Abstract

Background

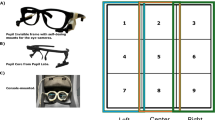

Eye-tracking technology has been shown to improve trainee performance in the aircraft industry, radiology, and surgery. The ability to track a supervisor’s point-of-regard and reflect it onto a subject’s laparoscopic screen to aid instruction in a simulated task is attractive, particularly given the multilingual make-up of modern surgical teams and the development of collaborative surgical techniques. We aimed to develop a bespoke interface to project a supervisor’s point-of-regard onto a subject’s laparoscopic screen and to investigate whether the supervisor’s eye-gaze could be used as a tool to aid identification of a target during a simulated surgical task.

Methods

We developed software to project a supervisor’s point-of-regard onto a subject’s screen while the subject undertook surgically related laparoscopic tasks. Twenty-eight subjects with varying levels of operative experience and proficiency in English undertook a series of these tasks. Subjects were instructed with verbal cues (V), a cursor reflecting the supervisor’s eye-gaze (E), or both (VE). Performance metrics included time to complete tasks, eye-gaze latency, and number of errors.
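As an illustration of the cursor condition (E), the following minimal sketch shows one way a supervisor’s gaze samples might be mapped to a cursor position on a second screen. It is not the software used in the study. The class name `GazeCursor`, the assumption that the eye tracker reports normalised coordinates between 0 and 1, and the moving-average window are all hypothetical.

```python
from collections import deque


class GazeCursor:
    """Maps normalised gaze samples to pixel positions on a second screen.

    Hypothetical sketch: assumes the tracker reports (x, y) in the range 0..1.
    """

    def __init__(self, width, height, window=5):
        self.width = width
        self.height = height
        # Keep only the most recent samples; older ones drop off automatically.
        self.samples = deque(maxlen=window)

    def update(self, gx, gy):
        """Add one normalised sample and return the smoothed pixel position."""
        # Clamp so that a glance off-screen leaves the cursor at the edge.
        gx = min(max(gx, 0.0), 1.0)
        gy = min(max(gy, 0.0), 1.0)
        self.samples.append((gx, gy))

        # A moving average damps the jitter of individual gaze samples.
        n = len(self.samples)
        mean_x = sum(s[0] for s in self.samples) / n
        mean_y = sum(s[1] for s in self.samples) / n

        # Scale to pixel indices (0 .. width-1, 0 .. height-1).
        return round(mean_x * (self.width - 1)), round(mean_y * (self.height - 1))
```

A larger window gives a steadier cursor at the cost of a slower response to genuine shifts in the supervisor’s gaze, so the value would need tuning for any real tracker.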

Results

Completion times and number of errors were significantly reduced when eye-gaze instruction was employed (VE, E). In addition, the time taken for the subject to correctly focus on the target (latency) was significantly reduced.
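The latency metric can be illustrated with a short sketch. The abstract defines latency only as the time taken for the subject to focus correctly on the target, so the details here are assumptions: gaze samples are taken to be `(time_s, x, y)` tuples in screen pixels, "on target" is taken to mean within a fixed radius of the target centre, and the function name `gaze_latency` is hypothetical.

```python
import math


def gaze_latency(samples, target, radius, onset=0.0):
    """Return seconds from instruction onset until gaze first lands on the target.

    samples: iterable of (time_s, x, y) tuples in chronological order.
    target:  (x, y) centre of the target in the same pixel coordinates.
    radius:  distance in pixels within which gaze counts as on target (assumed).
    Returns None if the gaze never reaches the target.
    """
    tx, ty = target
    for t, x, y in samples:
        if t < onset:
            continue  # ignore gaze recorded before the instruction was given
        if math.hypot(x - tx, y - ty) <= radius:
            return t - onset
    return None


# Usage: gaze reaches the target region at the third sample.
samples = [(0.0, 10, 10), (0.1, 50, 50), (0.2, 98, 101), (0.3, 100, 100)]
latency = gaze_latency(samples, target=(100, 100), radius=5)
```

A stricter definition might require the gaze to dwell within the radius for a minimum duration before counting it as a fixation on the target.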

Conclusions

We have successfully demonstrated the effectiveness of a novel framework that enables a supervisor’s eye-gaze to be projected onto a trainee’s laparoscopic screen. Furthermore, we have shown that using eye-tracking technology to provide visual instruction improves completion times and reduces errors in a simulated environment. Although this technology requires significant development, the potential applications are wide-ranging.

Disclosures

A. Chetwood, J. Clark, K.W. Kwok, L.W. Sun, A. Darzi, and G.Z. Yang have no conflicts of interest or financial ties to disclose.

Cite this article

Chetwood, A.S.A., Kwok, KW., Sun, LW. et al. Collaborative eye tracking: a potential training tool in laparoscopic surgery. Surg Endosc 26, 2003–2009 (2012). https://doi.org/10.1007/s00464-011-2143-x

DOI: https://doi.org/10.1007/s00464-011-2143-x