Abstract

Purpose

Eye gaze tracking and pupillometry are emerging tools in tele-robotic surgery, particularly for estimating cognitive load (CL). Because the field is young, current solutions for gaze and pupil tracking in robotic surgery still require assessment. Since reliable CL estimation depends on stable pupillometry signals, we compare the accuracy of three eye trackers, including head-worn and console-mounted designs.

Methods

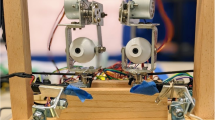

We conducted a user study with the da Vinci Research Kit (dVRK) to compare the three designs. We collected eye tracking and dVRK video data while participants observed nine markers distributed over the dVRK screen. We then computed and analyzed pupil detection stability and gaze prediction accuracy for each design.

Results

Head-worn devices showed better stability and accuracy of gaze prediction and pupil detection than console-mounted systems. Tracking stability across the field of view varied between trackers, and some gaze predictions fell in invalid zones of the image despite high reported confidence.

Conclusion

While head-worn solutions show benefits in confidence and stability, our results demonstrate the need to improve eye tracker performance regarding pupil detection, stability, and gaze accuracy in tele-robotic scenarios.

Acknowledgements

This work was supported in part by an Intuitive Surgical Technology Advancement Grant.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethical approval

Approval was obtained from the ethics committee of Johns Hopkins University under HIRB00014648.

Consent to participate

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Soberanis-Mukul, R.D., Puentes, P.R., Acar, A. et al. Cognitive load in tele-robotic surgery: a comparison of eye tracker designs. Int J CARS (2024). https://doi.org/10.1007/s11548-024-03150-x

DOI: https://doi.org/10.1007/s11548-024-03150-x