Abstract

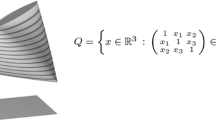

We derive tight bounds for the maximum number of k-faces, \(0\le k\le d-1\), of the Minkowski sum, \(P_1+P_2\), of two d-dimensional convex polytopes \(P_1\) and \(P_2\), as a function of the number of vertices of the polytopes. For even dimensions \(d\ge 2\), the maximum values are attained when \(P_1\) and \(P_2\) are cyclic d-polytopes with disjoint vertex sets. For odd dimensions \(d\ge 3\), the maximum values are attained when \(P_1\) and \(P_2\) are \(\lfloor \frac{d}{2}\rfloor \)-neighborly d-polytopes, whose vertex sets are chosen appropriately from two distinct d-dimensional moment-like curves.

Notes

In the rest of the paper, all polytopes are considered to be convex.

For simplicial faces, we identify the face with its defining vertex set.

For these particular shellings, \(h_k({\mathcal {X}}_1)\) and \(h_k({{\mathcal {X}}_1}/{v})\) have a geometric interpretation: \(h_k({\mathcal {X}}_1)\) (resp., \(h_k({{\mathcal {X}}_1}/{v})\)) counts the number of restrictions of size k of the facets of \({\mathcal {K}}_1\) (resp., \({{\mathcal {K}}_1}/{v}\)) in \({\mathcal {X}}_1\) (resp., \({{\mathcal {X}}_1}/{v}\)) in the shelling \({\mathbb {S}}({\mathcal {K}}_1)\) (resp., \({\mathbb {S}}({{\mathcal {K}}_1}/{v})\)) of \({\mathcal {K}}_1\) (resp., \({{\mathcal {K}}_1}/{v}\)).

To see this, rewrite relation (34) in its element-wise form:

$$\begin{aligned} h_k({\mathcal {K}}_1)=h_k({\mathcal {X}}_1)+h_k({\mathcal {S}}_1), \end{aligned}$$

and recall that \({\mathbb {S}}({\mathcal {K}}_1)\) shells \({\mathcal {S}}_1\) first. Since the shellings of \({\mathcal {K}}_1\) and \({\mathcal {S}}_1\) coincide as long as we shell \({\mathcal {S}}_1\), we get a contribution of one to both \(h_k({\mathcal {K}}_1)\) and \(h_k({\mathcal {S}}_1)\) for every restriction of \({\mathbb {S}}({\mathcal {K}}_1)\) of size k. After the shelling \({\mathbb {S}}({\mathcal {K}}_1)\) has left \({\mathcal {S}}_1\), a restriction of size k of the shelling contributes one to \(h_k({\mathcal {K}}_1)\) and thus, necessarily, to \(h_k({\mathcal {X}}_1)\). But these restrictions are precisely the restrictions corresponding to the facets of \({\mathcal {X}}_1\) in the shelling \({\mathbb {S}}({\mathcal {K}}_1)\). The argument for \(h_k({{\mathcal {X}}_1}/{v})\) is entirely similar.

Given two facets \(F_i\) and \(F_j\) in the shelling \({\mathbb {S}}(\partial {Q})\) (resp., \({\mathbb {S}}({\partial {Q}}/{v})\)) of \(\partial {Q}\) (resp., \({\partial {Q}}/{v}\)) that share a ridge, the edge connecting \(F_i\) and \(F_j\) in the dual graph \(G^\Delta (\partial {Q})\) (resp., \(G^\Delta ({\partial {Q}}/{v})\)) is oriented from \(F_i\) to \(F_j\) if and only if \(i<j\).

They are images of moment curves under invertible linear transformations.

To see this, consider the Laplace expansion of the matrix with respect to the columns of its top-left block.

References

[1] Adiprasito, K.A., Sanyal, R.: Relative Stanley–Reisner theory and upper bound theorems for Minkowski sums. arXiv:1405.7368 (2014)

[2] Bruggesser, H., Mani, P.: Shellable decompositions of cells and spheres. Math. Scand. 29, 197–205 (1971)

[3] The Cauchy–Binet formula. http://en.wikipedia.org/wiki/Cauchy-Binet_formula

[4] de Berg, M., van Kreveld, M., Overmars, M., Schwarzkopf, O.: Computational Geometry: Algorithms and Applications, 2nd edn. Springer, Berlin (2000)

[5] Fogel, E., Halperin, D., Weibel, C.: On the exact maximum complexity of Minkowski sums of polytopes. Discrete Comput. Geom. 42, 654–669 (2009)

[6] Fogel, E.: Minkowski sum construction and other applications of arrangements of geodesic arcs on the sphere. PhD Thesis, Tel-Aviv University (2008)

[7] Fukuda, K., Weibel, C.: \(f\)-vectors of Minkowski additions of convex polytopes. Discrete Comput. Geom. 37(4), 503–516 (2007)

[8] Gantmacher, F.R.: Applications of the Theory of Matrices. Dover, Mineola (2005)

[9] Graham, R.L., Knuth, D.E., Patashnik, O.: Concrete Mathematics. Addison-Wesley, Reading (1989)

[10] Gritzmann, P., Sturmfels, B.: Minkowski addition of polytopes: computational complexity and applications to Gröbner bases. SIAM J. Discrete Math. 6(2), 246–269 (1993)

[11] Huber, B., Rambau, J., Santos, F.: The Cayley trick, lifting subdivisions and the Bohne–Dress theorem on zonotopal tilings. J. Eur. Math. Soc. 2(2), 179–198 (2000)

[12] Kalai, G.: A simple way to tell a simple polytope from its graph. J. Comb. Theory Ser. A 49, 381–383 (1988)

[13] Karavelas, M.I., Tzanaki, E.: Convex hulls of spheres and convex hulls of convex polytopes lying on parallel hyperplanes. In: Proceedings of 27th Annual ACM Symposium on Computational Geometry (SCG’11), pp. 397–406. Paris, France, 13–15 June (2011)

[14] Karavelas, M.I., Tzanaki, E.: The maximum number of faces of the Minkowski sum of two convex polytopes. In: Proceedings of the 23rd ACM–SIAM Symposium on Discrete Algorithms (SODA’12), pp. 11–28. Kyoto, Japan, 17–19 Jan (2012)

[15] Karavelas, M.I., Tzanaki, E.: Tight lower bounds on the number of faces of the Minkowski sum of convex polytopes via the Cayley trick. arXiv:1112.1535v1 (2012)

[16] Karavelas, M.I., Tzanaki, E.: A geometric approach for the upper bound theorem for Minkowski sums of convex polytopes. In: Proceedings of the 31st International Symposium on Computational Geometry (SCG’15), pp. 81–95. Eindhoven, The Netherlands, 22–25 June (2015)

[17] Karavelas, M.I., Tzanaki, E.: A geometric approach for the upper bound theorem for Minkowski sums of convex polytopes. In: Proceedings of the 31st Symposium on Computational Geometry (SCG’15) (2015)

[18] Karavelas, M.I., Konaxis, C., Tzanaki, E.: The maximum number of faces of the Minkowski sum of three convex polytopes. arXiv:1211.6089 (2012)

[19] Karavelas, M.I., Konaxis, C., Tzanaki, E.: The maximum number of faces of the Minkowski sum of three convex polytopes. J. Comput. Geom. 6(1), 21–74 (2015)

[20] McMullen, P.: The maximum numbers of faces of a convex polytope. Mathematika 17, 179–184 (1970)

[21] Pachter, L., Sturmfels, B. (eds.): Algebraic Statistics for Computational Biology. Cambridge University Press, New York (2005)

[22] Rosenmüller, J.: Game Theory: Stochastics, Information, Strategies and Cooperation. Theory and Decision Library, Series C, vol. 25. Kluwer Academic Publishers, Dordrecht (2000)

[23] Sanyal, R.: Topological obstructions for vertex numbers of Minkowski sums. J. Comb. Theory Ser. A 116(1), 168–179 (2009)

[24] Sharir, M.: Algorithmic motion planning. In: Goodman, J.E., O’Rourke, J. (eds.) Handbook of Discrete and Computational Geometry, Chap. 47, 2nd edn, pp. 1037–1064. Chapman & Hall/CRC, London (2004)

[25] Weibel, C.: Minkowski sums of polytopes: combinatorics and computation. PhD Thesis, École Polytechnique Fédérale de Lausanne (2007)

[26] Weibel, C.: Maximal f-vectors of Minkowski sums of large numbers of polytopes. Discrete Comput. Geom. 47(3), 519–537 (2012)

[27] Zhang, H.: Observable Markov decision processes: a geometric technique and analysis. Oper. Res. 58(1), 214–228 (2010)

[28] Ziegler, G.M.: Lectures on Polytopes. Graduate Texts in Mathematics, vol. 152. Springer, New York (1995)

Acknowledgments

The authors would like to thank Michael Joswig for discussions related to the shellability of simplicial polytopes and the graphs of their duals, and Efi Fogel for suggestions on improving the presentation of the material. Finally, we would like to thank the anonymous referee for suggesting the use of generating functions and for providing pointers on improving the exposition of our results in earlier versions of the paper. The work in this paper has been partially supported by the FP7-REGPOT-2009-1 Project “Archimedes Center for Modeling, Analysis and Computation,” and has been co-financed by the European Union (European Social Fund – ESF) and Greek national funds through the Operational Program “Education and Lifelong Learning” of the National Strategic Reference Framework (NSRF) – Research Funding Program: THALIS – UOA (MIS 375891).

Additional information

Editor in Charge: Günter M. Ziegler

An earlier version of this paper has appeared in [14].

Appendix

1.1 Proof of Lemma 3

Proof

McMullen [20] in his original proof of the Upper Bound Theorem for polytopes proved that for any d-polytope P the following relation holds:

Multiplying both sides of (59) by \(t^{d-k-1}\) and summing over all \( 0 \le k \le d\), we get

For the right-hand side of (60), we have

whereas for the left-hand side of (60), we get

Substituting in (60), from (61) and (62), we recover the relation in the statement of the lemma. \(\square \)

1.2 Proof of an Identity

In this section we prove the following identity, used in Sect. 4, to prove the upper bound for \(f_{k-1}({\mathcal {F}})\) (see relations (50) and (51)).

Lemma 23

For any \(d\ge 2\) and any sequence of numbers \(\alpha _i\), where \(0\le i\le \lfloor \frac{d+1}{2}\rfloor \), we have

Proof

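We start by recalling the definition of the symbol \(\sum \nolimits _{i=0}^{\frac{\delta }{2}}{^{^{*}}}T_i\). This symbol denotes the sum of the elements \(T_0,T_1,\ldots ,T_{\lfloor \frac{\delta }{2}\rfloor }\), where the last term is halved if \(\delta \) is even. More precisely:

$$\begin{aligned} \sum \nolimits _{i=0}^{\frac{\delta }{2}}{^{^{*}}}T_i= {\left\{ \begin{array}{ll} T_0+T_1+\cdots +T_{\lfloor \frac{\delta }{2}\rfloor -1}+\frac{1}{2}T_{\lfloor \frac{\delta }{2}\rfloor }, &{} \text {if } \delta \text { is even},\\ T_0+T_1+\cdots +T_{\lfloor \frac{\delta }{2}\rfloor }, &{} \text {if } \delta \text { is odd}. \end{array}\right. } \end{aligned}$$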
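The halving rule of the starred sum can be sketched as a small function. This is only an illustration: the name `starred_sum` and the convention of passing the terms \(T_0,T_1,\ldots \) as a list are assumptions made here, not notation from the paper.

```python
from fractions import Fraction

def starred_sum(terms, delta):
    """Sum T_0, ..., T_{floor(delta/2)}, halving the last term when delta is even.

    `terms` is a list with terms[i] = T_i (hypothetical calling convention).
    """
    top = delta // 2
    if len(terms) <= top:
        raise ValueError("need the terms T_0, ..., T_{floor(delta/2)}")
    total = sum(Fraction(t) for t in terms[:top])   # T_0 + ... + T_{top-1}
    last = Fraction(terms[top])                     # T_{floor(delta/2)}
    return total + (last / 2 if delta % 2 == 0 else last)

print(starred_sum([1, 2, 3], 4))  # delta even: 1 + 2 + 3/2
print(starred_sum([1, 2, 3], 5))  # delta odd:  1 + 2 + 3
```

Exact rationals are used so that the halved term does not introduce rounding.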

Let us now first consider the case d odd. In this case \(d+1\) is even, and we have

The case d even is even simpler to prove. In this case \(d+1\) is odd; hence

This completes the proof. \(\square \)

1.3 Proof of Lemma 19

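Before proceeding with the proof of Lemma 19, we need to introduce Vandermonde and generalized Vandermonde determinants. Given a vector of \(n\ge 2\) real numbers \({\varvec{x}}=(x_1,x_2,\ldots ,x_n)\), the Vandermonde determinant \(\text {VD}({\varvec{x}})\) of \({\varvec{x}}\) is the \(n\times n\) determinant

$$\begin{aligned} \text {VD}({\varvec{x}})=\left| \begin{array}{cccc} 1 &{} 1 &{} \cdots &{} 1\\ x_1 &{} x_2 &{} \cdots &{} x_n\\ x_1^2 &{} x_2^2 &{} \cdots &{} x_n^2\\ \vdots &{} \vdots &{} &{} \vdots \\ x_1^{n-1} &{} x_2^{n-1} &{} \cdots &{} x_n^{n-1} \end{array}\right| =\prod _{1\le i<j\le n}(x_j-x_i). \end{aligned}$$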

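From the above expression, it is readily seen that if the elements of \({\varvec{x}}\) are in strictly increasing order, then \(\text {VD}({\varvec{x}})>0\). A generalization of the Vandermonde determinant is the generalized Vandermonde determinant: if, in addition to \({\varvec{x}}\), we specify a vector of exponents \({\varvec{\mu }}=(\mu _1,\mu _2,\ldots ,\mu _n)\), where we require that \(0\le \mu _1<\mu _2<\cdots <\mu _n\), we can define the generalized Vandermonde determinant \(\text {GVD}({\varvec{x}};{\varvec{\mu }})\) as the \(n\times n\) determinant:

$$\begin{aligned} \text {GVD}({\varvec{x}};{\varvec{\mu }})=\left| \begin{array}{cccc} x_1^{\mu _1} &{} x_2^{\mu _1} &{} \cdots &{} x_n^{\mu _1}\\ x_1^{\mu _2} &{} x_2^{\mu _2} &{} \cdots &{} x_n^{\mu _2}\\ \vdots &{} \vdots &{} &{} \vdots \\ x_1^{\mu _n} &{} x_2^{\mu _n} &{} \cdots &{} x_n^{\mu _n} \end{array}\right| . \end{aligned}$$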

It is a well-known fact that, if the elements of \({\varvec{x}}\) are positive and in strictly increasing order, then \(\text {GVD}({\varvec{x}};{\varvec{\mu }})>0\) (see, for example, [8] for a proof of this fact).
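The positivity claims can be checked numerically on small instances. The sketch below is an illustration only: the helper names `det` and `gvd` are chosen here, and the matrix layout (exponent \(\mu _i\) in row i, entry \(x_j\) in column j) is an assumption that does not affect the value of the determinant. The last line shows that positivity of the entries of \({\varvec{x}}\) matters for the generalized determinant.

```python
from fractions import Fraction

def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(v) for v in row] for row in matrix]
    n, sign, result = len(a), 1, Fraction(1)
    for c in range(n):
        # Find a row with a non-zero entry in column c to use as pivot.
        pivot = next((r for r in range(c, n) if a[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            a[c], a[pivot] = a[pivot], a[c]
            sign = -sign          # each row swap flips the sign
        result *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= factor * a[c][k]
    return sign * result

def gvd(x, mu):
    """Generalized Vandermonde determinant with entry x_j ** mu_i in row i, column j."""
    return det([[Fraction(xj) ** m for xj in x] for m in mu])

x = [1, 2, 3, 5]
print(gvd(x, [0, 1, 2, 3]))      # ordinary Vandermonde: product of (x_j - x_i), i < j; prints 48
print(gvd(x, [0, 2, 3, 7]) > 0)  # positive, increasing entries
print(gvd([-2, 1], [0, 2]))      # increasing but not positive: the value is negative
```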

To prove Lemma 19 we exploit the Cauchy–Binet formula (cf. [3]). Let M be an \(n\times n\) square matrix factorized into a product LR of an \( n \times m \) matrix L and an \( m \times n \) matrix R, with \( m \ge n\). If J is a subset of \( \{1,2,\ldots , m \}\) of size n, we denote by \( L_{[n],J} \) the \( n \times n\) matrix whose columns are the columns of L at indices from J and by \( R_{J,[n]} \) the \( n \times n \) matrix whose rows are the rows of R at indices from J. The Cauchy–Binet theorem states that

$$\begin{aligned} \det (M)=\sum _{J\in \left( {\begin{array}{c}[m]\\ n\end{array}}\right) }\det (L_{[n],J})\,\det (R_{J,[n]}), \end{aligned}$$

where \(\left( {\begin{array}{c}[m]\\ n\end{array}}\right) \) denotes the set of subsets of [m] of size n.
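As a sanity check, the Cauchy–Binet expansion can be compared with a direct determinant on a small example. The matrices below are arbitrary illustrative values, not the matrices L and R used in the proof of Lemma 19, and the function names are chosen here.

```python
from itertools import combinations

def det(m):
    """Integer determinant by Laplace expansion along the first row (small matrices only)."""
    if not m:
        return 1
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def cauchy_binet(L, R):
    """Sum of det(L_{[n],J}) * det(R_{J,[n]}) over all n-subsets J of the m inner indices."""
    n, m = len(L), len(L[0])
    total = 0
    for J in combinations(range(m), n):
        L_J = [[row[j] for j in J] for row in L]   # columns of L indexed by J
        R_J = [R[j] for j in J]                    # rows of R indexed by J
        total += det(L_J) * det(R_J)
    return total

L = [[1, 2, 0, 1],
     [0, 1, 3, 2]]   # n x m with n = 2, m = 4
R = [[2, 1],
     [0, 1],
     [1, 1],
     [3, 0]]         # m x n
print(det(matmul(L, R)), cauchy_binet(L, R))  # both sides agree: -7 -7
```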

Proof

The determinant \(D_{k,l}(\tau )\) is clearly a polynomial function of \(\tau \). To prove our lemma, it suffices to show that the coefficient of the minimum exponent of \(\tau \) in \(D_{k,l}(\tau )\) is strictly positive.

In order to apply the Cauchy–Binet formula in our case, we factorize the matrix \(\Delta _{k,l}(\tau )\), corresponding to the determinant \(D_{k,l}(\tau )\), into the product of an \( (m+3)\times 2(m+1)\) and a \(2(m+1) \times (k+l)\) matrix L and R, respectively, as follows (recall that \(m+3=k+l\)):

The numbers above and beside L indicate the column and row indices, respectively, with \(\hat{k} := k+m+1\). We partition the index set J into \(J_1 \cup J_2\) where \(J_1 \subseteq \{1,\ldots ,m+1\}\) and \(J_2\subseteq \{\widehat{1}, \widehat{2},\ldots ,\widehat{m+1} \}.\) Notice that a term \(\det (L_{[m+3],J}) \det (R_{J,[k+l]})\) in the Cauchy–Binet expansion of \(D_{k,l}(\tau ) \) vanishes in the following two cases:

(i) \( i \in J_1\) and \( \hat{\imath }\in J_2\) for some \( 3 \le i \le m+1\); in this case the i-th and \(\hat{\imath }\)-th columns of \(L_{[m+3],J}\) are identical, and thus \(\det (L_{[m+3],J})=0\).

(ii) \(|J_1| \ne k\) or \( |J_2| \ne l\); in this case \(R_{J,[k+l]} \) is a block-diagonal square matrix with non-square non-zero blocks. The determinant of such a matrix is always zero (see footnote 6).
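The vanishing in case (ii) can be illustrated on a toy matrix: a square block-diagonal matrix whose two diagonal blocks are not square has linearly dependent rows (or columns) and therefore zero determinant. The blocks below are arbitrary illustrative values, not the blocks of \(R_{J,[k+l]}\), and the helper names are chosen here.

```python
def det(m):
    """Integer determinant by Laplace expansion along the first row (small matrices only)."""
    if not m:
        return 1
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))

def block_diag(top_left, bottom_right):
    """Place the two blocks on the diagonal and fill the remaining entries with zeros."""
    c1, c2 = len(top_left[0]), len(bottom_right[0])
    return ([row + [0] * c2 for row in top_left] +
            [[0] * c1 + row for row in bottom_right])

tall_wide = block_diag([[1], [2]], [[3, 4]])     # 2 x 1 block and 1 x 2 block, 3 x 3 overall
wide_tall = block_diag([[1, 2]], [[3], [4]])     # 1 x 2 block and 2 x 1 block, 3 x 3 overall
square = block_diag([[1, 2], [3, 4]], [[5]])     # square blocks, for contrast

print(det(tall_wide), det(wide_tall))  # 0 0
print(det(square))                     # product of the block determinants: -10
```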

Furthermore, notice that for any non-vanishing term in the Cauchy–Binet expansion of \(D_{k,l}(\tau )\), we have

where \({\varvec{\mu }}_1\) (resp., \({\varvec{\mu }}_2\)) is the vector consisting of the elements in \(\{i-1\mid i\in J_1\}\) (resp., \(\{i-(m+1)-1\mid i\in J_2\}\)) ordered increasingly. The parameter \(\tau \) appears only in \(\text {GVD}(\tau {\varvec{x}};{\varvec{\mu }}_1)\) and can be factored out (see 64). We thus have

since \(\text {GVD}({\varvec{x}};{\varvec{\mu }}_1)\) and \(\text {GVD}({\varvec{y}};{\varvec{\mu }}_2)\) are positive due to the way we have chosen \({\varvec{x}}\) and \({\varvec{y}}\).
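The factoring step relies on the fact that scaling every entry of \({\varvec{x}}\) by \(\tau \) multiplies the row with exponent \(\mu _i\) by \(\tau ^{\mu _i}\), so the determinant picks up \(\tau \) raised to the sum of the exponents. The following sketch checks this on sample values; the function name `gvd`, the matrix layout, and the chosen numbers are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import permutations

def gvd(x, mu):
    """Generalized Vandermonde determinant via the Leibniz formula (fine for small n).

    The entry in row i, column j is x[j] ** mu[i].
    """
    n = len(x)
    total = Fraction(0)
    for perm in permutations(range(n)):
        # Sign of the permutation from its number of inversions.
        inversions = sum(perm[i] > perm[j] for i in range(n) for j in range(i + 1, n))
        term = Fraction(-1) ** inversions
        for i in range(n):
            term *= Fraction(x[perm[i]]) ** mu[i]
        total += term
    return total

tau = Fraction(1, 10)
x, mu = [1, 2, 4], [0, 2, 3]
lhs = gvd([tau * xi for xi in x], mu)   # GVD(tau * x; mu)
rhs = tau ** sum(mu) * gvd(x, mu)       # tau^(mu_1 + ... + mu_n) * GVD(x; mu)
print(lhs == rhs)
```

For small positive \(\tau \), the term with the smallest total exponent dominates, which is why the proof looks for the index set minimizing the exponent of \(\tau \).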

Among all possible index sets \(J=J_1\cup J_2 \) for which the product \(\det (L_{[m+3],J})\det (R_{J,[k+l]})\) does not vanish, we have to find the index set that gives the minimum exponent for \(\tau \). Recall that, in view of condition (ii), the size of \(J_1\) is k and the size of \(J_2\) is l. The minimum exponent M(J) is then attained when \(J_1=J_1^\star :=\{1,\ldots ,k \}\). In view of condition (i), we have \(J_2 \subseteq \{\widehat{1}, \widehat{2},\ldots ,\widehat{m+1}\}\setminus \{\widehat{3},\ldots ,\widehat{k} \}, \) which leaves no other choice but \(J_2=J_2^\star :=\{ \widehat{1}, \widehat{2}, \widehat{k+1},\ldots ,\widehat{m+1} \}\).

For \(J^\star =J_1^\star \cup J_2^\star \), the matrix \(L_{[m+3],J}\) is

Clearly, \(L_{[m+3],J^\star }\) becomes the identity matrix after 2k row swaps. Hence \(\det (L_{[m+3],J^\star })=1\), which further implies that

\(\square \)

Cite this article

Karavelas, M.I., Tzanaki, E. The Maximum Number of Faces of the Minkowski Sum of Two Convex Polytopes. Discrete Comput Geom 55, 748–785 (2016). https://doi.org/10.1007/s00454-015-9726-6

DOI: https://doi.org/10.1007/s00454-015-9726-6

Keywords

- High-dimensional geometry

- Discrete geometry

- Combinatorial geometry

- Combinatorial complexity

- Minkowski sum

- Convex polytopes