Abstract

Can we efficiently compute optimal solutions to instances of a hard problem from optimal solutions to neighbor instances, that is, instances with one local modification? For example, can we efficiently compute an optimal coloring for a graph from optimal colorings for all one-edge-deleted subgraphs? Studying such questions not only gives detailed insight into the structure of the problem itself, but also into the complexity of related problems, most notably, graph theory’s core notion of critical graphs (e.g., graphs whose chromatic number decreases under deletion of an arbitrary edge) and the complexity-theoretic notion of minimality problems (also called criticality problems, e.g., recognizing graphs that become 3-colorable when an arbitrary edge is deleted). We focus on two prototypical graph problems, colorability and vertex cover. For example, we show that it is \(\text {NP}\)-hard to compute an optimal coloring for a graph from optimal colorings for all its one-vertex-deleted subgraphs, and that this remains true even when optimal solutions for all one-edge-deleted subgraphs are given. In contrast, computing an optimal coloring from all (or even just two) one-edge-added supergraphs is in \(\text {P}\). We observe that vertex cover exhibits a remarkably different behavior, demonstrating the power of our model to delineate problems from each other more precisely on a structural level. Moreover, we provide a number of new complexity results for minimality and criticality problems. For example, we prove that Minimal-3-UnColorability is complete for \(\text {DP}\) (differences of \(\text {NP}\) sets), which was previously known only for the more amenable case of deleting vertices rather than edges. For vertex cover, we show that recognizing \(\beta \)-vertex-critical graphs is complete for \(\Theta _2^\text {p}\) (parallel access to \(\text {NP}\)), obtaining the first completeness result for a criticality problem for this class.

1 Introduction and Related Work

In Sect. 1.1, we introduce and motivate our new model, which we then compare and contrast to related notions in Sect. 1.2. Finally, we present in Sect. 1.3 an overview of our most interesting results and place them into the context of the wider literature.

1.1 Our Model

In view of the almost complete absence of progress in the question of \(\text {P} \) versus \(\text {NP} \), it is natural to wonder just how far and in what way these sets may differ. For example, how much additional information enables us to design an algorithm that solves an otherwise \(\text {NP}\)-hard problem in polynomial time? We are specifically interested in the case where this additional information takes the form of optimal solutions to neighboring (i.e., locally modified) instances. This models situations such as that of a newcomer who may ask experienced peers for advice on how to solve a difficult problem, for instance finding an optimal work route. Similar circumstances arise when new servers join a computer network. Formally, we consider the following oracle model: An algorithm may, on any given input, repeatedly select an arbitrary instance neighboring the given one and query the oracle for an optimal solution to it. Occasionally, it will be interesting to limit the number of queries that we grant the algorithm. In general, we do not impose such a restriction, however.

What precisely constitutes a local modification and thus a neighbor depends on the specific problem, of course. We examine the prototypical graph problems colorability and vertex cover, considering the following four local modifications, which are arguably the most natural choices: deleting an edge, adding an edge, deleting a vertex (including adjacent edges), and adding a vertex (including an arbitrary, possibly empty, set of edges from the added vertex to the existing ones). For example, we ask whether there is a polynomial-time algorithm that computes a minimum vertex cover for an input graph G if it has access to minimum vertex covers for all one-edge-deleted subgraphs of G. We will show that questions of this sort are closely connected to and yet clearly distinct from research in other areas, in particular the study of critical graphs, minimality problems, self-reducibility, and reoptimization.

1.2 Related Concepts

Criticality. The notion of criticality was introduced into the field of graph theory by Dirac [1] in 1952 in the context of colorability with respect to vertex deletion. Thirty years later, Wessel [2] generalized the concept to arbitrary graph properties and modification operations. Nevertheless, colorability has remained a central focus of the extensive research on critical graphs. Indeed, a graph G is called critical without any further specification if it is \(\chi \)-critical under edge deletion, that is, if its chromatic number \(\chi (G)\) (the number of colors used in an optimal coloring of G) changes when an arbitrary edge is deleted. Besides colorability, one other problem has received a comparable amount of attention and thorough analysis in three different manifestations: Independent Set, vertex cover, and Clique. The corresponding notions are \(\alpha \)-criticality, \(\beta \)-criticality, and \(\omega \)-criticality, where \(\alpha \) is the independence number (size of a maximum independent set), \(\beta \) is the vertex cover number (size of a minimum vertex cover), and \(\omega \) is the clique number (size of a maximum clique). Note that these graph numbers are all monotone—either nondecreasing or nonincreasing—with respect to each of the local modifications examined in this paper.

Minimality. Another strongly related notion is that of minimality problems. An instance is called minimal with respect to a property if only the instance itself but none of its neighbors has this property; that is, it inevitably loses the property under the considered local modification. The corresponding minimality problem is to decide whether an instance is minimal in the described sense. For example, a graph G is minimally 3-uncolorable (with respect to edge deletion) if it is not 3-colorable, yet all its one-edge-deleted neighbors are. The minimality problem \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) is the set of all minimally 3-uncolorable graphs. Note that a graph is critical exactly if it is minimally k-uncolorable for some k.
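
The definition of minimal k-uncolorability under edge deletion can be checked mechanically on small graphs. The following is a minimal brute-force sketch, assuming graphs are given as a vertex count and an edge list over vertices 0..n-1; the function names are illustrative and not taken from the paper, and the running time is exponential.

```python
from itertools import product

def is_k_colorable(n, edges, k):
    """Brute force: try every assignment of k colors to the vertices 0..n-1."""
    return any(
        all(coloring[u] != coloring[v] for u, v in edges)
        for coloring in product(range(k), repeat=n)
    )

def is_minimally_k_uncolorable(n, edges, k):
    """True iff the graph is not k-colorable but every one-edge-deleted subgraph is."""
    if is_k_colorable(n, edges, k):
        return False
    return all(
        is_k_colorable(n, edges[:i] + edges[i + 1:], k)
        for i in range(len(edges))
    )

# K4 is not 3-colorable, but deleting any edge makes it 3-colorable.
k4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
print(is_minimally_k_uncolorable(4, k4, 3))
```

Attaching a pendant vertex to K4 destroys minimality, since deleting the pendant edge leaves K4 intact.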

While minimality problems tend to be in \(\text {DP}\) (i.e., differences of two \(\text {NP}\) sets, the second level of the Boolean hierarchy), \(\text {DP}\)-hardness is so difficult to prove for them that only a few have been shown to be \(\text {DP}\)-complete so far; see for instance Papadimitriou and Wolfe [3]. Note that the notion of minimality is not restricted to graph problems. Indeed, minimally unsatisfiable formulas figure prominently in many of our proofs.

Auto-Reducibility. Our model provides a refinement of the notion of functional auto-reducibility; see Faliszewski and Ogihara [4]. An algorithm solves a function problem \(R\subseteq \Sigma _1^*\times \Sigma _2^*\) if on input \(x\in \Sigma _1^*\) it outputs some \(y\in \Sigma _2^*\) with \((x,y)\in R\). The problem R is auto-reducible if there is a polynomial-time algorithm with unrestricted access to an oracle that provides solutions to all instances except x itself. The task of finding an optimal solution to a given instance is a special kind of function problem. Defining all instances to be neighbors (local modifications) of each other lets the two concepts coincide.

Self-Reducibility. Self-reducibility is auto-reducibility with the additional restriction that the algorithm may query the oracle only on instances that are smaller in a certain way. There are a multitude of definitions of self-reducibility that differ in what exactly is considered to be “smaller,” the two seminal ones stemming from Schnorr [5] and from Meyer and Paterson [6]. For Schnorr, an instance is smaller than another one if its encoding input string is strictly shorter. While his definition does allow for functional problems (i.e., more than mere decision problems, in particular the problem of finding an optimal solution), it is too restrictive for self-reducibility to encompass our model since not all neighboring graphs have shorter strings under natural encodings.

Meyer and Paterson are less rigid and allow instead any partial order having short downward chains to determine which instances are considered smaller than the given one (see Footnote 1). The partial orders induced by deleting vertices, by deleting edges, and by adding edges all have short downward chains. The definition by Meyer and Paterson is thus sufficient for our model to become part of functional self-reducibility for all local modifications considered in this paper but one, namely, the case of adding a vertex, which is too generous a modification to display any particularly interesting behavior.

As an example, consider the graph decision problem \(\textsc {Color} \textsc {ability} =\{(G,k)\mid \chi (G)\le k\}\), which is self-reducible by the following observation. Any graph G with at least two vertices that is not a clique is k-colorable exactly if at least one of the polynomially many graphs that result from merging two nonadjacent vertices in G is k-colorable. This works for the optimization variant of the problem as well. Any optimal coloring of G assigns at least two vertices the same color, except in the trivial case of G being a clique. An optimal coloring for the graph that has two such vertices merged then yields an optimal coloring for G. This contrasts well with the findings for colorability’s behavior under our new model discussed below.
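
The merging observation can be illustrated with a short recursive sketch. It assumes graphs on vertices 0..n-1 given as edge lists, and the names `merge` and `chromatic_number` are hypothetical. Where a self-reduction would query an oracle on the merged graphs, this sketch simply recurses on all of them, so it takes exponential time and only demonstrates the identity, not an efficient algorithm.

```python
from itertools import combinations

def merge(n, edges, u, v):
    """Merge the nonadjacent vertices u < v of a graph on vertices 0..n-1."""
    def relabel(w):
        if w == v:
            return u                    # v is identified with u
        return w - 1 if w > v else w    # close the gap left by v
    merged = {tuple(sorted((relabel(a), relabel(b)))) for a, b in edges}
    return n - 1, merged

def chromatic_number(n, edges):
    """chi(G) is n for a clique, else the minimum of chi over all merged graphs."""
    edges = {tuple(sorted(e)) for e in edges}
    nonadjacent = [p for p in combinations(range(n), 2) if p not in edges]
    if not nonadjacent:                 # clique (including n <= 1)
        return n
    return min(chromatic_number(*merge(n, edges, u, v)) for u, v in nonadjacent)

c5 = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
print(chromatic_number(5, c5))
```

Merging never decreases the chromatic number, and merging two vertices that share a color in an optimal coloring preserves it, which is why taking the minimum is correct.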

Reoptimization. Reoptimization examines optimization problems under a model that is tightly connected to ours. The notion of reoptimization was coined by Schäffter [7] and first applied by Archetti et al. [8]. The reoptimization model sets the following task for an optimization problem:

Given an instance, an optimal solution to it, and a local modification of this instance, compute an optimal solution to the modified instance.

The proximity to our model becomes clearer after a change of perspective. We reformulate the reoptimization task by reversing the roles of the given and the modified instance.

Given an instance, a local modification of it, and an optimal solution to the modified instance, compute an optimal solution to the original instance.

Note that this perspective switch flips the definition of local modification; for example, edge deletion turns into edge addition. Aside from this, the task now reads almost identically to that demanded in our model. The sole but crucial difference is that in reoptimization, the neighboring instance and the optimal solution to it are given as part of the input, whereas in our model, the algorithm may select any number of neighboring instances and query the oracle for optimal solutions to them. Even if we limit the number of queries to just one, our model is still more generous since the algorithm chooses (instead of being given) the neighboring instance to which the oracle will supply an optimal solution. Thus, hardness in our model always implies hardness for reoptimization, but not vice versa. In fact, all problems examined under the reoptimization model so far remain \(\text {NP}\)-hard. Only for some of them could an improvement of the approximation ratio be achieved after extensive studies, the first discovered examples being TSP under edge-weight changes [9] and under addition or deletion of vertices [10]. This stands in stark contrast to the results for our model, as outlined in the next section.

1.3 Results

We shed new light on two of the most prominent and well-examined graph problems, colorability and vertex cover. Our results come in two different types.

The first type concerns the hardness of the two problems in our model for the most common local modifications; Table 1 summarizes the main results of this type. In addition, Corollaries 3 and 17 show that Satisfiability and vertex cover remain \(\text {NP}\)-hard for any number of queries if the local modification is the deletion of a clause or a triangle, respectively. The results for the vertex-addition columns are trivial since we can just query an optimal solution for the graph with an added isolated vertex.

Theorem 1

In our model with the local modification of adding a vertex, the two problems of finding an optimal vertex cover and finding an optimal coloring are in \(\text {P}\).

Proof

Let G be the given graph. Add an isolated vertex v and query the oracle for an optimal vertex cover or an optimal coloring of \(G+v\), respectively. Clearly, the restriction of this solution to G is optimal as well since an isolated vertex is never part of an optimal vertex cover and can be colored arbitrarily. (Note that we could also avoid isolated vertices by adding a universal vertex instead since a universal vertex needs to be colored in a unique color and is, except in the case of a clique, part of any optimal vertex cover.) \(\square \)
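
The single-query argument for vertex cover can be sketched as follows. The oracle here is a hypothetical stand-in implemented by exhaustive search; in the model it would be a black box. Graphs are assumed to be given as a vertex count and an edge list over vertices 0..n-1, and the added isolated vertex receives the number n.

```python
from itertools import combinations

def brute_force_vc_oracle(n, edges):
    """Hypothetical stand-in for the oracle: a minimum vertex cover by exhaustive search."""
    for size in range(n + 1):
        for cover in combinations(range(n), size):
            chosen = set(cover)
            if all(u in chosen or v in chosen for u, v in edges):
                return chosen

def min_vertex_cover_via_neighbor(n, edges, oracle=brute_force_vc_oracle):
    """Query the oracle on G + v (v isolated, numbered n) and restrict the answer to G."""
    cover = oracle(n + 1, edges)        # same edge set, one extra isolated vertex
    return {u for u in cover if u < n}  # an isolated vertex is never in a minimum cover

path = [(0, 1), (1, 2)]
print(min_vertex_cover_via_neighbor(3, path))
```

The restriction step is a no-op for any correct oracle, because a cover containing the isolated vertex could be made smaller by dropping it.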

The hardness results for the one-query case all follow from the same simple Theorem 11, variations of which appear in the study of self-reducibility and many other fields. The findings of Theorems 12, 14, and 15 clearly delineate our model from that of reoptimization, where the \(\text {NP}\)-hard problems examined in the literature remain \(\text {NP}\)-hard despite the significant amount of advice in the form of the provided optimal solution; see Böckenhauer et al. [11].

The results of the second type locate criticality problems in relation to the complexity classes \(\text {DP}\) and \(\Theta _2^\text {p}\). The class \(\Theta _2^\text {p}\) was introduced by Wagner [12] and represents the languages that can be decided in polynomial time by an algorithm that has access to an \(\text {NP}\) oracle under the restriction that all queries are submitted at the same time. The definitions of the classes immediately yield the inclusions \(\text {NP} \cup \text {coNP} \subseteq \text {DP} \subseteq \Theta _2^\text {p}\).

Papadimitriou and Wolfe [3] have shown that \(\textsc {Minimal} \text {-}{}\textsc {Un} \textsc {Sat} \) (the set of unsatisfiable formulas that become satisfiable when an arbitrary clause is deleted) is \(\text {DP}\)-complete. Cai and Meyer [13] built upon this to prove \(\text {DP}\)-completeness of \(\textsc {Vertex} \textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) (the set of graphs that are not k-colorable but become k-colorable when an arbitrary vertex is deleted), for all \(k \ge 3\). With Theorems 8 and 9, we were able to extend this result to classes that are analogously defined for the much smaller local modification of edge deletion, which is considered the default setting; namely, we prove \(\text {DP}\)-completeness of \(\textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) (the set of graphs that are not k-colorable but become k-colorable when an arbitrary edge is deleted), for all \(k \ge 3\).

In Theorem 10, we show that recognizing criticality and recognizing vertex-criticality are both in \(\Theta _2^\text {p}\) and \(\text {DP}\)-hard. As Joret [14, Corollary 4 (p. 86)] points out, a construction by Papadimitriou and Wolfe [3] proves the \(\text {DP}\)-hardness of recognizing \(\beta \)-critical graphs. This problem also lies in \(\Theta _2^\text {p}\), but no finer classification has been achieved so far. In Theorem 18, we show that this problem is in fact \(\Theta _2^\text {p}\)-hard, yielding the first known \(\Theta _2^\text {p}\)-completeness result for a criticality problem (see Footnote 2).

2 Preprocessing Satisfiability

Our main technique for proving the nontrivial hardness results in our model is to describe a reduction from a standard \(\text {NP}\)-hard problem. In addition to what a usual polynomial-time reduction does, this reduction will surround any instance with simply solvable neighbors. To be more precise, there is a polynomial-time algorithm that optimally solves any given neighbor of any given instance that may result from our reduction. The instances in the image of the reduction can thus be seen as islands of hardness, as it were, sprinkled across a sea of simplicity. Hence, the oracle providing optimal solutions to locally modified instances is essentially useless to us: It can supply only those optimal solutions to the neighbor instances that we could have just as well computed by ourselves. A similar approach is taken in some proofs of \(\text {DP}\)-completeness for minimality problems. Indeed, we can occasionally combine the proof of \(\text {DP}\)-hardness with that of the \(\text {NP}\)-hardness of computing an optimal solution from optimal solutions to locally modified instances.

We first present an outline of the proof and then provide all details in Sect. 2.2.

2.1 Proof Outline

Denote by \(\textsc {3CNF} \) the set of nonempty \(\textsc {CNF} \)-formulas with exactly three distinct literals per clause (see Footnote 3). We begin by showing in Theorem 2 that there is a reduction from \(\textsc {3Sat} \) (the set of satisfiable \(\textsc {3CNF} \)-formulas) to \(\textsc {3Sat} \) that incorporates polynomial-time computable solutions for all one-clause-deleted subformulas of the resulting \(\textsc {3CNF} \)-formula. At first glance, this very surprising result may seem dangerously close to proving \(\text {P} =\text {NP} \); Corollary 3 will make explicit where the hardness remains. We will then use the reduction of Theorem 2 as a preprocessing step in reductions from \(\textsc {3Sat} \) to other problems.

Theorem 2

There is a polynomial-time many-one reduction f from \(\textsc {3Sat} \) to \(\textsc {3Sat} \) and a polynomial-time computable function \(s\) such that, for every \(\textsc {3CNF} \)-formula \(\Phi \) and for every clause \({C}\) in \(f(\Phi )\), \(s(f(\Phi ) - {C})\) is a satisfying assignment for \(f(\Phi ) - {C}\).

Proof Outline

Papadimitriou and Wolfe [3, Lemma 1] give a reduction from \(\textsc {3UnSat} \) to \(\textsc {Minimal} \text {-}{}\textsc {Un} \textsc {Sat} \) (the set of \(\textsc {CNF} \)-formulas that are unsatisfiable but that become satisfiable with the removal of an arbitrary clause). We show how to enhance this reduction such that it has all properties of our theorem.

First, we carefully prove that there is a function \(s\) that together with the original reduction satisfies all properties of our theorem, except that we may output a formula that is not in \(\textsc {3CNF} \). In order to rectify this, we show that the standard reduction from \(\textsc {Sat} \) to \(\textsc {Sat} \) that decreases the number of literals per clause to at most three maintains all the required properties. The same is then shown for the standard reduction that transforms \(\textsc {CNF} \)-formulas with at most three literals per clause into \(\textsc {3CNF} \)-formulas that have exactly three distinct literals per clause. Combining these three reductions, we can therefore satisfy all requirements of our theorem. \(\square \)

Having seen how to surround formulas by trivially satisfiable neighbors, we now formally show that this implies the \(\text {NP}\)-hardness of satisfying a given formula even with an oracle that provides satisfying assignments for all neighbors.

Corollary 3

Given a \(\textsc {3CNF} \)-formula whose one-clause-deleted subformulas are all satisfiable, together with satisfying assignments for all of these subformulas, computing a satisfying assignment for the formula itself, whenever one exists, is \(\text {NP}\)-hard.

Proof

We provide a reduction from the standard problem, which comes with neither a guarantee that all one-clause-deleted subformulas have satisfying assignments nor an oracle providing such assignments. Given a \(\textsc {3CNF} \)-formula \(\Phi \), use the reduction f and the accompanying function \(s\) from Theorem 2 to compute \(f(\Phi )\) (an equisatisfiable formula with simply satisfied subformulas) and also a satisfying assignment \(s(f(\Phi ) - {C})\) for every subformula resulting from deleting one clause \({C}\) in \(f(\Phi )\). Now we can apply the assumed algorithm to compute a satisfying assignment for \(f(\Phi )\) if one exists and use this to determine whether \(\Phi \) is satisfiable. \(\square \)

2.2 Proof Details

We now go through the reduction from \(\textsc {3UnSat} \) to \(\textsc {Minimal} \text {-}{}\textsc {Un} \textsc {Sat} \) by Papadimitriou and Wolfe [3] (mostly following the notation of Büning and Kullmann [17]) plus three standard reductions for additional form constraints, showing that the composition has all the properties that we need for our results.

2.2.1 The Main Reduction

Let \(\Phi \in \textsc {3CNF} \) be a Boolean formula over the variable set \(\{x_1,\ldots ,x_n\}\) with \(n>1\). This means that we have a formula \(\Phi =C_1\wedge \ldots \wedge C_m\) such that, for every \(i\in \{1,\dots ,m\}\), clause \(C_i=\ell _{i,1}\vee \ell _{i,2}\vee \ell _{i,3}\) consists of three distinct literals from \(\{x_1,\ldots ,x_n,\overline{x}_1,\ldots ,\overline{x}_n\}\), where an overline denotes negation. In the following, we construct in polynomial time an equivalent \(\textsc {CNF} \)-formula \(\Psi \) with the additional property that each one-clause-deleted subformula of \(\Psi \) has an easy-to-compute satisfying assignment. First, delete without replacement any clause that contains a variable and its negation. (Such a clause is satisfied for every assignment.) Assume thus without loss of generality that no clause \(C_i\) contains a variable and its negation. Now introduce m new variables \(\{y_1,\ldots ,y_m\}\) and let \(\pi _i= y_1\vee \ldots \vee y_{i-1}\vee y_{i+1}\vee \ldots \vee y_m\). Let

$$\begin{aligned} \Psi =\bigwedge _{i=1}^m\Bigl [(C_i\vee \pi _i)\wedge \bigwedge _{j=1}^3 (\overline{\ell }_{i,j}\vee \pi _i\vee \overline{y}_i)\Bigr ]\wedge \bigwedge _{1\le i<j\le m}(\overline{y}_i\vee \overline{y}_j). \end{aligned}$$

This concludes the description of the construction. We now prove all the required properties.

2.2.2 If \({\Phi }\) Satisfiable, then \(\Psi \) Satisfiable

Let \(\alpha \) be a satisfying assignment for \(\Phi \). Then

$$\begin{aligned} \beta :{\left\{ \begin{array}{ll} x_i\mapsto \alpha (x_i),&{}\text { for }i\in \{1,\ldots ,n\},\text { and}\\ y_i\mapsto 0,&{}\text { for }i\in \{1,\ldots ,m\} \end{array}\right. } \end{aligned}$$

is a satisfying assignment for \(\Psi \). Indeed, we have \(\beta (C_i\vee \pi _i)=1\) since \(\alpha (C_i)=1\); and we have \(\beta (\overline{\ell }_{i,j}\vee \pi _i\vee \overline{y}_i)=1\) and \(\beta (\overline{y}_i\vee \overline{y}_j)=1\) since \(\beta (\overline{y}_i)=1\).

2.2.3 If \(\Psi \) Satisfiable, then \(\Phi \) Satisfiable

Let \(\beta \) be a satisfying assignment for \(\Psi \). We prove that \(\beta \) (or, to be precise, the restriction \(\beta \vert _X\)) also satisfies \(\Phi \). The satisfied clauses of the form \(\overline{y}_i\vee \overline{y}_j\) in \(\Psi \) guarantee that \(\beta (\overline{y}_{{{\hat{\imath }}}})=0\) for at most one \({{\hat{\imath }}}\in \{1,\ldots ,m\}\).

Case 1.

Assume that \(\beta (\overline{y}_i)=1\) for all \(i\in \{1,\ldots ,m\}\). All clauses that contain a literal \(\overline{y}_i\) are clearly satisfied. The only remaining clauses have the form \(C_i\vee \pi _i\). Moreover, \(\beta (y_i)=0\) for all \(i\in \{1,\ldots ,m\}\) implies \(\beta (\pi _i)=0\) for all \(i\in \{1,\ldots ,m\}\). Thus, we have \(\beta (C_i\vee \pi _i)=1\) if and only if \(\beta (C_i)=1\). By assumption \(\beta \) satisfies all clauses of \(\Psi \), in particular those of the form \(C_i\vee \pi _i\); therefore \(\beta \) also satisfies \(\Phi \).

Case 2.

Assume that \(\beta (\overline{y}_{{{\hat{\imath }}}})=0\), that is, \(\beta (y_{{{\hat{\imath }}}})=1\) for exactly one \({{\hat{\imath }}}\in \{1,\ldots ,m\}\). Then \(\beta (\pi _{{{\hat{\imath }}}})=0\) and \(\beta (\pi _i)=1\) for the remaining \(i\ne {{\hat{\imath }}}\). Thus, all clauses that contain \(\overline{y}_i\) or \(\pi _i\) with \(i\ne {{\hat{\imath }}}\) are trivially satisfied. The only four remaining clauses are

$$\begin{aligned}(C_{{{\hat{\imath }}}}\vee \pi _{{{\hat{\imath }}}})\wedge \bigwedge _{j=1}^3 (\overline{\ell }_{{{\hat{\imath }}},j}\vee \pi _{{{\hat{\imath }}}}\vee \overline{y}_{{{\hat{\imath }}}}),\end{aligned}$$which, due to \(\beta (\pi _{{{\hat{\imath }}}})=\beta (\overline{y}_{{{\hat{\imath }}}})=0\), simplify to \(C_{{{\hat{\imath }}}}\wedge \overline{\ell }_{{{\hat{\imath }}},1}\wedge \overline{\ell }_{{{\hat{\imath }}},2}\wedge \overline{\ell }_{{{\hat{\imath }}},3}\). This is unsatisfiable since \(C_{{{\hat{\imath }}}}=\ell _{{{\hat{\imath }}},1}\vee \ell _{{{\hat{\imath }}},2}\vee \ell _{{{\hat{\imath }}},3}\). Thus, Case 2 cannot occur.

2.2.4 After the Deletion of an Arbitrary Clause, \(\Psi \) is Satisfiable

There are three cases, which we handle separately.

Case 1: The deleted clause is \(\overline{{y}}_{{{{\hat{\imath }}}}}\vee \overline{{y}}_{{{{\hat{\jmath }}}}}\). We show that, in this case, the following assignment is satisfying:

$$\begin{aligned} \beta :{\left\{ \begin{array}{ll} y_{{\hat{\imath }}}\mapsto 1,&{}\\ y_{{\hat{\jmath }}}\mapsto 1,&{}\\ y_i\mapsto 0,&{}\text { for }{{\hat{\imath }}}\ne i\ne {{\hat{\jmath }}},\text { and}\\ x_i\mapsto \text {arbitrary}. \end{array}\right. } \end{aligned}$$
We have \(\beta (\pi _i)=1\) for all \(i\in \{1,\ldots ,m\}\) since any \(\pi _i\) contains either \(y_{{\hat{\imath }}}\) or \(y_{{\hat{\jmath }}}\). The remaining clauses \(\overline{y}_i\vee \overline{y}_j\) with \((i,j)\ne ({{\hat{\imath }}},{{\hat{\jmath }}})\) are trivially satisfied since at least one of \(y_i\) and \(y_j\) is assigned 0.

Case 2: The deleted clause is \({C}_{{{{\hat{\imath }}}}}\vee {\pi }_{{{{\hat{\imath }}}}}\). In this case, the assignment

$$\begin{aligned} \beta :{\left\{ \begin{array}{ll} y_{{\hat{\imath }}}\mapsto 1,&{}\\ y_i\mapsto 0,&{}\text { for }i\ne {{\hat{\imath }}},\\ x_i\mapsto 0,&{}\text { for }x_i\in \{\ell _{{{\hat{\imath }}},1},\ell _{{{\hat{\imath }}},2},\ell _{{{\hat{\imath }}},3}\},\\ x_i\mapsto 1,&{}\text { for }x_i\in \{\overline{\ell }_{{{\hat{\imath }}},1},\overline{\ell }_{{{\hat{\imath }}},2}, \overline{\ell }_{{{\hat{\imath }}},3}\},\text { and}\\ x_i\mapsto \text {arbitrary},&{}\text { otherwise,} \end{array}\right. } \end{aligned}$$is satisfying. All clauses of the form \(\overline{y}_i\vee \overline{y}_j\) are satisfied since only \(y_{{\hat{\imath }}}\) is assigned 1 and \(i\ne j\). We also have \(\beta (\pi _i)=1\) for all \(i\ne {{\hat{\imath }}}\), so all clauses containing \(\pi _i\) for \(i\ne {{\hat{\imath }}}\) are satisfied. Since \(C_{{\hat{\imath }}}\vee \pi _{{\hat{\imath }}}\) is deleted, the only three remaining clauses are \(\overline{\ell }_{{{\hat{\imath }}},j}\vee \pi _{{\hat{\imath }}}\vee \overline{y}_{{\hat{\imath }}}\) for \(j\in \{1,2,3\}\). These are satisfied because \(\beta (\overline{\ell }_{{{\hat{\imath }}},j})=1\) for \(j\in \{1,2,3\}\). (Such an assignment is valid since no clause \(C_i\) contains a variable and its negation, as mentioned in the first paragraph, in particular \(C_{{\hat{\imath }}}\), that is, \(\{\ell _{{{\hat{\imath }}},1},\ell _{{{\hat{\imath }}},2},\ell _{{{\hat{\imath }}},3}\}\cap \{\overline{\ell }_{{{\hat{\imath }}},1},\overline{\ell }_{{{\hat{\imath }}},2}, \overline{\ell }_{{{\hat{\imath }}},3}\}=\emptyset \).)

Case 3: The deleted clause is \(\overline{{\ell }}_{{{{{\hat{\imath }}}}},{{{{\hat{\jmath }}}}}}\vee {\pi }_{{{{\hat{\imath }}}}}\vee \overline{{y}}_{{{{\hat{\imath }}}}}\). Also in this case, the assignment

$$\begin{aligned} \beta :{\left\{ \begin{array}{ll} y_{{\hat{\imath }}}\mapsto 1,&{}\\ y_i\mapsto 0,&{}\text { for }i\ne {{\hat{\imath }}},\\ x_i\mapsto 1,&{}\text { for }x_i\in \{\ell _{{{\hat{\imath }}},{{\hat{\jmath }}}}\}\cup \{\overline{\ell }_{{{\hat{\imath }}},j}\mid j\ne {{\hat{\jmath }}}\},\\ x_i\mapsto 0,&{}\text { for }x_i\in \{\overline{\ell }_{{{\hat{\imath }}},{{\hat{\jmath }}}}\}\cup \{\ell _{{{\hat{\imath }}},j}\mid j\ne {{\hat{\jmath }}}\},\\ x_i\mapsto \text {arbitrary},&{}\text { otherwise,} \end{array}\right. } \end{aligned}$$is satisfying. The same argument as in Case 2 shows that the assignment to the variables \(y_i\) satisfies all clauses but the three clauses \(C_{{\hat{\imath }}}\vee \pi _{{\hat{\imath }}}\) and \(\overline{\ell }_{{{\hat{\imath }}},j}\vee \pi _{{\hat{\imath }}}\vee \overline{y}_{{\hat{\imath }}}\) for \(j\in \{1,2,3\}-\{{{\hat{\jmath }}}\}\). The clause \(C_{{\hat{\imath }}}\vee \pi _{{\hat{\imath }}}\) is satisfied because \(\beta (\ell _{{{\hat{\imath }}},{{\hat{\jmath }}}})=1\); the other two are satisfied due to \(\beta (\overline{\ell }_{{{\hat{\imath }}},j})=1\) for \(j\ne {{\hat{\jmath }}}\).
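
The three cases can be checked mechanically on small formulas. The sketch below builds the clauses of \(\Psi \) from the three clause forms named in the proof (\(C_i\vee \pi _i\), \(\overline{\ell }_{i,j}\vee \pi _i\vee \overline{y}_i\), and \(\overline{y}_i\vee \overline{y}_j\)) and implements the case assignments as a function `s`. The integer encoding of literals (positive for a variable, negative for its negation, with \(y_i\) numbered after the \(x\) variables), the clause tags, and all function names are assumptions made for illustration; values marked "arbitrary" in the text are fixed to false.

```python
from itertools import combinations, product

def build_psi(phi, n):
    """phi: list of 3-literal clauses over x_1..x_n as nonzero ints; returns tagged clauses."""
    m = len(phi)
    psi = []
    for i, clause in enumerate(phi):
        pi = [n + k + 1 for k in range(m) if k != i]          # pi_i: all y_k with k != i
        psi.append((("C", i), list(clause) + pi))              # C_i or pi_i
        for j, lit in enumerate(clause):
            psi.append((("L", i, j), [-lit] + pi + [-(n + i + 1)]))
    for i, j in combinations(range(m), 2):
        psi.append((("Y", i, j), [-(n + i + 1), -(n + j + 1)]))
    return psi

def s(phi, n, tag):
    """Assignment from Cases 1 to 3 for Psi minus the clause identified by tag."""
    beta = {v: False for v in range(1, n + len(phi) + 1)}      # 'arbitrary' fixed to False
    if tag[0] == "Y":                                          # Case 1
        beta[n + tag[1] + 1] = beta[n + tag[2] + 1] = True
        return beta
    i = tag[1]
    beta[n + i + 1] = True
    for j, lit in enumerate(phi[i]):
        # Case 2: every literal of C_i is false. Case 3: only the literal at tag[2] is true.
        lit_value = tag[0] == "L" and j == tag[2]
        beta[abs(lit)] = lit_value if lit > 0 else not lit_value
    return beta

def satisfies(beta, clauses):
    return all(any(beta[abs(l)] == (l > 0) for l in c) for c in clauses)

def neighbors_easy(phi, n):
    """True iff s yields a satisfying assignment for every one-clause-deleted subformula."""
    psi = build_psi(phi, n)
    return all(satisfies(s(phi, n, t), [c for u, c in psi if u != t]) for t, _ in psi)

def satisfiable(clauses, num_vars):
    return any(satisfies(dict(zip(range(1, num_vars + 1), bits)), clauses)
               for bits in product([False, True], repeat=num_vars))

# All eight sign patterns over x_1, x_2, x_3 form an unsatisfiable 3CNF-formula.
phi_unsat = [(a, 2 * b, 3 * c) for a in (1, -1) for b in (1, -1) for c in (1, -1)]
print(neighbors_easy(phi_unsat, 3))
```

The brute-force `satisfiable` helper is only there to confirm equisatisfiability on toy inputs; the point of the construction is that `s` runs in polynomial time.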

2.2.5 Additional Form Constraint: Two or Three Literals per Clause

We describe and analyze a reduction from the problem for \(\textsc {CNF} \)-formulas to the restriction to \(\textsc {2or3} \text {-}\textsc {CNF} \), which is the set of all \(\textsc {CNF} \)-formulas with exactly two or three literals in every clause.

Construction

The formula \(\Psi \) can be replaced by an equivalent \(\textsc {2or3} \text {-}\textsc {CNF} \)-formula \(\Psi '\) while retaining the property that each one-clause-deleted subformula has an easy-to-compute satisfying assignment. We can use the standard reduction that replaces a clause \(C_i=\ell _{i,1}\vee \ldots \vee \ell _{i,\Vert C_i\Vert }\), where \(\Vert C_i\Vert \) denotes the number of literals in the clause \(C_i\), by

where \(z_{i,1},\ldots ,z_{i,\Vert C_i\Vert }\) are \(\Vert C_i\Vert \) new variables.

Equivalence

The formulas \(\Psi \) and \(\Psi '\) are equivalent because we can use the assignment of truth values to \(z_{i,1},\ldots ,z_{i,\Vert C_i\Vert }\) to satisfy all but an arbitrary one of the substituted clauses above.

Easy-to-compute satisfying assignments for one-clause-deleted subformulas

Deleting a clause \(C_{{{\hat{\imath }}},{{\hat{\jmath }}}}\) from \(\Psi '\) corresponds to the deletion of the clause \(C_{{{\hat{\imath }}}}\) from \(\Psi \) because the clauses \(C_{{{\hat{\imath }}},j}\) with \(j\ne {{\hat{\jmath }}}\) can always be satisfied by assigning 1 to the variables \(z_{{{\hat{\imath }}},1},\ldots ,z_{{{\hat{\imath }}},{{\hat{\jmath }}}-1}\) and 0 to the variables \(z_{{{\hat{\imath }}},{{\hat{\jmath }}}+1},\ldots ,z_{{{\hat{\imath }}},\Vert C_{{\hat{\imath }}}\Vert }\).

2.2.6 Additional Form Constraint: Variables Occurring at Most Once per Clause, Thrice Overall

We describe and analyze a reduction from the problem for \(\textsc {3CNF} \)-formulas to the restriction to \(\textsc {3Occurrences} \text {-}\textsc {2or3} \text {-}\textsc {CNF} \), which is the set of \(\textsc {3CNF} \)-formulas where each variable occurs at most once in each clause and at most three times in the entire formula. In particular, each clause only contains distinct literals and each literal occurs at most twice overall.

Construction

Let \(\Phi \) be a \(\textsc {3CNF} \)-formula over \(\{x_1,\ldots ,x_n\}\). Assume that \(x_1\) occurs in \(\Phi \) a total of a times in the affirmative and b times negated. We replace the a affirmative occurrences by \(x_{1,1},\ldots ,x_{1,a}\) and the b negated occurrences by \(\overline{x}_{1,a+1},\ldots ,\overline{x}_{1,a+b}\). Moreover, we add the following new clauses:

$$\begin{aligned} (\overline{x}_{1,1}\vee x_{1,2})\wedge (\overline{x}_{1,2}\vee x_{1,3})\wedge \ldots \wedge (\overline{x}_{1,a+b-1}\vee x_{1,a+b}). \end{aligned}$$

Observe that the added clauses are equivalent to the implication chain

$$\begin{aligned} x_{1,1}\Rightarrow x_{1,2}\Rightarrow \ldots \Rightarrow x_{1,a+b}. \end{aligned}$$

We repeat this construction for \(x_2,\ldots ,x_n\) and obtain our formula \(\Psi \). Now, we show that \(\Psi \) is equivalent to \(\Phi \).

Correctness

Given a satisfying assignment \(\alpha \) for \(\Phi \), the assignment \(\beta :x_{i,j}\mapsto \alpha (x_i) \) trivially satisfies the constructed formula \(\Psi \). For the converse, assume there is a satisfying assignment \(\beta \) for \(\Psi \). We prove that the modified assignment \(\beta '(x_{i,j})=\beta (x_{i,a(i)})\) for all \(j\in \{1,\ldots ,a(i)+b(i)\}\) also satisfies \(\Psi \). Obviously, \(\beta '\) satisfies the implication chains since there is no dependence on j. To see that the other clauses are satisfied as well, consider the two possible assignments for \(x_{i,a(i)}\).

Case 1.

If \(\beta (x_{i,a(i)})=1\), then \(\beta (x_{i,j})=1\) for all \(j\ge a(i)\) by the implication chain. These variables are also assigned 1 by \(\beta '\), which has \(\beta '(x_{i,j})=1\) for all j. Thus \(\beta '\) can only differ from \(\beta \) on the variables \(x_{i,j}\) with \(j<a(i)\). These are positively occurring variables and \(\beta '\) assigns 1 to all of them. Therefore, the changes to the assignment keep all the satisfied clauses satisfied.

- Case 2.:

-

If \(\beta (x_{i,a(i)})=0\), then \(\beta (x_{i,j})=0\) for all \(j\le a(i)\) by the contrapositive of the implication chain. These variables are also assigned 0 by \(\beta '\), which has \(\beta '(x_{i,j})=0\) for all j. Thus \(\beta '\) can only differ from \(\beta \) on the variables \(x_{i,j}\) with \(j>a(i)\). These are the negatively occurring variables and \(\beta '\) assigns 0 to all of them. As before, we conclude that none of the changes to the assignment renders any satisfied clause unsatisfied.

Now, we trivially obtain from \(\beta '\) a satisfying assignment for \(\Phi \).

Easy-to-compute satisfying assignments for one-clause-deleted subformulas.

Assume that a clause \(\overline{x}_{{{\hat{\imath }}},{{\hat{\jmath }}}}\vee x_{{{\hat{\imath }}},{{\hat{\jmath }}}+1}\) is deleted. (For all other clauses, the correspondence between \(\Phi \) and \(\Psi \) is immediate.) Then, the \({{\hat{\imath }}}\)th implication chain breaks in two and we are left with

\(x_{{{\hat{\imath }}},1}\Rightarrow \cdots \Rightarrow x_{{{\hat{\imath }}},{{\hat{\jmath }}}}\) and \(x_{{{\hat{\imath }}},{{\hat{\jmath }}}+1}\Rightarrow \cdots \Rightarrow x_{{{\hat{\imath }}},a({{\hat{\imath }}})+b({{\hat{\imath }}})}.\)

Consider the three (partial) assignments

\(\beta _0:x_{{{\hat{\imath }}},j}\mapsto 0\) for all j, \(\beta _1:x_{{{\hat{\imath }}},j}\mapsto 1\) for all j, and \(\beta ':x_{{{\hat{\imath }}},j}\mapsto 1\) for \(j\le {{\hat{\jmath }}}\) and \(x_{{{\hat{\imath }}},j}\mapsto 0\) for \(j>{{\hat{\jmath }}}\).

As already seen, the two assignments \(\beta _0\) and \(\beta _1\) correspond to the two possible assignments for \(x_{{{\hat{\imath }}}}\) in \(\Phi \). The new option \(\beta '\) gives us additional freedom. In the case of \({{\hat{\jmath }}}\le a({{\hat{\imath }}})\), we may choose between \(\beta '\) and \(\beta _1\) instead of between \(\beta _0\) and \(\beta _1\); both assign 1 to the first \({{\hat{\jmath }}}\ge 1\) occurrences of \(x_{{{\hat{\imath }}}}\), which are all positive and thus satisfied. For \({{\hat{\jmath }}}> a({{\hat{\imath }}})\), we analogously choose between \(\beta _0\) and \(\beta '\), which both assign 0 to at least the last and possibly more of the negative occurrences of \(x_{{{\hat{\imath }}}}\). In either case, we can satisfy the occurrence of \(x_{{{\hat{\imath }}}}\) in at least one clause without loss of generality, which is tantamount to deleting the clause of \(\Phi \) where it occurs. For the remaining formula, we can then assign values as described at the end of Sect. 2.2.2 again.

2.2.7 Additional Form Constraint: Exactly Three Literals per Clause

We describe and analyze a reduction from the problem for \(\textsc {3Occurrences} \text {-}\textsc {2or3} \text {-}\textsc {CNF} \)-formulas to the one for \(\textsc {3CNF} \)-formulas. Thus, we gain the property that every clause contains exactly 3 distinct literals (instead of either 2 or 3), but lose the property that every variable occurs at most three times and every literal at most twice.

Construction

Let \(\Phi \) be a given formula in \(\textsc {3Occurrences} \text {-}\textsc {2or3} \text {-}\textsc {CNF} \). We construct \(\Psi \) in the following way: Clauses with exactly three literals remain unchanged. A clause \(C_i=(\ell _{i,1}\vee \ell _{i,2})\) with two literals is replaced by \(\widetilde{C}_i=(\ell _{i,1}\vee \ell _{i,2}\vee y_i)\wedge (\ell _{i,1}\vee \ell _{i,2}\vee \overline{y}_i)\), with a new variable \(y_i\). A clause \(C_i=(\ell _{i,1})\) with only one literal does not in fact occur in the given \(\Phi \) by our assumption, but if it did, it could still be replaced by

\(\widetilde{C}_i=(\ell _{i,1}\vee y_i\vee z_i)\wedge (\ell _{i,1}\vee y_i\vee \overline{z}_i)\wedge (\ell _{i,1}\vee \overline{y}_i\vee z_i)\wedge (\ell _{i,1}\vee \overline{y}_i\vee \overline{z}_i),\)

with new variables \(y_i\) and \(z_i\).

Correctness

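By assigning suitable values to \(y_i\) (to \(y_i\) and \(z_i\), respectively), we can satisfy either one of the two clauses (any three of the four clauses, respectively) of \(\widetilde{C}_i\), leaving one that simplifies to the original \(C_i\). Since no assignment to the new variables alone satisfies all clauses of \(\widetilde{C}_i\), the formula \(\Psi \) is satisfiable if and only if \(\Phi \) is.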

Easy-to-compute satisfying assignments for one-clause-deleted subformulas

If a clause of \(\widetilde{C}_i\) is deleted, we can again use the assignment to \(y_i\) and \(z_i\) to satisfy the remaining ones; thus virtually deleting the whole of \(\widetilde{C}_i\).
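The padding step of this subsection can be illustrated as follows. This is a small sketch under the usual assumptions: literals are signed integers, the one-literal case uses the standard four-clause padding with two fresh variables, and the function names `pad_to_three` and `satisfiable` are hypothetical.

```python
from itertools import product

def pad_to_three(clauses, num_vars):
    """Pad clauses with 1 or 2 literals to exactly 3 literals using fresh variables.

    clauses: list of tuples of nonzero ints (+i is x_i, -i is its negation).
    Returns (new_clauses, total_number_of_variables).
    """
    out = []
    next_var = num_vars + 1
    for c in clauses:
        if len(c) == 3:
            out.append(tuple(c))
        elif len(c) == 2:
            y = next_var
            next_var += 1
            # (l1 v l2 v y) and (l1 v l2 v not y)
            out += [(*c, y), (*c, -y)]
        elif len(c) == 1:
            y, z = next_var, next_var + 1
            next_var += 2
            # All four sign patterns of the fresh variables y and z.
            out += [(*c, s * y, t * z) for s in (1, -1) for t in (1, -1)]
        else:
            raise ValueError("clauses must have between 1 and 3 literals")
    return out, next_var - 1

def satisfiable(clauses, num_vars):
    """Brute-force satisfiability check, only meant for tiny formulas."""
    return any(
        all(any((lit > 0) == bits[abs(lit) - 1] for lit in c) for c in clauses)
        for bits in product([False, True], repeat=num_vars)
    )
```

Deleting any single padded clause leaves the fresh variables free to satisfy the remaining clauses of the same group, which mirrors the argument for one-clause-deleted subformulas above.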

3 Colorability

As mentioned in the previous section, the constructions of some \(\text {DP}\)-completeness results for minimality problems can be adapted to obtain \(\text {NP}\)-hardness for computing optimal solutions from optimal solutions to locally modified instances. There are remarkably few complexity results for minimality problems; fortunately, however, \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) (the set of graphs that are not 3-colorable but become 3-colorable after deleting any vertex; see Footnote 4) is \(\text {DP}\)-complete by reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) [13]. We will show how to extract from said reduction a proof of the fact that computing an optimal coloring for a graph from optimal colorings for its one-vertex-deleted subgraphs is \(\text {NP}\)-hard (Theorem 5). However, the standard notion of criticality is \(\chi \)-criticality under edge deletion, and the construction by Cai and Meyer [13] unfortunately does not yield the analogous result for deleting edges. This was to be expected, since working with edge deletion is much harder. Surprisingly, however, a targeted modification of the constructed graph allows us to establish, through a far more elaborate case distinction, that computing an optimal coloring for a graph from optimal colorings for its one-edge-deleted subgraphs is \(\text {NP}\)-hard (Theorem 7) as well as that the related minimality problem \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) is \(\text {DP}\)-complete (Theorem 8).

Lemma 4

There is a polynomial-time many-one reduction g from \(\textsc {3Sat} \) to \(\textsc {3} \text {-}\textsc {Color} \textsc {ability} \) and a polynomial-time computable function \({\text {opt}}\) such that, for every \(\textsc {3CNF} \)-formula \(\Phi \) and for every vertex v in \(g(\Phi )\), \({\text {opt}}(g(\Phi ) - v)\) is an optimal coloring for \(g(\Phi ) - v\).

Proof

Given a \(\textsc {3CNF} \)-formula \(\Phi \), let \(g(\Phi ) = h(f(\Phi ))\), where f is the reduction from Theorem 2 and h is the reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) by Cai and Meyer [13]. Since h also reduces \(\textsc {3Sat} \) to \(\textsc {3} \text {-}\textsc {Color} \textsc {ability} \) [13, Lemma 2.2], so does g. A careful inspection of the reduction g reveals that there is a polynomial-time computable function \({\text {opt}}\) such that, for every vertex v in \(g(\Phi )\), \({\text {opt}}(g(\Phi ) - v)\) is a 3-coloring of \(g(\Phi ) - v\). We can also verify that \(g(\Phi ) - v\) does not have a 2-coloring, hence \({\text {opt}}(g(\Phi ) - v)\) is an optimal coloring. We do not dive into the details as this lemma immediately follows from the proof of the analogous result for edge deletion, Lemma 6, as explained there. \(\square \)

Theorem 5

Computing an optimal coloring for a graph from optimal colorings for its one-vertex-deleted subgraphs is \(\text {NP}\)-hard.

Proof

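We reduce from the decision problem \(\textsc {3Sat} \). Suppose there were a polynomial-time algorithm that computes an optimal coloring for a graph from optimal colorings for all its one-vertex-deleted subgraphs. Given a \(\textsc {3CNF} \)-formula \(\Phi \), compute \(g(\Phi )\), where g is the reduction from Lemma 4, compute \({\text {opt}}(g(\Phi ) - v)\) for every vertex v in \(g(\Phi )\), and apply the assumed algorithm to these optimal solutions to obtain an optimal coloring for \(g(\Phi )\). The number of colors it uses determines whether \(g(\Phi )\) is 3-colorable and thus whether \(\Phi \) is satisfiable. \(\square \)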

Lemma 6

There is a polynomial-time many-one reduction g from \(\textsc {3Sat} \) to \(\textsc {3} \text {-}\textsc {Color} \textsc {ability} \) and a polynomial-time computable function \({\text {opt}}\) such that, for every \(\textsc {3CNF} \)-formula \(\Phi \) and for every edge e in \(g(\Phi )\), \({\text {opt}}(g(\Phi ) - e)\) is an optimal coloring of \(g(\Phi ) - e\).

Proof

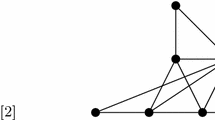

Given a \(\textsc {3CNF} \)-formula \(\Phi \), let \(g(\Phi ) = h(f(\Phi )) - e_v\), where f is the reduction from Theorem 2, h is the reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) by Cai and Meyer [13] described below, \(v_\text {c}\) is the unique vertex adjacent to all variable-setting vertices, \(v_\text {s}\) is the only remaining neighbor vertex of \(v_\text {c}\), and \(e_v\) is the edge \(\{v_\text {c},v_\text {s}\}\). See Fig. 1 for an example of the construction.

We will show that g reduces \(\textsc {3Sat} \) to \(\textsc {3} \text {-}\textsc {Color} \textsc {ability} \) and that there is a polynomial-time computable function \({\text {opt}}\) such that, for every \(\textsc {3CNF} \)-formula \(\Phi \) and for every edge e in \(g(\Phi )\), \({\text {opt}}(g(\Phi ) - e)\) is an optimal coloring of \(g(\Phi ) - e\).

For completeness, we briefly describe the reduction h from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) [13] (also excellently explained by Rothe and Riege [18]). Let \(\Phi \) be a \(\textsc {3CNF} \)-formula with variables \(\{x_1,\ldots ,x_n\}\) and clauses \(\{c_1,\ldots ,c_m\}\). The graph \(h(\Phi )\) is defined as follows; see Fig. 1. First, we create two vertices \(v_\text {c}\) and \(v_\text {s}\) connected by an edge. Then, for each variable \(x_i\), we create two vertices \(x_i\) and \(\overline{x}_i\) and connect them to each other and each of them to \(v_\text {c}\). For each clause \({C}_k=\ell _{k1}\vee \ell _{k2}\vee \ell _{k3}\) of \(\Phi \), we create nine new vertices, namely a triangle \(t_{k1}, t_{k2}, t_{k3}\) and a pair \(a_{ki},b_{ki}\) for each literal \(\ell _{ki}\), where \(t_{ki}\) is connected to \(b_{ki}\), \(a_{ki}\) is connected to \(b_{ki}\), and both \(a_{ki}\) and \(b_{ki}\) are connected to \(v_\text {s}\); if and only if the literal \(\ell \in \{x_j,\overline{x}_j\}\) appears as the ith literal in \({C}_k\), there is an edge from \(\ell \) to \(a_{ki}\).
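The description of the graph \(h(\Phi )\) translates directly into a short construction routine. The following Python sketch is only an illustration of the paragraph above: the vertex labels (`"vc"`, `"vs"`, and the tuples for x-, a-, b-, and t-vertices), the signed-integer literal encoding, and the function name `build_h` are assumptions made for this example.

```python
def build_h(clauses, num_vars):
    """Build the edge set of h(Phi) for a 3CNF-formula.

    clauses: list of 3-tuples of nonzero ints (+j is x_j, -j is its negation).
    Returns a set of edges, each edge a frozenset of two vertex labels.
    """
    edges = set()

    def add(u, v):
        edges.add(frozenset((u, v)))

    def literal_vertex(lit):
        return ("x", lit) if lit > 0 else ("nx", -lit)

    # The two special vertices v_c and v_s, connected by an edge.
    add("vc", "vs")
    # Variable gadgets: x_i and its negation form a triangle with v_c.
    for i in range(1, num_vars + 1):
        add(("x", i), ("nx", i))
        add(("x", i), "vc")
        add(("nx", i), "vc")
    # Clause gadgets: a triangle t_k1, t_k2, t_k3 and a pair a_ki, b_ki per literal.
    for k, clause in enumerate(clauses, start=1):
        add(("t", k, 1), ("t", k, 2))
        add(("t", k, 2), ("t", k, 3))
        add(("t", k, 1), ("t", k, 3))
        for i, lit in enumerate(clause, start=1):
            a, b = ("a", k, i), ("b", k, i)
            add(("t", k, i), b)
            add(a, b)
            add(a, "vs")
            add(b, "vs")
            add(literal_vertex(lit), a)
    return edges
```

With this representation, the graph used in the proof of Lemma 6 is obtained by removing the single edge `frozenset(("vc", "vs"))` from the result. For a formula with n variables and m clauses, the routine produces 2 + 2n + 9m vertices and 1 + 3n + 18m edges.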

We first show that g is a reduction from \(\textsc {3Sat} \) to \(\textsc {3} \text {-}\textsc {Color} \textsc {ability} \). Cai and Meyer [13, Lemma 2.2] show that h is a reduction from \(\textsc {3Sat} \) to \(\textsc {3} \text {-}\textsc {Color} \textsc {ability} \). This implies that for every \(\textsc {3CNF} \)-formula \(\Phi \), \(\Phi \) is satisfiable if and only if \(h(f(\Phi ))\) is 3-colorable, so it suffices to show that if \(h(f(\Phi )) - \{v_\text {c},v_\text {s}\}\) is 3-colorable, then so is \(h(f(\Phi ))\). A 3-coloring of \(h(f(\Phi )) - \{v_\text {c},v_\text {s}\}\) that assigns different colors to \(v_\text {c}\) and \(v_\text {s}\) is already a 3-coloring of \(h(f(\Phi ))\). So consider a 3-coloring of \(h(f(\Phi )) - \{v_\text {c},v_\text {s}\}\) such that \(v_\text {c}\) and \(v_\text {s}\) get the same color. Following the original proof [13], we call the colors \(\text {T} \), \(\text {F} \), and \(\text {C} \). Assume without loss of generality that \(v_\text {c}\) and \(v_\text {s}\) are colored \(\text {T} \). Now change the color of \(v_\text {c}\) to \(\text {C} \) and change the color of every x-vertex originally colored \(\text {C} \) to \(\text {T} \). It is easy to check that this new coloring is a 3-coloring of \(h(f(\Phi ))\) (see Footnote 5).

Let e be an edge in \(g(\Phi )\). We need to show that there is a polynomial-time computable optimal coloring of \(g(\Phi ) - e\). We show that there is a polynomial-time computable 3-coloring. (This is optimal because \(g(\Phi ) - e\) is not 2-colorable since it contains triangles.)

Let \({C}\) be a clause in \(f(\Phi )\). Let \(\alpha \) be a polynomial-time computable satisfying assignment for \(f(\Phi ) - {C}\). We now show how to compute from this assignment in polynomial time a 3-coloring of \(g(\Phi ) - {C}\), i.e., \(g(\Phi )\) minus the nine clause-vertices representing \({C}\), in such a way that the x-vertices are colored \(\text {T} \) or \(\text {F} \) according to \(\alpha \), \(v_\text {c}\) is colored \(\text {C} \), and \(v_\text {s}\) is colored \(\text {T} \):

1. If \(e = \{x_i,\overline{x}_i\}\), let \({C}\) be a clause in \(f(\Phi )\) such that \(x_i\) occurs positively in \({C}\) (note that it follows from the definition of f that every literal appears positively in at least one clause of \(f(\Phi )\)). Color \(g(\Phi ) - {C}\) as explained above. If \(x_i\) is colored \(\text {F} \), change its color to \(\text {T} \). This is still a 3-coloring of \(g(\Phi ) - {C}\), and since \(x_i\) occurs positively in \({C}\), we can extend this coloring to a 3-coloring of \(g(\Phi )\).

2. If \(e = \{v_\text {c},\ell _i\}\), where \(\ell _i \in \{x_i, \overline{x}_i\}\), let \({C}\) be a clause in \(f(\Phi )\) such that \(\ell _i\) occurs positively in \({C}\). Color \(g(\Phi ) - {C}\) as explained above. If \(\ell _i\) is colored \(\text {T} \), then we can extend the coloring to a 3-coloring of \(g(\Phi )\) in polynomial time. If \(\ell _i\) is colored \(\text {F} \), change the color of \(\ell _i\) to \(\text {C} \), and for every a-vertex connected to \(\ell _i\), change its color from \(\text {C} \) to \(\text {F} \), and for every b-vertex connected to a changed a-vertex, change its color from \(\text {F} \) to \(\text {C} \). It is possible that because of this, the b-vertices in a clause are all colored \(\text {C} \), and the attached triangle cannot be colored. If that is the case, there is a b-vertex in the clause that is connected to an a-vertex that is connected to a literal that is colored \(\text {T} \). Change the color of the a-vertex to \(\text {C} \) and that of the b-vertex to \(\text {F} \). Now we can color the triangle. This results in a 3-coloring of \(g(\Phi ) - {C}\), and it is easy to check that we can extend this coloring to a 3-coloring of \(g(\Phi )\).

3. Let \({C}\) be a clause such that e is connected to an a-vertex of \({C}\). Again, color \(g(\Phi ) - {C}\) as above. If \(\alpha \) satisfies \(f(\Phi )\), we can in polynomial time compute a 3-coloring of \(g(\Phi )\). So suppose that \(\alpha \) does not satisfy \(f(\Phi )\). Then all x-vertices connected to an a-vertex of \({C}\) are colored \(\text {F} \). When we try to extend this coloring, all a-vertices in the clause must be colored \(\text {C} \) and all b-vertices must be colored \(\text {F} \), which means that we cannot color the triangle with 3 colors. If e is one of the triangle edges, we can color the triangle-vertices \(\text {T} \), \(\text {C} \), and \(\text {T} \). If e connects a b-vertex to a t-vertex, we can color that t-vertex \(\text {F} \), and the other t-vertices \(\text {T} \) and \(\text {C} \). For the remaining cases, we show that we can change the color of one of the b-vertices, which again allows us to color the triangle. If e connects an x-vertex to an a-vertex, we can color the a-vertex \(\text {F} \) and the connecting b-vertex \(\text {C} \). If e connects an a-vertex to a b-vertex, we can color the b-vertex \(\text {C} \). If e connects \(v_\text {s}\) to an a-vertex, we can color the a-vertex \(\text {T} \) and the connecting b-vertex \(\text {C} \). If e connects \(v_\text {s}\) to a b-vertex, we can color the b-vertex \(\text {T} \).

This completes the proof of Lemma 6. We now explain why g also fulfills the requirements of Lemma 4. Let v be a vertex in \(g(\Phi )\), and let e be an edge incident with v (such an edge always exists since \(g(\Phi )\) does not contain isolated vertices). Then \({\text {opt}}(g(\Phi ) - e)\) is a 3-coloring. This gives us a 3-coloring of \(g(\Phi )-v\), which is optimal since \(g(\Phi ) - v\) does not have a 2-coloring. \(\square \)

Theorem 7

Computing an optimal coloring for a graph from optimal colorings for its one-edge-deleted subgraphs is \(\text {NP}\)-hard.

Proof

The same argument as for Theorem 5 can be applied here. \(\square \)

Theorem 8

\(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) is \(\text {DP}\)-complete.

Proof

The membership in \(\text {DP}\) is immediate, since given a graph \(G = (V,E)\), determining whether \(G - e\) is 3-colorable for every \(e\in E\) is in \(\text {NP}\) and so is determining whether G is 3-colorable. As for \(\text {DP}\)-hardness, the argument from the proof of Lemma 6 shows that mapping \(\Phi \) to \(h(\Phi ) - \{v_\text {c},v_\text {s}\}\), where h is the reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) by Cai and Meyer [13], gives a reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) (and to \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) as well). Recall that \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) is \(\text {DP}\)-hard [3]. \(\square \)

Cai and Meyer [13] show \(\text {DP}\)-completeness for \(\textsc {Vertex} \textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \), for all \(k \ge 3\). We now prove that the analogous result for deletion of edges holds as well.

Theorem 9

\(\textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) is \(\text {DP}\)-complete, for every \(k \ge 3\).

Proof

The membership in \(\text {DP}\) is again immediate. To show hardness for \(k \ge 4\), we reduce \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) to \(\textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \). We use the construction for deleting vertices [13, Theorem 3.1] and map graph G to \(G+K_{k-3}\) (see Footnote 6). Note that \(\chi (K_{k-3}) = k - 3\) and \(\chi (H+H') = \chi (H) + \chi (H')\) for any two graphs H and \(H'\). First suppose \(G + K_{k-3}\) is in \(\textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \). Then \(G + K_{k-3}\) is not k-colorable, and so G is not 3-colorable. Let e be an edge in G. Then \((G - e) + K_{k-3} = (G+K_{k-3}) - e\) is k-colorable, and thus \(G - e\) is 3-colorable. It follows that G is in \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \).

Now suppose G is in \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \). Then \(G + K_{k-3}\) is not k-colorable. Let e be an edge in \(G + K_{k-3}\). If e is an edge in G, then \(G - e\) is 3-colorable and so \((G + K_{k-3}) - e = (G - e) + K_{k-3}\) is k-colorable. If e is an edge in \(K_{k-3}\), then \(K_{k-3} - e\) is \((k-4)\)-colorable and G is 4-colorable (let \(\hat{e}\) be any edge in G, take a 3-coloring of \(G - \hat{e}\), and change the color of one of the vertices incident to \(\hat{e}\) to the remaining color), so \((G + K_{k-3}) - e = G + (K_{k-3} - e)\) is k-colorable. Finally, if \(e=\{v,w\}\) for a vertex v in G and a vertex w in \(K_{k-3}\), let \(\hat{e}\) be an edge in G incident to v, take a 3-coloring of \(G - \hat{e}\), take a disjoint \((k - 3)\)-coloring of \(K_{k-3}\), and change the color of v to the color of w. As a result, for all edges e in \(G + K_{k-3}\), \((G + K_{k-3}) - e\) is k-colorable. It follows that \(G + K_{k-3}\) is in \(\textsc {} \textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \). \(\square \)
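The reduction \(G\mapsto G+K_{k-3}\) can be checked by brute force on very small graphs. The sketch below is only an illustration: it assumes vertices numbered from 0, edges given as pairs, and exhaustive search over colorings, and the function names are hypothetical.

```python
from itertools import combinations, product

def is_colorable(n, edges, k):
    """Exhaustively test whether the graph on vertices 0..n-1 has a proper k-coloring."""
    return any(
        all(col[u] != col[v] for u, v in edges)
        for col in product(range(k), repeat=n)
    )

def join_with_clique(n, edges, q):
    """Return G + K_q: add a q-clique on new vertices and join it to all of G."""
    clique = range(n, n + q)
    new_edges = set(edges)
    new_edges |= {(u, w) for u in range(n) for w in clique}
    new_edges |= set(combinations(clique, 2))
    return n + q, new_edges

def is_minimal_uncolorable(n, edges, k):
    """G is not k-colorable, but G - e is k-colorable for every edge e."""
    edges = set(edges)
    return (not is_colorable(n, edges, k)
            and all(is_colorable(n, edges - {e}, k) for e in edges))
```

For example, \(K_4\) is in \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \), and its join with \(K_1\) or \(K_2\) passes the corresponding test for \(k=4\) or \(k=5\), whereas the 3-colorable cycle \(C_5\) fails the test both before and after the join.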

The construction above does not prove the analogues of Lemmas 4 and 6: Note that G is 3-colorable if and only if \((G + K_{k-3}) - v\) and \((G + K_{k-3}) - e\) are both \((k-1)\)-colorable for every vertex v in \(K_{k-3}\) and for every edge e in \(K_{k-3}\), and so we can certainly determine whether a graph is 3-colorable from the optimal solutions to the one-vertex-deleted subgraphs and one-edge-deleted subgraphs of \(G+K_{k-3}\) in polynomial time. Turning to criticality and vertex-criticality, we can bound their complexity as follows.

Theorem 10

The two problems of determining whether a graph is critical and whether it is vertex-critical are both in \(\Theta _2^\text {p}\) and \(\text {DP}\)-hard.

Proof

For the \(\Theta _2^\text {p}\)-membership of the two problems, we observe that the relevant chromatic numbers of a graph \(G = (V,E)\) and its neighbors can be computed by querying the \(\text {NP}\) oracle \(\textsc {Color} \textsc {ability} =\{(G,k)\,\mid \,\chi (G) \le k\}\) for every (G, k), \((G - e, k)\), and \((G - v, k)\) for every \(e\in E\), \(v\in V\), and \(k \le \vert V(G)\vert \), where \(\vert S\vert \) denotes the cardinality of a set S, in parallel.

For the \(\text {DP}\)-hardness of the two problems, we prove that \(h(\Phi )-\{v_\text {c},v_\text {s}\}\)—that is, the reduction h from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) by Cai and Meyer [13] with one edge removed, as illustrated in Fig. 1—is a reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to both of them. We have already seen that it reduces \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \). Hence, for every \(\Phi \in \textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \), the graph \(h(\Phi )-\{v_\text {c},v_\text {s}\}\) is in \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \subseteq \textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) and thus both critical and vertex-critical. For the converse, it suffices to note that, for every \(\Phi \in \textsc {CNF} \) with clauses of size at most 3, \(h(\Phi )-\{v_\text {c},v_\text {s}\}\) is 4-colorable and thus in \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) (in \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \), respectively) if and only if it is critical (vertex-critical, respectively). \(\square \)

The exact complexity of these problems remains open, however. In particular, it is unknown whether they are \(\Theta _2^\text {p}\)-hard. This contrasts with the case of vertex cover, for which we prove in Theorem 18 that recognizing \(\beta \)-vertex-criticality is indeed \(\Theta _2^\text {p}\)-complete.

Before that, however, we return to our model and consider colorability under the local modification of adding an edge. If we allow only one query, the problem stays \(\text {NP}\)-hard via a simple Turing reduction: Iteratively adding edges to the given instance eventually leads to a clique, which is a trivial instance. The proof is based on a technique that is commonly applied in reoptimization, self-reducibility, and many other fields. We formulate Theorem 11 as an adaptation of Lemma 1 by Böckenhauer et al. [20] with two main differences: On the one hand, we consider not just one efficiently solvable instance but an entire set of them. On the other hand, we require that every instance has not just one modification chain connecting it to an efficiently solvable one, but that all possible modification chains eventually lead to an efficiently solvable instance.

Theorem 11

Let \(\textsc {Opt} \textsc {Prob} \) be an optimization problem. Let T be a set of efficiently solvable instances of \(\textsc {Opt} \textsc {Prob} \). (Or, to be more precise: Let \(T\subseteq \Sigma _1\) be a subset of instances for the problem \(\textsc {Opt} \textsc {Prob} \subseteq \Sigma _1\times \Sigma _2\) such that there is a polynomial-time algorithm that computes an optimal solution on every instance of T.) Let the considered local modification be such that applying arbitrary such modifications repeatedly will inevitably transform any instance of \(\textsc {Opt} \textsc {Prob} \) into an instance in T in a polynomial number of steps. Then \(\textsc {Opt} \textsc {Prob} \) is \(\text {NP}\)-hard in our model with restriction to one query.

Proof

We give a reduction from \(\textsc {Opt} \textsc {Prob} \) in the classical setting to \(\textsc {Opt} \textsc {Prob} \) in our model. Let \(A \) be a polynomial-time algorithm for \(\textsc {Opt} \textsc {Prob} \) in our model with one query, that is, \(A \) computes on instance \(I_i\) a locally modified instance \(I_{i+1}\) and uses an optimal solution to \(I_{i+1}\) to compute an optimal solution for \(I_i\). For any given instance \(I_0\) of \(\textsc {Opt} \textsc {Prob} \), we thus get a chain \(I_0,I_1,\ldots ,I_n\) of polynomial length such that \(I_n\) is in T. We can efficiently compute an optimal solution to \(I_n\in T\) and then use \(A \) to successively compute optimal solutions to \(I_{n-1},\ldots ,I_1,I_0\) in polynomial time. \(\square \)

Note that the restriction to one query is crucial for this reduction to work. If the assumed algorithm uses, for example, up to two queries to solve an instance, then the chain starting at any given \(I_0\) may branch in every step before reaching an instance in T. Despite each chain having at most polynomial length, this might lead to an exponential number of chains and instances that need to be considered to find an optimal solution for \(I_0\). The following theorem shows that this breakdown of the hardness proof is inevitable unless \(\text {P} =\text {NP} \) since the problem becomes in fact polynomial-time solvable if just one more oracle call is granted.

Theorem 12

There is a polynomial-time algorithm that computes an optimal coloring for a graph from optimal colorings of all its one-edge-added supergraphs; in fact, two optimal colorings, one for each of two specific one-edge-added supergraphs, suffice.

For the proof of this theorem, we naturally extend the notion of universal vertices as follows.

Definition 1

An edge \(\{u,v\}\in E\) of a graph \(G=(V,E)\) is called universal if, for every vertex \(x\in V-\{u,v\}\), we have \(\{x,u\}\in E\) or \(\{x,v\}\in E\). A graph is called universal-edged if all its edges are universal.

Additionally, we denote, for any given graph \(G = (V,E)\) and any vertex \(x\in V\), the open neighborhood of x in G by \(N(x):=\{y\mid \{x,y\}\in E\}\) and the closed neighborhood of x in G by \(N[x]:=N(x)\cup \{x\}\). We are now ready to give the proof of Theorem 12.

Proof of Theorem 12

We show that Colorer (Algorithm 1), which uses the oracle of our model and Subcol (Algorithm 2) as subroutines, has the desired properties.

Fig. 3 An example of the construction that we use in Subcol (Algorithm 2) for a k-colorable graph G, exploiting the fact that G is known to be universal-edged. In the example, we have \(k=4\). In general, the graph G is k-colorable if and only if the induced subgraph G[M] is \((k-2)\)-colorable. The subgraphs G[L] and G[R] are independent sets. The following relations hold in general as well: \(L=N(\ell )-N[r]=V-N[r]\), \(M=N(\ell )\cap N(r)\), \(R=N(r)-N[\ell ]=V-N[\ell ]\), and \(V=N[\ell ]\cup N[r]=L\cup M\cup R\cup \{\ell ,r\}\). In the example, only edge d prevents G[M] from being 1-colorable and thus G from being 3-colorable.

We begin by proving that Colorer is correct. Assume first that the input graph \(G=(V,E)\) is not universal-edged. Then Colorer can find an edge \(\{u,v\}\in E\) with a non-neighboring vertex \(x\in V\) and query the oracle on \(G\cup \{u,x\}\) and \(G\cup \{v,x\}\) for optimal colorings \(f_1\) and \(f_2\). We argue that at least one of them is also optimal for G. Let f be any optimal coloring of G. Since u and v are connected by an edge, we have \(f(u)\ne f(v)\) and hence \(f(x)\ne f(u)\) or \(f(x)\ne f(v)\); see Fig. 2. Thus, f is also an optimal coloring of \(G\cup \{x,u\}\) or \(G\cup \{x,v\}\), and so we have \(\chi (G)=\chi (G\cup \{x,u\})\) or \(\chi (G)=\chi (G\cup \{x,v\})\). Therefore, \(f_1\) or \(f_2\) is an optimal coloring for G as well and returned on line 7 or 9, respectively.
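The two-query step just described can be sketched as follows. This is a minimal illustration and not a transcription of Algorithm 1: the oracle is passed in as a function, `brute_force_oracle` is a hypothetical stand-in that finds optimal colorings by exhaustive search, and edges are assumed to be given as a set of two-element frozensets.

```python
from itertools import product

def brute_force_oracle(vertices, edges):
    """Stand-in oracle: an optimal coloring by exhaustive search (tiny graphs only)."""
    order = sorted(vertices)
    for k in range(1, len(order) + 1):
        for colors in product(range(k), repeat=len(order)):
            f = dict(zip(order, colors))
            if all(f[u] != f[v] for u, v in edges):
                return f
    return {}

def non_universal_witness(vertices, edges):
    """Return (u, v, x) with edge {u, v} and x adjacent to neither, or None."""
    for u, v in edges:
        for x in vertices:
            if (x not in (u, v)
                    and frozenset((x, u)) not in edges
                    and frozenset((x, v)) not in edges):
                return u, v, x
    return None

def color_with_two_queries(vertices, edges, oracle):
    """Optimal coloring of a non-universal-edged graph from two supergraph queries."""
    witness = non_universal_witness(vertices, edges)
    if witness is None:
        return None  # universal-edged graph: no query is needed in this case
    u, v, x = witness
    f1 = oracle(vertices, edges | {frozenset((u, x))})
    f2 = oracle(vertices, edges | {frozenset((v, x))})
    # Both are proper colorings of G; the one with fewer colors is optimal for G.
    return min(f1, f2, key=lambda f: len(set(f.values())))
```

On the path with four vertices, for instance, one of the two queried supergraphs is a 4-cycle and the other contains a triangle, so the coloring with fewer colors is the 2-coloring of the 4-cycle, which is also optimal for the path.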

The while loop can be entered only if the graph G is universal-edged. This allows us to compute an optimal solution to G with no queries at all by using \({}\textsc {Subcol} \) (Algorithm 2). We will show that \({}\textsc {Subcol} \) is a polynomial-time algorithm that computes, for any universal-edged graph G and any positive integer k, a k-coloring of G if there is one, and outputs NO otherwise. The while loop of \({}\textsc {Colorer} \) thus searches for the smallest integer k such that G has a k-coloring, that is, \(k=\chi (G)\). Hence, an optimal coloring of G is returned on line 18. Due to \(k=\chi (G)\le \vert V\vert \), Colorer has polynomial time complexity.

It remains to prove the correctness and polynomial time complexity of Subcol, formally stated in Lemma 13. This can be done by bounding its recursion depth and verifying the correctness for each of the six return statements; this is hardest for the last two. The proof relies on the properties of the partition \(M\cup L\cup R\cup \{\ell \}\cup \{r\}\) as illustrated in Fig. 3. \(\square \)

Lemma 13

The subroutine Subcol (Algorithm 2) is correct and runs in polynomial time.

Proof of Lemma 13

Note first that the input for Subcol is a pair (G, k), where G is a universal-edged graph and k a positive integer. It is thus clear that Subcol runs in polynomial time: The recursion depth is at most \(\lfloor (k-1)/2\rfloor \), and for the two base cases it is easy to check whether G has edges and whether G has a bipartition and, if there is one, to find it in polynomial time.

We now show that Subcol is correct by going through all six return statements. The first one in line 2 is correct since it returns a constant 1-coloring and we assume k to be a positive integer. With the second one in line 4, the case \(k=1\) is completely and correctly covered. Analogously, the third one in line 7 is correct since a 2-coloring is a k-coloring for any \(k\in \mathbb {N}-\{0,1\}\), and the case \(k=2\) is correctly covered together with the fourth return statement in line 9. If none of the first four return statements of Subcol are executed, the graph G has an edge and the choice of an edge \(\{\ell ,r\}\in E\) is possible.

For the last two return statements in lines 15 and 17, we will prove the correctness by induction over k, with the above two cases \(k=1\) and \(k=2\) serving as the induction basis. We will rely on the properties of a partition of G that we describe in what follows; see Fig. 3 for an illustrating example with a graph that is k-colorable for \(k=4\) but not for \(k=3\). Let \(\{\ell ,r\}\) be the edge of G as chosen by the algorithm. The remaining vertices \(V-\{\ell ,r\}\) are partitioned, depending on the way they are connected to \(\{\ell ,r\}\), into the three sets L, R, and M: L contains the vertices adjacent to \(\ell \) but not to r, R contains the vertices connected to r but not to \(\ell \), and M contains the vertices that are adjacent to both \(\ell \) and r. Note that the sets L, R, and M are disjoint. They cover \(V-\{\ell ,r\}\) since every vertex is adjacent to \(\ell \) or r because G is universal-edged and \(\{\ell ,r\}\) is thus universal. We now consider the case that NO is returned with the fifth return statement. This happens only if \(g=\mathop {{}\textsc {Subcol} }(G[M],k-2)=\text {NO}\).

Thus, G[M] is not \((k-2)\)-colorable by the induction hypothesis. We show that a k-coloring of G yields a \((k-2)\)-coloring of G[M], thus proving by contradiction that G is not k-colorable. Assume that there is a k-coloring f of G. Due to the edge \(\{\ell ,r\}\), the two vertices \(\ell \) and r have two different colors out of the k available ones. Since all vertices of M are adjacent to both \(\ell \) and r, the subgraph G[M] is indeed colored by f with the \(k-2\) remaining colors.

Finally, we consider the case where the last return statement in line 17 is reached. We need to prove that the output f is a k-coloring of G. By the induction hypothesis, we know that g is a \((k-2)\)-coloring on G[M] using the colors \({1,\ldots ,k-2}\). The remaining vertices \(L\cup \{r\}\) and \(R\cup \{\ell \}\) are colored with \(k-1\) and k, respectively. Thus, it suffices to show that \(G[L\cup \{r\}]\) and \(G[R\cup \{\ell \}]\) are independent sets. Consider first \(G[L\cup \{r\}]\). On the one hand, none of the vertices in L are adjacent to r by the definition of L. On the other hand, if there were \(x,y\in L\) with \(\{x,y\}\in E\), then r would be adjacent to neither x nor y, contradicting the universality of the edge \(\{x,y\}\). Analogously, we see that \(G[R\cup \{\ell \}]\) is an independent set, concluding the proof. \(\square \)
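The six return statements discussed in this proof suggest the following recursive structure. The Python sketch below is reconstructed from the prose only and should not be read as a transcription of Algorithm 2: it assumes the graph is given as a dictionary `adj` mapping each vertex to its set of neighbors, it represents NO by `None`, and the helper names `two_coloring` and `is_proper` are hypothetical.

```python
from collections import deque

def two_coloring(vertices, adj):
    """Return a proper coloring of G[vertices] with colors 1 and 2, or None."""
    color = {}
    for start in vertices:
        if start in color:
            continue
        color[start] = 1
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in vertices:
                    continue
                if w not in color:
                    color[w] = 3 - color[u]
                    queue.append(w)
                elif color[w] == color[u]:
                    return None
    return color

def subcol(vertices, adj, k):
    """k-coloring of the universal-edged graph G[vertices], or None for NO."""
    vertices = set(vertices)
    edge = next(((u, w) for u in vertices for w in adj[u] if w in vertices), None)
    if edge is None:
        return {v: 1 for v in vertices}      # no edges: constant 1-coloring
    if k == 1:
        return None                          # an edge rules out a 1-coloring
    bipartition = two_coloring(vertices, adj)
    if bipartition is not None:
        return bipartition                   # a 2-coloring is a k-coloring for k >= 2
    if k == 2:
        return None
    l, r = edge
    nl, nr = adj[l] & vertices, adj[r] & vertices
    M = nl & nr                              # adjacent to both l and r
    L = nl - nr - {r}                        # adjacent to l only
    R = nr - nl - {l}                        # adjacent to r only
    g = subcol(M, adj, k - 2)
    if g is None:
        return None                          # G[M] not (k-2)-colorable
    f = dict(g)
    f.update({x: k - 1 for x in L | {r}})
    f.update({x: k for x in R | {l}})
    return f

def is_proper(f, adj):
    """Check that no edge between colored vertices is monochromatic."""
    return all(f[u] != f[w] for u in f for w in adj[u] if w in f)
```

Complete multipartite graphs are universal-edged and make convenient test inputs: on the octahedron \(K_{2,2,2}\), the sketch reports NO for \(k=2\) and returns a proper coloring for \(k=3\).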

In this section, we have proven that \(\textsc {Minimal} \text {-}{}k\text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) is complete for \(\text {DP}\) for every \(k \ge 3\) and demonstrated that colorability remains \(\text {NP}\)-hard in our model for deletion of vertices or edges, whereas it becomes polynomial-time solvable when the local modification is the addition of an edge. In the next section, we turn our attention to vertex cover.

4 Vertex Cover

This section will show that the behavior of vertex cover in our model is distinctly different from the one that we demonstrated for colorability in the previous section. In particular, Theorem 14 proves that computing an optimal vertex cover from optimal solutions of one-vertex-deleted subgraphs can be done in polynomial time, which is impossible for optimal colorings according to Theorem 5 unless \(\text {P} =\text {NP} \).

First, we note that the \(\text {NP}\)-hardness proof for our most restricted case with only one query still works (i.e., Theorem 11 is applicable): Repeatedly deleting vertices, deleting edges, or adding edges will always lead to the null graph, an edgeless graph, or a clique, respectively, through polynomially many instances. As we have seen for colorability in the previous section, hardness proofs of this type may fail due to exponential branching as soon as multiple queries are allowed. We can show that, unless \(\text {P} =\text {NP} \), this is necessarily the case for edge addition and vertex deletion since two granted queries suffice to obtain a polynomial-time algorithm.

Theorem 14

There is a polynomial-time algorithm that computes an optimal vertex cover for a graph from two optimal vertex covers for some one-vertex-deleted subgraphs.

Proof

Observe what can happen when a vertex v is removed from a graph G with an optimal vertex cover of size k. If v is part of some optimal vertex cover of G, then the size of an optimal vertex cover for \(G-v\) is \(k-1\); it can never be smaller, since adding v to a vertex cover of \(G-v\) always yields a vertex cover of G. Given any graph G with at least one edge (the edgeless case being trivial), pick any two adjacent vertices \(v_1\) and \(v_2\). Since there is an edge between them, every optimal vertex cover contains at least one of them, thus either \(G-v_1\) or \(G-v_2\) or both will have an optimal vertex cover of size \(k-1\). Two queries to the oracle return optimal vertex covers for \(G-v_1\) and \(G-v_2\). The algorithm chooses the smaller of these two covers (or any, if they are the same size) and adds the corresponding \(v_i\). The resulting vertex cover has size k and is thus optimal for G. \(\square \)
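The two-query procedure from this proof can be sketched as follows. The oracle is modeled by a hypothetical exhaustive-search function `brute_force_oracle`, which takes exponential time and serves only to make the example runnable; the function names and the edge-list representation are assumptions made for illustration.

```python
from itertools import combinations


def brute_force_oracle(vertices, edges):
    """Hypothetical stand-in for the oracle: a smallest vertex cover,
    found by exhaustive search (exponential time, illustration only)."""
    vertices = list(vertices)
    for size in range(len(vertices) + 1):
        for candidate in combinations(vertices, size):
            chosen = set(candidate)
            if all(u in chosen or v in chosen for u, v in edges):
                return chosen


def cover_from_vertex_deleted(vertices, edges, oracle=brute_force_oracle):
    """Compute an optimal vertex cover of G from optimal covers of
    G - v1 and G - v2, where {v1, v2} is an arbitrary edge of G."""
    edges = list(edges)
    if not edges:
        return set()                    # edgeless graph: empty cover is optimal
    v1, v2 = edges[0]                   # any two adjacent vertices
    candidates = []
    for v in (v1, v2):
        rest_vertices = [u for u in vertices if u != v]
        rest_edges = [(a, b) for a, b in edges if v not in (a, b)]
        # One oracle query per deleted vertex; putting v back covers
        # every edge that was removed together with v.
        candidates.append(oracle(rest_vertices, rest_edges) | {v})
    return min(candidates, key=len)     # the smaller one has size k
```

Apart from the two oracle calls, the procedure only performs linear-time bookkeeping, which is the point of the theorem.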

The following theorem shows that the analogous result for adding an edge holds as well.

Theorem 15

There is a polynomial-time algorithm that computes an optimal vertex cover for a graph from two optimal vertex covers for some one-edge-added supergraphs.

Proof

Observe first what can happen when an edge e is added to a graph G with an optimal vertex cover of size k. If one of its endpoints v is part of some optimal vertex cover of G, then this optimal vertex cover of G containing v is also an optimal vertex cover for \(G\cup e\). Given any graph G, the algorithm picks any two non-universal vertices \(v_1\) and \(v_2\) that are adjacent. Since there is an edge between them, any given optimal vertex cover contains at least one of them. If an edge incident to this vertex is added, the vertex cover of size k thus remains optimal. Because \(v_1\) and \(v_2\) are non-universal, the algorithm can add to G an edge \(e_1\) that is incident to \(v_1\) and an edge \(e_2\) incident to \(v_2\). Now the algorithm queries the oracle for two optimal vertex covers, one for \(G\cup e_1\) and one for \(G\cup e_2\). At least one of them has size k (as opposed to \(k+1\)) and is thus optimal for G as well; the algorithm outputs the smaller of the two. It remains to handle the case where no such pair of vertices exists. If G has the property that, for every pair of adjacent vertices \(v_1\) and \(v_2\), one of them is universal, then the set of all universal vertices constitutes an optimal vertex cover, except in the case of a clique. To see that this statement is true, first observe that not including a universal vertex in the solution implies that all other vertices must be included lest an edge leading to them remain uncovered. Such a solution can be optimal only if all but one of the n vertices of the graph must be included to cover all edges. This is trivially true for the clique on n vertices but false for any proper subgraph because removing just a single edge already allows for a vertex cover of size \(n-2\), namely the one containing all vertices but the two incident to the removed edge. \(\square \)
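The case distinction in this proof can be sketched in Python. As before, the oracle is replaced by a hypothetical exhaustive-search function so that the example runs; the function names and the representation of a simple graph as a vertex list plus an edge list (each edge listed once, no loops) are assumptions made for illustration.

```python
from itertools import combinations


def brute_force_oracle(vertices, edges):
    """Hypothetical stand-in for the oracle: a smallest vertex cover,
    found by exhaustive search (exponential time, illustration only)."""
    vertices = list(vertices)
    for size in range(len(vertices) + 1):
        for candidate in combinations(vertices, size):
            chosen = set(candidate)
            if all(u in chosen or v in chosen for u, v in edges):
                return chosen


def cover_from_edge_added(vertices, edges, oracle=brute_force_oracle):
    """Compute an optimal vertex cover of G from optimal covers of two
    one-edge-added supergraphs, following the case distinction above."""
    vertices, edges = list(vertices), list(edges)
    n = len(vertices)
    adj = {v: set() for v in vertices}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    universal = {v for v in vertices if len(adj[v]) == n - 1}

    # Case 1: some edge joins two non-universal vertices v1 and v2.
    for v1, v2 in edges:
        if v1 not in universal and v2 not in universal:
            covers = []
            for x in (v1, v2):
                # x is non-universal, so some non-neighbor w exists.
                w = next(y for y in vertices if y != x and y not in adj[x])
                covers.append(oracle(vertices, edges + [(x, w)]))
            # A cover of a supergraph also covers G; the smaller has size k.
            return min(covers, key=len)

    # Case 2: every edge has a universal endpoint.
    if len({frozenset(e) for e in edges}) == n * (n - 1) // 2:
        return set(vertices[1:])        # clique: all but one vertex
    return universal                    # otherwise the universal vertices
```

The second case needs no oracle query at all, which matches the observation that the universal vertices already form an optimal cover whenever G is not a clique.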

At this point, we would like to prove either an analogue to Theorem 7, showing that computing an optimal vertex cover is \(\text {NP} \)-hard even if we get access to a solution for every one-edge-deleted subgraph, or an analogue to Theorem 15, showing that the problem is in \(\text {P} \) if we have access to a solution for more than one one-edge-deleted subgraph. We were unable to prove either, however. The latter is easy to do for many restricted graph classes (e.g., graphs with bridges), yet we suspect that the problem is \(\text {NP}\)-hard in general. We will detail a few reasons for the apparent difficulty of proving this statement after the following theorem and corollary, which look at deleting a triangle as the local modification.

Theorem 16

There is a reduction g from \(\textsc {3Sat} \) to \(\textsc {Vertex} \textsc {Cover} \) such that, for every \(\textsc {3CNF} \)-formula \(\Phi \) and for every triangle T in \(g(\Phi )\), there is a polynomial-time computable optimal vertex cover of \(g(\Phi ) - T\).

Proof

The proof of Theorem 16 relies on the standard reduction from \(\textsc {3Sat} \) to \(\textsc {Vertex} \textsc {Cover} \); see [21], where clauses correspond to triangles.

Given a \(\textsc {3CNF} \)-formula \(\Phi \), let \(g(\Phi ) = h(f(\Phi ))\), where f is the reduction from Theorem 2 and h is the standard reduction from \(\textsc {3Sat} \) to \(\textsc {Vertex} \textsc {Cover} \) [21]. Let T be a triangle in \(g(\Phi )\). Then T corresponds to a clause \({C}\) in \(f(\Phi )\) and the satisfying assignments of \(f(\Phi ) - {C}\) correspond to optimal vertex covers of \(g(\Phi ) - T\), in a polynomially computable way. Hence, we can compute in polynomial time an optimal vertex cover of \(g(\Phi ) - T\) from the polynomial-time computable satisfying assignment for \(f(\Phi ) - {C}\). \(\square \)

Applying the same argument as in the proof of Theorem 5 yields the following corollary.

Corollary 17

Computing an optimal vertex cover for a graph from optimal vertex covers for the one-triangle-deleted subgraphs is \(\text {NP}\)-hard.

What can we say about optimal vertex covers for one-edge-deleted graphs? Papadimitriou and Wolfe show [3, Theorem 4] that there is a reduction g from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Minimal} \text {-}{}k\text {-}\textsc {No} \textsc {Vertex} \textsc {Cover} \) (called \(\textsc {Critical} \text {-} \textsc {Vertex} \textsc {Cover} \) in [3]; asking, given a graph G and an integer k, whether G does not have a vertex cover of size k but all one-edge-deleted subgraphs do). The reduction guarantees that we can compute in polynomial time a vertex cover of size k for any one-edge-deleted subgraph. And so g is a reduction from \(\textsc {3Sat} \) to \(\textsc {Vertex} \textsc {Cover} \) such that there exists a polynomial-time computable function \({\text {opt}}\) such that for every \(\textsc {3CNF} \)-formula \(\Phi \) and \(g(\Phi ) = (G,k)\), it holds, for every edge e in G, that \({\text {opt}}(G - e)\) is a vertex cover of size k. Unfortunately, it may happen that an optimal vertex cover of \(G - e\) has size \(k-1\); namely, if e is an edge connecting two triangles, an edge between two variable-setting vertices, or any edge of the clause triangles. The function \({\text {opt}}\) thus does not give us an optimal vertex cover, thwarting the proof attempt. This shows that we cannot always get results for our model from the constructions for criticality problems.

The following would be one approach to design a polynomial-time algorithm that computes an optimal vertex cover from optimal vertex covers for all one-edge-deleted subgraphs: It is clear that deleting an edge does not increase the size of an optimal vertex cover and decreases it by at most one. If, for any two neighbor graphs, the provided vertex covers differ in size, then we can take the smaller one, restore the deleted edge, and add any one of the two incident vertices to the vertex cover; this gives us the desired optimal vertex cover. If the optimal vertex cover size decreases for all deletions of a single edge, we can do the same with any of them. Thus, it is sufficient to design a polynomial-time algorithm that solves the problem on graphs whose one-edge-deleted subgraphs all have optimal vertex covers of the same size as an optimal vertex cover of the original graph. One might suspect that only very few and simple graphs can be of this kind. However, we obtain infinitely many such graphs by the removal of any edge from different cliques, as already mentioned in the introduction. In fact, there is a far larger class of graphs with this property and no apparent commonality to be exploited for the efficient construction of an optimal vertex cover.
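The easy part of this approach, where the provided covers differ in size, can be sketched as follows. The function name and the input format (a dictionary mapping each edge to an optimal cover of the graph without that edge) are assumptions made for illustration; the sketch deliberately returns `None` when all covers have the same size, since that is exactly the situation left unresolved above.

```python
def cover_from_edge_deleted(edges, covers):
    """Try to build an optimal vertex cover of G from optimal covers of
    all one-edge-deleted subgraphs.

    edges:  list of edges (u, v) of G
    covers: dict mapping each edge e to an optimal vertex cover of G - e
    Returns an optimal cover of G if the cover sizes differ, else None.
    """
    if not edges:
        return set()                    # edgeless graph: empty cover is optimal
    sizes = {len(covers[e]) for e in edges}
    if len(sizes) == 1:
        return None                     # all sizes equal: not decided here
    # Sizes differ, so the smallest cover has size beta(G) - 1 and cannot
    # contain an endpoint of its deleted edge (else it would cover G).
    e = min(edges, key=lambda edge: len(covers[edge]))
    u, _ = e
    return set(covers[e]) | {u}         # restore e and add one endpoint


# Usage: a triangle 0-1-2 with a pendant vertex 3 attached to vertex 0.
example_edges = [(0, 1), (0, 2), (1, 2), (0, 3)]
example_covers = {
    (0, 1): {0, 2},   # optimal for G - {0,1}
    (0, 2): {0, 1},   # optimal for G - {0,2}
    (1, 2): {0},      # optimal for G - {1,2}, a star centered at 0
    (0, 3): {0, 1},   # optimal for G - {0,3}, a triangle
}
example_result = cover_from_edge_deleted(example_edges, example_covers)
```

On a cycle with four vertices, by contrast, every one-edge-deleted subgraph is a path whose optimal cover has size 2, so the sketch returns `None`.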

We now turn to our complexity results on \(\beta \)-(vertex-)criticality. The reduction from \(\textsc {Minimal} \text {-}{}3\text {-}\textsc {UnSat} \) to \(\textsc {Minimal} \text {-}{}k\text {-}\textsc {No} \textsc {Vertex} \textsc {Cover} \) by Papadimitriou and Wolfe [3] establishes the \(\text {DP}\)-hardness of deciding whether a graph is \(\beta \)-critical. However, it seems unlikely that \(\beta \)-criticality is in \(\text {DP}\). The obvious upper bound is \(\Theta _2^\text {p}\), since a polynomial number of queries to a \(\textsc {Vertex} \textsc {Cover} \) oracle, namely (G, k) and \((G-e,k)\) for all edges e in G and all \(k \le \vert V(G)\vert \), in parallel allows us to determine \(\beta (G)\) and \(\beta (G - e)\) for all edges e in polynomial time, and thus allows us to determine whether G is \(\beta \)-critical. While we have not succeeded in proving a matching lower bound, or even any lower bound beyond \(\text {DP}\)-hardness, we do get this lower bound for \(\beta \)-vertex-criticality, thereby obtaining the first \(\Theta _2^\text {p}\)-completeness result for a criticality problem.

Theorem 18

Determining whether a graph is \(\beta \)-vertex-critical is \(\Theta _2^\text {p}\)-complete.

Proof

The membership follows with the same argument as above, this time querying the oracle \(\textsc {Vertex} \textsc {Cover} \) in parallel for all (G, k) and \((G-v,k)\) for all vertices v in G and all \(k \le \vert V(G)\vert \). To show that this problem is \(\Theta _2^\text {p}\)-hard, we use a reduction similar to the one used by Hemaspaandra et al. [22, Lemma 4.12] to prove that it is \(\Theta _2^\text {p}\)-hard to determine whether a given vertex is a member of a minimum vertex cover. We reduce from the \(\Theta _2^\text {p}\)-complete problem \(\textsc {VC} _= = \{(G,H)\mid \beta (G) = \beta (H)\}\) [23].

For any given graph pair (G, H), let \(n = \max (\vert V(G)\vert ,\vert V(H)\vert )\), let \(G'\) consist of \(n+1-\vert V(G)\vert \) isolated vertices, let \(H'\) consist of \(n+1-\vert V(H)\vert \) isolated vertices, and let \(F = (G \cup G') + (H \cup H')\). Note that \(\beta (F) = (n+1) + \min (\beta (G),\beta (H))\). If \(\beta (G) = \beta (H)\), then \(\beta (F) = (n+1) + \beta (G) = (n+1) + \beta (H)\) and for every vertex v in F, \(\beta (F - v) = n + \beta (G)\). Thus, F is critical. If \(\beta (G) \ne \beta (H)\), assume without loss of generality that \(\beta (G) < \beta (H)\). Then \(\beta (F) = n + 1 + \beta (G)\). Let v be a vertex in \(G'\). Then \(\beta (F - v) = \min (n + 1 + \beta (G), n + \beta (H)) = n + 1 + \beta (G)\), and therefore F is not critical. \(\square \)
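The construction of F and both cases of the argument can be checked on small instances with the following sketch. The helper names (`beta`, `build_f`, `is_beta_vertex_critical`), the vertex labeling, and the exhaustive computation of \(\beta \) are assumptions made for illustration; the brute-force search stands in for the oracle queries and is feasible only for very small graphs.

```python
from itertools import combinations


def beta(vertices, edges):
    """Size of a minimum vertex cover, by exhaustive search (illustration only)."""
    vertices = list(vertices)
    for size in range(len(vertices) + 1):
        for candidate in combinations(vertices, size):
            chosen = set(candidate)
            if all(u in chosen or v in chosen for u, v in edges):
                return size


def build_f(g, h):
    """Build F = (G u G') + (H u H'): pad both graphs with isolated vertices
    to n + 1 vertices each, then join the two sides completely."""
    (vg, eg), (vh, eh) = g, h
    n = max(len(vg), len(vh))
    # Tag vertices so that the two sides are disjoint.
    left = [("G", v) for v in vg] + [("G'", i) for i in range(n + 1 - len(vg))]
    right = [("H", v) for v in vh] + [("H'", i) for i in range(n + 1 - len(vh))]
    edges = [(("G", a), ("G", b)) for a, b in eg]
    edges += [(("H", a), ("H", b)) for a, b in eh]
    edges += [(x, y) for x in left for y in right]   # the join
    return left + right, edges


def is_beta_vertex_critical(vertices, edges):
    """True if deleting any single vertex decreases beta."""
    b = beta(vertices, edges)
    for v in vertices:
        rest_vertices = [u for u in vertices if u != v]
        rest_edges = [(a, c) for a, c in edges if v not in (a, c)]
        if beta(rest_vertices, rest_edges) >= b:
            return False
    return True


# Usage: a single edge (beta = 1) versus two isolated vertices (beta = 0).
single_edge = ([0, 1], [(0, 1)])
two_isolated = ([0, 1], [])
f_equal = build_f(single_edge, single_edge)      # beta(G) = beta(H)
f_unequal = build_f(single_edge, two_isolated)   # beta(G) != beta(H)
```

Here \(n=2\), so each side of the join has three vertices; the first instance has \(\beta (F)=3+1=4\) and is critical, while the second has \(\beta (F)=3+0=3\) and is not.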

5 Conclusion and Future Research

We defined a natural model that provides new insights into the structural properties of \(\text {NP}\)-hard problems. Specifically, we revealed interesting differences in the behavior of colorability and vertex cover under different types of local modifications. While colorability remains \(\text {NP}\)-hard when the local modification is the deletion of either a vertex or an edge, there is an algorithm that finds an optimal coloring by querying the oracle on at most two edge-added supergraphs. Vertex cover, in contrast, becomes easy in our model for both deleting vertices and adding edges, as soon as two queries are granted. The question of what happens for the local modification of deleting an edge remains as an intriguing open problem that defies any simple approach, as briefly outlined above. Moreover, examples of problems where one can prove a jump from membership in \(\text {P}\) to \(\text {NP}\)-hardness at a given number of queries greater than 2 might be especially instructive.

With its close connections to many distinct research areas, most notably the study of self-reducibility and critical graphs, our model can serve as a tool for new discoveries. In particular, we were able to exploit the tight relations to criticality in the proof that recognizing \(\beta \)-vertex-critical graphs is \(\Theta _2^\text {p}\)-hard, yielding the first completeness result for \(\Theta _2^\text {p}\) in the field.

Notes

Formally, a partial order is said to have short downward chains if the following condition is satisfied: There is a polynomial p such that every chain decreasing with respect to the considered partial order and starting with some string x is shorter than \(p(\vert x\vert )\) and such that all strings preceding x in that order are bounded in length by \(p(\vert x\vert )\).

This set is often denoted \(\textsc {E3-CNF} \) in the literature.

It should be noted that \(\textsc {Vertex} \textsc {Minimal} \textsc {3UnColor} \textsc {ability} \) is denoted by \(\textsc {Minimal} \text {-}{} \textsc {3} \text {-}\textsc {Un} \textsc {Color} \textsc {ability} \) by Cai and Meyer [13] despite the fact that minimality problems usually refer to the case of edge deletion.

Note that this also shows that deleting the edge \(\{v_\text {c},v_\text {s}\}\) is crucial for the lemma and that the original construction [13] does not work for deleting edges.