Abstract

One of the first and easiest-to-use techniques for proving run time bounds for evolutionary algorithms is the so-called method of fitness levels by Wegener. It uses a partition of the search space into a sequence of levels which are traversed by the algorithm in increasing order, possibly skipping levels. An easy, but often strong upper bound for the run time can then be derived by adding the reciprocals of the probabilities to leave the levels (or upper bounds for these). Unfortunately, a similarly effective method for proving lower bounds has not yet been established. The strongest such method, proposed by Sudholt (2013), requires a careful choice of the viscosity parameters \(\gamma _{i,j}\), \(0 \le i < j \le n\). In this paper we present two new variants of the method, one for upper and one for lower bounds. Besides the level leaving probabilities, they only rely on the probabilities that levels are visited at all. We show that these can be computed or estimated without great difficulty and apply our method to reprove the following known results in an easy and natural way. (i) The precise run time of the (1+1) EA on LeadingOnes. (ii) A lower bound for the run time of the (1+1) EA on OneMax, tight apart from an O(n) term. (iii) A lower bound for the run time of the (1+1) EA on long k-paths (which differs slightly from the previous result due to a small error in the latter). We also prove a tighter lower bound for the run time of the (1+1) EA on jump functions by showing that, regardless of the jump size, only with probability \(O(2^{-n})\) can the algorithm avoid jumping over the valley of low fitness.

1 Introduction

The theory of evolutionary computation aims at explaining the behavior of evolutionary algorithms, for example by giving detailed run time analyses of such algorithms on certain test functions, defined on some search space (for this paper we will focus on \(\{0,1\}^n\)). The first general method for conducting such analyses is the fitness level method (FLM) [60, 61]. The idea of this method is as follows. We partition the search space into a number m of sections (“levels”) in a linear fashion, so that all elements of later levels have better fitness than all elements of earlier levels. For the algorithm to be analyzed we regard the best-so-far individual and the level it is in. Since the best-so-far individual can never move to lower levels, it will visit each level at most once (possibly staying there for some time). Suppose we can show that, for any level \(i < m\) which the algorithm is currently in, the probability to leave this level is at least \(p_i\). Then, bounding the expected waiting time for leaving a level i by \(1/p_i\), we can derive an upper bound for the run time of \(\sum _{i=1}^{m-1} 1/p_i\) by pessimistically assuming that we visit (and thus have to leave) each level \(i < m\) before reaching the target level m. The fitness level method allows for simple and intuitive proofs and has therefore frequently been applied. Variations of it come with tail bounds [64], work for parallel EAs [47], regard populations [62] or admit non-elitist EAs [8, 22, 25, 44].

While very effective for proving upper bounds, it seems much harder to use fitness level arguments to prove lower bounds (see Theorem 5 for an early attempt). The first (and so far only) to devise a fitness level-based lower bound method that gives competitive bounds was Sudholt [59]. His approach uses viscosity parameters \(\gamma _{i,j}\), \(0 \le i < j \le n\), which control the probability of the algorithm to jump from one level i to a higher level j (see Sect. 3.3 for details). While this allows for deriving strong results, the application is rather technical due to the many parameters and the restrictions they have to fulfill.

In this paper, we propose a new variant of the FLM for lower bounds, which is easier to use and which appears more intuitive. For each level i, we regard the visit probability \(v_i\), that is, the probability that level i is visited at all during a run of the algorithm. This way we can directly characterize the run time of the algorithm as \(\sum _{i=1}^{m-1} v_i/p_i\) when \(p_i\) is the precise probability to leave level i independent of where on level i the algorithm is. When only estimates for these quantities are known, e.g., because the level leaving probability is not independent of the current state, then we obtain the corresponding upper or lower bounds on the expected run time (see Sect. 3.4 for details).

We first use this method to give the precise expected run time of the \((1 + 1)\) EA on LeadingOnes in Sect. 4. While this run time was already well-understood before, it serves as a simple demonstration of the ease with which our method can be applied.

Next, in Sect. 5, we give a bound on the expected run time of the \((1 + 1)\) EA on OneMax, precise apart from terms of order \(\varTheta (n)\). Such bounds have also been known before, but needed much deeper methods (see Sect. 5.3 for a detailed discussion). Sudholt’s lower bound method has also been applied to this problem, but gave a slightly weaker bound deviating from the truth by an \(O(n \log \log n)\) term. In addition to the precise result, we feel that our FLM with visit probabilities gives a clearer structure of the proof than the previous works.

In Sect. 6, we prove tighter lower bounds for the run time of the \((1 + 1)\) EA on jump functions. We do so by determining (asymptotically precisely) the probability that in a run of the \((1 + 1)\) EA on a jump function the algorithm does not reach a non-optimal search point outside the fitness valley (and thus does not have to jump over this valley). Interestingly, this probability is only \(O(2^{-n})\) regardless of the jump size (width of the valley).

Finally, in Sect. 7, we consider the \((1 + 1)\) EA on so-called long k-paths. We show how the FLM with visit probabilities can give results comparable to those of the FLM with viscosities while again being much simpler to apply.

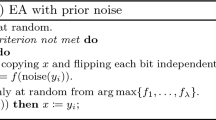

2 The \((1 + 1)\) EA

In this paper we consider exactly one randomized search heuristic, the \((1 + 1)\) EA. It maintains a single individual, the best it has seen so far. Each iteration it uses standard bit mutation with mutation rate \(p \in (0,1)\) (flipping each bit of the bit string independently with probability p) and keeps the result if and only if it is at least as good as the current individual under a given fitness function f. We give a more formal definition in Algorithm 1.
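The verbal description above can be sketched in a few lines of Python. This is only an illustrative sketch, not the paper's Algorithm 1: the function names, the `optimum_value` stopping criterion, the `max_iters` safeguard, and the uniformly random initial point are assumptions made for the example.

```python
import random

def one_plus_one_ea(f, n, p, optimum_value, max_iters=10**6, rng=None):
    """Run a (1+1) EA on f: {0,1}^n -> R and return the number of iterations
    until the current individual has fitness optimum_value.
    (Stopping criterion and max_iters are assumptions of this sketch.)"""
    rng = rng or random.Random()
    x = [rng.randint(0, 1) for _ in range(n)]  # assumed: uniform random start
    fx = f(x)
    t = 0
    while fx < optimum_value and t < max_iters:
        # Standard bit mutation: flip each bit independently with probability p.
        y = [b ^ 1 if rng.random() < p else b for b in x]
        fy = f(y)
        if fy >= fx:  # keep the offspring iff it is at least as good
            x, fx = y, fy
        t += 1
    return t

def one_max(x):
    """Number of 1s in the bit string."""
    return sum(x)

def leading_ones(x):
    """Number of 1s before the first 0."""
    count = 0
    for b in x:
        if b == 0:
            break
        count += 1
    return count
```

A call such as `one_plus_one_ea(one_max, 20, 1 / 20, 20)` returns one sample of the run time on OneMax; averaging many such samples gives an empirical estimate that can be compared with the bounds derived later.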

3 The Fitness Level Methods

The fitness level method is typically phrased in terms of a fitness-based partition, that is, a partition of the search space into sets \(A_1,\ldots ,A_m\) such that elements of later sets have higher fitness. We first introduce this concept and abstract away from it to ease the notation. After this, in Sect. 3.2, we state the original FLM. In Sect. 3.3 we describe the lower bound based on the FLM from Sudholt [59], before presenting our own variant, the FLM with visit probabilities, in Sect. 3.4.

3.1 Level Processes

Definition 1

(Fitness-Based Partition [61]) Let \(f: \{0,1\}^n \rightarrow {\mathbb {R}}\) be a fitness function. A partition \(A_1,\ldots ,A_m\) of \(\{0,1\}^n\) is called a fitness-based partition if for all \(i,j \le m\) with \(i < j\) and \(x \in A_i\), \(y \in A_j\), we have \(f(x) < f(y)\).

We will use the following shorthands. We write [a..b] for the set \(\{i \in {\mathbb {Z}}\mid a \le i \le b\}\); for \(x \in \{0,1\}^n\) we write \(\Vert x\Vert _1\) for the number of 1s in x. For a given fitness-based partition and \(i \le m\), we write \(A_{\ge i} = \bigcup _{j=i}^m A_j\) and \(A_{\le i} = \bigcup _{j=1}^i A_j\).

In order to simplify our notation, we focus on processes on [1..m] (the levels) with underlying Markov chain as follows.

Definition 2

(Non-decreasing Level Process) A stochastic process \((X_t)_t\) on [1..m] is called a non-decreasing level process if and only if (i) there exists a Markov process \((Y_t)_t\) over a state space S such that there is an \(\ell : S \rightarrow [1..m]\) with \(\ell (Y_t) = X_t\) for all t, and (ii) the process \((X_t)_t\) is non-decreasing, that is, we have \(X_{t+1} \ge X_t\) with probability one for all t.

We later want to analyze algorithms in terms of non-decreasing level processes, making the transition as follows. Suppose we have an algorithm with state space \(\{0,1\}^n\). Denoting by \(Y_t\) the best among the first t search points generated by the algorithm, this defines a Markov chain \((Y_t)_t\) in the state space \(S = \{0,1\}^n\), the run of the algorithm. Further, suppose the algorithm optimizes a fitness function f such that the state of the algorithm is non-decreasing in terms of fitness. In order to get a non-decreasing level process, we can now define any fitness-based partition and get a corresponding level function \(\ell : S \rightarrow [1..m]\) by mapping any \(x \in S\) to the unique i with \(x \in A_i\). Then the process \((\ell (Y_t))_t\) is a non-decreasing level process.

The main reason for us to use the formal notion of a level process is the property formalized in the following lemma. Essentially, if a level process makes progress with probability at least p in each iteration (regardless of the precise current state), then the expected number of iterations until the process progresses is at most 1/p. This situation resembles a geometric distribution, but does not assume independence of the different iterations (one could show that the time to progress is stochastically dominated by a geometric distribution with success rate p, but we do not need this level of detail).

Lemma 3

Let \((X_t)_t\) be a non-decreasing level process with underlying Markov chain \((Y_t)_t\) and level function \(\ell \). Assume \(X_t\) starts on some particular level. Let \(p \in (0,1]\) be a lower bound on the probability for the level process to leave this level regardless of the state of the underlying Markov chain. Then the expected first time t such that \(X_t\) changes is at most 1/p.

Analogously, if p is an upper bound, the expected time t such that \(X_t\) changes is at least 1/p.

Proof

We let \((Z_t)_t\) be the stochastic process on \(\{0,1\}\) such that \(Z_t\) is 1 if and only if \(X_t > X_0\). According to our assumptions, we have, for all t before the first time that \(Z_t=1\), that \(E[Z_{t+1} - Z_t \mid Z_t] \ge p\). From the additive drift theorem [38, 45] we obtain that the expected first time such that \(Z_t = 1\) is bounded by 1/p as desired. The “analogously” clause follows analogously. \(\square \)

3.2 Original Fitness Level Method

The following theorem contains the original Fitness Level Method and makes the basic principle formal.

Theorem 4

(Fitness Level Method, upper bound [61]) Let \((X_t)_t\) be a non-decreasing level process (as detailed in Definition 2).

For all \(i \in [1..m-1]\), let \(p_i\) be a lower bound on the probability of a state change of \((X_t)_t\), conditional on being in state i. Then the expected time for \((X_t)_t\) to reach the state m is at most

\[ \sum_{i=1}^{m-1} \frac{1}{p_i}. \]

This bound is very simple, yet strong. It is based on the idea that, in the worst case, all levels have to be visited sequentially. Note that one can improve this bound (slightly) by considering only those levels which come after the (random) start level \(X_0\) (by changing the start of the sum to \(X_0\) instead of 1 and then taking expectation). Intuitively, low levels that are never visited do not need to be left.
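As a small numerical illustration of this upper bound (the sum of the reciprocals \(1/p_i\) described in the introduction), the following sketch evaluates it for the \((1 + 1)\) EA on OneMax with mutation rate 1/n, using the estimate \(p_i \ge (1-\frac{1}{n})^{n-1}\frac{n-i}{n}\) that appears in Sect. 5. The function names and the choice n = 100 are assumptions made for the example.

```python
def flm_upper_bound(leave_prob_lower_bounds):
    """Classic fitness level upper bound: sum of 1/p_i over all non-target levels."""
    return sum(1.0 / p for p in leave_prob_lower_bounds)

def onemax_leave_bounds(n):
    """Lower bounds p_i >= (1 - 1/n)^(n-1) * (n - i)/n for levels i = 0..n-1
    (flip exactly one of the n - i zero-bits and nothing else)."""
    keep_others = (1.0 - 1.0 / n) ** (n - 1)
    return [keep_others * (n - i) / n for i in range(n)]

n = 100  # example size, chosen arbitrarily
bound = flm_upper_bound(onemax_leave_bounds(n))
print(f"fitness level upper bound for n={n}: {bound:.1f}")
```

For these leaving probabilities the sum collapses to \((1-\frac{1}{n})^{-(n-1)} \, n \sum_{i=1}^{n} \frac{1}{i}\), that is, roughly \(e n \ln n\).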

There is a lower bound based on the observation that at least the initial level has to be left (if it was not the last level).

Theorem 5

(Fitness Level Method, lower bound [61]) Let \((X_t)_t\) be a non-decreasing level process (as detailed in Definition 2).

For all \(i \in [1..m-1]\), let \(p_i\) be an upper bound on the probability of a state change, conditional on being in state i. Then the expected time for \((X_t)_t\) to reach the state m is at least

\[ \sum_{i=1}^{m-1} \Pr[X_0 = i] \cdot \frac{1}{p_i}. \]

This bound is very weak since it assumes that the first improvement on the initial search point already finds the optimum.

We note, very briefly, that a second main analysis method, drift analysis, also has additional difficulties with lower bounds. Additive drift [38], multiplicative drift [20], and variable drift [40, 49] all easily give upper bounds for run times; however, only the additive drift theorem yields lower bounds with the same ease. The existing multiplicative [12, 24, 63] and variable [13, 17, 32, 35] drift theorems for lower bounds all need significantly stronger assumptions than their counterparts for upper bounds.

3.3 Fitness Level Method with Viscosity

While the upper bound above is strong and useful, the lower bound is typically not strong enough to give more than a trivial bound. Sudholt [59] gave a refinement of the method by considering bounds on the transition probabilities from one level to another.

Theorem 6

(Fitness Level Method with Viscosity, lower bound [59]) Let \((X_t)_t\) be a non-decreasing level process (as detailed in Definition 2). Let \(\chi ,\gamma _{i,j} \in [0,1]\) and \(p_i \in (0,1]\) be such that

-

For all t, if \(X_t = i\), the probability that \(X_{t+1} = j\) is at most \(p_i \cdot \gamma _{i,j}\);

-

\(\sum _{j=i+1}^m \gamma _{i,j} = 1\); and

-

For all \(j>i\), we have \(\gamma _{i,j} \ge \chi \sum _{k=j}^m \gamma _{i,k}\).

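Then the expected time for \((X_t)_t\) to reach the state m is at least

\[ \sum_{i=1}^{m-1} \Pr[X_0 = i] \left( \frac{1}{p_i} + \chi \sum_{j=i+1}^{m-1} \frac{1}{p_j} \right). \]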

This result is much stronger than the original lower bound of the fitness level method, since now the leaving probabilities of all segments are part of the bound, at least with a fractional impact prescribed by \(\chi \). The weakness of the method is that \(\chi \) has to be defined globally, the same for all segments i.

There is also a corresponding upper bound as follows; in [59] this result was used to derive a tight bound for LeadingOnes, but we believe it has otherwise not found application so far.

Theorem 7

(Fitness Level Method with Viscosity, upper bound [59]) Let \((X_t)_t\) be a non-decreasing level process (as detailed in Definition 2). Let \(\chi ,\gamma _{i,j} \in [0,1]\) and \(p_i \in (0,1]\) be such that

-

For all t, if \(X_t = i\), the probability that \(X_{t+1} = j\) is at least \(p_i \cdot \gamma _{i,j}\);

-

\(\sum _{j=i+1}^m \gamma _{i,j} = 1\);

-

For all \(j>i\), we have \(\gamma _{i,j} \le \chi \sum _{k=j}^m \gamma _{i,k}\); and

-

For all \(j \le m-2\), we have \((1-\chi )p_j \le p_{j+1}\).

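Then the expected time for \((X_t)_t\) to reach the state m is at most

\[ \sum_{i=1}^{m-1} \Pr[X_0 = i] \left( \frac{1}{p_i} + \chi \sum_{j=i+1}^{m-1} \frac{1}{p_j} \right). \]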

3.4 Fitness Level Method with Visit Probabilities

In this paper, we give a new FLM theorem for proving lower bounds. The underlying idea is that exactly all those levels that have ever been visited need to be left; thus, we can use the expected waiting time for leaving a specific level multiplied with the probability of visiting that level at all. The following theorem makes this idea precise for lower bounds; Theorem 9 gives the corresponding upper bound. We note that for the particular case of the optimization of the LeadingOnes problem via \((1+1)\)-type elitist algorithms, our bounds are special cases of [21, Lemma 5] and [27, Theorem 3].

Theorem 8

(Fitness Level Method with visit probabilities, lower bound) Let \((X_t)_t\) be a non-decreasing level process (as detailed in Definition 2). For all \(i \in [1..m-1]\), let \(p_i\) be an upper bound on the probability of a state change of \((X_t)_t\), conditional on being in state i. Furthermore, let \(v_i\) be a lower bound on the probability of there being a t such that \(X_t = i\). Then the expected time for \((X_t)_t\) to reach the state m is at least

\[ \sum_{i=1}^{m-1} \frac{v_i}{p_i}. \]

Proof

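For each \(i < m\), let \(T_i\) be the (random) time spent in level i. Thus, the time to reach state m is \(\sum_{i=1}^{m-1} T_i\), and by linearity of expectation its expectation is

\[ \sum_{i=1}^{m-1} E[T_i]. \]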

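Now let \(i < m\). We want to show that \(E[T_i] \ge v_i/p_i\). We let E be the event that the process ever visits level i and, noting that \(T_i = 0\) when E does not occur, compute

\[ E[T_i] = \Pr[E] \cdot E[T_i \mid E] + \Pr[\overline{E}] \cdot E[T_i \mid \overline{E}] \ge v_i \, E[T_i \mid E]. \]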

For all t with \(X_t = i\), with probability at most \(p_i\), we have \(X_{t+1} > i\). Thus, using Lemma 3, the expected time until a search point with \(X_k > i\) is found is at least \(1/p_i\), giving \(E[T_i \mid E] \ge 1/p_i\) as desired. \(\square \)

A strength of this formulation is that skipping levels due to a higher initialization does not need to be taken into account separately (as in the two previous lower bounds); it is part of the visit probabilities. A corresponding upper bound follows with analogous arguments.
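To see the characterization \(\sum_i v_i/p_i\) at work, the following sketch considers a small level process that is itself a Markov chain, so that the leaving probability of each level is exact. It computes the visit probabilities, evaluates \(\sum_i v_i/p_i\), and compares the result with the expected hitting time obtained from a first-step recursion. The transition matrix `P`, the initial distribution `init`, and all function names are made up for illustration; levels are indexed from 0 in the code.

```python
from fractions import Fraction as F

def visit_and_leave(P, init):
    """Return (visit, leave): visit[i] is the probability that level i is ever
    visited; leave[i] is the probability to leave level i in one step."""
    m = len(P)
    leave = [sum(P[i][j] for j in range(i + 1, m)) for i in range(m - 1)]
    visit = list(init)
    for i in range(m - 1):
        for j in range(i + 1, m):
            # When level i is left, the next level is j with
            # conditional probability P[i][j] / leave[i].
            visit[j] += visit[i] * P[i][j] / leave[i]
    return visit, leave

def expected_hitting_time(P, init):
    """Exact expected time to reach the last level, by first-step analysis."""
    m = len(P)
    E = [F(0)] * m
    for i in range(m - 2, -1, -1):
        p_i = sum(P[i][j] for j in range(i + 1, m))
        E[i] = (1 + sum(P[i][j] * E[j] for j in range(i + 1, m))) / p_i
    return sum(init[i] * E[i] for i in range(m))

# Hypothetical 4-level process (rows sum to 1, no downward moves).
P = [
    [F(1, 2), F(1, 4), F(1, 8), F(1, 8)],
    [0, F(2, 3), F(1, 6), F(1, 6)],
    [0, 0, F(3, 4), F(1, 4)],
    [0, 0, 0, 1],
]
init = [F(1, 2), F(1, 4), F(1, 4), 0]

visit, leave = visit_and_leave(P, init)
flm_value = sum(v / p for v, p in zip(visit, leave))
exact = expected_hitting_time(P, init)
print(flm_value, exact)  # both equal 5 for this example
```

Here the visit probabilities of the three non-target levels are 1/2, 1/2, and 5/8, and the leaving probabilities are 1/2, 1/3, and 1/4, so both computations give 1 + 3/2 + 5/2 = 5.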

Theorem 9

(Fitness Level Method with visit probabilities, upper bound) Let \((X_t)_t\) be a non-decreasing level process (as detailed in Definition 2).

For all \(i \in [1..m-1]\), let \(p_i\) be a lower bound on the probability of a state change of \((X_t)_t\), conditional on being in state i. Furthermore, let \(v_i\) be an upper bound on the probability of there being a t such that \(X_t = i\). Then the expected time for \((X_t)_t\) to reach the state m is at most

\[ \sum_{i=1}^{m-1} \frac{v_i}{p_i}. \]

In a typical application of the FLM, finding good estimates for the leaving probabilities is easy. It is more complicated to estimate the visit probabilities accurately, so we propose one possible approach in the following lemma.

Lemma 10

Let \((Y_t)_t\) be a Markov-process over state space S and \(\ell : S \rightarrow [1..m]\) a level function. For all t, let \(X_t = \ell (Y_t)\) and suppose that \((X_t)_t\) is non-decreasing. Further, suppose that \((X_t)_t\) reaches state m after a finite time with probability 1.

Let \(i < m\) be given. For any \(x \in S\) and any set \(M \subseteq S\), let \(x \rightarrow M\) denote the event that the Markov chain with current state x transitions to a state in M. For all j let \(A_j = \{s \in S \mid \ell (s) = j\}\). Suppose there is \(v_i\) such that, for all \(x \in A_{\le i-1}\) with \(\Pr [x \rightarrow A_{\ge i}] > 0\),

\[ \Pr[x \rightarrow A_i \mid x \rightarrow A_{\ge i}] \ge v_i \]

and

\[ \Pr[Y_0 \in A_i \mid Y_0 \in A_{\ge i}] \ge v_i. \]

Then \(v_i\) is a lower bound for visiting level i as required by Theorem 8.

Proof

Let T be minimal such that \(Y_T \in A_{\ge i}\). Then the probability that level i is being visited is \(\Pr [Y_T \in A_i]\), since \((X_t)_t\) is non-decreasing.

By the law of total probability we can show the claim by showing it first conditional on \(T=0\) and then conditional on \(T\ne 0\).

We have that \(T = 0\) is equivalent to \(Y_0 \in A_{\ge i}\), thus we have \(\Pr [Y_T \in A_i \mid T = 0] \ge v_i\) from the second condition in the statement of the lemma.

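Otherwise, let \(x = Y_{T-1}\). Since \(Y_T \in A_{\ge i}\),

\[ \Pr[Y_T \in A_i \mid T \ne 0, Y_{T-1} = x] = \Pr[x \rightarrow A_i \mid x \rightarrow A_{\ge i}]. \]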

As T was chosen minimally, we have \(x \not \in A_{\ge i}\) and thus get the desired bound from the first condition in the statement of the lemma. \(\square \)

Implicitly, the lemma suggests taking the minimum of all these conditional probabilities over the different choices for x. Note that this estimate might be somewhat imprecise since the worst-case x might not be encountered frequently. Also note that a corresponding upper bound for Theorem 9 follows analogously.

4 The Precise Run Time for LeadingOnes

One of the classic fitness functions used for analyzing the optimization behavior of randomized search heuristics is the LeadingOnes function. Given a bit string x of length n, the LeadingOnes value of x is defined as the number of 1s in the bit string before the first 0 (if any). In parallel independent work, the precise expected run time of the \((1 + 1)\) EA on the LeadingOnes benchmark function was determined in [7, 59]. Even more, the distribution of the run time was determined with variants of the FLM in [21, 27]. As a first simple application of our methods, we now determine the precise run time of the \((1 + 1)\) EA on LeadingOnes via Theorems 8 and 9.

Theorem 11

Consider the \((1 + 1)\) EA optimizing LeadingOnes with mutation rate p. Let T be the (random) time for the \((1 + 1)\) EA to find the optimum. Then

\[ E[T] = \frac{1}{2} \sum_{i=0}^{n-1} \frac{1}{p(1-p)^i}. \]

Proof

We want to apply Theorems 8 and 9 simultaneously. We partition the search space in the canonical way such that, for all \(i \le n\), \(A_i\) contains the set of all search points with fitness i. Now we need a precise result for the probability to leave a level and for the probability to visit a level.

First, we consider the probability \(p_i\) to leave a given level \(i < n\). Suppose the algorithm has a current search point in \(A_i\), so it has i leading 1s and then a 0. The algorithm leaves level \(A_i\) now if and only if it flips the first 0 of the bit string (probability of p) and no previous bits (probability \((1-p)^i\)). Hence, \(p_i = p(1-p)^i\).

Next we consider the probability \(v_i\) to visit a level i. We claim that it is exactly 1/2, following reasoning given in several places before [19, 59]. We want to use Lemma 10 and its analogue for upper bounds. Let i be given. For the initial search point, if it is at least on level i (the condition considered by the lemma), the individual is on level i if and only if the \(i+1\)st bit is a 0, so exactly with probability 1/2 as desired for both bounds. Before an individual with at least i leading 1s is created, the bit at position \(i+1\) remains uniformly random (this can be seen by induction: it is uniform at the beginning and does not experience any bias in any iteration while no individual with at least i leading 1s is created). Once such an individual is created, if the bit at position \(i+1\) is 1, the level i is skipped, otherwise it is visited. Thus, the algorithm skips level i with probability exactly 1/2, giving \(v_i = 1/2\). With these exact values for the \(p_i\) and \(v_i\), Theorems 8 and 9 immediately yield the claim. \(\square \)

By computing the geometric series in Theorem 11, we obtain as a (well-known) corollary that the \((1 + 1)\) EA with the classic mutation rate \(p = 1/n\) optimizes LeadingOnes in an expected run time of \(n^2\frac{e-1}{2}(1\pm o(1))\).
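The geometric series can also be checked numerically. The sketch below sums \(v_i/p_i\) with \(v_i = 1/2\) and \(p_i = p(1-p)^i\) from the proof above, taking the levels to be \(i = 0, \dots, n-1\) (our reading of the partition used in the proof), and compares the result with the closed form \(\frac{1}{2p^2}\big((1-p)^{1-n} - (1-p)\big)\) of the series and with \(n^2 \frac{e-1}{2}\). Function names and the choice n = 100 are assumptions made for the example.

```python
import math

def leading_ones_runtime(n, p):
    """Sum of v_i / p_i with v_i = 1/2 and p_i = p * (1 - p)**i for i = 0..n-1."""
    return sum(0.5 / (p * (1.0 - p) ** i) for i in range(n))

def leading_ones_closed_form(n, p):
    """Closed form of the same geometric series."""
    return ((1.0 - p) ** (1 - n) - (1.0 - p)) / (2.0 * p * p)

n = 100  # example size, chosen arbitrarily
p = 1.0 / n
series = leading_ones_runtime(n, p)
closed = leading_ones_closed_form(n, p)
asymptotic = n * n * (math.e - 1.0) / 2.0
print(series, closed, series / asymptotic)
```

For p = 1/n the ratio to \(n^2 \frac{e-1}{2}\) tends to 1 as n grows; for n = 100 it is already within one percent.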

5 A Tight Lower Bound for OneMax

In this section, as a more involved example of the usefulness of our general method, we prove a lower bound for the run time of the \((1 + 1)\) EA with standard mutation rate \(p=\frac{1}{n}\) on OneMax that is below the upper bound following from the classic fitness level method by only an additive term of order O(n). This is tighter than the best gap of order \(O(n \log \log n)\) proven previously with fitness level arguments. Moreover, our lower bound is the tightest lower bound apart from the significantly more complicated works that determine the run time precisely apart from o(n) terms. We defer a detailed account of the literature together with a comparison of the methods to Sect. 5.3.

We recall the definition of the OneMax test functions as \(\textsc {OneMax} (x) = \sum _{i=1}^n x_i\), the number of 1s in a given bit string \(x \in \{0,1\}^n\). We further use the standard fitness levels of the OneMax function as given by

\[ A_i := \{x \in \{0,1\}^n \mid \textsc {OneMax} (x) = i\}, \quad i \in [0..n]. \]

We use the notation \(A_{\ge i} :=\bigcup _{j = i}^n A_j\) and \(A_{\le i} :=\bigcup _{j = 0}^i A_j\) for all \(i \in [0..n]\) as defined above for fitness-based partitions, but with the appropriate bounds 0 and n instead of 1 and m.

We denote by \(T_{k,\ell }\) the expected number of iterations the \((1 + 1)\) EA, started with a search point in \(A_k\), takes to generate a search point in \(A_{\ge \ell }\). We further denote by \(T_{\mathrm{rand},\ell }\) the expected number of iterations the \((1 + 1)\) EA started with a random search point takes to generate a solution in \(A_{\ge \ell }\). These notions extend previously proposed fine-grained run time notions: \(T_{\mathrm{rand},\ell }\) is the fixed target run time first proposed in [21] as a technical tool and advocated more broadly in [6]. The time \(T_{k,n}\) until the optimum is found when starting with fitness k was investigated in [1] when \(k > n/2\), that is, when starting with a better-than-average solution. We spare the details and only note that such fine-grained complexity notions (which also include the fixed-budget complexity proposed in [42]) have given a much better picture on how to use EAs effectively than the classic run time \(T_{\mathrm{rand},n}\) alone. In particular, it was observed that different parameters or algorithms are preferable when not optimizing until the optimum or when starting with a good solution.

For all \(k, \ell \in [0..n]\), we denote by \(p_{k,\ell }\) the probability that standard bit mutation with mutation rate \(p = \frac{1}{n}\) creates an offspring in \(A_\ell \) from a parent in \(A_k\). We also write \(p_{k,\ge \ell } := \sum _{j = \ell }^n p_{k,j}\) to denote the probability to generate an individual in \(A_{\ge \ell }\) from a parent in \(A_k\). Then \(p_i := p_{i, \ge i+1}\) is the probability that the \((1 + 1)\) EA optimizing OneMax leaves the i-th fitness level.

5.1 Upper and Lower Bounds Via Fitness Levels

Using the notation just introduced, the classic fitness level method (see Theorem 4 and note that the fitness of the parent individuals describes a non-decreasing level process with state change probabilities \(p_i\)) shows that

\[ T_{k,\ell} \le \sum_{i=k}^{\ell-1} \frac{1}{p_i} =: {\tilde{T}}_{k,\ell}. \]

To prove a nearly matching lower bound employing our new methods, we first analyze the probability that the \((1 + 1)\) EA optimizing OneMax skips a particular fitness level. Note that if \(q_i\) is the probability to skip the i-th fitness level, then \(v_i := 1 - q_i\) is the probability to visit the i-th level as used in Theorem 8.

Lemma 12

Let \(i \in [0..n]\). Consider a run of the \((1 + 1)\) EA with mutation rate \(p = \frac{1}{n}\) on the OneMax function started with a (possibly random) individual x with \(\textsc {OneMax} (x) < i\). Then the probability \(q_i\) that during the run the parent individual never has fitness i satisfies

\[ q_i \le \frac{n-i}{n(1-\frac{1}{n})^{i-1}}. \]

Proof

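Since we assume that we start below fitness level i, by Lemma 10 (and using the notation from that lemma for a moment) we have

\[ q_i \le \max_{x \in A_{\le i-1}} \Pr[x \rightarrow A_{\ge i+1} \mid x \rightarrow A_{\ge i}] = \max_{k \in [0..i-1]} \frac{p_{k,\ge i+1}}{p_{k,\ge i}}. \]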

Hence it suffices to show that \(\frac{p_{k,\ge i+1}}{p_{k, \ge i}} \le \frac{n-i}{n(1-\frac{1}{n})^{i-1}}\) for all \(k \in [0..i-1]\), and this is what we will do in the remainder of this proof.

Let us, slightly abusing the common notation, write \({{\,\mathrm{Bin}\,}}(m,p)\) to denote a random variable following a binomial law with parameters m and p. Let \(k, \ell \in {\mathbb {N}}\) with \(k \le \ell \). Noting that the only way to generate a search point in \(A_\ell \) from some \(x \in A_k\) is to flip, for some \(j \in [\ell -k..\min \{n-k,\ell \}]\), exactly j of the \(n-k\) zero-bits of x and exactly \(j - (\ell -k)\) of the k one-bits, we easily obtain the well-known fact that

Since \(p = \frac{1}{n}\), the mode of \({{\,\mathrm{Bin}\,}}(n-k,p)\) is at most 1. Since the binomial distribution is unimodal, we conclude that \(\Pr [{{\,\mathrm{Bin}\,}}(n-k,p) = j] \le \Pr [{{\,\mathrm{Bin}\,}}(n-k,p) = \ell -k]\) for all \(j \ge \ell -k\). Consequently, the first line of the above set of equations gives

and thus

We recall that our target is to estimate \(\frac{p_{k,\ge i+1}}{p_{k, \ge i}}\) for all \(k \in [0..i-1]\). By (1), we have

where the last estimate is [29, equation following Lemma 1.10.38]. We also have \(p_{k,\ge i} \ge p_{k,i} \ge (1-p)^k \Pr [{{\,\mathrm{Bin}\,}}(n-k,p)=i-k]\). Hence from

we conclude

using again that \(p = \frac{1}{n}\). For \(k \in [0..i-1]\), this expression is maximal for \(k = i-1\), giving that \(q_i \le \frac{n-i}{n(1-\frac{1}{n})^{i-1}}\) as claimed. \(\square \)
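The inequality shown in this proof, \(\frac{p_{k,\ge i+1}}{p_{k,\ge i}} \le \frac{n-i}{n(1-\frac{1}{n})^{i-1}}\) for all \(k \in [0..i-1]\), can be sanity-checked numerically for small n. The sketch below computes \(p_{k,\ell}\) by summing over the number of zero-bits and one-bits flipped, and reports the smallest slack over all pairs (k, i). This is a numerical illustration and not part of the proof; the function names and the choice n = 30 are assumptions, and the case i = n is omitted because both sides are then zero.

```python
from math import comb

def transition_prob(n, k, l, p):
    """Probability that standard bit mutation with rate p turns a string with
    k ones into a string with l ones."""
    total = 0.0
    for a in range(n - k + 1):      # a = number of zero-bits flipped to one
        b = a - (l - k)             # b = number of one-bits flipped to zero
        if 0 <= b <= k:
            total += (comb(n - k, a) * comb(k, b)
                      * p ** (a + b) * (1 - p) ** (n - a - b))
    return total

def at_least(n, k, l, p):
    """p_{k,>=l}: probability to create an offspring with at least l ones."""
    return sum(transition_prob(n, k, j, p) for j in range(l, n + 1))

def skip_bound(n, i):
    """Right-hand side (n - i) / (n * (1 - 1/n)^(i-1)) of the lemma."""
    return (n - i) / (n * (1 - 1 / n) ** (i - 1))

n = 30  # example size, chosen arbitrarily
p = 1 / n
worst_gap = min(
    skip_bound(n, i) - at_least(n, k, i + 1, p) / at_least(n, k, i, p)
    for i in range(1, n)
    for k in range(i)
)
print(f"smallest slack of the bound for n={n}: {worst_gap:.4f}")
```

A non-negative value of `worst_gap` means that the bound held for every pair (k, i) that was checked.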

With this estimate, we can now easily give a very tight lower bound on the run time of the \((1 + 1)\) EA on OneMax.

Theorem 13

Let \(k , \ell \in [0..n]\) with \(k < \ell \). Then the expected number \(T_{k,\ell }\) of iterations the \((1 + 1)\) EA optimizing OneMax and initialized with any search point x with \(\textsc {OneMax} (x) = k\) takes to generate a search point z with fitness \(\textsc {OneMax} (z) \ge \ell \) is at least

\[ {\tilde{T}}_{k,\ell} - (\ell-k-1)\, e (e-1) \exp \left( \frac{k}{n-1} \right), \]

where \({\tilde{T}}_{k,\ell }\) is the upper bound stemming from the fitness level method as defined at the beginning of this section. This lower bound holds also for \(T_{k',\ell }\) with \(k' \le k\), that is, when starting with a search point x with \(\textsc {OneMax} (x) \le k\).

Proof

We use our main result, Theorem 8. We note first that when assuming that the level process regarded in Theorem 8 starts on level \(k'\), then the expected time for it to reach level \(\ell \) or higher is at least \(\sum _{i=k'}^{\ell -1} \frac{v_i}{p_i}\). This follows immediately from the proof of the theorem or by applying the theorem to the level process \((X_t')\) defined by \(X'_t = \min \{\ell , X_t\} - k'\) for all t.

Consider now a run of the \((1 + 1)\) EA on the OneMax function started with an initial search point \(x_0\) such that \(k' = \textsc {OneMax} (x_0) \le k\). Denote by \(x_t\) the individual selected in iteration t as future parent. Then \(X_t = \textsc {OneMax} (x_t)\) defines a level process. As before, we denote the probabilities to visit level i by \(v_i\), to not visit it by \(q_i = 1 - v_i\), and to leave it to a higher level by \(p_i\). Using our main result and the elementary argument above, we obtain an expected run time of

We note that the first expression is exactly the upper bound \({\tilde{T}}_{k,\ell }\) stemming from the classic fitness level method. We estimate the second expression. We have

where the last estimate stems from regarding only the event that exactly one missing bit is flipped. Together with the estimate \(q_i \le \frac{n-i}{n(1-\frac{1}{n})^{i-1}}\) from Lemma 12, we compute

where the estimate in (3) uses the well-known inequality \(1+r \le e^r\) valid for all \(r \in {\mathbb {R}}\) and the last estimate exploits the convexity of the exponential function in the interval [0, 1], that is, that \(\exp (\alpha ) \le 1 + \alpha (\exp (1)-\exp (0))\) for all \(\alpha \in [0,1]\). \(\square \)

The result above shows that the classic fitness level method and our new lower bound method can give very tight run time results. We note that the difference \(\delta _{k,\ell } = (\ell -k-1) e (e-1) \exp (\frac{k}{n-1})\) between the two fitness level estimates is only of order \(O(\ell - k)\), in particular, only of order O(n) for the classic run time \(T_{{{\,\mathrm{rand}\,}},n}\), which itself is of order \(\varTheta (n \log n)\). Hence here the gap is only a term of lower order.

5.2 Estimating the Fitness Level Estimate \({\tilde{T}}_{k,\ell }\)

To make our results above meaningful, it remains to analyze the quantity \({\tilde{T}}_{k,\ell }= \sum _{i=k}^{\ell -1} 1/p_{i}\), which is the estimate from the classic fitness level method.

Here, again, it turns out that upper bounds tend to be easier to obtain since they require a lower bound for the \(p_{i}\), for which the estimate \(p_{i} \ge (1-\tfrac{1}{n})^{n-1} \frac{n-i}{n}\) from (2) usually is sufficient. To ease the presentation, let us use the notation \(e_n = (1 - \tfrac{1}{n})^{-(n-1)}\) and note that \(e (1-\frac{1}{n}) \le e_n \le e\), see, e.g., [29, Corollary 1.4.6]. With this notation, the lower bound (2) gives the upper bound

To prove a lower bound, we observe that

We can thus estimate

where the last inequality follows from the estimate \(\Pr [{{\,\mathrm{Bin}\,}}(n,p) \ge k] \le \left( {\begin{array}{c}n\\ k\end{array}}\right) p^k\), see, e.g., [34, Lemma 3] or [29, Lemma 1.10.37]. We note that the first summand in (5) is exactly our lower bound (2) for \(p_{i}\), so it is the second term that determines the slack of our estimates. We estimate coarsely

Summing over the fitness levels, we obtain

We note that our upper and lower bounds on \({\tilde{T}}_{k,\ell }\) deviate only by \({\tilde{T}}_{k,\ell }^+ - {\tilde{T}}_{k,\ell }^- = \frac{1}{2} e_n^2 (\ell -k)\). Together with Theorem 13, we have proven the following estimates for \(T_{k,\ell }\), which are tight apart from a term of order \(O(\ell -k)\).

Theorem 14

The expected number of iterations the \((1 + 1)\) EA optimizing OneMax, started with a search point of fitness k, takes to find a search point with fitness \(\ell \) or larger, satisfies

where \(e_n := (1-\frac{1}{n})^{-(n-1)}\).

We recall from above that \(e (1-\frac{1}{n}) \le e_n \le e\). We add that for \(\ell <n\), the sum \(\sum _{i = n-\ell +1}^{n-k} \frac{1}{i}\) is well-approximated by \(\ln (\frac{n-k}{n-\ell })\), e.g., \(\ln (\frac{n-k}{n-\ell }) -1< \sum _{i = n-\ell +1}^{n-k} \frac{1}{i} < \ln (\frac{n-k}{n-\ell })\) or \(\sum _{i = n-\ell +1}^{n-k} \frac{1}{i} = \ln (\frac{n-k}{n-\ell }) - O(\frac{1}{n-\ell })\), see, e.g., [29, Sect. 1.4.2] and note that \(\sum _{i = n-\ell +1}^{n-k} \frac{1}{i} = \sum _{i = 1}^{n-k} \frac{1}{i} - \sum _{i = 1}^{n-\ell } \frac{1}{i}\). For \(\ell = n\), we have \(\ln (n-k) < \sum _{i = n-\ell +1}^{n-k} \frac{1}{i} \le \ln (n-k)+1\) and \(\sum _{i = n-\ell +1}^{n-k} \frac{1}{i} = \ln (n-k)+O(\frac{1}{n-k})\).
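
As a quick sanity check of the logarithmic approximations just stated, the partial harmonic sum can be evaluated directly. This is only an illustrative sketch; the helper name `partial_harmonic` is an assumption and does not appear in the original text.

```python
from math import log


def partial_harmonic(n: int, k: int, l: int) -> float:
    """Sum of 1/i for i = n-l+1, ..., n-k (requires 0 <= k < l <= n)."""
    return sum(1 / i for i in range(n - l + 1, n - k + 1))


if __name__ == "__main__":
    n, k, l = 100, 50, 90
    s = partial_harmonic(n, k, l)
    # For l < n the sum lies strictly between ln((n-k)/(n-l)) - 1 and ln((n-k)/(n-l)).
    print(log((n - k) / (n - l)) - 1, s, log((n - k) / (n - l)))
```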

When starting the \((1 + 1)\) EA with a random initial search point, the following bounds apply.

Theorem 15

There is an absolute constant K such that the expected run time \(T = T_{{{\,\mathrm{rand}\,}},n}\) of the \((1 + 1)\) EA with random initialization on OneMax satisfies

In particular,

Proof

By [11, Theorem 2], the expected run time of the \((1 + 1)\) EA with random initialization on OneMax differs from the expected run time when starting with a search point on level \(A_M\), \(M :=\lfloor n/2 \rfloor \), by at most a constant. Hence we have \(T \le T_{M,n} + O(1) \le {\tilde{T}}^+_{M,n} + O(1) = e_n n \sum _{i = 1}^{\lceil n/2 \rceil } \frac{1}{i} + O(1)\) by Theorem 14.

For the lower bound, we use Eq. (3) in the proof of Theorem 13, which is slightly tighter than the result stated in the theorem itself. Together with (6), we estimate

The second set of estimates stems from noting that \({\tilde{T}}_{M,n}^+ = e_n n \sum _{i = 1}^{\lceil n/2 \rceil } \frac{1}{i} = e_n n (\ln (\lceil n/2 \rceil ) + \gamma \pm O(\frac{1}{n})) = e (1 - O(\frac{1}{n})) n (\ln n - \ln 2 + \gamma \pm O(\frac{1}{n}))\), where \(\gamma = 0.5772156649\dots \) is the Euler-Mascheroni constant. \(\square \)

Let us comment a little on the tightness of our result. Due to the symmetries in the OneMax process, the probability to leave the i-th fitness level is independent of the particular search point \(x \in A_i\) that is the current parent. Consequently, in principle, Theorems 8 and 9 give the exact bound

where \(v_{i|k}\) denotes the probability that the process started on level k visits level i.
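
For small n, the exactness of this expression can be verified numerically. The sketch below is an illustration under the assumption that the exact bound referred to here is \(\sum _{i=k}^{n-1} v_{i|k} / p_i\) for the target level n. It computes the exact level transition probabilities of the OneMax process, derives the visit probabilities \(v_{i|k}\) by a forward recursion, and compares the weighted sum with the expected hitting time obtained from the usual backward recurrence. All function names are hypothetical.

```python
from math import comb


def transition(n: int, i: int, j: int) -> float:
    """Probability that mutating a parent with i ones (rate 1/n) gives j > i ones."""
    p = 1.0 / n
    d = j - i
    # b one-bits and b + d zero-bits are flipped
    return sum(comb(n - i, b + d) * comb(i, b)
               * p ** (2 * b + d) * (1 - p) ** (n - 2 * b - d)
               for b in range(0, min(i, n - i - d) + 1))


def time_via_visit_probabilities(n: int, k: int) -> float:
    """Sum over levels of (visit probability) / (leaving probability)."""
    probs = {i: {j: transition(n, i, j) for j in range(i + 1, n + 1)}
             for i in range(k, n)}
    leave = {i: sum(probs[i].values()) for i in range(k, n)}
    visit = {i: 0.0 for i in range(k, n + 1)}
    visit[k] = 1.0
    for i in range(k, n):                    # levels in increasing order
        for j, pij in probs[i].items():
            visit[j] += visit[i] * pij / leave[i]
    return sum(visit[i] / leave[i] for i in range(k, n))


def time_via_recurrence(n: int, k: int) -> float:
    """Expected hitting time of level n from level k, by backward recurrence."""
    expected = [0.0] * (n + 1)
    for i in range(n - 1, k - 1, -1):
        probs = [transition(n, i, j) for j in range(i + 1, n + 1)]
        leave = sum(probs)
        expected[i] = (1 + sum(q * expected[j]
                               for q, j in zip(probs, range(i + 1, n + 1)))) / leave
    return expected[k]


if __name__ == "__main__":
    print(time_via_visit_probabilities(30, 15), time_via_recurrence(30, 15))
```

The two values agree up to floating-point error, which illustrates that the difficulty discussed next lies in estimating \(v_{i|k}\) and \(p_i\) in closed form, not in the identity itself.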

The reason why we cannot avoid a gap of order \(\varTheta (n)\) in our bounds is that computing the \(v_{i|k}\) and \(p_i\) precisely is very difficult. Let us regard the \(v_{i|k}\) first. It is easy to see that states i with \(k < i \le (1-\varepsilon ) n\), \(\varepsilon \) a positive constant, have a positive chance of not being visited: By Lemma 12, with probability \(\varOmega (1)\) level \(i-1\) is visited and from there, again with probability \(\varOmega (1)\), a two-bit flip occurs that leads to level \(i+1\). Since with constant probability the last level visited below level i is not \(i-1\), and since skipping level i conditional on the last level below i being at most \(i-2\) is, by a positive constant, less likely than skipping level i when on level \(i-1\) before (that is, \(\frac{p_{i-2,\ge i+1}}{p_{i-2,\ge i}} \le \frac{p_{i-1,\ge i+1}}{p_{i-1,\ge i}} - \varOmega (1)\); we omit a formal proof of this statement), our estimate \(q_{i|k} \le \max _{j \in [k..i-1]} \frac{p_{j,\ge i+1}}{p_{j,\ge i}}\) already leads to a constant factor loss in the estimate of the \(q_i\), which translates into a \(\varTheta (n)\) contribution to the gap of our lower bound from the truth. To overcome this, one would need to compute \(q_{i|k} = \sum _{j = k}^{i-1} Q_{j|k} \frac{p_{j,\ge i+1}}{p_{j,\ge i}}\) precisely, where \(Q_{j|k}\) is the probability that level j is the highest level visited below i in a process started on level k. This appears very complicated.

The second contribution to our \(\varTheta (n)\) gap is the estimate of \(p_i\). We need a lower bound on \(p_i\) both in the estimate of the run time advantage due to not visiting all levels (see Eq. (3)) and in the run time estimate stemming from the fitness level method (4). Since the \(q_i\) are \(\varOmega (1)\) when \(i \le (1-\varepsilon )n\), a constant-factor misestimation of the \(p_i\) leads to a \(\varTheta (n)\) contribution to the gap. Unfortunately, it is hard to avoid a constant-factor misestimation of the \(p_i\), \(i \le (1-\varepsilon )n\). Our estimate \(p_i \ge (1-\frac{1}{n})^{n-1} \frac{n-i}{n}\) only regards the event that the i-th level is left (to level \(i+1\)) by flipping exactly one zero-bit into a one-bit. However, for each constant j the event that level \(i+1\) is reached by flipping \(j+1\) zero-bits and j one-bits has a constant probability of appearing. Moreover, for each constant j the event that level i is left to level \(i+j\) also has a constant probability. For these reasons, a precise estimate of the \(p_i\) appears rather tedious. We could imagine that either the methods developed or the partial results proven in [36] could help to give more precise estimates for \(v_{i|k}\) and \(p_i\), or even more directly for \(\frac{v_{i|k}}{p_i}\), but most likely this would still not be elementary.

In summary, we feel that our method quite easily gave a run time estimate precise apart from terms of order O(n), but for more precise results drift analysis [45] might be the better tool (though still the relatively precise estimate of the expected progress from a level \(i \le (1-\varepsilon )n\), which will necessarily be required for such an analysis, will be difficult to obtain).

5.3 Comparison with the Literature

We end this section by giving an overview of the previous works analyzing the run time of the \((1 + 1)\) EA on OneMax and comparing them to our result. Some of the results described in the following, in particular, Sudholt’s lower bound [59], were also proven for general mutation rates p instead of only \(p = \frac{1}{n}\). To ease the comparison with our result, we only state the results for the case that \(p = \frac{1}{n}\). We note that with our method we could also have analyzed broader ranges of mutation rates. The resulting computations, however, would have been more complicated and would have obscured the basic application of our method.

To the best of our knowledge, the first to state and rigorously prove a run time bound for OneMax was Rudolph in his dissertation [53, p. 95], who showed that \(T = T_{{{\,\mathrm{rand}\,}},n}\) satisfies \(E[T] \le (1-\frac{1}{n})^{-(n-1)} n \sum _{i=1}^{n} \frac{1}{i}\), which is exactly the upper bound \({\tilde{T}}^+_{0,n}\) from the fitness level method and from only regarding the events that levels are left via one-bit flips. A lower bound of \(n \ln (n) - O(n \log \log n)\) was shown in [18] for the optimization of a general separable function with positive weights when starting in the search point \((0, \dots , 0)\). From the proof of this result, it is clear that it holds for any pseudo-Boolean function with unique global optimum \((1, \dots , 1)\). This lower bound builds on the argument that each bit needs to be flipped at least once in some mutation step. It is not difficult to see that the expected time until this event happens is indeed \((1 \pm o(1)) n \ln n\), so this argument is too weak to make the leading constant of E[T] precise.

Only a very short time after these results and thus quite early in the young history of run time analysis of evolutionary algorithms, Garnier, Kallel, and Schoenauer [33] showed that \(E[T] = en\ln (n) + c_1 n + o(n)\) for a constant \(c_1 \approx -1.9\); however, the completeness of their proof has been doubted in [36]. Since at that early time precise run time analyses were not very popular, it took a while until Doerr, Fouz, and Witt [16] revisited this problem and showed with \(E[T] \ge (1-o(1)) e n \ln (n)\) the first lower bound that made the leading constant precise. Their proof used a variant of additive drift from [39] together with the potential function \(\ln (Z_t)\), where \(Z_t\) denotes the number of zeroes in the parent individual at time t. Shortly afterwards, Sudholt [58] (journal version [59]) used his fitness level method for lower bounds to show \(E[T] \ge en \ln (n) - 2n\log \log n - 16n\). That the run time was \(E[T] = en\ln (n) - \varTheta (n)\) was proven first in [17], where an upper bound of \(en\ln (n) - 0.1369n + O(1)\) was shown via variable drift for upper bounds [40, 49] and a lower bound of \(E[T] \ge en\ln (n) - O(n)\) was shown via a new variable drift theorem for lower bounds on hitting times. An explicit version of the lower bound of \(en\ln (n) - 7.81791n - O(\log n)\) and an alternative proof of the upper bound \(en\ln (n) - 0.1369n + O(1)\) were given in [48] via a very general drift theorem.

The final answer to this problem was given in an incredibly difficult work by Hwang, Panholzer, Rolin, Tsai, and Chen [36] (see [37] for a simplified version), who showed \(E[T] = en\ln (n) + c_1 n + \frac{1}{2} e \ln (n) + c_2 + O(n^{-1} \log n)\) with explicit constants \(c_1 \approx -1.9\) and \(c_2 \approx 0.6\).

In the light of these results, we feel that our proof of an \(en\ln (n) \pm O(n)\) bound is the first simple proof of a run time estimate of this precision for this problem. Interestingly, our explicit lower bound \(en\ln (n) - 4.871n - O(\log n)\) is even a little stronger than the bound \(en\ln (n) - 7.81791n - O(\log n)\) proven with drift methods in [48].

6 Jump Functions

In this section, we regard jump functions, which comprise the most intensively studied benchmark in the theory of randomized search heuristics that is not unimodal and which has greatly aided our understanding of how different heuristics cope with local optima [2,3,4,5, 9, 10, 14, 15, 19, 26, 28, 30, 41, 43, 46, 51, 54,55,56, 65].

For all representation lengths n and all \(k \in [1..n]\), the jump function with jump size k is defined by

\({{\,\mathrm{\textsc {Jump}}\,}}_{n,k}(x) = \begin{cases} \Vert x\Vert _1 + k &amp; \text {if } \Vert x\Vert _1 \in [0..n-k] \cup \{n\}, \\ n - \Vert x\Vert _1 &amp; \text {otherwise} \end{cases}\)

for all \(x \in \{0,1\}^n\). Jump functions have a fitness landscape isomorphic to \(\textsc {OneMax} \), except on the fitness valley or gap

\(\{x \in \{0,1\}^n \mid n-k< \Vert x\Vert _1 < n\},\)

where the fitness is low and deceptive (pointing away from the optimum).

For simple elitist heuristics, not surprisingly, the time to find the optimum is strongly related to the time to cross the valley of low fitness. For the \((1 + 1)\) EA with mutation rate \(\frac{1}{n}\), the probability to generate the optimum from a search point on the local optimum \(L = \{x \in \{0,1\}^n \mid \Vert x\Vert _1 = n-k\}\) is \(p_k = (1-\frac{1}{n})^{n-k} n^{-k}\), and hence the expected time to cross the valley of low fitness is \(\frac{1}{p_k}\).
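
To make the objects of this section concrete, here is a small sketch of a jump function and of the valley-crossing probability \(p_k\). It assumes the usual definition from the literature (fitness \(\Vert x\Vert _1 + k\) outside the gap and at the optimum, and \(n - \Vert x\Vert _1\) inside the gap), which is consistent with the description above. The function names are hypothetical, and the enumeration is only feasible for very small n.

```python
from itertools import product


def jump(x, k: int) -> int:
    """Jump function with jump size k on a 0/1 tuple x (assumed standard definition)."""
    n, ones = len(x), sum(x)
    if ones <= n - k or ones == n:
        return k + ones          # OneMax-like part and the optimum
    return n - ones              # gap: fewer ones means higher fitness


def p_k(n: int, k: int) -> float:
    """Closed form for the probability to create the optimum from the local optimum."""
    return (1 - 1 / n) ** (n - k) * n ** (-k)


def prob_optimum_by_enumeration(x) -> float:
    """Sum the probabilities of all flip masks that turn x into the all-ones string."""
    n = len(x)
    p = 1.0 / n
    total = 0.0
    for mask in product((0, 1), repeat=n):
        offspring = tuple(bit ^ m for bit, m in zip(x, mask))
        if all(offspring):
            flips = sum(mask)
            total += p ** flips * (1 - p) ** (n - flips)
    return total


if __name__ == "__main__":
    local_opt = (1, 1, 1, 0, 0, 0)          # n = 6, k = 3
    print(p_k(6, 3), prob_optimum_by_enumeration(local_opt))
```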

The true expected run time deviates slightly from this value, both because some time is spent to reach the local optimum and because the algorithm may be lucky and not need to cross the valley, or not in its full width. The first aspect, making additive terms of order at most \(O(n \log n)\) more precise, can be treated with arguments very similar to the ones of the previous section, so we do not discuss this here. More interesting appears to be the second aspect. In particular for larger values of k, the algorithm has a decent chance to start in the fitness valley. It is clear that even when starting in the valley, the deceptive nature of the valley will lead the algorithm rather towards the local optimum. We show now how our argumentation via omitted fitness levels allows us to prove very precise bounds with elementary arguments. In principle, we could also use our fitness level theorem, but since we shall regard only the single level \(N_{n,k} = \{x \in \{0,1\}^n \mid \Vert x\Vert _1 \in [0..n-k]\}\), we shall not make this explicit and simply use the classic typical-run argument (that except with some probability q, a state is reached from which the expected run time is at least some t, and that this gives a lower bound of \((1-q)t\) for the expected run time).

The two previous analyses of the run time of the \((1 + 1)\) EA on jump functions deal with the problem of starting in the valley in a different manner. In [19], it is argued that with probability at least \(\frac{1}{2}\), the initial search point has at most \(\frac{n}{2}\) ones. In case the initial search point is nevertheless in the gap region (because \(k > \frac{n}{2}\)), then with high probability a OneMax-style optimization process will reach the local optimum in time \(O(n^2)\) except when in this period the optimum is generated. Since all parent individuals in this period have Hamming distance at least \(\frac{n}{2}\) from the optimum, the probability for this exceptional event is exponentially small. This argument proves an \(\varOmega (\frac{1}{p_k})\) bound for the expected run time, and this for all values of \(k \ge 2\). In [26], only the case \(k \le \frac{n}{2}\) was regarded and it was exploited that in this case, the probability for the initial search point to be in the gap (or the optimum) is only \(2^{-n} \left( {\begin{array}{c}n\\ \le k-1\end{array}}\right) \). This gives a lower bound of \(\big (1 - 2^{-n} \left( {\begin{array}{c}n\\ \le k-1\end{array}}\right) \big ) \frac{1}{p_k}\), which is tight including the leading constant for \(k \in [2..\frac{n}{2} - \omega (\sqrt{n})]\).

We now show that estimating the probability of never reaching a search point x with \(\Vert x\Vert _1 \le n-k\) is not difficult with arguments similar to the ones used in the previous section. We need a slightly different approach since now the probability to skip a fitness level is not maximal when closest to this fitness level (the probability to skip \(N_{n,k}\) is maximal when the algorithm is in the lowest fitness level, which is in Hamming distance \(k-1\) from \(N_{n,k}\)). Interestingly, we obtain very tight bounds which could be of some general interest, namely that the probability to never reach a point x with \(\Vert x\Vert _1 \le n-k\) is \(O(\frac{1}{n})\), when allowing an arbitrary initialization (different from the global optimum), and is only \(O(2^{-n})\) when using the usual random initialization.

Theorem 16

Let \(n \in {\mathbb {N}}\) and \(k \in [2..n]\). Consider a run of the \((1 + 1)\) EA with mutation rate \(p = \frac{1}{n}\) on the jump function \({{\,\mathrm{\textsc {Jump}}\,}}_{n,k}\). Denote by \(N := N_{n,k} = \{x \in \{0,1\}^n \mid \Vert x\Vert _1 \in [0..n-k]\}\) the set of non-optimal solutions that do not lie in the gap region of the jump function and by \(p_k = (1-\frac{1}{n})^{n-k} n^{-k}\) the probability to generate the optimum from a solution on the local optimum.

(i) Assume that the \((1 + 1)\) EA starts with an arbitrary solution different from the global optimum. Then with probability \(1 - O(\frac{1}{n})\), the algorithm reaches a search point in N. Consequently, the expected run time is at least \((1 - O(\frac{1}{n})) p_k^{-1}\).

(ii) Assume that the \((1 + 1)\) EA starts with a random initial solution. Then with probability \(1 - O(2^{-n})\), the algorithm reaches a search point in N. Consequently, the expected run time is at least \((1 - O(2^{-n})) p_k^{-1}\).

Proof

Denote by f the jump function \({{\,\mathrm{\textsc {Jump}}\,}}_{n,k}\). We consider the partition of the search space into the fitness levels of the gap as well as N and the optimum. Hence let

Let us also denote by \(G = A_{\le k-1}\) the set of solutions in the gap region. Our first claim is that, regardless of the initialization as long as different from the optimum, the probability \(q_k\) that the algorithm never has the parent individual in \(A_k\) is \(O(\frac{1}{n})\). Since we start the algorithm with a non-optimal search point, the only way the algorithm can avoid \(A_k\) is that it starts with a solution in G and that at some time it generates the global optimum from a solution in G. Denote by \(r_j\) the probability that the algorithm, if the current search point is in \(A_j\), in the remaining run generates the optimum from a search point in \(A_j\). Then by a simple union bound, \(q_k\) is at most the sum of the \(r_j\). More precisely, let \(R_j\), \(j \in [1..k-1]\), denote the event that the algorithm ever has a search point from \(A_j\) as parent individual. Then

The probability \(r_j\) is exactly the probability that in the iteration in which from a search point in \(A_j\) a better individual is generated, this is actually the global optimum. Hence \(r_j = \Pr [y = (1, \dots , 1) \mid f(y) > j]\), where y is a mutation offspring generated from a search point in \(A_j\). To be on the safe side, we also make this argument more precise. Let us denote the parent individual at time t by \(x^{(t)}\). Let \(t_0\) be a time such that \(x^{(t_0)} \in A_j\). For all \(t \in {\mathbb {N}}_0\), let \(O_t\) be the event that \(x^{(t)} \in A_j\) and \(x^{(t+1)} = (1, \dots , 1)\), that is, that in iteration t the optimum is generated from a parent in \(A_j\). Then \(r_j = \sum _{t = t_0}^\infty \Pr [O_t]\). Let \(T^+\) be the first (and only) time that from a search point in \(A_j\) a better solution is generated, that is, such that \(x^{(t)} \in A_j\) and \(f(x^{(t+1)}) > f(x^{(t)})\). By the law of total probability, we have

We note that \(\Pr [O_t \mid t \ne T^+] = 0\) because \(t < T^+\) implies \(x^{(t+1)} \in A_j\) and thus \(x^{(t+1)} \ne (1, \dots , 1)\) and because \(t > T^+\) implies \(x^{(t)} \notin A_j\). Hence \(r_j = \sum _{t = t_0}^\infty \Pr [t = T^+] \Pr [O_t \mid t = T^+] \). Since we have a Markov process, the particular time t has no influence on the transition probabilities, and since we have symmetry in all search points having the same number of ones, the particular parent at time \(T^+\) is irrelevant (apart from the fact that it is in \(A_j\)). Consequently, \(r_j = \Pr [y = (1, \ldots , 1) \mid f(y) > j]\), where y is a mutation offspring from an arbitrary point in \(A_j\).

We now compute

where we estimated the probability to generate a search point with fitness better than j by the probability of the event that a single one is flipped into a zero. Consequently, \(q_k \le \sum _{j=1}^{k-1} r_j \le \sum _{j=1}^{n-1} \frac{e}{n^{j-1} (n-j)} = O(\frac{1}{n})\).
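
The estimate for \(r_j\) can be compared with its exact value for small n. The sketch below rests on two assumptions that should be read as such: that the gap level \(A_j\) consists of the search points with exactly j zeros (as in the usual jump function definition), and that an offspring of a point in \(A_j\) is strictly better exactly when it is the optimum or has more than j zeros. The names `r_exact` and `r_bound` are hypothetical.

```python
from math import comb, e


def r_exact(n: int, j: int) -> float:
    """Pr[offspring is the optimum | offspring is better], parent with j zeros."""
    p = 1.0 / n
    to_optimum = p ** j * (1 - p) ** (n - j)
    # a zeros flipped to one, b > a ones flipped to zero: more zeros than before
    more_zeros = sum(comb(j, a) * comb(n - j, b)
                     * p ** (a + b) * (1 - p) ** (n - a - b)
                     for a in range(j + 1)
                     for b in range(a + 1, n - j + 1))
    return to_optimum / (to_optimum + more_zeros)


def r_bound(n: int, j: int) -> float:
    """The estimate e / (n^(j-1) (n-j)) used in the text."""
    return e / (n ** (j - 1) * (n - j))


if __name__ == "__main__":
    n = 20
    for j in (1, 2, 3):
        print(j, r_exact(n, j), r_bound(n, j))
    print("n * sum of bounds:", n * sum(r_bound(n, j) for j in range(1, n)))
```

The sum of the estimates is dominated by the term for \(j = 1\), which is where the \(O(\frac{1}{n})\) behavior comes from.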

Once a search point in \(A_k\) is reached, the remaining run time dominates a geometric distribution with success probability \(p_k = (1-\frac{1}{n})^{n-k} n^{-k}\), simply because each of the following iterations (before the optimum is found) has at most this probability of generating the optimum; hence the expected remaining run time is at least \(\frac{1}{p_k}\). This shows that the expected run time of the \((1 + 1)\) EA started with any non-optimal search point is at least \((1-q_k) \frac{1}{p_k}\).

For the case of a random initialization, we proceed in a similar manner, but also use the trivial observation that to skip the fitness range N by jumping from \(A_j\), \(j \in [1..k-1]\), right into the optimum, it is necessary that the algorithm visits \(A_j\). To visit \(A_j\), it is necessary that the initial search point lies in \(A_1 \cup \dots \cup A_j\), which happens with probability \(2^{-n} \sum _{i=1}^{j} \left( {\begin{array}{c}n\\ i\end{array}}\right) \) only. This, together with the observation that the only other way to avoid \(A_k\) is that the initial individual is already the optimum, gives

Using a tail estimate for binomial distributions (equation (VI.3.4) in [31], also to be found as (1.10.62) in [29]), we bound \(\sum _{i=1}^{j} \left( {\begin{array}{c}n\\ i\end{array}}\right) \le 1.5 \left( {\begin{array}{c}n\\ j\end{array}}\right) \) for all \(j \le \frac{1}{4} n\). We also note from (7) that \(r_j \le 4 n^{-j}\) in this case. For \(j \ge \frac{1}{4} n\), we trivially have \(\sum _{i=1}^{j} 2^{-n} \left( {\begin{array}{c}n\\ i\end{array}}\right) r_j \le r_j \le e n^{-(j-1)}\). Consequently,

Hence, as above, the expected run time is at least \((1-q_k) \frac{1}{p_k} = (1 - O(2^{-n})) \frac{1}{p_k}\). \(\square \)
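
The order of magnitude of this bound can be illustrated numerically. The following sketch assumes that the union bound used above has the form \(q_k \le 2^{-n} \big (1 + \sum _{j=1}^{k-1} \sum _{i=1}^{j} \left( {\begin{array}{c}n\\ i\end{array}}\right) r_j\big )\) and plugs in the estimate \(r_j \le \frac{e}{n^{j-1}(n-j)}\). It returns the bound multiplied by \(2^n\), which should stay bounded by a constant. The function name `scaled_skip_bound` is a hypothetical helper.

```python
from math import comb, e


def scaled_skip_bound(n: int, k: int) -> float:
    """2^n times the union bound on the probability of never visiting A_k
    under uniform random initialization (assumed form, see lead-in)."""
    total = 1.0          # the initial point is already the optimum
    start_count = 0      # number of points in A_1, ..., A_j (those with 1..j zeros)
    for j in range(1, k):
        start_count += comb(n, j)
        r_j = min(1.0, e / (n ** (j - 1) * (n - j)))
        total += start_count * r_j
    return total


if __name__ == "__main__":
    for n in (10, 20, 40):
        print(n, scaled_skip_bound(n, n))
```

For these values of n the scaled bound stays below a small constant, in line with the \(O(2^{-n})\) statement of Theorem 16 (ii).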

We note that the \(O(\frac{1}{n})\) term in the bound for arbitrary initialization cannot be avoided in general, simply because when starting with a search point that is a neighbor of the optimum, the first iteration with probability at least \(\frac{1}{en}\) generates the optimum. The \(O(2^{-n})\) term in the bound for random initialization is apparently necessary because with probability \(2^{-n}\) already the initial random solution is the global optimum.

We also note that we did not optimize the implicit constants in the \(O(\frac{1}{n})\) and \(O(2^{-n})\) term. With more care, these could be replaced by \((1 + o(1)) \frac{1}{e-1} \frac{1}{n}\) and \((1+o(1)) \frac{e}{e-1} 2^{-n}\), respectively.

7 A Bound for Long k-Paths

Long k-paths, introduced in [52], have been studied in various places; we point the reader to [57] for a discussion, which also contains the formalization that we use. A lower bound for long k-paths using FLM with viscosities was given in [59].

We use [57, Lemma 3] (phrased as a definition below) and need to know no further details about what a long k-path is. In fact, our proof uses all the ideas of the proof of [59], but cast in terms of our FLM with visit probabilities, which, we believe, makes the proof simpler and the core ideas given by [59] more prominent. Note that [59] first needs to extend the FLM with viscosities by introducing an additional parameter before it is applicable in this case.

Definition 17

Let k, n be given such that k divides n. A long k-path is a function \(f: \{0,1\}^n \rightarrow {\mathbb {R}}\) such that

- The all-0 bit string has a fitness of 0; there are \(m = k2^{n/k} - k\) bit strings of positive fitness, and all these values are distinct; all other bit strings have negative fitness. We say that the bit strings with non-negative fitness are on the path and consider them ordered by fitness (this way we can talk about the “next” element on the path).

- For each bit string with non-negative fitness and each \(i < k\), the bit string with i-next higher fitness is exactly a Hamming distance of i away.

- For each bit string with non-negative fitness and each \(i\ge k\), the bit string with i-next higher fitness is at least a Hamming distance of k away.

For an explicit construction of a long k-path, see [19, 57]. The long k-paths are designed such that optimization proceeds by following the (long) path and true shortcuts are unlikely, since they require jumping at least k.
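
For readers who want to experiment, the following sketch builds the points of a long k-path with the standard recursive construction from the literature (two copies of the shorter path, the second one reversed, joined by a bridge of \(k-1\) points). This is an assumption about the construction in [19, 57], whose details are not reproduced here; the function names are hypothetical. The properties of Definition 17 can then be checked by brute force for small n.

```python
def long_k_path(n: int, k: int) -> list:
    """Points of a long k-path on n bits (k must divide n), in path order.
    Standard recursive construction; assumed to match the cited references."""
    if n == 0:
        return [""]
    prev = long_k_path(n - k, k)
    lower = ["0" * k + x for x in prev]
    bridge = ["0" * (k - i) + "1" * i + prev[-1] for i in range(1, k)]
    upper = ["1" * k + x for x in reversed(prev)]
    return lower + bridge + upper


def hamming(x: str, y: str) -> int:
    return sum(a != b for a, b in zip(x, y))


def satisfies_definition(path: list, k: int) -> bool:
    """Check the distance properties of the definition for all pairs on the path."""
    for s in range(len(path)):
        for i in range(1, len(path) - s):
            d = hamming(path[s], path[s + i])
            if (i < k and d != i) or (i >= k and d < k):
                return False
    return True


if __name__ == "__main__":
    path = long_k_path(6, 3)
    print(len(path), path)
    print(satisfies_definition(path, 3))
```

The path has \(k2^{n/k} - k + 1\) points: the all-0 string plus the m points of positive fitness.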

The following lower bound for optimizing long k-paths with the \((1 + 1)\) EA is given in [59]. Note that n is the length of the bit strings, m is the length of the path and p is the mutation rate.

We want to show here that we can derive essentially the same bound with the same ideas but fewer technical details.

Note that the lower bound given in [59] is only meaningful for \(k \ge \sqrt{n/\log (1/p)}\), as the last term of the bound would otherwise be close to 0:

We have that \(n/k-k\log (1/p)\) is positive if and only if \(n/\log (1/p) \ge k^2\).

In fact, if \(k = \omega \left( \sqrt{n/\log (1/p)}\right) \), we have

This also entails

With our fitness level method, we obtain the following lower bound. It differs from Sudholt’s bound (8) by an additional term m, which reduces the lower bound. Analyzing why this term does not appear in Sudholt’s analysis, we note that the \(\gamma _{i,j}\) chosen in [59] are underestimating the true probability to jump to elements of the path that are more than k steps (on the path) away. When this is corrected, as confirmed to us by the author, Sudholt’s proof would also only show our bound below. Consequently, there is currently no proof for (8).

Theorem 18

Consider the \((1 + 1)\) EA on a long k-path of length m with mutation rate \(p \le 1/2\) starting at the all-0 bit string (the start of the path).

Let T be the (random) time for the \((1 + 1)\) EA to find the optimum. Then

Proof

We are setting up to apply Theorem 8. We partition the search space in the canonical way such that, for all \(i \le m\) with \(i > 0\), \(A_i\) contains only the i-th point of the path and nothing else, and \(A_0\) contains all points not on the path. In order to simplify the analysis, we will first change the behavior of the algorithm such that it discards any offspring which differs from its parent by at least k bits. This will allow us to apply Theorem 8 quickly and cleanly; afterwards we will show that the progress of this modified algorithm is very close to the progress of the original algorithm.

In this modified process, we first consider the probability \(p_i\) to leave a given level \(i < m\). For this, the algorithm has to jump up exactly \(j < k\) fitness levels, which is achieved by flipping a specific set of j bits; the probability for this is

Next we consider the probability \(v_i\) to visit a level i. We want to apply Lemma 10, so let some \(x \in A_{< i}\) be given, on level \(\ell (x)\). Let \(d = i-\ell (x)\). Note that d is the Hamming distance between x and the unique point in \(A_i\). Thus, in case of \(d \ge k\), we have \(\Pr [x \rightarrow A_i] = 0\), so suppose \(d < k\). Then we have

By Lemma 10, we can use this last term as \(v_i\) in Theorem 8 (it also fulfills the second condition of Lemma 10, since the process starts deterministically in the 0 string). Note that neither \(p_i\) nor \(v_i\) depends on i. Using Theorem 8 and recalling that we have m levels, we get a lower bound of

Note that this is exactly the term derived in [59] except for a term correcting for the possibility of jumps of more than k bits, which we also still need to correct for.
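
The modified process can be simulated exactly as a Markov chain on the path indices, which gives a way to see how tight a visit-probability argument is. The sketch below is an illustration under explicit assumptions, not the computation of the proof: it takes the leaving probability to be \(\sum _{j=1}^{k-1} p^j (1-p)^{n-j}\), and it uses the coarse visit-probability estimate \(\frac{1-2p}{1-p}\), which follows from bounding a geometric series and may differ from the expression used in the original. The function names are hypothetical.

```python
def modified_process_time(n: int, k: int, m: int, p: float) -> float:
    """Exact expected time of the modified process to go from index 0 to index m,
    where a jump of j < k path steps has probability p^j (1-p)^(n-j)."""
    step = [p ** j * (1 - p) ** (n - j) for j in range(k)]   # step[0] is unused
    expected = [0.0] * (m + 1)
    for i in range(m - 1, -1, -1):
        moves = [(j, step[j]) for j in range(1, k) if i + j <= m]
        leave = sum(q for _, q in moves)
        expected[i] = (1 + sum(q * expected[i + j] for j, q in moves)) / leave
    return expected[0]


def visit_weighted_lower_bound(n: int, k: int, m: int, p: float) -> float:
    """m * v / p_leave with the coarse estimate v = (1 - 2p) / (1 - p)."""
    leave = sum(p ** j * (1 - p) ** (n - j) for j in range(1, k))
    visit = (1 - 2 * p) / (1 - p)
    return m * visit / leave


if __name__ == "__main__":
    n, k = 12, 3
    m = k * 2 ** (n // k) - k      # path length from the definition
    p = 1.0 / n
    print(modified_process_time(n, k, m, p), visit_weighted_lower_bound(n, k, m, p))
```

For these illustrative parameters the lower bound is within a few percent of the exact expected time of the modified process.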

We now show that this probability of making a successful jump of distance at least k is small. To that end we will show that it is very unlikely to leave a fitness level with a large jump rather than just move to the next level.

Suppose the algorithm is currently at \(x \in A_i\). Leaving x with a jump of at least k to a specific element on the path is less likely the longer the jump is (since \(p \le 1/2\)). Thus, we can upper bound the probability of jumping to an element of the path which is more than k away as \(p^k(1-p)^{n-k}\). Thus, conditional on leaving the fitness level, the probability of leaving it with a \(\ge k\)-jump is

Thus, the probability of never making an accepted jump of at least k is bounded from below by the probability to, independently once for each of the m fitness levels, leave the fitness level with a 1-step rather than a jump of at least k:

By pessimistically assuming that the process takes a time of 0 in case it ever makes an accepted jump of at least k, we can lower-bound the expected time of the original process to reach the optimum as the product of the expected time of the modified process times the probability to never make progress of k or more. \(\square \)

8 Conclusion

In this work, we proposed a simple and natural way to prove lower bounds via fitness level arguments. The key to our approach is that the true run time can be expressed as the sum of the waiting times to leave a fitness level, weighted with the probability that this level is visited at all. When applying this idea, usually the most difficult part is estimating the probabilities to visit the levels, but as our examples LeadingOnes, OneMax, jump functions, and long paths show, this is not overly difficult and clearly easier than setting correctly the viscosity parameters of the previous fitness level method for lower bounds. For this reason, we are optimistic that our method will be an effective way to prove other lower bounds in the future, most easily, of course, for problems where upper bounds were proven via fitness level arguments as well.

Our method makes most sense for elitist evolutionary algorithms even though by regarding the best-so-far individual any evolutionary algorithm gives rise to a non-decreasing level process (at the price that the estimates for the level leaving probabilities become weaker). We are optimistic that our method can be extended to non-elitist algorithms, though. We note that the level visit probability \(v_i\) for an elitist algorithm is equal to the expected number of separate visits to this level (simply because each level is visited exactly once or never). When defining the \(v_i\) as the expected number of times the i-th level is visited, our upper and lower bounds of Theorems 8 and 9 remain valid (the proof would use Wald’s equation). We did not detail this in our work since our main focus was the elitist examples regarded in [59], but we are optimistic that this direction could be interesting to prove lower bounds also for non-elitist algorithms.

References

Antipov, Denis, Buzdalov, Maxim, Doerr, Benjamin: First steps towards a runtime analysis when starting with a good solution. In: Parallel Problem Solving from Nature, PPSN 2020, Part II, pp. 560–573. Springer, Cham (2020)

Antipov, Denis, Buzdalov, Maxim, Doerr, Benjamin: Lazy parameter tuning and control: choosing all parameters randomly from a power-law distribution. In: Genetic and Evolutionary Computation Conference, GECCO 2021, pp. 1115–1123. ACM (2021)

Antipov, Denis, Doerr, Benjamin: Runtime analysis of a heavy-tailed \((1+(\lambda , \lambda ))\) genetic algorithm on jump functions. In: Parallel Problem Solving from Nature, PPSN 2020, Part II, pp. 545–559. Springer (2020)

Antipov, Denis, Doerr, Benjamin, Karavaev, Vitalii: The \((1 + (\lambda ,\lambda ))\) GA is even faster on multimodal problems. In: Genetic and Evolutionary Computation Conference, GECCO 2020, pp. 1259–1267. ACM (2020)

Benbaki, Riade, Benomar, Ziyad, Doerr, Benjamin: A rigorous runtime analysis of the 2-MMAS\(_{\rm ib }\) on jump functions: ant colony optimizers can cope well with local optima. In: Genetic and Evolutionary Computation Conference, GECCO 2021, pp. 4–13. ACM (2021)

Buzdalov, Maxim, Doerr, Benjamin, Doerr, Carola, Vinokurov, Dmitry: Fixed-target runtime analysis. In: Genetic and Evolutionary Computation Conference, GECCO 2020, pp. 1295–1303. ACM (2020)

Böttcher, Süntje, Doerr, Benjamin, Neumann, Frank: Optimal fixed and adaptive mutation rates for the LeadingOnes problem. In: Parallel Problem Solving from Nature, PPSN 2010, pp. 1–10. Springer (2010)

Corus, Dogan, Dang, Duc-Cuong, Eremeev, Anton V., Lehre, Per Kristian: Level-based analysis of genetic algorithms and other search processes. IEEE Trans. Evol. Comput. 22, 707–719 (2018)

Corus, Dogan, Oliveto, Pietro S., Yazdani, Donya: On the runtime analysis of the Opt-IA artificial immune system. In: Genetic and Evolutionary Computation Conference, GECCO 2017, pp. 83–90. ACM (2017)

Corus, Dogan, Oliveto, Pietro S., Yazdani, Donya: Fast artificial immune systems. In: Parallel Problem Solving from Nature, PPSN 2018, Part II, pp. 67–78. Springer (2018)

Doerr, Benjamin, Doerr, Carola: The impact of random initialization on the runtime of randomized search heuristics. Algorithmica 75, 529–553 (2016)

Doerr, Benjamin, Doerr, Carola, Kötzing, Timo: Static and self-adjusting mutation strengths for multi-valued decision variables. Algorithmica 80, 1732–1768 (2018)

Doerr, Benjamin, Doerr, Carola, Yang, Jing: Optimal parameter choices via precise black-box analysis. Theor. Comput. Sci. 801, 1–34 (2020)

Dang, Duc-Cuong, Friedrich, Tobias, Kötzing, Timo, Krejca, Martin S., Lehre, Per Kristian, Oliveto, Pietro S., Sudholt, Dirk, Sutton, Andrew M.: Escaping local optima with diversity mechanisms and crossover. In: Genetic and Evolutionary Computation Conference, GECCO 2016, pp. 645–652. ACM (2016)

Dang, Duc-Cuong, Friedrich, Tobias, Kötzing, Timo, Krejca, Martin S., Lehre, Per Kristian, Oliveto, Pietro S., Sudholt, Dirk, Sutton, Andrew M.: Escaping local optima using crossover with emergent diversity. IEEE Trans. Evol. Comput. 22, 484–497 (2018)

Doerr, Benjamin, Fouz, Mahmoud, Witt, Carsten: Quasirandom evolutionary algorithms. In: Genetic and Evolutionary Computation Conference, GECCO 2010, pp. 1457–1464. ACM (2010)

Doerr, Benjamin, Fouz, Mahmoud, Witt, Carsten: Sharp bounds by probability-generating functions and variable drift. In: Genetic and Evolutionary Computation Conference, GECCO 2011, pp. 2083–2090. ACM (2011)

Droste, Stefan, Jansen, Thomas, Wegener, Ingo: A rigorous complexity analysis of the \({(1+1)}\) evolutionary algorithm for separable functions with boolean inputs. Evol. Comput. 6, 185–196 (1998)

Droste, Stefan, Jansen, Thomas, Wegener, Ingo: On the analysis of the (1+1) evolutionary algorithm. Theor. Comput. Sci. 276, 51–81 (2002)

Doerr, Benjamin, Johannsen, Daniel, Winzen, Carola: Multiplicative drift analysis. Algorithmica 64, 673–697 (2012)

Doerr, Benjamin, Jansen, Thomas, Witt, Carsten, Zarges, Christine: A method to derive fixed budget results from expected optimisation times. In: Genetic and Evolutionary Computation Conference, GECCO 2013, pp. 1581–1588. ACM (2013)

Doerr, Benjamin, Kötzing, Timo: Multiplicative up-drift. In: Genetic and Evolutionary Computation Conference, GECCO 2019, pp. 1470–1478. ACM (2019)

Doerr, Benjamin, Kötzing, Timo: Lower bounds from fitness levels made easy. In: Genetic and Evolutionary Computation Conference, GECCO 2021, pp. 1142–1150. ACM (2021)

Doerr, Benjamin, Kötzing, Timo, Lagodzinski, J. A. Gregor, Lengler, Johannes: The impact of lexicographic parsimony pressure for ORDER/MAJORITY on the run time. Theor. Comput. Sci. 816, 144–168 (2020)

Dang, Duc-Cuong, Lehre, Per Kristian: Runtime analysis of non-elitist populations: from classical optimisation to partial information. Algorithmica 75, 428–461 (2016)

Doerr, Benjamin, Le, Huu Phuoc, Makhmara, Régis, Nguyen, Ta Duy: Fast genetic algorithms. In: Genetic and Evolutionary Computation Conference, GECCO 2017, pp. 777–784. ACM (2017)

Doerr, Benjamin: Analyzing randomized search heuristics via stochastic domination. Theor. Comput. Sci. 773, 115–137 (2019)

Doerr, Benjamin: Does comma selection help to cope with local optima? In: Genetic and Evolutionary Computation Conference, GECCO 2020, pp. 1304–1313. ACM (2020)

Doerr, Benjamin: Probabilistic tools for the analysis of randomized optimization heuristics. In: Benjamin Doerr and Frank Neumann (eds.), Theory of Evolutionary Computation: Recent Developments in Discrete Optimization, pp. 1–87. Springer (2020). Also available at arXiv:1801.06733

Doerr, Benjamin, Zheng, Weijie: Theoretical analyses of multi-objective evolutionary algorithms on multi-modal objectives. In: Conference on Artificial Intelligence, AAAI 2021, pp. 12293–12301. AAAI Press (2021)

Feller, William: An introduction to probability theory and its applications, vol. I, 3rd edn. Wiley, Amsterdam (1968)

Feldmann, Matthias, Kötzing, Timo: Optimizing expected path lengths with ant colony optimization using fitness proportional update. In: Foundations of Genetic Algorithms, FOGA 2013, pp. 65–74. ACM (2013)

Garnier, Josselin, Kallel, Leila, Schoenauer, Marc: Rigorous hitting times for binary mutations. Evol. Comput. 7, 173–203 (1999)

Gießen, Christian, Witt, Carsten: The interplay of population size and mutation probability in the \({(1 + \lambda )}\) EA on OneMax. Algorithmica 78, 587–609 (2017)

Gießen, Christian, Witt, Carsten: Optimal mutation rates for the \({(1 + \lambda )}\) EA on OneMax through asymptotically tight drift analysis. Algorithmica 80, 1710–1731 (2018)

Hwang, Hsien-Kuei, Panholzer, Alois, Rolin, Nicolas, Tsai, Tsung-Hsi, Chen, Wei-Mei: Probabilistic analysis of the (1+1)-evolutionary algorithm. Evol. Comput. 26, 299–345 (2018)

Hwang, Hsien-Kuei, Witt, Carsten: Sharp bounds on the runtime of the (1+1) EA via drift analysis and analytic combinatorial tools. In: Foundations of Genetic Algorithms, FOGA 2019, pp. 1–12. ACM (2019)

He, Jun, Yao, Xin: Drift analysis and average time complexity of evolutionary algorithms. Artif. Intell. 127, 51–81 (2001)

Jägersküpper, Jens: Algorithmic analysis of a basic evolutionary algorithm for continuous optimization. Theor. Comput. Sci. 379, 329–347 (2007)

Johannsen, Daniel: Random Combinatorial Structures and Randomized Search Heuristics. PhD thesis, Universität des Saarlandes (2010)

Jansen, Thomas, Wegener, Ingo: The analysis of evolutionary algorithms - a proof that crossover really can help. Algorithmica 34, 47–66 (2002)

Jansen, Thomas, Zarges, Christine: Performance analysis of randomised search heuristics operating with a fixed budget. Theor. Comput. Sci. 545, 39–58 (2014)

Lehre, Per Kristian: Negative drift in populations. In: Parallel Problem Solving from Nature, PPSN 2010, pp. 244–253. Springer (2010)

Lehre, Per Kristian: Fitness-levels for non-elitist populations. In: Genetic and Evolutionary Computation Conference, GECCO 2011, pp. 2075–2082. ACM (2011)

Lengler, Johannes: Drift analysis. In: Benjamin Doerr and Frank Neumann (eds.), Theory of Evolutionary Computation: Recent Developments in Discrete Optimization, pp. 89–131. Springer (2020). Also available at arXiv:1712.00964

Lissovoi, Andrei, Oliveto, Pietro S., Warwicker, John Alasdair: On the time complexity of algorithm selection hyper-heuristics for multimodal optimisation. In: Conference on Artificial Intelligence, AAAI 2019, pp. 2322–2329. AAAI Press (2019)

Lässig, Jörg, Sudholt, Dirk: General upper bounds on the runtime of parallel evolutionary algorithms. Evol. Comput. 22, 405–437 (2014)

Lehre, Per Kristian, Witt, Carsten: Concentrated hitting times of randomized search heuristics with variable drift. In: International Symposium on Algorithms and Computation, ISAAC 2014, pp. 686–697. Springer (2014)

Mitavskiy, Boris, Rowe, Jonathan E., Cannings, Chris: Theoretical analysis of local search strategies to optimize network communication subject to preserving the total number of links. Int. J. Intell. Comput. Cybern. 2, 243–284 (2009)

Meyn, Sean, Tweedie, Richard: Markov chains and stochastic stability. Cambridge University Press (2009)

Rowe, Jonathan E., Aishwaryaprajna: The benefits and limitations of voting mechanisms in evolutionary optimisation. In: Foundations of Genetic Algorithms, FOGA 2019, pp. 34–42. ACM (2019)

Rudolph, Günter: How mutation and selection solve long path problems in polynomial expected time. Evol. Comput. 4, 195–205 (1996)

Rudolph, Günter: Convergence properties of evolutionary algorithms. Verlag Dr. Kovač (1997)

Rajabi, Amirhossein, Witt, Carsten: Self-adjusting evolutionary algorithms for multimodal optimization. In: Genetic and Evolutionary Computation Conference, GECCO 2020, pp. 1314–1322. ACM (2020)

Rajabi, Amirhossein, Witt, Carsten: Stagnation detection in highly multimodal fitness landscapes. In: Genetic and Evolutionary Computation Conference, GECCO 2021, pp. 1178–1186. ACM (2021)

Rajabi, Amirhossein, Witt, Carsten: Stagnation detection with randomized local search. In: Evolutionary Computation in Combinatorial Optimization, EvoCOP 2021, pp. 152–168. Springer (2021)

Sudholt, Dirk: The impact of parametrization in memetic evolutionary algorithms. Theor. Comput. Sci. 410, 2511–2528 (2009)

Sudholt, Dirk: General lower bounds for the running time of evolutionary algorithms. In: Parallel Problem Solving from Nature, PPSN 2010, Part I, pp. 124–133. Springer (2010)

Sudholt, Dirk: A new method for lower bounds on the running time of evolutionary algorithms. IEEE Trans. Evol. Comput. 17, 418–435 (2013)

Wegener, Ingo: Theoretical aspects of evolutionary algorithms. In: Automata, Languages and Programming, ICALP 2001, pp. 64–78. Springer (2001)

Wegener, Ingo: Methods for the analysis of evolutionary algorithms on pseudo-Boolean functions. In: Ruhul Sarker, Masoud Mohammadian, and Xin Yao (eds.), Evolutionary Optimization, pp. 349–369. Kluwer (2002)

Witt, Carsten: Runtime analysis of the (\(\mu \) + 1) EA on simple pseudo-Boolean functions. Evol. Comput. 14, 65–86 (2006)

Witt, Carsten: Tight bounds on the optimization time of a randomized search heuristic on linear functions. Combinatorics, Probab. Comput. 22, 294–318 (2013)

Witt, Carsten: Fitness levels with tail bounds for the analysis of randomized search heuristics. Inf. Process. Lett. 114, 38–41 (2014)

Whitley, Darrell, Varadarajan, Swetha, Hirsch, Rachel, Mukhopadhyay, Anirban: Exploration and exploitation without mutation: solving the jump function in \({\Theta (n)}\) time. In: Parallel Problem Solving from Nature, PPSN 2018, Part II, pp. 55–66. Springer (2018)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

This work was supported by a public grant as part of the Investissements d’avenir project, reference ANR-11-LABX-0056-LMH, LabEx LMH, and by the Deutsche Forschungsgemeinschaft (DFG), Grant FR 2988/17-1.

Extended version of the conference paper [23]

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Doerr, B., Kötzing, T. Lower Bounds from Fitness Levels Made Easy. Algorithmica 86, 367–395 (2024). https://doi.org/10.1007/s00453-022-00952-w

DOI: https://doi.org/10.1007/s00453-022-00952-w