Abstract

The search problem of computing a Stackelberg (or leader-follower) equilibrium (also referred to as an optimal strategy to commit to) has been widely investigated in the scientific literature in, almost exclusively, the single-follower setting. Although the optimistic and pessimistic versions of the problem, i.e., those where the single follower breaks any ties among multiple equilibria either in favour of or against the leader, are solved with different methodologies, both cases allow for efficient, polynomial-time algorithms based on linear programming. The situation is different with multiple followers, where results are only sporadic and depend strictly on the nature of the followers’ game. In this paper, we investigate the setting of a normal-form game with a single leader and multiple followers who, after observing the leader’s commitment, play a Nash equilibrium. When both leader and followers are allowed to play mixed strategies, the corresponding search problem, both in the optimistic and pessimistic versions, is known to be inapproximable in polynomial time to within any multiplicative polynomial factor unless \(\textsf {P}=\textsf {NP}\). Exact algorithms are known only for the optimistic case. We focus on the case where the followers play pure strategies, a restriction that applies to a number of real-world scenarios and which, in principle, makes the problem easier, under the assumption of pessimism (the optimistic version of the problem can be straightforwardly solved in polynomial time).
After casting this search problem (with followers playing pure strategies) as a pessimistic bilevel programming problem, we show that, with two followers, the problem is NP-hard and, with three or more followers, it cannot be approximated in polynomial time to within any multiplicative factor which is polynomial in the size of the normal-form game, nor, assuming utilities in [0, 1], to within any constant additive loss strictly smaller than 1 unless \(\textsf {P}=\textsf {NP}\). This shows that, differently from what happens in the optimistic version, hardness and inapproximability in the pessimistic problem are not due to the adoption of mixed strategies. We then show that the problem admits, in the general case, a supremum but not a maximum, and we propose a single-level mathematical programming reformulation which asks for the maximization of a nonconcave quadratic function over an unbounded nonconvex feasible region defined by linear and quadratic constraints. Since, due to admitting a supremum but not a maximum, only a restricted version of this formulation can be solved to optimality with state-of-the-art methods, we propose an exact ad hoc algorithm (which we also embed within a branch-and-bound scheme) capable of computing the supremum of the problem and, for cases where there is no leader’s strategy where such value is attained, also an \(\alpha \)-approximate strategy where \(\alpha > 0\) is an arbitrary additive loss (at most as large as the supremum). We conclude the paper by evaluating the scalability of our algorithms via computational experiments on a well-established testbed of game instances.

1 Introduction

In recent years, Stackelberg (or Leader-Follower) Games (SGs) and their corresponding Stackelberg Equilibria (SEs) have attracted a growing interest in many disciplines, including theoretical computer science, artificial intelligence, and operations research. SGs describe situations where one player (the leader) commits to a strategy and the other players (the followers) first observe the leader’s commitment and, then, decide how to play. In the literature, SEs are often referred to as optimal strategies (for the leader) to commit to. SGs encompass a broad array of real-world games. A prominent example is that of security games, where a defender, acting as leader, is tasked with allocating scarce resources to protect valuable targets from an attacker, who acts as follower [3, 17, 28]. Besides the security domain, applications can be found in, among others, interdiction games [10, 23], toll-setting problems [19], and network routing [2].

While, with only a few exceptions (see [6, 8, 13, 18, 21]), the majority of the game-theoretical investigations on the computation of SEs assumes the presence of a single follower, in this work we address the multi-follower case.

When facing an SG and, in particular, a multi-follower one, two aspects need to be considered: the type of game (induced by the leader’s strategy) the followers play and, in it, how ties among the multiple equilibria which could arise are broken.

As to the nature of the followers’ game, and restricting ourselves to the cases which look more natural, the followers may play hierarchically one at a time, as in a hierarchical Stackelberg game [14], simultaneously and cooperatively [13], or simultaneously and noncooperatively [4].

As to breaking ties among multiple equilibria, it is natural to consider two cases: the optimistic one (often called strong SE), where the followers end up playing an equilibrium which maximizes the leader’s utility, and the pessimistic one (often called weak SE), where the followers end up playing an equilibrium by which the leader’s utility is minimized. This distinction is customary in the literature since the seminal paper on SEs with mixed-strategy commitments by Von Stengel and Zamir [34]. We remark that the adoption of either the optimistic or the pessimistic setting does not correspond to assuming that the followers could necessarily agree on an optimistic or pessimistic equilibrium in a practical application. Rather, by computing an optimistic and a pessimistic SE the leader becomes aware of the largest and smallest utility she can get without having to make any assumptions on which equilibrium the followers would actually end up playing if the game resulting from the leader’s commitment were to admit more than a single one. What is more, while an optimistic SE accounts for the best case for the leader, a pessimistic SE accounts for the worst case. In this sense, the computation of a pessimistic SE is paramount in realistic scenarios as, differently from the optimistic one, it is robust, guaranteeing the leader a lower bound on the maximum utility she would get independently of how the followers would break ties among multiple equilibria. As we will see, though, this degree of robustness comes at a high computational cost, as computing a pessimistic SE is a much harder task than computing its optimistic counterpart.

1.1 Stackelberg Nash Equilibria

Throughout the paper, we will consider the case of normal-form games where, after the leader’s commitment to a strategy, the followers play simultaneously and noncooperatively, reaching a Nash equilibrium. We refer to the corresponding equilibrium as a Stackelberg Nash Equilibrium (SNE). We focus on the case where the followers are restricted to pure strategies. This restriction is motivated by several reasons. First, while the unrestricted problem is already hard with two followers (as shown in [4]), it is not known whether the restriction to followers playing pure strategies makes the problem easier or not. Secondly, many games admit pure-strategy NEs, among which potential games [25], congestion games [29], and toll-setting problems [19] and, as we show in Sect. 3.3, the same also holds with high probability in many unstructured games.

1.2 Original Contributions

After briefly pointing out that an optimistic SNE (with followers restricted to pure strategies) can be computed efficiently (in polynomial time) by a mixture of enumeration and linear programming, we entirely devote the remainder of the paper to the pessimistic case (with, again, followers restricted to pure strategies). In terms of computational complexity, we show that, differently from the optimistic case, in the pessimistic one the equilibrium-finding problem is NP-hard with two or more followers, while, when the number of followers is three or more, the problem cannot be approximated in polynomial time to within any polynomial multiplicative factor nor to within any constant additive loss unless \(\textsf {P}=\textsf {NP}\). To establish these two results, we introduce two reductions, one from Independent Set and the other one from 3-SAT.

After analyzing the complexity of the problem, we focus on its algorithmic aspects. First, we formulate the problem as a pessimistic bilevel programming problem with multiple followers. We then show how to recast it as a single-level Quadratically Constrained Quadratic Program (QCQP), which we show to be impractical to solve due to admitting a supremum, but not a maximum. We then introduce a restriction based on a Mixed-Integer Linear Program (MILP) which, while forsaking optimality, always admits an optimal (restricted) solution. Next, we propose an exact algorithm to compute the value of the supremum of the problem based on an enumeration scheme which, at each iteration, solves a lexicographic MILP (lex-MILP) where the two objective functions are optimized in sequence. Subsequently, we embed the enumerative algorithm within a branch-and-bound scheme, obtaining an algorithm which is, in practice, much faster. We also extend the algorithm (in both versions) so that, for cases where the supremum is not a maximum, it returns a strategy by which the leader can obtain a utility within an additive loss \(\alpha \) with respect to the supremum, for any arbitrarily chosen \(\alpha > 0\). To conclude, we experimentally evaluate the scalability of our methods over a testbed of randomly generated instances.

The status, in terms of complexity and known algorithms, of the problem of computing an SNE (with followers playing pure or mixed strategies) is summarized in Table 1. The original results we provide in this paper are reported in boldface.

1.3 Paper Outline

The paper is organized as follows. Previous works are introduced in Sect. 2. The problem we study is formally stated in Sect. 3, together with some preliminary results. In Sect. 4, we present the computational complexity results. Sect. 5 introduces the single-level reformulation(s) of the problem, while Sect. 6 describes our exact algorithm (in its two versions). An empirical evaluation of our methods is carried out in Sect. 7. Sect. 8 concludes the paper.

2 Previous Works

As we mentioned in Sect. 1, most of the works on (normal-form) SGs focus on the single-follower case. In such a case, as shown in [14], the follower always plays a pure strategy (except for degenerate games). In the optimistic case, an SE can be found in polynomial time by solving a Linear Program (LP) for each action of the (single) follower (the algorithm is, thus, a multi-LP). Each LP maximizes the expected utility of the leader subject to a set of constraints imposing that the given follower’s action is a best-response [14]. As shown in [13], all these LPs can be encoded into a single LP, a slight variation of the LP that is used to compute a correlated equilibrium (the solution concept where all the players can exploit a correlation device to coordinate their strategies). Some works study the equilibrium-finding problem (only in the optimistic version) in structured games where the action space is combinatorial. See [7] for more references.

As for the pessimistic single-follower case, the authors of [34] study the problem of computing the supremum of the leader’s expected utility. They show that, for the latter, it suffices to consider the follower’s actions which constitute a best-response to a full-dimensional region of the leader’s strategy space. The multi-LP algorithm the authors propose solves two LPs per action of the follower, one to verify whether the best-response region for that action is full-dimensional (so as to discard it if full-dimensionality does not hold) and a second one to compute the best leader’s strategy within that best-response region. The algorithm runs in polynomial time. While the authors limit their analysis to computing the supremum of the leader’s utility, we remark that such value does not always translate into a strategy that the leader can play as, in the general case where the leader’s utility does not admit a maximum, there is no leader’s strategy giving her a utility equal to the supremum. In such cases, one should rather look for a strategy providing the leader with an expected utility which approximates the value of the supremum. This aspect, which is not addressed in [34], is tackled for the multi-follower case in our work.

The multi-follower case, which, to the best of our knowledge, has only been investigated in [4, 6], is computationally much harder than the single-follower case. It is, in the general case where leader and followers are entitled to mixed strategies, NP-hard and inapproximable in polynomial time to within any multiplicative factor which is polynomial in the size of the normal-form game unless \({\mathsf {P}}=\mathsf {NP}\). In the aforementioned works, the problem of finding an equilibrium in the optimistic case is formulated as a nonlinear and nonconvex mathematical program and solved to global optimality (within a given tolerance) with spatial branch-and-bound techniques. No exact methods are proposed for the pessimistic case.

3 Problem Statement and Preliminary Results

After setting the notation used throughout the paper, this section offers a formal definition of the equilibrium-finding problem we tackle in this work and illustrates some of its properties.

3.1 Notation

Let \(N=\{1,\ldots ,n\}\) be the set of players and, for each player \(p \in N\), let \(A_p\) be her set of actions, of cardinality \(m_p = |A_p|\). Let also \(A = \times _{p \in N} A_p\) be the set of action profiles. For each player \(p \in N\), let \(x_p \in [0,1]^{m_p}\), with \(\sum _{a_p \in A_p}x_p^{a_p} = 1\), be her strategy vector (or strategy, for short), where each component \(x_{p}^{a_p}\) of \(x_p\) represents the probability by which player p plays action \(a_p \in A_{p}\). For each player \(p \in N\), let also \(\varDelta _p = \{ x_p \in [0,1]^{m_p} : \sum _{a_p \in A_p} x_p^{a_p} = 1 \}\) be the set of her strategies, or strategy space, which corresponds to the standard \((m_p-1)\)-simplex in \({\mathbb {R}}^{m_p}\). A strategy is said to be pure when only one action is played with positive probability, i.e., when \(x_p \in \{0,1\}^{m_p}\), and mixed otherwise. In the following, we denote the collection of strategies of the different players (called strategy profile) by \(x=(x_{1}, \ldots , x_{n})\). For the case where all the strategies are pure, we denote the collection of actions played by the players (called action profile) by \(a = (a_1, \ldots , a_n)\).

Given a strategy profile x, we denote the collection of all the strategies in it but the one of player \(p \in N\) by \(x_{-p}\), i.e., \(x_{-p}=(x_1,\ldots ,x_{p-1},x_{p+1},\ldots ,x_n)\). Given \(x_{-p}\) and a strategy vector \(x_p\), we denote the whole strategy profile x by \((x_{-p},x_p)\). For action profiles, \(a_{-p}\) and \((a_{-p},a_p)\) are defined analogously. For the case where all players are restricted to pure strategies with the sole exception of player p, who is allowed to play mixed strategies, we use the notation \((a_{-p}, x_p)\).

We consider normal-form games where \(U_p \in {\mathbb {Q}}^{m_1 \times \cdots \times m_n}\) represents, for each player \(p \in N\), her (multidimensional) utility (or payoff) matrix. For each \(p \in N\) and given an action profile \(a=(a_1,\ldots ,a_n)\), each component \(U_p^{a_1 \ldots a_n}\) of \(U_p\) corresponds to the utility of player p when all the players play the action profile a. For the ease of presentation and when no ambiguity arises, we will often write \(U_p^a\) in place of \(U_p^{a_1 \ldots a_n}\). Given a collection of actions \(a_{-p}\) and an action \(a_p \in A_p\), we will also use \(U_p^{a_{-p},a_p}\) to denote the component of \(U_p\) corresponding to the action profile \((a_{-p},a_p)\). Given a strategy profile \(x=(x_1,\ldots ,x_n)\), the expected utility of player \(p \in N\) is the n-th-degree polynomial \(\sum _{a \in A} U_p^{a} x_1^{a_1} \, x_2^{a_2} \dots \, x_n^{a_n}\).
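As an illustration of the expected-utility polynomial above, the following minimal Python sketch evaluates it by summing over all action profiles. The dictionary-based payoff representation, the function name `expected_utility`, and the two-player payoffs are assumptions made for this example only.

```python
from fractions import Fraction
from itertools import product

def expected_utility(U_p, x):
    """Expected utility of one player.

    U_p maps each action profile (a tuple of action indices, one per player)
    to that player's payoff; x is a list of strategy vectors, one per player.
    """
    total = 0
    for a in product(*(range(len(x_q)) for x_q in x)):
        prob = 1
        for q, a_q in enumerate(a):
            prob *= x[q][a_q]          # probability that profile a is played
        total += U_p[a] * prob
    return total

# Hypothetical two-player game with two actions per player.
U_1 = {(0, 0): 3, (0, 1): 0, (1, 0): 5, (1, 1): 1}
x = [[Fraction(1, 2), Fraction(1, 2)], [Fraction(1, 4), Fraction(3, 4)]]
print(expected_utility(U_1, x))
```

Exact rational arithmetic is used so that later equilibrium checks, which compare utilities, are not affected by rounding.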

An action profile \(a = (a_1, \ldots , a_n)\) is called pure strategy Nash Equilibrium (or pure NE, for short) if, when the players in \(N {\setminus } \{p\}\) play as the equilibrium prescribes, player p cannot improve her utility by deviating from the equilibrium and playing another action \(a_p' \ne a_p\), for all \(p \in N\). More generally, a mixed strategy Nash Equilibrium (or mixed NE, for short) is a strategy profile \(x = (x_1, \ldots ,x_n)\) such that no player \(p \in N\) could improve her utility by playing a strategy \(x_p' \ne x_p\) assuming the other players would play as the equilibrium prescribes. A mixed NE always exists [26] in a normal-form game, while a pure NE may not. For more details on (noncooperative) game theory, we refer the reader to [32].
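The definition of a pure NE translates into a direct check of all unilateral deviations. The sketch below is illustrative only: the names `is_pure_ne` and `pure_nes` and both example games (a coordination game and matching pennies) are assumptions, chosen to show that a pure NE may or may not exist.

```python
from itertools import product

def is_pure_ne(U, sizes, a):
    """True if no player can gain by unilaterally deviating from profile a.

    U[p] maps action profiles to player p's payoff; sizes[p] is the number
    of actions of player p.
    """
    for p in range(len(sizes)):
        for dev in range(sizes[p]):
            deviated = a[:p] + (dev,) + a[p + 1:]
            if U[p][deviated] > U[p][a]:
                return False
    return True

def pure_nes(U, sizes):
    """All pure NEs, found by enumerating every action profile."""
    return [a for a in product(*(range(m) for m in sizes))
            if is_pure_ne(U, sizes, a)]

# Coordination game: two pure NEs.
coord = {(0, 0): 2, (0, 1): 0, (1, 0): 0, (1, 1): 1}
coordination = [coord, coord]
# Matching pennies: no pure NE (only a mixed one).
pennies_1 = {(0, 0): 1, (0, 1): -1, (1, 0): -1, (1, 1): 1}
pennies = [pennies_1, {a: -u for a, u in pennies_1.items()}]

print(pure_nes(coordination, (2, 2)))
print(pure_nes(pennies, (2, 2)))
```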

Similar definitions hold for the case of SGs when assuming that only a subset of players (the followers) play an NE given the strategy the leader has committed to.

3.2 The Problem and Its Formulation

In the following, we assume that the n-th player takes the role of leader. We denote the set of followers (the first \(n-1\) players) by \(F=N {\setminus } \{n\}\). For the ease of notation, we also define \(A_F = \times _{p \in F} A_p\) as the set of followers’ action profiles, i.e., the set of all collections of followers’ actions. We also assume, unless otherwise stated, \(m_p=m\) for every player \(p \in N\), where m denotes the number of actions available to each player. This is without loss of generality, as one could always introduce additional actions with a utility small enough to guarantee that they would never be played, thus obtaining a game where each player has the same number of actions.

As we mentioned in Sect. 1, in this work we tackle the problem of computing an equilibrium in a normal-form game where the followers play a pure NE once they have observed the leader’s commitment to a mixed strategy. We refer to an Optimistic Stackelberg Pure-Nash Equilibrium (O-SPNE) when the followers play a pure NE which maximises the leader’s utility, and to a Pessimistic Stackelberg Pure-Nash Equilibrium (P-SPNE) when they seek a pure NE by which the leader’s utility is minimized.

3.2.1 The Optimistic Case

Before focusing our attention entirely on the pessimistic case, let us briefly address the optimistic one.

An O-SPNE can be found by solving the following bilevel programming problem with \(n-1\) followers:
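A formulation of Problem (1) that is consistent with the notation of Sect. 3.1 and with the description that follows can be written as below. It is a reconstruction from the surrounding text, so the exact arrangement of the constraints is an assumption:

\[
\begin{aligned}
\max _{x_n,\, x_{-n}} \quad & \sum _{a \in A} U_n^{a} \, x_1^{a_1} \, x_2^{a_2} \dots \, x_n^{a_n} \\
\text {s.t.} \quad & x_n \in \varDelta _n \\
& x_p \in \mathop {\mathrm {argmax}}_{x_p} \Big \{ \sum _{a \in A} U_p^{a} \, x_1^{a_1} \, x_2^{a_2} \dots \, x_n^{a_n} : x_p \in \varDelta _p \cap \{0,1\}^{m_p} \Big \} \quad \forall p \in F.
\end{aligned}
\tag{1}
\]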

Note that, due to the integrality constraints on \(x_p\) for all \(p \in F\), each follower can play a single action with probability 1. By imposing the \({{\,\mathrm{argmax}\,}}\) constraint for each \(p \in F\), the formulation guarantees that each follower plays a best-response action \(a_p\), thus guaranteeing that the action profile \(a_{-n} = (a_1, \dots , a_{n-1})\) with, for all \(a_p \in A_p\), \(a_p = 1\) if and only if \(x_p^{a_p} = 1\), be an NE for the given \(x_n\). It is crucial to note that the maximization in the upper level is carried out not only w.r.t. \(x_n\), but also w.r.t. \(x_{-n}\). This way, if the followers’ game admits multiple NEs for the chosen \(x_n\), optimal solutions to Problem (1) are then guaranteed to contain followers’ action profiles which maximize the leader’s utility—thus satisfying the assumption of optimism.

As shown in the following proposition, computing an O-SPNE is an easy task:

Proposition 1

In a normal-form game, an O-SPNE can be computed in polynomial time by solving a multi-LP.

Proof

It suffices to enumerate, in \(O(m^{n-1})\), all the followers’ action profiles \(a_{-n} \in A_F\) and, for each of them, solve an LP to: i) check whether there is a strategy vector \(x_n\) for the leader for which the action profile \(a_{-n}\) is an NE and ii) find, among all such strategy vectors \(x_n\), one which maximizes the leader’s utility. The action profile \(a_{-n}\) which, with the corresponding \(x_n\), yields the largest expected utility for the leader is an O-SPNE.

Given a followers’ action profile \(a_{-n}\), i) and ii) can be carried out in polynomial time by solving the following LP, where the second constraint guarantees that \(a_{-n} = (a_1, \dots , a_{n-1})\) is a pure NE for the followers’ game for any of its solutions \(x_n\):
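Under the notation of Sect. 3.1, an LP matching this description can be written as follows. This is a reconstruction and the ordering of the constraints is assumed, with the NE condition placed second as the text indicates:

\[
\begin{aligned}
\max _{x_n} \quad & \sum _{a_n \in A_n} U_n^{a_{-n},a_n} \, x_n^{a_n} \\
\text {s.t.} \quad & x_n \in \varDelta _n \\
& \sum _{a_n \in A_n} U_p^{a_1 \ldots a_p \ldots a_{n-1} a_n} \, x_n^{a_n} \geqslant \sum _{a_n \in A_n} U_p^{a_1 \ldots a_p' \ldots a_{n-1} a_n} \, x_n^{a_n} \quad \forall p \in F, \ a_p' \in A_p {\setminus } \{a_p\}.
\end{aligned}
\]

Both sides of the second constraint are linear in \(x_n\) because the followers’ actions are fixed, which is what makes each subproblem an LP.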

As the size of an instance of the problem is bounded from below by \(m^n\), one can enumerate over the set of the followers’ action profiles (whose cardinality is \(m^{n-1}\)) in polynomial time. The claim of polynomiality of the overall algorithm follows due to linear programming being solvable in polynomial time. \(\square \)
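The enumeration in the proof can be sketched in a few lines for the special case of a leader with exactly two actions, where \(x_n = (1-\rho ,\rho )\) and each LP reduces to intersecting intervals for \(\rho \). The restriction to two leader actions, the function names, and the three-player game below are assumptions made for illustration; a general implementation would call an LP solver for each followers’ action profile.

```python
from fractions import Fraction
from itertools import product

def build_game():
    """Hypothetical 3-player game (two followers, leader last), 2 actions each."""
    U = [dict(), dict(), dict()]
    for a1, a2, a3 in product(range(2), repeat=3):
        a = (a1, a2, a3)
        U[0][a] = {(0, 0): 1, (0, 1): 2, (1, 0): 2 * a3, (1, 1): 0}[(a1, a2)]
        U[1][a] = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}[(a1, a2)]
        U[2][a] = {(0, 0): 0, (0, 1): 5 + 5 * a3, (1, 0): 1, (1, 1): 0}[(a1, a2)]
    return U

def o_spne(U, sizes):
    """Return (leader utility, rho, followers' profile) of an O-SPNE, or None."""
    n = len(sizes)
    best = None
    for a_f in product(*(range(m) for m in sizes[:-1])):
        lo, hi, feasible = Fraction(0), Fraction(1), True
        for p in range(n - 1):
            for dev in range(sizes[p]):
                if dev == a_f[p]:
                    continue
                d_f = a_f[:p] + (dev,) + a_f[p + 1:]
                # NE constraint: g0 + (g1 - g0) * rho >= 0
                g0 = Fraction(U[p][a_f + (0,)] - U[p][d_f + (0,)])
                g1 = Fraction(U[p][a_f + (1,)] - U[p][d_f + (1,)])
                slope = g1 - g0
                if slope > 0:
                    lo = max(lo, -g0 / slope)
                elif slope < 0:
                    hi = min(hi, -g0 / slope)
                elif g0 < 0:
                    feasible = False
        if not feasible or lo > hi:
            continue
        # A linear objective over an interval is maximized at an endpoint.
        for rho in (lo, hi):
            value = (1 - rho) * U[n - 1][a_f + (0,)] + rho * U[n - 1][a_f + (1,)]
            if best is None or value > best[0]:
                best = (value, rho, a_f)
    return best

print(o_spne(build_game(), (2, 2, 2)))
```

In this hypothetical game the best outcome for the leader is obtained by committing to her second action with probability 1, with the followers playing the profile `(0, 1)`.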

3.2.2 The Pessimistic Case

In the pessimistic case, the computation of a P-SPNE amounts to solving the following pessimistic bilevel problem with \(n-1\) followers:
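A formulation of Problem (2) consistent with the description that follows, i.e., with a \(\min \) over the followers’ equilibria and a \(\sup \) over the leader’s strategies, can be written as below. It is a reconstruction from the surrounding text rather than a verbatim statement:

\[
\begin{aligned}
\sup _{x_n} \ \min _{x_{-n}} \quad & \sum _{a \in A} U_n^{a} \, x_1^{a_1} \, x_2^{a_2} \dots \, x_n^{a_n} \\
\text {s.t.} \quad & x_n \in \varDelta _n \\
& x_p \in \mathop {\mathrm {argmax}}_{x_p} \Big \{ \sum _{a \in A} U_p^{a} \, x_1^{a_1} \, x_2^{a_2} \dots \, x_n^{a_n} : x_p \in \varDelta _p \cap \{0,1\}^{m_p} \Big \} \quad \forall p \in F.
\end{aligned}
\tag{2}
\]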

There are two differences between this problem and its optimistic counterpart: the presence of the \(\min \) operator in the objective function and the fact that Problem (2) calls for a \(\sup \) rather than for a \(\max \). The former guarantees that, in the presence of many pure NEs in the followers’ game for the chosen \(x_n\), one which minimizes the leader’s utility is selected. The \(\sup \) operator is introduced because, as illustrated in Subsect. 3.3, the pessimistic problem does not admit a maximum in the general case.

Throughout the paper, we will compactly refer to the above problem as \(\sup _{x_n \in \varDelta _n} f(x_n)\),

where f is the leader’s utility in the pessimistic case, defined as a function of \(x_n\). Since a pure NE may not exist for every leader’s strategy \(x_n\), we define \(\sup _{x_n \in \varDelta _n} f(x_n) = - \infty \) whenever there is no \(x_n\) such that the resulting followers’ game admits a pure NE. Note that f is always bounded from above when assuming bounded payoffs and, thus, \(\sup _{x_n \in \varDelta _n} f(x_n) < \infty \).
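For a fixed leader’s strategy, the value \(f(x_n)\) can be evaluated by brute force: induce the followers’ game, enumerate its pure NEs, and take the smallest leader’s utility among them. The sketch below is a minimal illustration. The function names and the three-player game are hypothetical, and returning \(-\infty \) when the induced game has no pure NE is a convention assumed here by analogy with the definition above.

```python
from fractions import Fraction
from itertools import product

def build_game():
    """Hypothetical 3-player game (two followers, leader last), 2 actions each."""
    U = [dict(), dict(), dict()]
    for a1, a2, a3 in product(range(2), repeat=3):
        a = (a1, a2, a3)
        U[0][a] = {(0, 0): 1, (0, 1): 2, (1, 0): 2 * a3, (1, 1): 0}[(a1, a2)]
        U[1][a] = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}[(a1, a2)]
        U[2][a] = {(0, 0): 0, (0, 1): 5 + 5 * a3, (1, 0): 1, (1, 1): 0}[(a1, a2)]
    return U

def pessimistic_utility(U, sizes, x_n):
    """Leader's worst-case utility over the pure NEs induced by x_n."""
    n = len(sizes)

    def expected(p, a_f):
        # Expected payoff of player p when followers play a_f and leader plays x_n.
        return sum(U[p][a_f + (a_n,)] * x_n[a_n] for a_n in range(sizes[-1]))

    values = []
    for a_f in product(*(range(m) for m in sizes[:-1])):
        is_ne = all(
            expected(p, a_f) >= expected(p, a_f[:p] + (dev,) + a_f[p + 1:])
            for p in range(n - 1) for dev in range(sizes[p])
        )
        if is_ne:
            values.append(expected(n - 1, a_f))
    return min(values) if values else float("-inf")  # assumed convention

U, sizes = build_game(), (2, 2, 2)
for rho in (Fraction(1, 4), Fraction(49, 100), Fraction(1, 2)):
    print(rho, pessimistic_utility(U, sizes, (1 - rho, rho)))
```

In this hypothetical game the value grows with \(\rho \) up to, but not including, \(\rho = \frac{1}{2}\), where a second equilibrium appears and the pessimistic value drops.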

3.3 Some Preliminary Results

Since not all normal-form games admit a pure NE, a normal-form game may not admit an (optimistic or pessimistic) SPNE. Assuming that the payoffs of the game are independent and follow a uniform distribution, and provided that the number of players’ actions is sufficiently large, with high probability there always exists a leader’s commitment such that the resulting followers’ game has at least one pure NE. This is shown in the following proposition:

Proposition 2

Given a normal-form game with n players and independent uniformly distributed payoffs, the probability that there exists a leader’s strategy \(x_n \in \varDelta _n\) inducing at least one pure NE in the followers’ game approaches 1 as the number of players’ actions m goes to infinity.

Proof

As shown in [33], in an n-player normal-form game with independent and uniformly distributed payoffs the probability of the existence of a pure NE can be expressed as a function of the number of players’ actions m, say \({\mathcal {P}}(m)\), which approaches \(1 - \frac{1}{e}\) for \(m \rightarrow \infty \). Assume now that we are given one such n-player normal-form game. Then, for every leader’s action \(a_n \in A_n\), let \({\mathcal {P}}_{a_n}(m)\) be the probability that the followers’ game induced by the leader’s action \(a_n\) admits a pure NE. Since each of the followers’ games resulting from the choice of \(a_n\) also has independent and uniformly distributed payoffs, all the probabilities are equal, i.e., \({\mathcal {P}}_{a_n}(m) = {\mathcal {P}}(m)\) for every \(a_n \in A_n\). Since the m followers’ games involve disjoint sets of payoffs and are therefore independent, it follows that the probability that at least one of such followers’ games admits a pure NE is:

\[
1 - \left( 1 - {\mathcal {P}}(m) \right) ^{m}.
\]

Since this probability approaches 1 as m goes to infinity, the probability of the existence of a leader’s strategy \(x_n \in \varDelta _n\) which induces at least one pure NE in the followers’ game also approaches 1 for \(m \rightarrow \infty \). \(\square \)
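The argument can be checked empirically with a small Monte Carlo experiment. The sketch below is restricted to two followers and to the leader’s pure actions, and the sample size, seed, and function names are assumptions. It estimates both the probability that a single random followers’ game has a pure NE and the probability that at least one of the m induced games does.

```python
import random

def has_pure_ne(u1, u2, m):
    """True if the m-by-m bimatrix game (u1, u2) has a pure NE."""
    for i in range(m):
        for j in range(m):
            best_for_1 = u1[i][j] == max(u1[k][j] for k in range(m))
            best_for_2 = u2[i][j] == max(u2[i])
            if best_for_1 and best_for_2:
                return True
    return False

def random_game(rng, m):
    """Two payoff matrices with independent uniform entries in [0, 1)."""
    return ([[rng.random() for _ in range(m)] for _ in range(m)],
            [[rng.random() for _ in range(m)] for _ in range(m)])

def estimate(m, trials, seed=0):
    rng = random.Random(seed)
    single = at_least_one = 0
    for _ in range(trials):
        # One followers' game per pure action of the leader.
        flags = [has_pure_ne(*random_game(rng, m), m) for _ in range(m)]
        single += flags[0]
        at_least_one += any(flags)
    return single / trials, at_least_one / trials

single, multi = estimate(m=4, trials=400)
print(single, multi)
```

The second estimate should be visibly closer to 1 than the first, in line with the expression \(1 - (1 - {\mathcal {P}}(m))^m\).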

The fact that Problem (2) may not admit a maximum is shown by the following proposition:

Proposition 3

In a normal-form game, Problem (2) may not admit a \(\max \) even if the followers’ game admits a pure NE for every leader’s mixed strategy \(x_n\).

Proof

Consider a game with \(n=3\), \(A_1 = \{a_1^1,a_1^2\}\), \(A_2 = \{a_2^1, a_2^2\}\), \(A_3 = \{a_3^1,a_3^2\}\). The matrices reported in the following are the utility matrices for, respectively, the case where the leader plays action \(a_3^1\) with probability 1, action \(a_3^2\) with probability 1, or the strategy vector \(x_3 = (1-\rho , \rho )\) for some \(\rho \in [0,1]\) (the third matrix is the convex combination of the first two with weights \(x_3\)):

In the optimistic case, one can verify that \((a_1^1,a_2^2,a_3^2)\) is the unique O-SPNE (as it achieves the largest leader’s payoff in \(U_3\), no mixed strategy \(x_3\) would yield a better utility).

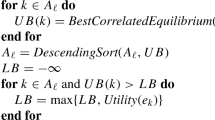

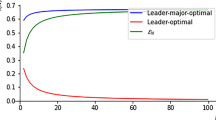

In the pessimistic case, the leader induces the followers’ game in the third matrix by playing \(x_3 = (1-\rho ,\rho )\). For \( \rho < \frac{1}{2} \), \((a_1^1,a_2^2)\) is the unique NE, giving the leader a utility of \(5+5\rho \). For \(\rho \geqslant \frac{1}{2} \), there are two NEs, \((a_1^1,a_2^2)\) and \( (a_1^2,a_2^1)\), with a utility of, respectively, \(5+5\rho \) and 1. Since, in the pessimistic case, the latter is selected, we conclude that the leader’s utility is equal to \(5+5 \rho \) for \(\rho < \frac{1}{2}\) and to 1 for \(\rho \geqslant \frac{1}{2}\) (see Fig. 1 for an illustration). Thus, Problem (2) admits a supremum of value \(5+\frac{5}{2}\), but not a maximum. \(\square \)
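The gap between supremum and maximum in this proof can be made explicit. Writing \(f(\rho )\) for the leader’s pessimistic utility under \(x_3 = (1-\rho ,\rho )\), the values stated above give the following, where the choice of \(\rho _\alpha \) is an illustrative assumption and not part of the proof:

\[
f(\rho ) = {\left\{ \begin{array}{ll} 5+5\rho &{} \text {if } 0 \leqslant \rho < \frac{1}{2} \\ 1 &{} \text {if } \frac{1}{2} \leqslant \rho \leqslant 1 \end{array}\right. }, \qquad \sup _{\rho \in [0,1]} f(\rho ) = \lim _{\rho \uparrow \frac{1}{2}} (5+5\rho ) = \frac{15}{2}, \qquad f\left( \tfrac{1}{2}\right) = 1.
\]

For any additive loss \(\alpha \in (0, \frac{5}{2}]\), the strategy with \(\rho _\alpha = \frac{1}{2} - \frac{\alpha }{5}\) yields \(f(\rho _\alpha ) = \frac{15}{2} - \alpha \), so the supremum can be approached to within \(\alpha \) but is never attained.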

We remark that the result in Proposition 3 is in line with a similar result shown in [34] for the single-follower case, as well as with those which hold for general pessimistic bilevel problems [35].

The relevance of computing a pessimistic SPNE is highlighted by the following proposition:

Proposition 4

In the worst case, in a normal-form game with payoffs in [0, 1] the leader’s utility in an O-SPNE cannot be approximated to within any constant multiplicative factor nor to within any constant additive loss strictly smaller than 1 by the leader’s strategy corresponding to a P-SPNE, nor by any leader’s strategy obtained by perturbing the leader’s strategy corresponding to an O-SPNE.

Proof

Consider the following normal-form game with payoffs in [0, 1] where \(n=3\), \(A_1=\{a_1^1,a_1^2\}\), \(A_2=\{a_2^1,a_2^2\}\), \(A_3=\{a_3^1,a_3^2\}\), parametrized by \(\mu > 4\):

Fig. 2 The leader’s utility in the normal-form game in the proof of Proposition 4, plotted as a function of \(\rho \), where the leader’s strategy is \(x_3=(1 - \rho ,\rho )\)

Let \(x_3 = (1-\rho ,\rho )\). The followers’ game admits the NE \((a_1^2, a_2^1)\) for all values of \(\rho \) (with leader’s utility \(\frac{2+ (4 \mu -2) \rho }{\mu ^2}\)) and the NE \((a_1^1, a_2^2)\) for \(\rho =0\) (with leader’s utility 1). Therefore, the game admits a unique O-SPNE achieved at \(\rho = 0\) (utility 1), and a unique P-SPNE achieved at \(\rho =1\) (utility \(\frac{4}{\mu }\)). See Fig. 2 for an illustration of the leader’s utility function.

To show the first part of the claim, it suffices to observe that the ratio between the leader’s utility in the unique O-SPNE, which is equal to 1, and that in a P-SPNE, which is equal to \(\frac{4}{\mu }\), is \(\frac{\mu }{4}\) and thus becomes arbitrarily large when letting \(\mu \rightarrow \infty \), whereas the difference between these two quantities approaches 1 for \(\mu \) approaching \(\infty \).

As to the second part of the claim, after perturbing the value that \(x_3\) takes in the unique O-SPNE by any arbitrarily small \(\epsilon > 0\) (i.e., by considering the leader’s strategy \(x_3=(1-\epsilon ,\epsilon )\)), we obtain a leader’s utility of \(\frac{2+(4 \mu -2) \epsilon }{\mu ^2}\), whose ratio w.r.t. the utility of 1 in the unique O-SPNE becomes again arbitrarily large for \(\mu \rightarrow \infty \), whereas the difference between these two quantities approaches 1 for \(\mu \) approaching \(\infty \). \(\square \)
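The two limits used in the proof are easy to tabulate. The following sketch evaluates the utility expression \(\frac{2+(4\mu -2)\rho }{\mu ^2}\) given above at \(\rho = 1\) (the P-SPNE) and at a small perturbation \(\rho = \epsilon \). The function name and the sample values of \(\mu \) and \(\epsilon \) are assumptions chosen for illustration.

```python
from fractions import Fraction

def utility_always_ne(mu, rho):
    """Leader's utility in the NE that exists for every rho (from the proof)."""
    return (2 + (4 * mu - 2) * rho) / mu ** 2

eps = Fraction(1, 1000)
for mu in (Fraction(8), Fraction(100), Fraction(10 ** 6)):
    pess = utility_always_ne(mu, 1)          # equals 4 / mu
    perturbed = utility_always_ne(mu, eps)   # after perturbing the O-SPNE strategy
    # Optimistic utility is 1, so ratio = 1 / pess and gap = 1 - pess.
    print(mu, 1 / pess, float(1 - pess), float(1 - perturbed))
```

As \(\mu \) grows, the ratio \(\frac{\mu }{4}\) diverges while both additive gaps tend to 1.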

4 Computational Complexity

Let P-SPNE-s be the search version of the problem of computing a P-SPNE for normal-form games. In Sect. 4.1, we show that solving P-SPNE-s is NP-hard for \(n \geqslant 3\) (i.e., with at least two followers). Moreover, in Sect. 4.2 we prove that for \(n \geqslant 4\) (i.e., for games with at least three followers) the problem is inapproximable, in polynomial time, to within any polynomial multiplicative factor or to within any constant additive loss unless \(\textsf {P}=\textsf {NP}\). We introduce two reductions: one which is valid for \(n \geqslant 3\) but is not approximation-preserving, and another one which is approximation-preserving but only valid for \(n \geqslant 4\).

In decision form, the problem of computing a P-SPNE reads:

Definition 1

(P-SPNE-d) Given a normal-form game with \(n \geqslant 3\) players and a finite number K, is there a P-SPNE in which the leader achieves a utility greater than or equal to K?

In Sect. 4.1, we show that P-SPNE-d is NP-complete by polynomially reducing to it Independent Set (IND-SET) (one of Karp’s original 21 NP-complete problems [16]). In decision form, IND-SET reads:

Definition 2

(IND-SET-d) Given an undirected graph \(G=(V,E)\) and an integer \(J \leqslant |V|\), does G contain an independent set (a subset of vertices \(V' \subseteq V: \forall u,v \in V'\), \(\{u,v\} \notin E\)) of size greater than or equal to J?
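For concreteness, IND-SET-d can be decided on small graphs by exhaustive search. The sketch below is only meant to illustrate the definition (it runs in exponential time, and the function name and example graph are assumptions). It checks subsets of size exactly J, which suffices because every subset of an independent set is itself independent.

```python
from itertools import combinations

def has_independent_set(vertices, edges, J):
    """True if the graph has an independent set of size at least J."""
    edge_set = {frozenset(e) for e in edges}
    for subset in combinations(vertices, J):
        if all(frozenset(pair) not in edge_set
               for pair in combinations(subset, 2)):
            return True
    return False

# Path graph 1 - 2 - 3 - 4.
vertices = [1, 2, 3, 4]
edges = [(1, 2), (2, 3), (3, 4)]
print(has_independent_set(vertices, edges, 2))
print(has_independent_set(vertices, edges, 3))
```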

In Sect. 4.2, we prove the inapproximability of P-SPNE-s for the case with at least three followers by polynomially reducing to it 3-SAT (another of Karp’s 21 NP-complete problems [16]). 3-SAT reads:

Definition 3

(3-SAT) Given a collection \(C=\{\phi _1,\ldots ,\phi _t\}\) of clauses (disjunctions of literals) on a finite set V of Boolean variables with \(|\phi _c|=3\) for \(1 \leqslant c \leqslant t\), is there a truth assignment for V which satisfies all the clauses in C?
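Similarly, a 3-SAT instance can be represented and decided by brute force as sketched below. The signed-integer encoding of literals (k for variable k, -k for its negation), the function name, and the two instances are assumptions made for this example.

```python
from itertools import product

def satisfiable(num_vars, clauses):
    """True if some truth assignment satisfies every clause.

    Each clause is a tuple of three non-zero integers: k stands for
    variable k and -k for its negation (variables are numbered from 1).
    """
    for bits in product([False, True], repeat=num_vars):
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            return True
    return False

# (x1 or x2 or x3) and (not x1 or not x2 or not x3)
easy = [(1, 2, 3), (-1, -2, -3)]
# All eight sign patterns over x1, x2, x3: every assignment falsifies one clause.
unsat = [(s1 * 1, s2 * 2, s3 * 3) for s1, s2, s3 in product((1, -1), repeat=3)]

print(satisfiable(3, easy))
print(satisfiable(3, unsat))
```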

4.1 NP-Completeness

Before presenting our reduction, we introduce the following class of normal-form games:

Definition 4

Given two rational numbers b and c with \(1> c> b > 0\) and an integer \(r \geqslant 1\), let \(\varGamma _b^c(r)\) be a class of normal-form games with three players (\(n=3\)), the first two having \(r+1\) actions each with action sets \(A_1 = A_2 = A = \{1,\ldots ,r,\chi \}\) and the third one having r actions with action set \(A_3 = A {\setminus } \{\chi \}\), such that, for every third player’s action \(a_3 \in A {\setminus } \{\chi \} \), the other players play a game where:

the payoffs on the main diagonal (where both players play the same action) satisfy \(U_1^{a_3 a_3 a_3} \!=\!U_2^{a_3 a_3 a_3} \!=\!1, U_1^{\chi \chi a_3} \!=\!c, U_2^{\chi \chi a_3} \!=\!b\) and, for every \(a_1 \in A {\setminus } \{a_3,\chi \}\), \(U_1^{a_1 a_1 a_3} \!=\!U_2^{a_1 a_1 a_3} \!=\!0\);

for every \(a_1,a_2 \in A {\setminus } \{\chi \}\) with \(a_1 \ne a_2\), \(U_1^{a_1 a_2 a_3} \!=\!U_2^{a_1 a_2 a_3} = b\);

for every \(a_2 \in A {\setminus } \{\chi \}\), \(U_1^{\chi a_2 a_3} \!=\!c \) and \( U_2^{\chi a_2 a_3} \!=\!0\);

for every \(a_1 \in A {\setminus } \{\chi \}\), \(U_1^{a_1 \chi a_3} \!=\!1 \) and \(U_2^{a_1 \chi a_3} \!=\!0\).

No restrictions are imposed on the third player’s payoffs.

See Fig. 3 for an illustration of one such game \(\varGamma _b^c(r)\) with \(r=3\), parametric in b and c.

The special feature of \(\varGamma _b^c(r)\) games is that, no matter which mixed strategy the third player (the leader) commits to, with the exception of \((\chi ,\chi )\) only the diagonal outcomes can be pure NEs in the resulting followers’ game. Moreover, for every subset of diagonal outcomes there is a leader’s strategy such that this subset precisely corresponds to the set of all pure NEs in the followers’ game. This is formally stated by the following proposition:

Proposition 5

A \(\varGamma _b^c(r)\) game with \(c \leqslant \frac{1}{r} \) admits, for all \(S \subseteq \{(a_1,a_1) : a_1 \in A {\setminus } \{\chi \}\}\) with \(S\ne \emptyset \), a leader’s strategy \(x_3 \in \varDelta _3\) such that the outcomes \((a_1,a_1) \in S\) are exactly the pure NEs in the resulting followers’ game.

Proof

First, observe that the followers’ payoffs that are not on the main diagonal are independent of the leader’s strategy \(x_3\). Thus, any outcome \( (a_1,a_2) \) with \(a_1,a_2 \in A {\setminus } \{\chi \}\) and \(a_1 \ne a_2\) cannot be an NE, as the first follower would deviate by playing action \(\chi \) so as to obtain a utility \(c > b\). Analogously, any outcome \((\chi ,a_2)\) with \(a_2 \in A {\setminus } \{\chi \}\) cannot be an NE because the second follower would deviate by playing \(\chi \) (since \(b > 0\)). The same holds for any outcome \((a_1,\chi )\) with \(a_1 \in A {\setminus } \{\chi \}\), since the second follower would be better off playing another action (as \(b > 0\)). The last outcome on the diagonal, \((\chi ,\chi )\), cannot be an NE either, as the first follower would deviate from it (as she would get c in it, while she can obtain \(1 > c\) by deviating).

As a result, the only outcomes which can be pure NEs are those in \(\{(a_1,a_1) : a_1 \in A {\setminus } \{\chi \} \}\). When the leader plays a pure strategy \(a_3 \in A {\setminus } \{\chi \}\), the unique pure NE in the followers’ game is \((a_3,a_3)\) as, due to providing the followers with their maximum payoff, they would not deviate from it. Outcomes \((a_1,a_1)\) with \(a_1 \in A {\setminus } \{\chi ,a_3\}\) are not NEs as, with them, the first follower would get \(0 < c\). In general, if the leader plays an arbitrary mixed strategy \(x_3 \in \varDelta _3\) the resulting followers’ game is such that the payoffs in \((a_3,a_3)\) with \(a_3 \in A {\setminus } \{\chi \} \) are \((x_3^{a_3},x_3^{a_3})\). Noticing that \((a_3,a_3)\) is an equilibrium if and only if \(x_3^{a_3} \geqslant c\) (as, otherwise, the first follower would deviate by playing action \(\chi \)), we conclude that the set of pure NEs in the followers’ game is \(S = \{(a_3,a_3) : x_3^{a_3} \geqslant c\}\).

In order to guarantee that, for every possible \(S \subseteq \{(a_1,a_1) : a_1 \in A{\setminus } \{\chi \}\}\) with \(S\ne \emptyset \), there is a leader’s strategy such that S contains all the pure NEs of the followers’ game, we must properly choose the value of c. Choosing \(c \leqslant \frac{1}{r}\) suffices, as, for any set S, the leader’s strategy \( x_3 \in \varDelta _3 \) such that \(x_3^{a_3} = \frac{1}{|S|}\) for every \(a_3 \in A{\setminus } \{\chi \}\) with \((a_3,a_3) \in S\) induces a followers’ game in which all the outcomes in S are NEs. \(\square \)
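The characterization in Proposition 5 can be checked numerically on a small instance. The following is a minimal sketch, not part of the original construction: it assumes exact rational arithmetic, the illustrative values \(r=3\), \(b=\frac{1}{6}\), \(c=\frac{1}{3}\), and hypothetical helper names (`follower_payoffs`, `pure_nes`, `check_proposition_5`). It builds the followers' payoffs of Definition 4, enumerates the pure NEs by brute force, and compares them with the set S for the uniform strategy used in the proof.

```python
from fractions import Fraction
from itertools import combinations

CHI = "chi"  # hypothetical label for the extra action chi


def follower_payoffs(a1, a2, a3, b, c):
    """Return (U_1, U_2) at outcome (a1, a2, a3), following Definition 4."""
    if a1 == CHI and a2 == CHI:
        return c, b
    if a1 == CHI:              # (chi, a2) with a2 != chi
        return c, 0
    if a2 == CHI:              # (a1, chi) with a1 != chi
        return 1, 0
    if a1 == a2:               # diagonal outcome without chi
        return (1, 1) if a1 == a3 else (0, 0)
    return b, b                # off-diagonal outcome without chi


def pure_nes(x3, b, c):
    """Enumerate the pure NEs of the followers' game induced by x3."""
    r = len(x3)
    actions = list(range(1, r + 1)) + [CHI]

    def expected(a1, a2, player):
        return sum(x3[a3 - 1] * follower_payoffs(a1, a2, a3, b, c)[player]
                   for a3 in range(1, r + 1))

    nes = set()
    for a1 in actions:
        for a2 in actions:
            stable_1 = all(expected(d, a2, 0) <= expected(a1, a2, 0) for d in actions)
            stable_2 = all(expected(a1, d, 1) <= expected(a1, a2, 1) for d in actions)
            if stable_1 and stable_2:
                nes.add((a1, a2))
    return nes


def check_proposition_5(r, b, c):
    """Check every nonempty S against the uniform strategy used in the proof."""
    for k in range(1, r + 1):
        for S in combinations(range(1, r + 1), k):
            x3 = [Fraction(1, k) if a in S else Fraction(0) for a in range(1, r + 1)]
            if pure_nes(x3, b, c) != {(a, a) for a in S}:
                return False
    return True


print(check_proposition_5(3, Fraction(1, 6), Fraction(1, 3)))
```

The enumeration is quadratic in the number of follower actions for each strategy and exponential in r over all subsets, so it is only meant as a sanity check on toy instances.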

Notice that the followers’ game always admits a pure NE for any leader’s commitment \(x_3\) in a \(\varGamma _b^c(r)\) game with \(c \leqslant \frac{1}{r}\). As shown in Fig. 4 for \(r=3\), the leader’s strategy space \(\varDelta _3\) is partitioned into \(2^{r}-1\) regions, each corresponding to a subset of \(\{(a_1,a_1) : a_1 \in A {\setminus } \{\chi \} \}\) containing those diagonal outcomes which are the only pure NEs in the followers’ game. Hence, in a \(\varGamma _b^c(r)\) game with \(c \leqslant \frac{1}{r}\) the number of combinations of outcomes which may constitute the set of pure NEs in the followers’ game is exponential in r, and, thus, in the size of the game instance.

Fig. 4 A \(\varGamma _b^c(r)\) game with \(r=3\) and \(c \leqslant \frac{1}{r}\). The leader’s strategy space \(\varDelta _3\) is partitioned into \(2^{r}-1\) regions, one per subset of \(\{(a_1,a_1) : a_1 \in A {\setminus } \{\chi \} \}\) (the three NEs in the followers’ game, (1, 1), (2, 2), and (3, 3), are labelled A, B, C)

Relying on Proposition 5, we can establish the following result:

Theorem 1

P-SPNE-d is strongly NP-complete even for \(n=3\).

Proof

For the sake of clarity, we split the proof over multiple steps.

Mapping Given an instance of IND-SET-d, i.e., an undirected graph \(G=(V,E)\) and a positive integer J, we construct a special instance \(\varGamma (G)\) of P-SPNE-d of class \(\varGamma _b^c(r)\) as follows. Assuming an arbitrary labelling of the vertices \(\{v_1,v_2,\ldots ,v_r\}\), let \(\varGamma (G)\) be an instance of \(\varGamma _b^c(r)\) with \(c < \frac{1}{r}\) and \(0<b< c < 1\), where each action \(a_1 \in A {\setminus }\{\chi \}\) is associated with a vertex \(v_{a_1} \in V\). In compliance with Definition 4, in which no constraints are specified for the leader payoffs, we define:

for any pair of vertices \(v_{a_1},v_{a_2} \in V\): \(U_3^{a_1 a_1 a_2} = U_3^{a_2 a_2 a_1} = \frac{-1-c}{c} \) if \(\{v_{a_1},v_{a_2}\} \in E\), and \(U_3^{a_1 a_1 a_2} = U_3^{a_2 a_2 a_1} = 1\) otherwise;

for every \(a_3 \in A {\setminus } \{\chi \}\): \(U_3^{a_3 a_3 a_3} = 0\) and \(U_3^{\chi \chi a_3} = 0\);

for every \(a_3 \in A {\setminus } \{\chi \} \) and for every \(a_1, a_2 \in A \) with \(a_1 \ne a_2\): \(U_3^{a_1 a_2 a_3} = U_3^{a_2 a_1 a_3} = 0\).

As an example, Fig. 5 illustrates an instance of IND-SET-d from which the game depicted in Fig. 3 is obtained by applying our reduction. Finally, let \( K = \frac{J - 1}{J} \). Note that this transformation can be carried out in time polynomial in the number of vertices \(|V|=r\). W.l.o.g., we assume that the graph G contains no isolated vertices. Indeed, it is always possible to remove all the isolated vertices from G (in polynomial time), solve the problem on the residual graph, and, then, add the isolated vertices back to the independent set that has been found, still obtaining an independent set.

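If. We show that, if the graph G contains an independent set of size greater than or equal to J, then \( \varGamma (G)\) admits a P-SPNE with leader’s utility greater than or equal to K. Let \(V^*\) be an independent set with \(|V^*| = J\). Consider the case in which outcomes \((a_1,a_1)\), with \(v_{a_1} \in V^*\), are the only pure NEs in the followers’ game, and assume that the leader’s strategy \(x_3\) is \(x_3^{a_3} = \frac{1}{|V^*|}\) if \(v_{a_3} \in V^*\) and \(x_3^{a_3} = 0\) otherwise. Since, by construction, \(U_3^{a_1 a_1 a_1} = 0\) and, \(V^*\) being an independent set, \(U_3^{a_1 a_1 a_3} = 1\) for all \(a_3 \in A {\setminus } \{\chi ,a_1\}\) with \(v_{a_3} \in V^*\), the leader’s utility at an equilibrium \((a_1,a_1)\) is:

\[\sum _{a_3 \in A {\setminus } \{\chi \}} U_3^{a_1 a_1 a_3} x_3^{a_3} = \sum _{a_3 \in A {\setminus } \{\chi ,a_1\} : v_{a_3} \in V^*} \frac{1}{|V^*|} = \frac{|V^*|-1}{|V^*|} = \frac{J-1}{J} = K.\]

As this value is obtained in every pure NE induced by \(x_3\), the leader’s utility in a P-SPNE is at least K.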

Only if. We show that, if \(\varGamma (G)\) admits a P-SPNE with leader’s utility greater than or equal to K, then G contains an independent set of size greater than or equal to J. Due to Proposition 5, at any P-SPNE the leader plays a strategy \({\bar{x}}_3\) inducing a set of pure NEs in the followers’ game corresponding to \(S^* = \{(a_3,a_3) : {\bar{x}}_3^{a_3} \geqslant c\}\). We now show that the leader would never play two actions \(a_1,a_2 \in A {\setminus } \{\chi \}\) with \(\{v_{a_1},v_{a_2}\} \in E\) with probability greater than or equal to c in a P-SPNE. By contradiction, assume that the leader’s equilibrium strategy \({\bar{x}}_3\) is such that \({\bar{x}}_3^{a_1}, {\bar{x}}_3^{a_2} \geqslant c\). When the followers play the equilibrium \((a_1,a_1)\) (the same holds for \((a_2,a_2)\)), the leader’s utility, recalling that \(U_3^{a_1 a_1 a_1} = 0\) and \(U_3^{a_1 a_1 a_2} = \frac{-1-c}{c}\), is:

\[\sum _{a_3 \in A {\setminus } \{\chi \}} U_3^{a_1 a_1 a_3} {\bar{x}}_3^{a_3} = \sum _{a_3 \in A {\setminus } \{\chi ,a_1,a_2\}} U_3^{a_1 a_1 a_3} {\bar{x}}_3^{a_3} + \frac{-1-c}{c} \, {\bar{x}}_3^{a_2}.\]

In the right-hand side, the first term is \(<1\) (as the leader’s payoffs are \(\leqslant 1\) and \(\sum _{a_3 \in A {\setminus } \{\chi ,a_1,a_2\}} {\bar{x}}_3^{a_3} = 1 - {\bar{x}}_3^{a_1} - \bar{x}_3^{a_2} < 1 \), since \({\bar{x}}_3^{a_1} , {\bar{x}}_3^{a_2} \geqslant c\)). The second term is less than or equal to \(c \, \frac{-1-c}{c} = -1 - c\) (as \({\bar{x}}_3^{a_2} \geqslant c\)), which is strictly less than \(-1\). It follows that, since \((a_1,a_1)\) (or, equivalently, \((a_2,a_2)\)) always provides the leader with a negative utility, she would never play \({\bar{x}}_3\) in an equilibrium. This is because, by playing a pure strategy she would obtain a utility of at least zero (as the followers’ game admits a unique pure NE giving her a zero payoff when she plays a pure strategy). As a result, we have \(U_3^{a_3 a_3 a_3} = 0\) for every action \(a_3\) such that \({\bar{x}}_3^{a_3} \geqslant c\) and \(U_3^{a_1 a_1 a_3} = 1\) for every other action \(a_1\) such that \({\bar{x}}_3^{a_1} \geqslant c\) (since \(v_{a_1}\) and \(v_{a_3}\) are not connected by an edge).

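Note that, in any equilibrium \((a_1,a_1) \in S^*\), the leader’s utility (recalling that \(U_3^{a_1 a_1 a_1} = 0\)) is:

\[\sum _{a_3 \in A {\setminus } \{\chi \}} U_3^{a_1 a_1 a_3} {\bar{x}}_3^{a_3} = \sum _{a_3 \in A {\setminus } \{\chi ,a_1\} : {\bar{x}}_3^{a_3} \geqslant c} U_3^{a_1 a_1 a_3} {\bar{x}}_3^{a_3} + \sum _{a_3 \in A {\setminus } \{\chi \} : {\bar{x}}_3^{a_3} < c} U_3^{a_1 a_1 a_3} {\bar{x}}_3^{a_3},\]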

where, in the first summation in the right-hand side, each payoff \(U_3^{a_1 a_1 a_3}\) is equal to 1 (as \({\bar{x}}_3^{a_1} \geqslant c\) and \({\bar{x}}_3^{a_3} \geqslant c\)). We show that the same holds for each payoff \(U_3^{a_1 a_1 a_3}\) appearing in the second summation. By contradiction, assume that there exists an action \(a_3 \in A {\setminus } \{\chi \}\) such that \({\bar{x}}_3^{a_3} < c\) and \(U_3^{a_1 a_1 a_3}= \frac{-1-c}{c}\) for some equilibrium \((a_1,a_1) \in S^*\). By shifting all the probability that \({\bar{x}}_3\) places on \(a_3\) to actions \(a_1\) such that \((a_1,a_1) \in S^*\) (so that \(\bar{x}_3^{a_3} = 0\)), we obtain a new leader’s strategy which induces the same set \(S^*\) of pure NEs in the followers’ game. Moreover, the leader’s utility in any equilibrium \((a_1,a_1) \in S^*\) strictly increases if \(U_3^{a_1 a_1 a_3} = \frac{-1-c}{c}\), while it stays the same when \(U_3^{a_1 a_1 a_3} = 1\). This contradicts the fact that \({\bar{x}}_3\) is a P-SPNE. Thus, all the actions \(a_3 \in A {\setminus } \{\chi \}\) such that \(\bar{x}_3^{a_3} < c\) satisfy \(U_3^{a_1 a_1 a_3} = 1\) for every equilibrium \((a_1,a_1) \in S^*\).

As a result, the leader’s utility at an equilibrium \((a_3,a_3) \in S^*\) is \(1 - {\bar{x}}_3^{a_3}\). Since, due to the pessimistic assumption, the leader maximizes her utility in the worst NE, her best choice is to select an \({\bar{x}}_3\) such that all NEs yield the same utility, that is: \({\bar{x}}_3^{a_1} = {\bar{x}}_3^{a_2}\) for every \(a_1,a_2\) with \((a_1,a_1), (a_2,a_2) \in S^*\). This results in the leader playing all actions \(a_3\) such that \((a_3,a_3) \in S^*\) with the same probability \({\bar{x}}_3^{a_3} = \frac{1}{|S^*|}\), obtaining a utility of \(\frac{|S^*|-1}{|S^*|} = K\). Therefore, the vertices in the set \(\{v_{a_3} : (a_3,a_3) \in S^* \}\) form an independent set of G of size \(|S^*|=J\). The reduction is, thus, complete.

NP membership Given a triple \((a_1,a_2,x_3)\) which is encoded with a number of bits which is polynomial w.r.t. the size of the game, we can verify in polynomial time whether \((a_1,a_2)\) is an NE in the followers’ game induced by \(x_3\) and whether, when playing \((a_1,a_2,x_3)\), the leader’s utility is at least as large as K. The existence of such a triple follows as a consequence of the correctness of either of the two equilibrium-finding algorithms that we propose in Sect. 6; we refer the reader to Sect. 6.2 for a discussion on this. Therefore, we deduce that P-SPNE-d belongs to NP. Moreover, since in the game of the reduction the players’ payoffs are encoded with a polynomial number of bits and due to IND-SET being strongly NP-complete, P-SPNE-d is strongly NP-complete. \(\square \)
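The reduction from IND-SET-d can be illustrated on a toy graph. The sketch below is only an illustration under stated assumptions: it takes for granted, via Proposition 5, that the pure NEs induced by \(x_3\) are the diagonal outcomes \((a,a)\) with \(x_3^{a} \geqslant c\), it uses 0-based vertex indices, and the names `leader_payoff` and `pessimistic_utility`, as well as the path graph and the value \(c=\frac{1}{4}\), are hypothetical choices.

```python
from fractions import Fraction


def leader_payoff(a1, a3, edges, c):
    """U_3 at (a1, a1, a3): 0 if a1 == a3, (-1-c)/c on an edge, 1 otherwise."""
    if a1 == a3:
        return Fraction(0)
    if frozenset((a1, a3)) in edges:
        return (-1 - c) / c
    return Fraction(1)


def pessimistic_utility(x3, edges, c):
    """Worst leader utility over the NEs (a, a) with x3[a] >= c (Proposition 5)."""
    r = len(x3)
    equilibria = [a for a in range(r) if x3[a] >= c]
    return min(
        sum(leader_payoff(a1, a3, edges, c) * x3[a3] for a3 in range(r))
        for a1 in equilibria
    )


# Hypothetical instance: the path v0 - v1 - v2, with c < 1/r as in the mapping.
edges = {frozenset((0, 1)), frozenset((1, 2))}
c = Fraction(1, 4)
half = Fraction(1, 2)

independent = pessimistic_utility([half, 0, half], edges, c)  # V* = {v0, v2}
adjacent = pessimistic_utility([half, half, 0], edges, c)     # v0, v1 share an edge
pure = pessimistic_utility([1, 0, 0], edges, c)               # pure commitment
print(independent, adjacent, pure)
```

With \(J=2\), the uniform strategy over the independent set \(\{v_0,v_2\}\) yields \(K=\frac{J-1}{J}\), whereas placing probability at least c on two adjacent vertices yields a negative utility, in line with the argument of the proof.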

4.2 Inapproximability

We show now that P-SPNE-s (the search problem of computing a P-SPNE) is not only NP-hard (due to its decision version, P-SPNE-d, being NP-complete), but it is also difficult to approximate. Since the reduction from IND-SET which we gave in Theorem 1 is not approximation-preserving, we propose a new one based on 3-SAT (see Definition 3). We remark that, differently from our previous reduction (which holds for any number of followers greater than or equal to two), this one requires at least three followers.

In the following, given a literal l (an occurrence of a variable, possibly negated), we define v(l) as its corresponding variable. Moreover, for a generic clause \(\phi = l_1 \vee l_2 \vee l_3\), we denote by \(L_{\phi }\) the ordered set of the eight possible truth assignments to the variables \(x=v(l_1)\), \(y=v(l_2)\), and \(z=v(l_3)\), where, in each truth assignment, a variable is set to 1 if positive and to 0 if negative. Given a generic 3-SAT instance, we build a corresponding normal-form game as detailed in the following definition.

Definition 5

Given a 3-SAT instance where \(C=\{\phi _1,\ldots ,\phi _t\}\) is a collection of clauses and \(V=\{v_1,\ldots ,v_r\}\) is a set of Boolean variables, and some \(\epsilon \in (0,1)\), let \(\varGamma _\epsilon (C,V)\) be a normal-form game with four players (\(n=4\)) defined as follows. The fourth player has an action for each variable in V plus an additional one, i.e., \(A_4=\{1,\ldots ,r\}\cup \{w\}\). Each action \(a_4 \in \{1,\dots ,r\}\) is associated with variable \(v_{a_4}\). The other players share the same set of actions A, with \(A=A_1=A_2=A_3=\{\varphi _{ca} \mid c \in \{1, \dots , t\}, a \in \{1,\dots ,8\}\}\cup \{\chi \}\), where each action \(\varphi _{ca}\) is associated with one of the eight possible assignments of truth to the variables appearing in clause \(\phi _c\), so that \(\varphi _{ca}\) corresponds to the a-th assignment in the ordered set \(L_{\phi _c}\). For each player \(p \in \{1,2,3\}\), we define her utilities as follows:

for each \(a_4 \in A_4{\setminus }\{w\}\) and for each \(a_1 \in A{\setminus }\{\chi \}\) with \(a_1=\varphi _{ca}=l_1 l_2 l_3\), \(U_p^{a_1 a_1 a_1 a_4}=1\) if \(v(l_p)=v_{a_4}\) and \(l_p\) is a positive literal or \(v(l_p) \ne v_{a_4}\) and \(l_p\) is negative;

for each \(a_4 \in A_4{\setminus }\{w\}\) and for each \(a_1 \in A{\setminus }\{\chi \}\) with \(a_1=\varphi _{ca}=l_1 l_2 l_3\), \(U_p^{a_1 a_1 a_1 a_4}=0\) if \(v(l_p)=v_{a_4}\) and \(l_p\) is a negative literal or \(v(l_p) \ne v_{a_4}\) and \(l_p\) is positive;

for each \(a_1 \in A{\setminus }\{\chi \}\) with \(a_1=\varphi _{ca}=l_1 l_2 l_3\), \(U_p^{a_1 a_1 a_1 w}=0\) if \(l_p\) is a positive literal, while \(U_p^{a_1 a_1 a_1 w}=1\) otherwise;

for each \(a_4 \in A_4\) and for each \(a_1,a_2,a_3 \in A{\setminus }\{\chi \}\) such that \(a_1\ne a_2 \vee a_2\ne a_3 \vee a_1\ne a_3\), \(U_p^{a_1 a_2 a_3 a_4}=\frac{1}{r+2}\);

for each \(a_4 \in A_4\), \(a_3 \in A{\setminus }\{\chi \}\), and \(a_2 \in A{\setminus }\{\chi \}\) with \(a_2 =\varphi _{ca}=l_1 l_2 l_3\), \(U_1^{\chi a_2 a_3 a_4}=\frac{1}{r+1}\) if \(l_1\) is a positive literal, whereas \(U_1^{\chi a_2 a_3 a_4}=\frac{r}{r+1}\) if \(l_1\) is negative, while \(U_2^{\chi a_2 a_3 a_4}=U_3^{\chi a_2 a_3 a_4}=0\);

for each \(a_4 \in A_4\), \(a_3 \in A{\setminus }\{\chi \}\), and \(a_1 \in A{\setminus }\{\chi \}\) with \(a_1 =\varphi _{ca}=l_1 l_2 l_3\), \(U_2^{a_1 \chi a_3 a_4}=\frac{1}{r+1}\) if \(l_2\) is a positive literal, whereas \(U_2^{a_1 \chi a_3 a_4}=\frac{r}{r+1}\) if \(l_2\) is negative, while \(U_1^{a_1 \chi a_3 a_4}=1\) and \(U_3^{a_1 \chi a_3 a_4}=0\);

for each \(a_4 \in A_4\), \(a_1 \in A{\setminus }\{\chi \}\), and \(a_2 \in A{\setminus }\{\chi \}\) with \(a_2=\varphi _{ca}=l_1 l_2 l_3\), \(U_3^{a_1 a_2 \chi a_4}=\frac{1}{r+1}\) if \(l_3\) is a positive literal, whereas \(U_3^{a_1 a_2 \chi a_4}=\frac{r}{r+1}\) if \(l_3\) is negative, while \(U_1^{a_1 a_2 \chi a_4}=0\) and \(U_2^{a_1 a_2 \chi a_4}=1\);

for each \(a_4 \in A_4\), \(U_1^{a_1 \chi \chi a_4}=U_3^{a_1 \chi \chi a_4}=1\) and \(U_2^{a_1 \chi \chi a_4}=0\), for all \(a_1 \in A{\setminus }\{\chi \}\);

for each \(a_4 \in A_4\), \(U_1^{\chi a_2 \chi a_4}=1\) and \(U_2^{\chi a_2 \chi a_4}=U_3^{\chi a_2 \chi a_4}=0\), for all \(a_2 \in A{\setminus }\{\chi \}\);

for each \(a_4 \in A_4\), \(U_1^{\chi \chi a_3 a_4}=U_3^{\chi \chi a_3 a_4}=0\) and \(U_2^{\chi \chi a_3 a_4}=1\), for all \(a_3 \in A\).

The payoff matrix of the fourth player is so defined:

for each \(a_4 \in A_4\) and for each \(a_1 \in A{\setminus }\{\chi \}\) with \(a_1=\varphi _{ca}=l_1 l_2 l_3\), \(U_4^{a_1 a_1 a_1 a_4}=\epsilon \) if the truth assignment identified by \(\varphi _{ca}\) makes \(\phi _c\) false (i.e., whenever, for each \(p \in \{1,2,3\}\), the clause \(\phi _c\) contains the negation of \(l_p\)), while \(U_4^{a_1 a_1 a_1 a_4}=1\) otherwise;

for each \(a_4 \in A_4\) and for each \(a_1,a_2,a_3 \in A\) such that \(a_1\ne a_2 \vee a_2\ne a_3 \vee a_1\ne a_3\), with the addition of the triple \((\chi ,\chi ,\chi )\), \(U_4^{a_1 a_2 a_3 a_4}=0\).

Games adhering to Definition 5 have some interesting properties, which we formally state in the following Propositions 6 and 7.

First, we give a characterization of the strategy space of the leader in terms of the set of pure NEs in the followers’ game. In particular, given a game \(\varGamma _\epsilon (C,V)\), the leader’s strategy space \(\varDelta _4\) is partitioned according to the boundaries \(x_4^{a_4} = \frac{1}{r+1}\), for \(a_4 \in A_4 {\setminus }\{w\}\), by which \(\varDelta _4\) is split into \(2^r\) regions, each corresponding to a possible truth assignment to the variables in V. Specifically, in the assignment corresponding to a region, variable \(v_{a_4}\) takes value 1 if \(x_4^{a_4} \geqslant \frac{1}{r+1}\), while it takes value 0 if \(x_4^{a_4} \leqslant \frac{1}{r+1}\). Moreover, for each \(a_1 \in A {\setminus } \{\chi \}\) and \(a_1=\varphi _{ca}\) an outcome \((a_1,a_1,a_1)\) is an NE in the followers’ game only in the regions of the leader’s strategy space whose corresponding truth assignment is compatible with the one represented by \(\varphi _{ca}\). For instance, if \(\varphi _{ca}={\bar{v}}_1 v_2 v_3\) the corresponding outcome is an NE only if \(x_4^1 \leqslant \frac{1}{r+1}\), \(x_4^2 \geqslant \frac{1}{r+1}\), and \(x_4^3 \geqslant \frac{1}{r+1}\) (with no further restrictions on the other probabilities). Formally, we can claim the following:

Proposition 6

Given a game \(\varGamma _\epsilon (C,V)\) and an action \(a_1 \in A {\setminus } \{\chi \}\) with \(a_1=\varphi _{ca}=l_1 l_2 l_3\), the outcome \((a_1,a_1,a_1)\) is an NE of the followers’ game whenever the leader commits to a strategy \(x_4 \in \varDelta _4\) such that:

\(x_4^{a_4} \geqslant \frac{1}{r+1}\) if \(v(l_p)=v_{a_4}\) and \(l_p\) is a positive literal, for some \(p \in \{1,2,3\}\);

\(x_4^{a_4} \leqslant \frac{1}{r+1}\) if \(v(l_p)=v_{a_4}\) and \(l_p\) is a negative literal, for some \(p \in \{1,2,3\}\);

\(x_4^{a_4}\) can take any value if \(v(l_p) \ne v_{a_4}\) for each \(p \in \{1,2,3\}\).

All the other outcomes of the followers’ game, i.e., those belonging to the set \(\{ (a_1,a_2,a_3) : a_1,a_2,a_3 \in A \text { with } a_1\ne a_2 \vee a_2\ne a_3 \vee a_1\ne a_3 \} \cup \{(\chi ,\chi ,\chi )\}\), cannot be NEs for any of the leader’s commitments.

Proof

Observe that the followers’ payoffs do not depend on the leader’s strategy \(x_4\) in the outcomes not in \(\{(a_1,a_1,a_1) : a_1 \in A {\setminus } \{\chi \} \}\). Thus, for every \(a_1,a_2,a_3 \in A {\setminus } \{\chi \}\) such that \(a_1\ne a_2 \vee a_2\ne a_3 \vee a_1\ne a_3\) the outcome \((a_1,a_2,a_3)\) cannot be an NE as the first follower would deviate by playing action \(\chi \), obtaining a utility at least as large as \(\frac{1}{r+1}\), instead of \(\frac{1}{r+2}\). Also, for all \(a_2, a_3 \in A {\setminus } \{\chi \}\) the outcome \((\chi ,a_2,a_3)\) is not an NE since the second follower would be better off playing \(\chi \) (as she gets \(1 > 0\)). Analogously, for all \(a_1, a_3 \in A {\setminus } \{\chi \}\) the outcome \((a_1,\chi ,a_3)\) cannot be an NE as the third follower would deviate to \(\chi \) (getting a utility of \(1 > 0\)). For all \(a_3 \in A\), a similar argument also applies to the outcome \((\chi ,\chi ,a_3)\) as the first follower would have an incentive to deviate by playing any action different from \(\chi \) (note that \((\chi ,\chi ,\chi )\), whose payoffs are defined in the last item of Definition 5, is included). Moreover, for all \(a_1 \in A {\setminus } \{\chi \}\) the outcome \((a_1,\chi ,\chi )\) is not an NE as the second follower would deviate to any other action (getting a utility of 1). For all \(a_1, a_2 \in A {\setminus } \{\chi \}\), the same holds for the outcome \((a_1,a_2,\chi )\), where the first follower would deviate and play action \(\chi \), and for the outcome \((\chi ,a_2,\chi )\) where, for all \(a_2 \in A {\setminus } \{\chi \}\), the second follower would deviate and play \(\chi \).

Therefore, the only outcomes which can be NEs in the followers’ game are those in \(\{(a_1,a_1,a_1) : a_1 \in A {\setminus } \{\chi \} \}\). Assume that the leader commits to an arbitrary mixed strategy \(x_4 \in \varDelta _4\). For each \(a_1 \in A {\setminus } \{\chi \}\) with \(a_1=\varphi _{ca}=l_1 l_2 l_3\) and for each \(p \in \{1,2,3\}\), the outcome \((a_1,a_1,a_1)\) provides follower p with a utility of \(u_p\) such that:

\(u_p=x_4^{a_4}\) if \(v(l_p)=v_{a_4}\) and \(l_p\) is a positive literal;

\(u_p=1-x_4^{a_4}\) if \(v(l_p)=v_{a_4}\) and \(l_p\) is a negative literal.

The outcome \((a_1,a_1,a_1)\) is an NE if the following conditions hold:

\(u_p \geqslant \frac{1}{r+1}\) for each \(p \in \{1,2,3\}\) such that \(l_p\) is positive, as otherwise follower p would deviate and play \(\chi \);

\(u_p \geqslant \frac{r}{r+1}\) for each \(p \in \{1,2,3\}\) such that \(l_p\) is negative, as otherwise follower p would deviate and play \(\chi \).

The claim is proven by these conditions, together with the definition of \(u_p\). \(\square \)

The characterization of the leader’s strategy space given in Proposition 6 establishes the relationship between the leader’s utility in a P-SPNE of a game \(\varGamma _\epsilon (C,V)\) and the feasibility of the corresponding 3-SAT instance. We highlight it in the following proposition.

Proposition 7

Given a game \(\varGamma _\epsilon (C,V)\), the leader’s utility in a P-SPNE is 1 if and only if the corresponding 3-SAT instance is feasible, and it is equal to \(\epsilon \) otherwise.

Proof

The result follows from Proposition 6. If the 3-SAT instance is a YES instance (i.e., if it is feasible), then there exists a strategy \(x_4 \in \varDelta _4\) such that all the NEs of the resulting followers’ game provide the leader with a utility of 1. This is because there is a region corresponding to a truth assignment which satisfies all the clauses. On the other hand, if the 3-SAT instance is a NO instance (i.e., if it is not satisfiable), then in each region of the leader’s strategy space there exists an NE for the followers’ game which provides the leader with a utility of \(\epsilon \). Therefore, the followers would always play such an equilibrium due to the assumption of pessimism. \(\square \)
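The dichotomy of Proposition 7 can be reproduced on tiny 3-SAT instances. The following sketch is illustrative only and rests on several assumptions: it does not build the full payoff tensors of Definition 5 but applies the conditions of Proposition 6 directly; clauses are encoded as triples of nonzero integers (`+i` for \(v_i\), `-i` for its negation) over three distinct variables; and the helpers `region_strategy`, `pessimistic_utility`, and `best_over_regions` are hypothetical names. Only one strategy in the interior of each of the \(2^r\) regions is evaluated.

```python
from fractions import Fraction
from itertools import product


def region_strategy(tau):
    """A strategy x_4 (last entry: action w) strictly inside the region of tau."""
    r = len(tau)
    thr = Fraction(1, r + 1)
    delta = Fraction(1, 2 * r * (r + 1))   # small enough to keep w's mass >= 0
    x = [thr + delta if value else Fraction(0) for value in tau]
    x.append(1 - sum(x))
    return x


def pessimistic_utility(clauses, x4, eps):
    """Worst leader payoff over the diagonal NEs given by Proposition 6."""
    r = len(x4) - 1
    thr = Fraction(1, r + 1)
    worst = None
    for clause in clauses:
        variables = [abs(lit) for lit in clause]
        for bits in product((True, False), repeat=3):   # the eight actions phi_ca
            is_ne = all(
                x4[v - 1] >= thr if bit else x4[v - 1] <= thr
                for v, bit in zip(variables, bits)
            )
            if not is_ne:
                continue
            satisfied = any(bit == (lit > 0) for lit, bit in zip(clause, bits))
            payoff = Fraction(1) if satisfied else eps
            worst = payoff if worst is None else min(worst, payoff)
    return worst


def best_over_regions(clauses, r, eps):
    """Best pessimistic utility over one interior strategy per region."""
    return max(
        pessimistic_utility(clauses, region_strategy(tau), eps)
        for tau in product((True, False), repeat=r)
    )


r = 3
eps = Fraction(1, 2 ** r + 1)          # a value in (0, 1/2^r)
sat = [(1, 2, 3), (-1, 2, 3)]
unsat = [tuple(s * v for s, v in zip(signs, (1, 2, 3)))
         for signs in product((1, -1), repeat=3)]
print(best_over_regions(sat, r, eps), best_over_regions(unsat, r, eps))
```

The enumeration over regions is exponential in r, which is consistent with the hardness result: the sketch verifies the construction, it does not solve it efficiently.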

We are now ready to state the result.

Theorem 2

With \(n \geqslant 4\) and unless P = NP, P-SPNE-s cannot be approximated in polynomial time to within any multiplicative factor which is polynomial in the size of the normal-form game given as input, nor (assuming the payoffs are normalized between 0 and 1) to within any constant additive loss strictly smaller than 1.

Proof

Given a generic 3-SAT instance, let us build its corresponding game \(\varGamma _\epsilon (C,V)\) according to Definition 5. This construction can be done in polynomial time as \(|A_4|=r+1\) and \(|A|=|A_1|=|A_2|=|A_3|=8t+1\) are polynomials in r and t, and, therefore, the number of outcomes in \(\varGamma _\epsilon (C,V)\) is polynomial in r and t. Furthermore, let us select \(\epsilon \in \big (0,\frac{1}{2^{r}}\big )\) (the polynomiality of the reduction is preserved as \(\frac{1}{2^{r}}\) is representable in binary encoding with a polynomial number of bits).

By contradiction, let us assume that there exists a polynomial-time approximation algorithm \({\mathcal {A}}\) capable of constructing a solution to the problem of computing a P-SPNE with a multiplicative approximation factor \(\frac{1}{\text {poly}(I)}\), where \(\text {poly}(I)\) is any polynomial function of the size I of the normal-form game given as input. By Proposition 7, it follows that, when applied to \(\varGamma _\epsilon (C,V)\), \({\mathcal {A}}\) would return an approximate solution with value greater than or equal to \(1 \cdot \frac{1}{\text {poly}(I)} > \frac{1}{2^{r}}\) (for a sufficiently large r) if and only if the 3-SAT instance is feasible. When the 3-SAT instance is not satisfiable, \({\mathcal {A}}\) would return a solution with value at most \(\frac{1}{2^r}\). Since this would provide us with a solution to 3-SAT in polynomial time, we conclude that P-SPNE-s cannot be approximated in polynomial time to within any polynomial multiplicative factor unless P = NP.

For the additive case, observe that an algorithm \({\mathcal {A}}\) with a constant additive loss \(\ell < 1\) would return a solution of value at least \(1 - \ell \) for feasible 3-SAT instances and a solution of value at most \(\frac{1}{2^r}\) for infeasible ones. As for any \(\ell < 1 - \frac{1}{2^r}\) this algorithm would allow us to decide in polynomial time whether the 3-SAT instance is feasible or not, a contradiction unless \(\textsf {P} = \textsf {NP}\), we deduce \(\ell \geqslant 1 - \frac{1}{2^r}\). Since \(\frac{1}{2^r} \rightarrow 0\) for \(r \rightarrow \infty \), this implies \(\ell \geqslant 1\), a contradiction. \(\square \)
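The phrase "for a sufficiently large r" in the multiplicative case can be made concrete with a short calculation. The sketch below is a rough illustration under assumptions that are not in the text: the size I of the game is taken to be its number of payoff entries, \(4(8t+1)^3(r+1)\), the polynomial is \(\text {poly}(I)=I^k\), and the number of clauses is tied to the number of variables by \(t = \texttt{clauses\_per\_variable} \cdot r\). The function names are hypothetical.

```python
def game_size(r, t):
    """Payoff entries of the game: 4 players times (8t+1)^3 (r+1) outcomes."""
    return 4 * (8 * t + 1) ** 3 * (r + 1)


def first_r_with_gap(k, clauses_per_variable=1):
    """Smallest r with 2^r > I^k, i.e., with 1/I^k > 1/2^r."""
    r = 1
    while 2 ** r <= game_size(r, clauses_per_variable * r) ** k:
        r += 1
    return r


for k in (1, 2, 3):
    r0 = first_r_with_gap(k)
    print(k, r0, game_size(r0, r0))
```

The loop terminates because \(2^r\) eventually dominates any fixed power of a polynomial in r; from that point on, the guarantee \(\frac{1}{\text {poly}(I)}\) separates satisfiable instances from unsatisfiable ones.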

5 Single-Level Reformulation and Restriction

In this section, we propose a single-level reformulation of the problem admitting a supremum but, in general, not a maximum, and a corresponding restriction which always admits optimal (restricted) solutions.

For notational simplicity, we consider the case with \(n=3\) players. Although notationally more involved, the generalization to \(n \geqslant 3\) is straightforward. With only two followers, Problem (2), i.e., the bilevel programming formulation we gave in Sect. 3.2, reads:

5.1 Single-Level Reformulation

In order to cast Problem (3) into a single-level problem, we first introduce the following reformulation of the followers’ problem:

Lemma 1

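The following MILP, parametric in \(x_3\), is an exact reformulation of the followers’ problem of finding a pure NE which minimizes the leader’s utility given a leader’s strategy \(x_3\):

\[\begin{aligned}
\min _{y} \quad & \sum _{(a_1,a_2) \in A_1 \times A_2} y^{a_1a_2} \sum _{a_3 \in A_3} U_3^{a_1a_2a_3} x_3^{a_3} && \text {(4a)} \\
\text {s.t.} \quad & \sum _{(a_1,a_2) \in A_1 \times A_2} y^{a_1a_2} = 1 && \text {(4b)} \\
& y^{a_1a_2} \sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3} \geqslant 0 \quad \forall (a_1,a_2) \in A_1 \times A_2, a_1' \in A_1 && \text {(4c)} \\
& y^{a_1a_2} \sum _{a_3 \in A_3} (U_2^{a_1a_2a_3} - U_2^{a_1a_2'a_3}) x_3^{a_3} \geqslant 0 \quad \forall (a_1,a_2) \in A_1 \times A_2, a_2' \in A_2 && \text {(4d)} \\
& y^{a_1a_2} \in \{0,1\} \quad \forall (a_1,a_2) \in A_1 \times A_2 && \text {(4e)}
\end{aligned}\]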

Proof

Note that, in Problem (3), a solution to the followers’ problem satisfies \(x_1^{a_1}=x_2^{a_2}=1\) for some \((a_1,a_2) \in A_1 \times A_2\) and \(x_1^{a_1'}=x_2^{a_2'}=0\) for all \((a_1',a_2') \ne (a_1,a_2)\). Problem (4) encodes this in terms of the variable \(y^{a_1a_2}\) by imposing \(y^{a_1a_2} = 1\) if and only if \((a_1,a_2)\) is a pessimistic NE. Let us look at this in detail.

Due to Constraints (4b) and (4e), \(y^{a_1a_2}\) is equal to 1 for one and only one pair \((a_1,a_2)\).

Due to Constraints (4c) and (4d), for all \((a_1,a_2)\) such that \(y^{a_1a_2}=1\) there can be no action \(a_1' \in A_1\) (respectively, \(a_2' \in A_2\)) by which the first follower (respectively, the second follower) could obtain a better payoff when assuming that the other follower would play action \(a_2\) (respectively, action \(a_1\)). This guarantees that \((a_1,a_2)\) be an NE. Also note that Constraints (4c) and (4d) boil down to the tautology \(0 \geqslant 0\) for any \((a_1,a_2) \in A_1 \times A_2\) with \(y^{a_1a_2} = 0\).

By minimizing the objective function (which corresponds to the leader’s utility), a pessimistic pure NE is found.\(\square \)
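For small games, the followers' problem of Lemma 1 can also be solved by plain enumeration rather than via a MILP solver. The sketch below is a minimal illustration, not the method used in the paper: payoffs are stored as nested lists `U[a1][a2][a3]`, the function name `pessimistic_pure_ne` and the 2×2×2 game are hypothetical, and ties among worst-case equilibria are broken by enumeration order.

```python
from fractions import Fraction
from itertools import product


def expected(U, a1, a2, x3):
    """Expected payoff at (a1, a2) under the leader's mixed strategy x3."""
    return sum(U[a1][a2][a3] * x3[a3] for a3 in range(len(x3)))


def pessimistic_pure_ne(U1, U2, U3, x3):
    """Return (leader_utility, (a1, a2)) for a pure NE minimizing the leader's
    expected utility, or None if the followers' game has no pure NE."""
    m1, m2 = len(U1), len(U1[0])
    best = None
    for a1, a2 in product(range(m1), range(m2)):
        # No profitable unilateral deviation for either follower.
        stable_1 = all(expected(U1, a1, a2, x3) >= expected(U1, d, a2, x3)
                       for d in range(m1))
        stable_2 = all(expected(U2, a1, a2, x3) >= expected(U2, a1, d, x3)
                       for d in range(m2))
        if stable_1 and stable_2:
            value = expected(U3, a1, a2, x3)
            if best is None or value < best[0]:
                best = (value, (a1, a2))
    return best


# Hypothetical game: the followers coordinate, the leader prefers (0, 0).
U1 = [[[1, 1], [0, 0]], [[0, 0], [1, 1]]]
U2 = U1
U3 = [[[1, 1], [0, 0]], [[0, 0], [0, 1]]]
x3 = [Fraction(1, 2), Fraction(1, 2)]
print(pessimistic_pure_ne(U1, U2, U3, x3))
```

In this toy game both \((0,0)\) and \((1,1)\) are pure NEs, and the pessimistic assumption selects the one which is worse for the leader.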

To arrive at a single-level reformulation of Problem (3), we rely on linear programming duality to restate Problem (4) in terms of optimality conditions which do not employ the min operator. First, we show the following:

Lemma 2

The linear programming relaxation of Problem (4) always admits an optimal integer solution.

Proof

Let us focus on Constraints (4c) and analyse, for all \((a_1,a_2) \in A_1 \times A_2\) and \(a_1' \in A_1\), the coefficient \(\sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3}\) which multiplies \(y^{a_1a_2}\). The coefficient is equal to the regret the first player would suffer by not playing action \(a_1'\). If it is equal to 0, we have the tautology \(0 \geqslant 0\). If the regret is positive, after dividing both sides of the constraint by \(\sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3}\) we obtain \(y^{a_1a_2} \geqslant 0\), which is subsumed by the nonnegativity of \(y^{a_1a_2}\). If the regret is negative, after dividing both sides of the constraint again by \(\sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3}\) we obtain \(y^{a_1a_2} \leqslant 0\), which implies \(y^{a_1a_2}=0\). A similar reasoning applies to Constraints (4d).

Let us now define O as the set of pairs \((a_1,a_2)\) such that there is at least one action \(a_1'\) or \(a_2'\) for which one of the followers suffers from a strictly negative regret. We have \(O {:}{=} \{(a_1,a_2) \in A_1 \times A_2: \exists a_1' \in A_1 \text { with } \sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3}< 0 \vee \exists a_2' \in A_2 \text { with } \sum _{a_3 \in A_3} (U_2^{a_1a_2a_3} - U_2^{a_1a_2'a_3}) x_3^{a_3} < 0\}\). Relying on O, Problem (4) can be rewritten as:

All variables \(y^{a_1a_2}\) with \((a_1,a_2) \in O\) can be discarded. We obtain a problem with a single constraint imposing that the sum of all the \(y^{a_1a_2}\) variables with \((a_1,a_2) \notin O\) be equal to 1. Since the objective function is minimized, the linear programming relaxation of such a problem always admits an optimal solution with \(y^{a_1a_2} = 1\) for the pair \((a_1,a_2)\) which achieves the smallest value of \(\sum _{a_3 \in A_3} U_3^{a_1a_2a_3} x_3^{a_3}\) (ties can be broken arbitrarily), and with \(y^{a_1a_2} = 0\) otherwise.

\(\square \)
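The argument of Lemma 2 can be illustrated numerically: once the pairs in O are discarded, the relaxation minimizes a linear function over a simplex, so no fractional point can beat the best vertex. The sketch below is an informal check on a hypothetical 2×2×2 game, not a proof; the helper names (`exp_u`, `excluded_pairs`, `objective`) and the random sampling of fractional points are assumptions made for illustration.

```python
import random
from fractions import Fraction
from itertools import product


def exp_u(U, a1, a2, x3):
    """Expected payoff at (a1, a2) under the leader's mixed strategy x3."""
    return sum(U[a1][a2][a3] * x3[a3] for a3 in range(len(x3)))


def excluded_pairs(U1, U2, x3):
    """The set O: pairs where some follower has a strictly negative coefficient."""
    m1, m2 = len(U1), len(U1[0])
    O = set()
    for a1, a2 in product(range(m1), range(m2)):
        dev1 = any(exp_u(U1, a1, a2, x3) < exp_u(U1, d, a2, x3) for d in range(m1))
        dev2 = any(exp_u(U2, a1, a2, x3) < exp_u(U2, a1, d, x3) for d in range(m2))
        if dev1 or dev2:
            O.add((a1, a2))
    return O


def objective(y, U3, x3):
    """Leader's expected utility for a (possibly fractional) y."""
    return sum(w * exp_u(U3, a1, a2, x3) for (a1, a2), w in y.items())


# Hypothetical game: the followers coordinate, the leader prefers (0, 0).
U1 = [[[1, 1], [0, 0]], [[0, 0], [1, 1]]]
U2 = U1
U3 = [[[1, 1], [0, 0]], [[0, 0], [0, 1]]]
x3 = [Fraction(1, 2), Fraction(1, 2)]

O = excluded_pairs(U1, U2, x3)
allowed = [p for p in product(range(2), range(2)) if p not in O]
vertex_value = min(exp_u(U3, a1, a2, x3) for a1, a2 in allowed)

# Sample fractional points of the relaxed feasible region (y = 0 on O, sum = 1).
rng = random.Random(0)
fractional_values = []
for _ in range(100):
    weights = [Fraction(rng.randint(1, 10)) for _ in allowed]
    total = sum(weights)
    y = {p: w / total for p, w in zip(allowed, weights)}
    fractional_values.append(objective(y, U3, x3))

print(sorted(O), vertex_value, min(fractional_values) >= vertex_value)
```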

As a consequence of Lemma 2, the following can be established:

Theorem 3

The following single-level Quadratically Constrained Quadratic Program (QCQP) is an exact reformulation of Problem (3):

Proof

By relying on Lemma 2, we first introduce the linear programming dual of the linear programming relaxation of Problem (4). Thanks to Constraint (4b), \(y^{a_1a_2} \in \{0,1\}\) can be relaxed w.l.o.g. into \(y^{a_1a_2} \in {\mathbb {Z}}^+\) for all \(a_1 \in A_1, a_2 \in A_2\). This way, we do not have to introduce a dual variable for each of the constraints \(y^{a_1a_2} \leqslant 1\) which would be introduced when relaxing \(y^{a_1a_2} \in \{0,1\}\) into \(y^{a_1a_2} \in [0,1]\). Letting \(\alpha \), \(\beta _1^{a_1a_2a_1'}\), and \(\beta _2^{a_1a_2a_2'}\) be the dual variables of, respectively, Constraints (4b), (4c), and (4d), the dual reads:

A set of optimality conditions for Problem (4) can then be derived by simultaneously imposing primal and dual feasibility for the sets of primal and dual variables (by imposing the respective constraints) and equating the objective functions of the two problems.

The dual variable \(\alpha \) can then be removed by replacing it with the primal objective function, leading to Constraints (5e).

The result in the claim is obtained after introducing the leader’s utility as objective function and then casting the resulting problem as a maximization problem (in which a supremum is sought). \(\square \)

Since, as shown in Proposition 3, the problem of computing a P-SPNE in a normal-form game may only admit a supremum but not a maximum, the same must hold for Problem (5) due to its correctness (established in Theorem 3).

We formally highlight this property in the following proposition, showing in the proof how this can manifest in terms of the variables of the formulation.

Proposition 8

In the general case, Problem (5) may not admit a finite optimal solution.

Proof

Consider the game introduced in the proof of Proposition 3 and let \(x_3 = (1-\rho , \rho )\) for \(\rho \in [0,1]\). Adopting, for convenience, the notation \((a_1^1,a_2^1)=(1,1)\), \((a_1^1,a_2^2)=(1,2)\), \((a_1^2,a_2^1)=(2,1)\), and \((a_1^2,a_2^2)=(2,2)\), Constraints (5e) read:

Note that the left-hand sides of the four constraints are all equal to the objective function (i.e., to the leader’s utility).

Let us consider the case \(\rho < 0.5\) for which, as shown in the proof of Proposition 3, (1, 2) is the unique pure NE in the followers’ game. Let \(\rho =0.5-\epsilon \) for \(\epsilon \in \left( 0,0.5\right] \) (which is equivalent to assuming \(\rho < 0.5\)). The outcome (1, 2) is obtained by letting \(y^{12} = 1\) and \(y^{11}=y^{21}=y^{22} = 0\), for which the left-hand sides of the four constraints become equal to \(7.5 - 5\epsilon \). Note that this value converges to the supremum as \(\epsilon \rightarrow 0\). For this choice of y and \(\rho \), the constraints read:

Rearrange the four constraints as follows:

The second constraint implies \(\beta _1^{122} = \beta _2^{121} =0\). Letting \(\beta _1^{112} = \beta _2^{221} =0\), which corresponds to the least restriction on the first and fourth constraints, we derive:

As \(\epsilon \rightarrow 0\), we have a finite lower bound for \(\beta _2^{111}\) and \(\beta _1^{221}\), but we also have \(\beta _1^{211} + \beta _2^{212} \geqslant \frac{6.5 - 5\epsilon }{\epsilon } \rightarrow \infty \), which prevents \(\beta _1^{211}\) and \(\beta _2^{212}\) from taking a finite value.

With a similar argument, one can verify that there is no other way of achieving an objective function value approaching 7.5 as, for \(\rho \geqslant 0.5\), the third constraint in the original system imposes an upper bound on the objective function value of 1. \(\square \)
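To make the blow-up in the proof above concrete, the short Python sketch below evaluates the lower bound \((6.5 - 5\epsilon )/\epsilon \) on \(\beta _1^{211} + \beta _2^{212}\) next to the objective value \(7.5 - 5\epsilon \), for a few values of \(\epsilon \). The function name and the sampled values of \(\epsilon \) are illustrative assumptions; the two expressions are the ones derived in the proof.

```python
def required_multiplier_sum(eps):
    """Lower bound (6.5 - 5*eps)/eps on beta_1^{211} + beta_2^{212} when rho = 0.5 - eps."""
    if not 0 < eps <= 0.5:
        raise ValueError("eps must lie in (0, 0.5]")
    return (6.5 - 5 * eps) / eps

# The objective approaches 7.5 only as eps shrinks, while the bound grows without limit.
for eps in (0.5, 0.1, 0.01, 0.001):
    objective = 7.5 - 5 * eps
    print(f"eps={eps:<6} objective={objective:.3f} bound={required_multiplier_sum(eps):.1f}")
```

Any fixed finite cap on the two multipliers therefore excludes all sufficiently small values of \(\epsilon \).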

5.2 A Restricted Single-Level (MILP) Formulation

State-of-the-art numerical optimization solvers usually rely on the boundedness of their variables when tackling a problem. Due to the result in Proposition 8, solving the single-level formulation in Problem (5) may therefore be numerically impossible.

We consider, here, the option of introducing an upper bound of M on both \(\beta _1^{a_1a_2a_1'}\) and \(\beta _2^{a_1a_2a_2'}\), for all \(a_1 \in A_1, a_2 \in A_2, a_1' \in A_1, a_2' \in A_2\). Due to the continuity of the objective function, this suffices to obtain a formulation which, although being a restriction of the original one, always admits a maximum (over the reals) as a consequence of Weierstrass’ extreme-value theorem. Quite conveniently, this restricted reformulation can be cast as an MILP, as we now show.

Theorem 4

One can obtain an exact MILP reformulation of Problem (5) for the case where \(\beta _1^{a_1a_2a_1'} \leqslant M\) and \(\beta _2^{a_1a_2a_2'} \leqslant M\) hold for all \(a_1 \in A_1, a_2 \in A_2, a_1' \in A_1, a_2' \in A_2\), and a restricted one when these bounds are not valid.

Proof

After introducing the variable \(z^{a_1a_2a_3}\), each bilinear product \(y^{a_1a_2} x_3^{a_3}\) in Problem (5) can be linearised by substituting \(z^{a_1a_2a_3}\) for it and introducing the McCormick envelope constraints [24], which are sufficient to guarantee \(z^{a_1a_2a_3} = y^{a_1a_2} x_3^{a_3}\) if \(y^{a_1a_2}\) takes binary values [1].

Assuming \(\beta _1^{a_1a_2a_1'} \in [0,M]\) for each \(a_1 \in A_1, a_2 \in A_2, a_1' \in A_1\), we can restrict ourselves to \(\beta _1^{a_1a_2a_1'} \in \{0,M\}\). This is the case also in the dual (reported in the proof of Theorem 3). Indeed, the dual problem asks for solving the following problem:

The \(\min \) operator ranges over functions (one for each pair \((a_1,a_2) \in A_1 \times A_2\)) defined on disjoint domains (the \(\beta _1,\beta _2\) variables contained in each such function are not contained in any of the other ones). Therefore, we can w.l.o.g. set the value of \(\beta _1\) and \(\beta _2\) so that each function be individually maximized. For each \((a_1,a_2) \in A_1 \times A_2\), this is achieved by setting, for each \(a_1' \in A_1\) (resp., \(a_2' \in A_2\)), \(\beta _1^{a_1a_2a_1'}\) (resp., \(\beta _2^{a_1a_2a_2'}\)) to its upper bound M if \(\sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3} < 0\) (resp., \(\sum _{a_3 \in A_3} (U_2^{a_1a_2a_3} - U_2^{a_1a_2'a_3}) x_3^{a_3} < 0\)), and to its lower bound of 0 otherwise.

We can, therefore, introduce the variable \(p_1^{a_1a_2a_1'} \in \{0,1\}\), substituting \(M p_1^{a_1a_2a_1'}\) for each occurrence of \(\beta _1^{a_1a_2a_1'}\). This way, for each \(a_1 \in A_1, a_2 \in A_2, a_1' \in A_1\), the term \(\beta _1^{a_1a_2a_1'} \sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) x_3^{a_3}\) becomes \( M\sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) p_1^{a_1a_2a_1'} x_3^{a_3}\). We can, then, introduce the variable \(q_1^{a_1a_2a_1'a_3}\) and impose \(q_1^{a_1a_2a_1'a_3} = p_1^{a_1a_2a_1'} x_3^{a_3}\) via the McCormick envelope constraints. This way, the term \( M\sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) p_1^{a_1a_2a_1'} x_3^{a_3}\) becomes the linear term \(M \sum _{a_3 \in A_3} (U_1^{a_1a_2a_3} - U_1^{a_1'a_2a_3}) q_1^{a_1a_2a_1'a_3}\). Similar arguments can be applied for \(\beta _2^{a_1a_2a_2'}\), leading to an MILP formulation. \(\square \)
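The linearisation step used in the proof above can be checked numerically. The sketch below assumes the standard McCormick envelope of a product \(z = y x\) with both factors bounded in [0, 1] (which holds here because \(x_3^{a_3}\) is a probability), namely \(z \geqslant 0\), \(z \geqslant x + y - 1\), \(z \leqslant x\), and \(z \leqslant y\). The function name and the sampled points are illustrative assumptions.

```python
def mccormick_interval(y, x):
    """Range [lo, hi] allowed for z by the McCormick envelope of z = y*x, with y, x in [0, 1]."""
    lo = max(0.0, x + y - 1.0)   # from z >= 0 and z >= x + y - 1
    hi = min(x, y)               # from z <= x and z <= y
    return lo, hi

# With y binary, the envelope pins z to the product y*x (up to rounding).
for y in (0, 1):
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        lo, hi = mccormick_interval(y, x)
        assert abs(lo - y * x) < 1e-12 and abs(hi - y * x) < 1e-12

# With y fractional, the envelope is only a relaxation of the product.
print(mccormick_interval(0.5, 0.5))
```

This is why integrality of \(y^{a_1a_2}\) and \(p_1^{a_1a_2a_1'}\) is needed for the reformulation to be exact rather than a relaxation.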

The impact of bounding \(\beta _1^{a_1a_2a_1'}\) and \(\beta _2^{a_1a_2a_2'}\) by M is explained as follows. Assume that those upper bounds are introduced into Problem (5). If M is not large enough for the chosen \(x_3\) (remember that, as shown in Proposition 8, one may need \(M \rightarrow \infty \) for \(x_3\) approaching a discontinuity point of the leader’s utility function), Constraints (5e) may remain active for some \(({\hat{a}}_1,{\hat{a}}_2)\) which is not an NE for the chosen \(x_3\). Let \((a_1,a_2)\) be the worst-case NE the followers would play and assume that the right-hand side of Constraint (5e) for \(({\hat{a}}_1,{\hat{a}}_2)\) is strictly smaller than the utility the leader would obtain if the followers played the NE \((a_1,a_2)\), namely, \(\sum _{a_3 \in A_3} U_3^{{\hat{a}}_1 {\hat{a}}_2 a_3} x_3^{a_3} - \sum _{a_1' \in A_1} \beta _1^{{\hat{a}}_1 {\hat{a}}_2 a_1'} \sum _{a_3 \in A_3} (U_1^{{\hat{a}}_1 {\hat{a}}_2 a_3} - U_1^{a_1' {\hat{a}}_2 a_3}) x_3^{a_3} - \sum _{a_2' \in A_2} \beta _2^{{\hat{a}}_1 {\hat{a}}_2 a_2'} \sum _{a_3 \in A_3} (U_2^{{\hat{a}}_1 {\hat{a}}_2 a_3} - U_2^{{\hat{a}}_1 a_2'a_3}) x_3^{a_3} < \sum _{a_3 \in A_3} U_3^{a_1a_2a_3} x_3^{a_3}\). Letting \(y^{a_1a_2} = 1\), this constraint would be violated (as, with that value of y, the left-hand side of the constraint would be \(\sum _{a_3 \in A_3} U_3^{a_1a_2a_3} x_3^{a_3}\), which we assumed to be strictly larger than the right-hand side). This forces the choice of a different \(x_3\) for which the upper bound of M on \(\beta _1^{a_1a_2a_1'}\) and \(\beta _2^{a_1a_2a_2'}\) is sufficiently large not to cause the same issue with the worst-case NE corresponding to that \(x_3\), thus restricting the set of strategies the leader could play.

In spite of this, by solving the MILP reformulation outlined in Theorem 4 we are always guaranteed to find optimal (restricted) solutions to it (if M is large enough for the restricted problem to admit feasible solutions). Such solutions correspond to feasible strategies of the leader, guaranteeing her a lower bound on her utility at a P-SPNE.

6 Exact Algorithm

In this section, we propose an exact exponential-time algorithm for the computation of a P-SPNE, i.e., of \(\sup _{x_n \in \varDelta _n} f(x_n)\), which does not suffer from the shortcomings of the formulations we introduced in the previous section. In particular, if there is no \(x_n \in \varDelta _n\) where the leader’s utility \(f(x_n)\) attains \(\sup _{x_n \in \varDelta _n} f(x_n)\) (as \(f(x_n)\) does not admit a maximum), our algorithm also returns, together with the supremum, a strategy \({\hat{x}}_n\) which provides the leader with a utility equal to an \(\alpha \)-approximation (in the additive sense) of the supremum, namely, a strategy \({\hat{x}}_n\) satisfying \(\sup _{x_n \in \varDelta _n} f(x_n) - f({\hat{x}}_n) \leqslant \alpha \) for any additive loss \(\alpha >0\) chosen a priori. We first introduce a version of the algorithm based on explicit enumeration, in Sect. 6.1, which we then embed into a branch-and-bound scheme in Sect. 6.3.

In the remainder of the section, we denote the closure of a set \(X \subseteq \varDelta _n\) relative to \({{\,\mathrm{aff}\,}}(\varDelta _n)\) by \({\overline{X}}\), its boundary relative to \({{\,\mathrm{aff}\,}}(\varDelta _n)\) by \({{\,\mathrm{bd}\,}}(X)\), and its complement relative to \(\varDelta _n\) by \(X^c\). Note that, here, \({{\,\mathrm{aff}\,}}(\varDelta _n)\) denotes the affine hull of \(\varDelta _n\), i.e., the hyperplane in \({\mathbb {R}}^{m}\) containing \(\varDelta _n\).

6.1 Enumerative Algorithm

6.1.1 Computing \(\sup _{x_n \in \varDelta _n} f(x_n)\)

The key ingredient of our algorithm is what we call outcome configurations. Letting \(A_F {:}{=} \times _{p \in F} A_p\) be the set of the followers’ action profiles, we say that a pair \((S^+, S^-)\) with \(S^+ \subseteq A_F\) and \(S^- = A_F {\setminus } S^+\) is an outcome configuration for a given \(x_n \in \varDelta _n\) if, in the followers’ game induced by \(x_n\), all the followers’ action profiles \(a_{-n} \in S^+\) constitute an NE and all the action profiles \(a_{-n} \in S^-\) do not.

For every \(a_{-n} \in A_F\), we define \(X(a_{-n})\) as the set of all leader’s strategies \(x_n \in \varDelta _n\) for which \(a_{-n}\) is an NE in the followers’ game induced by \(x_n\). Formally, \(X(a_{-n})\) corresponds to the following (closed) polytope:

For every \(a_{-n} \in A_F\), we also introduce the set \(X^c(a_{-n})\) of all \(x_n \in \varDelta _n\) for which \(a_{-n}\) is not an NE. For that purpose, we first define the following set for each \(p \in F\):

\(D_p(a_{-n},a_p')\), which is a polytope that is neither open nor closed (as it has a missing facet, the one corresponding to its strict inequality), is the set of all values of \(x_n\) for which player p would achieve a better utility by deviating from \(a_{-n}\) and playing a different action \(a_p' \in A_p\). For every \(p \in F\), \(a_{-n} \in A_F\), and \(a'_p \in A_p\), we call the corresponding set \(D_p(a_{-n},a_p')\) degenerate if \(U_p^{a_{-n}, a_n} = U_p^{a_{-n}', a_n}\) for each \(a_n \in A_n\) (recall that \(a_{-n}'=(a_1,\ldots ,a_{p-1},a_p',a_{p+1},\ldots ,a_{n-1})\)). In a degenerate \(D_p(a_{-n},a_p')\), the constraint \(\sum _{a_n \in A_n} U_p^{a_{-n}, a_n} x_n^{a_n} < \sum _{a_n \in A_n} U_p^{a_{-n}', a_n} x_n^{a_n}\) reduces to \(0 < 0\), so the set is empty. Since, in principle, any player could deviate from \(a_{-n}\) by playing any action other than the one prescribed by \(a_{-n}\), \(X^c(a_{-n})\) is the following disjunctive set:

Notice that, since any point in \({{\,\mathrm{bd}\,}}(X^c(a_{-n}))\) which is not in \({{\,\mathrm{bd}\,}}(\varDelta _n)\) would satisfy, for some \(a_p'\), the (originally strict) inequality of \(D_p(a_{-n},a_p')\) as an equation, such a point is not in \(X^c(a_{-n})\) and, hence, \({{\,\mathrm{bd}\,}}(X^c(a_{-n})) \cap X^c(a_{-n}) \subseteq {{\,\mathrm{bd}\,}}(\varDelta _n)\). The closure \(\overline{X^c(a_{-n})}\) of \(X^c(a_{-n})\) is obtained by discarding any degenerate \(D_p(a_{-n},a_p')\) and by turning the strict constraint in the definition of each nondegenerate \(D_p(a_{-n},a_p')\) into a nonstrict one. Degenerate sets are discarded because, for such sets, turning the strict inequality into a \(\leqslant \) inequality would turn the empty set \(D_p(a_{-n},a_p')\) (whose closure is the empty set) into \(\varDelta _n\). An illustration of \(X(a_{-n})\) and \(X^c(a_{-n})\), together with the closure \(\overline{X^c(a_{-n})}\) of the latter, is reported in Fig. 6.

Fig. 6 An illustration of \(X(a_{-n})\), \(X^c(a_{-n})\), and \(\overline{X^c(a_{-n})}\) for the case with \(m=3\). The three sets are depicted as subsets (highlighted in gray and continuous lines) of the leader’s strategy space \(\varDelta _n\). Dashed lines and circles indicate parts of \(\varDelta _n\) which are not contained in the sets.
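The definitions of \(X(a_{-n})\), \(D_p(a_{-n},a_p')\), and of an outcome configuration can be sketched in a few lines of Python. The sketch assumes that each follower's utilities are stored as a dictionary `U[p][profile]` holding one list entry per leader action; this layout, the function names, the tolerance `tol`, and the toy game are hypothetical choices made for illustration and are not part of the paper.

```python
from itertools import product

def expected(U_p, profile, x_n):
    """Expected utility of one follower at `profile` under the leader's strategy x_n."""
    return sum(u * prob for u, prob in zip(U_p[profile], x_n))

def deviation(profile, p, b):
    """Profile obtained from `profile` when follower p switches to action b."""
    return profile[:p] + (b,) + profile[p + 1:]

def in_X(U, actions, profile, x_n, tol=1e-12):
    """True if x_n lies in X(profile), i.e. `profile` is a pure NE of the induced game."""
    for p, A_p in enumerate(actions):
        for b in A_p:
            if b == profile[p]:
                continue
            if expected(U[p], profile, x_n) < expected(U[p], deviation(profile, p, b), x_n) - tol:
                return False  # x_n lies in D_p(profile, b)
    return True

def is_degenerate(U, profile, p, b):
    """D_p(profile, b) is degenerate when both utility rows coincide for every leader action."""
    return list(U[p][profile]) == list(U[p][deviation(profile, p, b)])

def outcome_configuration(U, actions, x_n):
    """Pair (S_plus, S_minus) realised by x_n."""
    A_F = set(product(*actions))
    S_plus = {a for a in A_F if in_X(U, actions, a, x_n)}
    return S_plus, A_F - S_plus

# Hypothetical game: follower 0 wants to match the leader, follower 1 wants to match follower 0.
actions = [(0, 1), (0, 1)]
U = [
    {(a1, a2): [1 if a1 == a3 else 0 for a3 in (0, 1)] for a1, a2 in product(*actions)},
    {(a1, a2): [1 if a1 == a2 else 0] * 2 for a1, a2 in product(*actions)},
]
print(outcome_configuration(U, actions, [0.75, 0.25]))
print(outcome_configuration(U, actions, [0.5, 0.5]))
```

In this toy game the configuration changes at \(x_n = (0.5, 0.5)\), where follower 0 becomes indifferent and a second profile enters \(S^+\).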

For every outcome configuration \((S^+,S^-)\), we introduce the following sets:

and

While the former is a closed polytope, the latter is the union of polytopes that are neither open nor closed and, thus, it is neither open nor closed itself. Similarly to \(X^c(a_{-n})\), \(X(S^-)\) satisfies \({{\,\mathrm{bd}\,}}(X(S^-)) \cap X(S^-) \subseteq {{\,\mathrm{bd}\,}}(\varDelta _n)\). The closure \(\overline{X(S^-)}\) of \(X(S^-)\) is obtained by taking the closure of each \(X^c(a_{-n})\). Hence, \(\overline{X(S^-)} = \bigcap _{a_{-n}\in S^-} \overline{X^c(a_{-n})}\).

By leveraging these definitions, we can now focus on the set of all leader’s strategies which realize the outcome configuration \((S^+,S^-)\), namely:

As for \(X(S^-)\), \(X(S^+) \cap X(S^-)\) is neither an open nor a closed set. Due to \(X(S^+)\) being closed, the only points of \({{\,\mathrm{bd}\,}}(X(S^+) \cap X(S^-))\) which are not in \(X(S^+) \cap X(S^-)\) itself are the very points in \({{\,\mathrm{bd}\,}}(X(S^-))\) which are not in \(X(S^-)\). As a consequence, \(\overline{X(S^+) \cap X(S^-)} = X(S^+) \cap \overline{X(S^-)}\).

Let us define the set \(P {:}{=} \{(S^+,S^-) : S^+ \in 2^{A_F} \wedge S^- = A_F {\setminus } S^+\}\), which contains all the outcome configurations of the game. The following theorem highlights the structure of \(f(x_n)\), suggesting an iterative way of expressing the problem of computing \(\sup _{x_n \in \varDelta _n} f(x_n)\). We will rely on it when designing our algorithm.
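To give a sense of the size of P, the following Python sketch enumerates every pair \((S^+,S^-)\) with \(S^+ \subseteq A_F\) and \(S^-\) its complement in \(A_F\). The function name and the representation of the followers' action sets as a list of tuples are assumptions made for illustration.

```python
from itertools import chain, combinations, product

def outcome_configurations(actions):
    """Yield every (S_plus, S_minus) with S_plus a subset of A_F and S_minus its complement."""
    A_F = list(product(*actions))
    subsets = chain.from_iterable(combinations(A_F, k) for k in range(len(A_F) + 1))
    for S_plus in subsets:
        yield frozenset(S_plus), frozenset(A_F) - frozenset(S_plus)

# Two followers with two actions each: |A_F| = 4, hence 2**4 configurations.
configs = list(outcome_configurations([(0, 1), (0, 1)]))
print(len(configs))
```

The number of configurations is \(2^{|A_F|}\), which is consistent with the exponential running time of an explicit enumeration.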

Theorem 5

Let \(\displaystyle \psi (x_n;{S}^+) {:}{=} \min _{a_{-n} \in S^+} \sum _{a_n \in A_n} U_n^{a_{-n}, a_n} x_n^{a_n}\). The following holds:

Proof