Abstract

We show that randomization can lead to significant improvements for a few fundamental problems in distributed tracking. Our basis is the count-tracking problem, where there are k players, each holding a counter \(n_i\) that gets incremented over time, and the goal is to track an \(\varepsilon \)-approximation of their sum \(n=\sum _i n_i\) continuously at all times, using minimum communication. While the deterministic communication complexity of the problem is \({\varTheta }(k/\varepsilon \cdot \log N)\), where N is the final value of n when the tracking finishes, we show that with randomization, the communication cost can be reduced to \({\varTheta }(\sqrt{k}/\varepsilon \cdot \log N)\). Our algorithm is simple and uses only O(1) space at each player, while the lower bound holds even assuming each player has infinite computing power. Then, we extend our techniques to two related distributed tracking problems: frequency-tracking and rank-tracking, and obtain similar improvements over previous deterministic algorithms. Both problems are of central importance in large data monitoring and analysis, and have been extensively studied in the literature.

Similar content being viewed by others

Change history

28 July 2020

After publication of the article [1] the authors have noticed that the funding information are not published in online and print version of the article. The omitted funding acknowledgement is given below.

Notes

We sometimes omit “(t)” when the context is clear.

A more careful analysis leads to a slightly better bound of \(O(k/\varepsilon \cdot \log (\varepsilon N/k))\), but we will assume that N is sufficiently large, compared to k and \(1/\varepsilon \), to simplify the bounds.

The lower bound in [29] was stated for the heavy hitters tracking problem, but essentially the same proof works for count-tracking.

In Feller’s book [11] the following is proved. Let \(p \in (0,1)\) be some constant and \(q = 1-p\). The population size is N and the sample size is n, so that \(n < N\) and Np, Nq are both integers. The hypergeometric distribution is \(P(k; n, N) = {Np \atopwithdelims ()k}{Nq \atopwithdelims ()n-k}/{N \atopwithdelims ()n}\) for \(0 \le k \le n\). Theorem 8 [11] If \(N \rightarrow \infty , n \rightarrow \infty \) so that \(n/N \rightarrow t\in (0,1)\) and \(x_k := (k - np)/\sqrt{npq} \rightarrow x\), then

$$\begin{aligned} p(k;n,N) \sim \frac{e^{-x^2/2(1-t)}}{\sqrt{2\pi npq(1-t)}} \end{aligned}$$

References

Agarwal, P.K., Cormode, G., Huang, Z., Phillips, J.M., Wei, Z., Yi, K.: Mergeable summaries. In: Proceedings of the ACM Symposium on Principles of Database Systems (2012)

Arackaparambil, C., Brody, J., Chakrabarti, A.: Functional monitoring without monotonicity. In: Proceedings of the International Colloquium on Automata, Languages, and Programming (2009)

Babcock, B., Olston, C.: Distributed top-k monitoring. In: Proceedings of the ACM SIGMOD International Conference on Management of Data (2003)

Bar-Yossef, Z.: The complexity of massive data set computations. PhD thesis, University of California at Berkeley (2002)

Chan, H.-L., Lam, T.W., Lee, L.-K., Ting, H.-F.: Continuous monitoring of distributed data streams over a time-based sliding window. Algorithmica 62(3–4), 1088–1111 (2011)

Cormode, G.: The continuous distributed monitoring model. ACM SIGMOD Rec. 42(1), 5–14 (2013)

Cormode, G., Garofalakis, M., Muthukrishnan, S., Rastogi, R.: Holistic aggregates in a networked world: distributed tracking of approximate quantiles. In: Proceedings of the ACM SIGMOD International Conference on Management of Data (2005)

Cormode, G., Hadjieleftheriou, M.: Finding frequent items in data streams. In: Proceedings of the International Conference on Very Large Data Bases (2008)

Cormode, G., Muthukrishnan, S., Yi, K.: Algorithms for distributed functional monitoring. ACM Trans. Algorithms 7(2), Article 21 (2011). (Preliminary version in SODA’08)

Cormode, G., Muthukrishnan, S., Yi, K., Zhang, Q.: Continuous sampling from distributed streams. J. ACM 59(2), 10 (2012). (Preliminary version in PODS’10)

Feller, W.: An Introduction to Probability Theory and Its Applications. Wiley, New York (1968)

Gibbons, P.B., Tirthapura, S.: Estimating simple functions on the union of data streams. In: Proceedings of the ACM Symposium on Parallelism in Algorithms and Architectures (2001)

Greenwald, M., Khanna, S.: Space-efficient online computation of quantile summaries. In: Proceedings of the ACM SIGMOD International Conference on Management of Data (2001)

Huang, Z., Wang, L., Yi, K., Liu, Y.: Sampling based algorithms for quantile computation in sensor networks. In: Proceedings of the ACM SIGMOD International Conference on Management of Data (2011)

Huang, Z., Yi, K., Liu, Y., Chen, G.: Optimal sampling algorithms for frequency estimation in distributed data. In: IEEE INFOCOM (2011)

Keralapura, R., Cormode, G., Ramamirtham, J.: Communication-efficient distributed monitoring of thresholded counts. In: Proceedings of the ACM SIGMOD International Conference on Management of Data (2006)

Manjhi, A., Shkapenyuk, V., Dhamdhere, K., Olston, C.: Finding (recently) frequent items in distributed data streams. In: Proceedings of the IEEE International Conference on Data Engineering (2005)

Manku, G., Motwani, R.: Approximate frequency counts over data streams. In: Proceedings of the International Conference on Very Large Data Bases (2002)

Metwally, A., Agrawal, D., Abbadi, A.: An integrated efficient solution for computing frequent and top-k elements in data streams. ACM Trans. Database Syst. 31(3), 1095–1133 (2006)

Misra, J., Gries, D.: Finding repeated elements. Sci. Comput. Program. 2, 143–152 (1982)

Munro, J.I., Paterson, M.S.: Selection and sorting with limited storage. Theor. Comput. Sci. 12, 315–323 (1980)

Patt-Shamir, B., Shafrir, A.: Approximate distributed top-k queries. Distrib. Comput. 21(1), 1–22 (2008)

Suri, S., Toth, C., Zhou, Y.: Range counting over multidimensional data streams. Discrete Comput. Geom. 36, 633–655 (2006)

Tirthapura, S., Woodruff, D.P.: Optimal random sampling from distributed streams revisited. In: Proceedings of the International Symposium on Distributed Computing (2011)

Vapnik, V.N., Chervonenkis, A.Y.: On the uniform convergence of relative frequencies of events to their probabilities. Theory Probab. Appl. 16, 264–280 (1971)

Woodruff, D.P.: Efficient and Private Distance Approximation in the Communication and Streaming Models. PhD thesis, Massachusetts Institute of Technology (2007)

Woodruff, D.P., Zhang, Q.: Tight bounds for distributed functional monitoring. In: Proceedings of the ACM Symposium on Theory of Computing (2012)

Yao, A.C.: Probabilistic computations: towards a unified measure of complexity. In: Proceedings of the IEEE Symposium on Foundations of Computer Science (1977)

Yi, K., Zhang, Q.: Optimal tracking of distributed heavy hitters and quantiles. In: Proceedings of the ACM Symposium on Principles of Database Systems (2009)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Lower Bound for the Sampling Problem

Appendix: Lower Bound for the Sampling Problem

Claim

To solve the sampling problem we need to probe at least \({\varOmega }(k)\) sites.

Proof

Suppose that the coordinator only samples \(z = o(k)\) sites. Let X be the number of sites that are sampled with bit 1. Then X is chosen from the hypergeometric distribution with probability density function (pdf) \(\mathsf {Pr}[X = x] = {s' \atopwithdelims ()x}{k' - s' \atopwithdelims ()z - x}/{k' \atopwithdelims ()z}\). The expected value of X is \(\frac{z}{k'} \cdot s'\), which is \(\frac{z}{k'}\left( \frac{k}{2} - y + \sqrt{k}\right) \) or \(\frac{z}{k'}\left( \frac{k}{2} - y - \sqrt{k}\right) \), depending on the value of \(s'\). Let \(p = \left( \frac{k}{2} - y\right) /k' = \frac{1}{2} \pm o(1)\) and \(\alpha = \sqrt{k}/k' = 1/\sqrt{k} \pm o(1/\sqrt{k})\). To avoid tedious calculation, we assume that X is picked randomly from one of the two normal distributions \(\mathcal {N}_1(\mu _1, \sigma _1^2)\) and \(\mathcal {N}_2(\mu _2, \sigma _2^2)\) with equal probability, where \(\mu _1 = z(p-\alpha ), \mu _2 = z(p+\alpha ), \sigma _1, \sigma _2 = {\varTheta }(\sqrt{zp(1-p)}) = {\varTheta }(\sqrt{z})\). In Feller [11] it is shown that the normal distribution approximates the hypergeometric distribution very well when z is large and \(p \pm \alpha \) are constants in (0, 1).Footnote 5 Now our task is to decide from which of the two distributions X is drawn based on the value of X with success probability at least 0.7.

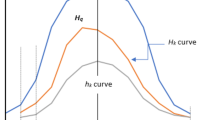

Let \(f_1(x; \mu _1, \sigma _1^2)\) and \(f_2(x; \mu _2, \sigma _2^2)\) be the pdf of the two normal distributions \(\mathcal {N}_1, \mathcal {N}_2\), respectively. It is easy to see that the best deterministic algorithm of differentiating the two distributions based on the value of a sample X will do the following.

-

If \(X > x_0\), then X is chosen from \(\mathcal {N}_2\), otherwise X is chosen from \(\mathcal {N}_1\), where \(x_0\) is the value such that \(f_1(x_0; \mu _1, \sigma _1^2) = f_2(x_0; \mu _2, \sigma _2^2)\) (thus \(\mu _1< x_0 < \mu _2\)).

Indeed, if \(X > x_0\) and the algorithm decides that “X is chosen from \(\mathcal {N}_1\)”, we can always flip this decision and improve the success probability of the algorithm.

The error comes from two sources: (1) \(X > x_0\) but X is actually drawn from \(\mathcal {N}_2\); (2) \(X \le x_0\) but X is actually drawn from \(\mathcal {N}_1\). The total error is

where \(\ell _1 = x_0 - \mu _1\) and \(\ell _2 = \mu _2 - x_0\). (Thus \(\ell _1 + \ell _2 = \mu _2 - \mu _1 = 2 \alpha z\)). \({\varPhi }(\cdot )\) is the cumulative distribution function (cdf) of the normal distribution. See Fig. 2.

Finally note that \(\ell _1/\sigma _1 = O(\alpha z / \sqrt{z}) = O(\sqrt{z/k}) = o(1)\) and \(\ell _2/\sigma _2 = O(\alpha z / \sqrt{z}) = o(1)\), so \({\varPhi }(-\ell _1/\sigma _1) + {\varPhi }(-\ell _2/\sigma _2) > 0.99\). Therefore, the failure probability is at least 0.49, contradicting our success probability guarantee. Thus we must have \(z = {\varOmega }(k)\). \(\square \)

Rights and permissions

About this article

Cite this article

Huang, Z., Yi, K. & Zhang, Q. Randomized Algorithms for Tracking Distributed Count, Frequencies, and Ranks. Algorithmica 81, 2222–2243 (2019). https://doi.org/10.1007/s00453-018-00531-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00453-018-00531-y