Abstract

The local computation of Linial [FOCS’87] and Naor and Stockmeyer [STOC’93] studies whether a locally defined distributed computing problem is locally solvable. In classic local computation tasks, the goal of distributed algorithms is to construct a feasible solution for some constraint satisfaction problem (CSP) locally defined on the network. In this paper, we consider the problem of sampling a uniform CSP solution by distributed algorithms in the \(\mathsf {LOCAL}\) model, and ask whether a locally definable joint distribution is locally sample-able. We use Markov random fields and Gibbs distributions to model locally definable joint distributions. We give two distributed algorithms based on Markov chains, called LubyGlauber and LocalMetropolis, which we believe to represent two basic approaches for distributed Gibbs sampling. The algorithms achieve respective mixing times \(O(\varDelta \log n)\) and \(O(\log n)\) under typical mixing conditions, where n is the number of vertices and \(\varDelta \) is the maximum degree of the graph. We show that the time bound \(\varTheta (\log n)\) is optimal for distributed sampling. We also show a strong \(\varOmega (\mathrm {diam})\) lower bound: in particular for sampling independent set in graphs with maximum degree \(\varDelta \ge 6\). This gives a strong separation between sampling and constructing locally checkable labelings.

1 Introduction

Local computation and the \(\mathsf {LOCAL}\) model. Locality of computation is a central theme in the theory of distributed computing. In the seminal works of Linial [44], and Naor and Stockmeyer [49], the locality of distributed computation and the locally definable distributed computing problems are respectively captured by the \(\mathsf {LOCAL}\) model and the notion of locally checkable labeling (LCL) problems. In the \(\mathsf {LOCAL}\) model [49, 52], a network of n processors is represented as an undirected graph, where each vertex represents a processor and each edge represents a bidirectional communication channel. Computations and communications are organized in synchronized rounds. In each round, each processor may receive a message of arbitrary size from each of its neighbors, perform an arbitrary local computation with the information collected so far, and send a message of arbitrary size to each of its neighbors. The output value for each vertex in a t-round protocol is determined by the local information within the t-neighborhood of the vertex. The local computation tasks are usually formulated as labeling problems, such as the locally checkable labeling (LCL) problems introduced in [49], in which the distributed algorithm is asked to construct a feasible solution of a constraint satisfaction problem (CSP) defined by local constraints with constant diameter in the network. Many problems can be expressed in this way, including various vertex/edge colorings, or local optimizations such as maximal independent set (MIS) and maximal matching.

A classic question for local computation is whether a locally definable problem is locally computable. Mathematically, this asks whether a feasible solution for a given local CSP can be constructed using only local information. There is a substantial body of research works dedicated to this question [2,3,4,5, 10, 24, 25, 29,30,31, 34, 39,40,42, 44, 49, 54].

The local sampling problem. Given an LCL problem which defines a local CSP on the network, aside from constructing a feasible solution of the local CSP, another interesting problem is to sample a uniform random feasible solution, e.g. to sample a uniform random proper coloring of the network G with a given number of colors. More abstractly, given an instance of local CSP which, say, treats the vertices in the network G(V, E) as variables, a joint distribution of uniform random feasible solution \(\varvec{X}=(X_v)_{v\in V}\) is accordingly defined by these local constraints. Our main question is whether a locally definable joint distribution can be sampled from locally.

Intuitively, sampling could be substantially more difficult than labeling, because to sample a feasible solution is at least as difficult as to construct one, and furthermore, the marginal distribution of each random variable \(X_v\) in a jointly distributed feasible solution \(\varvec{X}=(X_v)_{v\in V}\) may already encapsulate certain amount of non-local information about the solution space.

Retrieving such information about the solution space (as in sampling) instead of constructing one solution (as in labeling) by distributed algorithms is especially well motivated in the context of distributed machine learning [14, 15, 17, 32, 50, 57, 61,62,63], where the data (the description of the joint distribution) is usually distributed among a large number of servers.

Besides uniform distributions, it is also natural to consider sampling from general non-uniform distributions over the solution space, which are usually formulated as graphical models known as the weighted CSPs [7], also known as factor graphs [47]. In this model, a probability distribution called the Gibbs distribution is defined over the space \(\varOmega =[q]^V\) of configurations, in such a way that each constraint of the weighted CSP contributes a nonnegative factor in the probability measure of a configuration in \(\varOmega \). Due to Hammersley-Clifford’s fundamental theorem [47, Theorem 9.3] of random fields, this model is universal for conditionally independent (spatial Markovian) [47, Proposition 9.2] joint distributions. The conditional independence property roughly says that, for a fixed separator \(S\subset V\) whose removal “disconnects” the variable sets A and B, given any feasible configuration \(X_S=\sigma _S\) over S, the configurations \(X_A\) over A and \(X_B\) over B are conditionally independent.

We are particularly interested in a basic class of weighted local CSPs, namely the Markov random fields (MRFs), where every local constraint (factor) is either a binary constraint over an edge or a unary constraint on a vertex. Specifically, given a graph G(V, E) and a finite domain \([q]=\{1,2,\ldots , q\}\), the probability measure \(\mu (\sigma )\) of each configuration \(\sigma \in [q]^V\) under the Gibbs distribution \(\mu \) is defined to be proportional to the weight:

\[w(\sigma ) = \prod _{e=(u,v)\in E}A_e(\sigma _u,\sigma _v)\prod _{v\in V}b_v(\sigma _v), \tag{1}\]

where \(\{A_e\in {\mathbb {R}}_{\ge 0}^{q\times q}\}_{e\in E}\) are non-negative \(q\times q\) symmetric matrices and \(\{b_v\in {\mathbb {R}}_{\ge 0}^{ q}\}_{v\in V}\) are non-negative q-vectors, both specified by the instance of MRF. Examples of MRFs include combinatorial models such as independent set, vertex cover, graph coloring, and graph homomorphism, or physical models such as hardcore gas model, Ising model, Potts model, and general spin systems.

1.1 Our results

We give two Markov chain based distributed algorithms for sampling from Gibbs distributions. Given any \(\epsilon >0\), each algorithm returns a random output which is within total variation distance \(\epsilon \) from the Gibbs distribution. Our expositions mainly focus on MRFs, although both algorithms can be extended straightforwardly to general weighted local CSPs.

In classic single-site Markov chains for Gibbs sampling, such as the Glauber dynamics, at each step a variable is picked at random and is updated according to its neighbors’ current states. A generic approach for parallelizing a single-site sequential Markov chain is to update a set of non-adjacent vertices in parallel at each step. This natural idea has been considered in [32], also in a much broader context such as parallel job scheduling [12] or distributed Lovász local lemma [11, 48]. For sampling from locally defined joint distributions, it is especially suitable because of the conditional independence property of MRFs.

Our first algorithm, named LubyGlauber, naturally parallelizes the Glauber dynamics by parallel updating vertices from independent sets generated by the “Luby step” in Luby’s algorithm [1, 46]. It is well known that Glauber dynamics achieves the mixing rate \(\tau (\epsilon )=O\left( n\log \left( \frac{n}{\epsilon }\right) \right) \) under the Dobrushin’s condition for the decay of correlation [16, 35]. By a standard coupling argument, the LubyGlauber algorithm achieves a mixing rate \(\tau (\epsilon )=O\left( \varDelta \log \left( \frac{n}{\epsilon }\right) \right) \) under the same condition, where \(\varDelta \) is the maximum degree of the network. In particular, for uniform proper q-colorings, this implies:

Theorem 1

If \(q\ge \alpha \varDelta \) for an arbitrary constant \(\alpha >2\), there is an algorithm which samples a uniform proper q-coloring within total variation distance \(\epsilon >0\) within \(O\left( \varDelta \log \left( \frac{n}{\epsilon }\right) \right) \) rounds of communications on any graph G(V, E) with \(n=|V|\) vertices and maximum degree \(\varDelta \), where \(\varDelta \) may be unbounded.

A barrier for this natural approach is that it will perform poorly on general graphs with large chromatic number. This situation motivates us to ask the following questions:

Is it possible to update all variables in \(\varvec{X}=(X_v)_{v\in V}\) simultaneously and still converge to the correct stationary distribution \(\mu \)?

More concretely, is it always possible to sample almost uniform proper q-coloring, for a \(q=O(\varDelta )\), on any graphs G(V, E) with \(n=|V|\) vertices and maximum degree \(\varDelta \), within \(O(\log n)\) rounds of communications, especially when \(\varDelta \) is unbounded?

Surprisingly, the answers to both questions are “yes”. We give an algorithm, called the LocalMetropolis algorithm, achieving these goals. This is a bit surprising, since it seems to fully parallelize a process which is intrinsically sequential due to the massive local dependencies, especially on graphs with unbounded maximum degree. The algorithm follows the Metropolis-Hastings paradigm: at each step, it proposes to update all variables independently and then applies proper local filtrations to the proposals to ensure its convergence to the correct joint distribution. Our main discovery is that for locally defined joint distributions, the Metropolis filters are localizable.

The LocalMetropolis algorithm always converges to the correct Gibbs distribution. The analysis of its mixing time is more involved. In particular, for uniformly sampling proper q-coloring we show:

Theorem 2

If \(q\ge \alpha \varDelta \) for an arbitrary constant \(\alpha >2+\sqrt{2}\), there is an algorithm for sampling uniform proper q-coloring within total variation distance \(\epsilon >0\) in \(O\left( \log \left( \frac{n}{\epsilon }\right) \right) \) rounds of communications on any graph G(V, E) with \(n=|V|\) vertices and maximum degree at most \(\varDelta \ge 9\), where \(\varDelta \) may be unbounded.

Neither of the algorithms abuses the power of the \(\mathsf {LOCAL}\) model: each message is of \(O(\log n)\) bits if the domain size \(q=\mathrm {poly}(n)\).

Due to the exponential correlation between variables in Gibbs distributions, the \(O\left( \log \left( \frac{n}{\epsilon }\right) \right) \) time bound achieved in Theorem 2 is optimal.

After the submission of this paper, two independent works [21, 23] give the same distributed algorithm for sampling random q-coloring, which improves the LocalMetropolis algorithm by introducing a step of laziness as distributed symmetry breaking. This new algorithm achieves an \(O(\log n)\) mixing time under the Dobrushin’s condition \(q \ge (2+\delta )\varDelta \). Furthermore, for graphs with sufficiently large maximum degree and girth at least 9, it achieves an \(O(\log n)\) mixing time when \(q \ge (\alpha ^* + \delta )\varDelta \), where \(\alpha ^* \approx 1.763\) is the positive root of equation \(x = \mathrm {e}^{1/x}\). Another non-MCMC algorithm, named the distributed JVV sampler, is given in [22]. For many locally definable joint distributions, this algorithm successfully samples a configuration within \(\mathrm {polylog}(n)\) rounds in the \(\mathsf {LOCAL}\) model with high probability. In particular, this algorithm samples random q-coloring of triangle-free graphs within \(O(\log ^3 n)\) rounds in the \(\mathsf {LOCAL}\) model as long as \(q \ge (\alpha ^* + \delta )\varDelta \). This non-MCMC sampling algorithm abuses the power of the \(\mathsf {LOCAL}\) model by assuming unlimited message-size and local computations.

It is a well known phenomenon that sampling may become computationally intractable when the model exhibits the non-uniqueness phase-transition property, e.g. independent sets in graphs of maximum degree bounded by a \(\varDelta \ge 6\) [27, 28, 55, 56]. For the same class of distributions, we show the following unconditional \(\varOmega ({\mathrm {diam}})\) lower bound for sampling in the \(\mathsf {LOCAL}\) model.

Theorem 3

For \(\varDelta \ge 6\), there exist infinitely many graphs G(V, E) with maximum degree \(\varDelta \) and diameter \({\mathrm {diam}}(G)=|V|^{\varOmega (1)}\) such that any algorithm that samples uniform independent set in G within sufficiently small constant total variation distance \(\epsilon \) requires \(\varOmega ({\mathrm {diam}}(G))\) rounds of communications, even assuming the vertices \(v\in V\) to be aware of G.

The lower bound is proved by a now fairly well-understood reduction from maximum cut to sampling independent sets when \(\varDelta \ge 6\) [28, 55, 56]. Specifically, we show that when \(\varDelta \ge 6\) there are infinitely many graphs G(V, E) such that if one can sample a nearly uniform independent set in G(V, E), then one can also sample an almost uniform maximum cut in an even cycle of size \(|V|^{\varOmega (1)}\), which is necessarily a global task because of the long-range correlation.

Theorem 3 strongly separates sampling from labeling problems for distributed computing:

In the \(\mathsf {LOCAL}\) model it is trivial to construct an independent set (because \(\emptyset \) is an independent set). In contrast, Theorem 3 says that sampling a uniform independent set is very much a global task for graphs with maximum degree \(\varDelta \ge 6\).

In the \(\mathsf {LOCAL}\) model any labeling problem would be trivial once the network structure G is known to each vertex. In contrast, the sampling lower bound in Theorem 3 still holds even when each vertex is aware of G. Unlike labeling whose hardness is due to the locality of information, for sampling the hardness is solely due to the locality of randomness.

A breakthrough of Ghaffari et al. [30] shows that any labeling problem that can be solved sequentially with local information admits a \(O(\mathrm {polylog}(n))\)-round randomized protocol in the \(\mathsf {LOCAL}\) model. In contrast, for sampling we have an \(\varOmega ({\mathrm {diam}})\) randomized lower bound for graphs with \(n^{\varOmega (1)}\) diameter.

1.2 Related work

The topic of sequential MCMC (Markov chain Monte Carlo) sampling is extensively studied. The study of sampling proper q-colorings was initiated by the seminal works of Jerrum [37] and independently of Salas and Sokal [53]. So far the best rapid mixing condition for general bounded-degree graphs is \(q \ge \frac{11}{6}\varDelta \) due to Vigoda [59]. See [26] for an excellent survey.

The chromatic-scheduler-based parallelization of Glauber dynamics was studied in [32]. This parallel chain is in fact a special case of systematic scan for Glauber dynamics [18, 19, 35], in which the variables are updated according to a fixed order.

Empirical studies showed that sometimes an ad hoc “Hogwild!” parallelization of sequential sampler might work well in practice [51] and the mixing results assuming bounded asynchrony were given in [14, 38].

A sampling algorithm based on the Lovász local lemma is given in [33]. When sampling from the hardcore model with \(\lambda <\frac{1}{2\sqrt{\mathrm {e}}\varDelta -1}\) on a graph of maximum degree \(\varDelta \), this sampling algorithm can be implemented in the \(\mathsf {LOCAL}\) model which runs in \(O(\log n)\) rounds.

A problem related to the local sampling is the finitary coloring [36], in which a random feasible solution is sampled according to an unconstrained distribution as long as the distribution is over feasible solutions, rather than a specific distribution such as the Gibbs distribution. Therefore, the nature of this problem is still labeling rather than sampling.

Our algorithms are Markov chains which randomly walk over the solution space. A related notion is the distributed random walks [13], which walk over the network.

Our LocalMetropolis algorithm should be distinguished from the parallel Metropolis-Hastings algorithm [9] or the parallel tempering [58], in which the sampling algorithm makes N proposals or runs N copies of the system in parallel for a suitably large N, in order to improve the dynamic properties of the Monte Carlo simulation.

Organization of the paper. The models and preliminaries are introduced in Sect. 2. The LubyGlauber algorithm is introduced in Sect. 3. The LocalMetropolis algorithm is introduced in Sect. 4. The lower bounds are proved in Sect. 5.

2 Models and preliminaries

2.1 The \(\mathsf {LOCAL}\) model

We assume Linial’s \(\mathsf {LOCAL}\) model [49, 52] for distributed computation, which is as described in Sect. 1. We further allow each node in the network G(V, E) to be aware of upper bounds of \(\varDelta \) and \(\log n\), where \(n=|V|\) is the number of nodes. This information is accessed only because the running time of the Monte Carlo algorithms may depend on them.

2.2 Markov random field and local CSP

The Markov random field (MRF), or spin system, is a well studied stochastic model in probability theory and statistical physics. Given a graph G(V, E) and a set of spin states \([q]=\{1,2,\ldots ,q\}\) for a finite \(q\ge 2\), a configuration \(\sigma \in [q]^V\) assigns each vertex one of the q spin states. For each edge \(e\in E\) there is a non-negative \(q\times q\) symmetric matrix \(A_e\in {\mathbb {R}}_{\ge 0}^{q\times q}\) associated with e, called the edge activity; and for each vertex \(v\in V\) there is a non-negative q-dimensional vector \(b_v \in {\mathbb {R}}_{\ge 0}^q\) associated with v, called the vertex activity. Then each configuration \(\sigma \in [q]^V\) is assigned a weight \(w(\sigma )\) which is as defined in (1).

This gives rise to a natural probability distribution \(\mu \), called the Gibbs distribution, over all configurations in the sample space \(\varOmega =[q]^V\) proportional to their weights, such that \(\mu (\sigma ) = {w(\sigma )}/{Z}\) for each \(\sigma \in \varOmega \), where \(Z=\sum _{\sigma \in \varOmega }w(\sigma )\) is the normalizing factor. A configuration \(\sigma \in \varOmega \) is feasible if \(\mu (\sigma )>0\).

Several natural joint distributions can be expressed as MRFs:

Independent sets/vertex covers: When \(q=2\), all \(A_e=\begin{bmatrix}1&1 \\ 1&0\end{bmatrix}\) and all \(b_v=\begin{bmatrix}1 \\ 1 \end{bmatrix}\), each feasible configuration corresponds to an independent set (or vertex cover, if the other spin state indicates the set) in G, and the Gibbs distribution \(\mu \) is the uniform distribution over independent sets (or vertex covers) in G. When \(b_v=\begin{bmatrix}1 \\ \lambda \end{bmatrix}\) for some parameter \(\lambda >0\), this is the hardcore model from statistical physics.

Colorings and list colorings: When every \(A_e\) has \(A_e(i,i)=0\) and \(A_e(i,j)=1\) if \(i\ne j\), and every \(b_v\) is the all-1 vector, the Gibbs distribution \(\mu \) becomes the uniform distribution over proper q-colorings of graph G. For list colorings, each vertex \(v\in V\) can only use the colors from its list \(L_v\subseteq [q]\) of available colors. Then we can let each \(b_v\) be the indicator vector for the list \(L_v\) and \(A_e\)’s are the same as for proper q-colorings, so that the Gibbs distribution is the uniform distribution over proper list colorings.

Physical model: The proper q-coloring is a special case of the Potts model in statistical physics, in which each \(A_e\) has \(A_e(i,i)=\beta \) for some parameter \(\beta >0\) and \(A_e(i,j)=1\) if \(i\ne j\). When further \(q=2\), the model becomes the Ising model.
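As an illustration of the definitions above, the Gibbs distribution of a tiny MRF can be enumerated by brute force. The following is a minimal sketch under stated assumptions: spins are indexed from 0 rather than 1, and the names `mrf_weight` and `gibbs_distribution` as well as the dictionary layout for the activities are hypothetical choices made for this example. It is only feasible for very small graphs, since it enumerates all \(q^{|V|}\) configurations.

```python
from itertools import product

def mrf_weight(sigma, edges, A, b):
    """Weight w(sigma): product of edge activities times product of vertex activities."""
    w = 1
    for (u, v) in edges:
        w *= A[(u, v)][sigma[u]][sigma[v]]
    for v, spin in enumerate(sigma):
        w *= b[v][spin]
    return w

def gibbs_distribution(n, q, edges, A, b):
    """Brute-force Gibbs distribution on [q]^V, restricted to feasible configurations."""
    weights = {s: mrf_weight(s, edges, A, b) for s in product(range(q), repeat=n)}
    Z = sum(weights.values())  # normalizing factor
    return {s: w / Z for s, w in weights.items() if w > 0}

# Example instance (an assumption of this sketch): a triangle.
edges = [(0, 1), (1, 2), (0, 2)]

# Proper 3-colorings: A_e(i, i) = 0, A_e(i, j) = 1 for i != j, all-1 vertex activities.
coloring = [[0 if i == j else 1 for j in range(3)] for i in range(3)]
mu_col = gibbs_distribution(3, 3, edges, {e: coloring for e in edges}, [[1, 1, 1]] * 3)

# Independent sets: q = 2, A_e = [[1, 1], [1, 0]], b_v = (1, 1).
hardcore = [[1, 1], [1, 0]]
mu_is = gibbs_distribution(3, 2, edges, {e: hardcore for e in edges}, [[1, 1]] * 3)
```

On the triangle, `mu_col` is uniform over the six proper 3-colorings and `mu_is` is uniform over the four independent sets (the empty set and the three singletons).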

The model of MRF can be further generalized to allow multivariate asymmetric constraints, which gives us the weighted CSPs, also known as the factor graphs. In this model, we have a collection \({\mathcal {C}}\) of constraints \(c=(f_c, S_c)\) where each \(f_c:[q]^{|S_c|}\rightarrow {\mathbb {R}}_{\ge 0}\) is a constraint function with scope \(S_c\subseteq V\). Each configuration \(\sigma \in [q]^V\) is assigned a weight:

\[w(\sigma ) = \prod _{c=(f_c,S_c)\in {\mathcal {C}}}f_c\left( \sigma |_{S_c}\right) ,\]

where \(\sigma |_{S_c}\) represents the restriction of \(\sigma \) on \(S_c\). And the Gibbs distribution \(\mu \) over all configurations in \(\varOmega =[q]^V\) is defined in the same way proportional to the weights. In particular, when \(f_c\)’s are Boolean-valued functions, the Gibbs distribution \(\mu \) is the uniform distribution over CSP solutions.

A constraint \(c=(f_c,S_c)\) is said to be local with respect to network G if the diameter of the scope \(S_c\) in network G is bounded by a constant. Local CSPs are expressive, for example:

Dominating sets: They can be expressed by having a “cover” constraint on each inclusive neighborhood \(\varGamma ^+(v)\) which constrains that at least one vertex from \(\varGamma ^+(v)\) is chosen.

Maximal independent sets (MISs): An MIS is a dominating independent set.

Clearly, the MRF is a special class of weighted local CSPs, defined by unary and binary symmetric local constraints with respect to G.
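The local CSP examples above can be checked on a small instance. The sketch below is an illustration under stated assumptions: constraints are represented as hypothetical `(function, scope)` pairs, spin 1 means "chosen", and the instance is a path on three vertices. Counting configurations of positive weight gives the number of CSP solutions.

```python
from itertools import product

def csp_weight(sigma, constraints):
    """Weight of sigma: product of f_c applied to sigma restricted to the scope S_c."""
    w = 1
    for f, scope in constraints:
        w *= f(tuple(sigma[v] for v in scope))
    return w

def count_solutions(n, q, constraints):
    """Number of configurations in [q]^V with positive weight."""
    return sum(1 for s in product(range(q), repeat=n)
               if csp_weight(s, constraints) > 0)

# Path 0 - 1 - 2 (an assumption of this sketch).
adj = {0: [1], 1: [0, 2], 2: [1]}

# "Cover" constraint on each inclusive neighborhood: at least one vertex is chosen.
cover = [(lambda vals: int(any(vals)), [v] + adj[v]) for v in adj]

# Independence constraint on each edge: the two endpoints are not both chosen.
indep = [(lambda vals: int(not (vals[0] and vals[1])), [u, v])
         for u in adj for v in adj[u] if u < v]

num_dominating = count_solutions(3, 2, cover)      # dominating sets
num_mis = count_solutions(3, 2, cover + indep)     # maximal independent sets
```

On this path there are five dominating sets and two maximal independent sets, namely \(\{1\}\) and \(\{0,2\}\).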

2.3 Local sampling

The local sampling problem is defined as follows. Let G(V, E) be a network. Given an MRF defined on G (or more generally a weighted CSP that is local with respect to G), where the specifications of the local constraints are given as private inputs to the involved processors, for any \(\epsilon >0\) upon termination each processor \(v\in V\) outputs a random variable \(X_v\) such that the total variation distance between the distribution \(\nu \) of the random vector \(X=(X_v)_{v\in V}\) and the Gibbs distribution \(\mu \) is bounded as \(d_{\mathrm {TV}}\left( {\mu },{\nu }\right) \le \epsilon \), where the total variation distance between two distributions \(\mu ,\nu \) over \(\varOmega =[q]^V\) is defined as

\[d_{\mathrm {TV}}\left( {\mu },{\nu }\right) = \frac{1}{2}\sum _{\sigma \in \varOmega }\left| \mu (\sigma )-\nu (\sigma )\right| .\]
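For concreteness, the total variation distance between two finitely supported distributions is half of their \(\ell _1\) distance. A minimal sketch, assuming distributions are given as dictionaries from outcomes to probabilities (the name `total_variation` is hypothetical):

```python
def total_variation(mu, nu):
    """Half of the l1 distance between two distributions given as dicts."""
    support = set(mu) | set(nu)
    return 0.5 * sum(abs(mu.get(x, 0.0) - nu.get(x, 0.0)) for x in support)

# A fair coin versus a coin that always shows "a".
d = total_variation({"a": 0.5, "b": 0.5}, {"a": 1.0})
```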

2.4 Mixing rate

Our algorithms are given as Markov chains. Given an irreducible and aperiodic Markov chain \(X^{(0)},X^{(1)},\ldots \in \varOmega \), for any \(\sigma \in \varOmega \) let \(\pi ^{(t)}_{\sigma }\) denote the distribution of \(X^{(t)}\) conditioning on that \(X^{(0)}=\sigma \). For \(\epsilon >0\) the mixing rate \(\tau (\epsilon )\) is defined as

\[\tau (\epsilon ) = \max _{\sigma \in \varOmega }\min \left\{ t \mid d_{\mathrm {TV}}\left( {\pi ^{(t)}_{\sigma }},{\pi }\right) \le \epsilon \right\} ,\]

where \(\pi \) is the stationary distribution for the chain. For formal definitions of these notions for Markov chain, we refer to a standard textbook of the subject [43]. Informally, irreducibility and aperiodicity guarantee that \(X^{(t)}\) converges to the unique stationary distribution \(\pi \) as \(t\rightarrow \infty \), and the mixing rate \(\tau (\epsilon )\) tells us how fast it converges.
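The mixing rate of a small chain can be computed directly from its transition matrix. The following sketch is an illustration only: the function names and the two-state lazy chain are assumptions of this example. It returns the first t at which the distance to \(\pi \) is at most \(\epsilon \) from every starting state; since the distance to the stationary distribution never increases with t, this coincides with the max-min form of the definition.

```python
def step(dist, P):
    """One step of the chain: row vector dist times transition matrix P."""
    n = len(P)
    return [sum(dist[x] * P[x][y] for x in range(n)) for y in range(n)]

def tv(p, r):
    """Total variation distance between two distributions given as lists."""
    return 0.5 * sum(abs(a - c) for a, c in zip(p, r))

def mixing_rate(P, pi, eps, t_max=10_000):
    """Smallest t such that every starting state is within eps of pi in TV distance."""
    n = len(P)
    # dists[x] is the distribution of X^(t) given X^(0) = x.
    dists = [[1.0 if y == x else 0.0 for y in range(n)] for x in range(n)]
    for t in range(t_max + 1):
        if max(tv(d, pi) for d in dists) <= eps:
            return t
        dists = [step(d, P) for d in dists]
    raise ValueError("chain did not mix within t_max steps")

# Lazy two-state chain with uniform stationary distribution.
P = [[0.75, 0.25], [0.25, 0.75]]
tau = mixing_rate(P, [0.5, 0.5], eps=0.1)
```

For this chain the distance from either starting state halves at every step (0.5, 0.25, 0.125, 0.0625), so `tau` equals 3.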

Notations. Given a graph G(V, E), we denote by \(d_v=\deg (v)\) the degree of v in G, \(\varDelta =\varDelta _G\) the maximum degree of G, \({\mathrm {diam}}={\mathrm {diam}}(G)\) the diameter of G, and \({\mathrm {dist}}(u,v)={\mathrm {dist}}_G(u,v)\) the shortest path distance between vertices u and v in G.

We also denote by \(\varGamma (v)=\{u\mid uv\in E\}\) the neighborhood of v, and \(\varGamma ^+(v)=\varGamma (v)\cup \{v\}\) the inclusive neighborhood. Finally we write \(B_r(v)=\{u\mid {\mathrm {dist}}(u,v)\le r\}\) for the r-ball centered at v.

3 The LubyGlauber algorithm

In this section, we analyze a generic scheme for parallelizing Glauber dynamics, a classic sequential Markov chain for sampling from Gibbs distributions.

We assume a Markov random field (MRF) defined on the network G(V, E), with edge activities \(\varvec{A}=\{A_e\}_{e\in E}\) and vertex activities \(\varvec{b}=\{b_v\}_{v\in V}\), which specifies a Gibbs distribution \(\mu \) over \(\varOmega =[q]^V\). The single-site heat-bath Glauber dynamics, or simply the Glauber dynamics, is a well known Markov chain for sampling from the Gibbs distribution \(\mu \). Starting from an arbitrary initial configuration \(X\in [q]^V\), at each step the chain does the following:

sample a vertex \(v\in V\) uniformly at random;

resample the value of \(X_v\) according to the marginal distribution induced by \(\mu \) at vertex v conditioning on the current spin states of v’s neighborhood.

It is well known (see [43]) that the Glauber dynamics is a reversible Markov chain whose stationary distribution is the Gibbs distribution \(\mu \).

Formally, suppose that \(\sigma \in [q]^V\) is sampled from \(\mu \). For any \(v\in V\), \(S\subseteq V\) and \(\tau _S\in [q]^S\), the marginal distribution at vertex v conditioning on \(\tau _S\), denoted as \({\mu }_v(\cdot \mid \tau _S)\), is defined as

\[\mu _v(c\mid \tau _S) = \Pr \left[ \sigma _v=c\mid \sigma _S=\tau _S\right] \quad \text {for each } c\in [q].\]

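In the Glauber dynamics, \(X_v\) is resampled according to the marginal distribution \(\mu _v(\cdot \mid X_{\varGamma (v)})\). Here \(X_{\varGamma (v)}\) represents the current spin states of v’s neighborhood \(\varGamma (v)\). For Markov random field, this marginal distribution can be computed as

\[\mu _v(c\mid X_{\varGamma (v)}) = \frac{b_v(c)\prod _{u\in \varGamma (v)}A_{uv}(c,X_u)}{\sum _{a\in [q]}b_v(a)\prod _{u\in \varGamma (v)}A_{uv}(a,X_u)}\quad \text {for each } c\in [q]. \tag{2}\]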

For example, when the MRF is the proper q-coloring, this is just the uniform distribution over available colors in [q] which are not used by v’s neighbors. For the Glauber dynamics to work, it is common to assume that the sum \(\sum _{a\in [q]}b_v(a)\prod _{u\in \varGamma (v)}A_{uv}(a,X_u)\) is always positive, so that the marginal distributions are well-defined.
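One step of the single-site Glauber dynamics described above can be sketched as follows. This is an illustration under stated assumptions, not a reference implementation: spins are 0-indexed, the configuration `X` is a list indexed by vertex, and the edge activities are supplied through a hypothetical callable `A(u, v)` returning the matrix \(A_{uv}\).

```python
import random

def marginal(v, X, adj, A, b, q):
    """mu_v(. | X_Gamma(v)): b_v(c) * prod_u A_uv(c, X_u), normalized over c in [q]."""
    weights = []
    for c in range(q):
        w = b[v][c]
        for u in adj[v]:
            w *= A(u, v)[c][X[u]]
        weights.append(w)
    total = sum(weights)
    if total <= 0:
        raise ValueError("marginal distribution is not well-defined")
    return [w / total for w in weights]

def glauber_step(X, adj, A, b, q, rng):
    """Pick a uniform random vertex and resample its spin from the marginal."""
    v = rng.randrange(len(X))
    X[v] = rng.choices(range(q), weights=marginal(v, X, adj, A, b, q))[0]

# Example: proper 4-colorings of the path 0 - 1 - 2 (an assumption of this sketch).
COLORING = [[0 if i == j else 1 for j in range(4)] for i in range(4)]
adj = {0: [1], 1: [0, 2], 2: [1]}
b = {v: [1, 1, 1, 1] for v in adj}
A = lambda u, v: COLORING

X = [0, 1, 0]
rng = random.Random(0)
for _ in range(100):
    glauber_step(X, adj, A, b, 4, rng)
```

Starting from a proper coloring, every intermediate configuration remains proper, because a resampled vertex never receives a color used by one of its neighbors.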

A generic scheme for parallelizing the Glauber dynamics is that at each step, instead of updating one vertex, the chain updates a group of “non-interfering” vertices in parallel, as follows:

independently sample a random independent set I in G;

for each \(v\in I\), resample \(X_v\) in parallel according to the marginal distribution \(\mu _v(\cdot \mid X_{\varGamma (v)})\).

This can be seen as a relaxation of the chromatic-based scheduler [32] and systematic scans [19].

A convenient way for generating a random independent set in a distributed fashion is the “Luby step” in Luby’s algorithm for distributed MIS [1, 46]: each vertex samples a uniform and independent ID from the interval [0, 1] (which can be discretized with \(O(\log n)\) bits) and the vertices v who are locally maximal among the inclusive neighborhood \(\varGamma ^+(v)\) are selected into the independent set I.
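The "Luby step" can be simulated centrally in a few lines. A minimal sketch, assuming the graph is given as an adjacency dictionary and using floating-point IDs in place of the discretized IDs mentioned above (the name `luby_step` is hypothetical):

```python
import random

def luby_step(adj, rng):
    """Each vertex draws an ID in [0, 1); local maxima form the independent set I."""
    ids = {v: rng.random() for v in adj}
    return {v for v in adj if all(ids[v] > ids[u] for u in adj[v])}

# Path 0 - 1 - 2 plus an isolated vertex 3 (an assumption of this sketch).
adj = {0: [1], 1: [0, 2], 2: [1], 3: []}
I = luby_step(adj, random.Random(0))
```

Two adjacent vertices cannot both hold the strictly larger ID, so the returned set is always independent. Ignoring ties, a vertex v is the maximum among the \(d_v+1\) IDs in \(\varGamma ^+(v)\) with probability \(1/(d_v+1)\ge 1/(\varDelta +1)\), so every vertex is selected with positive probability.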

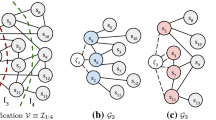

The resulting algorithm is called LubyGlauber, whose pseudocode is given in Algorithm 1.

According to the definition of marginal distribution (2), resampling \(X_v\) can be done locally by exchanging neighbors’ current spin states. After T iterations, where T is a threshold determined for specific Markov random field, the algorithm terminates and outputs the current \(\varvec{X}=(X_v)_{v\in V}\).
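The overall iteration can be sketched for the special case of uniform proper q-colorings. The code below is a centralized simulation written for illustration and is an assumption of this example rather than a transcription of Algorithm 1: it does not model message passing, it assumes \(q>\varDelta \) so that an available color always exists, and the function name and signature are hypothetical.

```python
import random

def luby_glauber_coloring(adj, q, T, rng, X=None):
    """Run T iterations: Luby step, then resample every selected vertex."""
    # Arbitrary initial configuration unless one is supplied.
    X = dict(X) if X is not None else {v: rng.randrange(q) for v in adj}
    for _ in range(T):
        # Luby step: vertices whose ID is a strict local maximum form I.
        ids = {v: rng.random() for v in adj}
        I = [v for v in adj if all(ids[v] > ids[u] for u in adj[v])]
        # I is independent, so no neighbor of a selected vertex changes in this
        # iteration, and updating sequentially equals updating in parallel.
        for v in I:
            used = {X[u] for u in adj[v]}
            X[v] = rng.choice([c for c in range(q) if c not in used])
    return X

# Cycle on 6 vertices with q = 5 colors (an assumption of this sketch).
n = 6
adj = {v: [(v - 1) % n, (v + 1) % n] for v in range(n)}
start = {v: v % 2 for v in range(n)}  # a proper 2-coloring of the even cycle
X = luby_glauber_coloring(adj, q=5, T=50, rng=random.Random(0), X=start)
```

Because the run above starts from a proper coloring, the output is guaranteed to be proper. From an arbitrary start, the configuration becomes proper once every vertex has been resampled at least once.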

Remark 1

The LubyGlauber algorithm can be easily extended to sample from weighted CSPs defined by local constraints \(c=(f_c,S_c)\in {\mathcal {C}}\), by simply overriding the definition of neighborhood as \(\varGamma (v)=\{u\ne v\mid \exists c\in {\mathcal {C}}, \{u,v\}\subseteq S_c\}\), thus \(\varGamma (v)\) is the neighborhood of v in the hypergraph where \(S_c\)’s are the hyperedges and now I is the strongly independent set of this hypergraph.

3.1 Mixing of LubyGlauber

Let \(\mu _{\mathsf {LG}}\) denote the distribution of \(\varvec{X}\) returned by the algorithm upon termination. As in the case of single-site Glauber dynamics, we assume that the marginal distribution (2) is always well-defined, and the single-site Glauber dynamics is irreducible among all feasible configurations. The following proposition is easy to obtain.

Proposition 1

The Markov chain LubyGlauber is reversible and has stationary distribution \(\mu \). Furthermore, under the above assumption, \(d_{\mathrm {TV}}\left( {\mu _{\mathsf {LG}}},{\mu }\right) \) converges to 0 as \(T\rightarrow \infty \).

Proof

We prove this for a more general family of Markov chains, where the “Luby step” is replaced by an arbitrary way of independently sampling a random independent set I, as long as \(\Pr [v\in I]>0\) for every vertex \(v\in V\).

Let \(\varOmega =[q]^V\) and \(P\in {\mathbb {R}}^{|\varOmega |\times |\varOmega |}_{\ge 0}\) denote the transition matrix for the LubyGlauber chain. We first show that the chain is reversible and \(\mu \) is stationary. Specifically, this means to verify the detailed balance equation:

\[\mu (X)P(X,Y) = \mu (Y)P(Y,X)\]

for all configurations \(X,Y\in \varOmega =[q]^V\).

If both X and Y are infeasible, then \(\mu (X)=\mu (Y)=0\) and the detailed balance equation holds trivially. If X is feasible and Y is not then \(\mu (Y)=0\) and meanwhile since the chain never moves from a feasible configuration to an infeasible one, we have \(P(X,Y)=0\) so the detailed balance equation is also satisfied.

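It remains to verify the detailed balance equation when both X and Y are feasible. Let \(D=\{v\in V\mid X_v\ne Y_v\}\) be the set of disagreeing vertices. If D is not an independent set, then \(P(X,Y)=P(Y,X)=0\) and the detailed balance equation holds. Suppose that D is an independent set. For any independent set \(I\supseteq D\), we denote by \(\Pr [X\rightarrow Y\mid I]\) the probability that within an iteration the chain moves from X to Y conditioning on I being the independent set sampled in the first step. Therefore,

\[\frac{\Pr [X\rightarrow Y\mid I]}{\Pr [Y\rightarrow X\mid I]} = \prod _{v\in I}\frac{\mu _v(Y_v\mid X_{\varGamma (v)})}{\mu _v(X_v\mid Y_{\varGamma (v)})} = \prod _{v\in D}\frac{b_v(Y_v)\prod _{u\in \varGamma (v)}A_{uv}(Y_v,X_u)}{b_v(X_v)\prod _{u\in \varGamma (v)}A_{uv}(X_v,X_u)} = \frac{\mu (Y)}{\mu (X)},\]

where the second equality uses that I is an independent set containing D, so that \(X_{\varGamma (v)}=Y_{\varGamma (v)}\) for every \(v\in I\) and the factors for \(v\in I{\setminus }D\) are equal to 1.

By the law of total probability, summing over all independent sets \(I\supseteq D\),

\[\frac{P(X,Y)}{P(Y,X)} = \frac{\sum _{I\supseteq D}\Pr [I]\cdot \Pr [X\rightarrow Y\mid I]}{\sum _{I\supseteq D}\Pr [I]\cdot \Pr [Y\rightarrow X\mid I]} = \frac{\mu (Y)}{\mu (X)}.\]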

Thus, the chain is reversible with respect to the Gibbs distribution \(\mu \).

Next, observe that the chain will never move from a feasible configuration to an infeasible one. Moreover, due to the assumption that the marginal distribution (2) is always well-defined, once a vertex v has been resampled, it will satisfy all local constraints. Therefore, the chain will be feasible once every vertex has been resampled. Since every vertex v has positive probability \(\Pr [v\in I]\) to be resampled, the chain is absorbing to feasible configurations.

It is easy to observe that every feasible configuration is aperiodic, since it has a self-loop transition, i.e. \(P(X,X) > 0\) for all feasible X. Moreover, any move \(X \rightarrow Y\) between feasible configurations \(X,Y\in \varOmega \) in the single-site Glauber dynamics with vertex v being updated can be simulated by a move in the LubyGlauber chain by first sampling an independent set \(I\ni v\) (which is always possible since \(\Pr [v\in I]>0\)) and then updating v according to \(X\rightarrow Y\) and meanwhile keeping all \(u\in I{\setminus }\{v\}\) unchanged (which is always possible for feasible X). Provided the irreducibility of the single-site Glauber dynamics among all feasible configurations, the LubyGlauber chain is also irreducible among all feasible configurations. Combining with the absorption towards feasible configurations and their aperiodicity, due to the Markov chain convergence theorem [43], the total variation distance \(d_{\mathrm {TV}}\left( {\mu _{\mathsf {LG}}},{\mu }\right) \) converges to 0 as \(T \rightarrow \infty \).\(\square \)

We then apply a standard coupling argument from [18, 35] to analyze the mixing rate of the LubyGlauber chain. The following notions are essential to the mixing of Glauber dynamics.

Definition 1

(influence matrix) For \(v\in V\) and \(\sigma \in [q]^V\), we write \(\mu _v^\sigma =\mu _v(\cdot \mid \sigma _{\varGamma (v)})\) for the marginal distribution of the value of v, for configurations sampled from \(\mu \) conditioning on agreeing with \(\sigma \) at all neighbors of v. For vertices \(i, j\in V\), the influence of j on i is defined as

\[\rho _{i,j} = \max _{(\sigma ,\tau )\in S_j}d_{\mathrm {TV}}\left( {\mu _i^\sigma },{\mu _i^\tau }\right) ,\]

where \(S_j\) denotes the set of all pairs of feasible configurations \(\sigma ,\tau \in [q]^V\) such that \(\sigma \) and \(\tau \) agree on all vertices except j. Let \(R=(\rho _{i,j})_{i,j\in V}\) be the \(n\times n\) influence matrix.

Definition 2

(Dobrushin’s condition) Let \(\varvec{\alpha }\) be the total influence on a vertex, defined by

$$\begin{aligned} \varvec{\alpha }=\max _{i\in V}\sum _{j\in V}\rho _{i,j}. \end{aligned}$$

We say that Dobrushin’s condition is satisfied if \(\varvec{\alpha }<1\).

It is a fundamental result that Dobrushin’s condition is sufficient for the rapid mixing of Glauber dynamics [16, 35, 53], with a mixing rate of \(\tau (\epsilon )=O\left( \frac{n}{1-\varvec{\alpha }}\log \left( \frac{n}{\epsilon }\right) \right) \). Here we show that the LubyGlauber chain is essentially a parallel speed-up of the Glauber dynamics by a factor of \(\varTheta (\frac{n}{\varDelta })\).

Theorem 4

Under the same assumption as Proposition 1, if the total influence \(\varvec{\alpha }<1\), then the mixing rate of the LubyGlauber chain is \(\tau (\epsilon )= O\left( \frac{\varDelta }{1-\varvec{\alpha }}\log \left( \frac{n}{\epsilon }\right) \right) \).

Consequently, for any \(\epsilon >0\) the LubyGlauber algorithm can terminate within \(O\left( \frac{\varDelta }{1-\varvec{\alpha }}\log \left( \frac{n}{\epsilon }\right) \right) \) rounds in the \(\mathsf {LOCAL}\) model and return an \(\varvec{X}\in [q]^V\) whose distribution \(\mu _\mathsf {LG}\) is \(\epsilon \)-close to the Gibbs distribution \(\mu \) in total variation distance.

Remark 2

In fact, Proposition 1 and Theorem 4 hold for a more general family of Markov chains, where the “Luby step” can be any subroutine that independently generates a random independent set I, as long as every vertex has positive probability of being selected into I. In general, the mixing rate in Theorem 4 is \(\tau (\epsilon )= O\left( \frac{1}{(1-\varvec{\alpha })\gamma }\log \left( \frac{n}{\epsilon }\right) \right) \), where \(\gamma \) is a lower bound on the probability \(\Pr [v\in I]\) over all \(v\in V\).
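To make the generalized chain concrete, the following is a minimal sketch of one step for proper q-colorings. It assumes the "Luby step" draws an i.i.d. random priority at every vertex and selects the local maxima, which gives \(\Pr [v\in I]=\frac{1}{\deg (v)+1}\ge \frac{1}{\varDelta +1}\). The names `luby_step` and `luby_glauber_coloring_step` and the adjacency-dictionary representation are illustrative choices, not taken from the paper.

```python
import random

def luby_step(adj, rng):
    """Sample an independent set I: v joins I when its priority beats every neighbour's."""
    priority = {v: rng.random() for v in adj}
    return {v for v in adj if all(priority[v] > priority[u] for u in adj[v])}

def luby_glauber_coloring_step(adj, X, q, rng):
    """One step for proper q-colorings (assumes q >= max degree + 1)."""
    I = luby_step(adj, rng)
    new_X = dict(X)
    for v in I:
        # Resample v from its marginal: uniform over colors unused by its neighbours.
        used = {X[u] for u in adj[v]}
        new_X[v] = rng.choice([c for c in range(q) if c not in used])
    return new_X, I
```

Since I is an independent set, the neighbours of every resampled vertex keep their values during the step, so all updates can be carried out in parallel within a single round.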

The following lemma is crucial for relating the mixing rate to the influence matrix. The lemma has been proved in various places [14, 18, 35].

Lemma 1

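Let X and Y be two random variables that take values over the feasible configurations in \(\varOmega =[q]^V\). Then for any \(i\in V\),

$$\begin{aligned} \mathbf {E}\left[ d_{\mathrm {TV}}\left( {\mu _i^X},{\mu _i^Y}\right) \right] \le \sum _{j\in V}\rho _{i,j}\Pr [X_j\ne Y_j]. \end{aligned}$$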

Proof

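We enumerate V as \(V=\{1,2,\ldots , n\}\). For \(0\le k\le n\), define \(Z^{(k)}\) by setting, for each \(j\in V\), \(Z^{(k)}_{j}=X_j\) if \(j>k\) and \(Z^{(k)}_{j}=Y_j\) if \(j\le k\). In particular, \(Z^{(0)}=X\) and \(Z^{(n)}=Y\). Now, by the triangle inequality,

$$\begin{aligned} d_{\mathrm {TV}}\left( {\mu _i^X},{\mu _i^Y}\right) \le \sum _{k=1}^{n} d_{\mathrm {TV}}\left( {\mu _i^{Z^{(k-1)}}},{\mu _i^{Z^{(k)}}}\right) . \end{aligned}$$

Next, we note that \(Z^{(k-1)}=Z^{(k)}\) if and only if \(X_k=Y_k\). Therefore,

$$\begin{aligned} d_{\mathrm {TV}}\left( {\mu _i^{Z^{(k-1)}}},{\mu _i^{Z^{(k)}}}\right) =\mathbf {1}[X_k\ne Y_k]\cdot d_{\mathrm {TV}}\left( {\mu _i^{Z^{(k-1)}}},{\mu _i^{Z^{(k)}}}\right) . \end{aligned}$$

Since \(Z^{(k-1)}\) and \(Z^{(k)}\) can only differ at vertex k, it follows that \((Z^{(k-1)},Z^{(k)})\in S_k\), and hence,

$$\begin{aligned} d_{\mathrm {TV}}\left( {\mu _i^{Z^{(k-1)}}},{\mu _i^{Z^{(k)}}}\right) \le \rho _{i,k}\cdot \mathbf {1}[X_k\ne Y_k]. \end{aligned}$$

By linearity of expectation,

$$\begin{aligned} \mathbf {E}\left[ d_{\mathrm {TV}}\left( {\mu _i^X},{\mu _i^Y}\right) \right] \le \sum _{k=1}^{n}\rho _{i,k}\Pr [X_k\ne Y_k]. \end{aligned}$$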

\(\square \)

Proof of Theorem 4:

We are actually going to prove a stronger result. Denote by I the random independent set on which the resampling is executed, write \(\gamma _v=\Pr [v\in I]\) for each \(v\in V\), and assume that for all \(v\in V\), \(\gamma _v\ge \gamma \) for some \(\gamma >0\). Clearly, when I is generated by the “Luby step”, this holds for \(\gamma =\frac{1}{\varDelta +1}\). We are going to prove that \(\tau (\epsilon )=O\left( \frac{1}{(1-\varvec{\alpha })\gamma }\log \left( \frac{n}{\epsilon }\right) \right) \).

The proof follows the framework of Hayes [35]. We construct a coupling of the Markov chain \((X^{(t)}, Y^{(t)})\) such that the transition rules for \(X^{(t)}\rightarrow X^{(t+1)}\) and \(Y^{(t)}\rightarrow Y^{(t+1)}\) are the same as the LubyGlauber chain. If \(\Pr [X^{(T)}\ne Y^{(T)}\mid X^{(0)}=\sigma \wedge Y^{(0)}=\tau ]\le \epsilon \) for any initial configurations \(\sigma ,\tau \in \varOmega \), then by the coupling lemma for Markov chains [43], we have the mixing rate \(\tau (\epsilon )\le T\).

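The coupling we are going to use is the maximal one-step coupling of the LubyGlauber chain, which for every vertex \(i\in V\) achieves that

$$\begin{aligned} \Pr \left[ X_i^{(t+1)}\ne Y_i^{(t+1)}\mid X^{(t)},Y^{(t)}, i\in I\right] =d_{\mathrm {TV}}\left( {\mu _i^{X^{(t)}}},{\mu _i^{Y^{(t)}}}\right) , \end{aligned}$$

where \(\mu _i^{X^{(t)}}\) and \(\mu _i^{Y^{(t)}}\) are the marginal distributions as defined in Definition 1. The existence of such a coupling is guaranteed by the coupling lemma.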

Arbitrarily fix \(\sigma ,\tau \in \varOmega =[q]^V\). For \(t\ge 0\), define \((X^{(t)},Y^{(t)})\in \varOmega ^2\) by iterating a maximal one-step coupling of the LubyGlauber chain, starting from initial condition \(X^{(0)}=\sigma ,Y^{(0)}=\tau \). Since the marginal distribution (2) is well-defined, we know that once all vertices have been resampled, the configuration will be feasible and will remain feasible thereafter.

Let \(T_1\) be a positive integer and let \({\mathcal {F}}\) denote the event that all vertices have been resampled in chains X and Y in the first \(T_1\) steps. By the union bound, we have

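Next, we assume that \(X^{(t)},Y^{(t)}\) are both feasible for \(t\ge T_1\). We define the vector \(\mathbf {p}^{(t)}\in [0,1]^V\) as

$$\begin{aligned} p^{(t)}_i=\Pr \left[ X_i^{(t)}\ne Y_i^{(t)}\right] ,\quad i\in V. \end{aligned}$$

By the definition of the LubyGlauber chain, it holds for every \(j\in V\) that

$$\begin{aligned} \Pr \left[ X_j^{(t+1)}\ne Y_j^{(t+1)}\right] =(1-\gamma _j)\,p^{(t)}_j+\gamma _j\Pr \left[ X_j^{(t+1)}\ne Y_j^{(t+1)}\mid j\in I\right] . \end{aligned}\tag{4}$$

By the definition of maximal one-step coupling and Lemma 1, for \(t\ge T_1\), for any \(i\in V\),

$$\begin{aligned} \Pr \left[ X_i^{(t+1)}\ne Y_i^{(t+1)}\mid i\in I\right] =\mathbf {E}\left[ d_{\mathrm {TV}}\left( {\mu _i^{X^{(t)}}},{\mu _i^{Y^{(t)}}}\right) \right] \le \sum _{j\in V}\rho _{i,j}\,p^{(t)}_j. \end{aligned}$$

Combined with equality (4), for \(t\ge T_1\) we have the component-wise inequality

$$\begin{aligned} \mathbf {p}^{(t+1)}\le M\mathbf {p}^{(t)}, \end{aligned}$$

where the matrix \(M=(J-\varGamma )J+\varGamma R\); here \(\varGamma \) is the \(n\times n\) diagonal matrix with \(\varGamma _{i,i}=\gamma _i\), J is the \(n\times n\) identity matrix, and \(R=(\rho _{ij})\) is the influence matrix. The \(\infty \)-norm of M is bounded as

$$\begin{aligned} \Vert M\Vert _\infty =\max _{i\in V}\left( (1-\gamma _i)+\gamma _i\sum _{j\in V}\rho _{i,j}\right) \le \max _{i\in V}\left( 1-(1-\varvec{\alpha })\gamma _i\right) \le 1-(1-\varvec{\alpha })\gamma . \end{aligned}$$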

Let \(T=T_1+T_2\). By induction, we obtain the component-wise inequality

Conditioning on that \(X^{(T_1)}\) and \(Y^{(T_1)}\) are both feasible, we have

For any \(\epsilon \), we choose \(T_1=\left\lceil \frac{1}{\gamma }\ln \left( \frac{4n}{\epsilon }\right) \right\rceil \) and \(T_2=\left\lceil \frac{1}{(1-\varvec{\alpha })\gamma }\ln \left( \frac{2n}{\epsilon }\right) \right\rceil \). Then \(T=T_1+T_2=O\left( \frac{1}{(1-\varvec{\alpha })\gamma }\log \left( \frac{n}{\epsilon }\right) \right) \). Combining (3) and (5), conditioning on \(X^{(0)}=\sigma \wedge Y^{(0)}=\tau \) for arbitrary \(\sigma ,\tau \in \varOmega \), we have

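This implies that

$$\begin{aligned} \tau (\epsilon )=O\left( \frac{1}{(1-\varvec{\alpha })\gamma }\log \left( \frac{n}{\epsilon }\right) \right) . \end{aligned}$$

In particular, if the random independent set I is generated by the “Luby step”, we have \(\gamma =\frac{1}{\varDelta +1}\), and therefore for the LubyGlauber chain

$$\begin{aligned} \tau (\epsilon )=O\left( \frac{\varDelta }{1-\varvec{\alpha }}\log \left( \frac{n}{\epsilon }\right) \right) . \end{aligned}$$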

\(\square \)
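As a worked instance of the choices of \(T_1\) and \(T_2\) made in the proof, the following sketch evaluates the two ceilings for concrete parameters. The function name `luby_glauber_rounds` and the sample values are illustrative assumptions. The code only evaluates the stated formulas and does not verify that a given instance actually has total influence \(\varvec{\alpha }\).

```python
import math

def luby_glauber_rounds(n, eps, alpha, gamma):
    """Return (T1, T2) as chosen in the proof of Theorem 4."""
    if not (0 <= alpha < 1 and 0 < gamma <= 1 and 0 < eps < 1):
        raise ValueError("need 0 <= alpha < 1, 0 < gamma <= 1, 0 < eps < 1")
    # T1: enough steps for every vertex to be resampled at least once.
    t1 = math.ceil(math.log(4 * n / eps) / gamma)
    # T2: enough further steps for the contraction factor 1-(1-alpha)*gamma to take effect.
    t2 = math.ceil(math.log(2 * n / eps) / ((1 - alpha) * gamma))
    return t1, t2

# Example: n = 1000 vertices, maximum degree 10 (so gamma = 1/11), alpha = 1/2.
t1, t2 = luby_glauber_rounds(n=1000, eps=0.01, alpha=0.5, gamma=1 / 11)
```

With these values, \(n(1-\gamma )^{T_1}\le \epsilon /4\) and \(n\left( 1-(1-\varvec{\alpha })\gamma \right) ^{T_2}\le \epsilon /2\), both following from \((1-x)^t\le \mathrm {e}^{-xt}\).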

3.2 Application of LubyGlauber for sampling graph colorings

For uniformly distributed proper q-colorings of a graph G, it is well known that Dobrushin’s condition is satisfied when \(q\ge 2\varDelta +1\), where \(\varDelta \) is the maximum degree of G.

We consider a more general problem, list coloring, where each vertex \(v\in V\) maintains a list \(L_v\subseteq [q]\) of colors that it can use. Proper q-coloring is the special case of list coloring in which every list is precisely [q]. For each vertex \(v\in V\), we denote by \(q_v=|L_v|\) the size of v’s list, and by \(d_v=\deg (v)\) the degree of v. It is easy to verify that the total influence \(\varvec{\alpha }\) is now bounded as

$$\begin{aligned} \varvec{\alpha }\le \max _{v\in V}\frac{d_v}{q_v-d_v}. \end{aligned}$$

Applying Theorem 4, we have the following corollary, which also implies Theorem 1.

Corollary 1

If there is an arbitrary constant \(\delta >0\) such that \(q_v\ge (2+\delta )d_v\) for every vertex v, then the mixing rate of the LubyGlauber chain for sampling list coloring is \(\tau (\epsilon )= O\left( \varDelta \log \left( \frac{n}{\epsilon }\right) \right) \).

4 The LocalMetropolis algorithm

In this section, we give an algorithm that may fully parallelize the sequential process under suitable mixing conditions, even on graphs with unbounded degree. The algorithm is inspired by the famous Metropolis-Hastings algorithm for MCMC, in which a random choice is proposed and then filtered to enforce the target stationary distribution. Our algorithm, called the LocalMetropolis algorithm, makes each vertex propose independently, and localizes the work of filtering to each edge.

We are given a Markov random field (MRF) defined on the network G(V, E), with edge activities \(\varvec{A}=\{A_e\}_{e\in E}\) and vertex activities \(\varvec{b}=\{b_v\}_{v\in V}\), whose Gibbs distribution is \(\mu \). Starting from an arbitrary configuration \(X\in [q]^V\), in each iteration, the LocalMetropolis chain does the following:

Propose: Each vertex \(v \in V\) independently proposes a spin state \(\sigma _v\in [q]\) with probability proportional to \(b_v(\sigma _v)\).

Local filter: Each edge \(e=uv\in E\) flips a biased coin independently, with the probability of HEADS being

$$\begin{aligned} \tilde{A}_e(\sigma _u,\sigma _v)\tilde{A}_e(X_u,\sigma _v)\tilde{A}_e(\sigma _u,X_v), \end{aligned}$$

where \(\tilde{A}_e\) is the matrix obtained by normalizing \(A_e\) as \(\tilde{A}_e=A_e/\max _{i,j}A_e(i,j)\). We say that the edge passes the check if the outcome of coin flipping is HEADS. Then for each vertex \(v \in V\), if all edges incident with v passed their checks, v accepts the proposal and updates the value as \(X_v=\sigma _v\), otherwise v leaves \(X_v\) unchanged.

After T iterations, where T is a threshold determined for the specific Markov random field, the algorithm terminates and outputs the current \(\varvec{X}=(X_v)_{v\in V}\). The pseudocode for the LocalMetropolis algorithm is given in Algorithm 2.

We remark that in each iteration, for each edge \(e=uv\), the two endpoints u and v access the same random coin to determine whether e passes the check in this iteration.
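The propose and local-filter steps above can be sketched as a single centralized simulation of one iteration. This is only an illustration under stated assumptions: the function name `local_metropolis_step`, the edge list, and the nested-list encoding of \(A_e\) and \(b_v\) are hypothetical, and the per-edge coin is simulated once per edge to mirror the shared coin of the two endpoints.

```python
import random

def local_metropolis_step(edges, A, b, X, rng):
    """edges: list of (u, v); A[(u, v)][i][j]: edge activity; b[v][i]: vertex activity."""
    q = len(next(iter(b.values())))
    # Propose: each vertex draws sigma_v with probability proportional to b_v.
    sigma = {v: rng.choices(range(q), weights=b[v])[0] for v in X}
    accepts = {v: True for v in X}
    # Local filter: one shared coin per edge, HEADS with the normalized product.
    for (u, v) in edges:
        Ae = A[(u, v)]
        m = max(max(row) for row in Ae)
        p = Ae[sigma[u]][sigma[v]] * Ae[X[u]][sigma[v]] * Ae[sigma[u]][X[v]] / m**3
        if rng.random() >= p:  # TAILS: the edge fails, both endpoints reject
            accepts[u] = accepts[v] = False
    return {v: sigma[v] if accepts[v] else X[v] for v in X}
```

In the \(\mathsf {LOCAL}\) model the same iteration takes a constant number of rounds, since each vertex only needs the proposals and current values of its neighbors.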

Remark 3

The LocalMetropolis algorithm can be naturally extended to sample from weighted CSPs. The local filtering now occurs on each local constraint, such that a k-ary constraint \(c=(f_c,S_c)\in {\mathcal {C}}\) passes the check with the probability which is a product of \(2^k-1\) normalized factors \(\tilde{f}_c(\tau )\) for the \(\tau \in [q]^{S_c}\) obtained from \(2^k-1\) ways of mixing \(\sigma _{S_c}\) with \(X_{S_c}\) except the \(X_{S_c}\) itself.

4.1 Mixing of LocalMetropolis

Let \(\mu _\mathsf {LM}\) denote the distribution of \(\varvec{X}=(X_v)_{v\in V}\) returned by the LocalMetropolis algorithm after T iterations.

We need to ensure the chain is well behaved even when starting from infeasible configurations. Now we make the following assumption: for all \(X \in [q]^V\) and \(v \in V\),

which is slightly stronger than the assumption made for the Glauber dynamics. As in the case of Glauber dynamics, the property is needed only when the chain is allowed to start from an infeasible configuration \(X\in [q]^V\) with \(\mu (X)=0\). For specific MRFs, such as graph colorings, the condition (6) is satisfied as long as \(q \ge \varDelta + 1\) and \(q\ge 3\). As before, we further assume that the single-site Markov chain (see footnote 2) is irreducible among feasible configurations.

Theorem 5

The Markov chain LocalMetropolis is reversible and has stationary distribution \(\mu \). Furthermore, under the above assumptions, \(d_{\mathrm {TV}}\left( {\mu _{\mathsf {LM}}},{\mu }\right) \) converges to 0 as \(T \rightarrow \infty \).

Proof

Let \(\varOmega =[q]^V\) and \(P \in {\mathbb {R}}_{\ge 0} ^{|\varOmega | \times |\varOmega |}\) denote the transition matrix for the LocalMetropolis chain. First, we show this chain is reversible and \(\mu \) is stationary, by verifying the detailed balance equation:

$$\begin{aligned} \mu (X)P(X,Y)=\mu (Y)P(Y,X),\quad \forall X,Y\in \varOmega . \end{aligned}$$

If two configurations X, Y are both infeasible, then \(\mu (X)=\mu (Y)=0\). If precisely one of X, Y is feasible, say X is feasible and Y is not, then \(\mu (Y)=0\) and X cannot move to Y since at least one edge cannot pass its check, which means \(P(X,Y) = 0\). In both cases, the detailed balance equation holds.

Next, we suppose X, Y are both feasible. Consider a move in the LocalMetropolis chain. Let \({\mathcal {C}}\in \{0,1\}^E\) be a Boolean vector such that \({\mathcal {C}}_e\) indicates whether edge \(e\in E\) passes its check. We call \(v\in V\) non-restricted by \({\mathcal {C}}\) if \({\mathcal {C}}_e=1\) for all e incident with v, so that v accepts the proposal; and call \(v\in V\) restricted by \({\mathcal {C}}\) otherwise.

A move in the chain is completely determined by \({\mathcal {C}}\) along with the proposed configurations \(\sigma \in [q]^V\). Let \(\varOmega _{X \rightarrow Y}\) denote the set of pairs \((\sigma , {\mathcal {C}})\) with which X moves to Y, and \(\varDelta _{X,Y}=\{v\in V\mid X_v\ne Y_v\}\) the set of vertices on which X and Y disagree. Note that each \((\sigma , {\mathcal {C}})\in \varOmega _{X \rightarrow Y}\) satisfies:

\(\forall v \in \varDelta _{X,Y}\): \(\sigma _v=Y_v\) and v is non-restricted by \({\mathcal {C}}\);

\(\forall v \not \in \varDelta _{X,Y}\): either \(\sigma _v = X_v=Y_v\) or v is restricted by \({\mathcal {C}}\).

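The same holds for \(\varOmega _{Y \rightarrow X}\), the set of \((\sigma , {\mathcal {C}})\) with which Y moves to X. Hence:

$$\begin{aligned} \frac{P(X,Y)}{P(Y,X)}=\frac{\sum _{(\sigma ,{\mathcal {C}})\in \varOmega _{X \rightarrow Y}}{\Pr }(\sigma ){\Pr }({\mathcal {C}}\mid \sigma ,X)}{\sum _{(\sigma ',{\mathcal {C}}')\in \varOmega _{Y \rightarrow X}}{\Pr }(\sigma '){\Pr }({\mathcal {C}}'\mid \sigma ',Y)}. \end{aligned}\tag{7}$$

In order to verify the detailed balance equation, we construct a bijection \(\phi _{X,Y} : \varOmega _{X \rightarrow Y} \rightarrow \varOmega _{Y \rightarrow X}\) and show that for every \((\sigma ,{\mathcal {C}}) \in \varOmega _{X \rightarrow Y}\), writing \((\sigma ^\prime ,{\mathcal {C}}^\prime )=\phi _{X,Y}(\sigma ,{\mathcal {C}})\),

$$\begin{aligned} \frac{{\Pr }(\sigma ){\Pr }({\mathcal {C}}\mid \sigma ,X)}{{\Pr }(\sigma '){\Pr }({\mathcal {C}}'\mid \sigma ',Y)}=\frac{\mu (Y)}{\mu (X)}. \end{aligned}\tag{8}$$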

The detailed balance equation then follows from (7) and (8).

The bijection \((\sigma ,{\mathcal {C}}){\mathop {\longmapsto }\limits ^{\phi _{X,Y}}}(\sigma ^\prime ,{\mathcal {C}}^\prime )\) is constructed as follows:

\({\mathcal {C}}^\prime = {\mathcal {C}}\);

for all v non-restricted by \({\mathcal {C}}\), since \((\sigma ,{\mathcal {C}})\in \varOmega _{X \rightarrow Y}\) it must hold \(\sigma _v=Y_v\), then set \(\sigma '_v=X_v\);

for all v restricted by \({\mathcal {C}}\), since \((\sigma ,{\mathcal {C}})\in \varOmega _{X \rightarrow Y}\) it must hold \(X_v=Y_v\), then set \(\sigma '_v=\sigma _v\).

It can be verified that the \(\phi _{X,Y}\) constructed in this way is indeed a bijection from \(\varOmega _{X \rightarrow Y}\) to \(\varOmega _{Y \rightarrow X}\). For any \((\sigma ,{\mathcal {C}})\in \varOmega _{X \rightarrow Y}\) and the corresponding \((\sigma ',{\mathcal {C}}')\in \varOmega _{Y \rightarrow X}\), since \({\mathcal {C}}'={\mathcal {C}}\), in the following we will not specify whether v is (non-)restricted by \({\mathcal {C}}\) or \({\mathcal {C}}'\) but just say v is (non-)restricted, and the following conditions are satisfied:

\(\forall v\in \varDelta _{X,Y}\): \(\sigma _v = Y_v\), \(\sigma '_v=X_v\) and v is non-restricted;

\(\forall v \not \in \varDelta _{X,Y}\): either \(\sigma _v=\sigma _v'=X_v=Y_v\) or v is restricted and \(\sigma _v=\sigma _v'\). In both cases, \(\sigma _v = \sigma '_v\).

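Then we have:

$$\begin{aligned} \frac{{\Pr }(\sigma )}{{\Pr }(\sigma ')}=\prod _{v\in V}\frac{b_v(\sigma _v)}{b_v(\sigma '_v)}=\prod _{v\in \varDelta _{X,Y}}\frac{b_v(Y_v)}{b_v(X_v)}=\prod _{v\in V}\frac{b_v(Y_v)}{b_v(X_v)}. \end{aligned}\tag{9}$$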

Next, for each edge \(e\in E\) we calculate the ratio \(\frac{{\Pr }({\mathcal {C}}_e\mid \sigma ,X)}{{\Pr }({\mathcal {C}}_e^\prime \mid \sigma ^\prime ,Y)}\). There are two cases:

If \({\mathcal {C}}_e=0\), which means e does not pass its check, then

$$\begin{aligned}&{\Pr }[{\mathcal {C}}_e=0 \mid \sigma ,X] = 1-\tilde{A}_e(\sigma _u,\sigma _v)\tilde{A}_e(X_u,\sigma _v)\tilde{A}_e(\sigma _u,X_v)\\&\text {and}\quad \\&{\Pr }[{\mathcal {C}}'_e=0\mid \sigma ',Y] = 1-\tilde{A}_e(\sigma '_u,\sigma '_v)\tilde{A}_e(Y_u,\sigma '_v)\tilde{A}_e(\sigma '_u,Y_v). \end{aligned}$$

And both u and v are restricted by \({\mathcal {C}}\). By our construction of the bijection \(\phi _{X,Y}\), we have \(\sigma _u=\sigma '_u\), \(\sigma _v=\sigma '_v\), \(X_u=Y_u\), and \(X_v=Y_v\). It follows that

$$\begin{aligned} \frac{{\Pr }[{\mathcal {C}}_e=0\mid \sigma ,X]}{{\Pr }[{\mathcal {C}}^\prime _e=0\mid \sigma ^\prime ,Y] } =\frac{A_e(Y_u,Y_v)}{A_e(X_u,X_v)}=1. \end{aligned}$$

If \({\mathcal {C}}_e=1\), which means e passes its check, then

$$\begin{aligned}&{\Pr }[{\mathcal {C}}_e=1\mid \sigma ,X] = \tilde{A}_e(\sigma _u,\sigma _v)\tilde{A}_e(X_u,\sigma _v)\tilde{A}_e(\sigma _u,X_v),\\&\text {and }\\&{\Pr }[{\mathcal {C}}'_e=1\mid \sigma ',Y] = \tilde{A}_e(\sigma '_u,\sigma '_v)\tilde{A}_e(Y_u,\sigma '_v)\tilde{A}_e(\sigma '_u,Y_v). \end{aligned}$$

There are three sub-cases according to whether vertices u and v are restricted:

1. Both u and v are restricted, in which case \(\sigma _u=\sigma '_u\), \(\sigma _v=\sigma '_v\), \(X_u=Y_u\), \(X_v=Y_v\).

2. Precisely one of \(\{u,v\}\) is restricted, say v is restricted and u is non-restricted, in which case \(\sigma _u=Y_u\), \(\sigma '_u=X_u\), \(\sigma _v=\sigma '_v\), and \(X_v=Y_v\).

3. Both u and v are non-restricted, in which case \(\sigma _u = Y_u\), \(\sigma '_u=X_u\), \(\sigma _v=Y_v\), \(\sigma '_v=X_v\).

In all three sub-cases, the following identity can be verified:

$$\begin{aligned} \frac{{\Pr }[{\mathcal {C}}_e=1\mid \sigma ,X]}{{\Pr }[{\mathcal {C}}^\prime _e=1\mid \sigma ^\prime ,Y]} =\frac{\tilde{A}_e(Y_u,Y_v)}{\tilde{A}_e(X_u,X_v)}=\frac{A_e(Y_u,Y_v)}{A_e(X_u,X_v)}. \end{aligned}$$

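Since each edge passes its check independently, we have

$$\begin{aligned} \frac{{\Pr }({\mathcal {C}}\mid \sigma ,X)}{{\Pr }({\mathcal {C}}'\mid \sigma ',Y)}=\prod _{e\in E}\frac{{\Pr }({\mathcal {C}}_e\mid \sigma ,X)}{{\Pr }({\mathcal {C}}'_e\mid \sigma ',Y)}=\prod _{e=uv\in E}\frac{A_e(Y_u,Y_v)}{A_e(X_u,X_v)}. \end{aligned}\tag{10}$$

Combining (9) and (10), for every \((\sigma ,{\mathcal {C}})\in \varOmega _{X \rightarrow Y}\) and the corresponding \((\sigma ',{\mathcal {C}}')\in \varOmega _{Y \rightarrow X}\), we have:

$$\begin{aligned} \frac{{\Pr }(\sigma ){\Pr }({\mathcal {C}}\mid \sigma ,X)}{{\Pr }(\sigma '){\Pr }({\mathcal {C}}'\mid \sigma ',Y)}=\prod _{v\in V}\frac{b_v(Y_v)}{b_v(X_v)}\prod _{e=uv\in E}\frac{A_e(Y_u,Y_v)}{A_e(X_u,X_v)}=\frac{\mu (Y)}{\mu (X)}. \end{aligned}$$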

This completes the verification of detailed balance equation and the proof of the reversibility of the chain with respect to stationary distribution \(\mu \).

Next, observe that the chain will never move from a feasible configuration to an infeasible one, since at least one of the edges will not pass its check. By assumption (6), for all \(X \in [q]^V\), feasible or not, and for every \(v \in V\) there must be a spin state \(i\in [q]\) such that with positive probability v is successfully updated to spin state i. Note that once a vertex is successfully updated it satisfies and will keep satisfying all its local constraints. Therefore, the chain is absorbing to feasible configurations.

It is easy to observe that every feasible configuration is aperiodic, since it has a self-loop transition, i.e. \(P(X,X) > 0\) for all feasible X. In addition, any move \(X \rightarrow Y\) between feasible configurations \(X,Y\in \varOmega \) in the single-site Markov chain with vertex v being updated can be simulated by a move in the LocalMetropolis chain in which all the vertices u other than v propose their current spin state \(X_u\) and v proposes \(Y_v\). Provided the irreducibility of the single-site Markov chain among all feasible configurations, the LocalMetropolis chain is also irreducible among all feasible configurations. Combining this with the absorption towards feasible configurations and their aperiodicity, the Markov chain convergence theorem [43] implies that \(d_{\mathrm {TV}}\left( {\mu _{\mathsf {LM}}},{\mu }\right) \) converges to 0 as \(T \rightarrow \infty \). \(\square \)

4.2 The mixing of LocalMetropolis chain for graph colorings

Unlike the LubyGlauber chain, whose mixing rate is essentially due to the analysis of systematic scans, the mixing rate of the LocalMetropolis chain is much more complicated to analyze. Here we analyze the mixing rate of the LocalMetropolis chain for proper q-colorings.

Given a graph G(V, E), a q-coloring \(\sigma \in [q]^V\) is proper if \(\sigma _u\ne \sigma _v\) for all \(uv\in E\). For this special MRF, the LocalMetropolis chain behaves simply as follows. Starting from an arbitrary coloring \(X\in [q]^V\), not necessarily proper, in each step:

Propose: each vertex v proposes a color \(c_v\in [q]\) uniformly at random;

Local filter: each vertex v rejects its proposal if there is a neighbor \(u \in \varGamma (v)\) such that one of the following occurs:

1. (v proposed the neighbor’s current color) \(c_v = X_u\);

2. (v and the neighbor proposed the same color) \(c_v = c_u\);

3. (the neighbor proposed v’s current color) \(X_v = c_u\);

otherwise, v accepts its proposal and updates its color \(X_v\) to \(c_v\).

The first two filtering rules are sufficient to guarantee that the chain will never move to a “less proper” coloring. Although at first glance the third filtering rule looks redundant, it is necessary to guarantee the reversibility of the chain as well as the uniform stationary distribution.
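The three filtering rules translate directly into code. The sketch below simulates one step of the chain for q-colorings centrally. The function name `local_metropolis_coloring_step` and the adjacency-dictionary representation are illustrative assumptions.

```python
import random

def local_metropolis_coloring_step(adj, X, q, rng):
    """One LocalMetropolis step for q-colorings; X need not be proper."""
    # Propose: every vertex draws a uniform color.
    c = {v: rng.randrange(q) for v in adj}
    new_X = {}
    for v in adj:
        # Local filter: rules 1, 2 and 3, checked against every neighbor u.
        rejected = any(
            c[v] == X[u] or c[v] == c[u] or X[v] == c[u] for u in adj[v]
        )
        new_X[v] = X[v] if rejected else c[v]
    return new_X
```

Any edge with at least one updated endpoint is properly colored after the step, so the number of monochromatic edges never increases, matching the observation that the chain never moves to a less proper coloring.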

It can be verified that when \(q \ge \varDelta + 2\), the condition (6) is satisfied and the single-site Glauber dynamics for proper q-coloring is irreducible, and hence the chain is mixing due to Theorem 5. The following theorem states a condition in the form \(q\ge \alpha \varDelta \) for the logarithmic mixing rate even for unbounded \(\varDelta \) and q. This proves Theorem 2.

Theorem 6

If \(q\ge \alpha \varDelta \) for a constant \(\alpha >2+\sqrt{2}\), the mixing rate of the LocalMetropolis chain for proper q-coloring on graphs with maximum degree at most \(\varDelta =\varDelta (n)\ge 9\) is \(\tau (\epsilon )=O(\log \left( \frac{n}{\epsilon }\right) )\), where the constant factor in \(O(\cdot )\) depends only on \(\alpha \) but not on the maximum degree \(\varDelta \).

The theorem is proved by path coupling, a powerful engineering tool for coupling Markov chains. A coupling of a Markov chain on space \(\varOmega \) is a Markov chain \((X,Y)\rightarrow (X',Y')\) on space \(\varOmega ^2\) such that the transitions \(X\rightarrow X'\) and \(Y\rightarrow Y'\) individually follow the same transition rule as the original chain on \(\varOmega \). For path coupling, we can construct a coupled Markov chain \((X,Y)\rightarrow (X',Y')\) for \(X,Y\in [q]^V\) which differ at only one vertex. The chain mixes rapidly if the expected number of disagreeing vertices in \((X',Y')\) is \(<1\).

4.2.1 An ideal coupling

The \(2+\sqrt{2}\) threshold in Theorem 6 is due to an ideal coupling in the \(\varDelta \)-regular tree. Let \({\mathbb {T}}_{\varDelta }\) denote the infinite \(\varDelta \)-regular tree rooted at \(v_0\). We assume that the current pair of colorings (X, Y) disagree only at the root \(v_0\) and \(X_u=Y_u\not \in \{X_{v_0},Y_{v_0}\}\) for all other vertices u in \({\mathbb {T}}_{\varDelta }\).

An ideal coupling can be constructed as follows in a breadth-first fashion: (1) the root \(v_0\) proposes the same random color in both chains X, Y; (2) each child u of the root proposes the same random color in both chains unless it proposed one of \(\{X_{v_0},Y_{v_0}\}\), in which case it switches the roles of the two colors \(\{X_{v_0},Y_{v_0}\}\) in the Y chain; (3) for all other vertices u, it proposes the same random color in both chains unless its parent proposed different colors in the two chains, in which case u switches the roles of \(\{X_{v_0},Y_{v_0}\}\) in the Y chain. For this ideal coupling, by a calculation, it can be verified that for the root \(v_0\):

and for any non-root vertex u in \({\mathbb {T}}_{\varDelta }\) at distance \(\ell \) from \(v_0\):

The expected number of disagreeing vertices in \((X',Y')\) is then bounded as

The path coupling argument requires this quantity to be \(<1\). For \(q=\alpha ^{\star }\varDelta \) and \(\varDelta \rightarrow \infty \), this quantity becomes \(1-\mathrm {e}^{-2/\alpha ^{\star }}\left( 1-\frac{1}{\alpha ^{\star }}-\frac{1}{\alpha ^{\star }-2}\right) \), which is \(<1\) if \(\alpha ^{\star }>2+\sqrt{2}\).
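As a quick numerical check of this threshold, the limiting quantity can be evaluated directly. The function name `ideal_coupling_limit` is an illustrative choice, and the sketch only evaluates the closed form stated above.

```python
import math

def ideal_coupling_limit(a):
    """Limit of the expected number of disagreements for q = a * Delta, Delta -> infinity."""
    return 1.0 - math.exp(-2.0 / a) * (1.0 - 1.0 / a - 1.0 / (a - 2.0))

threshold = 2.0 + math.sqrt(2.0)
# At the threshold the bracket 1 - 1/a - 1/(a-2) vanishes, so the limit equals 1.
at_threshold = ideal_coupling_limit(threshold)
```

The bracket \(1-\frac{1}{\alpha ^{\star }}-\frac{1}{\alpha ^{\star }-2}\) is positive exactly when \((\alpha ^{\star })^2-4\alpha ^{\star }+2>0\), i.e. when \(\alpha ^{\star }>2+\sqrt{2}\) for \(\alpha ^{\star }>2\).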

For general non-tree graphs G(V, E) and arbitrary pairs of colorings (X, Y) which disagree at only one vertex, where X, Y may not even be proper, we essentially show that the above special pair of colorings (X, Y) on the infinite \(\varDelta \)-regular tree \({\mathbb {T}}_{\varDelta }\) represent the worst case for path coupling. The analysis for this general case is quite involved. We first state the path coupling lemma with general metric.

Lemma 2

(Bubley and Dyer [6]) Given a pre-metric, which is a connected undirected graph on configuration space \(\varOmega \) with positive edge weight such that every edge is a shortest path, let \(\varPhi (X,Y)\) be the length of the shortest path between two configurations \(X,Y\in \varOmega \). Suppose that there is a coupling \((X,Y) \rightarrow (X',Y')\) of the Markov chain defined only for the pair (X, Y) of configurations that are adjacent in the pre-metric, which satisfies that

$$\begin{aligned} \mathbf {E}\left[ \varPhi (X',Y')\mid X,Y\right] \le (1-\delta )\varPhi (X,Y) \end{aligned}$$

for some \(0< \delta < 1\). Then the mixing rate of the Markov chain is bounded by

$$\begin{aligned} \tau (\epsilon )\le \frac{1}{\delta }\ln \left( \frac{{\mathrm {diam}}(\varOmega )}{\epsilon }\right) , \end{aligned}$$

where \({\mathrm {diam}}(\varOmega )\) denotes the diameter of \(\varOmega \) in the pre-metric.

We use the following slightly modified pre-metric: A pair \((X,Y)\in \varOmega =[q]^V\) is connected by an edge in the pre-metric if and only if X and Y differ at only one vertex, say v, and the edge-weight is given by \(\deg (v)\). This leads us to the following definition.

Definition 3

For any \(X', Y'\in \varOmega \), for \(u\in V\), we define \(\phi _u(X',Y')=\deg (u)\) if \(X'_u\ne Y'_u\) and \(\phi _u(X',Y')=0\) otherwise; and for \(S\subseteq V\), we define the distance between \(X'\) and \(Y'\) on S as

$$\begin{aligned} \varPhi _S(X',Y')=\sum _{u\in S}\phi _u(X',Y'). \end{aligned}$$

In addition, we denote \(\varPhi (X',Y')=\varPhi _V(X',Y')\).

Clearly, the diameter of \(\varOmega \) in distance \(\varPhi \) has \({\mathrm {diam}}(\varOmega )\le n\varDelta \).
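For concreteness, the degree-weighted distance of Definition 3 can be computed as follows. The function name `phi_distance` and the adjacency-dictionary representation are illustrative assumptions.

```python
def phi_distance(adj, X, Y, S=None):
    """Sum of deg(u) over the vertices u in S (default: all vertices) where X and Y differ."""
    vertices = adj.keys() if S is None else S
    return sum(len(adj[u]) for u in vertices if X[u] != Y[u])

# Star with centre 0: the colorings differ at the centre (degree 3) and at leaf 2 (degree 1).
adj = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
X = {0: 0, 1: 1, 2: 1, 3: 1}
Y = {0: 2, 1: 1, 2: 0, 3: 1}
d = phi_distance(adj, X, Y)  # 3 + 1 = 4
```

Each vertex contributes at most \(\varDelta \), which is where the bound \({\mathrm {diam}}(\varOmega )\le n\varDelta \) comes from.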

We prove the mixing rate in Theorem 6 for two separate regimes for q by using two different couplings. We define \(\alpha ^*\approx 3.634\ldots \) to be the positive root of \(\alpha =2\mathrm {e}^{1/\alpha }+1\).
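The constant \(\alpha ^*\) has no closed form, but it is easy to approximate. The sketch below uses plain bisection on \(f(\alpha )=\alpha -2\mathrm {e}^{1/\alpha }-1\), which is increasing for \(\alpha >0\) and changes sign on [3, 4]. The function name `alpha_star` and the bracketing interval are choices made for this illustration.

```python
import math

def alpha_star(tol=1e-12):
    """Approximate the positive root of a = 2*exp(1/a) + 1 by bisection."""
    f = lambda a: a - 2.0 * math.exp(1.0 / a) - 1.0
    lo, hi = 3.0, 4.0  # f(3) < 0 < f(4)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

root = alpha_star()
```

The computed root lies above \(2+\sqrt{2}\approx 3.414\), which is why the regime between the two thresholds needs the separate coupling of Lemma 4.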

Lemma 3

If \(q \ge \alpha \varDelta +3\) for a constant \(\alpha >\alpha ^*\), then \(\tau (\epsilon )=O(\log \left( \frac{n}{\epsilon }\right) )\).

Lemma 4

If \(\alpha \varDelta \le q\le 3.7\varDelta +3\) for \(2+\sqrt{2}<\alpha \le 3.7\) and \(\varDelta \ge 9\), then \(\tau (\epsilon )=O(\log \left( \frac{n}{\epsilon }\right) )\).

Theorem 6 follows by combining the two lemmas.

4.2.2 An easy local coupling for \(q > 3.634\varDelta +3\)

We first prove Lemma 3 by constructing a local coupling where the disagreement will not percolate outside its neighborhood. Let \(X,Y\in [q]^V\) be two q-colorings, not necessarily proper. Assume that X and Y disagree only at vertex \(v_0\in V\). The coupling \((X,Y)\rightarrow (X',Y')\) is constructed as follows:

Each vertex \(v\in V\) proposes the same random color in the two chains X and Y. Then \((X',Y')\) is determined due to the transition rule of LocalMetropolis chain.

Next we show the path coupling condition:

The following technical lemma is frequently applied in the analysis of this and next couplings.

Lemma 5

If \(q \ge a \varDelta \), then for any integer \(0\le d\le \varDelta \), \(d\left( 1-\frac{a}{q}\right) ^d\le \varDelta \left( 1-\frac{a}{q}\right) ^{\varDelta }\).

Proof

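It is sufficient to show that the function \(d\left( 1-\frac{a}{q}\right) ^d\) is monotonically non-decreasing for integer \(1\le d\le \varDelta \):

$$\begin{aligned} d\left( 1-\frac{a}{q}\right) ^d-(d-1)\left( 1-\frac{a}{q}\right) ^{d-1}=\left( 1-\frac{a}{q}\right) ^{d-1}\left( 1-\frac{ad}{q}\right) , \end{aligned}$$

which is nonnegative when \(q\ge ad\). \(\square \)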
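The monotonicity in Lemma 5 can be checked numerically for concrete parameters. The helper below (`lemma5_holds`, a name introduced for this illustration) compares every \(0\le d\le \varDelta \) against the value at \(d=\varDelta \), with a small floating-point tolerance because consecutive values can coincide exactly, as they do for \(q=a\varDelta \) at \(d=\varDelta -1\).

```python
def lemma5_holds(a, q, Delta, tol=1e-12):
    """Check d*(1 - a/q)**d <= Delta*(1 - a/q)**Delta for all integers 0 <= d <= Delta."""
    f = lambda d: d * (1.0 - a / q) ** d
    return all(f(d) <= f(Delta) + tol for d in range(Delta + 1))

# The hypothesis q >= a * Delta holds in the first two calls and fails in the third.
ok_tight = lemma5_holds(a=3, q=30, Delta=10)
ok_slack = lemma5_holds(a=3, q=40, Delta=10)
violated = lemma5_holds(a=3, q=12, Delta=10)
```

The third call illustrates that the hypothesis \(q\ge a\varDelta \) cannot simply be dropped: for \(q=12\) the function peaks near \(d=3\) and then decreases.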

Proof of Lemma 3

First, observe that if \(v \not \in \varGamma ^+(v_0)\), where \(v_0\) is the vertex at which X and Y disagree, then it always holds that \(X'_v = Y'_v\), because all vertices in \(\varGamma ^+(v)\) are colored the same in X and Y and will propose the same random color in the two chains due to the coupling. Therefore, it is sufficient to consider the difference between \(X'\) and \(Y'\) in \(\varGamma ^+(v_0)\) and we have

For each v, let \(c_v\in [q]\) be the uniform random color proposed independently by v, which is identical in both chains by the coupling.

For the disagreeing vertex \(v_0\), it holds that \(X'_{v_0} =Y'_{v_0}\) if \(v_0\) accepts the proposal in both chains, which occurs when \(c_{v_0} \not \in \{X_u, Y_u: {u\in \varGamma (v_0)}\}\) and \(\forall u \in \varGamma (v_0), c_u \not \in \{X_{v_0},Y_{v_0}, c_{v_0}\}\). Since X and Y disagree only at \(v_0\), we have

For each \(u \in \varGamma (v_0)\), since \(X_{u}=Y_{u}\), the event \(X'_u\ne Y'_u\) occurs only when \(c_u \in \{X_{v_0}, Y_{v_0}\}\) and \(\forall w \in \varGamma (u)\), \(c_w \not \in \{X_u, c_u\}\). Note that to guarantee \(X_u'\ne Y'_u\) one must have \(c_u\ne X_u\), thus

Combining (11) and (12) together and due to linearity of expectation, we have

where the last inequality is due to the monotonicity stated in Lemma 5.

The path coupling condition is satisfied when

For \(q=\alpha ^*\varDelta \) and \(\varDelta \rightarrow \infty \), the LHS becomes \(\left( 1-\frac{1}{\alpha ^*}\right) \mathrm {e}^{-{3}/{\alpha ^*}}-\frac{2}{\alpha ^*}\mathrm {e}^{-{2}/{\alpha ^*}}\), which is 0 when \(\alpha ^*\) is the positive root of \(\alpha ^* = 2\mathrm {e}^{{1}/{\alpha ^*}} + 1\).

Furthermore, for \(\varDelta \ge 1\) and \(q\ge \alpha \varDelta +3\), the LHS becomes:

which is a positive constant independent of \(\varDelta \) when \(\alpha >\alpha ^*\).

Therefore, when \(\alpha >\alpha ^*\), there is a constant \(\delta >0\) which depends only on \(\alpha \), such that for all \(\varDelta \ge 1\) and \(q\ge \alpha \varDelta +3\), the inequality (13) is satisfied, which by Lemma 2, gives us \(\tau (\epsilon ) = O\left( \log \left( \frac{n}{\epsilon }\right) \right) \).

4.2.3 A global coupling for \((2+\sqrt{2})\varDelta <q\le 3.7\varDelta +3\)

Next, we prove Lemma 4 and bound the mixing rate when \((2+\sqrt{2})\varDelta <q\le 3.7\varDelta +3\). This is done by a global coupling where the disagreement may percolate to the entire graph, whose construction and analysis are substantially more sophisticated than the previous local coupling. Although this sophistication only improves the threshold for q in Lemma 3 by a small constant factor, the effort is worthwhile because it helps us to approach the threshold of the ideal coupling discussed in Sect. 4.2.1 and shows that the infinite \(\varDelta \)-regular tree \({\mathbb {T}}_{\varDelta }\) represents the worst case for path coupling. Curiously, the extremity of this worst case only holds when q is also properly upper bounded, say \(q\le 3.7\varDelta +3\), whereas the mixing rate for larger q is guaranteed by Lemma 3.

Let \(v_0 \in V\) be a vertex and \(X,Y \in [q]^V\) any two q-colorings (not necessarily proper) which disagree only at \(v_0\). The coupling \((X,Y)\rightarrow (X',Y')\) of the LocalMetropolis chain is constructed by coupling \((\varvec{c}^X,\varvec{c}^Y)\), where \(\varvec{c}^X,\varvec{c}^Y\in [q]^V\) are the respective vectors of proposed colors in the two chains X and Y. For each \(v\in V\), the pair \((c_v^X,c_v^Y)\) is sampled from one of the two following joint distributions:

consistent: \(c_v^X=c_v^Y\) and is uniformly distributed over [q];

permuted: \(c_v^X\) is uniform in [q] and \(c_v^Y=\phi (c_v^X)\), where \(\phi :[q]\rightarrow [q]\) is the bijection defined by \(\phi (X_{v_0})=Y_{v_0}\), \(\phi (Y_{v_0})=X_{v_0}\), and \(\phi (x)=x\) for all \(x\not \in \{X_{v_0},Y_{v_0}\}\).

Note that for all \(u\ne v_0\) we have \(X_u=Y_u\), and if further \(X_u\in \{X_{v_0},Y_{v_0}\}\), we say the vertices \(w\in \varGamma ^+(u)\setminus \{v_0\}\) are blocked by u; all other vertices \(w\ne v_0\) are unblocked. The special vertex \(v_0\) is neither blocked nor unblocked. We denote by \(\varGamma ^B(v)\) and \(\varGamma ^U(v)\) the respective sets of blocked and unblocked neighbors of vertex v and let \(b_v = |\varGamma ^B(v)|\).

The coupling \((\varvec{c}^X,\varvec{c}^Y)\) of proposed colors is constructed by the following recursive procedure:

Initially, for the disagreeing vertex \(v_0\), \((c_{v_0}^X,c_{v_0}^Y)\) is sampled consistently in the two chains.

For each unblocked \(u\in \varGamma (v_0)\), the \((c_{u}^X,c_{u}^Y)\) is sampled independently (of other vertices) from the permuted distribution.

Let \({\mathcal {S}}\subseteq V\) denote the current set of vertices v such that \((c_v^X,c_v^Y)\) has been sampled, and \({\mathcal {S}}^{\ne }\subseteq {\mathcal {S}}\) the set of vertices v with \((c_v^X,c_v^Y)\) sampled inconsistently as \(c_v^X\ne c_v^Y\). We abuse the notation and use \(\partial {\mathcal {S}}^{\ne }=\{\text {unblocked }u\not \in {\mathcal {S}}\mid \exists uv\in E, \text { s.t. }v\in {\mathcal {S}}^{\ne } \}\) to denote the unblocked un-sampled vertex boundary of \({\mathcal {S}}^{\ne }\). If such \(\partial {\mathcal {S}}^{\ne }\) is non-empty, then all \(u\in \partial {\mathcal {S}}^{\ne }\) sample the respective \((c_{u}^X,c_{u}^Y)\) independently from the permuted distribution and join the \({\mathcal {S}}\) simultaneously. Grow \({\mathcal {S}}^{\ne }\) according to the results of sampling. Repeat this step until the current \(\partial {\mathcal {S}}^{\ne }\) is empty and thus \({\mathcal {S}}\) is stabilized.

For all remaining vertices v, \((c_v^X,c_v^Y)\) is sampled independently and consistently.

This procedure is in fact a Galton-Watson branching process starting from root \(v_0\). The blocked-ness of each vertex is determined by the current X and Y. The \({\mathcal {S}}\) grows from the root by a percolation of disagreement \(c_v^X\ne c_v^Y\) added in a breadth-first order.

It is easy to see that each individual \(c_v^X\) or \(c_v^Y\) is uniformly distributed over [q] and is independent of \(c_u^X\) or \(c_u^Y\) for all other \(u\ne v\) (although the joint distributions \((c_v^X,c_v^Y)\) may depend on each other). Therefore, \((\varvec{c}^X,\varvec{c}^Y)\) is a valid coupling of proposed colors.
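The recursive construction of \((\varvec{c}^X,\varvec{c}^Y)\) can be sketched in a few lines. This is an illustrative sketch, not the authors' implementation: it assumes the graph is given as an adjacency dictionary over integer vertices, that colors are 0, ..., q-1, and that \(\varGamma ^+(u)\) denotes the inclusive neighborhood \(\varGamma (u)\cup \{u\}\). The function names and the 5-cycle example are hypothetical.

```python
import random


def blocked_vertices(adj, X, v0, swap):
    """Vertices w != v0 that have some u in Gamma^+(w) minus {v0} with X[u] in swap."""
    blocked = set()
    for w in adj:
        if w == v0:
            continue
        closed_nbhd = [w] + [u for u in adj[w] if u != v0]
        if any(X[u] in swap for u in closed_nbhd):
            blocked.add(w)
    return blocked


def couple_proposals(adj, X, Y, v0, q, rng):
    """Sample coupled proposals (cX, cY); X and Y differ only at v0."""
    x0, y0 = X[v0], Y[v0]
    blocked = blocked_vertices(adj, X, v0, {x0, y0})

    def phi(c):
        # Bijection swapping x0 and y0, identity elsewhere.
        return y0 if c == x0 else x0 if c == y0 else c

    cX, cY = {}, {}
    # Step 1: the disagreeing vertex v0 proposes consistently.
    cX[v0] = cY[v0] = rng.randrange(q)
    # Step 2: unblocked neighbors of v0 use the permuted distribution.
    frontier = sorted(u for u in adj[v0] if u not in blocked)
    disagree = set()  # plays the role of S^{!=}
    # Step 3: grow the sampled set along the unblocked boundary of S^{!=}.
    while frontier:
        for u in frontier:  # all boundary vertices are sampled in the same round
            cX[u] = rng.randrange(q)
            cY[u] = phi(cX[u])
            if cX[u] != cY[u]:
                disagree.add(u)
        frontier = sorted({u for v in disagree for u in adj[v]
                           if u not in cX and u not in blocked})
    # Step 4: every remaining vertex proposes consistently.
    for v in adj:
        if v not in cX:
            cX[v] = cY[v] = rng.randrange(q)
    return cX, cY, disagree


# Hypothetical example: a 5-cycle with v0 = 0, X_{v0} = 0 and Y_{v0} = 1.
adj = {0: [1, 4], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [0, 3]}
X = {0: 0, 1: 2, 2: 3, 3: 1, 4: 4}
Y = dict(X)
Y[0] = 1
cX, cY, disagree = couple_proposals(adj, X, Y, v0=0, q=5, rng=random.Random(0))
```

In this example vertex 3 carries color 1, so it blocks itself and its neighbors 2 and 4; only vertex 1 can ever receive inconsistent proposals.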

A walk \({\mathcal {P}}=(v_0,v_1,\ldots ,v_\ell )\) in G(V, E) is called a strongly self-avoiding walk (SSAW) if \({\mathcal {P}}\) is a simple path in G and \(v_iv_j\) is not an edge in G for any \(0< i+1<j \le \ell \). An SSAW \({\mathcal {P}}=(v_0,v_1,\ldots ,v_\ell )\) is said to be a path of disagreement with respect to \((\varvec{c}^X,\varvec{c}^Y)\) if the pairs \((c_{v_i}^X,c_{v_i}^Y)\), \(v_i\in {\mathcal {P}}\), are sampled in the order along the path \({\mathcal {P}}\) from \(i=0\) to \(\ell \), and \(c_{v_i}^X\ne c_{v_i}^Y\) for all \(1\le i\le \ell \). For any specific SSAW \({\mathcal {P}}=(v_0,v_1,\ldots ,v_\ell )\) through unblocked vertices \(v_1,v_2,\ldots ,v_{\ell }\), each pair \((c_{v_i}^X,c_{v_i}^Y)\) drawn from the permuted distribution disagrees with probability \(\frac{2}{q}\), so by the chain rule

\[ \Pr [{\mathcal {P}}\text { is a path of disagreement}]\le \left( \frac{2}{q}\right) ^{\ell }. \]

Proposition 2

For any vertex \(u\ne v_0\), the event \(c_u^X\ne c_u^Y\) occurs only if there is a strongly self-avoiding walk (SSAW) \({\mathcal {P}}=(v_0,v_1,\ldots ,v_\ell )\) from \(v_0\) to \(v_\ell =u\) through unblocked vertices \(v_1,v_2,\ldots ,v_{\ell }\) such that \({\mathcal {P}}\) is a path of disagreement.

Proof

By the coupling, \(c^X_u \ne c^Y_u\) only when \((c^X_u, c^Y_u)\) is sampled from the permuted distribution and it must hold that \(\{c^X_u,c^Y_u\} = \{X_{v_0},Y_{v_0}\}\). This means that u itself must be unblocked.

At the time when \((c^X_u, c^Y_u)\) is being sampled, there must exist a neighbor \(w \in \varGamma (u)\) such that either (1) \(w=v_0\) or (2) \(w \in {\mathcal {S}}^{\ne }\), which means that \(c^X_w\ne c^Y_w\), that \(\{c^X_w,c^Y_w\} = \{X_{v_0},Y_{v_0}\}\) was sampled before \((c^X_u, c^Y_u)\), and that vertex w is unblocked. In the latter case, we repeat this argument for w recursively until \(v_0\) is reached. This gives a path \({\mathcal {P}}=(v_0,v_1,\ldots ,v_{\ell })\) from \(v_0\) to \(u=v_{\ell }\) through unblocked vertices \(v_1,\ldots ,v_{\ell }\) such that for all \(1\le i\le \ell \), the pairs \((c^X_{v_i}, c^Y_{v_i})\) are sampled in that order, \(c^X_{v_i} \ne c^Y_{v_i}\) and \(\{c^X_{v_i},c^Y_{v_i}\} = \{X_{v_0},Y_{v_0}\}\). Thus, \({\mathcal {P}}\) is a path of disagreement through unblocked vertices. This path \({\mathcal {P}}=(v_0,v_1,\ldots ,v_{\ell })\) must be strongly self-avoiding. Assume to the contrary that \({\mathcal {P}}\) is not strongly self-avoiding, so that there exist \(0\le i,j\le \ell \) with \(i<j-1\) such that \(v_{i}v_{j}\) is an edge. Then, right after \(c^X_{v_i}\ne c^Y_{v_i}\) is sampled and \(v_i\) joins \({\mathcal {S}}^{\ne }\), both \(v_{i+1}\) and \(v_{j}\) must be in \(\partial {\mathcal {S}}^{\ne }\), because both are unblocked un-sampled neighbors of \(v_i\) at that time. By our construction of the coupling, the pairs \((c^X_{v_{i+1}}, c^Y_{v_{i+1}})\) and \((c^X_{v_{j}}, c^Y_{v_{j}})\) are sampled, and \(v_{i+1}, v_{j}\) join \({\mathcal {S}}\), simultaneously, which contradicts the assumption that \((c^X_{v_{j}}, c^Y_{v_{j}})\) is sampled after \((c^X_{v_{i+1}}, c^Y_{v_{i+1}})\) along the path. Therefore, \({\mathcal {P}}\) is an SSAW through unblocked vertices and is also a path of disagreement. \(\square \)
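The strongly self-avoiding condition used in Proposition 2 is easy to test directly. The following is a small sketch, assuming the graph is an adjacency dictionary; the helper name `is_ssaw` and the two example graphs are illustrative only.

```python
def is_ssaw(adj, walk):
    """True iff walk is a simple path with no edge v_i v_j for j > i + 1."""
    # A simple path visits no vertex twice.
    if len(set(walk)) != len(walk):
        return False
    # Consecutive vertices must be adjacent.
    for a, b in zip(walk, walk[1:]):
        if b not in adj[a]:
            return False
    # No chord between non-consecutive vertices of the walk.
    for i in range(len(walk)):
        for j in range(i + 2, len(walk)):
            if walk[j] in adj[walk[i]]:
                return False
    return True


# Hypothetical examples: a 4-cycle and a path on four vertices.
cycle4 = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
path4 = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}

short_walk_ok = is_ssaw(cycle4, [0, 1, 2])        # no chord between 0 and 2
closing_chord = is_ssaw(cycle4, [0, 1, 2, 3])     # edge between 3 and 0 is a chord
path_ok = is_ssaw(path4, [0, 1, 2, 3])
```

On the 4-cycle, the walk (0, 1, 2, 3) is a simple path but not an SSAW, because its endpoints are adjacent.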

The coupled next step \((X',Y')\) is determined by the current (X, Y) and the coupled proposed colors \((\varvec{c}^X,\varvec{c}^Y)\).

Proposition 3

For any vertex \(u\ne v_0\), the event \(X'_u\ne Y'_u\) occurs only if \(c^X_u,c^Y_u \in \{X_{v_0},Y_{v_0}\}\). Furthermore, for any unblocked vertex \(u\ne v_0\), the event \(X_u'\ne Y_u'\) occurs only if \(c_u^X\ne c_u^Y\).

Proof

We pick any \(u\ne v_0\) and assume, for contradiction, that \(X'_u\ne Y'_u\) while \(c^X_u=c^Y_u \not \in \{X_{v_0},Y_{v_0}\}\). This covers every case in which \(c^X_u,c^Y_u \in \{X_{v_0},Y_{v_0}\}\) fails, because \(c^X_u\ne c^Y_u\) occurs only when \(c^X_u,c^Y_u \in \{X_{v_0},Y_{v_0}\}\).

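We then show that for every edge uw incident to u, the following hold:

\[ c^X_u=c^X_w \iff c^Y_u=c^Y_w, \tag{15} \]

\[ c^X_w=X_u \iff c^Y_w=Y_u, \tag{16} \]

\[ c^X_u=X_w \iff c^Y_u=Y_w. \tag{17} \]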

By (15), (16) and (17), each edge uw passes the check in chain X if and only if it passes the check in chain Y. Combined with the fact that \(X_u=Y_u\) for \(u\ne v_0\), this implies \(X_u'=Y_u'\), a contradiction.

We then verify (15), (16) and (17):

If \(X_u=Y_u \in \{X_{v_0},Y_{v_0}\}\), then for every neighbor \(w \in \varGamma (u)\), either w is blocked or \(w=v_0\). In both cases \(c_w^X=c_w^Y\) is sampled consistently, which implies (15) and (16) because \(c^X_u = c^Y_u\) and \(X_u = Y_u\). Moreover, either \(\{X_w,Y_w\} = \{X_{v_0},Y_{v_0}\}\) (in the case \(w = v_0\)) or \(X_w = Y_w\) (in the case \(w \ne v_0\)), which implies (17) because \(c^X_u = c^Y_u \not \in \{X_{v_0},Y_{v_0}\}\).

If \(X_u=Y_u \not \in \{X_{v_0},Y_{v_0}\}\), then for each neighbor \(w \in \varGamma (u)\), either \(\{c^X_w,c^Y_w\} = \{X_{v_0},Y_{v_0}\}\) or \(c^X_w=c^Y_w\), because the event \(c^X_w \ne c^Y_w\) happens if and only if \(\{c^X_w,c^Y_w\} = \{X_{v_0},Y_{v_0}\}\) due to the coupling. Since \(c^X_u = c^Y_u \not \in \{X_{v_0},Y_{v_0}\}\) and \(X_u=Y_u \not \in \{X_{v_0},Y_{v_0}\}\), this implies (15) and (16). Moreover, either \(\{X_w,Y_w\} = \{X_{v_0},Y_{v_0}\}\) (in the case \(w = v_0\)) or \(X_w = Y_w\) (in the case \(w \ne v_0\)), which implies (17) because \(c^X_u = c^Y_u \not \in \{X_{v_0},Y_{v_0}\}\).

For an unblocked vertex \(u\ne v_0\), assume \(X_u'\ne Y_u'\). By the above argument, we must have \(c_u^X, c_u^Y\in \{X_{v_0},Y_{v_0}\}\). We now show that \(c^X_u \ne c^Y_u\). Assume for contradiction that \(c^X_u = c^Y_u\). Since \(c_u^X, c_u^Y\in \{X_{v_0},Y_{v_0}\}\), the pair \((c_u^X, c_u^Y)\) must have been sampled from the consistent distribution. Since u is unblocked and \(u\ne v_0\), this happens only when every neighbor \(w\in \varGamma (u)\) satisfies \(w\ne v_0\) (which means \(X_w=Y_w\)) and \(c^X_w=c^Y_w\). In summary, \(X_u=Y_u\), \(c_u^X=c_u^Y\), and \(X_w=Y_w\), \(c_w^X=c_w^Y\) for all neighbors \(w\in \varGamma (u)\), which guarantees that \(X_u'=Y_u'\), a contradiction. Therefore, for any unblocked \(u\ne v_0\), \(X_u'\ne Y_u'\) only if \(c_u^X\ne c_u^Y\). \(\square \)

We then analyze the probability of \(X'_u \ne Y'_u\) for each vertex \(u \in V\).

Lemma 6

For the vertex \(v_0\) at which the q-colorings \(X,Y \in [q]^V\) disagree,

Proof

The event \(X'_{v_0}=Y'_{v_0}\) occurs if \(v_0\) accepts the proposal, which happens if the following events occur simultaneously:

\(c^X_{v_0} \not \in \{X_u \mid u \in \varGamma (v_0)\}\) (and hence \(c^Y_{v_0} \not \in \{Y_u \mid u \in \varGamma (v_0)\}\) by the coupling \(c^Y_{v_0}=c^X_{v_0}\) and the fact that \(X_u=Y_u\) for \(u\ne v_0\)). This occurs with probability at least \(\frac{q-d_{v_0}}{q}\).

For all unblocked neighbors \(u \in \varGamma ^U(v_0)\), we have \(c^X_{u} \not \in \{X_{v_0},c^X_{v_0}\}\) and \(c^Y_u \not \in \{Y_{v_0},c^Y_{v_0}\}\). This occurs with probability at least \(\left( 1-\frac{2}{q}\right) ^{d_{v_0}-b_{v_0}}\) conditioned on any choice of \(c^X_{v_0}=c^Y_{v_0}\).

For all blocked neighbors \(w \in \varGamma ^B(v_0)\), we have \(c^X_w \not \in \{c^X_{v_0},X_{v_0},Y_{v_0}\}\) (and hence \(c^Y_w \not \in \{c^Y_{v_0},X_{v_0},Y_{v_0}\}\) due to the coupling \(c^Y_{w}=c^X_{w}\)). This occurs with probability at least \(\left( 1-\frac{3}{q}\right) ^{b_{v_0}}\) conditioned on any choice of \(c^X_{v_0}=c^Y_{v_0}\), independently of the unblocked neighbors \(u \in \varGamma ^U(v_0)\).

Thus the following is obtained by the chain rule:

where the last inequality is due to the monotonicity stated in Lemma 5. \(\square \)
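As a sanity check of the three events in this proof, the acceptance probability at \(v_0\) can be enumerated exactly on a tiny instance. The sketch below is not from the paper. It assumes a star centered at \(v_0\), so that a leaf is blocked exactly when its own color lies in \(\{X_{v_0},Y_{v_0}\}\). It also assumes the edge check as it is used in this section: an edge passes when the two proposals differ and neither proposal equals the other endpoint's current color. All function names and example values are hypothetical.

```python
from fractions import Fraction
from itertools import product


def both_chains_accept_prob(q, x0, y0, leaf_colors):
    """Exact Pr[v0 accepts in chain X and in chain Y] on a star centered at v0."""
    blocked = [col in (x0, y0) for col in leaf_colors]

    def phi(c):
        # Bijection swapping x0 and y0, identity elsewhere.
        return y0 if c == x0 else x0 if c == y0 else c

    d = len(leaf_colors)
    accepted = 0
    for c0 in range(q):  # consistent proposal of v0
        for cs in product(range(q), repeat=d):  # chain-X proposals of the leaves
            ok = True
            for c_x, col, is_blocked in zip(cs, leaf_colors, blocked):
                # Blocked leaves propose consistently, unblocked ones use phi.
                c_y = c_x if is_blocked else phi(c_x)
                pass_x = c0 != c_x and c0 != col and c_x != x0
                pass_y = c0 != c_y and c0 != col and c_y != y0
                if not (pass_x and pass_y):
                    ok = False
                    break
            accepted += ok
    return Fraction(accepted, q ** (d + 1))


def product_bound(q, d, b):
    """Product of the three lower bounds listed in the proof."""
    return (Fraction(q - d, q)
            * Fraction(q - 2, q) ** (d - b)
            * Fraction(q - 3, q) ** b)


# Hypothetical instance: q = 5, X_{v0} = 0, Y_{v0} = 1, three leaves, one blocked.
q, x0, y0 = 5, 0, 1
leaf_colors = [2, 3, 1]
exact = both_chains_accept_prob(q, x0, y0, leaf_colors)
bound = product_bound(q, d=3, b=1)
```

On this instance the exact probability is at least the product of the three factors, as the chain-rule argument predicts; the enumeration is only feasible for very small q and degree.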

Lemma 7

For any unblocked vertex \(u \ne v_0\), it holds that

where the sum enumerates all strongly self-avoiding walks (SSAW) \({\mathcal {P}}=(v_0,v_1,\ldots ,v_{\ell })\) from \(v_0\) to \(v_{\ell }=u\) over unblocked vertices \(v_1,v_2,\ldots , v_{\ell }=u\), and \(\ell ({\mathcal {P}})=\ell \) denotes the length of the walk \({\mathcal {P}}\).

Proof

Due to Proposition 3, for unblocked \(u\ne v_0\), the event \(X_u'\ne Y_u'\) occurs only if \(c_u^X\ne c_u^Y\) and u accepts its proposal in at least one chain among X, Y. Observe that any edge uv between unblocked vertices u, v either passes the check in both chains X, Y or fails the check in both chains. Therefore, the event \(X_u'\ne Y_u'\) occurs for an unblocked \(u\ne v_0\) only if the following events occur simultaneously:

\(c_u^X\ne c_u^Y\), which according to Proposition 2 occurs only if there is an SSAW \({\mathcal {P}}=(v_0,v_1,\ldots , v_{\ell })\) from \(v_0\) to \(v_\ell =u\) through unblocked vertices \(v_1,\ldots , v_{\ell }\) such that \({\mathcal {P}}\) is a path of disagreement;

for all unblocked neighbors \(w\in \varGamma ^{U}(u)\), the edge uw passes the check, which means \(c_w^X\not \in \{c_u^X,X_u\}\) (and meanwhile \(c_w^Y\not \in \{c_u^Y,Y_u\}\) by coupling) for all \(w\in \varGamma ^{U}(u)\);

all edges uw to blocked neighbors \(w\in \varGamma ^{B}(u)\) pass the check in at least one of the chains X, Y, which means either \(c^X_w \not \in \{c^X_u, X_u\}\) for all \(w \in \varGamma ^B(u)\) or \(c^Y_w \not \in \{c^Y_u,Y_u\}\) for all \(w \in \varGamma ^B(u)\).

More specifically, these events occur only if:

there is an SSAW \({\mathcal {P}}=(v_0,v_1,\ldots , v_{\ell })\) from \(v_0\) to \(v_\ell =u\) through unblocked vertices \(v_1,\ldots , v_{\ell }\) such that \(c_{v_i}^X\in \{X_{v_0},Y_{v_0}\}\) for \(1\le i\le \ell -1\), which occurs with probability \(\left( \frac{2}{q}\right) ^{\ell -1}\);

if \(u\in \varGamma (v_0)\), then \(c_u^X=Y_{v_0}\) (and meanwhile \(c_u^Y=X_{v_0}\) by coupling), and if \(u\not \in \varGamma (v_0)\), then \(c_u^X\in \{X_{v_0},Y_{v_0}\}\setminus \{c_{v_{\ell -1}}^X\}\) (and meanwhile \(c_u^Y\in \{X_{v_0},Y_{v_0}\}\setminus \{c_{v_{\ell -1}}^Y\}\) by coupling), which, in either case, occurs with probability \(\frac{1}{q}\) conditioned on \((c_{v_{\ell -1}}^X,c_{v_{\ell -1}}^Y)\);

\(c_w^X\not \in \{c_u^X,X_u\}\) (and meanwhile \(c_w^Y\not \in \{c_u^Y,Y_u\}\) by coupling) for all unblocked \(w\in \varGamma ^U(u)\setminus \{v_{\ell -1}\}\), which occurs with probability \(\left( 1-\frac{2}{q}\right) ^{d_u-b_u-1}\) conditioning on \(c_u^X\);

either \(c^X_w \not \in \{c^X_u, X_u\}\) for all \(w \in \varGamma ^B(u)\) or \(c^Y_w \not \in \{c^Y_u,Y_u\}\) for all \(w \in \varGamma ^B(u)\), which occurs with probability at most \(\left[ 2\left( 1-\frac{2}{q}\right) ^{b_u}-\left( 1-\frac{3}{q}\right) ^{b_u}\right] \) conditioning on \((c_u^X,c_u^Y)\) by the principle of inclusion-exclusion.
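The inclusion-exclusion expression in the last item can be verified by brute force for small parameters. The sketch below is illustrative and not part of the paper. It assumes that the \(b_u\) blocked neighbors propose independent uniform colors that are identical in the two chains, and that the forbidden sets \(\{c_u^X,X_u\}\) and \(\{c_u^Y,Y_u\}\) each have two elements and share exactly \(X_u=Y_u\). The function names and color values are hypothetical.

```python
from fractions import Fraction
from itertools import product


def prob_pass_in_some_chain(q, b, forbidden_x, forbidden_y):
    """Exact Pr[all b proposals avoid forbidden_x, or all avoid forbidden_y]."""
    good = 0
    for proposals in product(range(q), repeat=b):
        ok_x = all(c not in forbidden_x for c in proposals)
        ok_y = all(c not in forbidden_y for c in proposals)
        good += ok_x or ok_y
    return Fraction(good, q ** b)


def inclusion_exclusion(q, b):
    """2 (1 - 2/q)^b - (1 - 3/q)^b, the expression from the proof."""
    return 2 * Fraction(q - 2, q) ** b - Fraction(q - 3, q) ** b


# Hypothetical colors: c_u^X = 0, c_u^Y = 1 and X_u = Y_u = 2.
forbidden_x = {0, 2}
forbidden_y = {1, 2}
checks = {
    (q, b): prob_pass_in_some_chain(q, b, forbidden_x, forbidden_y)
    for q in (4, 5, 6)
    for b in range(4)
}
```

Under these assumptions the union of the two forbidden sets has three colors, so the enumeration agrees with the closed form exactly, which is consistent with the "at most" bound stated above.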

Take the union bound over all SSAWs \({\mathcal {P}}=(v_0,v_1,\ldots , v_{\ell })\) through unblocked vertices \(v_1,\ldots , v_{\ell }=u\). Due to the strongly self-avoiding property, it is safe to apply the chain rule for every \({\mathcal {P}}\). We have:

\(\square \)

Lemma 8

For any blocked vertex \(u \ne v_0\), it holds that

where the sum enumerates all the strongly self-avoiding walks (SSAW) \({\mathcal {P}}=(v_0,v_1,\ldots ,v_{\ell })\) from \(v_0\) to \(v_{\ell }=u\) through unblocked vertices \(v_1,\ldots ,v_{\ell -1}\), and \(\ell ({\mathcal {P}})=\ell \) denotes the length of the walk \({\mathcal {P}}\).

Proof

By the coupling, any blocked vertex \(u \in V\) proposes consistently in the two chains, thus \(c^X_u = c^Y_u\). And we have \(X_u = Y_u\) for \(u \ne v_0\).

We first consider \(v_0\)’s blocked neighbors \(u \in \varGamma ^B(v_0)\). There are two cases for such a vertex u:

\(X_u = Y_u \not \in \{X_{v_0},Y_{v_0}\}\). Since vertex u is blocked, there must exist a vertex \(w_0 \in \varGamma (u) \setminus \{v_0\}\) such that \(X_{w_0} = Y_{w_0} \in \{X_{v_0},Y_{v_0}\}\). Without loss of generality, suppose \(X_{w_0} = Y_{w_0} =X_{v_0}\) (the case \(X_{w_0}=Y_{w_0}=Y_{v_0}\) follows by symmetry). By Proposition 3, \(X'_u \ne Y'_u\) only if \(c^X_u = c^Y_u \in \{X_{v_0},Y_{v_0}\}\). If \(c^X_u = c^Y_u = X_{v_0}\), then the edge \(uw_0\) fails the check in both chains, hence \(X'_u = Y'_u\), a contradiction. So we must have \(c^X_u = c^Y_u=Y_{v_0}\), in which case the edge \(v_0u\) cannot pass the check in chain Y. Thus the event \(X'_u \ne Y'_u\) occurs only when u accepts the proposal in chain X, which happens only if \(c^X_w \not \in \{c^X_u,X_u\}\) for all \(w \in \varGamma (u)\). Recall that we already have \(c^X_u=Y_{v_0}\ne X_u\), and note that all vertices in chain X propose independently. Therefore \(X'_u \ne Y'_u\) occurs with probability at most \(\frac{1}{q}\left( 1-\frac{2}{q}\right) ^{d_u}\).

\(X_u = Y_u \in \{X_{v_0},Y_{v_0}\}\). Without loss of generality, suppose \(X_u = Y_u = X_{v_0}\) (the case \(X_u = Y_u = Y_{v_0}\) follows by symmetry). By Proposition 3, \(X'_u \ne Y'_u\) only if \(c^X_u = c^Y_u \in \{X_{v_0},Y_{v_0}\}\). If \(c^X_u=c^Y_u=X_{v_0}\), the proposal and the current color of u are the same in the two chains, hence \(X'_u = Y'_u\), a contradiction. So we must have \(c^X_u = c^Y_u=Y_{v_0}\), in which case the edge \(uv_0\) cannot pass the check in chain Y. Thus the event \(X'_u \ne Y'_u\) occurs only if vertex u accepts the proposal in chain X, which happens only if \(c^X_w \not \in \{c^X_u,X_u\}=\{X_{v_0},Y_{v_0}\}\) for all \(w \in \varGamma (u)\). Recall that we already have \(c^X_u=Y_{v_0}\), and note that all vertices in chain X propose independently. Therefore \(X'_u \ne Y'_u\) occurs with probability at most \(\frac{1}{q}\left( 1-\frac{2}{q}\right) ^{d_u}\).

Hence, for all \(u \in \varGamma ^B(v_0)\), we have:

The walk \({\mathcal {P}}=(v_0,u)\) is a strongly self-avoiding walk (SSAW) from \(v_0\) to u with only u blocked. Therefore (19) is proved for blocked vertices \(u \in \varGamma ^B(v_0)\).

Now we consider the general blocked vertices \(u \not \in \varGamma ^+(v_0)\). Assume that \(X_u'\ne Y'_u\).