Abstract

We present generic transformations, which allow one to translate classic fault-tolerant distributed algorithms and their correctness proofs into a real-time distributed computing model (and vice versa). Owing to the non-zero-time, non-preemptible state transitions employed in our real-time model, scheduling and queuing effects (which are inherently abstracted away in classic zero step-time models, sometimes leading to overly optimistic time complexity results) can be accurately modeled. Our results thus make fault-tolerant distributed algorithms amenable to a sound real-time analysis, without sacrificing the wealth of algorithms and correctness proofs established in classic distributed computing research. By means of an example, we demonstrate that real-time algorithms generated by transforming classic algorithms can be competitive even w.r.t. optimal real-time algorithms, despite their comparatively simple real-time analysis.



1 Introduction

Executions of distributed algorithms are typically modeled as sequences of zero-time state transitions (steps) of a distributed state machine. The progress of time is solely reflected by the time intervals between steps. Owing to this assumption, it does not make a difference, for example, whether messages arrive at a processor simultaneously or nicely staggered in time: Conceptually, the messages are processed instantaneously in a step at the receiver when they arrive. The zero step-time abstraction is hence very convenient for analysis, and a wealth of distributed algorithms, correctness proofs, impossibility results and lower bounds have been developed for models that employ this assumption [15].

In real systems, however, computing steps are neither instantaneous nor arbitrarily preemptible: A computing step triggered by a message arriving in the middle of the execution of some other computing step is delayed until the current computation is finished. This results in queuing phenomena, which depend not only on the actual message arrival pattern, but also on the queuing/scheduling discipline employed. Real-time systems research has established powerful techniques for analyzing those effects [3, 32], such that worst-case response times and even end-to-end delays [34] can be computed.

Our real-time model for message-passing systems [20, 22] reconciles the distributed computing and the real-time systems perspectives: By replacing zero-time steps with non-zero-time steps, it allows reasoning about queuing effects and puts scheduling in the proper perspective. In sharp contrast to the classic model, the end-to-end delay of a message is no longer a model parameter, but results from a real-time analysis based on job durations and communication delays.

Apart from making distributed algorithms amenable to real-time analysis, the real-time model also allows us to address the interesting question of whether (and which) properties of real systems are inaccurately or even wrongly captured when resorting to classic zero step-time models. For example, it turned out [20] that no \(n\)-processor clock synchronization algorithm with constant running time can achieve optimal precision, but that \({\varOmega }(n)\) running time is required for this purpose. Since an \(\mathrm {O}(1)\) algorithm is known for the classic model [13], this is an instance of a problem where the standard distributed computing analysis gives overly optimistic results.

In view of the wealth of distributed computing results, determining the properties that are preserved when moving from the classic zero step-time model to the real-time model is important: This transition should facilitate a real-time analysis without invalidating classic distributed computing analysis techniques and results. We developed powerful general transformations [24, 26], which showed that a system adhering to some particular instance of the real-time model can simulate a system that adheres to some instance of the classic model (and vice versa). All the transformations presented in [26] were based on the assumption of a fault-free system, however.

Contributions: In this paper, we generalize our transformations to the fault-tolerant setting: Processors are allowed to either crash or even behave arbitrarily (Byzantine) [11], and hardware clocks can drift. We define (mild) conditions on problems, algorithms and system parameters, which allow re-using classic fault-tolerant distributed algorithms in the real-time model, and employing classic correctness proof techniques for fault-tolerant distributed algorithms designed for the real-time model. As our transformations are generic, i.e., work for any algorithm adhering to our conditions, proving their correctness was already a non-trivial exercise in the fault-free case [26] and becomes considerably harder in the presence of failures. We apply our transformation to the well-known problem of Byzantine agreement and analyze the timing properties of the resulting real-time algorithm.

Roadmap: Section 2 gives a brief, informal summary of the computing models and the fundamental problem of real-time analysis, which is followed by a review of related work in Sect. 3. Section 4 restates the formal definitions of the system models and presents the fault-tolerant extensions novel to this paper. The new, fault-tolerant system model transformations and their proofs can be found in Sects. 5 and 6, while Sect. 7 illustrates these transformations by applying them to well-known distributed computing problems.

2 Informal overview

A distributed system consists of a set of processors and some means for communication. In this paper, we will assume that a processor is a state machine running some kind of algorithm and that communication is performed via message-passing over point-to-point links between pairs of processors.

The algorithm specifies the state transitions that the processor may carry out. In distributed algorithms research, the common assumption is that state transitions are performed in zero time. The question remains, however, as to when these transitions are performed. In conjunction with bounds on message transmission delays, the answer to this question determines the synchrony of the computing model: The time required for one message to be sent, transmitted and received can either be constant (lock-step synchrony), bounded (synchrony or partial synchrony), or finite but unbounded (asynchrony). Note that, when computation times are zero, transmission delay bounds typically represent end-to-end delay bounds: All kinds of delays are abstracted away in one system parameter.

2.1 Computing models

The transformations introduced in this paper will relate two different distributed computing models:

1. In what we call the classic synchronous model, processors execute zero-time steps (called actions) and the only model parameters are lower and upper bounds on the end-to-end delays \([\underline{\delta }^-, \underline{\delta }^+]\).Footnote 1 Note that this assumption does not rule out end-to-end delays that are composed of communication delays + inter-step time bounds [7].

2. In the real-time model, the zero-time assumption is dropped, i.e., the end-to-end delay bounds are split into bounds on the transmission time of a message (which we will call message delay) \([\delta ^-_{}, \delta ^+_{}]\) and on the actual processing time \([\mu ^-_{}, \mu ^+_{}]\). In contrast to the actions of the classic model, we call the non-zero-time computing steps in the real-time model jobs. Contrary to the notion of a task in classic real-time analysis literature, a job in our setting does not represent a significant piece of code but rather a (few) simple machine operation(s).

Figure 1 illustrates the real-time model: \(p\) and \(q\) are two processors. Processor \(p\) receives a message (from some other processor not shown in the diagram) at time \(0\), represented by an incoming arrow. The box from time \(0\) to \(3\) corresponds to the time \(p\) requires to process the message, to perform state transitions and to send out messages in response. One of those messages, \(m\), is represented by the dotted arrow and sent to \(q\).Footnote 2 It arrives at processor \(q\) at time \(4\), while \(q\) is still busy executing the jobs triggered by two messages that arrived earlier. At time \(7\), \(q\) is idle again and can start processing \(m\), represented by the dotted box.

The figure explicitly shows the major timing-related parameters of the real-time model, namely, message delay (\(\delta \)), queuing delay (\(\omega \)), end-to-end delay (\({\varDelta }= \delta + \omega \)), and processing delay (\(\mu \)) for the message \(m\). The bounds on the message delay \(\delta \) and the processing delay \(\mu \) are part of the system model (but need not be known to the algorithm).
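The parameter definitions above can be made concrete with the numbers from Fig. 1. The following Python sketch assumes a non-preemptive, non-idling FIFO scheduler at \(q\) (one possible policy, not one prescribed by the model) and measures the message delay from the start of the sending job, as defined in Sect. 4.2.1. The arrival times and durations of the two earlier messages at \(q\), and the duration of \(m\)'s job, are hypothetical values chosen only so that \(q\) becomes idle at time \(7\), as in the figure.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Arrival:
    name: str
    arrival: float   # real time at which the message is enqueued at the processor
    duration: float  # processing time of the job the message triggers


def fifo_schedule(arrivals: List[Arrival]) -> Dict[str, Tuple[float, float]]:
    """Non-preemptive, non-idling FIFO processing on a single processor.

    Returns {name: (begin, end)} of the job triggered by each message.
    """
    jobs: Dict[str, Tuple[float, float]] = {}
    busy_until = 0.0
    for a in sorted(arrivals, key=lambda a: a.arrival):
        begin = max(a.arrival, busy_until)  # wait until the running job has finished
        end = begin + a.duration
        jobs[a.name] = (begin, end)
        busy_until = end
    return jobs


# Fig. 1: p's job sending m starts at time 0, and m arrives at q at time 4.
send_job_begin = 0.0
m_arrival = 4.0
arrivals_at_q = [
    Arrival("earlier_1", 1.0, 3.0),  # hypothetical
    Arrival("earlier_2", 2.0, 3.0),  # hypothetical
    Arrival("m", m_arrival, 2.0),    # duration of m's job is hypothetical
]
jobs = fifo_schedule(arrivals_at_q)
m_begin, m_end = jobs["m"]

delta = m_arrival - send_job_begin  # message delay
omega = m_begin - m_arrival         # queuing delay
Delta = delta + omega               # end-to-end delay
mu = m_end - m_begin                # processing delay
print(delta, omega, Delta, mu)      # 4.0 3.0 7.0 2.0
```

Under a different scheduling policy at \(q\), \(\omega\) (and hence \({\varDelta }\)) would generally change even though \(\delta\) and \(\mu\) stay the same, which illustrates why \({\varDelta }\) cannot simply be a parameter of the real-time model.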

Bounds on the queuing delay \(\omega \) and the end-to-end delay \({\varDelta }\), however, are not parameters of the system model—in sharp contrast to the classic model. Rather, those bounds (if they exist) must be derived from the system parameters \([\delta ^-_{},\delta ^+_{}]\), \([\mu ^-_{},\mu ^+_{}]\) and the message pattern of the algorithm. Depending on the algorithm, this can be a non-trivial problem, and a generic solution to this issue is outside the scope of this paper. The following subsection gives a high-level overview of the problem; the examples in Sect. 7 will illustrate how such a real-time analysis can be performed for simple algorithms by deriving an upper bound on the queuing delay.

2.2 Real-time analysis

Consider the application of distributed algorithms in real-time systems, where both safety properties (like consistency of replicated data) and timeliness properties (like a bound on the maximum response time for a computation triggered by some event) must be satisfied. In order to assess some algorithm’s feasibility for a given application, bounds on the maximum (and minimum) end-to-end delay \([{\varDelta }^-{}, {\varDelta }^+{}]\) are instrumental: Any relevant time complexity measure obviously depends on end-to-end delays, and even the correctness of synchronous and partially synchronous distributed algorithms [7] may rest on their ability to reliably time out messages (explicitly or implicitly, via synchronized communication rounds).

Unfortunately, determining \([{\varDelta }^-{},{\varDelta }^+{}]\) is difficult in practice: End-to-end delays include queuing delays, i.e., the time a delivered message waits until the processor is idle and ready to process it. The latter depends not only on the computing step times (\([\mu ^-_{}, \mu ^+_{}]\)) and the communication delays (\([\delta ^-_{}, \delta ^+_{}]\)) of the system, but also on the message pattern of the algorithm: If more messages arrive simultaneously at the same destination processor, the queuing delay increases. In order to compute \([{\varDelta }^-{},{\varDelta }^+{}]\), a proper worst-case response time analysis (like in [34]) must be conducted for the end-to-end delays, which has to take into account the worst-case message pattern, computing requirements, failure patterns, etc.

Computing worst-case end-to-end delays is relatively easy in case of round-based synchronous distributed algorithms, like the Byzantine Generals algorithm [11] analyzed in Sect. 7.2: If one can rely on the lock-step round assumption, i.e., that only round-\(k\) messages are sent and received by the processors in round \(k\), their maximum number and hence the resulting queuing and processing delays can be determined easily. Choosing a round duration larger than or equal to the computed maximum end-to-end delay \({\varDelta }^+{}\) is then sufficient to guarantee the lock-step round assumption in the system.

In case of general distributed algorithms, the worst-case response time analysis is further complicated by a circular dependency: The message pattern and computing load generated by some algorithm (and hence the bounds on the end-to-end delays computed in the analysis) may depend on the actual end-to-end delays. In case of partially synchronous processors [7], for example, the number of new messages generated by a fast processor while some slow message \(m\) is still in transit obviously depends on \(m\)’s end-to-end delay. These new messages can cause queuing delays for \(m\) at the receiver processor, however, which in turn affect its end-to-end delay [35]. As a consequence, worst-case response time analyses typically involve solving a fixed point equation [3, 34].

Recast in our setting, the following real-time analysis problem (termed worst-case end-to-end delay analysis in the sequel) needs to be solved: Given some algorithm \(\mathcal {A}\) under failure model \(\mathcal {C}\), scheduling policy \(pol\) and assumed end-to-end delay bounds \([{\varDelta }^-{}, {\varDelta }^+{}]\), where the latter are considered as (still) unvalued parameters, and some real system with computing step times \([\mu ^-_{}, \mu ^+_{}]\) and communication delays \([\delta ^-_{}, \delta ^+_{}]\) in which \(\mathcal {A}\) shall run, develop a fixed point equation for the end-to-end delay bounds \([{\varDelta }^-{}, {\varDelta }^+{}]\) in terms of \([\delta ^-_{}, \delta ^+_{}]\), \([\mu ^-_{}, \mu ^+_{}]\) and also \([{\varDelta }^-{}, {\varDelta }^+{}]\), i.e., determine a function \(F(.)\) such that \([{\varDelta }^-{}, {\varDelta }^+{}] = F_{\mathcal {A},\mathcal {C},pol}([\delta ^-_{}, \delta ^+_{}], [\mu ^-_{}, \mu ^+_{}], [{\varDelta }^-{}, {\varDelta }^+{}])\) (or show that no such function \(F(.)\) can exist, which could happen e.g. if unbounded queuing could develop). Solving this equation provides a feasible assignment of values for the end-to-end delays \([{\varDelta }^-{}, {\varDelta }^+{}]\) for the algorithm \(\mathcal {A}\) in the given system, which is sufficient for guaranteeing its correctness: It will never happen that, during any run, any message will experience an end-to-end delay outside \([{\varDelta }^-{}, {\varDelta }^+{}]\). Since \(\mathcal {A}\) is guaranteed to work correctly under this assumption, it will only generate message patterns that do not violate the assumptions made in the analysis leading to \([{\varDelta }^-{}, {\varDelta }^+{}]\).
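A common way to solve such a fixed point equation, borrowed from classic response-time analysis, is to iterate it from a safe starting value until it stabilizes. The Python sketch below does this for the upper bound \({\varDelta }^+{}\) only, under a deliberately simple, hypothetical load model: while a message is in transit or queued, at most \(\lceil {\varDelta }^+{}/T \rceil\) other jobs can be ahead of it at the receiver, each lasting at most \(\mu ^+_{}\), where \(T\) is an assumed minimum spacing between competing messages. This \(F\) is an illustration only, not the analysis of any algorithm in this paper; if the iterates grow without bound, the sketch reports that no bound was found, which corresponds to the case of unbounded queuing.

```python
import math
from typing import Callable, Optional


def solve_fixed_point(F: Callable[[float], float], start: float,
                      max_iter: int = 1000, limit: float = 1e9) -> Optional[float]:
    """Iterate x_{k+1} = F(x_k) from start until x_{k+1} == x_k.

    For a non-decreasing F with F(start) >= start, the iterates are
    non-decreasing and never overshoot the least fixed point above start.
    Returns None if they exceed limit or do not settle within max_iter steps.
    """
    x = start
    for _ in range(max_iter):
        nxt = F(x)
        if nxt == x:
            return x
        if nxt > limit:
            return None
        x = nxt
    return None


def make_F(delta_plus: float, mu_plus: float, T: float) -> Callable[[float], float]:
    """Hypothetical F: Delta^+ = delta^+ + (max. number of jobs ahead) * mu^+."""
    return lambda Delta: delta_plus + math.ceil(Delta / T) * mu_plus


# omega >= 0, so Delta^+ >= delta^+; start the iteration there.
print(solve_fixed_point(make_F(5, 1, 3), start=5))  # 8
print(solve_fixed_point(make_F(5, 4, 3), start=5))  # None (queuing grows unboundedly)
```

In the first case the iterates are 5, 7, 8, 8; in the second, each job ahead costs more than the assumed message spacing, so the queue never drains and the iteration diverges.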

Note carefully that, once a feasible assignment for \([{\varDelta }^-{}, {\varDelta }^+{}]\) is known, there is no need to consider the system parameters \([\delta ^-_{}, \delta ^+_{}]\) and \([\mu ^-_{}, \mu ^+_{}]\) further. By “removing” the dependency on the real system’s characteristics in this way, the real-time model facilitates a sound real-time analysis without sacrificing the compatibility with classic distributed computing analysis techniques and results. Recall that, in the classic model, the end-to-end delays \([\underline{\delta }^-, \underline{\delta }^+]\) were part of the system model and hence essentially had to be correctly guessed. By virtue of the transformations introduced in the later sections, all that is needed to employ some classic fault-tolerant distributed algorithm in the real-time model is to conduct an appropriate worst-case end-to-end delay analysis and to compute a feasible end-to-end delay assignment.

3 Related work

All the work on time complexity of distributed algorithms we are aware of considers end-to-end delays as a model parameter in a zero step-time model. Hence, queuing and scheduling do not occur at all, even in more elaborate examples, e.g., [30]. Papers that assume non-zero step times often consider them sufficiently small to ignore queuing effects completely [27] or assume shared-memory access instead of a message-passing network [1, 2].

The only work in the area of fault-tolerant distributed computing we are aware of that explicitly addresses queuing and scheduling is [8]. It introduces the Time Immersion (“late binding”) approach, where real-time properties of an asynchronous or partially synchronous distributed algorithm e.g. for consensus are just “inherited” from the underlying system. Nevertheless, somewhat contrary to intuition, guaranteed timing bounds can be determined by a suitable real-time analysis. Their work does not rest on a formal distributed computing model, however.

There are also a few approaches in real-time systems research that aim at an integrated schedulability analysis in distributed systems [17, 28, 33, 34]. However, contrary to the execution of many distributed algorithms, they assume very simple interaction patterns of the processors in the system, and do not consider failures.

Hence, our real-time model seems to be the first attempt to rigorously bridge the gap between fault-tolerant distributed algorithms and real-time systems that does not sacrifice the strengths of the individual views. Our real-time model, the underlying low-level st-traces and our general transformations between real-time model and classic model have been introduced in [20, 22] and extended in [24, 26]; [20] and [21] analyze clock synchronization issues in this model. The present paper finally adds failures to the picture.

Given that systems with real-time requirements have also been an important target for formal verification for decades, it is appropriate to also relate our approach to some important results of verification-related research. In fact, verification tools like Kronos [6] or Uppaal [12] based on timed automata [4] have successfully been used for model-checking real-time properties in many different application domains. On the other hand, there are also modeling and analysis frameworks based on various IO automata [9, 14, 16, 18, 31], which primarily use interactive (or manual) theorem-proving for verifying implementation correctness via simulation relations.

Essentially, all these frameworks provide the capabilities needed for modeling and analyzing distributed algorithms at the level of our st-traces (see Sect. 4.4).Footnote 3 However, to the best of our knowledge, none of these frameworks provides a convenient abstraction comparable to our rt-runs, which allows reasoning about real-time scheduling and queuing effects explicitly and independently of correctness issues: State-based specifications suitable e.g. for Uppaal tightly intertwine the control flow of the algorithms with execution constraints and scheduling policies. This not only leads to very complex specifications, but also rules out the separation of correctness proofs (using classic distributed algorithms results) and real-time analysis (using worst-case response time analysis techniques) made possible by our transformations.

4 System models

Since the fault-free variants of the classic and the real-time model have already been introduced [24, 26], we only restate the most important properties and the fault-tolerant extensions here.

4.1 Classic system model

We consider a network of \(n\) processors, which communicate by passing unique messages. Each processor \(p\) is equipped with a CPU, some local memory, a read-only hardware clock, and reliable, non-FIFO links to all other processors.

The hardware clock \(HC_p: \mathbb {R}^+ \rightarrow \mathbb {R}^+\) is an invertible function that maps dense real-time to dense clock-time; it can be read but not changed by its processor. It starts with some initial value \(HC_p(0)\) and then increases strictly, continuously and without bound.

An algorithm defines initial states and a transition function. The transition function takes the processor index \(p\), one incoming message, the receiver processor’s current local state and hardware clock reading as input, and yields a list of states and messages to be sent, e.g., \([oldstate, int.st._1, int.st._2, \text{msg. } m \text{ to } q, \text{msg. } m' \text{ to } q', int.st._3, newstate]\), as output. The list must start with the processor’s current local state and end with a state. Thus, the single-element list \([oldstate = newstate]\) is also valid.

If the CPU is unable to perform the transition from \(oldstate\) to \(newstate\) in an atomic manner, intermediate states (\(int.st._{1/2/3}\) in our example) might be present for a short period of time. Since, in the classic model, this time is abstracted away and the state transition from \(oldstate\) to \(newstate\) is assumed to be instantaneous, these states are usually neglected in the classic model. We explicitly model them to retain compatibility with the real-time model, where they will become important.

Formally, we consider a state to be a set of (variable name, value) pairs, containing no variable name more than once. We do not restrict the domain or type of those values, which might range, e.g., from simple Boolean values to lists or complex data structures.

A “message to be sent” (\(m\) and \(m'\) in our example) is specified as a pair consisting of the message itself and the destination processor the message will be sent to.
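As an illustration of this output format, here is a Python sketch that encodes states as dictionaries (matching the set of (variable name, value) pairs above) and messages to be sent as (message, destination) tuples. The "echo" algorithm, the helper names and the encoding are hypothetical; they only show the shape of the output list and the rule that it must start with the current state and end with a state.

```python
from typing import Any, Dict, List, Tuple, Union

State = Dict[str, Any]   # variable name -> value, each name at most once
Send = Tuple[Any, str]   # (message, destination processor)
Step = Union[State, Send]


def echo_transition(p: str, msg: Tuple[str, Any], state: State, hc: float) -> List[Step]:
    """Hypothetical algorithm: store the received value and echo it to the sender."""
    sender, value = msg
    int_st = {**state, "last": value}  # intermediate state
    new_state = {**int_st, "count": state.get("count", 0) + 1, "hc": hc}
    return [state, int_st, (("echo", p, value), sender), new_state]


def is_valid_output(out: List[Step], old_state: State) -> bool:
    """The list must start with the current state and end with a state."""
    return len(out) >= 1 and out[0] == old_state and isinstance(out[-1], dict)


s0: State = {"count": 0}
out = echo_transition("q", ("p", 42), s0, hc=10.0)
print(is_valid_output(out, s0))   # True
print(is_valid_output([s0], s0))  # True: [oldstate = newstate] is valid
```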

Every message reception immediately causes the receiver processor to change its state and send out all messages according to the transition function; this constitutes an action. The complete action (message arrival, processing and sending messages) is performed instantly in zero time.

Actions can be triggered by ordinary, timer or input messages:

- Ordinary messages (\(m_o\)) are transmitted over the links. Let \(\underline{\delta }_m\) denote the difference between the real-time of the action sending some ordinary message \(m\) and the real-time of the action receiving it. The classic model defines a lower and an upper bound \([\underline{\delta }^-,\underline{\delta }^+]\) on \(\underline{\delta }_m\), for all \(m\). Since the time required to process a message is zero in the classic model (which also means that no queuing effects can occur), \(\underline{\delta }_m\) represents both the message (transmission) delay and the end-to-end delay.

- Timer messages (\(m_t\)) are used for modeling time(r)-driven execution in our message-driven setting: A processor setting a timer is modeled as sending a timer message \(m\) (to itself) in an action, and timer expiration is represented by the reception of a timer message. Timer messages are received when the hardware clock reaches (or has already reached) the time specified in the message.

- Input messages (\(m_i\)) arrive from outside the system and can be used to model booting and starting the algorithm, as well as interaction with elements (e.g., users, interfaces) outside the distributed system.

4.1.1 Executions

An execution in the classic model is a sequence \(ex\) of actions and an associated set of \(n\) hardware clocks \(HC^{ex} = \{HC^{ex}_p, HC^{ex}_q, \ldots \}\). (We will omit the superscript of \(HC^{ex}_p\) if the associated execution is clear from context).

An action \(ac\) occurring at real-time \(t\) at processor \(p\) is a \(5\)-tuple, consisting of the processor index \(proc(ac)=p\), the received message \(msg(ac)\), the occurrence real-time \(time(ac)=t\), the hardware clock value \(HC(ac)=HC_p(t)\) and the state transition sequence \(trans(ac) = [oldstate, \cdots , newstate]\) (including messages to be sent).

A valid execution \(ex\) of an algorithm \(\underline{\mathcal {A}}\) must satisfy the following properties:

- EX1: \(ex\) must be a sequence of actions with a well-defined total order \(\prec \). \(time(ac)\) must be non-decreasing. Message sending and receiving must be in the correct causal order, i.e., \(msg(ac') \in trans(ac) \implies ac\prec ac'\).

- EX2: Processor states can only change during an action, i.e., \(newstate(ac_1) = oldstate(ac_2)\) must hold for two consecutive actions \(ac_1\) and \(ac_2\) on the same processor.

- EX3: The first action \(ac\) at every processor \(p\) must occur in an initial state of \(\underline{\mathcal {A}}\).

- EX4: The hardware clock readings must increase strictly (\(\forall t, t', p: t < t' \Rightarrow HC_p(t) < HC_p(t')\)), continuously and without bound.

- EX5: Messages must be unique,Footnote 4 i.e., there is at most one action sending some message \(m\) and at most one action receiving it. Messages can only be sent by and processed by the processors specified in the message.

- EX6: Every non-input message that is received must have been sent.
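The purely structural properties EX1, EX2 and the uniqueness part of EX5 lend themselves to mechanical checking. The Python sketch below is one possible checker; it assumes a hypothetical encoding in which messages are hashable values, states are dictionaries, and messages to be sent appear in \(trans(ac)\) as (message, destination) tuples. EX3, EX4 and EX6 are not checked, since they need the algorithm's initial states, the hardware clock functions and the set of input messages.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class Action:
    proc: str         # proc(ac)
    msg: Any          # msg(ac), must be hashable
    time: float       # time(ac)
    hc: float         # HC(ac)
    trans: List[Any]  # [oldstate, ..., (message, destination), ..., newstate]


def sent_messages(ac: Action) -> List[Any]:
    return [step[0] for step in ac.trans if isinstance(step, tuple)]


def check_execution(ex: List[Action]) -> bool:
    """Check EX1 (order and causality), EX2 (state continuity), EX5 (uniqueness)."""
    if any(a.time > b.time for a, b in zip(ex, ex[1:])):
        return False                      # EX1: time(ac) non-decreasing
    send_index: Dict[Any, int] = {}
    for i, ac in enumerate(ex):
        for m in sent_messages(ac):
            if m in send_index:
                return False              # EX5: sent at most once
            send_index[m] = i
    received = set()
    last_state: Dict[str, Any] = {}
    for j, ac in enumerate(ex):
        if ac.msg in send_index and send_index[ac.msg] >= j:
            return False                  # EX1: sending action must come first
        if ac.msg in received:
            return False                  # EX5: received at most once
        received.add(ac.msg)
        if ac.proc in last_state and last_state[ac.proc] != ac.trans[0]:
            return False                  # EX2: newstate(ac1) == oldstate(ac2)
        last_state[ac.proc] = ac.trans[-1]
    return True


s0, s1 = {"x": 0}, {"x": 1}
ex = [
    Action("p", "input_p", 0.0, 5.0, [s0, ("m1", "q"), s1]),  # triggered by an input message
    Action("q", "m1", 2.0, 7.0, [s0, s1]),
]
print(check_execution(ex))  # True
```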

Note that further conditions (such as adherence to the bounds on the message delay or the state transitions of the algorithm) will be added by the failure model in Sect. 4.3.

A classic system \(\underline{s}\) is a system adhering to the classic model, parameterized by the system size \(n\) and the interval \([\underline{\delta }^-, \underline{\delta }^+]\) specifying bounds on the message delay.

4.2 Real-time model

The real-time model extends the classic model in the following way: A computing step in a real-time system is executed non-preemptively within system-wide bounds \([\mu ^-_{}, \mu ^+_{}]\), which may depend on the number of messages sent in a computing step. In order to clearly distinguish a computing step in the real-time model from a zero-time action in the classic model, we use the term job to refer to the former. We consider jobs as the unit of preemption in the real-time model, i.e., a running job cannot be interrupted by the scheduler.

This simple extension makes the real-time model more realistic but also more complex. In particular, queuing and scheduling effects must be taken into account:

- We must now distinguish two modes of a processor at any point in real-time \(t\): idle and busy (i.e., currently executing a job). Since jobs cannot be interrupted, a queue is needed that stores messages arriving while the processor is busy.

- Contrary to the classic model, the state transitions \(oldstate \rightarrow \cdots \rightarrow newstate\) in a single computing step typically occur at different times during the job, allowing an intermediate state to be valid on a processor for some non-zero duration.

- Some scheduling policy is used to select a new message from the queue whenever processing of a job has been completed. To ensure liveness, we assume that the scheduling policy is non-idling. Note that the scheduling policy can also be used for implementing non-preemptible tasks consisting of multiple jobs, if required.

- We assume that the hardware clock can only be read at the beginning of a job. This models the fact that real clocks cannot usually be read arbitrarily fast, i.e., with zero access time. This restriction in conjunction with our definition of message delays allows us to define transition functions in exactly the same way as in the classic model. After all, the transition function just defines the “logical” semantics of a transition, but not its timing.

- If a timer set during some job \(J\) expires earlier than \(end(J)\), the timer message will arrive at time \(end(J)\), when \(J\) has completed.

- In the classic zero step-time model, a faulty processor can send an arbitrary number of messages to all other processors. This is not an issue when assuming zero step times, but could cause problems in the real-time model: It would allow a malicious node to create a huge number of jobs at any of its peers. Consequently, we must ensure that messages from faulty processors do not endanger the liveness of the algorithm at correct processors. To protect against such “babbling” faulty nodes, each processor is equipped with an admission control component, allowing the scheduler to drop certain messages instead of processing them.
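The timer rule above amounts to a one-line formula: a timer message for clock value \(T\) set during job \(J\) on \(p\) arrives at real time \(\max(HC_p^{-1}(T), end(J))\), using the invertibility of the hardware clock. The Python sketch below assumes a hypothetical linear hardware clock \(HC_p(t) = 100 + 1.25\,t\) purely to obtain a closed-form inverse; the model itself only requires \(HC_p\) to be strictly increasing, continuous and unbounded.

```python
from typing import Callable


def timer_arrival(hc_inverse: Callable[[float], float], timer_value: float,
                  job_end: float) -> float:
    """Real-time arrival of a timer message set during a job J ending at job_end.

    The timer expires when the hardware clock reaches timer_value; if this
    happens no later than end(J), the message arrives at end(J) instead.
    """
    return max(hc_inverse(timer_value), job_end)


def hc_inverse(clock_value: float) -> float:
    # Inverse of the hypothetical clock HC(t) = 100 + 1.25 * t.
    return (clock_value - 100.0) / 1.25


print(timer_arrival(hc_inverse, 105.0, job_end=3.0))  # 4.0: expires after J
print(timer_arrival(hc_inverse, 105.0, job_end=6.0))  # 6.0: expired during J
```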

Both the scheduling and the admission control policy are represented by a single function

\[(\text {queue}, \text {alg. state}) \mapsto (\text {msg}, \text {queue}^{\text {new}})\]

with \(\text {queue}^{\text {new}} \subseteq \text {queue}\), \(\text {msg} \not \in \text {queue}^{\text {new}}\) and \(\text {msg} \in \text {queue} \cup \{\bot \}\). The non-idling requirement can be formalized as \(\text {msg} = \bot \implies \text {queue}^{\text {new}} = \emptyset \).

This function is used whenever a scheduling decision is made, i.e., (a) at the end of a job and (b) whenever the queue is empty, the processor is idle, and a new message just arrived. If \(\text {msg} \ne \bot \), the scheduling decision causes \(\text {msg}\) to be processed. “alg. state” refers to the \(newstate\) of the job that just finished or last finished, corresponding to cases (a) and (b), respectively, or the initial state, if no job has been executed on that processor yet.

Since we assume non-preemptive scheduling of jobs, a message received while the processor is currently busy will be neither scheduled nor dropped until the current job has finished. “Delaying” the admission control decision in such a way has the advantage that no intermediate states can ever be used for admission control decisions.
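To make the requirements on this function tangible, the following Python sketch implements one hypothetical combined scheduling and admission control policy (the name sched is illustrative): it drops every queued message whose sender appears in a set stored under a hypothetical variable blocked in the algorithm state, and otherwise processes messages in FIFO order. None plays the role of \(\bot\). Messages are assumed to be (sender, payload) pairs; neither this format nor the blocking rule is prescribed by the model.

```python
from typing import Any, List, Optional, Tuple

Msg = Tuple[str, Any]  # hypothetical message format: (sender, payload)


def sched(queue: List[Msg], alg_state: dict) -> Tuple[Optional[Msg], List[Msg]]:
    """Hypothetical scheduling and admission control policy.

    Drops all queued messages whose sender is in alg_state["blocked"] and
    selects the oldest remaining message (FIFO). Returns (msg, queue_new),
    where None stands for the bottom element.
    """
    blocked = alg_state.get("blocked", set())
    admitted = [m for m in queue if m[0] not in blocked]
    if not admitted:
        return None, []  # non-idling: msg is bottom only if queue_new is empty
    return admitted[0], admitted[1:]


def dropped(queue: List[Msg], msg: Optional[Msg], queue_new: List[Msg]) -> List[Msg]:
    """Messages removed by admission control: queue minus queue_new minus msg."""
    return [m for m in queue if m != msg and m not in queue_new]


queue = [("p", "a"), ("z", "b"), ("q", "c")]
msg, queue_new = sched(queue, {"blocked": {"z"}})
print(msg, queue_new)                  # ('p', 'a') [('q', 'c')]
print(dropped(queue, msg, queue_new))  # [('z', 'b')]
```

Because the policy only reads the algorithm state passed to it (the \(newstate\) of the last completed job), it never bases an admission decision on an intermediate state.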

4.2.1 System parameters

Like the processing delay, the message delay and hence the bounds \([\delta ^-_{},\delta ^+_{}]\) may depend on the number of messages sent in the sending job: For example, \(\delta ^+_{(3)}\) is the upper bound on the message delay of messages sent by a job sending three messages in total. Formally, the interval boundaries \(\delta ^-_{}\), \(\delta ^+_{}\), \(\mu ^-_{}\) and \(\mu ^+_{}\) can be seen as functions \(\{0,\ldots ,n-1\} \rightarrow \mathbb {R}^+\), representing a mapping from the number of destination processors to which ordinary messages are sent during that computing step to the actual message or processing delay bound. We assume that \(\delta ^-_{(\ell )}\), \(\delta ^+_{(\ell )}\), \(\mu ^-_{(\ell )}\) and \(\mu ^+_{(\ell )}\) as well as the message delay uncertainty \(\varepsilon _{(\ell )} = \delta ^+_{(\ell )} - \delta ^-_{(\ell )}\) are non-decreasing w.r.t. \(\ell \). In addition, sending \(\ell \) messages at once must not be more costly than sending those messages in multiple steps; formally, \(\forall i, j \ge 1: f_{(i+j)} \le f_{(i)} + f_{(j)}\) (for \(f = \delta ^-_{}\), \(\delta ^+_{}\), \(\mu ^-_{}\) and \(\mu ^+_{}\)).
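These assumptions are easy to check for concrete bound functions. In the Python sketch below, each bound function is represented as a list f whose entry f[l] is the bound for a job sending ordinary messages to l destinations, \(l = 0, \ldots, n-1\) (a hypothetical encoding); the example bounds, affine in \(\ell\) with \(n = 4\), are made up for illustration.

```python
from typing import Sequence


def is_monotone(f: Sequence[float]) -> bool:
    """f is non-decreasing in the number of destinations."""
    return all(f[ell] <= f[ell + 1] for ell in range(len(f) - 1))


def is_subadditive(f: Sequence[float]) -> bool:
    """f[i + j] <= f[i] + f[j] for all i, j >= 1 with i + j <= n - 1."""
    n = len(f)
    return all(f[i + j] <= f[i] + f[j]
               for i in range(1, n) for j in range(1, n - i))


def check_bounds(delta_minus, delta_plus, mu_minus, mu_plus) -> bool:
    eps = [hi - lo for lo, hi in zip(delta_minus, delta_plus)]  # epsilon_(l)
    return (all(is_monotone(f) and is_subadditive(f)
                for f in (delta_minus, delta_plus, mu_minus, mu_plus))
            and is_monotone(eps))


# Hypothetical affine bounds for n = 4 (l = 0..3 destinations).
delta_minus = [1.0, 1.5, 2.0, 2.5]
delta_plus = [2.0, 3.0, 4.0, 5.0]
mu_minus = [0.5, 0.6, 0.7, 0.8]
mu_plus = [1.0, 1.2, 1.4, 1.6]
print(check_bounds(delta_minus, delta_plus, mu_minus, mu_plus))  # True
print(is_subadditive([0.0, 1.0, 3.0, 6.0]))  # False: f(2) = 3 > f(1) + f(1) = 2
```

Note that subadditivity is only required of \(\delta ^-_{}\), \(\delta ^+_{}\), \(\mu ^-_{}\) and \(\mu ^+_{}\); for the uncertainty \(\varepsilon \) the sketch checks monotonicity only, as stated above.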

The delay of a message \(\delta \in [\delta ^-_{}, \delta ^+_{}]\) is measured from the real-time of the start of the job sending the message to the arrival real-time at the destination processor (where the message will be enqueued or, if the processor is idle, the corresponding job starts immediately). This might seem counter-intuitive at first glance. However, this was not a technical requirement but rather a deliberate choice: The message delays are in fact bounds on the sum of

(a) the time between the start of the job and the actual sending of the message and

(b) the actual transmission delays.

Defining the message delay this way makes the model more flexible: If information about the actual sending time of the messages is known (e.g., always approximately in the middle of the job), this information can be used to make the bounds \([\delta ^-_{}, \delta ^+_{}]\) more realistic. Adding (a) to the message delay is justified, since this is a more-or-less constant value—in stark contrast to the queuing delay, which, depending on the system load, might vary between none and multiple processing delays.

Thus, as a trade-off between accuracy and simplicity, we chose the option where messages are “sent” at the start of processing a job, since it allows at least some information about the actual sending times to be incorporated into the model, without adding additional parameters or making the transition function more complex.
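With delays measured from the start of the sending job, the possible arrival times of a message follow directly from \(begin(J)\) and the number \(\ell\) of destinations of the sending job. A minimal Python sketch, representing \(\delta ^-_{}\) and \(\delta ^+_{}\) as lists indexed by \(\ell\) (a hypothetical encoding) and using made-up example values:

```python
from typing import Sequence, Tuple


def arrival_window(job_begin: float, num_destinations: int,
                   delta_minus: Sequence[float],
                   delta_plus: Sequence[float]) -> Tuple[float, float]:
    """Earliest and latest real-time arrival of a message sent by a job that
    starts at job_begin and sends ordinary messages to num_destinations
    processors. The delay bounds already include the time from the start of
    the job to the actual sending of the message."""
    ell = num_destinations
    return job_begin + delta_minus[ell], job_begin + delta_plus[ell]


delta_minus = [1.0, 1.5, 2.0, 2.5]  # hypothetical delta^-_(l), l = 0..3
delta_plus = [2.0, 3.0, 4.0, 5.0]   # hypothetical delta^+_(l)
print(arrival_window(10.0, 2, delta_minus, delta_plus))  # (12.0, 14.0)
```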

In addition, it is important to note that our model naturally supports a fine-grained modeling of standard “tasks” used in classic real-time analysis papers: Instead of modeling a job as a significant piece of code, a job in our setting can be thought of as consisting of a few simple machine operations: A classic task is then made up of several jobs, which are executed consecutively (and may of course be preempted at job boundaries). Hence, a job involving the sending of a message can be anywhere within the sequence of jobs making up a task.

4.2.2 Real-time runs

A real-time run (rt-run) corresponds to an execution in the classic model. An rt-run consists of a sequence \(ru\) of receive events, jobs and drop events, and of an associated set of \(n\) hardware clocks \(HC^{ru} = \{HC^{ru}_p, HC^{ru}_q, \ldots \}\). (Again, the superscript will be omitted if clear from context.)

A receive event \(R\) for a message arriving at \(p\) at real-time \(t\) is a triple consisting of the processor index \(proc(R)=p\), the message \(msg(R)\), and the arrival real-time \(time(R)=t\). Note that \(t\) is the receiving/enqueuing time in Fig. 1.

A job \(J\) starting at real-time \(t\) on \(p\) is a \(6\)-tuple, consisting of the processor index \(proc(J)=p\), the message being processed \(msg(J)\), the start time \(begin(J)=t\), the job processing time \(duration(J)\), the hardware clock reading \(HC(J)=HC_p(t)\), and the state transition sequence \(trans(J) =\) \([oldstate, \cdots , newstate]\). We define \(end(J) = begin(J) + duration(J)\). Figure 1 provides an example of an rt-run containing three receive events and three jobs on the second processor. Note that neither the actual state transition times nor the actual sending times of the sent messages are modeled in a job.

A drop event \(D\) at real-time \(t\) on processor \(p\) consists of the processor index \(proc(D)=p\), the message \(msg(D)\), and the dropping real-time \(time(D)=t\). These events represent messages getting dropped by the admission control component rather than being processed by a job.
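One possible way to encode the three kinds of rt-run elements is shown below as a Python sketch. The field names mirror the notation above, but the classes themselves are hypothetical; states and messages are left as opaque values, and \(trans(J)\) is stored as a tuple whose first and last entries are \(oldstate\) and \(newstate\).

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class ReceiveEvent:
    proc: int        # proc(R)
    msg: Any         # msg(R)
    time: float      # time(R): arrival (enqueuing) real-time


@dataclass(frozen=True)
class Job:
    proc: int        # proc(J)
    msg: Any         # msg(J): the message being processed
    begin: float     # begin(J): real-time start
    duration: float  # duration(J)
    hc: float        # HC(J) = HC_p(begin(J))
    trans: tuple     # trans(J) = (oldstate, ..., newstate)

    @property
    def end(self) -> float:
        # end(J) = begin(J) + duration(J)
        return self.begin + self.duration

    @property
    def oldstate(self) -> Any:
        return self.trans[0]

    @property
    def newstate(self) -> Any:
        return self.trans[-1]


@dataclass(frozen=True)
class DropEvent:
    proc: int        # proc(D)
    msg: Any         # msg(D)
    time: float      # time(D): dropping real-time


J = Job(proc=2, msg="m1", begin=1.0, duration=0.5, hc=1.02, trans=("s0", "s1"))
print(J.end)  # 1.5
```

As in the model, neither the exact state transition times nor the actual sending times are stored in a job.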

Formally, an rt-run \(ru\) of some algorithm \(\mathcal {A}\) must satisfy the following properties:

- RU1: \(ru\) must be a sequence of receive events, drop events and jobs with a well-defined total order \(\prec \). The begin times (\(begin(J)\) for jobs, \(time(R)\) and \(time(D)\) for receive events and drop events) must be non-decreasing. Message sending, receiving and processing/dropping must be in the correct causal order, i.e., \(msg(R) \in trans(J) \implies J\prec R\), \(msg(J) = msg(R) \implies R\prec J\), and \(msg(D) = msg(R) \implies R\prec D\).
- RU2: Processor states can only change during a job, i.e., \(newstate(J_1) = oldstate(J_2)\) must hold for two consecutive jobs \(J_1\) and \(J_2\) on the same processor.
- RU3: The first job \(J\) on every processor \(p\) must start in an initial state of \(\mathcal {A}\), i.e., \(oldstate(J)\) must be an initial state.
- RU4: The hardware clock readings must increase strictly, continuously and without bound.
- RU5: Messages must be unique, i.e., there is at most one job sending some message \(m\), at most one receive event receiving it, and at most one job processing it or drop event dropping it. Messages must only be sent by and received/processed/dropped by the processors specified in the message.
- RU6: Every non-input message that is received must have been sent. Every message that is processed or dropped must have been received.
- RU7: Jobs on the same processor do not overlap: If \(J\prec J'\) and \(proc(J) = proc(J')\), then \(end(J) \le begin(J')\).
- RU8: Drop events can only occur when a scheduling decision is made, i.e., immediately after a receive event when the processor is idle, or immediately after a job has finished processing.
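To make two of these properties concrete, the following Python sketch checks RU2 and RU7 on a list of jobs given in run order. It is a hypothetical helper, not part of the model: receive and drop events, as well as RU1, RU3 to RU6 and RU8, are deliberately ignored.

```python
from typing import Dict, List, NamedTuple


class Job(NamedTuple):
    proc: int
    begin: float
    duration: float
    trans: tuple  # (oldstate, ..., newstate)

    @property
    def end(self) -> float:
        return self.begin + self.duration


def check_ru2_ru7(jobs: List[Job]) -> List[str]:
    """Check RU2 and RU7 for jobs given in the total order of the run.

    Returns human-readable violations; an empty list means none were found.
    """
    violations: List[str] = []
    last: Dict[int, Job] = {}  # most recent job on each processor
    for job in jobs:
        prev = last.get(job.proc)
        if prev is not None:
            # RU7: consecutive jobs on the same processor must not overlap.
            if prev.end > job.begin:
                violations.append(
                    f"RU7 on p{job.proc}: job starting at {prev.begin} "
                    f"overlaps job starting at {job.begin}")
            # RU2: the state may only change inside jobs.
            if prev.trans[-1] != job.trans[0]:
                violations.append(
                    f"RU2 on p{job.proc}: {prev.trans[-1]!r} != {job.trans[0]!r}")
        last[job.proc] = job
    return violations


run = [
    Job(1, 0.0, 1.0, ("s0", "s1")),
    Job(2, 0.5, 1.0, ("q0", "q1")),
    Job(1, 0.8, 1.0, ("s1", "s2")),  # starts before the first job on p1 ends
    Job(1, 2.0, 0.5, ("s3", "s4")),  # state jumps from s2 to s3 between jobs
]
for v in check_ru2_ru7(run):
    print(v)
```

The example run violates RU7 once (overlapping jobs on \(p_1\)) and RU2 once (the state changes between two jobs).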

A real-time system \(s\) is defined by an integer \(n\) and two intervals \([\delta ^-_{}, \delta ^+_{}]\) and \([\mu ^-_{}, \mu ^+_{}]\).

4.3 Failures and admissibility

A failure model indicates whether a given execution or rt-run is admissible w.r.t. a given system running a given algorithm. In this work, we restrict our attention to the \(f\)-\(f'\)-\(\rho \) failure model, which is a hybrid failure model [5, 19, 35] that incorporates both crash and Byzantine faulty processors. Of the \(n\) processors in the system,

- at most \(f\ge 0\) may crash, and
- at most \(f'\ge 0\) may be arbitrarily faulty (“Byzantine”).

All other processors are called correct.

A given execution (resp. rt-run) conforms to the \(f\)-\(f'\)-\(\rho \) failure model if all message delays lie within \([\underline{\delta }^-, \underline{\delta }^+]\) (resp. \([\delta ^-_{}, \delta ^+_{}]\)) and the following conditions hold:

- All timer messages arrive at their designated hardware clock time.
- On all non-Byzantine processors, clocks drift by at most \(\rho \): \(\forall t < t': (1+\rho ) \ge \frac{HC_p(t')-HC_p(t)}{t'-t} \ge (1-\rho )\).
- All correct processors make state transitions as specified by the algorithm. In the real-time model, they obey the scheduling/admission policy, and all of their jobs take between \(\mu ^-_{}\) and \(\mu ^+_{}\) time units.
- A crashing processor behaves like a correct one until it crashes. In the classic model, the state transition sequence of all actions after the crash contains only the one-element “NOP sequence” \([s]\), i.e., \(s = oldstate(ac) = newstate(ac)\). In the real-time model, after a processor has crashed, all messages in its queue are dropped, and every new message arriving will be dropped immediately rather than being processed. Unclean crashes are allowed: the last action/job on a processor might execute only a prefix of its state transition sequence.
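The drift condition can be tested on sampled clock readings, as in the following Python sketch. The helper `within_drift` and the sample clock are hypothetical; note that a finite set of samples can only refute the bound, never establish it for all \(t < t'\).

```python
from itertools import combinations
from typing import Sequence, Tuple


def within_drift(samples: Sequence[Tuple[float, float]], rho: float) -> bool:
    """Check the drift bound on sampled pairs (t, HC_p(t)).

    Every pair t < t' must satisfy
        1 - rho <= (HC_p(t') - HC_p(t)) / (t' - t) <= 1 + rho.
    Finite samples can refute the bound but cannot prove it for all t, t'.
    """
    for (t1, c1), (t2, c2) in combinations(sorted(samples), 2):
        if t1 == t2:
            if c1 != c2:
                return False  # two different readings for the same real time
            continue
        rate = (c2 - c1) / (t2 - t1)
        if not (1 - rho <= rate <= 1 + rho):
            return False
    return True


# A hypothetical clock that runs 1% fast.
samples = [(0.0, 0.0), (10.0, 10.1), (20.0, 20.2)]
print(within_drift(samples, rho=0.02))   # True
print(within_drift(samples, rho=0.005))  # False
```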

In the analysis and the transformation proofs, we will examine given executions and rt-runs. Therefore, we know which processors behaved in a correct, crashing or Byzantine faulty manner. Note, however, that this information is only available during analysis; the algorithms themselves, including the simulation algorithms presented in the following sections, do not know which of the other processors are faulty. The same holds for timing information: While, during analysis, we can say that an event occurred at some exact real time \(t\), the only information available to the algorithm is the local hardware clock reading at the beginning of the job.

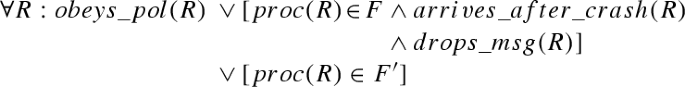

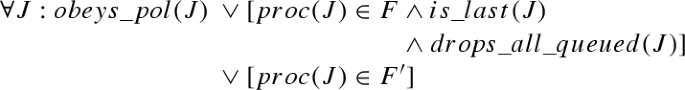

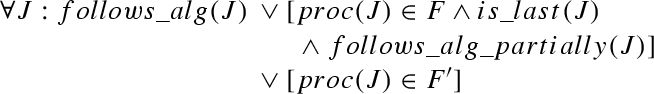

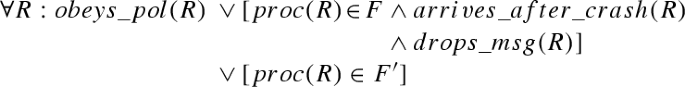

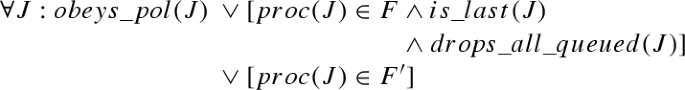

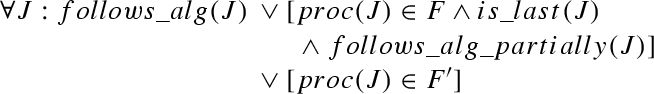

Formally, failure models can be specified as predicates on executions and rt-runs. Let \({\varPi }\) denote the set of \(n\) processors. \(f\)-\(f'\)-\(\rho \) is defined as follows. Predicates involving faulty processors are underlined.

The predicates \(obeys\_pol(R)\) and \(obeys\_pol(J)\) refer to the scheduling and admission control policy; they are satisfied if the following conditions hold, respectively:

- \(obeys\_pol(R)\): If no job is running at time \(time(R)\), a scheduling decision is made after \(R\) completes.
- \(obeys\_pol(J)\): If there are still messages that have been received but not processed or dropped at time \(end(J)\), a scheduling decision is made after \(J\) completes.

This scheduling decision causes messages to be dropped and/or a job to be started (according to the chosen policy \(pol\)).

The table in Fig. 2 formalizes the other predicates used in the definition of \(f\)-\(f'\)-\(\rho \). In Sect. 6, two variants of failure model \(f\)-\(f'\)-\(\rho \) will be considered:

- \(f\)-\(f'\)-\(\rho \)+latetimers\(_{\alpha }\) is equivalent to \(f\)-\(f'\)-\(\rho \) in the classic model, except that \({}\wedge \forall m_t: arrives\_timely(m_t) \vee [proc(m_t) \in F']\) is weakened to \({}\wedge \forall m_t: arrives\_timely(m_t) {}\vee is\_late\_timer(m_t, \alpha ) \vee [proc(m_t) \in F']\).
- Likewise, \(f\)-\(f'\)-\(\rho \)+precisetimers\(_{\alpha }\) corresponds to \(f\)-\(f'\)-\(\rho \) in the real-time model plus the following restriction: \({} \wedge \forall m_t: gets\_processed\_precisely(m_t, \alpha ) {}\vee [proc(m_t) \in F']\).

These variants will be explained in detail in Sect. 6.

Fig. 2: Predicates used in the failure model definitions. Variables \(ac\), \(R\), \(J\), \(D\), \(m_o\), \(m_t\) and \(p\) are used for actions, receive events, jobs, drop events, ordinary messages, timer messages and processor indices, respectively. \(JD\) can refer to either a job or a drop event, with \(time(JD)=begin(J)\) if \(JD=J\) and \(time(JD)=time(D)\) if \(JD=D\). \(suffix\) denotes a (possibly empty) sequence of states and messages, and “+” is used to concatenate sequences as well as to add time values to intervals (resulting in a shifted interval). \(sHC(m_t)\) denotes the hardware clock time for which the timer message \(m_t\) is set (or \(HC(ac)\)/\(HC(J)\) of the action/job setting the timer, whichever is higher). \(proc(m_t)\) is the processor setting the timer. \(\ell \) refers to the number of ordinary messages sent in \(J\). If \(J\) is a crashing job, then \(\ell \) is the number of messages that would have been sent if the processor had not crashed.

4.4 State transition traces

The global state of a system is composed of the real-time \(t\) and the local state \(s_p\) of every processor \(p\). Rt-runs do not allow a well-defined notion of global states, since they do not fix the exact time of state transitions in a job. Thus, we use the “microscopic view” of state-transition traces (st-traces) introduced in [24, 26] to assign real-times to all atomic state transitions.

Definition 1

A state transition event (st-event) represents a change in the global state or the arrival of an input message. It is

- a tuple \((transition: t, p, s, s')\), indicating that, at time \(t\), processor \(p\) changes its internal state from \(s\) to \(s'\), or
- a tuple \((input: t, m)\), indicating that, at time \(t\), input message \(m\) arrives from an external source (see Footnote 5).

Example 2

Let \(J\) with \(trans(J) = [oldstate,\,\hbox {msg. }m\hbox { to }q,\,int.st._1,\,newstate]\) and \(proc(J) = p\) be a job in a real-time run \(ru\). If \(tr\) is an st-trace of \(ru\), then it contains the following st-events \(ev'\) and \(ev''\):

- \(ev' = (transition: t', p, oldstate, int.st._1)\)
- \(ev'' = (transition: t'', p, int.st._1, newstate)\)

with \(begin(J) \le t' \le t'' \le end(J)\). \(\square \)
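The construction in Example 2 can be sketched in Python: the sent messages are skipped, and each pair of consecutive states yields one transition st-event with a time inside \([begin(J), end(J)]\). The tuple encoding of messages as `("msg", m, dest)` and the function names are hypothetical assumptions made only for this illustration.

```python
from typing import Any, List, Sequence, Tuple

StEvent = Tuple[str, float, int, Any, Any]  # ("transition", t, p, s, s')


def is_message(entry: Any) -> bool:
    # Hypothetical encoding: a sent message appears in trans(J) as ("msg", m, dest).
    return isinstance(entry, tuple) and len(entry) == 3 and entry[0] == "msg"


def st_events_of_job(proc: int, trans: Sequence[Any], times: Sequence[float],
                     begin: float, end: float) -> List[StEvent]:
    """Transition st-events of one job: one per pair of consecutive states.

    Sent messages are skipped; `times` assigns a real time to each state
    transition and must satisfy begin <= t' <= t'' <= ... <= end.
    """
    states = [e for e in trans if not is_message(e)]
    if len(times) != len(states) - 1:
        raise ValueError("need exactly one time per state transition")
    bounds = [begin, *times, end]
    if any(a > b for a, b in zip(bounds, bounds[1:])):
        raise ValueError("times must be non-decreasing within [begin, end]")
    return [("transition", t, proc, s, s_next)
            for t, s, s_next in zip(times, states, states[1:])]


# Example 2 with p = 1 and arbitrarily chosen times t' = 1.2, t'' = 1.5.
trans = ("oldstate", ("msg", "m", "q"), "int.st.1", "newstate")
for ev in st_events_of_job(1, trans, [1.2, 1.5], begin=1.0, end=2.0):
    print(ev)
```

Choosing other admissible values for `times` yields a different st-trace of the same rt-run, which is why an rt-run generally has several st-traces.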

An st-trace \(tr\) contains the set of st-events, the processor’s hardware clock readings \(HC^{tr}\) (\(=HC^{ex}\) or \(HC^{ru}\)), and, for every time \(t\), at least one global state \(g = (s_1(g), \ldots , s_n(g))\). Note carefully that \(tr\) may contain more than one \(g\) with \(time(g) = t\). For example, if \(t' = t''\) in the previous example, three different global states at time \(t'\) would be present in the st-trace, with \(s_p(g)\) representing \(p\)’s state as \(oldstate\), \(int.st._1\) or \(newstate\). Nevertheless, in every st-trace, all st-events and global states are totally ordered by some relation \(\prec \), based on the times of the st-events and on the order of the state transitions in the transition sequences of the underlying jobs.

The relation \(\prec \) must also preserve the causality of state transitions connected by a message: For example, if one job has a transition sequence of \([s_1,s_2,msg,s_3]\) and the receipt of \(msg\) spawns a job with a transition sequence of \([s_4,s_5]\) on another processor, the switch from \(s_1\) to \(s_2\) must occur before the switch from \(s_4\) to \(s_5\), since there is a causal chain \((s_1 \rightarrow s_2), msg, (s_4 \rightarrow s_5)\).

Clearly, there are multiple possible st-traces for a single rt-run. Executions in the classic model have corresponding st-traces as well, with \(t = time(ac)\) for the time \(t\) of all st-events corresponding to some action \(ac\).

A problem \(\mathcal {P}\) is defined as a set of (or a predicate on) st-traces. An execution or an rt-run satisfies a problem if \(tr\in \mathcal {P}\) holds for all its st-traces. If all st-traces of all admissible rt-runs (or executions) of some algorithm in some system satisfy \(\mathcal {P}\), we say that this algorithm solves \(\mathcal {P}\) in the given system.

5 Running real-time algorithms in the classic model

As the real-time model is a generalization of the classic model, the set of systems covered by the classic model is a strict subset of the systems covered by the real-time model. More precisely, every system in the classic model \((n, [\underline{\delta }^-, \underline{\delta }^+])\) can be specified in terms of a real-time model \((n, [\delta ^-_{}, \delta ^+_{}], [\mu ^-_{}, \mu ^+_{}])\) with \(\delta ^-_{} = \underline{\delta }^-\), \(\delta ^+_{} = \underline{\delta }^+\) and \(\mu ^-_{} = \mu ^+_{} = 0\). Thus, every result (correctness or impossibility) for some classic system also holds in the corresponding real-time system with (a) the same message delay bounds, (b) \(\mu ^-_{(\ell )} = \mu ^+_{(\ell )} = 0\) for all \(\ell \), and (c) an admission control component that does not drop any messages. Intuition tells us that impossibility results also hold for the general case, i.e., that an impossibility result for some classic system \((n, [\underline{\delta }^-, \underline{\delta }^+])\) holds for all real-time systems \((n, [\delta ^-_{}, \delta ^+_{}], [\mu ^-_{}, \mu ^+_{}])\) with \(\delta ^-_{} \le \underline{\delta }^-\), \(\delta ^+_{} \ge \underline{\delta }^+\) and arbitrary \(\mu ^-_{}, \mu ^+_{}\) as well, because the additional delays do not provide the algorithm with any useful information.

As it turns out, this conjecture is true: This section will present a simulation (Algorithm 1) that allows us to use an algorithm designed for the real-time model in the classic model—and, thus, to transfer impossibility results from the classic to the real-time model (see Sect. 7.1 for an example)—provided the following conditions hold:

- Cond1: Problems must be simulation-invariant.

Definition 3

We define \(gstates(tr)\) to be the (ordered) set of global states in some st-trace \(tr\). For some state \(s\) and some set \(\mathcal {V}\), let \(s|_\mathcal {V}\) denote \(s\) restricted to variable names contained in the set \(\mathcal {V}\). For example, if \(s = \{(a, 1), (b, 2), (c, 3)\}\), then \(s|_{\{a, b\}} = \{(a, 1), (b, 2)\}\). Likewise, let \(gstates(tr)|_\mathcal {V}\) denote \(gstates(tr)\) where all local states \(s\) have been replaced by \(s|_\mathcal {V}\).

A problem \(\mathcal {P}\) is simulation-invariant, if there exists a finite set \(\mathcal {V}\) of variable names, such that \(\mathcal {P}\) can be specified as a predicate on \(gstates(tr)|_\mathcal {V}\) and the sequence of \(input\) st-events (which usually takes the form \(Pred_1(input\text { st-events of }tr) \Rightarrow Pred_2(gstates(tr)|_\mathcal {V})\)).
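The restriction operator of Definition 3 can be illustrated with a short Python sketch, assuming local states are represented as dictionaries from variable names to values (a hypothetical encoding). It reproduces the example \(s|_{\{a, b\}}\) and shows that restricting to the “relevant” variables hides extra simulation variables such as \(queue\) and \(idle\).

```python
from typing import Dict, Iterable, List, Set

State = Dict[str, object]


def restrict(s: State, V: Set[str]) -> State:
    """s|_V: keep only the variables whose names are in V."""
    return {name: value for name, value in s.items() if name in V}


def restrict_gstates(gstates: Iterable[List[State]], V: Set[str]) -> List[List[State]]:
    """gstates(tr)|_V: restrict every local state of every global state."""
    return [[restrict(s, V) for s in g] for g in gstates]


s = {"a": 1, "b": 2, "c": 3}
print(restrict(s, {"a", "b"}))  # {'a': 1, 'b': 2}

# A simulator's extra variables disappear under the restriction to V = {"x"}.
print(restrict_gstates([[{"x": 1, "queue": [], "idle": True}]], {"x"}))  # [[{'x': 1}]]
```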

Informally, this means that adding variables to some algorithm or changing its message pattern does not influence its ability to solve some problem \(\mathcal {P}\), as long as the state transitions of the “relevant” variables \(\mathcal {V}\) still occur in the same way at the same time.

For example, the classic clock synchronization problem specifies conditions on the adjusted clock values of the processors, i.e., the hardware clock values plus the adjustment values, at any given real time. The problem cares neither about additional variables the algorithm might use nor about the number or contents of messages exchanged.

The advantage of such a problem specification is that algorithms can be run in a (time-preserving) simulation environment and still solve the problem: As long as the algorithm’s state transitions are the same and occur at the same time, the simulator may add its own variables and change the way information is exchanged. On the other hand, a problem specification that restricts either the type of messages that might be sent or the size of the local state would not be simulation-invariant.

- Cond2: The delay bounds in the classic system must be at least as restrictive as those in the real-time system. As long as \(\delta ^-_{(\ell )} \le \underline{\delta }^-\) and \(\delta ^+_{(\ell )} \ge \underline{\delta }^+\) holds (for all \(\ell \)), any message delay of the simulating execution (\(\underline{\delta }\in [\underline{\delta }^-{}, \underline{\delta }^+{}]\)) can be directly mapped to a message delay in the simulated rt-run (\(\delta = \underline{\delta }\)), such that \(\delta \in [\delta ^-_{(\ell )}, \delta ^+_{(\ell )}]\) is satisfied, cf. Fig. 6a. Thus, a simulated message corresponds directly to a simulation message with the same message delay.

- Cond3: Hardware clock drift must be reasonably low. Assume a system with very inaccurate hardware clocks, combined with very accurate processing delays: In that case, timing information might be gained from the processing delay, for example, by increasing a local variable by \((\mu ^-_{} + \mu ^+_{})/2\) during each computing step. If \(\rho \), the hardware clock drift bound, is very large and \(\mu ^+_{} - \mu ^-_{}\) is very small, the precision of this simple “clock” might be better than that of the hardware clock. Thus, algorithms might in fact benefit from the processing delay, as opposed to the zero step-time situation.

To avoid such effects, the hardware clock must be “accurate enough” to time out a span that is guaranteed to lie within \(\mu ^-_{}\) and \(\mu ^+_{}\), which requires \(\rho \le \frac{\mu ^+_{(\ell )} - \mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\). In this case, the classic system can simulate a delay within \(\mu ^-_{(\ell )}\) and \(\mu ^+_{(\ell )}\) real-time units by waiting for \(\tilde{\mu }_{(\ell )}{} = 2\frac{\mu ^+_{(\ell )}\mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\) hardware clock time units.

Lemma 4

If \(\rho \le \frac{\mu ^+_{(\ell )} - \mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\) holds, \(\tilde{\mu }_{(\ell )}\) hardware clock time units correspond to a real-time interval of \([\mu ^-_{(\ell )}, \mu ^+_{(\ell )}]\) on a non-Byzantine processor.

Proof

Since drift is bounded, \((1+\rho ) \ge \frac{HC_p(t')-HC_p(t)}{t'-t} \ge (1-\rho )\). Since \(HC_p\) is an unbounded, strictly increasing continuous function (cf. EX4), an inverse function \(HC^{-1}_p\), mapping hardware clock time to real time, exists. Thus, \( \forall T < T': \frac{1}{1+\rho } \le \frac{HC_p^{-1}(T') - HC_p^{-1}(T)}{T' - T} \le \frac{1}{1-\rho }\).

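Choose \(T\) and \(T'\) such that \(T' - T = \tilde{\mu }_{(\ell )}{}\). Then

\[\frac{\tilde{\mu }_{(\ell )}}{1+\rho } \le HC_p^{-1}(T + \tilde{\mu }_{(\ell )}) - HC_p^{-1}(T) \le \frac{\tilde{\mu }_{(\ell )}}{1-\rho }.\]

Since \(\rho \le \frac{\mu ^+_{(\ell )} - \mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\) holds, we have \(1+\rho \le \frac{2\mu ^+_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\) and \(1-\rho \ge \frac{2\mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\), and therefore

\[\tilde{\mu }_{(\ell )}\frac{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}{2\mu ^+_{(\ell )}} \le HC_p^{-1}(T + \tilde{\mu }_{(\ell )}) - HC_p^{-1}(T) \le \tilde{\mu }_{(\ell )}\frac{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}{2\mu ^-_{(\ell )}}.\]

Applying the definition of \(\tilde{\mu }_{(\ell )}{}\) yields \(\mu ^-_{(\ell )} \le HC_p^{-1}(T + \tilde{\mu }_{(\ell )}{}) - HC_p^{-1}(T) \le \mu ^+_{(\ell )}\). \(\square \)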
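Lemma 4 can be checked numerically with the following Python sketch, which computes \(\tilde{\mu }_{(\ell )}\), the largest drift permitted by Cond3, and the resulting real-time span of the wait. The function names and the values \(\mu ^- = 1\), \(\mu ^+ = 3\) are illustrative assumptions; the sketch also assumes \(\mu ^- > 0\) and \(\rho < 1\).

```python
def mu_tilde(mu_minus: float, mu_plus: float) -> float:
    """Waiting time in hardware clock units: 2 mu+ mu- / (mu+ + mu-)."""
    return 2 * mu_plus * mu_minus / (mu_plus + mu_minus)


def max_drift(mu_minus: float, mu_plus: float) -> float:
    """Largest rho permitted by Cond3: (mu+ - mu-) / (mu+ + mu-)."""
    return (mu_plus - mu_minus) / (mu_plus + mu_minus)


def real_time_span(wait_hc: float, rho: float) -> tuple:
    """Real-time bounds of a wait of wait_hc hardware clock units (rho < 1)."""
    return (wait_hc / (1 + rho), wait_hc / (1 - rho))


mu_minus, mu_plus = 1.0, 3.0
w = mu_tilde(mu_minus, mu_plus)      # 1.5
rho = max_drift(mu_minus, mu_plus)   # 0.5
print(real_time_span(w, rho))        # (1.0, 3.0)
```

At the maximal drift the real-time span hits \([\mu ^-, \mu ^+]\) exactly; any smaller \(\rho \) yields a span strictly inside it.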

5.1 Overview

The following theorem, which hinges on a formal transformation from executions to rt-runs, represents one of the main results of this paper in a slightly simplified version.

Theorem 5

Let \(\underline{s}= (n, [\underline{\delta }^-, \underline{\delta }^+])\) be a classic system. If

- \(\mathcal {P}\) is a simulation-invariant problem (Cond1),
- the algorithm \(\mathcal {A}\) solves problem \(\mathcal {P}\) in some real-time system \(s= (n, [\delta ^-_{}, \delta ^+_{}], [\mu ^-_{}, \mu ^+_{}])\) with some scheduling/admission policy \(pol\) under failure model \(f\)-\(f'\)-\(\rho \),
- \(\forall \ell : \delta ^-_{(\ell )} \le \underline{\delta }^-\) and \(\delta ^+_{(\ell )} \ge \underline{\delta }^+\) (Cond2), and
- \(\forall \ell : \rho \le \frac{\mu ^+_{(\ell )} - \mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\) (Cond3),

then the algorithm \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) solves \(\mathcal {P}\) in \(\underline{s}\) under failure model \(f\)-\(f'\)-\(\rho \).

For didactic reasons, the following structure will be used in this section: First, the simulation algorithm, the transformation and a sketch of the correctness proof for Theorem 5 will be presented. Afterwards, we show how Cond2 can be weakened, followed by a full formal proof of correctness.

Cond2: \(\forall \ell : \delta ^-_{(\ell )} \le \underline{\delta }^-\wedge \delta ^+_{(\ell )} \ge \underline{\delta }^+\) is a very strong requirement, since \([\underline{\delta }^-, \underline{\delta }^+]\) must lie within all intervals \([\delta ^-_{(1)}, \delta ^+_{(1)}]\), \([\delta ^-_{(2)}, \delta ^+_{(2)}]\), .... In some cases, such an interval \([\underline{\delta }^-, \underline{\delta }^+]\) might not exist: Consider, e.g., the case in the bottom half of Fig. 6b, where \([\delta ^-_{(1)}, \delta ^+_{(1)}]\) and \([\delta ^-_{(2)}, \delta ^+_{(2)}]\) do not overlap. After the sketch of Theorem 5’s proof, we will show that it is possible to weaken Cond2 while retaining correctness, although this modification adds complexity to the transformation as well as to the algorithm and the proof.
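The following Python sketch makes Cond2 and its potential infeasibility concrete: a classic interval satisfies Cond2 only if it lies inside the intersection of all \([\delta ^-_{(\ell )}, \delta ^+_{(\ell )}]\), and that intersection is empty when two of these intervals do not overlap. The helper names and the example intervals are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Interval = Tuple[float, float]


def satisfies_cond2(classic: Interval, rt_bounds: Sequence[Interval]) -> bool:
    """Cond2: delta^-_(l) <= classic lower bound and delta^+_(l) >= classic upper bound, for all l."""
    lo, hi = classic
    return all(d_lo <= lo and d_hi >= hi for d_lo, d_hi in rt_bounds)


def common_interval(rt_bounds: Sequence[Interval]) -> Optional[Interval]:
    """Intersection of all [delta^-_(l), delta^+_(l)], or None if it is empty.

    Any classic interval satisfying Cond2 must lie inside this intersection.
    """
    lo = max(d_lo for d_lo, _ in rt_bounds)
    hi = min(d_hi for _, d_hi in rt_bounds)
    return (lo, hi) if lo <= hi else None


overlapping = [(1.0, 5.0), (2.0, 6.0)]            # bounds for l = 1 and l = 2
print(common_interval(overlapping))               # (2.0, 5.0)
print(satisfies_cond2((2.0, 5.0), overlapping))   # True
print(common_interval([(1.0, 2.0), (3.0, 4.0)]))  # None: Cond2 cannot hold
```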

5.2 Algorithm

Algorithm \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) (\(=\)Algorithm 1), designed for the classic model, allows us to simulate a real-time system, and, thus, to use an algorithm \(\mathcal {A}\) designed for the real-time model to solve problems in a classic system. The algorithm essentially simulates queuing, scheduling, and execution of real-time model jobs of some duration within \(\mu ^-_{(\ell )}\) and \(\mu ^+_{(\ell )}\); it is parameterized with some real-time algorithm \(\mathcal {A}\), some scheduling/admission policy \(pol\) and the waiting time \(\tilde{\mu }_{(\ell )}{} = 2\frac{\mu ^+_{(\ell )}\mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\). We define \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) to have the same initial states as \(\mathcal {A}\), with the set of variables extended by a \(queue\) and a flag \(idle\).

All actions occurring on a non-Byzantine processor within an execution \(ex\) of \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) fall into one of the following five groups:

- (a) an algorithm message arriving, which is immediately processed,
- (b) an algorithm message arriving, which is enqueued,
- (c) a (finished-processing) timer message arriving, causing some message from the queue to be processed,
- (d) a (finished-processing) timer message arriving when no messages are in the queue (or all messages in the queue get dropped),
- (e) an algorithm message arriving, which is immediately dropped.

Figure 3 illustrates state transitions (a)–(e) in the simulation algorithm: At every point in time, the simulated processor is either idle (variable \(idle = true\)) or busy (\(idle = false\)). Initially, the processor is idle. As soon as the first algorithm message (i.e., a message other than the internal (finished-processing) timer message) arrives, the processor becomes busy [type (a) action] and waits for \(\tilde{\mu }_{(\ell )}{}\) hardware clock time units (\(\ell \) being the number of ordinary messages sent during that computing step), unless the scheduling/admission policy drops the message immediately [type (e) action], in which case the processor stays idle. All algorithm messages arriving while the processor is busy are enqueued [type (b) action]. After these \(\tilde{\mu }_{(\ell )}{}\) hardware clock time units have passed (modeled as the arrival of a (finished-processing) timer message), a scheduling/admission decision is made on the queue, possibly dropping messages. If the queue is then empty, the processor returns to its idle state [type (d) action]; otherwise, the next message is processed [type (c) action].
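The idle/busy logic just described can be sketched in Python as follows. This is not Algorithm 1: the state transitions of \(\mathcal {A}\) are omitted, hardware clock readings are passed in explicitly, and the policy `fifo_with_admission` (drop messages matching a predicate, then serve in FIFO order), the function `sent_count` standing in for \(\ell \), and the constant waiting time are all hypothetical choices made for illustration. Each handler returns the type, (a) to (e), of the action it performs.

```python
from collections import deque
from typing import Callable, Deque, List, Optional, Tuple

# pol: given the queue, remove the messages to drop and return them,
# together with the next message to process (None if nothing is left).
Policy = Callable[[Deque[str]], Tuple[List[str], Optional[str]]]


def fifo_with_admission(should_drop: Callable[[str], bool]) -> Policy:
    """Hypothetical pol: drop queued messages matching should_drop, then FIFO."""
    def pol(queue: Deque[str]) -> Tuple[List[str], Optional[str]]:
        dropped = [m for m in queue if should_drop(m)]
        kept = [m for m in queue if not should_drop(m)]
        queue.clear()
        queue.extend(kept)
        return dropped, (queue.popleft() if queue else None)
    return pol


class SimulatedProcessor:
    """Idle/busy bookkeeping of the simulation; A's own steps are omitted."""

    def __init__(self, pol: Policy, wait: Callable[[int], float],
                 sent_count: Callable[[str], int]):
        self.idle = True
        self.queue: Deque[str] = deque()
        self.dropped: List[str] = []         # would become drop events in the rt-run
        self.pol = pol
        self.wait = wait                     # ell -> mu~_(ell), hardware clock units
        self.sent_count = sent_count         # hypothetical: ell for a given message
        self.fp_due: Optional[float] = None  # arrival of the (finished-processing) timer

    def _schedule(self, hc: float) -> Optional[str]:
        dropped, nxt = self.pol(self.queue)
        self.dropped.extend(dropped)
        if nxt is not None:                  # start processing: busy for mu~_(ell)
            self.idle = False
            self.fp_due = hc + self.wait(self.sent_count(nxt))
        return nxt

    def on_algorithm_message(self, msg: str, hc: float) -> str:
        self.queue.append(msg)
        if not self.idle:
            return "b"                       # busy: only enqueue
        return "a" if self._schedule(hc) is not None else "e"

    def on_finished_processing(self, hc: float) -> str:
        if self.idle:
            raise RuntimeError("timer while idle contradicts Lemma 8")
        if self._schedule(hc) is not None:
            return "c"
        self.idle, self.fp_due = True, None
        return "d"


p = SimulatedProcessor(fifo_with_admission(lambda m: m.startswith("junk")),
                       wait=lambda ell: 1.5, sent_count=lambda m: 1)
kinds = [p.on_algorithm_message("m1", 0.0),     # idle -> process
         p.on_algorithm_message("m2", 0.5),     # busy -> enqueue
         p.on_algorithm_message("junk", 1.0),   # busy -> enqueue
         p.on_finished_processing(1.5),         # drop "junk", process "m2"
         p.on_finished_processing(3.0),         # queue empty -> idle
         p.on_algorithm_message("junk2", 4.0)]  # idle, dropped at once
print(kinds)  # ['a', 'b', 'b', 'c', 'd', 'e']
```

The example produces the action sequence (a), (b), (b), (c), (d), (e), i.e., the same order of action types as in the example of Fig. 5.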

5.3 The transformation \(T_{C\rightarrow R}\) from executions to rt-runs

As shown in Fig. 4, the first step of the proof that this simulation is correct consists of transforming every execution \(ex\) of \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) into a corresponding rt-run of \(\mathcal {A}\). By showing that this rt-run is an admissible rt-run of \(\mathcal {A}\) and that the execution and the rt-run have (roughly) the same state transitions, the fact that the execution satisfies \(\mathcal {P}\) will be derived from the fact that the rt-run satisfies \(\mathcal {P}\).

The transformation \(ru= T_{C\rightarrow R}(ex)\) constructs an rt-run \(ru\). We set \(HC^{ru}_p = HC^{ex}_p\) for all \(p\), such that both \(ex\) and \(ru\) have the same hardware clocks. Depending on the type of action, a corresponding receive event, job and/or drop event in \(ru\) is constructed for each action \(ac\) on a fault-free processor.

- Type (a): This action is mapped to a receive event \(R\) and a subsequent job \(J\) in \(ru\). The job’s duration equals the time required for the (finished-processing) message to arrive.
- Type (b): This action is mapped to a receive event \(R\) in \(ru\). There is one special (technical) case where the action is instead mapped to a receive event at a different time, see Sect. 5.4 for details.
- Type (c): This action is mapped to a job \(J\) in \(ru\), processing the algorithm message of the corresponding type (b) action (i.e., the message chosen by applying the scheduling policy to variable \(queue\)). The job’s duration equals the time required for the (finished-processing) message to arrive. In addition, for every message dropped from \(queue\) (if any), a drop event \(D\) is created right before \(J\).
- Type (d): Similar to type (c) actions, a drop event \(D\) is created for every message removed from \(queue\) (if any).
- Type (e): This action is mapped to a receive event \(R\) and a subsequent drop event \(D\) in \(ru\), both with the same parameters.

The state transitions of the jobs created by the transformation conform to those of the corresponding actions with the simulation variables (\(queue\), \(idle\)) removed. To illustrate this transformation, Fig. 5 shows an example with actions of types (a), (b) (twice), (c), (d) and (e) occurring in \(ex\) (in this order), the actions taken by the simulation algorithm and the resulting rt-run \(ru\).
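The mapping rules for a fault-free processor can be sketched in Python as below. The `Action` record and the tuple encoding of events are hypothetical; hardware clock readings, state transitions, the timer special case of Sect. 5.4, crashes and Byzantine processors are left out. A job's duration is only known once the next type (c) or (d) action, i.e., the arrival of its (finished-processing) message, is reached.

```python
from typing import List, NamedTuple, Optional, Tuple


class Action(NamedTuple):
    kind: str                      # "a", "b", "c", "d" or "e"
    time: float                    # real time of the action in ex
    msg: Optional[str] = None      # message received (a, b, e) or processed (c)
    dropped: Tuple[str, ...] = ()  # messages removed from queue by pol (c, d)


def transform(actions: List[Action]) -> List[tuple]:
    """Map the actions of one fault-free processor to rt-run events.

    A job ends when the next type (c) or (d) action occurs, i.e., when its
    (finished-processing) message arrives. The timer special case, crashes
    and Byzantine processors are not handled in this sketch.
    """
    events: List[tuple] = []
    open_job: Optional[int] = None   # index of the job whose end is still unknown
    for ac in actions:
        if ac.kind in ("c", "d") and open_job is not None:
            _, msg, begin, _ = events[open_job]
            events[open_job] = ("job", msg, begin, ac.time - begin)  # duration
            open_job = None
        if ac.kind in ("a", "b", "e"):
            events.append(("receive", ac.msg, ac.time))
        if ac.kind == "e":
            events.append(("drop", ac.msg, ac.time))
        for m in ac.dropped:         # drop events right before the new job
            events.append(("drop", m, ac.time))
        if ac.kind in ("a", "c"):
            events.append(("job", ac.msg, ac.time, None))
            open_job = len(events) - 1
    return events


ex = [Action("a", 0.0, "m1"), Action("b", 0.5, "m2"), Action("b", 1.0, "junk"),
      Action("c", 1.5, "m2", ("junk",)), Action("d", 3.0), Action("e", 4.0, "junk2")]
for event in transform(ex):
    print(event)
```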

Crashing processors: When a processor crashes in \(ex\), there is some action \(ac^{last}\) that might execute only part of its state transition sequence and that is followed only by actions with “NOP” transitions. All actions up to \(ac^{last}\) are mapped according to the rules above. If \(ac^{last}\) was a type (a) or (c) action that did not succeed in sending out its (finished-processing) message, we will, for the purposes of the transformation, assume that such a (finished-processing) message with a real-time delay of \(\mu ^-_{(\ell )}\) had been sent; this allows us to construct the corresponding job \(J^{last}\) (see Footnote 6). If \(ac^{last}\) was not a type (a) or (c) action, let \(J^{last}\) be the job corresponding to the last type (a) or (c) action before \(ac^{last}\) (if such an action exists).

Clearly, all actions of this processor in \(ex\) occurring between \(begin(J^{last})\) and \(end(J^{last})\) are (possibly partial) type (b) actions (before the crash) or NOP actions (after the crash). All of these actions are treated as type (b) actions w.r.t. the transformation, i.e., they are transformed into simple receive events. After \(J^{last}\) has finished, all messages still in \(queue\) plus all messages received during \(J^{last}\) are dropped, i.e., a drop event is created in \(ru\) for each of these messages at time \(end(J^{last})\).

Every action after \(end(J^{last})\) on this processor (which must be a NOP action) is treated like a type (e) action: It is mapped to a receive event immediately followed by a drop event.

Byzantine processors: On Byzantine processors, every action in the execution is simply mapped to a corresponding receive event and a zero-time job, sending the same messages and performing the same state transitions. Since jobs on Byzantine nodes do not need to obey any timing restrictions, it is perfectly legal to model them as taking zero time.

5.4 Special case: timer messages

There is a subtle difference between the classic and the real-time model with respect to the \(arrives\_timely(m_t)\) predicate of \(f\)-\(f'\)-\(\rho \): In an rt-run, a timer message \(m_t\) sent during some job \(J\) arrives at the end of the job (\(end(J)\)) if the desired arrival hardware clock time (\(sHC(m_t)\)) occurs while \(J\) is still in progress. On the other hand, in an execution, the timer message always arrives at \(sHC(m_t)\).

For \(T_{C\rightarrow R}\) this means that the transformation rule for type (b) actions changes: If the type (b) action \(ac\) for timer message \(m_t = msg(ac)\) occurs at some time \(t = time(ac)\) while the (finished-processing) message corresponding to the simulated job that sent \(m_t\) is still in transit, then the corresponding receive event \(R\) does not occur at \(t\) but rather at \(t' = time(ac')\), with \(ac'\) denoting the type (c) or (d) action where the (finished-processing) message arrives.

This change ensures that the receive event in the simulated rt-run occurs at the correct time, i.e., no earlier than at the end of the job sending the timer message. One inconsistency still remains, though: The order of the messages in the queue might differ between the simulated queue in the execution (i.e., variable \(queue\)) and the queue in the rt-run constructed by \(T_{C\rightarrow R}\): In the execution, \(m_t\) is added to \(queue\) at time \(t\), whereas in the rt-run, \(m_t\) is added to the real-time queue at time \(t'\). This could make a difference, for example, when another message arrives between \(t\) and \(t'\).

Since \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) “knows” about \(\mathcal {A}\), it is obviously possible for the simulation algorithm to detect such cases and reorder \(queue\) accordingly. We have decided not to include these details in Algorithm 1, since the added complexity might make it more difficult to understand the main structure of the simulation algorithm. For the remainder of this section, we will assume that such a reordering takes place.
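The receive-time adjustment for timer messages and the resulting queue reordering can be sketched in Python as follows. Both helper names are hypothetical, as is the choice to break ties by enqueuing order; the example mirrors the situation described above, where an ordinary message arrives between \(t\) and \(t'\).

```python
from typing import List, Tuple


def rt_receive_time(arrival: float, sender_job_end: float) -> float:
    """Time of the receive event R for a timer message under T_{C->R}.

    arrival:        time(ac) of the type (b) action receiving the timer in ex
    sender_job_end: end of the simulated job that set the timer, i.e., the
                    arrival time of that job's (finished-processing) message
    A timer firing while that message is still in transit is received at the
    end of the sending job; otherwise its receive time is unchanged.
    """
    return max(arrival, sender_job_end)


def reorder_queue(entries: List[Tuple[str, float]]) -> List[str]:
    """Order (message, rt receive time) pairs as in the rt-run's queue.

    sorted() is stable, so ties keep their enqueuing order (an assumption).
    """
    return [m for m, _ in sorted(entries, key=lambda e: e[1])]


# Timer m_t fires at t = 2.0, but the job that set it ends at t' = 2.5;
# an ordinary message m arrives at 2.2, between t and t'.
queue_in_ex = [("m_t", rt_receive_time(2.0, 2.5)), ("m", 2.2)]
print(reorder_queue(queue_in_ex))  # ['m', 'm_t']
```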

5.5 Observations on algorithm \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) and transformation \(T_{C\rightarrow R}\)

The following can be asserted for every fault-free or not-yet-crashed processor:

Observation 6

Every type (c) action has a corresponding type (b) action where the algorithm message being processed in the type (c) action (Line 17) is enqueued (Line 8). More generally, every message removed from \(queue\) by \(pol\) in a type (c) or (d) action has been received earlier by a corresponding type (b) action.

Observation 7

Every type (a) and every type (c) action sending \(\ell \) ordinary messages also sends one (finished-processing) timer message, which arrives \(\tilde{\mu }_{(\ell )}{} := 2\frac{\mu ^+_{(\ell )}\mu ^-_{(\ell )}}{\mu ^+_{(\ell )} + \mu ^-_{(\ell )}}\) hardware clock time units later (Line 19).

Lemma 8

Initially and directly after executing some action \(ac\) with \(proc(ac) = p\), processor \(p\) is in one of two well-defined states:

- State 1 (idle): \(newstate(ac).idle = true\), \(newstate(ac).queue\,= empty\), and there is no (finished-processing) timer message to \(p\) in transit,
- State 2 (busy): \(newstate(ac).idle = false\) and there is exactly one (finished-processing) timer message to \(p\) in transit.

Proof

By induction. Initially (replace \(newstate(ac)\) with the initial state), every processor is in state 1. If a message is received while the processor is in state 1, it is added to the queue. Then, the message is either dropped, causing the processor to stay in state 1 [type (e) action], or the message is processed, \(idle\) is set to \(false\) and a (finished-processing) timer message is sent, i.e., the processor switches to state 2 [type (a) action]. If a message is received during state 2, one of two things can happen:

- The message is a (finished-processing) timer message. If the queue was empty or all messages got dropped (Line 13; recall that \(next = \bot \) implies \(queue = empty\), since we assume a non-idling scheduler), the processor switches to state 1 [type (d) action]. Otherwise, a new (finished-processing) timer message is generated. Thus, the processor stays in state 2 [type (c) action].
- The message is an algorithm message. The message is added to the queue and the processor stays in state 2 [type (b) action]. \(\square \)

The following observation follows directly from this lemma and the design of the algorithm:

Observation 9

Type (a) and (e) actions can only occur in idle state, type (b), (c) and (d) actions only in busy state. Type (a) and (d) actions change the state (from idle to busy and from busy to idle, respectively), all other actions keep the state (see Fig. 3).

Lemma 10

After a type (a) or (c) action \(ac\) sending \(\ell \) ordinary messages occurred at hardware clock time \(T\) on processor \(p\) in \(ex\), the next type (a), (c), (d) or (e) action on \(p\) can occur no earlier than at hardware clock time \(T + \tilde{\mu }_{(\ell )}{}\), when the (finished-processing) message sent by \(ac\) has arrived.

Proof

Since \(ac\) is a type (a) or (c) action, \(newstate(ac).idle = false\), which, by Lemma 8, cannot change until no more (finished-processing) messages are in transit. By Observation 7, this cannot happen earlier than at hardware clock time \(T + \tilde{\mu }_{(\ell )}{}\). Lemma 8 also states that no second (finished-processing) message can be in transit simultaneously.

Thus, between \(T\) and \(T + \tilde{\mu }_{(\ell )}{}\), \(idle = false\) and only algorithm messages arrive at \(p\), which means that only type (b) actions can occur. \(\square \)

Lemma 11

On non-Byzantine processors, there is a one-to-one correspondence between (finished-processing) messages in \(ex\) and jobs in \(ru\): A job \(J\) exists in \(ru\) if, and only if, there is a corresponding (finished-processing) message \(m\) in \(ex\), with \(begin(J) = time(ac)\) of the action \(ac\) sending \(m\) and \(end(J) = time(ac')\) of the action \(ac'\) receiving \(m\).

Proof

(finished-processing) \(\rightarrow \) job: Note that (finished-processing) messages in \(ex\) are only sent in type (a) and (c) actions. \(T_{C\rightarrow R}\) ensures that for both kinds of actions a job exists in \(ru\) that ends exactly at the time at which the (finished-processing) message arrives in \(ex\).

job \(\rightarrow \) (finished-processing): Follows from the fact that, due to the rules of \(T_{C\rightarrow R}\), jobs only exist in \(ru\) if there is a corresponding type (a) or (c) action in \(ex\). These actions send (finished-processing) messages, and the mapping of the job length to the delivery time of the (finished-processing) message ensures that these messages do not arrive until the job has completed. \(\square \)
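On a non-Byzantine processor, the correspondence of Lemma 11 amounts to pairing the send and receive times of each (finished-processing) message. The sketch below uses hypothetical record types `TimerMsg` and `Job`, which are not taken from the paper. It also checks a consequence of Lemma 10: consecutive jobs on one processor never overlap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimerMsg:
    """A (finished-processing) message in ex (hypothetical record)."""
    sent_at: float      # time(ac) of the type (a) or (c) action sending it
    received_at: float  # time(ac') of the action receiving it


@dataclass(frozen=True)
class Job:
    begin: float
    end: float


def jobs_from_timers(timers):
    """Lemma 11 sketch: exactly one job per (finished-processing) message,
    with begin(J) = time(ac) and end(J) = time(ac')."""
    jobs = sorted((Job(t.sent_at, t.received_at) for t in timers),
                  key=lambda job: job.begin)
    # Lemma 10: no type (a)/(c) action before the pending timer arrives,
    # so jobs on the same processor do not overlap.
    for prev, nxt in zip(jobs, jobs[1:]):
        assert prev.end <= nxt.begin, "overlapping jobs contradict Lemma 10"
    return jobs


def timer_for_job(job):
    """Inverse direction of the correspondence."""
    return TimerMsg(job.begin, job.end)


timers = [TimerMsg(2.0, 4.5), TimerMsg(0.0, 2.0), TimerMsg(7.0, 9.0)]
jobs = jobs_from_timers(timers)
# Round trip: job -> timer recovers the original set of timer messages.
assert {timer_for_job(j) for j in jobs} == set(timers)
```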

5.6 Correctness proof (sketch)

This section sketches the proof idea for Theorem 5, following the outline of Fig. 4. Its main purpose is to prepare the reader for the more intricate proof of Theorem 16.

As defined in Theorem 5, let \(\underline{s}= (n, [\underline{\delta }^-, \underline{\delta }^+])\) be a classic system and \(\mathcal {P}\) be a simulation-invariant problem (Cond1). Let \(\mathcal {A}\) be an algorithm solving problem \(\mathcal {P}\) in some real-time system \(s= (n, [\delta ^-_{}, \delta ^+_{}], [\mu ^-_{}, \mu ^+_{}])\) with some scheduling/admission policy \(pol\) under failure model \(f\)-\(f'\)-\(\rho \). Let \(\forall \ell : \delta ^-_{(\ell )} \le \underline{\delta }^-\) and \(\delta ^+_{(\ell )} \ge \underline{\delta }^+\) (Cond2), and \(\forall \ell : \rho \le (\mu ^+_{(\ell )} - \mu ^-_{(\ell )})/(\mu ^+_{(\ell )} + \mu ^-_{(\ell )})\) (Cond3). As shown in Lemma 4, Cond3 ensures that the simulation algorithm can simulate a real-time delay between \(\mu ^-_{(\ell )}\) and \(\mu ^+_{(\ell )}\).

For each execution \(ex\) of \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) in \(\underline{s}\) conforming to failure model \(f\)-\(f'\)-\(\rho \), we create the corresponding rt-run \(ru\) according to transformation \(T_{C\rightarrow R}\). Applying the formal definitions of a valid rt-run and of failure model \(f\)-\(f'\)-\(\rho \), it can be shown that \(ru\) is an admissible rt-run of algorithm \(\mathcal {A}\) in system \(s\).

Since (a) \(ru\) is an admissible rt-run of algorithm \(\mathcal {A}\) in \(s\), and (b) \(\mathcal {A}\) is an algorithm solving \(\mathcal {P}\) in \(s\), it follows that \(ru\) satisfies \(\mathcal {P}\). Choose any st-trace \(tr^{ru}\) of \(ru\) where all state transitions are performed at the beginning of the job. Since \(ru\) satisfies \(\mathcal {P}\), \(tr^{ru} \in \mathcal {P}\). Transformation \(T_{C\rightarrow R}\) ensures that exactly the same state transitions are performed in \(ex\) and \(ru\) (omitting the simulation variables \(queue\) and \(idle\)). Since (i) \(\mathcal {P}\) is a simulation-invariant problem, (ii) \(tr^{ru} \in \mathcal {P}\), and (iii) every st-trace \(tr^{ex}\) of \(ex\) performs the same state transitions on algorithm variables as some \(tr^{ru}\) of \(ru\) at the same time, it follows that \(tr^{ex} \in \mathcal {P}\) and, thus, \(ex\) satisfies \(\mathcal {P}\).

By applying this argument to every admissible execution \(ex\) of \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) in \(\underline{s}\), we see that every such execution satisfies \(\mathcal {P}\). Thus, \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) solves \(\mathcal {P}\) in \(\underline{s}\) under failure model \(f\)-\(f'\)-\(\rho \).

5.7 Generalizing Cond2

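Cond2 can be weakened to \(\delta ^-_{(1)} \le \underline{\delta }^-\wedge \delta ^+_{(1)} \ge \underline{\delta }^+\) by simulating the additional delay with a timer message (see Fig. 6b). This bound, denoted Cond2’, suffices if Cond3 is slightly strengthened as follows (denoted Cond3’): \(\forall \ell : \rho \le (\mu ^+_{(\ell )} - \mu ^-_{(\ell )})/(\mu ^+_{(\ell )} + \mu ^-_{(\ell )})\) and \(\forall \ell > 1: \rho \le \big ((\delta ^+_{(\ell )} - \delta ^+_{(1)}) - (\delta ^-_{(\ell )} - \delta ^-_{(1)})\big )/\big ((\delta ^+_{(\ell )} - \delta ^+_{(1)}) + (\delta ^-_{(\ell )} - \delta ^-_{(1)})\big )\).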

First, note that \(\delta ^+_{(1)} \ge \underline{\delta }^+\Leftrightarrow \forall \ell : \delta ^+_{(\ell )} \ge \underline{\delta }^+\), since \(\delta ^+_{(\ell )}\) is non-decreasing in \(\ell \) (cf. Sect. 4.2). Thus, the generalization mainly allows \(\delta ^-_{(\ell )}\) to be greater than \(\underline{\delta }^-\) for \(\ell > 1\). The message delay uncertainty \(\varepsilon _{(\ell )} = \delta ^+_{(\ell )} - \delta ^-_{(\ell )}\) is non-decreasing in \(\ell \) as well, so \(\varepsilon _{(\ell )}\ge \varepsilon _{(1)}\) holds. The real message delay might be smaller than \(\delta ^-_{(\ell )}\). We can nevertheless ensure that the simulated message delays lie within \([\delta ^-_{(\ell )}, \delta ^+_{(\ell )}]\) by introducing an artificial, additional message delay within the interval \([\delta ^-_{(\ell )} - \delta ^-_{(1)}, \delta ^+_{(\ell )} - \delta ^+_{(1)}]\) upon receiving a message. This interval is non-empty precisely because \(\varepsilon _{(\ell )}\ge \varepsilon _{(1)}\). The restriction on \(\rho \) in Cond3’ ensures that the algorithm can generate such a delay with its drifting hardware clock.
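The interval arithmetic behind the artificial delay can be checked directly. The sketch below assumes hypothetical, linear delay bounds \(\delta ^-_{(\ell )}\) and \(\delta ^+_{(\ell )}\), given as integers (e.g., in microseconds) to avoid rounding. It also assumes a classic system whose delays satisfy Cond2’. For simplicity, the artificial delay is treated here as a whole interval. The algorithm itself uses a single hardware-clock timeout, which Lemma 12 maps into that interval.

```python
from typing import Callable, Tuple

Interval = Tuple[int, int]
Bound = Callable[[int], int]


def artificial_delay(d_minus: Bound, d_plus: Bound, ell: int) -> Interval:
    """[δ-_(ℓ) - δ-_(1), δ+_(ℓ) - δ+_(1)]; non-empty iff ε_(ℓ) >= ε_(1)."""
    lo, hi = d_minus(ell) - d_minus(1), d_plus(ell) - d_plus(1)
    assert lo <= hi, "requires non-decreasing delay uncertainty"
    return lo, hi


def simulated_delay(classic: Interval, d_minus: Bound, d_plus: Bound,
                    ell: int) -> Interval:
    """Range of (real classic delay + artificial delay) for a message with ℓ."""
    c_lo, c_hi = classic
    # Cond2': the classic delays lie within [δ-_(1), δ+_(1)]
    assert d_minus(1) <= c_lo and c_hi <= d_plus(1)
    a_lo, a_hi = artificial_delay(d_minus, d_plus, ell)
    return c_lo + a_lo, c_hi + a_hi


# Hypothetical real-time delay bounds (non-decreasing in ℓ, as is ε_(ℓ)).
def d_minus(ell: int) -> int:
    return 10 + 5 * (ell - 1)


def d_plus(ell: int) -> int:
    return 30 + 10 * (ell - 1)


lo, hi = simulated_delay((12, 28), d_minus, d_plus, ell=3)
print(lo, hi)                                  # 22 48
assert d_minus(3) <= lo and hi <= d_plus(3)    # within [20, 50]
```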

Lemma 12

If Cond3’ holds, \(\tilde{\delta }_{(\ell )}:= 2\frac{(\delta ^+_{(\ell )} - \delta ^+_{(1)})(\delta ^-_{(\ell )} - \delta ^-_{(1)})}{(\delta ^+_{(\ell )} - \delta ^+_{(1)}) + (\delta ^-_{(\ell )} - \delta ^-_{(1)})}\) hardware clock time units correspond to a real-time interval of \([\delta ^-_{(\ell )} - \delta ^-_{(1)}, \delta ^+_{(\ell )} - \delta ^+_{(1)}]\).

Proof

Analogous to Lemma 4. \(\square \)
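Lemma 12 reuses the harmonic-mean construction of Observation 7 for the artificial delay. The sketch below checks this numerically with exact fractions. As a modeling simplification of ours, it assumes that the hardware clock rate stays within \([1-\rho , 1+\rho ]\). The helper names are hypothetical. For a target interval \([lo, hi]\), `max_drift` returns the largest \(\rho \) for which the timeout stays inside that interval under this assumption.

```python
from fractions import Fraction


def harmonic_timeout(lo, hi):
    """Hardware-clock duration 2*hi*lo/(hi + lo) targeting real time [lo, hi]."""
    if lo == hi == 0:
        return Fraction(0)          # no additional delay needed (e.g. ℓ = 1)
    return Fraction(2 * hi * lo, hi + lo)


def max_drift(lo, hi):
    """Largest ρ keeping the timeout inside [lo, hi] (assumed drift model)."""
    return Fraction(hi - lo, hi + lo)


def real_time_range(hw_duration, rho):
    """Real time spanned by hw_duration hardware-clock units when the clock
    rate lies within [1 - rho, 1 + rho]."""
    return hw_duration / (1 + rho), hw_duration / (1 - rho)


# Observation 7 with hypothetical μ- = 4, μ+ = 6.
print(real_time_range(harmonic_timeout(4, 6), max_drift(4, 6)))
# Lemma 12 with hypothetical δ-_(ℓ) - δ-_(1) = 10, δ+_(ℓ) - δ+_(1) = 20.
print(real_time_range(harmonic_timeout(10, 20), max_drift(10, 20)))
```

At the maximal drift, both calls hit the interval endpoints exactly. Any smaller \(\rho \) yields a strictly narrower range inside the interval.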

Adding this delay requires that each algorithm message be wrapped into a simulation message that also carries the value \(\ell \). The right-hand side of Fig. 6 illustrates the principle of this extended algorithm (Algorithm 2), denoted \(\underline{\mathcal {S'}}_{\mathcal {A}, pol, \delta {}, \mu {}}\), and the transformation of an execution of \(\underline{\mathcal {S'}}_{\mathcal {A}, pol, \delta {}, \mu {}}\) into an rt-run.

Interestingly, for \(\underline{\mathcal {S'}}_{\mathcal {A}, pol, \delta {}, \mu {}}\) to work, Cond1 needs to be strengthened as well. Recall that processors can only send messages during an action or during a job, which, in turn, must be triggered by the reception of a message – this is the exact reason why we need input messages to boot the system! This restriction applies to Byzantine processors as well.

Consider Fig. 6b and assume that (1) the first action/job on the first processor boots the system and that (2) the second processor is Byzantine. Note that messages \((m,2)\) (in the execution) and \(m\) (in the rt-run) are received at different times. Since Byzantine processors can make arbitrary state transitions and send arbitrary messages, in the classic model, the second processor could send out a message \(m'\) right after receiving \((m,2)\). Let us assume that this happens, and let us call this execution \(ex'\).

Mapping \(ex'\) to an rt-run \(ru'\), however, causes a problem: We cannot map \(m'\) to \(ru'\), since, in the real-time model, the second processor has not received any message yet. Thus, it has not booted: there is no corresponding job that could send \(m'\) (see Footnote 7).

Note that this is only an issue during booting: Afterwards, arbitrary jobs could be constructed on the Byzantine processor due to its ability to send timer messages to itself. Since booting is modeled through input messages, we strengthen Cond1 as follows:

-

Cond1’: Problems must be simulation-invariant, and also invariant with respect to input messages on Byzantine processors.

This allows us to map \(ex'\) to an rt-run \(ru'\) in which the second processor receives an input message right before sending \(m'\).

5.8 Transformation \(T_{C\rightarrow R}\) revisited

\(\underline{\mathcal {S'}}_{\mathcal {A}, pol, \delta {}, \mu {}}\) adds a layer: The actions of \(\underline{\mathcal {S}}_{\mathcal {A}, pol, \mu {}}\) previously triggered by incoming ordinary messages are now triggered by an (additional-delay, \(m\)) message instead. Two new types of actions, (f) and (g), can occur. A type (f) action receives an \((m, \ell )\) pair and sends an (additional-delay, \(m\)) message (possibly with delay \(0\), if \(\ell = 1\)). A type (g) action ignores a malformed message. For example, the first action on the second processor in Fig. 6b would be a type (f) action. Since \(\underline{\mathcal {S'}}_{\mathcal {A}, pol, \delta {}, \mu {}}\) modifies neither \(queue\) nor \(idle\), Observations 6, 7 and 9 as well as Lemmas 8, 10 and 11 still hold.
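The extra layer of \(\underline{\mathcal {S'}}_{\mathcal {A}, pol, \delta {}, \mu {}}\) can be pictured as a thin dispatcher in front of the idle/busy logic. In the hypothetical Python sketch below, `Wrapped` models an \((m, \ell )\) pair and `AdditionalDelay` models the (additional-delay, \(m\)) timer message. `extra_delay` and `send_timer` are assumed callbacks. The sketch treats anything other than a pair with a positive integer \(\ell \) as malformed; this is our simplification, and Algorithm 2's actual validity check may differ.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Wrapped:
    payload: Any   # algorithm message m
    ell: int       # number of ordinary messages sent by the sending action


@dataclass(frozen=True)
class AdditionalDelay:
    payload: Any   # (additional-delay, m) timer message to self


def on_network_msg(msg: Any,
                   extra_delay: Callable[[int], float],
                   send_timer: Callable[[Any, float], None]) -> str:
    """Handle an incoming network message; returns the action type."""
    if isinstance(msg, Wrapped) and isinstance(msg.ell, int) and msg.ell >= 1:
        # Type (f): wait the hardware-clock timeout for ℓ (0 if ℓ = 1).
        # When the timer fires, m is handed to the unchanged idle/busy
        # logic, where it triggers a type (a), (b) or (e) action.
        send_timer(AdditionalDelay(msg.payload), extra_delay(msg.ell))
        return "f"
    return "g"   # Type (g): malformed message, ignored


sent = []
kind = on_network_msg(Wrapped("m", 3),
                      extra_delay=lambda ell: 0 if ell == 1 else 5,
                      send_timer=lambda m, d: sent.append((m, d)))
print(kind, sent)
```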

In the transformation, actions of type (f) and (g) are ignored. This also holds for NOP actions on crashed processors that would have been type (f) or (g) actions before the crash. Apart from that, the transformation rules of Sect. 5.3 still apply, with the following exceptions. Let a valid ordinary message be a message that would trigger Line 31 in Algorithm 2 after reaching a fault-free recipient (this includes all messages sent by non-Byzantine processors).

-

1.

Valid ordinary messages received by a fault-free processor are “unwrapped”:

-

Sending side: A message \((m, \ell )\) in \(trans(ac)\) in \(ex\) is mapped to simply \(m\) in \(trans(J)\) of the corresponding job in \(ru\).

-

Receiving side: A message (additional-delay, \(m\)) in \(msg(ac)\) is replaced by \(m\) in \(msg(JD)\) of the corresponding job or drop event \(JD\) in \(ru\).

Note that \(T_{C\rightarrow R}\) removes the reception of \((m, \ell )\) and the sending of (additional-delay, \(m\)), since type (f) actions are ignored. In effect, the transformation condenses the chain \(m \rightarrow (m, \ell ) \rightarrow \) (additional-delay, \(m\)) \(\rightarrow m\) into a simple transmission of message \(m\) (cf. Fig. 7, the message from \(p_2\) to \(p_1\)).


-

2.

Valid ordinary messages received by a crashing processor \(p\) are unwrapped as well. On the sending side, \((m, \ell )\) is replaced by \(m\). As long as the receiving processor \(p\) has not crashed, the remainder of the transformation does not differ from the fault-free case. After (or during) the crash, the receiving action (which would otherwise have been of type (f)) no longer generates an (additional-delay) timer message. In this case, we add a receive event and a drop event for message \(m\) at \(t + \delta ^-_{(\ell )}\) on \(p\), with \(t\) denoting the sending time of the message. Analogously to Sect. 5.3, the drop event happens at the end of \(J^{last}\) instead if the arrival time \(t + \delta ^-_{(\ell )}\) lies between \(begin(J^{last})\) and \(end(J^{last})\). Since type (f) actions are ignored in the transformation, we have effectively replaced the transmission of \((m, \ell )\) in \(ex\), taking between \(\delta ^-_{(1)}\) and \(\delta ^+_{(1)}\) time units, with a transmission of \(m\) in \(ru\), taking \(\delta ^-_{(\ell )}\) time units.

-

3.

Valid ordinary messages received by some Byzantine processor \(p\) are unwrapped as well. Note, however, that on \(p\) all actions are transformed to (zero-time) jobs. There is no separation into types (a)–(g), since the processor does not need to execute the correct algorithm. In this case, the “unwrapping” just substitutes \((m, \ell )\) with \(m\) on both the sender and the receiver side. It also adds a receiving job \(J'_R\) (and a matching receive event) for \(m\) with a NOP transition sequence on the Byzantine processor at \(t + \delta ^-_{(\ell )}\), with \(t\) denoting the sending time of the message. Let \(ac_R\) be the action receiving the message in \(ex\), and let \(J_R\) and \(R_R\) be the corresponding job and receive event in \(ru\). Their triggering messages \(msg(J_R)\) and \(msg(R_R)\) are changed to some new dummy timer message, which is sent by adding it to some earlier job on \(p\). If \(R_R\) is the first receive event on \(p\), Cond1’ instead allows us to insert a new input message into \(ru\) that triggers \(R_R\). Adding \(J'_R\) guarantees that the message delays of all messages stay between \(\delta ^-_{(\ell )}\) and \(\delta ^+_{(\ell )}\) in \(ru\). Keeping \(J_R\), on the other hand, is required to ensure that any (Byzantine) actions performed by \(ac_R\) can be mapped to the rt-run and happen at the same time.

-

4.

Invalid ordinary messages (which can only be sent by Byzantine processors) are removed from the transition sequence of the sending job. To ensure message consistency, we also need to make sure that the message does not appear on the receiving side: If the receiving processor is non-Byzantine, a type (g) action is triggered on the receiver. Since type (g) actions are not mapped to the rt-run, we are done. If the receiver is Byzantine, let \(J_R\) be the job corresponding to \(ac_R\), the action receiving the message. As in rule 3, we replace \(msg(J_R)\) (and the message of the corresponding receive event) with a timer message sent by an earlier job or with an additional input message.
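The four cases above can be summarized as a dispatch on message validity and receiver status. The hypothetical Python sketch below returns the applicable rule number. For rule 2, it also computes the receive and drop times that \(T_{C\rightarrow R}\) inserts for a crashed receiver. Two details are our assumptions: \(J^{last}\) is represented as a (begin, end) pair, and its boundaries are treated as inclusive.

```python
from enum import Enum
from typing import Optional, Tuple


class Receiver(Enum):
    FAULT_FREE = "fault-free"
    CRASHING = "crashing"
    BYZANTINE = "Byzantine"


def rule_for(valid: bool, receiver: Receiver) -> int:
    """Which rule of the revised T_{C->R} handles an ordinary message."""
    if not valid:
        return 4  # only Byzantine senders produce invalid messages
    return {Receiver.FAULT_FREE: 1,
            Receiver.CRASHING: 2,
            Receiver.BYZANTINE: 3}[receiver]


def crash_receive_and_drop(t_send: float, d_minus_ell: float,
                           last_job: Optional[Tuple[float, float]]):
    """Rule 2 after the crash: receive event at t + δ-_(ℓ); the drop event
    moves to end(J^last) if the arrival falls inside J^last."""
    arrival = t_send + d_minus_ell
    if last_job is not None and last_job[0] <= arrival <= last_job[1]:
        return arrival, last_job[1]
    return arrival, arrival


print(rule_for(True, Receiver.CRASHING))                   # 2
print(crash_receive_and_drop(10, 5, last_job=(12, 20)))    # (15, 20)
```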