Abstract

Given finite i.i.d. samples in a Hilbert space with zero mean and trace-class covariance operator \(\Sigma \), the problem of recovering the spectral projectors of \(\Sigma \) naturally arises in many applications. In this paper, we consider the problem of finding distributional approximations of the spectral projectors of the empirical covariance operator \({\hat{\Sigma }}\), and offer a dimension-free framework where the complexity is characterized by the so-called relative rank of \(\Sigma \). In this setting, novel quantitative limit theorems and bootstrap approximations are presented subject to mild conditions in terms of moments and spectral decay. In many cases, these even improve upon existing results in a Gaussian setting.

1 Introduction

Let X be a random variable in a separable Hilbert space \(\mathcal {H}\) with expectation zero and covariance operator \(\Sigma = \mathbb {E}X \otimes X\). A fundamental problem in high-dimensional statistics and statistical learning is dimensionality reduction, that is, one seeks to reduce the dimension of X while keeping as much information as possible. Letting \((\lambda _j)\) be the sequence of positive eigenvalues of \(\Sigma \) (in non-increasing order) and \((u_j)\) a corresponding orthonormal system of eigenvectors, solutions to this problem are given by the projections \(P_{\mathcal {J}}X\) with spectral projector \(P_{\mathcal {J}}=\sum _{j\in \mathcal {J}}u_j\otimes u_j\) and index set \(\mathcal {J}\).

In statistical applications, the distribution of X, and thus its covariance structure, is unknown. Instead, one often observes a sample \(X_1,\dots ,X_n\) of n independent copies of X, and the problem is to find an estimator of \(P_{\mathcal {J}}\). The idea of principal component analysis (PCA) is to solve this problem by first estimating \(\Sigma \) by the empirical covariance operator \(\hat{\Sigma } = n^{-1}\sum _{i = 1}^n X_i \otimes X_i\), and then constructing the empirical counterpart \({\hat{P}}_{\mathcal {J}}\) of \(P_{\mathcal {J}}\) based on \({\hat{\Sigma }}\) (see Sect. 2.2.1 for a precise definition). Hence, a key problem is to control and quantify the distance between \(\hat{P}_{\mathcal {J}}\) and \(P_{\mathcal {J}}\).
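As a finite-dimensional illustration of this estimation pipeline, the following Python sketch takes \(\mathcal {H}=\mathbb {R}^d\) and a hypothetical diagonal covariance with polynomially decaying eigenvalues. It computes \(\hat{\Sigma }\), the empirical projector \(\hat{P}_{\mathcal {J}}\), and the Hilbert–Schmidt distance \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2\), which in finite dimensions is the Frobenius norm. It is only a sketch under these assumptions, and the helper name `spectral_projector` is ours.

```python
import numpy as np

def spectral_projector(S, J):
    """Orthogonal projector onto the eigenvectors of the symmetric matrix S
    whose eigenvalues, sorted in non-increasing order, have 1-based index in J."""
    w, V = np.linalg.eigh(S)            # eigh returns eigenvalues in ascending order
    V = V[:, np.argsort(w)[::-1]]       # reorder columns to non-increasing eigenvalues
    U = V[:, [j - 1 for j in J]]        # select u_j for j in J (1-based -> 0-based)
    return U @ U.T                      # sum of u_j (x) u_j over j in J

rng = np.random.default_rng(0)
d, n = 20, 500
lam = 1.0 / np.arange(1, d + 1) ** 2                 # hypothetical eigenvalues of Sigma
Sigma = np.diag(lam)
X = rng.standard_normal((n, d)) * np.sqrt(lam)       # n centered samples with covariance Sigma
Sigma_hat = X.T @ X / n                              # empirical covariance (known zero mean)

J = [1, 2]                                           # index set J = {1, 2}
P = spectral_projector(Sigma, J)
P_hat = spectral_projector(Sigma_hat, J)
hs_dist = np.linalg.norm(P_hat - P, "fro")           # Hilbert-Schmidt (Frobenius) norm
print(f"||P_hat - P||_2 = {hs_dist:.4f}")
```

Replacing `J` by, say, `[5]` gives the single-projector case. Since \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2^2 = 2\vert \mathcal {J}\vert - 2\,{\text {tr}}(\hat{P}_{\mathcal {J}}P_{\mathcal {J}})\), the distance never exceeds \(\sqrt{2\vert \mathcal {J}\vert }\).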

Over the past decades, an extensive body of literature has evolved around this problem; see e.g. Fan et al. [13], Johnstone and Paul [24], Horváth and Kokoszka [18], Schölkopf and Smola [45], Jolliffe [23] for some overviews. A traditional approach for studying the distance between \(\hat{P}_{\mathcal {J}}\) and \(P_{\mathcal {J}}\) is to control a norm of the difference between the empirical covariance operator \(\hat{\Sigma }\) and the population covariance operator \(\Sigma \). Once this has been established, one may deduce bounds for \(\hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\) from inequalities such as the Davis–Kahan inequality; see for instance Hsing and Eubank [16] and Yu et al. [52], as well as Cai and Zhang [9] and Jirak and Wahl [25] for some recent results and extensions. However, for a more precise statistical analysis, fluctuation results such as limit theorems or bootstrap approximations are much more desirable.

The more recent works by Koltchinskii and Lounici [27,28,29] (and related works) are of particular interest here. Among other things, they provide the leading order of the expected squared Hilbert–Schmidt distance \(\mathbb {E}\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2^2\) and a precise, non-asymptotic analysis of distributional approximations of \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2^2\) in terms of Berry–Esseen type bounds, in a Gaussian setup. Some extensions and related questions are discussed in Löffler [32], Koltchinskii [31], Koltchinskii et al. [30]. However, as noted in Naumov et al. [39], these results have some limitations, and bootstrap approximations may be more desirable and flexible. Again in a purely Gaussian setup, Naumov et al. [39] present a bootstrap procedure with accompanying bounds that alleviates some of the problems attached to limit distributions for inferential purposes. Let us point out, though, that from a mathematical point of view the results of Koltchinskii and Lounici [29] and Naumov et al. [39] are somewhat complementary. More precisely, there are scenarios where the bound for the bootstrap approximation in Theorem 2.1 of Naumov et al. [39] fails (meaning that it only yields a triviality), whereas the bound in Theorem 6 of Koltchinskii and Lounici [29] does not, and vice versa; see Sect. 5 for some examples and further discussion. Limit theorems and bootstrap approximations have also been broadly investigated for eigenvalues and related quantities, see for instance Cai et al. [7], Yao and Lopes [51], Lopes et al. [33], Jiang and Bai [20], Liu et al. [34].

The aim of this work is to provide quantitative bounds for both distributional (e.g. CLTs) and bootstrap approximations, subject to comparatively mild conditions in terms of moments and spectral decay. Concerning the latter, our results display a certain invariance, being largely unaffected by polynomial, exponential (or even faster) decay.

To be more specific, let us briefly discuss the two main previous approaches used in Koltchinskii and Lounici [29] and Naumov et al. [39]. The Berry–Esseen type bounds of Koltchinskii and Lounici [29] rely on classical perturbation (Neumann) series, an application of the isoperimetric inequality for (Hilbert space-valued) Gaussian random variables, and the classical Berry–Esseen bound for independent real-valued random variables. As a consequence, Theorem 6 in Koltchinskii and Lounici [29] yields a Berry–Esseen type bound for the distributional closeness of \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2^2\) (appropriately normalized) and a standard Gaussian random variable. In contrast, Naumov et al. [39] derive novel comparison and anti-concentration results for certain quadratic Gaussian forms, allowing them to directly compare the random variables in question and bypassing any argument involving a limit distribution. They do, however, rely on similar perturbation and concentration results as Koltchinskii and Lounici [29]. Finally, let us mention that both works only consider the case of single spectral projectors (meaning that \(\mathcal {J}\) is the index set of one eigenvalue) and heavily rely on the assumption that X is Gaussian.

Removing (sub-)Gaussianity also means that the isoperimetric inequality, the key ingredient of the above approach, is no longer available. We therefore take a different route, inspired by the relative rank approach developed in Jirak [21], Jirak and Wahl [26], Jirak and Wahl [25], Reiss and Wahl [43], Wahl [49], which consists of the following three key ingredients:

- Relative perturbation bounds: Employing the aforementioned concept of the relative rank, we establish general perturbation results for \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2\). These results may be of independent interest.

- Fuk–Nagaev type inequalities: We formulate general Fuk–Nagaev type inequalities for sums of independent random operators that allow us to replace the assumption of (sub-)Gaussianity by weaker moment assumptions. Together with the relative perturbation bounds, this leads to approximations for \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2\) (valid with high probability) in regimes that appeared to be out of reach for previous approaches based on absolute perturbation theory.

- Bounds for Wasserstein and uniform distance for Hilbert space-valued random variables: The last key ingredient consists of general bounds for differences of probability measures on Hilbert spaces. Together with the above expansions and concentration inequalities, these permit us to obtain novel quantitative limit theorems and bootstrap approximations. Moment conditions are expressed in terms of the Karhunen–Loève coefficients of X.

To briefly demonstrate the flavour of our results, let us present two examples where the eigenvalues \(\lambda _j\) of \(\Sigma \) have a rather opposing behaviour. The first result deals with polynomially decaying eigenvalues, a common situation in statistics and machine learning (cf. Bartlett et al. [6], Fischer and Steinwart [14], Hall and Horowitz [17]). For single projectors (\(\mathcal {J}= \{J\}\)), subject to mild moment conditions on the Karhunen–Loève coefficients of X, we show that

for all \(J\ge 1\), where \(L_{\mathcal {J}}Z\) is an appropriate Gaussian random variable. Key points here are the mild moment conditions and the explicit dependence on J, which is optimal up to \(\log \)-factors. This result, among others, is novel even for the Gaussian case. For a precise statement, see Sect. 5.1.2.

The second case deals with a pervasive factor like structure, a popular model in econometrics and finance (cf. Fan et al. [13], Bai [2], Stock and Watson [47]). In this case, again subject to mild moment conditions for the Karhunen–Loève coefficients of X, we have

for all \(J\ge 1\), where \(L_{\mathcal {J}}Z\) is an appropriate Gaussian random variable. As before, we are not aware of a similar result even in a Gaussian setup. For a precise statement, see Sect. 5.2.1.

This work is structured as follows. We first introduce some notation and establish a number of preliminary results in Sects. 2.1–2.3. Bounds for quantitative limit theorems are then presented in Sect. 3 for both the uniform and the Wasserstein distance, whereas Sect. 4 contains accompanying results for a suitable bootstrap approximation. Finally, we discuss some key models from the literature and provide a comparison to previous results in Sect. 5. Proofs are given in the remaining Sect. 6.

2 Preliminaries

2.1 Basic notation

We write \(\lesssim \), \(\gtrsim \) and \(\asymp \) to denote (two-sided) inequalities involving a multiplicative constant. If the involved constant depends on some parameters, say p, then we write \(\lesssim _p\), \(\gtrsim _p\) and \(\asymp _p\). For \(a,b\in \mathbb {R}\), we write \(a \wedge b = \min (a,b)\) and \(a \vee b = \max (a,b)\). Given a subset \(\mathcal {J}\) of the index set (in most cases \(\mathbb {N}\)), we denote by \(\mathcal {J}^c\) its complement in the index set. Given a (real) Hilbert space \(\mathcal {H}\), we always write \(\Vert \cdot \Vert = \Vert \cdot \Vert _{\mathcal {H}}\) for the corresponding norm. For a bounded linear operator A and \(q\in [1,\infty ]\), we denote by \(\Vert A\Vert _q\) the Schatten q-norm. In particular, \(\Vert A\Vert _2\) denotes the Hilbert–Schmidt norm, \(\Vert A\Vert _1\) the nuclear norm and \(\Vert A\Vert _{\infty }\) the operator norm. For a self-adjoint compact operator A mapping \(\mathcal {H}\) into itself, we write |A| for the absolute value of A and \(|A|^{1/2}\) for the positive self-adjoint square-root of |A|. For a random variable X, we write \(\overline{X} = X - \mathbb {E}X\).

2.2 Tools from perturbation theory

In this section, we present our underlying perturbation bounds. Instead of applying standard perturbation theory, we make use of recent improvements from Jirak and Wahl [25] and Wahl [49], adapted for our purposes. Proofs are deferred to Sect. 6.

2.2.1 Further notation

Throughout this section, \(\Sigma \) denotes a positive self-adjoint compact operator on the separable Hilbert space \(\mathcal {H}\) (in applications, it will be the covariance operator of the random vector X with values in \(\mathcal {H}\)). By the spectral theorem, there exists a sequence \(\lambda _1\ge \lambda _2\ge \dots >0\) of positive eigenvalues (which is either finite or converges to zero) together with an orthonormal system of eigenvectors \(u_1,u_2,\dots \) such that \(\Sigma \) has the spectral representation

$$\begin{aligned} \Sigma =\sum _{j\ge 1}\lambda _jP_j \end{aligned}$$

with rank-one projectors \(P_j=u_j\otimes u_j\), where \((u\otimes v)x=\langle v,x\rangle u\), \(x\in \mathcal {H}\). Without loss of generality, we shall assume that the eigenvectors \(u_1,u_2,\dots \) form an orthonormal basis of \(\mathcal {H}\) such that \(\sum _{j\ge 1}P_j=I\). For \(1\le j_1\le j_2\le \infty \), we consider an interval of the form \({\mathcal {J}}=\{j_1,\dots ,j_2\}\) (with \(\mathcal {J}=\{j_1,j_1+1,\dots \}\) if \(j_2=\infty \)). We write

$$\begin{aligned} P_{\mathcal {J}}=\sum _{j\in \mathcal {J}}P_j,\qquad P_{\mathcal {J}^c}=I-P_{\mathcal {J}}=\sum _{j\notin \mathcal {J}}P_j \end{aligned}$$

for the orthogonal projections onto the direct sum of the eigenspaces of \(\Sigma \) corresponding to the eigenvalues \(\lambda _{j_1},\dots ,\lambda _{j_2}\), and onto its orthogonal complement, respectively. Moreover, let
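In finite dimensions (\(\mathcal {H}=\mathbb {R}^d\)), the convention \((u\otimes v)x=\langle v,x\rangle u\) is simply the outer product \(uv^\top \). The NumPy sketch below, using a hypothetical spectrum and a random orthonormal basis, assembles \(\Sigma \) from the rank-one projectors \(P_j\) and forms \(P_{\mathcal {J}}\) as a sum of the \(P_j\) with \(P_{\mathcal {J}^c}=I-P_{\mathcal {J}}\). The helper name `tensor` is ours.

```python
import numpy as np

def tensor(u, v):
    """(u ⊗ v) as a matrix: (u ⊗ v) x = <v, x> u, i.e. the outer product u v^T."""
    return np.outer(u, v)

rng = np.random.default_rng(1)
d = 5
lam = np.array([5.0, 3.0, 2.0, 1.0, 0.5])            # hypothetical eigenvalues, non-increasing
U, _ = np.linalg.qr(rng.standard_normal((d, d)))     # columns: an orthonormal basis u_1, ..., u_d
P = [tensor(U[:, j], U[:, j]) for j in range(d)]     # rank-one projectors P_j = u_j ⊗ u_j
Sigma = sum(lam_j * P_j for lam_j, P_j in zip(lam, P))  # spectral representation of Sigma

J = [2, 3]                                           # J = {j1, ..., j2} with j1 = 2, j2 = 3
P_J = sum(P[j - 1] for j in J)                       # projector onto the eigenspaces in J
P_Jc = np.eye(d) - P_J                               # projector onto the orthogonal complement
```

Because the \(u_j\) form a basis here, \(\sum _j P_j=I\) holds exactly, and \(P_{\mathcal {J}}\) commutes with \(\Sigma \).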

be the reduced ‘outer’ resolvent of \({\mathcal {J}}\). Finally, let \(g_\mathcal {J}\) be the gap between the eigenvalues with indices in \({\mathcal {J}}\) and the eigenvalues with indices not in \({\mathcal {J}}\), defined by

$$\begin{aligned} g_{\mathcal {J}}=\min (\lambda _{j_1-1}-\lambda _{j_1},\lambda _{j_2}-\lambda _{j_2+1}) \end{aligned}$$

for \(j_1>1\) and \(j_2<{\text {dim}} \mathcal {H}\) and with corresponding changes otherwise. If \(\mathcal {J}= \{j\}\) is a singleton, then we also write \(g_j\) instead of \( g_{\mathcal {J}}\).
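The gap can be computed directly from its verbal definition. The sketch below assumes a finite spectrum and 1-based indices; the function name is ours. It takes the minimum distance between eigenvalues inside and outside \(\mathcal {J}\). For a non-increasing sequence this reduces to the two neighbouring differences at \(j_1\) and \(j_2\), and distances between eigenvalues inside \(\mathcal {J}\) do not enter.

```python
import numpy as np

def gap(lam, j1, j2):
    """g_J for J = {j1, ..., j2} (1-based): the smallest distance between an
    eigenvalue with index in J and an eigenvalue with index outside J."""
    lam = np.asarray(lam, dtype=float)
    inside = lam[j1 - 1:j2]
    outside = np.concatenate([lam[:j1 - 1], lam[j2:]])
    if outside.size == 0:
        return np.inf  # J contains every index, so there is no gap to measure
    return float(np.min(np.abs(inside[:, None] - outside[None, :])))

print(gap([5.0, 3.0, 2.5, 1.0], 2, 3))  # J = {2, 3}; prints 1.5
```

In the example, the difference \(3-2.5\) within \(\mathcal {J}\) is ignored, and the gap is \(\min (5-3,\,2.5-1)=1.5\).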

Let \(\hat{\Sigma }\) be another positive self-adjoint compact operator on \(\mathcal {H}\) (in applications, it will be the empirical covariance operator). We consider \(\hat{\Sigma }\) as a perturbed version of \(\Sigma \) and write \(E = \hat{\Sigma }-\Sigma \) for the (additive) perturbation. Again, there exists a sequence \(\hat{\lambda }_1\ge \hat{\lambda }_2\ge \dots \ge 0\) of eigenvalues together with an orthonormal system of eigenvectors \(\hat{u}_1,\hat{u}_2,\dots \) such that we can write

$$\begin{aligned} \hat{\Sigma }=\sum _{j\ge 1}\hat{\lambda }_j\hat{P}_j \end{aligned}$$

with \(\hat{P}_j=\hat{u}_j\otimes \hat{u}_j\). We shall also assume that the eigenvectors \(\hat{u}_1,\hat{u}_2,\dots \) form an orthonormal basis of \(\mathcal {H}\) such that \(\sum _{j\ge 1}{\hat{P}}_j=I\). We write

$$\begin{aligned} \hat{P}_{\mathcal {J}}=\sum _{j\in \mathcal {J}}\hat{P}_j,\qquad \hat{P}_{\mathcal {J}^c}=I-\hat{P}_{\mathcal {J}}=\sum _{j\notin \mathcal {J}}\hat{P}_j \end{aligned}$$

for the orthogonal projections onto the direct sum of the eigenspaces of \({\hat{\Sigma }}\) corresponding to the eigenvalues \(\hat{\lambda }_{j_1},\dots ,\hat{\lambda }_{j_2}\), and onto its orthogonal complement, respectively.

2.2.2 Main perturbation bounds

Let us now present our main perturbation bound. The following quantity will play a crucial role

where

is the positive self-adjoint square-root of \(\vert R_{\mathcal {J}^c}\vert \). In the special case that \({\mathcal {J}}=\{j\}\) is a singleton, it has been introduced in Wahl [49]. Moreover, for a Hilbert–Schmidt operator A on \(\mathcal {H}\) we define

The following result presents a linear perturbation expansion with remainder expressed in terms of \(\delta _{\mathcal {J}}(E)\).

Proposition 1

We have

and

Using the identities \(P_{\mathcal {J}}-{\hat{P}}_\mathcal {J}={\hat{P}}_{\mathcal {J}^c}-P_{\mathcal {J}^c}\) and \(L_{\mathcal {J}}E=-L_{\mathcal {J}^c}E\), it is possible to replace \(\delta _{\mathcal {J}}\) by \(\min (\delta _{\mathcal {J}},\delta _{\mathcal {J}^c})\) in Proposition 1, at least for \(j_1=1\). In the latter case, we implicitly assume that \(\mathcal {J}\) is chosen such that \(\delta _{\mathcal {J}}\) is equal to \(\min (\delta _{\mathcal {J}},\delta _{\mathcal {J}^c})\) in what follows.

Clearly, we can bound \(\delta _{\mathcal {J}}\le \Vert E\Vert _\infty /g_{\mathcal {J}}\), in which case the inequality (2.3) turns into a standard perturbation bound, cf. Lemma 2 in Jirak and Wahl [25] or Equation (5.17) in Reiss and Wahl [43].

Corollary 1

We have

For our next consequence (used in the bootstrap approximations), let \({\tilde{\Sigma }}\) be a third positive self-adjoint compact operator on \(\mathcal {H}\). As above, we write \({\tilde{E}}={\tilde{\Sigma }}-\Sigma \) and \(\tilde{P}_{\mathcal {J}}\) for the orthogonal projection onto the direct sum of the eigenspaces of \({\tilde{\Sigma }}\) corresponding to the eigenvalues with indices in \(\mathcal {J}\).

Corollary 2

We have

The dependence on \(\min (\vert {\mathcal {J}}\vert ,\vert {\mathcal {J}}^c\vert )\) in (2.4) and Corollaries 1 and 2 can be further improved by using the first inequality in Lemma 10 below (instead of the second one). Since this leads to additional quantities that have to be controlled, such improvements are not pursued here.

2.3 Tools from probability

2.3.1 Metrics for convergence of laws

In this section, we provide several upper bounds for the uniform metric and the 1-Wasserstein metric. The propositions are proved in Sect. 6.2. For two real-valued random variables X, Y, the uniform (or Kolmogorov) metric is defined by

Moreover, for two (induced) probability measures \(\mathbb {P}_X,\mathbb {P}_Y\), let \(\mathcal {L}(\mathbb {P}_X,\mathbb {P}_Y)\) be the set of all probability measures (couplings) with marginals \(\mathbb {P}_X\) and \(\mathbb {P}_Y\). Then the 1-Wasserstein metric is defined as the minimal \(L^1\)-distance over all couplings, that is,

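For empirical distributions on the real line, both metrics are easy to evaluate. The NumPy sketch below is illustrative only. It computes the uniform distance between two empirical distribution functions and the 1-Wasserstein distance between two empirical measures with the same number of atoms, using the standard fact that on \(\mathbb {R}\) the optimal coupling pairs the order statistics. The function names are ours. If SciPy is available, the statistic of `scipy.stats.ks_2samp` and `scipy.stats.wasserstein_distance` should give the same values.

```python
import numpy as np

def kolmogorov_distance(x, y):
    """Uniform (Kolmogorov) distance sup_t |F_x(t) - F_y(t)| between the
    empirical distribution functions of the samples x and y."""
    x, y = np.sort(np.asarray(x, float)), np.sort(np.asarray(y, float))
    grid = np.concatenate([x, y])   # both CDFs are step functions jumping only here
    F_x = np.searchsorted(x, grid, side="right") / x.size
    F_y = np.searchsorted(y, grid, side="right") / y.size
    return float(np.max(np.abs(F_x - F_y)))

def wasserstein1(x, y):
    """1-Wasserstein distance between two empirical measures on R with the same
    number of atoms: the optimal coupling pairs the order statistics."""
    x, y = np.sort(np.asarray(x, float)), np.sort(np.asarray(y, float))
    if x.size != y.size:
        raise ValueError("this shortcut needs samples of equal size")
    return float(np.mean(np.abs(x - y)))

rng = np.random.default_rng(3)
a, b = rng.standard_normal(1000), rng.standard_normal(1000) + 0.1
print(kolmogorov_distance(a, b), wasserstein1(a, b))
```

These are plug-in versions of the population metrics defined above, evaluated for two samples instead of two laws.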
Setting 1

Let T be a real-valued random variable. Moreover, let \(Y_1,\dots ,Y_n\) be independent random variables taking values in a separable Hilbert space \(\mathcal {H}\) satisfying \(\mathbb {E}Y_i=0\) and \(\mathbb {E}\Vert Y_i\Vert ^q < \infty \) for some \(q > 2\). Set

Let \(\lambda _1(\Psi )\ge \lambda _2(\Psi )\ge \dots >0\) be the positive eigenvalues of \(\Psi \) (each repeated according to its multiplicity). Moreover, let Z be a centered Gaussian random variable in \(\mathcal {H}\) with covariance operator \(\Psi \), that is, a Gaussian random variable with \(\mathbb {E}Z = 0\) and \(\mathbb {E}Z \otimes Z=n^{-1}\sum _{i = 1}^n \mathbb {E}Y_i \otimes Y_i\).

In applications T and S will correspond to (scaled or bootstrap versions of) \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _2^2\) and its approximation \(\Vert L_{\mathcal {J}}E\Vert _2^2\), respectively.

Our first result deals with the 1-Wasserstein distance between T and \(\Vert Z\Vert ^2\).

Proposition 2

Consider Setting 1 with \(q \in (2,3]\). Assume \(\vert T\vert \le C_T\) almost surely, and \(\sum _{i = 1}^n \mathbb {E}\Vert Y_i\Vert ^r \le n^{r/2}\) for \(r \in \{2,q\}\). Then, for all \(s > 0\) and \(u>0\),

In connection to the uniform metric, the following quantities and relations will be crucial:

where we also used that \(\mathbb {E}(G^2-1)^2=2\) and \(\mathbb {E}(G^2-1)^3=8\) for \(G \sim \mathcal {N}(0,1)\). By monotonicity of the Schatten norms, we have \(C\lesssim B\lesssim A\).
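The constants 2 and 8 are the second and third central moments of a \(\chi ^2_1\) variable. The short Python check below, our own illustration, recovers them from the Gaussian moments \(\mathbb {E}G^{2i}=(2i-1)!!\). It also records a standard fact that may help when reading (2.5): if Z is centered Gaussian with covariance eigenvalues \(\mu _k\), then \(\Vert Z\Vert ^2-\mathbb {E}\Vert Z\Vert ^2=\sum _k\mu _k(G_k^2-1)\) with independent standard normal \(G_k\). Hence the variance of \(\Vert Z\Vert ^2\) is \(2\Vert \Psi \Vert _2^2\) and its third central moment is \(8\Vert \Psi \Vert _3^3\).

```python
from math import comb, prod

def gaussian_moment(k):
    """E G^k for G ~ N(0,1): 0 for odd k and (k-1)!! = (k-1)(k-3)...1 for even k."""
    if k % 2:
        return 0
    return prod(range(k - 1, 0, -2))  # empty product (k = 0) equals 1

def central_moment_chi2_1(m):
    """E (G^2 - 1)^m via the binomial expansion in terms of E G^{2i}."""
    return sum(comb(m, i) * gaussian_moment(2 * i) * (-1) ** (m - i) for i in range(m + 1))

def norm_sq_moments(mu):
    """Variance and third central moment of ||Z||^2 = sum_k mu_k G_k^2 (independent G_k),
    where mu are the eigenvalues of Cov(Z); both are additive over independent terms."""
    var = central_moment_chi2_1(2) * sum(m ** 2 for m in mu)     # 2 ||Psi||_2^2
    third = central_moment_chi2_1(3) * sum(m ** 3 for m in mu)   # 8 ||Psi||_3^3
    return var, third

print(central_moment_chi2_1(2), central_moment_chi2_1(3))  # prints: 2 8
```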

Proposition 3

Consider Setting 1 with \(q =3\). Then, for all \(u > 0\),

as well as

with

The appearance of both \(\lambda _6(\Psi )\) and \(\lambda _{1,6}(\Psi )\) is in fact unavoidable; we refer to Sazonov et al. [46] for more details.

Let \(G \sim \mathcal {N}(0,1)\). Then, using invariance properties of the uniform metric, Lemma 15 below and (2.5), we get

However, in this case one may dispense with any conditions on the eigenvalues of \(\Psi \) altogether, as demonstrated by the next result.

Proposition 4

Consider Setting 1 with \(q \in (2,3]\). Then, for all \(u > 0\),

Propositions 2, 3 and 4 are based on various normal approximation bounds in the literature; we refer to the proofs for references. Finding (or confirming) the optimal dependence on the dimension and the underlying covariance structure in such bounds is still an open problem in general.

2.3.2 Concentration inequalities

In this section, we recall several useful concentration inequalities that we will need in the proofs below. Additional results are given in Sect. 6.3.

We will make frequent use of the classical Fuk–Nagaev inequality for real-valued random variables (cf. Nagaev [38]).

Lemma 1

Let \(Z_1,\dots , Z_n\) be independent real-valued random variables. Suppose that \(\mathbb {E}Z_i=0\) and \(\mathbb {E}\vert Z_i\vert ^p<\infty \) for some \(p > 2\) and all \(i=1,\dots ,n\). Then, it holds that

for all \(t>0\), with \(a_p = 2 e^{-p}(p+2)^{-2}\) and \(\mu _{n,p} = \sum _{i = 1}^n \mathbb {E}\vert Z_i\vert ^p\).
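To get a feel for the two terms in a bound of this type, the sketch below evaluates them numerically. It assumes the classical two-sided form \(\mathbb {P}(\vert \sum _{i}Z_i\vert \ge t)\le (1+2/p)^p\mu _{n,p}t^{-p}+2\exp (-a_pt^2/\sum _{i}\mathbb {E}Z_i^2)\) attributed to Nagaev [38]. The precise arrangement of constants in Lemma 1 may differ, and the function name and moment values are hypothetical.

```python
import math

def fuk_nagaev_rhs(t, p, mu_np, var_sum):
    """Two terms of the (assumed) classical two-sided Fuk-Nagaev bound
    P(|Z_1 + ... + Z_n| >= t) <= (1 + 2/p)^p mu_{n,p} / t^p + 2 exp(-a_p t^2 / var_sum)."""
    a_p = 2.0 * math.exp(-p) / (p + 2) ** 2             # a_p = 2 e^{-p} (p + 2)^{-2}
    polynomial = (1 + 2 / p) ** p * mu_np / t ** p      # heavy-tail (moment) term
    gaussian = 2.0 * math.exp(-a_p * t ** 2 / var_sum)  # sub-Gaussian term
    return polynomial, gaussian

# i.i.d. example (hypothetical): E Z^2 = 1, E|Z|^p = 10, so mu_{n,p} = 10 n, var_sum = n
n, p = 10_000, 4
for x in (5.0, 20.0, 80.0):                             # deviation levels t = x * sqrt(n)
    poly, gauss = fuk_nagaev_rhs(x * math.sqrt(n), p, 10 * n, n)
    print(f"x = {x:4.0f}: polynomial term {poly:.2e}, Gaussian term {gauss:.2e}")
```

The polynomial term decays like \(t^{-p}\) and the Gaussian term like \(e^{-a_pt^2/n}\), so the printout lets one compare which term drives the bound at a given deviation level.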

In particular, if \(Z_1,\dots ,Z_n\) are independent copies of a real-valued random variable Z with \(\mathbb {E}Z=0\) and \(\mathbb {E}\vert Z \vert ^p<\infty \), then there is a constant \(C>0\) such that

We will also apply Banach space versions of the Fuk–Nagaev inequality. The following Lemma collects concentration inequalities in Hilbert–Schmidt norm, in nuclear norm, and in operator norm.

Lemma 2

Let \(Y=\sum _{j\ge 1}\vartheta _j^{1/2}\zeta _ju_j\) be a random variable taking values in a separable Hilbert space \(\mathcal {H}\), where \(\vartheta = (\vartheta _1,\vartheta _2,\dots )\) is a sequence of positive numbers with \(\Vert \vartheta \Vert _1:=\sum _{j\ge 1}\vartheta _j<\infty \), \(u_1,u_2,\dots \) is an orthonormal system in \(\mathcal {H}\), and \(\zeta _1,\zeta _2,\dots \) is a sequence of centered random variables satisfying \(\sup _{j\ge 1}\mathbb {E}\vert \zeta _{j}\vert ^{2p} \le C_1\) for some \(p>2\) and \(C_1 > 0\). Let \(Y_1,\dots ,Y_n\) be n independent copies of Y. Then there exists a constant \(C_2 > 0\) depending only on \(C_1\) and p such that the following holds.

(i) For all \(t \ge 1\), it holds that

$$\begin{aligned} \mathbb {P}\left( \big \Vert \frac{1}{n}\sum _{i=1}^nY_i\otimes Y_i-\mathbb {E}Y\otimes Y\big \Vert _2 > \frac{C_2t\Vert \vartheta \Vert _1}{\sqrt{n}} \right) \le \frac{n^{1-p/2}}{t^{p}} + e^{-t^2}. \end{aligned}$$

(ii) Let \(\varpi _n^2 \ge \sup _{\Vert S\Vert _{\infty } \le 1} \mathbb {E}{\text {tr}}^2(S (Y \otimes Y-\Sigma ))\). Then, for all \(t\ge \Vert (\mathbb {E}(Y\otimes Y-\Sigma )^2 )^{1/2}\Vert _1\), it holds that

$$\begin{aligned} \mathbb {P}\left( \big \Vert \frac{1}{n}\sum _{i=1}^nY_i\otimes Y_i-\mathbb {E}Y\otimes Y\big \Vert _1 \ge \frac{C_2t}{\sqrt{n}}\right) \le \frac{n^{1-p/2}}{t^p} \Vert \vartheta \Vert _1^p + e^{-t^2/\varpi _n^2}. \end{aligned}$$

(iii) Assume additionally that \(\Vert \mathbb {E}(Y \otimes Y-\mathbb {E}Y\otimes Y)^2\Vert _{\infty } = 1\). Then, for all \(t \ge 1\), it holds that

$$\begin{aligned} \mathbb {P}\left( \big \Vert \frac{1}{n}\sum _{i=1}^nY_i\otimes Y_i-\mathbb {E}Y\otimes Y\big \Vert _\infty \ge \frac{C_2t}{\sqrt{n}}\right) \le C_2n^{1-p/2} t^{p} \Vert \vartheta \Vert _1^p + \Vert \vartheta \Vert _1^2 e^{-t^2}. \end{aligned}$$

Lemma 2(iii) is not optimal in terms of t but sufficient for the choice \(t\asymp \sqrt{\log n}\).

An important special case arises when the expansion for Y in Lemma 2 coincides with the Karhunen–Loève expansion. For this, suppose that Y is centered and strongly square-integrable, meaning that \(\mathbb {E} Y =0\) and \(\mathbb {E}\Vert Y\Vert ^2<\infty \). Let \(\Sigma =\mathbb {E} Y\otimes Y\) be the covariance operator of Y, which is a positive, self-adjoint trace-class operator, see e.g. Hsing and Eubank [16, Theorem 7.2.5]. Let \(Y_1,\dots ,Y_n\) be independent copies of Y and let \(\hat{\Sigma }=n^{-1}\sum _{i=1}^nY_i\otimes Y_i\) be the empirical covariance operator. Let \(\lambda _1,\lambda _2,\dots \) and \(u_1,u_2,\dots \) be the eigenvalues and eigenvectors of \(\Sigma \) as introduced in Sect. 2.2.1. Then we can write \(Y=\sum _{j\ge 1}\lambda _j^{1/2}\eta _ju_j\) almost surely, where \(\eta _j=\lambda _j^{-1/2}\langle u_j, Y\rangle \) are the Karhunen–Loève coefficients of Y. By construction, the \(\eta _j\) are centered, uncorrelated and satisfy \(\mathbb {E}\eta _j^2=1\). Now, if the Karhunen–Loève coefficients satisfy \(\sup _{j\ge 1}\mathbb {E}\vert \eta _{j}\vert ^{2p} \le C_1\) for some \(p>2\) and \(C_1 > 0\), then Lemma 2(i), applied with \(\vartheta _j=\lambda _j\) so that \(\Vert \vartheta \Vert _1={\text {tr}}(\Sigma )\), implies

$$\begin{aligned} \mathbb {P}\left( \Vert \hat{\Sigma }-\Sigma \Vert _2 > \frac{C_2t\,{\text {tr}}(\Sigma )}{\sqrt{n}} \right) \le \frac{n^{1-p/2}}{t^{p}} + e^{-t^2} \end{aligned}$$

for all \(t\ge 1\), while Lemma 2(ii) implies

$$\begin{aligned} \mathbb {P}\left( \Vert \hat{\Sigma }-\Sigma \Vert _1 \ge \frac{C_2t}{\sqrt{n}}\right) \le \frac{n^{1-p/2}}{t^p}\, {\text {tr}}(\Sigma )^p + e^{-t^2/\varpi _n^2} \end{aligned}$$

for all \(t\ge \Vert (\mathbb {E}(Y\otimes Y-\Sigma )^2 )^{1/2}\Vert _1\), where \(\varpi _n^2 \ge \sup _{\Vert S\Vert _{\infty } \le 1} \mathbb {E}{\text {tr}}^2(S (Y \otimes Y-\Sigma ))\). If additionally \(\Vert \mathbb {E}(Y \otimes Y-\Sigma )^2\Vert _{\infty }= 1\) holds, then Lemma 2(iii) implies that, for all \(t\ge 1\),

$$\begin{aligned} \mathbb {P}\left( \Vert \hat{\Sigma }-\Sigma \Vert _\infty \ge \frac{C_2t}{\sqrt{n}}\right) \le C_2n^{1-p/2} t^{p}\, {\text {tr}}(\Sigma )^p + {\text {tr}}(\Sigma )^2 e^{-t^2}. \end{aligned}$$

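The Karhunen–Loève coefficients can be illustrated in \(\mathbb {R}^d\). The sketch below uses a hypothetical spectrum and Student-t coefficients with 7 degrees of freedom rescaled to unit variance as a non-Gaussian example. These have finite moments of order below 7, so the condition \(\sup _j\mathbb {E}\vert \eta _j\vert ^{2p}<\infty \) holds for \(p<3.5\). The sketch builds \(Y=\sum _j\lambda _j^{1/2}\eta _ju_j\) and recovers \(\eta _j=\lambda _j^{-1/2}\langle u_j,Y\rangle \) from the population eigenpairs. It then checks that the plug-in coefficients computed from \(\hat{\Sigma }\) have an identity empirical second-moment matrix, the sample analogue of \(\mathbb {E}\eta _j\eta _k=\delta _{jk}\).

```python
import numpy as np

rng = np.random.default_rng(2)
n, d, df = 2000, 6, 7
lam = 2.0 ** -np.arange(d)                              # hypothetical eigenvalues of Sigma
U, _ = np.linalg.qr(rng.standard_normal((d, d)))        # eigenvectors u_j as columns

# Non-Gaussian KL coefficients: Student-t(df) rescaled to unit variance
eta = rng.standard_t(df, size=(n, d)) / np.sqrt(df / (df - 2))
Y = (eta * np.sqrt(lam)) @ U.T                          # rows: Y_i = sum_j sqrt(lam_j) eta_ij u_j

# Population KL coefficients: eta_j = lam_j^{-1/2} <u_j, Y>
eta_rec = (Y @ U) / np.sqrt(lam)

# Plug-in version based on the eigenpairs of the empirical covariance
Sigma_hat = Y.T @ Y / n
lam_hat, U_hat = np.linalg.eigh(Sigma_hat)
lam_hat, U_hat = lam_hat[::-1], U_hat[:, ::-1]          # non-increasing order
eta_hat = (Y @ U_hat) / np.sqrt(lam_hat)
print(np.round(eta_hat.T @ eta_hat / n, 10))            # identity matrix by construction
```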
In order to apply the above concentration inequalities, we will make frequent use of the following moment computations based on the Karhunen–Loève coefficients.

Lemma 3

Let \(Y=\sum _{j\ge 1}\vartheta _j^{1/2}\zeta _ju_j\) be an \(\mathcal {H}\)-valued random variable, where \(\vartheta = (\vartheta _1,\vartheta _2,\dots )\) is a sequence of positive numbers with \(\Vert \vartheta \Vert _1<\infty \), \(u_1,u_2,\dots \) is an orthonormal system in \(\mathcal {H}\), and \(\zeta _1,\zeta _2,\dots \) is a sequence of centered random variables satisfying \(\sup _{j\ge 1}\mathbb {E}\vert \zeta _{j}\vert ^{2p} \le C\) for some \(p\ge 2\) and \(C > 0\). Then

(i) \(\mathbb {E}\Vert Y\Vert ^{2r}\le C^{r/p} \Vert \vartheta \Vert _1^r\) for all \(1\le r\le p\).

(ii) \({\text {tr}}(\mathbb {E}(Y\otimes Y-\mathbb {E}Y\otimes Y)^2)\le C^{2/p}\Vert \vartheta \Vert _1^2\).

Suppose additionally that the \(\zeta _j\) are uncorrelated and satisfy \(\mathbb {E}\zeta _j \zeta _{k}^2 \zeta _{s} = 0\) for all j, k, s such that \(j\ne s\). Then

(iii) \(\Vert \mathbb {E}(Y\otimes Y-\mathbb {E}Y\otimes Y)^2\Vert _\infty \le C^{2/p} \Vert \vartheta \Vert _\infty \Vert \vartheta \Vert _1\).

(iv) \(\Vert (\mathbb {E}(Y\otimes Y-\mathbb {E}Y\otimes Y)^2 )^{1/2}\Vert _1\le C^{1/p} (\sum _{j\ge 1}\vartheta _j^{1/2})\Vert \vartheta \Vert _1^{1/2}\).

In (i), it suffices to assume that \(p\ge 1\).

3 Quantitative limit theorems

3.1 Assumptions and main quantities

Throughout this section, let X be a random variable taking values in \(\mathcal {H}\). We suppose that X is centered and strongly square-integrable, meaning that \(\mathbb {E} X =0\) and \(\mathbb {E}\Vert X\Vert ^2<\infty \). Let \(\Sigma =\mathbb {E} X\otimes X\) be the covariance operator of X, which is a positive self-adjoint trace-class operator. Let \(X_1,\dots ,X_n\) be independent copies of X and let

$$\begin{aligned} \hat{\Sigma }=\frac{1}{n}\sum _{i=1}^nX_i\otimes X_i \end{aligned}$$

be the empirical covariance operator.

Our main assumptions are expressed in terms of the Karhunen–Loève coefficients of X, defined by \(\eta _{j} = \lambda _j^{-1/2}\langle X, u_j \rangle \) for \(j\ge 1\). These lead to the Karhunen–Loève expansion \(X=\sum _{j\ge 1}\sqrt{\lambda _j}\eta _j u_j\) almost surely. If X is Gaussian, then the \(\eta _j\) are independent and standard normal. Non-Gaussian examples are given by elliptical models and factor models, see for instance Lopes [35] and Jirak and Wahl [26] for concrete computations or Hörmann et al. [19] and Panaretos and Tavakoli [42] for the context of functional data analysis.

Assumption 1

Suppose that for some \(p > 2\)

The actual condition on the number of moments p will be mild and depends on the desired rate of convergence; we refer to our results for more details. Apart from a relative rank condition, Assumption 1 is essentially all we need. In order to simplify the bounds (and examples), we also impose a non-degeneracy condition.

Assumption 2

There is a constant \(c_\eta >0\) such that, for every \(j\ne k\),

In the special case of bootstrap approximations, the situation is more complicated, and our next condition is therefore more restrictive. It allows us to explicitly compute moments of certain rather complicated random variables appearing in some of our bounds; see Lemma 6 and Sect. 5 below for more details.

Assumption 3

[m-th cumulant uncorrelatedness] If any of the indices \(i_1, \ldots , i_m \in \mathbb {N}\) differs from all the others, then

Let us point out that both Assumptions 2 and 3 trivially hold if the sequence \((\eta _k)\) is independent. A more general example is provided by martingale-type differences \(\eta _k = \epsilon _k v_k\) with independent sequences \((\epsilon _k)\) and \((v_k)\), where the \(\epsilon _k\) are mutually independent. In this case, Assumption 3 holds given the existence of m moments. Beyond its use for the bootstrap, Assumption 3 is quite convenient for discussing our examples in Sect. 5, allowing us in particular to relate the variance-type term \(\sigma _{\mathcal {J}}^2\) (cf. Definition 1 below) to higher-order cumulants. This leads to very simple and explicit bounds that mirror previous Gaussian results. It is also in line with our recent findings in Jirak and Wahl [26], which show that in this context moment conditions alone are in general not enough to obtain Gaussian-type behaviour, and that an entirely different behaviour is possible. Related assumptions, such as the \(L_4 - L_2\) norm equivalence (see for instance Mendelson and Zhivotovskiy [37], Wei and Minsker [50], Zhivotovskiy [53]), have already been used in the literature for similar purposes.
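The martingale-type example can be checked exactly in a small case. For illustration only, assume the \(\epsilon _k\) are i.i.d. Rademacher signs and condition on a fixed realisation of \((v_k)\). The sketch enumerates all sign patterns and confirms that the mixed moment \(\mathbb {E}\eta _{i_1}\cdots \eta _{i_4}\) vanishes whenever one index differs from all the others, which is how we read Assumption 3 for \(m=4\). Averaging over \((v_k)\), which is independent of \((\epsilon _k)\), then gives the unconditional statement whenever the moments exist. The function name and the values of `v` are hypothetical.

```python
import itertools
import numpy as np

def mixed_moment(v, idx):
    """E[eta_{i1} ... eta_{im} | v] for eta_k = eps_k * v_k, where eps_k are
    i.i.d. Rademacher signs (assumption), by enumerating all 2^d sign patterns."""
    d = len(v)
    total = 0.0
    for eps in itertools.product((-1.0, 1.0), repeat=d):
        eta = np.array(eps) * v
        total += np.prod(eta[list(idx)])
    return total / 2 ** d

v = np.array([0.5, 1.0, 2.0, 3.0])                     # hypothetical realisation of (v_k)
d = len(v)
for idx in itertools.product(range(d), repeat=4):
    has_unique = any(idx.count(i) == 1 for i in idx)   # some index differs from all others
    if has_unique:
        assert abs(mixed_moment(v, idx)) < 1e-12

print(mixed_moment(v, (0, 0, 1, 1)))                   # v_1^2 v_2^2, generally nonzero
```

Pairs of repeated indices, such as \((1,1,2,2)\), are not forced to vanish, so the \(\eta _k\) may still be dependent once \((v_k)\) is random.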

Definition 1

For \(\mathcal {J}=\{1,\dots ,j_2\}\), we define

On the other hand, for \({\mathcal {J}}=\{j_1,\dots ,j_2\}\) with \(j_1>1\), we define

The quantity \(\sigma _{\mathcal {J}}\) plays an important role in the analysis of \(\delta _{\mathcal {J}}\) (resp. \(\delta _{\mathcal {J}^c}\)) defined in (2.1). The size of \(\sigma _{\mathcal {J}}\) can be characterized by the so-called relative ranks defined as follows.

Definition 2

For \(\mathcal {J}=\{1,\dots ,j_2\}\), we define

On the other hand, for \({\mathcal {J}}=\{j_1,\dots ,j_2\}\) with \(j_1>1\), we define

Note that in the special case \(\mathcal {J}=\{j\}\), our definition coincides with the notion of relative rank given in Jirak and Wahl [26]. In the general case, we use a slightly weaker version of the definition in Jirak and Wahl [25].

The quantities \(\textbf{r}_\mathcal {J}\) and \(\sigma _\mathcal {J}\) are related as follows.

Lemma 4

Suppose that Assumption 1 holds. Then

If additionally Assumptions 2 and 3 hold with \(m = 4\), then

for \(\mathcal {J}=\{1,\dots ,j_2\}\) and

for \(\mathcal {J}=\{j_1,\dots ,j_2\}\) with \(j_1>1\). Moreover, we have

for \(\mathcal {J}=\{1,\dots ,j_2\}\) and

for \(\mathcal {J}=\{j_1,\dots ,j_2\}\) with \(j_1>1\).

Proof of Lemma 4

If \(\mathcal {J}=\{j_1,\dots ,j_2\}\) with \(j_1>1\), then Lemma 4 follows from Lemma 3 applied to the transformed data \(X'=(\vert R_{\mathcal {J}^c}\vert ^{1/2}+g_{\mathcal {J}}^{-1/2}P_{\mathcal {J}})X\). The main observation is that \(X'\) has the same Karhunen–Loève coefficients as X (possibly in a different order) and thus satisfies Assumption 1 with the same constant \(C_\eta \). Moreover, the eigenvalues of the covariance operator \(\Sigma '=\mathbb {E}\, X'\otimes X'\) are \(\lambda _k/(\lambda _{k} - \lambda _{j_1})\) for \(k < j_1\), \(\lambda _k/g_\mathcal {J}\) for \(k\in \mathcal {J}\), and \(\lambda _k/(\lambda _{j_2} - \lambda _k)\) for \(k > j_2\). Hence, the first two claims follow from Lemma 3 (ii) and (iii). The last claim follows similarly by using (6.12) below and Assumption 2 instead. If \(\mathcal {J}=\{1,\dots ,j_2\}\), then the claim follows similarly by interchanging the roles of \(\mathcal {J}\) and \(\mathcal {J}^c\). \(\square \)
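Reading the proofs of Lemmas 4 and 5 together, the eigenvalues listed above sum to \(\textbf{r}_\mathcal {J}\). The scaled data in the proof of Lemma 5 has covariance \(\sigma _\mathcal {J}^{-1}\Sigma '\) with trace \(\textbf{r}_\mathcal {J}/\sigma _\mathcal {J}\). The Python sketch below evaluates these eigenvalues and their sum for a finite spectrum with \(j_1>1\); the helper names are ours. It computes the gap as the smaller of the two neighbouring eigenvalue differences, which is our reading of the definition of \(g_\mathcal {J}\). This is a consequence of the proofs, not a restatement of Definition 2.

```python
import numpy as np

def transformed_eigenvalues(lam, j1, j2):
    """Eigenvalues of Cov(X') listed in the proof of Lemma 4, for J = {j1, ..., j2}
    (1-based, j1 > 1) and a finite non-increasing spectrum lam."""
    if j1 < 2:
        raise ValueError("this formula is stated for j1 > 1")
    lam = np.asarray(lam, dtype=float)
    l_j1, l_j2 = lam[j1 - 1], lam[j2 - 1]
    left_gap = lam[j1 - 2] - l_j1
    right_gap = l_j2 - lam[j2] if j2 < lam.size else np.inf
    g = min(left_gap, right_gap)                       # gap g_J (our reading)
    before = lam[:j1 - 1] / (lam[:j1 - 1] - l_j1)      # k < j1
    inside = lam[j1 - 1:j2] / g                        # k in J
    after = lam[j2:] / (l_j2 - lam[j2:])               # k > j2
    return np.concatenate([before, inside, after])

def relative_rank(lam, j1, j2):
    """Sum of the transformed eigenvalues, i.e. r_J by the proof of Lemma 5."""
    return float(np.sum(transformed_eigenvalues(lam, j1, j2)))

lam = 1.0 / np.arange(1, 10_001) ** 2                  # hypothetical polynomial decay
for j in (2, 5, 10, 50):
    print(j, round(relative_rank(lam, j, j), 2))       # single projectors J = {j}
```

For the toy spectrum \((4,2,1)\) and \(\mathcal {J}=\{2\}\), the gap is 1 and the transformed eigenvalues are \(4/2=2\), \(2/1=2\) and \(1/1=1\), giving a sum of 5.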

The relative rank \(\textbf{r}_{\mathcal {J}}\) and \(\sigma _{\mathcal {J}}^2\) allow us to characterize the behaviour of \(\delta _\mathcal {J}\) defined in (2.1).

Lemma 5

If Assumption 1 holds, then

with

Proof of Lemma 5

If \(\mathcal {J}=\{j_1,\dots ,j_2\}\) with \(j_1>1\), then Lemma 5 follows from (2.9) applied to the transformed and scaled data \(X'=\sigma _{\mathcal {J}}^{-1/2}(\vert R_{\mathcal {J}^c}\vert ^{1/2}+g_{\mathcal {J}}^{-1/2}P_{\mathcal {J}})X\), using again that \(X'\) has the same Karhunen–Loève coefficients as X and thus satisfies Assumption 1 with the same constant \(C_\eta \). Moreover, the eigenvalues of the covariance operator \(\Sigma '=\mathbb {E}\, X'\otimes X'\) are transformed in such a way that \({\text {tr}}(\Sigma ')=\textbf{r}_\mathcal {J}/\sigma _\mathcal {J}\). Hence, an application of (2.9) with \(t = C\sqrt{\log n}\) yields

where we also used the first claim in Lemma 4 and \(p>2\). If \(\mathcal {J}=\{1,\dots ,j_2\}\), then the claim follows similarly by interchanging the roles of \(\mathcal {J}\) and \(\mathcal {J}^c\). \(\square \)

We continue by recalling the following asymptotic result from multivariate analysis. By Dauxois et al. [10, Proposition 5], we have

where Z is a Gaussian random variable in the Hilbert space of all Hilbert–Schmidt operators (endowed with the trace inner product) with mean zero and covariance operator \({\text {Cov}}(Z)={\text {Cov}}(X\otimes X)\). More concretely, we have

where the upper triangular coefficients \(\xi _{jk}\), \(k\ge j\) are Gaussian random variables with

and the lower triangular coefficients \(\xi _{jk}\), \(k< j\), are determined by \(\xi _{jk}=\xi _{kj}\). In the distributional approximation of \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _{2}\), the random variable \(L_\mathcal {J}Z\) defined in (2.2) plays a central role. The latter is a centered Gaussian random variable taking values in the separable Hilbert space of all (self-adjoint) Hilbert–Schmidt operators on \(\mathcal {H}\). Let

be the covariance operator of \(L_\mathcal {J}Z\). We will make frequent use of the following quantities and relations (cf. (2.5)).

The following lemma provides the connection of the quantities \(A_\mathcal {J}\), \(B_\mathcal {J}\) and \(C_\mathcal {J}\) with the eigenvalues of \(\Sigma \).

Lemma 6

Suppose that Assumptions 1 and 2 are satisfied. Then

If additionally Assumption 3 holds with \(m = 4\), then the eigenvalues of \(\Psi _{\mathcal {J}}\) are given by

with \(\alpha _{jk}=\mathbb {E}\eta _{j}^2\eta _k^2\). In particular, we have

Proof of Lemma 6

Using the representation in (3.3), we get

Hence, the lower bound follows from inserting Assumption 2, while the upper bounds follow from Assumption 1 and Hölder’s inequality. Moreover, subject to Assumption 3 with \(m = 4\), the random variable \(L_\mathcal {J}Z\) has the Karhunen–Loève expansion

and the last two claims follow from the last relations given in (3.4). \(\square \)
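The limit Z above inherits its covariance from \(X\otimes X\). Writing \(X=\sum _l\sqrt{\lambda _l}\,\eta _l u_l\) for the Karhunen–Loève expansion, the standardized coefficients \(\eta _l\) are uncorrelated, so for \(j\ne k\) the coefficient \(\langle Z u_j,u_k\rangle \) has variance \({\text {Var}}(\langle X,u_j\rangle \langle X,u_k\rangle )=\lambda _j\lambda _k\alpha _{jk}\), with \(\alpha _{jk}=\mathbb {E}\eta _j^2\eta _k^2\) as in Lemma 6. The same variance holds for \(\sqrt{n}\langle (\hat{\Sigma }-\Sigma )u_j,u_k\rangle \) at every n. The Monte Carlo sketch below checks this for Gaussian X in \(\mathbb {R}^3\), where \(\alpha _{jk}=1\); the helper name and all numerical choices are illustrative.

```python
import numpy as np


def offdiag_variance_mc(lam, j, k, n, reps, rng):
    """Monte Carlo variance of sqrt(n) <(Sigma_hat - Sigma) u_j, u_k> for j != k (0-based).

    Illustrative assumptions: X = sum_l sqrt(lam_l) eta_l u_l with i.i.d. N(0, 1)
    coefficients eta_l, and u_l the standard basis of R^d.
    """
    lam = np.asarray(lam, dtype=float)
    X = rng.standard_normal((reps, n, lam.size)) * np.sqrt(lam)   # reps samples of size n
    # <Sigma_hat u_j, u_k> = n^{-1} sum_i X_ij X_ik, and <Sigma u_j, u_k> = 0 for j != k
    coeff = np.sqrt(n) * (X[:, :, j] * X[:, :, k]).mean(axis=1)
    return coeff.var()


rng = np.random.default_rng(0)
lam = np.array([2.0, 1.0, 0.5])
est = offdiag_variance_mc(lam, 0, 1, n=50, reps=4000, rng=rng)
print(est, lam[0] * lam[1])   # estimate vs lambda_1 * lambda_2 * alpha_12 (alpha_12 = 1 here)
```

For non-Gaussian coefficients, the same code with another standardized distribution for \(\eta _l\) would estimate \(\lambda _j\lambda _k\alpha _{jk}\) with \(\alpha _{jk}\) no longer equal to one.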

3.2 Main results

This section is devoted to quantitative limit theorems for \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _{2}\). We state several results under different conditions, each with its own range of applicability, and refer to Sect. 5 for examples and illustrations. Proofs are given in Sect. 6.4. We assume throughout this section that \(n \ge 2\). Our first result concerns estimates based on the 1-Wasserstein distance.

Theorem 1

Suppose that Assumptions 1 (\(p > 4\)) and 2 hold and that

for some sufficiently small constant \(c > 0\). Then, for any \(s> 0\),

where \(\textbf{p}_{\mathcal {J},n,p}\) is given in (3.1).

We require Condition (3.5) for all of our results. In most cases, however, it is redundant (see, for instance, Sect. 5) in the following sense: the upper bounds are nontrivial only if (3.5) holds.

The generality of Theorem 1 comes at a price: \((A_{\mathcal {J}}/n)^2\) may or may not be of the same order as the variance of \(\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _{2}^2\). In particular, if the expectation \(\mathbb {E}\Vert \hat{P}_{\mathcal {J}} - P_{\mathcal {J}}\Vert _{2}^2\) significantly dominates the square root of the variance, finer approximation results can be obtained by studying an appropriately centred and scaled version. As a first result in this direction, we present the following.

Theorem 2

Suppose that Assumptions 1 and 2 and (3.5) hold with \(p\ge 3\). Then we have

as well as

Recall the definition of \(\lambda _{1,j}(\Psi _\mathcal {J})\), given in (2.6).

A simpler (distributional) approximation is given by the following corollary.

Corollary 3

Suppose that the assumptions of Theorem 2 hold and that \(G \sim \mathcal {N}(0,1)\). Then we have

However, in this case one may dispense with any conditions on the eigenvalues \(\lambda _k(\Psi )\) altogether, as our next result demonstrates. The price is a slower rate of convergence.

Theorem 3

Suppose that Assumptions 1 and 2 and (3.5) hold and let \(G \sim \mathcal {N}(0,1)\). Then we have

An important feature of both Corollary 3 and Theorem 3 is the error term \((C_{\mathcal {J}}/B_{\mathcal {J}})^3\). As can be deduced from Barbour and Hall [4], a central limit theorem of this type requires, in general, that

since it cannot hold otherwise.

So far, we have not required any condition in terms of n, \(A_{\mathcal {J}}\), \(B_{\mathcal {J}}\) and \(C_{\mathcal {J}}\). Questions of optimality related to these quantities remain open in general; see also the comments after Proposition 4. In Sect. 5, we discuss examples to further illustrate this and also present optimal results up to \(\log \)-factors in the case of polynomially decaying eigenvalues.

4 Bootstrap approximations

Bootstrap methods are nowadays among the most popular tools for assessing the significance of a test or constructing confidence sets. One of their advantages over (conventional) limit theorems is that they provide finite sample approximations. Some bootstrap methods even outperform the typical Berry–Esseen bounds attached to the corresponding limit distributions, see for instance Hall [15]. As is apparent from our results in Sect. 3, our (unknown) normalising sequences are quite complicated. Hence, as discussed extensively in Naumov et al. [39], bootstrap methods are a very attractive alternative in the present context.

4.1 Assumptions and main quantities

We first require some additional notation. We denote by \(\mathcal {X} = \sigma \big (X_i,\, i \in \mathbb {N}\big )\) the \(\sigma \)-algebra generated by the whole sample, and by \((X_i')\) an independent copy of \((X_i)\). Next, we introduce the conditional expectations and probabilities

The (conditional) measure \({\tilde{\mathbb {P}}}\) will act as our probability measure in the bootstrap world (that is, the sample is fixed and all randomness comes from the bootstrap procedure). Finally, recall the uniform metric \(\textbf{U}(A,B)\) for real-valued random variables A, B, and denote by

the corresponding conditional version. As before, we assume throughout that \(n \ge 2\).
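We assume here that \(\textbf{U}(A,B)=\sup _{x\in \mathbb {R}}\vert \mathbb {P}(A\le x)-\mathbb {P}(B\le x)\vert \), the usual Kolmogorov distance. In simulations it can be estimated from samples; the hypothetical helpers below compute the empirical version between two samples and between a sample and \(G\sim \mathcal {N}(0,1)\). For the conditional metric \({\tilde{\textbf{U}}}\), one would keep the data fixed and use bootstrap draws as one of the two samples.

```python
import math

import numpy as np


def uniform_distance_two_sample(a, b):
    """sup_x |F_a(x) - F_b(x)| for the empirical CDFs of the samples a and b."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    grid = np.concatenate([a, b])      # both CDFs are step functions jumping only here
    Fa = np.searchsorted(a, grid, side="right") / a.size
    Fb = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(Fa - Fb)))


def uniform_distance_to_normal(x):
    """sup_t |F_x(t) - Phi(t)| for the empirical CDF of x and the N(0, 1) CDF Phi."""
    x = np.sort(np.asarray(x, dtype=float))
    n = x.size
    phi = np.array([0.5 * (1.0 + math.erf(t / math.sqrt(2.0))) for t in x])
    upper = np.arange(1, n + 1) / n - phi    # value of F_x at each order statistic
    lower = phi - np.arange(0, n) / n        # left limit of F_x at each order statistic
    return float(max(upper.max(), lower.max()))


rng = np.random.default_rng(3)
x = rng.standard_normal(2000)
print(uniform_distance_to_normal(x))         # small for a Gaussian sample
print(uniform_distance_to_normal(x + 1.0))   # population counterpart: Phi(0.5) - Phi(-0.5) = 0.383
```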

There are many ways to design bootstrap approximations. Multiplier methods are a popular and powerful choice, and we also employ them here. To this end, let \((w_i)\) be an i.i.d. sequence with the following properties.

where p is the same as in Assumption 1. Moreover, let \(\sigma _w^2 = \mathbb {E}(w_i^2-1)^2\). One may additionally require \(\mathbb {E}w_i = 0\), but this is not necessary. Throughout this section (and the corresponding proofs), we assume (4.2) without mentioning it any further.

Algorithm 1

[Bootstrap] Given \((X_i)\) and \((w_i)\), construct the sequence \(({\tilde{X}}_i) = (w_i X_i)\). Treat \(({\tilde{X}}_i)\) as a new sample, and compute the corresponding empirical covariance operator \({\tilde{\Sigma }}\) and spectral projector \({\tilde{P}}_{\mathcal {J}}\) accordingly.
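A minimal NumPy sketch of Algorithm 1 in finite dimension d follows, with the Hilbert–Schmidt norm realised as the Frobenius norm. Since the conditions (4.2) are not restated in this sketch, the multipliers are a hypothetical choice, \(w_i\in \{0,\sqrt{2}\}\) with equal probability, which gives \(\mathbb {E}w_i^2=1\) and \(\sigma _w^2=1\) (and \(\mathbb {E}w_i\ne 0\), which is allowed); whether this choice meets every requirement of (4.2) is not checked here. The names `spectral_projector` and `multiplier_bootstrap` are illustrative.

```python
import numpy as np


def spectral_projector(S, J):
    """Projector onto the eigenvectors of symmetric S whose (1-based) ranks lie in J,
    eigenvalues being ordered non-increasingly."""
    _, vecs = np.linalg.eigh(S)            # eigh returns ascending eigenvalues
    vecs = vecs[:, ::-1]                   # reorder to non-increasing
    U = vecs[:, [j - 1 for j in J]]
    return U @ U.T


def multiplier_bootstrap(X, J, n_boot, rng):
    """Bootstrap draws of ||P_tilde_J - P_hat_J||_2 following Algorithm 1.

    X has shape (n, d); its rows are the (centred) observations X_i.
    Hypothetical multipliers: w_i = sqrt(2) * Bernoulli(1/2), so that
    E w_i^2 = 1 and sigma_w^2 = E(w_i^2 - 1)^2 = 1.
    """
    n = X.shape[0]
    P_hat = spectral_projector(X.T @ X / n, J)
    draws = np.empty(n_boot)
    for b in range(n_boot):
        w = np.sqrt(2.0) * rng.integers(0, 2, size=n)
        X_tilde = w[:, None] * X                        # new sample (w_i X_i)
        Sigma_tilde = X_tilde.T @ X_tilde / n           # its empirical covariance
        P_tilde = spectral_projector(Sigma_tilde, J)
        draws[b] = np.linalg.norm(P_tilde - P_hat, "fro")   # Hilbert-Schmidt norm
    return draws


rng = np.random.default_rng(1)
lam = np.array([4.0, 2.0, 1.0, 0.5, 0.25])
X = rng.standard_normal((500, lam.size)) * np.sqrt(lam)
draws = multiplier_bootstrap(X, J=[1, 2], n_boot=200, rng=rng)
print(draws.mean(), np.quantile(draws, 0.9))
```

Since \(\Vert P-Q\Vert _2^2\le 2\min (\vert \mathcal {J}\vert ,\vert \mathcal {J}^c\vert )\) for projectors of equal rank (see the proof of Proposition 1), every draw in this example is at most 2.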

Note that our multiplier bootstrap is slightly different from the one of Naumov et al. [39], but a bit more convenient to analyze. On the other hand, by passing to the complex domain \(\mathbb {C}\) as underlying field of our Hilbert space, it is not hard to show that Theorems 4 and 5 and the attached corollaries below are equally valid for the multiplier bootstrap employed in Naumov et al. [39].

4.2 Main results

Recall that \((X_i')\) is an independent copy of \((X_i)\), and thus \(\hat{P}_{\mathcal {J}}'\), the empirical projection based on \((X_i')\), is independent of \(\mathcal {X}\). Throughout this section, we entirely focus on the uniform metric \({\tilde{\textbf{U}}}\).

Theorem 4

Suppose that Assumptions 1, 2 hold and that (3.5) is satisfied. Moreover, suppose that

is satisfied for some sufficiently small constant \(c > 0\). Let \(q \in (2,3]\) and \(s \in (0,1)\) and assume that \(p>2q\). Then, with probability at least \(1 - C_p\textbf{p}^{1-s}_{\mathcal {J},n,p}-C_p n^{1-p/(2q)}\), \(C_p > 0\), we have

where

The bound \(\textbf{A}_{\mathcal {J},n,p,s}\) above is also based on Theorem 3, which explains the appearance of \((C_{{\mathcal {J}}}/B_{{\mathcal {J}}})^3\). Loosely speaking, closeness in Theorem 4 is established by approximating both distributions with a standard Gaussian distribution.

The uniform metric is strong enough to deduce quantitative statements for empirical quantiles, which is useful in statistical applications. To exemplify this further, we state the following result.

Corollary 4

Given the assumptions and conditions of Theorem 4 and

we have

where \(\textbf{A}_{\mathcal {J},n,p,s}\) is defined in (4.4).
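Corollary 4 concerns the bootstrap quantile \(\hat{q}_{\alpha }\). Assuming, for this sketch only, that \(\hat{q}_{\alpha }\) is the empirical \((1-\alpha )\)-quantile of the bootstrap draws of \(\Vert {\tilde{P}}_{\mathcal {J}}-\hat{P}_{\mathcal {J}}\Vert _2\) (the precise definition is (4.5)), one can estimate the coverage \(\mathbb {P}(\Vert \hat{P}_{\mathcal {J}}-P_{\mathcal {J}}\Vert _2\le \hat{q}_{\alpha })\) by simulation. Gaussian data, the hypothetical multipliers \(w_i\in \{0,\sqrt{2}\}\) (so \(\sigma _w^2=1\)) and all sizes are illustrative; when the bootstrap approximation is accurate, the estimate should be close to \(1-\alpha \).

```python
import numpy as np


def projectors(S, J):
    """Spectral projectors for a stack of symmetric matrices S[..., d, d] (J is 1-based)."""
    _, vecs = np.linalg.eigh(S)                        # ascending eigenvalues
    U = vecs[..., ::-1][..., [j - 1 for j in J]]       # columns for non-increasing order
    return U @ np.swapaxes(U, -1, -2)


def bootstrap_quantile(X, J, alpha, n_boot, rng):
    """Empirical (1 - alpha)-quantile of the bootstrap draws of ||P_tilde_J - P_hat_J||_2."""
    n = X.shape[0]
    P_hat = projectors(X.T @ X / n, J)
    w2 = 2.0 * rng.integers(0, 2, size=(n_boot, n))    # w_i^2 for w_i in {0, sqrt(2)}
    # Sigma_tilde_b = n^{-1} sum_i w_{b,i}^2 X_i X_i^T, computed for all b at once
    Sigma_tilde = (X.T[None, :, :] * w2[:, None, :]) @ X / n
    stats = np.linalg.norm(projectors(Sigma_tilde, J) - P_hat, axis=(-2, -1))
    return np.quantile(stats, 1.0 - alpha)


def coverage(lam, J, n, alpha, n_rep, n_boot, seed=0):
    """Monte Carlo estimate of P(||P_hat_J - P_J||_2 <= q_hat_alpha) for Gaussian data."""
    rng = np.random.default_rng(seed)
    lam = np.asarray(lam, dtype=float)
    P = projectors(np.diag(lam), J)                    # population projector (diagonal Sigma)
    hits = 0
    for _ in range(n_rep):
        X = rng.standard_normal((n, lam.size)) * np.sqrt(lam)
        dist = np.linalg.norm(projectors(X.T @ X / n, J) - P)
        hits += int(dist <= bootstrap_quantile(X, J, alpha, n_boot, rng))
    return hits / n_rep


cov = coverage([4.0, 2.0, 1.0, 0.5, 0.25], J=[1, 2], n=400, alpha=0.1, n_rep=200, n_boot=200)
print(cov)   # compare with 1 - alpha = 0.9
```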

Next, we present our second main result in this section.

Theorem 5

Suppose that Assumptions 1 and 2 hold with \(p > 6\), Assumption 3 holds with \(m\in \{4,8\}\), and that (3.5) and (4.3) are satisfied. Finally, suppose that

Let \(s \in (0,1)\). Then, with probability at least \(1 - C_p\textbf{p}^{1-s}_{\mathcal {J},n,p} - C_pn^{1-p/6}\), \(C_p > 0\), we have

where

and \(\textbf{p}_{\mathcal {J},n,p}\) is given in (3.1). In all the results above, we require \(\mathcal {J}=\{j_1,\dots ,j_2\}\) and \(\mathcal {I}=\{1,\dots ,i_2\}\) with \(i_2>j_2+2\).

As in Theorem 2, it is possible to establish a \(\sqrt{n}\) rate at the cost of additional factors involving various eigenvalues. Since the proof is very similar, we omit any further details here.

The quantities \(A_{\mathcal {J},\mathcal {I}^c}\) and \(\Psi _{\mathcal {J},\mathcal {I}}\) that appear in the above bound have not been defined yet. In essence, the set \(\mathcal {I}\) is used to truncate some lower degree indices. The exact definition requires some preparation and is given in Sect. 6.6.1. In contrast to Theorem 4, Theorem 5 above is built around Theorem 2, and thus avoids the error term \((C_{{\mathcal {J}}}/B_{{\mathcal {J}}})^3\). However, apart from the eigenvalues, this comes at additional costs; in particular, the expressions \({\text {tr}}(\Psi _{\mathcal {J},\mathcal {I}}^{1/2})\) and \(A_{\mathcal {J},\mathcal {I}^c}\) require a careful selection of the set \(\mathcal {I}\). As before, we have the following corollary.

Corollary 5

Given the assumptions and conditions of Theorem 5 and \(\hat{q}_{\alpha }\) defined as in (4.5), we have for \(s \in (0,1)\)

where \(\textbf{B}_{\mathcal {J},n,p,s}\) is given in (4.7).

5 Applications: specific models and computations

In this section, we discuss specific models to illustrate our results with explicit bounds and compare them with previous results.

To this end, we discuss two basic, fundamental models omnipresent in the literature. In our first model, we consider the case where the eigenvalues \(\lambda _j\) decay at a certain rate (polynomial or exponential). This behaviour is typically encountered in functional data analysis or in a machine learning context involving kernels, see for instance Hall and Horowitz [17], Bartlett et al. [6], Fischer and Steinwart [14]. Our second model is the classical spiked covariance model (factor model), which is more popular in high dimensional statistics, econometrics and probability theory, see Johnstone [22], Fan et al. [13], Bai [2].

5.1 Model I

Throughout this section, we assume that Assumptions 1, 2 and 3 (\(m = 4\)) hold with \(p>3\).

For \(J\ge 1\), we consider the set \(\mathcal {J}= \{1,\ldots ,J\}\) and the singleton \(\mathcal {J}'=\{J\}\). We assume that \(n\ge 2\), so \(\log n\) is always positive. Throughout this section, all constants depend on the parameter \(\mathfrak {a}\); to simplify notation, we do not indicate this explicitly.

5.1.1 Exponential decay

We first consider the case of exponential decay, that is, we suppose that for some \(\mathfrak {a} > 0\), we have \(\lambda _j= e^{-\mathfrak {a} j}\) for all \(j\ge 1\).

In this setup, the relative rank \(\textbf{r}_{\mathcal {J}}\), \(A_{\mathcal {J}}\) and related quantities have already been computed in the literature, see for instance Jirak [21], Jirak and Wahl [26], Reiss and Wahl [43]. By similar (straightforward) computations together with Lemmas 4 and 6, we have

as well as

Moreover, using again Lemma 6, we have

It is now easy to apply the results. For example, Theorem 2 yields

for cumulated projectors, and

for the special case of single projectors. Here, we used that \(\textbf{p}^{}_{\mathcal {J},n,p}\) is negligible due to the fact that \(p> 3\), and that condition (3.5) can be dropped due to the fact that the uniform distance is bounded by 1, while the right-hand side of these bounds exceeds 1 whenever (3.5) does not hold, that is, when \(n^{-1/2}J (\log n)^{1/2}\gtrsim 1\).

Comparison and discussion: The results of Koltchinskii and Lounici [29] are not applicable in this setup and only provide the trivial bound \(\le 1\). More precisely, Theorem 6 in Koltchinskii and Lounici [29] only yields a nontrivial result if

However, this is not the case here. In fact, a normal approximation by a standard Gaussian random variable G is not possible at all in this setup. This follows from (3.6) and the fact that \(B_{\mathcal {J}} \asymp C_{\mathcal {J}}\).

In stark contrast, our bounds above in (5.2) and (5.3) provide non-trivial results even for moderately large J. This is a consequence of the relative approach that we employ here. In addition, our probabilistic assumptions are much weaker compared to Koltchinskii and Lounici [29]. Hence, even for the Gaussian case, our results are new.

Next, regarding bootstrap approximations, if \(p \ge 9\), then an application of Corollary 5 (with \(s = 1/2\), \(\mathcal {I}= \mathbb {N}\)) yields

Comparison and discussion: In contrast to Koltchinskii and Lounici [29], the bootstrap approximation of Naumov et al. [39], Theorem 2.1, is applicable if we additionally assume Gaussianity. For finite J, it appears that the bound provided by Theorem 2.1 is better than ours for single projectors. However, this drastically changes if J increases: Simple computations show that their rate is lower bounded by

which is useless already for \(J \ge (\log n)/(6\mathfrak {a})\). On the other hand, our bound (5.4) yields useful results even for \(J \le n^{1/6 - \delta }\), \(\delta > 0\). Thus, despite relying on much weaker assumptions, our results extend and improve upon those of Naumov et al. [39] even in the Gaussian case.

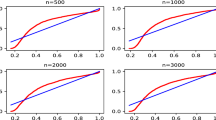

5.1.2 Polynomial decay

We next consider the case of polynomial decay, that is, we suppose that there is a constant \(\mathfrak {a} > 1\) such that \(\lambda _j= j^{-\mathfrak {a}}\) for all \(j\ge 1\). For simplicity, we assume that \(J\ge 2\).

As in the previous Sect. 5.1.1, straightforward computations, together with Lemmas 4 and 6, yield

as well as

Moreover, using again Lemma 6, it follows that

It is now again easy to apply the results. For example, Theorem 2 yields

for general projectors \(P_{\mathcal {J}}\), and

for the special case of single projectors. Here, we again exploited that condition (3.5) can be dropped due to the fact that the uniform distance is bounded by 1, while the right-hand side of these bounds exceeds 1 whenever (3.5) does not hold, that is, when \(n^{-1/2}J^{3/2}(\log J\log n)^{1/2}\gtrsim 1\) and \(n^{-1/2}J(\log J\log n)^{1/2}\gtrsim 1\), respectively. Observe that in case of single projectors, the dependence on J is optimal up to \(\log \)-factors, we refer to Jirak and Wahl [26], Example 2 for a more detailed discussion.

Comparison and discussion: As in the exponential case, the results of Koltchinskii and Lounici [29] are not applicable here. On the other hand, again due to the relative nature of our approach, our bounds in (5.2) are quite general, simple, and new even in the Gaussian case. We emphasize in particular that our bounds are independent of \(\mathfrak {a}\) up to constants.

Next, we want to apply Corollary 5. To this end, we need to strengthen Assumption 3 to \(m = 8\). First, if \(\mathfrak {a}>2\), we choose \(\mathcal {I}=\mathbb {N}\), in which case we have \(\Psi _{\mathcal {J},\mathcal {I}} = \Psi _{\mathcal {J}}\) (using Lemma 6) and thus

Hence, if \(p \ge 9\), an application of Corollary 5 (with \(s=1/2\)) yields

Second, if \(1<\mathfrak {a}\le 2\), let \(\mathcal {I}= \{1,\ldots ,I\}\) with \(I\ge 2J\), in which case

Thus, if \(p \ge 9\), an application of Corollary 5 (with \(s=1/2\)) yields

Balancing with respect to I leads to

Comparison and discussion: The situation is similar to the exponential case: for finite J, Theorem 2.1 in Naumov et al. [39] gives superior results for single projectors if the underlying distribution is Gaussian. However, their rate is lower bounded by

and thus leads to a much smaller range for J, particularly for larger \(\mathfrak {a}\). In contrast, the range for J for our results in (5.9) is invariant in \(\mathfrak {a}\), and the overall bound even slightly improves as \(\mathfrak {a}\) gets larger.

5.2 Model II

We only consider the case \(\mathcal {J}= \{1,\ldots , J\}\) with \(J > 6\) in this section. We note, however, that single projectors can readily be handled in a similar manner. Throughout this section, we assume the validity of Assumptions 1, 2 and 3 with \(p \ge 3\) and \(m = 4\).

5.2.1 Factor models—pervasive case

The literature on (approximate, pervasive) factor models typically assumes that the first J eigenvalues diverge at rate \(\asymp d\) (with \(d = {\text {dim}}\mathcal {H}\)), whereas all the remaining eigenvalues are bounded and do not, in total, have significantly more mass than any of the first J eigenvalues. This assumption can be expressed in terms of pervasive factors, see for instance Bai [2], Stock and Watson [47], which are particularly relevant in econometrics. In the language of statistics, this means the remaining \(d-J\) components do not explain significantly more (variance) than any of the first J.

Here, we generalize the above conditions as follows. We assume that there exist constants \(0< c \le C < \infty \), such that

Observe that this implies

which is the desired feature of pervasive factor models. It is convenient to introduce the subset trace

We then have the following relations.

It turns out that pervasive factor models lead to particularly simple results. Indeed, an application of Theorem 2 yields

where we used that \((C_{\mathcal {J}}/B_{\mathcal {J}})^3 \lesssim 1/\sqrt{J}\).

We note that there is no restriction on the dimension d of the underlying Hilbert space in this case.

Comparison and discussion: Our bound in (5.14) is easy to use and fairly general in terms of underlying assumptions. The literature does not appear to have a comparable result, even in the Gaussian case. The single projector results of Theorem 6 in Koltchinskii and Lounici [29] only yield the trivial bound \(\le 1\) in this case. We mention however that condition (3.6) amounts to \((C_{\mathcal {J}}/B_{\mathcal {J}})^3 \lesssim 1/\sqrt{J}\). Hence, if we consider the general projectors \(P_{\mathcal {J}}\), \(\mathcal {J}= \{1,\ldots ,J\}\) with \(J \rightarrow \infty \), one may also use a standard Gaussian approximation as in Theorem 3.

Next, we turn to the bootstrap. Using Corollary 5 (\(p > 6\), \(s = 1/2\), \(\mathcal {I}= \{1,\ldots ,d\}\), \(m = 8\) in Assumption 3), we arrive at

where we also used \({\text {tr}}(\sqrt{\Psi _{\mathcal {J}}}) \lesssim \sum _{1 \le k \le d} \sqrt{\lambda _k/\lambda _1} \lesssim \sqrt{d}\).

Comparison and discussion: The situation is related to Model I. If the dimension d is small and the setup is purely Gaussian, the results of Naumov et al. [39] are superior. However, for larger d, this changes, as can be seen as follows. In the present context, their rate is lower bounded by

This implies in particular that \(d = o\big ( \sqrt{n/\log n}\big )\) is required for a nontrivial result. In contrast, our bound (5.15) remains valid for d as large as \(d = o\big (n/\log n\big )\). For the sake of comparison, we assumed here that J is fixed, since the results of Naumov et al. [39] only apply to single projectors. A key reason for our improvement over Naumov et al. [39] is the use of our concentration inequality in Lemma 2(ii).

5.2.2 Spiked covariance

We next consider a simple spiked covariance model of the form

where \(\sigma ^2>0\) is the level of noise, \(g_J=\lambda _J-\lambda _{J+1}>0\) is the relevant spectral gap, and \(C>0\) is a constant. For simplicity, we assume that \(\sigma ^2=1\) such that J and \(g_J\) are the only remaining parameters. Moreover, we assume that \(d\ge 6\), \(J\le d-J\) and \(g_J\in (0,1]\). In particular, all eigenvalues have the same magnitude up to multiplicative constants and we have the following relations

Moreover, using again Lemma 6 and the fact that \(J>6\), it follows that \(\lambda _{j}(\Psi _\mathcal {J}) \asymp 1/g_J^2, \quad j=1,\dots ,6\). It is now again easy to apply the results. For example, Theorem 1 yields

provided that \(\log (n)dJ/(ng_J^2)\lesssim 1\), which amounts to condition (3.5). Note that if we demand that the bound in (5.18) is of magnitude o(1), (3.5) is trivially true. Moreover, an application of Theorem 3 yields

where we again exploited that condition (3.5) can be dropped due to the fact that the uniform distance is bounded by 1, while the right-hand side of these bounds exceeds 1 whenever (3.5) does not hold, that is, when \(dJ\log (n)/(ng_J^2)\gtrsim 1\). Note that the bound in (5.19) is only nontrivial if \(d \rightarrow \infty \) due to the error term \((d J)^{-1/2}\). However, if d is bounded, one may (again) apply Theorem 2, we omit the details.

Comparison and discussion: We first observe that for \((J d)/(ng_J^2) \le n^{-\delta }\), \(\delta > 0\) and p large enough, (5.18) is bounded by \(n^{-\alpha }\), where \(\alpha = (1/12) \wedge (\delta \rho /2)\) for \(\rho < 1\). It follows, in particular, that

This should be contrasted with previous results in the purely Gaussian setting, see for instance Cai et al. [8], and Birnbaum et al. [5] for a closely related result. Regarding (5.19), the situation is a bit more complex, as, even for large enough p, three terms may be dominant. Following the discussion in Koltchinskii and Lounici [29] below Theorem 6, let us fix J (and hence \(g_J\)), and assume \(d = d_n \rightarrow \infty \) as n increases, and that p is large enough. Then (5.19) and Theorem 6 in Koltchinskii and Lounici [29] amount to

respectively. So in this case, our bound is inferior to the one for the Gaussian case treated in Koltchinskii and Lounici [29], allowing only \(d = o(n^{1/3})\) compared to (almost) \(d = o(n)\). Such behaviour will always be present if the error bound from the perturbation approximation dominates, since it is weaker than the bounds implied by the isoperimetric inequality. On the other hand, Koltchinskii and Lounici [29] only treat \(\mathcal {J}= \{J\}\), and our distributional assumptions are much weaker. In particular, neither sub-Gaussianity nor Gaussianity is necessary for (5.19) to hold.

Next, we consider bootstrap approximations. Assuming \(p > 6\), Corollary 4 (\(q = 3\), \(s = 1/2\)), yields

For a comparison with Theorem 2.1 in Naumov et al. [39], we follow the discussion above and reconsider the case where J and \(g_J\) are fixed, \(d = d_n \rightarrow \infty \) and p is large enough. Our bound (5.21) and that of Theorem 2.1 in Naumov et al. [39] (purely Gaussian setup) are of magnitude

respectively. We see that for smaller d, the bound of Naumov et al. [39] is superior compared to ours. However, for larger d, our bound prevails. In particular, our range of applicability \(d = o(n^{1/3})\) is larger compared to \(d = o(n^{1/6}/(\log n)^{3/3})\), despite not demanding any sub-Gaussianity or even Gaussianity as in Naumov et al. [39].

6 Proofs

6.1 Proofs for Section 2.2

Conceptually, we borrow some ideas from Jirak and Wahl [26] and Wahl [49], coupled with some substantial innovations to deal with sums of eigenspaces.

The proofs are based on a series of lemmas. We start with an eigenvalue separation property.

Lemma 7

If \(\delta _{\mathcal {J}}\le 1\), then we have

Proof

The claim follows from Jirak and Wahl, [26, Proposition 1], using the same line of arguments as in the proof of Lemma 2 in Wahl [49]. For completeness, we repeat the proof. Set

Then Jirak and Wahl, [26, Proposition 1] states that \(\hat{\lambda }_{j_1}-\lambda _{j_1}\le \delta _{\mathcal {J}}(\lambda _{j_{1}-1}-\lambda _{j_1})\) (resp. \(\hat{\lambda }_{j_2}-\lambda _{j_2}\ge -\delta _{\mathcal {J}}(\lambda _{j_2}-\lambda _{j_2+1})\)), provided that \(\Vert T_{\ge j_1}ET_{\ge j_1}\Vert _\infty \le 1\) (resp. \(\Vert T_{\le j_2}ET_{\le j_2}\Vert _\infty \le 1\)). Now, by simple properties of the operator norm, using that

we get (recall that \(\delta _\mathcal {J}\le 1\))

Similarly, we have \(\Vert T_{\le j_2}ET_{\le j_2}\Vert _\infty \le 1\), and the claim follows. \(\square \)

The following lemma follows from simple properties of the operator norm.

Lemma 8

We have

Lemma 9

If \(\delta _{\mathcal {J}}<1/2\), then we have

and

where \(\vert R_{{\mathcal {J}}^c}\vert ^{-1/2}\) is the inverse of \(\vert R_{{\mathcal {J}}^c}\vert ^{1/2}\) on the range of \(P_{{\mathcal {J}}^c}\).

Proof

By Lemma 7, we have for every \(k<j_1\),

and thus also

Similarly, we have

By the identity \((\hat{\lambda }_j-\lambda _k)P_k{\hat{P}}_j=P_kE{\hat{P}}_j\), valid for every \(j,k\ge 1\), we get

Inserting (6.1) and (6.2) into (6.3), we arrive at

Moreover, we have

Combining these two estimates with Lemma 8, we arrive at

and the first claim follows inserting

where we again applied Lemma 8 in the second inequality. To get the second claim, note that

and the second claim follows from inserting the first claim. \(\square \)
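The identity \((\hat{\lambda }_j-\lambda _k)P_k{\hat{P}}_j=P_kE{\hat{P}}_j\) used in the proof above follows from \(P_k\Sigma =\lambda _kP_k\) and \(\hat{\Sigma }{\hat{P}}_j=\hat{\lambda }_j{\hat{P}}_j\), assuming, as the notation suggests, that \(E=\hat{\Sigma }-\Sigma \): indeed, \(P_kE{\hat{P}}_j=P_k\hat{\Sigma }{\hat{P}}_j-P_k\Sigma {\hat{P}}_j=(\hat{\lambda }_j-\lambda _k)P_k{\hat{P}}_j\). The short check below verifies it numerically with rank-one eigenprojectors; helper names are illustrative.

```python
import numpy as np


def eigen_pairs(S):
    """Eigenvalues (non-increasing) and rank-one eigenprojectors of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(S)
    vals, vecs = vals[::-1], vecs[:, ::-1]
    return vals, [np.outer(v, v) for v in vecs.T]


def max_identity_residual(Sigma, Sigma_hat):
    """max_{j,k} of the entrywise gap between (lam_hat_j - lam_k) P_k P_hat_j and P_k E P_hat_j."""
    E = Sigma_hat - Sigma
    lam, P = eigen_pairs(Sigma)
    lam_hat, P_hat = eigen_pairs(Sigma_hat)
    worst = 0.0
    for j, Pj_hat in enumerate(P_hat):
        for k, Pk in enumerate(P):
            lhs = (lam_hat[j] - lam[k]) * (Pk @ Pj_hat)
            rhs = Pk @ E @ Pj_hat
            worst = max(worst, float(np.abs(lhs - rhs).max()))
    return worst


rng = np.random.default_rng(0)
lam = np.array([4.0, 2.0, 1.0, 0.5])
X = rng.standard_normal((50, lam.size)) * np.sqrt(lam)
print(max_identity_residual(np.diag(lam), X.T @ X / 50))   # at floating-point round-off level
```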

In what follows, we abbreviate

Lemma 10

Suppose that \(\delta _{\mathcal {J}}<1/2\). Then we have

In particular, we have

Proof

Proceeding as in (4.10)–(4.11) in Jirak and Wahl [26], we have

Hence, by the triangle inequality, we get

First, we have

Inserting \(\Vert P_{{\mathcal {J}}}{\hat{P}}_{{\mathcal {J}}^c}\Vert _2=\Vert P_{{\mathcal {J}}^c}{\hat{P}}_{{\mathcal {J}}}\Vert _2\) and the second bound in Lemma 9, we get

where \(\gamma _{\mathcal {J}}\) is defined in (6.5). Second, by Lemma 7, proceeding as in the proof of Lemma 9, we get

Inserting Lemmas 8 and 9, we get

Finally,

Applying Lemmas 8 and 9 and the inequality

we get

Collecting these bounds for \(I_1,I_2,I_3\), we get

By (6.6), we have

and the second claim follows from inserting this into the first one. \(\square \)

Proof of Proposition 1

In order to prove (2.3), assume first that \(\delta _{\mathcal {J}}<1/4\). Then it follows from \(\Vert P_{{\mathcal {J}}}-{\hat{P}}_{\mathcal {J}}\Vert _2^2=2\Vert P_{{\mathcal {J}}^c}{\hat{P}}_{\mathcal {J}}\Vert _2^2\) and the second inequality in Lemma 9 that (2.3) holds. On the other hand, we always have \(\Vert P_{{\mathcal {J}}}-{\hat{P}}_{\mathcal {J}}\Vert _2^2\le 2\min (\vert {\mathcal {J}}\vert ,\vert {\mathcal {J}}^c\vert )\), implying (2.3) also in the case that \(\delta _{\mathcal {J}}\ge 1/4\).

It remains to prove (2.4). Applying the identities \(I=P_{{\mathcal {J}}}+P_{{\mathcal {J}}^c}= {\hat{P}}_{\mathcal {J}}+{\hat{P}}_{\mathcal {J}^c}\) and \(P_{\mathcal {J}}P_{\mathcal {J}^c}=0\), we have

Combining this with the triangle inequality, we obtain

Inserting the definition of the linear term in (2.2), we get

We again start with the case \(\delta _{\mathcal {J}}<1/4\). First, by Lemma 9 and the identity \(\Vert {\hat{P}}_{\mathcal {J}}P_{\mathcal {J}^c}\Vert _2=\Vert {\hat{P}}_{\mathcal {J}^c} P_{\mathcal {J}}\Vert _2\), we get

and

Second, by Lemma 10, we have

Hence, in the case \(\delta _{\mathcal {J}}< 1/4\), we arrive at

Finally, if \(\delta _{\mathcal {J}}\ge 1/4\), then we can use \(\Vert P_{\mathcal {J}}-{\hat{P}}_{\mathcal {J}}\Vert _2\le \sqrt{2}\min (\vert \mathcal {J}\vert ,\vert \mathcal {J}^c\vert )^{1/2}\) and (6.6) to obtain

This completes the proof. \(\square \)
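The projector relations used in this proof and in the proof of Lemma 10, namely \(\Vert P_{\mathcal {J}}-{\hat{P}}_{\mathcal {J}}\Vert _2^2=2\Vert P_{\mathcal {J}^c}{\hat{P}}_{\mathcal {J}}\Vert _2^2\), \(\Vert P_{\mathcal {J}}{\hat{P}}_{\mathcal {J}^c}\Vert _2=\Vert P_{\mathcal {J}^c}{\hat{P}}_{\mathcal {J}}\Vert _2\) and \(\Vert P_{\mathcal {J}}-{\hat{P}}_{\mathcal {J}}\Vert _2^2\le 2\min (\vert \mathcal {J}\vert ,\vert \mathcal {J}^c\vert )\), hold for any two orthogonal projectors of equal finite rank r, since all of them reduce to \(\Vert P-Q\Vert _2^2=2r-2\,{\text {tr}}(PQ)\). The sketch below checks them for random projectors in \(\mathbb {R}^d\); the helper names are illustrative.

```python
import numpy as np


def random_projector(d, r, rng):
    """Orthogonal projector onto a random r-dimensional subspace of R^d."""
    Q, _ = np.linalg.qr(rng.standard_normal((d, r)))   # orthonormal columns
    return Q @ Q.T


def hs_norm(A):
    """Hilbert-Schmidt (Frobenius) norm."""
    return np.linalg.norm(A, "fro")


def check_identities(d, r, rng):
    P, P_hat = random_projector(d, r, rng), random_projector(d, r, rng)
    I = np.eye(d)
    lhs = hs_norm(P - P_hat) ** 2
    return {
        "HS identity": abs(lhs - 2 * hs_norm((I - P) @ P_hat) ** 2),
        "cross terms": abs(hs_norm(P @ (I - P_hat)) - hs_norm((I - P) @ P_hat)),
        "bound slack": 2 * min(r, d - r) - lhs,        # non-negative by the bound
    }


print(check_identities(6, 2, np.random.default_rng(0)))
```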

Proof of Corollary 1

By the Cauchy–Schwarz inequality, we have

Hence, the claim follows from inserting the second claim of Proposition 1 and (6.6). \(\square \)

Proof of Corollary 2

By the triangle inequality, the inequality \((a+b)^2 \le 2a^2 + 2b^2\) and the Cauchy–Schwarz inequality, it follows that

Hence, the claim follows from inserting the second claim of Proposition 1 and (6.6) applied to both \(\hat{\Sigma }\) and \(\tilde{\Sigma }\). \(\square \)

6.2 Proofs for Section 2.3.1

Lemma 11

Consider Setting 1. Then we have

Here, \(V \sim \mathcal {N}(0,v^2)\), \(v \in (0,1]\), is independent of the \(Y_i\) and Z.

Proof of Lemma 11

The proof mainly relies on methods from Bentkus [3]. For a random variable Y in \(\mathcal {H}\), let \(Y^{\delta } = Y \mathbbm {1}_{\Vert Y\Vert \le \delta \sqrt{n}}\). Then by properties of the uniform distance (or a simple conditioning argument), we have

An application of Esseen’s smoothing inequality (cf. Feller, [12, Lemma 1, XVI.3]) yields

Next, proceeding as in Ul’yanov [48] (see Equation (86), resp. Theorem 4.6 in Bentkus [3]), one derives that

Since \(q\in (2,3]\), we have

and

It follows that (recall \(v\in (0,1]\))

This completes the proof. \(\square \)

Lemma 12

[Pinelis [41], Theorem 4.1] Let \((Y_i) \in \mathcal {H}\) be an independent sequence with \(\mathbb {E}Y_i = 0\) and \(\mathbb {E}\Vert Y_i\Vert ^{q}<\infty \) for all i and \(q\ge 1\). Then,

Lemma 13

Consider Setting 1 and assume in addition that \(\sum _{i = 1}^n \mathbb {E}\Vert Y_i\Vert ^r \le n^{r/2}\) for \(r \in \{2,q\}\). Then

Here, \(V \sim \mathcal {N}(0,v^2)\), \(v \in (0, 1]\), is independent of \(Y_1,\dots ,Y_n\) and Z.

Proof of Lemma 13

We use the well-known fact that for real-valued random variables X, Y, we have the dual representation

We conclude that for any \(a > 0\) and \(s > 1\), Markov’s inequality gives

We apply this inequality with \(s=q/2>1\). By assumption, we have \(\mathbb {E}\Vert Z\Vert ^2\le 1\) and thus \(\mathbb {E}\Vert Z\Vert ^{q} \lesssim _q 1\) (cf. Lemma 3). Lemma 11, the inequality \((x+y)^{q/2} \le 2^{q/2-1}\big (x^{q/2} + y^{q/2}\big )\), \(x,y \ge 0\), together with Lemma 12 then yield

Selecting \(a = v^{6/q} \big (n^{-q/2}\sum _{i = 1}^n\mathbb {E}\Vert Y_i\Vert ^{q} \big )^{-2/q}\), the claim follows. \(\square \)

Lemma 14

Consider Setting 1. Suppose in addition that \(\mathbb {E}\Vert Z\Vert ^2\le 1\) and \(|T|\le C_T\) almost surely. Then for any \(u,s > 0\), we have

Here, \(V \sim \mathcal {N}(0,v^2)\), \(v \in (0,1]\), is independent of S, T, Z.

Proof of Lemma 14

Let \(\mathcal {E} = \{|T-S|\le u \}\). By the triangle inequality and \(\mathbbm {1}_{\mathcal {E}}\le 1\), we have

Since again by the triangle inequality

we get

Since \(\mathbb {E}\Vert Z\Vert ^2 \le 1\), it follows that \(\mathbb {E}\Vert Z\Vert ^{2\,s+2} \lesssim _s 1\) for all \(s>0\) (cf. Lemma 3) and thus

Using this bound, we arrive at

and the claim follows from \(\mathbb {E}\vert V\vert \le v\). \(\square \)

Proof of Proposition 2

The claim follows by combining Lemmas 13 and 14 and balancing with respect to v. \(\square \)

Lemma 15

Let \(Y_1,\dots ,Y_n\) be independent random variables satisfying \(\mathbb {E}Y_i = 0\), \(\mathbb {E}Y_i^2 = 1\) and \(\mathbb {E}\vert Y_i\vert ^3 < \infty \). For \(a_i \in \mathbb {R}\), let \(A_n = \sum _{i = 1}^n a_i^2\). Then

Proof of Lemma 15

This immediately follows from a general version of the Berry–Esseen Theorem for non-identically distributed random variables, see e.g. Petrov [40]. \(\square \)

Lemma 16

Let \(G \sim \mathcal {N}(0,1)\) and T, S be real-valued random variables. Then, for any \(a,b > 0\), \(c \in \mathbb {R}\),

Proof of Lemma 16

Recall first that the uniform metric \(\textbf{U}\) is invariant with respect to affine transformations of the underlying random variables. Next, note that

A corresponding lower bound is also valid. Therefore, using the above mentioned affine invariance and the fact that the distribution function of G is Lipschitz continuous, the claim follows. \(\square \)
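The affine invariance used in this proof carries over to empirical distribution functions: a common map \(x\mapsto ax+c\) with \(a>0\) preserves the ordering of all sample points, so the empirical uniform distance between two samples does not change. A short illustration, with a hypothetical helper name:

```python
import numpy as np


def uniform_distance(a, b):
    """Empirical uniform (Kolmogorov) distance sup_x |F_a(x) - F_b(x)| between two samples."""
    a, b = np.sort(a), np.sort(b)
    grid = np.concatenate([a, b])
    Fa = np.searchsorted(a, grid, side="right") / a.size
    Fb = np.searchsorted(b, grid, side="right") / b.size
    return float(np.abs(Fa - Fb).max())


rng = np.random.default_rng(2)
T = rng.standard_normal(1000)
S = 0.9 * rng.standard_normal(1000) + 0.1
scale, shift = 3.7, -1.2                      # any scale > 0 and any shift
before = uniform_distance(T, S)
after = uniform_distance(scale * T + shift, scale * S + shift)
print(before == after)                        # True: the map preserves the ordering
```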

Next, we recall the following smoothing inequality (cf. Senatov [44], Lemma 4.2.1).

Lemma 17

Let X be a real-valued random variable, \(G \sim \mathcal {N}(0,\sigma ^2)\), \(\sigma > 0\), and \(G_{\epsilon } \sim \mathcal {N}(0,\epsilon ^2)\), \(\epsilon > 0\), independent of X, G. Then there exists an absolute constant C such that

Proof of Proposition 4

Due to Lemma 16 (with \(c = A\), \(b = B\)), it suffices to derive a bound for

For any \(\epsilon > 0\), let \(G_{\epsilon } \sim \mathcal {N}(0,\epsilon ^2)\). By Lemma 17 and the triangle inequality, we get

By the affine invariance of \(\textbf{U}\), we have

Setting \(V = G_{\epsilon }B/A\) implies \(v^2 = \mathbb {E}V^2 = (B \epsilon /A)^2 \). Hence an application of Lemma 11 yields

On the other hand, by the regularity of \(\textbf{U}\) (or explicitly by a simple conditioning argument) and Lemma 15 (applied with \(n=1\)), we obtain the bound

Piecing everything together, the claim follows. \(\square \)

For the next result, recall the definition of \(\lambda _{1,j}(\Psi )\), given in (2.6).

Lemma 18

[Lemma 3 in Naumov et al. [39]] Let \(Z\in \mathcal {H}\) be a Gaussian random variable with \(\mathbb {E}Z = 0\) and covariance operator \(\Psi \). Then, for any \(a>0\),

In particular, for any \(x \ge 0\), we have

that is, we have a uniform upper bound for the density of \(\Vert Z\Vert ^2\).

Lemma 19

[Lemma 2 in Naumov et al. [39]] For \(i \in \{1,2\}\), let \(Z_i\) be Gaussian with \(\mathbb {E}Z_i = 0\) and covariance operator \(\Psi _i\). Then

Lemma 20

Consider Setting 1 with \(q=3\). Then, we have

Proof of Lemma 20

We argue very similarly to the proof of Lemma 11, also using the notation therein. By Esseen’s smoothing inequality (cf. Feller [12, Lemma 1, XVI.3]) together with Lemma 18, we have for any \(a > 0\)

Setting \(t=1\) and using also (6.7), the claim follows from the (optimal) choice \(a = (n^{-3/2} \sum _{i = 1}^n \mathbb {E}\Vert Y_i\Vert ^3 \sqrt{\lambda _{1,2}(\Psi )})^{1/5}\). \(\square \)

Finally, let us mention the following result of Sazonov et al. [46].

Lemma 21

Consider Setting 1 with \(q= 3\). Then

Proof of Proposition 3

There exists a constant \(C > 0\) such that, for all \(x \ge 0\),

where we used Lemma 18 in the last step. In the same way, one derives a corresponding lower bound. Hence, the first claim now follows from Lemma 20. Using Lemma 21 instead of Lemma 20, the second claim follows. \(\square \)

6.3 Proofs for Section 2.3.2

The following general result is from Einmahl and Li [11].

Lemma 22

[Einmahl and Li [11]] Let \((B,\Vert \cdot \Vert )\) be a real separable Banach space with dual \(B^*\) and let \(B_1^*\) be the unit ball of \(B^*\). Let \(Z_1,\ldots ,Z_n\) be independent B-valued random variables with mean zero such that for some \(s > 2\), \(\mathbb {E}\Vert Z_i\Vert ^s < \infty \), \(1 \le i \le n\). Then we have for \(0 < \nu \le 1\), \(\delta > 0\) and any \(t > 0\),

where \(\varpi _n = \sup \big \{ \sum _{i = 1}^n \mathbb {E}f^2(Z_i) \,\,: \,\, f \in B_1^* \big \}\) and C is a positive constant depending on \(\nu \), \(\delta \) and s.

Proof of Lemma 2

(i) Follows from applying Lemma 22 to the Hilbert space of all Hilbert–Schmidt operators equipped with the Hilbert–Schmidt norm (see also Lemma 1 in Jirak and Wahl [25]).

(ii) Let B be the Banach space of all nuclear operators on \(\mathcal {H}\) equipped with the nuclear norm. It is well-known that the dual space \(B^*\) of B is the Banach space of all bounded linear operators equipped with the operator norm \(\Vert \cdot \Vert _\infty \). Moreover, for a bounded linear operator S, the corresponding functional is given by \(T\mapsto {\text {tr}}(TS)\). In order to apply Lemma 22 with \(Z_i=Y_i\otimes Y_i\) and \(s=p>2\), it remains to bound all involved quantities. First, since the map \(A \mapsto {\text {tr}}(A^{1/2})\) is concave on the set of all positive self-adjoint trace-class operators, Jensen’s inequality yields

Next, since \(Y \otimes Y\) is rank-one, we have \(\Vert Y \otimes Y \Vert _1=\Vert Y \Vert ^2\). Hence, by the triangle inequality and Jensen’s inequality, we have

Combining this with Lemma 3, we get

Finally, by the above discussion, we have

This completes the proof.
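The two facts used in part (ii), namely \(\Vert y\otimes y\Vert _1=\Vert y\Vert ^2\) for rank-one operators and Jensen's inequality for the concave map \(A\mapsto {\text {tr}}(A^{1/2})\), can be illustrated in finite dimensions. The Python sketch below (hypothetical helper names and a hypothetical spectrum) applies Jensen's inequality to the empirical measure of a Gaussian sample, for which it holds deterministically: the mean of \(\Vert Y_i\Vert = {\text {tr}}((Y_i\otimes Y_i)^{1/2})\) is at most \({\text {tr}}(\hat{\Sigma }^{1/2})\).

```python
import numpy as np


def nuclear_norm(M):
    """Trace (nuclear) norm: the sum of the singular values."""
    return np.linalg.svd(M, compute_uv=False).sum()


def trace_sqrt_psd(M):
    """tr(M^{1/2}) for a symmetric positive semi-definite matrix M."""
    eigvals = np.linalg.eigvalsh(M)
    return np.sqrt(np.clip(eigvals, 0.0, None)).sum()


rng = np.random.default_rng(0)
n, d = 500, 6
scales = np.array([3.0, 2.0, 1.0, 0.5, 0.25, 0.1])  # hypothetical spectrum
Y = rng.standard_normal((n, d)) * scales

# Rank-one fact: ||y (x) y||_1 = ||y||^2, with y (x) y realised as y y^T.
y = Y[0]
rank_one_gap = abs(nuclear_norm(np.outer(y, y)) - y @ y)

# Jensen for A -> tr(A^{1/2}) under the empirical measure:
# mean_i ||Y_i|| = mean_i tr((Y_i (x) Y_i)^{1/2}) <= tr((mean_i Y_i (x) Y_i)^{1/2}).
lhs = np.linalg.norm(Y, axis=1).mean()
rhs = trace_sqrt_psd(Y.T @ Y / n)
```

With a fast-decaying spectrum, \({\text {tr}}(\hat{\Sigma }^{1/2})\) can be much larger than the average norm, so the bound is far from tight in such cases.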

(iii) It is also possible to apply Lemma 22 to the operator norm. However, since the involved expectation term is difficult to bound in this case, we proceed differently and apply techniques from Minsker [36] instead. Note that under Assumptions 1 and 3 (which jointly imply \(L^4\)–\(L^2\) norm equivalence), one can alternatively apply techniques from Zhivotovskiy [53] and Abdalla and Zhivotovskiy [1], leading to slightly better logarithmic factors.

Let \(\psi : \mathbb {R}\rightarrow \mathbb {R}\) be the truncation function defined by

The following lemma follows from Theorem 3.2 in Minsker [36] and a standard approximation result.

Lemma 23

Let \((Z_i)\) with \(Z_i {\mathop {=}\limits ^{d}} Z\) be a sequence of i.i.d. compact self-adjoint random operators on \(\mathcal {H}\), let \(\tau _n^2 \ge n \Vert \mathbb {E}Z^2 \Vert _{\infty }\) and let \(\theta >0\) be a truncation level. Then

for any \(t>0\), where \(\overline{d} = {\text {tr}}(\mathbb {E}Z^2 )/\Vert \mathbb {E}Z^2 \Vert _{\infty }\). Here, \(\psi (\theta Z_i)\) denotes the usual functional transformation, i.e. the function \(\psi \) acts only on the spectrum of \(\theta Z_i\).
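The functional transformation \(\psi (\theta Z_i)\) and the quantity \(\overline{d}\) in Lemma 23 can be sketched for symmetric matrices as follows. Since the display defining \(\psi \) is not reproduced above, the sketch assumes a Minsker-type truncation, \(\psi (x)=x-x^3/6\) for \(|x|\le \sqrt{2}\) and \(\psi (x)={\text {sign}}(x)\,2\sqrt{2}/3\) otherwise; this choice, and all helper names, are assumptions for illustration. The matrix \(\psi (\theta Z)\) is obtained by applying \(\psi \) to the eigenvalues of \(\theta Z\) while keeping its eigenvectors.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def psi(x):
    """Assumed Minsker-type truncation: x - x^3/6 on |x| <= sqrt(2), constant beyond."""
    x = np.asarray(x, dtype=float)
    return np.where(np.abs(x) <= SQRT2, x - x ** 3 / 6.0, np.sign(x) * 2.0 * SQRT2 / 3.0)


def apply_spectral(f, Z):
    """f(Z) for a symmetric matrix Z: apply f to the eigenvalues, keep the eigenvectors."""
    eigvals, eigvecs = np.linalg.eigh(Z)
    return (eigvecs * f(eigvals)) @ eigvecs.T


def effective_rank(S):
    """tr(S) / ||S||_inf for a symmetric PSD matrix S (the quantity d-bar)."""
    return np.trace(S) / np.linalg.norm(S, ord=2)
```

By construction, \(\psi (\theta Z)\) commutes with \(Z\) and has operator norm at most \(2\sqrt{2}/3\), which is what makes such a truncated operator amenable to exponential moment bounds.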

We now turn to the proof of Lemma 2(iii), combining Lemma 23 with a truncation argument. To this end, set \(\theta = t/\sqrt{n}\), \(Z=\overline{Y \otimes Y}\) and \(\tau _n^2 = n \Vert \mathbb {E}(\overline{Y \otimes Y})^2 \Vert _{\infty } = n\), recalling that \(\Vert \mathbb {E}(\overline{Y \otimes Y})^2\Vert _\infty =1\). First,

Combining this with (6.11), it follows that

An application of Lemma 23 yields

The claim now follows from Lemma 3(ii). \(\square \)

Proof of Lemma 3

For \(1\le r\le p\), we have

Hence, by the triangle inequality, the Hölder inequality and the moment assumption, we have

This gives claim (i). In order to prove (ii), let us write

where the second equality follows from the fact that \((u_j \otimes u_k) (u_r \otimes u_s)\) is equal to \(u_j \otimes u_s\) if \(k = r\) and equal to 0 otherwise. Hence,

and thus

as can be seen from inserting

To see the improvements of (iii)–(iv), let us note that under the additional assumptions, we have \(\mathbb {E}\overline{\zeta _j \zeta _k}\, \overline{\zeta _k \zeta _s}=0\) for \(j\ne s\). Indeed, if \(j\ne s\), then either \(j\ne k\) and \(k\ne s\) in which case \(\mathbb {E}\overline{\zeta _j \zeta _k}\, \overline{\zeta _k \zeta _s}=\mathbb {E}\zeta _j \zeta _k^2 \zeta _s=0\), or \(j=k\) and \(k\ne s\) in which case \(\mathbb {E}\overline{\zeta _j \zeta _k}\, \overline{\zeta _k \zeta _s}=\mathbb {E}\zeta _k^3\zeta _s-(\mathbb {E}\zeta _k^2)(\mathbb {E}\zeta _k \zeta _s)=0\), or \(j\ne k\) and \(s=k\) in which case \(\mathbb {E}\overline{\zeta _j \zeta _k}\, \overline{\zeta _k \zeta _s}=\mathbb {E}\zeta _k^3\zeta _j-(\mathbb {E}\zeta _k^2)(\mathbb {E}\zeta _k \zeta _j)=0\). Hence, under the additional assumptions, we have

leading to

and

This gives claims (iii) and (iv). \(\square \)
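The case analysis above can be verified exactly in a toy model. The Python sketch below assumes, for illustration only, that the coefficients \(\zeta _0,\zeta _1,\zeta _2\) are independent and identically distributed, mean zero and deliberately skewed (values \(-1\) and \(2\) with probabilities \(2/3\) and \(1/3\)). It computes \(\mathbb {E}\overline{\zeta _j \zeta _k}\, \overline{\zeta _k \zeta _s}\) with rational arithmetic, confirming that it vanishes whenever \(j\ne s\) without any symmetry assumption. It also checks the product rule \((u_j \otimes u_k) (u_r \otimes u_s)=\mathbbm {1}_{\{k=r\}}\, u_j \otimes u_s\) for the standard basis, with \(u\otimes v\) realised as \(uv^\top \). All names are hypothetical.

```python
from fractions import Fraction
from itertools import product

import numpy as np

# Hypothetical one-dimensional law: mean zero but skewed (not symmetric).
VALUES = (Fraction(-1), Fraction(2))
PROBS = (Fraction(2, 3), Fraction(1, 3))
DIM = 3  # three independent coordinates zeta_0, zeta_1, zeta_2


def expectation(f):
    """Exact E f(zeta) for zeta with i.i.d. coordinates drawn from (VALUES, PROBS)."""
    total = Fraction(0)
    for idx in product(range(len(VALUES)), repeat=DIM):
        weight = Fraction(1)
        for i in idx:
            weight *= PROBS[i]
        total += weight * f([VALUES[i] for i in idx])
    return total


def centered_cross_moment(j, k, s):
    """E[(zeta_j zeta_k - E zeta_j zeta_k) (zeta_k zeta_s - E zeta_k zeta_s)]."""
    m_jk = expectation(lambda z: z[j] * z[k])
    m_ks = expectation(lambda z: z[k] * z[s])
    return expectation(lambda z: (z[j] * z[k] - m_jk) * (z[k] * z[s] - m_ks))


def rank_one_product_rule(dim=4):
    """Check (u_j (x) u_k)(u_r (x) u_s) = [k == r] u_j (x) u_s for the standard basis."""
    E = np.eye(dim, dtype=int)
    for j, k, r, s in product(range(dim), repeat=4):
        lhs = np.outer(E[j], E[k]) @ np.outer(E[r], E[s])
        rhs = np.outer(E[j], E[s]) if k == r else np.zeros((dim, dim), dtype=int)
        if not np.array_equal(lhs, rhs):
            return False
    return True
```

For \(j=s\) the moments survive: here \(\mathbb {E}\zeta ^2=2\) and \(\mathbb {E}\zeta ^4=6\), so the diagonal terms equal \(4\) for \(j=s\ne k\) and \(6-2^2=2\) for \(j=s=k\).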

6.4 CLTs: Proofs for Section 3

Proof of Theorem 1

We want to apply Proposition 2 with the choices

For this, let us write

The random variable \(L_\mathcal {J}X\otimes X\) takes values in the separable Hilbert space of all (self-adjoint) Hilbert–Schmidt operators on \(\mathcal {H}\) (endowed with the trace inner product) and has the decomposition

with \(\zeta _{jk} = \eta _j \eta _k\), as can be seen from inserting the Karhunen–Loève expansion of X and the definition of \(L_\mathcal {J}\). Using Assumption 1, the Cauchy–Schwarz inequality and the fact that the summation is over different indices, we have

Hence, by Lemma 3(i) and Lemma 6, we have for every \(1\le r\le p\),

In particular, setting \(q=p\wedge 3\), we get

for all \(r\in [2,q]\). By Corollary 1, we have

Thus, by (3.5) and Lemma 5, we get

as long as

Finally, we have

Inserting these choices for T, S, u and \(C_T\) into Proposition 2 (applied with \(q=p\wedge 3\)), the claim follows. \(\square \)

Proof of Theorem 3

We want to apply Proposition 4 with the choices \(A=A_{\mathcal {J}}\), \(B=B_{\mathcal {J}}\), \(C=C_{\mathcal {J}}\) (recall (3.4)),

and

For this, let us write

Inserting these choices for A, B, C, T, S, u into Proposition 4 (applied with \(q=p\wedge 3\)) and using (6.14) and (6.15), the claim follows. \(\square \)

Proof of Corollary 3

Using Lemma 15, we may argue as in the proof of Proposition 4. \(\square \)

Proof of Theorem 2

We proceed exactly as in the proof of Theorem 3, using Proposition 3 instead of Proposition 4. \(\square \)

6.5 Bootstrap I: Proof of Theorem 4

We start by applying Proposition 4 with respect to \({\tilde{\textbf{U}}}\), and

In doing so, we consider Setting 1 with

Note that we use an additional tilde to indicate that we are dealing with the bootstrap quantities. Hence, we have

as well as

The following lemma shows that the quantities \({\tilde{A}}_{\mathcal {J}}\), \({\tilde{B}}_{\mathcal {J}}\), \({\tilde{C}}_{\mathcal {J}}\) are concentrated around their corresponding quantities \({A}_{\mathcal {J}}\), \({B}_{\mathcal {J}}\), \({C}_{\mathcal {J}}\) from (3.4).

Lemma 24

Suppose that (4.3) holds. Then with probability at least \(1 - 2n^{1- p/4}\), we have

In particular, for n large enough, we have with the same probability,

Proof

First, (i)–(iii) are consequences of (4.3) and the first three claims (note that \(C_{\mathcal {J}}\lesssim B_{\mathcal {J}}\lesssim A_{\mathcal {J}}\) by properties of the Schatten norms). Let us start by proving the first claim. By independence of \((w_i)\) and \((Y_i)\), we have

By (6.14), we have \((\mathbb {E}(\Vert Y \Vert _2^2)^{p/2})^{2/p}\lesssim _p A_\mathcal {J}\). Since \(\mathbb {E}{\tilde{A}}_{\mathcal {J}} = A_{\mathcal {J}}\), (2.7) yields that for some constant \(C > 0\),

Using that \({\tilde{B}}_{\mathcal {J}}=\sqrt{2}\Vert {\tilde{\Psi }}_\mathcal {J}\Vert _2\) and \({B}_{\mathcal {J}}=\sqrt{2}\Vert \Psi _\mathcal {J}\Vert _2\), the triangle inequality and \(\vert \Vert {\tilde{\Psi }}_\mathcal {J}\Vert _2-\Vert \Psi _\mathcal {J}\Vert _2 \vert \le \Vert \tilde{\Psi }_\mathcal {J}-\Psi _\mathcal {J}\Vert _2\), the second claim follows if we can show that, with probability at least \(1 - n^{1- p/4}\),

In order to get (6.19), we apply Lemma 2(i). For this, recall from (6.13) that we have

with \(\zeta _{jk} = \eta _j \eta _k\), where the \(\zeta _{jk}\) satisfy \(\mathbb {E}\zeta _{jk}=0\) and \(\mathbb {E}\big \vert \zeta _{jk}\big \vert ^p \le C_{\eta }\) for all \(j\in \mathcal {J}\) and \(k\notin \mathcal {J}\). Hence, Lemmas 2(i) and 6 yield that for some \(C > 0\),

where \(t\ge 1\), and (6.19) follows by setting \(t = C\sqrt{\log n}\). Finally, using that \({\tilde{C}}_{\mathcal {J}}=2\Vert \tilde{\Psi }_\mathcal {J}\Vert _3\) and \({C}_{\mathcal {J}}=2\Vert \Psi _\mathcal {J}\Vert _3\) and the inequality \(\vert \Vert \tilde{\Psi }_\mathcal {J}\Vert _3-\Vert \Psi _\mathcal {J}\Vert _3 \vert \le \Vert \tilde{\Psi }_\mathcal {J}- \Psi _\mathcal {J}\Vert _3\le \Vert \tilde{\Psi }_\mathcal {J}- \Psi _\mathcal {J}\Vert _2\), the third claim follows from (6.19). Applying the union bound completes the proof. \(\square \)
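The Schatten-norm facts used in this proof, namely the monotonicity \(\Vert \cdot \Vert _3\le \Vert \cdot \Vert _2\le \Vert \cdot \Vert _1\) behind \(C_{\mathcal {J}}\lesssim B_{\mathcal {J}}\lesssim A_{\mathcal {J}}\) and the reverse triangle inequality \(\vert \Vert \tilde{\Psi }_\mathcal {J}\Vert _3-\Vert \Psi _\mathcal {J}\Vert _3 \vert \le \Vert \tilde{\Psi }_\mathcal {J}- \Psi _\mathcal {J}\Vert _3\le \Vert \tilde{\Psi }_\mathcal {J}- \Psi _\mathcal {J}\Vert _2\), can be checked numerically on matrices. In the Python sketch below, random symmetric matrices serve as hypothetical stand-ins for \(\Psi _\mathcal {J}\) and its bootstrap counterpart.

```python
import numpy as np


def schatten_norm(M, p):
    """Schatten p-norm: the l^p norm of the singular values of M."""
    s = np.linalg.svd(M, compute_uv=False)
    return float((s ** p).sum() ** (1.0 / p))


rng = np.random.default_rng(2)
G1, G2 = rng.standard_normal((2, 6, 6))
A = (G1 + G1.T) / 2                 # hypothetical stand-in for Psi_J
B = A + 0.1 * (G2 + G2.T) / 2       # hypothetical stand-in for its bootstrap version
```

The monotonicity is just \(\ell ^p\)-norm monotonicity applied to the singular values, which is why the Hilbert–Schmidt bound (6.19) also controls the deviation in the Schatten 3-norm.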

Corollary 6

Let \(q \in (2,3]\) with \(q\le p\) and \(s \in (0,1)\). Then, with probability at least \(1 - C_p \textbf{p}^{1-s}_{\mathcal {J},n,p}-C_pn^{1-p/(2q)}\), \(C_p > 0\), we have

Proof

Applying Proposition 4 to the choices (6.18), we get

By (6.14), we have \((\mathbb {E}(\Vert Y \Vert _2^q)^{p/q})^{q/p}\lesssim _q A_\mathcal {J}^{q/2}\). Hence, (2.7) yields that for some constant \(C > 0\),

Hence, with probability at least \(1 - n^{1-p/(2q)}\),

By Corollary 2, we have

Arguing as in Lemma 5, we have

In fact, since w is independent of X and satisfies \(\mathbb {E}w^2=1\), the random variable \(w(\vert R_{\mathcal {J}^c}\vert ^{1/2}+g_\mathcal {J}^{-1/2}P_\mathcal {J})X\) has the same covariance operator as \(X'=(\vert R_{\mathcal {J}^c}\vert ^{1/2}+g_\mathcal {J}^{-1/2}P_\mathcal {J})X\), and its Karhunen–Loève coefficients are given by \(w\eta _j\), where \(\eta _j\) are the Karhunen–Loève coefficients of both \(X'\) and X. Hence, (6.21) follows by the same line of arguments as Lemma 5. Combining (6.21) with (3.5), we thus obtain

for \(C > 0\) sufficiently large. By Markov’s inequality, we have

Due to Lemma 24, we can replace \({\tilde{A}}_{\mathcal {J}}, {\tilde{B}}_{\mathcal {J}}, {\tilde{C}}_{\mathcal {J}}\) by their counterparts \({A}_{\mathcal {J}}, {B}_{\mathcal {J}}, {C}_{\mathcal {J}}\) with probability at least \(1 - C n^{1-p/4}\), \(C > 0\). Piecing everything together, the claim follows. \(\square \)
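The covariance argument behind (6.21) only uses that w is independent of X with \(\mathbb {E}w^2=1\) and that X is centred. The Python sketch below checks this exactly for hypothetical finite laws: a mean-zero X in \(\mathbb {R}^3\), a multiplier w taking the values 0 and 2 with probabilities 3/4 and 1/4, and a symmetric matrix M standing in for \(\vert R_{\mathcal {J}^c}\vert ^{1/2}+g_\mathcal {J}^{-1/2}P_\mathcal {J}\). All of these choices are assumptions for illustration.

```python
import numpy as np

# Hypothetical finite laws: X is mean zero in R^3, w is independent with E w^2 = 1.
X_VALUES = np.array([[1.0, 2.0, 0.0], [-1.0, -2.0, 0.0], [0.0, 1.0, -1.0], [0.0, -1.0, 1.0]])
X_PROBS = np.array([0.3, 0.3, 0.2, 0.2])
W_VALUES = np.array([0.0, 2.0])
W_PROBS = np.array([0.75, 0.25])  # E w^2 = 0.25 * 4 = 1

# Hypothetical symmetric operator playing the role of |R|^{1/2} + g^{-1/2} P.
M = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.0], [0.0, 0.0, 0.3]])


def second_moment_of_weighted():
    """E[(w M X)(w M X)^T], computed exactly by summing over the product law."""
    out = np.zeros((3, 3))
    for x, px in zip(X_VALUES, X_PROBS):
        for w, pw in zip(W_VALUES, W_PROBS):
            v = w * (M @ x)
            out += px * pw * np.outer(v, v)
    return out


def covariance_of_unweighted():
    """Cov(M X) = M Sigma M^T with Sigma = E X X^T (X has mean zero)."""
    sigma = sum(px * np.outer(x, x) for x, px in zip(X_VALUES, X_PROBS))
    return M @ sigma @ M.T
```

Because \(\mathbb {E}wMX=\mathbb {E}w\cdot M\,\mathbb {E}X=0\), the second moment of \(wMX\) is its covariance, and it factorises as \(\mathbb {E}w^2\cdot M\Sigma M\); the multiplier need not be centred for this step.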

Proof of Theorem 4

To simplify the notation, we assume w.l.o.g. \(\sigma _w^2 = 1\). Then by affine invariance and the triangle inequality

The first term on the right-hand side is treated in Corollary 6. In order to deal with the second term, we can directly apply Theorem 3 as follows. By affine invariance, the triangle inequality and independence

For the first term on the right-hand side, we can apply Theorem 3. Note that the corresponding bound is dominated by the one provided by Corollary 6. For the second term, using that the standard Gaussian distribution function \(\Phi \) is Lipschitz continuous and satisfies \(\Phi (ax)-\Phi (x)\le (a-1)x\Phi '(x)\lesssim a-1\) for \(a\ge 1\) and \(x>0\), we get

Due to Lemma 24, we have

with probability at least \(1 - 2n^{1-p/4}\). Combining all bounds together with the union bound for the involved probabilities, the claim follows. \(\square \)
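The Gaussian inequality used in the proof follows from the mean value theorem: \(\Phi (ax)-\Phi (x)=(a-1)x\,\Phi '(\xi )\) for some \(\xi \in (x,ax)\), and \(\Phi '\) is decreasing on \((0,\infty )\). Moreover, \(\sup _{x>0}x\Phi '(x)=(2\pi e)^{-1/2}\), attained at \(x=1\), which gives the implicit constant in \(\lesssim a-1\). The Python sketch below (hypothetical helper names) checks both statements on a grid.

```python
import math


def Phi(x):
    """Standard Gaussian distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def phi(x):
    """Standard Gaussian density, Phi'(x)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def max_violation(a_grid, x_grid):
    """Largest value of Phi(a x) - Phi(x) - (a - 1) x phi(x) over the grid (should be <= 0)."""
    return max(Phi(a * x) - Phi(x) - (a - 1.0) * x * phi(x) for a in a_grid for x in x_grid)


SUP_X_PHI = 1.0 / math.sqrt(2.0 * math.pi * math.e)  # sup_{x>0} x phi(x), attained at x = 1
```

Combining both facts gives \(\Phi (ax)-\Phi (x)\le (a-1)/\sqrt{2\pi e}\approx 0.242\,(a-1)\) for all \(a\ge 1\) and \(x>0\).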

Proof of Corollary 4