Abstract

We prove a non-extinction result for Fleming–Viot-type systems of two particles with dynamics described by an arbitrary symmetric Hunt process under the assumption that the reference measure is finite. Additionally, we describe an invariant measure for the system, we discuss its ergodicity, and we prove that the reference measure is a stationary measure for the embedded Markov chain of positions of the surviving particle at successive branching times.


1 Introduction

Consider a Hunt process X(t) on a state space D, with a possibly finite lifetime \(\zeta \). The Fleming–Viot system of \(K \geqslant 2\) particles is a collection of K processes \({\bar{X}}_k(t)\), which evolve as follows. The particles are independent copies of X(t) up to the random time \(\tau _1\) when one of the particles reaches its lifetime. Instead of dying, however, this particle immediately jumps to the position of a randomly chosen other particle. Then the particles again evolve as independent copies of X(t) until one of them reaches its lifetime, at a random time \(\tau _2\), and so the process continues.

Alternatively, one can think of a randomly chosen particle branching into two whenever some particle dies. For this reason, the random times \(\tau _n\) described above are often called branching times. One expects that, under reasonable assumptions, the branching times \(\tau _n\) diverge to infinity, and so the Fleming–Viot system is well-defined for every \(t \in [0, \infty )\). It is known, however, that in some cases the limit \(\tau _\infty = \lim _{n \rightarrow \infty } \tau _n\) can be finite with positive probability, and then there is no obvious way to extend the evolution of the Fleming–Viot system past \(\tau _\infty \). We say that extinction, or branching explosion, occurs at \(\tau _\infty \) whenever it is finite.

The main result of this paper deals with just \(K = 2\) particles. Note that in this case at each branching time there is only one surviving particle, and so both particles occupy the same location at each branching time. Our key assumption is that the Hunt process X(t) is self-dual (or symmetric) with respect to a finite reference measure m. Here by self-duality we simply mean that the transition operators of X(t) are self-adjoint on \(L^2(m)\).

Theorem 1.1

Consider the Fleming–Viot system of two particles evolving according to a self-dual Hunt process on a state space D with a finite reference measure m. Then extinction never happens for almost every initial configuration of the two particles, where "almost every" can be understood in either of the following senses: (a) on \(D \times D\), with respect to the product measure \(m \times m\); or (b) on the diagonal of \(D \times D\), with respect to the measure concentrated on the diagonal with marginals m.

Remark 1.2

The Fleming–Viot system of particles following the Brownian motion was introduced in [6, 7]. A non-extinction result was stated already in [7], but the proof given there has an error. This led to an open problem which has been resolved only for sufficiently regular domains.

More precisely, non-extinction was established rigorously in [15] under the assumption that the domain satisfies the interior and exterior cone conditions, and the interior cone has a sufficiently large aperture. The result of [15] also covers particles following a general diffusion with smooth coefficients. A very similar result was simultaneously proved in [2] for Lipschitz domains with a sufficiently small Lipschitz constant, as well as for systems of two particles in polyhedral domains.

Our Theorem 1.1 shows that no regularity is needed for Fleming–Viot systems of two Brownian particles, and that the Brownian motion can be replaced by an arbitrary self-dual Hunt process with a finite reference measure. The question for Fleming–Viot systems of more than two particles, however, remains open.

We mention here a related stream of research [1, 4, 5, 8, 21] on the spine (the path of the surviving particle) of the Fleming–Viot system. In particular, the results of the present paper are used in [5] to show that for Fleming–Viot systems of two particles following the Brownian motion in an interval, the spine does not coincide with the Brownian motion conditioned to live forever.

Remark 1.3

Self-duality is an essential assumption in Theorem 1.1. For example, if \(D = (0, 1)\) and X(t) is the uniform motion to the left (that is, \(X(t) = X(0) - t\) for t less than the lifetime \(\zeta = X(0)\)), then \(\tau _\infty = \max \{{\bar{X}}_1(0), \bar{X}_2(0)\}\) is always finite.

We expect that for many self-dual Hunt processes on state spaces D with an infinite reference measure m the assertion of Theorem 1.1 holds true, but the assumption that m is finite cannot be completely removed. In other words, if m is an infinite measure, then the system may become extinct in finite time with positive probability. For example, Theorem 1.1(a) in [3] asserts that Bessel processes of negative dimension lead to Fleming–Viot systems of two particles with \(\tau _\infty < \infty \) almost surely; see also Example 5.7 in [2]. We note that a Bessel process of dimension \(\nu \in \mathbb {R}\) is a self-dual Hunt process on \((0, \infty )\), but the reference measure \(r^{\nu - 1} dr\) has infinite mass near the origin when \(\nu \leqslant 0\).

Under mild additional assumptions, Theorem 1.1 holds for every initial configuration. This is illustrated by the following result, which clearly covers the case of Brownian particles.

Corollary 1.4

Consider the Fleming–Viot system of two particles evolving according to a self-dual Hunt process X(t) on a state space D with a finite reference measure m. Suppose that the one-dimensional distributions of X(t) are absolutely continuous with respect to m for every \(t > 0\) and every starting point \(X(0) \in D\). Then, the Fleming–Viot system of two particles never becomes extinct, regardless of the initial configuration of the particles.

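Let G(x, dy) be the potential kernel of X(t):

\[G(x, dy) = \int _0^\infty \mathbb {P}^x(X(t) \in dy) \, dt, \tag{1.1}\]

and denote

\[\Vert G\Vert = \int _D G(x, D) \, m(dx) = \int _D \int _D G(x, dy) \, m(dx). \tag{1.2}\]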

We say that the Hunt process X(t) is irreducible if for every Borel set \(A \subseteq D\) such that \(m(A) > 0\) we have \(G(x, A) > 0\) for almost every \(x \in D\). By Theorems 6 and 29 in [19], irreducibility is equivalent to the following property: for every \(t > 0\) and every Borel set \(A \subseteq D\) such that \(m(A) > 0\) we have \(\mathbb {P}^x(X(t) \in A) > 0\) for almost every \(x \in D\); for further equivalent definitions, we refer to [19]. In addition to Theorem 1.1, we prove the following result on ergodicity of Fleming–Viot systems of two particles.

Theorem 1.5

Consider the Fleming–Viot system of two particles \(({\bar{X}}_1(t), {\bar{X}}_2(t))\) evolving according to a self-dual Hunt process X(t) on a state space D with a finite reference measure m. If \(\Vert G\Vert \) is finite (or, more generally, if the measure G(x, dy)m(dx) is \(\sigma \)-finite), then G(x, dy)m(dx) is an invariant measure for \(({\bar{X}}_1(t), {\bar{X}}_2(t))\).

If \(\Vert G\Vert \) is finite and additionally X(t) is irreducible, then, for every nonnegative Borel function \(\varphi \) on \(D \times D\) and for almost every initial configuration of the two particles (with respect to either the product measure \(m \times m\) or the measure m on the diagonal of \(D \times D\), as in Theorem 1.1), the ergodic averages of \(\varphi ({\bar{X}}_1(t), {\bar{X}}_2(t))\) converge with probability one:

\[\lim _{T \rightarrow \infty } \frac{1}{T} \int _0^T \varphi ({\bar{X}}_1(t), {\bar{X}}_2(t)) \, dt = \frac{1}{\Vert G\Vert } \int _D \int _D \varphi (x, y) \, G(x, dy) \, m(dx).\]

The above theorem is closely related to the following result on the embedded Markov chain of branching positions.

Corollary 1.6

Consider the Fleming–Viot system of two particles evolving according to a self-dual Hunt process X(t) on a state space D with a finite reference measure m, and assume that the branching times are finite with probability one. Let \(Z_n\) denote the position of the surviving particle at the nth branching time \(\tau _n\). Then \(Z_n\) is a conservative Markov chain, and m is a stationary measure for \(Z_n\).

Remark 1.7

Let us discuss the assumptions in Theorem 1.5 and Corollary 1.6. By definition, \(\Vert G\Vert = \int _D \mathbb {E}^x \zeta \, m(dx)\). Therefore, if \(\Vert G\Vert \) is finite, then \(\mathbb {P}^x(\zeta < \infty ) = 1\) for almost every \(x \in D\), and hence the branching times \(\tau _n\) are finite with probability one for almost every initial configuration (with respect to both the product measure \(m \times m\) and the measure on the diagonal with marginals m). The converse implication need not hold, but if \(\mathbb {P}^x(\zeta < \infty ) = 1\) for almost every \(x \in D\), then G(x, dy)m(dx) is a \(\sigma \)-finite measure; see, for example, items (iv) and (v) of Proposition (2.2) in [12].

Let us define \(D_0 = \{x \in D: \mathbb {P}^x(\zeta < \infty ) = 1\}\). As it was kindly pointed out by the referee, for a self-dual Hunt process X(t) on a state space D with a finite reference measure m, it can be proved that \(D_0\) is an invariant set, and \(\mathbb {P}^x(\zeta = \infty ) = 1\) for almost every \(x \in D {\setminus } D_0\) (see Remark 3.3). From the point of view of Fleming–Viot systems of particles, we can safely ignore \(D {\setminus } D_0\), or, in other words, we may restrict our attention to the case when \(\mathbb {P}^x(\zeta < \infty ) = 1\) for almost every \(x \in D\). Thus, the assumptions in the first part of Theorem 1.5 and in Corollary 1.6 are quite natural and not restrictive. We refer to [13] for further discussion.
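The first part of Theorem 1.5 can be checked in exact arithmetic on a toy example. The sketch below assumes a hypothetical continuous-time Markov chain on \(D = \{0, 1, 2\}\) with symmetric jump rates `RATES` and killing rates `KILL` (denoted \(\kappa \) in the comments). Such a chain is self-dual with respect to the uniform probability measure m, its potential kernel is \(G(x, \{y\}) = (-Q)^{-1}(x, y)\) for the sub-Markovian generator Q, and the two-particle Fleming–Viot system is again a finite Markov chain on \(D \times D\). The code verifies that \(G(x, \{y\}) m(\{x\})\) satisfies the balance equations of that chain, that is, that it is invariant. All names (`RATES`, `KILL`, `solve`, `potential_kernel`, `fleming_viot_generator`) are illustrative and not taken from the paper.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy model (not from the paper): a killed Markov chain on D = {0, 1, 2}
# with symmetric jump rates, hence self-dual with respect to the uniform measure m.
RATES = [[0, 1, 0], [1, 0, 2], [0, 2, 0]]  # q(x, y) = q(y, x)
KILL = [1, 0, 2]                           # killing rates kappa(x)
N = len(KILL)
M = [Fraction(1, N)] * N                   # m as a probability vector


def solve(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (a square and invertible)."""
    n = len(a)
    aug = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def generator():
    """Sub-Markovian generator Q: off-diagonal entries q(x, y), row sums -kappa(x)."""
    q = [[Fraction(RATES[x][y]) for y in range(N)] for x in range(N)]
    for x in range(N):
        q[x][x] = -sum(q[x]) - KILL[x]
    return q


def potential_kernel():
    """G(x, {y}) = integral over t of P^x(X(t) = y), i.e. the matrix (-Q)^{-1}."""
    minus_q = [[-v for v in row] for row in generator()]
    columns = [solve(minus_q, [int(x == y) for x in range(N)]) for y in range(N)]
    return [[columns[y][x] for y in range(N)] for x in range(N)]


def fleming_viot_generator():
    """Generator of the two-particle Fleming-Viot chain on D x D."""
    states = list(product(range(N), repeat=2))
    index = {s: i for i, s in enumerate(states)}
    big = [[Fraction(0)] * len(states) for _ in states]

    def add(src, dst, rate):
        if src != dst and rate:  # self-loops do not contribute to a generator
            big[index[src]][index[dst]] += rate
            big[index[src]][index[src]] -= rate

    for x, y in states:
        for z in range(N):
            if z != x:
                add((x, y), (z, y), RATES[x][z])  # first particle jumps
            if z != y:
                add((x, y), (x, z), RATES[y][z])  # second particle jumps
        add((x, y), (y, y), KILL[x])  # first particle dies, restarts at the second one
        add((x, y), (x, x), KILL[y])  # second particle dies, restarts at the first one
    return states, big


states, big = fleming_viot_generator()
G = potential_kernel()
pi = [G[x][y] * M[x] for x, y in states]  # candidate invariant measure G(x, dy) m(dx)
balance = [sum(pi[i] * big[i][j] for i in range(len(states))) for j in range(len(states))]
print("||G|| =", sum(pi))
print("pi is invariant:", all(b == 0 for b in balance))
```

Exact rational arithmetic is used so that invariance shows up as an exact zero rather than a rounding-level residual; any other symmetric choice of rates, with killing reachable from every state, should behave the same way.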

The remaining part of the paper is divided into four sections. In Sect. 2 we illustrate the main idea of the proof when X(t) is the standard Brownian motion in a smooth domain in \(\mathbb {R}^d\). In Sect. 3 we consider two independent copies of the underlying Hunt process X(t). This part contains two auxiliary lemmas, which describe the location of the surviving particle when the other one dies. Section 4 contains the proof of Theorem 1.1, while in Sect. 5 we prove Theorem 1.5.

2 Idea of the proof

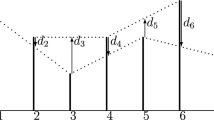

Let us consider a Fleming–Viot system of two particles, which from now on we denote by \(({\bar{X}}(t), {\bar{Y}}(t))\) rather than \((\bar{X}_1(t), {\bar{X}}_2(t))\). In this section we assume that \({\bar{X}}(t)\) and \({\bar{Y}}(t)\) evolve as independent Brownian motions in a smooth, bounded Euclidean domain D, and whenever one of the particles hits the boundary, it immediately jumps to the position of the other particle. The key idea of our proof lies in the following observation. Its refined variant, Lemma 3.2, is proved rigorously in Sect. 3.

Proposition 2.1

If the Fleming–Viot pair of particles is started at a random point distributed uniformly over the diagonal of \(D \times D\), then at the first branching time the particles are again distributed uniformly on the diagonal of \(D \times D\).

With the above result at hand, the remaining part of the proof of Theorem 1.1 is fairly simple. The sequence \((Z_n, \sigma _n)\) of branching locations \(Z_n = {\bar{X}}(\tau _n) = \bar{Y}(\tau _n)\) and gaps between branching times \(\sigma _n = \tau _n - \tau _{n - 1}\) forms a stationary Markov chain, and hence, by the ergodic theorem, \(\tau _n / n\) converges to a positive limit with probability one. In particular, \(\tau _\infty = \lim _{n \rightarrow \infty } \tau _n\) is necessarily infinite, that is, there is no extinction.
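The following Monte Carlo sketch illustrates this argument for two standard Brownian particles in \(D = (0, 1)\). It assumes a crude Euler discretisation with a hypothetical time step `dt`, so the boundary is only detected at grid times and the output carries a discretisation bias of a few per cent. Started from a uniform point on the diagonal, the branching locations \(Z_n\) should look uniformly distributed, and \(\tau _n / n\) should be roughly \(\mathbb {E}^\delta \sigma _1 = \frac{1}{2} \Vert G\Vert = \frac{1}{12}\), since \(\mathbb {E}^x \zeta = x (1 - x)\) and hence \(\Vert G\Vert = \frac{1}{6}\). The function name and parameters are illustrative only.

```python
import random


def simulate_fleming_viot(n_branchings, dt=4e-4, seed=1):
    """Crude Euler sketch of two Brownian particles in D = (0, 1) with Fleming-Viot jumps.

    Starts on the diagonal at a uniform point and returns the branching locations
    Z_1, ..., Z_n together with the branching times tau_1, ..., tau_n.
    """
    rng = random.Random(seed)
    step = dt ** 0.5
    x = y = rng.random()  # X(0) = Y(0), uniform on D
    t = 0.0
    locations, times = [], []
    while len(locations) < n_branchings:
        new_x = x + step * rng.gauss(0.0, 1.0)
        new_y = y + step * rng.gauss(0.0, 1.0)
        t += dt
        x_alive = 0.0 < new_x < 1.0
        y_alive = 0.0 < new_y < 1.0
        if x_alive and y_alive:
            x, y = new_x, new_y
            continue
        if x_alive:  # the second particle hit the boundary and jumps to the first
            z = new_x
        elif y_alive:  # the first particle hit the boundary and jumps to the second
            z = new_y
        else:  # both left D within one step (an artefact of the discretisation)
            z = rng.choice((x, y))
        x = y = z
        locations.append(z)
        times.append(t)
    return locations, times


zs, taus = simulate_fleming_viot(2000)
quarters = [sum(k / 4 <= z < (k + 1) / 4 for z in zs) / len(zs) for k in range(4)]
print("fraction of Z_n in each quarter of (0, 1):", quarters)
print("tau_n / n:", taus[-1] / len(taus))
```

Smaller values of `dt` reduce the bias at the cost of running time.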

We remark that our proof of Theorem 1.1 in Sect. 4 uses the full statement of Lemma 3.2 rather than just Proposition 2.1, and it is in fact even simpler than the argument sketched above.

Our proof of Proposition 2.1 (or Lemma 3.2) in the next section uses relatively standard, but rather abstract tools from the theory of Markov processes. For this reason, we think it will be instructive to discuss first a more direct approach to Proposition 2.1 for Brownian particles in a smooth domain D. While the two arguments (the one that follows and the actual proof in Sect. 3) may appear quite different, in fact both of them follow the same line: we identify the occupation density of the bivariate process with the Green function of D (using an analytic approach in Step 1 below, and a probabilistic reasoning in Lemma 3.1), and then we determine the distribution at the first branching time (expressing it in terms of the Poisson kernel of D in Step 2 below, and using resolvent techniques in Lemma 3.2).

Proof

(Sketch of the proof of Proposition 2.1) We divide the argument into three steps.

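Step 1. Let \(G_D(x, y)\) denote the usual Green function in D. That is, we have \(G_D(x, y) = G_D(y, x)\), for every \(y \in D\) the function \(x \mapsto G_D(x, y)\) vanishes continuously on the boundary of D, and we have

\[\Delta _x G_D(x, y) = -\delta _y(x)\]

in the sense of distributions. Here \(\Delta _x\) stands for the Laplace operator in \(\mathbb {R}^d\) acting on the variable x, and \(\delta _y\) is the Dirac delta at y. By symmetry,

\[\Delta _y G_D(x, y) = -\delta _x(y).\]

It follows that, as a bivariate function, the Green function satisfies

\[(\Delta _{x_1} + \Delta _{x_2}) G_D(x_1, x_2) = -2 \delta \qquad \text {in } D \times D.\]

Here and below we denote by \(\delta \) the uniform measure on the diagonal of \(D \times D\):

\[\int _{D \times D} \varphi (y_1, y_2) \, \delta (dy_1, dy_2) = \int _D \varphi (y, y) \, dy.\]

Since \(G_D(x_1, x_2)\) converges to zero on the boundary of \(D \times D\), Green’s third identity implies that

\[G_D(x_1, x_2) = 2 \int _{D \times D} G_{D \times D}((x_1, x_2), (y_1, y_2)) \, \delta (dy_1, dy_2).\]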

Here \(G_{D \times D}((x_1, x_2), (y_1, y_2))\) denotes the Green function in \(D \times D\).

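Step 2. Consider the 2d-dimensional Brownian motion (X(t), Y(t)) in \(D \times D\), absorbed at the boundary, and denote by \(\tau \) the hitting time of the boundary. By Kakutani’s formula for the solution of the Dirichlet problem, if \((X(0), Y(0)) = (x_1, x_2)\), then the density function of the distribution of \((X(\tau ), Y(\tau ))\) on the boundary of \(D \times D\) is the Poisson kernel \(P_{D \times D}((x_1, x_2), (z_1, z_2))\). Recall that the Poisson kernel is the inward normal derivative of the Green function (with respect to the second variable) on the boundary \(\partial (D \times D) = (\partial D \times D) \cup (D \times \partial D)\):

\[P_{D \times D}((x_1, x_2), (z_1, z_2)) = \begin{cases} \dfrac{\partial }{\partial \nu }\Big |_{z_1} G_{D \times D}((x_1, x_2), (z_1, z_2)) & \text {if } z_1 \in \partial D, \, z_2 \in D, \\[1ex] \dfrac{\partial }{\partial \nu }\Big |_{z_2} G_{D \times D}((x_1, x_2), (z_1, z_2)) & \text {if } z_1 \in D, \, z_2 \in \partial D. \end{cases}\]

Here \(\tfrac{\partial }{\partial \nu }|_z\) denotes the inward normal derivative on the boundary of D evaluated at a point \(z \in \partial D\).

It follows that if the initial position (X(0), Y(0)) is uniformly distributed over the diagonal of \(D \times D\), then the density function of the distribution of \((X(\tau ), Y(\tau ))\) is given by

\[\frac{1}{|D|} \int _{D \times D} P_{D \times D}((y_1, y_2), (z_1, z_2)) \, \delta (dy_1, dy_2),\]

and hence it is the inward normal derivative on the boundary of \(D \times D\) of

\[\frac{1}{|D|} \int _{D \times D} G_{D \times D}((y_1, y_2), (z_1, z_2)) \, \delta (dy_1, dy_2) = \frac{1}{2 |D|} \, G_D(z_1, z_2);\]

here we used the symmetry of \(G_{D \times D}\) and the identity obtained in Step 1. In other words, the density of \((X(\tau ), Y(\tau ))\) at \((z_1, z_2)\) is

\[\frac{1}{2 |D|} \frac{\partial }{\partial \nu }\Big |_{z_1} G_D(z_1, z_2) \quad \text {if } z_1 \in \partial D, \qquad \frac{1}{2 |D|} \frac{\partial }{\partial \nu }\Big |_{z_2} G_D(z_1, z_2) \quad \text {if } z_2 \in \partial D.\]

But the inward normal derivative (with respect to y) of the Green function in D, \(G_D(x, y)\), is the Poisson kernel in D, \(P_D(x, z)\). Thus, by the symmetry of \(G_D\), the density of \((X(\tau ), Y(\tau ))\) is

\[\frac{1}{2 |D|} P_D(z_2, z_1) \quad \text {if } z_1 \in \partial D, \, z_2 \in D, \qquad \frac{1}{2 |D|} P_D(z_1, z_2) \quad \text {if } z_1 \in D, \, z_2 \in \partial D.\]

Suppose that \(Z = X(\tau )\) if \(Y(\tau ) \in \partial D\), and \(Z = Y(\tau )\) if \(X(\tau ) \in \partial D\). What we have found above implies that for every Borel set \(A \subseteq D\),

\[\mathbb {P}(Z \in A) = 2 \cdot \frac{1}{2 |D|} \int _A \int _{\partial D} P_D(x, z) \, dz \, dx = \frac{1}{|D|} \int _A \int _{\partial D} P_D(x, z) \, dz \, dx,\]

where dz denotes the surface measure on \(\partial D\). However, the integral of \(P_D(x, z)\) over \(z \in \partial D\) is equal to one, and so

\[\mathbb {P}(Z \in A) = \frac{|A|}{|D|},\]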

that is, Z is uniformly distributed over D.

Step 3. Let us now consider the Fleming–Viot pair \(\bar{X}(t)\) and \({\bar{Y}}(t)\) evolving as independent Brownian motions in D, but whenever either particle hits the boundary of D, it is immediately resurrected at the location of the other particle. We suppose that \({\bar{X}}(0) = {\bar{Y}}(0)\) is uniformly distributed over D, and we claim that in this case also the first branching location \(Z_1 = {\bar{X}}(\tau _1) = {\bar{Y}}(\tau _1)\) is uniformly distributed over D.

Observe that up to time \(\tau _1\), \(({\bar{X}}(t), {\bar{Y}}(t))\) is the Brownian motion in \(D \times D\), and \(Z_1 = {\bar{X}}(\tau _1) = \bar{Y}(\tau _1)\) is equal to the left limit \({\bar{X}}(\tau _1-) = \bar{X}(\tau _1)\) if \({\bar{Y}}(\tau _1-) \in \partial D\), and to the left limit \({\bar{Y}}(\tau _1-) = {\bar{Y}}(\tau _1)\) if \({\bar{X}}(\tau _1-) \in \partial D\). Therefore, \(Z_1\) is the same random variable as the variable Z studied in the previous step. This completes the proof: we have already shown that Z is uniformly distributed over D. \(\square \)

3 Where were you

In this section we prove an auxiliary result, which describes the distribution of the position of the Hunt process X(t) at the lifetime of its independent copy. This turns out to be the key ingredient of the proof of Theorem 1.1 in the next section.

Suppose that X(t) is a self-dual Hunt process with state space \(D \cup \{\partial \}\), where \(\partial \) is a cemetery point, and a finite reference measure m. By \({\mathscr {F}}_t\) we denote the natural filtration of X(t). For notational convenience, with no loss of generality we assume that m is a probability measure. As is customary, we adopt the convention that \(X(\infty ) = \partial \), and that if f is a function on D, then we automatically extend f to \(D \cup \{\partial \}\) so that \(f(\partial ) = 0\). We denote by \(\mathbbm {1}\) the constant one on D; note, however, that \(\mathbbm {1}(\partial ) = 0\). We also write \(\langle f, g \rangle \) for the inner product of \(f, g \in L^2(m)\). Finally, we denote by \(\delta \) the measure concentrated on the diagonal of \(D \times D\) with marginals m,

\[\delta (A) = m(\{x \in D : (x, x) \in A\}) \qquad \text {for Borel sets } A \subseteq D \times D.\]

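We write \(\mathbb {P}^x\) for the probability corresponding to the process X(t) started at \(X(0) = x\). We denote by \(\zeta \) the lifetime of X(t), by

\[p_t(x, dy) = \mathbb {P}^x(X(t) \in dy)\]

the transition kernel of X(t), and by

\[P_t f(x) = \mathbb {E}^x f(X(t)) = \int _D f(y) \, p_t(x, dy)\]

the transition operators \(P_t\) of the process X(t), defined whenever the expectation or the integral is well-defined. Then \(P_t\) form a strongly continuous semigroup of self-adjoint contractions on \(L^2(D, m)\). We also define the resolvent kernel

\[u_\lambda (x, dy) = \int _0^\infty e^{-\lambda t} p_t(x, dy) \, dt\]

and the resolvent operators

\[U_\lambda f(x) = \mathbb {E}^x \int _0^\infty e^{-\lambda t} f(X(t)) \, dt = \int _D \int _0^\infty e^{-\lambda t} f(y) \, p_t(x, dy) \, dt,\]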

defined whenever \(\lambda \geqslant 0\) and the double integral on the right-hand side is well-defined. Note that for \(\lambda = 0\) we recover the potential kernel \(G(x, dy) = u_0(x, dy)\) discussed in the introduction. For further information about Hunt processes and their transition and resolvent operators, we refer to [9].

Suppose that Y(t) is an independent copy of X(t). Let us write \(\mathbb {P}^{x, y}\) for the probability corresponding to processes X(t) and Y(t) started at \(X(0) = x\) and \(Y(0) = y\), and \(\mathbb {P}^\delta \) for the probability corresponding to processes X(t) and Y(t) started at a random point \(X(0) = Y(0)\) with distribution m:

\[\mathbb {P}^\delta = \int _D \mathbb {P}^{x, x} \, m(dx).\]

We stress that under \(\mathbb {P}^\delta \), the processes X(t) and Y(t) are not independent. Denote by \(\zeta ^X\), \(\zeta ^Y\) the lifetimes of X(t) and Y(t), respectively. We view the bivariate process (X(t), Y(t)) as a Hunt process on state space \((D \cup \{\partial \}) \times (D \cup \{\partial \})\), with lifetime \(\max \{\zeta ^X, \zeta ^Y\}\), and cemetery state \((\partial , \partial )\). Clearly, for all Borel sets \(A, B \subseteq D \cup \{\partial \}\), for all Borel functions f, g on \(D \cup \{\partial \}\), and for all \(x, y \in D\),

\[\mathbb {P}^{x, y}(X(t) \in A, \, Y(t) \in B) = p_t(x, A) \, p_t(y, B), \qquad \mathbb {E}^{x, y}\bigl (f(X(t)) g(Y(t))\bigr ) = \mathbb {E}^x f(X(t)) \, \mathbb {E}^y g(Y(t)).\]

Here we abuse the notation by setting \(p_t(x, \{\partial \}) = 1 - p_t(x, D)\).

Symmetry (or self-duality) of X(t) allows us to link the resolvent kernels of the bivariate process (X(t), Y(t)) and the original process X(t).

Lemma 3.1

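For bounded Borel functions f and g and \(\lambda > 0\), or for nonnegative Borel functions f and g and \(\lambda \geqslant 0\), we have

\[\mathbb {E}^\delta \int _0^\infty e^{-2 \lambda t} f(X(t)) g(Y(t)) \, dt = \frac{1}{2} \langle U_\lambda f, g \rangle . \tag{3.1}\]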

Note that since we agreed that \(f(\partial ) = g(\partial ) = 0\), we have \(f(X(t)) g(Y(t)) = 0\) when \(t \geqslant \min \{\zeta ^X, \zeta ^Y\}\). Thus, the integral in (3.1) is effectively over \(t \in [0, \min \{\zeta ^X, \zeta ^Y\})\).

Proof

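Clearly,

\[\mathbb {E}^\delta \bigl (f(X(t)) g(Y(t))\bigr ) = \int _D P_t f(x) \, P_t g(x) \, m(dx) = \langle P_t f, P_t g \rangle .\]

Using the fact that \(P_t\) is self-adjoint and the semigroup property, we find that

\[\langle P_t f, P_t g \rangle = \langle P_{2t} f, g \rangle . \tag{3.2}\]

Thus, by Fubini’s theorem,

\[\mathbb {E}^\delta \int _0^\infty e^{-2 \lambda t} f(X(t)) g(Y(t)) \, dt = \int _0^\infty e^{-2 \lambda t} \langle P_{2t} f, g \rangle \, dt = \frac{1}{2} \int _0^\infty e^{-\lambda s} \langle P_s f, g \rangle \, ds = \frac{1}{2} \langle U_\lambda f, g \rangle ,\]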

as desired. \(\square \)
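Lemma 3.1 can be verified exactly on a finite example. The sketch below assumes a hypothetical three-state chain with symmetric jump rates `RATES` and killing rates `KILL`, which is self-dual with respect to the uniform probability measure m. For such a chain \(U_\lambda = (\lambda - Q)^{-1}\), and the pair (X(t), Y(t)) killed at the first death has generator \(Q \otimes I + I \otimes Q\) on \(D \times D\), so the left-hand side of (3.1) is obtained by solving a single linear system and averaging over the diagonal. The helper names are illustrative, not part of the paper.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy model (not from the paper): symmetric killed chain on D = {0, 1, 2}.
RATES = [[0, 1, 0], [1, 0, 2], [0, 2, 0]]  # symmetric jump rates q(x, y)
KILL = [1, 0, 2]                           # killing rates kappa(x)
N = len(KILL)
M = [Fraction(1, N)] * N                   # uniform probability measure m
STATES = list(product(range(N), repeat=2))  # D x D


def solve(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (a square and invertible)."""
    n = len(a)
    aug = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def generator():
    """Sub-Markovian generator Q: off-diagonal entries q(x, y), row sums -kappa(x)."""
    q = [[Fraction(RATES[x][y]) for y in range(N)] for x in range(N)]
    for x in range(N):
        q[x][x] = -sum(q[x]) - KILL[x]
    return q


def resolvent(lam, g):
    """U_lam g = (lam - Q)^{-1} g."""
    q = generator()
    a = [[int(x == y) * lam - q[x][y] for y in range(N)] for x in range(N)]
    return solve(a, g)


def pair_solve(lam, rhs):
    """Solve (2 lam - Q (+) Q) h = rhs; Q (+) Q generates (X, Y) killed at the first death."""
    q = generator()
    a = [[int(s == t) * 2 * lam
          - (q[s[0]][t[0]] * int(s[1] == t[1]) + q[s[1]][t[1]] * int(s[0] == t[0]))
          for t in STATES] for s in STATES]
    return dict(zip(STATES, solve(a, rhs)))


def lemma_3_1_sides(lam, f, g):
    """(E^delta int e^{-2 lam t} f(X(t)) g(Y(t)) dt, (1/2) <U_lam f, g>)."""
    h = pair_solve(lam, [f[x] * g[y] for x, y in STATES])  # value started from (x, y)
    left = sum(M[x] * h[(x, x)] for x in range(N))  # average over the diagonal
    right = sum(M[x] * u * g[x] for x, u in enumerate(resolvent(lam, f))) / 2
    return left, right


print(lemma_3_1_sides(1, [1, 2, 3], [2, 0, 1]))
print(lemma_3_1_sides(0, [1, 1, 1], [1, 1, 1]))  # both sides equal E^delta sigma = ||G|| / 2
```

With \(\lambda = 0\) and \(f = g = \mathbbm {1}\), both sides equal \(\mathbb {E}^\delta \min \{\zeta ^X, \zeta ^Y\} = \frac{1}{2} \Vert G\Vert \) for this chain.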

Our next result is a refined version of Proposition 2.1. Before we state it, we recall that if \(\zeta ^X \leqslant \zeta ^Y\), then we understand that \(X(\zeta ^Y) = \partial \) and so \(f(X(\zeta ^Y)) = 0\). We also agree that \(e^{-\infty } = 0\).

Lemma 3.2

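If f is a bounded Borel function and \(\lambda > 0\), then

\[\mathbb {E}^\delta \bigl (e^{-2 \lambda \zeta ^Y} f(X(\zeta ^Y))\bigr ) = \frac{1}{2} \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx). \tag{3.3}\]

When \(\lambda = 0\) and \(\mathbb {P}^x(\zeta ^X < \infty ) = 1\) for almost all \(x \in D\), we have

\[\mathbb {E}^\delta f(X(\zeta ^Y)) = \frac{1}{2} \langle f, \mathbbm {1} \rangle = \frac{1}{2} \int _D f(x) \, m(dx). \tag{3.4}\]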

Remark 3.3

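Self-duality of X(t) and finiteness of m imply that \(\mathbb {P}^x(\zeta ^X < \infty ) \in \{0, 1\}\) for almost every \(x \in D\). Indeed: if \(f(x) = \mathbb {P}^x(\zeta ^X = \infty ) = \lim _{t \rightarrow \infty } P_t \mathbbm {1}(x)\), then, arguing as in (3.2), we have

\[\int _D (f(x))^2 \, m(dx) = \lim _{t \rightarrow \infty } \langle P_t \mathbbm {1}, P_t \mathbbm {1} \rangle = \lim _{t \rightarrow \infty } \langle P_{2t} \mathbbm {1}, \mathbbm {1} \rangle = \int _D f(x) \, m(dx).\]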

Therefore, \(f(x) - (f(x))^2\) is a nonnegative function with integral zero. This is only possible if \(f(x) \in \{0, 1\}\) for almost every \(x \in D\), as claimed. Additionally, \(f = P_t f\) for every \(t > 0\), and hence the set of \(x \in D\) such that \(\mathbb {P}^x(\zeta ^X < \infty ) = 1\) is an invariant set. Thus, if X(t) is irreducible, then either X(t) is conservative (in the sense that \(\mathbb {P}^x(\zeta ^X = \infty ) = 1\) for almost every \(x \in D\)) or \(\mathbb {P}^x(\zeta ^X < \infty ) = 1\) for almost every \(x \in D\). We refer to [19] for a closely related discussion.

Remark 3.4

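One can prove that X(t) has an exit law \(\ell _t(x)\) such that if \(s, t > 0\), then \(P_s \ell _t(x) = \ell _{t + s}(x)\) for almost every \(x \in D\), and \(\mathbb {P}^x(\zeta ^X \in dt) = \ell _t(x) dt\) on \((0, \infty )\) for almost every \(x \in D\). Thus, using independence of X(t) and \(\zeta ^Y\), we have

\[\mathbb {E}^\delta \bigl (e^{-2 \lambda \zeta ^Y} f(X(\zeta ^Y))\bigr ) = \int _D \int _0^\infty e^{-2 \lambda t} \, P_t f(x) \, \ell _t(x) \, dt \, m(dx) = \int _0^\infty e^{-2 \lambda t} \langle P_t f, \ell _t \rangle \, dt.\]

Since \(P_t\) is a self-adjoint operator,

\[\langle P_t f, \ell _t \rangle = \langle f, P_t \ell _t \rangle = \langle f, \ell _{2t} \rangle .\]

Undoing the initial steps, we conclude that

\[\mathbb {E}^\delta \bigl (e^{-2 \lambda \zeta ^Y} f(X(\zeta ^Y))\bigr ) = \int _0^\infty e^{-2 \lambda t} \langle f, \ell _{2t} \rangle \, dt = \frac{1}{2} \int _D f(x) \int _0^\infty e^{-\lambda s} \ell _s(x) \, ds \, m(dx) = \frac{1}{2} \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx),\]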

and Lemma 3.2 follows. The above argument, kindly suggested by the referee, is very elegant and insightful, but it depends on the existence of the exit law \(\ell _t(x)\). This fact can be proved using Proposition (3.7) in [10] and an appropriate approximation argument, similar to the one given below; see also [13]. With some effort, one can also extend Lemma 3.2 to the case of a \(\sigma \)-finite reference measure m. However, we choose to restrict our attention to finite reference measures m, and we give a slightly longer, but more elementary proof.

Proof

The idea of the proof is to apply Lemma 3.1 to f and \(-L \mathbbm {1}\), where L denotes the generator of the process X(t). However, \(\mathbbm {1}\) typically fails to be in the domain of L. In order to circumvent this difficulty, we use resolvent techniques. More precisely, since the Yosida approximations \(\mu ^2 U_\mu - \mu \) converge to L, we approximate the (non-existent) function \(-L \mathbbm {1}\) by \(\mu \mathbbm {1}- \mu ^2 U_\mu \mathbbm {1}\), where \(\mu \rightarrow \infty \).

Let \(\mu> \lambda > 0\), and let \(g = \mu \mathbbm {1}- \mu ^2 U_\mu \mathbbm {1}\). Our goal is to apply Lemma 3.1 to f and g, simplify both sides of (3.1), and pass to the limit as \(\mu \rightarrow \infty \). We divide the proof into three steps.

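Step 1. By the resolvent equation,

\[U_\lambda g = \mu U_\lambda \mathbbm {1} - \mu ^2 U_\lambda U_\mu \mathbbm {1} = \mu U_\lambda \mathbbm {1} - \frac{\mu ^2}{\mu - \lambda } \bigl (U_\lambda \mathbbm {1} - U_\mu \mathbbm {1}\bigr ) = \frac{\mu }{\mu - \lambda } \, \mu U_\mu \mathbbm {1} - \frac{\lambda \mu }{\mu - \lambda } \, U_\lambda \mathbbm {1}.\]

As \(\mu \rightarrow \infty \), we have \(\mu U_\mu \mathbbm {1}\rightarrow \mathbbm {1}\) in \(L^2(D, m)\), and hence \(U_\lambda g \rightarrow \mathbbm {1}- \lambda U_\lambda \mathbbm {1}\) in \(L^2(D, m)\). It follows that

\[\lim _{\mu \rightarrow \infty } \langle U_\lambda f, g \rangle = \lim _{\mu \rightarrow \infty } \langle f, U_\lambda g \rangle = \langle f, \mathbbm {1} - \lambda U_\lambda \mathbbm {1} \rangle .\]

Finally, since \(\mathbbm {1}(X(t)) = 1\) when \(t < \zeta ^X\) and \(\mathbbm {1}(X(t)) = 0\) for \(t \geqslant \zeta ^X\), we have

\[\mathbbm {1}(x) - \lambda U_\lambda \mathbbm {1}(x) = 1 - \mathbb {E}^x \int _0^{\zeta ^X} \lambda e^{-\lambda t} \, dt = \mathbb {E}^x e^{-\lambda \zeta ^X}, \qquad x \in D.\]

Therefore,

\[\lim _{\mu \rightarrow \infty } \frac{1}{2} \langle U_\lambda f, g \rangle = \frac{1}{2} \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx). \tag{3.5}\]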

The above identity links the right-hand side of (3.1) and the right-hand side of (3.3).

Step 2. For \(x, y \in D \cup \{\partial \}\) we have

Using the above formula and the Markov property (with the usual abuse of notation), together with Fubini’s theorem, we find that

Recall that \(\mathbbm {1}(Y(t)) = 1\) if \(t < \zeta ^Y\) and \(\mathbbm {1}(Y(t)) = 0\) otherwise. It follows that

By Fubini’s theorem,

Substituting \(s = u / \mu \) and \(t = \zeta ^Y - v / \mu \), we arrive at

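Since f is bounded and \(e^{-2 \lambda (\zeta ^Y - v / \mu )} \leqslant 1\), the dominated convergence theorem applies, and we conclude that

\[\lim _{\mu \rightarrow \infty } \mathbb {E}^\delta \int _0^\infty e^{-2 \lambda t} f(X(t)) g(Y(t)) \, dt = \mathbb {E}^\delta \bigl (e^{-2 \lambda \zeta ^Y} f(X(\zeta ^Y))\bigr ). \tag{3.6}\]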

This identity provides a link between the left-hand sides of (3.1) and (3.3).

Step 3. The desired result for \(\lambda > 0\) follows now from (3.5) and (3.6), combined with Lemma 3.1. Finally, the result for \(\lambda = 0\) is shown by passing to the limit as \(\lambda \rightarrow 0^+\) and using the dominated convergence theorem. \(\square \)
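The approximation used in Step 1 can be watched numerically on a finite example. The sketch assumes a hypothetical three-state chain with symmetric jump rates `RATES` and killing rates `KILL` (\(\kappa \) in the comments), so that \(U_\lambda = (\lambda - Q)^{-1}\) and \(\mathbb {E}^x e^{-\lambda \zeta } = (\lambda - Q)^{-1} \kappa (x)\). It checks that \(\mathbbm {1} - \lambda U_\lambda \mathbbm {1}\) coincides with \(\mathbb {E}^x e^{-\lambda \zeta ^X}\), and that \(U_\lambda g\) with \(g = \mu \mathbbm {1} - \mu ^2 U_\mu \mathbbm {1}\) approaches it as \(\mu \) grows. The names are illustrative only.

```python
from fractions import Fraction

# Hypothetical toy model (not from the paper): symmetric killed chain on D = {0, 1, 2}.
RATES = [[0, 1, 0], [1, 0, 2], [0, 2, 0]]  # symmetric jump rates q(x, y)
KILL = [1, 0, 2]                           # killing rates kappa(x)
N = len(KILL)


def solve(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (a square and invertible)."""
    n = len(a)
    aug = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def generator():
    """Sub-Markovian generator Q: off-diagonal entries q(x, y), row sums -kappa(x)."""
    q = [[Fraction(RATES[x][y]) for y in range(N)] for x in range(N)]
    for x in range(N):
        q[x][x] = -sum(q[x]) - KILL[x]
    return q


def resolvent(lam, g):
    """U_lam g = (lam - Q)^{-1} g."""
    q = generator()
    a = [[int(x == y) * lam - q[x][y] for y in range(N)] for x in range(N)]
    return solve(a, g)


def approximation_error(lam, mu):
    """max over x of |U_lam g(x) - (1 - lam U_lam 1)(x)| for g = mu 1 - mu^2 U_mu 1."""
    ones = [1] * N
    g = [mu - mu * mu * v for v in resolvent(mu, ones)]
    target = [1 - lam * v for v in resolvent(lam, ones)]
    return max(abs(a - b) for a, b in zip(resolvent(lam, g), target))


lam = 1
target = [1 - lam * v for v in resolvent(lam, [1] * N)]  # 1 - lam U_lam 1
laplace = resolvent(lam, KILL)  # E^x e^{-lam zeta} solves (lam - Q) h = kappa
print("1 - lam U_lam 1 equals E^x e^{-lam zeta}:", target == laplace)
for mu in (10, 100, 1000):
    print(mu, float(approximation_error(lam, mu)))
```

For a finite chain the error decays like \(1/\mu \), which is consistent with the \(L^2\) convergence used in Step 1.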

We will need the following simple property:

\[\mathbb {P}^\delta (\zeta ^X = \zeta ^Y < \infty ) = 0. \tag{3.7}\]

This follows easily from the fact that the semigroup \(P_t\) is analytic (see Theorem 1 in Sect. III.1, p. 67, in [20]). Indeed: the function \(\mathbb {P}^\delta (\zeta ^X> s, \zeta ^Y > t) = \langle P_s \mathbbm {1}, P_t \mathbbm {1}\rangle \) is real-analytic with respect to \(s, t > 0\), and so the joint distribution of \((\zeta ^X, \zeta ^Y)\) under \(\mathbb {P}^\delta \) is absolutely continuous on \((0, \infty ) \times (0, \infty )\) (with a real-analytic density function). Alternatively, one can derive (3.7) from formula (3.4) with \(f = \mathbbm {1}\), with a minor twist when \(\mathbb {P}^\delta (\zeta ^X = \infty ) \in (0, 1)\) (which is only possible when X(t) is not irreducible). For a closely related result, see Proposition (3.7) in [10] or Proposition (6.20)(i) in [14].

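We define the random time

\[\sigma = \min \{\zeta ^X, \zeta ^Y\}\]

and the random variable (if \(\zeta ^X = \zeta ^Y\), both cases below give \(Z = \partial \))

\[Z = \begin{cases} X(\sigma ) & \text {if } \sigma = \zeta ^Y, \\ Y(\sigma ) & \text {if } \sigma = \zeta ^X. \end{cases} \tag{3.8}\]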

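Recall that \(f(X(t)) g(Y(t)) = 0\) when \(t \geqslant \sigma \). Thus, Lemma 3.1 reads

\[\mathbb {E}^\delta \int _0^\sigma e^{-2 \lambda t} f(X(t)) g(Y(t)) \, dt = \frac{1}{2} \langle U_\lambda f, g \rangle .\]

When \(\lambda > 0\) and \(f = g = \mathbbm {1}\), we obtain

\[\frac{1 - \mathbb {E}^\delta e^{-2 \lambda \sigma }}{2 \lambda } = \frac{1}{2} \langle U_\lambda \mathbbm {1}, \mathbbm {1} \rangle .\]

On the other hand, setting \(\lambda = 0\) and \(f = g = \mathbbm {1}\) leads to

\[\mathbb {E}^\delta \sigma = \frac{1}{2} \langle U_0 \mathbbm {1}, \mathbbm {1} \rangle = \frac{1}{2} \Vert G\Vert \]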

(see (1.2)). Thus, the assumption that \(\Vert G\Vert \) is finite in Theorem 1.5 is equivalent to finiteness of \(\mathbb {E}^\delta \sigma \).

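We now rephrase Lemma 3.2. Note that \(e^{-2 \lambda \zeta ^Y} f(X(\zeta ^Y)) = e^{-2 \lambda \sigma } f(X(\sigma ))\), as \(\zeta ^Y = \sigma \) when \(\zeta ^Y < \zeta ^X\), and both sides are equal to zero otherwise. Thus, formula (3.3) can be written as

\[\mathbb {E}^\delta \bigl (e^{-2 \lambda \sigma } f(X(\sigma ))\bigr ) = \frac{1}{2} \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx).\]

By symmetry,

\[\mathbb {E}^\delta \bigl (e^{-2 \lambda \sigma } f(Y(\sigma ))\bigr ) = \frac{1}{2} \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx),\]

and therefore, since \(f(Z) = f(X(\sigma )) + f(Y(\sigma ))\) (recall that \(f(\partial ) = 0\)),

\[\mathbb {E}^\delta \bigl (e^{-2 \lambda \sigma } f(Z)\bigr ) = \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx). \tag{3.10}\]

By considering \(\lambda = 0\), we find that if \(\mathbb {P}^x(\zeta ^X < \infty ) = 1\) for almost all \(x \in D\), then

\[\mathbb {E}^\delta f(Z) = \langle f, \mathbbm {1} \rangle = \int _D f(x) \, m(dx),\]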

a property that was stated as Proposition 2.1 in the previous section, and proved with a more direct approach when X(t) is the Brownian motion.
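For a finite chain, the identity \(\mathbb {E}^\delta (e^{-2 \lambda \sigma } f(Z)) = \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} m(dx)\) can be checked exactly, with f the indicator of a single state w. The sketch assumes a hypothetical three-state chain with symmetric jump rates and killing rates \(\kappa \). For such a chain, \(h(x, y) = \mathbb {E}^{x, y}(e^{-2 \lambda \sigma }; Z = w)\) solves a linear system for the killed pair, with source term \(\kappa (x) \mathbbm {1}_{\{y = w\}} + \kappa (y) \mathbbm {1}_{\{x = w\}}\), because the survivor's position becomes Z when the other particle is killed. With \(\lambda = 0\) the check says that Z has distribution m under \(\mathbb {P}^\delta \), which is the statement that m is stationary for the embedded chain in Corollary 1.6. The helper names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy model (not from the paper): symmetric killed chain on D = {0, 1, 2}.
RATES = [[0, 1, 0], [1, 0, 2], [0, 2, 0]]  # symmetric jump rates q(x, y)
KILL = [1, 0, 2]                           # killing rates kappa(x)
N = len(KILL)
M = [Fraction(1, N)] * N                   # uniform probability measure m
STATES = list(product(range(N), repeat=2))  # D x D


def solve(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (a square and invertible)."""
    n = len(a)
    aug = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]


def generator():
    """Sub-Markovian generator Q: off-diagonal entries q(x, y), row sums -kappa(x)."""
    q = [[Fraction(RATES[x][y]) for y in range(N)] for x in range(N)]
    for x in range(N):
        q[x][x] = -sum(q[x]) - KILL[x]
    return q


def pair_solve(lam, rhs):
    """Solve (2 lam - Q (+) Q) h = rhs; Q (+) Q generates (X, Y) killed at sigma."""
    q = generator()
    a = [[int(s == t) * 2 * lam
          - (q[s[0]][t[0]] * int(s[1] == t[1]) + q[s[1]][t[1]] * int(s[0] == t[0]))
          for t in STATES] for s in STATES]
    return dict(zip(STATES, solve(a, rhs)))


def branching_law(lam):
    """[E^delta(e^{-2 lam sigma}; Z = w) for w in D], computed from the killed pair."""
    law = []
    for w in range(N):
        # killing of X at (x, y) leaves Z = y; killing of Y at (x, y) leaves Z = x
        b = [KILL[x] * int(y == w) + KILL[y] * int(x == w) for x, y in STATES]
        h = pair_solve(lam, b)
        law.append(sum(M[x] * h[(x, x)] for x in range(N)))
    return law


def predicted_law(lam):
    """m(w) E^w e^{-lam zeta}, where E^x e^{-lam zeta} = (lam - Q)^{-1} kappa (x)."""
    q = generator()
    a = [[int(x == y) * lam - q[x][y] for y in range(N)] for x in range(N)]
    laplace = solve(a, KILL)
    return [M[w] * laplace[w] for w in range(N)]


print(branching_law(1), predicted_law(1))
print(branching_law(0))  # the distribution m: stationarity of the branching locations
```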

4 We’ll meet again

We are now ready to prove Theorem 1.1. This is done below, after the Fleming–Viot system of two particles is introduced in a more careful way.

As in the previous section, we consider a self-dual Hunt process X(t) on a state space D with a finite reference measure m, and its independent copy Y(t). Again with no loss of generality we assume that m is a probability measure. In this section we consider the corresponding Fleming–Viot system of two particles: a bivariate process \(({\bar{X}}(t), {\bar{Y}}(t))\) which evolves just as (X(t), Y(t)), except that at an increasing sequence of branching times \(\tau _n\) the coordinate that is about to die re-enters the state space D at the position of the other coordinate.

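More precisely, the Fleming–Viot system \(({\bar{X}}(t), {\bar{Y}}(t))\) is constructed recursively. We let \(\tau _0 = 0\), and we let \(Z_0\) be a random variable taking values in D, with distribution m. Once \(\tau _{n - 1} \in [0, \infty )\) and \(Z_{n - 1} \in D\) are given, and \({\bar{X}}(t)\) and \({\bar{Y}}(t)\) are defined on \([0, \tau _{n - 1})\), we proceed as follows. We sample an independent copy \((X_n(t), Y_n(t))\) of the bivariate process (X(t), Y(t)) started at the random position \((Z_{n - 1}, Z_{n - 1})\), and we denote the corresponding variables \(\sigma \) and Z by \(\sigma _n\) and \(Z_n\). That is,

\[\sigma _n = \min \{\zeta ^{X_n}, \zeta ^{Y_n}\}, \qquad Z_n = \begin{cases} X_n(\sigma _n) & \text {if } \sigma _n = \zeta ^{Y_n}, \\ Y_n(\sigma _n) & \text {if } \sigma _n = \zeta ^{X_n}. \end{cases}\]

We define

\[{\bar{X}}(\tau _{n - 1} + t) = X_n(t), \qquad {\bar{Y}}(\tau _{n - 1} + t) = Y_n(t)\]

for \(t \in [0, \sigma _n)\). Furthermore, we let

\[\tau _n = \tau _{n - 1} + \sigma _n, \qquad {\bar{X}}(\tau _n) = {\bar{Y}}(\tau _n) = Z_n.\]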

We denote the probability corresponding to the above construction by \({\bar{\mathbb {P}}}^\delta \) to emphasise that the initial configuration of the particles is distributed according to the measure \(\delta \) on the diagonal of \(D \times D\) with marginals m.

Exactly the same construction can be carried out for an arbitrary distribution of the initial configuration of the two particles. If \({\bar{X}}(0) = x\) and \({\bar{Y}}(0) = y\) with probability one, we write \({\bar{\mathbb {P}}}^{x, y}\) for the corresponding probability. If \({\bar{X}}(0)\) and \({\bar{Y}}(0)\) are drawn independently from distribution m, we denote the corresponding probability by \({\bar{\mathbb {P}}}^{m \times m}\). More generally, for an arbitrary probability measure \(\mu \) on \(D \times D\) we write \({\bar{\mathbb {P}}}^\mu = \int _{D \times D} {\bar{\mathbb {P}}}^{x, y} \mu (dx, dy)\) for the probability corresponding to the system of particles with initial configuration \(({\bar{X}}(0), {\bar{Y}}(0))\) chosen randomly from distribution \(\mu \). Clearly, for any event E we have

\[{\bar{\mathbb {P}}}^\mu (E) = \int _{D \times D} {\bar{\mathbb {P}}}^{x, y}(E) \, \mu (dx, dy). \tag{4.1}\]

Note that the process \(({\bar{X}}(t), {\bar{Y}}(t))\) is defined on \([0, \tau _\infty )\), where

\[\tau _\infty = \lim _{n \rightarrow \infty } \tau _n.\]

If \(\tau _\infty < \infty \), we say that the Fleming–Viot particle system becomes extinct (or suffers from a branching explosion) at time \(\tau _\infty \), and in this case we set \(\bar{X}(t) = {\bar{Y}}(t) = \partial \) for \(t \geqslant \tau _\infty \) to make the definition of \(({\bar{X}}(t), {\bar{Y}}(t))\) complete.

Below we restate, and then prove, Theorem 1.1. For convenience, we show items (a) and (b) of Theorem 1.1 separately, as Corollary 4.2 and Theorem 4.1, respectively.

Theorem 4.1

Consider the Fleming–Viot system \(({\bar{X}}(t), \bar{Y}(t))\) of two particles evolving according to a self-dual Hunt process X(t) on a state space D with a finite reference measure m. For almost every \(x \in D\) (with respect to m), if the initial configuration is \({\bar{X}}(0) = {\bar{Y}}(0) = x\), then the system never becomes extinct.

Proof

With no loss of generality we assume that m is a probability measure.

By construction, up to the first branching time, the process \((\bar{X}(t), {\bar{Y}}(t))\) is a copy of the process (X(t), Y(t)) studied in the previous section. More precisely, for \(t \in [0, \tau _1)\) the process \(({\bar{X}}(t), {\bar{Y}}(t)) = (X_1(t), Y_1(t))\) is a copy of the process (X(t), Y(t)) restricted to \(t \in [0, \sigma )\). Note, however, that at time \(\tau _1\) we have \({\bar{X}}(\tau _1) = \bar{Y}(\tau _1) = Z_1\), while one of the variables \(X_1(\tau _1)\) and \(Y_1(\tau _1)\) is equal to \(\partial \).

By definition, \(Z_1 = X_1(\tau _1)\) if \(\tau _1 = \zeta ^{Y_1}\), and \(Z_1 = Y_1(\tau _1)\) if \(\tau _1 = \zeta ^{X_1}\). This means that \(Z_1\) is defined in terms of \(X_1(t)\) and \(Y_1(t)\) in the same way as the random variable Z was constructed in (3.8) using X(t) and Y(t).

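Again by construction, the process \(({\bar{X}}(t), {\bar{Y}}(t))\) has the strong Markov property at time \(\tau _1\): the evolution after time \(\tau _1\) is independent of the past, conditionally on the present value of \(Z_1 = {\bar{X}}(\tau _1) = {\bar{Y}}(\tau _1)\). We fix \(\lambda > 0\), and we write

\[f(x) = {\bar{\mathbb {E}}}^{x, x} e^{-2 \lambda \tau _\infty }, \qquad x \in D.\]

By the strong Markov property and (3.10), we have

\[\int _D f(x) \, m(dx) = {\bar{\mathbb {E}}}^\delta e^{-2 \lambda \tau _\infty } = {\bar{\mathbb {E}}}^\delta \bigl (e^{-2 \lambda \tau _1} f(Z_1)\bigr ) = \mathbb {E}^\delta \bigl (e^{-2 \lambda \sigma } f(Z)\bigr ) = \int _D f(x) \, \mathbb {E}^x e^{-\lambda \zeta ^X} \, m(dx).\]

Thus,

\[\int _D f(x) \bigl (1 - \mathbb {E}^x e^{-\lambda \zeta ^X}\bigr ) \, m(dx) = 0.\]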

However, \(\mathbb {E}^x e^{-\lambda \zeta ^X} < 1\) for every \(x \in D\). It follows that \(f(x) = 0\) for almost every \(x \in D\), that is, \(\tau _\infty = \infty \) with probability \({\bar{\mathbb {P}}}^{x, x}\) one for almost every \(x \in D\). \(\square \)

Claim (b) in Theorem 1.1 is thus proved. In order to extend this result to almost all initial configurations \(({\bar{X}}(0), {\bar{Y}}(0)) = (x, y)\) with respect to the product measure \(m \times m\), as in claim (a), we need one more step.

Corollary 4.2

The result of Theorem 4.1 remains true for almost every initial configuration \({\bar{X}}(0) = x\), \({\bar{Y}}(0) = y\) (with respect to the product measure \(m \times m\)), and also when the distribution of \(({\bar{X}}(0), {\bar{Y}}(0))\) is absolutely continuous with respect to \(m \times m\).

Proof

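Again, with no loss of generality we assume that m is a probability measure. Suppose that the initial position of \((\bar{X}(t), {\bar{Y}}(t))\) is chosen randomly according to the product measure \(m \times m\), and recall that we denote the corresponding probability by \({\bar{\mathbb {P}}}^{m \times m}\). As in the proof of Theorem 4.1, we observe that up to the first branching time \(\tau _1\), we have \({\bar{X}}(t) = X_1(t)\) and \({\bar{Y}}(t) = Y_1(t)\), where \(X_1(t)\) and \(Y_1(t)\) are independent copies of the underlying Hunt process X(t), and both are started independently at a random position in D chosen according to the measure m. Furthermore, we have \(Z_1 = X_1(\tau _1)\) if \(\tau _1 = \zeta ^{Y_1}\) and \(Z_1 = Y_1(\tau _1)\) if \(\tau _1 = \zeta ^{X_1}\). It follows that for every Borel set \(A \subseteq D\),

\[{\bar{\mathbb {P}}}^{m \times m}(Z_1 \in A) = {\bar{\mathbb {P}}}^{m \times m}\bigl (X_1(\zeta ^{Y_1}) \in A\bigr ) + {\bar{\mathbb {P}}}^{m \times m}\bigl (Y_1(\zeta ^{X_1}) \in A\bigr )\]

(recall that \(X_1(\zeta ^{Y_1}) = \partial \) if \(\zeta ^{Y_1} \geqslant \zeta ^{X_1}\)). By independence,

\[{\bar{\mathbb {P}}}^{m \times m}\bigl (X_1(\zeta ^{Y_1}) \in A\bigr ) = \int _{(0, \infty )} {\bar{\mathbb {P}}}^{m \times m}\bigl (X_1(t) \in A\bigr ) \, {\bar{\mathbb {P}}}^{m \times m}(\zeta ^{Y_1} \in dt),\]

and the same formula holds with the roles of \(X_1\) and \(Y_1\) interchanged. If \(\mathbb {P}^m\) corresponds to the process X(t) started at a random position X(0) with distribution m, then we obtain

\[{\bar{\mathbb {P}}}^{m \times m}(Z_1 \in A) = 2 \int _{(0, \infty )} \mathbb {P}^m(X(t) \in A) \, \mathbb {P}^m(\zeta \in dt).\]

However, writing \(\mathbbm {1}_A\) for the indicator function of A,

\[\mathbb {P}^m(X(t) \in A) = \int _D P_t \mathbbm {1}_A(x) \, m(dx) = \langle P_t \mathbbm {1}_A, \mathbbm {1} \rangle ,\]

and since \(P_t\) is self-adjoint, we have

\[\langle P_t \mathbbm {1}_A, \mathbbm {1} \rangle = \langle \mathbbm {1}_A, P_t \mathbbm {1} \rangle = \int _A \mathbb {P}^x(\zeta > t) \, m(dx).\]

Therefore,

\[{\bar{\mathbb {P}}}^{m \times m}(Z_1 \in A) = 2 \int _A \int _{(0, \infty )} \mathbb {P}^x(\zeta > t) \, \mathbb {P}^m(\zeta \in dt) \, m(dx) \leqslant 2 m(A).\]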

In particular, the distribution of \(Z_1\) is absolutely continuous with respect to m.
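The role of self-adjointness in the computation above can be checked numerically in a toy special case. This case is our own illustrative assumption, not part of the argument: \(D = \{0, \ldots , n - 1\}\), m is the uniform probability measure, and X(t) is a continuous-time Markov chain with symmetric jump rates and killing rates k(x). In this setting, symmetry gives the finite-state formula \(\mathbb {P}(Z_1 = z) = \frac{2}{n^2} \int _0^\infty \mathbb {P}^z(\zeta > t) \sum _a k(a) \mathbb {P}^a(\zeta > t) \, dt\). We derived this formula for the sketch; it is not copied from the displays above. The Python sketch below uses hypothetical helper names and computes the law of \(Z_1\) under \(m \times m\) in two ways. The first uses the Green matrix of the pair \((X_1, Y_1)\). The second uses this formula together with an eigendecomposition of the symmetric generator.

```python
import numpy as np


def toy_model(n, seed=0):
    """Hypothetical toy model on D = {0, ..., n-1}: symmetric jump rates plus killing."""
    rng = np.random.default_rng(seed)
    rates = rng.uniform(0.5, 2.0, size=(n, n))
    rates = (rates + rates.T) / 2                # symmetric rates: counting measure is symmetric
    np.fill_diagonal(rates, 0.0)
    k = rng.uniform(0.0, 1.0, size=n)            # killing rates k(x)
    k[0] += 0.5                                  # make sure killing actually happens
    Q = rates - np.diag(rates.sum(axis=1) + k)   # sub-Markov generator with Q 1 = -k
    return rates, k, Q


def z1_law_direct(Q, k):
    """Law of Z_1 when (X_1(0), Y_1(0)) ~ m x m, from the Green matrix of the pair."""
    n = len(k)
    I = np.eye(n)
    L = np.kron(Q, I) + np.kron(I, Q)            # two independent copies, killed at sigma
    start = np.full(n * n, 1.0 / n**2)           # uniform product initial distribution
    occ = (start @ np.linalg.inv(-L)).reshape(n, n)  # expected time at (a, b) before sigma
    # X_1 is killed at a while Y_1 sits at z, or Y_1 is killed at b while X_1 sits at z
    return occ.T @ k + occ @ k


def z1_law_spectral(Q, k):
    """Same law from (2 / n^2) * int_0^inf s_t(z) <k, s_t> dt, where s_t = P_t 1."""
    n = len(k)
    lam, V = np.linalg.eigh(Q)                   # Q symmetric: Q = V diag(lam) V^T, lam < 0
    c = V.T @ np.ones(n)                         # s_t = V diag(exp(lam t)) c
    w = V.T @ k
    M = -1.0 / (lam[:, None] + lam[None, :])     # int_0^inf exp((lam_i + lam_j) t) dt
    return (2.0 / n**2) * (V @ (c * (M @ (w * c))))


rates, k, Q = toy_model(4, seed=1)
direct, spectral = z1_law_direct(Q, k), z1_law_spectral(Q, k)
print(np.abs(direct - spectral).max(), direct.sum())
```

The second function uses only the symmetry of Q, which is the finite-state counterpart of the self-adjointness of \(P_t\) on \(L^2(m)\).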

By the strong Markov property, the shifted process \(({\bar{X}}(\tau _1 + t), {\bar{Y}}(\tau _1 + t))\) is the same Fleming–Viot particle system, with initial configuration \((Z_1, Z_1)\). Above we proved that the distribution of \(Z_1\) under \({\bar{\mathbb {P}}}^{m \times m}\) is absolutely continuous with respect to m. Thus, using the strong Markov property and Theorem 4.1, we find that
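
\[{\bar{\mathbb {P}}}^{m \times m}(\tau _\infty < \infty ) = {\bar{\mathbb {E}}}^{m \times m}\bigl (\mathbbm {1}_{\{\tau _1 < \infty \}} \, {\bar{\mathbb {P}}}^{Z_1, Z_1}(\tau _\infty < \infty )\bigr ) = 0,\]
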
that is, the system never becomes extinct. By (4.1) (with \(\mu = m \times m\)), we conclude that \(\tau _\infty = \infty \) with probability \({\bar{\mathbb {P}}}^{x, y}\) one for almost every pair x, y.

The latter assertion of the corollary follows immediately from the former by (4.1) and Fubini’s theorem. Alternatively, one can repeat the above argument with the initial configuration distributed according to a given absolutely continuous distribution rather than \(m \times m\). \(\square \)

Of course, this proves claim (a) of Theorem 1.1, and so the proof of our main result is complete.

Proof of Corollary 1.4

Suppose that \({\bar{X}}(t)\) and \({\bar{Y}}(t)\) are started at fixed points x and y, respectively. Then the processes \({\bar{X}}(t)\) and \(\bar{Y}(t)\) are independent up to the first branching time \(\tau _1\). By the same argument as in the proof of Corollary 4.2, the distribution of \(Z_1\) is a mixture of the one-dimensional distributions of the underlying Hunt process X(t), and hence, by assumption, it is absolutely continuous with respect to m. The remaining part of the proof is exactly the same as in the proof of Corollary 4.2. \(\square \)

5 Isn’t this where we came in?

In this section we prove Theorem 1.5 and Corollary 1.6. We use the notation introduced in the previous section, and we begin by showing that m is a stationary measure for the embedded Markov chain \(Z_n\).

Proof of Corollary 1.6

With no loss of generality we assume that m is a probability measure, and we suppose that the initial configuration \(({\bar{X}}(0), {\bar{Y}}(0))\) has distribution \(\delta \) on the diagonal of \(D \times D\), as in the proof of Theorem 4.1. By assumption, \(Z_0 = {\bar{X}}(0) = {\bar{Y}}(0)\) has distribution m, and \(\mathbb {P}^x(\zeta ^X < \infty ) = 1\) for almost every \(x \in D\).

As observed in the proof of Theorem 4.1, the process \(({\bar{X}}(t), {\bar{Y}}(t))\) up to the first branching time \(\tau _1\) is a copy of the bivariate process (X(t), Y(t)) up to time \(\sigma = \min \{\zeta ^X, \zeta ^Y\}\), and hence the distribution of \(Z_1\) under \({\bar{\mathbb {P}}}^\delta \) is equal to the distribution of Z under \(\mathbb {P}^\delta \). By (3.11), the latter is equal to m. Therefore, \(Z_1\) has distribution m.

Suppose that we already know that \(Z_{n - 1}\) has distribution m. By the strong Markov property for the process \(({\bar{X}}(t), \bar{Y}(t))\) at time \(\tau _{n - 1}\) (see [16, 18]), the shifted process \(({\bar{X}}(\tau _{n - 1} + t), {\bar{Y}}(\tau _{n - 1} + t))\) under \({\bar{\mathbb {P}}}^\delta \) is just a copy of the original process \((\bar{X}(t), {\bar{Y}}(t))\) under \({\bar{\mathbb {P}}}^\delta \). The result of the previous paragraph implies that \(Z_n\) has distribution m, and additionally it proves the Markov property for the sequence \(Z_0, Z_1, \ldots \) at time \(n - 1\). Thus, by induction, the sequence \(Z_0, Z_1, \ldots \) is indeed a Markov chain, and for every n the random variable \(Z_n\) has distribution m. \(\square \)
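The same toy setting is an illustrative assumption: finite D, symmetric jump rates, killing rates k, and m uniform. In it, the transition matrix of the embedded chain \(Z_n\) can be written down exactly from the Green matrix of the pair started on the diagonal. Corollary 1.6 then predicts that this matrix is doubly stochastic, that is, \(m P = m\). The following is a minimal Python sketch; the helper names are hypothetical.

```python
import numpy as np


def toy_model(n, seed=0):
    """Hypothetical toy model on D = {0, ..., n-1}: symmetric jump rates plus killing."""
    rng = np.random.default_rng(seed)
    rates = rng.uniform(0.5, 2.0, size=(n, n))
    rates = (rates + rates.T) / 2                # symmetric rates: counting measure is symmetric
    np.fill_diagonal(rates, 0.0)
    k = rng.uniform(0.0, 1.0, size=n)            # killing rates k(x)
    k[0] += 0.5                                  # make sure killing actually happens
    Q = rates - np.diag(rates.sum(axis=1) + k)   # sub-Markov generator with Q 1 = -k
    return rates, k, Q


def embedded_chain(Q, k):
    """P[x, z] = P(Z_1 = z | Z_0 = x) in the finite toy model."""
    n = len(k)
    I = np.eye(n)
    L = np.kron(Q, I) + np.kron(I, Q)            # pair state (a, b) has index a * n + b
    G2 = np.linalg.inv(-L)                       # Green matrix of (X, Y) killed at sigma
    diag = [x * n + x for x in range(n)]         # rows of the diagonal starts (x, x)
    R = G2[diag, :].reshape(n, n, n)             # R[x, a, b] = expected time at (a, b) from (x, x)
    # X dies at a (rate k[a]) while Y sits at z, or Y dies at b (rate k[b]) while X sits at z
    return np.einsum('xaz,a->xz', R, k) + np.einsum('xzb,b->xz', R, k)


rates, k, Q = toy_model(5, seed=2)
P = embedded_chain(Q, k)
m = np.full(len(k), 1.0 / len(k))                # normalised counting measure
print(P.sum(axis=1))                             # each row sums to 1
print(m @ P - m)                                 # zero up to rounding: m is stationary
```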

For the construction of a stationary measure for the Fleming–Viot system \(({\bar{X}}(t), {\bar{Y}}(t))\) in Theorem 1.5, we additionally denote

\[\mu (dx, dy) = \Vert G\Vert ^{-1} G(x, dy) \, m(dx)\]

whenever \(\Vert G\Vert \) is finite; see (1.1) and (1.2). Note that in this case \(\mu \) is a probability measure on \(D \times D\). Our goal is to show that the system \(({\bar{X}}(t), {\bar{Y}}(t))\) is stationary under the probability \({\bar{\mathbb {P}}}^\mu \), which corresponds to the Fleming–Viot system of two particles with initial configuration \(({\bar{X}}(0), {\bar{Y}}(0))\) distributed according to \(\mu \).
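Consider again the finite toy model used for illustration. Its assumptions are: \(D = \{0, \ldots , n - 1\}\), m uniform, symmetric jump rates, and killing rates k. In this model the two-particle system is itself a continuous-time Markov chain on \(D \times D\), and the claim that \(\mu \) is stationary reduces to \(\mu A = 0\) for its generator A. The sketch below takes G to be the Green matrix \((-Q)^{-1}\) of the killed chain. This is our reading of \(G(x, dy) = u_0(x, dy)\) in this setting. The helper names are hypothetical.

```python
import numpy as np


def toy_model(n, seed=0):
    """Hypothetical toy model on D = {0, ..., n-1}: symmetric jump rates plus killing."""
    rng = np.random.default_rng(seed)
    rates = rng.uniform(0.5, 2.0, size=(n, n))
    rates = (rates + rates.T) / 2                # symmetric rates: counting measure is symmetric
    np.fill_diagonal(rates, 0.0)
    k = rng.uniform(0.0, 1.0, size=n)            # killing rates k(x)
    k[0] += 0.5                                  # make sure killing actually happens
    Q = rates - np.diag(rates.sum(axis=1) + k)   # sub-Markov generator with Q 1 = -k
    return rates, k, Q


def fleming_viot_pair_generator(Q, k):
    """Generator of the two-particle system on D x D; (x, y) has index x * n + y."""
    n = len(k)
    I = np.eye(n)
    A = np.kron(Q, I) + np.kron(I, Q)            # independent motion; killing removes mass
    for x in range(n):
        for y in range(n):
            A[x * n + y, y * n + y] += k[x]      # X dies and jumps onto Y: (x, y) -> (y, y)
            A[x * n + y, x * n + x] += k[y]      # Y dies and jumps onto X: (x, y) -> (x, x)
    return A


rates, k, Q = toy_model(4, seed=3)
A = fleming_viot_pair_generator(Q, k)
G = np.linalg.inv(-Q)                            # G[x, y]: expected time at y before zeta, from x
mu = G / G.sum()                                 # mu(x, y) proportional to G(x, {y}) m({x})
print(np.abs(A.sum(axis=1)).max())               # A is conservative
print(np.abs(mu.reshape(-1) @ A).max())          # mu A = 0 up to rounding
```

In this toy case the identity \(\mu A = 0\) reduces to \(QG + GQ = -2I\) together with \(G k = \mathbb {1}\), that is, \(\mathbb {P}^x(\zeta < \infty ) = 1\).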

The only essential property that we use here is that the process \(({\bar{X}}(t), {\bar{Y}}(t))\) is obtained by concatenating ‘excursions’ \((X_k(t), Y_k(t))\), \(t \in [0, \sigma _k)\), and that the initial configurations \((X_k(0), Y_k(0)) = (Z_{k - 1}, Z_{k - 1})\) of these excursions form a stationary Markov chain (by Corollary 1.6). While such a concatenation procedure seems to be rather standard (see, for example, [16, 18]), the author was unable to find a suitable reference, and so we include full details.
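The concatenation of excursions described above translates directly into a simulation. The following Python sketch assumes the same hypothetical finite toy model: symmetric jump rates, killing rates k, and m uniform on \(\{0, \ldots , n - 1\}\). Each excursion starts at \((Z_{k-1}, Z_{k-1})\) and runs the two particles independently until the first killing. The survivor's position at that moment becomes \(Z_k\). Only the embedded jump chain is simulated, since holding times do not affect \(Z_n\).

```python
import numpy as np


def toy_model(n, seed=0):
    """Hypothetical toy model on D = {0, ..., n-1}: symmetric jump rates plus killing."""
    rng = np.random.default_rng(seed)
    rates = rng.uniform(0.5, 2.0, size=(n, n))
    rates = (rates + rates.T) / 2                # symmetric rates: counting measure is symmetric
    np.fill_diagonal(rates, 0.0)
    k = rng.uniform(0.0, 1.0, size=n)            # killing rates k(x)
    k[0] += 0.5                                  # make sure killing actually happens
    Q = rates - np.diag(rates.sum(axis=1) + k)   # sub-Markov generator with Q 1 = -k
    return rates, k, Q


def branching_positions(rates, k, z0, n_excursions, seed=0):
    """Simulate Z_0, Z_1, ... by concatenating excursions started at (Z_{j-1}, Z_{j-1})."""
    rng = np.random.default_rng(seed)
    n = len(k)
    cum = np.cumsum(rates, axis=1)               # cumulative jump rates, to sample jump targets
    out = cum[:, -1] + k                         # total event rate (jump or death) of a particle
    Z = [z0]
    for _ in range(n_excursions):
        pos = [Z[-1], Z[-1]]                     # the excursion starts on the diagonal
        while True:
            # Embedded jump chain only: holding times do not affect Z_n.
            i = 0 if rng.random() * (out[pos[0]] + out[pos[1]]) < out[pos[0]] else 1
            x = pos[i]
            u = rng.random() * out[x]
            if u < k[x]:                         # particle i is killed ...
                Z.append(pos[1 - i])             # ... and restarts at the other particle
                break
            # otherwise particle i jumps to y with probability rates[x, y] / cum[x, -1];
            # min(...) only guards against floating-point round-off at the upper end
            pos[i] = min(int(np.searchsorted(cum[x], u - k[x], side='right')), n - 1)
    return np.array(Z)


rates, k, Q = toy_model(3, seed=4)
Z = branching_positions(rates, k, z0=0, n_excursions=20000, seed=5)
freq = np.bincount(Z, minlength=3) / len(Z)
print(freq)                                      # close to (1/3, 1/3, 1/3)
```

With a long run, the empirical frequencies of \(Z_n\) should be close to the uniform distribution, in line with Corollary 1.6. This is a Monte Carlo illustration, not a proof.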

We fix \(\lambda > 0\) and two bounded nonnegative Borel functions f, g, and we denote

Recall that \(\delta \) is the measure on the diagonal of \(D \times D\) with marginals m, and once again with no loss of generality we assume that m is a probability measure. The following lemma is the key technical result in the proof of Theorem 1.5.

Lemma 5.1

Suppose that \(\Vert G\Vert \) is finite. With the above definitions, we have

Proof

Recall that \(G(x, dy) = u_0(x, dy)\), and that the process \((\bar{X}(t), {\bar{Y}}(t))\) up to the first branching time \(\tau _1\) is a copy of the process (X(t), Y(t)) up to time \(\sigma = \min \{\zeta ^X, \zeta ^Y\}\). Thus, formula (3.9) implies that

We now show a variant of the resolvent equation, with one integral over \((0, \infty )\) and the other one over \((0, \tau _1)\). Using the Markov property, we find that

By Fubini’s theorem,

We study each of the terms on the right-hand side. For the first one, by equality of \(({\bar{X}}(t), {\bar{Y}}(t))\) and (X(t), Y(t)) up to the first branching time, and by (3.9) with \(\lambda = 0\), we obtain

In order to transform the third term, we apply the strong Markov property:

Since \(\Vert G\Vert \) is finite, we have \({\bar{\mathbb {P}}}^{x, x}(\tau _1< \infty ) = \mathbb {P}^{x, x}(\sigma < \infty ) = 1\) for almost every \(x \in D\), and hence \({\bar{\mathbb {P}}}^\delta (\tau _1 < \infty ) = 1\). By Corollary 1.6, the distribution of \(Z_1\) under \({\bar{\mathbb {P}}}^\delta \) is equal to m. Thus,

By the definition of \(\varphi \), we have

Combining (5.3) and (5.4) with (5.2), we find that

The last three terms on the right-hand side cancel out, and the desired result follows. \(\square \)

Proof of Theorem 1.5

We divide the argument into three parts.

Step 1. Suppose that \(\Vert G\Vert \) is finite. Using the definition of \({\bar{\mathbb {P}}}^\mu \) and \(\varphi \), formula (5.1) can be rewritten as

Fubini’s theorem implies that if \(\Phi (t) = {\bar{\mathbb {E}}}^\mu \bigl (f({\bar{X}}(t)) g({\bar{Y}}(t))\bigr )\), then

\[\int _0^\infty e^{-\lambda t} \Phi (t) \, dt = \frac{\Phi (0)}{\lambda },\]

that is, the Laplace transform of \(\Phi \) is given by \(\Phi (0) / \lambda \). It follows that \(\Phi (t) = \Phi (0)\) for almost every \(t \in [0, \infty )\). Assume that f and g are additionally continuous. Then \(\Phi \) is right-continuous (by right-continuity of the paths of \(({\bar{X}}(t), {\bar{Y}}(t))\) and the dominated convergence theorem), and hence we simply have \(\Phi (t) = \Phi (0)\) for every \(t \in [0, \infty )\). In other words,
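
\[{\bar{\mathbb {E}}}^\mu \bigl (f({\bar{X}}(t)) g({\bar{Y}}(t))\bigr ) = {\bar{\mathbb {E}}}^\mu \bigl (f({\bar{X}}(0)) g({\bar{Y}}(0))\bigr )\]
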
for every \(t \geqslant 0\) and all bounded continuous nonnegative functions f and g. By a density argument, the distributions of \(({\bar{X}}(t), {\bar{Y}}(t))\) and \(({\bar{X}}(0), {\bar{Y}}(0))\) under \({\bar{\mathbb {P}}}^\mu \) are equal. By the Markov property, this means that \(({\bar{X}}(t), {\bar{Y}}(t))\) is indeed a stationary process under \({\bar{\mathbb {P}}}^\mu \). The first assertion of Theorem 1.5 is proved when \(\Vert G\Vert \) is finite.
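The passage in Step 1 from the Laplace transform identity to \(\Phi (t) = \Phi (0)\) is standard. For the reader's convenience, it can be spelled out as follows. Since f and g are bounded, so is \(\Phi \), and

\[\int _0^\infty e^{-\lambda t} \bigl (\Phi (t) - \Phi (0)\bigr ) \, dt = \frac{\Phi (0)}{\lambda } - \frac{\Phi (0)}{\lambda } = 0 \qquad \text {for every } \lambda > 0.\]

By uniqueness of the Laplace transform of bounded measurable functions, \(\Phi (t) = \Phi (0)\) for almost every \(t \geqslant 0\). If, moreover, \(\Phi \) is right-continuous, then for every \(t \geqslant 0\) there are \(t_j \downarrow t\) with \(\Phi (t_j) = \Phi (0)\), and so \(\Phi (t) = \lim _{j \rightarrow \infty } \Phi (t_j) = \Phi (0)\).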

Step 2. Extension to the case when G(x, dy)m(dx) is a \(\sigma \)-finite measure is immediate, except that we need to work with the infinite measure \(\mu (dx, dy) = G(x, dy) m(dx)\) (without the normalisation constant \(\Vert G\Vert ^{-1}\)). We leave it to the interested reader to verify that the above proof carries over to this setting.

Step 3. The second assertion of Theorem 1.5 now follows from Birkhoff’s ergodic theorem for Markov processes. Indeed, suppose that \(\varphi \) is a nonnegative Borel function on \(D \times D\). By the ergodic theorem given in Corollary 25.9 in [17], the limit

\[\lim _{T \rightarrow \infty } \frac{1}{T} \int _0^T \varphi ({\bar{X}}(t), {\bar{Y}}(t)) \, dt\]

exists with probability \({\bar{\mathbb {P}}}^\mu \) one, and if \({\mathscr {I}}\) denotes the \(\sigma \)-algebra of all events which are invariant under time shifts, then
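
\[\lim _{T \rightarrow \infty } \frac{1}{T} \int _0^T \varphi ({\bar{X}}(t), {\bar{Y}}(t)) \, dt = {\bar{\mathbb {E}}}^\mu \bigl (\varphi ({\bar{X}}(0), {\bar{Y}}(0)) \bigm | {\mathscr {I}}\bigr ).\]
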
Below we prove that irreducibility of X(t) implies that \(\mathscr {I}\) is trivial: it only contains events of probability \({\bar{\mathbb {P}}}^\mu \) zero or one. This implies that
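
\[\lim _{T \rightarrow \infty } \frac{1}{T} \int _0^T \varphi ({\bar{X}}(t), {\bar{Y}}(t)) \, dt = \int _{D \times D} \varphi \, d\mu \]
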
with probability \({\bar{\mathbb {P}}}^\mu \) one, completing the proof of the theorem.
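The conclusion of Step 3 can be illustrated by simulation for the same hypothetical finite toy model. This model is irreducible because all jump rates are positive. The fraction of time the system spends at each pair (x, y) should approach \(\mu (\{(x, y)\})\), with G again taken to be \((-Q)^{-1}\). The sketch below simulates the system with exponential holding times and starts from (0, 0) rather than from \(\mu \). For a finite irreducible chain, the starting point does not affect the limit. This is an illustration under stated assumptions, not part of the proof.

```python
import numpy as np


def toy_model(n, seed=0):
    """Hypothetical toy model on D = {0, ..., n-1}: symmetric jump rates plus killing."""
    rng = np.random.default_rng(seed)
    rates = rng.uniform(0.5, 2.0, size=(n, n))
    rates = (rates + rates.T) / 2                # symmetric rates: counting measure is symmetric
    np.fill_diagonal(rates, 0.0)
    k = rng.uniform(0.0, 1.0, size=n)            # killing rates k(x)
    k[0] += 0.5                                  # make sure killing actually happens
    Q = rates - np.diag(rates.sum(axis=1) + k)   # sub-Markov generator with Q 1 = -k
    return rates, k, Q


def occupation_fractions(rates, k, n_branchings, seed=0):
    """Fraction of time the two-particle system spends at each (x, y), started at (0, 0)."""
    rng = np.random.default_rng(seed)
    n = len(k)
    cum = np.cumsum(rates, axis=1)               # cumulative jump rates, to sample jump targets
    out = cum[:, -1] + k                         # total event rate (jump or death) of a particle
    occ = np.zeros((n, n))
    x, y, branchings = 0, 0, 0                   # the start (0, 0) is arbitrary here
    while branchings < n_branchings:
        total = out[x] + out[y]
        occ[x, y] += rng.exponential(1.0 / total)    # exponential holding time at (x, y)
        move_x = rng.random() * total < out[x]       # whose clock rang first?
        a = x if move_x else y
        u = rng.random() * out[a]
        if u < k[a]:                             # a particle dies and jumps onto the other one
            branchings += 1
            x, y = (y, y) if move_x else (x, x)
            continue
        # min(...) only guards against floating-point round-off at the upper end
        b = min(int(np.searchsorted(cum[a], u - k[a], side='right')), n - 1)
        if move_x:
            x = b
        else:
            y = b
    return occ / occ.sum()


rates, k, Q = toy_model(3, seed=4)
G = np.linalg.inv(-Q)
mu = G / G.sum()                                 # our reading of mu in this toy case
est = occupation_fractions(rates, k, n_branchings=20000, seed=6)
print(np.abs(est - mu).max())                    # small for long runs
```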

It remains to show that \({\mathscr {I}}\) is trivial. This follows by a standard argument, which is, however, difficult to find in the literature, and so we provide full details. Recall that we assume irreducibility of X(t): if \(m(A) > 0\) and \(t > 0\), then \(\mathbb {P}^x(X(t) \in A) > 0\) for almost every \(x \in D\). Our goal is to prove that if I is an invariant event for \(({\bar{X}}(t), {\bar{Y}}(t))\), in the sense that the time shifts leave I unchanged, then \({\bar{\mathbb {P}}}^\mu (I)\) is either zero or one.

By Lemma 1 in [11], for every invariant event I we have

for some invariant Borel set \(B \subseteq D \times D\). That is, for every \(t > 0\) we have

for almost every \((x, y) \in D \times D\) with respect to the measure \(\mu \). Since the product measure \(m \times m\) is absolutely continuous with respect to \(\mu (dx, dy) = \Vert G\Vert ^{-1} G(x, dy) m(dx)\), the above property also holds for almost every \((x, y) \in D \times D\) with respect to \(m \times m\). It follows that for every \(t > 0\),

for almost every \((x, y) \in D \times D\) (with respect to \(m \times m\)). On the other hand, irreducibility of X(t) and Y(t) and independence of these processes imply that if \((m \times m)(B) > 0\), then for almost every \((x, y) \in D \times D\) we have

Thus, if \((m \times m)(B) > 0\), then \(\mathbbm {1}_B(x, y) > 0\) for almost every \((x, y) \in D \times D\), that is, B is of full measure \(m \times m\). We conclude that either B or its complement has zero measure \(m \times m\). In the former case \({\bar{\mathbb {P}}}^\mu (I) = 0\), while in the latter \({\bar{\mathbb {P}}}^\mu (I) = 1\), and so \({\mathscr {I}}\) is indeed trivial. \(\square \)

References

[1] Bieniek, M., Burdzy, K.: The distribution of the spine of a Fleming–Viot type process. Stoch. Process. Appl. 128(11), 3751–3777 (2018). https://doi.org/10.1016/j.spa.2017.12.003

[2] Bieniek, M., Burdzy, K., Finch, S.: Non-extinction of a Fleming–Viot particle model. Probab. Theory Relat. Fields 153, 1–40 (2012). https://doi.org/10.1007/s00440-011-0372-5

[3] Bieniek, M., Burdzy, K., Pal, S.: Extinction of Fleming–Viot-type particle systems with strong drift. Electron. J. Probab. 17(11), 1–15 (2012). https://doi.org/10.1214/EJP.v17-1770

[4] Burdzy, K., Engländer, J.: The spine of the Fleming–Viot process driven by Brownian motion. Preprint (2021). arXiv:2112.01720

[5] Burdzy, K., Engländer, J., Marshall, D.E.: The spine of two-particle Fleming–Viot process in a bounded interval. Preprint (2023). arXiv:2308.14290

[6] Burdzy, K., Hołyst, R., Ingerman, D., March, P.: Configurational transition in a Fleming–Viot-type model and probabilistic interpretation of Laplacian eigenfunctions. J. Phys. A: Math. Gen. 29(11), 2633–2642 (1996). https://doi.org/10.1088/0305-4470/29/11/004

[7] Burdzy, K., Hołyst, R., March, P.: A Fleming–Viot particle representation of the Dirichlet Laplacian. Commun. Math. Phys. 214(3), 679–703 (2000). https://doi.org/10.1007/s002200000294

[8] Burdzy, K., Tadić, T.: On the spine of two-particle Fleming–Viot process driven by Brownian motion. Preprint (2021). arXiv:2111.07968

[9] Chung, K.L., Walsh, J.B.: Markov Processes, Brownian Motion, and Time Symmetry. Grundlehren der Mathematischen Wissenschaften, vol. 249. Springer, New York (2005). https://doi.org/10.1007/0-387-28696-9

[10] Fitzsimmons, P.J., Getoor, R.K.: On the potential theory of symmetric Markov processes. Math. Ann. 281, 495–512 (1988). https://doi.org/10.1007/BF01457159

[11] Fukushima, M.: A note on irreducibility and ergodicity of symmetric Markov processes. In: Albeverio, S., Combe, P., Sirugue-Collin, M. (eds.) Stochastic Processes in Quantum Theory and Statistical Physics. Lecture Notes in Physics, vol. 173, pp. 200–207. Springer, Berlin, Heidelberg (1982)

[12] Getoor, R.K.: Transience and recurrence of Markov processes. Sémin. Probab. Strasbg. 14, 397–409 (1980)

[13] Getoor, R.K.: Excessive Measures. Probability and Its Applications. Birkhäuser, Boston (1990)

[14] Getoor, R.K., Sharpe, M.J.: Naturality, standardness, and weak duality for Markov processes. Z. Wahrscheinlichkeitstheor. Verw. Geb. 67, 1–62 (1984). https://doi.org/10.1007/BF00534082

[15] Grigorescu, I., Kang, M.: Immortal particle for a catalytic branching process. Probab. Theory Relat. Fields 153, 333–361 (2012). https://doi.org/10.1007/s00440-011-0347-6

[16] Ikeda, N., Nagasawa, M., Watanabe, S.: A construction of Markov processes by piecing out. Proc. Jpn. Acad. 42, 370–375 (1966). https://doi.org/10.3792/pja/1195522037

[17] Kallenberg, O.: Foundations of Modern Probability, 3rd edn. Springer Nature, Switzerland (2021). https://doi.org/10.1007/978-3-030-61871-1

[18] Meyer, P.-A.: Renaissance, recollements, mélanges, ralentissement de processus de Markov. Ann. Inst. Fourier 25(3–4), 465–497 (1975). https://doi.org/10.5802/aif.593

[19] Schilling, R.: A note on invariant sets. Probab. Math. Stat. 24(1), 47–66 (2004)

[20] Stein, E.M.: Topics in Harmonic Analysis Related to the Littlewood–Paley Theory. Annals of Mathematics Studies, vol. 63. Princeton University Press, Princeton (1970). https://doi.org/10.1515/9781400881871

[21] Tough, O.: Scaling limit of the Fleming–Viot multi-colour process. Preprint (2021). arXiv:2110.05049

Acknowledgements

I am immensely grateful to Krzysztof Burdzy and Jim Pitman for introducing me to the subject and for inspiration, and to the anonymous referee for insightful and exceptionally helpful comments.

Author information

Contributions

M.K. is the sole author of the manuscript.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

In loving memory of my father.

Work supported by the Polish National Science Centre (NCN) Grant No. 2019/33/B/ST1/03098.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kwaśnicki, M. Fleming–Viot couples live forever. Probab. Theory Relat. Fields 188, 1385–1408 (2024). https://doi.org/10.1007/s00440-023-01247-z
