Abstract

Executive functions (EF) are a set of higher-order cognitive abilities that enable goal-directed behavior by controlling lower-level operations. In the brain, those functions have been traditionally associated with activity in the Frontoparietal Network, but recent neuroimaging studies have challenged this view in favor of more widespread cortical involvement. In the present study, we aimed to explore whether the network that serves as critical hubs at rest, which we term network reliance, differentiate individuals as a function of their level of EF. Furthermore, we investigated whether such differences are driven by genetic as compared to environmental factors. For this purpose, resting-state functional magnetic resonance imaging data and the behavioral testing of 453 twins from the Colorado Longitudinal Twins Study were analyzed. Separate indices of EF performance were obtained according to a bifactor unity/diversity model, distinguishing between three independent components representing: Common EF, Shifting-specific and Updating-specific abilities. Through an approach of step-wise in silico network lesioning of the individual functional connectome, we show that interindividual differences in EF are associated with different dependencies on neural networks at rest. Furthermore, these patterns show evidence of mild heritability. Such findings add knowledge to the understanding of brain states at rest and their connection with human behavior, and how they might be shaped by genetic influences.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Executive functions (EF) are an umbrella term for high-order cognitive abilities employed for situations requiring goal-directed behavior, including the maintenance of task goal(s), the integration and elaboration of incoming lower-level signals and processes for the selection of relevant information and inhibition of distractors, switching between concurrent goals, and overall decision making (Banich 2009). The neurological underpinnings of brain organization are commonly investigated by using fluctuations in the amount of blood (de-)oxygenation, known as Blood Oxygen Level Dependent (BOLD) signal, across distant cortical regions (Anderson et al. 2008). This approach has unveiled complex cortical profiles, whereby regions show patterns of co-activation and spontaneously assemble into coherent networks at rest, which mimic those observed during task (Smith et al. 2013). Traditionally, cognitive processes underlying EF have been thought to rely on the metabolic and functional activity of frontal and parietal regions (Stuss et al. 1998; Collette et al. 2005), which form the Frontoparietal Network (FPN). However, recent neuroimaging studies have expanded this view in favor of a more widespread involvement of remote brain regions in EF as well, including of posterior and subcortical structures (Jurado and Rosselli 2007; Fedorenko et al. 2013). This evidence suggests that interconnectedness and integrity across multiple brain regions may help sustaining executive functioning. As such, investigating inter-network organization may be a fruitful route for understanding interindividual differences in EF, especially considering the role that regions outside the FPN might play in supporting higher levels of EF.

Since EF abilities rely upon distributed brain functional patterns, great interest has been directed toward the study of interindividual differences in their organization as assessed by resting-state patterns of connectivity. Indeed, not only have interindividual differences in the functional connectome been proven to represent a unique signature of the individual (Finn et al. 2015), but they have also been proven highly stable in the adult brain (Ronnlund et al. 2015). These findings have motivated the development of new approaches to help characterize individual differences, with such approaches showing that most of the regions helpful in improving interindividual differentiation lie in the default mode, attentional and control executive networks (Airan et al. 2016). Such findings are consistent with prior studies that reveal how the network organization of both the FPN and other attentional networks, as well as their coupling, differentiate higher versus lower EF individuals (Reineberg et al. 2015, 2018).

More recently, the study of brain networks has been conceptualized within the framework of graph theory measures that allow for investigations of integration and segregation mechanisms that ensure information flow across the brain (Sporns 2013). Graph theory approaches deal with the study of network topology looking at the relationship between the graph nodes (i.e., brain regions) and edges (i.e., functional or structural connections). The study of interindividual differences has been approached with the use of several graph theory measures. These have shown evidence of a tight link between global efficiency measures and interindividual differences in intelligence scores (Li et al. 2009). Of interest, the degree of global connectivity of the prefrontal cortex has also been shown to selectively relate with interindividual differences in intelligence, suggesting a role of this region as a major hub for information exchange in the brain that is necessary for higher-order cognitive behavior (Cole et al. 2012; Duncan et al. 2020). In line with the aforementioned studies, high and low EF individuals show distinct network topologies at rest with hub-like behavior for high EF individuals occurring for some regions outside the traditional FPN (Reineberg and Banich 2016).

In the present study, we expand upon these findings in two ways. First, we used in silico lesioning of the functional connectome (Hart et al. 2016) to examine network characteristics as a function of an individual’s level of EF. In these in silico approaches, the network topology at baseline is determined. Then, networks are selectively lesioned iteratively in a step-by-step manner. In our case, in each step we took out the brain node (or edge) that is associated with the highest level of hub-like activity, referred to as centrality. In our study, centrality of nodes was determined based on their nodal degree, a measure representing the amount of connection that a given brain region has to the rest of the brain. While several other measures of centrality have also been utilized in the literature, they tend to yield similar results (Joyce et al. 2013). This approach is then run iteratively, such as that every time a node is removed from the individual connectivity matrix, centrality of all nodes is recalculated and the “new” most central node is removed. Because the most central nodes for information transfer are removed first in this procedure, one can determine those regions that play the most important role in the system across varying levels of degradation. Networks which lost the greatest number of nodes during the first stages of lesioning were considered to represent the networks on which individuals most rely during rest, which we term “network reliance”.

Lesioning approaches have a long history in network science, as they provide a window on the role of network topology in sustaining efficient information processing (Barabasi and Bonabeau 2003). For example, in silico lesioning studies of scale-free systems have revealed that efficiency comes with a trade-off in the resilience of the network to sustain the random or targeted attack of its nodes, as the removal of central hubs causes greater disruption than the removal of random nodes (Barabasi and Bonabeau 2003). Particularly efficient networks, like the brain, appear to be able to withstand both targeted and random attacks (Achard et al. 2006; Joyce et al. 2013).

The second manner in which our study extends beyond prior work is that it was performed on a set of twins (in their late 20 s) which provides the opportunity to examine whether genetic or environmental influences are most prominent in an individual’s network characteristics as a function of EF. We did so by exploring how these brain patterns of network reliance differ between pairs of monozygotic (MZ) twins, who share 100% of their genes, as compared to dizygotic (DZ) twins, who share half their genes. Twins’ studies represent indeed one of the most common approaches for the investigation of genetic influences on a trait and hence its heritability (Mayhew and Meyre 2017; Friedman et al. 2021). In recent years, twins’ studies have for instance been employed to demonstrate the genetic underpinnings of EF (Lessov-Schlaggar et al. 2007; Friedman et al. 2008; Tucker-Drob et al. 2013), the genetic influences in shaping the individual functional and structural connectome (Thompson et al. 2013; Reineberg et al. 2019), the heritability of the topological organization of the human connectome (Bolken et al. 2014; Sinclair et al. 2015), as well as the heritability of resilience to its systematic lesioning (Menardi et al. 2021). However, to our knowledge, no study has tried to combine this evidence to verify if in silico lesioning can be used to unveil patterns of preferential network reliance at rest as a function of individual EF abilities, nor if such reliance is at least partially influenced by genetic factors.

Methods

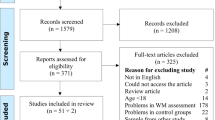

Participants and assessment of executive functioning

A total of 453 twins from the Colorado Longitudinal Study (age: M = 28.6, SD = ± 0.62; MZ: n = 229, M = 28.6, SD = ± 0.62; DZ: n = 216, M = 28.7, SD = 0.63) were recruited based on birth records from the Colorado Twin Registry. All participants underwent cognitive testing particularly focused on determining EF. From all tests’ scores, three EF components in the form of z-values were extracted according to the unity and diversity model by Miyake and colleagues (Miyake et al. 2000; Friedman and Miyake 2017), consisting of a common EF (cEF) factor, representative of the shared variance underlying all the administered EF tasks; and the updating (UPD) and shifting (SHI) specific components, reflecting the remaining correlation between EF tasks once the cEF factor is removed. For more details on the model, the readers are referred to the original published work (Miyake et al. 2000; Friedman and Miyake 2017).

To determine the amount of overlap in participants’ scores across EF factors, higher and lower performers for each of the three EF components (cEF, SHI, UPD) were determined based on a cut-off score around 0, such as that z-values above 0 were considered as representative of individuals whose performance was above the sample mean, thus referred as “higher performers”, whereas z-values below 0 were considered indicative of “lower performers” within the same sample. Overall, the 67% and 65% of the subjects classified as higher performers for the cEF component were also observed to show higher performance on the SHI and UPD components, respectively; on the other hand, 45% of higher SHI performers also had higher scores at the UPD component. In this study, a moderate positive correlation was observed between our measures of cEF and SHI (r = 0.31, p < 0.0001) and between cEF and UPD (r = 0.38, p < 0.0001); with a negative correlation between SHI and UPD measures (r = − 0.28; p < 0.0001).

To control for the possible risk of multicollinearity, the Variance Inflation Factor (VIF) was computed for each cognitive measure. VIF values inform on the percentage of the coefficients’ variance that is inflated, where values close to 1 indicate no correlation, values between 1 and 5 indicate moderate correlation and values above 5 suggest high correlation (Fox 2015). Our results prove a VIF near 1 for all the three EF factors (VIFcEF = 1.49; VIFSHI = 1.38; VIFUPD = 1.46), suggesting the correlations to be only mild and that they do not imply a risk of multicollinearity of the data (Fox 2015).

Neuroimaging data acquisition and preprocessing

All participants underwent a single scanning session in either a Siemens Tim Trio (3 T) or Prisma (3 T) scanner for the acquisition of T1 anatomical images (repetition time (TR) = 2400 ms, echo time (TE) = 2.07 ms, matrix size = 320 × 320 × 224, voxel-size = 0.80 × 0.80 × 0.80mm3, flip angle (FA) = 8.00 deg., slice thickness = 0.80 mm) and T2* resting-state functional magnetic resonance imaging (rs-fMRI) lasting 6.25 min (number of volumes = 816, TR = 460 ms, TE = 27.2 ms, matrix size = 82 × 82 × 56, voxel-size = 3.02 × 3.02 × 3.00mm3, FA = 44.0 deg., slice thickness = 3.00 mm, field of view (FOV) = 248 mm). Details on the preprocessing steps have been published in a recent work by some of the authors (Reineberg et al. 2019) and include: signal stabilization via the removal of the first 10 volumes, head motion correction, coregistration, normalization to the standard MNI152 template, denoising from motion and other noise signals through the AROMA ICA procedure and finally band-pass filtering (0.001–0.08 Hz). Blood Oxygen Level Dependent (BOLD) time series were extracted from each of the 264 spherical 1 cm parcels of the Power’s Atlas (Power et al. 2011). For each participant, a 264 × 264 functional connectivity matrix was then computed from the Pearson’s r Correlation between each pair of parcels and further underwent normalization through Fischer’s z transformation.

In silico networks’ lesioning

To obtain a quantitative estimate over network reliance as a function of performance scores at the three EF components, we adopted a lesioning approach based on the selective removal of each node (parcel) from the original correlation matrix. In more detail, weighted adjacency matrices were obtained from the thresholded functional matrices, retaining only the 10% of the overall connection density. The rationale for such a stringent approach comes from prior large cohort studies demonstrating that 10% sparsity thresholds have the highest test–retest reproducibility for global metrics (Wang et al. 2011). Furthermore, genetic contributions have been reported to be better exploited at connection density around 10% (Sinclair et al. 2015). Nevertheless, as common in the graph theory literature, we have also tested a range of lower thresholds (from 80 to 60%), retaining from the 20% to the 40% of the original connection densities. Results comparing different thresholding are available in the Supplementary Materials, and demonstrate that the pattern of results is maintained across different thresholds.

The study of brain network properties was then approached within the graph theory framework, whereby graphs are constructed considering brain parcels as nodes and their functional correlation as weighted links, known as edges. A measure of nodal degree, an index of connectedness between a node and all the other nodes of the brain, was computed based on the Brain Connectivity Toolbox (https://sites.google.com/site/bctnet/) function running in Matlab 2017b. The measure of nodal degree was weighted based on the individual adjacency matrix, and hence it was computed as the total sum of the weighted links connecting a given node to all other nodes of the network. Nodes were then ranked based on their nodal degree, such as that parcels ranking first are thought to represent major hubs in the information flow, whereby their lesioning is expected to cause a greater disruption in network connectivity (Jeong et al. 2000), compared to the removal of less central nodes (Albert et al. 2000).

To investigate the patterns of network degradation, we progressively decomposed the functional matrix through the selective removal of one node at a time, iteratively re-computing the rank order at each stage of degradation. We then inferred the relevance of each network by counting the average number of nodes lost per network across 8 equal stages of matrix lesioning, each accounting for 33 nodes loss (i.e., equal to a 12.5% reduction in network components), totalizing 264 nodes. We hypothesized that nodes lost at the first stages of lesioning represent the brain regions and connections that are most important in network integrity as they count the most in ensuring proficient information flow. In contrast, regions and connections lost at later stages have instead a minor role in ensuring efficient functioning.

Linear regression models, accounting for dependence between twins and scanner type (TRIO, PRISMA), were then run to test the association between EF components’ scores and the associated number of nodes lost for each network, which were both treated in the form of continuous variables in the formula:

Significance threshold value was set as p = 0.05. Figure 1 graphically presents the main methodological steps underlying neuroimaging data preprocessing, definition of matrices and in silico lesioning of networks.

Data acquisition and analysis workflow. a Structural and functional MRI data were acquired from 453 twins from the Colorado LTS dataset. b. BOLD signal fluctuations were then extracted from each of the 264 cortical nodes as defined by the Power Atlas (Power et al. 2011) and used to extract individual functional connectivity matrices from the Pearson’s r correlation value between each pair of ROIs. c Matrices were then thresholded to retain the 10% of the connection density. d Brain graph metrics were extracted, considering brain parcels as nodes and their functional connections as edges. e A matrix lesioning approach was employed to estimate the extent for which individual brain activity at rest relies on cortical nodes belonging to different cortical networks. To do so, all nodes in the brain were ranked based on their nodal degree, so that the most important hub in the brain was removed first during the lesioning process. After each lesion, brain topology measures were re-computed and the order of lesioning updated. f At the end of the lesioning process, we counted the number of nodes belonging to a given network that were lost at each stage of matrix lesioning. Networks which lost the greatest number of nodes during the first stages of lesioning (reduction of 12.5% of nodes) were considered to represent the networks on which individuals most rely during rest (i.e., network reliance). Finally, the influence of genetics and of environmental factors in determining network reliance was computed as the difference between MZ and DZ twins. *AUD auditory network, CING cingulo-opercular network, DAN dorsal attention network, DMN default mode network, FPN fronto-parietal network, LTS Colorado Longitudinal Twin Study, rs-fMRI resting-state functional magnetic resonance imaging, s-MRI structural magnetic resonance imaging, SMN sensorimotor network, SN salience network, SUB subcortical network, VAN ventral attention network, VIS visual network

Heritability analyses

Monozygotic and dizygotic twins born and raised in the same family represent a unique sample of data from which to derive estimates of a trait’s heritability. In particular, pairs of MZ twins share 100% of their genetic profile, or additive genetic variance (A). Moreover, they are likely to receive equal maternal care, education, and socioeconomic benefits, thus also sharing a common environment (C) (Mayhew and Meyre 2017). Similarly, DZ twins also share the environment in which they grow but, differently from MZ twins, they only share 50% of their genes (Mayhew and Meyre 2017). Based on these notions, Falconer’s formula (Mayhew and Meyre 2017) is commonly employed to estimate heritability of a given human trait from the correlation between MZ and DZ twins, multiplied by 2:

According to this formula, if a trait presents a significantly higher correlation in MZ twins compared to DZ twins, then that trait can be considered as genetically influenced, whereas if a higher DZ correlation is observed compared to MZ, then that trait might be more driven by environmental factors instead. Although the Falconer’s formula has the advantage of providing a fast and easily computable estimate of heritability, more information can be derived from structural equation models (SEMs) (Mayhew and Meyre 2017). In genetic studies, SEMs are typically employed for the comparisons of 4 models: ACE, which considers the influence of A, C and of unique (E) environmental influences plus measurement error, of only AC (dropping E), of only AE (dropping C) and finally of only E models. This approach has the advantage of allowing an estimation of the contribution of each specific factor (A, C and E) to the phenotype. In the present study, SEMs were compute via OpenMx (Neale et al. 2016). The best model fit was chosen based on the model with a non-significant Chi-Square test and the lowest Akaike Information Criteria (AIC), as those are indicative of models with smaller prediction error (Wilson and Hilferty 1931; Akaike 1973).

In this study, Falconer’s formula was used to derive initial estimates of heritability, followed by a more throughout analysis via SEMs. The heritability of EF was assessed for replication purposes, since prior studies have already determined their genetic origin (Lessov-Schlaggar et al. 2007; Friedman et al. 2008; Tucker-Drob et al. 2013). On the other hand, a more in-depth analysis was carried out to explore the extent to which resting-state networks’ reliance, computed from the average node loss per network in the first four stages of lesioning (up to 50% of the network is lesioned), could be considered genetically influenced and, thus, heritable.

Results

Differential networks reliance as a function of EF performance

Networks’ lesioning via the targeted removal of nodes based on their nodal degree was used to explore patterns of networks reliance as a function of EF performance. Results of linear regression models yielding a significant, positive beta value indicated that individuals with higher EF scores were losing more nodes belonging to that specific resting-state network than those with lower EF scores, while negative beta values indicated the opposite: individuals with lower EF scores were losing more nodes belonging to a specific network than those with higher EF scores. Figure 2 shows a graphical depiction of the observed patterns of network reliance as a function of EF performance. Associated raw beta and p values for each stage of matrix lesioning are listed in Table 1. In consideration of potential type I errors due to multiple comparisons, p values surviving false-discovery rate (FDR) correction (Jafari and Ansari-Pour 2019) are marked in bold in Table 1. For each network, FDR was applied to consider multiple testing over the 8 stages of matrix lesioning.

Network reliance at rest as a function of EF performance. Patterns of network reliance were computed based on the average number of nodes lost per network for each stage of matrix lesioning. Overall, degradation patterns appeared to differ as a function of EF performance. a Individuals scoring higher across EF tasks (cEF component) showed an initial greater loss of DMN nodes, opposite to the lower performers, who appeared to rely more on VIS nodes instead. Interestingly, the pattern switched along the lesioning process, with high and low performers losing visual and DMN/FPN nodes at the last stages of lesioning, respectively. b Performance scores for the shifting-specific (SHI) component proved that, even at rest, higher performers tend to rely more on CING and SUB network nodes, whereas those same networks appear of less relevance in low performers, who lose them only at the last stages of lesioning. c. Higher and lower performers at the updating-specific (UPD) component tended to equally rely more on bottom-up attentional networks (VAN), with a slight tendency for higher performers to also lose more DMN nodes. In the legend, brain size is indicative of the associated statistical significance of each finding. *AUD auditory network, cEF common executive function, CING cingulo-opercular network, DAN dorsal attention network, DMN default mode network, FPN fronto-parietal network, ns non-significant, SHI shifting-specific factor, SMN sensorimotor network, SN salience network, SUB subcortical network, UPD updating-specific factor, VAN ventral attention network, VIS visual network

For cEF, none of the results survived the correction for multiple comparisons. Nonetheless, a number of statistically significant effects were observed and they are described here for completeness. Higher scoring individuals tended to lose nodes belonging to the Default Mode Network (DMN) at the very first stage of lesioning (b1 = 1.15, p1 = 0.036), opposite to what observed in those with lower EF, who tended to lose sensory nodes belonging to the Visual (VIS) network in the first two steps of matrix lesioning (b1 = − 0.91, p1 = 0.037; b2 = − 0.53, p2 = 0.024). Interestingly, the observed pattern appeared to flip toward the final stages of matrix lesioning, with higher cEF individuals losing VIS nodes at stages 4 and 6 (b4 = 0.045, p4 = 0.018; b6 = 0.35, p6 = 0.026) and lower cEF losing DMN and FPN nodes at stage 5 and 6 (DMN: b5 = − 0.41, p5 = 0.041; FPN: b6 = − 0.29, p6 = 0.046).

With regard to the SHI component, results surviving the FDR correction showed a significant positive correlation between performance scores at SHI-specific abilities and the higher loss of nodes belonging to the Subcortical (SUB) network at stage 3 (b3 = 0.3, p3 = 0.002) and Ventral Attention (VAN) at stage 7 (b7 = 0.2, p7 = 0.012), and conversely a negative correlation with the loss of nodes belonging to the Auditory (AUD) network at stage 6 (b6 = − 0.38, p6 = 0.006) and the VAN at stage 5 (b5 = − 0.22, p5 = 0.004). Although not surviving correction for multiple comparisons, we also observed a trend in the data whereby lower SHI performers tended to show a higher loss of nodes belonging to the cingulo-opercular (CING) and SUB networks at later stages of lesioning (5th, 6th and 8th (i.e., final)) (CING: b5 = − 0.23, p5 = 0.021; b6 = − 0.19, p6 = 0.044; SUB: b8 = − 0.38, p8 = 0.019).

In regard of UPD-specific abilities, results surviving the FDR correction showed a significant higher loss of nodes belonging to the VAN at stage 6 of lesioning (b6 = 0.28, p6 = 0.004) in individuals with higher performance scores. In addition, although not surviving correction for multiple comparisons, we observed a tendency by the higher performers to also loose VAN nodes at stages 4 of matrix lesioning (b4 = 0.21, p4 = 0.039) and more DMN nodes at stage 5 (b5 = 0.54, p5 = 0.034).

Genetic influence in neuronal networks reliance and EF performance

As found in previous waves of this study (Friedman et al. 2008; Friedman and Miyake 2017), high heritability was observed for the measures of EF performance. In general, higher correlations for EF scores were observed in MZ twins compared to DZ twins, suggesting very similar cognitive profiles across pairs of MZ twins. As a result, moderate-to-high heritability estimates were observed for the cEF factor (rMZ = 0.81, rDZ = 0.22, Falconer’s value = 1.18), followed by the SHI (rMZ = 0.55, rDZ = 0.15, Falconer’s value = 0.79) and UPD (rMZ = 0.65, rDZ = 0.32, Falconer’s value = 0.68) components (see Table 2).

In the present work, we also asked whether the observed patterns of network reliance, as indexed by the average node loss in the first stages of lesioning (stage 1 to 4), was influenced by genetics and/or environmental factors. Said differently, we were examining whether the pattern of loss of nodes appears to show genetic influences. For this purpose, both the Falconer’s Formula and SEMs, comparing the models’ fitness considering all combinations between A, C and E components, were run. As shown in Table 3, both the Falconer’s Formula and the model selection based on non-significant Chi-Square and low AIC values reveal the presence of genetic influences for 3 networks: the DAN, the DMN and the FPN. For the DAN network, the Falconer’s Formula reveals a MZ correlation that is double that of DZ twins’ pairs (rMZ = 0.28, rDZ = 0.14, H = 0.29). The pairwise model comparison dropping A resulted in poorer model fit, as well as the model comparison dropping A and C. This suggests a significant contribution of genetic components to the phenotype, which is supported by the best model fit being the one combining the A and E components. A similar pattern was observed for the DMN, where the MZ correlation remained higher than the DZ correlation (rMZ = 0.46, rDZ = 0.38, H = 0.15), suggesting a genetic contribution. Again, the pairwise model comparison revealed the AE model as the one with the smallest prediction error and it was hence preferred over all other combinations. Finally, a slightly more complex scenario was observed for the FPN. As for the DAN and DMN, the correlation between MZ twin pairs was still higher than that of DZ twins (rMZ = 0.40, rDZ = 0.28, H = 0.23). However, a drop in model fit was observed whenever one of the three parameters (A, C or E) was removed from the model, suggesting that the best prediction could be achieved only when their combined contribution was considered.

Interestingly, these findings were replicated across different matrix thresholding, specifically from 80 to 60% thresholding, for both the DAN, DMN and FPN. SEMs results along all ranges of matrix thresholding are reported in Table S1 of the Supplementary Materials. The complete patterns of network lesioning for the 3 networks showing genetic influences are also displayed in Fig. 3.

Phenotypic similarity across MZ and DZ twins. The average number of nodes lost for the FPN, DAN and DMN network along the 8 stages of matrix lesioning is shown. The greater the similarity in the profiles between MZ and DZ siblings, the narrower the shadowed area in-between profiles. *FPN fronto-parietal network, DAN dorsal attention network, DMN default mode network, MZ monozygotic, DZ dizygotic

Discussion

In the present study, we expand the current knowledge of the relationship between brain and cognition by quantifying how, within a large set of neurologically normal individuals, one’s level of EF performance is associated with the differential load upon brain networks at rest. The notion that specific cortical activation patterns are observed as a function of the individual EF abilities, as assessed outside the scanner, has already been shown in the literature (see for example Reineberg et al. 2015, 2018; Reineberg and Banich 2016). These patterns of activity extend beyond the traditionally recognized frontal and parietal regions, involving subcortical and lower-level regions (Jurado and Rosselli 2007; Bettcher et al. 2016), whereby individuals with higher cognitive performance appear to benefit from more distributed resting-state network activity (Reineberg et al. 2015). An example of this diffuse processing is represented by the different network-to-network interactions that underlie individual differences in aspects of higher-level cognition, which have been reported both during resting-state conditions (Tian et al. 2013; Roye et al. 2020; Jolles et al. 2020), as well as during online task execution (Kelly et al. 2008; Sala-Llonch et al. 2012; Zhang et al. 2019).

Notably, several sources of evidence have shown that the functional patterns associated with behavior, usually detected via task fMRI, can be reliably detected via resting-state fMRI as well (Smith et al. 2013; Vidaurre et al. 2021). Indeed, recent work has unveiled this tight linkage providing the feasibility of reliably predicting interindividual differences via the mapping of resting-state components to task-evoked activity, showing the application of a wide range of machine learning tools for the prediction of behavior via rest data (Parker Jones et al. 2017; Cohen et al. 2020). Moreover, recent evidence also suggests that the capacity of resting-state data to accurately identify regions typically associated with behavior, e.g., language areas, might even be superior to what exploited via task-evoked activity (Park et al. 2020). In another study, the observed close correlation between task activity and the amplitude in fluctuation of the resting-state signal has been suggested as a possible rationale for the implementation of resting-state fMRI analysis as a possible proxy to determine task activity in non-compliant patients’ populations, where task fMRI testing is not possible (Kannurpatti et al. 2012). Taken together, all those lines of evidence support the existence of a strong relationship between networks behavior at rest and the unique profile of the individual (Finn et al. 2015).

In this study, we hypothesized that individuals would differ in their reliance on brain networks as function of their executive function ability and that this relationship would be reflected by our in silico lesioning approach. In particular, our logic was that networks for which the individuals showed higher reliance during rest and hence greater intra- and inter-network connectivity, as indexed by the graph theory metric of nodal degree, would be lost at the first stages of lesioning. For each of our cumulative measures of EF, specifically cEF, SHI and UPD, we were indeed able to observe distinguishable patterns, which we will discuss below.

For our cEF factor, we observed a tendency for a positive association between performance scores and the amount of nodes lost in the first stages of lesioning belonging to the DMN and VAN, whereas lower performance scores showed a tendency toward the preferential loss of nodes belonging to the VIS network instead. Interestingly, this pattern appeared to switch halfway through the stages of lesioning, such as that high performers lost VIS nodes only at the later stages and, vice versa: low cEF performers lost DMN, FPN and marginally VAN nodes at the last stages (Fig. 2a). However, because these findings did not survive the correction for multiple comparisons, great caution is advised in their interpretation, which we will discuss only for completeness and future reference. Indeed, the plausibility of the existence of discernable patterns as a function of performance abilities, has already been addressed (Reineberg et al. 2018). In this regard, prior studies have demonstrated that the DMN is involved not only in internal processes such as memory retrieval or mind wandering (Buckner and Carroll 2007; Hongkeun 2010; Spreng et al. 2010; Sestieri et al. 2011), but it also actively cooperates with other networks in sustaining high-order cognitive processes (Elton and Gao 2015), useful for the formation of chains of thoughts (Spreng et al. 2010) and in supporting goal-directed behavior (Smallwood et al. 2012). In line with this interpretation, greater DMN activity at rest is associated with better task performance (for a review see Anticevic and colleagues (Anticevic et al. 2012)). In contrast, the more the DMN activity is suppressed during a task, the more the activity of visual and sensory areas tends to emerge (Greicius and Menon 2004), such as that the greater the overall decoupling between VIS and DMN nodes, the better the performance at cognitive tasks, such as memory recall (Zhang et al. 2019). On the other hand, reduced activation of the DMN in favor of abnormal activity in the VIS network has been associated with important attentional deficits, as those observed in individuals suffering from Attention Deficit Hyperactivity Disorder (ADHD) (Hale et al. 2014). Taken together, we speculate that lower cEF performers might be more driven by external sensory inputs resulting in greater functional connectivity at rest between VIS nodes.

Differently from what was observed for the cEF component, shifting-specific scores, after correcting for multiple comparisons, were associated with differences between high and low performers mainly with regards to the involvement of networks mediating attentional processes and bottom-up/automatized behavior. In particular, we observed that individuals with higher SHI scores showed greater reliance on the SUB network (whose nodes were lost at the beginning of the lesioning process) and less reliance on the VAN (nodes were lost only at the last stages of lesioning), whereas individuals with lower SHI scores lost both AUD and VAN nodes in the last stages of lesioning. In addition, we also observed a tendency (that did not survive multiple comparisons) toward the loss of CING and SUB nodes in lower performers at the last stages, suggesting a less central role of those networks at rest (Fig. 2b). In line with this observation, studies on both healthy and pathological populations have reported a link between subcortical structures, especially the basal ganglia and the thalamus, and an individual’s capacity to proficiently switch one’s attentional focus (Ravizza and Ivry 2001; Green et al. 2017). Similarly, the connectivity of the anterior cingulate cortex with the prefrontal cortex is thought to help sustain high-order cognitive processes by enabling a transition from”thoughts to actions” (Paus 2001). On the other hand, VAN activity mediates the ability to attend to relevant sensory stimuli, particularly relevant in attentional shifting (Corbetta et al. 2008). Finally, the CING network has also been proposed to mediate alertness levels in the absence of predictive stimuli, such as that the greater its pre-stimulus activity, the faster the response times upon stimulus presentation (Coste and Kleinschmidt 2016). As such, it may be that greater connectivity at rest in nodes mediating attentional-relevant processes may allow for an individual to promptly respond to, and to switch between, different stimuli.

Finally, we investigated whether differences in network reliance could also be observed for the updating-specific component of the unity-diversity model (Friedman and Miyake 2017). In this case, UPD performance scores were mostly related to nodes belonging to the VAN network, when associations were corrected for multiple comparisons. This pattern was present in form of a significant positive correlation between the number of VAN nodes lost and the individual performance scores, which might suggest that higher UPD performers might benefit from greater network reliance upon this network, whose activity is relevant in mediating contextual updating and modulation of top-down processes (Geng and Vossel 2013). In addition, although not surviving correction for multiple comparisons, we also observed that individuals with higher scores tended to present greater involvement of the DMN network at the central stages of lesioning (Fig. 2c). A possible link between higher UPD abilities and the activity of the DMN is represented by the reported positive association between the extent of DMN network engagement and the greater levels of thought’s details during a working memory task (Sormaz et al. 2018; Turnbull et al. 2019).

A second aim of the present study was to address if the observed interindividual differences in brain and behavior might be explained by genetic influences, as exploited via Falconer’s Formula and SEMs’ pairwise comparison. SEMs were run to compare the efficacy of 4 models, iteratively considering all possible combinations between the A, C and E factors to respectively determine the influence of genetic, common and unique environmental influences on the trait of interest. Our results suggest the presence of genetic influences in the lesioning patterns of the DAN, DMN and FPN networks across a range of matrix thresholding scenarios. Interestingly, all three networks showing genetic influences are well-established cognitively relevant networks, with a strong role in determining EF abilities, as discussed above. Furthermore, we were able to replicate prior evidences of the strong genetic origin of EF abilities via the simpler implementation of the Falconer’s Formula, used to compare MZ and DZ measures based on the assumption that higher correlation between MZ twins, compared to DZ twins, is indicative of a genetic contribution to the measure at hand. It is, however, important to consider that the estimates made by the model on the role of additive genetic and environmental components on a given trait are specific to the population being tested (Mayhew and Meyre 2017). As a result, if any alteration is present in one of the component (genetic or environmental) as a result of sampling, this will be reflected in the model’s results as well (Mayhew and Meyre 2017).

Prior work was able to detail the complex heterogeneity of genetic influences in the brain, and in particular how it correlates with the resting-state networks (Richiardi et al. 2015), proving higher heritability for within, rather than between, regions in the brain serving similar functional roles (Reineberg et al. 2019). It is worth mentioning that prior studies have highlighted the issue that heritability estimates might be more difficult to exploit in the absence of long scan times (Menardi et al. 2021). Because this is a common issue in neuroimaging studies, a recent investigation has suggested a possible way to overcome this via the combination of task and rest fMRI data, to obtain a measure known as “general functional connectivity” (GFC), which has shown to entail stronger test–retest reliability and to display higher heritability estimates compared to when only rest or task fMRI data are analyzed (Elliott et al. 2019). It would be of interest for future studies to apply in silico lesioning considering GFC measures.

Limitations

Overall, our study is not free of limitations. First of all, one might argue that the majority of the cited evidence in favor of a given network preponderance in explaining cognitive differences comes from studies looking at task fMRI data, whereas all our inferences come purely from rs-fMRI. As such, it might be challenging to assume that interindividual differences at rest could still hold any value in explaining task-related behavior. Nevertheless, a recent study investigating the replicability and reliability of fMRI data on a large dataset was able to prove how interindividual differences in the functional connectome are persistent across resting state and 7 different task-related scan acquisitions, spanning EF, motor, social and emotional tasks (Shah et al. 2016). This evidence supports that what is observed during the resting state is indeed characteristic of individual differences that shape intra and inter-network connections, which ultimately determine behavior. Of course, not all the brain areas show such heterogeneous profiles across individuals, but all the regions that do predict cognitive differences belong to areas of the brain with high variability (Mueller et al. 2013).

A second limitation of the present study is that our approach allows us to discern reliance on specific networks as a function of EF performance but not within regions of the same network, and also not with regards to hemispheric differences. For instance, prior studies have proven the existence of a right-hemispheric bias during selective attention tasks (Shulman et al. 2010), and a left hemispheric bias for task switching performance (Kim et al. 2012; Ambrosini and Vallesi 2016). One possibility is that our approach applied to resting-state data might present sufficient sensitivity, but diminished specificity, to detect the intrinsic and finer grade linkages of behavior to network reliance, which might otherwise be more easily examined via task-evoked activity instead.

Finally, while the high interindividual variability across our participants has allowed us to detect interesting differences in the brain–behavior continuum as a function of their cognitive performance, on the other hand it might have diminished the statistical strength of some of our findings. In particular, only some of the results survived FDR correction. Nevertheless, we believe that providing the reader with all our findings can help point to meaningful patterns, which would have otherwise been obscured by using more stringent corrections. Nonetheless, it would be advisable to attempt to replicate our findings in future studies.

Conclusions

The present study adds knowledge on the relationship between the individual organization of the functional connectome at rest and interindividual differences in EF. In particular, we show that discernable patterns of preferential network reliance can be demonstrated as a function of EF performance, as revealed through an in silico lesioning approach of the functional connectome. Furthermore, while interindividual differences in EF showed high heritability estimates in our sample, replicating prior findings in the literature, heritability estimates of reliance on specific rs-fMRI networks as a function of level of EF were more modest.

Availability of data and material

Data release is handled on a case-by-case basis after contacting the Authors.

Code availability

The code used for the computation of the Nodal Degree measure is freely available from the Brain Connectivity Toolbox (https://sites.google.com/site/bctnet/).

References

Achard S, Raymond S, Whitcher B et al (2006) A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. J Neurosci 26:63–72. https://doi.org/10.1523/JNEUROSCI.3874-05.2006

Airan RD, Vogelstein JT, Pillai JJ et al (2016) Factors affecting characterization and localization of interindividual differences in functional connectivity using MRI. Hum Brain Mapp 37:1986–1997. https://doi.org/10.1002/hbm.23150

Akaike H (1973) Information theory and an extension of maximum likelihood principle. In: Petrov BN, Csa´ki F (eds), Akademia´Kiado´, Budapest, pp 267–281

Albert R, Jeong H, Barabási A-L (2000) Error and attack tolerance of complex networks. Nature 406:378. https://doi.org/10.1038/35019019

Ambrosini E, Vallesi A (2016) Asymmetry in prefrontal resting-state EEG spectral power underlies individual differences in phasic and sustained cognitive control. Neuroimage 124:843–857. https://doi.org/10.1016/j.neuroimage.2015.09.035

Anderson JR, Carter CS, Fincham JM et al (2008) Using fMRI to test models of complex cognition. Cogn Sci 32:1323–1348. https://doi.org/10.1080/03640210802451588

Anticevic A, Cole MW, Murray JD et al (2012) The role of default network deactivation in cognition and disease. Trends Cogn Sci 16:584–592. https://doi.org/10.1016/j.tics.2012.10.008

Banich MT (2009) Executive function: the search for an integrated account. Curr Dir Psychol Sci

Barabasi A-L, Bonabeau E (2003) Scale-free networks. Sci Am 288:60–69

Bettcher BM, Mungas D, Patel N et al (2016) Neuroanatomical substrates of executive functions: beyond prefrontal structures. Neuropsychologia 85:100. https://doi.org/10.1016/j.neuropsychologia.2016.03.001

Bolken MM, Mandl RCW, Brouwer RM et al (2014) Heritability of structural brain network topology: A DTI study of 156 twins. Hum Brain Mapp 35:5295–5305. https://doi.org/10.1002/hbm.22550

Buckner RL, Carroll DC (2007) Self-projection and the brain. Trends Cogn Sci 11:49–57. https://doi.org/10.1016/j.tics.2006.11.004

Cohen AD, Chen Z, Jones OP et al (2020) Regression-based machine-learning approaches to predict task activation using resting-state fMRI. Hum Brain Mapp 41:815–826. https://doi.org/10.1002/hbm.24841

Cole MW, Yarkoni T, Repovš G et al (2012) Global connectivity of prefrontal cortex predicts cognitive control and intelligence. J Neurosci 32:8988–8999. https://doi.org/10.1523/JNEUROSCI.0536-12.2012

Collette F, der Linden MV, Laureys S et al (2005) Exploring the unity and diversity of the neural substrates of executive functioning. Hum Brain Mapp 25:409–423. https://doi.org/10.1002/hbm.20118

Corbetta M, Patel G, Shulman GL (2008) The reorienting system of the human brain: from environment to theory of mind. Neuron 58:306–324. https://doi.org/10.1016/j.neuron.2008.04.017

Coste CP, Kleinschmidt A (2016) Cingulo-opercular network activity maintains alertness. Neuroimage 128:264–272. https://doi.org/10.1016/j.neuroimage.2016.01.026

Duncan J, Assem M, Shashidhara S (2020) Integrated intelligence from distributed brain activity. Trends Cogn Sci 24:838–852. https://doi.org/10.1016/j.tics.2020.06.012

Elliott ML, Knodt AR, Cooke M et al (2019) General functional connectivity: shared features of resting-state and task fMRI drive reliable and heritable individual differences in functional brain networks. Neuroimage 189:516–532. https://doi.org/10.1016/j.neuroimage.2019.01.068

Elton A, Gao W (2015) Task-positive functional connectivity of the default mode network transcends task domain. J Cogn Neurosci. https://doi.org/10.1162/jocn_a_00859

Fedorenko E, Duncan J, Kanwisher N (2013) Broad domain generality in focal regions of frontal and parietal cortex. PNAS 110:16616–16621. https://doi.org/10.1073/pnas.1315235110

Finn ES, Shen X, Scheinost D et al (2015) Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nat Neurosci 18:1664–1671. https://doi.org/10.1038/nn.4135

Fox J (2015) Applied regression analysis and generalized linear models. Sage Publications, Thousand Oaks

Friedman NP, Miyake A (2017) Unity and diversity of executive functions: Individual differences as a window on cognitive structure. Cortex 86:186–204. https://doi.org/10.1016/j.cortex.2016.04.023

Friedman NP, Miyake A, Young SE et al (2008) Individual differences in executive functions are almost entirely genetic in origin. J Exp Psychol Gen 137:201. https://doi.org/10.1037/0096-3445.137.2.201

Friedman NP, Banich MT, Keller M (2021) Twin studies to GWAS: there and back again. Trends Cogn Sci. https://doi.org/10.1016/j.tics.2021.06.007

Geng JJ, Vossel S (2013) Re-evaluating the role of TPJ in attentional control: contextual updating? Neurosci Biobehav Rev 37:2608–2620. https://doi.org/10.1016/j.neubiorev.2013.08.010

Green JJ, Boehler CN, Roberts KC et al (2017) Cortical and subcortical coordination of visual spatial attention revealed by simultaneous EEG–fMRI recording. J Neurosci 37:7803–7810. https://doi.org/10.1523/JNEUROSCI.0326-17.2017

Greicius MD, Menon V (2004) Default-mode activity during a passive sensory task: uncoupled from deactivation but impacting activation. J Cogn Neurosci 16:1484–1492. https://doi.org/10.1162/0898929042568532

Hale TS, Kane AM, Kaminsky O et al (2014) Visual network asymmetry and default mode network function in ADHD: an fMRI study. Front Psych. https://doi.org/10.3389/fpsyt.2014.00081

Hart MG, Ypma RJF, Romero-Garcia R et al (2016) Graph theory analysis of complex brain networks: new concepts in brain mapping applied to neurosurgery. J Neurosurg 124:1665–1678. https://doi.org/10.3171/2015.4.JNS142683

Hongkeun K (2010) Dissociating the roles of the default-mode, dorsal, and ventral networks in episodic memory retrieval. Neuroimage 50:1648–1657. https://doi.org/10.1016/j.neuroimage.2010.01.051

Jafari M, Ansari-Pour N (2019) Why, When and How to Adjust Your P Values? Cell Journal (yakhteh) 20:604. https://doi.org/10.22074/cellj.2019.5992

Jeong H, Tombor B, Albert R et al (2000) The large-scale organization of metabolic networks. Nature 407:651. https://doi.org/10.1038/35036627

Jolles DD, Mennigen E, Gupta MW et al (2020) Relationships between intrinsic functional connectivity, cognitive control, and reading achievement across development. Neuroimage. https://doi.org/10.1016/j.neuroimage.2020.117202

Joyce KE, Hayasaka S, Laurienti PJ (2013) The human functional brain network demonstrates structural and dynamical resilience to targeted attack. PLoS Comput Biol 9:e1002885. https://doi.org/10.1371/journal.pcbi.1002885

Jurado MB, Rosselli M (2007) The elusive nature of executive functions: a review of our current understanding. Neuropsychol Rev 17:213–233. https://doi.org/10.1007/s11065-007-9040-z

Kannurpatti SS, Rypma B, Biswal BB (2012) Prediction of task-related BOLD fMRI with amplitude signatures of resting-state fMRI. Front Syst Neurosci. https://doi.org/10.3389/fnsys.2012.00007

Kelly CAM, Uddin LQ, Biswal BB et al (2008) Competition between functional brain networks mediates behavioral variability. Neuroimage 39:527–537. https://doi.org/10.1016/j.neuroimage.2007.08.008

Kim C, Cilles SE, Johnson NF, Gold BT (2012) Domain general and domain preferential brain regions associated with different types of task switching: a meta-analysis. Hum Brain Mapp 33:130–142. https://doi.org/10.1002/hbm.21199

Lessov-Schlaggar CN, Swan GE, Reed T et al (2007) Longitudinal genetic analysis of executive function in elderly men. Neurobiol Aging 28:1759–1768. https://doi.org/10.1016/j.neurobiolaging.2006.07.018

Li Y, Liu Y, Li J et al (2009) Brain Anatomical Network and Intelligence. PLoS Comput Biol 5:e1000395. https://doi.org/10.1371/journal.pcbi.1000395

Mayhew AJ, Meyre D (2017) Assessing the heritability of complex traits in humans: methodological challenges and opportunities. Curr Genomics 18:332. https://doi.org/10.2174/1389202918666170307161450

Menardi A, Reineberg AE, Vallesi A et al (2021) Heritability of brain resilience to perturbation in humans. Neuroimage 235:118013. https://doi.org/10.1016/j.neuroimage.2021.118013

Miyake A, Friedman NP, Emerson MJ et al (2000) The unity and diversity of executive functions and their contributions to complex “Frontal Lobe” tasks: a latent variable analysis. Cogn Psychol 41:49–100. https://doi.org/10.1006/cogp.1999.0734

Mueller S, Wang D, Fox MD et al (2013) Individual variability in functional connectivity architecture of the human brain. Neuron 77:586–595. https://doi.org/10.1016/j.neuron.2012.12.028

Neale MC, Hunter MD, Pritikin JN et al (2016) OpenMx 2.0: extended structural equation and statistical modeling. Psychometrika 81:535–549. https://doi.org/10.1007/s11336-014-9435-8

Park KY, Lee JJ, Dierker D et al (2020) Mapping language function with task-based vs. resting-state functional MRI. PLoS ONE 15:e0236423. https://doi.org/10.1371/journal.pone.0236423

ParkerJones O, Voets NL, Adcock JE et al (2017) Resting connectivity predicts task activation in pre-surgical populations. NeuroImage Clin 13:378–385. https://doi.org/10.1016/j.nicl.2016.12.028

Paus T (2001) Primate anterior cingulate cortex: where motor control, drive and cognition interface. Nat Rev Neurosci 2:417–424. https://doi.org/10.1038/35077500

Power JD, Cohen AL, Nelson SM et al (2011) Functional network organization of the human brain. Neuron 72:665–678. https://doi.org/10.1016/j.neuron.2011.09.006

Ravizza SM, Ivry RB (2001) Comparison of the basal Ganglia and cerebellum in shifting attention. J Cogn Neurosci 13:285–297. https://doi.org/10.1162/08989290151137340

Reineberg AE, Banich MT (2016) Functional connectivity at rest is sensitive to individual differences in executive function: a network analysis: executive function individual differences at rest. Hum Brain Mapp 37:2959–2975. https://doi.org/10.1002/hbm.23219

Reineberg AE, Andrews-Hanna JR, Depue BE et al (2015) Resting-state networks predict individual differences in common and specific aspects of executive function. Neuroimage 104:69–78. https://doi.org/10.1016/j.neuroimage.2014.09.045

Reineberg AE, Gustavson DE, Benca C et al (2018) The relationship between resting state network connectivity and individual differences in executive functions. Front Psychol. https://doi.org/10.3389/fpsyg.2018.01600

Reineberg AE, Hatoum AS, Hewitt JK et al (2019) Genetic and environmental influence on the human functional connectome. Cereb Cortex. https://doi.org/10.1093/cercor/bhz225

Richiardi J, Altmann A, Milazzo A-C et al (2015) Correlated gene expression supports synchronous activity in brain networks. Science 348:1241–1244. https://doi.org/10.1126/science.1255905

Ronnlund M, Sundstrom A, Nilsson LG (2015) Interindividual differences in general cognitive ability from age 18 to age 65 years are extremely stable and strongly associated with working memory capacity. Intelligence 53:59–64. https://doi.org/10.1016/j.intell.2015.08.011

Roye S, Castagna PJ, Calamia M et al (2020) Relationships between multiple dimensions of executive functioning and resting-state networks in adults. Neuropsychologia 141:107418. https://doi.org/10.1016/j.neuropsychologia.2020.107418

Sala-Llonch R, Pena-Gomez C, Arenaza-Urquijo EM et al (2012) Brain connectivity during resting state and subsequent working memory task predicts behavioural performance. Cortex 48:1187–1196. https://doi.org/10.1016/j.cortex.2011.07.006

Sestieri C, Corbetta M, Romani GL, Shulman GL (2011) Episodic memory retrieval, parietal cortex, and the default mode network: functional and topographic analyses. J Neurosci 31:4407–4420. https://doi.org/10.1523/JNEUROSCI.3335-10.2011

Shah LM, Cramer JA, Ferguson MA et al (2016) Reliability and reproducibility of individual differences in functional connectivity acquired during task and resting state. Brain Behav. https://doi.org/10.1002/brb3.456

Shulman GL, Pope DLW, Astafiev SV et al (2010) Right hemisphere dominance during spatial selective attention and target detection occurs outside the dorsal frontoparietal network. J Neurosci 30:3640–3651. https://doi.org/10.1523/JNEUROSCI.4085-09.2010

Sinclair B, Hansell NK, Blokland GAM et al (2015) Heritability of the network architecture of intrinsic brain functional connectivity. Neuroimage 121:243–252. https://doi.org/10.1016/j.neuroimage.2015.07.048

Smallwood J, Brown K, Schooler JW (2012) Cooperation between the default mode network and the frontal–parietal network in the production of an internal train of thought. Brain Res 1428:60–70. https://doi.org/10.1016/j.brainres.2011.03.072

Smith SM, Vidaurre D, Beckmann CF et al (2013) Functional connectomics from resting-state fMRI. Trends Cogn Sci 17:666–682. https://doi.org/10.1016/j.tics.2013.09.016

Sormaz M, Murphy C, Wang H et al (2018) Default mode network can support the level of detail in experience during active task states. Proc Natl Acad Sci 115:9318–9323. https://doi.org/10.1073/pnas.1721259115

Sporns O (2013) Structure and function of complex brain networks. Dialogues Clin Neurosci 15:16

Spreng NR, Stevens DW, Chamberlain JP et al (2010) Default network activity, coupled with the frontoparietal control network, supports goal-directed cognition. Neuroimage 53:303–317. https://doi.org/10.1016/j.neuroimage.2010.06.016

Stuss DT, Alexander MP, Hamer L et al (1998) The effects of focal anterior and posterior brain lesions on verbal fluency. J Int Neuropsychol Soc 4:265–278. https://doi.org/10.1017/S1355617798002653

Thompson P, Ge T, Glahn DC et al (2013) Genetics of the connectome. Neuroimage 80:475–488. https://doi.org/10.1016/j.neuroimage.2013.05.013

Tian L, Kong Y, Ren J et al (2013) Spatial vs. temporal features in ICA of resting-state fMRI – a quantitative and qualitative investigation in the context of response inhibition. PLoS ONE 8:e66572. https://doi.org/10.1371/journal.pone.0066572

Tucker-Drob EM, Briley DA, Harden KP (2013) Genetic and environmental influences on cognition across development and context. Curr Direct Psychol Sci. https://doi.org/10.1177/0963721413485087

Turnbull A, Wang HT, Schooler JW et al (2019) The ebb and flow of attention: between-subject variation in intrinsic connectivity and cognition associated with the dynamics of ongoing experience. Neuroimage 15:286–299. https://doi.org/10.1016/j.neuroimage.2018.09.069

Vidaurre A, Llera A, Smith SM, Woolrich MW (2021) Behavioural relevance of spontaneous, transient brain network interactions in fMRI. Neuroimage 229:117713. https://doi.org/10.1016/j.neuroimage.2020.117713

Wang J-H, Zuo X-N, Gohel S et al (2011) Graph theoretical analysis of functional brain networks: test-retest evaluation on short- and long-term resting-state functional MRI data. PLoS ONE. https://doi.org/10.1371/journal.pone.0021976

Wilson EB, Hilferty MM (1931) The distribution of chi-square. Proc Natl Acad Sci USA 17:684. https://doi.org/10.1073/pnas.17.12.684

Zhang M, Savill N, Margulies DS et al (2019) Distinct individual differences in default mode network connectivity relate to off-task thought and text memory during reading. Sci Rep. https://doi.org/10.1038/s41598-019-52674-9

Acknowledgements

We thank Dr. Naomi P. Friedman, PI, of NIH grant R01 MH063207, who shared the dataset analyzed in this study as well as NIH grants R01 MH063207 and R01 AG046938 that supported the data collection.

Funding

LTS data were collected under grant MH063207 and NIH grants R01 MH063207 and R01 AG046938 as supporting data collection.

Author information

Authors and Affiliations

Contributions

All authors have contributed to the conceptualization of the study. AM, AER, LLS and CF conducted the main analyses of the paper. All authors helped in the interpretation of the data. AM wrote the first draft. All authors revised the manuscript critically for important intellectual content and the final version has been approved by all authors.

Corresponding author

Ethics declarations

Conflict of interest

All authors have no conflict of interest to declare.

Ethics approval

Data collection was approved under protocol 11-0614 from the University of Colorado Boulder's Institutional Review Board.

Consent to participate

All LTS participants signed informed consent prior to data collection.

Consent for publication

Not Applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Menardi, A., Reineberg, A.E., Smith, L.L. et al. Topographical functional correlates of interindividual differences in executive functions in young healthy twins. Brain Struct Funct 227, 49–62 (2022). https://doi.org/10.1007/s00429-021-02388-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00429-021-02388-4