Abstract

Previous work has shown that language plays an important role in the development of action control. This study examined the role of verbal processes in action–effect learning in 4-year-old children. Participants performed an acquisition phase consisting of a two-choice key-pressing task in which each key press (action) was followed by a particular sound (effect). Children were instructed to (1) label their actions along with the corresponding effects, (2) verbalize task-irrelevant words, or (3) perform without verbalization. In a subsequent test phase, they responded to the same sound effects under either a consistent or an inconsistent sound-key mapping. Evidence for action–effect learning was obtained only if actions and effects were labeled or if no verbalization was performed, but not if children verbalized task-irrelevant words. Importantly, action–effect learning was most pronounced when children verbalized the actions and the corresponding effects, suggesting that task-relevant verbal labeling supports the integration of event representations.



Introduction

“If I hit the brake, then my car will slow down.” Learning this type of simple association between an action and its effect forms the basis of goal-directed behavior and is essential for mastering the challenges of daily life. According to ideomotor approaches to action control (e.g., Greenwald, 1970; Hommel, 1996; Hommel, Müsseler, Aschersleben, & Prinz, 2001; James, 1890; Stock & Stock, 2004), performing a given action, such as a certain movement, automatically creates an association between the motor pattern producing the movement (m) and its sensory effects (e). The theory of action–effect learning (Elsner & Hommel, 2001) assumes that these associations are bidirectional (m ↔ e), so that people can use them to control their motor behavior by mentally anticipating the effect of a given action. That is, once I know that hitting the brake will slow down my car (m → e), the intention of slowing down (which reactivates e) will prompt me to hit the brake by priming the associated action (e → m).

Adults indeed acquire and use bidirectional action–outcome contingencies to plan and guide their actions (for reviews, see Hommel, 2009; Hommel & Elsner, 2009), but only a few studies have focused on action–effect learning in childhood, where the acquisition of novel action–effect contingencies seems particularly important. In one study, 4- and 7-year-olds performed two successive experimental phases (Eenshuistra, Weidema, & Hommel, 2004). In the acquisition phase, they carried out free-choice responses by pressing one of two response keys (m₁ and m₂) in each trial. Each key press triggered a distinct auditory effect (i.e., one of two sounds; m₁ → e₁, m₂ → e₂). In the test phase, children first heard a sound and then responded by pressing one of the response keys. Importantly, the assignment of response keys to sounds was either consistent (e₁ → m₁, e₂ → m₂) or inconsistent (e₁ → m₂, e₂ → m₁) with the sound-key mapping in the acquisition phase. In both age groups, children in the consistent mapping condition responded faster than those in the inconsistent mapping condition (mapping-consistency effect). In response accuracy, however, the mapping-consistency effect was much more pronounced in the 4-year-olds than in the 7-year-olds (cf. Eenshuistra, Verschoor, Kray, & Hommel, 2009; Kray, Eenshuistra, Kerstner, Weidema, & Hommel, 2006), indicating that younger children were less efficient at overwriting previously learned action–effect associations in order to maintain and implement a new set of task rules in the test phase. Based on these findings, one goal of the present study was to provide further evidence for action–effect learning in childhood.

Our second and more important focus was on the role of language in the acquisition of action–effect associations. Ever since the early work of Vygotsky (1962), researchers have emphasized the importance of language for the development of action control (e.g., Luria, 1960, 1969; Meacham, 1979). Their findings are compatible with more recent frameworks that highlight the role of language in the regulation of behavior. The cognitive complexity and control theory (Zelazo & Frye, 1997), for instance, assumes that language is the medium for rule use: formulating rules in natural language allows children to access and focus on particular pieces of knowledge when performing tasks. Accordingly, Zelazo (1999, 2004) assumes that language serves two distinct functions in the development of action control. When children start talking, they begin to label previous perceptions and the outcomes of their behavior. These semantic descriptions can outlast the respective sensory data and exist independently of them, thus supporting the maintenance of enduring traces in working memory (constitutive function). Once an event is represented in working memory, the retrieval of its label may trigger action schemata without external stimulation (executive function). With increasing age, children learn to use this mechanism for action regulation. Similarly, Gentner assumes that language is important for cognitive development because using common labels for distinct instances of a category and acquiring words for relations support the acquisition of increasingly complex relations (e.g., Gentner & Loewenstein, 2002; Gentner & Medina, 1998). Taken together, these models suggest that language can support the integration of relationships between events (cf. Hermer-Vazquez, Spelke, & Katsnelson, 1999) and facilitate the binding of actions to their effects (i.e., action–effect learning).

Consistent with these theoretical assumptions, a number of recent empirical studies indicate that children and adults can indeed employ verbal processes to support the retrieval and maintenance of task goals, action sequences, and if–then rules (e.g., Baddeley, Chincotta, & Adlam, 2001; Bryck & Mayr, 2005; Emerson & Miyake, 2003; Goschke, 2000; Kirkham, Cruess, & Diamond, 2003; Kray, Eber, & Karbach, 2008; Kray, Eber, & Lindenberger, 2004; Müller, Zelazo, Hood, Leone, & Rohrer, 2004; Towse, Redbond, Houston-Price, & Cook, 2000).

In a previous study, we examined the role of verbal labeling in the acquisition of action–effect associations in 4-year-old preschoolers (Kray et al., 2006). As in earlier studies (e.g., Eenshuistra et al., 2004), children performed an acquisition phase and a test phase. In the acquisition phase, they responded to the faces of Ernie and Bert from the popular TV show “Sesame Street”: as soon as Ernie’s face appeared on the screen, they pressed either a blue or a green response button, choosing freely between the two. Pressing one of the buttons was followed by a trumpet sound, pressing the other by a bell sound. Children carried out the acquisition phase under one of four verbalization conditions. The action group named their actions, that is, which response button they had pressed (e.g., “blue”). The effect group named the effect of their action, that is, the sound it induced (e.g., “trumpet”). The action + effect group labeled both the action and its effect (e.g., “green-bell”), while a fourth group verbalized words that were unrelated to the action and the effect (predicting what Ernie liked for lunch, “pizza” or “spaghetti”). In the test phase, half of each verbalization group was tested under a consistent and the other half under an inconsistent sound-button mapping. Accuracy data showed that naming only one part of the action–effect pair (i.e., action only or effect only) did not allow for action–effect learning, whereas naming both the action and the effect did. This suggests that naming only one member of the action–effect pair draws attention to this member at the expense of the other, thereby preventing the acquisition of their relationship. In contrast, verbally relating effects to the corresponding actions seems to support the integration of both into one event representation.

Although these results point to an important role of verbal labeling in action–effect learning, the study by Kray et al. also yielded an unexpected result: children performing under task-irrelevant verbalization did not perform significantly differently from children in the “action + effect” condition. Thus, instead of disrupting inner speech and thereby impairing action–effect learning, task-irrelevant verbalization still allowed for the acquisition of action–effect associations. One possible explanation of this finding is that the exact content of the verbalization matters less, as long as it draws attention to the relation between action and effect. If this were true, “pizza” and “spaghetti” may not have been ideal choices for task-irrelevant verbalization: although these words were related neither to the actions nor to their effects, the cover story did provide a general link between the verbalizations and the stimuli, because the children were told to guess Ernie’s lunch. One way to test whether this link was relevant for the acquisition of action–effect associations would be to use a different type of task-irrelevant verbalization, such as randomly created non-words completely unrelated to the task, the stimuli, and the instructions. Another issue that hampers the interpretation of the Kray et al. study is that it included a task-relevant and a task-irrelevant verbalization condition, but no baseline condition without verbalization. Accordingly, it is hard to disentangle the relative contributions of the two types of verbalization and to determine whether action–effect learning would have occurred without verbalization in this paradigm (cf. Eenshuistra et al., 2004; Elsner & Hommel, 2001).

Thus, the purpose of the present study was twofold. First, we investigated the influence of task-relevant (action + effect) and task-irrelevant verbalization on action–effect learning. In contrast to our previous study (Kray et al., 2006), we chose meaningless non-words as task-irrelevant verbalization to ensure that they were unrelated to the task and did not draw the children’s attention to any relevant task features. Second, we examined the acquisition of action–effect associations in a control condition without verbalization. This baseline allows the effects of task-relevant and task-irrelevant verbalization on action–effect learning to be assessed independently of each other. Since action control can be supported by task-relevant verbalization (Kray et al., 2006, 2008) and impaired by task-irrelevant verbalization (e.g., Baddeley et al., 2001; Emerson & Miyake, 2003; Kray et al., 2008), we expected action–effect learning (i.e., the mapping-consistency effect) to be more pronounced under task-relevant verbalization, and less pronounced under task-irrelevant verbalization, than in the control condition. As previous studies have suggested that accuracy measures are more sensitive to action–effect acquisition in children than reaction times, we focus on accuracy but also report reaction times.

Method

Participants

One hundred and ten 4-year-old children recruited from four kindergartens in southern Germany participated in the study. Exclusion criteria were motor impairments and developmental disorders reported by parents and kindergarten teachers. Children with myopia (n = 8) wore corrective glasses while performing the experimental tasks. Twenty children had to be excluded from the analysis because they did not finish the experiment (n = 4), were not able to perform the color-naming test (see below; n = 4), or responded at chance level in the test phase (n = 12), yielding a final sample of 90 children (see Table 1). The children received small presents for participating, and the kindergartens were given money to buy games (total amount per child: 6 EUR/~8 USD).

Stimuli and apparatus

We used a notebook computer for stimulus presentation and data collection. The faces of Ernie and Bert from the TV show “Sesame Street” served as visual stimuli. A left and a right response key on an external response box registered manual responses. Because 4-year-olds do not yet have a clear representation of “left” and “right”, the response buttons were color-tagged and referred to as the “green” and the “blue” button. The mapping of the colors to the left and right response buttons was counterbalanced across subjects. Auditory stimuli consisted of the sounds of a bell and a trumpet.

Design and procedure

The experiment was divided into an acquisition phase and a test phase. In the acquisition phase, the children were to press one of the two response keys as quickly as possible when Ernie appeared. They were instructed to choose the keys freely but to use them about equally often. Pressing one of the response keys was followed by a bell sound (m₁ → e₁) and pressing the other by a trumpet sound (m₂ → e₂); that is, each sound effect was produced by a distinct motor action. The mapping of the two response buttons to the two sounds was counterbalanced across subjects. To motivate the children to complete the acquisition phase, the experiment was set up as a game. The children were told to respond to the appearance of Ernie because he liked to play the game (go trials), but not to respond to Bert because he did not like to play (no-go trials).
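The two counterbalanced factors (the color-to-side assignment of the buttons and the button-to-sound assignment) cross into four combinations. The following is a minimal Python sketch of how participants could be rotated through them; the dictionaries, the function names `counterbalancing_cells` and `assign_cell`, and the rotation scheme are illustrative assumptions, since the paper states only that both mappings were counterbalanced across subjects.

```python
from itertools import product

# Hypothetical encodings of the two counterbalanced mappings (not from the paper).
COLOR_TO_SIDE = [
    {"green": "left", "blue": "right"},
    {"green": "right", "blue": "left"},
]
COLOR_TO_SOUND = [
    {"green": "bell", "blue": "trumpet"},
    {"green": "trumpet", "blue": "bell"},
]


def counterbalancing_cells():
    """Return all (side_index, sound_index) combinations: 2 x 2 = 4 cells."""
    return list(product(range(len(COLOR_TO_SIDE)), range(len(COLOR_TO_SOUND))))


def assign_cell(participant_index):
    """Rotate participants through the four cells in order (an assumed scheme)."""
    cells = counterbalancing_cells()
    side_idx, sound_idx = cells[participant_index % len(cells)]
    return COLOR_TO_SIDE[side_idx], COLOR_TO_SOUND[sound_idx]
```

With this rotation, `assign_cell(4)` returns the same assignment as `assign_cell(0)`, so every run of four consecutive participants covers each combination exactly once.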

The acquisition phase consisted of 144 trials (96 go and 48 no-go trials) divided into three blocks. Each acquisition trial started after an inter-trial interval of 1,500 ms. The go stimulus remained on the screen for 7,000 ms or until a response was made. The sound effects lasted 250 ms. How often each sound occurred varied slightly across participants (depending on how often they pressed each of the response keys), but the sounds were equally distributed in all experimental conditions (see Table 2). The no-go stimulus remained on the screen for about 2,000 ms. For the acquisition phase, participants were assigned to one of three verbalization groups. In the task-relevant verbalization group, the children named both the action and the effect it produced (e.g., “green-trumpet”/“blue-bell”). Children in the task-irrelevant verbalization group verbalized non-words (i.e., “bababu”/“dododi”) that were unrelated to the action and its effect. The third group served as a control condition without verbalization.

In the test phase, each of the three groups was randomly split: one half of the participants performed with an acquisition-consistent sound-key mapping and the other half with an acquisition-inconsistent sound-key mapping. Under the consistent mapping, children worked with the same action–effect assignment as in the acquisition phase but in reversed order, so that they now pressed each key in response to the sound that this key had previously produced (i.e., m₁ → e₁, m₂ → e₂ in the acquisition phase yielded the mapping e₁ → m₁, e₂ → m₂ in the test phase). Under the inconsistent mapping, the sound-key assignment from the acquisition phase was inverted (i.e., m₁ → e₁, m₂ → e₂ in the acquisition phase yielded the mapping e₁ → m₂, e₂ → m₁). Children were told that Ernie likes to make music, so they should press one key when they heard the trumpet and the other key when they heard the bell. Again, they were to withhold responding when Bert appeared, because “Bert does not like music and prefers the silence.”
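The derivation of the two test-phase mappings from a child’s acquisition mapping can be written as a small function. This is a minimal Python sketch; the function name `derive_test_mapping` and the dictionary representation (key to sound during acquisition, sound to key during test) are illustrative assumptions, not the software used in the study.

```python
def derive_test_mapping(acquisition, consistent):
    """Derive a test-phase sound -> key mapping from an acquisition key -> sound mapping.

    acquisition: dict mapping each of two keys to the sound it produced (m -> e).
    consistent: True for the acquisition-consistent group, False for the inconsistent group.
    """
    if len(acquisition) != 2:
        raise ValueError("expected exactly two keys")
    # Consistent: reverse each pair, so a sound calls for the key that produced it (e -> m).
    reversed_pairs = {sound: key for key, sound in acquisition.items()}
    if consistent:
        return reversed_pairs
    # Inconsistent: each sound calls for the other key.
    first, second = acquisition
    other_key = {first: second, second: first}
    return {sound: other_key[key] for sound, key in reversed_pairs.items()}


acquisition = {"m1": "e1", "m2": "e2"}
print(derive_test_mapping(acquisition, consistent=True))   # {'e1': 'm1', 'e2': 'm2'}
print(derive_test_mapping(acquisition, consistent=False))  # {'e1': 'm2', 'e2': 'm1'}
```

Because the consistent mapping is just the inverse of the acquisition mapping, any difference between the two test groups can be attributed to whether the previously learned m → e links point toward or away from the required response.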

The test phase consisted of three blocks of 24 go- and 6 no-go trials. The trial procedure was identical to the acquisition phase. Consistent with previous studies (Eenshuistra et al., 2004; Kray et al., 2006), the response keys triggered the corresponding sounds in both the acquisition phase and the test phase to avoid extinction of the action–effect associations (see Elsner & Hommel, 2001).
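The trial counts of both phases can be checked with a short generator of go/no-go sequences. This Python sketch is a hypothetical illustration: `make_blocks` is not from the paper, randomizing trial order within blocks is an assumption, and so is the equal split of the 144 acquisition trials into three blocks of 32 go and 16 no-go trials (the text gives only the totals).

```python
import random


def make_blocks(n_blocks, go_per_block, nogo_per_block, seed=None):
    """Return n_blocks lists of 'go' (Ernie) and 'no-go' (Bert) trials, shuffled within each block."""
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        block = ["go"] * go_per_block + ["no-go"] * nogo_per_block
        rng.shuffle(block)  # assumption: trial order randomized within blocks
        blocks.append(block)
    return blocks


# Acquisition: 144 trials (96 go, 48 no-go) in three blocks.
# The equal 32/16 split per block is an assumption; the paper gives only the totals.
acquisition_blocks = make_blocks(3, 32, 16, seed=1)

# Test: three blocks of 24 go and 6 no-go trials, as stated in the text.
test_blocks = make_blocks(3, 24, 6, seed=2)

print(sum(len(b) for b in acquisition_blocks), sum(len(b) for b in test_blocks))  # 144 90
```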

The test sessions took approximately 40 min per child, including short breaks between the color-naming test (see below), the acquisition phase, and the test phase.

Matching procedure

To avoid pre-existing group differences in verbal speed, children were matched across the three verbalization groups based on their results in a color-naming test. In this test, the children saw a sheet with a template in the top row assigning four different colors to four different shapes (yellow circle, blue cross, red triangle, and green square). Twenty-four uncolored shapes were shown below the template, and children were instructed to name the color belonging to each shape as quickly and accurately as possible. The test score was the number of correctly named colors after 45 s.
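Scoring and matching can be sketched as follows. The scoring rule (correct colors named within 45 s, using the shape-color template above) follows the text; the response record format is hypothetical. The paper does not describe how the matching was carried out, so `match_to_groups` implements one common scheme purely as an assumption: rank children by score and randomly distribute each consecutive set of three across the three verbalization groups.

```python
import random

# Shape-color template from the color-naming test.
TEMPLATE = {"circle": "yellow", "cross": "blue", "triangle": "red", "square": "green"}
TIME_LIMIT_S = 45.0


def color_naming_score(responses):
    """Count correctly named colors within the 45-s limit.

    responses: list of (shape, named_color, seconds_since_start) tuples
    (a hypothetical record format).
    """
    return sum(
        1
        for shape, color, t in responses
        if t <= TIME_LIMIT_S and TEMPLATE.get(shape) == color
    )


def match_to_groups(scores, groups=("relevant", "irrelevant", "control"), seed=None):
    """Assumed matching scheme: rank children by score, then randomly assign
    each consecutive set of three children to the three groups."""
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get, reverse=True)
    assignment = {}
    for i in range(0, len(ranked), len(groups)):
        labels = list(groups)
        rng.shuffle(labels)
        for child, label in zip(ranked[i:i + len(groups)], labels):
            assignment[child] = label
    return assignment
```

Assigning within score-ranked triplets keeps the group means of verbal speed close while still leaving the final assignment random.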

Results

Verbal speed

Table 1 shows the mean number of correctly named colors for the three verbalization groups. Analysis of variance (ANOVA) including the between-subjects factors verbalization group (relevant/irrelevant/control) and mapping group (consistent/inconsistent) revealed that neither the main effects nor their interaction reached significance (all p’s > 0.10), showing that the groups did not differ significantly in their speed of verbal responding.

Acquisition phase

Left and right key presses were equally distributed in each of the three groups (see Table 2). An ANOVA of mean reaction times (RT) with the within-subjects factor key press (left/right) and the between-subjects factor verbalization group (relevant/irrelevant/control) showed that children generally responded somewhat faster with the right hand than with the left hand, F(1, 84) = 4.98, p < 0.05, η² = 0.05, but that latencies were comparable across the three verbalization groups and that key press did not interact with verbalization group.

Test phase

Mean error rates and RT of correct responses were analyzed by means of ANOVAs with the between-subjects factors verbalization group (relevant/irrelevant/control) and mapping group (consistent/inconsistent).
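The degrees of freedom of this 3 × 2 between-subjects ANOVA follow directly from the design: levels minus one for each factor, the product of these for the interaction, and the number of children minus the number of cells for the error term. The small Python helper below (hypothetical, written only to check reported F statistics against the design) makes this explicit for the final sample of 90 children.

```python
def between_anova_df(n_total, levels_a, levels_b):
    """Degrees of freedom for a two-way, fully between-subjects ANOVA."""
    df_a = levels_a - 1
    df_b = levels_b - 1
    return {
        "A": df_a,                                # main effect of factor A
        "B": df_b,                                # main effect of factor B
        "AxB": df_a * df_b,                       # A x B interaction
        "error": n_total - levels_a * levels_b,   # N minus number of cells
    }


# A = verbalization group (3 levels), B = mapping group (2 levels), N = 90.
print(between_anova_df(90, 3, 2))  # {'A': 2, 'B': 1, 'AxB': 2, 'error': 84}
```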

Error rates

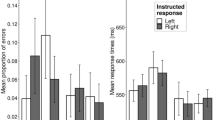

We found a main effect of mapping group, F(1, 84) = 20.86, p < 0.0001, η² = 0.17, reflecting higher error rates under the inconsistent than under the consistent mapping, and an interaction between mapping group and verbalization group, F(2, 84) = 4.27, p < 0.05, η² = 0.07, but no main effect of verbalization group (p = 0.22). To test whether the mapping-consistency effect reached significance in each of the three verbalization conditions, we ran separate analyses for each of them. These revealed reliable mapping-consistency effects in the task-relevant verbalization group and in the control group (F(1, 29) = 24.77, p < 0.0001, η² = 0.46, and F(1, 28) = 5.06, p < 0.05, η² = 0.15, respectively), but not in the task-irrelevant verbalization group (p = 0.41). Importantly, pairwise comparisons indicated that the mapping-consistency effect was larger in the task-relevant verbalization condition than in the task-irrelevant condition and the control condition (F(1, 56) = 7.42, p < 0.01, η² = 0.12, and F(1, 57) = 4.41, p < 0.05, η² = 0.07, respectively), but did not differ between the task-irrelevant condition and the control condition (p = 0.42) (see Fig. 1, upper panel).

Fig. 1 Proportion of errors (upper panel) and mean reaction times (lower panel) as a function of verbalization group (relevant, irrelevant, control) and mapping condition (consistent, inconsistent). Error bars refer to standard errors of the mean. Significant between-group differences with respect to consistency effects are highlighted.
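The mapping-consistency effect is the difference between mean error rates under inconsistent and consistent mapping, computed separately for each verbalization group. The Python sketch below illustrates only that descriptive arithmetic, on made-up numbers that are not the study’s data; the function name and input format are assumptions, and the sketch does not reproduce the F tests reported above.

```python
from statistics import mean


def consistency_effects(error_rates):
    """Mapping-consistency effect per verbalization group.

    error_rates: dict mapping (verbalization_group, mapping_group) to a list of
    per-child proportions of errors in the test phase.
    Returns the inconsistent minus consistent mean error rate for each group;
    positive values indicate action-effect learning.
    """
    groups = {group for group, _ in error_rates}
    return {
        g: mean(error_rates[(g, "inconsistent")]) - mean(error_rates[(g, "consistent")])
        for g in sorted(groups)
    }


# Made-up illustrative values, NOT the study's data.
toy = {
    ("relevant", "consistent"): [0.10, 0.20],
    ("relevant", "inconsistent"): [0.40, 0.50],
    ("control", "consistent"): [0.10, 0.20],
    ("control", "inconsistent"): [0.25, 0.25],
}
effects = consistency_effects(toy)  # roughly {'control': 0.10, 'relevant': 0.30}
```

Comparing these differences between groups corresponds to the interaction of mapping group and verbalization group; whether such a difference is reliable still requires the inferential tests.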

Latencies

A main effect of mapping group, F(1, 84) = 4.31, p < 0.05, η² = 0.05, showed that children responded more slowly under the inconsistent than under the consistent mapping (see Fig. 1, lower panel). Neither the main effect of verbalization group nor its interaction with mapping group reached significance (p = 0.92 and p = 0.76, respectively). Because latencies and error rates thus pointed in the same direction, a speed-accuracy trade-off can be ruled out as an explanation for the pattern of error rates.¹

Discussion

The purpose of the present study was to examine the role of verbal processes in the acquisition of action–effect associations in 4-year-old children. Specifically, we tested whether different types of verbalization (task-relevant, task-irrelevant, no verbalization) during an acquisition phase modulated the occurrence of action–effect learning (i.e., the mapping-consistency effect) in a subsequent test phase. As in our previous studies (Eenshuistra et al., 2004, 2009; Kray et al., 2006), effects were most pronounced in accuracy, which is considered a more sensitive measure than reaction times in preschool-aged children (for a review, see Diamond & Kirkham, 2005). Overall, our findings provide two novel insights.

First, although action–effect learning occurred in the absence of any verbalization, it was particularly pronounced under task-relevant verbalization. This pattern provides clear evidence for the acquisition of bidirectional action–effect associations in preschoolers under “standard” conditions without verbalization, supporting ideomotor approaches to action control in general and Elsner and Hommel’s (2001) theory of action–effect learning in particular. Importantly, our data also support the view that task-relevant verbalization facilitates action control in childhood (cf. Kray et al., 2006, 2008). That is, when children verbalized their actions and the corresponding effects, thereby verbally connecting the two most relevant aspects of the task, the acquisition of action–effect associations was particularly enhanced. This finding is consistent with a recent study on the role of verbal processes in feature binding in 4-year-olds (Dessalegn & Landau, 2008). In that study, children were shown a target (e.g., a split square, red on the left and green on the right). After a brief delay, they saw a display including the target, its mirror image, and a square with a different geometric split, and they were instructed to identify the target. Children failed to maintain color-location conjunctions, but their performance improved when targets were accompanied by a verbal cue specifying the relation between the color and its position (e.g., “the red is on the left”), whereas no such improvement was found with non-linguistic cues (e.g., pointing).
On a more general note, our results support accounts stressing the importance of verbal processes for the integration of different sources of information into one coherent representation (e.g., Hermer-Vazquez et al., 1999), the organization of temporal relations between stimuli and the corresponding motor responses (e.g., Zelazo, 1999), and the sequencing of goal-directed behavioral activity (e.g., Bryck & Mayr, 2005).

Second, while action–effect learning was found under task-relevant verbalization and in the control condition, it did not occur under task-irrelevant verbalization. Thus, in contrast to our previous study (Kray et al., 2006), suppressing inner speech by means of task-irrelevant verbalization impaired the acquisition of action–effect associations, suggesting that children strongly rely on verbal processes to integrate relations between events (cf. Zelazo, 1999, 2004). This result is consistent with previous evidence provided by Kray et al. (2008), who examined the role of language in task-switching performance in children, younger adults, and older adults. While switching from one task to another, participants engaged in task-relevant verbalization (labeling the next task goal), engaged in task-irrelevant verbalization (intended to disrupt inner speech), or did not verbalize at all. Task switching was facilitated by task-relevant verbalization, particularly in children and older adults, and hampered by task-irrelevant verbalization, which points to the importance of verbal processes in selecting task goals and maintaining action sequences (cf. Baddeley et al., 2001; Bryck & Mayr, 2005; Emerson & Miyake, 2003; Goschke, 2000; Kirkham et al., 2003; Kray et al., 2004, 2008; Müller et al., 2004; Towse et al., 2000).

So why did our previous study show action–effect learning even under task-irrelevant verbalization? Considering the specific nature of that verbalization (guessing what Ernie liked for lunch), it seems likely that even the very general link between the stimuli and the task-irrelevant verbalization facilitated action–effect learning. This has important implications for understanding the role of verbal processes in action control, because it suggests that, aside from facilitating the integration of relationships between events, language can also have a more general function that primarily serves to redirect and focus attention on the task (cf. Karbach & Kray, 2007; Kirkham et al., 2003; Müller et al., 2004; Towse et al., 2000; Vygotsky, 1962), even if it does not explicitly stress the relationship between the most relevant task features (such as an action and its effect). This interpretation is consistent with the cognitive complexity and control theory, which assumes that verbal processes can facilitate higher-order rule use but can also help refocus children’s attention in the absence of higher-order rules. According to Jacques and Zelazo (2005), the effects of language are limited to refocusing attention in 3-year-olds. By the age of four, however, language can also support the integration of conflicting stimulus dimensions and of relations between events.

Taken together, our results suggest that the interplay between language and action control is complex and operates on multiple levels; that is, language can serve different functions in regulating behavior (cf. Dessalegn & Landau, 2008; Jacques & Zelazo, 2005; Luria, 1969; Zelazo, 1999, 2004). Future studies need to disentangle the specific functions of different types of task-related verbalization for different components of action planning and behavioral control, and to determine to what degree these mechanisms are subject to age-related changes during childhood. In sum, our findings suggest that action–effect learning in early childhood is strongly mediated by verbal processes and that it can be supported by task-relevant verbalization relating actions to their effects. Language thus appears to be a useful tool for (re)directing attention to relevant task features and for implementing and applying task representations in order to plan and guide behavior in preschoolers.

Notes

¹ Although we found no significant pre-experimental group differences in verbal speed, one may argue that the children in the consistent mapping group tended to verbalize faster than those in the inconsistent mapping group. To make sure that this tendency did not account for the group differences found in the test phase, we performed two control analyses in which we included the color-naming score as a covariate in the two-way ANOVA with the between-subjects factors verbalization group (relevant/irrelevant/control) and mapping group (consistent/inconsistent). We found no significant effect of verbal speed on latencies (p = 0.53). Although the effect reached significance for error rates, F(1, 84) = 4.60, p < 0.05, η² = 0.05, the pattern of results did not change.

References

Baddeley, A., Chincotta, D., & Adlam, A. (2001). Working memory and the control of action: Evidence from task switching. Journal of Experimental Psychology: General, 130, 641–657.

Bryck, R. L., & Mayr, U. (2005). On the role of verbalization during task set selection: Switching or serial order control? Memory & Cognition, 33, 611–623.

Dessalegn, B., & Landau, B. (2008). More than meets the eye: The role of language in binding and maintaining feature conjunctions. Psychological Science, 19, 189–195.

Diamond, A., & Kirkham, N. (2005). Not quite as grown-up as we like to think: Parallels between cognition in childhood and adulthood. Psychological Science, 16, 291–297.

Eenshuistra, R. M., Verschoor, S., Kray, J., & Hommel, B. (2009). Explicit learning of arbitrary and non-arbitrary action–effect relations in adults and 4-year-olds (Manuscript under revision).

Eenshuistra, R. M., Weidema, M. A., & Hommel, B. (2004). Development of the acquisition and control of action–effect associations. Acta Psychologica, 115, 185–209.

Elsner, B., & Hommel, B. (2001). Effect anticipation and action control. Journal of Experimental Psychology: Human Perception and Performance, 27, 229–240.

Emerson, M. J., & Miyake, A. (2003). The role of inner speech and task switching: A dual-task investigation. Journal of Memory and Language, 48, 148–168.

Gentner, D., & Loewenstein, J. (2002). Relational language and relational thought. In E. Amsel & J. P. Byrnes (Eds.), Language, literacy, and cognitive development: The development and consequences of symbolic communication (pp. 87–120). Mahwah, NJ: Erlbaum.

Gentner, D., & Medina, J. (1998). Similarity and the development of rules. Cognition, 53, 129–153.

Goschke, T. (2000). Intentional reconfiguration and involuntary persistence in task set switching. In S. Monsell & J. Driver (Eds.), Control of cognitive processes: Attention and performance XVIII (pp. 331–355). Cambridge, MA: MIT Press.

Greenwald, A. G. (1970). Sensory feedback mechanisms in performance control: With special reference to the ideo-motor mechanism. Psychological Review, 77, 73–99.

Hermer-Vazquez, L., Spelke, E. S., & Katsnelson, A. S. (1999). Sources of flexibility in human cognition: Dual-task studies of space and language. Cognitive Psychology, 39, 3–36.

Hommel, B. (1996). The cognitive representation of action: Automatic integration of perceived action effects. Psychological Research, 59, 176–186.

Hommel, B. (2009). Action control according to TEC (theory of event coding). Psychological Research, 73, 512–526.

Hommel, B., & Elsner, B. (2009). Acquisition, representation, and control of action. In E. Morsella, J. A. Bargh, & P. M. Gollwitzer (Eds.), Oxford handbook of human action (pp. 371–398). New York: Oxford University Press.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–937.

Jacques, S., & Zelazo, P. D. (2005). On the possible roots of cognitive flexibility. In B. D. Homer & C. S. Tamis-Lemonda (Eds.), The development of social understanding and communication (pp. 53–81). Mahwah, NJ: Lawrence Erlbaum.

James, W. (1890). The principles of psychology. New York: Dover.

Karbach, J., & Kray, J. (2007). Developmental changes in switching between mental task sets: The influence of verbal labeling in childhood. Journal of Cognition and Development, 8, 205–236.

Kirkham, N. Z., Cruess, L., & Diamond, A. (2003). Helping children apply their knowledge to their behavior on a dimension-switching task. Developmental Science, 6, 449–476.

Kray, J., Eber, J., & Karbach, J. (2008). Verbal self-instructions in task switching: A compensatory tool for action-control deficits in childhood and old age? Developmental Science, 11, 223–236.

Kray, J., Eber, J., & Lindenberger, U. (2004). Age differences in executive functioning across lifespan: The role of verbalization in task preparation. Acta Psychologica, 115, 143–165.

Kray, J., Eenshuistra, R. M., Kerstner, H., Weidema, M., & Hommel, B. (2006). Language and action control: The acquisition of action goals in early childhood. Psychological Science, 17, 737–741.

Luria, A. R. (1960). Experimental analysis of the development of voluntary action in children. In H. P. David & J. C. Brengelmann (Eds.), Perspectives in personality research (pp. 139–149). Oxford, England: Springer.

Luria, A. R. (1969). Speech development and the formation of mental processes. In P. Lloyd & C. Fernyhough (Eds.), Lev Vygotsky: Critical assessments: Thought and language (Vol. II, pp. 84–112). Florence, KY, US: Taylor & Francis/Routledge.

Meacham, J. A. (1979). The role of verbal activity in remembering the goals of actions. In G. Zivin (Ed.), The development of self-regulation through private speech (pp. 237–263). New York: Wiley.

Müller, U., Zelazo, P. D., Leone, T., Hood, S., & Rohrer, L. (2004). Interference control in a new rule use task: Age-related changes, labeling, and attention. Child Development, 75, 1–16.

Stock, A., & Stock, C. (2004). A short history of ideo-motor action. Psychological Research, 68, 176–188.

Towse, J. N., Redbond, J., Houston-Price, C. M. T., & Cook, S. (2000). Understanding the dimensional change card sort: Perspectives from task success and failure. Cognitive Development, 15, 347–365.

Vygotsky, L. S. (1962). Thought and language. Cambridge, MA: MIT Press. (Original work published 1934).

Zelazo, P. D. (1999). Language, levels of consciousness, and the development of intentional action. In P. D. Zelazo & J. W. Astington (Eds.), Developing theories of intention: Social understanding and self-control (pp. 95–117). Mahwah, NJ, US: Lawrence Erlbaum Associates.

Zelazo, P. D. (2004). The development of conscious control in childhood. Trends in Cognitive Sciences, 8, 12–17.

Zelazo, P. D., & Frye, D. (1997). Cognitive complexity and control: A theory of the development of deliberate reasoning and intentional action. In M. I. Stamenov (Ed.), Language structure, discourse and the access to consciousness (pp. 112–153). Amsterdam: Benjamins.

Acknowledgments

This research was supported by the Deutsche Forschungsgemeinschaft (SPP 1107) through grants to Jutta Kray (KR 1884/3-3) and Bernhard Hommel (HO 1430/8-3). We are grateful to Rena Eenshuistra for constructive comments and to Kathrin Bächle, Anna Orth, and Anna Walter for their help in running the experiment.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


About this article

Cite this article

Karbach, J., Kray, J. & Hommel, B. Action–effect learning in early childhood: does language matter?. Psychological Research 75, 334–340 (2011). https://doi.org/10.1007/s00426-010-0308-1
