Abstract

Several methods to localise sources of vibrations have been established in the literature. A great amount of those methods are based on databases with features of known impact positions. Great effort needs to be put into highly expensive experiments that deliver those databases. In this paper, we propose several simulation techniques that may replace the expensive experiments for source localisation. The paper compares the localisation accuracy of simulated and experimental data for two different localisation approaches, the reference database method and neural networks. Both methods process signal arrival time differences from several positions on the structure. The methods are exemplarily applied to a complex small-scale structure from the automotive industry: The small dimensions of the brake disk hat and the inclusion of holes is a challenging task for the accuracy of the applied localisation techniques. Results show that simulated data can replace experimentally gained data well in case of the reference database method, whereas the neuronal networks approach should stick to experimentally gained data. The evaluations show that, despite the small dimension, the relative localisation accuracy is within accepted ranges of literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

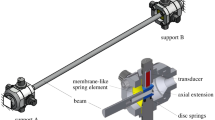

Stick–slip vibrations occur in various systems which involve friction processes. These range from the string vibrations of violins, and creaking doors, from everyday life to highly complex engineering problems. One of the latter represents the subject of this paper from the automotive industry: the contact between the rim, brake disc hat and the wheel carrier can give rise to acoustic phenomena under certain boundary conditions. To investigate the mechanisms at the contact, the exact localisation of the points of excitation is essential. Several studies focus on the localisation of sound sources with the help of measurements with microphone arrays [1,2,3,4,5,6]. Another strategy is to use signals that are recorded by sensors, directly mounted on the surface of the object. In the literature, there are several studies that use one (triaxial) sensor, see, e.g. [7,8,9]. In this study, the focus lies on strategies using more than one sensor mounted on the surface. Furthermore, the study focuses on the determination of the point of impact and not on determining the force-time history like it is presented in [10,11,12]. In the following, we present the experimental setup and some localisation strategies that are suitable for this type of problem.

1.1 Geometry of the investigated problem

In this paper, we investigate the feasibility of localising acoustic sources from the wheel assembly, whose principal layout is shown in Fig. 1. The brake disc hat is part of a vehicle’s braking system and has been chosen for the analysis due to its small dimensions and inhomogeneities. The geometry is visualised in Fig. 1a. The brake disc hat in the case at hand is made of aluminium and includes a hole for centring the disc hat on a car wheel carrier. The five smaller holes are called through bores and are used for the wheel bolts; the sixth one is used to fix the brake disc hat onto the wheel carrier. The disc has a thickness of 7 mm and a diameter of 155 mm. For this setup, the high velocity of the structure-borne sound waves, the dimensions of the disc, plus the inhomogeneities induced by the material cutouts lead to a challenging task.

1.2 Basics of source localisation using acoustic emissions

Generally, the localisation of sources of vibrations, often caused by damage or impacts, can be performed by the so-called Acoustic Emission (AE) technique. AEs are defined as the generation of transient acoustic waves due to a sudden redistribution of stress in the material. The sources of AE can be of different nature like thermal or mechanical stresses, cracks or friction processes. An important source of AEs in the context of the aforementioned stick–slip effects is the transition from a stick phase to a slip phase [13]. The thereby induced waves travelling through the material can be recorded by AE sensors, placed at different positions on the structure. The signals recorded by the sensors are then processed to determine the wave’s point of origin [14]. Different methods exist in the literature for this purpose. Different categorisations for the approaches have been proposed in several review papers [7, 14,15,16,17]. Hereinafter, some of the algorithms that are based on the use of arrival time differences of the waves at the different sensors will be briefly summarised.

Travel times can be obtained from the difference between the time of excitation \(t_{\textrm{exc}}\) and the time of arrival \(t_{\textrm{AT},i}^p\) at a sensor i. The arrival time \(t_{\textrm{AT},i}^p\) when exciting a structure at position p is determined from the recorded raw signals of the sensors, using methods for Arrival Time Picking (ATP) that are comprehensively introduced in Appendix B. All determined arrival times at sensors 1 to \(n_{\textrm{sens}}\) from one source p are stored in the vector \(\textbf{t}_{\textbf{AT}}^\textbf{p}\):

When sensor data is recorded from an unknown impact location, the time of excitation \(t_{\textrm{exc}}\) is unknown. However, the travel time differences from the source location to the divergent sensors correspond to the differences in arrival times \(\Delta t_{\textrm{AT},ij}^p\) at the sensors that can be determined by

The most established source localisation technique for isotropic and homogeneous structures is triangulation [16, 18]. It is based on the differences in travelled distances \(\Delta d_{ij}^p\) between the source and two different sensors:

where v is the velocity of the wave travelling through the material. The wave speed has to be chosen according to the wave type that the sensors recognise first. Different types of waves propagate through the material [19] and, in this study, it has to be focussed on the P wave which can be identified as it arrives first. In the triangulation, the acoustic source is determined by constructing three circles around three sensor positions based on the differences in travelled distance. The circle radii are increased equally until they intersect at one common point, which is the location of impact [16]. Triangulation is predominantly suitable for simple, homogeneous structures without holes, as these would disturb a straightforward propagating wave. A minimum of three sensors is necessary for the determination of the impact position in the case of a two-dimensional structure with isotropic material [20].

1.2.1 Arrival time approach

The so-called Arrival Time Approach (ATA) is based on an arrival time function \(f(\mathbf {x_{S,i},x_{\textrm{exc}}})\), which describes the arrival time \(t_{\textrm{AT},i}^p\) at a sensor i at position \(x_{S,i}\) when the structure is excited at position \(x_{\textrm{exc}}\) at time \(t_{\textrm{exc}}\):

Different strategies for solving this equation exist, depending on the complexity of the arrival time function. A simple example for \(f(\mathbf {x_{S,i},x_{\textrm{exc}}})\) is [7]

Iterative and non-iterative techniques to solve a set of Eq. (4) for \(n_{\textrm{sens}}\) sensors are summarised in [7, 17]. Non-iterative methods are, in general, simple to apply and quicker, whereas iterative methods allow more complex velocity models. The USBM [21] and the Inglada method [22] are examples for solving these equation non-iteratively. Examples for iterative approaches are derivative approaches [23, 24], the Simplex algorithm [25,26,27] or genetic algorithms [28,29,30,31,32,33]. More examples for localisation strategies using an optimisation technique for isotropic or anisotropic plates can be found in [16, 34,35,36,37,38]. All these iterative and non-iterative methods aim to converge at one single point which is interpreted as the point of impact.

1.2.2 Artificial neural networks

To overcome the arising localisation issues when dealing with complex structures or inhomogeneous materials, Artificial Neural Networks (ANNs) are frequently used, e.g. [39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55]. Feed-forward neural networks with back-propagation are a suitable network type for localisation problems [51, 53, 54]. This kind of ANN is also the most commonly applied one [56]. The inputs of the model are the arrival time differences of the acoustic signals at the different sensors. The outputs of the ANN are the predicted coordinates of the acoustic source. In [53], the ANN approach is compared to classic approaches regarding the localisation accuracy.

1.2.3 Reference database method

Similar to ANN, the reference DaTaBase method (DTB) [43, 57,58,59,60,61,62,63,64] uses a large amount of datasets with known input features and results. An unknown impact can be located by comparing the input features from a set of known sources with that of the unknown impact. Database features and features from an unknown excitation are compared by correlation analysis [58, 59, 63, 65, 66] or calculating root mean square values [61,62,63]. As only discrete test points can be stored in the databases, an interpolation is normally applied between the entries of the databases [57,58,59,60]. The last two data-based approaches, ANN and DTB, are compared in [57] regarding the localisation performance for impacts on a composite plate.

1.3 Outline of the paper

The majority of the methods discussed in the cited literature rely on the construction of databases based on experiments. The effort and costs involved raise the question of whether data can also be generated differently, for example by simulation. An approach to obtain arrival time data numerically is shown by [64]. The travel times are determined by

where \((x_{\textrm{exc}},z_{\textrm{exc}})\) and \((x_S,z_S)\) are the coordinates of the source and the sensor respectively. This definition works well for simple plate structures without holes.

In this paper, two main targets are pursued on the basis of the presented structure:

-

The first question is whether a digital twin of an experimental setup can replace the generation of real experimental data.

-

The second question is if existing methods from the literature fed by either experimental or simulated data are suitable for a small-scale problem like the aforementioned problem in the wheel assembly.

Different simulation models of the hardware problem are compared for their modelling effort and localisation accuracy. The replacement of experimental data by simulated data is analysed for two different localisation approaches, the DTB method and localisation with ANNs. The sensitivity of the localisation accuracy of the localisation process with respect to several parameters is investigated using experimental data with known impact positions. Section 2 will focus on the acquisition of data, both by simulation and experiment. Section 3 will introduce the two localisation methods based on data sets that are discussed in this paper. Section 4 shows how to parametrise the methods with the goal of tuning various parameters of both methods to improve their localisation accuracy. The methods with tuned parameters are compared in Sect. 5.

2 Data acquisition methods

The localisation approaches applied in this paper are based on the usage of data sets that are collected prior to the localisation process. To avoid costly experiments as discussed in Sect. 2.1, different simulation models of varying complexity are established to describe the sound propagation in elastic materials and to collect data:

-

Direct Ray Approach (DRA); presented in Sect. 2.2

-

Ray Tracing (RT); presented in Sect. 2.3

-

Finite Element Method (FEM); presented in Sect. 2.4

After an introduction to experimental data acquisition in Sect. 2.1, the application of DRA, RT and FEM on the brake disc hat is introduced.

2.1 Experimental data acquisition

A classic method to obtain data for the establishment of databases are experiments where the real structure is impacted at several locations. The experimental set-up for this purpose consists of the brake disc hat located on foam which has a much lower wave speed than aluminium to avoid bypasses. Ten tear-drop charge accelerometer sensors are mounted on both sides of the structure. The signals are collected at a sampling rate of 5 MHz. During the experiments, 470 different positions are excited on the structure. A steel ball is used as an impulsive excitation of the structure. Figure 2a shows an example of the signal obtained from one of the sensors.

As travel times with respect to the impact cannot be revealed directly, normalised arrival times \({\tau }_{\textrm{AT},\textbf{p}}\) are used: these refer to the arrival time \(t_{\textrm{AT},sf}^{p}\) at the sensor sf, the sensor that records the signal first and thus has the shortest distance between source and sensor [43]:

By sorting all normalised arrival times in a vector, the normalised arrival time vector \({\tau }_{\textrm{AT},\textbf{p}}\) is obtained:

Different methods, such as the Akaike Information Criterion (AIC), the Short Time Average over Long Time Average (STA/LTA) and a correlation-based approach are applied for the purpose of ATP, and are briefly introduced in Appendix B. Figure 2b zooms in on Fig. 2a near the detected arrival time of the wave. The vertical lines mark the determined arrival times picked by the AIC and the STA/LTA method. Figure 3 shows the normalised arrival times (Eq. (7)) for measurements with five repetitions as black, dashed lines. The results indicate very good reproducibility. Sensors 1 and 6 as well as 2 and 7 (and other pairs of sensors) detect similar arrival times of the waves as they are similarly distant from the source, but on different sides of the hat; see Fig. 4. The additional lines represent the normalised arrival times for the three simulation approaches described later in this section. The simulated results differ slightly for sensors close to the point of excitation. Greater differences are found for sensors 5 and 10, which had the largest distance from the point of excitation.

2.2 Simulation: direct ray approach

The DRA is the simulation technique with the highest reduction in complexity of the presented structure. In this approach, the source and sensors are connected by the shortest geometrical distance \(l_i\) between them; see Eq. (6) or [64]. The travel times \(t_{TT}^i\) are determined by dividing the length of this "direct ray" by the wave propagation speed:

If this linear function intersects with the circular cutout in the centre of the plate, the arc length between the two intersection points is taken instead of the direct connection. To keep this approach simple, the algorithm still chooses the direct connection when the ray intersects with one of the through bores where the effect is lower than that of the big cutout in the centre of the plate. The advantage of this approach is the short calculation time and the low effort required to construct the database. The blue, dashed lines in Fig. 4 visualise direct rays between a sensor and two different possible points of excitation.

2.3 Simulation: ray tracing

The RT is a refined version of the direct ray approach. If a ray intersects with one of the circular cutouts, the ray is bent around it. This is visualised by the green, solid lines shown in Fig. 4. The length of the rays hitting the desired end point (sensors) are compared so that the shortest distance between the source and sensor can be determined. Travel times are finally calculated by inserting the shortest distance into Eq. (9). A detailed description of the algorithm is given in Appendix C.

2.4 Simulation: finite elements

This approach uses a finite element model of the brake disc hat to simulate the wave propagation and arrival times at the different sensors. In the simulation, the brake disc hat is supported by several soft springs to model the foam on which the disc is positioned in the validation experiments. The excitation signal is idealised as a triangular pulse as shown in Fig. 5a. The excited frequency range depends on the duration of the triangular load: short durations lead to higher frequency inputs, but may cause numerical issues. A duration of \(2\cdot 10^{-7}\) s is chosen. The object of investigation is represented through an ABAQUS explicit model, which is simulated for a total time of \(3\cdot 10^{-5}\) s using a numerical time step procedure. The output signal for five out of ten sensors is shown in Fig. 5b. The arrival times at the different sensors are highlighted as vertical, dashed lines. A threshold-based ATP algorithm is used to extract the times of arrival.

3 Methods for localisation

To investigate the localisation potential with computer-generated data, two approaches of localisation are discussed within this paper: the comparison of recorded data from an unknown source position with that from databases, (Sect. 3.1) and the application of a neural network that is trained with reference data (Sect. 3.2).

3.1 Approach A: comparison with reference data

In the following, data related to travel times from different known positions of excitation are compared with data from an unknown location. Delta Arrival Time (DAT) matrices \(\textbf{A}_{\textrm{AT}}^p \in \mathbb {R}^{n_{\textrm{sens}} \text {x} n_{\textrm{sens}}}\) are constructed from the normalised arrival times:

The approach to localising an unknown point of excitation is based on the comparison between the unknown DAT matrix \(\tilde{\textbf{A}}_{\textrm{AT}}^p\) and that from possible reference positions from the database \(\textbf{A}_{\textrm{AT}}^p\) by subtraction:

For every point p, a residuum \(\epsilon _p\) is determined:

In this study, the proposal for the point of excitation is determined using a weighted average over k points with coordinates \(x_i\):

Using a weighted average enables us to propose source coordinates from a continuous field and not only the discrete points that are stored in the database. The weights \(w_i\) depend on the error determined at the respective position:

Fig. 6b visualizes this approach. Green areas mark points with low values of \(\epsilon _{p}\) whereas red areas indicate a high deviation from the measured data to that from the database.

3.2 Approach B: artificial neural networks

In the second approach, an ANN is trained with sensor data to predict impact source locations. A feedforward ANN is chosen for the task. It is considered the most suitable network type for localisation problems [51, 53, 54]. Its architecture is shown in Fig. 7. The ANN consists of nodes, referred to as neurons, which are arranged in several layers. All the neurons in one layer are connected to all the neurons in its neighbouring layers. The applied ANN has three different types of layers: the arrival times \(\tau _{\textrm{AT},s}^p\) are inserted into the network at the input layer. The so-called hidden layers process the data. The results of the network are the predicted source coordinates \(x_{\textrm{prop}}\), \(z_{\textrm{prop}}\), and are obtained in the output layer. Input as well as output data are continuous in this case. The processing of data in the applied ANN is performed in the same way in all layers. The connections forward values from neurons in one layer to neurons in another layer. The incoming values are combined using weighted sums by the neurons of the receiving layer. The thereby applied weights and biases are learnable parameters of the network. The weighted sums form the input of so-called activation functions. The values of the functions are the outputs of the neurons and are forwarded to the subsequent layer. The weights and biases of the network are adjusted using supervised learning with data of known impact positions. The error backpropagation rule is applied to update the parameters [56]. Since the weights and biases are only slightly adjusted in each step, the data sets are processed several times by the network. A single run through all the training data is called a training epoch. After training, the performance of the ANN is evaluated in the validation phase. The percentage of the validation data in the complete data is specified by the validation split. After validation, the ANN can be applied to predict unknown source positions.

4 Tuning of parameters for the localisation and data generation methods to increase localisation accuracy

In this section, localisation process parameters that influence the localisation accuracy are varied to find the best set-up for the comparison of the methods in the discussion in Sect. 5. Here, the data sets from the experiments are used as validation points for the purpose of parameter tuning: the recorded signals from the validation points are used as an input for the localisation methods, while proposed positions of excitation \({\textbf{x}}_{\textbf{prop}}\) are compared with the actual ones \(\textbf{x}_{\textbf{exc}}\). An error criterion \(\xi \) is defined based on the distance between these two points:

There are different causes for the error: firstly, errors arise during the measurements due to uncertainties in the exact positions of sensors and impact positions, the sensor dimensions and some other minor aspects [54]. The ATP process affects arrival times and, therefore, has an impact on the localisation accuracy. In the case of simulated data acquisition, the abstraction of the method (DRA, RT, FEM) and its parameters (mesh size, material properties, etc.) influence the localisation accuracy. Finally, the localisation approaches themselves have parameters that influence the accuracy. The goal of this section is, on the one hand, to find parameters for both approaches that minimise the total error and, on the other hand, to quantify the effects of the different parameters. The localisation accuracy of all 450 test points should be taken into account when different parametrisations of the methods are compared. A cumulative frequency analysis is performed, which evaluates the percentage of all test points that have an error smaller than a certain reference value.

4.1 Influence of parameters of approach A on the localisation accuracy

For the comparison of different data acquisition methods, the parameters of the localisation and data acquisition process need to be tuned. The effect of different parameters is presented in the following.

4.1.1 Data preparation: influence of the ATP method

The influence of the ATP methods that are introduced in Appendix B is considered in Fig. 8. The parameters of the different approaches are adjusted to estimate the arrival times as accurately as possible. The chosen parameters and how they are defined can be found in Table 5 in Appendix B. The AIC and the correlation approach lead to similar results. STA/LTA leads to worse results for the majority of the considered points. In general, different ATP methods should be used and compared for robust detection. In this case, it seems that the improvement obtained using a more accurate ATP method would be small or even unnoticeable, since two independent ATP approaches led to similar results. For the discussion in Sect. 5.1, the results gained from the AIC are chosen.

4.1.2 Database creation: sound propagation velocity

Preliminary investigations with the experimental setup yielded a wave velocity of \(c=5391~\frac{\textrm{m}}{\textrm{s}}\), see Appendix D. Nevertheless, a study on the influence of the wave propagation speed on the localisation accuracy is conducted in the following, as this value will vary in reality due to the scatter of the material properties. Figure 9a–c shows the localisation accuracy of the DRA, RT and FEM for different wave propagation velocities. It can be deduced from Fig. 9a and b that using the determined wave speed leads to an enhanced localisation accuracy for the DRA and RT. The optimal value for the FEM simulation of \(c=~5200~\frac{\textrm{m}}{\textrm{s}}\) represents a deviation of \(3.6 \%\) from the experimentally obtained value. All graphics show that determining the wave propagation speed is essential, as higher deviations from the real value significantly influence the localisation accuracy.

4.1.3 Database creation: influence of database mesh

The influence of the mesh size of the simulation techniques is investigated in this section. Databases with 500, 1000, 3000 and 5000 reference points that are generated by the DRA and RT will be compared. In the case of the FEM model, the reference mesh of the brake disc hat with 15,524 elements is refined to 123,712 elements and re-meshed with 2446 elements. The localisation results with all these different meshes are shown in Fig. 9g–i. The graphic shows that a refinement of the mesh leads to better results. Investigations with an even finer mesh do not lead to further improvement of the accuracy for any of the types of simulation approach. For the discussion in Sect. 5.1, the finest meshes for each case are chosen.

4.1.4 Comparison: number of considered sensors

This paragraph investigates the effect of using different numbers of sensors in the case of the complex geometry of the break disc hat. Figure 9d–f shows the influence of the number of considered sensors on the localisation accuracy for the simulated approaches. The mentioned number of sensors x means that x sensors with the lowest time of arrival are considered, whereas the remaining 10-x sensors with higher arrival times are neglected. The figure shows that taking 8 sensors into account leads to better results than 10 sensors. It is important to note that this does not mean that installing more sensors on the structure always leads to worse results: the figure demonstrates that the precision of localisation improves when the sensors nearest to the source are considered, and the sensors that have a larger distance from the source are neglected. This observation is further discussed in Sect. 5.1.

4.2 Influence of parameters of approach B on the localisation accuracy

In order to find adequate parameters for the training process, different properties of the neural networks are investigated, including the number of neurons per layer or the number of epochs. The parametrisation of the network and its training process is evaluated by averaging the error \(\epsilon \) over all test points. Figure 10a shows the influence of the number of neurons in the first and the second hidden layer. The graphic shows that a minimum number of three neurons is necessary in both layers to avoid poor localisation results. Above that minimum, no clear tendency can be determined from Fig. 10a. The same result holds for Fig. 10b, which visualises the influence of the number of training epochs. Again, a minimum number of training epochs is necessary to avoid the effect of underfitting the neural network, leading to worse results. If too many epochs are chosen (\(\gg \) 100 in the case at hand), results get worse again due to the effect of overfitting.

The performance of neural networks is further influenced by the type of optimiser, the activation function, the way of preparing the data and many other parameters. The optimisation of all these parameters is not dealt with in this paper. A setup with parameters that lead to satisfying results is chosen for the discussion in Sect. 5.1 and is summarised in Table 1. The number of neurons in layer 1 and layer 2, and the number of training epochs are chosen based on a full factorial design. The values are different depending on the type of input data.

5 Results and discussion

In this section, the data acquisition methods are compared and some results from Sect. 4 are discussed. Whether experimental data can be replaced by simulated data in the case of the two presented localisation approaches is also discussed.

5.1 Comparison of the localisation performance of experimental and simulated data

The localisation accuracy is an essential criteria when considering the replacement of experimental data by simulated data. Figure 11 shows the localisation error for the four different approaches of data acquisition, both for localisation approach A and B. In the case of approach A, the extension of wave bending around the holes by refining DRA towards RT leads to a noticeable increase in performance. Compared to RT, the more detailed FEM simulation does not further improve the results. A possible explanation could be the additional ATP process, which adds an additional source of error. All approaches based on simulated data yield better results than those based on experimental data, even though it should be mentioned that much fewer experimental data points could be used with respect to the simulated ones. A different conclusion can be drawn for approach B: the highest localisation accuracy is obtained for the application of a neural network that is trained with experimental data. When an ANN is trained with simulated data, its parameters are adjusted to the laws and assumptions on which the simulation model is based. An ANN trained with simulated data can therefore not deal with all the properties and effects of data obtained by an experimental setup using the real structure. Hence less accurate results are achieved when applying this ANN to experimental data.

5.2 Comparison of the effort and quality of data acquisition methods

The effort of the data acquisition process is the second important criterion that should be considered if experimental data should be replaced by simulated data. First of all, it will be shown that more experimental points increase localisation accuracy. The 450 data points are grouped randomly in five groups of 90 points, each containing the data of different excitation points. Between one and four of these groups are then used as “training sets" to parametrise the localisation method while the corresponding remaining groups serve as validation points. For a training set of 180 points, e.g., there are nine possible combinations of groups, leading to nine graphs in Fig. 12. The remaining points, that are not used as a basis for establishing the database, are used as validation points. The figure shows the influence of the number of database points on the localisation accuracy. It becomes clear that the more points there are, the higher the localisation performance is, which obviously also leads to higher effort and costs in the data generation phase.

Table 2 summarises and compares the presented approaches of data acquisition. "Preparation effort" evaluates the effort for implementing the simulation model or rather building up the experimental set-up. In contrast, the "acquisition effort" describes the effort that is needed for data acquisition when the method is prepared, i.e. the simulation model is validated or rather the experimental set-up is ready for use. An advantage of applying measurement data is that both the sensor properties as well as the actual wave propagation speed are "included" in the database. These properties are not taken into account in the simulation models. The FEM needs an additional ATP because the output of the method are the time series of the sensors. In many applications, a FEM model may be already available for other purposes, and this constitutes an advantage of this approach. DRA and RT are limited to simple structures like plates with holes, and are not suitable for complex 3D geometries. The effort to implement RT as presented in Appendix C is significantly higher than the effort for DRA due to the consideration of wave reflections. Localisation accuracy depends on the combination of the data acquisition method and localisation approach. As shown in Sect. 5.1, approach A worked well with simulated data, whereas approach B led to better results with experimental data. Costs for hardware are not taken into account, as in the case of simulated data acquisition, measurement hardware is still needed for the collection of data from unknown sources.

The following is a brief comparison of the two discussed localisation approaches. Approach A is very simple to implement. The main problem of approach B, the neural network approach, is the high effort for determining good parameters of the training process and the network itself. As shown in Sect. 4.2, localisation accuracy is sensitive to properties of the network itself.

5.3 Discussion on the localisation accuracy in small-scale problems

The mean errors and corresponding standard deviations of the localisation errors that are shown in Fig. 11 and are summarised in Table 3. The mean errors range from 5.34 mm to 7.59 mm. Taking the diameter of the disc of 17 cm into account leads to relative errors of 3.14 to 4.47 \(\%\). We then compared these values with results in the literature. In [53], a comparison of the localisation performance between a classic localisation method and an ANN is shown for a vessel with a diameter of 500 mm. The mean deviations from the actual point of excitation are 70–140 mm for the classic localisation technique and 20–50 mm for the ANN, depending on the type of excitation. With regard to the circumference of the vessel of 500 mm, the deviations are between 0.6 and 4.7\(\%\) of the circumference of the vessel. Further accuracy estimations of classic localisation methods can be found in seismology. Beside the choice of the localisation method, several factors, such as the accuracy of the crust velocity models and the density of the seismic stations network influence the localisation precision. Therefore, it is difficult to make a general statement. However, in [67] it is shown that, for distances between source and station of approx. 100–200 km, an error of 5 km, that is, 2.5–5\(\%\), is expected. It can be concluded that the localisation accuracy for the small-scale problem at hand is within the range of known values in the literature.

5.4 Discussion on the influence of the number of considered sensors in the comparison step

In Sect. 4.1.4, the influence of the number of sensors that are considered during the feature comparison step with the database is shown. Due to the damping properties of the material, the slope of the amplitude of the signal at arrival is less steep when sensors have a larger distance from the source. ATP is more difficult for the algorithm if the rise of the signal is less steep. As shown in Fig. 13a, sensors that have a larger distance from the source (red, thick lines) exhibit a less steep amplitude slope at arrival than sensors nearer to the source (thin, green lines). Signals at sensors that have a larger distance from the source and are the last to detect the wave, may show errors in ATP and consequently, lead to a reduction in localisation accuracy, if they are still considered.

Definition of a criterion to select signals for the database comparison step. a Shows that signals of sensors that have a larger distance from the source have a less steep increase of the signal. All signals are shifted by their corresponding arrival time. b Shows the dependency of the criterion \(\theta \) on the rise position of the sensor

This negative impact of sensors that have a larger distance from the source should be minimised in the following by determining a criterion \(\theta \) that helps to identify which signals should be neglected and which should be kept in the localisation process. The "steepness" of the signal at arrival is quantified by determining the area under the derivative of the signal \(\frac{df}{dt}\) in the time interval \([0, \tau _{ev}]\). The trapezoidal rule of integration replaces the integrals because the measured signals are discrete. A criterion \(\theta \) is defined in the following way where \(f_s\) is the sampling rate:

Figure 13b shows that this criterion takes higher values for sensors near the source, i.e. sensors that recognise the wave first. The idea is to choose those signals for the comparison step of approach A for which \(\theta > \theta _{th}\) holds. The two parameters of this criterion, the threshold \(\theta _{th}\) and the time interval of evaluation \(\tau _{ev}\) are chosen optimally from a full factorial design. Figure 14 shows the performance of the described criterion compared to taking all sensors into account (black dashed line). To get an optimal line for the criterion, every point is localised by taking 2 to 10 sensors into account, leading to different errors between the proposed and actual point of impact for the different number of considered sensors. The minimum of these nine values is then stored for every test point, leading to a vector of optimal results. The green, dashed line represents the optimal result when the ideal number of sensors is considered for every test point. It becomes clear that the defined criterion increases localisation accuracy over taking all sensors into account. Nevertheless, there is still some room for improvement left for the criterion compared to the optimal results.

6 Conclusion

The main question of this paper is whether simulated data can replace experimental data in data-based localisation strategies. For this purpose, a small complex aluminium plate is chosen as the investigation object, to find out if the approaches perform accurately for problems where classic localisation approaches generally have issues to deal with. Two different localisation approaches, the DTB method and neural networks, are implemented and fed with different types of data. Besides classic experimental data acquisition, different simulation techniques are presented for the creation of databases: the direct ray approach, which is simple to implement, the ray tracing approach, which extends the direct ray approach by accounting for waves bending around structural holes, and the finite element method, which allows for a more detailed structural representation. The parameters of the whole localisation process are tuned to increase the localisation accuracy and to perform a final comparison of the approaches with experimental or simulated data. The main contribution of the paper can be summarised as follows:

-

The localisation accuracy, described by the mean error, varies between 3.14 and 4.47 \(\%\). This means that all the presented methods are, in general, suitable for localising impacts on the investigated small-scale structure.

-

Simulated data have the potential to replace experimental data when considering the localisation accuracy. Combining simulated data with the DTB localisation method led to even better results than using experimental data. However, ANNs trained with experimental data led to better results than ANNs trained with simulated data.

-

The RT and the FEM approach showed the best performance among the presented wave propagation simulation techniques. Nevertheless, the DRA can be an interesting approach thanks to its low computational load and simplicity in implementation.

-

The localisation accuracy obviously depends on the mesh size of the data set. A localisation with simulated data has the main advantage that a mesh refinement only increases the computational load, avoiding effort related to experiments.

-

Results showed that the localisation accuracy depends on the number of sensors that are taken into account when comparing the features of an unknown event with features from the database. A criterion is presented that helps to select which signals should be taken into account. Using this criterion improves localisation accuracy compared to the case of an unfiltered use of the signals of all sensors.

Finally, we would like to emphasize that the presented approach can be applied to various localisation problems that have a punctual excitation in tangential and/or normal direction. However, the type of excitation influences the wave type travelling through the material and the corresponding velocities of the waves. The presented simulation techniques have to be parametrised according to the specific problem.

References

Billingsley, J., Kinns, R.: The acoustic telescope. J. Sound Vib. 48(4), 485–510 (1976)

Lanslots, J., Deblauwe, F., Janssens, K.: Selecting sound source localization techniques for industrial applications. Sound Vib. 44(6), 6 (2010)

Padois, T., Sgard, F., Doutres, O., Berry, A.: Acoustic source localization using a polyhedral microphone array and an improved generalized cross-correlation technique. J. Sound Vib. 386, 82–99 (2017)

Ma, W., Bao, H., Zhang, C., Liu, X.: Beamforming of phased microphone array for rotating sound source localization. J. Sound Vib. 467, 115064 (2020)

Wei, M., Xun, L.: Improving the efficiency of Damas for sound source localization via wavelet compression computational grid. J. Sound Vib. 395, 341–353 (2017)

Kita, S., Kajikawa, Y.: Fundamental study on sound source localization inside a structure using a deep neural network and computer-aided engineering. J. Sound Vib. 513, 116400 (2021)

Ge, M.: Analysis of source location algorithms: part I. Overview and non-iterative methods. J. Acoust. Emiss. 21(1), 29–51 (2003)

Stafsudd, J., Asgari, S., Hudson, R., Yao, K., Taciroglu, E.: Localization of short-range acoustic and seismic wideband sources: algorithms and experiments. J. Sound Vib. 312(1–2), 74–93 (2008)

Goutaudier, D., Gendre, D., Kehr-Candille, V.: Impulse identification technique by estimating specific modal ponderations from vibration measurements. J. Sound Vib. 474, 115263 (2020)

Gaul, L., Hurlebaus, S.: Determination of the impact force on a plate by piezoelectric film sensors. Arch. Appl. Mech. 69(9), 691–701 (1999)

Yen, C.S., Wu, E.: On the inverse problem of rectangular plates subjected to elastic impact. Part II: experimental verification and further applications. J. Appl. Mech. 62(3), 699–705 (1995)

Inoue, H., Harrigan, J.J., Reid, S.R.: Review of inverse analysis for indirect measurement of impact force. Appl. Mech. Rev. 54(6), 503–524 (2001)

Moreno Triguero, E: Acoustic emission in friction: influence of driving velocity and normal force (2019)

Sengupta, S., Datta, A.K., Topdar, P.: Structural damage localisation by acoustic emission technique: a state of the art review. Lat. Am. J. Solids Struct. 12(8), 1565–1582 (2015)

Mujica Delgado, LE, Bened, R: A review of impact damage detection in structures using strain data

Kundu, T.: Acoustic source localization. Ultrasonics 54(1), 25–38 (2014). https://doi.org/10.1016/j.ultras.2013.06.009

Ge, M.: Analysis of source location algorithms: part II. Iterative methods. J. Acoust. Emiss. (2003). https://doi.org/10.1016/j.ultras.2013.06.009

Tobias, A.: Acoustic-emission source location in two dimensions by an array of three sensors. Nondestruct. Test. 9(1), 9–12 (1976)

Achenbach, J.: Wave Propagation in Elastic Solids. Elsevier, Amsterdam (2012)

Salehian, A: Identifying the location of a sudden damage in composite laminates using wavelet approach (2003)

Leighton, F, Blake, W: Rock noise source localization techniques. (1970)

Inglada, V.: Die Berechnung der Herdkoordinaten eines Nahbebens aus den Eintrittszeiten der in einingen benachbarten Stationen aufgezeichneten P-oder S-Wellen. Gerlands Beitrage zur Geophysik 19(12), 73–98 (1928)

Geiger, L.: Herdbestimmung bei Erdbeben aus den Ankunftszeiten. Nachrichten von der Gesellschaft der Wissenschaften zu Göttingen. Mathematisch-Physikalische Klasse 19(12), 331–349 (1910)

Thurber, C.H.: Nonlinear earthquake location: theory and examples. Bull. Seismol. Soc. Am. 75(3), 779–790 (1985)

Nelder, J.A., Mead, R.: A simplex method for function minimization. Comput. J. 7(4), 308–313 (1965)

Prugger, A.F., Gendzwill, D.J.: Microearthquake location: a nonlinear approach that makes use of a simplex stepping procedure. Bull. Seismol. Soc. Am. 78(2), 799–815 (1988)

Gendzwill, D, Prugger, A: Algorithms for micro-earthquake locations. In: Proc. 4th Syrup. on Acoustic Emissions and Microseismicity, pp. 601–615 (1985)

Kennett, B.L., Sambridge, M.S.: Earthquake location-genetic algorithms for teleseisms. Phys. Earth Planet. Inter. 75(1–3), 103–110 (1992)

Sambridge, M., Gallagher, K.: Earthquake hypocenter location using genetic algorithms. Bull. Seismol. Soc. Am. 83(5), 1467–1491 (1993)

Billings, S., Kennett, B.L., Sambridge, M.S.: Hypocentre location: genetic algorithms incorporating problem-specific information. Geophys. J. Int. 118(3), 693–706 (1994)

Xie, Z., Spencer, T.W., Rabinowitz, P.D., Fahlquist, D.A.: A new regional hypocenter location method. Bull. Seismol. Soc. Am. 86(4), 946–958 (1996)

Barricelli, NA: Symbiogenetic Evolution Processes Realized by Artificial Methods (1957)

Fraser, A., Burnell, D.: Computer Models in Genetics (1970)

Nicknam, A., Hosseini, M.H.: Structural damage localization and evaluation based on modal data via a new evolutionary algorithm. Arch. Appl. Mech. 82, 191–203 (2011)

Kundu, T, Das, S, Jata, K: Impact point detection in stiffened plates by acoustic emission technique. Smart Mater. Struct. 18 (2009)

Kundu, T., Das, S., Jata, K.V.: Point of impact prediction in isotropic and anisotropic plates from the acoustic emission data. J. Acoust. Soc. Am. 122(4), 2057–2066 (2007)

Hajzargerbashi, T., Kundu, T., Bland, S.: An improved algorithm for detecting point of impact in anisotropic inhomogeneous plates. Ultrasonics 51(3), 317–324 (2011)

Koabaz, M., Hajzargarbashi, T., Kundu, T., Deschamps, M.: Locating the acoustic source in an anisotropic plate. Struct. Health Monit. 11(3), 315–323 (2012)

Yue, N., Sharif Khodaei, Z.: Assessment of impact detection techniques for aeronautical application: Ann vs. LSSVM. Multiscale Model. Simul. 7(04), 1640005 (2016)

Kundu, T., Das, S., Jata, K.V.: An improved technique for locating the point of impact from the acoustic emission data. In: Structural Health Monitoring, vol. 6532, p. 65320 (2007). International Society for Optics and Photonics

Seno, A.H., Khodaei, Z.S., Aliabadi, M.F.: Passive sensing method for impact localisation in composite plates under simulated environmental and operational conditions. Mech. Syst. Signal Process. 129, 20–36 (2019)

Mallardo, V., Aliabadi, M., Khodaei, Z.S.: Optimal sensor positioning for impact localization in smart composite panels. J. Intell. Mater. Syst. Struct. 24(5), 559–573 (2013)

Sanchez, N., Meruane, V., Ortiz-Bernardin, A.: A novel impact identification algorithm based on a linear approximation with maximum entropy. Smart Mater. Struct. 25(9), 095050 (2016)

Sung, D.U., Oh, J.H., Kim, C.G., Hong, C.S.: Impact monitoring of smart composite laminates using neural network and wavelet analysis. J. Intell. Mater. Syst. Struct. 11(3), 180–190 (2000)

Jang, B.W., Kim, C.G.: Impact localization of composite stiffened panel with triangulation method using normalized magnitudes of fiber optic sensor signals. Compos. Struct. 211, 522–529 (2019)

Jang, B.W., Lee, Y.G., Kim, J.H., Kim, Y.Y., Kim, C.G.: Real-time impact identification algorithm for composite structures using fiber Bragg grating sensors. Struct. Health Monit. 19(7), 580–591 (2012)

Haywood, J., Coverley, P., Staszewski, W.J., Worden, K.: An automatic impact monitor for a composite panel employing smart sensor technology. Smart Mater. Struct. 14(1), 265 (2004)

Staszewski, W.J., Worden, K., Wardle, R., Tomlinson, G.R.: Fail-safe sensor distributions for impact detection in composite materials. Smart Mater. Struct. 9(3), 298–303 (2000)

Worden, K., Staszewski, W.: Impact location and quantification on a composite panel using neural networks and a genetic algorithm. Strain 36(2), 61–68 (2000)

LeClerc, J., Worden, K., Staszewski, W.J., Haywood, J.: Impact detection in an aircraft composite panel-A neural-network approach. J. Sound Vib. 299(3), 672–682 (2007)

Fu, T., Zhang, Z., Liu, Y., Leng, J.: Development of an artificial neural network for source localization using a fiber optic acoustic emission sensor array. Struct. Health Monit. 14(2), 168–177 (2015)

Prevorovsky, Z, Chlada, M, Vodicka, J: Inverse problem solution in acoustic emission source analysis: classical and artificial neural network approaches. In: Universality of Nonclassical Nonlinearity, pp. 515–529. Springer (2006)

Kalafat, S., Sause, M.G.: Localization of acoustic emission sources in fiber composites using artificial neural networks (2014)

Kalafat, S., Sause, M.G.: Acoustic emission source localization by artificial neural networks. Struct. Health Monit. 14(6), 633–647 (2015)

Steinberg, B.Z., Beran, M.J., Chin, S.H., Howard, J.H., Jr.: A neural network approach to source localization. J. Acoust. Soc. Am. 90(4), 2081–2090 (1991)

Basheer, I.A., Hajmeer, M.: Artificial neural networks: fundamentals, computing, design, and application. J. Microbiol. Methods 43(1), 3–31 (2000)

Seno, A.H., Aliabadi, M.: Impact localisation in composite plates of different stiffness impactors under simulated environmental and operational conditions. Sensors 19(17), 3659 (2019)

Li, H., Wang, Z., Forrest, J.Y.L., Jiang, W.: Low-velocity impact localization on composites under sensor damage by interpolation reference database and fuzzy evidence theory. IEEE Access 6, 31157–31168 (2018)

Kim, J.H., Kim, Y.Y., Park, Y., Kim, C.G.: Low-velocity impact localization in a stiffened composite panel using a normalized cross-correlation method. Smart Mater. Struct. 24(4), 045036 (2015)

Shrestha, P., Park, Y., Kwon, H., Kim, C.G.: Error outlier with weighted median absolute deviation threshold algorithm and FBG sensor based impact localization on composite wing structure. Compos. Struct. 180, 412–419 (2017)

Jang, B.W., Kim, C.G.: Impact localization on a composite stiffened panel using reference signals with efficient training process. Compos. B. Eng. 94, 271–285 (2016)

Jang, B.W., Lee, Y.G., Kim, C.G., Park, C.Y.: Impact source localization for composite structures under external dynamic loading condition. Adv. Compos. Mater. 24(4), 359–374 (2015)

Shrestha, P., Kim, J.H., Park, Y., Kim, C.G.: Impact localization on composite wing using 1d array FBG sensor and RMS/correlation based reference database algorithm. Compos. Struct. 125, 159–169 (2015)

Frieden, J., Cugnoni, J., Botsis, J., Gmür, T.: Low energy impact damage monitoring of composites using dynamic strain signals from FBG sensors—part I: impact detection and localization. Compos. Struct. 94(2), 438–445 (2012). https://doi.org/10.1016/j.compstruct.2011.08.003

Ing, R.K., Quieffin, N., Catheline, S., Fink, M.: In solid localization of finger impacts using acoustic time-reversal process. Appl. Phys. Lett. 87(20), 204104 (2005)

Park, B., Sohn, H., Olson, S.E., DeSimio, M.P., Brown, K.S., Derriso, M.M.: Impact localization in complex structures using laser-based time reversal. Struct. Health Monit. 11(5), 577–588 (2012)

Karasozen, E., Karasozen, B.: Earthquake location methods. Int. J. Geomath. 11, 1–28 (2020)

Kurz, J., Grosse, C., Reinhardt, H.W.: Strategies for reliable automatic onset time picking of acoustic emissions and of ultrasound signals in concrete. Ultrasonics 43(7), 538–546 (2005)

Akram, J., Eaton, D.: A review and appraisal of arrival-time picking methods for downhole microseismic dataarrival-time picking methods. Geophysics 81(2), 71–91 (2016)

Maeda, N.: A method for reading and checking phase times in autoprocessing system of seismic wave data. Zisin 38, 365–379 (1985)

Acknowledgements

Manuel Scholl: conceptualisation, methodology, writing: original draft, writing: review and editing, visualisation, software, validation Matthias Passek: conceptualisation, methodology, writing: original draft, writing: review and editing, software, validation Mirjam Lainer: conceptualisation, methodology, software, writing: review and editing Francesca Taddei: conceptualisation, writing: review and editing Felix Schneider: conceptualisation, writing: review and editing Gerhard Müller: conceptualisation, methodology, writing: review and editing, validation, supervision BMW Group: resources (data of the experiments) TU Munich: resources (data of the experiments). The authors would like to thank the team of the Chair of Vibroacoustics of Vehicles and Machines of the TUM for their support.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: List of symbols

Table 4 summarise all symbols that are used within this paper. Symbols that are only used in the appendix are not listed.

Appendix B: ATP

Several approaches for the purpose of ATP are proposed in the literature, see, e.g., [68, 69]. The AIC, the STA/LTA and a correlation-based criterion are presented in the following.

1.1 B.1 AIC

The AIC for the discrete time signal f is defined for all time steps \(k = 1... N\) as follows [70]:

where \(\textrm{var}(x)\) is the variance function and N the length of the discrete time signal f. f(a, b) means that all discrete samples of f from index a to b are taken. The ToA is determined at the minimum value of AIC(k). The discrete time signal f is shortened to the interval \([t_0 - t_{w,1}; t_0 +t_{w,2}]\) so that the AIC is not determined for all samples of the recorded signal. \(t_{w,1}\) and \(t_{w,2}\) are user-defined window lengths.

1.2 B.2 STA/LTA

This criterion is defined by the ratio of STA to LTA, which are computed by [69]:

where \(n_s\) and \(n_l\) are the short term and long term window lengths, respectively. The arrival time is determined when the ratio \(r = \frac{STA}{LTA} > d_{\textrm{LTA},\textrm{STA}}\) for the first time, where \(d_{\textrm{LTA},\textrm{STA}}\) is a user-defined threshold. We slightly changed this criterion and defined the arrival time at the point where the derivative of r exceeds a certain threshold for the first time. This change lead to better results in the case at hand.

1.3 B.3 Correlation

The idea of this approach is to focus on comparing signals from different sensors, because arrival time differences are—in contrast to travel times—available. The time series are shortened to the interval \([t_0 - t_{w}/2; t_0 +t_{w}/2 ]\), where \(t_0\) is the point where the derivative of the signal exceeds the threshold \(d_{\textrm{cor},\textrm{A}}\) for the first time. \(t_{w}\) is the block length. The signal is set to 0 for all \(t > t_1\). \(t_1\) is the point where the derivative of the signal exceeds the threshold \(d_{\textrm{cor},\textrm{B}}\) for the first time. The signals are normalised by the value \(f(t_1)\) so that the maximum value of all blocks is 1. A cross-correlation of all combinations of recorded signals is calculated to determine the delays between the signals, which describes the arrival time differences that should be determined.

1.4 B.4 Threshold

This algorithm simply searches for the point where the absolute value of the recorded signal exceeds the threshold \(d_{\textrm{tresh},\textrm{C}}\) for the first time. This algorithm is not suitable for measured signals because of their noise. Nevertheless, tests showed that this criterion lead to better results than the AIC and the STA/LTA criterion for time series gained from FEM simulations.

1.5 B.5 Comparison of the criterion

Fig. 15 compares the normalised arrival times for different points of excitation. Whereas the AIC criterion and the correlation method yield similar results, the STA/LTA criterion deviated for the sensors with the largest distance from the point of impact. The arrival time differences are very similar for the three different criteria. This is an important conclusion as it shows the robustness of the algorithms.

Influence of the ATP approach on the normalised arrival times for the ten sensor positions. Different points of excitation are shown: a \(\hat{x} = (4.0,0.1,-1.0)\), b \(\hat{x} = (4.0,0.1,1.0)\), c \(\hat{x} = (-1.5,0,6.5)\), d \(\hat{x} = (-5.5,0,-4.5)\). Dimensions are given in cm; compare with Fig. 4

1.6 B.6 Chosen parameters for the ATP methods

The chosen parameters for the different ATP methods are summarised in Table 5.

Appendix C: Detailed Description of the ray tracing approach

The ray tracing approach uses the following steps to determine the minimum distance between a possible location of excitation and every sensor on the disc:

-

Determine a direct connection between sensor and source: if an intersection with any hole exists, go to step 2. Otherwise, stop the algorithm at this point. The RT approach is equivalent to the DRA in this case.

-

Determine the tangent points between source and every hole.

-

Starting from the tangent points, the rays are bent around the hole. Every \(\frac{360}{n_{\textrm{subrays}}}\) degree, a new subray starts in the direction of the sensor. If the subray reaches the sensor position, the length of this ray is saved.

-

Compare the length of all direct rays and subrays that connect the position of excitation and the sensor and determine the shortest one.

Figure 16 visualises the described procedure: the blue rays start from the source point in every direction and end when a hole is hit. Tangent rays (darkgreen) are determined between the source and every hole. The red rays highlight the bending around the holes. Light green rays start from different points at the circumference of the holes, and are orientated towards the sensor. The pink rays visualise the shortest distance between the source and sensor.

Appendix D: Determination of the wave propagation speed

A single estimation for the wave propagation speed can be determined by the ratio of the difference of traveled distances and travel times between two sensors i and j

For all points of excitation \(p= 1... n_{\textrm{Measure}}\), the wave propagation speed can be determined by averaging over all estimations \(c_{\textrm{est},ij}\):

The parameter \(n_{\textrm{pairs}}\) simply defines the number of pairs defined by \(\frac{2}{n_{\textrm{sens}}^2 - n_{\textrm{sens}}}\). The estimation \(c_{\textrm{est}}\) represents an approximation for the wave propagation speed. The time of excitation is not required in that approach. A mean value of 5391 \(\frac{\textrm{m}}{\textrm{s}}\) is determined from this approach. Figure 17 shows the distribution of determined wave propagation speeds together with their mean value. The very low and very high values that are determined in some of the evaluations are caused by errors in the ATP.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scholl, M., Passek, M., Lainer, M. et al. Data-based localisation methods using simulated data with application to small-scale structures. Arch Appl Mech 93, 2411–2433 (2023). https://doi.org/10.1007/s00419-023-02388-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00419-023-02388-2