Abstract

Purpose

The purpose of this study is to evaluate ChatGPT's responses to Ear, Nose and Throat (ENT) clinical cases and compare them with the responses of ENT specialists.

Methods

We devised 10 scenarios based on everyday ENT practice, each grouping cases that share the same primary symptom, and constructed 20 clinical cases, 2 for each scenario. We presented the cases to 3 ENT specialists and to ChatGPT.

The difficulty of the clinical cases was assessed by the 5 ENT authors of this article, who also evaluated ChatGPT's responses for correctness and for consistency with the responses of the 3 ENT experts. To verify the stability of ChatGPT's responses, we repeated the queries, always from the same account, on 5 consecutive days.

Results

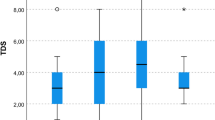

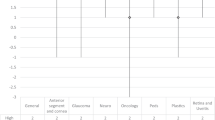

Among the 20 cases, 8 were rated as low complexity, 6 as moderate complexity and 6 as high complexity. The overall mean correctness and consistency scores of ChatGPT's responses were 3.80 (SD 1.02) and 2.89 (SD 1.24), respectively. We did not find a statistically significant difference in the mean ChatGPT correctness and consistency scores according to case complexity. The total intraclass correlation coefficients (ICC) for the stability of the correctness and consistency of ChatGPT's responses were 0.763 (95% confidence interval [CI] 0.553–0.895) and 0.837 (95% CI 0.689–0.927), respectively.
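
As an illustration of how a stability ICC of this kind can be computed, the following is a minimal sketch rather than the analysis actually performed in the study. The abstract does not state which ICC form was used; the sketch assumes a two-way random-effects, absolute-agreement, single-measurement ICC, often written ICC(2,1), with one row per clinical case and one column per day. The function name `icc2_1` and the scores in `example_scores` are hypothetical and are not study data.

```python
from typing import Sequence


def icc2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    Rows are targets (e.g. clinical cases); columns are repeated
    measurements (e.g. the score given on each day).
    ASSUMPTION: this ICC form is chosen for illustration only.
    """
    n = len(ratings)          # number of targets
    k = len(ratings[0])       # number of repeated measurements
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a rectangular table with >= 2 rows and columns")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares (one observation per cell).
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_err = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    denom = ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    if denom == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_rows - ms_err) / denom


# Hypothetical scores: 4 cases (rows) scored on 5 consecutive days (columns).
example_scores = [
    [4, 4, 5, 4, 4],
    [2, 3, 2, 2, 3],
    [5, 5, 5, 4, 5],
    [3, 3, 4, 3, 3],
]
stability = icc2_1(example_scores)
```

Values closer to 1 indicate that the scores assigned to a given case change little from day to day relative to the differences between cases. Confidence intervals such as those reported above require an additional F-distribution step that this sketch omits.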

Conclusions

Our results revealed the potential usefulness of ChatGPT in ENT diagnosis. The instability in responses and the inability to recognise certain clinical elements are its main limitations.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

We express our appreciation for the support of ChatGPT, an AI language model, during the composition of this manuscript. ChatGPT played a role in generating certain portions of the text and offered valuable recommendations for enhancing the language and coherence of the manuscript.

Funding

None.

Author information

Contributions

VD and LG conceived the study and wrote the paper; AS, RS and LC responded blindly to the clinical cases, and in case of inconsistencies, they reached unanimous consensus for the resolution of the case; FV completed the statistical analysis of the study; Luca Calabrese supervised the work and corrected the paper.

Ethics declarations

Conflict of interest

All authors disclose any potential sources of conflict of interest, including any interest or relationship, financial or otherwise, in the subject matter or materials discussed.

Ethical approval

Ethics board approval was not applicable for this study.

Informed consent

No participant consent for publication is necessary.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix. ChatGPT's responses and ENT specialists' responses to 20 clinical cases, subdivided into 10 scenarios.

See Tables 3, 4, 5, 6, 7, 8, 9, 10, 11, 12 and Fig. 2.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Dallari, V., Sacchetto, A., Saetti, R. et al. Is artificial intelligence ready to replace specialist doctors entirely? ENT specialists vs ChatGPT: 1-0, ball at the center. Eur Arch Otorhinolaryngol 281, 995–1023 (2024). https://doi.org/10.1007/s00405-023-08321-1

DOI: https://doi.org/10.1007/s00405-023-08321-1