Abstract

This is the first of two papers that describe the generation of a 25-member perturbed parameter ensemble (PPE) of high-resolution, global coupled simulations for the period 1900–2100, using CMIP5 historical and RCP8.5 emissions. Fifteen of these 25 coupled simulations now form a subset of the global projections provided for the UK Climate Projections 2018 (UKCP18). This first paper describes the selection of 25 variants (combinations of 47 parameters) using a set of cheap, coarser-resolution atmosphere-only simulations from a large sample of nearly 3000 variants. Retrospective 5-day weather forecasts run at climate resolution, and simulations of 2004–2009 with prescribed SST and sea ice are evaluated to filter out poor performance. We opted for a single design choice and sensitivity tests were done after the PPE was generated to demonstrate the effect of design choices on the filtering. Given our choice, only 38 of the parameter combinations were found to have acceptable performance at this stage. Idealised atmosphere-only simulations were then used to select the subset of 25 members that were as diverse as possible in terms of their CO2 and aerosol forcing, and their response to warmer SSTs. Using our parallel set of atmosphere-only and coupled PPEs (the latter from paper 2), we show that local biases in the atmosphere-only experiments are generally informative about the biases in the coupled PPE. Biases in radiative fluxes and cloud amounts are strongly informative for most regions, whereas this is only true for a smaller fraction of the globe for precipitation and dynamical variables. Therefore, the cheap experiments are an affordable way to search for promising parameter combinations but have limitations.

Availability of data and code

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request. The parameter combinations for both the full 2914 PPE members and the subset of 25 members are available in CSV format at https://qump-project.github.io/qump-hadgem3/key-documents/. Some code to read the CSV file is also available there.

References

Aldrin M, Holden M, Guttorp P et al (2012) Bayesian estimation of climate sensitivity based on a simple climate model fitted to observations of hemispheric temperatures and global ocean heat content. Environmetrics 23:253–271. https://doi.org/10.1002/env.2140

Andrews T, Gregory JM, Paynter D et al (2018) Accounting for changing temperature patterns increases historical estimates of climate sensitivity. Geophys Res Lett 45:8490–8499. https://doi.org/10.1029/2018GL078887

Armour KC (2017) Energy budget constraints on climate sensitivity in light of inconstant climate feedbacks. Nat Clim Chang 7:331–335. https://doi.org/10.1038/nclimate3278

Barsugli JJ, Battisti DS (2002) The basic effects of atmosphere-ocean thermal coupling on midlatitude variability. J Atmos Sci 55:477–493. https://doi.org/10.1175/1520-0469(1998)055%3c0477:tbeoao%3e2.0.co;2

Best MJ, Pryor M, Clark DB et al (2011) The joint UK land environment simulator (JULES), model description—part 1: energy and water fluxes. Geosci Model Dev. https://doi.org/10.5194/gmd-4-677-2011

Bond TC, Bergstrom RW (2006) Light absorption by carbonaceous particles: an investigative review. Aerosol Sci Technol

Booth BBB, Bernie D, McNeall D et al (2013) Scenario and modelling uncertainty in global mean temperature change derived from emission-driven global climate models. Earth Syst Dyn 4:95–108. https://doi.org/10.5194/esd-4-95-2013

Carslaw KS, Lee LA, Reddington CL et al (2013) Large contribution of natural aerosols to uncertainty in indirect forcing. Nature 503:67–71. https://doi.org/10.1038/nature12674

Chadwick R, Good P, Andrews T, Martin G (2014) Surface warming patterns drive tropical rainfall pattern responses to CO2 forcing on all timescales. Geophys Res Lett 41:610–615. https://doi.org/10.1002/2013GL058504

Chadwick R, Douville H, Skinner CB (2017) Timeslice experiments for understanding regional climate projections: applications to the tropical hydrological cycle and European winter circulation. Clim Dyn 49:3011–3029. https://doi.org/10.1007/s00382-016-3488-6

Collins M, Booth BBB, Harris GR et al (2006) Towards quantifying uncertainty in transient climate change. Clim Dyn 27:127–147. https://doi.org/10.1007/s00382-006-0121-0

Collins M, Brierley CM, MacVean M et al (2007) The sensitivity of the rate of transient climate change to ocean physics perturbations. J Clim 20:2315–2320. https://doi.org/10.1175/JCLI4116.1

Collins M, Booth BBB, Bhaskaran B et al (2011) Climate model errors, feedbacks and forcings: a comparison of perturbed physics and multi-model ensembles. Clim Dyn. https://doi.org/10.1007/s00382-010-0808-0

Covey C, Lucas DD, Tannahill J et al (2013) Efficient screening of climate model sensitivity to a large number of perturbed input parameters. J Adv Model Earth Syst. https://doi.org/10.1002/jame.20040

Cumming JA, Wooff DA (2007) Dimension reduction via principal variables. Comput Stat Data Anal. https://doi.org/10.1016/j.csda.2007.02.012

DEFRA (2016) UK climate change—risk assessment 2017

Demory M-E, Vidale PL, Roberts MJ et al (2014) The role of horizontal resolution in simulating drivers of the global hydrological cycle. Clim Dyn 42:2201–2225. https://doi.org/10.1007/s00382-013-1924-4

Department for Farming and Rural Affairs (2012) Summary of the key findings from the UK climate change risk assessment 2012 contents. Assessment. https://doi.org/10.1029/2005JD006548

Flato G, Marotzke J, Abiodun B, et al (2013) IPCC AR5. WG1. Chap. 9. Evaluation of climate models. Clim Chang 2013 Phys Sci Basis Contrib Work Gr I to Fifth Assess Rep Intergov Panel Clim Chang. https://doi.org/10.1017/CBO9781107415324

Fountalis I, Dovrolis C, Bracco A et al (2018) δ-MAPS: from spatio-temporal data to a weighted and lagged network between functional domains. Appl Netw Sci. https://doi.org/10.1007/s41109-018-0078-z

Gates WL, Boyle JS, Covey C et al (1999) An Overview of the results of the atmospheric model intercomparison project (AMIP I). Bull Am Meteorol Soc 80:29–55

Giorgi F, Francisco R (2000) Uncertainties in regional climate change prediction: a regional analysis of ensemble simulations with the HADCM2 coupled AOGCM. Clim Dyn 16:169–182. https://doi.org/10.1007/PL00013733

Harris GR, Sexton DMH, Booth BBB et al (2006) Frequency distributions of transient regional climate change from perturbed physics ensembles of general circulation model simulations. Clim Dyn 27:357–375. https://doi.org/10.1007/s00382-006-0142-8

Hawkins E, Sutton R (2009) The potential to narrow uncertainty in regional climate predictions. Bull Am Meteorol Soc. https://doi.org/10.1175/2009BAMS2607.1

Hyder P, Edwards JM, Allan RP et al (2018) Critical southern ocean climate model biases traced to atmospheric model cloud errors. Nat Commun. https://doi.org/10.1038/s41467-018-05634-2

Johnson JS, Regayre LA, Yoshioka M, et al (2020) Robust observational constraint of uncertain aerosol processes and emissions in a climate model and the effect on aerosol radiative forcing. submitted

Jones RN, Patwardhan A, Cohen SJ, et al (2015) Foundations for decision making. In: Climate change 2014 impacts, adaptation and vulnerability: part A: global and sectoral aspects

Karmalkar AV, Sexton DMH, Murphy JM, et al (2019) Finding plausible and diverse variants of a climate model. Part II: development and validation of methodology. Clim Dyn. https://doi.org/10.1007/s00382-019-04617-3

Lambert FH, Harris GR, Collins M et al (2013) Interactions between perturbations to different Earth system components simulated by a fully-coupled climate model. Clim Dyn 41:3055–3072. https://doi.org/10.1007/s00382-012-1618-3

Lee LA, Carslaw KS, Pringle KJ et al (2011) Emulation of a complex global aerosol model to quantify sensitivity to uncertain parameters. Atmos Chem Phys 11:12253–12273. https://doi.org/10.5194/acp-11-12253-2011

Lee LA, Carslaw KS, Pringle KJ, Mann GW (2012) Mapping the uncertainty in global CCN using emulation. Atmos Chem Phys 12:9739–9751. https://doi.org/10.5194/acp-12-9739-2012

Lee LA, Pringle KJ, Reddington CL et al (2013) The magnitude and causes of uncertainty in global model simulations of cloud condensation nuclei. Atmos Chem Phys 13:8879–8914. https://doi.org/10.5194/acp-13-8879-2013

Liu Y, Daum PH, Guo H, Peng Y (2008) Dispersion bias, dispersion effect, and the aerosol-cloud conundrum. Environ Res Lett. https://doi.org/10.1088/1748-9326/3/4/045021

Lowe JA, Bernie D, Bett P, et al (2019) UKCP18 science overview report

Ma H-Y, Xie S, Klein SA et al (2014) On the correspondence between mean forecast errors and climate errors in CMIP5 models. J Clim 27:1781–1798. https://doi.org/10.1175/JCLI-D-13-00474.1

Mann GW, Carslaw KS, Spracklen DV et al (2010) Description and evaluation of GLOMAP-mode: a modal global aerosol microphysics model for the UKCA composition-climate model. Geosci Model Dev. https://doi.org/10.5194/gmd-3-519-2010

McKay MD, Beckman RJ, Conover WJ (1979) Comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21:239–245. https://doi.org/10.1080/00401706.1979.10489755

McSweeney CF, Jones RG, Lee RW, Rowell DP (2015) Selecting CMIP5 GCMs for downscaling over multiple regions. Clim Dyn 44:3237–3260. https://doi.org/10.1007/s00382-014-2418-8

Menary MB, Kuhlbrodt T, Ridley J et al (2018) Preindustrial control simulations with HadGEM3-GC3.1 for CMIP6. J Adv Model Earth Syst 10:3049–3075. https://doi.org/10.1029/2018MS001495

Mulcahy JP, Jones C, Sellar A et al (2018) Improved aerosol processes and effective radiative forcing in HadGEM3 and UKESM1. J Adv Model Earth Syst 10:2786–2805. https://doi.org/10.1029/2018MS001464

Mulholland DP, Haines K, Sparrow SN, Wallom D (2017) Climate model forecast biases assessed with a perturbed physics ensemble. Clim Dyn 49:1729–1746. https://doi.org/10.1007/s00382-016-3407-x

Murphy JM, Sexton DMH, Barnett DN et al (2004) Quantification of modelling uncertainties in a large ensemble of climate change simulations. Nature 430:768–772. https://doi.org/10.1038/nature02771

Murphy JM, Sexton DMH, Jenkins GJ et al (2009) UK climate projections science report: climate change projections. Meteorological Office Hadley Centre, Exeter

Murphy JM, Harris GR, Sexton DMH, et al (2018) UKCP18 land projections: science report

Oakley JE, O’Hagan A (2010) SHELF: the Sheffield Elicitation Framework (Version 2.0)

Ogura T, Shiogama H, Watanabe M et al (2017) Effectiveness and limitations of parameter tuning in reducing biases of top-of-atmosphere radiation and clouds in MIROC version 5. Geosci Model Dev 10:4647–4664. https://doi.org/10.5194/gmd-10-4647-2017

Olson R, Sriver R, Goes M et al (2012) A climate sensitivity estimate using Bayesian fusion of instrumental observations and an earth system model. J Geophys Res Atmos. https://doi.org/10.1029/2011JD016620

Phillips TJ, Potter GL, Williamson DL et al (2004) Evaluating parameterizations in general circulation models—climate simulation meets weather prediction. Bull Am Meteorol Soc 85:1903–1915. https://doi.org/10.1175/BAMS-85-12-1903

Qian Y, Wan H, Yang B, et al (2018) Parametric sensitivity and uncertainty quantification in the version 1 of E3SM atmosphere model based on short perturbed parameter ensemble simulations. J Geophys Res Atmos 123:13,046–13,073. https://doi.org/10.1029/2018JD028927

Regayre LA, Pringle KJ, Booth BBB et al (2014) Uncertainty in the magnitude of aerosol-cloud radiative forcing over recent decades. Geophys Res Lett 41:9040–9049. https://doi.org/10.1002/2014GL062029

Regayre LA, Pringle KJ, Lee LA et al (2015) The climatic importance of uncertainties in regional aerosol-cloud radiative forcings over recent decades. J Clim 28:6589–6607. https://doi.org/10.1175/JCLI-D-15-0127.1

Regayre LA, Johnson JS, Yoshioka M et al (2018) Aerosol and physical atmosphere model parameters are both important sources of uncertainty in aerosol ERF. Atmos Chem Phys. https://doi.org/10.5194/acp-18-9975-2018

Ringer MA, Andrews T, Webb MJ (2014) Global-mean radiative feedbacks and forcing in atmosphere-only and coupled atmosphere-ocean climate change experiments. Geophys Res Lett 41:4035–4042. https://doi.org/10.1002/2014GL060347

Rodwell MJ, Palmer TN (2007) Using numerical weather prediction to assess climate models. Q J R Meteorol Soc 133:129–146. https://doi.org/10.1002/qj.23

Rostron JW, Sexton DMH, McSweeney CF et al (2020) The impact of performance filtering on climate feedbacks in a perturbed parameter ensemble. Clim Dyn. https://doi.org/10.1007/s00382-020-05281-8

Rougier J, Goldstein M (2014) Climate simulators and climate projections. In: Fienberg SE (ed) Annual review of statistics and its application, vol 1. pp 103–123

Rougier J, Sexton DMH (2007) Inference in ensemble experiments. Philos Trans R Soc A Mathematical Phys Eng Sci 365:2133–2143. https://doi.org/10.1098/rsta.2007.2071

Sanchez C, Williams KD, Collins M (2016) Improved stochastic physics schemes for global weather and climate models. Q J R Meteorol Soc. https://doi.org/10.1002/qj.2640

Scaife AA, Copsey D, Gordon C et al (2011) Improved Atlantic winter blocking in a climate model. Geophys Res Lett. https://doi.org/10.1029/2011GL049573

Senior CA, Andrews T, Burton C et al (2016) Idealized climate change simulations with a high-resolution physical model: HadGEM3-GC2. J Adv Model Earth Syst. https://doi.org/10.1002/2015MS000614

Sexton DMH, Murphy JM, Collins M, Webb MJ (2012) Multivariate prediction using imperfect climate models part I: outline of methodology. Clim Dyn 38:2513–2542

Sexton DMH, Karmalkar AV, Murphy JM et al (2019) Finding plausible and diverse variants of a climate model. Part 1: establishing the relationship between errors at weather and climate time scales. Clim Dyn 53:989–1022. https://doi.org/10.1007/s00382-019-04625-3

Shiogama H, Watanabe M, Yoshimori M et al (2012) Perturbed physics ensemble using the MIROC5 coupled atmosphere-ocean GCM without flux corrections: experimental design and results Parametric uncertainty of climate sensitivity. Clim Dyn 39:3041–3056. https://doi.org/10.1007/s00382-012-1441-x

Stainforth DA, Aina T, Christensen C et al (2005) Uncertainty in predictions of the climate response to rising levels of greenhouse gases. Nature 433:403–406. https://doi.org/10.1038/nature03301

Taylor KE, Crucifix M, Braconnot P et al (2007) Estimating shortwave radiative forcing and response in climate models. J Clim. https://doi.org/10.1175/JCLI4143.1

Taylor KE, Stouffer RJ, Meehl GA (2012) An overview of CMIP5 and the experiment design. Bull Am Meteorol Soc 93:485–498. https://doi.org/10.1175/BAMS-D-11-00094.1

Tett SFB, Stott PA, Allen MR et al (1999) Causes of twentieth-century temperature change near the Earth’s surface. Nature. https://doi.org/10.1038/21164

Titchner HA, Rayner NA (2014) The met office hadley centre sea ice and sea surface temperature data set, version 2: 1. sea ice concentrations. J Geophys Res. https://doi.org/10.1002/2013JD020316

Turnock ST, Mann GW, Woodhouse MT et al (2019) The impact of changes in cloud water pH on aerosol radiative forcing. Geophys Res Lett 46:4039–4048. https://doi.org/10.1029/2019GL082067

Waliser DE, Moncrieff MW, Burridge D et al (2012) The “year” of tropical convection (May 2008-April 2010): climate variability and weather highlights. Bull Am Meteorol Soc 93:1189–1218

Walters D, Baran A, Boutle I, et al (2017a) The met office unified model global atmosphere 7.0/7.1 and JULES global land 7.0 configurations. Geosci Model Dev Discuss. https://doi.org/10.5194/gmd-2017-291

Walters D, Boutle I, Brooks M et al (2017) The met office unified model global atmosphere 6.0/6.1 and JULES global land 6.0/6.1 configurations. Geosci Model Dev 10:1487–1520. https://doi.org/10.5194/gmd-10-1487-2017

Wang H, Su W (2013) Evaluating and understanding top of the atmosphere cloud radiative effects in Intergovernmental Panel on Climate Change (IPCC) Fifth Assessment Report (AR5) Coupled Model Intercomparison Project Phase 5 (CMIP5) models using satellite observations. J Geophys Res Atmos. https://doi.org/10.1029/2012JD018619

Williams KD, Bodas-Salcedo A, Deque M et al (2013) The transpose-AMIP II experiment and its application to the understanding of southern ocean cloud biases in climate models. J Clim 26:3258–3274. https://doi.org/10.1175/JCLI-D-12-00429.1

Williams KD, Copsey D, Blockley EW, et al (2018) The met office global coupled model 3.0 and 3.1 (GC3.0 and GC3.1) configurations. J Adv Model Earth Syst. https://doi.org/10.1002/2017MS001115

Williamson D, Goldstein M, Allison L et al (2013) History matching for exploring and reducing climate model parameter space using observations and a large perturbed physics ensemble. Clim Dyn 41:1703–1729. https://doi.org/10.1007/s00382-013-1896-4

Willmott C, Robeson S, Matsuura K (2017) Climate and other models may be more accurate than reported. Eos (Washington DC). https://doi.org/10.1029/2017EO074939

Wilson DR, Bushell AC, Kerr-Munslow AM et al (2008) PC2: a prognostic cloud fraction and condensation scheme. I: scheme description. Q J R Meteorol Soc 134:2093–2107. https://doi.org/10.1002/qj.333

Wilson DR, Bushell AC, Kerr-Munslow AM et al (2008) PC2: a prognostic cloud fraction and condensation scheme. II: climate model simulations. Q J R Meteorol Soc 134:2109–2125. https://doi.org/10.1002/qj.332

Wood N, Staniforth A, White A et al (2014) An inherently mass-conserving semi-implicit semi-Lagrangian discretization of the deep-atmosphere global non-hydrostatic equations. Q J R Meteorol Soc. https://doi.org/10.1002/qj.2235

Yamazaki K, Sexton DMH, Rostron JW, et al (2021) A perturbed parameter ensemble of HadGEM3-GC3.05 coupled model projections: Part 2: Global performance and future changes. Prep

Yoshioka M, Regayre LA, Pringle KJ, et al (2019) Ensembles of global climate model variants designed for the quantification and constraint of uncertainty in aerosols and their radiative forcing. J Adv Model Earth Syst. https://doi.org/10.1029/2019MS001628

Zappa G, Shepherd TG (2017) Storylines of atmospheric circulation change for European regional climate impact assessment. J Clim 30:6561–6577. https://doi.org/10.1175/JCLI-D-16-0807.1

Acknowledgements

This work and David Sexton, Carol McSweeney, Kuniko Yamazaki, Ben Booth, and James Murphy, and the visit of Ambarish Karmalkar was supported by the Met Office Hadley Centre Climate Programme funded by BEIS and Defra. The contributors John Rostron, Leighton Regayre and Jill Johnson were supported by the UK-China Research & Innovation Partnership Fund through the Met Office Climate Science for Service Partnership (CSSP) China as part of the Newton Fund. Jill Johnson was also supported by the Natural Environment Research Council (NERC) under grants ACID-PRUF and GASSP (NE/I020059/1 and NE/J024252/1 respectively). Leighton Regayre was also funded by A-CURE (NE/P013406/1), a NERC Doctoral Training Grant, and a CASE studentship with the UK Met Office Hadley Centre. We would also like to thank Steven Turnock for his advice on the cloud pH issue, Pat Hyder for useful conversations on the link between atmosphere-only and coupled runs, Hazel Thornton and Philip Bett for help with the qualitative filtering, the model experts for help with the elicitation, and Mark Ringer for advice on the atmosphere experiments. We would like to thank our modelling experts: Alison Stirling, Rachel Stratton, Simon Vosper, Ian Boutle, Adrian Lock, Cyril Morcrette, Paul Field, Kalli Furtado, Jonathan Wilkinson, John Edwards, and Andy Wiltshire. We also thank Jane Mulcahy and Ben Johnson for help with GC3.1 developments, and Colin Johnson for help with implementing the aerosol parameters in the model.

Funding

This work and David Sexton, Carol McSweeney, Kuniko Yamazaki, Ben Booth, and James Murphy, and the visit of Ambarish Karmalkar was supported by the Met Office Hadley Centre Climate Programme funded by BEIS and Defra. The contributors John Rostron, Leighton Regayre and Jill Johnson were supported by the UK-China Research & Innovation Partnership Fund through the Met Office Climate Science for Service Partnership (CSSP) China as part of the Newton Fund. Jill Johnson was also supported by the Natural Environment Research Council (NERC) under grants ACID-PRUF and GASSP (NE/I020059/1 and NE/J024252/1 respectively). Leighton Regayre was also funded by A-CURE (NE/P013406/1), a NERC Doctoral Training Grant, and a CASE studentship with the UK Met Office Hadley Centre.

Ethics declarations

Conflicts of interest

We declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

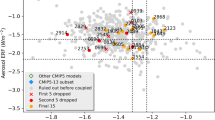

For the filtering of the ATMOS experiment, we added a criterion for the net TOA flux imbalance, which was distinct from the other MSE-based criteria. A model variant was filtered out if its net TOA balance was outside the range −3.3 to 3.7 Wm−2; this kind of constraint is similar in nature to the kind used in History Matching.

The tolerable range used here is wider than a range used to tune a climate model, which typically requires that the net TOA flux is balanced to a precision that is greater than the uncertainty in its individual components to stop the model drifting into an unrealistic state (this is described briefly in Box 9.1 in Flato et al. 2013). The hope is that, when the ocean component is coupled, the net TOA flux at the start of the spin-up will be close to its final value, so that it only takes several decades to spin up and the SST biases are not intolerably large. Collins et al. (2006) explain the need for this wider tolerance in a PPE. One reason pertinent to our study is that there is a structural error in the TOA balance “due to missing, poorly-resolved or structurally-deficient representations of physical processes, in which case the process of tuning model parameters may lead to TOA balance being achieved for the wrong reasons”. For example, the addition of a previously missing process in HadGEM3-GC3 via the inclusion of the Liu scheme (described in Sect. 2.1) altered the net TOA flux without tuning by 0.38 Wm−2. Tuning that resolves the TOA imbalance for the wrong reasons would therefore go against our value V2, and imposing a tight constraint on TOA that neglects the structural error would go against our value V3. Collins et al. (2006) used flux adjustment in their PPE, where they perturbed ocean parameters, to reduce drifts and SST biases. In Part 2, we use flux adjustment over ocean points outside the Arctic with a spatially uniform correction for each perturbed member to counteract the net TOA flux differences from the standard variant.

We had several pieces of evidence to consider when determining the tolerable range. First, the CMIP5 models explore a range of TOA imbalances, with one study of 12 models showing an AMIP mean bias of 0.6 Wm−2 with a standard deviation of 1 Wm−2 (Wang and Su 2013), whilst another shows a different range of −2 to 6 Wm−2 (Karmalkar et al. 2019) based on shorter time periods. We started with an initial range of −1.5 to 2 Wm−2, which may reflect uncertainty for tuned models, and then centred this on the HadGEM3-GC3.05 standard value of 0.2 Wm−2. To make a range that was more tolerant for perturbed members (Karmalkar et al. (2019) explored TOA from −15 to 15 Wm−2), we simply doubled the width of our initial range to give a tolerable range of −3.3 to 3.7 Wm−2.
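The arithmetic behind the tolerable range can be checked with a short sketch. It assumes that centring preserves the width of the initial range and that the doubling is applied symmetrically about the standard value; the function names are hypothetical and are not taken from the study's code.

```python
def tolerable_range(initial_low, initial_high, centre, widen_factor=2.0):
    """Re-centre an initial range on `centre` and scale its width."""
    half_width = 0.5 * (initial_high - initial_low) * widen_factor
    return centre - half_width, centre + half_width


def passes_toa_filter(net_toa, low, high):
    """True if the net TOA flux (W m-2) lies inside the tolerable range."""
    return low <= net_toa <= high


# Values quoted in the text: initial range -1.5 to 2 W m-2,
# standard-variant value 0.2 W m-2, width doubled.
low, high = tolerable_range(-1.5, 2.0, centre=0.2)
print(round(low, 2), round(high, 2))  # -3.3 3.7
```

The initial width of 3.5 Wm−2 becomes 7 Wm−2 after doubling, so the limits sit 3.5 Wm−2 either side of 0.2 Wm−2, which reproduces the quoted range.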

Appendix B

Another step in the method is to augment the PPE with new members that are chosen because they are expected to have a greater chance of not being ruled out and therefore are likelier to be a candidate for the final 25 model variants than their predecessors. This idea of a new ‘wave’ of runs is an integral part of History Matching to focus in on the better parts of parameter space. The new wave is chosen based on the use of emulators for each of the quantities of interest, trained on the runs that exist. Members are not ruled out unless the emulator confidently predicts the Implausibility threshold to be exceeded; a poor emulator with a high variance can cause a model variant to be retained.

Here, we are using emulators to predict MSEs for potential new PPE members, and then to predict the subset of these that when actually run, do not exceed the hard and soft thresholds. The emulators predict a distribution for the model output with mean and variance, and so we can use this to test that the emulated MSEs are not statistically different from these hard and soft thresholds relevant for actual runs, i.e. \(T\Sigma \) and \(S\Sigma \) where \(\Sigma \) is the normalisation term in Eq. 1. At this point, History Matching uses a normalisation term that includes the emulator variance rather than internal variability. As the former is essentially internal variability plus an additional contribution from lack of fit of the mean term, and therefore larger, our test is a tougher requirement but necessitated by the different aim of building the new wave.

The emulators are built for the \(\mathrm{log}(MSE)\) for each metric, as the logarithmic transformation stabilises the variance. As in Sexton et al. (2019), we use a Gaussian Process emulator that simultaneously fits a linear model to the parameter values, and a decorrelation length scale for each parameter that together define a correlation matrix representing a smooth response surface across the residuals. We use an initial stepwise linear analysis to remove redundant parameters from the linear model. We also fit a nugget term based on the variance of the \(\mathrm{log}(MSE)\) values from the relevant stochastic ensemble (Sect. 2.4) to avoid over-fitting to internal variability. Note for each diversity metric and TOA imbalance, the emulators are built in the same way, but without the logarithmic transform. Examples of how well the emulators of key performance metrics and feedbacks validate can be found in Fig. 13 of Rostron et al. (2020). With the emulated prediction of the \(\mathrm{log}(MSE)\) for the ith metric for parameter combination, x, which has a mean, \({\mu }_{EM}(x)\) and error variance, \({\Sigma }_{EM}(x)\), we test if the error in the emulated mean is statistically significantly above the hard or soft threshold. For instance, a hard threshold is exceeded if.

We define a parameter \(\alpha \) to control the level of statistical significance (see Sects. 4.2 and 5.2 for more information on the effect of this parameter). For building new waves, we also emulated the TOA flux and tested whether it was statistically significantly outside the tolerable range (see Appendix A).
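The role of the emulator variance and of \(\alpha \) can be illustrated with a small sketch. This is not the authors' equation: it assumes a one-sided test in which a variant is ruled out only when the emulated mean of \(\mathrm{log}(MSE)\) lies more than \(\alpha \) emulator standard deviations above the logarithm of the threshold. The function name, the one-sided form and the numbers are all assumptions made for illustration.

```python
import math


def confidently_exceeds(mu_em, var_em, threshold, alpha):
    """Assumed one-sided test: the emulated log(MSE) mean is more than
    `alpha` emulator standard deviations above log(threshold)."""
    return mu_em - alpha * math.sqrt(var_em) > math.log(threshold)


# Hypothetical numbers: same emulated mean, different emulator variance.
threshold = 4.0  # stands in for the hard threshold T * Sigma
print(confidently_exceeds(math.log(6.0), 0.01, threshold, alpha=2.0))  # True
print(confidently_exceeds(math.log(6.0), 1.00, threshold, alpha=2.0))  # False
```

Under this assumed form, the second call shows how a high-variance emulator retains a variant that a more confident emulator would rule out, and a larger \(\alpha \) makes the test more lenient.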

Appendix C

An important step in Karmalkar et al. (2019) was the selection of a subset of surviving members that were as diverse as possible, in terms of maximising their spread across a pre-defined set of so-called diversity metrics. In our approach, there are two circumstances where we need to subsample a set of parameter combinations. The first is when we are augmenting the TAMIP or ATMOS ensemble to improve our chances of finding parameter combinations that pass the acceptability criteria (see Appendix B). The second is when we sub-select from the complete filtered set of PPE members to generate the final small, affordable set of parameter combinations to run as coupled models. In both cases, we aim to pick a subset of M members from N candidates where the selection criterion is designed to generate a subset M that are optimally spread out across the diversity metrics. The two cases differ in that the augmentation works with untried parameter combinations and so is based on emulated estimates of the diversity metrics, whereas the final sub-selection is based on estimates of the diversity metrics from actual runs.

We have adapted the approach in Karmalkar et al. (2019) to (i) include more diversity metrics, and (ii) simplify the measure of distance between each pair of model variants. First, Karmalkar et al. (2019) used two types of diversity metrics. The first type measured globally-averaged forcing and feedback based on the differences between the idealised atmosphere-only experiments and ATMOS (see Sect. 2.3) for the given parameter combination. The second type was the performance metrics i.e. the mean square errors, to ensure that diversity was not achieved simply by sub-selecting the relatively poor members from the filtered set. Karmalkar et al. (2019) used a small set of globally-averaged diversity metrics to demonstrate the concept. Here we have a much larger number of performance metrics, and we also aim to widen the net to include regional climate response to warmer SSTs based on differences between the SSTfuture and ATMOS experiments.

The diversity metrics are based on the cheap atmosphere-only experiments and not actual coupled simulations. So here, this step is to make sure the final 25 runs sample parameter combinations as widely as possible by focussing on the parameters and processes that cause most spread in atmosphere-only mode. We could have just aimed for runs to be as disparate as possible in parameter space normalised between 0 and 1. However, this would treat each parameter as equally important. The diversity step aims to do better than this by implicitly focussing on parameters that affect the atmospheric and land processes that are important for generating spread in the diversity metrics from the atmosphere-only idealised runs.

Ringer et al. (2014) show that the processes that affect clouds in AMIP4K runs (similar to SSTfuture, but with warming that is uniform across the global ocean) dominate the spread in coupled abrupt4CO2 simulations. Therefore, we expect diversity in global feedbacks from the atmosphere-only experiments to imply diversity in global temperature in the coupled PPE. Part 2 shows that for the global temperature response the spread is driven more by the use of different CO2 pathways, a result that requires further experiments and work to understand. The extension of the set of metrics to include regional responses is based on work by Lee et al. (2011) and our own investigation into HadGEM3-GA4, which show that different parameters are important in different regions of the globe (e.g. Regayre et al. 2015). We expect diversity of the regional responses to transfer through to the coupled PPE, with the caveat that patterns of regional response will also be influenced in coupled simulations by air-sea interactions and future changes in ocean circulation, notably through their effects on historical biases and future changes in SSTs.

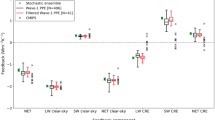

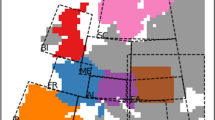

For the diversity metrics based on forcing and feedbacks, we chose nine (net feedback plus the four LW/SW all-sky/cloud feedback components, the net and aerosol-radiative-interaction (ARI) and aerosol-cloud-interaction (ACI) components of the aerosol forcing, and the 4xCO2 forcing). For regional climate response, we considered annual, DJF and JJA responses in temperature and precipitation across all 23 Giorgi-Francisco regions (Giorgi and Francisco 2000). Finally, we considered all the performance metrics used in the filtering (see Fig. 5 lower panel). To provide a better balance across the three types of diversity metric, we used nine from each set. This meant using all the metrics from the forcing and feedback set, but sub-selecting nine from each of the other two sets.

For the regional climate response, we first removed all those variables for which the parametric spread was not much larger than that generated by the stochastic physics schemes. This was done so that the distance between parameter combinations was more representative of differences in the signal rather than randomly sampled noise. To achieve this, we removed variables where the spread across the stochastic ensemble was at least 65% of the spread across the PPE in the response to SSTfuture relative to the present day ATMOS run; 65% was chosen as this choice provided a clear separation between the 14 that were ruled out and the remaining 103 regional diversity metrics. We then selected nine of the 103 diversity metrics, using Principal Variables (Cumming and Wooff 2007), which is a form of dimension reduction that picks a subset of the original variables that are most independent of each other whilst explaining the most variance between them. Finally, we also used Principal Variables to pick nine out of the 962 performance metrics.
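The noise-screening step can be sketched as follows. The sketch assumes that "spread" is measured by the sample standard deviation, which the text does not specify; the function name, metric names and values are hypothetical. The Principal Variables selection itself is not shown.

```python
import statistics


def screen_noisy_metrics(ppe_values, stochastic_values, max_ratio=0.65):
    """Keep metrics whose stochastic-ensemble spread is below `max_ratio`
    of the PPE spread. Spread is taken as the sample standard deviation,
    which is an assumption of this sketch."""
    kept = []
    for name, ppe in ppe_values.items():
        ratio = statistics.stdev(stochastic_values[name]) / statistics.stdev(ppe)
        if ratio < max_ratio:
            kept.append(name)
    return kept


# Hypothetical responses (SSTfuture minus ATMOS) for two regional metrics.
ppe = {
    "tas_ann_region1": [1.0, 2.0, 3.0, 4.0],
    "pr_jja_region2": [0.10, 0.20, 0.15, 0.12],
}
stoch = {
    "tas_ann_region1": [2.4, 2.5, 2.6, 2.5],
    "pr_jja_region2": [0.10, 0.19, 0.14, 0.12],
}
print(screen_noisy_metrics(ppe, stoch))  # ['tas_ann_region1']
```

In this toy case the precipitation metric is dropped because its stochastic spread is nearly as large as its parametric spread, so distances along it would mostly reflect noise.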

Based on these 27 variables, we pick the 25 members that are most spread out in terms of “standardized Euclidean” distance, i.e. the Euclidean distance calculated after standardizing the metrics across the candidate set. We start from an initial member, chosen either randomly or, in the case of the final selection, as the standard member, which by design must be one of the final 25. Then, iteratively, we add the member that causes the greatest increase in the total pairwise distance among the members selected so far.
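The greedy selection described above can be sketched as follows. This is a simplified illustration under stated assumptions rather than the code used for the study: the function names and the toy candidate set are hypothetical, standardization uses the population standard deviation, and ties are broken by taking the first candidate.

```python
import math
from statistics import mean, pstdev


def standardise(rows):
    """Scale each metric (column) to zero mean and unit standard deviation."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # guard against constant columns
    return [[(x - m) / s for x, m, s in zip(row, mus, sds)] for row in rows]


def select_diverse(rows, n_select, start=0):
    """Greedily pick n_select candidate indices, beginning with index `start`."""
    z = standardise(rows)
    chosen = [start]
    while len(chosen) < n_select:
        remaining = [i for i in range(len(z)) if i not in chosen]
        # Adding candidate i raises the total pairwise distance by the sum of
        # its distances to every member already chosen.
        best = max(remaining, key=lambda i: sum(math.dist(z[i], z[j]) for j in chosen))
        chosen.append(best)
    return chosen


# Toy candidate set: 5 variants described by 2 metrics (hypothetical values)
rows = [[0.0, 0.0], [0.1, 0.1], [6.0, 6.0], [-5.0, -5.0], [0.0, 0.2]]
chosen = select_diverse(rows, n_select=3, start=0)
```

Here index 0 plays the role of the standard member. The two outlying candidates are added next because each maximises the summed distance to the members already selected.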

Appendix D

Cloud_pH (param_cloud_hplus) is an important parameter in determining sulphate aerosol concentrations, especially over source regions of anthropogenic sulphur dioxide (SO2) emissions, and it affects aerosol forcing both over these regions and over regions remote from emission sources (Turnock et al. 2019). In reality, several different reactions form sulphate particles, but in the family of HadGEM3-GC3.x models there are only three oxidation reactions involving SO2: a gas-phase reaction with hydroxyl radicals leading to new particle formation, and two aqueous reactions, with hydrogen peroxide and ozone respectively. Cloud_pH has a strong influence on the aqueous dissolution of SO2 and the subsequent rate of reaction involving ozone.

Having run many of the first wave of ATMOS runs, we discovered that we had made an error in the implementation of the cloud_pH parameter for these runs and the TAMIP ensemble. The value was elicited by the experts as a pH number but the line of code to convert this to the number of hydrogen ions as required by HadGEM3-GC3 was not included. In effect we had drastically lowered the number of hydrogen ions by several orders of magnitude. Our error effectively switched off the reaction with ozone, reducing the number of cloud condensation nuclei.

We checked the impact of correcting the cloud_pH parameter in 50 randomly sampled members of the ATMOS PPE and compared their MSEs for the ATMOS metrics with those of the corresponding incorrect runs. Correcting cloud_pH does not have a major effect on the overall performance of the members, which is part of the reason that the error was hard to spot. Clear-sky outgoing shortwave radiation improves everywhere due to improved aerosol-radiation interaction. Some variables, like thick and thin extratropical cloud amounts, show small systematic improvements with a high correlation across the 50 members, whilst others, like the medium-thick cloud amounts, change systematically but become slightly worse. The shortwave cloud forcing is slightly worse, but this is less systematic across the regions.

Our filtering method is based on normalised MSEs. The normalisation is dominated by the structural term where we use the MSE of the standard variant. In effect, our normalised MSE is the ratio between the MSE of the ensemble member and the MSE of the standard variant, and both will be affected by this error. We assessed how much the error in cloud_pH might affect our filtering of the ATMOS experiments by counting how many runs are beyond the hard threshold of 4.5 times the MSE of the standard variant. For most variables these counts were similar for the corrected and incorrect PPEs. Low and mid-level thick cloud both became less effective at ruling out poor performance because there were larger improvements in the worse members and the standard MSE did not change much. Outgoing longwave at the top of atmosphere became slightly more effective at ruling out poor performers. The other two variables that were most important in the TAMIP filtering, that is, precipitation and mean sea level pressure, showed no change in effectiveness of filtering.
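As a minimal sketch of the count described above, the following treats the normalised MSE as the simple ratio of a member's MSE to that of the standard variant, which is the approximation stated in the text. The function name and all MSE values are hypothetical.

```python
def count_beyond_threshold(member_mses, standard_mse, threshold=4.5):
    """Count members whose MSE exceeds threshold times the standard variant's MSE."""
    return sum(1 for mse in member_mses if mse / standard_mse > threshold)


# Hypothetical MSEs for one variable in five members, before and after the fix
incorrect_mses = [1.2, 3.0, 9.5, 6.1, 0.8]
corrected_mses = [1.1, 2.8, 8.0, 4.2, 0.8]
standard_mse_incorrect = 1.0
standard_mse_corrected = 1.0  # assumed unchanged by the correction

n_ruled_out_incorrect = count_beyond_threshold(incorrect_mses, standard_mse_incorrect)
n_ruled_out_corrected = count_beyond_threshold(corrected_mses, standard_mse_corrected)
```

In this toy case the variable rules out fewer members after the correction, because the worst members improve while the standard variant's MSE stays the same. This mirrors the behaviour reported above for low and mid-level thick cloud.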

The real impact of cloud_pH is on the aerosol forcing (Regayre et al. 2018). As the error had only a small impact on the present-day performance of the PPE members for the variables that were effective at filtering 5-day climate forecasts, we did not re-run the TAMIP experiment. There is therefore a chance that we inappropriately ruled out a few members, or retained a few others, based on the TAMIP filtering. The latter would still have been filtered using the 5-year runs, so the overall impact of this error is small.

Cite this article

Sexton, D.M.H., McSweeney, C.F., Rostron, J.W. et al. A perturbed parameter ensemble of HadGEM3-GC3.05 coupled model projections: part 1: selecting the parameter combinations. Clim Dyn 56, 3395–3436 (2021). https://doi.org/10.1007/s00382-021-05709-9
