Abstract

Using the sub-seasonal to seasonal forecast model of Beijing Climate Center, several key physical parameters are perturbed by the Latin hypercube sampling method to find a better configuration for representation of Madden–Julian oscillation (MJO) in the free-run simulation. We find that although model simulation is especially sensitive to some parameters, there are overall no significant linear relationships between model skill and any one of the parameters, and the optimum performance can be obtained by combined perturbations of multiple parameters. By optimization, MJO’s spectrum, intensity, spatial structure and propagation, as well as the mean state and variance, are all improved to some extent, suggesting the correspondence and interrelation of model’s performances in simulating different characteristics of MJO. Further, several sets of initialized hindcasts using the optimized parameters are conducted, and their results are compared with the hindcasts using only improved initial conditions. We show that with an optimized model, the forecast of MJO beyond 3-week lead time is not improved, and the maximum useful skill is only slightly increased, implying that a decrease of model error does not always translate into an increase of forecast skill at all lead time. However, the skill is obviously enhanced during lead times of 2–3 weeks for forecasts in most seasons and initial phases except for a few cases. Particularly, the deficiency in forecasting MJO’s propagation from the Indian Ocean to the Pacific is relieved, further highlighting the positive contribution of reducing model error compared to previous work that only reduced initial condition error. In this study, we also show benefits of multi-scheme ensemble strategy in describing uncertainties of model error and initial condition error and thus improving MJO forecast.


1 Introduction

The Madden–Julian oscillation (MJO; Madden and Julian 1971) is a well-known phenomenon that prevails in the tropics and strongly modulates the tropical and extra-tropical atmospheric circulations. Because of its close connection with a wide range of local and remote weather and climate events (e.g., tropical cyclones, mid-latitude weather, monsoons, and the El Niño–Southern Oscillation), the MJO has become an important topic at many research and operational centers.

For exploring MJO’s origin, representation and influence, numerical climate model is undoubtedly a pivotal tool, which has achieved widespread use in the past few decades. Many studies showed that state-of-the-art climate models are capable of reproducing MJO’s basic characteristics in intensity, spectrum, spatial structure and propagation as well as the relevant dynamics (e.g., Kim et al. 2009, 2014b; Hung et al. 2013; Ahn et al. 2017). However, it was also revealed by these studies that the skill of MJO simulation always varied from one model to another, and most models showed limitations in one or more aspects. To minimize climate model’s deficiency and to improve MJO simulation, numerous attempts have been made in enhancing model’s grid resolution or its ability of explicitly resolving subgrid processes (e.g., Inness et al. 2001; Khairoutdinov et al. 2005; Ziemianski et al. 2005; Liu et al. 2009), introducing complete air-sea coupling (e.g., Waliser et al. 1999; Zheng et al. 2004; Sperber et al. 2005; Rajendran and Kitoh 2006; Zhang et al. 2006), and improving physics parameterizations (e.g., Lee et al. 2001; Liu et al. 2005; Zhang and Mu 2005; Deng et al. 2015). These efforts present effective methods for improving model performance, and also suggest that MJO simulation is highly sensitive to climate model’s various settings. Especially, convection parameterization scheme is a critical part in determining model’s ability in simulating MJO (e.g., Lee et al. 2003; Liu et al. 2005; Kim and Kang 2012; Cai et al. 2013; Zhu et al. 2017). Even small changes of physical parameters related to convection and precipitation, such as closure assumption, convection trigger, evaporation of convective precipitation, entrainment rate, and diabatic heating, can result in marked differences in MJO’s representation in a model (e.g., Wang and Schlesinger 1999; Maloney and Hartmann 2001; Zhang and Mu 2005; Lin et al. 2008b; Li et al. 2009; Boyle et al. 2015; Del Genio et al. 2015). 
In this context, refining physics parameterization, optimizing relevant key physical parameters and thus improving model performance become goals of research groups for numerical simulation of MJO.

With the success in MJO simulation, dynamical prediction of the MJO has also made good progress. In recent years, MJO forecast skill has gradually improved, and useful skill at lead times beyond 2–3 weeks has been achieved by many climate models. In particular, the operational prediction models at the Australian Bureau of Meteorology and the National Centers for Environmental Prediction (NCEP) of NOAA can provide skillful MJO forecasts up to 3 weeks ahead (Rashid et al. 2011; Kim et al. 2014c; Wang et al. 2014); the European Centre for Medium-Range Weather Forecasts (ECMWF) forecast system can skillfully predict the MJO up to 27 days in advance (Kim et al. 2014c; Vitart 2014); and the Geophysical Fluid Dynamics Laboratory (GFDL) coupled model also exhibited 27-day skill for the boreal winter MJO (Xiang et al. 2015). Multi-model ensembles can further extend MJO prediction skill by about 1 week, as shown by the Intraseasonal Variability Hindcast Experiment (ISVHE) Project (Zhang et al. 2013). Despite this progress, MJO prediction remains considerably challenging because of its strong sensitivity to initial conditions (e.g., Vitart et al. 2007; Fu et al. 2011; Liu et al. 2017), air-sea coupling (e.g., Vitart et al. 2007; Fu et al. 2013), differences among models (e.g., Fu et al. 2013; Zhang et al. 2013; Neena et al. 2014), and the characteristics of the MJO event itself (e.g., Lin et al. 2008a; Rashid et al. 2011).

Given the importance and the challenges of MJO simulation and prediction, increasing attention is paid to this topic by many major projects (Zhang et al. 2013). Particularly, in recent years, the Sub-seasonal to Seasonal (S2S) Prediction Project is ongoing, and many organizations join in this effort to promote scientific research and operational application of sub-seasonal forecast (Vitart et al. 2017). Being an important source of sub-seasonal predictability, MJO is listed as one of the key issues for the S2S project. Thus, improving the simulation and prediction of MJO in any individual model that participates in this project is meaningful, not only for the improvement of model itself but also for a positive contribution to the real-time operational products released by the project.

The Beijing Climate Center (BCC) climate system model (BCC_CSM) is one of the climate models participating in the S2S project. It has provided comprehensive reforecasts for every initial date during the past 20 years and real-time forecast products up to now. Liu et al. (2017) is a detailed documentation on the progress of BCC’s participation in the S2S project, including the model version used to build a forecast system, initialization and ensemble forecast schemes, performance and deficiency, and further improvement on MJO prediction. It pointed out that the BCC_CSM has apparent biases in spectrum, structure and propagation of MJO, which may cause the limited skill of MJO prediction to some extent. Even with these model deficiencies, the MJO prediction skill was still enhanced from 15–16 to 21–22 days because of the improvement of initial conditions (Liu et al. 2017). In this context, it is necessary to explore whether this skill can be further enhanced by optimizing the model itself. In addition to a possible benefit to the S2S products from this model, this exploration is meaningful for addressing the following questions. (1) To what degree is the MJO simulation sensitive to changes of multiple physical parameters in a complex climate model? Parameter tuning has always been used as an economic and effective method to optimize a model with established dynamics and physics schemes. Previous studies of parameter sensitivity focused more on the extreme cases, climatological mean state or seasonal-to-interannual variability rather than on the intraseasonal variability; or they paid more attention to the sensitivity of MJO simulation to a single physical parameter rather than to multiple parameters. It is useful to explore how good the representation of MJO is if multiple key parameters are simultaneously tuned to reduce the uncertainty of physics in a climate model, given that the impacts of various parameters may be nonlinear and interactive. 
(2) To what extent can the MJO prediction skill be enhanced by reducing the error in MJO simulation? Studies by Jiang et al. (2015), Klingaman et al. (2015a, b), and Xavier et al. (2015) characterized the key features in MJO physics and further examined how these features were related to models’ MJO simulation and forecast skills. They showed weak and statistically insignificant relationships between MJO fidelity in the free-run simulations and initialized hindcasts. From the perspective of cross comparison among multiple models, these studies suggested that MJO performance in model simulation does not necessarily translate into performance in short-term forecast. Due to use of different dynamic frameworks and physical processes, various models differ largely in terms of error behavior, which leads to uncertainty of multi-model comparison results; thus, it is meaningful to explore the above-mentioned issue using a single model with relatively fixed error feature. (3) What are the impacts of model error and initial condition error on limiting MJO prediction skill in a model? Model error and initial condition error are two crucial factors controlling reliability of prediction. The in-depth understanding of the uncertainty, importance and impacts of these two types of error is meaningful for enhancing model’s capability in forecasting MJO.

The rest of this paper is organized as follows. In Sect. 2, we give details of the model, experiment, validation data, and methods. In Sect. 3, we show the sensitivity of model performance to several key physical parameters and the improvement of MJO simulation by parameter optimization. In Sect. 4, we present the impact of parameter optimization on MJO prediction. Summary and discussion are given in Sect. 5.

2 Model, experiments and validation data

2.1 Model

The model used in this study is the moderate-resolution BCC_CSM version 1.2, which participates in the S2S project. It is an atmosphere–land–ocean–sea ice coupled model. The atmospheric component is the BCC Atmospheric General Circulation Model version 2 with a horizontal T106 triangular truncation and 40 vertical hybrid sigma/pressure layers. The land component is the BCC Atmosphere and Vegetation Interaction Model version 1.0. The ocean component is the GFDL Modular Ocean Model version 4 at 1/3°–1° horizontal resolution. The sea ice component is GFDL Sea Ice Simulator. Details on similar versions of BCC_CSM and their use in climate change projection and short-term climate prediction have been documented in several studies (e.g., Wu et al. 2013, 2014; Liu et al. 2014, 2015).

2.2 Experimental design

Both simulation and prediction experiments are conducted in this study. Their design details differ due to different purposes.

2.2.1 Simulation experiments with perturbed parameter sets

It is assumed that a large part of the convection and precipitation biases in climate models may come from the cloud and convection parameterization schemes that include many uncertain parameters (e.g., Jackson et al. 2008; Yan et al. 2014; Qian et al. 2015; Yang et al. 2015). Especially, significant sensitivities of MJO simulation to deep and shallow convection schemes and relevant parameters have been shown by previous studies. In BCC_CSM, the shallow convection (Hack scheme) and cloud process parameterizations resemble those in the Community Atmosphere Model version 3 (CAM3; Collins et al. 2004). The deep convection parameterization uses a mass flux cumulus convection scheme by Wu (2012), which makes several innovations and is thus different from that in CAM3 (Zhang and McFarlane 1995).

In this study, we select seven parameters related to the convection and cloud processes. The parameters’ descriptions, default values and perturbed ranges are given in Table 1. The adjustment time scale and precipitation efficiency for shallow convection (τ_shal and C0_shal), the relative humidity thresholds for low and high stable clouds (RH_low and RH_high), the relative humidity threshold for the convection trigger (RH_trig), and the precipitation efficiency and evaporation efficiency for deep convection (C0_deep and Ke_deep) are chosen according to the following considerations. τ_shal and C0_shal are selected to test the importance of the Hack shallow convection scheme in MJO simulation, which was demonstrated by Zhang and Song (2009) and Cai et al. (2013). The sensitivity of MJO simulation to RH_trig, C0_deep and Ke_deep in the deep convection parameterization was shown by many studies (e.g., Wang and Schlesinger 1999; Maloney and Hartmann 2001; Zhang and Mu 2005; Lin et al. 2008b; Boyle et al. 2015). In addition, for the atmospheric component model of the BCC_CSM, Yang et al. (2015) illustrated the significant sensitivity of precipitation and winds over the tropical Indian Ocean and western Pacific to the parameters C0_deep, Ke_deep, RH_low and RH_high. The uncertainty ranges for these parameters follow the suggestions of model developers or previous studies (Collins et al. 2004; Jackson et al. 2008; Boyle et al. 2015; Yang et al. 2015; Wu 2017, personal communication). Perturbed-parameter ensemble approaches have been widely used to assess the uncertainties of model parameters or to explore the potential for improving model physical schemes (e.g., Jackson et al. 2008; Collins et al. 2011; Yan et al. 2014; Boyle et al. 2015; Posselt et al. 2016). Here, we use the Latin hypercube sampling (LHS) method to sample points within the seven-dimensional parameter space. LHS is an effective sampling technique for a large number of dimensions. 
It uses a stratification strategy to produce good uniformity on each one-dimensional projection. The method divides the range of each parameter into several equal bins and makes each bin appear once at an optimal distance, thus ensuring an even distribution of the sampled points in the multi-dimensional space. Theoretically, the sample size should be as large as possible. Boyle et al. (2015) conducted 1100-member perturbation experiments for 22 parameters, an approach that is instructive but impractical in this study given limited computing resources. Loeppky et al. (2009) suggested that the number of sampled points should be at least 10 times the number of parameters. Given this, a total of 85 perturbed points are sampled for the 7 parameters in this work, about 12 times the number of parameters. A similar consideration was used by Yang et al. (2015) for a sampling and sensitivity analysis of several parameters in the BCC atmospheric model.
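The stratification idea behind LHS can be illustrated with a minimal sketch. It assumes plain LHS with random pairing of bins across dimensions and does not include the distance optimization mentioned above. The function name `latin_hypercube` and the unit ranges are hypothetical and are not the perturbed ranges of Table 1.

```python
import numpy as np

def latin_hypercube(n_samples, bounds, seed=0):
    """Return an (n_samples, n_params) array of Latin hypercube samples.

    Each parameter range is split into n_samples equal bins, and every
    bin is used exactly once per parameter.
    """
    rng = np.random.default_rng(seed)
    bounds = np.asarray(bounds, dtype=float)  # shape (n_params, 2)
    n_params = bounds.shape[0]
    unit = np.empty((n_samples, n_params))
    for j in range(n_params):
        # One random point inside each bin, then shuffle the bin order
        # independently for this parameter.
        points = (np.arange(n_samples) + rng.random(n_samples)) / n_samples
        unit[:, j] = rng.permutation(points)
    low, high = bounds[:, 0], bounds[:, 1]
    return low + unit * (high - low)

# Hypothetical unit ranges for illustration only (not the values of Table 1).
bounds = [(0.0, 1.0)] * 7
samples = latin_hypercube(85, bounds)  # 85 points for 7 parameters
```

Each row of `samples` would correspond to one perturbed parameter set. A distance-optimized variant would additionally select, among many such designs, the one with the largest minimum distance between points.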

For the perturbed runs with 85 sets of parameters as well as the control run with the default parameters, the model is integrated for 7 years for each run and the daily outputs of the last 6 years are used for analysis. In addition, to confirm the effects of parameter tuning, two 15-year simulations are conducted using the default parameters and the optimal parameters, respectively. Using the outputs from all these experiments, the performance of the model in simulating MJO features is scored. Many metrics regarding MJO variability and internal physics have been defined in previous studies. Here, we choose only several basic metrics: the wavenumber-frequency spectra of 15°S–15°N averaged 850-hPa zonal wind (WFS_U850), the wavenumber-frequency spectra of 15°S–15°N averaged outgoing longwave radiation (WFS_OLR), the two leading empirical orthogonal functions (EOFs) of intraseasonal anomalies of combined outgoing longwave radiation, 850-hPa and 200-hPa zonal winds, and the lagged correlation between the first and second principal components corresponding to the two EOFs (LC_PCs). To obtain a skill score in a simple way, for the 1- or 2-dimensional distributions of the above fields, we compute the pattern correlation coefficient (PCC) to represent the similarity between simulation and observation. The PCCs are computed separately for WFS_U850, WFS_OLR, EOFs and LC_PCs, and their average is used as the skill score of one experiment. Using this method, all the simulation experiments are evaluated to identify the skillful and unskillful ones.
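The scoring procedure can be sketched as follows. The sketch assumes a centered, unweighted PCC (no area weighting) and that each metric is supplied as an array on a common grid. The function names, dictionary keys and synthetic fields are illustrative only.

```python
import numpy as np

def pattern_correlation(sim, obs):
    """Centered pattern correlation between two fields of the same shape."""
    s = np.asarray(sim, dtype=float).ravel()
    o = np.asarray(obs, dtype=float).ravel()
    s = s - s.mean()
    o = o - o.mean()
    return float(np.sum(s * o) / np.sqrt(np.sum(s**2) * np.sum(o**2)))

def skill_score(sim_fields, obs_fields):
    """Average the PCCs over all metrics present in obs_fields."""
    pccs = [pattern_correlation(sim_fields[k], obs_fields[k]) for k in obs_fields]
    return float(np.mean(pccs))

# Synthetic stand-ins for the four metrics (illustration only).
rng = np.random.default_rng(1)
obs_fields = {k: rng.normal(size=(20, 30))
              for k in ("WFS_U850", "WFS_OLR", "EOFs", "LC_PCs")}
sim_fields = {k: v + 0.5 * rng.normal(size=v.shape)
              for k, v in obs_fields.items()}
score = skill_score(sim_fields, obs_fields)
```

Ranking the 85 perturbed runs by `score` would then separate the skillful runs from the unskillful ones.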

2.2.2 Prediction experiments using optimized parameters

Since the model used in this study is one of the participants in the S2S project, a prediction strategy similar to that used in the project is adopted to allow a direct comparison with the BCC S2S hindcast outputs. We use the original hindcasts for the S2S project (EXP0_S2S) and the improved hindcasts with new initialization schemes (EXP1_Ini) from the BCC S2S forecast model. Details of these two sets of hindcasts can be found in Liu et al. (2017). Compared to EXP0_S2S, EXP1_Ini uses a different atmospheric reanalysis to generate the atmospheric initial conditions, and also introduces observed daily sea surface temperature data to upgrade the ocean initial conditions. Both were conducted on the 1st, 6th, 11th, 16th, 21st, and 26th of each month during 2000–2013, i.e., at a quasi-5-day interval. Each hindcast consisted of a 60-day integration and included four lagged average forecasting (LAF) members, initialized at 0000 UTC on the hindcast day and at 1800, 1200 and 0600 UTC of the previous day, respectively. EXP0_S2S and EXP1_Ini differ only in their initial conditions.

For a further exploration on the impacts of model optimization to MJO prediction, we add several sets of hindcast as follows.

1. Hindcast experiments with the optimal parameter set (EXP2_Ini_OptPara). On the basis of EXP1_Ini, these hindcasts replace the default model parameter set with the optimal parameter set from the parameter perturbation experiments defined in Sect. 2.2.1. Comparing EXP2_Ini_OptPara with EXP1_Ini indicates how much MJO prediction improves owing to the better representation of the MJO in the model.

2. Hindcast experiments with an ensemble of several different parameter sets (EXP3_Ini_EnsPara). These hindcasts are all initialized at 0000 UTC of the hindcast day, and they replace the LAF ensemble strategy with an ensemble of four different parameter sets. To achieve this, all the parameter perturbation experiments are scored according to the metrics described in Sect. 2.2.1, and the four most skillful ones are chosen to provide the parameter sets of the ensemble members. The ensemble scheme in EXP3_Ini_EnsPara depicts the uncertainty of model physical parameters, while those in EXP1_Ini and EXP2_Ini_OptPara depict the uncertainty of initial conditions.

3. Hindcast experiments with a broad ensemble strategy (EXP1-3_Ens), which takes the ensemble average of the hindcasts of EXP1_Ini, EXP2_Ini_OptPara and EXP3_Ini_EnsPara. Compared to the other experiments, EXP1-3_Ens explores whether MJO prediction can be improved by an ensemble of different types of experiments that accounts for the uncertainty of both initial condition error and model error.

2.3 Validation data and method

The observational data used to evaluate the MJO simulation and forecast mainly include the NOAA daily outgoing longwave radiation (OLR; Liebmann and Smith 1996), and daily wind field from the NCEP/Department of Energy (DOE) Reanalysis 2 (Kanamitsu et al. 2002).

The procedures for extracting MJO signals from the observations, simulations and prediction runs are exactly the same as those in Liu et al. (2017), which adopted techniques from Wheeler and Hendon (2004), Lin et al. (2008a) and Gottschalck et al. (2010). The preprocessing of simulation outputs differs from that of prediction outputs. For the former, the intraseasonal variability is extracted using a 20–100-day Lanczos filter, and the MJO spatial structure and principal components (PCs) are derived by an EOF expansion of the combined variables of OLR, 850-hPa zonal wind (U850) and 200-hPa zonal wind (U200). For the latter, the mean over the previous 120 days is subtracted, and the PCs are obtained by projecting the forecast intraseasonal anomalies onto the observed multi-variable EOFs.
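The preprocessing of prediction outputs can be illustrated with a minimal sketch. It assumes that the 120-day window excludes the current day, that the input series is already an anomaly relative to the seasonal cycle, and that the observed EOFs are stored as rows of an array. The exact procedure follows Liu et al. (2017) and may differ in detail; the function names are hypothetical.

```python
import numpy as np

def remove_previous_mean(series, window=120):
    """Subtract from each day the mean of the preceding `window` days.

    The first `window` days have no complete history and are set to NaN.
    """
    x = np.asarray(series, dtype=float)
    out = np.full_like(x, np.nan)
    csum = np.concatenate(([0.0], np.cumsum(x)))
    for t in range(window, x.size):
        # csum[t] - csum[t - window] is the sum of x[t-window] .. x[t-1].
        out[t] = x[t] - (csum[t] - csum[t - window]) / window
    return out

def project_onto_eofs(anomalies, eofs):
    """Project anomalies (time, space) onto EOFs (mode, space) to get PCs."""
    return np.asarray(anomalies, dtype=float) @ np.asarray(eofs, dtype=float).T

# Toy example: a linear trend becomes a constant offset after the subtraction.
trend = np.arange(300, dtype=float)
filtered = remove_previous_mean(trend)
```

In practice the observed record before the initial date would be prepended to each forecast so that the 120-day mean is available from the first forecast day.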

As in Wheeler and Hendon (2004), MJO structure, amplitude and phase angle are defined by the two leading EOF modes, $(\mathrm{PC1}^2 + \mathrm{PC2}^2)^{1/2}$, and $\tan^{-1}(\mathrm{PC2}/\mathrm{PC1})$, respectively. The MJO forecast skill is mainly measured by bivariate anomaly correlation (BAC) as defined in Lin et al. (2008a).
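These definitions can be written compactly in code. The sketch below assumes the usual form of the BAC, in which the observed and forecast (PC1, PC2) pairs are correlated over all forecasts at a fixed lead time. The function names are illustrative.

```python
import numpy as np

def mjo_amplitude(pc1, pc2):
    """Amplitude (PC1^2 + PC2^2)^(1/2)."""
    return np.hypot(pc1, pc2)

def mjo_phase_angle(pc1, pc2):
    """Phase angle in radians; arctan2 resolves the quadrant that
    tan^-1(PC2/PC1) alone leaves ambiguous."""
    return np.arctan2(pc2, pc1)

def bivariate_anomaly_correlation(obs_pc1, obs_pc2, fc_pc1, fc_pc2):
    """BAC between observed and forecast PCs at one lead time."""
    a1, a2 = np.asarray(obs_pc1, float), np.asarray(obs_pc2, float)
    b1, b2 = np.asarray(fc_pc1, float), np.asarray(fc_pc2, float)
    num = np.sum(a1 * b1 + a2 * b2)
    den = np.sqrt(np.sum(a1**2 + a2**2)) * np.sqrt(np.sum(b1**2 + b2**2))
    return float(num / den)

# Toy check: a forecast identical to the observation gives BAC = 1, and a
# forecast a quarter cycle out of phase gives BAC = 0.
t = np.linspace(0.0, 2.0 * np.pi, 50)
obs1, obs2 = np.cos(t), np.sin(t)
bac_perfect = bivariate_anomaly_correlation(obs1, obs2, obs1, obs2)
bac_shifted = bivariate_anomaly_correlation(obs1, obs2, -obs2, obs1)
```

A BAC of 0.5 is a commonly used threshold for useful skill, so evaluating the function at each lead time yields the skill curve from which the maximum useful lead time is read.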

3 Impacts of parameter perturbation on MJO simulation

3.1 Sensitivity of model performance to parameters

A model’s performance is often determined by the configuration of main physical parameters. In this section, we explore the sensitivity of model climatology and variance over the tropics to the parameters in Table 1.

Figure 1 shows the spatial distributions of sensitivities of climatology and intraseasonal variance of U850 and OLR in November–April to the selected parameters. Here, sensitivity is defined as the regression coefficients of climatology and intraseasonal variance against the perturbed parameters in the 85 simulations. In terms of sensitivity of climatology (Fig. 1a), RH_low is found to be the most influential one among the parameters, which has especially significant positive correlations with the zonal wind and precipitation over the eastern Pacific (not shown). It means that over the eastern Pacific where a large amount of low-level clouds is often observed, enlarging RH_low value can reduce the low-level clouds and remarkably strengthen the convection there. This is possibly because the decrease of low-level clouds is helpful to increase the surface incident solar radiation, warm the underlying surface, intensify the low-level instability, and thus support the enhancement of convection. With a relatively weak magnitude, the impact of RH_high is generally similar to that of RH_low, while the impacts of τ_shal and C0_shal are both opposite to that of RH_low. This may suggest that the effect of RH_high on high-level clouds and the effects of τ_shal and C0_shal on shallow convection are associated with the change of low-level clouds in the model; or it may mean that the results of tuning RH_high, τ_shal and C0_shal tend to follow or offset the results of tuning RH_low while simultaneously perturbing multiple parameters. The three parameters in the deep convection scheme affect the climatology in different ways. C0_deep shows more impacts over the tropical Indian Ocean and the eastern Pacific; Ke_deep and RH_trig are relatively more influential over the Maritime Continent and the equatorial Pacific, respectively. The sensitivities of intraseasonal variance to the parameters exhibit different details (Fig. 1b). Nevertheless, RH_low is still the most influential one. 
Also, the effect of RH_low is opposite to the effects of τ_shal and C0_shal, but similar to that of RH_high; the influences of C0_deep, Ke_deep and RH_trig in the deep convection scheme differ from each other. These results are highly model-dependent. Yang et al. (2015) used an atmosphere-only model that was the same as the atmospheric component of the coupled BCC_CSM and chose several parameters that were partly similar to the parameters used in this study, but they found some results that are different from this study. This is possibly because we use different combinations of parameters in perturbation experiments and an ocean–atmosphere coupling can also increase the complexity of parameter sensitivity.

Fig. 1 Spatial distributions of sensitivities of 850-hPa zonal wind (shading; units: m s−1) and outgoing longwave radiation (contour; units: W m−2) to different parameters. Model sensitivities are calculated as the regression coefficients of November–April (a) climatology and (b) intraseasonal variance of these two variables against the parameter values (normalized to [0, 1]) in the 85 simulations. Stippled areas indicate where the responses of 850-hPa zonal wind are statistically significant at the 95% confidence level
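The sensitivity measure used for Fig. 1 can be sketched as a grid-point regression across the ensemble of runs. The sketch assumes a separate single-variable least-squares fit for each parameter and a normalization by the sampled minimum and maximum. Whether the original analysis used simple or multiple regression, or normalized by the prescribed ranges of Table 1, is not stated here, so both choices are assumptions.

```python
import numpy as np

def sensitivity_maps(params, fields):
    """Regression slopes of a field on each normalized parameter.

    params: (n_runs, n_params) parameter values of the perturbed runs.
    fields: (n_runs, n_grid) climatology or variance of each run.
    Returns an (n_params, n_grid) array of slopes.
    """
    p = np.asarray(params, dtype=float)
    f = np.asarray(fields, dtype=float)
    # Normalize each parameter to [0, 1] over the sampled values.
    p_norm = (p - p.min(axis=0)) / (p.max(axis=0) - p.min(axis=0))
    p_anom = p_norm - p_norm.mean(axis=0)
    f_anom = f - f.mean(axis=0)
    # Least-squares slope = cov(p, f) / var(p), for every parameter and point.
    return (p_anom.T @ f_anom) / np.sum(p_anom**2, axis=0)[:, None]

# Toy example: one parameter, two grid points with known slopes 3 and 6.
p_demo = np.linspace(2.0, 4.0, 85)[:, None]
p01 = (p_demo - 2.0) / 2.0
f_demo = np.hstack([3.0 * p01 + 1.0, 6.0 * p01 - 2.0])
slopes = sensitivity_maps(p_demo, f_demo)
```

Because the parameters are normalized, each slope is the change of the field as the parameter moves across its full sampled range, which makes the maps of different parameters directly comparable.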

For climatology and intraseasonal variance of U850 and OLR, PCC of spatial distribution between each simulation experiment and observation is calculated as the model’s skill in depicting climatological background of MJO, and then the responses of skill to various parameters in the 85 simulations are given in Fig. 2. It is found that there are clear differences in the magnitude and variation range between the skills of OLR and U850, and that the skill of OLR is mostly lower than that of U850, suggesting that OLR is more difficult to simulate in the model due to the complicated impacts of convection, cloud and microphysics processes. Nevertheless, for both climatology and intraseasonal variance, the variations of OLR skill agree well with those of U850 skill, indicating correspondences of parameters’ impacts on model performance in simulating different climatological variables. For the variations of model skill and each parameter, the distribution of PCC versus parameter value often shows considerably scattered feature, and there is overall no obvious monotonic linear relationship between the changes of parameter and model performance, possibly due to complex interrelation among multiple parameters and physical parameters’ nonlinear impacts on model simulation. One exception is RH_low, for which a too large parameter value can cause a nearly linear decline of model skill. This is because RH_low is the most influential parameter as shown in Fig. 1, and its large-amplitude increase may induce an intense change of physics process and thus aggravate the model simulation.

Fig. 2 Responses of model performance in simulating (a) climatology and (b) intraseasonal variance of 850-hPa zonal wind (blue dots) and outgoing longwave radiation (red dots) to the seven parameters (all normalized to [0, 1]). Model performance is defined as the pattern correlation coefficient between simulation and observation over the tropics (30°S–30°N). The results for zonal wind and outgoing longwave radiation in (b) refer to the left and right y-axes, respectively. The purple and yellow lines are fits to the blue and red data points, respectively

Further, the PCC of the MJO phase-longitude composite map between simulation and observation is computed as the model’s performance in describing MJO characteristics, and its responses to the various parameters are shown in Fig. 3. The MJO PCC series given in Fig. 3 are weakly but significantly correlated with the U850 PCCs (R = 0.26) and OLR PCCs (R = 0.25) shown in Fig. 2, passing the 95% confidence test for 85 samples. This suggests a possible link between model performance in terms of climatology and that in terms of MJO variability. It is further noted that, compared to the responses of model skill in depicting climatology and variance distributions to the selected parameters, the responses of model skill in simulating MJO features are more complicated. For most parameters, the model skill is widely scattered against the parameter values, indicating that there is overall no linear relationship between the changes of model performance and the parameters. This reemphasizes the complexity of the parameters’ interactions and influences. Of course, to reveal the significance of these parameters, approaches beyond simple linear methods ought to be adopted. For example, Boyle et al. (2015) demonstrated parameter sensitivity for MJO simulation by conducting a very large number of perturbation experiments and then using a Bayesian parameter estimation method to reveal the importance of several parameters in the deep convection scheme of the CAM5 model.
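The significance of the correlations quoted above can be checked with a short calculation. It assumes a two-sided Student's t test with the 85 runs treated as independent samples; the test actually used is not specified in the text. The critical value 1.989 for 83 degrees of freedom is an approximate tabulated value.

```python
import math

def t_statistic(r, n):
    """t statistic of a correlation coefficient r from n independent samples."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)

N_RUNS = 85
T_CRIT_95 = 1.989  # approximate two-sided 5% critical value, 83 degrees of freedom

t_u850 = t_statistic(0.26, N_RUNS)  # about 2.45
t_olr = t_statistic(0.25, N_RUNS)   # about 2.35

# Smallest |r| that would pass the same test (about 0.21).
r_crit = T_CRIT_95 / math.sqrt(T_CRIT_95**2 + (N_RUNS - 2))
```

Both t values exceed the critical value, consistent with the statement that the correlations pass the 95% confidence test, although they explain only about 6–7% of the variance.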

Fig. 3 Responses of model performance in simulating MJO characteristics to the seven parameters (all normalized to [0, 1]). Model performance is defined as the pattern correlation coefficient of phase-longitude composites of 15°S–15°N averaged outgoing longwave radiation and 850-hPa zonal wind between simulation and observation. Because of a possible phase lag between the MJO in the model and that in the observation, the order of MJO phases in the simulation is sometimes adjusted to maximize similarity to the observation. The purple curve is a fit to the data points

3.2 Influence on basic characteristics of MJO

Using the method described in Sect. 2.2.1, we compute the PCCs of WFS_U850, WFS_OLR, EOFs, and LC_PCs between simulations and observations for all the experiments with perturbed parameters, and then rank the runs from skillful to unskillful according to their average PCC scores. The parameter values and PCC scores for the perturbed runs with the highest and lowest skills, as well as for the unperturbed control run, are given in Table 2. The table again indicates that the parameter values do not exhibit a clear relationship with the degree of skill, suggesting that the best or worst simulation may result from a complex compromise among the nonlinear impacts of the various parameters.

Climatology and intraseasonal variance are two basic characteristics, although they are not considered in the metrics in Sect. 2.2.1 to score the experiments. Figure 4 shows the distributions of OLR and U850 climatology in observation and various model runs. The observations exhibit robust convection from the tropical Indian Ocean to the western Pacific, coupled with strong precipitation and westerly wind over the region. The control run captures the general distribution of convection, but with apparent biases; especially, a remarkable convection suppression bias appears over the eastern Indian Ocean and the Maritime Continent, which is thought to be an important deficiency in the BCC_CSM to limit the MJO propagation from the Indian Ocean to the Pacific and further contribute to the formation of the Maritime Continent predictability barrier (Liu et al. 2017). In the low-skill simulation, this deficiency is maintained, while the biases over the eastern Pacific and the western Pacific are mostly strengthened. In contrast, in the high-skill simulation, the extent and magnitude of dry bias over the Maritime Continent is clearly reduced. This indicates significant sensitivity of model climatology to parameter values. However, the biases may be affected by the structural deficiency of physical schemes, given that the patterns of bias distribution in different runs are generally similar. Boyle et al. (2015) suggested that parameter optimization may produce reasonable skill in precipitation mean field at the cost of deterioration in radiation mean field in the CAM5 model. Differences in model parameterizations and parameter properties may lead to different simulation results. In Table 3, we list the bias and root mean square error (RMSE) of shortwave and longwave cloud radiation effects over the global domain for boreal winter climatological means between simulation and observation. 
The high-skill run exhibits an error magnitude comparable to that in the control run, providing a reasonable parameter setting and radiation simulation result that is acceptable for a climate model.

Fig. 4 Spatial distributions of November–April climatology of outgoing longwave radiation (shading; units: W m−2) and 850-hPa zonal wind (contour; units: m s−1) in (a) observation, (b) control simulation, (d) high-skill simulation, and (f) low-skill simulation. Also shown are the corresponding biases for the (c) control, (e) high-skill and (g) low-skill simulations

Figure 5 shows the distributions of intraseasonal variance of U850 and OLR in observation and various runs. In the observation, there is strong variance of U850 over the tropical Indian Ocean and the extra-tropical Pacific, with a large-magnitude center over the Maritime Continent. The feature is generally reproduced in the high-skill run, but with apparent biases over several regions in the control run and low-skill run. Generally speaking, the simulation of intraseasonal variance of OLR is worse than that of U850, although the strong variance over the Indian Ocean and Maritime Continent is partially reproduced by the high-skill run and the control run. The metrics for parameter optimization in this study target the improvement of MJO features rather than that of climatology or variance, but the results shown in Figs. 4 and 5 partly confirm the correspondence between skill enhancement in climatology and that in the MJO itself.

Spatial distributions of November–April intraseasonal variance of (left column) 850-hPa zonal wind (shading; units m2 s−2) and (right column) outgoing longwave radiation (shading; units W2 m−4) and its ratio to the total variance (contour; units %). Shown are the results from a, b observation, c, d control simulation, e, f high-skill simulation, and g, h low-skill simulation

The spectrum and EOF modes are two important aspects for examining the parameter perturbation experiments. Their features can directly indicate the validity of parameter tuning. Figure 6 gives the wavenumber-frequency spectra of tropical U850 and OLR in observation and model runs. The observation features a dominant spatial scale of zonal wavenumber 1 for U850 and zonal wavenumbers 1–3 for OLR at periods of 30–80 days, which distinguishes the MJO from other equatorial waves. The control run features a spectral peak at lower frequency and a wider wavenumber range. With perturbed parameters, the low-skill run shows extremely small spectral values at the intraseasonal scale, while the high-skill run displays a distribution that more closely resembles the observation, although with an underestimated spectral peak for OLR and U850. Similarly, the precipitation spectrum in the high-skill run also shows a spectral peak at the intraseasonal scale of 30–80 days, although the spectral magnitude is apparently underestimated (not shown).

Wavenumber-frequency spectra of 15°S–15°N averaged (left column) outgoing longwave radiation and (right column) 850-hPa zonal wind in a, b observation, c, d control simulation, e, f high-skill simulation, and g, h low-skill simulation. Value given in the parentheses at the top right above each panel is the pattern correlation between observed and simulated feature

Figures 7 and 8 give the two leading EOFs of 20–100-day filtered anomalies of combined OLR, U850 and U200, and the lagged correlations between the PCs of these two modes, respectively. We can see that the first EOF mode is featured by enhanced convection near the Maritime Continent and inhibited convection over the eastern Pacific to Africa, while the second EOF mode shows convection enhancement over the western Pacific and suppression over the Indian Ocean (Fig. 7a, b). These two modes explain about 43% of the variance, and exhibit the maximum positive or negative correlation when PC1 leads or lags PC2 by 10 days, denoting the eastward propagation of MJO signal (Fig. 8). In the control run, reversed order of EOF1 and EOF2 (with respect to the order in the observation), underestimated total variance, unrealistic strength and width of convection anomaly, and the largest correlation at a shorter-than-observed lag between PCs are found. However, the spatial structure is reasonably captured by the model, with pattern correlation coefficients of 0.93 and 0.83 between the simulations and observations for EOF1 and EOF2, respectively (Figs. 7c, d, 8). The low-skill run shows deteriorated features. In contrast, the high-skill run gives similar pattern correlations of EOFs as the control run, and thus does not show remarkable improvement in spatial structure, but its lagged correlation between PCs is closer to the observation and its total variance of the two EOFs is also slightly enhanced, suggesting a more reasonable evolution of MJO (Figs. 7e, f, 8).
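The lead-lag relationship between the two principal components can be sketched as follows. This is a minimal illustration, assuming daily PC time series of equal length; the function name `lag_correlation` and the sign convention (positive lag means PC1 leads PC2) are choices made for this example and are not taken from the original analysis.

```python
import numpy as np

def lag_correlation(pc1, pc2, max_lag):
    """Correlate pc1(t) with pc2(t + lag) for lag = -max_lag .. max_lag.

    A positive lag means pc1 leads pc2 by that many time steps.
    Returns (lags, corr) as NumPy arrays.
    """
    pc1 = np.asarray(pc1, dtype=float)
    pc2 = np.asarray(pc2, dtype=float)
    n = len(pc1)
    lags = np.arange(-max_lag, max_lag + 1)
    corr = np.empty(len(lags))
    for i, lag in enumerate(lags):
        if lag >= 0:
            a, b = pc1[:n - lag], pc2[lag:]      # pc2 shifted forward in time
        else:
            a, b = pc1[-lag:], pc2[:n + lag]     # pc1 shifted forward in time
        corr[i] = np.corrcoef(a, b)[0, 1]
    return lags, corr
```

For an idealized 40-day oscillation in which PC2 is a quarter-cycle behind PC1, the correlation computed this way peaks at a lag of +10 days and reaches its minimum at −10 days, which is the signature of eastward propagation described above.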

Two leading EOFs of intraseasonal anomalies of combined outgoing longwave radiation, 850- and 200-hPa zonal winds in a, b observation, c, d control simulation, e, f high-skill simulation, and g, h low-skill simulation. The variance explained by each mode is shown at the top right above each panel. Value given in the parentheses of the subtitle is the spatial pattern correlation between observation and simulation. The order of EOF1 and EOF2 in simulations is reversed with respect to that of the observation

3.3 Improvement of MJO propagation

To further verify the improvement of MJO simulation by parameter tuning, two additional 15-year runs are conducted with the optimal parameters and default parameters in the above-mentioned high-skill simulation and control simulation, respectively. The characteristics of MJO propagation in these runs are then investigated.

Figure 9 shows the lag-longitude diagram of 10°S–10°N averaged OLR and U850 correlated against PC1. Compared to the strong MJO signal and clear propagation from the Indian Ocean to the Pacific in the observation, the control run shows an apparently weaker signal and a discontinuous, faster propagation. In the high-skill run with the optimal parameters, the continuity and magnitude of the MJO signal are both clearly improved, though the propagation is still faster than observed.

November–April lag–longitude diagram of 10°S–10°N averaged intraseasonal outgoing longwave radiation anomalies (shading) and intraseasonal 850-hPa zonal wind anomalies (contour) correlated against the first principal component of EOF in a observation, b control simulation and c high-skill simulation

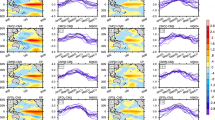

The composites of OLR and U850 anomalies for the eight phases of the MJO lifecycle are given in Fig. 10. We can see that well-organized MJO convection initiates from Africa and the western Indian Ocean, propagates from the Indian Ocean across the Maritime Continent to the western Pacific and disappears in the western hemisphere. The control run generally captures the eastward propagation of MJO, but it shows a faster developing and decaying tendency for the convection from the Indian Ocean to the Maritime Continent. Meanwhile, the convection anomalies over the western Indian Ocean in phases 2–4 and over the western Pacific in phases 5–6 cover an especially wide spatial range, indicating a poorly organized MJO structure. The high-skill run reduces the biases over the western Indian Ocean and the western Pacific to some extent, and gives a more reasonable transition of convection from wet phase to dry phase over the Indian Ocean and the Maritime Continent.

November–April composite intraseasonal anomalies of outgoing longwave radiation (shading; units W m−2) and 850-hPa zonal wind (contour; units m s−1) as a function of MJO phase in a observation, b control simulation and c high-skill simulation. The composite is made using the days when the MJO amplitude is larger than one. The number of days used to generate the composite for each phase is shown at the bottom right of each panel

Figure 11 shows the pressure–phase diagrams of 10°S–10°N averaged intraseasonal specific humidity anomaly and its tendency at three positions. Over the Indian Ocean and western Pacific, apparent wet and dry anomalies are observed through most of the troposphere, with maximum centers located between 500 and 700 hPa. Along the phase axis, the positive (negative) moisture anomaly is led by a drying (wetting) tendency and followed by a wetting (drying) tendency, denoting the propagation of convection and thus inducing alternation of wet and dry phases. By comparison, the control run shows weaker anomalies, lower intensity centers and an unrealistic pace of phase transition. These deficiencies are apparently lessened in the optimized run, although obvious biases remain. Over the eastern Pacific, the observation displays quite small thickness and strength of moisture anomalies, which are wrongly depicted in the control run but significantly improved in the optimized run.

November–April pressure–phase diagram of 10°S–10°N averaged intraseasonal specific humidity anomalies (shading; units g kg−1) and its tendency (contour; units 10−10 kg kg−1 s−1) at the longitudes of (top row) 80°E, (middle row) 130°E and (bottom row) 140°W. Shown are the results from (left column) observation, (middle column) control simulation and (right column) high-skill simulation

These results indicate that although the goal of parameter tuning is to optimize the wave spectrum and spatial modes of MJO, the propagation and phase transitions of MJO, as well as its climatological background, are all improved to some extent. This illustrates that the optimization result in this study is not a coincidental outcome of tuning multiple parameters; it rests on recognizing and further reducing the uncertainty of physical parameters in the model. However, the biases remaining in the optimized run reveal some systematic deficiencies, which cannot be overcome by parameter optimization.

4 Impact of parameter optimization on MJO prediction

The model used in this study participated in the S2S project. Its original hindcast for the S2S project (i.e., EXP0_S2S) showed limited skill in forecasting MJO, while another hindcast with improved initialization schemes (i.e., EXP1_Ini) showed clearly improved skills. However, even with improved initial conditions, the deficiency in the model itself is still an important factor that limits MJO forecast (Liu et al. 2017). In this section, two additional sets of hindcast (i.e., EXP2_Ini_OptPara and EXP3_Ini_EnsPara) and the ensemble of multiple hindcasts (i.e., EXP1-3_Ens) are used to explore whether the MJO forecast skill can be further improved by optimizing the MJO characteristics in the model itself. Details of these experiments are given in Sect. 2.2.

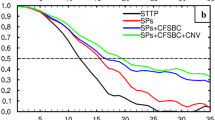

Figure 12 shows the variation of MJO forecast skill with different lead times in various experiments. For the 5-day sampling frequency (Fig. 12a), if we take BAC = 0.5 as the threshold of useful skill, the skill is 15 days in EXP0_S2S, 21 days in EXP1_Ini, 22 days in EXP2_Ini_OptPara and EXP3_Ini_EnsPara, and 23 days in EXP1-3_Ens. The degree of skill in these improved experiments is comparable to that in the operational models of the Australian Bureau of Meteorology and NCEP (Rashid et al. 2011; Wang et al. 2014). Our results indicate a 6-day increase of skill by improving initial conditions and a 1–2-day enhancement of skill by further optimizing the physical parameters or changing the ensemble strategy. Compared to EXP1_Ini, the upper limit of useful skill is not greatly raised in either EXP2_Ini_OptPara or EXP3_Ini_EnsPara, but the skills during the lead times of 7–20 days are consistently increased after adopting better parameters. This demonstrates the success of model physics optimization in improving MJO prediction. It should be noted, however, that the skills beyond the 20-day lead time are similar among EXP1_Ini, EXP2_Ini_OptPara and EXP3_Ini_EnsPara, which suggests a quick growth of forecast error that cannot be overcome by current methods. With an ensemble of these three sets of experiments, the skill beyond 20 days is enhanced in EXP1-3_Ens, indicating a better representation of error uncertainty in the new ensemble scheme. Further investigation of ensembles of any two of these hindcast sets showed that the increase of skill at long lead time is not simply due to enlarged member size, and that it only occurred when EXP1_Ini was included in the ensemble (not shown). This implies that a reasonable description of error uncertainty, rather than the employment of many members, is more critical to the performance of ensemble forecast, although in this study we do not build a highly efficient ensemble strategy to enhance the prediction skills at most lead times. For the 10-day sampling frequency (Fig. 12b), which is conventionally used by most models in the ISVHE project and by several models in the S2S project (Zhang et al. 2013; Vitart et al. 2017), similar skill variation features are found, indicating that the forecast cases with this sampling frequency can also be used to show the overall forecast skill of MJO.
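To make the skill measure concrete, the sketch below computes a bivariate anomaly correlation (BAC) as a function of lead time and then reads off the useful skill at the BAC = 0.5 threshold. It follows the commonly used bivariate correlation form for a two-component MJO index; the array layout, the function names and the rule "last lead time before BAC first falls below the threshold" are assumptions for illustration and may differ from the exact procedure used for Fig. 12.

```python
import numpy as np

def bivariate_anomaly_correlation(obs, fcst):
    """BAC per lead time.

    obs, fcst : arrays of shape (n_cases, n_leads, 2) holding the two
                MJO index components (e.g. PC1 and PC2) for each case.
    """
    obs = np.asarray(obs, dtype=float)
    fcst = np.asarray(fcst, dtype=float)
    # Sum over cases (axis 0) and over the two components (axis 2).
    num = np.sum(obs * fcst, axis=(0, 2))
    den = np.sqrt(np.sum(obs ** 2, axis=(0, 2))) * np.sqrt(np.sum(fcst ** 2, axis=(0, 2)))
    return num / den

def useful_skill_days(bac, lead_days, threshold=0.5):
    """Last lead time before BAC first drops below the threshold.

    Returns None if BAC is already below the threshold at the first lead,
    and the final lead time if BAC never drops below it.
    """
    bac = np.asarray(bac, dtype=float)
    below = np.flatnonzero(bac < threshold)
    if below.size == 0:
        return lead_days[-1]
    if below[0] == 0:
        return None
    return lead_days[below[0] - 1]
```

Taking the first crossing, rather than the last lead time with BAC at or above 0.5, avoids counting a late, spurious recovery of the correlation as useful skill.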

MJO forecasting during the Dynamics of the MJO/Cooperative Indian Ocean Experiment on Intraseasonal Variability in Year 2011 (DYNAMO/CINDY) period has attracted much attention in recent years (e.g., Fu et al. 2013; Xiang et al. 2015). Thus, Fig. 13 shows MJO forecast skill as a function of lead time during the DYNAMO/CINDY period. With the 5-day experiment frequency (Fig. 13a), EXP1_Ini gives a 27-day skill, but it is not further enhanced in EXP2_Ini_OptPara and EXP3_Ini_EnsPara. This means that the optimization of model physics and the use of a parameter ensemble approach do not necessarily translate into an increase of overall forecast skill of MJO during that particular period. However, EXP1-3_Ens shows a skill of about 28–29 days, which is comparable to the results in Fu et al. (2013) and Xiang et al. (2015). When the experiment frequency is changed to 10 days (Fig. 13b), the useful skills in various experiments exhibit a clear spread from 22 to 31 days, suggesting that forecasts for some of the cases during the DYNAMO period are sensitive to the change of model parameters and ensemble prediction schemes.

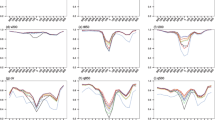

Figure 14 further gives the seasonal variation of forecast skill in various experiments. As demonstrated in Liu et al. (2017), EXP1_Ini shows a better MJO forecast skill compared to EXP0_S2S, due to the improvement of initial conditions. The skill is high in winter and low in summer, corresponding to the difference of MJO amplitude in the two seasons. From EXP1_Ini to EXP2_Ini_OptPara and EXP3_Ini_EnsPara, with optimization of model parameters, a clear enhancement of forecast skill is mainly found beyond the lead time of about 1 week. As a result, the useful skill (BAC = 0.5) is increased from about 16 days to more than 20 days in summer. In winter, although the skill is also enhanced at most lead times, it is raised only a little or even slightly reduced beyond 3 weeks in EXP2_Ini_OptPara and EXP3_Ini_EnsPara. EXP1-3_Ens partly relieves this problem, but it does not achieve a consistent increase of skill during most lead times in all four seasons. This again confirms that enlarging member size does not always lead to effective enhancement of ensemble forecast skill.

Bivariate anomaly correlation between observations and forecasts as a function of lead time and calendar date. Skills are computed using 5-day-interval experiments within a 3-month window centered on each hindcast start date. Results shown are from EXP1_Ini (shading), EXP2_Ini_OptPara (short dashed contour), EXP3_Ini_EnsPara (long dashed contour), and EXP1-3_Ens (solid contour)

MJO forecast skill is also sensitive to initial and target phase (e.g., Lin et al. 2008a; Kim et al. 2014c; Wang et al. 2014). Thus, the BAC is computed for each MJO phase using the cases with larger-than-one initial or target amplitude. The skills as a function of lead time and phase in various experiments are given in Fig. 15. In EXP1_Ini, the differences of BAC among various initial phases become appreciable beyond 1-week lead time, featured by gradually emerging peaks near phases 2 and 5 and valleys near phases 3 and 7 (Fig. 15a). For the forecast target phases, relatively high skills are found near phases 3 and 6 and low skills near phases 2 and 5 (Fig. 15b). This implies that the MJO is easily predicted when it is initially located over the central-western Indian Ocean and Maritime Continent, but is difficult to forecast when it starts from the eastern Indian Ocean and the central Pacific. In EXP2_Ini_OptPara and EXP3_Ini_EnsPara, due to optimization of model physics parameters, the skills beyond 1-week lead time at most initial and target phases are somewhat enhanced. In particular, the forecasts at initial phase 2 and target phases 6 and 7, aiming at the MJO propagation from the central-western Indian Ocean to the western Pacific, show better than 30-day skills. In EXP1-3_Ens, the skills are raised slightly further.
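The stratification by phase and amplitude can be illustrated with a short sketch that assigns each day to one of eight MJO phases from the two index components. It assumes the widely used Wheeler and Hendon style convention, in which the phase number increases counter-clockwise in the (PC1, PC2) plane starting from the negative PC1 axis; the function name and this convention are assumptions here, and the mapping depends on the sign and order of the EOFs actually used.

```python
import numpy as np

def mjo_phase_amplitude(pc1, pc2):
    """Return (phase, amplitude) for paired MJO index components.

    phase is an integer array with values 1..8; amplitude is
    sqrt(pc1**2 + pc2**2).
    """
    pc1 = np.asarray(pc1, dtype=float)
    pc2 = np.asarray(pc2, dtype=float)
    amplitude = np.hypot(pc1, pc2)
    angle = np.arctan2(pc2, pc1)  # radians in [-pi, pi]
    # Split the circle into eight 45-degree sectors, counted from angle = -pi.
    phase = (np.floor((angle + np.pi) / (np.pi / 4)).astype(int) % 8) + 1
    return phase, amplitude
```

Cases for a given initial phase can then be selected with a mask such as `(phase == 2) & (amplitude > 1.0)` before computing the skill score for that subset.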

Focusing on the initial phase 2, at which the forecast is most skillful and the improvement by scheme modification is most significant, Fig. 16 displays time-longitude composites of OLR and U850 anomalies. In the observation, MJO evolution from initial phase 2 is featured by propagation of the enhanced convection anomaly from the Indian Ocean to the western Pacific, flanked by eastward propagating anomalies of suppressed convection on both sides (Fig. 16a). In EXP0_S2S, eastward propagation of the convection signal is featured by faster moving speed, quicker amplitude decay and more disordered spatial structure compared to the observation (Fig. 16b). EXP1_Ini with upgraded initializations partially improves the initial state and evolution of the convection anomaly over the Indian Ocean and the western Pacific, but the MJO propagation is still clearly faster than observed, and the spatial structure beyond 3 weeks is still disordered (Fig. 16c). With optimized physical parameters, EXP2_Ini_OptPara and EXP3_Ini_EnsPara show distributions of OLR and U850 anomalies that are more similar to the observation, although the decay of convection intensity and the propagation of the convection signal at longer lead times are still faster than observed (Fig. 16d, e). EXP1-3_Ens does not further improve the convection and circulation evolution to a considerable extent. This shows that the growing forecast error is complicated and changeable, and that the current ensemble scheme is still deficient in depicting the uncertainty of forecast error at longer lead time (Fig. 16f).

Time-longitude composites of outgoing longwave radiation (shading; units W m−2) and 850-hPa zonal wind (contour; units m s−1) anomalies for initial phase 2 of MJO in a observation, b EXP0_S2S, c EXP1_Ini, d EXP2_Ini_OptPara, e EXP3_Ini_EnsPara, and f EXP1-3_Ens. Only MJO cases with larger-than-one initial amplitude are used in the composite

5 Summary and discussion

In this study, using the BCC S2S forecast model, we examine the sensitivity of several key physical parameters and explore the usefulness of parameter optimization in enhancing the skills of MJO simulation and prediction.

For several key parameters related to the cloud and convection physics that are crucial to MJO simulation, we use the LHS method to perturb the parameters and further investigate their impacts on model performance. In terms of the climatology and intraseasonal variance of U850 and OLR, the relative humidity threshold for low stable clouds is the most sensitive parameter, with especially remarkable impacts over the eastern Pacific. Among the multiple parameters, some show quite similar (opposite) responses, suggesting the homogeneity (heterogeneity) of these parameters in affecting the model’s performance. However, there are overall no significant linear relationships between any one of the parameters and the model skill in depicting climatology, intraseasonal variance and MJO characteristics, possibly due to the parameters’ nonlinear influences on model results and complex interactions among various parameters. This indicates the necessity of tuning the model in multi-dimensional parameter space, to search for the best configuration of parameters for optimum model performance.

Based on the comparison among all the experiments with perturbed parameters, the most skillful and unskillful runs are chosen, and their skills in simulating basic characteristics of MJO are then explored. Compared to the control run, the optimal-parameter run shows a more realistic spectrum, and similar skill in the spatial distribution of MJO modes but a clearly improved relationship between the modes. Meanwhile, the distributions of climatology and intraseasonal variance, which are not the goals of the optimization, are also improved, implying a possible link between the simulation of mean state and that of MJO variability. Using the optimal and default parameter sets, two longer integrations are conducted to verify the degree of skill in simulating MJO’s propagation characteristics. It is found that due to the parameter optimization, the model’s deficiencies such as weaker-than-observed signals, not-well-organized spatial structure and faster-than-observed propagation of MJO are all somewhat reduced. In particular, the vertical structure of convection and the transition between wet phase and dry phase of convection during MJO’s life cycle are considerably improved. These results support the view that the optimization process may have tuned the physical parameters towards ideal values at which model physics match reality better, rather than values at which the model state merely happens to resemble reality.

Further, several sets of sub-seasonal hindcasts using the optimized parameters are conducted, and their results are compared with those of the hindcast using the original default parameters. We then explore the importance of model optimization and impacts of model error and initial condition error on forecast. For overall forecast of MJO, the original model shows a maximum useful skill of 15 days, which is largely increased to 21 days in the hindcasts with improved initial conditions, and further enhanced to 22 days in the hindcasts with both improved initial conditions and model parameters. By optimizing the model, the skills are little changed within 1 week and beyond 3 weeks, but they are somewhat enhanced during lead times of 2–3 weeks. The skill’s dependences on season and on MJO phase in different hindcasts are also compared. After 1-week lead time, a clear increase of skill due to model parameter optimization is often found in most seasons, especially the summer, and at most initial phases, especially phase 2. Beyond 3-week lead time, however, the upper limit of useful skill in winter remains unchanged or is even slightly reduced, and the forecasts from a few initial phases also become slightly worse when the optimized parameters are adopted. This suggests that the forecast error is not a simple linear accumulation of initial condition error and model error, and that a decrease of model error does not always translate into an increase of forecast skill. The above results demonstrate the positive impact of model optimization on improving MJO forecast, but they also indicate the deficiency of current initialization and model physics schemes in inhibiting the quick growth of forecast error at longer lead time. An ensemble of multiple hindcasts with different schemes can partly reduce this deficiency, due to a better representation of error uncertainty rather than a simple enlargement of ensemble member size.

This study to some extent reveals the relationships between representations of different MJO characteristics in model simulation, and between the simulation skill and forecast skill of MJO. Sperber et al. (2005) indicated that realistic description of mean state is important for better simulation of MJO, while Kim et al. (2011), Benedict et al. (2013) and Boyle et al. (2015) found that improved representation of MJO may correspond to degraded mean state in one or more aspects in models. By comparing several models, Jiang et al. (2015) showed an insignificant link between model MJO fidelity and mean state, which suggests that some factors other than the mean state are critical for realistic simulation of MJO. Klingaman et al. (2015a, b) further showed that there is little correspondence between the MJO fidelity in model simulation and the MJO skill in initialized forecasts. For the model used in this study, higher fidelity of MJO is accompanied by a better mean state in the model, and is also associated with overall better performance in MJO forecast. However, our results also indicate that improvement of MJO simulation does not necessarily translate into enhancement of MJO forecast skill at all lead times, which agrees with the finding that there are weak relationships between MJO fidelity in 2-day hindcasts and 20-day hindcasts (Klingaman et al. 2015b). The irregular correspondence between simulation and forecast may be attributed to the complex and model-dependent interaction between model error and initial condition error; thus, improvement of forecast should rely more on describing the uncertainty of these two errors, rather than on only decreasing the magnitude of the errors.

In Liu et al. (2017), the tendency toward drying over the eastern Indian Ocean and Maritime Continent during MJO’s evolution is assumed to be an important factor to accelerate the wet-to-dry phase transition and thus accounts for the Maritime Continent predictability barrier of MJO in the BCC_CSM. This current work has partially relieved this deficiency as shown in Figs. 4 and 10, in which the dry bias of mean state and the underestimated wet anomaly of MJO convection near the Maritime Continent are both reduced to some extent. With this improvement of the model, the prediction skill valleys near target phases 4 and 5 at the lead time of about 20 days are not as notable as those in the forecasts with the original model (see the dashed lines and shadings in Fig. 15b). In a way, these results partially support the assumption for the attribution of the Maritime Continent predictability barrier of MJO in Liu et al. (2017), and demonstrate the positive role of model optimization in overcoming forecast deficiency.

Particularly, because of the optimization of the model, the forecasts of MJO for initial phase 2 or target phase 7 show especially prominent increases of skill, implying the obviously improved forecast of MJO propagation from the central Indian Ocean to the western Pacific. It is assumed that the convection suppression over the western Pacific plays a dynamically active role in MJO propagation over the Indian Ocean and Maritime Continent (Kim et al. 2014a), and its strong representation is the key for high skill MJO events in the ECMWF hindcast (Kim et al. 2016). Liu et al. (2017) pointed out that a better description of dry anomaly over the western Pacific in model initial condition favors the enhancement of forecast skill for MJO propagation from the Indian Ocean in the BCC S2S hindcast. As a follow-up study of Liu et al. (2017), we reveal in this study that for further increase of MJO forecast skill by improved model physics, the dry anomaly over the western Pacific could last at longer lead time (see Fig. 16d), suggesting the positive role of decreasing model’s deficiency over this area. Thus, the efforts for improving MJO forecast should take the optimization of convection state over the western Pacific as a necessary target.

References

Ahn MS, Kim D, Sperber KR et al (2017) MJO simulation in CMIP5 climate models: MJO skill metrics and process-oriented diagnosis. Clim Dyn 49:4023–4045

Benedict JJ, Maloney ED, Sobel AH, Frierson DM, Donner LJ (2013) Tropical intraseasonal variability in version 3 of the GFDL atmosphere model. J Clim 26:426–449

Boyle JS, Klein SA, Lucas DD, Ma HY, Tannahill J, Xie S (2015) The parametric sensitivity of CAM5’s MJO. J Geophys Res Atmos 120:1424–1444

Cai Q, Zhang GJ, Zhou T (2013) Impacts of shallow convection on MJO simulation: a moist static energy and moisture budget analysis. J Clim 26:2417–2431

Collins WD, Rasch PJ, Boville BA et al (2004) Description of the NCAR Community Atmosphere Model (CAM 3.0). NCAR Tech Note NCAR/TN-464+STR. http://www.cesm.ucar.edu/models/atm-cam/docs/description/description.pdf. Accessed June 2004

Collins M, Booth BB, Bhaskaran B, Harris GR, Murphy JM, Sexton DMH, Webb MJ (2011) Climate model errors, feedbacks and forcings: a comparison of perturbed physics and multi-model ensembles. Clim Dyn 36:1737–1766

Del Genio AD, Wu J, Wolf AB, Chen Y, Yao MS, Kim D (2015) Constraints on cumulus parameterization from simulations of observed MJO events. J Clim 28:6419–6442

Deng Q, Khouider B, Majda A (2015) The MJO in a coarse-resolution GCM with a stochastic multicloud parameterization. J Atmos Sci 72:55–74

Fu X, Wang B, Lee JY, Wang W, Gao L (2011) Sensitivity of dynamical intraseasonal prediction skills to different initial conditions. Mon Weather Rev 139:2572–2592

Fu X, Lee JY, Hsu PC, Taniguchi H, Wang B, Wang W, Weaver S (2013) Multi-model MJO forecasting during DYNAMO/CINDY period. Clim Dyn 41:1067–1081

Gottschalck J, Wheeler M, Weickmann K et al (2010) A framework for assessing operational Madden–Julian oscillation forecasts: a CLIVAR MJO Working Group project. Bull Am Meteorol Soc 91:1247–1258

Hung MP, Lin JL, Wang W, Kim D, Shinoda T, Weaver SJ (2013) MJO and convectively coupled equatorial waves simulated by CMIP5 climate models. J Clim 26:6185–6214

Inness PM, Slingo JM, Woolnough SJ, Neale RB, Pope VD (2001) Organization of tropical convection in a GCM with varying vertical resolution; implications for the simulation of the Madden–Julian Oscillation. Clim Dyn 17:777–793

Jackson CS, Sen MK, Huerta G, Deng Y, Bowman KP (2008) Error reduction and convergence in climate prediction. J Clim 21:6698–6709

Jiang X, Waliser DE, Xavier PK et al (2015) Vertical structure and physical processes of the Madden–Julian oscillation: exploring key model physics in climate simulations. J Geophys Res Atmos 120:4718–4748

Kanamitsu M, Ebisuzaki W, Woollen J, Yang SK, Hnilo JJ, Fiorino M, Potter GL (2002) NCEP–DOE AMIP-II reanalysis (R-2). Bull Am Meteorol Soc 83:1631–1643

Khairoutdinov M, Randall D, DeMott C (2005) Simulations of the atmospheric general circulation using a cloud-resolving model as a superparameterization of physical processes. J Atmos Sci 62:2136–2154

Kim D, Kang IS (2012) A bulk mass flux convection scheme for climate model description and moisture sensitivity. Clim Dyn 38:411–429

Kim D, Sperber K, Stern W et al (2009) Application of MJO simulation diagnostics to climate models. J Clim 22:6413–6436

Kim D, Sobel AH, Maloney ED, Frierson DMW, Kang IS (2011) A systematic relationship between intraseasonal variability and mean state bias in AGCM simulations. J Clim 24:5506–5520

Kim D, Kug JS, Sobel AH (2014a) Propagating versus nonpropagating Madden–Julian oscillation events. J Clim 27:111–125

Kim D, Xavier P, Maloney E et al (2014b) Process-oriented MJO simulation diagnostic: moisture sensitivity of simulated convection. J Clim 27:5379–5395

Kim HM, Webster PJ, Toma VE, Kim D (2014c) Predictability and prediction skill of the MJO in two operational forecasting systems. J Clim 27:5364–5378

Kim HM, Kim D, Vitart F, Toma VE, Kug JS, Webster PJ (2016) MJO propagation across the maritime continent in the ECMWF ensemble prediction system. J Clim 29:3973–3988

Klingaman NP, Jiang X, Xavier PK, Petch J, Waliser D, Woolnough SJ (2015a) Vertical structure and physical processes of the Madden–Julian oscillation: synthesis and summary. J Geophys Res Atmos 120:4671–4689

Klingaman NP, Woolnough SJ, Jiang X et al (2015b) Vertical structure and physical processes of the Madden–Julian oscillation: linking hindcast fidelity to simulated diabatic heating and moistening. J Geophys Res Atmos 120:4690–4717

Lee MI, Kang IS, Kim JK, Mapes BE (2001) Influence of cloud-radiation interaction on simulating tropical intraseasonal oscillation with an atmospheric general circulation model. J Geophys Res 106:14219–14233

Lee MI, Kang IS, Mapes BE (2003) Impacts of cumulus convection parameterization on aqua-planet AGCM simulations of tropical intraseasonal variability. J Meteorol Soc Jpn 81:963–992

Li C, Jia X, Ling J, Zhou W, Zhang C (2009) Sensitivity of MJO simulations to diabatic heating profiles. Clim Dyn 32:167–187

Liebmann B, Smith CA (1996) Description of a complete (interpolated) outgoing longwave radiation dataset. Bull Am Meteorol Soc 77:1275–1277

Lin H, Brunet G, Derome J (2008a) Forecast skill of the Madden–Julian oscillation in two Canadian atmospheric models. Mon Weather Rev 136:4130–4149

Lin JL, Lee MI, Kim D, Kang IS, Frierson DMW (2008b) The impacts of convective parameterization and moisture triggering on AGCM-simulated convectively coupled equatorial waves. J Clim 21:883–909

Liu P, Wang B, Sperber KR, Li T, Meehl GA (2005) MJO in the NCAR CAM2 with the Tiedtke convective scheme. J Clim 18:3007–3020

Liu P, Satoh M, Wang B et al (2009) An MJO simulated by the NICAM at 14- and 7-km resolutions. Mon Weather Rev 137:3254–3268

Liu X, Wu T, Yang S et al (2014) Relationships between interannual and intraseasonal variations of the Asian-western Pacific summer monsoon hindcasted by BCC_CSM1.1(m). Adv Atmos Sci 31:1051–1064

Liu X, Wu T, Yang S et al (2015) Performance of the seasonal forecasting of the Asian summer monsoon by BCC_CSM1.1(m). Adv Atmos Sci 32:1156–1172

Liu X, Wu T, Yang S et al (2017) MJO prediction using the sub-seasonal to seasonal forecast model of Beijing Climate Center. Clim Dyn 48:3283–3307

Loeppky JL, Sacks J, Welch WJ (2009) Choosing the sample size of a computer experiment: a practical guide. Technometrics 51:366–376

Madden RA, Julian PR (1971) Detection of a 40–50 day oscillation in the zonal wind in the tropical Pacific. J Atmos Sci 28:702–708

Maloney ED, Hartmann DL (2001) The sensitivity of intraseasonal variability in the NCAR CCM3 to changes in convective parameterization. J Clim 14:2015–2034

Neena JM, Lee JY, Waliser D, Wang B, Jiang X (2014) Predictability of the Madden–Julian Oscillation in the intraseasonal variability hindcast experiment (ISVHE). J Clim 27:4531–4543

Posselt D, Fryxell B, Molod A, Williams B (2016) Quantitative sensitivity analysis of physical parameterizations for cases of deep convection in the NASA GEOS-5. J Clim 29:455–479

Qian Y, Yan H, Hou Z et al (2015) Parametric sensitivity analysis of precipitation at global and local scales in the Community Atmosphere Model CAM5. J Adv Model Earth Syst 7:382–411

Rajendran K, Kitoh A (2006) Modulation of tropical intraseasonal oscillations by ocean–atmosphere coupling. J Clim 19:366–391

Rashid HA, Hendon HH, Wheeler MC, Alves O (2011) Prediction of the Madden–Julian oscillation with the POAMA dynamical prediction system. Clim Dyn 36:649–661

Sperber KR, Gualdi S, Legutke S, Gayler V (2005) The Madden–Julian oscillation in ECHAM4 coupled and uncoupled general circulation models. Clim Dyn 25:117–140

Vitart F (2014) Evolution of ECMWF sub-seasonal forecast skill scores. Q J R Meteorol Soc 140:1889–1899

Vitart F, Woolnough S, Balmaseda MA, Tompkins A (2007) Monthly forecast of the Madden–Julian oscillation using a coupled GCM. Mon Weather Rev 135:2700–2715

Vitart F, Ardilouze C, Bonet A et al (2017) The sub-seasonal to seasonal prediction (S2S) project database. Bull Am Meteorol Soc 98:163–173

Waliser DE, Lau KM, Kim JH (1999) The influence of coupled sea surface temperatures on the Madden–Julian oscillation: a model perturbation experiment. J Atmos Sci 56:333–358

Wang W, Schlesinger ME (1999) The dependence on convection parameterization of the tropical intraseasonal oscillation simulated by the UIUC 11-layer atmospheric GCM. J Clim 12:1423–1457

Wang W, Hung MP, Weaver SJ, Kumar A, Fu X (2014) MJO prediction in the NCEP climate forecast system version 2. Clim Dyn 42:2509–2520

Wheeler MC, Hendon HH (2004) An all-season real-time multivariate MJO index: development of an index for monitoring and prediction. Mon Weather Rev 132:1917–1932

Wu T (2012) A mass-flux cumulus parameterization scheme for large-scale models: description and test with observations. Clim Dyn 38:725–744

Wu T, Li W, Ji J et al (2013) Global carbon budgets simulated by the Beijing Climate Center climate system model for the last century. J Geophys Res Atmos 118:1–22

Wu T, Song L, Li W et al (2014) An overview of BCC climate system model development and application for climate change studies. J Meteorol Res 28:34–56

Xavier PK, Petch JC, Klingaman NP et al (2015) Vertical structure and physical processes of the Madden–Julian Oscillation: biases and uncertainties at short range. J Geophys Res Atmos 120:4749–4763

Xiang B, Zhao M, Jiang X et al (2015) The 3–4-week MJO prediction skill in a GFDL coupled model. J Clim 28:5351–5364

Yan H, Qian Y, Lin G, Leung LR, Yang B, Fu Q (2014) Parametric sensitivity and calibration for the Kain–Fritsch convective parameterization scheme in the WRF Model. Clim Res 59:135–147

Yang B, Zhang Y, Qian Y, Wu T, Huang A, Fang Y (2015) Parametric sensitivity analysis for the Asian summer monsoon precipitation simulation in the Beijing Climate Center AGCM, version 2.1. J Clim 28:5622–5644

Zhang GJ, McFarlane NA (1995) Sensitivity of climate simulations to the parameterization of cumulus convection in the Canadian Climate Centre general circulation model. Atmos Ocean 33:407–446

Zhang GJ, Mu M (2005) Simulation of the Madden–Julian oscillation in the NCAR CCM3 using a revised Zhang–McFarlane convection parameterization scheme. J Clim 18:4046–4064

Zhang GJ, Song X (2009) Interaction of deep and shallow convection is key to Madden–Julian oscillation simulation. Geophys Res Lett 36:L09708. https://doi.org/10.1029/2009GL037340

Zhang C, Dong M, Gualdi S, Hendon HH, Maloney ED, Marshall A, Sperber KR, Wang W (2006) Simulations of the Madden–Julian oscillation in four pairs of coupled and uncoupled global models. Clim Dyn 27:573–592

Zhang C, Gottschalck J, Maloney ED et al (2013) Cracking the MJO nut. Geophys Res Lett 40:1223–1230

Zheng Y, Waliser DE, Stern W, Jones C (2004) The role of coupled sea surface temperatures in the simulation of the tropical intraseasonal oscillation. J Clim 17:4109–4134

Zhu J, Wang W, Kumar A (2017) Simulations of MJO propagation across the Maritime continent: impacts of SST feedback. J Clim 30:1689–1704

Ziemianski MZ, Grabowski WW, Moncrieff MW (2005) Explicit convection over the western Pacific warm pool in the Community Atmospheric Model. J Clim 18:1482–1502

Acknowledgements

This study was jointly supported by the National Natural Science Foundation of China (Grant 41675090), the National Basic Research Program of China (Grant 2015CB453203), and the National Key R&D Program of China (Grant 2016YFA0602100).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Liu, X., Li, W., Wu, T. et al. Validity of parameter optimization in improving MJO simulation and prediction using the sub-seasonal to seasonal forecast model of Beijing Climate Center. Clim Dyn 52, 3823–3843 (2019). https://doi.org/10.1007/s00382-018-4369-y
