Abstract

Intrinsic variability (IV) in regional climate models (RCMs) is often assumed to be small because, at climatological timescales, the model solutions tend to be dominated by the model’s lateral boundary conditions. Recent studies have indicated that IV may in fact be large for some variables in certain instances. Direct interpretation of anomalies from RCM sensitivity studies relies on the assumption that differences between model simulations are entirely due to a physical forcing. If IV is as large as or larger than the physical signal, this assumption is violated. Using a 20-member ensemble of RCM simulations, we verify that IV of precipitation within an RCM can be large enough to violate the sensitivity study assumption, and we show that generating ensembles of simulations can help reduce the level of IV. We also present two indicators that can rule out the influence of IV when it is ambiguous whether anomalies within a sensitivity study are due to the sensitivity perturbation or to IV.


1 Introduction

Regional climate models (RCMs) are becoming a common tool for assessing the sensitivity of climate to perturbations in the climate system. In this role, they are typically used in place of global climate models (GCMs) when either the scale of the forcing or the scale of the climate of interest is smaller than the resolvable scale of a GCM. For example, RCMs have been used to investigate the sensitivity of the hydrological climate of Western N. America to doubled CO2 (Snyder et al. 2004), the sensitivity of radiative balance over East Asia to the presence of dust (Zhang et al. 2009), and the sensitivity of monsoonal rainfall to vegetation cover in India (Dutta et al. 2009).

The traditional setup for such experiments, the model sensitivity study, consists of two RCM simulations that are identical in all but one respect: one represents a control climate, and the other represents a perturbation to the control climate [e.g. see the methodologies of Snyder et al. (2004), Zhang et al. (2009), and Dutta et al. (2009)]. The climatological differences between the two experiments are then attributed to whatever factor differs between the control and the perturbation simulations. We argue that this simple interpretation assumes that no other factors affect the climatologies of the two experiments. Because RCMs are deterministic numerical simulations, it would seem reasonable to accept this assumption.

It has been shown that the spatiotemporal evolution of weather in climate models is extremely sensitive to the model’s initial conditions (Lorenz 1963; Hulme et al. 1999). This chaotic sensitivity could be a source of noise that cannot be controlled in a traditional sensitivity study, since it is intrinsic to the models used in the study. Following Lucas-Picher et al. (2008), we refer to this ‘intrinsic noise’ as intrinsic variability (IV). However, IV in RCMs is often assumed to be small: the initial conditions lose their influence over long integrations, and the lateral boundary conditions, which supply information about incoming weather systems, dominate at climatological timescales (Giorgi and Mearns 1999). If a model’s IV were not small, it would violate the assumption that the sensitivity study is a perfectly controlled experiment, complicating an otherwise simple interpretation of the climatological differences. In an investigation of IV, Giorgi and Bi (2000) showed that anomalies within a sensitivity study can fall within the level of IV.

Recent studies indicate that IV within RCMs increases with increasing domain size (Rinke and Dethloff 2000; Vannitsem and Chomé 2005; Plummer et al. 2006; Lucas-Picher et al. 2008), most model variables exhibit a seasonal cycle in IV (with summer having higher IV) (Christensen et al. 2001; Caya and Biner 2004; Alexandru et al. 2007; de Elía et al. 2008; Lucas-Picher et al. 2008), the magnitude of IV can affect seasonal averages (de Elía et al. 2008), and IV shows no long-term trend over a 10 year period (Lucas-Picher et al. 2008). All these studies suggest that IV could be large enough to affect interpretation of results in certain cases.

The result of Giorgi and Bi (2000), that IV can be large enough to affect interpretation of a sensitivity study, motivates this investigation of IV within an RCM sensitivity study with different forcings, over a longer time period, and with multiple ensemble members. The main goal of this manuscript is to validate a new methodology for performing RCM sensitivity studies when the change in physical forcing is relatively small.

2 Experimental design

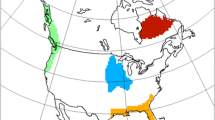

To verify the result of Giorgi and Bi (2000) in a different domain, and to attempt to develop a method for discriminating between physical signal and IV, we performed a series of simple sensitivity tests. The control run is a 10-year simulation of climate over Western North America with the International Centre for Theoretical Physics (ICTP) RegCM3 (Pal et al. 2000), forced at its initial and lateral boundaries by NCEP Reanalysis II data (Kanamitsu et al. 2002) for 1995–2006, and with vegetation in the BATS land surface model (Dickinson et al. 1993) consistent with present-day vegetation cover. The first 12 months of the simulation were discarded as model spin-up. Figure 1a shows the BATS vegetation cover at 60 km resolution, and Fig. 1b shows the areas where evergreen forest was changed to deciduous. The 10-year precipitation climatology for this simulation is shown in Fig. 2.

Fig. 1 (a) Modern vegetation cover as prescribed in the BATS land surface model of RegCM3. The domain shown is a grid on a Lambert conformal projection centered at 123° W, 37° N; it is 64 by 34 grid cells (at 60 km resolution) in the horizontal, with 18 levels in the vertical. (b) Areas where evergreen needle-leaf forest is changed to broad-leaf deciduous forest between the CONTROL and FORESTMOD experiments; twenty-eight grid cells were changed. The colored boxes, labeled A, B, and C, mark areas referred to in the text.

The perturbation simulation is identical to the control simulation in every way, except that all areas of evergreen needle-leaf forest in the BATS land surface model are changed to deciduous broad-leaf forest. We refer to these two simulations as CONTROL and FORESTMOD, respectively. The main differences between the two land cover parametrizations are detailed in Table 1. All of the differences between the two land cover types are constant throughout the year except vegetation coverage, which transitions smoothly between the warm and cold seasons: it reaches its maximum in the summer and its minimum in the winter (specifically, when the temperature falls below 269 K).

We also duplicated both the control and the perturbation simulations: for each, we ran one simulation that was identical in every way, except that we randomly added or removed up to 1/1,000th of the existing specific humidity at each grid cell in the lateral boundaries (Note 1). We refer to these two simulations as CONTROL+n and FORESTMOD+n, where ‘+n’ indicates the intentional induction of IV in the CONTROL and FORESTMOD experiments. Because the land surface perturbation persists throughout the run, it can induce IV throughout the run; it is therefore imperative that the technique for generating ensemble members also induce IV throughout the run. We accomplish this by adding noise to the lateral boundary conditions (LBCs), which differs from the methods used by Caya and Biner (2004), Alexandru et al. (2007), de Elía et al. (2008), and Lucas-Picher et al. (2008). Whereas their methods perturb only the initial conditions of the model, our method perturbs the initial conditions and also perturbs the LBCs throughout the run.
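The boundary perturbation described above can be sketched as follows. This is a minimal illustration, not the RegCM3 preprocessing code: it assumes that the lateral-boundary specific humidity for one boundary-update time is available as a flat list of floats, and the function name `perturb_lbc_humidity` is hypothetical. Each value is scaled by a factor drawn uniformly from [1 − 0.001, 1 + 0.001]; the uniform distribution is also an assumption, since the text states only the maximum size of the change.

```python
import random


def perturb_lbc_humidity(q_boundary, max_frac=1e-3, rng=None):
    """Return a copy of lateral-boundary specific humidity values (kg/kg),
    each randomly increased or decreased by up to max_frac of its value.

    Hypothetical helper: the flat-list layout and the uniform distribution
    of the relative change are illustrative assumptions.
    """
    rng = rng if rng is not None else random.Random()
    return [q * (1.0 + rng.uniform(-max_frac, max_frac)) for q in q_boundary]


# Each ensemble member would use its own random stream and apply it at every
# boundary-update time, so the perturbation persists throughout the run.
member_rng = random.Random(7)
boundary_q = [0.018, 0.009, 0.0004]  # made-up values (kg/kg) for illustration
perturbed = perturb_lbc_humidity(boundary_q, rng=member_rng)
```

For boundary air holding about 20 g/kg of water vapor, a relative change of 1/1,000 is about 0.02 g/kg, the same order as the maximum perturbation given in the notes.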

As a traditional sensitivity study (without the ensemble members), we would be investigating the sensitivity of Western North American climate in RegCM3 to the presence of evergreen versus deciduous forest. Strictly speaking, we are only attempting to emulate a sensitivity study; we do not imply that there is a situation where this ecosystem transition might occur. In the context of our experimental design and the goals of this study, the exact sensitivity perturbation (and its details, e.g. seasonally changing differences in vegetation coverage) is not as important as whether it generates anomalies of similar (or smaller) magnitude than the model’s IV. We chose to change evergreen needle-leaf coverage to deciduous broad-leaf coverage as the sensitivity perturbation because of the presumably mild impact of this change on the domain’s climatology. Results shown in the following section support this choice.

3 Results and analysis

We focus on precipitation anomalies in this study because precipitation shows the most IV relative to the boundary condition perturbations. Figure 3a shows the 10-year average December, January and February (DJF) climatological difference in precipitation between FORESTMOD and CONTROL, Fig. 3b shows the same difference for FORESTMOD+n and CONTROL+n, and Fig. 3c shows the same difference between CONTROL+n and CONTROL. Figure 3d–f show the same differences as Fig. 3a–c, respectively, but for the June, July, and August (JJA) months.

All six of these panels show a relatively spatially incoherent pattern of increases and decreases in precipitation, though Fig. 3a and b have somewhat coherent regions of increase and decrease that appear to be part of the physical signal (Note 2). A striking feature is that the pattern and magnitude of the difference in Fig. 3c over the ocean and most of California appear similar to those in both Fig. 3a and b. The difference shown in Fig. 3c is due only to the model’s IV; CONTROL and CONTROL+n are identical except for differences in humidity of one part in a thousand at the lateral boundaries. Likewise, Fig. 3d–f all look quite similar, even though the differences in (f) are entirely due to IV, whereas (d) and (e) should bear the physical signal of changing evergreen to deciduous forest (as well as differences due to IV).

While it might be tempting to categorize the anomalies in Fig. 3d as physical signal because the anomalies are generally co-located with areas where evergreen was changed to deciduous, the anomalies in (f), which are entirely due to IV, occur over the same area. This clearly shows that visual inspection cannot distinguish between physical signal and IV. As in Giorgi and Bi (2000), we would be forced to conclude that there may be a physical signal associated with changing evergreen to deciduous that is hidden by the model’s IV. Because Fig. 3c, f show that the model exhibits IV in precipitation that is of the same magnitude as the anomalies in (a) and (d), the assumption of a perfect sensitivity study is clearly violated over the ocean (and most of California) in the winter months and over the continent in the summer months. Any interpretation of the differences shown in Fig. 3a or d would be tenuous at best.

However, it may be possible to control for the IV by generating ensembles of each experiment. The basic idea is that individual manifestations of IV are random in nature, and so perturbed copies of both the control run and the perturbation run (e.g. duplications of CONTROL+n and FORESTMOD+n) will differ by small, random amounts. The numerous copies (ensembles) of each experiment are then averaged together to produce an ensemble average. Given that the manifestations of IV are random, the magnitude of the IV signal should tend toward zero as the number of ensemble members in the ensemble average tends toward infinity. For a large enough ensemble set, the difference between the two ensemble average experiments should then be mainly due to the physical perturbation, though it will still have a small (ideally negligible) component of IV.
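The argument that ensemble averaging suppresses IV can be checked with a toy Monte Carlo experiment. This is a sketch under strong simplifying assumptions that are not from the text: IV at a single grid point is modelled as independent Gaussian noise with standard deviation `iv_sigma` in every member of both experiments, and the physical signal is a fixed offset `signal`. Under those assumptions, the spread of the ensemble-mean difference should come out close to `iv_sigma * sqrt(2 / N)`, while its average stays at `signal`.

```python
import random
import statistics


def ensemble_mean_difference(signal, iv_sigma, n_members, rng):
    """Ensemble-mean difference (perturbed minus control) at one grid point,
    where every member is a fixed climate state plus independent Gaussian IV."""
    control = [rng.gauss(0.0, iv_sigma) for _ in range(n_members)]
    perturbed = [signal + rng.gauss(0.0, iv_sigma) for _ in range(n_members)]
    return statistics.fmean(perturbed) - statistics.fmean(control)


def residual_iv(signal, iv_sigma, n_members, n_trials=2000, seed=0):
    """Standard deviation of the ensemble-mean difference over many synthetic
    repeats of the whole experiment, i.e. the IV left after averaging."""
    rng = random.Random(seed)
    diffs = [ensemble_mean_difference(signal, iv_sigma, n_members, rng)
             for _ in range(n_trials)]
    return statistics.stdev(diffs)


# Toy numbers (assumed): a 0.1 mm/day signal buried in 0.3 mm/day of IV.
spreads = {n: residual_iv(0.1, 0.3, n) for n in (1, 4, 20)}
```

In this toy setting, going from one member to twenty should shrink the residual IV to roughly a fifth (1/√20 ≈ 0.22) of its single-member value, which is the behavior the ensemble approach relies on.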

We tested this process on the CONTROL and FORESTMOD experiments. We generated 18 additional duplicates of both simulations using the same process used to create the CONTROL+n and FORESTMOD+n simulations (i.e. randomly changing the water vapor mixing ratio at the lateral boundaries by a tiny amount). Including the original simulations and the ‘+n’ simulations, this gives twenty ensemble members for each experiment. Each duplicate’s lateral-boundary humidity differed from that of the original simulation by at most 1/1,000th. We took the mean of all twenty CONTROL ensemble members and of all twenty FORESTMOD ensemble members, and refer to these ensemble averages as CONTROL_AVG and FORESTMOD_AVG, respectively.

Figure 4a shows the 10-year average DJF difference between FORESTMOD_AVG and CONTROL_AVG, and Fig. 4b shows the corresponding JJA difference. In Fig. 4a, there is a large, spatially coherent negative precipitation anomaly off the coast of Oregon, along with an equally large and coherent positive precipitation anomaly just inland. The offshore negative anomaly appears to be caused by a positive height anomaly aloft (not shown) associated with increased subsidence over the region. We attribute the positive precipitation anomaly to an increase in the moisture content of the downwind air: the increased subsidence makes precipitation, the upwind sink of moisture aloft, less effective. This moistened air is then orographically uplifted downwind.

Figure 4b shows a hint of a similar, but offset, signal. However, this figure is not straightforward to interpret, since the signal is similar in magnitude to what appears to be a persistent IV signal in the eastern portion of the domain. We return to this point later in the paper.

In contrast to Fig. 3a and d, Fig. 4a and b appear less spatially noisy. In particular, the DJF IV signal originally shown in Fig. 3c is much weaker in Fig. 4a. Likewise, the part of the anomaly in Fig. 3a that appeared more spatially coherent than the IV signal, and that is roughly co-located with the land surface modification, dominates Fig. 4a. The weakening of the IV signal, together with the greater prominence of the physical signal, shows that ensemble averaging can be successfully applied to RCM sensitivity studies. However, the lack of a spatially coherent signal in Fig. 4b and the relatively large precipitation anomalies within the summer IV region (the eastern portion of the domain; see Fig. 3f) make it difficult to assess whether all of the anomalies in Fig. 4b are physically meaningful.

If we assume that the boundary condition perturbations induce statistically identical IV in the CONTROL and FORESTMOD experiments (i.e. IV-induced anomalies at a given grid point are drawn from the same probability distribution in both experiments), then the distribution of IV-induced differences between the CONTROL and FORESTMOD experiments should have a mean of zero. This follows from the fact that two independent variables, x and y, drawn from the same distribution have the same mean, \(\mu\): \(\bar{x} = \bar{y} = \mu\). The difference between x and y is then zero on average: \(\overline{x-y}=\bar{x}-\bar{y}=\mu-\mu=0\). Under this assumption of statistical equality, differences between the CONTROL and FORESTMOD experiments whose distribution is not centered about zero cannot be attributed to IV alone.
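The same reasoning can be written in terms of expectations and extended to ensemble means. Assuming, beyond what is stated above, that the N members of each experiment are independent with a common IV variance \(\sigma^2\), and writing \(\bar{x}_N\) and \(\bar{y}_N\) for the two ensemble means:

\[
\mathrm{E}[x - y] = \mathrm{E}[x] - \mathrm{E}[y] = \mu - \mu = 0,
\qquad
\mathrm{Var}\left(\bar{x}_N - \bar{y}_N\right) = \frac{\sigma^2}{N} + \frac{\sigma^2}{N} = \frac{2\sigma^2}{N}.
\]

Under these assumptions, the IV-induced part of the ensemble-mean difference has zero mean and a standard deviation of \(\sigma\sqrt{2/N}\), which has the form \(C/\sqrt{N}\) with \(C = \sigma\sqrt{2}\).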

We use a bootstrapping method to generate an approximate distribution of the precipitation differences between the CONTROL and FORESTMOD experiments within Box A (see Fig. 1b) in the DJF months. We denote the mean DJF precipitation difference between the two experiments at latitude index i, longitude index j, and ensemble member n as \(\Delta P_{i,j,n}\), and we assume that all the \(\Delta P_{i,j,n}\) are independent and identically distributed. Following standard bootstrapping methodology (Wilks 2006, Chap. 5), we generate 10,000 bootstrap samples by drawing each member \(\Delta P'_{i,j,n,k}\) (where k indexes the kth bootstrap sample) with replacement from the original set of \(\Delta P_{i,j,n}\). We then average each bootstrap sample over i, j, and n to obtain 10,000 resampled means: \(\overline{\Delta P'_{k}} = \frac{1}{M}\sum_{i,j,n}\Delta P'_{i,j,n,k}\), where M is the total number of (i, j, n) combinations. The distribution of \(\overline{\Delta P'}\), which approximates the sampling distribution of the mean DJF precipitation anomaly within Box A, is shown in Fig. 5a.
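A minimal version of this resampling procedure is sketched below. It is not the authors’ code: the hypothetical function `bootstrap_means` takes the Box A values \(\Delta P_{i,j,n}\) already flattened into one list (one entry per grid cell and ensemble member), treats them as i.i.d. as the text assumes, and returns one mean per bootstrap sample. The nested `[member][lat][lon]` layout assumed by the helper `flatten_box` is likewise illustrative.

```python
import random
import statistics


def bootstrap_means(delta_p, n_boot=10_000, seed=0):
    """Bootstrap distribution of the mean of delta_p.

    delta_p: flat list of precipitation differences (mm/day), one value for
    every grid cell (i, j) in the box and every ensemble member n.
    Each bootstrap sample draws len(delta_p) values with replacement, and its
    mean is one resampled box mean (the k-th mean in the text).
    """
    rng = random.Random(seed)
    m = len(delta_p)  # M, the number of (i, j, n) combinations
    return [statistics.fmean(rng.choices(delta_p, k=m)) for _ in range(n_boot)]


def flatten_box(diff_by_member):
    """Flatten a nested [member][lat][lon] list of differences (assumed layout)."""
    return [v for member in diff_by_member for row in member for v in row]
```

A histogram of the returned list plays the role of Fig. 5a.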

In the following paragraphs, we will refer to analyses performed in Boxes A, B, and C. We select Boxes A, B, and C because Box A represents an area of high winter IV, Box C represents an area of high summer IV (and visually ambiguous physical signal), and Box B represents an area where a physical signal is clearly present from Fig. 4a.

The distribution of precipitation anomalies in Fig. 5a is not exactly centered around zero, but zero is well within the bulk of the distribution, meaning that a mean of zero for the precipitation anomalies cannot be statistically ruled out. We would have to conclude that it is statistically ambiguous whether the DJF precipitation anomaly is due to a physical forcing or due to IV. In contrast, we show an approximate distribution of the JJA precipitation anomalies within Box C in Fig. 5b (generated in the same manner as the histogram in Fig. 5a); zero is well outside the bulk of the distribution. We can confidently say (at well above the 99% confidence level) that the mean of the distribution is not zero, meaning that it is unlikely that the JJA anomaly within Box C is due to IV.
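Whether zero lies “well within” or “well outside” a bootstrap distribution can be made quantitative. The sketch below uses one common convention, a two-sided percentile bootstrap p-value together with a central percentile interval; the text does not state exactly which test was applied, so the function names `bootstrap_p_value_zero` and `percentile_interval` and this convention are assumptions. Both take a list of resampled means such as those histogrammed in Fig. 5.

```python
def bootstrap_p_value_zero(boot_means):
    """Two-sided percentile-bootstrap p-value for the hypothesis that the
    mean anomaly is zero: twice the smaller tail fraction on either side of 0."""
    n = len(boot_means)
    at_or_below = sum(1 for v in boot_means if v <= 0.0) / n
    at_or_above = sum(1 for v in boot_means if v >= 0.0) / n
    return min(1.0, 2.0 * min(at_or_below, at_or_above))


def percentile_interval(boot_means, level=0.99):
    """Central percentile interval of the bootstrap means (nearest rank)."""
    s = sorted(boot_means)
    tail = (1.0 - level) / 2.0
    lo = s[round(tail * (len(s) - 1))]
    hi = s[round((1.0 - tail) * (len(s) - 1))]
    return lo, hi
```

Under this convention, a p-value below 0.01, or roughly equivalently a 99% interval that excludes zero, corresponds to the Box C statement above; the Box A case corresponds to a large p-value.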

De Elía et al. (2008) show that for independent and identically distributed variables (or variables that approximate this condition), IV tends to decrease as \(C/\sqrt{N}\), where C is a constant and N is the number of simulation years. To investigate the rate at which IV decreases with ensemble size, and whether it also follows a \(1/\sqrt{N}\) dependence (where N is now the number of ensemble members), we plot the spatial mean and standard deviation of the precipitation differences within Boxes A, B, and C (see Fig. 1b) as a function of the number of ensemble members. Figure 6a shows this dependence for the DJF months, and Fig. 6b shows it for the JJA months.
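The quantities plotted in Fig. 6 can be computed as sketched below. Assumptions not given in the text: members are added in a fixed order (member 1, then members 1 and 2, and so on), each member is a list of box grid-point values in the same order for both experiments, and the spread is the population standard deviation across grid points. The name `stats_vs_members` is hypothetical.

```python
import statistics


def stats_vs_members(control, forestmod):
    """Spatial mean and standard deviation of the FORESTMOD minus CONTROL
    difference of N-member ensemble means, for N = 1 .. number of members.

    control, forestmod: one entry per ensemble member; each entry is a list
    of box grid-point values (e.g. seasonal-mean precipitation, mm/day).
    Returns a list of (N, spatial_mean, spatial_std) tuples.
    """
    n_points = len(control[0])
    out = []
    for n in range(1, len(control) + 1):
        diff = [
            statistics.fmean([f[p] for f in forestmod[:n]])
            - statistics.fmean([c[p] for c in control[:n]])
            for p in range(n_points)
        ]
        out.append((n, statistics.fmean(diff), statistics.pstdev(diff)))
    return out
```

Reversing the sign convention, as done for the DJF panel of Fig. 6, only flips the sign of the mean; the standard deviation is unchanged.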

Fig. 6 (a) The spatial mean and standard deviation within Boxes A and B of the average DJF precipitation difference between FORESTMOD and CONTROL, as a function of the number of ensemble members used in the average. The black curve shows \(S(N) = 0.157/\sqrt{N}\). The sign convention of the precipitation anomalies is reversed (CONTROL − FORESTMOD is shown) for clarity. (b) As in (a), but for JJA and Boxes B and C. The horizontal black lines show the median of the bootstrap distribution of JJA precipitation anomalies.

As expected from IV-induced anomalies, the Box A average in Fig. 6a sits near 0. The standard deviation within Box A, which is a measure of the strength of the IV signal, falls off with a dependence on N that is close to an inverse square root. A least-squares fit yields \(S(N) = 0.157/\sqrt{N},\) which is shown as the black curve in Fig. 6a. This inverse square root dependence is indicative of an IV-induced anomaly. In contrast, the standard deviation within Box B follows that of Box A until about four ensemble members, and then levels off to a standard deviation of about 0.10 mm/day; this left-over spatial variance is due to the gradient in precipitation anomaly that increases with proximity to the coast (see Fig. 4a) and is indicative of a non-IV induced anomaly.
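For the one-parameter model \(S(N) = C/\sqrt{N}\), ordinary least squares has a closed form: minimizing \(\sum_N (s_N - C N^{-1/2})^2\) gives \(C = \sum_N s_N N^{-1/2} / \sum_N N^{-1}\). The sketch below implements that formula with a hypothetical function name; the text does not say whether the fit yielding 0.157 was done this way or, for instance, in log space, so treat it as illustrative.

```python
import math


def fit_inverse_sqrt(ns, stds):
    """Least-squares C for S(N) = C / sqrt(N).

    Minimizes sum over N of (s_N - C / sqrt(N))**2; setting the derivative
    with respect to C to zero gives C = sum(s_N / sqrt(N)) / sum(1 / N).
    """
    num = sum(s / math.sqrt(n) for n, s in zip(ns, stds))
    den = sum(1.0 / n for n in ns)
    return num / den
```

Comparing the fitted curve with the observed spread at large N is what separates the two boxes: Box A tracks the curve, while Box B levels off near 0.10 mm/day.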

Although it is not as clear as in Fig. 6a, the mean JJA anomaly off the Oregon coast (Box B; Fig. 6b) appears to tend toward a non-zero value, and its standard deviation appears to converge toward a stable value. The lack of \(1/\sqrt{N}\) dependence in Box B suggests that the anomaly is not due to IV. The variability in this region is smaller in the summer than in the winter [compare the magnitudes of the red dot–dash lines in (a) and (b)], though in the summer the variability is larger than the mean (Note 3). Both the mean and the variability in the high summer IV region (Box C) drop rapidly but then stabilize around 0.15 mm/day. The mean converges toward the median value of the bootstrap distribution in Fig. 5b. Together with the evidence of a physical signal in Fig. 5b, the lack of inverse-square-root dependence on N suggests that the JJA anomaly in Box C is not due to IV, and is therefore likely due to the land surface perturbation.

In both Fig. 6a and b, the standard deviations within Boxes B and C level off to a steady value somewhere around four ensemble members. This suggests that four ensemble members might have been sufficient for this sensitivity study. It is not clear from this study whether so few ensemble members would be appropriate for other sensitivity studies, though this is certainly worth investigating.
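The “levels off” behavior can be summarized by a single number. The hypothetical `plateau_ratio` below compares the spread at the largest N with what a pure \(1/\sqrt{N}\) decay from the smallest N would predict: values near 1 are consistent with IV, and values well above 1 indicate that the spread has stopped shrinking. No threshold for this ratio is proposed in the text; any cut-off would need calibrating, for example against a region such as Box A.

```python
import math


def plateau_ratio(ns, stds):
    """Observed spread at the largest N divided by the spread predicted by a
    pure C / sqrt(N) decay anchored at the smallest N (hypothetical metric)."""
    n_first, s_first = ns[0], stds[0]
    n_last, s_last = ns[-1], stds[-1]
    predicted = s_first * math.sqrt(n_first / n_last)
    return s_last / predicted
```

For example, a spread that falls from 0.2 at N = 1 to 0.1 at N = 4 and then stays at 0.1 through N = 16 gives a ratio of about 2, whereas a spread that keeps halving with each quadrupling of N gives about 1.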

4 Conclusions

Under the assumption that IV-related anomalies are statistically identical in the two experiments of our sensitivity study, we have shown that a distinctly non-zero mean of the distribution of precipitation anomalies between the two experiments is a positive indicator of physical signal (versus IV signal). In addition to this distribution indicator, we have shown evidence that areas where the standard deviation converges to a non-zero value, instead of falling off like \(1/\sqrt{N}\), are areas where the anomaly is caused by the perturbation in the sensitivity study and not by IV. The convergence indicator could be used where the non-zero mean indicator might fail: where the sensitivity perturbation causes a change in variability, but not a change in the mean. Alternatively, in this case, the bootstrap methodology could be used to test whether the ratio of variances between the two experiments is statistically different from 1. Our results also suggest that as few as four ensemble members might be sufficient to detect a physical signal with this method.
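The variance-ratio check suggested above could look like the following sketch. It resamples each experiment independently with replacement and reports a percentile interval for var(a)/var(b); an interval that excludes 1 would point to a change in variability. The function name, the choice of inputs (for example one box-mean value per ensemble member from FORESTMOD and from CONTROL) and the 99% level are assumptions for illustration.

```python
import random
import statistics


def bootstrap_variance_ratio(a, b, n_boot=10_000, level=0.99, seed=0):
    """Percentile bootstrap interval for var(a) / var(b).

    a, b: samples from the two experiments (at least two values each).
    Each sample is resampled independently with replacement; resamples in
    which b has zero variance are skipped. Returns (low, high).
    """
    rng = random.Random(seed)
    ratios = []
    for _ in range(n_boot):
        ra = rng.choices(a, k=len(a))
        rb = rng.choices(b, k=len(b))
        vb = statistics.variance(rb)
        if vb > 0:
            ratios.append(statistics.variance(ra) / vb)
    ratios.sort()
    tail = (1.0 - level) / 2.0
    lo = ratios[round(tail * (len(ratios) - 1))]
    hi = ratios[round((1.0 - tail) * (len(ratios) - 1))]
    return lo, hi
```

With only twenty members per experiment the interval will be wide, so this check is likely to detect only fairly large changes in variability.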

Additionally, one might reasonably assume that an anomaly is a physical signal if it appears outside a region that tends to exhibit high IV. For example, the negative anomaly off Oregon’s coast in Fig. 4a appears outside the oceanic region where this domain shows the highest winter IV (Fig. 3c), so we interpret this anomaly as being caused by the land surface perturbation and not by the model’s IV.

This study shows that ensembles of RCM simulations can improve the integrity of sensitivity studies when a model’s IV is large, relative to the anomaly generated by the physical forcing, for the variable of interest. Using 20 ensemble members, we show that changing evergreen forest to deciduous forest in Western N. America clearly causes a distinct pattern of precipitation anomaly over Oregon and parts of California in the DJF months. However, it is visually unclear whether the JJA anomalies are due to the land surface perturbation in our sensitivity study or to IV. Using two different indicators, we show that the precipitation anomalies in the eastern portion of the domain (which is also the high IV region of this domain in the JJA months) are likely a physical signal. We emphasize that RCM sensitivity studies, especially those that examine small changes in forcing, should be carefully analyzed, since RCM IV can be quite large for some variables (especially precipitation). Even sensitivity studies that use an ensembling method, as we have done here, need to be interpreted carefully, since it is not always visually obvious whether anomalies are a physical signal or are due to IV that might converge only slowly toward zero. The RCM community would greatly benefit from more, and more detailed, studies of intrinsic variability with different RCMs (and with different parametrizations within those RCMs), in different regions, with different forcings, and especially in the context of sensitivity studies. Novel indicators of physical signal in sensitivity studies (versus IV signal), especially ones that require few ensemble members, could greatly improve the integrity of published sensitivity studies without the high computational cost of numerous ensemble members.

Notes

1. The magnitude of the perturbation is as high as about 0.02 g/kg, though it is typically an order of magnitude smaller.

2. When we refer to a physical signal in this paper, we are referring to anomalies that are caused by the perturbation in the sensitivity study, and not by IV.

3. It is likely that the variability in precipitation is largest in Box B in the winter because the jet stream tends to drive precipitation into this region in the winter, and that the variability in precipitation is largest in Box C in the summer because convective precipitation is most active over this region in the summer.

References

Alexandru A, de Elía R, Laprise R (2007) Internal variability in regional climate downscaling at the seasonal scale. Mon Weather Rev 135(9):3221–3238

Caya D, Biner S (2004) Internal variability of RCM simulations over an annual cycle. Clim Dyn 22(1):33–46

Christensen OB, Gaertner MA, Prego JA, Polcher J (2001) Internal variability of regional climate models. Clim Dyn 17(11):875–887

Dickinson R, Henderson-Sellers A, Kennedy P (1993) Biosphere–atmosphere transfer scheme (BATS) version 1e as coupled to the NCAR community climate model. Technical report, NCAR/TN-387+STR

Dutta SK, Das S, Kar SC, Mohanty UC, Joshi PC (2009) Impact of vegetation on the simulation of seasonal monsoon rainfall over the Indian subcontinent using a regional model. J Earth Syst Sci 118(5):413

de Elía R, Caya D, Côté H, Frigon A, Biner S, Giguère M, Paquin D, Harvey R, Plummer D (2008) Evaluation of uncertainties in the CRCM-simulated North American climate. Clim Dyn 30(2):113–132

Giorgi F, Bi X (2000) A study of internal variability of a regional climate model. J Geophys Res 105:503

Giorgi F, Mearns LO (1999) Introduction to special section: regional climate modeling revisited. J Geophys Res 104:6335 doi:10.1029/98JD02072

Hulme M, Barrow EM, Arnell NW, Harrison PA, Johns TC, Downing TE (1999) Relative impacts of human-induced climate change and natural climate variability. Nature 397(6721):688–691

Kanamitsu M, Ebisuzaki W, Woollen J, Yang SK, Hnilo JJ, Fiorino M, Potter GL (2002) NCEP–DOE AMIP-II reanalysis (R-2). Bull Am Meteorol Soc 83(11):1631–1643

Lorenz EN (1963) Deterministic nonperiodic flow. J Atmos Sci 20(2):130–141

Lucas-Picher P, Caya D, de Elía R, Laprise R (2008) Investigation of regional climate models’ internal variability with a ten-member ensemble of 10-year simulations over a large domain. Clim Dyn 31(7):927–940

Pal J, Small E, Eltahir E (2000) Simulation of regional-scale water and energy budgets: representation of subgrid cloud and precipitation processes within RegCM. J Geophys Res Atmos 105:29579–29594

Plummer DA, Caya D, Frigon A, Côté H, Giguère M, Paquin D, Biner S, Harvey R, de Elía R (2006) Climate and climate change over North America as simulated by the Canadian RCM. J Clim 19(13):3112–3132

Rinke A, Dethloff K (2000) On the sensitivity of a regional arctic climate model to initial and boundary conditions. Clim Res 14(2):101–113

Snyder MA, Sloan LC, Bell JL (2004) Modeled regional climate change in the hydrologic regions of California: a CO2 sensitivity study. J Am Water Resour Assoc 40:591–601

Vannitsem S, Chomé F (2005) One-way nested regional climate simulations and domain size. J Clim 18(1):229–233

Wilks DS (2006) Statistical methods in the atmospheric sciences, International Geophysics Series, vol 91, 2nd edn. Academic Press, San Diego

Zhang DF, Zakey AS, Gao XJ, Giorgi F, Solmon F (2009) Simulation of dust aerosol and its regional feedbacks over East Asia using a regional climate model. Atmos Chem Phys Discuss 9(4):1095–1110

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. ATM-0533482-001. This work was partially supported by a grant from the California Energy Commission. We would like to thank the editor and two anonymous reviewers, whose comments greatly helped to improve the clarity of this manuscript.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


About this article

Cite this article

O’Brien, T.A., Sloan, L.C. & Snyder, M.A. Can ensembles of regional climate model simulations improve results from sensitivity studies? Clim Dyn 37, 1111–1118 (2011). https://doi.org/10.1007/s00382-010-0900-5
