Abstract

Around 2001 we classified the Leonard systems up to isomorphism. The proof was lengthy and involved considerable computation. In this paper we give a proof that is shorter and involves minimal computation. We also give a comprehensive description of the intersection numbers of a Leonard system.


1 Introduction

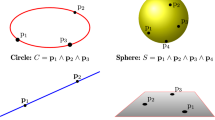

In the area of Algebraic Combinatorics there is an object called a commutative association scheme [2, 13]. This is a combinatorial generalization of a finite group, that retains enough of the group structure so that one can still speak of the character table. During the decade 1970–1980 it was realized by E. Bannai, P. Delsarte, D. Higman and others that commutative association schemes help to unify many aspects of group theory, coding theory, and design theory. An early work in this direction was the 1973 thesis of Delsarte [5]. This thesis helped to motivate the work of Bannai [2, p. i], who taught a series of graduate courses on commutative association schemes during September 1978–December 1982 at the Ohio State University. The lecture notes from those courses, along with more recent developments, became a book coauthored with T. Ito [2]. The book had a large impact; it is currently cited 698 times according to MathSciNet.

In the Introduction to [2], Bannai and Ito describe the goals of their book. One goal was to summarize what is known about commutative association schemes up to that time. Another goal was to focus the reader’s attention on two remarkable types of schemes, said to be P-polynomial and Q-polynomial. A P-polynomial scheme is essentially the same thing as a distance-regular graph, and can be viewed as a finite analog of a 2-point homogeneous space [25]. Similarly, a Q-polynomial scheme is a finite analog of a rank 1 symmetric space [25]. By a theorem of H. C. Wang [25], a compact Riemannian manifold is 2-point homogeneous if and only if it is rank 1 symmetric. This result was extended to the noncompact case by J. Tits [24] and S. Helgason [8]. Motivated by all this, Bannai and Ito conjectured that a primitive association scheme is P-polynomial if and only if it is Q-polynomial, provided that the diameter is sufficiently large [2, p. 312]. They also proposed the classification of schemes that are both P-polynomial and Q-polynomial [2, p. xiii].

Progress on the proposed classification was made while the book was still in preparation. A P-polynomial scheme gets its name from the fact that there exists a sequence of orthogonal polynomials \(\lbrace u_i\rbrace _{i=0}^d\) such that \(u_i(A)=A_i/k_i\) for \(0 \le i \le d\), where d is the diameter of the scheme, \(A_i\) is the ith associate matrix, \(A=A_1\), and \(k_i\) is the ith valency [2, pp. 190, 261]. Similarly, for a Q-polynomial scheme there exists a sequence of orthogonal polynomials \(\lbrace u^*_i\rbrace _{i=0}^d\) such that \(u^*_i(A^*)=A^*_i/k^*_i\) for \(0 \le i \le d\), where \(A^*_i\) is the ith dual associate matrix, \(A^*=A^*_1\), and \(k^*_i\) is the ith dual valency [2, pp. 193, 261], [15, p. 384]. For schemes that are P-polynomial and Q-polynomial, we have \(u_i(\theta _j)= u^*_j(\theta ^*_i) \) for \(0 \le i,j\le d\), where \(\lbrace \theta _i\rbrace _{i=0}^d\) (resp. \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) ) are the eigenvalues of A (resp. \(A^*\)) [2, p. 262]. These equations are known as Delsarte duality [12] or Askey-Wilson duality [20, p. 261]. This duality can be defined for \(d=\infty \), but throughout this paper we assume that d is finite.

Askey-Wilson duality comes up naturally in the area of special functions and orthogonal polynomials. In this area the classical orthogonal polynomials are often described using a partially-ordered set called the Askey-tableau. The vertices in the poset represent the various families of classical orthogonal polynomials, and the covering relation describes what happens when a limit is taken. See [11] for an early version of the tableau, and [10, pp. 183, 413] for a more recent version. One branch of the tableau, sometimes called the terminating branch, contains the polynomials that are orthogonal with respect to a measure that is nonzero at finitely many arguments. At the top of this terminating branch sit the q-Racah polynomials, introduced in 1979 by R. Askey and J. Wilson [1]. The rest of the terminating branch consists of the q-Hahn, dual q-Hahn, q-Krawtchouk, dual q-Krawtchouk, quantum q-Krawtchouk, affine q-Krawtchouk, Racah, Hahn, dual-Hahn, and Krawtchouk polynomials. The above-named polynomials are defined using hypergeometric series or basic hypergeometric series, and it is transparent from the definition that they satisfy Askey-Wilson duality.

Back at Ohio State, there was a graduate student attending Bannai’s classes by the name of Douglas Leonard. With Askey’s encouragement, Leonard showed in [12] that the q-Racah polynomials give the most general orthogonal polynomial system that satisfies Askey-Wilson duality, under the assumption that \(d\ge 9\). In [2, Theorem 5.1] Bannai and Ito give a version of Leonard’s theorem that removes the assumption on d and explicitly describes all the limiting cases that show up. This version gives a complete classification of the orthogonal polynomial systems that satisfy Askey-Wilson duality. It shows that the orthogonal polynomial systems that satisfy Askey-Wilson duality are from the terminating branch of the Askey-tableau, except for one family with \(q=-1\) now called the Bannai-Ito polynomials [2, p. 271], [21, Example 5.14]. In our view, the terminating branch of the Askey-tableau should include the Bannai-Ito polynomials. Adopting this view, for the rest of this paper we include the Bannai-Ito polynomials in the terminating branch of the Askey-tableau.

The Leonard theorem [2, Theorem 5.1] is notoriously complicated; the statement alone takes 11 pages. In an effort to simplify and clarify the theorem, the present author introduced the notion of a Leonard pair [18, Definition 1.1] and Leonard system [18, Definition 1.4]. Roughly speaking, a Leonard pair consists of two diagonalizable linear transformations on a finite-dimensional vector space, each acting on the eigenspaces of the other one in an irreducible tridiagonal fashion; see Definition 2.1 below. A Leonard system is essentially a Leonard pair, together with appropriate orderings of their eigenspaces; see Definition 2.3 below. In [18, Theorem 1.9] the Leonard systems are classified up to isomorphism. This classification is related to Leonard’s theorem as follows. In [18, Appendix A] and [20, Sect. 19], a bijection is given between the isomorphism classes of Leonard systems over \({\mathbb {R}}\), and the orthogonal polynomial systems that satisfy Askey-Wilson duality. Given the bijection, the classification [18, Theorem 1.9] becomes a ‘linear-algebraic version’ of Leonard’s theorem. This version is conceptually simple and quite elegant in our view. In [23] we start with the Leonard pair axiom and derive, in a uniform and attractive manner, the polynomials in the terminating branch of the Askey-tableau, along with their properties such as the 3-term recurrence, difference equation, Askey-Wilson duality, and orthogonality.

We comment on how the theory of Leonard pairs and Leonard systems depends on the choice of ground field. The classification [18, Theorem 1.9] shows that the ground field does not matter in a substantial way, unless it has characteristic 2. In this case, the theory admits an additional family of polynomials called the orphans. The orphans have diameter \(d=3\) only; they are described in [21, Example 5.15] and Example 20.13 below.

The book [2] appeared in 1984 and the paper [18] appeared in 2001. It is natural to ask what happened in between. The concept of a Leonard pair over \({\mathbb {R}}\) appears in [14, Definitions 1.1, 1.2], where it is called a thin Leonard pair. Also appearing in [14] is the correspondence between Leonard pairs over \({\mathbb {R}}\) and the orthogonal polynomial systems that satisfy Askey-Wilson duality. In addition, [14, Definition 3.1] describes an algebra called the Leonard algebra, now known as the Askey-Wilson algebra. The paper [14] was submitted but never published. In [26] A. Zhedanov introduced the Askey-Wilson algebra. This algebra has a presentation involving two generators \(K_1\), \(K_2\) that satisfy a pair of quadratic relations. In [6], Granovskii, Lutzenko, and Zhedanov consider a finite-dimensional irreducible module for the Askey-Wilson algebra, on which each of \(K_1\), \(K_2\) is diagonalizable. Under some minor assumptions, they show that each of \(K_1\), \(K_2\) acts in an irreducible tridiagonal fashion on an eigenbasis for the other one. In hindsight, it is fair to say that they constructed an example of a Leonard pair, although they did not define a Leonard pair as an independent concept. The paper [15, Theorem 2.1] contains a version of the Leonard pair concept that is close to [18, Definition 1.1].

Turning to the present paper, we obtain two main results: (i) an improved proof for the classification of Leonard systems; (ii) a comprehensive description of the intersection numbers of a Leonard system.

We now describe our main results in detail. In [18, Theorem 1.9] the Leonard systems are classified up to isomorphism, and the given proof is completely correct as far as we know. However the proof is longer than necessary. In the roughly two decades since the paper was published, we have discovered some ‘shortcuts’ that simplify the proof and avoid certain tedious calculations. The shortcuts are summarized as follows.

- In [18, Sect. 3] we established the split canonical form for a Leonard system. In the present paper we make use of the fact that the split canonical form still exists under weaker assumptions; these are described in Proposition 7.6 below.

- The concept of a normalizing idempotent was introduced by Edward Hanson in [7, Sect. 6]. In the present paper we use this concept to simplify numerous arguments; see Sects. 6, 7, 17 below.

- In [18, Theorem 4.8] we explicitly gave the matrix entries for a certain matrix representation of the primitive idempotents for a Leonard system. The computation of these entries is tedious and takes up most of [18, Sect. 4]. In the present paper we replace all of this by a single identity (15) that is established in a few lines.

- In the present paper we use an antiautomorphism \(\dagger \) to obtain the result Proposition 4.4, which is roughly summarized as follows: as we construct a Leonard system, if we construct three-fourths of it then the last fourth comes for free.

- In [18, Lemma 7.2] we used a slightly obscure method to establish the irreducibility of the underlying module for a Leonard system. In the present paper this lemma is avoided using the first bullet point above.

- We replace the slightly technical results [18, Lemmas 10.3–10.5] by a more elementary result, Proposition 13.4 below.

- We replace most of [18, Sect. 11] by a single result Proposition 8.4 called the wrap-around result. The wrap-around result was discovered by T. Ito and the present author during our effort to classify the tridiagonal pairs; it is the essential idea behind the proof of [9, Lemma 9.9].

- Using the improvements listed above, we replace the arguments in [18, Sects. 13, 14] with more efficient arguments in Sect. 17 below.

Some parts of the improved proof are unchanged from the original; we still use [18, Sect. 8, 9] and [18, Lemmas 10.2, 12.4]. These results are reproduced in the present paper in order to obtain a complete proof, all in one place. We believe that this complete proof is suitable for The Book if not this journal.

Concerning our second main result, we mentioned earlier that the Leonard systems correspond to the orthogonal polynomial sequences that satisfy Askey-Wilson duality. Unfortunately, it is a bit difficult to go back and forth between the two points of view, because from the polynomial perspective, the main parameters are the intersection numbers (or connection coefficients) that describe the 3-term recurrence, and from the Leonard system perspective, the main parameters are the first and second split sequence that make up part of the parameter array. There are some equations that relate the two types of parameters; see [20, Theorem 17.7] and Lemma 19.4 below. However the nonlinear nature of these equations makes them difficult to use. In order to mitigate the difficulty, we display many identities that involve the intersection numbers along with the first and second split sequence. Taken together, these identities should make it easier to work with the intersection numbers in the future. These identities can be found in Sect. 19. We also explicitly give the intersection numbers for every isomorphism class of Leonard system; these are contained in the Appendix.

The paper is organized as follows. Sects. 2, 3 contain preliminary comments and definitions. In Sect. 4 we describe the antiautomorphism \(\dagger \) and use it to obtain Proposition 4.4. In Sect. 5 we describe some polynomials that will be used throughout the paper. In Sect. 6 we discuss the concept of a normalizing idempotent. In Sect. 7 we use normalizing idempotents to describe certain kinds of decompositions relevant to Leonard systems. Section 8 contains the wrap-around result. In Sect. 9 we recall the parameter array of a Leonard system. In Sect. 10 we state the Leonard system classification, which is Theorem 10.1. Sections 11, 12, 13 are about recurrent sequences. In Sect. 14 we define a polynomial in two variables that will be useful on several occasions later in the paper. Sections 15, 16 are about the tridiagonal relations. In Sect. 17 we complete the proof of Theorem 10.1. Section 18 contains two characterizations related to Leonard systems and parameter arrays. In Sect. 19 we give a comprehensive treatment of the intersection numbers of a Leonard system. These intersection numbers are listed in the Appendix.

2 Preliminaries

We now begin our formal argument. Shortly we will define a Leonard pair and Leonard system. Before we get into detail, we briefly review some notation and basic concepts. Let \({\mathbb {F}}\) denote a field. Every vector space and algebra discussed in this paper is understood to be over \({\mathbb {F}}\). Throughout the paper fix an integer \(d\ge 0\). Let \(\mathrm{Mat}_{d+1}({\mathbb {F}})\) denote the algebra consisting of the \(d+1\) by \(d+1\) matrices that have all entries in \({\mathbb {F}}\). We index the rows and columns by \(0,1,\ldots , d\). Throughout the paper V denotes a vector space with dimension \(d+1\). Let \(\mathrm{End}(V)\) denote the algebra consisting of the \({\mathbb {F}}\)-linear maps from V to V. Next we recall how each basis \(\lbrace v_i\rbrace _{i=0}^d\) of V gives an algebra isomorphism \(\mathrm{End}(V) \rightarrow \mathrm{Mat}_{d+1}({\mathbb {F}})\). For \(X \in \mathrm{End}(V)\) and \(M \in \mathrm{Mat}_{d+1}({\mathbb {F}})\), we say that M represents X with respect to \(\lbrace v_i\rbrace _{i=0}^d\) whenever \(Xv_j = \sum _{i=0}^d M_{ij}v_i\) for \(0 \le j \le d\). The isomorphism sends X to the unique matrix in \(\mathrm{Mat}_{d+1}({\mathbb {F}})\) that represents X with respect to \(\lbrace v_i\rbrace _{i=0}^d\). A matrix \(M \in \mathrm{Mat}_{d+1}({\mathbb {F}})\) is called tridiagonal whenever each nonzero entry lies on either the diagonal, the subdiagonal, or the superdiagonal. Assume that M is tridiagonal. Then M is called irreducible whenever each entry on the subdiagonal is nonzero, and each entry on the superdiagonal is nonzero.

Definition 2.1

(See [18, Definition 1.1]). By a Leonard pair on V we mean an ordered pair \(A, A^*\) of elements in \(\mathrm{End}(V)\) such that:

- (i) there exists a basis for V with respect to which the matrix representing A is diagonal and the matrix representing \(A^*\) is irreducible tridiagonal;

- (ii) there exists a basis for V with respect to which the matrix representing \(A^*\) is diagonal and the matrix representing A is irreducible tridiagonal.

The Leonard pair \(A,A^*\) is said to be over \({\mathbb {F}}\) and have diameter d.
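To make Definition 2.1 concrete, the following sketch checks conditions (i) and (ii) for one small pair of matrices over the rationals with \(d=3\). The matrices `A`, `A_star` and the change-of-basis matrix `P` are illustrative choices that do not appear in the text above; the helper names are hypothetical. The columns of `P` serve as the basis in condition (i), and the matrix representing X with respect to that basis is \(P^{-1}XP\), in line with the convention \(Xv_j = \sum _{i=0}^d M_{ij}v_i\).

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def is_diagonal(M):
    n = len(M)
    return all(M[i][j] == 0 for i in range(n) for j in range(n) if i != j)

def is_irreducible_tridiagonal(M):
    n = len(M)
    tridiagonal = all(M[i][j] == 0
                      for i in range(n) for j in range(n) if abs(i - j) > 1)
    off_diagonal_nonzero = all(M[i][i - 1] != 0 and M[i - 1][i] != 0
                               for i in range(1, n))
    return tridiagonal and off_diagonal_nonzero

# Illustrative matrices over the rationals (an assumption, not from the text).
A = [[0, 3, 0, 0], [1, 0, 2, 0], [0, 2, 0, 1], [0, 0, 3, 0]]
A_star = [[3, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -3]]

# Columns of P are the candidate basis for condition (i).
P = [[1, 3, 3, 1], [1, 1, -1, -1], [1, -1, -1, 1], [1, -3, 3, -1]]
eight_times_identity = [[8 if i == j else 0 for j in range(4)] for i in range(4)]
assert matmul(P, P) == eight_times_identity  # hence P^{-1} = P / 8
P_inv = [[Fraction(x, 8) for x in row] for row in P]

def represent(X):
    """Matrix representing X with respect to the columns of P."""
    return matmul(P_inv, matmul(X, P))

# Condition (i): A diagonal and A_star irreducible tridiagonal in the P-basis.
condition_i = (is_diagonal(represent(A))
               and is_irreducible_tridiagonal(represent(A_star)))
# Condition (ii): the standard basis already works.
condition_ii = is_diagonal(A_star) and is_irreducible_tridiagonal(A)
print(condition_i, condition_ii)
```

In this particular example the two representations simply trade places: with respect to the columns of `P`, the map A is represented by `A_star` and the map \(A^*\) is represented by `A`.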

Note 2.2

According to a common notational convention, \(A^*\) denotes the conjugate-transpose of A. We are not using this convention. In a Leonard pair A, \(A^*\) the linear transformations A and \(A^*\) are arbitrary subject to (i), (ii) above.

When working with a Leonard pair, it is convenient to consider a closely related object called a Leonard system. Before defining a Leonard system, we recall a few concepts from linear algebra. An element \(A \in \mathrm{End}(V)\) is said to be diagonalizable whenever V is spanned by the eigenspaces of A. The element A is called multiplicity-free whenever A is diagonalizable and each eigenspace of A has dimension one. Note that A is multiplicity-free if and only if A has \(d+1\) mutually distinct eigenvalues in \({\mathbb {F}}\). Assume that A is multiplicity-free, and let \(\lbrace V_i\rbrace _{i=0}^d\) denote an ordering of the eigenspaces of A. For \(0 \le i \le d\) let \(\theta _i\) denote the eigenvalue of A for \(V_i\). For \(0 \le i \le d\) define \(E_i \in \mathrm{End}(V)\) such that \((E_i - I)V_i = 0 \) and \(E_i V_j=0\) if \(j\not =i\) \((0 \le j \le d)\). We call \(E_i\) the primitive idempotent of A for \(V_i\) (or \(\theta _i\)). We have (i) \(E_i E_j = \delta _{i,j} E_i\) \((0 \le i,j\le d)\); (ii) \(I = \sum _{i=0}^d E_i\); (iii) \(AE_i = \theta _i E_i = E_i A\) \((0 \le i \le d)\); (iv) \(A = \sum _{i=0}^d \theta _i E_i\); (v) \(V_i = E_iV\) \((0 \le i \le d)\); (vi) \(\mathrm{rank}(E_i) = 1\) \((0 \le i \le d)\), (vii) \(\mathrm{tr}(E_i) = 1\) \((0 \le i \le d)\), where tr means trace. Moreover

$$\begin{aligned} E_i = \prod _{\substack{0 \le j \le d \\ j \not = i}} \frac{A-\theta _j I}{\theta _i - \theta _j} \qquad \qquad (0 \le i \le d). \end{aligned}$$

Let \({\mathcal {D}}\) denote the subalgebra of \(\mathrm{End}(V)\) generated by A. The elements \(\lbrace A^i\rbrace _{i=0}^d\) form a basis for \({\mathcal {D}}\), and \(\prod _{i=0}^d (A-\theta _i I) = 0\). Moreover \(\lbrace E_i\rbrace _{i=0}^d\) form a basis for \({\mathcal {D}}\).
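The listed properties of the primitive idempotents can be checked numerically. The sketch below assumes the standard interpolation formula \(E_i = \prod _{j\not =i} (A-\theta _j I)/(\theta _i-\theta _j)\) and applies it to an illustrative \(4\times 4\) matrix with eigenvalues \(3,1,-1,-3\); the matrix and all function names are hypothetical choices made for this example.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def primitive_idempotents(A, thetas):
    """Return [E_0, ..., E_d], E_i = prod_{j != i} (A - theta_j I)/(theta_i - theta_j)."""
    n = len(A)
    idempotents = []
    for i in range(n):
        E = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
        for j in range(n):
            if j == i:
                continue
            scale = Fraction(1, thetas[i] - thetas[j])
            factor = [[scale * (A[r][c] - (thetas[j] if r == c else 0))
                       for c in range(n)] for r in range(n)]
            E = matmul(E, factor)
        idempotents.append(E)
    return idempotents

# Illustrative multiplicity-free matrix (an assumption, not from the text).
A = [[0, 3, 0, 0], [1, 0, 2, 0], [0, 2, 0, 1], [0, 0, 3, 0]]
thetas = [3, 1, -1, -3]
E = primitive_idempotents(A, thetas)
n = len(A)
zero = [[0] * n for _ in range(n)]
identity = [[int(r == c) for c in range(n)] for r in range(n)]

def weighted_sum(weights):
    """Entrywise sum of weights[i] * E[i]."""
    return [[sum(w * E_i[r][c] for w, E_i in zip(weights, E)) for c in range(n)]
            for r in range(n)]

checks = {
    "(i) E_i E_j = delta_ij E_i": all(
        matmul(E[i], E[j]) == (E[i] if i == j else zero)
        for i in range(n) for j in range(n)),
    "(ii) I = sum E_i": weighted_sum([1] * n) == identity,
    "(iii) A E_i = theta_i E_i = E_i A": all(
        matmul(A, E[i]) == matmul(E[i], A)
        == [[thetas[i] * x for x in row] for row in E[i]]
        for i in range(n)),
    "(iv) A = sum theta_i E_i": weighted_sum(thetas) == A,
    "(vii) tr(E_i) = 1": all(
        sum(E[i][r][r] for r in range(n)) == 1 for i in range(n)),
}
for name, ok in checks.items():
    print(name, ok)
```

Exact rational arithmetic is used so that the equalities are tested without rounding error.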

Definition 2.3

(See [18, Definition 1.4]). By a Leonard system on V, we mean a sequence

$$\begin{aligned} \Phi = (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d) \end{aligned}$$

of elements in \(\mathrm{End}(V)\) that satisfy (i)–(v) below:

- (i) each of A, \(A^*\) is multiplicity-free;

- (ii) \(\lbrace E_i\rbrace _{i=0}^d\) is an ordering of the primitive idempotents of A;

- (iii) \(\lbrace E^*_i\rbrace _{i=0}^d\) is an ordering of the primitive idempotents of \(A^*\);

- (iv) \({\displaystyle { E^*_iAE^*_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if }\vert i-j\vert > 1}; \\ \not =0, &{}{\text { if }\vert i-j \vert = 1} \end{array}\right. } \qquad (0 \le i,j\le d)}}\);

- (v) \({\displaystyle { E_iA^*E_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if }\vert i-j\vert > 1}; \\ \not =0, &{}{\text { if }\vert i-j \vert = 1} \end{array}\right. } \qquad (0 \le i,j\le d)}}\).

The Leonard system \(\Phi \) is said to be over \({\mathbb {F}}\) and have diameter d.

Leonard pairs and Leonard systems are related as follows. Let \((A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) denote a Leonard system on V. Then \(A, A^*\) is a Leonard pair on V. Conversely, let \(A, A^*\) denote a Leonard pair on V. Then each of A, \(A^*\) is multiplicity-free [18, Lemma 1.3]. Moreover there exists an ordering \(\lbrace E_i\rbrace _{i=0}^d\) of the primitive idempotents of A, and there exists an ordering \(\lbrace E^*_i\rbrace _{i=0}^d\) of the primitive idempotents of \(A^*\), such that \((A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) is a Leonard system on V.

Next we recall the notion of isomorphism for Leonard pairs and Leonard systems.

Definition 2.4

Let \(A, A^*\) denote a Leonard pair on V, and let \(B,B^*\) denote a Leonard pair on a vector space \(V'\). By an isomorphism of Leonard pairs from \(A, A^*\) to \(B, B^*\) we mean a vector space isomorphism \(\sigma : V \rightarrow V'\) such that \(B = \sigma A \sigma ^{-1}\) and \(B^* = \sigma A^* \sigma ^{-1}\). The Leonard pairs \(A, A^*\) and \(B,B^*\) are isomorphic whenever there exists an isomorphism of Leonard pairs from \(A, A^*\) to \(B, B^*\).

Let \(\sigma : V \rightarrow V'\) denote an isomorphism of vector spaces. For \(X \in \mathrm{End}(V)\) abbreviate \(X^\sigma = \sigma X \sigma ^{-1}\) and note that \(X^\sigma \in \mathrm{End}(V')\). The map \(\mathrm{End}(V) \rightarrow \mathrm{End}(V')\), \(X \mapsto X^\sigma \) is an isomorphism of algebras. For a Leonard system \(\Phi = (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) on V the sequence

$$\begin{aligned} \Phi ^\sigma = (A^\sigma ; \lbrace E^\sigma _i\rbrace _{i=0}^d; A^{*\sigma }; \lbrace E^{*\sigma }_i\rbrace _{i=0}^d) \end{aligned}$$

is a Leonard system on \(V'\).

Definition 2.5

Let \(\Phi \) denote a Leonard system on V, and let \(\Phi '\) denote a Leonard system on a vector space \(V'\). By an isomorphism of Leonard systems from \(\Phi \) to \(\Phi '\), we mean an isomorphism of vector spaces \(\sigma : V\rightarrow V'\) such that \(\Phi ^\sigma = \Phi '\). The Leonard systems \(\Phi \) and \(\Phi '\) are isomorphic whenever there exists an isomorphism of Leonard systems from \(\Phi \) to \(\Phi '\).

In [18, Theorem 1.9] we classified the Leonard systems up to isomorphism. Our first main goal in the present paper is to give an improved proof of this classification. This goal will be accomplished in Sects. 3–17. The statement of the classification is given in Theorem 10.1. The proof of Theorem 10.1 will be completed in Sect. 17.

Recall the commutator notation \([r,s]= rs - sr\).

3 Pre Leonard Systems

As we start our investigation of Leonard systems, it is helpful to consider a more general object called a pre Leonard system. This object is defined as follows.

Definition 3.1

By a pre Leonard system on V, we mean a sequence

$$\begin{aligned} \Phi = (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d) \end{aligned} \tag{3}$$

of elements in \(\mathrm{End}(V)\) that satisfy conditions (i)–(iii) in Definition 2.3.

The results in this section refer to the pre Leonard system \(\Phi \) from (3).

Definition 3.2

For \(0 \le i \le d\) let \(\theta _i\) (resp. \(\theta ^*_i\)) denote the eigenvalue of A (resp. \(A^*\)) for \(E_i\) (resp. \(E^*_i\)). We call \(\lbrace \theta _i \rbrace _{i=0}^d\) (resp. \(\lbrace \theta ^*_i \rbrace _{i=0}^d\)) the eigenvalue sequence (resp. dual eigenvalue sequence) of \(\Phi \). Let \({\mathcal {D}}\) (resp. \({\mathcal {D}}^*\)) denote the subalgebra of \(\mathrm{End}(V)\) generated by A (resp. \(A^*\)).

Definition 3.3

Define

$$\begin{aligned} a_i = \mathrm{tr}(AE^*_i), \qquad \qquad a^*_i = \mathrm{tr}(A^*E_i) \qquad \qquad (0 \le i \le d). \end{aligned}$$

We call \(\lbrace a_i \rbrace _{i=0}^d\) (resp. \(\lbrace a^*_i \rbrace _{i=0}^d\)) the diagonal sequence (resp. dual diagonal sequence) of \(\Phi \).

Lemma 3.4

We have

$$\begin{aligned} \sum _{i=0}^d a_i = \sum _{i=0}^d \theta _i, \end{aligned} \tag{4}$$

$$\begin{aligned} \sum _{i=0}^d a^*_i = \sum _{i=0}^d \theta ^*_i. \end{aligned} \tag{5}$$

Proof

To obtain (4), observe that

$$\begin{aligned} \sum _{i=0}^d a_i = \sum _{i=0}^d \mathrm{tr}(AE^*_i) = \mathrm{tr}\Bigl (A \sum _{i=0}^d E^*_i\Bigr ) = \mathrm{tr}(A) = \sum _{i=0}^d \theta _i. \end{aligned}$$

The proof of (5) is similar. \(\square \)

Lemma 3.5

For \(0 \le i \le d\),

- (i) \(E^*_i A E^*_i = a_i E^*_i\);

- (ii) \(E_i A^* E_i = a^*_i E_i\).

Proof

- (i) Abbreviate \({{\mathcal {A}}}=\mathrm{End}(V)\). Since \(E^*_i\) has rank 1, the vector space \(E^*_i{\mathcal {A}} E^*_i\) is spanned by \(E^*_i\). Therefore there exists \(\alpha _i \in {\mathbb {F}}\) such that \(E^*_i A E^*_i=\alpha _i E^*_i\). In this equation take the trace of each side and use Definition 3.3 along with \(\mathrm{tr}(XY) = \mathrm{tr}(YX)\) to obtain \(a_i=\alpha _i\).

- (ii) Similar to the proof of (i) above.

\(\square \)
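As a sanity check on Lemmas 3.4 and 3.5, the sketch below builds a small pre Leonard system over the rationals and verifies \(E^*_i A E^*_i = a_i E^*_i\) together with \(\sum _i a_i = \sum _i \theta _i\). It takes \(a_i = \mathrm{tr}(AE^*_i)\), which is what the trace argument in the proof of Lemma 3.5 yields. The two triangular matrices are hypothetical examples chosen only because their eigenvalues are visibly distinct; they are not claimed to form a Leonard pair.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def trace(X):
    return sum(X[i][i] for i in range(len(X)))

def primitive_idempotents(A, thetas):
    """E_i = prod_{j != i} (A - theta_j I)/(theta_i - theta_j)."""
    n = len(A)
    idempotents = []
    for i in range(n):
        E = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
        for j in range(n):
            if j == i:
                continue
            scale = Fraction(1, thetas[i] - thetas[j])
            factor = [[scale * (A[r][c] - (thetas[j] if r == c else 0))
                       for c in range(n)] for r in range(n)]
            E = matmul(E, factor)
        idempotents.append(E)
    return idempotents

# Hypothetical multiplicity-free matrices (triangular, distinct diagonal entries).
A = [[1, 0, 0], [1, 2, 0], [0, 1, 4]]
thetas = [1, 2, 4]
A_star = [[0, 1, 0], [0, 1, 1], [0, 0, 3]]
theta_stars = [0, 1, 3]

E_star = primitive_idempotents(A_star, theta_stars)

# Diagonal sequence, taken here as a_i = tr(A E*_i).
a = [trace(matmul(A, Es)) for Es in E_star]

# Lemma 3.5(i): E*_i A E*_i = a_i E*_i.
lemma_3_5_i = all(
    matmul(Es, matmul(A, Es)) == [[a_i * x for x in row] for row in Es]
    for a_i, Es in zip(a, E_star))

# Trace identity: the a_i and the theta_i both sum to tr(A).
trace_identity = sum(a) == sum(thetas) == trace(A)
print(lemma_3_5_i, trace_identity)
```

The check relies only on each \(E^*_i\) being a rank 1 idempotent, which matches the argument given in the proof.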

We have been discussing the pre Leonard system

$$\begin{aligned} \Phi = (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d) \end{aligned}$$

on V. Each of the following is a pre Leonard system on V:

$$\begin{aligned} \Phi ^*&= (A^*; \lbrace E^*_i\rbrace _{i=0}^d; A; \lbrace E_i\rbrace _{i=0}^d), \\ \Phi ^\downarrow&= (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_{d-i}\rbrace _{i=0}^d), \\ \Phi ^\Downarrow&= (A; \lbrace E_{d-i}\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d). \end{aligned}$$

Proposition 3.6

With the above notation,

| pre LS | Eigenvalue seq. | Dual eigenvalue seq. | Diagonal seq. | Dual diagonal seq. |
|---|---|---|---|---|
| \(\Phi \) | \(\lbrace \theta _i \rbrace _{i=0}^d\) | \(\lbrace \theta ^*_i \rbrace _{i=0}^d\) | \(\lbrace a_i \rbrace _{i=0}^d\) | \(\lbrace a^*_i \rbrace _{i=0}^d\) |
| \(\Phi ^\Downarrow \) | \(\lbrace \theta _{d-i} \rbrace _{i=0}^d\) | \(\lbrace \theta ^*_i \rbrace _{i=0}^d\) | \(\lbrace a_i \rbrace _{i=0}^d\) | \(\lbrace a^*_{d-i} \rbrace _{i=0}^d\) |
| \(\Phi ^\downarrow \) | \(\lbrace \theta _i \rbrace _{i=0}^d\) | \(\lbrace \theta ^*_{d-i} \rbrace _{i=0}^d\) | \(\lbrace a_{d-i} \rbrace _{i=0}^d\) | \(\lbrace a^*_i \rbrace _{i=0}^d\) |
| \(\Phi ^* \) | \(\lbrace \theta ^*_i \rbrace _{i=0}^d\) | \(\lbrace \theta _i \rbrace _{i=0}^d\) | \(\lbrace a^*_i \rbrace _{i=0}^d\) | \(\lbrace a_i \rbrace _{i=0}^d\) |

Proof

4 The Antiautomorphism \(\dagger \)

We continue to discuss the pre Leonard system \(\Phi =(A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) from Definition 3.1.

Lemma 4.1

Assume that

$$\begin{aligned} E^*_iAE^*_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if }\vert i-j\vert > 1}; \\ \not =0, &{}{\text { if }\vert i-j \vert = 1} \end{array}\right. } \qquad \qquad (0 \le i,j\le d). \end{aligned}$$

Then the elements

$$\begin{aligned} A^r E^*_0 A^s \qquad \qquad (0 \le r,s\le d) \end{aligned} \tag{6}$$

form a basis for the vector space \(\mathrm{End}(V)\).

Proof

For \(0 \le i \le d\) pick \(0 \not =v_i \in E^*_iV\). So \(\lbrace v_i \rbrace _{i=0}^d \) is a basis for V. Without loss of generality, we may identify each \(X\in \mathrm{End}(V)\) with the matrix in \(\mathrm{Mat}_{d+1}({\mathbb {F}})\) that represents X with respect to \(\lbrace v_i \rbrace _{i=0}^d\). From this point of view A is irreducible tridiagonal and \(E^*_0 = \mathrm{diag}(1,0,\ldots ,0)\). Using these matrices one routinely checks that the elements (6) are linearly independent. There are \((d+1)^2\) elements listed in (6), and this is the dimension of \(\mathrm{End}(V)\). Therefore the elements (6) form a basis for \(\mathrm{End}(V)\). \(\square \)
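The linear independence step can be illustrated numerically. The sketch below assumes that the elements in (6) are the products \(A^r E^*_0 A^s\) for \(0 \le r,s\le d\), which is consistent with the count \((d+1)^2\) and with Lemma 4.2(i). It uses a hypothetical irreducible tridiagonal matrix with \(d=3\) and computes the rank of the 16 flattened products by Gaussian elimination over the rationals.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def rank(rows):
    """Rank of a list of vectors, by exact Gaussian elimination."""
    rows = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            if rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

# Hypothetical irreducible tridiagonal A, written in an eigenbasis of A*.
A = [[0, 3, 0, 0], [1, 0, 2, 0], [0, 2, 0, 1], [0, 0, 3, 0]]
n = len(A)
E0_star = [[1 if r == c == 0 else 0 for c in range(n)] for r in range(n)]

# Powers A^0, ..., A^d.
powers = [[[int(r == c) for c in range(n)] for r in range(n)]]
for _ in range(n - 1):
    powers.append(matmul(powers[-1], A))

# The (d+1)^2 products A^r E*_0 A^s, flattened to vectors of length (d+1)^2.
elements = [matmul(matmul(powers[r], E0_star), powers[s])
            for r in range(n) for s in range(n)]
flattened = [[x for row in M for x in row] for M in elements]

dimension = rank(flattened)
print(dimension, n * n)
```

One way to see why the rank is full: \(A^r E^*_0 A^s\) is the outer product of the first column of \(A^r\) with the first row of \(A^s\), and for an irreducible tridiagonal matrix each of these families is linearly independent.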

Lemma 4.2

Under the assumption in Lemma 4.1, each of the following is a generating set for the algebra \(\mathrm{End}(V)\): (i) \(A, E^*_0\); (ii) \(A, A^*\).

Proof

- (i) By Lemma 4.1.

- (ii) By (i) above and since \(E^*_0\) is a polynomial in \(A^*\).

\(\square \)

By an automorphism of \(\mathrm{End}(V)\) we mean an algebra isomorphism \(\mathrm{End}(V)\rightarrow \mathrm{End}(V)\). By an antiautomorphism of \(\mathrm{End}(V)\) we mean a vector space isomorphism \(\zeta : \mathrm{End}(V) \rightarrow \mathrm{End}(V) \) such that \((XY)^\zeta = Y^\zeta X^\zeta \) for all \(X, Y \in \mathrm{End}(V)\).

Lemma 4.3

Under the assumption in Lemma 4.1,

- (i) there exists a unique antiautomorphism \(\dagger \) of \(\mathrm{End}(V)\) that fixes each of \(A, A^*\);

- (ii) \(\dagger \) fixes everything in \({\mathcal {D}}\) and everything in \({\mathcal {D}}^*\);

- (iii) \(\dagger \) fixes each of \(E_i, E^*_i\) for \(0 \le i \le d\);

- (iv) \((X^\dagger )^\dagger = X\) for all \(X \in \mathrm{End}(V)\).

Proof

- (i) First we show that \(\dagger \) exists. For \(0 \le i \le d\) pick \(0 \not =v_i \in E^*_iV\). So \(\lbrace v_i \rbrace _{i=0}^d\) is a basis for V. For \(X \in \mathrm{End}(V)\) let \(X^\sharp \in \mathrm{Mat}_{d+1}({\mathbb {F}})\) represent X with respect to \(\lbrace v_i \rbrace _{i=0}^d\). The map \(\sharp : \mathrm{End}(V) \rightarrow \mathrm{Mat}_{d+1}({\mathbb {F}})\), \(X \mapsto X^\sharp \) is an algebra isomorphism. Write \(B=A^\sharp \) and \(B^*=A^{*\sharp }\). The matrix B is irreducible tridiagonal and \(B^*=\mathrm{diag}(\theta ^*_0, \theta ^*_1,\ldots , \theta ^*_d)\). Define a diagonal matrix \(K \in \mathrm{Mat}_{d+1}({\mathbb {F}})\) with diagonal entries

$$\begin{aligned} K_{ii} = \frac{B_{01} B_{12} \cdots B_{i-1,i}}{B_{10}B_{21} \cdots B_{i,i-1}} \qquad \qquad (0 \le i \le d). \end{aligned}$$

The matrix K is invertible and \(K^{-1} B^t K = B\). Define a map \(\flat : \mathrm{Mat}_{d+1}({\mathbb {F}}) \rightarrow \mathrm{Mat}_{d+1}({\mathbb {F}}), X \mapsto K^{-1} X^t K\). The map \(\flat \) is an antiautomorphism of \(\mathrm{Mat}_{d+1}({\mathbb {F}})\) that fixes each of \(B,B^*\). The composition

$$\begin{aligned} \dagger : \quad \mathrm{End}(V) \xrightarrow {\;\sharp \;} \mathrm{Mat}_{d+1}({\mathbb {F}}) \xrightarrow {\;\flat \;} \mathrm{Mat}_{d+1}({\mathbb {F}}) \xrightarrow {\;\sharp ^{-1}\;} \mathrm{End}(V) \end{aligned}$$

is an antiautomorphism of \(\mathrm{End}(V)\) that fixes each of \(A,A^*\). We have shown that \(\dagger \) exists. Next we show that \(\dagger \) is unique. Let \(\zeta \) denote an antiautomorphism of \(\mathrm{End}(V)\) that fixes each of \(A,A^*\). Then the composition \(\dagger \circ \zeta ^{-1}\) is an automorphism of \(\mathrm{End}(V)\) that fixes each of \(A, A^*\). Now \(\dagger \circ \zeta ^{-1} = 1\) in view of Lemma 4.2(ii). Therefore \(\dagger = \zeta \). We have shown that \(\dagger \) is unique.

- (ii) Since A (resp. \(A^*\)) generates \({\mathcal {D}}\) (resp. \({\mathcal {D}}^*\)).

- (iii) Since \(E_i \in {\mathcal {D}}\) and \(E^*_i \in {\mathcal {D}}^*\) for \(0 \le i \le d\).

- (iv) The composition \(\dagger \circ \dagger \) is an automorphism of \(\mathrm{End}(V)\) that fixes each of \(A, A^*\). Now \(\dagger \circ \dagger = 1\) in view of Lemma 4.2(ii).

\(\square \)
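The construction of the map \(\flat \) in the proof of Lemma 4.3(i) can be tried out on a concrete matrix. The sketch below builds the diagonal matrix K from a hypothetical irreducible tridiagonal B with \(d=3\), then checks that \(X \mapsto K^{-1}X^tK\) fixes B and a diagonal \(B^*\), reverses products, and squares to the identity map. The matrices `B`, `B_star`, `X` and the function name `flat` are illustrative assumptions.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# Hypothetical irreducible tridiagonal B and diagonal B*.
B = [[0, 3, 0, 0], [1, 0, 2, 0], [0, 2, 0, 1], [0, 0, 3, 0]]
B_star = [[3, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -3]]
n = len(B)

# K_ii = (B_01 B_12 ... B_{i-1,i}) / (B_10 B_21 ... B_{i,i-1}); K_00 = 1.
K_diag = [Fraction(1)]
for i in range(1, n):
    K_diag.append(K_diag[-1] * Fraction(B[i - 1][i], B[i][i - 1]))

def flat(X):
    """X -> K^{-1} X^t K for the diagonal matrix K built above."""
    return [[X[j][i] * K_diag[j] / K_diag[i] for j in range(n)]
            for i in range(n)]

# An arbitrary test matrix, used only to exercise the map.
X = [[1, 2, 0, 0], [0, 1, 0, 5], [7, 0, 1, 0], [0, 0, 3, 1]]

fixes_B = flat(B) == B
fixes_B_star = flat(B_star) == B_star
reverses_products = flat(matmul(X, B)) == matmul(flat(B), flat(X))
is_involution = flat(flat(X)) == X
print(K_diag, fixes_B, fixes_B_star, reverses_products, is_involution)
```

The involution check mirrors Lemma 4.3(iv); it works here because K is diagonal, so \(K^t = K\).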

Proposition 4.4

Consider the following four conditions:

- (i) \(\displaystyle { E^*_iAE^*_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if } i-j > 1}; \\ \not =0, &{}{\text { if } i-j = 1} \end{array}\right. } \qquad (0 \le i,j\le d); }\)

- (ii) \(\displaystyle { E^*_iAE^*_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if } j-i > 1}; \\ \not =0, &{}{\text { if } j-i = 1} \end{array}\right. } \qquad (0 \le i,j\le d); }\)

- (iii) \(\displaystyle { E_iA^*E_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if } i-j > 1}; \\ \not =0, &{}{\text { if } i-j = 1} \end{array}\right. } \qquad (0 \le i,j\le d); }\)

- (iv) \(\displaystyle { E_iA^*E_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if } j-i > 1}; \\ \not =0, &{}{\text { if } j-i = 1} \end{array}\right. } \qquad (0 \le i,j\le d). }\)

Assume at least three of (i)–(iv) hold. Then each of (i)–(iv) holds; in other words the pre Leonard system \(\Phi \) is a Leonard system.

Proof

Interchanging \(A, A^*\) if necessary, we may assume without loss of generality that (i), (ii) hold. Now the assumption of Lemma 4.1 holds, so Lemma 4.3 applies. Consider the map \(\dagger \) from Lemma 4.3. For \(0 \le i,j\le d\) we have

$$\begin{aligned} (E_i A^* E_j)^\dagger = E^\dagger _j A^{*\dagger } E^\dagger _i = E_j A^* E_i. \end{aligned}$$

Therefore \(E_i A^*E_j= 0\) if and only if \(E_jA^*E_i=0\). Consequently (iii) holds if and only if (iv) holds. The result follows. \(\square \)

5 The Polynomials \(\tau _i, \eta _i, \tau ^*_i, \eta ^*_i\)

We continue to discuss the pre Leonard system \(\Phi =(A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) from Definition 3.1.

Let \(\lambda \) denote an indeterminate. Let \({\mathbb {F}}[\lambda ]\) denote the algebra consisting of the polynomials in \(\lambda \) that have all coefficients in \({\mathbb {F}}\).

Definition 5.1

For \(0 \le i \le d\) define \(\tau _i, \eta _i, \tau ^*_i, \eta ^*_i \in {\mathbb {F}}[\lambda ]\) by

$$\begin{aligned} \tau _i&= (\lambda -\theta _0)(\lambda -\theta _1)\cdots (\lambda -\theta _{i-1}),&\eta _i&= (\lambda -\theta _d)(\lambda -\theta _{d-1})\cdots (\lambda -\theta _{d-i+1}), \\ \tau ^*_i&= (\lambda -\theta ^*_0)(\lambda -\theta ^*_1)\cdots (\lambda -\theta ^*_{i-1}),&\eta ^*_i&= (\lambda -\theta ^*_d)(\lambda -\theta ^*_{d-1})\cdots (\lambda -\theta ^*_{d-i+1}). \end{aligned}$$

Each of \(\tau _i, \eta _i, \tau ^*_i, \eta ^*_i \) is monic with degree i.

We mention some results about \(\lbrace \tau _i\rbrace _{i=0}^d\) and \(\lbrace \eta _i\rbrace _{i=0}^d\); similar results apply to \(\lbrace \tau ^*_i\rbrace _{i=0}^d\) and \(\lbrace \eta ^*_i\rbrace _{i=0}^d\).

Lemma 5.2

The vectors \(\lbrace \tau _i(A)\rbrace _{i=0}^d\) form a basis for \({\mathcal {D}}\). Moreover the vectors \(\lbrace \eta _i(A)\rbrace _{i=0}^d\) form a basis for \({\mathcal {D}}\).

Proof

Since \(\lbrace A^i\rbrace _{i=0}^d\) is a basis for \({\mathcal {D}}\), and \(\tau _i\), \(\eta _i\) have degree i for \(0 \le i \le d\). \(\square \)

Lemma 5.3

For \(0 \le i \le d\),

- (i) \(\tau _i(A) = \sum _{h=i}^d \tau _i(\theta _h) E_h\);

- (ii) \(\eta _i(A) = \sum _{h=0}^{d-i} \eta _i(\theta _h) E_h\).

Proof

- (i) We have \(A=\sum _{h=0}^d \theta _h E_h\), so \(\tau _i(A)=\sum _{h=0}^d \tau _i(\theta _h) E_h\). However \(\tau _i(\theta _h)=0\) for \(0 \le h \le i-1\), so \(\tau _i(A)=\sum _{h=i}^d \tau _i(\theta _h) E_h\).

- (ii) Apply (i) above to \(\Phi ^\Downarrow \).

\(\square \)
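Lemma 5.3(i) is easy to test on an example. The sketch below takes \(\tau _i = (\lambda -\theta _0)(\lambda -\theta _1)\cdots (\lambda -\theta _{i-1})\), the monic polynomial of degree i that vanishes at \(\theta _0,\ldots ,\theta _{i-1}\) as used in the proof, and compares \(\tau _i(A)\) with \(\sum _{h=i}^d \tau _i(\theta _h)E_h\) for a hypothetical \(4\times 4\) matrix with eigenvalues \(3,1,-1,-3\). The idempotents are computed by the usual interpolation formula; all names are illustrative.

```python
from fractions import Fraction

def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def shifted(A, theta):
    """The matrix A - theta * I."""
    n = len(A)
    return [[A[r][c] - (theta if r == c else 0) for c in range(n)]
            for r in range(n)]

# Hypothetical multiplicity-free matrix with ordered eigenvalues.
A = [[0, 3, 0, 0], [1, 0, 2, 0], [0, 2, 0, 1], [0, 0, 3, 0]]
thetas = [3, 1, -1, -3]
n = len(A)
d = n - 1
identity = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]

# Primitive idempotents E_h = prod_{j != h} (A - theta_j I)/(theta_h - theta_j).
E = []
for h in range(n):
    E_h = identity
    for j in range(n):
        if j != h:
            scale = Fraction(1, thetas[h] - thetas[j])
            E_h = matmul(E_h, [[scale * x for x in row]
                               for row in shifted(A, thetas[j])])
    E.append(E_h)

def tau_value(i, x):
    """tau_i evaluated at the scalar x."""
    result = 1
    for h in range(i):
        result *= x - thetas[h]
    return result

def tau_of_A(i):
    """tau_i evaluated at the matrix A."""
    result = identity
    for h in range(i):
        result = matmul(result, shifted(A, thetas[h]))
    return result

def truncated_sum(i):
    """sum_{h=i}^{d} tau_i(theta_h) E_h."""
    return [[sum(tau_value(i, thetas[h]) * E[h][r][c] for h in range(i, d + 1))
             for c in range(n)] for r in range(n)]

lemma_5_3_i = all(tau_of_A(i) == truncated_sum(i) for i in range(d + 1))
print(lemma_5_3_i)
```

Part (ii) could be checked the same way after reversing the list `thetas`, which corresponds to passing to \(\Phi ^\Downarrow \).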

Lemma 5.4

For \(0 \le i \le d\),

- (i) the elements \(\lbrace \tau _j(A)\rbrace _{j=0}^i\) and \(\lbrace A^j\rbrace _{j=0}^i\) have the same span;

- (ii) the elements \(\lbrace \tau _j(A)\rbrace _{j=i}^d\) and \(\lbrace E_j\rbrace _{j=i}^d\) have the same span.

Proof

- (i) The polynomial \(\tau _j\) has degree j for \(0 \le j \le d\).

- (ii) By Lemma 5.2 it suffices to show that the span of \(\lbrace \tau _j(A)\rbrace _{j=i}^d\) is contained in the span of \(\lbrace E_j\rbrace _{j=i}^d\). But this follows from Lemma 5.3(i).

\(\square \)

Lemma 5.5

For \(0 \le i \le d\),

- (i) the elements \(\lbrace \eta _j(A)\rbrace _{j=0}^i\) and \(\lbrace A^j\rbrace _{j=0}^i\) have the same span;

- (ii) the elements \(\lbrace \eta _j(A)\rbrace _{j=i}^d\) and \(\lbrace E_j\rbrace _{j=0}^{d-i}\) have the same span.

Proof

Apply Lemma 5.4 to \(\Phi ^\Downarrow \). \(\square \)
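The vanishing pattern behind Lemma 5.3 can be checked numerically. The following is a minimal sketch, assuming that \(\tau _i\) and \(\eta _i\) are the products \((\lambda -\theta _0)\cdots (\lambda -\theta _{i-1})\) and \((\lambda -\theta _d)\cdots (\lambda -\theta _{d-i+1})\), which is the reading consistent with the proof of Lemma 5.3. The function names and the sample eigenvalues are hypothetical and chosen only for illustration.

```python
from fractions import Fraction

def tau(i, theta, x):
    """Evaluate tau_i at x, assuming tau_i = (x - theta_0)...(x - theta_{i-1})."""
    value = Fraction(1)
    for h in range(i):
        value *= x - theta[h]
    return value

def eta(i, theta, x):
    """Evaluate eta_i at x, assuming eta_i = (x - theta_d)...(x - theta_{d-i+1})."""
    d = len(theta) - 1
    value = Fraction(1)
    for h in range(i):
        value *= x - theta[d - h]
    return value

# Hypothetical mutually distinct eigenvalues with d = 3.
theta = [Fraction(v) for v in (0, 1, 3, 6)]
d = len(theta) - 1

# tau_i(theta_h) = 0 exactly when h <= i - 1, matching the sum from h = i in Lemma 5.3(i).
tau_support_ok = all(
    (tau(i, theta, theta[h]) == 0) == (h < i)
    for i in range(d + 1) for h in range(d + 1)
)

# eta_i(theta_h) = 0 exactly when h >= d - i + 1, matching the sum up to d - i in Lemma 5.3(ii).
eta_support_ok = all(
    (eta(i, theta, theta[h]) == 0) == (h > d - i)
    for i in range(d + 1) for h in range(d + 1)
)
```

Exact rational arithmetic is used so that the zero tests are not affected by rounding.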

6 Normalizing Idempotents

We continue to discuss the pre Leonard system \(\Phi =(A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) from Definition 3.1.

Next we explain what it means for \(E^*_0\) to be normalizing. This concept was introduced in [7, Sect. 6], although our point of view is different.

Definition 6.1

The primitive idempotent \(E^*_0\) is called normalizing whenever \(E_iE^*_0\not =0\) for \(0 \le i \le d\).

In the next two lemmas we give some necessary and sufficient conditions for \(E^*_0\) to be normalizing. The proofs are routine, and omitted.

Lemma 6.2

The following (i)–(iv) are equivalent:

-

(i)

\(E^*_0\) is normalizing;

-

(ii)

\({{\mathcal {D}}}E^*_0\) has dimension \(d+1\);

-

(iii)

the elements \(\lbrace A^i E^*_0\rbrace _{i=0}^d\) are linearly independent;

-

(iv)

for \(X \in {\mathcal {D}}\), \(X E^*_0=0\) implies \(X= 0\).

Lemma 6.3

The following (i)–(iv) are equivalent:

-

(i)

\(E^*_0\) is normalizing;

-

(ii)

\(E_iV = E_iE^*_0V\) for \(0 \le i \le d\);

-

(iii)

\({{\mathcal {D}}}E^*_0V = V\);

-

(iv)

for \(0 \not =\xi \in E^*_0V\) the map \({\mathcal {D}} \rightarrow V\), \(X \mapsto X\xi \) is a bijection.

Proposition 6.4

Assume that \(E^*_0\) is normalizing. Then for \(X \in \mathrm{End}(V)\) and \(0 \le i \le d\) the following are equivalent:

-

(i)

\(XE_i=0\);

-

(ii)

\(XE_iE^*_0=0\).

Proof

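Using Lemma 6.3(ii),

$$\begin{aligned} XE_i=0 \quad \Leftrightarrow \quad XE_iV=0 \quad \Leftrightarrow \quad XE_iE^*_0V=0 \quad \Leftrightarrow \quad XE_iE^*_0=0. \end{aligned}$$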

\(\square \)

7 Normalizing Idempotents and Decompositions

We continue to discuss the pre Leonard system \(\Phi =(A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) from Definition 3.1.

Definition 7.1

By a decomposition of V we mean a sequence \(\lbrace V_i \rbrace _{i=0}^d\) of one-dimensional subspaces of V such that the sum \(V= \sum _{i=0}^d V_i\) is direct.

Example 7.2

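Each of the sequences

$$\begin{aligned} \lbrace E_iV\rbrace _{i=0}^d, \qquad \qquad \lbrace E^*_iV\rbrace _{i=0}^d \end{aligned}$$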

is a decomposition of V.

Example 7.3

Let \(\lbrace v_i\rbrace _{i=0}^d\) denote a basis for V. For \(0 \le i \le d\) let \(V_i\) denote the span of \(v_i\). Then \(\lbrace V_i\rbrace _{i=0}^d\) is a decomposition of V, said to be induced by \(\lbrace v_i\rbrace _{i=0}^d\).

Lemma 7.4

Assume that \(E^*_0\) is normalizing, and define

$$\begin{aligned} U_i = \tau _i(A)E^*_0V \qquad \qquad (0 \le i \le d). \end{aligned}$$

Then the following (i)–(v) hold:

-

(i)

\(\lbrace U_i\rbrace _{i=0}^d \) is a decomposition of V;

-

(ii)

\((A-\theta _i I)U_i = U_{i+1}\) \((0 \le i \le d-1)\);

-

(iii)

\((A-\theta _d I)U_d = 0\);

-

(iv)

\(U_0 + U_1 + \cdots + U_i = E^*_0V + A E^*_0V+ \cdots + A^i E^*_0V \qquad (0 \le i \le d);\)

-

(v)

\(U_i + U_{i+1} + \cdots + U_d = E_iV+ E_{i+1}V+ \cdots + E_dV \qquad (0 \le i\le d).\)

Proof

-

(i)

By Lemma 6.3(iv) and since \(\lbrace \tau _i(A)\rbrace _{i=0}^d\) is a basis for \({\mathcal {D}}\).

-

(ii)

By Definition 5.1.

-

(iii)

Since \(0=\prod _{i=0}^d (A-\theta _i I)=(A-\theta _d I)\tau _d(A)\).

-

(iv)

By Lemma 5.4(i).

-

(v)

By Lemma 5.4(ii) and Lemma 6.3(ii).

\(\square \)

Lemma 7.5

The following (i)–(iii) are equivalent:

-

(i)

\(\displaystyle { E^*_iAE^*_j = {\left\{ \begin{array}{ll} 0, &{} {\text { if } i-j > 1}; \\ \not =0, &{}{\text { if } i-j = 1} \end{array}\right. } \qquad (0 \le i,j\le d); }\)

-

(ii)

for \(0 \le i \le d\) there exists \(f_i \in {{\mathbb {F}}}[\lambda ]\) such that \(\mathrm{deg}(f_i)=i\) and \(E^*_iV = f_i(A) E^*_0V\);

-

(iii)

for \(0 \le i \le d\),

$$\begin{aligned} E^*_0V + E^*_1V+ \cdots + E^*_iV = E^*_0V + A E^*_0V + \cdots + A^i E^*_0V. \end{aligned}$$(7)

Assume that (i)–(iii) hold. Then \(E^*_0\) is normalizing.

Proof

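\(\mathrm{(i)} \Rightarrow \mathrm{(ii)}\) For \(0 \le i \le d\) pick \(0 \not =v_i \in E^*_iV\). So \(\lbrace v_i\rbrace _{i=0}^d\) is a basis for V. Let \( B \in \mathrm{Mat}_{d+1}({\mathbb {F}})\) represent A with respect to \(\lbrace v_i \rbrace _{i=0}^d\). The entries of B satisfy

$$\begin{aligned} B_{i,j} {\left\{ \begin{array}{ll} =0, &{} {\text { if } i-j > 1}; \\ \not =0, &{}{\text { if } i-j = 1} \end{array}\right. } \qquad (0 \le i,j\le d). \end{aligned}$$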

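Define polynomials \(\lbrace f_i \rbrace _{i=0}^d\) in \({\mathbb {F}}[\lambda ]\) by \(f_0=1\) and

$$\begin{aligned} \lambda f_j = \sum _{i=0}^{j+1} B_{i,j} f_i \qquad \qquad (0 \le j \le d-1). \end{aligned}$$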

For \(0 \le i \le d\) the polynomial \(f_i\) has degree i. Also \(v_i = f_i(A)v_0\), so \(E^*_iV = f_i(A)E^*_0V\).

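\(\mathrm{(ii)} \Rightarrow \mathrm{(iii)}\) The polynomial \(f_j\) has degree at most i for \(0 \le j\le i\), so

$$\begin{aligned} E^*_0V + E^*_1V+ \cdots + E^*_iV \subseteq E^*_0V + A E^*_0V + \cdots + A^i E^*_0V. \end{aligned}$$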

In this inclusion, the left-hand side has dimension \(i+1\) and the right-hand side has dimension at most \(i+1\). Therefore the inclusion holds with equality.

\(\mathrm{(iii)} \Rightarrow \mathrm{(i)}\) For \(0 \le i \le d\) let \(V_i\) denote the common value in (7). Observe that

Also observe that

Now for \(0 \le i,j\le d\) we check the conditions in (i). First assume that \(i-j>1\). Then

so \(E^*_iAE^*_j = 0\). Next assume that \(i-j=1\). To show that \(E^*_iAE^*_j\not =0\), we suppose \(E^*_iAE^*_j=0\) and get a contradiction. For \(0 \le h \le i-1\) we have \(E^*_iA E^*_h = 0\), so \(E^*_iA E^*_hV = 0\). Therefore \(E^*_i A V_{i-1} = 0\). We also have \(E^*_iV_{i-1}=0\), so \(E^*_iV_i = E^*_i(V_{i-1} + A V_{i-1}) = 0\), for a contradiction. Therefore \(E^*_iAE^*_j\not =0\).

Assume that (i)–(iii) hold. Setting \(i=d\) in (iii) we obtain \(V={{\mathcal {D}}} E^*_0V\). Consequently \(E^*_0\) is normalizing by Lemma 6.3(i),(iii). \(\square \)

Proposition 7.6

The following (i)–(iii) are equivalent:

-

(i)

Both

$$\begin{aligned} E^*_iAE^*_j&= {\left\{ \begin{array}{ll} 0, &{} {\text { if } i-j > 1}; \\ \not =0, &{}{\text { if }i-j = 1} \end{array}\right. } \qquad (0 \le i,j\le d), \end{aligned}$$(8)$$\begin{aligned} E_i A^*E_j&= 0 \quad \text { if }j-i> 1 \qquad (0 \le i,j \le d). \end{aligned}$$(9) -

(ii)

There exists a decomposition \(\lbrace U_i \rbrace _{i=0}^d\) of V such that

$$\begin{aligned}&(A-\theta _i I) U_i = U_{i+1} \qquad (0 \le i \le d-1), \qquad \quad (A-\theta _d I)U_d=0, \end{aligned}$$(10)$$\begin{aligned}&(A^*-\theta ^*_i I)U_i \subseteq U_{i-1} \qquad (1 \le i \le d), \qquad \qquad (A^* - \theta ^*_0 I) U_0=0. \end{aligned}$$(11) -

(iii)

There exist scalars \(\lbrace \varphi _i\rbrace _{i=1}^d\) in \({\mathbb {F}}\) and a basis for V with respect to which

$$\begin{aligned} A: \quad \left( \begin{array}{cccccc} \theta _0 &{} &{} &{} &{} &{} \mathbf{0} \\ 1 &{} \theta _1 &{} &{} &{} &{} \\ &{} 1 &{} \theta _2 &{} &{} &{} \\ &{}&{} \cdot &{} \cdot &{}&{} \\ &{} &{} &{} \cdot &{} \cdot &{} \\ \mathbf{0} &{} &{} &{} &{} 1 &{} \theta _d \end{array} \right) , \qquad \quad A^*: \quad \left( \begin{array}{cccccc} \theta ^*_0 &{} \varphi _1 &{} &{} &{} &{} \mathbf{0} \\ &{} \theta ^*_1 &{} \varphi _2 &{} &{} &{} \\ &{} &{} \theta ^*_2 &{} \cdot &{} &{} \\ &{}&{} &{} \cdot &{} \cdot &{} \\ &{} &{} &{} &{} \cdot &{} \varphi _d \\ \mathbf{0} &{} &{} &{} &{} &{} \theta ^*_d \end{array} \right) . \end{aligned}$$(12)

Assume that (i)–(iii) hold. Then \(E^*_0\) is normalizing and \(U_i= \tau _i(A)E^*_0V\) for \(0 \le i \le d\). The basis for V from (iii) is \(\lbrace \tau _i(A)\xi \rbrace _{i=0}^d\), where \(0 \not =\xi \in E^*_0V\). This basis induces the decomposition \(\lbrace U_i \rbrace _{i=0}^d\). The sequence \(\lbrace \varphi _i\rbrace _{i=1}^d\) is unique.

Proof

\(\mathrm{(i)}\Rightarrow \mathrm{(ii)} \) The element \(E^*_0\) is normalizing by Lemma 7.5, so Lemma 7.4 applies. Consider the decomposition \(\lbrace U_i \rbrace _{i=0}^d\) of V from Lemma 7.4. This decomposition satisfies (10) by Lemma 7.4(ii),(iii). We now show (11). By Lemma 7.4(iv) and Lemma 7.5(iii),

For \(0 \le i \le d\),

Also, using Lemma 7.4(v) and (9),

The above comments imply (11).

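\(\mathrm{(ii)}\Rightarrow \mathrm{(i)} \) From (11) we obtain

$$\begin{aligned} U_0 + U_1 + \cdots + U_i = E^*_0V + E^*_1V + \cdots + E^*_iV \qquad \qquad (0 \le i \le d). \end{aligned}$$(13)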

In particular \(U_0=E^*_0V\). By this and (10) we obtain \(U_i=\tau _i(A)E^*_0V\) for \(0 \le i \le d\). Consequently \(V={{\mathcal {D}}}E^*_0V\), so \(E^*_0\) is normalizing by Lemma 6.3(i),(iii). We show (8). Combining Lemma 7.4(iv) and (13) we obtain Lemma 7.5(iii). This gives Lemma 7.5(i), which is (8). Next we show (9). Let i, j be given with \(j-i>1\). Using Lemma 7.4(v) and (11),

Therefore \(E_i A^*E_j=0\). We have shown (9).

\(\mathrm{(ii)}\Leftrightarrow \mathrm{(iii)} \) Assertion (iii) is a reformulation of (ii) in terms of matrices.

Now assume that (i)–(iii) hold. We mentioned in the proof of \(\mathrm{(ii)}\Rightarrow \mathrm{(i)} \) that \(E^*_0\) is normalizing and \(U_i = \tau _i(A)E^*_0V\) for \(0 \le i \le d\). Let \(\lbrace u_i\rbrace _{i=0}^d\) denote the basis for V from (iii), and define \(\xi = u_0\). From the matrix representing \(A^*\) in (12), we see that \(\xi \) is an eigenvector for \(A^*\) with eigenvalue \(\theta ^*_0\). So \(\xi \in E^*_0V\). From the matrix representing A in (12), we obtain \((A-\theta _i I)u_i =u_{i+1}\) for \(0 \le i \le d-1\). Consequently \(u_i = \tau _i(A)\xi \) for \(0 \le i \le d\). For \(0 \le i \le d\) we have \(u_i = \tau _i(A)\xi \in \tau _i(A)E^*_0V=U_i\). So the basis \(\lbrace u_i \rbrace _{i=0}^d\) induces the decomposition \(\lbrace U_i \rbrace _{i=0}^d\). The sequence \(\lbrace \varphi _i\rbrace _{i=1}^d\) is unique since the vector \(\xi \) is unique up to multiplication by a nonzero scalar. \(\square \)
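The matrix reformulation in Proposition 7.6(iii) can be illustrated with a small computation. The sketch below builds the two matrices in (12) from sample data and checks that the standard basis vectors, playing the role of \(u_i=\tau _i(A)\xi \), satisfy the raising relation (10) and the lowering relation (11) with the containment realized as multiplication by \(\varphi _i\). The helper names and the numerical values are hypothetical.

```python
from fractions import Fraction

def split_matrices(theta, theta_star, varphi):
    """Build the matrices in (12): A lower bidiagonal, A_star upper bidiagonal."""
    n = len(theta)
    A = [[Fraction(0)] * n for _ in range(n)]
    A_star = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        A[i][i] = Fraction(theta[i])
        A_star[i][i] = Fraction(theta_star[i])
        if i > 0:
            A[i][i - 1] = Fraction(1)                   # ones on the subdiagonal
            A_star[i - 1][i] = Fraction(varphi[i - 1])  # varphi_i in position (i-1, i)
    return A, A_star

def apply_shifted(M, shift, v):
    """Return (M - shift * I) v."""
    n = len(v)
    return [sum(M[r][c] * v[c] for c in range(n)) - shift * v[r] for r in range(n)]

def unit(n, i):
    """Coordinate vector of u_i; the zero vector if i is out of range."""
    return [Fraction(int(k == i)) for k in range(n)]

# Hypothetical data with d = 3.
theta, theta_star, varphi = [0, 1, 3, 6], [0, 2, 5, 9], [4, 7, 5]
A, A_star = split_matrices(theta, theta_star, varphi)
n = len(theta)

# (10): (A - theta_i I) u_i = u_{i+1}, with u_{d+1} read as 0.
raising_ok = all(
    apply_shifted(A, theta[i], unit(n, i)) == unit(n, i + 1) for i in range(n)
)

# (11): (A_star - theta_star_i I) u_i = varphi_i u_{i-1}, with u_{-1} read as 0.
lowering_ok = all(
    apply_shifted(A_star, theta_star[i], unit(n, i))
    == [(varphi[i - 1] if i > 0 else 0) * x for x in unit(n, i - 1)]
    for i in range(n)
)
```

Column i of each matrix holds the coordinates of the image of \(u_i\), which is why the ones sit below the diagonal of A and the \(\varphi _i\) sit above the diagonal of \(A^*\).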

Lemma 7.7

Assume that the equivalent conditions (i)–(iii) hold in Proposition 7.6. Then for \(1 \le i \le d\) the following (i)–(iii) are equivalent:

-

(i)

\(E_{i-1}A^*E_i \not =0\);

-

(ii)

\((A^*-\theta ^*_i I) U_i =U_{i-1}\);

-

(iii)

\(\varphi _i \not =0\).

Proof

\(\mathrm{(i)} \Rightarrow \mathrm{(ii)}\) We assume that \((A^*-\theta ^*_i I) U_i \not =U_{i-1}\) and get a contradiction. We have \((A^*-\theta ^*_i I) U_i =0\) since \((A^*-\theta ^*_i I) U_i \subseteq U_{i-1}\) and \(U_{i-1}\) has dimension one. Using Lemma 7.4(v),

Therefore \(E_{i-1}A^*E_i=0\) for a contradiction. We have shown \((A^*-\theta ^*_i I) U_i =U_{i-1}\).

\(\mathrm{(ii)} \Rightarrow \mathrm{(i)}\) We assume that \(E_{i-1} A^*E_i=0\) and get a contradiction. Using Lemma 7.4(v),

This contradicts the fact that \(\lbrace U_i\rbrace _{i=0}^d\) is a decomposition. We have shown \(E_{i-1} A^*E_i\not =0\).

\(\mathrm{(ii)} \Leftrightarrow \mathrm{(iii)}\) Use the matrix representation of \(A^*\) from (12). \(\square \)

8 A Result About Wrap-Around

We continue to discuss the pre Leonard system \(\Phi =(A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) from Definition 3.1.

Throughout this section we assume that \(\Phi \) satisfies the equivalent conditions (i)–(iii) in Proposition 7.6. We will obtain a useful result involving the scalars \(\lbrace \varphi _i \rbrace _{i=1}^d\) from Proposition 7.6(iii); this result is sometimes called the wrap-around result.

Recall the parameters \(\lbrace a_i\rbrace _{i=0}^d\), \(\lbrace a^*_i\rbrace _{i=0}^d \) from Definition 3.3. We next compute these parameters in terms of \(\lbrace \theta _i\rbrace _{i=0}^d\), \(\lbrace \theta ^*_i\rbrace _{i=0}^d\), \(\lbrace \varphi _i\rbrace _{i=1}^d\). First assume that \(d=0\). Then \(A=\theta _0 I\) and \(A^*=\theta ^*_0 I\), so \(a_0=\theta _0\) and \(a^*_0 = \theta ^*_0\).

Lemma 8.1

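(See [18, Lemma 5.1]). For \(d\ge 1\) we have

$$\begin{aligned} a_0&= \theta _0 + \frac{\varphi _1}{\theta ^*_0-\theta ^*_1}, \\ a_i&= \theta _i + \frac{\varphi _i}{\theta ^*_i-\theta ^*_{i-1}} + \frac{\varphi _{i+1}}{\theta ^*_i-\theta ^*_{i+1}} \qquad (1 \le i \le d-1), \\ a_d&= \theta _d + \frac{\varphi _d}{\theta ^*_d-\theta ^*_{d-1}} \end{aligned}$$

and

$$\begin{aligned} a^*_0&= \theta ^*_0 + \frac{\varphi _1}{\theta _0-\theta _1}, \\ a^*_i&= \theta ^*_i + \frac{\varphi _i}{\theta _i-\theta _{i-1}} + \frac{\varphi _{i+1}}{\theta _i-\theta _{i+1}} \qquad (1 \le i \le d-1), \\ a^*_d&= \theta ^*_d + \frac{\varphi _d}{\theta _d-\theta _{d-1}}. \end{aligned}$$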

Proof

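Concerning \(\lbrace a_i\rbrace _{i=0}^d\), define

$$\begin{aligned} x_0&= \theta _0 + \frac{\varphi _1}{\theta ^*_0-\theta ^*_1}, \\ x_i&= \theta _i + \frac{\varphi _i}{\theta ^*_i-\theta ^*_{i-1}} + \frac{\varphi _{i+1}}{\theta ^*_i-\theta ^*_{i+1}} \qquad (1 \le i \le d-1), \\ x_d&= \theta _d + \frac{\varphi _d}{\theta ^*_d-\theta ^*_{d-1}}. \end{aligned}$$

We show that \(a_i=x_i\) for \(0 \le i \le d\). Since \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) are mutually distinct, it suffices to show that \(0 = \sum _{i=0}^d (x_i-a_i)\theta ^{*r}_i\) for \(0 \le r \le d\). Let r be given. We compute the trace of \(A A^{*r}\) in two ways. On one hand, \(A^*= \sum _{i=0}^d \theta ^*_i E^*_i\) so \(A^{*r}= \sum _{i=0}^d \theta ^{*r}_i E^*_i\). By this and Definition 3.3,

$$\begin{aligned} \mathrm{tr}(AA^{*r}) = \sum _{i=0}^d a_i \theta ^{*r}_i. \end{aligned}$$(14)

On the other hand, consider the matrix representations of A and \(A^*\) from (12). Using these matrices we compute the trace of \(A A^{*r}\) as the sum of the diagonal entries. A brief calculation yields

$$\begin{aligned} \mathrm{tr}(AA^{*r}) = \sum _{i=0}^d \theta _i \theta ^{*r}_i + \sum _{i=1}^d \varphi _i \frac{\theta ^{*r}_{i-1}-\theta ^{*r}_i}{\theta ^*_{i-1}-\theta ^*_i}. \end{aligned}$$(15)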

Comparing (14), (15) we get an equation that becomes \(0 = \sum _{i=0}^d (x_i-a_i)\theta ^{*r}_i\) after rearranging the terms. We have shown that \(a_i=x_i\) for \(0 \le i \le d\). Our assertions concerning \(\lbrace a^*_i\rbrace _{i=0}^d\) are similarly obtained, by computing in two ways the trace of \(A^* A^r\) for \(0 \le r \le d\). \(\square \)
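The two-way trace computation in the proof of Lemma 8.1 can be replayed on a small example. The sketch below assumes that Definition 3.3 sets \(a_i=\mathrm{tr}(AE^*_i)\), and it takes as a candidate closed form \(x_i = \theta _i + \varphi _i/(\theta ^*_i-\theta ^*_{i-1}) + \varphi _{i+1}/(\theta ^*_i-\theta ^*_{i+1})\), with the out-of-range term omitted at \(i=0\) and \(i=d\); both are our reading of the cited results rather than quotations. All function names and numerical values are hypothetical.

```python
from fractions import Fraction

def matmul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def trace(X):
    return sum(X[i][i] for i in range(len(X)))

def split_matrices(theta, theta_star, varphi):
    """Matrices of A and A_star as in (12)."""
    n = len(theta)
    A = [[Fraction(0)] * n for _ in range(n)]
    A_star = [[Fraction(0)] * n for _ in range(n)]
    for i in range(n):
        A[i][i], A_star[i][i] = Fraction(theta[i]), Fraction(theta_star[i])
        if i > 0:
            A[i][i - 1] = Fraction(1)
            A_star[i - 1][i] = Fraction(varphi[i - 1])
    return A, A_star

def dual_idempotent(A_star, theta_star, i):
    """E*_i as the product over j != i of (A_star - theta*_j I) / (theta*_i - theta*_j)."""
    n = len(theta_star)
    E = [[Fraction(int(r == c)) for c in range(n)] for r in range(n)]
    for j in range(n):
        if j != i:
            scale = Fraction(theta_star[i] - theta_star[j])
            factor = [[(A_star[r][c] - (theta_star[j] if r == c else 0)) / scale
                       for c in range(n)] for r in range(n)]
            E = matmul(E, factor)
    return E

def candidate_a(theta, theta_star, varphi):
    """Candidate closed form x_i described in the lead-in (an assumption)."""
    d = len(theta) - 1
    x = []
    for i in range(d + 1):
        value = Fraction(theta[i])
        if i >= 1:
            value += Fraction(varphi[i - 1]) / (theta_star[i] - theta_star[i - 1])
        if i <= d - 1:
            value += Fraction(varphi[i]) / (theta_star[i] - theta_star[i + 1])
        x.append(value)
    return x

# Hypothetical data with d = 3.
theta, theta_star, varphi = [0, 1, 3, 6], [0, 2, 5, 9], [4, 7, 5]
A, A_star = split_matrices(theta, theta_star, varphi)
d = len(theta) - 1

# a_i computed directly as tr(A E*_i).
a = [trace(matmul(A, dual_idempotent(A_star, theta_star, i))) for i in range(d + 1)]

# Compare tr(A A*^r) with sum_i a_i theta*_i^r for 0 <= r <= d.
power = [[Fraction(int(r == c)) for c in range(d + 1)] for r in range(d + 1)]
traces_agree = True
for r in range(d + 1):
    lhs = trace(matmul(A, power))
    rhs = sum(a[i] * Fraction(theta_star[i]) ** r for i in range(d + 1))
    traces_agree = traces_agree and lhs == rhs
    power = matmul(power, A_star)
```

In this example the directly computed list `a` coincides with `candidate_a(theta, theta_star, varphi)`, in line with the uniqueness argument based on the mutually distinct \(\theta ^*_i\).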

Lemma 8.2

(See [18, Lemma 5.2]). For \(1 \le i \le d\) the scalar \(\varphi _i\) is equal to each of the following four expressions:

Proof

Use Lemmas 3.4, 8.1. \(\square \)

Definition 8.3

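Define

$$\begin{aligned} \vartheta _i = \varphi _i - (\theta ^*_i-\theta ^*_0)(\theta _{i-1}-\theta _d) \qquad \qquad (1 \le i \le d) \end{aligned}$$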

and \(\vartheta _0=0\), \(\vartheta _{d+1}=0\).

Proposition 8.4

(wrap-around) Assume \(d\ge 2\). Then

$$\begin{aligned} \vartheta _1 = \vartheta _d. \end{aligned}$$

Proof

In the equation \(I = \sum _{i=0}^d E^*_i\), multiply each term on the right by \(AE^*_0\). Simplify the result using \(E^*_0AE^*_0= a_0 E^*_0\) and \(E^*_i A E^*_0 = 0 \) \((2 \le i \le d)\) to obtain

In (16), multiply each term on the left by \(A^*\) and simplify to get

In the equation \(I = \sum _{i=0}^d E_i\), multiply each term on the left by \(E_d A^*\). Simplify the result using \(E_d A^* E_d = a^*_d E_d\) to obtain

In (18), multiply each term on the right by A and simplify to obtain

We now compute \(\theta ^*_1 E_d\) times (16) minus \(E_d\) times (17) minus (18) times \(\theta _{d-1} E^*_0\) plus (19) times \( E^*_0\). The result is

In the above equation, consider the coefficient of \(E_d E^*_0\). Evaluate this coefficient using

to find that this coefficient is \(\vartheta _1 -\vartheta _d\). \(\square \)

For the sake of completeness, we mention a second version of Proposition 8.4. We do not use this second version, so we will not dwell on the proof.

Lemma 8.5

Assume \(d\ge 2\). Then

Proof

Similar to the proof of Proposition 8.4. \(\square \)

9 The Parameter Array of a Leonard System

In this section we consider a Leonard system \(\Phi = (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d)\) on V. Note that \(\Phi \) satisfies the equivalent conditions (i)–(iii) in Proposition 7.6.

Definition 9.1

By the first split sequence for \(\Phi \) we mean the sequence \(\lbrace \varphi _i\rbrace _{i=1}^d\) from Proposition 7.6(iii). Let \(\lbrace \phi _i \rbrace _{i=1}^d\) denote the first split sequence for \(\Phi ^\Downarrow \). We call \(\lbrace \phi _i \rbrace _{i=1}^d\) the second split sequence for \(\Phi \).

By Lemma 7.7 and the construction, \(\varphi _i\) and \(\phi _i\) are nonzero for \(1 \le i \le d\).

Lemma 9.2

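There exists a basis for V with respect to which

$$\begin{aligned} A: \quad \left( \begin{array}{cccccc} \theta _d &{} &{} &{} &{} &{} \mathbf{0} \\ 1 &{} \theta _{d-1} &{} &{} &{} &{} \\ &{} 1 &{} \theta _{d-2} &{} &{} &{} \\ &{}&{} \cdot &{} \cdot &{}&{} \\ &{} &{} &{} \cdot &{} \cdot &{} \\ \mathbf{0} &{} &{} &{} &{} 1 &{} \theta _0 \end{array} \right) , \qquad \quad A^*: \quad \left( \begin{array}{cccccc} \theta ^*_0 &{} \phi _1 &{} &{} &{} &{} \mathbf{0} \\ &{} \theta ^*_1 &{} \phi _2 &{} &{} &{} \\ &{} &{} \theta ^*_2 &{} \cdot &{} &{} \\ &{}&{} &{} \cdot &{} \cdot &{} \\ &{} &{} &{} &{} \cdot &{} \phi _d \\ \mathbf{0} &{} &{} &{} &{} &{} \theta ^*_d \end{array} \right) . \end{aligned}$$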

Proof

Apply Proposition 7.6(iii) to \(\Phi ^\Downarrow \). \(\square \)

Lemma 9.3

For a Leonard system \(\Phi '\) over \({\mathbb {F}}\), the following are equivalent:

-

(i)

\(\Phi , \Phi '\) are isomorphic;

-

(ii)

\(\Phi , \Phi '\) have the same eigenvalue sequence, dual eigenvalue sequence, and first split sequence;

-

(iii)

\(\Phi , \Phi '\) have the same eigenvalue sequence, dual eigenvalue sequence, and second split sequence.

Proof

\(\mathrm{(i)} \Leftrightarrow \mathrm{(ii)}\) By Proposition 7.6(iii).

\(\mathrm{(i)} \Leftrightarrow \mathrm{(iii)}\) By Lemma 9.2. \(\square \)

In Lemma 8.1 we gave some formulas for \(\lbrace a_i\rbrace _{i=0}^d\), \(\lbrace a^*_i\rbrace _{i=0}^d\) that involved \(\lbrace \varphi _i\rbrace _{i=1}^d\). Next we give some similar formulas that involve \(\lbrace \phi _i\rbrace _{i=1}^d\).

Lemma 9.4

For \(d\ge 1\) we have

and

Proof

Recall that \(\lbrace \phi _i \rbrace _{i=1}^d\) is the first split sequence for \(\Phi ^\Downarrow \). Apply Lemma 8.1 to \(\Phi ^\Downarrow \) and use the data for \(\Phi ^\Downarrow \) in Proposition 3.6. \(\square \)

Lemma 9.5

(See [18, Lemma 6.4]). For \(1 \le i \le d\) the scalar \(\phi _i\) is equal to each of the following four expressions:

Proof

Apply Lemma 8.2 to \(\Phi ^\Downarrow \) and use the data for \(\Phi ^\Downarrow \) in Proposition 3.6. \(\square \)

Definition 9.6

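(See [19, Definition 10.1]). By the parameter array of \(\Phi \) we mean the sequence

$$\begin{aligned} \bigl ( \lbrace \theta _i \rbrace _{i=0}^d; \lbrace \theta ^*_i \rbrace _{i=0}^d; \lbrace \varphi _i \rbrace _{i=1}^d; \lbrace \phi _i \rbrace _{i=1}^d \bigr ), \end{aligned}$$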

where we recall that \(\lbrace \theta _i \rbrace _{i=0}^d\) is the eigenvalue sequence of \(\Phi \), \(\lbrace \theta ^*_i \rbrace _{i=0}^d\) is the dual eigenvalue sequence of \(\Phi \), \(\lbrace \varphi _i \rbrace _{i=1}^d\) is the first split sequence of \(\Phi \), and \(\lbrace \phi _i \rbrace _{i=1}^d\) is the second split sequence of \(\Phi \).

Lemma 9.7

(See [18, Theorem 1.11]). The parameter arrays of

$$\begin{aligned} \Phi , \qquad \Phi ^\Downarrow , \qquad \Phi ^\downarrow , \qquad \Phi ^* \end{aligned}$$

are related as follows.

| LS | Parameter array |
|---|---|
| \(\Phi \) | \(\bigl ( \lbrace \theta _i \rbrace _{i=0}^d; \lbrace \theta ^*_i \rbrace _{i=0}^d; \lbrace \varphi _i \rbrace _{i=1}^d; \lbrace \phi _i \rbrace _{i=1}^d \bigr ) \) |
| \(\Phi ^\Downarrow \) | \( \bigl ( \lbrace \theta _{d-i} \rbrace _{i=0}^d; \lbrace \theta ^*_i \rbrace _{i=0}^d; \lbrace \phi _i \rbrace _{i=1}^d; \lbrace \varphi _i \rbrace _{i=1}^d \bigr ) \) |
| \(\Phi ^\downarrow \) | \( \bigl ( \lbrace \theta _{i} \rbrace _{i=0}^d; \lbrace \theta ^*_{d-i} \rbrace _{i=0}^d; \lbrace \phi _{d-i+1} \rbrace _{i=1}^d; \lbrace \varphi _{d-i+1} \rbrace _{i=1}^d \bigr )\) |
| \(\Phi ^*\) | \( \bigl ( \lbrace \theta ^*_{i} \rbrace _{i=0}^d; \lbrace \theta _{i} \rbrace _{i=0}^d; \lbrace \varphi _{i} \rbrace _{i=1}^d; \lbrace \phi _{d-i+1} \rbrace _{i=1}^d \bigr ) \) |

Proof

Use Proposition 3.6 and Lemmas 8.2, 9.5. \(\square \)

We mention a variation on Lemma 9.3.

Proposition 9.8

Two Leonard systems over \({\mathbb {F}}\) are isomorphic if and only if they have the same parameter array.

Proof

By Lemma 9.3 and Definition 9.6. \(\square \)

10 Statement of the Leonard System Classification

In the following theorem we classify up to isomorphism the Leonard systems over \({\mathbb {F}}\).

Theorem 10.1

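(See [18, Theorem 1.9]). Consider a sequence

$$\begin{aligned} \bigl ( \lbrace \theta _i \rbrace _{i=0}^d; \lbrace \theta ^*_i \rbrace _{i=0}^d; \lbrace \varphi _i \rbrace _{i=1}^d; \lbrace \phi _i \rbrace _{i=1}^d \bigr ) \end{aligned}$$(21)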

of scalars in \({\mathbb {F}}\). Then there exists a Leonard system \(\Phi \) over \({\mathbb {F}}\) with parameter array (21) if and only if the following conditions (PA1)–(PA5) hold:

- (PA1):

-

\( \theta _i\not =\theta _j,\quad \theta ^*_i\not =\theta ^*_j \quad \) if \(\;\;i\not =j,\qquad \qquad (0 \le i,j\le d)\);

- (PA2):

-

\( \varphi _i \not =0, \quad \phi _i\not =0 \qquad \qquad (1 \le i \le d)\);

- (PA3):

-

\( {\displaystyle { \varphi _i = \phi _1 \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} +(\theta ^*_i-\theta ^*_0)(\theta _{i-1}-\theta _d) \qquad \;\;(1 \le i \le d)}}\);

- (PA4):

-

\( {\displaystyle { \phi _i = \varphi _1 \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} +(\theta ^*_i-\theta ^*_0)(\theta _{d-i+1}-\theta _0) \qquad (1 \le i \le d)}}\);

- (PA5):

-

the scalars

$$\begin{aligned} \frac{\theta _{i-2}-\theta _{i+1}}{\theta _{i-1}-\theta _i},\qquad \qquad \frac{\theta ^*_{i-2}-\theta ^*_{i+1}}{\theta ^*_{i-1}-\theta ^*_i} \qquad \qquad \end{aligned}$$(22)are equal and independent of i for \(2\le i \le d-1\).

Moreover, if \(\Phi \) exists then \(\Phi \) is unique up to isomorphism of Leonard systems.

The proof of Theorem 10.1 will be completed in Sect. 17.

Definition 10.2

By a parameter array of diameter d over \({\mathbb {F}}\), we mean a sequence (21) of scalars in \({\mathbb {F}}\) that satisfy (PA1)–(PA5).

Theorem 10.1 gives a bijection between the following two sets:

-

(i)

the parameter arrays over \({\mathbb {F}}\) that have diameter d;

-

(ii)

the isomorphism classes of Leonard systems over \({\mathbb {F}}\) that have diameter d.
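Conditions (PA1)–(PA5) are finite checks, so membership in the first set above can be tested mechanically. The following is a minimal sketch over the rational numbers; the function name `is_parameter_array` and the sample data are hypothetical, and the lists `varphi`, `phi` store \(\varphi _i\), \(\phi _i\) at position \(i-1\).

```python
from fractions import Fraction

def is_parameter_array(theta, theta_star, varphi, phi):
    """Check (PA1)-(PA5) for rational data; varphi[i-1], phi[i-1] hold varphi_i, phi_i."""
    theta = [Fraction(t) for t in theta]
    theta_star = [Fraction(t) for t in theta_star]
    varphi = [Fraction(t) for t in varphi]
    phi = [Fraction(t) for t in phi]
    d = len(theta) - 1
    if len(theta_star) != d + 1 or len(varphi) != d or len(phi) != d:
        return False
    # (PA1): both eigenvalue sequences are mutually distinct.
    if len(set(theta)) != d + 1 or len(set(theta_star)) != d + 1:
        return False
    # (PA2): both split sequences are nonzero.
    if any(v == 0 for v in varphi + phi):
        return False
    # (PA3), (PA4): the two split sequences determine each other.
    for i in range(1, d + 1):
        s = sum(((theta[h] - theta[d - h]) / (theta[0] - theta[d]) for h in range(i)),
                Fraction(0))
        dual_gap = theta_star[i] - theta_star[0]
        if varphi[i - 1] != phi[0] * s + dual_gap * (theta[i - 1] - theta[d]):
            return False
        if phi[i - 1] != varphi[0] * s + dual_gap * (theta[d - i + 1] - theta[0]):
            return False
    # (PA5): the ratios in (22) are equal and independent of i for 2 <= i <= d-1.
    ratios = set()
    for i in range(2, d):
        ratios.add((theta[i - 2] - theta[i + 1]) / (theta[i - 1] - theta[i]))
        ratios.add((theta_star[i - 2] - theta_star[i + 1])
                   / (theta_star[i - 1] - theta_star[i]))
    return len(ratios) <= 1

# Hypothetical example with d = 3 and arithmetic eigenvalue sequences.
example_ok = is_parameter_array([3, 1, -1, -3], [3, 1, -1, -3], [-3, -4, -3], [9, 12, 9])
perturbed_ok = is_parameter_array([3, 1, -1, -3], [3, 1, -1, -3], [-3, -4, -2], [9, 12, 9])
```

For \(d\le 2\) the range in (PA5) is empty, so the sketch treats that condition as vacuously satisfied.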

We have a comment.

Lemma 10.3

For \(d\ge 1\), a parameter array \(\bigl ( \lbrace \theta _i \rbrace _{i=0}^d; \lbrace \theta ^*_i \rbrace _{i=0}^d; \lbrace \varphi _i \rbrace _{i=1}^d; \lbrace \phi _i \rbrace _{i=1}^d \bigr )\) is uniquely determined by \(\varphi _1\), \(\lbrace \theta _i \rbrace _{i=0}^d\), \(\lbrace \theta ^*_i \rbrace _{i=0}^d\).

Proof

By the nature of the equations (PA3), (PA4). \(\square \)
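The dependence described in Lemma 10.3 can be made explicit. In the sketch below, (PA4) at \(i=1\) gives \(\phi _1\) from \(\varphi _1\), after which (PA3) and (PA4) give the remaining \(\varphi _i\) and \(\phi _i\). The function name and the sample data are hypothetical; the output is only a candidate, since (PA2) and (PA5) still have to be checked separately.

```python
from fractions import Fraction

def split_sequences_from(theta, theta_star, varphi_1):
    """Recover (varphi_1..varphi_d, phi_1..phi_d) from varphi_1 via (PA3), (PA4); needs d >= 1."""
    theta = [Fraction(t) for t in theta]
    theta_star = [Fraction(t) for t in theta_star]
    varphi_1 = Fraction(varphi_1)
    d = len(theta) - 1

    def partial_sum(i):
        # sum_{h=0}^{i-1} (theta_h - theta_{d-h}) / (theta_0 - theta_d)
        return sum(((theta[h] - theta[d - h]) / (theta[0] - theta[d]) for h in range(i)),
                   Fraction(0))

    # (PA4) at i = 1: phi_1 = varphi_1 + (theta*_1 - theta*_0)(theta_d - theta_0).
    phi_1 = varphi_1 + (theta_star[1] - theta_star[0]) * (theta[d] - theta[0])
    varphi = [phi_1 * partial_sum(i)
              + (theta_star[i] - theta_star[0]) * (theta[i - 1] - theta[d])
              for i in range(1, d + 1)]
    phi = [varphi_1 * partial_sum(i)
           + (theta_star[i] - theta_star[0]) * (theta[d - i + 1] - theta[0])
           for i in range(1, d + 1)]
    return varphi, phi

# Hypothetical example with d = 3.
varphi, phi = split_sequences_from([3, 1, -1, -3], [3, 1, -1, -3], -3)
```

As a consistency check, the first entry of the returned `varphi` reproduces the input \(\varphi _1\), because (PA3) and (PA4) agree at \(i=1\).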

11 Recurrent Sequences

Throughout this section let \(\lbrace \theta _i\rbrace _{i=0}^d\) denote scalars in \({\mathbb {F}}\).

Definition 11.1

(See [18, Definition 8.2]). Let \(\beta , \gamma , \varrho \) denote scalars in \({\mathbb {F}}\).

-

(i)

The sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is said to be recurrent whenever \(\theta _{i-1}\not =\theta _i\) for \(2 \le i \le d-1\), and

$$\begin{aligned} \frac{\theta _{i-2}-\theta _{i+1}}{\theta _{i-1}-\theta _i} \end{aligned}$$(23)is independent of i for \(2 \le i \le d-1\).

-

(ii)

The sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is said to be \(\beta \)-recurrent whenever

$$\begin{aligned} \theta _{i-2}\,-\,(\beta +1)\theta _{i-1}\,+\,(\beta +1)\theta _i \,-\,\theta _{i+1} \end{aligned}$$(24)is zero for \(2 \le i \le d-1\).

-

(iii)

The sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is said to be \((\beta ,\gamma )\)-recurrent whenever

$$\begin{aligned} \theta _{i-1}\,-\,\beta \theta _i\,+\,\theta _{i+1}=\gamma \end{aligned}$$(25)for \(1 \le i \le d-1\).

-

(iv)

The sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is said to be \((\beta ,\gamma ,\varrho )\)-recurrent whenever

$$\begin{aligned} \theta ^2_{i-1}-\beta \theta _{i-1}\theta _i+\theta ^2_i -\gamma (\theta _{i-1} +\theta _i)=\varrho \end{aligned}$$(26)for \(1 \le i \le d\).

Lemma 11.2

The following are equivalent:

-

(i)

the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is recurrent;

-

(ii)

we have \(\theta _{i-1}\not =\theta _i\) for \(2 \le i \le d-1\), and there exists \(\beta \in {\mathbb {F}}\) such that \(\lbrace \theta _i\rbrace _{i=0}^d\) is \(\beta \)-recurrent.

Suppose (i), (ii) hold, and that \(d\ge 3\). Then the common value of (23) is equal to \(\beta +1\).

Proof

Routine. \(\square \)

Lemma 11.3

For \(\beta \in {\mathbb {F}}\) the following are equivalent:

-

(i)

the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is \(\beta \)-recurrent;

-

(ii)

there exists \(\gamma \in {\mathbb {F}}\) such that \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma )\)-recurrent.

Proof

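\(\mathrm{(i)}\Rightarrow \mathrm{(ii)} \) For \(2\le i \le d-1\), the expression (24) is zero by assumption, so

$$\begin{aligned} \theta _{i-2}-\beta \theta _{i-1}+\theta _i = \theta _{i-1}-\beta \theta _i+\theta _{i+1}. \end{aligned}$$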

The left-hand side of (25) is independent of i, and the result follows.

\(\mathrm{(ii)}\Rightarrow \mathrm{(i)} \) For \(2\le i \le d-1\), subtract the equation (25) at i from the corresponding equation obtained by replacing i by \(i-1\), to find (24) is zero. \(\square \)

Lemma 11.4

The following (i), (ii) hold for all \(\beta , \gamma \in {\mathbb {F}}\).

-

(i)

Suppose \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma )\)-recurrent. Then there exists \(\varrho \in {\mathbb {F}}\) such that \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma ,\varrho )\)-recurrent.

-

(ii)

Suppose \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma ,\varrho )\)-recurrent, and that \(\theta _{i-1}\not =\theta _{i+1}\) for \(1 \le i\le d-1\). Then \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma )\)-recurrent.

Proof

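Let \(p_i\) denote the expression on the left in (26), and observe

$$\begin{aligned} p_i-p_{i+1} = (\theta _{i-1}-\theta _{i+1})(\theta _{i-1}-\beta \theta _i+\theta _{i+1}-\gamma ) \end{aligned}$$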

for \(1 \le i \le d-1\). Assertions (i), (ii) are both routine consequences of this. \(\square \)

12 Recurrent Sequences in Closed Form

In this section, we obtain some formulas involving recurrent sequences. Let \(\overline{{\mathbb {F}}}\) denote the algebraic closure of \({\mathbb {F}}\). For \(q \in \overline{{\mathbb {F}}}\) let \({\mathbb {F}}[q ]\) denote the field extension of \({\mathbb {F}}\) generated by q.

Throughout this section let \(\beta \) and \(\lbrace \theta _i\rbrace _{i=0}^d\) denote scalars in \({\mathbb {F}}\).

Lemma 12.1

Assume that \(\lbrace \theta _i\rbrace _{i=0}^d\) is \(\beta \)-recurrent. Then the following (i)–(iii) hold.

-

(i)

Suppose \(\beta \not =2\), \(\beta \not =-2\), and pick \(0 \not =q \in \overline{{\mathbb {F}}}\) such that \(q+q^{-1}=\beta \). Then there exist scalars \(\alpha _1, \alpha _2, \alpha _3\) in \({\mathbb {F}}[q ]\) such that

$$\begin{aligned} \theta _i = \alpha _1 + \alpha _2 q^i + \alpha _3 q^{-i} \qquad \qquad (0 \le i \le d). \end{aligned}$$(27) -

(ii)

Suppose \(\beta = 2\). Then there exist \(\alpha _1, \alpha _2, \alpha _3 \) in \({\mathbb {F}} \) such that

$$\begin{aligned} \theta _i = \alpha _1 + \alpha _2 i + \alpha _3 i(i-1)/2 \qquad \qquad (0 \le i \le d). \end{aligned}$$(28) -

(iii)

Suppose \(\beta = -2\) and \(\mathrm{char}({\mathbb {F}}) \not =2\). Then there exist \(\alpha _1, \alpha _2, \alpha _3 \) in \({\mathbb {F}} \) such that

$$\begin{aligned} \theta _i = \alpha _1 + \alpha _2 (-1)^i + \alpha _3 i(-1)^i \qquad \qquad (0 \le i \le d). \end{aligned}$$(29)

Referring to case (ii) above, if \(\mathrm{char}({\mathbb {F}}) =2\) then we interpret the expression \(i(i-1)/2\) as 0 if \(i=0\) or \(i=1\) (mod 4), and as 1 if \(i=2\) or \(i=3\) (mod 4).

Proof

-

(i)

We assume \(d\ge 2\); otherwise the result is trivial. Let q be given, and consider the equations (27) for \(i=0,1,2\). These equations are linear in \(\alpha _1, \alpha _2, \alpha _3\). We routinely find the coefficient matrix is nonsingular, so there exist \(\alpha _1, \alpha _2, \alpha _3\) in \({\mathbb {F}} [q ]\) such that (27) holds for \(i=0,1,2\). Using these scalars, let \(\varepsilon _i \) denote the left-hand side of (27) minus the right-hand side of (27), for \(0 \le i \le d\). On one hand \(\varepsilon _0\), \(\varepsilon _1\), \(\varepsilon _2\) are zero from the construction. On the other hand, one readily checks

$$\begin{aligned} \varepsilon _{i-2}\,-\,(\beta +1)\varepsilon _{i-1}\,+\,(\beta +1)\varepsilon _i \,-\,\varepsilon _{i+1}=0 \end{aligned}$$for \(2 \le i \le d-1\). By these comments \(\varepsilon _i=0\) for \(0 \le i \le d\), and the result follows.

-

(ii), (iii)

Similar to the proof of (i) above.

\(\square \)
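The argument in the proof of Lemma 12.1 (fit \(\alpha _1, \alpha _2, \alpha _3\) at \(i=0,1,2\), then propagate by the recurrence) can be replayed numerically. The sketch below works over the rationals, so it takes \(q=2\) in case (i) to keep q inside the base field; the function names, the starting values and the choice of q are hypothetical.

```python
from fractions import Fraction

def extend_beta_recurrent(first_three, beta, d):
    """Extend theta_0, theta_1, theta_2 to theta_0..theta_d by setting (24) to zero."""
    theta = [Fraction(t) for t in first_three]
    for i in range(2, d):
        theta.append(theta[i - 2] - (beta + 1) * theta[i - 1] + (beta + 1) * theta[i])
    return theta

def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def fit_alphas(theta, basis):
    """Solve the closed-form equations at i = 0, 1, 2 by Cramer's rule."""
    M = [list(basis(i)) for i in range(3)]
    D = det3(M)
    alphas = []
    for k in range(3):
        Mk = [row[:] for row in M]
        for r in range(3):
            Mk[r][k] = theta[r]
        alphas.append(det3(Mk) / D)
    return alphas

def closed_form_matches(theta, basis):
    """True if the alphas fitted at i = 0, 1, 2 reproduce theta_i for every i."""
    alphas = fit_alphas(theta, basis)
    return all(theta[i] == sum(a * b for a, b in zip(alphas, basis(i)))
               for i in range(len(theta)))

d = 6
start = [5, 7, 2]  # hypothetical theta_0, theta_1, theta_2

# Case (i): beta = q + 1/q with q = 2, closed form (27).
q = Fraction(2)
ok_q = closed_form_matches(
    extend_beta_recurrent(start, q + 1 / q, d),
    lambda i: (Fraction(1), q ** i, q ** (-i)))

# Case (ii): beta = 2, closed form (28).
ok_2 = closed_form_matches(
    extend_beta_recurrent(start, Fraction(2), d),
    lambda i: (Fraction(1), Fraction(i), Fraction(i * (i - 1), 2)))

# Case (iii): beta = -2, closed form (29).
ok_minus2 = closed_form_matches(
    extend_beta_recurrent(start, Fraction(-2), d),
    lambda i: (Fraction(1), Fraction((-1) ** i), Fraction(i * (-1) ** i)))
```

Cramer's rule is used only because the system is three by three; any exact linear solver would serve equally well.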

Lemma 12.2

Assume that \(\lbrace \theta _i\rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent. Then (i)–(iv) hold below.

-

(i)

Suppose \(\beta \not =2\), \(\beta \not =-2\), and pick \(0 \not = q \in \overline{{\mathbb {F}}}\) such that \(q+q^{-1}=\beta \). Then \(q^i \not =1 \) for \(1 \le i \le d\).

-

(ii)

Suppose \(\beta = 2\) and \(\mathrm{char}({\mathbb {F}})=p\), \(p\ge 3\). Then \(d<p\).

-

(iii)

Suppose \(\beta = -2\) and \(\mathrm{char}({\mathbb {F}}) =p\), \(p\ge 3\). Then \(d<2p\).

-

(iv)

Suppose \(\beta = 0\) and \(\mathrm{char}({\mathbb {F}}) =2\). Then \(d\le 3\).

Proof

-

(i)

Using (27), we find \(q^i=1 \) implies \(\theta _i=\theta _0\) for \(1 \le i \le d\).

-

(ii)

Suppose \(d\ge p\). Setting \(i=p\) in (28) and recalling that p is congruent to 0 modulo p, we obtain \(\theta _p=\theta _0\), a contradiction. Hence \(d<p\).

-

(iii)

Suppose \(d\ge 2p\). Setting \(i=2p\) in (29) and recalling that p is congruent to 0 modulo p, we obtain \(\theta _{2p}=\theta _0\), a contradiction. Hence \(d<2p\).

-

(iv)

Suppose \(d\ge 4\). Setting \(i=4\) in (28), we find \(\theta _4=\theta _0\) in view of the comment at the end of Lemma 12.1. This is a contradiction, so \(d\le 3\).

\(\square \)

Lemma 12.3

(See [18, Lemma 9.4]). Assume that \(\lbrace \theta _i\rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent. Pick any integers i, j, r, s \((0 \le i,j,r,s \le d)\) such that \(i+j=r+s\), \(r\not =s\). Then (i)–(iv) hold below.

-

(i)

Suppose \(\beta \not =2\), \(\beta \not =-2\). Then

$$\begin{aligned} \frac{\theta _i-\theta _{j}}{\theta _r-\theta _s} = \frac{q^{i}-q^j}{q^r-q^s}, \end{aligned}$$(30)where \(q+q^{-1}=\beta \).

-

(ii)

Suppose \(\beta = 2\) and \(\mathrm{char}({\mathbb {F}}) \not =2\). Then

$$\begin{aligned} \frac{\theta _i-\theta _{j}}{\theta _r-\theta _s} = \frac{i-j}{r-s}. \end{aligned}$$(31) -

(iii)

Suppose \(\beta = -2\) and \(\mathrm{char}({\mathbb {F}})\not =2\). Then

$$\begin{aligned} \frac{\theta _i-\theta _{j}}{\theta _r-\theta _s} = \left\{ \begin{array}{ll} (-1)^{i+r} \frac{i-j}{r-s}, &{} \text {if }\;i+j\;\text { is even}; \\ (-1)^{i+r}, &{} \text {if }\;i+j\;\text { is odd.} \end{array} \right. \end{aligned}$$(32) -

(iv)

Suppose \(\beta = 0\) and \(\mathrm{char}({\mathbb {F}}) =2\). Then

$$\begin{aligned} \frac{\theta _i-\theta _{j}}{\theta _r-\theta _s} = \left\{ \begin{array}{ll} 0, &{} \text {if }\;i=j; \\ 1, &{} \text {if }\;i\not =j. \end{array} \right. \end{aligned}$$(33)

Proof

To get (i), evaluate the left-hand side of (30) using (27), and simplify the result. The cases (ii)–(iv) are similar. \(\square \)

13 A Sum

Throughout this section assume \(d\ge 1\). Let \(\beta \) and \(\lbrace \theta _i\rbrace _{i=0}^d\) denote scalars in \({\mathbb {F}}\) with \(\lbrace \theta _i\rbrace _{i=0}^d\) mutually distinct.

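We consider the sums

$$\begin{aligned} \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d}, \end{aligned}$$(34)

where \(0 \le i \le d+1\). Denoting the sum in (34) by \(\vartheta _i\), we have

$$\begin{aligned} \vartheta _0=0, \qquad \quad \vartheta _1=1, \qquad \quad \vartheta _d=1, \qquad \quad \vartheta _{d+1}=0. \end{aligned}$$(35)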

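Moreover

$$\begin{aligned} \vartheta _i = \vartheta _{d-i+1} \qquad \qquad (0 \le i \le d+1). \end{aligned}$$(36)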

The sums (34) play an important role a bit later, so we will examine them carefully. We begin by giving explicit formulas for the sums (34) under the assumption that \(\lbrace \theta _i\rbrace _{i=0}^d\) is \(\beta \)-recurrent. To avoid trivialities we assume that \(d\ge 3\).

Lemma 13.1

(See [18, Lemma 10.2]). Assume that \(\lbrace \theta _i\rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent. Further assume that \(d\ge 3\). Then for \(0 \le i \le d+1\) we have the following.

-

(i)

Suppose \(\beta \not =2\), \(\beta \not =-2\). Then

$$\begin{aligned} \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} = \frac{q^i-1}{q-1}\,\frac{q^{d-i+1}-1}{q^d-1}, \end{aligned}$$(37)where \(q+q^{-1}=\beta \).

-

(ii)

Suppose \(\beta = 2\) and \(\mathrm{char}({\mathbb {F}}) \not =2\). Then

$$\begin{aligned} \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} = \frac{i(d-i+1)}{d}. \end{aligned}$$(38) -

(iii)

Suppose \(\beta = -2\), \(\mathrm{char}({\mathbb {F}}) \not =2\), and d odd. Then

$$\begin{aligned} \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} = \left\{ \begin{array}{ll} 0, &{} \text {if }i\text { is even}; \\ 1, &{} \text {if }i\text { is odd.} \end{array} \right. \end{aligned}$$(39) -

(iv)

Suppose \(\beta = -2\), \(\mathrm{char}({\mathbb {F}}) \not =2\), and d even. Then

$$\begin{aligned} \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} = \left\{ \begin{array}{ll} i/d, &{} \text {if }i\text { is even; } \\ (d-i+1)/d, \quad &{} \text {if }i\text { is odd. } \end{array} \right. \end{aligned}$$(40) -

(v)

Suppose \(\beta = 0\), \(\mathrm{char}({\mathbb {F}}) =2\), and \(d=3\). Then

$$\begin{aligned} \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} = \left\{ \begin{array}{ll} 0, &{} \text {if }i \text { is even; } \\ 1, \quad &{} \text {if }i\text { is odd. } \end{array} \right. \end{aligned}$$(41)

Proof

The above sums can be computed directly from Lemma 12.3. \(\square \)
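The closed forms (37)–(40) can be compared with the defining sum on small examples. The sketch below uses rational sequences of the shapes (27), (28), (29) with hypothetical coefficients, and \(q=2\) in case (i); it is a spot check under these assumptions, not a proof.

```python
from fractions import Fraction

def partial_sum(theta, i):
    """sum_{h=0}^{i-1} (theta_h - theta_{d-h}) / (theta_0 - theta_d)."""
    d = len(theta) - 1
    return sum(((theta[h] - theta[d - h]) / (theta[0] - theta[d]) for h in range(i)),
               Fraction(0))

def formula_37(q, d, i):
    return (q ** i - 1) / (q - 1) * (q ** (d - i + 1) - 1) / (q ** d - 1)

def formula_38(d, i):
    return Fraction(i * (d - i + 1), d)

def formula_39_40(d, i):
    if d % 2 == 1:                       # (39): d odd
        return Fraction(i % 2)
    if i % 2 == 0:                       # (40): d even, i even
        return Fraction(i, d)
    return Fraction(d - i + 1, d)        # (40): d even, i odd

q = Fraction(2)

# Shape (27) with d = 4 and hypothetical alphas (1, 3, 5).
theta_q = [1 + 3 * q ** i + 5 * q ** (-i) for i in range(5)]
ok_37 = all(partial_sum(theta_q, i) == formula_37(q, 4, i) for i in range(6))

# Shape (28) with d = 4 and hypothetical alphas (1, 2, 3).
theta_2 = [Fraction(1 + 2 * i) + 3 * Fraction(i * (i - 1), 2) for i in range(5)]
ok_38 = all(partial_sum(theta_2, i) == formula_38(4, i) for i in range(6))

# Shape (29) with hypothetical alphas (0, 1, 1), for d = 3 (odd) and d = 4 (even).
theta_odd = [Fraction((-1) ** i * (1 + i)) for i in range(4)]
theta_even = [Fraction((-1) ** i * (1 + i)) for i in range(5)]
ok_39 = all(partial_sum(theta_odd, i) == formula_39_40(3, i) for i in range(5))
ok_40 = all(partial_sum(theta_even, i) == formula_39_40(4, i) for i in range(6))
```

Each test sequence is mutually distinct, as the lemma requires, so the denominator \(\theta _0-\theta _d\) is nonzero.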

Note 13.2

Referring to Lemma 13.1, the cases (iii), (iv) can be handled in the following uniform way. Suppose \(\beta =-2\) and \(\mathrm{char}({\mathbb {F}}) \not =2\). Then for \(0 \le i \le d+1\),

We make an observation.

Lemma 13.3

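Assume that \(\lbrace \theta _i\rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent. Define

$$\begin{aligned} \vartheta _i = \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0-\theta _d} \qquad \qquad (0 \le i \le d+1). \end{aligned}$$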

Then the sequence \(\lbrace \vartheta _i\rbrace _{i=0}^{d+1}\) is \(\beta \)-recurrent.

Proof

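For \(d=1\) there is nothing to prove. For \(d=2\) we have

$$\begin{aligned} \vartheta _0-(\beta +1)\vartheta _1+(\beta +1)\vartheta _2-\vartheta _3=0 \end{aligned}$$

since

$$\begin{aligned} \vartheta _0=0, \qquad \quad \vartheta _1=\vartheta _2, \qquad \quad \vartheta _3=0. \end{aligned}$$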

For \(d\ge 3\) the result is obtained by examining the cases in Lemma 13.1. \(\square \)

Proposition 13.4

Assume that \(\lbrace \theta _i\rbrace _{i=0}^d \) are mutually distinct and \(\beta \)-recurrent. Then for scalars \(\lbrace \vartheta _i\rbrace _{i=0}^{d+1}\) in \({\mathbb {F}}\) the following are equivalent:

-

(i)

\(\displaystyle \vartheta _i = \vartheta _1 \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0 - \theta _d} \qquad \qquad (0 \le i \le d+1)\);

-

(ii)

the sequence \(\lbrace \vartheta _i\rbrace _{i=0}^{d+1}\) is \(\beta \)-recurrent and

$$\begin{aligned} \vartheta _0=0, \qquad \quad \vartheta _1 =\vartheta _d, \qquad \quad \vartheta _{d+1} = 0. \end{aligned}$$(43)

Proof

\(\mathrm{(i)} \Rightarrow \mathrm{(ii)}\) The sequence \(\lbrace \vartheta _i\rbrace _{i=0}^{d+1}\) is \(\beta \)-recurrent by Lemma 13.3. Condition (43) follows from (35).

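\(\mathrm{(ii)} \Rightarrow \mathrm{(i)}\) Define

$$\begin{aligned} \Delta _i = \vartheta _i - \vartheta _1 \sum _{h=0}^{i-1} \frac{\theta _h-\theta _{d-h}}{\theta _0 - \theta _d} \qquad \qquad (0 \le i \le d+1). \end{aligned}$$

We show that \(\Delta _i=0\) for \(0 \le i \le d+1\). By construction

$$\begin{aligned} \Delta _0=0, \qquad \quad \Delta _1=0, \qquad \quad \Delta _d=0, \qquad \quad \Delta _{d+1}=0. \end{aligned}$$(44)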

For the rest of the proof we assume that \(d\ge 3\); otherwise we are done. By construction and Lemma 13.3, the sequence \(\lbrace \Delta _i \rbrace _{i=0}^{d+1}\) is \(\beta \)-recurrent. We break the argument into cases.

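Case \(\beta \not =2\), \(\beta \not =-2\). Pick \(0 \not = q \in \overline{{\mathbb {F}}}\) such that \(q+q^{-1}=\beta \). There exist \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) in \({{\mathbb {F}}}[q ]\) such that

$$\begin{aligned} \Delta _i = \alpha _1 + \alpha _2 q^i + \alpha _3 q^{-i} \qquad \qquad (0 \le i \le d+1). \end{aligned}$$

Since \(\lbrace \theta _i \rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent, we have \(q^i \not =1\) for \(1 \le i \le d\). The first three equations in (44) give

$$\begin{aligned} \left( \begin{array}{ccc} 1 &{} 1 &{} 1 \\ 1 &{} q &{} q^{-1} \\ 1 &{} q^d &{} q^{-d} \end{array} \right) \left( \begin{array}{c} \alpha _1 \\ \alpha _2 \\ \alpha _3 \end{array} \right) = 0. \end{aligned}$$

For the above equation the coefficient matrix has determinant

$$\begin{aligned} (q-1)(q^{d-1}-1)(q^d-1)q^{-d}, \end{aligned}$$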

which is nonzero. Therefore the coefficient matrix is invertible, so each of \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) is zero. Consequently \(\Delta _i = 0\) for \(0 \le i \le d+1\).

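Case \(\beta =2\) and \(\mathrm{char}({\mathbb {F}})\not =2\). There exist \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) in \({{\mathbb {F}}}\) such that

$$\begin{aligned} \Delta _i = \alpha _1 + \alpha _2 i + \alpha _3 i(i-1)/2 \qquad \qquad (0 \le i \le d+1). \end{aligned}$$

Since \(\lbrace \theta _i \rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent, we have \(\mathrm{char}({\mathbb {F}})=0\) or \(\mathrm{char}({\mathbb {F}})=p\) with \(d<p\). The first three equations in (44) give

$$\begin{aligned} \left( \begin{array}{ccc} 1 &{} 0 &{} 0 \\ 1 &{} 1 &{} 0 \\ 1 &{} d &{} d(d-1)/2 \end{array} \right) \left( \begin{array}{c} \alpha _1 \\ \alpha _2 \\ \alpha _3 \end{array} \right) = 0. \end{aligned}$$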

For the above equation the coefficient matrix has determinant \(d(d-1)/2\), which is nonzero. Therefore the coefficient matrix is invertible, so each of \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) is zero. Consequently \(\Delta _i = 0\) for \(0 \le i \le d+1\).

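Case \(\beta =-2\) and \(\mathrm{char}({\mathbb {F}})\not =2\). There exist \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) in \({{\mathbb {F}}}\) such that

$$\begin{aligned} \Delta _i = \alpha _1 + \alpha _2 (-1)^i + \alpha _3 i(-1)^i \qquad \qquad (0 \le i \le d+1). \end{aligned}$$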

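Since \(\lbrace \theta _i \rbrace _{i=0}^d\) are mutually distinct and \(\beta \)-recurrent, we have

$$\begin{aligned} \mathrm{char}({\mathbb {F}})=0 \quad \text{or} \quad \mathrm{char}({\mathbb {F}})=p \text{ with } d/2<p. \end{aligned}$$(45)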

The first three equations in (44) give

$$\begin{aligned} \left( \begin{array}{ccc} 1 &{} 1 &{} 0 \\ 1 &{} -1 &{} -1 \\ 1 &{} (-1)^d &{} d(-1)^d \end{array} \right) \left( \begin{array}{c} \alpha _1 \\ \alpha _2 \\ \alpha _3 \end{array} \right) = 0. \end{aligned}$$

For the above equation, consider the determinant of the coefficient matrix. For even \(d=2n\) this determinant is \(-2^2 n\), and for odd \(d=2n+1\) this determinant is \(2^2 n\). Note that \(2 \not =0\) in \({\mathbb {F}}\) since \(\mathrm{char}({\mathbb {F}})\not =2\). For either parity of d we have \(n\not =0\) in \({\mathbb {F}}\) by (45). So for either parity of d the determinant is nonzero. Therefore the coefficient matrix is invertible, so each of \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) is zero. Consequently \(\Delta _i = 0\) for \(0 \le i \le d+1\).

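Case \(\beta =0\) and \(\mathrm{char}({\mathbb {F}})=2\). We have \(d=3\) by Lemma 12.2(iv). There exist \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) in \({{\mathbb {F}}}\) such that

$$\begin{aligned} \Delta _i = \alpha _1 + \alpha _2 i + \alpha _3 i(i-1)/2 \qquad \qquad (0 \le i \le 4), \end{aligned}$$

where \(i(i-1)/2\) is interpreted at the end of Lemma 12.1. The first three equations in (44) give

$$\begin{aligned} \left( \begin{array}{ccc} 1 &{} 0 &{} 0 \\ 1 &{} 1 &{} 0 \\ 1 &{} 1 &{} 1 \end{array} \right) \left( \begin{array}{c} \alpha _1 \\ \alpha _2 \\ \alpha _3 \end{array} \right) = 0. \end{aligned}$$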

In the above equation the coefficient matrix is invertible, so each of \(\alpha _1\), \(\alpha _2\), \(\alpha _3\) is zero. Consequently \(\Delta _i = 0\) for \(0 \le i \le d+1\). \(\square \)
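The determinant claims in the case analysis can be spot-checked by machine. The sketch below assumes the closed forms \(\Delta _i = \alpha _1 + \alpha _2 q^i + \alpha _3 q^{-i}\) (generic \(\beta \)), \(\Delta _i = \alpha _1 + \alpha _2 i + \alpha _3 i(i-1)/2\) (\(\beta =2\)), and \(\Delta _i = \alpha _1 + \alpha _2 (-1)^i + \alpha _3 i(-1)^i\) (\(\beta =-2\)), and evaluates each at \(i=0,1,d\). These forms and the function names are assumptions of this illustration, and the computation is carried out over the rationals only.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (p, r, s), (u, v, w) = m
    return a * (r * w - s * v) - b * (p * w - s * u) + c * (p * v - r * u)


def rows_generic(q, d):
    """Rows (1, q^i, q^-i) for i = 0, 1, d; q must be a Fraction."""
    return [[1, q**i, q**(-i)] for i in (0, 1, d)]


def rows_beta_plus2(d):
    """Rows (1, i, i(i-1)/2) for i = 0, 1, d."""
    return [[1, i, Fraction(i * (i - 1), 2)] for i in (0, 1, d)]


def rows_beta_minus2(d):
    """Rows (1, (-1)^i, i(-1)^i) for i = 0, 1, d."""
    return [[1, (-1) ** i, i * (-1) ** i] for i in (0, 1, d)]


for d in range(3, 8):
    print(
        d,
        det3(rows_generic(Fraction(3), d)),
        det3(rows_beta_plus2(d)),
        det3(rows_beta_minus2(d)),
    )
```

For \(\beta =2\) and \(\beta =-2\) the printed values can be compared with \(d(d-1)/2\) and \(\mp 2^2 n\) from the proof; the characteristic-2 case is not covered by this rational computation.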

14 The Polynomial P(x, y)

Let \(\beta , \gamma , \varrho \) denote scalars in \({\mathbb {F}}\), and consider a polynomial in two variables

$$\begin{aligned} P(x,y) = x^2-\beta xy+y^2-\gamma (x+y)-\varrho . \end{aligned}$$(46)

Note that \(P(x,y)= P(y,x)\). Let \(\lbrace \theta _i \rbrace _{i=0}^d\) denote scalars in \({\mathbb {F}}\).

Lemma 14.1

The following are equivalent:

-

(i)

\(P(\theta _{i-1},\theta _i)=0 \) for \(1 \le i \le d\);

-

(ii)

the sequence \(\lbrace \theta _i \rbrace _{i=0}^d\) is \((\beta , \gamma , \varrho )\)-recurrent.

Proof

By Definition 11.1(iv). \(\square \)

Proposition 14.2

Assume that \(\lbrace \theta _i\rbrace _{i=0}^d\) are mutually distinct and \((\beta , \gamma ,\varrho )\)-recurrent. Then the following hold:

-

(i)

\(P(x,\theta _j) = (x-\theta _{j-1})(x-\theta _{j+1}) \qquad (1 \le j \le d-1)\);

-

(ii)

for \(0 \le i,j\le d\), \(P(\theta _i, \theta _j) = 0\) implies \(|i-j|=1\) or \(i,j\in \lbrace 0,d\rbrace \).

Proof

-

(i)

The polynomial \(P(x,\theta _j)\) is monic in x, and has roots \(\theta _{j-1}\), \(\theta _{j+1}\) by Lemma 14.1.

-

(ii)

Assume that \(P(\theta _i,\theta _j)=0\). Also assume that \(1 \le i \le d-1\) or \(1 \le j \le d-1\); otherwise \(i,j\in \lbrace 0,d\rbrace \) and we are done. Interchanging i, j if necessary, we may assume that \(1 \le j \le d-1\). Using (i) we have \(0= P(\theta _i,\theta _j)= (\theta _i-\theta _{j-1})(\theta _i-\theta _{j+1})\). Therefore \(i=j-1\) or \(i=j+1\), so \(|i-j|=1\).

\(\square \)
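Proposition 14.2 lends itself to a quick numerical check. The sketch below assumes \(P(x,y) = x^2-\beta xy+y^2-\gamma (x+y)-\varrho \) and reads \(\gamma \), \(\varrho \) off the first terms of a sample sequence. The sample \(\theta _i = 1 + 3q^i + 7q^{-i}\) with \(q=2\), and all function names, are hypothetical choices made for this illustration.

```python
from fractions import Fraction


def make_P(beta, gamma, rho):
    """Return P(x, y) = x^2 - beta*x*y + y^2 - gamma*(x + y) - rho."""
    def P(x, y):
        return x * x - beta * x * y + y * y - gamma * (x + y) - rho
    return P


# Hypothetical sample sequence with beta = q + 1/q, q = 2.
q = Fraction(2)
beta = q + 1 / q
d = 5
theta = [1 + 3 * q**i + 7 * q**(-i) for i in range(d + 1)]

# gamma and rho are read off the first terms of the sequence.
gamma = theta[0] - beta * theta[1] + theta[2]
rho = theta[0] ** 2 - beta * theta[0] * theta[1] + theta[1] ** 2 - gamma * (theta[0] + theta[1])
P = make_P(beta, gamma, rho)

# Index pairs (i, j) at which P(theta_i, theta_j) vanishes.
zeros = [(i, j) for i in range(d + 1) for j in range(d + 1) if P(theta[i], theta[j]) == 0]
print(zeros)
```

Every pair in `zeros` should satisfy \(|i-j|=1\) or \(i,j\in \lbrace 0,d\rbrace \), in line with Proposition 14.2(ii).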

15 The Tridiagonal Relations

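In this section, we consider a Leonard system

$$\begin{aligned} \Phi = (A; \lbrace E_i\rbrace _{i=0}^d; A^*; \lbrace E^*_i\rbrace _{i=0}^d) \end{aligned}$$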

on V, with eigenvalue sequence \(\lbrace \theta _i \rbrace _{i=0}^d\) and dual eigenvalue sequence \(\lbrace \theta ^*_i \rbrace _{i=0}^d\). Our goal is to prove the following result.

Theorem 15.1

(See [18, Theorem 1.12]). There exists a sequence of scalars \(\beta ,\gamma ,\gamma ^*,\varrho ,\varrho ^*\) taken from \({\mathbb {F}}\) such that both

- (TD1):

-

\(\qquad 0 = [A, A^2 A^*-\beta A A^* A+A^* A^2-\gamma \left( A A^*+A^* A\right) -\varrho A^* ]\),

- (TD2):

-

\(\qquad 0=[A^*, A^{*2} A-\beta A^*AA^* +AA^{*2}-\gamma ^* \left( A^*A+AA^*\right) -\varrho ^* A]\).

The sequence is unique if \(d\ge 3\).

The relations (TD1), (TD2) are called the tridiagonal relations. They are displayed in [16, Lemma 5.4] and examined carefully in [17].
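To see the tridiagonal relations in a concrete case, the following sketch evaluates both commutators for a Krawtchouk-type pair: \(A\) is tridiagonal with zero diagonal, superdiagonal \(d, d-1, \ldots , 1\) and subdiagonal \(1, 2, \ldots , d\), and \(A^* = \mathrm{diag}(d, d-2, \ldots , -d)\). This example and the parameter values \(\beta =2\), \(\gamma =\gamma ^*=0\), \(\varrho =\varrho ^*=4\) are not taken from this section; they are an illustrative assumption, as are the helper names.

```python
def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def lin(*terms):
    """Linear combination lin((c1, M1), (c2, M2), ...) of square matrices."""
    n = len(terms[0][1])
    return [[sum(c * M[i][j] for c, M in terms) for j in range(n)] for i in range(n)]


def krawtchouk_pair(d):
    """Illustrative pair: A tridiagonal, A_star diagonal with entries d - 2i."""
    n = d + 1
    A = [[0] * n for _ in range(n)]
    for i in range(d):
        A[i][i + 1] = d - i   # superdiagonal d, d-1, ..., 1
        A[i + 1][i] = i + 1   # subdiagonal 1, 2, ..., d
    A_star = [[d - 2 * i if i == j else 0 for j in range(n)] for i in range(n)]
    return A, A_star


def td_residual(A, B, beta, gamma, rho):
    """Return [A, A^2 B - beta A B A + B A^2 - gamma (A B + B A) - rho B]."""
    A2 = matmul(A, A)
    AB, BA = matmul(A, B), matmul(B, A)
    inner = lin((1, matmul(A2, B)), (-beta, matmul(AB, A)), (1, matmul(B, A2)),
                (-gamma, AB), (-gamma, BA), (-rho, B))
    return lin((1, matmul(A, inner)), (-1, matmul(inner, A)))


d = 4
A, A_star = krawtchouk_pair(d)
td1 = td_residual(A, A_star, 2, 0, 4)   # (TD1) with beta=2, gamma=0, rho=4
td2 = td_residual(A_star, A, 2, 0, 4)   # (TD2) with beta=2, gamma*=0, rho*=4
print(td1)
print(td2)
```

Swapping the roles of the two matrices in `td_residual` turns (TD1) into (TD2), which mirrors the passage from \(\Phi \) to \(\Phi ^*\) used later in the proof.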

Lemma 15.2

For \(\beta , \gamma , \varrho \in {\mathbb {F}}\) the following are equivalent:

-

(i)

the scalars \(\beta , \gamma , \varrho \) satisfy (TD1);

-

(ii)

the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma , \varrho )\)-recurrent.

Proof

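Let C denote the expression on the right in (TD1). We have

$$\begin{aligned} C = \sum _{i=0}^d \sum _{j=0}^d E_iCE_j. \end{aligned}$$

For \(0 \le i,j\le d\),

$$\begin{aligned} E_iCE_j = (\theta _i-\theta _j)P(\theta _i,\theta _j)E_iA^*E_j, \end{aligned}$$(47)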

where P is from (46).

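\(\mathrm{(i)} \Rightarrow \mathrm{(ii)}\) We have \(C=0\). So for \(1 \le j \le d\),

$$\begin{aligned} 0 = E_{j-1}CE_j = (\theta _{j-1}-\theta _j)P(\theta _{j-1},\theta _j)E_{j-1}A^*E_j. \end{aligned}$$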

By construction \(\theta _{j-1}\not =\theta _j\) and \(E_{j-1}A^*E_j\not =0\). Therefore \(P(\theta _{j-1},\theta _j)=0\). Consequently the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta ,\gamma , \varrho )\)-recurrent.

\(\mathrm{(ii)} \Rightarrow \mathrm{(i)}\) For \(0 \le i,j\le d\) the right-hand side of (47) has at least one zero factor, so \(E_iCE_j=0\). Consequently \(C=0\). \(\square \)

Lemma 15.3

The following (i)–(iii) hold for \(0 \le i,j\le d\):

-

(i)

\(E^*_i A^r E^*_j=0\) for \(0 \le r<\vert i-j\vert \);

-

(ii)

\(E^*_iA^rE^*_j \not =0\) for \(r=\vert i-j\vert \);

-

(iii)

for \(0 \le r,s\le d\),

$$\begin{aligned}&E^*_i A^r A^* A^s E^*_j= {\left\{ \begin{array}{ll} \theta ^*_{j+s} E^*_i A^{r+s} E^*_j,&{} {\text {if }i-j=r+s}; \\ \theta ^*_{j-s}E^*_i A^{r+s} E^*_j,&{} {\text {if }j-i=r+s}; \\ 0,&{} {\text { if }\vert i-j\vert >r+s}. \end{array}\right. } \end{aligned}$$

Proof

For \(0 \le i \le d\) pick \(0 \not =v_i \in E^*_iV\). So \(\lbrace v_i \rbrace _{i=0}^d\) is a basis for V. Without loss of generality, we may identify each \(X\in \mathrm{End}(V)\) with the matrix in \(\mathrm{Mat}_{d+1}({\mathbb {F}})\) that represents X with respect to \(\lbrace v_i \rbrace _{i=0}^d\). From this point of view, A is irreducible tridiagonal and \(A^* = \mathrm{diag}(\theta ^*_0,\theta ^*_1,\ldots ,\theta ^*_d)\). Moreover for \(0 \le i \le d\), the matrix \(E^*_i\) is diagonal with (i, i)-entry 1 and all other entries 0. Using these matrix representations, one routinely verifies the assertions (i)–(iii) in the lemma statement. \(\square \)
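The matrix point of view in the above proof is easy to test numerically. In the basis \(\lbrace v_i \rbrace _{i=0}^d\) the product \(E^*_iXE^*_j\) has a single possibly nonzero entry, namely the \((i,j)\)-entry of \(X\), so the three assertions reduce to statements about entries of \(A^r\) and \(A^rA^*A^s\). The sketch below checks them for an illustrative Krawtchouk-type pair with \(d=4\); this choice of \(A\), \(A^*\) and the helper names are assumptions, not data from the text.

```python
def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def krawtchouk_pair(d):
    """Illustrative pair: A irreducible tridiagonal, A_star = diag(d - 2i)."""
    n = d + 1
    A = [[0] * n for _ in range(n)]
    for i in range(d):
        A[i][i + 1] = d - i
        A[i + 1][i] = i + 1
    A_star = [[d - 2 * i if i == j else 0 for j in range(n)] for i in range(n)]
    return A, A_star


d = 4
A, A_star = krawtchouk_pair(d)
theta_star = [A_star[i][i] for i in range(d + 1)]

# pw[r] holds A^r for 0 <= r <= 2d.
pw = [[[int(i == j) for j in range(d + 1)] for i in range(d + 1)]]
for _ in range(2 * d):
    pw.append(matmul(pw[-1], A))


def check_i_ii():
    """(A^r)_{ij} = 0 for r < |i-j| and (A^r)_{ij} != 0 for r = |i-j|."""
    for i in range(d + 1):
        for j in range(d + 1):
            gap = abs(i - j)
            if any(pw[r][i][j] != 0 for r in range(gap)) or pw[gap][i][j] == 0:
                return False
    return True


def check_iii():
    """Entrywise form of assertion (iii) for all 0 <= r, s <= d."""
    for r in range(d + 1):
        for s in range(d + 1):
            M = matmul(matmul(pw[r], A_star), pw[s])
            for i in range(d + 1):
                for j in range(d + 1):
                    if i - j == r + s:
                        expected = theta_star[j + s] * pw[r + s][i][j]
                    elif j - i == r + s:
                        expected = theta_star[j - s] * pw[r + s][i][j]
                    elif abs(i - j) > r + s:
                        expected = 0
                    else:
                        continue
                    if M[i][j] != expected:
                        return False
    return True


print(check_i_ii(), check_iii())
```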

Recall that \({\mathcal {D}}\) is the subalgebra of \(\mathrm{End}(V)\) generated by A.

Lemma 15.4

Define

$$\begin{aligned} L_i = E_0+E_1+\cdots +E_i \qquad \qquad (0 \le i \le d). \end{aligned}$$

Then

-

(i)

\(\lbrace L_i \rbrace _{i=0}^d\) is a basis for the vector space \({\mathcal {D}}\);

-

(ii)

\(L_d=I\);

-

(iii)

for \(0 \le i \le d-1\),

$$\begin{aligned} L_i A^*-A^*L_i = E_i A^*E_{i+1}-E_{i+1}A^*E_i. \end{aligned}$$

Proof

-

(i)

Since \(\lbrace E_i \rbrace _{i=0}^d\) is a basis for \({\mathcal {D}}\).

-

(ii)

Since \(I=\sum _{i=0}^d E_i\).

-

(iii)

For \(0\le j\le d-1\) we have

$$\begin{aligned} E_jA^*&= E_j A^* (E_0 + \cdots + E_d) \nonumber \\&=E_jA^*E_{j-1}+E_jA^*E_j+E_jA^*E_{j+1} , \end{aligned}$$(48)

where \(E_{-1}=0\). Similarly for \(0 \le j \le d-1\),

$$\begin{aligned} A^*E_j&= E_{j-1}A^*E_j+E_jA^*E_j+E_{j+1}A^*E_j. \end{aligned}$$(49)

Sum each of (48), (49) over \(j=0,1,\ldots ,i\) and take the difference between these two sums.

\(\square \)
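Lemma 15.4 can be illustrated with exact arithmetic. The sketch below assumes \(L_i = E_0 + \cdots + E_i\) and computes each primitive idempotent as \(E_i = \prod _{j\not =i} (A-\theta _jI)/(\theta _i-\theta _j)\). The Krawtchouk-type pair with \(d=3\), its eigenvalue ordering \(\theta _i = d-2i\), and the helper names are illustrative assumptions rather than data from the text.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def lin(*terms):
    """Linear combination lin((c1, M1), (c2, M2), ...) of square matrices."""
    n = len(terms[0][1])
    return [[sum(c * M[i][j] for c, M in terms) for j in range(n)] for i in range(n)]


def krawtchouk_pair(d):
    """Illustrative pair: A tridiagonal with eigenvalues d - 2i, A_star diagonal."""
    n = d + 1
    A = [[0] * n for _ in range(n)]
    for i in range(d):
        A[i][i + 1] = d - i
        A[i + 1][i] = i + 1
    A_star = [[d - 2 * i if i == j else 0 for j in range(n)] for i in range(n)]
    return A, A_star


def primitive_idempotents(A, theta):
    """E_i = prod_{j != i} (A - theta_j I) / (theta_i - theta_j)."""
    n = len(A)
    I = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    result = []
    for i, th_i in enumerate(theta):
        E = I
        for j, th_j in enumerate(theta):
            if j != i:
                factor = lin((Fraction(1, th_i - th_j), A), (Fraction(-th_j, th_i - th_j), I))
                E = matmul(E, factor)
        result.append(E)
    return result


d = 3
A, A_star = krawtchouk_pair(d)
theta = [d - 2 * i for i in range(d + 1)]
E = primitive_idempotents(A, theta)
L = [lin(*[(1, E[h]) for h in range(i + 1)]) for i in range(d + 1)]


def lemma_iii_holds(i):
    """Compare L_i A* - A* L_i with E_i A* E_{i+1} - E_{i+1} A* E_i."""
    lhs = lin((1, matmul(L[i], A_star)), (-1, matmul(A_star, L[i])))
    rhs = lin((1, matmul(matmul(E[i], A_star), E[i + 1])),
              (-1, matmul(matmul(E[i + 1], A_star), E[i])))
    return lhs == rhs


print([lemma_iii_holds(i) for i in range(d)])
```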

Lemma 15.5

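We have

$$\begin{aligned} \mathrm{Span}\lbrace XA^*Y-YA^*X \mid X,Y\in {\mathcal {D}}\rbrace = \lbrace ZA^*-A^*Z \mid Z\in {\mathcal {D}}\rbrace . \end{aligned}$$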

Proof

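Using Lemma 15.4 we obtain

$$\begin{aligned} \mathrm{Span}\lbrace XA^*Y-YA^*X \mid X,Y\in {\mathcal {D}}\rbrace&= \mathrm{Span}\lbrace E_iA^*E_j-E_jA^*E_i \mid 0 \le i,j\le d\rbrace \\&= \mathrm{Span}\lbrace E_iA^*E_{i+1}-E_{i+1}A^*E_i \mid 0 \le i\le d-1\rbrace \\&= \mathrm{Span}\lbrace L_iA^*-A^*L_i \mid 0 \le i\le d\rbrace \\&= \lbrace ZA^*-A^*Z \mid Z\in {\mathcal {D}}\rbrace . \end{aligned}$$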

\(\square \)

Proof of Theorem 15.1

First assume that \(d\ge 3\). By Lemma 15.5 (with \(X=A^2\) and \(Y=A\)) there exists \(Z\in {\mathcal {D}}\) such that

$$\begin{aligned} A^2A^*A-AA^*A^2 = ZA^*-A^*Z. \end{aligned}$$(50)

Since \(\lbrace A^i \rbrace _{i=0}^d\) is a basis for \({\mathcal {D}}\), there exists a polynomial \(f\in {\mathbb {F}}[\lambda ]\) such that \(\mathrm{deg}(f)\le d\) and \(Z=f(A)\). Let k denote the degree of f.

We show that \(k=3\). We first assume that \(k<3\) and get a contradiction. We multiply each term in (50) on the left by \(E^*_3\) and on the right by \(E^*_0\). We evaluate the result using Lemma 15.3 to find \((\theta ^*_1-\theta ^*_2)E^*_3A^3E^*_0=0\). The scalar \( \theta ^*_1-\theta ^*_2\) is nonzero, and \(E^*_3A^3E^*_0\not =0\) by Lemma 15.3(ii). Therefore \( (\theta ^*_1-\theta ^*_2 )E^*_3A^3E^*_0\not =0\) for a contradiction. We have shown \(k\ge 3\). Let c denote the coefficient of \(\lambda ^k\) in f. By construction \(c\not =0\).

Next we assume that \(k>3\) and get a contradiction. We multiply each term in (50) on the left by \(E^*_k\) and on the right by \(E^*_0\). We evaluate the result using Lemma 15.3 to find \(0= c(\theta ^*_0-\theta ^*_k) E^*_kA^kE^*_0\). The scalars c and \( \theta ^*_0-\theta ^*_k\) are nonzero, and \(E^*_kA^kE^*_0\not =0\) by Lemma 15.3(ii). Therefore \( 0\not =c\left( \theta ^*_0-\theta ^*_k\right) E^*_kA^kE^*_0\) for a contradiction. We have shown \(k=3\).

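Define \(\beta = c^{-1}-1\), so \(\beta +1=c^{-1}\). Multiply each term in (50) by \(c^{-1}\). The result is

$$\begin{aligned} A^3A^*-(\beta +1)A^2A^*A+(\beta +1)AA^*A^2-A^*A^3 = \gamma (A^2A^*-A^*A^2)+\varrho (AA^*-A^*A), \end{aligned}$$(51)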

where \(\gamma , \varrho \in {\mathbb {F}}\). The equation (51) is (TD1) in disguise; it is (TD1) with the commutator expanded. Therefore \(\beta , \gamma ,\varrho \) satisfy (TD1). Concerning (TD2), pick any integer i \((2 \le i\le d-1)\). We multiply each term in (51) on the left by \(E^*_{i-2}\) and on the right by \(E^*_{i+1}\). We evaluate the result using Lemma 15.3 to find that \(E^*_{i-2}A^3E^*_{i+1}\) times

$$\begin{aligned} \theta ^*_{i-2}-(\beta +1)\theta ^*_{i-1}+(\beta +1)\theta ^*_i-\theta ^*_{i+1} \end{aligned}$$(52)

is zero. We have \(E^*_{i-2}A^3E^*_{i+1}\not =0\) by Lemma 15.3(ii), so (52) is zero. Thus the sequence \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) is \(\beta \)-recurrent. By Lemma 11.3 there exists \(\gamma ^* \in {\mathbb {F}}\) such that \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) is \((\beta , \gamma ^*)\)-recurrent. By Lemma 11.4(i) there exists \(\varrho ^* \in {\mathbb {F}}\) such that \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) is \((\beta , \gamma ^*, \varrho ^*)\)-recurrent. By this and Lemma 15.2 (applied to \(\Phi ^*\)) we see that \(\beta , \gamma ^*, \varrho ^*\) satisfy (TD2).

We have obtained scalars \(\beta , \gamma , \gamma ^*, \varrho , \varrho ^*\) in \({\mathbb {F}}\) that satisfy (TD1), (TD2). Next we show that these scalars are unique. Let \(\beta , \gamma , \gamma ^*, \varrho , \varrho ^*\) denote any scalars in \({\mathbb {F}}\) that satisfy (TD1), (TD2). By Lemma 15.2 the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta , \gamma , \varrho )\)-recurrent. By Lemma 11.4(ii) the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is \((\beta , \gamma )\)-recurrent. By Lemma 11.3 the sequence \(\lbrace \theta _i\rbrace _{i=0}^d\) is \(\beta \)-recurrent. Also by Lemma 15.2 the sequence \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) is \((\beta , \gamma ^*, \varrho ^*)\)-recurrent. By Lemma 11.4(ii) the sequence \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) is \((\beta , \gamma ^*)\)-recurrent. By Lemma 11.3 the sequence \(\lbrace \theta ^*_i\rbrace _{i=0}^d\) is \(\beta \)-recurrent. By these comments and Definition 11.1,

-

the scalars

$$\begin{aligned} \frac{\theta _{i-2}-\theta _{i+1}}{\theta _{i-1}-\theta _i},\qquad \frac{\theta ^*_{i-2}-\theta ^*_{i+1}}{\theta ^*_{i-1}-\theta ^*_i} \end{aligned}$$

are both equal to \(\beta +1\) for \(2\le i\le d-1\);

-

\(\gamma =\theta _{i-1}-\beta \theta _i+\theta _{i+1} \qquad (1\le i\le d-1)\),

-

\(\gamma ^*=\theta ^*_{i-1}-\beta \theta ^*_i+\theta ^*_{i+1} \qquad (1\le i\le d-1)\),

-