Abstract

Fourier PlenOctrees have proven to be an efficient representation for real-time rendering of dynamic neural radiance fields (NeRF). Despite their many advantages, they suffer from artifacts introduced by the involved compression when combined with recent state-of-the-art techniques for training the static per-frame NeRF models. In this paper, we perform an in-depth analysis of these artifacts and leverage the resulting insights to propose an improved representation. In particular, we present a novel density encoding that adapts the Fourier-based compression to the characteristics of the transfer function used by the underlying volume rendering procedure and leads to a substantial reduction of artifacts in the dynamic model. We demonstrate the effectiveness of our enhanced Fourier PlenOctrees in quantitative and qualitative evaluations on synthetic and real-world scenes.

1 Introduction

Photorealistic rendering of dynamic real-world scenes such as moving persons or interactions of people with surrounding objects plays a vital role in 4D content generation and has numerous applications including augmented reality (AR) and virtual reality (VR), advertisement, or entertainment. Traditional approaches typically capture such scenarios with professional, well-calibrated hardware setups [10, 19] in a controlled environment. This way, high-fidelity reconstructions of scene geometry, material properties, and surrounding illumination can be obtained. Recent advances in neural scene representations and, in particular, the seminal work on neural radiance fields (NeRF) [39] marked a breakthrough toward synthesizing photorealistic novel views. Unlike previous approaches, NeRF can generate highly detailed renderings of complex static scenes from only a set of posed multi-view images recorded by commodity cameras. Several extensions have subsequently been developed to alleviate the limitations of the original NeRF approach, leading to significant reductions in training time [41] or accelerated rendering [8, 18].

Further approaches explored the application of NeRF to dynamic scenarios but still suffer from slow rendering speed [20, 27, 32, 51]. Among these, Fourier PlenOctrees (FPO) [60] offer an efficient representation and compression of the temporally evolving scene while at the same time enabling free-viewpoint rendering in real time. In particular, they combine the advantages of the static PlenOctree representation [72] with a discrete Fourier transform (DFT) compression technique to compactly store time-dependent information in a sparse octree structure. Although this elegant formulation enables high runtime performance, the Fourier-based compression results in a low-frequency approximation of the original data. This approximation is susceptible to artifacts in both the reconstructed geometry and the color of the model, which often persist and cannot be fully resolved even after an additional fine-tuning step. Strong priors like a pretrained generalizable NeRF [62] may mitigate these artifacts and are applied in FPOs for a more robust initialization. However, when recent state-of-the-art techniques are used to boost the training of the static per-frame neural radiance fields without requiring prior knowledge, obtaining a suitable compressed model remains challenging.

In this paper, we revisit the frequency-based compression of Fourier PlenOctrees in the context of volume rendering and investigate the characteristics and behavior of the involved time-dependent density functions. Our analysis reveals that, after decompression, these functions exhibit beneficial properties that can be exploited through the implicit clipping applied during rendering, where an additional Rectified Linear Unit (ReLU) operation enforces non-negative values. Based on these observations, we aim to find efficient strategies that retain the compact representation of FPOs without introducing significant additional complexity or overhead, while eliminating artifacts and even further accelerating the already high rendering performance. We particularly focus on flexible approaches that allow for interchanging components to leverage recent advances and, thus, model the Fourier-based compression as an explicit step in the training process instead of investigating end-to-end trainable systems. To this end, we derive an efficient density encoding consisting of two transformations: (1) a component-dependent encoding counteracts the under-estimation of values inherent to the reconstruction from a reduced set of Fourier coefficients, and (2) a logarithmic encoding facilitates both the reconstruction from Fourier coefficients and the fine-tuning process by placing more emphasis on small values in the underlying \( L_2 \)-minimization. While it is tempting to learn such an encoding in an end-to-end fashion using, e.g., small MLPs, our handcrafted encoding directly incorporates the gained insights and adds only negligible computational overhead, which is especially beneficial for fast rendering.

In summary, our key contributions are:

- We perform an in-depth analysis of the compression artifacts induced in the Fourier PlenOctree representation when recent state-of-the-art techniques for training the individual per-frame NeRF models are employed.
- We introduce a novel density encoding for the Fourier-based compression that adapts to the characteristics of the transfer function in volume rendering of NeRF.

2 Related work

2.1 Neural scene representations

With the rise of machine learning, neural networks have become increasingly popular and attracted significant interest for reconstructing and representing scenes [5, 23, 40, 43, 50, 52, 70, 71]. In this context, the seminal work on NeRF [39] demonstrated impressive results for synthesizing novel views using volume rendering of density and view-dependent radiance that are optimized in an implicit neural scene representation using multi-layer perceptrons (MLP) from a set of posed images. Further work focused on addressing several of its limitations such as relaxing the dependence on accurate camera poses by jointly optimizing extrinsic [29, 61, 64] and intrinsic parameters [61, 64], the dependence on sharp input images by a simulation of the blurring process [36, 61], representing unbounded scenes [3, 74] and large-scene environments [54], or reducing aliasing artifacts by modeling the volumetric rendering with conical frustums instead of rays [2,3,4].

2.2 Acceleration of NeRF training and rendering

A further major limitation of implicit neural scene representations is the high computational cost during both training and rendering. In order to speed up the rendering process, several techniques were proposed that reduce the number of required samples along the ray [25, 31, 42] or subdivide the scene and use smaller and faster networks for evaluating the individual parts [47, 48]. Some approaches represented the scene using a latent feature embedding where feature vectors are stored in voxel grids [53, 67] or octrees [33]. Another strategy for accelerating rendering relies on efficiently storing precomputed features in discrete representations such as sparse grids with a texture atlas [21], textured polygon meshes [8], or caches [18] and inferring view-dependent effects with a small MLP. Furthermore, PlenOctrees [72] store the density and the view-dependent radiance, in terms of spherical harmonics (SH), in a hierarchical octree structure to entirely avoid network evaluations.

Improving the convergence of the training process has also been investigated by using additional data such as depth maps [11] or a visual hull computed from binary foreground masks [24] as an additional guidance. Furthermore, meta learning approaches allow for a more effective initialization compared to random weights [55]. Similar to the advances in rendering performance, discrete scene representations were also leveraged to boost the training. Instant-NGP [41] incorporated a multi-resolution hash encoding to significantly accelerate the training of neural models including NeRF. Plenoxels [15] stored SH and opacity values within a sparse voxel grid, and TensoRF [7] factorized dense voxel grids into multiple low-rank components. However, all the aforementioned methods and representations are limited to static scenes only and do not take dynamic scenarios like motion into account.

2.3 Dynamic scene representations

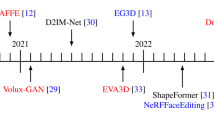

Although novel views of scenes containing motion can be directly synthesized from the individual per-frame static models, significant effort has been devoted to more efficient representations for neural rendering, such as subdividing the scene into static and dynamic parts [30, 68], using point clouds [68], mixtures of volumetric primitives [35], deformable human models [45], or encoding the dynamics with encoder–decoder architectures [34, 38]. Due to the success and representation power of neural radiance fields, these developments also inspired recent extensions of NeRF to dynamic scenes. Some methods leveraged the additional temporal information to perform novel-view synthesis from a single video of a moving camera instead of large collections of multi-view images [12, 16, 28, 44, 46, 56, 65, 69]. Among these, the reconstruction of humans also gained increasing interest, where morphable [16] and implicit generative models [69], pre-trained features [59], or deformation fields [44, 65] were employed to regularize the reconstruction. Furthermore, TöRF [1] used time-of-flight sensor measurements as an additional source of information, and DyNeRF [27] learned time-dependent latent codes to constrain the radiance field. Another way of handling scene dynamics is the decomposition into separate networks, each handling a specific part such as static and dynamic content [17], rigid and non-rigid motion [65], new areas [51], or even only a single dynamic entity [73]. Similarly, some methods reconstruct the scene in a canonical volume and model motion via a separate temporal deformation field [13, 20, 32] or residual fields [26, 58]. Discrete grid-based representations [7] applied for accelerating static scene training have also been extended to factorize the 4D spacetime [6, 14, 22, 49].
In this context, Fourier PlenOctrees (FPO) [60] lifted the restriction of ordinary PlenOctrees [72] to static scenes by combining their hierarchical representation with the Fourier transform, which enables efficient handling of time-variant density and SH-based radiance.

3 Preliminaries

In this section, we revisit the method of representing a dynamic scene using a Fourier PlenOctree [60], which extends the model-free, static, explicit PlenOctree representation [72] for real-time rendering of NeRFs. Given a set of T individual PlenOctrees each corresponding to a frame in a dynamic time sequence, the construction of an FPO consists of two parts: (1) a structure unification of the T static models, and (2) the computation of the DFT-compressed octree leaf entries, which will be discussed in more detail in Sects. 3.1 and 3.2, respectively.

In order to render an image of a scene at time step \( { t \in \{0,\dots ,T-1\} } \), the color \(\hat{\varvec{C}}\) of a pixel is accumulated along the ray \( { \varvec{r}(\tau ) = \varvec{o} + \tau \cdot \varvec{d} \in {\mathbb {R}}^3 } \) with origin \( { \varvec{o} \in {\mathbb {R}}^3 } \) at the camera, viewing direction \( { \varvec{d} \in {\mathbb {R}}^3 } \), and distance \( \tau \in {\mathbb {R}}_{\ge 0} \) along the ray. The ray \(\varvec{r}\) is taken from the set \( {\mathcal {R}} \) of all rays cast from the input images. The accumulation is performed analogously to PlenOctrees [72]:

\[ \hat{\varvec{C}}(\varvec{r}, t) = \sum_{i=1}^{N} T_i(t) \left( 1 - \exp(-\sigma_i(t) \, \delta_i) \right) \varvec{c}_i(t), \tag{1} \]

where N is the number of octree leaves hit by \(\varvec{r}\), \( { \delta _i {=} \tau _{i + 1} - \tau _i } \) the distance between voxel borders, and

\[ T_i(t) = \exp\left( -\sum_{j=1}^{i-1} \sigma_j(t) \, \delta_j \right) \tag{2} \]

is the accumulated transmittance from the camera up to leaf node i. Here, \(\left( 1 - \exp (-\sigma _i(t) \, \delta _i)\right) \) can be considered as the transfer function from densities to the opacity of this node.
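To make the accumulation concrete, the following minimal NumPy sketch composites a single ray front to back. It assumes that the leaves hit by the ray are already sorted by distance and that their densities and sigmoid-normalized colors have been decoded for time step t. The function name `render_ray` and the early-termination threshold `eps` are hypothetical choices for illustration, not part of the FPO reference code; the clipping of negative densities and the skipping of free space reflect the rendering behavior analyzed in Sect. 4.

```python
import numpy as np

def render_ray(sigma, rgb, delta, eps=1e-4):
    """Front-to-back compositing of one ray through N octree leaves.

    sigma: (N,) densities sigma_i(t) of the leaves hit by the ray
    rgb:   (N, 3) sigmoid-normalized colors c_i(t)
    delta: (N,) distances between the voxel borders of each leaf
    eps:   hypothetical early-termination threshold on the transmittance
    """
    color = np.zeros(3)
    transmittance = 1.0  # nothing occludes the first leaf
    for s, c, d in zip(sigma, rgb, delta):
        s = max(s, 0.0)  # implicit ReLU: negative densities act as free space
        if s == 0.0:
            continue  # free space: color evaluation is skipped
        alpha = 1.0 - np.exp(-s * d)  # transfer function: density -> opacity
        color += transmittance * alpha * c
        transmittance *= 1.0 - alpha  # running product = exp(-sum sigma_j * delta_j)
        if transmittance < eps:
            break  # early ray termination
    return color, transmittance
```

Multiplying the running transmittance by \(1 - \alpha_i\) after each leaf is equivalent to exponentiating the accumulated optical depth but avoids recomputing the sum for every leaf.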

The rendering procedure of an FPO is analogous to PlenOctrees [72], with the addition of passing the time step t to the renderer. The time-dependent density \( { \sigma _i(t) \in {\mathbb {R}} } \) is reconstructed by applying the inverse discrete Fourier transform (IDFT) for time step t to the values stored in leaf node i of the FPO. Similarly, the time- and view-dependent color \( { \varvec{c}_i(t) \in {\mathbb {R}}^3 } \) is obtained by first applying the IDFT to the FPO entries of the respective SH coefficients \( { \varvec{z}_i(t) \in {\mathbb {R}}^{Z \times 3} } \), with Z SH coefficients per color channel, and then querying \(\varvec{z}_i(t)\) for the given viewing direction \(\varvec{d}\). Finally, the sigmoid function is applied to \( \varvec{c}_i(t)\) for normalization. In the following, we omit the subscript i for brevity, as all computations are performed per leaf. Since all operations are differentiable with respect to the octree leaves, the compressed representation can be directly fine-tuned on the rendered images using the following image loss function [60]:

\[ \mathcal{L} = \sum_{t=0}^{T-1} \sum_{\varvec{r} \in \mathcal{R}} \left\| \hat{\varvec{C}}(\varvec{r}, t) - \varvec{C}(\varvec{r}, t) \right\|_2^2, \tag{3} \]

where \(\varvec{C}(\varvec{r}, t)\) denotes the ground-truth color of the pixel corresponding to \(\varvec{r}\) at time step t.

3.1 PlenOctree structure unification

To construct an FPO, the time-dependent SH coefficients and densities from all PlenOctrees are merged into a single data structure. The sparse octree structures are first unified to obtain the structure of the FPO. The static PlenOctrees contain leaves at maximum resolution only where the scene is non-empty. Identifying these regions over all time steps and refining the structure of all PlenOctrees accordingly yields the sparse octree structure for the dynamic representation [60].
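As a simplified illustration of the structure unification, the sketch below assumes that every static PlenOctree has already been refined to a common maximum resolution and is given as a dictionary mapping leaf coordinates to densities. The unified leaf set is the union over all frames, and a leaf that is empty in some frame receives a density of zero there, which yields one time series per leaf ready for compression. The function `unify_structures` and this flat dictionary layout are hypothetical; an actual octree implementation would subdivide coarser nodes instead.

```python
import numpy as np

def unify_structures(frames):
    """Merge per-frame leaf sets into one sparse structure.

    frames: list of T dicts mapping integer leaf coordinates (x, y, z)
            to the density stored in that frame's static PlenOctree.
    Returns the sorted unified leaf list and a (num_leaves, T) density
    table in which leaves absent from a frame hold zero (empty space).
    """
    leaves = sorted(set().union(*(f.keys() for f in frames)))
    index = {leaf: i for i, leaf in enumerate(leaves)}
    table = np.zeros((len(leaves), len(frames)))
    for t, frame in enumerate(frames):
        for leaf, sigma in frame.items():
            table[index[leaf], t] = sigma
    return leaves, table
```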

3.2 Time-variant data compression

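After creating the structure of the FPO, the SH coefficients and densities of all leaves and time steps are compressed by converting them into the frequency domain using the DFT. Each SH coefficient and density value is compressed independently for each octree leaf, where only \( K_{\sigma } \) components for the transformed density functions and \( K_{\varvec{z}} \) components for the SH coefficients are kept and stored. Thereby, K components correspond to \( 0.5 \cdot (K + 1) \) frequencies, and the omitted components correspond to higher frequencies in the frequency domain, so a low-frequency approximation of the data is computed. Thus, the entries of the Fourier PlenOctree are calculated as

\[ \omega_k = \sum_{t=0}^{T-1} x(t) \cdot \mathrm{DFT}_k(t), \quad k \in \{0, \dots, K-1\}, \tag{4} \]

with

\[ \mathrm{DFT}_k(t) = \begin{cases} \frac{1}{T} \cos\left( \frac{k \pi t}{T} \right), & \text{if } k \text{ is even}, \\ \frac{1}{T} \sin\left( \frac{(k+1) \pi t}{T} \right), & \text{if } k \text{ is odd}. \end{cases} \tag{5} \]

Here, x represents either the density \( \sigma \) or a component of the SH coefficients \( \varvec{z} \), and \( \omega _k \) is the k-th Fourier coefficient for the density or the specific SH coefficient. Rendering remains completely differentiable, and the time-dependent densities and SH coefficients can be reconstructed using the IDFT:

\[ x(t) = \sum_{k=0}^{K-1} \omega_k \cdot \mathrm{IDFT}_k(t), \tag{6} \]

with

\[ \mathrm{IDFT}_k(t) = \begin{cases} \cos\left( \frac{k \pi t}{T} \right), & \text{if } k \text{ is even}, \\ \sin\left( \frac{(k+1) \pi t}{T} \right), & \text{if } k \text{ is odd}. \end{cases} \tag{7} \]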
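The per-leaf compression and reconstruction can be sketched with NumPy as matrix products. The sketch assumes the real-valued basis of the original FPO formulation [60], i.e., \(\cos(k\pi t/T)\) for even k and \(\sin((k+1)\pi t/T)\) for odd k, with a factor \(1/T\) in the forward transform only; this exact normalization is our assumption. The names `dft_basis`, `compress`, and `decompress` are hypothetical.

```python
import numpy as np

def dft_basis(K, T, inverse=False):
    """(K, T) matrix of the FPO-style real Fourier basis.

    Row k is cos(k*pi*t/T) for even k and sin((k+1)*pi*t/T) for odd k;
    the forward (DFT) rows carry an extra factor 1/T.
    """
    t = np.arange(T)
    k = np.arange(K)[:, None]
    basis = np.where(k % 2 == 0,
                     np.cos(k * np.pi * t / T),
                     np.sin((k + 1) * np.pi * t / T))
    return basis if inverse else basis / T

def compress(x, K):
    """Keep the first K Fourier coefficients of a length-T sequence x."""
    return dft_basis(K, len(x)) @ x

def decompress(omega, T):
    """Reconstruct x(t) for t = 0..T-1 from K coefficients via the IDFT."""
    return dft_basis(len(omega), T, inverse=True).T @ omega
```

Under this basis, keeping all \(K = 2T - 1\) components reproduces any sequence exactly, matching the full representation discussed in Sect. 4, while smaller K yields the low-frequency approximation used for compression.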

4 FPO analysis

Upon investigating the FPO representations of a dynamic scene, we particularly notice geometric reconstruction errors that are visible as ghosting artifacts of scene parts from other time steps. While the DFT is in general able to represent arbitrary discrete sequences using \(K=2T-1\) Fourier coefficients, we observe that cutting off high frequencies for the purpose of compression leads to artifacts that are equally distributed across the entire signal. These artifacts persist even after fine-tuning, which implies that the lower-dimensional representation of the signal cannot capture the crucial characteristics of the original values at each time step from the static reconstructions. However, the density functions in particular consistently exhibit the same properties, which upon analysis lead to two key observations.

Fig. 1. Density over time of a single octree leaf. The leaf is marked in red in the respective images taken from the same view at different time steps t. Although the opacity is similar in the views, highly varying densities are observed over time, except for \(t=16\), where the leaf contains empty space.

When dealing with static PlenOctrees that have been optimized independently, leaf entries may vary strongly in terms of the estimated density and color even though the rendered results are similar. The reason for this effect lies in the underlying volume rendering, which involves the exponential function in Eqs. 1 and 2 to compute the observed color and transmittance from \(\sigma \). For large input values, this function saturates, so that strongly differing densities can make scene content appear similarly opaque, as shown in Fig. 1.
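A small numerical example illustrates this saturation. The sketch assumes a hypothetical voxel size \(\delta = 1/128\) and two hypothetical densities of the same leaf at different time steps: a tenfold difference in \(\sigma\) changes the opacity \(1 - \exp(-\sigma\delta)\) by less than \(10^{-6}\), so both time steps render as practically identical opaque content.

```python
import numpy as np

def opacity(sigma, delta):
    """Transfer function from density to per-leaf opacity, with ReLU clipping."""
    return 1.0 - np.exp(-np.maximum(sigma, 0.0) * delta)

delta = 1.0 / 128.0  # hypothetical voxel size
low, high = opacity(2_000.0, delta), opacity(20_000.0, delta)
print(f"opacity at sigma=2000:  {low:.7f}")
print(f"opacity at sigma=20000: {high:.7f}")
```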

Fig. 2. Two exemplary density functions over time (left) reconstructed with different numbers of coefficients \(K_\sigma \). The original function of T time steps equals its reconstruction with \(K_\sigma = 119\) Fourier coefficients. The falloff of the marked peaks relative to their original value (right) depends on \(K_\sigma \) and follows the linear scaling function \( s(K_\sigma ) \).

Compressing a time-dependent function with a reduced number of frequencies in Fourier space returns a smoothed approximation of the original function. Figure 2 shows this effect for different numbers of components \(K_\sigma \) kept to represent the signal. Fewer frequencies result in smoother functions and a higher reconstruction error, which is especially visible for sharp peaks. Density values with a larger absolute difference from the average \({ {\bar{\sigma }} = 1/T \sum _{t=0}^{T-1}\sigma (t) }\) are reconstructed with a higher error. This interferes with faithfully reconstructing areas with low or zero densities, such as empty space or transparent and semi-opaque surfaces. However, large positive values do not need to be reconstructed as precisely: the saturation property of the transfer function tolerates higher reconstruction errors for large positive values, as visualized in Fig. 3. The reconstruction with only the compressed IDFT exhibits large errors, whereas after applying the transfer function, high densities are still approximated well. Scaling down the range of values, for instance by applying a logarithmic function, therefore automatically allows for a higher approximation error of high densities after the inverse transformation.

In addition to the compressed IDFT, the \(L_2\)-minimization of the fine-tuning process treats approximation errors of all input values equally. This is unnecessary for large densities in opaque areas and leads to the conclusion that the density reconstruction should concentrate on low- and zero-density areas.

Fig. 3. A density function and its reconstruction \(\pi _{K_\sigma }\) using the DFT and IDFT with \({K_\sigma =31}\) (top left) and the same function and its reconstruction after applying our logarithmic encoding \(e_{\log }\) (center left). Their full and compressed Fourier representations (top right, center right) show that a logarithmically scaled function contains less high-frequency information that gets lost during compression. Applying the transfer function to the reconstructions (bottom left) shows that the logarithmic version can better represent the original one.

When rendering an FPO, zero densities are interpreted as free space, and color computations are skipped for these locations. Negative densities generally cannot be interpreted in a meaningful way. However, during rendering and fine-tuning, colors only need to be evaluated for existing geometry, which is represented by positive densities. Negative values are ignored and can thus be interpreted as free space. Hence, the implicit clipping via the ReLU function lifts the restriction that free space has to be represented by a zero value. With this observation, we can grant more freedom to the representation of zero-density values and, thus, also to the DFT approximation.

Fig. 4. Reconstruction of a density function (Orig.) using only DFT and IDFT as proposed for FPOs [60] and additionally in combination with our component-dependent (comp.) and logarithmic (log.) encoding on top of the DFT and IDFT.

5 Density encoding

Based on the insights of the aforementioned analysis, we propose an encoding for the densities to facilitate the reconstruction of the original \(\sigma \). During the FPO construction, we perform the compression on the encoded densities

\[ e(\sigma(t)) = e_{\text{comp}}\big( e_{\log}(\sigma(t)) \big), \tag{8} \]

where the encoding consists of a component-dependent and a logarithmic part. We also use the latter during rendering and fine-tuning of the FPO, while we apply the former only for initialization during construction.

Figure 4 shows the differences in the reconstruction of a density function when using both parts or only one part of the encoding. Even without any fine-tuning, the encoding reconstructs the original densities better than the DFT and IDFT alone.

5.1 Logarithmic density encoding

We use the observation that high density values can have a larger approximation error without impairing the rendered result to improve the reconstruction with the IDFT. To weight the values according to their importance in the reconstruction and focus the approximation on densities near or equal to zero, larger values should be mapped closer together, while smaller values should stay almost the same.

This property is satisfied when encoding the individual non-negative density values \(\sigma \) logarithmically using

\[ e_{\log}(\sigma) = \log(\sigma + 1) \tag{9} \]

before applying the DFT. We choose the shift by 1 so that \(e_{\log }\) remains a non-negative function for non-negative input densities with \(e_{\log }(0) = 0\). During rendering, we apply the inverse of Eq. 9 after the IDFT to project the densities back to their original range. The effect of this encoding can be seen in Fig. 4.
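A minimal NumPy sketch of the logarithmic encoding and its inverse follows, assuming the natural logarithm (the base is our assumption). Using `np.log1p` and `np.expm1` keeps both directions numerically accurate for densities near zero; the function names `e_log` and `e_log_inv` are hypothetical.

```python
import numpy as np

def e_log(sigma):
    """Logarithmic density encoding log(sigma + 1); maps 0 to 0."""
    return np.log1p(sigma)

def e_log_inv(encoded):
    """Inverse encoding exp(x) - 1, applied after the IDFT during rendering."""
    return np.expm1(encoded)

# Hypothetical densities: the two large values end up close together.
densities = np.array([0.0, 0.5, 5_000.0, 20_000.0])
encoded = e_log(densities)
```

Because the inverse maps any negative reconstructed value into the interval \((-1, 0)\), such values still decode to negative densities, which the ReLU treats as free space.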

The encoded density sequences are easier to approximate with a low-frequency Fourier basis exploiting the properties of the transfer function. Furthermore, the \(L_2\)-error in fine-tuning is allowed to be larger for encoded high values than without logarithmic encoding, and we focus the optimization on the more important parts of the reconstruction.

5.2 Component-dependent encoding

With the truncated DFT, the reconstructed approximation of the original function increases low \(\sigma \) values and reduces high \(\sigma \) values. This leads to an under-estimation of its variations over time.

Intuitively, using fewer components leads to this under-estimation, as fewer frequencies are summed up to reconstruct the original function. As shown in Fig. 2, the heights of the peaks in the reconstruction are correlated with the ratio

\[ s(K_\sigma) = \frac{0.5 \cdot (K_\sigma + 1)}{T} \tag{10} \]

between the number of frequencies \(0.5 \cdot (K_\sigma + 1)\) represented by \( K_\sigma \) components and the number of time steps T. However, the amplitudes of the density function are reduced not relative to zero but relative to the average \({\bar{\sigma }}\). Values smaller than the average thus need to be reduced further.
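The dependence of the peak height on \(K_\sigma\) can be checked numerically. The sketch below assumes the FPO-style real Fourier basis (\(\cos(k\pi t/T)\) for even k, \(\sin((k+1)\pi t/T)\) for odd k, with a factor \(1/T\) in the forward transform) and reconstructs a hypothetical isolated spike of height 1. Under this assumption, the retained height equals \((K_\sigma + 1)/(2T)\) exactly, i.e., the number of retained frequencies divided by T; for broader peaks the ratio need not hold. The helper names are hypothetical.

```python
import numpy as np

def truncated_reconstruction(x, K):
    """Compress x with the first K components of an FPO-style real Fourier
    basis (cos for even k, sin for odd k) and reconstruct it again."""
    T = len(x)
    t = np.arange(T)
    k = np.arange(K)[:, None]
    basis = np.where(k % 2 == 0,
                     np.cos(k * np.pi * t / T),
                     np.sin((k + 1) * np.pi * t / T))
    omega = basis @ x / T   # DFT: K coefficients
    return basis.T @ omega  # IDFT: T reconstructed values

def scaling(K, T):
    """Ratio of retained frequencies (K + 1) / 2 to the number of time steps T."""
    return (K + 1) / (2 * T)

T = 60
spike = np.zeros(T)
spike[16] = 1.0  # isolated peak at a single time step
for K in (31, 61, 119):
    print(K, truncated_reconstruction(spike, K)[16], scaling(K, T))
```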

We shift the densities by \(\sigma _{\text {shift}}\) and then scale them with the inverse ratio \(1 / s(K_\sigma )\) before the DFT during FPO construction for a better approximation:

\[ e_{\text{comp}}(\sigma) = \frac{\sigma - \sigma_{\text{shift}}}{s(K_\sigma)}. \tag{11} \]

In octree leaves that contain only positive \(\sigma \) for all t, applying \(e_{\text {comp}}\) with a shift by the mean value \({\bar{\sigma }}\) can lead to undesired non-positive values: positive \(\sigma \) can accidentally be pushed below zero and cause holes in the reconstructed model. Thus, the shift is only applied if the leaf contains empty space, i.e., if at least one zero density is encountered. While such non-positive \(\sigma \) may still be introduced into the reconstruction, most cases that would otherwise lead to significant errors can be handled faithfully this way.

This scaling can produce larger amplitudes in the reconstruction than desired. Following the two key observations, however, this is not problematic: large values can become larger and small values smaller without changing the rendered result, since the transfer function saturates for high densities and negative densities are clipped. Using this technique, we can largely remove geometric artifacts caused by incorrectly reconstructed zero values. The effect of the scaling is shown in Fig. 4. We apply \(e_{\text {comp}}\) only during FPO construction for a better initialization of the Fourier components in the octree leaves, so its inverse and the involved values of \({\bar{\sigma }}\) do not have to be used or stored for rendering or fine-tuning. Since the component-dependent encoding introduces negative values, it needs to be applied after \(e_{\log }\), whose argument \(\sigma + 1\) must remain positive.
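The construction-time encoding of a single leaf can be sketched as follows. This is a hypothetical reading of the description in this section, not the authors' implementation: we assume \(s(K_\sigma) = (K_\sigma + 1)/(2T)\), a shift by the mean of the log-encoded sequence whenever the leaf contains at least one zero density, and no shift otherwise. The names `e_comp`, `encode_leaf`, and `zero_tol` are illustrative only.

```python
import numpy as np

def e_comp(x, K, zero_tol=0.0):
    """Hypothetical component-dependent encoding of one leaf's sequence x
    (length T): shift by the mean if the leaf contains empty space, then
    scale by the inverse ratio 1 / s(K)."""
    T = len(x)
    s = (K + 1) / (2 * T)               # assumed scaling s(K) = (K + 1) / (2T)
    has_empty_space = np.any(x <= zero_tol)
    shift = x.mean() if has_empty_space else 0.0
    return (x - shift) / s

def encode_leaf(sigma, K):
    """Construction-time encoding: logarithmic part first, then the
    component-dependent part (which may produce negative values)."""
    return e_comp(np.log1p(sigma), K)
```

The resulting sequence would then be transformed with the DFT; during rendering, only the logarithmic part is inverted, so the shift and scale never need to be stored.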

6 Experimental results

6.1 Data sets

We use synthetic data sets of the Lego scene from the NeRF synthetic data set [39] and of a walking human model (Walk) generated from motion data of the CMU Motion Capture Database [9] using a human model [37]. For each time step, each data set includes 125 inward-facing camera views anchored to the model with a resolution of \({800\,\times \,800}\) pixels, of which \(20\,\%\) are used for testing. For evaluation on real scenes, we use the real-world NHR data set [68], which consists of four scenes (Basketball, Sport 1, Sport 2, and Sport 3) including corresponding masks. Basketball includes 72 views at resolutions of \({1024\,\times \,768}\) and \({1224\,\times \,1024}\), of which 7 views are withheld for testing, whereas the Sport data sets each contain 56 views with 6 views withheld for testing.

6.2 Training

Since the reference implementation of the original Fourier PlenOctrees [60] is unfortunately not publicly available and also relies on a generalizable NeRF [62] that has been fine-tuned on the commercial Twindom data set [57], we evaluate our approach against a reimplementation, which we refer to as FPO-NGP in the following. In particular, we employed Instant-NGP [41] instead of a generalizable NeRF and additionally removed floater artifacts [66].

To obtain the static PlenOctrees, a set of \({T=60}\) NeRF models is trained first. The networks use the multiresolution hash encoding and NeRF network architecture of Instant-NGP [41] but produce view-independent SH coefficients instead of view-dependent RGB colors as output, analogous to NeRF-SH [72]. The training images are scaled down by a factor of two.

The PlenOctrees are extracted from the trained implicit reconstructions [72] using 9 SH coefficients per color channel on a grid of size \(512^3\). The PlenOctree bounds are set to be constant over time with varying center positions to enable representing larger motions. Fine-tuning of the static PlenOctrees is performed for 5 epochs with training images at full resolution.

We choose the same parameters for the Fourier approximation as in the original approach [60]: For the density, \(K_\sigma =31\) Fourier coefficients are stored in the FPO, while \(K_{\varvec{z}}=5\) components are used for the SH coefficients of each color channel. The FPO is fine-tuned for 10 epochs on the randomized training images of all time steps at full resolution. We also augment the time sequence by duplicating the first and last frame to avoid ghosting artifacts but exclude these additional two frames from the fine-tuning and the evaluation.
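With these settings, the number of stored Fourier coefficients per FPO leaf follows directly: \(K_\sigma = 31\) for the density plus \(K_{\varvec{z}} = 5\) for each of the 9 SH coefficients in each of the 3 color channels. The sketch below assumes 32-bit floats and ignores the octree topology, which is our simplification; the function name is hypothetical.

```python
def floats_per_leaf(k_sigma=31, k_z=5, sh_per_channel=9, channels=3):
    """Fourier coefficients stored per FPO leaf: density plus all SH functions."""
    return k_sigma + k_z * sh_per_channel * channels

n_values = floats_per_leaf()    # 31 + 5 * 9 * 3 = 166
bytes_per_leaf = 4 * n_values   # assuming 32-bit floats, no tree overhead
```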

All training is performed on NVIDIA GeForce RTX 3090 and RTX 4090 GPUs, where frame rates and training times are listed here for the RTX 4090 GPU. The process to obtain a fine-tuned FPO with our un-optimized implementation takes around 6 h for training one set of the static NeRF models and around 10–30 min per epoch of fine-tuning. Any other steps in the training require a few minutes each.

Further details of the training procedure are provided in the supplemental material.

6.3 Evaluation

Figure 5 shows renderings of the baseline and our enhanced FPO. The baseline exhibits artifacts introduced by the Fourier-based compression, which stem from the geometry of other time steps and are visible as floating structures. Compared with FPO-NGP, our method significantly reduces these artifacts. For fast-moving scene content such as the legs in the Walk scene, artifacts are mostly removed or much less apparent. Even without fine-tuning, the geometry is reconstructed well, as can be seen in Fig. 6.

In the enhanced FPO before fine-tuning, gray artifacts are still visible in the reconstruction. These stem from approximation errors in the SH coefficient functions, which are not altered by our approach. Leaves that are empty most of the time contain a default value of zero in most static PlenOctrees, which results in the gray coloration, since the sigmoid maps zero to 0.5. The fine-tuning process, however, ensures a realistic reconstruction of RGB colors for all time steps.

Table 1 provides an overview of the achieved PSNR, SSIM [63], and LPIPS [75] values. For our baseline reimplementation FPO-NGP, we observe a lower performance, both qualitatively and quantitatively, compared with the results of the original reference implementation [60]. However, our results are consistent with the evaluations reported in their supplemental material for the case where the generalizable NeRF [62], which has been specifically fine-tuned on the commercial Twindom data set, is not employed. Additional comparisons can be found in the supplementary material. Beyond these observations, our method achieves much better results than the baseline even with only a single epoch of fine-tuning. Likewise, without any further optimization, our method achieves higher metrics than the baseline due to a better initialization of the geometry. Consequently, the creation of an FPO representation of a dynamic scene is indirectly accelerated, as less time needs to be spent on fine-tuning.

Since our proposed encoding adds only a few computational operations, its impact on the performance of optimization and rendering is minimal. In fact, we still achieve real-time frame rates, as shown in Table 2. The frame rates even increase, since free space that previously exhibited artifacts due to incorrect positive densities is now correctly identified. Because free space does not require any color computation, this step is now skipped, which accelerates rendering significantly. Furthermore, our encoding does not change the memory required for storing an FPO, which is 2.4 GiB on average across all tested scenes.

6.4 Ablation studies

In Fig. 6, we present a more detailed overview of the effects of each part of our density encoding. Table 1 lists the corresponding metrics for different scenes.

The logarithmic part in particular improves the reconstruction significantly, since the low-frequency approximation reconstructs the density functions much better than without it, and most geometric artifacts are removed or become barely visible. Both the DFT and the optimization process put more focus on reconstructing low values and the transitions between free space and positive densities, which require a smaller error for good results.

The component-dependent part proves beneficial for a better initialization of the geometry reconstruction before fine-tuning. Gray artifacts are removed in most places at most time steps, as zero densities are represented by negative values and are thus interpreted as free space. In combination with the logarithmic part, the quality of the initial geometry reconstruction increases even further. After fine-tuning, the FPO initialized with only the component-dependent part of the encoding achieves better results than the baseline FPO, but also in this case, our full encoding further improves the overall quality.

Together, both parts of the encoding result in a significant rendering speed-up before and after fine-tuning (see Table 2). While the reconstruction using only the logarithmic part of the encoding shows improved visual quality over the baseline, its rendering speed decreases without fine-tuning. Here, zero densities are better approximated but are still assigned small positive values. Since high densities are also approximated by smaller values, more samples need to be accumulated along the ray to reach the termination criterion, which is determined by the transmittance. Our full encoding consisting of both parts yields the highest frame rate.

We provide additional renderings in the supplemental video and further ablation studies on the number of Fourier coefficients and on the underlying NeRF models in the supplementary material.

6.5 Limitations

Like other approaches, our method has some limitations. Our encoding primarily transforms the density functions so that they are easier to compress with the Fourier-based signal representation. The SH coefficient functions, however, exhibit different properties and are currently compressed using only the DFT, which introduces artifacts similar to those observed for the density functions. While fine-tuning improves the reconstruction, obtaining a realistic representation of the colors remains an important and challenging problem. Furthermore, our method inherits several limitations of the original FPO approach. Only the provided data are compressed, so generalizing the scene dynamics, i.e., extrapolating or interpolating the motion, is not directly possible.

7 Conclusion

In this paper, we revisited Fourier PlenOctrees as an efficient representation for real-time rendering of dynamic neural radiance fields and analyzed the characteristics of their compressed frequency-based representation. Based on the insights gained into the artifacts that the compression introduces in the underlying volume rendering when state-of-the-art NeRF techniques are employed, we derived an efficient density encoding that counteracts these artifacts while retaining the compactness of FPOs and avoiding significant additional complexity or overhead. Our method achieves superior reconstruction quality as well as a substantial further increase in real-time rendering performance, and we believe that our insights will also benefit other Fourier-based methods [58].

Data availability

Our source code is available at https://github.com/SaskiaRabich/FPOplusplus.

References

Attal, B., Laidlaw, E., Gokaslan, A., Kim, C., Richardt, C., Tompkin, J., O’Toole, M.: Törf: time-of-flight radiance fields for dynamic scene view synthesis. Adv. Neural Inf. Process. Syst. (NeurIPS) 34, 26289–26301 (2021)

Barron, J.T., Mildenhall, B., Tancik, M., Hedman, P., Martin-Brualla, R., Srinivasan, P.P.: Mip-nerf: a multiscale representation for anti-aliasing neural radiance fields. In: IEEE International Conference on Computer Vision (ICCV), pp. 5855–5864 (2021)

Barron, J.T., Mildenhall, B., Verbin, D., Srinivasan, P.P., Hedman, P.: Mip-nerf 360: unbounded anti-aliased neural radiance fields. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Barron, J.T., Mildenhall, B., Verbin, D., Srinivasan, P.P., Hedman, P.: Zip-nerf: anti-aliased grid-based neural radiance fields. In: IEEE International Conference on Computer Vision (ICCV) (2023)

Bi, S., Xu, Z., Sunkavalli, K., Hašan, M., Hold-Geoffroy, Y., Kriegman, D., Ramamoorthi, R.: Deep reflectance volumes: relightable reconstructions from multi-view photometric images. In: European Conference on Computer Vision (ECCV), pp. 294–311. Springer (2020)

Cao, A., Johnson, J.: Hexplane: a fast representation for dynamic scenes. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2023)

Chen, A., Xu, Z., Geiger, A., Yu, J., Su, H.: Tensorf: tensorial radiance fields. In: European Conference on Computer Vision (ECCV), pp. 333–350 (2022)

Chen, Z., Funkhouser, T., Hedman, P., Tagliasacchi, A.: Mobilenerf: exploiting the polygon rasterization pipeline for efficient neural field rendering on mobile architectures. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 16569–16578 (2023)

CMU Graphics Lab: Carnegie Mellon University - CMU Graphics Lab - motion capture library (2022). http://mocap.cs.cmu.edu/. Accessed on: 2022-07-22

Collet, A., Chuang, M., Sweeney, P., Gillett, D., Evseev, D., Calabrese, D., Hoppe, H., Kirk, A., Sullivan, S.: High-quality streamable free-viewpoint video. ACM Trans. Graph. (TOG) 34(4), 1–13 (2015)

Deng, K., Liu, A., Zhu, J.Y., Ramanan, D.: Depth-supervised nerf: fewer views and faster training for free. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 12882–12891 (2022)

Du, Y., Zhang, Y., Yu, H.X., Tenenbaum, J.B., Wu, J.: Neural radiance flow for 4D view synthesis and video processing. In: IEEE International Conference on Computer Vision (ICCV). IEEE Computer Society (2021)

Fang, J., Yi, T., Wang, X., Xie, L., Zhang, X., Liu, W., Nießner, M., Tian, Q.: Fast dynamic radiance fields with time-aware neural voxels. In: SIGGRAPH Asia Conference Papers, pp. 1–9 (2022)

Fridovich-Keil, S., Meanti, G., Warburg, F.R., Recht, B., Kanazawa, A.: K-planes: explicit radiance fields in space, time, and appearance. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 12479–12488 (2023)

Fridovich-Keil, S., Yu, A., Tancik, M., Chen, Q., Recht, B., Kanazawa, A.: Plenoxels: radiance fields without neural networks. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Gafni, G., Thies, J., Zollhöfer, M., Nießner, M.: Dynamic neural radiance fields for monocular 4D facial avatar reconstruction. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Gao, C., Saraf, A., Kopf, J., Huang, J.B.: Dynamic view synthesis from dynamic monocular video. In: IEEE International Conference on Computer Vision (ICCV), pp. 5712–5721 (2021)

Garbin, S.J., Kowalski, M., Johnson, M., Shotton, J., Valentin, J.: Fastnerf: high-fidelity neural rendering at 200fps. In: IEEE International Conference on Computer Vision (ICCV), pp. 14346–14355 (2021)

Guo, K., Lincoln, P., Davidson, P., Busch, J., Yu, X., Whalen, M., Harvey, G., Orts-Escolano, S., Pandey, R., Dourgarian, J., et al.: The relightables: volumetric performance capture of humans with realistic relighting. ACM Trans. Graph. (TOG) 38(6), 1–19 (2019)

Guo, X., Chen, G., Dai, Y., Ye, X., Sun, J., Tan, X., Ding, E.: Neural deformable voxel grid for fast optimization of dynamic view synthesis. In: Asian Conference on Computer Vision (ACCV), pp. 3757–3775 (2022)

Hedman, P., Srinivasan, P.P., Mildenhall, B., Barron, J.T., Debevec, P.: Baking neural radiance fields for real-time view synthesis. In: IEEE International Conference on Computer Vision (ICCV), pp. 5875–5884 (2021)

Işık, M., Rünz, M., Georgopoulos, M., Khakhulin, T., Starck, J., Agapito, L., Nießner, M.: Humanrf: high-fidelity neural radiance fields for humans in motion. ACM Trans. Graph. (TOG) 42(4), 1–12 (2023)

Jena, S., Multon, F., Boukhayma, A.: Neural mesh-based graphics. In: European Conference on Computer Vision Workshops (ECCVW) (2022)

Kondo, N., Ikeda, Y., Tagliasacchi, A., Matsuo, Y., Ochiai, Y., Gu, S.S.: Vaxnerf: revisiting the classic for voxel-accelerated neural radiance field. arXiv preprint arXiv:2111.13112 (2021)

Kurz, A., Neff, T., Lv, Z., Zollhöfer, M., Steinberger, M.: Adanerf: adaptive sampling for real-time rendering of neural radiance fields. In: European Conference on Computer Vision (ECCV), pp. 254–270 (2022)

Li, L., Shen, Z., Wang, Z., Shen, L., Tan, P.: Streaming radiance fields for 3D video synthesis. Adv. Neural Inf. Process. Syst. (NeurIPS) 35, 13485–13498 (2022)

Li, T., Slavcheva, M., Zollhöfer, M., Green, S., Lassner, C., Kim, C., Schmidt, T., Lovegrove, S., Goesele, M., Newcombe, R., et al.: Neural 3D video synthesis from multi-view video. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Li, Z., Niklaus, S., Snavely, N., Wang, O.: Neural scene flow fields for space-time view synthesis of dynamic scenes. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6498–6508 (2021)

Lin, C.H., Ma, W.C., Torralba, A., Lucey, S.: Barf: bundle-adjusting neural radiance fields. In: IEEE International Conference on Computer Vision (ICCV) (2021)

Lin, K.E., Xiao, L., Liu, F., Yang, G., Ramamoorthi, R.: Deep 3D mask volume for view synthesis of dynamic scenes. In: IEEE International Conference on Computer Vision (ICCV), pp. 1749–1758 (2021)

Lindell, D.B., Martel, J.N., Wetzstein, G.: Autoint: automatic integration for fast neural volume rendering. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 14556–14565 (2021)

Liu, J.W., Cao, Y.P., Mao, W., Zhang, W., Zhang, D.J., Keppo, J., Shan, Y., Qie, X., Shou, M.Z.: Devrf: fast deformable voxel radiance fields for dynamic scenes. Adv. Neural Inf. Process. Syst. (NeurIPS) 35, 36762–36775 (2022)

Liu, L., Gu, J., Zaw Lin, K., Chua, T.S., Theobalt, C.: Neural sparse voxel fields. Adv. Neural Inf. Process. Syst. (NeurIPS) 33, 15651–15663 (2020)

Lombardi, S., Simon, T., Saragih, J., Schwartz, G., Lehrmann, A., Sheikh, Y.: Neural volumes: learning dynamic renderable volumes from images. ACM Trans. Graph. (TOG) 38(4), 1–14 (2019)

Lombardi, S., Simon, T., Schwartz, G., Zollhöfer, M., Sheikh, Y., Saragih, J.: Mixture of volumetric primitives for efficient neural rendering. ACM Trans. Graph. (TOG) 40(4), 1–13 (2021)

Ma, L., Li, X., Liao, J., Zhang, Q., Wang, X., Wang, J., Sander, P.V.: Deblur-nerf: neural radiance fields from blurry images. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

MakeHuman: Makehuman - open source tool for making 3d characters (2022). www.makehumancommunity.org. Accessed on: 2022-07-22

Meka, A., Pandey, R., Haene, C., Orts-Escolano, S., Barnum, P., Davidson, P., Erickson, D., Zhang, Y., Taylor, J., Bouaziz, S., et al.: Deep relightable textures: volumetric performance capture with neural rendering. ACM Trans. Graph. (TOG) 39(6), 1–21 (2020)

Mildenhall, B., Srinivasan, P., Tancik, M., Barron, J., Ramamoorthi, R., Ng, R.: Nerf: representing scenes as neural radiance fields for view synthesis. In: European Conference on Computer Vision (ECCV) (2020)

Mildenhall, B., Srinivasan, P.P., Ortiz-Cayon, R., Kalantari, N.K., Ramamoorthi, R., Ng, R., Kar, A.: Local light field fusion: practical view synthesis with prescriptive sampling guidelines. ACM Trans. Graph. (TOG) 38(4), 1–14 (2019)

Müller, T., Evans, A., Schied, C., Keller, A.: Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. (TOG) 41(4), 102:1–102:15 (2022)

Neff, T., Stadlbauer, P., Parger, M., Kurz, A., Mueller, J.H., Chaitanya, C.R.A., Kaplanyan, A., Steinberger, M.: Donerf: towards real-time rendering of compact neural radiance fields using depth oracle networks. Comput. Graph. Forum (CGF) 40(4), 45–59 (2021)

Niemeyer, M., Mescheder, L., Oechsle, M., Geiger, A.: Differentiable volumetric rendering: learning implicit 3D representations without 3D supervision. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3504–3515 (2020)

Park, K., Sinha, U., Barron, J.T., Bouaziz, S., Goldman, D.B., Seitz, S.M., Martin-Brualla, R.: Nerfies: deformable neural radiance fields. In: IEEE International Conference on Computer Vision (ICCV) (2021)

Peng, S., Zhang, Y., Xu, Y., Wang, Q., Shuai, Q., Bao, H., Zhou, X.: Neural body: implicit neural representations with structured latent codes for novel view synthesis of dynamic humans. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Pumarola, A., Corona, E., Pons-Moll, G., Moreno-Noguer, F.: D-nerf: neural radiance fields for dynamic scenes. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Rebain, D., Jiang, W., Yazdani, S., Li, K., Yi, K.M., Tagliasacchi, A.: Derf: decomposed radiance fields. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 14153–14161 (2021)

Reiser, C., Peng, S., Liao, Y., Geiger, A.: Kilonerf: speeding up neural radiance fields with thousands of tiny MLPs. In: IEEE International Conference on Computer Vision (ICCV), pp. 14335–14345 (2021)

Shao, R., Zheng, Z., Tu, H., Liu, B., Zhang, H., Liu, Y.: Tensor4d: efficient neural 4d decomposition for high-fidelity dynamic reconstruction and rendering. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 16632–16642 (2023)

Sitzmann, V., Zollhöfer, M., Wetzstein, G.: Scene representation networks: continuous 3D-structure-aware neural scene representations. Adv. Neural Inf. Process. Syst. (NeurIPS) 32 (2019)

Song, L., Chen, A., Li, Z., Chen, Z., Chen, L., Yuan, J., Xu, Y., Geiger, A.: Nerfplayer: a streamable dynamic scene representation with decomposed neural radiance fields. IEEE Trans. Vis. Comput. Graph. 29(5), 2732–2742 (2023)

Suhail, M., Esteves, C., Sigal, L., Makadia, A.: Light field neural rendering. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Sun, C., Sun, M., Chen, H.T.: Direct voxel grid optimization: super-fast convergence for radiance fields reconstruction. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022)

Tancik, M., Casser, V., Yan, X., Pradhan, S., Mildenhall, B., Srinivasan, P.P., Barron, J.T., Kretzschmar, H.: Block-nerf: scalable large scene neural view synthesis. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 8248–8258 (2022)

Tancik, M., Mildenhall, B., Wang, T., Schmidt, D., Srinivasan, P.P., Barron, J.T., Ng, R.: Learned initializations for optimizing coordinate-based neural representations. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2846–2855 (2021)

Tretschk, E., Tewari, A., Golyanik, V., Zollhöfer, M., Lassner, C., Theobalt, C.: Non-rigid neural radiance fields: reconstruction and novel view synthesis of a dynamic scene from monocular video. In: IEEE International Conference on Computer Vision (ICCV) (2021)

Twindom: Twindom dataset. https://web.twindom.com/. Accessed on: 2024-02-10

Wang, L., Hu, Q., He, Q., Wang, Z., Yu, J., Tuytelaars, T., Xu, L., Wu, M.: Neural residual radiance fields for streamably free-viewpoint videos. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 76–87 (2023)

Wang, L., Wang, Z., Lin, P., Jiang, Y., Suo, X., Wu, M., Xu, L., Yu, J.: ibutter: neural interactive bullet time generator for human free-viewpoint rendering. In: ACM International Conference on Multimedia (2021)

Wang, L., Zhang, J., Liu, X., Zhao, F., Zhang, Y., Zhang, Y., Wu, M., Yu, J., Xu, L.: Fourier plenoctrees for dynamic radiance field rendering in real-time. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 13524–13534 (2022)

Wang, P., Zhao, L., Ma, R., Liu, P.: Bad-nerf: bundle adjusted deblur neural radiance fields. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4170–4179 (2023)

Wang, Q., Wang, Z., Genova, K., Srinivasan, P.P., Zhou, H., Barron, J.T., Martin-Brualla, R., Snavely, N., Funkhouser, T.: Ibrnet: learning multi-view image-based rendering. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. (TIP) 13(4), 600–612 (2004)

Wang, Z., Wu, S., Xie, W., Chen, M., Prisacariu, V.A.: Nerf--: neural radiance fields without known camera parameters. arXiv preprint arXiv:2102.07064 (2021)

Weng, C.Y., Curless, B., Srinivasan, P.P., Barron, J.T., Kemelmacher-Shlizerman, I.: Humannerf: free-viewpoint rendering of moving people from monocular video. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 16210–16220 (2022)

Wirth, T., Rak, A., Knauthe, V., Fellner, D.W.: A post processing technique to automatically remove floater artifacts in neural radiance fields. In: Computer Graphics Forum (CGF), vol. 42. Wiley Online Library (2023)

Wu, L., Lee, J.Y., Bhattad, A., Wang, Y.X., Forsyth, D.: Diver: real-time and accurate neural radiance fields with deterministic integration for volume rendering. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 16200–16209 (2022)

Wu, M., Wang, Y., Hu, Q., Yu, J.: Multi-view neural human rendering. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2020)

Xu, H., Alldieck, T., Sminchisescu, C.: H-nerf: neural radiance fields for rendering and temporal reconstruction of humans in motion. Adv. Neural Inf. Process. Syst. (NeurIPS) 34, 14955–14966 (2021)

Yariv, L., Gu, J., Kasten, Y., Lipman, Y.: Volume rendering of neural implicit surfaces. Adv. Neural Inf. Process. Syst. (NeurIPS) 34, 4805 (2021)

Yariv, L., Kasten, Y., Moran, D., Galun, M., Atzmon, M., Ronen, B., Lipman, Y.: Multiview neural surface reconstruction by disentangling geometry and appearance. Adv. Neural Inf. Process. Syst. (NeurIPS) 33, 2492–2502 (2020)

Yu, A., Li, R., Tancik, M., Li, H., Ng, R., Kanazawa, A.: Plenoctrees for real-time rendering of neural radiance fields. In: IEEE International Conference on Computer Vision (ICCV), pp. 5752–5761 (2021)

Zhang, J., Liu, X., Ye, X., Zhao, F., Zhang, Y., Wu, M., Zhang, Y., Xu, L., Yu, J.: Editable free-viewpoint video using a layered neural representation. ACM Trans. Graph. (TOG) 40(4), 1–18 (2021)

Zhang, K., Riegler, G., Snavely, N., Koltun, V.: Nerf++: analyzing and improving neural radiance fields. arXiv preprint arXiv:2010.07492 (2020)

Zhang, R., Isola, P., Efros, A.A., Shechtman, E., Wang, O.: The unreasonable effectiveness of deep features as a perceptual metric. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

Acknowledgements

This work has been funded by the Federal Ministry of Education and Research under grant no. 01IS22094E WEST-AI, by the Federal Ministry of Education and Research of Germany and the state of North Rhine-Westphalia as part of the Lamarr-Institute for Machine Learning and Artificial Intelligence, and additionally by the DFG project KL 1142/11-2 (DFG Research Unit FOR 2535 Anticipating Human Behavior).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary file 2 (mp4 15050 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rabich, S., Stotko, P. & Klein, R. FPO++: efficient encoding and rendering of dynamic neural radiance fields by analyzing and enhancing Fourier PlenOctrees. Vis Comput 40, 4777–4788 (2024). https://doi.org/10.1007/s00371-024-03475-3

DOI: https://doi.org/10.1007/s00371-024-03475-3