Abstract

Yarn hairiness affects yarn and fabric quality. Existing hairiness detection methods cannot distinguish crossed fibers (crossed hairs). To separate and measure crossed hairs accurately, an algorithm for separating crossed hairs is proposed. After image pretreatment, refined hair skeletons are obtained, the positions of the fiber intersection points are determined, and a hair information table is constructed from the characteristics of the hair cross-points. Each hair branch skeleton is then classified, the common hair skeletons are screened out, and a branch matching table is built from the hair branches adjacent to the two end points of each true common hair skeleton. Branches of the same crossed hair are paired according to the principle of the closest slope at the ends adjacent to the crossing, so that each complete crossed-hair skeleton can be defined and the hairs in a field of view counted. Compared with an existing photoelectric hairiness detection instrument, the algorithm separates crossed hairs and calculates the length of complete crossed hairs and curved hairs with high accuracy. On average, the developed algorithm measures hair length about 11.1% longer than manual measurement, whereas commercial apparatus reports hair length 62.5% shorter than the actual hair length.

1 Introduction

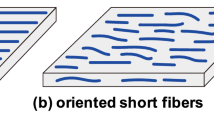

Conventional yarns spun from staple fibers consist of a yarn core and hairiness. Fiber ends protruding from the yarn body, i.e., hairs, are a characteristic feature that distinguishes staple yarns from filament yarns. While many short protruding fibers contribute substantially to a good handle, long fibers can impair the appearance and practical properties of the textile article. The number of yarn hairs and their length distribution are directly related to textile quality [1], so yarn hairiness information is important for evaluating yarn quality. However, because hairs are not straight and inevitably cross and overlap each other, accurately detecting and measuring fiber curvature and length from a yarn hairiness image remains a challenge [2]. The common detection methods for yarn hairiness are photoelectric detection and the pixel tracking technique [3,4,5]. These methods detect hairs without taking fiber crossovers and curvature into account.

The projection and photoelectric methods are currently the most widely used for yarn hairiness detection. Their results often differ significantly from the actual hair length, fundamentally because they measure the projected length of the hairs in a two-dimensional plane rather than their actual extended length. With advances in imaging technology, digital image processing methods have been widely used for hairiness parameter detection since the 1990s. Guha et al. [6] detected hair length using the Canny edge detection algorithm, with the extracted yarn core edge as the reference line; in this method, the determination of the measurement datum line and the hair extraction led to large errors. Telli [7] compared seven edge detection algorithms for separating the hairs from the yarn body in image-based determination of yarn hairiness; the problem of separating hairs for accurate length measurement remained. Yuvaraj and Nayar [8] applied a high voltage to charge the yarn so that the hairs projected outward from the yarn surface, and measured hair length on the captured yarn images. Because the hairs were straightened by the static charge, their method gives a more accurate hair count than commercial testers. Xia et al. [9] designed a test device with a blowpipe that stretches curly hairs in one direction, and used a projection receiver and a corresponding laser to detect hair length. However, this method changes the hair morphology, and the external force may lengthen the hairs. Huang et al. [10] proposed a relative hairiness index (RHI) to evaluate how well fiber ends are tucked into the yarn body. The RHI directly reveals the effect of different spinning parameters and methods on the security and fixation of fiber ends, and it provides an important reference for spinning process design and yarn quality control. Wang et al. [11] and Yasar, Memis and Mike [12] used image processing to obtain the yarn core and the total hair fiber length in images, and then calculated the coefficient of variation of yarn diameter and the hairiness index. Although many novel hairiness detection methods exist, they usually obtain the hairiness index only roughly, either by selecting a baseline or by altering or disturbing the hair morphology. Existing hairiness measurement methods, such as the hairiness segmentation approach of Sun et al. [13], improve detection accuracy, but they do not provide a solution for detecting crossed hairs.

To overcome the difficulties in detecting crossed fibers, the algorithm reported in this paper locates the intersection points in the hair skeleton from their neighborhood characteristics, removes them, and collects the information of each resulting independent hair branch. A hair branch information table is constructed, and the hair branches are classified by checking whether the eight-neighborhoods of the two end points of each branch contain an intersection point. The hair branches within each group are then paired according to the slope of each branch near the intersection point, which separates the crossed fibers. This method addresses the problem of overlapping hairs in hairiness measurement and improves the accuracy of hairiness detection.

2 Hair determination process and image acquisition

2.1 Test protocol design

The overall design flow of the algorithm for cross-fiber separation and yarn hair length determination is shown in Fig. 1. The process consists of yarn image acquisition, image processing and, finally, hair length measurement.

2.2 Hair skeleton acquisition

An RH2000 video microscope at 50× magnification was used to collect original yarn images with a resolution of 1920 × 1200 pixels. The field of view of the collected images is 11.5 × 7.2 mm. To avoid uneven brightness, an image of a background with high contrast to the yarn was first acquired, and the image of the yarn and its hairs was then captured against this background (Fig. 2a). The background was removed, and the image was converted to grayscale and binarized with image processing software to obtain the hair feature image. To avoid the blurring effect of out-of-focus hairs and to reduce the complexity of the subsequent hair tracking algorithm, a refinement (thinning) algorithm was used to reduce the hair features to a skeleton image one pixel wide [5]. After the pixel values were inverted, a skeleton image of the hairs and their crossing positions was obtained. Figure 2a shows an example yarn image, and Fig. 2b shows the pixel skeleton and the crossing positions between hairs.
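The text does not name the refinement (thinning) algorithm used to obtain the one-pixel-wide skeleton. As an illustration only, the sketch below uses the classic Zhang-Suen thinning algorithm, a common choice for this step; it is a stand-in, not necessarily the authors' method. The input is assumed to be the binarized hair image as a list of 0/1 rows with hairs as 1, and the function name `zhang_suen_thin` is hypothetical.

```python
def zhang_suen_thin(img):
    """Thin a binary image (list of 0/1 rows, foreground = 1) to a one-pixel-wide skeleton.

    Zhang-Suen thinning, used here as an illustrative stand-in for the
    unspecified refinement step. Border pixels are never examined, so the
    foreground should not touch the image edge.
    """
    g = [row[:] for row in img]  # work on a copy; the input is left unchanged
    h, w = len(g), len(g[0])

    def ring(r, c):
        # Neighbours P2..P9, clockwise, starting from the pixel directly above (r - 1, c).
        return [g[r - 1][c], g[r - 1][c + 1], g[r][c + 1], g[r + 1][c + 1],
                g[r + 1][c], g[r + 1][c - 1], g[r][c - 1], g[r - 1][c - 1]]

    changed = True
    while changed:
        changed = False
        for sub_iteration in (0, 1):
            to_clear = []
            for r in range(1, h - 1):
                for c in range(1, w - 1):
                    if g[r][c] != 1:
                        continue
                    p = ring(r, c)
                    p2, p3, p4, p5, p6, p7, p8, p9 = p
                    b = sum(p)  # number of foreground neighbours
                    # number of 0 -> 1 transitions in the cyclic sequence P2..P9, P2
                    a = sum(1 for i in range(8) if p[i] == 0 and p[(i + 1) % 8] == 1)
                    if sub_iteration == 0:
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and ok:
                        to_clear.append((r, c))
            for r, c in to_clear:  # delete in parallel after the whole scan
                g[r][c] = 0
            changed = changed or bool(to_clear)
    return g
```

For example, a bar three pixels thick shrinks to a single row of pixels along its middle. Library routines such as scikit-image's `skimage.morphology.skeletonize` serve the same purpose.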

3 Hair skeleton treatment

Because fibers are randomly distributed, some yarn hairs cross each other while others do not. The eight-neighborhood pixel tracking method has been used to identify hairs [6]. However, to track crossed hairs accurately, an adaptive algorithm is needed to deal with the hair crossings.

3.1 Determination of hair information

In the yarn hairiness skeleton image, a cross-point is the branch point of two crossed hairs, and removing the cross-points divides the crossed hairs into separate branches. The hair intersection points are determined as follows:

(a) In the image with crossed hairs, each pixel with a value of 1 is taken as a target point, and the number of pixels with a value of 1 in its eight-neighborhood is counted. As shown in Fig. 3, if the number of hair points around a3 is greater than 2, a3 is determined to be a hair intersection point.

(b) By traversing the hairiness image matrix in Fig. 4 row by row, the coordinates of all hair intersection points (X1, X2, X3 and X4 in Fig. 4) are obtained, as shown in Table 1.

(c) Setting the pixel values of all hair intersection points in the image to 0 divides the crossed hairs into separate hair branches; the numbered hair branches, with pixel values inverted, are shown in Fig. 5.

Figure 5 shows that each hair branch exists independently, with no crossings. The hairs in Fig. 5 consist of branches A (1, 3, 5, 7, 9), B (2, 3, 4), C (6, 7, 8) and D (10).
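Steps (a) to (c) can be sketched in Python as below. This is a minimal illustration, assuming the skeleton is a list of 0/1 rows; the helper names are hypothetical. On an 8-connected skeleton, several neighbouring pixels around one crossing can satisfy the "more than two neighbours" rule at once, in which case all of them are flagged and removed.

```python
def neighbour_coords(img, r, c):
    """Coordinates of the (up to) eight neighbours of (r, c) that lie inside the image."""
    h, w = len(img), len(img[0])
    return [(r + dr, c + dc)
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0) and 0 <= r + dr < h and 0 <= c + dc < w]


def find_intersections(skel):
    """Steps (a) and (b): scan row by row and flag every hair pixel (value 1)
    that has more than two hair pixels in its eight-neighbourhood."""
    points = []
    for r, row in enumerate(skel):
        for c, value in enumerate(row):
            if value == 1:
                count = sum(skel[y][x] for y, x in neighbour_coords(skel, r, c))
                if count > 2:
                    points.append((r, c))
    return points


def remove_intersections(skel, points):
    """Step (c): set the intersection pixels to 0 so the crossed hairs split into branches."""
    out = [row[:] for row in skel]  # leave the input skeleton unchanged
    for r, c in points:
        out[r][c] = 0
    return out
```

For two diagonal hairs crossing in an X, only the centre pixel has four hair neighbours, so it is the single intersection point, and removing it leaves four separate branches.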

3.2 Construction of a fiber information table

Since the hair branches form a skeleton image one pixel wide, removing the hair intersection points ensures that no hair branches cross. The information of each hair branch can therefore be obtained by traversing each branch with the eight-neighborhood tracking method. For example, Fig. 6 shows the eight-neighborhood tracking process in the hairiness pixel matrix for a1.

The starting point of a hair branch is determined by traversing the hairiness image row by row to find the first pixel with a value of 1 and counting the pixels with a value of 1 in its eight-neighborhood. If the count is 1, the pixel is an end (starting) point of the hair, e.g., pixel c1 in Fig. 6.

The eight-neighborhood tracking steps are as follows:

1. Traverse the hairiness image matrix to obtain the starting point c1 of the hair branch, and record the hair path [c1].

2. Use the latest pixel in the hair path, c1, as the seed point, and go to Step 3.

3. Count the untraced hair points in the eight-neighborhood of the seed point (here, there is one: c2), and go to Step 4.

4. Add the untraced hair point c2 to the hair path, which becomes [c1, c2].

5. Use the latest pixel in the hair path, c2, as the seed point and examine its eight-neighborhood, which contains the hair points c1 and c3.

6. Add the untraced hair point c3 to the hair path, which becomes [c1, c2, c3].

7. Repeat the above steps until hair tracing is complete and the hair path is [c1, c2, c3, c4, c5, c6].

8. When the last pixel (e.g., c6) is used as the seed point and its eight-neighborhood contains only one hair pixel, which is already stored in the path (c5 for c6), tracking is finished, and the pixel length of the hair is obtained.
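The end-point search and the eight-neighbourhood tracking steps above can be sketched in Python as follows. This is a minimal illustration, assuming a one-pixel-wide branch image stored as 0/1 rows with (row, column) coordinates; the function names are hypothetical, and the preference for orthogonal over diagonal neighbours is our own choice for resolving corners, not something stated in the text.

```python
# Orthogonal neighbours are listed first so that, at a corner, the path steps
# to the directly adjacent pixel before the diagonal one (an assumption).
OFFSETS = [(-1, 0), (0, -1), (0, 1), (1, 0), (-1, -1), (-1, 1), (1, -1), (1, 1)]


def hair_neighbours(img, r, c):
    """Hair pixels (value 1) in the eight-neighbourhood of (r, c)."""
    h, w = len(img), len(img[0])
    return [(r + dr, c + dc) for dr, dc in OFFSETS
            if 0 <= r + dr < h and 0 <= c + dc < w and img[r + dr][c + dc] == 1]


def find_start(img):
    """Row-by-row scan for the first hair pixel with exactly one hair neighbour (an end point)."""
    for r, row in enumerate(img):
        for c, value in enumerate(row):
            if value == 1 and len(hair_neighbours(img, r, c)) == 1:
                return (r, c)
    return None


def trace_branch(img, start):
    """Follow a one-pixel-wide branch from an end point and return the ordered hair path."""
    path = [start]              # Step 1: record [c1]
    visited = {start}
    while True:
        seed = path[-1]         # Steps 2 and 5: the latest pixel is the seed point
        untraced = [p for p in hair_neighbours(img, *seed) if p not in visited]
        if not untraced:        # Step 8: only already-stored pixels remain
            return path
        nxt = untraced[0]       # Steps 4 and 6: add the untraced pixel to the path
        path.append(nxt)
        visited.add(nxt)
```

The branch's pixel length is `len(path)`, and `path[0]` and `path[-1]` are the two end points recorded in a table such as Table 2.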

Using the above algorithm, the pixel length of each hair branch is counted, and the pixel coordinates of its two end points are recorded. The information for each hair branch in Fig. 5 is given in Table 2.

3.3 Hair branch information

The hairiness image in Fig. 5 shows that the ends of crossed hair branches lie next to hair crossing points. To classify the hair branches, and to use their adjacent ends to match branches belonging to a single hair, it is necessary to determine whether each end of a hair branch is adjacent to a hair crossing point. This is done by taking each of the two end points of a hair branch as a center point, as shown in Fig. 7, and checking whether any coordinate in its eight-neighborhood matches a hair intersection coordinate; if so, that branch end is recorded as adjacent to the intersection point.

Using the hair cross-point coordinates in Table 1 and the branch end point coordinates in Table 2, the branch end points adjacent to cross-points are identified, and Table 3 is generated.

4 Crossed hair separation

4.1 Removal of the pseudo-public hair skeleton

Depending on whether the ends of a hair branch are adjacent to intersection points, hair branches can be divided into three categories: public (common) hair branches, independent hair branches and cross-hair branches [14]. Both ends of a common hair branch are adjacent to hair intersection points; only one end of a cross-hair branch is adjacent to a hair intersection point; and neither end of an independent hair branch is adjacent to a fiber crossing. Table 4 shows the classification of the hair branches in Fig. 5.
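The end-point adjacency test and the three-way classification can be sketched as follows. This assumes the intersection coordinates (as in Table 1) and the two end points of each branch (as in Table 2) are available as (row, column) tuples; all names are hypothetical.

```python
def is_adjacent(p, q):
    """True if pixel q lies in the eight-neighbourhood of pixel p."""
    return p != q and abs(p[0] - q[0]) <= 1 and abs(p[1] - q[1]) <= 1


def adjacent_intersection(end, intersections):
    """The intersection point next to a branch end, or None (one entry of a Table 3-style lookup)."""
    for x in intersections:
        if is_adjacent(end, x):
            return x
    return None


def classify_branch(ends, intersections):
    """ends: the two end points of a branch. Returns 'common', 'cross' or 'independent'."""
    touching = sum(adjacent_intersection(e, intersections) is not None for e in ends)
    return {2: "common", 1: "cross", 0: "independent"}[touching]
```

With intersections at (2, 2) and (2, 6), a branch running from (2, 3) to (2, 5) touches both and is classed as common, while a branch whose only touching end is (1, 1) is a cross-hair branch.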

4.2 Selection of public hair branches

Because hairs can cross in complex ways, a hair branch may pass through several consecutive crossings. The branches whose two ends are both adjacent to hair intersection points (3, 5 and 7 in Fig. 5) may therefore include a pseudo-public hair branch such as branch 5, which meets the common-branch condition but is not a shared part of two crossed hairs; it is only part of one of the crossed hairs. An analysis of a large number of crossed hairs showed that true common hair branches, i.e., the overlapping parts of hairs, are usually short, with fewer than 20 pixels, whereas pseudo-public hair skeletons have 20 or more pixels. A branch whose hair path contains 20 or more pixels can therefore be regarded as a pseudo-public hair skeleton, which has the following two characteristic features:

Feature 1: The pixel number P of a pseudo-public hair branch, determined by Eq. (1), satisfies P ≥ 20,

where j is the number of hair pixels in the branch, and \(x_i\) and \(y_i\) are the coordinates of the hair pixel points.

Feature 2: At least one of the hair branches adjacent to the two ends of the pseudo-public hair branch also satisfies the common-branch condition.

Using these two features, the pseudo-public hair branches can be singled out; in Fig. 5, branch 5 is excluded, and branches 3 and 7 are retained as the true common hair branches.
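Assuming the pixel counts and end-to-end adjacency of the candidate branches are already known, the two features can be applied as in the sketch below. Feature 2 is read here as "at least one branch adjacent to either end is itself a candidate common branch"; the branch lengths in the usage example are made-up values for illustration, not data from the paper, and all names are hypothetical.

```python
PSEUDO_MIN_PIXELS = 20  # pixel threshold given in the text


def screen_common_branches(candidates, pixel_length, neighbours):
    """Split candidate common branches into true common and pseudo-public branches.

    candidates   -- ids of branches whose two ends both touch intersection points
    pixel_length -- branch id -> number of pixels P in the branch
    neighbours   -- branch id -> ids of branches meeting it at its ends
    """
    candidate_set = set(candidates)
    pseudo = {
        b for b in candidate_set
        if pixel_length[b] >= PSEUDO_MIN_PIXELS                 # Feature 1: P >= 20
        and any(n in candidate_set for n in neighbours[b])      # Feature 2
    }
    return sorted(candidate_set - pseudo), sorted(pseudo)
```

With hypothetical lengths of 8, 35 and 6 pixels for branches 3, 5 and 7, the call `screen_common_branches([3, 5, 7], {3: 8, 5: 35, 7: 6}, {3: [1, 2, 4, 5], 5: [3, 7], 7: [5, 6, 8, 9]})` keeps branches 3 and 7 as true common branches and flags branch 5 as pseudo-public.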

4.3 Hairiness information matching table

For the two ends of each of the common hair branches 3 and 7, the other hair branch end points located in the corresponding eight-neighborhoods are identified from the hair branch end point information table, and the hair information matching table is generated, as shown in Table 5.

As shown in Fig. 5, the four hair branches (1, 2, 4 and 5) adjacent to the two ends of common hair branch 3 in Table 5 form two pairs of branches belonging to two crossed hairs. Likewise, the four hair branches (5, 6, 8 and 9) adjacent to the two ends of common hair branch 7 form two pairs.

4.4 Hair skeleton matching

Because hairs bend gradually, branches belonging to the same hair skeleton have similar slopes near the intersection point. The slope k of a crossed-hair skeleton near the intersection point can be obtained with Eq. (2) as the average slope between the n pixels of the skeleton nearest the intersection point [15, 16]. The hair branches are then matched according to the principle of slope similarity.

where n is the number of pixels near the crossing end of the selected hair branch, and \(k_i\) is void when \(x(i + 1) = x(i)\).
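Because Eq. (2) is not reproduced here, the sketch below assumes it is the mean of the step slopes \(k_i = (y(i+1) - y(i)) / (x(i+1) - x(i))\) over the n pixels nearest the crossing end, with void (vertical) steps skipped. Coordinates are (x, y); a path traced as (row, column) would need converting, and because image rows grow downwards the sign of the slope flips unless y is negated. Function names are hypothetical.

```python
def crossing_end_points(path, n, crossing_at_start):
    """The n pixels of a traced path closest to the end that touches the crossing."""
    return path[:n] if crossing_at_start else path[-n:][::-1]


def end_slope(points):
    """Mean of the step slopes k_i over consecutive (x, y) points.

    Steps with x(i + 1) == x(i), where k_i is void, are skipped.
    Returns None if every step is vertical.
    """
    slopes = [(y1 - y0) / (x1 - x0)
              for (x0, y0), (x1, y1) in zip(points, points[1:])
              if x1 != x0]
    return sum(slopes) / len(slopes) if slopes else None
```

A 45° run such as (0, 0), (1, 1), (2, 2), (3, 3) gives k = 1.0, and a staircase (0, 0), (1, 0), (1, 1), (2, 1) gives k = 0.0 because its vertical step is skipped.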

Because hair branches are curved and variable in shape, the slopes at the two ends of a branch can differ. For example, pseudo-public hair skeleton 5 has a very gentle slope towards the adjacent hair branch 9 but a steep slope towards hair branch 8. It is therefore necessary to calculate the local slope at each end of the pseudo-public hair skeleton and compare it with the slopes of the adjacent hair branches at that end. The slope information near the crossing end of each hair branch is shown in Table 6, and from it the connections within each hair skeleton can be determined.

Table 6 shows that, at the two ends of common branch 3, branches 1 and 5 have the closest slopes, with a difference of 0.02, while branches 2 and 4 differ by 0.362. At the two ends of common branch 7, branches 6 and 8 differ by 0.379, while branches 5 and 9 have the closest slopes, with a difference of 0. Thus, branches belonging to the same crossed-hair skeleton have small slope differences, whereas branches from different hairs have large slope differences. The crossed-hair skeletons are matched according to this criterion, and the matching results are shown in Table 7. When crossed hairs have similar slopes, two hair branches may occasionally be mismatched. However, the mean hair length would not be affected, because a mismatch only exchanges branches between the two crossed hairs and leaves both the total pixel length and the number of hairs unchanged.
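One way to apply the closest-slope principle at a single common branch is to try every pairing of the branches at its two ends and keep the one with the smallest total slope difference, as sketched below. This exhaustive pairing is an assumption about how "closest slope" is resolved, and the slope values in the example are invented so that the differences match those quoted for branch 3 (0.02 and 0.362); the function name is hypothetical.

```python
from itertools import permutations


def match_across_common(end_a, end_b):
    """Pair the branches at one end of a common branch with those at the other end.

    end_a, end_b -- dict: branch id -> slope near its crossing end
    Returns the pairing with the smallest total absolute slope difference.
    """
    ids_a = list(end_a)
    best_cost, best_pairs = None, None
    for perm in permutations(end_b, len(ids_a)):
        cost = sum(abs(end_a[a] - end_b[b]) for a, b in zip(ids_a, perm))
        if best_cost is None or cost < best_cost:
            best_cost, best_pairs = cost, list(zip(ids_a, perm))
    return best_pairs
```

With hypothetical slopes `{1: 0.50, 2: -1.20}` at one end of branch 3 and `{4: -0.838, 5: 0.52}` at the other, the function returns `[(1, 5), (2, 4)]`. With only two branches per end there are just two candidate pairings. Slopes grow without bound near vertical, so comparing angles (for example via `math.atan`) may be more robust; that variant is not described in the text.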

4.5 Cross-hair separation

Using the hair branch matching table (Table 7), the complete hair skeletons can be constructed as follows:

Step 1: Take one hair branch matching array as the base array, and record its head and tail branch numbers.

Step 2: Search the other hair matching arrays for an item with the same number as the base array, and take the combination of the two matching arrays as the new base array.

Step 3: Repeat Step 2 for the remaining unsearched hair branch arrays until all arrays have been processed, removing the arrays that have been searched.
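The three steps can be sketched as a merge loop over matching arrays. The example assumes Table 7 holds branch sequences such as [1, 3, 5] and [5, 7, 9] (hypothetical contents consistent with hairs A, B and C in Fig. 5), and that arrays are joined only through their head or tail numbers, so the shared common branch 3 does not fuse hairs A and B. The function name is hypothetical.

```python
def assemble_hairs(matches):
    """Chain branch matching arrays into complete hairs.

    matches -- list of branch-id sequences, e.g. [1, 3, 5]; two arrays are
    joined when the head or tail of one equals the head or tail of the other.
    """
    remaining = [list(m) for m in matches]
    hairs = []
    while remaining:
        base = remaining.pop(0)          # Step 1: take a base array (head/tail recorded)
        merged = True
        while merged:                    # Step 3: repeat Step 2 until nothing joins
            merged = False
            for i, m in enumerate(remaining):   # Step 2: look for a shared end number
                if m[0] == base[-1]:
                    base = base + m[1:]
                elif m[-1] == base[0]:
                    base = m[:-1] + base
                elif m[-1] == base[-1]:
                    base = base + m[-2::-1]     # append m reversed, without its last item
                elif m[0] == base[0]:
                    base = m[:0:-1] + base      # prepend m reversed, without its first item
                else:
                    continue
                remaining.pop(i)         # searched arrays are removed
                merged = True
                break
        hairs.append(base)
    return hairs
```

`assemble_hairs([[1, 3, 5], [2, 3, 4], [6, 7, 8], [5, 7, 9]])` yields `[[1, 3, 5, 7, 9], [2, 3, 4], [6, 7, 8]]`. The independent branch 10 (hair D) never appears in a matching array and would be added as a hair on its own.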

Table 8 lists the hair branches and pixel lengths of each intact hair skeleton. Based on this table of complete hair skeletons, the crossed hair skeletons can be separated and the pixel length of each crossed hair obtained. The cross-hair separation method is based on traditional image processing algorithms and does not involve deep learning or other artificial intelligence techniques. It is simple and avoids the computational cost of a machine learning method, which requires large datasets for training and validation.

4.6 Hair length

The number of pixels per millimeter, N, can be calculated from the horizontal resolution V of the image (in pixels) and the horizontal length L of the field of view of the acquired hairiness image (in mm) using Eq. (3):

\(N = V / L\)  (3)

In the present setup, V = 1920 pixels and L = 11.5 mm, so 1 mm contains about 167 pixels (1920/11.5 ≈ 167). Hence, hair length (mm) = number of pixels / 167. Table 9 compares the hair lengths obtained with the cross-hair separation algorithm, with manual measurement using the two-dimensional measurement tool of the RH2000 digital microscope, and with the projection method. The manual method traces each hair over its full length and measures it by hand, while the projection method simulates commercial hairiness testers such as the Uster hairiness tester.
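Eq. (3) and the pixel-to-millimeter conversion reduce to two divisions, sketched below. The sketch keeps the unrounded scale (1920/11.5 ≈ 166.96 pixels/mm); the text rounds it to 167, which changes lengths by only about 0.03%. The function names are hypothetical.

```python
def pixels_per_mm(v_pixels, l_mm):
    """Eq. (3): horizontal resolution (pixels) divided by the horizontal field of view (mm)."""
    return v_pixels / l_mm


def hair_length_mm(pixel_count, px_per_mm):
    """Convert a hair's pixel length to millimeters."""
    return pixel_count / px_per_mm
```

For the RH2000 images, `pixels_per_mm(1920, 11.5)` rounds to 167; for the 1024 × 768 images with a 24 mm wide field of view used later, the same formula gives about 42.7 pixels/mm.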

The difference in hair length between the manual method and the cross-hair separation algorithm is small, within 8%. It arises mainly because, where a hair bends in the actual image, adjacent hair pixels are arranged diagonally at 45° rather than horizontally or vertically, whereas the algorithm calculates length by counting hair pixels as horizontal or vertical steps. The difference between the manual method and the projection method is up to 55%, which is relatively large.
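The 45° effect described above can be illustrated by comparing a plain pixel count with a chain length in which diagonal steps count as √2. Weighting diagonal steps this way is a common correction in skeleton length measurement and is shown only for comparison; it is not part of the algorithm described here. Paths are lists of (row, column) tuples, and the function names are hypothetical.

```python
import math


def pixel_count_length(path):
    """Length as the number of hair pixels, each counted as one unit."""
    return len(path)


def chain_length(path):
    """Sum of the steps between consecutive pixels: 1 for a horizontal or
    vertical step, sqrt(2) for a diagonal (45 degree) step."""
    return sum(math.hypot(r1 - r0, c1 - c0)
               for (r0, c0), (r1, c1) in zip(path, path[1:]))
```

For five pixels along a 45° diagonal, the pixel count is 5, while the chain length is 4√2 ≈ 5.66 pixel units; along a straight horizontal run of five pixels, the chain length is 4.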

The hair lengths obtained with the cross-hair separation algorithm are longer than those obtained with the projection method, mainly because the projection method measures the projected length of the hair and ignores its curvature [17], which leads to inaccurate results. The cross-hair separation algorithm tracks the hair curvature and reduces the length measurement error, and it is particularly suited to detecting crossed hairs. The method can therefore improve the accuracy of yarn hairiness detection.

To further verify the method, 50 hairiness images were acquired with the video microscope at a resolution of 1024 × 768 pixels and a field of view of 24 × 18 mm. Hairs with lengths in the range of 1–6 mm were selected, and the results were compared with those of the USTER ZWEIGLE HL400 hairiness tester and of manual measurement. The results in Table 10 show that the cross-hair separation algorithm measures hair length more accurately than the projection method. On average, the method developed in this paper measures hair length about 11.1% longer than manual measurement, whereas the commercial apparatus reports hair length 62.5% shorter than the actual length. Longer hairs (> 6 mm), if present, could be detected by joining adjacent fields of view into a larger image. Further research is needed to improve the accuracy of hair length measurement and to cover the full range of hair lengths.

5 Conclusion

This paper presents a cross-hair separation algorithm that converts yarn hairiness images into hair skeletons for hairiness measurement. It solves the problem of detecting crossed hairs by using hair branch information to match the branches of crossed fibers, so that the length of crossed fibers can be measured accurately. In comparison with two other hair detection methods (the manual method and the projection method), this method processes crossed hairs according to their morphological characteristics while avoiding the influence of overlapping hair pixels at the cross-points, resulting in a small measurement error. The comparison of hairiness detection results shows that the proposed cross-hair separation algorithm is more accurate than existing detection methods. The algorithm can also provide a basis for cross-fiber detection and separation and for hair length measurement in yarn hairiness image processing.

References

Jing, J., Huang, M., Li, P., Zhang, L., Zhang, H.: Automatic determination of yarn hairiness length based on image processing and analysis algorithm. J. Donghua Univ. 33, 587–591 (2016). https://doi.org/10.19884/j.1672-5220.2016.04.018

Rizvandi, N.B., Pižurica, A., Philips, W.: In: Campilho, A., Kamel, M. (eds.) Image Analysis and Recognition. Springer, Berlin, Heidelberg (2008)

Fabijańska, A., Jackowska-Strumiłło, L.: Image processing and analysis algorithms for yarn hairiness determination. Mach. Vision Appl. 23, 527–540 (2012). https://doi.org/10.1007/s00138-012-0411-y

Khan, M.K.R., Begum, H.A., Sheikh, M.R.: An overview on the spinning triangle based modifications of ring frame to reduce the staple yarn hairiness. J. Text. Sci. Technol. 6, 19–39 (2020). https://doi.org/10.4236/jtst.2020.61003

Li, Z., Zhong, P., Tang, X., Chen, Y., Su, S., Zhai, T.: A new method to evaluate yarn appearance qualities based on machine vision and image processing. IEEE Access 8, 30928–30937 (2020). https://doi.org/10.1109/ACCESS.2020.2972967

Guha, A., Amarnath, C., Pateria, S., Mittal, R.: Measurement of yarn hairiness by digital image processing. J. Text. Inst. 101, 214–222 (2010). https://doi.org/10.1080/00405000802346412

Telli, A.: The comparison of the edge detection methods in the determination of yarn hairiness through image processing. Text. Appar. 31, 91–98 (2021). https://doi.org/10.32710/tekstilvekonfeksiyon.770366

Yuvaraj, D., Nayar, R.C.: A simple yarn hairiness measurement setup using image processing techniques. Indian J. Fibre Text. Res. 37, 331–336 (2012)

Xia, Z., Liu, X., Wang, K., Deng, B., Xu, W.: A novel analysis of spun yarn hairiness inside limited two-dimensional space. Text. Res. J. 89, 4710–4716 (2019). https://doi.org/10.1177/0040517519841368

Huang, X., Tao, X., Yin, R., Liu, S.: A relative hairiness index for evaluating the securities of fiber ends in staple yarns and its application. Text. Res. J. 92, 356–367 (2022). https://doi.org/10.1177/00405175211035136

Wang, L., Lu, Y., Pan, R., Gao, W.: Evaluation of yarn appearance on a blackboard based on image processing. Text. Res. J. 91, 2263–2271 (2021). https://doi.org/10.1177/00405175211002863

Yassar, A.O., Memis, A., Mike, J.: Digital image processing and illumination techniques for yarn characterization. J. Electron. Imaging 14, 023001 (2005). https://doi.org/10.1117/1.1902743

Sun, Y., Li, Z., Pan, R., Zhou, J., Gao, W.: Measurement of long yarn hair based on hairiness segmentation and hairiness tracking. J. Text. Inst. 108, 1271–1279 (2017). https://doi.org/10.1080/00405000.2016.1240144

Tang, Y., Zheng, Y.: Mechanisms and Robotics. In: Pei, Z. (ed) Proceedings of the SPIE 12331, International Conference on Mechanisms and Robotics (ICMAR 2022), Zhuhai, China (2022)

Srisang, W., Jaroensutasinee, K., Jaroensutasinee, M.: Segmentation of overlapping chromosome images using computational geometry. Walailak J. Sci. Tech. 3, 181–194 (2011)

Bai, X., Latecki, L.J., Liu, W.Y.: Skeleton pruning by contour partitioning with discrete curve evolution. IEEE Trans. Pattern Anal. Mach. Intell. 29, 449–462 (2007). https://doi.org/10.1109/TPAMI.2007.59

Wang, W., Xin, B., Deng, N., Li, J., Liu, N.: Single vision based identification of yarn hairiness using adaptive threshold and image enhancement method. Meas. J. Int. Meas. Confed. 128, 220–230 (2018). https://doi.org/10.1016/j.measurement.2018.06.029

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Deng, Z., Yu, L., Wang, L. et al. An algorithm for cross-fiber separation in yarn hairiness image processing. Vis Comput 40, 3591–3599 (2024). https://doi.org/10.1007/s00371-023-03053-z

DOI: https://doi.org/10.1007/s00371-023-03053-z