Abstract

Strong demand for autonomous vehicles and the wide availability of 3D sensors are continuously fueling the proposal of novel methods for 3D object detection. In this paper, we provide a comprehensive survey of recent developments from 2012–2021 in 3D object detection covering the full pipeline from input data, over data representation and feature extraction to the actual detection modules. We introduce fundamental concepts, focus on a broad range of different approaches that have emerged over the past decade, and propose a systematization that provides a practical framework for comparing these approaches with the goal of guiding future development, evaluation, and application activities. Specifically, our survey and systematization of 3D object detection models and methods can help researchers and practitioners to get a quick overview of the field by decomposing 3DOD solutions into more manageable pieces.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Gaining a high-level and three-dimensional understanding of digital pictures is one of the major challenges in the field of artificial intelligence. Applications like augmented reality, autonomous driving and other robotic navigation systems are pushing research in this field faster than ever [3, 4, 14]. Participating in real-life road traffic, self-driving vehicles need to gain an absolute understanding of their surroundings. Hence, a vehicle not only needs to recognize other road users and other objects, but also comprehend their pose and location to avoid collisions. This objective is well known as 3D object detection (3DOD) [5].

Meanwhile, 2D object detection (2DOD) has obtained impressive results in terms of precision and inference time, and is able to compete with or even surpass human vision [164]. However, to fully grasp the scene in a real 3D world, 2D recognition and detection results alone are no longer sufficient. 3DOD now extends this approach into the three-dimensional space by adding the desired parameters of dimension and orientation of the object to the established location and classification results.

The literature volume for 3DOD has increased significantly over the past years [31, 34]. Against the backdrop of highly sophisticated 2DOD models, it is apparent that the focus of research is shifting to 3DOD as the necessary hardware in terms of sensors and computing units becomes increasingly available.

Since 3DOD is a steadily growing field of investigation, there are several promising approaches and trends, including a large pool of various design options for the object detection pipeline. Providing an overview about relevant approaches and seminal achievements may offer orientation and can help to initiate further development in the research community. For this reason, we present a comprehensive review of 3DOD models and methods with exemplary applications and aim to conceptualize the full range of 3DOD approaches along a multi-stage pipeline.

With our work, we complement related surveys in the field (e.g., Arnold et al. [5]; Guo et al., [45]; Fernandes et al., [31]), which often focus on a particular domain (e.g., autonomous driving), specific data input (e.g., point cloud data), or a certain set of methods (e.g., deep learning techniques).

To carry out our review, we investigated papers that were published in a period from 2012 to 2021. In total, our literature corpus comprises more than hundred papers which we examined in detail to provide a classification of all approaches. Throughout our review, we describe representative examples along the 3DOD pipeline, while highlighting seminal achievements.

This survey is structured as follows. In Sect. 2, we provide all relevant foundations and subsequently refer to related work in Sect. 3. Thereafter, the identified literature is discussed and analyzed in detail. This constitutes the main part of our survey, for which we propose a structured framework along the 3DOD pipeline in Sect. 4. The framework consists of several stages with corresponding design options, which are examined in Sects. 5–9 due to their thematic depth. Afterward, we leverage our framework and classify the examined literature corpus in Sect. 10. Finally, we draw a conclusion of this work and give an Sect. 11.

2 Foundations

To give orientation in the following sections, we introduce major concepts of computer vision and in particular of 3DOD that are regarded as background and foundational knowledge.

2.1 Object detection

A core task in the field of computer vision is to recognize and classify objects in images. This general task can further be subdivided into several sub-tasks as summarized in Table 1.

Following this distinction, object detection is the fusion of object recognition and localization. In detail, the approach tries to simultaneously classify and localize specific object instances in an image [164]. Detected objects are classified and usually marked with bounding boxes. These are imaginary boxes that describe the objects of interest. They are defined as the coordinates of the rectangular border that fully encloses the target object.

Object detection can be considered as a supervised learning problem which defines the process of learning a function that can map input data to known targets based on a given set of training data [10]. For this task, different kinds of machine learning algorithms can be applied.

Conventional machine learning approaches require to first extract representative image features based on feature descriptors, such as Viola-Jones method [143], scale-invariant feature transform (SIFT) [90] or histogram of oriented gradients (HOG) [23]. Those are low-level features which are manually designed for the specific use case. On their basis, prediction models such as support vector machines (SVMs) can be trained to perform the object recognition task [120]. However, the diversity of image objects and use cases in form of pose, illumination and background makes it difficult to manually create a robust feature descriptor that can describe all kinds of objects [54].

For this reason, recent efforts are increasingly directed toward the application of artificial neural networks with deep network architectures, broadly summarized under the term deep learning [66]. Deep neural networks are able to perform object recognition without having to manually define specific features in advance. Their multi-layered architecture allows them to be fed with high-dimensional raw input data and then automatically discover internal representations at different levels of abstraction that are needed for recognition and detection tasks [7, 66].

A common type of deep neural network architecture, which is widely adopted by the computer vision community, is that of convolutional neural networks (CNNs). Due to their nested design, they are able to process high-dimensional data that come in the form of multiple arrays, such as given by color images that are composed of arrays containing pixel intensities in different color channels [85]. These techniques are proved to be superior in 2DOD offering more complex and robust features, while being applicable to any use case [66, 85].

2.2 3D vision and 3D object detection

3D vision aims to extend the previously discussed concepts of object detection by adding data of the third dimension. This leads on the one hand to six possible degrees of freedom (6-DoF)Footnote 1 instead of three, and on the other hand, to an accompanying increase in the number of scenery configurations. While methods in 2D space are good for simple visual tasks, more sophisticated approaches are required to improve, for instance, autonomous driving or robotics applications [78, 82]. The full understanding of the environment composed of real 3D objects implies the interpretation of scenes in which items may show up in absolutely discretionary positions and directions related to 6-DoF. This requires a substantial amount of computing power and increases the complexity of the performed operations [24].

3DOD transfers the task of object detection into the three-dimensional space. The idea of 3DOD is to output dimension, location and rotation of 3D bounding boxes and the corresponding class labels for all relevant objects within the sensors field of view [121]. 3D bounding boxes are rectangular cuboids in the three-dimensional space. To ensure relevancy, their size should be minimal, while still containing all relevant parts of an object. One common way to parameterize a 3D bounding box is (x, y, z, h, w, l, c), where (x, y, z) represent the 3D coordinates of the bounding box center, (h, w, l) refer to the height, width and length of the box and c stands for the class of the box [19]. Further, most approaches add an orientation parameter to the 3D bounding box defining the rotation of each box (e.g., Shi et al.,[125]).

2.3 Sensing technologies

To capture 3D scenes, commonly used monocular cameras are no longer sufficient. Therefore, special sensors have been developed to capture depth information. RGB-Depth (RGB-D) cameras like Intel’s RealSense use stereo vision, while Light Detection and Ranging (LiDAR) sensors such as Velodyne’s HDL-32E use laser beams to infer depth information. The data acquired by these 3D sensors can be converted to a more generic structure, the point cloud, which can be understood as a set of points in vector space. Further details on the different data inputs are provided in Sect. 5. Typically, 3DOD models rely on data captured by various active and passive optical sensor modalities, with cameras and LiDAR-sensors being the most popular representatives.

2.3.1 Cameras

Stereo cameras: Stereo cameras are inspired by human ability to estimate depth by capturing images with two eyes. Depth gets reconstructed by exploiting the disparity between two or more camera images that record the same scene from different points of view. To do so, stereoscopy leverages triangulation and epipolar geometry theory to create range information [37]. The acquired depth map normally gets appended to an RGB image as the fourth channel, together called RGB-D image. This sensor variant exhibits a dense depth map, though its quality is heavily dependent on depth estimation, which is also computationally expensive [28].

Time-of-Flight cameras: Instead of deriving depth information from different perspectives, the time-of-flight (TOF) principle can directly estimate the device-to-target distance. TOF systems are based on the LiDAR principle of sending light signals to the scene and measuring the time until they return. The difference between LiDAR and camera-based TOF is that LiDAR creates point clouds with a pulsed laser, whereas TOF cameras capture depth maps with an RGB-like camera. The data captured by stereo and TOF cameras can either be transformed into 2.5D representation like RGB-D or into 3D representation by generating a point cloud (e.g., Song and Xiao [134], Qi et al. [107], Sun et al. [137], Ren and Sudderth [118].

In general, camera sensors such as stereo and TOF have the advantage of low integration costs and relatively low arithmetic complexity. However, all these methods experience considerable quality volatility through environmental conditions like light and weather.

2.3.2 LiDAR sensors

LiDAR sensors emit laser pulses onto the scene and measure the time from emitting the beam to receiving the reflection. In combination with the constant of the speed of light, the measured time reveals the distance to the target. By assembling the 3D spatial information from the reflected laser at a 360\(^\circ \) angle, the sensor constructs a full three-dimensional map of the environment. This map is a set of 3D points, also called point cloud.

The respective reflection values represent the strength of the received pulses. Thereby, LiDAR does not consider RGB [67]. The HDL-64E3, as a common LiDAR system, outputs 120,000 points per frame, which adds up to a huge amount of data, namely 1,200,000 points per second on a 10 Hz frame rate [5].

The advantages of LiDAR sensors are long-range detection abilities, high resolution compared to other 3D sensors and independence of lighting conditions, which are counterbalanced by its high costs and bulky devices [5, 31].

2.4 Domains

Looking at the extensive research on 3DOD in the past ten years, the literature can be roughly summarized into two main areas: indoor applications and autonomous vehicle applications. These two domains are the main drivers for the field, even though 3DOD is not strictly limited to these two specific areas, as there are also other applications conceivable and already in use, such as in retail, agriculture and fitness.

Both domains face individual challenges and opportunities which led to this differentiation. However, it should also be noted that research in both areas is not mutually exclusive, as some 3DOD models offer solutions that are sufficiently generic and therefore do not focus on a particular domain (e.g., Qi et al. [108], Xu et al. [153], Tang and Lee [139], Wang and Jia [148]).

A fundamental difference between indoor and autonomous vehicle applications is that objects in indoor environments are often stacked on top of each other. This provides opportunities for learning inter-object relationships between the target and base/carrier objects. In research, this is referred to as a holistic understanding of the inner scene, which enables a better communication between service robots and people [51, 118]. The challenges with indoor applications are that scenes are often cluttered and many objects occlude each other [118].

Autonomous vehicle applications are characterized by long distances to potential objects and difficult weather conditions such as snow, rain and fog, which make the detection process more difficult [5]. Objects may also occlude each other, but since objects like cars, pedestrians and traffic lights are unlikely to be on top of each other, techniques such as bird’s-eye view projection can efficiently compensate for this disadvantage (e.g., Beltrán et al. [9], Wang et al. [149]).

2.5 Datasets

As seen in 2DOD, a crucial prerequisite for continuous development and fast progress of algorithms is the availability of publicly accessible datasets. Extensive data are required to train learning-based state-of-the-art models. Further, they are used to apply benchmarks to compare one’s own results with those of others. For instance, the availability of the dataset ImageNet [25] accelerated the development of 2D image classification and 2DOD models remarkably. The same phenomenon is observable in 3DOD and other tasks based on 3D data: More available data lead to a larger coverage of possible scenarios.

Similar to the domain focus, the most commonly used datasets for 3DOD developments can be roughly divided into two groups, distinguishing between autonomous driving scenarios and indoor scenes. In the following, we describe some of the major datasets that are publicly available. In addition, Table 2 provides an overview of the described datasets including the main reference and the environment of the recordings as well as the number of scenes, frames, 3D bounding boxes and object classes.

2.5.1 Autonomous driving datasets

KITTI: The most popular dataset for autonomous driving applications is KITTI [36]. It consists of stereo images, LiDAR point clouds and GPS coordinates, all synchronized in time. Recorded scenes range from highways, complex urban areas and narrow country roads. The dataset can be used for various tasks such as stereo matching, visual odometry, 3D tracking and 3D object detection. For object detection, KITTI provides 7481 training and 7518 test frames including sensor calibration information and annotated 3D bounding boxes around the objects of interest in 22 video scenes. The annotations are categorized in easy, moderate and hard cases, depending on the object size, occlusion and truncation levels. Drawbacks of the dataset are the limited sensor configurations and light conditions: All recordings were made during daytime and mostly under sunny conditions. Moreover, the class frequencies are quite unbalanced. 75% belong to the class car, 15% to the class pedestrian and 4% to the class cyclist. In natural scenarios, the missing variety challenges the evaluation of the latest methods.

Waymo Open: The Waymo Open dataset [138] focuses on providing a diverse and comprehensive dataset. It consists of 1,150 videos that are exhaustively annotated with 2D and 3D bounding boxes in images and LiDAR point clouds, respectively. The data collection was conducted by using five cameras presenting a front and side view of the recording vehicle, as well as a LiDAR sensor for 360\(^\circ \) view. Further, the data were recorded in three different cities with various light and weather conditions, providing a diverse scenery.

nuScenes: NuScenes [12] comprises 1,000 video scenes, 20 s each, in the context of autonomous driving. Each scene is represented by six different camera views, LiDAR and radar data with full 360\(^\circ \) field of view. It is significantly larger than the pioneering KITTI dataset with more than seven times as many annotations and 100 times as many images. Further, the nuScenes dataset also provides nighttime and bad weather scenarios, which is neglected in the KITTI dataset. On the downside, the dataset has limited LiDAR sensor quality with 34,000 points per frame and limited geographical diversity compared to the Waymo Open dataset, which covers an effective area of only five square kilometers.

2.5.2 Indoor datasets

NYUv2 & SUN RGB-D: NYUv2 [128] and its successor SUN RGB-D [133] are datasets commonly used for indoor applications. The goal of these datasets is to encourage methods focused on total scene understanding. The datasets were recorded using four different RGB-D sensors to ensure the generalizability of applied methods for different sensors. Even though SUN RGB-D inherited the 1449 labeled RGB-D frames from the NYUv2 dataset, NYUv2 is still occasionally used by nowadays methods. SUN RGB-D consists of 10,335 RGB-D images that are labeled with about 146,000 2D polygons and around 64,500 3D bounding boxes with accurate object orientation measures. Additionally, there is a room layout and scene category provided for each image. To improve image quality, short videos of every scene have been recorded. Several frames of these videos were then used to create a refined depth map.

Objectron: Recently, Google released the Objectron dataset [1], which is composed of object-centric video clips capturing nine different objects categories in indoor and outdoor scenarios. The dataset consists of 14,819 annotated video clips containing over four million annotated images. Each video is accompanied by a sparse point cloud representation.

3 Related reviews

As of today, to the best of our knowledge, there are only a limited number of reviews that aim to organize and classify the most important methods and pipelines for 3DOD.

Arnold et al. [5] were some of the first to propose a classification for 3DOD approaches with a particular focus on autonomous driving applications. Based on the input data that is passed into the detection model, they divide the approaches into (i) monocular image-based methods, (ii) point cloud methods and (iii) fusion-based methods. Furthermore, they break down the point cloud category into three subcategories of data representation: (ii-a) projection-based, (ii-b) volumetric representations and (ii-c) Point Nets. The data representation states which kind of input the model consumes and which information the input contains so that the subsequent stage can process it more conveniently according to the design choice.

While regarding various applications, such as 3D object classification, semantic segmentation and 3DOD, Liu et al. [87] focus on feature extraction methods which constitutes the properties and characteristics that the model derives from the passed data. They classify deep learning models on point clouds into (i) point-based methods and (ii) tree-based methods. The former directly uses the raw point cloud, and the latter first employs a k-dimensional tree to preprocess the corresponding data representation.

Griffiths and Boehm [44] consider object detection as a special type of classification and thus provide relevant information for 3DOD in their review on deep learning techniques for 3D sensed data classification. They differentiate the approaches on behalf of the data representation into (i) RGB-D methods, (ii) volumetric approaches, (iii) multi view CNNs, (iv) unordered point set processing methods and (v) ordered point set processing techniques.

Huang and Chen [53] touch lightly upon 3DOD in their review paper about autonomous driving technologies using deep learning methods. They suggest a similar classification of methods as Arnold et al. [5] by distinguishing between (i) camera-based methods, (ii) LiDAR-based methods, (iii) sensor-fusion methods and additionally (iv) radar-based methods. While giving a coarse structure for 3DOD, the conference paper is waiving an explanation for their classification.

Bello et al. [8] consider the field from a broader perspective by providing a survey of deep learning methods on 3D point clouds. The authors organize and compare different methods based on a structure that is task-independent. Subsequently, they discuss the application of exemplary approaches for different 3D vision tasks, including classification, segmentation and object detection.

Addressing likewise the higher-level topic of deep learning for 3D point clouds, Guo et al. [45] give a more detailed look into 3DOD. They structure the approaches for handling point clouds into (i) region proposal-based methods, (ii) single-shot methods and (iii) other methods by categorizing them on account of their model design choice. Additionally, the region proposal-based methods are split along their data representation into (i-a) multi-view, (i-b) segmentation, (i-c) frustum-based and again (i-d) other methods. Likewise, the single-shot category inherits the subcategories (ii-a) bird’s-eye view, (ii-b) discretization and (ii-c) point-based approaches.

Most recently, Fernandes et al. [31] presented a comprehensive survey which might be the most similar to this work. They developed a detailed taxonomy for point-cloud-based 3DOD. In general, they divide the detection models along their pipeline into three stages, namely data representation, feature extraction and detection network modules.

The authors note that in terms of data representation, the existing literature takes the approach of either converting the point cloud data into voxels, pillars, frustums or 2D-projections, or consuming the raw point cloud directly. Feature extraction gets emphasized as the most crucial part of the 3DOD pipeline. Suitable features are essential for an optimal feature learning which in turn has a great impact on the appropriate object localization and classification in later steps. The authors classify the extraction methods into point-wise, segment-wise, object-wise and CNNs which are further divided into 2D CNN and 3D CNN backbones. The detection network module consists of the multiple output task of object localization and classification, as well as the regression of 3D bounding box parameters and object orientation. Same as in 2DOD, these modules are categorized into the architectural design principles of single-stage and dual-stage detectors.

Although all preceding reviews provide some systematization for 3DOD, they move—with exception of Fernandes et al. [31]—on a high level of abstraction. They tend to lose some of the information which is crucial to fully map relevant trends in this vivid research field.

Moreover, as mentioned above, all surveys are limited to either domain-specific aspects (e.g., autonomous driving applications) or focus on a subset of methods (e.g., point cloud-based approaches). Monocular-based methods, for example, are neglected in almost all existing review papers.

4 3D object detection pipeline

Intending to structure the research field of 3DOD from a broad perspective, we propose a systematization that enables to classify current 3DOD approaches at an appropriate abstraction level, by neither losing relevant information caused by a high level of abstraction nor being too specific and complex by a too fine-granular perspective. Likewise, our systematization aims at being sufficiently robust to allow a classification of all existing 3DOD pipelines and methods as well as of future works without the need for major adjustments to the general framework.

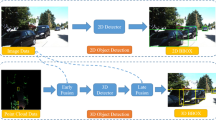

Figure 1 provides an overview of our systematization. It is structured along the general stages of an object detection pipeline, with several design choices at each stage. It starts with the choice of input data (Sect. 5), followed by the selection of a suitable data representation (Sect. 6) and corresponding approaches for feature extraction (Sect. 7). For the latter steps, it is possible to apply fusion approaches (Sect. 8) to combine different data inputs and take advantage of multiple feature representations. Finally, the object detection module is defined (Sect. 9).

The structuring along the pipeline enables us to order and understand the underlying principles of this field. Furthermore, we can compare the different approaches and are able to outline research trends in different stages of the pipeline. To this end, we carry out a qualitative literature analysis of proposed 3DOD approaches in the following sections along the pipeline to examine specific design options, benefits, limitations and trends within each stage.

5 Input data

In the first stage of the pipeline, a model consumes the input data which already restricts the further processing. Common inputs for 3DOD pipelines are (i) RGB images (Sect. 5.1), (ii) RGB-D images (Sect. 5.2) and (iii) point clouds (Sect. 5.3). 3DOD models using RGB-D images are often referred to as 2.5D approaches (e.g., Deng and Latecki [27], Sun et al. [137], Maisano et al. [94]), whereas 3DOD models using point clouds are regarded as true 3D approaches.

5.1 RGB images

Monocular or RGB images provide a dense pixel representation in the form of texture and shape information [5, 37, 80]. A 2D image can be seen as a matrix, containing the dimensions of height and width with the corresponding color values.

Especially for subtasks of 3DOD applications such as lane line detection, traffic light recognition or object classification, monocular-based approaches enjoy the advantage of real time processing by 2DOD models. Probably, the most severe disadvantage of monocular images is the lack of depth information. 3DOD benchmarks have shown that depth data is essential for accurate 3D localization [59]. Furthermore, monocular images face the problem of object occlusion as they only capture a single view.

5.2 RGB-D images

RGB-D images can be created with stereo or TOF cameras that provide depth information in addition to color information (cf. Section 2.3). RGB-D images consist of an RGB image with an additional depth map [28]. The depth map is comparable to a grayscale image, except that each pixel represents the actual distance between the sensor and the surface of the scene object. An RGB image and a depth image ideally have a one-to-one correspondence between pixels [147].

RGB-D images, also known as range images, are convenient to use with the majority of 2DOD methods, treating depth information similarly to the three RGB channels [37]. However, as with monocular images, RGB-D faces the problem of occlusion since the scene is only presented through a single perspective. In addition, objects are presented at different scales depending on their position in space.

5.3 Point cloud

The data acquired by 3D sensors can be converted to a more generic structure, the point cloud. It is a three-dimensional set of points that has an unorganized spatial structure [101]. The point cloud is defined by its points, which comprise the spatial coordinates of a sampled surface of an object. However, further geometric and visual attributes can be added to each point [37].

As described in Sect. 2.3, point clouds can be obtained from LiDAR sensors or transformed RGB-D images. Yet, point clouds obtained from RGB-D images are typically noisier and sparser compared to LiDAR-generated point clouds due to low resolution and perspective occlusion [92].

The point cloud offers a fully three-dimensional reconstruction of the scene, providing rich geometric, shape and scale information. This enables the extraction of meaningful features that boost the detection performance. Nevertheless, point clouds face severe challenges which are based on their nature and processability. Common deep learning operations, which have proven to be the most effective techniques for object detection, require data to be organized in a tensor with a dense structure (e.g., images, videos) which is not fulfilled by point clouds [169]. In particular, point clouds exhibit irregular, unstructured and unordered data characteristics [8].

Characteristics of point cloud data: (a) irregular collection with sparse and dense regions, (b) unstructured cloud of independent points without a fixed grid, (c) unordered set of points that are invariant to permutation [8]

-

Irregular means that the points of a point cloud are not evenly sampled across the scene. Hence, some of the regions have a denser distribution of points than others. Especially distant objects are usually represented sparsely by very few points because of the limited range recording ability of current sensors.

-

Unstructured means that points are not on a regular grid. Accordingly, the distances between neighboring points can vary. In contrast, pixels in an image always have a fixed position to their neighbors, which is evenly spaced throughout the image.

-

Unordered means that the point cloud is just a set of points that is invariant to permutations of its members. Particularly, the order in which the points are stored does not change the scene that it represents. In other formats, e.g., an image, data usually get stored as a list [8, 106]. Permutation invariance, however, means that a point cloud of N points has N! permutations and the subsequent data processing must be invariant to each of these different representations.

Figure 2 provides an illustrative overview of the three challenging characteristics of point cloud data.

6 Data representation

To ensure a correct processing of the input data by 3DOD models, it must be available in a suitable representation. Due to the different data formats, 3DOD data representations can be generally classified into 2D and 3D representations. Beyond that, we assign 2.5 representations to either 2D if they come in an image format (regardless of the number of channels), or 3D if the data get described in a spatial structure. 2D representations generally cover (i) monocular representations (Sect. 6.1) and (ii) RGB-D front views (Sect. 6.2). 3D representations cover (iii) grid cells (Sect. 6.3) and (iv) point-wise representations (Sect. 6.4).

6.1 Monocular representation

Despite the lack of information about the range, monocular representation enjoys a certain popularity among 3DOD methods due to its efficient computation. Additionally, the required cameras are affordable and simple to set up. Hence, monocular representations are attractive for applications where resources are limited [56, 61].

The vast majority of monocular representations use the widely known frontal view which is limited by the viewing angle of the camera. Other than that, [63] tackle monocular 360\(^\circ \) panoramic imagery using equirectangular projections instead of rectilinear projections of conventional camera images. To access true 360\(^\circ \) processing, they fold the panorama imagery into a 360\(^\circ \) ring by stitching left and right edges together with a 2D convolutional padding operation.

Only a small proportion of 3DOD monocular approaches exclusively use an image representation (e.g., Jörgensen et al. [56]) for 3D spatial estimations. Most models leverage additional data and information to substitute the missing depth information (more details in Sect. 7.2.2). Additionally, representation fusion techniques are quite popular to compensate disadvantages. For instance, 2D candidates get initially detected from monocular images before a 3D bounding box for the spatial object is predicted based on the initial proposals. In general, the latter step processes an extruded 3D subspace derived from the 2D bounding box. In case of representation fusion, the monocular representation is usually not used for full 3DOD but rather as a support for increasing efficiency through limiting the search space for heavy three-dimensional computations or for delivering additional features such as texture and color. These methods are described in depth in Sect. 8 (Fusion Approaches).

6.2 RGB-D front view

RGB-D data can either be transformed to a point cloud or kept in its natural form of four channels. Therefore, we can distinguish between an RGB-D (3D) representation (e.g., Chen et al. [18]; Tang and Lee [139], Ferguson and Law [30]), which exploits the depth information in its spatial form of a point cloud, and an RGB-D (2D) representation (e.g., Chen et al. [17], He et al. [49], Li et al. [75], Rahman et al. [112], Luo et al. [92]), which holds an additional 2D depth map in an image format.

Thus, as mentioned in Sect. 5.2, RGB-D (2D) images represent monocular images with an appended fourth channel of the depth map. The data are compressed along the z-axis generating a dense projection in the frontal view. The 2D depth image can be processed similarly to RGB channels by common 2D CNN models.

The RGB-D (2D) representation is often referred to as front view (FV) in 3DOD research. However, front and range view (RV) are occasionally equated with each other in current research. For clarification: In this work, the FV is considered as an RGB-D image generated by a TOF, stereo or similar camera, while the RV is defined as the natural frontal projection of a point cloud (see also Sect. 6.3.2).

6.3 Grid cells

Processing a point cloud (cf. Section 5.3) poses a particular challenge for CNNs because convolution operations require a structured grid, which is not present in point cloud data [8]. Thus, to take advantage of advanced deep learning methods and leverage highly informative point clouds, they must first be transformed into a suitable representation.

Current research presents two ways of handing point clouds. The first and more natural solution is to fit a regular grid onto the point cloud, producing a grid cell representation. Many approaches do so by either quantizing point clouds into 3D volumetric grids (Sect. 6.3.1) (e.g., Song and Xiao [134], Zhou and Tuzel [169], Shi et al. [125]) or by discretizing them into (multi-view) projections (Sect. 6.3.2) (e.g., Li et al. [72], Chen et al. [19], Beltrán et al. [9], Zheng et al. [165]).

The second and more abstract way to solve the point cloud representation problem is to process the point cloud directly by grouping points into point sets. This approach does not require convolutions and thus allows the point cloud to be processed without transforming it into a point-wise representation (Sect. 6.4) (e.g., Qi et al. [108], Shi et al. [124], Huang et al. [52]).

Along these directions, several state-of-the-art methods have been proposed for 3DOD pipelines, which we will describe exemplarily in the following.

6.3.1 Volumetric grids

The main idea behind the volumetric representation is to subdivide the point cloud into equally distributed grid cells, called voxels, which allows further processing in a structured form. For this purpose, the point cloud is converted into a 3D fixed-size voxel structure of dimension (x, y, z). The resulting voxels either contain raw points or already encode the occupied points into a feature representation such as point density or intensity per voxel [8]. Figure 3 illustrates the transformation of a point cloud into a voxel-based representation.

Voxelization of a point cloud [8]

Usually, voxels have a cuboid shape (e.g., Li [70], Zhou and Tuzel [169], Ren and Sudderth [118]). However, there are also approaches applying other forms such as pillars (e.g., Lang et al. [65], Lehner et al. [68]).

Feature extraction networks utilizing voxel-based representations are computationally more efficient and reduce memory needs. Instead of extracting low- and high-dimensional features for each point individually, clusters of points (i.e., voxels) are used to extract such features [31]. Despite reducing the dimensionality of a point cloud through discretization, the spatial structure is kept and allows to make use of the geometric features of the scene.

Volumetric approaches using cuboid transformation of the point cloud scene have been used, for example, by Song and Xiao [134], Li [70], Engelcke et al. [29], Zhou and Tuzel [169] and Ren and Sudderth [118].

To speed up computation, Lang et al. [65] propose a pillar-based voxelization of the point cloud, instead of using the conventional cubical quantization. The vertical column representation allows to skip expensive 3D convolutions in the following steps, since pillars have unlimited spatial extent in z-direction and can therefore be projected directly onto 2D pseudo images. As a result, all feature extraction operations are processable by efficient 2D CNNs. Kuang et al. [62] extent this approach even further by using a learning-based feature encoding approach as opposed to relying on handcrafted feature initialization.

6.3.2 Projection-based representation

In addition to the need to transform the point cloud into a processable state, several approaches seek to leverage the expertise and power of 2DOD processing. Especially for reasons of better inference times for point cloud models, projection approaches became popular. They project the point cloud onto an image plane whilst preserving the depth information. Subsequently, the representation can be processed by efficient 2D extractors and detectors. Commonly used representations are the previously mentioned range view, using an image plane projection, and the bird’s eye view, projecting the point cloud onto the ground plane.

Range View: Whereas the 2D FV corresponds to monocular and stereo cameras, the RV is a native 2D representation of the LiDAR data. The point cloud is projected onto a cylindrical 360\(^\circ \) panoramic plane exactly as the data is captured by the LiDAR sensor. Since the LiDAR projection is still neither in a processable state nor contains any discriminative features such as RGB information, the projected RV is partitioned into a fine-grained grid and encoded in the successive feature initialization step (see Sect. 7.4.1).

Meyer et al. [97] and Liang et al. [81] emphasize that the naturally compact RV results in a more efficient computation in comparison to other projections. In addition, the information loss of projection is considerably small since the RV constitutes the native representation of a rotating LiDAR sensor [81]. At the same time, RV suffers from distorted object size and shape due to its cylindrical image character [157]. Inevitably, RV representations face the same problem as camera images, in that the size of objects is closely related to their range and occlusions may occur due to perspective [168]. Zhou et al. [81] argue that “range image is a good choice for extracting initial features, but not a good choice for generating anchors”. Moreover, its performance in 3DOD models does not match with state-of-the-art bird’s eye view (BEV) projections. Nevertheless, RV enables more accurate detection of small objects [96, 97].

Exemplary approaches using RV are proposed by Li et al. [72], Chen et al. [19], Zhou et al. [168] and Liang et al. [81].

Bird’s Eye View: While RV discretize the data onto a panoramic image plane, the BEV is an orthographic view of the point cloud scene projecting the points onto the ground plane. Therefore, data get condensed along the y-axis. Figure 4 shows an exemplary illustration of a BEV projection.

Visualization of bird’s eye view projection of a point cloud without discretization into grid cells [156]

Chen et al. [19] were some of the first introducing BEV to 3DOD in their seminal work MV3D. They organized the point cloud as a set of voxels and then transformed each voxel column through an overhead perspective into a 2D grid with a specific resolution and encoding for each cell. As a result, a dense pseudo-image of the ground plane is generated which can be processed by standard image detection architectures.

Unlike RV, BEV offers the advantage that the object scales are preserved regardless of the range. Further, BEV perspective eases the typical occlusion problem of object detection since objects are displayed separately from each other in a free-standing position [168]. These advantages let the networks exploit priors about the physical dimension of objects, especially for anchor generation [157].

On the other hand, BEV data gets sparse in at distance, which makes it unfavorable for small objects [65, 153]. Furthermore, the assumption that all objects lie on one mutual ground plane often turns out to be infeasible in reality, especially in indoor scenarios [168]. Also the often coarse voxelization of BEV may remove fine granular information leading to inferior detection at small object sizes [96].

Exemplary models using BEV representation can be found in the work from Wang et al. [149], Beltrán et al. [9], Liang et al. [80], Yang et al. [157], Simon et al. [130], Zeng et al. [162], Li et al. [74], Ali et al. [2], He et al. [48], Liang et al. [81] and Wang et al. [145].

Multi-View Representation: Often BEV and RV are not used as single data representations but as a multi-view approach, meaning that RV and BEV but also monocular-based images are combined to represent the spatial information of a point cloud.

Chen et al. [19] were the first to integrate this concept into a 3DOD pipeline, followed by many other models adapting to fuse 2D representations from different perspectives (e.g., Ku et al. [60], Li et al. [74], Wang et al. [145, 146], Liang et al. [81]).

Although the representations of BEV and RV are compact and efficient, they are always limited by the loss of information, originating from the discretization of the point cloud into a fixed number of grid-cells and the respective feature encoding of the cells’ points.

6.4 Point-wise representation

Either way, discretizing the point cloud into a projection or volumetric representation inevitably leads to information loss. Against this backdrop, Qi et al. [106] introduced PointNet, and thus a new way to consume the raw point cloud in its unstructured nature having access to all the recorded information.

In point-wise representations, points are isolated and sparsely distributed in a spatial structure representing the visible surface, while preserving precise localization information. PointNet handles this representation by aggregating neighboring points and extracting a compressed feature from the low-dimensional point features of each set, enabling a raw point-based representation for 3DOD models. A more detailed description of PointNet and its successor PointNet++ is given in Sects. 7.3.1 and 7.3.2, respectively.

However, PointNet was developed and tested on point clouds containing 1,024 points [106], whereas realistic point clouds captured by a standard LiDAR sensor such as Velodyne’s HDL-64E3 usually consist of 120,000 points per frame. Thus, applying PointNet on the whole point cloud is a time- and memory-consuming operation. De facto, point clouds are rarely consumed in total. As a consequence, further techniques are required to improve efficiency, such as cascading fusion approaches (see Sect. 8.1) that crop point clouds to the region of interest and pass only subsets of the point cloud to the point-based feature extraction stage.

In general, it can be stated that point-based representations retain more information than voxel- or projection-based methods. But on the downside point-based methods are inefficient when the number of points is large. Yet, a reduction of the point clouds like in cascading fusion approaches always comes with a decrease in information. In summary, it can be said that maintaining both efficiency and performance is not achievable for any of the representations to date.

7 Feature extraction

Feature extraction gets emphasized as the most crucial part of the 3DOD pipeline that research is focusing on Fernandes et al. [31]. It follows the paradigm to reduce dimensionality of the data representation with the intention of representing the scene by a robust set of features. Features generally depict the unique characteristics of the data used to bridge the semantic gap, which denotes the difference between the human comprehension of the scene and the model’s prediction. Suitable features are essential for an optimal feature learning which in turn has a great impact on the detection in later steps. Hence, the goal of feature extraction is to provide a robust semantic representation of the visual scene that ultimately leads to the recognition and detection of different objects [164].

As with 2DOD, feature extraction approaches can be roughly divided into (i) handcrafted feature extraction (Sect. 7.1) and (ii) feature learning via deep learning methods. Regarding the latter, we can distinguish the broad body of 3DOD research depending on the respective data representation. Hence, feature learning can be performed either in a (ii-a) monocular (Sect. 7.2), (ii-b) point-wise (Sect. 7.3), (ii-c) segment-wise (Sect. 7.4) or in a (ii-d) fusion-based approach (Sect. 8).

7.1 Handcrafted feature extraction

Although the vast majority of 3DOD approaches have moved toward hierarchical deep learning, which can generate more complex and robust features, there are still cases where features are created manually.

Handcrafted feature extraction differs from feature learning in that the features are selected individually and are usually used directly for the final determination of the scene. Features like edges or corners are tailored by hand and serve as the ultimate characteristics for object detection. There is no algorithm that independently learns how these features are constructed or how they can be combined, as it is the case with CNNs. The feature initialization already represents the feature extraction step. Often, these handcrafted features are then scored by SVMs or random forest classifiers, which are deployed exhaustively over the entire image or scene.

A few exemplary 3DOD models using handcrafted feature extraction shall be introduced in the following. For instance, Song and Xiao [134] use four types of 3D features which they exhaustively extract from each voxel cell, namely point density, 3D shape feature, 3D normal feature and truncated signed distance function feature. The features are used to handle the problem of self-occlusion (see Fig. 5).

Visualization of manually crafted features [134]

Wang and Posner [144] also use a fixed-dimensional feature vector containing the mean and variance of the reflectance values of all points that lie within a voxel and an additional binary occupancy feature. The features are not processed further and are used directly for detection purposes in a voting scheme.

Ren and Sudderth [116] introduce their discriminative cloud of oriented gradients (COG) descriptor, which they further develop in their subsequent work by proposing LSS (latent support surfaces) [117] and COG 2.0 [118]. Additionally, the approach was also adopted by Liu et al. [88]. In general, the COG feature is able to describe complex 3D appearances within every orientation, as it consists of a gradient computation, 3D orientation bins and a normalization. For each proposal, the point cloud density, surface normal features and a COG-feature are calculated in a sliding window fashion, which are then scored with pre-trained SVMs.

In addition, manually configured features are typically used in combination with matching algorithms for detection purposes. For example, Yamazaki et al. [154] create gradient-based image features by applying principal component analysis to the point cloud, which are subsequently used to compute the normalized cross-correlation. The main idea is to use the spatial relationships between image projection directions to discover the optimal template matching for detection. Similarly, Teng and Xiao [140] use handcrafted features, namely color histogram and key point histogram for template matching purposes. Another example is the approach proposed by He et al. [49]. The authors create silhouette gradient orientations from RGB and surface normal orientations from depth images.

7.2 Monocular feature learning

Probably the most difficult form of feature extraction for 3DOD is exercised in monocular representation. Since there is no direct depth data, well-informed features are difficult to obtain, and thus, most approaches attempt to compensate for this lack of information with various depth substitution techniques.

7.2.1 Solely monocular approaches

In our literature corpus, there is only one paper which takes up the challenge of performing 3DOD exclusively with monocular images [56]. The authors use a single-shot detection (SSD) framework ( [86], see also Sect. 9.3.2) to generate per-object canonical 3D bounding box parameters. They start from a classical bounding box detector and add new output heads for 3DOD features. More specifically, they add distance, orientation, dimension and 3D corners to the already available class score and 2D bounding box heads. The conducted feature extraction is the same as it is in the 2D framework.

Models that use only monocular inputs follow the straightforward way of predicting spatial parameters directly without any range information. While the objectives of dimension and pose estimation are relatively easy to fulfill as they rely heavily on the given feature of appearance in 2D images, they face the challenge of difficult location estimation, since monocular inputs do not have a natural feature for spatial location [46, 87].

7.2.2 Informed monocular approaches

The absence of depth information cannot be fully compensated for in purely monocular approaches. Therefore, many state-of-the-art monocular models supplement the 2D representation by an auxiliary depth estimation network or additional external data and information. The idea is to use prior knowledge of the target objects and the scene, such as shape, context and occlusion patterns to compensate for missing depth data.

These depth substitution techniques can be roughly divided into (i) depth estimation networks, (ii) geometric constraints and (iii) 3D template matching. Often, they are used in combination.

While depth estimation networks are applied directly to the original representation to generate a new input for the model, geometric constraints and 3D model matching tackle the lack of range information in the later detection steps of the pipeline.

Depth Estimation Networks: Depth estimation networks generate informed depth features or even entirely new representations that possess range information, such as point clouds derived synthetically from monocular imagery. These new representations are then subsequently exploited by depth-aware models. A representative model is Mono3D [16]. It uses additional instance and semantic segmentation along with further features to reason about the pose and location of 3D objects.

Srivastava et al. [136] modify the generative adversarial network of BirdNet Beltran et al. [9] to create a BEV projection from the monocular representation. All following operations such as feature extractions and predictions are then performed on this new representation.

Similarly, Roddick et al. [119] transform a monocular representation to the BEV perspective. They introduce an orthographic feature transformation network that maps the features from the RGB perspective to a 3D voxel map. The features of the voxel map are eventually reduced to the 2D orthographic BEV feature map by consolidation along the vertical dimension.

Payen de La Garanderie et al. [63] adapt the monocular depth recovery technique of Godard et al. [40], called Mono Depth, for their special case of monocular 360\(^\circ \) panoramic processing. They predict a depth map by training a CNN on left-right consistency inside stereo image pairs. However, at the time of inference, the model only requires single monocular images to estimate a dense depth map.

Even more advanced, a few approaches devote themselves to generate a point cloud from monocular images. Xu and Chen [152] use a stand-alone network for disparity prediction which is similarly based on Mono Depth to predict a depth map. Unlike Payen de La Garanderie et al. [63], they further process the depth map to estimate a LiDAR-like point cloud.

An almost identical procedure is presented by Ma et al. [93]. First, they generate a depth map through a self-defined CNN and then proceed to generate a point cloud by using the camera calibration files. While Xu and Chen [152] mainly take the depth data as auxiliary information of RGB features, Ma et al. [93] focus on taking the generated depth as a core feature and explicitly using its spatial information.

Similarly, Weng and Kitani [150] adapt the deep ordinal regression network (DORN) by Fu et al. [35] for their pseudo LiDAR point cloud generation and then exploit a Frustum-PointNet-like model [108] for the object detection task. Further information on the Frustum PointNet approach is given in Sect. 8.1.

Instead of covering the entire scene in a point-based representation, Ku et al. [61] primarily reduce the space by lightweight predictions and then only transform the candidate boxes to point clouds, preventing redundant computation. To do so, they exploit instance segmentation and available LiDAR data for training to reconstruct a point cloud in a canonical object coordinate system. A similar approach for instance depth estimation is pursued by Qin et al. [110].

Geometric Constraints: Depth estimation networks offer the advantage of closing the gap of missing depth in a direct way. Yet, errors and noise occur during depth estimation, which may lead to biased overall results and contributes to a limited upper-bound of performance [6, 11]. Hence, various methods try to skip the naturally ill-posed depth estimation and tackle monocular 3DOD as a geometrical problem of mapping 2D into 3D space.

Especially in autonomous driving applications, the 3D box proposals are often constrained by a flat ground assumption, namely the street. It is assumed that all possible targets are located on this plane, since automotive vehicles do not fly. Therefore, these approaches force the bounding boxes to lay along the ground plane. In indoor scenarios, on the other hand, objects are located on various height levels. Hence, the ground plane constraint does not get the attention as in autonomous driving applications. Nevertheless, plane fitting is frequently applied in indoor scenarios to get the room orientation.

Zia et al. [170] were one of the first to assume a common ground plane in their approach, which helped them to extensively reconstruct the scene. Further, a ground plane drastically reduces the search space by only leaving two degrees of freedom for translation and one for rotation. Other representative examples for implementing ground plane assumptions in monocular representations are given by Chen et al. [16], Du et al. [28] and Gupta et al. [46]. All of them leverage the random sample consensus approach by Fischler and Bolles [33], a popular technique that is applied for ground plane estimation.

A different geometrical approach that is used to recover the under-constrained monocular 3DOD problem is to establish consistency between the 2D and 3D scenes. Mousavian et al. [99], Li et al. [71], Liu et al. [84] and Naiden et al [100] do so by projecting the 3D bounding box onto a previously determined 2D bounding box. The core notion is that the 3D bounding box should fit tightly to at least one side of its corresponding 2D box detection. Naiden et al. [100] use, for instance, a least square method for the fitting task.

Other methods deploy 2D-3D consistency by incorporating geometric constraints such as room layout and camera pose estimations through an entangled 2D-3D loss function (e.g., Huang et al. [51], Simonelli et al. [131], Brazil and Liu [11]). For example, Huang et al. [51] define the 3D object center through corresponding 2D and camera parameters. Then a physical overlap between 3D objects and 3D room layout gets penalized. Simonelli et al. [131] first disentangle the 2D and 3D detection losses to optimize each loss individually. Subsequently, they also leverage the correlation between 2D and 3D in a combined multi-task loss.

Another approach is presented by Qin et al. [111]. The authors exploit triangulation, which is well known for estimating 3D geometry in stereo images. They use 2D detection of the same object in a left and right monocular image for a newly introduced anchor triangulation, where they directly localize 3D anchors based on the 2D region proposals.

3D template matching: An additional way of handling monocular representations for 3DOD is to match the images with 3D object templates. The idea is to have a database of object images from different viewpoints and their underlying 3D depth features. One popular approach for creating templates is to render synthetic images from computer-aided design (CAD) models, whereby images are created from all sides of the object. Then the monocular input image is searched and matched using this template database. On this basis, the object pose and location can be concluded.

Fidler et al. [32] address the task of monocular object detection with the representation of an object as a deformable 3D cuboid. The 3D cuboid consists of faces and parts, which are allowed to deform according to their anchors in the 3D box. Each of these faces is modeled by a 2D template that corresponds to appearance of the object from an orthogonal point of view. It is assumed that the 3D cuboid can be rotated so that the image view from a defined set of angles can be projected onto the respective cuboids’ face and subsequently scored by a latent SVM.

Chabot et al. [13] initially use a network to generate 2D detection results, vehicle part coordinates and a 3D box dimension proposal. Thereafter, they match the dimensions to a 3D CAD dataset consisting of a fixed number of object models to assign the corresponding 3D shapes in the form of manually annotated vertices. Those 3D shapes are then used for performing 2D-to-3D pose matching in order to recover 3D orientation and location. Barabanau et al. [6] reason their approach on sparse but salient features, namely 2D key points. They match CAD templates based on 14 key points. After assigning one of five distinct geometric classes, they execute an instance depth estimation by a vertical plane passing through two of the visible key points to lift the predictions into a 3D space.

3D templates provide a potentially powerful source of information. However, a sufficient number of models are not always available for each object class. Therefore, these methods tend to focus on a small amount of classes. The limitation to shapes covered by the selection of 3D templates makes it difficult to generalize or extend 3D template matching to classes for which no models are available [61].

In summary, monocular 3DOD achieves promising results. Nevertheless, the lack of depth data prevents monocular 3DOD from reaching state-of-the-art results. The depth substitution techniques may limit the detection performance because errors in depth estimation, geometrical assumptions or template matching are propagated to the final 3D box prediction. In addition, informed monocular approaches may be comparably vulnerable to external attacks. Cheng et al. [21] showed that both physical and digital attacks on depth estimation networks have serious impacts on 3DOD performance.

7.3 Point-wise feature learning

As described above, the application of deep learning techniques on point cloud data is not straightforward due to the irregularity of the point cloud (cf. Section 5.3). Many existing methods try to leverage the expertise of convolutional feature extraction by either projecting point clouds onto 2D image views or by converting them into regular grids of voxels. However, projecting point clouds onto a specific viewpoint discards valuable information, which is particularly important in crowded scenes. The voxelization, on the other hand, leads to high computational costs due to the sparse nature of point clouds and also suffers from information loss in point-crowded voxels. Either way, manipulating the original data may have a negative effect.

To overcome the problem of irregularity in point clouds with an alternative approach, Qi et al. [106] proposed PointNet, which is able to learn point-wise features directly from the raw point cloud. It is based on the assumption that points which lie close to each other can be grouped together and compressed as a single point. Shortly after, Qi et al. [109] introduced its successor PointNet++, adding the ability to capture local structures in the point cloud. Both networks are originally designed for classification tasks on the whole point cloud, next to being able of predicting semantic classes for each point of the point cloud. Thereafter, Qi et al. [108] introduced a way to implement PointNet into 3DOD by proposing Frustum-PointNet. By now, many of the state-of-the-art 3DOD methods are based on the general PointNet-architectures. Therefore, it is crucial to understand the underlying architecture and how it is used in 3DOD methods.

7.3.1 PointNet

PointNet consists of three key modules: (i) a max-pooling layer serving as a symmetric function, (ii) a local and global information combination structure in the form of a multi-layer perceptron (MLP) and (iii) two joint alignment networks for the alignment of input points and point features, respectively Qi et al. [106].

To deal with the permutation invariance of point clouds, PointNet is built with symmetric functions in the form of max-pooling operations. Symmetric functions have the same output regardless of the input order. The max-pooling operation results in a global feature vector that aggregates information from all points of the point cloud. Since the max pooling function operates as a “winner takes it all” paradigm, it does not consider local structures, which is the main limitation of PointNet.

Following, PointNet accommodates an MLP that uses the global feature vector subsequently for the classification tasks. Other than that, the global features can also be used in a combination with local point features for segmentation purposes.

The joint alignment networks ensure that a single point cloud is invariant to geometric transformations (e.g., rotation and translation). PointNet uses this natural solution to align the input points of a set of point clouds to a canonical space by pose normalization through spatial transformers, called T-Net. The same operation is deployed again by a separate network for feature alignment of all point clouds in a feature space. Both operations are crucial for the networks’ predictions to be invariant of the input point cloud.

7.3.2 PointNet++

PointNet++ is built in a hierarchical manner on several set abstraction layers to address the original PointNet’s missing ability to consider local structures. At each level, a set of points is further abstracted to produce a new set with fewer elements, in fact summarizing the local context. The set abstraction layers are composed again of three key layers: (i) a sampling layer, (ii) a grouping layer, (iii) and a PointNet layer [109]. Figure 6 provides an overview of the architecture.

Architecture of PointNet++ [109]

The sampling layer is employed to reduce the resolution of points. PointNet++ uses farthest point sampling (FPS) which only samples the points that are the most distant from the rest of the sampled points [8]. Thereby, FPS identifies and retains the centroids of the local regions for a set of points.

Subsequently, the grouping layers are used to group the representative points, which are obtained from the sampling operation, into local patches. Moreover, it constructs local region sets by finding neighboring points around the sampled centroids. These are further exploited to compute the local feature representation of the neighborhood. PointNet++ adopts ball query, which searches a fixed sphere around the centroid point and then groups all within laying points.

The grouping and sampling layers represent preprocessing tasks to capture local structures before the abstracted points are passed to the PointNet layer. This layer consists of the original PointNet-architecture and is applied to generate a feature vector of the local region pattern. The input for the PointNet layer is the abstraction of the local regions, i.e., the centroids and local features that encode the centroids’ neighborhood.

The process of grouping, sampling and applying PointNet is repeated in a hierarchical fashion, with points being down-sampled further and further until the last layer yields a final global feature vector [8]. In this way, PointNet++ can work with the same input data at different scales and generate higher level features at each set abstraction layer, while thus capturing local structures.

7.3.3 PointNet-based feature extraction

In the following, no explicit distinction is made between PointNet and PointNet++. Instead, we summarize both under the term PointNet-based approaches, considering that we primarily want to imply that point-wise methods for feature extraction or classification purposes are used.

Many state-of-the-art models use PointNet-like networks in their pipeline for feature extraction. An exemplary selection of seminal works include the proposals from Qi et al. [108], Yang et al. [159], Zhou and Tuzel [169] Xu et al. [153], Shi et al. [124], Shin et al. [127], Pamplona et al. [102], Lang et al. [65], Wang and Jia [148], Yang et al. [158], Li et al. [73], Yoo et al. [161], Zhou et al. [167] and Huang et al. [52].

Very little is changed in the way PointNet is used from the models adopting it for feature extraction, indicating that it is already a well-designed and mature technique. Yet, Yang et al. [158] examine in their work that the up-sampling operation in the feature propagation layers and refinement modules consume about half of the inference time of existing PointNet approaches. Therefore, they abandon both processes to drastically reduce inference time. However, predicting only on the surviving representative points on the last set abstraction layer leads to huge performance drops. Therefore, they propose a novel sampling strategy based on feature distance and merge this criterion with the common Euclidean distance sampling for meaningful features.

PointNet-based 3DOD models generally show superior performances in classification as compared to models using other feature extraction methods. The set abstraction operation brings the crucial advantage of flexible receptive fields for feature learning through setting different search radii within the grouping layer [124]. Flexible receptive fields can better capture the relevant content or features because they adapt to the input. Fixed receptive fields such as convolutional kernels are always limited in their dimensionality, which can mean that features and objects of different sizes cannot be captured so well. However, PointNet operations, especially set abstractions, are computationally expensive, which translates into long inference times compared to convolutions or fully connected layers [158, 160].

7.4 Segment-wise feature learning

Segment-wise feature learning follows the idea of a regularized 3D data representation. In comparison to point-wise feature extraction, it does not take the points of the whole point cloud into account as in the previous section. Instead it processes an aggregated set of grid-like representations of the 3D scene (cf. Section 6.3), which are therefore considered as segments.

In the following, we describe central aspects and operations of segment-wise feature learning, including (i) feature initialization (Sect. 7.4.1), (ii) 2D and 3D convolutions (Sect. 7.4.2), sparse convolution (Sect. 7.4.3) and voting scheme (Sect. 7.4.4).

7.4.1 Feature initialization

Volumetric approaches discretize the point cloud into a specific volumetric grid during preprocessing. Projection models, on the other hand, typically lay a relatively fine-grained grid on the 2D mapping of the point cloud scene. In either case, the representation is transformed into segments. In other words, segments can be either be volumetric grids like voxels and pillars (cf. Section 6.3.1) or discretized projections of the point cloud like RV and BEV projections (cf. Section 6.3.2).

These generated segments enclose a certain set of points that does not yet have a processable state. Therefore, an encoding is applied on the individual segments that aggregates the points they enclose.

The intention of the encoding is to fill the formulated segments or grids with discriminative features that provide information about the set of points that lie in each individual grid. This process is called feature initialization. Through the grid, these features are now available in a regular and structured format. In contrast to handcrafted feature extraction methods (cf. Section 7.1), these grids are not yet used for detection, but are made accessible to CNNs or other extraction mechanisms to further condense the features.

For volumetric approaches, current research can be divided into two streams of feature initialization. The first and probably more intuitive approach is to manually encode the voxels. However, handcrafted feature encoding introduces a bottleneck that discards spatial information and may prevent these approaches from effectively utilizing 3D shape information. This led to the latter approach, where models apply a lightweight PointNet to each voxel to learn point-wise features and assign them in aggregated form as voxel features, which is referred to as voxel feature encoding (VFE) [169].

Volumetric feature initialization: Traditionally, voxels are encoded into manually selected features such that each voxel contains one or more values consisting of statistics computed from the points within that voxel cell. The selection of features is already a crucial task, as they should capture the important information within a voxel and describe the corresponding points in a sufficiently discriminative way.

Early approaches such as Sliding Shapes [134] use a combination of four types of 3D features to encode the cells, namely point density, a 3D shape feature, a surface normal feature and a specifically designed feature to deal with the problem of self-occlusion, called the truncated signed distance function. Others, however, rely only on statistical encoding like Wang and Posner [144] as well as their adaption by Engelcke et al. [29], which propose three shape factors, the mean and variance of the reflectance values of points as well as a binary occupancy feature.

Much simpler approaches are pursued by Li [70] and Li et al. [77] using a binary encoding to express whether a voxel contains points or not. To avoid too much information loss due to a rudimentary binary encoding, the voxel size is usually chosen comparatively small to generate high-resolution 3D grids [77].

More recently, voxel feature initialization has shifted more and more toward deep learning approaches for similar reasons as feature extraction in other computer vision tasks, where manually selected features are not as performant as learned ones.

While segment-wise approaches prove to be comparatively efficient 3D feature extraction methods, point-wise models show impressive results in detection accuracy as they have recourse to the full information of each point of the point cloud. By manually encoding the voxels into standard features, a lot of information is usually lost.

As a response, Zhou and Tuzel [169] introduced the seminal idea of VFE and a corresponding deep neural network which moves the feature initialization from hand-crafted voxel feature encoding to deep-learning-based encoding. More specifically, they proposed VoxelNet, which is able to extract point-wise features from each segment of a voxelized point cloud through a lightweight PointNet-like network. Subsequently, the individual point features are stacked together with a locally aggregated feature at voxel level. Finally, this volumetric representation is fed into 3D convolutional layers for further feature aggregation.

The use of PointNet allows VoxelNet to capture inter-point relations within a voxel and therefore hold a more discriminative feature then normal encoded voxel consisting of statistical values. A schematic overview of VoxelNet’s architecture is shown in Fig. 7.

Architecture of VFE module in VoxelNet [169]

The seminal idea of VFE is used—in modified versions—in many subsequent approaches. Several works focus on improving performance and efficiency of VoxelNet. In terms of performance, Kuang et al. [62] developed a novel feature pyramid extraction paradigm. To speed up the model, Yan et al. [155] and Shi et al. [125] combined VFE with more efficient sparse convolutions. In addition, Sun et al. [137] and Chen et al. [20] reduced the original architecture of VFE to reduce inference times.

Projection-based feature initialization: In projection approaches, feature initialization of cells is usually done by hand. Both RV and BEV utilize fine-grained grids that are primarily filled with statistical quantities of the point lying within them. Only recently have the first models begun to encode features using deep learning methods (e.g., Lehner et al. [68], Wang et al. [145], Liang et al. [81]).

For RV, it is most popular to encode the projection map into three-channel features, namely height, distance and intensity [19, 81, 168]. Instead, Meyer et al. [96, 97] form a five-channel image with range, height, azimuth angle, intensity and a flag indicating whether a cell contains a point. In contrast to manual encoding, Wang et al. [145] use a point-based, fully connected layer to learn high-dimensional point features of the LiDAR point cloud, and then apply a max-pooling operation along the z-axis to obtain the cells’ features in RV.

Similar to RV, Chen et al. [19] encode each cell of a BEV representation in height, intensity and density. To increase significance of this representation, the point cloud is divided into M slices along the y-axis, resulting in a BEV map with \(M + 2\) channel features.

After the introduction to 3DOD by Chen et al. [19], BEV representation became quite popular and many other approaches followed their proposal of feature initialization (e.g., Beltrán et al. [9], Liang et al. [80], Wang et al. [149], Simon et al. [130], Li et al. [74]). Yet, others choose a simpler set up, encoding only the maximum height and density without slicing the point cloud [2] or even using a binary occupancy encoding [48, 157].

To avoid information loss, more recent approaches first extract features using deep learning approaches and then project these features into BEV (e.g., Wang et al. [145], Liang et al. [81]).

Analogous to their RV approach, Wang et al. [145] again use the learned point-wise features of the point cloud, but now apply the max pooling operation along the y-axis for feature aggregation in BEV. Liang et al. [81], on the other hand, first extract features in RV and then transform them into a BEV representation, adding more high-level information compared to directly projecting the point cloud to BEV.

After feature initialization of either volumetric grids or projected cells, segment-wise solutions usually utilize 2D/3D convolutions to extract features of the global scene.

7.4.2 2D and 3D convolutions

Preprocessed 2D representations such as feature-initialized projections, monocular images and RGB-D (2D) images all have the advantage that they can all leverage mature 2D convolutional techniques to extract features.

Volumetric voxel-wise representations, on the other hand, represent the spatial space in a regular format which are accessed by 3D convolutions. However, directly applying convolutions in 3D space is a very inefficient procedure due to the multiplication of space.

Early approaches to extend the traditional 2D convolutions to 3D were applied by Song and Xiao [135] as well as Li [70] by placing 3D convolutional filters in 3D space and performing feature extraction in an exhaustive operation. Since the search space increases drastically from 2D to 3D, this procedure involves immense computational costs.

Further examples using conventional 3D CNNs can be found in the models of Chen et al. [17], Sun et al. [137], Zhou and Tuzel [169] and Sindagi et al. [132].

Despite delivering state-of-the-art results, the conventional 3D CNN lacks efficiency. Given the fact that the sparsity of point clouds leads to many empty and non-discriminative voxels in a volumetric representation, the exhausting 3D CNN operations perform a large amount of redundant computations. This issue can be addressed by a sparse convolution.

7.4.3 Sparse convolution

Sparse convolution was proposed by Graham [41] and [42]. First, a ground state is defined for the input data. The ground state expresses whether the spatial location (site) is active or not. A site in the input representation is active if it has a non-zero value, which in the case of a regularized point cloud is a voxel enclosing at least a certain threshold of points. Furthermore, a site in the following layers is active if any of the spatial locations from the foregoing layer, from which it receives its input, is active. Therefore, sparse convolution must only process the sites which differ from the ground state of the preceding convolution layer, focusing computational power on the meaningful and new information.