Abstract

People with color vision deficiency (CVD) have a reduced capability to discriminate different colors. This impairment can cause inconveniences in the individuals’ daily lives and may even expose them to dangerous situations, such as failing to read traffic signals. CVD affects approximately 200 million people worldwide. In order to compensate for CVD, a significant number of image recoloring studies have been proposed. In this survey, we briefly review the representative existing recoloring methods and categorize them according to their methodological characteristics. Concurrently, we summarize the evaluation metrics, both subjective and quantitative, introduced in the existing studies and compare the state-of-the-art studies using the experimental evaluation results with the quantitative metrics.

1 Introduction

Color perception is one of the essential functions of human vision. It affects almost all aspects of an individual’s daily and working life, for example, recognizing traffic signals, checking the condition of cooked meat, and discriminating the colors of flowers and leaves. Disorders in color vision have many causes, such as genetic alterations of cone cells, cortical trauma, and degenerative diseases, but anomalies in cone cells are the most common cause of color vision deficiency (CVD). There are three kinds of cone cells in the human retina: L-cone, M-cone, and S-cone cells. When all three kinds function properly, an individual has normal color vision and is called a trichromat (trichromacy). Anomalies in these cells can lead to partial or complete loss of function. According to the number of affected cone cell kinds and the severity of the anomaly, CVD can be classified as anomalous trichromacy (anomalous trichromats), dichromacy (dichromats), or monochromacy (monochromats) [1]. Both anomalous trichromacy and dichromacy involve one kind of anomalous cone cell. Moreover, anomalous trichromacy and dichromacy can be further divided into protan (L-cone anomaly), deutan (M-cone anomaly), and tritan (S-cone anomaly) deficiencies. Specifically, anomalous trichromacy can be classified as protan trichromacy, deutan trichromacy, and tritan trichromacy; dichromacy can be classified as protanopia (L-cone), deuteranopia (M-cone), and tritanopia (S-cone). Because individuals with protan deficiency (protan trichromacy; protanopia) and those with deutan deficiency (deutan trichromacy; deuteranopia) have difficulty discriminating red and green, they are commonly described as having red–green CVD. In monochromacy, either two or all three kinds of cone cells are missing or dysfunctional. People with monochromacy perceive all colors either as shades of gray or as shades of blue [2].

People with CVD experience varying degrees of contrast loss. In order to compensate for CVD, numerous recoloring approaches have been proposed in the past two decades. Ribeiro et al. carefully reviewed the recoloring algorithms for CVD compensation in their survey paper [3] with respect to methodology and the recoloring procedure. They classified all methods according to the compensation targets (anomalous trichromacy, dichromacy, and monochromacy) and the color space (e.g., RGB, CIE L*a*b*) in which the recoloring was performed. In addition, they gave a qualitative assessment of the recoloring approaches based on the perceptual requirements of contrast enhancement, naturalness preservation, and color consistency. The survey [3] is an efficient way to learn the key ideas and main pipelines of many recoloring methods. However, [3] was published in 2019, and outstanding recoloring works have been released since then. Furthermore, it has become clear that the evaluation experiments verifying the effectiveness of the recoloring algorithms are as important as the methodology proposals themselves.

In this survey, we briefly review all the representative existing recoloring approaches and give detailed descriptions of the state-of-the-art works; categorize all these methods according to their compensation targets and common methodological characteristics; summarize the subjective experiments and quantitative metrics introduced in the existing recoloring studies; and reproduce the recoloring results using the state-of-the-art works, and evaluate these recolored images using the introduced quantitative metrics.

2 Color vision deficiency simulation

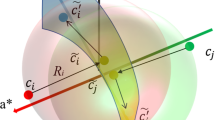

In order to digitally visualize what is seen by individuals with CVD, simulation methods have been proposed in [4,5,6]. Because one of the three kinds of cone cells is missing or dysfunctional, individuals with dichromacy perceive colors using the remaining two kinds of cone cells, in addition to the achromatic colors. Based on the studies [7, 8] that reported the color percepts of dichromats, Brettel et al. [4] proposed to simulate their color vision in the LMS color space. The gamut of dichromacy is modeled as two half-planes. As shown in Fig. 1, for people with protanopia or deuteranopia, the two half-planes are spanned by a neutral color vector (e.g., gray, white) and two chromatic vectors with wavelengths of 475 nm (blue) and 575 nm (yellow). For people with tritanopia, the half-planes are spanned by the neutral color vector and two chromatic vectors with wavelengths of 485 nm (blue–green) and 660 nm (red). Colors in the LMS space are then projected onto those half-planes along the axis corresponding to the non-functioning cone cell, which yields the simulation result of dichromacy. Because the gamut formulated in [4] consists of two separate half-planes, the simulation algorithm must first determine which half-plane a given color in the 3D color space is projected onto. Viénot et al. [5] built on [4] with a further experiment and proposed a linear dichromacy simulation algorithm that reduces to a single \(3 \times 3\) transformation matrix.

Fig. 1 Protanopia simulation in the LMS color space using the method proposed by Brettel et al. [4]. \(c\): a color in the 3D LMS space. \(c_{p}\): the protanopia simulation result for \(c\) (projected along the L-axis)
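The half-plane projection described above can be sketched in a few lines. This is a minimal illustration, not the implementation of [4]: the neutral and anchor vectors are placeholder values rather than measured LMS coordinates, and the rule for choosing the half-plane (comparing the S/M ratio of the color with that of the neutral vector) is stated here as an assumption. All function names are hypothetical.

```python
def cross(u, v):
    """Cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def project_along_l(c, neutral, anchor):
    """Move the LMS color c along the L axis onto the plane spanned by
    the neutral vector and one chromatic anchor vector."""
    n = cross(neutral, anchor)            # normal of a plane through the origin
    l_new = -(n[1] * c[1] + n[2] * c[2]) / n[0]
    return (l_new, c[1], c[2])            # M and S are left untouched


def simulate_protanopia(c, neutral, anchor_blue, anchor_yellow):
    """Choose the half-plane first, then project (protanopia case)."""
    # Assumed side test: colors whose S/M ratio is below that of the neutral
    # vector go to the yellow half-plane (cross-multiplied to avoid division).
    on_yellow_side = c[2] * neutral[1] < neutral[2] * c[1]
    anchor = anchor_yellow if on_yellow_side else anchor_blue
    return project_along_l(c, neutral, anchor)


# Placeholder LMS vectors, NOT measured values.
NEUTRAL = (1.0, 1.0, 1.0)
ANCHOR_BLUE = (0.1, 0.2, 0.9)      # stands in for the 475 nm anchor
ANCHOR_YELLOW = (0.9, 0.8, 0.05)   # stands in for the 575 nm anchor

c_p = simulate_protanopia((0.6, 0.3, 0.2), NEUTRAL, ANCHOR_BLUE, ANCHOR_YELLOW)
```

Because the projection only changes the L component, a color on the neutral axis is returned unchanged, which matches the expectation that achromatic colors are perceived correctly.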

Based on the shift theory of cone cell sensitivity [1] (Fig. 2), Machado et al. [6] proposed a method for simulating anomalous trichromacy of varying degrees. According to the shift theory, anomalous trichromacy results from exon replacement in cone cells. Exons in one kind of cone cell are partially replaced with those of another kind, and such replacements shift the sensitivity of the anomalous cone cells toward that of the other cone cells; for example, the L-cone sensitivity of protan trichromats shifts toward the M-cone sensitivity (Fig. 2b, c). A longer shift distance indicates a more severe degree of CVD. In [6], a sensitivity shift of 20 nm is regarded as a severe case of anomalous trichromacy. The simulation method builds upon the two-stage theory put forward in [9]. According to the two-stage theory, perceiving the color of a stimulus on a display involves two transformations: (1) the L-, M-, and S-cone cells transform the visible electromagnetic spectrum signal emitted from the screen into a bioelectrical signal \(\left( {l, m, s} \right)\) and transport the signal to the visual center of the brain; (2) the visual center transforms the bioelectrical signal \(\left( {l, m, s} \right)\) into three opponent-color channels, i.e., white–black, red–green, and yellow–blue. Combining the two transformations yields a \(3 \times 3\) transformation matrix \(T_{{{\text{normal}}}}\) that converts an RGB stimulus to opponent-color perception. However, the alterations in cone cells hinder the transformation of bioelectrical signals, and an alternative transformation matrix \(T_{{{\text{CVD}}}}\), which converts an RGB stimulus to the opponent-color space as perceived with CVD, can be obtained by taking the sensitivity shift into consideration.
To visualize the color perception of CVD for trichromats, given a stimulus \(C\) in the RGB color space and a specified sensitivity shift distance \(d\), the CVD simulation result \(C_{d}\) can be obtained as \(C_{d} = M\left( {C,d} \right) = T_{{{\text{normal}}}}^{ - 1} T_{{{\text{CVD}}}} C\). Letting \(T_{{{\text{sim}}}} = T_{{{\text{normal}}}}^{ - 1} T_{{{\text{CVD}}}}\), the entire procedure of anomalous trichromacy simulation can be performed in the RGB color space as \(C_{d} = T_{{{\text{sim}}}} C\).

Fig. 2 Shift theory of cone cell sensitivity adopted in the CVD simulation method [6]. a Sensitivity curves of normal vision. b, c Sensitivity curves of protan trichromacy: the L-cone sensitivity shifts toward the M-cone sensitivity. d, e Sensitivity curves of deutan trichromacy: the M-cone sensitivity shifts toward the L-cone sensitivity
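The relation \(C_{d} = T_{{{\text{normal}}}}^{ - 1} T_{{{\text{CVD}}}} C\) can be illustrated with a short sketch. The two matrices below are hypothetical placeholders chosen only so that the arithmetic is easy to follow; they are not the matrices derived in [6], and the helper names are assumptions.

```python
def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


def apply3(m, c):
    """Apply a 3x3 matrix to a 3-component color."""
    return [sum(m[i][k] * c[k] for k in range(3)) for i in range(3)]


def simulation_matrix(t_normal, t_cvd):
    """T_sim = T_normal^{-1} T_CVD, so that C_d = T_sim C."""
    return matmul3(inverse3(t_normal), t_cvd)


# Hypothetical RGB -> opponent-color matrices (rows: white-black,
# red-green, yellow-blue). Placeholder values, NOT those of [6].
T_NORMAL = [[0.6, 0.3, 0.1], [1.0, -1.0, 0.0], [0.5, 0.5, -1.0]]
T_CVD = [[0.6, 0.3, 0.1], [0.5, -0.5, 0.0], [0.5, 0.5, -1.0]]  # weaker red-green

T_SIM = simulation_matrix(T_NORMAL, T_CVD)
simulated_red = apply3(T_SIM, [1.0, 0.0, 0.0])
```

Since \(T_{{{\text{sim}}}}\) depends only on the shift distance \(d\) and not on the image, it can be computed once and then applied to every pixel.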

3 Evaluation metrics

This section reviews the evaluation metrics used in existing subjective and objective experiments to verify the recoloring algorithms’ effectiveness. In the subjective experiments, participants with CVD were recruited to evaluate recoloring results directly. Considering the difficulty in recruiting CVD participants, some studies adopted indirect evaluation, that is, inviting individuals with trichromacy and showing them the CVD simulation results of the recolored images. Contrast and naturalness are the two most important factors to consider. Contrast can be understood as the difference within a local area or among distant areas in an image, such as the texture of an object or the chromatic difference among objects. One important goal of recoloring is to compensate for the loss of contrast in the perception of CVD. When an image is recolored for contrast enhancement, some colors that CVD users can already perceive properly are inevitably changed as well, for example, a blue sky that the user can discriminate being recolored to gray, which makes the image appear unnatural to CVD users. Therefore, the preservation of naturalness is also an important indicator of algorithm effectiveness. In the objective experiments, naturalness and contrast can be directly evaluated using quantitative metrics. In the subjective experiments, in addition to requiring the participants to score the contrast of recolored images subjectively, some studies involved participants in color vision-related tasks for performance evaluation.

3.1 Subjective evaluation

Ishihara test: this evaluation scheme is introduced in [10,11,12]. Each test plate in the Ishihara test consists of dots with different colors. This tool is used to diagnose whether an individual has CVD. People with trichromacy can correctly read the figure or pattern formed by these dots. However, people with CVD cannot discriminate the dots of the foreground from those of the background due to the loss of color contrast; they may fail to read the figure or read it incorrectly. Therefore, the test can be used to evaluate the effectiveness of recoloring algorithms by checking whether the recolored images help CVD users read the test plates.

Farnsworth–Munsell 100 Hue test: this evaluation scheme is introduced in [13]. This tool involves an examinee in a cap-sorting task. Given two fixed chromatic caps, A and B, at the two ends, an examinee is asked to arrange the array of chromatic caps between A and B so that the color varies smoothly. Though distinct, each cap is very similar to the others, and an error value can be calculated from the cap-sorting result of the examinee; a higher error value indicates a more severe CVD. Besides diagnosing CVD, this test can therefore measure the degree of CVD. It can be used to evaluate the effectiveness of recoloring algorithms by checking whether the recoloring results help CVD users reduce the error values of the test.

Color matching test: this evaluation scheme is introduced in [14]. After being presented with a reference chromatic block, an examinee is required to select the block of the same color from a set of candidate blocks. This test evaluates whether recoloring algorithms can reduce the mismatching of chromatic blocks by CVD users.

Character recognition: this evaluation scheme is introduced in [15]. In this scheme, a participant is required to read tinted characters on a chromatic background. A high similarity between the font color and the background color makes the characters hard to read. This scheme evaluates whether recoloring algorithms can improve readability for CVD users when the font color and background color in the original media content are confusing to them.

Image scoring: this evaluation scheme can be seen in [16,17,18]. In this scheme, a participant directly scores the recolored image from his or her own point of view. The test image receives a score on a scale (e.g., the 5-point Likert scale) from negative to positive. The effectiveness of the recoloring algorithms is confirmed if they achieve high evaluation scores from participants.

3.2 Objective evaluation

Using the CVD simulation methods, the perception of CVD users can be obtained approximately. The recolored images and their CVD simulation results can be used in contrast and naturalness evaluations.

The quantitative metrics for contrast evaluation can be classified as either absolute or relative, according to whether a reference image is required. An absolute metric (without a reference image) sums up the strengths of contrasts within a single image. In contrast, a relative metric (with a reference image) compares the contrasts of homologous pixel pairs or color pairs in the test image with those in the reference image. In an absolute metric, stronger contrasts contribute to a higher score. In a relative metric, over-enhanced contrast is not rewarded; instead, a just noticeable difference (JND) or a contrast identical to that in the reference image scores better. In addition, the way a metric pairs pixels determines whether it is a global or a local contrast calculator. A metric that pairs a pixel only with its neighboring pixels can be considered a local contrast calculator.

Unlike the metrics for contrast evaluation, the quantitative metrics for naturalness evaluation always require reference images. Two kinds of assessment schemes are used in the existing studies. An intuitive scheme measures the amount of chromatic change made to CVD perception, i.e., it compares the CVD simulation of the recolored image with that of the original image. An alternative scheme takes universal design into account and considers the changes to the perception of normal vision as well as the changes to the perception of CVD; thus, this scheme directly compares the recolored images with the original images, pixel by pixel.

3.2.1 Contrast metrics in monochromacy studies

Global luminance error: in [19], the global luminance error (GLE) metric was introduced. In the GLE metric, the luminance difference between any pixels \(c_{i}\) and \(c_{j}\) in the grayscale image is compared with color contrast \(\delta_{ij}\) between homologous colors in the original image, and it can be calculated as:

where \(i\) ranges over the pixels in the image, and \(K\) indicates a set of pixels in the image; \({\text{lum}}\left( \cdot \right)\) stands for the pixel’s luminance in the grayscale image. A lower value of GLE indicates better preservation of contrast. The GLE metric is a global contrast calculator, and it calculates the relative contrast error between the test image and the reference image. The GLE metric can be used to uniformly and comprehensively evaluate the contrast preservation in the grayscale image, whether inside an object or between adjacent or distant objects.

Color contrast preserving ratio: in [20,21,22], the color contrast preserving ratio (CCPR) metric was introduced. This metric is devised based on JND, and it is defined as:

\({\text{CCPR}} = \frac{{\# \left\{ {\left( {x,y} \right) | \left( {x,y} \right) \in \Omega ,\left| {g_{x} - g_{y} } \right| \ge \tau } \right\}}}{{\left| \Omega \right|}}\),

where \(\tau\) is a threshold set by the user; \(\Omega\) represents a pixel pair set, and it contains the neighboring pixel pairs \(\left( {x,y} \right)\) in the original image whose color contrast is greater than \(\tau\); \(\left| \Omega \right|\) indicates the number of pixel pairs in \(\Omega\); \(g_{x}\) and \(g_{y}\) denote the grayscale values of pixels \(x\) and \(y\) in the grayscale image; \(\# \left\{ \cdot \right\}\) stands for the number of pixel pairs that satisfy two conditions, i.e., \(\left( {x,y} \right) \in \Omega\) and \(\left| {g_{x} - g_{y} } \right| \ge \tau\). A higher value of CCPR indicates better preservation of contrast. The CCPR metric is a local contrast calculator, and it calculates the relative contrast preserving ratio of the test image to the reference image. The CCPR metric can be used to evaluate whether the grayscale image preserves local details at least at a just noticeable level, for example, in studies that enhance edges between adjacent objects.
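The two counting conditions above (membership in \(\Omega\) and \(\left| {g_{x} - g_{y} } \right| \ge \tau\)) translate directly into code. The sketch below is illustrative only: it assumes that neighbors are the right and lower pixels, that color contrast is the Euclidean distance between color triples, and that an image with an empty \(\Omega\) scores 1. None of these choices is confirmed by the cited studies.

```python
import math


def ccpr(color_img, gray_img, tau):
    """Share of above-threshold neighboring pairs that stay >= tau in gray.

    color_img: 2D list of 3-tuples (e.g., L*, a*, b* values).
    gray_img:  2D list of floats with the same shape.
    """
    height, width = len(color_img), len(color_img[0])
    omega_size = 0   # number of pixel pairs in Omega
    preserved = 0    # pairs in Omega that remain distinguishable in gray
    for y in range(height):
        for x in range(width):
            for dy, dx in ((0, 1), (1, 0)):        # right and lower neighbor
                ny, nx = y + dy, x + dx
                if ny >= height or nx >= width:
                    continue
                delta = math.dist(color_img[y][x], color_img[ny][nx])
                if delta > tau:                     # the pair belongs to Omega
                    omega_size += 1
                    if abs(gray_img[y][x] - gray_img[ny][nx]) >= tau:
                        preserved += 1
    # Assumption: an image without any above-threshold pair loses nothing.
    return preserved / omega_size if omega_size else 1.0


colors = [[(0, 0, 0), (50, 0, 0), (50, 40, 0)]]
grays = [[0.0, 20.0, 25.0]]
score = ccpr(colors, grays, tau=10)
```

In this toy example both neighboring pairs exceed the threshold in color, but only the first keeps a gray difference of at least \(\tau\), so half of the contrast is counted as preserved.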

3.2.2 Contrast metrics in dichromacy studies

Global chromatic diversity: in [23, 24], the global chromatic diversity (GCD) metric in the CIE L*a*b* color space was introduced, and the GCD value of an image can be calculated as:

\({\text{GCD}}\left( {i,j} \right) = \sqrt {\left( {l_{i} - l_{j} } \right)^{2} + \left( {a_{i} - a_{j} } \right)^{2} + \left( {b_{i} - b_{j} } \right)^{2} }\),

where \(i\) and \(j\) range over the colors in the CVD simulation result of the recolored image; \(l_{i}\), \(a_{i}\), and \(b_{i}\) are the L*, a*, and b* values of pixel \(i\). The GCD metric is a global contrast calculator, and it calculates the absolute strength of the contrast in an image. The higher the GCD value, the stronger the contrast is. The GCD metric can be used to uniformly and comprehensively evaluate contrast that may even be stronger than that in the input image, for example, in image enhancement studies.
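As a rough sketch of how the pairwise distance above could be turned into a single image-level score, the snippet below averages \({\text{GCD}}\left( {i,j} \right)\) over all color pairs. The averaging step and the function names are assumptions made for illustration; the cited studies may aggregate the pairwise values differently.

```python
import math
from itertools import combinations


def pairwise_gcd(color_i, color_j):
    """Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(color_i, color_j)


def global_chromatic_diversity(lab_colors):
    """Mean pairwise distance over all colors (assumed aggregation)."""
    pairs = list(combinations(lab_colors, 2))
    if not pairs:
        raise ValueError("at least two colors are required")
    return sum(pairwise_gcd(ci, cj) for ci, cj in pairs) / len(pairs)


# Colors taken from a (hypothetical) CVD simulation of a recolored image.
simulated_colors = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 12.0)]
diversity = global_chromatic_diversity(simulated_colors)
```

Pairing every color with every other color is what makes the metric global; its cost grows quadratically with the number of colors, so it is typically applied to a reduced set of representative colors.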

Global contrast error: in [25, 26], global contrast error (GCE) metric was introduced, which can be computed as:

where \(i\) and \(j\) range over the colors in the image; \(c_{i}\) and \(c_{i}^{^{\prime}}\) denote homologous colors in the original image and the recolored image, respectively; \(\left\| \cdot \right\|\) is the Euclidean length of a vector; \({\text{sim}}\left( \cdot \right)\) is the dichromacy simulation function. The GCE metric is a global contrast calculator, and it calculates the relative contrast error of the test image compared with the reference image. The smaller the GCE value, the better the contrast preservation is. The GCE metric can be used for studies that constrain the pixel pair-wise or color pair-wise contrast in the CVD simulation result of the recolored image to be identical to that in the input image.

Local contrast error: in [17, 18, 27], the local contrast error (LCE) metric was introduced, which can be computed as:

where \(i\) ranges over the pixels in the image; \(\Omega_{i}\) indicates a set of randomly selected pixels from the neighborhood of pixel \(i\), and \(\left| {\Omega_{i} } \right|\) represents the number of pixels in \(\Omega_{i}\). The LCE metric is a local contrast calculator, and it calculates the relative contrast error between the test image and the reference image. The smaller the LCE value, the better the contrast preservation is. The LCE metric can be used to evaluate the local contrast within a certain area.

Local absolute contrast: in [16], the local absolute contrast (LAC) metric was introduced, which can be computed as:

where \(i\) ranges over the pixels in the CVD simulation result of the recolored image; \(G_{h} \left( \cdot \right)\) and \(G_{v} \left( \cdot \right)\) are the horizontal and vertical Sobel gradient operators, respectively. The LAC metric is a local contrast calculator, and it calculates the absolute strength of the contrast in an image. The higher the LAC value, the stronger the contrast is. The LAC metric can be used to evaluate the local contrast within a very small area.
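To make the role of the two Sobel operators concrete, the following sketch computes a gradient-based local contrast score on a single-channel image. It assumes that the score is the mean gradient magnitude \(\sqrt{G_{h}^{2} + G_{v}^{2}}\) over interior pixels; this combination rule, the single-channel input, and the function names are illustrative assumptions and may differ from the definition in [16].

```python
import math

SOBEL_H = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))    # horizontal gradient G_h
SOBEL_V = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))    # vertical gradient G_v


def apply_kernel(img, y, x, kernel):
    """Weighted sum of the 3x3 neighborhood centered at (y, x)."""
    return sum(kernel[dy + 1][dx + 1] * img[y + dy][x + dx]
               for dy in (-1, 0, 1) for dx in (-1, 0, 1))


def local_absolute_contrast(img):
    """Mean Sobel gradient magnitude over interior pixels (assumed form).

    img: 2D list of floats, e.g., one channel of the CVD simulation
    of a recolored image.
    """
    height, width = len(img), len(img[0])
    total, count = 0.0, 0
    for y in range(1, height - 1):          # border pixels are skipped
        for x in range(1, width - 1):
            g_h = apply_kernel(img, y, x, SOBEL_H)
            g_v = apply_kernel(img, y, x, SOBEL_V)
            total += math.hypot(g_h, g_v)
            count += 1
    return total / count if count else 0.0


step_edge = [[0.0, 0.0, 1.0],
             [0.0, 0.0, 1.0],
             [0.0, 0.0, 1.0]]
contrast = local_absolute_contrast(step_edge)
```

Because each value depends only on a 3 × 3 neighborhood, a score of this kind reacts to fine edges and textures but not to differences between distant regions.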

3.2.3 Contrast metrics in anomalous trichromacy studies

Contrast preservation rate: in [12], contrast preservation rate (CPR) metric was introduced, which can be computed as:

where \(i\) ranges over the pixels in the image, and \(j\) is the neighboring pixel of \(i\); \(n\) is the number of neighboring pixels of \(i\); \(p_{i}\) and \(q_{i}\) are homologous pixels in the test image and the reference image; \(\mu_{pi}\) and \(\sigma_{pi}\) are the mean and standard deviation of neighboring pixels of \(p_{i}\); \(\varepsilon\) is a small constant to avoid the denominator being zero. The CPR metric is a local contrast calculator, and it calculates the relative contrast error between the test image and the reference image. The higher the CPR value, the better the contrast preservation is. Similar to LCE, in order to evaluate the local contrast within a user-specified area, the CPR metric can be used. Table 1 shows the summary of quantitative metrics for contrast evaluation.

3.2.4 Naturalness metrics

Chromatic difference: in [12, 16, 18, 24,25,26, 28], chromatic difference (CD) metric in the CIE L*a*b* color space was introduced, which can be computed as:

where \(i\) ranges over the pixels in the image; \(l_{i}^{^{\prime}}\), \(l_{i}\), \(a_{i}^{^{\prime}}\), \(a_{i}\), \(b_{i}^{^{\prime}}\), and \(b_{i}\) are the L*, a*, and b* values of homologous pixel \(i\) in the test image and the reference image; \(\lambda\) is the weight of the luminance difference. Setting \(\lambda = 0\), as in [12, 16, 18], makes the metric consider only the two chromatic components.

In addition to the CD metric, the structural similarity (SSIM) [29] and feature similarity (FSIMc) [30] metrics are commonly used indexes in naturalness evaluation.
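The effect of the luminance weight \(\lambda\) can be illustrated with a small sketch. It assumes that the per-pixel difference has the form \(\sqrt{\lambda (l_{i}^{\prime} - l_{i})^{2} + (a_{i}^{\prime} - a_{i})^{2} + (b_{i}^{\prime} - b_{i})^{2}}\) and that the image-level value is the mean over pixels; both the placement of \(\lambda\) and the averaging are assumptions, not a transcription of the cited definitions.

```python
import math


def chromatic_difference(test_lab, ref_lab, lam=1.0):
    """Mean per-pixel L*a*b* difference with a luminance weight lam.

    test_lab, ref_lab: equally long lists of (L*, a*, b*) triples for
    homologous pixels in the test image and the reference image.
    """
    if len(test_lab) != len(ref_lab) or not test_lab:
        raise ValueError("images must be non-empty and of equal size")
    total = 0.0
    for (l1, a1, b1), (l0, a0, b0) in zip(test_lab, ref_lab):
        total += math.sqrt(lam * (l1 - l0) ** 2
                           + (a1 - a0) ** 2
                           + (b1 - b0) ** 2)
    return total / len(test_lab)


recolored = [(60.0, 13.0, 24.0)]
original = [(50.0, 10.0, 20.0)]
cd_chroma_only = chromatic_difference(recolored, original, lam=0.0)
cd_full = chromatic_difference(recolored, original, lam=1.0)
```

With \(\lambda = 0\) the luminance change of 10 is ignored and only the a* and b* shifts contribute, so `cd_chroma_only` is smaller than `cd_full`.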

4 Recoloring for monochromacy

Because only one kind of cone cell or no cone cell (only rod cells) functions, the color gamut of monochromacy is reduced to one dimension. Recoloring methods for monochromacy merely remap colors in the image to one channel (usually the luminance channel).

4.1 Monochromacy tailor-made recoloring

Among the methods reviewed in [3], only the recoloring methods in [31, 32] cover monochromacy compensation. These two methods are optimization-based, and the recoloring was performed in the CIE L*a*b* color space. The authors devised their objective functions to preserve an equal-proportional contrast in the grayscale image with reference to the original contrast between color pairs. In [31], a linear transformation function \(g\left( {c_{i} } \right)\) was adopted to remap an arbitrary color \(c_{i}\) in the original image to the target gamut, and the Fletcher–Reeves conjugate gradient method was utilized to minimize the objective function:

where constants Dmax and dmax in the function denote the maximal distance between any colors in the gamut of normal vision and monochromacy, respectively. The concept behind [32] is the same as [31], that is, preserving an equal-proportional contrast in the grayscale image. In [32], the objective function was formulated as:

where \(g_{i}\) stands for the one-dimensional value after recoloring for \(c_{i}\); and \(k\) denotes the target proportionality. Each resulting color \(g_{i}\) can be directly obtained by minimizing the objective function. The naturalness preservation, that is, the preservation of original luminance perceived by individuals with monochromacy, was not considered in [31, 32].

4.2 Color-to-gray conversion studies

As discussed by Shen et al. [33], recoloring for CVD individuals is one type of dimension reduction problem, especially for monochromacy, which has high relevance to color-to-gray conversion (decolorization) studies. In this survey, we introduce five color-to-gray methods, which are optimization-based [19, 20, 34, 35] and convolutional neural network-based [21].

Lu et al. [20], Gooch et al. [34], and Liu et al. [35] paid attention to contrast preservation. Gooch et al. [34] firstly devised a mechanism to calculate signed difference \(\delta_{ij}\) for each color pair \(\left( {c_{i} ,c_{j} } \right)\) in the CIE L*a*b* space; then, they introduced their objective function as:

where \(g_{i}\) and \(g_{j}\) stand for the resulting grayscale values of \(c_{i}\) and \(c_{j}\); finally, the conjugate gradient iteration method was adopted to minimize \(E\).
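A minimal sketch of this kind of optimization is given below. It assumes the least-squares objective \(E = \sum_{i<j} \left( (g_{i} - g_{j}) - \delta_{ij} \right)^{2}\) and, for brevity, swaps the conjugate gradient iteration of [34] for plain gradient descent; the signed differences \(\delta_{ij}\) are taken as given inputs, and all names are hypothetical.

```python
def energy(g, delta):
    """E = sum over pairs i<j of ((g_i - g_j) - delta_ij)^2."""
    n = len(g)
    return sum(((g[i] - g[j]) - delta[i][j]) ** 2
               for i in range(n) for j in range(i + 1, n))


def minimize_energy(delta, steps=100):
    """Plain gradient descent on E (a stand-in for conjugate gradient)."""
    n = len(delta)
    g = [0.0] * n
    step_size = 1.0 / (4 * n)   # small enough for the all-pairs objective
    for _ in range(steps):
        grad = [0.0] * n
        for i in range(n):
            for j in range(i + 1, n):
                residual = (g[i] - g[j]) - delta[i][j]
                grad[i] += 2.0 * residual
                grad[j] -= 2.0 * residual
        g = [gi - step_size * di for gi, di in zip(g, grad)]
    return g


# Toy signed differences derived from three known gray levels.
target = [10.0, 30.0, 60.0]
delta = [[ti - tj for tj in target] for ti in target]
g = minimize_energy(delta)
```

The objective only constrains differences between gray values, so the solution is determined up to a constant offset; starting from zeros, the sketch returns values centered on zero that would still need to be shifted into the displayable range.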

Likewise, Lu et al. [20] proposed a method to preserve the contrast in the grayscale image, considering both the RGB color space and CIE L*a*b* space. Given two colors \(c_{x} = \left( {r_{x} ,g_{x} ,b_{x} } \right)\) and \(c_{y} = \left( {r_{y} ,g_{y} ,b_{y} } \right)\), their difference \(g_{x}^{^{\prime}} - g_{y}^{^{\prime}}\) in the grayscale image is constrained by the original contrast \(\delta_{x,y}\), in a manner of maximizing likelihood:

Because of the ambiguity in determining the sign of \(\delta_{x,y}\) (the order of grayscale value), the authors adopted a bimodal selection mechanism to loosen the constraint introduced in [34]. For an unambiguous color pair \(\left( {c_{x} ,c_{y} } \right)\), which satisfies \(c_{x} \le c_{y}\), that is, \(r_{x} \le r_{y} \& g_{x} \le g_{y} \& b_{x} \le b_{y}\), the model \(G\left( {\delta_{x,y} ,\sigma^{2} } \right)\) is used; otherwise, if the pair \(\left( {c_{x} ,c_{y} } \right)\) is regarded as ambiguous, then an alternative model,

is used. Considering the complexity of the objective function, the authors developed a dedicated solver for their problem.

Liu et al. [35] proposed “WpmDecolor” as an optimization model for decolorization in the RGB color space. This model calculates the grayscale image \(g^{\prime}\) as a linear combination of the three channels of the original image. It can be represented as:

\(g^{\prime} = \mathop \sum \limits_{{c = \left\{ {r,g,b} \right\}}} w_{c} I_{c}\),

where \(I_{c}\) denotes the \(c\) channel of the original image, and \(w_{c}\) stands for the weight assigned to the \(c\) channel. Once the weights \(w_{c}\) are determined, the grayscale image \(g^{\prime}\) is obtained. The weights are subject to \(\sum\nolimits_{{c = \left\{ {r,g,b} \right\}}} {w_{c} = 1}\) and can be obtained by maximizing the objective function:

where \(P\) indicates a pixel pair set, which consists of local neighboring pixels and global paired pixels. The coefficient \(S_{x,y}\) is calculated as:

where \(I_{c,x}\) and \(I_{c,y}\) indicate the \(c\) channel values of the homologous pixels in the original image. A smaller \(\left| {I_{c,x} - I_{c,y} } \right|\) will output a larger \(S_{x,y}\), and the details in the resulting image can be enhanced.
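The linear combination \(g^{\prime} = \sum_{c} w_{c} I_{c}\) under the constraint \(\sum_{c} w_{c} = 1\) can be sketched as follows. The weight search itself is not shown, because it depends on the objective function of [35]; the weights used here are arbitrary example values and the function name is hypothetical.

```python
def decolorize(image, weights, tol=1e-9):
    """Weighted sum of the R, G, and B channels.

    image:   2D list of (r, g, b) tuples.
    weights: (w_r, w_g, w_b), which must sum to 1.
    """
    if abs(sum(weights) - 1.0) > tol:
        raise ValueError("channel weights must sum to 1")
    w_r, w_g, w_b = weights
    return [[w_r * r + w_g * g + w_b * b for (r, g, b) in row]
            for row in image]


image = [[(100.0, 50.0, 0.0), (0.0, 50.0, 100.0)]]
gray = decolorize(image, (0.5, 0.3, 0.2))   # example weights only
```

Because the mapping is a single global linear function, identical colors always receive identical gray values, and the whole conversion is controlled by only three weights.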

In [21], a convolutional neural network was proposed to decolorize images. The authors performed the decolorization while considering both global and local semantic features of the color images. Accordingly, the convolutional neural network consists of four parts. The first part, called the low-level feature network, directly extracts low-level features from the image. The second and third parts, the global feature network and the local semantic network, calculate global and local features from the extracted low-level features. Finally, the fourth part computes the grayscale image from the calculated global and local features. In order to train the whole network, the authors prepared a dataset using the decolorization method introduced in [20]. Furthermore, their loss function was devised as:

in which the Euclidean distance between the output of the network, \(\widehat{{g_{n} }}\), and the ground truth, \(g_{n}\), is calculated; \(N\) represents the number of images they used to train the network.

Kuhn et al. [19] proposed a decolorization algorithm utilizing the spring–mass system in the CIE L*a*b* color space. Each color \(c_{i}\) is regarded as a particle \(P_{i}\) in the system, and the mass of \(P_{i}\) is represented as:

where \(a_{i}\) and \(b_{i}\) stand for the a* and b* component values of color \(c_{i}\), respectively. Thus, the smaller the distance of a color to the luminance axis, the larger the mass assigned to its particle. For an achromatic color, whose mass is assumed to be infinite, the luminance is kept unchanged. Otherwise, the force \(F_{i} \left( t \right)\) applied to \(P_{i}\) and its luminance \(L_{i}^{*} \left( t \right)\) at time \(t\) are calculated according to Hooke’s law; the luminance at the next time step \(t + \Delta t\) can be calculated by:

Before applying the spring–mass system, Kuhn et al. decreased the number of particles via color quantization methods; finally, they interpolated the final grayscale image according to the decolorized quantized colors. The luminance of the original image is preserved to some extent by setting larger masses to colors with less chromaticity.

4.3 Evaluation experiment

Because it is difficult to recruit individuals with monochromacy, and because most studies introduced in this section address the color-to-gray conversion task, there were no subjective evaluations involving CVD participants; instead, user studies with normal vision volunteers were conducted in [21, 32, 35]. In the contrast evaluation task, participants selected the grayscale image that best represents the details of the reference (original) image.

4.4 Summary of monochromacy and the color-to-gray conversion studies

In this survey, seven recoloring methods for monochromacy compensation or decolorization were introduced. All of these methods except the network-based method [21] perform the recoloring based on optimization models. Both recoloring for monochromacy and decolorization aim to solve a common problem: mapping the information of three channels into a single channel while limiting contrast loss. Before neural networks became popular, hand-crafted algorithms made up the majority of recoloring methods for monochromacy and decolorization. Although it requires training data prepared with existing hand-crafted methods, the network proposed in [21] introduced deep learning to recoloring for monochromacy.

5 Recoloring for dichromacy

A large number of recoloring algorithms have been proposed for dichromacy compensation based on the dichromacy simulation methods. In this section, we first categorize the existing recoloring methods for dichromacy and then give detailed descriptions of the state-of-the-art methods; finally, we compare these methods using the results from the evaluation experiments conducted in these studies.

5.1 Recoloring methods for dichromacy in the past two decades

5.1.1 Customized difference addition

We classify the methods in [10, 22, 26, 28, 36, 37] into a category named “customized difference addition” (CDA). In these methods, a dichromacy simulation method is first adopted to simulate the dichromacy perception \(I_{d}\) of the original image \(I\), and the difference between the original image and the simulated image, \(\Delta I = \left| {I - I_{d} } \right|\), is then calculated; next, the calculated difference is adjusted either by the algorithm or by the user to obtain a customized difference \(\Delta I_{e}\); finally, the customized difference \(\Delta I_{e}\) is added to \(I\) to obtain the recolored image \(I^{\prime}\). CDA algorithms usually preserve naturalness well; they are also usually easy to implement and time-efficient, which is important for daily usage. However, the contrast in the recolored image may not be guaranteed because the relationship between pixels or colors is not given enough consideration.
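The CDA pipeline (simulate, take the difference, customize it, add it back) can be sketched generically. In the snippet below, the simulation function and the customization rule are toy stand-ins chosen for illustration, and clamping the result to the displayable range is an added assumption; none of them reproduces a specific cited method.

```python
def cda_recolor(image, simulate, customize):
    """Generic CDA pipeline on a list of RGB tuples with values in [0, 1]."""
    recolored = []
    for color in image:
        simulated = simulate(color)                               # I_d
        diff = tuple(abs(a - b) for a, b in zip(color, simulated))  # |I - I_d|
        diff_e = customize(diff)                                  # customized difference
        # I' = I + diff_e, clamped to [0, 1] (assumption)
        recolored.append(tuple(min(1.0, max(0.0, a + d))
                               for a, d in zip(color, diff_e)))
    return recolored


def toy_simulate(color):
    """Toy red-green confusion: R and G collapse to their mean (illustrative)."""
    r, g, b = color
    mean_rg = (r + g) / 2.0
    return (mean_rg, mean_rg, b)


def toy_customize(diff, gain=1.0):
    """Toy rule: move the lost red difference into the blue channel."""
    return (0.0, 0.0, gain * diff[0])


pixels = [(1.0, 0.0, 0.0), (0.5, 0.5, 0.5)]
result = cda_recolor(pixels, toy_simulate, toy_customize)
```

Colors that the simulation leaves unchanged, such as the gray pixel here, have a zero difference and are therefore returned untouched, which is why methods of this kind tend to preserve naturalness.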

5.1.2 Hue rotation

We classify the methods in [25, 27, 38,39,40,41,42,43,44,45,46] into a category named “hue rotation” (HR). In these methods, a rotation \(\Delta H^{\theta }\) by an angle \(\theta\) is applied to the hue of the original image. For example, the algorithm proposed by Ching et al. [44] rotates the hues of reds to yellows and maps the hues of greens to blues for contrast enhancement. In addition to the hue, the methods in [38, 39, 42] also modify the saturation and luminance of the original image to obtain the recolored image. Similar to CDA, the algorithms in HR are usually easy to implement and time-efficient. Nevertheless, the hue rotation may significantly change the CVD perception, which can reduce the naturalness of the result.
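A hue rotation by an angle \(\theta\) can be sketched with the standard library alone. The example uses the HSV color space as an illustrative choice; the cited methods work in various color spaces and select \(\theta\) per color or per image, which is not modeled here.

```python
import colorsys


def rotate_hue(rgb, theta_deg):
    """Rotate the hue of an RGB color (values in [0, 1]) by theta_deg degrees."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = (h + theta_deg / 360.0) % 1.0     # hue is cyclic in [0, 1)
    return colorsys.hsv_to_rgb(h, s, v)   # saturation and value are kept


def rotate_image(image, theta_deg):
    """Apply the same rotation to every pixel of a list of RGB tuples."""
    return [rotate_hue(color, theta_deg) for color in image]


red = (1.0, 0.0, 0.0)
rotated = rotate_hue(red, 120.0)          # red moves toward green
gray = rotate_hue((0.5, 0.5, 0.5), 120.0)
```

Achromatic colors have zero saturation, so they are unaffected by the rotation, whereas saturated colors can change drastically; this is the source of the naturalness loss noted above.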

5.1.3 Optimization

We classify the methods in [16,17,18, 22, 23, 25, 31, 32, 41, 43, 45,46,47,48,49,50,51,52,53] into a category named “optimization” (Opt). In these methods, except for Huang et al. [25], key colors are first extracted from the original image using image quantization, clustering, color sampling, and so forth. Then, objective functions are used to find optimal target colors for the extracted key colors or an optimal mapping to be applied to the whole image. Finally, the resulting image is obtained by interpolating from the recolored key colors or by applying the mapping to the original image. The method by Huang et al. [25] can be viewed as a mixture of HR and Opt, because the angle of hue rotation is obtained through the optimization model. The most salient advantage of optimization-based algorithms is that the contrast is guaranteed. However, Opt methods may require more computation time than HR and CDA methods.

5.1.4 Node mapping

We classify the methods in [24, 54] into a category named “node mapping” (NM). These methods first distribute the same number of nodes over the 3D gamut of trichromats in RGB space and over the 2D gamut of dichromats; then, the algorithms try to map the nodes in the 3D space to the nodes in the 2D space while maintaining the original distance relations. NM methods can preserve the image structure; however, naturalness preservation is not sufficiently considered.

5.2 The state-of-the-art studies

The algorithm in [26] is a follow-up of [28]. Both methods were proposed to compensate for red–green deficiency (protanopia and deuteranopia). Because individuals with red–green deficiency have difficulty discriminating red and green while they can perceive blue well, these methods attempt to compensate for the confusing colors by increasing their blue component. The recoloring is performed in the CIE XYZ color space, where the X and Z components are modified while the luminance component Y is kept unchanged. The algorithms try to increase the Z component of the colors because this component contributes to blue. In order to generate the recoloring result \(I^{\prime}\) for an input image \(I\), the following procedures are conducted: (1) use Brettel’s method to calculate the dichromacy simulation result \(I_{d}\) of \(I\), (2) transform \(I\) and \(I_{d}\) from RGB space to XYZ space, (3) normalize each color by its Y component, so that the normalized original color and simulated color are represented as \(C_{o} = \left( {X_{o} ,Y_{o} ,Z_{o} } \right)\) and \(C_{s} = \left( {X_{s} ,Y_{s} ,Z_{s} } \right)\), respectively, (4) calculate the compensation vector \(\left( {\Delta X,0,\Delta Z} \right)\) from \(C_{o}\) and \(C_{s}\), (5) add the vector \(\left( {\Delta X,0,\Delta Z} \right)\) to \(C_{o}\) to obtain the resulting color \(C^{\prime}\), and (6) transform \(C^{\prime}\) back to RGB space. The only difference between [26] and [28] is the amount by which the Z component is increased: the increase \(\Delta Z\) in [26] is \(\beta\) times that in [28], where \(1 \le \beta \le 4\). For colors with a small difference from their dichromacy simulations, the amount of change is also small; thus, the naturalness of the original image should be preserved well in the recolored images. The method proposed in [26] can be classified into the CDA category.

Zhu et al. proposed two recoloring methods [16, 17] for dichromacy compensation, both of which perform the recoloring within the gamut of dichromacy. Both methods formulate the recoloring as an optimization problem consisting of contrast enhancement and naturalness preservation, and the recolored image can be obtained by solving a system of linear equations. The objective function is represented as follows:

where \(c_{i}^{^{\prime}}\) and \(c_{j}^{^{\prime}}\) denote the recoloring results and \(\lambda\) is the weight of the naturalness term. Similar to existing optimization-based studies, the methods proposed by Zhu et al. first extract a small number of representative colors \(RC\) (or dominant colors) from the original image \(I\) for acceleration; then, the recolored representative colors \(RRC\) are obtained by feeding \(RC\) into the optimization model; finally, the whole recolored image is obtained from \(RRC\) using edit propagation methods. The two methods differ in the color space used: the method in [16] performs the recoloring in the LMS color space, whereas that in [17] performs it in the CIE L*a*b* color space. For simplification, the method in [16] processes each channel of LMS individually; the method in [17] focuses on compensation for red–green dichromacy, and the optimization is conducted in a single channel, that is, the yellow–blue channel b*. Generally, both methods simultaneously enhance the contrast and preserve the naturalness in the recolored image; however, the high computation time of representative color extraction and propagation hinders their real-time application. The methods in [16] and [17] can be classified into the Opt category.
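To illustrate why an objective with a contrast term and a \(\lambda\)-weighted naturalness term can be solved as a linear system, the Python sketch below minimizes a quadratic objective over one channel of a few representative colors. The exact objective of [16, 17] is not reproduced here; the quadratic form, the function name `recolor_channel`, and the `targets` matrix of desired signed differences are all assumptions chosen only for illustration.

```python
import numpy as np

def recolor_channel(x, targets, lam=1.0):
    """Least-squares recoloring of one channel (an assumed, simplified objective).

    Minimizes  sum_{i<j} ((x'_i - x'_j) - targets[i, j])^2  +  lam * sum_i (x'_i - x_i)^2,
    where targets[i, j] is the desired signed difference between colors i and j.
    """
    n = len(x)
    rows, rhs = [], []
    for i in range(n):
        for j in range(i + 1, n):
            row = np.zeros(n)
            row[i], row[j] = 1.0, -1.0   # contrast term for the pair (i, j)
            rows.append(row)
            rhs.append(targets[i, j])
    w = np.sqrt(lam)
    for i in range(n):
        row = np.zeros(n)
        row[i] = w                        # naturalness term pulls x'_i toward x_i
        rows.append(row)
        rhs.append(w * x[i])
    solution, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return solution

x = np.array([0.0, 1.0])
# Targets equal to the existing differences: nothing needs to change.
same = recolor_channel(x, targets=np.subtract.outer(x, x))
# A larger target difference: the two values are pushed apart, limited by lam.
wider = recolor_channel(x, targets=np.array([[0.0, -3.0], [3.0, 0.0]]))
```

Increasing `lam` in this sketch keeps the result closer to the input, while decreasing it lets the contrast targets dominate, which mirrors the trade-off between naturalness and contrast described above.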

Wang et al. [18] proposed an optimized dichromacy projection (ODP) for dichromacy compensation in the CIE L*a*b* color space. In [52], the two half-planes in the LMS space are fitted to 2D planes in the CIE L*a*b* space by the method of least squares; each 2D plane passes through the luminance axis L*, and the angle between the plane and the L*b* plane is denoted \(\theta\). The algorithm in [18] attempts to find a linear transformation that projects the colors in the 3D space onto the 2D plane while preserving naturalness and enhancing contrast. By rotating the coordinates of the CIE L*a*b* space about the L* axis by the angle \(\theta\), the L*b* plane can be aligned with the 2D plane. After this rotation, the a* value of the colors projected onto the 2D plane need not be computed because any color on the 2D plane has \(a = 0\); in addition, assuming that recoloring the image with the colors on the 2D plane preserves the luminance of the original image, the luminance channel can also be left unmodified. As a result, channel b* becomes the only channel that needs to be changed, and the recoloring can be represented as projecting colors on the 2D a*b* plane onto the 1D b* axis. The projection can be represented as \(b^{\prime} = w_{a} a_{o} + w_{b} b_{o}\), where \(a_{o}\) and \(b_{o}\) are the a* and b* components of the original color after rotation, and \(w_{a}\) and \(w_{b}\) are projection weights. Constraining \(w_{a} + w_{b} = 1\), the projection can be further simplified as \(b^{\prime} = \left( {1 - w_{b} } \right)a_{o} + w_{b} b_{o}\). The only variable \(w_{b}\) in the projection is obtained from an optimization model consisting of naturalness preservation and contrast enhancement terms. The linear transformation and the sparse sampling strategy in the optimization enable the algorithm to recolor an image very quickly; contrast enhancement and naturalness preservation are guaranteed by the optimization. The method in [18] can be classified into the Opt category.
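The projection step \(b^{\prime} = \left( {1 - w_{b} } \right)a_{o} + w_{b} b_{o}\) can be sketched in Python as follows. This is a sketch under stated assumptions, not the implementation of [18]: the function name `odp_project`, the sign convention of the rotation, and the final rotation back to the original a*b* axes are assumptions, and \(w_{b}\) is passed in directly rather than obtained from the optimization model.

```python
import numpy as np

def odp_project(lab, theta, w_b):
    """Project L*a*b* colors onto a plane through the L* axis at angle theta (radians).

    lab has shape (..., 3). The rotation direction is an assumed convention.
    """
    L, a, b = lab[..., 0], lab[..., 1], lab[..., 2]
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    # Rotate a*b* about the L* axis so that the dichromat plane becomes the L*b* plane.
    a_o = cos_t * a + sin_t * b
    b_o = -sin_t * a + cos_t * b
    # b' = (1 - w_b) * a_o + w_b * b_o; a' = 0 on the plane; L* is left untouched.
    b_new = (1.0 - w_b) * a_o + w_b * b_o
    # Rotate the point (0, b') back to the original a*b* axes.
    a_out = -sin_t * b_new
    b_out = cos_t * b_new
    return np.stack([L, a_out, b_out], axis=-1)

colors = np.array([[50.0, 10.0, 20.0]])
projected = odp_project(colors, theta=0.0, w_b=0.5)
tilted = odp_project(colors, theta=0.3, w_b=0.5)
```

Because only one scalar weight has to be found and the mapping is linear, applying it to every pixel is a single vectorized operation, which is consistent with the speed advantage noted above.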

Li et al. [22] introduced neural networks into recoloring for dichromacy. Following the great success of research on generative adversarial networks (GANs), many more studies have tried to use GANs and their variations to transform the style of an input image or change its colors. Li et al. demonstrated that a trained GAN (e.g., pix2pix-GAN [55]) could be used to generate recolored images for dichromacy compensation. For training, Li et al. prepared a dataset consisting of pairs of original and recolored images. The recolored images are generated by the method of Huang et al. [41] or by their own method, named the improved octree quantification method (IOQM). The IOQM first clusters the input image to obtain key colors \(C^{0}\). Then, the difference \(D_{i}\) between each key color \(C_{i}^{0}\) and its dichromacy simulation result \(C_{i}^{d}\) is calculated to determine its confidence; a smaller \(D_{i}\) corresponds to a higher confidence. Next, each key color \(C_{i}^{0}\) is compared to the key colors that have higher confidence than \(C_{i}^{0}\), and if \(C_{i}^{0}\) is judged to be “unrecognizable,” it is recolored by adding a customized difference of \(D_{i}\) to itself, where the difference is customized in the optimization stage. Finally, each pixel in the original image is recolored according to the distance to its nearest key color. In order to improve the quality of the dataset, they recruited people with normal vision to filter inappropriate data from the dataset. Besides the neural network’s performance, the characteristics of the dataset (e.g., whether naturalness preservation is considered) are an important factor that influences the recoloring results. The method in [22] can be classified as a mixture of the CDA and Opt categories.
At the same time, semantic information (e.g., objects) has begun to attract researchers’ attention, and [22] suggests that introducing artificial intelligence networks into recoloring for CVD is likely to become popular.

5.3 Comparison

In order to evaluate the effectiveness of the recoloring results, subjective and quantitative experiments were conducted in the recoloring studies. The methodologies and evaluation experiments are summarized in Table 2.

Figures 3 and 4 show the recoloring results of Hassan et al. [26, 28], Zhu et al. [16, 17], and Wang et al. [18] for protanopia and deuteranopia compensations, respectively. The first row of each instance shows the stimulus images, and the second row shows the dichromacy simulation images generated using [4]. In Fig. 3, for the instance of “Paint,” Zhu et al. [16, 17] (Fig. 3d, e) and Wang et al. [18] (Fig. 3f) enhanced the contrast between the sun and the sky well; for the instance of “Plant,” Hassan [26] (Fig. 3c), Zhu et al. [17] (Fig. 3e), and Wang et al. [18] (Fig. 3f) significantly enhanced the contrast between the berries and leaves; for the instance of “Paprika,” Hassan et al. [26, 28] (Fig. 3b, c), Zhu et al. [16] (Fig. 3d), and Wang et al. [18] (Fig. 3f) significantly enlarged the difference between red paprikas and green paprikas. For “Flower 1” in Fig. 4, Hassan [26] (Fig. 4c) and Wang et al. [18] (Fig. 4f) enhanced the contrast between the flowers and background. For “Flower 2,” Wang et al. [18] (Fig. 4f) significantly enhanced the contrast between the flower and leaves; for the instance of “Ishihara Test,” Hassan [26] (Fig. 4c) and Wang et al. [18] (Fig. 4f) significantly enlarged the difference between the figure “26” and the background. The quantitative evaluation results of the recolored images in Figs. 3 and 4 using the GCD, LCE, CD, and FSIMc metrics are shown in Tables 3, 4, 5, and 6, respectively. From the viewpoint of quantitative metrics, in terms of protanopia compensation, [26] and [16] obtained the best scores for the global contrast evaluation metric GCD, whereas [17], [28], and [18] obtained the best scores for the local contrast evaluation metric LCE; for the naturalness evaluation, the scores of [28] and [17] are best. Regarding deuteranopia compensation, [26] and [18] obtained the best scores for the global contrast evaluation metric GCD. Additionally, [18] obtained the best scores for the local contrast evaluation metric LCE; for the naturalness evaluation, the scores of [28] and [17] are best. In general, the method of [28] preserves naturalness well, but its improvement of contrast is not significant; the method of [26] shows the opposite behavior. Zhu et al. [16, 17] preserved naturalness well but may fail to enhance the contrast in some cases (e.g., Fig. 3-“Paprika”). Wang et al. [18] enhanced contrast well but may fail to preserve the naturalness in some cases (e.g., Fig. 4-“Flower 2”). In addition, compared to Zhu et al. [16, 17], the algorithms of [18, 26, 28] are time-efficient.

5.4 Summary of recoloring for dichromacy

In this section, recoloring methods for dichromacy have been classified as CDA, HR, Opt, and NM. The state-of-the-art studies [16,17,18, 22, 26] have been explained in detail. The methodologies and evaluation metrics have been summarized. Qualitative and quantitative comparisons of state-of-the-art studies have been made, using the examples of recoloring results and the evaluation metrics introduced in Sect. 3, respectively.

6 Recoloring for anomalous trichromacy

This section first categorizes the existing recoloring methods for anomalous trichromacy and then gives detailed descriptions of the state-of-the-art method; finally, we introduce the evaluation experiment we conducted.

6.1 Recoloring methods in the past two decades

6.1.1 Color vision correction

We classify the methods in [13, 56,57,58,59] into a category named “color vision correction” (CVC). These methods are based on the assumption that individuals with low-severity anomalous trichromacy see colors in a distorted way, while their color gamut is almost identical to that of normal vision. Thus, the distortion in the color perception of anomalous trichromats can be corrected by reinforcing components of the original stimulus. These methods correct the perception of CVD by applying the inverse of the CVD simulation \(M\) to the original stimulus \(s\) to obtain the recoloring result \(s^{\prime}\), that is, \(s^{\prime} = M^{ - 1} s\). Then, the CVD perception of \(s^{\prime}\) is approximately identical to the normal vision perception of \(s\). An appealing property of CVC methods is that they can correct the distorted color perception of anomalous trichromacy. However, they fail if a target color overflows (exceeds the displayable range of the screen) or the degree of CVD is severe (the reduction in the color gamut is significant).
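The relation \(s^{\prime} = M^{ - 1} s\) and the overflow problem can be illustrated with a short Python sketch. The matrix `M` below is a hypothetical simulation matrix invented for this example (it is not taken from [6] or any other published model), and the function name `cvc_correct` is likewise an assumption.

```python
import numpy as np

# Hypothetical 3x3 simulation matrix for a mild deficiency (illustration only).
M = np.array([
    [0.80, 0.20, 0.00],
    [0.10, 0.90, 0.00],
    [0.00, 0.05, 0.95],
])

def cvc_correct(s, M):
    """Return s' = M^{-1} s and a flag telling whether s' fits the [0, 1] display range."""
    s_prime = np.linalg.solve(M, s)   # equivalent to inv(M) @ s, but numerically safer
    in_gamut = bool(np.all((s_prime >= 0.0) & (s_prime <= 1.0)))
    return s_prime, in_gamut

gray = np.array([0.5, 0.5, 0.5])
red = np.array([1.0, 0.0, 0.0])
gray_corrected, gray_ok = cvc_correct(gray, M)   # stays displayable
red_corrected, red_ok = cvc_correct(red, M)      # overflows the display range
```

For the saturated red, the corrected stimulus has a red component above 1 and a negative green component, so it cannot be shown on the screen; this is the overflow case in which CVC fails.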

6.1.2 Channel adjustment

We classify the methods in [60,61,62] into a category named “channel adjustment” (CA). These methods individually increase or decrease the values of the channels of the input image to compensate for the target CVD. For example, the method proposed by Poret et al. [60] decreases the red channel of the input image by about 20% in the RGB color space for protan deficiency compensation; the rationale behind [60] is that individuals with protan deficiency perceive dark, saturated reds better than dull, light ones. In contrast, Chen and Liao [61] decided, based on an experimental study, to increase the saturation of the input image by 20% in the HSI color space. The method of Lee and Santos [62] distributes the information of the red channel to the green and blue channels for protan compensation and distributes the value of the green channel to the red and blue channels for deutan compensation. Similar to CVC methods, CA methods are time-efficient, and they can improve color discriminability to some extent. However, the methods by Poret et al. [60] and Chen and Liao [61] do not consider the diversity of anomalous trichromacy, i.e., they cannot adapt the recoloring results to the degree of CVD; thus, these methods are difficult to apply in practice. Because the contents of images are not considered by Lee and Santos [62], the effectiveness of their method may be limited.
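A channel adjustment of the kind described for [60] amounts to scaling one channel. The Python sketch below shows a 20% reduction of the red channel; the function name `reduce_red` and the fixed `factor` are illustrative assumptions, and no other detail of [60] is reproduced.

```python
import numpy as np

def reduce_red(img, factor=0.8):
    """Scale the red channel of a float RGB image; factor=0.8 is a 20% decrease."""
    out = img.astype(float).copy()
    out[..., 0] *= factor
    return out

img = np.array([[[1.0, 0.5, 0.5]]])
adjusted = reduce_red(img)
```

The fixed factor makes the limitation noted above visible: nothing in the computation depends on the viewer's degree of CVD or on the image content.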

6.1.3 Optimization

We classify the methods in [11, 12, 14, 15, 63, 65] into a category named “optimization” (Opt). These methods, except for Huang et al. [63], follow a recoloring pipeline of “key color extraction,” “key color recoloring,” and “result image interpolation.” By interacting with CVD users, Flatla et al. [14] obtained a function that could judge whether the user could distinguish between two colors; if two key colors were judged as indistinguishable, then one of the two key colors was replaced with a randomly generated color; the replacement procedure continued until there were no indistinguishable colors. In order to improve color discrimination, Ichikawa et al. [15] recolored the key colors by a genetic algorithm driven by a fitness function in the CIE Luv color space. Huang et al. [63] optimized the hue channel of the input image in the HSV color space via histogram transformation. Milic et al. [11] and Shen et al. [65] rotated colors lying on the same confusion line by different angles and in different directions, according to the positions of their neighborhoods in the color space. Optimization guarantees the color contrast but may increase the computation time significantly.

6.2 The state-of-the-art methods

The method proposed in [12] was a follow-up of Zhu et al. [16] and [17]. The recoloring model of Zhu et al. [12] is illustrated in Fig. 5. The methods in [16] and [17] recolored images for dichromacy compensation, while [12] adopted the anomalous trichromacy simulation method from Machado et al. [6] and adapted the recoloring to the difference in anomalous trichromacy for better compensation. In addition, they further improved the effect of compensation by introducing a coefficient \(\alpha\) which is related to the perception errors of individual colors into the recoloring. This strategy keeps strong naturalness preservation constraints on discriminable colors, such as yellow and blue, for protan and deutan deficiencies, while loosening those constraints on confusing colors, such as red and green, for contrast enhancement. Given a specified CVD degree \(d\), the objective function formulated in [12] can be represented as follows:

where \(c_{i}\) and \(c_{i}^{^{\prime}}\) denote corresponding colors in the original image and the recolored image, and \(M\left( {c_{i} ,d} \right)\) denotes the result of CVD simulation for \(c_{i}\) using [6]; the naturalness coefficient \(\alpha_{i}\) of an individual color \(c_{i}\) is calculated in the form of a Gaussian function:

Fig. 5 Recoloring model proposed by Zhu et al. [12]. (a) The cubic space represents the gamut of normal vision, and the shaded subspace stands for the gamut of anomalous trichromacy; the diagram illustrates the recoloring procedure of Zhu et al. [12] in RGB color space. (b) A simplified diagram of the recoloring procedure shown in (a). \(c_{i}\), \(c_{j}\): colors in the input image. \(\widetilde{{c_{i} }}\), \(\widetilde{{c_{j} }}\): CVD simulation results for \(c_{i}\), \(c_{j}\). \(c_{i}^{^{\prime}}\), \(c_{j}^{^{\prime}}\): recoloring results for \(c_{i}\), \(c_{j}\). \(\widetilde{{c_{i}^{^{\prime}} }}\), \(\widetilde{{c_{j}^{^{\prime}} }}\): CVD simulation results for \(c_{i}^{^{\prime}}\), \(c_{j}^{^{\prime}}\)

In [12], the authors recruited participants with different CVD degrees and showed them compensation images of varying CVD degrees generated from Ishihara test images. Furthermore, they computed “singular degrees” from the subjective evaluation result of each participant to explore the relationship between the performance of participants in the subjective experiment and their physical degrees of CVD. Finally, a user interface was developed for users to select the recoloring degree appropriate for their condition.

The method proposed by Shen et al. [65] is a follow-up of [11]. As in [11], Shen et al. [65] extracted key colors from the input image and transformed the image from RGB color space to CIE Luv color space. Referring to the confusion points and confusion lines defined by Mollon and Regan [66], the key colors that lie on the same confusion lines are recolored to enhance the contrast between key colors. Finally, the other colors are recolored with reference to the key colors. A significant difference between [65] and [11] is that Milic et al. [11] only processed the chromatic component of colors, while Shen et al. [65] further adopted a lightness adjustment strategy to improve the effect of contrast enhancement. The lightness adjustment strategy modifies the luminance channel of a color according to its distance to the confusion point given by [66].

6.3 Comparison

The methodologies and evaluation experiments are summarized in Table 7. Compared to dichromacy studies, fewer quantitative experiments were conducted in anomalous trichromacy research. A possible reason is that no study can statistically summarize or classify all CVD severities worldwide. However, powerful simulation methods for anomalous trichromacy had already been proposed. Naively assuming five severity degrees (which may not physically exist), i.e., 20, 40, 60, 80, and 100% of anomalous trichromacy according to the simulation model of Machado et al. [6], Zhu et al. [12] conducted a quantitative experiment for contrast and naturalness evaluations. They adopted the contrast preservation rate (CPR) metric introduced by Shen et al. [33] for contrast evaluation and calculated the chromatic difference between the CVD simulations of the recolored and the original images for naturalness evaluation. Because a 100% degree of anomalous trichromacy approaches dichromacy, we compared the recoloring results for 100% anomalous trichromacy with the dichromacy studies evaluated above, using the same quantitative metrics.

Figures 6 and 7 show the recoloring results of Zhu et al. [12] for protan trichromacy and deutan trichromacy compensations, respectively. Both figures show that the loss of color contrast becomes more severe as the assumed CVD degree increases. In these two examples, the algorithm in [12] adapts the contrast enhancement and naturalness preservation to the degree of CVD well. Evaluation results of the recolored images in Figs. 6 and 7, using the same metrics we used to evaluate the dichromacy studies, are shown in Tables 8 and 9.

Fig. 6 Recoloring results for 20, 40, 60, 80, and 100% protan trichromacy using Zhu et al. [12]. The first row of b–f shows the CVD simulation results of a, which are generated using Machado et al. [6]. The second row of b–f shows the recoloring results. The third row of b–f shows the CVD simulation results of the recolored images shown in the second row

Fig. 7 Recoloring results for 20, 40, 60, 80, and 100% deutan trichromacy using Zhu et al. [12]. The first row of b–f shows the CVD simulation results of a, which are generated using Machado et al. [6]. The second row of b–f shows the recoloring results. The third row of b–f shows the CVD simulation results of the recolored images shown in the second row

At the same time, we compare the results of CVD degree 100% in Figs. 6 and 7 with those in Figs. 3 and 4. The result for protan 100% (Fig. 6f) shows that Zhu et al. [12] preserved the contrast between the sun and sky well, which is comparable to those of Zhu et al. [16] (Fig. 3d), Zhu et al. [17] (Fig. 3e), and Wang et al. [18] (Fig. 3f), but better than those of Hassan and Paramesran [28] (Fig. 3b) and Hassan [26] (Fig. 3c). Figure 7f shows a distinction between the flower and leaves in the recoloring result of Zhu et al. [12] for deutan 100%, which is better than those of Hassan and Paramesran [28] (Fig. 4b), Hassan [26] (Fig. 4c), Zhu et al. [16] (Fig. 4d), and Zhu et al. [17] (Fig. 4e); additionally, it is more natural than that of Wang et al. [18] (Fig. 4f). For the result of the quantitative metric evaluation shown in Tables 8 and 3–6, the results of Zhu et al. [12] are comparable to those of Zhu et al. [16, 17], Wang et al. [18], and Hassan and Paramesran [28] but better than those of Hassan [26]; for Tables 9 and 3–6, the results of Zhu et al. [17] are better than those of Zhu et al. [12], but Zhu et al. [12] is still comparable to Zhu et al. [16], Hassan and Paramesran [28] and Wang et al. [18] and is better than Hassan [26].

6.4 Summary of recoloring for anomalous trichromacy

In this section, recoloring methods for anomalous trichromacy compensation have been reviewed. These methods are classified as CVC, CA, and Opt. Unlike in dichromacy, the gamut of low-severity anomalous trichromacy is almost the same as that of normal vision, but colors are perceived in a distorted way. This characteristic enables anomalous trichromats to perceive the recolored images as trichromats perceive the original images. The lack of severity calibration reduces the performance of CVC methods: they may perform well for low-severity anomalous trichromacy, but the effect of compensation may decrease as the severity increases. Nevertheless, both CVC and CA approaches are time-efficient. Optimization guarantees the color contrast but may increase the computation time significantly. On the other hand, though the severity calibration problem has been discussed in [33] and [12], there is still no effective method for CVD degree calibration. Besides the methodologies, we summarized the subjective and objective experiments conducted in the recoloring studies; finally, we showed the recoloring results of the state-of-the-art work [12] and compared its results for 100% CVD with those of the dichromacy studies in terms of the GCD, LCE, CD, and FSIMc metrics.

7 Direction of future research

A comprehensive solution for CVD compensation should consist of CVD calibration, a recoloring algorithm, and application to devices. In this section, we discuss future research directions from these three viewpoints.

7.1 Calibration

As reviewed in Sect. 2, simulation methods for anomalous trichromacy, such as the model in [6], can simulate varying degrees of CVD. Nevertheless, there is still no method that can precisely measure the degree of CVD by which an individual is affected. The subjective experiment conducted by Zhu et al. [12] demonstrated the importance of adapting the recoloring to the personal degree of CVD. Therefore, developing CVD calibration techniques can be a fundamental direction of future research for CVD compensation. Zhu et al. [12] suggested a potential breakthrough for CVD calibration, that is, utilizing the recoloring results generated for varying degrees.

7.2 Compensation approach

As discussed in [3], conventional recoloring approaches process images in a pixelwise manner and suffer from the conflicting requirements of contrast and naturalness. The emergence and development of deep learning techniques provide a new option for improving approaches for CVD compensation. Although studies [21, 22] showed the potential of introducing deep learning into recoloring for CVD compensation, they still used the result images generated by conventional recoloring methods as ground truth for training the network. Therefore, how to collect a sufficient amount of high-quality training data is an important issue. Extracting semantic information with deep learning approaches and incorporating it into the recoloring is also a potential direction of research.

7.3 Application

Beyond PC displays, applying recoloring algorithms to other devices, such as head-mounted displays, may encounter problems such as high computation time and misalignment between the displayed contents and the real scene [67]. Studies [33, 64] pointed to a future direction of combining recoloring algorithms with advanced display devices, such as stereo displays and computational glasses, for supporting the daily lives of CVD individuals as well as the visual communication between CVD individuals and people with normal vision.

8 Summary

In this survey, we first reviewed the CVD simulation models for dichromacy and anomalous trichromacy. We then summarized the evaluation schemes and metrics introduced in the existing studies. Finally, we introduced the recoloring studies on monochromacy, dichromacy, and anomalous trichromacy.

The subjective evaluation schemes reviewed in this survey can be listed as follows:

- Ishihara test (performance);
- Farnsworth–Munsell 100 Hue test (performance);
- color matching test (performance);
- character recognition (performance);
- image scoring (performance, naturalness).

The quantitative evaluation metrics reviewed in this survey can be listed as follows:

- global luminance error (contrast);
- color contrast preserving ratio (contrast);
- global chromatic diversity (contrast);
- global contrast error (contrast);
- local contrast error (contrast);
- local absolute contrast (contrast);
- contrast preservation rate (contrast);
- chromatic difference (naturalness);
- structure similarity (naturalness);
- feature similarity (naturalness).

In the sections on recoloring for dichromacy and recoloring for anomalous trichromacy, we first categorized the existing recoloring methods. The recoloring methods for dichromacy compensation were classified into the following categories:

- customized difference addition;
- hue rotation;
- optimization;
- node mapping.

The recoloring methods for anomalous trichromacy compensation were classified into categories as follows:

- color vision correction;
- channel adjustment;
- optimization.

We also discussed the pros and cons of these kinds of methods. Then, we gave detailed descriptions of the state-of-the-art methods. Finally, we compared the recoloring methods using results from the evaluation experiments and discussed the directions of future research regarding CVD compensation.

References

Sharpe, L., Stockman, A., Jägle, H., Nathans, J.: Opsin genes, cone photopigments, color vision and color blindness. In: Gegenfurtner, K., Sharpe, L. (eds.) Color vision: from genes to perception. Cambridge University Press, Cambridge (1999)

Reitner, A., Sharpe, L., Zrenner, E.: Is colour vision possible with only rods and blue-sensitive cones? Nature 352(6338), 798–800 (1991)

Ribeiro, M., Gomes, A.: Recoloring algorithms for colorblind people: a survey. ACM Comput. Surv. 52(4), 72 (2019)

Brettel, H., Viénot, F., Mollon, J.D.: Computerized simulation of color appearance for dichromats. J. Opt. Soc. Am. A 14(10), 2647–2655 (1997)

Viénot, F., Brettel, H., Mollon, J.D.: Digital video colourmaps for checking the legibility of displays by dichromats. Color Res. Appl. 24(4), 243–252 (1999)

Machado, G.M., Oliveira, M.M., Fernandes, L.A.F.: A physiologically-based model for simulation of color vision deficiency. IEEE Trans. Vis. Comput. Graph. 15(6), 1291–1298 (2009)

Judd, D.B.: Color perceptions of deuteranopic and protanopic observers. J. Res. Natl. Bur. Stand. 41, 247–271 (1948)

Graham, C.H., Hsia, Y.: Studies of color blindness: a unilaterally dichromatic subject. Proc. Natl. Acad. Sci. 45(1), 96–99 (1959)

Judd, D.B.: Fundamental studies of color vision from 1860 to 1960. Proc. Natl. Acad. Sci. 55(6), 1313–1330 (1966)

Jefferson, L., Harvey, R.: An interface to support color blind computer users. In: Proceedings of the Conference on Human Factors in Computing Systems (CHI’2007), pp. 1535–1538. ACM Press (2007)

Milic, N., Hoffmann, M., Tomacs, T., Novakovic, D., Milosavljevic, B.: A content-dependent naturalness preserving daltonization method for dichromatic and anomalous trichromatic color vision deficiencies. J. Imaging Sci. Technol. 59(1), 10504 (2015)

Zhu, Z., Toyoura, M., Go, K., Kashiwagi, K., Fujishiro, I., Wong, T., Mao, X.: Personalized image recoloring for color vision deficiency compensation. IEEE Trans. Multimed. (2021) (first online)

Yang, S., Ro, Y., Wong, E., Lee, J.: Quantification and standardized description of color vision deficiency caused by anomalous trichromats—part I: simulation and measurement. EURASIP J. Image Vid. Process. 2008, 487618 (2008)

Flatla, D., Gutwin, C.: SSMRecolor: improving recoloring tools with situation-specific models of color differentiation. In: Proceedings of the 30th International Conference on Human factors in Computing Systems (CHI’12), pp. 2297–2306. ACM Press (2012)

Ichikawa, M., Tanaka, K., Kondo, S., Hiroshima, K., Ichikawa, K., Tanabe, S., Fukami, K.: Web-page color modification for barrier-free color vision with genetic algorithm. In: Proceedings of the Genetic and Evolutionary Computation Conference (GECCO’03), Lecture Notes in Computer Science, vol. 2724, pp. 2134–2146. Springer, Berlin (2003)

Zhu, Z., Toyoura, M., Go, K., Fujishiro, I., Kashiwagi, K., Mao, X.: Processing images for red–green dichromats compensation via naturalness and information-preservation considered recoloring. Vis. Comput. 35(6–8), 1053–1066 (2019)

Zhu, Z., Toyoura, M., Go, K., Fujishiro, I., Kashiwagi, K., Mao, X.: Naturalness- and information-preserving image recoloring for red–green dichromats. Sign. Process.: Image Commun. 76, 68–80 (2019)

Wang, X., Zhu, Z., Chen, X., Go, K., Toyoura, M., Mao, X.: Fast contrast and naturalness preserving image recolouring for dichromats. Comput. Graph. 98, 19–28 (2021)

Kuhn, G., Oliveira, M., Fernandes, L.: An improved contrast enhancing approach for color-to-grayscale mappings. Vis. Comput. 24(7–9), 505–514 (2008)

Lu, C., Xu, L., Jia, J.: Contrast preserving decolorization. In: 2012 IEEE International Conference on Computational Photography (ICCP), pp. 1–7 (2012)

Zhang, X., Liu, S.: Contrast preserving image decolorization combining global features and local semantic features. Vis. Comput. 34(6), 1099–1108 (2018)

Li, H., Zhang, L., Zhang, X., Zhang, M., Zhu, G., Shen, P., Shah, S.A.A.: Color vision deficiency datasets & recoloring evaluation using GANs. Multimed. Tools Appl. 79(37), 27583–27614 (2020)

Chen, W., Chen, W., Bao, H.: An efficient direct volume rendering approach for dichromats. IEEE Trans. Vis. Comput. Graph. 17(12), 2144–2152 (2011)

Ma, Y., Gu, X., Wang, Y.: Color discrimination enhancement for dichromats using self-organizing color transformation. Inf. Sci. 179(6), 830–843 (2008)

Huang, J.-B., Tseng, Y.-C., Wu, S.-I., Wang, S.-J.: Information preserving color transformation for protanopia and deuteranopia. Sign. Process. Lett. 14(10), 711–714 (2007)

Hassan, M.: Flexible color contrast enhancement method for red-green deficiency. Multidimens. Syst. Sign. Process. 30(4), 1975–1989 (2019)

Machado, G.M., Oliveira, M.M.: Real-time temporal-coherent color contrast enhancement for dichromats. Comput. Graph. Forum 29(3), 933–942 (2010)

Hassan, M., Paramesran, R.: Naturalness preserving image recoloring method for people with red-green deficiency. Sign. Process.: Image Commun. 57, 126–133 (2017)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Zhang, L., Zhang, L., Mou, X., Zhang, D.: FSIM: a feature similarity index for image quality assessment. IEEE Trans. Image Process. 20(8), 2378–2386 (2011)

Rasche, K., Geist, R., Westall, J.: Detail preserving reproduction of color images for monochromats and dichromats. IEEE Comput. Graph. Appl. 25(3), 22–30 (2005)

Rasche, K., Geist, R., Westall, J.: Re-coloring images for gamuts of lower dimension. Comput. Graph. Forum 24(3), 423–432 (2005)

Shen, W., Mao, X., Hu, X., Wong, T.: Seamless visual sharing with color vision deficiencies. ACM Trans. Graph. 35(4), 70 (2016)

Gooch, A., Olsen, S., Tumblin, J., Gooch, B.: Color2gray: salience-preserving color removal. ACM Trans. Graph. 24(3), 634–639 (2005)

Liu, Q., Li, S., Xiong, J., Qin, B.: Wpmdecolor: weighted projection maximum solver for contrast-preserving decolorization. Vis. Comput. 35(2), 205–221 (2019)

Anagnostopoulos, C.-N., Tsekouras, G., Anagnostopoulos, I., Kalloniatis, C.: Intelligent modification for the daltonization process of digitized paintings. In: Proceedings of the 5th International Conference on Computer Vision Systems (ICVS’07) (2007)

Ruminski, J., Wtorek, J., Ruminska, J., Kaczmarek, M., Bujnowski, A., Kocejko, T., Polinski, A.: Color transformation methods for dichromats. In: Proceedings of the 3rd Conference on Human System Interactions (HSI’10). IEEE Computer Society, pp. 634–641 (2010)

Yang, S., Ro, Y.: Visual contents adaptation for color vision deficiency. In: Proceedings of the 2003 International Conference on Image Processing (ICIP’03), vol. 1, pp. 453–456. IEEE Computer Society (2003)

Iaccarino, G., Malandrino, D., Del Percio, M., Scarano, V.: Efficient edge-services for colorblind users. In: Proceedings of the 15th International Conference on World Wide Web (WWW’06), pp. 919–920. ACM Press (2006)

Wong, A., Bishop, W.: Perceptually-adaptive color enhancement of still images for individuals with dichromacy. In: Proceedings of the Canadian Conference on Electrical and Computer Engineering (CCECE’08), pp. 2027–2032. IEEE Computer Society (2008)

Huang, J.-B., Chen, C.-S., Jen, T.-C., Wang, S.-J.: Image recolorization for the colorblind. In: Proceedings of the International Conference on Acoustics, Speech, and Signal Processing (ICASSP’09), pp. 1161–1164. IEEE Press (2009)

Lai, C.-L., Chang, S.-W.: An image processing based visual compensation system for vision defects. In: Proceedings of the 5th International Conference on Intelligent Information Hiding and Multimedia Signal Processing (IIH-MSP’09), pp. 559–562. IEEE Computer Society (2009)

Wang, M., Liu, B., Hua, X.: Accessible image search. In: Proceedings of the 17th ACM International Conference on Multimedia (MM’09). ACM Press (2009)

Ching, S.-L., Sabudin, M.: Website image colour transformation for the colour blind. In: Proceedings of the 2nd International Conference on Computer Technology and Development (ICCTD’10), pp. 255–259. IEEE Computer Society (2010)

Huang, C.-R., Chiu, K.-C., Chen, C.-S.: Key color priority based image recoloring for dichromats. In: Proceedings of the 11th Pacific-Rim Conference on Multimedia (PCM’10), Lecture Notes in Computer Science, vol. 6298, pp. 637–647. Springer, Berlin (2010)

Wang, M., Liu, B., Hua, X.: Accessible image search for colorblindness. ACM Trans. Intell. Syst. Technol. 1(1), 8 (2010)

Kovalev, V.: Towards image retrieval for eight percent of color-blind men. In: Proceedings of the 17th International Conference on Pattern Recognition (ICPR’04), vol. 2, pp. 943–946. IEEE Press (2004)

Kovalev, V., Petrou, M.: Optimising the choice of colours of an image database for dichromats. In: Proceedings of the 4th International Conference on Machine Learning and Data Mining in Pattern Recognition (MLDM’05), Lecture Notes in Computer Science, vol. 3587, pp. 456–465. Springer, Berlin (2005)

Wakita, K., Shimamura, K.: SmartColor: disambiguation framework for the colorblind. In: Proceedings of the 7th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS’05), pp. 158–165. ACM Press (2005)

Jefferson, L., Harvey, R.: Accommodating color blind computer users. In: Proceedings of the 8th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS’06), pp. 40–47. ACM Press (2006)

Nakauchi, S., Onouchi, T.: Detection and modification of confusing color combinations for red-green dichromats to achieve a color universal design. Color Res. Appl. 33(3), 203–211 (2008)

Kuhn, G., Oliveira, M., Fernandes, L.: An efficient naturalness-preserving image-recoloring method for dichromats. IEEE Trans. Vis. Comput. Graph. 14(6), 1747–1754 (2008)

Flatla, D., Reinecke, K., Gutwin, C., Gajos, K.: SPRWeb: preserving subjective responses to website colour schemes through automatic recolouring. In: Proceedings of the Conference on Human Factors in Computing Systems (CHI’2013), pp. 2069–2078. ACM Press (2013)

Deng, Y., Wang, Y., Ma, Y., Bao, J., Gu, X.: A fixed transformation of color images for dichromats based on similarity matrices. In: Proceedings of the 3rd International Conference on Intelligent Computing (ICIC’07), Lecture Notes in Computer Science, vol. 4681, pp. 1018–1028. Springer, Berlin (2007)

Isola, P., Zhu, J., Zhou, T., Efros, A.: Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1125–1134 (2017)

Mochizuki, R., Nakamura, T., Chao, J., Lenz, R.: Color-weak correction by discrimination threshold matching. In: Proceedings of the 5th Conference on Colour in Graphics, Imaging and Vision (CGIV’08), pp. 208–213. Society for Imaging Science and Technology (2008)

Kojima, T., Mochizuki, R., Lenz, R., Chao, J.: Riemann geometric color-weak compensation for individual observers. In: Proceedings of the Universal Access in Human–Computer Interaction (UAHCI’14), Lecture Notes in Computer Science, vol. 8514, pp. 121–131. Springer, Cham (2014)

Nuñez, J.R., Anderton, C.R., Renslow, R.S.: Optimizing colormaps with consideration for color vision deficiency to enable accurate interpretation of scientific data. PLoS One 13(7), e0199239 (2018)

Woo, S., Park, C., Baek, Y.S., Kwak, Y.: Flexible technique to enhance color-image quality for color-deficient observers. Curr. Opt. Photon. 2(1), 101–106 (2018)

Poret, S., Dony, R., Gregori, S.: Image processing for colour blindness correction. In: Proceedings of the International Conference on Science and Technology for Humanity (TIC-STH’09), pp. 539–544. IEEE Computer Society (2009)

Chen, Y.-C., Liao, T.-S.: Hardware digital color enhancement for color vision deficiencies. ETRI J. 33(1), 71–77 (2011)

Lee, J., Santos, W.: An adaptive fuzzy-based system to simulate, quantify and compensate color blindness. Integr. Comput.-Aid. Eng. 18(1), 29–40 (2011)

Huang, J.-B., Wu, S.-Y., Chen, C.-S.: Enhancing color representation for the color vision impaired. In: Proceedings of the Workshop on Enhancing Color Representation for the Color Vision Impaired, Held in Conjunction with the 10th European Conference on Computer Vision (ECCV’08) (2008)

Langlotz, T., Sutton, J., Zollmann, S., Itoh, Y., Regenbrecht, H.: Chromaglasses: computational glasses for compensating colour blindness. In: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI'2018), p. 390. ACM Press (2018)

Shen, X., Feng, J., Zhang, X.: A content-dependent daltonization algorithm for colour vision deficiencies based on lightness and chroma information. IET Image Process. 15(4), 983–996 (2021)

Mollon, J.D., Regan, B.C.: Cambridge colour test handbook. Cambridge Research Systems Ltd, Cambridge (2000)

Tang, Y., Zhu, Z., Toyoura, M., Go, K., Kashiwagi, K., Fujishiro, I., Mao, X.: ALCC-glasses: arriving light chroma controllable optical see-through head-mounted display system for color vision deficiency compensation. Appl. Sci. 10(7), 2381 (2020)

Acknowledgements

This work is supported by JSPS Grants-in-Aid for Scientific Research (Grant Nos. 17H00738, 20J15406, and 20K20408).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhu, Z., Mao, X. Image recoloring for color vision deficiency compensation: a survey. Vis Comput 37, 2999–3018 (2021). https://doi.org/10.1007/s00371-021-02240-0

DOI: https://doi.org/10.1007/s00371-021-02240-0