Abstract

Isogeometric analysis has brought a paradigm shift in integrating computational simulations with geometric designs across engineering disciplines. This technique necessitates analysis-suitable parameterization of physical domains to fully harness the synergy between Computer-Aided Design and Computer-Aided Engineering analyses. Existing methods often fix boundary parameters, leading to challenges in elongated geometries such as fluid channels and tubular reactors. This paper presents an innovative solution for the boundary parameter matching problem, specifically designed for analysis-suitable parameterizations. We employ a sophisticated Schwarz–Christoffel mapping technique, which is instrumental in computing boundary correspondences. A refined boundary curve reparameterization process complements this. Our dual-strategy approach maintains the geometric exactness and continuity of input physical domains, overcoming limitations often encountered with the existing reparameterization techniques. By employing our proposed boundary parameter matching method, we show that even a simple linear interpolation approach can effectively construct a satisfactory analysis-suitable parameterization. Our methodology offers significant improvements over traditional practices, enabling the generation of analysis-suitable and geometrically precise models, which is crucial for ensuring accurate simulation results. Numerical experiments show the capacity of the proposed method to enhance the quality and reliability of isogeometric analysis workflows.

1 Introduction

Isogeometric analysis (IGA) represents a revolutionary development in the integration of Computer-Aided Design (CAD) and Computer-Aided Engineering (CAE), offering a transformative approach across various engineering disciplines [1, 2]. IGA distinguishes itself from the conventional finite element method by utilizing consistent spline-based basis functions for both geometric modeling and numerical simulation. This seamless integration circumvents the need for converting spline-based CAD models into linear mesh models, thereby preserving geometric integrity and eliminating potential errors in the analysis phase.

A pivotal first step in the IGA workflow is to construct an analysis-suitable spline-based parameterization \(\varvec{x}(\varvec{\xi })\) from the Boundary Representation (B-Rep) of the physical domain \(\Omega\) [3]. Research indicates that the quality of this parameterization significantly influences the accuracy and efficiency of subsequent analyses [4, 5]. For an analysis-suitable parameterization, ensuring bijectivity is of utmost importance, followed by the minimization of angle and area distortions. Over the past decade, various approaches have been developed [6,7,8,9,10,11,12].

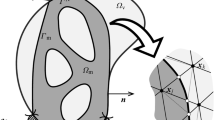

Many established methods in this field assume that input boundary curves remain unchanged. However, the parameterization speed of these curves plays a crucial role in the quality of the resulting parameterization. This is particularly true for elongated and thin physical domains, as illustrated in Fig. 1a. A common challenge arises from designers focusing primarily on the curve at hand, often neglecting the parameterization speed of the opposite curve. This oversight frequently leads to a manual determination of boundary correspondences, a practice that limits both automation and efficiency in the parameterization process. Our work addresses this gap, proposing a method that enhances automation and streamlines the parameterization process.

The quality of boundary correspondence has been recognized as a pivotal factor in analysis-suitable parameterization, directly influencing the quality of domain parameterization and, in turn, the accuracy of subsequent analyses. Zheng et al. pioneered a method leveraging optimal mass transportation theory to enhance boundary correspondence, primarily focusing on selecting the four corner points [13]. Zhan et al. utilized a deep neural network approach for corner point selection in planar parameterizations [40]. Both of these methodologies commence with selecting four corner points for planar parameterization and then rely on conventional chord-length parameterization to determine the speed of boundary parameters. This approach, however, presents a notable limitation: it can constrain the overall quality and accuracy of the parameterization, as illustrated in Fig. 1a.

This paper presents a novel approach to address the boundary parameter matching problem for elongated physical domains in isogeometric analysis-suitable parameterization, focusing specifically on the parameter speed of boundary curves. Our method employs the Schwarz–Christoffel (SC) mapping, a specialized form of conformal mapping that has not been previously applied in the IGA framework. This method facilitates precise boundary correspondence, as demonstrated in Fig. 1b. To the best of our knowledge, this study is the first to tackle the boundary parameter matching issue in IGA.

The main contributions of this paper are as follows:

- Schwarz–Christoffel (SC) mapping is employed to compute markers on input boundary curves. The proposed approach transforms complex NURBS-represented boundary curves into simplified polygons, followed by computing a SC mapping from these polygons to a unit disk. The existence and uniqueness of the SC mapping are underpinned by the well-known Riemann mapping theorem.

- A boundary reparameterization scheme is developed, which actively recalibrates parameters to achieve a harmonious alignment between boundary curves. This scheme is specifically designed to maintain the geometric accuracy of the original boundaries. The theoretical proof is given to demonstrate that our proposed scheme preserves the exact geometry of the input boundaries.

- Our findings suggest that satisfactory parameterization results can be achieved with a straightforward linear interpolation-based method, offering an alternative to more advanced analysis-suitable parameterization techniques. This is a significant insight, as it implies that simpler methods, when applied effectively, can still yield high-quality parameterizations. The effectiveness of this approach is further validated by numerical experiments, which demonstrate notable improvements in parameterization quality using the proposed method.

The remainder of the paper is organized as follows. Section 2 provides a comprehensive review of the existing literature on analysis-suitable parameterization and SC mapping. In Sect. 3, we define the specific problem our study addresses and introduce the key ideas underlying our proposed method. Section 4 presents an in-depth exploration of our methodology. The results and comparative analysis of different methods are discussed in Sect. 5, demonstrating the efficacy of our approach. Section 6 explores the practical applications of our proposed method in solid modelling and IGA simulations. This paper concludes in Sect. 7 with a summary of our key findings and insights into future research directions.

2 Related work

This section first reviews current parameterization methodologies in IGA and then surveys the applications and theoretical advancements of SC mapping.

2.1 Review for analysis-suitable parameterization in IGA

The importance of parameterization quality in enhancing the accuracy and efficiency of downstream analyses has been emphasized in various studies [3,4,5]. Algebraic methods, such as Coons patches [14] and Spring patches [15], are commonly employed for constructing analysis-suitable parameterizations due to their simplicity and efficiency. However, these methods can sometimes result in non-bijective parameterizations, particularly for complex computational domains.

To address these limitations, a range of methods has been developed over the past decade. Xu et al. [6] pioneered a nonlinear constrained optimization framework, marking a significant advancement in this field. However, this method often involves a large number of constraints, leading to substantial computational demands. To mitigate this, Wang and Qian [16] and Pan et al. [17] introduced strategies that effectively reduce computational burdens. Utilizing barrier-type objective functions, Ji et al. efficiently eliminated foldovers through solving a simple unconstrained optimization problem [18]. An alternative approach involves the use of penalty functions and Jacobian regularization techniques, tracing back to Garanzha et al.’s work in mesh untangling [19, 20]. Wang and Ma [21] adopted this strategy, thereby avoiding additional foldover elimination steps. Ji et al. improved upon this by introducing a new penalty term that minimizes numerical errors from previous iterations [22]. Beyond the barrier-type objective function, other studies have delved into quasi-conformal theories, such as Teichmüller mapping [23] and the low-rank quasi-conformal method [24]. Additionally, Martin et al. utilized discrete harmonic functions for trivariate B-spline solids [25], while Nguyen and Jüttler [26] and Xu et al. [27] explored sequences and variational methods of harmonic mapping, respectively. Falini et al. approached the problem by computing harmonic maps from physical domains to parametric domains using boundary element methods, followed by inverse mapping approximations via least-squares fitting [28]. Building on the principles of Elliptic Grid Generation (EGG), Hinz et al. proposed PDE-based methods that excel in domains with extreme aspect ratios due to their robust convergence properties [8, 29]. Further advancing this domain, Ji et al. introduced an enhanced elliptic PDE-based parameterization technique, notable for its speed and ability to produce uniform elements near concave/convex boundary regions in general domains [11]. Zhang et al. [30, 31] and Liu et al. [32] developed volumetric spline-based parameterizations for IGA, utilizing T-splines known for their local refinement capabilities. A computational reuse framework for three-dimensional models was introduced by Xu et al. [33]. Later, Wang et al. [34] proposed a multi-patch parameterization method that integrates frame field and polysquare structures to handle complex computational domains. In addition, Xu et al. [35] and Ji et al. [36] introduced r-adaptive methods that focus on minimizing curvature metrics.

The aforementioned studies presuppose the availability of boundary representations and keep these input boundary curves and surfaces fixed in their original form. Establishing a coherent correspondence between distinct shapes is a fundamental challenge in shape analysis, and various methods have been established over the past decades [37, 38]. However, these traditional methods fall short in the context of our research, as their underlying assumptions are not applicable. Liu et al. allow the parameterization of the boundary curves to change by optimizing the boundary and interior control points simultaneously [10]. Zheng et al. introduced an automated technique for boundary correspondence in isogeometric analysis-suitable parameterization, grounded in optimal mass transportation theory [13]. This approach primarily focuses on identifying appropriate corner placements and then applies chord-length methods for boundary reparameterization, which may lead to outcomes as depicted in Fig. 1a. Building on this, Zheng et al. extended the concept to volumetric cases [39], while Zhan et al. identified corner points in the physical domain using deep neural networks [40].

This paper proceeds with the fundamental assumption that the four corner points of the domain are predetermined. The key contribution of our work is in calculating the boundary parameter correspondence, a critical aspect for achieving isogeometric analysis-suitable parameterization.

2.2 Review for Schwarz–Christoffel mapping

Conformal mapping, particularly the Schwarz–Christoffel (SC) mapping, plays a vital role due to its theoretical significance and wide-ranging practical applications [41]. Central to the numerical computation of SC mapping is the challenging Schwarz–Christoffel parameter problem [42]. To address this complexity, a myriad of computational techniques has been developed, with numerical conformal mapping emerging as a particularly effective solution. To surmount computational challenges associated with SC mapping, Driscoll and Vavasis introduced an innovative approach utilizing Cross-Ratios and Delaunay Triangulation (CRDT) algorithms [43]. This method was further extended by Delillo and Kropf to accommodate multiply connected domains [44]. As for the available implementations of SC mapping, the SCPACK package [45], originally developed in Fortran, represents an early solution. The SC Toolbox [46] in MATLAB and its subsequent open-source version [47] are notable advancements. The computational speed of SC mapping has been further accelerated by Banjai and Trefethen [48] through the use of a multipole method. Moreover, Anderson extended SC mapping to non-polygonal domains [49]. For an in-depth understanding of SC mapping, the monograph by Driscoll and Trefethen [50] is a valuable reference.

3 Problem statement and key ideas

In this section, the focus is on defining the specific problem addressed by our research, which establishes the notation that will be used consistently throughout the paper. Additionally, we introduce the key ideas that underpin our proposed methodology.

3.1 Problem statement

In numerous industrial applications, domains characterized by a pronounced, elongated shape with extreme aspect ratios are commonly encountered. Such configurations are prevalent in channels, conduits within reactors, and waterways, as illustrated in Fig. 1. In these scenarios, a reasonable boundary correspondence across opposing long boundary curves is crucial. The parameter speed along these boundaries significantly influences the quality and bijectivity of the derived parameterization. Our study specifically addresses this challenge, seeking to optimize boundary parameter matching and ensure the integrity of parameterization in such elongated domains.

Consider the real variables \(\varvec{x} = [x,y]^{\textrm{T}}\) in the physical domain \(\Omega\), alongside \(\varvec{\xi } = [\xi ,\eta ]^{\textrm{T}}\), representing orthogonal real variables within the parametric domain \(\hat{\Omega }\). The quartet of boundary curves defined in B-spline form, namely \(\mathcal {C}^{\textrm{West}}(\eta )\), \(\mathcal {C}^{\textrm{East}}(\eta )\), \(\mathcal {C}^{\textrm{South}}(\xi )\), and \(\mathcal {C}^{\textrm{North}}(\xi )\), with the superscript \(^{*}\) representing the boundary direction, are given by

where \(R_{i,p^{*}}^{\varvec{\Xi }^{*}}(\xi ) = {\omega _i N_{i,p^{*}}^{\varvec{\Xi }^{*}}(\xi )}/{\sum _{j=0}^{n^{*}} \omega _j N_{j,p^{*}}^{\varvec{\Xi }^{*}}(\xi )}\) are the i-th degree-\(p^{*}\) NURBS basis functions, and \(N_{i,p^{*}}^{\varvec{\Xi }^{*}}(\xi )\) denote the i-th degree-\(p^{*}\) B-Spline basis functions defined over the knot vector \(\varvec{\Xi }^{*}\). Assuming, for simplicity, that the West curve \(\mathcal {C}^{\textrm{West}}(\eta )\) and the East curve \(\mathcal {C}^{\textrm{East}}(\eta )\) represent the longitudinal boundaries, our goal is to establish an analysis-suitable parameterization \(\varvec{x}:\ \hat{\Omega } \rightarrow \Omega\) through a coordinate transformation, expressed in B-Spline form, that adheres to the prescribed boundary conditions \(\varvec{x}^{-1}|_{\partial \Omega } = \partial \hat{\Omega }\). Hence, we define:

where \(\mathcal {I}_I\) and \(\mathcal {I}_B\) index the unknown interior and the known boundary control points, respectively,

are bivariate tensor-product NURBS basis functions of bi-degree \((p_1,p_2)\), and \(\omega _{i_1, i_2}\) are weights.

The differential form of the transformation is expressed as follows:

with \(\varvec{\mathcal {J}}(\xi , \eta )\) representing the Jacobian matrix. A scaled rotation transformation is defined by the composition of a diagonal scaling matrix and an orthogonal rotation matrix, i.e.,

where \(h(\xi , \eta )\) and \(g(\xi , \eta )\) are scalar functions that respectively define the components of the scaling transformation, and \(\theta (\xi , \eta )\) represents the angle of rotation transformation.

A conformal transformation is distinguished as a scaled rotation transformation that fulfills the additional condition of \(g = h\). Specifically, this implies that

Such a transformation preserves angles and is characterized by a metric that remains invariant with respect to the direction of \(d \varvec{\xi } = d \xi + \vec {i} d\eta\), where \(\vec {i}\) is the imaginary unit. For concise notation, a point in a plane is not distinguished from a complex number in this paper. In complex context, if we define \(\varvec{x} = x + \vec {i} y\) and \(\varvec{\xi } = \xi + \vec {i} \eta\), then (6) is essentially a statement of the well-known Cauchy-Riemann equations.
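As a worked illustration of this last remark, suppose the scaled rotation is written as a rotation applied after a diagonal scaling. This ordering and sign convention are assumptions made here for illustration; equivalent conventions exist. Setting \(g = h\) then gives

$$\begin{aligned} \varvec{\mathcal {J}} = \begin{bmatrix} x_{\xi } & x_{\eta } \\ y_{\xi } & y_{\eta } \end{bmatrix} = \begin{bmatrix} \cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{bmatrix} \begin{bmatrix} h & 0 \\ 0 & h \end{bmatrix} = h \begin{bmatrix} \cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{bmatrix}, \end{aligned}$$

so that \(x_{\xi } = y_{\eta } = h \cos \theta\) and \(x_{\eta } = -y_{\xi } = -h \sin \theta\). These are the Cauchy-Riemann equations for \(x + \vec {i} y\) regarded as a function of \(\xi + \vec {i} \eta\). Under the same assumption, \(\left| \varvec{\mathcal {J}} \right| = h^2\), which is positive wherever \(h \ne 0\).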

3.2 Key ideas

Figure 2 presents the main workflow of our methodology. Central to our approach is the Schwarz–Christoffel mapping, a conformal mapping technique. Initially, we simplify complex NURBS-represented boundary curves into a closed polygon. This step prepares the domain for the application of SC mapping. The polygon is then numerically mapped onto the unit disk through the SC mapping process. Next, a series of markers are placed along the long boundary curves to guide the reparameterization process.

Subsequent to the mapping, we proceed with a reparameterization scheme for the boundary curves. This entails a careful recalibration of parameters, ensuring the parameterization speed of one curve is aligned with that of the other. A distinguishing feature of our method is that it preserves both the accuracy and the continuity of the initial geometric boundaries.

Finally, we integrate the robust and efficient parameterization technique from our previous research. As shown in Fig. 2, this workflow yields parameterizations that are well suited to the demands of isogeometric analysis, thereby enhancing the fidelity and utility of the analysis process.

4 Methodology

This section details the core methodology of our proposed approach. In Sect. 4.1, we explore the foundational concepts of SC mapping. Section 4.2 details the computational aspects of the SC parameter problem. Section 4.3 presents the curve reparameterization process, emphasizing its role in preserving geometric accuracy. Lastly, Sect. 4.4 offers a concise overview of the improved PDE-based method.

4.1 Schwarz–Christoffel mapping

Consider \(\mathcal {P}\), an open and simply connected polygon on the complex plane \(\mathbb {C}\), as illustrated in the right of Fig. 3. The Riemann mapping theorem guarantees the existence of an analytic function f, with a non-vanishing derivative, that maps the open unit disk \(\mathcal {D}\) bijectively onto \(\mathcal {P}\), such that \(f(\mathcal {D}) = \mathcal {P}\). If we select a specific point \(z_0\) in the polygon \(\mathcal {P}\) and an angle \(\alpha\) in the range of \([0, 2\pi )\), then f is uniquely determined by the conditions \(f(0) = z_0\) and \(\arg (f'(0)) = \alpha\). Here, \(z_0\) serves as the conformal center of f, anchoring the mapping in the complex plane.

Denote by \(\omega _1, \omega _2, \ldots , \omega _n\) the vertices of the polygon \(\mathcal {P}\) in counterclockwise order. For ease of indexing, we define \(\omega _{n+1} = \omega _1\) and \(\omega _0 = \omega _n\). The interior angles at these vertices are represented by \(\alpha _1, \alpha _2, \ldots , \alpha _n\). We define \(\beta _j = \alpha _j / \pi - 1\) for each vertex j, where \(\beta _j\) falls within the interval \((-1, 1]\). Furthermore, the exterior angles must add up to a total turn of \(2\pi\), so that \(\sum _{k=1}^n \alpha _k = (n - 2)\pi\), or equivalently \(\sum _{k=1}^n \beta _k = -2\). Given these constraints, a conformal mapping f from the unit disk \(\mathcal {D}\) to the polygon \(\mathcal {P}\), as shown in Fig. 3, can be described as [50]:

where \(f(z_0)\) and C are complex constants with \(C \ne 0\). Here, \(z_1, z_2, \ldots , z_n\) are prevertices located on the boundary of \(\mathcal {D}\) in counterclockwise order, satisfying the condition \(\omega _k = f(z_k)\) for each \(k = 1, 2, \ldots , n\). Equation (7) is commonly referred to as the Schwarz–Christoffel formula.
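To make the roles of the prevertices \(z_k\) and the exponents \(\beta _k\) concrete, the following is a minimal sketch that evaluates the disk form of the Schwarz–Christoffel integral, \(\int _0^z \prod _k (1 - \zeta /z_k)^{\beta _k} \, d\zeta\), for a square. Several choices here are assumptions for illustration only: the additive constant is set to zero, \(C = 1\), the integration starts at the origin, and the prevertices are taken as the fourth roots of unity, which is valid for a square by symmetry. The function name `sc_disk_map` and the substitution \(t = 1 - s^2\) used to tame the endpoint singularity are likewise illustrative and are not the compound Gauss-Jacobi rule used in the paper.

```python
import numpy as np

def sc_disk_map(z, prevertices, beta, n_quad=40):
    """Evaluate int_0^z prod_k (1 - zeta/z_k)**beta_k d zeta along the ray 0 -> z.

    Illustrative only: additive constant 0 and multiplicative constant 1.
    The substitution t = 1 - s**2 smooths the integrand when z is a prevertex.
    """
    nodes, weights = np.polynomial.legendre.leggauss(n_quad)
    s = 0.5 * (nodes + 1.0)            # Gauss-Legendre nodes mapped to (0, 1)
    w = 0.5 * weights
    t = 1.0 - s**2                     # zeta = t * z, with t in (0, 1)
    zeta = t[:, None] * z              # shape (n_quad, 1)
    factors = (1.0 - zeta / prevertices[None, :]) ** beta[None, :]
    integrand = np.prod(factors, axis=1)
    # d zeta = z dt = -2 s z ds; swapping the limits removes the minus sign.
    return z * np.sum(w * 2.0 * s * integrand)

# Square: interior angles pi/2, hence beta_k = (pi/2)/pi - 1 = -1/2.
prevertices = np.exp(0.5j * np.pi * np.arange(4))   # 1, i, -1, -i
beta = np.full(4, -0.5)
vertices = np.array([sc_disk_map(z, prevertices, beta) for z in prevertices])
```

Under these assumptions the four image vertices are rotations of one another by \(\pi /2\), so they form a square centered at the origin. For a general polygon the prevertices are not known in advance, which is exactly the parameter problem discussed next.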

4.2 Schwarz–Christoffel parameter problem

A crucial step of the transformation (7) is the determination of the prevertices \(z_j\), for \(j =1, 2, \ldots , n\). These prevertices typically lack a closed-form solution, except in special cases. The transformation is characterized by \(n+4\) real parameters, including the affine constants \(f(z_0)\) and C, as well as the angles \(\theta _1, \theta _2, \ldots , \theta _n\). Each \(\theta _i\) denotes the argument of the prevertex \(z_i\) on the unit circle. Due to the three degrees of freedom inherent in Möbius transformations, it is possible to arbitrarily select three prevertices, including the predetermined \(z_n\). This choice leaves \(n-3\) remaining prevertices, which are uniquely defined and must be found by solving a system of nonlinear equations. This challenge forms the essence of the Schwarz–Christoffel parameter problem.

Practical implementation of SC mapping often involves solving complex nonlinear equations, derived from the geometric constraints of polygons. This process, fundamental to software like SCPACK [45] and the SC Toolbox for Matlab [46], aims to match the computed properties of the polygon \(\mathcal {P}\) (such as side lengths and orientation) with those of the desired polygon, thereby establishing \(n-3\) conditions [42]. However, two significant challenges limit the efficiency and applicability of these software packages.

Firstly, the nonlinear systems often lack a simple, solvable structure, leading to potential pitfalls such as local minima, which can significantly hinder, or even entirely prevent, the convergence of solvers. Secondly, a more critical challenge is the “crowding” phenomenon, a frequent occurrence in domains characterized by elongated and narrow channels [51]. This effect causes the prevertices \(z_j\) to cluster disproportionately on the unit circle, with the spacing between neighboring prevertices shrinking exponentially with the aspect ratio of these constricted regions. Such difficulties in the conventional SC mapping approaches underscore the need for a more robust and reliable alternative. In response to these challenges, our approach adopts the Cross-Ratios of the Delaunay Triangulation (CRDT) algorithm, a method within the numerical conformal mapping paradigm, as proposed in [43]. This algorithm is designed to remain stable and accurate in the presence of crowding.

The CRDT hinges on the principle that the cross-ratio is invariant under conformal mapping. Consider four distinct points a, b, c, and d in the complex plane, arranged to form a quadrilateral abcd with vertices ordered counterclockwise. Additionally, let ac be an interior diagonal of this quadrilateral. The cross-ratio of these points is mathematically defined as follows:
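A minimal sketch of a cross-ratio computation is given here. The specific ordering of the factors, \(\rho (a,b,c,d) = (d-a)(b-c)/((c-d)(a-b))\), is an assumption: it is one convention commonly associated with the CRDT literature, and the paper's own definition may order the points differently. The Möbius coefficients in `mobius` are arbitrary hypothetical values. The check relies on the fact that a cross-ratio of this form is exactly invariant under Möbius transformations, since each point appears once in the numerator and once in the denominator.

```python
def cross_ratio(a, b, c, d):
    """Cross-ratio of the quadrilateral abcd (counterclockwise, diagonal ac).

    Assumed convention: rho = (d - a)(b - c) / ((c - d)(a - b)).
    """
    return (d - a) * (b - c) / ((c - d) * (a - b))

def mobius(z, p=2 + 1j, q=0.5, r=0.3 - 0.2j, s=1.0):
    """Hypothetical Moebius map z -> (p z + q) / (r z + s), with p s - q r != 0."""
    return (p * z + q) / (r * z + s)

# Unit square with vertices in counterclockwise order.
square = [0j, 1 + 0j, 1 + 1j, 1j]
rho = cross_ratio(*square)
rho_mapped = cross_ratio(*[mobius(z) for z in square])
```

With this convention the square has \(\rho = -1\), so \(\log |\rho | = 0\), and the value is unchanged after applying the Möbius map.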

The main computation scheme is as follows:

- Step 1: Splitting the long edges of polygon \(\mathcal {P}\) prevents the formation of elongated and narrow quadrilaterals whose edges align with those of the polygon. This step is crucial as it ensures the prevertices of such quadrilaterals are not densely crowded on the unit circle. After this splitting, let n represent the total number of vertices.

- Step 2: Compute the Delaunay triangulation of the polygon \(\mathcal {P}\). From this triangulation, identify the \(n-3\) diagonals, denoted as \(d_1, d_2, \ldots , d_{n-3}\), and their corresponding quadrilaterals \(Q_1, Q_2, \ldots , Q_{n-3}\), with \(Q_i = Q(d_i)\) for each i. For each quadrilateral \(Q_i\), the vertices are represented as \(\omega _{k(i,1)}, \omega _{k(i,2)}, \omega _{k(i,3)},\) and \(\omega _{k(i,4)}\), where i ranges from 1 to \(n-3\). Here, each four-tuple \(\left( k(i,1), k(i,2), k(i,3), k(i,4) \right)\) consists of distinct indices drawn from the set \(\{1, 2, \ldots , n\}\). The next step is to calculate \(c_i\) for each quadrilateral, defined as:

  $$\begin{aligned} c_i = \log \left( \left| \rho (\omega _{k(i,1)}, \omega _{k(i,2)}, \omega _{k(i,3)}, \omega _{k(i,4)}) \right| \right) , \end{aligned} \tag{9}$$

  for \(i=1,2,\ldots ,n-3\), where \(\vert \cdot \vert\) indicates the magnitude of a complex number.

- Step 3: Solve the nonlinear system \(\varvec{\mathcal {F}} = 0\), where the i-th nonlinear equation is

  $$\begin{aligned} \varvec{\mathcal {F}}_i = \log \left( \left| \rho (\zeta _{k(i,1)}, \zeta _{k(i,2)}, \zeta _{k(i,3)}, \zeta _{k(i,4)}) \right| \right) - c_i, \end{aligned} \tag{10}$$

  where \(\zeta _1, \zeta _2, \ldots , \zeta _n\) constitute the vertices of the polygonal image derived from the SC mapping formula (7). This is based on the invariance of the cross-ratio among the vertices \(\zeta\) under a similarity transformation. For integration along a straight-line path originating from the origin, the compound Gauss-Jacobi quadrature rule, as detailed by [42], is utilized. The central computational challenge in CRDT lies in effectively solving this nonlinear system \(\varvec{\mathcal {F}} = 0\) in (10). In practice, we found that the simple Picard iteration converges too slowly. Therefore, we opt for nonlinear solvers that rely solely on function evaluations. Specifically, we employ the Gauss-Newton solver, enhanced with a Broyden update for the derivative \(\varvec{\mathcal {F}}'\), to achieve more efficient convergence.
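The idea of a Gauss-Newton iteration with a Broyden update can be sketched on a small toy problem. The code below is not the CRDT residual in (10): `toy_system` is a hypothetical two-equation system chosen only so that the root is known, and the function names, tolerances, and the one-off finite-difference initialization of the Jacobian are all assumptions made for illustration. After initialization, the approximate Jacobian is corrected using function values only.

```python
import numpy as np

def fd_jacobian(F, x, h=1e-7):
    """Forward-difference Jacobian, used once to initialise the iteration."""
    f0 = F(x)
    J = np.empty((f0.size, x.size))
    for j in range(x.size):
        xp = x.copy()
        xp[j] += h
        J[:, j] = (F(xp) - f0) / h
    return J

def broyden_solve(F, x0, tol=1e-10, max_iter=50):
    """Quasi-Newton iteration with Broyden's rank-one Jacobian update."""
    x = np.asarray(x0, dtype=float)
    J = fd_jacobian(F, x)
    f = F(x)
    for _ in range(max_iter):
        if np.linalg.norm(f) < tol:
            break
        # Gauss-Newton step: least-squares solution of J dx = -f.
        dx = np.linalg.lstsq(J, -f, rcond=None)[0]
        x = x + dx
        f_new = F(x)
        # Broyden update: enforce the secant condition J_new dx = f_new - f.
        J += np.outer(f_new - f - J @ dx, dx) / (dx @ dx)
        f = f_new
    return x

def toy_system(x):
    """Hypothetical test system with root (sqrt(2), sqrt(2))."""
    return np.array([x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]])

root = broyden_solve(toy_system, [1.5, 1.0])
```

The design point this illustrates is that each iteration costs one evaluation of the residual, which matters when every evaluation of \(\varvec{\mathcal {F}}\) involves numerical quadrature of the SC integral.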

Once the SC mapping in Eq. (7) has been computed, we evaluate it at uniformly sampled points on the boundary of the unit disk to identify the marker sets \(\textbf{P}_i^{\textrm{West}}\) and \(\textbf{P}_i^\textrm{East}\), located on the closed polygon, as depicted in Fig. 4a. The next step in our methodology is to reparameterize one boundary curve while keeping the parameter speed of the other boundary fixed.

4.3 Geometry-preserving curve reparameterization technique

Without loss of generality, we choose to fix the West boundary curve \(\mathcal {C}^{\textrm{West}}\) and apply reparameterization to the East boundary curve \(\mathcal {C}^\textrm{East}\). The theoretical foundation of the reparameterization process is the invariant property of B-Spline basis functions under scaling and translation transformations, as elucidated in the following lemma.

Lemma 1

Let \(N^{\varvec{\Xi }}_{i, p}(\xi )\) be the i-th degree-p B-Spline basis function defined over the knot vector \(\varvec{\Xi }\). Consider a scaled and translated knot vector \(\hat{\varvec{\Xi }} = s \varvec{\Xi } + t\) with \(s > 0\). Then \(N^{\hat{\varvec{\Xi }}}_{i, p}(s \xi + t) = N^{\varvec{\Xi }}_{i, p}(\xi )\) holds.

Proof

We prove this lemma using the principle of mathematical induction on the degree p of the B-Spline basis functions.

For \(p=0\), \(N^{\varvec{\Xi }}_{i, 0}(\xi )\) and \(N^{\hat{\varvec{\Xi }}}_{i, 0}(s \xi + t)\) are piecewise constant functions defined as:

and

Since \(s > 0\), the conditions for non-zero values are equivalent, implying \(N^{\hat{\varvec{\Xi }}}_{i, 0}(s \xi + t) = N^{\varvec{\Xi }}_{i, 0}(\xi )\).

Assume the lemma holds for all degrees less than \(p \ge 1\). Then, for degree p, the recurrence relation for B-Spline basis functions gives:

This completes the proof. \(\square\)
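Lemma 1 can also be checked numerically. The sketch below evaluates B-spline basis functions with the standard Cox-de Boor recursion, using half-open knot spans and the usual convention that terms with a zero denominator are dropped, and compares \(N^{\hat{\varvec{\Xi }}}_{i, p}(s \xi + t)\) with \(N^{\varvec{\Xi }}_{i, p}(\xi )\). The knot vector, the degree, and the values of s and t are hypothetical; they are chosen as exactly representable binary fractions so that the span tests agree without rounding effects.

```python
import numpy as np

def bspline_basis(i, p, knots, xi):
    """Cox-de Boor recursion for the i-th degree-p B-spline basis function."""
    if p == 0:
        return 1.0 if knots[i] <= xi < knots[i + 1] else 0.0
    left = right = 0.0
    d1 = knots[i + p] - knots[i]
    d2 = knots[i + p + 1] - knots[i + 1]
    if d1 > 0.0:   # skip terms with a zero denominator
        left = (xi - knots[i]) / d1 * bspline_basis(i, p - 1, knots, xi)
    if d2 > 0.0:
        right = (knots[i + p + 1] - xi) / d2 * bspline_basis(i + 1, p - 1, knots, xi)
    return left + right

p = 2
knots = np.array([0.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0, 1.0, 1.0])
s, t = 4.0, -1.0                          # hypothetical scaling and translation
knots_hat = s * knots + t                 # scaled and translated knot vector
samples = np.linspace(0.0, 0.9375, 16)    # stay inside the half-open domain [0, 1)
n_basis = len(knots) - p - 1

max_diff = max(
    abs(bspline_basis(i, p, knots_hat, s * xi + t) - bspline_basis(i, p, knots, xi))
    for i in range(n_basis)
    for xi in samples
)
partition = [sum(bspline_basis(i, p, knots, xi) for i in range(n_basis)) for xi in samples]
```

The difference `max_diff` vanishes up to rounding, in agreement with the lemma, because both the numerators and the denominators in the recursion pick up the same factor s while the translation t cancels.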

Corollary 2

Let \(R^{\varvec{\Xi }, \varvec{\mathcal {H}}}_{i,j,p,q}(\xi , \eta )\) be the NURBS basis function defined over the knot vectors \(\varvec{\Xi }\) and \(\varvec{\mathcal {H}}\) with weights \(\omega _{i,j}\), and degrees p and q. Consider scaled and translated knot vectors \(\hat{\varvec{\Xi }} = s \varvec{\Xi } + t\) and \(\hat{\varvec{\mathcal {H}}} = s' \varvec{\varvec{\mathcal {H}}} + t'\) with \(s> 0\) and \(s' > 0\). Then, \(R^{\hat{\varvec{\Xi }}, \hat{\varvec{\mathcal {H}}}}_{i,j,p,q}(s \xi + t, s' \eta + t') = R^{\varvec{\Xi }, \varvec{\mathcal {H}}}_{i,j,p,q}(\xi , \eta )\).

Proof

Given the invariant property of B-Spline basis functions as stated in Lemma 1, the proof of this corollary is trivial. \(\square\)

Building upon the invariant property of B-Spline basis functions established in Lemma 1, we can extend this concept to NURBS curves. The invariance of B-Spline basis functions under scaling and translation transformations of the knot vector implies that NURBS curves, which are defined using these basis functions, will similarly maintain their geometric form under such transformations. This observation leads us to the following proposition:

Proposition 3

Let \(\mathcal {C}(\xi )\) be a NURBS curve defined over the parameter \(\xi\) within the domain of the knot vector \(\varvec{\Xi }\). If \(\hat{\varvec{\Xi }} = s \varvec{\Xi } + t\) represents the scaled and translated knot vector with \(s > 0\), then the NURBS curve \(\hat{\mathcal {C}}(s \xi + t)\), defined over the transformed parameter domain, is geometrically identical to \(\mathcal {C}(\xi )\). Formally, \(\hat{\mathcal {C}}(s\xi +t) = \mathcal {C}(\xi )\), ensuring the geometric shape of the curve remains invariant under scaling and translation of its parameter domain.

Based on the fundamental principle in Proposition 3 that the geometric integrity of NURBS curves is preserved under scaling and translation transformations of their parameter domain, we develop a novel reparameterization method specifically tailored for addressing boundary parameter correspondence challenges.

With the computed markers \(\textbf{P}_i^{\textrm{West}}\) and \(\textbf{P}_i^\textrm{East}\) (\(i=0,1,\ldots ,m\)) from the SC map, the next step is to determine the corresponding parameter values \(\xi _i^\textrm{East}\) and \(\xi _i^{\textrm{West}}\) for these markers. This involves projecting a point onto a NURBS curve to find the closest point on the curve and then performing point inversion to find the corresponding parameter. This process is known as the point inversion and projection problem, as described in Section 6.1 of the well-known monograph [52]. In our context, the markers \(\textbf{P}_i^{\textrm{West}}\) and \(\textbf{P}_i^\textrm{East}\) do not necessarily lie on the input curves \(\mathcal {C}^{\textrm{West}}(\xi )\) and \(\mathcal {C}^\textrm{East}(\xi )\) since they are on the approximating polygon. Therefore, we compute the closest points on the East and West boundary curves by solving the following nonlinear equation:

where \(\mathcal {C}^{\prime , \textrm{West}}(\xi ) = {\partial \mathcal {C}^{\textrm{West}}(\xi )}/{\partial \xi }\) and \(\mathcal {C}^{\prime , \textrm{East}}(\xi ) = {\partial \mathcal {C}^{\textrm{East}}(\xi )}/{\partial \xi }\) represent the first derivatives of the curves \(\mathcal {C}^{\textrm{West}}(\xi )\) and \(\mathcal {C}^{\textrm{East}}(\xi )\), respectively. Here, \(\textbf{P}^{\textrm{West}}_i\) and \(\textbf{P}^{\textrm{East}}_i\) denote the i-th marker on the boundary curves \(\mathcal {C}^{\textrm{West}}(\xi )\) and \(\mathcal {C}^{\textrm{East}}(\xi )\), respectively.

In our implementation, the standard Newton’s method is employed to solve equation (12) efficiently. Note that a good initial guess is critical to the convergence of the standard Newton’s method. To this end, we evaluate curve points at densely spaced parameter values and use the parameter value with the closest distance to the current marker as the initial guess for the Newton iteration.
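The point inversion step can be sketched as follows. To keep the example self-contained, a quadratic Bézier curve with hypothetical control points `CTRL` stands in for a NURBS boundary curve; the function names and tolerances are likewise illustrative. The iteration solves \((\mathcal {C}(\xi ) - \textbf{P}) \cdot \mathcal {C}'(\xi ) = 0\) by Newton's method, starting from the closest of a set of densely sampled curve points.

```python
import numpy as np

# Hypothetical stand-in for a boundary curve: a quadratic Bezier curve on [0, 1].
CTRL = np.array([[0.0, 0.0], [1.0, 2.0], [2.0, 0.0]])

def curve(xi):
    b = np.array([(1 - xi) ** 2, 2 * xi * (1 - xi), xi ** 2])
    return b @ CTRL

def d_curve(xi):
    return 2 * (1 - xi) * (CTRL[1] - CTRL[0]) + 2 * xi * (CTRL[2] - CTRL[1])

def dd_curve(xi):
    return 2 * (CTRL[2] - 2 * CTRL[1] + CTRL[0])

def invert_point(marker, n_samples=101, tol=1e-12, max_iter=20):
    """Closest-point parameter: solve (C(xi) - P) . C'(xi) = 0 with Newton's method."""
    grid = np.linspace(0.0, 1.0, n_samples)
    dists = [np.linalg.norm(curve(g) - marker) for g in grid]
    xi = grid[int(np.argmin(dists))]          # dense sampling gives the initial guess
    for _ in range(max_iter):
        r = curve(xi) - marker
        f = r @ d_curve(xi)
        df = d_curve(xi) @ d_curve(xi) + r @ dd_curve(xi)
        step = f / df
        xi = min(1.0, max(0.0, xi - step))    # keep the parameter inside [0, 1]
        if abs(step) < tol:
            break
    return xi

xi_on = invert_point(curve(0.3))               # marker lying on the curve
xi_off = invert_point(np.array([1.0, 3.0]))    # marker off the curve, above the apex
```

For a marker on the curve the original parameter is recovered, and for the off-curve marker the symmetric configuration places the closest point at the middle of the parameter range.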

Upon identifying these parameters, we then focus on the East boundary curve \(\mathcal {C}^\textrm{East}\). We split this curve at the parameter \(\xi _{i+1}^\textrm{East}\). This is achieved by inserting the value of \(\xi _{i+1}^\textrm{East}\) until its multiplicity is equal to the degree of the curve. Consequently, we obtain a curve segment defined over the parameter interval \([\xi _{i}^\textrm{East}, \xi _{i+1}^\textrm{East}]\). The next step is to align this segment with the West boundary curve \(\mathcal {C}^{\textrm{West}}\). To accomplish this, we apply an affine transformation to the knot vector of the curve segment, so that the parameter interval of the East curve segment matches the parameter interval \([\xi _{i}^{\textrm{West}}, \xi _{i+1}^{\textrm{West}}]\). The result is a consistent alignment between the East and West boundary curves, while Proposition 3 guarantees that the geometry of each segment is unchanged. Note, however, that this procedure is equivalent to subdividing the entire computational domain into a set of subdomains and parameterizing each subdomain separately. Therefore, the curve, after reparameterization, only has \(G^k\) continuity instead of the \(C^k\) continuity of its original form.
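The segment-wise affine transformation can be sketched as follows. The marker parameters `east` and `west`, the degree-2 segment knot vectors, and the function names are hypothetical. Only the knot vectors change; by Proposition 3 the control points and weights of each segment are left untouched.

```python
import numpy as np

def affine_map(src, dst):
    """Return (s, t) such that s * src[k] + t = dst[k] for k = 0, 1."""
    s = (dst[1] - dst[0]) / (src[1] - src[0])
    return s, dst[0] - s * src[0]

def reparameterize_knots(segment_knots, east_params, west_params):
    """Map the knots of each split East segment onto the matching West interval."""
    new_knots = []
    for i, knots in enumerate(segment_knots):
        s, t = affine_map(east_params[i:i + 2], west_params[i:i + 2])
        new_knots.append(s * np.asarray(knots, dtype=float) + t)
    return new_knots

# Hypothetical marker parameters on the two curves (two segments).
east = [0.0, 0.2, 1.0]
west = [0.0, 0.5, 1.0]
# Degree-2 segments after splitting, each clamped on its own interval.
segments = [[0.0, 0.0, 0.0, 0.2, 0.2, 0.2],
            [0.2, 0.2, 0.2, 0.6, 1.0, 1.0, 1.0]]
mapped = reparameterize_knots(segments, east, west)
```

Because the marker parameters are increasing on both curves, every scaling factor s is positive, which is the condition required by Lemma 1. The scaling factor generally differs from one segment to the next, which is why the parametric continuity at the split points is reduced.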

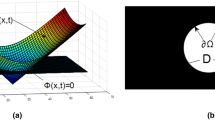

This procedure reparameterizes the curve while preserving its geometry exactly. Applying the linear interpolation-based parameterization method along the \(\eta\)-direction then yields the parameterization shown in Fig. 4b, whose quality is significantly better than that obtained before reparameterization.
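As a rough sketch of the linear interpolation step, the control net of the planar patch can be built row by row from the two boundary control polygons. The sketch assumes that both curves have already been brought to the same degree and knot vector, so that their control points correspond one to one; `ruled_control_net` and the sample data are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def ruled_control_net(west: List[Point], east: List[Point],
                      n_rows: int = 2) -> List[List[Point]]:
    """Blend corresponding control points linearly from the West to the East curve."""
    if len(west) != len(east):
        raise ValueError("boundary curves must have matching control points")
    net = []
    for j in range(n_rows):
        t = j / (n_rows - 1)  # t = 0 gives the West row, t = 1 the East row
        net.append([((1.0 - t) * w[0] + t * e[0], (1.0 - t) * w[1] + t * e[1])
                    for w, e in zip(west, east)])
    return net


# Made-up control polygons of two opposite boundary curves.
west = [(0.0, 0.0), (1.0, 0.5), (2.0, 0.0)]
east = [(0.0, 1.0), (1.0, 1.5), (2.0, 1.0)]
net = ruled_control_net(west, east, n_rows=3)
```

With `n_rows=2` this is exactly the degree-1 interpolation in the \(\eta\)-direction; the quality of the result depends entirely on how well the parameters of the two boundary curves match, which is why the matching step comes first.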

4.4 Analysis-suitable parameterization

As demonstrated in Fig. 5a, our method for reparameterizing boundary curves, when compared with the original boundary curves shown in Fig. 1a, proves to be highly effective. Even a straightforward linear interpolation between corresponding boundary control points results in a markedly improved parameterization. In this section, we explore the possibility of further enhancing the parameterization quality by integrating our previously developed PDE-based method [11].

It is important to highlight that, in this case, no additional degrees of freedom are available for refining the parameterization quality. To address this, we perform k-refinement along the \(\eta\)-direction, thereby introducing the necessary degrees of freedom. Henceforth in this paper, unless explicitly stated otherwise, we elevate the degree along the \(\eta\)-direction to 2 and insert 2 extra knots into the knot vector \(\varvec{\mathcal {H}}\). This k-refinement procedure results in the knot vector \(\varvec{\mathcal {H}} = \{ 0, 0, 0, 1/3, 2/3, 1, 1, 1 \}\).
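The resulting knot vector can be reproduced with a few lines of bookkeeping. The sketch below tracks only the knot vector, not the control points, and rests on two assumptions not stated explicitly in the text: the linear interpolation starts from the degree-1 knot vector \(\{0, 0, 1, 1\}\), and the inserted knots are equally spaced. The function name `k_refine_knots` is hypothetical.

```python
from fractions import Fraction
from typing import List


def k_refine_knots(knots: List[Fraction], degree: int,
                   new_degree: int, n_insert: int) -> List[Fraction]:
    """Degree elevation followed by uniform knot insertion (knot vector only)."""
    raise_by = new_degree - degree
    distinct = sorted(set(knots))
    # Degree elevation raises the multiplicity of every distinct knot.
    elevated: List[Fraction] = []
    for u in distinct:
        elevated += [u] * (knots.count(u) + raise_by)
    # Then insert n_insert equally spaced interior knots.
    a, b = distinct[0], distinct[-1]
    inserted = [a + (b - a) * Fraction(k, n_insert + 1)
                for k in range(1, n_insert + 1)]
    return sorted(elevated + inserted)


linear = [Fraction(0), Fraction(0), Fraction(1), Fraction(1)]
refined = k_refine_knots(linear, degree=1, new_degree=2, n_insert=2)
```

Elevating first and inserting afterwards is what distinguishes k-refinement from inserting knots and then elevating: the new interior knots keep multiplicity one, so the basis stays as smooth as the new degree allows.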

The core step of the PDE-based parameterization method lies in solving a nonlinear elliptic PDE system:

where the tensor field \(\mathbb {A}\) is set to \(\texttt {DIAG}(1/\left| \varvec{\mathcal {J}} \right| , 1/\left| \varvec{\mathcal {J}} \right| )\) in [11].

Denote by \(\mathbb {S}_{p,q}^{\varvec{\Xi }, \varvec{\mathcal {H}}}\) the spline space spanned by the bivariate B-spline bases of degree p and q with knot vectors \(\varvec{\Xi }\) and \(\varvec{\mathcal {H}}\), respectively, and let \((\mathbb {S}_{p,q}^{\varvec{\Xi }, \varvec{\mathcal {H}}})^0 = \{N_i \in \mathbb {S}_{p,q}^{\varvec{\Xi }, \varvec{\mathcal {H}}}: N_i \vert _{\partial \hat{\Omega }} = 0\}\) be the subset of basis functions vanishing on the boundary \(\partial \hat{\Omega }\) of the parametric domain. The variational principle reduces the nonlinear PDE system (13) to its equivalent discretized form:

where \(\textbf{N}\) denotes the collection of B-spline basis functions in the spline space \((\mathbb {S}_{p,q}^{\varvec{\Xi }, \varvec{\mathcal {H}}})^0\). The resulting parameterization, depicted in Fig. 5b, shows a significant improvement in quality over the linear interpolation result, which underscores the effectiveness of the proposed approach. Our implementation is available in the open-source G+Smo library [53], offering a robust solution framework for the analysis-suitable parameterization problem in isogeometric analysis.

5 Numerical experiments and comparisons

In this section, we present a numerical investigation to demonstrate the effectiveness of our proposed method for the boundary parameter matching problem.

5.1 Implementation details and parameterization quality metrics

The method described in this paper is implemented in C++. The computational experiments were conducted on a MacBook Pro 14-inch 2021 equipped with an Apple M1 Pro CPU and 16 GB of RAM. We utilize G+Smo (Geometry + Simulation Modules), an open-source C++ library, as the foundation of our implementation, leveraging its extensive functionalities in geometric computing and simulation [53, 54]. For matrix and vector operations, as well as for solving the linear systems arising in our method, we use the Eigen library [55].

Two quality metrics are employed to assess the parameterization: the scaled Jacobian, denoted as \(\vert \varvec{\mathcal {J}} \vert _s\), which evaluates orthogonality, and the uniformity metric, denoted as \(m_{\mathrm{unif.}}\), which measures uniformity.

- The scaled Jacobian is defined as follows:

  $$\begin{aligned} \vert \varvec{\mathcal {J}} \vert _s = \frac{\vert \varvec{\mathcal {J}} \vert }{\vert \varvec{x}_{\xi } \vert \vert \varvec{x}_{\eta } \vert }. \end{aligned} \tag{15}$$

  This metric takes values ranging from \(-1.0\) to 1.0. A negative value of \(\vert \varvec{\mathcal {J}} \vert _s\) indicates a fold in the parameterization \(\varvec{x}\), signifying overlaps in the mapping. The ideal value of \(\vert \varvec{\mathcal {J}} \vert _s\), which is 1.0, is achieved when the orthogonality in the parameterization is maintained optimally.

- The uniformity metric is computed as:

  $$\begin{aligned} m_{\mathrm{unif.}} = \left| \frac{\vert \varvec{\mathcal {J}} \vert }{R_\textrm{area}} - 1 \right| , \end{aligned} \tag{16}$$

  where \(R_\textrm{area} = {\text {Area}(\Omega )}/{\text {Area}(\hat{\Omega })}\) represents the area ratio between the physical domain \(\Omega\) and the parametric domain \(\hat{\Omega }\). This metric \(m_{\mathrm{unif.}}\) attains its optimal value of 0.0 when the parameterization uniformly conserves area.

For a comprehensive evaluation, these metrics are computed on a dense grid of \(1001 \times 1001\) sample points, including the domain boundaries. We exclude the maximum value of \(\vert \varvec{\mathcal {J}} \vert _s\) and the minimum value of \(m_{\mathrm{unif.}}\) from the reported statistics, since these ideal values are typically attained in all the examples and therefore do not help to distinguish between parameterizations.
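To make the two metrics concrete, the following sketch evaluates equations (15) and (16) on a uniform grid over the unit square. It is an illustration only: the partial derivatives are supplied as plain functions rather than spline evaluations, the default grid is much coarser than the \(1001 \times 1001\) grid used in the experiments, and the names `quality_metrics` and `shear` are hypothetical.

```python
import math
from typing import Callable, Dict, Tuple

Vec = Tuple[float, float]


def quality_metrics(x_xi: Callable[[float, float], Vec],
                    x_eta: Callable[[float, float], Vec],
                    area_ratio: float, n: int = 21) -> Dict[str, float]:
    """Sample the scaled Jacobian (15) and uniformity metric (16) on an n x n grid."""
    js_min, unif_max = math.inf, 0.0
    js_sum = unif_sum = 0.0
    for a in range(n):
        for b in range(n):
            xi, eta = a / (n - 1), b / (n - 1)  # grid includes the boundary
            ax, ay = x_xi(xi, eta)
            bx, by = x_eta(xi, eta)
            det = ax * by - ay * bx              # Jacobian determinant |J|
            js = det / (math.hypot(ax, ay) * math.hypot(bx, by))
            unif = abs(det / area_ratio - 1.0)
            js_min, unif_max = min(js_min, js), max(unif_max, unif)
            js_sum += js
            unif_sum += unif
    count = n * n
    return {"min_scaled_jacobian": js_min,
            "avg_scaled_jacobian": js_sum / count,
            "max_uniformity": unif_max,
            "avg_uniformity": unif_sum / count}


# Illustrative map x(xi, eta) = (xi + 0.5 * eta, eta): a sheared unit square of area 1.
shear = quality_metrics(lambda xi, eta: (1.0, 0.0),
                        lambda xi, eta: (0.5, 1.0),
                        area_ratio=1.0)
```

For this sheared map the area is preserved everywhere, so the uniformity metric is zero, while the scaled Jacobian equals \(1/\sqrt{1.25} \approx 0.894\) at every sample because the parametric lines are not orthogonal. A negative `min_scaled_jacobian` would flag a non-bijective parameterization.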

5.2 Role of boundary correspondence

This section is devoted to conducting a comparative quality analysis of the parameterizations derived from various methods.

Initially, as depicted in Fig. 6a, the boundary curves are parameterized using an approximate chord-length method tailored to their individual representations. However, this choice makes it difficult to create an analysis-suitable parameterization: the parameter speeds of the boundary curves do not match, which visibly degrades the quality of the resulting parameterization.

Our proposed boundary parameter matching method addresses these issues effectively, enhancing the overall quality of parameterization. This improvement is achieved through a simple linear interpolation along the \(\eta\)-direction, as illustrated in Fig. 6b, leading to considerable enhancements. With the linear interpolation-based parameterization as an initial guess, further refinement is obtained by implementing our PDE-based parameterization technique, which results in a superior quality of parameterization, as shown in Fig. 6c. The effectiveness of these improvements is substantiated by comprehensive statistical data, detailed in Table 1. In this case, the initial application of the chord-length method results in an invalid parameterization, indicated by a negative minimum value of the scaled Jacobian \(\vert \varvec{\mathcal {J}} \vert _s\). This suggests that the parameterization is non-bijective. We omit the additional quality metrics for the non-bijective parameterization results.

Building on the previous discussion, the effectiveness of our proposed method is further exemplified with an additional “M”-shaped domain, as shown in Fig. 7. This example highlights the substantial improvement in parameterization quality achieved by our approach. Remarkably, even a straightforward linear interpolation technique, when applied in conjunction with our boundary parameter matching approach, results in parameterizations of surprisingly high quality. In instances like these, resorting to a PDE-based parameterization method to further improve quality becomes superfluous. This outcome demonstrates the robustness of our method and its capability to handle complex geometries.

Although the proposed method is primarily designed for elongated strip-shaped geometries with extreme aspect ratios, it can also be applied to other types of geometries, such as disk-shaped ones. In the example shown in Fig. 8, the upper boundary is composed of two B-spline curves, which demonstrates the feasibility of applying the proposed method to boundaries composed of multiple B-spline curves. First, we manually determine the four corners for parameterization. Next, the two upper boundary curves are merged into a single \(C^0\)-continuous B-spline curve. Then, we apply the proposed method to the resulting geometry. The result, shown in Fig. 8b, confirms that the proposed method is applicable not only to strip-shaped geometries but also to other types of geometries. Quantitative data are given in Table 1.

5.3 Comparisons with existing methods

This section presents a comparative analysis of our proposed method against established techniques, specifically the low-rank parameterization method introduced by Pan et al. [24], the boundary correspondence method utilizing optimal mass transport as proposed by Zheng et al. [13], the boundary corners selection method based on deep learning by Zhan et al. [40], and the simultaneous boundary and interior parameterization method via deep learning proposed by Zhan et al. [56].

For our comparative analysis, we utilize the open-source implementation of the boundary correspondence method provided by the original authors [13], which is accessible at https://github.com/ZH-ye/BoundaryCorrespondenceOT. Within the workflow of this method, the authors first identify optimal corner points that align the curvature measure of the physical domain \(\Omega\) with that of the unit square. Subsequently, a spline-based least-squares fitting subroutine, in conjunction with chord-length parameterization, is used to approximate the input point cloud, and the low-rank quasi-conformal mapping parameterization method [24] is then applied to complete the parameterization. For the last two competitors, by Zhan et al. [40, 56], the parameterizations were kindly provided by the original authors.

Figure 9 shows the parameterizations of a "G"-shaped domain obtained through various existing methods alongside our proposed approach. As depicted in Fig. 9a, the boundary correspondence method [13] does not correctly identify the corners in this example. Specifically, the least-squares approximation of the input curves results in the loss of geometric precision at a sharp corner, an issue our method avoids by preserving the exact geometry of the boundaries. A key concern is the significant distortion in the resulting parameterization due to the mismatched opposite boundaries. To address this, we establish correct corner correspondences and engage the low-rank quasi-conformal method [57]. However, as shown in Fig. 9b, this approach yields a non-bijective parameterization (see Table 1). Figure 9c shows the automatic corner detection using a deep learning method as proposed by Zhan et al. [40], coupled with an interior parameterization achieved through the low-rank quasi-conformal method detailed by Pan et al. [24]. It is evident that the parameterization quality is poor due to the mismatching of the opposing boundaries. Figure 9d shows the non-bijective parameterization, characterized by numerous self-intersections, produced by the deep learning approach [56]. In contrast, the parameterization initiated with chord-length parameterization and the one refined using our method with simple linear interpolation are both bijective, as demonstrated in Fig. 9e and Fig. 9f, respectively. Notably, our method significantly enhances the orthogonality of the resulting parameterization compared with the initial parameterization in Fig. 9e.

Additional comparative results are presented in Fig. 10. In this case, the boundary correspondence method [13], as depicted in Fig. 10a, successfully identifies the four corners, leading to a parameterization result comparable to that achieved by the low-rank quasi-conformal method (Fig. 10b). Figure 10c, d show the resulting non-bijective parameterizations generated by [40] and [56], respectively. The parameterization derived from the initial chord-length method, illustrated in Fig. 10e, exhibits significant distortion, particularly at the upper left turn. Comprehensive statistical analysis is provided in Table 1, where the negative minimum value of the scaled Jacobian \(\left| \mathcal {J} \right| _s\) indicates that these parameterizations are non-bijective. In contrast, our proposed parameterization method, shown in Fig. 10f, achieves a superior parameterization.

6 Applications

6.1 Application to solid modeling

In 3D solid modeling applications, numerous CAD models can be generated or effectively simplified to planar cases through fundamental operations such as extrusion, sweeping, lofting, ruling, and revolving [52]. Although this paper primarily focuses on planar domains, the proposed method can be readily adapted to 3D volumes created by using these fundamental modeling operations. Figure 11 shows volumetric parameterizations generated by combining the proposed method with extrusion and sweeping, showcasing its applicability to solid modeling.

Furthermore, we apply the proposed boundary parameter matching method to generate a volumetric parameterization for a 5-lobe rotor and its circular casing, as illustrated in Fig. 11c. A planar slice is first parameterized using the PDE-based technique introduced in Sect. 4.4, and the volumetric parameterization is then obtained through rotation and lofting along the third direction in the physical space. The results are shown both before (left) and after (right) employing the proposed boundary parameter matching method. The colormap represents the scaled Jacobian of the resulting parameterization. It is evident that the proposed method significantly improves the quality, particularly enhancing the orthogonality of the resulting parameterization.

6.2 Application to IGA simulation

To validate the usability of our parameterizations for IGA simulation, we consider Poisson’s problem with mixed boundary conditions in the domain \(\Omega\) (see Fig. 12):

where \(f \in L^2(\Omega ): \Omega \rightarrow \mathbb {R}\) denotes a given source term, \(\partial \Omega =\bar{\Gamma }_{D}\cup \bar{\Gamma }_{N}\) defines the boundary of the physical domain \(\Omega\) with \(\Gamma _{D}\cap \Gamma _{N}=\mathbf {\varnothing }\), \(\textbf{n}\) denotes the outward pointing unit normal vector on the boundary \(\partial \Omega\), \(\Gamma _{D}\) and \(\Gamma _{N}\) denote the separate parts of the boundary where Dirichlet and Neumann boundary conditions are prescribed, respectively.
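For orientation, a standard strong form of the mixed problem that is consistent with the symbols defined above, and with the source term and exact solution used in this example, can be sketched as follows. The names \(g\) and \(h\) for the Dirichlet and Neumann data are assumptions introduced here for illustration only.

$$\begin{aligned} -\Delta u&= f \quad \text {in } \Omega , \\ u&= g \quad \text {on } \Gamma _{D}, \\ \nabla u \cdot \textbf{n}&= h \quad \text {on } \Gamma _{N}. \end{aligned}$$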

Figure 12a shows the initial chord-length parameterization, and Fig. 12b shows the linear interpolation-based parameterization obtained with our boundary matching method. These visualizations, with the scaled Jacobian \(\vert \varvec{\mathcal {J}} \vert _s\) color-encoded, demonstrate a significant improvement in orthogonality due to our method. For this example, the source term is set as \(f = 8 \pi ^2 \sin (2 \pi x) \sin (2 \pi y)\), leading to the exact solution \(u^\textrm{exact} = \sin (2 \pi x) \sin (2 \pi y)\). The boundary conditions and the exact solution are depicted in Fig. 12c, d, respectively.
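The relation between the source term and the exact solution can be checked numerically. The short sketch below assumes the sign convention \(-\Delta u = f\), which is the one under which the stated \(f\) and \(u^\textrm{exact}\) match, and approximates the Laplacian by central differences; the function names and sample points are arbitrary.

```python
import math


def u_exact(x: float, y: float) -> float:
    return math.sin(2.0 * math.pi * x) * math.sin(2.0 * math.pi * y)


def source(x: float, y: float) -> float:
    return 8.0 * math.pi ** 2 * math.sin(2.0 * math.pi * x) * math.sin(2.0 * math.pi * y)


def neg_laplacian_fd(u, x: float, y: float, h: float = 1e-4) -> float:
    """Second-order central-difference approximation of -Laplace(u) at (x, y)."""
    return -(u(x + h, y) + u(x - h, y) + u(x, y + h) + u(x, y - h)
             - 4.0 * u(x, y)) / (h * h)


# Largest mismatch between -Laplace(u_exact) and f over a few arbitrary points.
points = [(0.1, 0.2), (0.37, 0.81), (0.5, 0.25), (0.9, 0.6)]
max_residual = max(abs(neg_laplacian_fd(u_exact, x, y) - source(x, y))
                   for x, y in points)
```

Each second derivative of \(u^\textrm{exact}\) contributes a factor \(-(2\pi )^2\), so the two directions together give \(-\Delta u^\textrm{exact} = 8\pi ^2 u^\textrm{exact}\), which is the stated source term.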

Figure 13 shows the \(L_2\) and \(H_1\) error convergence for different parameterizations. The results highlight a substantial reduction in error for our improved parameterization compared to the original chord-length parameterization, with all methods achieving the optimal rate of convergence.
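Whether a computed error sequence attains the optimal rate can be read off from the observed order between successive refinements. The sketch below shows this calculation; the mesh sizes and errors are synthetic values generated from \(e = 0.5\,h^{3}\) purely for illustration and are not results from this paper. For splines of degree \(p\), the optimal rates are \(p+1\) in the \(L_2\) norm and \(p\) in the \(H_1\) norm.

```python
import math
from typing import List


def observed_orders(mesh_sizes: List[float], errors: List[float]) -> List[float]:
    """Observed convergence order between each pair of successive refinements."""
    return [math.log(errors[k] / errors[k + 1])
            / math.log(mesh_sizes[k] / mesh_sizes[k + 1])
            for k in range(len(errors) - 1)]


# Synthetic data only: errors that decay exactly like h^3 (degree-2 L2 behavior).
mesh_sizes = [1.0 / 4, 1.0 / 8, 1.0 / 16, 1.0 / 32]
errors = [0.5 * h ** 3 for h in mesh_sizes]
orders = observed_orders(mesh_sizes, errors)
```

Two parameterizations can share the same observed order while differing in the error constant, which is the situation described above: the rate is optimal in all cases, but the improved parameterization gives smaller errors at every refinement level.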

7 Conclusions and outlook

This paper presents a novel method designed to address the boundary parameter correspondence challenge, a crucial aspect of analysis-suitable parameterization in IGA. Our approach, integrating Schwarz–Christoffel mapping with a subsequent curve reparameterization procedure, effectively maintains the geometric exactness and continuity of the input. Significantly, our boundary parameter matching technique enables even basic linear interpolation methods to yield high-quality parameterizations, especially in elongated domains. Through numerous numerical experiments, the effectiveness and reliability of our method have been convincingly demonstrated, underscoring its potential in practical applications.

Despite its strengths, our method is not without limitations. A significant constraint lies in the planar nature of conformal mapping, while the demand for mesh generation predominantly exists in three-dimensional contexts. This discrepancy presents both a challenge and an opportunity for future research. Extending our method to address surface parameter correspondence in three-dimensional spaces is an exciting and valuable direction for ongoing investigations. Such advancements could significantly broaden the applicability and impact of our approach in the field of IGA. In addition, a typical workflow for computational domains with holes involves first computing a multi-patch layout and then applying a single-patch parameterization method to each individual patch. In this context, boundary parameter matching is critical to the quality of the resulting parameterization [58, 59]. Applying the proposed boundary parameter matching technique in this setting is an interesting topic for future work.

Data Availability

No datasets were generated or analysed during the current study.

References

Hughes TJ, Cottrell JA, Bazilevs Y (2005) Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Comput Methods Appl Mech Eng 194(39–41):4135–4195

Cottrell JA, Hughes TJ, Bazilevs Y (2009) Isogeometric analysis: toward integration of CAD and FEA. Wiley

Cohen E, Martin T, Kirby R, Lyche T, Riesenfeld R (2010) Analysis-aware modeling: understanding quality considerations in modeling for isogeometric analysis. Comput Methods Appl Mech Eng 199(5–8):334–356

Xu G, Mourrain B, Duvigneau R, Galligo A (2013) Optimal analysis-aware parameterization of computational domain in 3D isogeometric analysis. Comput Aided Des 45(4):812–821

Pilgerstorfer E, Jüttler B (2014) Bounding the influence of domain parameterization and knot spacing on numerical stability in Isogeometric Analysis. Comput Methods Appl Mech Eng 268:589–613

Xu G, Mourrain B, Duvigneau R, Galligo A (2011) Parameterization of computational domain in isogeometric analysis: methods and comparison. Comput Methods Appl Mech Eng 200(23–24):2021–2031

Xu G, Mourrain B, Galligo A, Rabczuk T (2014) High-quality construction of analysis-suitable trivariate NURBS solids by reparameterization methods. Comput Mech 54(5):1303–1313

Hinz J, Möller M, Vuik C (2018) Elliptic grid generation techniques in the framework of isogeometric analysis applications. Comput Aided Geomet Des 65:48–75

Pan Q, Rabczuk T, Xu G, Chen C (2019) Isogeometric analysis for surface PDEs with extended Loop subdivision. J Comput Phys 398:108892

Liu H, Yang Y, Liu Y, Fu X-M (2020) Simultaneous interior and boundary optimization of volumetric domain parameterizations for IGA. Comput Aided Geomet Des 79:101853

Ji Y, Chen K, Möller M, Vuik C (2023) On an improved PDE-based elliptic parameterization method for isogeometric analysis using preconditioned Anderson acceleration. Comput Aided Geomet Des 102:102191

Pan M, Zou R, Tong W, Guo Y, Chen F (2023) G1-smooth planar parameterization of complex domains for isogeometric analysis. Comput Methods Appl Mech Eng 417:116330

Zheng Y, Pan M, Chen F (2019) Boundary correspondence of planar domains for isogeometric analysis based on optimal mass transport. Comput Aided Des 114:28–36

Farin G, Hansford D (1999) Discrete Coons patches. Comput Aided Geomet Des 16(7):691–700

Gravesen J, Evgrafov A, Nguyen D-M, Nørtoft P (2012) Planar parametrization in isogeometric analysis. In: International Conference on mathematical methods for curves and surfaces, Springer, pp 189–212

Wang X, Qian X (2014) An optimization approach for constructing trivariate B-spline solids. Comput Aided Des 46:179–191

Pan M, Chen F, Tong W (2020) Volumetric spline parameterization for isogeometric analysis. Comput Methods Appl Mech Eng 359:112769

Ji Y, Yu Y-Y, Wang M-Y, Zhu C-G (2021) Constructing high-quality planar NURBS parameterization for isogeometric analysis by adjustment control points and weights. J Comput Appl Math 396:113615

Garanzha V, Kaporin I (1999) Regularization of the barrier variational method. Comput Math Math Phys 39(9):1426–1440

Garanzha V, Kaporin I, Kudryavtseva L, Protais F, Ray N, Sokolov D (2021) Foldover-free maps in 50 lines of code. ACM Trans Graph (TOG) 40(4):1–16

Wang X, Ma W (2021) Smooth analysis-suitable parameterization based on a weighted and modified Liao functional. Comput Aided Des 140:103079

Ji Y, Wang M-Y, Pan M-D, Zhang Y, Zhu C-G (2022) Penalty function-based volumetric parameterization method for isogeometric analysis. Comput Aided Geomet Des 94:102081

Nian X, Chen F (2016) Planar domain parameterization for isogeometric analysis based on Teichmüller mapping. Comput Methods Appl Mech Eng 311:41–55

Pan M, Chen F, Tong W (2018) Low-rank parameterization of planar domains for isogeometric analysis. Comput Aided Geomet Des 63:1–16

Martin T, Cohen E, Kirby RM (2009) Volumetric parameterization and trivariate B-spline fitting using harmonic functions. Comput Aided Geomet Des 26(6):648–664

Nguyen T, Jüttler B (2010) Parameterization of contractible domains using sequences of harmonic maps. In: International Conference on curves and surfaces, Springer, pp 501–514

Xu G, Mourrain B, Duvigneau R, Galligo A (2013) Constructing analysis-suitable parameterization of computational domain from CAD boundary by variational harmonic method. J Comput Phys 252:275–289

Falini A, Špeh J, Jüttler B (2015) Planar domain parameterization with THB-splines. Comput Aided Geomet Des 35:95–108

Hinz J (2020) PDE-based parameterization techniques for isogeometric analysis applications, Ph.D. thesis, Delft University of Technology

Zhang Y, Wang W, Hughes TJ (2012) Solid T-spline construction from boundary representations for genus-zero geometry. Comput Methods Appl Mech Eng 249–252:185–197

Zhang Y, Wang W, Hughes TJ (2013) Conformal solid T-spline construction from boundary T-spline representations. Comput Mech 51(6):1051–1059

Liu L, Zhang Y, Hughes TJ, Scott MA, Sederberg TW (2014) Volumetric T-spline construction using Boolean operations. Eng Comput 30:425–439

Xu G, Kwok T-H, Wang CC (2017) Isogeometric computation reuse method for complex objects with topology-consistent volumetric parameterization. Comput Aided Des 91:1–13

Wang S, Ren J, Fang X, Lin H, Xu G, Bao H, Huang J (2022) IGA-suitable planar parameterization with patch structure simplification of closed-form polysquare. Comput Methods Appl Mech Eng 392:114678

Xu G, Li B, Shu L, Chen L, Xu J, Khajah T (2019) Efficient r-adaptive isogeometric analysis with Winslow’s mapping and monitor function approach. J Comput Appl Math 351:186–197

Ji Y, Wang M-Y, Wang Y, Zhu C-G (2022) Curvature-based r-adaptive planar NURBS parameterization method for isogeometric analysis using bi-level approach. Comput Aided Des 150:103305

Van Kaick O, Zhang H, Hamarneh G, Cohen-Or D (2011) A survey on shape correspondence. Comput Graph Forum 30(6):1681–1707

Sahillioğlu Y (2020) Recent advances in shape correspondence. Vis Comput 36(8):1705–1721

Zheng Y, Chen F (2021) Volumetric boundary correspondence for isogeometric analysis based on unbalanced optimal transport. Comput Aided Des 140:103078

Zhan Z, Zheng Y, Wang W, Chen F (2023) Boundary correspondence for isogeometric analysis based on deep learning. Commun Math Stat 11(1):131–150

Lopez-Menchon H, Ubeda E, Heldring A, Rius JM (2022) A parallel Monte Carlo method for solving electromagnetic scattering in clusters of dielectric objects. J Comput Phys 463:111231

Trefethen LN (1980) Numerical computation of the Schwarz-Christoffel transformation. SIAM J Sci Stat Comput 1(1):82–102

Driscoll TA, Vavasis SA (1998) Numerical conformal mapping using cross-ratios and Delaunay triangulation. SIAM J Sci Comput 19(6):1783–1803

Delillo TK, Kropf EH (2011) Numerical computation of the Schwarz-Christoffel transformation for multiply connected domains. SIAM J Sci Comput 33(3):1369–1394

Trefethen LN (1983) SCPACK: A FORTRAN77 package for Schwarz-Christoffel conformal mapping. https://www.netlib.org/conformal/. Accessed 23 June 2024

Driscoll TA (1996) Algorithm 756: a MATLAB toolbox for Schwarz-Christoffel mapping. ACM Trans Math Softw (TOMS) 22(2):168–186

Driscoll TA (2005) Algorithm 843: improvements to the Schwarz-Christoffel toolbox for MATLAB. ACM Trans Math Softw (TOMS) 31(2):239–251

Banjai L, Trefethen LN (2003) A multipole method for Schwarz-Christoffel mapping of polygons with thousands of sides. SIAM J Sci Comput 25(3):1042–1065

Andersson A (2008) Schwarz-Christoffel mappings for nonpolygonal regions. SIAM J Sci Comput 31(1):94–111

Driscoll TA, Trefethen LN (2002) Schwarz-Christoffel mapping, vol 8. Cambridge University Press

Howell LH, Trefethen LN (1990) A modified Schwarz-Christoffel transformation for elongated regions. SIAM J Sci Stat Comput 11(5):928–949

Piegl L, Tiller W (1996) The NURBS book. Springer Science & Business Media

Jüttler B, Langer U, Mantzaflaris A, Moore SE, Zulehner W (2014) Geometry + simulation modules: implementing isogeometric analysis. PAMM 14(1):961–962

Mantzaflaris A (2019) An overview of geometry plus simulation modules. In: International Conference on mathematical aspects of computer and information sciences, Springer, pp 453–456

Guennebaud G, Jacob B et al (2010) Eigen v3. http://eigen.tuxfamily.org. Accessed 23 June 2024

Zhan Z, Wang W, Chen F (2024) Simultaneous boundary and interior parameterization of planar domains via deep learning. Comput Aided Des 166:103621

Pan M, Chen F (2019) Low-rank parameterization of volumetric domains for isogeometric analysis. Comput Aided Des 114:82–90

Xu G, Li M, Mourrain B, Rabczuk T, Xu J, Bordas SP (2018) Constructing IGA-suitable planar parameterization from complex CAD boundary by domain partition and global/local optimization. Comput Methods Appl Mech Eng 328:175–200

Zhang Y, Ji Y, Zhu C-G (2024) Multi-patch parameterization method for isogeometric analysis using singular structure of cross-field. Comput Math Appl 162:61–78

Acknowledgements

This research was partially funded by the National Natural Science Foundation of China (Grant nos. 12071057 and 12301490) and the Fundamental Research Funds for the Central Universities (Grant no. DUT23LAB302).

Author information

Contributions

Y.J. and M.M. conceptualized the study and designed the research framework. Y.J. and Y.Y. contributed to the methodology development and data analysis. Y.J. conducted the software and numerical experiments, while Y.Y. assisted with data analysis and figure preparation. The manuscript was jointly written by Y.J. and C.Z., with significant revisions by M.M. and C.Z. All authors reviewed and approved the final manuscript for submission.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ji, Y., Möller, M., Yu, Y. et al. Boundary parameter matching for isogeometric analysis using Schwarz–Christoffel mapping. Engineering with Computers (2024). https://doi.org/10.1007/s00366-024-02020-z

DOI: https://doi.org/10.1007/s00366-024-02020-z