Abstract

The generalized Kaiser–Bessel window function is defined via the modified Bessel function of the first kind and arises frequently in tomographic image reconstruction. In this paper, we study in detail the properties of the Kaiser–Bessel distribution, which we define via the symmetric form of the generalized Kaiser–Bessel window function. The Kaiser–Bessel distribution resembles the Bessel distribution of McKay of the first type; it is a platykurtic or sub-Gaussian distribution; it is not infinitely divisible in the classical sense; and it is an extension of Wigner’s semicircle, parabolic and n-sphere distributions, as well as of the ultra-spherical (or hyper-spherical) and power semicircle distributions. We deduce the moments and absolute moments of this distribution and we find its characteristic and moment generating functions in two different ways. In addition, we find its cumulative distribution function in three different ways and we deduce a recurrence relation for the moments and absolute moments. Moreover, by using a formula of Ismail and May on quotients of modified Bessel functions of the first kind, we deduce a closed-form expression for the differential entropy. We also prove that the Kaiser–Bessel distribution belongs to the family of log-concave and geometrically concave distributions, and we study in detail the monotonicity and convexity properties of the probability density function with respect to the argument and each of the parameters. In the study of the monotonicity with respect to one of the parameters we complement a known result of Gronwall concerning the logarithmic derivative of modified Bessel functions of the first kind. Finally, we present a modified method of moments to estimate the parameters of the Kaiser–Bessel distribution, and by using the classical rejection method we present two algorithms for sampling independent continuous random variables with Kaiser–Bessel distribution. The paper closes with conclusions and proposals for future work.


1 Introduction and Motivation of Our Work

Signal processing is an electrical engineering subfield that focuses on analysing, modifying, and synthesizing signals such as sound, images, and scientific measurements. It is essential for the use of X-rays, MRIs and CT scans, since it allows medical images to be analyzed and deciphered by complex data processing techniques. According to the web-page https://en.wikipedia.org/wiki/Window_function, in signal processing a window function (also called a tapering function or apodization function) is often used to restrict an arbitrary function or signal in some way. A window function is usually zero-valued outside of some interval, symmetric around the middle of the interval, usually near to a maximum in the middle, and usually tapering away from the middle. Thus, when another function or waveform is "multiplied" by an arbitrary window function, the product is also zero-valued outside the interval: all that is left is the part where they overlap, the so-called "view through the window". Tapering, not segmentation, is therefore the main purpose of window functions. In typical applications, the window functions used are non-negative, smooth, "bell-shaped" curves. Window functions are often used in spectral analysis, the design of finite impulse response filters, as well as beamforming and antenna design. The most popular window functions are the following: Parzen window, Welch window, sine window, power of sine/cosine window, Hann and Hamming window, Blackman window, Nuttall window, Blackman–Nuttall window, Blackman–Harris window, Rife–Vincent window, Gaussian window, Slepian window, Kaiser window, Dolph–Chebyshev window, ultraspherical window, Bartlett–Hann window, Planck–Bessel window and Lanczos window. For more details on window functions and their applications in signal processing the interested reader is referred to the recent book [55] on this topic and to the references therein.
The Kaiser window, also known as the Kaiser–Bessel window, was developed by the electrical engineer James Frederick Kaiser at Bell Laboratories. It is a one-parameter family of window functions used in finite impulse response filter design and spectral analysis. The Kaiser window approximates the discrete prolate spheroidal sequence or Slepian window, which maximizes the energy concentration in the main lobe but is difficult to compute. The Kaiser–Bessel window function is given by [37]

\[w_{a,\alpha }(x)=\left\{ \begin{array}{ll} \dfrac{I_0\left( \alpha \sqrt{1-\left( \frac{x}{a}\right) ^2}\right) }{I_0(\alpha )}, &{} |x|\le a,\\ 0, &{} |x|>a, \end{array}\right. \]

where \(I_0\) is the zeroth-order modified Bessel function of the first kind, a is the window duration, and the parameter \(\alpha \) controls the taper of the window and thereby controls the trade-off between the width of the main lobe and the amplitude of the side lobes of the Fourier transform of the window.
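As a minimal numerical sketch of the Kaiser–Bessel window, the following Python snippet assumes the standard form \(w_{a,\alpha }(x)=I_0\left( \alpha \sqrt{1-(x/a)^2}\right) /I_0(\alpha )\) on \([-a,a]\) and zero elsewhere. The helper names `bessel_i0` and `kaiser_window` are illustrative choices and are not taken from [37]; \(I_0\) is summed from its power series, which is adequate for moderate values of \(\alpha \).

```python
import math

def bessel_i0(x, tol=1e-16):
    """I_0(x) from its power series sum_k ((x/2)^2)^k / (k!)^2."""
    q = 0.25 * x * x
    term, total, k = 1.0, 1.0, 0
    while term > tol * total:
        k += 1
        term *= q / (k * k)   # ratio of consecutive series terms
        total += term
    return total

def kaiser_window(x, a, alpha):
    """Assumed form: I_0(alpha*sqrt(1-(x/a)^2)) / I_0(alpha) on [-a, a], 0 outside."""
    if abs(x) > a:
        return 0.0
    s = math.sqrt(max(0.0, 1.0 - (x / a) ** 2))
    return bessel_i0(alpha * s) / bessel_i0(alpha)

# Larger alpha gives a stronger taper towards the ends of the interval.
for alpha in (1.0, 5.0, 10.0):
    print(alpha, [round(kaiser_window(x, 1.0, alpha), 4) for x in (0.0, 0.5, 0.9, 1.0)])
```

The window equals 1 at the centre and \(1/I_0(\alpha )\) at the endpoints, so increasing \(\alpha \) narrows the effective width of the window.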

The use of spherically symmetric basis functions, also known as blobs, represents an alternative regularization method for low-count emission imaging. Moreover, blobs usually have some attractive properties for tomographic image reconstruction, such as rotational symmetry, finite spatial support and being nearly band-limited. Blobs are non-orthogonal functions, which means that adjacent blobs overlap. A specific blob used in tomographic image reconstruction is based on the generalized Kaiser–Bessel window function and is described as follows (see [41, p. 1844])

\[w_{a,\alpha ,m}(r)=\left\{ \begin{array}{ll} \dfrac{1}{I_m(\alpha )}\left( \sqrt{1-\left( \frac{r}{a}\right) ^2}\right) ^m I_m\left( \alpha \sqrt{1-\left( \frac{r}{a}\right) ^2}\right) , &{} 0\le r\le a,\\ 0, &{} r>a, \end{array}\right. \]

where r is the radial distance from the blob center, \(I_m\) denotes the modified Bessel function of the first kind of order \(m>-1\), a is the radius of the blob, and \(\alpha \) is a parameter controlling the blob shape. The shape and smoothness of this generalized Kaiser–Bessel window function can be controlled by the parameters (the parameter m allows us to control the smoothness of the function and the parameter \(\alpha \) determines its shape), it is completely localized in space, it is nearly band limited, and finally, its projection has a particularly convenient analytical form which can be easily evaluated. Moreover, this function is isotropic (its projections do not depend on the direction), which makes the computation of the imaging operator significantly faster, see [52] for more details. An interesting study of the blob parameters and a comparison of different spherically symmetric basis functions is presented in [46], where the authors found out the optimum parameters in some sense to be \(m=2\) (or higher, because in this case the blob has a continuous first derivative at the radial boundary), \(a=2\) and \(\alpha =10.417\) (being the second positive zero of the Bessel function \(J_{\frac{7}{2}}\)). In [46] the optimum parameters are found out with the help of the Fourier transform of the generalized Kaiser–Bessel window function (see also [42]), and in [52] the authors arrive at a similar conclusion based on an analysis on approximation-theoretic properties and determining \(\alpha \) to minimize a residual error (since the generalized Kaiser–Bessel window function does not satisfy the partition of unity). For more details and interesting applications on the generalized Kaiser–Bessel window function the interested reader is also referred to the papers [19, 54, 72] and to the references therein.

It is important to mention that the generalized Kaiser–Bessel window function \(w_{a,\alpha ,m}\) is in fact the extension of the truncated version of the Kaiser–Bessel window function \(w_{a,\alpha }\), having as support the interval [0, a] instead of \([-a,a].\) Motivated by the importance of the Kaiser–Bessel window function and of the generalized Kaiser–Bessel window function, our aim in this paper is to consider the symmetric version of the generalized Kaiser–Bessel window function supported on the symmetric interval \([-a,a]\) (Footnote 1) and to study it from the point of view of probability theory and of classical analysis. The idea behind this is quite natural: since the symmetric and generalized Kaiser–Bessel window function is a "bell-shaped" curve, we can normalize it to arrive at a probability density function and then study the properties of the obtained distribution. Since the Kaiser–Bessel and the generalized Kaiser–Bessel window functions are frequently used in signal processing, we hope that the Kaiser–Bessel distribution will be of potential interest (Footnote 2) for electrical engineers and also for the mathematical community. The rest of the paper is organized as follows: in the next section we define the so-called Kaiser–Bessel distribution with the help of the symmetric version of the generalized Kaiser–Bessel window function. We find that its cumulative distribution function can be expressed in terms of hypergeometric type functions. In Sect. 3 we make a detailed analysis of the moments of the Kaiser–Bessel distribution, and conclude that this distribution is sub-Gaussian and that it is an extension of Wigner’s semicircle distribution. We also present a modified version of the method of moments for parameter estimation of the Kaiser–Bessel distribution. The conclusion of Sect. 4 is that the Kaiser–Bessel distribution is a log-concave and geometrically concave distribution and that the monotonicity and convexity (with respect to the argument and each of the parameters) of its probability density function depend on the behavior of the logarithmic derivative of modified Bessel functions of the first kind. Some known and new Turán type inequalities for modified Bessel functions of the first kind play an important role in this section. In addition, by using the classical rejection method two algorithms are presented for sampling independent continuous random variables with Kaiser–Bessel distribution. Section 5 contains the characteristic function and moment generating function of the Kaiser–Bessel distribution, as well as an explicit form of the differential entropy or Shannon entropy and of the Rényi entropy of the Kaiser–Bessel distribution.

2 Probability Density and Cumulative Distribution Function of the Kaiser–Bessel Probability Distribution

In this section our aim is to introduce a new univariate continuous and symmetric distribution, called in what follows the Kaiser–Bessel distribution, whose probability density function is the normalized form of the symmetric and generalized Kaiser–Bessel window function; the normalizing constant is related to the modified Bessel function of the first kind. We also present three different derivations of the cumulative distribution function by using special cases of the generalized hypergeometric functions.

2.1 Probability Density Function

For the real parameters \(a>0,\) \(\alpha >0\) and \(\nu >-1\) we consider the so-called symmetric and generalized Kaiser–Bessel window function \(f_{a,\alpha ,\nu }:{\mathbb {R}}\rightarrow [0,\infty ),\) defined by

By using the change of variable \(s=\sqrt{1-\left( \frac{x}{a}\right) ^2}\) we obtain

It turns out that the function \(\varphi _{a,\alpha ,\nu }:{\mathbb {R}}\rightarrow [0,\infty ),\) defined by

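that is,

\[\varphi _{a,\alpha ,\nu }(x)=\left\{ \begin{array}{ll} \dfrac{1}{a\,I_{\nu +\frac{1}{2}}(\alpha )}\sqrt{\dfrac{\alpha }{2\pi }}\left( 1-\left( \frac{x}{a}\right) ^2\right) ^{\frac{\nu }{2}}I_{\nu }\left( \alpha \sqrt{1-\left( \frac{x}{a}\right) ^2}\right) , &{} |x|\le a,\\ 0, &{} |x|>a, \end{array}\right. \tag{2.2}\]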

is a probability density function with symmetric support \([-a,a]\). Consequently, the continuous and symmetric (with respect to the origin) random variable X defined on some standard probability space has Kaiser–Bessel distribution with the parameter space \(\left\{ (a, \alpha , \nu ) \in {\mathbb {R}}_+^2\times (-1, \infty )\right\} \) if it possesses the probability density function (2.2). This we signify in the sequel as \(X\sim \textrm{KB}(a,\alpha ,\nu ).\)
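The normalization can be checked numerically. The sketch below assumes the density \(\varphi _{a,\alpha ,\nu }(x)=\sqrt{\alpha /(2\pi )}\,\left( a\,I_{\nu +\frac{1}{2}}(\alpha )\right) ^{-1}\left( 1-(x/a)^2\right) ^{\nu /2}I_{\nu }\left( \alpha \sqrt{1-(x/a)^2}\right) \) for \(|x|<a\), which follows from the change of variable above together with Sonine's first finite integral. The names `bessel_i`, `kb_pdf` and `midpoint_integral` are hypothetical helpers; the Bessel function is summed from its power series and the integral uses a plain midpoint rule, so the result is only approximate.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 via sum_k (x/2)^(2k+nu) / (k! Gamma(k+nu+1))."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def kb_pdf(x, a, alpha, nu):
    """Assumed Kaiser-Bessel density; returns 0 at and beyond the endpoints +-a."""
    s2 = 1.0 - (x / a) ** 2
    if s2 <= 0.0:
        return 0.0
    s = math.sqrt(s2)
    norm = math.sqrt(alpha / (2.0 * math.pi)) / (a * bessel_i(nu + 0.5, alpha))
    return norm * s ** nu * bessel_i(nu, alpha * s)

def midpoint_integral(f, lo, hi, n=4000):
    """Composite midpoint rule; never evaluates f at the endpoints."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))

# Total probability mass for a few parameter choices (should be close to 1).
for a, alpha, nu in ((2.0, 3.0, 1.0), (1.0, 0.5, 0.0)):
    print(a, alpha, nu, midpoint_integral(lambda x: kb_pdf(x, a, alpha, nu), -a, a))
```

Setting the density to zero at the two endpoints does not change any integral and avoids evaluating \(I_{\nu }\) at zero for negative \(\nu \).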

The Bessel distribution of type I of McKay has the probability density function (see [47])

where \(x>0,\) \(b>0,\) \(c>1\) and \(m>-\frac{1}{2}.\) This is a well-known univariate distribution and, together with the Bessel distribution of type II of McKay, which involves the modified Bessel function of the second kind, appears frequently in the electrical and electronic engineering literature; see for example [21, 34, 49] and [26] for more details and the references therein. The next transformation of the probability density function of the Bessel distribution of type I of McKay

resembles the probability density function of the Kaiser–Bessel distribution; however, the exponential term makes these distributions completely different from each other. Finally, note that an interesting list of Bessel type (and other special function type) distributions can be found in [48].

2.2 Cumulative Distribution Function

Since the Kaiser–Bessel distribution is a symmetric distribution with respect to the origin, that is, the probability density function \(x\mapsto \varphi _{a,\alpha ,\nu }(x)\) is an even function, the related cumulative distribution function \(F_{a,\alpha ,\nu }(x) = P(X<x)\) can be uniquely presented for each \(x \in {\mathbb {R}}\) in the form

where the auxiliary function \(\Phi _{a,\alpha ,\nu }:{\mathbb {R}}\rightarrow [0,\infty )\) is defined by

This auxiliary function is an odd function of the argument, that is, \(\Phi _{a,\alpha ,\nu }(-x) = -\Phi _{a,\alpha ,\nu }(x)\) for each x real, and the median equals zero, therefore \(\Phi _{a,\alpha ,\nu }(a) =\frac{1}{2}\), since the random variable X has support \([-a, a]\). To obtain the cumulative distribution function we have to compute the integral in (2.3). Substituting \(u = a\sqrt{1-s^2}\), we arrive at

where

In what follows the integral (2.4) will be the starting point in the evaluation of \(\Phi _{a,\alpha ,\nu }(x).\)
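Before turning to the series representations, the cumulative distribution function can be sketched by direct quadrature. The snippet below assumes the decomposition \(F_{a,\alpha ,\nu }(x)=\frac{1}{2}+\Phi _{a,\alpha ,\nu }(x)\) for \(|x|<a\) with \(\Phi _{a,\alpha ,\nu }(x)=\int _0^x\varphi _{a,\alpha ,\nu }(t)\,dt\), which is consistent with the oddness of \(\Phi _{a,\alpha ,\nu }\) and with \(\Phi _{a,\alpha ,\nu }(a)=\frac{1}{2}\). The function names are illustrative, and the midpoint rule is a stand-in for the closed forms derived below, not a replacement for them.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def kb_pdf(x, a, alpha, nu):
    """Assumed Kaiser-Bessel density (zero at and beyond the endpoints)."""
    s2 = 1.0 - (x / a) ** 2
    if s2 <= 0.0:
        return 0.0
    s = math.sqrt(s2)
    norm = math.sqrt(alpha / (2.0 * math.pi)) / (a * bessel_i(nu + 0.5, alpha))
    return norm * s ** nu * bessel_i(nu, alpha * s)

def kb_cdf(x, a, alpha, nu, n=4000):
    """F(x) = 1/2 + Phi(x), with Phi(x) the signed integral of the density over [0, x]."""
    if x <= -a:
        return 0.0
    if x >= a:
        return 1.0
    h = x / n  # negative for x < 0, which makes Phi odd
    phi = h * sum(kb_pdf((i + 0.5) * h, a, alpha, nu) for i in range(n))
    return 0.5 + phi

print([round(kb_cdf(x, 2.0, 3.0, 1.0), 6) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)])
```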

First approach Expanding \((1-s^2)^{-\frac{1}{2}}\) in the integrand of (2.4) into the Maclaurin series, which is a legitimate procedure since the integration region \(\left( g_a(x), 1\right) \) is inside the unit s-interval, and then changing the order of summation and integration (Tonelli’s theorem) we conclude that

where

Collecting the appropriate relations we deduce the expression

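Here we used the usual notation for the generalized hypergeometric function \({}_pF_q,\) which is defined by

\[{}_pF_q\left( a_1,\ldots ,a_p;b_1,\ldots ,b_q;x\right) =\sum _{n\ge 0}\frac{(a_1)_n\cdots (a_p)_n}{(b_1)_n\cdots (b_q)_n}\frac{x^n}{n!},\]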

and \((a)_n=a(a+1)\cdots (a+n-1),\) \( a\ne 0,\) stands for the Pochhammer symbol or ascending factorial. Recall that for \(p \le q\) this series converges for any x, but when \(q = p-1\) convergence occurs for \(|x| <1\) unless the series terminates. The function \({}_2F_1\) is the familiar Gaussian hypergeometric function.
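A short sketch of how such a series can be summed term by term is given below. It uses the standard definition \({}_pF_q(a_1,\ldots ,a_p;b_1,\ldots ,b_q;x)=\sum _{n\ge 0}\frac{(a_1)_n\cdots (a_p)_n}{(b_1)_n\cdots (b_q)_n}\frac{x^n}{n!}\) and builds each term from the previous one, so no Pochhammer symbol is evaluated explicitly. The function name `hyp_pfq` and the stopping rule are assumptions made for illustration; the routine is only meant for arguments well inside the convergence region.

```python
import math

def hyp_pfq(a_params, b_params, x, tol=1e-16, max_terms=10000):
    """Partial sums of pFq(a_params; b_params; x) using the term ratio
    t_{n+1}/t_n = prod(a_i + n) / prod(b_j + n) * x / (n + 1)."""
    term, total = 1.0, 1.0
    for n in range(max_terms):
        ratio = x / (n + 1)
        for a in a_params:
            ratio *= a + n
        for b in b_params:
            ratio /= b + n
        term *= ratio
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total

# 0F0(x) = exp(x) and 2F1(1, 1; 2; x) = -log(1 - x) / x for |x| < 1.
print(hyp_pfq([], [], 1.0), math.e)
print(hyp_pfq([1.0, 1.0], [2.0], 0.5), 2.0 * math.log(2.0))

# I_nu(x) = (x/2)^nu / Gamma(nu + 1) * 0F1(; nu + 1; x^2 / 4), checked for nu = 1/2.
nu, x = 0.5, 1.0
i_half = (x / 2.0) ** nu / math.gamma(nu + 1.0) * hyp_pfq([], [nu + 1.0], x * x / 4.0)
print(i_half, math.sqrt(2.0 / (math.pi * x)) * math.sinh(x))
```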

Second approach Expanding now the modified Bessel function term in the integrand of (2.4) into the power series, then changing in legitimate manner the integration and summation order we arrive at

Here the complete and the incomplete Beta functions were used, respectively

\[B(a,b)=\int _0^1t^{a-1}(1-t)^{b-1}dt,\qquad B_x(a,b)=\int _0^xt^{a-1}(1-t)^{b-1}dt,\]

where \(\min \{a,b\}>0\). Having in mind the transformation formula

after some algebraic computations we find that

where

Therefore, we deduce that

Third approach Expanding now both the binomial term \((1-s^2)^{-\frac{1}{2}}\) and the modified Bessel term \(I_\nu (\alpha s)\) in the integrand of (2.4), and again interchanging summation and integration, we deduce a double series result. Indeed, after several routine steps in which we employ the Legendre duplication formula for the Pochhammer symbol, that is,

\[(a)_{2n}=4^n\left( \frac{a}{2}\right) _n\left( \frac{a+1}{2}\right) _n,\]

we arrive at

where

and

stands for the so-called Kampé de Fériet hypergeometric function of two variables [4, p. 150]. This function converges when

(i) \(p+q<l+m+1\), \(p+k<l+n+1\) and \(\max \{|x|, |y|\} <\infty \);

(ii) \(p+q=l+m+1\), \(p+k=l+n+1\) and \(|x|^{\frac{1}{p-l}}+|y|^{\frac{1}{p-l}}<1\) for \(l<p\) and \(\max \{|x|,|y|\} < 1\) for \(l\ge p.\)

Consequently, we find that

It remains to confirm the convergence in both Kampé de Fériet double hypergeometric series. According to the condition (ii) we have \(p+q=2=l+m+1\), and therefore the convergence domain of the first series above is \(0<\alpha <2\), while the second series converges for \(|x|<a\).

Summarizing, since the cumulative distribution functions are left continuous, we obtain the next result.

Theorem 1

The cumulative distribution function of the Kaiser–Bessel distribution has the form

with the condition that when we use (2.7) we need to suppose that \(\alpha <2.\)

Note that since \(F_{a, \alpha , \nu }(a) = 1,\) the last case is well-defined, and clearly the cumulative distribution function formulae (2.5), (2.6) and (2.7) present the same function. Therefore as a consequence of our result by equating the related auxiliary \(\Phi _{a, \alpha , \nu }\) functions we may establish potentially useful functional transformation results between special cases of generalized hypergeometric \({}_1F_2\), the Gaussian \({}_2F_1\) and/or the generalized Kampé de Fériet hypergeometric function \(F_{1:0;1}^{1:1;0}\) of two variables.

3 Moments, Absolute Moments and Mellin Transforms of the Kaiser–Bessel Distribution

In probability theory the moments of a probability distribution are important numerical characteristics. For a probability distribution on a bounded interval, the collection of all the moments (of all orders) uniquely determines the distribution (Hausdorff moment problem) if it is solvable (determinate moment problem). In this section our aim is to give an explicit expression for the moments, absolute moments and Mellin transforms of the Kaiser–Bessel distribution. In addition, we consider the limiting and some particular cases of the Kaiser–Bessel distribution, and it turns out that the Kaiser–Bessel distribution is an extension of Wigner’s semicircle distribution, Wigner’s parabolic distribution and Wigner’s n-sphere distribution. We also deduce a recurrence relation for the moments and by using some Turán type inequalities for modified Bessel functions of the first kind, we deduce some bounds for the effective variance and we show that the Kaiser–Bessel distribution is a platykurtic distribution. We mention that in Sect. 5.2 we show that the moments of the Kaiser–Bessel distribution determine uniquely the distribution.

3.1 Recurrence Relation for the Moments of the Kaiser–Bessel Distribution

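Since the probability density function of the Kaiser–Bessel distribution is an even function, the odd order moments vanish, that is, \({\text {E}}\left[ X^{2n-1}\right] =0\) for each \(n\in {\mathbb {N}}\). The even order moments for each \(n\in {\mathbb {N}}\) are given by

\[{\text {E}}\left[ X^{2n}\right] =\frac{(2n-1)!!\,a^{2n}}{\alpha ^n}\cdot \frac{I_{\nu +n+\frac{1}{2}}(\alpha )}{I_{\nu +\frac{1}{2}}(\alpha )}.\]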

Now, consider the following notations: \(\mu _{2n,\nu }={\text {E}}\left[ X^{2n}\right] \) and

Then clearly

and by using the recurrence relation

\[I_{\nu -1}(x)-I_{\nu +1}(x)=\frac{2\nu }{x}I_{\nu }(x) \tag{3.1}\]

we deduce that

that is,

This recurrence relation can be rewritten as

and this is equivalent to

3.2 Mellin Transforms and Inequalities for the Absolute Moments

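An extension of the moments involves the Mellin transform of |X|, that is, \({\text {E}}\left[ |X|^{r-1}\right] ,\) which for \(r\in {\mathbb {C}}\) such that \({\text {Re}}r>0\) is given by

\[{\text {E}}\left[ |X|^{r-1}\right] =\frac{1}{\sqrt{\pi }}\left( \frac{2a^2}{\alpha }\right) ^{\frac{r-1}{2}}\Gamma \left( \frac{r}{2}\right) \frac{I_{\nu +\frac{r}{2}}(\alpha )}{I_{\nu +\frac{1}{2}}(\alpha )}.\]

Thus, for \(m>-1\) the \(m\hbox {th}\) absolute moment of the random variable \(X_{\nu }\sim \textrm{KB}(a,\alpha ,\nu )\) is given by

\[{\text {E}}\left[ \left| X_{\nu }\right| ^{m}\right] =\frac{1}{\sqrt{\pi }}\left( \frac{2a^2}{\alpha }\right) ^{\frac{m}{2}}\Gamma \left( \frac{m+1}{2}\right) \frac{I_{\nu +\frac{m+1}{2}}(\alpha )}{I_{\nu +\frac{1}{2}}(\alpha )}.\]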

On the other hand, we know that [44, Theorem 3] if \(\alpha >0\) and \(\beta >0\) are fixed, then \(\nu \mapsto I_{\nu +\beta }(\alpha )/I_{\nu }(\alpha )\) is decreasing for \(-\beta \le 2\nu \) and \(\nu >-1.\) This implies that for \(\alpha >0,\) \(m\ge 3\) and \(\nu >-1\) the function \(\nu \mapsto {I_{\nu +\frac{m+1}{2}}(\alpha )}/{I_{\nu +\frac{1}{2}}(\alpha )}\) is decreasing, and consequently the function \(\nu \mapsto {\text {E}}\left[ \left| X_{\nu }\right| ^{m}\right] \) is also decreasing on \((-1,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(m\ge 3.\) In other words, if \(X_{\mu }\sim \textrm{KB}(a,\alpha ,\mu )\) and \(X_{\nu }\sim \textrm{KB}(a,\alpha ,\nu ),\) then for \(\mu \ge \nu >-1,\) \(a>0,\) \(\alpha >0\) and \(m\ge 3\) we arrive at

Observe that \(\beta _{\nu ,0}=1\) and \(r\mapsto {\left( \beta _{\nu ,r}\right) }^{\frac{1}{r}}\) is a non-decreasing function on \((0,\infty ),\) that is, if \(r>s>0,\) \(\alpha >0\) and \(\nu >-1,\) then

This Lyapunov-type inequality follows from the Hölder-Rogers inequality for integrals with conjugate exponents. Moreover, if we consider the Gauss-Winckler inequality (see [16, eq. (51)] or [8]) for the absolute moments, that is,

where \(r>s>0,\) we obtain that

where \(r>s>0,\) \(\alpha >0\) and \(\nu >-1,\) and this inequality clearly improves (3.2) since the function \(r\mapsto (r+1)^{\frac{1}{r}}\) is decreasing on \((-1,\infty ).\)

It is also known that the absolute moment \(\beta _r={\text {E}}\left[ |X|^r\right] \) of a random variable X is log-convex with respect to r and there holds the Turán type inequality [45, p. 28, Eq. (1.4.6)] \(\beta _{r-1}^2 \le \beta _r \beta _{r-2},\) where \(r>2\), which we can readily extend by using the well-known Cauchy-Bunyakovsky-Schwarz inequality to the case \(\beta _{r-s}^2 \le \beta _r \beta _{r-2\,s},\) where \(r>2\,s>0.\) Now, if we consider the absolute moments of the Kaiser–Bessel distribution, the above inequality for the absolute moments leads to the next Turán-type inequality for modified Bessel functions of the first kind

where \(\alpha >0,\) \(\nu >-1\) and \(r>2s-1>-1.\) In Sect. 3.4 we will see that this general Turán type inequality is not new, it has been proved recently in [69] and [51].
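The monotonicity in \(\nu \), the Lyapunov-type inequality and the Turán-type inequality for the absolute moments can be illustrated numerically. The sketch below assumes the closed form \(\beta _{\nu ,m}=\pi ^{-\frac{1}{2}}\left( 2a^2/\alpha \right) ^{\frac{m}{2}}\Gamma \left( \frac{m+1}{2}\right) I_{\nu +\frac{m+1}{2}}(\alpha )/I_{\nu +\frac{1}{2}}(\alpha )\), obtained from the Mellin transform above; `bessel_i` and `abs_moment` are hypothetical helper names, and the parameter values are arbitrary.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def abs_moment(m, a, alpha, nu):
    """Assumed closed form of E|X|^m for X ~ KB(a, alpha, nu), m > -1."""
    scale = (2.0 * a * a / alpha) ** (m / 2.0) * math.gamma((m + 1.0) / 2.0) / math.sqrt(math.pi)
    return scale * bessel_i(nu + (m + 1.0) / 2.0, alpha) / bessel_i(nu + 0.5, alpha)

a, alpha = 2.0, 3.0

# nu -> E|X_nu|^3 on a grid of increasing nu (expected to decrease).
print([round(abs_moment(3, a, alpha, nu), 6) for nu in (-0.5, 0.0, 0.5, 1.0, 2.0, 5.0)])

# r -> (beta_r)^(1/r) on a grid of increasing r (expected to be non-decreasing).
print([round(abs_moment(r, a, alpha, 1.0) ** (1.0 / r), 6) for r in (0.5, 1.0, 2.0, 3.5, 6.0)])

# Turan-type inequality beta_{r-s}^2 <= beta_r * beta_{r-2s} for r > 2s > 0.
r, s = 5.0, 1.5
print(abs_moment(r - s, a, alpha, 1.0) ** 2, abs_moment(r, a, alpha, 1.0) * abs_moment(r - 2 * s, a, alpha, 1.0))
```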

3.3 Limiting and Particular Cases

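Now, let us focus on the limiting and some particular cases of the Kaiser–Bessel probability distribution and its moments. Since for \(\nu \) fixed as \(x\rightarrow 0\) we have

\[I_{\nu }(x)\sim \frac{1}{\Gamma (\nu +1)}\left( \frac{x}{2}\right) ^{\nu },\]

we obtain that for \(a>0,\) \(\nu >-1\) and \(|x|<a\) fixed as \(\alpha \rightarrow 0\)

\[\varphi _{a,\alpha ,\nu }(x)\rightarrow \frac{\Gamma \left( \nu +\frac{3}{2}\right) }{\sqrt{\pi }\,a\,\Gamma (\nu +1)}\left( 1-\left( \frac{x}{a}\right) ^2\right) ^{\nu }. \tag{3.4}\]

Similarly, since for \(\nu \) fixed as \(x\rightarrow \infty \) we have

\[I_{\nu }(x)\sim \frac{e^x}{\sqrt{2\pi x}},\]

we obtain that for \(a>0,\) \(\nu >-1\) and \(|x|<a\) fixed as \(\alpha \rightarrow \infty \)

\[\varphi _{a,\alpha ,\nu }(x)\sim \frac{1}{a}\sqrt{\frac{\alpha }{2\pi }}\left( 1-\left( \frac{x}{a}\right) ^2\right) ^{\frac{\nu }{2}-\frac{1}{4}}e^{-\alpha \left( 1-\sqrt{1-\left( \frac{x}{a}\right) ^2}\right) }.\]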

Moreover, in view of the asymptotic expansion

where \(x\rightarrow \infty ,\) \(\nu \) is fixed and

and taking into account the fact that the quotient of two asymptotic power series is also an asymptotic power series, we arrive at the following asymptotic power series

where \(s=g_a(x)\) and for all \(n\in {\mathbb {N}}\) we have

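Observe also that for \(\nu =\frac{1}{2},\) \(\nu =1\) and \(a=1,\) \(\nu =\frac{n-1}{2}\) the limiting distribution function in (3.4) for \(|x|<a\) and \(|x|<1,\) respectively, reduces to

\[\frac{2}{\pi a^2}\sqrt{a^2-x^2},\qquad \frac{3}{4a^3}\left( a^2-x^2\right) ,\qquad \frac{\Gamma \left( \frac{n}{2}+1\right) }{\sqrt{\pi }\,\Gamma \left( \frac{n+1}{2}\right) }\left( 1-x^2\right) ^{\frac{n-1}{2}},\]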

and these are known in the literature as Wigner’s semicircle distribution (sometimes also called the Sato-Tate distribution in the number-theoretic literature), Wigner’s parabolic distribution and Wigner’s n-sphere distribution (see for example [24] and [39]). This shows that the Kaiser–Bessel distribution is in fact an extension of Wigner’s semicircle distribution (Footnote 3). Moreover, the distribution with density function

is called in the literature the ultra-spherical (or hyper-spherical) distribution, and contains all the Gaussian distributions with respect to the five fundamental notions of independence in non-commutative probability, as classified by Muraki [50]; see [6] for more details. Note also that the limiting distribution in (3.4) is known in probability theory and statistics as the Pearson type II distribution, and its density function is a solution of the Pearson differential equation. It is also worth mentioning that the limiting distribution in (3.4) with \(\nu +\frac{1}{2}\) instead of \(\nu \) is known in the literature as the power semicircle distribution (see for example [6]) and can be obtained from the Kaiser–Bessel distribution for \(a>0,\) \(\nu >-\frac{3}{2}\) and \(|x|<a\) fixed as \(\alpha \rightarrow 0\)

Moreover, if we use the known result that for \(x>0\) fixed we have

as \(\nu \rightarrow \infty ,\) then for \(\alpha >0,\) and x, a fixed such that \(|x|<a\) we deduce that

as \(\nu \rightarrow \infty .\)
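The limiting behavior as \(\alpha \rightarrow 0\) discussed above can be illustrated for \(\nu =\frac{1}{2}\), where the limit is Wigner’s semicircle density \(\frac{2}{\pi a^2}\sqrt{a^2-x^2}\). The sketch below is only a numerical sanity check under the assumed closed form of the Kaiser–Bessel density; the helper names and the choice \(\alpha =10^{-3}\) are illustrative.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def kb_pdf(x, a, alpha, nu):
    """Assumed Kaiser-Bessel density (zero at and beyond the endpoints)."""
    s2 = 1.0 - (x / a) ** 2
    if s2 <= 0.0:
        return 0.0
    s = math.sqrt(s2)
    norm = math.sqrt(alpha / (2.0 * math.pi)) / (a * bessel_i(nu + 0.5, alpha))
    return norm * s ** nu * bessel_i(nu, alpha * s)

def semicircle_pdf(x, a):
    """Wigner's semicircle density with radius a."""
    return 2.0 / (math.pi * a * a) * math.sqrt(a * a - x * x) if abs(x) < a else 0.0

# For small alpha and nu = 1/2 the two densities should nearly coincide.
a, alpha = 2.0, 1e-3
for x in (-1.5, -0.3, 0.0, 0.9, 1.9):
    print(x, kb_pdf(x, a, alpha, 0.5), semicircle_pdf(x, a))
```

The relative difference is of order \(\alpha ^2\), in line with the next term of the power series of \(I_{\nu }\).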

By using the above limiting forms of the modified Bessel function of the first kind it is also possible to deduce the limiting behavior of the moments and absolute moments of the Kaiser–Bessel distribution. More precisely, for fixed \(n\in {\mathbb {N}},\) \(m>-1,\) \(a>0\) and \(\nu >-1\) as \(\alpha \rightarrow 0\) we arrive at

and

Similarly, for fixed \(n\in {\mathbb {N}},\) \(m>-1,\) \(a>0\) and \(\nu >-1\) as \(\alpha \rightarrow \infty \) we see that

and for \(n\in {\mathbb {N}},\) \(m>-1,\) \(\alpha >0\) and \(a>0\) fixed, as \(\nu \rightarrow \infty \) we find that

and

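Note also that by using the relations of modified Bessel functions of the first kind with elementary functions like hyperbolic sine and hyperbolic cosine functions, that is,

\[I_{\frac{1}{2}}(x)=\sqrt{\frac{2}{\pi x}}\sinh x,\qquad I_{-\frac{1}{2}}(x)=\sqrt{\frac{2}{\pi x}}\cosh x,\]

we arrive at the following particular hyperbolic sine and hyperbolic cosine distributions with support \([-a,a]\)

\[\varphi _{a,\alpha ,\frac{1}{2}}(x)=\frac{\sinh \left( \alpha \sqrt{1-\left( \frac{x}{a}\right) ^2}\right) }{\pi a I_1(\alpha )},\qquad \varphi _{a,\alpha ,-\frac{1}{2}}(x)=\frac{\cosh \left( \alpha \sqrt{1-\left( \frac{x}{a}\right) ^2}\right) }{\pi a I_0(\alpha )\sqrt{1-\left( \frac{x}{a}\right) ^2}},\qquad |x|<a.\]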

It is important to note that the symmetric and generalized Kaiser–Bessel window function \(f_{a,\alpha ,\nu }\) for \(\nu =-\frac{1}{2}\) reduces to

which resembles the hyperbolic cosine window function considered in [7]

with support \([-a,a].\)
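The half-integer cases can be checked against the general density. The sketch below uses the standard identities \(I_{\frac{1}{2}}(x)=\sqrt{2/(\pi x)}\sinh x\) and \(I_{-\frac{1}{2}}(x)=\sqrt{2/(\pi x)}\cosh x\); the two resulting densities, written here as `sinh_density` and `cosh_density`, are derived under the assumed closed form of the Kaiser–Bessel density and all function names are illustrative.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def kb_pdf(x, a, alpha, nu):
    """Assumed Kaiser-Bessel density (zero at and beyond the endpoints)."""
    s2 = 1.0 - (x / a) ** 2
    if s2 <= 0.0:
        return 0.0
    s = math.sqrt(s2)
    norm = math.sqrt(alpha / (2.0 * math.pi)) / (a * bessel_i(nu + 0.5, alpha))
    return norm * s ** nu * bessel_i(nu, alpha * s)

def sinh_density(x, a, alpha):
    """Case nu = 1/2 for |x| < a: sinh(alpha*s) / (pi * a * I_1(alpha))."""
    s = math.sqrt(1.0 - (x / a) ** 2)
    return math.sinh(alpha * s) / (math.pi * a * bessel_i(1.0, alpha))

def cosh_density(x, a, alpha):
    """Case nu = -1/2 for |x| < a: cosh(alpha*s) / (pi * a * s * I_0(alpha))."""
    s = math.sqrt(1.0 - (x / a) ** 2)
    return math.cosh(alpha * s) / (math.pi * a * s * bessel_i(0.0, alpha))

x, a, alpha = 0.3, 1.0, 2.0
print(sinh_density(x, a, alpha), kb_pdf(x, a, alpha, 0.5))
print(cosh_density(x, a, alpha), kb_pdf(x, a, alpha, -0.5))
```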

3.4 Bounds for the Effective Variance and Sign of the Excess Kurtosis

The effective radius and the effective variance of a probability distribution (see [29]) are defined via the second, third and fourth order moments and are analogous to characteristics used in statistics to describe frequency distributions. In the case of the generalized inverse Gaussian distribution, knowledge of the effective radius and variance, obtained for example by means of remote sensing, together with the aerosol optical depth is very useful for calculating physical quantities such as the aerosol column mass loading per unit area (see [1]). Motivated by the papers [1, 29], in this subsection we introduce the effective radius and variance of the absolute moments of the Kaiser–Bessel distribution and we deduce some bounds for the effective variance of the absolute moments. It is worth mentioning that, because we use absolute moments instead of moments, the properties of the effective variance of the absolute moments will be different from the properties of the usual effective variance involving moments. By using the notation \(\beta _{\nu ,m}={\text {E}}\left[ \left| X_{\nu }\right| ^{m}\right] ,\) we define the effective radius and variance for the Kaiser–Bessel distribution involving the \(m\hbox {th}\) absolute moments as follows

By using the above-mentioned result of Lorch, the effective radius is clearly a decreasing function of \(\nu \) on \(\left[ -\frac{3}{4},\infty \right) \) for each \(a>0\) and \(\alpha >0.\) Observe that the effective variance is independent of a. On the other hand, by following the discussion in Sect. 3.2 about the log-convexity of the absolute moments, we conclude that \(\sigma _{\nu }(\alpha )>0\) for all \(\alpha >0\) and \(\nu >-1.\) Moreover, according to Lorch [44, p. 79] the next general Turán type inequality is valid

for all \(\nu >-\frac{1}{2},\) \(0<\varepsilon \le 1\) and \(x>0.\) Consequently, for each \(\nu >-\frac{5}{2}\) and \(\alpha >0\) we find that

that is, the effective variance satisfies the next inequality

Note that according to [69] it is known that for \(\min \{\nu ,\mu \}>-2,\) \(\nu +\mu >-2\) and \(\nu ,\mu \ne -1\) the function

is strictly increasing on \((0,\infty )\) and consequently under the same conditions the Turán type inequality (Footnote 4)

is valid (see also [51, Theorem 3] and its proof for a different approach of (3.10)). This in turn implies that for all \(\nu >-\frac{5}{2}\) and \(\alpha >0\) we have

which leads to the next result

Moreover, by using the general result in [38, Eq. (25)] for \(\varepsilon =\frac{1}{2},\) we arrive at

for all \(\nu >-\frac{5}{2}\) and \(\alpha >0,\) which clearly implies the next result.

Theorem 2

The effective variance of the Kaiser–Bessel distribution satisfies

for all \(\nu >-\frac{5}{2}\) and \(\alpha >0,\) where \(\ell _{\nu }\) is described in (3.11).

In other words, the result of Kalmykov and Karp on the generalized Turánian in (3.9) gives in particular the same lower bound for the effective variance as the left-hand side of (3.10) does; however, their lower bound for the generalized Turánian gives a better upper bound for the effective variance.

It is also important to mention that Lorch’s lower bound in (3.9) can be improved in another way than in [38, Example 1] and this in turn yields another upper bound for the effective variance. Namely, by using the well-known Jordan inequalities

and having in mind also the monotone increasing nature of the modified Bessel function of the first kind appearing in the integrand of (3.9), after suitable substitutions we conclude that

that is,

where

as the integral is positive for all \(\nu >-\frac{1}{2},\) \(x>0,\) \(0<\varepsilon \le 1\) and where we applied three times the formula

with \(2\nu +n+1>0\) and \(n\in {\mathbb {N}}.\) By using the above improved lower bound for the generalized Turánian \(T_{\nu ,\varepsilon }(x)\) we obtain the next upper bound for the effective variance

which holds for all \(\nu >-\frac{5}{2}\) and \(\alpha >0.\)

We also mention that as an attractive by-product on the same parameter range we arrive at a convexity type inequality, which is valid for the contiguous neighbors for the hypergeometric function \({}_1F_2\)

and holds for all \(\nu > -\frac{1}{2}\) and \(x>0\).

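Note that the excess kurtosis of the Kaiser–Bessel distribution is given by

\[\frac{{\text {E}}\left[ X^4\right] }{\left( {\text {E}}\left[ X^2\right] \right) ^2}-3=3\cdot \frac{I_{\nu +\frac{1}{2}}(\alpha )I_{\nu +\frac{5}{2}}(\alpha )}{I_{\nu +\frac{3}{2}}^2(\alpha )}-3,\]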

and in view of (3.12) we arrive at

for all \(a>0,\) \(\alpha >0\) and \(\nu >-2.\) This implies the following result.

Theorem 3

If \(a>0,\) \(\alpha >0\) and \(\nu >-1,\) then the Kaiser–Bessel distribution has negative excess kurtosis and thus it is a platykurtic or sub-Gaussian distribution. Since the Kaiser–Bessel distribution has compact support, it is not infinitely divisible in the classical sense.
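The sign of the excess kurtosis can be illustrated numerically. The sketch below assumes the expression \(3I_{\nu +\frac{1}{2}}(\alpha )I_{\nu +\frac{5}{2}}(\alpha )/I_{\nu +\frac{3}{2}}^2(\alpha )-3\), which follows from the closed form of the second and fourth moments and does not depend on a; the function names and the parameter grid are arbitrary choices, and a finite grid is of course no substitute for the proof.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def excess_kurtosis(alpha, nu):
    """Assumed closed form E[X^4] / E[X^2]^2 - 3 for X ~ KB(a, alpha, nu)."""
    i1 = bessel_i(nu + 0.5, alpha)
    i3 = bessel_i(nu + 1.5, alpha)
    i5 = bessel_i(nu + 2.5, alpha)
    return 3.0 * i1 * i5 / (i3 * i3) - 3.0

# Every entry is expected to be negative (platykurtic case).
for nu in (-0.9, -0.5, 0.0, 1.0, 5.0):
    print(nu, [round(excess_kurtosis(alpha, nu), 4) for alpha in (0.1, 1.0, 5.0, 20.0)])

# Small alpha with nu = 1/2 approaches the semicircle value -1.
print(excess_kurtosis(1e-3, 0.5))
```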

The fact that the Kaiser–Bessel distribution is not infinitely divisible in the classical sense can also be deduced immediately from the fact that its excess kurtosis is strictly negative. In other words, if the Kaiser–Bessel distribution were infinitely divisible in the classical sense, then its excess kurtosis would be non-negative, according to [6, p. 171]. It would be of interest to verify whether the Kaiser–Bessel distribution is infinitely divisible in the free sense or in the monotone sense, and to evaluate its free divisibility indicator. This problem is motivated by the fact that the integer powers of Wigner’s semicircular distribution are freely infinitely divisible, see [5] for more details.

3.5 Bounds for the Moments

By using the well-known Amos’ inequality (see for example [2] or [58])

which holds for all \(\nu \ge 0\) and \(\alpha >0,\) we find that

for all \(n\in {\mathbb {N}},\) \(\nu \ge -\frac{1}{2}\) and \(\alpha >0.\) This in turn implies that \(\mu _{2n,\nu }={\text {E}}[X^{2n}]<(2n-1)!!a^{2n}\) for each \(a>0,\) \(\alpha >0,\) \(\nu \ge -\frac{1}{2}\) and \(n\in {\mathbb {N}}.\)
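The even moments, the recurrence of Sect. 3.1 and the bound above can be checked together. The sketch below assumes the closed form \(\mu _{2n,\nu }=(2n-1)!!\,a^{2n}\alpha ^{-n}I_{\nu +n+\frac{1}{2}}(\alpha )/I_{\nu +\frac{1}{2}}(\alpha )\); the three-term relation coded in `next_even_moment`, namely \(\mu _{2n+2,\nu }=(2n+1)\frac{a^2}{\alpha ^2}\left[ (2n-1)a^2\mu _{2n-2,\nu }-(2\nu +2n+1)\mu _{2n,\nu }\right] \), is re-derived here from (3.1) and may be arranged differently from the form given in Sect. 3.1. All names are illustrative.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def odd_double_factorial(n):
    """(2n-1)!! = 1 * 3 * ... * (2n-1), with the empty product 1 for n = 0."""
    out = 1.0
    for k in range(1, n + 1):
        out *= 2 * k - 1
    return out

def even_moment(n, a, alpha, nu):
    """Assumed closed form of E[X^(2n)] for X ~ KB(a, alpha, nu)."""
    ratio = bessel_i(nu + n + 0.5, alpha) / bessel_i(nu + 0.5, alpha)
    return odd_double_factorial(n) * a ** (2 * n) / alpha ** n * ratio

def next_even_moment(n, a, alpha, nu, mu_prev, mu_curr):
    """mu_{2n+2} from mu_{2n-2} (mu_prev) and mu_{2n} (mu_curr), n >= 1."""
    return (2 * n + 1) * a * a / alpha ** 2 * (
        (2 * n - 1) * a * a * mu_prev - (2 * nu + 2 * n + 1) * mu_curr)

a, alpha, nu = 2.0, 3.0, 1.0
for n in (1, 2, 3):
    direct = even_moment(n + 1, a, alpha, nu)
    stepped = next_even_moment(n, a, alpha, nu,
                               even_moment(n - 1, a, alpha, nu),
                               even_moment(n, a, alpha, nu))
    bound = odd_double_factorial(n + 1) * a ** (2 * n + 2)
    print(n + 1, direct, stepped, bound)
```

Running the recurrence forward over many steps amplifies rounding errors because of the subtraction, so for large n the closed form is the safer choice.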

Moreover, in view of the well-known Mittag-Leffler expansion

\[\frac{I_{\nu +1}(x)}{I_{\nu }(x)}=\sum _{n\ge 1}\frac{2x}{x^2+j_{\nu ,n}^2}, \tag{3.13}\]

where \(j_{\nu ,n}\) stands for the \(n\hbox {th}\) positive zero of the Bessel function of the first kind \(J_{\nu },\) we find that the function

is decreasing on \((0,\infty )\) for all \(\nu \ge -1\) and \(a>0\) as a product of n decreasing functions. Thus, we deduce that \(\alpha \mapsto \mu _{2n,\nu }={\text {E}}\left[ X^{2n}\right] \) is also decreasing on \((0,\infty )\) for all \(\nu >-1\) and \(a>0\), and this together with (3.8) implies that

for all \(n\in {\mathbb {N}},\) \(a>0,\) \(\alpha >0\) and \(\nu \ge -1.\)
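The monotonicity of the even moments in \(\alpha \) and the resulting upper bound can be illustrated as follows. The sketch assumes the closed form of \(\mu _{2n,\nu }\) and takes as upper bound its limit as \(\alpha \rightarrow 0\), computed here from the small-argument behavior of \(I_{\nu }\) as \((2n-1)!!\,a^{2n}\Gamma \left( \nu +\frac{3}{2}\right) /\left( 2^n\Gamma \left( \nu +n+\frac{3}{2}\right) \right) \). The function names and the grid of \(\alpha \) values are arbitrary.

```python
import math

def bessel_i(nu, x, tol=1e-16, max_terms=500):
    """I_nu(x) for x > 0 and nu > -1 from its power series."""
    half = 0.5 * x
    term = half ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= half * half / (k * (k + nu))
        total += term
        if term <= tol * total:
            break
    return total

def odd_double_factorial(n):
    """(2n-1)!! = 1 * 3 * ... * (2n-1)."""
    out = 1.0
    for k in range(1, n + 1):
        out *= 2 * k - 1
    return out

def even_moment(n, a, alpha, nu):
    """Assumed closed form of E[X^(2n)] for X ~ KB(a, alpha, nu)."""
    ratio = bessel_i(nu + n + 0.5, alpha) / bessel_i(nu + 0.5, alpha)
    return odd_double_factorial(n) * a ** (2 * n) / alpha ** n * ratio

def even_moment_limit(n, a, nu):
    """Limit of E[X^(2n)] as alpha -> 0 (moment of the Pearson type II limit)."""
    return (odd_double_factorial(n) * a ** (2 * n) * math.gamma(nu + 1.5)
            / (2.0 ** n * math.gamma(nu + n + 1.5)))

n, a, nu = 2, 1.5, 0.0
alphas = (0.1, 0.5, 1.0, 2.0, 5.0, 10.0)
moments = [even_moment(n, a, alpha, nu) for alpha in alphas]
print(even_moment_limit(n, a, nu), moments)
```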

Since the zeros \(j_{\nu ,n}\) for fixed \(n\in {\mathbb {N}}\) are increasing functions of \(\nu ,\) in view of the Mittag-Leffler expansion (3.13) it follows that the function

is decreasing on \((-1,\infty )\) for all \(a>0\) and \(\alpha >0\) as a product of n decreasing functions. Thus, we find that \(\nu \mapsto \mu _{2n,\nu }={\text {E}}\left[ X^{2n}\right] \) is also decreasing on \((-1,\infty )\) for all \(a>0\) and \(\alpha >0,\) and this in turn implies that

for all \(n\in {\mathbb {N}},\) \(a>0,\) \(\alpha >0\) and \(\nu >-1,\) where in the last inequality we used the monotonicity of (3.14) for \(\nu =-1\) and the relation

3.6 Parameter Estimation by Method of Moments

To determine the values of the unknown parameters of the Kaiser–Bessel distribution \(\textrm{KB}(a, \alpha , \nu )\) under the knowledge of the values of three consecutively indexed absolute moments \(\beta _{\nu ,m}, \beta _{\nu ,m+1}, \beta _{\nu ,m+2},\) it is enough to consider for some fixed m the related system of their formulae

Obviously, it is necessary to compute a, \(\alpha ,\) \(\nu \) from the system (3.15) numerically, since an explicit solution can be found (and even then only partially) only in exceptional cases, such as for fixed \(\nu \), where the above system reduces after some routine calculation to the a-free equation which consists of three Turánian quotients

Now, solving this equation with respect to \(\alpha \), routine steps lead to the value of a.

Another approach to solving (3.15) would be to consider the corresponding quotients of modified Bessel functions of the first kind in the above equations, to use the fact that every such quotient is a power series (whose coefficients can be evaluated by using the Cauchy product of two power series), then to apply the Lagrange inversion theorem to each equation and finally to solve the resulting system of equations. However, this way looks complicated and difficult to implement numerically.

However, to avoid solving equations numerically, we can proceed by another method, fixing the values of five consecutive odd order absolute moments \(\beta _{\nu ,j}\) with \(j\in \{1,3,5,7,9\}\). Since this system looks difficult to solve (see Footnote 5), we propose a new variant of the method of moments for this special situation, based on the recurrence relation for the modified Bessel functions of the first kind. For this observe that since

if we use the three term recurrence relation (3.1) of the modified Bessel functions of the first kind for \(\nu +n\) instead of \(\nu ,\) then we arrive at the following recurrence relation for the absolute moments of odd order of the Kaiser–Bessel distribution

Thus, if we consider the particular cases when \(n\in \{1,2,3\},\) we find that

which is equivalent to the non-homogeneous linear system

where \(x= a^2,\) \(y= \nu ,\) \(z =\alpha ^2/a^2\). Solving this system we obtain the following explicitly expressed values of the parameters a, \(\alpha \) and \(\nu \)

provided that the denominator in the above fractions does not vanish and everywhere the shorthand notation \(\beta _j = \beta _{\nu ,j}\) is used. It is worth mentioning that the system (3.17) is not overdetermined because there are no more equations than unknowns, and although five theoretical absolute moments are used instead of three, there is no inconsistency in the system since those moments are connected to each other by the recurrence relation (3.16).

In the classical method of moments the parameters of a probability distribution are estimated by matching the empirical moments of the sample with those of the corresponding probability distribution. The number of moments required corresponds to the number of unknown parameters of the distribution. Application of this method is straightforward, as closed-form expressions for the moments can be readily derived for most commonly used distributions. Now, let \(X_1,X_2,\ldots ,X_n\) be independent and identically distributed Kaiser–Bessel random variables with unknown parameters a, \(\alpha \) and \(\nu ,\) and suppose that for some \(k\in {\mathbb {N}}\) the theoretical absolute moment \(\beta _{\nu ,k}\) is estimated by the empirical absolute sample moment

where, in the case of a numerical sample, the realizations \(x_j\) replace the random variables \(X_j\) in the statistic \({\overline{\beta }}_k\). These considerations lead to the counterpart system to (3.17), that is,

which leads to the following estimates of the parameters a, \(\alpha \) and \(\nu \)

provided that the denominator in the above fractions does not vanish.
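The estimators above take the empirical absolute moments of odd order as their only input. A minimal sketch of how these inputs can be computed from a numerical sample is given below; it assumes the usual definition of the empirical absolute moment of order k as the sample mean of \(|x_j|^k,\) and the function names and the sample values are hypothetical.

```python
def empirical_abs_moment(sample, k):
    """Empirical absolute moment of order k: the mean of |x_j|^k over the sample."""
    if not sample:
        raise ValueError("sample must be non-empty")
    return sum(abs(x) ** k for x in sample) / len(sample)

def odd_abs_moments(sample, orders=(1, 3, 5, 7, 9)):
    """The five odd-order empirical absolute moments used as inputs of the estimators."""
    return {k: empirical_abs_moment(sample, k) for k in orders}

# Made-up realizations, for illustration only.
sample = [-0.8, -0.1, 0.3, 0.5, -0.4, 0.05]
print(odd_abs_moments(sample))
```

High-order moments such as the ninth are sensitive to the largest observations, so in practice a fairly large sample is advisable before the resulting values are substituted into the estimators.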

Finally, note that clearly we can use the same procedure with the first five even order absolute moments \(\beta _{\nu ,j},\) \(j\in \{2,4,6,8,10\}\) (which in fact coincide with the same order raw moments \(\mu _{j,\nu },\) \(j\in \{2,4,6,8,10\}\)). Namely, by using again the three term recurrence relation (3.1) we find that

and if we consider the particular cases when \(n\in \{1,2,3\},\) we obtain that

which is equivalent to the following linear system

The desired values of the parameters are

provided that the denominator in the above fractions does not vanish. Moreover, when we have no insight into the values of the theoretical absolute moments \(\beta _{\nu ,2j},\) \(j\in \{1,2,3,4,5\}\), but the statistical sample from a \(\textrm{KB}(a, \alpha , \nu )\)-distributed population is at our disposal, we estimate the unknown distributional parameters by the following moments method estimators

provided that the denominator in the above fractions does not vanish. We will consider the probabilistic/statistical efficiency analysis elsewhere.

4 Unimodality, Monotonicity, Convexity, Log-Concavity and Geometrical Concavity of the Kaiser–Bessel Probability Density Function

In this section our aim is to present a detailed analysis of the probability density function of the Kaiser–Bessel distribution. We show that the distribution is unimodal, it belongs to the family of log-concave and geometrically concave distributions, and we also study the monotonicity (and convexity) of the probability density function with respect to the argument x as well as with respect to the parameters a, \(\alpha \) and \(\nu \). In the process of this analytic study we use some known inequalities for the logarithmic derivative of the modified Bessel function of the first kind as well as some Turán type inequalities. We also use a formula for the product of two modified Bessel functions of the first kind of different order and argument, and this formula is one of the key tools in the proofs of this whole section. Because of the connection between the monotonicity and convexity of the probability density function of the Kaiser–Bessel distribution with respect to the argument and parameters, in this section we present these monotonicity and convexity results divided into subsections and the order of these subsections depends mostly on the connections. In addition, by using the classical rejection method we present two algorithms for sampling independent continuous random variables of Kaiser–Bessel distribution. Some nice figures illustrate the main results of this section.

4.1 Unimodality, Monotonicity with Respect to x

To show that the Kaiser–Bessel distribution is unimodal we use the monotonicity of \(s\mapsto s^{\nu }I_{\nu }(\alpha s).\) Namely, by using the recurrence relation

we obtain that for each \(\alpha >0\) and \(\nu >-1\) the function \(s\mapsto s^{\nu }I_{\nu }(\alpha s)\) is increasing on \((0,\infty )\) and hence also on (0, 1]. On the other hand, by using the notations

we arrive at

and since

this becomes (see Footnote 6)

Thus, we have the following result.

Theorem 4

The probability density function of the Kaiser–Bessel distribution \(\varphi _{a,\alpha ,\nu }\) is increasing on \((-a,0]\) and decreasing on [0, a) for all \(a>0,\) \(\alpha >0\) and \(\nu >-1.\) In other words, the distribution is unimodal and its mode is zero (see Footnote 7), and, as in the case of other symmetric unimodal distributions, the mean \({\text {E}}[X]=0\) and the mode coincide. Moreover, the median of the distribution is also zero because of the symmetry of the support.

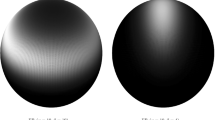

The figure above, which resembles a beautiful colored pashmina, illustrates the monotonicity behavior of the probability density function for fixed values of a and \(\nu ,\) and for different values of \(\alpha .\)
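Since the mode is zero, the value of the density at zero bounds the density on the whole support, which is what a basic rejection sampler with a uniform proposal requires. The following is a minimal sketch of this idea and not a reproduction of the two sampling algorithms of this paper. It assumes that the density is proportional to \(g^{\nu }I_{\nu }(\alpha g)\) with \(g=\sqrt{1-x^2/a^2},\) and it is restricted to \(\nu \ge 0\) so that the assumed kernel stays bounded. All function names are illustrative.

```python
import math
import random

def bessel_i(nu, z, tol=1e-16, max_terms=500):
    """I_nu(z) for z >= 0 and nu >= 0, summed from its power series."""
    q = (z / 2.0) ** 2
    term = (z / 2.0) ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= q / (k * (k + nu))
        total += term
        if term < tol * total:
            break
    return total

def kb_kernel(x, a, alpha, nu):
    """Assumed unnormalized density on [-a, a]: g^nu * I_nu(alpha * g)."""
    g = math.sqrt(max(0.0, 1.0 - (x / a) ** 2))
    return g ** nu * bessel_i(nu, alpha * g)

def sample_kb(n, a, alpha, nu, rng):
    """Rejection sampling with a uniform proposal on (-a, a); assumes nu >= 0."""
    peak = kb_kernel(0.0, a, alpha, nu)  # kernel value at the mode bounds the kernel
    draws = []
    while len(draws) < n:
        x = rng.uniform(-a, a)
        # Accept x with probability kernel(x) / peak.
        if rng.random() * peak <= kb_kernel(x, a, alpha, nu):
            draws.append(x)
    return draws

rng = random.Random(12345)
draws = sample_kb(2000, a=1.0, alpha=3.0, nu=1.0, rng=rng)
print(sum(draws) / len(draws))  # sample mean, expected to be close to zero
```

The uniform envelope is the simplest possible choice; its acceptance rate drops as \(\alpha \) grows and the density concentrates around zero, so a tighter envelope would be preferable for large \(\alpha .\)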

4.2 Log-Concavity and Geometrical Concavity with Respect to x

In view of (4.1), the logarithmic derivative of the probability density function of the Kaiser–Bessel distribution becomes

Now, consider the auxiliary function \(S_{a,\alpha ,\nu }:(0,\alpha ]\rightarrow (-\infty ,0),\) defined by

The function \(x\mapsto s_{a,\alpha }(x)=-\displaystyle \frac{\alpha \sqrt{\alpha ^2-x^2}}{ax}\) is strictly increasing on \((0,\alpha )\) for all \(a>0\) and \(\alpha >0\) since its derivative is strictly positive therein, that is

for each \(a>0,\) \(\alpha >0\) and \(x\in (0,\alpha ).\) On the other hand, the function \(x\mapsto 1/q_{\nu }(x)=I_{\nu }(x)/I_{\nu -1}(x)\) is increasing on \((0,\infty )\) for \(\nu \ge \frac{1}{2},\) see [71] or [67]. This implies that when \(\nu \ge \frac{1}{2}\) the function \(q_{\nu }\) is decreasing on \((0,\infty )\) and so is on \((0,\alpha ].\) This in turn implies that for \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}\) the function \(x\mapsto S_{a,\alpha ,\nu }(x)=s_{a,\alpha }(x)\cdot q_{\nu }(x)\) is strictly increasing on \((0,\alpha ]\) as a product of a negative and strictly increasing function and of a strictly positive and decreasing function. Now, observe that \(Q_{a,\alpha ,\nu }(x)=S_{a,\alpha ,\nu }(\alpha g_a(x)).\) Since \(x\mapsto \alpha g_a(x)\) is decreasing on [0, a), the monotonicity of \(S_{a,\alpha ,\nu }\) implies that \(Q_{a,\alpha ,\nu }\) is decreasing on [0, a) for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\) Moreover, the function \(g_a\) is even on \((-a,a)\) and thus the function \(Q_{a,\alpha ,\nu }\) is odd on \((-a,a),\) and consequently \(Q_{a,\alpha ,\nu }\) is decreasing too on \((-a,0]\) for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\) This is justified by the fact that geometrically, the graph of an odd function has rotational symmetry with respect to the origin, meaning that its graph remains unchanged after rotation of 180 degrees about the origin. Thus, the logarithmic derivative \(Q_{a,\alpha ,\nu }\) is decreasing on \((-a,a)\) for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2},\) and this is equivalent to the fact that the probability density function \(\varphi _{a,\alpha ,\nu }\) is log-concave on \((-a,a)\) for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\) In other words, the Kaiser–Bessel distribution for \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}\) belongs to the family of log-concave distributions, that is

for all \(a>0,\) \(\alpha >0,\) \(\nu \ge \frac{1}{2},\) \(x,y\in (-a,a)\) and \(\rho \in [0,1].\) Moreover, since the probability density function \(\varphi _{a,\alpha ,\nu }\) is decreasing on [0, a) for all \(a>0,\) \(\alpha >0\) and by using the arithmetic–geometric mean inequality we arrive at

for all \(a>0,\) \(\alpha >0,\) \(\nu \ge \frac{1}{2},\) \(x,y\in [0,a)\) and \(\rho \in [0,1],\) that is, the probability density function \(\varphi _{a,\alpha ,\nu }\) is geometrically concave on [0, a) for \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\)

The corresponding figure, which resembles a beautiful rainbow, illustrates the log-concavity behavior of the probability density function.

On the other hand, we know that when \(\nu \in \left( 0,\frac{1}{2}\right) ,\) then the function \(x\mapsto 1/q_{\nu }(x)=I_{\nu }(x)/I_{\nu -1}(x)\) is increasing first to reach a maximum and then decreasing, see for example [71, p. 446]. Note that the quotient \(I_{\nu }(x)/I_{\nu -1}(x)\) satisfies the Riccati differential equation

So, if we denote by \(x_{\nu }^*\) the point in which the derivative of \(1/q_{\nu }(x)\) vanishes, then \(x_{\nu }^*\) is in fact the solution of the equation

and numerical experiments and graphs show that \(x_{\nu }^*\) is increasing with \(\nu .\) By using a similar argument as in the case of \(\nu \ge \frac{1}{2}\) above, we readily see that the probability density function \(\varphi _{a,\alpha ,\nu }\) is log-concave on \((-a,a)\) for all \(a>0,\) \(x_{\nu }^*\ge \alpha >0\) and \(0<\nu <\frac{1}{2},\) and is geometrically concave on [0, a) for all \(a>0,\) \(x_{\nu }^*\ge \alpha >0\) and \(0<\nu <\frac{1}{2}.\)

Summarizing, we have obtained the following result.

Theorem 5

The probability density function \(\varphi _{a,\alpha ,\nu }\) is log-concave on \((-a,a)\) for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}\) and is geometrically concave on [0, a) for \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\) Moreover, the probability density function \(\varphi _{a,\alpha ,\nu }\) is log-concave on \((-a,a)\) for all \(a>0,\) \(x_{\nu }^*\ge \alpha >0\) and \(0<\nu <\frac{1}{2},\) and is geometrically concave on [0, a) for all \(a>0,\) \(x_{\nu }^*\ge \alpha >0\) and \(0<\nu <\frac{1}{2}.\)
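The log-concavity for \(\nu \ge \frac{1}{2}\) can be checked numerically through the second differences of the logarithm of the density on a grid, which should all be negative. The sketch below does this under the assumption that the density is proportional to \(g^{\nu }I_{\nu }(\alpha g)\) with \(g=\sqrt{1-x^2/a^2}\) (the normalizing constant does not affect the second differences). The grid and the parameter values are arbitrary illustrative choices.

```python
import math

def bessel_i(nu, z, tol=1e-16, max_terms=500):
    """I_nu(z) for z > 0 and nu > -1, summed from its power series."""
    q = (z / 2.0) ** 2
    term = (z / 2.0) ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= q / (k * (k + nu))
        total += term
        if term < tol * total:
            break
    return total

def log_kernel(x, a, alpha, nu):
    """Logarithm of the assumed unnormalized density at |x| < a."""
    g = math.sqrt(1.0 - (x / a) ** 2)
    return nu * math.log(g) + math.log(bessel_i(nu, alpha * g))

def max_second_difference(a, alpha, nu, points=399):
    """Largest second difference of the log-kernel on a uniform grid inside (-a, a)."""
    xs = [-0.99 * a + 1.98 * a * i / (points - 1) for i in range(points)]
    ys = [log_kernel(x, a, alpha, nu) for x in xs]
    return max(ys[i - 1] - 2.0 * ys[i] + ys[i + 1] for i in range(1, points - 1))

for nu in (0.5, 1.0, 3.0):
    # A negative maximum means every second difference on the grid is negative.
    print(nu, max_second_difference(a=2.0, alpha=3.0, nu=nu))
```

A grid check of this kind can only support the statement for the chosen parameters; it is not a substitute for the proof given above.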

It is worth mentioning here that if a probability density function is log-concave, then the corresponding cumulative distribution function is also log-concave, see for example [3] and [9]. Moreover, we also know that if a probability density function is geometrically concave, then the cumulative distribution function is also geometrically concave, according to [14, Theorem 3]. By using these results, we clearly have the following result.

Corollary 1

The cumulative distribution function \(F_{a,\alpha ,\nu }\) is log-concave on \((-a,a)\) for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2},\) and is geometrically concave on [0, a) for \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\) In addition, the cumulative distribution function \(F_{a,\alpha ,\nu }\) is log-concave on \((-a,a)\) for all \(a>0,\) \(x_{\nu }^*\ge \alpha >0\) and \(0<\nu <\frac{1}{2},\) and is geometrically concave on [0, a) for all \(a>0,\) \(x_{\nu }^*\ge \alpha >0\) and \(0<\nu <\frac{1}{2}.\)

Note that in view of the explicit form of \(F_{a,\alpha ,\nu }(x)\) a direct proof of the above properties would not be trivial.

4.3 Monotonicity of the Probability Density Function with Respect to a

Observe that by using the notations \(s=g_a(x)\) and \(t=\alpha s,\) and the recurrence relation

we find that

In view of the inequalities [58, p. 526]

where the left-hand side holds for \(\nu \ge -1\) and \(t>0,\) while the right-hand side holds for \(\nu \ge -\frac{1}{2}\) and \(t>0,\) we obtain that the right-hand side of (4.3) is strictly positive when \((2\nu +1)x^2\ge a^2\) and it is strictly negative when

More precisely, for \(\alpha >0,\) \(a>0\) and \(\nu \ge -\frac{1}{2},\) the function \(a\mapsto \varphi _{a,\alpha ,\nu }(x)\) is strictly increasing for \(a>|x|\ge \frac{a}{\sqrt{2\nu +1}}\) and is strictly decreasing for

Moreover, the range of the monotonicity can be further extended in the case when \(a>0\) and \(\alpha>\nu +1>1.\) Namely, by using the arithmetic–geometric mean inequality for \(I_{\nu -1}(t)\) and \(I_{\nu +1}(t)\) and the Turán type inequality (3.10) we arrive at

whenever

with \(a>0,\) \(\alpha >0\) and \(\nu >0.\) Thus, in this case the function \(a\mapsto \varphi _{a,\alpha ,\nu }(x)\) is strictly increasing on \((0,\infty ),\) of course with the above assumptions.

Now, we introduce the following notations: for \(\nu \ge 0\) let \(t_{\alpha ,\nu }\) be the unique positive root of the equation

in the interval \([0,\alpha )\) and let \(r_{\alpha ,\nu }^2=1-t_{\alpha ,\nu }^2/\alpha ^2.\) Moreover, for \(-1<\nu <0\) and \(\alpha \) large enough let \(t_{\alpha ,\nu ,1}\) and \(t_{\alpha ,\nu ,2}\) denote the positive roots of the equation (4.5) in the interval \([0,\alpha )\), and let \(r_{\alpha ,\nu ,i}^2=1-t_{\alpha ,\nu ,i}^2/\alpha ^2\) for \(i\in \{1,2\}.\)

With a more sophisticated analysis we arrive at an almost complete description of the monotonicity pattern of the probability density function with respect to a.

Theorem 6

The following assertions are true:

(i) If \(a>0,\) \(\alpha >0,\) \(\nu \ge 0\) and \(a>|x|\ge a\cdot r_{\alpha ,\nu },\) then the function \(a\mapsto \varphi _{a,\alpha ,\nu }(x)\) is increasing; if \(a>0,\) \(\alpha >0,\) \(\nu \ge 0\) and \(|x|\le a\cdot r_{\alpha ,\nu },\) then the function \(a\mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing;

(ii) If \(a>0,\) \(-1<\nu <0,\) \(\alpha \) is large enough and \(a\cdot r_{\alpha ,\nu ,1}\ge |x|\ge a\cdot r_{\alpha ,\nu ,2},\) then the function \(a\mapsto \varphi _{a,\alpha ,\nu }(x)\) is increasing; if \(a>0,\) \(-1<\nu <0,\) \(\alpha \) is large enough and either \(|x|\le a\cdot r_{\alpha ,\nu ,2}\) or \(a\cdot r_{\alpha ,\nu ,1}\le |x|<a,\) then the function \(a\mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing.

Proof

First we show that Eq. (4.5) for \(\nu \ge 0\) has only one positive solution in \([0,\alpha ).\) To prove this we introduce the following auxiliary notations

Since

the function \(t\mapsto y_{\nu }(t)\) maps \([0,\infty )\) into \([\nu ,\infty )\) and hence \([0,\alpha ]\) into \([\nu ,y_{\nu }(\alpha )].\) This implies that for all \(\nu >-1\) the function \(t\mapsto r_{\nu }(t)\) maps \([0,\alpha ]\) into \([2\nu +1,r_{\nu }(\alpha )].\) On the other hand, the function \(t\mapsto y_{\nu }(t)\) is an increasing function on \((0,\infty )\) for all \(\nu >-1,\) and for large values of t and fixed \(\nu \) it has the asymptotic expansion [28, p. 275]

Consequently \(\lim \limits _{t\rightarrow \infty }{r_{\nu }(t)}/{t}=1\) and \(\lim \limits _{t\rightarrow \infty }\left[ r_{\nu }(t)-t\right] =\nu +\frac{1}{2},\) and thus the function \(t\mapsto r_{\nu }(t)\) is increasing on \([0,\alpha ]\) and has the skew asymptote \(u=t+\nu +\frac{1}{2}\) in the tu-plane. Moreover, since according to [28, p. 275] (see also [15, p. 231]) we have \(y_{\nu }(t)>t-\frac{1}{2}\) for all \(t>0\) and \(\nu \ge \frac{1}{2},\) we see that \(r_{\nu }(t)>t+\nu +\frac{1}{2},\) that is, the graph of the function \(r_{\nu }\) is over the graph of its skew asymptote. On the other hand, the function \(q_{\alpha }\) is an increasing and convex function on \([0,\alpha )\) and has the vertical asymptote \(t=\alpha \) in the tu-plane. Since \(q_{\alpha }(0)=1\le \nu +\frac{1}{2}<2\nu +1=r_{\nu }(0),\) the graph of the function \(q_{\alpha }\) crosses the graph of the skew asymptote \(u=t+\nu +\frac{1}{2}\) and then the graph of the function \(r_{\nu }\) only once, and in the case when \(\nu \ge \frac{1}{2}\) the equation (4.5) has indeed only one positive solution in \([0,\alpha ).\)

When \(0\le \nu <\frac{1}{2}\) the situation is a little bit different. In this case the function \(r_{\nu }\) crosses its skew asymptote \(u=t+\nu +\frac{1}{2}\) at a point \(\left( s_{\nu },r_{\nu }(s_{\nu })\right) \) and for \(t\gtrless s_{\nu }\) we find that \(r_{\nu }(t)\lessgtr t+\nu +\frac{1}{2}.\) Since \(r_{\nu }(0)=2\nu +1\ge q_{\alpha }(0)=1> \nu +\frac{1}{2},\) the graph of the function \(q_{\alpha }\) crosses the graph of the function \(r_{\nu }\) and its skew asymptote only once (see Footnote 8), and in this case Eq. (4.5) also has only one positive solution in \((0,\alpha ).\) It remains just to show that the graph of the function \(r_{\nu }\) crosses its skew asymptote only once, that is, the equation \(r_{\nu }(t)=t+\nu +\frac{1}{2}\) has only one solution in t on \([0,\infty )\) for each \(\nu \in \left[ 0,\frac{1}{2}\right) .\) For this we use Gronwall’s idea [28, p. 276] and consider the function \(\gamma _{\nu }:[0,\infty )\rightarrow (-\infty ,\nu ],\) defined by

and we show that the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) has only one solution in t on \([0,\infty )\) for each \(\nu \in \left[ 0,\frac{1}{2}\right) .\) Since \(y_{\nu }\) satisfies the Riccati differential equation

we obtain that \(\gamma _{\nu }\) satisfies the next Riccati differential equation

and hence

Now, observe that \(\gamma _{\nu }(0)=\nu \) and in view of (4.6) the function \(\gamma _{\nu }\) is decreasing for t sufficiently small. This implies that the first extremum of \(\gamma _{\nu },\) if any, must be a minimum, and according to (4.8) this minimum must satisfy \(\gamma _{\nu }(t)\le -\frac{1}{2}.\) Thus, the graph of the function \(t\mapsto \gamma _{\nu }(t)\) crosses the horizontal line \(u=-\frac{1}{2}\) at least once (the corresponding figure illustrates this behavior quite well) and in what follows we show that there is no further intersection point. We denote the first solution of the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) by \(t_{\nu }\) and we assume that \(t^{\circ }>t_{\nu }\) is the next value of t for which \(\gamma _{\nu }(t)=-\frac{1}{2}.\) Then obviously \(\gamma _{\nu }'(t^{\circ })\ge 0\) and in view of the Riccati differential equation (4.7) we obtain that \(\nu ^2\ge \frac{1}{4},\) which is a contradiction. Thus, indeed when \(\nu \in \left[ 0,\frac{1}{2}\right) ,\) the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) has only one solution (see Footnote 9), denoted by \(t_{\nu }.\) Moreover, it is important to mention here that in fact the above proof of the uniqueness of the solution is valid for all \(\nu \in \left( -\frac{1}{2},0\right] \) too and thus we have shown that the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) has only one solution in t when \(|\nu |<\frac{1}{2}.\)

Now, since for \(\nu \ge 0\) we find that \(w_{\alpha ,\nu }(0)=2\nu \ge 0\) and \(w_{\alpha ,\nu }(t)\) tends to \(-\infty \) as \(t\nearrow \alpha ,\) we arrive at the conclusion that

is positive when \(t\in (0,t_{\alpha ,\nu }]\) and is negative when \(t\in [t_{\alpha ,\nu },\alpha ).\) This leads to the result stated in (i).

When \(-\frac{1}{2}\le \nu <0\) the situation is a little bit more complicated. In this case the graph of the function \(r_{\nu }\) crosses its skew asymptote \(u=t+\nu +\frac{1}{2}\) at a point \(\left( s_{\nu },r_{\nu }(s_{\nu })\right) \) and for \(t\gtrless s_{\nu }\) we find that \(r_{\nu }(t)\lessgtr t+\nu +\frac{1}{2}.\) The equation \(r_{\nu }(t)=t+\nu +\frac{1}{2}\) has indeed only one solution in t for \(\nu \in \left( -\frac{1}{2},0\right) ,\) according to the above discussion about the uniqueness of the solution of the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\). We just need to verify the case when \(\nu =-\frac{1}{2}.\) In this case the equation \(\gamma _{-\frac{1}{2}}(t)=-\frac{1}{2}\) becomes \(t\sinh t/\cosh t=t,\) which has the unique solution \(t=0.\) Now, since \(q_{\alpha }(0)=1>r_{\nu }(0)=2\nu +1\ge \nu +\frac{1}{2},\) for \(\alpha \) large enough the graph of the function \(q_{\alpha }\) crosses the graph of the function \(r_{\nu }\) and its skew asymptote twice. This is because the function \(q_{\alpha }\) is convex, it has a vertical asymptote and is growing faster than \(r_{\nu }.\)

Similarly, when \(-1<\nu <-\frac{1}{2}\) the graph of the function \(r_{\nu }\) crosses its skew asymptote \(u=t+\nu +\frac{1}{2}\) at a point \(\left( s_{\nu },r_{\nu }(s_{\nu })\right) \) and for \(t\gtrless s_{\nu }\) we find that \(r_{\nu }(t)\gtrless t+\nu +\frac{1}{2}.\) To show this we use a similar argument as before. We know that in this case \(-1<\gamma _{\nu }(0)=\nu <-\frac{1}{2}\) and \(\lim \limits _{t\rightarrow \infty }\gamma _{\nu }(t)=-\frac{1}{2}.\) On the other hand, in view of (4.6) the function \(\gamma _{\nu }\) is decreasing for t sufficiently small, and thus the first extremum of \(\gamma _{\nu }\) is clearly a minimum. Now, if we suppose that the function \(\gamma _{\nu }\) has no more critical points, then clearly we arrive at \(\gamma _{\nu }'(t)>0\) for all \(t>t^*,\) where \(\gamma _{\nu }'(t^*)=0.\) But, according to (4.7), this implies that \(\gamma _{\nu }^2(t)<\nu ^2\) and thus \(\gamma _{\nu }(t)>\nu \) for all \(t>t^*,\) which contradicts the fact that \(\gamma _{\nu }\) intersects the horizontal line \(u=\nu .\) Thus, the function \(\gamma _{\nu }\) has a second critical point and because of its behaviour at infinity, this is a maximum. Denoting by \(t^\bullet \) the value for which \(\gamma _{\nu }'(t^\bullet )=0\) and \(\gamma _{\nu }''(t^\bullet )<0,\) in view of (4.8) we clearly obtain that \(\gamma _{\nu }(t^\bullet )>-\frac{1}{2},\) that is, the graph of the function \(\gamma _{\nu }\) intersects the horizontal line \(u=-\frac{1}{2}\) at least once. Now, we show that there is no other intersection point. We denote the first solution of the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) by \(t_{\nu }\) and we assume that \(t^{\star }>t_{\nu }\) is the next value of t for which \(\gamma _{\nu }(t)=-\frac{1}{2}.\) Then obviously \(\gamma _{\nu }'(t^{\star })\le 0\) and in view of the Riccati differential equation (4.7) we obtain that \(\nu ^2\le \frac{1}{4},\) which is a contradiction. 
Thus, indeed when \(\nu \in \left( -1,-\frac{1}{2}\right) ,\) the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) has only one solution. Now, since \(q_{\alpha }(0)=1>\nu +\frac{1}{2}>r_{\nu }(0)=2\nu +1,\) for \(\alpha \) large enough the graph of the function \(q_{\alpha }\) crosses the graph of the function \(r_{\nu }\) and its skew asymptote twice. This is also the consequence of the convexity of \(q_\alpha \), its vertical asymptote and the faster growth of \(q_{\alpha }\) than \(r_\nu \).

Thus, when \(-1<\nu <0\) we find that \(w_{\alpha ,\nu }(0)=2\nu <0\) and \(w_{\alpha ,\nu }(t)\) tends to \(-\infty \) as \(t\nearrow \alpha .\) Taking into account these facts we arrive at the conclusion that \(w_{\alpha ,\nu }(t)\) is positive when \(t\in [t_{\alpha ,\nu ,1},t_{\alpha ,\nu ,2}]\) and is negative when \(t\in (0,t_{\alpha ,\nu ,1}]\) or \(t\in [t_{\alpha ,\nu ,2},\alpha ).\) This leads to the result stated in (ii). \(\square \)

The corresponding figure illustrates geometrically the solutions of Eq. (4.5) in the above four different cases concerning the parameter \(\nu \). The only thing missing from this almost complete description of the monotonicity pattern is how large the parameter \(\alpha \) needs to be, when \(-1<\nu <0,\) in order that the graph of \(q_{\alpha }\) crosses the graph of \(r_{\nu },\) that is, Eq. (4.5) has two solutions. Of course, when \(\alpha \) is small and the graphs of the functions \(q_{\alpha }\) and \(r_{\nu }\) do not intersect each other, the right-hand side of (4.3) is negative, and in this case the probability density function of the Kaiser–Bessel distribution is decreasing with respect to a. It is a challenging problem to find in the case when \(-1<\nu <0\) the smallest positive value of \(\alpha \) for which Eq. (4.3) has at least one solution.
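Part (i) of Theorem 6 can be explored numerically. The sketch below rests on several assumptions reconstructed from the surrounding text rather than quoted from it: \(y_{\nu }(t)=tI_{\nu }'(t)/I_{\nu }(t),\) \(r_{\nu }(t)=y_{\nu }(t)+\nu +1,\) \(q_{\alpha }(t)=\alpha ^2/(\alpha ^2-t^2),\) \(w_{\alpha ,\nu }=r_{\nu }-q_{\alpha },\) and a density equal to \(1/a\) times a function of \(x/a\) proportional to \(g^{\nu }I_{\nu }(\alpha g)\) with \(g=\sqrt{1-x^2/a^2}.\) Under these assumptions it locates \(t_{\alpha ,\nu }\) by bisection and compares the sign of the derivative of the log-density with respect to a on both sides of \(a\cdot r_{\alpha ,\nu }.\)

```python
import math

def bessel_i(nu, z, tol=1e-16, max_terms=500):
    """I_nu(z) for z > 0 and nu > -1, summed from its power series."""
    q = (z / 2.0) ** 2
    term = (z / 2.0) ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= q / (k * (k + nu))
        total += term
        if term < tol * total:
            break
    return total

def y(nu, t):
    """t I_nu'(t) / I_nu(t), using I_nu'(t) = I_{nu+1}(t) + (nu / t) I_nu(t)."""
    return nu + t * bessel_i(nu + 1.0, t) / bessel_i(nu, t)

def w(t, alpha, nu):
    """Assumed form of w = r_nu - q_alpha (reconstructed, see the lead-in)."""
    return y(nu, t) + nu + 1.0 - alpha ** 2 / (alpha ** 2 - t ** 2)

def root_t(alpha, nu, iters=200):
    """Bisection for the sign change of w on (0, alpha); assumes nu > 0."""
    lo, hi = 1e-9, alpha * (1.0 - 1e-12)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if w(mid, alpha, nu) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def log_density_up_to_const(x, a, alpha, nu):
    """log of (1/a) * g^nu * I_nu(alpha g); the omitted constant does not depend on a."""
    g = math.sqrt(1.0 - (x / a) ** 2)
    return -math.log(a) + nu * math.log(g) + math.log(bessel_i(nu, alpha * g))

def slope_in_a(x, a, alpha, nu, h=1e-6):
    """Central finite difference of the log-density with respect to a."""
    up = log_density_up_to_const(x, a + h, alpha, nu)
    down = log_density_up_to_const(x, a - h, alpha, nu)
    return (up - down) / (2.0 * h)

alpha, nu, a = 2.0, 1.0, 1.0
t_root = root_t(alpha, nu)
r = math.sqrt(1.0 - (t_root / alpha) ** 2)
print(r, slope_in_a(0.8, a, alpha, nu), slope_in_a(0.2, a, alpha, nu))
```

For the chosen parameters the threshold \(a\cdot r_{\alpha ,\nu }\) lies between 0.2 and 0.8, so the two printed slopes are expected to have opposite signs.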

4.4 Inequalities for the Quotient of Modified Bessel Functions

In this subsection we present some direct consequences of an auxiliary result proved in the previous subsection. Observe that the equation \(\gamma _{\nu }(t)=-\frac{1}{2}\) is equivalent to \(y_{\nu }(t)=t-\frac{1}{2}.\) Thus, in fact in Sect. 4.3 we have shown that the equation \(y_{\nu }(t)=t-\frac{1}{2}\) has a unique solution in t when \(t\in [0,\infty )\) and \(\nu \in \left( -1,\frac{1}{2}\right) .\) Since for \(|\nu |<\frac{1}{2}\) we have \(y_{\nu }(0)=\nu >-\frac{1}{2},\) we obtain the inequalities \(y_{\nu }(t)\gtrless t-\frac{1}{2},\) that is

whenever \(t\lessgtr t_{\nu }.\) Moreover, since for \(-1<\nu <-\frac{1}{2}\) we have \(y_{\nu }(0)=\nu <-\frac{1}{2},\) we arrive at the inequalities \(y_{\nu }(t)\lessgtr t-\frac{1}{2},\) that is

whenever \(t\lessgtr t_{\nu }.\) These inequalities complement the results in [28] and [15].
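The crossing point \(t_{\nu }\) has no closed form in general, but it is easy to approximate. The following sketch uses bisection on \(\gamma _{\nu }(t)=y_{\nu }(t)-t,\) with \(y_{\nu }(t)=tI_{\nu }'(t)/I_{\nu }(t)\) evaluated through the identity \(I_{\nu }'(t)=I_{\nu +1}(t)+(\nu /t)I_{\nu }(t).\) It assumes \(|\nu |<\frac{1}{2},\) so that by the uniqueness result \(\gamma _{\nu }\) lies above \(-\frac{1}{2}\) before the crossing and below it afterwards. The bracket \([10^{-9},20]\) and the function names are illustrative choices.

```python
import math

def bessel_i(nu, z, tol=1e-16, max_terms=500):
    """I_nu(z) for z > 0 and nu > -1, summed from its power series."""
    q = (z / 2.0) ** 2
    term = (z / 2.0) ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(1, max_terms):
        term *= q / (k * (k + nu))
        total += term
        if term < tol * total:
            break
    return total

def gamma_fn(nu, t):
    """gamma_nu(t) = y_nu(t) - t, with y_nu(t) = nu + t I_{nu+1}(t) / I_nu(t)."""
    return nu + t * bessel_i(nu + 1.0, t) / bessel_i(nu, t) - t

def crossing(nu, lo=1e-9, hi=20.0, iters=200):
    """Bisection for gamma_nu(t) = -1/2; assumes |nu| < 1/2 (unique crossing)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if gamma_fn(nu, mid) > -0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for nu in (-0.25, 0.0, 0.25):
    t_nu = crossing(nu)
    # The two booleans check the inequalities on either side of the crossing.
    print(nu, t_nu, gamma_fn(nu, 0.5 * t_nu) > -0.5, gamma_fn(nu, 2.0 * t_nu) < -0.5)
```

The power series is adequate for the moderate arguments used here; for large t a scaled evaluation of the Bessel functions would be more robust.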

To show another direct consequence of the above mentioned auxiliary result observe that the equation \(r_{\nu }(t)=t+\nu +\frac{1}{2}\) is equivalent to \(v_{\nu }(t)=h_{\nu }(t),\) where

The function \(v_{\nu }\) has applications in finite elasticity, and it has been used to prove that a nonlinearly elastic cylinder eventually becomes unstable in uniaxial compression, see the papers [60] and [61] for more details. The properties of the function \(v_{\nu }\) have been well studied in recent years, and several bounds have been found for this quotient of modified Bessel functions of the first kind, see for example the recent papers [10, 31, 68] and [70]. In this subsection we present some novel results on the function \(v_{\nu },\) which are in fact byproducts of the results proved in the previous subsection. Recall that from Sect. 4.3 we know that the equation \(v_{\nu }(t)=h_{\nu }(t)\) has only one solution in t when \(t\in [0,\infty )\) and \(\nu \in \left( -1,\frac{1}{2}\right) .\) Since \(v_{\nu }(0)=2(\nu +1)>0,\) when \(|\nu |<\frac{1}{2}\) we obtain that \(v_{\nu }(t)<h_{\nu }(t)\) for all \(\nu +\frac{1}{2}<t<t_{\nu }\) and \(v_{\nu }(t)>h_{\nu }(t)\) for all \(t>t_{\nu }.\) Moreover, since \(t_{-\frac{1}{2}}=0,\) we easily obtain that \(v_{-\frac{1}{2}}(t)>h_{-\frac{1}{2}}(t)=t\) for all \(t\ge 0.\) In addition, since \(v_{\nu }(0)=2(\nu +1)>0\) for all \(\nu \in \left( -1,-\frac{1}{2}\right) ,\) we arrive at \(v_{\nu }(t)>h_{\nu }(t)\) for all \(0\le t<t_{\nu }\) and \(\nu \in \left( -1,-\frac{1}{2}\right) ,\) and \(v_{\nu }(t)<h_{\nu }(t)\) for all \(t>t_{\nu }\) and \(\nu \in \left( -1,-\frac{1}{2}\right) .\) Finally, since \(y_{\nu }(t)>t-\frac{1}{2}\) for all \(t\ge 0\) and \(\nu \ge \frac{1}{2},\) according to [28, p. 275] (see also [15, p. 231]), it follows that the equation \(y_{\nu }(t)=t-\frac{1}{2}\) has no solution when \(t\ge 0\) and \(\nu \ge \frac{1}{2}.\) This implies that the equation \(v_{\nu }(t)=h_{\nu }(t)\) has no solution when \(t\ge 0\) and \(\nu \ge \frac{1}{2},\) and consequently, for all \(t>\nu +\frac{1}{2}\) and \(\nu \ge \frac{1}{2}\) we have that \(v_{\nu }(t)<h_{\nu }(t).\) In other words, for all \(t>\nu +\frac{1}{2}\) and \(\nu \ge \frac{1}{2}\) the following inequality is valid

It is interesting to note that both functions \(v_{\nu }\) and \(h_{\nu }\) have the skew asymptote \(u=t+\nu +\frac{1}{2}\) in the tu-plane. Moreover, \(v_{\nu }\) is convex (according to [60]) for all \(\nu \ge 0\) and the hyperbola \(h_{\nu }\) is also convex on \(\left( \nu +\frac{1}{2},\infty \right) \) for all \(\nu \ge 0.\) The asymptotic expansion (in fact the Laurent series) of \(h_{\nu }(t)\) is the following

Now, in view of (3.5), for the function \(v_{\nu }(t)\) we arrive at the following asymptotic expansion

where \(\alpha _0(\nu )=1,\) \(\alpha _1(\nu )=\nu +\frac{1}{2}\) and for all \(n\ge 1\)

with the coefficients \(a_n(\nu )\) given in (3.6). Note that by using the above recurrence relation, we arrive at the next explicit expressions

Now, if we consider the sequence of functions \(\{\pi _n(\nu )\}_{n\ge 2},\) defined by

then we obtain that

Observe that \(\pi _n(\nu )>0\) for all \(n\in \{2,3,4,5,6\}\) and \(|\nu |<\frac{1}{2},\) and based on computer experiments our conjecture is that \(\pi _n(\nu )>0\) for all \(n\ge 2\) and \(|\nu |<\frac{1}{2}.\) This conjecture was also motivated by the fact that when \(t>t_{\nu }\) and \(|\nu |<\frac{1}{2}\) we have that \(v_{\nu }(t)>h_{\nu }(t).\)

Finally, it is worth mentioning that in [12, p. 581] the author noted that computer-generated pictures suggest that for \(\nu \in \left( -1,\frac{1}{2}\right) \) the first derivative of the function \(t\mapsto \sqrt{t}e^{-t}I_{\nu }(t)\) changes sign on \([0,\infty ).\) An elementary argument shows that the fact that the equation \(y_{\nu }(t)=t-\frac{1}{2}\) has a unique solution in t when \(t\in [0,\infty )\) and \(\nu \in \left( -1,\frac{1}{2}\right) \) is equivalent to the following result: for \(\nu \in \left( -1,\frac{1}{2}\right) \) the first derivative of the function \(t\mapsto \sqrt{t}e^{-t}I_{\nu }(t)\) indeed changes sign on \([0,\infty ),\) and only once. This shows the validity of the above claim stated in [12].

4.5 Convexity with Respect to x and Inflection Points

In view of (4.1) we obtain

where

Thus, the inflection points of the probability density function \(\varphi _{a,\alpha ,\nu }\) are given by the transcendental equation \(\zeta _{a,\alpha ,\nu }(x)=0.\) This equation for \(a,\alpha >0\) and \(\nu \ge 0\) clearly has at least two solutions in x since the function \(\zeta _{a,\alpha ,\nu }\) is even, \(\zeta _{a,\alpha ,\nu }(0)=-a^2<0\) and \(\zeta _{a,\alpha ,\nu }(x)>0\) for \(\nu x^2\ge a^2.\) The corresponding figure, which resembles the wings of a beautiful flying adder, illustrates the inflection points of the probability density function. This figure suggests that the positive zeros of \(\zeta _{a,\alpha ,\nu }\) are decreasing with respect to \(\nu ,\) and hence the negative zeros of \(\zeta _{a,\alpha ,\nu }\) are increasing with respect to \(\nu .\)

Note also that for \(a>0,\) \(\alpha >0\) and \(\nu \ge 0\) such that \(\nu x^2\ge a^2\) we deduce that \(\varphi _{a,\alpha ,\nu }''(x)>0\) and thus for \(a>|x|\ge \frac{a}{\sqrt{\nu }}\) the probability density function \(\varphi _{a,\alpha ,\nu }\) is convex. By using some known bounds for the logarithmic derivative of the modified Bessel function of the first kind we can improve the range of convexity a little bit. The idea is to use the change of variables \(t=\alpha g_a(x)\) and the recurrence relation (4.2). With this change of variables, \(\zeta _{a,\alpha ,\nu }(x)\) becomes

By using the inequalities (4.4) we find that

for \((2\nu -1)x^2\ge a^2\) and

for \(\left( \sqrt{\alpha ^2+\left( \nu -\frac{1}{2}\right) ^2}+\nu -\frac{1}{2}\right) x^2\le a^2.\) Here in the first case \(a>0,\) \(\alpha >0\) and \(\nu >\frac{1}{2},\) while in the second case \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}.\) In other words, for all \(a>0,\) \(\alpha >0\) and \(\nu >\frac{1}{2}\) the probability density function \(\varphi _{a,\alpha ,\nu }\) is convex for \(|x|\ge \frac{a}{\sqrt{2\nu -1}},\) and for all \(a>0,\) \(\alpha >0\) and \(\nu \ge \frac{1}{2}\) it is concave for \(|x|\le \frac{a}{\sqrt{\sqrt{\alpha ^2+\left( \nu -\frac{1}{2}\right) ^2}+\nu -\frac{1}{2}}}.\) Thus, for \(a>0,\) \(\alpha >0\) and \(\nu >-\frac{1}{2}\) the inflection points of the probability density function of the Kaiser–Bessel distribution are contained in

Moreover, the range of convexity can be further extended in the case when \(a>0\) and \(\alpha>\nu >1.\) Namely, by using the arithmetic–geometric mean inequality for \(I_{\nu }(t)\) and \(I_{\nu -2}(t)\) and the Turán type inequality (3.10) we obtain

whenever \(\frac{a}{\sqrt{\nu +\alpha \varsigma }}\le |x|\le \frac{a}{\sqrt{\nu }}\) with \(a>0,\) \(\alpha >0\) and \(\varsigma =1-\frac{1}{\nu }>0.\) This clearly implies that for \(a>0,\) \(\alpha >0\) and \(\nu >1\) the probability density function \(\varphi _{a,\alpha ,\nu }\) is convex for \(|x|\ge \frac{a}{\sqrt{\nu +\alpha \varsigma }}.\)

Finally, in view of (4.10) and by changing \(\nu \) to \(\nu -1\) in (i) and (ii) of Sect. 4.3, we obtain the following result.

Corollary 2

The next assertions are true:

(iii) If \(a>0,\) \(\alpha >0,\) \(\nu \ge 1\) and \(a>|x|\ge a\cdot r_{\alpha ,\nu -1},\) then the function \(x\mapsto \varphi _{a,\alpha ,\nu }(x)\) is convex; if \(a>0,\) \(\alpha >0,\) \(\nu \ge 1\) and \(|x|\le a\cdot r_{\alpha ,\nu -1},\) then the function \(x\mapsto \varphi _{a,\alpha ,\nu }(x)\) is concave;

(iv) If \(a>0,\) \(0<\nu <1,\) \(\alpha \) is large enough and \(a\cdot r_{\alpha ,\nu -1,1}\ge |x|\ge a\cdot r_{\alpha ,\nu -1,2},\) then the function \(x\mapsto \varphi _{a,\alpha ,\nu }(x)\) is convex; if \(a>0,\) \(0<\nu <1,\) \(\alpha \) is large enough and either \(|x|\le a\cdot r_{\alpha ,\nu -1,2}\) or \(a\cdot r_{\alpha ,\nu -1,1}\le |x|<a,\) then the function \(x\mapsto \varphi _{a,\alpha ,\nu }(x)\) is concave.

4.6 Monotonicity of the Probability Density Function with Respect to \(\nu \)

By using the notations \(t=\alpha s\) and \(s=g_a(x),\) we focus on the following expression

and in what follows our aim is to determine its sign. For this we recall the following Cauchy product formula for the product of Bessel functions of the first kind (see [66, p. 148])

which is valid for \(\mu ,\nu >-1,\) \(a,b\in {\mathbb {R}}\) and \(z\in {\mathbb {C}}.\) Observe that if we change in this equation z to \(\textrm{i}z\) and we use the relation \(I_{\nu }(z)=\textrm{i}^{-\nu }J_{\nu }(\textrm{i}z),\) then we arrive at the following interesting formula for the product of modified Bessel functions of the first kind

Choosing in (4.11) the values \(z=\alpha ,\) \(b=s,\) \(a=1,\) \(\mu =\nu +\frac{1}{2}\) and changing \(\nu \) to \(\nu +\varepsilon ,\) we find that

By choosing \(z=\alpha ,\) \(b=s,\) \(a=1\) and \(\mu =\nu +\varepsilon +\frac{1}{2}\) in (4.11), a similar argument shows that

It is important to mention that

is a polynomial and is equal to

Combining this with (4.12) and (4.13) we arrive at

where

Now, since

we deduce that

where \(\psi (x)=\Gamma '(x)/\Gamma (x)\) is the logarithmic derivative of the Euler gamma function. It is well-known that the gamma function is log-convex on \((0,\infty )\), and hence the logarithmic derivative of the gamma function is increasing on \((0,\infty ).\) On the other hand we see that \(\ln s<0,\) since \(s<1.\) These in turn imply that for each \(m\ge n\ge 1\) and \(\nu >-\frac{1}{2}\) the coefficients \(\delta _{\nu ,m,n}(s)\) are all strictly negative. From this we readily see that the sign of (4.14) depends on the sign of \(\delta _{\nu ,m,0}(s),\) that is,

Here in the last step we used the recurrence relation for the digamma function, that is,

\[\psi (x+1)=\psi (x)+\frac{1}{x}.\]

Observe that for all \(m\ge 0\) and \(\nu \ge \nu _0\) we arrive at

where \(\nu _0\simeq 1.24873582438346{\cdots }\) is the unique positive root of the equation \(\psi (\nu +1)=\frac{1}{\nu +\frac{1}{2}},\) and we find that \(\delta _{\nu ,m,0}(s)\) is strictly negative for all \(m\ge 0\) and \(\nu \ge \nu _0.\) From this we readily see that the sign of (4.14) is negative for all \(a>0,\) \(\alpha >0,\) \(\nu \ge \nu _0\) and \(|x|<a,\) and since \(\nu \mapsto s^{\nu }\) is decreasing on \((0,\infty ),\) we deduce that the probability density function of the Kaiser–Bessel distribution is decreasing with respect to the parameter \(\nu \) on the interval \((\nu _0,\infty ).\) In other words, \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((\nu _0,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(|x|<a.\) Moreover, because the coefficients \(\delta _{\nu ,m,0}(s)\) contain the term \(\ln s,\) we may have other monotonicity results with respect to \(\nu ,\) but on subintervals of \([-a,a].\) For example, by using the elementary inequality \(\ln (1-u)<-u\) for \(u=x^2/a^2<1,\) we see that for \(a>|x|\ge \frac{a}{\sqrt{\nu +\frac{1}{2}}},\) \(\nu \ge \nu _1\) and \(m\ge 0\) this leads to the next result

where \(\nu _1\simeq 0.901017015767612{\cdots }\) is the unique positive root of the equation \(2\psi (\nu +1)=\frac{1}{\nu +\frac{1}{2}}.\) Consequently, we deduce that \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((\nu _1,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(\frac{a}{\sqrt{\nu +\frac{1}{2}}}\le |x|<a.\) It is possible to broaden the domain of x in the above monotonicity result. For this we emphasize that a similar argument as in the derivation of (4.14) shows that

and changing s to \(s^2\) in (4.15) we deduce that \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((\nu _1,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(\frac{a}{\sqrt{2\nu +1}}\le |x|<a.\)
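The numerical values of \(\nu _0\) and \(\nu _1\) quoted above can be reproduced with a few lines of code. The sketch below is an illustration under stated assumptions: the digamma function is approximated by its upward recurrence combined with a truncated asymptotic series, and the roots are located by plain bisection on the bracket \([0,5]\); the function names `digamma` and `bisect_root` and the bracket are choices made here, not taken from the paper.

```python
import math


def digamma(x):
    """psi(x) for x > 0: shift x upward with psi(x) = psi(x+1) - 1/x,
    then apply the truncated asymptotic expansion for large arguments."""
    shift = 0.0
    while x < 10.0:
        shift -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 * (
        1.0 / 252 - inv2 * (1.0 / 240 - inv2 / 132))))
    return shift + math.log(x) - 0.5 / x - series


def bisect_root(func, lo, hi, iterations=200):
    """Bisection for a function that changes sign exactly once on [lo, hi]."""
    f_lo = func(lo)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        f_mid = func(mid)
        if (f_mid > 0.0) == (f_lo > 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# nu_0 solves psi(nu + 1) = 1 / (nu + 1/2).
nu0 = bisect_root(lambda nu: digamma(nu + 1.0) - 1.0 / (nu + 0.5), 0.0, 5.0)
# nu_1 solves 2 * psi(nu + 1) = 1 / (nu + 1/2).
nu1 = bisect_root(lambda nu: 2.0 * digamma(nu + 1.0) - 1.0 / (nu + 0.5), 0.0, 5.0)
print(nu0, nu1)
```

Bisection suffices here because \(\psi (\nu +1)\) is increasing and \(\frac{1}{\nu +\frac{1}{2}}\) is decreasing on \((0,\infty ),\) so each difference changes sign exactly once.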

Summarizing, we have obtained the following monotonicity results.

Theorem 7

The function \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((\nu _0,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(|x|<a,\) and is decreasing on \((\nu _1,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(\frac{a}{\sqrt{2\nu +1}}\le |x|<a.\)

These monotonicity properties are illustrated in Fig. . This figure also resembles a beautiful colored pashmina, and it is interesting to note the similarity between the behavior of the probability density function with respect to \(\alpha \) and \(\nu .\) Figure also illustrates the monotonicity behavior of the function \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x).\) From this figure we can see that, for example, the function \(\nu \mapsto \varphi _{2,1,\nu }(1)\) is decreasing on \((1,\infty ),\) and this shows that the number \(\nu _0\) is not optimal, in the sense that it is not the critical point of \(\nu \mapsto \varphi _{2,1,\nu }(1).\) All the same, the decreasing property of \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x)\) on \((\nu _0,\infty )\) is quite interesting since it does not depend on a, \(\alpha \) and x. It is an interesting challenge to find the critical point of \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x),\) which is a global maximum and is independent of a, \(\alpha \) and x.
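The first part of Theorem 7 can be illustrated numerically at the sample point \(a=2,\) \(\alpha =1,\) \(x=1.\) The sketch below is hedged in one important respect: it assumes that the density has the form \(\varphi _{a,\alpha ,\nu }(x)=\sqrt{\frac{\alpha }{2\pi }}\,\frac{s^{\nu }I_{\nu }(\alpha s)}{a\,I_{\nu +\frac{1}{2}}(\alpha )}\) with \(s=g_a(x)=\sqrt{1-x^2/a^2},\) which is consistent with the notation of this section but is restated here as an assumption. The names `bessel_i`, `kb_pdf` and `integrate_pdf` are illustrative.

```python
import math


def bessel_i(nu, t, tol=1e-17, max_terms=500):
    """I_nu(t) for t > 0 and nu > -1, summed from its power series."""
    term = (t / 2.0) ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(max_terms):
        term *= (t / 2.0) ** 2 / ((k + 1.0) * (k + nu + 1.0))
        total += term
        if term < tol * total:
            break
    return total


def kb_pdf(x, a, alpha, nu):
    """Assumed Kaiser-Bessel density on (-a, a); zero outside the support."""
    if abs(x) >= a:
        return 0.0
    s = math.sqrt(1.0 - (x / a) ** 2)
    norm = a * bessel_i(nu + 0.5, alpha)
    return math.sqrt(alpha / (2.0 * math.pi)) * s ** nu * bessel_i(nu, alpha * s) / norm


def integrate_pdf(a, alpha, nu, n=20000):
    """Midpoint rule on [-a, a]; used only to check the normalization."""
    h = 2.0 * a / n
    return h * sum(kb_pdf(-a + (i + 0.5) * h, a, alpha, nu) for i in range(n))


nus = [1.25, 1.5, 2.0, 3.0, 5.0]          # all at or above nu_0 = 1.2487...
values = [kb_pdf(1.0, 2.0, 1.0, nu) for nu in nus]
total = integrate_pdf(2.0, 1.0, 1.5)
print(values, total)
```

If the assumed form of the density is correct, `total` should be close to 1 and `values` should be strictly decreasing, in agreement with the monotonicity on \((\nu _0,\infty ).\)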

4.7 Monotonicity of the Abel Transform of the Density Function with Respect to \(\nu \)

Among other properties, the probability density function of the Kaiser–Bessel distribution satisfies the following integral relation

where \({\mathcal {A}}[\varphi _{a,\alpha ,\nu }]\) is the Abel transform of the probability density function, and the derivation of this integral equation can be done by using the Sonine first finite integral [66, p. 373]

where \(\mu ,\nu >-1\) and z is an arbitrary complex number. Namely, following Lewitt [41, p. 1844], and choosing \(\mu =-\frac{1}{2}\) in the above Sonine first finite integral, we arrive at

where we used the change of variables \(t=\sqrt{a^2-x^2}\cos \theta \) in the first step and later the connection between Bessel and modified Bessel functions of the first kind.

Now, observe that by using the Chu-Vandermonde identity

the relation (4.11) reduces to a well-known and frequently used formula for modified Bessel functions of the first kind

which holds for all \(\mu ,\nu >-1\) and \(z\in {\mathbb {C}}.\) Applying this product formula for the modified Bessel functions of the first kind, we arrive at

which is clearly negative for each \(\alpha >0\) and \(\nu >-\frac{3}{2}.\) Thus, the function \(\nu \mapsto {I_{\nu +1}(\alpha )}/{I_{\nu +\frac{1}{2}}(\alpha )}\) is decreasing on \(\left[ -\frac{3}{2},\infty \right) \) for all \(\alpha >0.\) Combining this result with the results of the previous subsection, in view of (4.16) we obtain the following results for the Abel transform of the probability density function of the Kaiser–Bessel distribution.

Corollary 3

The function \(\nu \mapsto {\mathcal {A}}[\varphi _{a,\alpha ,\nu }]\) is decreasing on \(\left( \nu _0-\frac{1}{2},\infty \right) \) for all \(a>0,\) \(\alpha >0\) and \(|x|<a;\) the function \(\nu \mapsto {\mathcal {A}}[\varphi _{a,\alpha ,\nu }]\) is decreasing on \(\left( \nu _1-\frac{1}{2},\infty \right) \) for all \(a>0,\) \(\alpha >0\) and \(\frac{a}{\sqrt{2\nu +1}}\le |x|<a.\)
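The decreasing property of \(\nu \mapsto {I_{\nu +1}(\alpha )}/{I_{\nu +\frac{1}{2}}(\alpha )}\) used above can be spot-checked on a few sample values. The following is a minimal sketch, assuming a power-series evaluation of \(I_{\nu }\) and restricting to \(\nu \ge -\frac{1}{2}\) so that all gamma function arguments stay positive; the grid of \(\nu \) values, the sample values of \(\alpha \) and the names `bessel_i` and `ratio` are illustrative choices.

```python
import math


def bessel_i(nu, t, tol=1e-17, max_terms=500):
    """I_nu(t) for t > 0 and nu > -1, summed from its power series."""
    term = (t / 2.0) ** nu / math.gamma(nu + 1.0)
    total = term
    for k in range(max_terms):
        term *= (t / 2.0) ** 2 / ((k + 1.0) * (k + nu + 1.0))
        total += term
        if term < tol * total:
            break
    return total


def ratio(nu, alpha):
    """The quotient I_{nu+1}(alpha) / I_{nu+1/2}(alpha)."""
    return bessel_i(nu + 1.0, alpha) / bessel_i(nu + 0.5, alpha)


nus = [-0.5, 0.0, 0.5, 1.0, 2.0, 4.0, 8.0]
is_decreasing = {
    alpha: all(ratio(u, alpha) > ratio(v, alpha) for u, v in zip(nus, nus[1:]))
    for alpha in (0.5, 1.0, 5.0)
}
print(is_decreasing)
```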

4.8 Monotonicity of the Probability Density Function with Respect to \(\alpha \)

By using the recurrence relation (4.2) twice, and the Mittag-Leffler expansion for modified Bessel functions of the first kind (3.13), we see that

Note that, based on the Hellmann-Feynman theorem of quantum chemistry (see for example [33] for a rigorous proof of the Hellmann-Feynman theorem), Lewis and Muldoon [40] proved that for fixed n the function \(\nu \mapsto j_{\nu ,n}/\nu \) is decreasing on \((0,\infty )\) and \(\nu \mapsto j_{\nu ,n}^2/\nu \) is increasing for sufficiently large \(\nu ,\) in particular, \(\nu \mapsto j_{\nu ,1}^2/\nu \) is increasing for \(\nu \ge 3.\) By using these results we immediately conclude that

where \(n\in {\mathbb {N}},\) \(\nu >0\) in the first inequality and \(\nu \) is sufficiently large in the second inequality; in particular, for \(n=1\) it suffices that \(\nu \ge 3.\) These in turn imply that

where \(n\in {\mathbb {N}},\) \(a>0,\) \(\nu >0\) in (4.17) and \(n\in {\mathbb {N}},\) \(a>0\) and \(\nu \) is sufficiently large in (4.18). Thus, as a function of \(\alpha ,\) the probability density function \(\alpha \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((0,\infty )\) for \(a>0,\) \(\nu >0\) and \(a>|x|\ge \frac{a\sqrt{4\nu +1}}{2\nu +1},\) and is increasing on \((0,\infty )\) for \(a>0\) and sufficiently large \(\nu \) such that \(|x|\le \frac{a}{\sqrt{2\nu +1}}.\) Moreover, a monotonicity property of a slightly different type can be obtained by an argument similar to the one above. Namely, if we apply the fact that the function \(\nu \mapsto j_{\nu ,n}/j_{\nu ,1}\) is decreasing on \((-1,\infty )\) for all \(n\in {\mathbb {N}}\) fixed (see [43]), then we arrive at

which implies that the probability density function \(\alpha \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((0,\infty )\) for \(a>0,\) \(\nu >-1\) and \(a>|x|\ge a\sqrt{j_{\nu +\frac{1}{2},1}^2-j_{\nu ,1}^2}\Big /{j_{\nu +\frac{1}{2},1}}.\) On the other hand, we know that \(\nu \mapsto j_{\nu ,n}\) is concave (according to Elbert [25]) and hence log-concave on \((-1,\infty )\) for each \(n\in {\mathbb {N}}\) fixed and so is the function \(\nu \mapsto j_{\nu ,1}.\) In view of this we see that \(\nu \mapsto j_{\nu +\frac{1}{2},1}/j_{\nu ,1}\) is clearly decreasing on \((-1,\infty )\) and consequently \(\nu \mapsto j_{\nu ,1}^2/j_{\nu +\frac{1}{2},1}^2\) is increasing on \((-1,\infty ),\) and \(\nu \mapsto 1-j_{\nu ,1}^2/j_{\nu +\frac{1}{2},1}^2\) is decreasing on \((-1,\infty ).\) From this we may obtain for example that the function \(\alpha \mapsto \varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((0,\infty )\) for \(a>0,\) \(\nu >0\) and \(a>|x|\ge a\sqrt{j_{\frac{1}{2},1}^2-j_{0,1}^2}\Big /{j_{\frac{1}{2},1}}.\) Note that \(j_{\frac{1}{2},1}=\pi \) and \(j_{0,1}\simeq 2.404825557695772{\cdots }\) and hence \(\sqrt{j_{\frac{1}{2},1}^2-j_{0,1}^2}\Big /{j_{\frac{1}{2},1}}\simeq 0.643459985555029{\cdots }.\)

The above monotonicity properties are illustrated in Fig. 1.
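The constant \(\sqrt{j_{\frac{1}{2},1}^2-j_{0,1}^2}\Big /{j_{\frac{1}{2},1}}\) quoted above can be recomputed directly. The sketch below is an illustration only: it evaluates \(J_0\) by its power series, locates \(j_{0,1}\) by bisection on the bracket \([2,3]\) (an assumption justified by \(J_0(2)>0>J_0(3)\)), and uses \(j_{\frac{1}{2},1}=\pi .\) The names `bessel_j0` and `first_zero_j0` are illustrative.

```python
import math


def bessel_j0(x, terms=40):
    """J_0(x) from its alternating power series; adequate for small |x|."""
    term = 1.0
    total = 1.0
    for k in range(1, terms):
        term *= -((x / 2.0) ** 2) / (k * k)
        total += term
    return total


def first_zero_j0(lo=2.0, hi=3.0, iterations=100):
    """Bisection for the first positive zero of J_0, bracketed by [2, 3]."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if bessel_j0(lo) * bessel_j0(mid) <= 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


j01 = first_zero_j0()
j_half_1 = math.pi  # J_{1/2}(x) is proportional to sin(x) / sqrt(x)
threshold = math.sqrt(j_half_1 ** 2 - j01 ** 2) / j_half_1
print(j01, threshold)
```

Multiplying `threshold` by a gives the lower end of the range of \(|x|\) in the last monotonicity statement.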

4.9 Other Bounds for the Ratio \(\varphi _{a,\alpha ,\nu +1}(x)/\varphi _{a,\alpha ,\nu }(x)\)

By using the monotonicity property of \(\nu \mapsto \varphi _{a,\alpha ,\nu }(x)\) we readily see that

for all \(a>0,\) \(\alpha >0,\) \(\nu \ge \nu _0\) and \(|x|<a.\) We note that it is possible to deduce other upper bounds for the ratio \(\varphi _{a,\alpha ,\nu +1}(x)/\varphi _{a,\alpha ,\nu }(x)\) which are not so tight, but valid for a greater range of the parameter \(\nu .\) For example, we can deduce the following inequality

which is valid for all \(a>0,\) \(\alpha >0,\) \(\nu \ge -\frac{1}{2},\) \(|x|<a\) and follows from the Soni inequality [62] \(I_{\nu }(t)>I_{\nu +1}(t)\) (valid for \(t>0\) and \(\nu \ge -\frac{1}{2}\)). In the case when \(\nu >0\) the inequality (4.20) follows also from the fact that the function \(\nu \mapsto I_{\nu +\frac{1}{2}}(\alpha )\varphi _{a,\alpha ,\nu }(x)\) is decreasing on \((0,\infty )\) for all \(a>0,\) \(\alpha >0\) and \(|x|<a.\) This latter monotonicity property is a direct consequence of the decreasing property of the function \(\nu \mapsto I_{\nu }(t)\) for \(t>0\) fixed, see [20]. A better upper bound than in the inequality (4.20) can be obtained easily by noticing that \(t\mapsto tI_{\nu +1}(t)/I_{\nu }(t)\) is strictly increasing on \((0,\infty )\) for all \(\nu >-1\) (this follows for example from the Mittag-Leffler expansion (3.13)). Namely, we find that