Abstract

We develop new theoretical results on matrix perturbation to shed light on the impact of architecture on the performance of a deep network. In particular, we explain analytically what deep learning practitioners have long observed empirically: the parameters of some deep architectures, such as residual networks (ResNets) and dense networks (DenseNets), are easier to optimize than others, such as convolutional networks (ConvNets). Building on our earlier work connecting deep networks with continuous piecewise-affine splines, we develop an exact local linear representation of a deep network layer for a family of modern deep networks that includes ConvNets at one end of a spectrum and ResNets, DenseNets, and other networks with skip connections at the other. For regression and classification tasks that optimize the squared-error loss, we show that the optimization loss surface of a modern deep network is piecewise quadratic in the parameters, with local shape governed by the singular values of a matrix that is a function of the local linear representation. We develop new perturbation results for how the singular values of matrices of this sort behave as we add a fraction of the identity and multiply by certain diagonal matrices. A direct application of our perturbation results explains analytically why a network with skip connections (such as a ResNet or DenseNet) is easier to optimize than a ConvNet: thanks to its more stable singular values and smaller condition number, the local loss surface of such a network is less erratic, less eccentric, and features local minima that are more accommodating to gradient-based optimization. Our results also shed new light on the impact of different nonlinear activation functions on a deep network’s singular values, regardless of its architecture.

1 Introduction

Deep learning has significantly advanced our ability to address a wide range of difficult inference and approximation problems. Today’s machine learning landscape is dominated by deep (neural) networks (DNs) [19], which are compositions of a large number of simple parameterized linear and nonlinear operators, known as layers. An all-too-familiar story of late is that of plugging a DN into an application as a black box, learning its parameter values using gradient descent optimization with copious training data, and then significantly improving performance over classical task-specific approaches.

Over the past decade, a menagerie of DN architectures has emerged, including Convolutional Neural Networks (ConvNets) that feature affine convolution operations [18], Residual Networks (ResNets) that extend ConvNets with skip connections that jump over some layers [12], Dense Networks (DenseNets) with several parallel skip connections [15], and beyond. A natural question for the practitioner is: Which architecture should be preferred for a given task? Approximation capability does not offer a point of differentiation, because, as their size (number of parameters) grows, these and most other DN models attain universal approximation capability [6].

Practitioners know that DNs with skip connections, such as ResNets and DenseNets, are much preferred over ConvNets, because empirically their gradient descent learning converges faster and more stably to a better solution. In other words, it is not what a DN can approximate that matters, but rather how it learns to approximate. Empirical studies [21] have indicated that this is because the so-called loss landscape of the objective function navigated by gradient descent as it optimizes the DN parameters is much smoother for ResNets and DenseNets as compared to ConvNets (see Fig. 1). However, to date there has been no analytical work in this direction.

In this paper, we provide the first analytical characterization of the local properties of the DN loss landscape that enables us to quantitatively compare different DN architectures. The key is that, since the layers of all modern DNs (ConvNets, ResNets, and DenseNets included) are continuous piecewise-affine (CPA) spline mappings [1, 2], the loss landscape is a continuous piecewise function of the DN parameters. In particular, for regression and classification problems where the DN approximates a function by minimizing the \(\ell _2\)-norm squared-error, we show that the loss landscape is a continuous piecewise quadratic function of the DN parameters. The local eccentricity of this landscape and the width of each local minimum basin are governed by the singular values of a matrix that is a function of not only the DN parameters but also the DN architecture. This enables us to quantitatively compare different DN architectures in terms of their singular values.

Let us introduce some notation to elaborate on the above programme. We study state-of-the-art DNs whose layers \(f_k\) comprise a nonlinear, continuous piecewise-linear activation function \(\phi \) that is applied element-wise to the output of an affine transformation. We focus on the ubiquitous class of activations \(\phi (t) = \max (\eta t,t)\), which yields the so-called rectified linear unit (ReLU) for \(\eta =0\), leaky-ReLU for small \(\eta > 0\), and absolute value for \(\eta =-1\) [11]. In an abuse of notation that is standard in the machine learning community, \(\phi \) can also operate on vector inputs by applying the above activation function to each coordinate of the vector input separately. Focusing for this introduction on ConvNets and ResNets (we deal with DenseNets in Sect. 3), denote the input to layer k by \({z}_k\) and the output by \({z}_{k+1}\). Then we can write

$$\begin{aligned} {z}_{k+1} = f_k({z}_k) = \phi (W_k {z}_k + b_k) + \rho \, {z}_k, \end{aligned}$$(1)

where \(W_k\) is an \(n\times n\) weight matrix and \(b_k\) is a vector of offsets (Footnote 1). Typically \(W_k\) is a (strided) convolution matrix. The choice \(\rho =0\) corresponds to a ConvNet, while \(\rho =1\) corresponds to a ResNet.

Optimization loss landscape of two deep networks (DNs) along a 2D slice of their parameter space (from [21]). (left) Convolutional neural network (ConvNet) with no skip connections. (right) Residual network (ResNet) with skip connections. This paper develops new matrix perturbation theory tools to understand these landscapes and in particular explain why skip connections produce landscapes that are less erratic, less eccentric, and feature local minima that are more accommodating to gradient-based optimization. Used with permission

Previous work [1, 2] has demonstrated that the operator \(f_k\) is a collection of continuous piecewise-affine (CPA) splines that partition the layer’s input space into polytopal regions and fit a different affine transformation on each region with the constraint that the overall mapping is continuous. This means that, locally around the input \(z_{k}\) and the DN parameters \(W_k, b_k\), (1) can be written as

$$\begin{aligned} {z}_{k+1} = f_k({z}_k) = D_k (W_k {z}_k + b_k) + \rho \, {z}_k, \end{aligned}$$(2)

where the diagonal matrix \(D_k\) contains 1s at the positions where the corresponding entries of \(W_k {z}_k+b_k\) are positive and \(\eta \) where they are negative.
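The equivalence between the layer \(\phi (W_k z_k + b_k) + \rho z_k\) and its local form \(D_k(W_k z_k + b_k) + \rho z_k\) can be checked numerically. The following is a minimal NumPy sketch, assuming a square layer of width n with random Gaussian weights and the activation \(\phi (t)=\max (\eta t, t)\) with \(\eta \le 1\); the function names `phi`, `layer`, and `local_affine` are hypothetical illustrations, not code from the paper.

```python
import numpy as np

def phi(t, eta):
    """Activation max(eta * t, t), applied coordinate-wise."""
    return np.maximum(eta * t, t)

def layer(z, W, b, eta, rho):
    """Layer phi(W z + b) + rho * z; rho = 0 gives a ConvNet layer, rho = 1 a ResNet layer."""
    return phi(W @ z + b, eta) + rho * z

def local_affine(z, W, b, eta, rho):
    """Local form D (W z + b) + rho * z, with D read off from the signs of W z + b."""
    t = W @ z + b
    D = np.diag(np.where(t > 0, 1.0, eta))   # 1 where t > 0, eta elsewhere
    return D @ t + rho * z, D

rng = np.random.default_rng(0)
n = 6
W, b, z = rng.normal(size=(n, n)), rng.normal(size=n), rng.normal(size=n)
for eta in (0.0, 0.1, -1.0):                  # ReLU, leaky-ReLU, absolute value
    for rho in (0.0, 1.0):                    # ConvNet, ResNet
        out, D = local_affine(z, W, b, eta, rho)
        assert np.allclose(out, layer(z, W, b, eta, rho))
```

Because D depends on the input only through the sign pattern of \(W_k z_k + b_k\), the same D reproduces the layer for every input with that sign pattern, which is what makes the layer locally affine.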

In supervised learning, we are given a set of G labeled training data pairs \(\{x^{(g)}, y^{(g)}\}_{g=1}^G\), and we tune the DN parameters \(W_k, b_k\) such that, when datum \(x^{(g)}\) is input to the DN, the output \(\widehat{y}^{(g)}\) is close to the true label \(y^{(g)}\) as measured by some loss function L. In this paper, we focus on the \(\ell _2\)-norm squared-error loss averaged over a subset of the training data (called a mini-batch)

Standard practice is to use some flavor of gradient descent to iteratively reduce L by differentiating with respect to \(W_k, b_k\). For ease of exposition, we focus our analysis first on the case of fully stochastic gradient descent that involves only a single data point per gradient step (i.e., \(G=1\)), meaning that we iteratively minimize

$$\begin{aligned} L^{(g)} = \Vert y^{(g)} - \widehat{y}^{(g)}\Vert _2^2 \end{aligned}$$

for different choices of \(g\). We then extend our theoretical results to arbitrary \(G>1\) in Sect. 3.6. Let \({z}_k^{(g)}\) denote the input to the k-th layer when the DN input is \(x^{(g)}\). Using the CPA spline formulation (2), it is easy to show (see (46)) that, for fixed \(y^{(g)}\), the loss function \(L^{(g)}\) is continuous and piecewise-quadratic in \(W_k, b_k\).

The squared-error loss \(L^{(g)}\) is ubiquitous in regression tasks, where \(y^{(g)}\) is real-valued, but it is also relevant for classification tasks, where \(y^{(g)}\) is discrete-valued. Indeed, recent empirical work [16] has demonstrated that state-of-the-art DNs trained for classification tasks using the squared-error loss perform as well as or better than the same networks trained using the more popular cross-entropy loss (Footnote 2).

We can characterize the local geometric properties of this piecewise-quadratic loss surface as follows. First, without loss of generality, we simplify our notation. For the rest of the paper, we label the layer of interest by \(k=0\) and suppress the subscript 0 (i.e., \(W_0 \rightarrow W\)); we assume that there are p subsequent layers (\(f_1,\dots ,f_p\)) between layer 0 and the output \(\widehat{y}\). Optimizing the weights W of layer f with training datum \(x^{(g)}\) that produces layer input \(z^{(g)}\) requires the analysis of the DN output \(\widehat{y}\), due to the chain rule calculation of the gradient of L with respect to W. (As we discuss below, there is no need to analyze the optimization of b.) For further simplicity, we suppress the superscript \({(g)}\) that enumerates the training data whenever possible. We thus write the DN output as

where

collects the combined effect of applying \(W_k\) in subsequent layers \(f_k\), \(k=1,\dots ,p\), and B collects the combined offsets. Note that \(z\) reflects the influence of the training datum combined with the action of the layers preceding f and can be interpreted as the input to the shallower network consisting of layers \(f,f_1,\dots ,f_p\). This justifies using the index 0 for the layer under consideration.

Using this notation and fixing b as well as the input \({z}\), the piecewise-quadratic loss function L can be written locally as a linear term in W plus a quadratic term in W, which can be written as a quadratic form featuring matrix Q (see Lemma 8)

Here, w denotes the columnized vector version of the matrix W.

The semi-axes of the ellipsoidal level sets of the local quadratic loss (7) are determined by the singular values of the matrix Q, which we can write as \(s_i \cdot |{z}[j]|\) according to Corollary 9, with \(s_i=s_i(MD)\) the i-th singular value of the linear mapping MD and \({z}[j]\) the j-th entry in the vector \({z}\). The eccentricity of the ellipsoidal level sets is governed by the condition number of Q which, therefore, factors as

Here, \(\min ^* \) denotes the minimum after discarding all vanishing elements (cf. (53)). In this paper we focus on the effect of the DN architecture on the condition number of the matrix MD representing the action of the subsequent layers, which is

However, the factorization in (8) provides insights into the role played by the training datum \({z}\) (see Corollary 9). Extensions of these results to batches \((G>1)\) are found in Sect. 3.6. (We note at this point that the optimization of the offset vector b has no effect on the shape of the loss function.)

For a fixed set of training data (i.e., fixed \({x}\)), the difference between the loss landscapes of a ConvNet and a ResNet is determined solely by \(\rho \) in (6). Therefore, we can make a fair, quantitative comparison between the loss landscapes of the ConvNet and ResNet architectures by studying how the singular values of the linear mapping \(M(\rho )D\) evolve as we move infinitesimally from a ConvNet (\(\rho =0\)) towards a ResNet (\(\rho =1\)).
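To make the comparison concrete, the following sketch tracks \(\kappa (M(\rho )D)\), the ratio of the largest to the smallest singular value, as \(\rho \) moves from 0 to 1. It is an illustration under strong assumptions: a single subsequent layer (p = 1) of the local form \(D_1(W_1 z + b_1) + \rho z\), so that \(M(\rho ) = D_1 W_1 + \rho \,\textrm{Id}\); absolute-value activations (\(\eta =-1\)); random sign patterns `D1` and `D`; and a hypothetical Gaussian weight scale `sigma / sqrt(n)`. None of these choices is taken from the paper's experiments.

```python
import numpy as np

def condition_number(X):
    """Ratio of the largest to the smallest singular value of a square matrix X."""
    s = np.linalg.svd(X, compute_uv=False)     # descending order
    return s[0] / s[-1]

rng = np.random.default_rng(9)
n, sigma = 64, 0.3                                     # hypothetical width and weight scale
W1 = rng.normal(scale=sigma / np.sqrt(n), size=(n, n))
D1 = np.diag(rng.choice([1.0, -1.0], size=n))          # hypothetical sign pattern of f_1 (eta = -1)
D = np.diag(rng.choice([1.0, -1.0], size=n))           # hypothetical sign pattern of the trained layer

kappas = []
for rho in np.linspace(0.0, 1.0, 6):
    M = D1 @ W1 + rho * np.eye(n)                      # one subsequent layer: M(rho) = D_1 W_1 + rho Id
    kappas.append(condition_number(M @ D))
    print(f"rho = {rho:.1f}: kappa(M(rho) D) = {kappas[-1]:.2f}")
```

With the absolute-value activation, D is a signed identity and therefore orthogonal, so \(\kappa (M(\rho )D)=\kappa (M(\rho ))\). With ReLU, D has zero diagonal entries and the smallest singular value has to be taken over the nonvanishing ones.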

Addressing how the singular values and condition number of the linear mapping \(M(\rho )D\) (and hence the DN optimization landscape) change when passing from \(\rho =0\) towards \(\rho =1\) requires a nontrivial extension of matrix perturbation theory. Our focus and main contributions in this paper lie in exploring this under-explored territory (Footnote 3). Our analysis technique is new, and so we dedicate most of the paper to its development, which is potentially of independent interest.

We briefly summarize our key theoretical findings from Sects. 2–6 and our penultimate result in Theorem 22. We provide concrete bounds on the growth or decay of the singular values of any square matrix \(M_o=M(0)\) when it is perturbed to \(M(\rho ) = M_o + \rho \,\textrm{Id}\) by adding a multiple of the identity \(\rho \,\textrm{Id}\) for \(0\le \rho \le 1\). The bounds are in terms of the largest and smallest eigenvalues of the symmetrized matrix \(M_o^T+ M_o\). The behavior of the largest singular value under this perturbation can be controlled quite accurately in the sense that random matrices tend to follow the upper bound we provide quite closely with high probability. For all other singular values, we require that they be non-degenerate, meaning that they have multiplicity 1 for all but finitely many \(\rho \), a property that holds for most matrices in a generic sense (see Lemma 12). Further, we establish bounds for arbitrary singular values that do not hinge on the assumption of multiplicity 1 but that are somewhat less tight.
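The sketch below is not the bound of Theorem 22. It only illustrates, under the assumption of a Gaussian \(M_o\) with a hypothetical scale, why the extreme eigenvalues of \(M_o^T+M_o\) enter such bounds: for every unit vector u, \(\Vert (M_o+\rho \,\textrm{Id})u\Vert ^2 = \Vert M_o u\Vert ^2 + \rho \, u^T(M_o^T+M_o)u + \rho ^2\), which for \(\rho \ge 0\) yields an upper bound on \(s_1(M(\rho ))\) and a lower bound on \(s_n(M(\rho ))\). The helper name `elementary_bounds` is hypothetical.

```python
import numpy as np

def elementary_bounds(Mo, rho):
    """Upper bound on s_1 and lower bound on s_n of Mo + rho*Id for rho >= 0, from
    ||(Mo + rho Id) u||^2 = ||Mo u||^2 + rho u^T (Mo^T + Mo) u + rho^2 for unit u."""
    s = np.linalg.svd(Mo, compute_uv=False)        # singular values, descending
    lam = np.linalg.eigvalsh(Mo.T + Mo)            # eigenvalues of the symmetrized matrix, ascending
    upper_s1 = np.sqrt(s[0] ** 2 + rho * lam[-1] + rho ** 2)
    lower_sn = np.sqrt(max(s[-1] ** 2 + rho * lam[0] + rho ** 2, 0.0))  # vacuous if clipped to 0
    return upper_s1, lower_sn

rng = np.random.default_rng(1)
n = 50
Mo = rng.normal(scale=0.3 / np.sqrt(n), size=(n, n))   # hypothetical initialization scale
for rho in np.linspace(0.0, 1.0, 11):
    s = np.linalg.svd(Mo + rho * np.eye(n), compute_uv=False)
    upper_s1, lower_sn = elementary_bounds(Mo, rho)
    assert s[0] <= upper_s1 + 1e-12 and s[-1] >= lower_sn - 1e-12
    kappa_bound = upper_s1 / lower_sn if lower_sn > 0 else np.inf
    print(f"rho = {rho:.1f}: kappa = {s[0] / s[-1]:.2f} <= {kappa_bound:.2f}")
```

The lower bound becomes vacuous whenever \(s_n(M_o)^2+\rho \lambda _{\min }(M_o^T+M_o)+\rho ^2\le 0\), which shows why the smallest eigenvalue of the symmetrized matrix matters for controlling the condition number.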

Our new perturbation results enable us to draw three rigorous conclusions regarding the optimization landscape of a DN. First, with regard to network architecture, we establish that the addition of skip connections improves the condition number of a DN’s optimization landscape. To this end, we point out that no guarantees on the piecewise-quadratic optimization landscape’s condition number are available for ConvNets; in particular, \(\kappa (M(0))\) can take any value, even if the entries of M(0) are bounded. However, as we move from ConvNets (\(\rho =0\)) towards networks with skip connections such as ResNets and DenseNets (\(\rho >0\)), bounds on \(\kappa (M(\rho ))\) become available. For an appropriate random initialization of the weights and a wide network architecture (large n), we show that the condition number of a ResNet (\(\rho =1\)) is bounded above asymptotically by just 3 (see (121), (122), and (128)). Second, for the particular case of DNs employing absolute value activation functions, we show that the relevant condition number is easier to control than for ReLU activations; that is, absolute value requires less restrictive assumptions on the entries of M in order to bound the condition number. For absolute value, we also prove that the condition number of M(1) (ResNet) is smaller than that of M(0) (ConvNet) with probability asymptotically equal to 1. Third, with regard to the influence of the training data, we prove that extreme values of the data negatively affect the performance of linear least squares and that this impact is tempered when applying mini-batch learning due to its inherent averaging.

In the context of DN optimization, these results demonstrate that the local loss landscape of a DN with skip connections (e.g., ResNet, DenseNet) is better conditioned than that of a ConvNet and thus less erratic, less eccentric, and with local minima that are more accommodating to gradient-based optimization, particularly when the weights in W are small. This is typically the case at the initialization of the optimization [10] and often remains true throughout training [9]. In particular, our results also provide new insights into the best magnitude to randomly initialize the weights at the beginning of learning in order to reap the maximum benefit from a skip connection architecture.

This paper is organized as follows. Section 2 introduces our notation and provides a review of existing useful results on the perturbation of singular values. Section 3 overviews our prior work establishing that CPA DNs can be viewed as locally linear and leverages this essential property to identify the singular values relevant for understanding the shape of the quadratic loss surface used in gradient-based optimization. Section 4 develops the perturbation results needed to capture the influence on the singular values when adding a skip connection, i.e., when passing from \(M_o\) to \(M_o+\textrm{Id}\). In particular, we provide bounds on the condition number of \(M_o+\rho \textrm{Id}\) in terms of the largest singular value of \(M_o\) and the largest and the smallest eigenvalue of \(M_o^T+M_o\). Section 5 combines the findings of Sects. 3 and 4 to show that a layer with a skip connection and weights bounded by an appropriate constant will have a bounded condition number. Clearly, this applies to weights drawn from a uniform distribution. Section 6 is dedicated to a ResNet layer with random weights of bounded standard deviation. Here, we establish bounds on the condition number of the corresponding ResNet and on the probability with which they hold, explicitly in terms of the width n of the network. Further, we provide convincing numerical evidence that the asymptotic bounds apply in practice for widths as modest as \(n\ge 5\). We conclude in Sect. 7 with a synthesis of our results and perspectives on future research directions. All proofs are provided within the main paper. Various empirical experiments sprinkled throughout the paper support our theoretical results.

2 Background

2.1 Notation

Singular Values Let \(A\) be an \(n\times n\)-matrix, and let \(\Vert u\Vert \) denote the \(\ell _2\)-norm of the vector u. All products are to be understood as matrix multiplications, even if the factors are vectors. Vectors are always column vectors.

Let \(u_i\) denote a unit eigenvector of \(A^TA\) associated with its eigenvalue \(\lambda _i=\lambda _i(A^TA)\), chosen such that the vectors \(u_i\) (\(i=1,\ldots , n\)) form an orthonormal basis.

The singular values of \(A\) are defined as the square roots of the eigenvalues of \({A}^T{A}\):

$$\begin{aligned} s_i = s_i({A}) = \sqrt{\lambda _i({A}^T{A})}. \end{aligned}$$

Note that the singular values are always real and non-negative, since

$$\begin{aligned} \lambda _i = u_i^T {A}^T{A}\, u_i = \Vert {A} u_i\Vert ^2 \ge 0. \end{aligned}$$

For definiteness, we take the eigenvalues of \({A}^T{A}\), and thus the singular values of \({A}\), to be ordered by size: \(s_1\ge \cdots \ge s_n\).

Singular Vectors The vectors \(u_i\) are called right singular vectors of \({A}\). The left singular vectors of \({A}\) are denoted \(v_i\) and are defined as an orthonormal basis with the property that

$$\begin{aligned} {A} u_i = s_i v_i, \qquad i=1,\ldots ,n. \end{aligned}$$

Such a basis of left-singular vectors always exists.

SVD Collecting the right singular vectors \(u_i\) as columns into an orthogonal matrix U, the left singular vectors \(v_i\) into an orthogonal matrix V, and the singular values \(s_i\) into a diagonal matrix \(\Sigma \) in corresponding order, we obtain the Singular Value Decomposition (SVD) of \({A}=V\Sigma U^T\).
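The relations of this subsection can be checked with NumPy. The sketch below assumes a random Gaussian test matrix; note that `np.linalg.svd` returns its factors as `(V, s, Ut)` in the notation used here, i.e., the left singular vectors first and the transposed right singular vectors last.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 5
A = rng.normal(size=(n, n))

# NumPy returns A = V @ diag(s) @ Ut with s in descending order:
# the columns of V are the left singular vectors v_i, the rows of Ut are the u_i^T.
V, s, Ut = np.linalg.svd(A)
U = Ut.T

# Singular values are the square roots of the eigenvalues of A^T A.
lam = np.linalg.eigvalsh(A.T @ A)[::-1]          # eigvalsh is ascending; reverse to match s
assert np.allclose(s, np.sqrt(np.clip(lam, 0.0, None)))

# Each right singular vector is mapped onto its left singular vector: A u_i = s_i v_i.
for i in range(n):
    assert np.allclose(A @ U[:, i], s[i] * V[:, i])

# Reassembling the factors recovers the SVD A = V Sigma U^T.
assert np.allclose(V @ np.diag(s) @ U.T, A)
```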

Eigenvectors Note that \({A}\) may have eigenvectors that differ from the singular vectors. In particular, eigenvectors are not always orthogonal to each other. Clearly, if \({A}\) is symmetric, then its eigenvectors can be chosen to coincide with its right singular vectors, and every eigenvalue \(r_i\) of \({A}\) corresponds to a singular value \(s_i=|r_i|\). However, to avoid confusion, we will refrain from referring to the eigenvalues and the singular values of the same matrix whenever possible.

Smooth Matrix Deformations Consider a family of \(n \times n\) matrices \({A}(\rho )\), where the variable \(\rho \) is assumed to lie in some interval I. We say that the family \({A}(\rho )\) is \(\mathcal {C}^{m}(I)\) if all its entries are \(\mathcal {C}^{m}(I)\), with \(m=0\) corresponding to being continuous and \(m=1\) to continuously differentiable. If \({A}\) is \(\mathcal {C}^{m}(I)\), then so is \({A}^T{A}\).

We denote the matrix of derivatives of the entries of \({A}\) by \({A}'(\rho )=\frac{d}{d\rho } {A}(\rho )\).

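Multiplicity of Singular Values Denote by \(S_i\subset I\) the set of \(\rho \) values for which \(s_i\) is a simple singular value:

$$\begin{aligned} S_i = \{\rho \in I : s_j(\rho ) \ne s_i(\rho ) \text { for all } j\ne i\}. \end{aligned}$$(13)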

For later use, let

For completeness, we mention an obvious fact that follows from the SVD:

2.2 Singular Values Under Additive Perturbation

A classical result that will prove to be key in our study is due to Wielandt and Hoffman [14]. It uses the notion of the Frobenius norm \(\Vert {A}\Vert _F\) of the \(n\times n\)-matrix \({A}\). Denoting the entries of \({A}\) by \({A}[i,j]\) and its singular values by \(s_i({A})\), it is well known that

$$\begin{aligned} \Vert {A}\Vert _F^2 = \sum _{i,j=1}^{n} {A}[i,j]^2 = \sum _{i=1}^{n} s_i({A})^2. \end{aligned}$$(16)

Proposition 1

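(Wielandt and Hoffman) Let \({A}\) and \({{\tilde{A}}}\) be symmetric \(n\times n\)-matrices with eigenvalues ordered by size. Then

$$\begin{aligned} \sum _{i=1}^{n} \big (\lambda _i({A})-\lambda _i({{\tilde{A}}})\big )^2 \le \Vert {A}-{{\tilde{A}}}\Vert _F^2. \end{aligned}$$(17)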

This result can easily be strengthened to read as follows.

Corollary 2

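(Wielandt and Hoffman) Let \({A}\) and \({{\tilde{A}}}\) be any \(n\times n\)-matrices with singular values ordered by size. Then

$$\begin{aligned} \sum _{i=1}^{n} \big (s_i({A})-s_i({{\tilde{A}}})\big )^2 \le \Vert {A}-{{\tilde{A}}}\Vert _F^2. \end{aligned}$$(18)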

Note that, like most of the results in this section, this one also holds for rectangular matrices with the usual adjustments.

Proof

We establish Corollary 2. The 2n eigenvalues of the symmetric \(2n\times 2n\)-matrix

$$\begin{aligned} H_A = \begin{pmatrix} 0 & {A} \\ {A}^T & 0 \end{pmatrix} \end{aligned}$$

are \(s_i({A})\) with eigenvector \(\frac{1}{\sqrt{2}}\begin{pmatrix} v_i \\ u_i \end{pmatrix}\) and \(-s_i({A})\) with eigenvector \(\frac{1}{\sqrt{2}}\begin{pmatrix} -v_i \\ u_i \end{pmatrix}\), as follows from \({A}u_i=s_iv_i\) and \({A}^Tv_i=s_iu_i\). Also, \(\Vert H_A\Vert _F^2=2\Vert {A}\Vert _F^2\). Therefore, when applying Proposition 1 with \(A\) replaced by \(H_A\) and \({\tilde{A}}\) replaced by \(H_{\tilde{A}}\), we run through the left-hand side of (18) twice and in turn obtain twice the right-hand side.
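Both ingredients of the proof can be checked numerically: the symmetric dilation \(H_A\), with blocks 0 and \({A}\) in the first block row and \({A}^T\) and 0 in the second, has eigenvalues \(\pm s_i({A})\), and the singular values satisfy the Hoffman–Wielandt inequality \(\sum _i (s_i({A})-s_i({\tilde{A}}))^2 \le \Vert {A}-{\tilde{A}}\Vert _F^2\). The sketch assumes random Gaussian test matrices; the helper name `H` is hypothetical.

```python
import numpy as np

def H(A):
    """Symmetric dilation [[0, A], [A^T, 0]] used in the proof of Corollary 2."""
    n, m = A.shape
    return np.block([[np.zeros((n, n)), A], [A.T, np.zeros((m, m))]])

rng = np.random.default_rng(3)
n = 6
A = rng.normal(size=(n, n))
At = A + 0.1 * rng.normal(size=(n, n))     # a perturbed copy ("A tilde")

s = np.linalg.svd(A, compute_uv=False)
st = np.linalg.svd(At, compute_uv=False)

# The 2n eigenvalues of H_A are exactly +s_i(A) and -s_i(A).
eig = np.sort(np.linalg.eigvalsh(H(A)))
assert np.allclose(eig, np.sort(np.concatenate([s, -s])))
# ||H_A||_F^2 = 2 ||A||_F^2 (np.linalg.norm of a matrix defaults to the Frobenius norm).
assert np.isclose(np.linalg.norm(H(A)) ** 2, 2 * np.linalg.norm(A) ** 2)
# Corollary 2: Hoffman-Wielandt inequality for singular values.
assert np.sum((s - st) ** 2) <= np.linalg.norm(A - At, "fro") ** 2
```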

Corollary 2 immediately implies a well-known result that will be key: the singular values of a matrix depend continuously on its entries, as we state next.

Lemma 3

If \({A}(\rho )\) is \(\mathcal {C}^{0}(I)\), then all its singular values \(s_i(\rho )\) depend continuously on \(\rho \). Consequently, all \(S_i\) in (13) and \(S_i^*\) in (14) are then open.

Proof

To establish continuity, choose \(\rho _1\) and \(\rho _2\) arbitrary and apply Corollary 2 (in slight abuse of notation) with \({A}={A}(\rho _1)\) and \({{\tilde{A}}}={A}(\rho _2)\).

As will become clear in the sequel, the main difficulty in establishing further results on the regularities of the singular values with elementary arguments and estimates lies in dealing with multiple zeros, i.e., with multiple singular values.

Also useful are some facts about the special role of the largest singular value.

Proposition 4

(Operator norm) Let \({A}\) be an \(n\times n\)-matrix. Then, its largest singular value is equal to its operator norm, and we have the following inequalities:

In particular, if \({A}\) is rank 1, then both norms coincide. Also, being a norm, the largest singular value satisfies

for any matrices \({A}\) and \({{\tilde{A}}}\).

Proof

The first equality follows from the fact that \(s_1\) is the largest semi-axis of the ellipsoid that is the image of the unit-sphere. The first inequality follows from (16). The last inequality follows again from considering the aforementioned ellipsoid.
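The facts stated in Proposition 4 and its proof can be illustrated numerically: \(s_1\) is the operator norm (no unit vector is stretched by more than \(s_1\)), it is bounded by the Frobenius norm with equality for rank-1 matrices, and it satisfies the triangle inequality. The sketch assumes random Gaussian test matrices; the helper `s1` is hypothetical, while `np.linalg.norm(X, 2)` is NumPy's spectral norm.

```python
import numpy as np

def s1(X):
    """Largest singular value of X."""
    return np.linalg.svd(X, compute_uv=False)[0]

rng = np.random.default_rng(4)
n = 5
A, At = rng.normal(size=(n, n)), rng.normal(size=(n, n))

# s_1 is the operator norm: no unit vector is stretched by more than s_1 ...
u = rng.normal(size=(n, 1000))
u /= np.linalg.norm(u, axis=0)                     # 1000 random unit vectors (columns)
assert np.max(np.linalg.norm(A @ u, axis=0)) <= s1(A) + 1e-12
assert np.isclose(s1(A), np.linalg.norm(A, 2))     # NumPy's spectral norm agrees
# ... it never exceeds the Frobenius norm, with equality for a rank-1 matrix ...
assert s1(A) <= np.linalg.norm(A, "fro")
R = np.outer(rng.normal(size=n), rng.normal(size=n))
assert np.isclose(s1(R), np.linalg.norm(R, "fro"))
# ... and, being a norm, it satisfies the triangle inequality.
assert s1(A + At) <= s1(A) + s1(At) + 1e-12
```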

Another proof of the continuity of singular values is found in the well-known interlacing properties of singular values, which we adapt to our notation and summarize for the convenience of the reader (see, e.g. [5, Theorem 6.1.3-5.]).

Proposition 5

(Interlacing) Let \({A}\) and \({{\tilde{A}}}\) be \(n\times n\)-matrices with singular values ordered by size.

- (Weyl additive perturbation) We have:

$$\begin{aligned} s_{i+j-1}({A}) \le s_i({{\tilde{A}}})+s_j({A}-{{\tilde{A}}}). \end{aligned}$$(22)

- (Cauchy interlacing by deletion) Let \({{\tilde{A}}}\) be obtained from \({A}\) by deleting m rows or m columns. Then

$$\begin{aligned} s_{i}({A}) \ge s_i({{\tilde{A}}}) \ge s_{i+m}({A}). \end{aligned}$$(23)

From (22) applied with \(j=1\), we note that the singular values are in fact uniformly continuous. Indeed, we have for any i that

$$\begin{aligned} |s_i({A})-s_i({{\tilde{A}}})| \le s_1({A}-{{\tilde{A}}}). \end{aligned}$$
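Proposition 5 and the resulting bound \(|s_i({A})-s_i({\tilde{A}})| \le s_1({A}-{\tilde{A}})\) can be checked on random matrices. The sketch below assumes Gaussian test matrices and uses 0-based indices, so the Weyl inequality reads `sA[a + c] <= sAt[a] + sE[c]` whenever `a + c < n`; the helper `sv` is hypothetical.

```python
import numpy as np

def sv(X):
    """Singular values of X in descending order."""
    return np.linalg.svd(X, compute_uv=False)

rng = np.random.default_rng(5)
n, m = 8, 2
A = rng.normal(size=(n, n))
At = A + 0.05 * rng.normal(size=(n, n))        # perturbed copy ("A tilde")
sA, sAt, sE = sv(A), sv(At), sv(A - At)

# Weyl additive perturbation (22) in 0-based form.
for a in range(n):
    for c in range(n - a):
        assert sA[a + c] <= sAt[a] + sE[c] + 1e-12

# Case j = 1: every singular value moves by at most s_1(A - At).
assert np.max(np.abs(sA - sAt)) <= sE[0] + 1e-12

# Cauchy interlacing by deletion (23): remove the last m rows.
sDel = sv(A[:-m, :])
for i in range(n - m):
    assert sA[i] + 1e-12 >= sDel[i] >= sA[i + m] - 1e-12
```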

2.3 Singular Values Under Smooth Perturbation

We now turn to the study of how singular values of \({A}(\rho )\) change as a function of \(\rho \), thereby leveraging the concept of differentiability.

This can be done in several ways; the following computation is particularly simple and well known. Let \(N(\rho )\) denote a family of symmetric \(\mathcal {C}^{1}(I)\) matrices. Dropping all indices and variables \(\rho \) for ease of reading, the eigenvalues and eigenvectors satisfy

$$\begin{aligned} N u = \lambda u, \qquad u^T u = 1. \end{aligned}$$

Denoting the derivatives with respect to \(\rho \) by \(N'\), \(\lambda '\) and \(u'\) and assuming they exist, we obtain

$$\begin{aligned} N' u + N u' = \lambda ' u + \lambda u', \qquad u^T u' = 0. \end{aligned}$$

We then left-multiply the first equality by \(u^T\) and use the second equality to find

$$\begin{aligned} \lambda ' = u^T N' u + u^T N u'. \end{aligned}$$

Finally, as N is symmetric, we see that \(u^T Nu'=(Nu)^Tu'=\lambda u^T u' = 0\), leading to

$$\begin{aligned} \lambda ' = u^T N' u. \end{aligned}$$

In quantum mechanics, the last equation is known as the Hellmann–Feynman Theorem and was discovered independently by several authors in the 1930s. It is known, though seldom mentioned, that the formula may fail when eigenvalues coincide. For further studies in this direction, we refer the interested reader to works in the field of degenerate perturbation theory.

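For a proof of the Hellmann–Feynman Theorem, it is natural to consider the function

$$\begin{aligned} \Psi (\rho , \lambda , u) = \begin{pmatrix} N(\rho ) u - \lambda u \\ u^T u - 1 \end{pmatrix}, \end{aligned}$$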

where u is an n-dimensional column vector.

The Implicit Function Theorem applied to \(\Psi \) then yields the following result.

Theorem 6

(Hellmann–Feynman) Assume \(N(\rho )\) forms a family of symmetric \(\mathcal {C}^{1}(I)\) matrices. Then, as a function of \(\rho \), each of its eigenvalues \(\lambda _i=\lambda _i(\rho )\) is continuously differentiable where it is simple. Its derivative reads as

$$\begin{aligned} \lambda _i'(\rho ) = u_i(\rho )^T N'(\rho )\, u_i(\rho ). \end{aligned}$$

Its corresponding unit eigenvector \(u_i(\rho )\) can be chosen such that it becomes \(\mathcal {C}^{1}(S_i)\) as well.

If, in addition to the assumptions of Theorem 6, the family N is actually \(\mathcal {C}^{m}(I)\), then \(\lambda _i(\rho )\) and \(u_i(\rho )\) are \(\mathcal {C}^{m}(S_i)\). From Hellmann–Feynman we may conclude the following.
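The derivative formula \(\lambda _i'(\rho ) = u_i(\rho )^T N'(\rho )\, u_i(\rho )\) of Theorem 6 can be compared with a central finite difference. The sketch assumes a hypothetical affine family \(N(\rho ) = N_0 + \rho N_1\) of random symmetric matrices, whose eigenvalues are simple with probability 1, so the ordered eigenvalues at \(\rho \pm h\) match those at \(\rho \).

```python
import numpy as np

rng = np.random.default_rng(6)
n = 5
N0 = rng.normal(size=(n, n))
N0 = N0 + N0.T                                 # symmetric
N1 = rng.normal(size=(n, n))
N1 = N1 + N1.T                                 # symmetric derivative N'(rho)

def N(rho):
    """Hypothetical C^1 (here affine) family of symmetric matrices N(rho) = N0 + rho * N1."""
    return N0 + rho * N1

rho, h = 0.3, 1e-6
lam, U = np.linalg.eigh(N(rho))                # ascending eigenvalues, orthonormal eigenvectors u_i
predicted = np.sum(U * (N1 @ U), axis=0)       # u_i^T N'(rho) u_i for every i
finite_diff = (np.linalg.eigvalsh(N(rho + h)) - np.linalg.eigvalsh(N(rho - h))) / (2 * h)
assert np.allclose(predicted, finite_diff, atol=1e-5)
```

When two eigenvalues coincide at \(\rho \), the ordered eigenvalues may have a kink there and the comparison breaks down, in line with the caveat on degenerate eigenvalues above.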

Corollary 7

Let \({A}(\rho )\) be \(\mathcal {C}^{1}(I)\). Assume that \(S_i^*\) consists of isolated points only. Then, \(s_i(A)\) and its left singular vector \(v_i\) are \(\mathcal {C}^{1}(S_i^*)\) with

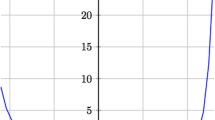

Simple examples show that the above results cannot be improved without stronger assumptions (see Example 1 below and Fig. 2).

Proof

Apply Theorem 6 to the family \(N={A}^T {A}\). Note that \(N'={A}'^T {A} + {A}^T {A}'\) and apply the chain rule to \(\lambda _i=(s_i(\rho ))^2\) to find the first equality of the following formula:

$$\begin{aligned} 2 s_i s_i' = u_i^T\big ({A}'^T {A} + {A}^T {A}'\big ) u_i = 2\, u_i^T {A}^T {A}' u_i. \end{aligned}$$

Also, we have \(u_i^T{A}'^T {A}u_i=(u_i^T{A}'^T {A}u_i)^T =u_i^T{A}^T {A}'u_i\), since this is a real-valued number. This implies the second equality in the formula above. Finally, note that \({A} u_i=s_i v_i\), so that the right-hand side equals \(2 s_i v_i^T {A}' u_i\); dividing by \(2 s_i\) completes the proof.
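For the family \(M(\rho ) = M_o + \rho \,\textrm{Id}\) studied in this paper, \(A'(\rho ) = \textrm{Id}\), so Corollary 7 predicts \(s_i'(\rho ) = v_i^T u_i\). The sketch compares this with a finite difference, assuming a random \(15\times 15\) matrix \(M_o\) with Gaussian entries (\(\sigma =0.3\)) in the spirit of Fig. 2b; it only compares singular values that are clearly simple and nonzero, as the corollary requires. The thresholds `1e-3` are hypothetical safety margins.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 15
Mo = rng.normal(scale=0.3, size=(n, n))          # Gaussian entries with sigma = 0.3

def singular_values(rho):
    """Singular values of M(rho) = Mo + rho * Id in descending order."""
    return np.linalg.svd(Mo + rho * np.eye(n), compute_uv=False)

rho, h = 0.5, 1e-6
V, s, Ut = np.linalg.svd(Mo + rho * np.eye(n))   # columns of V are v_i, rows of Ut are u_i^T
predicted = np.sum(V * Ut.T, axis=0)             # v_i^T A'(rho) u_i with A'(rho) = Id
finite_diff = (singular_values(rho + h) - singular_values(rho - h)) / (2 * h)

# Corollary 7 needs s_i to be simple and nonzero; keep only those that clearly are.
gap_prev = np.abs(np.diff(s, prepend=np.inf))
gap_next = np.abs(np.diff(s, append=-np.inf))
ok = (np.minimum(gap_prev, gap_next) > 1e-3) & (s > 1e-3)
assert ok.any()
assert np.allclose(predicted[ok], finite_diff[ok], atol=1e-5)
```

Since \(v_i^Tu_i\) lies in \([-1,1]\), this also shows that each simple, nonzero singular value of \(M_o+\rho \,\textrm{Id}\) changes with slope at most 1 in absolute value.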

Singular values of two families of matrices of the form \(M(\rho )=M_o+\rho \textrm{Id}\) for \(0\le \rho \le 1\). a \(M_o=\textrm{diag}(-0.4~~ -0.2 ~~-0.1)\) demonstrates that even the largest singular value may not be increasing or everywhere differentiable. b The entries of the \(15\times 15\) matrix \(M_o\) are drawn from a zero-mean Gaussian distribution with \(\sigma =0.3\). This example demonstrates that the singular values may be non-convex and may have several extrema even without coinciding with others

Example 1. The diagonal family \(M=\textrm{diag}(-4, -2)+\rho \,\textrm{Id}\) depends smoothly (indeed, affinely) on \(\rho \). When ordered by size, the eigenvalues of \(N=M^TM\) are \(\max ((\rho -4)^2,(\rho -2)^2)\) and \(\min ((\rho -4)^2,(\rho -2)^2)\). They are not differentiable where they coincide. In particular, the matrix norm of N is not differentiable at \(\rho =3\). The singular values of M are \(s_1=\max (|\rho -4|,|\rho -2|)\) and \(s_2=\min (|\rho -4|,|\rho -2|)\); both are not differentiable at \(\rho =3\), where they coincide, and \(s_2\) is in addition not differentiable at its zeros \(\rho =2\) and \(\rho =4\). Also, the left singular vector \(v_2\) changes sign at the zeros of \(s_2\) and so is not even continuous there (see Fig. 2 for a similar example).
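Example 1 can be reproduced numerically. The sketch below checks the closed forms \(s_1=\max (|\rho -4|,|\rho -2|)\) and \(s_2=\min (|\rho -4|,|\rho -2|)\) on a grid and computes one-sided difference quotients at the kinks; the helper names `example1_singular_values` and `one_sided_slopes` are hypothetical.

```python
import numpy as np

def example1_singular_values(rho):
    """Singular values (descending) of M(rho) = diag(-4, -2) + rho * Id from Example 1."""
    return np.linalg.svd(np.diag([-4.0, -2.0]) + rho * np.eye(2), compute_uv=False)

rho = np.linspace(0.0, 6.0, 601)
s = np.array([example1_singular_values(r) for r in rho])

# Closed forms: s_1 = max(|rho - 4|, |rho - 2|), s_2 = min(|rho - 4|, |rho - 2|).
assert np.allclose(s[:, 0], np.maximum(np.abs(rho - 4), np.abs(rho - 2)))
assert np.allclose(s[:, 1], np.minimum(np.abs(rho - 4), np.abs(rho - 2)))

def one_sided_slopes(i, at, h=1e-4):
    """Left and right difference quotients of s_i (0-based index i) at rho = at."""
    left = (example1_singular_values(at)[i] - example1_singular_values(at - h)[i]) / h
    right = (example1_singular_values(at + h)[i] - example1_singular_values(at)[i]) / h
    return left, right

print(one_sided_slopes(0, 3.0))   # kink of s_1 where s_1 = s_2
print(one_sided_slopes(1, 2.0))   # kink of s_2 at its zero
```

In both cases the left and right slopes are approximately \(-1\) and \(+1\), so neither singular value has a derivative at these points.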

3 Singular Values in Deep Learning

In this section, we establish that the squared-error loss landscape of a deep network (DN) is governed locally by the singular values of the matrix of weights of a layer and that the difference between certain network architectures can be interpreted as a perturbation of this matrix. Combining this key insight with the perturbation theory of singular values for DNs, which will be developed in the sections to follow, will enable us to characterize the dependence of the loss landscape on the underlying architecture. Doing so, we will ultimately provide analytical insight into numerous phenomena that have so far been observed only empirically, such as the fact that so-called ResNets [13] are easier to optimize than ConvNets [18].

3.1 The Continuous Piecewise-Affine Structure of Deep Networks

The most popular DNs contain activation nonlinearities that are continuous piecewise-affine (CPA), such as the ReLU, leaky-ReLU, absolute value, and max-pooling nonlinearities [11]. To be more precise, let \(f_{k}\) denote a layer of a DN. We will assume that it can be written as an affine map \( W_{k}{z_{k}}+b_{k} \) followed by the activation \(\phi _{k}\):

$$\begin{aligned} f_{k}({z_{k}}) = \phi _{k}(W_{k}{z_{k}}+b_{k}). \end{aligned}$$

The entries of the matrix \(W_{k}\) are called weights; the constant additive term \(b_{k}\) is called the bias. More general settings can easily be employed; however, the above will be sufficient for our purpose. The above-mentioned, commonly used nonlinearities can be written in this form using

$$\begin{aligned} \phi _{k}(t) = \max (\eta t, t), \end{aligned}$$(34)

where the max is taken coordinate by coordinate. Clearly, any activation of the form (34) is continuous and piecewise linear, rendering the corresponding layer CPA (see Fig. 3). The following choices for \(\eta \) correspond to ReLU (\(\eta =0\)) [10], leaky-ReLU (\(0<\eta <1\)) [25], and absolute value (\(\eta =-1\)) [4] (see also [1, 2]). In summary:

For example, in two dimensions we obtain \(\phi _{k}^\mathrm{(Abs)}(t_1,t_2)=(|t_1|,|t_2|)\). We will consider only nonlinearities that can be written in the form (34). Such nonlinearities are in fact linear in each hyper-octant of space and can be written as

$$\begin{aligned} \phi _{k}(t) = D_{k}\, t, \end{aligned}$$

where \(D_{k}\) is a diagonal matrix with entries that depend on the signs of the coordinates of the input \(t=W_{k}{z_{k}}+b_{k}\). The diagonal entries of \(D_{k}\) equal either 1 or \(\eta \) depending on the hyper-octant.
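The representation \(\phi _k(t) = D_k t\) can be illustrated directly: D has entry 1 where a coordinate of t is positive and \(\eta \) elsewhere, and it stays the same for all t in one hyper-octant. The sketch assumes \(\eta \le 1\) and a hypothetical helper `activation_matrix`.

```python
import numpy as np

def activation_matrix(t, eta):
    """Diagonal D with max(eta * t, t) = D t: entry 1 where t > 0 and eta elsewhere (eta <= 1)."""
    return np.diag(np.where(t > 0, 1.0, eta))

t = np.array([1.5, -2.0])
for eta in (0.0, 0.01, -1.0):                    # ReLU, leaky-ReLU, absolute value
    assert np.allclose(activation_matrix(t, eta) @ t, np.maximum(eta * t, t))

# Absolute value in two dimensions: (t1, t2) -> (|t1|, |t2|).
D = activation_matrix(t, -1.0)
assert np.allclose(D @ t, np.abs(t))

# Within one hyper-octant D does not change, so the activation acts linearly there.
t2 = np.array([0.2, -7.0])                       # same sign pattern as t
assert np.array_equal(D, activation_matrix(t2, -1.0))
assert np.allclose(np.abs(3.0 * t + t2), D @ (3.0 * t + t2))
```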

Consequently, the input space of the layer \(f_{k}\) is divided into regions that depend on \(W_{k}\) and \(b_{k}\), in which \(f_{k}\) is affine and can be rewritten as

$$\begin{aligned} f_{k}({z_{k}}) = D_{k}W_{k}{z_{k}} + D_{k}b_{k}. \end{aligned}$$

By the linear nature of \({z_{k}}\mapsto W_{k}{z_{k}}\), these regions of the input space (\({z_{k}}\)-space) are polyhedral, i.e., bounded by hyperplanes. Concatenating several layers into a multi-layer \(F({z_1})=f_p\circ \cdots \circ f_1({z_1})\) then leads to a subdivision of its input space into polyhedral regions, in which F is affine, a result due to [1, 2]. In each linear region, this multi-layer can be written as

$$\begin{aligned} F({z_1}) = D_{p}W_{p}\cdots D_{1}W_{1}\,{z_1} + B, \end{aligned}$$

where all constant terms are collected in

$$\begin{aligned} B = \sum _{k=1}^{p} D_{p}W_{p}\cdots D_{k+1}W_{k+1}\,D_{k}b_{k}. \end{aligned}$$
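Within one region, the multi-layer collapses to a single affine map \(F({z_1}) = D_pW_p\cdots D_1W_1\,{z_1} + B\) with \(B=\sum _{k} D_pW_p\cdots D_{k+1}W_{k+1}D_kb_k\). The sketch below builds both terms with a running recursion and compares them with a direct forward pass, assuming random square layers of the form \(\phi _k(W_kz_k+b_k)\) without skip connections; the names `forward` and `collapse` are hypothetical.

```python
import numpy as np

def forward(z, Ws, bs, eta):
    """Apply f_p o ... o f_1 with f_k(z) = max(eta*t, t), t = W_k z + b_k; record each D_k."""
    Ds = []
    for W, b in zip(Ws, bs):
        t = W @ z + b
        Ds.append(np.diag(np.where(t > 0, 1.0, eta)))
        z = np.maximum(eta * t, t)
    return z, Ds

def collapse(Ws, bs, Ds):
    """Return (lin, const) with F(z) = lin @ z + const in the current region:
    lin = D_p W_p ... D_1 W_1, const = sum_k D_p W_p ... D_{k+1} W_{k+1} D_k b_k."""
    n = Ws[0].shape[1]
    lin, const = np.eye(n), np.zeros(n)
    for W, b, D in zip(Ws, bs, Ds):
        lin = D @ W @ lin                        # prepend the next layer's linear part
        const = D @ (W @ const + b)              # push earlier offsets through layer k
    return lin, const

rng = np.random.default_rng(8)
n, p, eta = 4, 3, 0.1
Ws = [rng.normal(size=(n, n)) for _ in range(p)]
bs = [rng.normal(size=n) for _ in range(p)]
z1 = rng.normal(size=n)
out, Ds = forward(z1, Ws, bs, eta)
lin, const = collapse(Ws, bs, Ds)
assert np.allclose(lin @ z1 + const, out)
```

The collapsed map is valid only for inputs that produce the same activation patterns \(D_1,\ldots ,D_p\), i.e., within the region containing `z1`.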

A multi-layer can be considered to be an entire DN or a part of one.

3.2 From ConvNets to ResNets and DenseNets, and Learning

A DN with an architecture \(F({z_1})=f_p\circ \cdots \circ f_1({z_1})\) as described above is called a Convolutional Network (ConvNet) [18] whenever the \(W_k\) are circulant-block-circulant; otherwise it is called a multi-layer perceptron. Without loss of generality, we will focus on ConvNets and their extensions, particularly ResNets and DenseNets, in this paper. While such architectures are responsible for the recent cresting wave of deep learning successes, they are known to be difficult to employ effectively when the number of layers p is large. This motivated research on alternative architectures.

When adding the input \({z_{k}}\) to the output \(\phi _{k}(W_{k}{z_{k}}+b_{k})\) of a convolutional layer (a skip connection), one obtains a residual layer, which can be written in each region of linearity as

$$\begin{aligned} f_{k}({z_{k}}) = D_{k}W_{k}{z_{k}} + D_{k}b_{k} + {z_{k}}. \end{aligned}$$

A DN in which all (or most) layers are residual is called a Residual Network (ResNet) [13]. Densely Connected Networks (DenseNets) [15] allow the residual connection to bypass not just a single layer but multiple layers.

All of the above DN architectures are universal approximators. Learning the per-layer weights of any DN architecture is performed by solving an optimization problem that involves a training data set, a differentiable loss function such as the squared-error or cross-entropy, and a weight update policy such as gradient descent. Hence, the key benefit of one architecture over another only emerges when considering the learning problem, and in particular gradient-based optimization.

3.3 Learning

We now consider the learning process for one particular layer of a DN. As we make clear below, for the comparison of different network architectures, it is sufficient to study each layer independently of the others. For ease of exposition, our analysis in Sects. 3.3–3.5 also focuses on a single training data point, which corresponds to learning via fully stochastic gradient descent (\(G=1\) in the Introduction). We extend our analysis to the general case of mini-batch gradient descent (\(G>1\)) in Sect. 3.6.

The layer being learned is \(f_0\); however, for clarity we drop the index 0 and use the notation

\[f({z})=\phi (W{z}+b)=DW{z}+Db.\]

The layers following f are the only ones relevant in the study of learning layer f. In fact, the previous layers transform the DN input into a feature map that, for the layer in question, can be seen as a fixed input. The part of the network following f will be denoted by \(F=f_p\circ \cdots \circ f_1\). In each partition region, the output of the DN takes the form

\[\widehat{y}=MDW{z}+B.\]

Here, B collects all of the constants, D represents the nonlinearity of the trained layer in the current region, and M collects the action of the subsequent layers F. We use the parameter \(\rho \) to distinguish between ConvNets and ResNets, with \(\rho =0\) corresponding to convolution layers and \(\rho =1\) to residual ones. The matrix \(M=M(\rho )\) representing the subsequent layers F becomes

\[M(\rho )=\rho \,\textrm{Id}+D_pW_p\cdots D_1W_1 .\]

As a shorthand, we will often write \(M(\rho ) = M_o+\rho \textrm{Id}\), where

\[M_o=D_pW_p\cdots D_1W_1 .\]

When saying “let \(M_o\) be arbitrary” we mean that no restrictions on the factors in (45) are imposed except for the \(D_i\) to be diagonal and the \(W_i\) to be square. When choosing \(D_p=\cdots =D_1=W_p=\cdots =W_2=\textrm{Id}\) and \(W_1\) arbitrary, we have \(M_o=W_1\) arbitrary. So, there is no ambiguity in our usage of the term “arbitrary”.

3.4 Quadratic Loss

We wish to quantify the advantage in training of a ResNet architecture over a ConvNet one. We consider a quadratic loss function such as the squared-error as mentioned in the Introduction (cf. (4); we drop the superscript (g)):

In the above, we used that \(y^T\widehat{y}=\left( y^T\widehat{y}\right) ^T=\widehat{y}^Ty\), since this is a real number.

Recalling that the DN is a continuous piecewise-affine operator and fixing all parameters except for W, we can locally write \(\widehat{y}=MD W {z} +B\) for all W in some region \(\mathcal {R}\) of F (cf. (4); \(z\) is the input to f produced by the datum \(x^{(g)}\)). Writing \(\left\| \widehat{y} \right\| _2^2 =\widehat{y} ^T\widehat{y} \), we find that the loss can be viewed as an affine term P(W), with P a polynomial of degree 1, plus a quadratic term \(\Vert MD W {z} \Vert _2^2\):

\[L(W)=P(W)+\Vert MDW{z}\Vert _2^2 .\]

We stress once more that all parameters are fixed except for W. Denote by \(W_o=W_o(\rho ,\mathcal {R})\) a minimizer of the function \(h(W)=P(W)+\Vert MD W {z} \Vert _2^2\) over all W, which may very well lie outside \(\mathcal {R}\). Writing \(\Delta W=W-W_o\) and expanding h into its Taylor series at \(W_o\), we find \(h(W)=h(W_o)+\Vert MD \Delta W {z} \Vert _2^2\), since h is quadratic and all first-order derivatives of h vanish at \(W_o\).

It is worth noting that the region \(\mathcal {R}\) does not change when changing the value of \(\rho \) in \(M=M(\rho )\), since \(D\Delta W{z}\) does not depend on \(\rho \). From this, writing \(c=h(W_o)\) for short, we obtain for any \(\rho \)

\[L(W)=c+\Vert M(\rho )D\,\Delta W\,{z}\Vert _2^2\qquad (W\in \mathcal {R}).\]

This means that in each region \(\mathcal {R}\) of F the loss surface is part of an exact ellipsoid whose shape is determined by the second-order expression \(\Vert M(\rho )D \Delta W {z} \Vert _2^2\) as a function of \(\Delta W\). The value c should not be interpreted as the minimum of the loss function, since \(W_o\) may lie outside the region \(\mathcal {R}\).
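Because h is quadratic, its second-order Taylor expansion is exact. A small NumPy sketch (random matrices, hypothetical names) makes this visible. It assumes the unnormalized squared error \(\Vert y-\widehat{y}\Vert_2^2\) with \(\widehat{y}=MDWz+B\) and with M, D and B frozen, as they are within one region. The loss minus its first-order approximation then equals \(\Vert MD\,\Delta W z\Vert_2^2\) with no remainder.

```python
import numpy as np

# Illustrative sketch: random stand-ins for the quantities of one region (assumptions).
rng = np.random.default_rng(1)
n = 5
MD = rng.standard_normal((n, n))   # the product M(rho) D, constant on the region
B = rng.standard_normal(n)         # collected constant term
z = rng.standard_normal(n)         # fixed input of the trained layer
y = rng.standard_normal(n)         # target

def loss(W):
    r = y - (MD @ W @ z + B)       # residual y - yhat
    return r @ r

def grad(W):
    # gradient of ||y - MD W z - B||^2 with respect to W: -2 (MD)^T r z^T
    r = y - (MD @ W @ z + B)
    return -2.0 * np.outer(MD.T @ r, z)

W = rng.standard_normal((n, n))
dW = rng.standard_normal((n, n))
remainder = loss(W + dW) - loss(W) - np.sum(grad(W) * dW)
quadratic = np.sum((MD @ dW @ z) ** 2)    # ||M D dW z||_2^2
print(np.isclose(remainder, quadratic))   # True
```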

Before commenting further on the loss, however, let us untangle the influence of the data \({z}\) (which remains unchanged during training) from that of the weights W in (47) (and analogously of \(\Delta W\) in (48)). When listing the variables W[i, j] row by row in a “flattened” vector w, the term (47) becomes a quadratic form defined by the following matrix Q.

Lemma 8

In the quadratic term (47) of the MSE, we have the following matrices: W is \(n\times n\), MD is \(n\times n\), and \({z}\) is \(n\times 1\). Denote the flattened version of W by w (\(n^2 \times 1\)) and the singular values of MD by \(s_i=s_i(MD)\). Let \( Q = \textrm{diag}({z}) \otimes (MD) \), meaning that

\[Q=\begin{pmatrix} {z}[1]\,MD & & \\ & \ddots & \\ & & {z}[n]\,MD\end{pmatrix}.\]

Then, we have (cf. (47))

and that the singular values of Q factor as

\[s_{i,j}(Q)=|{z}[j]|\,s_i(MD),\]

with \(1 \le i,j \le n\).

Proof

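Direct computation easily verifies (50). For (51), note that the characteristic polynomial of \(A=Q^TQ\) is as follows, where in slight abuse of notation Id denotes the identity in the appropriate dimensions:

\[\det (A-\lambda \,\textrm{Id})=\prod _{j=1}^{n}\det \big ({z}[j]^2\,(MD)^T(MD)-\lambda \,\textrm{Id}\big ).\]
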
Its zeros are easily identified as \(\lambda _{i,j} = {z}[j]^2\lambda _i((MD)^T(MD)) = {z}[j]^2s_i^2(MD) \), establishing (51).
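A quick numerical check of Lemma 8, sketched in NumPy with arbitrary random data: `np.kron(np.diag(z), MD)` builds the block-diagonal matrix Q, and its singular values should coincide with the products \(|z[j]|\,s_i(MD)\).

```python
import numpy as np

# Illustrative sketch: random MD and z are assumptions, not data from the paper.
rng = np.random.default_rng(2)
n = 4
MD = rng.standard_normal((n, n))
z = rng.standard_normal(n)

Q = np.kron(np.diag(z), MD)                  # block-diagonal, j-th block z[j] * MD
s_Q = np.linalg.svd(Q, compute_uv=False)     # descending
s_MD = np.linalg.svd(MD, compute_uv=False)
predicted = np.sort(np.abs(np.outer(s_MD, z)).ravel())[::-1]   # |z[j]| s_i(MD), descending
print(np.allclose(s_Q, predicted))           # True
```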

3.5 Condition Number

We now return to the shape of the loss surface of the trained layer f. Due to (48) and Lemma 8, the loss surface is exactly a portion of an ellipsoid whose geometry is completely characterized by the spectrum of the matrix Q. Its curvature and higher-dimensional eccentricity have a considerable influence on the numerical behavior of gradient-based optimization. In essence, the larger the eccentricity, the less accurately the gradient points towards the maximal change of the loss when making a non-infinitesimal change in W.

The condition number \(\kappa \) of the matrix Q provides a measure of its higher-dimensional eccentricity and, therefore, of the difficulty of optimization by linear least squares. It is defined as the ratio of the largest singular value \(s_1\) to the smallest non-zero singular value. Denoting the latter by \(s_*\) for convenience, the condition number of an arbitrary matrix A is given by

\[\kappa (A)=\frac{s_1(A)}{s_*(A)}.\]

Having identified \(\kappa (Q)\) as the key quantity for understanding the performance of linear least squares, we can apply Lemma 8 to identify and separate the influence of the data and of the subsequent layers on the loss landscape as follows. For efficient notation, we recall that the singular values of \(\textrm{diag}({z})\) are |z[j]| and that vanishing z[j] do not contribute to \(\kappa (\textrm{diag}({z}))\) (cf. (53)).

Corollary 9

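(The Data Factor) The condition number of the matrix Q of Lemma 8 factors as

\[\kappa (Q)=\kappa (\textrm{diag}({z}))\,\kappa (MD)=\frac{\max _j|{z}[j]|}{\min ^*_j|{z}[j]|}\,\kappa (MD),\]

where \(\min ^*_j\) runs over the non-vanishing \({z}[j]\).
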
Our main interest lies in the impact of the architecture of the p layers following f (adding a residual link or not), leaving the rest of the DN as is. Since the input data \({z}\) does not depend on this architectural choice, the performance of the linear least-squares optimization we focus on here is governed by \(\kappa (MD)=\kappa (M(\rho )D)\).
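In floating-point arithmetic, the convention of disregarding vanishing singular values needs a threshold; the relative tolerance in the hypothetical helper `cond_nonzero` below is our own choice. The NumPy sketch checks Corollary 9 for an input z with one vanishing coordinate, where \(\kappa(\textrm{diag}(z))=2/0.5=4\).

```python
import numpy as np

def cond_nonzero(A, tol=1e-10):
    """kappa(A) = s_1 / s_*, where s_* is the smallest singular value above tol * s_1."""
    s = np.linalg.svd(A, compute_uv=False)
    s_nonzero = s[s > tol * s[0]]
    return s_nonzero[0] / s_nonzero[-1]

# Illustrative sketch: random MD and the hand-picked z are assumptions.
rng = np.random.default_rng(3)
n = 4
MD = rng.standard_normal((n, n))
z = np.array([0.5, -2.0, 0.0, 1.0])     # one vanishing feature z[2] = 0

Q = np.kron(np.diag(z), MD)
print(np.isclose(cond_nonzero(Q), cond_nonzero(np.diag(z)) * cond_nonzero(MD)))  # True
```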

Consequently, we are faced with two types of perturbation settings: First, the evolution of the loss landscape of DNs as their architecture is continuously interpolated from a ConvNet (\(\rho =0\)) to a ResNet (\(\rho =1\)), that is, when moving from M(0) to M(1). In this case, since the region \(\mathcal {R}\) of F does not depend on \(\rho \), such an analysis is meaningful for understanding the optimization of the loss via (48). Second, the effect of multiplying M by a diagonal matrix D. Before studying the perturbations in the subsequent sections, we make a remark regarding the use of mini-batches.

3.6 Learning Using Mini-Batches

In deep learning, one typically works with mini-batches of data \({x}^{(g)}\) (\(g=1,\ldots , G\)) that produce the inputs \(z^{(g)}\) to the trained layer f. The loss of a mini-batch is obtained by averaging the losses of the individual data points (cf. (3), (47) and (50)). Using the superscript (g) in a self-explanatory way and letting w be as in Lemma 8, we have

Letting \({z}^{(g)} [k]\) denote the k-th coordinate of the input to the trained layer produced by the g-th datum \({x}^{(g)}\) we can rewrite the mini-batch loss in a form analogous to (47), which enables us to extend our analysis of the shape of the loss surface to mini-batches.

Lemma 10

There exists a linear polynomial \({P_\textrm{batch}}\) and a symmetric matrix \({Q_\textrm{batch}}\) such that

\[{L_\textrm{batch}}={P_\textrm{batch}}(W)+\Vert {Q_\textrm{batch}}w\Vert _2^2 .\]

The singular values of \({Q_\textrm{batch}}\) can be bounded as follows:

Notably, for any j with \({z}^{(g)} [j]=0\) \((1\le g \le G)\), we have that \( s_{i,j}({Q_\textrm{batch}})=0 \) for \(1 \le i \le n\).

Proof

The linear polynomial is obviously \( {P_\textrm{batch}}(W) = ( 1 / G ) \sum _{g=1}^G P^{(g)}(W) .\) To clarify the choice of \({Q_\textrm{batch}}\), set \( A= ( 1 / G ) \sum _{g=1}^G (Q^{(g)})^TQ^{(g)} .\) Then, \( {L_\textrm{batch}}= {P_\textrm{batch}}(W) + w^T Aw \), and A is symmetric and positive semi-definite.

Notably, the matrix \(A\) inherits the block-diagonal structure of the \(Q^{(g)}\) as given in (49). In a slight abuse of notation, we can write \(A=\textrm{diag}(A_1,\ldots ,A_n)\), where the j-th block is the symmetric \(n\times n\) matrix

\[A_j=\frac{1}{G}\sum _{g=1}^G {z}^{(g)}[j]^2\,\big (M^{(g)}D^{(g)}\big )^T\big (M^{(g)}D^{(g)}\big ).\]

Following the argumentation of the proof of Lemma 8, meaning that we exploit the fact that the blocks do not interact, it becomes apparent that the eigenvalues of \(A\) are those of the blocks \(A_j\). In short, we can list the eigenvalues of \(A\) as \( \lambda _{i,j}(A) = \lambda _{i}(A_j) \ge 0, \) where we arrange eigenvalues by size in descending order within each block. Arranging these eigenvalues by block into a diagonal matrix \(\Lambda \), there is an orthogonal matrix U such that \(A= U^T \Lambda U\). Now arranging their square roots \(\sqrt{\lambda _{i,j}(A)}\) in the same order into the diagonal matrix S, we can set \({Q_\textrm{batch}}=U^TSU\). Then, \( A= {Q_\textrm{batch}}^T {Q_\textrm{batch}}\) and \( w^T Aw = \Vert {Q_\textrm{batch}}w \Vert _2^2\) as desired. While we could choose \({Q_\textrm{batch}}\) to be any matrix of the form \(V^TSU\) with V orthogonal and find identical singular values, we chose a symmetric matrix for reasons of simplicity and convenience.

Clearly, \( s_{i,j}^2({Q_\textrm{batch}}) = \lambda _{i,j}(A) = \lambda _{i}(A_j) .\) For the upper bound of these singular values, we proceed as follows

From this, (57) follows easily. For the lower bound (58) let u denote the normalized eigenvector to the smallest eigenvalue \(\lambda _{n,j}\) of \(A_j\). Using (59) we obtain

From this, using \(\Vert ( M^{(g)}D^{(g)} )u \Vert _2 \ge s_n( M^{(g)}D^{(g)} ) \Vert u \Vert _2 = s_n( M^{(g)}D^{(g)} ) \), the bound (58) follows.
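The construction of \(Q_\textrm{batch}\) in the proof is a symmetric square root of A. The NumPy sketch below uses random per-datum matrices \(M^{(g)}D^{(g)}\) and inputs \(z^{(g)}\) as stand-ins for real data (an assumption). Note that `numpy.linalg.eigh` returns \(A=V\Lambda V^T\), so the matrix U of the proof corresponds to \(V^T\). One feature is set to zero for every datum, so the corresponding n singular values of \(Q_\textrm{batch}\) vanish, as stated in Lemma 10.

```python
import numpy as np

# Illustrative sketch: the per-datum matrices and inputs are random assumptions.
rng = np.random.default_rng(4)
n, G = 3, 5
MDs = [rng.standard_normal((n, n)) for _ in range(G)]   # stand-ins for M^(g) D^(g)
zs = [rng.standard_normal(n) for _ in range(G)]         # stand-ins for z^(g)
for z in zs:
    z[1] = 0.0                                          # feature j = 1 vanishes for every datum

Qs = [np.kron(np.diag(z), MD) for z, MD in zip(zs, MDs)]
A = sum(Q.T @ Q for Q in Qs) / G                        # symmetric positive semi-definite

lam, V = np.linalg.eigh(A)                              # A = V diag(lam) V^T, i.e. U = V^T
S = np.sqrt(np.clip(lam, 0.0, None))                    # clip round-off below zero
Q_batch = V @ np.diag(S) @ V.T                          # = U^T S U, a symmetric choice

print(np.allclose(Q_batch, Q_batch.T), np.allclose(Q_batch.T @ Q_batch, A))   # True True
s = np.linalg.svd(Q_batch, compute_uv=False)
print(np.sum(s < 1e-5))                                 # 3, the block of feature j = 1
```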

For a convenient bound of the condition number of \({Q_\textrm{batch}}\), we set \(\overline{z} [j] = \sqrt{(1/G) \sum _{g=1}^G |{z}^{(g)} [j]|^2 } \), which can be interpreted as the j-th mean square feature of the batch. From Lemma 10 we can conclude that \(\overline{z} [j]=0\) implies \(s_{i,j}({Q_\textrm{batch}})=0\) for all i. Since vanishing singular values are disregarded in the computation of the condition number as defined in this paper, we obtain the following result.

Corollary 11

In the notation of Lemma 10, assume that there are constants C and \(c>0\) such that for \(1\le g \le G\)

Assume that there is at least one non-vanishing \(\overline{z} [j]\), and denote by \(\min _j^*\overline{z} [j]\) the minimum over all non-vanishing \(\overline{z} [j]\). Then

4 Perturbation of a DN’s Loss Landscape When Moving from a ConvNet to a ResNet

In this section we exploit differentiability of the family \(M(\rho )\) in order to study the perturbation of its singular values when moving from ConvNets with \(\rho =0\) to ResNets with \(\rho =1\) (see Sect. 3.2).

We start with a general result that will prove most useful.

Lemma 12

Let \(N(\rho )\) be a family of symmetric \(n\times n\) matrices with entries that are polynomials in \(\rho \). Let \({\mathcal {E}}\) denote the set of \(\rho \in \mathbb {R}\) for which \(N(\rho )\) has multiple eigenvalues. Then the following dichotomy holds: either \({\mathcal {E}}\) is finite or \({\mathcal {E}}=\mathbb {R}\).

Also, if the entries of N are real analytic functions of \(\rho \) in some open set containing the compact interval \([\rho _1,\rho _2]\), then the set of \(\rho \in [\rho _1,\rho _2]\) with multiple eigenvalues of \(N(\rho )\) is either finite or all of \([\rho _1,\rho _2]\).

Clearly, this result extends easily to the multiplicity of the singular values of any family of square matrices \(A(\rho )\) by considering \(N(\rho )=A^TA\).

Proof

Fixing \(\rho \) for a moment, the eigenvalues of \(N(\rho )\) are the zeros of the characteristic polynomial \(h(\lambda )=\det (N(\rho )-\lambda \,\textrm{Id})\). This polynomial h has multiple zeros \(\lambda \) if and only if its discriminant is zero. It is well known that the discriminant \({\chi }_h\) of a polynomial h is itself a polynomial in the coefficients of h.

If the entries of N are polynomials in \(\rho \), then the discriminant of h is, as a function of \(\rho \), in fact a polynomial \({\chi }_h(\rho )\). In other words, the set \({\mathcal {E}}\) is the set of zeros of the polynomial \({\chi }_h(\rho )\). This set is finite unless the polynomial \({\chi }_h(\rho )\) vanishes identically, in which case \({\mathcal {E}}\) becomes all of \(\mathbb {R}\).

Finally, if the entries of N are real analytic, then so is \({\chi }_h(\rho )\), and the identity theorem implies the claim.

Combining with Lemma 3 and Theorem 6 we obtain the following.

Corollary 13

Let N be as in Lemma 12. In the real analytic case, assume that \(\rho _1=0\) and \(\rho _2=\rho \) for some fixed \(\rho \). Assume that N(0) has no multiple eigenvalues. Then \({\mathcal {E}}\) is finite. Thus, for any i the set \([0,\rho ]{\setminus } S_i\) is finite and

After these general remarks, let us now consider \(M(\rho )=M_o+\rho \) Id (45) and use (64) to bound the growth of the singular values of M. To this end, we note the following.

Lemma 14

(Derivative) Let \(M_o\) be arbitrary (see (45)). Writing \(u_i=u_i(\rho )\) for short, the derivative of the eigenvalue \(\lambda _i(\rho )\) of \(M^TM\) becomes, on \(S_i\) (see (13))

On \(S_i^*\) (see (14)) we have

where \(\alpha _i(\rho )\) denotes the angle between the i-th left and right singular vectors.

Using (66) it is possible to compute the derivative \(s_i'(\rho )\) numerically with ease.

Proof

Apply Theorem 6 to \(N(\rho )=M^TM\). Use \(N'=(M')^TM+M^T(M')=M+M^T=M_o^T+M_o+2\)Id and \(u_i^Tu_i=1\) to obtain

Also, note that \(u_i^TM_o^Tu_i=(u_i^TM_o^Tu_i)^T =u_i^TM_ou_i\), since this is a real-valued number. This establishes (65). In a similar way

The chain rule implies (66). Alternatively, (66) follows from Corollary 7.
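As noted after Lemma 14, the derivative is easy to evaluate numerically. In our reading, (66) states \(s_i'(\rho)=\cos\alpha_i(\rho)\), the inner product of the i-th left and right singular vectors; this matches the standard first-order perturbation formula \(s_i'=v_i^TM'(\rho)u_i\) with \(M'=\textrm{Id}\). The NumPy sketch compares that cosine with a central finite difference; the step size h, the value of \(\rho\) and the random \(M_o\) are our own choices.

```python
import numpy as np

# Illustrative sketch: M_o, rho and the step h are assumptions chosen for the demo.
rng = np.random.default_rng(5)
n = 5
M_o = rng.standard_normal((n, n))
I = np.eye(n)

def singular_values(rho):
    return np.linalg.svd(M_o + rho * I, compute_uv=False)   # descending s_1 >= ... >= s_n

rho, h = 0.3, 1e-7
# numpy factors M = V_left @ diag(s) @ Uh: column i of V_left is the left singular
# vector v_i and row i of Uh is the right singular vector u_i
V_left, s, Uh = np.linalg.svd(M_o + rho * I)
cos_alpha = np.einsum("ji,ij->i", V_left, Uh)               # v_i^T u_i for each i
finite_diff = (singular_values(rho + h) - singular_values(rho - h)) / (2 * h)
print(np.allclose(finite_diff, cos_alpha, atol=1e-5))      # True
```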

Definition 15

For a family of matrices of the form \(M(\rho )=M_o+\rho \,\textrm{Id}\), denote the smallest eigenvalue of the symmetric matrix \(M_o^T+M_o\) by \(\underline{\tau }\) and its largest by \(\overline{\tau }\).

Note that, due to \(\underline{\tau }\le \overline{\tau }\), we have

Using Corollary 13 with \(N=M^TM\) and (65) from Lemma 14, we have the following immediate result.

Theorem 16

Assume that \(M_o\) has no multiple singular values but is otherwise arbitrary (see (45)). Then, for all i,

We mention that a random matrix with iid uniform or Gaussian entries has almost surely no multiple singular values (see Theorem 34).

We continue with some observations on the growth of singular values that explicitly leverage the simple form of \(M(\rho )\) (see (45)) and that apply even when \(M_o\) has multiple singular values.

Obviously, (70) also holds if for any \(\rho \) the matrix \(M(\rho )\) is known to have no multiple singular values. Even without any such knowledge, we have the following useful consequence of Weyl’s additive perturbation bound.

Lemma 17

Let \(M_o\) be arbitrary (see (45)). Then we have

Proof

Apply (24) with \(A=M(\rho )=M_o+\rho \,\textrm{Id}\) and \({\tilde{A}}=\rho \,\textrm{Id}\) to obtain

For comparison, note that (70) implies that for any i

whenever it holds. Since \(\overline{\tau }\le 2 s_1(M_o)\) and \(s_i(0)\le s_1(0)\), the upper bound is clearly sharper than that of (71).
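Lemma 17 is Weyl's bound applied to \(M(\rho)=M_o+\rho\,\textrm{Id}\) and \(\rho\,\textrm{Id}\), whose singular values all equal \(\rho\) for \(\rho\ge 0\). The NumPy check below, with a random \(M_o\) whose scaling is an arbitrary choice, confirms \(|s_i(\rho)-\rho|\le s_1(M_o)\) for several \(\rho\ge 0\).

```python
import numpy as np

# Illustrative sketch: the random M_o and its scaling are assumptions.
rng = np.random.default_rng(6)
n = 50
M_o = rng.standard_normal((n, n)) / np.sqrt(n)
s1_o = np.linalg.norm(M_o, 2)                     # spectral norm = s_1(M_o)

for rho in [0.0, 0.5, 1.0, 2.0]:
    s = np.linalg.svd(M_o + rho * np.eye(n), compute_uv=False)
    # Weyl: every singular value of M(rho) lies within s_1(M_o) of rho
    assert np.all(np.abs(s - rho) <= s1_o + 1e-12)
```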

Continuing our observations, the following fact is obvious due to the simple form of \(M(\rho )\).

Lemma 18

(Eigenvector) Let \(M_o\) be arbitrary (see (45)). Assume that, for some \(\rho _o\), the vector \(w=u_i(\rho _o)\) is a right singular vector as well as an eigenvector of \(M(\rho _o)\) with eigenvalue t. Then, for all \(\rho \), the vector w is an eigenvector and right singular vector of \(M(\rho )\) with eigenvalue \(t+\rho -\rho _o\) and singular value \(|t+\rho -\rho _o|\).

Note that the singular value of the fixed vector w may change order, as other singular values may increase or decrease at a different speed.

Further, we have that \(|s_i'(\rho )|\le 1\) on \(S_i^*\), which is obvious from geometry and from (66). The eigenvectors of \(M_o\), if they exist, achieve extreme growth or decay.

Lemma 19

(Maximum Growth) Let \(M_o\) be arbitrary (see (45)). Assume that \(s'_i(\rho _o)=1\) for some \(\rho _o\). Then, \(w=u_i(\rho _o)\) is an eigenvector and a right singular vector of \(M(\rho )\) with eigenvalue \(t+\rho -\rho _o\) and singular value \(|t+\rho -\rho _o|\) for \(t=s_i(\rho _o)>0\).

The analogous result holds for \(s'_i(\rho _o)=-1\) with \(t=-s_i(\rho _o)<0\).

Note that the singular value of the fixed vector w may change order, since other singular values may increase or decrease at a different speed.

The zeros of the singular values are also of interest, since differentiability may fail there (cf. Lemma 14).

Lemma 20

(Non-vanishing Singular Values) Let \(M=M_o+\rho \textrm{Id}\). Assume that \(s_i(\rho _o)=0\) for some \(\rho _o\). Then, \(w=u_i(\rho _o)\) is an eigenvector and a right singular vector of \(M(\rho )\) for all \(\rho \), with eigenvalue \(\rho -\rho _o\) and singular value \(|\rho -\rho _o|\). Consequently, if \(M_o\) has no real eigenvectors, then \(s_i(\rho )\ne 0\) for all \(\rho \) and i.
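Two small hand-picked matrices (hypothetical, chosen only for illustration) make Lemmas 18 and 20 tangible. For a symmetric \(M_o\), every eigenvector is also a right singular vector, so the singular values of \(M(\rho)\) are \(|t+\rho|\) for the eigenvalues t of \(M_o\), and one of them vanishes at \(\rho=-t\). A rotation by 90 degrees has no real eigenvectors, and indeed both singular values of \(M(\rho)\) equal \(\sqrt{1+\rho^2}>0\). The sketch uses NumPy.

```python
import numpy as np

# Illustrative sketch: both matrices are hand-picked assumptions.
# symmetric example with eigenvalues t = -0.5, 1, 3
M_sym = np.array([[2.0, 1.0, 0.0],
                  [1.0, 2.0, 0.0],
                  [0.0, 0.0, -0.5]])
t = np.linalg.eigvalsh(M_sym)
for rho in [0.0, 0.25, 0.5, 1.0]:
    s = np.linalg.svd(M_sym + rho * np.eye(3), compute_uv=False)
    assert np.allclose(np.sort(s), np.sort(np.abs(t + rho)))   # singular values |t + rho|
print(np.linalg.svd(M_sym + 0.5 * np.eye(3), compute_uv=False))  # smallest is (numerically) 0

# rotation by 90 degrees: no real eigenvectors, so no singular value ever vanishes
R = np.array([[0.0, -1.0],
              [1.0, 0.0]])
for rho in [-1.0, 0.0, 2.0]:
    s = np.linalg.svd(R + rho * np.eye(2), compute_uv=False)
    assert np.allclose(s, np.sqrt(1.0 + rho**2))
```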

Theorem 16 shows that under mild conditions all singular values should be expected to increase when adding a multiple of the identity. However, when concerned only with the condition number, the following result suffices, requiring no technical assumptions at all.

Lemma 21

Let \(M_o\) be arbitrary (see (45)). Then

Proof

The argument consists of a direct computation, leveraging the special form of \(M(\rho )=M_o+\rho \textrm{Id}\). For simplicity, let us denote the normalized eigenvector of \(N(\rho )=M^TM\) with eigenvalue \(\lambda _i(\rho )=s_i(\rho )^2\) by \(u=u_i(\rho )\). Then,

Similarly, we can estimate the second to last expression from below by \(\lambda _n(0)+\rho \underline{\tau }+\rho ^2 \). Since i is arbitrary, the claim follows.
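The proof rests on the identity \(\Vert M(\rho)u\Vert_2^2=\Vert M_ou\Vert_2^2+\rho\,u^T(M_o^T+M_o)u+\rho^2\) for unit vectors u. For \(\rho\ge 0\), this traps every \(\lambda_i(\rho)=s_i(\rho)^2\) between \(\lambda_n(0)+\rho\underline{\tau}+\rho^2\) and \(\lambda_1(0)+\rho\overline{\tau}+\rho^2\). The NumPy sketch below computes \(\underline{\tau}\) and \(\overline{\tau}\) of Definition 15 with `eigvalsh` and checks this two-sided estimate on a random \(M_o\) of our choosing; it also confirms \(|\underline{\tau}|,|\overline{\tau}|\le 2s_1(M_o)\).

```python
import numpy as np

# Illustrative sketch: the random M_o is an assumption.
rng = np.random.default_rng(7)
n = 40
M_o = rng.standard_normal((n, n)) / np.sqrt(n)
tau = np.linalg.eigvalsh(M_o + M_o.T)            # ascending eigenvalues
tau_low, tau_high = tau[0], tau[-1]              # underline tau and overline tau
s_o = np.linalg.svd(M_o, compute_uv=False)
lam1_0, lamn_0 = s_o[0] ** 2, s_o[-1] ** 2       # lambda_1(0) and lambda_n(0)

assert max(abs(tau_low), abs(tau_high)) <= 2 * s_o[0] + 1e-12
for rho in np.linspace(0.0, 2.0, 9):
    lam = np.linalg.svd(M_o + rho * np.eye(n), compute_uv=False) ** 2
    lower = lamn_0 + rho * tau_low + rho**2
    upper = lam1_0 + rho * tau_high + rho**2
    assert np.all((lower - 1e-10 <= lam) & (lam <= upper + 1e-10))
```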

The advantage of (71) is its simple form, while (74) is sharper (cf. (73)). As we will see, certain random matrices of interest tend to achieve the upper bound (74) quite closely with high probability. Combining this observation with Lemmata 17 and 21, we obtain the following.

Theorem 22

Let \(M_o\) be arbitrary (see (45)). Assume that \(s_1(M_o)< 1\). Then

Alternatively, assume that \(\underline{\tau } > -1\). Then

We list all four bounds due to their different advantages. Due to (69), the first bounds in both (76) and (77) are in general sharper than the second. Indeed, for random matrices as used in Sect. 6.2 we typically have \(\overline{\tau }\simeq \sqrt{2}s_1(M_o) <2s_1(M_o)\). The second bounds are simpler and sufficient in certain deterministic settings.

The first bound of (77) is the tightest of all four bounds for the random matrices of interest in this paper. On the other hand, since we typically have \(-\underline{\tau }\simeq \sqrt{2}s_1(M_o)\) for such matrices, the stated sufficient condition for (76) is somewhat easier to meet than that of (77).

Proof

From Lemmata 21 and 17 we obtain the first inequality of (76); the second part follows from \(\overline{\tau }\le 2s_1(0)\). Using Lemma 21 we obtain (77).
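A numerical illustration of how Lemmata 17 and 21 combine at \(\rho=1\), sketched in NumPy. The scaling of the random \(M_o\) is an assumption chosen so that both \(s_1(M_o)<1\) and \(\underline{\tau}>-1\) hold. Under these assumptions, the two lemmata give \(\kappa(M(1))\le\sqrt{s_1(M_o)^2+\overline{\tau}+1}/(1-s_1(M_o))\le(1+s_1(M_o))/(1-s_1(M_o))\) and \(\kappa(M(1))\le\sqrt{(s_1(M_o)^2+\overline{\tau}+1)/(s_n(M_o)^2+\underline{\tau}+1)}\). These explicit forms are our reconstruction from the lemmata and may differ in presentation from (76) and (77).

```python
import numpy as np

# Illustrative sketch: the scaling of M_o is a hypothetical choice (s_1(M_o) about 0.6).
rng = np.random.default_rng(8)
n = 100
M_o = 0.3 * rng.standard_normal((n, n)) / np.sqrt(n)

s_o = np.linalg.svd(M_o, compute_uv=False)
tau = np.linalg.eigvalsh(M_o + M_o.T)                   # ascending
s1, sn, tau_low, tau_high = s_o[0], s_o[-1], tau[0], tau[-1]
assert s1 < 1.0 and tau_low > -1.0                      # assumptions of the two bound families

s = np.linalg.svd(M_o + np.eye(n), compute_uv=False)    # M(1): residual link added
kappa = s[0] / s[-1]
top = np.sqrt(s1**2 + tau_high + 1.0)                   # upper estimate of s_1(M(1)), Lemma 21
bound_a = top / (1.0 - s1)                              # with s_n(M(1)) >= 1 - s_1(M_o), Lemma 17
bound_b = (1.0 + s1) / (1.0 - s1)                       # uses tau_high <= 2 s_1(M_o)
bound_c = top / np.sqrt(sn**2 + tau_low + 1.0)          # lower estimate of s_n(M(1)), Lemma 21

print(kappa <= bound_a <= bound_b, kappa <= bound_c)    # True True
```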

5 Influence of the Activation Nonlinearity on a Deep Network’s Loss Landscape

As we put forward in Sect. 3, each layer of a DN can be represented locally as a product of matrices \(D_iW_i\), where \(W_i\) contains the weights of the layer while the diagonal \(D_i\) with entries equal to \(\eta \) or 1 (compare (35) and (36)) captures the effect of the activation nonlinearity.

In the last section we explored how the singular values of a matrix \(M_o\) change when adding a fraction of the identity, i.e., when moving from a ConvNet to a ResNet. In this section we explore the effect of the activation nonlinearities on the singular values. To this end, let \(\mathcal D(m,n,\eta )\) denote the set of all \(n \times n\) diagonal matrices with exactly m diagonal entries equal to \(\eta \) and \(n-m\) entries equal to 1:

We will always assume that \(0\le m \le n\). Note that \(\mathcal D(0,n,\eta )=\{\textrm{Id}\}\) and \(\mathcal D(n,n,\eta )=\{\eta \textrm{Id}\}\).

5.1 Absolute Value Activation

Consider the case \(\eta =-1\), corresponding to an activation of the type “absolute value” (see (35)).

Proposition 23

(Absolute Value) Let \(M_o\) be arbitrary (see (45)). For any \(D\in \mathcal {D}(m,n,-1)\), we have

\[s_i(DM)=s_i(MD)=s_i(M)\qquad (1\le i\le n).\]

Proof

This is a simple consequence of the SVD. Since D is orthogonal due to \(\eta =-1\), DV and DU are orthogonal as well. Multiplication by D only changes the singular vectors, not the singular values: \(DM=D(V\Sigma U^T)=(DV)\Sigma U^T\) and \(MD=(V\Sigma U^T)D=V\Sigma (DU)^T\).
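Proposition 23 in a few lines of NumPy (the random M and the choice of which m signs to flip are illustrative): since \(D\in\mathcal{D}(m,n,-1)\) is orthogonal, multiplying from either side leaves the singular values unchanged.

```python
import numpy as np

# Illustrative sketch: the random M and the sign pattern of D are assumptions.
rng = np.random.default_rng(9)
n, m = 6, 2
M = rng.standard_normal((n, n))
D = np.diag([-1.0] * m + [1.0] * (n - m))      # an element of D(m, n, -1)

s = np.linalg.svd(M, compute_uv=False)
print(np.allclose(np.linalg.svd(D @ M, compute_uv=False), s),
      np.allclose(np.linalg.svd(M @ D, compute_uv=False), s))   # True True
```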

5.2 ReLU Activation

The next simple case is the ReLU activation, corresponding to \(\eta =0\) in (35). As we state next, the singular values of a linear map M decrease when the map is combined with a ReLU activation, from left or right.

Lemma 24

(ReLU Decreases Singular Values) Let \(M_o\) be arbitrary (see (45)). For any \(D\in \mathcal {D}(m,n,0)\) we have

\[s_i(DM)\le s_i(M)\quad \textrm{and}\quad s_i(MD)\le s_i(M)\qquad (1\le i\le n).\]

Note that a similar result holds for leaky ReLU (\(\eta \ne 0\)), however only for \(i=1\). This follows from the fact that \(s_1(MD)\le s_1(M)s_1(D)\) for any two matrices M and D.

Proof

This is a simple consequence of the Cauchy interlacing property by deletion (see (23)).

As a consequence, we have for ReLU that \( | s_i(M) - s_i(DM) | = s_i(M) - s_i(DM) \), and analogously for MD. This motivates computing the difference of the traces of \(M^TM\) and \((DM)^T(DM)\), which equals the difference of the sums of squares of the singular values (recall (16)).

Proposition 25

(ReLU) Let \(M_o\) be arbitrary (see (45)). Let \(\rho \) be arbitrary and write M instead of \(M(\rho )\) for short. For any \(D\in \mathcal {D}(m,n,0)\), the following holds: Let \(k_i\) (\(i=1,\ldots , m\)) be such that \(D[k_i,k_i]=0\). Denote by \({s_i}\) the singular values of M and by \(\tilde{s_i}\) those of MD. Then

Proof

Write \(\tilde{M}=MD\) for short. The following equality simplifies our computation and holds in this special setting, because D amounts to an orthogonal projection enabling us to appeal to Pythagoras: \( \Vert M\Vert _F^2 = \Vert \tilde{M}\Vert _F^2 + \Vert M-\tilde{M}\Vert _F^2 . \) This can also be verified by direct computation. Lemma 24 implies

yielding (81). Using Corollary 2 with \({A}=M\) and \({{\tilde{A}}}=\tilde{M}\), we obtain (82).
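The following NumPy sketch (random M, and randomly drawn zeroed indices \(k_i\); both are illustrative assumptions) checks Lemma 24 and the Pythagoras identity from the proof of Proposition 25. The singular values of MD never exceed those of M, the last m of them vanish, and the drop in \(\sum_i s_i^2\) equals the squared norms of the columns \(k_i\) of M that D removes.

```python
import numpy as np

# Illustrative sketch: M and the zeroed indices are random assumptions.
rng = np.random.default_rng(10)
n, m = 6, 2
M = rng.standard_normal((n, n))
k = rng.choice(n, size=m, replace=False)        # indices k_i with D[k_i, k_i] = 0
d = np.ones(n)
d[k] = 0.0
D = np.diag(d)                                  # an element of D(m, n, 0)

s = np.linalg.svd(M, compute_uv=False)
s_tilde = np.linalg.svd(M @ D, compute_uv=False)

print(np.all(s_tilde <= s + 1e-12))             # Lemma 24: True
print(np.allclose(s_tilde[-m:], 0.0))           # m singular values vanish: True
drop = np.sum(s**2) - np.sum(s_tilde**2)        # difference of traces of M^T M and (MD)^T MD
print(np.isclose(drop, np.sum(M[:, k] ** 2)))   # squared norms of the deleted columns: True
```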

Bounds on the effect of nonlinearities at the input can be obtained easily from bounds at the output using the following fact.

Proposition 26

(Input–Output via Transposition) For D diagonal,

\[s_i(DM)=s_i(M^TD)\quad \textrm{and}\quad s_i(MD)=s_i(DM^T)\qquad (1\le i\le n).\]

Proof

This follows from the fact that matrix transposition does not alter singular values.

6 Bounding the Weights of a ResNet Bounds its Condition Number

In this section, we combine the results of the preceding two sections in order to quantify how bounds on the entries of the matrix \(W_i\) translate into bounds on the condition number. As we have seen, this is of relevance in the context of optimizing DNs, especially when comparing ConvNets to ResNets (recall Sect. 3.2).

To this end, we will proceed in three steps:

1. Bounds on the weights \(W_i[j,k]\) translate into bounds on M(0) (ConvNet).
2. Perturbation when adding a residual link, moving from M(0) (ConvNet) to M(1) (ResNet).
3. Perturbation by the activation nonlinearity of the trained layer (passing from M(1) to M(1)D).

We treat the two cases of deterministic weights (hard bounds) and random weights (high probability bounds) separately.

6.1 Hard Bounds

We start with a few facts on how hard bounds on the entries of a matrix are inherited by the entries and singular values of perturbed versions of the matrix. We use the term “hard bounds” for the following results on weights \(W_i\) bounded by a constant in order to distinguish them from “high probability bounds” for random weights \(W_i\) with bounded standard deviation. Clearly, for uniform random weights we can apply both types of results.

Lemma 27

Assume that all entries of the \(n\times n\) matrix \(W_i\) are bounded by the same constant: \(|W_i[j,k]|\le c\). Then

\[s_1(W_i)\le cn,\]

with equality, for example, for the matrix \(W_i\) with all entries equal to c.

Proof

The bound is a simple consequence of (20): \(s_1(W_i) \le \Vert W_i\Vert _F \le cn\). If all entries of \(W_i\) are equal to c, then the unit vector \(u_1=(1/\sqrt{n}) (1,\ldots ,1)^T\) achieves \(W_i\, u_1=c\,n\, u_1\).

Lemma 28

Let \(W_i\) (\(i=1,\ldots , p\)) be matrices as in Lemma 27. Assume that \(c\le 1/n\). Let \(D_i\) (\(i=1,\ldots , p\)) be diagonal matrices with entries bounded by 1. Then all the entries of \(M_o=M(0)=D_pW_p\cdots D_1W_1\) are bounded by c as well.

Proof

Each entry of \(D_iW_i\) is bounded by c, and each entry of \((D_2W_2)(D_1W_1)\) is bounded by \(nc^2=(nc)c\le c\). Iterating the argument establishes the claim.
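The hard bounds of Lemmata 27 and 28 can be checked directly. In this NumPy sketch (our choice of n, p, uniform weights and random diagonals with entries in [0, 1]), the all-c matrix attains \(s_1=cn\), and with \(c=1/n\) the entries of the product \(M_o\) stay bounded by c.

```python
import numpy as np

# Illustrative sketch: sizes, weight distribution and diagonals are assumptions.
rng = np.random.default_rng(11)
n, p = 8, 4
c = 1.0 / n                                     # Lemma 28 needs c <= 1/n

# Lemma 27, equality case: all entries equal to c give s_1 = c n
print(np.isclose(np.linalg.norm(np.full((n, n), c), 2), c * n))   # True

# Lemma 28: the entries of M_o = D_p W_p ... D_1 W_1 remain bounded by c
M_o = np.eye(n)
for _ in range(p):
    W = rng.uniform(-c, c, size=(n, n))         # |W_i[j, k]| <= c
    D = np.diag(rng.uniform(0.0, 1.0, size=n))  # diagonal entries bounded by 1
    M_o = D @ W @ M_o
print(np.max(np.abs(M_o)) <= c)                 # True
```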

Recall that \(M(0)=M_o\) corresponds to a multi-layer \(F=f_p\circ \cdots \circ f_1\) with arbitrary nonlinearities. Let us add a residual skip link by choosing \(\rho =1\) in (44).

Lemma 29

Let \(W_i\) be as in Lemma 28 and recall (44). Assume that \(c<1/n\). Then

\[1-cn\le s_n(M(1))\le s_1(M(1))\le 1+cn\]

and

\[\kappa (M(1))\le \frac{1+cn}{1-cn}.\]

Proof

To establish (86) apply Lemma 17 with \(i=1\) and \(i=n\) and \(\rho =1\), and note that \(s_1(M_o)\le cn\) by Lemmata 27 and 28. The bound (87) follows immediately.
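Adding the skip connection thus pins every singular value to the interval \([1-cn,\,1+cn]\). A NumPy sketch under the assumptions of Lemma 29, with \(c=0.5/n\), three layers, uniform weights in \([-c,c]\) and ReLU-type diagonals \(D_i\) with entries 0 or 1 (all of them our own choices):

```python
import numpy as np

# Illustrative sketch: layer count, weights and activation patterns are assumptions.
rng = np.random.default_rng(12)
n, p = 10, 3
c = 0.5 / n                                     # c < 1/n, as in Lemma 29

M_o = np.eye(n)
for _ in range(p):
    W = rng.uniform(-c, c, size=(n, n))         # entries bounded by c
    D = np.diag(rng.choice([0.0, 1.0], size=n)) # ReLU-type activation pattern
    M_o = D @ W @ M_o

s = np.linalg.svd(M_o + np.eye(n), compute_uv=False)   # M(1) = M_o + Id
print(1 - c * n <= s[-1] and s[0] <= 1 + c * n)          # True
print(s[0] / s[-1] <= (1 + c * n) / (1 - c * n))        # True
```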

Proposition 23 implies the following bound for the absolute value activation.

Theorem 30

(Hard Bound, Absolute Value) Under the assumptions of Lemma 29 and for \(D\in \mathcal {D}(m,n,-1)\), we have

\[\kappa (M(1)D)=\kappa (M(1))\le \frac{1+cn}{1-cn}.\]

For a residual multi-layer (44) with ReLU nonlinearities, we find the following.

Theorem 31

(Hard Bound, ReLU) Under the assumptions of Lemma 29 and for \(D\in \mathcal {D}(m,n,0)\), the following statements hold:

Provided \(2cn<1/(1+n)\), we have

Provided \(m\le n(1-cn)^2\), we have

Provided \(2cn<1/(1+2m)\), we have

Proof

Applying Proposition 25 with \(\rho =1\), i.e., \(M=M(1)\), we have by (81) that

Note that this bound cannot be made arbitrarily small by choosing c small and that it amounts to at least m. However, the bulk of the sum of deviations stems from some m singular values of M being changed to zero by D, compensating the constant term m in the above bound. More precisely, \(s_i\ge 1-cn\) by (86) for all i while \(\tilde{s}_i=0\) for \(i=n-m+1,\ldots , n\). Thus,

implying that for any \(1\le j \le n-m\)

(using \(cn-cn^2<0\)). Therefore,

(using \(m(1+n) \le n^2-1\le n^2\)). Since this bound holds for all non-zero singular values of \(\tilde{M}\), we obtain

Finally, we have \(\tilde{s}_1\le s_1\le 1+cn\) by Lemma 24 and (86). This proves (89). Alternatively, we can estimate as follows:

using \(1/n\le 1\), which proves (91). At last, we can use (82) instead of (81). Analogous to before, we find

implying for any \(1\le j \le n-m\) that \( |s_j - \tilde{s}_j |^2 \le 2mc(1+n) . \) Therefore, provided \(2cn<1/(1+n)\) or \(2c(1+n)<1/n\), we obtain (90) by observing that

For leaky-ReLU, the situation is more intricate.

Theorem 32

(Hard Bound, Leaky-ReLU) Let \(W_i\) be as in Lemma 28 and recall (44). Assume that \(c<1/n\). Let \(D\in \mathcal {D}(m,n,\eta )\) with \(0\le \eta \le 1\).

Provided \(n\ge 5\) and \(\eta <1\) large enough to ensure \((1-cn)^2 > 4(1-\eta )\sqrt{m} \), we have

Alternatively, if \(\eta <1\) is such that \(1-cn>(1-\eta )m(3c+1)\), then we have

This situation corresponds to a ResNet (44) with leaky-ReLU nonlinearities, because \(\eta \) can take any value between 0 and 1 (see (35)).

Proof

We proceed along the lines of the arguments of Theorem 31. To this end, we need to generalize the results of Proposition 25.

In order to replace (81) we write for short \(M=M(1)=M_o+\)Id with singular values \(s_i\) and \(\tilde{M}=M(1)D\) with singular values \(\tilde{s}_i\). Applying Proposition 1 with \({A}=M^TM\) and \({{\tilde{A}}}= \tilde{M}^T\tilde{M}= (MD)^TMD=D{A}D\), we obtain

Since all entries of \(M_o\) are bounded by c due to Lemma 28 and using \(cn<1\), it follows that the entries of \({A}\) are bounded as follows

Using these bounds, direct computation shows that \( {A}-{{\tilde{A}}}={A}-D{A}D \) contains \(2m(n-m)\) terms off the diagonal bounded by \(3c(1-\eta )\), m terms in the diagonal bounded by \((3c+1)(1-\eta ^2)\), further \(m^2-m\) terms off the diagonal bounded by \(3c(1-\eta ^2)\), and the remaining \((n-m)^2\) terms equal to zero. Using \(cn<1\) where applicable as well as \((1-\eta ^2)\le 2(1-\eta )\), we can estimate as follows:

We conclude that, for \(n\ge 5\), we have \(|s_j^2-\tilde{s}_j^2 |\le (1-\eta )4\sqrt{m}\). Together with \(s_j\ge (1-cn)\), we obtain (100).

Alternatively, we may adapt (82) (which is based on Corollary 2) to \(\eta \ne 0\). Recomputing (82) using (18) with \({A}=M\) and \({{\tilde{A}}}=\tilde{M}\) for non-zero \(\eta \), we obtain

We obtain \(|s_j-\tilde{s}_j | \le (1-\eta )\sqrt{m(c^2n+2c+1)} \le (1-\eta )\sqrt{m(3c+1)} \) and (101).

We note that the earlier Theorem 22 will not lead to strong results in this context. However, it will prove effective in the random setting of the next section.

6.2 Bounds with High Probability for Random Matrices

While the results of the last section provide absolute guarantees, they are quite restrictive on the matrix \(M_o\) since they cover the worst cases. Working with the average behavior of a randomly initialized matrix \(M_o\) enables us to relax the restrictions from bounding the actual values of the weights to only bounding their standard deviation.

The strongest results in this context concern the operator norm and exploit cancellations in the entries of \(M_o\) (see [3, Proposition 3.1], [23, 24]). Most useful for this study is the well-known result due to Tracy and Widom [23, 24]. Translated into our setting, it reads as follows.

Theorem 33

(Tracy-Widom) Let \(X_{i,j}\) (\(1\le i,j\)) be an infinite family of iid copies of a Uniform or Gaussian random variable X with zero mean and variance 1.

Let \(K_n\) be a sequence of \(n\times n\) matrices with entries \(K_n[i,j]=X_{i,j}\) (\(1\le i,j\le n\)).

Let \(\overline{t_n}\) denote the largest eigenvalue of \(K_n^T+K_n\) and let \(\underline{t_n}\) denote its smallest.

Then, as \(n\rightarrow \infty \), both \(\overline{t_n}\) and \(-\underline{t_n}\) lie with high probability in the interval

More precisely, the distributions of

converge both (individually) to a Tracy-Widom law \(P_{TW}\). The Tracy-Widom law is well concentrated at values close to 0.

The random variables \(Z_n\) and \(\tilde{Z}_n\) are equal in distribution due to the symmetry of the random variable X. However, they are not independent.

Fig. 4 Empirical demonstration of the Tracy-Widom law. Note the collapsing histograms of \(Z_n=(\overline{t_n} -\sqrt{8n})\, n^{1/6}\) for \(n=5, 10, 25, 50, 100\), where \(\overline{t_n}\) denotes the largest eigenvalue of the \(n\times n\) matrix \(K_n+K_n^T\) and the entries of \(K_n\) are drawn from (a) a Gaussian or (b) a uniform distribution with zero mean and variance 1. Note that the right-side tails \(P[Z_n>t]\) decay very quickly, leading to negligible probabilities at moderate values of t.

It is easy to verify that the theory of Wigner matrices and the Tracy-Widom law apply here, which establishes Theorem 33. In particular, \(K_n^T+K_n\) is symmetric, with iid entries above the diagonal and iid entries on the diagonal, all with finite nonzero second moment. The normalization of \(\overline{t_n}\) leading to \(Z_n\) (see (107)) is quite sharp, meaning that the distributions of \(Z_n\) are already close to their limit for n as small as 5 (see Fig. 4).

The Tracy-Widom distributions are well studied and documented, with a right tail that decays exponentially fast [7]. Moreover, they are well concentrated at values close to 0. Combined with the fast convergence, this implies that \(\overline{t_n}\) is close to \( \sqrt{8n}\) with high probability even for modest n.
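A minimal Monte Carlo sketch, assuming NumPy, illustrates Theorem 33 and the normalization used in Fig. 4. It samples \(K_n\) with zero-mean, unit-variance Gaussian or uniform entries, computes the extreme eigenvalues of \(K_n^T+K_n\), and forms \(Z_n=(\overline{t_n}-\sqrt{8n})\,n^{1/6}\) together with the mirrored statistic \(\tilde{Z}_n=(-\underline{t_n}-\sqrt{8n})\,n^{1/6}\). The latter is our reading of how \(\tilde{Z}_n\) is defined, consistent with the identity \(P[\underline{\tau }_n>-1] = P[\tilde{Z}_n < (r-1)\sqrt{8} n^{2/3}]\) used later in this section; the helper `sample_extreme_eigs` is hypothetical.

```python
import numpy as np


def sample_extreme_eigs(n, rng, dist="gaussian"):
    """Largest and smallest eigenvalue of K^T + K for one random n x n matrix K."""
    if dist == "gaussian":
        K = rng.standard_normal((n, n))
    else:
        # Uniform on [-sqrt(3), sqrt(3)] has zero mean and unit variance.
        K = rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), size=(n, n))
    eigs = np.linalg.eigvalsh(K.T + K)  # eigenvalues in ascending order
    return eigs[-1], eigs[0]


rng = np.random.default_rng(0)
n, trials = 100, 200
top = np.empty(trials)
bottom = np.empty(trials)
for k in range(trials):
    top[k], bottom[k] = sample_extreme_eigs(n, rng)

Z = (top - np.sqrt(8 * n)) * n ** (1 / 6)            # Z_n
Z_tilde = (-bottom - np.sqrt(8 * n)) * n ** (1 / 6)  # mirrored statistic
print("mean of t_max / sqrt(8n):", top.mean() / np.sqrt(8 * n))
print("mean and std of Z_n:", Z.mean(), Z.std())
print("mean and std of tilde Z_n:", Z_tilde.mean(), Z_tilde.std())
```

Increasing n should push the mean ratio toward 1 while the spread of \(Z_n\) stays of order 1, which is the collapse of the histograms seen in Fig. 4.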

With the appropriate adjustments, Theorem 33 provides tight control on \(\overline{\tau }\) and \(\underline{\tau }\) (see Definition 15). To exploit this control for bounding the growth of singular values via Theorem 16, the following result is useful.

Theorem 34

Assume that the \(n^2\) entries of the random matrix \(M_o\) are jointly continuous with some joint probability density function (e.g., Uniform or jointly Gaussian entries). Then, almost surely, \(M_o\) possesses no multiple singular value, and the growth of the singular values of \(M_o+\rho \textrm{Id}\) is bounded as in (70).

Proof

As in the proof of Lemma 12, we argue that the discriminant \(\chi \) of the characteristic polynomial of the \(n\times n\) matrix \(M_o^TM_o\) is itself a polynomial in the entries of \(M_o\). Viewing \(M_o\) as a point in \(\mathbb {R}^{n\times n}\) defined by its entries, the matrices \(M_o\) with multiple singular values form a set that is identical to the zero-set of the polynomial \(\chi \) and, therefore, a set of Lebesgue measure 0. Since the distribution of \(M_o\) has a density, \(M_o\) falls into this set with probability 0, and the statement follows.

A related useful result reads as follows (see [5, Theorem 6.2.6 and Section 6.2.3] and the references therein).

Theorem 35

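Let \(K_n\) be as in Theorem 33, but Gaussian. Then, as \(n\rightarrow \infty \),

$$s_1(K_n)/\sqrt{n}\rightarrow 2 \quad \text{almost surely}.$$

Moreover, letting \(\mu _n=(\sqrt{n-1}+\sqrt{n})^2\) and \(q_n=\sqrt{\mu _n}(1/\sqrt{n-1}+1/\sqrt{n})^{1/3}\), the distribution of

$$\begin{aligned} Y_n=\frac{s_1(K_n)^2-\mu _n}{q_n} \end{aligned}$$ (110)

converges narrowly to a Tracy-Widom law.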

Comparing with Theorem 33, we should expect that \(s_1(K_n)\simeq 2\sqrt{n}\simeq \overline{t}_n/\sqrt{2}\) (compare to Fig. 5).
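A Monte Carlo sketch of Theorem 35 and of the comparison \(s_1(K_n)\simeq 2\sqrt{n}\simeq \overline{t}_n/\sqrt{2}\), assuming NumPy. The statistic \(Y_n=(s_1(K_n)^2-\mu _n)/q_n\) is our reading of the normalization in Theorem 35; it is consistent with the estimate \(P[s_1(W_1)^2\ge 1] \le P[ Y_n \ge \sqrt{2}(2r^2-1) n^{2/3} ]\) used later in this section. The helper `mu_q` is hypothetical.

```python
import numpy as np


def mu_q(n):
    """Centering mu_n and scaling q_n as defined in Theorem 35."""
    mu = (np.sqrt(n - 1) + np.sqrt(n)) ** 2
    q = np.sqrt(mu) * (1 / np.sqrt(n - 1) + 1 / np.sqrt(n)) ** (1 / 3)
    return mu, q


rng = np.random.default_rng(1)
n, trials = 100, 200
mu, q = mu_q(n)
s1 = np.empty(trials)
tmax = np.empty(trials)
for k in range(trials):
    K = rng.standard_normal((n, n))
    s1[k] = np.linalg.svd(K, compute_uv=False)[0]  # largest singular value of K_n
    tmax[k] = np.linalg.eigvalsh(K.T + K)[-1]      # largest eigenvalue of K_n^T + K_n

Y = (s1 ** 2 - mu) / q  # Y_n
print("mean of s_1 / sqrt(n):", s1.mean() / np.sqrt(n))
print("mean of s_1 / (t_max / sqrt(2)):", (s1 / (tmax / np.sqrt(2))).mean())
print("mean and std of Y_n:", Y.mean(), Y.std())
```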

Fig. 5 (a) Empirical demonstration of the convergence of \(Y_n\) in (110) to a Tracy-Widom law. Note that the right-side tails \(P[Y_n>t]\) decay very quickly, leading to negligible probabilities at moderate values of t and for n as small as 5, similar to \(Z_n\) in Fig. 4. (b) Empirical demonstration of the convergence of \(\overline{\tau }/(\sqrt{2}s_1)\) to 1: the ratio indeed lies with high probability between 0.6 and 1.4 for n as small as 10.

Combining Theorem 22 (with \(M_o=W_1\)) with Theorems 33 and 35, and using that (for \(n\ge 2\)) \(\mu _n\le 4n\) and \(q_n \le \sqrt{4n}\,2^{1/3}(n-1)^{-1/6} \le \sqrt{4n}\,2^{1/3}(n/2)^{-1/6} = 2^{3/2}n^{1/3} \), we obtain the following.

Lemma 36

(High Probability Bound) In the notation of Theorem 33, let \(r>1\) and \(n\ge 2\), set \(\sigma _n = 1/(r\sqrt{8n})\), and let \( W_1 = \sigma _n K_n . \) Denote the largest and smallest eigenvalues of \(W_1^T+W_1\) by \(\overline{\tau }_n=\overline{\tau }_n(r)\) and \(\underline{\tau }_n=\underline{\tau }_n(r)\) (compare with (69)).

(i) We have

and

(ii) In the Gaussian case, we have that \( s_1(W_1) {\rightarrow } 1/(\sqrt{2}r) \) almost surely. Also

(iii) For any realization of \(W_1\) with \(s_1(W_1)<1\)

(iv) For any realization of \(W_1\) with \(\underline{\tau }_n>-1\) we have the slightly tighter bounds

The condition number of \(W_1+\textrm{Id}\) is then bounded as

When comparing (i) and (ii), it becomes apparent that the stated sufficient condition in (iii) is more easily satisfied than that of (iv).
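The two elementary estimates used before Lemma 36, \(\mu _n\le 4n\) and \(q_n\le 2^{3/2}n^{1/3}\) for \(n\ge 2\), can be spot-checked on a grid with NumPy. This is a sanity check on a finite range of n, not a proof.

```python
import numpy as np

# Grid of dimensions n = 2, ..., 100000 (as floats for vectorized arithmetic).
n = np.arange(2, 100_001, dtype=float)
mu = (np.sqrt(n - 1) + np.sqrt(n)) ** 2
q = np.sqrt(mu) * (1 / np.sqrt(n - 1) + 1 / np.sqrt(n)) ** (1 / 3)

mu_ok = bool(np.all(mu <= 4 * n))                 # mu_n <= 4n
q_ok = bool(np.all(q <= 2 ** 1.5 * n ** (1 / 3)))  # q_n <= 2^{3/2} n^{1/3}
print(mu_ok, q_ok)
```

For large n, \(4n-\mu _n\) approaches 2 and \(q_n/(2^{3/2}n^{1/3})\) approaches \(2^{-1/6}\approx 0.89\), so both inequalities hold with room to spare.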

For a single residual layer (43) with weights \(W_1\) and a ResNet with absolute value nonlinearities, we obtain the following.

Corollary 37

(High Probability Bound, Absolute Value) Let \(W_1\) be as in Lemma 36. Let \(D,D_1\in \mathcal {D}(m,n,-1)\) for some fixed m. Set \(M_o=D_1W_1\) and recall Definition 15.

(i) In the Gaussian case, we have \( s_1(M_o) {\rightarrow } 1/(\sqrt{2}r) \) almost surely.

(ii) For any realization of \(M_o\) for which \(s_1(M_o)<1\), we have

(iii) For any realization of \(W_1\) with \(\underline{\tau }>-1\) the following bound holds

Proof

Note that \(M_o=D_1W_1\) is a random matrix with the same distribution as \(W_1\) itself, since \(D_1\) is deterministic and only changes the sign of some of the entries of \(W_1\), whose distribution is symmetric. We may, therefore, apply Lemma 36 to \(M_o\) (in place of \(W_1\)).

Also, \(D_1\) and D are diagonal with entries \(\pm 1\) and hence orthogonal, so they do not alter singular values: \(s_i(M_o)=s_i(W_1)\) and \(s_i((M_o+\textrm{Id})D)=s_i(M_o+\textrm{Id})\).
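The two invariances used in this proof can be checked numerically. This sketch assumes NumPy and models \(D_1\) and D as diagonal matrices with m entries equal to \(-1\) and the remaining entries equal to \(+1\) (our reading of \(\mathcal {D}(m,n,-1)\)); the sizes, seed, and the scaling with \(r=2\) are arbitrary illustrative choices.

```python
import numpy as np


def sv(A):
    """Singular values of A in descending order."""
    return np.linalg.svd(A, compute_uv=False)


rng = np.random.default_rng(3)
n, m, r = 50, 20, 2.0
W1 = rng.standard_normal((n, n)) / (r * np.sqrt(8 * n))  # assumed scaling

# Sign patterns: m randomly placed entries equal to -1, the rest +1.
d1 = np.ones(n)
d1[rng.choice(n, m, replace=False)] = -1.0
d = np.ones(n)
d[rng.choice(n, m, replace=False)] = -1.0
D1, D = np.diag(d1), np.diag(d)

I = np.eye(n)
Mo = D1 @ W1
print(np.allclose(sv(Mo), sv(W1)))                # s_i(M_o) = s_i(W_1)
print(np.allclose(sv((Mo + I) @ D), sv(Mo + I)))  # s_i((M_o + Id) D) = s_i(M_o + Id)
# Adding Id breaks the invariance for a fixed realization; equality holds only in distribution.
print(np.allclose(sv(Mo + I), sv(W1 + I)))
```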

Probabilities of Exceptional Events

- Clarification regarding \(\underline{\tau }\) vs. \(\underline{\tau }_n\) in the setting of Corollary 37: While we have \(s_1(M_o)=s_1(W_1)\), note that \(\overline{\tau }\) (the largest eigenvalue of \(M_o^T+M_o\)) may differ from \(\overline{\tau }_n\) (the largest eigenvalue of \(W_1^T+W_1\)) for any particular realization. However, since \(M_o\) and \(W_1\) are equal in distribution due to the special form of \(D_1\), the random variables \(\overline{\tau }\) and \(\overline{\tau }_n\), as well as \(-\underline{\tau }\) and \(-\underline{\tau }_n\), are all equal in distribution, with a distribution well concentrated around 1/r.

- Exception \( E_{\underline{\tau }_n} = E_{\underline{\tau }_n(r)} = \{\underline{\tau }_n(r) \le -1\}\): We have

  $$\begin{aligned} P[E_{\underline{\tau }_n}]\rightarrow 0 \quad \text{ as }\quad n\rightarrow \infty \quad \text{for}\quad r>1. \end{aligned}$$ (119)

  Moreover, \(P[E_{\underline{\tau }_n}]\approx 0\) already for \(r\ge 2\) and \(n\ge 5\). We support this claim with the following computations and simulations. On the one hand, \(P[\underline{\tau }_n>-1] = P[\tilde{Z}_n < (r-1)\sqrt{8} n^{2/3}] \rightarrow 1\) for \(r>1\). On the other hand, for \(r\ge 2\) and \(n\ge 5\), we have \((r-1)\sqrt{8} n^{2/3}>8\) and may estimate \(P[\underline{\tau }_n>-1] = P[\tilde{Z}_n< (r-1)\sqrt{8} n^{2/3}] \ge P[\tilde{Z}_n< 8] = P[ Z_n < 8]. \) From Fig. 4 it appears that \(P[Z_n<8]=1\) for \(n\ge 5 \) for all practical purposes. This is confirmed by our simulations: every single one of the 500,000 random matrices simulated with \(r=2\) satisfied \(\underline{\tau }_n>-1\).

- Exception \( E_{s_1(W_1)} = \{s_1(W_1)\ge 1\}\): We have

  $$\begin{aligned} P[ E_{s_1(W_1)} ]\rightarrow 0 \quad \text{ as }\quad n\rightarrow \infty \quad \text{for} \quad r>1. \end{aligned}$$ (120)

  Moreover, \(P[ E_{s_1(W_1)} ]\approx 0\) already for \(r\ge 2\) and \(n\ge 5\). According to (113) and Theorem 35, we have \( P[s_1(W_1)^2\ge 1] \le P[ Y_n \ge \sqrt{2}({2r^2}-1) n^{2/3} ] \rightarrow 0 . \) As demonstrated in Fig. 5, \(Y_n\) converges rapidly to a Tracy-Widom law, so that \(P[E_{s_1(W_1)}]\) is small for \(r\ge 2\) and modest n. In the setting of Corollary 37, the probability of the event \( E_{s_1(M_o)} = \{s_1(M_o)\ge 1\}\) likewise tends to zero rapidly.
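A small Monte Carlo sketch of the two exceptional events, assuming NumPy, Gaussian entries, and the scaling \(\sigma _n = 1/(r\sqrt{8n})\) implied by the identity \(P[\underline{\tau }_n>-1] = P[\tilde{Z}_n < (r-1)\sqrt{8} n^{2/3}]\); the helper `exceptional_rates` is hypothetical. With \(r=2\) and \(n=5\) both empirical frequencies are expected to be negligible, in line with the simulations reported above, although the trial count here is far smaller than the 500,000 used there.

```python
import numpy as np


def exceptional_rates(n, r, trials, rng):
    """Empirical frequencies of {tau_min <= -1} and {s_1(W_1) >= 1}."""
    sigma_n = 1.0 / (r * np.sqrt(8 * n))  # assumed scaling of W_1 = sigma_n K_n
    hits_tau = 0
    hits_s1 = 0
    for _ in range(trials):
        W1 = sigma_n * rng.standard_normal((n, n))
        hits_tau += int(np.linalg.eigvalsh(W1.T + W1)[0] <= -1)       # event E_tau
        hits_s1 += int(np.linalg.svd(W1, compute_uv=False)[0] >= 1)  # event E_s1
    return hits_tau / trials, hits_s1 / trials


rng = np.random.default_rng(4)
p_tau, p_s1 = exceptional_rates(n=5, r=2.0, trials=5_000, rng=rng)
print("P[E_tau] ~", p_tau, "  P[E_s1] ~", p_s1)
```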

Fig. 6 Empirical demonstration of the efficiency of the bounds (115) and (116) using \(r=2\). The panels show histograms of the ratios (a) \( [s_n^2(W_1+\textrm{Id})]/ [s_n^2(0)+\underline{\tau }_n+1] \), (b) \( [s_1^2(W_1)+\overline{\tau }_n+1] / [s_1^2(W_1+\textrm{Id})] \), and (c) \( \sqrt{(s_1^2(W_1)+\overline{\tau }_n+1)/(\underline{\tau }_n+1)} / \kappa (W_1+\textrm{Id}) \). Note that all values are above 1 and in fact quite close to 1.

The bounds (115) and (116) are surprisingly efficient. The first upper bound of (116), for example, is only about \(10\%\) larger than \(\kappa (W_1+\textrm{Id})\) for large n (see Fig. 6).
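The ratios shown in Fig. 6 can be recomputed with a short sketch, assuming NumPy, Gaussian entries, and \(\sigma _n = 1/(r\sqrt{8n})\), and reading \(s_n^2(0)\) in panel (a) as \(s_n^2(W_1)\), that is, the smallest singular value of \(W_1+\rho \textrm{Id}\) at \(\rho =0\). Each ratio is at least 1 by the identity \(\Vert (W_1+\textrm{Id})x\Vert ^2=\Vert W_1x\Vert ^2+x^T(W_1^T+W_1)x+1\) for unit vectors x.

```python
import numpy as np

rng = np.random.default_rng(5)
r, n, trials = 2.0, 100, 200
sigma_n = 1.0 / (r * np.sqrt(8 * n))  # assumed scaling of W_1 = sigma_n K_n
I = np.eye(n)
ratios = np.empty((trials, 3))
for k in range(trials):
    W1 = sigma_n * rng.standard_normal((n, n))
    s_W = np.linalg.svd(W1, compute_uv=False)      # singular values of W_1, descending
    s_A = np.linalg.svd(W1 + I, compute_uv=False)  # singular values of W_1 + Id
    eigs = np.linalg.eigvalsh(W1.T + W1)           # ascending
    tau_min, tau_max = eigs[0], eigs[-1]
    upper = s_W[0] ** 2 + tau_max + 1              # upper bound on s_1^2(W_1 + Id)
    kappa = s_A[0] / s_A[-1]                       # condition number of W_1 + Id
    ratios[k] = (
        s_A[-1] ** 2 / (s_W[-1] ** 2 + tau_min + 1),  # panel (a)
        upper / s_A[0] ** 2,                          # panel (b)
        np.sqrt(upper / (tau_min + 1)) / kappa,       # panel (c)
    )

print("smallest ratio per panel:", ratios.min(axis=0))
print("median ratio per panel:", np.median(ratios, axis=0))
```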

We attribute this efficiency of the bounds to the fact that the singular values of random matrices tend to spread as widely as possible: the largest singular values grow as fast as possible, while the smallest singular values grow as slowly as possible. While this maximal spreading is well known for symmetric random matrices, the so-called Wigner matrices, we are not aware of any reports of this behavior for non-symmetric matrices such as \(W_1\).

Fig. 7 Empirical demonstration of the asymptotic bound (121). Histograms of \(\kappa (W_1+\textrm{Id})\) for (a) \(r=2\) and (b) \(r=3\), together with the upper bound given by the deterministic expression from (121), shown in black. Note that the values of \(\kappa (W_1+\textrm{Id})\) lie asymptotically below the deterministic expression, as indicated in (121). Also, recall that \(\kappa (W_1+\textrm{Id})\) and \(\kappa (D_1W_1+\textrm{Id})\) are equal in distribution in the setting of Corollary 37.

For design purposes, an asymptotic bound on the condition number \(\kappa (M(1)D)\) in terms of the network parameters might be more useful than the bounds of Corollary 37, which are stated in terms of the random realization.

Theorem 38

(High Probability Bound in the Limit) With the notation and assumptions of Theorem 33 and Lemma 36, the upper bounds given there converge in distribution to constants as follows

and

For a demonstration of the deterministic expression bounding the condition number as in (121), see Fig. 7. For \(r=2\), for example, the right-hand side of (121) becomes 1.8028, while the right-hand side of (122) becomes the slightly larger 1.9719.

Proof

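Since \(Z_n\) converges in distribution to a real-valued random variable Z, we can conclude that \(Z_n/n^{2/3}\) converges in distribution to 0. Indeed, for any \(\varepsilon >0\) and any \(n_o\ge 1\), we have for \(n\ge n_o\) that

$$P[|Z_n/n^{2/3}|\ge \varepsilon ] \le P[|Z_n|\ge \varepsilon \, n_o^{2/3}].$$

From this we obtain for any \(n_o\ge 1\) that

$$\limsup _{n\rightarrow \infty } P[|Z_n/n^{2/3}|\ge \varepsilon ] \le \limsup _{n\rightarrow \infty } P[|Z_n|\ge \varepsilon \, n_o^{2/3}] \le P[|Z|\ge \varepsilon \, n_o^{2/3}],$$

where the last step uses the portmanteau theorem for the closed set \(\{z: |z|\ge \varepsilon \, n_o^{2/3}\}\). Letting \(n_o\rightarrow \infty \), we conclude that \( P[|Z_n/n^{2/3}|\ge \varepsilon ] \rightarrow 0 . \) This establishes that \(Z_n/n^{2/3}{\mathop {\rightarrow }\limits ^\textrm{distr}} 0\). Similarly, we obtain \(\tilde{Z}_n/n^{2/3}{\mathop {\rightarrow }\limits ^\textrm{distr}} 0\).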

By the continuous mapping theorem, and since \(r>1\), we obtain

Similarly,

Simple algebra completes the proof.
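The two constants quoted after Theorem 38 for \(r=2\) (1.8028 for (121) and 1.9719 for (122)) can be reproduced with a few lines of arithmetic under an assumption about the form of the bounds. We assume that (121) is the limit of the bound \(\sqrt{(s_1^2(W_1)+\overline{\tau }_n+1)/(\underline{\tau }_n+1)}\) from panel (c) of Fig. 6, and that (122) is the limit of \(\sqrt{s_1^2(W_1)+\overline{\tau }_n+1}/(1-s_1(W_1))\), our guess based on Weyl's inequality \(s_n(W_1+\textrm{Id})\ge 1-s_1(W_1)\), which applies when \(s_1(W_1)<1\). Substituting the limits \(s_1(W_1)\rightarrow 1/(\sqrt{2}r)\), \(\overline{\tau }_n\rightarrow 1/r\) and \(\underline{\tau }_n\rightarrow -1/r\) gives the constants; the function name `limit_bounds` is hypothetical.

```python
import math


def limit_bounds(r):
    """Limits of the two condition-number bounds (assumed forms, see lead-in); needs r > 1."""
    s1 = 1 / (math.sqrt(2) * r)  # limit of s_1(W_1)
    tau_max = 1 / r              # limit of the largest eigenvalue of W_1^T + W_1
    tau_min = -1 / r             # limit of the smallest eigenvalue of W_1^T + W_1
    top = s1 ** 2 + tau_max + 1  # limit of the upper bound on s_1^2(W_1 + Id)
    bound_tau = math.sqrt(top / (tau_min + 1))  # via s_n^2(W_1 + Id) >= tau_min + 1
    bound_weyl = math.sqrt(top) / (1 - s1)      # via s_n(W_1 + Id) >= 1 - s_1(W_1)
    return bound_tau, bound_weyl


for r in (2, 3, 5):
    b_tau, b_weyl = limit_bounds(r)
    print(f"r={r}: {b_tau:.4f} {b_weyl:.4f}")  # r=2 line: 1.8028 1.9719
```

In closed form the two limits are \(\sqrt{(2r^2+2r+1)/(2r(r-1))}\) and \(\sqrt{2r^2+2r+1}/(\sqrt{2}r-1)\); both decrease toward 1 as r grows.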

Our simulations suggest that, in fact, \(\overline{\tau }_n / s_1(W_1)\) converges in distribution to \(\sqrt{2}\) as \(n\rightarrow \infty \), implying that we should see \(\overline{\tau }_n \simeq \sqrt{2} s_1(W_1)\) for most matrices in real-world applications.
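A quick single-draw sketch, assuming NumPy, Gaussian entries, and \(\sigma _n = 1/(r\sqrt{8n})\), compares \(s_1(W_1)\), \(\overline{\tau }_n\) and \(\underline{\tau }_n\) with their limits \(1/(\sqrt{2}r)\), \(1/r\) and \(-1/r\), and reports the ratio \(\overline{\tau }_n/s_1(W_1)\) discussed above. The helper `lemma36_stats` is hypothetical.

```python
import numpy as np


def lemma36_stats(n, r, rng):
    """s_1(W_1) and the extreme eigenvalues of W_1^T + W_1 for one draw of W_1."""
    sigma_n = 1.0 / (r * np.sqrt(8 * n))  # assumed scaling of W_1 = sigma_n K_n
    W1 = sigma_n * rng.standard_normal((n, n))
    s1 = np.linalg.svd(W1, compute_uv=False)[0]  # largest singular value
    eigs = np.linalg.eigvalsh(W1.T + W1)         # ascending order
    return s1, eigs[-1], eigs[0]


rng = np.random.default_rng(2)
r, n = 2.0, 400
s1, tau_max, tau_min = lemma36_stats(n, r, rng)
print(f"s_1     = {s1:.4f}  vs 1/(sqrt(2) r) = {1 / (np.sqrt(2) * r):.4f}")
print(f"tau_max = {tau_max:.4f}  vs 1/r = {1 / r:.4f}")
print(f"tau_min = {tau_min:.4f}  vs -1/r = {-1 / r:.4f}")
print(f"tau_max / s_1 = {tau_max / s1:.4f}  vs sqrt(2) = {np.sqrt(2):.4f}")
```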

We turn now to the condition number of the relevant matrix \((D_1W_1+\textrm{Id})D\) for a ResNet with ReLU activations that are represented by \(D_1\in \mathcal {D}(\tilde{m},n,0)\) and \(D\in \mathcal {D}(m,n,0)\). We address the case of a single residual layer following the trained layer (cf. (43) with \(p=1\)).

To this end, we let

which can be considered as a measure of the extent to which these two nonlinearities affect the output in combination.

Theorem 39

(High Probability Bound, ReLU) Let \(D_1\in \mathcal {D}(\tilde{m},n,0)\) and \(D\in \mathcal {D}(m,n,0)\) with \(1 \le m + \tilde{m} \). Then, \(1\le \nu \le n\). Choose \(\theta \) such that \(4<\theta \le 2 \sqrt{n}\), and let \(\sigma _n\) and \(W_1\) be as in Lemma 36, with r defined as below and with Gaussian entries for \(W_1\).

(1) Choose \(r>(2+2\nu +\theta )\). Then, with probability at least \(1-2\exp (-\theta ^2/2) - P[E_{s_1(W_1)}],\) we have

(2) Choose \(r'>1\) and \(r>({r'}+{r'}\nu +\theta )\). Then, with probability at least \(1-2\exp (-\theta ^2/2) - P[E_{\underline{\tau }_n(r')}] \), we have

We recall that, according to our simulations, \( P[ E_{s_1(W_1)}] = P[s_1(W_1)^2\ge 1] \le P[ Y_n \ge \sqrt{2}({2r^2}-1) n^{2/3} ] \) and \( P[E_{\underline{\tau }_n(r')}] = P[Z_n > ({r'}-1)\sqrt{8} n^{2/3}] \) are negligible for \(r,{r'}\ge 2\) and modest n.

We mention further that the restriction \(4\le \theta \le 2 \sqrt{n}\) serves solely to simplify the presentation and can be dropped at the cost of more complicated formulas (see proof). In the given form, useful probability bounds are obtained already for n as small as 7.

Clearly, the bound (128) on the condition number \(\kappa \) can be forced arbitrarily close to 2 by choosing r sufficiently large. In fact, bounds can be given that come arbitrarily close to 1, as becomes apparent from (149) and (143) in the proof.

Proof

Let \({A}=W_1+\textrm{Id}\) with singular values \(s_i\) and \({{\tilde{A}}}=(D_1W_1+\textrm{Id})D\) with singular values \(\tilde{s}_i\). Clearly, \({r}>{r'}>1\).

We proceed in several steps, addressing first (129).

(i) Sure bound on the sum of perturbations. We cannot apply Proposition 25 as we did in Theorem 31, since we must also take into account the effect of the left factor \(D_1\). Due to Corollary 2 we have