Abstract

We consider approximating analytic functions on the interval \([-1,1]\) from their values at a set of \(m+1\) equispaced nodes. A result of Platte, Trefethen & Kuijlaars states that fast and stable approximation from equispaced samples is generally impossible. In particular, any method that converges exponentially fast must also be exponentially ill-conditioned. We prove a positive counterpart to this ‘impossibility’ theorem. Our ‘possibility’ theorem shows that there is a well-conditioned method that provides exponential decay of the error down to a finite but user-controlled tolerance \(\epsilon > 0\), which in practice can be chosen close to machine epsilon. The method is known as polynomial frame approximation or polynomial extensions. It uses algebraic polynomials of degree n on an extended interval \([-\gamma ,\gamma ]\), \(\gamma > 1\), to construct an approximation on \([-1,1]\) via an SVD-regularized least-squares fit. A key step in the proof of our main theorem is a new result on the maximal behaviour of a polynomial of degree n on \([-1,1]\) that is simultaneously bounded by one at a set of \(m+1\) equispaced nodes in \([-1,1]\) and by \(1/\epsilon \) on the extended interval \([-\gamma ,\gamma ]\). We show that linear oversampling, i.e. \(m = c n \log (1/\epsilon ) / \sqrt{\gamma ^2-1}\), is sufficient for uniform boundedness of any such polynomial on \([-1,1]\). This result aside, we also prove an extended impossibility theorem, which shows that such a possibility theorem (and consequently the method of polynomial frame approximation) is essentially optimal.


1 Introduction

In this paper, we consider the problem of approximating an analytic function \(f : [-1,1] \rightarrow \mathbb {C}\) from its values at \(m+1\) equispaced points in \([-1,1]\). Several years ago, Platte et al. [34] demonstrated that this problem is intrinsically difficult. They proved an ‘impossibility’ theorem which states that any method that offers exponential rates of convergence in m for all functions analytic in a fixed, but arbitrary region of the complex plane must necessarily be exponentially ill-conditioned. Furthermore, the best rate of convergence achievable by a stable method is necessarily subexponential—specifically, root-exponential in m.

This result generalizes what has long been known for polynomial interpolation at equispaced nodes: namely, Runge’s phenomenon. Polynomial interpolation is divergent for functions that are not analytic in a sufficiently large complex region (the Runge region). And while it converges exponentially fast for functions that are analytic in this region, its condition number is also exponentially large. Such ill-conditioning means that, when computed in floating-point arithmetic, the error of polynomial interpolation eventually increases, even for entire functions, due to the accumulation of round-off error.

Many methods have been proposed to overcome Runge’s phenomenon by stably and accurately approximating analytic functions from equispaced nodes (see, e.g., [10, 15, 34] and references therein). Several such methods exhibit fast convergence in practice, which, for some functions at least, appears to be faster than root-exponential. Yet full mathematical explanations for this phenomenon have hitherto been lacking.

The purpose of this paper is to fully analyse one such method in view of the impossibility theorem. This method is termed polynomial frame approximation or polynomial extensions [7] and is closely related to so-called Fourier extensions [14, 16, 26, 29, 31, 33]. It approximates a function f on \([-1,1]\) using orthogonal polynomials on an extended interval \([-\gamma ,\gamma ]\) for some fixed \(\gamma > 1\). The approximation is then computed by solving a regularized least-squares problem, with user-controlled regularization parameter \(\epsilon > 0\).

Our main contribution is to show that this method offers a positive counterpart to the impossibility theorem of [34]. We prove a ‘possibility’ theorem, which asserts that the polynomial frame approximation method is well-conditioned and, for all functions that are analytic in a sufficiently large region (related to the parameter \(\gamma \)), its error decreases exponentially fast down to roughly \(\mathcal {O}(m^{3/2} \epsilon )\), where \(\epsilon \) is the user-determined tolerance. This tolerance may be taken to be on the order of machine epsilon without impacting the conditioning of the method, thus rendering the approach suitable for practical purposes. But it may also be taken larger (with benefits in terms of stability) if fewer digits of accuracy are required. While the impossibility theorem dictates that exponential convergence to zero cannot be achieved by a well-conditioned method, we show that exponential decrease of the error down to an arbitrary tolerance, multiplied by the slowly growing factor proportional to \(m^{3/2}\), is indeed possible. Additionally, we establish an ‘extended’ impossibility theorem, which relates conditioning and error decay for approximation methods that achieve only a finite accuracy \(\epsilon \). This theorem explains how the method we consider is essentially optimal, in a suitable sense.

Our main result hinges on a new bound for the maximal behaviour of polynomials that are bounded simultaneously at a set of \(m+1\) equispaced nodes on \([-1,1]\) and on the extended interval \([-\gamma ,\gamma ]\). The impossibility theorem of [34] uses a classical result of Coppersmith and Rivlin [21], which states that a polynomial of degree n that is bounded by one at \(m \ge n\) equispaced nodes can grow as large as \(\alpha ^{n^2/m}\) on \([-1,1]\) outside of these nodes, where \(\alpha > 1\) is a constant. In particular, \(m = c n^2\) equispaced points are both sufficient and necessary to ensure boundedness of such a polynomial on \([-1,1]\). We consider a nontrivial variation of this setting, where the polynomial is also assumed to be no larger than \(1/\epsilon \) on \([-\gamma ,\gamma ]\). Our key result shows that \(m = c n \log (1/\epsilon ) / \sqrt{\gamma ^2-1}\) equispaced points suffice for ensuring boundedness of any such polynomial on \([-1,1]\). While we use this bound to analyse polynomial frames, we expect it to be of independent interest from a pure approximation-theoretic perspective.

More broadly, our work has some connections with optimal recovery. We note that optimal recovery of functions in general, and from equispaced samples in particular, has been actively studied in approximation theory, with an emphasis on optimal approximation methods rather than on their stability. See, for instance, [32, 37, 38].

The outline of the remainder of this paper is as follows. In Sect. 2 we present an overview of the main components of the paper. We introduce notation, describe the impossibility theorem of [34] and then present polynomial frame approximation. We next state our main results and then conclude with a discussion of related work. In Sect. 3 we analyse the accuracy and conditioning of polynomial frame approximation. Then in Sect. 4 we give the proof of the aforementioned result on the maximal behaviour of polynomials bounded at equispaced nodes and on the extended interval \([-\gamma ,\gamma ]\). With this in hand, in Sect. 5 we give the proof of the main results: the possibility theorem for polynomial frame approximation and the extended impossibility theorem. We then present several numerical examples in Sect. 6, before offering some concluding remarks in Sect. 7.

2 Overview of the Paper

2.1 Notation

Throughout, \(\mathbb {P}_n\) denotes the space of polynomials of degree at most n. For \(m \ge 1\), we let \(\{ x_i \}^{m}_{i=0}\) be a set of \(m+1\) equispaced points in \([-1,1]\) including endpoints, i.e. \(x_i = -1 + 2 i /m\). Given an interval I, we let C(I) be the space of continuous functions on I and

\[ {\left\| f\right\| }_{I,\infty } = \sup _{x \in I} |f(x)| \]

be the uniform norm over I. We also let

\[ \langle f, g \rangle _{I,2} = \int _{I} f(x) \overline{g(x)} \,\mathrm {d}x \]

be the usual \(L^2\)-inner product over I and \({\left\| \cdot \right\| }_{I,2} = \sqrt{\langle \cdot , \cdot \rangle _{I,2}}\) be the corresponding \(L^2\)-norm.

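Next, we define several discrete semi-norms and semi-inner products. We define

\[ {\left\| f\right\| }_{m,\infty } = \max _{i=0,\ldots ,m} |f(x_i)|, \]

where \(\{ x_i \}^{m}_{i=0}\) is the equispaced grid on \([-1,1]\), and

\[ \langle f, g \rangle _{m,2} = \frac{2}{m+1} \sum _{i=0}^{m} f(x_i) \overline{g(x_i)} . \]

We also let \({\left\| \cdot \right\| }_{m,2} = \sqrt{\langle \cdot , \cdot \rangle _{m,2}}\) be the corresponding discrete semi-norm. Note that \(\langle \cdot , \cdot \rangle _{m,2}\) is an inner product on \(\mathbb {P}_n\) for any \(m \ge n\), since a polynomial of degree at most n cannot vanish at \(m+1 \ge n+1\) distinct points unless it is the zero polynomial. Observe also that

\[ {\left\| f\right\| }_{m,2} \le \sqrt{2}\, {\left\| f\right\| }_{m,\infty } \le \sqrt{2}\, {\left\| f\right\| }_{I,\infty } \]

for any interval \(I \supseteq [-1,1]\) and any \(f \in C(I)\).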
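The discrete quantities above are simple to compute from samples. The NumPy sketch below is illustrative only: the helper names are hypothetical, and it assumes the normalization \(\langle f,g\rangle_{m,2} = \frac{2}{m+1}\sum_{i=0}^{m} f(x_i)\overline{g(x_i)}\), which is consistent with the factor \(\sqrt{2/(m+1)}\) used later in the least-squares matrix. It checks \(\|f\|_{m,2} \le \sqrt{2}\,\|f\|_{m,\infty}\) for a sample function and compares \(\|f\|_{m,2}\) with the continuous \(L^2\)-norm over \([-1,1]\).

```python
import numpy as np
from numpy.polynomial import legendre as L


def equispaced_grid(m):
    """The m+1 equispaced nodes x_i = -1 + 2i/m, i = 0, ..., m."""
    return -1.0 + 2.0 * np.arange(m + 1) / m


def discrete_inf_norm(fx):
    """||f||_{m,inf} = max_i |f(x_i)|, from the samples fx = (f(x_0), ..., f(x_m))."""
    return np.max(np.abs(fx))


def discrete_inner(fx, gx):
    """<f, g>_{m,2} = 2/(m+1) * sum_i f(x_i) conj(g(x_i)) (assumed normalization)."""
    return 2.0 / len(fx) * np.sum(fx * np.conj(gx))


def discrete_2_norm(fx):
    """||f||_{m,2} = sqrt(<f, f>_{m,2})."""
    return np.sqrt(np.real(discrete_inner(fx, fx)))


f = lambda x: np.exp(x) * np.cos(5 * x)
m = 200
fx = f(equispaced_grid(m))
norm_inf, norm_2 = discrete_inf_norm(fx), discrete_2_norm(fx)

# Reference value of the continuous L^2([-1,1]) norm via Gauss-Legendre quadrature.
t, w = L.leggauss(100)
l2 = np.sqrt(np.sum(w * f(t) ** 2))

print(norm_2 <= np.sqrt(2) * norm_inf)   # True: ||f||_{m,2} <= sqrt(2) ||f||_{m,inf}
print(norm_2, l2)                        # close to each other for large m
```

With this normalization the weights \(2/(m+1)\) sum to the length of \([-1,1]\), so \(\|f\|_{m,2}\) tends to \(\|f\|_{[-1,1],2}\) as \(m \to \infty\) for continuous f.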

Finally, given a compact set \(E \subset \mathbb {C}\), we write B(E) for the set of functions that are continuous on E and analytic in its interior. We also define \({\left\| f\right\| }_{E,\infty } = \sup _{z \in E} |f(z)|\).

2.2 The Impossibility Theorem

Throughout this paper, we consider families of mappings

\[ \mathcal {R}_m : C([-1,1]) \rightarrow C([-1,1]), \qquad m \ge 1, \]

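where, for each \(m \ge 1\) and \(f \in C([-1,1])\), \(\mathcal {R}_m(f)\) depends only on the values \(\{f(x_i)\}^{m}_{i=0}\) of f on the equispaced grid \(\{x_i\}^{m}_{i=0}\). We define the (absolute) condition number of \(\mathcal {R}_m\) (in terms of the continuous and discrete uniform norms) as

\[ \kappa (\mathcal {R}_m) = \sup _{f \in C([-1,1])} \lim _{\delta \rightarrow 0^+} \sup \left\{ \frac{{\left\| \mathcal {R}_m(f+h) - \mathcal {R}_m(f)\right\| }_{[-1,1],\infty }}{\delta } : h \in C([-1,1]),\ 0 < {\left\| h\right\| }_{m,\infty } \le \delta \right\} . \tag{2.2} \]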

We are now ready to state the impossibility theorem:

Theorem 2.1

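(The impossibility theorem, [34]) Let \(E \subset \mathbb {C}\) be a compact set containing \([-1,1]\) in its interior and \(\{ \mathcal {R}_m \}^{\infty }_{m=1}\) be an approximation procedure based on equispaced grids of \(m+1\) points such that, for some \(C,\rho > 1\) and \(1/2 < \tau \le 1\), we have

\[ {\left\| f - \mathcal {R}_m(f)\right\| }_{[-1,1],\infty } \le C \rho ^{-m^{\tau }} {\left\| f\right\| }_{E,\infty }, \qquad \forall \, m \ge 1,\ f \in B(E). \]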

Then the condition numbers (2.2) satisfy

\[ \kappa (\mathcal {R}_m) \ge \sigma ^{m^{2\tau -1}} \]

for some \(\sigma > 1\) and all sufficiently large m.

When \(\tau = 1\), this implies that any approximation procedure that achieves exponential convergence at a geometric rate must also be exponentially ill-conditioned at a geometric rate. Furthermore, the best possible (and achievable [11]) rate of convergence of a stable method is root-exponential in m, i.e. the error decays like \(\rho ^{-\sqrt{m}}\) for some \(\rho > 1\).

2.3 Polynomial Frame Approximation

We now describe polynomial frame approximation. As observed, this method was formalized in [7] and is related to earlier works on Fourier extensions [9, 12, 14, 16, 26, 29, 31, 33], and more generally, numerical approximations with frames [6, 8].

Let \(\gamma > 1\). Polynomial frame approximation uses orthogonal polynomials on an extended interval \([-\gamma ,\gamma ]\) to construct an approximation to a function over \([-1,1]\). In this paper, we use orthonormal Legendre polynomials, although we remark in passing that other orthogonal polynomials such as Chebyshev polynomials could also be employed. Note that an orthonormal basis on \([-\gamma ,\gamma ]\) fails to constitute a basis when restricted to the smaller interval \([-1,1]\). It forms a so-called frame [6, 19], hence the name polynomial ‘frame’ approximation.

Let \(P_i(x)\) be the classical Legendre polynomial on \([-1,1]\), normalized so that \(P_i(1) = 1\). Since \(\int ^{1}_{-1} |P_i(x)|^2 \,\mathrm {d}x = (i+1/2)^{-1}\), we define the Legendre polynomial frame on \([-1,1]\) as \(\{ \psi _i \}^{\infty }_{i=0}\), where the ith such function is given by

\[ \psi _i(x) = \sqrt{\frac{i+1/2}{\gamma }}\, P_i(x/\gamma ), \qquad i = 0,1,2,\ldots \tag{2.3} \]

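As a check on this normalization, the sketch below evaluates \(\psi_i(x) = \sqrt{(2i+1)/(2\gamma)}\, P_i(x/\gamma)\) with NumPy's Legendre–Vandermonde routine and verifies, by Gauss-Legendre quadrature, that \(\psi_0,\ldots,\psi_n\) are orthonormal on \([-\gamma,\gamma]\). The helpers legendre_frame and gram_on_interval are hypothetical names introduced only for this illustration.

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_frame(x, n, gamma):
    """Evaluate psi_0, ..., psi_n at the points x; returns shape (len(x), n+1).

    psi_i(x) = sqrt((2i+1)/(2*gamma)) * P_i(x/gamma), with P_i the classical
    Legendre polynomial normalized so that P_i(1) = 1.
    """
    x = np.asarray(x, dtype=float)
    scale = np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))
    return L.legvander(x / gamma, n) * scale


def gram_on_interval(n, gamma, a, b, nquad=200):
    """Gram matrix (<psi_j, psi_i>_{[a,b],2})_{i,j} by Gauss-Legendre quadrature.

    nquad points integrate polynomials of degree <= 2*nquad - 1 exactly.
    """
    t, w = L.leggauss(nquad)                 # nodes and weights on [-1, 1]
    x = 0.5 * (b - a) * t + 0.5 * (a + b)    # affine map to [a, b]
    Psi = legendre_frame(x, n, gamma)
    return Psi.T @ ((0.5 * (b - a) * w)[:, None] * Psi)


n, gamma = 30, 2.0
G_ext = gram_on_interval(n, gamma, -gamma, gamma)
print(np.max(np.abs(G_ext - np.eye(n + 1))))   # near machine precision: orthonormal
```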
Let \(m,n \ge 0\) and consider a function \(f \in C([-1,1])\). Our aim is to compute a polynomial approximation to f of the form

\[ \sum _{i=0}^{n} {\hat{c}}_i \psi _i \]

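for suitable coefficients \({\hat{c}}_i\). It is natural to strive to do this via a least-squares fit, i.e.

\[ {\hat{c}} \in \mathop {\mathrm {argmin}}_{c \in \mathbb {C}^{n+1}} {\left\| A c - b\right\| }_{2}, \tag{2.4} \]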

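where

\[ A = \sqrt{\frac{2}{m+1}} \left( \psi _j(x_i) \right) _{i=0,\ldots ,m,\ j=0,\ldots ,n} \in \mathbb {C}^{(m+1) \times (n+1)}, \qquad b = \sqrt{\frac{2}{m+1}} \left( f(x_i) \right) _{i=0}^{m} \in \mathbb {C}^{m+1}. \tag{2.5} \]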

Note that \(\sqrt{2/(m+1)}\) is simply a normalization factor that is included for convenience. Unfortunately, as described in [7], this least-squares problem is ill-conditioned for large n (even when \(m \gg n\)) due to the use of a frame rather than a basis [6]. Therefore, we instead solve a suitably regularized least-squares problem. There are a number of different ways to do this, but, following previous works, we consider an \(\epsilon \)-truncated Singular Value Decomposition (SVD).

Suppose that the least-squares matrix (2.5) has SVD \(A = U \Sigma V^*\), where \(\Sigma = \mathrm {diag}(\sigma _0,\ldots ,\sigma _n) \in \mathbb {R}^{(m+1) \times (n+1)}\) is the diagonal matrix of singular values \(\sigma _0 \ge \sigma _1 \ge \cdots \ge \sigma _n \ge 0\). Recall that the minimal 2-norm solution \({\hat{c}}\) of (2.4) is given by

\[ {\hat{c}} = A^{\dag } b = V \Sigma ^{\dag } U^* b, \]

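where \(\dag \) denotes the pseudoinverse. Given \(\epsilon > 0\), we define the \(\epsilon \)-regularized version \(\Sigma ^{\epsilon }\) of \(\Sigma \) as

\[ \Sigma ^{\epsilon } = \mathrm {diag}(\sigma ^{\epsilon }_0,\ldots ,\sigma ^{\epsilon }_n), \qquad \sigma ^{\epsilon }_i = \begin{cases} \sigma _i, & \sigma _i > \epsilon , \\ 0, & \text{otherwise}, \end{cases} \]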

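and let \(\Sigma ^{\epsilon ,\dag }\) be its pseudoinverse, i.e.

\[ \Sigma ^{\epsilon ,\dag } = \mathrm {diag}\big ((\sigma ^{\epsilon }_0)^{\dag },\ldots ,(\sigma ^{\epsilon }_n)^{\dag }\big ) \in \mathbb {R}^{(n+1) \times (m+1)}, \qquad (\sigma ^{\epsilon }_i)^{\dag } = \begin{cases} 1/\sigma _i, & \sigma _i > \epsilon , \\ 0, & \text{otherwise}. \end{cases} \]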

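Then we define the \(\epsilon \)-regularized approximation of (2.4) as \({\hat{c}}^{\epsilon } = V \Sigma ^{\epsilon ,\dag } U^* b\) and the corresponding approximation to f as

\[ f \approx \sum _{i=0}^{n} {\hat{c}}^{\epsilon }_i \psi _i . \]

With this in hand, we define the overall approximation procedure as the mapping

\[ \mathcal {P}^{\epsilon ,\gamma }_{m,n} : C([-1,1]) \rightarrow \mathbb {P}_n, \qquad f \mapsto \mathcal {P}^{\epsilon ,\gamma }_{m,n}(f), \tag{2.6} \]

where

\[ \mathcal {P}^{\epsilon ,\gamma }_{m,n}(f) = \sum _{i=0}^{n} {\hat{c}}^{\epsilon }_i \psi _i . \tag{2.7} \]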

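The whole procedure fits in a few lines. The following is a minimal NumPy sketch, not the authors' implementation: it assumes real-valued samples, builds A and b with the factor \(\sqrt{2/(m+1)}\), discards singular values \(\sigma_i \le \epsilon\), and forms \(\hat c^{\epsilon} = V \Sigma^{\epsilon,\dagger} U^* b\). The function names, the test function and the parameter values (\(\gamma = 2\), \(\epsilon = 10^{-14}\), \(m = 4n\)) are illustrative choices.

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_frame(x, n, gamma):
    """Columns psi_i(x) = sqrt((2i+1)/(2*gamma)) * P_i(x/gamma), i = 0..n."""
    scale = np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))
    return L.legvander(np.asarray(x, dtype=float) / gamma, n) * scale


def frame_approx(fvals, n, gamma, eps):
    """epsilon-truncated SVD fit of sum_i c_i psi_i to real equispaced samples.

    fvals holds f(x_0), ..., f(x_m) with x_i = -1 + 2i/m. Returns the coefficient
    vector c^eps = V Sigma^{eps,dagger} U^T b and a callable evaluating the fit.
    """
    fvals = np.asarray(fvals, dtype=float)
    m = len(fvals) - 1
    x = -1.0 + 2.0 * np.arange(m + 1) / m
    w = np.sqrt(2.0 / (m + 1))                       # normalization factor
    A = w * legendre_frame(x, n, gamma)              # (m+1) x (n+1) matrix
    b = w * fvals
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps                                   # drop singular values <= eps
    c = Vh[keep].T @ ((U[:, keep].T @ b) / s[keep])
    return c, (lambda t: legendre_frame(t, n, gamma) @ c)


f = lambda x: np.exp(x) * np.sin(5 * x)
n, gamma, eps = 40, 2.0, 1e-14
m = 4 * n                                            # linear oversampling (illustrative)
x = -1.0 + 2.0 * np.arange(m + 1) / m
c, p = frame_approx(f(x), n, gamma, eps)
t = np.linspace(-1.0, 1.0, 5001)
err = np.max(np.abs(f(t) - p(t)))
print(f"max error on [-1,1]: {err:.2e}, coefficient norm: {np.linalg.norm(c):.2e}")
```

Because the columns of A are normalized so that \(A^*A\) approximates the Gram matrix of \(\psi_0,\ldots,\psi_n\) over \([-1,1]\), whose eigenvalues are at most one, the largest singular value is of order one; an absolute threshold \(\epsilon\) near machine precision therefore acts, in effect, as a relative one.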
Remark 2.2

(Why not use orthogonal polynomials on the original interval?) The use of orthogonal polynomials on an extended interval may at first seem bizarre, since, as noted, the infinite collection of such functions forms only a frame, not a basis, when restricted to \([-1,1]\). And even though the first \(n+1\) such functions \(\psi _0,\ldots ,\psi _n\) constitute a basis for \(\mathbb {P}_n\), they are extremely ill-conditioned as \(n \rightarrow \infty \). To be precise, the condition number of their Gram matrix \(G = (\langle \psi _j, \psi _i \rangle _{[-1,1],2} )^{n}_{i,j=0}\) is exponentially large in n. In turn, the matrix A is also exponentially ill-conditioned in n, even when \(m \gg n\). To understand why, observe that \(A^* A = (\langle \psi _j, \psi _i \rangle _{m,2} )^{n}_{i,j=0}\) is simply a discrete approximation to G.

Why, then, do we not consider Legendre polynomials on \([-1,1]\)? These constitute a perfectly well-conditioned basis, which means that the corresponding matrix A would also be well-conditioned for \(m \gg n\). Thus there is no need for regularization, and we may simply compute the least-squares fit by solving (2.4). Note that this simply corresponds to \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) with \(\epsilon = 0\) and \(\gamma = 1\). The problem is that such an approximation, which we term polynomial least-squares approximation, behaves exactly as the impossibility theorem (Theorem 2.1) predicts in the best case. It is ill-conditioned if \(m = o(n^2)\) as \(n \rightarrow \infty \), and in particular, if \(m \sim c n\) as \(n \rightarrow \infty \), then the condition number of the mapping grows exponentially fast. On the other hand, with quadratic oversampling, i.e. \(m \sim c n^2\) as \(n \rightarrow \infty \), the approximation is well-conditioned and its convergence rate is root-exponential in m for all analytic functions. See [11] for a discussion of polynomial least squares and the impossibility theorem. See also Remark 2.3 below.
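Remark 2.2 is easy to observe numerically. The sketch below (hypothetical helper names; A normalized as in (2.5)) compares the 2-norm condition number of A for the frame with \(\gamma = 2\) against that for the orthonormal Legendre basis on \([-1,1]\), i.e. \(\gamma = 1\), using quadratic oversampling \(m = 2n^2\) so that \(A^*A\) is close to the corresponding Gram matrix. For \(\gamma = 2\) the computed values are soon limited by double-precision rounding rather than by the true, exponentially larger, condition number.

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_frame(x, n, gamma):
    """psi_i(x) = sqrt((2i+1)/(2*gamma)) * P_i(x/gamma); gamma = 1 gives Legendre on [-1,1]."""
    scale = np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))
    return L.legvander(np.asarray(x, dtype=float) / gamma, n) * scale


def ls_matrix(m, n, gamma):
    """A = sqrt(2/(m+1)) * (psi_j(x_i)) on the equispaced grid x_i = -1 + 2i/m."""
    x = -1.0 + 2.0 * np.arange(m + 1) / m
    return np.sqrt(2.0 / (m + 1)) * legendre_frame(x, n, gamma)


print(" n      m   cond(A), gamma = 2   cond(A), gamma = 1")
for n in (10, 20, 40):
    m = 2 * n * n                              # generous (quadratic) oversampling
    c_frame = np.linalg.cond(ls_matrix(m, n, 2.0))
    c_basis = np.linalg.cond(ls_matrix(m, n, 1.0))
    print(f"{n:2d}  {m:5d}   {c_frame:.2e}             {c_basis:.2e}")
```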

2.4 Maximal Behaviour of Polynomials Bounded at Equispaced Nodes

As mentioned, both the impossibility theorem and the subsequent possibility theorem rely on estimates for the maximal behaviour of polynomials that are bounded at equispaced nodes. The former is based on a classical result due to Coppersmith and Rivlin concerning the maximal growth of a polynomial \(p \in \mathbb {P}_n\) that is at most one at the \(m+1\) equispaced nodes \(\{ x_i \}^{m}_{i=0}\). Specifically, in [21] they showed that there exist constants \(\beta \ge \alpha > 1\) such that, if

\[ B(m,n) = \sup \left\{ {\left\| p\right\| }_{[-1,1],\infty } : p \in \mathbb {P}_n,\ {\left\| p\right\| }_{m,\infty } \le 1 \right\} , \]

then

\[ \alpha ^{n^2/m} \le B(m,n) \le \beta ^{n^2/m}, \qquad 1 \le n \le m. \tag{2.8} \]

Remark 2.3

(The condition number of polynomial least-squares approximation) It is not difficult to show that the condition number of polynomial least-squares approximation \(\mathcal {P}_{m,n} = \mathcal {P}^{0,1}_{m,n}\) satisfies

\[ B(m,n) \le \kappa (\mathcal {P}_{m,n}) \le \sqrt{m+1}\, B(m,n), \]

where B(m, n) is as in (2.8) (this follows by setting \(\gamma = 1\) and \(\epsilon = 0\) in a result we show later, Lemma 3.1). Thus, (2.8) immediately explains why this approximation is ill-conditioned when \(m = o(n^2)\) as \(n \rightarrow \infty \), and only well-conditioned when \(m \sim c n^2\) (or faster) as \(n \rightarrow \infty \).
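Because \(\mathcal{P}_{m,n}\) (and, more generally, \(\mathcal{P}^{\epsilon,\gamma}_{m,n}\)) is linear in the data, it can be written as \(\sum_{i} f(x_i)\,\ell_i\) for some polynomials \(\ell_i\), and for a linear map the condition number (2.2) reduces to the Lebesgue-type constant \(\max_{x\in[-1,1]}\sum_i|\ell_i(x)|\). The sketch below estimates this maximum on a fine grid (so it can only underestimate the true value) and contrasts linear (\(m = 2n\)) with quadratic (\(m = n^2\)) oversampling for polynomial least squares. condition_estimate is a hypothetical helper whose gamma and eps arguments default to the least-squares case \(\gamma = 1\), \(\epsilon = 0\).

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_frame(x, n, gamma):
    """psi_i(x) = sqrt((2i+1)/(2*gamma)) * P_i(x/gamma), i = 0..n."""
    scale = np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))
    return L.legvander(np.asarray(x, dtype=float) / gamma, n) * scale


def condition_estimate(m, n, gamma=1.0, eps=0.0, nfine=4001):
    """Estimate max_t sum_i |ell_i(t)|, where the approximation is sum_i f(x_i) ell_i.

    gamma = 1, eps = 0 gives polynomial least squares. The maximum is taken over
    nfine equispaced points in [-1, 1], so it can only underestimate the true value.
    """
    x = -1.0 + 2.0 * np.arange(m + 1) / m
    w = np.sqrt(2.0 / (m + 1))
    A = w * legendre_frame(x, n, gamma)
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps
    # Samples -> coefficients: c = V Sigma^{eps,dagger} U^T (w * f(x)).
    coef_map = ((Vh[keep].T / s[keep]) @ U[:, keep].T) * w
    t = np.linspace(-1.0, 1.0, nfine)
    ell = legendre_frame(t, n, gamma) @ coef_map    # ell[k, i] = ell_i(t_k)
    return np.max(np.sum(np.abs(ell), axis=1))


for n in (10, 20, 30, 40):
    lin = condition_estimate(2 * n, n)      # linear oversampling, m = 2n
    quad = condition_estimate(n * n, n)     # quadratic oversampling, m = n^2
    print(f"n = {n:2d}:  m = 2n -> {lin:9.2e},  m = n^2 -> {quad:9.2e}")
```

Since least squares reproduces constants, \(\sum_i \ell_i \equiv 1\), so every estimate is at least one.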

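As described above, the polynomial frame approximation is constructed via an \(\epsilon \)-truncated SVD. We will see later in Sect. 3 that such truncation means that the approximation \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}(f)\) of a function f belongs to (a subspace of) the set of polynomials

\[ P^{\epsilon ,\gamma }_{m,n} = \left\{ p \in \mathbb {P}_n : \epsilon {\left\| p\right\| }_{[-\gamma ,\gamma ],2} \le {\left\| p\right\| }_{m,2} \right\} \]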

whose \(L^2\)-norm over the extended interval \([-\gamma ,\gamma ]\) is at most \(1/\epsilon \) times larger than their discrete 2-norm over the equispaced grid. In other words, the effect of regularization via the truncated SVD is to restrict the type of polynomial the approximation can take to one that does not grow too large on the extended interval.

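After replacing the 2-norms by uniform norms, this observation motivates the study of the quantity

\[ C(m,n,\gamma ,\epsilon ) = \sup \left\{ {\left\| p\right\| }_{[-1,1],\infty } : p \in \mathbb {P}_n,\ {\left\| p\right\| }_{m,\infty } \le 1,\ \epsilon {\left\| p\right\| }_{[-\gamma ,\gamma ],\infty } \le 1 \right\} . \tag{2.9} \]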

In Sect. 3, we show that the condition number of the polynomial frame approximation \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) satisfies

Notice that \(C(m,n,\gamma ,0) = B(m,n)\) and, in general,

\[ C(m,n,\gamma ,\epsilon ) \le B(m,n), \]

where B(m, n) is as in (2.8). However, whereas B(m, n) is only bounded as \(m,n\rightarrow \infty \) in the quadratic oversampling regime (i.e. \(m \sim c n^2\) for some \(c>0\)), for \(C(m,n,\gamma ,\epsilon )\) we show that linear oversampling is sufficient. Specifically:

Theorem 2.4

(Maximal behaviour of polynomials bounded at equispaced nodes and extended intervals) Let \(0 < \epsilon \le 1/\mathrm {e}\), \(\gamma > 1\) and \(n \ge \sqrt{\gamma ^2-1} \log (1/\epsilon )\), and consider the quantity \(C(m,n,\gamma ,\epsilon )\) defined in (2.9). Suppose that

Then

for some numerical constant \(c > 0\). Specifically, c can be taken as \(c = 4 \beta + 3\) for \(\beta \) as in (2.8).

This theorem is a direct consequence of a more general result (Theorem 4.4) that we state and prove in Sect. 4. Note that the factor 36 appearing in (2.10) was chosen for convenience. Theorem 4.4 describes in general how a condition roughly of the form \(m \ge c_1 n \log (1/\epsilon ) / \sqrt{\gamma ^2-1}\) leads to a bound \(C(m,n,\gamma ,\epsilon ) \le h(c_1)\) for some function \(h(c_1)\) depending on \(c_1\).

2.5 The Motivations for Considering Polynomials on an Extended Interval

Above we asserted that, by considering orthogonal polynomials on an extended interval and using regularization, the polynomial frame approximation constructs an approximation in a space within which the polynomials cannot grow too large on \([-\gamma ,\gamma ]\). As Theorem 2.4 makes clear, this prohibits such polynomials from behaving too wildly on \([-1,1]\) away from the equispaced grid, whenever m scales linearly with n. Thus, using orthogonal polynomials on an extended interval allows for a reduction in the oversampling rate from quadratic in n (as is the case for standard polynomial least-squares approximation; see Remark 2.3) to linear in n.

On the other hand, by restricting the approximation space in this way, we potentially limit the ability of the scheme to accurately approximate analytic functions. In Theorem 3.3, we establish an error bound for the polynomial frame approximation that takes the form

where \(\epsilon ' = \epsilon (n+1) / \sqrt{\gamma }\) (the reasons for this choice of \(\epsilon '\) are discussed further below). This holds for any \(c > 1\) and \(f \in C([-1,1])\), provided m and n are chosen so that

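Such a bound is very similar to those shown previously for both Fourier extensions [9] and polynomial frame approximations [7], the main difference being the use of the \(L^{\infty }\)-norm instead of the \(L^2\)-norm. The key component of it is the best approximation term

\[ \inf _{p \in \mathbb {P}_n} \left\{ {\left\| f - p\right\| }_{[-1,1],\infty } + \epsilon {\left\| p\right\| }_{[-\gamma ,\gamma ],\infty } \right\} . \]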

In other words, the effect of the truncated SVD regularization is to replace the classical best approximation error term

\[ \inf _{p \in \mathbb {P}_n} {\left\| f - p\right\| }_{[-1,1],\infty } \]

(which arises in the case \(\epsilon = 0\), i.e. standard polynomial least-squares approximation) by one that also involves a term depending on \(\epsilon \) multiplied by the norm of p over the extended interval. As a result, the overall approximation error depends on how well f can be approximated by a polynomial \(p \in \mathbb {P}_n\) uniformly on \([-1,1]\) (the term \({\left\| f - p\right\| }_{[-1,1],\infty }\)) that does not grow too large on the extended interval \([-\gamma ,\gamma ]\) (the term \( {\left\| p\right\| }_{[-\gamma ,\gamma ],\infty } \)).

Our main result, stated next, arises by bounding this best approximation term for functions that are analytic in sufficiently large complex regions.

2.6 Main Result: The Possibility Theorem

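We now state our main result (see Sect. 5 for the proof). For this, we first recall the definition of the Bernstein ellipse with parameter \(\theta > 1\):

\[ E_{\theta } = \left\{ \tfrac{1}{2}\left( z + z^{-1}\right) : z \in \mathbb {C},\ 1 \le |z| \le \theta \right\} . \]

A classical theorem in approximation theory states that any function \(f \in B(E_{\theta })\) is approximated to exponential accuracy by polynomials. Specifically,

\[ \inf _{p \in \mathbb {P}_n} {\left\| f - p\right\| }_{[-1,1],\infty } \le \frac{2 {\left\| f\right\| }_{E_{\theta },\infty }}{\theta - 1}\, \theta ^{-n}, \qquad \forall \, f \in B(E_{\theta }),\ n \ge 0 \tag{2.12} \]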

(see also Lemma 5.1 later). Our main results assert that polynomial frame approximation can achieve a similar rate of decay in n, subject to linear oversampling in m. Specifically:

Theorem 2.5

(The possibility theorem) Let \(0 < \epsilon \le 1/\mathrm {e}\), \(\gamma > 1\) and \(n \ge \sqrt{\gamma ^2-1} \log (1/\epsilon )\), and consider the polynomial frame approximation \(\mathcal {P}^{\epsilon ',\gamma }_{m,n}\) defined in (2.6)–(2.7), where

Then the condition number of the mapping \(\mathcal {P}^{\epsilon ',\gamma }_{m,n}\) satisfies

where c is as in Theorem 2.4. Moreover, if \(E_{\theta }\) is a Bernstein ellipse with parameter

then, for all \(f \in B(E_{\theta })\),

where \(g(\theta ,\gamma )\) depends on \(\theta \) and \(\gamma \) only and

This result shows that polynomial frame approximation is well-conditioned when n scales linearly with m (specifically, (2.13) holds), with its condition number being at worst \(\mathcal {O}(\sqrt{m})\) as \(m \rightarrow \infty \). Moreover, for functions that are analytic in \(E_{\theta }\) (note that this region contains the extended interval \([-\gamma ,\gamma ]\) in its interior, due to the condition (2.14)) its error decreases exponentially fast in m down to the level \(\mathcal {O}(m^{3/2} \epsilon )\). Recall that the rate \(\theta ^{-n}\) in (2.15) is the same as in (2.12) for the best polynomial approximation of a function in \(B(E_{\theta })\). Thus, one can achieve a near-optimal error decay rate in n with only linear oversampling in m.
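The same Lebesgue-type estimate can be applied to the frame approximation. The sketch below repeats the hypothetical helpers so that it runs on its own, uses the truncation parameter \(\epsilon' = \epsilon(n+1)/\sqrt{\gamma}\) of Theorem 2.5 with \(\epsilon = 10^{-14}\) and \(\gamma = 2\), and compares with plain least squares at the same linear oversampling \(m = 4n\). This ratio \(m/n\) is an illustrative choice that is much smaller than the oversampling required by the theorem, so the output should be read as an experiment rather than as a check of the theorem.

```python
import numpy as np
from numpy.polynomial import legendre as L


def legendre_frame(x, n, gamma):
    """psi_i(x) = sqrt((2i+1)/(2*gamma)) * P_i(x/gamma), i = 0..n."""
    scale = np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))
    return L.legvander(np.asarray(x, dtype=float) / gamma, n) * scale


def condition_estimate(m, n, gamma=1.0, eps=0.0, nfine=4001):
    """Estimate max_t sum_i |ell_i(t)| for the truncated-SVD fit on m+1 equispaced points."""
    x = -1.0 + 2.0 * np.arange(m + 1) / m
    w = np.sqrt(2.0 / (m + 1))
    A = w * legendre_frame(x, n, gamma)
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps                                  # epsilon-truncation of the SVD
    coef_map = ((Vh[keep].T / s[keep]) @ U[:, keep].T) * w
    t = np.linspace(-1.0, 1.0, nfine)
    ell = legendre_frame(t, n, gamma) @ coef_map    # ell[k, i] = ell_i(t_k)
    return np.max(np.sum(np.abs(ell), axis=1))


eps, gamma = 1e-14, 2.0
for n in (10, 20, 40):
    m = 4 * n                                       # linear oversampling (illustrative)
    eps_prime = eps * (n + 1) / np.sqrt(gamma)      # truncation parameter of Theorem 2.5
    frame = condition_estimate(m, n, gamma, eps_prime)
    ls = condition_estimate(m, n)                   # plain least squares, same m and n
    print(f"n = {n:2d}, m = {m:3d}:  frame {frame:9.2e},  least squares {ls:9.2e}")
```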

Overall, Theorem 2.5 provides a positive counterpart to the impossibility theorem (Theorem 2.1). It is important to emphasize that it does not violate Theorem 2.1: indeed, exponential decay of the error is only guaranteed down to a finite accuracy.

Remark 2.6

(Varying \(\epsilon \) with n) It is worth observing how the theorem changes if one strives to scale \(\epsilon \) with n so as to achieve exponential convergence of the error down to zero, rather than exponential decay down to a finite level of accuracy. If \(\epsilon = \theta ^{-n}\), then \(\log (1/\epsilon ) = n \log (\theta )\), so the condition on m in Theorem 2.4, which scales like \(n \log (1/\epsilon )\), forces m to scale quadratically with n. This yields root-exponential convergence (to zero) of the approximation with respect to m, in precise agreement with the impossibility theorem.

Another key aspect of Theorem 2.5 is the dependence on \(\epsilon \) in (2.13) and, in turn, the exponential rate (2.16). Since \(\epsilon > 0\) dictates the limiting accuracy of the approximation scheme, it is often desirable to choose \(\epsilon \) close to machine epsilon \(\epsilon _{\mathrm {mach}}\), which in IEEE double precision is roughly \(\epsilon _{\mathrm {mach}} \approx 1.1 \times 10^{-16}\). Thus, the scaling \(\log (1/\epsilon )\)—which is proportional to the number of digits of accuracy desired—is highly appealing. A scaling of, for example, \(1/\epsilon \), would be meaningless for practical purposes.

Note that Theorem 2.5 does not say anything about the rate of the decay of the error for functions that are not analytic in a Bernstein ellipse \(E = E_{\theta }\) that is large enough to contain the extended interval \([-\gamma ,\gamma ]\). We discuss the behaviour of the error for such functions in Sect. 2.7. On the other hand, this theorem also offers some insight into the effect of the choice of \(\gamma \) on the approximation. Specifically, choosing a smaller \(\gamma \) means that (2.14) holds for smaller values of \(\theta \), thus the analyticity requirement \(f \in B(E_{\theta })\) becomes less stringent. However, this also leads to a slower rate of exponential convergence in m, since \(\rho \) is an increasing function of both \(\gamma \) and \(\theta \). We discuss this matter further in Sect. 6.
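The trade-off in the choice of \(\gamma\) can be made concrete when the singularity \(z_0\) of f nearest to \([-1,1]\) is known. Writing \(z_0 = \tfrac{1}{2}(w + w^{-1})\) with \(|w| \ge 1\), the Bernstein ellipse through \(z_0\) has parameter \(\theta_f = |w|\), so \(f \in B(E_{\theta})\) for every \(\theta < \theta_f\); on the other hand, \(E_{\theta}\) contains \([-\gamma,\gamma]\) in its interior precisely when \(\theta > \gamma + \sqrt{\gamma^2-1}\). A minimal sketch, with hypothetical helper names, for Runge's function \(1/(1+25x^2)\), whose poles are at \(\pm \mathrm{i}/5\):

```python
import numpy as np


def bernstein_parameter(z0):
    """Parameter theta of the Bernstein ellipse (foci -1 and 1) passing through z0.

    Solves z0 = (w + 1/w)/2 for w and returns |w| >= 1.
    """
    z0 = complex(z0)
    w = z0 + np.sqrt(z0 * z0 - 1)
    return max(abs(w), 1.0 / abs(w))


def tau(gamma):
    """gamma + sqrt(gamma^2 - 1): E_theta contains [-gamma, gamma] in its interior iff theta > tau."""
    return gamma + np.sqrt(gamma**2 - 1)


# Runge-type function 1/(1 + 25 x^2) with poles at +-i/5.
theta_f = bernstein_parameter(0.2j)          # f lies in B(E_theta) for theta < theta_f
gamma_max = 0.5 * (theta_f + 1.0 / theta_f)  # tau(gamma) < theta_f exactly when gamma < gamma_max
print(theta_f, tau(2.0), gamma_max)
```

For this function \(\theta_f = 0.2 + \sqrt{1.04} \approx 1.22\), so with \(\gamma = 2\) (where \(\gamma + \sqrt{\gamma^2-1} \approx 3.73\)) no admissible ellipse contains \([-\gamma,\gamma]\), whereas any \(\gamma < \sqrt{1.04} \approx 1.02\) leaves room for such an ellipse.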

Finally, we remark that this theorem actually considers a polynomial frame approximation with parameter \(\epsilon ' = \epsilon (n+1)/\sqrt{\gamma }\) that grows linearly in n. The reason for this can be traced to the need to switch between the \(L^2\)-norm (or corresponding discrete seminorm) and the \(L^{\infty }\)-norm (or corresponding discrete seminorm) at various stages in the proof. See the proofs of Lemma 3.2 and Theorem 3.3 for the precise details. This choice of scaling is made to ensure the first term in the error bound decreases exponentially fast in m, which in turn follows from the linear relationship \(m \approx 24 n \log (1/\epsilon )/\sqrt{\gamma ^2-1}\). It is also possible to use \(\epsilon \) as the truncation parameter rather than \(\epsilon '\). Following much the same arguments, one can show that this choice results in a log-linear relationship between m and n, which in turn leads to subexponential convergence of the form \(\sigma ^{-m / \log (m)}\) for large m, where, like \(\rho \), \(\sigma > 1\) depends on \(\theta \), \(\gamma \) and \(\epsilon \).

2.7 Error Decay Rates for Functions of Lower Regularity

Theorem 2.5 only asserts exponential decay of the error for functions that are analytic in complex regions containing the extended interval \([-\gamma ,\gamma ]\). We now consider arbitrary analytic functions. The following result shows that the error for such functions decays exponentially fast with the same rate \(\theta ^{-n}\), but only down to a larger tolerance.

Theorem 2.7

(Error decay for arbitrary analytic functions) Consider the setup of Theorem 2.5. Let \(E_{\theta }\) be the Bernstein ellipse with parameter

Then, for all \(f \in B(E_{\theta })\),

where \(g(\theta ,\gamma )\) and \(\rho \) are also as in Theorem 2.5.

This result shows that the error decreases exponentially fast down to roughly \(\epsilon ^{\frac{\log (\theta )}{\log (\tau )}}\), i.e. some fractional power of \(\epsilon \) depending on the relative sizes of \(\theta \) and \(\tau = \gamma + \sqrt{\gamma ^2-1}\). It raises an immediate question: what happens after such an accuracy level is reached? As we show later through an extended impossibility theorem, we cannot expect exponential decay down to \(\epsilon \) in general. However, we now show that superalgebraic decay, i.e. decay faster than \(m^{-k}\) for every \(k \in \mathbb {N}\), is indeed possible down to this level.

We do this by first noting that an analytic function is infinitely smooth, and then by establishing an error bound for functions that are k-times continuously differentiable. Specifically, in the following result we consider the space \(C^{k}([-1,1])\) of functions that are k-times continuously differentiable on \([-1,1]\). We define the norm on this space as

Theorem 2.8

(Error decay for \(C^k\) functions) Consider the setup of Theorem 2.5. Then, for all \(k \in \mathbb {N}\) and \(f \in C^k([-1,1])\),

where \(g(k,\gamma )\) depends on k and \(\gamma \) only.

Proofs of Theorems 2.7 and 2.8 can be found in Sect. 5. Note that all these observations about the rate of error decay are seen in practice in numerical examples. We present a series of experiments confirming these results in Sect. 6.

We remark in passing that superalgebraic decay is slower than root-exponential decay, which is the best possible stipulated by the impossibility theorem for analytic function approximation. Whether or not polynomial frame approximation exhibits root-exponential decay in m after the breakpoint \(\epsilon ^{\frac{\log (\theta )}{\log (\tau )}}\) is an open problem.
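The breakpoint \(\epsilon^{\log(\theta)/\log(\tau)}\), with \(\tau = \gamma + \sqrt{\gamma^2-1}\), is easy to tabulate. The sketch below (hypothetical helper name) evaluates it for \(\epsilon = 10^{-14}\) and several values of \(\gamma\), taking \(\theta\) at the limiting value \(0.2 + \sqrt{1.04} \approx 1.22\) for Runge's function \(1/(1+25x^2)\); strictly, this function lies in \(B(E_\theta)\) only for smaller \(\theta\), so the numbers are indicative.

```python
import numpy as np


def breakpoint_level(eps, theta, gamma):
    """eps ** (log(theta) / log(tau)) with tau = gamma + sqrt(gamma^2 - 1)."""
    tau = gamma + np.sqrt(gamma**2 - 1)
    return eps ** (np.log(theta) / np.log(tau))


eps = 1e-14
theta_runge = 0.2 + np.sqrt(1.04)     # ellipse through the poles +-i/5 of 1/(1 + 25x^2)
for gamma in (4.0, 2.0, 1.2, 1.05):
    print(f"gamma = {gamma:4.2f}: breakpoint ~ {breakpoint_level(eps, theta_runge, gamma):.1e}")
```

For \(\gamma = 2\) the level is roughly \(8 \times 10^{-3}\), while for \(\gamma = 1.05\) it is of order \(10^{-9}\): a smaller \(\gamma\) lowers the breakpoint, at the price of the slower exponential rate discussed after Theorem 2.5.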

2.8 An Extended Impossibility Theorem

Theorems 2.5 and 2.7 show that polynomial frame approximation can achieve roughly the same exponential rate \(\theta ^{-n}\) as the best polynomial approximation for functions in \(B(E_{\theta })\) when subject to linear oversampling. However, it only maintains this rate down to roughly \(\epsilon \) for sufficiently large \(\theta \). We now ask whether or not there exists an approximation scheme that can perform better than this: namely, whether an exponential rate \(\theta ^{-n}\) down to \(\epsilon \) can be attained for any \(\theta > 1\) in the linear oversampling regime. The following extended impossibility theorem shows that the answer to this question is no.

Theorem 2.9

(Extended impossibility theorem) Let \(\{ \mathcal {R}_m \}^{\infty }_{m=1}\) be an approximation procedure based on equispaced grids of \(m+1\) points such that, for some \(c > 0\), \(C,\theta ^* > 1\), \(0 < \epsilon \le (4C)^{-2}\) and \(1/2 < \tau \le 1\), we have

for all \(m \in \mathbb {N}\), \(f \in B(E_{\theta })\) and \(1 < \theta \le \theta ^*\). Then the condition numbers (2.2) satisfy

for some \(\sigma > 1\) and all sufficiently large m.

The proof of this theorem can be found in Sect. 5. It follows essentially the same steps as that of the impossibility theorem.

This theorem has several consequences. First, it extends the impossibility theorem by showing that exactly the same relationship between fast error decay and conditioning holds even when the overall error decreases only down to a constant tolerance \(\epsilon \). To do this, it makes the stronger assumption that the scheme yields exponential decay for all analytic functions—including those with singularities arbitrarily close to \([-1,1]\)—with the rate of exponential decay being dependent on the size of the region of analyticity. Specifically, \(f \in B(E_{\theta })\) implies a term of the form \(\theta ^{-c m^{\tau }}\) for all \(1 < \theta \le \theta ^*\).

Second, it implies the following. Any well-conditioned method must either (i) yield root-exponential decrease of the error down to \(\epsilon \), i.e. \(\theta ^{-c \sqrt{m}} + \epsilon \); or (ii) fail to yield exponential convergence for all analytic functions, i.e. (2.18) holds with \(\tau = 1\) only for \(\theta ^{**} \le \theta \le \theta ^*\) for some \(1 < \theta ^{**} \le \theta ^*\). As discussed previously, (ii) is exactly how polynomial frame approximation behaves, up to small algebraic factors in m. In other words, it is nearly optimal.

Remark 2.10

(Varying \(\gamma \) with n) Recall that Theorem 2.5 only asserts exponential decay down to \(\epsilon \) for functions that are analytic in Bernstein ellipses containing the interval \([-\gamma ,\gamma ]\). A natural idea is therefore to decrease \(\gamma \) with n so that, for any fixed analytic function, the interval \([-\gamma ,\gamma ]\) is included in its region of analyticity for all large n.

To determine a suitable scaling for \(\gamma \), one can use similar ideas to those employed in so-called mapped polynomial spectral methods [10, 13, 22, 25, 28]: namely, choose \(\gamma \) to match the terms in the error bound (2.15). Ignoring the term n (for simplicity), we therefore solve the equation \((\gamma + \sqrt{\gamma ^2-1})^{-n} = \epsilon \) with respect to \(\gamma \), which yields

\[ \gamma = \frac{1}{2}\left( \epsilon ^{-1/n} + \epsilon ^{1/n}\right) = \cosh \left( \frac{\log (1/\epsilon )}{n}\right) . \tag{2.19} \]

Observe that \(\gamma \rightarrow 1^+\) as \(n \rightarrow \infty \) for fixed \(\epsilon \). By combining Theorems 2.5 (for large n, since \(\gamma < \theta \) for all large n) and 2.7 (for small n, noting that \(\epsilon ^{\frac{\log (\theta )}{\log (\tau )}} = \theta ^{-n}\) with this choice of \(\gamma \)), one can show that

for \(f \in B(E_{\theta })\) and any \(\theta > 1\). Yet, scaling \(\gamma \) as in (2.19) causes the relation between m and n to become quadratic. Indeed, \(\sqrt{\gamma ^2 - 1} = \log (1/\epsilon )/n + \mathcal {O}((\log (1/\epsilon )/n)^3)\) as \(n \rightarrow \infty \), and therefore the condition (2.10) becomes \(m > 36 n^2\) for all large n. Of course, this is to be expected in view of Theorem 2.9, since the error bound (2.20) holds for any \(\theta > 1\).
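The scaling (2.19) and the expansion quoted above can be checked numerically. Solving \((\gamma + \sqrt{\gamma^2-1})^{-n} = \epsilon\) gives \(\gamma = \cosh(\log(1/\epsilon)/n)\); the sketch below (hypothetical helper name) confirms that \(\tau^{-n} = \epsilon\) for \(\tau = \gamma + \sqrt{\gamma^2-1}\) and that \(n\sqrt{\gamma^2-1}/\log(1/\epsilon) \to 1\), which is why \(n\log(1/\epsilon)/\sqrt{\gamma^2-1}\) behaves like \(n^2\) for large n.

```python
import numpy as np


def gamma_schedule(n, eps):
    """gamma = cosh(log(1/eps)/n), the solution of (gamma + sqrt(gamma^2 - 1))^(-n) = eps."""
    return np.cosh(np.log(1.0 / eps) / n)


eps = 1e-14
for n in (10, 50, 250, 1250):
    g = gamma_schedule(n, eps)
    tau = g + np.sqrt(g**2 - 1)
    ratio = n * np.sqrt(g**2 - 1) / np.log(1.0 / eps)   # equals sinh(x)/x with x = log(1/eps)/n
    print(f"n = {n:4d}: gamma = {g:.6f}, tau^(-n) = {tau**(-n):.3e}, ratio = {ratio:.5f}")
```

The ratio approaches one only slowly: it is about 3.9 at \(n = 10\) and exceeds one by roughly \(10^{-4}\) at \(n = 1250\).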

3 Accuracy and Conditioning of \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\)

The next three sections develop the proofs of Theorems 2.4–2.9. We commence in this section by analysing the error and condition number of the polynomial frame approximation \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\). Our analysis follows similar lines to that of [8] and, in particular, [7], the main difference being that we work in the \(L^{\infty }\)-norm, rather than the \(L^2\)-norm.
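To fix ideas before the analysis, the following Python/NumPy sketch implements an \(\epsilon \)-truncated SVD least-squares fit of the kind described in the abstract. It is an illustration under our own assumptions, not the authors' code: we assume \(\psi _j(x) = \sqrt{(2j+1)/(2\gamma )}\, P_j(x/\gamma )\) (orthonormal Legendre polynomials on \([-\gamma ,\gamma ]\)), that A has entries \(\psi _j(x_i)/\sqrt{m+1}\) at the \(m+1\) equispaced nodes \(x_i\), and the names `psi` and `poly_frame_approx` are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as L

def psi(x, n, gamma):
    """Orthonormal Legendre polynomials psi_0..psi_n on [-gamma, gamma], as columns."""
    V = L.legvander(np.asarray(x, dtype=float) / gamma, n)
    return V * np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))

def poly_frame_approx(f, m, n, gamma, eps):
    """Truncated-SVD polynomial frame approximation from m+1 equispaced samples."""
    x = np.linspace(-1.0, 1.0, m + 1)
    A = psi(x, n, gamma) / np.sqrt(m + 1)
    b = f(x) / np.sqrt(m + 1)
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps                                   # discard singular values <= eps
    coef = Vh[keep].conj().T @ ((U[:, keep].conj().T @ b) / s[keep])
    return lambda t: psi(t, n, gamma) @ coef         # evaluate the approximation

f = lambda x: 1.0 / (1.0 + x**2)
n = 40
p = poly_frame_approx(f, m=8 * n, n=n, gamma=1.2, eps=1e-14)
t = np.linspace(-1.0, 1.0, 2001)
print(np.max(np.abs(p(t) - f(t))))                  # uniform error on a fine grid
```

The threshold `s > eps` is the only regularization in this sketch; with `eps = 0` it essentially reduces to ordinary least squares in the ill-conditioned extended basis.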

3.1 Reformulation in Terms of Singular Vectors

Let \(v_0,\ldots ,v_{n} \in \mathbb {C}^{n+1}\) be the right singular vectors of the matrix A defined in (2.5). Then, to each such vector, we can associate a polynomial in \(\mathbb {P}_n\):

Here the \(\psi _j\) are as in (2.3). Since these functions are orthonormal on \([-\gamma ,\gamma ]\) and the \(v_i\) are orthonormal vectors, the functions \(\xi _i\) are themselves orthonormal on \([-\gamma ,\gamma ]\):

Moreover, the functions \(\xi _i\) are also orthogonal with respect to the discrete inner product \(\langle \cdot , \cdot \rangle _{m,2}\). Indeed, since \(v_j\) and \(v_k\) are singular vectors, we have

With this in hand, we now define the subspace

We note in passing that this space coincides with \(\mathbb {P}_n\) whenever \(m \ge n\) and \(\sigma _{\min } = \sigma _n > \epsilon \). In particular, \(\mathbb {P}^{0,\gamma }_{m,n} = \mathbb {P}_n\) for \(m \ge n\). On the other hand, when \(\epsilon > 0\) we have \(\mathbb {P}^{\epsilon ,\gamma }_{m,n} \subseteq P^{\epsilon ,\gamma }_{m,n}\), where

is, as before, the set of polynomials of degree at most n whose \(L^2\)-norm over the extended interval \([-\gamma ,\gamma ]\) is at most \(1/\epsilon \) times larger than their discrete 2-norm over the equispaced grid. To see why this holds, let \(p = \sum _{\sigma _i > \epsilon } c_i \xi _i \in \mathbb {P}^{\epsilon ,\gamma }_{m,n}\). Then, by the double orthogonality of the \(\xi _i\),

Hence, \(p \in P^{\epsilon ,\gamma }_{m,n}\), as required.
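The double orthogonality of the \(\xi _i\), and the resulting inclusion, can be checked numerically. The Python sketch below assumes the normalization \(\langle f, g \rangle _{m,2} = (m+1)^{-1} \sum _i f(x_i) \overline{g(x_i)}\) over the equispaced nodes and the orthonormal Legendre basis \(\psi _j(x) = \sqrt{(2j+1)/(2\gamma )}\, P_j(x/\gamma )\); both stand in for the definitions of Sect. 2, and the name `psi` is hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as L

def psi(x, n, gamma):
    """Orthonormal Legendre polynomials psi_0..psi_n on [-gamma, gamma], as columns."""
    V = L.legvander(np.asarray(x, dtype=float) / gamma, n)
    return V * np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))

m, n, gamma = 60, 15, 1.3
x = np.linspace(-1.0, 1.0, m + 1)                    # m+1 equispaced nodes
A = psi(x, n, gamma) / np.sqrt(m + 1)                # assumed scaling of A
U, s, Vh = np.linalg.svd(A, full_matrices=False)
Vmat = Vh.conj().T                                   # columns: right singular vectors v_i

# xi_i = sum_j (v_i)_j psi_j at Gauss-Legendre nodes on [-gamma, gamma] (exact to degree 2n+1)
tq, wq = L.leggauss(n + 1)
Xq = psi(gamma * tq, n, gamma) @ Vmat
G_cont = Xq.conj().T @ ((gamma * wq)[:, None] * Xq)  # L^2([-gamma, gamma]) Gram matrix

Xd = psi(x, n, gamma) @ Vmat
G_disc = Xd.conj().T @ Xd / (m + 1)                  # discrete Gram matrix

# p = sum_{sigma_i > eps} c_i xi_i satisfies ||p||_{L^2(-gamma,gamma)} <= ||p||_{m,2} / eps
eps = 1e-3
keep = s > eps
c = np.random.default_rng(0).standard_normal(keep.sum())
l2_ext = np.linalg.norm(c)                           # L^2 norm on [-gamma, gamma], by orthonormality
disc = np.linalg.norm(A @ (Vmat[:, keep] @ c))       # discrete norm ||p||_{m,2}
print(np.max(np.abs(G_cont - np.eye(n + 1))), np.max(np.abs(G_disc - np.diag(s**2))))
```

Both Gram-matrix deviations are at the level of rounding error: the \(\xi _i\) are orthonormal on \([-\gamma ,\gamma ]\) and satisfy \(\langle \xi _j, \xi _k \rangle _{m,2} = \sigma _j^2 \delta _{jk}\) under this normalization.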

Finally, observe that the polynomial frame approximation \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}(f)\) of \(f \in C([-1,1])\) belongs to the space \(\mathbb {P}^{\epsilon ,\gamma }_{m,n}\). In fact, it is the orthogonal projection onto this space with respect to the discrete inner product \(\langle \cdot , \cdot \rangle _{m,2}\). Therefore, by orthogonality, we may write

3.2 Accuracy and Conditioning Up to Constants

We can now examine the accuracy and conditioning of \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\). We first require the following:

Lemma 3.1

Let \(\epsilon \ge 0\), \(\gamma \ge 1\), and let \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) be the corresponding polynomial frame approximation. Then, for any \(f \in C([-1,1])\),

where

Moreover, the condition number \(\kappa (\mathcal {P}^{\epsilon ,\gamma }_{m,n})\) satisfies

These constants are interpreted as follows. The first, \(C_1\), measures how large an element of the space \(\mathbb {P}^{\epsilon ,\gamma }_{m,n}\) can be uniformly on \([-1,1]\) relative to its discrete uniform norm over the grid. The second, \(C_2\), examines the effect of the \(\epsilon \)-truncation. Specifically, it measures the error in reconstructing a polynomial \(p \in \mathbb {P}_n\) relative to its size on the extended interval. Note, in particular, that \(C_2 = 0\) when \(\epsilon = 0\) and \(m \ge n\). We also have \(C_1(m,n,\gamma ,0) = B(m,n)\) in this case, where B(m, n) is as in (2.8).

Proof

Let \(f \in C([-1,1])\) and \(p \in \mathbb {P}_n\). Then

Here, in the second step, we used the fact that \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) is linear and its range is the space \(\mathbb {P}^{\epsilon ,\gamma }_{m,n}\). Now recall that \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) is an orthogonal projection with respect to \(\langle \cdot , \cdot \rangle _{m,2}\). Thus, using (2.1),

Substituting this into the previous expression now gives (3.2).

For the second result, we use the fact that \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) is linear once more to write

Notice that \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}(p) = p\) for all \(p \in \mathbb {P}^{\epsilon ,\gamma }_{m,n}\). Hence,

which gives the lower bound in (3.4). On the other hand, using (2.1) and the fact that \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\) is an orthogonal projection once more, we have

This gives the upper bound in (3.4). \(\square \)

3.3 Bounding the Constants

The next step is to estimate the constants \(C_1\) and \(C_2\) appearing in this lemma.

Lemma 3.2

Consider the setup of the previous lemma. Then the constants \(C_1\) and \(C_2\) defined in (3.3) satisfy

and

where C is as in (2.9) and \(c_{n,\gamma } = \frac{n+1}{\sqrt{2\gamma }}\).

Proof

Consider \(C_1\) first. Let \(p \in \mathbb {P}^{\epsilon ,\gamma }_{m,n}\) with \({\left\| p\right\| }_{m,\infty } \le 1\). Then we can write \(p = \sum _{\sigma _i > \epsilon } c_i \xi _i\) and, using the orthogonality of the \(\xi _i\), we see that

Therefore,

We also recall the following inequality over \(\mathbb {P}_n\):

This can be shown directly by recalling that the classical Legendre polynomial \(P_i(x)\) attains its maximum absolute value on \([-1,1]\) at \(x = 1\), where \(P_i(1) = 1\). Hence, writing \(q \in \mathbb {P}_n\) as \(q = \sum ^{n}_{i=0} c_i \psi _i\) and recalling (2.3), we get

which establishes (3.5). Therefore, since \(p \in \mathbb {P}^{\epsilon ,\gamma }_{m,n} \subseteq \mathbb {P}_n\), we get

We deduce that

which gives the first result.
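We read (3.5) as \({\left\| q\right\| }_{[-\gamma ,\gamma ],\infty } \le c_{n,\gamma } {\left\| q\right\| }_{L^2(-\gamma ,\gamma )}\) for \(q \in \mathbb {P}_n\), with \(c_{n,\gamma } = (n+1)/\sqrt{2\gamma }\) as in Lemma 3.2; this reading is an assumption consistent with the Legendre argument above. The Python sketch below checks it for random coefficient vectors, assuming the same orthonormal Legendre basis, and shows that the bound is attained at \(x = \gamma \) by the coefficients \(c_j = \sqrt{2j+1}\).

```python
import numpy as np
from numpy.polynomial import legendre as L

def psi(x, n, gamma):
    """Orthonormal Legendre polynomials psi_0..psi_n on [-gamma, gamma], as columns."""
    V = L.legvander(np.asarray(x, dtype=float) / gamma, n)
    return V * np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))

def sup_over_l2(c, gamma, npts=20001):
    """Ratio ||q||_{[-gamma,gamma],inf} / ||q||_{L^2} for q = sum_j c_j psi_j."""
    n = len(c) - 1
    x = np.linspace(-gamma, gamma, npts)
    sup = np.max(np.abs(psi(x, n, gamma) @ c))       # sampled sup norm
    return sup / np.linalg.norm(c)                   # L^2 norm equals ||c||_2 by orthonormality

n, gamma = 12, 1.5
bound = (n + 1) / np.sqrt(2 * gamma)                 # c_{n,gamma}
rng = np.random.default_rng(1)
ratios = [sup_over_l2(rng.standard_normal(n + 1), gamma) for _ in range(100)]
extremal = sup_over_l2(np.sqrt(2 * np.arange(n + 1) + 1.0), gamma)
print(max(ratios) / bound, extremal / bound)
```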

We now consider \(C_2\). Let \(p \in \mathbb {P}_n\) with \({\left\| p\right\| }_{[-\gamma ,\gamma ],\infty } \le \epsilon ^{-1}\). Since \(p \in \mathbb {P}_n\), we may write

and using (3.1), we may also write

Therefore,

Using the fact that the \(\xi _i\) are orthonormal over \([-\gamma ,\gamma ]\), we deduce that

and, using the fact that the \(\xi _i\) are orthogonal with respect to the discrete semi-inner product \(\langle \cdot , \cdot \rangle _{m,2}\), we see that

Now observe that we can write

Let \(q = p - \mathcal {P}^{\epsilon ,\gamma }_{m,n}(p) \in \mathcal {A}\). Then \(q \in \mathbb {P}_n\) and, due to (3.5) and (3.6),

Also, by (2.1), (3.7) and the fact that \({\left\| p\right\| }_{[-\gamma ,\gamma ],\infty } \le \epsilon ^{-1}\),

and therefore \( {\Vert q\Vert }_{m,\infty } \le \sqrt{(m+1)/2} \sqrt{2 \gamma }\). Hence,

which implies that \(C_2 \le \max \{ {\Vert q\Vert }_{[-1,1],\infty } : q \in \mathcal {B}\}\) and, after renormalizing,

This gives the second result. \(\square \)

3.4 Main Result on Accuracy and Conditioning

We now summarize these two lemmas with the following theorem:

Theorem 3.3

(Accuracy and conditioning of polynomial frame approximation) Let \(\epsilon > 0\), \(\gamma \ge 1\), \(c > 1\) and \(m , n \ge 1\) be such that

Then the polynomial frame approximation \(\mathcal {P}^{\epsilon ',\gamma }_{m,n}\) with \(\epsilon ' = \epsilon (n+1) / \sqrt{\gamma }\) satisfies

and, for any \(f \in C([-1,1])\),

Proof

Observe that \(C(m,n,\gamma ,\epsilon )\) is a decreasing function of \(\epsilon \). Hence, by this, Lemma 3.2 and the fact that \(\epsilon ' = \sqrt{2} c_{n,\gamma } \epsilon \),

and

Therefore, the condition (3.8) implies that

We now apply Lemma 3.1 to get that \(\kappa (\mathcal {P}^{\epsilon ',\gamma }_{m,n}) \le c \sqrt{m+1}\). For the error bound, we have

where in the second step we used the definition of \(\epsilon '\). The result now follows, since \(1 + c\sqrt{m+1} \le 2 c \sqrt{m+1}\). \(\square \)

4 Maximal Behaviour of Polynomials Bounded at Equispaced Nodes

In this section, we prove Theorem 2.4.

4.1 Pointwise Markov Inequality

We first require a pointwise Markov inequality. The following lemma may be viewed as a generalization to higher derivatives of the pointwise Bernstein inequality (4.1) for algebraic polynomials \(p \in \mathbb {P}_n\), namely \(|p'(x)| \le \frac{n}{\sqrt{1-x^2}} {\left\| p\right\| }_{[-1,1],\infty }\) for \(x \in (-1,1)\). This lemma appeared in [27] in a slightly less general form; we provide essentially the same proof for completeness.

Lemma 4.1

For any \(k,n \in \mathbb {N}\) and \(\delta \in (0,1)\) such that \(k < n \sqrt{(1-\delta ^2)/2}\), and for any polynomial \(p \in \mathbb {P}_n\), we have

Note that we cannot get (4.2) just by iterating the Bernstein inequality (4.1). Such iterated use produces a much weaker result

Proof

We will use the following known results. First, Schaeffer and Duffin [35] proved that, for any \(k,n \in \mathbb {N}\) and \(p \in \mathbb {P}_n\),

where

Second, Shadrin [36] derived the explicit expression for \(D_{n,k}(\cdot )\). Specifically,

where

In particular,

Now, using (4.5) and (4.6), we see that

Clearly

We now find an upper bound for the sum \(B_{n,k}(x)\). Using (4.7) we expand \(c_{m,k}\) as

In the latter expression, we estimate the first factor using Wallis’ inequality (see, e.g., [17, Chpt. 22]):

For the second factor, we observe that

Therefore,

where in the second step we used the inequality \(\frac{k^2-s^2}{n^2-s^2} < \frac{k^2}{n^2}\). Finally, if \(k \le n \sqrt{(1-\delta ^2)/2}\) and \(|x| \le \delta \), then \(\frac{1}{1-x^2} \frac{k^2}{n^2} \le \frac{1}{2}\), and

Altogether, this gives

as required. \(\square \)

Corollary 4.2

For any \(k,n \in \mathbb {N}\) and \(\delta \in (0,1)\) such that \(k < n \sqrt{(1-\delta ^2)/2}\), and for any polynomial \(p \in \mathbb {P}_n\), we have

4.2 A Lemma on Best Approximation

Next, we require the following lemma on best approximation by polynomials. Although well known, we give a proof for completeness.

Lemma 4.3

Let \(f \in C^r([a,b])\). Then

Proof

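Let \(p_\Delta \in \mathbb {P}_{r-1}\) be the polynomial that interpolates f at the r points of the set \(\Delta = (x_i)^{r}_{i=1} \subset [a,b]\). By the Lagrange interpolation formula, for any \(x \in [a,b]\) we have \(f(x) - p_\Delta (x) = \frac{f^{(r)}(\xi )}{r!}\, \omega _\Delta (x)\), where \(\omega _\Delta (x) = \prod ^{r}_{i=1} (x - x_i)\),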

for some \(\xi = \xi _x \in [a,b]\). It follows that

It is well known that the infimum of \(\Vert \omega _\Delta \Vert _{[a,b],\infty }\) is attained for the set \(\Delta _*\) of zeros of the Chebyshev polynomial \(T_r^*\) on [a, b], and that for this set we have \(\Vert \omega _{\Delta _*} \Vert _{[a,b],\infty } = 2 \left( \frac{b-a}{4}\right) ^{r}\).

This completes the proof. \(\square \)
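The extremal property of the Chebyshev zeros used in this proof can be illustrated numerically. The Python sketch below (helper names hypothetical) compares the sampled maximum of \(|\omega _\Delta |\) for Chebyshev and equispaced node sets on [a, b] with the value \(2((b-a)/4)^r\), which follows from the fact that the monic Chebyshev polynomial of degree r has maximum modulus \(2^{1-r}\) on \([-1,1]\).

```python
import numpy as np

def omega_sup(nodes, a, b, npts=200001):
    """Sampled maximum over [a, b] of |omega(x)| = |prod_i (x - x_i)|."""
    x = np.linspace(a, b, npts)
    return np.max(np.abs(np.prod(x[:, None] - np.asarray(nodes)[None, :], axis=1)))

def chebyshev_zeros(r, a, b):
    """Zeros of the Chebyshev polynomial T_r, mapped affinely to [a, b]."""
    k = np.arange(1, r + 1)
    t = np.cos((2 * k - 1) * np.pi / (2 * r))
    return 0.5 * (a + b) + 0.5 * (b - a) * t

r, a, b = 6, 0.0, 3.0
exact = 2 * ((b - a) / 4) ** r
cheb = omega_sup(chebyshev_zeros(r, a, b), a, b)
equi = omega_sup(np.linspace(a, b, r), a, b)
print(cheb / exact, equi / exact)    # Chebyshev nodes attain the minimum; equispaced nodes do worse
```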

4.3 Bounding the Norm on a Subinterval

We are now in a position to state and prove the main result of this section. Note that, for convenience, we formulate this result in terms of intervals \([-\delta ,\delta ]\) and \([-1,1]\) as opposed to intervals \([-1,1]\) and \([-\gamma ,\gamma ]\). The analogous result for the latter is obtained by setting \(\delta = 1/\gamma \).

Theorem 4.4

Given \(\epsilon > 0\), let \(p \in \mathbb {P}_n\) satisfy

and assume that, for some \(\delta \in (0,1)\) and \(m \in \mathbb {N}\), we also have

If

for some \(0 < \epsilon \le 1/\mathrm {e}\) and \(c_1 \ge \max \{1/\lfloor \log (1/\epsilon ) \rfloor , 3/n\}\), then

where \(\beta > 1\) is the upper constant in the Coppersmith and Rivlin bound (2.8).

This theorem is essentially a more general version of Theorem 2.4 in which the dependence of the bound on \(C_0\) in the constant factor \(c_1\) in (4.9) is made explicit. We now show how it implies Theorem 2.4.

Proof of Theorem 2.4

We use Theorem 4.4 with \(\delta = 1/\gamma \) and \(c_1 = 3\). Recall that \(0 < \epsilon \le 1/\mathrm {e}\) and \(n \ge \sqrt{\gamma ^2-1} \log (1/\epsilon ) > 0\) by assumption. In particular, \(n \ge 1\) since it is an integer. This implies that

Hence, the value \(c_1 = 3\) is permitted. Observe now that, since \(\delta = 1/\gamma \),

Therefore, (2.10) implies that (4.9) holds. We deduce that

as required. \(\square \)

Proof of Theorem 4.4

For a given \(p \in \mathbb {P}_n\) that satisfies the assumptions of the theorem, set

If \(k > n \sqrt{(1-\delta ^2)/2}\), then the condition (4.9) implies that

Hence, the polynomial p is bounded on the interval \([-\delta , \delta ]\) at \(m+1 > c_1 n^2/3 + 1\) equidistant points. We deduce that

by the Coppersmith and Rivlin bound (2.8).

We now suppose that \(k \le n \sqrt{(1-\delta ^2)/2}\), so that the Markov-type inequality (4.8) is applicable. First, consider the partition of the interval \([-\delta ,\delta ]\) with the \(m+1\) equispaced points

Take any subinterval \(I = [x_r, x_s] \subset [-\delta ,\delta ]\) containing \(\lfloor c_1 k^2 \rfloor + 1\) points, so that

where we used the inequality \(m \ge 12 c_1 n k \frac{\delta }{\sqrt{1-\delta ^2}}\).

Now, with the same \(k = \lfloor \log (1/\epsilon ) \rfloor \), let \(Q \in \mathbb {P}_k\) be the polynomial of best approximation to \(p\in \mathbb {P}_n\) from \(\mathbb {P}_k\) on I:

By Lemma 4.3,

and by Corollary 4.2

Hence, using the well-known estimate \(k! \ge \sqrt{2\pi }\sqrt{k} (k/\mathrm {e})^k\), and the bound (4.11) for |I|, we obtain

Now, recalling that \(k = \lfloor \log (1/\epsilon ) \rfloor \), whereas \(\Vert p\Vert _{[-1,1],\infty } \le 1/\epsilon \), we conclude that

On the other hand, by construction the interval \(I = [x_r, x_s]\) contains \(\lfloor c_1 k^2 \rfloor +1\) equispaced points from the \((x_i)\). By (2.8), for \(Q \in \mathbb {P}_k\), we have

Here, for the Coppersmith–Rivlin bound, the assumption \(c_1k^2 \ge k\), i.e. boundedness of \(Q \in \mathbb {P}_k\) on at least \(k+1\) points, is required, and similarly we required \(c_1 n^2/3 \ge n\) in obtaining (4.10). This is where the theorem’s condition

came from. Also, since \(c_1 k^2 \ge k \ge 1\), we have the inequality \(\lfloor c_1 k^2 \rfloor \ge c_1 k^2/2\) which we used in (4.13)

We now conclude the proof. Using (4.12) and (4.13), we obtain

as required. \(\square \)
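The two elementary inequalities used in this proof, \(k! \ge \sqrt{2\pi }\sqrt{k} (k/\mathrm {e})^k\) and \(\lfloor y \rfloor \ge y/2\) for \(y \ge 1\), are easy to spot-check. The Python snippet below does so over a finite range; it is a sanity check, not a proof.

```python
import math

def stirling_lower(k):
    """Lower bound sqrt(2*pi*k) * (k/e)**k for k!."""
    return math.sqrt(2 * math.pi * k) * (k / math.e) ** k

stirling_ok = all(math.factorial(k) >= stirling_lower(k) for k in range(1, 150))
ratio_100 = math.factorial(100) / stirling_lower(100)   # close to 1 + 1/(12*100)

# floor(y) >= y/2 for y >= 1: equals 1 >= y/2 on [1, 2), and floor(y) > y - 1 >= y/2 for y >= 2
ys = [1 + 0.01 * j for j in range(10000)]
floor_ok = all(math.floor(y) >= y / 2 for y in ys)
print(stirling_ok, ratio_100, floor_ok)
```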

5 Proofs of the Possibility and Impossibility Theorems

We now prove Theorems 2.5–2.9. For these, we first require the following result.

Lemma 5.1

Suppose that \(E_{\theta } \subset \mathbb {C}\) is the Bernstein ellipse (2.11) with parameter \(\theta > 1\) and \(f \in B(E_{\theta })\). Then there exists a polynomial \(p \in \mathbb {P}_n\) for which

Moreover, this polynomial satisfies

and

Proof

This result is essentially standard; we include the proof in order to obtain explicit bounds for p. Since \(f \in B(E_{\theta })\), its Chebyshev expansion converges uniformly on \([-1,1]\), i.e.

and its coefficients satisfy \(|c_k| \le 2 {\left\| f\right\| }_{E_{\theta }} \theta ^{-k}\) [39, Thm. 8.1]. Let \(p = \sum ^{n}_{k=0} c_k \phi _k\). Then

Evaluating the sum gives the first result.

For the other results, recall that the Bernstein ellipse is given by \(E_{\tau } = \left\{ J(z) : z \in \mathbb {C},\ 1 \le |z | \le \tau \right\} \), where \(J(z) = \frac{1}{2}(z+z^{-1})\) is the Joukowsky map, and the Chebyshev polynomials satisfy \(\phi _k(J(z)) = \frac{1}{2} \left( z^k + z^{-k} \right) \). Hence, \({\left\| \phi _k \right\| }_{E_{\tau },\infty } \le \tau ^{k}\). Therefore,

which yields the result. \(\square \)
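The two facts about Chebyshev polynomials used in this proof, \(\phi _k(J(z)) = \frac{1}{2}(z^k + z^{-k})\) and the resulting bound on \(E_{\tau }\), can be checked with NumPy's `numpy.polynomial.chebyshev.chebval`. In the sketch below the values \(\tau = 1.8\) and \(k = 7\) are arbitrary; by the maximum modulus principle it suffices to sample the boundary \(|z| = \tau \).

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def joukowsky(z):
    """Joukowsky map J(z) = (z + 1/z) / 2."""
    return 0.5 * (z + 1.0 / z)

tau, k = 1.8, 7
z = tau * np.exp(1j * np.linspace(0.0, 2.0 * np.pi, 2001))   # circle |z| = tau
coeffs = np.zeros(k + 1)
coeffs[k] = 1.0                                              # T_k in the Chebyshev basis
Tk = C.chebval(joukowsky(z), coeffs)                         # T_k on the boundary of E_tau

identity_err = np.max(np.abs(Tk - 0.5 * (z**k + z**(-k))))
sup_boundary = np.max(np.abs(Tk))
print(identity_err, sup_boundary, 0.5 * (tau**k + tau**(-k)), tau**k)
```

The sampled maximum equals \(\frac{1}{2}(\tau ^k + \tau ^{-k})\), which is slightly smaller than the bound \(\tau ^k\) used in the proof.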

Proof of Theorem 2.5

We use Theorem 3.3. Note that the condition (2.13) implies that (2.10) holds. Therefore, Theorem 2.4 implies that (3.8) holds with c as defined therein.

The desired bound for the condition number follows immediately. For the error bound, let \(f \in B(E_{\theta })\), where \(\theta > \gamma + \sqrt{\gamma ^2-1}\), and let \(p \in \mathbb {P}_n\) be as in the previous lemma. Since \([-\gamma ,\gamma ] \subset E_{\tau }\) where \(\tau = \gamma + \sqrt{\gamma ^2-1} < \theta \) by assumption, this lemma gives

for some function \(g(\theta ,\gamma )\) depending on \(\theta \) and \(\gamma \) only. Here we also use the fact that \(n \ge 1\), which follows from the fact that n is an integer and \(n \ge \log (1/\epsilon ) > 0\) by assumption. Supposing that (3.8) holds, Theorem 3.3 now gives, up to a constant change in \(g(\theta ,\gamma )\),

which is the desired error bound with respect to n. To obtain the error bound with respect to m, we simply notice that (2.13) implies that \(m \le 36 n \log (1/\epsilon ) / \sqrt{\gamma ^2-1} + 1 = n/c^*+1\). Hence, \(\theta ^{-n} \le \theta ^{c^*(1-m)} = \rho ^{1-m}\), as required. \(\square \)

Proof of Theorem 2.7

The overall argument is similar to the previous proof. The only difference is the estimation of the term

Suppose that f is analytic in \(E = E_{\theta }\) for some \(\theta \) with \(1< \theta < \tau : = \gamma + \sqrt{\gamma ^2-1}\). In other words, the Bernstein ellipse \(E_{\theta }\) does not contain the extended interval \([-\gamma ,\gamma ]\). Let \(1 \le k \le n\) and \(p \in \mathbb {P}_k\) be the polynomial guaranteed by Lemma 5.1. Then

We now choose

so that

and

Since \(n \ge 1\), we deduce that

as required. \(\square \)

Proof of Theorem 2.8

As in the previous proof, we only need to obtain the desired estimate for the term (5.1). Let \(f \in C^{k}([-1,1])\). Then f has a \(C^k\)-extension to the interval \([-\gamma ,\gamma ]\). Specifically, there is a function \({\tilde{f}} \in C^{k}([-\gamma ,\gamma ])\) satisfying \({\tilde{f}}(x) = f(x)\) for all \(x \in [-1,1]\) and

for some constant \(c_{k,\gamma } \ge 1\) depending on k and \(\gamma \) only. Since \({\tilde{f}} \in C^{k}([-\gamma ,\gamma ])\), a classical result (see, e.g., [18, §4.6]) gives that

for some \(c'_{k,\gamma } > 0\). Observe that

Therefore,

where in the last step we used (5.2) and the fact that \(n \ge 1\). This gives the desired result. \(\square \)

We conclude this section with the proof of Theorem 2.9.

Proof of Theorem 2.9

The proof is similar to that of the impossibility theorem shown in [34]. First observe that \(\mathcal {R}_m(0) = 0\) for any approximation procedure satisfying (2.18). Now let \(k \in \mathbb {N}_0\). Then the condition number (2.2) satisfies

Let \(p \in \mathbb {P}_k\) with \({\left\| p\right\| }_{m,\infty } \ne 0\) and set \(q = \delta p / {\left\| p\right\| }_{m,\infty }\) so that \(q \in \mathbb {P}_k\) with \({\left\| q\right\| }_{m,\infty } = \delta \). Since polynomials are entire functions, (2.18) gives that

Here, in the second step, we used the classical inequality \({\left\| q\right\| }_{E_{\theta },\infty } \le \theta ^k {\left\| q\right\| }_{[-1,1],\infty }\), \(\forall q \in \mathbb {P}_k\). Recalling the definition of q, we deduce that

Now let

This choice of k gives \(C \left( \theta ^{-c m^{\tau }} + \epsilon \right) \theta ^k \le 1/2\), and therefore,

where \(\alpha > 1\) is as in (2.8). Observe that this holds for all \(1 < \theta \le \theta ^*\). Now choose \(\theta = \theta _m = \epsilon ^{-\frac{1}{c m^{\tau }}}\), and observe that \(\theta _m \le \theta ^*\) for all large m. This value of \(\theta \) and the fact that \(0 < \epsilon \le (4 C)^{-2}\) give

and therefore \(k \ge \frac{1}{3} c m^{\tau }\) for all sufficiently large m. We deduce that

for some \(\sigma > 1\) and all large m, as required. \(\square \)

6 Numerical Examples

We conclude this paper with a series of experiments to examine the various theoretical results. Unless otherwise stated, we compute the discrete \(L^{\infty }\)-norm error of the approximation on a grid of 50,000 equispaced points in \([-1,1]\). Also, we use \(\epsilon \), rather than \(\epsilon '\) (as used in the main theorems), as the threshold parameter of the polynomial frame approximation. In theory, this choice leads to a log-linear sample complexity; in practice, however, it appears to be adequate.

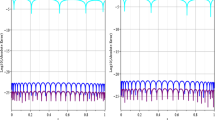

In Fig. 1 we plot the error versus n for different values of the oversampling parameter \(\eta = m/n\). We compare several different values for the extended domain parameter \(\gamma \), and several different values of \(\epsilon \). Notice that in all cases, we witness exponential decrease of the error down to some fixed limiting accuracy. The limiting accuracy is related to the stability of the approximation and the parameter \(\epsilon \). Observe that it gets smaller with increasing \(\eta \), and for sufficiently large \(\eta \) it closely tracks the value of \(\epsilon \) used. Moreover, it is larger when \(\gamma \) is smaller and smaller when \(\gamma \) is larger. Both observations are intuitive. Increasing the number of sample points (i.e. larger \(\eta \)) reduces the maximal growth of a polynomial on \([-1,1]\) relative to its values on the equispaced grid. Similarly, increasing \(\gamma \) lengthens the region within which the polynomial cannot exceed the value \(1/\epsilon \), and therefore, it also cannot grow as large on \([-1,1]\). We also notice that increasing the oversampling parameter makes less difference to the limiting accuracy when \(\epsilon \) is larger than it does when \(\epsilon \) is smaller. Again, this is intuitive, since larger \(\epsilon \) means the polynomial cannot grow as large on the extended interval. These three observations are also supported by Theorem 2.4, in which the sufficient scaling between m and n is proportional to \(\log (1/\epsilon )/\sqrt{\gamma ^2-1}\), a decreasing function of both \(\epsilon \) and \(\gamma \).

Approximation error versus n for approximating the function \(f(x) = \frac{1}{1+x^2}\) via \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\), where \(m/n = \eta \), using various different values of \(\eta \), \(\gamma \) and \(\epsilon \). The values of \(\epsilon \) used are \(\epsilon = 10^{-14}\) (top), \(\epsilon = 10^{-10}\) (middle) and \(\epsilon = 10^{-6}\) (bottom). The dashed line shows the quantity \(\theta ^{-n}\), where \(\theta = \sqrt{2}+1\)

In Fig. 1 the function we consider has poles at \(x = \pm \mathrm {i}\), meaning that it is analytic within any Bernstein ellipse \(E_{\theta }\) for which \(\frac{1}{2}(\theta - \theta ^{-1}) < 1\), i.e. \(1< \theta < \sqrt{2}+1 \approx 2.41\). Recall that the interval \([-\gamma ,\gamma ]\) is contained in the Bernstein ellipse \(E_{\tau }\) with \(\tau = \gamma + \sqrt{\gamma ^2-1}\). In particular, \(\tau < \sqrt{2}+1\) for \(\gamma = 1.2\) and \(\gamma = 1.4\). Our analysis in Theorem 2.5 therefore asserts exponential decay of the error with rate roughly \((\sqrt{2}+1)^{-n}\) for these two choices of \(\gamma \). This is what we observe in practice in Fig. 1.

On the other hand, when \(\gamma = 1.8\), \(\tau = \gamma + \sqrt{\gamma ^2-1} \approx 3.30 > 2.41 = \sqrt{2}+1\). In this case, our analysis in Theorem 2.7 predicts exponential convergence with rate roughly \((\sqrt{2}+1)^{-n}\) down to roughly \(\epsilon ^{\frac{\log (\sqrt{2}+1)}{\log (\tau )}} \approx \epsilon ^{0.74}\), and slower convergence below this level. This is again in agreement with the right column of Fig. 1.
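The numerical values quoted above, and the values of \(\theta \) in the caption of Fig. 2, follow from two elementary formulas: the parameter of the Bernstein ellipse passing through a singularity \(z_0\) is \(|z_0 + \sqrt{z_0^2-1}|\) (with the branch chosen so that the modulus is at least one), and \([-\gamma ,\gamma ] \subset E_{\tau }\) with \(\tau = \gamma + \sqrt{\gamma ^2-1}\). A short Python check, with hypothetical helper names:

```python
import cmath
import math

def theta_from_singularity(z0):
    """Parameter of the Bernstein ellipse passing through the point z0."""
    s = cmath.sqrt(z0 * z0 - 1)
    return max(abs(z0 + s), abs(z0 - s))

def tau(gamma):
    """Parameter of the smallest Bernstein ellipse containing [-gamma, gamma]."""
    return gamma + math.sqrt(gamma**2 - 1)

theta = theta_from_singularity(1j)          # f(x) = 1/(1+x^2) has poles at +-i
minor_semi_axis = 0.5 * (theta - 1 / theta) # equals 1: E_theta passes through +-i
for g in (1.2, 1.4, 1.8):
    print(g, round(tau(g), 3), tau(g) < theta)
# Breakpoint exponent from Theorem 2.7 for gamma = 1.8: eps**(log(theta)/log(tau))
print(math.log(theta) / math.log(tau(1.8)))
```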

To further investigate how the error decay slows down for functions with smaller regions of analyticity, in Fig. 2 we plot the error versus n for several different functions and values of \(\gamma \). We also plot the theoretical breakpoint described in Theorem 2.7, i.e. the value (6.1) given by \(\epsilon ^{\frac{\log (\theta )}{\log (\tau )}}\), where \(\tau = \gamma + \sqrt{\gamma ^2-1}\).

In all cases, we see reasonable agreement between the theoretical results and the empirical performance. The error decays with rate \(\theta ^{-n}\) down to the breakpoint, as predicted by Theorem 2.7, and more slowly beyond it. This second phase is described by Theorem 2.8 (since analytic functions are infinitely differentiable).

Approximation errors versus n for approximating the functions \(f_1(x) = \frac{1}{1+4x^2}\) (top), \(f_2(x) = \frac{1}{10-9 x}\) (middle) and \(f_3(x) = 25 \sqrt{9 x^2-10}\) (bottom) via \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\), where \(m/n = 4\), using various different values of \(\gamma \) and \(\epsilon \). The dot-dashed lines show the breakpoints (6.1) in each case and the dashed lines show the quantity \(\theta ^{-n}\). In this experiment, the values of \(\theta \) are \(\theta = \frac{1}{2}(1+\sqrt{5})\) (top), \(\theta = \frac{1}{9}(10 + \sqrt{19})\) (middle) and \(\theta = \sqrt{10/9} + 1/3\) (bottom)

It is notable that the error decay rate after the breakpoint is quite different for the functions considered. This effect has also been observed and discussed in the case of Fourier extensions [5, 12]. It can be understood through Theorem 2.8. Recall that this theorem asserts that the error is bounded by

for any \(k \in \mathbb {N}\) (since all functions considered are infinitely smooth). The derivatives of the first function \(f_1(x) = \frac{1}{1+4x^2}\) do not grow too large on \([-1,1]\) with increasing k. Hence, the constants \( {\left\| f\right\| }_{C^k([-1,1])}\) in the error term remain reasonably small and we see rapid decrease in n. On the other hand, the derivatives of the functions \(f_2\) and \(f_3\) grow rapidly with k, meaning the constants \( {\left\| f\right\| }_{C^k([-1,1])}\) also grow rapidly with k. Thus, (6.2) suggests that the error decays progressively more slowly the closer it gets to the limiting value \(\epsilon \). This is exactly the effect we observe in practice.

Approximation errors versus n for approximating the function \(f(x) = \exp (\mathrm {i}\omega \pi x)\) via \(\mathcal {P}^{\epsilon ,\gamma }_{m,n}\), where \(m/n = 4\), using various different values of \(\gamma \) and \(\epsilon \). The values of \(\omega \) are \(\omega = 40\) (top), \(\omega = 60\) (middle) and \(\omega = 80\) (bottom)

In Fig. 3 we consider approximating the oscillatory function \(f(x) = \mathrm {e}^{\mathrm {i}\omega \pi x}\) for various different values of \(\omega \). Oscillatory functions are an interesting case study for approximation procedures from equispaced nodes. They are entire functions, yet they grow extremely rapidly along the imaginary axis for large \(|\omega |\), meaning that the term \({\left\| f\right\| }_{E_{\theta },\infty }\) is extremely large unless \(\theta \approx 1\). As we see in Fig. 3, the approximation error is of order one until a minimum value \(n = n_0(\omega )\) is reached. After this point, the function begins to be resolved and the error decreases rapidly. Determining the behaviour of \(n_0(\omega )\) allows us to examine the resolution power of the approximation scheme, i.e. the number of points needed before decay of the error sets in.

For the polynomial frame approximation, we determine this point by recalling the error bound

Since f is entire and \(|f(x)| = 1\) for real x, we can write

Hence, the resolution power of polynomial frame approximation is governed by that of best polynomial approximation on the extended interval \([-\gamma ,\gamma ]\). It is well known (see, e.g., [5, 13, 24, 25]) that on the interval \([-1,1]\) an oscillatory function with frequency \(\omega \) can be resolved by a polynomial once the degree n exceeds roughly \(\pi \omega \). Since f must be resolved on the extended interval, this implies that \(n_0(\omega ) \approx \pi \gamma \omega \).

This value is also shown in Fig. 3. It closely predicts the point at which the error begins to decrease. Note that this suggests choosing a small value of \(\gamma \) so as to obtain a higher resolution power. Yet, as seen, this worsens the condition number of the approximation. Or, to put it another way, a smaller \(\gamma \) necessitates a more severe oversampling ratio \(\eta \) so as to maintain the same condition number, thus worsening the resolution power with respect to the number of equispaced samples m.
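The rule of thumb \(n_0(\omega ) \approx \pi \gamma \omega \) can be illustrated by the Chebyshev coefficients of f on the extended interval. The Python sketch below is an illustration, not the experiment behind Fig. 3: it rescales \([-\gamma ,\gamma ]\) to \([-1,1]\), so that f becomes \(t \mapsto \mathrm {e}^{\mathrm {i}\omega \pi \gamma t}\), and uses NumPy's `chebinterpolate` to compute its Chebyshev coefficients. These stay of moderate size up to degree about \(\pi \gamma \omega \) and then decay super-exponentially. The helper names and the tolerance are assumptions.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def extended_cheb_coeffs(omega, gamma, deg):
    """Chebyshev coefficients of x -> exp(i*omega*pi*x) on [-gamma, gamma]."""
    g = lambda t: np.exp(1j * omega * np.pi * gamma * t)    # rescaled to [-1, 1]
    return C.chebinterpolate(g, deg)

def resolution_index(c, tol=1e-10):
    """Smallest k such that |c_j| < tol for all j >= k."""
    big = np.nonzero(np.abs(c) >= tol)[0]
    return int(big[-1]) + 1 if big.size else 0

gamma = 1.2
for omega in (40, 60, 80):
    a = np.pi * gamma * omega                                # predicted n_0(omega)
    c = extended_cheb_coeffs(omega, gamma, deg=int(2 * a) + 50)
    print(omega, round(a), resolution_index(c))
```

The index returned exceeds \(\pi \gamma \omega \) by a margin that depends on the tolerance, consistent with the qualitative statement that decay sets in once n passes \(\pi \gamma \omega \).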

Finally, we conclude in Fig. 4 with an experiment that compares fixed \(\epsilon \) (the main setting in this paper) with varying \(\epsilon \), the latter chosen as in Remark 2.6 to decay like \(\theta ^{-n}\) for a given \(\theta > 1\). In order to make a fair comparison, we define a maximum allowable condition number \(\kappa ^* = 100\). Then, given n, we find the smallest value of m for each scheme such that its condition number is at most \(\kappa ^*\). To do this, we follow the approach of [7, §8] and work in the discrete \(L^2\)-norm (as opposed to the discrete uniform norm considered in previous experiments) over a grid of 50,000 equispaced points in \([-1,1]\). Doing so means that the condition number can be computed as the norm of a certain matrix.
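One possible way to carry out this computation is sketched below in Python, under our own assumptions and not necessarily following the procedure of [7, §8]. With discrete \(L^2\)-norms normalized by the number of points, the truncated-SVD map from data to values on the fine grid is \(B V_r \Sigma _r^{-1} U_r^*\); since \(U_r\) has orthonormal columns, its spectral norm equals that of \(B V_r \Sigma _r^{-1}\). Here A and B are the scaled matrices of an assumed orthonormal Legendre basis on \([-\gamma ,\gamma ]\) at the \(m+1\) equispaced nodes and at M fine-grid points; the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as L

def psi(x, n, gamma):
    """Orthonormal Legendre polynomials psi_0..psi_n on [-gamma, gamma], as columns."""
    V = L.legvander(np.asarray(x, dtype=float) / gamma, n)
    return V * np.sqrt((2 * np.arange(n + 1) + 1) / (2 * gamma))

def frame_condition_number(m, n, gamma, eps, M=50_000):
    """Discrete-L^2 condition number of the eps-truncated frame approximation."""
    x = np.linspace(-1.0, 1.0, m + 1)
    A = psi(x, n, gamma) / np.sqrt(m + 1)
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps
    y = np.linspace(-1.0, 1.0, M)
    B = psi(y, n, gamma) / np.sqrt(M)
    # columns of V_r divided by sigma_i; the factor U_r^* does not change the norm
    return np.linalg.norm(B @ (Vh[keep].conj().T / s[keep]), 2)

def smallest_m(n, gamma, eps, kappa_max=100.0, M=50_000):
    """Smallest m >= n with condition number at most kappa_max (linear search)."""
    m = n
    while frame_condition_number(m, n, gamma, eps, M) > kappa_max:
        m += 1
    return m
```

For the varying-\(\epsilon \) scheme one would call these functions with \(\epsilon = \max \{\theta ^{-n}, 10^{-14}\}\), as in the caption of Fig. 4. Discarding more singular values can only remove columns from \(B V_r \Sigma _r^{-1}\), so in this sketch a larger threshold never increases the computed condition number.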

For the first function, \(f_1\), we observe that varying \(\epsilon \) can lead to a benefit whenever the parameter \(\theta \) is chosen suitably: specifically, whenever it is chosen close to the value \(\theta = \theta ^*\), where \(\theta ^*\) is the parameter of the largest Bernstein ellipse within which the function is analytic. If \(\theta \) is chosen too large, then the scheme behaves similarly to the standard polynomial frame approximation with fixed \(\epsilon \). On the other hand, if \(\theta \) is chosen too small, then the scheme performs significantly worse. In fact, it can even perform worse than standard polynomial least-squares approximation using orthonormal Legendre polynomials on \([-1,1]\) (see Remark 2.2).

For the second function, \(f_2\), varying \(\epsilon \) confers no benefit over fixed \(\epsilon \), and, as before, it can lead to worse performance if \(\theta \) is chosen too small. It is notable that polynomial least-squares approximation outperforms any of the polynomial frame approximations for this function. This is not surprising. The function grows rapidly near the endpoint \(x = + 1\). In polynomial frame approximation the approximating polynomial is constrained to be of a finite size on \([-\gamma ,\gamma ]\). Hence, it cannot capture this rapid growth as effectively as in polynomial least-squares approximation, where there is no such constraint.

The third function, \(f_3\), is entire and oscillatory. Observe that when \(\theta \) is small, the polynomial frame approximation with varying \(\epsilon \) initially resolves the function using fewer samples than the scheme with fixed \(\epsilon \). Yet, as m increases, its error decays more slowly, and it eventually exceeds the error of the latter method.

In the second row of Fig. 4 we plot the scaling between m and n. As expected, polynomial least-squares approximation exhibits a quadratic scaling, while polynomial frame approximation with fixed \(\epsilon \) exhibits a linear scaling. Polynomial frame approximation with varying \(\epsilon \) exhibits a quadratic scaling for small \(\theta \). When \(\theta \) is larger, the scaling is at first quadratic and then linear. This arises because \(\epsilon \) is constrained to be no smaller than \(10^{-14}\) in this experiment, in order to avoid numerical effects in the thresholded SVD. Note that in this regime, the two polynomial frame approximations coincide.

The main conclusion from this experiment is that varying \(\epsilon \) with n can lead to some benefit (for small m), as long as the parameter \(\theta \) is chosen carefully. Unfortunately, such a choice is function dependent (compare, for instance, \(f_1\) versus \(f_3\)), and may require knowledge of the domain of analyticity of the (unknown) function.

Comparison of various schemes for approximating the functions \(f_1\), \(f_2\) and \(f_3\). The top row shows the approximation error (measured in the discrete \(L^2\)-norm) versus m. The bottom row shows the relationship between m and n where, given n, m is the smallest integer such that the (discrete \(L^2\)-norm) condition number of each scheme is at most 100. The schemes considered are: polynomial least-squares approximation (“PLS”), which uses orthonormal Legendre polynomials on \([-1,1]\) (see Remark 2.2); polynomial frame approximation with fixed \(\epsilon = 10^{-14} \) (“PFF”); and polynomial frame approximation with varying \(\epsilon = \max \{ \theta ^{-n}, 10^{-14} \}\) (“PFV”). Both PFF and PFV use the value \(\gamma = 1.25\). The quantity \(\theta ^*\) is the ‘optimal’ value of \(\theta \), in the sense that it is the parameter of the largest Bernstein ellipse within which the given function is analytic

7 Conclusions and Outlook

In this work, we have shown a positive counterpart to the impossibility theorem of [34]. Namely, we have shown a possibility theorem (Theorem 2.5) for polynomial frame approximation, which asserts stability and exponential convergence down to a finite, but user-controlled limiting accuracy. This holds for all functions that are analytic in a sufficiently large region, a condition that is necessary for any scheme achieving this type of error decay, as shown by our extended impossibility theorem (Theorem 2.9). On the other hand, for insufficiently analytic functions, we have shown exponential decay down to some fractional power of \(\epsilon \) (Theorem 2.7), and superalgebraic decay (Theorem 2.8) beyond this point.

There are several avenues for further investigation. First, recall that our main error bounds involve algebraic factors in m and n, albeit small ones. It would be interesting to see whether such factors could be removed by modifying the approximation scheme. Alternatively, one could work in the \(L^2\)-norm, which is arguably more natural for least-squares approximations.

Second, this work was inspired by so-called Fourier extensions [9, 12, 14, 16, 26, 29, 31, 33], wherein a smooth, non-periodic function on \([-1,1]\) is approximated by a Fourier series on \([-\gamma ,\gamma ]\). In practice, linear oversampling appears sufficient for accuracy and stability of \(\epsilon \)-regularized Fourier extensions, with exponential error decay down to \(\epsilon \) [9, 12]. Proving a similar possibility theorem for this scheme is an open problem. Note that Fourier extension is equivalent to a polynomial approximation problem on an arc of the complex unit circle [23, 40]. Fourier extension schemes have several advantages over the polynomial extension scheme studied herein. For example, they generally possess higher resolution power [5] (recall Fig. 3 and the discussion in Sect. 6).
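An \(\epsilon \)-regularized Fourier extension can be sketched in the same spirit. This is a minimal illustration under our own assumptions rather than the specific schemes of [9, 12]: the frame is \(e^{i\pi k x/\gamma }\), \(|k| \le n\), which is orthonormal with respect to the uniform probability measure on \([-\gamma ,\gamma ]\); the data are m+1 equispaced samples in \([-1,1]\); and the least-squares fit discards singular values below \(\epsilon \) (absolute threshold). The helper names are hypothetical.

```python
import numpy as np


def fourier_frame(x, n, gamma):
    """Columns exp(i*pi*k*x/gamma), k = -n..n: a Fourier basis on [-gamma, gamma],
    orthonormal w.r.t. the uniform probability measure there."""
    k = np.arange(-n, n + 1)
    return np.exp(1j * np.pi * np.outer(np.asarray(x, dtype=float), k) / gamma)


def fourier_extension(f, n, m, gamma, eps):
    """Coefficients of the truncated-SVD least-squares fit on m+1 equispaced nodes in [-1, 1]."""
    nodes = np.linspace(-1.0, 1.0, m + 1)
    A = fourier_frame(nodes, n, gamma) / np.sqrt(m + 1)
    b = f(nodes) / np.sqrt(m + 1)
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps  # discard singular values below eps (absolute threshold)
    return Vh[keep].conj().T @ ((U[:, keep].conj().T @ b) / s[keep])


def eval_fourier(c, x, gamma):
    n = (len(c) - 1) // 2
    return fourier_frame(x, n, gamma) @ c


# Extend the non-periodic function exp(x) from [-1, 1] to a 2*gamma-periodic series,
# using linear oversampling m = 4 * (2n + 1).
gamma, n = 2.0, 25
c = fourier_extension(np.exp, n, 4 * (2 * n + 1), gamma, 1e-14)
x_fine = np.linspace(-1.0, 1.0, 2000)
err = np.max(np.abs(eval_fourier(c, x_fine, gamma) - np.exp(x_fine)))
print(f"max error on [-1, 1]: {err:.1e}")
```

The only change relative to a polynomial frame is the choice of frame; the sampling and the SVD regularization are identical, which is why the two schemes are so naturally compared.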

Third, we mention that equispaced points are not special. Similar impossibility theorems have been shown for scattered data, or more generally, any sample points that do not cluster quadratically near the endpoints \(x = \pm 1\) [11]. Beyond pointwise samples, it is notable that an impossibility theorem also holds for reconstructing analytic functions from their Fourier samples [2,3,4]. The question of whether or not possibility theorems hold for these more general types of samples is also an open problem.

Fourth, notice that we have not concerned ourselves with fast computation of the polynomial frame approximation in this work. We anticipate, however, that a fast implementation may be possible, as it is with Fourier extensions [29,30,31]. One potential idea in this direction is the AZ algorithm [20].

Fifth and finally, we note that the one-dimensional problem is, in some sense, a toy problem. Polynomial frame approximations were first formalized in [7] to accurately and stably approximate functions defined over general compact domains in two or more dimensions. Here the domain is embedded in a hypercube, and a tensor-product orthogonal polynomial basis on the hypercube is used to construct the approximation. Such approximations are often used in practice for surrogate model construction in uncertainty quantification [1, 7]. Showing that linear oversampling is sufficient in two or more dimensions, and establishing a corresponding possibility theorem for analytic function approximation in arbitrary dimensions, would be an interesting and practically relevant extension.
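The multivariate construction just described can be illustrated in two dimensions. This is a minimal sketch under our own assumptions, not the method of [7] verbatim: the domain is the unit disk embedded in \([-1,1]^2\), the frame is the tensor-product Legendre basis of degree at most n in each variable (orthonormal on the square) restricted to the disk, samples are taken on an equispaced grid clipped to the disk, and the fit is an SVD-regularized least-squares fit discarding singular values below \(\epsilon \). The function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def tensor_legendre(x, y, n):
    """Tensor-product Legendre polynomials P_i(x) P_j(y), 0 <= i, j <= n, on the
    square [-1, 1]^2, scaled to be orthonormal w.r.t. its uniform probability measure."""
    w = np.sqrt(2.0 * np.arange(n + 1) + 1.0)
    Vx = legendre.legvander(x, n) * w  # shape (N, n+1)
    Vy = legendre.legvander(y, n) * w
    return (Vx[:, :, None] * Vy[:, None, :]).reshape(len(x), -1)


def disk_grid(k):
    """Points of a k-by-k equispaced grid on [-1, 1]^2 that lie in the unit disk."""
    g = np.linspace(-1.0, 1.0, k)
    X, Y = np.meshgrid(g, g)
    inside = X**2 + Y**2 <= 1.0
    return X[inside], Y[inside]


def frame_fit_2d(f, n, k, eps):
    """SVD-regularized least-squares fit on the disk, discarding singular values below eps."""
    x, y = disk_grid(k)
    A = tensor_legendre(x, y, n) / np.sqrt(len(x))
    b = f(x, y) / np.sqrt(len(x))
    U, s, Vh = np.linalg.svd(A, full_matrices=False)
    keep = s > eps
    return Vh[keep].T @ ((U[:, keep].T @ b) / s[keep])


f = lambda x, y: np.exp(x + y)
c = frame_fit_2d(f, n=10, k=60, eps=1e-14)
xt, yt = disk_grid(101)  # a different, finer grid for testing
err = np.max(np.abs(tensor_legendre(xt, yt, 10) @ c - f(xt, yt)))
print(f"max error on test points in the disk: {err:.1e}")
```

The basis is orthogonal on the square but not on the disk, so the restricted system is a frame rather than a basis; the SVD threshold plays the same stabilizing role as in one dimension.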

References

Adcock, B., Brugiapaglia, S., Webster, C.G.: Sparse Polynomial Approximation of High-Dimensional Functions. Society for Industrial and Applied Mathematics, Philadelphia (2022)

Adcock, B., Hansen, A.C.: Stable reconstructions in Hilbert spaces and the resolution of the Gibbs phenomenon. Appl. Comput. Harmon. Anal. 32(3), 357–388 (2012)

Adcock, B., Hansen, A.C.: Generalized sampling and the stable and accurate reconstruction of piecewise analytic functions from their Fourier coefficients. Math. Comput. 84, 237–270 (2014)

Adcock, B., Hansen, A.C., Shadrin, A.: A stability barrier for reconstructions from Fourier samples. SIAM J. Numer. Anal. 52(1), 125–139 (2014)

Adcock, B., Huybrechs, D.: On the resolution power of Fourier extensions for oscillatory functions. J. Comput. Appl. Math. 260, 312–336 (2014)

Adcock, B., Huybrechs, D.: Frames and numerical approximation. SIAM Rev. 61(3), 443–473 (2019)

Adcock, B., Huybrechs, D.: Approximating smooth, multivariate functions on irregular domains. Forum Math. Sigma 8, e26 (2020)

Adcock, B., Huybrechs, D.: Frames and numerical approximation II: generalized sampling. J. Fourier Anal. Appl. 26(6), 87 (2020)

Adcock, B., Huybrechs, D., Martín-Vaquero, J.: On the numerical stability of Fourier extensions. Found. Comput. Math. 14(4), 635–687 (2014)

Adcock, B., Platte, R.: A mapped polynomial method for high-accuracy approximations on arbitrary grids. SIAM J. Numer. Anal. 54(4), 2256–2281 (2016)

Adcock, B., Platte, R., Shadrin, A.: Optimal sampling rates for approximating analytic functions from pointwise samples. IMA J. Numer. Anal. 39(3), 1360–1390 (2019)

Adcock, B., Ruan, J.: Parameter selection and numerical approximation properties of Fourier extensions from fixed data. J. Comput. Phys. 273, 453–471 (2014)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Springer-Verlag (1989)

Boyd, J.P.: A comparison of numerical algorithms for Fourier Extension of the first, second, and third kinds. J. Comput. Phys. 178, 118–160 (2002)

Boyd, J.P., Ong, J.R.: Exponentially-convergent strategies for defeating the Runge phenomenon for the approximation of non-periodic functions, part I: single-interval schemes. Commun. Comput. Phys. 5(2–4), 484–497 (2009)

Bruno, O.P., Han, Y., Pohlman, M.M.: Accurate, high-order representation of complex three-dimensional surfaces via Fourier continuation analysis. J. Comput. Phys. 227(2), 1094–1125 (2007)

Bullen, P.S.: Dictionary of Inequalities. Monographs and Research Notes in Mathematics, 2nd edn. CRC Press, Boca Raton (2015)

Cheney, E.W.: Introduction to Approximation Theory, vol. 317, 2nd edn. AMS Chelsea Publishing, Providence (1982)

Christensen, O.: An Introduction to Frames and Riesz Bases. Applied and Numerical Harmonic Analysis, 2nd edn. Birkhäuser, Basel (2016)

Coppé, V., Huybrechs, D., Matthysen, R., Webb, M.: The AZ algorithm for least squares systems with a known incomplete generalized inverse. SIAM J. Matrix Anal. Appl. 41(3), 1237–1259 (2020)

Coppersmith, D., Rivlin, T.J.: The growth of polynomials bounded at equally spaced points. SIAM J. Math. Anal. 23, 970–983 (1992)

Don, W.-S., Solomonoff, A.: Accuracy enhancement for higher derivatives using Chebyshev collocation and a mapping technique. SIAM J. Sci. Comput. 18, 1040–1055 (1997)

Geronimo, J.S., Liechty, K.: The Fourier extension method and discrete orthogonal polynomials on an arc of the circle. Adv. Math. 365, 107064 (2020)

Gottlieb, D., Orszag, S.A.: Numerical Analysis of Spectral Methods: Theory and Applications, 1st edn. Society for Industrial and Applied Mathematics, Philadelphia (1977)

Hale, N., Trefethen, L.N.: New quadrature formulas from conformal maps. SIAM J. Numer. Anal. 46(2), 930–948 (2008)

Huybrechs, D.: On the Fourier extension of non-periodic functions. SIAM J. Numer. Anal. 47(6), 4326–4355 (2010)

Konyagin, S., Shadrin, A.: On stable reconstruction of analytic functions from selected Fourier coefficients. In preparation (2021)

Kosloff, D., Tal-Ezer, H.: A modified Chebyshev pseudospectral method with an \(\cal{O} (N^{-1})\) time step restriction. J. Comput. Phys. 104, 457–469 (1993)

Lyon, M.: A fast algorithm for Fourier continuation. SIAM J. Sci. Comput. 33(6), 3241–3260 (2012)

Matthysen, R., Huybrechs, D.: Fast algorithms for the computation of Fourier extensions of arbitrary length. SIAM J. Sci. Comput. 38(2), A899–A922 (2016)

Matthysen, R., Huybrechs, D.: Function approximation on arbitrary domains using Fourier frames. SIAM J. Numer. Anal. 56(3), 1360–1385 (2018)

Micchelli, C.A., Rivlin, T.J.: Lectures on optimal recovery. In: Turner, P.R. (ed.) Numerical Analysis Lancaster 1984. Lecture Notes in Mathematics, vol. 1129. Springer, Berlin (1985)

Pasquetti, R., Elghaoui, M.: A spectral embedding method applied to the advection–diffusion equation. J. Comput. Phys. 125, 464–476 (1996)

Platte, R., Trefethen, L.N., Kuijlaars, A.: Impossibility of fast stable approximation of analytic functions from equispaced samples. SIAM Rev. 53(2), 308–318 (2011)

Schaeffer, A.C., Duffin, R.J.: On some inequalities of S. Bernstein and W. Markoff for derivatives of polynomials. Bull. Am. Math. Soc. 44(4), 289–297 (1938)

Shadrin, A.: Twelve proofs of the Markov inequality. In: Approximation Theory: A Volume Dedicated to Borislav Bojanov, pp. 233–298. Professor Marin Drinov Academic Publishing House, Sofia (2004)

Temlyakov, V.: Multivariate Approximation. Cambridge Monographs on Applied and Computational Mathematics, vol. 32. Cambridge University Press, Cambridge (2018)

Temlyakov, V.N.: On approximate recovery of functions with bounded mixed derivative. J. Complex. 9(1), 41–59 (1993)

Trefethen, L.N.: Approximation Theory and Approximation Practice. Society for Industrial and Applied Mathematics, Philadelphia (2013)

Webb, M., Coppé, V., Huybrechs, D.: Pointwise and uniform convergence of Fourier extensions. Constr. Approx. 52, 139–175 (2020)

Additional information

Communicated by Albert Cohen.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Adcock, B., Shadrin, A. Fast and Stable Approximation of Analytic Functions from Equispaced Samples via Polynomial Frames. Constr Approx 57, 257–294 (2023). https://doi.org/10.1007/s00365-022-09593-2

DOI: https://doi.org/10.1007/s00365-022-09593-2

Keywords

- Polynomial approximation

- Equispaced samples

- Exponential convergence

- Markov-type inequalities

- Least squares