Abstract

For two probability measures \({\rho }\) and \({\pi }\) on \([-1,1]^{{\mathbb {N}}}\) we investigate the approximation of the triangular Knothe–Rosenblatt transport \(T:[-1,1]^{{\mathbb {N}}}\rightarrow [-1,1]^{{\mathbb {N}}}\) that pushes forward \({\rho }\) to \({\pi }\). Under suitable assumptions, we show that T can be approximated by rational functions without suffering from the curse of dimension. Our results are applicable to posterior measures arising in certain inference problems where the unknown belongs to an (infinite dimensional) Banach space. In particular, we show that it is possible to efficiently approximately sample from certain high-dimensional measures by transforming a lower-dimensional latent variable.

1 Introduction

In this paper we discuss the approximation of transport maps on infinite-dimensional domains. Our main motivation comes from inference problems in which the unknown belongs to a Banach space Y. Two examples are the following:

- Groundwater flow. Consider a porous medium in a domain \(\mathrm {D}\subseteq {{\mathbb {R}}}^3\). Given observations of the subsurface flow, we are interested in the permeability (hydraulic conductivity) of the medium in \(\mathrm {D}\). The physical system is described by an elliptic partial differential equation, and the unknown quantity describing the permeability can be modeled as a function \(\psi \in L^\infty (\mathrm {D})=Y\) [25].

- Inverse scattering. Suppose that \(\mathrm {D}_{\mathrm{scat}}\subseteq {{\mathbb {R}}}^3\) is filled by a perfect conductor and illuminated by an electromagnetic wave. Given measurements of the scattered wave, we are interested in the shape of the scatterer \(\mathrm {D}_{\mathrm{scat}}\). Assume that this domain can be described as the image of some bounded reference domain \(\mathrm {D}\subseteq {{\mathbb {R}}}^3\) under a bi-Lipschitz transformation \(\psi :\mathrm {D}\rightarrow {{\mathbb {R}}}^3\), i.e., \(\mathrm {D}_\mathrm{scat}=\psi (\mathrm {D})\). The unknown is then the function \(\psi \in W^{1,\infty }(\mathrm {D})=Y\). We describe the forward model in [17].

The Bayesian approach to these problems is to model \(\psi \) as a Y-valued random variable and to determine the distribution of \(\psi \) conditioned on a (typically noisy) observation of the system. Bayes’ theorem can be used to specify this “posterior” distribution via the prior and the likelihood. The prior is a measure on Y that represents our information on \(\psi \in Y\) before making an observation. Mathematically speaking, assuming that the observation and the unknown follow some joint distribution, the prior is the marginal distribution of the unknown \(\psi \). The goal is to explore the posterior and in this way to make inferences about \(\psi \). We refer to [9] for more details on the general methodology of Bayesian inversion in Banach spaces.

For the analysis and implementation of such methods, instead of working with (prior and posterior) measures on the Banach space Y, it can be convenient to parameterize the problem and work with measures on \({{\mathbb {R}}}^{{\mathbb {N}}}\). To demonstrate this, choose a sequence \((\psi _j)_{j\in {{\mathbb {N}}}}\) in Y and a measure \(\mu \) on \({{\mathbb {R}}}^{{\mathbb {N}}}\). With \({{\varvec{y}}}{:=}(y_j)_{j\in {{\mathbb {N}}}}\in {{\mathbb {R}}}^{{\mathbb {N}}}\) and

$$\begin{aligned} \Phi ({{\varvec{y}}}){:=}\sum _{j\in {{\mathbb {N}}}}y_j\psi _j, \end{aligned}$$(1.1)

we can formally define a prior measure on Y as the pushforward \(\Phi _\sharp \mu \). Rather than inferring \(\psi \in Y\) directly, we may infer the coefficient sequence \({{\varvec{y}}}=(y_j)_{j\in {{\mathbb {N}}}}\in {{\mathbb {R}}}^{{\mathbb {N}}}\), in which case \(\mu \) holds the prior information on the unknown coefficients. These viewpoints are equivalent in the sense that the conditional distribution of \(\psi \) given an observation is the pushforward, under \(\Phi \), of the conditional distribution of \({{\varvec{y}}}\) given the observation. Under certain assumptions on the prior and the space Y, construction (1.1) arises naturally through the Karhunen–Loève expansion; see, e.g., [1, 22]. In this case the \(y_j\in {{\mathbb {R}}}\) are uncorrelated random variables with unit variance, and the \(\psi _j\) are eigenvectors of the prior covariance operator, with their norms equal to the square root of the corresponding eigenvalues.

In this paper we concentrate on the special case where the coefficients \(y_j\) are known to belong to a bounded interval. Up to a shift and a scaling this is equivalent to \(y_j\in [-1,1]\), which will be assumed throughout. We refer to [9, Sect. 2] for the construction and further discussion of such (bounded) priors. The goal then becomes to determine and explore the posterior measure on \(U{:=}[-1,1]^{{\mathbb {N}}}\). Denote this measure by \({\pi }\) and let \(\mu \) be the prior measure on U such that \({\pi }\ll \mu \). Then the Radon-Nikodym derivative \(f_{\pi }{:=}\frac{\mathrm {d}{\pi }}{\mathrm {d}\mu }:U\rightarrow [0,\infty )\) exists. Since the forward model (and thus the likelihood) only depends on \(\Phi ({{\varvec{y}}})\) in the Banach space Y, \(f_{\pi }\) must be of the specific type

$$\begin{aligned} f_{\pi }({{\varvec{y}}})={\mathfrak {f}}_{\pi }(\Phi ({{\varvec{y}}}))={\mathfrak {f}}_{\pi }\Big (\sum _{j\in {{\mathbb {N}}}}y_j\psi _j\Big ) \end{aligned}$$(1.2)

for some \({\mathfrak {f}}_{\pi }:Y\rightarrow [0,\infty )\). We give a concrete example in Example 2.6 where this relation holds.

“Exploring” the posterior refers to computing expectations and variances w.r.t. \({\pi }\), or detecting areas of high probability w.r.t. \({\pi }\). A standard technique to do so in high dimensions is Monte Carlo—or in this context Markov chain Monte Carlo—sampling, e.g., [31]. Another approach is via transport maps [23]. Let \({\rho }\) be another measure on U from which it is easy to sample. Then, a map \(T:U\rightarrow U\) satisfying \(T_\sharp {\rho }={\pi }\) (i.e., \({\pi }(A)={\rho }(\{{{\varvec{y}}}\,:\,T({{\varvec{y}}})\in A\})\) for all measurable A) is called a transport map that pushes forward \({\rho }\) to \({\pi }\). Such a T has the property that if \({{\varvec{y}}}\sim {\rho }\) then \(T({{\varvec{y}}})\sim {\pi }\), and thus samples from \({\pi }\) can easily be generated once T has been computed. Observe that \(\Phi \circ T:U\rightarrow Y\) will then transform a sample from \({\rho }\) to a sample from \((\Phi \circ T)_\sharp {\rho }=\Phi _\sharp {\pi }\), which is the posterior in the Banach space Y. Thus, given T, we can perform inference on the quantity in the Banach space.
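
As a one-dimensional illustration of the pushforward property, the following sketch draws from a uniform reference on \([-1,1]\) and applies a transport map. The target density \(1+ay\) (taken with respect to \(\frac{\lambda }{2}\)), the parameter `a`, and the function names are illustrative assumptions and are not taken from the paper; the closed form of `transport` follows from matching the two distribution functions.

```python
import math
import random


def transport(x: float, a: float) -> float:
    """Increasing map pushing the uniform measure on [-1, 1] to the density 1 + a*y.

    Both densities are taken w.r.t. lambda/2 and |a| < 1 is required.
    Matching distribution functions gives a*y**2 + 2*y - (a + 2*x) = 0;
    the root in [-1, 1] is written in a cancellation-free form.
    """
    return (a + 2.0 * x) / (1.0 + math.sqrt(1.0 + a * a + 2.0 * a * x))


def sample_target(n: int, a: float, seed: int = 0) -> list[float]:
    """Draw y from the uniform reference and return T(y), distributed like the target."""
    rng = random.Random(seed)
    return [transport(rng.uniform(-1.0, 1.0), a) for _ in range(n)]


if __name__ == "__main__":
    a = 0.8
    samples = sample_target(20_000, a)
    # The mean of the density 1 + a*y w.r.t. lambda/2 equals a/3.
    print(sum(samples) / len(samples), a / 3)
```

Once `transport` is available, sampling from the target costs one reference draw and one function evaluation per sample, which is the property the text relies on.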

This motivates the setting we are investigating in this paper: for two measures \({\rho }\) and \({\pi }\) on U whose densities are of type (1.2) for a smooth (see Sect. 2) function \({\mathfrak {f}}_{\pi }\), we are interested in the approximation of \(T:U\rightarrow U\) such that \(T_\sharp {\rho }={\pi }\). More precisely, we will discuss the approximation of the so-called Knothe–Rosenblatt (KR) transport by rational functions. The reason for using rational functions (rather than polynomials) is to guarantee that the resulting approximate transport is a bijection from U to U. The rate of convergence will in particular depend on the decay rate of the functions \(\psi _j\). If (1.1) is a Karhunen–Loève expansion, this is the decay rate of the square root of the eigenvalues of the covariance operator of the prior. The faster this decay, the larger the convergence rate will be. The reason for analyzing the triangular KR transport is its wide use in practical algorithms [13, 16, 35, 38], and the fact that its concrete construction makes it amenable to a rigorous analysis.

Sampling from high-dimensional distributions by transforming a (usually lower-dimensional) “latent” variable into a sample from the desired distribution is a standard problem in machine learning. It is tackled by methods such as generative adversarial networks [15] and variational autoencoders [12]. In the setting above, the high-dimensional distribution is the posterior on Y. We will show that under the assumptions of this paper, it is possible to approximately sample from this distribution by transforming a low-dimensional latent variable and without suffering from the curse of dimensionality. While Bayesian inference is our motivation, for the rest of the manuscript the presentation remains in an abstract setting, and our results therefore have ramifications on the broader task of transforming high-dimensional distributions.

1.1 Contributions and Outline

In this manuscript we generalize the analysis of [41] to the infinite-dimensional case. Some of the proofs are based on the results in [41], which we recall in the appendix where appropriate to improve readability.

In Sect. 2 we provide a short description of our main result. Section 3 discusses the KR map in infinite dimensions. Its well-definedness in infinite dimensions has been established in [4]. In Theorem 3.3 we additionally give a formula for the pushforward density assuming continuity of the densities w.r.t. the product topology. In Sect. 4 we analyze the regularity of the KR transport. The fact that a transport inherits the smoothness of the densities is known for certain function classes: for example, in the case of \(C^k\) densities, [11] shows that the optimal transport also belongs to \(C^k\), and a similar statement holds for the KR transport; see for example [33, Remark 2.19]. In Proposition 4.2, assuming analytic densities we show analyticity of the KR transport. Furthermore, and more importantly, we carefully examine the domain of holomorphic extension to the complex numbers. These results are exploited in Sect. 5 to show convergence of rational function approximations to T in Theorem 5.2. This result proves a dimension-independent higher-order convergence rate for the transport of measures supported on infinite-dimensional spaces (which need not be supported on finite-dimensional subspaces). In this result, all occurring constants (not just the convergence rate) are controlled independently of the dimension. In Sect. 6 we show that this implies convergence of the pushforward measures (on U and on the Banach space Y) in the Hellinger distance, the total variation distance, the KL divergence, and the Wasserstein distance. These results are formulated in Theorems 6.1 and 6.4. To prove the latter, in Proposition 6.2 we slightly extend a statement from [32] to compact Polish spaces, to show that the Wasserstein distance between two pushforward measures can be bounded by the maximal distance of the two maps pushing forward the initial measure. 
Finally, we show that it is possible to compute approximate samples from the pushforward measure in the Banach space Y, by mapping a low-dimensional reference sample to the Banach space; see Corollary 6.5. All proofs can be found in the appendix.

2 Main Result

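For \(k\in {{\mathbb {N}}}\) let

$$\begin{aligned} U_{k}{:=}[-1,1]^k\qquad \text {and}\qquad U{:=}[-1,1]^{{\mathbb {N}}}, \end{aligned}$$(2.1)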

where these sets are equipped with the product topology and the Borel \(\sigma \)-algebra, which coincides with the product \(\sigma \)-algebra [3, Lemma 6.4.2 (ii)]. Additionally, let \(U_0{:=}\emptyset \). Denote by \(\lambda \) the Lebesgue measure on \([-1,1]\) and by

$$\begin{aligned} \mu {:=}\bigotimes _{j\in {{\mathbb {N}}}}\frac{\lambda }{2} \end{aligned}$$(2.2)

the infinite product measure. Then \(\mu \) is a (uniform) probability measure on U. By abuse of notation, for \(k\in {{\mathbb {N}}}\) we additionally denote \(\mu =\otimes _{j=1}^k\frac{\lambda }{2}\), where k will always be clear from context.

For a reference \({\rho }\ll \mu \) and a target measure \({\pi }\ll \mu \) on U, we investigate the smoothness and approximability of the KR transport \(T:U\rightarrow U\) satisfying \(T_\sharp {\rho }={\pi }\); the notation \(T_\sharp {\rho }\) refers to the pushforward measure defined by \(T_\sharp {\rho }(A){:=}{\rho }(\{{{\varvec{y}}}\in U\,:\,T({{\varvec{y}}})\in A\})\) for all measurable \(A\subseteq U\). While in general there exist multiple maps \(T:U\rightarrow U\) pushing forward \({\rho }\) to \({\pi }\), the KR transport is the unique such map satisfying triangularity and monotonicity. Triangularity refers to the kth component \(T_k\) of \(T=(T_k)_{k\in {{\mathbb {N}}}}\) being a function of the variables \(x_1,\dots ,x_k\) only, i.e., \(T_k:U_{k}\rightarrow U_{1}\) for all \(k\in {{\mathbb {N}}}\). Monotonicity means that \(x_k\mapsto T_k(x_1,\dots ,x_{k-1},x_k)\) is monotonically increasing on \(U_{1}\) for every \(k\in {{\mathbb {N}}}\) and every fixed \((x_1,\dots ,x_{k-1})\in U_{k-1}\).

Absolute continuity of \({\rho }\) and \({\pi }\) w.r.t. \(\mu \) implies existence of the Radon-Nikodym derivatives

$$\begin{aligned} f_{\rho }{:=}\frac{\mathrm {d}{\rho }}{\mathrm {d}\mu }\qquad \text {and}\qquad f_{\pi }{:=}\frac{\mathrm {d}{\pi }}{\mathrm {d}\mu }, \end{aligned}$$

which will also be referred to as the densities of these measures. Assuming for the moment existence of the KR transport T, approximating T requires approximating the infinitely many functions \(T_k:U_{k}\rightarrow U_{1}\), \(k\in {{\mathbb {N}}}\). This, and the fact that the domain \(U_{k}\) of \(T_k\) becomes increasingly high dimensional as \(k\rightarrow \infty \), makes the problem quite challenging.

For these reasons, further assumptions on \({\rho }\) and \({\pi }\) are necessary. Typical requirements imposed on the measures guarantee some form of intrinsic low dimensionality. Examples include densities belonging to certain reproducing kernel Hilbert spaces, or to other function classes of sufficient regularity. In this paper we concentrate on the latter. As is well-known, if \(T_k:U_{k}\rightarrow U_{1}\) belongs to \(C^k\), then it can be uniformly approximated with the k-independent convergence rate of 1, for instance with multivariate polynomials. The convergence rate to approximate \(T_k\) then does not deteriorate with increasing k, but the constants in such error bounds usually still depend exponentially on k. Moreover, as \(k\rightarrow \infty \), this line of argument requires the components of the map to become arbitrarily regular. For this reason, in the present work, where \(T=(T_k)_{k\in {{\mathbb {N}}}}\), it is not unnatural to restrict ourselves to transports that are \(C^\infty \). More precisely, we in particular assume analyticity of the densities \(f_{\rho }\) and \(f_{\pi }\), which in turn implies analyticity of T as we shall see. This will allow us to control all occurring constants independent of the dimension, and approximate the whole map \(T:U\rightarrow U\) using only finitely many degrees of freedom in our approximation.

Assume in the following that Z is a Banach space with complexification \(Z_{\mathbb {C}}\); see, e.g., [18, 27] for the complexification of Banach spaces. We may think of Z and \(Z_{\mathbb {C}}\) as real- and complex-valued function spaces, e.g., \(Z=L^2([0,1],{{\mathbb {R}}})\) and \(Z_{\mathbb {C}}=L^2([0,1],{\mathbb {C}})\). To guarantee analyticity and the structure in (1.1) we consider densities f of the following type:

Assumption 2.1

For constants \(p\in (0,1)\), \(0<M\le L<\infty \), a sequence \((\psi _j)_{j\in {{\mathbb {N}}}}\subseteq Z\), and a differentiable function \({\mathfrak {f}}:O_Z\rightarrow {\mathbb {C}}\) with \(O_Z\subseteq Z_{\mathbb {C}}\) open, the following hold:

- (a) \(\sum _{j\in {{\mathbb {N}}}}\Vert \psi _{j} \Vert _{Z}^p<\infty \),
- (b) \(\sum _{j\in {{\mathbb {N}}}}y_j\psi _{j}\in O_Z\) for all \({{\varvec{y}}}\in U\),
- (c) \({\mathfrak {f}}(\sum _{j\in {{\mathbb {N}}}}y_j\psi _{j})\in {{\mathbb {R}}}\) for all \({{\varvec{y}}}\in U\),
- (d) \(M= \inf _{\psi \in O_Z}|{\mathfrak {f}}(\psi )|\le \sup _{\psi \in O_Z}|{\mathfrak {f}}(\psi )| = L\).

The function \(f:U\rightarrow {{\mathbb {R}}}\) given by

$$\begin{aligned} f({{\varvec{y}}}){:=}{\mathfrak {f}}\Big (\sum _{j\in {{\mathbb {N}}}}y_j\psi _{j}\Big ) \end{aligned}$$(2.3)

satisfies \(\int _U f({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})=1\).

Assumption 2.2

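For two sequences \((\psi _{*,j})_{j\in {{\mathbb {N}}}}\subseteq Z\) with \((*,Z)\in \{({\rho },X),({\pi },Y)\}\), the functions

$$\begin{aligned} f_{\rho }({{\varvec{y}}})={\mathfrak {f}}_{\rho }\Big (\sum _{j\in {{\mathbb {N}}}}y_j\psi _{{\rho },j}\Big ),\qquad f_{\pi }({{\varvec{y}}})={\mathfrak {f}}_{\pi }\Big (\sum _{j\in {{\mathbb {N}}}}y_j\psi _{{\pi },j}\Big ) \end{aligned}$$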

both satisfy Assumption 2.1 for some fixed constants \(p\in (0,1)\) and \(0<M\le L<\infty \).

The summability parameter p determines the decay rate of the functions \(\psi _j\)—the smaller p the stronger the decay of the \(\psi _j\). Because \(p<1\), the argument of \({\mathfrak {f}}\) in (2.3) is well-defined for \({{\varvec{y}}}\in U\) since \(\sum _{j\in {{\mathbb {N}}}}|y_j|\Vert \psi _{j} \Vert _{Z}<\infty \).
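
The absolute convergence claimed here can be spelled out in one line. The index \(J\) below is introduced only for this argument: since \(\sum _{j\in {{\mathbb {N}}}}\Vert \psi _{j} \Vert _{Z}^p<\infty \) forces \(\Vert \psi _{j} \Vert _{Z}\rightarrow 0\), there is \(J\in {{\mathbb {N}}}\) with \(\Vert \psi _{j} \Vert _{Z}\le 1\) for all \(j\ge J\). Using \(|y_j|\le 1\) and \(1-p>0\),

$$\begin{aligned} \sum _{j\in {{\mathbb {N}}}}|y_j|\Vert \psi _{j} \Vert _{Z} \le \sum _{j<J}\Vert \psi _{j} \Vert _{Z}+\sum _{j\ge J}\Vert \psi _{j} \Vert _{Z}^{p}\,\Vert \psi _{j} \Vert _{Z}^{1-p} \le \sum _{j<J}\Vert \psi _{j} \Vert _{Z}+\sum _{j\ge J}\Vert \psi _{j} \Vert _{Z}^{p}<\infty . \end{aligned}$$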

Our main result is about the existence and approximation of the KR-transport \(T:U\rightarrow U\) satisfying \(T_\sharp {\rho }={\pi }\). We state the result here in a simplified form; more details will be given in Theorems 5.2, 6.1, and 6.4. We only mention that the trivial approximation \(T_k(x_1,\dots ,x_k)\simeq x_k\) is interpreted as not requiring any degrees of freedom in the following theorem.

Theorem 2.3

Let \(f_{\rho }:U\rightarrow (0,\infty )\) and \(f_{\pi }:U\rightarrow (0,\infty )\) be two probability densities as in Assumption 2.2 for some \(p\in (0,1)\). Then there exists a unique triangular, monotone, and bijective map \(T:U\rightarrow U\) satisfying \(T_\sharp {\rho }={\pi }\).

Moreover, for every \(N\in {{\mathbb {N}}}\) there exists a space of rational functions employing N degrees of freedom, and a bijective, monotone, and triangular \({{\tilde{T}}}:U\rightarrow U\) in this space such that

$$\begin{aligned} \mathrm{dist}({{\tilde{T}}}_\sharp {\rho },{\pi })\le C N^{-\frac{1}{p}+1}. \end{aligned}$$(2.4)

Here C is a constant independent of N and “\(\mathrm{dist}\)” may refer to the total variation distance, the Hellinger distance, the KL divergence, or the Wasserstein distance.

Equation (2.4) shows a dimension-independent convergence rate (indeed our transport is defined on the infinite-dimensional domain \(U=[-1,1]^{{\mathbb {N}}}\)), so that the curse of dimensionality is overcome. The rate of algebraic convergence becomes arbitrarily large as \(p\in (0,1)\) in Assumption 2.1 becomes small. The convergence rate \(\frac{1}{p}-1\) in Theorem 2.3 is well-known for the approximation of functions as in (2.3) by sparse polynomials, e.g., [6,7,8]; also see Remark 2.7. There is a key difference to earlier results dealing with the approximation of such functions: we do not approximate the function \(f:U\rightarrow {{\mathbb {R}}}\) in (2.3), but instead we approximate the transport \(T:U\rightarrow U\), i.e., an infinite number of functions. Our main observation in this paper is that the sparsity of the densities \(f_{\rho }\) and \(f_{\pi }\) carries over to the transport. Even though it has infinitely many components, T can still be approximated very efficiently if the ansatz space is carefully chosen and tailored to the specific densities. In addition to showing error convergence (2.4), in Theorem 5.2 we give concrete ansatz spaces achieving this convergence rate. These ansatz spaces can be computed in linear complexity and may be used in applications.
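
A standard source of the algebraic rate \(\frac{1}{p}-1\) in the sparse approximation literature is Stechkin's lemma: for a sequence in \(\ell ^p\) with \(p<1\), the \(\ell ^1\) norm of what remains after keeping the N largest entries is at most \(N^{-(\frac{1}{p}-1)}\) times the \(\ell ^p\) quasi-norm. The sketch below checks this inequality numerically. The sequence `b` is a hypothetical stand-in for \(\Vert \psi _{j} \Vert _{Z}\), and how the proof of Theorem 5.2 uses such an estimate is not shown in this section, so this should be read as background rather than as the authors' argument.

```python
def tail_after_best_n_terms(b: list[float], n: int) -> float:
    """l^1 norm of what remains after keeping the n largest entries of b."""
    ordered = sorted((abs(x) for x in b), reverse=True)
    return sum(ordered[n:])


def stechkin_bound(b: list[float], n: int, p: float) -> float:
    """Stechkin's bound n**(-(1/p - 1)) * ||b||_{l^p} for 0 < p < 1 and n >= 1."""
    lp_norm = sum(abs(x) ** p for x in b) ** (1.0 / p)
    return n ** (-(1.0 / p - 1.0)) * lp_norm


if __name__ == "__main__":
    p = 0.5
    b = [j ** -3.0 for j in range(1, 5001)]  # hypothetical decay, p-summable for p > 1/3
    for n in (1, 10, 100, 1000):
        print(n, tail_after_best_n_terms(b, n), stechkin_bound(b, n, p))
```

Smaller admissible `p` yields a larger exponent, which matches the statement that the rate improves as p decreases.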

The main application for our result is to provide a method to sample from the target \({\pi }\) or the pushforward \(\Phi _\sharp {\pi }\) in the Banach space Y, where \(\Phi ({{\varvec{y}}})=\sum _{j\in {{\mathbb {N}}}}y_j\psi _{{\pi },j}\). Given an approximation \({{\tilde{T}}}=({{\tilde{T}}}_j)_{j\in {{\mathbb {N}}}}\) to T, this is achieved via \(\Phi ({{\tilde{T}}}({{\varvec{y}}}))\) for \({{\varvec{y}}}\sim {\rho }\). It is natural to truncate this expansion, which yields

$$\begin{aligned} (y_1,\dots ,y_s)\mapsto \sum _{j=1}^s {{\tilde{T}}}_j(y_1,\dots ,y_j)\psi _{{\pi },j} \end{aligned}$$

for some truncation parameter \(s\in {{\mathbb {N}}}\) and \((y_1,\dots ,y_s)\in U_{s}\). This map transforms a sample from a distribution on the s-dimensional space \(U_{s}\) to a sample from an infinite-dimensional distribution on Y. In Corollary 6.5 we show that the error of this truncated representation in the Wasserstein distance converges with the same rate as given in Theorem 2.3.
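
The truncated sampling map can be sketched as follows. Everything concrete here is a hypothetical choice made for illustration: the basis functions `psi` on \([0,1]\), the placeholder map `t_tilde` (one nontrivial first component, and the trivial approximation \(T_j(y_1,\dots ,y_j)\simeq y_j\) for the others), and the uniform reference. The rational ansatz spaces of Theorem 5.2 are not reproduced.

```python
import math
import random


def psi(j: int, t: float) -> float:
    """Hypothetical basis function psi_j(t) = j**-3 * sin(j*pi*t) on [0, 1]."""
    return j ** -3.0 * math.sin(j * math.pi * t)


def t_tilde(y: list[float]) -> list[float]:
    """Placeholder triangular map: component j only depends on y[0], ..., y[j-1]."""
    a = 0.5  # illustrative parameter of the first component, |a| < 1
    first = (a + 2.0 * y[0]) / (1.0 + math.sqrt(1.0 + a * a + 2.0 * a * y[0]))
    return [first] + list(y[1:])  # remaining components: T_j(y) = y_j


def sample_function(s: int, grid: list[float], rng: random.Random) -> list[float]:
    """Map an s-dimensional latent sample to sum_{j<=s} T_j(y) * psi_j on a grid."""
    y = [rng.uniform(-1.0, 1.0) for _ in range(s)]
    coeffs = t_tilde(y)
    return [sum(c * psi(j, t) for j, c in enumerate(coeffs, start=1)) for t in grid]


if __name__ == "__main__":
    grid = [i / 10 for i in range(11)]
    print(sample_function(8, grid, random.Random(0)))
```

The latent variable has only `s` entries, while the output represents an element of the function space through its values on `grid`.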

Remark 2.4

The reference \({\rho }\) is a “simple” measure whose main purpose is to allow for easy sampling. One possible choice for \({\rho }\) (that we have in mind throughout this paper) is the uniform measure \(\mu \). It trivially satisfies Assumption 2.1 with \({\mathfrak {f}}_{\rho }:{\mathbb {C}}\rightarrow {\mathbb {C}}\) being the constant 1 function (and, e.g., \(\psi _{{\rho },j}=0\in {\mathbb {C}}\)).

Remark 2.5

Even though we can think of \({\rho }\) as being \(\mu \), we formulated Theorem 2.3 in more generality, mainly for the following reason: since the assumptions on \({\rho }\) and \({\pi }\) are the same, we may switch their roles. Thus Theorem 2.3 can be turned into a statement about the inverse transport \(S{:=}T^{-1}:U\rightarrow U\), which can also be approximated at the rate \(\frac{1}{p}-1\).

Example 2.6

(Bayesian inference) For a Banach space Y (“parameter space”) and a Banach space \({\mathcal {X}}\) (“solution space”), let \({\mathfrak {u}}:O_Y\rightarrow {\mathcal {X}}_{\mathbb {C}}\) be a complex differentiable forward operator. Here \(O_Y\subseteq Y_{\mathbb {C}}\) is some nonempty open set. Let \(G:{\mathcal {X}}\rightarrow {{\mathbb {R}}}^m\) be a bounded linear observation operator. For some unknown \(\psi \in Y\) we are given a noisy observation of the system in the form

$$\begin{aligned} \varsigma =G({\mathfrak {u}}(\psi ))+\eta \in {{\mathbb {R}}}^m, \end{aligned}$$

where \(\eta \sim {\mathcal {N}}(0,\Gamma )\) is a centered Gaussian random variable with symmetric positive definite covariance \(\Gamma \in {{\mathbb {R}}}^{m\times m}\). The goal is to recover \(\psi \) given the measurement \(\varsigma \).

To formulate the Bayesian inverse problem, we first fix a prior: let \((\psi _{j})_{j\in {{\mathbb {N}}}}\) be a summable sequence of linearly independent elements in Y. With

$$\begin{aligned} \Phi ({{\varvec{y}}}){:=}\sum _{j\in {{\mathbb {N}}}}y_j\psi _j \end{aligned}$$

and the uniform measure \(\mu \) on U, we choose the prior \(\Phi _\sharp \mu \) on Y. Determining \(\psi \) within the set \(\{\Phi ({{\varvec{y}}})\,:\,{{\varvec{y}}}\in U\}\subseteq Y\) is equivalent to determining the coefficient sequence \({{\varvec{y}}}\in U\). Assuming independence of \({{\varvec{y}}}\sim \mu \) and \(\eta \sim {{\mathcal {N}}}(0,\Gamma )\), the distribution of \({{\varvec{y}}}\) given \(\varsigma \) (the posterior) can then be characterized by its density w.r.t. \(\mu \), which, up to a normalization constant, equals

$$\begin{aligned} \exp \Big (-\frac{(\varsigma -G({\mathfrak {u}}(\Phi ({{\varvec{y}}}))))^\top \Gamma ^{-1}(\varsigma -G({\mathfrak {u}}(\Phi ({{\varvec{y}}}))))}{2}\Big ). \end{aligned}$$(2.5)

This posterior density is of form (2.3) and the corresponding measure \({\pi }\) can be chosen as a target in Theorem 2.3. Given T satisfying \(T_\sharp {\rho }={\pi }\), we may then explore \({\pi }\) to perform inference on the unknown \({{\varvec{y}}}\); see for instance [41, Sect. 7.4]. For more details on the rigorous derivation of (2.5) we refer to [34] and in particular [9, Sect. 3].
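
To make the structure of such a posterior density concrete, here is a minimal sketch for a scalar toy problem. All specifics are assumptions chosen for illustration: \(Y={{\mathbb {R}}}\), the coefficients \(\psi _j=j^{-2}\), the exponential forward map, a single observation (\(m=1\)), and the function names. The point is only that the density depends on \({{\varvec{y}}}\) through \(\Phi ({{\varvec{y}}})\).

```python
import math


def phi(y: list[float]) -> float:
    """Truncated expansion sum_j y_j * psi_j with hypothetical psi_j = j**-2 in Y = R."""
    return sum(yj * j ** -2.0 for j, yj in enumerate(y, start=1))


def forward(psi_value: float) -> float:
    """Hypothetical forward map composed with the observation operator (m = 1)."""
    return math.exp(psi_value)


def unnormalized_posterior(y: list[float], obs: float, noise_var: float) -> float:
    """Gaussian misfit exp(-(obs - G(u(Phi(y))))**2 / (2 * noise_var)).

    This is the posterior density w.r.t. the uniform prior up to a constant.
    """
    misfit = obs - forward(phi(y))
    return math.exp(-0.5 * misfit * misfit / noise_var)


if __name__ == "__main__":
    print(unnormalized_posterior([0.3, -0.5, 0.1], obs=1.2, noise_var=0.05))
```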

Remark 2.7

Functions as in Assumption 2.1 belong to the set of so-called \(({{\varvec{b}}},p,\varepsilon )\)-holomorphic functions; see, e.g., [6]. This class contains infinite parametric functions that are holomorphic in each argument \(y_j\), and exhibit some growth in the domain of holomorphic extension as \(j\rightarrow \infty \). The results of the present paper and the key arguments remain valid if we replace Assumption 2.1 with the \(({{\varvec{b}}},p,\varepsilon )\)-holomorphy assumption. Since most relevant examples of such functions are of specific type (2.3), we restrict the discussion to this case in order to avoid technicalities.

3 The Knothe–Rosenblatt Transport in Infinite Dimensions

Recall that we consider the product topology on \(U=[-1,1]^{{\mathbb {N}}}\). Assume that \(f_{\rho }\in C^0(U,{{\mathbb {R}}}_+)\) and \(f_{\pi }\in C^0(U,{{\mathbb {R}}}_+)\) are two positive probability densities. Here \({{\mathbb {R}}}_+{:=}(0,\infty )\), and \(C^0(U,{{\mathbb {R}}}_+)\) denotes the continuous functions from \(U\rightarrow {{\mathbb {R}}}_+\). We now recall the construction of the KR map.

For \({{\varvec{y}}}=(y_j)_{j\in {{\mathbb {N}}}}\in {\mathbb {C}}^{{\mathbb {N}}}\) and \(1\le k\le n<\infty \) let

$$\begin{aligned} {{\varvec{y}}}_{[k]}{:=}(y_j)_{j=1}^k,\qquad {{\varvec{y}}}_{[k:n]}{:=}(y_j)_{j=k}^n,\qquad {{\varvec{y}}}_{[n:]}{:=}(y_j)_{j\ge n}. \end{aligned}$$(3.1)

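For \(*\in \{{\rho },{\pi }\}\) and \({{\varvec{y}}}\in U\) define

$$\begin{aligned} {{\hat{f}}}_{*,0}{:=}1 \end{aligned}$$

and for \(k\in {{\mathbb {N}}}\)

$$\begin{aligned} {{\hat{f}}}_{*,k}({{\varvec{y}}}_{[k]}){:=}\int _U f_*({{\varvec{y}}}_{[k]},{{\varvec{t}}})\;\mathrm {d}\mu ({{\varvec{t}}}),\qquad f_{*,k}({{\varvec{y}}}_{[k]}){:=}\frac{{{\hat{f}}}_{*,k}({{\varvec{y}}}_{[k]})}{{{\hat{f}}}_{*,k-1}({{\varvec{y}}}_{[k-1]})}. \end{aligned}$$(3.2)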

Then, \({{\varvec{y}}}_{[k]}\mapsto {{\hat{f}}}_{{\rho },k}({{\varvec{y}}}_{[k]})\) is the marginal density of \({\rho }\) in the first k variables \({{\varvec{y}}}_{[k]}\in U_{k}\), and we denote the corresponding measure on \(U_{k}\) by \({\rho }_k\). Similarly, \(y_k\mapsto f_{{\rho },k}({{\varvec{y}}}_{[k-1]},y_k)\) is the conditional density of \(y_k\) given \({{\varvec{y}}}_{[k-1]}\), and the corresponding measure on \(U_{1}\) is denoted by \({\rho }_k^{{{\varvec{y}}}_{[k-1]}}\). The same holds for the densities of \({\pi }\), and we use the analogous notation \({\pi }_k\) and \({\pi }_k^{{{\varvec{y}}}_{[k-1]}}\) for the marginal and conditional measures.

Recall that for two atomless measures \(\eta \) and \(\nu \) on \(U_{1}\) with distribution functions \(F_\eta :U_{1}\rightarrow [0,1]\) and \(F_\nu :U_{1}\rightarrow [0,1]\), \(F_\eta ^{-1}\circ F_\nu :U_{1}\rightarrow U_{1}\) pushes forward \(\nu \) to \(\eta \), as is easily checked, e.g., [33, Theorem 2.5]. In case \(\eta \) and \(\nu \) have positive densities on \(U_{1}\), this map is the unique strictly monotonically increasing such function. With this in mind, the KR-transport can be constructed as follows: let \(T_1:U_{1}\rightarrow U_{1}\) be the (unique) monotonically increasing transport satisfying

$$\begin{aligned} (T_1)_\sharp {\rho }_1={\pi }_1. \end{aligned}$$(3.3a)

Analogous to (3.1) denote \(T_{[k]}{:=}(T_j)_{j=1}^k:U_{k}\rightarrow U_{k}\). Let inductively for any \({{\varvec{y}}}\in U\), \(T_{k+1}({{\varvec{y}}}_{[k]},\cdot ):U_{1}\rightarrow U_{1}\) be the (unique) monotonically increasing transport such that

$$\begin{aligned} (T_{k+1}({{\varvec{y}}}_{[k]},\cdot ))_\sharp {\rho }_{k+1}^{{{\varvec{y}}}_{[k]}}={\pi }_{k+1}^{T_{[k]}({{\varvec{y}}}_{[k]})}. \end{aligned}$$(3.3b)

Note that \(T_{k+1}:U_{{k+1}}\rightarrow U_{1}\) and thus \(T_{[k+1]}=(T_j)_{j=1}^{k+1}:U_{{k+1}}\rightarrow U_{{k+1}}\). It can then be shown that for any \(k\in {{\mathbb {N}}}\) [33, Proposition 2.18]

$$\begin{aligned} (T_{[k]})_\sharp {\rho }_k={\pi }_k. \end{aligned}$$(3.4)

By induction this construction yields a map \(T{:=}(T_k)_{k\in {{\mathbb {N}}}}\) where each \(T_k:U_{k}\rightarrow U_{1}\) satisfies that \(T_k({{\varvec{y}}}_{[k-1]},\cdot ):U_{1}\rightarrow U_{1}\) is strictly monotonically increasing and bijective. This implies that \(T:U\rightarrow U\) is bijective, as follows. First, to show injectivity: let \({{\varvec{x}}}\ne {{\varvec{y}}}\in U\) and \(j={{\,\mathrm{argmin}\,}}\{i\,:\,x_i\ne y_i\}\). Since \(t\mapsto T_j(x_1,\dots ,x_{j-1},t)\) is bijective, \(T_j(x_1,\dots ,x_{j-1},x_j)\ne T_j(x_1,\dots ,x_{j-1},y_j)\) and thus \(T({{\varvec{x}}})\ne T({{\varvec{y}}})\). Next, to show surjectivity: fix \({{\varvec{y}}}\in U\). Bijectivity of \(T_1:U_{1}\rightarrow U_{1}\) implies existence of \(x_1\in U_{1}\) such that \(T_1(x_1)=y_1\). Inductively choose \(x_j\) such that \(T_j(x_1,\dots ,x_j)=y_j\). Then \(T({{\varvec{x}}})={{\varvec{y}}}\). Thus:

Lemma 3.1

Let \(T=(T_k)_{k\in {{\mathbb {N}}}}:U\rightarrow U\) be triangular. If \(t\mapsto T_k({{\varvec{y}}}_{[k-1]},t)\) is bijective from \(U_{1}\rightarrow U_{1}\) for every \({{\varvec{y}}}\in U\) and \(k\in {{\mathbb {N}}}\), then \(T:U\rightarrow U\) is bijective.
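
The surjectivity argument above is constructive: the preimage is found one coordinate at a time. The following sketch mirrors it for finitely many components, using bisection in each coordinate. The three-component map `T` and the helper `shift` are hypothetical examples of a triangular map with increasing, bijective components; they are not the KR map of any particular pair of measures.

```python
import math
from typing import Callable, Sequence

Component = Callable[[Sequence[float]], float]  # T_k(x_1, ..., x_k)


def invert_triangular(components: Sequence[Component], y: Sequence[float],
                      tol: float = 1e-12) -> list[float]:
    """Solve T(x) = y component by component, as in the surjectivity argument."""
    x: list[float] = []
    for t_k, y_k in zip(components, y):
        lo, hi = -1.0, 1.0
        # t -> T_k(x_1, ..., x_{k-1}, t) is increasing and maps [-1, 1] onto itself.
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            if t_k(x + [mid]) < y_k:
                lo = mid
            else:
                hi = mid
        x.append(0.5 * (lo + hi))
    return x


def shift(t: float, b: float) -> float:
    """Increasing bijection of [-1, 1] for |b| < 1 (the identity when b == 0)."""
    return (b + 2.0 * t) / (1.0 + math.sqrt(1.0 + b * b + 2.0 * b * t))


# Hypothetical triangular map used only for the demonstration.
T: list[Component] = [
    lambda x: shift(x[0], 0.5),
    lambda x: shift(x[1], 0.8 * x[0]),
    lambda x: shift(x[2], 0.4 * x[0] * x[1]),
]

if __name__ == "__main__":
    x_true = [0.3, -0.7, 0.2]
    y = [t_k(x_true[: k + 1]) for k, t_k in enumerate(T)]
    print(invert_triangular(T, y))  # close to x_true
```

Each coordinate requires only a scalar root search, since the earlier coordinates are already fixed when component k is inverted.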

The continuity assumption on the densities guarantees that the marginal densities on \(U_{k}\) converge uniformly to the full density, as we show next. This indicates that in principle it is possible to approximate the infinite-dimensional transport map by restricting to finitely many dimensions.

Lemma 3.2

Let \(f\in C^0(U,{{\mathbb {R}}}_+)\), and let \({{\hat{f}}}_k\) and \(f_k\) be as in (3.2). Then

- (i) f is measurable and \(f\in L^2(U,\mu )\),
- (ii) \({{\hat{f}}}_{k}\in C^0(U_{k},{{\mathbb {R}}}_+)\) and \(f_{k}\in C^0(U_{k},{{\mathbb {R}}}_+)\) for every \(k\in {{\mathbb {N}}}\),
- (iii) it holds

$$\begin{aligned} \lim _{k\rightarrow \infty }\sup _{{{\varvec{y}}}\in U}|{{\hat{f}}}_{k}({{\varvec{y}}}_{[k]})-f({{\varvec{y}}})|=0. \end{aligned}$$(3.5)
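
The uniform convergence in (iii) can be observed explicitly for a product density. In the sketch below, the density \(f({{\varvec{y}}})=\prod _j e^{b_jy_j}b_j/\sinh (b_j)\), the decay \(b_j=j^{-2}\), and the truncation at 200 factors (a finite-dimensional stand-in for the infinite product) are all assumptions made for illustration. Each factor integrates to one with respect to \(\frac{\lambda }{2}\), so the marginal density in the first k variables is the product of the first k factors, and the supremum can be evaluated in closed form.

```python
import math


def factor(b: float, t: float) -> float:
    """One-dimensional density exp(b*t) * b / sinh(b) w.r.t. lambda/2 on [-1, 1]."""
    return math.exp(b * t) * b / math.sinh(b)


def sup_error(b: list[float], k: int) -> float:
    """sup_y |fhat_k(y_[k]) - f(y)| for f(y) = prod_j factor(b_j, y_j) with b_j > 0.

    fhat_k is the product of the first k factors. Every factor is increasing
    in t, so the supremum is attained at a corner of the cube.
    """
    head = math.prod(factor(bj, 1.0) for bj in b[:k])
    tail_hi = math.prod(factor(bj, 1.0) for bj in b[k:])
    tail_lo = math.prod(factor(bj, -1.0) for bj in b[k:])
    return head * max(tail_hi - 1.0, 1.0 - tail_lo)


if __name__ == "__main__":
    b = [j ** -2.0 for j in range(1, 201)]  # hypothetical decay, truncated at 200 terms
    for k in (1, 10, 50, 150):
        print(k, sup_error(b, k))
```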

Throughout what follows T always stands for the KR transport defined in (3.3). Next we show that T indeed pushes forward \({\rho }\) to \({\pi }\), and additionally we provide a formula for the transformation of densities. In the following \(\partial _jg({{\varvec{x}}}){:=}\frac{\partial }{\partial x_j}g({{\varvec{x}}})\). Furthermore, we call \(f:U\rightarrow {{\mathbb {R}}}\) a positive probability density if \(f({{\varvec{y}}})>0\) for all \({{\varvec{y}}}\in U\) and \(\int _U f({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})=1\).

Theorem 3.3

Let \(f_{\pi }\), \(f_{\rho }\in C^0(U,{{\mathbb {R}}}_+)\) be two positive probability densities. Then

- (i) \(T=(T_k)_{k\in {{\mathbb {N}}}}:U\rightarrow U\) is measurable, bijective and satisfies \(T_\sharp {\rho }={\pi }\),
- (ii) for each \(k\in {{\mathbb {N}}}\) it holds that \(\partial _kT_k({{\varvec{y}}}_{[k]})\in C^0(U_{k},{{\mathbb {R}}}_+)\) and

$$\begin{aligned} \det dT({{\varvec{y}}}){:=}\lim _{n\rightarrow \infty }\prod _{j=1}^n\partial _jT_j({{\varvec{y}}}_{[j]})\in C^0(U,{{\mathbb {R}}}_+) \end{aligned}$$(3.6)

is well-defined (i.e., converges in \(C^0(U,{{\mathbb {R}}}_+)\)). Moreover

$$\begin{aligned} f_{{\pi }}(T({{\varvec{y}}}))\det dT({{\varvec{y}}})=f_{\rho }({{\varvec{y}}}) \qquad \forall {{\varvec{y}}}\in U. \end{aligned}$$

Remark 3.4

Switching the roles of \(f_{\rho }\) and \(f_{\pi }\), for \(S=T^{-1}\) it holds \(f_{\rho }(S({{\varvec{y}}}))\det dS({{\varvec{y}}})=f_{\pi }({{\varvec{y}}})\) for all \({{\varvec{y}}}\in U\), where \(\det dS({{\varvec{y}}}){:=}\lim _{n\rightarrow \infty }\prod _{j=1}^n\partial _jS_j({{\varvec{y}}}_{[j]})\) is well-defined.
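
The density transformation formula can be checked numerically on a small example. Assume, purely for illustration, the uniform reference \(f_{\rho }=1\) and the target \(f_{\pi }({{\varvec{y}}})=1+ay_1y_2\) with \(|a|<1\), which depends on the first two coordinates only. The first marginal of the target is uniform, so \(T_1\) is the identity; the conditional density of \(y_2\) given \(y_1\) is \(1+(ay_1)y_2\), which gives \(T_2\) in closed form, and \(T_k(x_1,\dots ,x_k)=x_k\) for \(k\ge 3\). The function names and the finite-difference step are hypothetical choices.

```python
import math


def kr_map(x1: float, x2: float, a: float) -> tuple[float, float]:
    """First two KR components from the uniform reference to the density 1 + a*y1*y2."""
    b = a * x1  # the y1-marginal of the target is uniform, so T_1 is the identity
    t2 = (b + 2.0 * x2) / (1.0 + math.sqrt(1.0 + b * b + 2.0 * b * x2))
    return x1, t2


def density_residual(x1: float, x2: float, a: float, h: float = 1e-6) -> float:
    """f_pi(T(x)) * det dT(x) - f_rho(x), with d_2 T_2 from central differences."""
    y1, y2 = kr_map(x1, x2, a)
    d2_t2 = (kr_map(x1, x2 + h, a)[1] - kr_map(x1, x2 - h, a)[1]) / (2.0 * h)
    det = 1.0 * d2_t2  # d_1 T_1 = 1 and d_k T_k = 1 for k >= 3
    return (1.0 + a * y1 * y2) * det - 1.0


if __name__ == "__main__":
    for point in [(0.3, -0.4), (-0.9, 0.7), (0.5, 0.0)]:
        print(point, density_residual(*point, a=0.9))
```

The residual vanishes up to the finite-difference error, in agreement with \(f_{{\pi }}(T({{\varvec{y}}}))\det dT({{\varvec{y}}})=f_{\rho }({{\varvec{y}}})\).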

4 Analyticity of T

In this section we investigate the domain of analytic extension of T. To state our results, for \(\delta >0\) and \(D\subseteq {\mathbb {C}}\) we introduce the complex sets

$$\begin{aligned} {{\mathcal {B}}}_\delta {:=}\{z\in {\mathbb {C}}\,:\,|z|<\delta \},\qquad {{\mathcal {B}}}_\delta (D){:=}\{z+y\,:\,z\in {{\mathcal {B}}}_\delta ,~y\in D\}, \end{aligned}$$

and for \(k\in {{\mathbb {N}}}\) and \({\varvec{\delta }}\in (0,\infty )^k\)

$$\begin{aligned} {{\mathcal {B}}}_{\varvec{\delta }}{:=}\mathop {\times }_{j=1}^k{{\mathcal {B}}}_{\delta _j},\qquad {{\mathcal {B}}}_{\varvec{\delta }}(D){:=}\mathop {\times }_{j=1}^k{{\mathcal {B}}}_{\delta _j}(D), \end{aligned}$$

which are subsets of \({\mathbb {C}}^k\). Their closures will be denoted by \({{\bar{{{\mathcal {B}}}}}}_\delta \), etc. If we write \({{\mathcal {B}}}_{\varvec{\delta }}(U_{1})\times U\) we mean elements \({{\varvec{y}}}\in {\mathbb {C}}^{{\mathbb {N}}}\) with \(y_j\in {{\mathcal {B}}}_{\delta _j}(U_{1})\) for \(j\le k\) and \(y_j\in U_{1}\) otherwise. Subsets of \({\mathbb {C}}^{{\mathbb {N}}}\) are always equipped with the product topology.

More precisely, we analyze the domain of holomorphic extension of each component \(T_k:U_{k}\rightarrow U_{1}\) of T to subsets of \({\mathbb {C}}^k\). The reason we are interested in such statements is that they allow us to bound the expansion coefficients w.r.t. certain polynomial bases: for a multiindex \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\) (where \({{\mathbb {N}}}_0=\{0,1,2,\dots \}\)) let \(L_{\varvec{\nu }}({{\varvec{y}}})=\prod _{j=1}^kL_{\nu _j}(y_j)\) be the product of the one-dimensional Legendre polynomials normalized in \(L^2(U_{1},\mu )\). Then \((L_{\varvec{\nu }})_{{\varvec{\nu }}\in {{\mathbb {N}}}_0^k}\) forms an orthonormal basis of \(L^2(U_{k},\mu )\). Hence we can expand \(\partial _kT_k({{\varvec{y}}}_{[k]})=\sum _{{\varvec{\nu }}\in {{\mathbb {N}}}_0^k}l_{k,{\varvec{\nu }}} L_{\varvec{\nu }}({{\varvec{y}}}_{[k]})\) for \({{\varvec{y}}}\in U\) and with the Legendre coefficients

$$\begin{aligned} l_{k,{\varvec{\nu }}}=\int _{U_{k}}\partial _kT_k({{\varvec{y}}})L_{\varvec{\nu }}({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}}). \end{aligned}$$

Analyticity of \(T_k\) (and thus of \(\partial _kT_k\)) on the set \({{\mathcal {B}}}_{\varvec{\delta }}(U_{1})\) implies bounds of the type (see Lemma C.3)

$$\begin{aligned} |l_{k,{\varvec{\nu }}}|\le C\prod _{j=1}^k(1+\delta _j)^{-\nu _j}. \end{aligned}$$(4.2)

Here C in particular depends on \(\min _j \delta _j>0\). The exponential decay in each \(\nu _j\) leads to exponential convergence of truncated sparse Legendre expansions. Once we have approximated \(\partial _kT_k\), we integrate this term in \(x_k\) to obtain an approximation to \(T_k\). The reason for not approximating \(T_k\) directly is explained after Proposition 4.2 below; see (4.5). The size of the holomorphy domain (the size of \({\varvec{\delta }}\)) determines the constants in these estimates—the larger the entries of \({\varvec{\delta }}\), the smaller the upper bound (4.2) and the faster the convergence.
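
The exponential decay of Legendre coefficients for functions with a holomorphic extension can be observed in one dimension. The sketch below computes the coefficients of a test function with respect to the Legendre polynomials normalized in \(L^2(U_{1},\mu )\). The test function \(g(y)=1/(2-y)\), the Simpson quadrature, and all function names are assumptions made for illustration; they are unrelated to the specific transport components \(\partial _kT_k\).

```python
import math


def legendre(n: int, y: float) -> float:
    """Legendre polynomial P_n(y) via the three-term recurrence."""
    p_prev, p = 1.0, y
    if n == 0:
        return p_prev
    for k in range(1, n):
        p_prev, p = p, ((2 * k + 1) * y * p - k * p_prev) / (k + 1)
    return p


def legendre_coefficient(g, n: int, panels: int = 2000) -> float:
    """l_n = int g * L_n dmu with L_n = sqrt(2n+1) * P_n and mu = lambda/2.

    The integral over [-1, 1] is approximated by the composite Simpson rule
    (panels must be even).
    """
    h = 2.0 / panels
    total = 0.0
    for i in range(panels + 1):
        y = -1.0 + i * h
        weight = 1.0 if i in (0, panels) else (4.0 if i % 2 else 2.0)
        total += weight * g(y) * legendre(n, y)
    integral = total * h / 3.0
    return 0.5 * math.sqrt(2 * n + 1) * integral


def g(y: float) -> float:
    """Hypothetical test function, holomorphic away from the pole at y = 2."""
    return 1.0 / (2.0 - y)


if __name__ == "__main__":
    coeffs = [legendre_coefficient(g, n) for n in range(7)]
    for n in range(6):
        print(n, coeffs[n], coeffs[n + 1] / coeffs[n])
```

The printed ratios of consecutive coefficients stay well below one, reflecting geometric decay; moving the pole further away from \([-1,1]\) makes the decay faster, in line with the role of \({\varvec{\delta }}\) described above.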

We are now in a position to present our main technical tool to find suitable holomorphy domains of each \(T_k\) (or equivalently \(\partial _kT_k\)). We will work under the following assumption on the two densities \(f_{\rho }:U\rightarrow (0,\infty )\) and \(f_{\pi }:U\rightarrow (0,\infty )\). The assumption is a modification of [41, Assumption 3.5].

Assumption 4.1

For constants \(C_1\), \(M>0\), \(L<\infty \), \(k\in {{\mathbb {N}}}\), and \({\varvec{\delta }}\in (0,\infty )^k\), the following hold:

- (a) \(f\in C^0({{\mathcal {B}}}_{{\varvec{\delta }}}(U_{1})\times U,{\mathbb {C}})\) and \(f:U\rightarrow {{\mathbb {R}}}_+\) is a probability density,
- (b) \({{\varvec{x}}}\mapsto f({{\varvec{x}}},{{\varvec{y}}})\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}(U_{1}),{\mathbb {C}})\) for all \({{\varvec{y}}}\in U\),
- (c) \( M\le |f({{\varvec{y}}})|\le L\) for all \({{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}(U_{1})\times U\),
- (d) \(\sup _{{{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}\times \{0\}^{{\mathbb {N}}}}|f({{\varvec{x}}}+{{\varvec{y}}})-f({{\varvec{x}}})| \le C_1\) for all \({{\varvec{x}}}\in U\),
- (e) \(\sup _{{{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}_{[j]}}\times \{0\}^{{{\mathbb {N}}}}}|f({{\varvec{x}}}+{{\varvec{y}}})-f({{\varvec{x}}})|\le C_1 \delta _{j+1}\) for all \({{\varvec{x}}}\in U\) and \(j\in \{1,\dots ,k-1\}\).

Such densities yield certain holomorphy domains for \(T_k\) as we show in the next proposition, which is an infinite-dimensional version of [41, Theorem 3.6].

Proposition 4.2

Let \(k\in {{\mathbb {N}}}\), \({\varvec{\delta }}\in (0,\infty )^k\) and \(0< M\le L<\infty \). There exist \(C_1>0\), \(C_2\in (0,1]\) and \(C_3>0\) solely depending on M and L (but not on k or \({\varvec{\delta }}\)) such that if \(f_{\rho }\) and \(f_{\pi }\) satisfy Assumption 4.1 with \(C_1\), M, L, and \({\varvec{\delta }}\), then:

With \({\varvec{\zeta }}=(\zeta _j)_{j=1}^k\) defined by

it holds for all \(j\in \{1,\dots ,k\}\) with \(R_j{:=}\partial _jT_j\) (with T as in (3.3)) that

(i) \(R_j\in C^1({{\mathcal {B}}}_{{\varvec{\zeta }}_{[j]}}(U_{1}),{{\mathcal {B}}}_{ C_3}(1))\) and \(\Re (R_j({{\varvec{x}}}))\ge \frac{1}{C_3}\) for all \({{\varvec{x}}}\in {{\mathcal {B}}}_{{\varvec{\zeta }}_{[j]}}(U_{1})\),

(ii) if \(j\ge 2\), \(R_j:{{\mathcal {B}}}_{{\varvec{\zeta }}_{[j-1]}}(U_{1})\times U_{1}\rightarrow {{\mathcal {B}}}_{\frac{C_3}{\delta _j}}(1)\).

Let us sketch how this result can be used to show that \(T_k\) can be approximated by polynomial expansions. In Appendix B.2 we will verify Assumption 4.1 for densities as in (2.3). Proposition 4.2 (i) then provides a holomorphy domain for \(\partial _kT_k\), and together with (4.2) we can bound the expansion coefficients \(l_{k,{\varvec{\nu }}}\) of \(\partial _kT_k=\sum _{{\varvec{\nu }}\in {{\mathbb {N}}}_0^k}l_{k,{\varvec{\nu }}} L_{\varvec{\nu }}({{\varvec{y}}})\). However, there is a catch: in general, Assumption 4.1 holds for many different \({\varvec{\delta }}\). The difficulty is to choose \({\varvec{\delta }}\) depending on \({\varvec{\nu }}\) so that the bound in (4.2) is as sharp as possible. To do so we will use ideas from, e.g., [6], where similar calculations were made.

The outlined argument based on Proposition 4.2 (i) suffices to prove convergence of sparse polynomial expansions in the finite-dimensional case; see [41, Theorem 4.6]. In the infinite-dimensional case where we want to approximate \(T=(T_k)_{k\in {{\mathbb {N}}}}\) with only finitely many degrees of freedom we additionally need to employ Proposition 4.2 (ii): for \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\) such that \({\varvec{\nu }}\ne {\varvec{0}}{:=}(0)_{j=1}^k\) but \(\nu _k=0\), Proposition 4.2 (ii) together with (4.2) implies a bound of the type

where the additional \(\frac{1}{\delta _k}\) stems from \(\Vert \partial _kT_k-1 \Vert _{L^\infty ({{\mathcal {B}}}_{{\varvec{\zeta }}_{[k-1]}}(U_{1})\times U_{1})}\le \frac{C_3}{\delta _k}\). Here we used the fact that \(\int _{U_{k}} L_{\varvec{\nu }}({{\varvec{y}}}_{[k]})\;\mathrm {d}\mu ({{\varvec{y}}}_{[k]})=0\) for all \({\varvec{\nu }}\ne {\varvec{0}}\), which holds by orthogonality of the \((L_{\varvec{\nu }})_{{\varvec{\nu }}\in {{\mathbb {N}}}_0^k}\) and because \(L_{\varvec{0}}\equiv 1\). If \(\nu _k\ne 0\), then the factor \(\frac{1}{1+\delta _k}\) occurs on the right-hand side of (4.2). Hence, all coefficients \(l_{k,{\varvec{\nu }}}\) for which \({\varvec{\nu }}\ne {\varvec{0}}\) are of size \(O(\frac{1}{\delta _k})\). In fact one can show that even \(\sum _{{\varvec{\nu }}\ne {\varvec{0}}}|l_{k,{\varvec{\nu }}}||L_{\varvec{\nu }}({{\varvec{y}}}_{[k]})|\) is of size \(O(\frac{1}{\delta _k})\). Thus

Using \(L_{\varvec{0}}\equiv 1\)

and therefore if \(\delta _k\) is very large, since \(L_{\varvec{0}}\equiv 1\)

Hence, for large \(\delta _k\) we can use the trivial approximation \(T_k({{\varvec{y}}}_{[k]})\simeq y_k\). To address this special role played by the kth variable for the kth component we introduce

which, up to constants, corresponds to the minimum of (4.2) and (4.4). This quantity can be interpreted as measuring the importance of the monomial \({{\varvec{y}}}^{\varvec{\nu }}\) in the ansatz space used for the approximation of \(T_k\), and we will use it to construct such ansatz spaces.

Remark 4.3

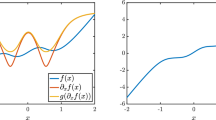

To explain the key ideas, in this section we presented the approximation of \(T_k\) via a Legendre expansion of \(\partial _k T_k\). For the proofs of our approximation results in Sect. 5 we instead approximate \(\sqrt{\partial _k T_k}-1\) with truncated Legendre expansions. This guarantees that the approximate transport satisfies the monotonicity property, as explained in Sect. 5.

5 Convergence of the Transport

We are now in a position to state an algebraic convergence result for approximations of infinite-dimensional transport maps \(T:U\rightarrow U\) associated to densities of type (2.3).

For a triangular approximation \({{\tilde{T}}}=({{\tilde{T}}}_k)_{k\in {{\mathbb {N}}}}\) to T it is desirable that it retains the monotonicity and bijectivity properties, i.e., \(\partial _k{{\tilde{T}}}_k>0\) and \({{\tilde{T}}}:U\rightarrow U\) is bijective. Monotonicity guarantees that \({{\tilde{T}}}\) is injective and easy to invert (by successively solving the one-dimensional equations \(x_k={{\tilde{T}}}_k(y_1,\dots ,y_k)\) for \(y_k\), starting with \(k=1\)). For the purpose of generating samples, bijectivity ensures that for \({{\varvec{y}}}\sim {\rho }\), the transformed sample \({{\tilde{T}}}({{\varvec{y}}})\sim {{\tilde{T}}}_\sharp {\rho }\) also belongs to U. These constraints are hard to enforce for polynomial approximations. For this reason, we use the same rational parametrization we introduced in [41] for the finite-dimensional case: for a set of k-dimensional multiindices \(\Lambda \subseteq {{\mathbb {N}}}_0^k\), define

The dimension of this space is equal to the cardinality of \(\Lambda \), which we denote by \(|\Lambda |\). Let \(p_k\in {{\mathbb {P}}}_\Lambda \) (where \(\Lambda \) remains to be chosen) be a polynomial approximation to \(\sqrt{\partial _kT_k}-1\). Set for \({{\varvec{y}}}\in U_{k}\)

It is easily checked that \({{\tilde{T}}}_k\) satisfies both monotonicity and bijectivity as long as \(p_k\ne -1\). Thus we end up with a rational function \({{\tilde{T}}}_k\), but we emphasize that the use of rational functions instead of polynomials is not due to better approximation capabilities, but solely to guarantee bijectivity of \({{\tilde{T}}}:U\rightarrow U\).
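To make the construction concrete, here is a minimal sketch in the last variable only, with the first \(k-1\) coordinates frozen. It assumes one parametrization consistent with the description above: the squared polynomial \((p_k+1)^2\) is integrated in \(y_k\) and normalized so that the endpoints \(\pm 1\) are fixed. The helper name `rational_component` and the coefficients of `p` are hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial


def rational_component(p):
    """Return t -> -1 + 2 * int_{-1}^t (p+1)^2 ds / int_{-1}^1 (p+1)^2 ds.

    p is a univariate polynomial in the last variable (other variables frozen).
    This normalized form is an assumption made for illustration.
    """
    density = (p + 1) ** 2                # nonnegative polynomial
    primitive = density.integ(lbnd=-1)    # antiderivative vanishing at t = -1
    total = primitive(1.0)                # positive unless p is identically -1
    return lambda t: -1.0 + 2.0 * primitive(t) / total


# Hypothetical polynomial approximating sqrt(d_k T_k) - 1 for frozen y_[k-1].
p = Polynomial([0.1, -0.3, 0.2])
T_tilde = rational_component(p)

grid = np.linspace(-1.0, 1.0, 201)
values = T_tilde(grid)                    # increasing from -1 to 1

# The zero polynomial reproduces the identity map in the last variable.
identity = rational_component(Polynomial([0.0]))
```

Because the integrand is a square, the map is nondecreasing for any choice of coefficients, and the normalization pins the endpoints; no constraint on the coefficients of `p` has to be enforced.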

Remark 5.1

Observe that \(\Lambda =\emptyset \) gives the trivial approximation \(p_k{:=}0\in {{\mathbb {P}}}_\emptyset \) and \({{\tilde{T}}}_k({{\varvec{y}}})=y_k\).

The following theorem yields an algebraic convergence rate, in terms of the total number of degrees of freedom used to approximate T, that is independent of the dimension (which is infinite here). The curse of dimensionality is therefore overcome for densities as in Assumption 2.2.

Theorem 5.2

Let \(f_{\rho }\), \(f_{\pi }:U\rightarrow (0,\infty )\) be two probability densities satisfying Assumption 2.2 for some \(p\in (0,1)\). Set \(b_j{:=}\max \{\Vert \psi _{{\rho },j} \Vert _{Z},\Vert \psi _{{\pi },j} \Vert _{Z}\}\), \(j\in {{\mathbb {N}}}\).

There exist \(\alpha >0\) and \(C>0\) such that the following holds: for \(j\in {{\mathbb {N}}}\) set

and with \(\gamma ({\varvec{\varrho }},{\varvec{\nu }})\) as in (4.6) define

For each \(k\in {{\mathbb {N}}}\) there exists a polynomial \(p_k\in {{\mathbb {P}}}_{\Lambda _{\varepsilon ,k}}\) such that with the components \({{\tilde{T}}}_{\varepsilon ,k}\) as in (5.1), \({{\tilde{T}}}_\varepsilon =(\tilde{T}_{\varepsilon ,k})_{k\in {{\mathbb {N}}}}:U\rightarrow U\) is a monotone triangular bijection. For all \(\varepsilon >0\), it holds that \(N_\varepsilon {:=}\sum _{k\in {{\mathbb {N}}}} |\Lambda _{\varepsilon ,k}|<\infty \) and

and

Remark 5.3

Fix \(\varepsilon >0\). Since \(N_\varepsilon <\infty \), there exists \(k_0\in {{\mathbb {N}}}\) such that \(\Lambda _{\varepsilon ,k}=\emptyset \) for all \(k\ge k_0\), and thus \({{\tilde{T}}}_{\varepsilon ,k}({{\varvec{y}}}_{[k]})=y_k\), cp. Remark 5.1.
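The following sketch illustrates how such thresholded index sets can be enumerated and why they become empty for large k. It rests on assumptions: the weight is taken to be \(\gamma ({\varvec{\varrho }},{\varvec{\nu }})=\varrho _k^{-\max \{1,\nu _k\}}\prod _{j<k}\varrho _j^{-\nu _j}\) with all \(\varrho _j>1\), and the set is \(\{{\varvec{\nu }}\in {{\mathbb {N}}}_0^k:\gamma ({\varvec{\varrho }},{\varvec{\nu }})\ge \varepsilon \}\). Both forms, the values of `rho`, and the function names are hypothetical stand-ins for the definitions referenced in Theorem 5.2.

```python
def gamma(rho, nu):
    """Assumed weight: rho_k^(-max(1, nu_k)) * prod_{j<k} rho_j^(-nu_j), with k = len(nu)."""
    k = len(nu)
    value = rho[k - 1] ** (-max(1, nu[k - 1]))
    for j in range(k - 1):
        value *= rho[j] ** (-nu[j])
    return value


def index_set(rho, k, eps):
    """Enumerate all nu in N_0^k with gamma(rho, nu) >= eps (requires rho_j > 1)."""
    result = []

    def extend(prefix):
        j = len(prefix)
        if j == k:
            result.append(tuple(prefix))
            return
        n = 0
        while True:
            # Padding with zeros maximizes gamma over all completions of the prefix,
            # and gamma is nonincreasing in n, so the first failure ends the loop.
            candidate = prefix + [n] + [0] * (k - j - 1)
            if gamma(rho, candidate) < eps:
                break
            extend(prefix + [n])
            n += 1

    extend([])
    return result


rho = [2.0, 4.0, 8.0, 16.0]   # hypothetical, increasing weights
sizes = [len(index_set(rho, k, 0.1)) for k in range(1, 5)]
```

With these hypothetical weights the sets shrink as k grows and the last one is empty, so the corresponding component would be the identity in its last variable.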

Switching the roles of \({\rho }\) and \({\pi }\), Theorem 5.2 also yields an approximation result for the inverse transport \(S=T^{-1}\) by some rational functions \({{\tilde{S}}}_k\) as in (5.1). Moreover, if \({{\tilde{T}}}\) is the rational approximation from Theorem 5.2, then its inverse \(\tilde{T}^{-1}:U\rightarrow U\) (whose components are not necessarily rational functions) also satisfies an error bound of type (5.3) as we show next.

Corollary 5.4

Consider the setting of Theorem 5.2. Denote \(S{:=}T^{-1}:U\rightarrow U\) and \({{\tilde{S}}}_\varepsilon {:=}{{\tilde{T}}}_\varepsilon ^{-1}:U\rightarrow U\). Then there exists a constant C such that for all \(\varepsilon >0\)

and

Note that both S and \({{\tilde{S}}}_\varepsilon \) in Corollary 5.4 are monotonic, triangular bijections as they are the inverses of such maps.
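Evaluating the inverse of a monotone triangular map only requires one-dimensional root finding, one coordinate at a time. The sketch below uses plain bisection for this; the two toy components and the name `invert_triangular` are hypothetical, and each component is assumed to be increasing in its last argument and to map \([-1,1]\) onto \([-1,1]\).

```python
def invert_triangular(components, x, tol=1e-12):
    """Solve x_k = T_k(y_1, ..., y_k) for y_k, successively for k = 1, 2, ...

    Each T_k is assumed increasing in its last argument with range [-1, 1].
    """
    y = []
    for T_k, x_k in zip(components, x):
        lo, hi = -1.0, 1.0
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            if T_k(*y, mid) < x_k:
                lo = mid
            else:
                hi = mid
        y.append(0.5 * (lo + hi))
    return y


# Hypothetical monotone triangular components fixing the endpoints -1 and 1.
T1 = lambda y1: y1 + 0.2 * (1.0 - y1 ** 2)
T2 = lambda y1, y2: y2 + 0.3 * y1 * (1.0 - y2 ** 2)

y_true = [0.3, -0.5]
x = [T1(y_true[0]), T2(y_true[0], y_true[1])]
y_rec = invert_triangular([T1, T2], x)
```

Bisection is chosen here for robustness; since the components are smooth, a Newton iteration in the last variable would be a natural faster alternative.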

6 Convergence of the Pushforward Measures

Theorem 5.2 established smallness of \(\sum _{k\in {{\mathbb {N}}}}|\partial _k(T_k-{{\tilde{T}}}_k)|\). The relevance of this term stems from the formal calculation (cp. (3.6))

Assuming that we can bound the last two products, the determinant \(\det d{{\tilde{T}}}\) converges to \(\det d T\) at the rate given in Theorem 5.2. This will allow us to bound the Hellinger distance (H), the total variation distance (TV), and the Kullback-Leibler divergence (KL) between \({{\tilde{T}}}_\sharp {\rho }\) and \({\pi }\), as we show in the following theorem. Recall that for two probability measures \(\nu \ll \mu \), \(\eta \ll \mu \) on U with densities \(f_\nu =\frac{\mathrm {d}\nu }{\mathrm {d}\mu }\), \(f_\eta =\frac{\mathrm {d}\eta }{\mathrm {d}\mu }\),

Theorem 6.1

Let \(f_{\rho }\), \(f_{\pi }\) satisfy Assumption 2.2 for some \(p\in (0,1)\), and let \({{\tilde{T}}}_\varepsilon :U\rightarrow U\) be the approximate transport from Theorem 5.2.

Then there exists \(C>0\) such that for \(\mathrm{dist}\in \{\mathrm{H},\mathrm{TV},\mathrm{KL}\}\) and every \(\varepsilon >0\)

Next we treat the Wasserstein distance. Recall that for a Polish space (M, d) (i.e., M is separable and complete with the metric d on M) and for \(q\in [1,\infty )\), the q-Wasserstein distance between two probability measures \(\nu \) and \(\eta \) on M (equipped with the Borel \(\sigma \)-algebra) is defined as [37, Def. 6.1] \(W_q(\nu ,\eta ){:=}\big (\inf _{\gamma \in \Gamma }\int _{M\times M}d(x,y)^q\;\mathrm {d}\gamma (x,y)\big )^{1/q}\),

where \(\Gamma \) stands for the couplings between \(\eta \) and \(\nu \), i.e., the set of probability measures on \(M\times M\) with marginals \(\nu \) and \(\eta \), cp. [37, Def. 1.1].
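For intuition, the definition can be evaluated exactly for empirical measures on the real line, where sorting the atoms yields an optimal coupling. The sketch below also compares the result with the cost of the particular coupling obtained by pushing the same samples through two maps, which is the elementary mechanism behind bounds on the distance between pushforward measures. The maps `T` and `T_tilde`, the sample size, and the uniform reference samples are hypothetical choices.

```python
import numpy as np


def wasserstein_1d(a, b, q):
    """q-Wasserstein distance between two empirical measures on R with equally many atoms."""
    a, b = np.sort(a), np.sort(b)         # monotone rearrangement is optimal in 1D
    return np.mean(np.abs(a - b) ** q) ** (1.0 / q)


rng = np.random.default_rng(0)
y = rng.uniform(-1.0, 1.0, 2000)          # hypothetical reference samples

T = lambda t: t + 0.2 * (1.0 - t ** 2)
T_tilde = lambda t: T(t) + 0.3 * np.sin(8.0 * t)   # hypothetical perturbed map

q = 2
w = wasserstein_1d(T(y), T_tilde(y), q)
# Cost of the coupling (T(y), T_tilde(y)); it can only overestimate the infimum.
coupling_cost = np.mean(np.abs(T(y) - T_tilde(y)) ** q) ** (1.0 / q)
sup_error = np.max(np.abs(T(y) - T_tilde(y)))
```

The ordering `w <= coupling_cost <= sup_error` holds for any pair of maps, since the infimum over couplings is bounded by the cost of any single coupling and an average is bounded by a maximum.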

To bound the Wasserstein distance, we employ the following proposition. A similar statement is given in [32, Theorem 2], but for measures on \({{\mathbb {R}}}^d\). To fit our setting, we extend the result to compact metric spaces (see Notes); the proof closely follows that of [32, Theorem 2]. As pointed out in [32], the bound in the proposition is sharp.

Proposition 6.2

Let \((M_1,d_1)\) be a compact metric space, and \((M_2,d_2)\) a Polish space, both equipped with the Borel \(\sigma \)-algebra. Let \(T:M_1\rightarrow M_2\) and \({{\tilde{T}}}:M_1\rightarrow M_2\) be two continuous functions and let \(\nu \) be a probability measure on \(M_1\). Then for every \(q\in [1,\infty )\)

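To apply Proposition 6.2 we first have to equip U with a metric. For a sequence \((c_j)_{j\in {{\mathbb {N}}}}\in \ell ^1({{\mathbb {N}}})\) of positive numbers set \(d({{\varvec{x}}},{{\varvec{y}}}){:=}\sum _{j\in {{\mathbb {N}}}}c_j|x_j-y_j|\) for \({{\varvec{x}}}\), \({{\varvec{y}}}\in U\). (6.3)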

By Lemma A.1, d defines a metric that induces the product topology on U. Since U with the product topology is a compact space by Tychonoff’s theorem [26, Theorem 37.3], (U, d) is a compact Polish space. Moreover:

Lemma 6.3

Let \(f_{\rho }\), \(f_{\pi }\) satisfy Assumption 2.2 and consider metric (6.3) on U. Then \(T:U\rightarrow U\) and the approximation \({{\tilde{T}}}_\varepsilon :U\rightarrow U\) from Theorem 5.2 are continuous with respect to d. Moreover, if there exists \(C>0\) such that with

it holds that \(b_j\le Cc_j\) for all \(j\in {{\mathbb {N}}}\) (cp. Assumption 2.2), then T and \({{\tilde{T}}}_\varepsilon \) are Lipschitz continuous.

With \(d:U\times U\rightarrow {{\mathbb {R}}}\) as in (6.3), (U, d) is a compact Polish space and T and \({{\tilde{T}}}_\varepsilon \) are continuous, so that we can apply Proposition 6.2. Using Theorem 5.2 and \(\sup _jc_j\in (0,\infty )\),

Next let us discuss why \(c_j{:=}b_j\) as in (6.4) is a natural choice in our setting. Let \(\Phi :U\rightarrow Y\) be the map \(\Phi ({{\varvec{y}}}){:=}\sum _{j\in {{\mathbb {N}}}}y_j\psi _{{\pi },j}\).

In the inverse problem discussed in Example 2.6, we try to recover an element \(\Phi ({{\varvec{y}}})\in Y\). For computational purposes, the problem is set up to recover instead the expansion coefficients \({{\varvec{y}}}\in U\). Now suppose that \({\pi }\) is the posterior measure on U. Then \(\Phi _\sharp {\pi }=(\Phi \circ T)_\sharp {\rho }\) is the corresponding posterior measure on Y (the space we are actually interested in). The map \(\Phi :U\rightarrow Y\) is Lipschitz continuous w.r.t. the metric d on U, since for \({{\varvec{x}}}\), \({{\varvec{y}}}\in U\) due to \(\Vert \psi _{{\pi },j} \Vert _{Y}\le b_j\),

Therefore, \(\Phi \circ T:U\rightarrow Y\) and \(\Phi \circ {{\tilde{T}}}_\varepsilon :U\rightarrow Y\) are Lipschitz continuous by Lemma 6.3. Moreover, compactness of U and continuity of \(\Phi :U\rightarrow Y\) imply that \(\Phi (T(U))=\Phi ({{\tilde{T}}}_\varepsilon (U))=\Phi (U)\subseteq Y\) is compact and thus separable. Hence we may apply Proposition 6.2 also to the maps \(\Phi \circ T:U\rightarrow \Phi (U)\) and \(\Phi \circ \tilde{T}_\varepsilon :U\rightarrow \Phi (U)\). This gives a bound on the distance between the pushforward measures on the Banach space Y. Specifically, since \(\Vert \Phi (T({{\varvec{y}}}))-\Phi ({{\tilde{T}}}_\varepsilon ({{\varvec{y}}})) \Vert _{Y}\le d(T({{\varvec{y}}}),{{\tilde{T}}}_\varepsilon ({{\varvec{y}}}))\), which can be bounded as in (6.5), we have shown:

Theorem 6.4

Let \(f_{\rho }\), \(f_{\pi }\) satisfy Assumption 2.2 for some \(p\in (0,1)\), let \({{\tilde{T}}}_\varepsilon :U\rightarrow U\) be the approximate transport and let \(N_\varepsilon \in {{\mathbb {N}}}\) be the number of degrees of freedom as in Theorem 5.2.

Then there exists \(C>0\) such that for every \(q\in [1,\infty )\) and every \(\varepsilon >0\)

and for the pushforward measures on the Banach space Y

Finally let us discuss how to efficiently sample from the measure \(\Phi _\sharp {\pi }\) on the Banach space Y. As explained in the introduction, for a sample \({{\varvec{y}}}\sim {\rho }\) we have \(T({{\varvec{y}}})\sim {\pi }\) and \(\Phi (T({{\varvec{y}}}))=\sum _{j\in {{\mathbb {N}}}}T_j({{\varvec{y}}}_{[j]})\psi _{{\pi },j}\sim \Phi _\sharp {\pi }\). To truncate this series, introduce \(\Phi _s({{\varvec{y}}}_{[s]}){:=}\sum _{j=1}^s y_j\psi _{{\pi },j}\). As earlier, denote by \({\rho }_s\) the marginal measure of \({\rho }\) on \(U_{s}\). For \({{\varvec{y}}}_{[s]}\sim {\rho }_s\), the sample

follows the distribution of \((\Phi _s\circ \tilde{T}_{\varepsilon ,[s]})_\sharp {\rho }_s\), where \({{\tilde{T}}}_{\varepsilon ,[s]}{:=}({{\tilde{T}}}_{\varepsilon ,k})_{k=1}^s:U_{s}\rightarrow U_{s}\). In the next corollary we bound the Wasserstein distance between \((\Phi _s\circ \tilde{T}_{\varepsilon ,[s]})_\sharp {\rho }_s\) and \(\Phi _\sharp {\pi }\). Note that the former is a measure on Y that, in contrast to the latter, is supported on an s-dimensional subspace. Thus in general neither of these two measures needs to be absolutely continuous w.r.t. the other. This implies that the KL divergence, the total variation distance, and the Hellinger distance, in contrast to the Wasserstein distance, need not tend to 0 as \(\varepsilon \rightarrow 0\) and \(s\rightarrow \infty \).

The corollary shows that the convergence rate in (6.7) can be retained by choosing the truncation parameter s as \(N_\varepsilon \) (the number of degrees of freedom in Theorem 5.2); in fact, it even suffices to truncate after the maximal k such that \(\Lambda _{\varepsilon ,k}\ne \emptyset \), as explained in Remark 6.7.

Corollary 6.5

Consider the setting of Theorem 6.4 and assume that \((b_j)_{j\in {{\mathbb {N}}}}\) in (6.4) is monotonically decreasing. Then there exists \(C>0\) such that for every \(q\in [1,\infty )\) and \(\varepsilon >0\)

Remark 6.6

Convergence in \(W_q\) implies weak convergence [37, Theorem 6.9].

Remark 6.7

Checking the proof of Theorem 5.2, we have \(N_\varepsilon \le C\varepsilon ^{-p}\), cp. (C.21). Thus the maximal activated dimension (represented by the truncation parameter \(s=N_\varepsilon \)) increases only algebraically as \(\varepsilon \rightarrow 0\). The approximation error also decreases algebraically like \(\varepsilon ^{1-p}\) as \(\varepsilon \rightarrow 0\), cp. (C.22). Moreover, the function \(\Phi _{s_\varepsilon }\circ {{\tilde{T}}}_{\varepsilon ,[s_\varepsilon ]}\) with \(s_\varepsilon {:=}\max \{k\in {{\mathbb {N}}}\,:\,\Lambda _{\varepsilon ,k}\ne \emptyset \}\) leads to the same convergence rate in Corollary 6.5. In other words, we only need to use the components \({{\tilde{T}}}_{\varepsilon ,k}\) for which \(\Lambda _{\varepsilon ,k}\ne \emptyset \).
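The sampling procedure discussed in this section can be sketched as follows: draw an s-dimensional latent sample, apply the first s components of the approximate transport, and form the truncated expansion. Everything concrete in the sketch is a hypothetical stand-in: the reference measure is taken to be uniform on \([-1,1]^s\), the two toy components replace \({{\tilde{T}}}_{\varepsilon ,k}\), and the functions \(\psi _{{\pi },j}\) are replaced by scaled sine modes on a grid.

```python
import numpy as np


def sample_truncated_pushforward(components, psi, n_samples, rng):
    """Draw samples of sum_{j<=s} T_j(y_1..y_j) * psi_j with y uniform on [-1, 1]^s.

    components[k] maps an array of shape (n, k+1) to an array of shape (n,);
    psi has shape (s, n_grid) and holds the expansion functions on a grid.
    """
    s = len(components)
    y = rng.uniform(-1.0, 1.0, size=(n_samples, s))        # latent samples
    x = np.column_stack([components[k](y[:, : k + 1]) for k in range(s)])
    return x @ psi                                         # shape (n_samples, n_grid)


# Hypothetical monotone triangular components with values in [-1, 1].
components = [
    lambda y: y[:, 0] + 0.2 * (1.0 - y[:, 0] ** 2),
    lambda y: y[:, 1] + 0.3 * y[:, 0] * (1.0 - y[:, 1] ** 2),
]

# Hypothetical expansion functions psi_j(t) = sin(j * pi * t) / j^2 on a grid in [0, 1].
grid = np.linspace(0.0, 1.0, 50)
psi = np.stack([np.sin((j + 1) * np.pi * grid) / (j + 1) ** 2 for j in range(2)])

samples = sample_truncated_pushforward(components, psi, 1000, np.random.default_rng(0))
```

Each sample costs s evaluations of low-dimensional functions plus one linear combination, independent of how the target measure was originally specified.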

7 Conclusions

The use of transportation methods to sample from high-dimensional distributions is becoming increasingly popular to solve inference problems and perform other machine learning tasks. Therefore, questions of when and how these methods can be successful are of great importance, but are thus far not well understood. In the present paper we analyze the approximation of the KR transport in the high- (or infinite-)dimensional regime and on the bounded domain \([-1,1]^{{\mathbb {N}}}\). Under the setting presented in Sect. 2, it is shown that the transport can be approximated without suffering from the curse of dimension. Our approximation is based on polynomial and rational functions, and we provide an explicit a priori construction of the ansatz space. Moreover, we show how these results imply that it is possible to efficiently sample from certain high-dimensional distributions by transforming a lower-dimensional latent variable.

As we have discussed in the finite-dimensional case [41, Sect. 5], from an approximation viewpoint there is also a link to neural networks, which can be established via [36, 39] where it is proven that ReLU neural networks are efficient at emulating polynomials and rational functions. While we have not developed this aspect further in the present manuscript, we mention that neural networks are used in the form of normalizing flows [29, 30] to couple distributions in spaces of equal dimension, and for example in the form of generative adversarial networks [2, 14] and, more recently, injective flows [19, 21], to map lower-dimensional latent variables to samples from a high-dimensional distribution. In Sect. 6 we provided some insight (for the present setting, motivated by inverse problems in science and engineering) into how low-dimensional the latent variable can be, and how expressive the transport should be, to achieve a certain accuracy in the Wasserstein distance (see Corollary 6.5). Further examining this connection and generalizing our results to distributions on unbounded domains (such as \({{\mathbb {R}}}^{{\mathbb {N}}}\) instead of \([-1,1]^{{\mathbb {N}}}\)) will be the topic of future research.

Notes

The author of [37] mentions that such a result is already known, but without providing a reference. For completeness we have added the proof.

References

Alexanderian, A.: A brief note on the Karhunen–Loève expansion (2015)

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein generative adversarial networks. In D. Precup and Y. W. Teh (eds.) Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pp. 214–223. PMLR, 06–11 Aug (2017)

Bogachev, V.I.: Measure Theory, vol. I–II. Springer, Berlin (2007)

Bogachev, V.I., Kolesnikov, A.V., Medvedev, K.V.: Triangular transformations of measures. Mat. Sb. 196(3), 3–30 (2005)

Chkifa, A.: Sparse polynomial methods in high dimension: application to parametric PDE, Ph.D. thesis, UPMC, Université Paris 06, Paris, France (2014)

Cohen, A., Chkifa, A., Schwab, C.: Breaking the curse of dimensionality in sparse polynomial approximation of parametric PDEs. J. Math. Pures Appl. 103(2), 400–428 (2015)

Cohen, A., DeVore, R., Schwab, C.: Convergence rates of best \(N\)-term Galerkin approximations for a class of elliptic sPDEs. Found. Comput. Math. 10(6), 615–646 (2010)

Cohen, A., Devore, R., Schwab, C.: Analytic regularity and polynomial approximation of parametric and stochastic elliptic PDE’s. Anal. Appl. (Singap.) 9(1), 11–47 (2011)

Dashti, M., Stuart, A.M.: The Bayesian approach to inverse problems. In: Handbook of Uncertainty Quantification. Vol. 1, 2, 3, pp. 311–428. Springer, Cham (2017)

Davis, P.: Interpolation and Approximation. Dover Books on Mathematics, Dover Publications (1975)

De Philippis, G., Figalli, A.: Partial regularity for optimal transport maps. Publ. Math. Inst. Hautes Études Sci. 121, 81–112 (2015)

Doersch, C.: Tutorial on variational autoencoders. arXiv preprint arXiv:1606.05908 (2016)

El Moselhy, T.A., Marzouk, Y.M.: Bayesian inference with optimal maps. J. Comput. Phys. 231(23), 7815–7850 (2012)

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: Z. Ghahramani, M. Welling, C. Cortes, N. Lawrence, and K. Q. Weinberger (Ed.) Advances in Neural Information Processing Systems, volume 27. Curran Associates, Inc., (2014)

Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial networks. arXiv preprint arXiv:1406.2661 (2014)

Jaini, P., Selby, K.A., Yu, Y.: Sum-of-squares polynomial flow. ICML, (2019)

Jerez-Hanckes, C., Schwab, C., Zech, J.: Electromagnetic wave scattering by random surfaces: shape holomorphy. Math. Mod. Meth. Appl. Sci. 27(12), 2229–2259 (2017)

Kirwan, P.: Complexifications of multilinear and polynomial mappings, 1997. Ph.D. thesis, National University of Ireland, Galway

Kothari, K., Khorashadizadeh, A., de Hoop, M., Dokmanić, I.: Trumpets: injective flows for inference and inverse problems (2021)

Krantz, S. G.: Function theory of several complex variables. AMS Chelsea Publishing, Providence (Reprint of the 1992 edition 2001)

Kumar, A., Poole, B., Murphy, K.: Regularized autoencoders via relaxed injective probability flow (2020)

Lord, G.J., Powell, C.E., Shardlow, T.: An Introduction to Computational Stochastic PDEs. Cambridge Texts in Applied Mathematics. Cambridge University Press, New York (2014)

Marzouk, Y., Moselhy, T., Parno, M., Spantini, A.: Sampling via measure transport: an introduction. In: Handbook of Uncertainty Quantification. Vol. 1, 2, 3, pp. 785–825. Springer, Cham (2017)

Mattner, L.: Complex differentiation under the integral. Nieuw Arch. Wiskd. (5), 2(1), 32–35 (2001)

McLaughlin, D., Townley, L.R.: A reassessment of the groundwater inverse problem. Water Resour. Res. 32(5), 1131–1161 (1996)

Munkres, J.R.: Topology. Prentice Hall Inc, Upper Saddle River (2000)

Muñoz, G.A., Sarantopoulos, Y., Tonge, A.: Complexifications of real Banach spaces, polynomials and multilinear maps. Studia Math. 134(1), 1–33 (1999)

Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W. editors. NIST handbook of mathematical functions. U.S. Department of Commerce, National Institute of Standards and Technology, Washington, DC; Cambridge University Press, Cambridge (2010)

Papamakarios, G., Nalisnick, E., Rezende, D.J., Mohamed, S., Lakshminarayanan, B.: Normalizing flows for probabilistic modeling and inference. J. Mach. Learn. Res. 22, 1–64 (2021)

Rezende, D., Mohamed, S.: Variational inference with normalizing flows. In: F. Bach and D. Blei (Eds.) Proceedings of the 32nd International Conference on Machine Learning, volume 37 of Proceedings of Machine Learning Research, pp. 1530–1538, Lille, France, 07–09 Jul 2015. PMLR

Robert, C.P., Casella, G.: Monte Carlo Statistical Methods (Springer Texts in Statistics). Springer, Berlin, Heidelberg (2005)

Sagiv, A.: The Wasserstein distances between pushed-forward measures with applications to uncertainty quantification. Commun. Math. Sci. 18(3), 707–724 (2020)

Santambrogio, F.: Optimal Transport for Applied Mathematicians, Volume 87 of Progress in Nonlinear Differential Equations and their Applications. Birkhäuser/Springer, Cham, Calculus of variations, PDEs, and modeling (2015)

Schwab, C., Stuart, A.M.: Sparse deterministic approximation of Bayesian inverse problems. Inverse Problems, 28(4), 045003 (2012)

Spantini, A., Bigoni, D., Marzouk, Y.: Inference via low-dimensional couplings. J. Mach. Learn. Res. 19(1), 2639–2709 (2018)

Telgarsky, M.: Neural networks and rational functions. In: D. Precup and Y. W. Teh (Eds.) Proceedings of the 34th International Conference on Machine Learning, Volume 70 of Proceedings of Machine Learning Research, pp. 3387–3393. PMLR, 06–11 Aug (2017)

Villani, C.: Optimal Transport, Volume 338 of Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences]. Springer, Berlin, Old and new (2009)

Wehenkel, A., Louppe, G.: Unconstrained monotonic neural networks. arXiv preprint arXiv:1908.05164 (2019)

Yarotsky, D.: Error bounds for approximations with deep ReLU networks. Neural Netw. 94, 103–114 (2017)

Zech, J.: Sparse-Grid Approximation of High-Dimensional Parametric PDEs, Dissertation 25683, ETH Zürich. https://doi.org/10.3929/ethz-b-000340651 (2018)

Zech, J., Marzouk, Y.: Sparse approximation of triangular transports. Part I: the finite dimensional case. Constr. Approx. https://doi.org/10.1007/s00365-022-09569-2 (2022)

Zech, J., Schwab, C.: Convergence rates of high dimensional Smolyak quadrature. ESAIM Math. Model. Numer. Anal. 54(4), 1259–1307 (2020)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Communicated by Albert Cohen.

This paper was written during the postdoctoral stay of JZ at MIT. JZ acknowledges support by the Swiss National Science Foundation under Early Postdoc Mobility Fellowship 184530. YM and JZ acknowledge support from the United States Department of Energy, Office of Advanced Scientific Computing Research, AEOLUS Mathematical Multifaceted Integrated Capability Center.

Appendices

A Proofs of Sect. 3

A.1 Lemma 3.2

Lemma A.1

Let \((c_j)_{j\in {{\mathbb {N}}}}\in \ell ^1({{\mathbb {N}}})\) be a sequence of positive numbers. Then \(d({{\varvec{x}}},{{\varvec{y}}}){:=}\sum _{j\in {{\mathbb {N}}}}c_j|x_j-y_j|\) defines a metric on U that induces the product topology.

Proof

Recall that the family of sets

forms a basis of the product topology on U. Fix \({{\varvec{y}}}\in U\) and \(\varepsilon >0\), and let \(N_\varepsilon \in {{\mathbb {N}}}\) be so large that \(\sum _{j>N_\varepsilon }2c_j<\frac{\varepsilon }{2}\). Let \(C_0{:=}\sum _{j=1}^{N_\varepsilon }c_j\). Then if \({{\varvec{x}}}\), \({{\varvec{y}}}\in U\) satisfy \(|x_j-y_j|<\frac{\varepsilon }{2C_0}\) for all \(j\le N_\varepsilon \), we have \(d({{\varvec{x}}},{{\varvec{y}}})=\sum _{j\in {{\mathbb {N}}}}c_j|x_j-y_j|<\frac{\varepsilon }{2}\frac{\sum _{j=1}^{N_\varepsilon }c_j}{C_0}+\sum _{j>N_\varepsilon }2c_j\le \varepsilon \), and thus

On the other hand, if we fix \({{\varvec{y}}}\in U\), \(\varepsilon >0\) and \(N\in {{\mathbb {N}}}\), and set \(C_0{:=}\min _{j=1,\dots ,N} c_j>0\), then

\(\square \)
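The first half of the proof rests on a tail estimate: since \(|x_j-y_j|\le 2\) on U, the coordinates beyond an index N contribute at most \(2\sum _{j>N}c_j\) to d. A small numerical sketch of this estimate follows; the weights \(c_j=j^{-2}\), the finite length `J` standing in for the infinite sequence, and the function name are hypothetical.

```python
import numpy as np


def truncated_metric(x, y, c, n):
    """Return the contribution of the first n coordinates to d and a bound on the rest."""
    head = float(np.sum(c[:n] * np.abs(x[:n] - y[:n])))
    tail_bound = 2.0 * float(np.sum(c[n:]))   # uses |x_j - y_j| <= 2 on [-1, 1]
    return head, tail_bound


J = 10_000                                   # finite proxy for the infinite sequence
c = 1.0 / np.arange(1, J + 1) ** 2           # hypothetical summable positive weights
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, J)
y = rng.uniform(-1.0, 1.0, J)

full = float(np.sum(c * np.abs(x - y)))
head, tail_bound = truncated_metric(x, y, c, 20)
```

The distance is thus determined by finitely many coordinates up to an error that is uniform in \({{\varvec{x}}}\) and \({{\varvec{y}}}\), which is what links d-balls to the cylinder sets of the product topology.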

Proof of Lemma 3.2

By [3, Lemma 6.4.2 (ii)], the Borel \(\sigma \)-algebra on U (with the product topology) coincides with the product \(\sigma \)-algebra on U. Since \(f:U\rightarrow {{\mathbb {R}}}\) is continuous, and because U and \({{\mathbb {R}}}\) are equipped with the Borel \(\sigma \)-algebras, \(f:U\rightarrow {{\mathbb {R}}}\) is measurable. Since f is bounded it belongs to \(L^2(U,\mu )\).

Fix \((c_j)_{j\in {{\mathbb {N}}}}\in \ell ^1({{\mathbb {N}}})\) with \(c_j>0\) for all \(j\in {{\mathbb {N}}}\), and let d be the metric on U from Lemma A.1. Since \(f\in C^0(U,{{\mathbb {R}}}_+)\) and U is compact by Tychonoff’s theorem [26, Theorem 37.3], f is uniformly continuous by the Heine–Cantor theorem. Thus for any \(\varepsilon >0\) there exists \(\delta _\varepsilon >0\) such that for all \({{\varvec{x}}}\), \({{\varvec{y}}}\in U\) with \(d({{\varvec{x}}},{{\varvec{y}}})<\delta _\varepsilon \) it holds that \(|f({{\varvec{x}}})-f({{\varvec{y}}})|<\varepsilon \). Now let \(k\in {{\mathbb {N}}}\) and \(\varepsilon >0\) be arbitrary. Then for all \({{\varvec{x}}}_{[k]}\), \({{\varvec{y}}}_{[k]}\in U_{k}\) such that \(\sum _{j=1}^k c_j|x_j-y_j|<\delta _\varepsilon \), we get

which shows continuity of \({{\hat{f}}}_k:U_{k}\rightarrow {{\mathbb {R}}}\).

Next, using that \(\inf _{{{\varvec{y}}}\in U}f({{\varvec{y}}}){=:}r>0\) (due to compactness of U and continuity of f), for \(k>1\) we have \({{\hat{f}}}_{k-1}({{\varvec{x}}}_{[k-1]})\ge \min \{r,1\}>0\) independent of \({{\varvec{x}}}_{[k-1]}\in U_{{k-1}}\). This implies that also \(\frac{{{\hat{f}}}_k}{{{\hat{f}}}_{k-1}}=f_k:U_{k}\rightarrow {{\mathbb {R}}}_+\) is continuous, where the case \(k=1\) is trivial since \({{\hat{f}}}_0\equiv 1\).

Finally we show (3.5). Let again \(\varepsilon >0\) be arbitrary and \(N_\varepsilon \in {{\mathbb {N}}}\) so large that \(\sum _{j>N_\varepsilon }2c_j<\delta _\varepsilon \). Then for every \({{\varvec{x}}}\), \({{\varvec{t}}}\in U\) and every \(k>N_\varepsilon \) we have \(d(({{\varvec{x}}}_{[k]},{{\varvec{t}}}),{{\varvec{x}}})\le \sum _{j>N_\varepsilon }c_j|x_j-t_j|\le \sum _{j>N_\varepsilon }2c_j<\delta _\varepsilon \), which implies \(|f({{\varvec{x}}}_{[k]},{{\varvec{t}}})-f({{\varvec{x}}})|<\varepsilon \). Thus for every \({{\varvec{x}}}\in U\) and every \(k>N_\varepsilon \)

which concludes the proof. \(\square \)

A.2 Theorem 3.3

With \(F_{*;k}({{\varvec{x}}}_{[k-1]},x_k){:=}\int _{-1}^{x_k} f_{*;k}({{\varvec{x}}}_{[k-1]},t_k)\;\mathrm {d}t_k\), the construction of \(T_k:U_{k}\rightarrow U_{1}\) described in Sect. 3 amounts to the explicit formula \(T_1(x_1){:=}(F_{{\pi };1})^{-1}\circ F_{{\rho };1}(x_1)\) and inductively \(T_k({{\varvec{x}}}_{[k-1]},\cdot ){:=}F_{{\pi };k}(T_{[k-1]}({{\varvec{x}}}_{[k-1]}),\cdot )^{-1}\circ F_{{\rho };k}({{\varvec{x}}}_{[k-1]},\cdot )\), (A.1)

where \(F_{{\pi };k}(T_{[k-1]}({{\varvec{x}}}_{[k-1]}),\cdot )^{-1}\) denotes the inverse of \(x_k\mapsto F_{{\pi };k}(T_{[k-1]}({{\varvec{x}}}_{[k-1]}),x_k)\).

Remark A.2

If \(f_{*;k}\in C^0(U_{k},{{\mathbb {R}}}_+)\) for \(*\in \{{\rho },{\pi }\}\), then by (A.1) it holds \(T_k\), \(\partial _kT_k\in C^0(U_{k})\).

Proof of Theorem 3.3

We start with (i). As a consequence of Remark A.2 and Lemma 3.2, \(T_k\in C^0(U_{k},U_{1})\) for every \(k\in {{\mathbb {N}}}\). So each \(T_k:U_{k}\rightarrow U_{1}\) is measurable and thus also \(T_{[n]}=(T_k)_{k=1}^n:U_{n}\rightarrow U_{n}\) is measurable for each \(n\in {{\mathbb {N}}}\). Furthermore \(T:U\rightarrow U\) is bijective by Lemma 3.1 and because for every \({{\varvec{x}}}\in U\) and \(k\in {{\mathbb {N}}}\) it holds that \(T_k({{\varvec{x}}}_{[k-1]},\cdot ):U_{1}\rightarrow U_{1}\) is bijective.

The product \(\sigma \)-algebra on U is generated by the algebra (see [3, Def. 1.2.1]) \({{\mathcal {A}}}_0\) given as the union of the \(\sigma \)-algebras \({{\mathcal {A}}}_n{:=}\{A_n\times [-1,1]^{{\mathbb {N}}}\,:\,A_n\in {{\mathcal {B}}}(U_{n})\}\), \(n\in {{\mathbb {N}}}\), where \({{\mathcal {B}}}(U_{n})\) denotes the Borel \(\sigma \)-algebra. For sets of the type \(A{:=}A_n \times [-1,1]^{{\mathbb {N}}}\in {{\mathcal {A}}}_n\) with \(A_n\in {{\mathcal {B}}}(U_{n})\), due to \(T_j({{\varvec{y}}}_{[j]})\in U_{1}\) for all \({{\varvec{y}}}\in U\) and \(j>n\), we have

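$$\begin{aligned} T^{-1}(A)=\{{{\varvec{y}}}\in U\,:\,T_{[n]}({{\varvec{y}}}_{[n]})\in A_n\}=(T_{[n]})^{-1}(A_n)\times [-1,1]^{{\mathbb {N}}}, \end{aligned}$$

which belongs to \({{\mathcal {A}}}_n\) and thus to the product \(\sigma \)-algebra on U since \(T_{[n]}\) is measurable. Hence \(T:U\rightarrow U\) is measurable w.r.t. the product \(\sigma \)-algebra.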

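Denote now by \({\pi }_n\) and \({\rho }_n\) the marginals on \(U_{n}\) w.r.t. the first n variables, i.e., \({\pi }_n(A){:=}{\pi }(A\times [-1,1]^{{\mathbb {N}}})\) for every \(A\in {{\mathcal {B}}}(U_{n})\), and analogously for \({\rho }_n\). By (3.4) (see [33, Proposition 2.18]), \((T_{[n]})_\sharp {\rho }_n={\pi }_n\). For sets of the type \(A{:=}A_n \times [-1,1]^{{\mathbb {N}}}\in {{\mathcal {A}}}_n\) with \(A_n\in {{\mathcal {B}}}(U_{n})\), we therefore obtain

$$\begin{aligned} T_\sharp {\rho }(A)={\rho }(T^{-1}(A))={\rho }\big ((T_{[n]})^{-1}(A_n)\times [-1,1]^{{\mathbb {N}}}\big )={\rho }_n\big ((T_{[n]})^{-1}(A_n)\big )={\pi }_n(A_n)={\pi }(A). \end{aligned}$$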

According to [3, Theorem 3.5.1], the extension of a non-negative \(\sigma \)-additive set function on the algebra \({{\mathcal {A}}}_0\) to the \(\sigma \)-algebra generated by \({{\mathcal {A}}}_0\) is unique. Since \(T:U\rightarrow U\) is bijective and measurable, both \({\pi }\) and \(T_\sharp {\rho }\) are measures on U. As they coincide on \({{\mathcal {A}}}_0\), it follows that \({\pi }=T_\sharp {\rho }\).

Finally we show (ii). Let \({{\hat{f}}}_{{\pi },n}\in C^0(U_{n},{{\mathbb {R}}}_+)\) and \({{\hat{f}}}_{{\rho },n}\in C^0(U_{n},{{\mathbb {R}}}_+)\) be as in (3.2), i.e., these functions denote the densities of the marginals \({\pi }_n\), \({\rho }_n\). Since \((T_{[n]})_\sharp {\rho }_n={\pi }_n\), by a change of variables (see, e.g., [4, Proposition 2.5]), for all \({{\varvec{x}}}\in U\)

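$$\begin{aligned} {{\hat{f}}}_{{\pi },n}(T_{[n]}({{\varvec{x}}}_{[n]}))\det dT_{[n]}({{\varvec{x}}}_{[n]})={{\hat{f}}}_{{\rho },n}({{\varvec{x}}}_{[n]}). \end{aligned}$$

Therefore

$$\begin{aligned} \det dT_{[n]}({{\varvec{x}}}_{[n]})=\prod _{j=1}^n\partial _jT_j({{\varvec{x}}}_{[j]})=\frac{{{\hat{f}}}_{{\rho },n}({{\varvec{x}}}_{[n]})}{{{\hat{f}}}_{{\pi },n}(T_{[n]}({{\varvec{x}}}_{[n]}))}. \end{aligned}$$ (A.2)

According to Lemma 3.2 we have uniform convergence

$$\begin{aligned} \lim _{n\rightarrow \infty }{{\hat{f}}}_{{\rho },n}({{\varvec{x}}}_{[n]})=f_{\rho }({{\varvec{x}}})\qquad \forall {{\varvec{x}}}\in U \end{aligned}$$

and uniform convergence of

$$\begin{aligned} \lim _{n\rightarrow \infty }{{\hat{f}}}_{{\pi },n}({{\varvec{y}}}_{[n]})=f_{\pi }({{\varvec{y}}})\qquad \forall {{\varvec{y}}}\in U. \end{aligned}$$

The latter implies with \({{\varvec{y}}}=T({{\varvec{x}}})\) that

$$\begin{aligned} \lim _{n\rightarrow \infty }{{\hat{f}}}_{{\pi },n}(T_{[n]}({{\varvec{x}}}_{[n]}))=f_{\pi }(T({{\varvec{x}}}))\qquad \forall {{\varvec{x}}}\in U \end{aligned}$$

converges uniformly. Since \(f_{\pi }:U\rightarrow {{\mathbb {R}}}_+\) is continuous and U is compact, we can conclude that \({{\hat{f}}}_{{\pi },n}({{\varvec{x}}})\ge r\) (cp. (3.2)) for some \(r>0\) independent of \(n\in {{\mathbb {N}}}\) and \({{\varvec{x}}}\in U_{n}\). Thus the right-hand side of (A.2) converges uniformly, and

$$\begin{aligned} \det dT({{\varvec{x}}}){:=}\lim _{n\rightarrow \infty }\prod _{j=1}^n\partial _jT_j({{\varvec{x}}}_{[j]}) \end{aligned}$$

converges uniformly. Moreover \(\det dT({{\varvec{x}}})f_{\pi }(T({{\varvec{x}}}))=f_{\rho }({{\varvec{x}}})\) for all \({{\varvec{x}}}\in U\). \(\square \)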
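A one-dimensional worked example may help to make the identity \(\det dT({{\varvec{x}}})f_{\pi }(T({{\varvec{x}}}))=f_{\rho }({{\varvec{x}}})\) concrete. The densities below are hypothetical and chosen only for illustration; they are taken w.r.t. the Lebesgue measure on \([-1,1]\), with a parameter \(a\in (-1,1)\backslash \{0\}\) that does not appear in the paper. Let \(f_{\rho }(x)=\frac{1}{2}\) and \(f_{\pi }(y)=\frac{1+ay}{2}\). The distribution functions are \(F_{\rho }(x)=\frac{x+1}{2}\) and \(F_{\pi }(y)=\frac{y+1}{2}+\frac{a(y^2-1)}{4}\), and solving \(F_{\pi }(T(x))=F_{\rho }(x)\) for \(T(x)\in [-1,1]\) gives

$$\begin{aligned} T(x)=\frac{-1+\sqrt{1+a^2+2ax}}{a},\qquad T'(x)=\frac{1}{\sqrt{1+a^2+2ax}},\qquad f_{\pi }(T(x))=\frac{\sqrt{1+a^2+2ax}}{2}, \end{aligned}$$

so that \(T'(x)f_{\pi }(T(x))=\frac{1}{2}=f_{\rho }(x)\) for all \(x\in [-1,1]\). Note that \(1+a^2+2ax\ge (1-|a|)^2>0\), so T is well-defined, and that \(T(-1)=-1\) and \(T(1)=1\).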

Proofs of Sect. 4

1.1 Proposition 4.2

The proposition is a consequence of the finite-dimensional result shown in [41]. For better readability, we recall the statement here together with its requirements; see [41, Assumption 3.5, Theorem 3.6]:

Assumption B.1

Let \(0<{{\hat{M}}}\le {{\hat{L}}}\), \({{{\hat{C}}}_1}>0\), \(k\in {{\mathbb {N}}}\) and \({\varvec{\delta }}\in (0,\infty )^k\) be given. For \(*\in \{{\rho },{\pi }\}\):

(a) \({{\hat{f}}}_*:U_{k}\rightarrow {{\mathbb {R}}}_+\) is a probability density and \({{\hat{f}}}_{*}\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}(U_{1}),{\mathbb {C}})\),

(b) \({{\hat{M}}}\le |{{\hat{f}}}_{*}({{\varvec{x}}})|\le {{\hat{L}}}\) for \({{\varvec{x}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}(U_{1})\),

(c) \(\sup _{{{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}}|{{\hat{f}}}_{*}({{\varvec{x}}}+{{\varvec{y}}})-{{\hat{f}}}_{*}({{\varvec{x}}})| \le {{{\hat{C}}}_1}\) for \({{\varvec{x}}}\in U_{k}\),

(d) \(\sup _{{{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}_{[j]}}\times \{0\}^{k-j}}|{{\hat{f}}}_{*}({{\varvec{x}}}+{{\varvec{y}}})-{{\hat{f}}}_{*}({{\varvec{x}}})|\le {{{\hat{C}}}_1} \delta _{j+1} \) for \({{\varvec{x}}}\in U_{k}\) and \(j\in \{1,\dots ,k-1\}\).

Theorem B.2

Let \(0<{{\hat{M}}}\le {{\hat{L}}}<\infty \), \(k\in {{\mathbb {N}}}\) and \({\varvec{\delta }}\in (0,\infty )^k\). There exist \({{{\hat{C}}}_1}\), \({{{\hat{C}}}_2}\) and \({{{\hat{C}}}_3}>0\) depending on \({{\hat{M}}}\) and \({{\hat{L}}}\) (but not on k or \({\varvec{\delta }}\)) such that if Assumption B.1 holds with \({{{\hat{C}}}_1}\), then:

Let \(H:U_{k}\rightarrow U_{k}\) be the KR-transport as in (3.3) such that H pushes forward the measure with density \({{\hat{f}}}_{\rho }\) to the one with density \({{\hat{f}}}_{\pi }\). Set \(R_j{:=}\partial _{j}H_j\) for \(j\in \{1,\dots ,k\}\). With \({\varvec{\zeta }}=(\zeta _j)_{j=1}^k\) where \(\zeta _{j}{:=}{{{\hat{C}}}_2} \delta _{j}\), the following holds for all \(j\in \{1,\dots ,k\}\):

(i) \(R_j\in C^1({{\mathcal {B}}}_{{\varvec{\zeta }}_{[j]}}(U_{1}),{{\mathcal {B}}}_{{{{\hat{C}}}_3}}(1))\) and \(\Re (R_j({{\varvec{x}}}))\ge \frac{1}{{{{\hat{C}}}_3}}\) for all \({{\varvec{x}}}\in {{\mathcal {B}}}_{{\varvec{\zeta }}_{[j]}}(U_{1})\),

(ii) if \(j\ge 2\), \(R_j:{{\mathcal {B}}}_{{\varvec{\zeta }}_{[j-1]}}(U_{1})\times U_{1}\rightarrow {{\mathcal {B}}}_{\frac{{{{\hat{C}}}_3}}{\max \{1,\delta _j\}}}(1)\).

Proof of Proposition 4.2

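For \(*\in \{{\rho },{\pi }\}\) and \({{\varvec{z}}}\in {{\mathcal {B}}}_{\varvec{\delta }}(U_{1})\subseteq {\mathbb {C}}^k\) let

$$\begin{aligned} {{\hat{f}}}_{*,k}({{\varvec{z}}}){:=}\int _U f_*({{\varvec{z}}},{{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}}) \end{aligned}$$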

be the extension of (3.2) to complex numbers. By Lemma 3.2, \({{\hat{f}}}_{*,k}\in C^0(U_{k})\). Moreover with \({\rho }_k\) and \({\pi }_k\) being the marginal measures on \(U_{k}\) in the first k variables, by definition \({{\hat{f}}}_{{\rho },k}=\frac{\mathrm {d}{\rho }_k}{\;\mathrm {d}\mu }\) and \({{\hat{f}}}_{{\pi },k}=\frac{\mathrm {d}{\pi }_k}{\;\mathrm {d}\mu }\). In other words, these functions are the respective marginal densities in the first k variables.

Let \(H:U_{k}\rightarrow U_{k}\) be the KR-transport satisfying \(H_\sharp {\rho }_k={\pi }_k\), and let \(T:U\rightarrow U\) be the KR-transport satisfying \(T_\sharp {\rho }={\pi }\). By construction (cp. (3.3)) and uniqueness of the KR-transport, it holds \(T_{[k]}=(T_j)_{j=1}^k=(H_j)_{j=1}^k=H\). In order to complete the proof, we will apply Theorem B.2 to H. To this end we need to check Assumption B.1 for the densities \({{\hat{f}}}_{{\rho },k}\), \({{\hat{f}}}_{{\pi },k}:U_{k}\rightarrow {{\mathbb {R}}}\). We will do so with the constants

where \({{{\hat{C}}}_1}({{\hat{M}}},{{\hat{L}}})\) is as in Theorem B.2. Assume for the moment that \({{\hat{f}}}_{{\rho },k}\), \({{\hat{f}}}_{{\pi },k}:U_{k}\rightarrow {{\mathbb {R}}}\) satisfy Assumption B.1 with \({{\hat{M}}}\) and \({{\hat{L}}}\). Then Theorem B.2 immediately implies the statement of Proposition 4.2 with \(C_2(M,L) {:=}{{{\hat{C}}}_2}({{\hat{M}}},{{\hat{L}}})\) and \(C_3(M,L){:=}{{{\hat{C}}}_3}({{\hat{M}}},{{\hat{L}}})\), where \({{{\hat{C}}}_2}\) and \({{{\hat{C}}}_3}\) are as in Theorem B.2.

It remains to verify Assumption B.1. We do so item by item and fix \(*\in \{{\rho },{\pi }\}\):

(a) By Lemma 3.2, \({{\hat{f}}}_{*,k}\in C^0(U_{k})\) and \(\int _{U_{k}}{{\hat{f}}}_{*,k}({{\varvec{x}}})\;\mathrm {d}\mu ({{\varvec{x}}})=\int _U f_*({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})=1\), so that \({{\hat{f}}}_{*,k}\) is a positive probability density on \(U_{k}\). Fix \({{\varvec{z}}}\in {{\mathcal {B}}}_{\varvec{\delta }}(U_{1})\subseteq {\mathbb {C}}^k\) and \(i\in \{1,\dots ,k\}\). We want to show that \(z_i\mapsto {{\hat{f}}}_{*,k}({{\varvec{z}}})\in {\mathbb {C}}\) is complex differentiable for \(z_i\in {{\mathcal {B}}}_{\delta _i}(U_{1})\). We have:

- By Assumption 4.1 (a), \({{\varvec{y}}}\mapsto f_*({{\varvec{z}}},{{\varvec{y}}}):U\rightarrow {\mathbb {C}}\) is continuous and therefore measurable for all \(z_i\in {{\mathcal {B}}}_{\delta _i}(U_{1})\).

- By Assumption 4.1 (b), for every fixed \({{\varvec{y}}}\in U\), \(z_i\mapsto f_*({{\varvec{z}}},{{\varvec{y}}}):{{\mathcal {B}}}_{\delta _i}(U_{1})\rightarrow {\mathbb {C}}\) is differentiable.

- By Assumption 4.1 (a), \(f_*:{{\mathcal {B}}}_{\varvec{\delta }}(U_{1})\times U\rightarrow {\mathbb {C}}\) is continuous. Thus, for every \(z_i\in {{\mathcal {B}}}_{\delta _i}(U_{1})\) and every \(r>0\) such that \({{\bar{{{\mathcal {B}}}}}}_r(z_i)\subseteq {{\mathcal {B}}}_{\delta _i}(U_{1})\), compactness of \({{\bar{{{\mathcal {B}}}}}}_r(z_i)\times U\) (w.r.t. the product topology) implies \(\sup _{x\in {{\mathcal {B}}}_{r}(z_i)}\sup _{{{\varvec{y}}}\in U}|f_*({{\varvec{z}}}_{[i-1]},x,{{\varvec{z}}}_{[i+1:k]},{{\varvec{y}}})|<\infty \). Hence

$$\begin{aligned} z_i\in {{\mathcal {B}}}_{\delta _i}(U_{1})\Rightarrow \exists r\!>\!0: \sup _{x\in {{\mathcal {B}}}_{r}(z_i)}\int _{{{\varvec{y}}}\!\in \! U}|f_*({{\varvec{z}}}_{[i-1]},x,{{\varvec{z}}}_{[i+1:k]},{{\varvec{y}}})|\;\mathrm {d}\mu ({{\varvec{y}}})\!<\!\infty . \end{aligned}$$

According to the main theorem in [24], this implies that \(z_i\mapsto {{\hat{f}}}_{*,k}({{\varvec{z}}})=\int _U f_*({{\varvec{z}}},{{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})\) is differentiable on \({{\mathcal {B}}}_{\delta _i}(U_{1})\). Since \(i\in \{1,\dots ,k\}\) was arbitrary, Hartogs' theorem, e.g., [20, Theorem 1.2.5], yields that \({{\varvec{z}}}\mapsto {{\hat{f}}}_{*,k}({{\varvec{z}}}):{{\mathcal {B}}}_{\varvec{\delta }}(U_{1})\rightarrow {\mathbb {C}}\) is differentiable.

(b) By Assumption 4.1 (c), and because \(f_*({{\varvec{y}}})\in {{\mathbb {R}}}_+\) for \({{\varvec{y}}}\in U\), we have \(M\le f_*({{\varvec{y}}})\le L\) for all \({{\varvec{y}}}\in U\). Thus \({{\hat{f}}}_{*,k}({{\varvec{x}}})=\int _U f_*({{\varvec{x}}},{{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})\ge M\) and also \({{\hat{f}}}_{*,k}({{\varvec{x}}})\le L\) for all \({{\varvec{x}}}\in U_{k}\). Furthermore, for \({{\varvec{z}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}\subseteq {\mathbb {C}}^k\) and \({{\varvec{x}}}\in U_{k}\), by Assumption 4.1 (d) and (B.1)

$$\begin{aligned} |{{\hat{f}}}_{*,k}({{\varvec{x}}}+{{\varvec{z}}})-{{\hat{f}}}_{*,k}({{\varvec{x}}})|\le \int _U|f_*({{\varvec{x}}}+{{\varvec{z}}},{{\varvec{y}}})-f_*({{\varvec{x}}},{{\varvec{y}}})|\;\mathrm {d}\mu ({{\varvec{y}}}) \le C_1 \le \frac{M}{2}. \end{aligned}$$

Thus, with \({{\hat{M}}}=\frac{M}{2}>0\) and \({{\hat{L}}}=L+\frac{{{\hat{M}}}}{2}\) we have \({{\hat{M}}}\le |{{\hat{f}}}_{*,k}({{\varvec{z}}})|\le {{\hat{L}}}\) for all \({{\varvec{z}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}(U_{1})\).

(c) For \({{\varvec{x}}}\in U_{k}\) by Assumption 4.1 (d) and (B.1)

$$\begin{aligned}&\sup _{{{\varvec{z}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}}|{{\hat{f}}}_{*,k}({{\varvec{x}}}+{{\varvec{z}}})-{{\hat{f}}}_{*,k}({{\varvec{x}}})|\\&\quad \le \sup _{{{\varvec{z}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}} \int _U |f_{*}({{\varvec{x}}}+{{\varvec{z}}},{{\varvec{y}}})-f_{*}({{\varvec{x}}},{{\varvec{y}}})|\;\mathrm {d}\mu ({{\varvec{y}}}) \le C_1(M,L)\\&\quad \le {{{\hat{C}}}_1}({{\hat{M}}},{{\hat{L}}}). \end{aligned}$$

(d) For \({{\varvec{x}}}\in U_{k}\) and \(j\in \{1,\dots ,k-1\}\) by Assumption 4.1 (e) and (B.1)

$$\begin{aligned}&\sup _{{{\varvec{z}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}_{[j]}}\times \{0\}^{k-j}}|{{\hat{f}}}_{*,k}({{\varvec{x}}}+{{\varvec{z}}})-{{\hat{f}}}_{*,k}({{\varvec{x}}})|\\&\quad \le \sup _{{{\varvec{z}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}_{[j]}}\times \{0\}^{{{\mathbb {N}}}}} \int _U |f_{*}({{\varvec{x}}}+{{\varvec{z}}},{{\varvec{y}}})-f_{*}({{\varvec{x}}},{{\varvec{y}}})|\;\mathrm {d}\mu ({{\varvec{y}}})\\&\quad \le C_1(M,L) \delta _{j+1} \le {{{\hat{C}}}_1}({{\hat{M}}},{{\hat{L}}})\delta _{j+1}. \end{aligned}$$

\(\square \)

1.2 Verifying Assumption 4.1

In this section we show that densities as in Assumption 2.1 satisfy Assumption 4.1.

Lemma B.3

Let \(f({{\varvec{y}}})={\mathfrak {f}}(\sum _{j\in {{\mathbb {N}}}}y_j\psi _j)\) satisfy Assumption 2.1 for some \(p\in (0,1)\) and \(0<M\le L<\infty \). Let \((b_j)_{j\in {{\mathbb {N}}}}\subset (0,\infty )\) be summable and such that \(b_j\ge \Vert \psi _j \Vert _{Z}\) for all \(j\in {{\mathbb {N}}}\). Let \(C_1=C_1(M,L)>0\) be as in Proposition 4.2.

There exists a monotonically increasing sequence \((\kappa _j)_{j\in {{\mathbb {N}}}}\in (0,\infty )^{{\mathbb {N}}}\) and \(\tau >0\) (depending on \((b_j)_{j\in {{\mathbb {N}}}}\), \(C_1\) and \({\mathfrak {f}}\)) such that for every fixed \(J\in {{\mathbb {N}}}\), \(k\in {{\mathbb {N}}}\) and \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\), with

f satisfies Assumption 4.1.

Lemma B.4

Let \({{\varvec{b}}}=(b_j)_{j\in {{\mathbb {N}}}}\in \ell ^1({{\mathbb {N}}})\) with \(b_j\ge 0\) for all j, and let \(\gamma >0\). There exists \((\kappa _j)_{j\in {{\mathbb {N}}}}\subset (0,\infty )\) monotonically increasing and such that \(\kappa _j\rightarrow \infty \) and \(\sum _{j\in {{\mathbb {N}}}}b_j\kappa _j<\gamma \).

Proof

If there exists \(d\in {{\mathbb {N}}}\) such that \(b_j=0\) for all \(j>d\), then the statement is trivial. Otherwise, for \(n\in {{\mathbb {N}}}\) set \(j_n{:=}\min \{j\in {{\mathbb {N}}}\,:\,\sum _{i\ge j}b_i\le 2^{-n}\}\). Since \({{\varvec{b}}}\in \ell ^1({{\mathbb {N}}})\), \((j_n)_{n\in {{\mathbb {N}}}}\) is well-defined, monotonically increasing, and tends to infinity (it may have repeated entries). For \(j\in {{\mathbb {N}}}\) let

which is well-defined since \(j_n\rightarrow \infty \) so that

and those sets are disjoint, in particular if \(j_n=j_{n+1}\) then \([j_n,j_{n+1})\cap {{\mathbb {N}}}=\emptyset \). Then

Set \(\kappa _j{:=}\frac{\gamma {{\tilde{\kappa }}}_j}{\sum _{i\in {{\mathbb {N}}}}b_i{{\tilde{\kappa }}}_i}\). \(\square \)
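The construction can be tried numerically on a truncated sequence. The sketch below is only an illustration under stated assumptions: the unnormalised weights are taken as \(2^{n/2}\) on the block \([j_n,j_{n+1})\) and as 1 before \(j_1\), which is one hypothetical choice compatible with the outline of the proof and not necessarily the definition used in the paper. The function name `kappa_sequence` is likewise made up, and all entries of `b` are assumed to be strictly positive.

```python
import math


def kappa_sequence(b, gamma):
    """Weights kappa_j for a finite, strictly positive list b (a truncation
    of a summable sequence), normalised as in the last line of the proof.

    ASSUMPTION (not from the paper): the unnormalised weight of index j is
    2**(n/2), where n is the largest integer n >= 0 such that the tail sum
    sum_{i >= j} b_i is at most 2**(-n).
    """
    # tails[j] = sum of b[j], b[j+1], ..., accumulated from the back.
    tails = []
    running = 0.0
    for bj in reversed(b):
        running += bj
        tails.append(running)
    tails.reverse()

    # The tails decrease in j, so the block index n(j) is non-decreasing.
    kappa_tilde = [
        2.0 ** (max(0, math.floor(-math.log2(t))) / 2.0) for t in tails
    ]

    # Normalise so that the weighted sum over the truncation equals gamma.
    total = sum(bj * kt for bj, kt in zip(b, kappa_tilde))
    return [gamma * kt / total for kt in kappa_tilde]


# Usage: b_j = j^(-2), truncated after 2000 terms.
b = [1.0 / j ** 2 for j in range(1, 2001)]
kappa = kappa_sequence(b, gamma=0.5)
```

On the block \([j_n,j_{n+1})\) the tail sum is at most \(2^{-n}\), so with this choice the block contributes at most \(2^{-n/2}\) to the weighted sum, which keeps the sum finite while the weights grow without bound.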

Proof of Lemma B.3

In Steps 1–2 we will construct \((\kappa _j)_{j\in {{\mathbb {N}}}}\subset (0,\infty )\) and \(\tau >0\) independent of \(J\in {{\mathbb {N}}}\), \(k\in {{\mathbb {N}}}\) and \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\). In Steps 3–4, we verify that \((\kappa _j)_{j\in {{\mathbb {N}}}}\) and \(\tau \) have the desired properties.

Moreover, we will use that Z is a Banach space, \(Z_{\mathbb {C}}\) its complexification as introduced in (and before) Assumption 2.1, and \(\psi _j\in Z\subseteq Z_{\mathbb {C}}\) for all j.

Step 1. Set \(K{:=}\{\sum _{j\in {{\mathbb {N}}}}y_j\psi _j\,:\,{{\varvec{y}}}\in U\}\subseteq Z\). According to [40, Remark 2.1.3], \({{\varvec{y}}}\mapsto \sum _{j\in {{\mathbb {N}}}}y_j\psi _j:U\rightarrow Z\) is continuous and \(K\subseteq Z\) is compact (as the image of a compact set under a continuous map). Compactness of K and continuity of \({\mathfrak {f}}\) imply \(\sup _{\psi \in K}|{\mathfrak {f}}(\psi )|<\infty \) and