Abstract

For two probability measures \({\rho }\) and \({\pi }\) with analytic densities on the d-dimensional cube \([-1,1]^d\), we investigate the approximation of the unique triangular monotone Knothe–Rosenblatt transport \(T:[-1,1]^d\rightarrow [-1,1]^d\), such that the pushforward \(T_\sharp {\rho }\) equals \({\pi }\). It is shown that for \(d\in {{\mathbb {N}}}\) there exist approximations \({\tilde{T}}\) of T, based on either sparse polynomial expansions or deep ReLU neural networks, such that the distance between \({\tilde{T}}_\sharp {\rho }\) and \({\pi }\) decreases exponentially. More precisely, we prove error bounds of the type \(\exp (-\beta N^{1/d})\) (or \(\exp (-\beta N^{1/(d+1)})\) for neural networks), where N refers to the dimension of the ansatz space (or the size of the network) containing \({\tilde{T}}\); the notion of distance comprises the Hellinger distance, the total variation distance, the Wasserstein distance and the Kullback–Leibler divergence. Our construction guarantees \({\tilde{T}}\) to be a monotone triangular bijective transport on the hypercube \([-1,1]^d\). Analogous results hold for the inverse transport \(S=T^{-1}\). The proofs are constructive, and we give an explicit a priori description of the ansatz space, which can be used for numerical implementations.

1 Introduction

A long-standing challenge in applied mathematics and statistics is to approximate integrals w.r.t. a probability measure \({\pi }\), given only through its (possibly unnormalized) Lebesgue density \(f_{{\pi }}\), on a high-dimensional integration domain. Here we consider probability measures on the bounded domain \([-1,1]^d\). One of the main applications is in Bayesian inference, where parameters \({{\varvec{y}}}\in [-1,1]^d\) are inferred from noisy and/or indirect data. In this case, \({\pi }\) is interpreted as the so-called posterior measure. It is obtained by Bayes’ rule and encompasses all information about the parameters given the data. A typical goal is to compute the expectation \(\int _{[-1,1]^d}g({{\varvec{y}}})\;\mathrm {d}{\pi }( {{\varvec{y}}})\) of some quantity of interest \(g:[-1,1]^d\rightarrow {{\mathbb {R}}}\) w.r.t. the posterior.

Various approaches to high-dimensional integration have been proposed in the literature. One of the most common strategies consists of Monte Carlo-type sampling, e.g., with Markov chain Monte Carlo (MCMC) methods [55]. Metropolis–Hastings MCMC algorithms, for instance, are versatile and simple to implement. Yet the mixing times of standard Metropolis algorithms can scale somewhat poorly with the dimension d. (Here function-space MCMC algorithms [15, 16, 57] and others [47, 70] represent notable exceptions, with dimension-independent mixing for certain classes of target measures.) In general, MCMC algorithms may suffer from slow convergence and possibly long burn-in phases. Furthermore, MCMC is intrinsically serial, which can make sampling infeasible when each evaluation of \(f_{\pi }\) is costly. Variational inference, e.g., [5], can improve on some of these drawbacks. It replaces the task of sampling from \({\pi }\) by an optimization problem. The idea is to minimize (for instance) the KL divergence between \({\pi }\) and a second measure \({\tilde{{\pi }}}\) in some given class of tractable measures, where ‘tractable’ means that independent and identically distributed (iid) samples from \({\tilde{{\pi }}}\) can easily be produced.

Transport-based methods are one instance of this category: given an easy-to-sample-from “reference” measure \({\rho }\), we look for an approximation \({\tilde{T}}\) to the transport T such that \(T_\sharp {\rho }={\pi }\). Here \(T_\sharp {\rho }\) denotes the pushforward measure, i.e., \(T_\sharp {\rho }(B)={\rho }(T^{-1}(B))\) for all measurable sets B. Then \({\tilde{{\pi }}}{:}{=}{\tilde{T}}_\sharp {\rho }\) is an approximation to the “target” \({\pi }=T_\sharp {\rho }\). Unlike in optimal transportation theory, e.g., [59, 71], T is not required to minimize some cost. This allows imposing further structure on T in order to simplify numerical computations. In this paper, we concentrate on the triangular Knothe–Rosenblatt (KR) rearrangement [56], which has been found to be particularly useful in this context. The reason for concentrating on the KR transport (rather than, for instance, optimal transport) is that it is widely used in practical algorithms [25, 34, 66, 72], with the advantages of allowing easy inversion, efficient computation of the Jacobian determinant, and direct extraction of certain conditionals [65]; and from a mathematical standpoint, its explicit construction makes it amenable to a rigorous analysis. Given an approximation \({\tilde{T}}\) to (some) transport T, for a random variable \(X\sim {\rho }\) it holds that \({\tilde{T}}(X)\sim {\tilde{{\pi }}}\). Thus, iid samples from \({\tilde{{\pi }}}\) are obtained via \(({\tilde{T}}(X_i))_{i=1}^n\), where \((X_i)_{i=1}^n\) are \({\rho }\)-distributed iid. This strategy, and various refinements and variants, has been investigated theoretically and empirically, and successfully applied in Bayesian inference; see, e.g., [2, 22, 25, 45, 52, 66].

Normalizing flows, now widely used in the machine learning literature [36, 50, 54] for variational inference, generative modeling, and density estimation, are another instance of this transport framework. In particular, many so-called autoregressive flows, e.g., [34, 51], employ specific neural network parametrizations of triangular maps. A complete mathematical convergence analysis of these methods is not yet available in the literature.

Sampling methods can be contrasted with deterministic approaches, where a quadrature rule is constructed that converges at a guaranteed (deterministic) rate for all integrands in some function class. Unlike sampling methods, deterministic quadratures can achieve higher-order convergence. They even overcome the curse of dimensionality, presuming certain smoothness properties of the integrand. We refer to sparse-grid quadratures [10, 13, 27, 30, 61, 77] and quasi-Monte Carlo quadrature [9, 20, 60] as examples. It is difficult to construct deterministic quadrature rules for an arbitrary measure \({\pi }\), however, so typically they are only available in specific cases such as for the Lebesgue or Gaussian measure. Interpreting \(\int _{[-1,1]^d}g(t)\;\mathrm {d}{\pi }( t)\) as the integral \(\int _{[-1,1]^d}g(t)f_{\pi }(t)\;\mathrm {d}t\) w.r.t. the Lebesgue measure (here \(f_{\pi }\) is again the Lebesgue density of \({\pi }\)), such methods are still applicable. In Bayesian inference, however, \({\pi }\) can be strongly concentrated, corresponding to either small noise in the observations or a large data set. Then this viewpoint may become problematic. For example, the error of Monte Carlo quadrature depends on the variance of the integrand. The variance of \(gf_{\pi }\) (w.r.t. the Lebesgue measure) can be much larger than the variance of g (w.r.t. \({\pi }\)) when \({\pi }\) is strongly concentrated, i.e., when \(f_{\pi }\) is very “peaky.” This problem was addressed in [62] by combining an adaptive sparse-grid quadrature with a linear transport map. This approach combines the advantage of high-order convergence with quadrature points that are mostly within the area where \({\pi }\) is concentrated. Yet if \({\pi }\) is multimodal (i.e., concentrated in several separated areas) or unimodal but strongly non-Gaussian, then the linearity of the transport precludes such a strategy from being successful. 
A similar statement can be made about the method analyzed in [63], where the (Gaussian) Laplace approximation is used in place of a strongly concentrated posterior measure. For such \({\pi }\), the combination of nonlinear transport maps with deterministic quadrature rules may lead to significantly improved algorithms.

In a related spirit, we also mention interacting particle systems such as kernel-based Stein variational gradient descent (SVGD) and its variants, which have recently emerged as a promising research direction [19, 41]. Put simply, these methods try to find n points \((x_i)_{i=1}^n\) minimizing an approximation of the KL divergence between the target \({\pi }\) and the measure represented by the sample \((x_i)_{i=1}^n\). A discrete convergence analysis is not yet available, but a connection between the mean field limit and gradient flow has been established [23, 40, 42].

In this paper, we analyze the approximation of the transport T satisfying \(T_\sharp {\rho }={\pi }\) under the assumption that the reference and target densities \(f_{\rho }\) and \(f_{\pi }\) are analytic. This assumption is quite strong, but satisfied in many applications, including the main application we have in mind, which is Bayesian inference in partial differential equations (PDEs). The reference \({\rho }\) can be chosen at will, e.g., as a uniform measure so that \(f_{\rho }\) is constant (thus analytic) on \([-1,1]^d\). And here the target density \(f_{\pi }\) is a posterior density, stemming from a PDE-driven likelihood function. For certain linear and nonlinear PDEs (for example, the Navier–Stokes equations), it can be shown under suitable conditions that the corresponding posterior density is indeed an analytic function of the parameters; we refer, for instance, to [13, 64].

As outlined above, T can be employed in the construction of either sampling-based or deterministic quadrature methods. Understanding the approximation properties of T is the first step toward a rigorous convergence analysis of such algorithms. In practice, once a suitable ansatz space has been identified, a (usually non-convex) optimization problem must be solved to find a suitable \({\tilde{T}}\) within the ansatz space. While this optimization is beyond the scope of the current paper, we intend to empirically analyze a numerical algorithm based on the present results in a forthcoming publication.

Throughout we consider transports on \([-1,1]^d\) with \(d\in {{\mathbb {N}}}\). It is straightforward to generalize all the presented results to arbitrary Cartesian products \(\times _{j=1}^d[a_j,b_j]\subset {{\mathbb {R}}}^d\) with \(-\infty<a_j<b_j<\infty \) for all j, via an affine transformation of all occurring functions. Most (theoretical and empirical) earlier works on this topic have, however, assumed measures supported on all of \({{\mathbb {R}}}^d\). A similar analysis in the unbounded case, as well as numerical experiments and the development and improvement of algorithms in this case, will be the topics of future research.

1.1 Contributions

For \(d\in {{\mathbb {N}}}\) and under the assumption that the reference and target densities \(f_{\rho }\), \(f_{\pi }:[-1,1]^d\rightarrow (0,\infty )\) are analytic, we prove that there exist sparse polynomial spaces of dimension \(N\in {{\mathbb {N}}}\), in which the best approximation of the KR transport T converges to T at the exponential rate \(\exp (-\beta N^{1/d})\) for some \(\beta >0\) as \(N\rightarrow \infty \); see Theorem 4.3. To guarantee that the approximation \(\tilde{T}:[-1,1]^d\rightarrow [-1,1]^d\) is bijective, we propose to use an ansatz of rational functions, which ensures this property and retains the same convergence rate. In this case, N refers to the dimension of the polynomial space used in the denominator and numerator, i.e., again to the number of degrees of freedom; see Theorem 4.5. The argument is based on a result quantifying the regularity of T in terms of its complex domain of analyticity, which is given in Theorem 3.6.

In Sect. 6, we investigate the implications of approximating the transport map for the corresponding pushforward measures. We show that closeness of the transports in \(W^{1,\infty }\) implies closeness of the pushforward measures in the Hellinger distance, the total variation distance and the KL divergence. A similar statement is true for the Wasserstein distance if the transports are close in \(L^\infty \). Specifically, Proposition 6.4 states that the distance between the pushforward \(\tilde{T}_\sharp {\rho }\) and the target \({\pi }\) converges at the same rate \(\exp (-\beta N^{1/d})\) as the approximation of the transport itself.

We provide lower bounds on \(\beta >0\), based on properties of \(f_{\rho }\) and \(f_{\pi }\). Furthermore, given \(\varepsilon >0\), we provide a priori ansatz spaces guaranteeing the best approximation in this ansatz space to be \(O(\varepsilon )\)-close to T; see Theorem 4.5 and Sect. 7. This allows us to improve upon existing numerical algorithms: previous approaches were based either on heuristics or on adaptive (greedy) enrichment of the ansatz space [25], neither of which can guarantee asymptotic convergence or convergence rates in general. Moreover, greedy methods are inherently sequential (in contrast to a priori approaches), which can slow down computations.

Using known approximation properties of polynomials by rectified linear unit (ReLU) neural networks (NNs), we also show that ReLU NNs can approximate T at the exponential rate \(\exp (-\beta N^{1/(1+d)})\). In this case, N refers to the number of trainable parameters (“weights and biases”) in the network; see Theorem 5.1 for the convergence of the transport map and Proposition 6.5 for the convergence of the pushforward measure. We point out that normalizing flows in machine learning also try to approximate T with a neural network; see, for example, [26, 29, 33, 54]. Recent theoretical works on the expressivity of neural network representations of transport maps include [43, 68, 69], which provide universal approximation results; moreover, Reference [37] provides estimates on the required network depth. In the present work we do not merely show universality, i.e., approximability of T by neural networks, but we even prove an exponential convergence rate. Similar results have not yet been established to the best of our knowledge.

1.2 Main Ideas

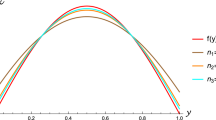

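Consider the case \(d=1\). Let \({\pi }\) and \({\rho }\) be two probability measures on \([-1,1]\) with strictly positive Lebesgue densities \(f_{\rho }\), \(f_{\pi }:[-1,1]\rightarrow \{x\in {{\mathbb {R}}}\,:\,x>0\}\). Let

$$\begin{aligned} F_{\rho }(x){:}{=}{\rho }([-1,x])=\int _{-1}^xf_{\rho }(t)\;\mathrm {d}t,\qquad F_{{\pi }}(x){:}{=}{\pi }([-1,x])=\int _{-1}^xf_{\pi }(t)\;\mathrm {d}t \end{aligned}$$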

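be the corresponding cumulative distribution functions (CDFs), which are strictly monotonically increasing and bijective from \([-1,1]\) to \([0,1]\). For any interval \([a,b]\subseteq [-1,1]\), it holds that

$$\begin{aligned} {\rho }\big (F_{\rho }^{-1}\circ F_{{\pi }}([a,b])\big )={\rho }\big ([F_{\rho }^{-1}(F_{{\pi }}(a)),F_{\rho }^{-1}(F_{{\pi }}(b))]\big )=F_{{\pi }}(b)-F_{{\pi }}(a)={\pi }([a,b]). \end{aligned}$$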

Hence, \(T{:}{=}F_{{\pi }}^{-1}\circ F_{\rho }\) is the unique monotone transport satisfying \({\rho }\circ T^{-1}={\pi }\), i.e., \(T_\sharp {\rho }={\pi }\). The formula \(T=F_{{\pi }}^{-1}\circ F_{\rho }\) implies that T inherits the smoothness of \(F_{{\pi }}^{-1}\) and \(F_{\rho }\). Thus, it is at least as smooth as \(f_{\rho }\) and \(f_{\pi }\) (more precisely, \(f_{\rho }\), \(f_{\pi }\in C^k\) imply \(T\in C^{k+1}\)). We will see in Proposition 3.4 that if \(f_{\rho }\) and \(f_{\pi }\) are analytic, the domain of analyticity of T is (under further conditions and in a suitable sense) proportional to the minimum of the domain of analyticity of \(f_{\rho }\) and \(f_{\pi }\). By the “domain of analyticity,” we mean the domain of holomorphic extension to the complex numbers.
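The one-dimensional formula \(T=F_{{\pi }}^{-1}\circ F_{\rho }\) can be tried out numerically. The following is a minimal sketch, not the construction analyzed in this paper: it tabulates both CDFs with a trapezoidal rule and inverts \(F_{{\pi }}\) by linear interpolation. The helper names `cdf_on_grid` and `monotone_transport` and the two example densities are illustrative assumptions.

```python
import numpy as np

def cdf_on_grid(density, grid):
    """Cumulative trapezoidal rule, rescaled so that the CDF runs from 0 to 1."""
    vals = density(grid)
    increments = 0.5 * (vals[1:] + vals[:-1]) * np.diff(grid)
    cdf = np.concatenate(([0.0], np.cumsum(increments)))
    return cdf / cdf[-1]

def monotone_transport(f_rho, f_pi, n_grid=4001):
    """Return a callable approximating T = F_pi^{-1} o F_rho on [-1, 1]."""
    grid = np.linspace(-1.0, 1.0, n_grid)
    F_rho = cdf_on_grid(f_rho, grid)
    F_pi = cdf_on_grid(f_pi, grid)

    def T(x):
        u = np.interp(x, grid, F_rho)    # u = F_rho(x)
        return np.interp(u, F_pi, grid)  # F_pi^{-1}(u), using the inverted table
    return T

# Hypothetical example densities (strictly positive, normalization not required).
f_rho = lambda x: np.ones_like(x)               # uniform reference
f_pi = lambda x: np.exp(-4.0 * (x - 0.3) ** 2)  # concentrated target

T = monotone_transport(f_rho, f_pi)
rng = np.random.default_rng(0)
samples = T(rng.uniform(-1.0, 1.0, size=200_000))  # approximately pi-distributed
```

Strict positivity of both densities is what makes the tabulated CDFs strictly increasing, so that the interpolation-based inverse is well defined.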

Knowledge of the analyticity domain of T allows us to prove exponential convergence of polynomial approximations: Assume for the moment that \(T:[-1,1]\rightarrow [-1,1]\) admits an analytic extension to the complex disk with radius \(r>1\) and center \(0\in {{\mathbb {C}}}\). Then \(T(x)=\sum _{k\in {{\mathbb {N}}}_0}\frac{d^k}{dy^k} T(y)|_{y=0} \frac{x^k}{k!}\) for \(x\in [-1,1]\), and the kth Taylor coefficient can be bounded with Cauchy’s integral formula by \(C r^{-k}\). This implies that the nth Taylor polynomial uniformly approximates T on \([-1,1]\) with error \(O(r^{-n})=O(\exp (-\log (r)n))\). Thus, r determines the rate of exponential convergence.
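The link between the radius of analyticity and the decay of the Taylor truncation error can be observed on a toy function. The sketch below uses \(g(x)=1/(r-x)\), which is analytic in the disk of radius r around 0 and has Taylor coefficients \(r^{-(k+1)}\); this function is a hypothetical stand-in chosen for its closed form and is not a transport map.

```python
import numpy as np

def taylor_sup_error(r, n, n_points=2001):
    """Sup-norm error on [-1, 1] of the degree-n Taylor polynomial of 1/(r - x)."""
    x = np.linspace(-1.0, 1.0, n_points)
    exact = 1.0 / (r - x)
    k = np.arange(n + 1)
    coeffs = r ** (-(k + 1.0))  # Taylor coefficients of 1/(r - x) at 0
    approx = np.polynomial.polynomial.polyval(x, coeffs)
    return np.max(np.abs(exact - approx))

r = 2.0
errors = [taylor_sup_error(r, n) for n in range(2, 12)]
# Successive errors shrink by the factor 1/r, i.e. the error behaves like r^{-n}.
ratios = [errors[i + 1] / errors[i] for i in range(len(errors) - 1)]
```

Increasing r in this experiment makes the ratios smaller, which mirrors the statement that a larger analyticity domain yields a faster exponential rate.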

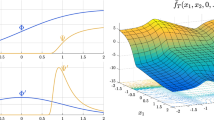

The above construction of the transport can be generalized to the KR transport \(T:[-1,1]^d\rightarrow [-1,1]^d\) for \(d\in {{\mathbb {N}}}\). We will determine an analyticity domain for each component \(T_k\) of \(T=(T_k)_{k=1}^d\): not in the shape of a polydisc, but rather as a pill-shaped set containing \([-1,1]^k\). The reason is that analyticity of \(f_{\rho }\) and \(f_{\pi }\) does not imply the existence of a polydisc, but does imply the existence of such pill-shaped domains. Instead of Taylor expansions, one can then prove exponential convergence of Legendre expansions. Rather than approximating T with Legendre polynomials, we introduce a correction guaranteeing our approximation \(\tilde{T}:[-1,1]^d\rightarrow [-1,1]^d\) to be bijective. This results in a rational function \({\tilde{T}}\). Using existing theory for ReLU networks, we also deduce a ReLU approximation result.

1.3 Outline

In Sect. 1.4, we introduce notation. Section 2 recalls the construction of the triangular KR transport T. In Sect. 3, we investigate the domain of analyticity of T. Section 4 applies the results of Sect. 3 to prove exponential convergence rates for the approximation of the transport through sparse polynomial expansions. Subsequently, Sect. 5 discusses a deep neural network approximation result for the transport. We then use these results in Sect. 6 to establish convergence rates for the associated measures (rather than the transport maps themselves). Finally, in Sect. 7 we present a standard example in uncertainty quantification and demonstrate how our results may be used in inference algorithms.

For the convenience of the reader, in Sect. 3.1 we discuss analyticity of the transport map in the one-dimensional case separately from the general case \(d\in {{\mathbb {N}}}\) (which builds on similar ideas but is significantly more technical) and provide most parts of the proof in the main text. In the remaining sections, all proofs and technical arguments are deferred to the appendix.

1.4 Notation

1.4.1 Sequences, Multi-indices, and Polynomials

Boldface characters denote vectors, e.g., \({{\varvec{x}}}=(x_i)_{i=1}^d\), \(d\in {{\mathbb {N}}}\). For \(j\le k\le d\), we denote slices by \({{\varvec{x}}}_{[k]}{:}{=}(x_i)_{i=1}^k\) and \({{\varvec{x}}}_{[j:k]}{:}{=}(x_i)_{i=j}^k\).

For a multi-index \({\varvec{\nu }}\in {{\mathbb {N}}}_0^d\), \({{\,\mathrm{supp}\,}}{\varvec{\nu }}{:}{=}\{j\,:\,\nu _j\ne 0\}\), and \(|{\varvec{\nu }}|{:}{=}\sum _{j\in {{\,\mathrm{supp}\,}}{\varvec{\nu }}}\nu _j\), where empty sums equal 0 by convention. Additionally, empty products equal 1 by convention and \({{\varvec{x}}}^{\varvec{\nu }}{:}{=}\prod _{j\in {{\,\mathrm{supp}\,}}{\varvec{\nu }}} x_j^{\nu _j}\). We write \({\varvec{\eta }}\le {\varvec{\nu }}\) if \(\eta _j\le \nu _j\) for all j, and \({\varvec{\eta }}<{\varvec{\nu }}\) if \({\varvec{\eta }}\le {\varvec{\nu }}\) and there exists j such that \(\eta _j<\nu _j\). A subset \(\Lambda \subseteq {{\mathbb {N}}}_0^d\) is called downward closed if it is finite and satisfies \(\{{\varvec{\eta }}\in {{\mathbb {N}}}_0^d\,:\,{\varvec{\eta }}\le {\varvec{\nu }}\}\subseteq \Lambda \) whenever \({\varvec{\nu }}\in \Lambda \).
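As an illustration of the definition of downward closed sets, the following sketch represents multi-indices as tuples and checks the property. The function names and the total-degree example are illustrative assumptions; checking only the immediate predecessors \({\varvec{\nu }}-{\varvec{e}}_j\) suffices for a finite set, by induction on \(|{\varvec{\nu }}|\).

```python
from itertools import product

def is_downward_closed(index_set):
    """Check that nu in the set implies eta in the set for every eta <= nu."""
    indices = set(map(tuple, index_set))
    for nu in indices:
        for j, nu_j in enumerate(nu):
            if nu_j > 0:
                # Immediate predecessor of nu in coordinate j.
                eta = nu[:j] + (nu_j - 1,) + nu[j + 1:]
                if eta not in indices:
                    return False
    return True

def total_degree_set(d, n):
    """All multi-indices nu in N_0^d with |nu| <= n (a downward closed set)."""
    return [nu for nu in product(range(n + 1), repeat=d) if sum(nu) <= n]

example = total_degree_set(2, 2)  # six multi-indices in dimension two
```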

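For \(n\in {{\mathbb {N}}}\), \({{\mathbb {P}}}_n{:}{=}\mathrm{span}\{x^i\,:\,i\in \{0,\dots ,n\}\}\), where the span is understood over the field \({{\mathbb {R}}}\) (rather than \({{\mathbb {C}}}\)). Moreover, for \(\Lambda \subseteq {{\mathbb {N}}}_0^d\)

$$\begin{aligned} {{\mathbb {P}}}_\Lambda {:}{=}\mathrm{span}\{{{\varvec{x}}}^{\varvec{\nu }}\,:\,{\varvec{\nu }}\in \Lambda \}, \end{aligned}$$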

and a function \(p\in {{\mathbb {P}}}_\Lambda \) maps from \({{\mathbb {C}}}^d\rightarrow {{\mathbb {C}}}\). If \(\Lambda =\emptyset \), \({{\mathbb {P}}}_\emptyset {:}{=}\{0\}\), i.e., \({{\mathbb {P}}}_\emptyset \) only contains the constant 0 function.

1.4.2 Real and Complex Numbers

Throughout, \({{\mathbb {R}}}^d\) is equipped with the Euclidean norm and \({{\mathbb {R}}}^{d\times d}\) with the spectral norm. We write \({{\mathbb {R}}}_+{:}{=}\{x\in {{\mathbb {R}}}\,:\,x>0\}\) and denote the real and imaginary part of \(z\in {{\mathbb {C}}}\) by \(\Re (z)\), \(\Im (z)\), respectively. For any \(\delta \in {{\mathbb {R}}}_+\) and \(S\subseteq {{\mathbb {C}}}\)

$$\begin{aligned} {{\mathcal {B}}}_\delta (S){:}{=}\{z\in {{\mathbb {C}}}\,:\,\exists y\in S\text { s.t. }|z-y|<\delta \}, \end{aligned} \tag{1.3}$$

and thus, \({{\mathcal {B}}}_\delta (S)=\bigcup _{x\in S} {{\mathcal {B}}}_\delta (x)\). For \({\varvec{\delta }}=(\delta _i)_{i=1}^d\subset {{\mathbb {R}}}_+\), \({{\mathcal {B}}}_{\varvec{\delta }}(S){:}{=}\times _{i=1}^d{{\mathcal {B}}}_{\delta _i}(S)\subseteq {{\mathbb {C}}}^d\). If we omit the argument S, then \(S{:}{=}\{0\}\), i.e., \({{\mathcal {B}}}_\delta {:}{=}{{\mathcal {B}}}_\delta (0)\).

1.4.3 Measures and Densities

Throughout \([-1,1]^d\) is equipped with the Borel \(\sigma \)-algebra. With \(\lambda \) denoting the Lebesgue measure on \([-1,1]\), \(\mu {:}{=}\frac{\lambda }{2}\). By abuse of notation also \(\mu {:}{=}\otimes _{j=1}^k\frac{\lambda }{2}\), where \(k\in {{\mathbb {N}}}\) will always be clear from context.

If we write “\(f:[-1,1]^d\rightarrow {{\mathbb {R}}}_+\) is a probability density,” we mean that f is measurable, \(f({{\varvec{x}}})>0\) for all \({{\varvec{x}}}\in [-1,1]^d\) and \(\int _{[-1,1]^d}f({{\varvec{x}}})\;\mathrm {d}\mu ({{\varvec{x}}})=1\), i.e., f is a probability density w.r.t. the measure \(\mu \) on \([-1,1]^d\).

1.4.4 Derivatives and Function Spaces

For \(f:[-1,1]^d\rightarrow {{\mathbb {R}}}\) (or \({{\mathbb {C}}}\)), we denote by \(\partial _kf({{\varvec{x}}}){:}{=}\frac{\partial }{\partial x_k}f({{\varvec{x}}})\) the (weak) partial derivative. For \({\varvec{\nu }}\in {{\mathbb {N}}}_0^d\), we write instead \(\partial ^{\varvec{\nu }}_{{\varvec{x}}}f({{\varvec{x}}}){:}{=}\frac{\partial ^{|{\varvec{\nu }}|}}{\partial _{x_1}^{\nu _1}\cdots \partial _{x_d}^{\nu _d}} f({{\varvec{x}}})\). For \(m\in {{\mathbb {N}}}_0\), the space \(W^{m,\infty }([-1,1]^d)\) consists of all \(f:[-1,1]^d\rightarrow {{\mathbb {R}}}\) with finite \(\Vert f \Vert _{W^{m,\infty }({[-1,1]^d})}{:}{=}\sum _{j=0}^m {{\,\mathrm{ess\,sup}\,}}_{{{\varvec{x}}}\in [-1,1]^d} \Vert d^j f({{\varvec{x}}}) \Vert _{}\). Here \(d^0f({{\varvec{x}}})=f({{\varvec{x}}})\) and for \(j\ge 1\), \(d^j f({{\varvec{x}}})\in {{\mathbb {R}}}^{d\times \cdots \times d}\simeq {{\mathbb {R}}}^{d^j}\) denotes the weak jth derivative, and \(\Vert d^jf({{\varvec{x}}}) \Vert _{}\) denotes the norm on \({{\mathbb {R}}}^{d\times \cdots \times d}\) induced by the Euclidean norm. For \(j=1\) we simply write \(df{:}{=}d^1f\). By abuse of notation, e.g., for \(T=(T_k)_{k=1}^d:{{\mathbb {R}}}^d\rightarrow {{\mathbb {R}}}^d\) we also write \(T\in W^{m,\infty }([-1,1]^d)\) meaning that \(T_k\in W^{m,\infty }([-1,1]^d)\) for all \(k\in \{1,\dots ,d\}\), and in this case, \(\Vert T \Vert _{W^{m,\infty }([-1,1]^d)}{:}{=}\sum _{k=1}^d\Vert T_k \Vert _{W^{m,\infty }([-1,1]^d)}\). Similarly, for a measure \(\nu \) on \([-1,1]^d\) and \(p\in [1,\infty )\) we denote by \(L^p([-1,1]^d,\nu )\) the usual \(L^p\) space with norm \(\Vert f \Vert _{L^p([-1,1]^d,\nu )}{:}{=}(\int _{[-1,1]^d}\Vert f({{\varvec{x}}}) \Vert _{}^p\;\mathrm {d}\nu ({{\varvec{x}}}))^{1/p}\), where either \(f:[-1,1]^d\rightarrow {{\mathbb {R}}}\) or \(f:[-1,1]^d\rightarrow {{\mathbb {R}}}^d\).

1.4.5 Transport Maps

Let \(d\in {{\mathbb {N}}}\). A map \(T:[-1,1]^d\rightarrow [-1,1]^d\) is called triangular if \(T=(T_j)_{j=1}^d\) and each \(T_j:[-1,1]^j\rightarrow [-1,1]\) is a function of \({{\varvec{x}}}_{[j]}=(x_i)_{i=1}^j\). We say that T is monotone if \(x_j\mapsto T_j({{\varvec{x}}}_{[j-1]},x_j)\) is monotonically increasing for every \({{\varvec{x}}}_{[j-1]}\in [-1,1]^{j-1}\), \(j\in \{1,\dots ,d\}\). Note that \(x_j\mapsto T_j({{\varvec{x}}}_{[j-1]},x_j):[-1,1]\rightarrow [-1,1]\) being bijective for every \({{\varvec{x}}}_{[j-1]}\in [-1,1]^{j-1}\), \(j\in \{1,\dots ,d\}\), implies \(T:[-1,1]^d\rightarrow [-1,1]^d\) to be bijective. Similar to our notation for vectors, for the vector valued function \(T=(T_j)_{j=1}^d\) we write \(T_{[k]}{:}{=}(T_j)_{j=1}^k\). Note that for a triangular transport, it holds that \(T_{[k]}:[-1,1]^k\rightarrow [-1,1]^k\).

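For a measurable bijection \(T:[-1,1]^d\rightarrow [-1,1]^d\) and a measure \({\rho }\) on \([-1,1]^d\), the pushforward \(T_\sharp {\rho }\) and the pullback \(T^\sharp {\rho }\) measures are defined as

$$\begin{aligned} T_\sharp {\rho }(A){:}{=}{\rho }(T^{-1}(A))\qquad \text {and}\qquad T^\sharp {\rho }(A){:}{=}{\rho }(T(A)) \end{aligned}$$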

for all measurable \(A\subseteq [-1,1]^d\).

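The inverse transport \(T^{-1}:[-1,1]^d\rightarrow [-1,1]^d\) is denoted by S. If \(T:[-1,1]^d\rightarrow [-1,1]^d\) is a triangular monotone bijection, then the same is true for \(S:[-1,1]^d\rightarrow [-1,1]^d\): It holds that \(S_1(y_1)=T_1^{-1}(y_1)\) and

$$\begin{aligned} S_k(y_1,\dots ,y_k)=T_k\big (S_1(y_1),\dots ,S_{k-1}(y_1,\dots ,y_{k-1}),\cdot \big )^{-1}(y_k),\qquad k\in \{2,\dots ,d\}. \end{aligned}$$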

Also note that \(T_\sharp {\rho }={\pi }\) is equivalent to \(S^\sharp {\rho }={\pi }\).
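The inverse of a triangular monotone bijection can be evaluated one component at a time: first invert \(T_1\), then invert \(x_2\mapsto T_2(S_1(y_1),x_2)\), and so on. The following sketch does this for \(d=2\) with bisection. The maps `T1` and `T2` are hypothetical examples chosen to be monotone bijections of \([-1,1]\) in their last argument, and `invert_monotone` is an illustrative helper.

```python
def invert_monotone(g, y, lo=-1.0, hi=1.0, iters=60):
    """Solve g(t) = y by bisection for an increasing bijection g of [-1, 1]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical triangular map: both components fix the endpoints -1 and 1
# and have a positive derivative in their last argument.
def T1(x1):
    return x1 + 0.4 * (1.0 - x1 ** 2)

def T2(x1, x2):
    return x2 + 0.3 * x1 * (1.0 - x2 ** 2)

def T(x):
    return (T1(x[0]), T2(x[0], x[1]))

def S(y):
    """Inverse of T, computed component by component."""
    s1 = invert_monotone(T1, y[0])
    s2 = invert_monotone(lambda t: T2(s1, t), y[1])
    return (s1, s2)
```

Each inversion is a scalar root-finding problem, which is the sense in which triangular maps allow easy inversion.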

2 Knothe–Rosenblatt Transport

Let \(d\in {{\mathbb {N}}}\). Given a reference probability measure \({\rho }\) and a target probability measure \({\pi }\) on \([-1,1]^d\), under certain conditions (e.g., as detailed below) the KR transport is the (unique) triangular monotone transport \(T:[-1,1]^d\rightarrow [-1,1]^d\) such that \(T_\sharp {\rho }={\pi }\). We now recall the explicit construction of T, as, for instance, presented in [59]. Throughout it is assumed that \({\pi }\ll \mu \) and \({\rho }\ll \mu \) have continuous and positive densities, i.e.,

$$\begin{aligned} f_{\rho }{:}{=}\frac{\mathrm {d}{\rho }}{\mathrm {d}\mu }\in C^0([-1,1]^d;{{\mathbb {R}}}_+),\qquad f_{\pi }{:}{=}\frac{\mathrm {d}{\pi }}{\mathrm {d}\mu }\in C^0([-1,1]^d;{{\mathbb {R}}}_+). \end{aligned} \tag{2.1}$$

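For a continuous probability density \(f:[-1,1]^d \rightarrow {{\mathbb {C}}}\), we denote \({\hat{f}}_0{:}{=}1\) and for \({{\varvec{x}}}\in [-1,1]^d\) and \(k\in \{1,\dots ,d\}\)

$$\begin{aligned} {\hat{f}}_k({{\varvec{x}}}_{[k]}){:}{=}\int _{[-1,1]^{d-k}}f({{\varvec{x}}}_{[k]},t_{k+1},\dots ,t_d)\;\mathrm {d}\mu ((t_i)_{i=k+1}^d),\qquad f_k({{\varvec{x}}}_{[k]}){:}{=}\frac{{\hat{f}}_k({{\varvec{x}}}_{[k]})}{{\hat{f}}_{k-1}({{\varvec{x}}}_{[k-1]})}. \end{aligned}$$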

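Thus, \({\hat{f}}_{k}( \cdot )\) is the marginal density of \({{\varvec{x}}}_{[k]}\) and \(f_k({{\varvec{x}}}_{[k-1]},\cdot )\) is the marginal density of \(x_k\) conditioned on \({{\varvec{x}}}_{[k-1]}\). The corresponding marginal conditional CDFs

$$\begin{aligned} F_{{\pi };k}({{\varvec{x}}}_{[k]}){:}{=}\int _{-1}^{x_k}f_{{\pi };k}({{\varvec{x}}}_{[k-1]},t)\;\mathrm {d}\mu (t),\qquad F_{{\rho };k}({{\varvec{x}}}_{[k]}){:}{=}\int _{-1}^{x_k}f_{{\rho };k}({{\varvec{x}}}_{[k-1]},t)\;\mathrm {d}\mu (t) \end{aligned}$$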

are well defined for \({{\varvec{x}}}\in [-1,1]^d\) and \(k\in \{1,\dots ,d\}\). They are interpreted as functions of \(x_k\) with \({{\varvec{x}}}_{[k-1]}\) fixed; in particular, \(F_{{\pi };k}({{\varvec{x}}}_{[k-1]},\cdot )^{-1}\) denotes the inverse of \(x_k\mapsto F_{{\pi };k}({{\varvec{x}}}_{[k]})\).

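For \({{\varvec{x}}}\in [-1,1]^d\), let

$$\begin{aligned} T_1(x_1){:}{=}(F_{{\pi };1})^{-1}\circ F_{{\rho };1}(x_1) \end{aligned} \tag{2.4a}$$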

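and inductively for \(k\in \{2,\dots ,d\}\) with \(T_{[k-1]}{:}{=}(T_j)_{j=1}^{k-1}:[-1,1]^{k-1}\rightarrow [-1,1]^{k-1}\), let

$$\begin{aligned} T_k({{\varvec{x}}}_{[k-1]},\cdot ){:}{=}F_{{\pi };k}(T_{[k-1]}({{\varvec{x}}}_{[k-1]}),\cdot )^{-1}\circ F_{{\rho };k}({{\varvec{x}}}_{[k-1]},\cdot ). \end{aligned} \tag{2.4b}$$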

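Then

$$\begin{aligned} T({{\varvec{x}}}){:}{=}\big (T_1(x_1),T_2({{\varvec{x}}}_{[2]}),\dots ,T_d({{\varvec{x}}}_{[d]})\big )^\top \end{aligned} \tag{2.5}$$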

yields the triangular KR transport \(T:[-1,1]^d\rightarrow [-1,1]^d\). In the following we denote by \(dT:[-1,1]^d\rightarrow {{\mathbb {R}}}^{d\times d}\) the Jacobian matrix of T. The following theorem holds; see, e.g., [59, Prop. 2.18] for a proof.

Theorem 2.1

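Assume (2.1). The KR transport T in (2.5) satisfies \(T_\sharp {\rho }={\pi }\) and

$$\begin{aligned} \det dT({{\varvec{x}}})\,f_{\pi }(T({{\varvec{x}}}))=f_{\rho }({{\varvec{x}}})\qquad \forall {{\varvec{x}}}\in [-1,1]^d. \end{aligned}$$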

The regularity assumption (2.1) on the densities can be relaxed in Theorem 2.1; see, e.g., [6].

In general, T satisfying \(T_\sharp {\rho }={\pi }\) is not unique. To keep the presentation succinct, henceforth we will simply refer to “the transport T,” by which we always mean the unique triangular KR transport in (2.5).
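To make the recursive construction of Sect. 2 concrete, here is a rough numerical sketch for \(d=2\). It assumes a uniform reference measure, so that \(F_{{\rho };k}({{\varvec{x}}}_{[k]})=(x_k+1)/2\), and replaces all integrals by trapezoidal sums on a fixed grid. The names `kr_transport_2d`, `normalized_cdf`, `GRID`, and the example target density are illustrative assumptions, and the target may be unnormalized.

```python
import numpy as np

GRID = np.linspace(-1.0, 1.0, 801)

def normalized_cdf(values):
    """Cumulative trapezoidal rule on GRID, rescaled to run from 0 to 1."""
    inc = 0.5 * (values[1:] + values[:-1]) * np.diff(GRID)
    cdf = np.concatenate(([0.0], np.cumsum(inc)))
    return cdf / cdf[-1]

def kr_transport_2d(f_pi):
    """Sketch of the KR map pushing the uniform measure on [-1,1]^2 to f_pi."""
    X1, X2 = np.meshgrid(GRID, GRID, indexing="ij")
    table = f_pi(X1, X2)
    # Marginal density of x_1: integrate out x_2 with the trapezoidal rule.
    marginal = (0.5 * (table[:, 1:] + table[:, :-1]) * np.diff(GRID)).sum(axis=1)
    F1 = normalized_cdf(marginal)

    def T(x1, x2):
        # First component: invert the marginal CDF of the target.
        t1 = np.interp(0.5 * (x1 + 1.0), F1, GRID)
        # Second component: invert the CDF of x_2 conditioned on the value t1.
        conditional = f_pi(np.full_like(GRID, t1), GRID)
        F2 = normalized_cdf(conditional)
        t2 = np.interp(0.5 * (x2 + 1.0), F2, GRID)
        return t1, t2
    return T

# Hypothetical positive target density on the square.
f_pi_example = lambda x1, x2: 1.0 + 0.5 * x1 * x2
T = kr_transport_2d(f_pi_example)
```

Note that the conditional CDF in the second component is evaluated at the already transported value `t1`, which corresponds to the composition with \(T_{[k-1]}\) in the inductive step.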

3 Analyticity

The explicit formulas for T given in Sect. 2 imply that positive analytic densities yield an analytic transport. Analyzing the convergence of polynomial approximations to T requires knowledge of the domain of analyticity of T. This is investigated in the present section.

3.1 One-Dimensional Case

Let \(d=1\). By (2.4a), \(T:[-1,1]\rightarrow [-1,1]\) can be expressed through composition of the CDF of \({\rho }\) and the inverse CDF of \({\pi }\). As the inverse function theorem is usually stated without giving details on the precise domain of extension of the inverse function, we give a proof, based on classical arguments, in Appendix A. This leads to the result in Lemma 3.2. Before stating it, we provide another short lemma that will be used multiple times.

Lemma 3.1

Let \(\delta >0\) and let \(K\subseteq {{\mathbb {C}}}\) be convex. Assume that \(f\in C^1({{\mathcal {B}}}_\delta (K);{{\mathbb {C}}})\) such that \(\sup _{x\in {{\mathcal {B}}}_\delta (K)}|f(x)|\le L\). Then \(\sup _{x\in K}|f'(x)|\le \frac{L}{\delta }\) and \(f:K\rightarrow {{\mathbb {C}}}\) is Lipschitz continuous with Lipschitz constant \(\frac{L}{\delta }\).

Proof

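For any \(x\in K\) and any \(\varepsilon \in (0,\delta )\), by Cauchy’s integral formula

$$\begin{aligned} |f'(x)|=\left| \frac{1}{2\pi \mathrm {i}}\int _{|\zeta -x|=\delta -\varepsilon }\frac{f(\zeta )}{(\zeta -x)^2}\;\mathrm {d}\zeta \right| \le \frac{L}{\delta -\varepsilon }. \end{aligned}$$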

Letting \(\varepsilon \rightarrow 0\) implies the claim. \(\square \)
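The Cauchy estimate behind Lemma 3.1 can be checked numerically. The sketch below approximates the contour integral for \(f'(x)\) with the trapezoidal rule on a circle and compares the result with the bound \(\sup |f|/\text {radius}\). The function name `derivative_via_cauchy` and the test function \(f=\exp \) are illustrative assumptions.

```python
import numpy as np

def derivative_via_cauchy(f, x, radius, n_nodes=64):
    """Approximate f'(x) by Cauchy's integral formula on the circle |zeta - x| = radius.

    With zeta = x + radius * exp(i*theta), the contour integral reduces to the
    average of f(zeta) * exp(-i*theta) over theta, divided by the radius.
    """
    theta = 2.0 * np.pi * np.arange(n_nodes) / n_nodes
    zeta = x + radius * np.exp(1j * theta)
    return np.mean(f(zeta) * np.exp(-1j * theta)) / radius, np.max(np.abs(f(zeta)))

x0, radius = 0.3, 0.5
approx_derivative, sup_on_circle = derivative_via_cauchy(np.exp, x0, radius)
cauchy_bound = sup_on_circle / radius  # the bound L / delta, with L taken on the circle
```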

Lemma 3.2

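Let \(\delta >0\), \(x_0\in {{\mathbb {C}}}\) and let \(f\in C^1({{\mathcal {B}}}_\delta (x_0);{{\mathbb {C}}})\). Suppose that

$$\begin{aligned} 0<M\le |f(x)|\le L\qquad \forall x\in {{\mathcal {B}}}_\delta (x_0). \end{aligned}$$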

Let \(F:{{\mathcal {B}}}_\delta (x_0)\rightarrow {{\mathbb {C}}}\) be an antiderivative of \(f:{{\mathcal {B}}}_\delta (x_0)\rightarrow {{\mathbb {C}}}\).

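With

$$\begin{aligned} \alpha =\alpha (M,L){:}{=}\frac{M^2}{2M+4L},\qquad \beta =\beta (M,L){:}{=}\frac{\alpha }{M}=\frac{M}{2M+4L}, \end{aligned} \tag{3.1}$$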

there then exists a unique function \(G:{{\mathcal {B}}}_{\alpha \delta }(F(x_0))\rightarrow {{\mathcal {B}}}_{\beta \delta }(x_0)\) such that \(F(G(y))=y\) for all \(y\in {{\mathcal {B}}}_{\alpha \delta }(F(x_0))\). Moreover, \(G\in C^1({{\mathcal {B}}}_{\alpha \delta }(F(x_0));{{\mathbb {C}}})\) with Lipschitz constant \(\frac{1}{M}\).

Proof

We verify the conditions of Proposition A.2 with \({\tilde{\delta }}{:}{=}\delta /(1+\frac{2L}{M})<\delta \). To obtain a bound on the Lipschitz constant of \(F'=f\) on \({{\mathcal {B}}}_{{\tilde{\delta }}}(x_0)\), it suffices to bound \(F''=f'\) there. Due to \({\tilde{\delta }}+\frac{{\tilde{\delta }} 2L}{M} =\delta \), for all \(x\in {{\mathcal {B}}}_{{\tilde{\delta }}}(x_0)\) we have by Lemma 3.1

$$\begin{aligned} |F''(x)|=|f'(x)|\le \frac{L}{\frac{{\tilde{\delta }}2L}{M}}=\frac{M}{2{\tilde{\delta }}}\le \frac{|F'(x_0)|}{2{\tilde{\delta }}}. \end{aligned}$$

Since \(F'(x_0)=f(x_0)\ne 0\), the conditions of Proposition A.2 are satisfied, and G is well defined and exists on \({{\mathcal {B}}}_{\alpha \delta }(F(x_0))\), where \(\alpha \delta =\frac{\delta M^2}{2M+4L}=\frac{{\tilde{\delta }} M}{2}\le \frac{{\tilde{\delta }} |F'(x_0)|}{2}\). Finally, due to \(1=F(G(y))'=F'(G(y))G'(y)\), it holds that \(G'(y)=\frac{1}{f(G(y))}\) for all \(y\in {{\mathcal {B}}}_{\alpha \delta }(F(x_0))\), which shows that \(G:{{\mathcal {B}}}_{\alpha \delta }(F(x_0))\rightarrow {{\mathbb {C}}}\) has Lipschitz constant \(\frac{1}{M}\). Hence, \(G:{{\mathcal {B}}}_{\alpha \delta }(F(x_0))\rightarrow {{\mathcal {B}}}_{\alpha \delta /M}(G(F(x_0)))={{\mathcal {B}}}_{\beta \delta }(x_0)\). Uniqueness of \(G:{{\mathcal {B}}}_{\alpha \delta }(F(x_0))\rightarrow {{\mathcal {B}}}_{\beta \delta }(x_0)\) satisfying \(F\circ G=\mathrm{Id}\) on \({{\mathcal {B}}}_{\alpha \delta }(F(x_0))\) follows by Proposition A.2 and the fact that \(\beta \delta =\frac{{\tilde{\delta }}}{2}\le {\tilde{\delta }}\). \(\square \)
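The radii appearing in the proof, \(\alpha \delta =\frac{\delta M^2}{2M+4L}\) and \(\beta \delta =\frac{\alpha \delta }{M}\), can be evaluated on an example with a closed-form inverse. The sketch below assumes the hypothetical choice \(f(z)=2+z\) on the unit disc around \(x_0=0\), where \(1\le |f|\le 3\); the helper name `inverse_radii` is also an assumption.

```python
import cmath

def inverse_radii(M, L, delta):
    """Radii alpha*delta (domain of G) and beta*delta (range of G) from the proof."""
    alpha = M ** 2 / (2.0 * M + 4.0 * L)
    beta = alpha / M
    return alpha * delta, beta * delta

# Hypothetical example: f(z) = 2 + z on the disc of radius 1 around x0 = 0.
M, L, delta = 1.0, 3.0, 1.0

def F(z):
    return 2.0 * z + 0.5 * z ** 2     # antiderivative of f with F(0) = 0

def G(y):
    return -2.0 + cmath.sqrt(4.0 + 2.0 * y)  # local inverse of F with G(0) = 0

radius_y, radius_x = inverse_radii(M, L, delta)
checks = []
for k in range(16):
    y = 0.999 * radius_y * cmath.exp(2j * cmath.pi * k / 16)
    # Record the residual of F(G(y)) = y and the modulus of G(y).
    checks.append((abs(F(G(y)) - y), abs(G(y))))
```

In this example the guaranteed radii are conservative: the inverse exists on a much larger disc than the one predicted by the lemma.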

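For \(x\in [-1,1]\) and a density \(f:[-1,1]\rightarrow {{\mathbb {R}}}\), the CDF equals \(F(x)=\int _{-1}^xf(t)\;\mathrm {d}\mu ( t)\). By definition of \(\mu \),

$$\begin{aligned} F(x)=\frac{1}{2}\int _{-1}^xf(t)\;\mathrm {d}t=\frac{x+1}{2}\int _0^1f(-1+t(x+1))\;\mathrm {d}t. \end{aligned} \tag{3.2}$$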

In case f allows an extension \(f:{{\mathcal {B}}}_\delta ([-1,1])\rightarrow {{\mathbb {C}}}\), then \(F:{{\mathcal {B}}}_\delta ([-1,1])\rightarrow {{\mathbb {C}}}\) is also well defined via (3.2). Without explicitly mentioning it, we always consider F to be naturally extended to complex values in this sense.

The next result generalizes Lemma 3.2 from complex balls \({{\mathcal {B}}}_\delta \) to the pill-shaped domains \({{\mathcal {B}}}_\delta ([-1,1])\) defined in (1.3). The proof is given in Appendix B.1.

Lemma 3.3

Let \(\delta >0\) and let

(a) \(f:[-1,1]\rightarrow {{\mathbb {R}}}_+\) be a probability density such that \(f\in C^1({{\mathcal {B}}}_\delta ([-1,1]);{{\mathbb {C}}})\),

(b) \(M\le |f(x)|\le L\) for some \(0<M\le L<\infty \) and all \(x\in {{\mathcal {B}}}_\delta ([-1,1])\).

Set \(F(x){:}{=}\int _{-1}^x f(t)\;\mathrm {d}\mu ( t)\) and let \(\alpha =\alpha (M,L)\), \(\beta =\beta (M,L)\) be as in (3.1).

Then

(i) \(F:[-1,1]\rightarrow [0,1]\) is a \(C^1\)-diffeomorphism, and \(F\in C^1({{\mathcal {B}}}_\delta ([-1,1]);{{\mathbb {C}}})\) with Lipschitz constant L,

(ii) there exists a unique \(G:{{\mathcal {B}}}_{\alpha \delta }([0,1])\rightarrow {{\mathcal {B}}}_{\beta \delta }([-1,1])\) such that \(F(G(y))=y\) for all \(y\in {{\mathcal {B}}}_{\alpha \delta }([0,1])\) and

$$\begin{aligned} G:{{\mathcal {B}}}_{\alpha \delta }(F(x_0))\rightarrow {{\mathcal {B}}}_{\beta \delta }(x_0) \end{aligned} \tag{3.3}$$

for all \(x_0\in [-1,1]\). Moreover, \(G\in C^1({{\mathcal {B}}}_{\alpha \delta }([0,1]);{{\mathbb {C}}})\) with Lipschitz constant \(\frac{1}{M}\).

We arrive at a statement about the domain of analytic extension of the one-dimensional monotone transport \(T{:}{=}F_{\pi }^{-1}\circ F_{\rho }:[-1,1]\rightarrow [-1,1]\) as in (2.4a).

Proposition 3.4

Let \(\delta _{\rho }\), \(\delta _{\pi }>0\), \(L_{\rho }<\infty \), \(0<M_{\pi }\le L_{\pi }<\infty \) and

- (a) for \(*\in \{{\rho },{\pi }\}\) let \(f_*:[-1,1]\rightarrow {{\mathbb {R}}}_+\) be a probability density and \(f_*\in C^1({{\mathcal {B}}}_{\delta _*}([-1,1]);{{\mathbb {C}}})\),

- (b) for \(x\in {{\mathcal {B}}}_{\delta _{\rho }}([-1,1])\), \(y\in {{\mathcal {B}}}_{\delta _{\pi }}([-1,1])\)

  $$\begin{aligned} |f_{\rho }(x)|\le L_{\rho },\qquad 0< M_{\pi }\le |f_{\pi }(y)|\le L_{\pi }. \end{aligned}$$

Then with \(r{:}{=}\min \{\delta _{\rho }, \frac{\delta _{\pi }M_{\pi }^2}{L_{\rho }(2M_{\pi }+4L_{\pi })}\}\) and \(q{:}{=}\frac{r L_{\rho }}{M_{\pi }}\) it holds \(T\in C^1({{\mathcal {B}}}_r([-1,1]); {{\mathcal {B}}}_{q}([-1,1]))\).

Proof

First, according to Lemma 3.3 (i), \(F_{\rho }:[-1,1]\rightarrow [0,1]\) admits an extension

where we used that \(F_{\rho }\) is Lipschitz continuous with Lipschitz constant \(L_{\rho }\). Furthermore, Lemma 3.3 (ii) implies with \(\varepsilon {:}{=}\frac{\delta _{\pi }M_{\pi }^2}{2M_{\pi }+4L_{\pi }}\) that \(F_{\pi }^{-1}:[0,1]\rightarrow [-1,1]\) admits an extension

where we used that \(F_{\pi }^{-1}\) is Lipschitz continuous with Lipschitz constant \(\frac{1}{M_{\pi }}\).

Assume first \(r=\delta _{\rho }\), which implies \(L_{\rho }\delta _{\rho }\le \varepsilon \). Then \(F_{\pi }^{-1}\circ F_{\rho }\in C^1({{\mathcal {B}}}_{\delta _{\rho }}([-1,1]);{{\mathbb {C}}})\) is well defined by (3.4). In the second case where \(r=\frac{\delta _{\pi }M_{\pi }^2}{L_{\rho }(2M_{\pi }+4L_{\pi })}\), we have \(\varepsilon =L_{\rho }r\) and \(r\le \delta _{\rho }\). Hence, \(F_{\rho }:{{\mathcal {B}}}_r([-1,1])\rightarrow {{\mathcal {B}}}_{L_{\rho }r}([0,1])={{\mathcal {B}}}_{\varepsilon }([0,1])\) is well defined. Thus, \(F_{\pi }^{-1}\circ F_{\rho }\in C^1({{\mathcal {B}}}_{r}([-1,1]);{{\mathbb {C}}})\) is well defined. In both cases, since \(T=F_{\pi }^{-1}\circ F_{\rho }\) is Lipschitz continuous with Lipschitz constant \(\frac{L_{\rho }}{M_{\pi }}\) (cp. Lemma 3.3), T maps to \({{\mathcal {B}}}_{\frac{rL_{\rho }}{M_{\pi }}}([-1,1])\). \(\square \)

The radius r in Proposition 3.4 describing the analyticity domain of the transport behaves like \(O(\min \{\delta _{\rho },\delta _{\pi }\})\) as \(\min \{\delta _{\rho },\delta _{\pi }\}\rightarrow \infty \) (considering the \(M_*\), \(L_*\) constants fixed). In this sense, the analyticity domain of T is proportional to the minimum of the analyticity domains of the reference and target densities.
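A small numerical sketch of the one-dimensional transport \(T=F_{\pi }^{-1}\circ F_{\rho }\) may help fix ideas. It assumes that \(\mu \) is the uniform probability measure on \([-1,1]\) (so that \(\mathrm {d}\mu (t)=\mathrm {d}t/2\)); the densities `f_rho`, `f_pi` and all function names are hypothetical choices for illustration, and the Simpson rule and bisection are generic numerical tools, not part of the analysis above.

```python
def simpson(f, a, b, n=200):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    if a == b:
        return 0.0
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(a + i * h)
    return s * h / 3.0

def cdf(f, x):
    """F(x) = int_{-1}^x f(t) dmu(t), assuming dmu(t) = dt/2."""
    return 0.5 * simpson(f, -1.0, x)

def inverse_cdf(f, y, tol=1e-12):
    """Solve F(t) = y on [-1, 1] by bisection (F is increasing because f > 0)."""
    lo, hi = -1.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(f, mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def transport(f_rho, f_pi, x):
    """One-dimensional monotone transport T = F_pi^{-1} o F_rho."""
    return inverse_cdf(f_pi, cdf(f_rho, x))

# Hypothetical densities w.r.t. mu: uniform reference, linearly tilted target.
f_rho = lambda t: 1.0
f_pi = lambda t: 1.0 + 0.5 * t

xs = [-1.0 + 0.25 * i for i in range(9)]
Ts = [transport(f_rho, f_pi, x) for x in xs]
```

For this particular pair the transport can also be computed by hand, \(T(x)=-2+\sqrt{5+4x}\), which gives a direct check of the numerical values.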

3.2 General Case

We now come to the main result of Sect. 3, which is a multidimensional version of Proposition 3.4. More precisely, we give a statement about the analyticity domain of \((\partial _{k}T_k)_{k=1}^d\). The reason is that, from both a theoretical and a practical viewpoint, it is convenient first to approximate \(\partial _{k}T_k:[-1,1]^k\rightarrow [0,\infty )\) and then to obtain an approximation to \(T_k\) by integrating over \(x_k\). We explain this in more detail in Sect. 4, see (4.9).

The following technical assumption gathers our requirements on the reference \({\rho }\) and the target \({\pi }\).

Assumption 3.5

Let \(0< M \le L <\infty \), \(C_6>0\), \(d\in {{\mathbb {N}}}\) and \({\varvec{\delta }}\in (0,\infty )^d\). For \(*\in \{{\rho },{\pi }\}\):

- (a) \(f_*:[-1,1]^d\rightarrow {{\mathbb {R}}}_+\) is a probability density and \(f_{*}\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}([-1,1]);{{\mathbb {C}}})\),

- (b) \(M\le |f_{*}({{\varvec{x}}})|\le L\) for \({{\varvec{x}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}([-1,1])\),

- (c) \(\sup _{{{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}}}|f_{*}({{\varvec{x}}}+{{\varvec{y}}})-f_{*}({{\varvec{x}}})| \le C_6\) for \({{\varvec{x}}}\in [-1,1]^d\),

- (d) \( \sup _{{{\varvec{y}}}\in {{\mathcal {B}}}_{{\varvec{\delta }}_{[k]}}\times \{0\}^{d-k}}|f_{*}({{\varvec{x}}}+{{\varvec{y}}})-f_{*}({{\varvec{x}}})|\le C_6 \delta _{k+1} \) for \({{\varvec{x}}}\in [-1,1]^d\) and \(k\in \{1,\dots ,d-1\}\).

Assumptions (a) and (b) state that \(f_*\) is a positive analytic probability density on \([-1,1]^d\) that allows a complex differentiable extension to the set \({{\mathcal {B}}}_{\varvec{\delta }}([-1,1])\subseteq {{\mathbb {C}}}^d\), cp. (1.3). Equation (2.4) shows that \(T_{k+1}\) is obtained by a composition of \(F_{{\pi };k+1}(T_{1},\dots ,T_{k},\cdot )^{-1}\) (the inverse in the last variable) and \(F_{{\rho };k+1}\). The smallness conditions (c) and (d) can be interpreted as follows: They will guarantee \(F_{{\rho };k+1}({{\varvec{y}}})\) (for certain complex \({{\varvec{y}}}\)) to belong to the domain where the complex extension of \(F_{{\pi };k+1}(T_1,\dots ,T_{k},\cdot )^{-1}\) is well defined.

Theorem 3.6

Let \(0<M\le L<\infty \), \(d\in {{\mathbb {N}}}\) and \({\varvec{\delta }}\in (0,\infty )^d\). There exist \(C_6\), \(C_7\), and \(C_8>0\) depending on M and L (but not on d or \({\varvec{\delta }}\)) such that if Assumption 3.5 holds with \(C_6\), then:

Let \(T=(T_k)_{k=1}^d\) be as in (2.5) and \(R_k{:}{=}\partial _{k}T_k\). With \({\varvec{\zeta }}=(\zeta _k)_{k=1}^d\) where

$$\begin{aligned} \zeta _k{:}{=}C_7\delta _k \end{aligned}$$

it holds for all \(k\in \{1,\dots ,d\}\) that

- (i) \(R_k\in C^1({{\mathcal {B}}}_{{\varvec{\zeta }}_{[k]}}([-1,1]);{{\mathcal {B}}}_{C_8}(1))\) and \(\Re (R_k({{\varvec{x}}}))\ge \frac{1}{C_8}\) for all \({{\varvec{x}}}\in {{\mathcal {B}}}_{{\varvec{\zeta }}_{[k]}}([-1,1])\),

- (ii) if \(k\ge 2\), \(R_k:{{\mathcal {B}}}_{{\varvec{\zeta }}_{[k-1]}}([-1,1])\times [-1,1]\rightarrow {{\mathcal {B}}}_{\frac{C_8}{\max \{1,\delta _k\}}}(1)\).

Put simply, the first item of the theorem can be interpreted as follows: The function \(\partial _{k}T_k\) allows in the jth variable an analytic extension to the set \({{\mathcal {B}}}_{\zeta _j}([-1,1])\), where \(\zeta _j\) is proportional to \(\delta _j\). The constant \(\delta _j\) describes the domain of analytic extension of the densities \(f_{\rho }\), \(f_{\pi }\) in the jth variable. Thus, the analyticity domain of each \(\partial _{k} T_k\) is proportional to the (intersection of the) domains of analyticity of the densities. Additionally, the real part of \(\partial _{k} T_k\) remains strictly positive on this extension to the complex domain. Note that \(\partial _{k} T_k({{\varvec{x}}})\) is necessarily positive for real \({{\varvec{x}}}\in [-1,1]^k\), since the transport is monotone.

The second item of the theorem states that the kth variable \(x_k\) plays a special role for \(T_k\): if we merely extend \(\partial _{k}T_k\) in the first \(k-1\) variables to the complex domain and let the kth argument \(x_k\) belong to the real interval \([-1,1]\), then the values of this extension behave like \(1+O(\frac{1}{\delta _k})\), and thus, the extension is very close to the constant 1 function for large \(\delta _k\). In other words, if the densities \(f_{\rho }\), \(f_{\pi }\) allow a (uniformly bounded from above and below) analytic extension to a very large subset of the complex domain in the kth variable, then the kth component of the transport \(T_k({{\varvec{x}}}_{[k]})\) will be close to \(-1+\int _{-1}^{x_k}1\;\mathrm {d}t=x_k\), i.e., to the identity in the kth variable.

We also emphasize that we state the analyticity results here for \(\partial _{k}T_k\) (in the form they will be needed below), but this immediately implies that \(T_k\) allows an analytic extension to the same domain.

Remark 3.7

Crucially, for any \(k<d\) the left-hand side of the inequality in Assumption 3.5 (d) depends on \((\delta _j)_{j=1}^k\), while the right-hand side depends only on \(\delta _{k+1}\) but not on \((\delta _j)_{j=1}^k\). This will allow us to suitably choose \({\varvec{\delta }}\) when verifying this assumption (see the proof of Lemma 3.9).

Remark 3.8

The proof of Theorem 3.6 shows that there exists \(C\in (0,1)\) independent of M and L such that we can choose \(C_6=C\frac{\min \{M,1\}^5}{\max \{L,1\}^4}\), \(C_7=C\frac{\min \{M,1\}^3}{\max \{L,1\}^3}\) and \(C_8=C^{-1}(\frac{\max \{L,1\}^4}{\min \{M,1\}^4})\); see (B.43), (B.41) and (B.24a), (B.40).

To give an example for \({\rho }\), \({\pi }\) fitting our setting, we show that Assumption 3.5 holds (for some sequence \({\varvec{\delta }}\)) whenever the densities \(f_{\rho }\), \(f_{\pi }\) are analytic.

Lemma 3.9

For \(*\in \{{\rho },{\pi }\}\), let \(f_*:[-1,1]^d\rightarrow {{\mathbb {R}}}_+\) be a probability density, and assume that \(f_*\) is analytic on an open set in \({{\mathbb {R}}}^d\) containing \([-1,1]^d\). Then there exist \(0<M\le L <\infty \) and \({\varvec{\delta }}\in (0,\infty )^d\) such that Assumption 3.5 holds with \(C_6(M,L)\) as in Theorem 3.6.

4 Polynomial-Based Approximation

Analytic functions \(f:[-1,1]^d\rightarrow {{\mathbb {R}}}\) allow for exponential convergence when approximated by multivariate polynomial expansions. We recall this in Sect. 4.1 for truncated Legendre expansions. These results are then applied to the KR transport in Sect. 4.2.

4.1 Exponential Convergence of Legendre Expansions

For \(n\in {{\mathbb {N}}}_0\), let \(L_n\in {{\mathbb {P}}}_n\) be the nth Legendre polynomial normalized in \(L^2([-1,1],\mu )\). For \({\varvec{\nu }}\in {{\mathbb {N}}}_0^d\) set \(L_{\varvec{\nu }}({{\varvec{y}}}){:}{=}\prod _{j=1}^dL_{\nu _j}(y_j)\). Then \((L_{\varvec{\nu }})_{{\varvec{\nu }}\in {{\mathbb {N}}}_0^d}\) is an orthonormal basis of \(L^2([-1,1]^d,\mu )\). Thus, every \(f\in L^2([-1,1]^d,\mu )\) admits the multivariate Legendre expansion \(f({{\varvec{y}}}) = \sum _{{\varvec{\nu }}\in {{\mathbb {N}}}_0^d}l_{\varvec{\nu }}L_{\varvec{\nu }}({{\varvec{y}}})\) with coefficients \(l_{\varvec{\nu }}= \int _{[-1,1]^d}f({{\varvec{y}}})L_{\varvec{\nu }}({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})\). For finite \(\Lambda \subseteq {{\mathbb {N}}}_0^d\), \(\sum _{{\varvec{\nu }}\in \Lambda }l_{\varvec{\nu }}L_{\varvec{\nu }}({{\varvec{y}}})\) is the orthogonal projection of f in the Hilbert space \(L^2([-1,1]^d,\mu )\) onto its subspace

As is well known, functions that are holomorphic on sets of the type \({{\mathcal {B}}}_{\varvec{\delta }}([-1,1])\) have exponentially decaying Legendre coefficients. We recall this in the next lemma, which is adapted to the regularity we showed for the transport in Theorem 3.6.

Lemma 4.1

Let \(k\in {{\mathbb {N}}}\), \({\varvec{\delta }}\in (0,\infty )^k\) and \(f\in C^1({{\mathcal {B}}}_{\varvec{\delta }}([-1,1]);{{\mathbb {C}}})\). Set \(w_{\varvec{\nu }}{:}{=}\prod _{j=1}^k(1+2\nu _j)^{3/2}\), \({\varvec{\varrho }}=(1+\delta _j)_{j=1}^k\) and \(l_{\varvec{\nu }}{:}{=}\int _{[-1,1]^k} f({{\varvec{y}}}) L_{\varvec{\nu }}({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})\) for \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\). Then

- (i) for all \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\)

  $$\begin{aligned} |l_{\varvec{\nu }}| \le {\varvec{\varrho }}^{-{\varvec{\nu }}} w_{\varvec{\nu }}\Vert f \Vert _{L^\infty ({{\mathcal {B}}}_{\varvec{\delta }}({[-1,1]}))} \prod _{j\in {{\,\mathrm{supp}\,}}{\varvec{\nu }}}\frac{2\varrho _j}{\varrho _j-1}, \end{aligned}$$ (4.1)

- (ii) for all \({\varvec{\nu }}\in {{\mathbb {N}}}_0^{k-1}\times \{0\}\)

  $$\begin{aligned} |l_{\varvec{\nu }}| \le {\varvec{\varrho }}^{-{\varvec{\nu }}} w_{\varvec{\nu }}\Vert f \Vert _{L^\infty ({{\mathcal {B}}}_{{\varvec{\delta }}_{[k-1]}}([-1,1])\times [-1,1])} \prod _{j\in {{\,\mathrm{supp}\,}}{\varvec{\nu }}}\frac{2\varrho _j}{\varrho _j-1}. \end{aligned}$$ (4.2)
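The bound (4.1) can be checked numerically in the simplest case \(k=1\). The sketch below is only an illustration under stated assumptions: \(\mu \) is taken to be the uniform probability measure on \([-1,1]\), so that \(L_n=\sqrt{2n+1}\,P_n\) with \(P_n\) the classical Legendre polynomial; \({{\mathcal {B}}}_\delta ([-1,1])\) is taken to be the set of complex points at distance less than \(\delta \) from \([-1,1]\); and the test function \(f(y)=1/(2-y)\), the value \(\delta =1/2\) and all function names are hypothetical choices. The Gauss-Legendre rule is built by a standard Newton iteration.

```python
import math

def legendre_pair(n, x):
    """Return (P_n(x), P_{n-1}(x)) via the three-term recurrence."""
    p_prev, p = 0.0, 1.0  # placeholder for P_{-1}, and P_0 = 1
    for j in range(n):
        p_prev, p = p, ((2 * j + 1) * x * p - j * p_prev) / (j + 1)
    return p, p_prev

def gauss_legendre(m):
    """Nodes and weights of the m-point Gauss-Legendre rule on [-1, 1]."""
    nodes, weights = [], []
    for i in range(1, m + 1):
        x = math.cos(math.pi * (i - 0.25) / (m + 0.5))  # initial guess
        for _ in range(100):  # Newton iteration on P_m
            p, p_prev = legendre_pair(m, x)
            dp = m * (x * p - p_prev) / (x * x - 1.0)
            dx = p / dp
            x -= dx
            if abs(dx) < 1e-15:
                break
        p, p_prev = legendre_pair(m, x)
        dp = m * (x * p - p_prev) / (x * x - 1.0)
        nodes.append(x)
        weights.append(2.0 / ((1.0 - x * x) * dp * dp))
    return nodes, weights

def legendre_coeff(f, n, nodes, weights):
    """l_n = int f L_n dmu with L_n = sqrt(2n+1) P_n and dmu = dt/2 (assumed)."""
    s = sum(w * f(x) * legendre_pair(n, x)[0] for x, w in zip(nodes, weights))
    return 0.5 * math.sqrt(2 * n + 1) * s

# Hypothetical example: f(y) = 1/(a - y) is holomorphic on B_delta([-1,1]) for delta < a - 1.
a, delta = 2.0, 0.5
f = lambda y: 1.0 / (a - y)
rho = 1.0 + delta
sup_norm = 1.0 / (a - 1.0 - delta)  # sup of |f| over B_delta([-1,1])

def bound(n):
    """Right-hand side of (4.1) for k = 1; the product is empty when n = 0."""
    if n == 0:
        return sup_norm
    return rho ** (-n) * (1 + 2 * n) ** 1.5 * sup_norm * 2.0 * rho / (rho - 1.0)

nodes, weights = gauss_legendre(40)
coeffs = [legendre_coeff(f, n, nodes, weights) for n in range(13)]
```

In this example the computed coefficients decay considerably faster than the bound requires, which is expected: (4.1) only uses holomorphy on the pill-shaped domain and is not sharp for a specific function.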

The previous lemma in combination with Theorem 3.6 yields a bound on the Legendre coefficients of \(\partial _k T_k-1\), the shifted partial derivative of the kth component of the transport. Specifically, for \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\) Theorem 3.6 (i) together with Lemma 4.1 (i) implies with \(t_1{:}{=}{\varvec{\varrho }}^{-{\varvec{\nu }}}\Vert \partial _kT_k-1 \Vert _{L^\infty ({{\mathcal {B}}}_{\varvec{\delta }}({[-1,1]}))}\) and \(t_2{:}{=}w_{\varvec{\nu }}\prod _{j\in {{\,\mathrm{supp}\,}}{\varvec{\nu }}}\frac{2\varrho _j}{\varrho _j-1}\) the bound \(t_1t_2\) for the corresponding Legendre coefficient. For a multi-index \({\varvec{\nu }}\in {{\mathbb {N}}}_0^{k-1}\times \{0\}\), applying instead Theorem 3.6 (ii) together with Lemma 4.1 (ii) yields the bound \({\tilde{t}}_1 t_2\) where \({\tilde{t}}_1{:}{=}{\varvec{\varrho }}^{-{\varvec{\nu }}}\Vert \partial _kT_k-1 \Vert _{L^\infty ({{\mathcal {B}}}_{{\varvec{\delta }}_{[k-1]}}({[-1,1]})\times [-1,1])}\). By Theorem 3.6 (ii), the last norm is bounded by \(\frac{C_8}{\delta _k}\). Hence, compared to the first estimate \(t_1\), we gain the factor \(\frac{1}{\delta _k}\) by using the second estimate \({\tilde{t}}_1\) instead (see Footnote 1).

Taking the minimum of these estimates leads us to introduce for \(k\in {{\mathbb {N}}}\), \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\) and \({\varvec{\varrho }}\in (1,\infty )^k\) the quantity

and the set

corresponding to the largest values of \(\gamma ({\varvec{\varrho }},{\varvec{\nu }})\).

The structure of \(\Lambda _{k,\varepsilon }\) is as follows: The larger the \(\varrho _j\), the smaller the \(\varrho _j^{-1}\). Thus, the larger the \(\varrho _j\), the fewer multi-indices \({\varvec{\nu }}\) with \(j\in {{\,\mathrm{supp}\,}}{\varvec{\nu }}\) belong to \(\Lambda _{k,\varepsilon }\). In this sense, \(\varrho _j\) measures the importance of the jth variable in the Legendre expansion of \(\partial _k T_k\). The kth variable plays a special role, as it always is among the most important variables, since for all \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\) it holds that \(\gamma ({\varvec{\varrho }}, {\varvec{\nu }}) \le \gamma ({\varvec{\varrho }}, {\varvec{e}}_k)\), where \({\varvec{e}}_k=(0,\dots ,0,1)\in {{\mathbb {N}}}_0^k\). In other words, whenever \(\varepsilon >0\) is so small that \(\Lambda _{k,\varepsilon }\ne \emptyset \), at least one \({\varvec{\nu }}\) with \(\nu _k\ne 0\) belongs to \(\Lambda _{k,\varepsilon }\).
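To make the structure of the index sets concrete, the following sketch enumerates \(\Lambda _{k,\varepsilon }\) by brute force. Since the displayed definitions of \(\gamma \) and \(\Lambda _{k,\varepsilon }\) are not reproduced above, the code works under an explicit assumption: \(\gamma ({\varvec{\varrho }},{\varvec{\nu }})=\varrho _k^{-\max \{1,\nu _k\}}\prod _{j<k}\varrho _j^{-\nu _j}\) and \(\Lambda _{k,\varepsilon }=\{{\varvec{\nu }}\,:\,\gamma ({\varvec{\varrho }},{\varvec{\nu }})\ge \varepsilon \}\). This form is consistent with the discussion of \(t_1\), \({\tilde{t}}_1\) and with the set being nonempty exactly for \(\varepsilon \le \varrho _k^{-1}\), but it should be compared with (4.3) and (4.4) before use. The function names and the values of `rho` and `eps` are hypothetical.

```python
import math
from itertools import product

def gamma(rho, nu):
    """Assumed form: rho_k^{-max(1, nu_k)} * prod_{j<k} rho_j^{-nu_j}."""
    k = len(rho)
    val = rho[k - 1] ** (-max(1, nu[k - 1]))
    for j in range(k - 1):
        val *= rho[j] ** (-nu[j])
    return val

def index_set(rho, eps):
    """All nu in N_0^k with gamma(rho, nu) >= eps (assumed definition)."""
    # gamma >= eps forces rho_j^{-nu_j} >= eps, i.e. nu_j <= log(1/eps)/log(rho_j).
    # One extra index per direction guards against rounding in the logarithm;
    # the exact test gamma >= eps below decides membership.
    caps = [int(math.floor(-math.log(eps) / math.log(r))) + 1 for r in rho]
    boxes = [range(max(c, 0) + 1) for c in caps]
    return [nu for nu in product(*boxes) if gamma(rho, nu) >= eps]

# Hypothetical example with k = 2: the second (last) variable has the larger rho.
rho = (2.0, 4.0)
Lam = index_set(rho, 0.1)
```

With these assumptions, a large \(\varrho _j\) visibly shrinks the admissible range of \(\nu _j\), and the multi-indices \({\varvec{\nu }}\) and \({\varvec{\nu }}+{\varvec{e}}_k\) with \(\nu _k=0\) always enter the set together.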

Having determined a set of multi-indices corresponding to the largest upper bounds obtained for the Legendre coefficients, we arrive at the next proposition. The assumptions on the function f correspond to the regularity of \(\partial _{k}T_k\) shown in Theorem 3.6. The proposition states that such f can be approximated with the error decreasing as \(O(\exp (-\beta N^{1/k}))\) in terms of the dimension N of the polynomial space.

Proposition 4.2

Let \(k\in {{\mathbb {N}}}\), \({\varvec{\delta }}\in (0,\infty )^k\) and \(r>0\), such that \(f\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}([-1,1]);{{\mathcal {B}}}_r)\) and \(f:{{\mathcal {B}}}_{{\varvec{\delta }}_{[k-1]}}([-1,1])\times [-1,1]\rightarrow {{\mathcal {B}}}_{\frac{r}{1+\delta _k}}\). With \(\varrho _j{:}{=}1+\delta _j\) set

For \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\), set \(l_{\varvec{\nu }}{:}{=}\int _{[-1,1]^k}f({{\varvec{y}}})L_{\varvec{\nu }}({{\varvec{y}}})\;\mathrm {d}\mu ({{\varvec{y}}})\).

Then for every \({\tilde{\beta }}<\beta \), there exists \(C=C(k,m,{\tilde{\beta }},{\varvec{\delta }},r,\Vert f \Vert _{L^\infty ({{{\mathcal {B}}}_{\varvec{\delta }}([-1,1])})})\) s.t. for every \(\varepsilon \in (0,\varrho _k^{-1})\) the following holds with \(\Lambda _{k,\varepsilon }\) as in (4.4):

In Proposition 4.2, \({\tilde{\beta }}\in (0,\beta )\) can be chosen arbitrarily close to \(\beta \). However, as \({\tilde{\beta }}\) approaches \(\beta \), the constant C in (4.8) will tend to infinity, cp. (C.11).

4.2 Polynomial and Rational Approximation

Combining Proposition 4.2 with Theorem 3.6 we obtain the following approximation result for the transport. It states that \(T:[-1,1]^d\rightarrow [-1,1]^d\) can be approximated by multivariate polynomials, converging in \(W^{m,\infty }([-1,1]^d)\) with the error decreasing as \(\exp (-\beta N_\varepsilon ^{1/d})\). Here \(N_\varepsilon \) is the dimension of the (ansatz) space in which T is approximated.

Theorem 4.3

Let \(m\in {{\mathbb {N}}}_0\). Let \(f_{\rho }\), \(f_{\pi }\) satisfy Assumption 3.5 for some constants \(0<M\le L<\infty \), \({\varvec{\delta }}\in (0,\infty )^d\) and with \(C_6=C_6(M,L)\) as in Theorem 3.6. Let \(C_7=C_7(M,L)\) be as in Theorem 3.6. For \(j\in \{1,\dots ,d\}\), set

For \(k\in \{1,\dots ,d\}\), let \(\Lambda _{k,\varepsilon }\) be as in (4.4) and define

For every \({\tilde{\beta }}<\beta \), there exists \(C=C({\varvec{\varrho }},m,d,{\tilde{\beta }},f_{\rho },f_{\pi })>0\) such that for every \(\varepsilon \in (0,1)\) with

\({\tilde{T}}{:}{=}({\tilde{T}}_k)_{k=1}^d\) and \(N_\varepsilon {:}{=}\sum _{k=1}^d|\Lambda _{k,\varepsilon }|\), it holds

Remark 4.4

We set \(\varrho _j=1+C_7\delta _j>1\) in Theorem 4.3, where \(\delta _j\) as in Assumption 3.5 encodes the size of the analyticity domain of the densities \(f_{\rho }\) and \(f_{\pi }\) (in the jth variable). The constant \(\beta \) in (4.7b) is an increasing function of each \(\varrho _j\). Loosely speaking, Theorem 4.3 states that the larger the analyticity domain of the densities, the faster the convergence when approximating the corresponding transport T with polynomials.

We skip the proof of the above theorem and instead proceed with a variation of this result. It states a convergence rate for an approximation \({\tilde{T}}_{k}\) to \(T_k\), which enjoys the property that \({\tilde{T}}_{k}({{\varvec{x}}}_{[k-1]},\cdot ):[-1,1]\rightarrow [-1,1]\) is monotonically increasing and bijective for every \({{\varvec{x}}}_{[k-1]}\in [-1,1]^{k-1}\). Thus, contrary to \({\tilde{T}}\) in Theorem 4.3, the \({\tilde{T}}\) in the next proposition is a bijection from \([-1,1]^d\rightarrow [-1,1]^d\) by construction.

This is achieved as follows: Let \(g:{{\mathbb {R}}}\rightarrow \{x\in {{\mathbb {R}}}\,:\,x\ge 0\}\) be analytic, such that \(g(0)=1\) and \(h{:}{=}g^{-1}:(0,\infty )\rightarrow {{\mathbb {R}}}\) is also analytic. We first approximate \(h(\partial _{k}T_k)\) by some function \(p_k\) and then obtain \(-1+\int _{-1}^{x_k}g(p_k({{\varvec{x}}}_{[k-1]},t))\;\mathrm {d}t\) as an approximation \({\tilde{T}}_k\) to \(T_k\). This approach, similar to what is proposed in [53], and in the present context in [45], guarantees \(\partial _{k}{\tilde{T}}_k=g(p_k({{\varvec{x}}}_{[k]}))\ge 0\) and \({\tilde{T}}_k({{\varvec{x}}}_{[k-1]},-1)=-1\) so that \({\tilde{T}}_k({{\varvec{x}}})\) is monotonically increasing in \(x_k\). In order to force \(\tilde{T}_k({{\varvec{x}}}_{[k-1]},\cdot ):[-1,1]\rightarrow [-1,1]\) to be bijective we introduce a normalization which leads to

The meaning of \(g(0)=1\) is that the trivial approximation \(p_k\equiv 0\) then yields \({\tilde{T}}_k({{\varvec{x}}})=x_k\).

To avoid further technicalities, henceforth we choose \(g(x)=(x+1)^2\) (and thus \(h(x)=\sqrt{x}-1\)), but emphasize that our analysis works just as well with any other positive analytic function such that \(g(0)=1\), e.g., \(g(x)=\exp (x)\) and \(h(x)=\log (x)\). The choice \(g(x)=(x+1)^2\) has the advantage that \(g(p_k)\) is polynomial if \(p_k\) is polynomial. This allows exact evaluation of the integrals in (4.9) without resorting to numerical quadrature, and results in a rational approximation \(\tilde{T}_k\):

Theorem 4.5

Let \(m\in {{\mathbb {N}}}_0\). Let \(f_{\rho }\), \(f_{\pi }\) satisfy Assumption 3.5 for some constants \(0<M\le L<\infty \), \({\varvec{\delta }}\in (0,\infty )^d\) and with \(C_6=C_6(M,L)\) as in Theorem 3.6. Let \(\varrho _j\), \(\beta \) and \(\Lambda _{k,\varepsilon }\) be as in Theorem 4.3.

For every \({\tilde{\beta }}<\beta \) there exists \(C=C({\varvec{\varrho }},m,d,{\tilde{\beta }},f_{\rho },f_{\pi })>0\) and for every \(\varepsilon \in (0,1)\) there exist polynomials \(p_{k,\varepsilon }\in {{\mathbb {P}}}_{\Lambda _{k,\varepsilon }}\), \(k\in \{1,\dots ,d\}\), such that with

the map \({\tilde{T}}_\varepsilon {:}{=}({\tilde{T}}_{k,\varepsilon })_{k=1}^d:[-1,1]^d\rightarrow [-1,1]^d\) is a monotone triangular bijection, and with

it holds

We emphasize that our reason for using rational functions rather than polynomials in Theorem 4.5 is merely to guarantee that the resulting approximation \({\tilde{T}}:[-1,1]^d\rightarrow [-1,1]^d\) is a bijective and monotone map. We do not employ specific properties of rational functions (as done for Padé approximations) in order to improve the convergence order.
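The construction can be illustrated in one variable. The sketch below assumes that the normalized map has the form \({\tilde{T}}(x)=-1+2\int _{-1}^{x}g(p(t))\;\mathrm {d}t\,/\int _{-1}^{1}g(p(t))\;\mathrm {d}t\) with \(g(x)=(x+1)^2\); this is one natural reading of the normalization described before Theorem 4.5 and should be compared with (4.9). The polynomial `p` (monomial coefficients, lowest degree first) and all function names are hypothetical. Because \(g(p)\) is a polynomial, both integrals are evaluated exactly through an antiderivative.

```python
def poly_mul(a, b):
    """Product of two polynomials given by monomial coefficients."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def poly_antiderivative(a):
    """Antiderivative with zero constant term."""
    return [0.0] + [c / (i + 1) for i, c in enumerate(a)]

def poly_eval(a, x):
    """Horner evaluation."""
    val = 0.0
    for c in reversed(a):
        val = val * x + c
    return val

def monotone_map(p):
    """Return T(x) = -1 + 2 * int_{-1}^x (p+1)^2 dt / int_{-1}^1 (p+1)^2 dt.

    p must be a non-empty coefficient list and must not be identically -1
    (otherwise the normalizing integral vanishes).
    """
    q = list(p)
    q[0] += 1.0                               # q = p + 1
    G = poly_antiderivative(poly_mul(q, q))   # antiderivative of g(p) = (p + 1)^2
    base = poly_eval(G, -1.0)
    total = poly_eval(G, 1.0) - base
    def T(x):
        return -1.0 + 2.0 * (poly_eval(G, x) - base) / total
    return T

p = [0.2, -0.5, 0.3]             # hypothetical p(t) = 0.2 - 0.5 t + 0.3 t^2
T_tilde = monotone_map(p)
identity = monotone_map([0.0])   # trivial approximation p = 0
grid = [-1.0 + 0.1 * i for i in range(21)]
values = [T_tilde(x) for x in grid]
```

In one variable the normalizing integral is a constant, so \({\tilde{T}}\) is itself a polynomial; for \(k\ge 2\) the same integral depends on \({{\varvec{x}}}_{[k-1]}\), which is where the rational structure comes from.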

Remark 4.6

If \(\Lambda _{k,\varepsilon }=\emptyset \) then by convention \({{\mathbb {P}}}_{\Lambda _{k,\varepsilon }}=\{0\}\); thus, \(p_{k,\varepsilon }= 0\) and \(\tilde{T}_{k,\varepsilon }({{\varvec{x}}})=x_k\).

Remark 4.7

Let \(S=T^{-1}\) so that \(T_\sharp {\rho }={\pi }\) is equivalent to \(S^\sharp {\rho }={\pi }\). It is often simpler to first approximate S, and then compute T by inverting S, see [45]. Since the assumptions of Theorem 4.5 (and Theorem 3.6) on the measures \({\rho }\) and \({\pi }\) are identical, Theorem 4.5 also yields an approximation result for the inverse transport map S: For all \(\varepsilon >0\) and with \(\Lambda _{k,\varepsilon }\) as in Theorem 4.5, there exist multivariate polynomials \(p_k\in {{\mathbb {P}}}_{\Lambda _{k,\varepsilon }}\) such that with

it holds

5 Deep Neural Network Approximation

Based on the seminal paper [73], it has recently been observed that ReLU neural networks (NNs) are capable of approximating analytic functions at an exponential convergence rate [24, 49], and slight improvements can be shown for certain smoother activation functions, e.g., [39]. We also refer to [46] for much earlier results of this type for different activation functions. As a consequence, our analysis in Sect. 3 yields approximation results of the transport by deep neural networks (DNNs). Below we present the statement, which is based on [49, Thm. 3.7].

To formulate the result, we recall the definition of a feedforward ReLU NN. The (nonlinear) ReLU activation function is defined as \(\varphi (x){:}{=}\max \{0,x\}\). We call a function \(f:{{\mathbb {R}}}^d\rightarrow {{\mathbb {R}}}^d\) a ReLU NN, if it can be written as

for certain weight matrices \({{\varvec{W}}}_j\in {{\mathbb {R}}}^{n_{j+1}\times n_j}\) and bias vectors \({{\varvec{b}}}_j\in {{\mathbb {R}}}^{n_{j+1}}\) where \(n_0=n_{L+1}=d\). For simplicity, we do not distinguish between the network (described by \(({{\varvec{W}}}_j,{{\varvec{b}}}_j)_{j=0}^L\)), and the function it expresses (different networks can have the same output). We then write \(\mathrm{depth}(f){:}{=}L\), \(\mathrm{width}(f){:}{=}\max _j n_j\) and \(\mathrm{size}(f){:}{=}\sum _{j=0}^{L}(|{{\varvec{W}}}_j|_0+|{{\varvec{b}}}_j|_0)\), where \(|{{\varvec{W}}}_j|_0=|\{(k,l)\,:\,({{\varvec{W}}}_j)_{kl}\ne 0\}|\) and \(|{{\varvec{b}}}_j|_0=|\{k\,:\,({{\varvec{b}}}_j)_{k}\ne 0\}|\). In other words, the depth corresponds to the number of applications of the activation function (the number of hidden layers) and the size equals the number of nonzero weights and biases, i.e., the number of trainable parameters in the network.
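The bookkeeping of depth, width and size can be made concrete with a few lines of code. The sketch assumes the usual feedforward form, in which the activation \(\varphi \) is applied componentwise after each affine map except the last one; the list-of-pairs representation `layers`, the function names and the example network are hypothetical.

```python
def relu(v):
    """Componentwise ReLU activation."""
    return [max(0.0, t) for t in v]

def affine(W, b, x):
    """Compute W x + b for a matrix W given as a list of rows."""
    return [sum(w * t for w, t in zip(row, x)) + bi for row, bi in zip(W, b)]

def forward(layers, x):
    """Evaluate the network; layers = [(W_0, b_0), ..., (W_L, b_L)]."""
    for W, b in layers[:-1]:
        x = relu(affine(W, b, x))
    W, b = layers[-1]
    return affine(W, b, x)  # no activation after the last affine map

def depth(layers):
    """Number of applications of the activation function (hidden layers)."""
    return len(layers) - 1

def width(layers):
    """Maximum of n_0 (input dimension) and the row counts n_{j+1} of the W_j."""
    return max([len(layers[0][0][0])] + [len(W) for W, _ in layers])

def size(layers):
    """Number of nonzero weights and biases."""
    nnz = 0
    for W, b in layers:
        nnz += sum(1 for row in W for w in row if w != 0)
        nnz += sum(1 for bi in b if bi != 0)
    return nnz

# Hypothetical example with d = 1: |x| = relu(x) + relu(-x).
abs_net = [
    ([[1.0], [-1.0]], [0.0, 0.0]),  # W_0 in R^{2x1}, b_0 in R^2
    ([[1.0, 1.0]], [0.0]),          # W_1 in R^{1x2}, b_1 in R^1
]
```

Here `abs_net` has depth 1, width 2 and size 4; its biases are all zero and therefore do not count towards the size.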

Theorem 5.1

Let \(f_{\rho }\), \(f_{\pi }\) be two positive and analytic probability densities on \([-1,1]^d\). Then there exists \(\beta >0\), and for every \(N\in {{\mathbb {N}}}\), there exists a ReLU NN \(\Phi _N=(\Phi _{N,j})_{j=1}^d:{{\mathbb {R}}}^d\rightarrow {{\mathbb {R}}}^d\), such that \(\Phi _N:[-1,1]^d\rightarrow [-1,1]^d\) is bijective, triangular and monotone,

\(\mathrm{size}(\Phi _N) \le C N\) and \(\mathrm{depth}(\Phi _N)\le C\log (N)N^{1/2}\). Here C is a constant depending on d, \(f_{\rho }\) and \(f_{\pi }\) but independent of N.

Remark 5.2

Compared to Theorems 4.3 and 4.5, for ReLU networks we obtain the slightly worse convergence rate \(\exp (-\beta N^{1/(d+1)})\) instead of \(\exp (-\beta N^{1/d})\). This stems from the fact that, for ReLU networks, the best known approximation results for analytic functions in d dimensions show convergence with the rate \(\exp (-\beta N^{1/(d+1)})\); see [49, Thm. 3.5].

The proof of Theorem 5.1 proceeds as follows: First, we apply results from [49] to obtain a neural network approximation \({\tilde{\Phi }}_k\) to \(T_k\). The constructed \((\tilde{\Phi }_k)_{k=1}^d:[-1,1]^d\rightarrow {{\mathbb {R}}}^d\) is a triangular map that is close to T in the norm of \(W^{1,\infty }([-1,1]^d)\). However, it is not necessarily a monotone bijective self-mapping of \([-1,1]^d\). To correct the construction, we use the following lemma:

Lemma 5.3

Let \(f:[-1,1]^{k-1}\rightarrow {{\mathbb {R}}}\) be a ReLU NN. Then there exists a ReLU NN \(g_f:[-1,1]^{k}\rightarrow {{\mathbb {R}}}\) such that \(|g_f({{\varvec{y}}},t)|\le |f({{\varvec{y}}})|\) for all \(({{\varvec{y}}},t)\in [-1,1]^{k-1}\times [-1,1]\),

and \(\mathrm{depth}(g_f)\le 1+\mathrm{depth}(f)\) and \(\mathrm{size}(g_f)\le C (1+\mathrm{size}(f))\) with C independent of f and \(g_f\). Moreover, in the sense of weak derivatives \(|\nabla _{{\varvec{y}}}g_f({{\varvec{y}}},t)|\le |\nabla f({{\varvec{y}}})|\) and \(|\frac{d}{dt} g_f({{\varvec{y}}},t)|\le \sup _{{{\varvec{y}}}\in [-1,1]^{k-1}}|f({{\varvec{y}}})|\), i.e., these inequalities hold a.e. in \([-1,1]^{k-1}\times [-1,1]\).

With \({\tilde{\Phi }}_k:[-1,1]^k\rightarrow {{\mathbb {R}}}\) approximating the kth component \(T_k:[-1,1]^k\rightarrow [-1,1]\), it is then easy to check that with \(f_1({{\varvec{x}}}_{[k-1]}){:}{=}1-{\tilde{\Phi }}_k({{\varvec{x}}}_{[k-1]},1)\) and \(f_{-1}({{\varvec{x}}}_{[k-1]}){:}{=}-1-{\tilde{\Phi }}_k({{\varvec{x}}}_{[k-1]},-1)\) for \({{\varvec{x}}}_{[k-1]}\in [-1,1]^{k-1}\), the NN

satisfies \(\Phi _k({{\varvec{x}}}_{[k-1]},1)=1\) and \(\Phi _k({{\varvec{x}}}_{[k-1]},-1)=-1\). Since the introduced correction terms \(g_{f_1}\) and \(g_{f_{-1}}\) have size and depth bounds of the same order as \({\tilde{\Phi }}_k\), they will not worsen the resulting convergence rates. The details are provided in Appendix D.1.

In the previous theorem, we consider a “sparsely connected” network \(\Phi \), meaning that certain weights and biases are, by choice of the network architecture, set a priori to zero. This reduces the overall size of \(\Phi \). We note that this also yields a convergence rate for fully connected networks: Consider all networks of width O(N) and depth \(O(\log (N) N^{1/2})\). The size of a network within this architecture is bounded by \(O(N^2\log (N) N^{1/2})=O(N^{5/2}\log (N))\), since the number of elements of the weight matrix \({{\varvec{W}}}_j\) between two consecutive layers is \(n_jn_{j+1}\le N^2\). The network \(\Phi \) from Theorem 5.1 belongs to this class, and thus, the best approximation among networks with this architecture achieves at least the exponential convergence \(\exp (-\beta N^{1/(d+1)})\). In terms of the number of trainable parameters \(M=O(N^{5/2}\log (N))\), this convergence rate is, up to logarithmic terms, \(\exp (-\beta M^{2/(5d+5)})\).
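The exponent in the last rate can be verified directly. Ignoring logarithmic factors, \(M\sim N^{5/2}\) gives \(N\sim M^{2/5}\), and therefore

$$\begin{aligned} \exp \big (-\beta N^{\frac{1}{d+1}}\big )\sim \exp \big (-\beta M^{\frac{2}{5}\cdot \frac{1}{d+1}}\big )=\exp \big (-\beta M^{\frac{2}{5d+5}}\big ). \end{aligned}$$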

Remark 5.4

The constant C in (4.12) and the (possibly different) constant C in (5.2) typically depend exponentially on the dimension d: This dependence is true for polynomial approximation results of analytic functions in d dimensions, which is why it will hold for C in (4.12) in general. Since the proof in [49], upon which our analysis is based, uses polynomial approximations, the same can be expected for the constant in (5.2). In [76] we discuss the approximation of T by rational functions in the high-dimensional case. There we give sufficient conditions on the reference and target to guarantee algebraic convergence of the error, with all constants being controlled independent of the dimension.

Remark 5.5

Normalizing flows approximate a transport map T using a variety of neural network constructions, typically by composing a series of nonlinear bijective transformations; each individual transformation employs neural networks in its parametrization, embedded into a specific functional form (possibly augmented with constraints) that ensures bijectivity [36, 50]. “Residual” normalizing flows [1, 54] compose maps that are not in general triangular, but “autoregressive” flows [33, 34, 72] use monotone triangular maps as their essential building block. Many practical implementations of autoregressive flows, however, limit the class of triangular maps that can be expressed. Thus, they cannot seek to directly approximate the KR transport in a single step; rather, they compose multiple such triangular maps, interleaved with permutations [68]. In principle, however, a direct approximation of the KR map is sufficient, and our results could be a starting point for constructive and quantitative guidance on the parametrization and expressivity of autoregressive flows in this setting. Our result is also close in style to [43], which shows low-order convergence rates for neural network approximations of transport maps for certain classes of target densities, by writing the map as a gradient of a potential function given by a neural network. This construction, which employs semi-discrete optimal transport, is not in general triangular and does not necessarily coincide with common normalizing flow architectures.

6 Convergence of Pushforward Measures

Let again \(T_\sharp {\rho }={\pi }\). In Sects. 4 and 5 we have shown that the approximation \({\tilde{T}}\) to T obtained in Theorems 4.5 and 5.1 converges to T in the \(W^{m,\infty }([-1,1]^d)\) norm for suitable \(m\in {{\mathbb {N}}}\). In the present section, we show that these results imply corresponding error bounds for the approximate pushforward measure, i.e., bounds for

with “\(\mathrm{dist}\)” referring to the Hellinger distance, the total variation distance, the Wasserstein distance or the KL divergence. Specifically, we will show that smallness of \(\Vert T-{\tilde{T}} \Vert _{W^{1,\infty }}\) (or \(\Vert T-\tilde{T} \Vert _{L^{\infty }}\) in case of the Wasserstein distance) implies smallness of (6.1).

As mentioned before, when casting the approximation of the transport as an optimization problem, it is often more convenient to first approximate the inverse transport \(S=T^{-1}\) by some \({\tilde{S}}\), and then to invert \({\tilde{S}}\) to obtain an approximation \({\tilde{T}} = {\tilde{S}}^{-1}\) of T; see [45] and also, e.g., the method in [22]. In this case, we usually have an upper bound on \(\Vert S-{\tilde{S}} \Vert _{}\) rather than \(\Vert T-{\tilde{T}} \Vert _{}\) in a suitable norm; cp. Remark 4.7. However, a bound of the type \(\Vert T-{\tilde{T}} \Vert _{W^{m,\infty }}<\varepsilon \) implies \(\Vert S-{\tilde{S}} \Vert _{W^{m,\infty }}=O(\varepsilon )\) for \(m\in \{0,1\}\) as the next lemma shows. Since closeness in \(L^\infty \) or \(W^{1,\infty }\) is all we require for the results of this section, the following analysis covers either situation.

Lemma 6.1

Let \(T:[-1,1]^d\rightarrow [-1,1]^d\) and \({\tilde{T}}:[-1,1]^d\rightarrow [-1,1]^d\) be bijective. Denote \(S=T^{-1}\) and \({\tilde{S}}={\tilde{T}}^{-1}\). Suppose that S has Lipschitz constant \(L_S\). Then

- (i) it holds

  $$\begin{aligned} \Vert S-{\tilde{S}} \Vert _{L^\infty ([-1,1]^d)}\le L_S \Vert T-{\tilde{T}} \Vert _{L^\infty ([-1,1]^d)}, \end{aligned}$$

- (ii) if S, T, \({\tilde{S}}\), \({\tilde{T}}\in W^{1,\infty }([-1,1]^d)\) and \(dT:[-1,1]^d\rightarrow {{\mathbb {R}}}^{d\times d}\) has Lipschitz constant \(L_{dT}\), then

  $$\begin{aligned} \Vert dS-d{\tilde{S}} \Vert _{L^\infty ([-1,1]^d)}\le (1+L_SL_{dT}) \Vert dS \Vert _{L^\infty ([-1,1]^d)} \Vert d\tilde{S} \Vert _{L^\infty ([-1,1]^d)} \Vert T-{\tilde{T}} \Vert _{W^{1,\infty }([-1,1]^d)}. \end{aligned}$$
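Item (i) is easy to observe numerically for \(d=1\). In the sketch below the maps \(T(x)=x+a(1-x^2)\) and \({\tilde{T}}(x)=x+{\tilde{a}}(1-x^2)\) are hypothetical monotone bijections of \([-1,1]\) (valid for \(|a|,|{\tilde{a}}|<\frac{1}{2}\)), the inverses are computed by bisection, and the suprema are approximated on a uniform grid; none of these choices comes from the text.

```python
def invert(T, y, tol=1e-13):
    """Invert an increasing bijection T of [-1, 1] by bisection."""
    lo, hi = -1.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if T(mid) < y:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical maps; T'(x) = 1 - 2*a*x > 0 on [-1, 1] whenever |a| < 1/2.
a, a_tilde = 0.2, 0.25
T = lambda x: x + a * (1.0 - x * x)
T_tilde = lambda x: x + a_tilde * (1.0 - x * x)

grid = [-1.0 + 2.0 * i / 400 for i in range(401)]

# Grid approximations of the sup-norm errors of the maps and of their inverses.
err_T = max(abs(T(x) - T_tilde(x)) for x in grid)
err_S = max(abs(invert(T, y) - invert(T_tilde, y)) for y in grid)

# Lipschitz constant of S = T^{-1}: sup |S'| = 1 / min T' = 1 / (1 - 2|a|).
L_S = 1.0 / (1.0 - 2.0 * abs(a))
```

For these values `err_T` equals \(|a-{\tilde{a}}|\) up to rounding, and `err_S` stays below `L_S * err_T`, in line with the inequality in (i).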

6.1 Distances

For two probability measures \({\rho }\ll \mu \) and \({\pi }\ll \mu \) on \([-1,1]^d\) equipped with the Borel sigma-algebra, we consider the following distances:

- Hellinger distance

$$\begin{aligned} \mathrm{H}({\rho },{\pi }) {:}{=}\left( \frac{1}{2}\int _{[-1,1]^d} \left( \sqrt{\frac{\mathrm {d}{\rho }}{\mathrm {d}\mu }({{\varvec{x}}})}-\sqrt{\frac{\mathrm {d}{\pi }}{\mathrm {d}\mu }({{\varvec{x}}})}\right) ^2 \;\mathrm {d}\mu ({{\varvec{x}}})\right) ^{1/2}, \end{aligned}$$(6.2a)

- total variation distance

$$\begin{aligned} \mathrm{TV}({\rho },{\pi }) {:}{=}\sup _{A\in {{\mathcal {A}}}}|{\rho }(A)-{\pi }(A)|=\frac{1}{2}\int _{[-1,1]^d} \Big |\frac{\mathrm {d}{\rho }}{\mathrm {d}\mu }({{\varvec{x}}})-\frac{\mathrm {d}{\pi }}{\mathrm {d}\mu }({{\varvec{x}}})\Big |\;\mathrm {d}\mu ({{\varvec{x}}}), \end{aligned}$$(6.2b)

- Kullback–Leibler (KL) divergence

$$\begin{aligned} \mathrm{KL}({\rho }\Vert {\pi }) {:}{=}{\left\{ \begin{array}{ll}\int _{[-1,1]^d} \log \left( \frac{\mathrm {d}{\rho }}{\mathrm {d}{\pi }}({{\varvec{x}}})\right) \;\mathrm {d}{\rho }({{\varvec{x}}}) &{}\text {if }{\rho }\ll {\pi }\\ \infty &{}\text {otherwise}, \end{array}\right. } \end{aligned}$$(6.2c)

- for \(p\in [1,\infty )\), the p-Wasserstein distance

$$\begin{aligned} W_p({\rho },{\pi }){:}{=}\inf _{\nu \in \Gamma ({\rho },{\pi })}\left( \int _{[-1,1]^d\times [-1,1]^d} \Vert {{\varvec{x}}}-{{\varvec{y}}} \Vert _{}^p\;\mathrm {d}\nu ({{\varvec{x}}},{{\varvec{y}}})\right) ^{1/p}, \end{aligned}$$(6.2d)

where \(\Gamma ({\rho },{\pi })\) denotes the set of all measures on \([-1,1]^d\times [-1,1]^d\) with marginals \({\rho }\), \({\pi }\).

Contrary to the Hellinger, total variation, and Wasserstein distances, the KL divergence is not symmetric; however, \(\mathrm{KL}({\rho }\Vert {\pi })>0\) iff \({\rho }\ne {\pi }\).
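
To make the definitions (6.2a)–(6.2c) concrete, the following minimal Python sketch evaluates them for \(d=1\) by a midpoint rule. It is an illustration under assumptions that are not part of the text: \(\mu \) is taken to be the uniform probability measure on \([-1,1]\), the two densities are chosen arbitrarily, and the function name `distances` and the grid size are hypothetical.

```python
import numpy as np

def distances(f_rho, f_pi, n=2000):
    """Hellinger, TV and KL(rho || pi) for densities w.r.t. the uniform
    probability measure mu on [-1, 1], using a midpoint rule with n cells."""
    x = -1.0 + (np.arange(n) + 0.5) * (2.0 / n)   # cell midpoints
    w = 1.0 / n                                    # mu-mass of each cell
    r, p = f_rho(x), f_pi(x)
    hellinger = np.sqrt(0.5 * np.sum((np.sqrt(r) - np.sqrt(p)) ** 2) * w)
    tv = 0.5 * np.sum(np.abs(r - p)) * w
    kl = np.sum(np.log(r / p) * r) * w             # assumes p > 0 everywhere
    return hellinger, tv, kl

# Illustrative choice: rho uniform, pi with mu-density 1 + x/2.
H, TV, KL = distances(lambda x: np.ones_like(x), lambda x: 1.0 + 0.5 * x)
print(H, TV, KL)
```

For these definitions the standard relations \(\mathrm{H}^2\le \mathrm{TV}\le \sqrt{2}\,\mathrm{H}\) and \(\mathrm{TV}\le \sqrt{\mathrm{KL}/2}\) (Pinsker) hold, and the computed values can be checked against them.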

Remark 6.2

As is well known, the Hellinger distance provides an upper bound of the difference of integrals w.r.t. two different measures. Assume that \(g\in L^2([-1,1]^d,{\rho }) \cap L^2([-1,1]^d,{\pi })\). Then
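
One bound of this kind follows from the Cauchy–Schwarz and Minkowski inequalities. It is given here as a sketch; the constant is the one produced by this particular argument and need not be the sharpest or the one used elsewhere. Writing \(f_{\rho }=\frac{\mathrm {d}{\rho }}{\mathrm {d}\mu }\) and \(f_{\pi }=\frac{\mathrm {d}{\pi }}{\mathrm {d}\mu }\),

$$\begin{aligned} \Big |\int g\;\mathrm {d}{\rho }-\int g\;\mathrm {d}{\pi }\Big |&=\Big |\int g\,\big (\sqrt{f_{\rho }}-\sqrt{f_{\pi }}\big )\big (\sqrt{f_{\rho }}+\sqrt{f_{\pi }}\big )\;\mathrm {d}\mu \Big |\\&\le \Big (\int \big (\sqrt{f_{\rho }}-\sqrt{f_{\pi }}\big )^2\;\mathrm {d}\mu \Big )^{1/2}\Big (\int g^2\big (\sqrt{f_{\rho }}+\sqrt{f_{\pi }}\big )^2\;\mathrm {d}\mu \Big )^{1/2}\\&\le \sqrt{2}\,\mathrm{H}({\rho },{\pi })\,\big (\Vert g \Vert _{L^2([-1,1]^d,{\rho })}+\Vert g \Vert _{L^2([-1,1]^d,{\pi })}\big ), \end{aligned}$$

where all integrals are over \([-1,1]^d\).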

6.2 Error Bounds

Throughout this subsection, \(p\in [1,\infty )\) is arbitrary but fixed. Under suitable assumptions a result of the following type was shown in [58, Theorem 2] (see the extended result [76, Prop. 6.2] for the following variant)
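
The mechanism behind estimates of this type can be sketched with a standard coupling argument, which is not necessarily the exact statement of the cited results. The measure \(\nu {:}{=}(T,{\tilde{T}})_\sharp {\rho }\) has marginals \(T_\sharp {\rho }\) and \({\tilde{T}}_\sharp {\rho }\), so it is admissible in (6.2d) and

$$\begin{aligned} W_p(T_\sharp {\rho },{\tilde{T}}_\sharp {\rho })\le \Big (\int _{[-1,1]^d}\Vert T({{\varvec{x}}})-{\tilde{T}}({{\varvec{x}}}) \Vert ^p\;\mathrm {d}{\rho }({{\varvec{x}}})\Big )^{1/p}\le \sup _{{{\varvec{x}}}\in [-1,1]^d}\Vert T({{\varvec{x}}})-{\tilde{T}}({{\varvec{x}}}) \Vert . \end{aligned}$$

Since \(T_\sharp {\rho }={\pi }\), an \(L^\infty \) bound on \(T-{\tilde{T}}\) controls the Wasserstein distance between \({\tilde{T}}_\sharp {\rho }\) and \({\pi }\).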

Thus, Theorems 4.5 and 5.1 readily yield bounds on \(W_p({\tilde{T}}_\sharp {\rho },{\pi })\) for the approximate polynomial, rational, and NN transport maps from Sects. 4.2 and 5.

For the other three distances/divergences in (6.2), to obtain a bound on \(\mathrm{dist}({\tilde{T}}_\sharp {\rho },T_\sharp {\rho })\), we will upper bound the difference between the densities of those measures. Since the density of \({\tilde{T}}_\sharp {\rho }\) is given by \(f_{\rho }({\tilde{S}}({{\varvec{x}}}))\det d{\tilde{S}}({{\varvec{x}}})\), where \({\tilde{S}}={\tilde{T}}^{-1}\), we need to upper bound \(|f_{\rho }(S({{\varvec{x}}}))\det dS({{\varvec{x}}}) -f_{\rho }({\tilde{S}}({{\varvec{x}}}))\det d{\tilde{S}}({{\varvec{x}}})|\), where \(S=T^{-1}\). This will be done in the proof of the following theorem. To state the result, for a triangular map \(S\in C^1([-1,1]^d;[-1,1]^d)\) we first define

Theorem 6.3

Let T, \({\tilde{T}}:[-1,1]^d\rightarrow [-1,1]^d\) be bijective, monotone, and triangular. Define \(S{:}{=}T^{-1}\) and \({\tilde{S}}{:}{=}{\tilde{T}}^{-1}\) and assume that T, \(S\in W^{2,\infty }([-1,1]^d)\) and \({\tilde{T}}\), \({\tilde{S}}\in W^{1,\infty }([-1,1]^d)\). Moreover, let \({\rho }\) be a probability measure on \([-1,1]^d\) such that \(f_{\rho }{:}{=}\frac{\mathrm {d}{\rho }}{\mathrm {d}\mu }:[-1,1]^d\rightarrow {{\mathbb {R}}}_+\) is strictly positive and Lipschitz continuous. Suppose that \(\tau _0>0\) is such that \(\Vert d{\tilde{S}} \Vert _{L^\infty ([-1,1]^d)}<\frac{1}{\tau _0}\) and \({\tilde{S}}_{\mathrm{min}}\ge \tau _0\).

Then there exists C depending on \(\tau _0\) but not on \({\tilde{T}}\) such that for \(\mathrm{dist}\in \{\mathrm{H},\mathrm{TV}\}\)

and

Together with Theorem 4.5, we can now show exponential convergence of the pushforward measure in the case of analytic densities. For \(\varepsilon >0\), denote by \({\tilde{T}}_\varepsilon = (\tilde{T}_{\varepsilon ,k})_{k=1}^d\) the approximation to T from Theorem 4.5. Moreover, recall that \(N_\varepsilon \) in (4.11) denotes the number of degrees of freedom of this approximation (the number of coefficients of this rational function). As shown in Lemma 3.9, the exponential convergence shown in the next proposition holds in particular for positive and analytic reference and target densities \(f_{\rho }\), \(f_{\pi }\).

Proposition 6.4

Consider the setting of Theorem 4.5; in particular, let \(f_{\rho }\), \(f_{\pi }\) satisfy Assumption 3.5, and let \(\beta >0\) be as in (4.7b).

Then for every \({\tilde{\beta }}<\beta \) there exists \(C>0\) such that for every \(\varepsilon \in (0,1)\) and \(\mathrm{dist}\in \{\mathrm{H},\mathrm{TV},\mathrm{KL},W_p\}\) with \({\tilde{T}}_\varepsilon \) as in (4.10) and \(N_\varepsilon \) as in (4.11) it holds

Similarly, we get a bound for the pushforward under the NN transport from Theorem 5.1.

Proposition 6.5

Let \(f_{\rho }\) and \(f_{\pi }\) be two positive and analytic probability densities on \([-1,1]^d\). Then for every \(\mathrm{dist}\in \{\mathrm{H},\mathrm{TV},\mathrm{KL},W_p\}\), there exist constants \(\beta >0\) and \(C>0\), and for every \(N\in {{\mathbb {N}}}\) there exists a ReLU neural network \(\Phi _N:[-1,1]^d\rightarrow [-1,1]^d\) such that

and \(\mathrm{size}(\Phi _N)\le CN\) and \(\mathrm{depth}(\Phi _N)\le C\log (N)N^{1/2}\).

The proof is completely analogous to that of Proposition 6.4 (but using Theorem 5.1 instead of Theorem 4.5 to approximate the transport T with the NN \(\Phi _N\)), which is why we do not give it in the appendix.
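
The density formula \(f_{\rho }({\tilde{S}}({{\varvec{x}}}))\det d{\tilde{S}}({{\varvec{x}}})\) used in this subsection can be illustrated numerically for \(d=1\). The sketch below rests on assumptions made only for illustration: the reference \({\rho }=\mu \) is the uniform probability measure on \([-1,1]\), the map is the hypothetical family \(T_c(x)=x+c(1-x^2)\) with \(0<c<1/2\) (a monotone bijection of \([-1,1]\) with closed-form inverse), and a second map with a slightly perturbed coefficient plays the role of \({\tilde{T}}\).

```python
import numpy as np

def S(y, c):
    """Inverse of T_c(x) = x + c (1 - x^2) on [-1, 1], valid for 0 < c < 1/2."""
    return (1.0 - np.sqrt(1.0 - 4.0 * c * (y - c))) / (2.0 * c)

def pushforward_density(y, c):
    """mu-density of (T_c)_# mu: f_rho(S(y)) * dS(y), with f_rho = 1
    and dS(y) = 1 / dT_c(S(y)) = 1 / (1 - 2 c S(y))."""
    return 1.0 / (1.0 - 2.0 * c * S(y, c))

n = 4000
y = -1.0 + (np.arange(n) + 0.5) * (2.0 / n)    # midpoint grid on [-1, 1]
w = 1.0 / n                                     # mu-mass of each cell

f = pushforward_density(y, 0.30)                # density of T_# mu
f_tilde = pushforward_density(y, 0.31)          # density of the perturbed map
mass = np.sum(f) * w                            # should be close to 1
tv = 0.5 * np.sum(np.abs(f - f_tilde)) * w      # TV distance as in (6.2b)
print(mass, tv)
```

In this toy setting the total variation distance is of the same order as the perturbation of the coefficient, in line with the qualitative message that closeness of the maps in \(W^{1,\infty }\) implies closeness of the pushforward measures.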

7 Application to Inverse Problems in UQ

To give an application and explain in more detail the practical value of our results, we briefly discuss a standard inverse problem in uncertainty quantification.

7.1 Setting

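Let \(n\in {{\mathbb {N}}}\) and let \(\mathrm {D}\subseteq {{\mathbb {R}}}^n\) be a bounded Lipschitz domain. For a diffusion coefficient \(a\in L^\infty (\mathrm {D};{{\mathbb {R}}})\) such that \({{\,\mathrm{ess\,inf}\,}}_{x\in \mathrm {D}}a(x)>0\), and a forcing term \(f\in H^{-1}(\mathrm {D})\), the PDE

$$\begin{aligned} -\mathrm{div}(a\nabla {\mathfrak {u}})=f\quad \text {in }\mathrm {D},\qquad {\mathfrak {u}}=0\quad \text {on }\partial \mathrm {D} \end{aligned}$$(7.1)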

has a unique weak solution in \(H_0^1(\mathrm {D})\). We denote it by \({\mathfrak {u}}(a)\), and call \({\mathfrak {u}}:a\mapsto {\mathfrak {u}}(a)\) the forward operator.

Let \(A:H_0^1(\mathrm {D})\rightarrow {{\mathbb {R}}}^m\) be a bounded linear observation operator for some \(m\in {{\mathbb {N}}}\). The inverse problem consists of recovering the diffusion coefficient \(a\in L^\infty (\mathrm {D})\), given the noisy observation

$$\begin{aligned} {\varvec{\varsigma }}=A({\mathfrak {u}}(a))+{\varvec{\eta }}\in {{\mathbb {R}}}^m \end{aligned}$$(7.2)

with the additive observation noise \({\varvec{\eta }}\sim {{\mathcal {N}}}(0,\Sigma )\), for a symmetric positive definite covariance matrix \(\Sigma \in {{\mathbb {R}}}^{m\times m}\).

7.2 Prior and Posterior

In uncertainty quantification and statistical inverse problems, the diffusion coefficient \(a\in L^\infty (\mathrm {D})\) is modeled as a random variable (independent of the observation noise \({\varvec{\eta }}\)) distributed according to some known prior distribution; see, e.g., [35]. Bayes’ theorem provides a formula for the distribution of the diffusion coefficient conditioned on the observations. This conditional is called the posterior and interpreted as the solution to the inverse problem.

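To construct a prior, let \((\psi _j)_{j=1}^d\subset L^\infty (\mathrm {D})\) and set

$$\begin{aligned} a({{\varvec{y}}})=a({{\varvec{y}}},x){:}{=}1+\sum _{j=1}^dy_j\psi _j(x), \end{aligned}$$(7.3)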

where \(y_j\in [-1,1]\). We consider the uniform measure on \([-1,1]^d\) as the prior: Every realization \((y_j)_{j=1}^d\in [-1,1]^d\) corresponds to a diffusion coefficient \(a({{\varvec{y}}})\in L^\infty (\mathrm {D})\), and equivalently the pushforward of the uniform measure on \([-1,1]^d\) under \(a:[-1,1]^d\rightarrow L^\infty (\mathrm {D})\) can be interpreted as a prior on \(L^\infty (\mathrm {D})\). Throughout we assume \({{\,\mathrm{ess\,inf}\,}}_{x\in \mathrm {D}}a({{\varvec{y}}},x)>0\) for all \({{\varvec{y}}}\in [-1,1]^d\) and write \(u({{\varvec{y}}}){:}{=}{\mathfrak {u}}(a({{\varvec{y}}}))\) for the solution of (7.1).

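Given m measurements \((\varsigma _i)_{i=1}^m\) as in (7.2), the posterior measure \({\pi }\) on \([-1,1]^d\) is the distribution of \({{\varvec{y}}}|{\varvec{\varsigma }}\). Since \({\varvec{\eta }}\sim {{\mathcal {N}}}(0,\Sigma )\), the likelihood (the density of \({\varvec{\varsigma }}|{{\varvec{y}}}\)) equals

$$\begin{aligned} \frac{1}{\sqrt{(2\pi )^m\det (\Sigma )}}\exp \Big (-\frac{1}{2}\big ({\varvec{\varsigma }}-A(u({{\varvec{y}}}))\big )^\top \Sigma ^{-1}\big ({\varvec{\varsigma }}-A(u({{\varvec{y}}}))\big )\Big ). \end{aligned}$$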

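By Bayes’ theorem, the posterior density \(f_{\pi }\), corresponding to the distribution of \({{\varvec{y}}}|{\varvec{\varsigma }}\), is proportional to the density of \({{\varvec{y}}}\) times the density of \({\varvec{\varsigma }}|{{\varvec{y}}}\). Since the (uniform) prior has constant density 1,

$$\begin{aligned} f_{\pi }({{\varvec{y}}})=\frac{1}{Z}\exp \Big (-\frac{1}{2}\big ({\varvec{\varsigma }}-A(u({{\varvec{y}}}))\big )^\top \Sigma ^{-1}\big ({\varvec{\varsigma }}-A(u({{\varvec{y}}}))\big )\Big ). \end{aligned}$$(7.4)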

The normalizing constant Z is in practice unknown. For more details see, e.g., [17].
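
Because Z is unknown, computations work with the unnormalized density: under the Gaussian noise model its logarithm is \(-\frac{1}{2}{{\varvec{r}}}^\top \Sigma ^{-1}{{\varvec{r}}}\) with the residual \({{\varvec{r}}}={\varvec{\varsigma }}-A(u({{\varvec{y}}}))\). The following Python sketch evaluates it for a toy instance of the model problem. Everything specific in it is an assumption made for illustration: \(\mathrm {D}=(0,1)\), forcing \(f=1\), \(d=2\) with the hypothetical functions \(\psi _1(x)=0.4\sin (\pi x)\) and \(\psi _2(x)=0.2\sin (2\pi x)\), point observations at \(x\in \{0.25,0.5,0.75\}\), noise covariance \(\Sigma =\sigma ^2I\), and a finite-difference discretization of the PDE.

```python
import numpy as np

n = 63                                   # interior grid nodes x_i = (i + 1) h
h = 1.0 / (n + 1)
x_mid = (np.arange(n + 1) + 0.5) * h     # cell midpoints, where a is sampled
obs_idx = np.array([15, 31, 47])         # nodes x = 0.25, 0.5, 0.75

def psi(x):
    """Hypothetical psi_1, psi_2 with sup-norms 0.4 and 0.2 (so a >= 0.4)."""
    return np.stack([0.4 * np.sin(np.pi * x), 0.2 * np.sin(2.0 * np.pi * x)])

def forward(y):
    """u(y): finite-difference solution of -(a u')' = 1, u(0) = u(1) = 0,
    with a(y, x) = 1 + sum_j y_j psi_j(x)."""
    a = 1.0 + y @ psi(x_mid)
    main = (a[:-1] + a[1:]) / h**2       # diagonal of the stiffness matrix
    off = -a[1:-1] / h**2                # off-diagonals
    K = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    return np.linalg.solve(K, np.ones(n))

def log_posterior_unnormalized(y, data, sigma):
    """log(Z f_pi(y)) for Sigma = sigma^2 I and the uniform prior."""
    r = data - forward(y)[obs_idx]       # residual: data minus A(u(y))
    return -0.5 * np.dot(r, r) / sigma**2

y_true = np.array([0.3, -0.5])           # parameter generating synthetic data
data = forward(y_true)[obs_idx]          # noise-free data, for illustration
print(log_posterior_unnormalized(np.zeros(2), data, sigma=0.01))
```

With noise-free synthetic data the unnormalized log-density attains its maximum value 0 at the generating parameter and is negative wherever the predicted observations differ from the data.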

In order to compute expectations w.r.t. the posterior \({\pi }\), we want to determine a transport map \(T:[-1,1]^d \rightarrow [-1,1]^d\) such that \(T_\sharp \mu ={\pi }\): Then if \(X_i\in [-1,1]^d\), \(i=1,\dots ,N\), are iid uniformly distributed on \([-1,1]^d\), \(T(X_i)\), \(i=1,\dots ,N\), are iid with distribution \(\pi \). This allows us to approximate integrals \(\int _{[-1,1]^d}g({{\varvec{y}}})\;\mathrm {d}{\pi }({{\varvec{y}}})\) via Monte Carlo sampling as \(\frac{1}{N}\sum _{i=1}^N g(T(X_i))\).
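
The sampling procedure just described can be sketched in a few lines of Python for \(d=1\). The target is an assumption chosen so that the transport is known in closed form: for the Lebesgue density \((1+y)/2\) on \([-1,1]\), the monotone map pushing the uniform measure to the target is \(T(x)=\sqrt{2(x+1)}-1\), and the exact mean of the target is 1/3. The function names and the sample size are illustrative.

```python
import numpy as np

def T(x):
    """Monotone transport from the uniform measure on [-1, 1] to the
    measure with Lebesgue density (1 + y) / 2 (illustrative target)."""
    return np.sqrt(2.0 * (x + 1.0)) - 1.0

def g(y):
    """Quantity of interest; here g(y) = y, i.e. the mean of the target."""
    return y

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=200_000)   # iid reference samples X_i
estimate = np.mean(g(T(X)))                # (1/N) sum_i g(T(X_i))
print(estimate)                            # Monte Carlo estimate of 1/3
```

No evaluation of the target density is needed at sampling time; all information about \({\pi }\) is encoded in T.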

7.3 Determining \(\Lambda _{k,\varepsilon }\)

Choose as the reference measure the uniform measure \({\rho }=\mu \) on \([-1,1]^d\) and let the target measure \({\pi }\) be the posterior with density \(f_{\pi }\) as in (7.4). To apply Theorem 4.5, we first need to determine \({\varvec{\delta }}\in (0,\infty )^d\), such that \(f_{\pi }\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}([-1,1]);{{\mathbb {C}}})\). Since \(\exp :{{\mathbb {C}}}\rightarrow {{\mathbb {C}}}\) is an entire function, by (7.4), in case \(u\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}([-1,1]);{{\mathbb {C}}})\) we have \(f_{\pi }\in C^1({{\mathcal {B}}}_{{\varvec{\delta }}}([-1,1]);{{\mathbb {C}}})\). One can show that the forward operator \({\mathfrak {u}}\) is complex differentiable from \(\{L^\infty (\mathrm {D};{{\mathbb {C}}})\,:\,{{\,\mathrm{ess\,inf}\,}}_{x\in \mathrm {D}}\Re (a(x))>0\}\) to \(H_0^1(\mathrm {D};{{\mathbb {C}}})\); see [75, Example 1.2.39]. Hence, \(u({{\varvec{y}}})={\mathfrak {u}}(1+\sum _{j=1}^dy_j\psi _j)\) indeed is complex differentiable, e.g., for all \({{\varvec{y}}}\in {{\mathbb {C}}}^d\) such that

Complex differentiability implies analyticity, and therefore, \(u({{\varvec{y}}})\) is analytic on \({{\mathcal {B}}}_{\varvec{\delta }}([-1,1];{{\mathbb {C}}})\) with \(\delta _j\) proportional to \(\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}^{-1}\):

Lemma 7.1

There exists \(\tau =\tau ({\mathfrak {u}},\Sigma ,d)>0\) and an increasing sequence \((\kappa _j)_{j=1}^d\subset (0,1)\) such that with \(\delta _j{:}{=}\kappa _j+\frac{\tau }{\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}}\), \(f_{\pi }\) in (7.4) satisfies Assumption 3.5.

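Let \(\varrho _j=1+C_7\delta _j\) be as in Theorem 4.5 (i.e., as in (4.7a)), where \(C_7\) is as in Theorem 3.6. With \(\kappa _j\in (0,1)\), \(\tau >0\) as in Lemma 7.1, this reads

$$\begin{aligned} \varrho _j=1+C_7\kappa _j+\frac{C_7\tau }{\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}}. \end{aligned}$$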

In particular, \(\varrho _j\ge 1+C_7\tau \Vert \psi _j \Vert _{L^\infty (\mathrm {D})}^{-1}\). In practice, we do not know \(\tau \) and \(C_7\) (although pessimistic estimates could be obtained from the proofs), and we simply set \(\varrho _j{:}{=}1+\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}^{-1}\). Theorem 4.3 (and Theorem 4.5) then suggest the choice (cp. (4.3), (4.4))

to construct a sparse polynomial ansatz space \({{\mathbb {P}}}_{\Lambda _{k,\varepsilon }}\) in which to approximate \(T_k\) (or \(\sqrt{\partial _{k}T_k}-1\)), \(k\in \{1,\dots ,d\}\). Here \(\varepsilon >0\) is a thresholding parameter, and as \(\varepsilon \rightarrow 0\) the ansatz spaces become arbitrarily large. We interpret (7.5) as follows: The smaller \(\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}\) is, the less important the jth variable is in the approximation of \(T_k\) for \(j<k\). The kth variable, however, plays a special role for \(T_k\) and is always among the most important ones in the approximation of \(T_k\).
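
The effect of the thresholding parameter can be illustrated with a short Python sketch. It does not implement (7.5) itself. Instead it assumes the simplified rule that a multi-index \({\varvec{\nu }}\in {{\mathbb {N}}}_0^k\) is kept whenever \(\prod _{j=1}^k\varrho _j^{-\nu _j}\ge \varepsilon \), which ignores the special treatment of the kth variable mentioned above. The function name `index_set` and the values of \(\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}\) are hypothetical; the choice \(\varrho _j=1+\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}^{-1}\) follows the text.

```python
import itertools
import math

def index_set(rho, eps):
    """Multi-indices nu in N_0^k with prod_j rho_j**(-nu_j) >= eps.
    This thresholding rule is an assumption made for illustration;
    it requires rho_j > 1 and 0 < eps <= 1."""
    budget = math.log(1.0 / eps) + 1e-12          # tolerance for round-off
    logs = [math.log(r) for r in rho]
    max_deg = [int(budget // l) for l in logs]    # largest nu_j on its own
    return [nu for nu in itertools.product(*(range(m + 1) for m in max_deg))
            if sum(n * l for n, l in zip(nu, logs)) <= budget]

psi_norms = [1.0, 0.5, 0.25]                      # hypothetical sup-norms
rho = [1.0 + 1.0 / s for s in psi_norms]          # rho_j = 1 + 1 / ||psi_j||
for eps in (1e-1, 1e-2, 1e-3):
    print(eps, len(index_set(rho, eps)))          # set grows as eps -> 0
```

Sets defined by such a rule are downward closed, and variables with larger \(\varrho _j\) (smaller \(\Vert \psi _j \Vert _{L^\infty (\mathrm {D})}\)) are resolved with lower polynomial degree.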

7.4 Performing Inference

Given data \({\varvec{\varsigma }}\in {{\mathbb {R}}}^m\) as in (7.2), we can now describe a high-level algorithm to perform inference on the model problem in Sects. 7.1–7.2:

(i) Determine ansatz space: Fix \(\varepsilon >0\) and determine \(\Lambda _{k,\varepsilon }\) in (7.5) for \(k=1,\dots ,d\).

(ii) Find transport map: Use as a target \(\pi \) the posterior with density in (7.4), and solve the optimization problem

$$\begin{aligned} {{\,\mathrm{arg\,min}\,}}_{{\tilde{T}}\text { as in (4.10) with } p_k\in {{\mathbb {P}}}_{\Lambda _{k,\varepsilon }}}\mathrm{dist}(\tilde{T}_\sharp {\rho },{\pi }). \end{aligned}$$(7.6)

(iii) Estimate parameter: Estimate the unknown parameter \({{\varvec{y}}}\in [-1,1]^d\) via its conditional mean (CM), i.e., compute the expectation under the posterior \(\pi \simeq {\tilde{T}}_\sharp {\rho }\)

$$\begin{aligned} \int _{[-1,1]^d} {{\varvec{y}}}\;\mathrm {d}{\pi }({{\varvec{y}}}) \simeq \int _{[-1,1]^d} \tilde{T}({{\varvec{x}}})\;\mathrm {d}{\rho }({{\varvec{x}}}){=}{:}{\tilde{{{\varvec{y}}}}}. \end{aligned}$$(7.7)

An estimate of the unknown diffusion coefficient \(a\in L^\infty (\mathrm {D})\) in (7.3) is obtained via \(1+\sum _{j=1}^d {\tilde{y}}_j\psi _j\).
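
Step (iii) amounts to integrating a known map against the reference measure, which can be done by quadrature. The Python sketch below does this for \(d=2\) with a tensorized Gauss–Legendre rule. The map `T_tilde` is a hypothetical monotone triangular polynomial map standing in for the result of step (ii), the coefficients `c1`, `c2` and the functions \(\psi _j\) are illustrative assumptions, and \({\rho }\) is taken to be the uniform probability measure.

```python
import numpy as np

c1, c2 = 0.3, 0.4    # hypothetical coefficients; |c1|, |c2| < 1/2 keeps
                     # both components strictly increasing in their last variable

def T_tilde(x1, x2):
    """Hypothetical triangular monotone bijection of [-1, 1]^2."""
    t1 = x1 + c1 * (1.0 - x1**2)
    t2 = x2 + c2 * 0.5 * (1.0 + x1) * (1.0 - x2**2)
    return np.stack([t1, t2])

nodes, weights = np.polynomial.legendre.leggauss(8)
weights = weights / 2.0                          # uniform probability measure
X1, X2 = np.meshgrid(nodes, nodes, indexing="ij")
W = np.outer(weights, weights)                   # tensor-product weights

# Conditional mean estimate: integral of T_tilde against rho, as in (7.7).
y_tilde = (T_tilde(X1, X2) * W).sum(axis=(1, 2))

def a_estimate(x):
    """1 + sum_j y_tilde_j psi_j(x) with hypothetical psi_1, psi_2."""
    return (1.0 + y_tilde[0] * 0.4 * np.sin(np.pi * x)
                + y_tilde[1] * 0.2 * np.sin(2.0 * np.pi * x))

print(y_tilde, a_estimate(0.5))
```

Since the components of this map are polynomials, the Gauss–Legendre rule integrates them exactly up to round-off; for this particular map the exact value of the conditional mean estimate is \((2c_1/3,\,c_2/3)\).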

We next provide more details for each of those steps.

7.4.1 Determining the Ansatz Space