Abstract

The Painlevé-III equation with parameters \(\Theta _0=n+m\) and \(\Theta _\infty =m-n+1\) has a unique rational solution \(u(x)=u_n(x;m)\) with \(u_n(\infty ;m)=1\) whenever \(n\in \mathbb {Z}\). Using a Riemann–Hilbert representation proposed in Bothner et al. (Stud Appl Math 141:626–679, 2018), we study the asymptotic behavior of \(u_n(x;m)\) in the limit \(n\rightarrow +\infty \) with \(m\in \mathbb {C}\) held fixed. We isolate an eye-shaped domain E in the \(y=n^{-1}x\) plane that asymptotically confines the poles and zeros of \(u_n(x;m)\) for all values of the second parameter m. We then show that unless m is a half-integer, the interior of E is filled with a locally uniform lattice of poles and zeros, and the density of the poles and zeros is small near the boundary of E but blows up near the origin, which is the only fixed singularity of the Painlevé-III equation. In both the interior and exterior domains we provide accurate asymptotic formulæ for \(u_n(x;m)\) that we compare with \(u_n(x;m)\) itself for finite values of n to illustrate their accuracy. We also consider the exceptional cases where m is a half-integer, showing that the poles and zeros of \(u_n(x;m)\) now accumulate along only one or the other of two “eyebrows,” i.e., exterior boundary arcs of E.



1 Introduction

Generic solutions of the six Painlevé equations cannot be expressed in terms of elementary functions, hence the common terminology of Painlevé transcendents for the general solutions of these famous equations. However, it is also known that all of the Painlevé equations except for the Painlevé-I equation admit solutions expressible in terms of classical special functions (e.g., Airy solutions for Painlevé-II, or Bessel solutions for Painlevé-III) as well as rational solutions, both of which occur for certain isolated values of the auxiliary parameters (each Painlevé equation except Painlevé-I is actually a family of differential equations indexed by one or more complex parameters). Rational solutions of Painlevé equations have attracted interest partly because they are known to occur in several diverse applications such as the description of equilibrium configurations of fluid vortices [11] and of particular solutions of soliton equations [10], electrochemistry [1], parametrization of string theories [15], spectral theory of quasi-exactly solvable potentials [20], and the description of universal wave patterns [6]. In several of these applications it is interesting to consider the behavior of the rational Painlevé solutions when the parameters in the equation become large (possibly along with the independent variable); as the degree of the rational function is tied to the parameters via Bäcklund transformations, in this limit algebraic representations of rational solutions become unwieldy and hence less attractive than analytical ones as a means for extracting asymptotic behaviors. Recent progress on the analytical study of large-degree rational Painlevé solutions includes [3, 7, 8, 18] for Painlevé-II and [5, 17] for Painlevé-IV. Both of these equations have the property that there is no fixed singular point except the point at infinity. 
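On the other hand, the Painlevé-III equation is the simplest of the Painlevé equations having a finite fixed singular point (at the origin). This paper is the second in a series beginning with [4] concerning the large-degree asymptotic behavior of rational solutions to the Painlevé-III equation, which we take in the generic form

$$\frac{\mathrm{d}^2u}{\mathrm{d}x^2}=\frac{1}{u}\left(\frac{\mathrm{d}u}{\mathrm{d}x}\right)^2-\frac{1}{x}\frac{\mathrm{d}u}{\mathrm{d}x}+\frac{4\Theta_0u^2+4(1-\Theta_\infty)}{x}+4u^3-\frac{4}{u}.\tag{1}$$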

It is convenient to represent the constant parameters \(\Theta _0\) and \(\Theta _\infty \) in the form

$$\Theta_0=n+m,\qquad \Theta_\infty=m-n+1.\tag{2}$$

It is known that if \(n\in \mathbb {Z}\), there exists a unique rational solution \(u(x)=u_n(x;m)\) of (1) that tends to 1 as \(x\rightarrow \infty \). The odd reflection \(u(x)=-u_n(-x;m)\) provides a second distinct rational solution. Similarly, if \(m\in \mathbb {Z}\), there are two rational solutions tending to \(\pm \mathrm {i}\) as \(x\rightarrow \infty \), namely \(u(x)=\pm \mathrm {i}u_m(\pm \mathrm {i}x;n)\), while if neither m nor n is an integer, (1) has no rational solutions at all. If only one of m and n is an integer, then there are exactly two rational solutions; however, if both \(m\in \mathbb {Z}\) and \(n\in \mathbb {Z}\), there are exactly four distinct rational solutions: \(u_n(x;m)\), \(-u_n(-x;m)\), \(\mathrm {i}u_m(\mathrm {i}x;n)\), and \(-\mathrm {i}u_m(-\mathrm {i}x;n)\).
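The case analysis above can be encoded as a small bookkeeping aid. This is only a sketch that restates the preceding paragraph; the helper names `is_integer` and `rational_solutions` are hypothetical, and the returned entries are formula strings, not computed functions.

```python
def is_integer(z) -> bool:
    """True if the (possibly complex) number z is an integer."""
    z = complex(z)
    return z.imag == 0 and z.real.is_integer()


def rational_solutions(m, n):
    """Rational solutions of (1)-(2) for parameters (m, n), as formula strings.

    u_n and u_m denote the rational solutions normalized to tend to 1 at infinity.
    """
    sols = []
    if is_integer(n):  # solutions tending to +1 and -1
        sols += ["u_n(x;m)", "-u_n(-x;m)"]
    if is_integer(m):  # solutions tending to +i and -i
        sols += ["i*u_m(i*x;n)", "-i*u_m(-i*x;n)"]
    return sols


print(len(rational_solutions(0.5, 3)))     # -> 2 (only n is an integer)
print(len(rational_solutions(2, 1 + 1j)))  # -> 2 (only m is an integer)
print(len(rational_solutions(1, 2)))       # -> 4 (both are integers)
```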

1.1 Representations of \(u_n(x;m)\)

1.1.1 Algebraic Representation

It has been shown [9, 12, 21] that \(u_n(x;m)\) admits the representation

$$u_n(x;m)=\frac{s_n(x;m-1)\,s_{n-1}(x;m)}{s_n(x;m)\,s_{n-1}(x;m-1)},\tag{3}$$

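where \(\{s_n(x;m)\}_{n=-1}^{\infty }\) are polynomials in x with coefficients polynomial in m that are defined by the recurrence formula (primes denoting derivatives with respect to x)

$$s_{n+1}(x;m)s_{n-1}(x;m)=-x\left[s_n(x;m)s_n''(x;m)-s_n'(x;m)^2\right]-s_n(x;m)s_n'(x;m)+(4x+2m+1)s_n(x;m)^2\tag{4}$$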

and the initial conditions \(s_{-1}(x;m)=s_0(x;m)=1\). The polynomials \(\{s_n(x;m)\}_{n=-1}^\infty \) are frequently called the Umemura polynomials, although in [21] Umemura originally considered instead related functions that are polynomials in \(1/x\). For n not too large, the recurrence relation (4) provides an effective computational strategy to obtain the poles and zeros of \(u_n(x;m)\). The rational function \(u_n(x;m)\) has the following symmetry:

$$u_n(-x;-m)=\frac{1}{u_n(x;m)}.\tag{5}$$

This follows from the fact that \(u(x)\mapsto u(-x)^{-1}\) is a symmetry of (1)–(2) corresponding to the parameter mapping \((m,n)\mapsto (-m,n)\). Since this symmetry preserves rationality and asymptotics \(u\rightarrow 1\) as \(x\rightarrow \infty \), it descends from general solutions to the particular solution \(u_n(x;m)\) as written in (5).
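As a minimal sketch of how the algebraic representation can be checked in practice, the SymPy code below builds the Umemura polynomials from the recurrence (4) with \(s_{-1}=s_0=1\), forms \(u_n(x;m)\) from the ratio (3), and tests, for small n and a sample rational m, that the residual of (1) with \(\Theta_0=n+m\), \(\Theta_\infty=m-n+1\) cancels, that \(u_n\to 1\) as \(x\to\infty\), and that \(u_n(x;m)\,u_n(-x;-m)=1\) as in (5). The exact forms of (1) and (4) used are spelled out in the docstrings and are assumptions of this sketch; the function names are illustrative. Exact rational arithmetic avoids rounding, at a cost that grows quickly with n, since the recurrence gives \(\deg s_n=n(n+1)/2\).

```python
import sympy as sp

x = sp.symbols("x")


def umemura(n_max, m):
    """Return [s_{-1}, s_0, ..., s_{n_max}] from the recurrence (4), taken as
    s_{k+1} s_{k-1} = -x (s_k s_k'' - s_k'^2) - s_k s_k' + (4x + 2m + 1) s_k^2."""
    s = [sp.Integer(1), sp.Integer(1)]               # s_{-1} = s_0 = 1
    for _ in range(n_max):
        sk, skm1 = s[-1], s[-2]
        d1 = sp.diff(sk, x)
        rhs = sp.expand(-x * (sk * sp.diff(d1, x) - d1**2) - sk * d1
                        + (4 * x + 2 * m + 1) * sk**2)
        s.append(sp.expand(sp.exquo(rhs, skm1, x)))  # raises if division is not exact
    return s


def u_rational(n, m):
    """u_n(x; m) from the ratio (3); list index k + 1 holds s_k."""
    s_m, s_m1 = umemura(n, m), umemura(n, m - 1)
    return sp.cancel(s_m1[n + 1] * s_m[n] / (s_m[n + 1] * s_m1[n]))


def piii_residual(u, n, m):
    """LHS minus RHS of (1), taken as u'' = u'^2/u - u'/x
    + (4 Theta_0 u^2 + 4 (1 - Theta_inf))/x + 4 u^3 - 4/u, with (2)."""
    th0, thinf = n + m, m - n + 1
    du = sp.diff(u, x)
    res = (sp.diff(u, x, 2) - du**2 / u + du / x
           - (4 * th0 * u**2 + 4 * (1 - thinf)) / x - 4 * u**3 + 4 / u)
    return sp.cancel(res)


m = sp.Rational(1, 3)
for n in range(1, 4):
    u = u_rational(n, m)
    print(n,
          piii_residual(u, n, m),                        # residual of (1)
          sp.limit(u, x, sp.oo),                         # normalization at infinity
          sp.cancel(u * u_rational(n, -m).subs(x, -x)))  # u_n(x;m) u_n(-x;-m), cf. (5)
```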

1.1.2 Analytic Representation

The goal of this paper is to study \(u_n(x;m)\) when n is a large positive integer and m is a fixed complex number. The representation (3) is useful for determining numerous properties of the rational Painlevé-III solutions; however, when n is large another representation is preferable. To explain this alternate representation, we first define some x-dependent arcs in an auxiliary complex \(\lambda \)-plane as follows. Given \(x\in \mathbb {C}\) with \(x\ne 0\) and \(|\mathrm {Arg}(x)|<\pi \), there is an intersection point \({p}\) and four oriented arcs  ,  ,  , and  such that:

The arc  originates from \(\lambda =\infty \) in such a direction that \(\mathrm {i}x\lambda \) is negative real and terminates at \(\lambda ={p}\), the arc  begins at \(\lambda ={p}\) and terminates at \(\lambda =0\) in a direction such that \(-\mathrm {i}x\lambda ^{-1}\) is negative real, and the net increment of the argument of \(\lambda \) along  is given by (6).

The ambiguity of the sign in (6) will be explained below (see Remark 1).

The arc  originates from \(\lambda =\infty \) in such a direction that \(-\mathrm {i}x\lambda \) is negative real and terminates at \(\lambda ={p}\), the arc  begins at \(\lambda ={p}\) and terminates at \(\lambda =0\) in a direction such that \(\mathrm {i}x\lambda ^{-1}\) is negative real, and the net increment of the argument of \(\lambda \) along  is given by (7).

The arcs  ,  ,  , and  do not otherwise intersect.

See Fig. 19 below for an illustration in the case of \(\mathrm {Arg}(x)=\tfrac{1}{4}\pi \). We define a single-valued branch of the argument function \(\lambda \mapsto \arg (\lambda )\), henceforth denoted by  , by first selecting  as the branch cut and then defining  for sufficiently large positive \(\lambda \) when \(\mathrm {Im}(x)>0\) and  for sufficiently large negative \(\lambda \) when \(\mathrm {Im}(x)<0\). It is easy to see that this definition is consistent for \(x>0\), but there is a jump across the negative real x-axis. We define an associated branch of the complex logarithm \(\log (\lambda )\) by setting  , that is, \(\ln |\lambda |\) plus \(\mathrm {i}\) times this branch of the argument. Then, given \(q\in \mathbb {C}\), the corresponding branch of the power function \(\lambda ^q\), namely \(\mathrm {e}^{q\log (\lambda )}\), will be denoted by  . Finally, we denote by L the union of the four oriented arcs  ,  ,  , and  , and define the function

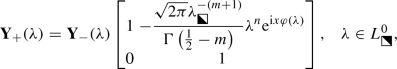

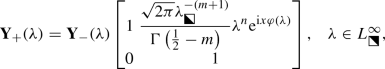

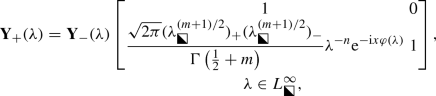

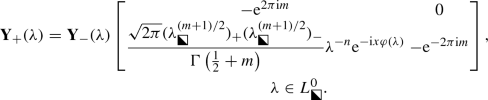

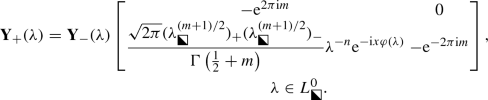

The following Riemann–Hilbert problem was formulated in [4, Sec. 1.2]. Here and below, we follow the convention that subscripts \(+\)/− refer to boundary values taken on a jump contour from the left/right, and \(\sigma _3:=\mathrm {diag}[1,-1]\) denotes a standard Pauli spin matrix.

Riemann–Hilbert Problem 1

Given parameters \(m\in \mathbb {C}\) and \(n=0,1,2,3,\dots \), as well as \(x\in \mathbb {C}\setminus \{0\}\) with \(|\mathrm {Arg}(x)|<\pi \), seek a \(2\times 2\) matrix function \(\lambda \mapsto \mathbf {Y}(\lambda )=\mathbf {Y}_n(\lambda ;x,m)\) with the following properties:

- 1.

Analyticity:\(\lambda \mapsto \mathbf {Y}(\lambda )\) is analytic in the domain \(\lambda \in \mathbb {C}\setminus L\). It takes continuous boundary values on \(L\setminus \{0\}\) from each maximal domain of analyticity.

- 2.

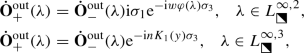

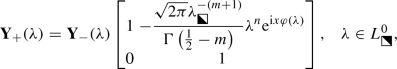

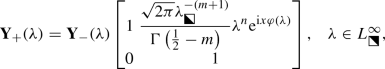

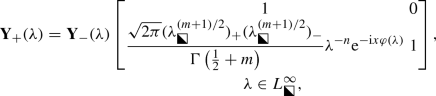

Jump conditions: The boundary values \(\mathbf {Y}_\pm (\lambda )\) are related on each arc of L by the following formulæ:

(8)

(8) (9)

(9) (10)

(10) (11)

(11) - 3.

Asymptotics:\(\mathbf {Y}(\lambda )\rightarrow \mathbb {I}\) as \(\lambda \rightarrow \infty \). Also, the matrix function

has a well-defined limit as \(\lambda \rightarrow 0\) (the same limit from each side of L).

has a well-defined limit as \(\lambda \rightarrow 0\) (the same limit from each side of L).

Remark 1

Given any choice of sign in (6), and for any given value of \(x\ne 0\) with \(|\mathrm {Arg}(x)|<\pi \), the sign may be reversed by a surgery performed on  that leaves the conditions of Riemann–Hilbert Problem 1 invariant. The surgery consists of bringing  together (with the same orientation) with  along some small arc. The jump for \(\mathbf {Y}\) cancels on this small arc because the jump matrices in (8)–(9) are inverses of each other; thus, up to some relabeling, one has effectively changed the sign in (6). In [4] the choice of sign in (6) was tied to the sign of \(\mathrm {Im}(x)\) due to the derivation of Riemann–Hilbert Problem 1 from direct/inverse monodromy theory; however, the above surgery argument shows that the sign is in fact arbitrary. The freedom to choose this sign will be important later when the solution of Riemann–Hilbert Problem 1 is constructed for large n.

It turns out that if Riemann–Hilbert Problem 1 is solvable for some \(x \in \mathbb {C}\setminus \{0\}\), then we may define corresponding matrices \(\mathbf {Y}_1^\infty (x)\) and \(\mathbf {Y}_0^0(x)\) by expanding \(\mathbf {Y}(\lambda )=\mathbf {Y}_n(\lambda ;x,m)\) for large and small \(\lambda \), respectively:

and

Then, according to [4, Theorem 1], an alternate formula for the rational solution \(u_n(x;m)\) of the Painlevé-III equation (1) is

where we have suppressed the parametric dependence on \(n\in \mathbb {Z}\) and \(m\in \mathbb {C}\) on the right-hand side.

1.2 Results and Outline of Paper

A good way to introduce our results is to first explain a simple formal asymptotic calculation. Since we are interested in solutions \(u=u_n(x;m)\) of (1) with parameters written in the form (2) when n is large, and since numerical experiments such as those in [4, Sec. 2] suggest that the largest poles and zeros of \(u_n(x;m)\) lie at a distance |x| from the origin proportional to n with a local spacing that neither grows nor shrinks with n, it is natural to introduce a complex parameter \(y\ne 0\) and a new independent variable \(w\in \mathbb {C}\) by setting \(x=ny+w\). It follows that if u(x) solves (1)–(2), then \({p}(w):=-\mathrm {i}u(x=ny+w)\) satisfies

$$\frac{\mathrm{d}^2{p}}{\mathrm{d}w^2}=\frac{1}{{p}}\left(\frac{\mathrm{d}{p}}{\mathrm{d}w}\right)^2+\frac{4\mathrm{i}({p}^2-1)}{y}-4{p}^3+\frac{4}{{p}}+\mathcal{O}(n^{-1}),$$

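in which the \(\mathcal {O}(n^{-1})\) symbol absorbs several terms each of which is explicitly proportional to \(n^{-1}\ll 1\). Dropping these formally small terms leads to an autonomous second-order equation which is amenable to classical analysis:

$$\frac{\mathrm{d}^2\dot{{p}}}{\mathrm{d}w^2}=\frac{1}{\dot{{p}}}\left(\frac{\mathrm{d}\dot{{p}}}{\mathrm{d}w}\right)^2+\frac{4\mathrm{i}(\dot{{p}}^2-1)}{y}-4\dot{{p}}^3+\frac{4}{\dot{{p}}},\tag{13}$$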

where \(\dot{{p}}\) denotes a formal approximation to \({p}\). Solutions of the equation (13) can be classified as follows:

Equilibrium solutions \(\dot{{p}}\equiv \text {constant}\). Generically with respect to y there are four such equilibria: \(\dot{{p}}\equiv \pm 1\) and

$$\begin{aligned} \dot{{p}}\equiv {p}_0^\pm (y):=\frac{\mathrm {i}}{2y}\mp \mathrm {i}\sqrt{\frac{1}{4y^2}+1}, \end{aligned}$$

where, to be precise, we take the square root to be equal to 1 at \(y=\infty \) and to be analytic in y except on a line segment branch cut connecting the branch points \(y=\pm \tfrac{1}{2}\mathrm {i}\) in the y parameter plane. Note that of these four, the unique equilibrium that tends to \(-\mathrm {i}\) as \(y\rightarrow \infty \) (as would be consistent with \(u=u_n(x;m)\rightarrow 1\) as \(x\rightarrow \infty \)) is \(\dot{{p}}\equiv {p}_0^+(y)\).

Nonequilibrium solutions. These can be obtained by integrating (13) to find a first integral. Thus, provided \(\dot{{p}}(w)\) is nonconstant, we may write (13) in the equivalent form

$$\begin{aligned} \left( \frac{\mathrm {d}\dot{{p}}}{\mathrm {d}w}\right) ^2= & {} \frac{16}{y^2}P(\dot{{p}};y,C),\\ P(\dot{{p}};y,C):= & {} -\frac{y^2}{4}\dot{{p}}^4+\frac{\mathrm {i}y}{2}\dot{{p}}^3 + C\dot{{p}}^2 + \frac{\mathrm {i}y}{2}\dot{{p}}-\frac{y^2}{4}, \end{aligned}\tag{14}$$

in which C is a constant of integration. There are two types of nonequilibrium solutions:

If, for given y, C is generic in the sense that P has 4 distinct roots, then all nonconstant solutions of (14) are (doubly periodic) elliptic functions of w with elliptic modulus depending on C and y.

If \(C=C(y)\) is such that the quartic P has fewer than 4 distinct roots, then the higher-order roots are necessarily equilibrium solutions of (13) and all nonconstant solutions of (14) are (singly-periodic) trigonometric functions of w.
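The passage from (13) to its first integral (14), and the list of equilibria, can be double-checked symbolically. The SymPy sketch below assumes that (13) has the form \(\dot{p}''=(\dot{p}')^2/\dot{p}+f(\dot{p})\) with \(f(\dot{p})=4\mathrm{i}(\dot{p}^2-1)/y-4\dot{p}^3+4/\dot{p}\), primes denoting w-derivatives (this is our reading of the formal limit of (1)–(2) under \(x=ny+w\), \({p}=-\mathrm{i}u\), and is restated in the code). It checks that differentiating (14) reproduces (13) for every C, that the equilibria are \(\pm 1\) and the roots of \(\dot{p}^2-\mathrm{i}\dot{p}/y+1=0\) (namely \({p}_0^\pm (y)\)), and that for the sample value \(C=\tfrac12y^2-\mathrm{i}y\) the quartic P has a double root at \(\dot{p}=1\), an instance of the degenerate (trigonometric) case.

```python
import sympy as sp

p, y, C = sp.symbols("p y C")

# (13) taken as p'' = (p')**2 / p + f(p), primes meaning d/dw (assumption of this sketch)
f = 4 * sp.I * (p**2 - 1) / y - 4 * p**3 + 4 / p

# The quartic P(p; y, C) of (14)
P = (-y**2 / 4 * p**4 + sp.I * y / 2 * p**3 + C * p**2
     + sp.I * y / 2 * p - y**2 / 4)

# From (p')**2 = 16 P / y**2, differentiating gives p'' = 8 P_p / y**2 (for p' != 0).
# Substituting (p')**2 = 16 P / y**2 into (13) must give the same p''.
first_integral_residual = sp.cancel(8 * sp.diff(P, p) / y**2
                                    - (16 * P / (y**2 * p) + f))

# Equilibria: p*y*f/4 = -(p**2 - 1) * (y*(p**2 + 1) - i*p), so for p, y != 0,
# f = 0 iff p = +1, p = -1, or p**2 - i*p/y + 1 = 0 (the roots p_0^{+-}(y)).
equilibrium_check = sp.expand(p * y * f / 4
                              + (p**2 - 1) * (y * (p**2 + 1) - sp.I * p))

# Degenerate case: for C = y**2/2 - i*y the quartic has a double root at p = 1.
C1 = y**2 / 2 - sp.I * y
double_root_check = [sp.expand(P.subs(C, C1).subs(p, 1)),
                     sp.expand(sp.diff(P, p).subs(C, C1).subs(p, 1))]

print(first_integral_residual, equilibrium_check, double_root_check)
```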

Our rigorous analysis of \(u_n(x;m)\) in the large-n limit shows that all of the above types of solutions of the approximating equation (13) play a role. In order to begin to explain our results, first observe that if x is replaced with \(ny+w\), then for large n, the dominant factors in the off-diagonal elements of the jump matrices in Riemann–Hilbert Problem 1 are the exponentials \(\mathrm {e}^{\pm nV(\lambda ;y)}\), where

The fact that V is multi-valued is not important because \(\mathrm {e}^{\pm nV(\lambda ;y)}\) is single-valued whenever \(n\in \mathbb {Z}\). However, \(\mathrm {Re}(V(\lambda ;y))\) is certainly single-valued for \(\lambda \in \mathbb {C}\setminus \{0\}\) and \(y\in \mathbb {C}\). For simplicity, in the rest of the paper we write \({p}(y):={p}_0^+(y)\). Since \({p}(y)\) is analytic and nonvanishing in its domain of definition, the left-hand side of the equation

defines a harmonic function in the complex y-plane omitting the vertical branch cut of \({p}(y)\) connecting the branch points \(\pm \tfrac{1}{2}\mathrm {i}\). Therefore, (16) determines a curve in the latter domain that turns out to be the union of four analytic arcs: two rays on the imaginary axis connecting the branch points \(y=\pm \tfrac{1}{2}\mathrm {i}\) to \(y=\pm \mathrm {i}\infty \) respectively, an arc in the right half-plane joining the two branch points, and its image under reflection through the imaginary axis. The union of the latter two arcs is the boundary of a compact and simply-connected eye-shaped set denoted by E containing the origin \(y=0\). The eye E is symmetric with respect to reflection through the origin as well as both the real and imaginary axes. See Fig. 20 below. Our first result is then the following.

Theorem 1

(Equilibrium asymptotics of \(u_n(x;m)\)) Fix \(m\in \mathbb {C}\), and let \(K\subset \mathbb {C}\setminus E\) be bounded away from E, i.e., \(\mathrm {dist}(K,E)>0\). Then

where the error estimate is uniform for \(y\in K\).

Thus, \(u_n\) is approximated by the unique equilibrium solution of (13) that tends to \(-\mathrm {i}\) as \(y\rightarrow \infty \), provided that y lies outside the eye E. Since \({p}(y)\) is analytic and nonvanishing as a function of y bounded away from E, the uniform convergence immediately implies the following.

Corollary 1

Fix \(m\in \mathbb {C}\), and let K be as in the statement of Theorem 1. Then \(u_n(\cdot ;m)\) has no zeros or poles in the set nK for n sufficiently large.
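The outer approximation \(u_n(x;m)\approx \mathrm{i}{p}(n^{-1}x)\) is simple to evaluate once the branch of the square root in \({p}_0^+(y)\) is fixed. A minimal NumPy sketch, assuming only the branch conventions stated with the definition of \({p}_0^\pm\): the rotation \(z=-\mathrm{i}y\) maps the cut \([-\tfrac12\mathrm{i},\tfrac12\mathrm{i}]\) onto \([-\tfrac12,\tfrac12]\), and \(R(z)=\sqrt{z-\tfrac12}\,\sqrt{z+\tfrac12}\) with principal square roots is analytic off that segment with \(R(z)\sim z\) at infinity. Hence \(\sqrt{1/(4y^2)+1}=R(z)/z\) and \({p}_0^+(y)=(\tfrac12\mathrm{i}+R(-\mathrm{i}y))/y\). The names `p_outer` and `u_outer` are illustrative, and the formula is only meaningful as an approximation for \(n^{-1}x\) bounded away from E.

```python
import numpy as np


def p_outer(y):
    """p(y) = p_0^+(y) = i/(2y) - i*sqrt(1/(4y^2) + 1), with the square root
    equal to 1 at y = infinity and cut on the segment from -i/2 to i/2 (y != 0)."""
    y = np.asarray(y, dtype=complex)
    z = -1j * y                                  # maps the cut to [-1/2, 1/2]
    R = np.sqrt(z - 0.5) * np.sqrt(z + 0.5)      # principal roots: cut only on [-1/2, 1/2]
    return (0.5j + R) / y                        # equals i/(2y) - i*R/z since z = -i*y


def u_outer(x, n):
    """Outer approximation i*p(x/n) to u_n(x; m), intended for x/n outside E."""
    return 1j * p_outer(np.asarray(x, dtype=complex) / n)


ys = np.array([2.0, -2.0, 1.5j, 1.0 + 1.0j])
print(np.round(u_outer(10 * ys, 10), 6))
```

Evaluating the same formula for y inside E gives the continuations from the left and right that are plotted for comparison in Figs. 1 and 2.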

As an application of these results, let \(y\in \mathbb {C}\setminus E\), and let \(C_y\) denote a positively-oriented loop surrounding the point y. Then, from Cauchy’s integral formula it follows that, as \(n\rightarrow +\infty \),

where to evaluate the integral we used (17). It is easy to see that the error term enjoys similar uniformity properties as in Theorem 1.

Next, we let \(E_\mathrm {L}\) (resp., \(E_\mathrm {R}\)) denote the part of the interior of E lying in the open left (resp., right) half-plane, compare again Fig. 20. We now develop an asymptotic formula for \(u_n(x;m)\) when \(n^{-1}x\in E_\mathrm {R}\) and \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\). Since \(E_\mathrm {L}\) and \(E_\mathrm {R}\) are related by reflection through the origin, by the symmetry (5) this formula will also be sufficient to describe \(u_n(x;m)\) for large n when \(n^{-1}x\in E_\mathrm {L}\), because \(-m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\) whenever \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\). Given \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\), in (88)–(89) below we define complex-valued functions \(\mathcal {Z}_n^\bullet (y,w;m)\), \(\mathcal {Z}_n^\circ (y,w;m)\), \(\mathcal {P}_n^\bullet (y,w;m)\), \(\mathcal {P}_n^\circ (y,w;m)\), and N(y), whose real and imaginary parts are smooth but nonanalytic functions of the real and imaginary parts of \(y\in E_\mathrm {R}\) and which are entire functions of \(w\in \mathbb {C}\), with \(N:E_\mathrm {R}\rightarrow \mathbb {C}\) nonvanishing. These functions depend crucially on a smooth but nonanalytic function \(C=C(y)\) defined on \(E_\mathrm {R}\) by a procedure described in Sects. 4.1.1 and 4.1.2, and also on a related smooth function \(B=B(y)\) with \(\mathrm {Re}(B(y))<0\) defined by (67). In detail, compare (88),

in which \(\Theta (z,B)\) denotes the Riemann theta function defined by (71), and in which the complex-valued phases  , and  are well-defined affine linear functions of \(w\in \mathbb {C}\) and \(n\in \mathbb {Z}_{\ge 0}\) with coefficients and constant terms that are smooth functions of \(y\in E_\mathrm {R}\) depending parametrically on \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\). We then define

excluding isolated exceptional values of \((y,w)\in E_\mathrm {R}\times \mathbb {C}\) for which the denominator vanishes.
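Definition (71) of \(\Theta (z,B)\) is not reproduced in this section. As an assumption, the sketch below uses a common genus-one convention, \(\Theta (z,B)=\sum _{k\in \mathbb {Z}}\mathrm {e}^{\frac{1}{2}Bk^2+kz}\), which converges precisely because \(\mathrm {Re}(B)<0\), truncated to \(|k|\le K\). Under this convention \(\Theta \) is even in z, \(2\pi \mathrm {i}\)-periodic, satisfies \(\Theta (z+B,B)=\mathrm {e}^{-B/2-z}\Theta (z,B)\), and vanishes exactly on \(\mathrm {i}\pi +\tfrac{1}{2}B+2\pi \mathrm {i}\mathbb {Z}+B\mathbb {Z}\); the code checks these properties numerically. The function name `theta` and the truncation parameter `K` are illustrative.

```python
import cmath
import math


def theta(z: complex, B: complex, K: int = 30) -> complex:
    """Truncated series sum_{|k| <= K} exp(B k^2 / 2 + k z).

    Assumed convention (not necessarily the paper's (71) verbatim); needs Re(B) < 0.
    Larger K is needed when Re(B) is close to 0 or |Re z| is large."""
    if not B.real < 0:
        raise ValueError("the theta series converges only for Re(B) < 0")
    return sum(cmath.exp(0.5 * B * k * k + k * z) for k in range(-K, K + 1))


B = -2.5 + 0.7j
z = 0.3 - 0.4j
print(abs(theta(z + 2j * math.pi, B) - theta(z, B)))               # 2*pi*i periodicity
print(abs(theta(z + B, B) - cmath.exp(-B / 2 - z) * theta(z, B)))  # quasi-periodicity
print(abs(theta(1j * math.pi + B / 2, B)))                         # zero of Theta
```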

Theorem 2

(Elliptic asymptotics of \(u_n(x;m)\)) Fix \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\). For each \(n\in \mathbb {Z}_{\ge 0}\) and each \(y\in E_\mathrm {R}\), the function \(\dot{{p}}(w):=-\mathrm {i}\dot{u}_n(y,w;m)\) is a nonequilibrium elliptic function solution of (13) in the form (14) with integration constant \(C=C(y)\). If \(\epsilon >0\) is an arbitrarily small fixed number and \(K_y\subset E_\mathrm {R}\) and \(K_w\subset \mathbb {C}\) are compact sets, then

holds uniformly on the set of (y, w, n) defined by the conditions \(y\in K_y\), \(w\in K_w\) such that

Under the same conditions and with the same sense of convergence,

which provides asymptotics of \(u_n(ny+w;m)\) when \(y\in E_\mathrm {L}\).

Formula (22) follows from (20) with the use of the symmetry (5), together with the fact that \(\dot{u}_n(y,w;m)\) turns out to be bounded and bounded away from zero on the indicated set. Thus, provided that \(n^{-1}x\) lies in either domain \(E_\mathrm {L}\) or \(E_\mathrm {R}\) and m is not a half-integer, the rational Painlevé-III function \(u_n(x;m)\) is locally approximated by a nonequilibrium elliptic function solution of the differential equation (13). That the leading term on the right-hand side of (22) is an elliptic function follows from the first statement of Theorem 2 and the fact that the integrated form (14) admits the symmetry \((\dot{p},C,y,w)\mapsto (-\dot{p}^{-1},C,-y,-w)\).
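The symmetry of (14) invoked above can be checked directly. If \(\dot{p}(w)\) solves (14) with parameters \((y,C)\), put \(\dot{q}(w):=-\dot{p}(-w)^{-1}\). By the chain rule, with the prime denoting differentiation with respect to the argument,

$$\left(\frac{\mathrm{d}\dot{q}}{\mathrm{d}w}\right)^2=\frac{\dot{p}'(-w)^2}{\dot{p}(-w)^4}=\frac{16}{y^2}\,\frac{P(\dot{p}(-w);y,C)}{\dot{p}(-w)^4},$$

while expanding the quartic term by term gives, for \(\dot{p}\ne 0\),

$$\dot{p}^4\,P(-\dot{p}^{-1};-y,C)=-\frac{y^2}{4}+\frac{\mathrm{i}y}{2}\dot{p}+C\dot{p}^2+\frac{\mathrm{i}y}{2}\dot{p}^3-\frac{y^2}{4}\dot{p}^4=P(\dot{p};y,C).$$

Combining the two, \((\mathrm{d}\dot{q}/\mathrm{d}w)^2=\tfrac{16}{(-y)^2}P(\dot{q};-y,C)\), so \(\dot{q}\) solves (14) with y replaced by \(-y\) and the same constant C.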

Remark 2

The fact that in (20) and (22) we are approximating a function of a single complex variable \(x=ny+w\) with a function of two independent complex variables (y, w) deserves some explanation. Indeed, given x, there are many different choices of parameters (y, w) for which \(x=ny+w\), so the form of \(\dot{u}_n(y,w;m)\) actually gives a family of approximations for the same quantity. The variable w captures the local properties of the rational function \(u_n(x;m)\); it is the scale on which \(u_n(x;m)\) resembles a fixed elliptic function. On the other hand, the variable y captures the way that the elliptic modulus depends on the point of observation within the eye E, and unlike the meromorphic dependence on w, \(\dot{u}_n(y,w;m)\) is a decidedly nonanalytic function of y. If we approximate \(u_n(x;m)\) by setting \(w=0\) and letting y vary, we obtain a globally accurate (on \(K_y\)) approximation that is unfortunately not analytic in y. However, if we fix \(y\in E_\mathrm {R}\) and let w vary, we obtain a locally accurate (\(w\in K_w\), so \(x-ny=w=\mathcal {O}(1)\) as \(n\rightarrow +\infty \)) approximation that is an exact elliptic function depending only parametrically on y.

If in any of the conditions (21) we put \(\epsilon =0\), then at equality the corresponding phase agrees with a point of the lattice \(2\pi \mathrm {i}\mathbb {Z}+B(y)\mathbb {Z}\), and the associated factor in the definition of \(\dot{u}_n(y,w;m)\) vanishes. For \(\epsilon >0\), each condition in (21) defines a “swiss-cheese”-like region in the variables (y, w) given \(n\in \mathbb {Z}_{\ge 0}\) and \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\) with holes centered at points corresponding to lattice points. In fact, if \(y\in E_\mathrm {R}\) is also fixed, then the lattice \(2\pi \mathrm {i}\mathbb {Z}+B(y)\mathbb {Z}\) is a uniform lattice and each of the conditions in (21) omits from the complex w-plane the union of disks of radius \(\epsilon \) centered at the lattice points. On the other hand, if instead it is \(w\in \mathbb {C}\) that is fixed, then each of the conditions (21) omits from the complex y-plane neighborhoods of diameter proportional to \(\epsilon n^{-1}\) containing the points in a set that can be roughly characterized as a curvilinear grid of spacing proportional to \(n^{-1}\).
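Checking a condition of the type (21) amounts to measuring the distance from a phase value to the lattice \(2\pi \mathrm {i}\mathbb {Z}+B\mathbb {Z}\). The phases themselves are defined later in the paper and are not reproduced here, so the sketch below only computes the exact distance from a given complex number to that lattice when \(\mathrm {Re}(B)<0\); the names `lattice_distance` and `outside_holes` are hypothetical. For each column \(k\), the nearest point \(Bk+2\pi \mathrm {i}q\) is found by rounding, and columns whose real offset already exceeds the best distance found are skipped, so the search is exact without first reducing the basis.

```python
import math


def lattice_distance(z: complex, B: complex) -> float:
    """Euclidean distance from z to the lattice 2*pi*i*Z + B*Z (requires Re B < 0)."""
    if not B.real < 0:
        raise ValueError("need Re(B) < 0 so that 2*pi*i and B are independent")

    def column_best(k: int) -> float:
        w = z - k * B                              # offset from the column through k*B
        q = round(w.imag / (2.0 * math.pi))        # nearest multiple of 2*pi*i
        return abs(w - 2j * math.pi * q)

    k0 = round(z.real / B.real)                    # column with the closest real part
    best = column_best(k0)
    # A column k can only do better if |Re z - k Re B| <= best.
    span = math.ceil(best / abs(B.real)) + 1
    for k in range(k0 - span, k0 + span + 1):
        best = min(best, column_best(k))
    return best


def outside_holes(phase: complex, B: complex, eps: float) -> bool:
    """Hypothetical check in the spirit of (21): phase stays eps away from the lattice."""
    return lattice_distance(phase, B) >= eps


B = -3.0 + 1.0j
pt = 2j * math.pi * 3 - 2 * B + 0.1                # a lattice point shifted by 0.1
print(lattice_distance(pt, B), outside_holes(pt, B, 0.05), outside_holes(pt, B, 0.2))
```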

Corollary 2

Fix \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\) and a compact set \(K_y\subset E_\mathrm {R}\). If \(\{y_n\}_{n=N}^\infty \subset K_y\) is a sequence such that \(\mathcal {Z}_n^\bullet (y_n,0;m)=0\) for \(n=N, N+1,\dots \) (or such that \(\mathcal {Z}_n^\circ (y_n,0;m)=0\) for \(n=N,N+1,\dots \)), then for each sufficiently small \(\epsilon >0\), there is exactly one simple zero, and possibly a group of an equal number of additional zeros and poles, of \(u_n(ny;m)\) within \(|y-y_n|<\epsilon n^{-1}\) for n sufficiently large. Likewise, if \(\{y_n\}_{n=N}^\infty \subset K_y\) is a sequence such that \(\mathcal {P}_n^\bullet (y_n,0;m)=0\) for \(n=N, N+1,\dots \) (or such that \(\mathcal {P}_n^\circ (y_n,0;m)=0\) for \(n=N,N+1,\dots \)), then for each sufficiently small \(\epsilon >0\), there is exactly one simple pole, and possibly a group of an equal number of additional zeros and poles, of \(u_n(ny;m)\) within \(|y-y_n|<\epsilon n^{-1}\) for n sufficiently large.
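The index argument behind Corollary 2 rests on the argument principle: the winding number of a meromorphic function around a small circle equals the number of zeros minus the number of poles inside, counted with multiplicity. A minimal NumPy sketch (the function name is illustrative) accumulates the phase increments of f along a sampled circle. It also shows why such a count cannot detect an extra zero-pole pair: the pair contributes nothing to the net index. The sampling must be fine enough that the phase of f changes by less than \(\pi \) between consecutive nodes.

```python
import numpy as np


def net_zeros_minus_poles(f, center, radius, samples=4096):
    """Winding number of f around the circle |z - center| = radius.

    By the argument principle this equals (#zeros - #poles) inside, counted
    with multiplicity, provided f has no zeros or poles on the circle."""
    t = np.linspace(0.0, 2.0 * np.pi, samples + 1)
    vals = f(center + radius * np.exp(1j * t))
    steps = np.angle(vals[1:] / vals[:-1])        # phase increments in (-pi, pi]
    return int(round(steps.sum() / (2.0 * np.pi)))


simple_zero = lambda z: z - 0.1
with_extra_pair = lambda z: (z - 0.1) * (z - 0.2) / (z + 0.05)
# both equal 1: the extra zero-pole pair is invisible to the index
print(net_zeros_minus_poles(simple_zero, 0.0, 0.5),
      net_zeros_minus_poles(with_extra_pair, 0.0, 0.5))
```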

The proof of this result depends on Theorem 2 and some additional technical properties of the zeros of the factors in the formula (19) and will be given in Sect. 4.7. The proof is based on an index argument, which computes the number of zeros minus the number of poles within a small disk. For this reason, we cannot rule out the possible attraction of one or more pole-zero pairs of the rational function \(u_n(x;m)\), in excess of a simple zero (or pole), toward a given zero (or singularity) of the approximating function. However, we do not observe any such “excess pairing” in practice. One approach to ruling out any excess pairing would be to compare against precise counts of the zeros and poles of \(u_n(x;m)\) as documented in [12]. However, such a comparison would require accurate approximations in domains that completely cover the eye E without overlaps. In this paper, we avoid analyzing \(u_n(x;m)\) near the origin, the corners \(y=\pm \tfrac{1}{2}\mathrm {i}\), and the “eyebrows” (except in the special case \(m\in \mathbb {Z}+\tfrac{1}{2}\); see below). These are projects for the future. Although for these reasons there remains some ambiguity about the distribution of poles and zeros of the rational function \(u_n(x;m)\), our analysis gives very detailed information about the distribution of singularities and zeros of the approximation \(\dot{u}_n(y,w;m)\). In particular, we have the following.

Theorem 3

Let \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\). There is a continuous function \(\rho :E_\mathrm {R}\rightarrow \mathbb {R}_+\), \(\rho \in L^1_\mathrm {loc}(E_\mathrm {R})\), such that for any compact set \(K\subset E_\mathrm {R}\),

where \(\mathrm {d}A(y)\) denotes the Lebesgue measure in the y-plane. The density \(\rho \) is independent of \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\) and satisfies \(\rho (y)\rightarrow 0\) as \(y\rightarrow \partial E_\mathrm {R}\setminus \{0\}\) and \(\rho (r\mathrm {e}^{\mathrm {i}\theta })=h(\theta )r^{-1}+o(r^{-1})\) as \(r\downarrow 0\) for some function \(h:(-\pi /2,\pi /2)\rightarrow \mathbb {R}_+\).

We would expect that the same statement holds with \(\dot{u}_n(y,0;m)\) replaced by \(u_n(ny;m)\), but this would require ruling out the excess pairing phenomenon mentioned above. The density function \(\rho (y)\) is defined in (122) below, and the proof of Theorem 3 is given in Sect. 4.7. Although the proof of Theorem 3 does not allow us to consider sets K that depend on n in any serious way, the assumption that (23) holds when K is the disk of radius \(n^{-2}\) centered at the origin leads to the prediction that this disk contains \(\mathcal {O}(1)\) zeros/singularities of \(\dot{u}_n(y,0;m)\), consistent with the empirical observation that the smallest zeros and poles of \(u_n(x;m)\) scale like \(n^{-1}\) in the x-plane [4].
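The \(n^{-2}\) radius in this prediction can be traced to the \(r^{-1}\) behavior of \(\rho \). As an assumption made only for this illustration (the precise statement of (23) is not reproduced in this section), suppose that (23) counts the zeros and singularities of \(\dot{u}_n(y,0;m)\) in K as \(n^2\int _K\rho \,\mathrm {d}A\) to leading order, which is the normalization under which the prediction above comes out. Near \(y=0\) the set \(E_\mathrm {R}\) is the open right half-disk, so for \(D_n:=\{y:|y|<n^{-2},\ \mathrm {Re}(y)>0\}\) the asymptotics of \(\rho \) give, provided \(\int _{-\pi /2}^{\pi /2}h(\theta )\,\mathrm {d}\theta <\infty \),

$$n^2\int_{D_n}\rho(y)\,\mathrm{d}A(y)\approx n^2\int_{-\pi/2}^{\pi/2}\int_0^{n^{-2}}h(\theta)\,r^{-1}\,r\,\mathrm{d}r\,\mathrm{d}\theta=\int_{-\pi/2}^{\pi/2}h(\theta)\,\mathrm{d}\theta=\mathcal{O}(1).$$

The factor r in \(\mathrm {d}A=r\,\mathrm {d}r\,\mathrm {d}\theta \) is what makes the \(r^{-1}\) singularity integrable, and since \(x=ny\), the radius \(n^{-2}\) in the y-plane corresponds to \(|x|\) of order \(n^{-1}\).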

While the asymptotic approximations of the rational Painlevé-III function \(u_n(x;m)\) for \(n^{-1}x\in E_\mathrm {L}\cup E_\mathrm {R}\) are much more complicated than the simple formula \(\mathrm {i}{p}(n^{-1}x)\) valid for \(n^{-1}x\in \mathbb {C}\setminus E\), they are easily implemented numerically, once the necessary ingredients developed as part of the proof of Theorem 2 are incorporated. To quantitatively illustrate the accuracy of the approximations described in Theorems 1 and 2, we compare \(u_n(x;m)\) with its approximations for x restricted to a real interval that bisects E in Figs. 1, 2 and 3. In these figures, we found it compelling to plot the approximate formula \(\mathrm {i}{p}(y)\) of Theorem 1 continued into the eye E from the left and right, even though we have no basis for comparing the graphs of these (reciprocal) continuations with that of \(u_n(ny;m)\) when \(y\in E\). Indeed, in some situations these graphs appear to form quite accurate upper or lower envelopes of the wild modulated elliptic oscillations of \(u_n(ny;m)\) that occur when \(y\in E\) and that are captured with locally uniform accuracy by \(\dot{u}_n(y,0;m)\). We have no explanation for these somewhat imprecise observations, but we find them interesting and note that similar phenomena occur for the rational solutions of the Painlevé-II equation (also without explanation) as was noted in [7].

Comparison of \(u_n(ny;m)\) (blue curve) with its approximations over the interval \(-0.5<y<0.5\) for \(m=0\) with \(n=10\) (left) and \(n=20\) (right). The points where this interval intersects \(\partial E\) are shown with vertical gray lines. The approximation \(\dot{u}_n(y,0;m)\) of Theorem 2 is plotted in between the gray lines with black broken curves. The dotted curve is the analytic continuation into E from the right of the outer approximation \(\mathrm {i}{p}(y)\) described in Theorem 1. Likewise, the dash/dotted curve is the meromorphic continuation into E from the left of the same outer approximation (Color figure online)

As in Fig. 1 but for \(m=1\) (Color figure online)

Now, we go into the complex y-plane where we can illustrate both the shape of the eye E and the phenomenon of attraction of poles and zeros of \(u_n(ny;m)\) to the left (\(E_\mathrm {L}\)) and right (\(E_\mathrm {R}\)) halves. In these figures, the zeros and poles of the rational Painlevé function \(u_n(ny;m)\) are plotted with the following convention (as in our earlier paper [4]):

Zeros of \(u_n(x;m)\) that are also zeros of \(s_n(x;m-1)\): blue filled dots.

Zeros of \(u_n(x;m)\) that are also zeros of \(s_{n-1}(x;m)\): blue unfilled dots.

Poles of \(u_n(x;m)\) that are also zeros of \(s_n(x;m)\): red filled dots.

Poles of \(u_n(x;m)\) that are also zeros of \(s_{n-1}(x;m-1)\): red unfilled dots.
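For moderate n, the four families of dots can be generated directly from the polynomial factors. The sketch below assumes the recurrence (4) in the form \(s_{k+1}s_{k-1}=-x(s_ks_k''-(s_k')^2)-s_ks_k'+(4x+2m+1)s_k^2\) with \(s_{-1}=s_0=1\) (restated in the code), builds the polynomials with SymPy, computes their roots with `numpy.roots`, and groups them according to the convention above; dividing by n gives the points plotted in the y-plane. The function names are illustrative. Floating-point root finding from monomial coefficients becomes ill-conditioned as the degree \(n(n+1)/2\) grows, so this is only meant for small n; larger n would call for a higher-precision solver.

```python
import numpy as np
import sympy as sp

x = sp.symbols("x")


def umemura(n_max, m):
    """[s_{-1}, s_0, ..., s_{n_max}] from the recurrence (4), assumed in the form
    s_{k+1} s_{k-1} = -x (s_k s_k'' - s_k'^2) - s_k s_k' + (4x + 2m + 1) s_k^2."""
    s = [sp.Integer(1), sp.Integer(1)]
    for _ in range(n_max):
        sk, skm1 = s[-1], s[-2]
        d1 = sp.diff(sk, x)
        rhs = sp.expand(-x * (sk * sp.diff(d1, x) - d1**2) - sk * d1
                        + (4 * x + 2 * m + 1) * sk**2)
        s.append(sp.expand(sp.exquo(rhs, skm1, x)))   # exact division
    return s


def poly_roots(expr):
    """Numerical roots of a polynomial in x (empty for a constant)."""
    coeffs = [complex(c) for c in sp.Poly(expr, x).all_coeffs()]
    return np.roots(coeffs) if len(coeffs) > 1 else np.array([], dtype=complex)


def classified_roots(n, m):
    """Zeros and poles of u_n(x; m), grouped by their polynomial factor."""
    s_m, s_m1 = umemura(n, m), umemura(n, m - 1)   # index k + 1 holds s_k
    return {
        "zeros, filled: s_n(x;m-1)": poly_roots(s_m1[n + 1]),
        "zeros, unfilled: s_{n-1}(x;m)": poly_roots(s_m[n]),
        "poles, filled: s_n(x;m)": poly_roots(s_m[n + 1]),
        "poles, unfilled: s_{n-1}(x;m-1)": poly_roots(s_m1[n]),
    }


n, m = 4, 0
for label, r in classified_roots(n, m).items():
    print(label, len(r), np.round(r / n, 3))   # y = x / n coordinates
```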

In addition to displaying the overall attraction of the poles and zeros to the eye domain E, the plots in Figs. 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15 are also intended to demonstrate the remarkable accuracy of the approximation of Theorem 2 in capturing the locations of individual poles and zeros as described in Corollary 2. As described in Sect. 4.7 below, each of the four factors in the fraction on the right-hand side of (19) has zeros that may be characterized as the intersection points of integral level curves of two different functions (see (116) and (117) below) defined on \(E_\mathrm {R}\) (and via the symmetry (5), \(E_\mathrm {L}\)). We plot the families of level curves for each of the four factors in separate figures in order to demonstrate another phenomenon that is evident but for which we have no good explanation: the zeros of the separate factors in the approximation \(\dot{u}_n(y,0;m)\) as defined by (19) appear to correspond precisely to the actual zeros of the four polynomial factors in the formula (3) for the rational Painlevé-III function \(u_n(ny;m)\). This coincidence is what motivates the superscript notation (\(\bullet \) versus \(\circ \)) on the four factors in (19); the zeros of the factors with superscript \(\bullet \) (resp., \(\circ \)) apparently correspond in the limit \(n\rightarrow +\infty \) to filled (resp., unfilled) dots.

The black curves including the vertical segment form the boundary of the left (\(E_\mathrm {L}\)) and right (\(E_\mathrm {R}\)) halves of the eye E. The light blue curves are \(\alpha _n^{0,+}(y,0,m)\in \mathbb {Z}\) (solid) and \(\beta _n^{0,+}(y,0,m)\in \mathbb {Z}\) (dotted) plotted in the y-plane; see (116) for definitions of these functions. These plots are for \(m=0\) and \(n=5\) (left), \(n=10\) (center), and \(n=20\) (right). The blue/red dots are the actual zeros/poles of \(u_n(ny;m)\) (filled for zeros of \(s_n\) and unfilled for zeros of \(s_{n-1}\)). Note how the unfilled blue dots are attracted toward the intersections of the curves, which are the zeros of \(\dot{u}_n(y,0;m)\) arising from roots of \(\mathcal {Z}_n^\circ (y,0;m)\) (Color figure online)

As in Fig. 4 but here the light blue curves are \(\alpha _n^{0,-}(y,0,m)\in \mathbb {Z}\) (solid) and \(\beta _n^{0,-}(y,0,m)\in \mathbb {Z}\) (dotted); see (116) for definitions of these functions. Note how the filled blue dots are attracted toward the intersections of the curves, which are now the zeros of \(\dot{u}_n(y,0;m)\) arising from roots of \(\mathcal {Z}_n^\bullet (y,0;m)\) (Color figure online)

As in Fig. 4 but here the light red curves are \(\alpha _n^{\infty ,+}(y,0,m)\in \mathbb {Z}\) (solid) and \(\beta _n^{\infty ,+}(y,0,m)\in \mathbb {Z}\) (dotted); see (117) for definitions of these functions. Note how the filled red dots are attracted toward the intersections of the curves, which are the singularities of \(\dot{u}_n(y,0;m)\) arising from roots of \(\mathcal {P}_n^\bullet (y,0;m)\) (Color figure online)

As in Fig. 4 but here the light red curves are \(\alpha _n^{\infty ,-}(y,0,m)\in \mathbb {Z}\) (solid) and \(\beta _n^{\infty ,-}(y,0,m)\in \mathbb {Z}\) (dotted); see (117) for definitions of these functions. Note how the unfilled red dots are attracted toward the intersections of the curves, which are now the singularities of \(\dot{u}_n(y,0;m)\) arising from roots of \(\mathcal {P}_n^\circ (y,0;m)\) (Color figure online)

As in Fig. 4 but for \(m=\tfrac{4}{5}\mathrm {i}\) (Color figure online)

As in Fig. 5 but for \(m=\tfrac{4}{5}\mathrm {i}\) (Color figure online)

As in Fig. 6 but for \(m=\tfrac{4}{5}\mathrm {i}\) (Color figure online)

As in Fig. 7 but for \(m=\tfrac{4}{5}\mathrm {i}\) (Color figure online)

As in Fig. 4 but for \(m=\tfrac{1}{4}\) (Color figure online)

As in Fig. 5 but for \(m=\tfrac{1}{4}\) (Color figure online)

As in Fig. 6 but for \(m=\tfrac{1}{4}\) (Color figure online)

As in Fig. 7 but for \(m=\tfrac{1}{4}\) (Color figure online)

Another feature of the plots in Figs. 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14 and 15 is that only one pole or zero is evidently attracted to each crossing point of the curves, which suggests that the excess pairing phenomenon that cannot be ruled out by our index-based proof of Corollary 2 does not in fact occur. Finally, these plots illustrate the most important properties of the pole/zero density function \(\rho (y)\) described in Theorem 3, namely the infinite density at the origin and the dilution of poles/zeros near the boundaries \(\partial E_\mathrm {L}\) and \(\partial E_\mathrm {R}\) (which include the segment of the imaginary axis vertically bisecting E).

Clearly, when \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\), there are many poles and zeros in the domains \(E_\mathrm {L}\) and \(E_\mathrm {R}\) when n is large, and in this situation we say that the eye is open. On the other hand, when \(m\in \mathbb {Z}+\tfrac{1}{2}\), the large-n asymptotic behavior of \(u_n(x;m)\) for \(n^{-1}x\) in a neighborhood of the eye E is completely different from that described above. We refer to the closures (i.e., including endpoints) of the arc of \(\partial E_\mathrm {L}\) in the open left half-plane and of the arc of \(\partial E_\mathrm {R}\) in the open right half-plane as the “eyebrows” of the eye E, denoting them by  and  , respectively. Our first result is that, in a sense, the eye is closed when \(m\in \mathbb {Z}+\tfrac{1}{2}\).

Theorem 4

(Equilibrium asymptotics of \(u_n(x;m)\) for \(m\in \mathbb {Z}+\tfrac{1}{2}\)) Suppose that \(m=-(k+\tfrac{1}{2})\) for \(k\in \mathbb {Z}_{\ge 0}\). Let  be bounded away from  , i.e.,  . Then

where  denotes the meromorphic continuation of \({p}(y)\) from a neighborhood of \(y=\infty \) to the maximal domain  as a nonvanishing function whose only singularity is a simple pole at the origin \(y=0\), and the error estimate is uniform for \(y\in K\). Likewise, if \(m=k+\tfrac{1}{2}\) for \(k\in \mathbb {Z}_{\ge 0}\) and  is bounded away from  , then

where  denotes the analytic continuation of \({p}(y)\) from a neighborhood of \(y=\infty \) to the maximal domain  as a function whose only zero is simple and lies at the origin, and the error estimate is uniform for \(y\in K\).

The functions  and  both agree with \({p}(y)\) for \(y\in \mathbb {C}\setminus E\), and they are reciprocals of one another when \(y\in E\). Theorem 4 is proved in Sect. 5.1. Note that this result is consistent with Theorem 1, which does not require any condition on \(m\in \mathbb {C}\); moreover, for the special case \(m\in \mathbb {Z}+\tfrac{1}{2}\) it is a far-reaching generalization of Theorem 1. The uniform nature of the convergence implies that \(u_n(ny;m)\) can have no poles or zeros in K for sufficiently large n unless K contains the origin. In that case, an index argument predicts a unique simple pole near the origin for \(m=-(k+\tfrac{1}{2})\) and a unique simple zero near the origin for \(m=k+\tfrac{1}{2}\). In fact, it is proven in [12] that there is a simple pole or zero exactly at the origin if n is sufficiently large (given \(k\in \mathbb {Z}_{\ge 0}\)). Therefore, we have the following.

Corollary 3

Suppose that \(m=-(k+\tfrac{1}{2})\), \(k\in \mathbb {Z}_{\ge 0}\). If \(K\subset \mathbb {C}\) is bounded away from  , then \(u_n(\cdot ;m)\) has no zeros or poles in the set nK for n sufficiently large, except for a simple pole at the origin. On the other hand, if \(m=k+\tfrac{1}{2}\), \(k\in \mathbb {Z}_{\ge 0}\), and \(K\subset \mathbb {C}\) is bounded away from  , then \(u_n(\cdot ;m)\) has no zeros or poles in the set nK for n sufficiently large, except for a simple zero at the origin.

This result can be combined with Theorem 4 to show immediately, as in (18), that the convergence of \(u_n(ny;m)\) for \(y\in K\) extends to all derivatives. Corollary 3 also shows that if \(m\in \mathbb {Z}+\tfrac{1}{2}\), all of the poles/zeros but one are attracted toward one or the other of the eyebrows as \(n\rightarrow +\infty \), depending on the sign of m. This is what we mean when we say that the eye is closed. Counting arguments make it plausible that in this case the poles and zeros should be organized near curves rather than filling a two-dimensional region such as \(E_\mathrm {L}\cup E_\mathrm {R}\). Indeed, [12] also shows that the total number of zeros and poles of \(u_n(x;m)\) grows proportionally to n as \(n\rightarrow +\infty \) when \(m\in \mathbb {Z}+\tfrac{1}{2}\), while for \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\) it grows proportionally to \(n^2\). Our methods allow for the following precise statement concerning the nature of convergence of the poles/zeros to one or the other of the eyebrows for \(m\in \mathbb {Z}+\tfrac{1}{2}\). The following results refer to a “tubular neighborhood” T of the eyebrow  defined as follows: for sufficiently small positive constants \(\delta _1\) and \(\delta _2\),

Since points on the eyebrow  satisfy \(\mathrm {Re}(V({p}(y);y))=0\), the set T contains points on both sides of  , and the angular condition bounds the set T away from the endpoints \(y=\pm \tfrac{1}{2}\mathrm {i}\) of  . Note that \(V({p}(y)^{-1};y)=-V({p}(y);y)\pmod {2\pi \mathrm {i}}\).

Theorem 5

(Layered trigonometric asymptotics of \(u_n(x;m)\) for \(m\in \mathbb {Z}+\tfrac{1}{2}\)) Let \(m=-(\tfrac{1}{2}+k)\), \(k\in \mathbb {Z}_{\ge 0}\), and let T be as defined in (24). Then the following asymptotic formulæ hold. The error terms are uniform on the indicated sub-domains of T, with small discs excised around each zero or pole of the indicated approximation. These discs have radius equal to an arbitrarily small multiple of \(n^{-1}\).

If \(y\in T\) with \(\mathrm {Re}(V({p}(y)^{-1};y))\le -\tfrac{1}{2}kn^{-1}\ln (n)\), then \(u_n(ny;m)=\dot{u}_n+\mathcal {O}(n^{-1})\), where \(\dot{u}_n\) is given explicitly by (149).

For \(\ell =1,\dots ,k\),

If \(y\in T\) with \(-\tfrac{1}{2}(k-2\ell +2)n^{-1}\ln (n)\le \mathrm {Re}(V({p}(y)^{-1};y))\le -\tfrac{1}{2}(k-2\ell +\tfrac{3}{2})n^{-1}\ln (n)\), then \(u_n(ny;m)=\dot{u}_n+\mathcal {O}(n^{-1/2})\), where \(\dot{u}_n\) is given explicitly by (150).

If \(y\in T\) with \(-\tfrac{1}{2}(k-2\ell +\tfrac{3}{2})n^{-1}\ln (n)\le \mathrm {Re}(V({p}(y)^{-1};y))\le -\tfrac{1}{2}(k-2\ell +\tfrac{1}{2})n^{-1}\ln (n)\), then \(u_n(ny;m)=\dot{u}_n+\mathcal {O}(n^{-1/2})\), where \(\dot{u}_n\) is given explicitly by (151) or (152).

If \(y\in T\) with \(-\tfrac{1}{2}(k-2\ell +\tfrac{1}{2})n^{-1}\ln (n)\le \mathrm {Re}(V({p}(y)^{-1};y))\le -\tfrac{1}{2}(k-2\ell )n^{-1}\ln (n)\), then \(u_n(ny;m)=\dot{u}_n+\mathcal {O}(n^{-1/2})\), where \(\dot{u}_n\) is given explicitly by (153).

If \(y\in T\) with \(\mathrm {Re}(V({p}(y)^{-1};y))\ge \tfrac{1}{2}kn^{-1}\ln (n)\), then \(u_n(ny;m)=\dot{u}_n+\mathcal {O}(n^{-1})\), where \(\dot{u}_n\) is given explicitly by (154).

These results imply corresponding asymptotic formulæ for \(u_n(ny;m)\) if \(m=\tfrac{1}{2}+k\), \(k\in \mathbb {Z}_{\ge 0}\), by the exact symmetry (5). In particular, the eyebrow near which the asymptotics are nontrivial is then the left one,  .
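The following sketch makes the bookkeeping of Theorem 5 concrete. It defines a small hypothetical helper; the name theorem5_layer and its output labels are ours. The helper takes k, n, and a real number r standing for \(\mathrm {Re}(V({p}(y)^{-1};y))\), and reports which layer of Theorem 5 contains r. It uses only the inequalities stated in the theorem. Adjacent layers overlap at their common boundaries, so it returns the first matching layer in the order listed there. It does not evaluate V itself, and it does not implement the approximations (149)–(154); it only sketches the dissection of T.

```python
import math

def theorem5_layer(k: int, n: int, r: float) -> str:
    """Label the layer of Theorem 5 that contains r = Re V(p(y)^{-1}; y).

    Hypothetical helper: layers are tested in the order listed in Theorem 5,
    so a value lying on a shared boundary is assigned to the earlier layer.
    """
    if k < 0 or n < 2:
        raise ValueError("need k >= 0 and n >= 2 (so that ln(n) > 0)")
    h = math.log(n) / n  # the unit n^{-1} ln(n) in which layer widths are measured
    if r <= -0.5 * k * h:
        return "innermost"  # first layer listed: error O(n^{-1}), formula (149)
    for ell in range(1, k + 1):
        # boundaries -(1/2)(k - 2*ell + c) * h for c = 2, 3/2, 1/2, 0 (increasing)
        b2, b32, b12, b0 = (-0.5 * (k - 2 * ell + c) * h for c in (2.0, 1.5, 0.5, 0.0))
        if b2 <= r <= b32:
            return f"ell={ell}, (150)"
        if b32 <= r <= b12:
            return f"ell={ell}, (151)/(152)"
        if b12 <= r <= b0:
            return f"ell={ell}, (153)"
    return "outermost"  # remaining case r >= (1/2) k h: formula (154)
```

The boundary \(-\tfrac{1}{2}(k-2\ell )n^{-1}\ln (n)\) of the \(\ell \)-th group coincides with the starting boundary of the \((\ell +1)\)-th group. The layers therefore tile T from the innermost to the outermost one without gaps.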

The inequalities on y in the statement of the theorem describe a dissection of T into finitely many (depending on k) “layers” roughly parallel to the right eyebrow  and overlapping at their common boundaries. The order of the layers as written in the theorem corresponds to y crossing  from inside E to outside, and the “interior” layers described by the index \(\ell \) each have width proportional to \(n^{-1}\ln (n)\). The approximation \(\dot{u}_n\) assigned to each layer is a fractional linear (Möbius) function of \(n^\beta \mathrm {e}^{2nV({p}(y)^{-1};y)}\), where the power \(\beta \) and the coefficients of the linear expressions in the numerator and denominator depend on the layer. The latter coefficients are relatively slowly varying functions of y alone that are explicitly built from \({p}(y)\), and hence the dominant local behavior in any given layer is essentially trigonometric with respect to y. We wish to stress a contrast with the approximation formula (19), whose ingredients involve implicitly defined functions of \(y\in E_\mathrm {R}\) and elements of algebraic geometry. By comparison, the approximation \(\dot{u}_n\) in each layer is an elementary function of \(V(\lambda ;y)\) and \({p}(y)\). In particular, it is easy to check that when y is in the innermost or outermost layers but bounded away from  (the “overlap domain”), Theorem 5 is consistent with Theorem 4.
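A one-line computation shows why the layer boundaries in Theorem 5 sit at fixed multiples of \(n^{-1}\ln (n)\). We include it as a heuristic aid, assuming for illustration that the power \(\beta \) is real. Suppose \(\mathrm {Re}(V({p}(y)^{-1};y))=-\tfrac{1}{2}s\,n^{-1}\ln (n)\) for a real number s. Then

```latex
\[
\bigl|n^{\beta}\mathrm{e}^{2nV({p}(y)^{-1};y)}\bigr|
  = n^{\beta}\,\mathrm{e}^{2n\,\mathrm{Re}(V({p}(y)^{-1};y))}
  = n^{\beta}\,\mathrm{e}^{-s\ln (n)}
  = n^{\beta-s}.
\]
```

The argument of the Möbius function is therefore large when s is less than \(\beta \), of order one when s equals \(\beta \), and small when s is greater than \(\beta \). Changing s by a fixed amount changes its size by a fixed power of n. As a result, which terms in the numerator and denominator dominate changes across bands in which \(\mathrm {Re}(V({p}(y)^{-1};y))\) varies by amounts proportional to \(n^{-1}\ln (n)\).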

The analogue of Corollary 2 in the present context is the following.

Corollary 4

Let \(m=-(k+\tfrac{1}{2})\), \(k\in \mathbb {Z}_{\ge 0}\), and let T be defined as in (24). If \(\{y_n\}_{n=N}^\infty \subset T\) is a sequence for which \(y_n\) is a zero of \(\dot{u}_n\) for all \(n\ge N\), then for each \(\epsilon >0\) sufficiently small there is exactly one simple zero, and possibly a group of an equal number of additional zeros and poles, of \(u_n(ny;m)\) within \(|y-y_n|<\epsilon n^{-1}\) for n sufficiently large. Likewise, if \(\{y_n\}_{n=N}^\infty \subset T\) is a sequence for which \(y_n\) is a pole of \(\dot{u}_n\) for all \(n\ge N\), then for each \(\epsilon >0\) sufficiently small there is exactly one simple pole, and possibly a group of an equal number of additional zeros and poles, of \(u_n(ny;m)\) within \(|y-y_n|<\epsilon n^{-1}\) for n sufficiently large.

As before, we suspect that with additional work one should be able to preclude the excess pairing phenomenon, so that the poles and zeros of \(u_n(x;m)\) and its approximation \(\dot{u}_n\) are in one-to-one correspondence. Now in each layer of T, the poles and zeros of \(\dot{u}_n\) are easily seen to lie exactly along certain explicit curves roughly parallel to the eyebrow.
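A short calculation indicates why zeros and poles of a Möbius function of \(w:=n^\beta \mathrm {e}^{2nV({p}(y)^{-1};y)}\) line up along curves. For illustration only, write a layer's approximation as \(\dot{u}_n=(a(y)w+b(y))/(c(y)w+d(y))\). Here a, b, c, d are hypothetical names for slowly varying coefficients; the actual ones are those in (149)–(154). Assume also that \(a(y)b(y)\ne 0\) and that \(\beta \) is real. Then a zero satisfies

```latex
\[
n^{\beta}\mathrm{e}^{2nV({p}(y)^{-1};y)}=-\frac{b(y)}{a(y)}
\quad\Longleftrightarrow\quad
\begin{cases}
2n\,\mathrm{Re}\bigl(V({p}(y)^{-1};y)\bigr)=\ln\bigl|b(y)/a(y)\bigr|-\beta\ln (n),\\[2pt]
2n\,\mathrm{Im}\bigl(V({p}(y)^{-1};y)\bigr)\equiv\arg\bigl(-b(y)/a(y)\bigr)\pmod{2\pi}.
\end{cases}
\]
```

The first condition places the zero on a single curve along which \(\mathrm {Re}(V({p}(y)^{-1};y))\) is nearly constant, since \(\ln |b/a|\) varies slowly. The second condition selects a discrete set of points on that curve, with adjacent points differing by approximately \(\pi /n\) in \(\mathrm {Im}(V({p}(y)^{-1};y))\). Poles arise in the same way from \(c(y)w+d(y)=0\).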

Theorem 6

Suppose that \(m=-(\tfrac{1}{2}+k)\), \(k\in \mathbb {Z}_{\ge 0}\), and let T be as in (24). The zeros and poles of the piecewise-meromorphic approximating function \(\dot{u}_n\) on T lie on a system of \(4k+2\) nonintersecting curves roughly parallel to the eyebrow  . From left to right, these are:

Analogous results hold for the approximation to \(u_n(ny;m)\) for \(m=\tfrac{1}{2}+k\), \(k\in \mathbb {Z}_{\ge 0}\), obtained from \(\dot{u}_n\) via the symmetry (5) (\(y\mapsto -y\), \(m\mapsto -m\), \(\dot{u}_n\mapsto \dot{u}_n^{-1}\)).

Corollary 4 and Theorem 6 are proved in Sect. 5.2.8. To illustrate the accuracy of these results, we compare the exact locations of zeros and poles of \(u_n(ny;m)\) for \(m=-(k+\tfrac{1}{2})\), \(k\in \mathbb {Z}_{\ge 0}\), with the curves described in Theorem 6 in Figs. 16, 17 and 18. In addition to illustrating the accuracy of the approximation by \(\dot{u}_n\), these figures demonstrate another phenomenon for which we do not yet have an explanation: for any given curve, the poles/zeros attracted are those contributed by exactly one of the four polynomial factors in (3). Furthermore, there appears again to be no excess pairing of poles and zeros.

As in Fig. 16 but for \(k=1\) (Color figure online)

As in Fig. 16 but for \(k=2\) (Color figure online)

Evidently, the large-n asymptotic behavior of \(u_n(x;m)\) is completely different for \(m=\pm (k+\tfrac{1}{2})\), \(k\in \mathbb {Z}_{\ge 0}\), and for \(m=\pm (k+\tfrac{1}{2})+\epsilon \), however small \(\epsilon \ne 0\) is. In other words, even crude aspects of the large-n asymptotic behavior of \(u_n(x;m)\) for \(n^{-1}x\) in a neighborhood of the eye E fail to be uniformly valid with respect to the second parameter m near half-integer values of the latter. Thus, given \(m\in \mathbb {C}\), the eye is either open or closed in the large-n limit. On the other hand, the polynomials \(s_n(x;m)\) in the formula (3) are actually polynomials in both arguments x and m [12]. In this sense, the limits of \(n\rightarrow +\infty \) and \(m\rightarrow \mathbb {Z}+\tfrac{1}{2}\) do not commute. Capturing the process of the closing of the eye requires connecting m with n in a suitable double-scaling limit so that m tends to a given half-integer as \(n\rightarrow +\infty \). In a subsequent paper, we will show that in the right double-scaling limit, all three types of solutions of the autonomous model equation (13) play a role in describing \(u_n(ny;m)\) as \(n\rightarrow +\infty \).

We also note that all of the results reported here assume that \(m\in \mathbb {C}\) is held fixed while \(n\rightarrow +\infty \), and we do not expect uniformity of the large-n asymptotic behavior of \(u_n(x;m)\) if m also becomes large. The parameter m enters into the conditions of Riemann–Hilbert Problem 1 in a more complicated way than does n, appearing not only in exponents but also through the factors \(\Gamma (\tfrac{1}{2}\pm m)\). To partly simplify the latter factors, let us write \(m\in \mathbb {C}\setminus (\mathbb {Z}+\tfrac{1}{2})\) in the form \(m=m_0-k\), where \(k\in \mathbb {Z}\) and \(0\le \mathrm {Re}(m_0)<1\); then consider the matrix \(\widetilde{\mathbf {Y}}(\lambda )\) related to \(\mathbf {Y}(\lambda )\) solving Riemann–Hilbert Problem 1 by

where \((a)_k:=\Gamma (a+k)/\Gamma (a)\) is a Pochhammer symbol. It is easy to check that \(\widetilde{\mathbf {Y}}(\lambda )\) satisfies the conditions of Riemann–Hilbert Problem 1 with only one change: \(\Gamma (\tfrac{1}{2}-m)\) and \(\Gamma (\tfrac{1}{2}+m)\) are replaced in the jump matrices by \(\Gamma (\tfrac{1}{2}-m_0)\) and \((-1)^k\Gamma (\tfrac{1}{2}+m_0)\), respectively. Moreover, one can then check that exactly the same reconstruction formula (12) holds for \(u_n(x;m)\), with the entries of the expansion coefficients of \(\mathbf {Y}(\lambda )\) simply replaced by the corresponding entries for \(\widetilde{\mathbf {Y}}(\lambda )\). Consequently, to study \(u_n(x;m)\) for large m (whether or not n is also large), Stirling asymptotics for the gamma function factors are needed only when the imaginary part of m grows. In a future work, we hope to investigate the rational solutions of the Painlevé-III equation when the parameter m is large.
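The replacement of the gamma factors rests on two elementary identities for Pochhammer symbols. With \(m=m_0-k\),

\(\Gamma (\tfrac{1}{2}-m)=(\tfrac{1}{2}-m_0)_k\Gamma (\tfrac{1}{2}-m_0)\) and \(\Gamma (\tfrac{1}{2}+m)=(-1)^k\Gamma (\tfrac{1}{2}+m_0)/(\tfrac{1}{2}-m_0)_k\).

The second identity is where the sign \((-1)^k\) comes from. The sketch below checks both identities numerically; it does not reconstruct the transformation \(\mathbf {Y}\mapsto \widetilde{\mathbf {Y}}\), which is not reproduced here. It assumes m is real, only because Python's math.gamma is real-valued, and the function names are ours. For such m, it splits m as \(m=m_0-k\) with \(0\le m_0<1\).

```python
import math

def split_m(m: float) -> tuple[float, int]:
    """Write m = m0 - k with k an integer and 0 <= m0 < 1 (real m only)."""
    k = -math.floor(m)
    return m + k, k

def pochhammer(a: float, k: int) -> float:
    """(a)_k := Gamma(a + k) / Gamma(a), for any integer k away from poles."""
    return math.gamma(a + k) / math.gamma(a)

def check_gamma_identities(m: float) -> tuple[float, float]:
    """Relative errors of the two identities used to replace Gamma(1/2 -/+ m)."""
    if (m - 0.5) % 1.0 == 0.0:
        raise ValueError("m must not be a half-integer")
    m0, k = split_m(m)
    # Gamma(1/2 - m) = (1/2 - m0)_k * Gamma(1/2 - m0)
    lhs_minus = math.gamma(0.5 - m)
    rhs_minus = pochhammer(0.5 - m0, k) * math.gamma(0.5 - m0)
    # Gamma(1/2 + m) = (-1)^k * Gamma(1/2 + m0) / (1/2 - m0)_k
    lhs_plus = math.gamma(0.5 + m)
    rhs_plus = (-1) ** k * math.gamma(0.5 + m0) / pochhammer(0.5 - m0, k)
    return (abs(lhs_minus - rhs_minus) / abs(lhs_minus),
            abs(lhs_plus - rhs_plus) / abs(lhs_plus))
```

Multiplying the two identities shows that the Pochhammer factors cancel in the product \(\Gamma (\tfrac{1}{2}-m)\Gamma (\tfrac{1}{2}+m)=(-1)^k\Gamma (\tfrac{1}{2}-m_0)\Gamma (\tfrac{1}{2}+m_0)\).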

2 Spectral Curve and g-Function

When n is large, the exponential factors \(\lambda ^{\mp n}\mathrm {e}^{\mp \mathrm {i}x\varphi (\lambda )}=\mathrm {e}^{\pm nV(\lambda ;y)}\) appearing in the jump conditions (8)–(11) need to be balanced in general by some compensating factors that can be used to control exponential growth. We therefore introduce a “g-function” \(g(\lambda ;y)\) that is taken to be bounded and analytic in \(\mathbb {C}\setminus L\) with \(g(\lambda ;y)\rightarrow g_\infty (y)\) as \(\lambda \rightarrow \infty \) for some \(g_\infty (y)\) to be determined, and we set

Thus, representing (8)–(11) in the general form \(\mathbf {Y}_{n+}(\lambda ;x,m)=\mathbf {Y}_{n-}(\lambda ;x,m)\mathbf {V}(\lambda ;x,m)\), we obtain the corresponding jump conditions for \(\mathbf {M}_n(\lambda ;y,m)\) in the form \(\mathbf {M}_{n+}(\lambda ;y,m)=\mathbf {M}_{n-}(\lambda ;y,m)\mathrm {e}^{ng_-(\lambda ;y)\sigma _3}\mathbf {V}(\lambda ;ny,m)\mathrm {e}^{-ng_+(\lambda ;y)\sigma _3}\). Noting that

we place the following conditions on g. We want g to be chosen so that L can be deformed and then split into several arcs along each of which one of the following alternatives holds (recall that V is defined by (15)):

\(g_+(\lambda ;y)-g_-(\lambda ;y)=\mathrm {i}K\) where \(K\in \mathbb {R}\) is constant (implying that \(g'(\lambda ;y)\) has no jump discontinuity across the arc), and \(\mathrm {Re}(2g_\pm (\lambda ;y)-V(\lambda ;y))<0\), or

\(g_+(\lambda ;y)+g_-(\lambda ;y)-V(\lambda ;y)=\mathrm {i}K\) where \(K\in \mathbb {R}\) is constant (implying that \(g_+'(\lambda ;y)+g_-'(\lambda ;y)-V'(\lambda ;y)=0\) holds along the arc), while \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))>0\) on both sides of the arc, or

\(g_+(\lambda ;y)+g_-(\lambda ;y)-V(\lambda ;y)=\mathrm {i}K\) where \(K\in \mathbb {R}\) is constant (implying that \(g_+'(\lambda ;y)+g_-'(\lambda ;y)-V'(\lambda ;y)=0\) holds along the arc), while \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))<0\) on both sides of the arc, or

\(g_+(\lambda ;y)-g_-(\lambda ;y)=\mathrm {i}K\) where \(K\in \mathbb {R}\) is constant (implying that \(g'(\lambda ;y)\) has no jump discontinuity across the arc), and \(\mathrm {Re}(2g_\pm (\lambda ;y)-V(\lambda ;y))>0\).

The real constant K will generally be different in each maximal arc.

2.1 The Spectral Curve and Its Degenerations

If we assume that \(g'(\lambda ;y)\) has a finite number of arcs of discontinuity along \(L\setminus \{0\}\), then obviously \((g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y))_+=(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y))_-\) except along these arcs. Along the arcs of discontinuity where instead the condition \(g_+(\lambda ;y)+g_-(\lambda ;y)-V(\lambda ;y)=\mathrm {i}K\) holds, by differentiation along the arc we have \((g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y))_+=-(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y))_-\). It follows that \((g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y))^2\) is an analytic function of \(\lambda \) except at \(\lambda =0\), which is the only singularity of \(V'(\lambda ;y)\). Now since \(g'(\lambda ;y)=\mathcal {O}(\lambda ^{-2})\) as \(\lambda \rightarrow \infty \) and \(g'(\lambda ;y)=\mathcal {O}(1)\) as \(\lambda \rightarrow 0\), it follows that

and hence if \(y\ne 0\),

Therefore, if \(y=0\), Liouville’s theorem shows that

while if \(y\ne 0\), we necessarily have that

where \(P(\cdot ;y,C)\) is the quartic polynomial defined by (14) and it only remains to determine C. Since the zero locus of \(P(\lambda ;y,C)\) is obviously symmetric with respect to the involution \(\lambda \mapsto \lambda ^{-1}\), the following configurations for \(P(\lambda ;y,C)\) include all possibilities, given that \(y\ne 0\):

- (i)

All four roots coincide, in which case the four-fold root must lie at either \(\lambda =1\) or \(\lambda =-1\); i.e., \(P(\lambda ;y,C)=-\tfrac{1}{4}y^2(\lambda \mp 1)^4 = -\tfrac{1}{4}y^2\lambda ^4\pm y^2\lambda ^3 -\tfrac{3}{2}y^2\lambda ^2 \pm y^2\lambda -\tfrac{1}{4}y^2\). Comparing with (14), we see that this situation occurs only if \(y=\pm \tfrac{1}{2}\mathrm {i}\), and then only if also \(C=-\tfrac{3}{2}y^2=\tfrac{3}{8}\). In this case, since \(P(\lambda ;y,C)\) is a perfect square, we have either \(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y)=\tfrac{1}{2}\mathrm {i}y(1\mp \lambda ^{-1})^2=\tfrac{1}{2}\mathrm {i}y(1\mp 2\lambda ^{-1}+\lambda ^{-2})\) or \(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y)=-\tfrac{1}{2}\mathrm {i}y(1\mp \lambda ^{-1})^2=-\tfrac{1}{2}\mathrm {i}y(1\mp 2\lambda ^{-1}+\lambda ^{-2})\). Since \(g'(\lambda ;y)=\mathcal {O}(\lambda ^{-2})\) as \(\lambda \rightarrow \infty \), only the former is consistent with (15), and then we see that in fact \(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y)=-\tfrac{1}{2}V'(\lambda ;y)\); i.e., \(g'(\lambda ;y)=0\) in this case, which implies that \(g(\lambda ;y)=g_\infty (y)\). This case turns out to be relevant exactly for \(y=\pm \tfrac{1}{2}\mathrm {i}\).

- (ii)

There are two double roots that are exchanged by the involution, in which case there is a number \({p}\ne \pm 1\) such that \(P(\lambda ;y,C)=-\tfrac{1}{4}y^2(\lambda -{p})^2(\lambda -{p}^{-1})^2 = -\tfrac{1}{4}y^2\lambda ^4+\tfrac{1}{2}y^2({p}+{p}^{-1})\lambda ^3 -\tfrac{1}{4}y^2({p}^2+4+{p}^{-2})\lambda ^2 +\tfrac{1}{2}y^2({p}+{p}^{-1})\lambda -\tfrac{1}{4}y^2\). Comparing with (14) shows that this is possible for all \(y\ne 0\), provided that \({p}\) is determined as a function of y up to reciprocation by \({p}+{p}^{-1}=\mathrm {i}y^{-1}\) and C is then given the value \(C=-\tfrac{1}{4}y^2({p}^2+4+{p}^{-2})=-\tfrac{1}{4}(2y^2-1)\). In this case, \(P(\lambda ;y,C)\) is again a perfect square, and hence either \(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y)=\tfrac{1}{2}\mathrm {i}y(1-{p}\lambda ^{-1})(1-{p}^{-1}\lambda ^{-1})\) or \(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y)=-\tfrac{1}{2}\mathrm {i}y(1-{p}\lambda ^{-1})(1-{p}^{-1}\lambda ^{-1})\). Only the former is consistent with (15) given that \(g'(\lambda ;y)=\mathcal {O}(\lambda ^{-2})\) as \(\lambda \rightarrow \infty \), and again we deduce that \(g'(\lambda ;y)=0\) and hence also \(g(\lambda ;y)=g_\infty (y)\). This turns out to be the case corresponding to \(y\in \mathbb {C}\setminus E\).

- (iii)

There is one double root and two simple roots, with the double root being fixed by the involution and hence occurring at \(\lambda =\pm 1\) and the simple roots being permuted by the involution and hence being given by \(\lambda =\lambda _0\) and \(\lambda =\lambda _0^{-1}\) for some \(\lambda _0\ne \pm 1\). Thus \(P(\lambda ;y,C)=-\tfrac{1}{4}y^2(\lambda \mp 1)^2(\lambda -\lambda _0)(\lambda -\lambda _0^{-1})=-\tfrac{1}{4}y^2\lambda ^4 +\tfrac{1}{4}y^2(\lambda _0\pm 2+\lambda _0^{-1})\lambda ^3-\tfrac{1}{2}y^2(1\pm (\lambda _0+\lambda _0^{-1}))\lambda ^2 +\tfrac{1}{4}y^2(\lambda _0\pm 2+\lambda _0^{-1})\lambda -\tfrac{1}{4}y^2\). Comparing with (14) shows that this configuration is possible for all \(y\ne 0\), provided that \(\lambda _0\) is determined up to reciprocation by \(\lambda _0+\lambda _0^{-1}=2\mathrm {i}y^{-1}\mp 2\) and that C is assigned the value \(C=-\tfrac{1}{2}y^2(1\pm (\lambda _0+\lambda _0^{-1}))=\tfrac{1}{2}y^2\mp \mathrm {i}y\). This case turns out to be relevant only when \(y\in (E\cap \mathrm {i}\mathbb {R})\setminus \{\pm \tfrac{1}{2}\mathrm {i}\}\).

- (iv)

There are four simple roots, none of which equals 1 or \(-1\). In this case, for some \(\lambda _0\) and \(\lambda _1\) with \(\lambda _0^2\ne 1\), \(\lambda _1^2\ne 1\), \(\lambda _1\ne \lambda _0\), and \(\lambda _1\ne \lambda _0^{-1}\), we have \(P(\lambda ;y,C)=-\tfrac{1}{4}y^2(\lambda -\lambda _0)(\lambda -\lambda _0^{-1})(\lambda -\lambda _1)(\lambda -\lambda _1^{-1})=-\tfrac{1}{4}y^2\lambda ^4+\tfrac{1}{4}y^2(\lambda _0+\lambda _0^{-1}+\lambda _1+\lambda _1^{-1})\lambda ^3-\tfrac{1}{4}y^2((\lambda _0+\lambda _0^{-1})(\lambda _1+\lambda _1^{-1})+2)\lambda ^2 + \tfrac{1}{4}y^2(\lambda _0+\lambda _0^{-1}+\lambda _1+\lambda _1^{-1})\lambda -\tfrac{1}{4}y^2\). Comparing with (14) shows that this case is possible for all \(y\ne 0\) with arbitrary C. The roots \(\lambda _0\) and \(\lambda _1\) are then determined up to reciprocation and exchange by the identities \(\lambda _0+\lambda _0^{-1}+\lambda _1+\lambda _1^{-1}=2\mathrm {i}y^{-1}\) and \((\lambda _0+\lambda _0^{-1})(\lambda _1+\lambda _1^{-1})=-2-4Cy^{-2}\). This turns out to be the case for \(y\in E_\mathrm {L}\cup E_\mathrm {R}\).
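The coefficient comparisons in cases (i)–(iii) can be checked mechanically. Reading off the coefficients these comparisons require, we infer that the quartic (14), which is not reproduced in this section, should be

\(P(\lambda ;y,C)=-\tfrac{1}{4}y^2\lambda ^4+\tfrac{1}{2}\mathrm {i}y\lambda ^3+C\lambda ^2+\tfrac{1}{2}\mathrm {i}y\lambda -\tfrac{1}{4}y^2\).

This formula is our inference and should be checked against (14). The sketch below uses it to confirm numerically the root configurations claimed in three of the cases; the function names are ours. In case (i), it checks that \(y=\tfrac{1}{2}\mathrm {i}\) and \(C=\tfrac{3}{8}\) give \(P=\tfrac{1}{16}(\lambda -1)^4\). In cases (ii) and (iii), it checks that the stated values of C produce the claimed double roots at a sample point y.

```python
import cmath

def P(lam: complex, y: complex, C: complex) -> complex:
    """Quartic inferred from the comparisons in cases (i)-(iv); assumed to match (14)."""
    return (-0.25 * y**2 * lam**4 + 0.5j * y * lam**3 + C * lam**2
            + 0.5j * y * lam - 0.25 * y**2)

def dP(lam: complex, y: complex, C: complex) -> complex:
    """Derivative of P with respect to lambda."""
    return -y**2 * lam**3 + 1.5j * y * lam**2 + 2 * C * lam + 0.5j * y

def roots_of_s_plus_inverse(s: complex) -> tuple[complex, complex]:
    """Solve q + 1/q = s, i.e. q**2 - s*q + 1 = 0; the two roots are reciprocal."""
    d = cmath.sqrt(s * s - 4)
    return (s + d) / 2, (s - d) / 2

def case_i() -> float:
    """Case (i): with y = i/2 and C = 3/8, P should equal (lambda - 1)^4 / 16."""
    y, C = 0.5j, 0.375
    return max(abs(P(lam, y, C) - (lam - 1) ** 4 / 16) for lam in (0, 1, 2, 1 + 1j, -3))

def case_ii(y: complex) -> list[float]:
    """Case (ii): double roots at p and 1/p, p + 1/p = i/y, C = -(2y^2 - 1)/4."""
    C = -0.25 * (2 * y**2 - 1)
    p, p_inv = roots_of_s_plus_inverse(1j / y)
    # a double root makes both P and dP/dlambda vanish
    return [abs(P(r, y, C)) + abs(dP(r, y, C)) for r in (p, p_inv)]

def case_iii(y: complex, sign: int) -> tuple[float, float]:
    """Case (iii): double root at sign*1, simple roots lambda_0^{+-1} with
    lambda_0 + 1/lambda_0 = 2i/y - 2*sign, and C = y^2/2 - sign*i*y."""
    C = 0.5 * y**2 - sign * 1j * y
    l0, l0_inv = roots_of_s_plus_inverse(2j / y - 2 * sign)
    double = abs(P(sign, y, C)) + abs(dP(sign, y, C))
    simple = abs(P(l0, y, C)) + abs(P(l0_inv, y, C))
    return double, simple
```

Case (iv) is not checked here, because C is a free parameter there and is later fixed by the Boutroux conditions.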

Note that in cases (iii) and (iv), where \(P(\lambda ;y,C)\) is not a perfect square, care is needed when obtaining \(g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y)\) as a square root from \((g'(\lambda ;y)-\tfrac{1}{2}V'(\lambda ;y))^2=\lambda ^{-4}P(\lambda ;y,C)\). The branch cuts of this square root must be placed so that the asymptotic relations (26) hold rather than just (27).

2.2 Boutroux Integral Conditions

In order to ensure that the constant K associated with each distinguished arc of L is real, it is necessary in case (iv) above to impose further conditions. Given y and C for which this case holds, let \(\Gamma =\{(\lambda ,\mu ):\,\mu ^2=\lambda ^{-4}P(\lambda ;y,C)\}\) be the genus-1 Riemann surface (or algebraic variety) associated with the equation \(\mu ^2=\lambda ^{-4}P(\lambda ;y,C)\) in \(\mathbb {C}^2\) with coordinates \((\lambda ,\mu )\). Let \((\mathfrak {a},\mathfrak {b})\) be a canonical homology basis on \(\Gamma \), and take concrete representatives that avoid the preimages on \(\Gamma \) of both points \(\lambda =0\) and \(\lambda =\infty \). Then we impose the Boutroux conditions

where \(C=u+\mathrm {i}v\), i.e., \(u:=\mathrm {Re}(C)\) and \(v:=\mathrm {Im}(C)\). It follows from (26) that the differential \(\mu \,\mathrm {d}\lambda \) has real residues at the two points of \(\Gamma \) over \(\lambda =0\) and the two points over \(\lambda =\infty \); therefore taken together the conditions (29) do not depend on the choice of homology basis. We expect that the two real conditions (29) should determine u and v as functions of \(y\in \mathbb {C}\). Differentiation of the algebraic identity relating \(\mu \) and \(\lambda \) gives

from which it follows (since the paths \(\mathfrak {a}\) and \(\mathfrak {b}\) may be locally taken to be independent of y and C) that

Therefore, the Jacobian determinant of the equations (29) equals

Noting that \(\mu ^{-1}\lambda ^{-2}\mathrm {d}\lambda \) is a nonzero differential spanning the (one-dimensional) vector space of holomorphic differentials on \(\Gamma \), it follows from [13, Chapter II, Corollary 1] that the above Jacobian is strictly negative under the assumption that the four roots of \(P(\lambda ;y,C)\) are distinct. Thus, an application of the implicit function theorem allows us to extend any solution of the integral conditions (29) for which \(P(\lambda ;y_0,u_0+\mathrm {i}v_0)\) has distinct roots to a neighborhood of \(y_0\) on which u and v are smooth real-valued functions of y satisfying \(u(y_0)=u_0\) and \(v(y_0)=v_0\). In fact, one can show that the Jacobian determinant (30) blows up as the spectral curve degenerates, and it is in this way that the implicit function theorem ultimately fails.
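Here is a sketch of the computation behind the sign of the Jacobian. It rests on two assumptions, which we state explicitly. First, (29) reads \(\mathrm {Re}\oint _{\mathfrak {a}}\mu \,\mathrm {d}\lambda =\mathrm {Re}\oint _{\mathfrak {b}}\mu \,\mathrm {d}\lambda =0\). Second, \(P(\lambda ;y,C)\) depends on C only through the term \(C\lambda ^2\), as the coefficient comparison in case (iv) indicates. Write A and B for the periods of the holomorphic differential \(\mu ^{-1}\lambda ^{-2}\mathrm {d}\lambda \) over \(\mathfrak {a}\) and \(\mathfrak {b}\). Since \(\mu \) depends holomorphically on \(C=u+\mathrm {i}v\), we have \(\partial _u=\partial _C\) and \(\partial _v=\mathrm {i}\partial _C\) on these periods, and so

```latex
\[
\frac{\partial\mu}{\partial C}=\frac{\lambda^{-2}}{2\mu},
\qquad
\frac{\partial}{\partial u}\oint\mu\,\mathrm{d}\lambda=\tfrac{1}{2}\oint\mu^{-1}\lambda^{-2}\,\mathrm{d}\lambda,
\qquad
\frac{\partial}{\partial v}\oint\mu\,\mathrm{d}\lambda=\tfrac{\mathrm{i}}{2}\oint\mu^{-1}\lambda^{-2}\,\mathrm{d}\lambda,
\]
\[
\det\begin{pmatrix}
\tfrac{1}{2}\mathrm{Re}(A) & -\tfrac{1}{2}\mathrm{Im}(A)\\[2pt]
\tfrac{1}{2}\mathrm{Re}(B) & -\tfrac{1}{2}\mathrm{Im}(B)
\end{pmatrix}
=-\tfrac{1}{4}\,\mathrm{Im}\bigl(\overline{A}B\bigr)
=-\tfrac{1}{4}\,|A|^2\,\mathrm{Im}\Bigl(\frac{B}{A}\Bigr).
\]
```

For a canonical homology basis with the standard orientation, the Riemann bilinear relations give \(\mathrm {Im}(B/A)>0\). This determinant is therefore negative, consistent with the statement above. We have not checked that it coincides exactly with the normalization used in (30).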

3 Asymptotics of \(u_n(ny;m)\) for \(y\in \mathbb {C}\setminus E\)

In this section, we study Riemann–Hilbert Problem 1 with \(x=ny\) (i.e., we set \(w=0\)) and assume that y lies in a neighborhood of \(y=\infty \) to be determined.

3.1 Placement of Arcs of L and Determination of \(\partial E\)

We first show that for y sufficiently large in magnitude, we may take \(C=-\tfrac{1}{4}(2y^2-1)\), so that \(P(\lambda ;y,C)\) has two double roots. The spectral curve is therefore reducible, leading to \(g(\lambda ;y)=g_\infty (y)\) for a suitable value of the latter constant. For large y we take the double root \({p}={p}(y)\) satisfying \({p}+{p}^{-1}=\mathrm {i}y^{-1}\) to be the branch for which \({p}(y)=-\mathrm {i}(1-\tfrac{1}{2}y^{-1}+\mathcal {O}(y^{-2}))\) as \(y\rightarrow \infty \). We then choose \(g_\infty (y):=\tfrac{1}{2}V({p}(y);y)\). Thus,

where the \(\mathcal {O}(1)\) error term applies in the limit \(y\rightarrow \infty \) uniformly for \(\lambda \) in compact subsets of \(\mathbb {C}\setminus \{0\}\). Taking into account that \(2g(\lambda ;y)-V(\lambda ;y)\) has a double zero at \(\lambda ={p}(y)\), one can show that if |y| is sufficiently large, taking the common intersection point of all four contour arcs to be the point \({p}(y)\), it is possible to arrange the arcs so that \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))<0\) (resp., \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))>0\)) holds on  (resp., on  ), with the inequality being strict except at the intersection point \(\lambda ={p}(y)\); compare Fig. 19. The function \({p}(y)\) has an analytic continuation from the neighborhood of \(y=\infty \) to the maximal domain \(y\in \mathbb {C}\setminus I\), where I denotes the imaginary segment connecting the two branch points \(\pm \tfrac{1}{2}\mathrm {i}\). As y is brought in from the point at infinity, it remains possible to place the arcs of the contour L as described above at least until either y meets the branch cut I of \({p}(y)\) or the topology of the zero level set of \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))\) changes. The latter occurs precisely when the only other critical point \(\lambda ={p}(y)^{-1}\) moves onto the zero level set; since \(\mathrm {Re}(V(\lambda ^{-1};y))=-\mathrm {Re}(V(\lambda ;y))\), whenever both \({p}(y)\) and \({p}(y)^{-1}\) lie on the same level curve of \(\mathrm {Re}(V(\lambda ;y))\) we necessarily have \(\mathrm {Re}(V({p}(y);y))=0\). The set of \(y\in \mathbb {C}\setminus I\) where the latter condition holds is plotted in Fig. 20. Because \(y\in \mathrm {i}\mathbb {R}\setminus I\) implies that \(|{p}(y)|=1\), it is easy to confirm that indeed \(\mathrm {Re}(V({p}(y);y))=0\) for such y; see also Fig. 20. The remaining points form a closed curve \(\partial E\) with two smooth arcs meeting at the branch points \(\pm \tfrac{1}{2}\mathrm {i}\) and bounding the eye-shaped domain E defined in Sect. 1.2.
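The reduction of the level-set condition to \(\mathrm {Re}(V({p}(y);y))=0\) can be written out using only \(2g(\lambda ;y)=2g_\infty (y)=V({p}(y);y)\) and the symmetry \(\mathrm {Re}(V(\lambda ^{-1};y))=-\mathrm {Re}(V(\lambda ;y))\) stated above:

\[
\mathrm {Re}\bigl (2g(\lambda ;y)-V(\lambda ;y)\bigr )\Big |_{\lambda ={p}(y)}=0,\qquad
\mathrm {Re}\bigl (2g(\lambda ;y)-V(\lambda ;y)\bigr )\Big |_{\lambda ={p}(y)^{-1}}
=\mathrm {Re}(V({p}(y);y))-\mathrm {Re}(V({p}(y)^{-1};y))
=2\,\mathrm {Re}(V({p}(y);y)).
\]

Hence the second critical point \({p}(y)^{-1}\) lies on the zero level set through \({p}(y)\) exactly when \(\mathrm {Re}(V({p}(y);y))=0\).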

For \(y=5\mathrm {e}^{\mathrm {i}\pi /4}\), the domain where \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))<0\) is shaded in red, and the domain where \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))>0\) is shaded in blue. Left panel: the \(\lambda \)-plane. Right panel: the \(\lambda ^{-1}\)-plane. The unit circle is shown with a dashed curve in each plot. Suitable contour arcs matching the scheme described in Sect. 1.1.2 including the argument increment conditions (6)–(7) (for one choice of the arbitrary sign in (6)) are also shown (Color figure online)

The curves in the y-plane where \(\mathrm {Re}(V({p}(y);y))=0\). The branch cut I of \({p}(y)\) (gray line) joins the two junction points. The dots correspond to plots in subsequent figures. The domain E is defined as the bounded region between the two black curves, bisected by the branch cut of \({p}(y)\). The sign of \(\mathrm {Re}(V({p}(y);y))\) is indicated in each region. In particular, \(E_\mathrm {R}\) (resp., \(E_\mathrm {L}\)) is the bounded region where \(\mathrm {Re}(V({p}(y);y))>0\) (resp., \(\mathrm {Re}(V({p}(y);y))<0\)) holds (Color figure online)
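For experimenting with these figures, the branch \({p}(y)\) and its cut I can be evaluated in closed form. The two solutions of \({p}+{p}^{-1}=\mathrm {i}y^{-1}\) are \(\tfrac{\mathrm {i}}{2y}(1\pm R)\) with \(R^2=1+4y^2\). Taking \(R(y)=2y\sqrt{1+1/(4y^2)}\) with the principal square root gives a function that is analytic off the segment I (where \(1+1/(4y^2)\le 0\)) and behaves like 2y at infinity, and the minus sign reproduces \({p}(y)=-\mathrm {i}(1-\tfrac{1}{2}y^{-1}+\mathcal {O}(y^{-2}))\). The Python sketch below assumes only this relation and normalization (it does not need V); the function name `p` is ours.

```python
import cmath


def p(y: complex) -> complex:
    """Branch of the root of p + 1/p = i/y with p(y) ~ -i(1 - 1/(2y)) as y -> oo.

    R(y) = 2y*sqrt(1 + 1/(4y^2)) (principal sqrt) satisfies R^2 = 1 + 4y^2,
    behaves like 2y at infinity, and is analytic off the segment I = [-i/2, i/2].
    """
    if y == 0:
        raise ValueError("p(y) is not defined at y = 0")
    r = 2 * y * cmath.sqrt(1 + 1 / (4 * y * y))
    return 1j / (2 * y) * (1 - r)


# Usage: compare with the large-y expansion, and evaluate |p| on iR \ I.
y = 5 * cmath.exp(1j * cmath.pi / 4)
print(p(y), -1j * (1 - 1 / (2 * y)))
print(abs(p(0.7j)))  # purely imaginary y with |y| > 1/2
```

The closed form makes the claim \(|{p}(y)|=1\) for \(y\in \mathrm {i}\mathbb {R}\setminus I\) easy to check: for \(y=\mathrm {i}t\) with \(|t|>\tfrac{1}{2}\) the square root is real, so \({p}(y)=\tfrac{1}{2t}-\mathrm {i}\sqrt{1-1/(4t^2)}\) up to the sign of the root.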

The following figures illustrate how sign charts such as the one shown in Fig. 19 change as y varies near the arcs of the curve shown in Fig. 20. Figure 21 concerns the three points on the real axis, and Fig. 22 the three points on the diagonal. Figure 23 shows that although there is a topological change in the level curve as y crosses the imaginary axis in the exterior of E, this does not obstruct the placement of the contour arcs of L. On the other hand, the topological change that occurs when y lies along the arc of \(\partial E\) in the right half-plane (resp., left half-plane) obstructs only the placement of the arc  (resp.,  ), and therefore we write \(\partial E\) as the union of two closed arcs:  . Note that the surgery allowing for a sign change  (see Remark 1) is compatible with the sign-chart/contour placement scheme provided that the domain where \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))<0\) consists of a single component. If it consists of two components, then the contours  and  necessarily lie in distinct components and the surgery becomes impossible. The former situation holds in the exterior of E for \(\mathrm {Re}(y)>0\) and the latter for \(\mathrm {Re}(y)\le 0\).

The domain where \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))<0\) is shown in red and the domain where \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))>0\) in blue for \(y=0.381372\) (left column), \(y=0.331372\) (middle column), and \(y=0.281372\) (right column), corresponding to the green, amber, and red dots, respectively, on the real axis in Fig. 20. The top row shows a neighborhood of the unit disk in the \(\lambda \)-plane, while the bottom row shows the exterior of the unit disk in the \(\lambda ^{-1}\)-plane. In the plots in the right-hand column, the level curve has broken, and it is no longer possible to place the contour arc  connecting \({p}(y)\) and \(\infty \) completely within the red region. This phase transition, which apparently occurs only on the right edge of the domain E, is relevant only if the jump matrix on  is not the identity, i.e., if \(m-\tfrac{1}{2}\notin \mathbb {Z}_{\ge 0}\). These plots show contours with  . The other choice  would also be compatible with the sign chart (Color figure online)

As in Fig. 21 except for \(y=0.414768\mathrm {e}^{-3\pi \mathrm {i}/4}\) (left column), \(y=0.364768\mathrm {e}^{-3\pi \mathrm {i}/4}\) (middle column), and \(y=0.314768\mathrm {e}^{-3\pi \mathrm {i}/4}\) (right column), corresponding to the green, amber, and red dots, respectively, on the diagonal in Fig. 20. In the plots in the right-hand column, the level curve has broken, and it is no longer possible to place the contour arc  connecting \({p}(y)\) and 0 completely within the blue region. This phase transition, which apparently occurs only on the left edge of the domain E, is relevant only if the jump matrix on  is not the identity, i.e., if \(-m-\tfrac{1}{2}\notin \mathbb {Z}_{\ge 0}\). These plots show contours with  . In this case, the other choice of  could only be arranged by a surgery of  that would result in contours incompatible with the sign chart (Color figure online)

As in Fig. 21 except for \(y=-0.05+0.55\mathrm {i}\) (left column), \(y=0.55\mathrm {i}\) (middle column), and \(y=0.05+0.55\mathrm {i}\) (right column), corresponding to the three green dots near the positive imaginary axis in Fig. 20. The topological change in the sign chart has no effect on the placement of the contours. The configurations with \(\mathrm {Re}(y)\le 0\) require the choice  . On the other hand, the configuration with \(\mathrm {Re}(y)>0\), although pictured here with  , admits surgery of the contours  and  within the domain \(\mathrm {Re}(2g(\lambda ;y)-V(\lambda ;y))<0\), and hence the choice  is also possible (Color figure online)

3.2 Parametrix Construction

Let y be fixed outside of E, let \(\delta >0\) be a sufficiently small constant (depending on y), and let D denote the simply connected neighborhood of \(\lambda ={p}(y)\) defined by the inequality \(|2g(\lambda ;y)-V(\lambda ;y)|<\delta ^2\). We will define a parametrix \(\dot{\mathbf {M}}_n(\lambda ;y,m)\) for \(\mathbf {M}_n(\lambda ;y,m)\) in (25) by a piecewise formula:

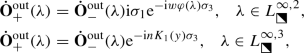
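The outer factor \(\dot{\mathbf {M}}^{\mathrm {out}}(\lambda ;y,m)\) is diagonal, must reproduce a constant jump on a single arc, and tends to \(\mathbb {I}\) as \(\lambda \rightarrow \infty \), so each diagonal entry is a scalar function with a constant multiplicative jump. As a generic illustration of that mechanism only, and not as the formula used in this section, consider the hypothetical function \(f(\lambda )=((\lambda -a)/(\lambda -b))^{\gamma }\) defined with the principal logarithm. Its only branch cut is the straight segment from a to b, \(f\rightarrow 1\) as \(\lambda \rightarrow \infty \), and the ratio of its boundary values from the left and from the right of the segment oriented from a to b is the constant \(\mathrm {e}^{-2\pi \mathrm {i}\gamma }\). With the illustrative choice \(\gamma =-m-\tfrac{1}{2}\), the diagonal matrix \(f^{\sigma _3}\) then has the jump \(-\mathrm {e}^{2\pi \mathrm {i}m\sigma _3}\), assuming the + side is the left side. The endpoints a, b and the helper names below are hypothetical.

```python
import cmath


def f(lam: complex, a: complex, b: complex, gamma: complex) -> complex:
    """Hypothetical scalar factor ((lam - a)/(lam - b))**gamma.

    The principal log of the Mobius ratio z = (lam - a)/(lam - b) is
    discontinuous exactly where z is negative real, i.e. on the segment
    from a to b; as lam -> infinity, z -> 1 and f -> 1.
    """
    return cmath.exp(gamma * cmath.log((lam - a) / (lam - b)))


def jump_ratio(a: complex, b: complex, gamma: complex,
               t: float = 0.5, eps: float = 1e-9) -> complex:
    """Approximate f_+/f_- at a + t(b - a); '+' is the left side of a -> b."""
    mid = a + t * (b - a)
    left = 1j * (b - a) / abs(b - a)  # unit normal pointing to the left
    return f(mid + eps * left, a, b, gamma) / f(mid - eps * left, a, b, gamma)


# Usage with illustrative values (a, b, m are arbitrary here).
m = 0.3 + 0.2j
gamma = -m - 0.5
a, b = 0.2 - 0.9j, 0.0
print(jump_ratio(a, b, gamma), -cmath.exp(2j * cmath.pi * m))
print(f(1e8, a, b, gamma))  # close to 1 for large |lam|
```

Because the jump is the same at every point of the segment, the reciprocal \(1/f\) automatically carries the (2,2) entry \(-\mathrm {e}^{-2\pi \mathrm {i}m}\) of the jump.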

Noting that the jump matrix for \(\mathbf {M}_n(\lambda ;y,m)\) converges uniformly on \(L\setminus D\) (with exponential accuracy) to \(\mathbb {I}\) except on  , where the limit is instead \(-\mathrm {e}^{2\pi \mathrm {i}m\sigma _3}\), and that  should have a limit as \(\lambda \rightarrow 0\), we define \(\dot{\mathbf {M}}^{\mathrm {out}}(\lambda ;y,m)\) as the following diagonal matrix:

where the branch cut is taken to be  and the branch is chosen such that the right-hand side tends to \(\mathbb {I}\) as \(\lambda \rightarrow \infty \). In order to define \(\dot{\mathbf {M}}_n^{\mathrm {in}}(\lambda ;y,m)\), we will find a certain canonical matrix function that satisfies exactly the jump conditions of \(\mathbf {M}_n(\lambda ;y,m)\) within the neighborhood D and then we will multiply the result on the left by a matrix holomorphic in D to arrange a good match with \(\dot{\mathbf {M}}^{\mathrm {out}}(\lambda ;y,m)\) on \(\partial D\). For the first part, we introduce a conformal mapping \(W:D\rightarrow \mathbb {C}\) by the following relation:

Because \(2g(\lambda ;y)-V(\lambda ;y)\) is a locally analytic function vanishing precisely to second order (Footnote 4) at \(\lambda ={p}(y)\), the relation (31) defines two different analytic functions of \(\lambda \), both vanishing to first order at \(\lambda ={p}(y)\). We choose the analytic solution \(W=W(\lambda ;y)\) that is negative real in the direction tangent to  . Then we deform the arcs of L within D so that in this neighborhood  and  correspond exactly to negative and positive real values of W, while  and  correspond exactly to negative and positive imaginary values of W. By the definition of D, its image W(D; y) under W is the disk of radius \(\delta \) centered at the origin; see Fig. 24. Consider the matrix \(\mathbf {N}_n(\lambda ;y,m)\) defined in terms of \(\mathbf {M}_n(\lambda ;y,m)\) for \(\lambda \in D\) by \(\mathbf {N}_n(\lambda ;y,m):= \mathbf {M}_n(\lambda ;y,m)d(\lambda ;y,m)^{\sigma _3}\), where