Abstract

Equivalence tests are statistical hypothesis testing procedures that aim to establish practical equivalence rather than the usual statistically significant difference. These testing procedures are common in “bioequivalence studies,” where one would wish to show that, for example, an existing drug and a new one under development have comparable therapeutic effects. In this article, we propose a two-stage randomized (RAND2) p-value that depends on a uniformly most powerful (UMP) p-value and an arbitrary tuning parameter \(c\in [0,1]\) for testing an interval composite null hypothesis. We investigate the behavior of the distribution function of the two p-values under the null hypothesis and alternative hypothesis for a fixed significance level \(t\in (0,1)\) and varying sample sizes. We evaluate the performance of the two p-values in estimating the proportion of true null hypotheses in multiple testing. We control the family-wise error rate using an adaptive Bonferroni procedure with a plug-in estimator to account for the multiplicity of the hypotheses under consideration. The various claims in this research are verified using a simulation study and real-world data analysis.


1 Introduction

Equivalence tests are testing procedures for establishing practical equivalence rather than the usual statistically significant difference. Within the frequentist framework, these tests use the fact that failing to reject a given null hypothesis of no difference is not logically equivalent to accepting the said null hypothesis (cf. Fogarty and Small 2014). Equivalence studies are common in the medical field, for example, where one would wish to show that an existing drug and a new one under development have comparable therapeutic effects. We refer to such studies as “bioequivalence studies.” Another area of application is in genetics, where they can be used to identify non-DE (differentially expressed) genes (cf. Qiu and Cui 2010) or to test for the Hardy-Weinberg equilibrium (HWE) when we have multiple alleles as in Ostrovski (2020). One can also use these tests to compare the similarity between two Kaplan-Meier curves, which estimate the survival functions in two populations. See Sect. 1.3 of Wellek (2010) for an in-depth discussion of these applications.

Studies on equivalence testing include Romano (2005), who provides bounds for the asymptotic power of equivalence tests and constructs efficient tests that attain those bounds. The same author also gives an asymptotically UMP test based on Le Cam’s notion of convergence of experiments for testing the mean of a multivariate normal. Equivalence tests can be conducted using the Two One-Sided Test (TOST) procedure. The TOST procedure is an example of an intersection–union test (cf. Berger and Hsu 1996) with the null hypothesis as a union of the null for the lower- and upper-sided tests and the alternative as an intersection of the rejection regions for the lower- and upper-sided tests.

Berger and Hsu (1996) consider an intersection–union test for the simultaneous assessment of equivalence on multiple endpoints. This test requires that all the \((1-2\alpha )100\%\) simultaneous intervals fall within the equivalence bounds for an overall \(\alpha \) level test. This approach can be conservative depending on the correlation structure among the endpoints and the study power. Due to these difficulties, they propose a \(100(1-\alpha )\%\) confidence interval corresponding to a size \(\alpha \) test.

Munk (1996) considered equivalence tests for Lehmann’s alternative, which are unbiased for equal sample sizes within the two groups. An extension of the expected p-value of a test (EPV) to univariate equivalence tests was considered by Pflüger and Hothorn (2002). Since this procedure is independent of the distribution of the test statistic under the null hypothesis, it avoids the problem of looking for this distribution for the test statistic. Furthermore, the EPV is independent of the nominal level \(\alpha .\)

Conducting multiple equivalence tests without multiplicity adjustments increases the probability of making false claims of equivalence (type I errors). Leday et al (2023) proposed a familywise error rate (FWER) control based on Hochberg’s method. The same authors also showed that Hommel’s method performs as well as Hochberg’s and that an “adaptive” version of Bonferroni’s method is more powerful than Hommel’s for equivalence testing. Giani and Finner (1991) and Giani and Straßburger (1994), on the other hand, considered simultaneous equivalence tests in the \(k\)-sample case and proposed tests based on the range statistic. Qiu and Cui (2010) and Qiu et al (2014) consider multiple equivalence tests based on the average equivalence criterion to identify non-DE genes. Both articles investigate the power and false discovery rate (FDR) of the TOST. Since the variance estimator in the TOST procedure can become unstable and lead to low power for small sample sizes, the latter article proposes a shrinkage variance estimator to improve the power. Huang et al (2006) also applied an average equivalence test criterion but adjusted for the multiplicity using the simultaneous confidence interval approach.

Multiple test procedures that utilize p-values are only valid if the p-value statistics follow the Uniform (0, 1) distribution under the null hypothesis. Since we use the p-values many times, any non-uniformity in their distribution quickly accumulates and reduces the power of the overall procedure. We can decompose the equivalence hypothesis into two one-sided hypotheses, each leading to a composite null hypothesis. When dealing with a composite null hypothesis, the p-value can fail to follow the Uniform (0, 1) distribution under the null hypothesis if the true parameter used is not the least favorable parameter configuration (LFC). Furthermore, we can have categorical data, for example, in genetic association studies that generate discrete data in counts, leading to test statistics with discrete distributions. Since the p-value is a deterministic transformation of the test statistic, this leads to discretely distributed p-values that are also nonuniform under the null hypothesis.

The problems of composite nulls and discrete test statistics can lead to conservative p-values, which implies that the p-value is stochastically larger than the UNI(0, 1) distribution under the null hypothesis. To our knowledge, no research has previously considered a two-stage randomized p-value in testing an interval composite null hypothesis. We propose a two-stage randomized p-value for multiple testing of interval composite null hypotheses when dealing with discrete data. The two-stage procedure uses the UMP p-value in the first stage to remove the discreteness of the test statistic. The randomized p-value proposed in Hoang and Dickhaus (2022) for a continuous test statistic is then used in the second stage to deal with the composite null hypothesis.

When utilizing the non-randomized version of the Two One-Sided Test (TOST) UMP p-value in discrete models, Finner and Strassburger (2001) showed that it is possible for the power function based on a sample of size n to coincide on the entire parameter space with the corresponding power function based on size \(n+i\) for small \(i\in {\mathbb {N}}\). We illustrate that the power function of a test based on the two-stage randomized (RAND2) p-value for discrete models, just like the one for the UMP randomized p-value, is strictly increasing with an increase in the sample size. We further illustrate that for small sample sizes, it is possible that the power functions of the UMP and RAND2 p-values do not strictly increase with an increase in the sample size.

We also investigate the behavior of the distribution function for the UMP and RAND2 p-values under the null hypothesis and alternative hypothesis. Three objectives are of interest: First, to find if the power and level of conservativity of the p-values depend on the size of the equivalence limit. Second, to investigate the behavior of the CDFs of the p-values when the parameter used to compute the p-values is close to or far from the midpoint of the equivalence interval. We are interested in finding the parameter value under the null hypothesis for which the p-values are least conservative or the parameter value that maximizes the power of a test based on our p-values under the alternative hypothesis. Third, to find out if the level of conservativity of the p-values depends on the sample sizes.

Finally, we consider multiple testing of equivalence hypotheses where we assess the performance of our p-values in estimating the proportion of true null hypotheses using an empirical-CDF-based estimator. An adaptive version of the Bonferroni procedure that utilizes the plug-in estimator of Finner and Gontscharuk (2009) is used for familywise error control.

The rest of this paper is organized as follows. General preliminaries are provided in Sect. 2. The definitions, CDFs, and investigations of the behaviors of those CDFs under the null and alternative hypothesis for the UMP and the two-stage randomized p-values are considered in Sect. 3. We also investigate if the power function of the p-values is monotonically increasing with an increase in the sample size in the same section. Furthermore, we give the parameter value that maximizes the power of a test based on the p-values in the same section. We defer all matters concerning multiple testing until Sect. 4, where we consider a real-world data analysis and a simulation study to assess the performance of the p-values in estimating the proportion of true null hypotheses. Finally, we discuss our results and give recommendations for future research in Sect. 5.

2 General preliminaries

Let \(\pmb {X}=(X_1,\ldots ,X_n)^\top \) denote our random data where each \(X_r\) is a real-valued, observable random variable, \(1 \le r \le n\) with the support of \(\pmb {X}\) denoted by \({\mathcal {X}}\). We assume all \(X_r\) are stochastically independent and identically distributed (i.i.d.) with a known parametric distribution. The marginal distribution of \(X_1\) is assumed to be \(P_\theta \), where \(\theta \in \Theta \subseteq {\mathbb {R}}\) is the model parameter. The distribution of \(\pmb {X}\) under \(\theta \) is as a result given by \(P_\theta ^{\otimes n} =: {\mathbb {P}}_\theta \). We will be concerned with an interval hypothesis test problem of the form

\[H: \theta \notin (\theta _1,\theta _2) \quad \text {versus} \quad K: \theta \in (\theta _1,\theta _2) \qquad (1)\]

for given numbers \(\theta _1,\theta _2 \in \Theta \) such that \(\theta _1<\theta _2\). When k hypotheses are of interest, then they will be expressed as \(H_j: \theta _j\notin \Delta _j\) versus \(K_j:\theta _j\in \Delta _j\) where \(\Delta _j\) denotes the range of values in the \(j^{th}\) interval between \(\theta _1^{(j)}\) and \(\theta _2^{(j)}\) for \(j\in \{1,\ldots ,k\}\) and k is the multiplicity of the problem. Denote the resulting k p-values by \(p_1,\ldots ,p_k\). We consider the case \(k=1\) in Sect. 3 and defer the multiple test problem till Sect. 4. When the difference between the \(j^{th}\) true parameter \(\theta ^{(j)}\) and \(\theta _1^{(j)}\) or \(\theta _2^{(j)}\) (\(j=1,\ldots ,k\)) is kept constant for all the k hypotheses, then this is referred to as the “average equivalence” criterion. We can sometimes make the interval in (1) symmetric to achieve equivariance to the permutation of groups, for example, the choice \(\theta _2=\theta _1^{-1}\) in Pflüger and Hothorn (2002) and Munk (1996).

As mentioned before, one method for testing this hypothesis is the Two One-Sided Test (TOST) procedure, where one tests the one-sided null hypotheses \(\theta \le \theta _1\) and \(\theta \ge \theta _2\) separately at size \(\alpha \) and in no particular order. The TOST procedure is a special case of the intersection–union test proposed by Berger (1982) where the null hypothesis is a union of disjoint sets, and the alternative hypothesis is an intersection of the complements of those sets. For this reason, we conduct the separate individual tests at size \(\alpha \) without a multiplicity adjustment like \(\alpha /2\). Practical equivalence is declared if both one-sided tests reject; otherwise, non-equivalence is concluded. These procedures suffer from a lack of power, and more powerful but more complicated alternatives have been suggested in the literature by Berger and Hsu (1996) and Brown et al (1997). Since these alternative tests are difficult to implement, we use the TOST procedure in this research.
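The TOST decision rule can be sketched for a binomial count as follows. This is a minimal illustration without randomization, not the UMP p-value of Sect. 3; the helper names `binom_pmf`, `binom_cdf`, and `tost_p_value` are hypothetical, and the boundary values \(\theta _1\) and \(\theta _2\) are used as the least favorable configurations of the two one-sided tests.

```python
from math import comb


def binom_pmf(x, n, theta):
    """P(T = x) for T ~ Bin(n, theta)."""
    return comb(n, x) * theta**x * (1.0 - theta) ** (n - x)


def binom_cdf(x, n, theta):
    """P(T <= x) for T ~ Bin(n, theta); 0 for x < 0 and 1 for x >= n."""
    if x < 0:
        return 0.0
    if x >= n:
        return 1.0
    return sum(binom_pmf(j, n, theta) for j in range(x + 1))


def tost_p_value(x, n, theta1, theta2):
    """Non-randomized TOST p-value: maximum of the two one-sided p-values."""
    # One-sided test of theta <= theta1: small when x is large.
    p_lower = 1.0 - binom_cdf(x - 1, n, theta1)  # P_{theta1}(T >= x)
    # One-sided test of theta >= theta2: small when x is small.
    p_upper = binom_cdf(x, n, theta2)  # P_{theta2}(T <= x)
    return max(p_lower, p_upper)


# Illustrative values (assumptions): n = 50, theta1 = 0.25, theta2 = 0.75.
p_mid = tost_p_value(25, 50, 0.25, 0.75)   # count in the middle of the interval
p_edge = tost_p_value(10, 50, 0.25, 0.75)  # count below n * theta1
equivalent = p_mid <= 0.05  # both one-sided tests reject at size 0.05
```

Taking the maximum means that equivalence is declared only when both one-sided tests reject at size \(\alpha \), which is why no \(\alpha /2\) adjustment is applied.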

We consider test statistics \(T(\pmb {X})\), where \(T: {\mathcal {X}} \rightarrow {\mathbb {R}}\) is a measurable mapping. Furthermore, the test statistics \(T_r\) for \(r=1,\ldots ,n\) are also assumed to be mutually independent. The marginal p-value \(p(\pmb {X})\) resulting from \(T(\pmb {X})\) is assumed to be valid, meaning that \({\mathbb {P}}_\theta (p(\pmb {X}) \le t)\le t\) holds for all significance levels \(t \in (0,1)\) and for any parameter value \(\theta \) in the null hypothesis. Valid p-values are stochastically larger than UNI (0, 1), as investigated by, among many others, Habiger and Pena (2011) and Dickhaus et al (2012). On the same note, we call a p-value conservative if it is valid and \({\mathbb {P}}_\theta (p(\pmb {X}) \le t)< t\) holds for some fixed significance level \(t \in (0,1)\). Throughout the article, we refer to the cumulative distribution function (CDF) of a p-value under the alternative hypothesis as a power function because we reject the null hypothesis for small p-values. Finally, we also make use of the (generalized) inverses of certain non-decreasing functions mapping from \({\mathbb {R}}\) to [0, 1]. In this regard, we follow Appendix 1 in Reiss (1989): If F is a real-valued, non-decreasing, right-continuous function, and similarly G is a real-valued, non-decreasing, left-continuous function where we define both F and G on \({\mathbb {R}}\), then \(F^{-1}(y) = \inf \{x \in {\mathbb {R}}: F(x) \ge y\}\) and \(G^{-1}(y) = \sup \{x \in {\mathbb {R}}: G(x) \le y\}\), respectively.
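The validity condition \({\mathbb {P}}_\theta (p(\pmb {X}) \le t)\le t\) can be checked exactly in a discrete model by summing the probability mass of all outcomes whose p-value is at most t. The sketch below does this for a non-randomized TOST p-value in a binomial model; the function names and the parameter values are illustrative assumptions, not part of the original analysis.

```python
from math import comb


def binom_pmf(x, n, theta):
    """P(T = x) for T ~ Bin(n, theta)."""
    return comb(n, x) * theta**x * (1.0 - theta) ** (n - x)


def tost_p_value(x, n, theta1, theta2):
    """Maximum of the two one-sided p-values evaluated at theta1 and theta2."""
    p_lower = sum(binom_pmf(j, n, theta1) for j in range(x, n + 1))
    p_upper = sum(binom_pmf(j, n, theta2) for j in range(x + 1))
    return max(p_lower, p_upper)


def p_value_cdf(t, n, theta, theta1, theta2):
    """Exact P_theta(p(X) <= t), summing over all possible counts x."""
    return sum(
        binom_pmf(x, n, theta)
        for x in range(n + 1)
        if tost_p_value(x, n, theta1, theta2) <= t
    )


t = 0.05
cdf_boundary = p_value_cdf(t, 50, 0.25, 0.25, 0.75)  # theta on the boundary
cdf_interior = p_value_cdf(t, 50, 0.18, 0.25, 0.75)  # theta deeper in the null
```

Both values stay below t, and the value for the parameter deeper inside the null hypothesis is smaller still, which is the conservativeness discussed in the text.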

3 Interval composite hypothesis

3.1 Introduction

In this article, we are interested in the (interval) composite null hypothesis of the form in (1). We test this hypothesis using two different p-values whose definitions and the CDFs are as follows.

Definition 1

(First stage randomization) Let U be a UNI (0, 1)-distributed random variable independent of the data \(\pmb {X}\). Further assume that \(T(\pmb {X})\) is our test statistic whose distribution has monotone likelihood ratio (MLR). The UMP-based p-value \(P^{UMP}(\pmb {X}, U)\) is

for \(i=1,2\) where \(\theta _1, \theta _2\) such that \(\theta _1<\theta _2\) are the LFC parameters and \(C_n\), \(D_n \in {\mathbb {R}}\) such that \(C_n\le D_n\) are the critical constants. The CDF of \(P^{UMP}(\pmb {X},U)\) is

where \(\theta \) is the chosen true parameter while \(\gamma _n\) and \(\delta _n\) are the randomization constants. The critical constants \(C_n, D_n\in {\mathbb {R}}\) and the randomization constants \(\gamma _n, \delta _n\in [0,1]\) are found by solving the equation \(E_{\theta _i}[T(\pmb {X})]= \alpha \) for \(i=1,2\) where \(T(\pmb {X})=\sum _{r=1}^nT(X_r).\) For large sample sizes, the critical and the randomization constants are \(C_n=F^{-1}_{\theta _1}(1-t)\), \(D_n=F^{-1}_{\theta _2}(t)\),

We can use the p-value defined in Equation (2) with models possessing monotone likelihood ratio (MLR), for example, any one-dimensional exponential family and the location family of folded normal distribution. For continuous models, the critical constants \(C_n\) and \(D_n\) are slightly modified, for example, by introducing the variance in the case of a normal distribution. Moreover, the randomization constants in (3) are such that \(\gamma _n=\delta _n=0\) for such continuous models. Next, we give a lemma whose proof is in the Appendix to show that the UMP p-value in Definition (1) is the maximum of the p-values for a lower- and an upper-tailed test.

Lemma 1

For a fixed but arbitrary significance level \(t\in (0,1)\) and a chosen true parameter under the null hypothesis \(\theta _0=\theta _1\) or \(\theta _0=\theta _2\), the UMP p-value in Equation (2) is the maximum of the p-values for a lower- and an upper-tailed test.

In calculating the UMP p-value in (2), using either \(\theta _1\) or \(\theta _2\) leads to the same result for the p-value. The UMP p-value is used in the first stage of randomization to deal with the discreteness of the test statistics. We conduct a second randomization to deal with the composite null hypothesis. The second stage randomized p-value (RAND2) (cf. (Hoang and Dickhaus 2022)) is defined as follows.

Definition 2

(Second stage randomization) Let U and \({\tilde{U}}\) be two UNI (0, 1)-distributed random variables that are stochastically independent of the data \(\pmb {X}\) and of each other. Assume also that we have an arbitrary constant \(c\in [0,1]\). The two-stage randomized p-value \(P^{rand2}(\pmb {X},U,{\tilde{U}},c)\) is

where \(P^{UMP}(\pmb {X},U)\) is the UMP p-value in the first stage as defined in Equation (2). Furthermore, we define \(P^{rand2}(\pmb {X},U,{\tilde{U}},0)={\tilde{U}}\) and \(P^{rand2}(\pmb {X},U,{\tilde{U}},1)=P^{UMP}(\pmb {X},U)\). The CDF of \(P^{rand2}(\pmb {X},U,{\tilde{U}},c)\) is
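As a hedged sketch, assume that RAND2 follows the single-stage construction of Hoang and Dickhaus (2022) with \(P^{UMP}\) in place of the LFC-based p-value, that is, \(P^{rand2}={\tilde{U}}\) when \(P^{UMP}\ge c\) and \(P^{rand2}=P^{UMP}/c\) when \(P^{UMP}<c\). This form agrees with the boundary cases \(c=0\) and \(c=1\) stated above. Under the further assumption that \(P^{UMP}\) has a continuous CDF F, the CDF of RAND2 at t is \(t\,(1-F(c))+F(tc)\). The function names below are hypothetical.

```python
def rand2_p_value(p_ump, u_tilde, c):
    """Second-stage randomization (assumed form, see the lead-in).

    p_ump   : first-stage (UMP) p-value in [0, 1]
    u_tilde : realization of a UNI(0, 1) variable independent of the data
    c       : tuning parameter in [0, 1]
    """
    if not 0.0 <= c <= 1.0:
        raise ValueError("c must lie in [0, 1]")
    if c == 0.0:
        return u_tilde  # boundary case stated in Definition 2
    if c == 1.0:
        return p_ump  # boundary case stated in Definition 2
    return p_ump / c if p_ump < c else u_tilde


def rand2_cdf(t, c, ump_cdf):
    """P(P^rand2 <= t), assuming P^UMP has the continuous CDF `ump_cdf`."""
    # Either the uniform draw is used and falls below t,
    # or P^UMP / c <= t, which is the event P^UMP <= t * c.
    return t * (1.0 - ump_cdf(c)) + ump_cdf(t * c)


# A uniform first-stage p-value stays uniform after the second stage.
uniform_case = rand2_cdf(0.3, 0.5, lambda x: x)
# A conservative first-stage p-value with CDF x**2 (an illustrative choice).
conservative_case = rand2_cdf(0.3, 0.5, lambda x: x * x)
```

In the conservative illustration, the RAND2 CDF at t lies between the first-stage CDF and t, so the second stage moves the p-value toward uniformity without exceeding the level.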

With our p-values so defined, we are now ready to use them to test our hypothesis. We first describe an example of a discrete model that we use to illustrate our randomized p-values in practice.

Example 1

(Binomial distribution) Assume that our (random) data is given by \(\pmb {X}=(X_1,\ldots ,X_n)^\top \), where each \(X_r\) is a real-valued, observable random variable, \(1 \le r \le n\), and all \(X_r\) are stochastically independent and identically distributed (i.i.d.) Bernoulli variables with parameter \(\theta _i\in (0,1)\) for \(i=1,2\), \(Bernoulli(\theta _i)\) for short. A sufficient test statistic for testing the hypothesis in (1) is \(T(\pmb {X})=\sum _{r=1}^n X_r\) which is distributed as a Binomial random variable with parameters n and \(\theta _i\), \(i=1,2\) and we shall denote this by \(Bin(n,\theta _i).\) The respective p-values with their CDFs are calculated using Equations (2), (3), (4), and (5). The critical constants \(C_n\) and \(D_n\) are given by \(C_n=F^{-1}_{Bin(n, \theta _1)}(1-t)\) and \(D_n=F^{-1}_{Bin(n, \theta _2)}(t)\) for a fixed significance level \(t\in (0,1)\) where \(F^{-1}(\bullet )\) denotes the quantile of a binomial random variable with parameters n and \(\theta .\) The randomization constants \(\gamma _n\) and \(\delta _n\) for large sample sizes and for arbitrary \(t\in (0,1)\) are given by \(\gamma _n=\{F_{Bin(n,\theta _1)}(C_n)-(1-t)\}\{f_{Bin(n,\theta _1)}(C_n)\}^{-1}\) and \(\delta _n=\{t-F_{Bin(n,\theta _2)}(D_n-1)\}\{f_{Bin(n,\theta _2)}(D_n)\}^{-1},\) where \(F_{Bin(n,\theta )}\) denotes the CDF and \(f_{Bin(n,\theta )}\) the probability mass function of a binomial variable with parameters n and \(\theta \).
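The critical and randomization constants of Example 1 can be computed directly from the formulas above. The following sketch uses only the standard library; the function names are hypothetical, and the quantile is the generalized inverse \(F^{-1}(y)=\inf \{x: F(x)\ge y\}\) from Sect. 2.

```python
from math import comb


def binom_pmf(x, n, theta):
    """f_{Bin(n, theta)}(x)."""
    return comb(n, x) * theta**x * (1.0 - theta) ** (n - x)


def binom_cdf(x, n, theta):
    """F_{Bin(n, theta)}(x); 0 for x < 0 and 1 for x >= n."""
    if x < 0:
        return 0.0
    if x >= n:
        return 1.0
    return sum(binom_pmf(j, n, theta) for j in range(x + 1))


def binom_quantile(y, n, theta):
    """Generalized inverse inf{x : F(x) >= y} of the Bin(n, theta) CDF."""
    for x in range(n + 1):
        if binom_cdf(x, n, theta) >= y:
            return x
    return n


def tost_constants(n, theta1, theta2, t):
    """Return (C_n, D_n, gamma_n, delta_n) as given in Example 1."""
    c_n = binom_quantile(1.0 - t, n, theta1)
    d_n = binom_quantile(t, n, theta2)
    gamma_n = (binom_cdf(c_n, n, theta1) - (1.0 - t)) / binom_pmf(c_n, n, theta1)
    delta_n = (t - binom_cdf(d_n - 1, n, theta2)) / binom_pmf(d_n, n, theta2)
    return c_n, d_n, gamma_n, delta_n


# Illustrative settings taken from the figures: n = 50, t = 0.05.
c_n, d_n, gamma_n, delta_n = tost_constants(50, 0.25, 0.75, 0.05)
```

With these constants, \({\mathbb {P}}_{\theta _1}(T>C_n)+\gamma _n\,{\mathbb {P}}_{\theta _1}(T=C_n)=t\) and \({\mathbb {P}}_{\theta _2}(T<D_n)+\delta _n\,{\mathbb {P}}_{\theta _2}(T=D_n)=t\), so each one-sided randomized test exhausts the level exactly.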

In this section, as mentioned before, we consider the individual test problem where \(k=1\). We are interested in finding if randomization is beneficial when the equivalence limit \(\Delta \) increases or decreases and if the power functions for the p-values are monotonic in sample size. Furthermore, we seek to find if the level of conservativity of the p-values depends on the sample sizes.

3.2 Sample size versus power

We expect that the power function for a test would be strictly increasing with an increase in sample size. A power function that is strictly increasing with an increase in the sample size is ideal for sample size planning since an additional observation cannot lower the power. In the case of discrete models, Finner and Strassburger (2001) showed that it is possible for the power of the (least favorable configuration) LFC-based p-value at a sample of size n to coincide over the entire parameter space with that of size \(n+i\), for small \(i\in {\mathbb {N}}\). We illustrate in the second panel of Fig. 1 and for the model in Example (1) that this paradoxical behavior can also occur for the UMP p-value and cannot be corrected even by use of randomization. The problem occurs for small samples with the chosen true parameter \(\theta \) too close to the boundary of the alternative hypothesis. To generate Fig. 1, we set the tuning parameter \(c=0.5\), \(t=0.05\), \(\theta _1=0.25\), and \(\theta _2=0.75\) in both panels. Furthermore, we choose \(\theta =0.5\) as the true parameter under the alternative hypothesis in the left panel and \(\theta =0.4\) in the right.

On the left panel in Fig. 1, both power functions are strictly increasing with an increase in the sample size. On the right panel, both power functions are not strictly increasing with an increasing sample size. We further illustrate in Fig. 2 that this paradoxical behavior of the power function of the UMP p-value in the right panel of Fig. 1 does not occur for small equivalence limit \(\Delta \). To generate Fig. 2, we maintain the parameter settings as in the right panel of Fig. 1 but only change \(\theta _1\) to 0.35 so that the resulting \(\Delta \) is decreased compared to the initial one.

From Fig. 2, the power functions for the UMP and RAND2 p-values are now strictly increasing with an increase in the sample size for most n. The problem of the power function failing to be strictly increasing with an increase in the sample size is partially dealt with, though not completely removed. Shrinking \(\Delta \) from both sides, however, worsens the problem in the right panel of Fig. 1. Finally, we provide Theorem (1) with a proof in the appendix to further justify the claims in the left panel of Figure (1).

Theorem 1

(Monotonicity of the power functions) The CDFs of the UMP and RAND2 p-values are strictly increasing with an increase in the sample size n for any fixed parameter value \(\theta \) under the alternative hypothesis. Consequently, for any significance level and a fixed parameter value \(\theta \) under the alternative hypothesis, the power of the corresponding test is monotonically increasing with an increase in the sample size n.
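The plateau effect reported by Finner and Strassburger (2001) for non-randomized p-values in discrete models can be explored numerically. The sketch below computes the exact power of a non-randomized binomial TOST over a range of sample sizes and records every n at which the power fails to increase. It is an illustration under assumed settings (\(\theta =0.4\), \(\theta _1=0.25\), \(\theta _2=0.75\), \(t=0.05\)) with hypothetical function names; it does not reproduce the UMP or RAND2 curves in Figs. 1 and 2.

```python
from math import comb


def binom_pmf(x, n, theta):
    """P(T = x) for T ~ Bin(n, theta)."""
    return comb(n, x) * theta**x * (1.0 - theta) ** (n - x)


def tost_power(n, theta, theta1, theta2, t):
    """Exact P_theta(TOST p-value <= t) without randomization."""
    power = 0.0
    for x in range(n + 1):
        p_lower = sum(binom_pmf(j, n, theta1) for j in range(x, n + 1))
        p_upper = sum(binom_pmf(j, n, theta2) for j in range(x + 1))
        if max(p_lower, p_upper) <= t:  # both one-sided tests reject
            power += binom_pmf(x, n, theta)
    return power


# Power as a function of the sample size for a fixed alternative.
powers = {n: tost_power(n, 0.4, 0.25, 0.75, 0.05) for n in range(5, 41)}

# Sample sizes at which one more observation does not raise the power.
non_increasing_steps = [n for n in range(6, 41) if powers[n] <= powers[n - 1]]
```

For very small n the rejection region of the non-randomized test is empty, so the power is zero for several consecutive sample sizes, and the list of non-increasing steps is therefore non-empty under these settings.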

3.3 Conservativity of the p-values

We expect that the distribution of a p-value under the null hypothesis is close to that of a UNI(0, 1) distribution. A p-value can fail to meet this requirement and hence be conservative, meaning it is stochastically greater than the Uniform distribution. We compare the CDFs of RAND2 and the UMP p-values under the null and alternative hypothesis for two equivalence limits \(\Delta _1\) and \(\Delta _2\) such that \(\Delta _1<\Delta _2\) and sample sizes \(n_1\) and \(n_2\) such that \(n_1<n_2\). We plot Fig. 3 to compare the conservativity and power functions of the two p-values for the model in Example 1 using two different equivalence limits \(\Delta .\)

The CDFs of the UMP and RAND2 p-values against t for \(n=50\) and \(c=0.5\). We choose the true parameter \(\theta =0.2\) under the null hypothesis and \(\theta =0.35\) under the alternative hypothesis. Furthermore, we set \(\theta _1=0.25\), and \(\theta _2=0.75\) in the first case (I) and \(\theta _1=0.3\), and \(\theta _2=0.75\) in the second case (II)

From Fig. 3, the CDF of the UMP p-value under the null hypothesis is farther from the UNI(0, 1) line than the one for the RAND2 p-value in both cases. Therefore, the UMP p-value is more conservative than the RAND2 p-value. Under the alternative hypothesis, the CDF of the UMP p-value is also farther from the UNI(0, 1) line than the one for the RAND2 p-value in both cases. Therefore, as expected, the power of the UMP p-value exceeds that of the RAND2 p-value.

Under the same parameter configurations and only shrinking the equivalence limit \(\Delta \), the UMP p-value becomes less powerful and more conservative. The two-stage randomized p-value also becomes less powerful, but the conservativeness of the p-value reduces even further. Next, we give Fig. 4 to illustrate the behavior of the CDFs for the two p-values under the null hypothesis using the same parameter configurations as in Fig. 3 except that the sample size n is not constant. Again, we consider two cases but with \(n=50\) in the first case (I) and \(n=100\) in the second case (II).

From Fig. 4, the CDF of the UMP p-value moves away from the UNI(0, 1) line while the one for the RAND2 p-value moves closer to it as the sample size increases. Therefore, the UMP p-value becomes more conservative while the RAND2 p-value becomes less conservative as the sample size increases.

3.4 Maximum power

In this section, we are interested in finding a parameter value \(\theta _{max}\) under the alternative hypothesis that maximizes the power of a test based on our p-values. If such a parameter exists, choosing it as our true parameter under the alternative hypothesis will always guarantee that we have the maximum power. Also, we investigate if the value of \(\theta _{max}\) is affected by the size of the equivalence limit \(\Delta .\) We generate Fig. 5 to address these questions for Example 1, where we have set \(c=0.5\) and used \(n=50\) as our sample size. Furthermore, we use \(\theta _1=0.15\) and \(\theta _2=0.45\) in the left panel of Fig. 5 and \(\theta _1=0.25\) and \(\theta _2=0.45\) in the right one.

The CDFs for the UMP and RAND2 p-values against the chosen parameter \(\theta \) for \(c=0.5\) and \(n=50\). We set \(\theta _1=0.15\) and \(\theta _2=0.45\) in the left panel and \(\theta _1=0.25\) and \(\theta _2=0.45\) in the right one. The vertical lines intersect the respective CDF curves at their maximum and the x axis at the parameter value \(\theta _{max}\) that maximizes those CDFs. The bold vertical line is for the UMP p-value while the thin dotted line is for RAND2 p-value. Furthermore, the thin dotted horizontal lines intersect the y axis at the value of \(\alpha \)

From Fig. 5 and for a large equivalence limit \(\Delta \), the parameter \(\theta _{max}\) for the two p-values always occurs at the midpoint of the interval \(\Delta \). For a small \(\Delta \), the parameter \(\theta _{max}\) for the RAND2 p-value can occur at a point close to \(\theta _1\) or \(\theta _2\). The one for the UMP p-value occurs at the midpoint throughout, no matter how small \(\Delta \) becomes. Also, for both p-values, only a single \(\theta _{max}\) exists under the alternative hypothesis. Moreover, the behavior of the CDFs in the right panel further confirms that the RAND2 p-value, unlike the UMP p-value, is not unbiased. To conclude this section, we give a figure illustrating the power for the two p-values against the equivalence limit \(\Delta \). To generate Figure (6), we set \(c=0.5\), \(n=50\), and choose \(\theta =0.2,0.3,0.4,\) and 0.48 as the true parameters under the alternative hypothesis. Moreover, we use different values of \(\theta _1\) and \(\theta _2\) to get different equivalence limits since \(\Delta =\theta _2-\theta _1\).

The CDF under the alternative hypothesis for the UMP and RAND2 p-values against the equivalence limit \(\Delta \) for \(c=0.5\) and \(n=50\). The chosen parameters under the alternative hypothesis are \(\theta =0.2, 0.3,0.4,\) and 0.48, respectively, from left to right. The vertical lines intersect the respective CDF curves at their maximum and the x axis at the \(\Delta \) value, which maximizes those CDFs. The bold vertical line is for the UMP p-value while the thin dotted line is for RAND2 p-value. Furthermore, the thin dotted horizontal lines intersect the y axis at the value of \(\alpha \)

From Fig. 6, as is expected, the range of \(\Delta \) in each panel is from the chosen parameter value \(\theta \) under the alternative hypothesis to \(1-\theta \). For example, in the first panel, the parameter is \(\theta =0.2\) and \(\Delta \) ranges from \(\theta =0.2\) to \(1-\theta =0.8\). The value of \(\theta _{max}\) moves closer to 0.5 as the chosen parameter under the alternative hypothesis moves closer to 0.5.

4 Estimation of the proportion of true null hypotheses

4.1 Introduction

In this section, we extend our discussions from Sect. 3 to the case when \(k>1\) hypotheses are of interest. According to Section 4.3 of Wellek (2010), it is possible to have a case of univariate equivalence tests for a parameter of interest. Comparison of a single proportion to a fixed reference success probability was the subject of Sect. 3. We extend this idea and compare multiple proportions to a fixed reference success probability. We do this to identify the proportion of true null hypotheses (an estimation problem) and not which particular null hypotheses are true (a selection problem).

Recall that for the multiple testing problem, our hypothesis in Eq. (1) can be expressed as

\[H_j: \theta _j\notin \Delta _j \quad \text {versus} \quad K_j:\theta _j\in \Delta _j,\]

where \(\Delta _j\) denotes the range of values in the \(j^{th}\) interval between \(\theta _1^{(j)}\) and \(\theta _2^{(j)}\) for \(j\in \{1,\ldots ,k\}\), k is the multiplicity of the problem, and \(p_1,\ldots ,p_k\) are the resulting k p-values. For example, assume we have a data set from \(k=1{,}000\) small regions of a country showing the number of individuals suffering from a certain disease. We are interested in testing, for each region, the hypothesis that the proportion of infected individuals lies in a certain interval \([\theta _1,\theta _2]\) when the equivalence limit is constant. We do this to find if the infection rate is at dangerously high levels in a particular region.

Testing each of these hypotheses at level \(\alpha \) increases the probability of type I errors since we do not account for the multiplicity of the problem. It is crucial to account for this multiplicity by, for example, controlling the familywise error rate (FWER). One commonly used method for familywise error control at level \(\alpha \) is the Bonferroni adjustment (cf. Bonferroni 1936). The Bonferroni procedure adjusts the raw p-values \(p_1,\ldots ,p_k\) by multiplying them by the number of hypotheses k. We reject a null hypothesis if its adjusted p-value is less than or equal to \(\alpha \). The Bonferroni procedure guarantees that the FWER is at most \(\alpha \) regardless of the ordering or the dependence structure of the p-values.

The Bonferroni procedure can be conservative when a large proportion of the null hypotheses are false. The adjustment then maintains the FWER at a level of at most \(\pi _0 \alpha \) instead of \(\alpha \), where \(\pi _0=k_0/k\) is the proportion of true null hypotheses. When the number of true null hypotheses satisfies \(k_0<k\), the individual tests can be conducted at the higher level \(\alpha /k_0\) instead of \(\alpha /k\), leading to a higher power for the testing procedure. We refer to this as the adaptive Bonferroni procedure, ABON for short. Since in practice we never know the number \(k_0\) (or the proportion \(\pi _0\)), we use ABON combined with the plug-in (ABON+plug-in) procedure of Finner and Gontscharuk (2009), which estimates \(\pi _0\) from the data. The ABON+plug-in procedure, unlike closed testing procedures (like Hommel (1988) and Hochberg (1988)), provides a theoretical guarantee to control the type I error rate at the desired level.
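The two rejection rules can be sketched as follows. The function names are hypothetical, and the estimate `k0_hat` is treated as a user-supplied input; the specific plug-in estimator of Finner and Gontscharuk (2009) is not reproduced here.

```python
def bonferroni_reject(p_values, alpha):
    """Reject H_j when the adjusted p-value min(1, k * p_j) is at most alpha."""
    k = len(p_values)
    return [min(1.0, k * p) <= alpha for p in p_values]


def adaptive_bonferroni_reject(p_values, alpha, k0_hat):
    """Reject H_j when p_j <= alpha / k0_hat.

    k0_hat is an estimate of the number of true null hypotheses
    (an assumption: any plug-in estimate may be supplied). It is
    clipped to the range [1, k] so the threshold stays meaningful.
    """
    k = len(p_values)
    k0 = min(max(float(k0_hat), 1.0), float(k))
    return [p <= alpha / k0 for p in p_values]


# Illustrative p-values (made up for this example).
p_values = [0.001, 0.008, 0.02, 0.6, 0.9]
plain = bonferroni_reject(p_values, alpha=0.05)
adaptive = adaptive_bonferroni_reject(p_values, alpha=0.05, k0_hat=2)
```

In this toy example the adaptive rule uses the threshold \(0.05/2\) instead of \(0.05/5\) and therefore rejects one additional hypothesis.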

One classical but still commonly used estimator for \(k_0\) is the Schweder and Spjøtvoll (1982) estimator. It is given by

\[{\hat{k}}_0(\lambda ) = k\,\frac{1-{\hat{F}}_k(\lambda )}{1-\lambda }, \qquad (6)\]

where \(\lambda \in [0,1)\) is a tuning parameter and \({\hat{F}}_k\) is the empirical CDF (ecdf) of the k marginal p-values. It is often suggested in practice to choose \(\lambda =0.5\). One crucial prerequisite for the applicability of this estimator is that the marginal p-values \(p_1, \ldots , p_k\) are (approximately) uniformly distributed on (0, 1) under the null hypothesis; see, e.g., Dickhaus (2013) and Hoang and Dickhaus (2022) and the references therein for details. The randomized p-values considered in this work are close to meeting the uniformity assumption, whereas the non-randomized p-values are over-conservative when testing two one-sided composite null hypotheses, especially in discrete models. Typically, the estimated value of \(k_0\) becomes too large if many null p-values are conservative and the estimator from (6) is employed.

4.2 Empirical distributions

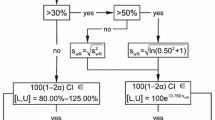

To illustrate the implication of using our proposed two-stage randomized p-value in multiple testing, we employ a graphical algorithm for computing \({\hat{\pi }}_0\). For a given \(\lambda \in [0,1)\), this algorithm connects the point \((\lambda ,{\hat{F}}_k(\lambda ))\) with the point (1, 1) by a straight line and extends this line until it intersects the y axis at the point \(1-{\hat{\pi }}_0\). The best p-value for use in the estimation of \(\pi _0\) is the one for which the resulting straight line meets the y axis at a point that is close to the actual \(1-\pi _0\). For this to work, the empirical CDF of the p-value must not lie below the UNI(0, 1) line. We summarize our steps in Algorithm 1 below.
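As a short worked step, and assuming the usual Schweder and Spjøtvoll form \({\hat{\pi }}_0(\lambda )=(1-{\hat{F}}_k(\lambda ))/(1-\lambda )\), the straight line through \((\lambda ,{\hat{F}}_k(\lambda ))\) and (1, 1) can be written as

\[ y(x) = 1-\frac{1-{\hat{F}}_k(\lambda )}{1-\lambda }\,(1-x), \qquad y(0) = 1-\frac{1-{\hat{F}}_k(\lambda )}{1-\lambda } = 1-{\hat{\pi }}_0(\lambda ). \]

The slope of this line equals \({\hat{\pi }}_0(\lambda )\). In particular, if \({\hat{F}}_k(\lambda )<\lambda \), the slope exceeds one, the intercept is negative, and \({\hat{\pi }}_0(\lambda )>1\), which indicates why an ECDF lying below the UNI(0, 1) line is problematic for this estimator.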

To generate Fig. 7, we set the number of hypotheses to \(k=1{,}000\), set both tuning parameters c and \(\lambda \) to 0.5, and use a sample of size \(n=50\). We take the proportion of true null hypotheses to be \(\pi _0=0.7\) and set \(\theta _1=0.25\) and \(\theta _2=0.75\). Furthermore, to calculate the UMP-based p-value, the parameter \(\theta \) is chosen as 0.18 under the null hypothesis and as 0.37 under the alternative hypothesis.

Fig. 7 Empirical CDF of the UMP p-value (black curve) and the two-stage randomized (RAND2) p-value (grey curve) for \(k=1{,}000\), \(\lambda =0.5\), \(c=0.5\), and \(\pi _0=0.7\). We set \(\theta _1=0.25\) and \(\theta _2=0.75\). Furthermore, we choose the true parameter under the null as \(\theta =0.18\) and otherwise \(\theta =0.37\). The dashed vertical line intersects the x axis at \(\lambda \), while the thin diagonal lines intersect the y axis at \(1-{\hat{\pi }}_0\).

From Fig. 7, the RAND2 p-value outperforms the UMP p-value since its ECDF lies above the UNI(0, 1) line for all values of \(t\in (0,1)\). Furthermore, the straight line from the point (1, 1) through \((\lambda , {\hat{F}}_k^{RAND2}(\lambda ))\), extended as described earlier, meets the y axis at a point that is close to \(1-\pi _0\).
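The qualitative behaviour described here can be explored with a small simulation under the settings stated for Fig. 7. The sketch below rests on two assumptions that are not confirmed by this section: it uses a non-randomized TOST-type p-value (the maximum of two one-sided binomial tail probabilities) as a stand-in for the UMP p-value, and it uses the two-stage form \(U\cdot 1\{p\ge c\}+(p/c)\cdot 1\{p<c\}\) of Hoang and Dickhaus (2022) for the randomized p-value. All function names and the seed are hypothetical.

```python
import math
import random


def binom_pmf(n, theta):
    """Probability mass function of Bin(n, theta) on 0..n."""
    return [math.comb(n, x) * theta**x * (1 - theta) ** (n - x) for x in range(n + 1)]


def tost_p_value(x, n, theta1, theta2):
    """Stand-in p-value for H: theta <= theta1 or theta >= theta2 (assumption)."""
    upper_tail = sum(binom_pmf(n, theta1)[x:])      # P_{theta1}(X >= x)
    lower_tail = sum(binom_pmf(n, theta2)[: x + 1])  # P_{theta2}(X <= x)
    return min(1.0, max(upper_tail, lower_tail))


def two_stage_randomize(p, u, c):
    """Assumed two-stage form: U if p >= c, else p / c."""
    return u if p >= c else p / c


def pi0_hat(p_values, lam):
    """Proportion estimator (1 - F_k(lam)) / (1 - lam)."""
    f_lam = sum(p <= lam for p in p_values) / len(p_values)
    return (1.0 - f_lam) / (1.0 - lam)


rng = random.Random(2024)  # arbitrary seed, for reproducibility only
n, k, pi0 = 50, 1000, 0.7
theta1, theta2 = 0.25, 0.75
theta_null, theta_alt = 0.18, 0.37
c = lam = 0.5
k0 = round(pi0 * k)

# The p-value depends on the data only through x, so tabulate it once.
p_table = [tost_p_value(x, n, theta1, theta2) for x in range(n + 1)]

plain, randomized = [], []
for i in range(k):
    theta = theta_null if i < k0 else theta_alt
    x = sum(rng.random() < theta for _ in range(n))  # one Bin(n, theta) draw
    p = p_table[x]
    plain.append(p)
    randomized.append(two_stage_randomize(p, rng.random(), c))

pi0_plain = pi0_hat(plain, lam)
pi0_rand = pi0_hat(randomized, lam)
print(pi0_plain, pi0_rand)
```

Because the null parameter 0.18 lies well inside the null region, the stand-in p-values of true nulls concentrate near one, so the estimate based on them tends to exceed one, whereas the randomized version is pulled back towards \(\pi _0\). This is a sketch of the mechanism, not a reproduction of Fig. 7.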

4.3 Simulation study

We now conduct a simulation study based on real-world data to support the claim in Sect. 4.2 that the RAND2 p-value outperforms the UMP p-value in estimating the proportion of true null hypotheses in multiple testing. We use the publicly available Coronavirus Disease 2019 (COVID-19) data taken from https://github.com/CSSEGISandData/COVID-19 (cf. Dong et al. 2020). The data set consists of confirmed COVID-19 cases and recoveries for \(k=58\) regions of the United States of America as of 12 May 2020. The regions include all fifty states and eight others: American Samoa, Diamond Princess, District of Columbia, Grand Princess, Guam, Northern Mariana Islands, Puerto Rico, and the Virgin Islands.

After cleaning the data by removing all regions with missing values, we have \(k=47\) regions for our analysis. We select an interval of recovery rates with limits \(\theta _1\) and \(\theta _2\) and conduct a TOST to determine whether the true rates from the data set belong to this interval. We use a Monte Carlo simulation to assess the (average) performance of the UMP and RAND2 p-values in estimating \(k_0\). We set the constant c and the tuning parameter \(\lambda \) in (6) to 0.5 for all the simulations. The recovery rates from the data set are assumed to be the true proportions. They are defined as \(\theta _i=r_i/n_i\), where \(r_i\) is the number of recoveries among the \(n_i\) infected individuals from the ith region for \(i\in \{1,\ldots , 47\}.\)

Using these rates and the numbers of confirmed cases, we generate a new data set on the computer for calculating the p-values. For simulation purposes, we choose different values for \(\theta _1\) and \(\theta _2\) in our null hypothesis, leading to different equivalence limits \(\Delta \). For each equivalence limit \(\Delta \), define \(k_0\) as the number of true null hypotheses, that is, the number of proportions \(\theta _i\), \(i \in \{1, \ldots , k\}\), such that \(\theta _i\le \theta _1\) or \(\theta _i\ge \theta _2\). For example, with the \(k=47\) regions, \(k_0\) can take any integer value between 0 and 47. Since we are utilizing randomized p-values in (6), we average the estimated value of \(k_0\) over 10,000 Monte Carlo repetitions.
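The definition of \(k_0\) for a given interval can be made concrete with a short sketch. The rates and the three intervals below are hypothetical and are not the COVID-19 values or the choices in Table 1.

```python
def count_true_nulls(rates, theta1, theta2):
    """Number of rates outside the open interval (theta1, theta2)."""
    return sum(1 for theta in rates if theta <= theta1 or theta >= theta2)


# Hypothetical recovery rates for eight regions.
rates = [0.05, 0.15, 0.25, 0.40, 0.55, 0.72, 0.85, 0.95]

# Three nested intervals, from narrow to wide.
intervals = [(0.30, 0.70), (0.20, 0.80), (0.10, 0.90)]
counts = [count_true_nulls(rates, t1, t2) for t1, t2 in intervals]
print(counts)  # [6, 4, 2]
```

Widening the interval moves rates from the null region into the alternative, so \(k_0\) can only stay the same or decrease.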

For illustration, we present ten choices of \(\theta _1\) and \(\theta _2\) in Table 1. A detailed description of the simulation is provided in Algorithm 2, and the results of the simulation study based on Algorithm 2 are presented in Table 1. From Table 1, for every value of \(\Delta \), the RAND2 p-value outperforms the UMP p-value by giving estimates that are on average closer to the actual value of \(k_0\). Also, as expected, the number of true null hypotheses \(k_0\) decreases as the interval \(\Delta \) widens.

4.4 Role of the tuning parameter \(\lambda \)

In this section, we investigate the role of the tuning parameter \(\lambda \) in the estimator given in (6) when using the two p-values. A proper choice of this parameter is crucial since a smaller \(\lambda \) leads to high bias and low variance, while a larger one leads to low bias and high variance of the proportion estimator. Based on this bias-variance trade-off, we take the optimal \(\lambda \) to be the one that minimizes the mean squared error (MSE).
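In a simulation, where the true \(k_0\) is known, the MSE criterion can be sketched as follows. The function names and the repeated estimates per \(\lambda \) are hypothetical and chosen only to mimic the trade-off described above; with real data the true \(k_0\) is unknown and the MSE would itself have to be estimated.

```python
def mse(estimates, truth):
    """Mean squared error of repeated estimates around the true value."""
    return sum((e - truth) ** 2 for e in estimates) / len(estimates)


def best_lambda(estimates_by_lambda, truth):
    """Return the lambda whose estimates have the smallest MSE."""
    return min(estimates_by_lambda, key=lambda lam: mse(estimates_by_lambda[lam], truth))


# Hypothetical Monte Carlo estimates of k0 for three values of lambda.
true_k0 = 30
estimates_by_lambda = {
    0.1: [36, 37, 35, 36],  # biased upwards, little spread
    0.5: [31, 29, 33, 28],  # small bias, moderate spread
    0.9: [20, 45, 15, 42],  # nearly unbiased on average, large spread
}
for lam, est in estimates_by_lambda.items():
    print(lam, mse(est, true_k0))
print(best_lambda(estimates_by_lambda, true_k0))  # 0.5
```

For \(\lambda =0.1\) the mean of the estimates is 36, so the squared bias is 36 and the variance is 0.5, which add up to the MSE of 36.5 and illustrate the decomposition MSE = bias² + variance.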

Other research in this direction includes the use of change-point concepts for choosing \(\lambda \) in the Storey (2002) estimator. In this approach, the p-value plot is first approximated by a piecewise linear function with a single change-point, and the p-value at this change-point is then selected as the value of \(\lambda \). Another approach is to choose \(\lambda =\alpha \). Hoang and Dickhaus (2022) noted that the default choice of \(\lambda =0.5\) works well with randomized p-values since the sensitivity of the estimator in (6) with respect to \(\lambda \) is least pronounced for randomized p-values. In general, however, the common default choice of \(\lambda =0.5\) can be unstable, especially when dealing with dependent p-values. We plot Fig. 8 to illustrate how the estimator based on the UMP and RAND2 p-values is affected by different choices of \(\lambda \). In this plot, we have used the same COVID-19 data and set \(c=0.5\), \(\theta _1=0.2963\), and \(\theta _2=0.7566\).

From Fig. 8, the estimate of \(k_0\) based on the UMP p-value moves away from the number of true null hypotheses \(k_0\) as the value of \(\lambda \) increases. The estimate based on the RAND2 p-value stays close to \(k_0\) and oscillates wildly around \(k_0\) only when \(\lambda \) is close to 1.

5 Discussion

In this research, we have considered the use of the UMP and two-stage randomized (RAND2) p-values for testing an interval composite null hypothesis. Using large sample sizes, we have illustrated that the power functions for the UMP and RAND2 p-values both increase monotonically with the sample size. We have also found that the power functions of the UMP and RAND2 p-values for a sample of size n and for a sample of size \(n+i\), for small \(i\in {\mathbb {N}}\), can coincide on the entire parameter space. This problem occurs when the samples are relatively small and the equivalence limit \(\Delta \) is too wide while the chosen parameter \(\theta \) under the alternative is too far from \(\theta _1\) or \(\theta _2\). It does not occur when \(\Delta \) is too narrow while the chosen parameter \(\theta \) under the alternative hypothesis is too close to \(\theta _1\) or \(\theta _2\) (see Fig. 2). This problem only occurs if the interval \(\Delta \) shrinks from one end while the other end is kept constant, for example, by holding \(\theta _2\) constant and increasing \(\theta _1\).

The problem of nonmonotonicity of the power functions gets worse if the equivalence limit decreases from both ends. A similar observation in Qiu and Cui (2010) is that when the equivalence limit is too narrow, the ROC curve of the TOST procedure is nonmonotonic for small sample sizes. A complete characterization of this paradox will be considered in future research following the ideas in Finner and Strassburger (2001) and Finner and Roters (1993). Of course, in practical problems, the equivalence limits are determined before the data collection and remain fixed throughout the experiment. The adjustments made here are to illustrate the behavior of the p-values and their CDFs under different equivalence limits.

A plot of the CDFs for the UMP and RAND2 p-values under the null and alternative hypotheses illustrates that the UMP p-value is more conservative and more powerful than the RAND2 p-value. The conservativeness of the UMP p-value increases, while that of the RAND2 p-value decreases, with a further decrease in the equivalence limit \(\Delta \). Furthermore, the power functions for both p-values decrease with a decrease in \(\Delta \). A similar trend for the CDFs occurs when \(\Delta \) is kept constant and the chosen parameter under the null (alternative) is too far from (close to) the boundary of the null (alternative).

Increasing both the parameters \(\theta _1\) and \(\theta _2\) by \(\epsilon _1\) and \(\theta \) by \(\epsilon _2\) leads to an increase in power for both the p-values, a decrease in conservativity of the UMP p-value, and no change in the level of conservativity of RAND2 p-value. A similar trend occurs for a large equivalence limit, provided \(\epsilon _2>\epsilon _1\). The power increases for a large equivalence limit since \(\theta \) moves closer to the midpoint of \(\Delta \), which is the parameter that gives the maximum power for both UMP and RAND2 p-values under this condition. We were also interested in finding the parameter value that maximizes the CDFs under the alternative hypothesis for the two p-values. We found that for large \(\Delta \), the parameter value that maximizes the CDFs of both p-values occurs at the midpoint of \(\Delta \). For small \(\Delta \), however, this parameter value can be too close to \(\theta _1\) or \(\theta _2\) for RAND2 p-value while the one for the UMP p-value is always at the midpoint.

Concerning the dependence of the level of conservativity of the p-values on the sample size, we found that, as the sample size increases, the CDF for the UMP p-value moves further away from the UNI(0, 1) line while the one for the RAND2 p-value moves closer to it. Therefore, the UMP p-value becomes more conservative while the level of conservativity for the RAND2 p-value remains the same with an increase in the sample size. Furthermore, Munk (1996) and Wellek (2010, Sect. 1.2, p. 5) argue that equivalence tests require much larger sample sizes to achieve a reasonable power compared to one- or two-sided tests, unless \(\Delta \) is chosen so wide that even “nonequivalent” hypotheses would be declared “equivalent.” Therefore, it would be better to consider the RAND2 p-value for multiple equivalence tests since it is less conservative even for large sample sizes.

A plot of the empirical CDFs of the p-values allows us to evaluate their performance when used with the estimator in (6). The ECDF of the RAND2 p-value, unlike the one for the UMP p-value, lies above the UNI(0, 1) line throughout. Furthermore, the slope of the line between the points (1, 1) and \((\lambda , {\hat{F}}_k^{RAND2}(\lambda ))\) for the RAND2 p-value is closer to \(\pi _0\) than the corresponding slope for the UMP p-value. Therefore, the RAND2 p-value outperforms the UMP p-value in the estimation of the proportion of true null hypotheses. To further justify this claim, we have given a real example and provided a simulation study showing that the RAND2 p-value outperforms the UMP p-value for all values of \(\Delta \) by giving estimates that are closer on average to the true proportions.

The choice of the tuning parameter \(\lambda \) for the estimator in (6) has also been of great concern in the recent literature. The sensitivity of this estimator to \(\lambda \) is more pronounced for conservative p-values than for non-conservative ones. Since the UMP p-value is more conservative than the RAND2 p-value, the choice of \(\lambda \) is critical for obtaining estimates that are close to the actual number of true null hypotheses when using the UMP p-value. Based on the results from our simulation study, we recommend a small value of \(\lambda \) when using the UMP p-value. Assuming a small \(\alpha \), this choice is similar to the choice \(\lambda =\alpha \) recommended in the previous literature. When using the RAND2 p-value, any choice of \(\lambda \) that is not close to one is recommended, since the estimate of \(k_0\) based on the RAND2 p-value oscillates wildly around the value of \(k_0\) as \(\lambda \longrightarrow 1\).

The observation in Dickhaus (2013), Habiger and Pena (2011), and Habiger (2015) that randomized p-values are nonsensical for a single hypothesis also applies to our RAND2 p-value; in that case, we advocate the use of the UMP p-value. Furthermore, we caution the practitioner against using randomized p-values in bioequivalence studies. Some general extensions of this research include using randomized test procedures to achieve unbiased tests for Lehmann’s alternative. Also, one could extend these procedures to consider multiple endpoints while accounting for the correlations among those endpoints. Finally, randomized p-values can be extended to stepwise regression since the p-values are used several times in these procedures, leading to multiple test problems.

References

Berger RL (1982) Multiparameter hypothesis testing and acceptance sampling. Technometrics 24(4):295–300

Berger RL, Hsu JC (1996) Bioequivalence trials, intersection-union tests and equivalence confidence sets. Stat Sci 1:283–302

Bonferroni C (1936) Teoria statistica delle classi e calcolo delle probabilità. Pubbl R Ist Super Sci Econ Commer Firenze 8:3–62

Brown LD, Hwang JG, Munk A (1997) An unbiased test for the bioequivalence problem. Ann Stat 25(6):2345–2367

Dickhaus T (2013) Randomized p-values for multiple testing of composite null hypotheses. J Stat Plan Inference 143(11):1968–1979

Dickhaus T, Strassburger K, Schunk D et al (2012) How to analyze many contingency tables simultaneously in genetic association studies. Stat Appl Genet Mol Biol 11(4):12

Dong E, Du H, Gardner L (2020) An interactive web-based dashboard to track COVID-19 in real time. Lancet Infect Dis 20(5):533–534

Finner H, Gontscharuk V (2009) Controlling the familywise error rate with plug-in estimator for the proportion of true null hypotheses. J R Stat Soc Ser B Stat Methodol 71(5):1031–1048

Finner H, Roters M (1993) On the behaviour of expectations and power functions in one-parameter exponential families. Stat Risk Model 11(3):237–250

Finner H, Strassburger K (2001) Increasing sample sizes do not always increase the power of UMPU-tests for 2\(\times \) 2 tables. Metrika 54(1):77–91

Fogarty CB, Small DS (2014) Equivalence testing for functional data with an application to comparing pulmonary function devices. Ann Appl Stat 1:2002–2026

Giani G, Finner H (1991) Some general results on least favorable parameter configurations with special reference to equivalence testing and the range statistic. J Stat Plan Inference 28(1):33–47

Giani G, Straßburger K (1994) Testing and selecting for equivalence with respect to a control. J Am Stat Assoc 89(425):320–329

Habiger JD (2015) Multiple test functions and adjusted p-values for test statistics with discrete distributions. J Stat Plan Inference 167:1–13

Habiger JD, Pena EA (2011) Randomised P-values and nonparametric procedures in multiple testing. J Nonparametr Stat 23(3):583–604

Hoang AT, Dickhaus T (2022) On the usage of randomized p-values in the Schweder–Spjøtvoll estimator. Ann Inst Stat Math 74(2):289–319

Hochberg Y (1988) A sharper Bonferroni procedure for multiple tests of significance. Biometrika 75(4):800–802

Hommel G (1988) A stagewise rejective multiple test procedure based on a modified Bonferroni test. Biometrika 75(2):383–386

Huang Y, Hsu JC, Peruggia M et al (2006) Statistical selection of maintenance genes for normalization of gene expressions. Stat Appl Genet Mol Biol 5:1

Leday GG, Hemerik J, Engel J et al (2023) Improved family-wise error rate control in multiple equivalence testing. Food Chem Toxicol 178:113928

Munk A (1996) Equivalence and interval testing for Lehmann’s alternative. J Am Stat Assoc 91(435):1187–1196

Ostrovski V (2020) New equivalence tests for Hardy–Weinberg equilibrium and multiple alleles. Stats 3(1):34–39

Pflüger R, Hothorn T (2002) Assessing equivalence tests with respect to their expected p-value. Biometr J 44(8):1015–1027

Qiu J, Cui X (2010) Evaluation of a statistical equivalence test applied to microarray data. J Biopharm Stat 20(2):240–266

Qiu J, Qi Y, Cui X (2014) Applying shrinkage variance estimators to the TOST test in high dimensional settings. Stat Appl Genet Mol Biol 13(3):323–341

Reiss RD (1989) Approximate distributions of order statistics. With applications to nonparametric statistics. Springer Series Statistics. Springer, New York

Romano JP (2005) Optimal testing of equivalence hypotheses. Ann Stat 33(3):1036–1047

Schweder T, Spjøtvoll E (1982) Plots of \(P\)-values to evaluate many tests simultaneously. Biometrika 69:493–502

Storey JD (2002) A direct approach to false discovery rates. J R Stat Soc Ser B Stat Methodol 64(3):479–498

Wellek S (2010) Testing statistical hypotheses of equivalence and noninferiority. CRC Press, New York

Acknowledgements

The author would like to acknowledge the editor and an anonymous reviewer for the careful reading of this manuscript and for their comments and suggestions, which improved the presentation of this manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Appendix: Mathematical proofs


Proof of Lemma 1

Recall that our (random) data is given by \(\pmb {X}\), U is a UNI(0, 1)-distributed random variable which is independent of our data, and \(t\in (0,1)\) is an arbitrary significance level. Define \(\phi (\pmb {X},U;t)\) to be a decision function for a test procedure such that we reject the null when \(\phi (\pmb {X},U;t)=1\) and otherwise fail to reject when \(\phi (\pmb {X},U;t)=0\). A p-value based on this decision function is

Consider a test of \(H:\theta \le \theta _0\) versus \(K:\theta >\theta _0\) where \(\theta _0\) is a prespecified constant. The size of this test for an arbitrary \(t\in (0,1)\) is

where \(C_n=F^{-1}_{\theta _0}(1-t)\) and \(\gamma _n=\{{\mathbb {P}}_{\theta _0}(T(\pmb {X})\le C_n)-(1-t)\}\{{\mathbb {P}}_{\theta _0}(T(\pmb {X})=C_n)\}^{-1}\) are the critical and randomization constants, respectively as given in Definition 1. The p-value for this test based on the definition in Equation (A1) is

Similarly, consider a test of the form \(H:\theta \ge \theta _0\) versus \(K:\theta <\theta _0\) where again \(\theta _0\) is a prespecified constant. The size of this test for an arbitrary \(t\in (0,1)\) is

where again \(D_n=F^{-1}_{\theta _0}(t)\) and \(\delta _n=\{t-{\mathbb {P}}_{\theta _0}(T(\pmb {X})\le D_n-1)\}\{{\mathbb {P}}_{\theta _0}(T(\pmb {X})=D_n)\}^{-1}\) are the critical and randomization constants, respectively as given in Definition 1. The p-value for this test based on the definition in Equation (A1) is

Assume now that the hypothesis is as given in Equation (1), then the overall test statistic for this problem is

The overall p-value is

since

which gives the overall p-value in (A4) using the definition in (A1). We can express this further as

which gives the p-value

again based on the definition of a p-value in Equation (A1). However, this is equivalent to the UMP p-value in Definition 1 since \(\theta _0\) is the LFC parameter, which can be \(\theta _1\) or \(\theta _2.\) \(\square \)

Proof of Theorem 1

To verify that the CDFs of the UMP and the two-stage randomized p-values are point-wise monotonically increasing with an increase in the sample size for any parameter value \(\theta \) belonging to the alternative hypothesis, it suffices to prove that these CDFs for a sample of size \(n+1\) are greater than those for size n. Recall from Equation (5) that

For an arbitrary but fixed \(c\in [0,1]\), further recall that \(C_n=F_{Bin(n,\theta _1)}^{-1}(1-c)\) and \(D_n=F_{Bin(n,\theta _2)}^{-1}(c)\) denote quantiles of a binomial random variable with parameters n and \(\theta _i\) for \(i=1,2\). Again recall that the randomization constants are given by

Define

Since X is a random variable that follows a binomial distribution with parameters n and \(\theta \), the above power function can be expressed as

where \(\varrho =\bigg (\frac{\theta }{1-\theta }\bigg )\) and it is such that \(\varrho \in (0,\infty )\). For a sample of size \(n+1\), Equation (A6) becomes

Since \(\gamma _n,\gamma _{n+1},\delta _n,\delta _{n+1}\in (0,1)\), to verify that \(\beta _{n+1}>\beta _n\), we compare the coefficients of \(\varrho ^x\) in Equations (A6) and (A7). To do this for the coefficients of \(\varrho ^x\) in the first terms in Equations (A6) and (A7), we have

provided \(c_{n}=c_{n+1}.\) The proof for the other case when \(c_{n}+1=c_{n+1}\) can be shown similarly. Next, comparing the other coefficients of \(\varrho ^x\) in Equations (A6) and (A7), we have

provided \(d_{n}+1=d_{n+1}.\) Again proving the other case when \(d_{n}=d_{n+1}\) will follow similar steps. With this, it is evident that \(\beta _{n+1}>\beta _n\). The proof for \({\mathbb {P}}_{\theta }\{P_T^{rand}(\pmb {X},U)\le tc\}\), which yields the same result, can be carried out similarly. With this, the CDF for the two-stage randomized p-value RAND2 is, under the stated conditions, monotonically increasing with an increase in n, which we needed to prove. Repeating the above calculations for \(\beta _n\) with \(t\in (0,1)\) in place of c provides the proof that the CDF of the UMP p-value is monotonically increasing with an increase in the sample size (under the stated conditions). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ochieng, D. Multiple testing of interval composite null hypotheses using randomized p-values. Stat Papers (2024). https://doi.org/10.1007/s00362-024-01591-9

DOI: https://doi.org/10.1007/s00362-024-01591-9