Abstract

We characterize absolutely continuous symmetric copulas with square integrable densities. This characterization is used to create new copula families that are perturbations of the independence copula. A complete study of the mixing properties of Markov chains generated by these copula families is conducted. An extension that includes the Farlie–Gumbel–Morgenstern family of copulas is proposed. We propose examples of copulas that generate non-mixing Markov chains, but whose convex combinations generate \(\psi \)-mixing Markov chains. Some general results on \(\psi \)-mixing are given. Spearman’s correlation \(\rho _S\) and Kendall’s \(\tau \) are provided for the created copula families, along with some general remarks on these coefficients. A central limit theorem is provided for parameter estimators in one example, and a simulation study is conducted to support the derived asymptotic distributions for some examples.


1 Introduction

A bivariate copula, defined as the restriction to the unit square \([0,1]^2\) of a bivariate joint cumulative distribution function with uniform marginals, is a tool that has been gaining popularity in dependence modeling. An equivalent definition can be found in Nelsen (2006), along with several related notions. The popularity of copulas is due to Sklar’s theorem, which relates them to the joint distribution and the marginals of a bivariate random vector (see Sklar (1959)).

Many authors have worked on building copulas with various properties. Several construction methods are presented in Nelsen (2006). Arakelian and Karlis (2014) studied mixtures of copulas, which were investigated for mixing by Longla (2015). Longla (2014) constructed copulas with prescribed \(\rho \)-mixing properties, extending results of Beare (2010) on mixing for copula-based Markov chains. Longla et al. (2022a, b) constructed copulas based on perturbations, considering two perturbation methods. In the first method, copulas are perturbed at the level of the variables by adding noise components to each of the variables. In the second method, a copula C(u, v) is perturbed by creating \(C_D(u,v)=C(u,v)+D(u,v)\) and requiring that \(C_D(u,v)\) satisfies the definition of a copula. This last method of perturbation was also presented in Komornik et al. (2017) and the references therein. Several other authors have extended existing copula families via other methods that are not the focus of this work; see for instance Morillas (2005), Aas et al. (2009), Klement et al. (2017) and Chesneau (2021), among others.

Chesneau (2021) considered multivariate trigonometric copulas, which seem close to one of the example families of this paper, but are in fact very different from the work we provide here. We are concerned with the important question of characterizing absolutely continuous symmetric copulas with square integrable densities. After characterizing such copulas, we extract some general copula families with specific functions, one of which is made of trigonometric functions. These copula families come with a predefined mixing structure for the copula-based Markov chains they generate. This, in general, cannot be said about the copula families of Chesneau (2021), which were published when this paper was already written. We also provide the mixing structure of Markov chains generated by any of the copulas constructed in this paper. Therefore, one of the main points of the paper is the presentation of several copula families and the mixing structure of the Markov chains they generate. Moreover, for each of these copulas, we provide the joint distribution of any two variables along the Markov chain they generate, and show that the copulas of these variables also belong to the initial copula family. We show that, for each selected basis of functions, the set of all copulas is closed under the fold product \(*\), defined by \(C^1(u,v)=C(u,v)\) and, for \(n>1\),

\[C^n(u,v)=C^{n-1}*C(u,v)=\int _0^1 C^{n-1}_{,2}(u,t)\,C_{,1}(t,v)\,dt,\]

where \(C_{,i}(u,v)\) is the derivative with respect to the i-th component of (u, v). This product plays a central role in our study, and its properties can be found in Darsow et al. (1992). Recall that for \(n=2\) this product is the joint distribution of \((X_1,X_3)\) when \((X_1,X_2,X_3)\) is a stationary Markov chain with copula C(u, v) and the uniform marginal distribution. In general, the copula \(C^{n}(u,v)\) is the joint distribution of \((X_0, X_n)\) when \(X_0, \ldots , X_n\) is a stationary Markov chain generated by C(u, v) and the Uniform(0,1) distribution. This is a simple consequence of applying the product formula recursively (see Longla et al. (2022a)).
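To make the product \(*\) concrete, the following Python sketch evaluates \((A*B)(u,v)=\int _0^1 A_{,2}(u,t)B_{,1}(t,v)\,dt\) by Gauss–Legendre quadrature for two FGM copulas \(C_\theta (u,v)=uv+\theta uv(1-u)(1-v)\), whose partial derivatives are available in closed form. The function names and the quadrature order are our own choices. Because \(\int _0^1(1-2t)\,dt=0\) and \(\int _0^1(1-2t)^2dt=1/3\), one gets \(C_{\theta _1}*C_{\theta _2}=C_{\theta _1\theta _2/3}\), which the sketch reproduces numerically.

```python
import numpy as np

# 20-point Gauss-Legendre rule mapped from [-1, 1] to [0, 1]; it is exact for
# the low-degree polynomial integrands that appear below.
_nodes, _weights = np.polynomial.legendre.leggauss(20)
T = 0.5 * (_nodes + 1.0)
W = 0.5 * _weights


def fgm(theta, u, v):
    """FGM copula C_theta(u, v) = uv + theta*uv(1-u)(1-v)."""
    return u * v + theta * u * v * (1.0 - u) * (1.0 - v)


def fgm_d1(theta, u, v):
    """Partial derivative of C_theta with respect to its first argument."""
    return v + theta * v * (1.0 - v) * (1.0 - 2.0 * u)


def fgm_d2(theta, u, v):
    """Partial derivative of C_theta with respect to its second argument."""
    return u + theta * u * (1.0 - u) * (1.0 - 2.0 * v)


def fold_fgm(theta1, theta2, u, v):
    """(A * B)(u, v) = int_0^1 A_{,2}(u, t) B_{,1}(t, v) dt with A = C_theta1, B = C_theta2."""
    return float(np.sum(W * fgm_d2(theta1, u, T) * fgm_d1(theta2, T, v)))


# Two-step copula of a chain generated by C_{0.6}: it should equal C_{0.6*0.6/3}.
print(fold_fgm(0.6, 0.6, 0.3, 0.7), fgm(0.6 * 0.6 / 3, 0.3, 0.7))
```

Iterating the product gives \(C^n\); for FGM copulas this yields \(C_\theta ^n=C_{3(\theta /3)^n}\).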

The product \(*\) and related notions are used in this paper to derive properties of Markov chains, including mixing and association. In Longla et al. (2022a), measures of association such as Spearman’s \(\rho \) and Kendall’s \(\tau \) were studied for perturbations of copulas. Recall that, in terms of copulas, these coefficients are defined as

\[\rho _S(C)=12\int _0^1\int _0^1 C(u,v)\,du\,dv-3, \qquad (1)\]

\[\tau (C)=1-4\int _0^1\int _0^1 C_{,1}(u,v)\,C_{,2}(u,v)\,du\,dv. \qquad (2)\]

Formulas (1) and (2) are respectively formula (5.1.15c) and formula (5.1.12) of Nelsen (2006). For definitions and practical use of these measures, we refer the reader to Nelsen (2006) and the references therein. In this paper, our interest in these measures lies in finding their relationship with the parameters of the copulas, and then proposing an estimation procedure. Among the copula families for which we derive measures of association is the family of copulas based on Legendre polynomials. This family is an extension of the Farlie–Gumbel–Morgenstern (FGM) copula family. The FGM family has been extensively studied in the literature, with several extensions (see Hurlimann (2017) or Ebaid et al. (2022)). Our extension is unrelated to the extensions that exist in the literature: we use a new approach that has not been applied to this family before. Moreover, we show that copulas from this family generate \(\psi \)-mixing stationary Markov chains for all values of the family parameter \(\theta \in [-1,1]\). This improves a previous result of Longla et al. (2022a), which did not provide a proof for the boundary points \(\theta =\pm 1\). The new characterization of copulas obtained in this paper yields a new, elegant proof of \(\psi \)-mixing that includes the boundary points. For more on mixing coefficients, see Bradley (2005), Beare (2010) and the references therein. To avoid confusion in this paper, we say that a function f(x) is a version of the function g(x) if and only if the set on which they differ has Lebesgue measure 0 (in the appropriate dimension).
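A quick numerical sanity check of formulas (1) and (2): the sketch below assumes their standard forms \(\rho _S(C)=12\int \int C(u,v)\,du\,dv-3\) and \(\tau (C)=1-4\int \int C_{,1}C_{,2}\,du\,dv\) and evaluates them with Gauss–Legendre quadrature for the FGM copula, for which \(\rho _S=\theta /3\) and \(\tau =2\theta /9\) are classical values. The quadrature order and helper names are arbitrary choices.

```python
import numpy as np

# Tensor-product Gauss-Legendre rule on [0, 1]^2.
_nodes, _weights = np.polynomial.legendre.leggauss(30)
_x = 0.5 * (_nodes + 1.0)
_w = 0.5 * _weights
U, V = np.meshgrid(_x, _x, indexing="ij")
WW = np.outer(_w, _w)


def spearman_rho(C):
    """Formula (1): rho_S(C) = 12 * int int C(u, v) du dv - 3."""
    return 12.0 * np.sum(WW * C(U, V)) - 3.0


def kendall_tau(C1, C2):
    """Formula (2): tau(C) = 1 - 4 * int int C_{,1}(u, v) C_{,2}(u, v) du dv."""
    return 1.0 - 4.0 * np.sum(WW * C1(U, V) * C2(U, V))


theta = 0.6  # FGM parameter, |theta| <= 1
C = lambda u, v: u * v + theta * u * v * (1 - u) * (1 - v)
C1 = lambda u, v: v + theta * v * (1 - v) * (1 - 2 * u)   # partial derivative in u
C2 = lambda u, v: u + theta * u * (1 - u) * (1 - 2 * v)   # partial derivative in v

rho = spearman_rho(C)       # theta / 3
tau = kendall_tau(C1, C2)   # 2 * theta / 9
print(rho, tau)
```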

This paper is structured as follows. In Sect. 2, we present a characterization of copulas with square integrable densities and use it to construct new copula families. These general functional families of copulas include finite and infinite sums, among which are new sine and cosine copula families. A second group of example copulas is based on Legendre polynomials and contains the FGM copula. We also provide Spearman’s \(\rho _S\) and Kendall’s \(\tau \) for each of the example copula families, along with some useful remarks. To the best of our knowledge, all these results are new. In Sect. 3, we develop mixing properties of the extended FGM copula family based on Legendre polynomials. Several examples are provided, along with some copulas that do not generate mixing Markov chains but have convex combinations that generate mixing Markov chains. These examples answer an open question on convex combinations of copulas and mixing. A new general result on mixing for Markov chains generated by copulas with square integrable densities is given. We extend a previous result of Longla et al. (2022c) on \(\psi \)-mixing and \(\psi '\)-mixing. Namely, we show that \(\psi \)-mixing follows from the two conditions \(c^{s_1}(u,v)>0\) on a set of Lebesgue measure 1 and \(c^{s_2}(u,v)<2\) for all \((u,v)\in [0,1]^2\). In Sect. 4, we provide some parameter estimators and their asymptotic distributions via central limit theorems, together with a simulation study. Section 5 covers comments and conclusions. The Appendix of proofs ends the paper.

2 Square integrable symmetric densities

In this section, we provide a characterization of copulas with square integrable densities. The aim is to show that any such copula can be represented as a sum of possibly infinitely many non-zero terms. Such a representation suggests a new way to construct copula families by perturbing the independence copula \(\Pi (u,v)=uv\). We use this method in this section to provide new sets of copulas and compute their coefficients \(\rho _S\) and \(\tau \).

Recall that a copula is, in general, a multivariate cumulative distribution function with uniform marginals on (0, 1); in the bivariate case it is considered on \([0,1]^2\). For any bivariate random vector (X, Y) with continuous marginal distributions \((F_X, F_Y)\), the copula of (X, Y) is the joint cumulative distribution function of \((F_X(X), F_Y(Y))\). Both \(F_X(X)\) and \(F_Y(Y)\) are uniform on [0, 1]. This is why most works on copula theory ignore the marginal distributions and restrict attention to uniform marginals, which is done without loss of generality when the variables are assumed continuous (see Nelsen (2006), Durante and Sempi (2016) or Sklar (1959) for more on the topic). This work is mainly concerned with absolutely continuous symmetric bivariate copulas C(u, v) for which the density function c(u, v) exists and satisfies

\[C(u,v)=\int _0^u\int _0^v c(s,t)\,dt\,ds, \qquad (u,v)\in [0,1]^2. \qquad (3)\]

Formula (3) follows from the fact that the singular part of the copula vanishes for absolutely continuous copulas (see Darsow et al. (1992)). The density of a copula is a non-negative bivariate function on \([0,1]^2\). Many authors have worked on copulas and their properties. Copulas are used to model dependence in various applied fields, for problems that include but are not limited to estimation, classification and statistical tests of hypotheses. When used to model dependence in Markov chains, they help establish mixing properties, which are useful for proving central limit theorems for sample averages of functions of observations (see Doukhan et al. (2009), Chen and Fan (2006), Peligrad and Utev (1997), and Jones (2004)). For more on properties of copulas, see Darsow et al. (1992) and Beare (2010).

In this paper, we say that a copula C(u, v) is symmetric if and only if \(C(u,v)=C(v,u)\) for all \((u,v)\in [0,1]^2\). Such copulas are useful in modeling reversible Markov chains. For a symmetric absolutely continuous copula, the density c(u, v) is square integrable if and only if

\[\int _0^1\int _0^1 c^2(u,v)\,du\,dv<\infty . \qquad (4)\]

Square integrable copula densities are kernels of Hilbert–Schmidt operators on the Hilbert space \(L^2(0,1)\). This means that the linear operator defined by

\[K\varphi (x)=\int _0^1 c(x,y)\,\varphi (y)\,dy \qquad (5)\]

is a Hilbert–Schmidt operator on \(L^2(0,1)\) (see Ferreira and Menegatto (2009)). Let \(\{\varphi _k(x), k\in \mathbb {N}\}\) be an orthonormal basis made of eigenfunctions of the operator K, that is, \(K\varphi _k(x)=\lambda _k\varphi _k(x)\), where \(\lambda _k\) is the eigenvalue of K associated to the eigenfunction \(\varphi _k(x)\) and the equality holds in the sense of \(L^2(0,1)\). Based on this, for a symmetric copula with square integrable density, we have the following (see also Beare (2010), Longla (2014)):

- The sequence of \(|{}\lambda _k|{}\) either has finitely many terms (counting multiplicities) or converges to 0.
- \(\{\varphi _k(x), k\in \mathbb {N}\}\) can be selected to form an orthonormal system in \(L^2(0,1)\).

Since \(L^2(0,1)\) is a separable Hilbert space and the orthonormal system made of eigenfunctions of this operator is complete, formula (6) holds in the sense of \(L^2(0,1)\). Therefore, by Mercer’s theorem (see formula 27 page 445 of Mercer (1909) or Theorem 2.4 of Ferreira and Menegatto (2009))

where the series converges pointwise and uniformly on \((0,1)^2\) when the operator is non-negative definite (that is, when all eigenvalues are non-negative). The same conclusion holds when all eigenvalues are non-positive (the operator is non-positive definite). In fact, Mercer (1909) showed that for any continuous non-negative definite kernel k(x, y), the series converges pointwise and uniformly. A non-negative definite kernel is a function k(x, y) such that

Mercer (1909) pointed out that the above definition is equivalent to

Moreover, for a continuous symmetric non-negative definite kernel k(x, y), the trace of the operator is

Therefore, the series of eigenvalues converges when the density of the copula is symmetric, square integrable, non-negative definite and continuous on \([0,1]^2\). It is important to mention that these conditions require the eigenvalues to be all non-negative (or all non-positive), thereby ruling out some copulas. However, the decomposition can be extended by continuity to copulas with eigenvalues of both signs.
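The spectral facts above can be illustrated numerically. The sketch below discretizes operator (5) with a midpoint (Nyström) rule for the FGM density \(c(u,v)=1+\theta (1-2u)(1-2v)\), a choice made only for illustration. For \(\theta \ge 0\) this kernel is non-negative definite, with eigenvalue 1 for the constant eigenfunction, eigenvalue \(\theta /3\) for \(\sqrt{3}(2x-1)\), and 0 otherwise, so the eigenvalues should sum to \(\int _0^1c(x,x)\,dx=1+\theta /3\). The grid size n is an arbitrary choice.

```python
import numpy as np

n = 400
x = (np.arange(n) + 0.5) / n               # midpoints of a uniform grid on (0, 1)
theta = 0.6                                 # theta >= 0 gives a non-negative definite kernel
c = 1.0 + theta * np.outer(1.0 - 2.0 * x, 1.0 - 2.0 * x)   # FGM density c(u, v)

K = c / n                                   # midpoint-rule (Nystrom) discretization of (5)
eigvals, eigvecs = np.linalg.eigh(K)        # K is symmetric
order = np.argsort(eigvals)[::-1]           # sort eigenvalues in decreasing order
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

trace_integral = np.mean(np.diag(c))        # midpoint approximation of int_0^1 c(x, x) dx

print(eigvals[:3])                          # approximately 1, theta/3 and 0
print(eigvals.sum(), trace_integral)        # both approximately 1 + theta/3
```

The leading eigenvector is constant, which is the discrete counterpart of the eigenfunction \(\varphi (x)=1\) with eigenvalue 1.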

Proposition 2.1

For any absolutely continuous copula C(u, v) the following holds.

1. The kernel operator (5) has an eigenvalue \(\lambda =1\) associated to the eigenfunction \(\varphi (x)=1\);
2. There exists a basis of eigenfunctions of (5) for any square integrable symmetric copula density. Moreover, there exists a decomposition (6) containing \(\varphi _1(x)=1\) associated to the eigenvalue \(\lambda _1=1\);
3. A selected basis might not contain \(\varphi (x)=1\) when the eigenvalue \(\lambda =1\) has multiplicity at least 2, in which case it generates a subspace of dimension higher than 1 with an orthonormal basis not containing \(\varphi (x)=1\).

Note that when the eigenvalue \(\lambda =1\) has multiplicity \(s>1\) in the decomposition, s has to be finite because of square integrability. It is also worth mentioning that some functions cannot be eigenfunctions associated to the eigenvalue 1; for instance, such an eigenfunction \(\varphi _k(x)\) has to be bounded.

We devote the following subsections to the case when \(\varphi (x)=1\) belongs to the basis. This case is interesting by itself because it reduces to the question of perturbations of the independence copula (see Longla et al. (2022a)). As we will see below, the mixing structure of Markov chains generated by the constructed copula families is easily established in general. This eases the study of estimators of coefficients and measures of association.

2.1 Perturbations of the copula \(\Pi (u,v)\)

The copula \(\Pi (u,v)=uv\) is called the independence copula: it is the copula of independent random variables, and it is used to model data under the assumption of independence. The notion of perturbation has recently been used in many papers to describe modifications made to a copula to improve estimation results. In the case of the independence copula, this is done by introducing some level of dependence through a function D(u, v) (see Durante et al. (2013)). When \(\Pi (u,v)\) is perturbed, the obtained copula exhibits some dependence that can be estimated to better fit the data at hand.

Various types of perturbations have been considered in Durante et al. (2013), Komornik et al. (2017), Longla et al. (2022a, c) and the references therein. In this section, we investigate perturbations of the kind \(C_D(u,v)=\Pi (u,v)+D(u,v)\), where D(u, v) is the perturbation factor to be determined using (6). This perturbation method was studied in Komornik et al. (2017) and the references therein. Longla et al. (2022a) and (2022c) considered mixing for Markov chains generated by perturbations with \(D(u,v)=\theta (\Pi (u,v)-C(u,v))\) and \(D(u,v)=\theta (M(u,v)-C(u,v))\), where \(M(u,v)=\min (u,v)\) is the Fréchet-Hoeffding upper bound.

Remark 1

The use of M(u, v) to perturb a copula C(u, v) for data with uniform marginals assumes that values of the Markov chain have a positive probability of repeating themselves before new values are drawn from the transition copula C(u, v).

Considering the decomposition (6), when the first term uses \(\varphi (x)=1\), reindexing the remaining functions \(\varphi _k(x)\) and integrating leads to

This implies \(C(u,v)=uv+D(u,v)\), where

Note that \(D(u,0)=D(u,1)=0\) and D(u, v) is symmetric. Therefore, \(uv+D(u,v)\) is a copula when its second-order mixed derivative is non-negative (see Longla et al. (2022a)). Hence, any representation (7) with \(\lambda _k\in \mathbb {R}\) defines a copula with square integrable density if and only if

Condition (9) is required for square integrability of the density and takes into account the fact that \(\varphi _k(x)\) form an orthonormal system of functions. Condition (8) is satisfied when the following holds:

Condition (10) is sufficient but not necessary: for different values of k, the functions \(\varphi _k(x)\) might have their minima or maxima at different points. It is a strong condition, but easy to handle in practice. When condition (10) holds and the functions \(\varphi _k(x)\) are uniformly bounded, (9) also holds. This can be shown by first proving that the series of \(|\lambda _k|\) converges; since \(|\lambda _k|\le 1\), we have \(\lambda _k^2\le |\lambda _k|\), so this series dominates the sum in (9). For all systems of functions considered in this section, all these conditions are satisfied. Thus, the following characterization theorems hold.

Theorem 2.2

Any symmetric absolutely continuous copula with square integrable density can be represented by (7), where \(\{1, \varphi _k(x), k>1, k\in \mathbb {N}\}\) is an orthonormal basis associated to the eigenvalues \(\{1, \lambda _k, k>1, k\in \mathbb {N}\}\) of the operator (5).

In Theorem 2.2, equality is understood as equality of operators in \(L^2(0,1)\). This equality turns into point-wise or uniform convergence when the density of the copula is continuous and defines a positive semi-definite operator.

Theorem 2.3

Assume that \((\lambda _k\in \mathbb {R}, k>1)\) is such that conditions (10) and (9) hold for an orthonormal system of functions of \(L^2(0,1)\) that contains \(\varphi _1(x)=1\). The function (7) is an absolutely continuous symmetric copula with square integrable density that we call Type-I Longla copula with notation \(L(u,v, \Lambda , \Phi )\).

For any uniformly bounded system of functions \(\varphi _k(x)\), condition (10) implies condition (9). Theorem 2.3 opens the way to various copula families with functions as parameters. These copulas can have finite or infinite sums of terms, and they depend both on the system of functions \(\varphi _k(x)\) and on the coefficients \(\lambda _k\). Theorems 2.2 and 2.3 together summarize the characterization obtained in the above analysis.

2.2 Examples of new copula families

Theorems 2.2 and 2.3 provide a relationship between the extreme values of the functions \(\varphi _k(x)\) and the possible eigenvalues \(\lambda _k\) of (5). For example, when all other coefficients are equal to 0, a function \(\varphi (x)\) with maximum 2 and minimum \(-3\) cannot be associated to an eigenvalue less than \(-1/9\) or larger than 1/6: the product \(\varphi (u)\varphi (v)\) takes values in \([-6, 9]\), and the density \(1+\lambda \varphi (u)\varphi (v)\) must remain non-negative. The larger the values of \(|{}\varphi _k(x)|{}\), the narrower the admissible range of \(\lambda _k\) when the sum (6) contains only \(\varphi _k(x)\) and 1. Setting \(\Phi (x)=\int _0^x\varphi (s)ds\) for any mean-zero function \(\varphi (x)\) on [0, 1] with unit \(L^2\) norm, we have the copula

An example of copula (11) is defined for \( -\min (\frac{1}{\alpha },\alpha ) \le \lambda \le 1,\) \(\alpha \in (0,\infty )\) by

Copula (12) is also a representative of the only class of copulas with square integrable symmetric densities based on a single function \(\varphi (x)\) that takes 2 values on [0, 1]. This class of copulas is defined using

where A is a set of measure \(\frac{1}{\alpha +1}\) for some strictly positive real number \(\alpha \). Copula (12) is obtained via integration to get \(\Phi (x)\) of formula (11) for

For copula (12), the parameter \(\lambda \) governs the strength of dependence (we will show later that its absolute value is the maximal coefficient of correlation), while the parameter \(\alpha \) determines the portions of \([0,1]^2\) on which the density is constant. In fact, the density of this copula is constant on each of the four portions of its support. Simple computations establish that for copula (12),

Remark 2

The coefficients \(\rho _S(C_{\alpha ,\lambda })\) and \(\tau (C_{\alpha ,\lambda })\) show that for the purpose of correlation or association, the range of \(\alpha \) can be limited to (0, 1], because \(1/\alpha \) and \(\alpha \) produce the same value for \(\rho _S(C_{\alpha ,\lambda })\) and \(\tau (C_{\alpha ,\lambda })\). The maximum of these coefficients is reached at \(\alpha =1\).
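The following sketch makes the single-term bound concrete. For a function with maximum \(M>0\) and minimum \(m<0\), the product \(\varphi (u)\varphi (v)\) ranges over \([Mm,\max (M^2,m^2)]\), so non-negativity of \(1+\lambda \varphi (u)\varphi (v)\) gives \(-1/\max (M^2,m^2)\le \lambda \le -1/(Mm)\). The sketch also checks the two-valued case behind copula (12): a two-valued function with mean 0 and unit norm, equal to its first value on a set A of measure \(1/(\alpha +1)\), must equal \(\sqrt{\alpha }\) on A and \(-1/\sqrt{\alpha }\) off A (up to a global sign), which reproduces the range \(-\min (1/\alpha ,\alpha )\le \lambda \le 1\). Taking \(A=[0,1/(1+\alpha )]\) is our assumption (the text only fixes the measure of A), and all helper names are hypothetical.

```python
import numpy as np


def single_term_range(phi_max, phi_min):
    """Admissible lambda for 1 + lambda*phi(u)*phi(v) >= 0, when phi has
    maximum phi_max > 0 and minimum phi_min < 0."""
    largest = max(phi_max ** 2, phi_min ** 2)   # largest value of phi(u)phi(v)
    smallest = phi_max * phi_min                 # smallest (negative) value of phi(u)phi(v)
    return -1.0 / largest, -1.0 / smallest


def phi_two_valued(x, alpha):
    """Hypothetical choice A = [0, 1/(1+alpha)]: sqrt(alpha) on A, -1/sqrt(alpha) off A."""
    a = 1.0 / (1.0 + alpha)
    return np.where(x <= a, np.sqrt(alpha), -1.0 / np.sqrt(alpha))


def Phi_two_valued(x, alpha):
    """Phi(x) = int_0^x phi(s) ds; piecewise linear with Phi(0) = Phi(1) = 0."""
    a = 1.0 / (1.0 + alpha)
    return np.where(x <= a, np.sqrt(alpha) * x, (1.0 - x) / np.sqrt(alpha))


def copula_two_valued(u, v, alpha, lam):
    """C(u, v) = uv + lam * Phi(u) * Phi(v), for -min(alpha, 1/alpha) <= lam <= 1."""
    if not -min(alpha, 1.0 / alpha) <= lam <= 1.0:
        raise ValueError("lam outside [-min(alpha, 1/alpha), 1]")
    return u * v + lam * Phi_two_valued(u, alpha) * Phi_two_valued(v, alpha)


print(single_term_range(2.0, -3.0))   # (-1/9, 1/6), as in the text
alpha = 3.0
print(single_term_range(np.sqrt(alpha), -1.0 / np.sqrt(alpha)))   # (-min(alpha, 1/alpha), 1)
```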

The interest in the copula family \(C_{1,\lambda }(u,v)\) lies in the fact that the range of the Pearson correlation coefficient of functions of variables (U, V) generated by this copula family is \([-1,1]\). For \(\lambda \ne \pm 1\), a stationary Markov chain with the uniform marginal distribution is generated from \(C_{1,\lambda }(u,v)\) as follows. We generate an observation \(U_0\) from Uniform(0, 1); then, for every integer \(i\ge 1\), we generate \(Q_i\) from Uniform(0, 1) independently of the past and define \(U_i\) by evaluating at \(Q_i\) the quantile function of the conditional distribution of \(U_i\) given \(U_{i-1}\). This quantile function is the inverse, with respect to v, of the partial derivative \(C_{,1}(u,v)\) evaluated at \(u=U_{i-1}\) (see Longla and Peligrad (2012)). We obtain the formula

Recall that the joint cumulative distribution function of \((U_0, U_n)\) is the copula \(C_{1, \lambda ^n}(u,v)\). Therefore, the following holds.

Lemma 1

For any stationary Markov chain generated by \(C_{1,\lambda }(u,v)\) and the uniform marginal distribution for \(\lambda \ne \pm 1\), we have \(U_n-Q_n\rightarrow 0\) in probability when \(n\rightarrow \infty \).

The limiting behavior of \(U_n\) as \(n\rightarrow \infty \) does not depend on the initial value \(U_0\). This is because \(\lambda \) is replaced by \(\lambda ^n\rightarrow 0\) in formula (13), and \(Q_n\) is drawn independently of the past. This fact is one of the selling points of the study of mixing properties: under mixing assumptions, the initial point of the Markov chain does not affect its long-run behavior.
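As an illustration of this sampler, the sketch below assumes \(\alpha =1\) and \(A=[0,1/2]\) (our choice; the text fixes only the measure of A), so that \(\varphi (u)=\pm 1\) and \(\Phi (v)=\min (v,1-v)\). The conditional distribution function is then \(C_{,1}(u,v)=v+\lambda \varphi (u)\Phi (v)\), and inverting it piecewise gives one possible version of the quantile map; we do not claim that it reproduces formula (13) symbol for symbol. Replacing \(\lambda \) by \(\lambda ^n\) shows the map approaching the identity in q, in line with Lemma 1. Function names are hypothetical.

```python
import numpy as np


def sign_half(u):
    """phi for alpha = 1 with the assumed set A = [0, 1/2]: +1 on A, -1 off A."""
    return np.where(u <= 0.5, 1.0, -1.0)


def conditional_cdf(v, u_prev, lam):
    """C_{,1}(u_prev, v) = v + lam * phi(u_prev) * Phi(v), with Phi(v) = min(v, 1 - v)."""
    return v + lam * sign_half(u_prev) * np.minimum(v, 1.0 - v)


def conditional_quantile(q, u_prev, lam):
    """Inverse in v of C_{,1}(u_prev, v); the conditional density is 1+m on [0, 1/2], 1-m after."""
    m = lam * sign_half(u_prev)
    return np.where(q <= (1.0 + m) / 2.0, q / (1.0 + m), (q - m) / (1.0 - m))


def simulate_chain(n, lam, rng):
    """Stationary chain U_0, ..., U_n from C_{1,lam} with Uniform(0,1) marginals, |lam| < 1."""
    u = np.empty(n + 1)
    u[0] = rng.uniform()
    q = rng.uniform(size=n)          # Q_1, ..., Q_n, independent of the past
    for i in range(1, n + 1):
        u[i] = conditional_quantile(q[i - 1], u[i - 1], lam)
    return u


rng = np.random.default_rng(0)
chain = simulate_chain(20_000, 0.8, rng)
print(chain.mean(), chain.var())     # close to 1/2 and 1/12
```

Because \(U_0\) is drawn from the stationary Uniform(0,1) distribution, the sample mean and variance of the chain should be close to 1/2 and 1/12.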

An extension of the copula family (12) is obtained by considering, for all \(s\in \mathbb {N^*}\), \(\delta _i=\mathbb {I}(a_i\le u, v<a_{i+1})\) and \(|\theta _i|\le 1\). This extension has density

Formula (14) defines the density of a copula for any set \(\{a_i, i=1,\ldots , s: 0\le a_1<a_2<\cdots<a_s<a_{s+1}= 1\}\). Via simple computations, we show that the functions

satisfy the conditions of Theorem 2.3. Note that \(\theta _i (a_{i+1}-a_i) =\lambda _i\) implies \(|\lambda _i |\le (a_{i+1}-a_i)\). Moreover, this copula coincides with copula (12) when \(s=1\) and \(a_1=0\). This copula departs from independence by adding local perturbations of size \(\pm \theta _i\) to the squares \([a_i, a_{i+1}]\times [a_i, a_{i+1}]\).

An interesting case arises when all functions have the same maximum and the same minimum, with equal absolute values; the range of the \(\lambda _k\) is then symmetric. This is the case when \(\varphi _k\) is \(a\sin w_kx\) or \(a\cos w_kx\). We consider these cases in the subsections below.

2.2.1 Copulas based on trigonometric functions

Here, we look into copula families based on trigonometric bases of \(L^2(0,1)\). These copulas are different from those introduced in Chesneau (2021), because they do not involve sums of variables. Moreover, the mixing structure of the copulas of Chesneau (2021) is not as evident as that of the copula families we construct here. It is also worth mentioning that the copula families introduced here can be finite or infinite sums, and that their fold products remain in the considered classes. When modeling with these copulas, the number of waves in the scatter plot can indicate the number of functions to include in the sum. An observation on \(\rho _S(C)\) and \(\tau (C)\) shows that some terms can be added to increase linear correlation without affecting association.

- The sine-cosine copulas as perturbations of \(\Pi (u,v)\)

We call sine-cosine copulas the perturbations of the independence copula based on the trigonometric orthonormal basis of \(L^2(0,1)\) consisting of the functions \(\{1, \sqrt{2}\cos 2\pi kx, \sqrt{2}\sin 2\pi kx, k\in \mathbb {N^*}\}\). Theorem 2.3 guarantees that

is a copula when \(\displaystyle \sum \nolimits _{k=1}^{\infty } (|{}\lambda _k|{}+|{}\mu _k|{})\le 1/2\). For this example, condition (9) holds automatically. The series (15) also converges uniformly and absolutely on \([0,1]^2\) by the Weierstrass M-test, because the functions in the series are uniformly bounded on \([0,1]^2\) and the coefficients are absolutely summable.

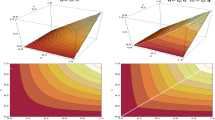

Figure 1a represents the copula density

Figure 1b is the graph of

Figure 1c represents

and Fig. 1d represents the copula density

The Spearman’s \(\rho \) and Kendall’s \(\tau \) for this copula family have been computed and are given below.

Lemma 2

For any bivariate population with copula C(u, v) defined by (15), the Spearman’s \(\rho _S(C)\) and Kendall’s \(\tau (C)\) are

In Fig. 1, we can see that the range of the copula decreases as the number of terms in the sum increases. For sine-cosine copulas, Lemma 2 shows that the cosine terms in the copula density do not affect \(\rho _S(C)\), while the sine terms affect \(\rho _S(C)\) linearly.
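The qualitative claim of Lemma 2 can be checked numerically without reproducing its formulas. The sketch assumes that, in (15), \(\lambda _k\) multiplies the cosine terms and \(\mu _k\) the sine terms of the density (consistent with Remark 3), so the cosine terms integrate to \(\Phi ^c_k(x)=\sqrt{2}\sin (2\pi kx)/(2\pi k)\) and the sine terms to \(\Phi ^s_k(x)=\sqrt{2}(1-\cos 2\pi kx)/(2\pi k)\). Since \(\int _0^1\Phi ^c_k=0\) and \(\int _0^1\Phi ^s_k=\sqrt{2}/(2\pi k)\), formula (1) gives a contribution 0 for a cosine term and \(6\mu _k/(\pi ^2k^2)\) for a sine term; these two values are our own computation. Helper names are hypothetical.

```python
import numpy as np


def sine_cosine_copula(lams, mus):
    """Return C(u, v) = uv + sum_k [lam_k Phi^c_k(u) Phi^c_k(v) + mu_k Phi^s_k(u) Phi^s_k(v)].

    Assumed convention: lams[k-1] multiplies sqrt(2)cos(2 pi k x) and mus[k-1]
    multiplies sqrt(2)sin(2 pi k x) in the density.
    """
    if len(lams) != len(mus):
        raise ValueError("lams and mus must have the same length")
    if sum(abs(a) for a in lams) + sum(abs(b) for b in mus) > 0.5:
        raise ValueError("need sum(|lam_k| + |mu_k|) <= 1/2")

    def C(u, v):
        out = u * v
        for k, (lam, mu) in enumerate(zip(lams, mus), start=1):
            w = 2.0 * np.pi * k
            Pc_u = np.sqrt(2.0) * np.sin(w * u) / w          # int_0^u sqrt(2) cos(w s) ds
            Pc_v = np.sqrt(2.0) * np.sin(w * v) / w
            Ps_u = np.sqrt(2.0) * (1.0 - np.cos(w * u)) / w  # int_0^u sqrt(2) sin(w s) ds
            Ps_v = np.sqrt(2.0) * (1.0 - np.cos(w * v)) / w
            out = out + lam * Pc_u * Pc_v + mu * Ps_u * Ps_v
        return out

    return C


def spearman_rho(C, n=200):
    """Formula (1) with a midpoint rule on an n x n grid."""
    x = (np.arange(n) + 0.5) / n
    U, V = np.meshgrid(x, x, indexing="ij")
    return 12.0 * np.mean(C(U, V)) - 3.0


C_cos = sine_cosine_copula([0.3], [0.0])   # cosine term only
C_sin = sine_cosine_copula([0.0], [0.3])   # sine term only
print(spearman_rho(C_cos))                  # 0 up to rounding
print(spearman_rho(C_sin), 6 * 0.3 / np.pi ** 2)
```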

Remark 3

Any copula from this family with \(\mu _k=0\) for all integers \(k\ge 1\) satisfies \(\rho _S(C)=\tau (C)=0\). This is a subclass of copulas of the form

Therefore, Spearman’s \(\rho \) and Kendall’s \(\tau \) are not good statistics for tests of independence: \(\rho _S(C)=\tau (C)=0\) for many members of this family, so these coefficients do not uniquely identify a member of the family.

- The sine copulas as perturbations of \(\Pi (u,v)\)

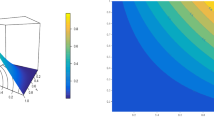

Consider the basis of \(L^2(0,1)\) given by \(\{1, \sqrt{2}\cos k\pi x, k>0,k\in \mathbb {N} \}\). By Theorem 2.3, for any sequence \(\{\lambda _k,k\in \mathbb {N}\}\) satisfying (18), the function (17) is a copula. Some examples of these copula densities are shown in Fig. 2.

Lemma 3

For copulas defined by formula (17), we have

This family of copulas seems close to the sine-cosine family, but the formulas for \(\rho _S(C)\) and \(\tau (C)\) in Lemma 3 show that they are not comparable. For these copulas, \(\rho _S(C)=0\) when all odd coefficients are equal to zero. Formula (16) defines a subclass of copulas from (17) for which all odd coefficients are equal to zero. Any finite sum of terms from (17) that includes the first term uv and whose coefficients satisfy condition (18) is a copula. An example is

Remark 4

The maximal coefficient of correlation of copula-based Markov chains generated by copulas (19) is \(|{}\lambda |{}\). We find \(\rho _S(C)=\frac{96}{\pi ^4}\lambda \) and \(\tau (C)=\frac{64}{\pi ^4}\lambda \). So, \(|{}\tau (C)|{}\le |{}\rho _S(C)|{}\le |{}\lambda |{}\).
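Remark 4 can be checked by quadrature. The sketch below assumes that copula (19) is the single-term member of (17) with k = 1, that is \(C(u,v)=uv+(2\lambda /\pi ^2)\sin \pi u\sin \pi v\) (obtained by integrating \(\sqrt{2}\cos \pi x\)), and that (18) reduces to \(|\lambda |\le 1/2\), the condition for the density \(1+2\lambda \cos \pi u\cos \pi v\) to be non-negative. It evaluates \(\rho _S=12\int \int C-3\) and \(\tau =1-4\int \int C_{,1}C_{,2}\) and compares them with \(96\lambda /\pi ^4\) and \(64\lambda /\pi ^4\).

```python
import numpy as np

# Tensor-product Gauss-Legendre rule on [0, 1]^2 (highly accurate for smooth integrands).
_nodes, _weights = np.polynomial.legendre.leggauss(40)
_x = 0.5 * (_nodes + 1.0)
_w = 0.5 * _weights
U, V = np.meshgrid(_x, _x, indexing="ij")
W = np.outer(_w, _w)


def rho_tau_single_cosine_term(lam):
    """rho_S by formula (1) and tau by formula (2) for the assumed form
    C(u, v) = uv + (2 lam / pi^2) sin(pi u) sin(pi v), |lam| <= 1/2."""
    if abs(lam) > 0.5:
        raise ValueError("the density 1 + 2 lam cos(pi u) cos(pi v) must stay non-negative")
    s_u, s_v = np.sin(np.pi * U), np.sin(np.pi * V)
    c_u, c_v = np.cos(np.pi * U), np.cos(np.pi * V)
    C = U * V + 2.0 * lam / np.pi ** 2 * s_u * s_v
    C1 = V + 2.0 * lam / np.pi * c_u * s_v     # partial derivative in u
    C2 = U + 2.0 * lam / np.pi * s_u * c_v     # partial derivative in v
    rho = 12.0 * np.sum(W * C) - 3.0
    tau = 1.0 - 4.0 * np.sum(W * C1 * C2)
    return rho, tau


rho, tau = rho_tau_single_cosine_term(0.4)
print(rho, 96 * 0.4 / np.pi ** 4)
print(tau, 64 * 0.4 / np.pi ** 4)
```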

2.2.2 The extended Farlie–Gumbel–Morgenstern copulas

In this subsection, we construct an example of a copula family based on formula (7) that extends the Farlie–Gumbel–Morgenstern copulas. The FGM copula is a perturbation of the independence copula (see Longla et al. (2022c)). Its form allows an extension based on shifted Legendre polynomials, which form an orthonormal basis of \(L^2(0,1)\). It is important to mention that formula (7) allows various families of extensions of the FGM copula, depending on the orthonormal system of functions used. Legendre polynomials are defined by Rodrigues’ formula

\[P_n(x)=\frac{1}{2^n n!}\frac{d^n}{dx^n}\left( x^2-1\right) ^n.\]

This formula was discovered by Rodrigues (1816) and leads to

Legendre polynomials form an orthogonal basis of \(L^2(-1,1)\). When they are shifted to [0, 1], a renormalization is used to obtain shifted orthonormal polynomials. They appear in many fields of mathematics as solutions of Legendre’s differential equation and are used for the approximation of functions. For these polynomials, \(\int _{-1}^1P^2_k(x)dx=\frac{2}{2k+1}\). The transformation \(y=2x-1\) gives the orthonormal basis of shifted Legendre polynomials

For \(k>0,\) \(\varphi _k(1)=\sqrt{2k+1}=\max \varphi _k\), for odd k, \(\min \varphi _k=-\sqrt{2k+1}=\varphi _k(0)\) and for even k, \(0>\min \varphi _k>-\sqrt{2k+1}\). This minimum is achieved at least at one point. Therefore, when (7) contains \(\varphi _k(x)\) alone, the range of \(\lambda _k\) is

Based on the special structure of these functions, using the following equality known as Bonnet’s recursion formula

we establish the equality

This formula uses the fact that \(P_n(-1)=(-1)^n\). Substituting \(u=\frac{1}{2}(x+1)\) and \(t=2\,s-1, 2ds =dt\), we obtain

Thus, using the definition of shifted Legendre polynomials \(\varphi _k(x)\), we have

For more on Legendre polynomials, see Belousov (1962), Szegö (1975) and the references therein. Formula (23) is used to establish the following.

Lemma 4

For any copula of the extended FGM copula family,

Simple computations show that \(-1/3\le \rho _S(C)\le 1/3\), and that \(\tau (C)\) can be increased or decreased by adding more non-zero terms to the series. In general, copulas with \(\varphi _k(x)\) given in formula (22) satisfy condition (10). We call this set of copulas the extended Legendre–Farlie–Gumbel–Morgenstern family of copulas. Some copulas of this form are as follows. For \(|{}\lambda |{}\le 1/3, \) \(3|{}\lambda _1|{}+5|{}\lambda _2|{}\le 1\),

The first example above is the FGM copula. For more on this copula family, see Farlie (1960), Gumbel (1960), Johnson and Kotz (1975) or Morgenstern (1956). In general this copula has parameter \(\theta \) in place of \(3\lambda _1\). The second example is one of the possible extensions of this copula family. For this extension, we have

Any subclass of the copula family defined with Legendre polynomials that contains the copula given by formula (24) is an extension of the FGM family. Depending on what the investigator is looking for, it might be better to set some coefficients equal to zero, or to choose the signs of the coefficients so as to increase or reduce the strength of dependence, as justified by formula (26). The FGM copula family has parameter \(\theta =3\lambda \), with \(|\theta |\le 1\); so \(|\lambda |\le 1/3\). No copula from our extension can have \(\rho _S(C)\) outside this range, because increasing the number of non-zero parameters reduces the range of \(\lambda _1\). Example (25) has \(-1/5\le \lambda _2\le 1/5\) when \(\lambda _1=0\), but this range can be extended to \(-1/5\le \lambda _2\le 2/5\) using the exact minimum of \(\varphi _2\) (Fig. 3).
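The admissible range for a single shifted Legendre term can be checked numerically. The sketch below evaluates \(\varphi _k(x)=\sqrt{2k+1}P_k(2x-1)\) on a fine grid with NumPy's Legendre class and applies the single-term bound given by non-negativity of \(1+\lambda _k\varphi _k(u)\varphi _k(v)\). It recovers \([-1/3,1/3]\) for k = 1 and \([-1/5,2/5]\) for k = 2. The grid-based extremum search is our simplification; it is exact here because the extrema of \(\varphi _1\) and \(\varphi _2\) fall on grid points. Helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Legendre


def shifted_legendre(k):
    """phi_k(x) = sqrt(2k + 1) * P_k(2x - 1), orthonormal on [0, 1]."""
    Pk = Legendre.basis(k)
    return lambda x: np.sqrt(2 * k + 1) * Pk(2.0 * np.asarray(x) - 1.0)


def legendre_term_range(k, n_grid=20001):
    """Range of lambda_k keeping 1 + lambda_k phi_k(u) phi_k(v) >= 0 (grid-based extrema)."""
    vals = shifted_legendre(k)(np.linspace(0.0, 1.0, n_grid))
    vmax, vmin = vals.max(), vals.min()
    return -1.0 / max(vmax ** 2, vmin ** 2), -1.0 / (vmax * vmin)


for k in (1, 2, 3):
    print(k, legendre_term_range(k))
```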

For any copula from this family, it is worth formulating the following.

Remark 5

Lemma 4 shows that all copulas from this family with the same \(\lambda _1\) have the same \(\rho _S(C)\), which is not the case for \(\tau (C)\). Other formulations of these copulas use the basis \(\varphi _{k}(x)=\sqrt{2j_k+1}P_{j_k}(2x-1)\), where \(j_k\) is a sequence of positive integers.
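Remark 5 can be illustrated numerically. The sketch below builds \(\Phi _k(x)=\int _0^x\varphi _k(s)ds\) for the shifted Legendre functions \(\varphi _k(x)=\sqrt{2k+1}P_k(2x-1)\) using NumPy's `Legendre.integ`, forms \(C(u,v)=uv+\sum _k\lambda _k\Phi _k(u)\Phi _k(v)\), and evaluates \(\rho _S=12\int \int C-3\) and \(\tau =1-4\int \int C_{,1}C_{,2}\). With \(\lambda _1=0.2\) fixed, adding \(\lambda _2=0.08\) (so that \(3|\lambda _1|+5|\lambda _2|=1\)) leaves \(\rho _S\) unchanged but shifts \(\tau \). For \(\lambda _2=0\) the copula is the FGM copula with \(\theta =3\lambda _1\), whose \(\tau =2\theta /9\). The function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Legendre

# Tensor-product Gauss-Legendre rule on [0, 1]^2; exact for the polynomial integrands below.
_nodes, _weights = np.polynomial.legendre.leggauss(40)
_x = 0.5 * (_nodes + 1.0)
_w = 0.5 * _weights
U, V = np.meshgrid(_x, _x, indexing="ij")
W = np.outer(_w, _w)


def phi_and_Phi(k):
    """phi_k(x) = sqrt(2k+1) P_k(2x-1) and Phi_k(x) = int_0^x phi_k(s) ds."""
    Pk = Legendre.basis(k)
    Pk_int = Pk.integ(lbnd=-1)                        # antiderivative of P_k vanishing at t = -1
    c = np.sqrt(2 * k + 1)
    phi = lambda s: c * Pk(2.0 * s - 1.0)
    Phi = lambda s: 0.5 * c * Pk_int(2.0 * s - 1.0)   # ds = dt / 2 when t = 2s - 1
    return phi, Phi


def rho_tau_extended_fgm(lams):
    """rho_S and tau for C(u, v) = uv + sum_k lams[k-1] Phi_k(u) Phi_k(v)."""
    C, C1, C2 = U * V, V.copy(), U.copy()
    for k, lam in enumerate(lams, start=1):
        phi, Phi = phi_and_Phi(k)
        C = C + lam * Phi(U) * Phi(V)
        C1 = C1 + lam * phi(U) * Phi(V)   # partial derivative in u
        C2 = C2 + lam * Phi(U) * phi(V)   # partial derivative in v
    rho = 12.0 * np.sum(W * C) - 3.0        # formula (1)
    tau = 1.0 - 4.0 * np.sum(W * C1 * C2)   # formula (2)
    return rho, tau


print(rho_tau_extended_fgm([0.2]))         # FGM copula with theta = 3 * 0.2
print(rho_tau_extended_fgm([0.2, 0.08]))   # same lambda_1, extra Legendre term
```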

2.2.3 Counterexample: the sine basis

This case shows that not all orthonormal bases of \(L^2(0,1)\) can be used to construct copulas with square integrable densities. We take the case of functions constructed using the sine basis, which do not define copulas under any assumptions. In fact, consider

If this function is a copula, then using \(C(u,1)=u\) and differentiating the series term by term with respect to u, we obtain

Multiplying by \(\sin k\pi u\) and integrating over [0, 1] leads to \(\frac{1-\cos k\pi }{k\pi } =\frac{\lambda _k}{\pi k} (1-\cos k\pi ).\) Since \(1-\cos k\pi =2\) for odd k, it follows that \(\lambda _{2k+1}=1\) for all \(k\ge 0\). As said earlier, in this case the function \(\varphi (x)=1\) is embedded in an infinite-dimensional subspace of eigenfunctions associated to the eigenvalue 1. This contradicts condition (9). Therefore, no such decomposition represents a square integrable copula density.
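The step leading to \(\lambda _{2k+1}=1\) can be written out in full. As a sketch, we assume the sine basis is \(\{\sqrt{2}\sin k\pi x, k\ge 1\}\), so that \(\Phi _k(x)=\sqrt{2}(1-\cos k\pi x)/(k\pi )\) and the candidate function is \(C(u,v)=\sum _k\lambda _k\Phi _k(u)\Phi _k(v)\); this reading of the elided formula is our assumption, chosen because it reproduces the identity stated in the text.

```latex
\begin{aligned}
C(u,1)=u \;&\Longrightarrow\; \sum_{j\ge 1}\lambda_j\,\Phi_j(1)\,\sqrt{2}\sin j\pi u = 1,
\qquad \Phi_j(1)=\frac{\sqrt{2}\,(1-\cos j\pi)}{j\pi},\\
\int_0^1 \sin j\pi u\,\sin k\pi u\,du=\frac{\delta_{jk}}{2}
\;&\Longrightarrow\; \lambda_k\,\frac{1-\cos k\pi}{k\pi}=\int_0^1 \sin k\pi u\,du=\frac{1-\cos k\pi}{k\pi}.
\end{aligned}
```

For odd k, \(1-\cos k\pi =2\ne 0\), so \(\lambda _k=1\) for every odd k and \(\sum _k\lambda _k^2\) diverges.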

3 Copula-based Markov chains and \(\psi \)-mixing

We study here the long-run behavior of several copula families constructed with various bases of \(L^2(0,1)\), and describe the mixing structure of copula-based Markov chains generated by the constructed copulas. In some cases, we modify the copulas to present them as convex combinations of members of the constructed families. This transformation links this work to results of Longla et al. (2022a) and Longla (2015). Mixing coefficients are used in probability theory to establish central limit theorems for sums of dependent random variables (see Peligrad and Utev (1997)), which in turn are used to construct confidence intervals for means of functions of Markov chains. When the marginal distributions are continuous, copulas characterize the dependence structure of Markov chains, and of multivariate random variables in general, via Sklar’s theorem (see Sklar (1959)). It is therefore important to identify conditions on copulas that guarantee a given dependence structure (see Beare (2010), Longla (2015) among others). Dependence structures considered in the literature include \(\alpha \)-mixing, \(\beta \)-mixing, \(\rho \)-mixing, \(\phi \)-mixing, \(\psi \)-mixing, \(\psi '\)-mixing, \(\psi ^*\)-mixing and others. We define only the mixing coefficients of interest in this paper; for more on mixing coefficients, see Bradley (2007). For a stationary Markov chain \(X_0, \ldots , X_n\) with \(\mathbb {A}=\sigma (X_0)\), the mixing coefficients are defined as

where \(P^n(B|A)\) stands for \(P(X_n\in B|X_0\in A)\), and \(P^n(A\cap B)\) stands for \(P(X_0\in A, X_n\in B)\). Using these coefficients, Bradley (2005) presented the following result in his survey. Suppose \(X_0, \ldots , X_n\) is a strictly stationary sequence of random variables. If there exists n such that \(\psi '_n>0\) and \(\psi _n^{*}<\infty \), then \(\psi _n\rightarrow 0\) (the sequence is \(\psi \)-mixing). Combining this statement with results of Longla (2015) and Longla et al. (2022c), we obtain the following.

Theorem 3.1

An absolutely continuous copula C(u,v) generates \(\psi \)-mixing stationary Markov chains if there exist positive integers \(s_1\) and \(s_2\) such that some versions of the densities of \(C^{s_1}(u,v)\) and \(C^{s_2}(u,v)\) satisfy one of the following conditions.

-

1.

There exists a constant real number M such that \(c^{s_1}(u,v)\) is bounded away from zero on a set of Lebesgue measure 1 and \(c^{s_2}(u,v)<M \) for all \((u,v)\in [0,1]^2\).

-

2.

There exists a constant \(M\le 2\) such that \(c^{s_2}(u,v)<M\) for all \((u,v)\in [0,1]^2\).

In Theorem 3.1, it is essential that the chosen version of the density be bounded above on the whole of \([0,1]^2\). Failure of this condition, even on a set of Lebesgue measure 0, can rule out \(\psi \)-mixing.

Example 1

The copula \(C(u,v)=aM(u,v)+(1-a)W(u,v),\) with \(0\le a\le 1,\) from the Mardia family does not generate \(\rho \)-mixing Markov chains when used with continuous marginals, as shown in Longla (2014). These copulas are not absolutely continuous. Another copula that fails these conditions is (12) with \(\alpha =1=\lambda \).

This example is a convex combination of \(W(u,v)=\max (u+v-1,0)\) and \(M(u,v)=\min (u,v)\). A convex combination of copulas \(C_1(u,v), \ldots , C_k(u,v)\) is a copula of the form \( C(u,v)= a_1 C_1(u,v)+ \cdots + a_k C_k(u,v), \) with \(a_i>0\) for all i and \(a_1+\cdots +a_k=1.\) Theorem 3.1 yields the following.

Corollary 1

Let \(C_1(u,v), \ldots , C_k(u,v)\) be copulas such that the density of \(C_{s_1}* C_{s_1+1}*\cdots *C_{s_2}(u,v)\) is bounded away from zero on a set of Lebesgue measure 1 for some positive integers \(s_1, s_2\). Assume that a version of the density of \((a_1C_{1}+\cdots +a_kC_{k})^{s_3}\) is bounded on \([0,1]^2\) for some positive integer \(s_3\) or a version of the density of \((\sum a_iC_i)^{s_4}(u,v)\) is strictly less than 2 for some \(s_4\in \mathbb {N}\). Then, any convex combination of copulas \(C_1(u,v), \ldots , C_k(u,v)\) generates exponential \(\psi \)-mixing stationary Markov chains with continuous marginals.

3.1 Applications to our new copula families

In this subsection, we apply the result on mixing to Markov chains generated by our newly created copula families. For any copula of the form (12), the maximal coefficient of correlation is \(|\lambda |\). Therefore, it generates \(\rho \)-mixing Markov chains when \(|{}\lambda |{}<1\). Moreover,

-

1.

For \(\alpha \ne 1\), \(C_{\alpha ,\lambda }(u,v)\) generates \(\psi \)-mixing when \(-\min (\frac{1}{\alpha }, \alpha ) \le \lambda <1\);

-

2.

\(C_{1,\lambda }(u,v)\) generates \(\psi \)-mixing when \(-1< \lambda <1\);

-

3.

There is no \(\psi '\)-mixing, \(\psi ^*\)-mixing or \(\psi \)-mixing for other values of \((\alpha ,\lambda )\).

Note that when \(\alpha =1\), the range of \(\lambda \) includes \(\pm 1\), for which there is no \(\rho \)-mixing, \(\psi ^*\)-mixing or \(\psi '\)-mixing. This case is equivalent to

When \(|\lambda |< 1\), the density (30) is constant on each of 4 subsets of \([0,1]^2\) whose union has full Lebesgue measure. Clearly, for \(|\lambda |=1\), this density is not bounded away from zero (see Fig. 4).

For a stationary copula-based Markov chain \(U_0, \ldots , U_n\) based on (7) with uniform marginals, the copula of \((U_0,U_n)\) is \(C^n(u,v)\) (see Longla et al. (2022a)).

Theorem 3.2

For any copula defined by (7) and satisfying condition (8),

Moreover, \(\rho _n=\sup _{k}|\lambda _k|^n\), and Markov chains generated with uniform marginals are both geometrically ergodic and exponentially \(\rho \)-mixing if and only if \(\sup _k |\lambda _k|<1\). If the sum has finitely many non-zero terms and \(|\lambda _k|<1\) for all k, then the Markov chains generated with uniform marginals are \(\psi \)-mixing.
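The identity behind Theorem 3.2, namely that the n-fold product \(C^n\) has density \(1+\sum _k\lambda _k^n\varphi _k(u)\varphi _k(v)\), can be checked for n = 2 by computing \(\int _0^1c(u,w)c(w,v)dw\) numerically. The sketch below assumes the two-term Legendre family with \(\lambda _1=0.2\) and \(\lambda _2=0.08\); all names are illustrative. By orthonormality, the cross terms integrate to zero and only the terms \(\lambda _k^2\varphi _k(u)\varphi _k(v)\) survive.

```python
import math

SQ3, SQ5 = math.sqrt(3.0), math.sqrt(5.0)
PHI = [lambda x: SQ3 * (2 * x - 1), lambda x: SQ5 * (6 * x * x - 6 * x + 1)]


def density(u, v, lams, power=1):
    """1 + sum_k lam_k**power * phi_k(u) * phi_k(v)."""
    return 1.0 + sum(lam ** power * phi(u) * phi(v) for lam, phi in zip(lams, PHI))


def composed_density(u, v, lams, n=2000):
    """Density of C*C: int_0^1 c(u, w) c(w, v) dw by the midpoint rule."""
    h = 1.0 / n
    ws = ((i + 0.5) * h for i in range(n))
    return h * math.fsum(density(u, w, lams) * density(w, v, lams) for w in ws)


lams = [0.2, 0.08]
for u, v in [(0.1, 0.9), (0.3, 0.3), (0.75, 0.5)]:
    print(u, v, composed_density(u, v, lams), density(u, v, lams, power=2))
print("rho_2 =", max(abs(lam) for lam in lams) ** 2)
```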

Corollary 2

For any set \(\{a_i, i=1,\ldots , s: 0\le a_1<a_2<\cdots<a_s<a_{s+1}= 1\}\) and \(|\theta _i|\le 1\), formula (14) defines the density of a copula that generates \(\psi \)-mixing stationary Markov chains with continuous marginal distributions.

3.2 Mixing properties of the extended FGM family

We investigate the long-run behavior of Markov chains generated by extended FGM copulas. Define \(\alpha _k=1\) if k is odd and \(\alpha _k=|\min _{x\in [-1,1]} P_k(x)|\) if k is even.

Theorem 3.3

For large enough n, a version of the copula density \(c^n(u,v)\) is bounded above by some \(M<2\) when \(\varphi _k(x)\) is defined using Legendre polynomials with \(\sum {\lambda _k^2}<\infty \), \(\sup _k|\lambda _k|<1\) and \(\sum |\lambda _k|(2k+1)\alpha _k\le 1\). Therefore, the copula C(u, v) generates \(\psi ^*\)-mixing stationary Markov chains (which is equivalent to \(\psi \)-mixing for stationary Markov chains) for all parameter values satisfying these conditions.

Remark 6

It follows from the proof of Theorem 3.3 that \(c^{n}(x,y)\rightarrow 1\) as \(n\rightarrow \infty \) when the conditions of Theorem 3.3 are satisfied. For any system of eigenfunctions \(\varphi _k(x)\) and all parameter values described in Theorem 3.3, the conclusions of Theorem 3.2 hold, because \(\psi \)-mixing implies \(\rho \)-mixing. In particular, Theorem 3.2 holds for the extended FGM copulas. A result similar to Theorem 3.3 holds for our trigonometric extensions (15) and (17): if \(\sum (|\lambda _k|+|\mu _k|)\le 1/2,\) the generated Markov chains are \(\psi \)-mixing.
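The bound behind Theorem 3.3 and Remark 6 can be made concrete. Assuming the representation \(c^n(u,v)=1+\sum _k\lambda _k^n\varphi _k(u)\varphi _k(v)\) of Theorem 3.2 and the Legendre bound \(\sup _x\varphi _k(x)^2=2k+1\), we have \(|c^n(u,v)-1|\le \sum _k|\lambda _k|^n(2k+1)\). Once this sum drops below 1, the density of \(C^n\) lies strictly between 0 and 2, and it tends to 1 as n grows. The hypothetical helper below returns the first such n for a finite list of coefficients.

```python
def first_power_below_two(lams, n_max=10_000):
    """Smallest n with sum_k |lam_k|**n * (2k+1) < 1, together with that sum.

    lams[k-1] plays the role of lambda_k in the Legendre basis. Under the
    assumptions above, 0 < c^n(u, v) < 2 for the returned n.
    Returns (None, None) if no n <= n_max works.
    """
    for n in range(1, n_max + 1):
        bound = sum(abs(lam) ** n * (2 * k + 1) for k, lam in enumerate(lams, start=1))
        if bound < 1.0:
            return n, bound
    return None, None


print(first_power_below_two([0.2, 0.08]))  # 3*0.2 + 5*0.08 = 1 at n = 1, so n = 2
print(first_power_below_two([1 / 3]))      # FGM boundary case theta = 1
```

This is only a sufficient criterion: it can overestimate the smallest n for which \(c^n<2\), since it ignores cancellations between terms.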

Based on the analysis above, we can derive several facts about mixing properties of Markov chains generated by absolutely continuous copulas with square integrable densities. In general, if we assume that a Markov chain is generated by a copula (7) satisfying conditions (9) and (10) with an absolutely continuous marginal distribution, then the following holds.

Theorem 3.4

Under the assumptions of Theorem 3.2,

-

1.

If the sequence \(\varphi _k(x)\) is uniformly bounded and \(\sup _k|\lambda _k|<1\), then the Markov chain is \(\psi \)-mixing.

-

2.

If \(\sup _k|\lambda _k|<1\), then the Markov chain is \(\rho \)-mixing.

-

3.

If Condition (10) holds with strict inequality, then the Markov chain is \(\psi '\)-mixing.

The density of each example in Fig. 1 is bounded away from zero on \([0,1]^2\) and bounded above by a constant strictly less than 2. Therefore, by Theorem 3.4, these examples generate \(\psi \)-mixing Markov chains. In particular, the sine copulas and sine-cosine copulas generate \(\psi \)-mixing Markov chains, because each basis function is bounded by \(\sqrt{2}\) and condition (10) implies \(|\lambda _k|\le 1/2\). Note that the uniform bound on the sequence \(\varphi _k(x)\) is crucial in statement 1 of Theorem 3.4.

3.3 Answer to an open question on mixing

Longla (2015) was devoted entirely to mixing properties of copula-based Markov chains. Results were provided for several mixing coefficients, in one direction: it was proved that if Markov chains generated by the copulas are mixing, then so are Markov chains generated by their convex combinations. In this subsection, we show that mixing for Markov chains generated by a convex combination does not require mixing for Markov chains generated by any of the copulas in the combination. To do this, we take the extreme cases of formula (30) defined by \(\lambda =\pm 1\). Neither of them exhibits mixing in the sense of the coefficients defined in this paper, yet both have square integrable densities.

Remark 7

We provide here a connection between various results of Longla et al. (2022a, c), Longla (2015) on mixing.

-

1.

The definition of \(C_3(u,v)\) through its density leads to

$$\begin{aligned} C_3(u,v)=\frac{1+\lambda }{2}C_1(u,v)+\frac{1-\lambda }{2}C_2(u,v), \end{aligned}$$

where \(C_1(u,v)\) and \(C_2(u,v)\) are the copulas of this family defined by \(\lambda =1\) and \(\lambda =-1\), respectively. Moreover, \(C_3(u,v)\) generates \(\psi \)-mixing Markov chains for \(|\lambda |<1\).

-

2.

Neither \(C_1(u,v)\) nor \(C_2(u,v)\) has a density bounded away from zero on a set of Lebesgue measure 1. Moreover, \(C_1(u,v)\) and \(C_2(u,v)\) do not generate \(\psi '\)-mixing Markov chains with continuous marginal distributions, because they do not generate \(\rho \)-mixing Markov chains with continuous marginals. Their convex combinations with \(|\lambda |< 1\) generate \(\psi '\)-mixing Markov chains (and hence \(\rho \)-mixing ones).

-

3.

Neither \(C_1(u,v)\) nor \(C_2(u,v)\) generates \(\psi \)-mixing, because otherwise it would generate \(\rho \)-mixing; but their convex combinations with \(|\lambda |< 1\) generate \(\psi \)-mixing Markov chains.

-

4.

Neither \(C_1(u,v)\) nor \(C_2(u,v)\) generates \(\phi \)-mixing, because otherwise it would generate \(\rho \)-mixing; but their convex combinations with \(|\lambda |<1\) generate \(\phi \)-mixing Markov chains.

-

5.

Neither \(C_1(u,v)\) nor \(C_2(u,v)\) generates \(\beta \)-mixing, because otherwise it would generate \(\rho \)-mixing; but their convex combinations with \(|\lambda |< 1\) generate \(\beta \)-mixing Markov chains. This holds because these convex combinations are symmetric and generate \(\rho \)-mixing Markov chains (see Longla and Peligrad (2012)).

It is crucial in the above results that the copulas are absolutely continuous. To see this, take the copula

\(C_{\alpha ,\theta }(u,v)\) is a convex combination of copulas that contains \(\Pi (u,v)\). Therefore, by Longla (2015), it generates \(\psi {'}\)-mixing stationary Markov chains. \(C_{\alpha ,\theta }(u,v)\) is also a convex combination that contains M(u, v). Therefore, by Longla et al. (2022a), it does not generate \(\psi \)-mixing stationary Markov chains with continuous marginals. Its density satisfies \(c_{\alpha ,\theta }(u,v)=\alpha (1+\theta (1-2u)(1-2v))<2\) for all \((u,v)\in [0,1]^2\) and all \(\alpha \) and \(\theta \) such that \(0<\alpha<1, 0<|\theta |<1\). Therefore, the absolute continuity assumption cannot be dropped.

Formula (12) defines an absolutely continuous copula that generates \(\psi \)-mixing stationary Markov chains. Note that its density is equal to 0 on a set of non-zero Lebesgue measure for \(\lambda =-\alpha >-1\) (\(\alpha <1\)) or \(\lambda =-\frac{1}{\alpha }>-1\) (\(\alpha >1\)). This alone answers an open question on \(\psi \)-mixing from Longla (2015). Longla (2015) showed that when the density is bounded away from 0 on a set of Lebesgue measure 1, the copula generates \(\psi '\)-mixing. This example shows that this sufficient condition is far from necessary: using copulas from these new families, we can obtain \(\psi \)-mixing even when the density is equal to zero on a set of strictly positive Lebesgue measure.

4 Central limit theorem and simulation study

In this section, we consider some functions of the Markov chain and derive central limit theorems for estimators of the copula parameters and of the population mean. We use a copula from the constructed families with special association properties: we select a copula with \(\rho _S(C)=0\), and a modification that also has \(\tau (C)=0\). For these examples, standard estimation procedures based on these measures of association fail.

4.1 Central limit theorem for parameter estimators

We consider in this section central limit theorems for averages of functions of the Markov chain generated by the copula

with \(\varphi _1(x)=\sqrt{2}\sin 2\pi x\), \(\varphi _2(x)=\sqrt{2}\sin 4\pi x\) and \(2|\mu _1|+2|\mu _2|\le 1\). Let f(x) be such that \(\mu =\int _0^1f(x)dx\) and \(\int _0^1f^2(x)dx<\infty \). Define \(S_n(f)=\sum _{i=1}^n f(U_i)\),

Theorem 4.1

Assume that \(U_1, \ldots , U_n\) is a realization of the stationary Markov chain generated by (32) and the uniform marginal distribution. Then the following hold.

-

1.

$$\begin{aligned} \sqrt{n-1}\left( {\hat{\mu }_1\atopwithdelims ()\hat{\mu }_2}-{\mu _1\atopwithdelims ()\mu _2}\right) \rightarrow N\left( {0 \atopwithdelims ()0}, \begin{pmatrix}1 &{} -\mu _1\mu _2 \\ -\mu _1\mu _2 &{} 1\end{pmatrix}\right) . \end{aligned}$$(33)

-

2.

The central limit theorem holds in the form

$$\begin{aligned} \sqrt{n}\left( \frac{S_n(f)}{n}-\mu \right) \rightarrow N(0, \sigma ^2_f), \quad \text {where}\quad \sigma ^2_f=\sigma ^2+2\left( \frac{\mu _1 A_1^2}{1-\mu _1}+\frac{\mu _2A_2^2}{1-\mu _2}\right) , \end{aligned}$$(34)

with \(A_i^2=2\left(\int _0^1\sin (2i \pi u) f(u)du\right)^2\).
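Formula (34) is straightforward to evaluate numerically for a given f. The sketch below assumes that \(\sigma ^2\) in (34) denotes \(\mathrm{Var}\,f(U)=\int _0^1f^2(u)du-\mu ^2\) for U uniform on (0, 1), which is consistent with the special cases (36) and (38) below; integrals use a plain midpoint rule, and the function names are ours.

```python
import math


def midpoint(f, n=20000):
    """Midpoint rule for int_0^1 f(u) du."""
    h = 1.0 / n
    return h * math.fsum(f((i + 0.5) * h) for i in range(n))


def sigma2_f(f, mu1, mu2, n=20000):
    """Asymptotic variance (34) of S_n(f)/n for the sine copula (32)."""
    mean = midpoint(f, n)
    sigma2 = midpoint(lambda u: f(u) ** 2, n) - mean ** 2       # Var f(U), U ~ Uniform(0, 1)
    a2 = [2 * midpoint(lambda u, i=i: math.sin(2 * i * math.pi * u) * f(u), n) ** 2
          for i in (1, 2)]                                      # A_1^2 and A_2^2
    return sigma2 + 2 * (mu1 * a2[0] / (1 - mu1) + mu2 * a2[1] / (1 - mu2))


mu1 = 0.05
mu2 = -4 * mu1
# f(x) = x: compare with the closed form (38)
closed_38 = 1 / 12 + 5 * mu1**2 / (math.pi**2 * (1 - mu1) * (1 + 4 * mu1))
print(sigma2_f(lambda x: x, mu1, mu2), closed_38)
# f(x) = I(x <= a): compare with (36)
a = 0.3
closed_36 = a * (1 - a) + 4 * mu1 / math.pi**2 * (
    math.sin(math.pi * a) ** 4 / (1 - mu1) - math.sin(2 * math.pi * a) ** 4 / (1 + 4 * mu1))
print(sigma2_f(lambda x: float(x <= a), mu1, mu2), closed_36)
```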

Based on properties of the copulas that we have constructed here, the variance \(\sigma ^2_f\) exists, is finite and is strictly positive. To construct a confidence set or test a specific value of \((\mu _1,\mu _2)\), we can use the following consequence of Theorem 4.1.

Corollary 3

Assume that \(U_1, \ldots , U_n\) is a realization of the stationary Markov chain generated by (32) and the uniform marginal distribution. The following holds.

has an approximately \(\chi ^2(2)\) distribution. For confidence interval purposes, replace \(\mu _1\mu _2\) by \(\hat{\mu }_1\hat{\mu }_2\).
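As an illustration of how Corollary 3 could be used for testing, here is a sketch. It assumes, as is standard for a bivariate normal limit, that the statistic is the quadratic form \((n-1)(\hat{\mu }-\mu )^\top \Sigma ^{-1}(\hat{\mu }-\mu )\), with \(\Sigma \) the limiting covariance matrix in (33); the function names and the numerical estimates in the usage line are hypothetical. The 95% critical value of \(\chi ^2(2)\) is \(-2\ln 0.05\approx 5.991\), because the \(\chi ^2(2)\) survival function is \(e^{-x/2}\).

```python
import math

CRIT_95 = -2.0 * math.log(0.05)  # 0.95 quantile of chi-square(2)


def chi2_statistic(mu1_hat, mu2_hat, mu1, mu2, n, r=None):
    """(n-1) d' Sigma^{-1} d with Sigma = [[1, -r], [-r, 1]] and r = mu1*mu2 by default.

    For confidence regions, pass r = mu1_hat * mu2_hat, as suggested after Corollary 3.
    """
    if r is None:
        r = mu1 * mu2
    d1, d2 = mu1_hat - mu1, mu2_hat - mu2
    # The inverse of [[1, -r], [-r, 1]] is [[1, r], [r, 1]] / (1 - r**2)
    return (n - 1) * (d1 * d1 + 2.0 * r * d1 * d2 + d2 * d2) / (1.0 - r * r)


def chi2_2_pvalue(stat):
    """P(X > stat) for X ~ chi-square(2)."""
    return math.exp(-stat / 2.0)


# Hypothetical estimates from a chain of length n = 1000, testing (mu1, mu2) = (0.05, -0.2)
stat = chi2_statistic(0.08, -0.25, 0.05, -0.2, n=1000)
print(stat, chi2_2_pvalue(stat), stat > CRIT_95)
```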

Consider now \(\lambda _k=0\) for all k and \(\mu _k=0\) for \(k>2\), and require in addition that \(\tau (C)=0\) for the sine-cosine copula. This implies \(\mu _2=-4 \mu _1\), which reduces the copula to a one-parameter family with \(|\mu _1|\le 0.11\).
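The bound \(|\mu _1|\le 0.11\) can be recovered numerically. Assume the reduced density is \(1+2\mu _1\sin 2\pi u\sin 2\pi v-8\mu _1\sin 4\pi u\sin 4\pi v\) (that is, \(1+\mu _1\varphi _1(u)\varphi _1(v)+\mu _2\varphi _2(u)\varphi _2(v)\) with \(\mu _2=-4\mu _1\)), and write it as \(1+2\mu _1g(u,v)\). Nonnegativity then requires \(-1/(2\max g)\le \mu _1\le 1/(2|\min g|)\). Using \(\sin 4\pi x=2\sin 2\pi x\cos 2\pi x\), a short calculation (ours, not from the text) suggests \(\max g=-\min g=289/64\), hence \(|\mu _1|\le 32/289\approx 0.1107\). The grid search below (a sketch; names are ours) confirms this, and shows that the sufficient condition \(2|\mu _1|+2|\mu _2|\le 1\) alone would only give \(|\mu _1|\le 0.1\).

```python
import math


def g_extremes(n=800):
    """Min and max over a periodic grid of g(u, v) = s1(u)s1(v) - 4 s2(u)s2(v),
    with s1(x) = sin(2 pi x) and s2(x) = sin(4 pi x)."""
    xs = [i / n for i in range(n)]
    s1 = [math.sin(2 * math.pi * x) for x in xs]
    s2 = [math.sin(4 * math.pi * x) for x in xs]
    lo, hi = math.inf, -math.inf
    for a1, a2 in zip(s1, s2):
        row = [a1 * b1 - 4.0 * a2 * b2 for b1, b2 in zip(s1, s2)]
        lo, hi = min(lo, min(row)), max(hi, max(row))
    return lo, hi


g_min, g_max = g_extremes()
# 1 + 2*mu1*g >= 0 for all (u, v)  <=>  -1/(2*g_max) <= mu1 <= 1/(2*|g_min|)
mu1_lower, mu1_upper = -1.0 / (2.0 * g_max), 1.0 / (2.0 * -g_min)
print(g_min, g_max, mu1_lower, mu1_upper)  # compare with -289/64, 289/64 and -/+ 32/289
```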

Remark 8

Consider a Markov chain generated by (35). Theorem 4.1 implies the following.

-

1.

For \(f(x)=\mathbb {I}(x\le a)\), \(a\in (0,1)\), we get a dependent Bernoulli sequence and a CLT for the sample relative frequency of success holds with

$$\begin{aligned} \sigma ^2_f= a(1-a)+4\frac{\mu _1}{\pi ^2}\left( \frac{\sin ^4 \pi a}{1-\mu _1}-\frac{\sin ^4 2\pi a}{1+4\mu _1}\right) . \end{aligned}$$(36)

Note that for \(\mu _1\ne 0\) the observations are dependent, yet for \(a=\frac{1}{\pi }\arccos \big (\big (\frac{1+4\mu _1}{16(1-\mu _1)}\big )^{1/4}\big )\) (obtained from (36) using \(\sin 2\pi a=2\sin \pi a\cos \pi a\)) we have \(\sigma ^2_f=a(1-a)\). For this value of a, the sample behaves as if it were a simple random sample. A researcher who starts from the simple random sample assumption would thus be led to wrong conclusions.

-

2.

For \(f(x)=-\lambda \ln (1-x)\), we get a Markov chain with exponential marginal distribution with mean \(\lambda \). The estimator of \(\lambda \) is \(S_n(f)/n\), and, using \((\int _0^1\sin 2\pi t \ln (1-t)dt)^2=0.1505165\) and \(4(\int _0^1\sin 4\pi t \ln (1-t)dt)^2=0.245684\) (values obtained by numerical integration in R), the CLT holds with

$$\begin{aligned} \sigma ^2_f=\lambda ^2+4\mu _1\lambda ^2\left( \frac{0.1505165}{1-\mu _1}-\frac{0.245684}{1+4\mu _1}\right) . \end{aligned}$$(37)

This example shows that we can still estimate \(\lambda \) using the sample mean, but the variance of the estimator has to be treated more carefully: the asymptotic variance of \(S_n(f)/n\) is not \(\lambda ^2/n\), but \(\lambda ^2/n\) multiplied by a factor that can be less than or greater than 1 depending on the choice of \(\mu _1\).

-

3.

For \(f(x)=x\), the asymptotic variance is never smaller than it would be if the data were assumed to form a simple random sample, and it is strictly larger whenever \(\mu _1\ne 0\):

$$\begin{aligned} \sigma ^2_f=\frac{1}{12}+\frac{\mu _1/\pi ^2}{1-\mu _1}-\frac{\mu _1/\pi ^2}{1+4\mu _1}=\frac{1}{12}+\frac{5\mu _1^2}{\pi ^2(1-\mu _1)(1+4\mu _1)}. \end{aligned}$$(38)

-

4.

For \( f(x,y)=2w \sin (2\pi x)\sin (2\pi y)-\frac{1}{2}(1-w)\sin (4\pi x)\sin (4\pi y)\), \(w\in [0,1]\), we have the estimator

$$\begin{aligned} \mu _w=\frac{1}{n-1}\sum _{i=2}^{n}\left( 2w \sin (2\pi U_{i-1})\sin (2\pi U_i)-\frac{1}{2}(1-w)\sin (4\pi U_{i-1})\sin (4\pi U_i)\right) . \end{aligned}$$

The central limit theorem for this estimator holds with an asymptotic variance no larger than that of \(\hat{\mu }_1\):

$$\begin{aligned} \sigma ^2_f= 1-2(1-4\mu _1^2)(w-w^2). \end{aligned}$$(39)

The smallest possible value of this variance, \(0.5+2\mu _1^2\), is attained at \(w=1/2\).
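The quantities in Remark 8 can be reproduced in a few lines; this is a sketch, and the function names are ours. The value of a for which \(\sigma ^2_f=a(1-a)\) in (36) is obtained by setting the bracket to zero and using \(\sin 2\pi a=2\sin \pi a\cos \pi a\), which gives \(\cos ^4\pi a=(1+4\mu _1)/(16(1-\mu _1))\); for \(\mu _1=0\) this yields \(a=1/3\). The two integrals in (37) are recomputed with a midpoint rule (in place of R's integrator), and (39) is checked at \(w=1/2\).

```python
import math


def sigma2_indicator(a, mu1):
    """Formula (36): asymptotic variance for f(x) = I(x <= a)."""
    return a * (1 - a) + 4 * mu1 / math.pi**2 * (
        math.sin(math.pi * a) ** 4 / (1 - mu1) - math.sin(2 * math.pi * a) ** 4 / (1 + 4 * mu1))


def a_star(mu1):
    """Root in (0, 1/2) of sin^4(pi a)/(1 - mu1) = sin^4(2 pi a)/(1 + 4 mu1)."""
    return math.acos(((1 + 4 * mu1) / (16 * (1 - mu1))) ** 0.25) / math.pi


def midpoint(f, n=100000):
    """Midpoint rule on [0, 1]; it never evaluates log(1 - t) at t = 1."""
    h = 1.0 / n
    return h * math.fsum(f((i + 0.5) * h) for i in range(n))


def exponential_constants(mu1):
    """The two constants of (37) and the factor sigma_f^2 / lambda^2."""
    c1 = midpoint(lambda t: math.sin(2 * math.pi * t) * math.log(1 - t)) ** 2
    c2 = 4 * midpoint(lambda t: math.sin(4 * math.pi * t) * math.log(1 - t)) ** 2
    return c1, c2, 1 + 4 * mu1 * (c1 / (1 - mu1) - c2 / (1 + 4 * mu1))


def sigma2_weighted(w, mu1):
    """Formula (39)."""
    return 1 - 2 * (1 - 4 * mu1**2) * (w - w * w)


mu1 = 0.05
a = a_star(mu1)
print(a, sigma2_indicator(a, mu1), a * (1 - a))
print(exponential_constants(mu1))  # compare with 0.1505165 and 0.245684 in (37)
print(sigma2_weighted(0.5, mu1), 0.5 + 2 * mu1**2)
```

By the symmetry of (36) under \(a\mapsto 1-a\), the value \(1-a\) works as well.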

4.2 Simulation study

We generate a Markov chain \((U_1,\ldots ,U_{1000})\) using the uniform distribution on (0, 1) and the copula (35) with \(\mu _1=0.05\), following a standard procedure. We draw \(U_1\) from Uniform(0, 1); then, for every \(i>1\), we draw a new observation W from Uniform(0, 1) and solve the equation \(C_{,1}(U_{i-1}, U_i)=W\) for \(U_{i}\). This step is implemented using the R function uniroot. After generating the sample, we performed a correlation test, which did not detect any correlation between variables along the Markov chain. Moreover, the scatter plot would further convince an investigator that the data form a random sample. All confidence intervals use \(\alpha =0.05\), that is, a \(95\%\) confidence level (Tables 1, 2, 3, 4, 5, 6).
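The generation procedure can be sketched in Python as follows. This is an illustrative translation, not the authors' R code. It assumes that copula (35) has density \(1+2\mu _1\sin 2\pi u\sin 2\pi v-8\mu _1\sin 4\pi u\sin 4\pi v\), integrates it in closed form to get \(C_{,1}(u,v)=\int _0^vc(u,t)dt\), and replaces uniroot by plain bisection. Bisection is safe here because, for admissible \(\mu _1\), \(C_{,1}(u,\cdot )\) is a continuous distribution function on [0, 1]. The function names and the seed are ours.

```python
import math
import random


def cond_cdf(u, v, mu1):
    """C_{,1}(u, v) = int_0^v c(u, t) dt for the assumed density
    c(u, v) = 1 + 2 mu1 sin(2 pi u) sin(2 pi v) - 8 mu1 sin(4 pi u) sin(4 pi v)."""
    return (v
            + mu1 / math.pi * math.sin(2 * math.pi * u) * (1 - math.cos(2 * math.pi * v))
            - 2 * mu1 / math.pi * math.sin(4 * math.pi * u) * (1 - math.cos(4 * math.pi * v)))


def solve(u, w, mu1, tol=1e-12):
    """Bisection for v in [0, 1] with C_{,1}(u, v) = w (stands in for R's uniroot)."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cond_cdf(u, mid, mu1) < w:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def simulate_chain(n, mu1, seed=1):
    """Stationary chain with uniform marginals via the conditional inverse method."""
    rng = random.Random(seed)
    chain = [rng.random()]                      # U_1 ~ Uniform(0, 1)
    for _ in range(n - 1):
        w = rng.random()                        # W ~ Uniform(0, 1)
        chain.append(solve(chain[-1], w, mu1))  # solve C_{,1}(U_{i-1}, U_i) = W
    return chain


chain = simulate_chain(1000, mu1=0.05, seed=2024)
mean = sum(chain) / len(chain)
lag1 = (sum((x - mean) * (y - mean) for x, y in zip(chain, chain[1:]))
        / sum((x - mean) ** 2 for x in chain))
print(round(mean, 3), round(lag1, 3))  # the lag-1 sample autocorrelation should be near 0
```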

-

1.

Case 1: We use \(a=0.1, 0.2, \ldots , 0.9\) to obtain, for each \(a_i=i/10\), the variables \(Y_i=\mathbb {I}(U\le a_i)\), \(i=1,\ldots ,9\), computed on our sample. We then estimate each parameter \(a_i\) from the resulting dependent Bernoulli sequence by \(\hat{a}_i=\bar{Y}_i\), whose asymptotic variance is given by formula (36), and construct a confidence interval. We run N such studies and record the proportion of intervals that contain the true value of the parameter, both under the independence assumption and under our model. The following comparative results report the coverage probabilities under each model for N = 100 and N = 1000.

These tables show that a researcher assuming independence for these data would be substantially off. Despite the small departure from independence (\(\mu _1=0.05\) and \(\mu _2=-0.2\)), there is a serious impact on the coverage probability of the confidence interval. If dependence is neglected, the interval is far from being a \(95\%\) confidence interval.

-

2.

Case 2: We create samples of \(Y=-\lambda \ln (1-U)\) with \(\lambda =0.5, 1, 5, 10, 20, 30\). For each value of \(\lambda \), we create N samples. Each sample produces a confidence interval, and we report the proportion of samples whose interval covers the true parameter \(\lambda \) as the coverage probability. For the given value of \(\mu _1,\) formula (37) gives \(\sigma ^2_f\approx 0.9907404\lambda ^2\) and \(\sigma _f\approx 0.9953594\lambda \).

For this example, the small departure from independence has little influence on the distribution of the estimator or on the confidence interval, so assuming independence would not have serious consequences. When the same sample of U's is used for all values of \(\lambda \), we obtain equal coverage probabilities, since Y and the resulting interval scale linearly with \(\lambda \).

-

3.

Case 3: Here the data have zero correlation, \(\rho _S=0\) and \(\tau =0\). We estimate the mean of the marginal distribution and build a confidence interval from samples of 1000 observations. This is repeated 100 times to obtain coverage probabilities, and the procedure is repeated for sample sizes 500 and 100.

These tables also show that the confidence intervals computed under independence capture the true value of the mean more than \(94\%\) of the time.

-

4.

Case 4: Estimation of \(\mu _1\) for a sample with the uniform marginal distribution. We construct 100 confidence intervals for each of the true values \(\mu _1=0.05, 0.1, 0.11\). The coverage probabilities are reported for sample sizes \(N=100, 500, 1000\), using \(w=0.25, 0.5, 0.75\).

This table shows that the best estimator would use values of w close to 1, giving more weight to the contribution of \(\varphi _1(x)\) to the estimator of \(\mu _1\). This is because, when \(w\le 0.6\), even for a sample of size 5000, the confidence intervals for \(\mu _1\) are too wide and cover the true value of \(\mu _1\) almost always.

5 Conclusion and comments

We have provided a characterization of copulas with square integrable symmetric densities. We have used this representation to construct several families of copulas. One of the constructed copula families is an extension of the FGM copula family. Other families include trigonometric functions and are all new in the literature. Mixing properties of the constructed copula families have been established. Theorems have been provided for \(\rho \)-mixing and \(\psi \)-mixing of Markov chains generated by these copulas and the uniform distribution. These results are equivalent to the corresponding mixing properties of Markov chains generated with any continuous marginal distribution.

Copulas based on \(\{1, \sin 2\pi kx, \cos 2\pi kx, k\in \mathbb {N} \}\) have been proposed. Their Spearman’s \(\rho \) and Kendall’s \(\tau \) indicate how to modify the copula while keeping \(\tau (C)=\rho _S(C)=0\), the values taken by the independence copula. We have investigated central limit theorems for the associated Markov chains. An example with \(|\mu _1|\le 0.11, \mu _2=-4\mu _1\), \(\mu _k=0, k\ge 3\) was used in simulations to illustrate departure from independence while preserving \(\tau (C)=\rho _S(C)=0\). This simulation study has shown that in some cases the dependence is detected neither graphically nor by a correlation test, and yet assuming independence produces poor confidence intervals. Various constructions of this form can be used to model the dependence structure of the data while keeping fixed the measures of association that the researcher does not want to modify. For this example, we have provided a central limit theorem for the estimator of \((\mu _1, \mu _2)\) and indicated how it can be used for testing and confidence intervals.

We have shown that copulas based on finite sums of terms can reach \(\rho _S(C)\) up to 0.49 and \(\tau (C)\) up to 0.32, values larger than those attainable in the popular FGM copula family. The extension of the FGM copula family can be used to modify Kendall’s \(\tau \) while keeping Spearman’s \(\rho \) at the level \(\lambda _1\), by introducing non-zero coefficients \(\lambda _k\), \(k\ge 2\). This follows from \(\rho _S(C)=\lambda _1\) for all copulas of the family.

We also note that the functions \(\varphi _k(x)\) can act as parameters of the constructed copula families and be subject to estimation. This would be the case when one needs to find the perturbation that best fits a given situation based on the available information. This question relies on mixing properties of the constructed copula families and is one of the topics for further research on estimation problems based on these copulas. A drawback of the constructed copula families is that, being absolutely continuous with bounded densities, they do not exhibit any tail dependence. Their tail-dependent extensions are the subject of ongoing work.

Data availability

This manuscript has no associated data.

References

Aas K, Czado C, Frigessi A, Bakken H (2009) Pair-copula constructions of multiple dependence. Insur Math Econ 44:182–198

Arakelian V, Karlis D (2014) Clustering dependencies via mixtures of copulas. Commun Stat-Simul Comput 43(7):1644–1661

Beare BK (2010) Copulas and temporal dependence. Econometrica 78(1):395–410

Belousov SL (1962) Tables of normalized associated Legendre polynomials. Mathematical Tables, vol 18. Pergamon Press

Bradley RC (2005) Basic properties of strong mixing conditions. A survey and some open questions. Probab Surv 2:107–144

Bradley RC (2007) Introduction to strong mixing conditions, vol 1–2. Kendrick Press, Utah

Chen X, Fan Y (2006) Estimation of copula-based semi-parametric time series models. J Econometrics 130:307–335

Chesneau C (2021) On new types of multivariate trigonometric copulas. Appl Math 1:3–17

Darsow WF, Nguyen B, Olsen ET (1992) Copulas and Markov processes. Ill J Math 36(4):600–642

Doukhan P, Fermanian J-D, Lang G (2009) An empirical central limit theorem with applications to copulas under weak dependence. Stat Inference Stoch Process 12(1):65–87

Durante F, Sempi C (2016) Principles of copula theory. CRC Press, Boca Raton

Durante F, Sanchez JF, Flores MU (2013) Bivariate copulas generated by perturbations. Fuzzy Sets Syst 228:137–144

Ebaid R, Elbadawy W, Ahmed E, Abdelghaly A (2022) A new extension of the FGM copula with an application in reliability. Commun Stat-Theory Methods 51(9):2953–2961

Farlie DJ (1960) The performance of some correlation coefficients for a general bivariate distribution. Biometrika 47(3–4):307–323

Ferreira JC, Menegatto VA (2009) Eigenvalues of integral operators defined by smooth positive definite kernels. Integral Equ Oper Theory 64(1):61–81

Gumbel EJ (1960) Bivariate exponential distributions. J Am Stat Assoc 55(292):698–707

Hurlimann W (2017) A comprehensive extension of the FGM copula. Stat Pap 58:373–392. https://doi.org/10.1007/s00362-015-0703-1

Johnson NL, Kotz S (1975) On some generalized Farlie-Gumbel-Morgenstern distributions. Commun Stat 4(5):415–427

Jones GL (2004) On the Markov chain central limit theorem. Probab Surv 1:299–320

Kipnis C, Varadhan SRS (1986) Central limit theorem for additive functionals of reversible Markov processes and applications to simple exclusions. Commun Math Phys 104(1):1–19

Klement EP, Kolesárová A, Mesiar R, Saminger-Platz S (2017) Copula constructions using ultramodularity. In: Úbeda Flores M, de Amo Artero E, Durante F, Fernández Sánchez J (eds) Copulas and dependence models with applications. Springer, Cham

Komornik J, Komornikova M, Kalicka J (2017) Dependence measures for perturbations of copulas. Fuzzy Sets Syst 324:100–116

Longla M (2014) On dependence structure of copula-based Markov chains. ESAIM 18:570–583

Longla M (2015) On mixtures of copulas and mixing coefficients. J Multivar Anal 139:259–265

Longla M, Peligrad M (2012) Some aspects of modeling dependence in copula-based Markov chains. J Multivar Anal 111:234–240

Longla M, Djongreba Ndikwa F, Muia Nthiani M, Takam Soh P (2022a) Perturbations of copulas and mixing properties. J Korean Stat Soc 51(1):149–171

Longla M, Muia Nthiani M, Djongreba Ndikwa F (2022b) Dependence and mixing for perturbations of copula-based Markov chains. Stat Probab Lett 180:109239

Longla M, Mous-Abou H, Ngongo IS (2022c) On some mixing properties of copula-based Markov chains. J Stat Theory Appl 21:131–154

Mercer J (1909) Functions of positive and negative type and their connection with the theory of integral equations. Philos Trans R Soc A 209(441–458):415–446

Morgenstern D (1956) Einfache Beispiele zweidimensionaler Verteilungen. Mitteilungsblatt für Mathematische Statistik 8:234–235

Morillas PM (2005) A method to obtain new copulas from a given one. Metrika 61:169–184

Nelsen RB (2006) An introduction to copulas, 2nd edn. Springer Series in Statistics. Springer, New York

Peligrad M, Utev S (1997) Central limit theorem for linear processes. Ann Probab 25(1):443–456

Rodrigues O (1816) Mémoire sur l’attraction des sphéroïdes. Correspondence sur l’Ecole Polytechnique 3:361–385

Sklar A (1959) Fonctions de répartition à \(n\) dimensions et leurs marges. Publ Inst Stat Univ Paris 8:229–231

Szegö G (1975) Orthogonal polynomials. Amer. Math. Soc, Rhode Island


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix of proofs


Proof of Proposition 2.1

-

1.

Note that for any copula, we have \(C(x,1)=x\) and \(C(x,0)=0\). Therefore, for any absolutely continuous copula, \(\varphi (x)=1\) satisfies

$$\begin{aligned} K \varphi (x) = \int _{0}^1c(x,y)dy=C_{,1}(x,1)-C_{,1}(x,0)=1=\varphi (x). \end{aligned}$$

It follows that the function \(\varphi (x)=1\) is an eigenfunction, associated with the eigenvalue 1, of the kernel operator defined by any absolutely continuous copula.

-

2.

The proof of the second point relies on formula (6) and the first point of Proposition 2.1.

-

3.

The third point follows from the fact that when the eigenvalue 1 has multiplicity greater than 1, its eigenspace has dimension greater than 1, and an orthonormal basis of this subspace not containing \(\varphi (x)=1\) can be constructed.

\(\square \)

Proof of Theorem 2.3

The statement of Theorem 2.3 implicitly claims that the sum converges pointwise. To prove this, we use the Weierstrass M-test: for a sequence of functions \(f_n(x)\) defined on a common domain E, if \(|f_n(x)|\le M_n\) for all \(x\in E\) and all n, and \(\sum M_n<\infty \), then \(\sum f_n(x)\) converges uniformly and absolutely on E. For the sum (7), Condition (10) is equivalent to

Note that \( |\lambda _k \varphi _k(x)\varphi _k(y)|\le |\lambda _k\alpha _k|\) for all x, y and k. Moreover, \(\sum _{k=1}^{\infty } |\lambda _k \alpha _k| \) converges as a consequence of Condition (10). Thus, by the Weierstrass M-test, the series that defines the density of the copula converges absolutely and uniformly, and so does the series in (7). The rest of the proof relies on the fact that the functions \(\varphi _k\) form an orthonormal system. Orthogonality to the constant function 1 gives \(\int _0^1\varphi _k(s)ds=0\), which implies \(C(1,x)=C(x,1)=x\); moreover, \(C(x,0)=C(0,x)=0\) by definition. \(\square \)

Proof of Theorem 3.1

The first condition of this theorem implies \(\psi '\)-mixing as a consequence of Longla (2015), who showed that if the density of the absolutely continuous part of \(C^{s_1}(u,v)\) is bounded away from 0 on a set of Lebesgue measure 1, then \(\psi '_{s_1}>0\). Moreover, \(c^{s_2}(u,v)<M\) implies \(\psi ^*_{s_2}\le M<\infty \). Therefore, by Bradley (2005), the first condition of Theorem 3.1 implies \(\psi \)-mixing. The second condition implies \(\psi \)-mixing as a result of Longla et al. (2022c). \(\square \)

Proof of Example 1

If this copula generated \(\psi \)-mixing stationary Markov chains with continuous marginal distributions, then it would generate \(\psi '\)-mixing and therefore \(\rho \)-mixing Markov chains, which contradicts Longla (2014). Therefore, it does not generate \(\psi \)-mixing. Its density satisfies \(c(u,v)=0\) for all \((u,v)\in (0,1)^2\) with \(v\ne u\) and \(v\ne 1-u\); although the density does not exist on the diagonals, a set of Lebesgue measure 0, it is bounded on a set of Lebesgue measure 1. The second example is based on the fact that the density of the given copula is equal to 2 on a set of Lebesgue measure 1/2 and equal to 0 on its complement. \(\square \)

Proof of Lemma 2

The upper bounds on \(\rho _S(C)\) and \(\tau (C)\) follow from the assumptions on the coefficients of the series. These conditions imply that \(|\mu _k|\le 1/2\), and the largest absolute value is attained when \(|\mu _1|=1/2\), \(\mu _k=0\) for \(k>1\) and \(\lambda _k=0\) for \(k\ge 1\). Moreover,

The last inequality uses \(|\lambda _1|+|\mu _1|\le 1/2\), with equality when \(|\mu _1|=1/2\) and \(\lambda _1=0\). These inequalities justify the bounds on \(\rho _S(C)\) and \(\tau (C)\). \(\square \)
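The following hedged illustration shows where a bound on \(\rho _S\) comes from. It considers only the sine part of the family, \(c(u,v)=1+\sum _k\mu _k\varphi _k(u)\varphi _k(v)\) with \(\varphi _k(x)=\sqrt{2}\sin (2\pi kx)\). Our assumption, consistent with \(\mathbb {E}\varphi _i(U_1)\varphi _i(U_2)=\mu _i\) in the proof of Theorem 4.1, is that these are the terms carrying the \(\mu _k\).

Since \(\int _0^1u\varphi _k(u)\,du=-\sqrt{2}/(2\pi k)\), one gets
\[\rho _S=12\,\mathbb {E}(UV)-3=\frac{6}{\pi ^2}\sum _k\frac{\mu _k}{k^2}.\]
Under this assumption, the extreme case \(|\mu _1|=1/2\) with all other coefficients zero gives \(|\rho _S|=3/\pi ^2\approx 0.304\).

The sketch compares the closed form with a midpoint-rule evaluation of \(12\iint uv\,c(u,v)\,du\,dv-3\). The coefficient values are hypothetical.

```python
import numpy as np

def spearman_closed_form(mus):
    """rho_S = (6 / pi^2) * sum_k mu_k / k^2 for the assumed sine family."""
    k = np.arange(1, len(mus) + 1)
    return 6.0 / np.pi**2 * np.sum(np.asarray(mus) / k**2)

def spearman_numeric(mus, n=1000):
    """rho_S = 12 * E[UV] - 3, with E[UV] computed by the midpoint rule."""
    grid = (np.arange(n) + 0.5) / n
    U, V = np.meshgrid(grid, grid, indexing="ij")
    c = np.ones_like(U)
    for k, mu in enumerate(mus, start=1):
        # mu_k * phi_k(u) * phi_k(v) with phi_k = sqrt(2) sin(2 pi k x)
        c += mu * 2.0 * np.sin(2 * np.pi * k * U) * np.sin(2 * np.pi * k * V)
    return 12.0 * np.mean(U * V * c) - 3.0

mus = [0.3, -0.1, 0.05]                            # hypothetical coefficients
print(spearman_closed_form(mus), spearman_numeric(mus))
print(spearman_closed_form([0.5]), 3 / np.pi**2)   # extreme case |mu_1| = 1/2
```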

Proof of Theorem 3.2

The proof of Theorem 3.2 relies on three facts: \(C^n(u,v)\) is the n-fold product of C(u, v); the functions \(\varphi _k(x)\) form an orthonormal basis of \(L^2(0,1)\); and the \(\lambda ^n_k\) are the eigenvalues of the Hilbert–Schmidt operator associated with \(c^n(u,v)\). Note that, although the density is square integrable, the supremum in Theorem 3.2 can be equal to 1. The rest is an application of Longla and Peligrad (2012). \(\square \)
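The eigenvalue statement can be checked numerically with the sketch below. It assumes \(c(u,v)=1+\sum _k\lambda _k\varphi _k(u)\varphi _k(v)\) with \(\varphi _k(x)=\sqrt{2}\sin (2\pi kx)\) and hypothetical coefficients. It computes the density of the fold product through \(c^{m+1}(u,v)=\int _0^1c^m(u,t)c(t,v)\,dt\), using the midpoint rule (a matrix product). The result is compared with \(1+\sum _k\lambda _k^n\varphi _k(u)\varphi _k(v)\). For this trigonometric basis the midpoint rule integrates the products \(\varphi _j\varphi _k\) exactly, so the two agree to rounding error.

```python
import numpy as np

def phi(k, x):
    """Assumed basis element sqrt(2) * sin(2*pi*k*x)."""
    return np.sqrt(2.0) * np.sin(2.0 * np.pi * k * x)

def series_density(u, v, coeffs):
    """1 + sum_k coeffs[k-1] * phi_k(u) * phi_k(v), evaluated on a grid."""
    c = np.ones(np.broadcast(u, v).shape)
    for k, a in enumerate(coeffs, start=1):
        c += a * phi(k, u) * phi(k, v)
    return c

lambdas = np.array([0.3, -0.1, 0.05])    # hypothetical coefficients
n = 256
grid = (np.arange(n) + 0.5) / n
U, V = np.meshgrid(grid, grid, indexing="ij")
c = series_density(U, V, lambdas)

# Fold product on the grid: c^{m+1}(u, v) = integral of c^m(u, t) c(t, v) dt.
power = 3
c_power = c.copy()
for _ in range(power - 1):
    c_power = c_power @ c / n

expected = series_density(U, V, lambdas**power)
print(np.max(np.abs(c_power - expected)))  # rounding-level difference
```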

Proof of Theorem 3.3

The proof of Theorem 3.3 is an application of Longla et al. (2022c), where it was shown that if the density satisfies \(c^n(u,v)< 2\) on \([0,1]^2\) for some n, then the copula C(u, v) generates \(\psi ^*\)-mixing Markov chains. Let \(\underline{\lambda }=\sup _{k}|\lambda _k|<1\). Since the density is square integrable, we have

Therefore, \(c^n(u,v)\) is bounded away from 0 on a set of Lebesgue measure 1, which implies \(\psi '\)-mixing. Moreover, \(|\lambda _{k}|\le (2k+1)^{-1}\) when k is odd. Thus,

The last inequality uses the fact that the sequence \(\lambda _k\) either converges to 0 or has finitely many nonzero terms. The second sum is also bounded. It is written separately to emphasize that, for even values of k, \(|\lambda _k|\) may exceed \((2k+1)^{-1}\). For odd values of k this is not possible, because \(|\lambda _k|\le (2k+1)^{-1}\). Let \(M=\sum _{k=1}^\infty \lambda _k^2\); then \(M<\infty \) because c(x, y) is square integrable. So, we obtain

Since M does not depend on n and \(\underline{\lambda }<1\), we have \(M\underline{\lambda }^{n-3}\rightarrow 0\) as \(n\rightarrow \infty \). Therefore, \(c^n(x,y)<2\) for sufficiently large values of n, and, by Longla et al. (2022c), the Markov chains are \(\psi \)-mixing.

Note that some values \(\lambda _k>(2k+1)^{-1}\) for even k are not covered by Theorem 3.3. Such values are limited only by the minimum of the corresponding even Legendre polynomials. Using the same arguments, we extend the theorem to the case where \(\sup _k|\lambda _k|<1\). By square integrability, \(\sum \lambda _k^2<\infty \). If, in addition, \(|\lambda _k|\) is eventually nonincreasing, then \(\lambda _k^2(2k+1)\rightarrow 0\) as \(k\rightarrow \infty \), since a convergent series with nonincreasing terms \(a_k\) satisfies \(ka_k\rightarrow 0\). Therefore, there exists an integer K such that \(\lambda ^2_k\le \varepsilon /(2k+1)\) for \(k>K\). For \(k\le K\), it is easy to find N such that \(\sum |\lambda _k|^n(2k+1)<\varepsilon _1\) for all \(n\ge N\), where the sum is taken over even integers less than or equal to K. This leads to

The parameters \(\varepsilon , \varepsilon _1, N\) and M in the last inequality do not depend on n. Therefore, since the right-hand side converges to 1, it is strictly less than 2 for all sufficiently large n, whatever the values of these parameters. So, \(c^{n}(u,v)<2\) for some integer n. \(\square \)
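A finite example illustrates the mechanism of this proof. As the factor \(2k+1\) suggests, the sketch assumes that \(\varphi _k(x)=\sqrt{2k+1}\,P_k(2x-1)\) are the normalized shifted Legendre polynomials. Then \(\sup _x\varphi _k^2(x)=2k+1\) and
\[c^n(u,v)\le 1+\sum _k|\lambda _k|^n(2k+1).\]
Take the hypothetical choice \(\lambda _1=0.2\), \(\lambda _2=0.3\). The density is then nonnegative but reaches 3.1 at \((0,0)\) and \((1,1)\). The bound nevertheless drops below 2 already at \(n=2\).

```python
import numpy as np
from numpy.polynomial import legendre

def phi(k, x):
    """Normalized shifted Legendre polynomial sqrt(2k+1) * P_k(2x - 1)."""
    coef = np.zeros(k + 1)
    coef[k] = 1.0
    return np.sqrt(2 * k + 1) * legendre.legval(2.0 * x - 1.0, coef)

def density_power(u, v, lambdas, n):
    """c^n(u, v) = 1 + sum_k lambda_k^n phi_k(u) phi_k(v)."""
    c = np.ones(np.broadcast(u, v).shape)
    for k, lam in enumerate(lambdas, start=1):
        c += lam**n * phi(k, u) * phi(k, v)
    return c

def sup_bound(lambdas, n):
    """Upper bound 1 + sum_k |lambda_k|^n (2k + 1) on c^n."""
    k = np.arange(1, len(lambdas) + 1)
    return 1.0 + np.sum(np.abs(lambdas) ** n * (2 * k + 1))

lambdas = np.array([0.2, 0.3])            # hypothetical coefficients
first_n = next(n for n in range(1, 100) if sup_bound(lambdas, n) < 2.0)

grid = np.linspace(0.0, 1.0, 201)
U, V = np.meshgrid(grid, grid, indexing="ij")
print(density_power(U, V, lambdas, 1).max())  # approximately 3.1, at (0, 0) and (1, 1)
print(first_n, sup_bound(lambdas, first_n))    # 2, approximately 1.57
print(density_power(U, V, lambdas, 1).min() > 0)
```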

Proofs of Theorem 4.1 and Corollary 3

Assume \((U_1,\ldots , U_n)\) is a Markov chain with uniform marginals generated by copula (32).

Proof of Theorem 4.1

Let \(f_i(u,v)=\varphi _i(u)\varphi _i(v)\) for \(i=1,2\), and let \(f=af_1+bf_2\). Clearly, \(\mathbb {E}f(U_i,U_{i+1})=a\mu _1+b\mu _2\) and \(var(f(U_1,U_2))\) is finite. Moreover, \(Y_{i-1}=(U_{i-1},U_{i})\), \(i=2,\ldots ,n\), is a Markov chain. It is ergodic because the original Markov chain is \(\psi \)-mixing. Therefore, by Kipnis and Varadhan (1986), the CLT holds with

Note that, for a Markov chain generated by (32), the joint density of \((U_1,U_2, U_{1+i}, U_{2+i})\) is obtained from the Markov property as follows. For \(i\ge 2\), using formula (31), \((U_2, U_{1+i})\) has density

Therefore, the joint density of \((U_1,U_2, U_{1+i}, U_{2+i})\) is

Formula (40) implies

The corresponding formulas for \(f_2\) follow by symmetry and are omitted. Using \(\varphi _1(x)=\sqrt{2}\sin (2\pi x)\) and \(\varphi _2(x)=\sqrt{2}\sin (4\pi x)\), we obtain

Substituting and simplifying leads to \(\sigma ^2_f=a^2+b^2-2ab\mu _1\mu _2.\) It follows that, for \(\bar{f_n}=\frac{1}{n-1}\sum _{i=1}^{n-1}(a\varphi _1(U_i) \varphi _1(U_{i+1})+b\varphi _2(U_i)\varphi _2(U_{i+1}))\),

Finally, the Cramér–Wold device completes the proof of Theorem 4.1. \(\square \)
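A minimal simulation sketch of Theorem 4.1 follows. It rests on three assumptions:

- copula (32) has density \(c(u,v)=1+\mu _1\varphi _1(u)\varphi _1(v)+\mu _2\varphi _2(u)\varphi _2(v)\), with \(\varphi _1,\varphi _2\) as above;
- \(2(|\mu _1|+|\mu _2|)\le 1\), which guarantees a nonnegative density;
- the parameter values and function names are hypothetical.

Each transition is drawn from \(c(u,\cdot )\) by rejection sampling against a uniform proposal. Each \(\mu _i\) is estimated by \(\frac{1}{n-1}\sum _t\varphi _i(U_t)\varphi _i(U_{t+1})\), which is \(\bar{f_n}\) with \((a,b)=(1,0)\) or \((0,1)\). For these choices the formula above gives \(\sigma _f^2=1\).

```python
import math
import random

def phi(i, x):
    """phi_i(x) = sqrt(2) sin(2 pi i x), i = 1, 2."""
    return math.sqrt(2.0) * math.sin(2.0 * math.pi * i * x)

def density(u, v, mu1, mu2):
    """Assumed two-term copula density."""
    return 1.0 + mu1 * phi(1, u) * phi(1, v) + mu2 * phi(2, u) * phi(2, v)

def simulate_chain(n, mu1, mu2, seed=0):
    """Stationary chain with uniform marginals: U_1 ~ U(0,1), U_{t+1} | U_t ~ c(U_t, .)."""
    rng = random.Random(seed)
    bound = 1.0 + 2.0 * (abs(mu1) + abs(mu2))   # upper bound on the density
    chain = [rng.random()]
    while len(chain) < n:
        u, v = chain[-1], rng.random()          # uniform proposal for U_{t+1}
        if rng.random() * bound <= density(u, v, mu1, mu2):
            chain.append(v)                     # accept with probability c(u, v) / bound
    return chain

def estimate(chain, i):
    """Average of phi_i(U_t) phi_i(U_{t+1}) over consecutive pairs."""
    pairs = zip(chain[:-1], chain[1:])
    return sum(phi(i, u) * phi(i, v) for u, v in pairs) / (len(chain) - 1)

mu1, mu2 = 0.2, -0.2          # hypothetical parameters
n = 20_000
chain = simulate_chain(n, mu1, mu2, seed=1)
mu1_hat, mu2_hat = estimate(chain, 1), estimate(chain, 2)
# With asymptotic variance 1, each estimate should lie within a few
# multiples of 1/sqrt(n) of the true value.
print(mu1_hat, mu2_hat, 1 / math.sqrt(n))
```

Rejection sampling is used only for brevity. Numerically inverting the conditional distribution function would serve equally well.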

Proof of Corollary 3

By the results above, this Markov chain is \(\psi \)-mixing. Let f be a function with \(\int _0^1f^2(x)dx<\infty \), and set \(\mu =\int _0^1f(x)dx\). Define \(S_n(f)=\sum _{i=1}^n f(U_i)\). By Kipnis and Varadhan (1986), the central limit theorem holds in the form

Using the formula for the joint distribution function of \((U_0, U_n)\), stationarity, and \(\varphi _1(x)=\sqrt{2}\sin 2\pi x\), \(\varphi _2(x)=\sqrt{2}\sin 4\pi x\), we obtain

Moreover, since the Markov chain is reversible, we have

where \(A_i=\int _0^1\varphi _i(u)f(u)du\) and \(\sigma ^2\) is the variance of \(f(U_i)\). In this case, \(\mu _2=-4\mu _1\). Simple computations lead to the formula for \(\sigma ^2_f\).
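The quantities \(A_i\) for the indicator function \(f(x)=\mathbb {I}(x\le a)\) and the identity \(f(x)=x\) can be checked numerically. The sketch computes \(A_i\) by the midpoint rule and compares \(A_i^2\) with \((1-\cos 2\pi ia)^2/(2\pi ^2i^2)\) and \(1/(2\pi ^2i^2)\).

It also evaluates a candidate for \(\sigma _f^2\), namely \(\sigma ^2+2\sum _{i=1}^2A_i^2\mu _i/(1-\mu _i)\). This expression is our own reconstruction and should not be read as the paper's formulas (36) to (38). It assumes that \((U_0,U_n)\) has density \(1+\sum _{i=1}^2\mu _i^n\varphi _i(u)\varphi _i(v)\). Under that assumption \(\operatorname {cov}(f(U_0),f(U_n))=\sum _i\mu _i^nA_i^2\), and the geometric series can then be summed. The values of a and \(\mu _1\) are hypothetical.

```python
import numpy as np

n = 100_000
x = (np.arange(n) + 0.5) / n                  # midpoint grid on [0, 1]

def phi(i, t):
    return np.sqrt(2.0) * np.sin(2.0 * np.pi * i * t)

def coefficient(i, f_values):
    """A_i = integral of phi_i(u) f(u) du by the midpoint rule."""
    return np.mean(phi(i, x) * f_values)

def long_run_variance(sigma2, A, mus):
    """Reconstructed sigma_f^2 = sigma^2 + 2 sum_i A_i^2 mu_i / (1 - mu_i) (assumption)."""
    return sigma2 + 2.0 * sum(a**2 * m / (1.0 - m) for a, m in zip(A, mus))

a = 0.3                                       # f(x) = I(x <= a)
ind = (x <= a).astype(float)
A_ind = [coefficient(i, ind) for i in (1, 2)]
A_ind_closed = [(1 - np.cos(2 * np.pi * i * a))**2 / (2 * np.pi**2 * i**2) for i in (1, 2)]

A_id = [coefficient(i, x) for i in (1, 2)]    # f(x) = x
A_id_closed = [1 / (2 * np.pi**2 * i**2) for i in (1, 2)]

mu1 = 0.1                                     # hypothetical; here mu_2 = -4 mu_1
var_uniform = long_run_variance(1 / 12, A_id, (mu1, -4 * mu1))
print(np.square(A_ind), A_ind_closed)
print(np.square(A_id), A_id_closed, var_uniform)
```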

- Case 1: Dependent Bernoulli observations. Select \(f(x)=\mathbb {I}(x\le a)\) for \(a\in (0,1)\). Via simple computations, we obtain \(\mu =\mathbb {E}f(U_1)=a\), \(\sigma ^2=a(1-a)\) and \(A_i^2=\frac{(1-\cos 2 \pi i a)^2}{2\pi ^2i^2}=\frac{2\sin ^4 \pi i a}{\pi ^2i^2}\). Thus, Eq. (36) holds.

- Case 2: Trigonometrically dependent exponential sequences. Consider \(f(x)=-\lambda \ln (1-x)\). This defines a sequence of variables \(X_1,\ldots , X_n\) with exponential marginal distributions (\(X_i=-\lambda \ln (1-U_i)\)), for which \(\mu =\mathbb {E}(X_i)=\lambda \) and \(\sigma ^2=\lambda ^2\). Simple computations give formula (37).

- Case 3: Dependent uniform data. Consider now \(f(x)=x\), which yields the generated Markov chain itself. Here \(\mathbb {E}(U_i)=1/2\), \(\sigma ^2=1/12\) and \(A_i^2=\frac{1}{2i^2\pi ^2}\). So, formula (38) holds. For \(|\mu _1|\le 0.11\), the variance (38) is strictly positive.

- Estimating \(\mu _1\). Consider estimating the parameter \(\mu _1\) based on the generated Markov chain. Take any \(w\in \mathbb {R}\) and define

$$\begin{aligned} f(x,y)=2w \sin (2\pi x)\sin (2\pi y)-\frac{1}{2}(1-w)\sin (4\pi x)\sin (4\pi y). \end{aligned}$$

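Since \(2\sin (2\pi x)\sin (2\pi y)=\varphi _1(x)\varphi _1(y)\), \(\frac{1}{2}\sin (4\pi x)\sin (4\pi y)=\frac{1}{4}\varphi _2(x)\varphi _2(y)\) and \(\mu _2=-4\mu _1\), we have \(\mathbb {E}f(U_1,U_2)=w\mu _1-\frac{1}{4}(1-w)\mu _2=\mu _1\) for every \(w\in \mathbb {R}\). \(\square \)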
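The choice of w matters for the precision of this estimator. Write f as \(a\varphi _1(x)\varphi _1(y)+b\varphi _2(x)\varphi _2(y)\) with \(a=w\) and \(b=-(1-w)/4\). Applying Theorem 4.1's formula \(\sigma _f^2=a^2+b^2-2ab\mu _1\mu _2\) with \(\mu _2=-4\mu _1\) then gives
\[\sigma ^2(w)=w^2+\frac{(1-w)^2}{16}-2w(1-w)\mu _1^2.\]
This is a quadratic in w, minimized at
\[w^*=\frac{1+16\mu _1^2}{17+32\mu _1^2}.\]
The minimizer is our own derivation, not a result stated in the paper. The sketch checks it against a grid search for a hypothetical value of \(\mu _1\). At \(\mu _1=0\) it reduces to the inverse-variance weight \(w^*=1/17\).

```python
import numpy as np

def asymptotic_variance(w, mu1):
    """sigma_f^2 = a^2 + b^2 - 2ab mu1 mu2 with a = w, b = -(1 - w)/4, mu2 = -4 mu1."""
    a, b, mu2 = w, -(1.0 - w) / 4.0, -4.0 * mu1
    return a**2 + b**2 - 2.0 * a * b * mu1 * mu2

def optimal_weight(mu1):
    """Closed-form minimizer of the quadratic asymptotic_variance(., mu1)."""
    return (1.0 + 16.0 * mu1**2) / (17.0 + 32.0 * mu1**2)

mu1 = 0.08                                   # hypothetical parameter value
w_grid = np.linspace(-1.0, 2.0, 300_001)     # grid search over w
w_best = w_grid[np.argmin(asymptotic_variance(w_grid, mu1))]
print(w_best, optimal_weight(mu1), asymptotic_variance(optimal_weight(mu1), mu1))
```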

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Longla, M. New copula families and mixing properties. Stat Papers (2024). https://doi.org/10.1007/s00362-024-01559-9


DOI: https://doi.org/10.1007/s00362-024-01559-9

Keywords

- Perturbations of copulas

- Characterization of symmetric copulas

- Square integrable copula density

- Reversible Markov chains

- Dependence modeling with copulas

- Mixing for copula-based Markov chains

- New copula families