Abstract

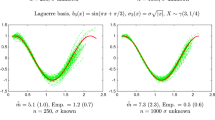

In this work, we develop two stable estimators for solving linear functional regression (LFR) problems. It is well known that such a problem is an ill-posed stochastic inverse problem, so stability deserves particular attention in the design of any estimator. Our proposed estimators combine a stable least-squares technique with a random projection of the slope function \(\beta _0(\cdot )\in L^2(J),\) where \(J\) is a compact interval. These estimators achieve a fairly good convergence rate at a reasonable computational cost, since the random projections involved are generally performed over a fairly low-dimensional subspace of \(L^2(J).\) More precisely, the first estimator is given as the least-squares solution of a regularized minimization problem over a finite-dimensional subspace of \(L^2(J).\) For this estimator, we give an upper bound for the empirical risk error as well as its convergence rate. The second stable LFR estimator combines the least-squares technique with a dyadic decomposition of the i.i.d. samples of the stochastic process associated with the LFR model. For this second estimator, we provide an \(L^2\)-risk error. Finally, we provide numerical simulations on synthetic as well as real data that illustrate the results of this work. These results indicate that the proposed estimators are competitive with some existing, popular LFR estimators.

References

Amini AA (2021) Spectrally-truncated kernel ridge regression and its free lunch. Electron J Stat 15:3743–3761

Ben Saber A, Karoui A (2023) A distribution free truncated kernel ridge regression estimator and related spectral analyses. Preprint at arXiv:2301.07172

Bouka S, Dabo-Niang S, Nkiet GM (2023) On estimation and prediction in spatial functional linear regression model. Lith Math J 63:13–30

Cai T, Hall P (2006) Prediction in functional linear regression. Ann Stat 34:2159–2179

Cai T, Yuan M (2012) Minimax and adaptive prediction for functional linear regression. J Am Stat Assoc 107:1201–1216

Cardot H, Ferraty F, Sarda P (2003) Spline estimators for the functional linear model. Stat Sin 13:571–591

Casella G (1985) Condition numbers and minimax ridge regression estimators. J Am Stat Assoc 80:753–758

Chen D, Hall P, Müller H-G (2011) Single and multiple index functional regression models with nonparametric link. Ann Stat 39:1720–1747

Chen C, Guo S, Qiao X (2022) Functional linear regression: dependence and error contamination. J Bus Econ Stat 40(1):444–457

Cohen A, Davenport MA, Leviatan D (2013) On the stability and accuracy of least square approximations. Found Comput Math 13(5):819–834

Crambes C, Kneip A, Sarda P (2009) Smoothing splines estimators for functional linear regression. Ann Stat 37:35–72

Du P, Wang X (2014) Penalized likelihood regression. Stat Sin 24(2):1017–1041

Escabias M, Aguilera AM, Valderrama MJ (2005) Modeling environmental data by functional principal component logistic regression. Environmetrics 16:95–107

Ferraty F (2014) Regression on functional data: methodological approach with application to near-infrared spectrometry. J Soc Fr Stat 155:100–120

Ferraty F, Vieu P (2006) Nonparametric functional data analysis: theory and practice. Springer, New York

James GM, Wang J, Zhu J (2009) Functional linear regression that’s interpretable. Ann Stat 37:2083–2108

Hall P, Horowitz JL (2007) Methodology and convergence rates for functional linear regression. Ann Stat 35(1):70–91

Hoerl AE, Kennard RW (1970) Ridge regression: biased estimation of nonorthogonal problems. Technometrics 12:55–67

Horn RA, Johnson CR (2013) Matrix analysis, 2nd edn. Cambridge University Press, Cambridge

Maronna RA, Yohai VJ (2011) Robust functional linear regression based on splines. Comput Stat Data Anal 65:46–55

Masselot P, Dabo-Niang S, Chebana F, Ouarda T (2016) Streamflow forecasting using functional regression. J Hydrol 538:754–766

Mishra P, Verkleij T, Klont R (2021) Improved prediction of minced pork meat chemical properties with near-infrared spectroscopy by a fusion of scatter-correction techniques. Infrared Phys Technol 113:103643

Morris JS (2015) Functional regression. Ann Rev Stat Appl 2(1):321–359

Olver FW, Lozier DW, Boisvert RF, Clark CW (2010) NIST handbook of mathematical functions, 1st edn. Cambridge University Press, New York

Panaretos VM, Tavakoli S (2013) Cramér-Karhunen-Loève representation and harmonic principal component analysis of functional time series. Stoch Process Appl 123:2779–2807

Ramsay JO, Ramsey JB (2002) Functional data analysis of the dynamics of the monthly index of nondurable goods production. J Econom 107(1–2):327–344

Ramsay JO, Silverman BW (2005) Functional data analysis. Springer, New York

Ratcliffe SJ, Leader LR, Heller GZ (2002) Functional data analysis with application to periodically stimulated foetal heart rate data. I: functional regression. Stat Med 21:1103–1114

Shenk JS, Westerhaus MO (1991) Population definition, sample selection, and calibration procedures for near infrared reflectance spectroscopy. Crop Sci 31:469–474

Shin H, Hsing T (2012) Linear prediction in functional data analysis. Stoch Process Appl 122:3680–3700

Shin H, Lee S (2016) An RKHS approach to robust functional linear regression. Stat Sin 26:255–272

Tropp JA (2019) Matrix concentration and computational linear algebra. Caltech CMS Lecture Notes 2019-01

Vinod HD (1978) A survey of ridge regression and related techniques for improvements over ordinary least squares. Rev Econ Stat 60:121–131

Wang JL, Chiou JM, Müller HG (2016) Functional data analysis. Ann Rev Stat Appl 3(1):257–295

Wang D, Zhao Z, Yu Y, Willett R (2022) Functional linear regression with mixed predictors. J Mach Learn Res 23:1–94

Wesley IJ, Uthayakumaran S, Anderssen RS, Cornish GB, Bekes F, Osborne BG, Skerritt JH (1999) A curve-fitting approach to the near infrared reflectance measurement of wheat flour proteins which influence dough quality. J Near Infrared Spectrosc 7:229–240

Wu Y, Fan JQ, Müller HG (2010) Varying-coefficient functional linear regression. Bernoulli 16(3):730–758

Yuan M, Cai TT (2010) A reproducing Kernel Hilbert space approach to functional linear regression. Ann Stat 38(6):3412–3444

Acknowledgements

The authors would like to thank the anonymous referees for their valuable suggestions and comments, which have greatly improved the first version of this work.

Ethics declarations

Conflict of interest

No conflict of interest was reported by the authors.

Appendix

Proof of Theorem 1: From Eqs. (15) and (16), it is easy to see that

where \(G_{N,\lambda }=F_{n,N}^T F_{n,N}+\lambda I_N.\) Consequently, we have

Let \(\pmb \varepsilon =\big (\varepsilon _1,\cdots ,\varepsilon _n\big )^T\) and let \(\beta _{0,N}\) be the projection of \(\beta _0(\cdot )\) over \(S_N=\text{ Span }\{\varphi _j, \, 1\le j\le N\}\), so that

Then, by writing \(<X_i(\cdot ),\beta _0(\cdot )>=<X_i(\cdot ),\beta _{0,N}(\cdot )>+<X_i(\cdot ),\beta _0(\cdot )-\beta _{0,N}(\cdot )>\), one gets

where \(\pmb \alpha =(\alpha _1,\cdots ,\alpha _N)^T\) and \(\pmb {\Delta _N}=\Big [<X_i(\cdot ),\beta _0(\cdot )-\beta _{0,N}(\cdot )>\Big ]_{1\le i\le n}^T.\) Next, the singular value decomposition of \(F_{n,N}\) is given by \(F_{n,N}=U\Sigma _{n,N}V^T.\) Here, \(U\) and \(V\) are orthogonal matrices and \(\Sigma _{n,N}\) is an \(n\times N\) rectangular diagonal matrix. Consequently, we have

Let \(D_N=\Sigma _{n,N}^T\Sigma _{n,N}.\) Then,

Hence, we have

with \(\big \Vert \pmb \alpha \big \Vert _n\le 1.\) Let \({\displaystyle \phi _{n}^\lambda =\Sigma _{n,N}(D_N+\lambda I_N)^{-1}\Sigma _{n,N}^T-I_n,}\) and recall that for an \(n\times N\) matrix A, \(\Vert A\Vert _2=\sigma _1(A)\) is the largest singular value of A. Then, we have

Note that \({\displaystyle \Sigma _{n,N}=\big [s_{i,j}\big ]_{\underset{1\le j\le N}{1\le i\le n}}, \quad s_{i,j}=\mu _j\delta _{i,j}}\) with \(\mu _j=\sqrt{\lambda _{j}(F_{n,N}^T F_{n,N})}\) are the singular values of \(F_{n,N}.\) Consequently, we have

Since \({\displaystyle |\sigma _1\big (\phi _{n}^\lambda \Sigma _{n,N}\big )|=\underset{1\le j\le N}{\max }\big (\frac{\lambda }{{\mu }_j^2+\lambda }\big )\mu _j}\) and since \({\displaystyle \underset{x\ge 0}{\sup }\frac{\lambda x}{x^2+\lambda }=\frac{\sqrt{\lambda }}{2},}\) then \({\displaystyle \underset{1\le j\le N}{\max }\Big (\frac{\lambda }{{\mu }_j^2+\lambda }\Big )\mu _j\le \frac{\sqrt{\lambda }}{2}.}\) That is, \(\big \Vert \phi _{n}^\lambda \Sigma _{n,N}\big \Vert _2\le \frac{\sqrt{\lambda }}{2}.\)
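The value of the supremum used in this step can be checked by elementary calculus. The short derivation below is added for convenience; the auxiliary function \(g\) is notation introduced here and does not appear in the original proof.

\[
g(x)=\frac{\lambda x}{x^2+\lambda },\qquad g'(x)=\frac{\lambda (x^2+\lambda )-2\lambda x^2}{(x^2+\lambda )^2}=\frac{\lambda (\lambda -x^2)}{(x^2+\lambda )^2},\qquad x\ge 0.
\]

Hence \(g\) is increasing on \([0,\sqrt{\lambda }]\) and decreasing on \([\sqrt{\lambda },\infty ),\) so that

\[
\underset{x\ge 0}{\sup }\, g(x)=g(\sqrt{\lambda })=\frac{\lambda \sqrt{\lambda }}{2\lambda }=\frac{\sqrt{\lambda }}{2}.
\]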

In the same way, one gets

where

Consequently, we have for \(1\le i\le n,\)

Moreover, since

then

Next, let \({\displaystyle B_n= U\Sigma _{n,N}(D_N+\lambda I_N)^{-1}\Sigma _{n,N}^T U^T}\) and let \(\Vert B_n\Vert _F\) be its Frobenius norm, given by

Since \(B_n\) has rank at most N, then

Also, by using the fact that the \(\varepsilon _i\) are i.i.d. and independent of the \(Z_i\) with \({\mathbb {E}}_{\varepsilon }(\varepsilon _i)=0\) and \({\mathbb {E}}_{\varepsilon }(\varepsilon ^2_i)=\sigma ^2,\) one gets

Finally, by combining Eqs. (62), (64)–(66) together with the fact that \((a+b)^2\le 2(a^2+b^2)\), one gets the desired result Eq. (20).
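The algebraic facts used in this proof can be checked numerically. The sketch below is only an illustration under stated assumptions: a Gaussian random matrix stands in for \(F_{n,N}\) (the identities hold for any real \(n\times N\) matrix with \(n\ge N\)), and the sizes and the value of \(\lambda\) are hypothetical. It verifies that \(B_n\) coincides with \(F_{n,N}G_{N,\lambda }^{-1}F_{n,N}^T,\) that the spectral norm of \(\phi _{n}^\lambda \Sigma _{n,N}\) matches the closed form above and stays below \(\sqrt{\lambda }/2,\) and that \(\Vert B_n\Vert _F^2\le N.\)

```python
import numpy as np

rng = np.random.default_rng(0)
n, N, lam = 50, 8, 0.1  # hypothetical sizes and regularization parameter

# Stand-in for F_{n,N} (assumption): any real n x N matrix works for these checks.
F = rng.standard_normal((n, N))

# Full SVD: F = U @ Sigma @ V.T, with Sigma an n x N rectangular diagonal matrix.
U, mu, Vt = np.linalg.svd(F, full_matrices=True)
Sigma = np.zeros((n, N))
Sigma[:N, :N] = np.diag(mu)
D = Sigma.T @ Sigma  # D_N = Sigma^T Sigma = diag(mu_j^2)

# inner = Sigma (D_N + lam I_N)^{-1} Sigma^T and phi = inner - I_n
inner = Sigma @ np.linalg.inv(D + lam * np.eye(N)) @ Sigma.T
phi = inner - np.eye(n)

# Largest singular value of phi @ Sigma, its closed form, and the bound sqrt(lam)/2.
spec_norm = np.linalg.norm(phi @ Sigma, 2)
closed_form = np.max(lam * mu / (mu**2 + lam))
bound = np.sqrt(lam) / 2

# B_n via the SVD factors, and directly as F G^{-1} F^T with G = F^T F + lam I_N.
B = U @ inner @ U.T
G = F.T @ F + lam * np.eye(N)
B_direct = F @ np.linalg.solve(G, F.T)
frob_sq = np.linalg.norm(B, "fro") ** 2

print("spectral norm vs closed form:", spec_norm, closed_form)
print("bound sqrt(lam)/2:", bound)
print("squared Frobenius norm of B_n:", frob_sq, "(N =", N, ")")
```

The nonzero eigenvalues of \(B_n\) are \(\mu _j^2/(\mu _j^2+\lambda )<1,\) which is why the squared Frobenius norm cannot exceed the rank bound \(N.\)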

Proof of Theorem 2: Since the i.i.d. random variables \(Z_{i,j}\) are centred with variances \(\sigma _Z^2\), then

Hence, we have

Moreover, the \(2^{k-1}\)-dimensional random matrix \(G_k\) is written in the following form

Note that each matrix \({\textbf{H}}_{i,k}\) is positive semi-definite. This follows from the fact that for any \(\pmb x\in {\mathbb {R}}^{2^{k-1}}\), we have

By using the Gershgorin circle theorem (see Horn and Johnson 2013), one gets

Hence, we have

with probability at least \((1-\delta _N).\) To conclude the proof of Eq. (38), it suffices to combine Eqs. (36), (37), (67), (68) and use the fact that \((1-\eta _1) (1-\eta _2) (1-\eta _3) > 1-\eta _1-\eta _2-\eta _3,\) where
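As an illustration of how the Gershgorin circle theorem yields a lower bound on the smallest eigenvalue of a symmetric matrix, here is a minimal sketch. The helper `gershgorin_lower_bound` and the test matrix (identity plus a small symmetric perturbation) are hypothetical and only mimic the general shape of the argument; they are not the matrix \(G_k\) of the proof.

```python
import numpy as np

def gershgorin_lower_bound(A):
    """Gershgorin lower bound on the eigenvalues of a real symmetric matrix A:
    every eigenvalue is at least min_i (a_ii - sum_{j != i} |a_ij|)."""
    A = np.asarray(A, dtype=float)
    diag = np.diag(A)
    radii = np.sum(np.abs(A), axis=1) - np.abs(diag)
    return float(np.min(diag - radii))

rng = np.random.default_rng(1)
m = 8  # hypothetical size, e.g. 2**(k-1) with k = 4

# Hypothetical test matrix: identity plus a small symmetric perturbation.
E = 0.05 * rng.standard_normal((m, m))
A = np.eye(m) + (E + E.T) / 2

lower = gershgorin_lower_bound(A)
lam_min = float(np.linalg.eigvalsh(A)[0])  # eigvalsh returns ascending eigenvalues

print("Gershgorin lower bound:", lower)
print("smallest eigenvalue:   ", lam_min)
```

The bound is informative only when the diagonal entries dominate the off-diagonal row sums, which is the situation a concentration argument is typically used to guarantee with high probability.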

Proof of Theorem 3: Let \({\textbf{c}}^k\) and \(\widehat{{\textbf{c}}}_{k}\) be the expansion coefficients vectors of \(\beta _0^k\) and \({{\widehat{\beta }}}_{n,N}^k,\) the orthogonal projections of \(\beta _0\) and \({{\widehat{\beta }}}_{n,N}\) over \(\text{ Span }\{\varphi _j(\cdot ),\, j\in I_k\},\) respectively. That is

where,

In a similar manner, let \(\widetilde{{\textbf{c}}}_{k}\) be the expansion coefficients vector of \({\widetilde{\beta }}_{N,{ M_1}}^k\), the orthogonal projection of \({\widetilde{\beta }}_{N,{ M_1}}\) over the same subspace. For each \(1\le k\le K_N,\) let \(\Omega _{+,k}\) and \(\Omega _{-,k}\) be the sets of all possible draws \((X_1(\cdot ),\cdots , X_n(\cdot ))\) for which \(\lambda _{\min }(G_k)\ge \eta _k\) and \(0<\lambda _{\min }(G_k)\le \eta _k,\) respectively. Then, we have

where

and

By using Parseval’s equality, we have from Eq. (69)

So that on \(\Omega _{+,k},\) we obtain

But

By using the hypotheses on the noises \(\varepsilon _i,\) it is easy to see that

Consequently, we have

The previous inequality, together with Eqs. (71)–(73) lead to

Now, since

then

Moreover, we have

Also, since \(\Vert \beta _0(\cdot )-\beta _{0,N}(\cdot )\Vert _{2}\) is deterministic, then

Finally, by using the previous equality together with Eqs. (74)–(76), one gets the desired \(L^2\)-risk error of the estimator \({\widetilde{\beta }}_{N,M_1}\).
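The use of Parseval’s equality in this proof, which converts an \(L^2\)-distance between two functions of the same subspace into a Euclidean distance between their coefficient vectors, can be illustrated numerically. The sketch below is only an example under assumptions: it takes \(J=[0,1]\) and a cosine orthonormal basis as a hypothetical choice of the \(\varphi _j\) (the basis used in the paper is not specified in this appendix), and the helper names are introduced here for illustration.

```python
import numpy as np

def cosine_basis(N, x):
    """Rows: phi_1 = 1 and phi_j(x) = sqrt(2) cos((j-1) pi x), j = 2..N,
    an orthonormal family of L^2([0, 1]) (hypothetical choice of basis)."""
    j = np.arange(N)[:, None]
    phi = np.sqrt(2.0) * np.cos(j * np.pi * x[None, :])
    phi[0, :] = 1.0
    return phi

def trapezoid(y, x):
    """Composite trapezoidal rule on a uniform grid."""
    h = x[1] - x[0]
    return h * (np.sum(y) - 0.5 * (y[0] + y[-1]))

rng = np.random.default_rng(2)
N = 6
x = np.linspace(0.0, 1.0, 2001)

c = rng.standard_normal(N)                # coefficients of a "true" projection
c_hat = c + 0.1 * rng.standard_normal(N)  # coefficients of a perturbed estimate

phi = cosine_basis(N, x)
diff = (c - c_hat) @ phi                  # difference of the two functions on the grid

l2_sq = trapezoid(diff**2, x)             # squared L^2([0, 1]) norm by quadrature
coef_sq = float(np.sum((c - c_hat) ** 2)) # squared Euclidean norm of coefficients

print("squared L2 norm:         ", l2_sq)
print("squared coefficient norm:", coef_sq)
```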

About this article

Cite this article

Ben Saber, A., Karoui, A. On some stable linear functional regression estimators based on random projections. Stat Papers (2024). https://doi.org/10.1007/s00362-024-01554-0

DOI: https://doi.org/10.1007/s00362-024-01554-0