Abstract

This article investigates some statistical and probabilistic properties of general threshold bilinear processes. Sufficient conditions for the existence of a causal, strictly and weakly stationary solution for the equation defining a self-exciting threshold superdiagonal bilinear \(\left( SETBL\right) \) process are derived. Then it is shown that under well-specified hypotheses the higher-order moments of the SETBL process are finite. As a result, the skewness and kurtosis indexes are explicitly computed. The exact autocorrelation function is derived with an arbitrarily fixed number of regimes. Also, the covariance functions of the process and its powers are evaluated and the second (respectively, higher)-order structure is shown to be similar to that of a linear process. This implies that the considered process admits an ARMA representation. Finally, necessary and sufficient conditions for the invertibility and geometric ergodicity of a SETBL model are established. Some examples illustrate the obtained theoretical results.

1 Introduction

An \(\mathbb R\)-valued univariate process \(\left( X_{t}\right) _{t\in \mathbb {Z}}\) defined on some probability space \(\left( \Omega ,\Im ,P\right) \) is said to be a self-exciting threshold superdiagonal bilinear process of type \(\left( \ell ; p, q, P, Q\right) \) and threshold delay parameter d, in short denoted by \(SETBL_{d}\big (\ell ;p,q,P,Q\big ) \), if it is a solution of the following stochastic difference equation

where \(d\ge 1\), \(R_{j}=] r_{j-1},r_{j}] \subset \mathbb R\), \(j=1,\ldots , \ell \), \(r_{j}\) is the threshold value such that \( -\infty =r_{0}<r_{1}<\cdots <r_{\ell }=+\infty , \) hence \(\overset{\ell }{\underset{j=1}{\cup }}R_{j}=\mathbb {R}\), and \( I_{\left\{ X_{t-d}\in R_{j}\right\} }\) is the indicator function of the set \(\left\{ X_{t-d}\in R_{j}\right\} \). The coefficients \(\left( a_{i}^{\left( j\right) }\right) _{0\le i\le p},\left( b_{i}^{\left( j\right) }\right) _{1\le i\le q}\) and \(\left( c_{mk}^{\left( j\right) }\right) _{1\le m,k\le \max (P,Q)},\) \(j=1,\ldots ,\ell \), are constant. The innovation process \(\left( e_{t}\right) _{t\in \mathbb {Z}}\) is an independent and identically distributed \(\left( i.i.d.\right) \) white noise with zero mean and unit variance. Assume that the higher-order moments of the process \((e_t)_{t \in \mathbb Z}\) are finite.

Note that the \(SETBL_{d}\) model is characterized by a piecewise nonlinear structure which follows a bilinear model in each regime \(R_{j}\), for all \(j = 1, \ldots , \ell \). This offers remarkably rich dynamics and complex behaviour to model non-Gaussian data-sets with structural changes or high-amplitude oscillations, which cannot be sufficiently explained by the theory of standard linear models.

Threshold first-order bilinear models are studied in Cappuccio et al. (1998) and Ferrante et al. (2003). These authors address ergodicity, regularity and geometric ergodicity of stationary solutions of the threshold first-order bilinear model. Such questions go back to Tong (1983) for the class of threshold models in nonlinear time series analysis, and to Brockwell et al. (1992) for ARMA models. Recent developments on the theory of threshold models can be found in Tong (2011, 2012, 2015). The results proved in our paper extend the work of the cited authors.

The \(SETBL_{d}\left( \ell ;p,q,P,Q\right) \) model encompasses many commonly used models existing in the literature:

1. Standard superdiagonal bilinear BL(p, q, P, Q) models. They are obtained by assuming \(\ell =1\) in (1.1). See, for example, Granger and Anderson (1978), Davis and Resnick (1996), and Popovič and Bakouch (2020).

2. \(SETARMA_{d}\left( \ell ;p,q\right) \) models. They are obtained by setting \(c_{mk}^{(j)}=0\) for all m, k and j in (1.1). See, for example, Tong (1983). Gibson and Nur (2011) investigate the relative efficacy of two-regime threshold autoregressive (TAR) models, applied to econometric dynamics, in the finance domain. A Bayesian analysis of such models has been developed by Chen and Lee (1995). A portmanteau test to detect self-exciting threshold autoregressive-type nonlinearity in time series data has been proposed by Petruccelli and Davies (1986).

The literature on SETARMA models has recently increased. Chan and Goracci (2019) solve the long-standing problem regarding the irreducibility condition of a first-order threshold autoregressive moving-average (TARMA) model, and derive a set of necessary and sufficient conditions for the ergodicity of invertible first-order TARMA processes. Li and Li (2011) derive the asymptotic null distribution of a quasilikelihood ratio test statistic for an ARMA model against its threshold extension, and propose a novel bootstrap approximation of that distribution based on stochastic permutation (the null hypothesis is that of no threshold, and the error term could be dependent). Multiple change point detection and validation in autoregressive time series data have been investigated by Ma et al. (2020), and Mohr and Selk (2020). Goracci et al. (2021) present supremum Lagrange multiplier tests to compare a linear ARMA specification against its threshold ARMA extension. Then they prove the consistency of the tests, and derive their asymptotic distribution both under the null hypothesis and contiguous local alternatives. Chan et al. (2024) propose a Lagrange multiplier test for dynamic regulation within TARMA setting, and provide a rigorous proof of tightness in the context of testing for threshold nonlinearity against difference stationarity.

3. Some classes of \(SET(G)ARCH_{d}\left( \ell ;p,q\right) \) models. See Kristensen (2009) for the description of (G)ARCH(p, q) models as special cases of standard bilinear models. A class of threshold bilinear GARCH processes has been introduced by Choi et al. (2012) to study different asymmetries in volatilities, accommodating some existing asymmetric models.

Remark 1.1

The piecewise bilinear structure of the \(SETBL_{d}\) model allows one to apply some well-known results obtained by Granger and Anderson (1978) in the bilinear setting.

Remark 1.2

The \(SETBL_{d}\left( 2;p,q,P,Q\right) \) model can be written as

where \(X_{t}^{\left( j\right) }\) is a standard superdiagonal bilinear \( BL\left( p,q,P,Q\right) \) model, \(j=1,2\), and the indicator function

is shortly denoted by \(I_{t - d}\). It follows that \(I_{\left\{ X_{t-d}\in R_{2}\right\} }=1-I_{t-d}.\) Of course, the switching between the two regimes in model (1.2) is governed by the process in (1.3).

Remark 1.3

If the process in (1.3) is second-order stationary and ergodic, then we have

where \(p_{j}=E\left\{ I_{t-d}I_{t-d-j}\right\} =P\left( X_{t-d}\le r_{1},X_{t-d-j}\le r_{1}\right) \), for all \(j\ge 0\) with \(p_0 = p\).

In this case, the process in (1.3) is called a Bernoulli process.

The aim of the paper is to investigate some statistical and probabilistic properties of \(SETBL_{d}\left( \ell ;p,q,P,Q\right) \) models. For statistical purposes, it is desirable in practice that the solution processes \(\left( X_{t} \right) _{t\in \mathbb {Z}} \) of equation (1.1) are stationary, ergodic, and satisfy \(X_{t}=f\left( e_{t},e_{t-1},\ldots \right) \) almost surely (a.s.), where f is a measurable function from \( \mathbb {R} ^{\infty }\) to \( \mathbb {R} \). Such solutions are called causal.

We now highlight our main contributions. First, using suitable Markovian state space representations of the \({\text {SETBL}}_d (\ell ; p, q, P, Q)\) model, we derive sufficient conditions ensuring the existence of a unique, causal, strictly stationary and ergodic solution of the proposed model. Then we prove that the higher-order moments of the SETBL process and its powers are finite, under certain tractable matrix conditions. As a result, the skewness and kurtosis indexes are explicitly computed. Second, since the second-order structure provides useful information for identifying a time series process, we derive the autocorrelation function and the covariance functions of the considered process. As a consequence, we find that the second-order structure is similar to that of some linear processes, hence the SETBL models admit ARMA representations. This finding is useful for the estimation of the model parameters via the GMM procedure. Furthermore, the ARMA representation plays an important role in forecasting the initial process. Third, we establish necessary and sufficient conditions for the existence of geometrically ergodic solutions of the SETBL models and for model invertibility. Examples are proposed to illustrate the usefulness of the proposed methodology.

The following notation is used throughout the paper: \(I_{(n)}\) denotes the \( n\times n\) identity matrix, \(I_{\Delta }\) the indicator function of the set \(\Delta \), and \(O_{(k, \ell )}\) the matrix of order \(k\times \ell \) whose entries are zeros. For simplicity, we set \(O_{(k)}:=O_{(k,k)}\) and \( \underline{O}_{(k)}:=O_{(k,1)}\). The notation \({\text {plim}} \) denotes convergence in probability. The spectral radius of a square matrix M is denoted by \(\rho \left( M\right) \). If \(M = (m_{ij})\) is an \(m \times n\) matrix and \(\underline{X} = (x_i)\) is an \(m \times 1\) vector, define \(\left| M\right| :=(\left| m_{ij}\right| )\) and \(\left| \underline{X}\right| : = (\left| x_i \right| )\). Then it is easy to see that \(\left| .\right| \) is submultiplicative, i.e., \(\left| M_{1}M_{2}\right| \le \left| M_{1}\right| \left| M_{2}\right| \) and \(\left| M \underline{X}\right| \le \left| M\right| \left| \underline{X }\right| \) for any appropriate vector \(\underline{X}\). It is also subadditive, i.e., \(\left| \sum \nolimits _{i}M_{i}\right| \le \sum \nolimits _{i}\left| M_{i}\right| \), where the inequality \(M\le N\) denotes the element-wise relation \(m_{ij}\le n_{ij}\) for all i and j. If \(M_{i}=\left( m_{jk}^{\left( i\right) }\right) \) is a sequence of \(m\times n\) matrices, define \(\underset{i}{\max }\left\{ \left| M_{i}\right| \right\} \) as the \(m\times n\) matrix whose (j, k) element is \(\underset{i}{\max }\left\{ \left| m_{jk}^{\left( i\right) }\right| \right\} \), i.e., \( \underset{i}{\max }\ \left\{ \left| M_{i}\right| \right\} = \left( \underset{i}{\max }\left\{ \left| m_{jk}^{\left( i\right) }\right| \right\} \right) \). The symbol \(\otimes \) denotes the usual Kronecker product of matrices. Set \(M^{\otimes r}=M\otimes M\otimes \cdots \otimes M\), r times, with \(M^{\otimes r} = M\) if \(r = 1\).
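The notation above maps directly onto a few array operations. The following is a minimal sketch in Python with NumPy, where the helper names (`spectral_radius`, `elementwise_max_abs`, `kron_power`) and the random test matrices are illustrative assumptions, not part of the paper. It checks the element-wise submultiplicative and subadditive inequalities numerically and builds \(\underset{i}{\max }\{|M_i|\}\) and \(M^{\otimes r}\).

```python
import numpy as np

def spectral_radius(M):
    """rho(M): largest modulus among the eigenvalues of a square matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(M))))

def elementwise_max_abs(mats):
    """max_i |M_i|: entry (j, k) is the largest |m_jk^(i)| over the matrices."""
    return np.max(np.abs(np.stack(mats)), axis=0)

def kron_power(M, r):
    """M^{(x) r}: Kronecker product of M with itself r times (r >= 1)."""
    out = M
    for _ in range(r - 1):
        out = np.kron(out, M)
    return out

# Hypothetical matrices, used only to exercise the definitions.
rng = np.random.default_rng(0)
M1 = rng.normal(size=(3, 3))
M2 = rng.normal(size=(3, 3))
x = rng.normal(size=3)

tol = 1e-12  # guards against floating-point rounding in the comparisons
submult_ok = bool(np.all(np.abs(M1 @ M2) <= np.abs(M1) @ np.abs(M2) + tol))
vec_ok = bool(np.all(np.abs(M1 @ x) <= np.abs(M1) @ np.abs(x) + tol))
subadd_ok = bool(np.all(np.abs(M1 + M2) <= np.abs(M1) + np.abs(M2) + tol))

max_abs = elementwise_max_abs([M1, M2])
kron2 = kron_power(M1, 2)
```

All comparisons are element-wise, matching the convention \(M\le N\) stated above.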

The remainder of the paper is organized as follows. The next section provides state-space representations of the \(SETBL_{d}\) process generated by (1.1). Such representations will be used in Sect. 3 to derive sufficient conditions for the \(SETBL_{d}\) model in (1.1) to have a unique stationary (in strong and weak sense), causal and ergodic solution. Section 4 is devoted to establishing sufficient conditions for the finiteness of higher-order moments of the considered process. Then the autocorrelation function of an \(\ell \)-regime \( SETBL_{d}\) model is derived analytically. In Sect. 5 the \(\mathbb {L} _{2}-\)structure is analyzed and the covariance function is derived, which allows us to give an ARMA representation. Extending the \(\mathbb {L} _{2}-\)structure to \(\mathbb {L}_{m}\) yields that the power process \( \left( X_{t}^{m}\right) _{t \in \mathbb Z}\) also admits an ARMA representation (Sect. 6). Section 7 is devoted to establishing necessary and sufficient conditions for the invertibility and geometric ergodicity of the \(SETBL_{d}\) model. Section 8 concludes.

2 Markovian representations of the \(SETBL_{d}\) model

In what follows, we shall assume, without loss of generality, that in (1.1) \(P=p\) and \(d \le r\), with \(r = p + q\), since otherwise zeros of \(a_{i}^{\left( j\right) }\) or \(b_{i}^{\left( j\right) }\) or \(c_{mk}^{\left( j\right) }\) can be filled in.

Define the r-dimensional state vector

and the \(r\times r \) block matrices \(\left( A_{i}^{\left( j\right) },0\le i\le Q\right) \), where

and

for all \(i = 1, \ldots , Q\) and \(j = 1, \ldots , \ell \).

Set \(\underline{H}_{r}:=(1,\underline{O}_{(r-1)}^{\prime })^{\prime } \in \mathbb R^r\) and \(\underline{F}_r:=(\underline{H}_{p}^{\prime },\underline{H} _{q}^{\prime })^{\prime } \in \mathbb R^r\). Then Eq. (1.1) can be expressed in the following state-space representation

for all \(t \in \mathbb Z\), where \(R_{j}^{\left( d\right) }\) is the Cartesian product \(\mathbb {R}^{d-1}\times R_{j}\times \mathbb {R}^{r-d}\), for all \(j = 1, \ldots , \ell \).

Now define

and

where \(K=r(Q+1)\). Then (2.1) can be transformed into the following Markovian state-space representation

where

and

for all \(j=1,\ldots , \ell \). It is evident that any solution \(\left( \underline{Y} _{t}\right) \) of (2.2) is a Markov chain with state space \(\mathbb {R }^{K}.\) Recall that a Markov chain is said to be irreducible if each state can be reached from any other state in a finite number of steps. For each Markov chain there exists a unique decomposition of the state space into a sequence of disjoint subsets on which the restriction of the chain is irreducible. So we can always assume that the considered Markov chain is irreducible (possibly, restricting the state space). Irreducibility is a crucial point as it allows us to apply the theory developed by Tweedie (see Tweedie (1974a, 1974b, 1975, 1976)).

Representation (2.2) can be rewritten as follows

where

Compare with Eq. (2.1) from Bibi and Ghezal (2015b) for the class of Markov switching bilinear processes. A different Markovian representation for bilinear time series models has been proposed by Pham (see Pham (1985, 1986)). Extending such a representation to the present setting is an open issue, which will be treated in a separate article together with further discussion and several statistical properties.

Since \((e_t)_{t \in \mathbb Z}\) is stationary and ergodic, the process \(\{(\Phi _t, \underline{\omega }_t)\}_{t \in \mathbb Z}\) is also stationary and ergodic. Let \(|| \, \cdot \, ||\) denote any operator norm on the sets of matrices and vectors. It is clear that \(E\left\{ {\text {log}}^{+} || \Phi _t ||\right\} \) and \(E \left\{ {\text {log}}^{+} || \underline{\omega }_t || \right\} \) are finite. Here \({\text {log}}^{+} x = {\text {max}} ({\text {log}} x, 0)\) for every positive real number x. By Brandt (1986) (see also Bougerol and Picard (1992)), the unique stationary solution of (2.3) is given by

whenever the top Lyapunov exponent \(\gamma _L (\Phi )\), associated to the sequence \((\Phi _t)_t\) and defined by

is strictly negative. Since all norms are equivalent on a finite-dimensional vector space, the choice of the norm is unimportant in the above definition.

In the general case the Lyapunov exponent seems difficult to compute but it is easily estimated by Monte Carlo simulation using (2.3) as

Then we have

Proposition 2.1

Consider the threshold bilinear model SETBL in (1.1) with Markovian representation (2.2) or (2.3), and suppose that \(\gamma _L (\Phi ) < 0\). Then the series (2.4) converges a.s., for all \(t \in \mathbb Z\), and the process \((X_t)\), defined as the first component of \(\underline{Y}_t\), is the unique causal strictly stationary and ergodic solution of (1.1).

The proof of this result follows essentially the same arguments as in Francq and Zakoïan (2001) for Markov switching (MS) ARMA models, and Bibi and Ghezal (2015b) for MS bilinear models.
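The Monte Carlo estimation of the top Lyapunov exponent mentioned above can be sketched as follows. This is a minimal Python sketch, assuming only that one can draw the random matrices \(\Phi _t\); the function `estimate_top_lyapunov`, the sampler `sample_phi`, and the matrices `A` and `B` are hypothetical placeholders rather than the matrices of representation (2.3). The estimator is \(\frac{1}{n}\log \Vert \Phi _n \cdots \Phi _1\Vert \), computed with a renormalization at each step to avoid numerical overflow or underflow.

```python
import numpy as np

def estimate_top_lyapunov(sample_phi, dim, n_steps, rng):
    """Estimate (1/n) log || Phi_n ... Phi_1 || along one simulated path.

    sample_phi(rng) must return one dim x dim draw of the random matrix.
    The running product is rescaled to unit norm at every step; the sum of
    the logged norms equals the log-norm of the full product.
    """
    prod = np.eye(dim)
    log_norm = 0.0
    for _ in range(n_steps):
        prod = sample_phi(rng) @ prod
        norm = np.linalg.norm(prod, 2)  # spectral norm; any norm would do
        log_norm += np.log(norm)
        prod = prod / norm
    return log_norm / n_steps

# Hypothetical example: Phi_t = A + B * e_t with standard normal e_t.
A = np.array([[0.3, 0.1], [0.0, 0.2]])
B = np.array([[0.4, 0.0], [0.1, 0.3]])

def sample_phi(rng):
    return A + B * rng.standard_normal()

rng = np.random.default_rng(0)
gamma_hat = estimate_top_lyapunov(sample_phi, dim=2, n_steps=5000, rng=rng)
is_negative = gamma_hat < 0  # the sign is what Proposition 2.1 requires
```

In practice one would repeat the estimate for several seeds or path lengths to gauge its Monte Carlo variability before concluding that the exponent is negative.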

3 Existence of a causal strictly stationary \(SETBL_{d}\) process

In this section we provide a further sufficient condition for the existence of a causal strictly stationary and ergodic solution for the \(SETBL_{d}\) process. Such a condition is based on the computation of the spectral radius of a well-specified matrix, hence it is easily tractable and programmable.

Let us consider the following assumptions:

\(\left[ A.0\right] \): The probability distribution of \(\left( e_{t}\right) \) is absolutely continuous with respect to the Lebesgue measure \(\lambda \).

\(\left[ A.1\right] \): The conditional mean function \(e\left( \, \cdot \right) : \mathbb R^K \rightarrow \mathbb R^K\), defined by

$$\begin{aligned} e\left( \underline{y}\right) \, = \, E\left\{ \underline{Y}_{t}\left| \underline{Y}_{t-1}=\underline{y}\right. \right\} \, = \, \sum \limits _{j=1}^{\ell }\Gamma _{0}^{\left( j\right) }\, \underline{y} \, I_{\left\{ \underline{y}\in {R}_{j}^{\left( d\right) }\right\} }, \end{aligned} \tag{3.1}$$

for all \(\underline{y} \in \mathbb R^K\), is continuous.

Assumption \(\left[ A.0\right] \) guarantees \(\lambda \)-irreducibility. Assumption \(\left[ A.1\right] \) has a counterpart in the analysis of segmented regression models, as documented by Feder (1975). Stability of the considered process will be deduced via appropriate contraction conditions involving Lipschitz continuity.

We get the following lemma

Lemma 3.1

The conditional mean function \(e\left( \cdot \right) : \mathbb R^K \rightarrow \mathbb R^K\) satisfies the Lipschitz condition

where \(\Lambda _0 = \underset{j}{\max }\, \left\{ \vert \Gamma _0^{(j)} \vert \right\} \in \mathbb R^{K \times K}\).

Proof

Here we use some techniques from Brockwell et al. (1992). Assume that \(\underline{y}\in {R}_{h}^{\left( d\right) }\) and \( \underline{z}\in {R}_{k}^{\left( d\right) }\) with \(h\le k.\)

Then there exists a sequence of \(K\times 1\) vectors \( \underline{y}_{h-1}:=\underline{y}, \quad \underline{y}_{h}, \quad \ldots , \quad \underline{y}_{k+1}:=\underline{z} \) on the boundaries of \({R}_{h-1}^{\left( d\right) },\) \({R}_{h}^{\left( d\right) },\) \(\ldots ,\) \({R}_{k+1}^{\left( d\right) }\) such that \( \left| \underline{y}-\underline{z}\right| =\left| \underline{y} _{h-1}-\underline{y}_{h}\right| +\left| \underline{y}_{h}-\underline{ y}_{h+1}\right| +\cdots +\left| \underline{y}_{k}-\underline{y} _{k+1}\right| . \) So we have

This completes the proof. \(\square \)
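The element-wise Lipschitz bound of Lemma 3.1 can be checked numerically on a toy case. The sketch below is in Python and rests on several assumptions that are not taken from the paper: two regimes, \(K=2\), \(d=1\), a single threshold at zero, and two hypothetical matrices that differ only in their first column, which makes the piecewise linear map continuous across the threshold as required by \(\left[ A.1\right] \). It forms \(\Lambda _0 = \underset{j}{\max }\{|\Gamma _0^{(j)}|\}\) and tests \(|e(\underline{y})-e(\underline{z})| \le \Lambda _0 |\underline{y}-\underline{z}|\) on random pairs.

```python
import numpy as np

def regime_index(value, thresholds):
    """0-based index j such that value lies in ]r_{j-1}, r_j] (right-closed)."""
    return int(np.searchsorted(thresholds, value, side="left"))

def conditional_mean(y, gammas, thresholds, d):
    """e(y) = Gamma^{(j)} y, with the regime chosen by the d-th component of y."""
    return gammas[regime_index(y[d - 1], thresholds)] @ y

def lipschitz_matrix(gammas):
    """Lambda_0: element-wise maximum of |Gamma^{(j)}| over the regimes."""
    return np.max(np.abs(np.stack(gammas)), axis=0)

# Hypothetical regime matrices; equal second columns give continuity at y_1 = 0.
thresholds = np.array([0.0])
gammas = [np.array([[0.5, 0.2], [-0.3, 0.1]]),
          np.array([[-0.4, 0.2], [0.6, 0.1]])]
Lambda0 = lipschitz_matrix(gammas)

rng = np.random.default_rng(2)
holds = True
for _ in range(2000):
    y, z = rng.normal(size=2), rng.normal(size=2)
    lhs = np.abs(conditional_mean(y, gammas, thresholds, 1)
                 - conditional_mean(z, gammas, thresholds, 1))
    rhs = Lambda0 @ np.abs(y - z)
    holds = holds and bool(np.all(lhs <= rhs + 1e-12))

# Spectral radius of Lambda_0, shown only to illustrate how such a
# matrix quantity is evaluated in practice.
rho = float(np.max(np.abs(np.linalg.eigvals(Lambda0))))
```

A random search of this kind can only fail to find a counterexample; it does not replace the proof.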

The next theorem provides sufficient conditions for the existence of strictly stationary, causal and ergodic solutions of model (1.1). Recall that the higher-order moments of the innovations are assumed to be finite. The matrix condition in (3.2) below is tractable and readily programmable, hence it is simple to check. Assumptions \(\left[ A.0\right] \), \(\left[ A.1\right] \) and conditions such as Eq. (3.2) below are commonly used to investigate stationarity in the context of time series analysis. For example, conditions similar to (3.2) have been used by Francq and Zakoïan (2001, 2005) to study stationarity of Markov switching ARMA and GARCH models, respectively.

Theorem 3.1

Under Assumptions \(\left[ A.0\right] \) and \(\left[ A.1\right] ,\) suppose that

Then the \(SETBL_d\) model in (1.1) with Markovian representation (2.2) or (2.3) has a unique causal strictly stationary and ergodic solution \(\left( \underline{Y}_{t}\right) _{t \in \mathbb Z } \), which belongs to \(\mathbb {L}_{1}\) and is the a.s. limit of the sequence defined recursively by

Proof

For any \(n>2,\) we have

As \(E\left\{ \left| e_{t}\right| \right\} <\infty ,\) \(\left( \underline{Y}_{n}\left( t\right) \right) \) is a Cauchy sequence in \(\mathbb {L }_{1}\) for each fixed t. Then there exists an \(\mathbb {L}_{1}\) limit, \(\underline{Y}_{t}\) say, which is also the a.s. limit of \( \underline{Y}_{n}\left( t\right) \) as \(n\rightarrow \infty \) because \( E\left\{ \left| \underline{Y}_{n}\left( t\right) -\underline{Y} _{n-1}\left( t\right) \right| \right\} \) is geometrically bounded. Moreover, we show that the process \(\left( \underline{Y}_{t}\right) \) is strictly stationary. Notice that the process \(\left( \underline{Y} _{n}\left( t\right) \right) \) is strictly stationary and ergodic, for any fixed n. Then, for any bounded continuous function g, we have

Now we check that \({\text {plim}} I_{\left\{ \underline{Y}_{n_{k}}\left( t\right) \in R_{j}^{\left( d\right) }\right\} }=I_{\left\{ \underline{Y}_{t}\in R_{j}^{\left( d\right) }\right\} }\) for all \(j\in \left\{ 1,\ldots , \ell \right\} \) and some subsequence \(\left( n_{k}\right) \) of \(\left( n\right) .\) Note that, for any \(\delta >0\) and a.s. convergent subsequence \(\left( \underline{ Y}_{n_{k}}\left( t\right) \right) \) of \(\left( \underline{Y}_{n}\left( t\right) \right) := \left( \left( Y_{n}^{\left( 1\right) }\left( t\right) ,\ldots ,Y_{n}^{\left( K\right) }\left( t\right) \right) ^{\prime }\right) \), we have

It now suffices to note that \(P\left( X_{t}=r_{j}\right) =0,\) for all \( j\in \left\{ 1,\ldots , \ell \right\} .\) The rest is immediate. \(\square \)

Remark 3.1

The process \(\left( X_{t}\right) \), where \(X_t = \underline{H}_K^{\prime } \, \underline{Y}_t\), is also the unique causal strictly stationary and ergodic solution of (1.1).

Remark 3.2

Ling et al. (2007) investigate the first-order threshold moving-average model, denoted by TMA(1) and defined as

where \(\phi \), \(\psi \) and r are constant and \(\epsilon _t\) is a sequence of independent and identically distributed random variables, with mean zero and a density function f(x), for all \(x \in X \subset \mathbb R\). These authors provide a sufficient condition for the ergodicity of the TMA(1) model, without the need to check irreducibility. Their main result (see Theorem 1 of Ling et al. (2007)) states that if \(| \psi | \, {\text {sup}}_{x\in X} \, | x \, f(x) | < 1\), then there exists a unique, strictly stationary and ergodic solution of the TMA(1) model. It is interesting to see that the ergodicity of the TMA(1) model depends on the coefficient \(\psi \), while the intercept \(\phi \) is irrelevant. Since our SETBL model encompasses many nonlinear models, including the TMA models, Theorem 3.1 implies the ergodicity of TMA(1) under a condition that is similar to, but different from, the one proposed in the cited paper.
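The condition \(| \psi | \, {\text {sup}}_{x} \, | x \, f(x) | < 1\) quoted above is easy to evaluate for a given innovation density. The following Python sketch assumes, purely for illustration, a standard normal density; the function names and the evaluation grid are hypothetical choices. For this density the supremum is attained at \(x=\pm 1\), where \(|x f(x)|\) equals the normal density at 1.

```python
import numpy as np

def std_normal_pdf(x):
    """Density of the standard normal distribution."""
    return np.exp(-0.5 * x ** 2) / np.sqrt(2.0 * np.pi)

def sup_abs_x_density(density, grid):
    """Grid approximation of sup_x |x f(x)|."""
    return float(np.max(np.abs(grid * density(grid))))

# Assumed innovation density: standard normal (an illustrative choice).
grid = np.linspace(-10.0, 10.0, 200001)
sup_val = sup_abs_x_density(std_normal_pdf, grid)
psi_bound = 1.0 / sup_val  # the condition holds for |psi| below this value

def tma1_condition_holds(psi):
    """Check |psi| * sup_x |x f(x)| < 1 for the assumed density."""
    return abs(psi) * sup_val < 1.0
```

Under this assumed density the bound on \(|\psi |\) is roughly 4.13, and it does not involve \(\phi \), in line with the remark above.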

4 Higher-order moments of the \(SETBL_{d}\) model

In this section we are interested in conditions ensuring the existence of higher-order moments for a strictly stationary \(SETBL_{d}\) process \(X = (X_t)_{t \in \mathbb Z}\). In particular, the exact form of the r-th moment of X is derived. The computation of higher-order moments for some classes of Markov switching (MS) bilinear models has been given in Bibi and Ghezal (2015b) and Bibi (2023). Matrix expressions in closed form for higher-order moments and asymptotic Fisher information matrix of general MS VARMA models have been provided in Cavicchioli (2017a, 2017b), respectively.

Let \(\mu _{r}\left( j\right) \) be the moment of order r for the j-th bilinear regime process, i.e., \(\mu _{r}\left( j\right) =E\left\{ \left( X_{t}^{\left( j\right) }\right) ^{r}\right\} \), \(\widetilde{\mu }_{r}\left( j\right) \) the r-th centered moment, \(v_{r}\left( j\right) =E\left\{ \left( I_{t-d}^{\left( j\right) }-p_{j}\right) ^{r}\right\} \), and \(\gamma _{ij}\left( k\right) \) the cross-covariance at lag k between the i-th and j-th bilinear regime processes, i.e., \(\gamma _{ij}\left( k\right) =Cov\left( X_{t}^{\left( j\right) },X_{t-k}^{\left( i\right) }\right) \), for all \(k>0\). For convenience, model (1.1) can also be written as follows

where \(X_{t}^{\left( j\right) }\backsim SBL\left( p,q,P,Q\right) \) is the standard superdiagonal bilinear process in regime j, for all \(j=1,\ldots , \ell \), and

We get the following lemma

Lemma 4.1

According to representation (4.1), the processes \(\left( I_{t-d}^{\left( j\right) }\right) _{t}\), for \(j = 1, \ldots , \ell \), satisfy the following properties:

(i) \(E\left\{ I_{t-d}^{\left( j\right) }\right\} =P\left( X_{t-d}\in R_j \right) =p_{j}.\)

(ii) \(\left( I_{t-d}^{\left( j\right) }\right) ^{m}=I_{t-d}^{\left( j\right) }\) for all \(j=1,\ldots ,\ell \) and \(m\ge 1.\)

(iii) \(\left( I_{t-d}^{\left( j\right) }\right) ^{m}\left( I_{t-d}^{\left( k\right) }\right) ^{n}=0\) for all \(j\ne k\in \{1,\ldots ,\ell \}\) and \( m,n\ge 1.\)

(iv) \(I_{t-d}^{\left( j\right) }I_{t-d-k}^{\left( j\right) }=\left\{ \begin{array}{l} 1\text { if } X_{t-d}\, \text {and } \, X_{t-d-k}\in R_{j} \\ 0\text { otherwise} \end{array} \right. \) with \(E\left\{ I_{t-d}^{\left( j\right) }I_{t-d-k}^{\left( i\right) }\right\} =P\left( X_{t-d}\in R_{j}\, \text {, }\, X_{t-d-k}\in R_{i}\right) =p_{ij}.\)

The proof is straightforward, hence omitted.
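The properties in Lemma 4.1 are simple enough to check on data. Below is a minimal Python sketch in which the series `x` is placeholder Gaussian noise (not a simulated SETBL path) and the thresholds are hypothetical values with \(\ell =3\). It builds the regime indicators for the right-closed intervals \(]r_{j-1},r_{j}]\), verifies properties (ii) and (iii), and forms empirical counterparts of \(p_{j}\) and \(p_{ij}\).

```python
import numpy as np

def regime_indicators(x, thresholds):
    """Return an (n, ell) 0/1 array; column j flags x in ]r_{j-1}, r_j]."""
    x = np.asarray(x, dtype=float)
    # side="left" gives index j with thresholds[j-1] < x <= thresholds[j]
    regime = np.searchsorted(thresholds, x, side="left")
    ell = len(thresholds) + 1
    return (regime[:, None] == np.arange(ell)[None, :]).astype(int)

rng = np.random.default_rng(1)
x = rng.normal(size=5000)            # placeholder data, for illustration only
thresholds = np.array([-0.5, 0.8])   # hypothetical r_1 < r_2, hence ell = 3
ind = regime_indicators(x, thresholds)

# (i): sample frequency of each regime, an estimate of p_j
p_hat = ind.mean(axis=0)

# (ii): powers of an indicator equal the indicator itself
idempotent = bool(np.array_equal(ind ** 3, ind))

# (iii): indicators of distinct regimes at the same time never overlap
disjoint = bool(np.all(ind[:, 0] * ind[:, 1] == 0)
                and np.all(ind[:, 0] * ind[:, 2] == 0)
                and np.all(ind[:, 1] * ind[:, 2] == 0))

# (iv): entry (j, i) estimates P(X_t in R_j, X_{t-k} in R_i) at lag k
k = 1
p_joint = ind[k:].T @ ind[:-k] / (len(x) - k)
```

With real data the same indicators would be evaluated at the delayed values \(X_{t-d}\), which only shifts the time index.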

Proposition 4.1

Let us consider model (4.1). Suppose that the local bilinear process in each regime admits finite moments up to order r. Then the expected value of the process \(\left( X_{t}^{r}\right) \) is given by

for all \(r \ge 0\).

Proof

Using Lemma 4.1, we have

By convention, set \(x_{0}=r\) and \(x_{\ell }=0\). Then the computation of the expected value of \(X_{t}^{r}\) gives formula (4.2). \(\square \)

Corollary 4.1

Let us consider model (4.1). Under the assumptions of Proposition 4.1, we have

Proof

The variance of \((X_t)\) can be expressed by the following expansion

Now we can write \(Var\left( X_{t}^{\left( j\right) }I_{t-d}^{\left( j\right) }\right) =\widetilde{\mu }_{2}\left( j\right) p_{j}-\mu _{1}^{2}\left( j\right) v_{2}\left( j\right) \), for all \(j=1,\ldots , \ell \), and

for \(1\le i<j\le \ell \). Thus formula (4.3) for the variance of \(X_{t} \) follows. \(\square \)

Corollary 4.2

Let us consider model (4.1). Assume that \(E\left\{ e_{t}^{4}\right\} <\infty \). If \(E\left\{ X_{t}^{4}\right\} <\infty \), then the third and fourth central unconditional moments of \(X_{t}\) are given by

and

where

Proof

The proof is similar to that of Corollary 4.1. \(\square \)

Now we derive closed-form expressions for the kurtosis and the skewness of the stationary threshold bilinear process \(X_{t}\).

Corollary 4.3

Let us consider model (4.1). Under the assumptions of Corollary 4.2, the kurtosis and the skewness of \(X_{t}\) exist and are given by substituting the formulas in Corollaries 4.1 and 4.2 into the usual expressions

Proof

Equations (4.3), (4.4), (4.5) linked to the unconditional variance, third and fourth central moments, respectively, are used to compute the skewness and the excess kurtosis. \(\square \)
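The "usual expressions" are the ratios \(\tau =\widetilde{\mu }_{3}/\widetilde{\mu }_{2}^{3/2}\) and \(\kappa =\widetilde{\mu }_{4}/\widetilde{\mu }_{2}^{2}\) of central moments. The short Python sketch below uses hypothetical helper names and the standard conversion from raw to central moments. As a sanity check it reuses the second and fourth moments reported in Examples 4.1 and 4.4 of this section, under the assumption (consistent with the reported zero skewness) that those processes have zero mean, so that raw and central moments coincide.

```python
def central_moments(m1, m2, m3, m4):
    """Convert raw moments E[X^r], r = 1..4, to central moments of order 2..4."""
    c2 = m2 - m1 ** 2
    c3 = m3 - 3.0 * m1 * m2 + 2.0 * m1 ** 3
    c4 = m4 - 4.0 * m1 * m3 + 6.0 * m1 ** 2 * m2 - 3.0 * m1 ** 4
    return c2, c3, c4

def skewness(c2, c3):
    """tau = third central moment over variance to the power 3/2."""
    return c3 / c2 ** 1.5

def kurtosis(c2, c4):
    """kappa = fourth central moment over squared variance (not excess)."""
    return c4 / c2 ** 2

# Values reported in the examples of this section, assuming zero mean.
kappa_ex41 = kurtosis(1.5216, 13.7085)   # Example 4.1 reports 5.9212
kappa_ex44 = kurtosis(2.4000, 35.2000)   # Example 4.4 reports 6.1111
```

The small discrepancy in the fourth decimal for Example 4.1 comes from the rounding of the reported moments.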

To complete the section we present some generic examples of threshold bilinear processes to illustrate the obtained theoretical results. In particular, some examples show that the sufficient condition (3.2) for strict stationarity is not necessary in general: the condition fails, yet the plots of the sample autocorrelation functions suggest that the considered processes are stationary. In addition, we check the validity of the obtained closed-form expressions for the higher-order moments and kurtosis. The proposed examples show that the values of the theoretical moments and kurtosis fit well with the empirical ones. We also compare sample and theoretical autocorrelation functions as a goodness-of-fit criterion.

Example 4.1

Consider the \(SETBL_{d}\left( 2;0,0,2,1\right) \) model defined by

Then the regime moments collected in the following table

can be used to compute the skewness and the kurtosis of \((X_t)\). More precisely, the skewness of \(X_{t}\) is \(\tau =0\) and the kurtosis of \(X_{t}\) is given by

The exact autocorrelation function \(\left( ACF\right) \) of the process \((X_t)\) is derived. The ACF coefficient \(\rho \left( k\right) \), defined by \(\rho \left( k\right) = \frac{Cov\left( X_{t},X_{t-k}\right) }{Var\left( X_{t}\right) }\) with \(\rho \left( k\right) =\rho \left( -k\right) \) according to the symmetry of the autocovariance function, has the following expression:

where \(Var\left( X_{t}\right) \) is given in Eq. (4.3).
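For comparison with a theoretical ACF, the sample counterpart of \(\rho (k)=Cov(X_{t},X_{t-k})/Var(X_{t})\) can be computed as in the following Python sketch. The function name `sample_acf` is an illustrative choice, and the AR(1) series used to exercise it is a stand-in with a known ACF, not a SETBL process.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations rho_hat(0), ..., rho_hat(max_lag)."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    denom = np.dot(xc, xc)
    n = len(x)
    return np.array([np.dot(xc[k:], xc[:n - k]) / denom
                     for k in range(max_lag + 1)])

# Stand-in data: AR(1) with coefficient 0.6, whose ACF is 0.6**k.
rng = np.random.default_rng(3)
n, phi = 20000, 0.6
e = rng.standard_normal(n)
x = np.empty(n)
x[0] = e[0]
for t in range(1, n):
    x[t] = phi * x[t - 1] + e[t]

acf_hat = sample_acf(x, max_lag=5)
```

Plotting `acf_hat` against the theoretical values gives the kind of visual comparison used in the examples of this section.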

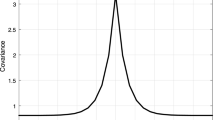

Set \(a=0.7\), \(c=0.5\), \(d=1\), \(r_1=4\), and \(p_1=0.7\). Then a typical realization of such a process is depicted in Fig. 1 (on the left side). The second and fourth moments of this process are given by \(\mu _2=E(X_t^2)=1.5216\) and \(\mu _4=E(X_t^4)=13.7085\), respectively, and the kurtosis is \(\kappa =5.9212\). The sample autocorrelation function is reported in Fig. 1 (on the right side). The matrix in the statement of Theorem 3.1 has spectral radius equal to 1. However, the sample ACF suggests that the given time series is strictly stationary. Thus the condition in Theorem 3.1 is sufficient but not necessary to guarantee strict stationarity.

Example 4.1: original data (left) and sample autocorrelation (ACF) (right)

Example 4.2

Consider the \(SETBL_{d}\left( 2;1,0,1,1\right) \) model defined by

Set \(a=0.7\), \(b=0.5\), \(c=0.8\), \(f=0.3\), \(d=1\), \(r_1=4\), and \(p_1=0.7\). We report in Fig. 2 a typical realization of such a process (left), the sample ACF (middle) and the sample partial ACF (right). The sample second and fourth moments of this process are given by \(\mu _2=0.2251\) and \(\mu _4=3.6210\), respectively, the skewness is \(\tau =0.6404\) and the kurtosis is \(\kappa =3.7706\).

Example 4.2: original data (left), sample ACF (middle), sample partial ACF (right)

Example 4.3

Consider the \(SETARMA_{d}\left( 2;1,1\right) \) model defined by

Then the regime moments in the following table

can be used to compute the skewness and the kurtosis of \((X_t)\). More precisely, the skewness of \(X_{t}\) is \(\tau =0\) and the kurtosis of \(X_{t}\) is given by

Furthermore, the autocorrelation of \(X_{t}\) is given by

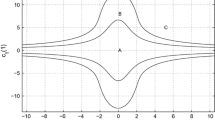

Set \(a=0.1\), \(b=0.7\), \(d=1\), \(r_1=4\), and \(p_1=0.7\). Then a typical realization of such a process is depicted in Fig. 3 (left) together with the theoretical autocorrelation function (right). The ACF decays exponentially and is close to zero by lag 20, which is consistent with stationarity of the given time series. The second and fourth moments of this process are given by \(\mu _2=E(X_t^2)=2.7071\) and \(\mu _4=E(X_t^4)=9.4589\), respectively, and the kurtosis is \(\kappa =4.3615\).

Example 4.3: original data (left) and theoretical autocorrelation function (ACF) (right)

Example 4.4

Consider the \(SETGARCH_{d}\left( 2;1,1\right) \) model defined as \(X_{t}= \sqrt{h_{t}}e_{t}\), where the volatility process \(h_{t}\) (at time t) is as follows

Then the regime moments in the following table

can be used to compute the skewness and the kurtosis of \((X_t)\). Indeed, the skewness of \(X_{t}\) is \(\tau =0\) and the kurtosis of \(X_{t}\) has the following expression

Furthermore, the autocorrelation of \(X_{t}^{2}\) is given by

Set \(a=1\), \(b=0.7\), \(c=0.5\), \(d=1\), \(r_1=4\), and \(p_1=0.7\). Then a typical realization of such a process is depicted in Fig. 4 (left) together with the sample ACF (middle) and the theoretical ACF (right). The second and fourth moments of this process are given by \(\mu _2=E(X_t^2)=2.4000\) and \(\mu _4=E(X_t^4)=35.2000\), respectively, and the kurtosis is \(\kappa =6.1111\). This time series is strictly stationary.

Example 4.4: original data (left), sample autocorrelation function (in the middle) and the theoretical autocorrelation function (right)

5 Second-order stationarity and ARMA representation

\((\textbf{5.1})\) Computation of the second-order moments. Once second-order stationarity is established, it can be useful to compute the second-order moment of the process \(\left( X_{t}\right) _{t\in \mathbb {Z}}\). We shall consider the centered version of the state vector \( \underline{Y}_{t}\), i.e.,

where \(\widehat{\underline{Y}}_{t}:=\underline{Y}_{t}-E\left\{ \underline{Y} _{t}\right\} \) and \(\widehat{\underline{\eta }}_{t}^{(j)}\left( e_{t}\right) \) and \(\widehat{\underline{e}}_{t}\) are centered residual vectors.

Let

Proposition 5.1

Consider the \(SETBL_{d}\left( \ell ;p,q,p,Q\right) \) process in (1.1) or (2.2) with centered state-space representation (5.1). Suppose that \(E\{e_t^4\} < \infty \) and

where

Then we have

where

Proof

Starting from (5.1), we have:

(a) If \(h=0\), then

where \(\Gamma ^{\left( 2\right) }\) is as above. Thus it follows that

because \(I_{(K)} \, - \, \Gamma ^{(2)}\) is invertible as \(\rho (\Gamma ^{(2)}) < 1\).

(b) If \(h>0\), then

Then we get

where \(\Gamma _{0}\) is as above. Hence \(\Xi _{X}\left( h\right) =\left( \underline{H}_{K}^{\otimes 2}\right) ^{\prime }\underline{\widehat{\Xi }}\left( h\right) = \left( \underline{H}_{K}^{\otimes 2}\right) ^{\prime } \, \Gamma _0^h \, \underline{\widehat{\Xi }}\left( 0\right) \). The result follows. \(\square \)
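As a sanity check of the two steps of the proof, it may help to look at a degenerate special case that is not treated in the paper: a single regime with no bilinear or moving-average part, \(X_{t}=aX_{t-1}+e_{t}\) with \(\left| a\right| <1\). Assuming that the state vector then reduces to dimension \(K=1\) with \(\Gamma _{0}=a\) and \(\Gamma _{1}=0\), the invertibility step for \(h=0\) and the recursion for \(h>0\) give the familiar AR(1) autocovariances:

```latex
% Illustrative special case (assumption): one regime, X_t = a X_{t-1} + e_t,
% |a| < 1, E{e_t} = 0, E{e_t^2} = 1, so that K = 1, \Gamma_0 = a, \Gamma_1 = 0.
\begin{align*}
\Gamma^{(2)} &= E\left\{ \left( \Gamma_0 + e_t \Gamma_1 \right)^{\otimes 2} \right\} = a^{2}, \\
\Xi_X(0) &= \left( 1 - \Gamma^{(2)} \right)^{-1} = \frac{1}{1 - a^{2}}, \\
\Xi_X(h) &= \Gamma_0^{h} \, \Xi_X(0) = \frac{a^{h}}{1 - a^{2}}, \qquad h > 0 .
\end{align*}
```

Here the condition \(\rho (\Gamma ^{(2)})<1\) is simply \(a^{2}<1\), and the geometric decay in \(h\) is what makes the second-order structure look like that of a linear process.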

\(({{\textbf {5.2}}}\)) ARMA representation. ARMA representations play an important role in forecasting and model identification. For these reasons, ARMA representations have already been derived for certain nonlinear processes. Indeed, Bibi (2003) and Bibi and Ghezal (2015b) showed that superdiagonal bilinear processes with time-varying coefficients and Markov-switching bilinear processes, respectively, admit weak ARMA representations. Weak VARMA representations of multivariate Markov-switching (MS) AR and MA models and of MS state-space models can be found in Cavicchioli (2014) and Cavicchioli (2016), respectively.

The following proposition establishes an ARMA representation for the \( SETBL_{d}\left( \ell ;p,q,P,Q\right) \) model.

Proposition 5.2

Under the conditions of Proposition 5.1, the \(SETBL_{d}\left( \ell ; p,q,P,Q\right) \) process with state-space representation (5.1) is an ARMA process.

Proof

Use the same approach as in Bibi and Ghezal (2015b). It is important to note that the ARMA representation naturally arises from the Markovian nature of the model. This was demonstrated by Pham (1985), Theorem 4.4, for the class of bilinear processes. \(\square \)

6 Covariance structure of higher-powers of a \(SETBL_{d}\) model

For identification purposes, it is necessary to examine higher powers of the process in order to distinguish between different ARMA representations. To this end, we first establish the following lemma, based on Lemma 3.1 in Bibi and Ghezal (2016), which was proved for the class of Markov-switching bilinear processes.

Lemma 6.1

Consider the \(SETBL_{d}\left( \ell ;p,q,P,Q\right) \) model in (1.1) or (2.2) with centered state space representation (5.1). Let us define the following matrices \(B_{j}^{\left( k\right) }(i,e_{t})\), \( j=0,\ldots \), \(k=0,\ldots ,m\) and \(i=1,\ldots , \ell \), with appropriate dimension such that

and

where by convention \(B_{j}^{\left( k\right) }\left( .,.\right) = 0\) if \(j>k\) or \(j<0\), and \(\widehat{\underline{Y}}_{t}^{\otimes 0}=B_{0}^{\left( 0\right) }\left( .,.\right) =1\). Then \(B_{j}^{\left( k\right) }(i,e_{t})\) are uniquely determined by the following recursive formulas

Proof

The proof follows by using an approach which is similar to that employed by Bibi and Ghezal (2015b), Lemma 3.1, for the class of Markov-switching subdiagonal bilinear processes. \(\square \)

Now, set

for all \(i=1,\ldots , \ell \). Then the following relations hold:

for all \(k >1\), where \(\tilde{B}_{j}^{\left( m,i\right) }=B_{j}^{\left( m,i\right) }\otimes I_{\left( K^{m}\right) }\). Moreover, we have

in which O is the null matrix with appropriate dimension.

Proposition 6.1

Consider the \(SETBL_{d}\left( \ell ;p,q,P,Q\right) \) model in (1.1) or (2.2) with centered state-space representation (5.1). If \(E\left\{ e_{t}^{2\left( m+1\right) }\right\} <+\infty \) and

with \(\Gamma ^{\left( 2\,m\right) }\left( j\right) :=E\left\{ \left( \Gamma _{0}^{\left( j\right) }+e_{t}\Gamma _{1}^{\left( j\right) }\right) ^{\otimes 2\,m}\right\} \), then \(\left( \widehat{\underline{Y}}_{t}^{\otimes m}\right) _{t\in \mathbb {Z}} \) is a second-order stationary process, and the following relations hold:

Remark 6.1

Proposition 6.1 allows one to compute \(W^{\left( m\right) }(h)\) recursively for all \(h\ge 0\). The unconditional mean and the covariance structure of \( \left( \underline{X}_{t}^{\otimes m}\right) _{t\in \mathbb {Z}} \) are given by

where \(\underline{v}^{\prime }=(\underline{F}^{\otimes 2\,m\prime }\vdots O\vdots \ldots \vdots O\vdots -E\left\{ \widehat{\underline{X}}_{t}^{\otimes m}\right\} \otimes \underline{F}^{\otimes m\prime }).\)

Corollary 6.1

\(\left[ \text {The }{} \textit{SETARMA}_{d} \, \text {model} \right] \) For the \(\textit{SETARMA}_{d}\) model, whose coefficients \(c_{mk}^{(j)}\) in (1.1) are all zero, Condition (6.2) reduces to \(\rho \left( \underset{j}{\max }\left\{ \left| \left( A_{0}^{\left( j\right) }\right) ^{\otimes 2\,m}\right| \right\} \right) <1\).
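The condition in Corollary 6.1 can be checked numerically once the regime matrices \(A_{0}^{\left( j\right) }\) are available. The sketch below is a minimal pure-Python illustration, not the authors' procedure: it forms the Kronecker powers, takes the entrywise maximum of absolute values over regimes, and estimates the spectral radius of the resulting nonnegative matrix through Gelfand's formula. All function names and the example matrices are hypothetical.

```python
import math


def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [[x * y for x in row_a for y in row_b]
            for row_a in a for row_b in b]


def kron_power(a, n):
    """n-fold Kronecker power of a (the 1x1 identity for n = 0)."""
    out = [[1.0]]
    for _ in range(n):
        out = kron(out, a)
    return out


def max_abs(mats):
    """Entrywise maximum of the absolute values of equally sized matrices."""
    rows, cols = len(mats[0]), len(mats[0][0])
    return [[max(abs(m[i][j]) for m in mats) for j in range(cols)]
            for i in range(rows)]


def spectral_radius_nonneg(a, iters=2000):
    """Estimate rho(a) for an entrywise nonnegative square matrix a.

    For nonnegative a, the max-norm of a^k applied to the all-ones vector
    equals the induced infinity norm of a^k, whose k-th root tends to rho(a).
    """
    v = [1.0] * len(a)
    log_norm = 0.0
    for _ in range(iters):
        v = [sum(aij * vj for aij, vj in zip(row, v)) for row in a]
        s = max(v)
        if s == 0.0:
            return 0.0  # a is nilpotent
        log_norm += math.log(s)
        v = [vi / s for vi in v]
    return math.exp(log_norm / iters)


def setarma_moment_condition(a0_list, m):
    """Return (rho, rho < 1) for rho(max_j |(A_0^(j))^{kron 2m}|)."""
    big = max_abs([kron_power(a0, 2 * m) for a0 in a0_list])
    rho = spectral_radius_nonneg(big)
    return rho, rho < 1.0


# Hypothetical two-regime example with scalar A_0^(j), m = 2
print(setarma_moment_condition([[[0.9]], [[-0.5]]], 2))
```

The estimate converges slowly (roughly like \(1/k\) in the number of iterations), so values close to 1 should be confirmed with a proper eigenvalue routine. Note also that the matrix dimension grows as \(K^{2m}\), which limits this brute-force check to small \(K\) and \(m\).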

Corollary 6.2

\(\left[ \text {The }SETGARCH_{d} \, \text {model} \right] \) For the \(SETGARCH_{d}\) model, whose volatility process can be regarded as a diagonal bilinear model without moving average part, Condition (6.2) reduces to

\(\rho \left( \underset{j}{\max }\left\{ E\left\{ \left| \left( \tilde{A} _{0}^{\left( j\right) }+e_{t}^{2}\tilde{A}_{1}^{\left( j\right) }\right) ^{\otimes 2\,m} \right| \right\} \right\} \right) {<}1\), where the matrices \(\left( \tilde{A}_{0}^{\left( j\right) }\right) _{j},\left( \tilde{A}_{1}^{\left( j\right) }\right) _{j}\) are uniquely determined and easily obtained.

We are now in a position to state the following result.

Proposition 6.2

Let \(\left( X_{t}\right) _{t\in \mathbb {Z}} \) be the causal second-order stationary solution of the \(SETBL_{d}\left( \ell ; p,q,P,Q\right) \) model with centered state-space representation (5.1). Then under the conditions of Proposition 6.1, the power process \(\left( X_{t}^{m}\right) _{t\in \mathbb {Z}}\) is an ARMA process.

Proof

The proof follows essentially the same arguments as in Proposition 5.2. \(\square \)

Remark 6.2

It is often necessary to look into higher-order cumulants in order to distinguish between linear and nonlinear models.

Example 6.1

The characteristics of the ARMA representations for the process \(X_{t}^{m}\) driven by \( SETBL_{d}\left( \ell ;1,0,1,1\right) \) models are collected in the following table

| Specification | Representation |
|---|---|
| \(m=1\) | |
| Standard | \(ARMA\left( 1,1\right) \) |
| \(SETGARCH_{d}\) | \(ARMA\left( 2 \ell -1,2 \ell -1\right) \) |
| \(SETBL_{d}\) | \(ARMA\left( \ell -1, \ell -1\right) \) |
| \(m>1\) | |
| Standard | \(ARMA\left( m-1,m-1\right) \) |
| \(SETGARCH_{d}\) | \(ARMA\left( \left( m+1\right) \ell -1,\left( m+1\right) \ell -1\right) \) |
| \(SETBL_{d}\) | \(ARMA\left( \left( m+1\right) \ell -1,\left( m+1\right) \ell -1\right) \) |

Example 6.2

Let us consider the SETBL process in Example 4.2. The process admits an ARMA(2, 2) representation \((1-\phi _1 L -\phi _2 L^2) X_t=(1+\theta _1 L + \theta _2 L^2) \epsilon _t\), whose coefficient estimates are reported alongside Fig. 5.

The SETBL process in Example 4.2, and its ARMA(2, 2) representation

Example 6.3

The SETARMA process in Example 4.3 admits an ARMA(1, 1) representation

whose coefficient estimates are reported in Fig. 6. Indeed, the AR and MA coefficients \(\phi \) and \(\theta \) are solutions of the equations

and

Substituting the numerical values from Example 4.3, we have to solve the second-order equations

Choosing the solutions of modulus less than 1 yields \(\phi = 0.5236\) and \(\theta = 0.8134\).

The SETARMA process in Example 4.3, and its ARMA(1, 1) representation
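The idea of solving a quadratic and keeping the root of modulus less than 1 can be illustrated with the textbook moment-matching route for a generic ARMA(1, 1) process \((1-\phi L)X_t=(1+\theta L)\epsilon _t\). This is a hedged sketch under that assumption and may differ from the equations actually used in Example 6.3: here \(\phi \) is obtained from \(\rho (2)=\phi \rho (1)\), and \(\theta \) from the quadratic implied by the lag-1 autocorrelation. The function names are illustrative.

```python
import math


def arma11_rho1(phi, theta):
    """Lag-1 autocorrelation of (1 - phi L) X_t = (1 + theta L) eps_t."""
    return (1 + phi * theta) * (phi + theta) / (1 + 2 * phi * theta + theta ** 2)


def arma11_from_acf(rho1, rho2):
    """Recover (phi, theta), with |theta| <= 1, from rho(1) and rho(2)."""
    phi = rho2 / rho1                  # rho(h) = phi * rho(h - 1) for h >= 2
    a = rho1 - phi                     # quadratic: a*theta^2 + b*theta + a = 0
    b = 2 * rho1 * phi - 1 - phi ** 2
    if abs(a) < 1e-12:
        return phi, 0.0                # pure AR(1): no MA part
    disc = b * b - 4 * a * a
    if disc < 0:
        raise ValueError("no real MA coefficient matches these autocorrelations")
    roots = [(-b + math.sqrt(disc)) / (2 * a), (-b - math.sqrt(disc)) / (2 * a)]
    # The two roots are reciprocal; keep the invertible one.
    return phi, min(roots, key=abs)


# Round trip on hypothetical coefficients
r1 = arma11_rho1(0.5, 0.4)
print(arma11_from_acf(r1, 0.5 * r1))
```

Because the constant and leading coefficients of the quadratic coincide, its roots multiply to 1, which is why exactly one of them gives an invertible moving-average part.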

7 Invertibility and geometric ergodicity of \(SETBL_{d}\) processes

\(({{\textbf {7.1}}}\)) Invertibility. The concept of invertibility plays a fundamental role in the analysis of time series and statistical applications. Various definitions of this concept have been proposed in the literature. Granger and Anderson (1978), Guégan and Pham (1987), Pham and Tran (1981), Subba Rao and Gabr (1984), Liu (1990), and Bibi and Ghezal (2015a) have derived invertibility conditions for some particular stationary (Markov-switching) bilinear models.

The \({\text {SETBL}}_{d} (\ell ; 0,0, p, 1)\) process \(\left( X_{t}\right) _{t \in \mathbb Z} \) defined by the stochastic difference equation

is said to be invertible if for all \(t\in \mathbb {Z},\) the innovation process \(e_{t}\) is \(\mathcal {F} _{t}\left( X\right) -\)measurable.

Let \(\Lambda _{t}:=-\sum \limits _{j=1}^{\ell } \, \sum \limits _{m=1}^{p}\, c_{m1}^{(j)} \, X_{t-m} \, I_{ \left\{ X_{t-d}\in R_{j}\right\} }\). Then we get

Assume that \(\left( X_{t}\right) \) is strictly stationary and ergodic. By the ergodic theorem, if \(E\left\{ \log \left| X_{t}\right| \right\} <\infty \), then

as \(m\longrightarrow \infty \). If \(E\left\{ \log \left| \Lambda _{t}\right| \right\} <0,\) then

as \(m\longrightarrow \infty .\) For any \(\omega \in \Omega \) such that the last inequality holds, there exists a natural number \(n_{\omega }\) such that \(\left\{ \prod \limits _{j=0}^{m}\left| \Lambda _{t-j}X_{t-m}\right| \right\} ^{\frac{1}{m}}\left( \omega \right) \le \delta _{\omega }<1\), for any \(m > n_{\omega }\). Thus, \( \left\{ \sum \limits _{k=1}^{m}\left\{ \prod \limits _{j=0}^{k-1}\Lambda _{t-j}\right\} X_{t-k}\right\} \left( \omega \right) \) converges as m goes to infinity, that is,

In the same manner, we can show that the second term in (7.2) converges to zero a.s. In this case, we get

hence model (7.1) is invertible.

We are now in a position to state the following results.

Theorem 7.1

Let \(\left( X_{t}\right) \) be the unique strictly stationary and ergodic solution of model (7.1) with \(E\left\{ \log \left| X_{t}\right| \right\} <\infty \). Then model (7.1) is invertible if

where \(F_{X}(x)\) is the distribution of \(X_{t}\). Furthermore, model (7.1) is not invertible if \( \lambda >1.\)
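The invertibility argument can be illustrated numerically. The sketch below assumes the simplest two-regime case with \(p=1\) and \(d=1\), written as \(X_{t}=e_{t}+c^{(j)}X_{t-1}e_{t-1}\) on \(\left\{ X_{t-1}\in R_{j}\right\} \), so that \(\Lambda _{t}=-c^{(j)}X_{t-1}\) and \(e_{t}=X_{t}+\Lambda _{t}e_{t-1}\). This model form is inferred from the definition of \(\Lambda _t\) above, and the coefficients, threshold and function names are hypothetical. The recursion is started from a deliberately wrong value to show that its influence vanishes when the empirical mean of \(\log \left| \Lambda _{t}\right| \) is negative.

```python
import math
import random


def regime_coef(x_lag, c, r1):
    """Coefficient of the active regime: c[0] if x_lag <= r1, else c[1]."""
    return c[0] if x_lag <= r1 else c[1]


def simulate(n, c, r1, seed=0):
    """Simulate X_t = e_t + c^(j) X_{t-1} e_{t-1} on {X_{t-1} in R_j}, d = 1."""
    rng = random.Random(seed)
    e = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x = [e[0]]  # arbitrary start-up value
    for t in range(1, n):
        x.append(e[t] + regime_coef(x[t - 1], c, r1) * x[t - 1] * e[t - 1])
    return x, e


def recover_innovations(x, c, r1, e_start=0.0):
    """Run e_t = X_t + Lambda_t e_{t-1} forward from a (wrong) starting guess."""
    e_hat, log_abs_lambda = [e_start], []
    for t in range(1, len(x)):
        lam = -regime_coef(x[t - 1], c, r1) * x[t - 1]  # Lambda_t
        e_hat.append(x[t] + lam * e_hat[-1])
        log_abs_lambda.append(math.log(max(abs(lam), 1e-300)))
    return e_hat, sum(log_abs_lambda) / len(log_abs_lambda)


x, e = simulate(500, c=(0.3, -0.2), r1=0.0, seed=1)
e_hat, mean_log_lambda = recover_innovations(x, c=(0.3, -0.2), r1=0.0)
print(mean_log_lambda < 0, abs(e_hat[-1] - e[-1]) < 1e-9)
```

The error of the recursion satisfies \(e_{t}-\widehat{e}_{t}=\Lambda _{t}\left( e_{t-1}-\widehat{e}_{t-1}\right) \), so it is a product of the \(\Lambda _{t-j}\) times the initial error. This is exactly the product whose geometric decay the ergodic-theorem argument establishes.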

The above arguments can be generalized to the case of a \(SETBL_{d}\left( \ell ;0,0,p,q\right) \) model as follows.

Let us consider the \(SETBL_{d}\left( \ell ;0,0,p,q\right) \) model defined by

Let \(\underline{e}_{t}:=\left( e_{t},e_{t-1},\ldots ,e_{t-q+1}\right) ^{\prime }\in \mathbb {R}^{q}.\) Then we get

with \(\left( \Lambda _{t}\right) _{t}\) defined as

As above, we can show that the second term in (7.6) converges to zero a.s. In this case, we get

hence model (7.5) is invertible. Finally, we obtain the following result.

Theorem 7.2

Let \(\left( X_{t}\right) \) be the unique strictly stationary and ergodic solution of model (7.5) with \(E\left\{ \log \left| X_{t}\right| \right\} <\infty \). Then model (7.5) is invertible if

and it is not invertible if \(\lambda >0.\)

\(({{\textbf {7.2}}}\)) Geometric ergodicity. The final result concerns the geometric ergodicity of the process \(\left( \underline{Y}_{t}\right) \) driven by the \(SETBL_d\) model in (2.2). The geometric ergodicity of a threshold first-order bilinear process has been studied by Cappuccio et al. (1998). In this article we study the geometric ergodicity of general threshold bilinear models. Let \( \underline{Y}_{0}\) be an arbitrarily specified random vector in \(\mathbb {R} ^{K}\). The process \(\left( \underline{Y}_{t}\right) \) is a Markov chain with state space \(\mathbb {R}^{K}\), \(n\)-step transition probability \(P^{(n)}\left( \underline{y},C\right) =P\left( \underline{Y}_{n}\in C|\underline{Y}_{0}= \underline{y}\right) \) and invariant probability measure \(\pi \left( C\right) =\int P\left( \underline{y},C\right) \pi \left( d\underline{y} \right) \) for any Borel set \(C\in \mathcal {B}_{\mathbb {R}^{K}}\). The chain is said to be \(\phi \)-irreducible if there exists a nontrivial measure \(\phi \) on \( \left( \mathbb {R}^{K},\mathcal {B}_{\mathbb {R}^{K}}\right) \) such that, for all \(C\in \mathcal {B}_{\mathbb {R}^{K}}\), \(\phi \left( C\right)>0\Longrightarrow \exists n>0\), \(P^{(n)}\left( \underline{y},C\right) >0\) for every \(\underline{y}\). It is called a Feller Markov chain if the function \( E\left\{ g(\underline{Y}_{t})|\underline{Y}_{t-1}=\underline{y}\right\} \) is continuous for every bounded and continuous function g defined on \(\mathbb {R}^{K}\). The chain is said to be geometrically ergodic if there exist a probability measure \(\pi \) on \(\mathcal {B}_{\mathbb {R}^{K}}\) and a real number \( c\in ] 0,1[ \) such that \(c^{-n}\left\| P^{(n)}\left( \underline{y},.\right) -\pi \left( .\right) \right\| _{V}\rightarrow 0\) as \(n\rightarrow +\infty \), where \(\left\| .\right\| _{V}\) denotes the total variation norm.

Let us make the following assumption

[A.2] The marginal distribution of \(e_{t}\) is absolutely continuous with respect to the Lebesgue measure \(\lambda \) on \(\mathbb {R}\). The support of \(e_{t}\), defined by its strictly positive density \(f_{e}\), contains an open set around zero.

Proposition 7.1

Under Assumptions \([A0]-[A2]\), the Markov chain \(\left( \underline{Y} _{t}\right) \) is a \(\lambda -\)irreducible, aperiodic and strong Feller chain. Moreover, if \( \left( \underline{Y}_t\right) \) is started from the stationary distribution, then its first component process is strictly stationary and geometrically ergodic.

The proof of this result follows by the same arguments as those of Theorem 4.1 in Bibi and Ghezal (2015b) for the class of Markov-switching bilinear models.

8 Conclusion

In this paper we analyse some statistical and probabilistic properties of the self-exciting threshold superdiagonal bilinear (SETBL) time series model, which are useful in statistics and econometrics. Using a suitable Markovian representation of such a model, we derive sufficient conditions for the existence of a unique, strictly stationary, causal and ergodic solution. Under suitable matrix conditions, we prove that the higher-order moments of the SETBL process and its powers are finite. Then we obtain closed-form matrix expressions for the computation of such moments. As a result, the skewness and kurtosis indexes are explicitly computed. These theoretical results are useful for the diagnostic analysis of the process and for model identification. Furthermore, we derive the autocorrelation function and the covariance functions of the SETBL process, and provide its ARMA representations. Such representations play an important role in forecasting and in estimating model parameters via the generalized method of moments (GMM). Finally, we examine necessary and sufficient conditions for the invertibility and geometric ergodicity of the proposed model. Several examples illustrate the obtained theoretical results.

In the paper we concentrate on superdiagonal bilinear models, as the crucial step of our method is to represent them by a Markovian state-space form. As pointed out by one of the referees, the general model may also be represented by an elegant \(\ell \)-Markovian form. This is an interesting problem, which can be solved by a direct modification of the proposed techniques. In this setting, and particularly to compute explicitly the higher-order moments, we need to consider the additional assumption that the innovation \(e_t\) and the sequence \(\{(\underline{Y}_{\tau - 1}, s_{\tau })\}_{\tau \le t}\) are independent, where \(\underline{Y}_t\) is the Markov chain with state space \(\mathbb R^K\) from Sect. 2 and \(s_t\) denotes the corresponding state function. After that, all the main results of the paper maintain their validity for the general model.

References

Bibi A (2003) On the covariance structure of time-varying bilinear models. Stoch Anal Appl 21:25–60

Bibi A (2023) Higher-order moments of Markov switching bilinear models. Stat Pap (Manuscript)

Bibi A, Ghezal A (2015a) Consistency of quasi-maximum likelihood estimator for Markov-switching bilinear time series models. Stat Probab Lett 100:192–202

Bibi A, Ghezal A (2015b) On the Markov-switching bilinear processes: stationarity, higher-order moments and \(\beta \)-mixing. Stochastics 1–27

Bibi A, Ghezal A (2016) Minimum distance estimation of Markov-switching bilinear processes. Statistics 50(6):1290–1309

Bougerol P, Picard N (1992) Strict stationarity of generalized autoregressive processes. Ann Probab 20:1714–1729

Brandt A (1986) The stochastic equation \(Y_{n + 1} = A_n \, Y_n \, + \, B_n\) with stationary coefficients. Adv Appl Probab 18:221–254

Brockwell PJ, Liu J, Tweedie RL (1992) On the existence of stationary threshold autoregressive moving-average processes. J Time Ser Anal 13(2):95–107

Cappuccio N, Ferrante M, Fonseca G (1998) A note on the stationarity of a threshold first-order bilinear process. Stat Probab Lett 40:379–384

Cavicchioli M (2014) Determining the number of regimes in Markov switching \(VAR\) and \(VMA\) models. J Time Ser Anal 35(2):173–186

Cavicchioli M (2016) Weak \(VARMA\) representations of regime-switching state-space models. Stat Pap 57(3):705–720

Cavicchioli M (2017a) Higher order moments of Markov switching \(VARMA\) models. Economet Theory 33(6):1502–1515

Cavicchioli M (2017b) Asymptotic Fisher information matrix of Markov switching \(VARMA\) models. J Multivar Anal 157:124–135

Chan K-S, Goracci G (2019) On the ergodicity of first-order threshold autoregressive moving-average processes. J Time Ser Anal 40(2):256–264

Chan K-S, Giannerini S, Goracci G, Tong H (2024) Testing threshold regulation in presence of measurement error. Stat Sin 34(3):1–40

Chen CWS, Lee JC (1995) Bayesian inference of threshold autoregressive models. J Time Ser Anal 16(5):483–492

Choi MS, Park JA, Hwang SY (2012) Asymmetric GARCH processes featuring both threshold effect and bilinear structure. Stat Probab Lett 82:419–426

Davis RA, Resnick SI (1996) Limit theory for bilinear processes with heavy-tailed noise. Ann Appl Probab 6(4):1191–1210

Feder PI (1975) On asymptotic distribution theory in segmented regression problems-identified case. Ann Stat 3(1):49–83

Ferrante M, Fonseca G, Vidoni P (2003) Geometric ergodicity, regularity of the invariant distribution and inference for a threshold bilinear Markov process. Stat Sin 13:367–384

Francq C, Zakoïan JM (2001) Stationarity of multivariate Markov-switching ARMA models. J Econometr 102:339–364

Francq C, Zakoïan JM (2005) \(L^2\)-structures of standard and switching regime GARCH models. Stoch Process Appl 115:1557–1582

Gibson D, Nur D (2011) Threshold autoregressive models in finance: a comparative approach. Applied Statistics Education and Research Collaboration (ASEARC)—Conference paper 26. https://ro.uow.edu.au/asearc/26

Goracci G, Giannerini S, Chan K-S, Tong H (2021) Testing for threshold effects in the TARMA framework. arXiv preprint arXiv:2103.13977

Granger CWJ, Anderson A (1978) An introduction to bilinear time series models. Vandenhoeck and Ruprecht, Göttingen

Guégan D, Pham DT (1987) Minimalité et Inversibilité des modèles bilinéaires à temps discret. C R Acad Sci Paris Serie I Math 448:159–162

Kristensen D (2009) On stationarity and ergodicity of the bilinear model with applications to the \(GARCH\) models. J Time Ser Anal 30:125–144

Li G, Li W (2011) Testing a linear time series model against its threshold extension. Biometrika 98(1):243–250

Ling S, Tong H, Li D (2007) Ergodicity and invertibility of threshold moving-average models. Bernoulli 13(1):161–168

Liu J (1990) A note on causality and invertibility of a general bilinear time series model. Adv Appl Probab 22:247–250

Ma L, Grant AJ, Sofronov G (2020) Multiple change point detection and validation in autoregressive time series data. Stat Pap 61:1507–1528

Mohr M, Selk L (2020) Estimating change points in nonparametric time series regression models. Stat Pap 61:1437–1463

Petruccelli JD, Davies N (1986) A portmanteau test for self-exciting threshold autoregressive-type nonlinearity in time series. Biometrika 73(3):687–694

Pham DT (1985) Bilinear Markovian representation and bilinear models. Stoch Process Appl 20(2):295–306

Pham DT (1986) The mixing property of bilinear and generalized random coefficient autoregressive models. Stoch Process Appl 23(2):291–300

Pham DT, Tran LT (1981) On the first order bilinear time series models. J Appl Probab 18(3):617–627

Popovič PM, Bakouch HS (2020) A bivariate integer-valued bilinear autoregressive model with random coefficients. Stat Pap 61:1819–1840

Subba Rao T, Gabr MM (1984) An introduction to bispectral analysis and bilinear time series models, vol 24. Lecture Notes in statistics. Springer, Berlin

Tong H (1983) Threshold models in non-linear time series analysis. Lecture Notes in statistics, vol 21. Springer, Berlin, pp 59–121

Tong H (2011) Threshold models in time series analysis—30 years on. Stat Interface 4(2):107–118

Tong H (2012) Threshold models in non-linear time series analysis, vol 21. Springer, Berlin

Tong H (2015) Threshold models in time series analysis—some reflections. J Econometr 189(2):485–491

Tweedie RL (1974a) R-Theory for Markov chains on a general state space I. Solidarity properties and r-recurrent chains. Ann Probab 2(5):840–864

Tweedie RL (1974b) R-Theory for Markov chains on a general state space II. r-subinvariant measures for r-transient chains. Ann Probab 2(5):865–878

Tweedie RL (1975) Sufficient conditions for ergodicity and recurrence of Markov chains on a general state space. Stoch Process Appl 3(4):385–403

Tweedie RL (1976) Criteria for classifying general Markov chains. Adv Appl Probab 8(4):737–771

Acknowledgements

Maddalena Cavicchioli acknowledges financial support by FAR 2022 research grant of the University of Modena and Reggio E., Italy. The authors thank the Editor in Chief of the journal, Professor Carsten Jentsch, and the three anonymous referees for their constructive comments and very useful suggestions and remarks which were most valuable for improvement of the final version of the paper.

Funding

Open access funding provided by Università degli Studi di Modena e Reggio Emilia within the CRUI-CARE Agreement.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghezal, A., Cavicchioli, M. & Zemmouri, I. On the existence of stationary threshold bilinear processes. Stat Papers 65, 3739–3767 (2024). https://doi.org/10.1007/s00362-024-01539-z

DOI: https://doi.org/10.1007/s00362-024-01539-z