Abstract

We consider the problem of predicting values of a random process or field satisfying a linear model \(y(x)=\theta ^\top f(x) + \varepsilon (x)\), where errors \(\varepsilon (x)\) are correlated. This is a common problem in kriging, where the case of discrete observations is standard. By focussing on the case of continuous observations, we derive expressions for the best linear unbiased predictors and their mean squared error. Our results are also applicable in the case where the derivatives of the process y are available, and either a response or one of its derivatives needs to be predicted. The theoretical results are illustrated by several examples, in particular for the popular Matérn 3/2 kernel.


1 Introduction

A common problem, which occurs in many different areas, most notably geostatistics (Ripley 1991; Cressie 1993), computer experiments (Sacks et al. 1989; Stein 1999; Santner et al. 2003; Leatherman et al. 2017) and machine learning (Rasmussen and Williams 2006), is to predict the response \(y(t_0)\) at a point \(t_0\in \mathbb {R}^d\) from given responses \(y(t_1),\ldots ,y(t_N)\) at points \(t_1,\ldots ,t_N\in \mathbb {R}^d\), where \(t_0\ne t_i\) for all \(i=1,\ldots ,N\). Making the prediction assuming that responses are observations of a random field is called kriging (Stein 1999). In classical kriging, it is assumed that y is a random field of the form

$$\begin{aligned} y(t)=\theta ^\top f(t)+\epsilon (t), \end{aligned}$$(1.1)

where \(f(t) \in \mathbb {R}^m \) is a vector of known regression functions, \(\theta \in \mathbb {R}^m \) is a vector of unknown parameters and \(\epsilon \) is a random field with zero mean and existing covariance kernel, say \(K(t,s) = E [\epsilon (t)\epsilon (s) ]\). The components of the vector-function f(t) are assumed to be linearly independent on the set of points where the observations have been made.

It is well-known, see e.g. (Sacks et al. 1989), that in the case of discrete observations the best linear unbiased predictor (BLUP) of \(y(t_0)\) has the form

$$\begin{aligned} \hat{y}(t_0)=f^\top (t_0)\hat{\theta }_{\textrm{BLUE}}+K_{t_0}^\top \varSigma ^{-1}\big (Y-X\hat{\theta }_{\textrm{BLUE}}\big ), \end{aligned}$$(1.2)

where \(\varSigma =\big (K(t_i,t_j)\big )_{i,j=1}^N\) is an \(N\!\times \! N\)-matrix, \(K_{t_0}=\big (K(t_0,t_1),\ldots ,K(t_0,t_N)\big )^\top \) is a vector in \(\mathbb {R}^N\), \(X=(f(t_1),\ldots ,f(t_N))^\top \) is an \(N\!\times \! m\)-matrix, \(Y=(y(t_1),\ldots ,\) \(y(t_N))^\top \in \mathbb {R}^N\) is a vector of observations and

$$\begin{aligned} \hat{\theta }_{\textrm{BLUE}}=(X^\top \varSigma ^{-1}X)^{-1}X^\top \varSigma ^{-1}Y \end{aligned}$$

is the best linear unbiased estimator (BLUE) of \(\theta \). The BLUP satisfies the unbiasedness condition \( \mathbb {E}[\hat{y}(t_0)]=\mathbb {E}[y(t_0)] \) and minimizes the mean squared error \(\textrm{MSE}(\tilde{y}(t_0))=\mathbb {E}\left( y(t_0) -\tilde{y}(t_0)\right) ^2\) in the class of all linear unbiased predictors \(\tilde{y}(t_0)\); its mean squared error is

$$\begin{aligned} \textrm{MSE}(\hat{y}(t_0))=K(t_0,t_0)-K_{t_0}^\top \varSigma ^{-1}K_{t_0}+\big (f(t_0)-X^\top \varSigma ^{-1}K_{t_0}\big )^\top (X^\top \varSigma ^{-1}X)^{-1}\big (f(t_0)-X^\top \varSigma ^{-1}K_{t_0}\big ). \end{aligned}$$

In the present paper, which is a follow-up of (Dette et al. 2019), we generalize the predictor (1.2) to the case of continuous observations of the response including possibly derivatives and prediction of derivatives and weighted averages of y(t). In practice, observations are rarely continuous, but they are often made on very fine grids, which makes the model with continuous observations a good approximation for such experimental schemes. Analyzing models with a very large number of observations can be significantly harder than analyzing models with continuous observations.

An important point concerning the construction of the BLUPs at different points is that they share a considerable common part related to the use of the same BLUE. This can lead to significant computational savings relative to independent construction of the BLUPs. This observation extends to the cases when the observations are taken in \(\mathbb {R}^d\) and when derivatives are also used for predictions.
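
To make the shared-BLUE remark concrete in the discrete setting, the following is a minimal sketch (not the authors' code) that evaluates the standard discrete BLUP, \(f^\top (t_0)\hat{\theta }_{\textrm{BLUE}}+K_{t_0}^\top \varSigma ^{-1}(Y-X\hat{\theta }_{\textrm{BLUE}})\), at many prediction points while solving against \(\varSigma \) for X and Y only once. The function names `exp_kernel` and `blup_many`, the exponential kernel and the linear trend in the usage note are illustrative assumptions.

```python
import numpy as np

def exp_kernel(t, s, lam=2.0):
    """Exponential kernel K(t, s) = exp(-lam * |t - s|) (illustrative choice)."""
    return np.exp(-lam * np.abs(np.subtract.outer(t, s)))

def blup_many(t_obs, y_obs, t_new, f, kernel):
    """Discrete BLUP and its MSE at every point of t_new, reusing one BLUE.

    f maps an array of points to the (len(points) x m) regression matrix.
    """
    X = f(t_obs)                                   # N x m
    Sigma = kernel(t_obs, t_obs)                   # N x N
    # Shared part: one solve against Sigma for X and Y together.
    S = np.linalg.solve(Sigma, np.column_stack([X, y_obs]))
    SiX, SiY = S[:, :-1], S[:, -1]
    C = X.T @ SiX                                  # X' Sigma^{-1} X
    theta = np.linalg.solve(C, X.T @ SiY)          # BLUE of theta
    resid_w = SiY - SiX @ theta                    # Sigma^{-1} (Y - X theta)

    # Point-specific part.
    F0 = f(t_new)                                  # M x m
    K0 = kernel(t_new, t_obs)                      # M x N, rows K_{t0}'
    pred = F0 @ theta + K0 @ resid_w
    Z = np.linalg.solve(Sigma, K0.T)               # columns Sigma^{-1} K_{t0}
    c = F0 - K0 @ SiX                              # rows f(t0) - X' Sigma^{-1} K_{t0}
    k00 = np.diag(kernel(t_new, t_new))            # K(t0, t0)
    mse = k00 - np.sum(K0 * Z.T, axis=1) + np.sum(c * np.linalg.solve(C, c.T).T, axis=1)
    return pred, mse, theta
```

A possible call is `blup_many(t_obs, y_obs, t_new, lambda t: np.column_stack([np.ones_like(t), t]), exp_kernel)`. The prediction itself costs only one inner product per new point once `theta` and `resid_w` are available; the MSE still needs one additional solve per point.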

The remaining part of this paper is organized as follows. In Sect. 2 we consider the BLUPs when we observe the process or field only. In Sect. 3 we study the BLUPs for either process values or one of its derivatives when we observe the process (or field) with derivatives. In Sect. 4 we give additional illustrating examples of the BLUPs for particular kernels. In Sect. 5 we provide proofs of the main results.

2 Prediction without derivatives

2.1 Prediction at a point

Assume \(\mathcal {T}\subset \mathbb {R}^d\) and consider prediction at a point \(t_0\not \in \mathcal {T}\) for a response given by the model (1.1), where the observations for all \(t\in \mathcal {T}\) are available. The vector-function \(f\!: \mathcal {T}\!\rightarrow \! \mathbb {R}^m \) is assumed to contain functions which are bounded, integrable, smooth enough and linearly independent on \(\mathcal {T}\); the covariance kernel \(K(t,s) = E[\epsilon (t) \epsilon (s) ]\) is assumed strictly positive definite.

A general linear predictor of \(y(t_0)\) can be defined as

$$\begin{aligned} \hat{y}_Q(t_0)=\int _\mathcal {T}y(t)Q(dt), \end{aligned}$$

where Q is a signed measure defined on the Borel field of \(\mathcal {T}\). This predictor is unbiased if \(\mathbb {E}[\hat{y}_Q(t_0)]=\mathbb {E}[y(t_0)]\), which is equivalent to the condition

$$\begin{aligned} \int _\mathcal {T}f(t)Q(dt)=f(t_0). \end{aligned}$$

The mean squared error (MSE) of \(\hat{y}_Q(t_0)\) is given by

$$\begin{aligned} \textrm{MSE}(\hat{y}_Q(t_0))=K(t_0,t_0)-2\int _\mathcal {T}K(t,t_0)Q(dt)+\int _\mathcal {T}\!\int _\mathcal {T}K(t,s)Q(dt)Q(ds). \end{aligned}$$

The best linear unbiased predictor (BLUP) \(\hat{y}_{Q_*}(t_0)\) of \(y(t_0)\) minimizes the mean squared error \(\textrm{MSE}(\hat{y}_{Q}(t_0))\) in the set of all linear unbiased predictors. The corresponding signed measure \(Q_*\) will be called the BLUP measure throughout this paper. Unlike the case of discrete observations, the BLUP measure does not have to exist for continuous observations.

Assumption A

(1) The best linear unbiased estimator (BLUE) \(\hat{\theta }_{\textrm{BLUE}} = \int _\mathcal {T}y(t) G(dt) \) exists in the model (1.1), where G(dt) is some signed vector-measure on \(\mathcal {T}\);

(2) There exists a signed measure \(\zeta _{t_0}(dt)\) which satisfies the equation

$$\begin{aligned} \int _\mathcal {T}K(t,s)\zeta _{t_0}(dt)= K(t_0,s),\;\; \forall s\in \mathcal {T}. \end{aligned}$$(2.1)

Assumption A will be discussed in Sect. 2.2 below. We continue with a general statement establishing the existence and explicit form of the BLUP.

Theorem 1

If Assumption A holds then the BLUP measure \(Q_*\) exists and is given by

$$\begin{aligned} Q_*(dt)=\zeta _{t_0}(dt)+c^\top G(dt), \end{aligned}$$(2.2)

where the signed measure \(\zeta _{t_0}(dt)\) satisfies (2.1) and \( c= f(t_0)-\int _\mathcal {T}f(t)\zeta _{t_0}(dt)\,. \) The MSE of the corresponding BLUP \(\hat{y}_{Q_*}(t_0)\) is given by

$$\begin{aligned} \textrm{MSE}(\hat{y}_{Q_*}(t_0))=K(t_0,t_0)+c^\top D f(t_0)-\int _\mathcal {T}K(t,t_0)Q_*(dt), \end{aligned}$$(2.3)

where \( D\!=\! \int _\mathcal {T}\!\! \int _\mathcal {T}K(t,s) G(dt) G^\top (ds) \) is the covariance matrix of \(\hat{\theta }_{\textrm{BLUE}}\!=\!\int _\mathcal {T}y(t) G(dt) \).

This theorem is a particular case of a more general Theorem 2, which considers the problem of predicting an integral of the response. A few examples illustrating applications of Theorem 1 for particular kernels are given in Sect. 4.

We can interpret the construction of the BLUP at \(t_0\) in model (1.1) as the following two-stage algorithm. At stage 1, we use the BLUE \(\hat{\theta }_{\textrm{BLUE}}=\int _\mathcal {T}y(t) G(dt) \) for estimating \(\theta \). At stage 2, we compute the BLUP in the model

which is a model with new error process and no trend. Straightforwardly, the covariance function of the process \(\tilde{y}(t)\) is calculated as

It then follows from Theorem 1 applied to the new model that the signed measure \(Q_*(dt)\) satisfies the equation

From (2.3) in the new model, we obtain an alternative representation for the MSE of the BLUP \(\hat{y}_{Q_*}(t_0)\); that is,

2.2 Validity of Assumption A

If \(\mathcal{T}\) is a discrete set then Assumption A is satisfied for any strictly positive definite covariance kernel.

In general, the main part of Assumption A is the existence of the BLUE of the parameter \(\theta \), which has been clarified by Dette et al. (2019). According to their Theorem 2.2, the BLUE of \(\theta \) exists if and only if there exists a signed vector-measure \(G=(G_1, \ldots , G_m)^\top \) on \(\mathcal {T}\), such that the \(m\!\times \! m \)-matrix \(\int _\mathcal {T}f(t) G^\top (dt)\) is the identity matrix and

$$\begin{aligned} \int _\mathcal {T}K(t,s)G(dt)=D f(s) \end{aligned}$$(2.4)

holds for all \(s\in \mathcal {T}\) and some \(m\! \times \! m\)-matrix D. In this case, \(\widehat{\theta }_{\textrm{BLUE}} = \int _\mathcal {T}Y(t)G(dt) \) and D is the covariance matrix of \(\widehat{\theta }_{\textrm{BLUE}} \); this matrix does not have to be non-degenerate.

Let \(\mathcal {H}_K\) be the reproducing kernel Hilbert space (RKHS) associated with kernel K. If the function \(K(t_0,s)\) belongs to \(\mathcal {H}_K\), then the second part of Assumption A is also satisfied; that is, there exists a measure \(\zeta _{t_0}(dt)\) satisfying Eq. (2.1). This follows from results of Parzen (1961). Note that the function \(K(t_0,s)\) does not automatically belong to \(\mathcal {H}_K\) since in general \(t_0 \notin \mathcal {T}\).

If all components of f belong to \(\mathcal {H}_K\) then Assumption A holds and the matrix D in (2.4) is non-degenerate; see Dette et al. (2019) and Parzen (1961).

If the matrix D in Theorem 1 is non-degenerate then this theorem can be reformulated in the following form which is practically more convenient as there is no unbiasedness condition to check.

Proposition 1

Assume that there exists a signed measure \(\zeta _{t_0}(dt)\) satisfying (2.1) and a signed vector-measure \(\zeta (dt)\) satisfying equation

$$\begin{aligned} \int _\mathcal {T}K(t,s)\zeta (dt)=f(s),\;\; \forall s\in \mathcal {T}. \end{aligned}$$(2.5)

If additionally the matrix \( C=\int _\mathcal {T}f(t)\zeta ^\top (dt) \) is non-degenerate, then the BLUP measure exists and is given by (2.2) with \(D=C^{-1}\). Its MSE is given by (2.3).

Clearly, if the conditions of Proposition 1 are satisfied then the BLUE measure G(dt) is expressed via the measure \(\zeta (dt)\) by \(G(dt)=C^{-1}\zeta (dt)\).

Explicit forms of the BLUP for some kernels are given in Sect. 4.

2.3 Matching expressions in the case of discrete observations

Let us show that in the case of discrete observations the form of the BLUP of Proposition 1 coincides with the standard form (1.2). Assume that \(\mathcal{T}\) is finite, say, \(\mathcal{T}=\{t_1,\ldots ,t_N\}\). In this case, Eq. (2.5) has the form \( \varSigma \zeta = X\), where \(\zeta \) is an \(N\!\times \! m\)-matrix. Since the kernel K is strictly positive definite, this gives \(\zeta =\varSigma ^{-1}X\), and we also obtain \(C=X^\top \varSigma ^{-1}X\), \(G^\top =C^{-1}\zeta ^\top \). A general linear predictor is of the form \(\tilde{y}(t_0)=Q^\top Y\) and the BLUP is \(Q_*^\top Y\) with \( Q^\top _*=\zeta _{t_0}^\top +c^\top G^\top , \) where \(\zeta _{t_0}=\varSigma ^{-1}K_{t_0}\) satisfies Eq. (2.1) and \( c= f(t_0)-X^\top \zeta _{t_0}. \) Expanding the expression for \(Q^\top _*\) we obtain

$$\begin{aligned} Q^\top _*=K^\top _{t_0}\varSigma ^{-1}+f^\top (t_0)C^{-1}X^\top \varSigma ^{-1}-K^\top _{t_0}\varSigma ^{-1}XC^{-1}X^\top \varSigma ^{-1}. \end{aligned}$$(2.6)

The classical form of the BLUP is given by (1.2), which can be written as \(Q^\top Y\) with \( Q^\top =f^\top (t_0)C^{-1}X^\top \varSigma ^{-1}+K^\top _{t_0}\varSigma ^{-1}-K^\top _{t_0}\varSigma ^{-1}XC^{-1}X^\top \varSigma ^{-1} \) and coincides with (2.6).
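
The matching of the two forms can also be checked numerically. The sketch below builds the weight vector once through the route of Proposition 1 (solve \(\varSigma \zeta =X\), then set \(Q_*=\zeta _{t_0}+Gc\)) and once from the classical expression. The design points, the kernel parameter `lam` and the quadratic trend are arbitrary illustrative choices, not taken from the paper.

```python
import numpy as np

lam = 1.5                                              # kernel parameter (illustrative)
t = np.array([0.0, 0.1, 0.25, 0.45, 0.6, 0.8, 1.0])    # design points t_1..t_N
t0 = 1.3                                               # prediction point outside the design

K = lambda a, b: np.exp(-lam * np.abs(np.subtract.outer(a, b)))
X = np.column_stack([np.ones_like(t), t, t**2])        # rows f(t_i)' with f(t) = (1, t, t^2)
f0 = np.array([1.0, t0, t0**2])                        # f(t0)
Sigma = K(t, t)
K0 = K(np.array([t0]), t)[0]                           # vector K_{t0}

# Route of Proposition 1.
zeta = np.linalg.solve(Sigma, X)                       # Sigma zeta = X
C = X.T @ zeta                                         # C = X' Sigma^{-1} X
G = zeta @ np.linalg.inv(C)                            # G' = C^{-1} zeta' (C is symmetric)
zeta0 = np.linalg.solve(Sigma, K0)                     # discrete version of Eq. (2.1)
c = f0 - X.T @ zeta0
Q_star = zeta0 + G @ c                                 # Q_*' = zeta_{t0}' + c' G'

# Classical route: weights of Y in f0' theta_hat + K0' Sigma^{-1} (Y - X theta_hat).
Si = np.linalg.inv(Sigma)
Ci = np.linalg.inv(C)
Q_classic = Si @ X @ Ci @ f0 + Si @ K0 - Si @ X @ Ci @ X.T @ Si @ K0

print(np.max(np.abs(Q_star - Q_classic)))              # difference at rounding-error level
```

The explicit inverses are used only to mirror the formulas term by term; in practice one would use `np.linalg.solve` throughout.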

2.4 Predicting an average with respect to a measure

Assume that we have a realization of a random field (1.1) observed for all \(t\in \mathcal {T}\subset \mathbb {R}^d\). Consider the prediction problem of \(Z=\int _\mathcal{S} y(t) \nu (dt),\) where \(\nu (dt) \) is some (signed) measure on the Borel field of \(\mathbb {R}^d\) with support \(\mathcal{S}\). Assume that \(\mathcal{S} {\setminus } \mathcal {T}\ne \emptyset \) (otherwise, if \(\mathcal{S} \subseteq \mathcal {T}\), the problem is trivial as we observe the full trajectory \(\{y(t) ~|~ t \in \mathcal {T}\} \)). We interpret Z as a weighted average of the true process values on \(\mathcal{S}\). The general linear predictor can be defined as

$$\begin{aligned} \hat{Z}_Q=\int _\mathcal {T}y(t)Q(dt), \end{aligned}$$

where Q is a signed measure on the Borel field of \(\mathcal {T}\). The estimator \(\hat{Z}_Q\) is unbiased if and only if

$$\begin{aligned} \int _\mathcal {T}f(t)Q(dt)=\int _\mathcal{S}f(t)\nu (dt). \end{aligned}$$(2.8)

The BLUP signed measure \(Q_*\) minimizes

among all signed measures Q satisfying the unbiasedness condition (2.8). Assumption A and Theorem 1 generalize to the following.

Assumption A\(^\prime \). The BLUE \(\hat{\theta }_{\textrm{BLUE}}\) exists and there exists a signed measure \(\zeta _{\nu }(dt)\) which satisfies the equation

$$\begin{aligned} \int _\mathcal {T}K(t,s)\zeta _{\nu }(dt)=\int _\mathcal{S}K(t,s)\nu (dt),\;\; \forall s\in \mathcal {T}. \end{aligned}$$(2.9)

Theorem 2

Suppose that Assumption A\(^\prime \) holds and let D be the covariance matrix of \(\hat{\theta }_{\textrm{BLUE}}=\int _\mathcal {T}y(t) G(dt) \). Then the BLUP measure exists and is given by

$$\begin{aligned} Q_*(dt)=\zeta _{\nu }(dt)+c^\top G(dt), \end{aligned}$$

where \(\zeta _{\nu }(dt)\) is the signed measure satisfying (2.9) and \( c\!=\! \int _{\mathcal{S}}f(s)\nu (ds)-\int _\mathcal {T}f(t)\zeta _{\nu }(dt). \) The MSE of the BLUP \(\hat{Z}_{Q_*}\) is given by

The proof of Theorem 2 is given in Sect. 5 and contains the proof of Theorem 1 as a special case. Note also that the BLUP \(\hat{Z}_{Q_*}\) is simply the average (with respect to the measure \(\nu \)) of the BLUPs at points \(s \in \mathcal{S}\).
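
The averaging property noted above is easy to see in a discrete stand-in for the problem. In the sketch below, \(\mathcal {T}\) is replaced by a finite grid and \(\nu \) by a three-point measure; the grid, the support points, the weights `nu`, the kernel and the trend \(f(t)=(1,t)^\top \) are all illustrative assumptions.

```python
import numpy as np

lam = 2.0
kern = lambda a, b: np.exp(-lam * np.abs(np.subtract.outer(a, b)))
feat = lambda a: np.column_stack([np.ones_like(a), a])    # rows f(t)' with f(t) = (1, t)

t = np.linspace(0.0, 1.0, 8)         # discrete stand-in for the observation set T
s = np.array([1.2, 1.5, 2.0])        # support S of nu, outside T
nu = np.array([0.2, 0.5, 0.3])       # point masses of the measure nu

Sigma, X = kern(t, t), feat(t)
SiX = np.linalg.solve(Sigma, X)
G = SiX @ np.linalg.inv(X.T @ SiX)   # BLUE weights: theta_hat = G' Y

def blup_weights(k_vec, f_vec):
    """Weights zeta + G c, where Sigma zeta = k_vec and c = f_vec - X' zeta."""
    zeta = np.linalg.solve(Sigma, k_vec)
    c = f_vec - X.T @ zeta
    return zeta + G @ c

# BLUP of Z = sum_k nu_k y(s_k): right-hand sides are averaged with respect to nu.
Q_Z = blup_weights(nu @ kern(s, t), nu @ feat(s))

# nu-average of the pointwise BLUP weights at s_1, s_2, s_3.
Q_avg = sum(w * blup_weights(kern(np.array([p]), t)[0], feat(np.array([p]))[0])
            for w, p in zip(nu, s))

print(np.max(np.abs(Q_Z - Q_avg)))   # difference at rounding-error level
```

The agreement reflects the linearity of both the defining equation for \(\zeta _\nu \) and the vector c in the measure \(\nu \).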

2.5 Location scale model on a product set

In this section we consider the location scale model

$$\begin{aligned} y(t)=\theta +\varepsilon (t),\;\; t\in \mathcal {T}, \end{aligned}$$(2.11)

and assume that the kernel \({\textsf {K}}\) of the random field \({\varepsilon }(t)\) is given by

$$\begin{aligned} {\textsf {K}}(t,t')=\mathbb {E}[\varepsilon (t)\varepsilon (t')]=K_1(t_1,t_1')K_2(t_2,t_2') \end{aligned}$$(2.12)

for \(t=(t_1,t_2), t'=(t'_1,t'_2)\in \mathcal {T}.\) We also assume that the set \(\mathcal {T}\subset \mathbb {R}^2\) is a product-set of the form \(\mathcal {T}= \mathcal {T}_1 \times \mathcal {T}_2\), where \(\mathcal {T}_1 \) and \( \mathcal {T}_2\) are Borel subsets of \(\mathbb {R}\) (in particular, these sets could be discrete or continuous). The kernel K of the product form (2.12) is called separable; such kernels are frequently used in modelling of spatial-temporal structures because they offer enormous computational benefits, including rapid fitting and simple extensions of many techniques from time series and classical geostatistics [see Gneiting et al. (2007) or Fuentes (2006) among many others].

Assume that Assumption A\(^\prime \) holds for two one-dimensional models

$$\begin{aligned} y_{(i)}(u)=\theta +\varepsilon _{(i)}(u),\;\; u\in \mathcal {T}_i\;\; (i=1,2), \end{aligned}$$(2.13)

with \( K_i(u,u')=\mathbb {E}[\varepsilon _{(i)}(u)\varepsilon _{(i)}(u')] ~,~~u,u' \in \mathcal {T}_i ~~ (i=1,2). \) Let the measures \(G_i(du)\) define the BLUE \( \int _{\mathcal {T}_i} y_{(i)}(u) G_i(du) \) in these two models. Then the BLUE of \(\theta \) in the model (2.11) is given by \( \hat{\theta }= \int _\mathcal {T}y(t) {\textsf {G}}(dt), \) where \( {\textsf {G}}\) is a product-measure \( {\textsf {G}}(dt)=G_1(dt_1)G_2(dt_2). \) Assume we want to predict y(t) at a point \(T=(T_1,T_2) \notin \mathcal {T}\). Note that Eq. (2.9) can be rewritten as

A solution of the above equation has the form \( {\zeta }_{T}(dt_1,dt_2)= \zeta _{T_1}(dt_1) \zeta _{T_2}(dt_2)\,, \) where \(\zeta _{T_i}(dt)\) (\(i=1,2\)) satisfies the equation

$$\begin{aligned} \int _{\mathcal {T}_i}K_i(u,s)\zeta _{T_i}(du)=K_i(T_i,s),\;\; \forall s\in \mathcal {T}_i. \end{aligned}$$

Finally, the BLUP at the point \(T=(T_1,T_2)\) is \(\int _\mathcal {T}y(t) \textsf {Q}_{*}(dt) \), where

The measure \( {\textsf {G}}(dt)\) is the BLUE measure and does not depend on \(T_1,T_2\). On the other hand, the measure \( {\zeta }_{T}(dt)\) and constant c do depend on \(T_1,T_2\). The MSE of the BLUP is \(\textrm{MSE}(\hat{ y}_{Q_*}(T))=1+c-\int _\mathcal {T}{\textsf {K}} (t,T) \textsf {Q}_{*}(dt)\,.\)
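
When \(\mathcal {T}_1\) and \(\mathcal {T}_2\) are finite, the product structure becomes a Kronecker structure, which gives a quick numerical check of the construction. The sketch below assembles the BLUP weights from the two one-dimensional problems and compares them with a direct solve on the full grid. The exponential factors with a common parameter `lam`, the grids `u1`, `u2` and the point `(T1, T2)` are illustrative assumptions.

```python
import numpy as np

lam = 1.0
k1 = lambda a, b: np.exp(-lam * np.abs(np.subtract.outer(a, b)))   # 1-D factor kernel

def parts_1d(u, T):
    """BLUE weights G_i and solution zeta_{T_i} for the 1-D location scale model on u."""
    S = k1(u, u)
    g = np.linalg.solve(S, np.ones_like(u))
    G = g / g.sum()                                     # weights of the 1-D BLUE
    zeta = np.linalg.solve(S, k1(np.array([T]), u)[0])  # S zeta = K_i(T_i, .)
    return G, zeta

u1 = np.linspace(0.0, 1.0, 4)        # T_1
u2 = np.linspace(0.0, 1.0, 5)        # T_2
T1, T2 = 1.4, 0.3                    # prediction point outside the grid

G1, z1 = parts_1d(u1, T1)
G2, z2 = parts_1d(u2, T2)
zeta_T = np.kron(z1, z2)             # product measure zeta_{T_1} x zeta_{T_2}
G = np.kron(G1, G2)                  # product measure G_1 x G_2
c = 1.0 - zeta_T.sum()               # c = f(T) - integral of f against zeta_T, with f = 1
Q_prod = zeta_T + c * G

# Direct solve on the full grid (same point ordering as np.kron).
g1, g2 = np.meshgrid(u1, u2, indexing="ij")
P = np.column_stack([g1.ravel(), g2.ravel()])
K2 = lambda a, b: k1(a[:, 0], b[:, 0]) * k1(a[:, 1], b[:, 1])
Sigma = K2(P, P)
kT = K2(np.array([[T1, T2]]), P)[0]
ones = np.ones(len(P))
zf = np.linalg.solve(Sigma, kT)
gf = np.linalg.solve(Sigma, ones)
Q_full = zf + (1.0 - ones @ zf) * gf / (ones @ gf)

print(np.max(np.abs(Q_prod - Q_full)))   # difference at rounding-error level
```

The product route only ever solves systems of size 4 and 5, whereas the direct route solves a system of size 20; this is the kind of saving that separable kernels offer.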

Example 1

Consider the case of \(\mathcal {T}=[0,1]^2\) and the exponential kernel

where \(\lambda >0\) and \(t=(t_1,t_2), t'=(t'_1,t'_2) \in [0,1]^{2}\). Define the measure

In view of (Dette et al. (2019), Sect 3.4), \(\int _0^1 y(u) G(du)\) is the BLUE in the model \(y(u)=\theta + \varepsilon (u)\) with kernel \(K(u,u')=\mathbb {E}[\varepsilon (u)\varepsilon (u')]=e^{-\lambda |u-u'|}\), \(u,u' \in [0,1]\). Equation (2.14) can be rewritten as

It follows from (Dette et al. (2019), Sect 3.4) that this equation is satisfied by the measure

For \(T_1 \le 0\) we obtain \( \textsf {Q}_{*}(dt)= {\zeta }_{(T_1,T_2)}(dt)+ c {\textsf {G}}(dt)\) in the following form

Similar formulas can be obtained for \(0<T_1<1\) and \(T_1\ge 1\).

In Table 1 we show the square root of the MSE of the BLUP for the equidistant design supported at points \((i/(N-1),j/(N-1))\), \({i,j=0,1,\ldots ,N-1}\). We can see that the MSE for the design with \(N=4\) is already rather close to the MSE for the design with large N and the design with continuous observations.

In Fig. 1 we show the plot of the square root of the MSE as a function of a prediction point for points \((T_1,T_2) \in [0.5,2]\times [0.5,2]\). As the design is symmetric with respect to the point (0.5, 0.5), the plot of the MSE is also symmetric with respect to this point. Consequently only the upper quadrant is depicted in the figure.

We observe that the MSE tends to zero when the prediction point tends to one of design points and the MSE does not change much if the prediction point is far enough from the observation domain.

Remark 1

The results of this section can be easily generalized to the case of \(d>2\) variables and, moreover, to the model \(y(t)=\theta f(t)+\varepsilon (t)\), where \(t=(t_1,\ldots ,t_d)\in \mathcal {T}_1 \times \ldots \times \mathcal {T}_d\), \( {\textsf {K}}( t,t')=\mathbb {E}[{\varepsilon }(t){\varepsilon }(t')]=K_1(t_1,t_1')\cdots K_d(t_d,t_d') \) and \( f(t)=f_{(1)}(t_1) \cdots f_{(d)}(t_d), \) where \(f_{(i)}\) are some functions on \(\mathcal {T}_i~;~~i=1, \ldots , d\).

3 Prediction with derivatives

In this section we consider prediction problems, where the trajectory y in model (1.1) is differentiable (in the mean-square sense) and derivatives of the process (or field) y are available. This problem is mostly known in the literature under the name of gradient-enhanced kriging and has become very popular in many different areas; see e.g. recent papers (Bouhlel and Martins 2019; Han et al. 2017; Ulaganathan et al. 2016), where many references to applications can also be found.

In Sect. 3.1 we discuss the discrete case of a once-differentiable process and in Sect. 3.2 we consider the general case of a q times differentiable (in the mean-square sense) process y satisfying the model (1.1). For the process y to be q times differentiable, the covariance kernel K and vector-function f in (1.1) have to be q times differentiable, which is one of the assumptions in Sect. 3.2. In Sect. 3.3 we consider the prediction problem for the location scale model on a two-dimensional product set in the case where the kernel \( {\textsf {K}}\) of the random field \({\varepsilon }\) has the product form (2.12). The results of this section can be easily generalized to the case of \(d>2\) variables.

3.1 Discrete case

Consider the model (1.1), where the kernel K and vector-function f are differentiable and one can observe the process y and its derivative at N different points \(t_{1}, \ldots , t_{N} \in \mathbb {R}\). In this case, the BLUP of \(y(t_0)\) has the form

where \(Y_{2N}=(y(t_1),\ldots ,y(t_N),y'(t_1),\ldots ,y'(t_N))^\top \in \mathbb {R}^{2N}\),

is a block matrix,

are \(N\!\times \! N\)-matrices,

is a vector in \(\mathbb {R}^{2N}\), \(X_{2N}=(f(t_1),\ldots ,f(t_N),f'(t_1),\ldots ,f'(t_N))^\top \) is a \(2N\!\times \! m\)-matrix and

is the BLUE of \(\theta \). The MSE of the BLUP (3.1) is given by

For more general cases of prediction of processes and fields with derivatives observed at a finite number of points, see Morris et al. (1993); Näther and Šimák (2003).
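
As an illustration of the discrete case with derivatives, the sketch below assembles the \(2N\!\times \! 2N\) block covariance matrix of \((y(t_1),\ldots ,y(t_N),y'(t_1),\ldots ,y'(t_N))\) and the BLUP weights for the location scale model \(f(t)=1\). It assumes the Matérn 3/2 kernel \(K(t,s)=(1+\lambda |t-s|)e^{-\lambda |t-s|}\), for which \(\partial K/\partial s=\lambda ^2(t-s)e^{-\lambda |t-s|}\) and \(\partial ^2K/\partial t\partial s=\lambda ^2(1-\lambda |t-s|)e^{-\lambda |t-s|}\); the function names are hypothetical.

```python
import numpy as np

def matern32_blocks(t, s, lam):
    """Covariances of (y, y') under K(t, s) = (1 + lam|t-s|) exp(-lam|t-s|)."""
    r = np.subtract.outer(t, s)
    e = np.exp(-lam * np.abs(r))
    k00 = (1 + lam * np.abs(r)) * e               # Cov(y(t), y(s))   = K
    k01 = lam**2 * r * e                          # Cov(y(t), y'(s))  = dK/ds
    k11 = lam**2 * (1 - lam * np.abs(r)) * e      # Cov(y'(t), y'(s)) = d^2K/(dt ds)
    return k00, k01, k11

def blup_weights_with_derivatives(t, t0, lam):
    """Weights on (y(t_1..t_N), y'(t_1..t_N)) for predicting y(t0) when f(t) = 1."""
    k00, k01, k11 = matern32_blocks(t, t, lam)
    Sigma = np.block([[k00, k01], [k01.T, k11]])                 # 2N x 2N
    X = np.concatenate([np.ones_like(t), np.zeros_like(t)])      # f = 1, f' = 0
    a, b, _ = matern32_blocks(np.array([t0]), t, lam)
    K0 = np.concatenate([a[0], b[0]])             # Cov(y(t0), observations)
    zeta0 = np.linalg.solve(Sigma, K0)
    g = np.linalg.solve(Sigma, X)
    C = X @ g                                     # X' Sigma^{-1} X (a scalar here)
    c = 1.0 - X @ zeta0                           # f(t0) - X' zeta0
    Q = zeta0 + c * g / C
    mse = 1.0 + c / C - K0 @ Q                    # K(t0,t0) + c D f(t0) - K0' Q, D = 1/C
    return Q, mse

t = np.linspace(0.0, 1.0, 5)
Q, mse = blup_weights_with_derivatives(t, t0=2.0, lam=2.0)
print(Q[:5], Q[5:], np.sqrt(mse))    # weights on y, weights on y', root MSE
```

For this equidistant design and a prediction point to the right of the design, the weights on the derivatives at the three interior points come out as numerical zeros, which is in line with the remark on interior derivatives made in Example 2.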

3.2 Continuous observations on an interval

Consider the continuous-time model (1.1), where the error process \(\epsilon \) has a q times differentiable covariance kernel K(t, s). We also assume that the vector-function f is q times differentiable and therefore the response y is q times differentiable as well.

Suppose we observe a realization \(y(t)=y^{(0)}(t)\) for \(t \in {\textsc {T}}_0 \subset \mathbb {R}\) and assume that observations of the derivatives \(y^{(i)}(t)\) are also available for all \(t\in {\textsc {T}}_i\), where \({\textsc {T}}_i \subset \mathbb {R}\); \(i=1, \ldots , q\). The sets \({\textsc {T}}_i\) (\(i=0,1, \ldots , q\)) do not have to be the same; some of these sets (but not all) can even be empty. If at least one of the sets \({\textsc {T}}_i\) contains an interval then we speak of a problem with continuous observations.

Consider the problem of prediction of \(y^{(p)}(t_0)\), the p-th derivative of y at a point \(t_0\not \in {\textsc {T}}_p\), where \(0 \le p \le q\).

A general linear predictor of the p-th derivative \(y^{(p)}(t_0)\) can be defined as

where \(\textbf{Y}(t)=\left( y(t), y^{(1)}(t), \ldots , y^{(q)}(t)\right) ^\top \) is a vector with observations of the process and its derivatives, \(\textbf{Q}(dt)= (Q_0(dt),\ldots ,Q_q(dt))^\top \) is a vector of length \( (q+1)\) and \(Q_0(dt),\ldots ,Q_q(dt)\) are signed measures defined on \({\textsc {T}}_0,\ldots , {\textsc {T}}_q\), respectively. The covariance matrix of \(\textbf{Y}(t)\) is

which is a non-negative definite matrix of size \((q+1) \times (q+1)\).

The estimator \(\hat{y}_{p,Q}(t_0) \) is unbiased if \(\mathbb {E}[\hat{y}_{p,Q}(t_0)]=\mathbb {E}[y^{(p)}(t_0)]\), which is equivalent to

where \(\textbf{F}(t)=\left( f(t), f^{(1)}(t), \ldots , f^{(q)}(t)\right) \) is a \(m \!\times \! (q+1)\)-matrix.

Assumption A\(^{\prime \prime }\).

(1) The best linear unbiased estimator (BLUE) \(\hat{\theta }_{\textrm{BLUE}} = \int \textbf{G}(dt)\textbf{Y}(t) \) exists in the model (1.1), where \(\textbf{G}(dt)\) is some signed \(m \!\times \! (q+1)\)-matrix measure (that is, the j-th column of \(\textbf{G}(dt)\) is a signed vector measure defined on \({\textsc {T}}_j\));

(2) There exists a signed vector-measure \(\zeta _{p,t_0}(dt)\) (of size \(q+1\)) which satisfies the equation

where \(\textbf{K}(t,s)=\big (\frac{\partial ^{j}K(t,s)}{\partial s^j}\big )_{j=0}^q\) is a \((q+1)\)-dimensional vector.

The problem of existence and construction of the BLUE in the continuous model with derivatives is discussed in Dette et al. (2019). A general statement establishing the existence and explicit form of the BLUP is as follows. The proof is given in Sect. 5.

Theorem 3

If Assumption A\(^{\prime \prime }\) holds, then the BLUP measure \(\textbf{Q}_{*}\) exists and is given by

where the signed measure \(\zeta _{p,t_0}(dt)\) satisfies (3.3) and

The MSE of the BLUP \(\hat{y}_{p,Q_*}(t_0)\) is given by

where

is the covariance matrix of \(\hat{\theta }_{\textrm{BLUE}}=\int \textbf{G}(dt)\textbf{Y}(t) \).

Example 2

As a particular case of prediction in the model (1.1), in this example we consider the problem of predicting a value of a process (so that \(p=0\)) with Matérn 3/2 covariance kernel \( K(t,s)=(1+\lambda |t-s|)e^{-\lambda |t-s|}\,; \) this kernel is once differentiable and is very popular in practice, see e.g. Rasmussen and Williams (2006). We assume that the vector-function f in the model (1.1) is 4 times differentiable and that the process y and its derivative \(y^\prime \) are observed on an interval [A, B] (so that \({\textsc {T}}_0={\textsc {T}}_1=[A,B]\) in the general statements). As shown in Dette et al. (2019), for this kernel the BLUE measure \(\textbf{G}(dt)\) can be expressed in terms of the signed matrix-measure \(\zeta (dt)=(\zeta _0(dt),\zeta _1(dt))\) with

where

Then using (Dette et al. (2019), Sect. 3.4) we obtain \(\zeta _{0,t_0}(dt)=(\zeta _{0,t_0,0}(dt),\) \( \zeta _{0,t_0,1}(dt))\) with

where for \(t_0>B\) we have \(z_{t_0,A}=0\), \(z_{t_0,1,A}=0,\) \( z_{t_0}(t)=0,\)

We also obtain the matrix

defined in (Dette et al. (2019), Lem. 2.1) from the condition of unbiasedness. If D, the covariance matrix of the BLUE, is non-degenerate, then \(D=C^{-1}\). In the present case,

The BLUE-defining measure \(\textbf{G}(dt)\) is expressed through the measures \(\zeta (dt)\) and the matrix C by \(\textbf{G}(dt)= C^{-1}\zeta (dt)\). The BLUP measure for process prediction is given by

where

For the location scale model with \(f(t)=1\), we obtain \(C=1+\lambda (B-A)/4\), \(c_0=(1-z_{t_0,B})\) and, therefore, a BLUP measure for this model is given by

Therefore, the corresponding BLUP is given by

Table 2 gives values of the square root of the MSE of the BLUP in the location scale model at the point \(t_0=2\) for three families of designs, where \([A,B] = [0,1]\). We observe that observations of derivatives inside the interval do not bring any improvement to the BLUP which can be explained by the fact that the weights of the continuous BLUP at derivatives at points in the interior of the interval [A, B] are 0.

3.3 Location scale model on a product set

Similarly to Sect. 2.5, we consider the location scale model (2.11) defined on the product set \(\mathcal {T}=\mathcal {T}_1 \times \mathcal {T}_2\) (where \(\mathcal {T}_1\) and \(\mathcal {T}_2\) are Borel sets in \(\mathbb {R}\)) with the kernel \( {\textsf {K}}\) of the random field \({\varepsilon }\) having the product form (2.12). The results of this section (as of Sect. 2.5) can be easily generalized to the case of \(d>2\) variables.

Assume that Assumption A\(^{\prime \prime }\) with \(q=1\) is satisfied for two one-dimensional models (2.13). For this assumption to hold, the process \( \{ y(t_1,t_2) ~|~ (t_1,t_2) \in \mathcal {T}\} \) has to be once differentiable with respect to \(t_1\) and \(t_2\). Let the measures \(G_{0,i}(du)\) and \(G_{1,i}(du)\) define the BLUE

in the univariate models (2.13); \(i=1,2\). In this case, results of Dette et al. (2019) imply that the BLUE of \(\theta \) in the model (2.11) has the form \( \hat{\theta }= \int _\mathcal {T}{\textbf{Y}}^\top (t) { {\textsf {G}}}(dt), \) where

and

with \({\textsf {G}}_{ij}(dt)=G_{i,1}(dt_1)G_{j,2}(dt_2)\).

Assume we want to predict y(T) at a point \(T=(T_1,T_2) \notin \mathcal {T}\). The analogue of the Eq. (2.9) is given by

where

Observing the product-form of expressions, we directly obtain that a solution of (3.5) has the form

where measures \(\zeta _{0,T_i}(dt)\) and \(\zeta _{1,T_i}(dt)\) for \(i=1,2\) satisfy the equation

Finally, the BLUP at the point \(T=(T_1,T_2)\) is \(\int _\mathcal {T}{\textbf{Y}}^\top (t) {\textsf {Q}}_{*}(dt) \), where \( {\textsf {Q}}_{*}(dt)= {\textsf {Z}}_{T}(dt)+ {c}_0 {{\textsf {G}}}(dt) \) with \( c_0=1- \int _\mathcal {T}(1,0,0,0){\textsf {Z}}_{T}(dt). \)

The MSE of the BLUP is given by

where D is the variance of the BLUE.

Example 3

Consider a location scale model on a square \([0,1]^{2}\) with a product covariance Matérn 3/2 kernel, that is

where

Define the measures

and

In view of (Dette et al. (2019), Sect. 3.4),

defines a BLUE in the model \(y(u)=\theta + \varepsilon (u)\) with \(u \in [0,1]\) and covariance kernel (3.6). Additionally, from (Dette et al. (2019), Sect. 3.4) we have

and

Finally, \(c_0=1- \int _0^1\!\! \int _0^1 (1,0,0,0){\textsf {Z}}_{T}(dt)= 1-\int _0^1\!\zeta _{0,T_1}(dt_1) \! \int _0^1 \zeta _{0,T_2}(dt_2)\) and the BLUP measure is given by \( {\textsf {Q}}_{*}(dt)= {\textsf {Z}}_{T}(dt)+ c_0 {{\textsf {G}}}(dt); \) that is,

We now investigate the performance of five discrete designs:

(i) the design \(\xi _{N^2,0,0,0}\), where we observe process y on an \(N\!\times \! N\) grid;

(ii) the design \(\xi _{N^2,4,4,4}\), where we observe process y on an \(N\!\times \! N\) grid and additionally derivatives \(\frac{\partial y}{\partial t_1}\), \(\frac{\partial y}{\partial t_2}\), \(\frac{\partial ^2 y}{\partial t_1\partial t_2}\) at 4 corners of \([0,1]^2\);

(iii) the design \(\xi _{N^2,N^2,N^2,0}\), where we observe process y and derivatives \(\frac{\partial y}{\partial t_1}\), \(\frac{\partial y}{\partial t_2}\) on an \(N\!\times \! N\) grid;

(iv) the design \(\xi _{N^2,4N-4,4N-4,4N-4}\), where we observe process y on an \(N\!\times \! N\) grid and derivatives \(\frac{\partial y}{\partial t_1}\), \(\frac{\partial y}{\partial t_2}\), \(\frac{\partial ^2 y}{\partial t_1\partial t_2}\) at \(4N-4\) equidistant points on the boundary of \([0,1]^2\);

(v) the design \(\xi _{N^2,N^2,N^2,N^2}\), where we observe process y and derivatives \(\frac{\partial y}{\partial t_1}\), \(\frac{\partial y}{\partial t_2}\), \(\frac{\partial ^2 y}{\partial t_1\partial t_2}\) on an \(N\!\times \! N\) grid.

The results are reported in Table 3, which shows the square root of the MSE of predictions at the points (2, 2) and (0.5, 2) for different sample sizes. For any given \(N\ge 2\), the MSEs for prediction outside the square \([0,1]^2\) for the designs \(\xi _{N^2,N^2,N^2,N^2}\) and \(\xi _{N^2,4N-4,4N-4,4N-4}\) are exactly the same. This is related to the fact that the BLUP weights associated with all derivatives at interior points in \([0,1]^2\) of the design \(\xi _{N^2,N^2,N^2,N^2}\) are all 0. This means that for optimal prediction of \(y(t_0)\) at a point \(t_0\) outside the observation region one needs the design guaranteeing the optimal BLUE plus the observations of y(t) and \(y'(t)\) at points t closest to \(t_0\). Note that the results of (Dette et al. (2019), Sect. 3.4) imply that the continuous optimal design for the BLUE does not use values of any derivatives of the process (or field for the product-covariance model) in the interior of \(\mathcal {T}\).

The observation above is consistent with our other numerical experience, which has shown that the BLUP at a point \(t_0 \in (0,1) \times (0,1)\) constructed from the design \(\xi _{N^2,N^2,N^2,N^2}\) has vanishing weights at all derivatives of interior points of \([0,1]^2\) with five exceptions: the center 0 and the four points which are closest to \(t_0\) in the \(L_\infty \) metric.

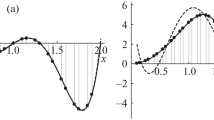

Figures 2 and 3 compare the MSE for some designs. Figure 3 illustrates that the MSE for designs \(\xi _{N^2,4N-4,4N-4,4N-4}\) and \(\xi _{N^2,N^2,N^2,N^2}\) is exactly the same for all points outside \([0,1]^2\) and almost the same at all interior points of \([0,1]^2\). This is in full agreement with Table 3, which also illustrates this phenomenon.

4 Additional examples of predicting process values

In this section, we give further examples of prediction of values of specific random processes y(t), which follow the model (1.1) and are observed for all \(t\in \mathcal {T}=[A,B]\). In Sect. 4.1, we illustrate application of Proposition 1 and in Sect. 4.2 we give an example of application of Theorem 3. In the example of Sect. 4.2 we consider the integrated Brownian motion process, which is a once differentiable random process, and we assume that in addition to values of y(t), the values of the derivative of y(t) are also available. As in the main body of the paper, the components of the vector-function f(t) in (1.1) are assumed to be smooth enough (for all formulas to make sense) and linearly independent on \(\mathcal {T}\).

4.1 Prediction for Markovian error processes

4.1.1 General Markovian process

Consider the prediction of the random process (1.1) with \(\mathcal {T}=[A,B]\) and the Markovian kernel \(K(t,s)=u(t)v(s)\) for \(t\le s\), where \(u(\cdot )\) and \(v(\cdot )\) are twice differentiable positive functions such that \(q(t)=u(t)/v(t)\) is monotonically increasing. As shown in (Dette et al. (2019),Sect. 2.6), a solution of the equation \( \int _A^B K(t,s)\zeta (dt)= f(s) \) holding for all \(s\in \mathcal {T}\) is the signed vector-measure \(\zeta (dt)=z_{A}\delta _A(dt)+z_{B}\delta _B(dt)+z(t)dt\) with

where \(\psi '\) denotes the derivative of a function \(\psi \) and the vector-function \(h(\cdot )\) is defined by \(h(t)=f(t)/v(t)\).

Then we obtain \(\zeta _{t_0}(dt)=z_{0A}\delta _A(dt)+z_{0B}\delta _B(dt)\) with

and

The BLUP measure is given by

and the MSE of the BLUP is

4.1.2 Prediction when the error process is Brownian motion

The covariance kernel \(K(t,s)=\min (t,s)\) of Brownian motion is a particular case of the Markovian kernel with \(u(t)=t\) and \(v(s)=1\), \(t\le s\). Below we present the BLUP for a few choices of f(t).

For the location-scale model with \(f(t)=1\), we obtain \(c=0\) and, therefore, the BLUP measure is given by \(Q_{*}(dt)=\delta _B(dt)\). The BLUP is \(\hat{y}_{Q_*}(t_0)=y(B)\) and it has \(\textrm{MSE}(\hat{y}_{Q_*}(t_0))=t_0-B\).

For the model with \(f(t)=t\), we obtain \(\tilde{c}=B^{-1}(t_0-B)\) and, thus, the BLUP measure is given by

$$\begin{aligned} Q_{*}(dt)=\frac{t_0}{B}\,\delta _B(dt). \end{aligned}$$

The BLUP is \(\hat{y}_{Q_*}(t_0)=\frac{t_0}{B} y(B)\) and it has \(\textrm{MSE}(\hat{y}_{Q_*}(t_0))=\frac{t_0}{B} (t_0-B)\).
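
The two closed forms above can be reproduced with a discrete design, because for \(K(t,s)=\min (t,s)\) and \(t_0>B\) the vector of covariances with \(y(t_0)\) equals the column of the covariance matrix that corresponds to the point B. The sketch below is a numerical check under illustrative choices of A, B, \(t_0\) and the grid; the function name `bm_blup` is hypothetical.

```python
import numpy as np

def bm_blup(t, t0, f_obs, f0):
    """Discrete BLUP weights and MSE for Brownian-motion errors and a scalar trend theta * f(t)."""
    Sigma = np.minimum.outer(t, t)            # K(t_i, t_j) = min(t_i, t_j)
    K0 = np.minimum(t0, t)                    # K(t0, t_i)
    zeta0 = np.linalg.solve(Sigma, K0)
    g = np.linalg.solve(Sigma, f_obs)
    C = f_obs @ g                             # X' Sigma^{-1} X (scalar)
    c = f0 - f_obs @ zeta0
    Q = zeta0 + c * g / C
    mse = t0 + c * f0 / C - K0 @ Q            # K(t0,t0) + c D f(t0) - K0' Q with D = 1/C
    return Q, mse

A, B, t0 = 0.5, 2.0, 3.0                      # illustrative values with 0 < A < B < t0
t = np.linspace(A, B, 6)                      # design containing the end point B

Q_const, mse_const = bm_blup(t, t0, np.ones_like(t), 1.0)   # f(t) = 1
Q_lin, mse_lin = bm_blup(t, t0, t, t0)                       # f(t) = t

print(Q_const, mse_const)   # all weight on y(B); MSE equals t0 - B
print(Q_lin, mse_lin)       # weight t0/B on y(B); MSE equals t0 (t0 - B) / B
```

Any finite design that contains B gives the same answer here, since both \(K_{t_0}\) and the regression vector for \(f(t)=t\) coincide with the last column of the covariance matrix.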

For the model with \(f(t)=t^2\), we obtain \(\tilde{c}=(A^3+4/3(B^3-A^3))^{-1}(t_0^2-B^2)\) and, thus, the BLUP measure is given by

The BLUP is

and it has the mean squared error

4.1.3 Prediction for an OU error process

The covariance kernel \(K(t,s)=\exp (-\lambda |t-s|)\) of the OU error process is also a particular case of the Markovian kernel with \(u(t)=e^{\lambda t}\) and \(v(s)=e^{-\lambda s}\), \(t\le s\).

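For the location-scale model \(f(t)=1\), we obtain \(\tilde{c}=(1+(B-A)\frac{\lambda }{2})^{-1}(1-e^{-\lambda |t_0-B|})\) and, therefore, the BLUP measure is given by \(Q_{*}(dt)=\frac{\tilde{c}}{2}\delta _A(dt)+\big (e^{-\lambda |t_0-B|}+\frac{\tilde{c}}{2}\big )\delta _B(dt)+\frac{\tilde{c}\lambda }{2}dt\).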

The BLUP is \( \hat{y}_{Q_*}(t_0)=\tilde{c}/2 y(A)+(e^{-\lambda |t_0-B|}+\tilde{c}/2)y(B)+\tilde{c}\lambda /2 \int _A^B y(t)dt \) and it has \(\textrm{MSE}(\hat{y}_{Q_*}(t_0))=1+\tilde{c}-\int _{A}^B e^{-\lambda |t-t_0|}Q_{*}(dt)\).

In Table 4 we give values of the square root of the MSE of the BLUP at the point \(t_0=2\) for the N-point equidistant design in the location-scale model on the interval [0, 1] with the OU kernel and \(\lambda =2\). From this table, we can see that one does not need many points to get an almost optimal prediction: indeed, the MSE for designs with \(N\ge 4\) is very close to the MSE for continuous observations. Similar results have been observed for other points \(t_0\) and other Markovian kernels.
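The comparison between discrete and continuous observations can be reproduced numerically. The sketch below is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the continuous MSE is evaluated from the formula \(1+\tilde{c}-\int _A^B e^{-\lambda |t-t_0|}Q_{*}(dt)\) with the integral against the density part approximated by the trapezoidal rule, and \(t_0>B\) is assumed.

```python
import numpy as np

def ou_kernel(t, s, lam):
    """Covariance kernel of the OU process."""
    return np.exp(-lam * np.abs(t - s))

def mse_discrete(n_points, t0, lam, A=0.0, B=1.0):
    """MSE of the discrete BLUP for f(t) = 1 on an equidistant design."""
    t = np.linspace(A, B, n_points)
    Sigma = ou_kernel(t[:, None], t[None, :], lam)
    k = ou_kernel(t, t0, lam)
    ones = np.ones(n_points)
    Si_k = np.linalg.solve(Sigma, k)
    Si_1 = np.linalg.solve(Sigma, ones)
    c = 1.0 - ones @ Si_k          # f(t0) - F^T Sigma^{-1} k
    C = ones @ Si_1                # F^T Sigma^{-1} F
    return 1.0 - k @ Si_k + c**2 / C

def mse_continuous(t0, lam, A=0.0, B=1.0, n_quad=20001):
    """MSE of the BLUP for f(t) = 1 when y is observed on all of [A, B]."""
    decay = np.exp(-lam * abs(t0 - B))
    c_tilde = (1.0 - decay) / (1.0 + (B - A) * lam / 2.0)
    # Atoms of Q_* at A and B and its constant density on (A, B).
    w_A, w_B, density = c_tilde / 2.0, decay + c_tilde / 2.0, c_tilde * lam / 2.0
    grid = np.linspace(A, B, n_quad)
    vals = ou_kernel(grid, t0, lam)
    step = grid[1] - grid[0]
    integral = step * (vals.sum() - 0.5 * (vals[0] + vals[-1]))  # trapezoidal rule
    k_Q = w_A * ou_kernel(A, t0, lam) + w_B * ou_kernel(B, t0, lam) + density * integral
    return 1.0 + c_tilde - k_Q

t0, lam = 2.0, 2.0                 # the setting described in the text
root_mse_cont = np.sqrt(mse_continuous(t0, lam))
for n in (2, 3, 4, 5, 10):
    print(n, np.sqrt(mse_discrete(n, t0, lam)), root_mse_cont)
```

The discrete MSE can never fall below the continuous one, because every discrete design is a special case of a linear predictor based on the whole trajectory; the loop shows how quickly the gap closes as N grows.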

4.2 Prediction when the error process is integrated Brownian motion

Consider the prediction of the random process (1.1) with \(\mathcal {T}=[A,B]\), the four times differentiable vector of regression functions f(t) and the kernel of the integrated Brownian motion defined by \(K(t,s)=\min (t,s)^2\,\big (3\max (t,s)-\min (t,s)\big )/6\).

From Dette et al. (2019, Sect. 3.2) we have that the signed matrix-measure \(\zeta (dt)=(\zeta _0(dt),\zeta _1(dt))\) has components \(\zeta _0(dt)=z_A\delta _A(dt)+z_B\delta _B(dt)+z(t)dt\) and \(\zeta _1(dt)=z_{1,A}\delta _A(dt)+z_{1,B}\delta _B(dt)\), where

Therefore we obtain \(\zeta _{t_0,0}(dt)=z_{t_0,A}\delta _A(dt)+z_{t_0,B}\delta _B(dt)+z_{t_0}(t)dt\) and \(\zeta _{t_0,1}(dt)=\) \(z_{t_0,1,A}\delta _A(dt) +z_{t_0,1,B}\delta _B(dt)\) with (for \(t_0>B\))

and \(z_{t_0}(t)=K^{(4)}(t,t_0)=0\). This implies \(\zeta _{t_0,0}(dt)=\delta _B(dt)\) and \(\zeta _{t_0,1}(dt)=(t_0-B)\delta _B(dt)\). Also we obtain \( C=\frac{12}{A^3}f(A)f^\top (A)-\frac{6}{A^2}\Big (f'(A)f^\top (A)+f(A)f'^\top (A)\Big )+\frac{4}{A}f'(A)f'^\top (A)+\int _A^B f''(t)f''^\top (t)dt \) and \( \tilde{c}_0=C^{-1}c_0=C^{-1} \Big (f(t_0) -[f(B)+(t_0-B)f'(B)]\Big ). \)

For the location-scale model with \(f(t)=1\), we obtain \(c_0=0\) and, therefore, the BLUP measure is given by \(\textbf{Q}_{*}(dt)=(\delta _B(dt),(t_0-B)\delta _B(dt))^\top \). The BLUP is \(\hat{y}_{\textbf{Q}_*}(t_0)=y(B)+(t_0-B)y'(B)\) and it has \(\textrm{MSE}(\hat{y}_{\textbf{Q}_*}(t_0))=(t_0-B)^3/3\), which vanishes as \(t_0\rightarrow B\).
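The MSE of the predictor \(y(B)+(t_0-B)y'(B)\) in the location-scale model can be evaluated directly from the covariance structure of the error process and its derivative. The sketch below assumes the standard integrated Brownian motion kernel \(K(t,s)=\min (t,s)^2(3\max (t,s)-\min (t,s))/6\); the function names and the numerical values of B and \(t_0\) are illustrative assumptions.

```python
import numpy as np

def K(t, s):
    """Cov(eps(t), eps(s)) for integrated Brownian motion (assumed kernel)."""
    lo, hi = min(t, s), max(t, s)
    return lo**2 * (3.0 * hi - lo) / 6.0

def dK_dt(t, s):
    """Cov(eps'(t), eps(s)): derivative of K with respect to its first argument."""
    return t * s - t**2 / 2.0 if t <= s else s**2 / 2.0

def d2K_dtds(t, s):
    """Cov(eps'(t), eps'(s)): the Brownian motion kernel."""
    return min(t, s)

def mse_taylor_predictor(B, t0):
    """Variance of eps(t0) - eps(B) - (t0 - B) * eps'(B)."""
    # Joint covariance matrix of (eps(t0), eps(B), eps'(B)).
    cov = np.array([
        [K(t0, t0),    K(t0, B),    dK_dt(B, t0)],
        [K(B, t0),     K(B, B),     dK_dt(B, B)],
        [dK_dt(B, t0), dK_dt(B, B), d2K_dtds(B, B)],
    ])
    a = np.array([1.0, -1.0, -(t0 - B)])   # coefficients of the prediction error
    return a @ cov @ a

B, t0 = 1.0, 2.0                           # illustrative values with t0 > B
print(mse_taylor_predictor(B, t0))
```

Since \(f(t)=1\) has zero derivative, the predictor is unbiased and its MSE reduces to the variance of the error combination computed above; the value does not depend on A.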

5 Proofs

5.1 Proof of Theorem 2

To start, we prove the following lemma.

Lemma 1

The mean squared error [relative to the true process value] of any unbiased estimator \(\hat{Z}_Q= \int _\mathcal {T}y(t)Q(dt)\) is given by

Proof

Straightforward calculation gives

as required.

Let us now prove the main result. We will show that \(\textrm{MSE}(\hat{Z}_Q)\ge \textrm{MSE}(\hat{Z}_{Q_*})\), where \(\hat{Z}_Q\) is any linear unbiased estimator of the form (2.7) and \(\hat{Z}_{Q_*}\) is defined by the measure (2.10). Define \(R(dt)=Q(dt)-Q_{*}(dt)\). From the condition of unbiasedness for Q(dt) and \(Q_{*}(dt)\), we have \(\int _\mathcal {T}f(t)R(dt)=0_{m \times 1}\).

We obtain

where the inequality follows from nonnegative definiteness of the covariance kernel and the last equality follows from the unbiasedness condition \(\int f(t)R(dt)=0\). \(\Box \)
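A discrete analogue of this argument can be checked numerically. The sketch below is illustrative only: the OU kernel, the design, the regression functions \(f(t)=(1,t)^\top \) and all variable names are assumptions chosen for the example. It perturbs the BLUP weights \(w_*\) by a vector r satisfying \(F^\top r=0\), which preserves unbiasedness, and compares the resulting increase of the MSE with the quadratic form \(r^\top \Sigma r\).

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative setting (assumptions): OU kernel, 8 design points, f(t) = (1, t).
lam, t0 = 2.0, 1.5
t = np.linspace(0.0, 1.0, 8)
Sigma = np.exp(-lam * np.abs(t[:, None] - t[None, :]))   # error covariance matrix
k = np.exp(-lam * np.abs(t - t0))                        # covariances with eps(t0)
F = np.column_stack([np.ones_like(t), t])                # regression matrix
f0 = np.array([1.0, t0])                                 # regressors at t0

def mse(w):
    """MSE of the predictor w^T y, valid for unbiased weights (F^T w = f0)."""
    return 1.0 - 2.0 * w @ k + w @ Sigma @ w

# BLUP weights: discrete counterpart of the optimal measure Q_*.
Si_k = np.linalg.solve(Sigma, k)
Si_F = np.linalg.solve(Sigma, F)
c_tilde = np.linalg.solve(F.T @ Si_F, f0 - F.T @ Si_k)
w_star = Si_k + Si_F @ c_tilde

# Perturbation r with F^T r = 0: residual of a random vector regressed on F.
g = rng.standard_normal(len(t))
r = g - F @ np.linalg.lstsq(F, g, rcond=None)[0]

gap = mse(w_star + r) - mse(w_star)
print(gap, r @ Sigma @ r)                                # the two numbers coincide
```

The cross term vanishes because \(\Sigma w_*-k\) lies in the column space of F, which is the discrete counterpart of the last equality in the proof; the remaining term \(r^\top \Sigma r\) is nonnegative by nonnegative definiteness of the kernel.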

5.2 Proof of Theorem 3

For simplicity, assume \(p=0\); the case \(p>0\) can be dealt with analogously. First, we derive the following lemma.

Lemma 2

The mean squared error of any unbiased estimator \(\hat{y}_{Q}(t_0)\) of the form (3.2) is given by

Proof

Straightforward calculation gives

as required.

Now we will prove the main result. We will show that

where \(\hat{y}_Q(t_{0})\) is any linear unbiased estimator of the form (3.2) and \(\hat{y}_{Q_{*}}(t_{0})\) is defined by (3.4). Define \(\textbf{R}(dt)=\textbf{Q}(dt)-\textbf{Q}_{*}(dt)\). From the unbiasedness condition for \(\textbf{Q}(dt)\) and \(\textbf{Q}_{*}(dt)\), we have \( \int _\mathcal {T}\textbf{F}(t)\textbf{R}(dt)=0_{m \times 1} \) with \(\textbf{F}(t)=(f(t),f^{(1)}(t),\ldots ,f^{(q)}(t)).\) Therefore we obtain

where the inequality follows from nonnegative definiteness of the covariance kernel and the last equality follows from the unbiasedness condition \(\int \textbf{F}(t)\textbf{R}(dt)=0\).

References

Bouhlel M, Martins J (2019) Gradient-enhanced kriging for high-dimensional problems. Eng Comput 35(1):157–173

Cressie N (1993) Statistics for spatial data. Wiley, New York

Dette H, Pepelyshev A, Zhigljavsky A (2019) The blue in continuous-time regression models with correlated errors. Ann Stat 47:1928–1959

Fuentes M (2006) Testing for separability of spatial-temporal covariance functions. J Stat Plan Inference 136(2):447–466

Gneiting T, Genton M, Guttorp P (2007) Geostatistical space-time models, stationarity, separability and full symmetry. In: Finkenstädt B, Held L, Isham V (eds) Statistical methods for spatio-temporal systems. Chapman and Hall/CRC, Boca Raton, pp 151–176

Han ZH, Zhang Y, Song CX, Zhang KS (2017) Weighted gradient-enhanced kriging for high-dimensional surrogate modeling and design optimization. Am Inst Aeronaut Astronaut J 55(12):4330–4346

Leatherman E, Dean A, Santner T (2017) Designing combined physical and computer experiments to maximize prediction accuracy. Comput Stat Data Anal 113:346–362

Morris MD, Mitchell TJ, Ylvisaker D (1993) Bayesian design and analysis of computer experiments: use of derivatives in surface prediction. Technometrics 35(3):243–255

Näther W, Šimák J (2003) Effective observation of random processes using derivatives. Metrika 58(1):71–84

Parzen E (1961) An approach to time series analysis. Ann Math Stat 32(4):951–989

Rasmussen C, Williams C (2006) Gaussian processes for machine learning. MIT Press, Cambridge

Ripley BD (1991) Statistical inference for spatial processes. Cambridge University Press, Cambridge

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Stat Sci 4:409–423

Santner TJ, Williams BJ, Notz WI (2003) The design and analysis of computer experiments. Springer series in statistics. Springer-Verlag, New York

Stein ML (1999) Interpolation of spatial data: some theory for kriging. Springer Science & Business Media, New York

Ulaganathan S, Couckuyt I, Dhaene T, Degroote J, Laermans E (2016) Performance study of gradient-enhanced kriging. Eng Comput 32:15–34

Acknowledgements

The authors are grateful to both referees for careful reading of the manuscript and useful comments.

The work of A. Pepelyshev was partially supported by the Russian Foundation for Basic Research under project 20-01-00096.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dette, H., Pepelyshev, A. & Zhigljavsky, A. Prediction in regression models with continuous observations. Stat Papers 65, 1985–2009 (2024). https://doi.org/10.1007/s00362-023-01465-6
