Abstract

We obtain discrete mixture representations for parametric families of probability distributions on Euclidean spheres, such as the von Mises–Fisher, the Watson and the angular Gaussian families. In addition to several special results we present a general approach to isotropic distribution families that is based on density expansions in terms of special surface harmonics. We discuss the connections to stochastic processes on spheres, in particular random walks, discrete mixture representations derived from spherical diffusions, and the use of Markov representations for the mixing base to obtain representations for families of spherical distributions.



1 Introduction

A discrete mixture representation for a parametric family \(\{P_\theta :\,\theta \in \Theta \}\) of probability measures in terms of another family \(\{Q_n:\, n\in \mathbb {N}_0\}\) of probability measures, the mixing base, all defined on the same measurable space, is of the form

\[P_\theta \;=\;\sum_{n=0}^\infty w_\theta(n)\,Q_n,\qquad \theta\in\Theta. \qquad (1)\]

Here, for each \(\theta \in \Theta \), the mixing coefficients \((w_\theta (n))_{n\in \mathbb {N}_0}\) are the individual probabilities of a distribution \(W_\theta \), the mixing distribution, on (the set of subsets of) \(\mathbb {N}_0\). A classical case is the representation of non-central chisquared distributions with k degrees of freedom, \(P_\theta =\chi _k^2(\theta ^2)\) with non-centrality parameter \(\theta ^2>0\), as Poisson mixtures of central chisquared distributions, where \(Q_n=\chi _{k+2n}^2:=\chi ^2_{k+2n}(0)\) and where \(W_\theta \) is the Poisson distribution with mean \(\theta ^2/2\); see e.g. the books of Liese and Miescke (2008) and Mörters and Peres (2010), where the representation appears, respectively, in statistics in connection with the power of statistical tests and in probability theory in connection with the local times of Markov processes. Such mixture representations can be related to two-stage experiments: In order to obtain a value x with distribution \(P_\theta \) we first choose n according to \(W_\theta \) and then choose x according to \(Q_n\). This leads to an immediate application of discrete mixture representations in the context of simulation methodology.
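The two-stage description lends itself to a quick numerical check in the chi-squared case. The following Python sketch (standard library only; all function names are ours and purely illustrative) compares the truncated Poisson mixture of \(\chi^2_{k+2n}\) densities with the closed-form density of \((X+\theta)^2\), \(X\sim N(0,1)\), for \(k=1\), and implements the two-stage sampler: first n from the Poisson distribution with mean \(\theta^2/2\), then a \(\chi^2_{k+2n}\) variate, drawn as a gamma variate with shape \((k+2n)/2\) and scale 2. It assumes \(k\ge 1\) and \(\theta>0\).

```python
import math
import random


def chi2_pdf(t, m):
    """Density of the central chi-squared distribution with m degrees of freedom."""
    if t <= 0.0:
        return 0.0
    log_pdf = (m / 2 - 1) * math.log(t) - t / 2 - (m / 2) * math.log(2.0) - math.lgamma(m / 2)
    return math.exp(log_pdf)


def poisson_pmf(n, mean):
    """Poisson(mean) probability of n, evaluated on the log scale (mean > 0)."""
    return math.exp(-mean + n * math.log(mean) - math.lgamma(n + 1))


def noncentral_chi2_pdf(t, k, theta, n_terms=200):
    """Truncated Poisson mixture: sum over n < n_terms of Poisson(theta^2/2)(n) * chi2_{k+2n}(t)."""
    mean = theta ** 2 / 2
    return sum(poisson_pmf(n, mean) * chi2_pdf(t, k + 2 * n) for n in range(n_terms))


def shifted_square_pdf(t, theta):
    """Closed-form density of (X + theta)^2 for X ~ N(0, 1), i.e. the case k = 1."""
    r = math.sqrt(t)
    phi = lambda u: math.exp(-u * u / 2) / math.sqrt(2 * math.pi)
    return (phi(r - theta) + phi(r + theta)) / (2 * r)


def sample_poisson(mean, rng):
    """Knuth's multiplication method; adequate for moderate means."""
    limit, n, prod = math.exp(-mean), 0, rng.random()
    while prod > limit:
        n += 1
        prod *= rng.random()
    return n


def sample_two_stage(k, theta, rng):
    """Stage 1: n ~ Poisson(theta^2/2). Stage 2: chi2_{k+2n}, i.e. Gamma(shape=(k+2n)/2, scale=2)."""
    n = sample_poisson(theta ** 2 / 2, rng)
    return rng.gammavariate((k + 2 * n) / 2, 2.0)
```

For example, `sample_two_stage(3, 2.0, random.Random(1))` draws one value from \(\chi^2_3(4)\); the average of many such draws should be close to \(k+\theta^2=7\).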

In the present paper we continue our previous investigations (see Baringhaus and Grübel (2021a, 2021b)), and now specifically consider distributions on the Euclidean sphere \(\mathbb {S}_d:=\{x\in \mathbb {R}^{d+1}:\, \Vert x\Vert =1\}\) of \((d+1)\)-dimensional real vectors of unit length. This case seems to us to deserve some interest, in particular if specific properties of spheres are taken into account: The group \(\mathbb {O}(d+1)\) of orthogonal transformations of the ambient space \(\mathbb {R}^{d+1}\) acts transitively on \(\mathbb {S}_d\), and there is a ‘polar decomposition’ (or ‘tangent-normal decomposition’, see Sect. 4.3) that relates \(\mathbb {S}_d\) to \([-1,1]\times \mathbb {S}_{d-1}\) if \(d>1\).

We generally assume that the distribution parameters in the above general setup are of the form \(\theta =(\eta ,\rho )\), where \(\eta \in \mathbb {S}_{d}\) may be seen as a location parameter; instead of \(P_\theta \) we also write \(P_{\eta ,\rho }\). We obtain mixture representations that split the dependence on the two parts of the parameter in the sense that

\[P_{\eta,\rho}\;=\;\sum_{n=0}^\infty w_\rho(n)\,Q_{n,\eta},\qquad \eta\in\mathbb S_d. \qquad (2)\]

In particular, the mixing distributions depend on \(\rho \) only. For fixed \(\rho \) on the left, or fixed \(n\in \mathbb {N}_0\) on the right hand side of (2), the families \(\{P_{\eta ,\rho }:\, \eta \in \mathbb {S}_d\}\) respectively \(\{Q_{n,\eta }:\,\eta \in \mathbb {S}_d\}\) are parametrized by the sphere and are defined on its Borel subsets \(\,\mathcal {B}(\mathbb {S}_d)\). We assume that these families interact with the group action mentioned above in the sense that they are isotropic; see (8) below. In particular, their elements are then rotationally symmetric about the axis specified by \(\eta \). As a simple application of the representation (2) we mention that with the finite sums \(R_{n,\eta ,\rho }(A)\,:=\,\sum _{k=0}^n w_\rho (k) \,Q_{k,\eta }(A)\), \(n\in \mathbb {N}_0\), we have monotonically increasing approximations of the probabilities \(P_{\eta ,\rho }(A)\), \(A\in \mathcal {B}(\mathbb {S}_{d})\), with uniform error bounds in the sense that

\[0\;\le\;P_{\eta,\rho}(A)-R_{n,\eta,\rho}(A)\;\le\;\sum_{k=n+1}^\infty w_\rho(k)\qquad\text{for all }A\in\mathcal B(\mathbb S_d),\ n\in\mathbb N_0.\]

The literature contains several other applications; see for example the relation to nonparametric Bayesian inference in Baringhaus and Grübel (2021b), Section 5.1.
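The uniform bound reduces the choice of a truncation level to a tail computation for the mixing distribution. As a minimal sketch, assuming Poisson mixing weights as in the chi-squared example (the function name is hypothetical), the smallest n with tail mass at most \(\varepsilon\) can be found by accumulating the probability mass function:

```python
import math


def poisson_truncation_level(mean, eps):
    """Smallest n with P(N > n) <= eps for N ~ Poisson(mean).

    With Poisson mixing weights, the partial sum over k <= n of w(k) Q_k(A) then
    lies within eps of P(A) simultaneously for all events A.
    """
    if eps < 1e-12:
        raise ValueError("eps below about 1e-12 is not resolvable via 1 - cdf in double precision")
    if not 0 < mean <= 700:
        raise ValueError("this sketch needs 0 < mean <= 700 to avoid underflow of exp(-mean)")
    pmf = math.exp(-mean)          # P(N = 0)
    cdf, n = pmf, 0
    while 1.0 - cdf > eps:
        n += 1
        pmf *= mean / n            # P(N = n) obtained from P(N = n - 1)
        cdf += pmf
    return n
```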

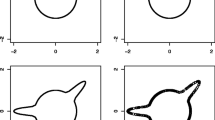

In Sect. 2 we collect some basic notation and obtain mixture representations for the von Mises–Fisher family and two spherical Cauchy families in Theorem 2, the Watson family in Theorem 3, and an angular Gaussian family in Theorem 5. The mixing bases are chosen specifically for the respective family, with a view towards reflecting its properties. A different base will generally lead to a different representation, as demonstrated by Baringhaus and Grübel (2021a) in the context of non-central chisquared distributions. In Sect. 3 we present a general approach that uses expansions of densities in terms of special surface harmonics. The resulting mixing base has a structural property that we call self-mixing stability. This property makes it comparatively easy to relate different expansions to each other. We obtain representations with this mixing base for the wrapped Cauchy and the wrapped normal families in Theorem 8, and for the von Mises–Fisher families in Theorem 9. These results only hold under conditions on the parameter \(\rho \) that ensure that the respective distribution is not too far away from the uniform distribution on the sphere; in Example 11 we work out a possibility for extending this range.

For fixed \(\rho \) or n we may regard \(P_{\eta ,\rho }\) and \(Q_{n,\eta }\) as probability kernels via \((\eta ,A)\mapsto P_{\eta ,\rho }(A)\), \((\eta ,A)\mapsto Q_{n,\eta }(A)\). This provides a general connection with Markov processes. We briefly return to the classical mixture representation of non-central one-dimensional chisquared distributions, which may be written as

\[(X+\theta)^2\;\stackrel{d}{=}\;X^2+2\sum_{i=1}^{N(\theta)}E_i. \qquad (3)\]

Here the random variables \(N(\theta ),X,E_1,E_2,\dots \) are independent, X has the standard normal distribution, \(E_1,E_2,\dots \) are exponentially distributed with mean 1, and \(N(\theta )\) has the Poisson distribution with parameter \(\theta ^2/2\). The pathwise point of view displays the distributions \(\chi ^2_1(\theta ^2)\), \(\theta \ge 0\), as the distributions of randomly stopped partial sums of independent random variables, and (3) may be used to read off stochastic monotonicity and infinite divisibility of non-central chisquared distributions. Note that the representation only covers the one-dimensional marginal distributions of the process \(((X+\theta )^2)_{\theta \ge 0}\), as the left hand side of (3) is obviously not pathwise monotone in the ‘time parameter’ \(\theta \). Quite generally, (1) can be related to randomly stopped stochastic processes: If \(X=(X_n)_{n\in \mathbb {N}_0}\) is such that \(X_n\) has distribution \(Q_n\) for all \(n\in \mathbb {N}_0\) then \(X_\tau \) has distribution \(P_\theta \) if \(\tau \) is independent of X and has distribution \(W_\theta \).
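Because \(\chi^2_{2n}\) is the distribution of \(2(E_1+\dots+E_n)\) with independent standard exponential \(E_i\), the Poisson mixture says that \((X+\theta)^2\) has the same distribution as \(X^2+2(E_1+\dots+E_{N(\theta)})\). The sketch below (illustrative names, standard library only) simulates both sides; their sample means and variances should both be close to \(1+\theta^2\) and \(2+4\theta^2\).

```python
import math
import random


def sample_poisson(mean, rng):
    """Knuth's multiplication method; adequate for moderate means."""
    limit, n, prod = math.exp(-mean), 0, rng.random()
    while prod > limit:
        n += 1
        prod *= rng.random()
    return n


def random_sum(theta, rng):
    """X^2 + 2 (E_1 + ... + E_N): X ~ N(0,1), E_i ~ Exp(1), N ~ Poisson(theta^2/2), independent."""
    x = rng.gauss(0.0, 1.0)
    n = sample_poisson(theta ** 2 / 2, rng)
    return x * x + 2.0 * sum(rng.expovariate(1.0) for _ in range(n))


def shifted_square(theta, rng):
    """Direct draw of (X + theta)^2 with X ~ N(0, 1)."""
    return (rng.gauss(0.0, 1.0) + theta) ** 2


def mean_and_var(values):
    """Sample mean and unbiased sample variance."""
    m = sum(values) / len(values)
    return m, sum((v - m) ** 2 for v in values) / (len(values) - 1)
```

Only the one-dimensional marginals are matched: for a fixed random seed, the two samplers produce different paths as \(\theta\) varies.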

In Sect. 4 we discuss several connections between families of spherical distributions and stochastic processes on spheres. We consider random walks on spheres in Sect. 4.1, distribution families that arise in connection with diffusion processes in Sect. 4.2, and the use of Markov representations of the mixing base in connection with almost sure representations for distribution families in Sect. 4.3. The ultraspherical mixing base from Sect. 3 will be useful at various stages.

For a single transition kernel we obtain a family \((X^\eta _n)_{n\in \mathbb {N}_0}\) of Markov chains indexed by their initial state \(\eta \), meaning that \(X^\eta _0=\eta \) with probability 1. Isotropy of the kernel then extends to isotropy of the corresponding distributions on the path space. For the elements of the mixing base in Sect. 3 the marginal distributions of these chains have a particularly simple description. Further, isotropy relates a family \(\{P_\eta :\,\eta \in \mathbb {S}_d\}\) to a single distribution on \([-1,1]\) via the latitude projection \(x\mapsto \eta ^t x\), with \(\eta \) as ‘north pole’. For the chain \(X^\eta =(X^\eta _n)_{n\in \mathbb {N}_0}\) we obtain an associated latitude process \(Y=(Y_n)_{n\in \mathbb {N}_0}\) via \(Y_n:=\eta ^{\text {t}}X^\eta _n\) for all \(n\in \mathbb {N}_0\). For isotropic kernels this is again a Markov chain, now on \([-1,1]\) and with start at 1. Finally, for the von Mises–Fisher distributions we show that a homogeneous Markov process on the sphere with these as marginal distributions does not exist, see Theorem 16, and we obtain a result similar to (3), see Example 18.

Proofs are collected in Sect. 5.

Mixing of distributions is a standard topic in probability theory and statistics, see e.g. Lindsay (1995). Spherical data and families of spherical distributions have similarly been investigated for a long time and by many researchers; standard references are the classic monograph of Watson (1983) and, more recently, the book of Mardia and Jupp (2000). For a review of distributions on spheres we refer to Pewsey and García-Portugués (2021), see also Watson (1982). Of particular interest for the topics treated here is the very recent paper of Mijatović et al. (2020) where a discrete mixture representation for the marginal distributions of spherical Brownian motion is developed. More specific references will be given at the appropriate places below.

2 Generalities and some special results

We need some basic notions and definitions. We write \(X\sim \mu \) if X is a random variable on some background probability space \((\Omega ,\mathcal {F},\mathbb {P})\) with distribution \(\mu \). Formally, let \((\Omega ,\mathcal {A})\) and \((\Omega ',\mathcal {A}')\) be measurable spaces and suppose that \(T:\Omega \rightarrow \Omega '\) is \((\mathcal {A},\mathcal {A}')\)-measurable. Then the push-forward \(P^T\) of a probability measure P on \((\Omega ,\mathcal {A})\) under T is the probability measure on \((\Omega ',\mathcal {A}')\) given by \(P^T(A)=P(T^{-1}(A))\), \(A\in \mathcal {A}'\), and \(X\sim \mu \) is the same as \(\mathbb {P}^X=\mu \). For many of the measurable spaces considered below there is a canonical uniform distribution, often defined by invariance under a group operation. To avoid tiresome repetitions we agree that densities refer to the respective uniform distribution if not specified otherwise.

We fix a dimension \(d\ge 1\), but instead of d we often use

\[\lambda:=\frac{d-1}{2}, \qquad (4)\]

as this is common in connection with families of special functions. In particular, whenever d and \(\lambda \) appear together, they are related by (4). The group \(\mathbb {O}(d+1)\) of orthogonal \((d+1)\times (d+1)\)-matrices U acts on \(\mathbb {S}_{d}\) via \(x\mapsto Ux\), and the uniform distribution \(\textrm{unif}(\mathbb {S}_{d})\) on the sphere is the unique probability measure on the Borel subsets of \(\mathbb {S}_d\) that is invariant under all such transformations. For a fixed \(\eta \in \mathbb {S}_{d}\) the push-forward \(\nu _d\) of \(\textrm{unif}(\mathbb {S}_{d})\) under the mapping \(x\mapsto \eta ^{\text {t}}x\) has density \(h_d\) with respect to the uniform distribution \(\textrm{unif}(-1,1)\) on the interval \([-1,1]\), where

\[h_d(y)=\frac{2\,\Gamma(\lambda+1)}{\Gamma(\frac12)\,\Gamma(\lambda+\frac12)}\,(1-y^2)^{\lambda-\frac12},\qquad -1<y<1.\]

Note that this does not depend on \(\eta \in \mathbb {S}_{d}\). Further, if \(X\sim \textrm{unif}(\mathbb {S}_{d})\) and \(Y=\eta ^{\text {t}}X\sim \nu _d\), then the conditional distribution of X given \(Y=y\) is the uniform distribution on

\[C_d(\eta,y):=\{x\in\mathbb S_d:\ \eta^{\text t}x=y\},\]

with \(\textrm{unif}(C_d(\eta ,y))\) the unique probability measure on this set that is invariant under the subgroup \(\{U\in \mathbb {O}(d+1):\, U\eta =\eta \}\) of \(\mathbb {O}(d+1)\). This may be seen in the context of the polar decomposition mentioned in the introduction.
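The latitude distribution \(\nu_d\) is used throughout, so a small numerical helper is convenient. The sketch assumes \(h_d(y)=2\Gamma(\lambda+1)(1-y^2)^{\lambda-1/2}/(\Gamma(\frac12)\Gamma(\lambda+\frac12))\) as the \(\textrm{unif}(-1,1)\)-density; expectations under \(\nu_d\) are computed after the substitution \(y=\cos\varphi\), which turns the weight into a multiple of \(\sin^{d-1}\varphi\) and avoids the endpoint singularity for \(d=1\). A Monte Carlo sampler via normalized Gaussian vectors serves as a cross-check; all helper names are our own.

```python
import math
import random


def h_d(y, d):
    """Assumed unif(-1, 1)-density of nu_d, with lam = (d - 1)/2."""
    lam = (d - 1) / 2
    const = 2.0 * math.exp(math.lgamma(lam + 1) - math.lgamma(0.5) - math.lgamma(lam + 0.5))
    return const * (1.0 - y * y) ** (lam - 0.5)


def nu_d_expectation(g, d, m=20000):
    """E[g(Y)] for Y ~ nu_d, using y = cos(phi) and the midpoint rule on (0, pi).

    After the substitution, nu_d has Lebesgue density
    Gamma(lam+1) / (sqrt(pi) Gamma(lam+1/2)) * sin(phi)^(d-1) on (0, pi).
    """
    lam = (d - 1) / 2
    const = math.exp(math.lgamma(lam + 1) - math.lgamma(lam + 0.5)) / math.sqrt(math.pi)
    step = math.pi / m
    total = 0.0
    for i in range(m):
        phi = (i + 0.5) * step
        total += g(math.cos(phi)) * math.sin(phi) ** (d - 1)
    return const * total * step


def sample_latitude(d, rng):
    """eta^t X for X ~ unif(S_d), eta the first unit vector (normalized Gaussian vector)."""
    z = [rng.gauss(0.0, 1.0) for _ in range(d + 1)]
    return z[0] / math.sqrt(sum(v * v for v in z))
```

Since \(Y^2\) has a beta distribution with parameters \(\frac12\) and \(\lambda+\frac12\), the second moment \(\mathbb E[Y^2]=1/(d+1)\) is a convenient test value.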

Conversely, given a probability measure \(\nu \) on \([-1,1]\) and a parameter \(\eta \in \mathbb {S}_{d}\), we can construct a distribution \(\mu =\mu _\eta \) on \(\mathbb {S}_{d}\) via the kernel \((y,A)\mapsto \textrm{unif}(C_d(\eta ,y))(A)\). In particular, for bounded and measurable functions \(\phi :\mathbb {S}_{d}\rightarrow \mathbb {R}\),

\[\int\phi\,d\mu_\eta=\int_{[-1,1]}\int_{C_d(\eta,y)}\phi(x)\,\textrm{unif}(C_d(\eta,y))(dx)\,\nu(dy).\]
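The kernel construction translates directly into a sampler: draw the latitude y from \(\nu\), then a point uniformly on \(C_d(\eta,y)\), written as \(y\eta+\sqrt{1-y^2}\,V\) with V uniform on the unit sphere of the orthogonal complement of \(\eta\). This is a minimal sketch with hypothetical helper names; it assumes \(\eta\) is a unit vector given as a Python list. With \(d=2\) and \(\nu=\textrm{unif}(-1,1)=\nu_2\) (Archimedes' theorem), the output should be uniform on \(\mathbb S_2\).

```python
import math
import random


def uniform_on_orthogonal_sphere(eta, rng):
    """Uniform unit vector orthogonal to the unit vector eta (dimension d + 1 >= 2).

    A standard Gaussian vector projected onto the orthogonal complement of eta is
    standard Gaussian there, so normalizing it gives the uniform distribution.
    """
    z = [rng.gauss(0.0, 1.0) for _ in eta]
    dot = sum(a * b for a, b in zip(z, eta))
    v = [a - dot * b for a, b in zip(z, eta)]
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def sample_isotropic(eta, sample_y, rng):
    """Draw X from mu_eta: Y ~ nu via sample_y(rng), then X uniform on C_d(eta, Y).

    X = Y * eta + sqrt(1 - Y^2) * V with V uniform on the unit sphere of eta-perp.
    """
    y = sample_y(rng)
    v = uniform_on_orthogonal_sphere(eta, rng)
    s = math.sqrt(max(0.0, 1.0 - y * y))
    return [y * e + s * a for e, a in zip(eta, v)]
```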

For \(\eta \in \mathbb {S}_d\) and a measurable function \(g:[-1,1]\rightarrow \mathbb {R}\) the function \(f_\eta :\mathbb {S}_{d}\rightarrow \mathbb {R}\) given by

\[f_\eta(x):=g(\eta^{\text t}x),\qquad x\in\mathbb S_d, \qquad (7)\]

is \(\textrm{unif}(\mathbb {S}_d)\)-integrable if and only if g is \(\nu _d\)-integrable, and then

\[\int f_\eta\,d\,\textrm{unif}(\mathbb S_d)=\int g\,d\nu_d.\]

Thus \(f_\eta \) is the density of a probability measure on \(\mathbb {S}_{d}\) if and only if g is the \(\nu _d\)-density of a probability measure on \([-1,1]\). In particular, a probability density g on \([-1,1]\) generates a family \(\{Q_\eta :\, \eta \in \mathbb {S}_d\}\) of spherical distributions via (7), and such families are isotropic in the sense that

\[Q_{U\eta}(UA)=Q_\eta(A)\qquad\text{for all }U\in\mathbb O(d+1),\ \eta\in\mathbb S_d,\ A\in\mathcal B(\mathbb S_d). \qquad (8)\]

In particular, each \(Q_\eta \) is invariant under all rotations with axis \(\eta \). As the function \(\eta \mapsto \int _A g(\eta ^{\text {t}}x)\,\textrm{unif}(\mathbb {S}_{d})(dx)\) is \(\mathcal {B}(\mathbb {S}_{d})\)-measurable for all \(A\in \mathcal {B}(\mathbb {S}_{d})\), \(Q_{{\cdot }}:\mathbb {S}_{d}\times \mathcal {B}(\mathbb {S}_{d})\rightarrow \mathbb {R}\) defined by \(Q_{{\cdot }}(\eta ,A)=Q_\eta (A),\,(\eta ,A)\in \mathbb {S}_{d}\times \mathcal {B}(\mathbb {S}_{d}),\) is a Markov kernel from \(\left( \mathbb {S}_{d},\mathcal {B}(\mathbb {S}_{d})\right) \) to \(\left( \mathbb {S}_{d},\mathcal {B}(\mathbb {S}_{d})\right) \). Further, if Q is a Markov kernel from \(\left( \mathbb {S}_{d},\mathcal {B}(\mathbb {S}_{d})\right) \) to \(\left( \mathbb {S}_{d},\mathcal {B}(\mathbb {S}_{d})\right) \) and \(U\in \mathbb {O}(d+1)\), then the kernel \(Q^U:\mathbb {S}_{d}\times \mathcal {B}(\mathbb {S}_{d})\rightarrow \mathbb {R}\) defined by \(Q^U(\eta ,A)=Q(\eta ,U^{\text {t}}A),\,(\eta ,A)\in \mathbb {S}_{d}\times \mathcal {B}(\mathbb {S}_{d}),\) with \(U^{\text {t}}A:=\{U^{\text {t}}x:x\in A\}\), is the push-forward of Q under U. The kernel Q is isotropic if

\[Q^U(\eta,A)=Q(U\eta,A)\qquad\text{for all }U\in\mathbb O(d+1),\ \eta\in\mathbb S_d,\ A\in\mathcal B(\mathbb S_d).\]

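Some classical special functions will be needed below. Let

\[(a)_0:=1,\qquad (a)_n:=a(a+1)\cdots(a+n-1),\quad n\in\mathbb N,\]

be the ascending factorials. The modified Bessel functions \(I_\alpha \) of the first kind are given by

\[I_\alpha(x)=\sum_{k=0}^\infty\frac{1}{k!\,\Gamma(k+\alpha+1)}\Bigl(\frac x2\Bigr)^{2k+\alpha},\]

with real nonnegative parameter \(\alpha \), the confluent hypergeometric functions are

\[{}_1F_1(\alpha;\beta;x)=\sum_{k=0}^\infty\frac{(\alpha)_k}{(\beta)_k}\,\frac{x^k}{k!},\]

with real positive parameters \(\alpha \) and \(\beta \), and the hypergeometric functions are

\[{}_2F_1(\alpha,\beta;\gamma;x)=\sum_{k=0}^\infty\frac{(\alpha)_k(\beta)_k}{(\gamma)_k}\,\frac{x^k}{k!},\qquad |x|<1,\]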
with real positive parameters \(\alpha \), \(\beta \), and \(\gamma \).
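For numerical work these series can be evaluated directly. The following sketch sums them term by term, updating each term from its predecessor. It is adequate for moderate arguments only (no asymptotic expansions), and the function names are ours. The identities \({}_1F_1(a;a;x)=e^x\), \({}_2F_1(1,1;2;x)=-\log(1-x)/x\) and \(I_{1/2}(x)=\sqrt{2/(\pi x)}\,\sinh x\) provide simple checks.

```python
import math


def rising(a, n):
    """Ascending factorial (a)_n = a (a + 1) ... (a + n - 1), with (a)_0 = 1."""
    out = 1.0
    for j in range(n):
        out *= a + j
    return out


def bessel_i(alpha, x, terms=80):
    """Modified Bessel function I_alpha(x) by its power series (x > 0, alpha >= 0)."""
    term = (x / 2) ** alpha / math.gamma(alpha + 1)          # k = 0 term
    total = term
    for k in range(terms):
        term *= (x / 2) ** 2 / ((k + 1) * (k + alpha + 1))   # ratio of consecutive terms
        total += term
    return total


def hyp1f1(a, b, x, terms=300):
    """Confluent hypergeometric function 1F1(a; b; x) by its power series."""
    term = total = 1.0
    for k in range(terms):
        term *= (a + k) / (b + k) * x / (k + 1)
        total += term
    return total


def hyp2f1(a, b, c, x, terms=2000):
    """Gauss hypergeometric function 2F1(a, b; c; x) for |x| < 1 by its power series."""
    term = total = 1.0
    for k in range(terms):
        term *= (a + k) * (b + k) / (c + k) * x / (k + 1)
        total += term
    return total
```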

Example 1

(a) Let \(p>-\frac{1}{2}\) and let \(\nu \) be the distribution on \([-1,1]\) with Lebesgue density \(y\mapsto \frac{\Gamma (p+\lambda +1)}{\Gamma (p+\frac{1}{2})\Gamma (\lambda +\frac{1}{2})} |y|^{2p}(1-y^2)^{\lambda -1/2}\). Then \(\nu \) has \(\nu _d\)-density \(y\mapsto \frac{\Gamma (\frac{1}{2})\Gamma (p+\lambda +1)}{\Gamma (p+\frac{1}{2}) \Gamma (\lambda +1)}\,|y|^{2p},\) and we obtain the spherical power distribution \(\textrm{SP}_d(\eta ,p)\) with density

\[f_d^{\textrm{SP}}(x\,|\,\eta,p)=\frac{\Gamma(\frac12)\,\Gamma(p+\lambda+1)}{\Gamma(p+\frac12)\,\Gamma(\lambda+1)}\,|\eta^{\text t}x|^{2p},\qquad x\in\mathbb S_d.\]

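We mainly use this with \(p=n\in \mathbb {N}_0\), and then have

\[f_d^{\textrm{SP}}(x\,|\,\eta,n)=\frac{(\lambda+1)_n}{(\frac12)_n}\,(\eta^{\text t}x)^{2n},\qquad x\in\mathbb S_d.\]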

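(b) Starting with \(\nu =\textrm{Beta}_{[-1,1]}(p+\lambda -\frac{1}{2},q+\lambda -\frac{1}{2})\), \(p,q>\frac{1}{2}-\lambda \), the beta distributions on \([-1,1]\) with Lebesgue densities \(y\mapsto c(p+\lambda -\frac{1}{2},q+\lambda -\frac{1}{2}) (1-y)^{p+\lambda -\frac{3}{2}}(1+y)^{q+\lambda -\frac{3}{2}}\), where \(c(p+\lambda -\frac{1}{2},q+\lambda -\frac{1}{2})=\Gamma (p+q+2\lambda -1)/\left( 2^{p+q+2(\lambda -1)}\Gamma (p+\lambda -\frac{1}{2})\Gamma (q+\lambda -\frac{1}{2})\right) \), we obtain the spherical beta distributions \(\textrm{SBeta}_d(\eta ,p,q)\), with densities

\[f_d^{\textrm{SBeta}}(x\,|\,\eta,p,q)=c_d(p,q)\,(1-\eta^{\text t}x)^{p-1}(1+\eta^{\text t}x)^{q-1},\qquad x\in\mathbb S_d,\]

where the norming constants are given by

\[c_d(p,q)=\frac{\Gamma(\frac12)\,\Gamma(\lambda+\frac12)\,\Gamma(p+q+2\lambda-1)}{2^{p+q+2\lambda-2}\,\Gamma(\lambda+1)\,\Gamma(p+\lambda-\frac12)\,\Gamma(q+\lambda-\frac12)}.\]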
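(c) The von Mises–Fisher distributions, which we denote by \(\textrm{MF}_d(\eta ,\rho )\), \(\rho > 0\), arise if we start with \(\nu _d\)-density proportional to \(y\mapsto \exp (\rho y)\), \(-1\le y\le 1\). The continuous density of the associated spherical distribution is

\[f_d^{\textrm{MF}}(x\,|\,\eta,\rho)=c_d(\rho)\,\exp(\rho\,\eta^{\text t}x),\qquad x\in\mathbb S_d,\]

where the norming constants are given by

\[c_d(\rho)=\frac{(\rho/2)^{\lambda}}{\Gamma(\lambda+1)\,I_\lambda(\rho)}.\]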
Further, \(\textrm{MF}_d(\eta ,0)=\textrm{unif}(\mathbb {S}_{d})\).

(d) The Watson distributions \(\textrm{Wat}_d(\eta ,\rho )\), \(\rho \in \mathbb {R}\), arise if we begin with \(\nu _d\)-density proportional to \(y\mapsto \exp (\rho y^2)\), \(-1\le y\le 1\). The continuous density of the associated spherical distribution is

\[f_d^{\textrm{Wat}}(x\,|\,\eta,\rho)=c_d(\rho)\,\exp\bigl(\rho\,(\eta^{\text t}x)^2\bigr),\qquad x\in\mathbb S_d,\]

with norming constants \(\ c_d(\rho ) = \bigl ({}_1F_1(\frac{1}{2};\lambda +1;\rho )\bigr )^{-1}\). Clearly, \(\textrm{Wat}_d(\eta ,0)=\textrm{unif}(\mathbb {S}_{d})\).
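The norming constants can be checked by one-dimensional quadrature under \(\nu_d\). In the sketch below the von Mises–Fisher constant is taken to be the standard closed form \((\rho/2)^\lambda/(\Gamma(\lambda+1)I_\lambda(\rho))\) (an assumption of this sketch), and the Watson constant is \(1/{}_1F_1(\frac12;\lambda+1;\rho)\) as stated above; helper names are hypothetical.

```python
import math


def nu_d_expectation(g, d, m=20000):
    """E[g(Y)] for Y ~ nu_d via y = cos(phi) and the midpoint rule on (0, pi)."""
    lam = (d - 1) / 2
    const = math.exp(math.lgamma(lam + 1) - math.lgamma(lam + 0.5)) / math.sqrt(math.pi)
    step = math.pi / m
    total = sum(g(math.cos((i + 0.5) * step)) * math.sin((i + 0.5) * step) ** (d - 1) for i in range(m))
    return const * total * step


def bessel_i(alpha, x, terms=80):
    """Modified Bessel function I_alpha(x) by its power series (x > 0, alpha >= 0)."""
    term = (x / 2) ** alpha / math.gamma(alpha + 1)
    total = term
    for k in range(terms):
        term *= (x / 2) ** 2 / ((k + 1) * (k + alpha + 1))
        total += term
    return total


def hyp1f1(a, b, x, terms=300):
    """Confluent hypergeometric function 1F1(a; b; x) by its power series."""
    term = total = 1.0
    for k in range(terms):
        term *= (a + k) / (b + k) * x / (k + 1)
        total += term
    return total


def mf_constant(d, rho):
    """Assumed closed form (rho/2)^lam / (Gamma(lam + 1) I_lam(rho)) for rho > 0."""
    lam = (d - 1) / 2
    return (rho / 2) ** lam / (math.gamma(lam + 1) * bessel_i(lam, rho))


def watson_constant(d, rho):
    """1 / 1F1(1/2; lam + 1; rho), as in Example 1 (d)."""
    return 1.0 / hyp1f1(0.5, (d - 1) / 2 + 1, rho)
```

For \(d=2\) the von Mises–Fisher constant reduces to \(\rho/\sinh\rho\), since \(\nu_2\) is the uniform distribution on \([-1,1]\).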

(e) The angular Gaussian distributions are the distributions of \(X=Z/\Vert Z \Vert \), where Z has the \((d+1)\)-variate normal distribution \(N_{d+1}(a,\Sigma )\) with mean vector \(a\in \mathbb {R}^{d+1}\setminus \{0\}\) and symmetric positive definite covariance matrix \(\Sigma \); see, e.g. Watson (1983, p. 108). Here, we exclusively deal with the case where \(\Sigma \) is the identity matrix \(I_{d+1}\), as the resulting families are then isotropic. The distributions arising in this special case seem to have first been studied in detail by Saw (1978). Putting \(\eta = a/\Vert a\Vert \) and \(\rho = (\frac{1}{2}\Vert a\Vert ^2)^{\frac{1}{2}}\) we denote by \(\textrm{AG}_d(\eta ,\rho )\) the distribution of X and speak of the angular Gaussian distribution with parameters \(\eta \) and \(\rho \). Its density is represented by the infinite series

\[f_d^{\textrm{AG}}(x\,|\,\eta,\rho)=e^{-\rho^2}\sum_{k=0}^\infty\frac{\Gamma(\lambda+1+\frac k2)}{\Gamma(\lambda+1)\,k!}\,(2\rho\,\eta^{\text t}x)^k,\qquad x\in\mathbb S_d.\]

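We refer to Saw (1978), where it is also pointed out that, with a random variable \(S\sim \chi _{d+1}^2\), the density can be written as

\[f_d^{\textrm{AG}}(x\,|\,\eta,\rho)=e^{-\rho^2}\,\mathbb E\Bigl[\exp\bigl(\rho\,\eta^{\text t}x\,\sqrt{2S}\bigr)\Bigr],\qquad x\in\mathbb S_d.\]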
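The two expressions for the angular Gaussian density can be compared numerically. The sketch assumes the series \(e^{-\rho^2}\sum_{k}\Gamma(\lambda+1+k/2)(2\rho y)^k/(\Gamma(\lambda+1)k!)\) and the expectation form \(e^{-\rho^2}\mathbb E[\exp(\rho y\sqrt{2S})]\), \(S\sim\chi^2_{d+1}\), with \(y=\eta^{\text t}x\). For \(d=1\) it also compares with the closed form \(e^{-\rho^2}(1+t\sqrt{2\pi}\,e^{t^2/2}\Phi(t))\), \(t=\sqrt2\rho y\), obtained from the moment generating function of the Rayleigh distribution. The expectation is computed by quadrature after the substitution \(S=U^2\); function names are illustrative.

```python
import math


def ag_density_series(y, d, rho, terms=300):
    """Series at y = eta^t x: e^{-rho^2} sum_k Gamma(lam+1+k/2) / (Gamma(lam+1) k!) (2 rho y)^k."""
    lam = (d - 1) / 2
    if y == 0.0:
        return math.exp(-rho ** 2)                  # only the k = 0 term survives
    log_base = math.log(abs(2 * rho * y))
    total = 0.0
    for k in range(terms):
        log_abs = math.lgamma(lam + 1 + k / 2) - math.lgamma(lam + 1) - math.lgamma(k + 1) + k * log_base
        sign = -1.0 if (y < 0 and k % 2 == 1) else 1.0
        total += sign * math.exp(log_abs)
    return math.exp(-rho ** 2) * total


def ag_density_expectation(y, d, rho, upper=40.0, m=40000):
    """e^{-rho^2} E[exp(rho y sqrt(2 S))], S ~ chi2_{d+1}, by the midpoint rule in U = sqrt(S).

    U has Lebesgue density 2 u^d exp(-u^2/2) / (2^{(d+1)/2} Gamma((d+1)/2)) on (0, inf).
    """
    log_norm = math.log(2.0) - (d + 1) / 2 * math.log(2.0) - math.lgamma((d + 1) / 2)
    step = upper / m
    total = 0.0
    for i in range(m):
        u = (i + 0.5) * step
        total += math.exp(log_norm + d * math.log(u) - u * u / 2 + rho * y * math.sqrt(2.0) * u)
    return math.exp(-rho ** 2) * total * step


def ag_density_circle(y, rho):
    """Closed form for d = 1 via the Rayleigh moment generating function, t = sqrt(2) rho y."""
    t = math.sqrt(2.0) * rho * y
    big_phi = 0.5 * math.erfc(-t / math.sqrt(2.0))   # standard normal cdf at t
    return math.exp(-rho ** 2) * (1 + t * math.sqrt(2 * math.pi) * math.exp(t * t / 2) * big_phi)
```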

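(f) We consider two types of spherical Cauchy distributions: The spherical Cauchy distributions of type I have the \(\textrm{unif}(\mathbb {S}_{d})\)-densities

\[f_d^{\textrm{CI}}(x\,|\,\eta,\rho)=\Bigl(\frac{1-\rho^2}{1+\rho^2-2\rho\,\eta^{\text t}x}\Bigr)^{d},\qquad x\in\mathbb S_d,\]

and the spherical Cauchy distributions of type II have the \(\textrm{unif}(\mathbb {S}_{d})\)-densities

\[f_d^{\textrm{CII}}(x\,|\,\eta,\rho)=\frac{1-\rho^2}{(1+\rho^2-2\rho\,\eta^{\text t}x)^{(d+1)/2}},\qquad x\in\mathbb S_d,\]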
both with parameters \(\eta \in \mathbb {S}_d\) and \(\rho \in (0,1)\). We denote by \({\textrm{CI}}_d(\eta ,\rho )\) the distribution with \(\textrm{unif}(\mathbb {S}_{d})\)-density \(f_d^\textrm{CI}(\,\cdot \,|\eta ,\rho )\), and by \({\textrm{CII}}_d(\eta ,\rho )\) the distribution with \(\textrm{unif}(\mathbb {S}_{d})\)-density \(f_d^\textrm{CII}(\,\cdot \,|\eta ,\rho )\). Clearly, \({\textrm{CI}}_d(\eta ,0)\,=\,\textrm{CII}_d(\eta ,0)\,=\,\textrm{unif}(\mathbb {S}_{d})\).
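A quick numerical check that both spherical Cauchy densities integrate to one, in the forms \(((1-\rho^2)/(1+\rho^2-2\rho y))^d\) and \((1-\rho^2)/(1+\rho^2-2\rho y)^{(d+1)/2}\) with \(y=\eta^{\text t}x\), and that they coincide for \(d=1\). These forms are assumed here (they are the Kato–McCullagh density and the Poisson kernel of the unit ball); the quadrature helper and names are ours.

```python
import math


def nu_d_expectation(g, d, m=20000):
    """E[g(Y)] for Y ~ nu_d via y = cos(phi) and the midpoint rule on (0, pi)."""
    lam = (d - 1) / 2
    const = math.exp(math.lgamma(lam + 1) - math.lgamma(lam + 0.5)) / math.sqrt(math.pi)
    step = math.pi / m
    total = sum(g(math.cos((i + 0.5) * step)) * math.sin((i + 0.5) * step) ** (d - 1) for i in range(m))
    return const * total * step


def ci_density(y, d, rho):
    """Assumed type I density at y = eta^t x: ((1 - rho^2) / (1 + rho^2 - 2 rho y))^d."""
    return ((1 - rho ** 2) / (1 + rho ** 2 - 2 * rho * y)) ** d


def cii_density(y, d, rho):
    """Assumed type II density at y = eta^t x: (1 - rho^2) / (1 + rho^2 - 2 rho y)^((d + 1)/2)."""
    return (1 - rho ** 2) / (1 + rho ** 2 - 2 * rho * y) ** ((d + 1) / 2)
```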

The distributions in Example 1 (a) - (e) and their push-forwards under \(x\mapsto \eta ^{\text {t}}x\), respectively, are all classical; for basic as well as specific properties and interesting historical comments we refer to Watson (1982, 1983) and Mardia and Jupp (2000). It is well known, for example, that the von Mises–Fisher family in part (c) arises from the multivariate normal distributions in part (e) by conditioning on \(\Vert Z\Vert \). A well known relation with Brownian motion on \(\mathbb {S}_{d}\) is addressed in Sect. 4.2 below. In the special case \(d=1=(d+1)/2\) the distributions \(\textrm{WC}_1(\eta ,\rho ):=\textrm{CI}_1(\eta ,\rho )=\textrm{CII}_1(\eta ,\rho )\) in Example 1 (f) are known as the wrapped Cauchy or circular Cauchy distributions; see, e.g. Mardia and Jupp (2000) and Sect. 3 below. Hence the two types of spherical Cauchy distributions given above are two different extensions of this distribution family to higher dimensions, which we distinguish by the qualifiers ‘of type I’ and ‘of type II’. The spherical Cauchy distributions of type I were introduced and studied by Kato and McCullagh (2020). Generalizing results obtained by McCullagh (1996) for \(d=1\), these authors deal in particular with the behavior of the spherical Cauchy distributions of type I under Möbius transformations. The densities \(f_d^{\textrm{CII}}(\,\cdot \,|\eta ,\rho )\) were considered by McCullagh (1989); he does not speak of spherical Cauchy distributions, however, but refers to the push-forward of \(\textrm{CII}_d(\eta ,\rho )\) under \(x\mapsto \eta ^{\text {t}}x\) as a noncentral version of the univariate symmetric beta distribution.

We recall from the introductory remarks that for a discrete mixture representation we need a mixing base \((Q_{n,\eta })_{n\in \mathbb {N}_0}\), \(\eta \in \mathbb {S}_d\), where each \(Q_{n,\eta }\) is a probability measure on the sphere, and mixing distributions on \(\mathbb {N}_0\) that depend on \(\rho \) only; see (2). Of special interest in the latter context are the negative binomial distributions \(\textrm{NB}(r,p)\) with parameters \(r>0\), \(p\in (0,1)\), and probability mass function

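the confluent hypergeometric series distributions \(\textrm{CHS}(\alpha ,\beta ,\tau )\) on \(\mathbb {N}_0\) with parameters \(\alpha ,\beta ,\tau >0\) and probability mass functions

\[\textrm{chs}(k\,|\,\alpha,\beta,\tau)=\frac{1}{{}_1F_1(\alpha;\beta;\tau)}\,\frac{(\alpha)_k}{(\beta)_k}\,\frac{\tau^k}{k!},\qquad k\in\mathbb N_0,\]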
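and the hypergeometric series distributions \(\textrm{HS}(\alpha ,\beta ,\gamma ,\tau )\) on \(\mathbb {N}_0\) with parameters \(\alpha ,\beta ,\gamma >0\), \(0<\tau <1\), and probability mass functions

\[\textrm{hs}(k\,|\,\alpha,\beta,\gamma,\tau)=\frac{1}{{}_2F_1(\alpha,\beta;\gamma;\tau)}\,\frac{(\alpha)_k(\beta)_k}{(\gamma)_k}\,\frac{\tau^k}{k!},\qquad k\in\mathbb N_0.\]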
For \(\tau =0\) we take \(\textrm{CHS}(\alpha ,\beta ,\tau )\) and \(\textrm{HS}(\alpha ,\beta ,\gamma ,\tau )\) to be the one-point mass at 0. The distribution \(\textrm{CHS}(\alpha ,\beta ,\tau )\) arises as the stationary distribution of a birth-death process with birth rates \((\alpha + i)\tau \) and death rates \(i(\beta + i-1)\), \(i\in \mathbb {N}_0\); see Hall (1956). Note that these three distribution families are subclasses of the family of generalized hypergeometric distributions considered recently by Themangani et al. (2020). We also require that each family \(\{Q_{n,\eta }:\, \eta \in \mathbb {S}_d\}\) is isotropic.
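The birth-death characterization can be verified via detailed balance. This sketch assumes the mass function \(\textrm{chs}(k\,|\,\alpha,\beta,\tau)\propto(\alpha)_k\tau^k/((\beta)_k\,k!)\), normalized by \({}_1F_1(\alpha;\beta;\tau)\), and checks \(\pi(i)(\alpha+i)\tau=\pi(i+1)(i+1)(\beta+i)\); for \(\alpha=\beta\) the distribution reduces to the Poisson distribution with mean \(\tau\). Names are hypothetical.

```python
import math


def chs_pmf(k, alpha, beta, tau, terms=500):
    """CHS(alpha, beta, tau) mass at k (alpha, beta, tau > 0); normalizing series truncated."""
    def log_weight(j):
        return (math.lgamma(alpha + j) - math.lgamma(alpha) - math.lgamma(beta + j) + math.lgamma(beta)
                + j * math.log(tau) - math.lgamma(j + 1))
    norm = sum(math.exp(log_weight(j)) for j in range(terms))
    return math.exp(log_weight(k)) / norm


def birth_rate(i, alpha, tau):
    """Birth rate (alpha + i) tau in state i."""
    return (alpha + i) * tau


def death_rate(i, beta):
    """Death rate i (beta + i - 1) in state i."""
    return i * (beta + i - 1)
```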

We can now state our first results. Let \(d\in \mathbb {N}\) be fixed and let \(\lambda \) be as in (4).

Theorem 2

(a) The family \(\,\{\textrm{MF}_d(\eta ,\rho ):\, \eta \in \mathbb {S}_{d}, \rho \ge 0\}\,\) has a unique discrete mixture representation with mixing base \(\textrm{SBeta}_d(\eta ,1,n+1)\), \(n\in \mathbb {N}_0\). This representation is given by

(b) The family \(\,\{\textrm{CI}_d(\eta ,\rho ):\, \eta \in \mathbb {S}_{d}, \rho \in (0,1)\}\,\) has a unique discrete mixture representation with mixing base \(\textrm{SBeta}_d(\eta ,1,n+1)\), \(n\in \mathbb {N}_0\). This representation is given by

(c) The family \(\,\{\textrm{CII}_d(\eta ,\rho ):\, \eta \in \mathbb {S}_{d}, \rho \in (0,1)\}\,\) has a unique discrete mixture representation with mixing base \(\textrm{SBeta}_d(\eta ,1,n+1)\), \(n\in \mathbb {N}_0\). This representation is given by
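As a numerical sanity check on part (a): since \(e^{\rho y}=e^{-\rho}\sum_n\rho^n(1+y)^n/n!\) and \(\textrm{SBeta}_d(\eta,1,n+1)\) has density proportional to \((1+y)^n\), \(y=\eta^{\text t}x\), uniqueness forces the weight of index n to be proportional to \(\rho^n\,\mathbb E[(1+Y)^n]/n!\) with \(Y\sim\nu_d\). Our own evaluation of these moments identifies the weights with \(\textrm{CHS}(\lambda+\frac12,2\lambda+1,2\rho)\); the sketch below checks this by quadrature rather than relying on that computation. It is an illustration under these assumptions, with hypothetical helper names.

```python
import math


def nu_d_power_moments(d, base, n_max, m=20000):
    """E[base(Y)^n] for n = 0..n_max and Y ~ nu_d (midpoint rule after y = cos(phi))."""
    lam = (d - 1) / 2
    const = math.exp(math.lgamma(lam + 1) - math.lgamma(lam + 0.5)) / math.sqrt(math.pi)
    step = math.pi / m
    moments = [0.0] * (n_max + 1)
    for i in range(m):
        phi = (i + 0.5) * step
        weight = const * step * math.sin(phi) ** (d - 1)
        b, power = base(math.cos(phi)), 1.0
        for n in range(n_max + 1):
            moments[n] += weight * power
            power *= b
    return moments


def chs_pmf(k, alpha, beta, tau, terms=500):
    """CHS(alpha, beta, tau) mass at k (alpha, beta, tau > 0)."""
    def log_weight(j):
        return (math.lgamma(alpha + j) - math.lgamma(alpha) - math.lgamma(beta + j) + math.lgamma(beta)
                + j * math.log(tau) - math.lgamma(j + 1))
    norm = sum(math.exp(log_weight(j)) for j in range(terms))
    return math.exp(log_weight(k)) / norm


def mf_mixing_weights(d, rho, n_max=40):
    """Weights of MF_d(eta, rho) over SBeta_d(eta, 1, n + 1), n = 0..n_max.

    SBeta_d(eta, 1, n + 1) has density (1 + y)^n / E[(1 + Y)^n], so the weight of
    index n is proportional to rho^n E[(1 + Y)^n] / n!; the series is truncated at n_max.
    """
    mom = nu_d_power_moments(d, lambda y: 1.0 + y, n_max)
    raw = [rho ** n * mom[n] / math.factorial(n) for n in range(n_max + 1)]
    total = sum(raw)
    return [r / total for r in raw]
```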

In our next result the mixing base depends on the value of \(\rho \).

Theorem 3

(a) The family \(\{\textrm{Wat}_d(\eta ,\rho ):\, \eta \in \mathbb {S}_{d}, \rho \ge 0\}\) has a unique discrete mixture representation with mixing base \(\textrm{SP}_d(\eta ,n)\), \(n\in \mathbb {N}_0\). This representation is given by

(b) The family \(\{\textrm{Wat}_d(\eta ,\rho ):\, \eta \in \mathbb {S}_{d}, \rho \le 0\}\) has a unique discrete mixture representation with mixing base \(\textrm{SBeta}_d(\eta ,n+1,n+1)\), \(n\in \mathbb {N}_0\). This representation is given by
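The same kind of check applies to the Watson family. For \(\rho>0\), \(e^{\rho y^2}=\sum_n\rho^n y^{2n}/n!\) and \(\textrm{SP}_d(\eta,n)\) has density proportional to \(y^{2n}\); for \(\rho<0\), \(e^{\rho y^2}=e^{\rho}\sum_n(-\rho)^n(1-y^2)^n/n!\) and \(\textrm{SBeta}_d(\eta,n+1,n+1)\) has density proportional to \((1-y^2)^n\). Our own computation identifies the resulting weights with \(\textrm{CHS}(\frac12,\lambda+1,\rho)\) and \(\textrm{CHS}(\lambda+\frac12,\lambda+1,-\rho)\), respectively; the sketch, with hypothetical names, checks this numerically under these assumptions.

```python
import math


def nu_d_power_moments(d, base, n_max, m=20000):
    """E[base(Y)^n] for n = 0..n_max and Y ~ nu_d (midpoint rule after y = cos(phi))."""
    lam = (d - 1) / 2
    const = math.exp(math.lgamma(lam + 1) - math.lgamma(lam + 0.5)) / math.sqrt(math.pi)
    step = math.pi / m
    moments = [0.0] * (n_max + 1)
    for i in range(m):
        phi = (i + 0.5) * step
        weight = const * step * math.sin(phi) ** (d - 1)
        b, power = base(math.cos(phi)), 1.0
        for n in range(n_max + 1):
            moments[n] += weight * power
            power *= b
    return moments


def chs_pmf(k, alpha, beta, tau, terms=500):
    """CHS(alpha, beta, tau) mass at k (alpha, beta, tau > 0)."""
    def log_weight(j):
        return (math.lgamma(alpha + j) - math.lgamma(alpha) - math.lgamma(beta + j) + math.lgamma(beta)
                + j * math.log(tau) - math.lgamma(j + 1))
    norm = sum(math.exp(log_weight(j)) for j in range(terms))
    return math.exp(log_weight(k)) / norm


def normalized(raw):
    total = sum(raw)
    return [r / total for r in raw]


def watson_weights_positive(d, rho, n_max=40):
    """rho > 0, base SP_d(eta, n): weight proportional to rho^n E[Y^(2n)] / n!."""
    mom = nu_d_power_moments(d, lambda y: y * y, n_max)
    return normalized([rho ** n * mom[n] / math.factorial(n) for n in range(n_max + 1)])


def watson_weights_negative(d, rho, n_max=40):
    """rho < 0, base SBeta_d(eta, n+1, n+1): weight proportional to (-rho)^n E[(1 - Y^2)^n] / n!."""
    mom = nu_d_power_moments(d, lambda y: 1.0 - y * y, n_max)
    return normalized([(-rho) ** n * mom[n] / math.factorial(n) for n in range(n_max + 1)])
```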

In order to obtain a similar representation for the family \(\{{\textrm{AG}}_d(\eta ,\rho ):\, \eta \in \mathbb {S}_{d},\rho >0\}\) of angular Gaussian distributions we make use of the integral representation

\[D_\nu(z)=\frac{e^{-z^2/4}}{\Gamma(-\nu)}\int_0^\infty t^{-\nu-1}\,e^{-zt-t^2/2}\,dt\]

of the parabolic cylinder functions \(D_\nu \) with real index \(\nu <0\); see Magnus (1966, p. 328).
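The integral representation can be evaluated by quadrature. This sketch (composite Simpson rule on a truncated range, names ours) restricts to \(\nu\le-1\), where the integrand is bounded at 0, and checks the result against \(D_{-1}(z)=e^{z^2/4}\sqrt{\pi/2}\,\textrm{erfc}(z/\sqrt2)\) and the recurrence \(D_{\nu+1}(z)-zD_\nu(z)+\nu D_{\nu-1}(z)=0\), which gives \(D_{-2}=D_0-zD_{-1}\) with \(D_0(z)=e^{-z^2/4}\).

```python
import math


def parabolic_cylinder(nu, z, upper=40.0, m=20000):
    """D_nu(z) for nu <= -1 from the integral representation (Simpson rule on [0, upper]).

    D_nu(z) = exp(-z^2/4) / Gamma(-nu) * int_0^inf t^(-nu-1) exp(-z t - t^2/2) dt.
    """
    if nu > -1:
        raise ValueError("this sketch needs nu <= -1 (the integrand is unbounded at 0 otherwise)")
    if m % 2:
        raise ValueError("Simpson's rule needs an even number of subintervals")
    f = lambda t: t ** (-nu - 1) * math.exp(-z * t - t * t / 2)
    h = upper / m
    s = f(0.0) + f(upper)
    for i in range(1, m):
        s += (4 if i % 2 else 2) * f(i * h)   # Simpson weights 4, 2, 4, ..., 4
    return math.exp(-z * z / 4) / math.gamma(-nu) * (s * h / 3)
```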

Lemma 4

Let \(\delta >\frac{1}{2}\) and \(\tau >0\). Then \(\textrm{dpc}(\,\cdot \,|\delta ,\tau )\) with

\(k\in \mathbb {N}_0\), is a probability mass function.

We write \(\textrm{DPC}(\delta ,\tau )\) for the associated discrete parabolic cylinder distribution with parameters \(\delta >\frac{1}{2}\) and \(\tau >0\). For the special values \(\delta =\lambda + 1=(d+1)/2\) with \(d\in \mathbb {N}\) the statement of the lemma also follows from (20) below as the values on the right hand side of (19) are all nonnegative.

Theorem 5

The family \(\{\textrm{AG}_d(\eta ,\,\rho ):\, \eta \in \mathbb {S}_{d}, \rho > 0\}\) has a unique discrete mixture representation with mixing base \(\textrm{SBeta}_d(\eta ,1,n+1)\), \(n\in \mathbb {N}_0\). This representation is given by

Remark 6

(a) Regarding probability measures as real functions on a set of events, we may interpret the series in (13) - (17) and (20) as referring to pointwise convergence of functions. In fact, as all distributions involved have continuous densities on the compact set \(\mathbb {S}_d\) and the partial sums of the density series increase to a continuous limit, Dini's theorem shows that the density series even converge uniformly on \(\mathbb {S}_d\), i.e. in the space of continuous functions.

(b) The representations are minimal in the sense that the respective mixing base cannot be reduced. This follows from the uniqueness and the fact that the mixing probabilities are strictly positive.

(c) With the bases in Theorem 2 and Theorem 5 all mixtures have densities that are increasing in \(\eta ^{\text {t}}x\), and in Theorem 3 all mixtures are invariant under the reflection \(x\mapsto -x\).

(d) Interestingly, the von Mises–Fisher family, the spherical Cauchy families and the angular Gaussian family have the same mixing base of spherical beta distributions. So, these families are obtained by picking at random (the index n of) the element \(\textrm{SBeta}_d(\eta ,1,n+1)\) according to the respective mixing distributions. Another family with this mixing base is the family of spherical normal distributions; see Sect. 4.2.

(e) For a discussion of other similarities as well as differences between the von Mises–Fisher family and the spherical Cauchy family of type I we refer to Kato and McCullagh (2020). The von Mises–Fisher family and the spherical Cauchy family of type II both have representations in terms of multivariate Brownian motion. To be specific, let \(X=(X_t)_{t\ge 0}\) be a standard Brownian motion in \(\mathbb {R}^{d+1}\), let \(Y=(Y_t)_{t\ge 0}\) with \(Y_t=\rho \eta t + X_t\) for \(t\ge 0\) be the drifted standard Brownian motion with constant drift vector \(\rho \eta \), where \(\rho \ge 0\), \(\eta \in \mathbb {S}_{d}\), and let \(Z=(Z_t)_{t\ge 0}\) with \(Z_t=\rho \eta +X_t\) for \(t\ge 0\), where \(0\le \rho <1\), \(\eta \in \mathbb {S}_{d}\), be the Brownian motion starting at \(\rho \eta \). With \(T_Y:=\inf \{t\ge 0: \Vert Y_t\Vert \ge 1\}\) as the first time that Y exits the Euclidean unit ball \(\mathbb {B}_{d}=\{x\in \mathbb {R}^{d+1}: \Vert x\Vert <1\}\) it then holds that \(Y_{T_Y}\sim \textrm{MF}_d(\eta ,\rho )\); see Gatto (2013) for a more recent proof and historical remarks on this result. Further, with \(T_Z:=\inf \{t\ge 0: \Vert Z_t\Vert \ge 1\}\) the first time that Z exits \(\mathbb {B}_{d}\) it holds that \(Z_{T_Z}\sim \textrm{CII}_d(\eta ,\rho )\); see Chung (1982, p. 170), and McCullagh (1989).
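The exit distribution \(\textrm{CII}_d(\eta,\rho)\) can be sampled without time discretization by the walk-on-spheres method, a standard technique that we substitute here for direct path simulation: from the current point, jump to a uniform point on the largest sphere around it that stays inside the ball, and stop once close to the boundary. Since \(x\mapsto\eta^{\text t}x\) is harmonic and bounded on the ball, \(\mathbb E[\eta^{\text t}Z_{T_Z}]=\eta^{\text t}Z_0=\rho\), which the sketch uses as a check. The tolerance eps and all names are our own choices.

```python
import math
import random


def walk_on_spheres_exit(start, rng, eps=1e-4):
    """Approximate exit point from the open unit ball of Brownian motion started at `start`.

    From x, jump to a uniform point on the sphere of radius 1 - |x| around x; stop once
    within eps of the boundary and project radially onto the unit sphere.
    """
    x = list(start)
    while True:
        norm = math.sqrt(sum(a * a for a in x))
        r = 1.0 - norm
        if r < eps:
            return [a / norm for a in x]
        u = [rng.gauss(0.0, 1.0) for _ in x]
        un = math.sqrt(sum(a * a for a in u))
        x = [a + r * b / un for a, b in zip(x, u)]   # uniform point on the inscribed sphere
```

The exit law of the walk differs from the harmonic measure only through the stopping tolerance eps, so no Euler-type discretization error arises.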

3 Ultraspherical mixing bases

Our aim in this section is a mixing base that is applicable for general distribution families where, as before, we consider distributions \(P_\theta \) on \((\mathbb {S}_d,\mathcal {B}(\mathbb {S}_d))\), with \(\theta =(\eta ,\rho )\in \mathbb {S}_d\times I\) and \(I\subset \mathbb {R}_+\) an interval, that have densities \(f_\theta \) of the form

\[f_\theta(x)=g_\rho(\eta^{\text t}x),\qquad x\in\mathbb S_d. \qquad (21)\]

We will occasionally omit d or \(\lambda \) from the notation. Recall that \(\lambda =(d-1)/2\) and that \(\nu _d\) is the push-forward of \(\textrm{unif}(\mathbb {S}_d)\) under the mapping \(x\mapsto \eta ^{\text {t}}x\).

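We assume that the functions \(g_\rho \) in (21) are elements of

\[\mathbb H_\lambda:=\Bigl\{f:[-1,1]\to\mathbb R\ \text{measurable}:\ \int f^2\,d\nu_d<\infty\Bigr\},\]

where, as usual, functions that agree \(\nu_d\)-almost everywhere are identified, and on \(\mathbb {H}_\lambda \) we use the inner product

\[\langle f,g\rangle_\lambda:=\int f\,g\,d\nu_d,\qquad f,g\in\mathbb H_\lambda,\]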
and the norm \(\Vert f\Vert _\lambda =\langle f,f\rangle _\lambda ^{1/2}\). Then \((\mathbb {H}_\lambda ,\langle \cdot ,\cdot \rangle _\lambda )\) is a Hilbert space. We deal with a special complete sequence of orthogonal polynomials in this space. For \(d=1\) and \(\lambda =0\) this is the sequence of Chebyshev polynomials \(T_n\) of the first kind of degree \(n\in \mathbb {N}_0\), for \(d>1\) and \(\lambda >0\) we use the sequence of Gegenbauer or ultraspherical polynomials \(C_n^\lambda \) of degree \(n\in \mathbb {N}_0\); see Erdélyi et al. (1953b, Chs. X, XI). These functions play an important role in directional statistics, especially nonparametric directional statistics; see e.g. the papers of Bingham (1972), Giné (1975), Prentice (1978), Baringhaus (1991), Jupp (2008), and García-Portugués et al. (2021). The functions are standardized such that

\[C_n^\lambda(1)=\frac{(2\lambda)_n}{n!},\qquad n\in\mathbb N_0,\]

if \(\lambda > 0\); further, \(T_n(1)=1\) for all \(n\in \mathbb {N}_0\). In particular, \(C_0^\lambda \equiv 1 \equiv T_0\). Of course, for \(n>0\) none of these functions is a probability density with respect to \(\nu _d\), as each of them has \(\nu _d\)-integral 0 (by orthogonality to the constant function 1) and therefore takes negative values. However, it is known that the Chebyshev polynomials and, for \(\lambda >0\), the Gegenbauer polynomials attain their absolute maximum on \([-1,1]\) at \(t=1\); see Erdélyi et al. (1953b, p. 206, formula (7)) and Abramowitz and Stegun (1964, p. 786). Hence the standardization

\[D_n^\lambda:=\frac{C_n^\lambda}{C_n^\lambda(1)}\ \ \text{if }\lambda>0,\qquad D_n^0:=T_n,\qquad n\in\mathbb N_0,\]

provides a sequence \((D_n^\lambda )_{n\in \mathbb {N}_0}\) of orthogonal polynomials that are bounded in absolute value on \([-1,+1]\) by their value 1 at \(t=1\). As \((D_n^\lambda )_{n\in \mathbb {N}_0}\) is complete in \(\mathbb {H}_\lambda \), we have the series expansion, converging in \(\mathbb {H}_\lambda \),

\[g_\rho=1+\sum_{n=1}^\infty\beta_n(\rho)\,D_n^\lambda, \qquad (22)\]

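with \(\beta _n(\rho ):= \langle g_\rho ,D_n^\lambda \rangle _\lambda \,\Vert D_n^\lambda \Vert _\lambda ^{-2}\) for \(n\in \mathbb {N}\); the constant term of the expansion is \(\langle g_\rho ,D_0^\lambda \rangle _\lambda =\int g_\rho \,d\nu _d=1\), as \(g_\rho \) is a \(\nu _d\)-density. We assume throughout this section that \(g_\rho \) is such that

\[\beta(\rho):=\sum_{n=1}^\infty|\beta_n(\rho)|<\infty.\]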
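The standardized polynomials and the coefficients \(\beta_n(\rho)\) are easy to compute. The sketch below assumes \(D_n^\lambda=C_n^\lambda/C_n^\lambda(1)\) with \(C_n^\lambda(1)=(2\lambda)_n/n!\) for \(\lambda>0\) and \(D_n^0=T_n\), evaluates them with the standard three-term recurrences, and obtains \(\beta_n(\rho)\) by quadrature under \(\nu_d\). For the wrapped Cauchy density on the circle, \((1-\rho^2)/(1+\rho^2-2\rho\cos s)=1+2\sum_{n\ge1}\rho^n\cos(ns)\), so \(\beta_n(\rho)=2\rho^n\) and \(\sum_n|\beta_n(\rho)|=2\rho/(1-\rho)\), which is at most 1 exactly when \(\rho\le\frac13\). Names are hypothetical.

```python
import math


def normalized_ultraspherical(n, lam, t):
    """D_n^lam(t): T_n(t) if lam == 0, else C_n^lam(t) / C_n^lam(1) with C_n^lam(1) = (2 lam)_n / n!."""
    if n == 0:
        return 1.0
    if lam == 0:
        prev, cur = 1.0, t                                   # T_0, T_1
        for k in range(2, n + 1):
            prev, cur = cur, 2 * t * cur - prev              # T_k = 2t T_{k-1} - T_{k-2}
        return cur
    prev, cur = 1.0, 2 * lam * t                             # C_0^lam, C_1^lam
    for k in range(2, n + 1):
        # k C_k = 2t (k + lam - 1) C_{k-1} - (k + 2 lam - 2) C_{k-2}
        prev, cur = cur, (2 * t * (k + lam - 1) * cur - (k + 2 * lam - 2) * prev) / k
    at_one = 1.0
    for k in range(n):
        at_one *= (2 * lam + k) / (k + 1)                    # builds (2 lam)_n / n!
    return cur / at_one


def nu_d_expectation(g, d, m=20000):
    """E[g(Y)] for Y ~ nu_d via y = cos(phi) and the midpoint rule on (0, pi)."""
    lam = (d - 1) / 2
    const = math.exp(math.lgamma(lam + 1) - math.lgamma(lam + 0.5)) / math.sqrt(math.pi)
    step = math.pi / m
    total = sum(g(math.cos((i + 0.5) * step)) * math.sin((i + 0.5) * step) ** (d - 1) for i in range(m))
    return const * total * step


def beta_coefficient(g, n, d):
    """beta_n = <g, D_n> / ||D_n||^2 in L^2(nu_d), with lam = (d - 1) / 2."""
    lam = (d - 1) / 2
    dn = lambda y: normalized_ultraspherical(n, lam, y)
    return nu_d_expectation(lambda y: g(y) * dn(y), d) / nu_d_expectation(lambda y: dn(y) ** 2, d)
```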

For fixed \(n\in \mathbb {N}_0\) and \(\eta \in \mathbb {S}_{d}\) the function \(H_{n,\eta }^\lambda :\mathbb {S}_{d}\rightarrow \mathbb {R}\) defined by

\[H_{n,\eta}^\lambda(x):=D_n^\lambda(\eta^{\text t}x),\qquad x\in\mathbb S_d,\]

is the unique surface harmonic of degree n that depends only on \(\eta ^{\text {t}}x\) and that satisfies \(H_{n,\eta }^\lambda (\eta )=1\); see Erdélyi et al. (1953b, p. 238). Obviously, \(H_{n,\eta }^\lambda \) is invariant under the subgroup \(\{U\in \mathbb {O}(d+1):\, U\eta =\eta \}\) of \(\mathbb {O}(d+1)\), i.e. for all elements U of the subgroup it holds that \(H_{n,\eta }^\lambda (Ux)=H_{n,\eta }^\lambda (x)\) for all \(x\in \mathbb {S}_{d}\).

Let

We will repeatedly make use of the basic formulas

and

see e.g. Erdélyi et al. (1953b, p. 245) and Saw (1984, formula (1.14)).

The mixing bases considered in what follows are of a very simple structure: The densities of the base distributions are built with only two special surface harmonics. To be precise, for \(n\in \mathbb {N}\) and real numbers \(-1 \le \alpha \le +1\) the functions

\[\delta_{n,\eta,\alpha}^\lambda(x):=1+\alpha\,H_{n,\eta}^\lambda(x),\qquad x\in\mathbb S_d,\]

are \(\textrm{unif}(\mathbb {S}_{d})\)-densities of probability distributions \(\Delta _{n,\eta ,\alpha }^\lambda \) on \(\mathbb {S}_{d}\). These distributions can be regarded as a multivariate generalization of the cardioid distributions introduced by Jeffreys (1948, p. 302); see also Mardia and Jupp (2000, Section 3.5.5). Let \(\Delta _{0,\eta ,\alpha }^\lambda :=\textrm{unif}(\mathbb {S}_d)\). Here we mainly deal with the special distributions \(\Delta _{n,\eta }^\lambda :=\Delta _{n,\eta ,1}^\lambda \), but see also Remark 10 (a) and Proposition 12 below. So, for \(n\in \mathbb {N}\) the \(\textrm{unif}(\mathbb {S}_{d})\)-density of \(\Delta _{n,\eta }^\lambda \) is simply the sum of the two special surface harmonics \(H_{0,\eta }^\lambda \equiv 1\) and \(H_{n,\eta }^\lambda \).

With the mixing base \(\Delta _{n,\eta }^\lambda ,n\in \mathbb {N}_0,\) given for each \(\eta \in \mathbb {S}_{d}\), we obtain discrete mixture representations for all spherical distributions with densities of the form (21) that are not too far away from \(\textrm{unif}(\mathbb {S}_d)\). This may be seen as an instance of the perturbation approach discussed in the survey paper of Pewsey and García-Portugués (2021) and, indeed, the value of \(\beta (\rho )\) may be interpreted as a distance between \(\nu _d\) and the measure with \(\nu _d\)-density \(g_\rho \). By an ultraspherical mixing base we mean a family \(\{\Delta _{n,\eta }^\lambda :\, n\in \mathbb {N}_0\}\).

The following general formula is an immediate consequence of the above definitions and the expansion in (22).

Proposition 7

Suppose that \(\beta _n(\rho )\ge 0\) for all \(n\in \mathbb {N}\) and that \(\beta (\rho )\le 1\). Let \(\eta \in \mathbb {S}_d\). Then \(P_{\eta ,\rho }\) has the discrete mixture expansion

\[P_{\eta,\rho}=\sum_{n=0}^\infty w_\rho(n)\,\Delta_{n,\eta}^\lambda\]

with \(w_\rho (0)=1-\beta (\rho )\) and \(w_\rho (n) =\beta _n(\rho )\) for all \(n\in \mathbb {N}\).

When applying this construction to specific families, we have to check the crucial condition \(\beta (\rho )\le 1\), equivalently \(w_\rho (0)\ge 0\). In each case, we obtain the mixing distribution and a range of \(\rho \)-values for which the representation is valid. Any distribution on \(\mathbb {N}_0\) may be written as a mixture of unit mass at 0 and a distribution on \(\mathbb {N}\), and it turns out that the latter occasionally belongs to a standard family. For a distribution on \(\mathbb {N}_0\) with mass function w such that \(w(0)<1\), we call the distribution on \(\mathbb {N}\) with mass function \(n\mapsto w(n)/(1-w(0))\), \(n\in \mathbb {N}\), its zero-truncated counterpart. Some of the results stated in what follows are simple consequences of Proposition 7.

In the first theorem we consider two families of wrapped distributions, hence \(d=1\) and \(\lambda =0\). There are different notational conventions in the literature; here, we regard the wrapped distribution associated with a given distribution \(\mu \) on (the Borel subsets of) the real line as the push-forward \(\mu ^T\) of \(\mu \) under the mapping \(T:\mathbb {R}\rightarrow \mathbb {S}_1\), \(x\mapsto (\cos (x),\sin (x))^{\text {t}}\). This construction is often applied to location-scale families. Alternatively, the interval \([-\pi ,\pi )\) is used instead of \(\mathbb {S}_1\) as the base set for the wrapped distribution. This means that, with \(X\sim \mu \), one deals with the \([-\pi ,\pi )\)-valued random variable \(X_0\) obtained by reducing X modulo \(2\pi \). If X has the density f with respect to the Lebesgue measure, then \(X_0\) has the \(\textrm{unif}\left( [-\pi ,+\pi )\right) \)-density \(2\pi \sum _{n=-\infty }^{+\infty } f(s+2\pi n),\,s\in [-\pi ,\pi )\). If the characteristic function \(\varphi \) of X is absolutely integrable, then f is continuous and the Poisson summation formula applies, i.e.

see Feller (1971, p. 632). For example, the wrapped normal distribution \(\textrm{WN}_1(\eta ,\rho )\) arises from the normal distribution \(N(\alpha ,\sigma ^2)\) with mean \(\alpha \) and variance \(\sigma ^2\), where \(\eta =(\cos (\alpha ),\sin (\alpha ))^{\text {t}}\) and \(\rho =\sigma ^2\). Note that some authors use \(\rho =2\sigma ^2\), see, e.g. Hartman and Watson (1974). With \(X\sim N\bigl (\alpha ,\sigma ^2\bigr )\) we deduce from (29) that \(X_0\) has the density

which means that \(T\circ X\sim \textrm{WN}_1(\eta ,\rho )\) has the \(\textrm{unif}(\mathbb {S}_{1})\)-density

It is worthwhile to note that as \(\rho \rightarrow 0\) the distribution \(\textrm{WN}_1(\eta ,\rho )\) converges weakly to the one-point mass distribution at \(\eta \). This is in contrast to other distributions considered here. For example, \(\textrm{MF}_1(\eta ,\rho ),\, \textrm{Wat}_1(\eta ,\rho ),\,\textrm{AG}_1(\eta ,\rho )\) all converge weakly to the uniform distribution \(\textrm{unif}(\mathbb {S}_{1})\) as \(\rho \rightarrow 0\). Also, as \(\rho \rightarrow \infty \), the distribution \(\textrm{WN}_1(\eta ,\rho )\) converges weakly to \(\textrm{unif}(\mathbb {S}_{1})\).
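As a numerical illustration of the Poisson summation step, the following Python sketch evaluates the \(\textrm{unif}([-\pi ,\pi ))\)-density of \(X_0\) for \(X\sim N(\alpha ,\rho )\), \(\rho =\sigma ^2\), in two ways: by direct wrapping, \(2\pi \sum _n f(s+2\pi n)\), and by the Fourier series \(1+2\sum _{n\ge 1}e^{-n^2\rho /2}\cos (n(s-\alpha ))\), which is the standard Fourier expansion of the wrapped normal density. The function names and the truncation at 50 terms are our own choices; the sketch checks the identity numerically and is not a reconstruction of the displayed formulas.

```python
import math


def wrapped_normal_direct(s, alpha, rho, terms=50):
    """unif([-pi, pi))-density of X mod 2*pi at s, X ~ N(alpha, rho), by direct wrapping."""
    sd = math.sqrt(rho)
    total = 0.0
    for n in range(-terms, terms + 1):
        z = (s + 2.0 * math.pi * n - alpha) / sd
        total += math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))  # Lebesgue density f
    return 2.0 * math.pi * total


def wrapped_normal_fourier(s, alpha, rho, terms=50):
    """The same density via its Fourier series 1 + 2 * sum_n exp(-n^2 rho / 2) cos(n (s - alpha))."""
    return 1.0 + 2.0 * sum(
        math.exp(-n * n * rho / 2.0) * math.cos(n * (s - alpha)) for n in range(1, terms + 1)
    )


grid = [-math.pi + 2.0 * math.pi * k / 200 for k in range(200)]
gap = max(abs(wrapped_normal_direct(s, 0.7, 1.0) - wrapped_normal_fourier(s, 0.7, 1.0)) for s in grid)
print(f"max difference for rho = 1: {gap:.1e}")
```

The Fourier form also makes the limits above visible: as \(\rho \rightarrow \infty \) all non-constant terms vanish, leaving the uniform density 1.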

For the wrapped Cauchy distribution \(\textrm{WC}_1(\eta ,\rho )\) we follow the definition given by Pewsey and García-Portugués (2021): If X has a standard Cauchy distribution with density \(x\mapsto 1/(\pi (1+x^2))\), \(x\in \mathbb {R}\), then we apply the wrapping procedure to \(Y:=\sigma X+\alpha \), where \(\sigma >0,\,\alpha \in \mathbb {R},\) and take \(\eta \) as in the wrapped normal case. For the scaling we use the parametrization \(\rho :=e^{-\sigma }\in (0,1)\) and augment this with the limiting uniform distribution at \(\rho =0\). Using (29) again we obtain that the distribution \(\textrm{WC}_1(\eta ,\rho )\) has the density

see also Pewsey and García-Portugués (2021).

We write \(\textrm{geo}_{\mathbb {N}_0}(n|p)=p(1-p)^n\), \(n\in \mathbb {N}_0\), for the probability mass function of the geometric distribution on \(\mathbb {N}_0\) with parameter \(p\in (0,1)\), and \(\textrm{geo}_\mathbb {N}\) for the mass function of its zero-truncated counterpart. Recall that the function \(\beta \) for a given distribution family is defined in (23).

Theorem 8

(a) For the wrapped Cauchy distributions we have \(\beta (\rho )= 2\rho /(1-\rho )\) and, for \(0\le \rho \le 1/3\),

(b) For the wrapped normal distributions we have \(\beta (\rho )= 2\sum _{n=1}^\infty e^{-n^2 \rho /2}\). Let \(\rho _0\approx 1.570818\) be the unique solution of the equation \(\beta (\rho )=1\). Then, with

and \(\rho \ge \rho _0\), it holds that

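The two-stage experiment described in the Introduction can be made concrete for Theorem 8 (a). The sketch below assumes that, for \(\lambda =0\), the distribution \(\Delta ^0_{n,\eta }\) has density \(1+\cos (n(\theta -\theta _\eta ))\) with respect to \(\textrm{unif}(\mathbb {S}_1)\), where \(\theta ,\theta _\eta \) are angles parametrizing points of \(\mathbb {S}_1\). This normalization \(D^0_n=T_n\) is our assumption, chosen because it reproduces \(\beta (\rho )=2\rho /(1-\rho )\) from the Fourier coefficients \(\rho ^n\) of the wrapped Cauchy density. Proposition 7 then gives the weight \(1-\beta (\rho )\) for the uniform component and \(2\rho ^n=\beta (\rho )\,\textrm{geo}_\mathbb {N}(n|1-\rho )\) for \(n\ge 1\). The function name is hypothetical.

```python
import math
import random


def sample_wc_mixture(theta_eta, rho, rng):
    """Draw an angle from WC_1(eta, rho), 0 <= rho <= 1/3, by the two-stage mixture experiment.

    Hypothetical normalization: Delta^0_{n,eta} has density 1 + cos(n (theta - theta_eta))
    with respect to unif(S_1), i.e. D^0_n = T_n.
    """
    if not 0.0 <= rho <= 1.0 / 3.0:
        raise ValueError("the mixture representation requires 0 <= rho <= 1/3")
    beta = 2.0 * rho / (1.0 - rho)          # beta(rho) of Theorem 8(a)
    if rng.random() >= beta:                # stage 1: n = 0 with probability 1 - beta(rho)
        return rng.uniform(-math.pi, math.pi)
    n = 1                                   # stage 1, n >= 1: P(n) = (1 - rho) * rho**(n - 1)
    while rng.random() < rho:
        n += 1
    while True:                             # stage 2: rejection sampling from (1 + cos(n u)) / (2 pi)
        u = rng.uniform(-math.pi, math.pi)
        if rng.random() < (1.0 + math.cos(n * u)) / 2.0:
            return math.remainder(theta_eta + u, 2.0 * math.pi)


rng = random.Random(2024)
draws = [sample_wc_mixture(0.5, 0.3, rng) for _ in range(5)]
```

Under the stated normalization only the component with \(n=1\) (respectively \(n=2\)) contributes to the first (respectively second) trigonometric moment, with value \(w_\rho (n)/2=\rho ^n\); this matches the Fourier coefficients of \(\textrm{WC}_1(\eta ,\rho )\) and gives a simple Monte Carlo check.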
We deal with the von Mises–Fisher families next. For these, we need variants of the Skellam distribution with parameter \(\rho \). This distribution arises as the distribution of \(N_1-N_2\) where \(N_1,N_2\) are independent random variables that both have the Poisson distribution with parameter \(\rho /2\); see Irwin (1937), and see Skellam (1946) where the more general case with possibly different means for \(N_1\) and \(N_2\) is considered. In the one-dimensional case we need the positive Skellam distribution, which is the conditional distribution of \(|N_1-N_2|\) given that \(N_1\ne N_2\). The associated probability mass function is given by

For \(d>1\) we use the generalized positive Skellam distribution with parameters \(\kappa >0\) and \(\tau >0\), with mass function

It will turn out as part of the proof of the next result that this is indeed a probability mass function. We have \(\lim _{\kappa \rightarrow 0}\frac{1}{\kappa }\frac{(2\kappa )_n}{n!} = \frac{2}{n}\), which gives \(\lim _{\kappa \rightarrow 0}\textrm{gpsk}(n|\kappa ,\tau )=\textrm{psk}(n|\tau )\) for all \(n\in \mathbb {N}\). Hence the positive Skellam distribution appears as the limiting case of the generalized positive Skellam distribution as \(\kappa \rightarrow 0\).

Theorem 9

We consider the von Mises–Fisher families \(\{\textrm{MF}_d(\eta ,\rho ):\, \rho \ge 0\}\), \(\eta \in \mathbb {S}_d\).

(a) If \(d=1\) then \(\beta (\rho )= e^\rho /I_0(\rho )-1\), the equation \(\beta (\rho )=1\) has a unique finite positive solution \(\rho _0\approx 0.876842\), and for \(0\le \rho \le \rho _0\) it holds that

(b) If \(d>1\) then

the equation \(\beta _\lambda (\rho )=1\) has a unique finite positive solution \(\rho _0(\lambda )\), and for \(0\le \rho \le \rho _0(\lambda )\) it holds that

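For \(d=1\), the positive Skellam mass function can be written in terms of modified Bessel functions: if \(N_1,N_2\) are independent with the Poisson distribution with parameter \(\rho /2\), then \(P(N_1-N_2=n)=e^{-\rho }I_n(\rho )\), hence \(\textrm{psk}(n|\rho )=2I_n(\rho )/(e^\rho -I_0(\rho ))\). Assuming the normalization \(D^0_n=T_n\), this is also what Proposition 7 yields, since the \(\textrm{MF}_1\) density is \(1+2\sum _{n\ge 1}(I_n(\rho )/I_0(\rho ))\cos (n\vartheta )\) and \(\beta (\rho )=e^\rho /I_0(\rho )-1\). The sketch below computes \(I_n\) from its power series, compares psk with a direct Poisson convolution, and solves \(e^\rho =2I_0(\rho )\) by bisection to recover \(\rho _0\approx 0.876842\) from Theorem 9 (a). All helper names are our own.

```python
import math


def bessel_i(n, x, rel_tol=1e-17):
    """Modified Bessel function I_n(x) for integer n >= 0 and x >= 0, from its power series."""
    half = x / 2.0
    term = 1.0
    for j in range(1, n + 1):
        term *= half / j                        # first series term (x/2)^n / n!
    total, k = term, 0
    while term > rel_tol * total:
        k += 1
        term *= half * half / (k * (k + n))     # ratio of consecutive series terms
        total += term
    return total


def psk(n, rho):
    """Positive Skellam mass function 2 I_n(rho) / (e^rho - I_0(rho)), n >= 1, rho > 0."""
    return 2.0 * bessel_i(n, rho) / (math.exp(rho) - bessel_i(0, rho))


def psk_direct(n, rho, k_max=200):
    """P(|N1 - N2| = n | N1 != N2) for independent Poisson(rho / 2) variables, summed directly."""
    mu = rho / 2.0

    def pois(k):
        return math.exp(k * math.log(mu) - mu - math.lgamma(k + 1))

    p_diff = sum(pois(k + n) * pois(k) for k in range(k_max))   # P(N1 - N2 = n)
    p_tie = sum(pois(k) ** 2 for k in range(k_max))              # P(N1 = N2)
    return 2.0 * p_diff / (1.0 - p_tie)


def rho0_mf1(lo=0.0, hi=2.0, iters=100):
    """Root of e^rho = 2 I_0(rho), i.e. beta(rho) = 1 for MF_1, by bisection."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if math.exp(mid) < 2.0 * bessel_i(0, mid):
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```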
Remark 10

(a) The condition that \(\beta _n(\rho ) \ge 0\) for all \(n\in \mathbb {N}\) in Proposition 7 is satisfied in all of the above families, but it can easily be removed by an appropriate extension of the mixing base. For this, let \(\Delta ^{\lambda ,-}_{n,\eta }\) be the distribution with density \(x\mapsto 1-D_n^\lambda (\eta ^{\text {t}}x)\), \(x\in \mathbb {S}_d\). Then the representation (28) continues to hold if we take \(Q_{n,\eta }=\Delta ^{\lambda ,-}_{n,\eta }\) and \(w_\rho (n)=-\beta _n(\rho )\) whenever \(\beta _n(\rho )<0\).

(b) The condition \(\beta (\rho )\le 1\) in Proposition 7 holds if \(P_{\eta ,\rho }\) is sufficiently close to the uniform distribution on the sphere. If instead of \(P_{\eta ,\rho }\) we consider a mixture of this distribution with the uniform, with enough weight on the latter, then the result is close enough to the uniform, and we again obtain a mixture representation. In the von Mises–Fisher case with \(d=1\), for example, we get for \(\rho >\rho _0\) and with \(\alpha (\rho ):= (1- 2e^{-\rho } I_0(\rho ))/(1- e^{-\rho } I_0(\rho ))\),

(c) Is there a countable mixing base that represents all isotropic spherical distributions with densities of the form \(x \mapsto g(\eta ^{\text {t}}x)\) with \(\eta \in \mathbb {S}_{d}\) and \(g\in \mathbb {H}_\lambda \)? This may be rephrased in terms of the set of extremal points of a convex set in an infinite dimensional space. We refer to Baringhaus and Grübel (2021b) for such geometric aspects in general, and for the construction of tree-based mixing bases that would lead to a positive answer for the set of all \(g\in \mathbb {H}_\lambda \) that are Riemann integrable.

The passage from an \(L^2\)-expansion (22) of \(g_\rho \) to the mixture representation (28) heavily relies on the nonnegativity of the functions \(1+D^\lambda _n\) (respectively \(1-D^\lambda _n\) in part (a) above). More generally, we may consider a mixing base \((Q_{n,\eta })_{n\in \mathbb {N}}\) where the density of \(Q_{n,\eta }\) is a polynomial of degree n in \(\eta ^{\text {t}}x\). This leads to the consideration of general linear combinations of ultraspherical polynomials, and finding conditions under which such linear combinations are nonnegative (on a given interval) is an ongoing research topic; see Askey (1975).

We confine ourselves to an example with \(\lambda =0\) and the Chebyshev polynomials. A change of mixing base will obviously lead to a change in the sequence of mixing coefficients. It turns out that this may lead to a representation of wider applicability.

Example 11

We define functions \(g_\rho :[-1,1]\rightarrow \mathbb {R}_+\), \(0\le \rho < 1\), by

where \(\phi _\rho (t):= (1-2\rho t +\rho ^2)^{1/2}\). These can be written as

see Magnus et al. (1966, p. 259). In particular, \(\int _{-1}^1 g_\rho (t)\, dt = 1\), so that we may define a family of distributions \(P_{\eta ,\rho }\) on \(\mathbb {S}_1\) via their densities \(x\mapsto g_\rho (\eta ^{\text {t}}x)\), \(x\in \mathbb {S}_1\).

We first derive an expansion in terms of the distributions \(\Delta ^0_{n,\eta }\). With \(t=1\) we get

and it follows that \(\beta (\rho )\le 1\) if and only if \(\rho \le 1-2^{-\frac{1}{2}}=:\rho _0\). We now introduce the zero-truncated negative binomial distribution with parameters \(r>0\), \(p\in (0,1)\), and probability mass function

Then (34) leads to the discrete mixture representations

for all \(\eta \in \mathbb {S}_1,\, 0< \rho \le \rho _0\).

On the other hand, Turán (1953) proved that, for all \(n\in \mathbb {N}_0\),

In view of \(T_k(\cos \vartheta )=\cos (k\vartheta )\) this can be used to obtain an alternative representation. For this, let \(\Sigma _{n,\eta }\) be the distribution on \(\mathbb {S}_1\) with density \(x\mapsto \sum _{k=0}^n \alpha _k T_k(\eta ^{\text {t}}x)\), where \(\alpha _k:= (\frac{1}{2})_k/{k!}\) and \(n\in \mathbb {N}_0\). Then (34), together with a summation by parts, leads to

where \(\textrm{geo}_{\mathbb {N}_0}\) and \(\textrm{geo}_\mathbb {N}\) are as defined before Theorem 8. Both (35) and (36) hold for all \(\eta \in \mathbb {S}_1\), but the range of permissible \(\rho \)-values increases from \(0\le \rho \le 1-2^{-1/2}\) for (35) to the full interval \(0\le \rho < 1\) for (36).
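The construction of \(\Sigma _{n,\eta }\) requires the trigonometric polynomials \(\sum _{k=0}^n \alpha _k\cos (k\vartheta )\), \(\alpha _k=(\frac{1}{2})_k/k!\), to be nonnegative, and their mean over the circle is \(\alpha _0=1\). The sketch below spot-checks both properties on a grid for \(n\le 30\), using the recursion \(\alpha _k=\alpha _{k-1}(k-\frac{1}{2})/k\). It is a numerical illustration only, not a substitute for Turán's proof; the grid size and function name are arbitrary choices.

```python
import math


def turan_sum(n, theta):
    """sum_{k=0}^n alpha_k cos(k theta) with alpha_k = (1/2)_k / k!."""
    alpha, total = 1.0, 1.0                    # alpha_0 = 1
    for k in range(1, n + 1):
        alpha *= (k - 0.5) / k                 # alpha_k = alpha_{k-1} (k - 1/2) / k
        total += alpha * math.cos(k * theta)
    return total


grid = [2.0 * math.pi * j / 2000 for j in range(2000)]
minima = [min(turan_sum(n, th) for th in grid) for n in range(31)]
means = [sum(turan_sum(n, th) for th in grid) / len(grid) for n in range(31)]
```

Averaging over equally spaced points is exact for trigonometric polynomials of degree below the grid size, so `means` equals \(\alpha _0=1\) up to rounding, which confirms that each \(\Sigma _{n,\eta }\) has total mass one.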

As pointed out earlier, mixing families constructed with surface harmonics can be used with all distributions of the form (21) as long as these are sufficiently close to the uniform distribution. The following result gives a property which we interpret as self-mixing stability of the distribution families \(\mathcal {D}^\lambda _0:=\{\textrm{unif}(\mathbb {S}_{d})\}\), \(\mathcal {D}^\lambda _n:=\{\Delta ^\lambda _{n,\eta ,\alpha }:\, \eta \in \mathbb {S}_d,\,-1\le \alpha \le +1\}\), \(n\in \mathbb {N}\), and \(\mathcal {D}^\lambda :=\bigcup _{n\in \mathbb {N}_0} \mathcal {D}^\lambda _n\): Mixing two elements of the same family results in a distribution that belongs to this family as well. Additionally, mixing any two elements moves the resulting distribution closer to the uniform distribution. Generally, the mixing operation relates distributions with different location parameters \(\eta \in \mathbb {S}_d\) to each other.
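For \(d=1\) the self-mixing stability can be checked by quadrature. The sketch assumes, as a hypothetical normalization, that \(\Delta ^0_{n,\eta ,\alpha }\), \(n\ge 1\), has density \(1+\alpha \cos (n(\vartheta -\vartheta _\eta ))\) with respect to \(\textrm{unif}(\mathbb {S}_1)\), and evaluates the density of the composition of a distribution with a kernel, as formalized in (37) below, by averaging over an equally spaced grid; this is exact for trigonometric polynomials of low degree. Under this normalization, mixing within \(\mathcal {D}^0_n\) replaces \(\alpha \) by \(\alpha \beta /2\) (the factor \(1/2\) is what plays the role of \(\gamma ^0_n\) here; we have not reproduced (24)), while mixing across degrees \(n\not =m\) returns the uniform density. Function names are our own.

```python
import math


def delta_density(n, alpha, theta_eta, x):
    """Hypothetical unif(S_1)-density of Delta^0_{n,eta,alpha}, n >= 1: 1 + alpha cos(n (x - theta_eta))."""
    return 1.0 + alpha * math.cos(n * (x - theta_eta))


def composed_density(n, alpha, m, beta, theta_eta, x, grid=256):
    """Density at x of Delta_{n,eta,alpha} o Delta_{m,.,beta}: grid average over y of f_P(y) f_Q(y, x)."""
    ys = [-math.pi + 2.0 * math.pi * k / grid for k in range(grid)]
    return sum(delta_density(n, alpha, theta_eta, y) * delta_density(m, beta, y, x) for y in ys) / grid


same = composed_density(3, 0.8, 3, -0.6, 0.4, 1.7)      # compare with delta_density(3, -0.24, 0.4, 1.7)
mixed = composed_density(3, 0.8, 5, 0.9, 0.4, 1.7)      # compare with the uniform density 1
```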

Proposition 12

Let \(\gamma _n^\lambda \) be the constants defined in (24).

(a) For all \(n\in \mathbb {N}_0\) and \(\eta \in \mathbb {S}_d\), \(-1\le \alpha ,\beta \le +1\),

for all \(A\in \mathcal {B}(\mathbb {S}_d)\).

(b) For all \(n,m\in \mathbb {N}_0\) with \(n\not = m\), and all \(\eta \in \mathbb {S}_d\), \(-1\le \alpha ,\beta \le +1\),

We recall that a probability kernel from a measurable space \((E,\mathcal {E})\) to another measurable space \((F,\mathcal {F})\) is a function \(Q:E\times \mathcal {F}\rightarrow \mathbb {R}\) that is \(\mathcal {E}\)-measurable in its first and a probability measure on \((F,\mathcal {F})\) in its second argument. Given a probability measure P on \((E,\mathcal {E})\) and a kernel Q from \((E,\mathcal {E})\) to \((F,\mathcal {F})\) we define a probability measure \(P\circ Q\) on \((F,\mathcal {F})\) by

For a family \(\{Q_x:\, x\in E\}\) of probability measures \(Q_x\) on \((F,\mathcal {F})\) with the property that the map \(x\mapsto Q_x(A)\) is \(\mathcal {E}\)-measurable for all \(A\in \mathcal {F}\), we may regard \(Q_{{\cdot }}:E\times \mathcal {F}\rightarrow \mathbb {R}\) defined by \(Q_{{\cdot }}(x,A)=Q_x(A)\), \((x,A)\in E\times \mathcal {F}\), as a Markov kernel from \((E,\mathcal {E})\) to \((F,\mathcal {F})\). Then (37) reads

which may be interpreted as a mixing operation. For use in the next section we note that for a family \(\{P_\eta :\eta \in \mathbb {S}_{d}\}\) of probability measures \(P_\eta \) on \((\mathbb {S}_{d},\mathcal {B}(\mathbb {S}_{d}))\) and a kernel Q from \((\mathbb {S}_{d},\mathcal {B}(\mathbb {S}_d))\) to \((\mathbb {S}_d,\mathcal {B}(\mathbb {S}_d))\) that are both isotropic in the sense defined by (8) and (9) respectively, the mixing results in an isotropic family again,

Further, for \(\alpha =\beta =1\) the statements in Proposition 12 can be simply rephrased as

Using the self-mixing stability and the bilinearity of the operation defined in (37) we obtain a discrete mixture representation for the composition of two families that both have a representation in terms of the ultraspherical mixing base.

Proposition 13

Suppose that \(P_\eta \) and \(P'_\eta \), \(\eta \in \mathbb {S}_d\), are distribution families on \(\mathbb {S}_d\) with discrete mixture representations \(\, P_\eta =\sum _{n=0}^\infty w(n)\Delta ^\lambda _{n,\eta }\,\) and \(\,P'_\eta =\sum _{n=0}^\infty w'(n)\Delta ^\lambda _{n,\eta }\,\) respectively. Then

where \(\; {\tilde{w}}(0):= 1 - \sum _{n=1}^\infty {\tilde{w}}(n)\) and \(\; {\tilde{w}}(n):= \gamma ^\lambda _n\, w(n)w'(n)\;\) for all \(n\in \mathbb {N}\).

This can be used to obtain the composition of wrapped Cauchy distributions. Indeed, taken together, Theorem 8 (a) and Proposition 13 lead to

It is worthwhile to point out that the restriction \(\rho ,\rho '\le 1/3\) in (39) can be omitted. In fact, for all \(0\le \rho ,\rho '<1\), using (24), (25), (26), and (31), the density of \(\textrm{WC}_1(\eta ,\rho )\circ \textrm{WC}_1({\cdot },\rho ')\) is easily calculated to be

In the next section this will be put into a wider context.
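The claim that the restriction \(\rho ,\rho '\le 1/3\) can be dropped is easy to test numerically. The wrapped Cauchy density with respect to \(\textrm{unif}(\mathbb {S}_1)\) is \((1-\rho ^2)/(1-2\rho \cos (\vartheta -\vartheta _\eta )+\rho ^2)=1+2\sum _{n\ge 1}\rho ^n\cos (n(\vartheta -\vartheta _\eta ))\), and composition multiplies Fourier coefficients, so \(\textrm{WC}_1(\eta ,\rho )\circ \textrm{WC}_1({\cdot },\rho ')\) should have the wrapped Cauchy density with parameter \(\rho \rho '\). The sketch compares a periodic trapezoidal evaluation of the composed density with that closed form, including parameter values outside \([0,1/3]\); function names and grid size are our own choices.

```python
import math


def wc_density(rho, theta):
    """unif(S_1)-density of WC_1 at angular distance theta from the mean direction, 0 <= rho < 1."""
    return (1.0 - rho * rho) / (1.0 - 2.0 * rho * math.cos(theta) + rho * rho)


def composed_wc_density(rho, rho2, theta_eta, x, grid=2048):
    """Density at x of WC_1(eta, rho) o WC_1(., rho2) via the periodic trapezoidal rule."""
    ys = [-math.pi + 2.0 * math.pi * k / grid for k in range(grid)]
    return sum(wc_density(rho, y - theta_eta) * wc_density(rho2, x - y) for y in ys) / grid


value = composed_wc_density(0.8, 0.6, 0.4, 1.0)   # compare with wc_density(0.48, 1.0 - 0.4)
```

The trapezoidal rule is used because the integrand is smooth and periodic, so its error decays geometrically in the grid size.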

4 Discrete mixture representations and Markov processes

Let \(\{P_{\eta ,\rho }:\,\eta \in \mathbb {S}_d, \, \rho \in I\}\), \(I\subset \mathbb {R}_+\) an interval, be a family of distributions of the type considered in the previous sections. Below we briefly discuss three different connections to Markov processes. First, for \(\rho \in I\) fixed, the corresponding subfamily may be regarded as a probability kernel and thus induces a Markov chain on the sphere. Second, an isotropic diffusion process on \(\mathbb {S}_d\) leads to a family of the above type via its one-dimensional marginal distributions, where \(\eta \) and \(\rho \) take over the role of starting point and (transformed) time parameter respectively. Third, starting with a discrete mixture representation we may find a discrete time Markov chain with marginal distributions equal to elements of the mixing base, and thus obtain an almost sure representation of the family as the distributions of the chain at random times.

4.1 Random walks on spheres

Let \(\{Q_\eta :\, \eta \in \mathbb {S}_d\}\) be a family of probability distributions that leads to a Markov kernel as described at the end of Section 3. Such kernels arise as transition probabilities of Markov processes. We may, for example, fix an \(\eta \in \mathbb {S}_d\) and define a Markov chain \((X_n)_{n\in \mathbb {N}_0}\) with state space \((\mathbb {S}_d,\mathcal {B}(\mathbb {S}_d))\) by the requirements that \(X_0\equiv \eta \) and that the distribution of \(X_{n+1}\) conditionally on \(X_n=\xi \) is given by \(Q_\xi \). For isotropic kernels each transition can be divided into two steps that make use of the representation of \(\mathbb {S}_d\) by \([-1,1]\times \mathbb {S}_{d-1}\) that also appeared in connection with (5) and (6). In the geometrically most familiar case \(d=2\) we consider the current position as the ‘north pole’, then first choose a latitude and thereafter, independently, a longitude uniformly at random. The result is regarded as the new north pole. A generalization of this setup has been considered by Bingham (1972); see also the references given there.
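The latitude/longitude description of an isotropic transition on \(\mathbb {S}_2\) can be sketched as follows. As a hypothetical choice of kernel we take \(Q_\xi =\textrm{MF}_2(\xi ,\rho )\): its latitude \(\xi ^{\text {t}}X\) has density proportional to \(e^{\rho t}\) on \([-1,1]\) (recall that \(\nu _2\) is the uniform distribution on \([-1,1]\)) and can be drawn by inversion. The new point is \(t\,\xi +(1-t^2)^{1/2}(\cos \phi \,u+\sin \phi \,v)\), where \(u,v\) complete \(\xi \) to an orthonormal basis of \(\mathbb {R}^3\) and \(\phi \) is uniform on \([0,2\pi )\). The names and the choice of kernel are illustrative assumptions.

```python
import math
import random


def orthonormal_frame(x):
    """Return unit vectors u, v such that (x, u, v) is an orthonormal basis of R^3 (x a unit vector)."""
    a = min(range(3), key=lambda i: abs(x[i]))      # axis least aligned with x, avoids cancellation
    u = [-x[a] * x[i] for i in range(3)]
    u[a] += 1.0                                     # u = e_a - (e_a . x) x  (Gram-Schmidt)
    norm = math.sqrt(sum(c * c for c in u))
    u = [c / norm for c in u]
    v = [x[1] * u[2] - x[2] * u[1],                 # v = x cross u
         x[2] * u[0] - x[0] * u[2],
         x[0] * u[1] - x[1] * u[0]]
    return u, v


def mf2_latitude(rho, rng):
    """Draw t in [-1, 1] with density proportional to exp(rho t), rho > 0, by inversion."""
    w = rng.random()
    return 1.0 + math.log(w + (1.0 - w) * math.exp(-2.0 * rho)) / rho


def step(x, rho, rng):
    """One isotropic transition from x: latitude t relative to x, then a uniform longitude phi."""
    t = mf2_latitude(rho, rng)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    u, v = orthonormal_frame(x)
    s = math.sqrt(max(0.0, 1.0 - t * t))
    return [t * x[i] + s * (math.cos(phi) * u[i] + math.sin(phi) * v[i]) for i in range(3)]


def random_walk(eta, rho, n_steps, rng):
    """Path X_0 = eta, X_1, ..., X_n of the random walk on S_2."""
    path = [list(eta)]
    for _ in range(n_steps):
        path.append(step(path[-1], rho, rng))
    return path
```

For this kernel the mean latitude of a single step is \(\coth \rho -1/\rho \), which gives a simple Monte Carlo check.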

The case \(d=1\) is somewhat special as the wrapping procedure is a group homomorphism from the additive group of real numbers into the multiplicative group \(\mathbb {S}_1\), regarded as a subset of \(\mathbb {C}\) and endowed with complex multiplication. Wrapping a random walk or a Lévy process thus leads to processes with values in \(\mathbb {S}_1\) that have stationary and independent increments, where the latter are now to be understood as ratios rather than differences. In fact, the location-scale family of Cauchy distributions arises as the one-dimensional marginals of a specific Lévy process, which gives (39) after an appropriate transformation of the scale parameter. A similar approach, now using Brownian motion on the real line, gives a corresponding statement for the wrapped normal distributions.

We collect some observations in the following result. Recall that the distribution \(\mathcal {L}(X)\) of a Markov chain \(X=(X_n)_{n\in \mathbb {N}_0}\) with state space \((\mathbb {S}_d, \mathcal {B}(\mathbb {S}_d))\) is a probability measure on the path space \((\mathbb {S}_d^{\mathbb {N}_0}, \mathcal {B}\bigl ( \mathbb {S}_d^{\mathbb {N}_0}\bigr ))\) of the chain, where the \(\sigma \)-field \(\mathcal {B}\bigl ( \mathbb {S}_d^{\mathbb {N}_0}\bigr )\) is generated by the projections \(\pi _k:\mathbb {S}_d^{\mathbb {N}_0}\rightarrow \mathbb {S}_d\), \((x_n)_{n\in \mathbb {N}_0}\mapsto x_k\), \(k\in \mathbb {N}_0\). Any measurable mapping \(T:\mathbb {S}_d\rightarrow \mathbb {S}_d\) may be lifted to a mapping from and to paths by componentwise application.

Proposition 14

Suppose that Q is an isotropic kernel on \(\mathbb {S}_d\) and that \(X^\eta =(X^\eta _n)_{n\in \mathbb {N}_0}\) is a Markov chain with start at \(\eta \in \mathbb {S}_d\) and transition kernel Q.

(a) The family \(\{\mathcal {L}(X^\eta ):\, \eta \in \mathbb {S}_d\}\) of probability measures on the path space is isotropic in the sense that \(\mathcal {L}(X^\eta )^U=\mathcal {L}(X^{U\eta })\) for all \(\eta \in \mathbb {S}_d\), \(U\in \mathbb {O}(d+1)\).

(b) The latitude process \(Y=(Y_n)_{n\in \mathbb {N}_0}\), with \(Y_n:=\eta ^{\text {t}}X^\eta _n\) for all \(n\in \mathbb {N}_0\), is a Markov chain with state space \([-1,1]\) and start at 1.

(c) Suppose that \(Q(\eta ,\cdot \,) =\sum _{k=0}^\infty w(k)\Delta _{k,\eta }^\lambda \) for all \(\eta \in \mathbb {S}_d\). Then the representation

holds with \(w_1(k):=w(k)\) for all \(k\in \mathbb {N}_0\) and, for \(n>1\),

The fact that the geodesic distances from the starting point are again a Markov chain is an instance of lumpability, see Rogers and Pitman (1981) for a general discussion. That the dependence on \(\eta \) is lost in the lumping transition is part of the assertion of part (b). Further, it follows from the cosine theorem for spherical triangles that the transition kernel \(Q_Y\) of Y may be written as

with Z, U independent, \(\mathcal {L}(Z)=\mathcal {L}(\eta ^{\text {t}}X_1^\eta )\) and \(\mathcal {L}(U)=\nu _{d-1}\); see also Bingham (1972). Part (c) shows that the mixing base introduced in Sect. 3 is useful in the Markov chain context, and (40) may be seen as a discrete mixture representation of the family \(\{P_{\eta ,n}:\, \eta \in \mathbb {S}_d,\, n\in \mathbb {N}\}\), with \(P_{\eta ,n}=\mathcal {L}(X^\eta _n)\). It implies that the marginal distributions of the associated latitude process are given by

where \(\mu _k^\lambda \) is the push-forward of \(\Delta ^\lambda _{k,\eta }\) under \(x\mapsto \eta ^{\text {t}}x\).
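The cosine-theorem form of the latitude kernel \(Q_Y\) can be simulated directly on \([-1,1]\), without tracking the full path on the sphere. The sketch takes \(d=2\), so that \(U\sim \nu _1\) is the cosine of a uniform angle, and, hypothetically, the \(\textrm{MF}_2\) latitude law with density proportional to \(e^{\rho z}\) for Z. By the addition theorem for Legendre polynomials, \(E[P_k(Y_n)]=(E[P_k(Z)])^n\) for each k; the test checks this for \(k=1,2\), using \(E[Z]=\coth \rho -1/\rho \) and \(E[Z^2]=1-2E[Z]/\rho \). Function names are our own.

```python
import math
import random


def mf2_latitude(rho, rng):
    """Draw Z in [-1, 1] with density proportional to exp(rho z) (hypothetical step law), by inversion."""
    w = rng.random()
    return 1.0 + math.log(w + (1.0 - w) * math.exp(-2.0 * rho)) / rho


def latitude_step(y, rho, rng):
    """One transition of Y: y Z + (1 - y^2)^(1/2) (1 - Z^2)^(1/2) U with U ~ nu_1 (d = 2)."""
    z = mf2_latitude(rho, rng)
    u = math.cos(rng.uniform(0.0, 2.0 * math.pi))    # nu_1: cosine of a uniform angle
    return y * z + math.sqrt(max(0.0, 1.0 - y * y)) * math.sqrt(max(0.0, 1.0 - z * z)) * u


def latitude_chain(n, rho, rng):
    """Y_n for the latitude chain started at Y_0 = 1."""
    y = 1.0
    for _ in range(n):
        y = latitude_step(y, rho, rng)
    return y
```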

4.2 Diffusion processes

Let \(X=(X_t)_{t\ge 0}\) be a homogeneous continuous time Markov process on the sphere with start at \(\eta \), i.e. \(\mathbb {P}(X_0=\eta )=1\), and transition densities \(p_t(x,y)\), \(t>0\), \(x,y\in \mathbb {S}_d\), that are isotropic in the sense that \(p_t(Ux,Uy)=p_t(x,y)\) for all \(t>0\), \(x,y\in \mathbb {S}_d\) and \(U\in \mathbb {O}(d+1)\). Then the marginal distributions \(\mathcal {L}(X_t)\), \(t\ge 0\), of the process may have a discrete mixture representation of the type considered above, with \(\rho \) related to time t.

We sketch the basic argument, see also Karlin and McGregor (1960), and then apply this in the context of the spherical Brownian motion \((B_t)_{t\ge 0}\). For a general discussion of the latter we refer to Ito and McKean (1997, Section 7.15) and Hsu (2002, Example 3.3.2). We note in passing that the marginal distributions of X characterize the full distribution \(\mathcal {L}(X)\) of the process, in view of the Chapman–Kolmogorov equations and the invariance of the transition mechanism under orthogonal transformations (clearly, \(\mathbb {O}(d+1)\) acts transitively on \(\mathbb {S}_d\)).

Suppose that X has transition densities \(p_t(x,y)\) and that its infinitesimal generator A has a discrete spectrum. As transitions are isotropic it is enough to consider one specific starting value \(x=\eta \). For the Kolmogorov forward equations

we may try to find a family of basic solutions \(\phi \) by a separation ansatz \(\phi (t,y)=f(t)g(y)\). This leads to

As the left-hand side depends on t only and the right-hand side on y only, both must be equal to a constant, and we may hope that

where \(\omega _n\), \(n\in \mathbb {N}_0\), are the eigenvalues of the operator A, with eigenfunctions \(\phi _{n,\eta }\).

Recall that \(\lambda =(d-1)/2\).

Theorem 15

Let \((B_t)_{t\ge 0}\) be the spherical Brownian motion on \(\mathbb {S}_d\), \(d>1\), with start at \(\eta \in \mathbb {S}_d\). Let

and let

Further, let \(\,t^\lambda _0\) be the unique solution of the equation \(\beta ^\lambda (t)=1\). Then, for \(t\ge t^\lambda _0\),

In view of the wrapping representation mentioned above, the corresponding result for \(d=1\) is contained in part (b) of Theorem 8.
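The displays defining \(\beta ^\lambda \) in Theorem 15 are not reproduced here. Assuming that the generator of \((B_t)_{t\ge 0}\) is one half of the Laplace–Beltrami operator and that \(D^\lambda _n=C^\lambda _n/C^\lambda _n(1)\), the standard eigenfunction expansion of the heat kernel gives the \(\textrm{unif}(\mathbb {S}_d)\)-density \(\sum _{n\ge 0}e^{-n(n+2\lambda )t/2}\frac{n+\lambda }{\lambda }C^\lambda _n(\eta ^{\text {t}}x)\), hence \(\beta ^\lambda (t)=\sum _{n\ge 1}e^{-n(n+2\lambda )t/2}\frac{n+\lambda }{\lambda }\frac{(2\lambda )_n}{n!}\). As \(\lambda \rightarrow 0\) each coefficient tends to 2, so this reduces to the function \(\beta \) of Theorem 8 (b) with \(\rho =t\), in line with the remark that the case \(d=1\) is contained there. The sketch solves \(\beta ^\lambda (t)=1\) by bisection; it illustrates these assumptions and is not the paper's definition.

```python
import math


def beta_bm(t, lam):
    """ASSUMED form: sum_{n>=1} exp(-n(n+2 lam) t / 2) * (n + lam) / lam * (2 lam)_n / n!, lam > 0."""
    total, r, n = 0.0, 1.0, 0                          # r holds (2 lam)_n / n!, starting at n = 0
    n_min = int(math.sqrt((2.0 * lam + 1.0) / t)) + 5  # roughly past the largest term
    while True:
        n += 1
        r *= (2.0 * lam + n - 1) / n
        term = math.exp(-n * (n + 2.0 * lam) * t / 2.0) * (n + lam) / lam * r
        total += term
        if n > n_min and term <= 1e-17 * total:
            return total


def t0(lam, lo=0.05, hi=50.0, iters=200):
    """Unique root of beta_bm(t, lam) = 1 by bisection; beta_bm is decreasing in t."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if beta_bm(mid, lam) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


roots = {d: t0((d - 1) / 2.0) for d in (2, 3, 6)}   # t_0^lambda for a few dimensions
```

Taking a very small \(\lambda \) reproduces the value \(\rho _0\approx 1.570818\) of Theorem 8 (b), which is the consistency check used in the test.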

Let \(B=(B_t)_{t\ge 0}\) be as in the theorem and let \(Y=(Y_t)_{t\ge 0}\) with \(Y_t=\eta ^{\text {t}}B_t\), \(t\ge 0\), be the associated latitude process. Then a polar decomposition shows that, for \(t>0\) fixed, \(B_t\) can be synthesized (in distribution) from \(Y_t\) and an independent random variable Z that is uniformly distributed on \(\mathbb {S}_{d-1}\). On the level of processes, the conditional distribution of B given Y is described by the skew product decomposition of spherical Brownian motion; see Ito and McKean (1997, p. 270) and Mijatović et al. (2020).

A representation with a different mixing base, closer in spirit to the representations in Sect. 2, has very recently been obtained by Mijatović et al. (2020). The result is based on the authors’ observation that for a spherical Brownian motion \((B^\lambda _t)_{t\ge 0}\) with start at \(\eta \in \mathbb {S}_d\), \(d>1\), the rescaled latitude process \((Y_t)_{t\ge 0}\), \(Y_t:=(1-\eta ^{\text {t}}B^\lambda _t)/2\) for all \(t\ge 0\), is a neutral Wright–Fisher diffusion with both mutation parameters equal to \(\lambda \). For these, a discrete mixture representation had earlier been given by Jenkins and Spanò (2017); see also Griffiths and Spanò (2010). Taken together, this leads to

with

where the term \((d)_{-1}\) appearing with \(n=k=0\) is defined to be \(1/(d-1)\); see Jenkins and Spanò (2017, formula (5)). Interestingly, the mixture coefficients turn out to be the individual probabilities associated with the marginal distributions of a particular pure death process \((Z_t)_{t>0}\), i.e. \(w_t^\lambda (n)=P(Z_t=n)\). In contrast to our representation in Theorem 15 via surface harmonics, no further restrictions on the time parameter are needed. Moreover, there is also a fascinating probabilistic interpretation, relating neutral Wright–Fisher diffusions to Kingman’s coalescent via moment duality; see Mijatović et al. (2020) for the details.
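The probabilities \(P(Z_t=m)\) of such a pure death process are usually evaluated from an alternating series. The sketch below assumes the form commonly stated in the literature (e.g. Jenkins and Spanò 2017), \(q^\theta _m(t)=\sum _{k\ge m}(-1)^{k-m}\frac{(2k+\theta -1)(\theta +m)_{(k-1)}}{m!\,(k-m)!}e^{-k(k+\theta -1)t/2}\) with rising factorials and \((\theta )_{(-1)}=1/(\theta -1)\); the appearance of \((d)_{-1}\) above suggests \(\theta =d\), but we treat \(\theta >1\) as a free parameter and do not reproduce the relation between t and the Brownian clock. The series suffers from severe cancellation for small t, so the sketch is only meant for moderate times; the function name is hypothetical.

```python
import math


def death_process_prob(m, t, theta, k_max=200):
    """P(Z_t = m) from the alternating series, in the ASSUMED form stated in the lead-in (theta > 1)."""
    total = 0.0
    for k in range(m, k_max + 1):
        # log[(theta + m)_(k-1) / (m! (k - m)!)]; for k = m = 0 the rising factorial is 1/(theta - 1)
        log_coef = (math.lgamma(theta + m + k - 1) - math.lgamma(theta + m)
                    - math.lgamma(m + 1) - math.lgamma(k - m + 1))
        term = (2 * k + theta - 1) * math.exp(log_coef - k * (k + theta - 1) * t / 2.0)
        total += term if (k - m) % 2 == 0 else -term
    return total


probs = [death_process_prob(m, 0.5, 3.0) for m in range(61)]
```

For moderate t the computed values are nonnegative and sum to one, as mixing coefficients must.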

Mardia and Jupp (2000) call the \(\mathcal {L}(B^\lambda _t)\) Brownian motion distributions on \(\mathbb {S}_{d}\); Kent (1977) regards \(\mathcal {L}(\eta ^{\text {t}}B^\lambda _t)\) as a spherical normal distribution. We adopt the notation of the latter. Remembering that \(B^\lambda \) starts in \(\eta \in \mathbb {S}_{d}\), we denote by \(\textrm{SN}_d(\eta ,\rho )\,=\,\mathcal {L}\bigl (B^\lambda _{1/\rho }\bigr )\) the spherical normal distribution with parameters \(\eta \in \mathbb {S}_{d}\) and \(\rho >0\). Then, interestingly, by (42) we have a discrete mixture representation for the family \(\{\,\textrm{SN}_d(\eta ,\rho ):\eta \in \mathbb {S}_{d}, \rho >0\}\,\) with the same mixing base of spherical beta distributions \(\textrm{SBeta}_d(\eta ,1,n+1)\), \(n\in \mathbb {N}_0\), as obtained in Sect. 2 for the von Mises–Fisher, the spherical Cauchy, and the angular Gaussian families.

As explained at the beginning of this subsection, isotropic diffusion processes on the sphere may lead to a discrete mixture representation for the family of their marginal distributions. Conversely, for a given family of the type considered in the previous sections, one might ask for a representation of its elements as the marginals of some diffusion process with values in \(\mathbb {S}_d\). The following result answers this question for the von Mises–Fisher distributions.

Theorem 16

There is no homogeneous Markov process \(X=(X_t)_{t\ge 0}\) on \(\mathbb {S}_d\) with the property that, for all \(\eta \in \mathbb {S}_d\),

Alternatively, one might start with a diffusion on the ambient space \(\mathbb {R}^{d+1}\) and then use the projection \(x\mapsto x/\Vert x\Vert \) from \(\mathbb {R}^{d+1}\setminus \{0\}\) to \(\mathbb {S}_d\). For example, if \(B=(B_t)_{t\ge 0}\) is a Brownian motion on \(\mathbb {R}^{d+1}\) with start at \(\eta \in \mathbb {S}_d\), then \(X=(X_t)_{t\ge 0}\), with \(X_t:=\Vert B_t\Vert ^{-1} B_t\,\) for all \(t\ge 0\), represents the family \(\{\textrm{AG}_d(\eta ,\rho ):\,\rho >0\}\) in the sense that

Here the bijection \(\rho :(0,\infty )\rightarrow (0,\infty )\) is given by \(\rho (t):=(2t)^{-1/2}\), \(t>0\).
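At a fixed time the projected Brownian motion is straightforward to simulate: \(B_t\sim N(\eta ,tI)\) in \(\mathbb {R}^{d+1}\) and \(X_t=B_t/\Vert B_t\Vert \). The sketch takes \(d=2\) and also implements the parameter map \(\rho (t)=(2t)^{-1/2}\); the function names are our own.

```python
import math
import random


def projected_bm(eta, t, rng):
    """X_t = B_t / ||B_t|| for a Brownian motion B in R^(d+1) started at eta, at a fixed time t > 0."""
    b = [e + math.sqrt(t) * rng.gauss(0.0, 1.0) for e in eta]   # B_t ~ N(eta, t I)
    norm = math.sqrt(sum(c * c for c in b))
    return [c / norm for c in b]


def rho_of_t(t):
    """The bijection rho(t) = (2 t)^(-1/2) relating time to the AG concentration parameter."""
    return (2.0 * t) ** -0.5


rng = random.Random(3)
sample = projected_bm([1.0, 0.0, 0.0], 0.5, rng)   # one draw from AG_2(eta, rho_of_t(0.5))
```

Small times correspond to large \(\rho \) (points concentrated near \(\eta \)), large times to small \(\rho \) (nearly uniform points).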

Remark 17

We relate Theorem 16 to the infinite divisibility statement for the von Mises–Fisher distributions obtained by Kent (1977). Interestingly, in both cases the proofs are based on the same series expansions (57), (59) of the densities \(f_d^{\textrm{MF}}(\,\cdot \,|\eta ,\rho )\) in terms of ultraspherical polynomials. An important ingredient of Kent’s approach is the associative convolution algebra \(({\mathscr {F}},\circ _d)\) on the space \({\mathscr {F}}\) of probability measures on \([-1,1]\) introduced by Bingham (1972), where the convolution \(F_1\circ _d F_2\) of \(F_1,F_2\in {\mathscr {F}}\) is defined to be the distribution of \(S_1S_2 + \Lambda (1-S_1^2)^{\frac{1}{2}}(1-S_2^2)^{\frac{1}{2}}\), see also (41). Here \(S_1,S_2,\Lambda \) are independent, \(S_i\sim F_i\) for \(i=1,2\), and \(\Lambda \sim \nu _{d-1}\); for \(d=1\), the distribution \(\nu _0\) is taken to be the discrete uniform distribution on \(\mathbb {S}_{0}:=\{-1,1\}\). With this definition, a probability measure \(F\in {\mathscr {F}}\) is said to be \(\circ _d\)-infinitely divisible if for each \(m\in \mathbb {N}\) there exists an \(F_m\in {\mathscr {F}}\) such that F is equal to the m-fold convolution \(F_m^{\circ _d m}\) of \(F_m\). As \(F\in {\mathscr {F}}\) is uniquely determined by its Fourier transform \(\varphi _F:\mathbb {N}_0\rightarrow \mathbb {R}\) defined by

and \(\varphi _{F_1\circ _d F_2}=\varphi _{F_1}\varphi _{F_2}\) for all \(F_1,F_2\in {\mathscr {F}}\), it holds that F is \(\circ _d\)-infinitely divisible if and only if for each \(m\in \mathbb {N}\) there is a Fourier transform \(\varphi _{F_m}:\mathbb {N}_0\rightarrow \mathbb {R}\) of an \(F_m\in {\mathscr {F}} \) such that \(\varphi _F(n)=\varphi _{F_m}(n)^m\) for all \(n\in \mathbb {N}_0\). The distribution \(\textrm{MF}_d^*(\rho )\) of \(\eta ^{\text {t}}X\), with \(X\sim \textrm{MF}_d(\eta ,\rho )\), has the \(\nu _d\)-density \(\frac{\rho ^\lambda }{2^\lambda \Gamma (\lambda +1)I_\lambda (\rho )}\exp (\rho t),\,t\in [-1,1]\). Kent (1977) showed that \(\textrm{MF}_d^*(\rho )\) is \(\circ _d\)-infinitely divisible. In fact, Kent even gives an interesting representation of the distributions \(F_m\) such that \(\textrm{MF}_d^*(\rho )=F_m^{\circ _d m}\) based on the spherical Brownian motion \((B_t)_{t\ge 0}\) on \(\mathbb {S}_d\) with start at \(\eta \in \mathbb {S}_d\): He showed that for each \(m\in \mathbb {N}\) there exists an absolutely continuous distribution \(G_m\) on the positive half-line such that \(\eta ^{\text {t}}B_{T_m}\sim F_m\), where \(T_m\sim G_m\) is independent of \((B_t)_{t\ge 0}\). Consequently,

In order to lift this from the interval \([-1,1]\) to the sphere, let \(\eta \in \mathbb {S}_{d}\) be fixed and let \({\mathscr {P}}_\eta \) be the family of distributions P on \(\mathbb {S}_{d}\) that are axially symmetric with respect to \(\eta \), i.e. \(P^U=P\) for each \(U\in \mathbb {O}(d+1)\) with \(U\eta =\eta \), where \(P^U\) denotes the push-forward of P under U. In order to carry over the convolution operation \(\circ _d\) to \({\mathscr {P}}_\eta \), a spherical addition \(\oplus \) on \(\mathbb {S}_{d}\) is defined. Assign to each \(x\in \mathbb {S}_{d}\) an element \(U_x\in \mathbb {O}(d+1)\) in such a way that \(U_x\eta =x\) for all \(x\in \mathbb {S}_{d}\) and that \(x\mapsto U_x\) is a measurable injection (a measurable embedding) from \(\mathbb {S}_{d}\) into \(\mathbb {O}(d+1)\). Then, for \(x,y\in \mathbb {S}_{d}\), let \(x\oplus y:=U_xy\). For \(P_1,P_2\in {\mathscr {P}}_\eta \), with independent \(\mathbb {S}_{d}\)-valued random vectors \(X_i\sim P_i\), \(i=1,2\), the \(\star _d\)-convolution \(P_1\star _d P_2\) of \(P_1\) and \(P_2\) is defined to be the distribution of \(X_1\oplus X_2\). Kent showed that \(P_1\star _d P_2\in {\mathscr {P}}_\eta \), and with \(F_i\) as the distribution of \(\eta ^{\text {t}}X_i\), \(i=1,2\), the cosine theorem for spherical triangles leads to \(\eta ^{\text {t}}(X_1\oplus X_2)\sim F_1\circ _d F_2\), i.e.

From (44) it now follows that \(\textrm{MF}_d(\eta ,\rho )\,=\,\mathcal {L}\bigl (B_{T_m}\bigr )^{\star _d m}\) for all \(m\in \mathbb {N}\).

4.3 Almost sure representations

The basic relation (2) connects \(\{P_\theta :\, \theta \in \Theta \}\) to the distributions \(W_\rho \) on \(\mathbb {N}_0\) and \(Q_{n,\eta }\) on \(\mathbb {S}_d\). Isotropy means that we may consider the \(\eta \)-part of the parameter as fixed. In this situation, if \((N_\rho )_{\rho \ge 0}\) and \((X_n)_{n\in \mathbb {N}_0}\) are independent stochastic processes such that

then

Equation (45) may be regarded as an almost sure representation of the distributional equation (2). Classical examples of such almost sure representations are the Skorohod coupling in connection with distributional convergence, see e.g. Kallenberg (1997, Theorem 3.30), and the representation of a sequence of uniformly distributed permutations on the sets \(\{0,1,\ldots ,n\}\), \(n\in \mathbb {N}\), by the Chinese restaurant process, see e.g. Pitman (2006, Section 3.1). Of course, such representations are most useful if the successive variables are close to each other (and not simply chosen to be independent). Our aim here is to obtain representations of the type (45) for the distribution families considered above. For this, we first formalize the polar decomposition.

Let \(\eta \in \mathbb {S}_d\), where we assume that \(d>1\). Recall from (6) that \(C_d(\eta ,y)=\{z\in \mathbb {S}_d:\, \eta ^{\text {t}}z = y\}\) and let

be the normalized projection onto the orthogonal complement of the linear subspace of \(\mathbb {R}^{d+1}\) spanned by \(\eta \). This can be extended to the whole of the sphere by choosing an arbitrary \(\xi \in \mathbb {S}_d\) as the value of \(n_\eta (\pm \eta )\). Then an inverse of the polar decomposition \(x\mapsto (\eta ^{\text {t}}x,n_\eta (x))\) is given by

in the sense that \(\; \Psi _\eta (\eta ^{\text {t}}x,n_\eta (x)) = x\) for all \(x\in \mathbb {S}_d\), and a random vector X with values in \(\mathbb {S}_d\) may be written as

Let \(Q_\eta \) be a distribution on \((\mathbb {S}_d,\mathcal {B}(\mathbb {S}_d))\) that has a density \(f_\eta \) with respect to \(\textrm{unif}(\mathbb {S}_d)\) of the form \(f_\eta (x)=g(\eta ^{\text {t}}x)\), \(x\in \mathbb {S}_d\), see (7). If \(X\sim Q_\eta \) then \(\eta ^{\text {t}}X\) and \(n_\eta (X)\) are independent, \(\eta ^{\text {t}}X\) has the distribution \(\nu _{d;g}\) with \(\nu _d\)-density g, and \(n_\eta (X)\sim \textrm{unif}\left( C_d(\eta ,0)\right) \); see Watson (1983, p. 92) and Mardia and Jupp (2000, p. 169). Conversely, if we have independent random variables \(Y\sim \nu _{d;g}\) and \(Z\sim \textrm{unif}\left( C_d(\eta ,0)\right) \), then

Mardia and Jupp (2000, p. 161, p. 169) call (46) and the distributional version (47) the tangent-normal decomposition. This decomposition has been applied successfully to a variety of problems in directional statistics, see e.g. Saw (1978, 1983, 1984), García-Portugués et al. (2020), and Ulrich (1984). In practice, a random variable X with distribution \(Q_\eta \) can be generated as follows. Suppose first that \(\eta \) is equal to \(e_1=(1,0,\ldots ,0)^{\text {t}}\), the first unit vector in the canonical basis of \(\mathbb {R}^{d+1}\). With \(e_1\) as ‘north pole’ the polar representation takes on a particularly simple form: From \(y\in [-1,1]\) and \(z=(z_1,\ldots ,z_d)^{\text {t}}\in \mathbb {S}_{d-1}\) we get \(x\in \mathbb {S}_d\) by \(x=\Phi (y,z)\) with

Then, starting with a random variable \(Y\sim \nu _{d;g}\) and another random variable \(Z\sim \textrm{unif}(\mathbb {S}_{d-1})\) independent of Y, we obtain an \(\mathbb {S}_d\)-valued random variable X with distribution \(Q_{e_1}\) via \(X:=\Phi (Y,Z)\). For a general \(\eta \in \mathbb {S}_d\) we use the fact that \(Q_\eta \) is the push-forward \(Q_{e_1}^U\) of \(Q_{e_1}\) under the mapping \(x\mapsto Ux\), where \(U\in \mathbb {O}(d+1)\) is such that \(\eta =Ue_1\), i.e. U has \(\eta \) as its first column. Then, defining \(\Phi _\eta (y,z):=U\Phi (y,z)\), it follows that \(X:=\Phi _\eta (Y,Z)\sim Q_\eta \); see also Saw (1978) and Ulrich (1984) for this construction.
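The construction of \(X\sim Q_\eta \) can be sketched in Python as follows. The displays defining \(\Phi \) and \(\Psi _\eta \) are not reproduced above, so we assume the standard forms \(\Phi (y,z)=(y,\sqrt{1-y^2}\,z^{\text {t}})^{\text {t}}\) and \(\Psi _\eta (y,z)=y\eta +\sqrt{1-y^2}\,z\); as the matrix U with first column \(\eta \) we take, again as an assumption, the Householder reflection exchanging \(e_1\) and \(\eta \). The sampler for \(\nu _{d;g}\) is supplied by the caller through the hypothetical argument sample_y.

```python
import math
import random


def householder_e1(eta):
    """Orthogonal, symmetric U with U e_1 = eta, so eta is its first column (assumed choice)."""
    m = len(eta)
    v = [(1.0 if i == 0 else 0.0) - eta[i] for i in range(m)]
    vv = sum(vi * vi for vi in v)
    eye = [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    if vv < 1e-15:                      # eta == e_1
        return eye
    return [[eye[i][j] - 2.0 * v[i] * v[j] / vv for j in range(m)]
            for i in range(m)]


def phi(y, z):
    """Phi(y, z) = (y, sqrt(1 - y^2) z) for y in [-1, 1] and z in S_{d-1}."""
    r = math.sqrt(max(0.0, 1.0 - y * y))
    return [y] + [r * zi for zi in z]


def phi_eta(eta, y, z):
    """Phi_eta(y, z) := U Phi(y, z)."""
    u = householder_e1(eta)
    p = phi(y, z)
    return [sum(uij * pj for uij, pj in zip(row, p)) for row in u]


def n_eta(eta, x, xi):
    """Normalised projection of x onto the complement of eta; xi is used at x = +-eta."""
    t = sum(e * xk for e, xk in zip(eta, x))
    w = [xk - t * e for xk, e in zip(x, eta)]
    r = math.sqrt(sum(wk * wk for wk in w))
    return list(xi) if r < 1e-12 else [wk / r for wk in w]


def psi_eta(eta, y, z):
    """Inverse of the polar decomposition: y eta + sqrt(1 - y^2) z."""
    r = math.sqrt(max(0.0, 1.0 - y * y))
    return [y * e + r * zk for e, zk in zip(eta, z)]


def uniform_sphere(m, rng):
    g = [rng.gauss(0.0, 1.0) for _ in range(m)]
    r = math.sqrt(sum(gi * gi for gi in g))
    return [gi / r for gi in g]


def sample_q(eta, sample_y, rng):
    """X := Phi_eta(Y, Z) with Y ~ nu_{d;g} from sample_y and Z ~ unif(S_{d-1})."""
    d = len(eta) - 1
    return phi_eta(eta, sample_y(rng), uniform_sphere(d, rng))


if __name__ == "__main__":
    rng = random.Random(0)
    eta, d = [0.0, 0.0, 1.0], 2
    # (1 - Y)/2 ~ Beta(d/2, d/2) means Y ~ nu_d (g = 1), so X is uniform on S_d
    print(sample_q(eta, lambda r: 1.0 - 2.0 * r.betavariate(d / 2, d / 2), rng))
```

Since U is symmetric and maps \(\eta \) back to \(e_1\), one has \(\eta ^{\text {t}}\Phi _\eta (y,z)=y\) exactly, which is the property the construction relies on.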

If Y is almost surely equal to 1, then the random variable \(X=\Phi _\eta (Y,Z)\) is almost surely equal to \(\eta \). Hence, from a Markov chain \((Y_n)_{n\in \mathbb {N}_0}\) with state space \([-1,1]\) starting in 1, where for \(n\in \mathbb {N}\) the distribution of \(Y_n\) is the push-forward of \(Q_{n,\eta }\) under \(x\mapsto \eta ^{\text {t}}x\), and a single random variable \(Z\sim \textrm{unif}(\mathbb {S}_{d-1})\) independent of the chain, we obtain a Markov chain \((X_n)_{n\in \mathbb {N}_0}\) with the desired one-dimensional marginal distributions by putting \(X_n:=\Phi _\eta (Y_n,Z)\) for all \(n\in \mathbb {N}_0\). Clearly, \((Y_n)_{n\in \mathbb {N}_0}\) is then the latitude process associated with \((X_n)_{n\in \mathbb {N}_0}\).
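The lifting step can be sketched as follows. Assuming the standard form \(\Phi (y,z)=(y,\sqrt{1-y^2}\,z^{\text {t}})^{\text {t}}\) (the display is not reproduced above), linearity of U gives \(\Phi _\eta (y,Z)=y\eta +\sqrt{1-y^2}\,w\) with \(w:=\Phi _\eta (0,Z)\sim \textrm{unif}\left( C_d(\eta ,0)\right) \). One direction w is therefore shared by all \(X_n\), and the whole chain stays on the half great circle from \(\eta \) through w to \(-\eta \). In the sketch, w is drawn by normalising the projection of a standard Gaussian vector onto the orthogonal complement of \(\eta \) (a convenient choice with the same distribution); the latitude sequence is supplied by the caller, and the toy values in the usage lines are purely illustrative.

```python
import math
import random


def uniform_equator(eta, rng):
    """Uniform point on C_d(eta, 0) = {w in S_d : eta^t w = 0}."""
    while True:
        g = [rng.gauss(0.0, 1.0) for _ in eta]
        t = sum(gi * e for gi, e in zip(g, eta))
        w = [gi - t * e for gi, e in zip(g, eta)]
        r = math.sqrt(sum(wi * wi for wi in w))
        if r > 1e-12:                    # rejects a probability-zero event
            return [wi / r for wi in w]


def lift_chain(eta, latitudes, w):
    """X_n = Y_n eta + sqrt(1 - Y_n^2) w for a given latitude sequence (Y_n)."""
    path = []
    for y in latitudes:
        r = math.sqrt(max(0.0, 1.0 - y * y))
        path.append([y * e + r * wi for e, wi in zip(eta, w)])
    return path


if __name__ == "__main__":
    rng = random.Random(0)
    eta = [1.0, 0.0, 0.0]
    w = uniform_equator(eta, rng)
    ys = [1.0, 0.3, -0.2, 0.8]           # toy latitudes starting in 1
    for x in lift_chain(eta, ys, w):
        print([round(c, 3) for c in x])
```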

This reduces the first step in an almost sure construction (45) to finding a Markov chain on \([-1,1]\) with prescribed marginals. For the second step we require a suitable integer-valued process \(N=(N_\rho )_{\rho \ge 0}\) with marginal distributions \(W_\rho \). One general possibility is the quantile transformation, which can also be used to construct a Skorohod coupling for real random variables: with \(U\sim \textrm{unif}(0,1)\) we obtain a random variable X with distribution function F via \(X:=F^{-1}(U)\), where \(F^{-1}(u):=\inf \{t\in \mathbb {R};\, F(t)\ge u\}\) for \(0<u<1\). If \(F=F_\rho \) is the distribution function associated with \(W_\rho \), the paths of the process \(N=(N_\rho )_{\rho \ge 0}\) constructed in this way depend on the relations between the distribution functions for different values of \(\rho \). In particular, if the distributions \(W_\rho \) are stochastically monotone, meaning that

whenever \(\rho \le \rho '\), then the paths of N are increasing. It is well known that this stochastic monotonicity holds for any pair of distributions \(W_\rho ,W_{\rho '}\) with monotone likelihood ratio. To be precise, defining a likelihood ratio of \(W_{\rho '}\) with respect to \(W_\rho \) to be a \(\mathcal {B}(\mathbb {R})\)-measurable function \(L_{\rho ,\rho '}:\mathbb {R}\rightarrow [0,\infty ] \) such that \(W_{\rho }(L_{\rho ,\rho '}<\infty )\,= 1\; \) and

the distributions \(W_\rho ,W_{\rho '}\) with \(\rho <\rho '\) have monotone likelihood ratio if there exists an increasing function \(h_{\rho ,\rho '}:\mathbb {R}\rightarrow [0,\infty ]\) such that

Note that if \(f_{\rho },f_{\rho '}\) are densities of \(W_\rho ,W_{\rho '}\) with respect to some \(\sigma \)-finite measure, then

is a special version of the likelihood ratio of \(W_{\rho '}\) with respect to \(W_\rho \); here \(1(\cdot )\) denotes the indicator function. In fact, (49) holds if \(W_\rho ,W_{\rho '}\) with \(\rho <\rho '\) have monotone likelihood ratio; see, e.g. Witting (1985, Satz 2.28).
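A minimal sketch of the quantile coupling on \(\mathbb {N}_0\): one uniform U is fed into the quantile functions of all members of the family. As a stand-in for \(W_\rho \) we use the Poisson family (our choice for illustration, not taken from the text), whose likelihood ratio \(e^{\lambda -\lambda '}(\lambda '/\lambda )^n\) is increasing in n for \(\lambda <\lambda '\); the paths \(\lambda \mapsto N_\lambda \) should therefore be nondecreasing.

```python
import math
import random


def poisson_pmf(lam):
    """Yield Poisson(lam) probabilities p(0), p(1), ... (stand-in for W_rho)."""
    p, n = math.exp(-lam), 0
    while True:
        yield p
        p *= lam / (n + 1)
        n += 1


def quantile(pmf_iter, u, max_terms=100000):
    """F^{-1}(u) = inf{n : F(n) >= u} for a distribution on N_0, 0 < u < 1."""
    cum, n = 0.0, 0
    for n, p in zip(range(max_terms), pmf_iter):
        cum += p
        if cum >= u:
            return n
    return n        # guard against rounding when u is extremely close to 1


def coupled_path(lams, u):
    """N_lam := F_lam^{-1}(u) with one common u for every lam in lams."""
    return [quantile(poisson_pmf(lam), u) for lam in lams]


if __name__ == "__main__":
    rng = random.Random(42)
    lams = [0.5 * k for k in range(1, 11)]
    print(coupled_path(lams, rng.random()))   # nondecreasing in lam
```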

Again, we consider the special case of the von Mises–Fisher distributions in some detail.

Example 18

Let \(\eta \in \mathbb {S}_{d}\). In order to translate Theorem 2, see also Example 1 (c), into an almost sure representation for the family \(\{\textrm{MF}_d(\eta ,\rho ):\,\rho \ge 0\}\) we need random variables \(Y_n\) with \(Y_n\sim \textrm{Beta}_{[-1,1]}(d/2,d/2 + n)\) for all \(n\in \mathbb {N}_0\). To this end let V, \(W_i\), \(i\in \mathbb {N}_0\), be independent random variables with \(V\sim \Gamma (d/2,1)\), \(W_0\sim \Gamma (d/2,1)\), and \(W_i\sim \textrm{Exp}(1)\) for all \(i\in \mathbb {N}\). Then, using the well-known connection between beta and gamma distributions, see e.g. Johnson and Kotz (1970), we obtain independent random variables \(B_0\sim \textrm{Beta}(d/2,d/2)\), \(B_n\sim \textrm{Beta}(d+n-1,1)\), \(n\in \mathbb {N}\), via

and products

The transformation \(Y_n:=1-2 {\tilde{Y}}_n\), \(n\in \mathbb {N}_0\), now gives the desired sequence \(Y=(Y_n)_{n\in \mathbb {N}_0}\). Moreover, \({\tilde{Y}}_{n+1}= {\tilde{Y}}_n B_{n+1}\) implies that

As \(B_{n+1}\) is independent of \((Y_0,\ldots ,Y_n)\), this shows that Y is a Markov chain.
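The gamma construction of the latitude chain can be sketched as follows. The displays for \(B_n\) and for the products are not reproduced above; we assume the standard beta-gamma choices \(B_0=V/(V+W_0)\) and \(B_n=S_{n-1}/S_n\) with \(S_n:=V+W_0+\cdots +W_n\), which have the stated distributions and telescope to \({\tilde{Y}}_n=B_0\cdots B_n=V/S_n\). The update in the code is the recursion \(1-Y_{n+1}=(1-Y_n)B_{n+1}\), which follows from \({\tilde{Y}}_{n+1}={\tilde{Y}}_n B_{n+1}\). Under these assumptions \(E(Y_n)=1-d/(d+n)=n/(d+n)\), which the usage lines check by simulation.

```python
import random


def latitude_chain(d, n_max, rng):
    """Return Y_0, ..., Y_{n_max} with Y_n = 1 - 2 V / S_n.

    V, W_0 ~ Gamma(d/2, 1) and W_1, W_2, ... ~ Exp(1) are independent;
    random.gammavariate takes (shape, scale), and scale 1 equals rate 1.
    """
    v = rng.gammavariate(d / 2, 1.0)
    s = v + rng.gammavariate(d / 2, 1.0)      # S_0 = V + W_0 ~ Gamma(d, 1)
    ys = [1.0 - 2.0 * v / s]                  # (1 - Y_0)/2 = B_0 ~ Beta(d/2, d/2)
    for _ in range(n_max):
        s_next = s + rng.expovariate(1.0)     # S_{n+1} = S_n + W_{n+1}
        b = s / s_next                        # B_{n+1} ~ Beta(d + n, 1)
        ys.append(1.0 - (1.0 - ys[-1]) * b)   # Markov step: 1 - Y_{n+1} = (1 - Y_n) B_{n+1}
        s = s_next
    return ys


if __name__ == "__main__":
    rng = random.Random(2024)
    d, n = 3, 3
    sims = [latitude_chain(d, n, rng)[n] for _ in range(20000)]
    print(sum(sims) / len(sims), n / (d + n))   # Monte Carlo mean vs E(Y_n) = n/(d+n)
```

Because every \(B_{n+1}\) lies in (0,1), each step moves the latitude towards 1, which is the monotonicity used below.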

Suppose now that \((N_\rho )_{\rho \ge 0}\) is a stochastic process, independent of V and \(W_i\), \(i\in \mathbb {N}_0\), with \(N_\rho \sim \textrm{CHS}(d/2,d,2\rho )\) for all \(\rho \ge 0\). Let \(Z\sim \textrm{unif}(\mathbb {S}_{d-1})\) be independent of N and of the variables V and \(W_i\), \(i\in \mathbb {N}_0\). Then \((X_{\rho })_{\rho \ge 0}\) with

and \(\Phi _\eta \) as in the remarks preceding the example, has the desired property that \(X_\rho \sim \textrm{MF}_d(\eta ,\rho )\) for all \(\rho \ge 0\).

For the construction of the counting process we use the quantile transformation. The likelihood ratio of \(W_{\rho '}\) with respect to \(W_\rho \) turns out to be

which, as a function of n, is increasing whenever \(\rho < \rho '\). As explained above, this shows that the paths of \((N_\rho )_{\rho \ge 0}\) are increasing. From (50) it follows that \(Y_n\ge Y_{n-1}\) for all \(n\in \mathbb {N}\). Taken together, we have found an almost sure representation (51) with a process \((Y_{N_\rho })_{\rho \ge 0}\) that has increasing paths.
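Putting the pieces together, one path \(\rho \mapsto X_\rho \) on an increasing grid of \(\rho \)-values can be sketched as below. Assumptions: \(\textrm{CHS}(a,b,\lambda )\) is taken to have probabilities proportional to \((a)_n\lambda ^n/((b)_n\,n!)\), which is what one obtains by expanding \(e^{\rho y}=e^{-\rho }\sum _n\rho ^n(1+y)^n/n!\) against \(\nu _d\); the series is truncated numerically; and the latitudes are \(Y_n=1-2V/S_n\) with \(S_n=V+W_0+\cdots +W_n\), our reading of the construction in the example. For \(d=2\) the mean of \(\eta ^{\text {t}}X_\rho \) should be close to \(\coth \rho -1/\rho \), the mean resultant length of \(\textrm{MF}_2(\eta ,\rho )\).

```python
import math
import random


def chs_pmf(a, b, lam, tol=1e-16):
    """CHS(a, b, lam) probabilities, p(n) proportional to (a)_n lam^n / ((b)_n n!).

    Truncated once n > lam (terms then decrease, as a <= b here) and the next
    term is below tol times the running total; then renormalised.
    """
    terms, total, n = [1.0], 1.0, 0
    while True:
        nxt = terms[-1] * (a + n) * lam / ((b + n) * (n + 1))
        n += 1
        if n > lam and nxt < tol * total:
            break
        terms.append(nxt)
        total += nxt
    return [t / total for t in terms]


def quantile(pmf, u):
    """inf{n : F(n) >= u} for a finite list of probabilities."""
    cum = 0.0
    for n, p in enumerate(pmf):
        cum += p
        if cum >= u:
            return n
    return len(pmf) - 1          # rounding guard


def uniform_equator(eta, rng):
    """Uniform point on C_d(eta, 0); same law as Phi_eta(0, Z)."""
    while True:
        g = [rng.gauss(0.0, 1.0) for _ in eta]
        t = sum(gi * e for gi, e in zip(g, eta))
        w = [gi - t * e for gi, e in zip(g, eta)]
        r = math.sqrt(sum(wi * wi for wi in w))
        if r > 1e-12:
            return [wi / r for wi in w]


def mf_path(eta, rhos, rng):
    """X_rho = Y_{N_rho} eta + sqrt(1 - Y_{N_rho}^2) w for an increasing grid rhos."""
    d = len(eta) - 1
    u = rng.random()                           # common U for the quantile coupling
    v = rng.gammavariate(d / 2, 1.0)           # V ~ Gamma(d/2, 1)
    sums = [v + rng.gammavariate(d / 2, 1.0)]  # S_0 = V + W_0
    w = uniform_equator(eta, rng)
    path = []
    for rho in rhos:
        n = quantile(chs_pmf(d / 2, d, 2.0 * rho), u)
        while len(sums) <= n:                  # draw further W_i ~ Exp(1) on demand
            sums.append(sums[-1] + rng.expovariate(1.0))
        y = 1.0 - 2.0 * v / sums[n]            # Y_n = 1 - 2 V / S_n
        r = math.sqrt(max(0.0, 1.0 - y * y))
        path.append([y * e + r * wi for e, wi in zip(eta, w)])
    return path


if __name__ == "__main__":
    rng = random.Random(3)
    eta = [0.0, 0.0, 1.0]                      # d = 2
    for x in mf_path(eta, [0.0, 0.5, 1.0, 2.0, 4.0], rng):
        print(round(x[2], 4))                  # latitudes, nondecreasing in rho
    sims = [mf_path(eta, [1.0], rng)[0][2] for _ in range(20000)]
    print(sum(sims) / len(sims), 1 / math.tanh(1.0) - 1.0)
```

The recursion for the CHS weights avoids factorials, but for very large \(\rho \) the unnormalised terms overflow; a log-scale version would be needed there.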

Some comments are in order. Obviously, almost sure representations are generally not unique. In the first step of Example 18, we could use a sequence \((Y_n)_{n\in \mathbb {N}_0}\) of independent random variables with \(Y_n\sim \textrm{Beta}_{[-1,1]}(d/2,d/2 + n)\) for all \(n\in \mathbb {N}_0\), or we could use the quantile transformation to obtain suitable variables \(Y_n\) as functions of a single \(U\sim \textrm{unif}(0,1)\) (in fact, the corresponding likelihood ratios would be increasing). As with (3) in the classical case, the representation (51) lies between these two extremes, no dependence at all and total dependence between the variables of interest. Also, the Markov chain featuring in the denominator of

has some resemblance to the sum appearing in (3). In Baringhaus and Grübel (2021a, Remark 4 (a)) we found a discrete mixture representation for the non-central family of hyperbolic secant distributions that may similarly be written as a function of a Markov chain of this type.

Returning to the general situation, we may regard the right-hand side of (3) or (45) as a representation of a continuous time stochastic process \(Z= (Z_t)_{t\ge 0}\) by independent processes \(X=(X_n)_{n\in \mathbb {N}_0}\) and \(N=(N_t)_{t\ge 0}\) via \(Z_t=X_{N_t}\) for all \(t\ge 0\). As already mentioned in the introduction, equality of the marginal distributions is considerably weaker than equality of the distributions of the processes. For example, the representation in Example 18 leads to a process that moves in a piecewise constant manner, by jumps along a fixed great circle through \(\eta \), towards \(\eta \) as \(\rho \) increases. Loosely speaking, a discrete mixture representation on the process level is only possible for processes of the pure jump type. However, if the time parameter of the base process X is continuous too, then we obtain a connection to a famous group of results known as skew product decompositions. For example, with \((X_t)_{t\ge 0}\) a Brownian motion on \(\mathbb {R}^{d+1}\) starting at \(a\not =0\) and \(R_t:=\Vert X_t\Vert \), \(t\ge 0\), we have \(R_t^{-1} X_t = B_{N_t}\) for all \(t\ge 0\), where \((B_t)_{t\ge 0}\) is a Brownian motion on \(\mathbb {S}_d\) starting at \(\eta :=\Vert a\Vert ^{-1} a\), \(N_t:=\int _0^{t} R_s^{-2}\, ds\), and B and N are independent. In particular, this leads to a representation of the distributions of \(X_t/\Vert X_t\Vert \), \(t\ge 0\), which form a family of spherical distributions, as continuous mixtures. In contrast to discrete mixture representations, such continuous mixtures seem to be less suitable for simulation.

5 Proofs

5.1 Proof of Theorem 2

(a) We first simplify the norming constant in (11) for the d-dimensional spherical beta distribution with parameters \(p=1\) and \(q=n+1\), \(n\in \mathbb {N}_0\). Using the duplication formula

for the gamma function we obtain

and it follows that the density of \(\textrm{SBeta}_d(\eta ,1,n+1)\) can be written as

We now write

and use the expansion

together with the identity

to obtain

In order to prove uniqueness suppose that

for two sequences \(v=(v_n)_{n\in \mathbb {N}_0},w=(w_n)_{n\in \mathbb {N}_0}\) of non-negative real numbers with sum 1. Passing to the respective push-forwards under \(x\mapsto \eta ^{\text {t}}x\), we obtain the equality of the (continuous) densities,

hence the sequences v and w are equal to each other.
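Part (a) can be checked numerically at the level of the latitude \(y=\eta ^{\text {t}}x\). The sketch below assumes (our reading) that the density of \(\textrm{SBeta}_d(\eta ,1,n+1)\) with respect to \(\textrm{unif}(\mathbb {S}_d)\) is \((1+y)^n/E(1+Y)^n\) with \(Y\sim \nu _d\), and that the weights are the \(\textrm{CHS}(d/2,d,2\rho )\) probabilities of Example 18, taken proportional to \((d/2)_n(2\rho )^n/((d)_n\,n!)\). The moments \(E(1+Y)^n\) and \(E(e^{\rho Y})\) are obtained by quadrature rather than from closed forms, so agreement of the two sides is a genuine check; for even d the \(\nu _d\)-weight \((1-y^2)^{d/2-1}\) is a polynomial and the midpoint rule is very accurate.

```python
import math


def nu_moments(d, rho, n_max, k=4000):
    """Midpoint-rule values of E[(1+Y)^n], n = 0..n_max, and E[exp(rho Y)]
    for Y ~ nu_d, whose Lebesgue density is proportional to (1 - y^2)^(d/2 - 1)."""
    h = 2.0 / k
    mom = [0.0] * (n_max + 1)
    m_exp = den = 0.0
    for i in range(k):
        y = -1.0 + (i + 0.5) * h
        wgt = (1.0 - y * y) ** (d / 2 - 1)
        den += wgt
        m_exp += math.exp(rho * y) * wgt
        p = wgt
        for n in range(n_max + 1):
            mom[n] += p                  # accumulates wgt * (1 + y)^n
            p *= 1.0 + y
    return [m / den for m in mom], m_exp / den


def chs_weights(a, b, lam, n_max):
    """CHS(a, b, lam) probabilities for n = 0..n_max (assumed form), renormalised."""
    w = [1.0]
    for n in range(n_max):
        w.append(w[-1] * (a + n) * lam / ((b + n) * (n + 1)))
    s = sum(w)
    return [x / s for x in w]


def compare(d, rho, ys, n_max=40):
    """Pairs (lhs, rhs): MF_d latitude density vs the SBeta mixture, at each y."""
    mom, m_exp = nu_moments(d, rho, n_max)
    w = chs_weights(d / 2, d, 2 * rho, n_max)
    out = []
    for y in ys:
        lhs = math.exp(rho * y) / m_exp
        rhs = sum(wn * (1.0 + y) ** n / mom[n] for n, wn in enumerate(w))
        out.append((lhs, rhs))
    return out


if __name__ == "__main__":
    for lhs, rhs in compare(4, 1.5, [-0.9, 0.0, 0.95]):
        print(lhs, rhs)
```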

(b) From \(d=2\lambda +1\), \(1-\frac{4\rho }{(1+\rho )^2} = \frac{(1-\rho )^2}{(1+\rho )^2}\), and (53) it follows that

The proof of the uniqueness is similar to that of part (a).

(c) Noting that \((d+1)/2=\lambda +1\) and

see Magnus et al. (1966, p. 39), we get, arguing as in part (b), that

5.2 Proof of Theorem 3

(a) Straightforward manipulations give

(b) As at the beginning of the proof of Theorem 2, with \(p=q=n+1\), \(n\in \mathbb {N}_0\), the general expression (11) for the norming constants can be simplified to

Hence the density for the associated spherical beta distribution may be written as

For \(\rho <0\) and \(\eta \in \mathbb {S}_{d}\) we have

for all \(x\in \mathbb {S}_{d}\). Because of

(see, e.g., Magnus et al. 1966, p. 267) it follows that

for all \(\eta \in \mathbb {S}_{d}\), \(\rho <0\).

In both cases, it is easy to adapt the uniqueness argument from the von Mises–Fisher context to the Watson situation.

5.3 Proof of Lemma 4

We write \(\Gamma (\delta ,\alpha )\) for the gamma distribution with shape parameter \(\delta >0\), rate (inverse scale) parameter \(\alpha >0\) and Lebesgue density \(t\mapsto \Gamma (\delta )^{-1}\alpha ^\delta t^{\delta -1}\exp (-\alpha t)\), \(t>0\). Let V be a positive random variable with \(V\sim \Gamma (\delta ,\frac{1}{2})\). Then, for \(k\in \mathbb {N}_0\),

Writing

and using the duplication formula (52) we obtain

Thus,

Using

for \(z\in \mathbb {R}\), see e.g. Erdélyi et al. (1953a, p. 265, formula (10)), and

we obtain

which in view of \(E(V^k)=\frac{\Gamma (k+\delta )}{\Gamma (\delta )}2^k\) gives \(\sum _{k=0}^\infty \textrm{dpc}(k|\tau ,\delta )=1\).

5.4 Proof of Theorem 5

Using (12) with \(S\sim \chi ^2_{d+1}\) and writing

we see that

As \(\chi _{d+1}^2=\Gamma (\lambda +1,\frac{1}{2})\), the asserted discrete mixture representation now follows with (56). The uniqueness of the representation is obtained as in the proofs of Theorems 2 and 3.

5.5 Proof of Theorem 8

(a) We use Proposition 7. We have \(\beta (\rho )=\sum _{n=1}^\infty 2\rho ^n=\frac{2\rho }{1-\rho }\le 1\) if and only if \(\rho \le \frac{1}{3}\). By (31), the density \(f_1^\textrm{WC}(\cdot |\eta ,\rho )\) of the wrapped Cauchy distribution \(\textrm{WC}_1(\eta ,\rho )\) can be written as

The representation now follows easily.

(b) Using (30) we can write the density \(f_1^\textrm{WN}(\cdot |\eta ,\rho )\) of the wrapped normal distribution \(\textrm{WN}_1(\eta ,\rho )\) as

The function \(\beta \) is easily seen to be continuous and strictly decreasing, and the equation \(\beta (\rho )=1\) has the unique solution \(\rho _0\approx 1.570818\). From Proposition 7 we thus obtain a discrete mixture expansion for the family \(\{\textrm{WN}_1(\eta ,\rho ):\, \eta \in \mathbb {S}_1, \rho \ge \rho _0\}\) with mixing base elements \(\Delta ^0_{n,\eta }\) and weights \(w_\rho (n)= 2e^{-n^2\rho /2}\), \(n\in \mathbb {N}\).
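The value of \(\rho _0\) can be reproduced by bisection. We assume, reading it off from the weights \(w_\rho (n)=2e^{-n^2\rho /2}\) and in analogy with part (a), that \(\beta (\rho )=\sum _{n=1}^\infty 2e^{-n^2\rho /2}\); since \(\beta \) is continuous and strictly decreasing, bisection on a bracketing interval converges to the unique root.

```python
import math


def beta_wn(rho, n_terms=200):
    """beta(rho) = sum_{n>=1} 2 exp(-n^2 rho / 2) (assumed form, see lead-in)."""
    return sum(2.0 * math.exp(-n * n * rho / 2.0) for n in range(1, n_terms + 1))


def solve_decreasing(f, target, lo, hi, tol=1e-12):
    """Bisection for f(x) = target, f continuous and strictly decreasing on [lo, hi]."""
    assert f(lo) >= target >= f(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    rho0 = solve_decreasing(beta_wn, 1.0, 0.5, 5.0)
    print(rho0)       # compare with rho_0 ≈ 1.570818 in the text
```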

5.6 Proof of Theorem 9

(a) The density \(f_1^\textrm{MF}(\cdot |\eta ,\rho )\) of the von Mises–Fisher distribution \(\textrm{MF}_1(\eta ,\rho )\) can be written as