Abstract

Beta regression is a useful tool for modelling a continuous bounded outcome variable in terms of explanatory variables. In the presence of multicollinearity, however, the maximum likelihood estimates of its parameters perform poorly. In this paper, we propose improved shrinkage estimators based on the Liu estimator to obtain more efficient estimates. We define linear shrinkage, pretest, shrinkage pretest, Stein and positive part Stein estimators of the parameters of the beta regression model for the case where some of the parameters have no significant effect on the outcome variable, so that a sub-model may be sufficient. We derive the asymptotic distributional biases and variances, and conduct an extensive Monte Carlo simulation study to assess the performance of the proposed estimation strategy. Our results show that the new methods offer a substantial benefit to practitioners, particularly in the applied sciences. We conclude the paper with two real data analyses from economics and education.

1 Introduction

Ferrari and Cribari-Neto (2004) introduced beta regression (BR) to model outcome variables bounded in an interval (a, b) in terms of explanatory variables. The main assumption of BR is that the dependent variable follows a beta distribution. Several applications of BR have been studied in the literature, for instance modelling the proportion of income spent on food, the poverty rate, the proportion of crude oil converted to gasoline and the proportion of surface covered by vegetation (Qasim et al. 2021). The BR model has also been applied to bounded time series data in an analysis of Canada Google® Flu Trends (Guolo and Varin 2014). Recently, the BR model has gained attention in machine learning: Espinheira et al. (2019) proposed criteria for variable selection in beta regression models.

Usually, the maximum likelihood estimator (MLE) is used to estimate the unknown regression coefficients in the BR model (Ferrari and Cribari-Neto 2004). However, a multicollinearity problem may arise in the BR model when there are near-linear dependencies between the predictor variables. As a remedy, Karlsson et al. (2020) studied a Liu estimator (Liu 1993) approach in the BR model to overcome the multicollinearity problem.

In regression models, when there is some prior information about the parameter vector \(\varvec{\beta } \) under a linear restriction defined as \( {{\textbf {H}}} \varvec{\beta } = {{\textbf {h}}} \), the shrinkage strategies, namely linear shrinkage (Thompson 1968), pretest (Bancroft 1944), shrinkage pretest estimator (Ahmed 1992), Stein estimator (Stein 1956), and positive Stein estimators (Kibria and Saleh 2004) are applied to the estimation of parameters. The parameter vector \( \varvec{\beta } \) is partitioned into two parts as \(\varvec{\beta } = (\varvec{\beta }_1^\prime , \varvec{\beta }_2^\prime )^\prime \) where \( \varvec{\beta }_1 \) is of order \(p_1 \times 1\) containing the active or significant parameters and \( \varvec{\beta }_2 \) is of order \(p_2 \times 1\) containing the inactive parameters that are not significantly effective in predicting the dependent variable in this setting. Note that the number of regression parameters is \(p=p_1 + p_2\). Therefore, there are two models, such as a full model or unrestricted model including all parameters estimated with the maximum likelihood method and a sub–model or restricted model only containing the significant parameters. For more details about methodology of shrinkage estimations, see Ahmed (2014) and Kibria and Saleh (2004).

The main purpose of this paper is to develop effective methods for the BR model in the presence of highly correlated variables where some of them may not have significant effect on the models specifically in econometric and education data. Therefore, we propose improved shrinkage estimation strategies by making use of the Liu estimator in the BR model to estimate the parameters in the presence of multicollinearity. We derive the theoretical properties of the proposed estimators and conduct a Monte Carlo simulation experiment to evaluate their relative performance with respect to the usual unrestricted Liu estimator. We observe that the proposed estimators, specifically the Liu Stein estimator, uniformly outperform the usual estimators in both the simulation studies and the real data application.

The paper is organized as follows. In Sect. 2, we introduce the BR model and the unrestricted Liu estimator, propose a restricted Liu estimator, and then derive the shrinkage Liu estimators. In Sect. 3, the asymptotic distributional bias and variance of the proposed estimators are presented. Asymptotic evaluations of the variance of the proposed estimators are given in Sect. 4. We provide the details of the Monte Carlo simulation experiment to compare the performance of the proposed estimators in Sect. 5. We apply the proposed estimation methods to two real data sets in Sect. 6. Finally, concluding remarks are presented in Sect. 7.

2 Theory and method

In this section, we briefly introduce the BR model. Then, the unrestricted and restricted Liu estimators and shrinkage Liu estimators are defined.

2.1 Beta regression model

Let \({{\mathbf {y}}}=\left[ y_1, y_2, \ldots , y_n\right] ^\prime \) be the vector of observations of the response variable, whose elements follow independent beta distributions with two shape parameters a and b, so that the probability density function (pdf) is given as

\[ f(y_i; a, b) = \frac{\Gamma (a+b)}{\Gamma (a)\Gamma (b)}\, y_i^{a-1} (1-y_i)^{b-1}, \quad 0<y_i<1, \]

where \(a>0, ~b>0\) and \(\Gamma (.)\) is the gamma function, and it is denoted as \(y_i \sim Beta(a,b)\). The mean and variance of each \(y_i\) are, respectively,

\[ E(y_i) = \frac{a}{a+b} \]

and

\[ Var(y_i) = \frac{ab}{(a+b)^2 (a+b+1)}. \]

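Following Ferrari and Cribari-Neto (2004), we use a different parametrization in order to derive the BR model. Let \(\mu = a/(a+b)\) and \(\phi = a+b\), where \(\phi \) is called the precision parameter. Now, the pdf of \(y_i\) can be written as

\[ f(y_i; \mu , \phi ) = \frac{\Gamma (\phi )}{\Gamma (\mu \phi )\Gamma ((1-\mu )\phi )}\, y_i^{\mu \phi -1} (1-y_i)^{(1-\mu )\phi -1}, \quad 0<y_i<1, \]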

where \(0<\mu <1\) and \(\phi >0\) such that \(y_i \sim Beta\left( \mu \phi , (1-\mu )\phi \right) \). Therefore, the mean and variance of each observation become, respectively, \(E(y_i)=\mu \) and \(Var(y_i)=V(\mu )/(1+\phi )\) where \(V(\mu )=\mu (1-\mu )\).
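As a small numerical check of this parametrization, the following Python sketch maps \((\mu , \phi )\) back to the shape parameters and confirms that both parametrizations give the same mean and variance. The function names are hypothetical and chosen only for illustration.

```python
def shape_from_mean_precision(mu, phi):
    """Map (mu, phi) to the beta shape parameters (a, b) = (mu*phi, (1-mu)*phi)."""
    if not (0.0 < mu < 1.0) or phi <= 0.0:
        raise ValueError("need 0 < mu < 1 and phi > 0")
    return mu * phi, (1.0 - mu) * phi


def beta_mean_var(a, b):
    """Mean and variance of Beta(a, b) in the original (a, b) parametrization."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1.0))
    return mean, var


mu, phi = 0.3, 5.0
a, b = shape_from_mean_precision(mu, phi)
mean, var = beta_mean_var(a, b)
# Both parametrizations agree: E(y) = mu and Var(y) = mu (1 - mu) / (1 + phi).
print(mean, var, mu * (1.0 - mu) / (1.0 + phi))
```

A larger \(\phi \) gives a smaller variance for the same mean, which is why \(\phi \) is read as a precision parameter.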

Now, the beta regression model is obtained by assuming that the mean of \(y_i\) satisfies

\[ g(\mu _i) = {{\mathbf {x}}}_i^\prime {\varvec{\beta }}, \quad i=1,2,\ldots ,n, \]

where \({{\mathbf {x}}}_i^\prime \) is the ith row of the design matrix \({{\mathbf {X}}}=\left[ {{\mathbf {x}}}_1,{{\mathbf {x}}}_2,\ldots ,{{\mathbf {x}}}_n\right] ^\prime \) of order \(n \times p\) \((n>p)\), and \({\varvec{\beta }}=\left[ \beta _1, \beta _2, \ldots , \beta _p \right] ^\prime \) is a vector of regression parameters. In Equation (3), we assume that the link function g(.) is a strictly monotone and twice differentiable function from the interval (0, 1) to \({\mathbb {R}}\).

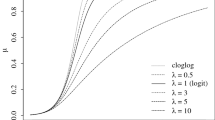

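Although alternative link functions are available for the BR model (Ferrari and Cribari-Neto 2004), we use the logit link function given as \(g(\mu )=\log (\mu /(1-\mu ))\) such that

\[ \mu _i = \frac{\exp ({{\mathbf {x}}}_i^\prime {\varvec{\beta }})}{1+\exp ({{\mathbf {x}}}_i^\prime {\varvec{\beta }})} \]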

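for \(i=1,2, \ldots , n\). Thus, the corresponding log-likelihood function of the BR model given in (3) can be written as

\[ \ell ({\varvec{\beta }}, \phi ) = \sum _{i=1}^{n} \Big \{ \log \Gamma (\phi ) - \log \Gamma (\mu _i\phi ) - \log \Gamma ((1-\mu _i)\phi ) + (\mu _i\phi -1)\log y_i + ((1-\mu _i)\phi -1)\log (1-y_i) \Big \}. \]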

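Because the log-likelihood function is nonlinear in the parameters, an iterative algorithm is needed to obtain the parameter estimates. The score functions are obtained by differentiating the log-likelihood function with respect to the parameters \({\varvec{\beta }}\) and \(\phi \), respectively, as

\[ U_{{\varvec{\beta }}}({\varvec{\beta }}, \phi ) = \phi \, {{\mathbf {X}}}^\prime {{\mathbf {T}}} ({{\mathbf {y}}}^* - {\varvec{\mu }}^*) \]

and

\[ U_{\phi }({\varvec{\beta }}, \phi ) = \sum _{i=1}^{n} \Big \{ \mu _i (y_i^* - \mu _i^*) + \log (1-y_i) - \psi ((1-\mu _i)\phi ) + \psi (\phi ) \Big \}, \]

where \(y_i^*=\log (y_i/(1-y_i))\), \(\mu _i^*=\psi (\mu _i \phi )-\psi ((1-\mu _i)\phi )\), \(\psi (.)\) is the digamma function, \({{\mathbf {T}}}=diag\{1/g'(\mu _1),\ldots , 1/g'(\mu _n) \}\), \({{\mathbf {y}}}^*=(y_1^*,\ldots , y_n^*)^\prime \) and \({\varvec{\mu }}^*=(\mu _1^*,\ldots , \mu _n^*)^\prime \). The Fisher's information matrix is

\[ {{\mathbf {K}}} = {{\mathbf {K}}}({\varvec{\beta }}, \phi ) = \begin{pmatrix} {{\mathbf {K}}}_{{\varvec{\beta }}{\varvec{\beta }}} & {{\mathbf {K}}}_{{\varvec{\beta }}\phi } \\ {{\mathbf {K}}}_{\phi {\varvec{\beta }}} & {{\mathbf {K}}}_{\phi \phi } \end{pmatrix}, \]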

where \({{\mathbf {K}}}_{{\varvec{\beta }}{\varvec{\beta }}}=\phi {{\mathbf {X}}}^\prime {{\mathbf {W}}}{{\mathbf {X}}}= \varvec{\mathcal {I}}\), \({{\mathbf {K}}}_{{\varvec{\beta }}\phi }={{\mathbf {K}}}_{\phi {\varvec{\beta }}}^\prime ={{\mathbf {X}}}^\prime {{\mathbf {T}}}{{\mathbf {c}}}\) and \({{\mathbf {K}}}_{\phi \phi }=trace({{\mathbf {D}}})\), \({{\mathbf {D}}}=diag\{d_1, \ldots , d_n \}\) with \(d_i=\psi ^\prime (\mu _i\phi )\mu _i^2+\psi ^\prime ((1-\mu _i)\phi )(1-\mu _i)^2-\psi ^\prime (\phi )\) such that \(\psi ^\prime (.)\) is the trigamma function, and \({{\mathbf {c}}}=(c_1, \ldots , c_n)^\prime \) with \(c_i=\phi \{\psi ^\prime (\mu _i\phi )\mu _i-\psi ^\prime ((1-\mu _i)\phi )(1-\mu _i) \}\). See the appendix of Ferrari and Cribari-Neto (2004) for detailed derivations of the score functions and the Fisher's information matrix.
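To make the log-likelihood and the score with respect to \({\varvec{\beta }}\) concrete, the following Python sketch evaluates both under the logit link and cross-checks the analytic score against a finite-difference gradient. It is an illustration only: the toy data are hypothetical, the digamma function is approximated by differencing `math.lgamma`, and the score uses \(\mu _i^*=\psi (\mu _i\phi )-\psi ((1-\mu _i)\phi )\) as in Ferrari and Cribari-Neto (2004).

```python
import math


def inv_logit(eta):
    """Inverse of the logit link: mu = exp(eta) / (1 + exp(eta))."""
    return 1.0 / (1.0 + math.exp(-eta))


def digamma(x, h=1e-5):
    """Digamma via a central difference of lgamma (illustrative, not production grade)."""
    return (math.lgamma(x + h) - math.lgamma(x - h)) / (2.0 * h)


def loglik(beta, phi, X, y):
    """Beta regression log-likelihood under the logit link."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        mu = inv_logit(sum(c * v for c, v in zip(beta, x_i)))
        a, b = mu * phi, (1.0 - mu) * phi
        total += (math.lgamma(phi) - math.lgamma(a) - math.lgamma(b)
                  + (a - 1.0) * math.log(y_i) + (b - 1.0) * math.log(1.0 - y_i))
    return total


def score_beta(beta, phi, X, y):
    """Score for beta: phi * X' T (y* - mu*), with 1/g'(mu) = mu (1 - mu) for the logit."""
    score = [0.0] * len(beta)
    for x_i, y_i in zip(X, y):
        mu = inv_logit(sum(c * v for c, v in zip(beta, x_i)))
        y_star = math.log(y_i / (1.0 - y_i))
        mu_star = digamma(mu * phi) - digamma((1.0 - mu) * phi)
        weight = phi * (y_star - mu_star) * mu * (1.0 - mu)
        for j, v in enumerate(x_i):
            score[j] += weight * v
    return score


def numeric_gradient(beta, phi, X, y, h=1e-6):
    """Central-difference gradient of loglik with respect to beta (cross-check only)."""
    grad = []
    for j in range(len(beta)):
        up = list(beta)
        down = list(beta)
        up[j] += h
        down[j] -= h
        grad.append((loglik(up, phi, X, y) - loglik(down, phi, X, y)) / (2.0 * h))
    return grad


# Toy data (hypothetical): an intercept column plus one covariate.
X = [[1.0, 0.5], [1.0, -1.0], [1.0, 0.2], [1.0, 1.3]]
y = [0.60, 0.20, 0.45, 0.80]
beta, phi = [0.1, 0.8], 5.0
analytic = score_beta(beta, phi, X, y)
numeric = numeric_gradient(beta, phi, X, y)
print(analytic, numeric)
```

In practice the estimates are obtained by iterating on these quantities (for example with Fisher scoring); the sketch stops at evaluating them.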

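It is known that, under the usual regularity conditions and as \(n \rightarrow \infty \), the distribution of the maximum likelihood estimators \(\widehat{\varvec{\beta }}\) and \(\widehat{\phi }\) of \({\varvec{\beta }}\) and \(\phi \) is approximately given by

\[ \begin{pmatrix} \widehat{\varvec{\beta }} \\ \widehat{\phi } \end{pmatrix} \sim N_{p+1}\left( \begin{pmatrix} {\varvec{\beta }} \\ \phi \end{pmatrix}, {{\mathbf {K}}}^{-1} \right) , \]

where \({{\mathbf {K}}}^{-1}\) is the inverse of the Fisher's information matrix.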

2.2 Unrestricted Liu estimator in beta regression

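One estimation method for handling the multicollinearity problem in the BR model is the Liu estimator, introduced by Karlsson et al. (2020), having the following form

\[ \widehat{\varvec{\beta }}^{UR} = ( {{\mathbf {X}}}^\prime \widehat{{{\mathbf {W}}}}{{\mathbf {X}}} + {{\mathbf {I}}}_p )^{-1} ( {{\mathbf {X}}}^\prime \widehat{{{\mathbf {W}}}}{{\mathbf {X}}} + d \, {{\mathbf {I}}}_p ) \widehat{\varvec{\beta }}, \]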

where \(0<d<1\) is the Liu biasing parameter and \(\widehat{{{\mathbf {W}}}}\) is a diagonal matrix such that the ith diagonal element is equal to \( \widehat{\mu }_i = exp( {{\mathbf {x}}}^\prime _i \widehat{\varvec{\beta }}) \).

In this study, following Karlsson et al. (2020), we estimate the Liu parameter d by \({\widehat{d}} =\max \left( 0, \frac{ \widehat{\varvec{\beta }}_{max}^2-1 }{\lambda _{max}+\widehat{\varvec{\beta }}_{max}^2 } \right) \) where \(\lambda _{max}\) is the maximum eigenvalue of the matrix \( {{\mathbf {X}}}' \widehat{{{\mathbf {W}}}}{{\mathbf {X}}}\) and \(\widehat{\varvec{\beta }}_{max}\) is the maximum element of the maximum likelihood estimator.
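The estimate \({\widehat{d}}\) and the way d shrinks the estimator can be sketched in a few lines of Python. The sketch assumes that the eigenvalues of \({{\mathbf {X}}}' \widehat{{{\mathbf {W}}}}{{\mathbf {X}}}\) have already been computed, and it uses the fact that a matrix of the form \(({{\mathbf {A}}}+{{\mathbf {I}}}_p)^{-1}({{\mathbf {A}}}+d\,{{\mathbf {I}}}_p)\), which is the matrix \({{\textbf {B}}}\) of Sect. 2.5, has eigenvalues \((\lambda _j+d)/(\lambda _j+1)\) when \({{\mathbf {A}}}\) is symmetric with eigenvalues \(\lambda _j\). The function names and numbers are hypothetical.

```python
def liu_d_hat(beta_ml, lambda_max):
    """d_hat = max(0, (b_max^2 - 1) / (lambda_max + b_max^2)).

    b_max is the maximum element of the ML estimate, as stated in the text.
    """
    b_max_sq = max(beta_ml) ** 2
    return max(0.0, (b_max_sq - 1.0) / (lambda_max + b_max_sq))


def liu_shrink_factors(eigenvalues, d):
    """Eigenvalues of (A + I)^{-1} (A + d I) for symmetric A with the given eigenvalues."""
    return [(lam + d) / (lam + 1.0) for lam in eigenvalues]


# Hypothetical inputs: an ML estimate and the eigenvalues of X' W X.
beta_ml = [2.0, -1.0, 0.5]
eigenvalues = [12.0, 1.0, 0.05]
d_hat = liu_d_hat(beta_ml, max(eigenvalues))
factors = liu_shrink_factors(eigenvalues, d_hat)
print(d_hat, factors)
```

Directions with small eigenvalues, which are the ones affected by multicollinearity, receive factors close to d, while well-determined directions are left almost unchanged.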

2.3 Restricted Liu estimator

When prior information about the parameters is available in the form of linear restrictions, for instance when some parameters are not significant and can be eliminated from the model, it can be used to improve estimation efficiency. Therefore, the following general hypothesis on \( \varvec{\beta } \) is defined

\[ {{\textbf {H}}}_0 : {{\textbf {H}}} \varvec{\beta } = {{\textbf {h}}}, \]

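where \( {{\textbf {H}}} \) is a \( p_2 \times p \) matrix, \( p_2 \) is the number of non-significant parameters, and \( {{\textbf {h}}} \) is a \( p_2 \times 1 \) known vector. Then, based on Kibria and Saleh (2012), the restricted estimator of \({\varvec{\beta }}\) denoted by \( \widehat{\varvec{\beta }}_{\text {RMLE}}\) can be written as

\[ \widehat{\varvec{\beta }}_{\text {RMLE}} = \widehat{\varvec{\beta }} - \varvec{\mathcal {I}}^{-1} {{\textbf {H}}}^\prime ( {{\textbf {H}}}\, \varvec{\mathcal {I}}^{-1} {{\textbf {H}}}^\prime )^{-1} ( {{\textbf {H}}} \widehat{\varvec{\beta }} - {{\textbf {h}}} ), \]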

where \(\varvec{\mathcal {I}}^{-1}\) is the inverse of the Fisher’s information matrix given in the previous sub-section. In the presence of multicollinearity, following Kibria and Saleh (2012), a restricted Liu estimator in the BR model denoted by \(\widehat{\varvec{\beta }}^{RL}\) is defined as

The test statistic for testing the null hypothesis given in (11) is defined as

As \( n \rightarrow \infty \), the above test statistic has an asymptotic chi-square distribution with \( p_2 \) degrees of freedom.

2.4 Improved estimators

Now, by applying shrinkage strategies defined in many papers for example Asar and Korkmaz (2022), Lisawadi et al. (2020), Hossain et al. (2018), and Hossain and Ahmed (2012), we propose the improved shrinkage estimators based on the unrestricted and restricted Liu estimators.

2.5 Liu linear shrinkage estimator

We denote the Liu linear shrinkage estimator of \( \varvec{\beta }\) by \(\widehat{\varvec{\beta }}^{LLS}\) as follows:

where \( 0 \le \delta \le 1 \) is the confidence level in prior information and can be specified by the researcher. However, if there is no prior information on \(\delta \), then one can estimate the optimum value of the \(\delta \), by minimizing the mean square error of \(\widehat{\varvec{\beta }}^{LLS}\) with respect to \(\delta \) (See Online Appendix 0), as follows:

in which \( {{\textbf {B}}} = ( {{\textbf {X}}}^\prime \widehat{{{\mathbf {W}}}}{{\textbf {X}}} + \, {{\textbf {I}}}_p )^{-1} ( {{\textbf {X}}}^\prime \widehat{{{\mathbf {W}}}}{{\textbf {X}}} + d \, {{\textbf {I}}}_p ) \), \( \varvec{\mathcal {Z}} = \varvec{\mathcal {I}}^{-1} {{\textbf {H}}}^\prime \Big ( {{\textbf {H}}}\, \varvec{\mathcal {I}} ^{-1} {{\textbf {H}}}^\prime \Big )^{-1} \) and \(\varvec{\Sigma }(.) \) is the variance-covariance matrix. Clearly, \(\delta _{optimal}\) depends on the unknown value of \(\varvec{\beta }\). In practice, we recommend plugging in the estimated value of \(\varvec{\beta }\).

2.6 Liu pretest estimator

The Liu pretest estimator of \( \varvec{\beta } \) denoted by \( \widehat{\varvec{\beta }}^{LPT}\) has the following form

where I(.) is an indicator function and \( T_{n,\alpha } \) is the upper \( \alpha \)-level critical value of the distribution of the test statistic \( T_n \). The Liu pretest estimator takes one of two values: if \( {{\textbf {H}}}_0 : {{\textbf {H}}} \varvec{\beta } = {{\textbf {h}}} \) is not rejected, then \( \widehat{\varvec{\beta }}^{LPT}= \widehat{\varvec{\beta }}^{RL}\); otherwise, \( \widehat{\varvec{\beta }}^{LPT}= \widehat{\varvec{\beta }}^{UR}\).

2.7 Liu shrinkage pretest estimator

The Liu shrinkage pretest estimator of \( \varvec{\beta } \) denoted by \( \widehat{\varvec{\beta }}^{LSPE}\) is as

Note that \( \widehat{\varvec{\beta }}^{LSPE}\) is more efficient than \( \widehat{\varvec{\beta }}^{LPT}\) in many parts of the parameter space.

2.8 Liu Stein estimator

We denote the Liu Stein estimator of \( \varvec{\beta } \) by \( \widehat{\varvec{\beta }}^{LS}\). It combines the Liu unrestricted and Liu restricted estimators in an optimal way, dominates the Liu unrestricted estimator, and is defined as follows

2.9 Liu positive Stein estimator

The Liu positive Stein estimator of \( \varvec{\beta } \) denoted by \( \widehat{\varvec{\beta }}^{LPS}\) is defined as

where \( z^{+} = \max (0 , z) \). The \( \widehat{\varvec{\beta }}^{LPS}\) controls for the over-shrinking problem in the Liu Stein estimator. For more on Liu estimators of Stein type, we refer to Kibria (2012) among others.
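The five improved estimators of Sects. 2.5 to 2.9 are all combinations of \( \widehat{\varvec{\beta }}^{UR}\) and \( \widehat{\varvec{\beta }}^{RL}\), which the following Python sketch makes explicit. It assumes the forms that are usual in the shrinkage literature (for example Ahmed 2014): weight \(\delta \) on the restricted estimate for the linear shrinkage estimator, and the Stein weight \(1-(p_2-2)/T_n\) with \(p_2 \ge 3\). These forms, the function names and the numbers are assumptions made for illustration and may differ in detail from the displayed definitions.

```python
def combine(b_ur, b_rl, w):
    """Return b_rl + w * (b_ur - b_rl): w = 1 is the unrestricted, w = 0 the restricted estimate."""
    return [r + w * (u - r) for u, r in zip(b_ur, b_rl)]


def liu_shrinkage_family(b_ur, b_rl, t_n, crit, delta, p2):
    """Assumed standard forms of the LLS, LPT, LSPE, LS and LPS estimators."""
    accept = t_n <= crit              # H0 is not rejected
    stein_w = 1.0 - (p2 - 2) / t_n    # needs p2 >= 3 and t_n > 0
    return {
        "LLS": combine(b_ur, b_rl, 1.0 - delta),
        "LPT": combine(b_ur, b_rl, 0.0 if accept else 1.0),
        "LSPE": combine(b_ur, b_rl, 1.0 - delta if accept else 1.0),
        "LS": combine(b_ur, b_rl, stein_w),
        "LPS": combine(b_ur, b_rl, max(0.0, stein_w)),
    }


# Hypothetical estimates with p1 = 2 active and p2 = 3 inactive coefficients.
b_ur = [2.0, -1.0, 0.3, -0.2, 0.1]
b_rl = [1.9, -0.8, 0.0, 0.0, 0.0]
# 7.815 is the upper 5% point of the chi-square distribution with 3 degrees of freedom.
est = liu_shrinkage_family(b_ur, b_rl, t_n=0.5, crit=7.815, delta=0.5, p2=3)
for name, value in est.items():
    print(name, value)
```

With a small test statistic the Stein weight becomes negative, so the Liu Stein estimate moves past the restricted estimate and flips the signs of the inactive coefficients. The positive part version truncates the weight at zero and returns the restricted estimate instead, which is the over-shrinking correction described above.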

3 Asymptotic properties

In this section, we provide the asymptotic properties of the Liu shrinkage estimators introduced in Sect. 2. To explore the properties when the subspace information \({{\textbf {H}}}\varvec{\beta } = {{\textbf {h}}} \) is wrong, we consider the sequence of local alternatives

\[ \mathcal {K}_{(n)} : {{\textbf {H}}}\varvec{\beta } = {{\textbf {h}}} + \frac{\varvec{\vartheta }}{\sqrt{n}}, \]

where \( \varvec{\vartheta } = (\vartheta _1, \vartheta _2, ..., \vartheta _{p_2})^\prime \in R^{p_2} \) is a \( p_2 \times 1 \) vector of fixed values. In order to compare the estimators, we compute the asymptotic distributional bias \( ( \mathcal {B}) \) and the asymptotic distributional variance \( (\mathcal {V}) \) of the proposed estimators.

Suppose \( \widehat{{\varvec{\beta }}} \) is any of the proposed estimators of \( {\varvec{\beta }}\). The asymptotic distributional bias of \( \widehat{{\varvec{\beta }}} \) is defined as

Also, the asymptotic distributional variance of \( \widehat{{\varvec{\beta }}} \) is defined as

We present the following lemma, which is useful for computing the asymptotic results for the proposed estimators.

Lemma 3.1

Under the sequence of local alternatives \( \lbrace \mathcal {K}_{(n)} \rbrace \) given in (21) and the usual regularity conditions of MLE, as \( n \rightarrow \infty \)

where \( \varvec{\mathcal {Z}} = \varvec{\mathcal {I}}^{-1} {{\textbf {H}}}^\prime ( {{\textbf {H}}}\, \varvec{\mathcal {I}}^{-1} {{\textbf {H}}}^\prime )^{-1} \), \( {{\textbf {B}}} = ( {{\mathbf {X}}}^\prime \widehat{{{\mathbf {W}}}}{{\mathbf {X}}}+ {{\mathbf {I}}}_p )^{-1} ({{\mathbf {X}}}^\prime \widehat{{{\mathbf {W}}}}{{\mathbf {X}}}+ d \, {{\mathbf {I}}}_p) \), \( {{\textbf {I}}}_p \) is an identity matrix of order p, and \( \varvec{\mathcal {I}}^{-1} \) is the inverse of the Fisher information matrix given right after Eq. (8).

Proof

See Online Appendix 1.

Using Lemma 3.1, we present the asymptotic properties of the Liu shrinkage estimators in the following theorems.

Theorem 3.2

Under the sequence of local alternatives given in (21) and the usual regularity conditions, the asymptotic distributional biases of the proposed estimators are as follows

where \( \varvec{\Psi }_{v}(. ; \Delta ^{*} ) \) is the cumulative distribution function of the \( \chi ^2_{v}( \Delta ^{*} ) \) distribution and \( \Delta ^{*} = \varvec{\vartheta }^\prime ( {{\textbf {H}}} \, \varvec{\mathcal {I}}^{-1} {{\textbf {H}}}^\prime )^{-1} \varvec{\vartheta } \) is the non-centrality parameter. \(\square \)

Proof

See Online Appendix 2.

Theorem 3.3

Under the local alternatives given in (21) and the usual regularity conditions, the asymptotic distributional variances of the estimators are as follows

Proof

See Online Appendix 3.

4 Some asymptotic evaluations of the variance of the proposed estimators

In this section, we compare the asymptotic distributional variances of the seven estimators discussed in Sect. 3. The following definition is very helpful for comparison purposes.

Definition 4.1

Let \(\mathcal {B}\) be the parameter space of \(\varvec{\beta }\). If two estimators \(\hat{\varvec{\beta }}^{*}\) and \(\hat{\varvec{\beta }}^{**}\) are such that \(\mathcal {V} (\hat{\varvec{\beta }}^{*})\le \mathcal {V} (\hat{\varvec{\beta }}^{**})\) for all values of \(\varvec{\beta }\in \mathcal {B}\), with strict inequality for at least one \(\varvec{\beta }\), we say that \(\hat{\varvec{\beta }}^{*}\) dominates \(\hat{\varvec{\beta }}^{**}\).

1. \(\varvec{\beta }^{RL}\) is superior to the \(\varvec{\beta }^{UR}\) if:

2. \(\varvec{\beta }^{LPT}\) is superior to the \(\varvec{\beta }^{UR}\) if:

3. \(\varvec{\beta }^{LLS}\) is superior to the \(\varvec{\beta }^{UR}\) if:

4. \(\varvec{\beta }^{LPS}\) is superior to the \(\varvec{\beta }^{LS}\) if:

The right-hand sides of the expressions above are real numbers. Since the expectation of a positive random variable is positive, it follows from the definition of an indicator function that

Since \(P(\chi ^{2}_{p_2+2}(\Delta ^{*})>0)=1\), for all \(\Delta ^{*}\in (0,+\infty )\)

5 Monte Carlo simulation

In this section, we provide the details of an extensive Monte Carlo simulation study in order to compare the performances of the listed estimators in terms of relative efficiency, which is defined in Eq. (24) where \(\widehat{\varvec{\beta }}^{*}\) corresponds to the listed methods in the paper

Since one of our main aims is to investigate the performance of the estimators under a multicollinear design, we generate the design matrix \({{\mathbf {X}}}\in {\mathbb {R}}^{n \times p}\) by drawing its rows independently from the multivariate normal distribution \(N\left( \mathbf{0},\varvec{\Sigma }\right) \) with zero mean vector \(\mathbf{0}\) and variance-covariance matrix \(\varvec{\Sigma }\), where \(\Sigma _{ij}=\rho ^{|i-j|}\), \(i,j=1,2, \ldots , p\), n is the sample size and p is the number of predictor variables. In this setting, \(\rho \) controls the degree of correlation between the predictors, and it is taken as 0.6 and 0.9.
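A design matrix with this correlation structure can be generated without any matrix factorization, because a stationary first-order autoregressive recursion across the columns has exactly the covariance \(\Sigma _{ij}=\rho ^{|i-j|}\). The Python sketch below uses this device; it is one possible way to draw such a design, not necessarily the one used for the reported results, and the seed and sizes are arbitrary.

```python
import math
import random


def ar1_design(n, p, rho, rng):
    """n rows, each N_p(0, Sigma) with Sigma_ij = rho**|i-j|, via an AR(1) recursion."""
    scale = math.sqrt(1.0 - rho * rho)
    rows = []
    for _ in range(n):
        row = [rng.gauss(0.0, 1.0)]
        for _ in range(1, p):
            # Unit variance is preserved and adjacent columns have correlation rho.
            row.append(rho * row[-1] + scale * rng.gauss(0.0, 1.0))
        rows.append(row)
    return rows


def sample_corr(X, j, k):
    """Sample correlation between columns j and k of X."""
    n = len(X)
    mj = sum(r[j] for r in X) / n
    mk = sum(r[k] for r in X) / n
    sjk = sum((r[j] - mj) * (r[k] - mk) for r in X)
    sjj = sum((r[j] - mj) ** 2 for r in X)
    skk = sum((r[k] - mk) ** 2 for r in X)
    return sjk / math.sqrt(sjj * skk)


rng = random.Random(2023)
X = ar1_design(5000, 4, 0.9, rng)
# Expect values near rho = 0.9 and rho**3 = 0.729, up to sampling error.
print(round(sample_corr(X, 0, 1), 2), round(sample_corr(X, 0, 3), 2))
```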

We consider the candidate sub-model given in (11). The hypothesis \({{\textbf {H}}}_0 : {{\textbf {H}}} \varvec{\beta } = {{\textbf {h}}} \) is tested against \({{\textbf {H}}}_1 : {{\textbf {H}}} \varvec{\beta } \ne {{\textbf {h}}}\) where \({{\textbf {H}}}=\left( {{\mathbf {0}}}_{p_2 \times p_1}, {{\mathbf {I}}}_{p_2} \right) \in {\mathbb {R}}^{p_2 \times p}\) is a matrix of rank \(p_2\), \({{\mathbf {I}}}_{p_2}\in {\mathbb {R}}^{p_2 \times p_2}\) is an identity matrix of order \(p_2\) such that \(p=p_1+p_2\). The sample size is chosen to be \(n=50, 100, 200\). The true regression parameters are taken to be \({\varvec{\beta }}=\left( {\varvec{\beta }}_1^\prime , {\varvec{\beta }}_2^\prime \right) ^\prime \) where \({\varvec{\beta }}_1 \in {\mathbb {R}}^{p_1}\) and \({\varvec{\beta }}_2 \in {\mathbb {R}}^{p_2}\) are active and inactive parameter vectors respectively.

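The response variable is generated using the beta distribution such that \(y_i \sim Beta\left( \mu _i \phi , (1-\mu _i)\phi \right) \) where

\[ \mu _i = \frac{\exp ({{\mathbf {x}}}_i^\prime {\varvec{\beta }})}{1+\exp ({{\mathbf {x}}}_i^\prime {\varvec{\beta }})}, \]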

which corresponds to the logit link function, and the precision parameter \(\phi \) is fixed at 5. The confidence level in prior information, \(\delta \), is taken as 0.5, which means that equal weights are put on the unrestricted and restricted Liu estimates. However, one can use the estimated value of \(\delta \) using (16). In designing this Monte Carlo experiment, we also aim to understand the effects of the departure from the true parameter vector. Thus, we focus on two different cases, namely, \({\varvec{\beta }}_2={{\mathbf {0}}}_{p_2}\) meaning that the null hypothesis \({{\textbf {H}}}_0\) holds and \({\varvec{\beta }}_2 \ne {{\mathbf {0}}}_{p_2}\) which means that the alternative hypothesis \({{\textbf {H}}}_1\) holds. In order to measure the effect of the departure from the null hypothesis, we define another parameter \(\Delta \) that represents the distance between the simulated model and the candidate sub-model. It is defined as \(\Delta =\Vert {\varvec{\beta }}-{\varvec{\beta }}^{(0)} \Vert ^2\) where \(\Vert . \Vert \) is the usual Euclidean norm and \({\varvec{\beta }}^{(0)}=\left( {\varvec{\beta }}_1^\prime , {{\mathbf {0}}}_{p_2}^\prime \right) ^\prime \) is the parameter vector under \({{\textbf {H}}}_0\). In the first scenario, \(\Delta =0\), and we set \({\varvec{\beta }}_1^\prime =(2.75, -1.75, 1.45)\) and \({\varvec{\beta }}_2={{\mathbf {0}}}_{p_2}\). In the second scenario, \({\varvec{\beta }}_1\) is the same while \({\varvec{\beta }}_2=\left( \sqrt{\Delta }, {{\mathbf {0}}}_{p_2-1} ^\prime \right) ^\prime \) where \(\Delta \in [0,2]\). Moreover, \(p_2\) is chosen to be 10, 15, 20.
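The data-generating step of this design can be sketched in Python as follows. The coefficient values, \(\phi = 5\) and the construction of \({\varvec{\beta }}_2\) are taken from the text; the function names, the seed, and the use of independent standard normal covariates (in place of the correlated design) are simplifications made only to keep the example short.

```python
import math
import random


def make_beta(beta1, p2, delta):
    """beta = (beta1', sqrt(delta), 0, ..., 0)'; delta = 0 reproduces the sub-model."""
    return list(beta1) + [math.sqrt(delta)] + [0.0] * (p2 - 1)


def simulate_response(X, beta, phi, rng):
    """Draw y_i ~ Beta(mu_i * phi, (1 - mu_i) * phi) with a logit link for mu_i."""
    y = []
    for x_i in X:
        eta = sum(c * v for c, v in zip(beta, x_i))
        mu = 1.0 / (1.0 + math.exp(-eta))
        # For extreme mu a draw can be numerically indistinguishable from 0 or 1.
        y.append(rng.betavariate(mu * phi, (1.0 - mu) * phi))
    return y


rng = random.Random(42)
beta = make_beta([2.75, -1.75, 1.45], p2=10, delta=1.0)
# Simplification: independent N(0, 1) covariates instead of the correlated design.
X = [[rng.gauss(0.0, 1.0) for _ in beta] for _ in range(50)]
y = simulate_response(X, beta, phi=5.0, rng=rng)
print(len(beta), min(y), max(y))
```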

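The number of repetitions in the simulation is 1000. The simulated mean squared error of an estimator \(\widehat{\varvec{\beta }}^{*}\) is computed as follows

\[ MSE(\widehat{\varvec{\beta }}^{*}) = \frac{1}{1000} \sum _{r=1}^{1000} (\widehat{\varvec{\beta }}^{*}_{r} - {\varvec{\beta }})^\prime (\widehat{\varvec{\beta }}^{*}_{r} - {\varvec{\beta }}), \]

where \(\widehat{\varvec{\beta }}^{*}_{r}\) is the estimate obtained in the rth repetition.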

Note that we use the relative efficiency, which is the relative mean squared errors (RMSE) such that a value of RMSE larger than one shows that the estimator \(\widehat{\varvec{\beta }}^*\) is superior to \(\widehat{\varvec{\beta }}^{UR}\).
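The following Python sketch computes the simulated mean squared error and the relative efficiency from stored replicate estimates. It takes the relative efficiency to be the MSE of the unrestricted Liu estimator divided by the MSE of the competing estimator, which matches the interpretation above that values larger than one favor \(\widehat{\varvec{\beta }}^*\). The function names and the two-replicate toy input are hypothetical.

```python
def simulated_mse(estimates, beta_true):
    """Average squared Euclidean distance between replicate estimates and the true vector."""
    total = 0.0
    for est in estimates:
        total += sum((e - b) ** 2 for e, b in zip(est, beta_true))
    return total / len(estimates)


def relative_efficiency(est_ur, est_star, beta_true):
    """MSE of the unrestricted estimator divided by the MSE of the competitor."""
    return simulated_mse(est_ur, beta_true) / simulated_mse(est_star, beta_true)


beta_true = [1.0, 0.0]
est_ur = [[1.2, 0.3], [0.8, -0.3]]    # replicates of the unrestricted estimator
est_star = [[1.1, 0.0], [0.9, 0.0]]   # replicates of a competing estimator
re = relative_efficiency(est_ur, est_star, beta_true)
print(re)
```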

The results of the simulation are summarized in Figs. 1 and 2, which show the RMSE performance of the methods with respect to \(\Delta \). The following conclusions can be drawn from the figures:

- At \( \Delta = 0 \), the performance of the restricted Liu estimator is the best in all the situations. When the null hypothesis is violated, the RMSE of this estimator sharply decreases.
- At \( \Delta = 0 \), the Liu positive Stein estimator is better than the Liu Stein estimator. However, as \( \Delta \) moves away from zero, the performance of these two estimators becomes the same.
- The RMSE of all estimators increases as the correlation between predictor variables increases.
- The RMSE of all estimators generally decreases as the sample size n increases.
- The most important result is that for high correlation (\(\rho =0.9\)) the relative efficiencies of all estimators are higher than that of the unrestricted estimator.
- For high correlation (\(\rho =0.9\)), the restricted Liu shrinkage estimators generally have higher relative efficiencies over a wide range of \(\Delta \).

6 Real data application

In this section, we apply the proposed estimators to two real data sets, described in the following subsections.

6.1 Government spending in Dutch cities data (2005)

The aim of this analysis is to explain the proportion of Dutch city budgets spent on administration and government based on 10 covariates. The data are contained in the fmlogit package in R as a data frame with 429 observations and 12 variables. The dependent variable is governing and the remaining variables are the explanatory variables, which are given in Table 1.

Since there are missing values in some observations, we exclude them and carry out a complete case analysis. We fit a beta regression model and observe the significant variables. We summarize the unrestricted and restricted models in Table 2. From Fig. 3, it is seen that there is a high correlation between some covariates. Also, we compute the condition number (CN) of the matrix of cross products \({{\textbf {X}}}^\prime \widehat{{{\mathbf {W}}}} {{\textbf {X}}}\), defined as the square root of the ratio of its maximum eigenvalue to its minimum eigenvalue, as 809.097. Both the correlation plot and the CN indicate a severe collinearity problem, so the usual beta regression may yield unreliable estimates for these data. Therefore, we apply the proposed estimators given in this paper. The corresponding values of the AIC criterion given in Table 2 indicate that five of the variables, houseval, education, recreation, social and urbanplanning, are effective and the rest of the variables are ineffective (see also the \(R^2\) values). Therefore, we use this restriction in the analysis and compute the proposed Liu shrinkage estimators (Table 3). We obtain the optimal value of \(\delta \) as 0.48 in the Liu linear shrinkage estimator. Further, we set the significance level to 0.05 in the preliminary test estimator. To evaluate the performance of the proposed estimators, we apply the bootstrap technique with \(n=200\) and 2000 bootstrap replications. We then compute the mean squares as the square of the estimated bias plus the square of the standard deviation for each estimator.
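The two diagnostics used in this paragraph are simple to compute once the eigenvalues and the bootstrap replicates are available. The Python sketch below shows the condition number and the bootstrap mean square for a single coefficient. It assumes that the bias is measured relative to a reference value such as the full-sample estimate, which is an assumption made here; the function names and numbers are hypothetical.

```python
import math
import statistics


def condition_number(eigenvalues):
    """Square root of the ratio of the largest to the smallest eigenvalue."""
    return math.sqrt(max(eigenvalues) / min(eigenvalues))


def bootstrap_mean_square(replicates, reference):
    """Squared estimated bias plus squared standard deviation of the replicates."""
    bias = statistics.mean(replicates) - reference
    sd = statistics.stdev(replicates)
    return bias ** 2 + sd ** 2


# Hypothetical eigenvalues of X' W X and bootstrap replicates of one coefficient.
cn = condition_number([400.0, 25.0, 0.25])
ms = bootstrap_mean_square([0.9, 1.1, 1.0, 1.2], reference=1.0)
print(cn, ms)
```

For a vector of coefficients, one natural choice is to sum the per-coefficient values before forming relative efficiencies.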

The results show that the bootstrap root mean squares given in Table 3 (and the standard errors, Online Appendix 4) of the proposed estimators, specifically the Stein-type and positive Stein-type shrinkage estimators, are generally lower than those based on the unrestricted maximum likelihood estimator of the beta regression model. The relative efficiencies also show that the restricted estimator has the highest value, 2.235, and is therefore preferable to the other estimators.

6.2 Student performance data set

This data set concerns student achievement in secondary education at two Portuguese schools. The data attributes include student grades and demographic, social and school-related features, and the data were collected using school reports and questionnaires from 395 students. The outcome variable is the students' performance in Mathematics, graded in the interval [0, 20]. In Cortez and Silva (2008), the data set was modeled under binary/five-level classification and regression tasks. However, we use beta regression without categorizing the outcome variable, since categorization may lose useful information (Altman and Royston 2006). Note that the outcome variable is the final grade (G3), which has a strong correlation with the second period grade (G2) and the first period grade (G1). As G3 (the final grade in mathematics) is bounded in the interval [0, 20], we converted it to the interval \(\left( 0,1\right) \) and fitted a beta regression. Based on the AIC and \(R^2\) measures, we form the null model given in Table 4. We then apply the estimators developed in this paper. We set the significance level (\(\alpha \)) equal to 0.05. Since we do not have any prior information about the parameters, we use the estimated value of \(\delta \) (0.354) in the linear shrinkage estimator. The results show that our proposed estimators outperform the unrestricted estimator in most cases in terms of the bootstrap root mean squares (Table 5) and standard errors (Online Appendix 4). Further, among the proposed estimators, the restricted estimator is by far the best in terms of relative efficiency (2.579). The positive Stein and Stein-type estimators are the next most favorable.
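The conversion of a grade on [0, 20] to the open interval (0, 1) is not spelled out above. One commonly used option, shown in the Python sketch below, is to rescale to [0, 1] and then compress slightly towards 0.5 so that boundary values such as a grade of 0 or 20 become admissible for the beta distribution. This particular transformation is an assumption for illustration and may not be the one applied to the data; the function name and grades are hypothetical.

```python
def to_open_unit_interval(values, lower, upper):
    """Rescale to [0, 1], then squeeze into (0, 1) via (s * (n - 1) + 0.5) / n."""
    n = len(values)
    scaled = [(v - lower) / (upper - lower) for v in values]
    return [(s * (n - 1) + 0.5) / n for s in scaled]


grades = [0, 10, 20, 15]   # hypothetical final grades on the [0, 20] scale
y = to_open_unit_interval(grades, lower=0, upper=20)
print(y)
```

The amount of compression shrinks as the number of observations grows, so with several hundred students the interior values are almost unchanged.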

7 Conclusion

In this paper, we considered different types of improved shrinkage estimators based on the Liu estimator, namely, restricted, preliminary test, Stein-type, positive Stein-type, and linear shrinkage estimators for the beta regression model. We obtained the analytical biases and variances of the proposed estimators under the local alternative hypothesis. Further, we conducted an extensive simulation study to examine the performance of the proposed estimators in finite samples. Our results showed that the Stein-type estimators uniformly outperform the usual maximum likelihood estimators. The other shrinkage-type estimators also had higher relative efficiencies than the maximum likelihood estimator over a wide range of the parameter space. We concluded the paper by applying the proposed methodology to two well-known real data sets from econometrics and education. The superiority of the proposed estimators was evident in terms of high overall relative efficiencies and lower bootstrap standard and mean square errors in both real examples.

References

Ahmed SE (1992) Shrinkage preliminary test estimation in multivariate normal distributions. J Stat Comput Sim 43:177–195

Ahmed SE (2014) Penalty, shrinkage and pretest strategies: variable selection and estimation. Springer, New York

Altman DG, Royston P (2006) The cost of dichotomising continuous variables. BMJ 332(7549):1080

Asar Y, Korkmaz M (2022) Almost unbiased Liu-type estimators in gamma regression model. J Comput Appl Math 403:113819

Bancroft TA (1944) On biases in estimation due to the use of preliminary tests of significance. Ann Math Stat 15:190–204

Cortez P, Silva A (2008) Using data mining to predict secondary school student performance. In: A. Brito and J. Teixeira (eds), Proceedings of 5th FUture BUsiness TEChnology Conference (FUBUTEC 2008) pp. 5-12, Porto, April, 2008, EUROSIS, ISBN 978-9077381-39-7

Espinheira PL, da Silva LCM, Silva ADO, Ospina R (2019) Model selection criteria on beta regression for machine learning. Mach Learn Knowl Extract 1(1):427–449

Ferrari S, Cribari-Neto F (2004) Beta regression for modelling rates and proportions. J Appl Stat 31(7):799–815

Guolo A, Varin C (2014) Beta regression for time series analysis of bounded data, with application to Canada Google Flu Trends. Ann Appl Stat 8(1):74–88

Hoerl AE, Kennard RW (1970) Ridge regression: biased estimation for non-orthogonal problems. Technometrics 12:69–82

Hossain S, Ahmed SE (2012) Shrinkage and penalty estimators of a Poisson regression model. Aust NZ J Stat 54:359–373

Hossain S, Thomson T, Ahmed SE (2018) Shrinkage estimation in linear mixed models for longitudinal data. Metrika 81:569–586

Judge GG, Bock ME (1978) The statistical implication of pre-test and Stein-rule estimators in econometrics. North-Holland, Amsterdam

Karlsson P, Månsson K, Kibria BMG (2020) A Liu estimator for the beta regression model and its application to chemical data. J Chemom 34(10):e3300

Kibria BMG (2012) Some Liu and ridge type estimators and their properties under the ill-conditioned Gaussian linear regression model. J Stat Comput Simul 82(1):1–17

Kibria BMG, Saleh AKME (2004) Performance of positive rule estimator in the ill-conditioned Gaussian regression model. Calcutta Statist Assoc Bull 55:209–239

Kibria BMG, Saleh AME (2012) Improving the estimators of the parameters of a probit regression model: a ridge regression approach. J Stat Plann Inference 142:1421–1435

Lisawadi S, Ahmed SE, Reangsephet O (2020) Post estimation and prediction strategies in negative binomial regression model. Int J Model Simul. https://doi.org/10.1080/02286203.2020.1792601

Liu K (1993) A new class of biased estimate in linear regression. Commun Stat Theory Methods 22(2):393–402

Qasim M, Månsson K, Kibria BMG (2021) On some beta ridge regression estimators: method, simulation and application. J Stat Comput Simul 91(9):1699–1712

Stein C (1956) The admissibility of Hotelling's \(T^2\)-test. Ann Math Stat 27:616–623

Thompson JR (1968) Some shrinkage techniques for estimating the mean. J Am Stat Assoc 63:113–122

Funding

Open access funding provided by Uppsala University.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Arabi Belaghi, R., Asar, Y. & Larsson, R. Improved shrinkage estimators in the beta regression model with application in econometric and educational data. Stat Papers 64, 1891–1912 (2023). https://doi.org/10.1007/s00362-022-01355-3

DOI: https://doi.org/10.1007/s00362-022-01355-3