Abstract

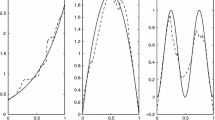

For the covariate-adjusted nonparametric regression model, an adaptive estimation method is proposed for the nonparametric regression function. Compared with procedures in the existing literature, the new method requires less restrictive conditions and adapts to covariate-adjusted nonparametric regression with asymmetric variables. More specifically, when the distributions of the variables are asymmetric, the new procedure yields more efficient estimators and recovers the data more accurately through a careful choice of weights; in the symmetric case, the new estimators attain the same asymptotic properties as those of the existing method when equal bandwidths and weights are used. Simulation studies examine the finite-sample performance of the new method, and the Boston Housing data are analyzed as an illustration.

References

Cui X, Guo W, Zhu L (2009) Covariate-adjusted nonlinear regression. Ann Stat 37:1839–1870

Delaigle A, Hall P, Zhou W (2016) Nonparametric covariate-adjusted regression. Ann Stat 44:2190–2220

Hansen BE (2008) Uniform convergence rates for kernel estimation with dependent data. Econom Theory 24:726–748

Li F, Lin L, Cui X (2010) Covariate-adjusted partially linear regression models. Commun Stat Theory Methods 39:1054–1074

Masry E (1996) Multivariate local polynomial regression for time series: uniform strong consistency and rates. J Time Ser Anal 17:571–599

Nguyen DV, Sentürk D (2008) Multicovariate-adjusted regression models. J Stat Comput Simul 78:813–827

Sentürk D, Müller HG (2005) Covariate-adjusted regression. Biometrika 92:75–89

Sentürk D, Müller HG (2006) Inference for covariate adjusted regression via varying coefficient models. Ann Stat 34:654–679

Sentürk D, Müller HG (2009) Covariate-adjusted generalized linear models. Biometrika 96:357–370

Zhang J, Zhu L, Zhu L (2012a) On a dimension reduction regression with covariate adjustment. J Multivar Anal 104:39–55

Zhang J, Zhu L, Liang H (2012b) Nonlinear models with measurement errors subject to single-indexed distortion. J Multivar Anal 112:1–23

Zhang J, Li G, Feng Z (2015) Checking the adequacy for a distortion errors-in-variables parametric regression model. Comput Stat Data Anal 83:52–64

Acknowledgements

The research was supported by NNSF Project (U1404104, 11501522, 11601283 and 11571204) of China, China Social Science Fund 18BTJ021 and a Natural Science Project of Zhengzhou University.

Appendix

In this Appendix, we first present some necessary lemmas and then give the technical proofs of the theorems.

Lemma 1

Assume that conditions C1–C6 hold. Then, for \(\ell =0,1,2,\) we have

where \(\delta _{\ell }(h_i)=h_i^2+(nh_i^{2\ell +1})^{-1/2}(\log n)^{1/2}.\)
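To get a feel for how the rate \(\delta _{\ell }(h)\) behaves, the following minimal sketch evaluates it numerically. The function name `delta`, the sample size and the bandwidth choice \(h=n^{-1/5}\) are illustrative assumptions and are not taken from the paper.

```python
import math


def delta(ell: int, h: float, n: int) -> float:
    """Evaluate delta_ell(h) = h^2 + (n h^(2*ell+1))^(-1/2) (log n)^(1/2)."""
    if h <= 0 or n < 2:
        raise ValueError("need h > 0 and n >= 2")
    return h**2 + math.sqrt(math.log(n) / (n * h ** (2 * ell + 1)))


# Illustrative values only: n = 1000 and the bandwidth h = n^(-1/5).
n = 1000
h = n ** (-1 / 5)
rates = [delta(ell, h, n) for ell in (0, 1, 2)]
```

For a fixed bandwidth \(h<1\), the stochastic part grows with the derivative order \(\ell ,\) so the rate for a higher-order derivative is slower than that for the function itself.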

Lemma 1 follows from Eq. (7.6) of Delaigle et al. (2016); for details, see Masry (1996) and Hansen (2008). Similar results also hold for \(\hat{\phi }_0^{+(\ell )}\) and \(\hat{\phi }_0^{-(\ell )}.\)

For the recovered data \( \hat{Y}_i=\widetilde{Y}_i/{\hat{\psi }}(U_i), \, {\hat{X}}_i=\widetilde{X}_i/{\hat{\phi }}(U_i), i=1,2,\ldots ,n, \) let \({\hat{w}}_Y(U_i)=\psi (U_i)/{\hat{\psi }}(U_i)\) and \({\hat{w}}_X(U_i)=\phi (U_i)/{\hat{\phi }}(U_i).\) Then the recovered data can be written as \(\hat{Y}_i={\hat{w}}_Y(U_i)Y_i\) and \(\hat{X}_i={\hat{w}}_X(U_i)X_i.\)
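The recovery step can be checked numerically. The sketch below assumes the distortion model \(\widetilde{Y}_i=\psi (U_i)Y_i,\) \(\widetilde{X}_i=\phi (U_i)X_i\) implied by the identities above. The particular forms of `psi` and `phi`, the simulated data, and the stand-in estimators `psi_hat` and `phi_hat` (the true functions times a small perturbation) are hypothetical and serve only to illustrate the algebra; they are not the estimators of the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
U = rng.uniform(0.0, 1.0, n)              # observed confounding covariate
X = rng.normal(1.0, 1.0, n)               # unobserved true predictor
Y = np.sin(X) + 0.1 * rng.normal(size=n)  # unobserved true response


# Hypothetical distortion functions, each with mean one under U ~ Uniform(0, 1).
def psi(u):
    return 1.0 + 0.3 * (u - 0.5)


def phi(u):
    return 1.0 + 0.2 * np.cos(2.0 * np.pi * u)


# Observed (distorted) data.
Y_tilde = psi(U) * Y
X_tilde = phi(U) * X


# Stand-ins for the estimated distortion functions (assumption: truth times
# a small perturbation, mimicking estimation error).
def psi_hat(u):
    return psi(u) * (1.0 + 0.01 * np.sin(3.0 * u))


def phi_hat(u):
    return phi(u) * (1.0 - 0.01 * u)


# Recovered data and the corresponding weights w_Y, w_X.
Y_hat = Y_tilde / psi_hat(U)
X_hat = X_tilde / phi_hat(U)
w_Y = psi(U) / psi_hat(U)
w_X = phi(U) / phi_hat(U)

# Sup-norm deviation of w_Y from one, the quantity bounded in Lemma 2.
max_dev_Y = np.max(np.abs(w_Y - 1.0))
```

In this toy setting the deviation of \({\hat{w}}_Y\) from one is driven entirely by the size of the perturbation in `psi_hat`, which plays the role of the estimation error of \({\hat{\psi }}.\)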

Lemma 2

\(\Vert {\hat{w}}_Y(U)-1\Vert _\infty =O_p(\delta _0(h_1)+\delta _0(h_2)).\)

Proof

\({\hat{w}}_Y(U)-1=\frac{\psi (U)-{\hat{\psi }}(U)}{{\hat{\psi }}(U)},\)

The last equation can be obtained from Lemma 1. \(\square \)

Lemma 3

Assume that conditions C1–C6 hold. Then the following equation holds:

where \({\hat{f}}_{{\hat{X}}}(x)=n^{-1}\sum \nolimits _{i=1}^nK_h({\hat{X}}_i-x)\) and \({\hat{f}}_X(x)=n^{-1}\sum \nolimits _{i=1}^nK_h(X_i-x).\)
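The two density estimators compared in Lemma 3 can be sketched as follows. The code assumes the usual scaling \(K_h(t)=K(t/h)/h\) and an Epanechnikov kernel; neither is specified in this Appendix. The perturbed sample `X_hat` is a hypothetical stand-in for the recovered data.

```python
import numpy as np


def epanechnikov(t):
    """Epanechnikov kernel K(t) = 0.75 (1 - t^2) on [-1, 1], zero elsewhere."""
    t = np.asarray(t, dtype=float)
    return np.where(np.abs(t) <= 1.0, 0.75 * (1.0 - t**2), 0.0)


def kde(x, data, h, kernel=epanechnikov):
    """Return n^{-1} sum_i K_h(data_i - x), with K_h(t) = K(t/h)/h (assumed)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    data = np.asarray(data, dtype=float)
    t = (data[None, :] - x[:, None]) / h  # one row per evaluation point
    return kernel(t).mean(axis=1) / h


rng = np.random.default_rng(1)
X = rng.normal(size=2000)
# Hypothetical recovered data: the true X times a weight close to one.
X_hat = X * (1.0 + 0.01 * rng.uniform(-1.0, 1.0, size=X.size))

grid = np.linspace(-5.0, 5.0, 1001)
f_X = kde(grid, X, h=0.4)
f_X_hat = kde(grid, X_hat, h=0.4)
max_gap = np.max(np.abs(f_X_hat - f_X))  # sup-norm gap over the grid
```

When the weights are uniformly close to one, the gap between the two estimates stays small relative to the estimates themselves, which is the qualitative content of Lemma 3.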

Lemma 3 can be proved by methods similar to those used in the proof of Eq. (7.11) in Delaigle et al. (2016).

Lemma 4

Suppose that conditions C1–C6 hold. Then we have

where

All of these expressions can be computed directly in the case of local linear estimation, and they are similar to Eq. (F.27) in the supplement of Delaigle et al. (2016).

Combining Lemma 2 with Lemma 4, we have

Proof of Theorem 1

Following Eq. (9) and Lemma 4, we obtain the expectation of \({\hat{\phi }}(u),\)

and the variance of \(\hat{\phi }(u),\)

The proof of Theorem 1 is completed. \(\square \)

Proof of Theorem 2

Firstly, we consider the term \(\hat{\Pi }_1(x)\) multiplied by \({\hat{f}}_{{\hat{X}}}(x)\) and obtain,

Similar to the computation of Eq. (7.25) in Delaigle et al. (2016), we have \(\max \nolimits _x |J_2(x)|=O_p(h^{-1}(\delta _0(h_1)+\delta _0(h_2))(\delta _0(h_3)+\delta _0(h_4))\log n).\) We also note

Therefore, \(J_1(x)\) is the dominating term and \(J_2(x)\) is negligible. Together with Lemma 3, we have

Next, we consider the term \(\hat{\Pi }_2(x).\) By a Taylor expansion, we obtain

where \(\xi _i\) lies between \((x-X_i)/h\) and \((x-{\hat{X}}_i)/h.\) By the law of large numbers and after some calculation, we have

and \(I_3(x)\) is negligible compared with \(I_1(x)\) and \(I_2(x).\)

Finally, for \(\hat{\Pi }_3(x)\) we have,

Similar to the proof of Lemma F.1 in Delaigle et al. (2016), we find that \(Q_2(x)\) is negligible compared with \(Q_1(x).\) Then, we have

By the central limit theorem and Slutsky's theorem, we obtain

Based on the expansion of \(\Pi _1(x), \Pi _2(x)\) and \(\Pi _3(x)\) above, the proof of Theorem 2 is completed. \(\square \)

Proof of Theorem 3

Following Eq. (12) we know \(\hat{m}_{LL}(x)=\mathbf{e}_1^\tau \mathbf{S}_{{\hat{X}}}^{-1}(x,K,h)\mathbf{T}_{{\hat{X}},{\hat{Y}}}(x,K,h).\)
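The form \(\mathbf{e}_1^\tau \mathbf{S}^{-1}\mathbf{T}\) can be made concrete with a short sketch. The entries used below, \(s_j=n^{-1}\sum _iK_h(X_i-x)\{(X_i-x)/h\}^j\) for \(\mathbf{S}\) and \(t_j=n^{-1}\sum _iK_h(X_i-x)\{(X_i-x)/h\}^jY_i\) for \(\mathbf{T},\) are the standard local linear definitions and are assumed here, since the Appendix does not restate them. The Epanechnikov kernel, the function names and the test data are likewise illustrative.

```python
import numpy as np


def epanechnikov(t):
    """Epanechnikov kernel K(t) = 0.75 (1 - t^2) on [-1, 1], zero elsewhere."""
    t = np.asarray(t, dtype=float)
    return np.where(np.abs(t) <= 1.0, 0.75 * (1.0 - t**2), 0.0)


def local_linear(x, X, Y, h, kernel=epanechnikov):
    """Local linear estimate e_1^T S^{-1} T at a scalar point x.

    S and T use the standard local linear entries (an assumption here).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    z = (X - x) / h                # scaled distances (X_i - x) / h
    w = kernel(z) / h              # K_h(X_i - x)
    s0, s1, s2 = (np.mean(w * z**j) for j in range(3))
    S = np.array([[s0, s1], [s1, s2]])
    T = np.array([np.mean(w * Y), np.mean(w * z * Y)])
    return np.linalg.solve(S, T)[0]  # first component: intercept at x


# In the paper's setting the inputs would be the recovered data; here we use
# noise-free toy data to show the behaviour of the estimator.
X = np.linspace(0.0, 1.0, 201)
m_lin = local_linear(0.3, X, 2.0 + 3.0 * X, h=0.2)        # linear regression function
m_sin = local_linear(0.3, X, np.sin(2 * np.pi * X), h=0.1)  # curved regression function
```

A local linear fit reproduces an exactly linear regression function without bias, so `m_lin` agrees with \(2+3\times 0.3\) up to rounding, whereas `m_sin` carries a smoothing bias of order \(h^2.\)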

Firstly, consider the term \(\mathbf{T}_{{\hat{X}},{\hat{Y}}}(x,K,h)\) and

After some computation we have

So \(\mathbf{T}_{{\hat{X}},{\hat{Y}}}(x,K,h)\) can be expressed as

Then \({\hat{m}}_{LL}(x)\) can be written as

Secondly, we deal with \({\hat{m}}_{LL,1}(x).\) A standard decomposition shows

Also we can obtain \(\Vert \mathbf{S}_{{\hat{X}}}^{-1}(x,K,h)-f_X^{-1}(x)\mathbf{S}^{-1}\Vert _\infty =o_p(1),\) where \(\mathbf{S}=\mathrm{diag}(1,u_2).\) Combining with Eq. (17), we have

Then we can obtain \(E(G_2(x))=\frac{1}{2}h^2m''(x)u_2(1+o(1))=B_1(x)(1+o(1)).\) And,

By Eq. (16), it follows that \(G_3(x)\xrightarrow {L} N\left( 0, \frac{1}{nhf_X(x)}\sigma ^2(x)v_0\right) .\)
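The leading bias \(\frac{1}{2}h^2m''(x)u_2\) and the variance \(\sigma ^2(x)v_0/\{nhf_X(x)\}\) depend on the kernel only through two constants. The sketch below assumes the usual definitions \(u_2=\int u^2K(u)\,du\) and \(v_0=\int K^2(u)\,du,\) which are not restated in this Appendix, and evaluates them numerically for an Epanechnikov kernel. The function names and the numerical inputs in the example are hypothetical.

```python
import numpy as np


def epanechnikov(t):
    """Epanechnikov kernel K(t) = 0.75 (1 - t^2) on [-1, 1], zero elsewhere."""
    t = np.asarray(t, dtype=float)
    return np.where(np.abs(t) <= 1.0, 0.75 * (1.0 - t**2), 0.0)


def kernel_constants(kernel, lo=-1.0, hi=1.0, m=200001):
    """Return (u2, v0) = (int u^2 K(u) du, int K(u)^2 du) by the trapezoid rule."""
    t = np.linspace(lo, hi, m)
    dt = t[1] - t[0]
    k = kernel(t)

    def integrate(f):
        return dt * (f.sum() - 0.5 * (f[0] + f[-1]))

    return integrate(t**2 * k), integrate(k**2)


def leading_bias(h, m_second, u2):
    """Leading bias term 0.5 * h^2 * m''(x) * u2."""
    return 0.5 * h**2 * m_second * u2


def asymptotic_variance(n, h, f_x, sigma2, v0):
    """Asymptotic variance sigma^2(x) * v0 / (n * h * f_X(x))."""
    return sigma2 * v0 / (n * h * f_x)


u2, v0 = kernel_constants(epanechnikov)
# Hypothetical inputs: n = 500, h = 0.2, m''(x) = -1, f_X(x) = 1, sigma^2(x) = 0.25.
bias = leading_bias(0.2, -1.0, u2)
var = asymptotic_variance(500, 0.2, 1.0, 0.25, v0)
```

For the Epanechnikov kernel the exact values are \(u_2=1/5\) and \(v_0=3/5,\) so the numerical integration serves only as a check that extends to other kernels.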

Thirdly, we consider \({\hat{m}}_{LL,2}(x),\)

and the last equation follows from Eq. (15).

Combining the components of the decomposition of \({\hat{m}}_{LL}(x),\) the proof of Theorem 3 is completed. \(\square \)

Cite this article

Li, F., Lin, L., Lu, Y. et al. An adaptive estimation for covariate-adjusted nonparametric regression model. Stat Papers 62, 93–115 (2021). https://doi.org/10.1007/s00362-019-01084-0

DOI: https://doi.org/10.1007/s00362-019-01084-0