Abstract

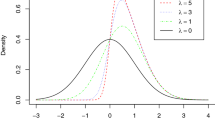

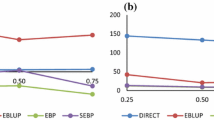

The empirical best linear unbiased prediction (EBLUP) based on the nested error regression model (Battese et al. in J Am Stat Assoc 83:28–36, 1988, NER) has been widely used for small area mean estimation. Its so-called optimality largely depends on the normality of the corresponding area-level and unit-level error terms. To allow departure from normality, we propose a transformed NER model with an invertible transformation, and employ the maximum likelihood method to estimate the underlying parameters of the transformed NER model. Motivated by Duan’s (J Am Stat Assoc 78:605–610, 1983) smearing estimator, we propose two small area mean estimators, depending on whether all the population covariates or only the population covariate means are available in addition to the sample covariates. We conduct two design-based simulation studies to investigate their finite-sample performance. The simulation results indicate that, compared with existing methods such as the EBLUP, the proposed estimators are nearly as reliable when the NER model is valid and generally more reliable when the NER model is violated. In particular, our method benefits from incorporating auxiliary covariate information.
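To make the retransformation idea concrete, the following is a minimal sketch of a Duan-type smearing average under a transformed model. It is not the estimator proposed in the paper: the sign-power form of the transformation, the function names `h`, `h_inv` and `smearing_mean`, and all numerical values are assumptions chosen for illustration only.

```python
import math

def h(y, lam):
    """Assumed sign-power transformation: sign(y) * |y|**lam."""
    return math.copysign(abs(y) ** lam, y)

def h_inv(t, lam):
    """Inverse of the assumed transformation: sign(t) * |t|**(1/lam)."""
    return math.copysign(abs(t) ** (1.0 / lam), t)

def smearing_mean(linear_predictors, residuals, lam):
    """Duan-type smearing: for each predictor eta on the transformed scale,
    average h_inv(eta + e) over the empirical residuals e, then average
    the results over all units."""
    total = 0.0
    for eta in linear_predictors:
        total += sum(h_inv(eta + e, lam) for e in residuals) / len(residuals)
    return total / len(linear_predictors)

# Hypothetical fitted values and residuals on the transformed scale.
lam = 0.5
etas = [2.0, 2.5, 3.0]
residuals = [-0.3, -0.1, 0.0, 0.1, 0.3]

# Naive back-transformation ignores the residual distribution.
naive = sum(h_inv(eta, lam) for eta in etas) / len(etas)
smeared = smearing_mean(etas, residuals, lam)
```

With \(\lambda = 0.5\) the inverse transformation is a square on the positive axis, so the smeared mean exceeds the naive back-transformed mean by the mean squared residual. This is the retransformation bias that smearing is designed to correct.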

References

Battese GE, Harter RM, Fuller WA (1988) An error components model for prediction of county crop area using survey and satellite data. J Am Stat Assoc 83:28–36

Box GEP, Cox DR (1964) An analysis of transformations. J R Stat Soc Ser B 26:211–252

Chen J, Variyath AM, Abraham B (2008) Adjusted empirical likelihood and its properties. J Comput Gr Stat 17:426–443

Duan N (1983) Smearing estimate: a nonparametric retransformation method. J Am Stat Assoc 78:605–610

Datta GS, Lahiri P (2000) A unified measure of uncertainty of estimated best linear unbiased predictors in small area estimation problems. Stat Sin 10:613–627

Fay RE, Herriot RA (1979) Estimates of income for small places: an application of James-Stein procedures to census data. J Am Stat Assoc 74:269–277

González-Manteiga W, Lombardía MJ, Molina I, Morales D, Santamaría L (2008) Bootstrap mean squared error of a small-area EBLUP. J Stat Comput Simul 78:443–462

Gurka MJ, Edwards LJ, Muller KE, Kupper LL (2006) Extending the Box-Cox transformation to the linear mixed model. J R Stat Soc Ser A 169:273–288

Hall P, Maiti T (2006) Nonparametric estimation of mean-squared prediction error in nested-error regression models. Ann Stat 34:1733–1750

Jiang J, Nguyen T (2012) Small area estimation via heteroscedastic nested-error regression. Can J Stat 40:588–603

John JA, Draper NR (1980) An alternative family of transformations. Appl Stat 29:190–197

Owen AB (1990) Empirical likelihood ratio confidence regions. Ann Stat 18:90–120

Owen AB (2001) Empirical likelihood. Chapman and Hall/CRC, New York

Pfeffermann D (2013) New important developments in small area estimation. Stat Sci 28:40–68

Pfeffermann D, Correa S (2012) Empirical bootstrap bias correction and estimation of prediction mean square error in small area estimation. Biometrika 99:457–472

Prasad NGN, Rao JNK (1990) The estimation of the mean squared error of small-area estimators. J Am Stat Assoc 85:163–171

Qin J, Lawless J (1994) Empirical likelihood and general estimating equations. Ann Stat 22:300–325

Rao JNK (2003) Small area estimation. Wiley, Hoboken

Rao JNK, Molina I (2015) Small area estimation, 2nd edn. Wiley, Hoboken

Schmid T, Münnich RT (2014) Spatial robust small area estimation. Stat Pap 55:653–670

Sinha SK, Rao JNK (2009) Robust small area estimation. Can J Stat 37:381–399

Sugasawa S, Kubokawa T (2014) Estimation and prediction in transformed nested error regression models. Manuscript. arXiv:1410.8269v1

Sugasawa S, Kubokawa T (2015) Box-Cox transformed linear mixed models for positive-valued and clustered data. Manuscript. arXiv:1502.03193v2

Yang ZL (2006) A modified family of power transformations. Econ Lett 92:14–19

Acknowledgements

We thank the editor, an associate editor, and two anonymous referees for their constructive suggestions that significantly improved the paper. The research was supported in part by the National Natural Science Foundation of China (Grant Numbers 11371142, 11501354, and 11571112), the Program of Shanghai Subject Chief Scientist (14XD1401600), the 111 Project (B14019), and the Doctoral Fund of the Ministry of Education of China (20130076110004).

Appendix

To study the consistency of the MLEs, we make the following assumptions.

- (C1) There exist constants \(0<c_1 <c_2\) such that the transformed response \(h(y; \lambda _0)\) with \(\lambda _0\in [c_1, c_2]\) and the transformation in (3) satisfy the NER model in (1).

- (C2) The number of small areas m is fixed and, as n goes to infinity, \(n_k/n=\rho _k +o(1)\) for \(\rho _k\in (0,1)\).

- (C3) The matrix \(\Sigma _x=\sum _{k=1}^m \rho _k \Sigma _{xk}\) is nonsingular, where \(\Sigma _{xk}\) is the variance matrix of \(\mathbf{x}_{kj}\) in small area k.

Condition (C1) requires that \(y_{kj}\) be an increasing function of \( \mathbf{x}_{kj}^{\mathrm {\scriptscriptstyle \top }}\beta + v_k +\varepsilon _{kj}\), since \(h(y; \lambda _0)\) is increasing when \(\lambda _0>0\). If \(y_{kj}\) is a decreasing function of \( \mathbf{x}_{kj}^{\mathrm {\scriptscriptstyle \top }}\beta + v_k +\varepsilon _{kj}\), then it is an increasing function of \( \mathbf{x}_{kj}^{\mathrm {\scriptscriptstyle \top }}(-\beta ) +(- v_k)+( - \varepsilon _{kj})\). Condition (C1) is then still satisfied, except that the parameter \(\beta \) has the opposite sign. Condition (C2) is imposed to provide a justification of the proposed estimation method for \(\lambda \). It also allows us to check whether the proposed method has systematic bias, although the sample sizes \(n_k\) are generally very small in the small area estimation literature.

Proof of Theorem 1

We begin by proving the consistency of \({\hat{\lambda }}\). Let \((\lambda _0, \gamma _0)\) be the true value of \((\lambda , \gamma )\). It suffices to show that, for large n, (1)

and (2) \( \frac{1}{n} \frac{\partial ^2 \ell (\lambda _0, \gamma _0)}{\partial \lambda \partial \lambda ^{\mathrm {\scriptscriptstyle \top }}}\) is positive definite. We shall prove only (1), since (2) can be proved along the same lines as (1) but with a more tedious derivation.

From (7), we have

To simplify S, we need to investigate \({\tilde{\sigma }}_e^2(\lambda _0, \gamma _0)\) and \(\partial {\tilde{\sigma }}_e^2(\lambda _0, \gamma _0)/\partial \lambda \). It follows from \(\mathbf{A}_k(\gamma )= \mathbf{I}_{n_k} - \frac{\gamma }{1+\gamma n_k}\mathbf{1}_{n_k} \mathbf{1}_{n_k}^{{\mathrm {\scriptscriptstyle \top }}}\) that for any fixed \(\gamma >0\),

The above three equalities imply that

By these three equalities, we immediately have

To calculate \(\partial {\tilde{\sigma }}_e^2(\lambda _0, \gamma _0)/\partial \lambda \), we denote \(z_{kj} \equiv \partial y_{kj}^{(\lambda _0)} / \partial \lambda = y_{kj}^{(\lambda _0)} \log (|y_{kj}|). \) Then

It can be found that

Accordingly

Putting (23) into (19), we obtain

To prove \(S=o_p(1)\), it is sufficient to prove for \(r=1,2,\ldots , m\) that

which is true as shown in Lemma 1. This proves the consistency of \({\hat{\lambda }}\).

Note that Eqs. (20) and (21) hold for any \(\gamma >0\). Since \({\hat{\lambda }}\) is consistent, we immediately obtain that \({\hat{\beta }}\) is a consistent estimator of \(\beta _0\). By re-examining the proof of (22), we find that it remains true when \(\gamma _0\) is replaced by any positive \(\gamma \) and \(\lambda _0\) is replaced by its consistent estimator \({\hat{\lambda }}\). This completes the proof of Theorem 1. \(\square \)
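The matrix \(\mathbf{A}_k(\gamma )= \mathbf{I}_{n_k} - \frac{\gamma }{1+\gamma n_k}\mathbf{1}_{n_k} \mathbf{1}_{n_k}^{{\mathrm {\scriptscriptstyle \top }}}\) used in the proof has a few elementary properties that follow directly from its definition. The sketch below checks three of them numerically; they are not necessarily the three equalities referred to in the proof. The helper names and the values of \(n_k\) and \(\gamma \) are hypothetical, and reading \(\mathbf{I}_{n_k}+\gamma \mathbf{1}_{n_k}\mathbf{1}_{n_k}^{{\mathrm {\scriptscriptstyle \top }}}\) as a scaled within-area covariance matrix is an assumption about the model.

```python
def a_matrix(n_k, gamma):
    """A_k(gamma) = I - gamma / (1 + gamma * n_k) * 1 1^T as nested lists."""
    c = gamma / (1.0 + gamma * n_k)
    return [[(1.0 if i == j else 0.0) - c for j in range(n_k)]
            for i in range(n_k)]

def matmul(a, b):
    """Plain-Python matrix product of two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

n_k, gamma = 4, 0.7  # hypothetical area sample size and gamma value
A = a_matrix(n_k, gamma)

# Property 1: A_k(gamma) is the inverse of I + gamma * 1 1^T.
V = [[(1.0 if i == j else 0.0) + gamma for j in range(n_k)]
     for i in range(n_k)]
product = matmul(A, V)

# Property 2: A_k(gamma) 1 = 1 / (1 + gamma * n_k), so every row sum is equal.
row_sums = [sum(row) for row in A]

# Property 3: y^T A_k(gamma) y = sum(y_j^2) - gamma/(1 + gamma*n_k) * (sum y_j)^2.
y = [1.0, -2.0, 0.5, 3.0]
quad_form = sum(y[i] * A[i][j] * y[j] for i in range(n_k) for j in range(n_k))
closed_form = sum(v * v for v in y) - gamma / (1.0 + gamma * n_k) * sum(y) ** 2
```

Property 1 is the Sherman–Morrison formula applied to a rank-one update of the identity, which is why no numerical matrix inversion is needed when working with \(\mathbf{A}_k(\gamma )\).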

Lemma 1

Under the assumptions for the transformed NER model, it holds that

where \({\mathbb {E}}_{x, \varepsilon }\) and \({\mathbb {C}\mathrm{ov}}_{x, \varepsilon }\) denote expectation and covariance conditional on \((\mathbf{x}, \varepsilon )\).

Proof

Denote the left-hand side of Eq. (24) by \(\Delta \). By assumption, the response \(y_{kj}^{(\lambda _0)} \), conditional on \(v_k\) and \(\mathbf{x}_{kj}\), follows \(N(\mathbf{x}_{kj}^{\mathrm {\scriptscriptstyle \top }}\beta +v_k, \sigma _e^2)\). Since \({\mathbb {E}}(\varepsilon _{kj})=0\) and \( z_{kj} = y_{kj}^{(\lambda _0)} \log (|y_{kj}|) = \lambda _0^{-1} y_{kj}^{(\lambda _0)} \log (|y_{kj}^{(\lambda _0)} |), \) it follows that

where we write \(a=\mathbf{x}_{kj}^{\mathrm {\scriptscriptstyle \top }}\beta +v_k\) for short. With the substitutions \(u=t/\sigma _e\) and \(b=a/\sigma _e\), we further have

Using \(d\{\phi (u-b)u\}= \{1- u(u-b) \} \phi (u-b) du \) and integration by parts, we have

\(\square \)
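Two identities used in the proof of Lemma 1 can be checked numerically. The first is the product-rule differential \(d\{\phi (u-b)u\}= \{1- u(u-b) \} \phi (u-b) du\), which follows from \(\phi '(x)=-x\phi (x)\). The second is the pair of expressions for the derivative of the transformed response with respect to \(\lambda \). The sketch below is only a sanity check: it assumes a sign-power transformation \(\mathrm{sign}(y)|y|^{\lambda }\), which is consistent with the stated derivative but is not shown in this section, and all function names and test points are hypothetical.

```python
import math

def phi(u):
    """Standard normal density."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

def central_diff(f, x, step=1e-5):
    """Symmetric finite-difference approximation to f'(x)."""
    return (f(x + step) - f(x - step)) / (2.0 * step)

def h(y, lam):
    """Assumed sign-power transformation: sign(y) * |y|**lam."""
    return math.copysign(abs(y) ** lam, y)

# Identity 1: d/du { phi(u - b) * u } = {1 - u (u - b)} * phi(u - b).
b = 0.8
max_gap = max(
    abs(central_diff(lambda t: phi(t - b) * t, u)
        - (1.0 - u * (u - b)) * phi(u - b))
    for u in [-2.0, -0.5, 0.0, 1.0, 2.5]
)

# Identity 2: d h(y; lam) / d lam = h * log|y| = h * log|h| / lam.
y, lam0 = -2.3, 0.6
z_numeric = central_diff(lambda lam: h(y, lam), lam0)
z_log_y = h(y, lam0) * math.log(abs(y))
z_log_h = h(y, lam0) * math.log(abs(h(y, lam0))) / lam0
```

Both checks rely on central differences, so agreement is expected only up to finite-difference error rather than to machine precision.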

Cite this article

Li, H., Liu, Y. & Zhang, R. Small area estimation under transformed nested-error regression models. Stat Papers 60, 1397–1418 (2019). https://doi.org/10.1007/s00362-017-0879-7

DOI: https://doi.org/10.1007/s00362-017-0879-7

Keywords

- Empirical best linear unbiased prediction

- (Adjusted) empirical likelihood

- Nested error regression model

- Small area estimation

- Transformed nested error regression model