Abstract

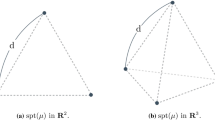

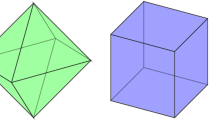

We consider functionals measuring the dispersion of a d-dimensional distribution which are based on the volumes of simplices of dimension \(k\le d\) formed by \(k+1\) independent copies and raised to some power \(\delta \). We study properties of extremal measures that maximize these functionals. In particular, for positive \(\delta \) we characterize their support and for negative \(\delta \) we establish connection with potential theory and motivate the application to space-filling design for computer experiments. Several illustrative examples are presented.

Similar content being viewed by others

References

Audze P, Eglais V (1977) New approach for planning out of experiments. Probl Dyn Strengths 35:104–107

Björck G (1956) Distributions of positive mass, which maximize a certain generalized energy integral. Arkiv för Matematik 3(21):255–269

Fedorov VV (1972) Theory of optimal experiments. Academic Press, New York

Hardin DP, Saff EB (2004) Discretizing manifolds via minimum energy points. Notices AMS 51(10):1186–1194

Johnson ME, Moore LM, Ylvisaker D (1990) Minimax and maximin distance designs. J Stat Plan Inference 26:131–148

Landkof NS (1972) Foundations of modern potential theory. Springer, Berlin

McKay MD, Beckman RJ, Conover WJ (1979) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 21(2):239–245

Morris MD, Mitchell TJ (1995) Exploratory designs for computational experiments. J Stat Plan Inference 43:381–402

Pronzato L, Müller WG (2012) Design of computer experiments: space filling and beyond. Stat Comput 22:681–701

Pronzato L, Pázman A (2013) Design of experiments in nonlinear models. Asymptotic normality, optimality criteria and small-sample properties. LNS 212, Springer, New York

Pronzato L, Wynn HP, Zhigljavsky A (2016) Extended generalised variances, with applications. Bernoulli (to appear). arXiv preprint arXiv:1411.6428

Saff EB (2010) Logarithmic potential theory with applications to approximation theory. Surv Approx Theory 5(14):165–200

Schilling RL, Song R, Vondracek Z (2012) Bernstein functions: theory and applications. de Gruyter, Berlin/Boston

Zhigljavsky AA, Dette H, Pepelyshev A (2010) A new approach to optimal design for linear models with correlated observations. J Am Stat Assoc 105(491):1093–1103

Acknowledgments

The work of the first author was partly supported by the ANR project 2011-IS01-001-01 DESIRE (DESIgns for spatial Random fiElds). The third author was supported by the Russian Science Foundation, project Nb. 15-11-30022 “Global optimization, supercomputing computations, and application”.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Lemma 2

Consider matrix A given by (2). The Laplacian of \(\det ^{\alpha }(A)\) considered as a function of \(x_1\) is

where \({\mathbf 1}_k=(1,\ldots ,1)^\top \in \mathbb {R}^k\).

Proof

We have

where \({\partial A{/}{\partial } \{x_1\}_i = -[{{\mathbf 1}_k \Delta _i^\top +\Delta _i{\mathbf 1}_k^\top }]}\) and \({\partial ^2 A{/}{\partial } \{x_1\}_i^2 =2 {\mathbf 1}_k{\mathbf 1}_k^\top }\), with \(\Delta _i=(\{x_2-x_1\}_i,\ldots ,\{x_{k+1}-x_1\}_i)^\top \in \mathbb {R}^k\). This gives

Noting that \(\sum _{i=1}^d \Delta _i \Delta _i^\top =A\), we have \(\sum _{i=1}^d \Delta _i^\top A^{-1}\Delta _i=\text{ trace }(I_k)=k\) and obtain

and

Now,

which finally gives (11). \(\square \)

A subgradient-type algorithm to maximize \({\widehat{\mathscr {D}}}_{k,-\infty }(\cdot )\).

Consider a design \(X_n=(x_1,\ldots ,x_n)\), with each \(x_i\in {\mathscr {X}}\), a convex subset of \(\mathbb {R}^d\), as a vector in \(\mathbb {R}^{n\times d}\). The function \({\widehat{\mathscr {D}}}_{k,-\infty }(\cdot )\) defined in (10) is not concave (due to the presence of \(\min \)), but is Lipschitz and thus differentiable almost everywhere. At points \(X_n\) where it fails to be differentiable, we consider any particular gradient from the subdifferential,

where \(x_{j_1},\ldots ,x_{j_{k+1}}\) are such that \({\mathscr {V}}_k(x_{j_1},\ldots ,x_{j_{k+1}})={\widehat{\mathscr {D}}}_{k,-\infty }(X_n)\) and where \(\nabla v_{j_1,\ldots ,j_{k+1}}(X_n)\) denotes the usual gradient of the function \({\mathscr {V}}_k(x_{j_1},\ldots ,x_{j_{k+1}})\). Our subgradient-type algorithm then corresponds to the following sequence of iterations, where the current design \(X_n^{(t)}\) is updated into

where \(P_{\mathscr {X}}[\cdot ]\) denotes the orthogonal projection on \({\mathscr {X}}\) and \(\gamma _t>0\), \(\gamma _t \searrow 0\), \(\sum _t \gamma _t=\infty \), \(\sum _t \gamma _t^2 < \infty \).

Direct calculation gives

where

so that

for \(j\in \{j_1,\ldots ,j_{k+1}\}\), \(j\ne j_1\), and

Rights and permissions

About this article

Cite this article

Pronzato, L., Wynn, H.P. & Zhigljavsky, A. Extremal measures maximizing functionals based on simplicial volumes. Stat Papers 57, 1059–1075 (2016). https://doi.org/10.1007/s00362-016-0767-6

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00362-016-0767-6