Abstract

In the framework of censored regression models the random errors are routinely assumed to have a normal distribution, mainly for mathematical convenience. However, this method has been criticized in the literature because of its sensitivity to deviations from the normality assumption. Here, we first establish a new link between the censored regression model and a recently studied class of symmetric distributions, which extend the normal one by the inclusion of kurtosis, called scale mixtures of normal (SMN) distributions. The Student-t, Pearson type VII, slash, contaminated normal, among others distributions, are contained in this class. A member of this class can be a good alternative to model this kind of data, because they have been shown its flexibility in several applications. In this work, we develop an analytically simple and efficient EM-type algorithm for iteratively computing maximum likelihood estimates of the parameters, with standard errors as a by-product. The algorithm has closed-form expressions at the E-step, that rely on formulas for the mean and variance of certain truncated SMN distributions. The proposed algorithm is implemented in the R package SMNCensReg. Applications with simulated and a real data set are reported, illustrating the usefulness of the new methodology.

Similar content being viewed by others

References

Akaike H (1974) A new look at the statistical model identification. Autom Control IEEE Trans 19:716–723

Arellano-Valle R, Castro L, González-Farías G, Muñoz-Gajardo K (2012) Student-t censored regression model: properties and inference. Stat Methods Appl 21:453–473

Arellano-Valle R. B (1994) Distribuições elípticas: propriedades, inferência e aplicações a modelos de regressão. Ph.D. thesis, Instituto de Matemática e Estatística, Universidade de São Paulo, in portuguese

Bai ZD, Krishnaiah PR, Zhao LC (1989) On rates of convergence of efficient detection criteria in signal processing with white noise. Inform Theory IEEE Trans 35:380–388

Barros M, Galea M, González M, Leiva V (2010) Influence diagnostics in the Tobit censored response model. Stat Methods Appl 19:716–723

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–38

Fang KT, Zhang YT (1990) Generalized multivariate analysis. Springer, Berlin

Garay A. M, Lachos V, Massuia M. B (2013) SMNCensReg: fitting univariate censored regression model under the scale mixture of normal distributions. R package version 2.2. http://CRAN.R-project.org/package=SMNCensReg

Genç AI (2012) Moments of truncated normal/independent distributions. Stat Pap 54:741–764

Greene W (2012) Econometric analysis. Prentice Hall, New York

Ibacache-Pulgar G, Paula G (2011) Local influence for Student-t partially linear models. Comput Stat Data Anal 55:1462–1478

Kim HJ (2008) Moments of truncated Student- distribution. J Korean Stat Soc 37:81–87

Labra FV, Garay AM, Lachos VH, Ortega EMM (2012) Estimation and diagnostics for heteroscedastic nonlinear regression models based on scale mixtures of skew-normal distributions. J Stat Plan Inference 142:2149–2165

Lachos VH, Ghosh P, Arellano-Valle RB (2010) Likelihood based inference for skew-normal independent linear mixed models. Stat Sin 20:303–322

Lange KL, Little R, Taylor J (1989) Robust statistical modeling using t distribution. J Am Stat Assoc 84:881–896

Lee G, Scott C (2012) EM algorithms for multivariate Gaussian mixture models with truncated and censored data. Comput Stat Data Anal 56:2816–2829

Liu C, Rubin DB (1994) The ECME algorithm: a simple extension of EM and ECM with faster monotone convergence. Biometrika 80:267–278

Lin TI (2010) Robust mixture modeling using multivariate skew tădistributions. Stat Comput 20:343–356

Louis TA (1982) Finding the observed information matrix when using the EM algorithm. J R Stat Soc 44:226–233

Massuia MB, Cabral CRB, Matos LA, Lachos VH (2014) Influence diagnostics for Student-t censored linear regression models. Statistics 85:1–21. doi:10.1080/02331888.2014.958489

Meilijson I (1989) A fast improvement to the EM algorithm to its own terms. J R Stat Soc Ser B 51:127–138

Meng XL, Rubin BD (1993) Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80:267–278

Meza C, Osorio F, la Cruz RD (2012) Estimation in nonlinear mixed-effects models using heavy-tailed distributions. Stat Comput 22:121–139

Mroz TA (1987) The sensitivity of an empirical model of married women’s hours of work to economic and statistical assumptions. Econometrica 55:765–799

Ortega EMM, Bolfarine H, Paula GA (2003) Influence diagnostics in generalized log-gamma regression models. Comput Stat Data Anal 42:165–186

Osorio F, Paula GA, Galea M (2007) Assessment of local influence in elliptical linear models with longitudinal structure. Comput Stat Data Anal 51:4354–4368

R Core Team (2015) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, http://www.R-project.org

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Therneau TM, Grambsch PM, Fleming RT (1990) Martingale-based residuals for survival models. Biometrika 77(1):147–160

Villegas C, Paula G, Cysneiros F, Galea M (2012) Influence diagnostics in generalized symmetric linear models. Comput Stat Data Anal 59:161–170

Wei CG, Tanner MA (1990) Posterior computations for censored regression data. J Am Stat Assoc 85:829–839

Wu L (2010) Mixed effects models for complex data. Chapman & Hall/CRC, Boca Raton

Acknowledgments

We thank the editor, associate editor, and two referees whose constructive comments led to an improved presentation of the paper. The research of Víctor H. Lachos was supported by Grant 305054/2011-2 from Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq-Brazil) and by Grant 2014/02938-9 from Fundação de Amparo à Pesquisa do Estado de São Paulo (FAPESP-Brazil). Celso R. B. Cabral was supported by CNPq (via the Universal and CT-Amazônia projects) and CAPES (via Project PROCAD 2007). The research of Aldo M. Garay is supported by Grant 161119/2012-3 from CNPq and by Grant 2013/21468-0 from FAPESP-Brazil and Heleno Bolfarine was supported by CNPq.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: Lemmas and corollary

The following Lemmas, provided by Kim (2008) and Genç (2012), are useful for evaluating some integrals used in this paper as well as for the implementation of the proposed EM-type algorithm.

Lemma 1

If \(Z\sim \text {TN}_{(a,b)}\left( 0,1\right) \), then

for \(k=-1,0,1,2,\ldots \)

Proof: See Lemma 2.3 in Kim (2008).

Lemma 2

Let U be a positive random variable. Then \({F}_{SMN}\left( a\right) =E_U\left[ \Phi \left( aU^{\frac{1}{2}}\right) \right] ,\) where \({F}_{SMN}(\cdot )\) denotes the cdf of a standard SMN random variable, that is, when \(\mu =0\) and \(\sigma ^2=1\).

Proof: See Lemma 3 in Genç (2012).

The following Corollary is a direct consequence of Proposition 1 given in Sect. 2.

Corollary 1

Let \(Y \sim \text {SMN}(\mu ,\sigma ^2, {\varvec{\nu }})\) with scale factor U and \(\mathcal{A}=(\text {a},\text {b})\). Then, for \(r \ge 1\),

where \(X \sim \text {SMN }(0,1,{\varvec{\nu }})\) and \(\mathcal{A}^*=\left( a^*,b^*\right) \), with \(a^*=\left( a-\mu \right) /\sigma \) and \(b^*=\left( b-\mu \right) /\sigma \).

Appendix 2: Derivations of quantities \(\text {E}_{\phi }\left( r,h\right) \) and \(\text {E}_{\Phi }\left( r,h\right) ~\)for SMN distributions

In this Appendix, we calculate the expressions for the expected values \(\text {E}_{\phi }\left( r,h\right) \) and \(\text {E}_{\Phi }\left( r,h\right) ,\) for \(r,h\ge 0\), given in Proposition 1.

1.1 Pearson type VII distribution (and the Student-t distribution)

In this case \(U\sim Gamma(\nu /2,\delta /2)\), with \(\nu >0~\text {and}~\delta >0.\) To facilitate the notation, let us make \(\alpha _1=(\nu +2r)/2\) and \(\alpha _2=(h^2+\delta )/2\). Then,

where the integrand in (26) is the pdf of a random variable \(U'\) with distribution \(Gamma\left( \alpha _1,\alpha _2\right) \).

where in (27) the expectation is computed with respect to \(U' \sim Gamma\left( \alpha _1,\delta /2\right) \) and \(F_{PVII}(\cdot )\) represents the cdf of the Pearson type VII distribution. Then, the result follows from Lemma 2. When \(\delta =\nu \), i.e., the Student-t distribution, we have that \(\text { E}_{\phi }\left( r,h\right) \) and \(\text { E}_{\Phi }\left( r,h\right) \) are given by

1.2 Slash distribution

In this case \(U \sim Beta(\nu ,1)\), with positive shape parameter \(\nu \), and

thus, considering \(\gamma ^{*}\left( a,x\right) =\int ^{x}_{0}e^{-t}t^{a-1}dt\), we obtain Eq. (28).

where the integrand in (29) is the expectation of the random variable \(\Phi (h U'^{\{ \frac{1}{2} \} })\), with \({U'}\sim ~\text {Beta}(\nu +r,1)\). Using Lemma 2, we obtain Eq. (30), where \(F_{SL}(\cdot )\) is the cdf of the slash distribution.

1.3 Contaminated normal distribution

where \(F_{CN}(\cdot )\) is the cdf of the contaminated normal distribution.

Appendix C. Details of the EM-type algorithm

In this Appendix, we derive the EM algorithm Eqs. (20)–(22). Let \({\varvec{\theta }}=({\varvec{\beta }}^{\top }, \sigma ^2, {\varvec{\nu }})\) be the vector with all parameters in the SMN-CR model and consider the notation given in Sect. 3.2. Denoting the complete-data likelihood by \(L(\cdot |\mathbf {y}_\text {obs}, \mathbf {y}_{L},\mathbf {u})\) and pdf’s in general by \(f(\cdot )\), we have that

Dropping unimportant constants, the complete-data log-likelihood function is given by

The Q-function at the E-step of the algorithm is given by

so we have

The expectations \(\mathcal{E}_{si}\big ({\varvec{\theta }}^{(k)}\big ) = \text {E}_{{\varvec{\theta }}^{(k)}}[U_i Y_i^s|y_{\text {obs}_i}],\,\,\, s=0,1,2\), used in the E-step of the algorithm, are computed considering the two possible cases: (i) when the observation i is uncensored and (ii) otherwise. In the former case we solve the problem using results obtained by Osorio et al. (2007). In the later case we use Proposition 1. Then, we have

In the CM-step, we take the derivatives of \(Q\big ({\varvec{\theta }}|{{\varvec{\theta }}}^{(k)}\big )\) with respect to \({\varvec{\beta }}\) and \(\sigma ^2\), i.e.,

The solution of \(\displaystyle \frac{\partial Q\big ({\varvec{\theta }}|{\varvec{\theta }}^{(k)}\big )}{\partial {\varvec{\beta }}} = 0\) is

The solution of \(\displaystyle \frac{\partial Q\big ({\varvec{\theta }}|{\varvec{\theta }}^{(k)}\big )}{\partial \sigma ^2} = 0\) is

For the CML-step, we estimate \({\varvec{\nu }}\) by maximizing the marginal log-likelihood, circumventing the (in general) complicated task of computing \(\text {E}_{{\varvec{\theta }}^{(k)}}[\log \left( U_i\right) |y_{\text {obs}_i}]\) and \(\text {E}_{{\varvec{\theta }}^{(k)}}[\log \left( f(U_i |{\varvec{\nu }})\right) |y_{\text {obs}_i}]\), i.e.,

Appendix D. Complementary results of the simulation studies: asymptotic properties

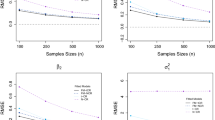

Figures 5 and 6 depict the average bias and the average MSE of \(\widehat{\beta }_1\), \(\widehat{\beta }_2\) and \(\widehat{\sigma ^2}\) for the levels of censoring \(p=25\,\%\) and \(p=45\,\%\), respectively.

Rights and permissions

About this article

Cite this article

Garay, A.M., Lachos, V.H., Bolfarine, H. et al. Linear censored regression models with scale mixtures of normal distributions. Stat Papers 58, 247–278 (2017). https://doi.org/10.1007/s00362-015-0696-9

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00362-015-0696-9