Abstract

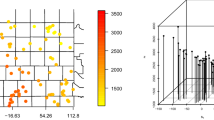

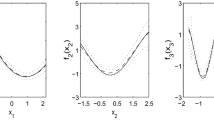

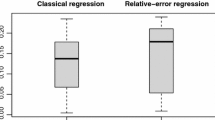

This paper considers a varying coefficient partially linear regression with spatial data. A general formulation is used to treat mean regression, median regression, quantile regression and robust mean regression in one setting. The parametric estimators of the model are obtained through piecewise local polynomial approximation of the nonparametric coefficient functions. The local estimators of the unknown coefficient functions are obtained by replacing the parameters in the model with their estimators and using local linear approximations. The asymptotic distribution of the estimator of the unknown parameter vector is established. The asymptotic distributions of the estimators of the unknown coefficient functions at both interior and boundary points are also derived. Finite sample properties of our procedures are studied through Monte Carlo simulations. A real-data example on spatial soil data is used to illustrate the proposed methodology.

References

Athreya KB, Pantula SG (1986) A note on strong mixing of ARMA processes. Stat Probab Lett 4:187–190

Carbon M, Tran LT, Wu B (1997) Kernel density estimation for random fields. Stat Probab Lett 36:115–125

Chen H (1988) Convergence rates for parametric components in a partly linear model. Ann Stat 16:136–146

Chen K, Jin Z (2006) Partial linear regression models for clustered data. J Am Stat Assoc 101:195–204

Cressie NAC (1991) Statistics for spatial data. Wiley, New York

Fan J, Huang T (2005) Profile likelihood inferences on semiparametric varying-coefficient partially linear models. Bernoulli 11:1031–1057

Gao J, Lu Z, Tjøstheim D (2006) Estimation in semiparametric spatial regression. Ann Stat 34:1395–1435

Hallin M, Lu Z, Tran LT (2001) Density estimation for spatial linear processes. Bernoulli 7:657–668

Hallin M, Lu Z, Tran LT (2004a) Density estimation for spatial processes: the L1 theory. J Multivar Anal 88:61–75

Hallin M, Lu Z, Tran LT (2004b) Local linear spatial regression. Ann Stat 32:2469–2500

Hallin M, Lu Z, Yu K (2009) Local linear spatial quantile regression. Bernoulli 15:659–686

Hampel FR, Ronchetti EM, Rousseeuw PJ, Stahel WA (1986) Robust statistics, the approach based on influence functions. Wiley, New York

Härdle W (1990) Applied nonparametric regression. Cambridge University Press, Cambridge

He X, Zhu Z, Fung WK (2002) Estimation in a semiparametric model for longitudinal data with unspecified dependence structure. Biometrika 89:579–590

Kai B, Li R, Zou H (2011) New efficient estimation and variable selection methods for semiparametric varying-coefficient partially linear models. Ann Stat 39:305–332

Kavousi A, Meshkani M, Mohammadzadeh M (2011) Spatial analysis of auto-multivariate lattice data. Stat Pap 52:937–952

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Koenker R, Bassett G (1978) Regression quantiles. Econometrica 46:33–50

Lee YK, Choi H, Park BU, Yu KS (2004) Local likelihood density estimation on random fields. Stat Probab Lett 68:347–357

Li D, Chen J, Lin Z (2011) Statistical inference in partially time-varying coefficient models. J Stat Plan Inference 141:995–1013

Lin Z, Li D, Gao J (2009) Local linear M-estimation in nonparametric spatial regression. J Time Ser Anal 30:286–314

Lu Z, Cheng P (2004) Spatial kernel regression estimation: weak consistency. Stat Probab Lett 68:125–136

Lu Z, Lundervold A, Tjøstheim D, Yao Q (2007) Exploring spatial nonlinearity using additive approximation. Bernoulli 13:447–472

Lu Z, Tang Q, Cheng L (2014) Estimating spatial quantile regression with functional coefficients: a robust semiparametric framework. Bernoulli 20:164–189

Ripley B (1981) Spatial statistics. Wiley, New York

Rosenblatt M (1956) A central limit theorem and a strong mixing condition. Proc Natl Acad Sci USA 42:43–47

Tang Q (2010) \(L_{1}\)-estimation in a semiparametric model with longitudinal data. J Stat Plan Inference 140:393–405

Tang Q, Cheng L (2009) B-spline estimation for varying coefficient regression with spatial data. Sci China Ser A 52(11):2321–2340

Tang Q, Cheng L (2012) Componentwise B-spline estimation for varying coefficient models with longitudinal data. Stat Pap 53:629–652

Tran LT (1990) Kernel density estimation on random field. J Multivar Anal 34:37–53

van der Vaart AW, Wellner JA (1996) Weak convergence and empirical processes: with applications to statistics. Springer, New York

Wang H, Zhu Z, Zhou J (2009) Quantile regression in partially linear varying coefficient models. Ann Stat 37:3841–3866

Xia Y, Zhang W, Tong H (2004) Efficient estimation for semivarying-coefficient models. Biometrika 91:661–681

Zhang W, Lee S, Song X (2002) Local polynomial fitting in semivarying coefficient model. J Multivar Anal 82:166–188

Zheng Y, Zhu J, Roy A (2010) Nonparametric Bayesian inference for the spectral density function of a random field. Biometrika 97:238–245

Acknowledgments

The author thanks the anonymous referees for their valuable comments and suggestions, which improved the earlier version of this paper. This work was supported by the National Natural Science Foundation of China (Grant No. 11071120).

Appendix: Proofs

Since the proof of Theorem 3.3 is similar to that of Theorem 3.2, we only give the proofs of Theorems 3.1 and 3.2. Let \(C\) denote a generic positive constant that does not depend on \(m\) and \(n\) and that may take a different value at each appearance. Let

and \(b_{0}=(b_{01}^{T},\ldots ,b_{0d_{1}}^{T})^{T}\), \(\tilde{G}_{k}=(\tilde{G}_{k1}^{T}, \ldots ,\tilde{G}_{kd_{1}}^{T})^{T}\), \(\tilde{G}=(\tilde{G}_{1},\ldots ,\tilde{G}_{d_{2}})\). Set \(\tilde{g}(X_{ij},U_{ij})^{T}=B_{ij}^{T}\tilde{G}\), \(\tilde{Z}_{ij}=N^{-1/2}[Z_{ij}-g^{*}(X_{ij},U_{ij})]\), \(\theta _{1}=N^{1/2}(\beta -\beta _{0})\), \(\tilde{B}_{ij}=(Nh_{0})^{-1/2}B_{ij}\), \(\theta _{2}=(Nh_{0})^{1/2}[(b-b_{0})+\tilde{G}(\beta -\beta _{0})]\), \(\theta =(\theta _{1}^{T},\theta _{2}^{T})^{T}\), \(V_{ij}=(\tilde{Z}_{ij}^{T},\tilde{B}_{ij}^{T})^{T}\) and \(e_{ij}=X_{ij}^{T}\alpha (U_{ij})-B_{ij}^{T}b_{0}+[g^{*}(X_{ij},U_{ij}) -\tilde{g}(X_{ij},U_{ij})]^{T}(\beta -\beta _{0})\). We consider the following new optimization problem

Clearly, \(\hat{\theta }_{1}=N^{1/2}(\hat{\beta }-\beta _{0})\). Let \(S_{N}(\theta )\) denote the objective function above and

Then

We first present several lemmas that are necessary to prove the theorems.

Lemma 6.1

Let \(\xi _{1}, \ldots , \xi _{n}\) be arbitrary scalar random variables such that \(\max _{1\le i\le n} E(|\xi _{i}|^{r})<\infty \) for some \(r\ge 1\). Then, we have

where \(C_{r}\) is a constant depending only on \(r\) and \(\max _{1\le i\le n} E(|\xi _{i}|^{r})\).

Proof

See Lemma 2.2.2 of van der Vaart and Wellner (1996). \(\square \)
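
For orientation, a bound of this type can be obtained from Jensen's inequality alone. The sketch below is an assumption about the general shape of such a maximal inequality, with the illustrative choice \(C_{r}=(\max _{1\le i\le n}E|\xi _{i}|^{r})^{1/r}\); it is not a restatement of the lemma's display.

\[
E\Big (\max _{1\le i\le n}|\xi _{i}|\Big )
\le \Big (E\max _{1\le i\le n}|\xi _{i}|^{r}\Big )^{1/r}
\le \Big (\sum _{i=1}^{n}E|\xi _{i}|^{r}\Big )^{1/r}
\le n^{1/r}\Big (\max _{1\le i\le n}E|\xi _{i}|^{r}\Big )^{1/r}.
\]

The first step is Jensen's inequality for the convex map \(t\mapsto t^{r}\) with \(r\ge 1\), the second bounds a maximum of nonnegative terms by their sum, and the third bounds each summand by the largest moment. No independence or mixing condition is needed, which is why the variables may be arbitrary.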

Lemma 6.2

Let \((R_{ij}: (i,j)\in \mathbf {Z}^{2})\) be a zero-mean real-valued random field such that \(\sup _{1\le i\le m,1\le j\le n}|R_{ij}|\le V_{0}<\infty \) for some positive constant \(V_{0}\). Then for each \(\tilde{q}=(\tilde{q}_{1},\tilde{q}_{2})\) with integer-valued coordinates \(\tilde{q}_{1}\in [1,m/2]\), \(\tilde{q}_{2}\in [1,n/2]\) and for each \(\varepsilon >0\) we have

where \(q^{*}=\tilde{q}_{1}\tilde{q}_{2}\), \(\tilde{p}=(\tilde{p}_{1},\tilde{p}_{2})\), \(\tilde{p}_{1}=m/(2\tilde{q}_{1})\), \(\tilde{p}_{2}=n/(2\tilde{q}_{2})\) and \(v^{2}(\tilde{q})=8\sigma ^{2}(\tilde{q})/{p^{*}}^{2}+V_{0}\varepsilon \) with \(p^{*}=\tilde{p}_{1}\tilde{p}_{2}\), \(\sigma ^{2}(\tilde{q})=\min _{\mathbf {i},\mathbf {j}}E(\sum _{(i,j)\in A_{\mathbf {i}\mathbf {j}}}R_{ij})^{2}\) and \(A_{\mathbf {i}\mathbf {j}}= \prod _{k=1}^{2}((i_{k}+2j_{k})\tilde{p}_{k}, (i_{k}+2j_{k}+1)\tilde{p}_{k}]\). The minimisation in the defining equation for \(\sigma ^{2}(\tilde{q})\) is taken over all pairs of \(2\)-tuple indices \(\mathbf {i}=(i_{1},i_{2})\) and \(\mathbf {j}=(j_{1},j_{2})\) with \(i_{k}=0,1\) and \(j_{k}=0, 1, \ldots , \tilde{q}_{k}- 1\).

Proof

The proof can be found in Lee et al. (2004). \(\square \)
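
The blocking scheme in Lemma 6.2 can be made concrete with a small sketch. The code below enumerates the index sets \(A_{\mathbf {i}\mathbf {j}}\) on an \(m\times n\) grid and counts how often each site is covered. The function names `spatial_blocks` and `coverage_counts` are hypothetical, and the sketch assumes for simplicity that \(2\tilde{q}_{1}\) divides \(m\) and \(2\tilde{q}_{2}\) divides \(n\), so that \(\tilde{p}_{1}\) and \(\tilde{p}_{2}\) are integers.

```python
from itertools import product


def spatial_blocks(m, n, q1, q2):
    """Map each index pair (i, j) to the grid sites contained in A_ij.

    Assumption of this sketch: 2*q1 divides m and 2*q2 divides n, so the
    block side lengths p1 = m/(2*q1) and p2 = n/(2*q2) are integers.
    """
    if m % (2 * q1) or n % (2 * q2):
        raise ValueError("this sketch needs 2*q1 | m and 2*q2 | n")
    p1, p2 = m // (2 * q1), n // (2 * q2)
    blocks = {}
    for i in product((0, 1), repeat=2):              # i_k in {0, 1}
        for j in product(range(q1), range(q2)):      # j_k in {0, ..., q_k - 1}
            lo1 = (i[0] + 2 * j[0]) * p1             # left end, open
            lo2 = (i[1] + 2 * j[1]) * p2
            # Half-open interval (lo, lo + p] contains integers lo+1, ..., lo+p.
            blocks[(i, j)] = [
                (a, b)
                for a in range(lo1 + 1, lo1 + p1 + 1)
                for b in range(lo2 + 1, lo2 + p2 + 1)
            ]
    return blocks


def coverage_counts(m, n, q1, q2):
    """Count how many blocks contain each site of the m-by-n grid."""
    counts = {(a, b): 0 for a in range(1, m + 1) for b in range(1, n + 1)}
    for sites in spatial_blocks(m, n, q1, q2).values():
        for site in sites:
            counts[site] += 1
    return counts
```

Under the divisibility assumption there are \(4\tilde{q}_{1}\tilde{q}_{2}\) blocks of \(\tilde{p}_{1}\tilde{p}_{2}\) sites each, and every site of the grid lies in exactly one of them. For a fixed \(\mathbf {i}\), the blocks indexed by different \(\mathbf {j}\) are separated by gaps of width \(\tilde{p}_{k}\) in each direction, which is what allows the mixing condition to be applied.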

Lemma 6.3

Under the assumptions of Theorem 3.1, for any sufficiently large \(L\), it holds that

Proof

Let \(T_{ij}(\theta )=\rho (\varepsilon _{ij}+e_{ij}-V_{ij}^{T}\theta )- \rho (\varepsilon _{ij}+e_{ij})+V_{ij}^{T}\theta \psi (\varepsilon _{ij})\) and \(R_{ij}(\theta )=T_{ij}(\theta )-E(T_{ij}(\theta )|X_{ij},Z_{ij},U_{ij})\). Then \(R_{N}(\theta )=\sum _{i=1}^{m}\sum _{j=1}^{n}R_{ij}(\theta )\). Observe that

By Assumption 4 and Lemma 6.1, we get

Hence, for any \(\epsilon >0\), there exists a sufficiently large constant \(L^{*}\) such that

When \(\max _{ij}\Vert X_{ij}\Vert \le L^{*}N^{1/2\kappa }\) and \(\max _{ij}\Vert Z_{ij}\Vert \le L^{*}N^{1/2\kappa }\), by Assumption 5, for \(\theta \) such that \(\Vert \theta \Vert \le L\), it holds that

By Assumption 1, we have

where \(A_{\mathbf {0}\mathbf {0}}=(0, \tilde{p}_{1}]\times (0, \tilde{p}_{2}]\). Using Assumptions 1, 5 and 6 and Taylor expansion, we obtain

Let \(c_{Nk}=[M_{N}^{\delta /(2+\delta )a}]\) for \(k=1,2\), where \(a>2(4+\delta )/(2+\delta )\) is a constant. Let the set \(\{(i,j)\ne (i',j')\in A_{\mathbf {0}\mathbf {0}}\}\) be split into the following two parts

By (6.6), we get

Using Lemma 5.1 of Hallin et al. (2004b) and Assumptions 1, 3 and 5 and the fact that \(\chi _{k}(u)\chi _{l}(u)=0\) for \(k, l=1,\ldots ,M_{N},k\ne l\), we deduce that

Using arguments similar to those used in the proof of Lemma 6.2 of Hallin et al. (2004b) and noting that \(\varphi (t)=O(e^{-\varsigma t})\) and \(c_{Nk}=[M_{N}^{\delta /(2+\delta )a}]\), we have

where \(0<\varsigma _{1}<\varsigma \) is some constant. Therefore

Combining (6.3)–(6.6), we conclude that

Set \(\Theta =\{\theta =(\theta _{1},\ldots ,\theta _{K_{N}}): \Vert \theta \Vert \le L\}\) and \(|\theta |=\max _{1\le i\le K_{N}}|\theta _{i}|\), where \(K_{N}=d_{2}+d_{1}M_{N}(s+1)\). Let \(\Theta \) be divided into \(J_{N}\) disjoint parts \(\Theta _{1},\ldots , \Theta _{J_{N}}\) such that for any \(\pi _{k}\in \Theta _{k},1\le k\le J_{N}\) and any sufficiently small \(\varepsilon >0\),

This can be done with \(J_{N}\le ((4CL^{*}M_{N}^{\frac{3}{2}}N^{\frac{1}{2}+\frac{1}{2\kappa }}L)/\varepsilon )^{K_{N}}\). Let \(\varepsilon _{N1}=(M_{N}\log N)^{3}/m^{1-1/\kappa }\) and \(\varepsilon _{N2}=(M_{N}\log N)^{3}/n^{1-1/\kappa }\). By Assumption 6, we have \(\varepsilon _{N1}\rightarrow 0\) and \(\varepsilon _{N2}\rightarrow 0\). Take \(\tilde{q}_{1}=[(M_{N}\log N)^{1/2}m^{1/2+1/(2\kappa )}/\varepsilon _{N1}^{1/4}]\), \(\tilde{q}_{2}=[(M_{N}\log N)^{1/2}n^{1/2+1/(2\kappa )}/\varepsilon _{N2}^{1/4}]\), then \(\tilde{p}_{1}=O(M_{N}\log N/\varepsilon _{N1}^{1/4})\) and \(\tilde{p}_{2}=O(M_{N}\log N/\varepsilon _{N2}^{1/4})\). Therefore, using Lemma 6.1, (6.2) and (6.7), we deduce that

where \(V_{0}=CLL^{*}M_{N}N^{-1/2+1/(2\kappa )}\). Now Lemma 6.3 follows from (6.3) and the above expression. \(\square \)
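
The term \(T_{ij}(\theta )\) in the proof above is the remainder left after removing the first-order part \(-V_{ij}^{T}\theta \psi (\varepsilon _{ij})\) from the loss increment. The sketch below evaluates it for one observation. It assumes the Huber loss as a concrete \(\rho \) with \(\psi =\rho '\); this choice, the tuning constant `c = 1.345` and the function names are illustrative assumptions, since the argument in the text is stated for a general \(\rho \).

```python
def huber_rho(t, c=1.345):
    """Huber loss: quadratic for |t| <= c, linear beyond (assumed example of rho)."""
    a = abs(t)
    return 0.5 * t * t if a <= c else c * a - 0.5 * c * c


def huber_psi(t, c=1.345):
    """Derivative of the Huber loss: t clipped to [-c, c]."""
    return max(-c, min(c, t))


def remainder_T(eps, e, v, rho=huber_rho, psi=huber_psi):
    """T = rho(eps + e - v) - rho(eps + e) + v * psi(eps).

    eps : model error, e : approximation error, v : the scalar V^T theta.
    """
    return rho(eps + e - v) - rho(eps + e) + v * psi(eps)


if __name__ == "__main__":
    # With e = 0 the remainder is of second order in v on the quadratic zone.
    for v in (0.2, 0.1, 0.05):
        print(v, remainder_T(0.3, 0.0, v))
```

When \(e=0\) and all arguments stay inside the quadratic zone of the Huber loss, the remainder equals \(v^{2}/2\) exactly; when they stay inside one linear zone it vanishes. This second-order smallness is what makes the centred sum \(R_{N}(\theta )\) negligible uniformly over bounded \(\theta \).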

Lemma 6.4

Suppose that Assumptions 1–6 hold. Then \(\Vert \hat{\theta }\Vert =O_{p}(M_{N}^{1/2})\).

Proof

By Assumptions 2 and 3, \(\max _{1\le i\le m,1\le j\le n}(|e_{ij}|+|M_{N}^{1/2}V_{ij}^{T}\theta |) =o(1)\). Then, by Assumption 5, we obtain

where \(\tilde{c}_{1}\) is a positive constant. Note that \(\tilde{Z}_{ij}^{T}\theta _{1}\tilde{B}_{ij}^{T}\theta _{2}= \frac{1}{Nh_{0}^{1/2}}\theta _{1}^{T}[Z_{ij}-g^{*}(X_{ij},U_{ij})] B_{ij}^{T}\theta _{2}\) and \(Z_{ij}-g^{*}(X_{ij},U_{ij})=Z_{ij}-E(Z_{ij}|X_{ij},U_{ij})+E(Z_{ij}|X_{ij},U_{ij})-g^{*}(X_{ij},U_{ij})\). Using the fact that \(g^{*}(x,u)\) is the projection of \(E(Z_{ij}|X_{ij}=x,U_{ij}=u)\) onto the varying coefficient functional space \(\mathcal {Y}\) and \(B_{ij}^{T}\theta _{2}\in \mathcal {Y}\), we have \(E\phi (X_{ij},U_{ij})[Z_{ij}-g^{*}(X_{ij},U_{ij})] B_{ij}^{T}\theta _{2}=0\). Let \(Q_{N}=\{(i,j): 1\le i\le m, 1\le j\le n\}\) and

Similar to the proof of (6.9), we deduce that

Using arguments similar to those used in the proof of Lemma 3 of Tang and Cheng (2009), we can prove that there are positive constants \(\tilde{C}_{1}\) and \(\tilde{C}_{2}\) such that, except on an event whose probability tends to zero, all the eigenvalues of \(\sum _{i=1}^{m}\sum _{j=1}^{n}\tilde{B}_{ij}\tilde{B}_{ij}^{T}\) fall between \(\tilde{C}_{1}\) and \(\tilde{C}_{2}\). Hence by Assumption 6 and (6.2), we conclude that

where \(\tilde{c}_{2}\) is a positive constant. It is easy to prove that

Hence

Combining (6.1), (6.12), (6.13) and Lemma 6.3, for sufficiently large \(L\), we deduce that

which implies, by the convexity of \(\rho \), that

Therefore \(\Vert \hat{\theta }\Vert =O_{p}(M_{N}^{1/2})\). The proof of Lemma 6.4 is finished. \(\square \)

Proof of Theorem 3.1

Let \(\eta =\Lambda ^{-1}\sum _{i=1}^{m}\sum _{j=1}^{n} \psi (\varepsilon _{ij})\tilde{Z}_{ij}\). Using arguments similar to those used in the proof of Lemma 6 of Tang and Cheng (2009), we have \(\sum _{i=1}^{m}\sum _{j=1}^{n} \psi (\varepsilon _{ij})\tilde{Z}_{ij} \longrightarrow _{d} N(0,\Delta )\). Hence, to prove Theorem 3.1, it suffices to prove that for any \(\epsilon >0,P\{\Vert \hat{\theta }_{1}-\eta \Vert <\epsilon \}\rightarrow 1\). Set \(\tilde{S}_{ij}(\theta _{1},\theta _{2})= \rho (\varepsilon _{ij}+e_{ij}-\tilde{Z}_{ij}^{T}\theta _{1}-\tilde{B}_{ij}^{T}\theta _{2}) -\rho (\varepsilon _{ij}+e_{ij}-\tilde{B}_{ij}^{T}\theta _{2})\) and \(\tilde{S}_{N}(\theta _{1},\theta _{2})=\sum _{i=1}^{m}\sum _{j=1}^{n}\tilde{S}_{ij}(\theta _{1},\theta _{2})\). Using the convexity of the absolute-valued function \(|\cdot |\), we need only to show that

By Lemma 6.4 and the definition of \(\eta \), we have \(\Vert \hat{\theta }_{2}\Vert =O_{p}(M_{N}^{1/2})\) and \(\Vert \eta \Vert =O_{p}(1)\). So it suffices to show that for any sufficiently large \(L>0,L^{'}>0\)

Set \(\tilde{\Gamma }_{N}(\theta _{1},\theta _{2}) =\sum _{i=1}^{m}\sum _{j=1}^{n}E(\tilde{S}_{ij}(\theta _{1},\theta _{2})|X_{ij},Z_{ij},U_{ij})\) and

Then

By Assumptions 1 and 5, we have

By arguments similar to those used in the proof of Lemma 3 of Tang and Cheng (2009), we deduce that

Similar to the proof of (6.9), we get \(\sum _{i=1}^{m}\sum _{j=1}^{n}\phi (X_{ij},U_{ij})\tilde{Z}_{ij}^{T}\theta _{1}e_{ij}=o_{p}(1)\). Hence, by (6.11), we have

Using the fact that \(2\theta _{1}^{T}\Lambda \eta =\theta _{1}^{T}\Lambda \theta _{1}-(\theta _{1}-\eta )^{T}\Lambda (\theta _{1}-\eta )+\eta ^{T}\Lambda \eta \) and that \(\Vert \theta _{1}-\eta \Vert =\epsilon \), we have

where \(\lambda _{\min }\) is the minimum eigenvalue of \(\Lambda \). By (6.16), we get

It follows from (6.17) and (6.18) that

Using arguments similar to those in the proof of Lemma 6.3, it can be shown that \(\sup _{\Vert \theta _{1}\Vert \le \tilde{L},\Vert \theta _{2}\Vert \le LM_{N}^{1/2}}|\tilde{R}_{N}(\theta _{1},\theta _{2})|=o_{p}(1).\) Hence (6.14) holds and consequently Theorem 3.1 follows.
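
The algebraic identity used in the proof of Theorem 3.1 is a completion of the square. The short derivation below spells it out, assuming \(\Lambda \) is symmetric and positive definite (an assumption made here for the illustration, consistent with the use of its minimum eigenvalue).

\[
(\theta _{1}-\eta )^{T}\Lambda (\theta _{1}-\eta )
=\theta _{1}^{T}\Lambda \theta _{1}-2\theta _{1}^{T}\Lambda \eta +\eta ^{T}\Lambda \eta ,
\]

so that, whenever \(\Vert \theta _{1}-\eta \Vert =\epsilon \),

\[
\theta _{1}^{T}\Lambda \theta _{1}-2\theta _{1}^{T}\Lambda \eta
=(\theta _{1}-\eta )^{T}\Lambda (\theta _{1}-\eta )-\eta ^{T}\Lambda \eta
\ge \lambda _{\min }\epsilon ^{2}-\eta ^{T}\Lambda \eta .
\]

The quadratic part of the objective therefore exceeds its value at \(\theta _{1}=\eta \) by at least \(\lambda _{\min }\epsilon ^{2}\) on the sphere of radius \(\epsilon \) around \(\eta \), which is the separation that the convexity argument exploits.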

Proof of Theorem 3.2

Under Assumption 2, by Taylor expansion we have

for \(|U_{ij}-u_{0}|\le Mh\), where \(|\xi _{ijr}-u_{0}|<|U_{ij}-u_{0}|\). Let \(a_{0}=(a_{10},\ldots ,a_{d_{1}0})^{T}\), \(a_{1}=(a_{11},\ldots ,a_{d_{1}1})^{T}\), \(\alpha ''(\xi _{ij})=(\alpha _{1}^{''}(\xi _{ij1}),\ldots ,\alpha _{d_{1}}^{''}(\xi _{ijd_{1}}))^{T}\), and \(e_{ij}^{*}=\frac{1}{2}(U_{ij}-u_{0})^{2}\alpha ''(\xi _{ij})^{T}X_{ij}\), \(D_{ij}=(Nh)^{-1/2}(1,h^{-1}(U_{ij} -u_{0}))^{T}\otimes X_{ij}\), \(\vartheta =(Nh)^{1/2}((a_{0}-\alpha (u_{0}))^{T},h(a_{1}-\alpha '(u_{0}))^{T})^{T}\), \(\bar{Z}_{ij}=N^{-1/2}Z_{ij}\), where \(\otimes \) is the Kronecker product. We consider the following new optimization problem

Clearly, \(\hat{\vartheta }=(Nh)^{1/2}((\hat{a}_{0}-\alpha (u_{0}))^{T},h(\hat{a}_{1}-\alpha '(u_{0}))^{T})^{T}\). Let \(\vartheta ^{*}= \frac{1}{2}N^{1/2}h^{5/2}P^{*}\otimes \alpha ^{''}(u_{0})\) and \(\tilde{\vartheta }=\vartheta ^{*}+\frac{1}{f(u_{0})}(P^{-1}\otimes \Omega ^{-1}(u_{0})) \sum _{i=1}^{m}\sum _{j=1}^{n}D_{ij}\psi (\varepsilon _{ij})K(\frac{U_{ij}-u_{0}}{h})\), where \(P^{*}=(\mu _{0}^{-1}\mu _{2},0)^{T}\) and \(P=diag(\mu _{0},\mu _{2})\). Under the assumptions of Theorem 3.2, using arguments similar to those used in the proof of Lemma 3.1 of Hallin et al. (2004b), we can show that

where \(\tilde{P}=diag(\nu _{0},\nu _{2})\). Therefore, to complete the proof of Theorem 3.2, it suffices to prove that for any \(\epsilon >0\),

Set \(S_{ij}^{*}(\vartheta ,\theta _{1})= [\rho (\varepsilon _{ij}+e_{ij}^{*}-D_{ij}^{T}\vartheta -\bar{Z}_{ij}^{T}\theta _{1}) -\rho (\varepsilon _{ij}+e_{ij}^{*}-\bar{Z}_{ij}^{T}\theta _{1})]K(\frac{U_{ij}-u_{0}}{h})\), \(S_{N}^{*}(\vartheta ,\theta _{1})=\sum _{i=1}^{m}\sum _{j=1}^{n}S_{ij}^{*}(\vartheta ,\theta _{1})\) and \(\Gamma _{N}^{*}(\vartheta ,\theta _{1}) =\sum _{i=1}^{m}\sum _{j=1}^{n}E(S_{ij}^{*}(\vartheta ,\theta _{1})|X_{ij},Z_{ij},U_{ij})\). By Assumptions 3, 5 and 7, we deduce that

Using arguments similar to those used in the proof of Lemma 2.1 of Hallin et al. (2004b), we deduce that

where \(\tilde{\kappa }=(\mu _{2},0)^{T}\). Similar to the proof of Theorem 3.1 and using the fact that \(\sum _{i=1}^{m}\sum _{j=1}^{n}E|\phi (X_{ij},U_{ij})D_{ij}^{T}\vartheta \bar{Z}_{1ij}^{T}\theta _{1}| K(\frac{U_{ij}-u_{0}}{h})=O(h^{1/2})=o(1)\), we can prove that (6.19) holds. The proof of Theorem 3.2 is finished.
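
The rescaling used in the proof of Theorem 3.2 can be checked numerically. The sketch below builds the design vector \(D_{ij}\) and the rescaled parameter \(\vartheta \) for one observation and confirms that \(D_{ij}^{T}\vartheta \) reduces to the local linear discrepancy \(X_{ij}^{T}(a_{0}-\alpha (u_{0}))+(U_{ij}-u_{0})X_{ij}^{T}(a_{1}-\alpha '(u_{0}))\). The function names and the numerical inputs are hypothetical and chosen only for illustration.

```python
import math


def local_linear_design(x, u, u0, h, n_total):
    """D = (N h)^{-1/2} * (1, (u - u0)/h)^T kron x, returned as a flat list."""
    scale = (n_total * h) ** -0.5
    t = (u - u0) / h
    # Kronecker product of the 2-vector (1, t) with x: first x, then t * x.
    return [scale * c * xk for c in (1.0, t) for xk in x]


def rescaled_parameter(a0, a1, alpha0, dalpha0, h, n_total):
    """vartheta = (N h)^{1/2} * ((a0 - alpha(u0))^T, h (a1 - alpha'(u0))^T)^T."""
    root = math.sqrt(n_total * h)
    level = [root * (a - b) for a, b in zip(a0, alpha0)]
    slope = [root * h * (a - b) for a, b in zip(a1, dalpha0)]
    return level + slope


def dot(p, q):
    """Plain inner product of two equal-length lists."""
    return sum(pk * qk for pk, qk in zip(p, q))


if __name__ == "__main__":
    x, u, u0, h, n_total = [1.0, 2.0], 0.6, 0.5, 0.2, 100
    a0, a1 = [1.1, 0.9], [0.5, -0.3]
    alpha0, dalpha0 = [1.0, 1.0], [0.4, -0.2]
    d = local_linear_design(x, u, u0, h, n_total)
    theta = rescaled_parameter(a0, a1, alpha0, dalpha0, h, n_total)
    direct = (dot(x, [a - b for a, b in zip(a0, alpha0)])
              + (u - u0) * dot(x, [a - b for a, b in zip(a1, dalpha0)]))
    print(dot(d, theta), direct)
```

The factors \((Nh)^{-1/2}\) in \(D_{ij}\) and \((Nh)^{1/2}\) in \(\vartheta \) cancel, as do \(h^{-1}\) and \(h\) in the slope component, so the reparametrisation leaves the fitted value unchanged while putting \(\vartheta \) on the scale at which it has a nondegenerate limit.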

Cite this article

Qingguo, T. Robust estimation for spatial semiparametric varying coefficient partially linear regression. Stat Papers 56, 1137–1161 (2015). https://doi.org/10.1007/s00362-014-0629-z
