Abstract

We introduce a model of judgment aggregation in which individuals do not necessarily have full information regarding the judgments held by their peers. This intuitively limits an individual’s ability to strategically manipulate the aggregation process. Our results confirm this basic intuition. Specifically, we show that known impossibility results concerning the existence of reasonable strategyproof judgment aggregation rules break down once we abandon the classical assumption of full information. For instance, the simple plurality rule is strategyproof in case individuals do not have any information about their peers, while the well-known premise-based rule can be rendered strategyproof by withholding only a negligible amount of information.


1 Introduction

The framework of logic-based judgment aggregation introduced by List and Pettit (2002) provides a rich environment in which to study collective decision making. It has been found useful by researchers in Legal Theory, Philosophy, Logic, Mathematics, Economics, Political Science, Computer Science, and Artificial Intelligence alike (see, e.g., List and Puppe 2009; List 2012; Grossi and Pigozzi 2014; Endriss 2016). While there now is a substantial body of literature on a variety of topics in judgment aggregation, the analysis of the incentives of individuals to misrepresent their own judgments has only received limited attention to date. One aspect in particular has so far been ignored entirely, namely the fact that in practice an individual considering whether to manipulate the outcome of a judgment aggregation rule typically will not have full information regarding the judgments to be submitted by her peers, which intuitively constrains her own ability to manipulate successfully. To address this shortcoming, in this paper, we propose a model of strategic manipulation in judgment aggregation under partial information.

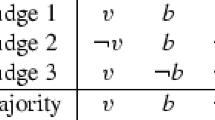

Example. To illustrate the basic idea, we begin with an example, inspired by Bovens and Rabinowicz (2006). Suppose a committee of five professors—Alice, Bob, Carol, Deniz, and Enrique—has to decide whether one of their junior colleagues should receive tenure. Regulations stipulate that the candidate needs to be found to perform at an excellent level with regard to research, teaching, and service to the profession. They also stipulate that on each of these issues the committee should decide by majority, and tenure should be granted if and only if there is a majority for each of the three issues. The private views of the committee members are shown in the following table:

| Committee | Research? | Teaching? | Service? | Tenure? |
|---|---|---|---|---|
| Alice | Yes | No | Yes | No |
| Bob | Yes | Yes | Yes | Yes |
| Carol | Yes | Yes | Yes | Yes |
| Deniz | No | No | No | No |
| Enrique | No | Yes | Yes | No |

Let us refer to the issues of excellence in research, teaching, and service as the premises and to the issue of whether tenure will be granted as the conclusion. Thus, the committee is using the well-known premise-based rule (Pettit 2001; Dietrich and Mongin 2010). If all committee members provide truthful judgments, then tenure will be granted, as there will be a majority on each of the three premises.

Now consider Alice, who would not be entirely happy with this outcome. If she—somehow—knows the others will vote as shown in the table, then she can lie and claim that she believes the candidate’s performance on research to be insufficient. In that case, the majority decision on research would come out negative and tenure would not be granted. Thus, given full information and assuming Alice only cares about the conclusion, she has an incentive to manipulate. In fact, this does not change if she has less information. Even if she has no information at all about the expected judgments of her colleagues, she could simply vote “No” on all three premises, which would be a safe strategy that would avoid the candidate getting tenure whenever Alice’s judgment is pivotal on any of the premises she truthfully accepts, and it would simply not change the outcome on the conclusion in all other cases.

But for the actual scenario above, a lie by Alice on the candidate’s performance regarding service would be an ineffective lie, as the majority in favour is too strong to begin with. Now suppose Alice cares not only about the conclusion: the outcome on the conclusion is what is most important to her but, all else being equal, she would rather have the collective judgment on the premises recorded in the official minutes of the meeting to be as close as possible to her own truthful judgments. Thus, if it does not affect the conclusion, she would not want to lie about a given premise. Hence, if Alice does not have full information and is unsure whether she is pivotal on the issues of research and service, then she cannot safely manipulate.
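The example can be replayed mechanically. The following Python sketch is only an illustration under our own encoding assumptions (the names `truthful`, `premise_based`, and `with_alice` are hypothetical, and each judgment is stored as a triple of Booleans for research, teaching, and service). It applies the premise-based rule to the table above and to three possible misreports by Alice.

```python
# Truthful judgments on the premises (research, teaching, service),
# copied from the table in the text; True stands for "Yes".
truthful = {
    "Alice":   (True,  False, True),
    "Bob":     (True,  True,  True),
    "Carol":   (True,  True,  True),
    "Deniz":   (False, False, False),
    "Enrique": (False, True,  True),
}

def premise_based(profile):
    """Strict majority on each premise; tenure iff every premise passes."""
    n = len(profile)
    votes = list(profile.values())
    premises = tuple(sum(v[k] for v in votes) > n / 2 for k in range(3))
    return premises, all(premises)

def with_alice(report):
    """Profile in which only Alice's report differs from the truth."""
    return dict(truthful, Alice=report)

honest = premise_based(truthful)
# Alice lies on research only: she is pivotal there, so tenure is blocked.
lie_research = premise_based(with_alice((False, False, True)))
# Alice lies on service only: the majority in favour is too strong.
lie_service = premise_based(with_alice((True, False, False)))
# Alice votes "No" on everything (the blanket strategy without information).
all_no = premise_based(with_alice((False, False, False)))

print(honest, lie_research, lie_service, all_no)
```

Under this encoding, only the reports that change Alice's vote on research alter the conclusion, which is why a lie on service alone is ineffective in this profile.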

Related work The idea of modelling strategic individuals who may manipulate by misrepresenting their truthful judgments was introduced into the judgment aggregation literature by Dietrich and List (2007c).Footnote 1 Adopting an axiomatic perspective, Dietrich and List characterise the family of all strategyproof judgment aggregation rules, i.e., all rules that are immune to strategic manipulation, but also show that all rules that have certain desirable properties must be manipulable. These—largely negative—results presuppose full information on the part of any potential manipulator.

In search of more positive news, one route to take is to impose domain restrictions, i.e., to limit the range of judgments that a group can submit. For example, if the group’s judgments are always unidimensionally aligned,Footnote 2 then the majority rule—which is known to be strategyproof (Dietrich and List 2007c)—does not suffer from the familiar problem of sometimes returning inconsistent outcomes (List 2003). A second approach is to look for aggregation rules for which strategic manipulation may be mathematically (hence theoretically) possible, but computationally (hence practically) intractable. Such computational barriers against strategic manipulation in judgment aggregation were first considered by Endriss et al. (2012). For example, the problem of deciding whether the premise-based rule can be manipulated successfully in a given profile is NP-complete. Further results of this kind have been obtained by Baumeister et al. (2015) and de Haan (2017).

But arguably the most natural approach towards containing strategic manipulation, namely informational barriers against manipulation, so far has been neglected in research on judgment aggregation. The situation is somewhat different in other areas of social choice theory. While voting and preference aggregation suffer from similarly negative results in the general case—starting with the seminal Gibbard-Satterthwaite Theorem (Gibbard 1973; Satterthwaite 1975)—and while domain restrictions (Gaertner 2001) and computational barriers to manipulation (Conitzer and Walsh 2016) have been investigated extensively in this domain as well, in the context of voting there also have been several attempts at capturing the partiality of the information available to a manipulator in an election.

For instance, Osborne and Rubinstein (2003) propose a model under which every voter knows the preferences of a small sample of the electorate and believes that this sample is representative. Chopra et al. (2004) work with a directed graph, called the knowledge graph, where voter i is taken to know the preferences of voter j if there is an edge from node i to node j in the graph. Conitzer et al. (2011) investigate a more general setting, where the set \(\mathcal {W}_i\) of preference profiles that a voter i deems possible is given explicitly. This model is developed further by Reijngoud and Endriss (2012), who assume that the information available to each voter is induced by some opinion poll. This model, also employed by Endriss et al. (2016), is the closest to the one we are going to develop in this paper. Finally, in a related model explored by Meir et al. (2014), each voter is taken to consider possible the set of preference profiles in some neighbourhood of the true profile. Most of these authors, like us in this paper, assume that individuals are extremely risk-averse, in the sense that they will only consider manipulating if, given the information available to them, they consider it certain that the outcome will not be worse than if they vote truthfully and they consider it possible that the outcome will be strictly better.

Our contribution In this paper, we introduce a model of strategic manipulation in judgment aggregation under partial information and show that some—but not all—known negative results concerning the existence of reasonable strategyproof judgment aggregation rules break down once we abandon the classical assumption of full information. Concretely, the simple plurality rule, which selects the overall judgment chosen by the largest number of individuals and which fails to be strategyproof under full information (Dietrich and List 2007c), is immune to strategic manipulation if individuals do not have any information about the judgments of others at all. While assuming zero information is a strong assumption, another result shows that we can always find an aggregation rule that fails to be strategyproof under full information but is strategyproof under partial (but nonzero) information.

Our results regarding one of the most important judgment aggregation rules used in practice, the premise-based rule, heavily depend on the assumptions we wish to make regarding the preferences of the individuals, which—together with the information they have access to and the aggregation rule in use—determine their incentives to manipulate. Specifically, we distinguish whether individuals only care about certain issues or whether they care about different issues to different degrees. Our results show that (i) when individuals care much more about conclusions than premises, then the premise-based rule can be rendered strategyproof by withholding only a negligible amount of information and that (ii) when individuals care equally about all issues, then the premise-based rule is strategyproof under both full and partial information. These results provide an interesting contrast with a result due to Dietrich and List (2007c), which shows that when individuals care only about the conclusion, then the premise-based rule fails to be strategyproof (this also is true under both full and partial information).

Paper overview The remainder of this paper is organised as follows. In Sect. 2, we summarise some of the fundamentals of judgment aggregation and fix our notation. In Sect. 3, we first recall relevant definitions and results due to Dietrich and List (2007c) and then introduce our model of strategic manipulation under partial information. Several results highlighting the differences between full and partial information are given in Sect. 4, while Sect. 5 focuses on results regarding the premise-based rule.Footnote 3 Section 6 concludes. Proofs of all technical results have been relegated to the “Appendix”.

2 The framework of judgment aggregation

In this section, we recall the standard model of judgment aggregation (List and Puppe 2009; List 2012; Grossi and Pigozzi 2014; Endriss 2016), originally introduced by List and Pettit (2002).

Consider a finite set of individuals \(N=\{1, 2, \ldots , n\}\), with \(n \ge 2\), that constitute a group whose judgments are to be aggregated into one collective decision. The judgments of the individuals are represented as formulas in classical propositional logic.Footnote 4

2.1 Agendas

The domain of decision making is an agenda, a nonempty set of formulas of the form \(\varPhi = \varPhi ^+ \cup \{\lnot \varphi :\varphi \in \varPhi ^+\}\), where the pre-agenda \(\varPhi ^+\) consists of non-negated formulas only.

Several restrictions can be imposed on the structure of an agenda, in order to better capture the essence of specific aggregation situations.Footnote 5 For instance, a conjunctive agenda \(\varPhi \) consists of a set of premises \(\varPhi ^p\) and a single conclusion (together with its negation). The latter is understood to be satisfied if and only if all premises are (Dietrich and List 2007c). The example given in the introduction uses an instance of a conjunctive agenda, as do many examples discussed in the literature (see, e.g., List and Pettit 2002; Hartmann and Sprenger 2012). Formally, the pre-agenda of a conjunctive agenda is of the form \(\varPhi ^+ = \{p_1,\ldots ,p_k,c\}\), with the \(p_j\) being propositional variables and \(c = (p_1\wedge \cdots \wedge p_k)\). Analogously, in a disjunctive agenda the conclusion is equivalent to the disjunction of all the (non-negated) premises. Conjunctive and disjunctive agendas appear in situations in which a final decision has to be made on a conclusion, but the reasons that lead to that choice, described by the premises, are also important.

A further class of agendas is that of the path-connected agendas (Dietrich and List 2007a), related to the concept of total-blockedness (Nehring and Puppe 2007). An agenda is path-connected if any two of its formulas are logically connected with each other, either directly or indirectly, via a sequence of conditional logical entailments. Formula \(\varphi \) conditionally entails formula \(\psi \) if \(\{\varphi ,\lnot \psi \}\cup \varPsi \) is logically inconsistent for some \(\varPsi \subseteq \varPhi \) logically consistent with \(\varphi \) and with \(\lnot \psi \). The agenda \(\varPhi \) is path-connected if for all propositions \(\varphi ,\psi \in \varPhi \) that are neither tautologies nor contradictions, there is a sequence of propositions \(\varphi _1,\varphi _2,\ldots ,\varphi _k\in \varPhi \) with \(\varphi =\varphi _1\) and \(\psi =\varphi _k\) such that \(\varphi _{i-1}\) conditionally entails \(\varphi _i\), for every \(i\in \{2,\ldots ,k\}\). Many standard and interesting agendas are path-connected, but note that conjunctive agendas and disjunctive agendas are not path-connected: in a conjunctive agenda there are no conditional entailments from non-negated towards negated formulas.

2.2 Aggregating individual judgments

Each individual i has a judgment set \(J_i\subseteq \varPhi \), the set of formulas she accepts. We assume that all individual judgment sets are consistent, i.e., logically consistent sets of formulas, and complete, i.e., \(\varphi \in J_i\) or \(\lnot \varphi \in J_i\) for every \(\varphi \in \varPhi ^+\).Footnote 6 The set of all consistent and complete subsets of the agenda is denoted as \(\mathcal {J}(\varPhi )\). A profile \(\varvec{J}=(J_1,\ldots ,J_n)\in \mathcal {J}(\varPhi )^n\) is a vector of all the individual judgment sets, and \(\varvec{J}_{-i}\) stands for the partial profile of judgments of the whole group besides individual i. We denote with \(N^{\varvec{J}}_{\varphi }\) the set \(\{i : \varphi \in J_i\}\) of individuals who accept formula \(\varphi \) in profile \(\varvec{J}\). We write \(\overline{J}\) for the complement \(\varPhi {\setminus } J\) of any given judgment set \(J\subseteq \varPhi \). Furthermore, we say that the judgment set \(J\) agrees with the judgment set \(J'\) on formula \(\varphi \) whenever \(\varphi \in J\cap J'\) or \(\varphi \in \overline{J}\cap \overline{J'}\), and that \(J\) disagrees with \(J'\) otherwise.
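To make the set \(\mathcal {J}(\varPhi )\) concrete, the following Python sketch enumerates it for a conjunctive agenda with k premises. The encoding is our own illustrative assumption: a complete judgment set is stored as a tuple of k+1 Booleans (one per premise, followed by the conclusion), where `True` at a position means the non-negated formula is accepted and `False` means its negation is accepted. The function name `conjunctive_judgment_sets` is hypothetical.

```python
from itertools import product

def conjunctive_judgment_sets(k):
    """Enumerate all consistent and complete judgment sets for k premises.

    Completeness is built into the encoding (every issue gets True or False).
    Consistency forces the conclusion to equal the conjunction of the premises.
    """
    return [premises + (all(premises),)
            for premises in product([False, True], repeat=k)]

sets_for_three = conjunctive_judgment_sets(3)
print(len(sets_for_three))
```

With three premises, as in the tenure example, this yields one judgment set per assignment to the premises, since the judgment on the conclusion is then determined.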

There are various methods to aggregate the judgments of a group, which lead to different collective outcomes. An aggregation rule F is a function that maps every profile of judgments \(\varvec{J} \in \mathcal {J}(\varPhi )^n\) to a nonempty set of nonempty collective judgments, i.e., to a nonempty subset of \(2^{\varPhi }{\setminus } \emptyset \), where \(2^\varPhi \) is the powerset of \(\varPhi \). Thus, there may be a tie between several “best” judgment sets and these judgment sets need not be consistent or complete. When \( F(\varvec{J})\) is always a singleton, that is, when \(F: \mathcal {J}(\varPhi )^n\rightarrow 2^{\varPhi }{\setminus } \emptyset \), the rule F is called resolute. In practice, the aim of an aggregation rule is to provide us with an answer about what the collective decision of the individuals is, or should be. Hence, resoluteness is essential, and we can guarantee it by considering a lexicographic tie-breaking rule to resolve the ties between the suggested collective opinions.Footnote 7

2.3 Specific aggregation rules

A straightforward judgment aggregation rule is the majority rule, which accepts a formula in the agenda if and only if more than half of the individuals accept it. The quota rules generalise this idea. According to them, a formula \(\varphi \) is part of the collective decision if and only if the number of individuals accepting \(\varphi \) meets the relevant quota \(q_{\varphi }\in [0,n+1]\). Formally, the quota rule \(F^q\) is such that, for any profile \(\varvec{J}\):

\[F^q(\varvec{J}) = \{\varphi \in \varPhi : |N^{\varvec{J}}_{\varphi }| \ge q_{\varphi }\}\]

Unfortunately, when a quota rule is used, the collective outcome may end up being logically inconsistent. This is the case, for instance, for the example given in the introduction (when the individuals are asked to provide judgments on research, teaching, service, and tenure), if the quota rule \(F^q\) with, say, \(q_\varphi =3\) for all \(\varphi \) is applied.
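The inconsistency can be verified directly. The sketch below is an illustration only: the tuple encoding of judgments (research, teaching, service, tenure) and the helper name `uniform_quota` are our assumptions. Because individual judgment sets are complete, the number of individuals accepting a negated formula equals n minus the number accepting the non-negated one.

```python
# Rows: (research, teaching, service, tenure), taken from the introduction.
profile = [
    (True,  False, True,  False),  # Alice
    (True,  True,  True,  True),   # Bob
    (True,  True,  True,  True),   # Carol
    (False, False, False, False),  # Deniz
    (False, True,  True,  False),  # Enrique
]

def uniform_quota(profile, q):
    """For each issue, report whether the formula and its negation meet quota q."""
    n = len(profile)
    result = []
    for k in range(len(profile[0])):
        yes = sum(row[k] for row in profile)
        no = n - yes  # completeness: everyone accepts the formula or its negation
        result.append((yes >= q, no >= q))
    return result

outcome = uniform_quota(profile, 3)
premises_accepted = all(outcome[k][0] for k in range(3))
negated_conclusion_accepted = outcome[3][1]
# All premises together with the negated conclusion form an inconsistent set.
inconsistent = premises_accepted and negated_conclusion_accepted
print(outcome, inconsistent)
```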

The most popular way to resolve this problem is to use the premise-based rule \(F^{\textit{pr}}\) (Pettit 2001; Chapman 2002; Dietrich and List 2007b; Dietrich and Mongin 2010; Hartmann and Sprenger 2012), which we define here with regard to conjunctive agendas only. First, a collective decision is made on the premises with respect to the (strict) majority rule. Concretely, for all \(p\in \varPhi ^p\cap \varPhi ^+\), \(p\in F^{\textit{pr}}(\varvec{J})\) if \(|N^{\varvec{J}}_{p}|>\frac{n}{2}\), and \(\lnot p \in F^{\textit{pr}}(\varvec{J})\) otherwise. Then, the conclusion is accepted by the group if and only if all the premises are: \(c\in F^{\textit{pr}}(\varvec{J})\) if \(p\in F^{\textit{pr}}(\varvec{J})\) for all \(p\in \varPhi ^p\cap \varPhi ^+\), and \(\lnot c\in F^{\textit{pr}}(\varvec{J})\) otherwise. Since \(c = (p_1\wedge \cdots \wedge p_k)\), a consistent outcome is then guaranteed. The definition for disjunctive agendas is analogous.

The premise-based rule applied to conjunctive agendas has received considerable attention from economists and philosophers, especially because of its significance in the domains of politics and law (Pettit 2001; Chapman 2002). A famous argument in favour of the premise-based way of aggregating individual judgments relates to deliberative democracy (Elster 1998), supporting the view that collective decisions on conclusions should be determined by the group’s opinions on the premises.

Next, we turn to a family of aggregation rules that most will find objectionable, the dictatorships. Living up to its name, a dictatorship is connected to a single individual, the dictator, whose judgment is taken to be the collective judgment independently of the input profile. So, F is a dictatorship if and only if there exists an individual \(i\in N\) such that \(F(\varvec{J})=\{J_i\}\), for every profile \(\varvec{J}=(J_1,\ldots ,J_n)\).

Observe that the quota rules, the premise-based rule, and the dictatorships are all resolute by construction.

A dictatorship trivially guarantees that the collective judgment will satisfy all the nice properties of individual judgments, like completeness and consistency. Fortunately, there are several other aggregation rules that also exhibit this advantage. One such aggregation rule, directly inspired by voting theory (see, e.g., Zwicker 2016), is the plurality rule. The plurality rule \(F^{\textit{p}\ell }\) considers the aggregated outcome to be the judgment set(s) submitted by the largest number of individuals, i.e., for \(\varvec{J}=(J_1,\ldots ,J_n)\) we get:

\[F^{\textit{p}\ell }(\varvec{J}) = \mathop {\mathrm {argmax}}_{J\in \mathcal {J}(\varPhi )} |\{i\in N : J_i = J\}|\]

The plurality rule presents certain theoretical limitations. For instance, it does not capture the internal logical structure of the judgment sets. Moreover, in settings with few individuals but many alternative judgments, it is very probable that several judgment sets are each supported by a single individual only and thus tie, so that the tie-breaking rule plays an overly important role in deciding the final outcome. Nonetheless, the plurality rule is of course widely used in political elections. Furthermore, in a different context motivated by applications to crowdsourcing, Caragiannis et al. (2014) show that an aggregation rule that they call modal ranking, and that is equivalent to the plurality rule (for judgment sets corresponding to rankings), is the unique one satisfying certain desirable truth-tracking properties. Later on we will see that the plurality rule also plays an important role in strategyproof judgment aggregation, since it turns out to be immune to strategic manipulation for partially informed individuals.
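A minimal sketch of the plurality rule, including the role of tie-breaking, is given next. Judgment sets are again encoded as Boolean tuples (an assumption of ours), and the particular lexicographic order used to break ties, namely Python's built-in tuple ordering, is chosen purely for illustration; the text does not fix a specific order.

```python
from collections import Counter

def plurality(profile):
    """Return the set of judgment sets submitted by the most individuals."""
    counts = Counter(profile)
    top = max(counts.values())
    return {J for J, c in counts.items() if c == top}

def resolute_plurality(profile):
    """Break ties lexicographically (assumed order: Python tuple order)."""
    return min(plurality(profile))

# Committee from the introduction: (research, teaching, service, tenure).
committee = [
    (True,  False, True,  False),  # Alice
    (True,  True,  True,  True),   # Bob
    (True,  True,  True,  True),   # Carol
    (False, False, False, False),  # Deniz
    (False, True,  True,  False),  # Enrique
]
winners = plurality(committee)

# Two individuals with different judgment sets: every set ties with support 1.
tied = [(True, False, True, False), (False, True, True, False)]
tied_winners = plurality(tied)
tie_broken = resolute_plurality(tied)
print(winners, tied_winners, tie_broken)
```

In the second profile the outcome is determined entirely by the tie-breaking order, which is the limitation described above.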

Finally, another rule that—like the dictatorships and the plurality rule—always selects from the judgment sets submitted by the individuals is the average-voter rule (Endriss and Grandi 2014). The average-voter rule \(F^{{\textit{av}}}\) takes into account a notion of distance between judgment sets and specifies the winners to be the individual judgment sets that minimise the average distance to the elements of the profile submitted by the group. Specifically, the Hamming-distance of two judgment sets \(J, J' \in 2^{\varPhi }\) is defined as the number of formulas in \(\varPhi \) on which they disagree:

\[H(J,J') = |J{\setminus } J'| + |J'{\setminus } J|\]

The Hamming-distance \(H(\varvec{J},J)\) of the profile \(\varvec{J}=(J_1,\ldots ,J_n)\) and the judgment set J is the sum of the Hamming distances of all judgment sets in \(\varvec{J}\) and J. That is, \(H(\varvec{J}, J)= \sum \nolimits _{i \in N} H(J_i,J)\). Then, given the profile \(\varvec{J}\),

\[F^{{\textit{av}}}(\varvec{J}) = \mathop {\mathrm {argmin}}_{J\in \{J_1,\ldots ,J_n\}} H(\varvec{J},J)\]
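As an illustration, the following sketch computes the average-voter winners for the committee of the introduction. It relies on assumptions of ours: judgment sets are complete and encoded as Boolean tuples with one entry per issue, so each issue on which two sets differ contributes two formulas (the formula and its negation) to the Hamming-distance. The function names are hypothetical. Since n is fixed, minimising the sum of distances is equivalent to minimising the average.

```python
def hamming(J1, J2):
    """Number of agenda formulas on which two complete judgment sets disagree.

    Each differing issue counts twice: once for the formula, once for its negation.
    """
    return 2 * sum(a != b for a, b in zip(J1, J2))

def total_distance(profile, J):
    """Sum of Hamming-distances between J and every judgment set in the profile."""
    return sum(hamming(J_i, J) for J_i in profile)

def average_voter(profile):
    """Submitted judgment sets that minimise the total distance to the profile."""
    best = min(total_distance(profile, J) for J in profile)
    return {J for J in profile if total_distance(profile, J) == best}

alice   = (True,  False, True,  False)
bob     = (True,  True,  True,  True)
carol   = (True,  True,  True,  True)
deniz   = (False, False, False, False)
enrique = (False, True,  True,  False)
committee = [alice, bob, carol, deniz, enrique]

winners = average_voter(committee)
print(winners, total_distance(committee, alice), total_distance(committee, deniz))
```

In this profile three distinct judgment sets tie, so a tie-breaking rule would again be needed to obtain a resolute outcome.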

2.4 Properties of aggregation rules

From a descriptive perspective, axioms provide a structured way of looking into aggregation rules, by helping us to compare them and better understand them. From a normative perspective, axioms can guide the design of aggregation rules, as they directly reflect the properties we wish our rules to satisfy. Here, we refer to axiomatic properties of resolute rules only.

We are going to make use of the following axioms, all of which have been widely discussed in the literature (see, e.g., Grossi and Pigozzi 2014):

- We call an aggregation rule F responsive if it gives a chance to every proposition to be accepted by the group. Formally, F is responsive if, for every formula \(\varphi \) that is neither a tautology nor a contradiction, there exist a profile \(\varvec{J}\) such that \(\varphi \in F(\varvec{J})\) and another profile \(\varvec{J}'\) such that \(\varphi \notin F(\varvec{J}')\).Footnote 8

- Monotonicity prescribes that extra support for a formula \(\varphi \in \varPhi \) can never be damaging. Formally, F is monotonic if \(\varphi \in J'_i{\setminus } J_i\) entails that \(\varphi \in F(J_i, \varvec{J}_{-i}) \Rightarrow \varphi \in F(J'_i, \varvec{J}_{-i})\), for all \((J_i, \varvec{J}_{-i}) \in \mathcal {J}(\varPhi )^n\) and \(J'_i\in \mathcal {J}(\varPhi )\).

- A more controversial property is independence, according to which each formula \(\varphi \) in \(\varPhi \) is to be treated separately by the aggregation rule F. Formally, F is independent if for all profiles \(\varvec{J},\varvec{J'}\), it is the case that \(N_{\varphi }^{\varvec{J}}=N_{\varphi }^{\varvec{J'}}\) implies \(\varphi \in F(\varvec{J})\Leftrightarrow \varphi \in F(\varvec{J'})\). It is easy to see that, for instance, the plurality rule and the premise-based rule are not independent.

Besides enforcing axioms such as these, we can also constrain the manner in which a rule can operate by imposing conditions on the outcomes it is expected to return. In particular, an aggregation rule F is said to be complete (similarly consistent) if \( F(\varvec{J})\) is complete (consistent) for every \(\varvec{J}\in \mathcal {J}(\varPhi )^n\).
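The failure of independence for the premise-based rule can be witnessed by a pair of small profiles. The sketch below is our own illustration, assuming a conjunctive agenda with two premises, three individuals, and the Boolean-tuple encoding (premise 1, premise 2, conclusion). In both profiles exactly the same individual accepts the conclusion, yet the collective judgment on the conclusion differs.

```python
def premise_based(profile):
    """Strict majority on each premise; conclusion is the conjunction of the results."""
    n = len(profile)
    k = len(profile[0]) - 1  # the last entry encodes the conclusion
    premises = tuple(sum(J[j] for J in profile) > n / 2 for j in range(k))
    return premises + (all(premises),)

def supporters(profile, issue):
    """Indices of the individuals accepting the given issue."""
    return {i for i, J in enumerate(profile) if J[issue]}

profile_a = [(True, True, True), (True, False, False), (False, True, False)]
profile_b = [(True, True, True), (False, False, False), (False, False, False)]

C = 2  # position of the conclusion in each tuple
same_support = supporters(profile_a, C) == supporters(profile_b, C)
outcome_a = premise_based(profile_a)[C]
outcome_b = premise_based(profile_b)[C]
print(same_support, outcome_a, outcome_b)
```

The conclusion is accepted in the first profile and rejected in the second, although its set of supporters is identical, which is exactly what independence rules out.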

3 Strategic manipulation

Let us interpret a given aggregation problem as a strategic situation, where individuals prefer certain collective decisions more than others. In this section, we introduce a model for representing such situations that emphasises the information available to each of the individuals. We start by modelling individual preferences.

3.1 Preferences

We assume that every individual i comes equipped with a preference relation \(\succsim _i\), defined over all the possible collective judgment sets \(J\in 2^{\varPhi }\). By writing \(J\succsim _i J'\), we mean that individual i wants the collective decision to be judgment J at least as much as she wants it to be judgment \(J'\). Considering all judgment sets \(J, J', J'' \in 2^{\varPhi }\), we take the relation \(\succsim _i\) to be reflexive (\(J\succsim _i J\)), transitive (\(J\succsim _i J'\) and \(J'\succsim _i J''\) implies \(J\succsim _i J''\)), and complete (either \(J \succsim _i J'\) or \(J' \succsim _i J\)). Thus, we assume that individuals rank all pairs of possible outcomes relative to each other; no two outcomes are going to be incomparable.Footnote 9 Finally, we write \(J\sim _i J'\) if \(J\succsim _i J'\) and \(J'\succsim _i J\), and we denote by \(J\succ _i J'\) the strict component of \(J \succsim _i J'\), i.e., the case where \(J\succsim _i J'\), but not \(J' \succsim _i J\).

The type of preferences that the individuals hold will play a crucial role in our analysis. So, let us reflect on some further assumptions that we can make about them. For example, in many aggregation contexts it is natural to suppose that the preferences of an individual depend on the truthful judgment set that this individual holds. Recall, for instance, the example presented in the introduction. In such a situation, it would be reasonable to assume that each individual would like the final collective decision to match her own judgment. Hence, following Dietrich and List (2007c), we restrict our study to cases where individual judgments and preferences over collective outcomes are expected to be related. A full identification of scenarios that satisfy our assumptions is an empirical problem, which certainly deserves further investigation.

A preference relation \(\succsim _i\) respects closeness to \(J_i\) if, for any two judgment sets J and \(J'\), \(J\succsim _i J'\) whenever \(J\cap J_i \supseteq J'\cap J_i\). For each judgment set \(J_i\), let \(C(J_i)\) be the set of all preference relations \(\succsim _i\) that respect closeness to \(J_i\). Then, \(C=\{C(J_i):J_i\in \mathcal {J}(\varPhi )\}\) is the class of closeness-respecting preferences. Roughly, individuals with closeness-respecting preferences rank higher the collective judgments that agree with their individual ones.
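The closeness condition only constrains some pairs of outcomes. The following sketch, in which the string names for formulas (with `~` marking negation) and the helper `closeness_requires` are assumptions made for illustration, tests the premise \(J\cap J_i \supseteq J'\cap J_i\) for Alice's judgment set from the introduction.

```python
def closeness_requires(J, J_prime, J_i):
    """True iff respecting closeness to J_i forces J to be weakly preferred to J_prime."""
    return (J & J_i) >= (J_prime & J_i)  # superset test on the overlaps with J_i

# Alice's truthful judgment set: research yes, teaching no, service yes, tenure no.
J_alice = frozenset({"r", "~t", "s", "~c"})

# Outcome when everyone is truthful, and outcome when research is rejected.
J_granted = frozenset({"r", "t", "s", "c"})
J_denied = frozenset({"~r", "t", "s", "~c"})
# An inconsistent collective set that agrees with Alice on three formulas.
J_mixed = frozenset({"r", "t", "s", "~c"})

silent = (not closeness_requires(J_granted, J_denied, J_alice)
          and not closeness_requires(J_denied, J_granted, J_alice))
mixed_dominates = (closeness_requires(J_mixed, J_granted, J_alice)
                   and closeness_requires(J_mixed, J_denied, J_alice))
print(silent, mixed_dominates)
```

For the first two outcomes neither overlap contains the other, so closeness alone leaves Alice's ranking of them open; this is why further assumptions on preferences matter for her incentives.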

3.2 Full information

Along the lines of Dietrich and List (2007c), we now develop a definition of strategyproofness of an aggregation rule, relative to a given class of preferences (such as the class of all closeness-respecting preferences). However, unlike these authors, we do not distinguish between being able to affect the outcome of an aggregation rule and the incentive of doing so, but only model strategic behaviour. Like Dietrich and List (2007c), we initially assume that every individual has full information about the profile of judgments.

When does an individual have an incentive to submit a dishonest judgment in an aggregation problem, under the assumption that she is fully informed about the judgments of her peers? This is the question we focus on next.

Definition 1

Consider a profile of judgments \(\varvec{J}=(J_1,\ldots ,J_n)\) and an aggregation rule F. Individual \(i \in N\) with preferences \({\succsim _i}\) has an incentive to manipulate in profile \(\varvec{J}\) if there exists a judgment set \(J_i^{*} \in \mathcal {J}(\varPhi )\) such that \(F(J_i^{*},\varvec{J}_{-i})\succ _i F(J_i,\varvec{J}_{-i})\).

In words, if by submitting an untruthful judgment set an individual can change the outcome into a judgment set she strictly prefers, then she has an incentive to manipulate in this manner.
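Definition 1 can be checked by brute force on small agendas. The sketch below is an illustration under several assumptions of ours: a conjunctive agenda with Boolean-tuple judgment sets, the premise-based rule as F, and a hypothetical strict preference `conclusion_only` under which an individual strictly prefers an outcome exactly when it matches her judgment on the conclusion and the alternative does not (mirroring the case in the introduction where Alice only cares about the conclusion).

```python
from itertools import product

def premise_based(profile):
    """Strict majority on each premise; conclusion is the conjunction of the results."""
    n = len(profile)
    k = len(profile[0]) - 1
    premises = tuple(sum(J[j] for J in profile) > n / 2 for j in range(k))
    return premises + (all(premises),)

def judgment_sets(k):
    """All consistent and complete judgment sets of a conjunctive agenda."""
    return [p + (all(p),) for p in product([False, True], repeat=k)]

def conclusion_only(J_i, J, J_prime):
    """Assumed strict preference: J matches i's conclusion, J_prime does not."""
    return J[-1] == J_i[-1] and J_prime[-1] != J_i[-1]

def profitable_reports(i, profile, rule, strictly_prefers):
    """Reports J* such that i strictly prefers rule(J*, J_-i) to rule(J_i, J_-i)."""
    truthful_outcome = rule(profile)
    k = len(profile[0]) - 1
    return [J_star for J_star in judgment_sets(k)
            if strictly_prefers(profile[i],
                                rule(profile[:i] + [J_star] + profile[i + 1:]),
                                truthful_outcome)]

committee = [
    (True,  False, True,  False),  # Alice
    (True,  True,  True,  True),   # Bob
    (True,  True,  True,  True),   # Carol
    (False, False, False, False),  # Deniz
    (False, True,  True,  False),  # Enrique
]
alice_lies = profitable_reports(0, committee, premise_based, conclusion_only)
bob_lies = profitable_reports(1, committee, premise_based, conclusion_only)
print(alice_lies, bob_lies)
```

Under these assumptions Alice has an incentive to manipulate in this profile (every profitable report rejects research), whereas Bob, who already obtains his preferred conclusion, has none.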

Consider a function \( PR \) that assigns to each individual i and judgment set \(J_i\in \mathcal {J}(\varPhi )\) a non-empty set \( PR (J_i)\) of reflexive, transitive and complete preference relations \(\succsim _i\). Then, by a slight abuse of notation, we also denote with \( PR \) the class of preferences constructible by that function, i.e., \( PR =\{ PR (J_i):J_i\in \mathcal {J}(\varPhi )\}\) (an example of such a class is the class of closeness-respecting preferences).

Definition 2

The aggregation rule F is manipulable for the class of preferences \( PR \) if there exist a profile \(\varvec{J}\in \mathcal {J}(\varPhi )^n\) and an individual \(i \in N\) holding preferences \({\succsim _i}\in PR (J_i)\) such that i has an incentive to manipulate in \(\varvec{J}\).

Next, the notion of strategyproofness of an aggregation rule captures the absence of all incentives for manipulation.Footnote 10

Definition 3

The aggregation rule F is strategyproof for the class of preferences \( PR \) if, for all individuals \(i \in N\), all profiles \(\varvec{J}=(J_1,\ldots ,J_n) \in \mathcal {J}(\varPhi )^n\), all preference relations \({\succsim _i}\in PR (J_i)\), and all judgment sets \(J_i^{*} \in \mathcal {J}(\varPhi )\), \(F(J_i,\varvec{J}_{-i})\succsim _i F(J^*_i,\varvec{J}_{-i})\).

Thus the rule F is strategyproof for the class of preferences \( PR \) if and only if F is not manipulable for \( PR \). This holds only because preference relations are taken to be complete. We will also refer to strategyproofness as immunity to manipulation and to manipulability as susceptibility to manipulation.

Definition 3 implies that the individuals have a noticeable truth-bias. That is, in cases where they like the outcomes obtained by the truthful and by some untruthful judgment equally, they will choose to be honest. Said differently, they will only lie if by doing so they can obtain a strictly better outcome. Obraztsova et al. (2013) justify this assumption by remarking that strategising can be costly for the individuals, for example in time and cognitive effort, so they would remain truthful when they cannot unilaterally affect the outcome (so when they have nothing to gain). Nevertheless, they will still try to manipulate if they can obtain a preferable result, assuming that their reward then will exceed the cost of strategising.

Dietrich and List (2007c) axiomatised the strategyproof aggregation rules, considering groups of individuals with closeness-respecting preferences that are reflexive and transitive. They proved that the strategyproof aggregation rules are exactly those that are both independent and monotonic. Next, we establish that this characterisation result remains valid for individuals whose preferences are furthermore complete.

Theorem 1

An aggregation rule F is strategyproof for all closeness-respecting preferences that are reflexive, transitive and complete if and only if F is both independent and monotonic.

As an immediate consequence of the result of Dietrich and List (2007c), we have that every independent and monotonic aggregation rule must be strategyproof for every subset of the class of all closeness-respecting preferences that are reflexive and transitive (and the class of all closeness-respecting preferences that are reflexive, transitive and complete obviously is such a subset). The interest in the above result thus lies in the fact that the converse is also true.

Hence, strategyproof rules exist, but they belong to a fairly narrow family of rules. The problem with rules that are independent is that they typically are not consistent and thus of limited interest in practice. For the large class of path-connected agendas, Dietrich and List (2007c) went one step further and showed that there exist no reasonable rules that are strategyproof:

Theorem 2

(Dietrich and List 2007c) For a path-connected agenda \(\varPhi \), an aggregation rule F is complete, consistent, responsive and strategyproof for the class of (reflexive and transitive) closeness-respecting preferences if and only if F is a dictatorship.

3.3 Partial information

Assuming that individuals in every situation know everything about the judgments of their peers is clearly rather stringent, particularly when we consider large groups of individuals or agendas with confidential issues. In practice, the information the individuals hold in an aggregation problem may be of different types. For example, a given individual may have the information of how many others hold a specific judgment set J, but she may not necessarily know which individuals do (which is common in election polls), or she may know everyone’s judgment on formula \(\varphi \), but not on \(\psi \), and so forth. Moreover, in a different setting where individuals are connected via a social network, an individual may know the judgments of some of the others (her neighbours in the network), but she may be completely uncertain about the rest.

We call \(\mathcal {I}\) the set of all possible pieces of information regarding a profile of judgment sets an individual could possibly be informed about, before the final reporting of judgments. Following Reijngoud and Endriss (2012), who introduce a similar concept in the context of voting, we define a judgment information function (JIF) \(\pi :N{\times } \mathcal {J}(\varPhi )^n\rightarrow \mathcal {I}\) as a function mapping individuals and profiles of judgment sets to elements of \(\mathcal {I}\). Intuitively, a JIF represents the available information for every individual, given the profile of judgments of the group. To simplify notation, we write \(\pi _i(\varvec{J})\) for the information of individual i about profile \(\varvec{J}\). The following are some natural choices for \(\mathcal {I}\) and the corresponding JIF \(\pi \).

- Full: The full-JIF returns precisely the input profile for every individual:

$$\begin{aligned} \pi _i(\varvec{J})= & {} \varvec{J} \quad \text {for all}\ i\in N\ \text {and}\ \varvec{J}\in \mathcal {J}(\varPhi )^n. \end{aligned}$$

- All\(\_\)but\(\_ \varvec{Y}\): For \(\varvec{Y}=(Y_1,\ldots ,Y_n)\), the all\(\_\)but\(\_\varvec{ Y}\)-JIF returns for each individual i the judgments of the rest of the group on each formula except for the formulas in \(Y_i\subseteq \varPhi \):

$$\begin{aligned} \pi _i(\varvec{J})= & {} \left( N_{\varphi }^{\varvec{J}}\right) _ {\varphi \in \varPhi {\setminus } Y_i} \quad \text {for all}\ i\in N\ \text {and}\ \varvec{J}\in \mathcal {J}(\varPhi )^n. \end{aligned}$$

- Besides\(\_ \varvec{I}\): For \(\varvec{I}=(I_1,\ldots ,I_n)\), the besides\(\_ \varvec{I}\)-JIF returns for each individual i the judgments of the other individuals besides those in \(I_i\subseteq N{\setminus }\{i\}\):

$$\begin{aligned} \pi _i(\varvec{J})= & {} (J_j)_ {j\in N{\setminus } I_i} \quad \text {for all}\ i\in N\ \text {and}\ \varvec{J}\in \mathcal {J}(\varPhi )^n. \end{aligned}$$

- Zero: The zero-JIF does not return any information; it just gives us a constant value:

$$\begin{aligned} \pi _i(\varvec{J})= & {} 0\ \quad \text {for all}\ i\in N\ \text {and}\ \varvec{J}\in \mathcal {J}(\varPhi )^n. \end{aligned}$$

Note that the full-JIF and the zero-JIF are extreme cases both of the all\(\_\)but\(\_ \varvec{Y}\)-JIF and of the besides\(\_ \varvec{I}\)-JIF. Full information is captured when \(\varvec{Y}=(\emptyset ,\ldots ,\emptyset )\) and \(\varvec{I}=(\emptyset ,\ldots ,\emptyset )\), while we get zero information for \(\varvec{Y}=(\varPhi ,\ldots ,\varPhi )\) and \(\varvec{I}=(N{\setminus }{\{1\}},\ldots ,N{\setminus }{\{n\}})\).
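As a rough illustration, the four JIFs above can be written as plain functions. This is a minimal sketch, assuming that a profile is a tuple of frozensets of formula strings and that individuals are indexed from 0; the function names and this encoding are illustrative choices and not part of the formal framework.

```python
def full_jif(i, profile):
    """Full-JIF: individual i sees the whole profile."""
    return profile


def all_but_y_jif(hidden, agenda):
    """All_but_Y-JIF: for each formula outside hidden[i], i sees who accepts it."""
    def pi(i, profile):
        return tuple(
            (phi, frozenset(j for j, J in enumerate(profile) if phi in J))
            for phi in sorted(agenda)
            if phi not in hidden[i]
        )
    return pi


def besides_i_jif(unknown):
    """Besides_I-JIF: i sees the judgment sets of everyone outside unknown[i]."""
    def pi(i, profile):
        return tuple((j, J) for j, J in enumerate(profile) if j not in unknown[i])
    return pi


def zero_jif(i, profile):
    """Zero-JIF: a constant value, so nothing is revealed."""
    return 0


# Hypothetical two-person profile over the agenda {p, ~p, q, ~q}.
agenda = {"p", "~p", "q", "~q"}
profile = (frozenset({"p", "q"}), frozenset({"~p", "q"}))
hide_q = all_but_y_jif(hidden=[{"q", "~q"}, set()], agenda=agenda)
info_0 = hide_q(0, profile)  # individual 0 learns only who accepts p and who accepts ~p
```

Because the values returned by a JIF are only ever compared for equality, any hashable encoding of the information would serve equally well here.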

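Our framework also allows for the above JIFs to be combined, letting different individuals have access to different types of information. Now, given the information expressed by a JIF \(\pi \) and a profile of judgments \(\varvec{J}=(J_i,\varvec{J}_{-i})\), we define the set of (partial) profiles that individual i considers possible:

$$\begin{aligned} \mathcal {W}_{i}^{\pi ,\varvec{J}}= & {} \{\varvec{J}'_{-i}\in \mathcal {J}(\varPhi )^{n-1} : \pi _i(J_i,\varvec{J}'_{-i})=\pi _i(\varvec{J})\}. \end{aligned}$$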

That is, \(\mathcal {W}_{i}^{\pi ,\varvec{J}}\) contains all the judgments of the rest of the group that are compatible with individual i’s information. In the special cases where the individuals are fully informed or completely uninformed, we of course get \(\mathcal {W}_{i}^{\text {full},(J_i,\varvec{J}_{-i})}=\{\varvec{J}_{-i}\}\) and \(\mathcal {W}_{i}^{\text {zero},(J_i,\varvec{J}_{-i})}=\mathcal {J}(\varPhi )^{n-1}\) for all \(i\in N\) respectively.

Coming back to the tenure example of the introduction, suppose that Alice (individual i) has talked, before the voting process, with the other members of the committee about the performance of the candidate regarding research (r) and teaching (t), so she already knows their judgments on those two criteria. On the other hand, the candidate’s service to the profession (s) has only been discussed between Alice, Bob, Carol and Deniz, but not Enrique. Call \(\pi \) the JIF capturing this situation and suppose that the actual judgments of the five professors are as depicted in the table of the introduction. Then, the partial profiles that Alice considers possible only differ on the judgment of Enrique concerning s. Formally, we have \(\mathcal {W}_{i}^{\pi ,\varvec{J}}=\{ \varvec{J}_{-i}, \varvec{J}'_{-i}\}\), where \( \varvec{J}_{-i}= (\{r,t,s\},\{r,t,s\},\{\lnot r, \lnot t, \lnot s\},\{\lnot r, t, s\})\) and \( \varvec{J}'_{-i}= (\{r,t,s\},\{r,t,s\},\{\lnot r, \lnot t, \lnot s\},\{\lnot r, t, \lnot s\}) \).
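The set of possible partial profiles in the tenure example can be recomputed by brute force. The sketch below assumes that judgment sets over (r, t, s) are encoded as boolean triples and that Alice has index 0 and Enrique index 4; Alice's own judgment set is a placeholder, since it does not influence the result, and the helper names are hypothetical.

```python
from itertools import product

# All complete judgment sets over the three criteria (r, t, s).
JUDGMENT_SETS = list(product([True, False], repeat=3))


def possible_worlds(pi, i, profile):
    """Partial profiles of the others that give i the same information as `profile`."""
    observed = pi(i, profile)
    worlds = []
    for others in product(JUDGMENT_SETS, repeat=len(profile) - 1):
        candidate = others[:i] + (profile[i],) + others[i:]
        if pi(i, candidate) == observed:
            worlds.append(others)
    return worlds


ALICE, ENRIQUE = 0, 4


def tenure_jif(i, profile):
    """Everyone's r and t are visible; s is visible for everyone except Enrique."""
    return tuple(
        (r, t, None if j == ENRIQUE else s) for j, (r, t, s) in enumerate(profile)
    )


profile = (
    (True, True, True),     # Alice (placeholder, an assumption)
    (True, True, True),     # Bob
    (True, True, True),     # Carol
    (False, False, False),  # Deniz
    (False, True, True),    # Enrique
)

worlds = possible_worlds(tenure_jif, ALICE, profile)
full_worlds = possible_worlds(lambda i, P: P, ALICE, profile)
zero_worlds = possible_worlds(lambda i, P: 0, ALICE, profile)
```

Here `worlds` contains exactly the two partial profiles named in the text, while the full and zero cases yield a single partial profile and all of \(\mathcal {J}(\varPhi )^{n-1}\), respectively.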

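Note that we only deal with qualitative beliefs. We assume that the individuals cannot or do not want to assign any numerical value (probability) to their beliefs about the possibility of the occurrence of each scenario concerning the judgments of the group. We observe that \(\mathcal {W}_{i}^{\pi ,\varvec{J}}\) satisfies the three axioms of reflexivity (REF), symmetry (SYM), and transitivity (TRA), and hence it induces an equivalence relation. In other words, for every individual i and judgment set \(J_i\), the JIF \(\pi \) induces a partition of the set \(\mathcal {J}(\varPhi )^{n-1}\). Clearly, the finest partition corresponds to the full-information case and the coarsest one to zero information (see Footnote 11). Formally, for all judgment sets \(J_i\) and for all (partial) profiles \(\varvec{J}_{-i}, \varvec{J}^*_{-i}, \varvec{J}^{**}_{-i}\), the following hold:

$$\begin{aligned}&\text {(REF)}\quad \varvec{J}_{-i}\in \mathcal {W}_{i}^{\pi ,(J_i,\varvec{J}_{-i})}\\&\text {(SYM)}\quad \text {if}\ \varvec{J}^*_{-i}\in \mathcal {W}_{i}^{\pi ,(J_i,\varvec{J}_{-i})},\ \text {then}\ \varvec{J}_{-i}\in \mathcal {W}_{i}^{\pi ,(J_i,\varvec{J}^*_{-i})}\\&\text {(TRA)}\quad \text {if}\ \varvec{J}^*_{-i}\in \mathcal {W}_{i}^{\pi ,(J_i,\varvec{J}_{-i})}\ \text {and}\ \varvec{J}^{**}_{-i}\in \mathcal {W}_{i}^{\pi ,(J_i,\varvec{J}^*_{-i})},\ \text {then}\ \varvec{J}^{**}_{-i}\in \mathcal {W}_{i}^{\pi ,(J_i,\varvec{J}_{-i})} \end{aligned}$$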

Axiom (REF) expresses that every individual always deems possible the truthful profile of judgments. Axioms (SYM) and (TRA) together state that whenever an individual considers some profile possible, then that profile would induce the same information set as her current one.

We can now refine the standard definitions of strategyproofness, accounting for individuals with incomplete information.

Definition 4

Consider an aggregation rule F, a JIF \(\pi \), and a truthful profile \(\varvec{J}=(J_1,\ldots ,J_n) \in \mathcal {J}(\varPhi )^n\). Individual \(i \in N\) with preferences \({\succsim _i}\) has an incentive to \(\varvec{\pi }\)-manipulate in \(\varvec{J}\) if there exists a judgment set \(J_i^{*} \in \mathcal {J}(\varPhi )\) such that

1. \(F(J_i^{*},\varvec{J}'_{-i})\succ _i F(J_i,\varvec{J}'_{-i})\), for some \(\varvec{J}'_{-i}\in \mathcal {W}^{\pi ,\varvec{J}}_i\), and

2. \(F(J^*_i,\varvec{J}''_{-i})\succsim _i F(J_i,\varvec{J}''_{-i})\), for all other \(\varvec{J}''_{-i}\in \mathcal {W}^{\pi ,\varvec{J}}_i\).

This means that an individual has an incentive to manipulate under the (partial) information provided by the JIF \(\pi \) by reporting an untruthful judgment if there is a scenario consistent with her information that will result in a more desirable collective decision for her and there is no scenario where she will be worse off than when reporting a truthful judgment. That is, we adopt a pessimistic perspective (from the individual’s standpoint), according to which an individual is willing to lie only if it is totally safe to do so. Said differently, the individuals are taken to be risk-averse: if there is at least one possible scenario where lying induces a less desirable result, then they remain truthful.
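The two conditions of Definition 4 translate directly into a check over the set of possible partial profiles. The following is a minimal sketch, assuming that the rule `F` takes a tuple of reports, that `worlds` lists the partial profiles in \(\mathcal {W}^{\pi ,\varvec{J}}_i\), and that `weakly_prefers(a, b)` encodes \(a\succsim _i b\); all names, and the two-person toy rule used in the demonstration, are illustrative assumptions.

```python
def has_incentive(F, i, truthful, lie, worlds, weakly_prefers):
    """Definition 4: the lie is strictly better in some world and never worse in any."""
    def outcome(report, others):
        return F(others[:i] + (report,) + others[i:])

    strictly_better_somewhere = False
    for others in worlds:
        honest = outcome(truthful, others)
        dishonest = outcome(lie, others)
        if not weakly_prefers(dishonest, honest):
            return False  # condition 2 fails: lying is not safe in this world
        if not weakly_prefers(honest, dishonest):
            strictly_better_somewhere = True  # condition 1 holds in this world
    return strictly_better_somewhere


# Toy rule on a single proposition: accepted iff exactly one of two individuals accepts.
def toy_rule(profile):
    return sum(profile) == 1


def wants_acceptance(a, b):
    return a >= b  # True (accepted) is weakly preferred to False


# Individual 0 truthfully accepts and considers reporting a rejection instead.
with_full_info = has_incentive(toy_rule, 0, True, False, [(True,)], wants_acceptance)
with_zero_info = has_incentive(toy_rule, 0, True, False, [(True,), (False,)], wants_acceptance)
```

In this toy case the incentive present under full information disappears once the second scenario, in which the other individual rejects the proposition, is also considered possible.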

If there is a profile \(\varvec{J}\) where at least one individual has an incentive to \(\pi \)-manipulate, then we say that the aggregation rule is \(\pi \)-manipulable:

Definition 5

Consider a JIF \(\pi \). The aggregation rule F is \(\varvec{\pi }\)-manipulable for the class of preferences \( PR \) if there are a profile \(\varvec{J}=(J_i,\varvec{J}_{-i})\in \mathcal {J}(\varPhi )^n\) and an individual \(i \in N\) holding preferences \({\succsim _i}\in PR (J_i)\) such that i has an incentive to \(\pi \)-manipulate in \(\varvec{J}\).

The aggregation rule F is \(\pi \)-strategyproof for the class of preferences \( PR \) if and only if F is not \(\pi \)-manipulable for \( PR \) (see Footnote 12):

Definition 6

Consider a JIF \(\pi \). The aggregation rule F is \(\varvec{\pi }\)-strategyproof for the class of preferences \( PR \) if, for all individuals \(i \in N\), all profiles \(\varvec{J}=(J_1,\ldots ,J_n) \in \mathcal {J}(\varPhi )^n\), all preference relations \({\succsim _i}\in PR (J_i)\), and all judgment sets \(J_i^{*} \in \mathcal {J}(\varPhi )\), at least one of the following conditions holds:

1. \(F(J_i,\varvec{J}'_{-i})\sim _i F(J^*_i,\varvec{J}'_{-i})\), for all \(\varvec{J}'_{-i}\in \mathcal {W}_{i}^{\pi ,\varvec{J}} \), or

2. \(F(J_i,\varvec{J}''_{-i})\succ _i F(J^*_i,\varvec{J}''_{-i})\), for some \(\varvec{J}''_{-i}\in \mathcal {W}_{i}^{\pi ,\varvec{J}}\).

The first condition of Definition 6 brings out a further assumption concerning the truth-bias of the individuals. Justifying it as in the case of full information, whenever an individual cannot unilaterally change the outcome, then she chooses to be honest, so that she does not have to bear the possible cost of strategising without being able to gain any profit. The second condition of Definition 6 is related to risk-aversion. If being sincere can induce a preferable collective decision in some scenario, then an individual will not lie and risk losing that desirable outcome (see Footnote 13).
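The step from Definition 4 to Definition 6 can be spelled out as a short derivation. This is a sketch under the assumption that preferences are complete; the shorthand \(a=F(J_i,\varvec{J}'_{-i})\) and \(b=F(J^*_i,\varvec{J}'_{-i})\), with the quantifiers ranging over \(\varvec{J}'_{-i}\in \mathcal {W}_{i}^{\pi ,\varvec{J}}\), is introduced here only for readability.

$$\begin{aligned} \lnot \big [(\exists \, b\succ _i a)\wedge (\forall \, b\succsim _i a)\big ]&\iff (\forall \, \lnot (b\succ _i a))\vee (\exists \, \lnot (b\succsim _i a))\\&\iff (\forall \, a\succsim _i b)\vee (\exists \, a\succ _i b)\\&\iff (\forall \, a\sim _i b)\vee (\exists \, a\succ _i b). \end{aligned}$$

The second equivalence uses completeness, under which "not strictly better" amounts to "weakly worse" and "not weakly better" amounts to "strictly worse". For the third, note that if no scenario satisfies \(a\succ _i b\), then \(b\succsim _i a\) holds everywhere, which together with \(a\succsim _i b\) gives indifference in every scenario. The two disjuncts in the last line are the two conditions of Definition 6.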

Obviously, when \(\pi \) is the full-JIF, \(\pi \)-strategyproofness (\(\pi \)-manipulation) is equivalent to strategyproofness (manipulation) under full information. We can further understand the importance of partial information on strategyproofness as follows. Consider an individual i. If this individual possesses full information about the judgments of the rest of the group, then she will manipulate with no second thought in case she finds an untruthful judgment that makes her better off. However, finding such an insincere judgment is not sufficient to make individual i manipulate under partial information. Then, an extra condition needs to be satisfied: for all possible scenarios, the untruthful judgment should induce a result at least as good as the one induced by the individual’s truthful judgment. Loosely speaking, this second condition provides an additional layer of safety against manipulation for an aggregation rule.

4 Comparing manipulation under full and partial information

This section presents the main theoretical results of our model. We first establish a bridge between the framework of manipulation under full information and the richer one that incorporates settings of partial information. Subsequently, we demonstrate how partial information allows aggregation rules to escape the manipulability that haunts the standard model of full information. Throughout this section we assume that all individuals have complete and closeness-respecting preferences.

4.1 Connecting full and partial information

We start with clarifying how known results about fully informed individuals can extend to partial information cases.

Let us call a JIF \(\pi \) at least as informative as another JIF \(\sigma \) if for all profiles \(\varvec{J}\) and all individuals i, \(\mathcal {W}^{\pi ,\varvec{J}}_i \subseteq \mathcal {W}^{\sigma ,\varvec{J}}_i\) (see Footnote 14). For example, the full-JIF is at least as informative as the all\(\_\)but\(\_ \varvec{Y}\)-JIF for every \( \varvec{Y}\), which is at least as informative as the zero-JIF. As one may naturally expect, we can show that, if a less informed individual has an incentive to manipulate an aggregation rule, then she also has an incentive to manipulate the same rule when holding more information. Interestingly though, these incentives do not necessarily coincide. That is, the profile that triggers the manipulation under less information may be different from the one causing the manipulation under more information [consult Reijngoud (2011) for the voting-counterpart of this result].

Proposition 3

If a JIF \(\pi \) is at least as informative as another JIF \(\sigma \), then all aggregation rules that are \(\sigma \)-manipulable for a class of preferences \( PR \) are also \(\pi \)-manipulable for \( PR \).

Corollary 4

If a JIF \(\pi \) is at least as informative as another JIF \(\sigma \), then all aggregation rules that are \(\pi \)-strategyproof for a class of preferences \( PR \) are also \(\sigma \)-strategyproof for \( PR \).

Hence, we have now settled that for any JIF \(\pi \), all aggregation rules that are full-strategyproof are also \(\pi \)-strategyproof. Roughly speaking, withholding information from the individuals can never damage the strategyproofness of an aggregation rule. By Theorem 1, this implies that for any JIF \(\pi \), all independent and monotonic aggregation rules are \(\pi \)-strategyproof for the class of closeness-respecting preferences.

Corollary 5

An aggregation rule F is \(\pi \)-strategyproof for all JIFs \(\pi \) for the class of closeness-respecting preferences if and only if F is both independent and monotonic.

Specifically, since quota rules are independent and monotonic (Dietrich and List 2007b), they are \(\pi \)-strategyproof for all JIFs \(\pi \), for the class of closeness-respecting preferences.

4.2 Partial information makes a difference

Next, we ponder: Is it possible to get qualitatively different results regarding the manipulability of aggregation rules by introducing the model of partial information? As we will shortly see, the answer is clearly positive. To begin with, we can guarantee the existence of a strategyproof aggregation rule for scenarios where the individuals are missing a reasonable part of the information concerning the judgments of their peers, even when the same rule is manipulable for fully informed individuals.

Theorem 6

For any agenda \(\varPhi \), there exist an aggregation rule F and a JIF \(\pi \) different from the zero-JIF, such that F is \(\pi \)-strategyproof but not full-strategyproof for the class of closeness-respecting preferences.

Theorem 6 ensures that for any agenda \(\varPhi \), if we have control over the information that a group of individuals has access to, then we can suggest the use of an aggregation rule that no-one will be able to manipulate. Moreover, it is worth stressing that our result does not require totally ignorant individuals; rather, having only two individuals that lack information about the judgments of each other already is a sufficient condition (consult the proof in the “Appendix” for the technical details).

To illustrate, suppose that the members of a political party in Greece need to decide whether a new secretary should be hired in their central office. Among the party members, everyone would of course like to hire their cousin in case the secretary position is open, but only the party’s top members, the leader and the sub-leader, have a realistic chance of doing so. However, these two individuals have a known history of not getting along well (especially when their personal interests are at stake). So, as the decision-making consultant of the party, we could wisely suggest the use of the following aggregation rule for the vote: the secretary position will be approved if and only if exactly one of the two top members votes in favour of it (so no fight between the leader and the sub-leader will create instability in the party in case they both want the opening) and at least half of the other members also believe it is a good idea to have the new position (suggesting that it is a reasonable choice to make). Now, if we could also guarantee that the two top members will not have access to each other’s judgments about the position before the vote, then we can make sure that no-one who wants the opening to be approved will have an incentive to lie; for instance, if the leader attempts to do so, she would immediately risk having the position rejected in case the sub-leader does not have a cousin available for hiring at the moment and thus is opposed to the opening. On the other hand, if the leader is informed that the sub-leader is planning to vote positively, then she could untruthfully vote negatively and, after the position is approved, join the fight about hiring her preferred person.
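The party example can be replayed in a few lines. The sketch below assumes three ordinary members with hypothetical votes and a leader who truthfully favours the opening; the function name and the boolean encoding of votes are illustrative choices.

```python
def party_rule(leader, sub_leader, others):
    """Approve iff exactly one top member is in favour and at least half of the others are."""
    return (leader != sub_leader) and 2 * sum(others) >= len(others)


others = (True, True, False)  # hypothetical votes of three ordinary members

# Full information: the leader knows that the sub-leader votes in favour.
honest_full = party_rule(True, True, others)   # both in favour, so rejected
lie_full = party_rule(False, True, others)     # exactly one in favour, so approved

# Partial information: the sub-leader's vote is unknown, so both scenarios are possible.
honest_partial = [party_rule(True, s, others) for s in (True, False)]
lie_partial = [party_rule(False, s, others) for s in (True, False)]
```

Under full information the lie turns a rejection into an approval. Under partial information the same lie loses the approval in the scenario where the sub-leader is opposed, so it is not safe in the sense of Definition 4.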

As a next step, we show that partial information can facilitate strategyproofness also in case there are specific restrictions regarding the choice of the aggregation rule. Remarkably, even if additional constraints (formulated as desirable axiomatic properties) have to be fulfilled by the aggregation rule, immunity to manipulation can nevertheless be achieved. In particular, Theorem 2, the impossibility theorem of Dietrich and List (2007c), breaks down.

Theorem 7

For any agenda \(\varPhi \) and any number of individuals \(n\ge 7\), the plurality rule \(F^{\textit{p}\ell }\) along with any lexicographic tie-breaking rule is nondictatorial, complete, consistent, responsive, and immune to zero-manipulation for the class of closeness-respecting preferences.

Corresponding results have been proved for the plurality rule in voting, albeit under stronger assumptions regarding the number of individuals (Conitzer et al. 2011; Reijngoud and Endriss 2012). The main insight in the proof of Theorem 7 is that when the plurality rule is applied, an individual has an incentive to manipulate if and only if she knows with certainty that her judgment is pivotal to the achievement of a strictly preferable outcome. When the individual is fully aware of the judgments of her peers, there are profiles where such an incentive is obvious. However, by restraining the information that the individual holds, her incentives to manipulate disappear.
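The pivotality argument can be checked by brute force on a small instance. The sketch below assumes an agenda with two logically independent issues (so four judgment sets), uses Hamming-distance preferences as one representative of the closeness-respecting class, and breaks ties in favour of the judgment set listed first; it therefore illustrates the theorem on one instance rather than verifying it in general.

```python
from collections import Counter
from itertools import product

JUDGMENT_SETS = list(product([True, False], repeat=2))  # two independent issues


def plurality(profile):
    """Most frequently submitted judgment set; ties go to the earliest in JUDGMENT_SETS."""
    counts = Counter(profile)
    return max(JUDGMENT_SETS, key=lambda J: (counts[J], -JUDGMENT_SETS.index(J)))


def hamming(J, K):
    return sum(a != b for a, b in zip(J, K))


def zero_manipulable(n):
    """Search for a truthful set and a lie satisfying Definition 4 under zero information."""
    worlds = list(product(JUDGMENT_SETS, repeat=n - 1))
    for truthful, lie in product(JUDGMENT_SETS, repeat=2):
        # Positive gain: the lie yields an outcome strictly closer to the truthful set.
        # Plurality is anonymous, so the manipulator's position in the profile is irrelevant.
        gains = [
            hamming(plurality((truthful,) + w), truthful)
            - hamming(plurality((lie,) + w), truthful)
            for w in worlds
        ]
        if max(gains) > 0 and min(gains) >= 0:
            return True
    return False


# Under full information a profitable lie exists: three peers submit X, three submit Y.
X, Y, Z = (True, True), (True, False), (False, False)
peers = (X,) * 3 + (Y,) * 3
honest_distance = hamming(plurality((Z,) + peers), Z)  # tie between X and Y goes to X
lying_distance = hamming(plurality((Y,) + peers), Z)   # Y wins outright
```

With zero information every lie is unsafe, because the scenario in which the peers split evenly between the truthful judgment set and the lie always remains possible.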

But it is important to emphasise that Theorem 7 does not trivially rely on the features of zero information. To convince the reader of this fact, we inspect the average-voter rule, which satisfies all the demands of Theorem 2 besides strategyproofness, and we show that zero-information does not solve the problem of manipulability in this case.

Proposition 8

There exist an agenda \(\varPhi \) and a group \(N\) for which the average-voter rule \(F^{{\textit{av}}}\) together with a lexicographic tie-breaking order is susceptible to zero-manipulation for the class of closeness-respecting preferences.

Proposition 8 together with the fact that adding more information can only harm strategyproofness (Proposition 3) makes it evident that there is no class of JIFs for which immunity to manipulation is guaranteed for every rule.

Corollary 9

There is no JIF \(\pi \) for which every aggregation rule F is \(\pi \)-strategyproof for the class of closeness-respecting preferences.

5 The premise-based rule

This section examines an aggregation rule that is popular due to its practical use and the properties of which have been extensively studied in the literature on judgment aggregation, namely the premise-based rule (Pettit 2001; Bovens and Rabinowicz 2006; Dietrich and List 2007c; Dietrich and Mongin 2010; Hartmann and Sprenger 2012). We restrict attention to the most relevant agendas for the application of the premise-based rule, namely the conjunctive agendas. However, all our results hold for disjunctive agendas too. Throughout our analysis, we are going to keep assuming that the preferences of the individuals are always complete.

As has become evident in the introduction, the premise-based rule can be manipulated by an individual who wants the conclusion of the conjunctive agenda to be rejected, but who nevertheless truthfully accepts one premise, say premise p. In case such an individual knows that her judgment on p is pivotal concerning the collective decision on the conclusion, i.e., that by untruthfully rejecting p the—previously accepted—conclusion will be rejected by the group, the individual will prefer to lie. This observation was formalised by Dietrich and List (2007c), who showed that the premise-based rule is full-manipulable for the class of closeness-respecting preferences. In particular, the result of Dietrich and List hinges on individuals with preferences that are only interested in the conclusion of the agenda and completely ignore the collective decision on the premises. Dietrich and List refer to these preferences as outcome-oriented (we will call them conclusion-oriented instead) and justify them by assuming that only the conclusion and not the premises carries consequences that the individuals care about. Concretely, for every judgment set \(J_i\), let \(O(J_i)\) be the set of preferences \(\succsim _i\) such that for all judgment sets \(J,J'\in 2^{\varPhi }\), \(J\succsim _i J'\) if and only if \(J'\) agreeing on c with \(J_i\) implies that J agrees on c with \(J_i\). Then, \(O=\{O(J_i): J_i\in \mathcal {J}(\varPhi )\}\) is the class of conclusion-oriented preferences.

Proposition 10

(Dietrich and List 2007c) For a conjunctive agenda, the premise-based rule is full-manipulable for the class of conclusion-oriented preferences.
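A concrete instance of such a manipulation can be sketched as follows, assuming three individuals, two premises p and q, the conclusion c equivalent to their conjunction, and strict majority voting on each premise; the profile and the helper names are hypothetical.

```python
def premise_based(profile):
    """Majority vote on premises p and q; the conclusion c = p and q is derived."""
    n = len(profile)
    p = 2 * sum(J[0] for J in profile) > n
    q = 2 * sum(J[1] for J in profile) > n
    return (p, q, p and q)


def judgment(p, q):
    """A complete and consistent judgment set, encoded as (p, q, c)."""
    return (p, q, p and q)


truthful = judgment(True, False)  # accepts p, rejects q and hence rejects c
others = (judgment(True, True), judgment(False, True))
lie = judgment(False, False)      # untruthfully rejects p as well

honest_outcome = premise_based((truthful,) + others)
lying_outcome = premise_based((lie,) + others)
```

The honest report leads to the conclusion being accepted, whereas the lie makes the group reject p and therefore c, which is what a conclusion-oriented individual who rejects c strictly prefers.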

Even though the news concerning the manipulability of the premise-based rule is negative at first sight, several assumptions associated with it deserve further investigation. These are the questions we shall focus on:

- Is the premise-based rule manipulable by individuals who have limited information about the judgments of their peers?

- Do individuals with specific and reasonable types of preferences, different from the conclusion-oriented ones, still have incentives to manipulate the premise-based rule?

Unfortunately, if we address the first question while restricting attention only to individuals with conclusion-oriented preferences, then we continue to obtain a negative result—even under the assumption that the manipulator does not have access to any kind of information.

Lemma 11

For any conjunctive agenda, the premise-based rule is zero-manipulable for the class of conclusion-oriented preferences.

By Proposition 3 and the above lemma, we obtain the following generalisation of Proposition 10:

Proposition 12

For any conjunctive agenda and for any JIF \(\pi \), the premise-based rule is \(\pi \)-manipulable for the class of conclusion-oriented preferences.

So, conclusion-oriented individuals have incentives to manipulate under any kind of information that they may hold about the judgments of their peers. This provides us with an extra motivation to study the strategic behaviour of individuals with different preferences, still reasonable for the scenarios addressed by the premise-based rule. Dietrich and List (2007c) initiated this discussion by considering individuals with reason-based preferences, i.e., preferences that aim at maximising the agreement between the individual’s truthful judgment and the collective decision on the premises only, disregarding the conclusion. Although the work of Dietrich and List (2007c) brought to light a positive result regarding the strategyproofness of the premise-based rule, their assumption of reason-based preferences is rather restrictive. In the sequel, instead we are going to demonstrate different ways of obtaining strong positive results, by exploiting our framework of partial information in combination with various kinds of preferences.

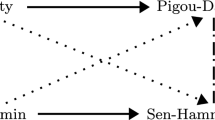

5.1 Conclusion-prioritising preferences

The class of conclusion-prioritising preferences P refines the class of conclusion-oriented preferences. Consider a conjunctive agenda \(\varPhi \), where \(\varPhi ^{p}\) is the set of its premises. The preference relations in P capture the idea that the individuals give highest priority to the outcome on the conclusion, and secondarily, they try to maximise the agreement on the premises. Formally, for each judgment set \(J_i\), let \(P(J_i)\) be the set of complete preferences \(\succsim _i\) such that for all judgment sets \(J,J'\in 2^{\varPhi }\), \(J\succ _i J'\) if and only if (i) J agrees with \(J_i\) on c but \(J'\) disagrees with \(J_i\) on c , or (ii) both J and \(J'\) agree or disagree with \(J_i\) on c and \(|\varPhi ^p\cap J \cap J_i|> |\varPhi ^p \cap J' \cap J_i|\). Then, \(P=\{P(J_i): J_i\in \mathcal {J}(\varPhi )\}\) is the class of conclusion-prioritising preferences.
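The strict part of a conclusion-prioritising preference can be sketched as a comparison function. The encoding of judgment sets as sets of formula strings is an assumption, and so is the choice, made in the example only, to pass both the premises and their negations as `premises`, so that agreement on rejected premises is counted as well.

```python
def strictly_prefers(J, K, own, c, premises):
    """True iff J is strictly preferred to K, given the truthful judgment set `own`."""
    def agrees_on_conclusion(X):
        return (c in X) == (c in own)

    if agrees_on_conclusion(J) != agrees_on_conclusion(K):
        return agrees_on_conclusion(J)  # clause (i): the conclusion decides
    # Clause (ii): same status on the conclusion, so agreement on the premises decides.
    return len(premises & J & own) > len(premises & K & own)


own = {"p", "~q", "~c"}
premises = {"p", "q", "~p", "~q"}

# The conclusion takes priority over the premises.
first = strictly_prefers({"~p", "q", "~c"}, {"p", "q", "c"}, own, "c", premises)
# With the conclusion settled, more agreement on the premises wins.
second = strictly_prefers({"p", "~q", "~c"}, {"~p", "~q", "~c"}, own, "c", premises)
```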

We can show that the premise-based rule is full-manipulable for conclusion-prioritising preferences, similarly to conclusion-oriented preferences. However, under partial information the balance changes. The premise-based rule is immune to manipulation for conclusion-prioritising preferences, while it is still manipulable for conclusion-oriented preferences. Furthermore, the amount of information that needs to be absent in order to achieve strategyproofness is remarkably small. Speaking informally, for large agendas truthfulness is guaranteed even when the individuals know almost everything about the judgments of the rest of the group, i.e., when their uncertainty tends to 0. Before stating our result formally, we define a measure of the uncertainty related to a JIF \(\pi \).

Definition 7

The uncertainty of individual \(i\in N\) induced by JIF \(\pi \) in profile \(\varvec{J}\in \mathcal {J}(\varPhi )^n\) is defined as follows:

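The uncertainty of a JIF \(\pi \) is the maximal uncertainty it can induce:

$$\begin{aligned} U(\pi )= & {} \max _{i\in N,\ \varvec{J}\in \mathcal {J}(\varPhi )^n} U^{\varvec{J}}_i(\pi ). \end{aligned}$$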

Thus, the uncertainty that the JIF \(\pi \) induces for an individual on a profile is a real number between 0 and 1, i.e., \(0\le U^{\varvec{J}}_i(\pi )\le 1\), where 0 denotes full certainty and 1 total uncertainty. The more partial profiles are possible for the individuals, the more uncertainty increases. For example, according to the full-JIF the individuals only consider possible the truthful partial profile, thus the uncertainty of the full-JIF is 0. At the other extreme, the uncertainty of the zero-JIF is 1, because according to it the individuals deem possible all the partial profiles.

Lemma 13

Consider a conjunctive agenda \(\varPhi \) with at least two premises \(p_1,p_2\in \varPhi ^p\). For \(Y=\{p_1,p_2,\lnot p_1, \lnot p_2\}\) and \(\varvec{Y}=(Y,\ldots , Y)\), the premise-based rule is immune to all\(\_\)but\(\_\varvec{Y}\)-manipulation for the class of conclusion-prioritising preferences.

Theorem 14

Consider a conjunctive agenda \(\varPhi \) with at least two premises. The premise-based rule \(F^{\textit{pr}}\) is susceptible to full-manipulation for the class of conclusion-prioritising preferences P. However, there is a family of JIFs \(\{\pi ^x:x\in \mathbb {N}\}\) with \(\lim _{x\rightarrow \infty } U(\pi ^x)=0\) such that \(F^{\textit{pr}}\) is immune to \(\pi ^m\)-manipulation for P, where \(m=|\varPhi |\).

Lemma 13 establishes that, even when the preferences of the individuals prioritise the conclusion in a conjunctive agenda—but when they do not totally overlook the premises—strategyproofness of the premise-based rule is guaranteed in combination with certain modest assumptions on the uncertainty of individuals. This result is important for at least two reasons. First, it shows that caring about the conclusion is not necessarily detrimental to the strategyproofness of the premise-based rule, as the result of Dietrich and List (2007c) seems to imply; and second, it confirms that aiming for zero information is not the only way of achieving positive results. Then, Theorem 14 stresses an additional intriguing observation: that determining exactly how much information causes the manipulability of an aggregation rule can be quite an intricate challenge. Specifically, it proves that for big agendas, a rule can be susceptible to manipulation under full information but strategyproof under almost-full information.

5.2 Hamming-distance preferences

One example of closeness-respecting preferences commonly used in the literature is the class of Hamming-distance preferences (Dietrich and List 2007c; Endriss et al. 2012; Baumeister et al. 2015; Botan et al. 2016). These preferences are widely adopted in settings where the individuals are expected to care equally about all the formulas in the agenda. For every individual i, the Hamming-distance naturally induces a (reflexive, transitive and complete) preference relation \(\succsim _i\) on judgment sets. Let \(H(J_i)\) be the set of preferences \(\succsim _i\) such that, for all judgment sets \(J,J'\in 2^{\varPhi }\) it is the case that \(J \succsim _i J'\) if and only if \(H(J, J_i) \le H(J', J_i)\). Then, \(H=\{H(J_i): J_i\in \mathcal {J}(\varPhi )\}\) is the class of Hamming-distance preferences.

We now show that, if the individuals have Hamming-distance preferences, then the strategyproofness of the premise-based rule is guaranteed, independently of the amount of information that the individuals possess.

Lemma 15

For any conjunctive agenda \(\varPhi \), the premise-based rule is immune to full-manipulation for the class of Hamming-distance preferences.
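The lemma can be checked exhaustively on a small instance. The sketch below assumes two premises p and q with conclusion c equivalent to their conjunction, strict majority voting on the premises, and a Hamming distance that counts disagreements over p, q and c; it covers only the group sizes it is called with and is not a substitute for the proof.

```python
from itertools import product

# Complete and consistent judgment sets, encoded as (p, q, c) with c = p and q.
JUDGMENT_SETS = [(p, q, p and q) for p, q in product([True, False], repeat=2)]


def premise_based(profile):
    """Majority vote on each premise; the conclusion is derived from the premises."""
    n = len(profile)
    p = 2 * sum(J[0] for J in profile) > n
    q = 2 * sum(J[1] for J in profile) > n
    return (p, q, p and q)


def hamming(J, K):
    return sum(a != b for a, b in zip(J, K))


def full_manipulable(n):
    """Look for a profile, an individual and a lie that yields a strictly closer outcome."""
    for profile in product(JUDGMENT_SETS, repeat=n):
        honest = hamming(premise_based(profile), None or profile[0]) if False else None
        for i, lie in product(range(n), JUDGMENT_SETS):
            changed = profile[:i] + (lie,) + profile[i + 1:]
            before = hamming(premise_based(profile), profile[i])
            after = hamming(premise_based(changed), profile[i])
            if after < before:
                return True
    return False


result_for_three = full_manipulable(3)
```

The search finds no profitable lie: flipping a pivotal premise costs one unit of agreement on that premise and can recover at most one unit on the conclusion, so the outcome never becomes strictly closer.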

By Corollary 4 and the above lemma, we thus obtain:

Theorem 16

For any conjunctive agenda \(\varPhi \) and any JIF \(\pi \), the premise-based rule is immune to \(\pi \)-manipulation for the class of Hamming-distance preferences.

Overall, we have underlined the gravity of the assumptions that one makes about the preferences of the individuals in an aggregation scenario as far as the manipulability of aggregation rules is concerned, especially when partial information comes into play. It now has become explicit that the manipulability of the premise-based rule is based on a special subset of the closeness-respecting preferences, namely the conclusion-oriented preferences, and narrowing down our analysis to individuals that completely overlook the conclusion in the agenda is not the only way to achieve strategyproofness. Notably, the non-independent premise-based rule is strategyproof under full information for a different subclass of the closeness-respecting preferences, consisting of the Hamming-distance preferences (Theorem 16; see Footnote 15). Moreover, by adding an extra layer of uncertainty, the premise-based rule is also strategyproof for a third subclass of the closeness-respecting preferences, namely the class of the conclusion-prioritising preferences (Theorem 14).

6 Conclusion

We have introduced a novel model of strategic manipulation in judgment aggregation that weakens the standard assumption of every potential manipulator having full information regarding the judgments of her peers. Our technical results clarify the relationship between the standard model of full information and our model of partial information and demonstrate that, by moving to more realistic assumptions regarding the information available to individuals, we can avoid some of the negative results proved in the literature and instead obtain strategyproof judgment aggregation rules. This is true, in particular, for the important premise-based rule, which turns out to be strategyproof under two sets of assumptions that may be deemed reasonable in certain domains.

While we have introduced a general framework for modelling the information available to an individual who might engage in strategic manipulation, our results mostly relate to very specific instances of this general model, such as the case of zero information. In the future, more work will be needed to identify and analyse more realistic choices of judgment information functions that are relevant to specific applications. For example, as previously mentioned, building on the ideas of Chopra et al. (2004) one could assume that individuals are part of a social network and have full information on their immediate neighbours, but no information on those individuals further removed in the network. Finally, another important direction of research expanding on our work concerns obtaining deeper technical results, for instance characterising for which types of partial information, if any, the impossibility of Dietrich and List (2007c) is reproduced.

Notes

Somewhat further removed from the concerns of this paper, other authors have investigated other forms of strategic behaviour in judgment aggregation, notably group manipulation (Botan et al. 2016), bribery (Baumeister et al. 2015), and agenda setting (Dietrich 2016). Moreover, in the context of epistemic judgment aggregation, i.e., when a ground truth can be assumed to exist about the issues under consideration, the strategic behaviour of partially informed individuals has been investigated by Bozbay et al. (2014). For a broader perspective, we refer to the recent survey by Baumeister et al. (2017), which specifically emphasises algorithmic considerations.

The judgments submitted by a group are said to be unidimensionally aligned if the members of that group can be lined up from left to right such that, for every issue upon which judgments are expressed, all the individuals who hold the same opinion are either all to the left or all to the right of those who disagree with them (List 2003).
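
As a rough illustration of this definition, the following Python sketch tests unidimensional alignment by brute force over all left-to-right orderings of the individuals. The function name and the encoding of judgments as tuples of 0/1 values (one entry per issue) are assumptions made for this example only; the search is exponential in the group size and is meant for small profiles.

```python
from itertools import permutations

def is_unidimensionally_aligned(profile):
    """Check whether some ordering of the individuals puts, for every issue,
    all supporters on one side of all opponents."""
    n_issues = len(profile[0])

    def one_switch(column):
        # Along the line, the opinion on an issue may change at most once.
        return sum(a != b for a, b in zip(column, column[1:])) <= 1

    return any(
        all(one_switch([judgment[k] for judgment in order]) for k in range(n_issues))
        for order in permutations(profile)
    )

# Issues: p, q, and their conjunction (hypothetical example profiles).
dilemma = [(1, 1, 1), (1, 0, 0), (0, 1, 0)]
aligned = [(1, 1, 1), (1, 0, 0), (0, 0, 0)]
print(is_unidimensionally_aligned(dilemma))  # False
print(is_unidimensionally_aligned(aligned))  # True
```

In the first profile each of the three issues would force a different individual to an end of the line, which is impossible with only two ends; in the second, the listed order already works.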

Further results pertaining to our model may be found in the Master’s thesis of the first author (Terzopoulou 2017).

An extended model of judgment aggregation which captures propositions expressed in richer logical languages, such as predicate logic, modal logic, and multivalued or fuzzy logic has been developed by Dietrich (2007).

An alternative technique of breaking ties could exploit random tie-breaking. However, we restrict attention to lexicographic tie-breaking orders. One reason is that breaking ties with the help of a fixed linear order satisfies the independence of irrelevant alternatives principle (Ray 1973). The independence of irrelevant alternatives principle, also known as Sen’s property \(\alpha \) (Sen 1969, 1970), states that if an alternative J is chosen from a set S, and J is also an element of a subset \(S'\) of S, then J must be chosen from \(S'\). That is, eliminating some of the unchosen alternatives should not affect the selection of J. We find this condition normatively desirable as far as the tie-breaking rule is concerned.

An alternative name of the responsiveness axiom in the literature is nonimposition.

The requirement of completeness of preferences has triggered a lot of discussion (e.g., Jeffrey 1983), and one of the main arguments against it is directly reflected in the judgment aggregation framework. The number of possible collective outcomes will usually be exponential in the number of formulas in the agenda, and the individuals have to be able to compare all of them. Nonetheless, one justification of the completeness constraint is based on our interpretation of the individuals’ preferences over the collective decisions. For example, we may think of preferences expressing “conceivable” acts and not “actual” ones, in the sense that they represent the choice dispositions of the individuals (Sen 1973; Gilboa 2009). From this perspective, completeness does not imply that the individuals should be able to rank a large number of options prior to making a decision about them; instead, it may mean that they possess an intrapersonal method to rank the different judgment sets when these judgments are presented in pairs, which induces a complete ordering. An instance of such a plausible method is defined later in this paper, and is constructed via the Hamming distance.

Note that in the original terminology of Dietrich and List (2007c), manipulability—as opposed to strategyproofness—is a preference-less concept (i.e., it only depends on the individuals’ truthful judgments and not on their possible preferences). In this paper though, we use this term with respect to a definition of preferences. More details on the preference-less notion of manipulability can be found in the proof of Theorem 1 in the “Appendix”.

Note that according to Definition 3 of simple strategyproofness, the aggregation rule F is said to be strategyproof if the first condition of Definition 6 holds, taking \(\pi \) to be the zero-JIF. After making this observation, one could also give an alternative interpretation to the original Definition 3. That is, it may be seen as not imposing any requirements on the information that the individuals hold, but instead asking for truthfulness to be a best response to any possible judgments of the others (namely a dominant-strategy equilibrium rather than only a Nash equilibrium). For the rest of this paper though we will stick to our first interpretation of Definition 3, which is associated with full information.

Equivalently, \(\pi \) is at least as informative as \(\sigma \) if for all individuals i and judgment sets \(J_i\) the partition on \(\mathcal {J}(\varPhi )^{n-1}\) induced by \(\pi \) is finer than the one induced by \(\sigma \).
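
The refinement condition can be sketched in a few lines of Python. This is only an illustration under stated assumptions: partitions are encoded as lists of frozensets over the same set of partial profiles, the labels P1 to P4 are hypothetical placeholders for elements of \(\mathcal {J}(\varPhi )^{n-1}\), and "finer" is read in the weak sense (a partition is finer than itself). We also assume that full information corresponds to the partition into singletons and zero information to the single-block partition.

```python
def is_at_least_as_fine(fine, coarse):
    """True if every block of `fine` lies inside some block of `coarse`.
    Both arguments are assumed to be partitions of the same set."""
    return all(any(block <= big for big in coarse) for block in fine)

# Four hypothetical partial profiles of the other individuals.
profiles = ["P1", "P2", "P3", "P4"]

full_info = [frozenset([p]) for p in profiles]   # every profile distinguished
zero_info = [frozenset(profiles)]                # no profile distinguished
partial = [frozenset(["P1", "P2"]), frozenset(["P3", "P4"])]

print(is_at_least_as_fine(full_info, partial))   # True
print(is_at_least_as_fine(partial, zero_info))   # True
print(is_at_least_as_fine(zero_info, partial))   # False
```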

For a motivating scenario where this would be an applicable rule, see the discussion following Theorem 6 in the body of the paper.

Note that for this part of the proof to work, the assumption that \(k\ge 3\) (which means that \(n\ge 7\)) is necessary. Suppose that \(k=2\), the closeness-respecting preference \(\succsim _i\) is such that \(J_i\sim _i J_i^*\), and the tie-breaking rule prioritises \(J_i^*\) over J. Then, in the profile \(\varvec{J}''=(J_i,\varvec{J}''_{-i})\) two individuals would submit \(J_i\), one would submit \(J_i^*\), and two other individuals would report J, so \(F^{\textit{p}\ell }(J_i,\varvec{J}''_{-i})=J_i\). Then, in case individual i reported \(J_i^*\) instead of \(J_i\), it would hold that \(F^{\textit{p}\ell }(J_i^{*},\varvec{J}''_{-i}) =J_i^* \sim _i F^{\textit{p}\ell }(J_i,\varvec{J}''_{-i}) \).
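
The five-individual profile described in this note can be replayed with a small Python sketch of the plurality rule with lexicographic tie-breaking. The string labels stand in for the judgment sets \(J_i\), \(J_i^*\) and J, and the function is our own illustrative encoding rather than a definition taken from the paper. The note only states that \(J_i^*\) precedes J in the tie-breaking order; placing \(J_i\) before J as well is an assumption here, chosen so that the truthful outcome matches the one stated in the note.

```python
from collections import Counter

def plurality(profile, tie_break):
    """Return the most frequently submitted judgment set; ties go to the
    set that appears earliest in the fixed linear order `tie_break`."""
    counts = Counter(profile)
    top = max(counts.values())
    winners = [j for j in counts if counts[j] == top]
    return min(winners, key=tie_break.index)

# Assumed tie-breaking order; only "Ji_star before J" is stated in the note.
TIE_BREAK = ["Ji", "Ji_star", "J"]

# Reports of the other four individuals: one Ji, one Ji_star, two J.
others = ["Ji", "Ji_star", "J", "J"]

truthful = plurality(["Ji"] + others, TIE_BREAK)
deviating = plurality(["Ji_star"] + others, TIE_BREAK)
print(truthful, deviating)  # Ji Ji_star
```

Under these assumptions the deviation changes the outcome from \(J_i\) to \(J_i^*\), which individual i regards as equally good, so it brings her no strict gain.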

The same proof would work for any number of individuals that is a multiple of three.

We know that such an agenda can be constructed by Dokow and Holzman (2009).

References

Baumeister D, Erdélyi G, Erdélyi OJ, Rothe J (2015) Complexity of manipulation and bribery in judgment aggregation for uniform premise-based quota rules. Math Soc Sci 76:19–30

Baumeister D, Rothe J, Selker A-K (2017) Strategic behavior in judgment aggregation. In: Endriss U (ed) Trends in Computational Social Choice, chapter 8. AI Access, pp 145–168

Botan S, Novaro A, Endriss U (2016) Group manipulation in judgment aggregation. In: Proceedings of the 15th international conference on autonomous agents and multiagent systems (AAMAS), pp 411–419

Bovens L, Rabinowicz W (2006) Democratic answers to complex questions–an epistemic perspective. Synthese 150(1):131–153

Bozbay I, Dietrich F, Peters H (2014) Judgment aggregation in search for the truth. Games Econ Behav 87:571–590

Caragiannis I, Procaccia AD, Shah N (2014) Modal ranking: a uniquely robust voting rule. In: Proceedings of the 28th AAAI conference on artificial intelligence, pp 616–622

Chapman B (2002) Rational aggregation. Politics Philos Econ 1(3):337–354

Chopra S, Pacuit E, Parikh R (2004) Knowledge-theoretic properties of strategic voting. In: Proceedings of the 8th European conference on logics in artificial intelligence (JELIA), pp 18–30

Conitzer V, Walsh T (2016) Barriers to manipulation in voting. In: Brandt F, Conitzer V, Endriss U, Lang J, Procaccia AD (eds) Handbook of computational social choice. Cambridge University Press, Cambridge, pp 127–145

Conitzer V, Walsh T, Xia L (2011) Dominating manipulations in voting with partial information. In: Proceedings of the 25th AAAI conference on artificial intelligence, pp 638–643

de Haan R (2017) Complexity results for manipulation, bribery and control of the Kemeny judgment aggregation procedure. In: Proceedings of the 16th international conference on autonomous agents and multiagent systems (AAMAS), pp 1151–1159

Dietrich F (2007) A generalised model of judgment aggregation. Soc Choice Welf 28(4):529–565

Dietrich F (2016) Judgment aggregation and agenda manipulation. Games Econ Behav 95:113–136

Dietrich F, List C (2007a) Arrow’s Theorem in judgment aggregation. Soc Choice Welf 29(1):19–33

Dietrich F, List C (2007b) Judgment aggregation by quota rules: majority voting generalized. J Theor Politics 19(4):391–424

Dietrich F, List C (2007c) Strategy-proof judgment aggregation. Econ Philos 23(3):269–300

Dietrich F, List C (2008) Judgment aggregation without full rationality. Soc Choice Welf 31(1):15–39

Dietrich F, Mongin P (2010) The premiss-based approach to judgment aggregation. J Econ Theory 145(2):562–582

van Ditmarsch H, Lang J, Saffidine A (2013) Strategic voting and the logic of knowledge. In: Proceedings of the 14th conference on theoretical aspects of rationality and knowledge (TARK), pp 196–205

Dokow E, Holzman R (2009) Aggregation of binary evaluations for truth-functional agendas. Soc Choice Welf 32(2):221–241

Elster J (1998) Deliberative democracy. Cambridge University Press, Cambridge

Endriss U (2016) Judgment aggregation. In: Brandt F, Conitzer V, Endriss U, Lang J, Procaccia AD (eds) Handbook of computational social choice. Cambridge University Press, Cambridge, pp 399–426

Endriss U, Grandi U (2014) Binary aggregation by selection of the most representative voter. In: Proceedings of the 28th AAAI conference on artificial intelligence, pp 668–674

Endriss U, Grandi U, Porello D (2012) Complexity of judgment aggregation. J Artif Intell Res 45(1):481–514

Endriss U, Obraztsova S, Polukarov M, Rosenschein JS (2016) Strategic voting with incomplete information. In: Proceedings of the 25th international joint conference on artificial intelligence (IJCAI), pp 236–242

Gaertner W (2001) Domain conditions in social choice theory. Cambridge University Press, Cambridge

Gärdenfors P (2006) A representation theorem for voting with logical consequences. Econ Philos 22(2):181–190

Gibbard A (1973) Manipulation of voting schemes: a general result. Econometrica 41(4):587–601

Gilboa I (2009) Theory of decision under uncertainty. Cambridge University Press, Cambridge

Grossi D, Pigozzi G (2014) Judgment aggregation: a primer. Synthesis lectures on artificial intelligence and machine learning. Morgan & Claypool Publishers, San Rafael

Hartmann S, Sprenger J (2012) Judgment aggregation and the problem of tracking the truth. Synthese 187(1):209–221

Jeffrey R (1983) Bayesianism with a human face. Test Sci Theor 10:133–156

List C (2003) A possibility theorem on aggregation over multiple interconnected propositions. Math Soc Sci 45(1):1–13

List C (2012) The theory of judgment aggregation: an introductory review. Synthese 187(1):179–207

List C, Pettit P (2002) Aggregating sets of judgments: an impossibility result. Econ Philos 18(1):89–110

List C, Puppe C (2009) Judgment aggregation: a survey. In: Anand P, Pattanaik P, Puppe C (eds) Handbook of rational and social choice. Oxford University Press, Oxford, pp 457–482

Meir R, Lev O, Rosenschein JS (2014) A local-dominance theory of voting equilibria. In: Proceedings of the 15th ACM conference on economics and computation (EC). ACM, New York, pp 313–330

Nehring K, Puppe C (2007) The structure of strategy-proof social choice–part I: general characterization and possibility results on median spaces. J Econ Theory 135(1):269–305

Obraztsova S, Markakis E, Thompson DR (2013) Plurality voting with truth-biased agents. In: Proceedings of the 6th international symposium on algorithmic game theory (SAGT), pp 26–37

Osborne MJ, Rubinstein A (2003) Sampling equilibrium, with an application to strategic voting. Games Econ Behav 45(2):434–441

Pettit P (2001) Deliberative democracy and the discursive dilemma. Philos Issues 11(1):268–299

Ray P (1973) Independence of irrelevant alternatives. Econometrica 41(5):987–991

Reijngoud A (2011) Voter response to iterated poll information. Master’s thesis, ILLC, University of Amsterdam

Reijngoud A, Endriss U (2012) Voter response to iterated poll information. In: Proceedings of the 11th international conference on autonomous agents and multiagent systems (AAMAS), pp 635–644

Satterthwaite MA (1975) Strategy-proofness and Arrow’s conditions: existence and correspondence theorems for voting procedures and social welfare functions. J Econ Theory 10(2):187–217

Sen A (1969) Quasi-transitivity, rational choice and collective decisions. Rev Econ Stud 36(3):381–393

Sen A (1970) Collective choice and social welfare. Holden-Day, San Francisco

Sen A (1973) Behaviour and the concept of preference. Economica 40(159):241–259

Terzopoulou Z (2017) Manipulating the manipulators: richer models of strategic behavior in judgment aggregation. Master’s thesis, ILLC, University of Amsterdam

Terzopoulou Z, Endriss U, de Haan R (2018) Aggregating incomplete judgments: axiomatisations for scoring rules. In: Proceedings of the 7th international workshop on computational social choice (COMSOC)

Zwicker WS (2016) Introduction to the theory of voting. In: Brandt F, Conitzer V, Endriss U, Lang J, Procaccia AD (eds) Handbook of computational social choice. Cambridge University Press, Cambridge, pp 23–56

Additional information

The authors thank the associate editor and the two reviewers for their valuable suggestions.

Appendix: Proofs

Proof of Theorem 1

We only show the non-trivial direction, which states that if an aggregation rule F is strategyproof for all reflexive, transitive and complete closeness-respecting preferences, then F is independent and monotonic.