Abstract

This paper proposes a method to extract financial causal knowledge from bi-lingual text data. Domain-specific causal knowledge plays an important role in human intellectual activities, especially expert decision making. In the financial domain in particular, fund managers, financial analysts, and other professionals need causal knowledge for their work. Natural language processing is highly effective for extracting human-perceived causality; however, existing methods have two major problems. First, causality related to global activities must be extracted from text data in multiple languages, but multilingual causality extraction has not been established to date. Second, technologies to extract complex causal structures, e.g., nested causalities, are insufficient. We consider that a model using universal dependencies can extract bi-lingual causalities and that nested causalities can be identified using clues, e.g., “because” and “since.” Thus, to solve these problems, the proposed model extracts nested causalities based on such clues and universal dependencies in multilingual text data. The proposed financial causality extraction method was evaluated on bi-lingual text data from the financial domain, and the results demonstrated that the proposed model outperformed existing models in the experiment.

1 Introduction

Domain-specific causal knowledge plays an important role in human intellectual activities, particularly in expert decision-making processes. For example, car companies store knowledge about broken parts and the reasons for their failure for use in subsequent research and development. In addition, financial institutions store the causalities between stock price movements and their causes as human knowledge to develop effective trading strategies.

In particular, financial institutions require causality information in English and other languages because early information on stock price fluctuations is published in different languages. Therefore, causality for global activities must be extracted from text data in multiple languages; however, an effective multilingual causality extraction method has not yet been established. For example, FinCausal [1] is a shared task for extracting causalities; however, this task only targets causalities in English. In addition, Zhou et al. [2] proposed a model to extract causality from Chinese traffic accident text data. Each of these previous studies targeted a single language, and it is unclear whether the models can extract causality from text data in different languages. There is also research on causality extraction from multilingual text [3]. However, because that method requires candidate causes and effects to be extracted manually, it cannot be used in the financial domain, where new causalities appear one after another.

Technical reports, e.g., financial texts and traffic accident texts [2], often include nested causalities. For example, the sentence presented in Table 1 includes two causalities. Here, the “r” tag represents an effect, the “b” tag represents a cause, and the “c” tag represents a clue to detect causalities. In addition, each tag has a number to associate a cause with its effect. In this example, cause 1 (b1) includes an additional causality (b2-r2), and each causality can be identified by clues. However, existing technologies to extract complex causal structures, e.g., nested causalities, are insufficient. For example, in the FinCausal dataset, nested causalities were combined into a single causality.

Therefore, in this paper, we propose a model to extract bi-lingual nested financial causalities using clues. In this research, we use data in two languages, English and Japanese, to confirm the performance of our model. Here, we assume that financial causality structures are language-independent, and we employ syntactic parsers based on universal dependencies [4]. The universal dependencies project is developing cross-linguistically consistent treebank annotation for many languages, including data from more than 100 languages. In addition, we employ graph neural networks to apply syntactic dependencies in the causality extraction task. Finally, we evaluated the proposed financial causality extraction method on bi-lingual text data from the financial domain.

Our primary contributions are summarized as follows.

- We propose a model to extract financial causalities from bi-lingual text data.
- The proposed model handles nested causalities.
- We show that clue expressions, multi-task learning, and syntactic dependencies with graph neural networks are useful for extracting causality.

2 Related Works

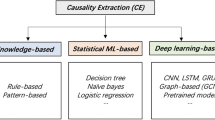

Many studies have investigated the extraction of causal information from texts. For example, Khoo et al. proposed a method to extract cause–effect expressions from newspaper articles by applying manually constructed patterns [5]. Khoo et al. also proposed a method to extract causalities from medical databases by applying graphical patterns [6]. Kao et al. [7] developed the best model in the FinCausal 2020 shared task. This model is based on BERT and the Viterbi decoder algorithm. Sakaji et al. proposed a method for extracting causalities from Japanese newspaper articles using syntactic patterns [8]. Their method also relies on clue expressions. Girju proposed a method to automatically detect and extract causal relations based on clue phrases [9], where causal relations are expressed by pairs of noun phrases. Here, WordNet was used to create semantic constraints to select candidate pairs. In addition, Mirza et al. proposed a framework to annotate causal signals and causal relations between events [10]. In their paper, they compared a rule-based method and a supervised method. Chang et al. proposed a bootstrapping method to extract causalities [11], and they used Naive Bayes to filter causalities. These studies were based on clues, which are important for extracting causalities; thus, this study is also based on clues. In addition, extracting causalities using a syntactic parser has been shown to be effective by Khoo et al. [6] and Chang et al. [11]. Therefore, given the findings of these previous studies, we adopt syntactic dependencies.

In terms of studies on causality extraction that assume an application, Hassanzadeh et al. attempted to detect causalities in phrase pairs in order to use causalities in the IBM Scenario Planning Advisor [12]. They proposed a BERT-based method, and it outperformed other methods. In addition, Hassanzadeh et al. proposed a causal knowledge extraction system [13] that provides four functions: cause–effect pair search, evidence search, causal analysis, and mind map creation. Hashimoto et al. proposed a method to detect causalities from phrase pairs in order to predict future scenarios [14]. Heindorf et al. constructed a causal net using a method based on bootstrapping [15], and they evaluated their causal net using human assessment and question answering. Izumi et al. extracted causalities using syntactic patterns and a machine learning method to construct causal chains [16] using word2vec and cosine similarities. Sakaji et al. extracted rare causalities from Japanese financial statement summaries based on the probabilities of occurrence of cause and effect [17]. They aimed to find investment opportunities using rare causalities. In this study, we also extract causalities for use in applications for companies, e.g., financial institutions.

Several studies have investigated the detection of causal knowledge from phrase pairs. For example, Bethard et al. proposed a method to classify verb pairs with causal relationships [18] using an SVM for classification. In their study, they considered both syntactic and semantic features. Do et al. proposed a minimally supervised method to identify causal relationships between events in context [19]; however, their method only detects causalities, leaving the extraction of phrases that represent causality to another method. Thus, the focus of their study differs from ours.

Note that these previous studies targeted a single language, mostly English; thus, it is unknown whether the corresponding methods can be used to extract causalities from text data in other languages. In contrast, the proposed model can extract causalities in any language supported by universal dependencies. In addition, the above studies rarely mention nested causalities, which indicates that our target task is challenging.

3 Tagging Causality

In reference to the literature, we define causalities in text data as cause expression and effect expression pairs [20]. Here, “reason” and “cause” are treated as equal because it is too difficult to distinguish these meanings. In addition, we focus on cause and effect expressions connected via clue expressions. Here, a clue expression is an expression that helps us detect both cause and effect expressions. For example, “because,” “due to,” and “as” are clue expressions in English. We must consider clue expressions to extract cause and effect expressions because the proposed model targets nested causalities. Using clue expressions, we can effectively detect which cause corresponds to which effect. For example, in Table 1, we can detect two causalities using two clue expressions, i.e., “as” and “from.” In this case, the cause expression “weaker-than-expected data on Chicago-area manufacturing offset gains from positive news about General Motors Corp.” corresponds to an effect expression “U.S. stocks ended lower on Friday in a volatile trading session,” and this cause–effect expression pair is connected by the “as” clue expression. In addition, the cause expression “positive news about General Motors Corp.” corresponds to the effect expression “weaker-than-expected data on Chicago-area manufacturing offset gains,” and this cause–effect expression pair is connected by the “from” clue expression. Therefore, for this task, we input the clue expressions to the causality extraction model to extract nested causalities. In this research, we define nested causality in comparison to FinCausal as follows.

- One sentence contains more than one causality.
- One causality encompasses another causality.
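The two conditions above can be illustrated with a small sketch. It represents each causality as token-index spans and checks both conditions on the example sentence, which is assembled here from the cause and effect expressions quoted in this section. The `Causality` class, the helper names, and whitespace tokenization are hypothetical choices made for illustration and are not part of the proposed model.

```python
from dataclasses import dataclass
from typing import Tuple

Span = Tuple[int, int]  # (start, end) token indices, end exclusive


@dataclass(frozen=True)
class Causality:
    clue: str
    cause: Span
    effect: Span


def covers(outer: Span, inner: Span) -> bool:
    """True if `inner` lies entirely inside `outer`."""
    return outer[0] <= inner[0] and inner[1] <= outer[1]


def extent(c: Causality) -> Span:
    """Smallest span containing both the cause and the effect of `c`."""
    return (min(c.cause[0], c.effect[0]), max(c.cause[1], c.effect[1]))


def encompasses(a: Causality, b: Causality) -> bool:
    """True if the cause or the effect of `a` contains all of `b`."""
    return covers(a.cause, extent(b)) or covers(a.effect, extent(b))


# Sentence assembled from the expressions quoted in the text (assumption).
sentence = (
    "U.S. stocks ended lower on Friday in a volatile trading session as "
    "weaker-than-expected data on Chicago-area manufacturing offset gains "
    "from positive news about General Motors Corp."
)
tokens = sentence.split()  # naive whitespace tokenization, for illustration

c1 = Causality("as", cause=(12, 26), effect=(0, 11))
c2 = Causality("from", cause=(20, 26), effect=(12, 19))
causalities = [c1, c2]

multiple = len(causalities) > 1
nested = any(
    encompasses(a, b) for a in causalities for b in causalities if a is not b
)
print(multiple, nested)
```

In this sketch, the cause of the “as” causality covers both the cause and the effect of the “from” causality, which is the situation described for cause 1 (b1) in Table 1.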

In this task, we use BIO tagging to extract cause and effect expressions. The BIO tagging scheme comprises beginning (B), inside (I), and outside (O) tags. For cause expressions, the “B-C” tag indicates B, and the “I-C” tag indicates I. For effect expressions, the “B-E” tag indicates B, and the “I-E” tag indicates I. Figure 1 shows an example of BIO tagging.

In Fig. 1, “Pfizer falls, oil shares rise” is a cause expression, and “U.S. stocks flat” is an effect expression. Therefore, the word “Pfizer” is tagged “B-C,” and “falls,” “,” “oil,” “shares,” and “rise” are tagged “I-C.” Similarly, the word “U.S.” is tagged “B-E,” and “stocks” and “flat” are tagged “I-E.” In this research, we develop a method for extracting cause and effect expressions using these tags.
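As a minimal sketch of this tagging scheme, the following snippet converts labeled spans into BIO tags. The token order and the connecting word “as” are assumptions, because only the cause and effect expressions of Fig. 1 are quoted in the text; the function name `bio_tags` is hypothetical.

```python
def bio_tags(n_tokens, spans):
    """Convert (label, start, end) spans (end exclusive) into BIO tags."""
    tags = ["O"] * n_tokens
    for label, start, end in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


# Assumed token sequence; the connector "as" is a guess for illustration.
tokens = ["U.S.", "stocks", "flat", "as",
          "Pfizer", "falls", ",", "oil", "shares", "rise"]
spans = [("E", 0, 3), ("C", 4, 10)]  # effect span, then cause span

tags = bio_tags(len(tokens), spans)
for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Words outside any cause or effect expression, such as the assumed connector, receive the “O” tag.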

4 Causality Extraction

In this section, we describe the proposed model to extract cause and effect expressions using both syntactic dependencies and clue expressions. The proposed model comprises bidirectional encoder representations from transformers (BERT) [21] and graph neural networks. The model can process multiple languages by using syntactic parsers based on universal dependencies. In addition, graph attention networks (GAT) [22] are employed as graph neural networks in order to apply syntactic dependencies. We initially considered graph convolutional networks (GCN) [23] rather than GATs; however, a preliminary evaluation showed that the GAT performed better than the GCN for this task. In addition, to handle nested causalities, the proposed model takes clue expressions as input. Here, the clue expressions are encoded using a gated recurrent unit (GRU) [24]. Figure 2 shows an overview of the proposed model.

First, we create a word sequence W from the input sentence. Here, the word sequence W comprises N words; thus, \(W = \{w_1, w_2,..., w_N\}\). Using this input and a BERT model trained on English or Japanese Wikipedia entries, we obtain the vector \(T_W\) of each word using Eq. 1.

Using the obtained vector of each word \(T_W = \{t_1, t_2,..., t_N\}\), we generate the inputs to the GAT. Here, the dimension l of the final BERT output is 768, and \(t_i \in \mathbb {R}^{l}\). We acquire directed edges from W using the syntactic dependencies. Here, we employ spaCy and GiNZA as dependency parsers for English and Japanese, respectively. In addition, we create \(\mathcal {N}_i\) using the acquired directed edges. \(\mathcal {N}_i\) is a set of connected words from word \(w_i\). Then, \(T_W\) is updated using the GAT following Eqs. 2 and 3.
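The construction of \(\mathcal {N}_i\) can be sketched as follows, assuming that the parser output has already been reduced to a list of head indices. The edge direction (head to dependent), the convention that the root points to itself, the optional self-loops, and the example head indices are all assumptions made for illustration; the text does not specify them.

```python
def dependency_edges(heads):
    """Directed (head, dependent) edges; the root is assumed to point to itself."""
    return [(h, d) for d, h in enumerate(heads) if h != d]


def neighbour_sets(n_words, edges, self_loops=True):
    """N_i as the set of words reached from w_i by one directed edge."""
    neighbours = [set() for _ in range(n_words)]
    for src, dst in edges:
        neighbours[src].add(dst)
    if self_loops:
        for i in range(n_words):
            neighbours[i].add(i)
    return neighbours


# Hypothetical parse: heads[i] is the index of the head of word i.
words = ["stocks", "fell", "due", "to", "losses"]
heads = [1, 1, 4, 2, 1]

edges = dependency_edges(heads)
N = neighbour_sets(len(words), edges)
for word, nbrs in zip(words, N):
    print(word, sorted(words[j] for j in nbrs))
```

Because only the head indices are used, the same sketch applies to any language for which a dependency parse is available.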

where, \(\text {a}\) is a weight vector, and \(\text {a} \in \mathbb {R}^{2\,l}\). In addition, \(W_{g}\) is a weight matrix, and \(W_{g} \in \mathbb {R}^{l \times l}\). Here, \(\text {LReLU}\) indicates \(\text {LeakyReLU}\) [25] and is calculated using Eq. 4.
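For orientation, the standard single-head graph attention update of [22] can be written in the symbols defined above. Whether Eqs. 2 to 4 take exactly this form is an assumption; in particular, the intermediate score \(e_{ij}\), the attention weight \(\alpha _{ij}\), the negative slope \(\beta\), and the absence of an output activation are choices made here for illustration.

```latex
\[
e_{ij} = \text{LReLU}\left(\text{a}^{\top}\left[\,W_{g} t_i \,\Vert\, W_{g} t_j\,\right]\right),
\qquad
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k \in \mathcal{N}_i} \exp(e_{ik})},
\qquad
t_i^{\prime} = \sum_{j \in \mathcal{N}_i} \alpha_{ij}\, W_{g} t_j
\]
\[
\text{LReLU}(x) = \max(0, x) + \beta \min(0, x), \qquad 0 < \beta < 1
\]
```

Here, \(\Vert\) denotes concatenation, so the argument of \(\text {a}^{\top }\) has dimension 2l, which matches \(\text {a} \in \mathbb {R}^{2\,l}\).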

Therefore, we can obtain \(T^{\prime }_W = \{t_1^{\prime }, t_2^{\prime },..., t_N^{\prime } \}\) using Eq. 3. We then obtain \(G_W\) using Eq. 5.

where, \(G_W\) comprises \(\{g_1, g_2,..., g_N\}\), and \(g_i \in \mathbb {R}^{l}\). In particular, \(g_i=t_i+t_i^{\prime }\), and \(G_W = \{t_1+t_1^{\prime }, t_2+t_2^{\prime },..., t_N+t_N^{\prime }\}\). Then, we create \(C= \{c_1, c_2,..., c_M\}\), which comprises the output for the [CLS] token and the outputs for the words of the input clue expression, where \(c_i \in \mathbb {R}^{l}\). Specifically, we select from \(G_W\) the \(g_i\) that correspond to the [CLS] token and the clue expression as C. Figure 3 shows an example of collecting C from the input.
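The residual sum and the selection of C can be sketched with plain lists. The toy vectors use l = 2 instead of 768, and the token sequence, the clue positions, and the function names are hypothetical values chosen for illustration.

```python
def residual_sum(T, T_prime):
    """g_i = t_i + t'_i for every word vector."""
    return [[a + b for a, b in zip(t, tp)] for t, tp in zip(T, T_prime)]


def select_clue_vectors(G, cls_position, clue_positions):
    """Collect C: the [CLS] vector followed by the clue-word vectors."""
    return [G[cls_position]] + [G[i] for i in clue_positions]


# Toy input with l = 2 (the model described in the text uses l = 768).
tokens = ["[CLS]", "profits", "fell", "due", "to", "costs"]
T = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [0.0, 2.0], [3.0, 3.0]]
T_prime = [[0.5, 0.5] for _ in tokens]  # stand-in for the GAT output

G = residual_sum(T, T_prime)
C = select_clue_vectors(G, cls_position=0, clue_positions=[3, 4])  # "due to"
print(C)
```

Selecting by position rather than by surface form avoids picking up other occurrences of a clue word, such as a second “to,” in the same sentence.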

Then, the proposed model obtains clue encoding \(\overrightarrow{h}_{\textrm{clue}}\) using C according to Eq. 6. Here, \(\overrightarrow{h}_{\textrm{clue}} \in \mathbb {R}^{\frac{l}{2}}\). Note that we attempted a bidirectional version of Eq. 6; however, we found that this was less effective than the presented version, i.e., Eq. 6.

The proposed model predicts cause and effect tags using \(G_W\) and \(\overrightarrow{h}_{\textrm{clue}}\) according to Eqs. 7 and 8, respectively.

where, \(W_\textrm{cause}\) and \(W_\textrm{effect}\) are weight matrices, and \(b_\textrm{cause}\) and \(b_\textrm{effect}\) are bias vectors. In addition, \(O_\textrm{cause}(w_i)\) and \(O_\textrm{effect}(w_i)\) are the output layers including three classes; i.e., the B, I and O tags.
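One plausible reading of Eqs. 7 and 8, given the symbols above, is a softmax classifier applied to each word vector combined with the clue encoding. The concatenation \([g_i \,;\, \overrightarrow{h}_{\textrm{clue}}]\) and the resulting weight shapes are assumptions made for illustration; the text only states that \(G_W\) and \(\overrightarrow{h}_{\textrm{clue}}\) are both used.

```latex
\[
O_{\textrm{cause}}(w_i) = \text{softmax}\left(W_{\textrm{cause}}\left[\,g_i \,;\, \overrightarrow{h}_{\textrm{clue}}\,\right] + b_{\textrm{cause}}\right),
\qquad
O_{\textrm{effect}}(w_i) = \text{softmax}\left(W_{\textrm{effect}}\left[\,g_i \,;\, \overrightarrow{h}_{\textrm{clue}}\,\right] + b_{\textrm{effect}}\right)
\]
```

Under this assumption, \(W_{\textrm{cause}}, W_{\textrm{effect}} \in \mathbb {R}^{3 \times \frac{3l}{2}}\) and \(b_{\textrm{cause}}, b_{\textrm{effect}} \in \mathbb {R}^{3}\), because \(g_i \in \mathbb {R}^{l}\), \(\overrightarrow{h}_{\textrm{clue}} \in \mathbb {R}^{\frac{l}{2}}\), and each output layer has three classes.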

Finally, the proposed model calculates loss \(\mathcal {L}\) using Eq. 9.

where, \(\mathcal {L}_\textrm{cause}\) and \(\mathcal {L}_\textrm{effect}\) are the losses of the cause and effect expressions, respectively, and both are cross-entropy losses. For simplicity, \(O_\textrm{cause}(w_i)\) and \(O_\textrm{effect}(w_i)\) are referred to as \(o_c\) and \(o_e\), respectively, in the following.

where, \(o_c\) is the cause output by the proposed model, and \(y_c\) is the true label for the cause. In addition, \(o_e\) is the effect output by the proposed model, and \(y_e\) is the true label for the effect.
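The multi-task loss can be sketched as the sum of two cross-entropy terms, one per output layer. The unweighted sum, the averaging over words, and the class order (B, I, O) are assumptions; the text states only that \(\mathcal {L}\) is computed from two cross-entropy losses.

```python
import math


def cross_entropy(probs, gold):
    """Mean negative log-likelihood of the gold class for each word.

    probs[i] is a distribution over the classes (B, I, O) for word i,
    and gold[i] is the index of the true class.
    """
    return -sum(math.log(p[y]) for p, y in zip(probs, gold)) / len(gold)


def multitask_loss(o_c, y_c, o_e, y_e):
    """Assumed form of the total loss: L = L_cause + L_effect."""
    return cross_entropy(o_c, y_c) + cross_entropy(o_e, y_e)


# Toy outputs for a two-word sentence.
o_c = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25]]  # cause layer
y_c = [0, 1]                                   # gold: B, I
o_e = [[0.25, 0.25, 0.5], [0.25, 0.25, 0.5]]  # effect layer
y_e = [2, 2]                                   # gold: O, O

loss = multitask_loss(o_c, y_c, o_e, y_e)
print(loss)
```

Keeping the two terms separate reflects the multi-task setup, in which cause tags and effect tags are predicted by different output layers over the same word representations.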

5 Experiment

We conducted an experiment to evaluate the performance of the proposed model. For this experiment, we prepared three datasets comprising English and Japanese text data from the financial domain. With these datasets, we confirmed that the proposed model can extract causal expressions from data in both languages.

5.1 Datasets

The first dataset contained Reuters news articles in English, and the second dataset was the FinCausal 2020 “PRACTICE” data. Because this FinCausal dataset is not the final version, the causal expression extraction results differ from the leaderboard results. Finally, we used Japanese financial statement summaries called “Kessan Tanshin.” Companies listed on the Tokyo Stock Exchange in Japan must prepare and publish Kessan Tanshin once per quarter, and the Kessan Tanshin data are published in PDF format on each company’s website. We have released the Kessan Tanshin dataset. Note that the Reuters dataset that we created cannot be made public because the content is a commercial product. Thus, two of the three datasets are open data. There are two tasks for detecting causalities in texts: selecting causality sentences and extracting cause–effect expressions. In this experiment, we use only sentences that include causalities because our target is the extraction of cause and effect expressions. Our previous work [17] is an example of a paper that selects causality sentences. Each dataset is described in more detail in the following.

Reuters news articles (English)

Here, we randomly selected 100 Reuters news articles published between 1996 and 2006. The 100 articles were tagged by three people working at a securities company. Each person tagged all 100 articles, and we then combined the results of the three annotators. The average Kappa value for the Reuters news articles is 0.65. As a result, the data included 808 causal expressions, and we split the data into 565 training, 82 validation, and 161 test instances.

FinCausal (English)

The FinCausal dataset includes 1,109 causal expressions, and we split the data into 770 training, 110 validation, and 229 test instances.

Kessan Tanshin (Japanese)

We gathered the Kessan Tanshin data from the websites of Japanese companies, and we selected 30 files at random to generate the dataset. We tagged the data to create the experimental causality dataset, and this tagging process was performed by two natural language processing researchers, who are also individual investors. Both researchers worked on all 30 files, and we then combined their results. Specifically, the first annotator tagged the texts, and the second annotator then checked the annotations. As a result, the data included 477 causal expressions, and we split the data into 332 training, 46 validation, and 99 test instances.

5.2 Models

In this performance evaluation, we compared the proposed model with existing models. First, we considered the model proposed by Dasgupta et al. [26], which is based on bidirectional long short-term memory [27] (BiLSTM). For this model, we used GloVe [28] for English word embeddings and WikiEntVec for Japanese word embeddings. We also employed the BERT model.

In addition, we used models that were constructed using individual components of the proposed model. Here, the MB model uses only multi-task learning, the CMB model uses multi-task learning and clue encoding (Fig. 2), and the GCMB model is the proposed model, which includes multi-task learning, clue encoding, and the GAT with syntactic dependencies. Additionally, we evaluated GCMB_GCN, which employs a GCN instead of the GAT. In this experiment, we used BERT-base models pre-trained on Wikipedia, and the models were implemented using PyTorch, PyTorch Geometric [29], and transformers [30]. We conducted the experiments with five PyTorch random seeds (2000, 2010, 2020, 2030, and 2040).

5.3 Clue Expressions

In this research, our proposed model uses clue expressions. For example, “because,” “as,” and “due to” are English clue expressions. We used 28 English clue expressions for the experiments on the Reuters news articles and the FinCausal dataset. Table 2 shows the clue expressions. In Table 2, “will *” denotes a combination of “will” and any verb. Additionally, we have published the English and Japanese clue expressions on the web.

5.4 Parameters

For each model, we searched for the best parameter settings using the validation data. Here, the number of epochs was searched from 3 to 10, the batch size from 8 to 32, and the learning rate from 5e-08 to 5e-03. In this experiment, we set the input size to 350 following Kao et al. [7], who investigated models that use BERT. These parameters were searched using Optuna [31]. We employed Adam as the optimizer for all models.

5.5 Experimental Results

Tables 3, 4, and 5 show the experimental results. In this experiment, we calculated precision, recall, and F1, where F1 is the harmonic mean of precision and recall. Specifically, for each word, we evaluated whether it is a cause, an effect, or otherwise, and we calculated the macro average over these results. These values were calculated for each of the five seeds and then averaged. The best parameters of the proposed model are shown in Table 6. Figure 4 shows examples of the labeled cause and effect expressions in the Reuters dataset. In Fig. 4, “B–C” represents the B tag of cause, “I–C” represents the I tag of cause, “B–E” represents the B tag of effect, and “I–E” represents the I tag of effect.
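The evaluation procedure can be sketched as a word-level macro average over three classes, followed by a mean over seeds. The class labels “C,” “E,” and “O,” the definition of macro F1 as the mean of per-class F1 values, and the toy label sequences are assumptions made for illustration; the reported numbers in Tables 3, 4, and 5 are not reproduced here.

```python
def macro_prf(gold, pred, labels=("C", "E", "O")):
    """Word-level macro precision, recall, and F1 over the given labels."""
    precisions, recalls, f1s = [], [], []
    for lab in labels:
        tp = sum(g == lab and p == lab for g, p in zip(gold, pred))
        fp = sum(g != lab and p == lab for g, p in zip(gold, pred))
        fn = sum(g == lab and p != lab for g, p in zip(gold, pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(labels)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


def mean_over_seeds(scores):
    """Average (precision, recall, F1) triples obtained with different seeds."""
    return tuple(sum(s[k] for s in scores) / len(scores) for k in range(3))


# Toy word-level labels for one seed.
gold = ["C", "C", "O", "E", "E", "O"]
pred = ["C", "O", "O", "E", "E", "E"]

p, r, f = macro_prf(gold, pred)
print(round(p, 4), round(r, 4), round(f, 4))
```

With five seeds, `mean_over_seeds` would be applied to the five triples returned by `macro_prf`, one per trained model.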

6 Discussion

As shown in Tables 3, 4, and 5, the proposed model outperformed the compared models, with the exception of the precision value obtained on the FinCausal dataset. We found that the F1 score obtained by the proposed model was greater than 0.80 on the Reuters and Kessan Tanshin datasets; thus, we consider that the performance of the proposed model is sufficient for use in practical applications. These results confirm the usefulness of the proposed model.

Tables 3, 4, and 5 compare the results of the MB, CMB, and GCMB models, and, as can be seen, performance increases in the order MB, CMB, GCMB. From these results, we have confirmed that all components of the proposed model (i.e., the multi-task learning, clue encoder, and GAT with universal dependencies components) are important in terms of causality extraction performance. Here, we found that it is important to learn the cause and effect separately in neural network models. In addition, it is essential to handle clue expressions effectively in the causality extraction task. Finally, graph neural networks with universal dependencies are important for extracting causal knowledge.

From Table 4, we found that the precision of the BiLSTM model was higher than that of the other models. This can be attributed to two reasons. First, the FinCausal dataset is limited to only one causality per sentence. Second, the BiLSTM model does not use multi-task learning. Therefore, it is possible that adequate performance on this dataset could have been achieved without using a complex Transformer-based model.

Figure 4 shows the results of the GCMB and BiLSTM models. In Fig. 4, the models were required to extract the cause expression “the No.1 U.S. meat processor beat analysts’ quarterly profit estimates” and the effect expression “Tyson Foods gained 3.8 percent.” In addition, the sentence includes an additional cause expression, i.e., “strong demand for beef,” and an additional effect expression, i.e., “the No.1 U.S. meat processor beat analysts’ quarterly profit estimates.” These cause and effect expressions are connected via the “due to” clue expression. In Fig. 4, the GCMB results were correct except for the tag of “No. 1.” Note that the BiLSTM model only identified four correct tags, i.e., those of “Tyson,” “Foods,” “gained,” and “after.”

In addition, as shown in Fig. 5, the models were required to extract the cause expression “(human resources investment, research and development expenses, and main office transfer)” and the effect expression “(increasing selling costs and increasing administrative expenses).” The sentence also includes another cause expression, i.e., “(increasing selling costs due to human resources investment, research and development expenses, and main office transfer and increasing administrative expenses)” and another effect expression, i.e., “(the amount was 285 million yen.)” These cause and effect expressions are connected via the “(from)” clue expression. In this case, the GCMB results were correct except for the tag of “(sale).” Note that the BiLSTM model exhibited three errors, for “(due to),” “(sale),” and “(cost).”

From these results, we confirm that the performance of the proposed model is excellent on both English and Japanese datasets. However, we should continue to improve the performance of the proposed model because several errors are observed in the results.

To examine the stability of each method, we summarized the results obtained with the five seed values as boxplots, which are shown in Figs. 6, 7 and 8. The trends in Figs. 6 and 8 are similar, but the trends in Fig. 7 are different. We consider that this difference is caused by whether the dataset includes nested causalities. This indicates that our model is suited to extracting nested causality. Additionally, the results indicate that all of our components (multi-task learning, clue encoder, and graph neural network based on syntactic dependencies) are useful for extracting nested causality.

From Figs. 6, 7 and 8, we can confirm that GCMB is more stable than the other models. The difference between the maximum and minimum values of GCMB tends to be smaller than that of the other models. We consider that the graph neural network with syntactic dependencies provided stability and accuracy for extracting causality.

From Figs. 6, 7 and 8, the precision of the BiLSTM and BERT models tends to be higher than their recall. However, their F1 is low due to their low recall. This result suggests that our components (multi-task learning, clue encoder, and graph neural network based on syntactic dependencies) are important for causality extraction regardless of whether the causalities are nested.

7 Conclusion

In this paper, we have proposed a model to extract nested financial causalities based on clue expressions and universal dependencies from bi-lingual texts. The proposed model uses a clue encoder to handle given clue expressions for detecting nested causalities. In addition, to extract bi-lingual causalities, the proposed model uses syntactic dependencies based on universal dependencies, as well as graph attention networks. To evaluate the proposed model, we created two datasets, i.e., the Reuters news dataset and the Kessan Tanshin dataset, from the financial domain. The proposed model was evaluated experimentally on both English and Japanese datasets, and the experimental results demonstrate that the proposed model outperformed the compared models.

In the future, we will attempt to extract causalities from datasets in other languages, e.g., a Chinese dataset, using the proposed model, and we have begun to explore causalities in Chinese financial text data to facilitate this future research direction. We also plan to develop a method to construct causal nets from multilingual causalities. We believe that the financial domain requires multilingual causal nets because the economy is becoming increasingly global. We consider that a method is required to connect causalities between different languages, and we would like to investigate forecasting global economic scenarios using the constructed causal nets. When an event occurs, some companies’ sales may decrease and other companies’ sales may increase. We expect that this process can be observed using causal nets; therefore, we consider that economic scenarios can be forecasted from past events using causal nets.

References

El-Haj, M., Athanasakou, V., Ferradans, S., Salzedo, C., Elhag, A., Bouamor, H., Litvak, M., Rayson, P., Giannakopoulos, G., Pittaras, N. (eds.): Proceedings of the 1st Joint Workshop on Financial Narrative Processing and MultiLing Financial Summarisation (2020)

Zhou, G., Ma, W., Gong, Y., Wang, L., Li, Y., Zhang, Y.: Nested causality extraction on traffic accident texts as question answering, 13029 LNAI, 354–362 (2021)

Hashimoto, C.: Weakly supervised multilingual causality extraction from Wikipedia. In: EMNLP-IJCNLP, pp. 2988–2999 (2019)

Nivre, J., Marneffe, M.-C., Ginter, F., Hajič, J., Manning, C.D., Pyysalo, S., Schuster, S., Tyers, F., Zeman, D.: Universal Dependencies v2: An evergrowing multilingual treebank collection. In: LREC, pp. 4034–4043 (2020)

Khoo, C.S.G., Kornfilt, J., Oddy, R.N., Myaeng, S.H.: Automatic extraction of cause-effect information from newspaper text without knowledge-based inferencing. Lit. Linguist. Comput. 13(4), 177–186 (1998)

Khoo, C.S.G., Chan, S., Niu, Y.: Extracting causal knowledge from a medical database using graphical patterns. In: ACL, pp. 336–343 (2000)

Kao, P.-W., Chen, C.-C., Huang, H.-H., Chen, H.-H.: NTUNLPL at FinCausal 2020, task 2: Improving causality detection using Viterbi decoder. In: Proceedings of the 1st Joint Workshop on Financial Narrative Processing and MultiLing Financial Summarisation (2020)

Sakaji, H., Sekine, S., Masuyama, S.: Extracting causal knowledge using clue phrases and syntactic patterns. In: PAKM, pp. 111–122 (2008)

Girju, R.: Automatic detection of causal relations for question answering. In: MultiSumQA, pp. 76–83 (2003)

Mirza, P., Tonelli, S.: An analysis of causality between events and its relation to temporal information. In: COLING, pp. 2097–2106 (2014)

Chang, D.S., Choi, K.S.: Incremental cue phrase learning and bootstrapping method for causality extraction using cue phrase and word pair probabilities. Inform. Process. Manag. 42, 662–678 (2006)

Hassanzadeh, O., Bhattacharjya, D., Feblowitz, M., Srinivas, K., Perrone, M., Sohrabi, S., Katz, M.: Answering binary causal questions through large-scale text mining: An evaluation using cause-effect pairs from human experts. In: IJCAI, pp. 5003–5009 (2019)

Hassanzadeh, O., Bhattacharjya, D., Feblowitz, M., Srinivas, K., Perrone, M., Sohrabi, S., Katz, M.: Causal knowledge extraction through large-scale text mining. In: AAAI, pp. 13610–13611 (2020)

Hashimoto, C., Torisawa, K., Kloetzer, J., Sano, M., Varga, I., Oh, J.-H., Kidawara, Y.: Toward future scenario generation: Extracting event causality exploiting semantic relation, context, and association features. In: ACL, pp. 987–997 (2014)

Heindorf, S., Scholten, Y., Wachsmuth, H., Ngomo, A.C.N., Potthast, M.: Causenet: Towards a causality graph extracted from the web. In: CIKM, pp. 3023–3030 (2020)

Izumi, K., Sakaji, H.: Economic causal-chain search using text mining technology. In: Proceedings of the First Workshop on Financial Technology and Natural Language Processing, pp. 61–65 (2019)

Sakaji, H., Murono, R., Sakai, H., Bennett, J., Izumi, K.: Discovery of rare causal knowledge from financial statement summaries. In: SSCI, pp. 602–608 (2017)

Bethard, S., Martin, J.H.: Learning semantic links from a corpus of parallel temporal and causal relations. In: ACL, pp. 177–180 (2008)

Do, Q.X., Chan, Y.S., Roth, D.: Minimally supervised event causality identification. In: EMNLP, pp. 294–303 (2011)

Prasad, R., Dinesh, N., Lee, A., Miltsakaki, E., Robaldo, L., Joshi, A., Webber, B.: The Penn Discourse TreeBank 2.0. In: LREC (2008)

Devlin, J., Chang, M.-W., Lee, K., Toutanova, K.: BERT: Pre-training of deep bidirectional transformers for language understanding. In: NAACL, pp. 4171–4186 (2019)

Veličković, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., Bengio, Y.: Graph Attention Networks. In: ICLR (2018)

Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: ICLR (2017)

Chung, J., Gulcehre, C., Cho, K., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modeling. In: NeurIPS 2014 Workshop on Deep Learning (2014)

Maas, A.L., Hannun, A.Y., Ng, A.Y.: Rectifier nonlinearities improve neural network acoustic models. ICML 30, 3 (2013)

Dasgupta, T., Saha, R., Dey, L., Naskar, A.: Automatic extraction of causal relations from text using linguistically informed deep neural networks. In: SIGDIAL, pp. 306–316 (2018)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Computat. 9(8), 1735–1780 (1997)

Pennington, J., Socher, R., Manning, C.D.: Glove: Global vectors for word representation. In: EMNLP, pp. 1532–1543 (2014)

Fey, M., Lenssen, J.E.: Fast graph representation learning with PyTorch Geometric. In: ICLR Workshop on Representation Learning on Graphs and Manifolds (2019)

Wolf, T., Debut, L., Sanh, V., Chaumond, J., Delangue, C., Moi, A., Cistac, P., Rault, T., Louf, R., Funtowicz, M., Davison, J., Shleifer, S., Platen, P., Ma, C., Jernite, Y., Plu, J., Xu, C., Scao, T.L., Gugger, S., Drame, M., Lhoest, Q., Rush, A.M.: Transformers: State-of-the-art natural language processing. In: EMNLP: System Demonstrations, pp. 38–45 (2020)

Akiba, T., Sano, S., Yanase, T., Ohta, T., Koyama, M.: Optuna: A next-generation hyperparameter optimization framework. In: KDD, pp. 2623–2631 (2019)

Acknowledgements

This work was supported by Daiwa Securities Group. This work was supported in part by JSPS KAKENHI Grant Number JP21K12010, and the JST-Mirai Program Grant Number JPMJMI20B1, and JST-PRESTO Grant Number JPMJPR2267, Japan.

Funding

Open access funding provided by The University of Tokyo.

Ethics declarations

Conflicts of interest

The authors have no conflict of interest.

Appendix A Parameters

In this appendix, we show graphs of the parameter search. Using Optuna’s “plot_slice” and “plot_param_importances,” we illustrate the search process for each parameter and the importance of each parameter. In this study, we define the batch size as \(2^N\) and search N from 3 to 5. We define the number of epochs as L and search L from 3 to 20. Finally, we define the learning rate as \(5e-M\) and search M from 8 to 3. In each experiment, 10 trials were performed using Optuna to search for optimal parameters.
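The search space described above can be sketched with the standard library alone. Uniform random sampling is used here as a stand-in for Optuna’s own samplers, and the function name `sample_trial` and the seed value are hypothetical; the parameter names follow the figure labels in this appendix.

```python
import random


def sample_trial(rng):
    """Draw one configuration from the search space described in the text."""
    n = rng.randint(3, 5)    # batch size is 2^N with N in 3..5
    l = rng.randint(3, 20)   # number of epochs L in 3..20
    m = rng.randint(3, 8)    # learning rate is 5e-M with M in 3..8
    return {
        "batch_size": 2 ** n,
        "epoch": l,
        "lr_lastlayer": 5 * 10.0 ** (-m),
    }


rng = random.Random(2000)  # illustrative seed
trials = [sample_trial(rng) for _ in range(10)]  # 10 trials per experiment
for trial in trials:
    print(trial)
```

Parameterizing the batch size and learning rate by their exponents keeps the search on a logarithmic grid, so values such as 8, 16, and 32 or 5e-08 to 5e-03 are equally likely to be tried.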

Figures 9, 10, and 11 show slice plots for each parameter. In these figures, “batch_size” indicates the batch size, “epoch” indicates the number of epochs, and “lr_lastlayer” indicates the learning rate. Additionally, Figs. 12, 13, and 14 show the importance of each parameter.

About this article

Cite this article

Sakaji, H., Izumi, K. Financial Causality Extraction Based on Universal Dependencies and Clue Expressions. New Gener. Comput. 41, 839–857 (2023). https://doi.org/10.1007/s00354-023-00233-2

DOI: https://doi.org/10.1007/s00354-023-00233-2