Abstract

Field Programmable Gate Arrays (FPGAs) have become a widely available computational resource for real-time image processing and can be used in time-critical optical process measurements. In this study, we investigate the applicability of the spatial filtering technique for time-resolved velocity field determination, on the example of a simple water tunnel flow. For this, the spatial filtering technique was implemented for a custom FPGA platform and analyzed regarding the effect of spatial and temporal resolution on the accuracy of the results. It was possible to prove the algorithm as suitable for velocity field estimation and identify algorithm parameters, which allow for increasing the accuracy of the results.

Graphical abstract

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Correlation-based particle image velocimetry (PIV) is widely applied for the time-resolved measurement of multidimensional velocity fields (Raffel et al. 2018). It requires the evaluation of image pairs captured at high frame rates, leading to high bandwidth and storage requirements for the image data, as well as an extensive demand for computational resources for the processing. This renders continuous online measurements of velocity fields in real-time with high frame rates nearly impossible. To overcome this limitation, parts of the image processing pipeline can be implemented within highly paralleled application-specific hardware modules, directly connected to the data output of an imaging sensor. For this purpose, Field Programmable Gate Arrays (FPGAs) are reasonable in terms of complexity and flexibility, as they have become more accessible in recent years. By using such a system at a sufficient clock speed the demand for bandwidth, storage, and computational resources can be reduced while yielding fewer intermediate results needed to be transferred to a post-processing system.

The algorithm used in this study is known as spatial filtering technique (Aizu and Asakura 2006). It is a suitable option for a hardware implementation as its processing time scales linearly with the amount of data to be processed. A description of the spatial filtering technique, alongside selected modifications, is given in Sect. 2.

An FPGA-based camera platform, capable of real-time image processing, was developed in a cooperative project between the University of Rostock and the OPTOLOGIC Components GmbH. Section 3 gives an overview of this system and addresses details of the hardware implementation. It is used in a verification experiment presented in Sect. 4.

Commercial length and velocity measurement systems using spatial filtering techniques are available from Optologic Components GmbH, ASTech GmbH, Micro-Epsilon Messtechnik GmbH & Co. KG, Kistler Instrumente GmbH, and Parsum GmbH. They are primarily used to measure one time-dependent velocity component with a high temporal resolution, at one point of a surface (0D–1C). Typically, optical line or area scan sensors are used in these systems.

2 Spatial filtering technique

2.1 Basic principles

In general, the spatial filtering technique is based on using a periodic, structured grating within the imaging path of an optical system (Aizu and Asakura 2006; Schaeper et al. 2008). The image information is weighted by this grating and integrated consecutively. Considering the one-dimensional image of a moving structure \(i\left( x-vt\right)\), the movement results in a velocity v or an equivalent displacement vt of the structures projection in the imaging plane. Using a time-independent spatial grating function \(g\left( x\right)\), the spatial filtering signal \(s\left( t\right)\) can be calculated as follows (Schaeper and Damaschke 2012):

The spatial filtering signal can be interpreted as time-resolved cross-correlation of the imaged structure with a grating function.

Real valued sine gratings

where \(k_g\) is the spatial angular frequency and \(P_g\) a defined period length, are a common choice in spatial filtering velocimetry. The velocity can then be estimated from the frequency of the spatial filtering signal \(\omega _s\) taking into account the grating functions spatial angular frequency (Schaeper and Damaschke 2012):

Building up on this approach, it is possible to use a complex harmonic grating function (Schaeper and Damaschke 2017)

that applied to Eq. 1 results in a complex valued spatial filtering signal

which is equivalent to a time-resolved Fourier coefficient of the respective spatial angular frequency \(k_g\) (Schaeper and Damaschke 2017). A Velocity estimation can then be performed, by estimating the phase derivation in Eq. 5, utilizing the phase difference \(\Delta \varphi _g\) of the signal \(s\left( t\right)\), at consecutive discrete temporal samples (Nomura et al. 1992):

The validity of this method can be shown, using the shifting property of the Fourier transform. It states, that a shift in spatial domain results in a linear frequency dependent phase shift in frequency domain, which is proportional to vt:

where I denotes the spatial spectrum of the one-dimensional image signal i with respect to the spatial frequency k. To isolate this phase shift analogously to Eq. 6, the complex conjugate multiplication between the spectra of the image information at two consecutive time steps can be computed:

Thereby the phase offsets introduced by the structure itself cancel out while the shift-dependent phase term, \(\Delta \varphi \left( k\right) =kv\Delta t\), remains. The expression can be rearranged in terms of the velocity

which should be equal for every frequency from gratings with different k. This proves Eq. 6 true as a special case of Eq. 10 and implies, that in theory any complex harmonic grating function is suitable for the velocity estimation.

2.2 Application to real imaging systems

In contrast to the previous considerations, a real imaging system incorporating a spatial filter would not provide an infinitely large imaging plane. Because the grating function should be mean-free, the spatial filter is in the following assumed to be limited to an integer multiple N of period length. Equation 5 can therefore be rewritten as:

This concept can be translated to a camera system, which uses a CCD or CMOS imaging sensor. The sensor provides a light-sensitive area, limited by a fixed number of equidistant pixels, which sample the imaging plane spatially. Therefore, the spatial filtering signal of a digitally recorded region of interest (ROI) can be expressed as the sum of pixel values, weighted by a discrete grating function:

The influence of the discretization of the imaging plane and the grating function is given only if the point spread function of the used imaging lens is smaller than the area of a single pixel or if a differential grating function with alternating positive and negative values is used (Schaeper and Damaschke 2017). In the case of a very small point spread function, the problem can be solved by slightly defocusing the objective lens. The second case of a differential grating function does not apply to the proposed method, as only harmonic grating functions with longer period lengths are used.

The discretization of the imaging plane and the spatial limitation of the ROI both increase the velocity estimations variance. As the image information moves over the imaging sensor’s surface, it crosses the ROIs boundaries, leading to changes in the spectral content between consecutive images. This causes inconsistencies and breaks in the phase progression of the spatial filtering signal, resulting in inaccurate velocity estimations. This increased variance depends on the density of image information and the shape of the chosen interrogation areas (Schaeper et al. 2015). It can be reduced by taking the average over multiple independent velocity estimations in the frequency (see 2.3) and time domain (see 2.6).

Building upon the usage of imaging sensors, the spatial filtering technique can be extended to two-dimensional rectangular interrogation areas with a certain width w and height h. Applying Eq. 12 to the respective directions of row and column sums of the ROI, results in two sets of independent spatial filtering signals, allowing a two-component velocity estimation. The grating functions for both directions can be chosen independently from each other regarding their spatial frequency, accounting for different orders of magnitude assumed for each velocity component.

A multitude of such interrogation areas can be distributed over the area of an image sensor, enabling spatial resolution of velocity fields in one or two dimensions. The ROI sizes and positions can be adjusted to meet certain requirements depending on the observed process. For example, if the system provides a dominant direction of movement, the interrogation area size can be expanded in this direction, allowing larger shifts of image information and therefore estimations of higher velocity values. ROIs can also be placed in an overlapping pattern, to increase the vector density of the velocity field measurement results.

2.3 Extension to multiple grating functions

The depicted approach using a single grating function to generate the spatial filtering signal (Eq. 5) has two obvious flaws. Even if Eq. 10 proves otherwise, not every grating function is equally suitable to obtain an appropriate velocity estimation in any case. Instead, the suitability of the applied grating function is highly dependent on the spectral content of the imaged structure and its velocity (Schaeper and Damaschke 2017). Since the computation of the complex spatial filtering signal is equivalent to a cross-correlation with a complex harmonic function of a given frequency, this frequency has to be part of the image’s spectral content in order to generate a valid spatial filtering signal.

Also, the limitation in terms of the maximum measurable velocity originates from the \(2\pi\)-periodic nature of harmonic grating functions. For one grating, the possible phase range is restricted to \([-\pi ,\pi ]\), resulting in determinable image shifts within half a period length for both directions and therefore limiting the measurable velocity to

where the subscript n will be used in the following to denote individual grating functions. This can either be treated by decreasing the sampling interval \(\Delta t\) or by increasing the period length, whereby a drastic decrease in the sampling interval becomes infeasible in practice. But also to increase the period length entails the disadvantage of lowering the accuracy at lower velocities (Schaeper and Damaschke 2017).

As a conclusion from the observations mentioned above, to increase the accuracy while sustaining a proper dynamic range for the velocity estimation, multiple grating functions should be used at the same time, resulting in a set of concurrent spatial filtering signals. Through evaluation of the different signals and combining their respective intermediate results, the velocity estimation can be optimized, leading to an adaptive spatial filtering algorithm. Strategies to evaluate spatial filtering signals from different grating functions have already been proposed, like the dual orthogonal pointer method (DOP) by Michel et al. (1995) and the coarse-to-fine algorithm by Bergmann (2010).

The most straight forward approach is to apply a set of N different grating functions to the same moving image and computing the average of the resulting independent velocity estimations:

This method can improve the accuracy compared to the use of a single grating function but does not provide an improvement in terms of the dynamic range. The estimation quality spontaneously degrades when the spatial shift exceeds the smallest period length used in the set of grating functions, due to the wrap-around behavior of the phase computation.

It is possible to identify the corrupted values by using the velocity estimation based on the grating function with the largest period length as a reference. If the velocity value exceeds the value range of certain spatial filtering signals, their estimation should be excluded from the average calculation. Assuming that the velocity measurements are ordered ascending by the period length of the used grating function, this can be written as follows:

Using this approach, the accuracy degrades on a smaller level as invalidated values no longer contribute to the average velocity.

Instead of invalidating the phase differences yielded by smaller grating functions, they can be estimated with respect to larger ones or simply the largest grating function used. Applying the equality of velocity estimations for different grating functions according to Eq. 10, the phase shift \(\Delta \varphi _{N-1}\) can be used to calculate an estimation of the phase shift

for any other smaller grating function.

Under ideal circumstances, this approximation should be equal to the respective phase difference \(\Delta \varphi _n\) extended by a multiple \(M_n\) of \(2\pi\) as it is no longer restricted to the value range of \(\left[ -\pi ,\pi \right]\) mentioned before:

\(M_n\) can be calculated while rounding half toward zero to reduce errors, as follows:

Thereby, an offset velocity \(\widetilde{v}_n\) for every individual velocity value can be computed, as it determines shifts of image information based on full period widths. Thus, Eq. 14 can be extended by this offset:

eliminating the error introduced by the limited phase value range. Nevertheless, the maximum estimable velocity remains limited by half the period length of the largest grating function.

2.4 Assessing the multi-grating-approach using artificial images

In order to compare the accuracy of the proposed multi-grating spatial filtering approach to other displacement estimation methods, multiple series of artificial particle images are used. Each series consists of 5000 images with a size of 512 px \(\times\) 10 px and are providing a constant pixel shift between 0.1 and 30 px in steps of 0.1 px respectively. The particle density for all images is set to one particle per 100 pixels and a slight effect of out-of-focus imaging is simulated by Gaussian blurring with a \(\sigma\)-value of one pixel in the subpixel domain.

Each image series is processed by different approaches. For a direct comparison, three conventional spatial filtering shift estimators using grating functions with period lengths of 128, 64 and 32 px (\({\text {SF}}_{x}\) with \(x=\{128,64,32\}\)) are used. The proposed multi-grating spatial filtering approach is applied in an unfiltered \({\text {SF}}_{\text {MG}}\) and filtered mode \({\text {SF}}_{\text {MG}}^*\) using a set of grating functions with period lengths of 16, 32, 64 and 128 px. The unfiltered mode simply yields the mean of each intermediate displacement estimation in every time step as displayed in Eq. 19, while the unfiltered mode only yields a mean displacement estimation if all four intermediate estimations lie within a range of one pixel. Additionally, a naive cross-correlation (CC) implementation using Fourier transform and a Gaussian fit for displacement estimation in the sub-pixel domain is applied as a benchmark.

The root mean square error (RMSE) of the individual shift estimation methods is shown in Fig. 1 for a reduced number of individual shifts.

The conventional spatial filtering methods provide a comparable high error level that is reduced toward shorter period lengths as mentioned in Sect. 2.3. Also, it becomes visible, that the estimation error begins to increase if the pixel shifts approach half the period length of the used grating function, as for \({\text {SF}}_{32}\) between displacements from around 11 to 16 px and for \({\text {SF}}_{64}\) starting at approximately 20 px.

The unfiltered multi-grating approach shows a slight improvement over the conventional spatial filtering estimator with a grating period length of 128 px only, which is also the largest grating function used for estimating the intermediate displacement results (see Eq. 16). This is due to the direct feedback of estimation errors from this low-frequency spatial filtering signal by calculating the correction factors \(M_n\) based on it. As there is a relatively large gap between the used period lengths, a small phase error at the reference \(\Delta \varphi _{N-1}\) can result in a large phase offset at the estimated phase differences \(\Delta \widetilde{\varphi }_n\).

The filtered multi-grating approach on the other hand provides a significantly improved estimation error when compared to the conventional spatial filtering estimators, and its unfiltered counterpart, which increases in the direction of higher displacement values. As estimation values are produced only, if all intermediate shift results lie within the range of a single pixel, the error arising when computing \(M_n\) as described above, is inherently suppressed. Despite the improved accuracy, this approach does not reach the low error level of the cross-correlation-based shift estimation. This can be explained by the respective amount of spectral content that contributes to the shift estimation. The conventional spatial filtering methods rely on single frequencies, the multi-grating approach combines four independent frequency components and the cross-correlation considers all frequencies obtainable by a discrete Fourier transform of the image information. Following this observation, the error level seems to scale with the amount of spectral information contributing to the shift estimation.

By excluding all processing results of the multi-grating approach, whose intermediate displacement estimations do not lie within the defined allowed displacement range of 1 px, a large number of estimations is discarded, causing a thinned-out dataset. Figure 2 shows the relative drop rates for the respective image series.

While the drop rates seem to be high, as already a pixel shift of 4 px results in only half of the shift estimations being considered valid, it has to be noted, that in the provided case study, no measures were in place to improve the signal quality before the shift estimation. Such measures could for example include the temporal integration using the superposition of pointers which is described in Sect. 2.6.

2.5 Mean image subtraction

To reduce the influence of static background illumination while generating the spatial filtering signal, it is possible to subtract a mean image from the digital image data before the grating function is applied (Schaeper et al. 2016). Such an image can be created by computing the pixelwise average over a set of images taken from the moving surface. Additionally to the mean image, a constant threshold can be subtracted from the pixel values, to take fluctuations in lighting or noise into account. The results of these subtractions are clipped to a minimum of zero, preventing noise and fluctuation offsets from persisting in the negative pixel values. If this approach can be used or if only a threshold should be applied depends on the imaged structure.

2.6 Temporal integration

Another possibility to improve the velocity estimation is the averaging of temporally consecutive values, which comes at the cost of losing temporal resolution. Depending on the overall properties of the processed image information, this can be either used to increase the accuracy of the average estimation or at least to gather enough data to compute a valid estimation result. Looking at the spatial filtering algorithm described above, there are two different suitable options to perform this kind of temporal integration.

The straight forward approach would be, to compute the average of \(N_v\) consecutive velocity estimations, yielded by Eq. 19:

This method can be used for systems that provide dense image information only, resulting in substantial velocity estimations in every time step. In contrast, if the images are rather sparsely populated with information, most single velocity estimations originate from background noise, degrading the average result. The same effect was also shown for the average velocity method in PIV by Meinhart et al. (2000).

An alternative approach is to perform the temporal integration before the velocity estimation in form of the accumulation of N temporal consecutive complex pointers yielded by Eq. 9. This method is comparable to the average correlation method in PIV (Meinhart et al. 2000) for individual frequencies. The magnitude of a complex spatial filtering signal generated from background noise only should be significantly lower than that generated from real structures. Therefore, the complex conjugate multiplication of two valid spatial filtering values will provide a higher magnitude compared to that of at least one invalid signal. Additionally, the phases of such valid results have to be equal, whereas the phase of invalid signals can be assumed as arbitrary distributed. Based on these considerations, the accumulation of the complex values results in a new pointer which phase reflects the shift of image information, slightly distorted by the invalid signal values:

The flaw in this approach arises when densely populated image data is evaluated and the errors in the spatial filtering signal are rather based on the change of information than on background noise. Under these circumstances, the result of the complex conjugate multiplication has a high amplitude, even if both factors do originate from image data, which is not correlated enough to allow a valid estimation.

3 Real-time processing

3.1 Platform description

An implementation of an FPGA-based smart camera platform originated as part of a cooperative project between the University of Rostock and the OPTOLOGIC Components GmbH. It consists of a high-speed CMOS image sensor, an FPGA, and an ARM-based System-on-module (SoM) (see Fig. 3).

The image sensor is capable of delivering a framerate of 340 fps at a full resolution of \({2048}\;{\textrm{px}}\times {1088}\;{\textrm{px}}\), resulting in a theoretical possible data rate to the FPGA of 722.5\(\;{\textrm{MBs}}^{-1}\), which is for technical reasons capped at 640 \({\textrm{MBs}}^{-1}\) in the current implementation. This means, that a framerate of 340 fps can only be used by reducing the ROI size to \({2048}\;{\textrm{px}}\times {963}\;{\textrm{px}}\). Even higher framerates can be reached by further reducing the ROIs number of lines. The FPGA provides 600 DSP-Cores for signal processing tasks and RAM Units with a total size of 11.700 kbit, for buffering tasks and storage of static and critical process data, besides other hardware resources. It handles the control of the sensor, manages the data acquisition from the sensor outputs, and implements the image processing algorithm. Furthermore, it is equipped with two external independent DDR3 RAM Banks, allowing operations that require larger storage than the internal RAM can provide, for example storing whole sets of images for subsequent evaluation. The FPGA is connected to an SoM via SPI and PCIe Gen. 3. The SoM itself is equipped with an ARM 1 GHz Quad-core processor and 1 GB of DDR3 RAM, running a Custom Linux, which contains all necessary drivers and software libraries to operate the FPGA. It also provides connectivity to an external host (e.g. via Ethernet) for data transmission and can be used for post-processing of the measurement data. Figure 4 shows the complete measurement system. The case has a size of \({120}\;{\text {mm}} \times {130}\;{\text {mm}} \times {64}\;{\text {mm}}\).

3.2 Processing architecture

The internal architecture of the FPGA implementation, as depicted in Fig. 5 consists of three organizational classes of different intellectual property cores (IP-Cores). The Sensor Control Block (green), provides all services related to the external imaging sensor, including parameterization, data acquisition, and triggering. Also, it converts the acquired image data into a streaming format for internal data transmission. The infrastructural IP-Cores (red) perform memory-related tasks. They are used to provide a PCIe-Endpoint, allowing the SoM to communicate with the FPGA, as well as to manage data access to internal and external memories and registers. The algorithmic IP-Cores (blue) are implementing the spatial filtering and shift estimation algorithms. They are consuming imaging data and producing velocity estimation values, which are written to a memory region that is accessible through the PCIe-Endpoint. Additionally to the processing pipeline, the current implementation features an independent sub-routine, for mean image generation. This functionality allows generating a mean image over an arbitrary number of images prior to the measurement, using the same sensor configuration, regarding frame rate and exposure time. In a future release, the mean image generation is planned to be migrated into the processing pipeline, for periodic renewal parallel to the measurement at configurable intervals ranging from a few minutes up to several hours. This will allow the system to adapt to changes in the background illumination that could occur in longer measurements or depending on the environment.

The processing pipeline is comprised of two individual parts, denoted as pre- and post-processing IP-Cores. While the preprocessing step is used to generate the spatial filtering signal, the post-processing performs the temporal integration and velocity estimation. Both IP Cores feature block control and configuration registers, which are used to manage the execution and parametrization of the algorithm.

Figure 6 shows the architecture of the preprocessing block. Incoming pixel values are reduced by corresponding values of a mean image and an optional threshold (see 2.5). The reduced pixel values are weighted by different grating functions (see 2.3), of which up to four can be applied simultaneously in the current implementation. Afterward, the pixels are accumulated in row and column directions, resulting in multiple spatial filtering signals, one for every grating function and interrogation area (see 2.2). It has to be noted, that the mean image and grating functions are accessed via independent memory interfaces. Since these datasets are frequently accessed, the throughput of the pipeline can be optimized by placing them on independent memory devices. The preprocessing block can process incoming pixels with the applied system clock speed of 200 MHz, while the pixel clock provided by the imaging sensor is at 40 MHz. Thereby, the possibility of pipeline stalling is eliminated and there are resources left for extending the block to more grating functions and generating spatial filtering signals for both dimensions.

As shown in Fig. 7, the post-processing block is used to estimate velocity values directly from the spatial filtering signal provided by the preprocessing block. In the first computation step, the complex conjugate multiplication of temporally consecutive signal values is performed (Eq. 9). Therefore, a delayed memory is added within this block to store signal values of the previous timestep, allowing the continuous generation of complex pointers for every calculated value of the spatial filtering signal after the first. Pointers yielded by the complex conjugate multiplication can be accumulated to perform the first form of temporal integration (Eq. 21), which increases the estimation quality (see 2.6). A CORDIC-based and fully pipelined atan2 implementation is used to generate the phase values of these accumulated pointers. After that, the corrected phase shifts are computed as stated in Eq. 16 and passed to a filter function. The filter function observes the divergence of the velocity estimations yielded by the corrected phases and passes information about their validity and the velocity offset (Eq. 19) to the final velocity estimation. Here, the velocities are estimated according to Eq. 19 and can additionally be averaged temporally for the second kind of temporal integration (see 2.6).

The current implementation is designed for processing the sensors data five times faster than the pixel stream input could provide, which eliminates the possibility of data inconsistencies in the dataflow within the pipeline. Despite this assumed reliability, there are measures in place, to handle possible occurring data inconsistencies within the pipeline. The pixel stream provides side-channel signals, to identify the first pixel of a new image and the last pixel of every image line. These side-channel signals are evaluated and converted to match the respective data structure in every step of the processing pipelines. If an inconsistency arises in one of those steps, the whole pipeline will be reset and will resume operation on the next new image.

Assuming the uninterrupted operation of the pipeline and that each image generates a set of output data, the delay time between the last pixel of an image coming from the sensor control block and raising the interrupt to signal that the dataset is ready, is approximate 200 \({{\mu }}s\). This delay value may vary, depending on the interrogation area height and the number of velocity estimations (i.e., the spatial resolution). About 99% of this delay time is caused by the shift estimation in the post-processing step, where the most demanding task is the phase calculation, which is currently performed by a single CORDIC-based processor. By implementing more of these processing blocks in parallel, the delay could be reduced by nearly the parallelization factor at the cost of more used resources inside the FPGA.

4 Flow Profile Characterization

4.1 Setup and Calibration

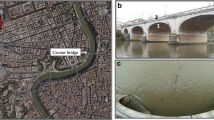

For the verification of the measurement system, the flow in a small water tunnel (Fig. 8) is observed. The tunnel has a rectangular cross-section with three acrylic windows. A laser light sheet is coupled into one of the side windows, parallel to the upper one. The measurement system is observing the light section perpendicularly from above, with the lines of the image sensor oriented in the main flow direction. To generate a flow inside the tunnel, a motor-driven propeller with an adjustable number of rotations u per second is used. A 200 mW Nd:YAG CW laser (532 nm) with a cylindrical lens as light sheet optics is used as light source. The measuring system is equipped with an objective of 3.5 mm focal length and its distance to the light section is adjusted to fit the cross section of the tunnel into an ROI of 512 times 512 pixels (Fig. 9). Throughout the measurement, the imaging sensor is configured with an exposure time of 0.2 ms and a framerate of 500 fps.

A calibration of the imaging field was performed by removing the cylindrical lens in front of the laser, resulting in a small Gaussian beam of light within the plane of measurements. The laser beam can be made visible by generating a mean image while the water inside the tunnel flows (see 2.5). The Laser is mounted on a motorized linear stage, which allows placing at even intervals of 5 mm, sampling the physical extent of the tunnel at a total length of 10 cm with 21 lines. These imaged laser beams are visible in Fig. 10.

A line-wise estimation of the peak positions yields nearly no distortion introduced by the lens itself, only slight deviations in the sub-pixel domain(\(\le {0.1}\;{\textrm{px}}\)), which can also be interpreted as a numerical error. The low image distortion can be explained by the limited measurement region of 512 times 512 pixels in the center of the sensor. Therefore, in the following for every line of the ROI is assumed, that the lens provides a magnification of 0.3 mm/px

4.2 Study on spatial resolution

In the following, the effect of the spatial resolution on the estimation results is examined at different flow velocities. Therefore, the motor of the tunnel is set to rotational frequencies u of \(50,100,150,200\;{\textrm{and}}\; 250\;{\text {s}}^{-1}\) while the interrogation areas are configured with heights h of 4, 8, 16 and 32 respectively. The measurement system is configured to use four complex harmonic grating functions with period lengths of 32, 24, 16 and 8 pixels and a pointer-based temporal integration of 100 consecutive samples.

Figures 11 and 12 show the mean flow profiles generated by the measurement data. All profiles feature a nearly linear relation to the rotational frequency u and even show similar features originating from the tunnel flow characteristics, especially when looking at the profiles measured at \(u={250}\;{\textrm{s}}^{-1}\). It can be seen, that a higher spatial resolution leads to slight fluctuations between neighboring values. In contrast, a reduction of the spatial resolution causes a degradation of the estimation in more dynamic areas, like the boundaries of the tunnel in Fig. 12.

As an indicator of the estimation accuracy, every interrogation area i is considered as a single velocity estimator, yielding a value \(v_{i,t}\) at every time step of the measurement. Those values are divided by the time average \(\overline{v}_i\) corresponding to their respective interrogation area and grouped by the measurement run. Therefore every box in Fig. 13 is derived by a number of values, equivalent to the number of interrogation areas multiplied by the number of samples in the time domain.

It becomes clear, that a reduction of spatial resolution improves the reliability of the estimation, caused by in-plane loss (see 2.2). However, this effect is only noticeable at the step from \(h=4\) to 8, which is due to the flow characteristics and the image quality. As 75% of the relative deviations for most of the measurement runs and configurations lie within a range of 5–10% it is assumable, that most of the deviations are introduced through in-stationary effects of the tunnel flow.

4.3 Study on temporal integration

In Sect. 2.6, a superposition of complex pointers is proposed as a temporal integration strategy, which has been examined experimentally. For this purpose, the rotation of the motor was set to a fixed speed of \({150}\;{\textrm{s}}^{-1}\) to ensure an approximately constant tunnel flow. For the conducted measurements, the sampling window \(n_p\) for temporal integration is set to 10, 25, 50 and 100 individual frame pairs and the height of the interrogation areas is set to \(h=4\).

Figure 14 illustrates the mean velocity profiles generated by this measurement. They show a slight underestimation for lower sampling windows, due to including zero-valued velocities into the average when no image information passes the interrogation area.

The accuracy of the strategy was examined analogous to Sect. 4.2, as shown in Fig. 15. It shows a strong convergence in the direction of longer sampling windows, but once again, it has to be assumed, that the majority of the deviations originate from in-stationary effects within the tunnel flow.

4.4 Observing a dynamic process

While the previous studies were focused on static operating points of the water tunnel, the following experiment aimed to observe a dynamic change in the flow. Therefore, the rotational speed of the motor is set to \({200}\;{\textrm{s}}^{-1}\) and immediately turned off, which has proven to be the most reliably repeatable process with the current setup. This turn-off process was observed at 500 fps with three different temporal integration lengths, resulting in the three different signal rates \(f_s\) (i.e., time resolutions), generated by the temporal integration strategy of pointer superposition. The results are shown in Fig. 16.

Due to the low density of image information, the results generated at the sampling rate of 40 samples per second exhibit high fluctuations and spikes. These effects are smoothed out for lower sampling rates at the cost of available time resolution.

Figure 17 shows the results of a one-dimensional and time-resolved flow profile measurement. The profiles are generated by the temporal superposition of 100 pointers, resulting in a signal rate of five velocity profiles per second. The comparably low temporal resolution allows reliable velocity estimations, despite the sparse image information.

The velocity profiles for the maximum velocity of about \({0.75}\;{\textrm{ms}}^{-1}\) contain spatial and temporal coherent velocity fluctuations, which seem to be large-scale turbulent structures in the flow. When the motor is turned off, the fluctuations decrease in frequency.

5 Conclusion

The proposed spatial filtering algorithm in connection with the developed FPGA platform has proven to be a promising approach for real-time velocity field measurement. Since its accuracy strongly depends on the type of process being measured and the configuration of the algorithm parameters, the identification of the appropriate parameters and the development of suitable correction and validation procedures is the subject of further research. Furthermore, the current implementation requires only a portion of the available FPGA resources of the platform, which should allow the measurement method to be extended to a 2D–2C technique. By using the presented FPGA-Camera system, such time-resolved profiles can be directly measured and processed without time limitation. Therefore the system can be used for continuous process characterization.

Availability of data and materials

The datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Code Availability

The code used for data evaluation during the current study is available from the corresponding author upon reasonable request. Algorithmic codes used for programmable logic and embedded systems will not be made available.

References

Aizu Y, Asakura T (2006) Spatial filtering velocimetry: fundamentals and applications, vol 116. Springer Science and Business Media, Berlin, Heidelberg

Bergmann A (2010) Verbesserung des ortsfrequenzfilterverfahrens zur kamerabasierten messung der translatorischen geschwindigkeit bewegter objekte. Shaker, Aachen

Meinhart CD, Wereley ST, Santiago JG (2000) A PIV algorithm for estimating time-averaged velocity fields. J Fluids Eng 122(2):285–289

Michel K, Richter A, Christofori K (1995) Geschwindigkeitsschätzung bei mehrteilchensignalen mittels dual-orthogonal-pointer (dop). In: Proceedings der4. GALA-Fachtagung Lasermethoden in der Strömungsmesstechnik, pp 9.1–9.3

Nomura M, Hori M, Shimomura J, Terashima M (1992) Velocity measurement using phase orthogonal spatial filters. In: Conference record of the 1992 IEEE industry applications society annual meeting, pp 1756–1761

Raffel M, Willert CE, Scarano F, Kähler CJ, Wereley ST, Kompenhans J (2018) Particle image velocimetry : a practical guide (Array edn) [Online-Ressource]. Cham: Springer. Retrieved from https://doi.org/10.1007/978-3- 319-68852-7 (Online-Ressource (XXVI, 669 p 434 illus, 164 illus. in color, online resource))

Schaeper M, Damaschke N (2012) Pre-processing for multidimensional spatial filtering technique. 16th International symposium on application of laser techniques to fluid mechanics

Schaeper M, Damaschke N (2017) Fourier-based layout for grating function structure in spatial filtering velocimetry. Mea Sci Technol 28(5):055008

Schaeper M, Menn I, Frank H, Damaschke N (2008) Spatial filtering technique for measurement of 2c flow velocity. In: Proceedings of 14th international symposium on applications of laser techniques to fluid mechanics, 7th–10th July

Schaeper M, Kostbade R, Kröger W, Damaschke N (2015) Analyse von algorithmen zur verbesserten versatzschätzung in der ortsfiltertechnik. In: Fachtagung Lasermethoden in der Strömungsmesstechnik, Dresden

Schaeper M, Schmidt R, Kostbade R, Damaschke N, Gimsa J (2016) Optical high-resolution analysis of rotational movement: testing circular spatial filter velocimetry (CSFV) with rotating biological cells. J Phys D Appl Phys 49(26):265402

Funding

Open Access funding enabled and organized by Projekt DEAL. This Project is supported by the Federal Ministry for Economic Affairs and Climate Action (BMWK) on the basis of a decision by the German Bundestag.

Author information

Authors and Affiliations

Contributions

All authors contributed to the conception and design of the study. Electronics design and interface implementation of the measurement system were performed by AR, RP, and TS. The used algorithms were developed by MS, ND, and TS. Algorithm implementation for programmable logic and embedded driver development was carried out by JO and TS. The measurement environment was prepared and supervised by AK and TS. The first draft of the manuscript was written by TS and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Ralf Poosch: Deceased

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Steinmetz, T., Schaeper, M., Kleinwächter, A. et al. Time-resolved determination of velocity fields on the example of a simple water channel flow, using a real-time capable FPGA-based camera platform. Exp Fluids 64, 73 (2023). https://doi.org/10.1007/s00348-023-03617-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00348-023-03617-8