Abstract

Although the singular value decomposition (SVD) and proper orthogonal decomposition have been widely used in fluid mechanics, Venturi (J Fluid Mech 559:215–254, 2006) and Epps and Techet (Exp Fluids 48:355–367, 2010) were among the first to consider how noise in the data affects the results of these decompositions. Herein, we extend those studies using perturbation theory to derive formulae for the 95% confidence intervals of the singular values and vectors, as well as formulae for the root mean square error (rmse) of each noisy SVD mode. Moreover, we show that the rmse is well approximated by \(\epsilon /\tilde{s}_k\) (where \(\epsilon\) is the rms noise and \(\tilde{s}_k\) is the singular value), which provides a useful estimate of the overall uncertainty in each mode.

Graphic abstract

Similar content being viewed by others

Notes

The SVD is related to the biorthogonal decomposition (Aubry 1991) and the method of empirical orthogonal functions (Loren 1956). The POD (Berkooz et al. 1993; Holmes et al. 1996, 1997) is related to the Karhunen–Loève transform (Karhunen 1946; Loève 1978), principal components analysis (Pearson 1901), the method of empirical eigenfunctions, and the method of snapshots (Sirovich 1987).

The number of data sites D is the number of individual pieces of data at each time step. For example, consider sampling two-dimensional velocity data on an \(I \times J\) grid of field points; then \(D = 2IJ\) is the total number of data sites.

Ideally, \(\mathbf{E}\) contains i.i.d. noise drawn from a Gaussian distribution, but herein we also consider \(\mathbf{E}\) containing spatially correlated noise, as occurs in PIV data.

In terms of the POD eigenvalues \({\tilde{\lambda }}_k = \tilde{s}_k^2\), the threshold criterion (3) requires \({\tilde{\lambda }}_k > \epsilon ^2 TD\).

Note that the reconstructed singular values \(\bar{s}_k\) could be used in place of the noisy ones \(\tilde{s}_k\), but we find this makes little difference in the predicted rmse.

Proof: since \(\mathbf{U}\) is orthogonal (\(\mathbf{U}\mathbf{U}^\intercal = \mathbf{I}\)), we can write (41) as \(\mathbf{H}= \mathbf{U}{\varvec{{\Lambda }}} \mathbf{U}^\intercal\). At the same time, \(\mathbf{H}= \mathbf{A}\mathbf{A}^\intercal = \mathbf{U}\mathbf{S}\mathbf{V}^\intercal \mathbf{V}\mathbf{S} \mathbf{U}^\intercal = \mathbf{U}\mathbf{S}^2 \mathbf{U}^\intercal\).

Kato uses the notation: \(\chi\), \(\mathbf{T}\), and \(\mathbf{T}(\chi )\) for \(\epsilon\), \(\mathbf{H}\), and \({\tilde{\mathbf{H}}}\), respectively. Kato and Venturi use \(\mathbf{S}\) for \(\mathbf{Q}\).

If \(\mathbf{H}\) has repeated eigenvalues, then Eq. (84) represents the weighted mean of such eigenvalues. In this case, the present theory then needs to be modified (via Kato’s reduction theory). However, these modifications complicate the analysis and prevent one from simplifying the results into forms as simple as, for example, equation (87).

Although matrix \(\mathbf{W}^{(1)}\) refers to mode k, we have omitted the subscript k to facilitate referring to its \(im\text {th}\) element as \(W^{(1)}_{im}\). The \(i\text {th}\) element of vector \({\tilde{\mathbf{u}}}_k\) is \(\tilde{U}_{ik}\).

Note that all odd “powers” of \(\mathbf{E}\) average to zero, so \(\langle W^{(1)}_{im}U_{mk} \rangle = \langle W^{(3)}_{im}U_{mk} \rangle = \big \langle ( W^{(1)}_{im}U_{mk} ) \, ( W^{(2)}_{in}U_{nk} ) \big \rangle = \dots = 0\).

Note that again all odd “powers” of \(\mathbf{E}\) average to zero, so \(\langle N^{(1)}_{im}V_{mk} \rangle = \langle N^{(3)}_{im}V_{mk} \rangle = \dots = 0\).

The author prefers to interpolate using a piecewise cubic Hermite interpolating polynomial, pchip, because it provides continuity of the function and its first derivative while not being susceptible to overshoots as in a cubic spline. In Matlab, \(g' = \texttt {pchip}(x,g, x')\) returns g(x) evaluated at \(x'\).

References

Aubry N (1991) On the hidden beauty of the proper orthogonal decomposition. Theor Comput Fluid Dyn 2:339–352

Beltrami E (1873) Sulle funzioni bilineari. English translation by D. Boley is available as Techical Report 90-37, University of Minnesota Department of Computer Science, Minneapolis, MN, 1990

Benaych-Georges F, Nadakuditi RR (2011) The eigenvalues and eigenvectors of finite, low rank perturbations of large random matrices. Adv Math 227:494–521

Berkooz G, Holmes P, Lumley JL (1993) The proper orthogonal decomposition in the analysis of turbulent flows. Annu Rev Fluid Mech 25:539–575

Breuer K, Sirovich L (1991) The use of the Karhunen-Loève procedure for the calculation of linear eigenfunctions. J Comput Phys 96:277–296

Brindise MC, Vlachos PP (2017) Proper orthogonal decomposition truncation method for data denoising and order reduction. Exp Fluids 58(4):28

Cagney N, Balabani S (2013) On multiple manifestations of the second response branch in streamwise vortex- induced vibrations. Phys Fluids 25(7):075110

Charonko JJ, King CV, Smith BL, Vlachos PP (2010) Assessment of pressure field calculations from particle image velocimetry measurements. Meas Sci Technol 21(10):105401

Cohen K, Siegel S, McLaughlin T, Gillies E (2003) Feedback control of a cylinder wake low-dimensional model. AIAA J 41(7):1389–1391

Davis C, Kahan W (1970) The rotation of eigenvectors by a perturbation. III. SIAM J Numer Anal 7(1):1–46

Dawson STM, Hemati MS, Williams MO, Rowley CW (2016) Characterizing and correcting for the effect of sensor noise in the dynamic mode decomposition. Exp Fluids 57(3):42

Dopico FM (2000) A note on \(\sin \theta\) theorems for singular subspace variations. BIT 40(2):395–403

Druault P, Bouhoubeiny E, Germain G (2012) POD investigation of the unsteady turbulent boundary layer developing over porous moving flexible fishing net structure. Exp Fluids 53:277–292

Epps BP, Krivitzky EM (2019) Singular value decomposition of noisy data: noise filtering. Exp Fluids (accepted)

Epps BP, Techet AH (2010) An error threshold criterion for singular value decomposition modes extracted from PIV data. Exp Fluids 48:355–367

Feng LH, Wang JJ, Pan C (2011) Proper orthogonal decomposition analysis of vortex dynamics of a circular cylinder under synthetic jet control. Phys Fluids 23(1):014106

Gandhi V, Bryant DB, Socolofsky SA, Stoesser T, Kim JH (2015) Concentration-based decomposition of the flow around a confined cylinder in a UV disinfection reactor. J Eng Mech 141(12):04015050

Holden D, Socha JJ, Cardwell ND, Vlachos PP (2014) Aerodynamics of the flying snake chrysopelea paradisi: how a bluff body cross-sectional shape contributes to gliding performance. J Exp Biol 217(3):382–394

Holmes P, Lumley JL, Berkooz G (1996) Turbulence, coherent structures, dynamic systems, and symmetry. Cambridge University Press, Cambridge

Holmes PJ, Lumley JL, Berkooz G, Mattingly JC, Wittenberg RW (1997) Low-dimensional models of coherent structures in turbulence. Phys Rep 287:337–384

Jordan C (1874a) Mémoire sur les formes bilinéaires. J Math Pures Appl 19:35–54

Jordan C (1874b) Sur la réduction des formes bilinéaires. Comptes Rend Acad Sci 78:614–617

Karhunen K (1946) Zur spektraltheorie stochastischer prozesse. Ann Acad Sci Fennicae A1:34

Kato T (1976) Perturbation theory for linear operators. Springer, Berlin

Kourentis L, Konstantinidis E (2012) Uncovering large-scale coherent structures in natural and forced turbulent wakes by combining PIV, POD, and FTLE. Exp Fluids 52:749–763

Kriegseis J, Dehler T, Pawlik M, Tropea C (2009) Pattern-identification study of the flow in proximity of a plasma actuator. In: 47th AIAA aerospace sciences meeting, p 1001

Li RC (1998) Relative perturbation theory: (i) eigenvalue and singular value variations. SIAM J Matrix Anal Appl 19(4):956–982

Loève M (1978) Probability theory. Springer, Berlin

Lorenz EN (1956) Empirical orthogonal functions and statistical weather prediction. Tech. rep., MIT

Marchenko VA, Pastur LA (1967) Distribution of eigenvalues for some sets of random matrices. Mat Sbornik 114(4):507–536

Marié S, Druault P, Lambaré H, Schrijer F (2013) Experimental analysis of the pressure-velocity correlations of external unsteady flow over rocket launchers. Aerosp Sci Technol 30:83–93

Mokhasi P, Rempfer D (2004) Optimized sensor placement for urban flow measurement. Phys Fluids 16(5):1758–1764

Neal DR, Sciacchitano A, Smith BL, Scarano F (2015) Collaborative framework for piv uncertainty quantification: the experimental database. Meas Sci Technol 26(7):074003. http://stacks.iop.org/0957-0233/26/i=7/a=074003

Nguyen TD, Wells JC, Mokhasi P, Rempfer D (2010) POD-based estimations of the flowfield from PIV wall gradient measurements in the backward-facing step flow. In: Proceedings of ASME 2010 3rd joint US-European fluids engineering summer meeting and 8th international conference on nanochannels, microchannels, and minichannels

Pearson K (1901) LIII on lines and planes of closest fit to systems of points in space. Lond Edinburgh Dublin Philos Mag J Sci 2(11):559–572

Rajaee M, Karlsson S, Sirovich L (1994) Low-dimensional description of free-shear-flow coherent structures and their dynamical behaviour. J Fluid Mech 258:1–29

Rowley CW, Mezic I, Bagheri S, Schlatter P, Henningson D (2009) Spectral analysis of nonlinear flows. J Fluid Mech 641:115–127

Schmidt E (1907) Zur theorie der linearen und nichtlinearen integralgleichungen. I teil. Entwicklung willkurlichen funktionen nach system vorgeschriebener. Math Annal 63:433–476

Sirovich L (1987) Turbulence and the dynamics of coherent structures. Part 1: coherent structures, Part 2: symmetries and transformations, Part 3: dynamics and scaling. Q Appl Math 45:561–590

Stewart GW (1978) A note on the perturbations of singular values. Tech. Rep. TR-720, University of Maryland

Stewart GW (1990) Perturbation theory for the singular value decomposition. Technical Report UMIACS-TR-90-124, CS-TR 2539, University of Maryland

Stewart GW (1993) On the early history of the singular value decomposition. SIAM Rev 35(4):551–566

Strang (2009) Introduction to linear algebra, 4th edn. Wellesley-Cambridge Press, Wellesley

Sylvester JJ (1889a) A new proof that a general quadric may be reduced to its canonical form (that is, a linear function of squares) by means of a real orthogonal substitution. Messenger Math 19:1–5

Sylvester JJ (1889b) On the reduction of a bilinear quantic of the nth order to the form of a sum of n products by a double orthogonal substitution. Messenger Math 19:42–46

Tu JH, Rowley CW, Luchtenburg DM, Brunton SL, Kutz JN (2013) On dynamic mode decomposition: theory and applications. arXiv:1312.0041

Utturkar Y, Zhang B, Shyy W (2005) Reduced-order description of fluid flow with moving boundaries by proper orthogonal decomposition. Inte J Heat Fluid Flow 26:276–288

Venturi D (2006) On proper orthogonal decomposition of randomly perturbed fields with applications to flow past a cylinder and natural convection over a horizontal plate. J Fluid Mech 559:215–254

Venturi D, Karniadakis GE (2004) Gappy data and reconstruction procedures for flow past a cylinder. J Fluid Mech 519:315–336

Wedin PA (1972) Perturbation bounds in connection with singular value decomposition. BIT 12:99–111

Weyl H (1912) Das asymptotische verteilungsgesetz der eigenwerte linearer partieller differentialgleichungen (mit einer anwendung auf die theorie der hohlraumstrahlung). Math Annal 71:441–479

Yildirim B, Chryssostomidis C, Karniadakis G (2009) Efficient sensor placement for ocean measurements using low-dimensional concepts. Ocean Model 27:160–173

Acknowledgements

B. P. Epps dedicates this paper to Julian D. Cole, who likely would have enjoyed working on this problem. B. P. Epps is grateful to Ben Southworth for his initial analytical studies.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Relation between the SVD and POD

For discrete data the POD and SVD yield identical results. In fact, we now show that the \(\mathbf{U}\), \(\mathbf{S}\) and \(\mathbf{V}\) obtained via the POD are identical to those obtained via the SVD. Given real-valued data \(\mathbf{A}\in \mathbb {R}^{T\times D}\) with \(T<D\), the POD procedure of Sirovich (1987) is as follows:

-

1.

Form the temporal autocorrelation matrix \(\mathbf {H} \in \mathbb {R}^{T\times T}\) via:

$$\begin{aligned} \mathbf {H} = \mathbf {A} \mathbf {A}^\intercal \,. \end{aligned}$$(40) -

2.

Solve the matrix eigenvalue problem

$$\begin{aligned} \mathbf {H} \mathbf {U} = \mathbf {U} \varvec{\Lambda }. \end{aligned}$$(41)This eigenvector matrix \(\mathbf {U} \in \mathbb {R}^{T\times T}\) is identical to the SVD left singular vector matrix, and the eigenvalue matrix \(\mathbf {\Lambda } \in \mathbb {R}^{T\times T}\) contains the squares of the T singular values of \(\mathbf {A}\), \(\lambda _k = s_k^2\).Footnote 6

-

3.

Find the scaled POD modes, \(\mathbf {M}\), by projecting the eigenvectors onto the data set

$$\begin{aligned} \mathbf {M} = \mathbf {U}^\intercal \mathbf {A}. \end{aligned}$$(42)Using (1), Eq. (42) is equivalent to \(\mathbf {M} = \mathbf {U}^\intercal \mathbf {U} \mathbf {S} \mathbf {V}^\intercal\) which simplifies to \(\mathbf {M} = \mathbf {S} \mathbf {V}^\intercal\).

-

4.

Rescale each mode to unit norm, and record the amplitudes. In other words, separate \(\mathbf {S} \mathbf {V}^\intercal\) into \(\mathbf {S}\) and \(\mathbf {V}^\intercal\).

Thus, the end results of the POD procedure are the same \(\mathbf {U}\), \(\mathbf {S}\), and \(\mathbf {V}\) that are given by the SVD.

Appendix 2: Perturbation theory derivations

In this section, we use perturbation theory to derive expressions for the expected values and standard deviations of the noisy singular values and vectors (\(\langle {\tilde{\mathbf{u}}}_k \rangle\), \(\langle \tilde{s}_k \rangle\), \(\langle {\tilde{\mathbf{v}}}_k \rangle\), \(\sigma _{{\tilde{\mathbf{u}}}_k}\), \(\sigma _{\tilde{s}_k}\), \(\sigma _{{\tilde{\mathbf{v}}}_k}\)). The present derivation builds upon the work of Kato (1976) and Venturi (2006): Kato (1976) derived the perturbations of the eigenvalues and eigenvectors of a noisy matrix, and Venturi (2006) made the key realization that because the SVD of \({\tilde{\mathbf{A}}}\) is related to the eigendecomposition of \({\tilde{\mathbf{A}}}{\tilde{\mathbf{A}}}^\intercal\), Kato’s theory can be used to describe the perturbations of the SVD. Herein, we extend these results in two ways: (1) by assuming unique singular values, we are able to greatly simplify the perturbation theory results into useful formulae for the experimentalist; and (2) we extend the theory to account for spatially correlated noise, which occurs in PIV.

Before we can derive the desired quantities, we need to establish several definitions. The temporal autocorrelation matrix \(\mathbf {H} \in \mathbb {R}^{T\times T}\) for the analytic data is defined as:

The temporal autocorrelation matrix \({\tilde{\mathbf{H}}}\) for the noisy data \({\tilde{\mathbf{A}}}=\mathbf{A}+\mathbf{E}\) is thus defined as

where \({\hat{\mathbf{H}}}^{(1)}\equiv \mathbf{A}{\hat{\mathbf{E}}}^\intercal + {\hat{\mathbf{E}}}\mathbf{A}^\intercal\) and \({\hat{\mathbf{H}}}^{(2)}\equiv {\hat{\mathbf{E}}}{\hat{\mathbf{E}}}^\intercal\). The elements of \({\hat{\mathbf{E}}}\equiv \mathbf{E}/\epsilon\) are each \(\mathcal{N}(0,1)\). These error data can either be independent or spatially correlated, as discussed in “Appendix 2.1" and “Appendix 2.2” below.

Herein, we assume that all eigenvalues of \(\mathbf{H}\) are unique (a.k.a. simple, multiplicity of one), which implies that the eigennilpotent matrix \((\mathbf{H}- \lambda _k \mathbf{I})\mathbf{P}_k\) is zero for all modes. This allows us to considerably simplify the presentation from that in Kato (1976) and Venturi (2006). The basic idea is that for repeated (a.k.a. degenerate) eigenvalues, the perturbation causes these eigenvalues to split and become unique upon the perturbation. Thus, although there might be cases where \(\mathbf{H}\) has repeated eigenvalues, \({\tilde{\mathbf{H}}}\) will always have unique eigenvalues. Since we are concerned with the practical experimental scenario of only knowing \({\tilde{\mathbf{H}}}\), it suffices for our purposes to only consider the case of unique eigenvalues, because the experimentalist will never know whether or not the original eigenvalues were unique.

Continuing with the required definitions, now define the projection matrix \(\mathbf{P}\equiv \mathbf{A}(\mathbf{A}^\intercal \mathbf{A})^{-1}\mathbf{A}^\intercal = \mathbf{U}\mathbf{U}^\intercal\), and define the mode k projection matrix by

Given vector \(\mathbf {x}\), the projection of \(\mathbf {x}\) onto the column space of \(\mathbf{A}\) is \(\mathbf{P}\mathbf {x}\), and the projection of \(\mathbf {x}\) onto mode k is \(\mathbf{P}_k \mathbf {x}\). Some useful identities are these: \(\mathbf{P}^2 = \mathbf{P}\), \(\mathbf{P}_k^2 = \mathbf{P}_k\), \(\mathbf{P}_j\mathbf{P}_k = \mathbf{P}_k \delta _{jk}\) (\(\delta _{jk}\) is the Kronecker delta). Note that since \(\mathbf{H}= \mathbf{U}{\varvec{{\Lambda }}} \mathbf{U}^\intercal = \sum _{k=1}^T \lambda _k \mathbf{P}_k\), the following additional results are true: \(\mathbf{P}\mathbf{H}= \mathbf{H}\mathbf{P}= \mathbf{P}\mathbf{H}\mathbf{P}= \mathbf{H}\), and \(\mathbf{H}\mathbf{P}_k = \mathbf{P}_k\mathbf{H}= \mathbf{P}_k\mathbf{H}\mathbf{P}_k = \lambda _k \mathbf{P}_k\).

Kato’s theory makes use of the resolvent matrix, \(\mathbf{R}(\zeta ) \equiv (\mathbf{H}- \zeta \mathbf{I})^{-1}\). The eigenvalues of \(\mathbf{H}\) are the singularities of \(\mathbf{R}(\zeta )\). Since \(\mathbf{H}\mathbf{P}_k = \lambda _k \mathbf{P}_k\), it is natural to consider the reduced resolvent matrixFootnote 7

Since \(\mathbf{P}_j\mathbf{P}_k = \mathbf{P}_k \delta _{jk}\), observe that \(\mathbf{P}_k\mathbf{Q}_k = \mathbf{Q}_k\mathbf{P}_k = 0\), and thus \(\mathbf{Q}_k^2 \equiv \sum _{j\ne k} \frac{\mathbf{P}_j}{(\lambda _j - \lambda _k)^2}\).

The expected value calculations are a tedious yet laborious application of the above definitions and identities. It is convenient to use index notation with the stipulation that summation over i, j, and k is not implied. We will define the \(ij{\text{th}}\) element of several matrices, many of which are defined specially for the \(k{\text{th}}\) mode. Repeated indices other than i, j, or k indicate implied summation over \(1,\ldots ,T\) or \(1,\ldots ,D\).

In this section, we consider the expected values of various combinations of \({\hat{\mathbf{H}}}^{(1)}\) and \({\hat{\mathbf{H}}}^{(2)}\) with \(\mathbf{P}_k\), \(\mathbf{Q}_k\), and \(\mathbf{Q}_k^2\). Using index notation (but with no implied sum over k), we have

1.1 Spatially independent error data

Assuming each element of \(\mathbf{E}\in \mathbb {R}^{T\times D}\) is independent, identically distributed \(\mathcal{N}(0,\epsilon ^2)\), all odd powers of \(\mathbf{E}\) terms are expected to be zero for any combination of indices: \(\langle E_{mn} \rangle = 0\), \(\langle E_{mn}E_{pq}E_{rs} \rangle = 0\), and so on. Some even power terms are as follows:

1.2 Spatially correlated error data

Error data with spatial correlation can be modeled as that produced by uniform smoothing (i.e. a moving average, as in the Matlab smooth function) of i.i.d. random data as follows:

where w is the window width. It is easy to show that if \({\bar{\mathbf{E}}}\) is composed of i.i.d. random data with a normal distribution \(\mathcal{N}(0,\bar{\epsilon }^2)\), then \(\mathbf{E}\) also has a normal distribution, \(\mathcal{N}(0,\epsilon ^2)\), but with standard deviation \(\epsilon = \bar{\epsilon }/ \sqrt{w}\) and now spatial correlation [introduced by the spatial smoothing in (59)]. For proof, consider \(\langle E_{mn}^2 \rangle\), with no implied sum over m or n. Note that for the original i.i.d. data, \(\langle \bar{E}_{mn} \bar{E}_{pq} \rangle = \bar{\epsilon }^2 \, \delta _{mp}\delta _{nq}\), so

Now consider the expected values of other useful \(\mathbf{E}\) terms. Clearly, the odd powers are still expected to be zero: \(\langle E_{mn} \rangle = 0\), \(\langle E_{mn}E_{pq}E_{rs} \rangle = 0\), and so on. Also, the data are still uncorrelated in time, so the even power terms still involve delta functions of the first index, such as \(\langle E_{mn} E_{pq} \rangle \sim \delta _{mp}\).

For perturbation theory, we are interested in sums across entire rows, such as \(\sum _{q=1}^D \langle E_{mn} E_{pq} \rangle\). Such a “cross-sum” involves terms like \(\langle E_{mn} E_{p,n+1} \rangle\) and \(\langle E_{mn} E_{p,n+2} \rangle\), which are evaluated as follows:

Thus, the desired cross-sum can be computed as follows:

To make the perturbation theory analysis tractable, we make the following “lumping” approximation:

Equation (64) is consistent with the row sum (63) and the i.i.d. case (\(w=1\)) (56). The approximation in (64) is to set all the \(q\ne n\) terms in (63) to zero and instead to lump the contributions of these terms into the \(q=n\) term. The consequence of this lumping approximation is that in the perturbation theory analysis, various \(A_{pq}\) terms are then only evaluated at \(q=n\); in other words, \(A_{pq}\) takes the value \(A_{pn}\) over the entire smoothing window. Since \(A_{pq}\) is expected to vary only slightly over the smoothing window, this approximation is justified.

With the lumping approximation (64), the even power terms are now given as follows:

Note the key difference between expressions (65) and (68) versus (66) and (67). In (65), a sum is implied across \(q = 1,\ldots ,D\), and this cross-sum leads to the appearance of w, as in (63). In (68), cross-sums are implied across each \(\{q,s,u\}\), which leads to the appearance of \(w^2\). By contrast, the weight w does not appear in (66) and (67), because the second index (n) is the same for both terms, and so no cross-sum is implied (but rather just a regular sum over all n).

Consider now other terms that appear in the perturbation theory derivations. Since all odd powers of \(\mathbf{E}\) terms are expected to be zero, so to are odd “powers” of \(\mathbf{H}^{(n)}\) terms. That is, \(\langle H_{mn}^{(1)} \rangle = \langle H_{mn}^{(1)}H_{pq}^{(2)} \rangle = \langle H_{mn}^{(1)}H_{pq}^{(1)}H_{rs}^{(1)} \rangle = \dots = 0\). Some even “power” terms are these:

The trace operation is \(\text {tr}(\mathbf{X}) \equiv X_{mm}\), with implied sum over \(m=1,\ldots ,T\). Also note the identities \(\text {tr}(\mathbf{X} + \mathbf{Y}) = \text {tr}(\mathbf{X}) + \text {tr}(\mathbf{Y})\) and \(\langle \text {tr}(\mathbf{X})\rangle = \text {tr}(\langle \mathbf{X}\rangle )\). Thus,

Computing the expected values of \(\langle \big ( W^{(1)}_{im}U_{mk} \big )^2 \rangle\), \(\langle W^{(2)}_{im}U_{mk} \rangle\), \(\langle \big ( N^{(1)}_{im}V_{mk} \big )^2 \rangle\), and \(\langle N^{(2)}_{im}V_{mk} \rangle\) is laborious but no more complicated than the examples shown here. The final results are as follows:

with \((1-\delta _{mk})\) indicating a sum over \(m=1,\ldots ,T\) but \(m\ne k\).

Recall that the lumping approximation was used in formulae (65)–(82), so these formulae are also valid for the case of i.i.d. error data upon setting \(w=1\).

1.3 Perturbed eigenvalues

Both for completeness and because the singular values are the square roots of the eigenvalues \(\tilde{s}_k = \sqrt{{\tilde{\lambda }}_k}\), we first consider the perturbed eigenvalues \({\tilde{\lambda }}_k\). The \(k{\text{th}}\) eigenvalue of \({\tilde{\mathbf{H}}}\) can be written as a perturbation expansion (Kato [II-2.21])

where, assuming the eigenvalues of \(\mathbf{H}\) are uniqueFootnote 8 (Kato [II-2.33])

Note that subscript k refers to the mode number, and no summation over k is implied. The expected value and standard deviation of \(\tilde{\lambda }_k\) are (Venturi 2006)

Equation (85) follows from the fact that \(\hat{\lambda }^{(1)}_k, \hat{\lambda }^{(3)}_k, \ldots\) are “odd” in \({\hat{\mathbf{E}}}\), so their expected values are zero. The evaluation of \(\langle \hat{\lambda }^{(2)}_k \rangle\) and \(\big \langle \big (\hat{\lambda }^{(1)}_k\big )^2 \big \rangle\) for i.i.d. error data is detailed in (Venturi 2006). Herein, we simplify his analysis by assuming unique eigenvalues, and we extend his results to the case of spatially correlated error. Substituting our Eqs. (77) and (78) into (85) and (86), we find

1.4 Perturbed singular values

We now use the above results to find the perturbed singular values. Since the singular values of \({\tilde{\mathbf{A}}}\) are the square roots of the eigenvalues of \({\tilde{\mathbf{H}}}\), we have

If the error is small, then (89) can be expanded in a Taylor series about \(\lambda _k\),

and upon substituting \(s_k = \sqrt{\lambda _k}\), we have

The expected value and standard deviation of \(\tilde{s}_k\) are

Inserting (77) and (78) into (92) and (93) yields

Note that to first order, \(\sigma _{\tilde{\lambda }_k} = 2s_k\, \sigma _{\tilde{s}_k}\), which makes sense since \(\lambda _k = s_k^2\), so \(d\lambda _k = 2 s_k \, ds_k\).

1.5 Perturbed left singular vectors

The \(k{\text{th}}\) left singular vector of \({\tilde{\mathbf{A}}}\) is (Kato [II-4.24])Footnote 9

with implied summation over \(m=1,\ldots ,T\), where (Kato [II-4.23])

The expected value and standard deviation of \(\tilde{U}_{ik}\) are (Venturi 2006)Footnote 10

Extending Venturi’s results, with some effort to evaluate \(\langle \big ( W^{(1)}_{im}U_{mk} \big )^2 \rangle\) and \(\langle W^{(2)}_{im} U_{mk} \rangle\) [see Eqs. (79) and (80)], we find

1.6 Perturbed right singular vectors

The \(k\text{th}\) right singular vector of \({\tilde{\mathbf{A}}}\) is (Venturi 2006)

with implied summation over \(m=1,\ldots ,D\). Venturi (2006) gives \(\mathbf{N}^{(1)}\), and we derive \(\mathbf{N}^{(2)}\) in “Appendix 3”.

The expected value and standard deviation of \(\tilde{V}_{ik}\) are (Venturi 2006)Footnote 11

Extending Venturi’s results, with some effort to evaluate \(\langle \big ( N^{(1)}_{im}V_{mk} \big )^2 \rangle\) and \(\langle N^{(2)}_{im} V_{mk} \rangle\) (see Eqs. (81) and (82)], we find

with \(O(\epsilon ^4)\) and \(O(\epsilon ^2)\) accuracy, respectively.

Appendix 3: Derivation of \(\mathbf{N}^{(1)}\) and \(\mathbf{N}^{(2)}\)

The \(k\text {th}\) right singular vector of \({\tilde{\mathbf{A}}}\) is (Venturi 2006)

To derive \(\mathbf{N}^{(1)}\) and \(\mathbf{N}^{(2)}\), recall the definition of the SVD \({\tilde{\mathbf{A}}}= {\tilde{\mathbf{U}}}{\tilde{\mathbf{S}}}{\tilde{\mathbf{V}}}^\intercal\) and thus \({\tilde{\mathbf{A}}}^\intercal {\tilde{\mathbf{u}}}_k = \tilde{s}_k{\tilde{\mathbf{v}}}_k\). Also recall \(\tilde{s}_k = \sqrt{{\tilde{\lambda }}_k}\), so

Expanding in a Laurent series,

so that

Grouping terms of order 1, \(\epsilon\), and \(\epsilon ^2\), and comparing the results with (104) we have:

Upon factoring out \({{\mathbf{v}}}_k\) using \(\mathbf{u}_k = \mathbf{A}{{\mathbf{v}}}_k/\sqrt{\lambda _k}\) or \(\mathbf{A}^\intercal \mathbf{u}_k = {{\mathbf{v}}}_k \sqrt{\lambda _k}\), we have the result

Appendix 4: Accuracy of RMSE estimates

In Sect. 3.1, we derived the following back-of-the-envelope formulae for the rmse (valid for modes \(k<k_F\)):

It is worthwhile to ask under what conditions will these formulae be accurate?

In comparing (31) to the full \(\text {rmse}({\tilde{\mathbf{v}}}_k)\) Eq. (19),

it is clear that (19) reduces to (31) if the summation term in (19) is negligible. Moreover, it is straightforward to show that if the summation term in (19) is negligible, then the summation term in (17) is roughly equal to one, and (17) reduces to (31) as well. But under what conditions does this occur? Recall Eq. (17) read

Assuming \(\lambda _m \gg \lambda _k\) for \({\scriptstyle m=1,\ldots ,k-2 }\) and \(\lambda _m \ll \lambda _k\) for \({\scriptstyle m=k+2,\ldots ,T}\), the summation term in (17) can be approximated as:

The first sum is negligible, because these \(\lambda _m \gg \lambda _k\). The second sum yields \(1 - (k+1)/T\), which is roughly 1 for \(k \ll T\). The third and fourth terms can be combined (in approximation), taking the numerators both as \(2\lambda _k^2\) and the denominators both as \((\text {gap}(\lambda _k))^2\). With these approximations, we have

Equation (113) now predicts that \(\text {rmse}({\tilde{\mathbf{u}}}_k) \approx \sqrt{w} \, \epsilon /s_k\) only when the relative gap is larger than

To validate Eq. (114), consider the following example with analytic data \(\mathbf{A}\) generated using the singular vectors from (26) and (28), as in Sect. 3.1, and new singular values

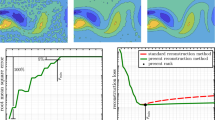

Here, the noise data \(\mathbf{E}\) were constructed by first drawing from a normal distribution with standard deviation \(\sqrt{w}\epsilon\) and then performing uniform spatial smoothing over a window of width w. This two-step process yields spatially correlated noise with standard deviation \(\epsilon\). Results of a Monte Carlo simulation with \(\epsilon = 10^{-5}\), \(w = 5\), \(T = 200\), \(D = 2000\), and \(N=1000\) are shown in Fig. 19.

One interesting feature of this example is that (115) gives the singular values “flat spots” at \(k=5{-}13, 22{-}29,\) and so on, where the relative gap between the singular values becomes very small (see Fig. 19a, b). These “flat spots” significantly increase the rmse of the modes (see Fig. 19c). Because of the “flat spots”, the summations in rmse predictions (17) and (19) contribute significantly.

Inspecting Fig. 19b, c, it is evident that the rmse “lifts off” the \(\epsilon /s_k\) curve when the relative gap is small enough that (114) is violated. This example shows that Eq. (114) must be true for the back-of-the-envelope formulae (31) to be accurate.

The other interesting feature of this example is that the noise data were generated using spatial smoothing, so this example provides validation of the rmse theory (17)/(19) and estimates (31) for the case of \(w>1\). Here, we find that the theoretical rmse predictions nearly overlay the numerically-computed rmse for nearly all modes.

(“Appendix 4” example) Monte Carlo results (\(s_k\) from (115), \(\epsilon = 10^{-5}\), \(N = 1000\)): a singular values; b relative gap between eigenvalues gap(\(\lambda _k)/\lambda _k\), where gap(\(\lambda _k)\) is given by (130) with \(\tilde{s}_k\) replaced by \(\lambda _k\); c root mean square error, comparing numerical results to theory (17)/(19) and estimates (31)

Appendix 5: Singular values of random data

The Marchenko–Pastur distribution (Marchenko and Pastur 1967) is related to the distribution of singular values of a matrix of i.i.d. Gaussian noise. Here, we provide this distribution, and we show that for \(D \gg T\), the Marchenko–Pastur distribution can also be modified so as to represent random data that has spatial correlation.

1.1 Spatially independent random data

Consider the expected distribution \(\mathbb {E}[\acute{s}(\mathbf{E})]\) of singular values of a matrix \(\mathbf{E} \in \mathbb {R}^{T \times D}\). Let \(\mathbf{E}\) be composed of independent, identically distributed (i.i.d.) random data drawn from a Gaussian (normal) distribution with zero mean and standard deviation \(\epsilon\), \(\mathcal{N}(0,\epsilon ^2)\). Without loss of generality, assume \(T<D\). The Marchenko–Pastur Law relates to the expected distribution \(\mathbb {E}[\lambda (\mathbf{Y})]\) of eigenvalues of matrix \(\mathbf{Y} = (\mathbf{E}{} \mathbf{E}^\intercal )/(D\epsilon ^2)\). Recall, the singular values of \(\mathbf{E}\) are equal to \(\sqrt{\text {eigenvalues of } \mathbf{E}{} \mathbf{E}^\intercal }\) and thus equal to \(\sqrt{(D \epsilon ^2) (\text {eigenvalues of } \mathbf{Y}) }\). Therefore,

The Marchenko–Pastur Law states that in the limit \(T\rightarrow \infty\) with constant \(y \equiv T/D\), the distribution of eigenvalues \(\lambda (\mathbf{Y})\) converges to that defined by the following probability density function (Marchenko and Pastur 1967)

where

are the largest and smallest eigenvalues of \(\mathbf{E}\), respectively.

To derive the associated distribution of singular values, consider the cumulative distribution function \(P(z) = \int _a^z p(z') \, {\text {d}}z'\), which is the fraction of eigenvalues \(\lambda\) in the range \(a \le \lambda \le z\). By definition \(P(z = a) = 0\) and \(P(z = b) = 1\). Carrying out the integration, we find

For a matrix \(\mathbf{E}\) of finite size (\(T < \infty )\), Eq. (119) can be used to determine the T singular values of \(\mathbf {E}\) as follows. First, find the T eigenvalues \(\lambda _k\) \(\scriptstyle (k=1,\ldots ,T)\) corresponding to \(P(\lambda _k) = 1 - (k-1)/(T-1)\), such that \(P(\lambda _k)\) takes on T discrete values (of even spacing) between \(P(\lambda _1=b)=1\) and \(P(\lambda _T=a)=0\). Practically, this can be accomplished by evaluating P(z) on a fine grid \(z \in [a,b]\) and interpolatingFootnote 12 to find

The expected singular values of \(\mathbf{E}\) are given by Eq. (116), which becomes

For example, the largest \(\acute{s}_1\) and smallest \(\acute{s}_T\) singular values of \(\mathbf{E}\) are

For shorthand in this manuscript, we will say “Marcheko–Pastur distribution” to refer to the expected singular values computed via (119), (120), and (121).

Note that the singular values in (121) are proportional to the error level \(\epsilon\), so it is useful to define a ‘unit-error’ singular value distribution

such that \(\acute{s}_k = \epsilon {\hat{s}}_k\).

1.2 Spatially correlated random data

Spatially correlated noise can occur in experimental data that are spatially smoothed during collection or processing. For example, PIV data are typically collected from overlapping interrogation windows, and processing typically includes smoothing by a weighted average over the 9 nearest neighbors. Such a dataset effectively has fewer than D independent data sites. Thus, it is reasonable to expect that the singular values of spatially correlated random data still follow a Marchenko–Pastur distribution, but with \(y=T/D\) replaced by \(y=fT/D\) in Eqs. (117)–(119). Indeed, we have empirically found this approximation to work well when \(D/T \gtrsim 20\) and \(D/fT \gtrsim 5\). The ‘spatial-correlation factor’ f represents the ratio \(f = (\text {actual } D)/(\text {effective } D)\), so \(f=1\) represents uncorrelated data, and \(f > 1\) indicates effectively-fewer independent data sites due to spatial correlation.

For example, consider random data with spatial correlation that is produced by taking a moving average of i.i.d. random data. Such an average could either have uniform weighting 1 / w (i.e. as in Eq. (59)) or Gaussian weighting:

for \(i = -h, \ldots , h\), where \(h = (w-1)/2\) and w is the window width (see Matlab gausswin function).

Figure 20 shows the singular values of random data with either uniform or Gaussian spatial smoothing. The \(w=1\) curve corresponds to the original i.i.d. data (no smoothing) and is well represented by the original Marchenko–Pastur distribution (\(f=1\)). Clearly, the ‘spatial-correlation factor’ f increases with increasing width w of the smoothing window. As f increases, the modified Marchenko–Pastur distribution becomes steeper: with the \(y = fT/D\) substitution, the largest and smallest singular values in (122) become

Appendix 6: Theoretical perturbation bounds

Classical perturbation theory provides bounds for the singular values and canonical angles (Stewart 1990; Dopico 2000). In this Appendix, we show that these bounds are much looser than the 95% confidence intervals derived in Sect. 2.

1.1 Perturbation bounds for singular values

Weyl’s theorem provides a bound on the perturbation of each singular value (Weyl 1912)

where \(\Vert \mathbf{E}\Vert _2 = \acute{s}_1 \approx \epsilon (\sqrt{fT}+\sqrt{D})\). Weyl’s theorem implies that large singular values are relatively unaffected by the measurement error; that is, \(\tilde{s}_k \approx s_k\) for \(\tilde{s}_k \gg \Vert \mathbf{E}\Vert _2\). However, small singular values (\(\tilde{s}_k < \Vert \mathbf{E}\Vert _2\)) are quite dubious. Assuming \(\tilde{s}_k > s_k\), as is typical for smaller singular values, Weyl’s theorem (126) only guarantees

We now show that (127) is a much looser bound than the 95% confidence interval predicted by our perturbation theory results. Assuming well separated singular values, the summation in Eq. (5) can be ignored, and with aid of (6), the 95% confidence interval \(\langle \!\langle \tilde{s}_k \rangle \!\rangle = \langle \tilde{s}_k \rangle \pm 1.96 \sigma _{\tilde{s}_k}\) provides the upper bound

Clearly (128) is a tighter bound than (127) for all the \(s_k > \epsilon D / ( 2 [ (\sqrt{fT}+\sqrt{D}) - 1.96]) \approx \epsilon \sqrt{D}/2\). In other words, (128) is a tighter bound than (127) for all \(s_k\) of interest, since lower modes with \(s_k < \epsilon \sqrt{D}/2\) are mostly noise anyway.

1.2 Perturbation bounds for singular vectors

Wedin’s theorem provides a bound on the canonical angles of the singular vectors (Davis and Kahan 1970; Wedin 1972)

where

In words, a singular vector is extremely sensitive to perturbations if it corresponds to a singular value that has nearby neighbors.

Wedin’s theorem can also be stated for a space \(\tilde{\mathcal{S}}\) spanned by a collection of singular vectors \(\{{\tilde{\mathbf{u}}}_k, k \in \mathcal{K}\}\), where \(\mathcal K\) is a subset of the integers \(1,\ldots ,T\). This is useful for the case when the gaps between singular values within the set \(\{\tilde{s}_k, k \in \mathcal{K}\}\) are small but the gap between this set and any other singular value, \(\text{gap}(\tilde{s}_{\mathcal{K}}) = \min ( \min \nolimits _{k \in {\mathcal{K}} \atop j \not \in {\mathcal{K}} } |\tilde{s}_k - s_j|\, , \, \tilde{s}_k)\), is large. In this scenario, the individual vectors \({\tilde{\mathbf{u}}}_{k\in \mathcal{K}}\) may be ill-defined, but the space \(\tilde{\mathcal{S}}\) may be well defined.

Using our perturbation theory results, we can evaluate the left hand side (LHS) and right hand side (RHS) terms of Wedin’s theorem (129). Equation (32) prescribes the left hand side terms of (129) as \(\sin \phi _k \approx \epsilon \sqrt{wT}/s_k\) and \(\sin \theta _k \approx \epsilon \sqrt{D}/s_k\), so

The expected value of the right hand side can be evaluated as follows using the methods in “Appendix 2”,

Figure 21 compares the LHS and RHS terms in Wedin’s theorem for the example in Sect. 3.1. Clearly, expressions (131) and (132) well approximate the left hand side (LHS) and right hand side (RHS) terms of Wedin’s theorem.

This example shows that Wedin’s theorem (129) is not an extremely useful tool, because it is too broad of a bound [(i.e. the right hand side of (129) is much larger than the left hand side]. On the other hand, our approximations (32) well describe the canonical angles and thus are much better estimates of the quantities of interest than Wedin’s theorem.

For cases where the gap between singular values is not large, we expect approximations (32) to fail. Thus, the expectation is that for cases with smaller \(\text {gap}(\tilde{s}_k)\), Wedin’s theorem is expected to ensure

Rights and permissions

About this article

Cite this article

Epps, B.P., Krivitzky, E.M. Singular value decomposition of noisy data: mode corruption. Exp Fluids 60, 121 (2019). https://doi.org/10.1007/s00348-019-2761-y

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00348-019-2761-y