Abstract

The conventional computing method based on the von Neumann architecture is limited by a series of problems such as high energy consumption, finite data exchange bandwidth between processors and storage media, etc., and it is difficult to achieve higher computing efficiency. A more efficient unconventional computing architecture is urgently needed to overcome these problems. Neuromorphic computing and stochastic computing have been considered to be two competitive candidates for unconventional computing, due to their extraordinary potential for energy-efficient and high-performance computing. Although conventional electronic devices can mimic the topology of the human brain, these require high power consumption and large area. Spintronic devices represented by magnetic tunnel junctions (MTJs) exhibit remarkable high-energy efficiency, non-volatility, and similarity to biological nervous systems, making them one of the promising candidates for unconventional computing. In this work, we review the fundamentals of MTJs as well as the development of MTJ-based neurons, synapses, and probabilistic-bit. In the section on neuromorphic computing, we review a variety of neural networks composed of MTJ-based neurons and synapses, including multilayer perceptrons, convolutional neural networks, recurrent neural networks, and spiking neural networks, which are the closest to the biological neural system. In the section on stochastic computing, we review the applications of MTJ-based p-bits, including Boltzmann machines, Ising machines, and Bayesian networks. Furthermore, the challenges to developing these novel technologies are briefly discussed at the end of each section.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Modern computers, based on von Neumann architecture that solves numerical problems in a serial, deterministic, and highly precise way, have been extensively developed for decades and are still the mainstream of the fashion for information processing at present. However, the emergence of big data with increasing volume and complexity challenged the von Neumann computing paradigm, in that shuttling such information between the processor and the storage inevitably causes substantial energy consumption. Therefore, in the context of seeking a solution for the “von Neumann bottleneck” [1], novel computing paradigms beyond von Neumann architecture, i.e., unconventional computing, are desired. Contrary to the von Neumann paradigm that gives out guaranteed and accurate results, approximate computing [2] employs redundant computation and returns approximate results that are sufficient for their objectives such as recognition, classification, prediction, optimization, and so on. Such emerging paradigms are expected to achieve high performance and energy-efficient computing when involving big data processing, in that 1) the approximate computing uses many low-precision or probabilistic calculations, and thus is inherently resilient to errors, 2) most paradigms for approximate computing are in parallel that would benefit for calculation speed, and 3) some of the approximate computing associated paradigms are designed for storing information locally where it is processed so that extricating from the large energy dissipation caused by commuting data between processor and memory.

Among approximate computing, bio-inspired computing has recently attracted much interest due to its massive parallelism, high energy efficiency, adaptivity to varying and complex inputs, and inherent tolerance to fault and variation. Therefore, bio-inspired computing is especially useful for unstructured data processing such as recognition, one of the purposes of machine learning. The direct strategy for bio-inspired computing design is emulating the human brain, namely Neuromorphic computing [3]. Neuromorphic computing is based on a variety of artificial neural networks (ANNs) which are composed of the following two elementary units: artificial neurons and synapses. Synapses function as connectors with different variable weights (i.e., connection strength) to update and deliver information. While neurons that are interconnected by synapses receive signals from other neurons and emit spikes to the subsequent neurons if activated. Choosing a proper neural network for a particular computing task is one of the key issues associated with neuromorphic computing. Specifically, the rudimentary classification tasks that require differentiating binary states can be handled by the first generation of ANN [4], also called “perceptron”. A perceptron is the simplest ANN that constitutes one layer of neurons for inputs and another layer of neurons for outputs and the two layers are connected by synapses. As shown in Fig. 1(a), however, the single perceptron can only solve classification problems in a linear way and thus function analogously to AND, NAND, and OR gate. Therefore, one of the second-generation ANNs, the multi-layer perceptron (MLP) [5] or deep neural network (DNN) [6] in which hidden layers play an important role has been proposed for solving the nonlinear classification problems which are analogous to XOR gate shown in Fig. 1(b). In DNN, neurons in one layer are fully connected by neurons in the neighboring layer and information would be delivered unidirectionally. Additionally, by adapting the mode that how neurons are connected and interacted, other multi-layered neural networks have been proposed to perform computation tasks with upgraded efficiency. For example, the convolutional neural network (CNN) [7] would be advantageous for image recognition due to the cooperation among the convolutional layer, max pooling layer, and fully connected layer that consist of the CNN. The data processed in the DNN and the CNN are time-independent or static, while recurrent neural network (RNN) [8] concerns processing sequence data. RNN not only allows for cycles that could achieve related data among adjacent time steps, but also has differing levels of connectivity; therefore, RNN is very desirable for sound recognition, natural language processing, computer vision, etc.

a The classification problem can be solved by finding a straight line whose function is Y = w1X1 + w2X2, where wi is weight, Xi is input (i = 1, 2) and Y is output. The logic is analogous to AND, NAND, and OR gate. b The classification problem needs to be solved by finding a nonlinear line in that the two output states cannot be separated by any straight line. The logic is analogous to the XOR gate

Compared to biological neural networks, the first- and second-generation ANNs are much more computationally driven and would be in the category of non-spiking neural networks (non-SNN), whereas in recent years, researchers started to design an ANN that could replicate biological behavior closely in that biological neural systems would inherently process information with high efficiency. Consequently, the third-generation ANN, the spiking neural networks (SNN) [9], would be more biomimetically driven and has attracted much attention. In additional to the potential for saving energy, SNN offers a platform for realizing spike-timing-dependent plasticity (STDP) [10], one of the most efficient unsupervised learning algorithms and is capable of training data online. It is worth noting that the capabilities of the spiking neuromorphic system have not been realized by training and learning mechanisms comprehensively and the superiority of computing performance for the SNN and non-SNN is still under debate.

On the other hand, stochastic computing exploits randomness, and its physics rules are also competitive for solving problems that neuromorphic computing concerns. The unit of stochastic computing is a random number generator with a tunable output probability, which is called a probabilistic-pit (p-bit) [11]. For machine learning, the Boltzmann machine (BM) [12] is widely used as the architecture of stochastic computing, which is also called “stochastic neural networks”. A more commonly utilized Boltzmann model is the restricted Boltzmann machine [13]. Compared to a general BM, the restricted Boltzmann machine could speed up the training rate due to the restricted connection among the units. Another typical case of the stochastic neural networks is related to Bayesian calculations, i.e., the Bayesian network (BN) [14]. BN is a feed-forward neural network, and the nodes could be divided into parent and child nodes in terms of their causal sequences inherited from events, expecting parent-to-child directionality for the data delivery. Furthermore, stochastic computing is also capable of solving the computation tasks that adiabatic quantum computing concerns [15]. For example, the Ising model has been applied to solve combinatorial optimization problems (COP) such as the travel salesman problem (TSP) [16], while inverse problems such as integer factorization (IF) [17], which is very difficult for conventional computing, can be worked out by stochastic networks. Figure 2 summarizes the relationship among the aforementioned computing paradigms that will be discussed in more detail in this review.

The hardware unit implementation for such unconventional computing paradigms, furthermore, was initially supported by typically hundreds to thousands of transistors which would be undesirable for unconventional computing tasks because of the energy and area requirements. In recent years, it has been proposed that a single spintronic device would be capable of emulating the behavior of synapse, neuron, and p-bit in that such devices could be engineered to a variety of properties such as non-volatility, plasticity, stochasticity, and oscillation, which are key features of the computing units [18]. Magnetic tunnel junctions (MTJs), a typical structure of spintronic devices, have been investigated for not only information storage but also unconventional computing. Due to their versatile properties, together with the outstanding endurance, and CMOS-technology compatibility, MTJs are promising candidates for the hardware of unconventional computing with high performance. Moreover, the MTJs would be expected in different behaviors to cooperate with a particular computing paradigm, and this will also be discussed in more detail in this review.

The structure of this review is conducted as follows: in Sect. 2, we discuss the hardware based on MTJ for unconventional computing on the device level, illustrating the mechanism for the operation of MTJ-based devices, the device features, design principles, and recent works. Then we divided the discussion of unconventional computing on the architecture level into neuromorphic computing (Sect. 3) and stochastic computing (Sect. 4), giving an overview of the aspects of computing tasks and applications to which these unconventional computing systems have been investigated. Finally, we enumerated some challenges that need to be tackled and concluded with promising perspectives for unconventional computing.

2 MTJ-based devices for unconventional computing: from mechanism to applications

2.1 Mechanism

For magnetic materials, magnetic orders stem from the neighboring localized, exchange-coupled electron spins. From the perspective of classical physics, magnetic orders are regarded as the magnetic moment which is controlled by the spin angular momentum. Due to the controllable magnetic orders, the device based on magnetic materials could achieve information storage, logic computation, and other novel functionalities. At an early stage, the magnetic order is controlled by a magnetic field, and then, in order to be compatible with circuits, people started to manipulate magnetization in electrical ways. Especially, the spin-transfer torque (STT) effect [19] plays an important role in electrically changing magnetic orders. The spin-polarized conduction electrons can change the magnetic moment by exchanging the spin angular momenta between the conduction electrons and spin electrons. More specifically, the STT effect can be experimentally achieved in a structure of a non-magnetic spacer sandwiched by two magnetic layers with large/small saturation magnetization called pinned/free layer, respectively. When the spin-polarized electrons which are filtered by pinned layer reached the free layer, the spin-polarization component that is parallel to the magnetization of the free layer can be transmitted, while the component which is perpendicular to the magnetization would be absorbed and thus led to the rotation of the magnetization due to angular momentum conservation.

Although STT is difficult to control the magnetization of magnetic materials with high resistance, the magnetization could also be rotated by spin-orbital torque (SOT) [20] which does not require electrons to pass through the magnets. The SOT effect originated from spin-orbital coupling (SOC) [21], and the principle of SOC can be attributed to an effective magnetic field generated by an electrical field. A material with broken inversion symmetry could produce a net spin polarization due to the asymmetric spin scatterings in the bulk, and this is the spin-Hall effect (SHE) [22]. Then, the spin-polarized electrons would accumulate at the interface of the material and thus can be absorbed by an adjacent ferromagnet in the form of damping-like SOT. Another physical explanation is attributed to the Rashba effect [23]; electrons pass through an interface with the asymmetrical inversion and therefore obtain a spin polarization. The polarized electrons can generate a torque on the adjacent ferromagnet via the exchange coupling. Although the SHE and Rashba effects are dominant in conventional ferromagnet/heavy metal heterostructures, these two are not the only origin of SOT. The other effects, such as the quantum spin-Hall effect [24] in topological insulators, which could also generate SOT, are still under intensive investigation.

In addition to applying the spin torques to manipulate magnetizations, the magnetic anisotropy of the magnets can be changed and, therefore, control the magnetization alignment. This effect is the voltage-control magnetic anisotropy (VCMA) [25] that plays an important role in the stochastic MTJ. The VCMA-MTJ is important in the computing paradigms which require stochasticity, and this will be discussed in detail later.

Besides, an essential element in spintronic technology in the last two decades is the MTJ. The resistance of an MTJ depends on the relative orientation of the magnetizations in the pinned layer (i.e., reference layer) and the free layer. The discovery of the tunneling magnetoresistance (TMR) effect [26] is one of the milestones for integrating spin-based devices with CMOS technology. Specifically, TMR gives a very large magnetoresistance ratio so that it can provide enough signal strength to the CMOS sense amplifier [27].

2.2 Applications

2.2.1 Neuromorphic computing

Compared to conventional computing technology, one of the strategies for processing data more efficiently and energy-conserving is to emulate the brain. The brain consists of the following two elementary units: synapses, and neurons. Synapses operate as connectors of neurons, while neurons interconnected by synapses receive signals from other neurons and emit spikes to the subsequent neurons if activated. Inspired by the functionality of the brain, neuromorphic computing is being intensively developed and has exhibited outstanding performance for computational tasks such as classification, recognition, and prediction. Hence, at the device level, designing artificial synapses and neurons for high-performance neuromorphic computing is of foremost importance. In this part, we will explain the MTJ-based synapse and neuron in the aspects of device features, design principles, and recent works.

2.2.1.1 Artificial synapses based on MTJ

Selecting the type of neural network is dependent on the specified applications. To be specific, the non-SNN are much more computationally driven, while SNN is proposed to explicitly reproduce biological behavior such as STDP. The selected neural network model defines the behavior of the synapse. Therefore, the artificial synapse could be classified into synapse for non-SNN and synapse for SNN.

For the non-SNNs, the synapses could be regarded as an unstable memory device that does not require a 10-year retention time. These synapses for non-SNN are required to 1) represent the strength of the connection (encoding to different weights) between the connected neurons, 2) update the weights according to the output of connected neurons to realize the learning process or plasticity-like properties, and 3) keep the connection strength within one iteration (short-term memory functionality). On the other hand, the synapses for SNNs are attempted to emulate biological behavior in a further precise way. One of the popular inclusions for more complex synapse properties is the STDP mechanism, which requires the connection strength to change over time.

Generally, memory devices can be used as the artificial synapse because they can memorize and be repetitively rewritten. So far, many works apply memory devices to synapses in both non-SNNs and SNNs. For example, floating-gate transistors are used as analog memory cells for synaptic weights storage [28], [29], while conductive-bridging RAM changes the connection strength via electrochemical properties [30]. Likewise, memristors based on ferroelectric materials [31], [32] and phase change memory [33] have been applied in the hardware of synaptic systems due to their plasticity-like and especially the STDP-like behavior.

Spintronic devices, additionally, have been considered as a competitive candidate for the hardware implementation of synapses. Spintronic devices can be non-volatile and allow for a variety of tunable spin dynamics such as intrinsic stochastic switching, the dynamics of domain wall (DW), and so on. These various spin dynamics could emulate synapses with different behaviors. For instance, because uniform and continuous variation of synaptic weights are required to guarantee the accuracy of the training [6, 34], linear synaptic behavior is desired in non-SNNs that exploit supervised learning such as the backpropagation (BP) learning rule. Memristor-like behavior that the synaptic weights that depend on both input amplitude and duration time is required for the SNNs with unsupervised learning, especially for STDP learning rules [35], [36]. Spintronic devices could emulate the synaptic behavior for both non-SNNs and SNNs. The spintronic devices not only keep the merits of fast operation speed which outperform conductive-bridging RAM, phase change memory, and some of the non-spin-based memristors but also are energy-efficient compared to volatile floating-gate transistors.

Due to exhaustive back-and-forth memory-processor operations and inevitable leakage current, the early artificial synapse based on a group of transistors requires intensive energy [37]. The power consumption can be reduced by introducing non-volatile memory units into CMOS circuits. Compared to pure CMOS circuits, the proposed CMOS/MTJ-hybrid structures [38], [39] exhibit reduced energy consumption and computational latency when performing the classification and recognition tasks. Nevertheless, in such CMOS/MTJ-hybrid structures, the MTJs just function as associative memories to store the synaptic weights of hardware, which would not fully exploit the versatility of MTJs.

For the MTJ-based synapse, however, one of the key issues is how to use the binary MTJ to mimic the analog synapse, which would realize a gradual or semi-gradual change of synaptic weight and thus achieve high computational accuracy. There are mainly two strategies to represent the strength of the connection as follows: 1) gradually changed the probability of the binary switch (bistate), and 2) gradually changed states (multi-state).

At the very beginning, the binary MTJ is designed to be thermally stable to target 10-year information preservation by designing the high energy barrier between the different states. As a result, the energy consumption required to switch nonvolatile MTJ is relatively high, typically 100 fJ [40], as compared with 23 fJ per synaptic event [41]. Subsequently, if the circuit requires frequent changes in the stored information to realize rapid updates for the synaptic weights, the MTJs are not energy-efficient [42], [43]. Additionally, MTJs are required to have a minimum variation, which requires severe constraints on nanofabrication. When the energy barrier between the two states is comparable to thermal energy, changing the state of the MTJ requires less power but introduces much noise. For the paradigm of neuromorphic computing, on the other hand, the neural networks are tolerant to and even could harness noise, variability, and stochasticity for the computation [44].

Furthermore, in binary MTJs, the resistance cannot evolve gradually, but the probability of an MTJ switching during a voltage pulse can be tuned gradually by the amplitude and duration of the pulse. Using the bistate synapses makes learning slower but offers the network increased memory stability. Furthermore, given that spin-transfer-torque magneto-resistive random-access memory (STT-MRAM) has been intensively developed in both the academic and industrial world, the neuromorphic chips composed of spintronic devices would tend to start from STT-MTJ with binary switch behavior. STT-MTJs could comprehensively emulate the functionality of biological synapses because of their intrinsic stochastic switching behavior [45].

Based on this principle, Vincent et al. [46] designed an artificial synapse based on a single STT-MTJ. By encoding the binary states of the STT-MTJ to the two weights representing light and dark, as shown in Fig. 3(a), the artificial neural network composed of such STT-MTJ artificial synapse is capable of unsupervised learning. When an input neuron spikes, a brief read pulse is applied to the crossbar and currents will reach the different output neurons simultaneously. Then, by design choice, only the inputs coming from the P synapses are integrated by the output neurons. When an output neuron spikes, other output neurons will be inhibited and their internal variable is reset to zero. The synapses in the crossbar architecture successfully counted cars via recognizing the change of brightness in lanes, which is showed in Fig. 3(b). Due to the inherently stochastic switching, only two STT-MTJs switch states are enough for the presented example.

The ANN is composed of binary STT-MTJs that function as synapses. a Schematic of the crossbar architecture. Read operation occurs when an input neuron spikes and STDP (write) operation occurs when an output neuron spikes. Waveforms (1) and (2) are applied concurrently. b The final state of the MTJs is organized as the input pixels in the image. White is P, black is AP state. Every sub-image represents one output neuron. The figures are adapted from Ref. [46] with the authors’ permission

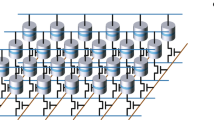

To further improve the performance of the STT-MTJ as a synapse, Locatelli et al. [47] reported strategies to effectively control the bit error rate by modulating the programming pulse amplitude or duration. Conversely, it is challenging for a synapse based on a single binary MTJ to handle complex computation tasks. Thus, the analog behavior of an artificial synapse is desired, resulting that such a synapse could exhibit multiple distinguished states corresponding to multiple discrete weights. To realize the analog behavior, embedding such binary-state STT-MTJ to crossbar frameworks has been proposed. Fig. 4(a), (b) shows that the optimized STT-MTJ crossbar synapse with multi-state is constructed by stacking several binary-state STT-MTJs [48] or connecting in the 2D architecture [49], named compound magnetoresistive synapse (CMS), respectively. The CMSs are offered an analog-like weight spectrum that results from different states of the individual MTJs leading to a gradual conductance modulation. CMSs are advantageous for number recognition tasks with high tolerance to fault and variation, nevertheless at the cost of device number and integration area. To solve this problem, Zhang et al. [49] proposed a 3D crossbar structure, that each MTJ is sandwiched by the vertical electrodes and the horizontal electrodes. The two vertical electrodes and the two horizontal electrodes are connected to the post-neurons and the pre-neurons, respectively.

The crossbar structure based on MTJ-array synapse for realizing multi-level weights. a multi-state synapse using several binary-state stacked STT-MTJs. The figures are adapted from Ref. [48] with the authors’ permission. b STT-MTJ crossbar synapse connected in parallel

Compared to STT-MTJs, in principle, SOT-MTJs are expected to perform better in terms of energy consumption, speed, and endurance [50]. Srinivasan et al. [51] demonstrated a SOT-MTJ which is a building block of the proposed all-spin SNN which is highly energy-efficient. The synapse based on the SOT-MTJ consumes less than 36 fJ per spiking event. It is also helpful for realizing the stochastic-STDP learning algorithm. The CMS based on the SOT-MTJs has also been reported. Such CMS with analog-like behavior can handle more complex computation tasks with high accuracy while keeping the power consumption low [52]. Alternatively, as shown in Fig. 5(a), Ghanatian et al. [53] created multiple states by putting multiple SOT-MTJs on a shared heavy metal layer but with different cross-section areas. Although the SOT-MTJ-based synapses have been proposed with progressive significance, the challenge of scaling is unavoidable. Therefore, developing an artificial synapse based on a single MTJ with multi-state would be desirable for reducing the area.

The MTJ-based synapse for realizing multi-level weights. a SOT-MTJs on a shared heavy metal layer but with different cross-section areas. b MTJs with a shared free layer. c A single MTJ combining binary switch and spin textures to achieve the four-state synapse. The figures are adapted from Ref. [53, 62], and [63] with the authors’ permission, respectively

The multi-state synapses are especially desired for SNN, which requires the connection strength to evolve continuously depending on the past activity of the connected neurons. The property is plasticity, which allows neural networks to learn and reconfigure. Magnetic devices are particularly well adapted for implementing plasticity [54] due to their memory effects and tunability. Embedding a magnetic DW in the MTJ structure can be used to implement synaptic plasticity. Such memristive behavior has been demonstrated in MTJ with more than 15 intermediate resistance states [55]. Furthermore, it has also been shown that similar continuous magnetization variations can be triggered by SOT in a magnetic stripe on top of an antiferromagnetic layer [56]. Memristive-like features can then be obtained by fabricating a tunnel junction on top of the bilayer stripe. These spintronic memristors could be used as multi-state synapses, similar to many strategies proposed for other memristive technologies [57], [58]. Moreover, Wang et al. [59] proposed a compact model of a synapse based on current-induced DW motion MTJ (CIDWM-MTJ), driven by the SOT, the CIDWM-MTJ exhibited reduced threshold current and a faster DW motion of 400 m/s compared to CIDWM-MTJ driven by the STT [60]. Cooperated with a peripheral circuit, the CIDWM-MTJ with low power consumption and high speed would be promising for high-performance SNN applications [61]. Besides, Siddiqui et al. [62] designed a linear synapse shown in Fig. 5(b) based on nine MTJs with a shared free layer to realize multilevel linear synaptic weight generation, which would be favorable for DNN applications. Lourembam et al. [63] reported a strategy for formatting and stabilizing metastable magnetic domains by the voltage pulse in the MTJ, combining binary switch and spin textures to achieve the four-state synapse by using only a single MTJ shown in Fig. 5(c). The MTJ was fabricated without any of the domain-wall pinning methods and, therefore, can alternatively realize metastable multi-domain states. Hong et al. [64] demonstrated a dual-domain-and-dual domain MTJ to realize the eight-state synapse.

2.2.1.2 Artificial neurons based on MTJ

The typical behavior of a neuron is to accumulate charge when the neuron’s membrane potential changes. The neuron would generate a spike when its membrane potential reaches a threshold.

McCulloch-Pitts Neuron model [65] is used in most ANNs. For this model, the output of neuron j is governed by the following equation:

where yj is the output value, f is an activation function, N is the number of inputs into neuron j, wi,j is the weight of the synapse from neuron i to neuron j, and xi is the output value of neuron i. In this neuron model, firstly, the neuron would integrate the weighted outputs of the pre-neurons through synapses. Next, this linearly combined integration is processed by the activation function of the neuron and then emits output to the next neuron. The activation function plays a key role in data processing. Choosing the activation function is heavily dependent on the particular neural networks and the different activation functions can be realized by the behaviors of the devices.

For artificial neurons applied in non-SNN, there are a variety of implementations of the traditional McCulloch-Pitts neuron model. The perceptron composed of CMOS [66], which implements a simple thresholding function, is commonly used in hardware implementation. As shown in Fig. 6(a), the simplest activation function is the step function [41], and the neuron hardware for this activation function requires less area utilization and would not be computationally intensive. However, the mainstream learning algorithms such as the BP algorithm are gradient-based. As the step function is not differentiable and not suitable for this algorithm, the other hardware-based activation functions, including the ramp-saturation function [67], linear [68], and piecewise linear [69] functions shown in Fig. 6(b), (c), have been implemented to match the gradient-based learning algorithm. As the complexity of the activation function is increased, i.e., from linear to nonlinear function, the overall accuracy of the learning process is increased, in that nonlinear activation functions, shown in Fig. 6(d) such as the basic sigmoid function [70] and the hyperbolic tangent function [71], gives derivatives with continuous variation offering a high resolution for the gradient-based learning algorithm.

Nonlinear activation functions, unfortunately, would cause complexity in computation and hardware implementation, The MTJ-based neuron could alleviate this challenge due to its nonlinear dynamics. For example, the motion of DWs could realize neural-like integration and thresholding. Besides, thresholding can be achieved by using a standard MTJ, which switches only if the amount of current it receives is above the critical current. The neurons together with the activation functions mentioned above are mainly for the non-SNN which is computationally intensive, and when the neurons are activated, they would not necessarily return to their initial states.

On the other hand, for artificial neurons applied in SNN, the behavior of fire means that when the neurons are activated, they would emit spikes and then back to their initial state spontaneously. A simple set of spiking neuron models belongs to the integrate-and-fire family, which is a set of models that vary in complexity from relatively simple (the basic integrate-and-fire) to those approaching complexity levels near that of the Izhikevich model [72] and other more complex biologically-inspired models. In general, the neuron models where action potentials are described as events are called “integrate-and-fire” models. Integrate-and-fire models have two separate components that are necessary to define their dynamics: 1) an equation that describes the evolution of the membrane potential, and 2) a mechanism to generate spikes. Although the “integrate-and-fire” models are still less biologically realistic but produce enough complexity in behavior to be useful in spiking neural systems. The simplest integrate-and-fire model maintains the current charge level of the neuron. Furthermore, there is a leaky integrate-and-fire (LIF) [73] implementation that expands the simplest implementation by introducing a leak term to the model, which leads to the potential for a neuron to decay over time. The LIF models use the following two ingredients: 1) a linear differential equation to describe the evolution of the membrane potential, and 2) a threshold for spike firing. It is one of the most popular models used in neuromorphic systems. Spin-torque nano-oscillators are specific types of MTJ, and the oscillation amplitudes have memory due to finite magnetization relaxation, which can imitate the leaky integration of neurons [74], [75]. Moreover, the next level of complexity of the neuron model is the general nonlinear integrate-and-fire method, including the quadratic integrate-and-fire model that is used in some neuromorphic systems [76]. These have also been used in neuromorphic systems. Nonetheless, the models aforementioned make use of the fact that neuronal action potentials of a given neuron always have roughly the same form, and no attempt is made to describe the shape of an action potential. If the shape of an action potential is always the same, the shape cannot be used to transmit information, i.e., rather information is contained in the presence or absence of a spike. As a result, action potentials are reduced to events that happen at a precise moment in time. Alternatively, another level of complexity is added with the adaptive exponential integrate-and-fire model [77].

In addition to the previous analog-style spiking neuron models, there are also implementations of digital spiking neuron models. The dynamics in a digital spiking neuron model are usually governed by a cellular automaton, rather than a set of nonlinear or linear differential equations. A hybrid analog/digital implementation has been created for neuromorphic implementations [78], as well as implementations of resonate-and-fire [79] and rotate-and-fire [80] digital spiking neurons. A generalized asynchronous digital spiking model has been created to enable the exhibition of nonlinear response characteristics [81]. Digital spiking neurons have also been utilized in pulse-coupled networks [82], and a neuron for a random neural network has been exploited in hardware [83].

2.2.2 Stochastic computing

Conventional computing is based on the binary representation of information in terms of “0” and “1”, as known as “bits”. These bits of information are processed and stored by stable deterministic devices like the MOSFETs or MTJs with stable magnets having energetic barriers of the order of 40–60 times the thermal energy at room temperature. Probabilistic spin logic (PSL) is a new paradigm of computing [84] that relies on probabilistic bits (p-bits for short) that fluctuate randomly between 0 and 1, with probabilities that can be tuned by an input. Besides, exploiting physics properties to do computation has become increasingly attractive in recent years, because such computation can naturally converge, which is governed by the physical laws instead of complex algorithms. In the field of physics-inspired computing, stochastic computing has gained significant interest due to its excellent performance in solving non-deterministic polynomial (NP)-hard problems. The unit of stochastic computing is p-bit which is realized by several devices with non-deterministic behavior. In this part, we will illustrate the device features, design principles, and recent works of the MTJ-based p-bits.

2.2.2.1 P-bit based on MTJ

Compared to the traditional deterministic von Neumann approach, stochastic computing would endow improved efficiency for solving computationally hard problems such as the COP [85] and factorization [17]. For stochastic computing, a large number of independent sources of the stochastic signal are needed, in that they are often based on Markov chain Monte Carlo techniques such as Gibbs sampling [86]. Therefore, energy-efficient, high-density hardware for generating true-random noise sources is of significance.

P-bits are evolved from random number generators (RNGs) and the key feature of the p-bits is the tunability of the probability for their outputs, i.e., concerning the inputs, the outputs of hardware that function as a p-bit should obey a specific probability distribution, generally, the sigmoidal-like probability distribution. The ideal p-bit behavior is described by the following two equations:

where Eq. (2) represents the state of the ith p-bit (given by mi) as a function of its input Ii. “r” is a random number with a uniform distribution between – 1 and 1, that captures the stochastic aspect of the output. Equation (3) provides the expression for the input Ii in terms of the connection strengths Jij of other p-bits in the network to the ith p-bit and the local bias hi. This is analogous to the concept of a Binary stochastic neuron (BSN) used in the field of stochastic neural networks [12].

MTJs have been integrated into CMOS technologies for memory applications, and they are engineered to have stable magnetic states. However, MTJs could become naturally fluctuating if choosing proper materials or geometry, resulting in such MTJs being one of the natural candidates for p-bit hardware. Evaluating the speed of such fluctuation is essential because it relates to the speed of computation in a stochastic computing scheme [87]. Stochastic fluctuation has been reported in superparamagnetic MTJs [17, 88], [89] with low uniaxial anisotropy and energy barriers, operating in the millisecond time regime. For the superparamagnetic MTJs, the fluctuation rate follows an Arrhenius-like relation [90]:

where the magnetization of a uniaxial anisotropy nanomagnet has two stable directions along its anisotropy axis: “Up” and “Down” for nomination. The two states are separated by an energy barrier, EB, which stabilizes the magnetization in one of the states. τ0 is called the attempt time, a material-dependent parameter of the nanomagnet. An exponential increase in fluctuation speed is expected upon reducing the energy barrier EB, with a frequency scale set approximately by the attempt frequency of 1/τo ∝ αγHk, where α is the Gilbert damping coefficient, Hk is the anisotropy field, and γ is the gyro-magnetic ratio. However, because both energy barrier EB = mHk/2 where m is the total moment of the macro-spin, and attempt frequency 1/τo reduce with the decreasing of Hk, the fluctuation speed of superparamagnetic MTJs is largely limited.

A potential approach to increase the fluctuation speed, consequently, is to exploit easy-plane anisotropy that can allow magnetic fluctuation confined in the plane and meanwhile keep high-speed fluctuation dynamics [91]. In the works [92], the energy barrier is determined by the shape of the MTJ. A low relative energy barrier could be achieved by constructing a circular in-plane junction. The attempt frequency for this magnetization configuration is then related to the free layer’s easy-plane anisotropy field, which can be remarkably higher than the easy-axis anisotropy field Hk, thus endowing a faster fluctuation speed. Furthermore, nanosecond fluctuation in the in-plane MTJs [93]-[94] has been achieved by investigating the mechanism for controlling relaxation time.

Introducing the VCMA effect to the MTJs is another strategy for achieving fast fluctuation speed. By applying voltage pulses, the magnetic anisotropy of the free layer would be switched between the in-plane and out-of-plane directions together with the thermal noise, achieving the random fluctuation of the magnetization without reducing the energy barrier. The VCMA-MTJs [95], [96] with stochasticity have been applied as not only true random number generators (TRNGs) but also p-bits the output probability could be tuned by the amplitude and the enduring time of the voltage pulses.

Besides the stochastic binary-switching MTJs, spin torque nano-oscillator (STNO) could also be operated as hardware of p-bit, exploiting intrinsic frequency fluctuation caused by thermal noise [97]. Cooperated by a peripheral circuit, the digital p-bit based on STNO would be able to act as a p-bit array by time division multiplexing, which overcomes the limitation of calibration and coupling connections encountered by synchronous p-bit arrays. More details of the applications of MTJs to stochastic computations will be discussed in Sect. 4.

3 Neuromorphic computing

In the previous section, MTJ-based artificial synapses and neurons were introduced. Facing the challenge of the Von Neumann bottleneck and the decline of Moore's Law [98], more efficient neuromorphic computing emerges as the times require, which is inspired by the human brain and can process and store the data simultaneously. Spintronic devices provide a feasible approach to building neuromorphic computing systems, due to their intrinsic dynamics being akin to biological synapses and neurons. Additionally, their low energy consumption, non-volatility, high speed, and potential for pure spin current transport make them one of the most promising candidates [99], [100].

Inspired by the human brain, ANN was created to mimic the functionality of the human brain to store and process information. Similar to the human brain, ANNs also consist of many synapses and neurons. As mentioned in Sect. 2.1, synapses and neurons are the fundamental building blocks of the brain. Among them, synapses are related to the formation of memory, while neurons are related to the information processing [101].

In the nervous system of the human brain, each synapse is a specialized junction with two neurons, which allows a neuron to transmit electrical or chemical signals to another neuron, as shown in Fig. 7(a). The information is transformed from the axon of the pre-neuron to the dendrite of the post-neuron through a synapse. In conventional ANN, there are generally two types of synapses: one requires multilevel memory, while another one relies on stochastic binary memory devices. As we have mentioned, one compact MTJ is sufficient to imitate the functionalities of the biological synapse.

a Schematic of biological neuron and synapse. b Diagram of perceptron with its weights, input function, activation function, and output. c Architecture of MTJ-based MLP for recognizing the hand-written digit. d The neuron model based on MTJ used in (c). The figures are adapted from Ref. [110] with the authors’ permission

Neurons play an essential role in producing and transmitting action potentials in neural networks. Their functionalities are intricate and plenteous [88]. However, in the conventional ANN, an artificial neuron is a mathematical function based on a model of biological neurons. Each neuron takes inputs, weighs them separately, sums them up, and passes this sum through a nonlinear function to produce output, where the nonlinear activation functions are extracted from the complex neural mechanisms [101]. The same as artificial synapses, MTJs exhibit great potential for mimicking artificial neurons. According to the characteristics of MTJ switching, the neuron models can be distinguished into two categories: deterministic and stochastic neurons [47].

The content for this section is organized as follows: First, the development and recent progress of neuromorphic computing are reviewed, including the MTJ-based single perceptron, multi-layered perceptron, conventional neural network, and recurrent neural network. The encoding approaches and learning methods are highlighted. The last part concludes the topic and envisions the challenge and prospects.

3.1 Artificial neural networks

In this part, several different neural networks are introduced, including MLP, CNN, RNN, and oscillator neural networks. From multi-layered perceptron to CNN, by increasing the number of layers or changing the network architecture, it is possible to build and perform tasks such as image recognition with a large number of neurons and synapses based on MTJs. In addition, RNNs and oscillator neural networks show potential for time-domain signal processing. ANN based on magnetic nano-oscillator and RNN will be discussed separately.

3.1.1 The perceptron based on MTJ

The development of neural networks has mainly gone through three periods: The first generation is a perceptron capable of binary operations. The second generation is an MLP and CNN with hidden layers, and the third generation is the event-driven SNN.

The concept of perceptron has a landmark effect on the development of the neural network. The single-layer perceptron model was proposed by Frank Rosenblatt in 1958 [102]. A perceptron is implemented as a binary classifier, which decides whether an input belongs to a specific class. As shown in Fig. 7(b), a perceptron consists of four main parts including input values, weights, net sum, and an activation function. During the learning process, the input values are multiplied by their weights. Additionally, all of these multiplied values are added together to create the weighted sum. The weighted sum is, after that, applied to the activation function, producing the perceptron's output, and only when the weighted sum exceeds a certain threshold, the neuron is activated. To ensure the output is mapped between (0, 1) or (− 1,1), the step function is chosen to be the activation function. In addition to the step function, the activation function also includes the sigmoid function (\(f\left(x\right)=1/(1+{e}^{-x})\)) [103], the ReLU function (\(\mathrm{ReLU}(x)=\mathrm{max}(0, x)\)) [104], the tanh (\(\mathrm{tanh}\left(x\right)=(1-{e}^{-2x})/(1+{e}^{-2x})\)) [105], and so on. Since the step function is not differentiable at x = 0, which makes it unusable for BP. The sigmoid function is the most widely used class of activation functions, with an exponential shape, which is the closest to a neuron in the physical sense. The output range of the sigmoid is (0, 1), which has good properties and can be represented as probability or used for input normalization. However, sigmoid also has its own shortcomings. The first point, the most obvious, is saturation. Specifically, in the process of BP, the gradient of the sigmoid will contain a factor, once the input falls into the saturation region at both ends, the factor will become close to 0, resulting in the gradient becoming very small in BP. At the same time, the network parameters may not even be updated, making it difficult to train effectively. This phenomenon is called gradient disappearance. The sigmoid network will produce gradient disappearance within 5 layers. The second point is the offset phenomenon of the activation function. The output values of the sigmoid function are all greater than 0 so that the output is not the mean value of 0, which will cause the neurons in the latter layer to get the non-zero mean signal of the previous layer as input. To overcome this problem, the tanh function is proposed. Compared to the sigmoid function, its mean of output is 0, making it converge faster than the sigmoid and reducing the number of iterative updates. However, like sigmoid, the gradient will vanish. The ReLU function is proposed to solve the saturation of sigmoid and tanh. When x > 0, there is no saturation problem. Consequently, ReLU can keep the gradient from decaying when x > 0, thereby alleviating the problem of gradient disappearance.

3.1.2 Multi-layered perceptron and convolutional neural network

Nevertheless, the perceptron has only the output layer neurons for activation function processing, that is, only one layer of functional neurons, which limits its learning ability. In 1969, Minsky and Papert [106] proposed that the perceptron can only solve linearly separable problems, that is, if there is a plane that can separate the two types of modes, the learning process of the perceptron will definitely converge. Nevertheless, for nonlinear separable problems, the perceptron learning process will have fluctuations and cannot obtain a suitable solution, which makes the perceptron unable to solve even simple nonlinear separable problems such as XOR. After a downturn for the first generation of AI, multiple layers of functional neurons are considered. This led to the concept of MLP [107] [108], [109], also known as the neural networks (NN), in the 1980s. Unlike the single perceptron, MLP has multiple hidden layers, and it is capable of solving both linearly and nonlinearly separable problems.

Figure 7(c) shows an MLP built by voltage-controlled stochastic MTJs [110]. The structure of the stochastic neuron model is an MTJ, which consists of CoFeB/MgO/CoFeB layers, as shown in Fig. 7(d). Its stochastic switching behavior is attributable to the VCMA effect by altering bias voltages. The electric bias changes the switching probability between the stable parallel (P) and antiparallel (AP) states, which can be probed readily by measuring the time average of the resistance or voltage across the MTJ. More importantly, the switching probability curves under various external current densities resemble a commonly used activation function, the sigmoid function. The MLP composed of MTJs has trained to recognize the handwritten digits from the MNIST dataset with about 95% accuracy.

As the problems that need to be solved become more complex, more hidden layers will be needed, such as speech recognition often requiring 4 layers. However, it is also common for image recognition problems to require 20 layers, leading to the number of trainable parameters increasing dramatically. For example, assuming that the input picture is a 1 K × 1 K picture, the implicit layer has 1 M nodes, and there will be 1012 weights that need to be adjusted, which will easily lead to overfitting and local optimal solution problems. In this case, the learning efficiency of MLP is limited, therefore, the concept of DNN is proposed [111], and new architectures start to be used to improve computational efficiency. Typical representatives of new architectures include the CNN and the RNN, which are widely used neural network architectures nowadays.

As shown in Fig. 8(a), CNN [7, 112], [113] is widely used in image recognition, and its architecture includes different types of layers, including the convolutional layers, max pooling layers, and fully connected layers. The convolutional layer is used to find features. The features of the image can be extracted through the convolution operation so that some features of the original signal can be enhanced, and the noise can be reduced. The pooling layer is used to reduce the amount of data processing while retaining useful information. Sampling will neglect the specific position of a feature, because after a certain feature is found, its position is no longer important, and only the relative position of this feature and other features is necessary. At last, the fully connected layer is used to make classification judgments.

a The architecture of CNN for image recognition. b The architecture of STT-computing in memory which implements the conventional operation. c XNOR-Net topology with STT-computing in memory as conventional layers. d The accelerator for BCNN and the main compute flow for convolutional layers of BCNN. The figures are adapted from Ref. [114], with the authors’ permission

The input layer reads in a simple regularized image. The units in each layer take as input a set of small local neighbors in the previous layer. Through the local perception field, neurons can extract some basic visual features, such as directed edges, end-points, corners, and so on. These features are then used by higher-level neurons, and basic feature extractors that apply to a part also tend to apply to the entire image. By using this feature, CNN uses a group of units distributed in different positions of the image but with the same weight vector to obtain the features of the image and form a feature map. At each location, the units from different feature maps get their own types of features. Different units in a feature map are restricted to perform the same operation on local data at various locations in the input map. This operation is equivalent to convolving the input image with a small kernel. A convolutional layer usually contains multiple feature maps with different weight vectors, so that multiple different features can be obtained at the same location. Once a feature is detected, its absolute position in the image becomes less important as long as its relative position with respect to other features has not changed. Therefore, each convolutional layer is followed by a pooling layer. The pooling layer performs local averaging and down-sampling operations, reducing the resolution of the feature map and reducing the sensitivity of the network output to displacement and deformation. The role of the fully connected layer is mainly for classification. The features obtained through the convolution and pooling layers above are classified at the fully connected layer. The fully connected layer is a fully connected neural network. The proportion of feedback from each neuron is different. Finally, the classification results are obtained by adjusting the weights and the network.

In the overall system architecture, one CNN subarray output could relate to a long interconnect and amplifier to one or more inputs of another CNN subarray. Connections between CNN subarrays are programmed with multiplexers. Direct connections between layers speed up deep CNNs. CNN makes full use of the local information in the image. There are inherent local patterns in images (such as contours, boundaries, human eyes, noses, mouths, etc.) that can be exploited, and it is clear that the concepts in image processing should be combined with neural network techniques. For CNNs, not all neurons can be directly connected, but through the “convolutional kernel” as a mediation. The same convolutional kernel is shared within all images, and the image retains its original positional relationship after the convolution operation.

MTJs have been widely used to build CNNs [114,115,116]. Pan et al. [114] proposed a multilevel cell-based STT-MRAM computing in-memory accelerator for a binary convolutional neural network (BCNN). Fig. 8(b) shows the architecture of STT-computing in memory used in this paper. The modified sensing circuit is designed for logic and full-addition operation. In the meanwhile, the mode controller decides the exact working mode. In this architecture, one cell is composed of two MTJs and two bits are stored in one cell. The addition operation of the two bits is implemented within the unit, which reduces the number of required transistors and reduces the power consumption. Fig. 8(c) shows the XNOR-Net topology and XNF-Net is used as the fully connected layer. The convolution operation can be implemented by the above-mentioned STT-computing in memory. As shown in Fig. 8(d), first, the process of input preprocessing is performed, i.e., batch normalization and binarization of the input, corresponding to the path of the blue arrow (input data flow) in Fig. 8(d). The process of weight preprocessing is shown by the path of the purple arrow (weight data flow) on the left side of Fig. 8(d), and the weights are binarized. The preprocessed inputs and weights are fed into the proposed convolutional layers. The weights stored in the computing in-memory array are shared, as we mentioned as one of the advantages of CNNs. The green and orange arrows in the convolutional layers represent binary AND operations and bit counting operations, respectively. The trained scale factor and convolution result are passed to the multiplier in the APU, and the convolution calculation is completed. The final pooling operation further reduces the number of parameters.

Above all, CNN greatly reduces the trainable parameters while ensuring the depth of the network. For image recognition applications [117, 118], CNN can efficiently extract image features by convolution operations and perform tasks such as classification or recognition. Nonetheless, the deepening of its layers cannot reflect the effect in temporal sequence and is no longer suitable for processing time-domain problems such as speech recognition. Facing this, RNNs are proposed [119], which incorporate feedback operations.

3.1.3 Recurrent neural network

Although the fully connected neural network can predict something, the input of the previous data and the input of the latter data are completely independent, which makes it impossible to deal with the data with sequence information. In many scenarios, yet sequence information is indispensable. For instance, to guess what the next word of the text is, usually information from the front part of the text needs to be used, because all the content in the text does not exist alone. In order to solve the “current output of a sequence is also related to the previous output” problem, RNN was proposed [120], as an important branch of artificial neural network. It contains a feedback mechanism in the hidden layer to achieve effective processing of sequence data. It is also known as a feedback neural network. RNNs have the powerful ability to store and process contextual information, and they have been widely used in recognition [121], natural language processing [122], computer vision [123] and other fields.

From the viewpoint of neuroscience, RNN aims at mimicking, in a reductionist scheme, how the human brain processes information. In this context, RNN assumes that the neurons are embedded in a randomly connected complex network whose intrinsic activity is modified by external stimuli. The persistent neural network activity makes the information processing of a given stimulus occur in the context of the response to previous excitations. The generated network activity is projected into other cortical areas that interpret or classify the outputs. It was this bio-inspired view that motivated one of the original RNN concepts. The main inspiration underlying RNN is the insight that the brain processes information generating patterns of transient neuronal activity excited by input sensory signals [124]. Information processing using a single dynamical node as a complex system.

Figure 9(a) shows the network structure of RNN. Through the loop connection on the hidden layer, the network state of the previous moment can be transmitted to the current moment; meanwhile, the state of the current moment can also be transmitted to the next moment. At time t, the hidden unit h receives data from two aspects, i.e., the value of the hidden unit at the previous moment of the network ht−1, and the current input data xt, and the output is calculated at the current moment through the value of the hidden unit. The input xt−1 at time t − 1 can then influence the output at time t through a loop structure. The forward calculation of RNN is carried out in time series, and the parameters in the network are updated using the time-based BP algorithm. Wsh is the weight matrix from the input unit to the hidden unit. Whh is the connection weight matrix between hidden units. Why is the connection between the hidden unit and the output unit weight matrix. by and bh are the bias vectors. The parameters required in the calculation process are shared. As a result, RNN can process sequence data of any length. The calculation of ht requires ht−1, the calculation of ht−1 requires ht−2, and so on. Therefore, the state at a certain moment in the RNN depends on all the states in the past. RNN can map sequence data to sequence data output. However, the length of the output sequence is not necessarily the same as the length of the input sequence. According to different task requirements, there will be various correspondences. As shown in Fig. 9(b), an RNN consisting of 40 MTJs is trained for the Chinese character recognition [125]. The black lines show the feedback connections where the information is transported, and the red lines are connections between output nodes and every MTJ.

a The typical diagram of RNN, and the connection to the next step, which is represented by the dashed line. b MTJ-based RNN for the Chinese character recognition. The red lines show the connections from every MTJ to output nodes with adjustable weights. The black lines show the feedback connections that transport the output signal to every MTJ in the RNN. c The difference between RNN and RC. d Schematic of a RC system using the spin dynamics in MTJs with S1 as the pinned layer and S2 as the free layer. The figures are adapted from Ref. [125, 126] with the authors’ permission

Reservoir computing is a computing framework derived from the theory of recurrent neural networks. Reservoir is a stationary, nonlinear system with internal dynamics that map input signals into a higher-dimensional computational space [120, 126, 127]. The architectural comparison of RNN and RC is shown in Fig. 9(c). RNN consists of input, intermediate, and output, and the information of the intermediate layer recursively propagates itself. The state of the middle layer is determined by the current input and the state of the past middle layer, that is, the middle layer in RNN has a memory effect. All weight matrices for the input (Win), middle (W) and output (Wout) are trained to obtain the desired output. However, when the middle layer has sufficient memory effects and non-linearities, computation can be achieved only by optimizing the output matrix (Wout). This led to the concept of RC being proposed. The typical structure of RC consists of an input layer, an output layer, and a dynamic reservoir, as shown in the lower part of Fig. 9(c). The input layer feeds the input signals to the reservoir via fixed-weight connections which are randomly initialized. The reservoir maps the input signals into higher dimensions before processing them. This requires the reservoir to be sufficiently complex, nonlinear, sparsely populated, self-organized in a certain manner and capable of short-term memory. The reservoir usually consists of a large number of randomly interconnected nonlinear nodes, constituting a recurrent network, that is, a network that has internal feedback loops. Under the influence of input signals, the network exhibits transient responses. These transient responses are read out at the output layer via a linear weighted sum of the individual node states. The objective of RNN is to implement a specific nonlinear transformation of the input signal or to classify the inputs. Classification involves the discrimination between a set of input data, for example, identifying features of images, voices, time series, and so on. The only part of the system that is trained is the output layer weights with fixed connections. As shown in Fig. 9(d), RC based on MTJs has been proposed [126], where the MTJs are driven by STT.

Macrospin simulation is conducted for the spin-dynamics in MTJs, for RC. RNN can be seen as a neural network that passes on time, and its depth is the length of time. As we have mentioned, the “gradient disappearance” phenomenon is about to appear again, but on the timeline. As a result, RNNs have the problem of not being able to solve long-term dependencies. In order to solve the above problems, long short-term memory is proposed, which realizes the memory function in time through switching the cell door and prevents the gradient from disappearing.

In addition to ordinary MTJs, STNOs [18, 74] are used as the building blocks of neural networks, due to their several unique features. The structure of STNO is shown in Fig. 10(a). According to the principle of STT [128], the oscillation frequency of the STNO can be controlled by adjusting the input voltage [129]. In a biological neural network, synapses cannot be completely separated from neurons. the neuron-synapse relationship in STNO-based neural networks can better reflect this biological relationship. Further, the relationship between the oscillation frequency of STNO and the applied current or magnetic field is highly nonlinear, leading to a direct implementation of nonlinear activation functions. In addition, STNOs can be coupled by means such as direct exchange, magnetic fields, or currents, which gives them the potential to scale to large networks. As shown in Fig. 10(b), a single STNO is used to process the speech file using time multiplexing [130]. A single oscillator can simulate 400 neurons by periodically assigning time intervals to each neuron's state and using finite relaxation times to simulate coupling between neurons. This RC network can achieve a recognition rate of up to 99.6% for MNIST TI-46 speech digits. The upper part of Fig. 10(c) shows a coupled STNO-based neural network for vowel recognition [131]. The first neural layer consists of two individual neurons A and B. The input is represented by the frequency through two microwave signals fA, and fB. Changing the bias currents of the STNOs can change the intrinsic frequencies of the oscillators. The second layer is composed of 4 full-connected neurons. The lower part of Fig. 10(c) shows the specific implementation method of the above network. If the i-th neuron in the second layer is synchronized with neuron A in the first layer, the equality of their frequencies simulates a strong synaptic coupling. On the contrary, neuron A and neuron i with independent dynamics and frequencies simulate weak synaptic coupling between them. The strength of these synapses can be tuned by changing the bias current of each oscillator in the second layer. In many applications [130,131,132,133], STNO exhibits good stability as well as reliability and can achieve complex functions with fewer devices and higher energy efficiency.

NC with STNOs. a The structure of STNO. When a d.c. current Idc is applied, the magnetization of FL gives an oscillating voltage due to the oscillating magnetoresistance. b The neuron model in RNN. Using time multiplexing in pre- and post-processing, a single STNO gives state of the art performance as a reservoir in a reservoir computing scheme, here recognizing the particular spoken digit as ‘1’. c Upside: schematic of RNN for vowel recognition. Downside: the input is represented by the frequencies of two microwaves applied through a strip line to the oscillators. I1–4 represent the bias currents and they can manipulate the natural frequencies of 4 STNOs. These STNOs can be tuned so that the synchronization pattern between the oscillators corresponds to the desired output. The figures are adapted from Ref. [130, 131] with the authors’ permission

Above all, the first-generation neural network, also known as the perceptron, was proposed around 1950. It has only two layers, the input layer and the output layer, which are mainly linear structures. It cannot solve linearly inseparable problems, and it cannot do anything with slightly more complicated functions, such as the XOR operation. In order to solve the defects of the first-generation neural network, Rumelhart, Williams et al. proposed the second-generation neural network i.e., MLP, around 1980. Compared to the first-generation neural network, the second-generation has multiple hidden layers, which can introduce some nonlinear structures and solve the defect that the nonlinear problem could not be solved before. To conquer the problem of increasing the number of hidden layers and increasing the parameters sharply, CNN was proposed, which greatly improved the computational efficiency. To solve the sequence correlation problem, RNNs are proposed, and because the neurons are continuously interconnected, the second-generation neural network generally supports the BP [134] learning method, which is another enormous improvement in learning efficiency.

3.2 Spiking neural network

The neural networks mentioned above are usually fully connected, receiving continuous values and outputting continuous values. Although contemporary neural networks have achieved breakthroughs in many fields, they are biologically imprecise and do not essentially mimic the mechanisms of the human brain. Therefore, the third generation of a neural network, SNN, was proposed [9, 135], and uses models that best fit biological neuron mechanisms to perform computations and aims to bridge the gap between neuroscience and machine learning. Compared to the previous two generations of neural networks, SNNs are closer to biological neuron mechanisms. SNNs use spikes, which are discrete events that occur at points in time, rather than the usual continuous values in ANNs. Each peak is represented by a differential equation representing a biological process, the most important of which is the neuron's membrane potential. Essentially, once a neuron reaches a certain potential, a spike occurs, and neurons that subsequently reach that potential are reset. Furthermore, SNNs are usually sparsely connected and take advantage of special network topologies.

Neurons in an ANN communicate with each other using activations encoded with high precision and continuous values and only propagate information in the spatial domain (i.e., layer by layer). As can be seen from the above equations, the multiply-and-accumulate of inputs and weights is the main operation of the network. However, in the SNN, communication between spiking neurons is through binary events, rather than continuous activation values. The spikes from the previous neuron are transmitted to the dendrites through synapses and finally processed by soma. The equations of SNN are shown as follows,

where t represents the time step, τ is a constant, and u and s represent the membrane potential and output peak. ur1 and ur2 are the resting potential and the reset potential, respectively. wj is the weight of the jth input synapse. \({t}_{j}^{k}\) is the moment when the kth pulse of the jth input synapse fires (i.e., the state is 1) within the integration time window Tw. \(K(t-{t}_{j}^{k})\) is the kernel function representing the delay effect. Tw is the integration time window. uth is a threshold, which means whether to fire once or not.

When the membrane potential u(t) (that is, the implicit potential of soma) is higher than the threshold uth, the spiking neuron is regarded as fired, at which time the output potential s(t) is set to 1, and then u(t) returns to the reset potential ur2. When u(t) is lower than uth, it does not fire, and the output remains at 0 at this time. At each time step, the update process of u(t) satisfies a differential equation, as shown above. At each time step, the value of u(t) should drop by a value as large as u(t)-ur1, where ur1 is the resting potential. In the meanwhile, at each time step, the value of the membrane potential u(t) should rise by a value, the value of which is related to the j input synapses of this neuron, and the weight of each input synapse is wj, and the contribution of this synapse to the rise in membrane potential is\({\sum }_{{t}_{j}^{k}\in {S}_{j}^{{T}_{w}}}K(t-{t}_{j}^{k})\), i.e., in \({S}_{j}^{{T}_{w}}\) pulses, if the input pulse at time \({t}_{j}^{k}\) is the fire state (ie, 1 state), then \(K(t-{t}_{j}^{k})\) is calculated once and accumulated.

Unlike ANNs, SNNs use sequences of spikes to transmit information, and each spiking neuron experiences rich dynamic behaviors [135, 136]. Specifically, in addition to information propagation in the spatial domain, history in the temporal domain also has a close influence on the current state. As a result, neural networks typically have more temporal generality and lower accuracy than neural networks that primarily propagate through space and activate continuously. Since spikes are only fired when the membrane potential exceeds a threshold, the overall spike is usually sparse. Furthermore, since spikes are binary, i.e., 0 or 1, if the integration time window Tw is adjusted to 1, the multiplication between the input and the weights can be eliminated. For the above reasons, SNN networks can generally achieve lower power consumption compared to computationally intensive ANN networks.

Although SNN has many advantages such as biological proximity, low power consumption, etc. There has long been a debate about the utility of SNNs as computational tools in AI and neuromorphic computing [137, 138], especially compared to ANN. Over the past few years, these doubts have slowed down the development of neuromorphic computing, and with the rapid progress of deep learning, researchers have tried to alleviate this problem at the root, people want to strengthen the SNN by means such as improving the training algorithm [136, 139], [139,140,141] to alleviate this problem.

3.2.1 Biological synapses based on MTJs

In general, learning in the neural network is achieved by adjusting synaptic weights. Traditional ANNs mainly rely on gradient descent-based BP algorithms [139, 142], while in SNN, because the function of the spiking neuron is usually a non-derivable differential equation, it is extremely difficult to implement BP in SNN. There are three mainstream ways of SNN implementation: The first is to convert traditional ANN to SNN without considering any SNN characteristics [143]. However, the trained network is fully converted into a binary spike-based network. For input, the input signal needs to be encoded as a pulse train. All neurons need to be replaced with corresponding spiking neurons, and the weights obtained from training need to be quantified. The second method is BP [144]. Although it is true that the spike function of the spiking neuron cannot be directly derived to calculate the gradient, researchers have come up with many methods to estimate the gradient of the changing parameters in the network for BP, including Spikeprop [145], Slayer, etc. Although these algorithms are still controversial, they do reduce the training complexity of SNNs to some extent. The third is using STDP [146]. The principle is to use STDP to adjust the weights, thresholds, synaptic delays, and other parameters of the SNN during the training process and obtain parameters that meet the requirements of the indicators (such as classification, recognition accuracy, etc.) and the training process is completed. Lastly, the parameters are fixed and the trained SNN is obtained. Compared to the previous two approaches, STDP is closer to the actual situation in biology. It has been the most widely used method so far. Its key feature is that if presynaptic neuron activity (electrical impulse release) precedes postsynaptic neuron activity, it will cause an increase in the strength of synaptic connections. Nevertheless, if the presynaptic activity lags the postsynaptic activity, inhibition will result in weakening the synaptic connection. The effect of such temporal sequencing of presynaptic and postsynaptic activities on synaptic transmission has been thought to be directly related to brain learning and memory functions.

The learning method of biological neurons is unsupervised learning. Consequently, the initial training of SNN is considered to be unsupervised. The Hebb rules [146] provide a firm theoretical basis for the direct training of SNNs, which state that the strength of synaptic connections between two neurons changes as the neuron state changes. Extended from Hebb's rule, the STDP mechanism [147] not only is the basis for the realization of biological learning and memory functions but also becomes the basic training principle of SNN. Long-term potentiation and long-term depression in synaptic transmission function are shown in Fig. 11(a). STDP studies the relationship between the time interval between pre-neuron and post-neuron firing and the strength of the synaptic connection between the two. When a post-neuron excites a spike sequence, if the excitation time is later than the arrival time of the spike from the previous neuron, the synaptic connection strength between the two is enhanced. The smaller the time difference, the greater the strength and the synaptic connection. The weight value is closer to the long-term potentiation in the upper half of the ordinate; on the contrary, if the excitation time is earlier than the arrival time of the pulse from the previous neuron, the synaptic connection strength between the two will be weakened. STDP can be expressed by the following equation:

a The typical STDP curve. If the presynaptic neuron spikes just before the postsynaptic neuron, the synaptic weight increases, and if the postsynaptic neuron spikes just before the presynaptic neuron, the synaptic weight decreases. b Left side: The heating pulse and the switching pulse applied on the MTJ and their time interval. Right side: The MTJ-based synapse following STDP, which is consist of two nanomagnets separated by a nonmagnetic spacer (MgO). The red curve is the switching probability of the artificial synapse as a function of pulse width. c The temperature (the red curve) response to the current pulse (the gray curve). The inset is the relationship of switching probability and temperature. d Experimental results of the proposed MTJ and its STDP behavior wherein the switching probability can be adjusted by changing the input pulses. The figures are adapted from Ref. [150], with the authors’ permission

where τ+ and τ- are time constants, and A+ and A- represent the maximum magnitudes of the synaptic value for the different time domains of s between the arrival and firing of neuron pulses before and after the synapse, respectively.