Abstract

Stochastic gradient descent (SGD) is one of the most popular algorithms in modern machine learning. The noise encountered in these applications is different from that in many theoretical analyses of stochastic gradient algorithms. In this article, we discuss some of the common properties of energy landscapes and stochastic noise encountered in machine learning problems, and how they affect SGD-based optimization. In particular, we show that the learning rate in SGD with machine learning noise can be chosen to be small, but uniformly positive for all times if the energy landscape resembles that of overparametrized deep learning problems. If the objective function satisfies a Łojasiewicz inequality, SGD converges to the global minimum exponentially fast, and even for functions which may have local minima, we establish almost sure convergence to the global minimum at an exponential rate from any finite energy initialization. The assumptions that we make in this result concern the behavior where the objective function is either small or large and the nature of the gradient noise, but the energy landscape is fairly unconstrained on the domain where the objective function takes values in an intermediate regime.

Data Availability Statement

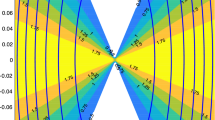

Data sharing not applicable to this article as no datasets were generated or analysed during the current study. The code for the simulations in Fig. 1 is available from the corresponding author on reasonable request.

References

Allen-Zhu, Z.: Natasha 2: faster non-convex optimization than SGD. (2017) arXiv preprint arXiv:1708.08694

Bernstein, J., Azizzadenesheli, K., Wang, Y.-X., Anandkumar, A.: Convergence rate of sign stochastic gradient descent for non-convex functions. In: International Conference on Machine Learning, pp. 560–569. PMLR (2018)

Bassily, R., Belkin, M., Ma, S.: On exponential convergence of sgd in non-convex over-parametrized learning. (2018) arXiv preprint arXiv:1811.02564

Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. SIAM Rev. 60(2), 223–311 (2018)

Bach, F., Moulines, E.: Non-strongly-convex smooth stochastic approximation with convergence rate O(1/n). (2013) arXiv preprint arXiv:1306.2119

Cooper, Y.: The loss landscape of overparameterized neural networks. (2018) arXiv:1804.10200 [cs.LG]

Défossez, A., Bottou, L., Bach, F., Usunier, N.: A simple convergence proof of Adam and Adagrad. (2020) arXiv preprint arXiv:2003.02395

Dieuleveut, A., Durmus, A., Bach, F.: Bridging the gap between constant step size stochastic gradient descent and Markov chains. (2017) arXiv preprint arXiv:1707.06386

Dereich, S., Kassing, S.: Convergence of stochastic gradient descent schemes for Lojasiewicz-landscapes. (2021) arXiv preprint arXiv:2102.09385

Fehrman, B., Gess, B., Jentzen, A.: Convergence rates for the stochastic gradient descent method for non-convex objective functions. J. Mach. Learn. Res. 21(1), 5354–5401 (2020)

Ghadimi, S., Lan, G.: Stochastic first-and zeroth-order methods for nonconvex stochastic programming. SIAM J. Optim. 23(4), 2341–2368 (2013)

Ghadimi, S., Lan, G., Zhang, H.: Mini-batch stochastic approximation methods for nonconvex stochastic composite optimization. Math. Program. 155(1–2), 267–305 (2016)

Hsieh, Y.-G., Iutzeler, F., Malick, J., Mertikopoulos, P.: On the convergence of single-call stochastic extra-gradient methods. (2019) arXiv preprint arXiv:1908.08465

Hsieh, Y.-G., Iutzeler, F., Malick, J., Mertikopoulos, P.: Explore aggressively, update conservatively: Stochastic extragradient methods with variable stepsize scaling. (2020) arXiv preprint arXiv:2003.10162

Jentzen, A., Kuckuck, B., Neufeld, A., von Wurstemberger, P.: Strong error analysis for stochastic gradient descent optimization algorithms. IMA J. Numer. Anal. 41(1), 455–492 (2021)

Klenke, A.: Wahrscheinlichkeitstheorie, vol. 1. Springer, Berlin (2006)

Karimi, H., Nutini, J., Schmidt, M.: Linear convergence of gradient and proximal-gradient methods under the Polyak-Lojasiewicz condition. In: Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 795–811. Springer (2016)

Königsberger, K.: Analysis 2. Springer-Verlag, Berlin (2013)

Kushner, H., Yin, G.G.: Stochastic Approximation and Recursive Algorithms and Applications, vol. 35. Springer Science and Business Media, Berlin (2003)

Li, Q., Tai, C., E, W.: Stochastic modified equations and adaptive stochastic gradient algorithms. In: International Conference on Machine Learning, pp. 2101–2110. PMLR (2017)

Moulines, E., Bach, F.: Non-asymptotic analysis of stochastic approximation algorithms for machine learning. Adv. Neural. Inf. Process. Syst. 24, 451–459 (2011)

Mertikopoulos, P., Hallak, N., Kavis, A., Cevher, V.: On the almost sure convergence of stochastic gradient descent in non-convex problems. (2020) arXiv preprint arXiv:2006.11144

Needell, D., Ward, R., Srebro, N.: Stochastic gradient descent, weighted sampling, and the randomized Kaczmarz algorithm. Adv. Neural. Inf. Process. Syst. 27, 1017–1025 (2014)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22(3), 400–407 (1951)

Rakhlin, A., Shamir, O., Sridharan, K.: Making gradient descent optimal for strongly convex stochastic optimization. (2011) arXiv preprint arXiv:1109.5647

Sard, A.: The measure of the critical values of differentiable maps. Bull. Am. Math. Soc. 48(12), 883–890 (1942)

Skorokhodov, I., Burtsev, M.: Loss landscape sightseeing with multi-point optimization. (2019) arXiv preprint arXiv:1910.03867

Vaswani, S., Bach, F., Schmidt, M.: Fast and faster convergence of SGD for over-parameterized models and an accelerated perceptron. In: Chaudhuri, K., Sugiyama, M. (eds.) Proceedings of the Twenty-Second International Conference on Artificial Intelligence and Statistics, volume 89 of Proceedings of Machine Learning Research, pp. 1195–1204. PMLR (2019)

Wojtowytsch, S.: Stochastic gradient descent with noise of machine learning type. Part II: Continuous time analysis. (2021) arXiv:2106.02588 [cs.LG]

Ward, R., Wu, X., Bottou, L.: Adagrad stepsizes: Sharp convergence over nonconvex landscapes. In: International Conference on Machine Learning, pp. 6677–6686. PMLR (2019)

Xie, Y., Wu, X., Ward, R.: Linear convergence of adaptive stochastic gradient descent. In: International Conference on Artificial Intelligence and Statistics, pp. 1475–1485. PMLR (2020)

Acknowledgements

The author would like to thank the anonymous reviewer, Krishnakumar Balasubramanian and Sebastian Kassing for their valuable comments.

Ethics declarations

Conflict of interest

There is no conflict of interest to report.

Additional information

Communicated by Anthony Bloch.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: On the Convergence of SGD with Classical Noise

To illustrate the necessity of our improvement, we show that non-constant functions which satisfy the Łojasiewicz inequality always have unbounded gradients.

Lemma A.1

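Let \(f:{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) be bounded from below and \(C^1\)-smooth. Assume that f satisfies the Łojasiewicz inequality

$$\begin{aligned} \Lambda \,\big (f(\theta ) - \inf f\big ) \le |\nabla f|^2(\theta ) \qquad \text {for all } \theta \in {\mathbb {R}}^m \end{aligned}$$ (A.1)

for some \(\Lambda >0\). Then either f is constant or \(\nabla f\) is unbounded.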

Proof

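Assume that f is not constant and, for the sake of contradiction, that \(\nabla f\) is bounded. Then f is bounded from above by (A.1). Without loss of generality, we may assume that \(\inf f=0\). Now take any \(\theta _0\in {\mathbb {R}}^m\) such that \(f(\theta _0)>0\) and consider the solution \(\theta \) of the ODE

$$\begin{aligned} {\dot{\theta }}(t) = \nabla f(\theta (t)), \qquad \theta (0) = \theta _0. \end{aligned}$$

Since

$$\begin{aligned} \frac{d}{dt} f(\theta (t)) = |\nabla f|^2(\theta (t)) \ge \Lambda \,f(\theta (t)), \end{aligned}$$

we find that \(f(\theta (t)) \ge f(\theta _0)\,\exp (\Lambda t)\), meaning that f cannot be bounded. \(\square \)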

We re-prove (Karimi et al. 2016, Theorem 4) under the assumption of bounded noise instead of bounded gradients and with a corrected second estimate containing a logarithm.

Theorem A.2

Assume that

(1) \(f:{\mathbb {R}}^m\rightarrow [0,\infty )\) is a \(C^1\)-function and bounded from below.

(2) \(\nabla f\) satisfies the one-sided Lipschitz condition

$$\begin{aligned} \langle \nabla f(\theta _1) - \nabla f(\theta _2), \theta _1-\theta _2\rangle \le C_L\,|\theta _1-\theta _2|^2 \end{aligned}$$

for some \(C_L>0\).

(3) f satisfies the Łojasiewicz inequality

$$\begin{aligned} \Lambda \,\big (f(\theta ) - \inf f\big ) \le |\nabla f|^2(\theta ) \end{aligned}$$

for some \(\Lambda >0\).

(4) \((\Omega ,{\mathcal {A}}, \mathbb {P})\) is a probability space and \(g:{\mathbb {R}}^m\times \Omega \rightarrow {\mathbb {R}}^m\) is a family of functions such that

(a) \({{\mathbb {E}}}_{\xi \sim \mathbb {P}} g(\theta ,\xi ) = \nabla f(\theta )\) for all \(\theta \in {\mathbb {R}}^m\) and

(b) \({{\mathbb {E}}}_{\xi \sim \mathbb {P}} \big [ |g(\theta ,\xi )- \nabla f(\theta )|^2\big ] < \sigma ^2\) for all \(\theta \in {\mathbb {R}}^m\).

Then the following hold.

(1) If the learning rates satisfy the Robbins-Monro conditions

$$\begin{aligned} \sum _{t=0}^\infty \eta _t = +\infty , \qquad \sum _{t=0}^\infty \eta _t^2 < \infty , \end{aligned}$$

then \(\lim _{t\rightarrow \infty } f(\theta _t) = 0\).

(2) If the learning rates are chosen as \(\eta _t= \frac{2}{\Lambda (t+1)}\), then the estimate

$$\begin{aligned} {{\mathbb {E}}}\big [ f(\theta _t) - \inf f\big ] \le \frac{2\,C_L\sigma ^2\,\log (t+1)}{\Lambda ^2t}\,{{\mathbb {E}}}\big [f(\theta _0)-\inf f\big ] \end{aligned}$$

holds for all \(t\in {\mathbb {N}}\).

(3) If the learning rate is constant, \(\eta _t \equiv \eta \le \frac{1}{C_L}\), then the estimate

$$\begin{aligned} {{\mathbb {E}}}\big [ f(\theta _t) - \inf f\big ] \le \left( 1- \frac{\Lambda \eta }{2}\right) ^{t}\,{{\mathbb {E}}}\big [ f(\theta _0)-\inf f\big ] + \frac{C_L\sigma ^2}{\Lambda }\,\eta \end{aligned}$$

holds for all \(t\in {\mathbb {N}}\).
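The constant learning rate estimate can be checked on a toy problem. The following is a minimal sketch, not the author's simulation: it assumes the one-dimensional objective \(f(\theta ) = a\theta ^2/2\) (so that \(C_L = a\) and \(\Lambda = 2a\)) and additive noise \(\sigma \xi \) with \(\xi \) uniform on \(\{-1,+1\}\). The noise variance then equals \(\sigma ^2\), the boundary case of assumption (4)(b). All function and variable names are hypothetical.

```python
import random


def sgd_bounded_noise(theta0, eta, steps, a, sigma, rng):
    """Constant-step SGD on f(theta) = a * theta**2 / 2 with additive noise.

    The estimator is g = a * theta + sigma * xi with xi uniform on {-1, +1},
    so E[g] = f'(theta) and E[|g - f'(theta)|^2] = sigma**2 (assumed model).
    Returns the final objective value f(theta_steps).
    """
    theta = theta0
    for _ in range(steps):
        xi = rng.choice((-1.0, 1.0))
        theta -= eta * (a * theta + sigma * xi)
    return 0.5 * a * theta**2


def constant_rate_bound(f0, eta, t, lam, c_l, sigma):
    """Right-hand side of the constant learning rate estimate."""
    return (1.0 - lam * eta / 2.0) ** t * f0 + c_l * sigma**2 * eta / lam


a, sigma, eta, steps, theta0 = 1.0, 1.0, 0.1, 200, 1.0
lam, c_l = 2.0 * a, a  # |f'|^2 = a^2 theta^2 = 2a f, and f'' = a

rng = random.Random(0)  # fixed seed for reproducibility
samples = [sgd_bounded_noise(theta0, eta, steps, a, sigma, rng) for _ in range(2000)]
mean_f = sum(samples) / len(samples)  # Monte Carlo estimate of E[f(theta_t)]
bound = constant_rate_bound(0.5 * a * theta0**2, eta, steps, lam, c_l, sigma)

print(f"Monte Carlo mean of f: {mean_f:.4f}, bound: {bound:.4f}")
```

In this toy model the expected objective does not decay to zero but settles at a plateau proportional to \(\eta \), which is the behavior the second term of the estimate describes.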

Proof

Preliminaries. The proof strongly resembles that of Theorem 3.1. Without loss of generality, we assume that \(\inf f=0\) and \(\eta _t < \frac{1}{C_L}\) for all t. Note that

due to the independence of \(\xi _t,\theta _t\). As in the proof of Theorem 3.1, we note that

Thus

First claim. Denote \(z_t = {{\mathbb {E}}}\big [f(\theta _t)\big ]\). Since

It remains to show that the right hand side converges to zero. Note that for any \(T\le t\) we have

where the approximation \(-2\alpha \le \log (1-\alpha ) \le -\alpha \) is valid for almost all terms since \(\eta _k\rightarrow 0\). Thus we find that

independently of T. Immediately, the first term on the right hand side goes to zero. For the second term, we employ the dominated convergence theorem for the sequence of functions

with respect to the finite measure on \({\mathbb {N}}\) which has counting density \(\eta _t^2\). \(F(k) = 1\) can be taken as an integrable majorizing function.

Second claim. For the specified learning rate, the estimate (A.2) becomes

which can be rewritten for the modified sequence \({\tilde{z}}_t = t\,z_t\) as

In particular since \({\widetilde{z}}_0=0\) we have

Third claim. In this case, we have

for constant \(\eta \), so

\(\square \)
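The second and third claims both come down to iterating a scalar recursion for \(z_t = {{\mathbb {E}}}[f(\theta _t)]\). The sketch below assumes the recursion has the form \(z_{t+1} \le (1-\Lambda \eta _t/2)\,z_t + C_L\sigma ^2\eta _t^2/2\); this form is our reading, chosen because its fixed point for constant \(\eta \) is exactly the term \(C_L\sigma ^2\eta /\Lambda \) in the third claim, and it is not quoted from the source. For the decaying schedule we compare against the elementary harmonic-sum bound \(2C_L\sigma ^2(1+\log t)/(\Lambda ^2 t)\), which is what this recursion yields directly and is not the exact expression printed in the theorem.

```python
import math


def iterate_recursion(z0, etas, lam, c_l, sigma):
    """Iterate z_{t+1} = (1 - lam*eta_t/2) z_t + c_l*sigma^2*eta_t^2/2 (assumed form)."""
    z = [z0]
    for eta in etas:
        z.append((1.0 - lam * eta / 2.0) * z[-1] + c_l * sigma**2 * eta**2 / 2.0)
    return z


lam, c_l, sigma, z0, horizon = 1.0, 2.0, 1.0, 5.0, 1000

# Decaying schedule eta_t = 2 / (lam * (t + 1)) from the second claim.
decaying = [2.0 / (lam * (t + 1)) for t in range(horizon)]
z_decay = iterate_recursion(z0, decaying, lam, c_l, sigma)
claim2_ok = all(
    z_decay[t] <= 2.0 * c_l * sigma**2 * (1.0 + math.log(t)) / (lam**2 * t) + 1e-12
    for t in range(1, horizon + 1)
)

# Constant schedule from the third claim.
eta = 0.1
z_const = iterate_recursion(z0, [eta] * horizon, lam, c_l, sigma)
claim3_ok = all(
    z_const[t] <= (1.0 - lam * eta / 2.0) ** t * z0 + c_l * sigma**2 * eta / lam + 1e-12
    for t in range(horizon + 1)
)

print(claim2_ok, claim3_ok)
```

With the decaying schedule the first step multiplies \(z_0\) by zero, which is why the bound for this recursion does not depend on the initial value.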

For the sake of completeness, we also provide an elementary version of the result that SGD finds stationary points for objective functions which are non-convex and do not satisfy a Łojasiewicz inequality.

Theorem A.3

Assume that

(1) \(f:{\mathbb {R}}^m\rightarrow [0,\infty )\) is a \(C^1\)-function and bounded from below.

(2) \(\nabla f\) satisfies the Lipschitz condition

$$\begin{aligned} |\nabla f(\theta _1) - \nabla f(\theta _2)| \le C_L\,|\theta _1-\theta _2| \end{aligned}$$

for some \(C_L>0\).

(3) \((\Omega ,{\mathcal {A}}, \mathbb {P})\) is a probability space and \(g:{\mathbb {R}}^m\times \Omega \rightarrow {\mathbb {R}}^m\) is a family of functions such that

(a) \({{\mathbb {E}}}_{\xi \sim \mathbb {P}} g(\theta ,\xi ) = \nabla f(\theta )\) for all \(\theta \in {\mathbb {R}}^m\) and

(b) \({{\mathbb {E}}}_{\xi \sim \mathbb {P}} \big [ |g(\theta ,\xi )- \nabla f(\theta )|^2\big ] < \sigma ^2\) for all \(\theta \in {\mathbb {R}}^m\).

If the learning rates satisfy the Robbins-Monro conditions

$$\begin{aligned} \sum _{t=0}^\infty \eta _t = +\infty , \qquad \sum _{t=0}^\infty \eta _t^2 < \infty , \end{aligned}$$

then \(\lim _{t\rightarrow \infty } {{\mathbb {E}}}\big [|\nabla f|^2(\theta _t)\big ] = 0\).

Proof

Step 1. Without loss of generality, we assume that \(\inf f = 0\). As previously in the proofs of Theorems 3.1 and A.2, we note that

if \(\eta _t<\frac{1}{C_L}\). Then

for all t, i.e. \({{\mathbb {E}}}\big [ f(\theta _t)\big ]\) is uniformly bounded in terms of the initial condition, the constants \(C_L\) and \(\sigma \), and the second Robbins-Monro condition.

Step 2. Assume for the sake of contradiction that \({\bar{c}}:= \liminf _{t\rightarrow \infty } {{\mathbb {E}}}\big [|\nabla f(\theta _t)|^2\big ]>0\). Then

for all sufficiently large t since \(\eta _t^2 \ll \eta _t\). We choose \(T\in {\mathbb {N}}\) such that (A.3) holds for all \(t\ge T\). Due to the first Robbins-Monro condition and Step 1, we have

As f is bounded from below, we have arrived at a contradiction.

Step 3. Assume for the sake of contradiction that

Then there exist sequences \(S_n, T_n\) such that

- \(S_n<T_n < S_{n+1}\),

- \({{\mathbb {E}}}\big [ |\nabla f|^2(\theta _{S_n})\big ] < \frac{{\bar{c}}}{4}\),

- \({{\mathbb {E}}}\big [ |\nabla f|^2(\theta _{T_n})\big ] > \frac{3\,{\bar{c}}}{4}\), and

- \(\frac{{\bar{c}}}{4} \le {{\mathbb {E}}}\big [|\nabla f|^2(\theta _t)\big ] \le \frac{3\,{\bar{c}}}{4}\) for \(S_n< t< T_n\).

By the energy dissipation estimates, we see that

for any \(T\in {\mathbb {N}}\). Then

In particular, \(\sum _{t=S_n}^{T_n-1} \eta _t \rightarrow 0\) as \(n\rightarrow \infty \).

Step 4. We are finally in a place where we can obtain a contradiction using (A.4). Due to the Lipschitz-continuity of \(\nabla f\), we find that

so

Since \(\sum _{i=S_n}^{T_n-1} \eta _i\rightarrow 0\) by (A.4), we arrive at a contradiction for large n. \(\square \)

The avoidance analysis for strict saddle points is more subtle and requires more precise analysis.

Appendix B: On the Non-convexity of Objective Functions in Deep Learning

Under general conditions, energy landscapes in machine learning regression problems have to be non-convex. The following result follows from Theorem 2.6.

Corollary B.1

Assume that \(h:{\mathbb {R}}^m\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is a parameterized function model, which is at least \(C^2\)-smooth in \(\theta \) for fixed x. Let \(L_y(\theta ) = \frac{1}{n}\sum _{i=1}^n \big (h(\theta ,x_i) - y_i\big )^2\) where \(y=(y_1,\dots ,y_n)\in {\mathbb {R}}^n\).

(1) If \(L_y\) is convex for every \(y\in {\mathbb {R}}^n\), the map \(\theta \mapsto h(\theta ,x_i)\) is linear for all \(x_i\).

(2) Assume that \(L_y(\theta ) =0\) and that there exists \(1\le j \le n\) such that \(D^2\,h(\theta ,x_j)\) has rank \(k>n\). Then for every \(\varepsilon >0\), there exists \(y'\in {\mathbb {R}}^n\) such that \(|y-y'|<\varepsilon \) and \(D^2L_{y'}(\theta )\) has a negative eigenvalue.

(3) Assume that \(L_y(\theta ) =0\) and that there exists \(1\le j \le n\) such that \(D^2\,h(\theta ,x_j)\) has rank \(k>n\). Assume furthermore that the gradients \(\nabla _\theta h(\theta ,x_1), \dots , \nabla _\theta h(\theta , x_n)\) are linearly independent. Then for every \(\varepsilon >0\), there exists \(\theta '\in {\mathbb {R}}^m\) such that \(|\theta -\theta '|<\varepsilon \) and \(D^2L_{y}(\theta ')\) has a negative eigenvalue.

The first statement is fairly weak, as the proof requires us to consider the convexity of \(L_y\) far away from the minimum. The second statement shows that even close to the minimum, \(L_y\) can be non-convex if the function model is sufficiently far from being ‘low dimensional’ – this statement concerns perturbations in y. The third claim is analogous, but concerns perturbations in \(\theta \). It is therefore stronger, as it shows that \(L_y\) is not convex in any neighborhood of a given point in the set of minimizers.

Proof

First claim. Compute

If \(D^2h(\theta , x_i)\ne 0\), there exists \(v\in {\mathbb {R}}^m\) such that \(v^T D^2h(\theta ,x_i)v \ne 0\) since the Hessian matrix is symmetric. Thus

meaning that \(L_y\) cannot be convex. Thus if \(L_y\) is convex for all y, by necessity \(D^2\,h(\theta , x_i)\equiv 0\) for all i, i.e. \(\theta \mapsto h(\theta ,x_i)\) is linear.

Second claim. Set \(y_j = h(\theta , x_j)\) for \(j\ne i\) and \(y_i = h(\theta ,x_i) \pm \varepsilon /2\). Then by a simple result in linear algebra (see Lemma B.3 below), the matrix

has a negative eigenvalue.

Third claim. Let \(V= \textrm{span}\{\nabla _\theta h(\theta ,x_j): j\ne i\}\). Since the set of gradients is linearly independent, there exists v such that \(v\bot V\) but \(v^T \nabla _\theta h(\theta , x_i) \ne 0\). Thus to leading order

As before, we conclude that \(D^2L_y(\theta +tv)\) has a negative eigenvalue if \(t>0\) is small enough. \(\square \)

Remark B.2

If \(\theta \mapsto h(\theta ,x_i)\) is linear, then \(D^2h(\theta ,x_i)\) vanishes, i.e. \(\textrm{rk}(D^2h) =0\). The large discrepancy between the conditions ensuring local convexity and global convexity is in fact necessary. Consider \(h(\theta , x_i) = \theta _i\) for all \(i\ge 1\) and

If \(D^2\psi \) is bounded and y is fixed, then for every \(R>0\) we can choose \(\varepsilon \) so small that \(L_y\) is convex on \(B_R(0)\). Note that the rank of \(D^2\psi \) is at most n in this example.

We prove a result which we believe to be standard in linear algebra, but have been unable to find a reference for.

Lemma B.3

Let \(A\in {\mathbb {R}}^{m\times m}\) be a symmetric positive semi-definite matrix of rank at most \(n<m\). Let B be a symmetric matrix of rank at least \(n+1\). Then, for every \(s>0\) at least one of the matrices \(A+sB\) or \(A-sB\) has a negative eigenvalue.

Proof

Without loss of generality, we assume that \(A = \textrm{diag}(\lambda _1,\dots , \lambda _n, 0, \dots , 0)\).

First case. The Lemma is trivial if there exists \(v\in \textrm{span}\{e_{n+1},\dots , e_m\}\) such that \(v^TBv\ne 0\) since then

is a linear function.

Second case. Assume that \(v^TBv =0\) for all \(v\in \textrm{span}\{e_{n+1}, \dots , e_m\}\). Since B has rank \(n+1\), there exists an eigenvector w for a non-zero eigenvalue \(\mu \) of B such that \(w\notin \textrm{span}\{e_1,\dots , e_n\}\). Without loss of generality, we may assume that \(e_{n+1} \cdot w >0\). Consider

Thus, if we choose the correct sign for t depending on \(\mu \) and s, then

In this situation, B is indefinite and the sign of s does not matter. \(\square \)
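Both cases of the proof can be seen in the smallest possible setting \(m=2\), \(n=1\). The sketch below uses \(A = \textrm{diag}(1,0)\) together with two hypothetical choices of B of rank 2: the identity (first case, since \(e_2^TBe_2\ne 0\)) and the off-diagonal matrix with \(e_2^TBe_2=0\) (second case). The matrices and helper names are our own illustration and do not come from the source.

```python
import math


def min_eigenvalue_sym2(mat):
    """Smallest eigenvalue of a symmetric 2x2 matrix ((a, b), (b, d)) in closed form."""
    (a, b), (_, d) = mat
    return ((a + d) - math.sqrt((a - d) ** 2 + 4.0 * b * b)) / 2.0


def combine(mat_a, mat_b, coeff):
    """Return mat_a + coeff * mat_b entrywise."""
    return tuple(
        tuple(x + coeff * y for x, y in zip(row_a, row_b))
        for row_a, row_b in zip(mat_a, mat_b)
    )


A = ((1.0, 0.0), (0.0, 0.0))           # positive semi-definite, rank 1
B_first_case = ((1.0, 0.0), (0.0, 1.0))   # rank 2, e_2^T B e_2 = 1
B_second_case = ((0.0, 1.0), (1.0, 0.0))  # rank 2, e_2^T B e_2 = 0

results = []
for B in (B_first_case, B_second_case):
    for s in (1e-3, 0.1, 1.0, 10.0):
        smallest = min(
            min_eigenvalue_sym2(combine(A, B, s)),
            min_eigenvalue_sym2(combine(A, B, -s)),
        )
        results.append(smallest < 0.0)

print(all(results))
```

For the off-diagonal B the determinant of \(A \pm sB\) equals \(-s^2\), so both signs already produce a negative eigenvalue, in line with the closing remark of the proof.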

Appendix C: Auxiliary Observations on Objective Functions and Łojasiewicz Geometry

Lemma C.1

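Assume that \(f:{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) is a non-negative function and \(\nabla f\) is Lipschitz continuous with constant \(C_L\). Then

$$\begin{aligned} |\nabla f(\theta )|^2 \le 2\,C_L\,f(\theta ) \qquad \text {for all } \theta \in {\mathbb {R}}^m. \end{aligned}$$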

Proof

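Take \(\theta \in {\mathbb {R}}^m\). The statement is trivially true at \(\theta \) if \(\nabla f(\theta ) =0\), so assume that \(\nabla f(\theta )\ne 0\). Consider the auxiliary function

$$\begin{aligned} g(t) = f(\theta - t\nu ), \qquad \nu = -\frac{\nabla f(\theta )}{|\nabla f(\theta )|}. \end{aligned}$$

Then \(g'(0) = - \nu \cdot \nabla f(\theta ) = |\nabla f(\theta )|\) and

$$\begin{aligned} |g'(t) - g'(0)| = \big |\nu \cdot \big (\nabla f(\theta - t\nu ) - \nabla f(\theta )\big )\big | \le C_L\,|t|. \end{aligned}$$

Thus

$$\begin{aligned} g(t) \le g(0) + g'(0)\,t + \frac{C_L}{2}\,t^2 = f(\theta ) + |\nabla f(\theta )|\,t + \frac{C_L}{2}\,t^2. \end{aligned}$$

The bound on the right is minimal for \(t = -\frac{|\nabla f(\theta )|}{C_L}\) when

$$\begin{aligned} g(t) \le f(\theta ) - \frac{|\nabla f(\theta )|^2}{2C_L}. \end{aligned}$$

Since \(f\ge 0\) also \(g\ge 0\), so \( f(\theta ) - \frac{|\nabla f(\theta )|^2}{2C_L} \ge 0\). \(\square \)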

Remark C.2

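In particular, if \(f\ge 0\) satisfies a Łojasiewicz inequality of the form \(\Lambda f\le |\nabla f|^2\) and has a Lipschitz-continuous gradient, then

$$\begin{aligned} \Lambda \,f(\theta ) \le |\nabla f(\theta )|^2 \le 2\,C_L\,f(\theta ) \qquad \text {for all } \theta \in {\mathbb {R}}^m. \end{aligned}$$ (C.1)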

We show that the class of objective functions which can be analyzed by our methods does not include loss functions of cross-entropy type under general conditions.

Corollary C.3

Assume that \(f:{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) is a \(C^1\)-function such that

- \(f\ge 0\) and

- (C.1) holds.

Then there exists \({\bar{\theta }}\in {\mathbb {R}}^m\) such that \(f({\bar{\theta }})=0\).

Proof

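Choose \(\theta _0 \in {\mathbb {R}}^m\) and consider the solution of the gradient flow equation

$$\begin{aligned} {\dot{\theta }}(t) = -\nabla f(\theta (t)), \qquad \theta (0) = \theta _0. \end{aligned}$$

Then

$$\begin{aligned} \frac{d}{dt} f(\theta (t)) = -|\nabla f|^2(\theta (t)) \le -\Lambda \,f(\theta (t)) \end{aligned}$$

and thus \(f(\theta (t)) \le f(\theta (0))\,e^{-\Lambda t}\). Furthermore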

whence we find that \(\theta (t)\) converges to a limiting point \(\theta _\infty \) as \(t\rightarrow \infty \). By the continuity of f, we find that

\(\square \)
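The exclusion of cross-entropy-type losses can be made concrete in one dimension. As an illustration of our own choosing, take the logistic loss \(f(\theta ) = \log (1+e^{\theta })\): it is positive, its second derivative is at most 1/4 (so \(C_L = 1/4\) works), and its infimum 0 is not attained. The sketch below checks numerically that the upper bound \(|f'|^2\le 2C_Lf\) of Lemma C.1 holds on a grid, while the ratio \(|f'|^2/f\) tends to zero as \(\theta \rightarrow -\infty \), so that no constant \(\Lambda >0\) can work in a Łojasiewicz inequality. Function names are hypothetical.

```python
import math


def logistic_loss(theta):
    """Numerically stable evaluation of log(1 + exp(theta))."""
    return max(theta, 0.0) + math.log1p(math.exp(-abs(theta)))


def logistic_loss_grad(theta):
    """Derivative of the logistic loss, i.e. the sigmoid function."""
    if theta >= 0.0:
        return 1.0 / (1.0 + math.exp(-theta))
    e = math.exp(theta)
    return e / (1.0 + e)


C_L = 0.25  # the second derivative sigmoid * (1 - sigmoid) is at most 1/4

# Upper bound from Lemma C.1 on a grid of points in [-30, 30].
grid = [k / 10.0 for k in range(-300, 301)]
upper_bound_ok = all(
    logistic_loss_grad(t) ** 2 <= 2.0 * C_L * logistic_loss(t) for t in grid
)

# The ratio |f'|^2 / f would have to stay above Lambda > 0; it does not.
ratios = {t: logistic_loss_grad(t) ** 2 / logistic_loss(t) for t in (-5.0, -10.0, -20.0)}

print(upper_bound_ok, ratios)
```

The ratio behaves like \(e^{\theta }\) for very negative \(\theta \), which matches the corollary: a function satisfying (C.1) must attain the value zero, and the logistic loss does not.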

Appendix D: Proof of Theorem 3.5: Local Convergence

We split the proof up over several lemmas. Our strategy follows along the lines of (Mertikopoulos et al. 2020, Appendix D), which in turn uses methods developed in Hsieh et al. (2019, 2020). We make suitable modifications to account for the fact that the smallness of noise comes from the fact that the values of the objective function are low, not that the learning rate decreases. Furthermore, we have slightly weaker control since we do not impose quadratic behavior with a strictly positive Hessian at the minimum, but only a Łojasiewicz inequality and Lipschitz continuity of the gradients.
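The distinction between noise that is small because the objective is small and noise that is small because the learning rate decays can be illustrated on a toy problem. The sketch below assumes the one-dimensional objective \(f(\theta ) = \theta ^2/2\) and a noise model with \({{\mathbb {E}}}|g-\nabla f|^2 = \sigma f\); this scaling is our reading of the constants appearing in Lemma E.1 and is labeled as an assumption, as are all names in the code. With a constant learning rate, every trajectory then contracts at a geometric rate.

```python
import math
import random


def sgd_ml_noise(theta0, eta, steps, sigma, rng):
    """Constant-step SGD on f(theta) = theta**2 / 2 with noise of size sqrt(sigma * f).

    The estimator is g = theta + sqrt(sigma * f(theta)) * xi with xi uniform on
    {-1, +1}, so E[g] = f'(theta) and E[|g - f'(theta)|^2] = sigma * f(theta)
    (assumed noise model). Returns the list of objective values along the path.
    """
    theta = theta0
    values = [0.5 * theta**2]
    for _ in range(steps):
        f_val = 0.5 * theta**2
        xi = rng.choice((-1.0, 1.0))
        theta -= eta * (theta + math.sqrt(sigma * f_val) * xi)
        values.append(0.5 * theta**2)
    return values


eta, sigma, steps = 0.1, 1.0, 500
rng = random.Random(0)  # fixed seed for reproducibility
paths = [sgd_ml_noise(1.0, eta, steps, sigma, rng) for _ in range(20)]
worst_final = max(path[-1] for path in paths)

# In this model E[f(theta_{t+1})] = rate * E[f(theta_t)] with the factor below.
rate = (1.0 - eta) ** 2 + eta**2 * sigma / 2.0

print(f"largest final objective value: {worst_final:.3e}, expected rate: {rate:.3f}")
```

In this example each step multiplies \(|\theta |\) by either \(1-\eta -\eta \sqrt{\sigma /2}\) or \(1-\eta +\eta \sqrt{\sigma /2}\), both of which are below one for the chosen parameters, so the decay is pathwise and not only in expectation.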

While weaker conditions may hold for the individual steps of the analysis, we always assume that the conditions of Theorem 3.5 are met for the remainder of this section. We decompose the gradient estimators as

and interpolate \(\theta _{t+s} = \theta _t - s\eta \,g(\theta _t,\xi _t)\) for \(s\in [0,1]\). With these notations, we can estimate the change of the objective in a single time-step as

where

All three variables scale like \(\eta \) and the difference between them can be ignored for the essence of the arguments. As usual, we denote by \({{\mathcal {F}}}_t\) the filtration generated by \(\theta _0, \xi _0, \dots , \xi _{t-1}\), with respect to which \(\theta _t\) is measurable. We note that \(\nabla f(\theta _t) \cdot \sqrt{f(\theta _t)}\,Y_{\theta _t,\xi _t}\) is a martingale difference sequence with respect to \({{\mathcal {F}}}_t\) since

To analyze the \(\theta _t\) over several time steps, we define the cumulative error terms

We furthermore define the events

for \(\varepsilon '>0\) and \(0<r<1\). The sets \({\widetilde{E}}_t\) are useful as they allow us to estimate the measure of \(E_t^c = \bigcup _{i=0}^t {\widetilde{E}}_i\), where all sets in the union are disjoint.

Lemma D.1

The following are true.

(1) \(\Omega _{t+1}\subseteq \Omega _t\) and \(E_{t+1}\subseteq E_t\).

(2) If \(\theta _0\in \Omega _0(\varepsilon ')\) for \(\varepsilon '<\varepsilon \), then \(E_{t-1}(r) \subseteq \Omega _t(\varepsilon )\) if \(\varepsilon ' +r + \sqrt{r} < \varepsilon \).

(3) Under the same conditions, the estimate

$$\begin{aligned} {{\mathbb {E}}}\big [ R_t\,1_{E_{t-1}}\big ] \le {{\mathbb {E}}}\big [R_{t-1}\,1_{E_{t-2}}\big ] + C\sigma \big (2C_L\varepsilon +1\big )\eta ^2\, {{\mathbb {E}}}\big [ 1_{E_{t-1}} f(\theta _t)\big ] - r \,\mathbb {P}\big ({\widetilde{E}}_t\big ) \end{aligned}$$ (D.1)

holds, where the constant \(C>0\) incorporates the factors between \({\hat{\eta }}\), \({\tilde{\eta }}\) and \({\bar{\eta }}\).

Proof

The first claim is trivial.

Second claim. Recall that \(\theta _0\) is initialized in \(\Omega (\varepsilon ')\subseteq \Omega (\varepsilon )\). In particular \(\Omega _0 = E_{-1} = \Omega \) is the entire probability space since the sum condition for \(E_{-1}\) is empty. We proceed by induction.

Assume that \(\omega \in E_{t}\). Then in particular \(\omega \in E_{t-1}\), so \(\omega \in \Omega _{t}\) by the induction hypothesis. Thus it suffices to show that \(f(\theta _t) < \varepsilon \), i.e. to focus on the last time step. A direct calculation yields

Third claim. A simple algebraic manipulation shows that

since \(R_{t-1}\ge r\) on \({\widetilde{E}}_{t-2}\). We recall that

and that

where the analysis of (III) reduces to that of (II) and the bound \(|\nabla f(\theta _t)|^2 \le 2C_L\,f(\theta _t)\) from Lemma C.1 was used. The result now follows by putting all estimates together. \(\square \)

We now proceed to estimate the probability that the quadratic noise \(R_t\) does not remain small by bounding the probability that it exceeds the given threshold in the t-th step and summing over t.

Lemma D.2

The estimate

holds.

Proof

First step. The probability that \(E_t\) does not occur coincides with the probability that there exists some \(i\le t\) such that \(R_i\) exceeds \(r_\delta \) at i, but not \(i-1\). More precisely

since \(E_0\) is the whole space. Hence

Using \({\widetilde{E}}_i = E_{i-1} \cap \{R_i>r\}\) and \(1_{{\widetilde{E}}_i} = 1_{E_{i-1}} 1_{\{R_i>r\}}\), we bound

since \(R_i\ge 0\) as a sum of squares.

Second step. From (D.1), we obtain

so by the telescoping sum identity

Note that the second term on the right hand side vanishes since the sum defining \(R_{-1}\) is empty.

Conclusion. Combining (D.2) and (D.3), we find that

so

\(\square \)

Finally, we are in a position to prove the local convergence result.

Proof of Theorem 3.5

Step 1. Since \(E_{i}\subseteq E_{i-1}\) and \(E_{i-1}\subseteq \Omega _i\), we have

where

as previously for functions which satisfy the Łojasiewicz inequality globally. We conclude from (D.4) that

Thus for every \(\delta >0\), there exists \(\varepsilon '>0\) such that \(\mathbb {P}(E_t) \ge 1-\delta \) for all \(t\in {\mathbb {N}}\) if \(f(\theta _0) <\varepsilon '\) almost surely.

Step 2. Consider the event

since \(E_{t-1}\subseteq \Omega _t\) and \(E_t\subseteq E_{t-1}\). Then

as in Step 1. We conclude that for every \(\beta \in [1,\rho _\eta ^{-1})\) the estimate

holds almost surely conditioned on \({\widetilde{\Omega }}\) as in the proof of Theorem 3.1. \(\square \)

Appendix E: Proof of Theorem 3.8: Global Convergence

Again, we split the proof up over several lemmas. First, we show that

We always assume that f satisfies the conditions of Theorem 3.8, although weaker conditions suffice in the individual steps.

Lemma E.1

If \({{\mathbb {E}}}\big [f(\theta _0)\big ]<\infty \), the estimate

holds.

Proof

Recall that for any \(\theta \) we have

as in the proof of Theorem 3.1. We distinguish two cases:

- If \(f(\theta )\le S\), then

$$\begin{aligned} {{\mathbb {E}}}_\xi \big [f(\theta - \eta g(\theta ,\xi ))\big ] \le \left( 1+ \frac{C_L\sigma \eta ^2}{2}\right) f(\theta ) \le \left( 1+ \frac{C_L\sigma \eta ^2}{2}\right) S. \end{aligned}$$

- If \(f(\theta )\ge S\), then

$$\begin{aligned} {{\mathbb {E}}}_\xi \big [f(\theta - \eta g(\theta ,\xi ))\big ] \le \left( 1- \Lambda \eta + \frac{C_L(\Lambda +\sigma )}{2\Lambda }\eta ^2\right) f(\theta ) \end{aligned}$$

due to the Łojasiewicz inequality on the set where f is large.

In particular, since f is non-negative, we have

where \({\tilde{\rho }}_\eta = 1- \Lambda \eta + \frac{C_L(\Lambda +\sigma )}{2\Lambda }\eta ^2\). If we abbreviate \(z_t = {{\mathbb {E}}}\big [f(\theta _t)\big ]\), we deduce that

Thus if \(z_t\) is large, then \(z_t\) decays. In fact

independently of the initial condition. \(\square \)
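The boundedness mechanism can be traced numerically. As a sketch under our own reading of the two cases above, we combine them into the single recursion \(z_{t+1}\le {\tilde{\rho }}_\eta \,z_t + \big (1+\frac{C_L\sigma \eta ^2}{2}\big )S\), which is valid in both cases because f is non-negative, and iterate it with equality. The combined form, the parameter values and the function names are assumptions made for illustration, not taken from the source.

```python
def rho_tilde(eta, lam, c_l, sigma):
    """Contraction factor 1 - lam*eta + c_l*(lam + sigma)/(2*lam) * eta^2."""
    return 1.0 - lam * eta + c_l * (lam + sigma) / (2.0 * lam) * eta**2


def iterate_expectation_bound(z0, eta, steps, level, lam, c_l, sigma):
    """Iterate z_{t+1} = rho * z_t + (1 + c_l*sigma*eta^2/2) * level (assumed form)."""
    rho = rho_tilde(eta, lam, c_l, sigma)
    offset = (1.0 + c_l * sigma * eta**2 / 2.0) * level
    z = [z0]
    for _ in range(steps):
        z.append(rho * z[-1] + offset)
    return z


lam, c_l, sigma, eta, level = 1.0, 2.0, 1.0, 0.1, 1.0
rho = rho_tilde(eta, lam, c_l, sigma)
fixed_point = (1.0 + c_l * sigma * eta**2 / 2.0) * level / (1.0 - rho)

z = iterate_expectation_bound(100.0, eta, 500, level, lam, c_l, sigma)

print(f"rho = {rho:.3f}, fixed point = {fixed_point:.3f}, z_500 = {z[-1]:.3f}")
```

Starting above the fixed point, the sequence decreases towards it; the limit is finite only because \(1-{\tilde{\rho }}_\eta \) stays bounded away from zero, which is the role of the uniformly positive learning rate noted after the lemma.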

Note that the finiteness of the bound hinges on the fact that the learning rate remains uniformly positive in this simple proof. In other variants of gradient flow, it can be non-trivial to control the possibility of escape.

The trajectories of SGD satisfy stronger bounds than the expectations.

Lemma E.2

Proof

Let

In particular, \(\Omega _n \subseteq \Omega _{n,N}\) for all \(N\ge n\) and \(1_{\Omega _n}\le 1_{\Omega _{n,t+1}}\le 1_{\Omega _{n,t}}\) for all \(t\ge n\). Thus

for any \(t\ge n\). Thus \(\mathbb {P}(\Omega _n) = 0\) for all \(n\in {\mathbb {N}}\). Hence also

\(\square \)

We have shown that we visit the set \(\{f<S\}\) infinitely often almost surely. We now show that in every visit \(\theta _t\), the probability that \(f(\theta _{t+1}) < \varepsilon '\) is uniformly positive. Below, we will use this to show that we visit the set \(\{f<\varepsilon '\}\) infinitely often with uniformly positive probability (which then implies that SGD iterates approach the set of minimizers almost surely). In this step, we use that the noise is uniformly ‘spread out’.

Lemma E.3

There exists \(\gamma >0\) such that the following holds: If \(f({\bar{\theta }})\le S\), then

Proof

We consider two cases separately: \(f({\bar{\theta }})<\varepsilon '\) or \(f({\bar{\theta }})\ge \varepsilon '\). In the first case, we can argue by considering the gradient descent structure, while we rely on the stochastic noise in the second case.

First case. If \(f({\bar{\theta }})<\varepsilon '\), then

since f satisfies a Łojasiewicz inequality in this region. In particular

Second case. By assumption, the set of moderate energy is not too far from the set of global minimizers in Hausdorff distance, i.e. there exists \(\theta '\) such that

(1) \(f(\theta ') =0\) and

(2) \(|{\bar{\theta }}-\theta '|<R\).

Due to the Lipschitz-continuity of the gradient of f, there exists \({\tilde{r}}>0\) depending only on \(C_L\) such that \(f(\theta )<\varepsilon '\) for all \(\theta \in B_{{\tilde{r}}}(\theta ')\). We conclude that

By assumption, the radius

is uniformly positive and the center of the ball

is in some large ball independent of \(\theta \). Thus the probability of jumping into \(\{f< \varepsilon '\}\), albeit small, is uniformly positive with a lower bound

\(\square \)

By Lemma E.2, the sequence of stopping times \(\tau _0=0\),

is well-defined except on a set of measure zero. Consider the Markov process

Note that we use \(\tau _k+1\) for odd times, not \(\tau _{k+1}\). To show the stronger statement that

we use the conditional Borel-Cantelli Lemma E.4.

Lemma E.4

(Klenke 2006, Übung 11.2.6) Let \({{\mathcal {F}}}_n\) be a filtration of a probability space and \(A_n\) a sequence of events such that \(A_n\in {{\mathcal {F}}}_n\) for all \(n\in {\mathbb {N}}\). Define

Then \(\mathbb {P}(A^*\Delta A_\infty ) = 0\) where \(A\Delta B\) denotes the symmetric difference of A and B.

Corollary E.5

Proof

Consider the filtration \({{\mathcal {F}}}_n\) generated by \(Z_n\) and the events

Then

except on the null set where \(\tau _k\) is undefined for some k. Thus \(\mathbb {P}\big (\limsup _{n\rightarrow \infty }A_n\big ) = 1\), so almost surely there exist infinitely many \(k\in {\mathbb {N}}\) such that \(f(Z_k) < \varepsilon '\). In particular, almost surely there exist infinitely many \(t\in {\mathbb {N}}\) such that \(f(\theta _t)<\varepsilon '\). \(\square \)
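The mechanism behind this corollary can be summarized in one line. As a sketch, assume that after each visit to \(\{f\le S\}\) the next step lands in \(\{f<\varepsilon '\}\) with conditional probability at least \(\gamma \) (the conclusion of Lemma E.3), and write \(B_j\) for the event that this fails after the j-th visit and \({{\mathcal {F}}}_{\tau _k}\) for the information available at the k-th visit; both notations are ours. The tower property then gives

$$\begin{aligned} \mathbb {P}\Big (\bigcap _{j=1}^k B_j\Big ) = {{\mathbb {E}}}\Big [ 1_{B_1}\cdots 1_{B_{k-1}}\,\mathbb {P}\big (B_k\,\big |\,{{\mathcal {F}}}_{\tau _k}\big )\Big ] \le (1-\gamma )\,\mathbb {P}\Big (\bigcap _{j=1}^{k-1} B_j\Big ) \le \dots \le (1-\gamma )^k \rightarrow 0 \end{aligned}$$

as \(k\rightarrow \infty \). Hence at least one visit is almost surely followed by a step into \(\{f<\varepsilon '\}\), and repeating the argument from any later visit yields infinitely many such steps. The conditional Borel-Cantelli Lemma E.4 packages this argument.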

We are now ready to prove the global convergence result.

Proof of Theorem 3.8

Let \(\beta \in [1,\rho _\eta ^{-1})\) and consider the event

Choose \(\delta \in (0,1)\) and associated \(\varepsilon '>0\). By Corollary E.5, the stopping time

is finite except on a set of measure zero. Consider \(\theta _\tau \) as the initial condition of a different SGD realization \({\tilde{\theta }}\) and note that the conditional independence properties which we used to obtain decay estimates still hold for the gradient estimators \({\widetilde{g}}_t = g(\theta _{t+\tau }, \xi _{t+\tau })\) with respect to the \(\sigma \)-algebras \({\widetilde{F}}_t\) generated by the random variables \(\theta _{t+\tau }\) for \(t\ge 0\).

By Theorem 3.5, we observe that with probability at least \(1-\delta \), we have

Thus for any \(T>0\), with probability at least \(1-\delta - \mathbb {P}(\tau >T)\) we have

Taking \(T\rightarrow \infty \) and \(\delta \rightarrow 0\), we find that almost surely

\(\square \)

We conclude by proving that not just the function values \(f(\theta _t)\), but also the arguments \(\theta _t\) converge.

Proof of Corollary 3.9

The proof follows the same lines as that of Corollary 3.4. Consider the set

Evidently

As in the proof of Corollary 3.4, we deduce that \(\theta _t\) converges pointwise almost everywhere on the set \(U_T\). As this is true for all T and

due to Theorem 3.8, we find that \(\theta _t\) converges almost surely to a random variable \(\theta _\infty \) and \(f(\theta _\infty ) = \lim _{t\rightarrow \infty } f(\theta _t) =0\). \(\square \)

About this article

Cite this article

Wojtowytsch, S. Stochastic Gradient Descent with Noise of Machine Learning Type Part I: Discrete Time Analysis. J Nonlinear Sci 33, 45 (2023). https://doi.org/10.1007/s00332-023-09903-3

Keywords

- Stochastic gradient descent

- Almost sure convergence

- Łojasiewicz inequality

- Non-convex optimization

- Machine learning

- Deep learning

- Overparametrization

- Global minimum selection