Abstract

It has been proved by the authors that the (extended) Sadowsky functional can be deduced as the \(\Gamma \)-limit of the Kirchhoff energy on a rectangular strip, as the width of the strip tends to 0. In this paper, we show that this \(\Gamma \)-convergence result is stable when affine boundary conditions are prescribed on the short sides of the strip. These boundary conditions include those corresponding to a Möbius band. This provides a rigorous justification of the original formal argument by Sadowsky about determining the equilibrium shape of a free-standing Möbius strip. We further write the equilibrium equations for the limit problem and show that, under some regularity assumptions, the centerline of a developable Möbius band at equilibrium cannot be a planar curve.



1 Introduction

The derivation of variational models for thin structures is one of the most fruitful applications of \(\Gamma \)-convergence in continuum mechanics. A typical example is a variational model for a two-dimensional structure deduced as \(\Gamma \)-limit of energies for bodies occupying cylindrical regions whose heights tend to zero. Quite often, such variational derivations focus on the asymptotic behavior of the bulk energy while partially or completely neglecting the contribution of external forces and/or boundary conditions. Usually, external forces, such as dead loads, can be easily included in the analysis afterward by using the stability of \(\Gamma \)-convergence with respect to continuous additive perturbations. On the other hand, boundary conditions may affect the \(\Gamma \)-limit of the bulk energy and, even if not so, they must be taken into account in the construction of the so-called recovery sequence. In some cases, boundary conditions may be an essential feature of the structure under study: consider, for instance, a Möbius band, where the two ends of the strip are glued together after a half-twist.

In this paper, we show that the \(\Gamma \)-convergence result leading to the derivation of the Sadowsky functional (see Freddi et al. (2016a, 2016b)) is stable with respect to an appropriate set of boundary conditions, which include those corresponding to a Möbius band. This provides a rigorous justification of the original formal argument by Sadowsky (1930) aimed at determining the equilibrium shape of a free-standing Möbius strip. Moreover, as a consequence of the Euler–Lagrange equations for the limit problem, we deduce some geometric properties of Möbius strips at equilibrium.

In recent years, the Sadowsky functional and, more generally, the theory of elastic ribbons have received a great deal of attention. Part of the renewed interest in the subject is due to the work by Starostin and van der Heijden (2007) on elastic Möbius strips. Since then, the literature has grown in several directions, as partially documented in the book edited by Fosdick and Fried (2015). For instance, the mechanics of Möbius elastic ribbons has been studied in Bartels and Hornung (2015), Moore and Healey (2018), Starostin and van der Heijden (2015). The morphological stability of ribbons has been considered in Audoly and Seffen (2015), Chopin et al. (2015), Levin et al. (2021), Moore and Healey (2018), and their helicoidal-to-spiral transition in Agostiniani et al. (2017), Paroni and Tomassetti (2019), Teresi and Varano (2013), Tomassetti and Varano (2017). The relation between rods and ribbons, as well as the derivation of viscoelastic models, has been investigated in Audoly and Neukirch (2021), Brunetti et al. (2020), Dias and Audoly (2015), Friedrich and Machill (2021), while models of ribbons with moderate displacements have been deduced in Davoli (2013), Freddi et al. (2018), Freddi et al. (2013). Finally, for numerics and experiments on ribbons, we refer to Bartels (2020), Charrondière et al. (2020), Korner et al. (2021), Kumar et al. (2020), Yu (2021), Yu et al. (2021), Yu and Hanna (2019).

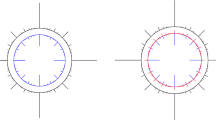

As a simple experiment shows, a Möbius strip made of an unstretchable material, when left to itself, adopts a sort of universal shape, independent of the material the band is made of (provided it is stiff enough for gravity to be negligible). To the best of our knowledge, Sadowsky was the first to address the problem (still open to date) of determining the equilibrium shape of a free-standing unstretchable Möbius strip. In 1930, he formulated the problem variationally in terms of an energy (now known as the Sadowsky functional) obtained as a formal limit of the Kirchhoff energy for a Möbius band of vanishing width (see Hinz and Fried (2015a, 2015b), Sadowsky (1930)).

This derivation was made precise much more recently in Freddi et al. (2016a, 2016b), using the language of \(\Gamma \)-convergence, for an open narrow ribbon, that is, without including any kind of boundary conditions or topological constraints. More precisely, let \(S_\varepsilon =(0,\ell )\times (-\varepsilon /2,\varepsilon /2)\) be the reference configuration of an inextensible isotropic strip, where the width \(\varepsilon \) is much smaller than the length \(\ell \). The Kirchhoff energy of the strip is
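$$\begin{aligned} E_\varepsilon (u)=\frac{1}{\varepsilon }\int _{S_\varepsilon }|\Pi _u|^2\,dx, \end{aligned}$$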
where \(u:S_\varepsilon \rightarrow {\mathbb {R}}^3\) is a \(W^{2,2}\)-isometry and \(\Pi _u\) is the second fundamental form of the surface \(u(S_\varepsilon )\). Note that by Gauss’s Theorema Egregium \(\det \Pi _u=0\). In Freddi et al. (2016a, 2016b), it has been proved that the \(\Gamma \)-limit of \(E_\varepsilon \), as \(\varepsilon \rightarrow 0\), provides an extension of the classical Sadowsky functional and is given by
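$$\begin{aligned} E(y,d_1,d_2,d_3)=\int _0^\ell \overline{Q}(d_1'\cdot d_3,\,d_2'\cdot d_3)\,dx_1, \end{aligned}\tag{1.1}$$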
where the unit vectors \(d_i\) are such that \(R^T:=(d_1|d_2|d_3)\in W^{1, 2}((0,\ell ); SO(3))\) and satisfy the nonholonomic constraint
$$\begin{aligned} d_1'\cdot d_2=0\quad \text {in } (0,\ell ), \end{aligned}\tag{1.2}$$
while the deformation y of the centerline of the strip is related to the system of directors by the equation
$$\begin{aligned} y'=d_1\quad \text {in } (0,\ell ). \end{aligned}\tag{1.3}$$
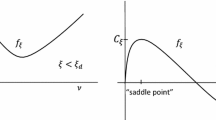

In other words, the director \(d_1\) is the tangent vector to the deformed centerline. The director \(d_2\) describes the “transversal orientation” of the deformed strip; hence, the constraint (1.2) means that the strip cannot bend within its own plane. The director \(d_3\) represents the normal vector to the deformed strip. The energy depends on the two quantities \(d_1'\cdot d_3\) and \(d_2'\cdot d_3\), that represent the bending strain and the twisting strain of the strip, respectively. The limiting energy density \(\overline{Q}\) is given by
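$$\begin{aligned} \overline{Q}(\mu ,\tau )={\left\{ \begin{array}{ll} \dfrac{(\mu ^2+\tau ^2)^2}{\mu ^2} & \text {if } |\mu |>|\tau |,\\ 4\tau ^2 & \text {if } |\mu |\le |\tau |, \end{array}\right. } \end{aligned}\tag{1.4}$$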
which is a convex function that coincides with the classical Sadowsky energy density for \(|\mu |>|\tau |\).
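To see concretely how the extended density differs from the classical one, here is a minimal Python sketch. It assumes the explicit form of the extended density from the cited works, namely \(\overline{Q}(\mu ,\tau )=(\mu ^2+\tau ^2)^2/\mu ^2\) for \(|\mu |>|\tau |\) and \(\overline{Q}(\mu ,\tau )=4\tau ^2\) for \(|\mu |\le |\tau |\); the function names are illustrative. The script checks that the two branches agree on \(|\mu |=|\tau |\), tests midpoint convexity on random samples, and shows that, unlike the classical Sadowsky density, the extended one stays bounded as \(\mu \rightarrow 0\) with \(\tau \) fixed.

```python
import math
import random

def sadowsky_classical(mu, tau):
    """Classical Sadowsky density (mu^2 + tau^2)^2 / mu^2; defined only for mu != 0."""
    return (mu * mu + tau * tau) ** 2 / (mu * mu)

def q_bar(mu, tau):
    """Extended Sadowsky density (assumed form, see the lead-in)."""
    if abs(mu) > abs(tau):
        return sadowsky_classical(mu, tau)
    return 4.0 * tau * tau

def midpoint_convexity_violations(n=10_000, seed=0, box=3.0):
    """Count random pairs p, q with q_bar((p + q)/2) > (q_bar(p) + q_bar(q))/2."""
    rng = random.Random(seed)
    bad = 0
    for _ in range(n):
        p = (rng.uniform(-box, box), rng.uniform(-box, box))
        q = (rng.uniform(-box, box), rng.uniform(-box, box))
        mid = ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)
        lhs = q_bar(*mid)
        rhs = (q_bar(*p) + q_bar(*q)) / 2
        if lhs > rhs + 1e-9 * (1.0 + rhs):  # small tolerance for rounding
            bad += 1
    return bad

if __name__ == "__main__":
    print(q_bar(1.0, 1.0), sadowsky_classical(1.0, 1.0))  # both branches give 4 on |mu| = |tau|
    print(midpoint_convexity_violations())
    for mu in (1e-1, 1e-2, 1e-3):
        # classical density blows up, extended density stays equal to 4 tau^2
        print(mu, sadowsky_classical(mu, 1.0), q_bar(mu, 1.0))
```

The branch \(4\tau ^2\) is what keeps the extended functional finite on frames where the bending strain vanishes while the twisting strain does not.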

The \(\Gamma \)-convergence result in Freddi et al. (2016a, 2016b) is supplemented by suitable compactness properties that guarantee convergence of minimizers of the Kirchhoff energy to minimizers of the (extended) Sadowsky functional (1.1).

As already mentioned, these results were proved without any kind of boundary conditions or topological constraints. Therefore, it remained an open question whether the Sadowsky functional correctly describes the behavior of narrow Möbius bands or of other closed narrow ribbons.

In this paper, we give a positive answer to this question by considering prescribed affine boundary conditions on the short sides \(\{0\}\times (-\varepsilon /2,\varepsilon /2)\) and \(\{\ell \}\times (-\varepsilon /2,\varepsilon /2)\). We prove (see Theorem 2.5) that, as \(\varepsilon \rightarrow 0\), the \(\Gamma \)-limit of the Kirchhoff energy is still given by the Sadowsky functional (1.1), where the frame (y, R) satisfies, in addition to (1.2–1.3), a set of boundary conditions that we now describe.

By translating and rotating the coordinate system, we may assume, with no loss of generality, that the side \(\{0\}\times (-\varepsilon /2,\varepsilon /2)\) is clamped and that, on this short side, the ribbon is tangent to the undeformed centerline of the ribbon. In the limit problem, this boundary condition leads to
$$\begin{aligned} y(0)=0,\qquad R(0)={\mathbb {I}}, \end{aligned}\tag{1.5}$$
where \({\mathbb {I}}\) is the identity matrix. Similarly, the boundary condition on the side \(\{\ell \}\times (-\varepsilon /2,\varepsilon /2)\) leads to
$$\begin{aligned} y(\ell )=\overline{y},\qquad R(\ell )=\overline{R}, \end{aligned}\tag{1.6}$$
where \(\overline{y}\in {\mathbb {R}}^3\) is the ending point of the deformed centerline and \(\overline{R}\in SO(3)\) is the orientation in the deformed configuration of the short side at \(\ell \).

We note that these boundary conditions can model both open and closed ribbons. In particular, a Möbius band satisfies the above boundary conditions with \(\overline{y}=0\) and \(\overline{R}=(e_1|-e_2|-e_3)\). However, we point out that the geometrical boundary conditions (1.5–1.6) are insensitive to the number of full turns of the director \(d_2\) along the centerline; thus, a closed ribbon with an odd number of half-twists satisfies the same boundary conditions as a Möbius band. Alternatively, one may prescribe both the boundary conditions and the linking number of the strip (see, e.g., Alexander and Antman (1982)). This will be addressed in future work.
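As a quick sanity check of the Möbius boundary data, the following minimal Python sketch (standard library only; the helper names are hypothetical) builds the end frame of a ribbon whose directors \(d_2,d_3\) are rotated about \(d_1=e_1\) by a total angle \(\theta \), and compares it with \((e_1|-e_2|-e_3)\). It illustrates the remark above: every odd multiple of \(\pi \) yields the same end frame, so the boundary conditions alone do not fix the number of half-twists. Only the rotational part is checked; the centerline of such a pure-twist frame is straight and therefore does not satisfy \(\overline{y}=0\).

```python
import math

def twist_end_frame(theta):
    """Return R^T = (d_1|d_2|d_3) (as columns) after rotating d_2, d_3 about d_1 = e_1 by theta."""
    c, s = math.cos(theta), math.sin(theta)
    d1, d2, d3 = (1.0, 0.0, 0.0), (0.0, c, s), (0.0, -s, c)
    return [[d1[i], d2[i], d3[i]] for i in range(3)]

# Moebius end frame (e_1 | -e_2 | -e_3); it is diagonal, hence equal to its transpose.
MOBIUS_END_FRAME = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]

def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def same_frame(a, b, tol=1e-12):
    """Entrywise comparison of two 3x3 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(3) for j in range(3))

if __name__ == "__main__":
    print(det3(MOBIUS_END_FRAME))  # 1.0, so the end frame lies in SO(3)
    for k in range(4):
        # k half-twists: odd k reproduces the Moebius end frame, even k the identity
        print(k, same_frame(twist_end_frame(k * math.pi), MOBIUS_END_FRAME))
```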

In the last part of the paper, we derive the Euler–Lagrange equations for the functional (1.1) with boundary conditions (1.5–1.6) and we discuss some of their consequences. In particular, we prove (see Theorem 6.3) that, under some regularity assumptions, the centerline of a developable Möbius band at equilibrium cannot be a planar curve.

We close this introduction by discussing the main mathematical difficulties in the proof of our main result (Theorem 2.5). Whereas compactness and the liminf inequality can be proved by relying on the results of Freddi et al. (2016a, 2016b), the construction of the recovery sequence requires a highly non-trivial modification. The first step in the construction consists in showing that one can assume the infinitesimally narrow ribbon to be “well behaved.” To do so, at the level of the infinitesimal ribbon we perform several approximations in which we iteratively approximate and correct the approximating sequences on ever finer scales. In particular, it is essential to correct the boundary conditions at the end of each approximation step. This procedure is not trivial, because the approximation process involves the bending and twisting strains, \(d_1'\cdot d_3\) and \(d_2'\cdot d_3\), while the boundary conditions (1.5–1.6) are expressed as final conditions (see 3.1–3.2) for the solution \(R: [0,\ell ]\rightarrow SO(3)\) of the ODE system \(R' = AR\) in \((0,\ell )\), with initial condition \(R(0) = {\mathbb {I}}\), where
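$$\begin{aligned} A=\begin{pmatrix} 0 & 0 & d_1'\cdot d_3\\ 0 & 0 & d_2'\cdot d_3\\ -d_1'\cdot d_3 & -d_2'\cdot d_3 & 0 \end{pmatrix}. \end{aligned}\tag{1.7}$$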
Thus, the correction process could spoil other essential properties. For this reason, one part of the modifications aims at making the sequence robust enough to be stable under the corrections. This argument is based on density results for framed curves preserving boundary conditions, proved in Hornung (2021).
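The way the frame, and hence the final conditions, are generated by the strains can be illustrated numerically. The following minimal sketch (pure Python; all names are illustrative, and the integrator is our own choice, not the method of the paper) solves \(R'=AR\), \(R(0)={\mathbb {I}}\), where A is skew-symmetric with \(A_{13}=\mu =d_1'\cdot d_3\), \(A_{23}=\tau =d_2'\cdot d_3\) and \(A_{12}=0\) (the constraint \(d_1'\cdot d_2=0\)), and then recovers the centerline from \(y'=d_1\), \(y(0)=0\). Each step multiplies by the exact exponential of a skew matrix (Rodrigues' formula), so the computed R stays in SO(3) up to rounding. The checks show how sensitive the final conditions are to the strains: on a unit interval, a pure bending strain \(\mu =2\pi \) closes the centerline into a circle with \(R(1)={\mathbb {I}}\), while a pure twist \(\tau =\pi \) gives the end frame \((e_1|-e_2|-e_3)\) with a straight centerline.

```python
import math

def matmul(a, b):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def strain_matrix(mu, tau):
    """Skew matrix with A_13 = mu, A_23 = tau and A_12 = 0."""
    return [[0.0, 0.0, mu], [0.0, 0.0, tau], [-mu, -tau, 0.0]]

def expm_skew(w):
    """Exact exponential of a 3x3 skew matrix (Rodrigues' formula)."""
    axis = (w[2][1], w[0][2], w[1][0])
    theta = math.sqrt(sum(c * c for c in axis))
    eye = [[float(i == j) for j in range(3)] for i in range(3)]
    if theta < 1e-15:
        return eye
    w2 = matmul(w, w)
    s, c = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta ** 2
    return [[eye[i][j] + s * w[i][j] + c * w2[i][j] for j in range(3)] for i in range(3)]

def integrate_frame(mu, tau, length=1.0, n=2000):
    """Solve R' = A R, R(0) = I, and y' = d_1, y(0) = 0, on (0, length).

    mu and tau are callables; the rows of R are d_1, d_2, d_3 (since R^T = (d_1|d_2|d_3)).
    A is frozen at each step midpoint (exponential midpoint rule, second order).
    """
    h = length / n
    r = [[float(i == j) for j in range(3)] for i in range(3)]
    y = [0.0, 0.0, 0.0]
    for k in range(n):
        t_mid = (k + 0.5) * h
        step = [[h * x for x in row] for row in strain_matrix(mu(t_mid), tau(t_mid))]
        r_next = matmul(expm_skew(step), r)
        # trapezoidal rule for y' = d_1 (first row of R)
        y = [y[i] + 0.5 * h * (r[0][i] + r_next[0][i]) for i in range(3)]
        r = r_next
    return r, y

if __name__ == "__main__":
    r_circ, y_circ = integrate_frame(lambda t: 2 * math.pi, lambda t: 0.0)
    r_twist, y_twist = integrate_frame(lambda t: 0.0, lambda t: math.pi)
    print([round(v, 9) for v in y_circ])  # closed centerline
    print([[round(v, 9) for v in row] for row in r_twist])
```

In the language of Sect. 3, the two checks compute \(R_A(1)\) and the endpoint of the centerline, which is exactly what the approximation procedure has to keep fixed.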

Plan of the paper. In Sect. 2, we set up the problem, prove compactness for deformations with equibounded energy, and state the \(\Gamma \)-convergence result. Section 3 contains the approximation results that are key to proving the existence of a recovery sequence in Sect. 4. In Sect. 5, we derive the equilibrium equations for the limit boundary value problem, and in the last section, we focus on regular solutions of this problem in the case of a Möbius band.

Notation. Throughout the paper, \((e_1,e_2,e_3)\) and \((\underline{e}_1,\underline{e}_2)\) denote the canonical bases of \({\mathbb {R}}^3\) and \({\mathbb {R}}^2\), respectively.

2 Setting of the Problem and Main Result

In this section, we recall the setting of the problem and state the main result (Theorem 2.5), which proves stability of \(\Gamma \)-convergence with respect to an appropriate set of geometric boundary conditions.

We consider the interval \(I=(0,\ell )\) with \(\ell >0\). For \(0<\varepsilon \ll 1\), let \(S_{\varepsilon } = I\times (-\varepsilon /2, \varepsilon /2)\) be the reference configuration of an inextensible elastic narrow strip. We assume the energy density of the strip, \(Q:{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}} \rightarrow [0,+\infty )\), to be an isotropic and quadratic function of the second fundamental form. The Kirchhoff energy of the strip for a deformation \(u : S_\varepsilon \rightarrow {\mathbb {R}}^3\) is
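$$\begin{aligned} E_\varepsilon (u)=\frac{1}{\varepsilon }\int _{S_\varepsilon }Q(\Pi _u)\,dx, \end{aligned}$$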
where the second fundamental form of u, \(\Pi _u : S_\varepsilon \rightarrow {\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}}\), is defined by
$$\begin{aligned} (\Pi _u)_{ij}=\partial _i\partial _j u\cdot n_u,\qquad i,j=1,2, \end{aligned}$$
and
$$\begin{aligned} n_u=\partial _1 u\wedge \partial _2 u \end{aligned}$$
is the unit normal to \(u(S_\varepsilon )\). Due to the inextensibility constraint, deformations u satisfy the relations \(\partial _i u\cdot \partial _j u = \delta _{ij}\), where \(\delta _{ij}\) is the Kronecker delta. We denote the space of \(W^{2,2}\)-isometries of \(S_\varepsilon \) by
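$$\begin{aligned} W^{2,2}_\mathrm{iso}(S_\varepsilon ;{\mathbb {R}}^3):=\{u\in W^{2,2}(S_\varepsilon ;{\mathbb {R}}^3):\ \partial _i u\cdot \partial _j u=\delta _{ij} \text { a.e. in } S_\varepsilon ,\ i,j=1,2\}. \end{aligned}$$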
Since the energy density Q is isotropic, it depends on \(\Pi _u\) only through the trace and the determinant of \(\Pi _u\). On the other hand, the inextensibility constraint and Gauss’s Theorema Egregium imply that the Gaussian curvature is equal to zero, that is, \(\det \Pi _u=0\). Thus, the energy may be expressed in terms of \(\text {tr}\,\Pi _u\) only. Equivalently, since \(|\Pi |^2+ 2\, \text {det}\, \Pi =(\text {tr}\,\Pi )^2\) for every \(\Pi \in {\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}}\), we can write the energy in terms of the norm of \(\Pi _u\). With these considerations in mind, up to a multiplicative constant, the energy can be written as
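$$\begin{aligned} E_\varepsilon (u)=\frac{1}{\varepsilon }\int _{S_\varepsilon }|\Pi _u|^2\,dx. \end{aligned}$$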
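The algebraic identity used above, and the way the classical Sadowsky density emerges from it, can be checked in a few lines of Python (a minimal sketch; the function names are ours). For a symmetric \(\Pi \) with entries \(\Pi _{11}=\mu \ne 0\), \(\Pi _{12}=\tau \), \(\Pi _{22}=\gamma \), the constraint \(\det \Pi =0\) forces \(\gamma =\tau ^2/\mu \), so that \(|\Pi |^2=(\text {tr}\,\Pi )^2=(\mu +\tau ^2/\mu )^2=(\mu ^2+\tau ^2)^2/\mu ^2\).

```python
import random

def identity_residual(a, b, c):
    """|P|^2 + 2 det P - (tr P)^2 for the symmetric matrix P = [[a, b], [b, c]]."""
    norm2 = a * a + 2.0 * b * b + c * c  # squared Frobenius norm
    det = a * c - b * b
    tr = a + c
    return norm2 + 2.0 * det - tr * tr

def energy_with_zero_det(mu, tau):
    """|P|^2 for P = [[mu, tau], [tau, tau**2 / mu]], the det-zero completion (mu != 0)."""
    gamma = tau * tau / mu
    return mu * mu + 2.0 * tau * tau + gamma * gamma

def sadowsky_classical(mu, tau):
    """Classical Sadowsky density (mu^2 + tau^2)^2 / mu^2."""
    return (mu * mu + tau * tau) ** 2 / (mu * mu)

if __name__ == "__main__":
    rng = random.Random(1)
    samples = [tuple(rng.uniform(-5.0, 5.0) for _ in range(3)) for _ in range(1000)]
    print(max(abs(identity_residual(*p)) for p in samples))  # zero up to rounding
    print(energy_with_zero_det(1.5, 0.7), sadowsky_classical(1.5, 0.7))  # equal up to rounding
```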
We shall require deformations u to satisfy “clamped” boundary conditions at \(x_1=0\) and \(x_1=\ell \). In particular, this implies that the short sides of the strip remain straight. By composing deformations with a rigid motion, we may assume, without loss of generality, that
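$$\begin{aligned} u(0,0)=0\quad \text {and}\quad \nabla u(0,x_2)=(e_1\,|\,e_2)\quad \text {for } x_2\in (-\varepsilon /2,\varepsilon /2). \end{aligned}$$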
To set the boundary conditions at \(x_1=\ell \), we fix \(\overline{y}\in {\mathbb {R}}^3\) and \(\overline{R}^T=(\bar{d}_1|\bar{d}_2|\bar{d}_3)\in SO(3)\) and require that
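$$\begin{aligned} u(\ell ,0)=\overline{y}\quad \text {and}\quad \partial _i u(\ell ,x_2)=\bar{d}_i\quad \text {for } x_2\in (-\varepsilon /2,\varepsilon /2), \end{aligned}$$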
for \( i=1,2\). We note that the imposed boundary conditions keep straight the sections at \(x_1=0\) and \(x_1=\ell \), or, in other words, the images of \(u(0, \cdot )\) and \(u(\ell , \cdot )\) are straight-line segments. Moreover, the inextensibility constraint implies that \(|\overline{y}|\le \ell \): indeed,
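$$\begin{aligned} |\overline{y}|=|u(\ell ,0)-u(0,0)|=\Big |\int _0^\ell \partial _1 u(t,0)\,dt\Big |\le \int _0^\ell |\partial _1 u(t,0)|\,dt=\ell . \end{aligned}$$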
We thus consider as domain of the energy \(E_\varepsilon \) the admissible class
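$$\begin{aligned} \mathcal {A}_\varepsilon :=\{u\in W^{2,2}_\mathrm{iso}(S_\varepsilon ;{\mathbb {R}}^3):\ u(0,0)=0,\ \nabla u(0,\cdot )=(e_1\,|\,e_2),\ u(\ell ,0)=\overline{y},\ \nabla u(\ell ,\cdot )=(\bar{d}_1\,|\,\bar{d}_2)\}, \end{aligned}$$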
where all equalities are in the sense of traces.

If \(|\overline{y}|= \ell \), the midline \(I\times \{0\}\) of the strip cannot deform and the cross sections cannot twist around the midline, because otherwise the “fibers” \(I\times \{\pm \varepsilon /2\}\) would get shorter or longer. In other words, if \(|\overline{y}|= \ell \), the whole strip cannot deform. This is proved in the next lemma.

Lemma 2.1

Let \(\varepsilon > 0\) and let \(u\in W^{2,2}_\mathrm{iso}(S_\varepsilon ;{\mathbb {R}}^3)\) be such that \(u(0,0) = 0\), \(\nabla u = (e_1\, |\, e_2)\) on \(\{0\}\times (-\varepsilon /2, \varepsilon /2)\), \(|u(\ell , 0)| = \ell \), and \(\nabla u\) is constant on \(\{\ell \}\times (-\varepsilon /2, \varepsilon /2)\). Then, \(u(x)=x_1e_1+x_2e_2\) on \(S_{\varepsilon }\) .

Lemma 2.1 is an immediate consequence of the following elementary lemma.

Lemma 2.2

Let \(\varepsilon > 0\) and let \(u : S_{\varepsilon }\rightarrow {\mathbb {R}}^3\) be 1-Lipschitz. Assume that u is affine on \(\{0\}\times (-\varepsilon /2, \varepsilon /2)\) and on \(\{\ell \}\times (-\varepsilon /2, \varepsilon /2)\). If \(|u(\ell ,0) - u(0,0)| = \ell \), then u is an affine isometry.

Proof

By applying a rigid motion to u, we may assume without loss of generality that \(u(0,0) = 0\) and that \(u(0, x_2) = x_2e_2\) for all \(x_2\in (-\varepsilon /2, \varepsilon /2)\). Since u is affine on \(\{\ell \}\times (-\varepsilon /2, \varepsilon /2)\), there exists a constant vector \(v\in {\mathbb {R}}^3\) such that
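$$\begin{aligned} u(\ell ,x_2)=u(\ell ,0)+x_2 v\quad \text {for all } x_2\in (-\varepsilon /2,\varepsilon /2). \end{aligned}$$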
Since u is 1-Lipschitz, we conclude that for all \(x_2\)
$$\begin{aligned} \ell \ge |u(\ell ,x_2)-u(0,x_2)|=|u(\ell ,0)+x_2(v-e_2)|. \end{aligned}$$
If \(v\ne e_2\), this would imply that
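$$\begin{aligned} 0\ge |u(\ell ,0)+x_2(v-e_2)|^2+|u(\ell ,0)-x_2(v-e_2)|^2-2\ell ^2=2x_2^2|v-e_2|^2>0\quad \text {for all } x_2\ne 0, \end{aligned}$$ where we used \(|u(\ell ,0)|=\ell \).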
Since this is impossible, we conclude that \(v = e_2\) and so
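$$\begin{aligned} u(\ell ,x_2)=u(\ell ,0)+x_2 e_2\quad \text {for all } x_2\in (-\varepsilon /2,\varepsilon /2). \end{aligned}\tag{2.1}$$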
In particular, \(|u(0, x_2) - u(\ell , x_2)| = \ell \) for all \(x_2\). Hence, (Eberhard and Hornung 2020, Lemma 2.1) implies that, for each \(x_2\), there is \(w(x_2)\in {\mathbb {R}}^3\) such that
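$$\begin{aligned} u(x_1,x_2)=u(0,x_2)+x_1 w(x_2)\quad \text {for all } x_1\in (0,\ell ). \end{aligned}$$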
Evaluating this at \(x_1 = \ell \) and using (2.1), we conclude that \(w(x_2) = \frac{u(\ell , 0)}{\ell }\) for all \(x_2\). Hence,
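$$\begin{aligned} u(x_1,x_2)=x_1\theta +x_2 e_2\quad \text {for all } (x_1,x_2)\in S_\varepsilon , \end{aligned}$$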
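with the unit vector \(\theta = u(\ell ,0)/\ell \). Since u is 1-Lipschitz and \(u(0,0) = 0\), we see that
$$\begin{aligned} x_1^2+x_2^2+2x_1x_2\,\theta \cdot e_2=|u(x_1,x_2)|^2\le x_1^2+x_2^2\quad \text {for all } (x_1,x_2)\in S_\varepsilon . \end{aligned}$$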
This can only be true if \(\theta \cdot e_2 = 0\). \(\square \)

Under the assumptions of Lemma 2.1, it follows that \(u(\ell ,0)=\ell e_1\) and \(\nabla u(\ell ,x_2)=(e_1|e_2)\). By this fact and the above considerations, we conclude that
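$$\begin{aligned} |\overline{y}|=\ell \quad \Longrightarrow \quad \mathcal {A}_\varepsilon ={\left\{ \begin{array}{ll} \{x\mapsto x_1e_1+x_2e_2\} & \text {if } \overline{y}=\ell e_1 \text { and } \overline{R}={\mathbb {I}},\\ \varnothing & \text {otherwise}. \end{array}\right. } \end{aligned}$$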
Hence, the only non-trivial case is \(|\overline{y}|<\ell \) (note that, if \(|\overline{y}|<\ell \), then \(\mathcal {A}_\varepsilon \ne \varnothing \) for \(\varepsilon >0\) small enough by Remark 2.6 below).

Hereafter, we shall always assume that \(|\overline{y}|<\ell \).

2.1 Change of Variables

We now change variables in order to rewrite the energy on the fixed domain
$$\begin{aligned} S:=I\times (-1/2,1/2). \end{aligned}$$
We introduce the rescaled version \(y: S\rightarrow {\mathbb {R}}^3\) of u by setting
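$$\begin{aligned} y(x_1,x_2):=u(x_1,\varepsilon x_2)\quad \text {for } (x_1,x_2)\in S. \end{aligned}$$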
We have that
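$$\begin{aligned} \nabla _\varepsilon y(x_1,x_2)=\nabla u(x_1,\varepsilon x_2)\quad \text {for } (x_1,x_2)\in S, \end{aligned}$$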
where the scaled gradient is defined by
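$$\begin{aligned} \nabla _\varepsilon y:=\Big (\partial _1 y\,\Big |\,\frac{1}{\varepsilon }\partial _2 y\Big ). \end{aligned}$$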
In particular, if \(u\in W^{2,2}_\mathrm{iso}(S_\varepsilon ;{\mathbb {R}}^3)\), the map y belongs to the space of scaled isometries
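$$\begin{aligned} W^{2,2}_{\mathrm{iso},\varepsilon }(S;{\mathbb {R}}^3):=\{y\in W^{2,2}(S;{\mathbb {R}}^3):\ |\partial _1 y|=1,\ |\partial _2 y|=\varepsilon ,\ \partial _1 y\cdot \partial _2 y=0 \text { a.e. in } S\}, \end{aligned}$$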
and
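$$\begin{aligned} u\in \mathcal {A}_\varepsilon \quad \Longleftrightarrow \quad y\in \mathcal {A}_\varepsilon ^s, \end{aligned}$$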
where the admissible class of scaled isometries \( \mathcal {A}_\varepsilon ^s\) is defined by
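$$\begin{aligned} \mathcal {A}_\varepsilon ^s:=\Big \{y\in W^{2,2}(S;{\mathbb {R}}^3):\ & |\partial _1 y|=1,\ |\partial _2 y|=\varepsilon ,\ \partial _1 y\cdot \partial _2 y=0 \text { a.e. in } S,\\ & y(0,0)=0,\ \nabla _\varepsilon y(0,\cdot )=(e_1\,|\,e_2),\ y(\ell ,0)=\overline{y},\ \nabla _\varepsilon y(\ell ,\cdot )=(\bar{d}_1\,|\,\bar{d}_2)\Big \}. \end{aligned}\tag{2.2}$$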
We define the scaled unit normal to y(S) by
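$$\begin{aligned} n_{y,\varepsilon }:=\partial _1 y\wedge \frac{1}{\varepsilon }\partial _2 y \end{aligned}$$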
and the scaled second fundamental form of y(S) by
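$$\begin{aligned} \Pi _{y,\varepsilon }:=\begin{pmatrix} \partial _{11}y\cdot n_{y,\varepsilon } & \frac{1}{\varepsilon }\partial _{12}y\cdot n_{y,\varepsilon }\\ \frac{1}{\varepsilon }\partial _{12}y\cdot n_{y,\varepsilon } & \frac{1}{\varepsilon ^2}\partial _{22}y\cdot n_{y,\varepsilon } \end{pmatrix}, \end{aligned}$$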
so that \(\Pi _u(x_1, \varepsilon x_2) = \Pi _{y,\varepsilon }(x_1, x_2)\). Finally, we denote the scaled energy by
$$\begin{aligned} J_\varepsilon (y):=\int _S|\Pi _{y,\varepsilon }|^2\,dx, \end{aligned}\tag{2.3}$$
and we have \(J_{\varepsilon }(y) = E_{\varepsilon }(u)\).
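The rescaling can be tested on an explicit isometry. In the minimal sketch below (pure Python with central finite differences; all names and the choice of example are illustrative), u rolls the plane into a unit cylinder along a direction forming an angle \(\varphi \) with the \(x_1\)-axis, so that \(\Pi _u=(\cos \varphi ,\sin \varphi )\otimes (\cos \varphi ,\sin \varphi )\) has nonzero bending and twisting entries. Assuming the scaled normal \(\partial _1 y\wedge \varepsilon ^{-1}\partial _2 y\) and the scaled second fundamental form with entries \(\partial _{11}y\cdot n_{y,\varepsilon }\), \(\varepsilon ^{-1}\partial _{12}y\cdot n_{y,\varepsilon }\), \(\varepsilon ^{-2}\partial _{22}y\cdot n_{y,\varepsilon }\), the code checks numerically that \(\Pi _{y,\varepsilon }(x_1,x_2)=\Pi _u(x_1,\varepsilon x_2)\).

```python
import math

PHI = 0.4  # angle between the bending direction and the x_1-axis (illustrative)

def u(x1, x2):
    """Isometric embedding of the plane as a unit cylinder, rolled along (cos PHI, sin PHI)."""
    s = math.cos(PHI) * x1 + math.sin(PHI) * x2
    t = -math.sin(PHI) * x1 + math.cos(PHI) * x2
    return (math.sin(s), t, 1.0 - math.cos(s))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def partials(f, x1, x2, h):
    """Central-difference approximations of f_1, f_2, f_11, f_12, f_22 for f: R^2 -> R^3."""
    def comb(terms, scale):
        vals = [(c, f(x1 + a * h, x2 + b * h)) for a, b, c in terms]
        return tuple(sum(c * v[i] for c, v in vals) / scale for i in range(3))
    f1 = comb([(1, 0, 1), (-1, 0, -1)], 2 * h)
    f2 = comb([(0, 1, 1), (0, -1, -1)], 2 * h)
    f11 = comb([(1, 0, 1), (0, 0, -2), (-1, 0, 1)], h * h)
    f22 = comb([(0, 1, 1), (0, 0, -2), (0, -1, 1)], h * h)
    f12 = comb([(1, 1, 1), (1, -1, -1), (-1, 1, -1), (-1, -1, 1)], 4 * h * h)
    return f1, f2, f11, f12, f22

def scaled_sff(f, x1, x2, eps, h=1e-3):
    """Entries (11, 12, 22) of the scaled second fundamental form; eps = 1 gives the usual one."""
    f1, f2, f11, f12, f22 = partials(f, x1, x2, h)
    n = cross(f1, tuple(c / eps for c in f2))  # scaled normal
    norm = math.sqrt(dot(n, n))
    n = tuple(c / norm for c in n)  # only removes finite-difference error
    return dot(f11, n), dot(f12, n) / eps, dot(f22, n) / eps ** 2

if __name__ == "__main__":
    eps = 0.1
    y = lambda x1, x2: u(x1, eps * x2)  # rescaled deformation on the fixed domain S
    for x1, x2 in [(0.2, -0.3), (0.5, 0.0), (0.9, 0.45)]:
        print(scaled_sff(y, x1, x2, eps), scaled_sff(u, x1, eps * x2, 1.0))
```

The factors \(\varepsilon ^{-1}\) and \(\varepsilon ^{-2}\) exactly compensate the shrinking of the \(x_2\)-derivatives, which is why the energy can be written on the fixed domain S without losing any information.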

2.2 Statement of the Main Results

As \(\varepsilon \) approaches zero, the convergence of the admissible deformations leads naturally, as shown in Lemma 2.4 below, to the admissible class
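$$\begin{aligned} \mathcal {A}_0:=\Big \{(y,R)\in W^{2,2}(I;{\mathbb {R}}^3)\times W^{1,2}(I;SO(3)):\ & R^T=(d_1|d_2|d_3),\ y'=d_1,\ d_1'\cdot d_2=0 \text { a.e. in } I,\\ & y(0)=0,\ R(0)={\mathbb {I}},\ y(\ell )=\overline{y},\ R(\ell )=\overline{R}\Big \}. \end{aligned}\tag{2.4}$$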
Proposition 2.3

Assume \(|\overline{y}|<\ell \). Then, \(\mathcal {A}_0\ne \varnothing \).

Proof

The proposition follows from Hornung (2021, Proposition 3.1). We provide here an explicit construction for the reader’s convenience.

Given \(\overline{y}\) and \(\overline{R}\), with \(|\overline{y}|<\ell \), we shall construct a pair \((y, R)\in \mathcal {A}_0\). To easily satisfy the constraint \(d_1'\cdot d_2=0\), we shall build y by gluing together straight segments and arcs of circles.

We start by giving two definitions.

The pair \((y, R):[\ell ^i,\ell ^e]\rightarrow {\mathbb {R}}^3\times SO(3)\), where \(\ell ^i<\ell ^e\), with \(y(t)=t e_1+\hat{y}\), \(d_1(t)=e_1\), \(d_2(t)=\cos (\alpha t+\beta ) e_2 +\sin (\alpha t+\beta ) e_3\), for some \(\hat{y}\in {\mathbb {R}}^3\), \(\alpha ,\beta \in {\mathbb {R}}\), and \(d_3(t)=d_1(t)\wedge d_2(t)\) shall be called a straight frame starting at \(y(\ell ^i)\), parallel to \(e_1\), and that rotates \(d_2(\ell ^i)\) to \(d_2(\ell ^e)\). Given three generic unit vectors \(d, b^i, b^e\) such that \(d\cdot b^i=d\cdot b^e=0\), and a point x, we can similarly define a straight frame, starting at x, parallel to d, and that rotates \(b^i\) to \(b^e\). Trivially, the straight frames satisfy the conditions \(y'=d_1\) and \(d_1'\cdot d_2=0\).

The pair \((y, R):[\ell ^i,\ell ^e]\rightarrow {\mathbb {R}}^3\times SO(3)\), where \(\ell ^i<\ell ^e\), with \(y(t)=\sigma (\cos (\alpha +t/\sigma ) e_2 +\sin (\alpha +t/\sigma ) e_3)+\hat{y}\), for some \(\hat{y}\in {\mathbb {R}}^3\), \(\alpha ,\sigma \in {\mathbb {R}}\), \(\sigma \ne 0\), \(d_1(t)=y'\), \(d_2(t)= e_1\), and \(d_3(t)=d_1(t)\wedge d_2(t)\) shall be called a circular frame, starting at \(y(\ell ^i)\), orthogonal to \(e_1\), and that rotates \(d_1(\ell ^i)\) to \(d_1(\ell ^e)\). If convenient, instead of specifying the starting point \(y(\ell ^i)\) we may specify the ending point \(y(\ell ^e)\). Given three generic unit vectors \(b, d^i, d^e\) such that \(b\cdot d^i=b\cdot d^e=0\), and a point x, we can similarly define a circular frame, starting at x, orthogonal to b, and that rotates \(d^i\) to \(d^e\). Clearly, the circular frames also satisfy the conditions \(y'=d_1\) and \(d_1'\cdot d_2=-d_1\cdot d_2'=0\).
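The defining properties of these two building blocks can also be checked numerically. The sketch below (pure Python; the function names and the finite-difference check are illustrative and play no role in the proof) implements a straight frame parallel to \(e_1\) and a circular frame orthogonal to \(e_1\) as defined above, with \(d_3=d_1\wedge d_2\), and measures the largest violation of \(y'=d_1\), of \(d_1'\cdot d_2=0\), and of orthonormality along the frame.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def straight_frame(t, alpha, beta, yhat=(0.0, 0.0, 0.0)):
    """Straight frame parallel to e_1: y = t e_1 + yhat, d_2 turns in the (e_2, e_3) plane."""
    ang = alpha * t + beta
    y = (t + yhat[0], yhat[1], yhat[2])
    d1 = (1.0, 0.0, 0.0)
    d2 = (0.0, math.cos(ang), math.sin(ang))
    return y, d1, d2, cross(d1, d2)

def circular_frame(t, alpha, sigma, yhat=(0.0, 0.0, 0.0)):
    """Circular frame orthogonal to e_1: arc of radius |sigma| in the (e_2, e_3) plane, d_2 = e_1."""
    ang = alpha + t / sigma
    y = (yhat[0], yhat[1] + sigma * math.cos(ang), yhat[2] + sigma * math.sin(ang))
    d1 = (0.0, -math.sin(ang), math.cos(ang))  # exact derivative of y
    d2 = (1.0, 0.0, 0.0)
    return y, d1, d2, cross(d1, d2)

def max_constraint_defect(frame, t0, t1, n=50, h=1e-5):
    """Largest violation of y' = d_1, d_1'.d_2 = 0 and orthonormality on [t0, t1].

    Derivatives are approximated by central differences with step h.
    """
    worst = 0.0
    for k in range(n + 1):
        t = t0 + (t1 - t0) * k / n
        y_p, d1_p, _, _ = frame(t + h)
        y_m, d1_m, _, _ = frame(t - h)
        _, d1, d2, d3 = frame(t)
        dy = [(p - m) / (2 * h) for p, m in zip(y_p, y_m)]
        dd1 = [(p - m) / (2 * h) for p, m in zip(d1_p, d1_m)]
        worst = max(worst,
                    max(abs(a - b) for a, b in zip(dy, d1)),  # y' = d_1
                    abs(dot(dd1, d2)),                        # d_1'.d_2 = 0
                    abs(dot(d1, d2)),                         # d_1 orthogonal to d_2
                    abs(dot(d3, d3) - 1.0))                   # |d_1 ^ d_2| = 1
    return worst

if __name__ == "__main__":
    print(max_constraint_defect(lambda t: straight_frame(t, 2.0, 0.3), 0.0, 1.0))
    print(max_constraint_defect(lambda t: circular_frame(t, 0.5, 0.25), 0.0, 1.0))
```

Both defects are at the level of finite-difference error, consistent with the remark that straight and circular frames satisfy \(y'=d_1\) and \(d_1'\cdot d_2=0\) exactly.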

It is also convenient to denote by \(S_{PQ}\) the segment whose endpoints are P and Q.

Given these definitions, we prove the proposition by first assuming \(\overline{y}\ne 0\). Let

We define (y, R) in several steps.

(1) If \(e_1\) is not orthogonal to the segment \(S_{0\overline{y}}\), let \((y^0,R^0):[0,\delta ]\rightarrow {\mathbb {R}}^3\times SO(3)\) be a circular frame starting at 0, orthogonal to \(e_2\), and that rotates \(e_1\) to a unit vector \({\overline{d}}_1^0:=(y^0)'(\delta )\) orthogonal to \(S_{0\overline{y}}\). We set \(P^0:=y^0(\delta )\) and \(\overline{d}_2^0:=e_2\).

(2) If \(e_1\) is orthogonal to the segment \(S_{0\overline{y}}\), let \((y^0,R^0):\{0\}\rightarrow {\mathbb {R}}^3\times SO(3)\), with \(y^0(0)=0\), \(R^0(0)={\mathbb {I}}\), and let \(P^0:=0\), \(\overline{d}_1^0:=e_1\), and \(\overline{d}_2^0:=e_2\).

(3) If \(\overline{d}_1\ne -\overline{d}_1^0\), let \((y^\ell ,R^\ell ):[\ell -\delta ,\ell ]\rightarrow {\mathbb {R}}^3\times SO(3)\) be a map such that \(d_1^\ell (\ell -\delta )=-\overline{d}_1^0\), \(d_2^\ell (\ell -\delta )=\overline{d}_2^0\), \(y^\ell (\ell )=\overline{y}\), and \(R^\ell (\ell )=\overline{R}\). This can be achieved in the following way: let a be a unit vector such that \(a\cdot \overline{d}_1=a\cdot \overline{d}_1^0=0\); we glue together a straight frame parallel to \(-\overline{d}_1^0\) that rotates \(\overline{d}_2^0\) to a, with a circular frame orthogonal to a that rotates \(-\overline{d}_1^0\) to \(\overline{d}_1\), and finally with a straight frame ending at \(\overline{y}\), parallel to \(\overline{d}_1\) and that rotates a to \(\overline{d}_2\). We set \(P^\ell :=y^\ell (\ell -\delta )\).

(4) If \(\overline{d}_1=-\overline{d}_1^0\), let \((y^\ell , R^\ell ):\{\ell \}\rightarrow {\mathbb {R}}^3\times SO(3)\), with \(y^\ell (\ell )=\overline{y}\), \(R^\ell (\ell )=\overline{R}\), and let \(P^\ell :=\overline{y}\) and \(\overline{d}_2^\ell :=\overline{d}_2\).

(5) Let

$$\begin{aligned} s^0:=\{P^0+t \overline{d}_1^0: \ t\in (0,+\infty )\} \quad \text {and}\quad s^\ell :=\{P^\ell +t \overline{d}_1^0: \ t\in (0,+\infty )\}, \end{aligned}$$

and note that the distance between \(s^0\) and \(s^\ell \) is larger than \(10\delta \) and smaller than \(\ell -10\delta \). Let now \(Q^0\in s^0\) and \(Q^\ell \in s^\ell \) be such that the segment \(S_{Q^0Q^\ell }\) is orthogonal to \(\overline{d}_1^0\). The length of the curve obtained by gluing together the curve \(y^0\), the segment \(S_{P^0Q^0}\), the segment \(S_{Q^0Q^\ell }\), the segment \(S_{Q^\ell P^\ell }\), and the curve \(y^\ell \) has a minimum value that is at most \(\ell -6\delta \). Therefore, we can choose \(Q^0\) and \(Q^\ell \) such that the total length of this curve is exactly equal to \(\ell +(4-\pi )\delta \). We denote by \(d^p\) the unit vector parallel to \(S_{Q^0Q^\ell }\) pointing toward \(Q^\ell \).

(6) We are now in a position to define (y, R). Let b be a unit vector orthogonal to the plane containing the points \(P^0\), \(Q^0\), and \(Q^\ell \) (note that \(P^\ell \) belongs to this plane, too). We consider the following curves:

(i) Let \(\eta ^0\) be the distance between \(P^0\) and \(Q^0\) (note that \(\eta ^0>3\delta \)) and let \(\tilde{\delta }^i:=\delta \) if case (1) holds, and \(\tilde{\delta }^i:=0\) if case (2) holds. Let \((y^i,R^i):[\tilde{\delta }^i, \tilde{\delta }^i+\eta ^0-\delta ] \rightarrow {\mathbb {R}}^3\times SO(3)\) be a straight frame starting at \(P^0\), parallel to \(\overline{d}_1^0\), and that rotates \(\overline{d}_2^0\) to b.

(ii) Let \(\eta ^\ell \) be the distance between \(P^\ell \) and \(Q^\ell \) (note that \(\eta ^\ell >\delta \)) and let \(\tilde{\delta }^e:=\delta \) if case (3) holds, and \(\tilde{\delta }^e:=0\) if case (4) holds. Let \((y^e,R^e):[\ell -\tilde{\delta }^e-\eta ^\ell +\delta , \ell -\tilde{\delta }^e] \rightarrow {\mathbb {R}}^3\times SO(3)\) be a straight frame ending at \(P^\ell \), parallel to \(-\overline{d}_1^0\), and that rotates b to \(\overline{d}_2^0\) in case (3) and to \(\overline{d}_2^\ell \) in case (4).

(iii) Let \((y^{ci},R^{ci}):[\tilde{\delta }^i+\eta ^0-\delta , \tilde{\delta }^i+\eta ^0-\delta +\pi \delta /2] \rightarrow {\mathbb {R}}^3\times SO(3)\) be a circular frame starting at \(y^i(\tilde{\delta }^i+\eta ^0-\delta )\), orthogonal to b, and that rotates \(\overline{d}_1^0\) to \(d^p\).

(iv) Let \((y^{ce},R^{ce}):[\ell -\tilde{\delta }^e-\eta ^\ell +\delta -\pi \delta /2,\ell -\tilde{\delta }^e-\eta ^\ell +\delta ] \rightarrow {\mathbb {R}}^3\times SO(3)\) be a circular frame ending at \(y^e(\ell -\tilde{\delta }^e-\eta ^\ell +\delta )\), orthogonal to b, and that rotates \(d^p\) to \(-\overline{d}_1^0\).

(v) Let \((y^p,R^p):[\tilde{\delta }^i+\eta ^0-\delta +\pi \delta /2, \ell -\tilde{\delta }^e-\eta ^\ell +\delta -\pi \delta /2] \rightarrow {\mathbb {R}}^3\times SO(3)\) be a straight frame, starting at \(y^{ci}(\tilde{\delta }^i+\eta ^0-\delta +\pi \delta /2)\), with \((R^p(t))^T=(d^p\,|\,b\,|\,d^p\wedge b)\).

We define \((y,R):[0,\ell ]\rightarrow {\mathbb {R}}^3\times SO(3)\) as the function equal to \((y^0,R^0)\), \((y^i,R^i)\), \((y^{ci},R^{ci})\), \((y^p,R^p)\), \((y^{ce},R^{ce})\), \((y^e,R^e)\), and \((y^\ell ,R^\ell )\) on the respective domains. It is easy to check that y and R have the desired regularity and satisfy all the conditions in the definition of \({\mathcal {A}}_0\).

If \(\overline{y}= 0\), one can first consider the map \((y^a,R^a):[3 \ell /4,\ell ]\rightarrow {\mathbb {R}}^3\times SO(3)\) defined by \((y^a(t), R^a(t))=((t -\ell ) \overline{d}_1,\overline{R})\) and then repeat the previous argument with \(3\ell /4\) in place of \(\ell \), and with \(y^a(3\ell /4) =-(\ell /4) \overline{d}_1\) in place of \(\overline{y}\). \(\square \)

The next lemma shows that the compactness result (Freddi et al. 2016a, Lemma 2.1) remains true under our set of boundary conditions.

Lemma 2.4

Let \((y_{\varepsilon })\) be a sequence of scaled isometries such that \(y_\varepsilon \in \mathcal {A}_\varepsilon ^s\) for every \(\varepsilon >0\) and
$$\begin{aligned} \sup _{\varepsilon >0}J_\varepsilon (y_\varepsilon )<+\infty . \end{aligned}$$
Then, up to a subsequence, there exists \((y,R)\in \mathcal {A}_0\) such that
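$$\begin{aligned} y_\varepsilon \rightharpoonup y \text { weakly in } W^{2,2}(S;{\mathbb {R}}^3),\qquad \nabla _\varepsilon y_\varepsilon \rightharpoonup (d_1\,|\,d_2) \text { weakly in } W^{1,2}(S;{\mathbb {R}}^{3\times 2}), \end{aligned}\tag{2.5}$$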
and
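$$\begin{aligned} \Pi _{y_\varepsilon ,\varepsilon }\rightharpoonup \begin{pmatrix} d_1'\cdot d_3 & d_2'\cdot d_3\\ d_2'\cdot d_3 & \gamma \end{pmatrix} \text { weakly in } L^2(S;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}}), \end{aligned}$$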
with \(\gamma \in L^2(S)\).

Proof of Lemma 2.4

The proof is an easy adaptation of that of Freddi et al. (2016a, Lemma 2.1) with slight modifications due to the presence of boundary conditions. In particular, estimates (3.1) and (3.2) in Freddi et al. (2016a) imply that the sequence \((y_\varepsilon )\) is uniformly bounded in \(W^{2,2}(S;{\mathbb {R}}^3)\) (without any additive constant, thanks to the boundary conditions). In addition, the boundary conditions for y and R are satisfied owing to (2.5), the continuity of traces, and the compact embedding of \(W^{2,2}(S;{\mathbb {R}}^3)\) in \(C(\overline{S})\) (in fact, in \(C^{0,\lambda }(\overline{S})\) for every \(\lambda \in (0,1)\)), which implies uniform convergence on \(\overline{S}\) of weakly converging sequences in \(W^{2,2}(S;{\mathbb {R}}^3)\). More precisely, the conditions \(y(0)=0\) and \(y(\ell )=\overline{y}\) follow from passing to the limit in \(y_\varepsilon (0,0) =0\) and \(y_\varepsilon (\ell ,0)=\overline{y}\), respectively, using that \(y_\varepsilon \rightharpoonup y\) in \(W^{2,2}(S;{\mathbb {R}}^3)\), hence uniformly on \(\overline{S}\). The equality \(y' = R^T e_1\) is a consequence of the definition of R and (2.5). The condition \(y'(0)=e_1\) follows from \(\partial _1 y_\varepsilon (0, x_2) = e_1\), the fact that \(\partial _1 y_\varepsilon \rightharpoonup y'\) in \(W^{1,2}(S;{\mathbb {R}}^3)\), and the continuity of the trace. Similarly, the condition \(d_2(0)=e_2\) follows from \(\partial _2 y_\varepsilon (0, x_2) = \varepsilon e_2\), the fact that \(\frac{\partial _2 y_\varepsilon }{\varepsilon }\rightharpoonup d_2\) in \(W^{1,2}(S;{\mathbb {R}}^3)\), and the continuity of the trace. This implies that \(d_3(0)=d_1(0) \wedge d_2(0)=e_3\), hence \(R(0) = {\mathbb {I}}\). Analogously, one deduces that \(R(\ell )=\overline{R}\). \(\square \)

The following theorem is the main result of the paper. It proves that the functionals \(J_\varepsilon \) defined in (2.3) with domain \(\mathcal {A}_\varepsilon ^s\) (see 2.2) \(\Gamma \)-converge to the functional
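$$\begin{aligned} E(y,d_1,d_2,d_3):=\int _0^\ell \overline{Q}(d_1'\cdot d_3,\,d_2'\cdot d_3)\,dx_1 \end{aligned}$$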
with domain \(\mathcal {A}_0\) (see 2.4), where \(\overline{Q}\) is defined in (1.4).

Theorem 2.5

As \(\varepsilon \rightarrow 0\), the functionals \(J_\varepsilon \), with domain \(\mathcal {A}^s_\varepsilon \), \(\Gamma \)-converge to the limit functional E, with domain \(\mathcal {A}_0\), in the following sense:

(i) (liminf inequality) for every \((y,R)\in \mathcal {A}_0\) and every sequence \((y_{\varepsilon })\) such that \(y_\varepsilon \in \mathcal {A}_\varepsilon ^s\) for every \(\varepsilon >0\), \(y_{\varepsilon }\rightharpoonup y\) in \(W^{2,2}(S; {\mathbb {R}}^3)\), and \(\nabla _{\varepsilon } y_{\varepsilon } \rightharpoonup (d_1\, |\, d_2)\) in \(W^{1,2}(S; {\mathbb {R}}^{3\times 2})\), we have that

$$\begin{aligned} \liminf _{\varepsilon \rightarrow 0} J_\varepsilon (y_\varepsilon )\ge E(y,d_1,d_2,d_3); \end{aligned}$$

(ii) (recovery sequence) for every \((y,R)\in \mathcal {A}_0\) there exists a sequence \((y_{\varepsilon })\) such that \(y_{\varepsilon }\in \mathcal {A}^s_\varepsilon \) for every \(\varepsilon >0\), \(y_{\varepsilon }\rightharpoonup y\) in \(W^{2,2}(S; {\mathbb {R}}^3)\), \(\nabla _{\varepsilon } y_{\varepsilon } \rightharpoonup (d_1\, |\, d_2)\) in \(W^{1,2}(S; {\mathbb {R}}^{3\times 2})\), and

$$\begin{aligned} \limsup _{\varepsilon \rightarrow 0} J_\varepsilon (y_\varepsilon )\le E(y,d_1,d_2,d_3). \end{aligned}$$

Remark 2.6

By combining Proposition 2.3 and Theorem 2.5(ii), we deduce, in particular, that \(\mathcal {A}^s_\varepsilon \ne \varnothing \), hence \(\mathcal {A}_\varepsilon \ne \varnothing \), for \(\varepsilon >0\) small enough. For the boundary conditions of a Möbius band, an explicit construction was provided by Sadowsky (1930); see also Hinz and Fried (2015a).

The liminf inequality can be proved exactly as in Freddi et al. (2016a).

The proof of the existence of a recovery sequence is based on the approximation results of the next section and is postponed to Sect. 4. The strategy proceeds as follows. The limiting energy density \(\overline{Q}\) is obtained by relaxing the zero determinant constraint with respect to the weak convergence in \(L^2\). More precisely, Freddi et al. (2016a, Lemma 3.1) shows that the lower semicontinuous envelope of the functional
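$$\begin{aligned} \mathcal {F}(M):={\left\{ \begin{array}{ll} \displaystyle \int _0^\ell |M|^2\,dx_1 & \text {if } \det M=0 \text { a.e. in } (0,\ell ),\\ +\infty & \text {otherwise}, \end{array}\right. } \end{aligned}\tag{2.6}$$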
with respect to the weak topology of \(L^2((0,\ell );{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\) is given by the functional
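$$\begin{aligned} \overline{\mathcal {F}}(M):=\int _0^\ell \big (|M|^2+2|\det M|\big )\,dx_1 \end{aligned}$$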
for \(M\in L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\). The density \(\overline{Q}\) is then defined by the minimization problem
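$$\begin{aligned} \overline{Q}(\mu ,\tau ):=\min _{\gamma \in {\mathbb {R}}}\Big \{|M|^2+2|\det M|:\ M=\begin{pmatrix} \mu & \tau \\ \tau & \gamma \end{pmatrix}\Big \}, \end{aligned}\tag{2.7}$$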
from which equation (1.4) easily follows.
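Since the minimization defining \(\overline{Q}\) is one-dimensional and strictly convex in \(\gamma \), it can be solved by a simple golden-section search. The sketch below is a minimal illustration under an explicit assumption: it takes the relaxed integrand of Freddi et al. (2016a, Lemma 3.1) to be \(|M|^2+2|\det M|\) (the convex envelope of \(|M|^2\) restricted to \(\det M=0\)) and minimizes it over the entry \(M_{22}=\gamma \); the function names are ours. It then compares the result with the closed form \((\mu ^2+\tau ^2)^2/\mu ^2\) for \(|\mu |>|\tau |\) and \(4\tau ^2\) otherwise. For \(|\mu |>|\tau |\) the minimizer is \(\gamma =\tau ^2/\mu \), so the optimal M has zero determinant, which is why \(\overline{Q}\) agrees with the classical Sadowsky density in that region.

```python
import math

def relaxed_integrand(mu, tau, gamma):
    """Assumed relaxed density |M|^2 + 2|det M| for M = [[mu, tau], [tau, gamma]]."""
    return mu * mu + 2.0 * tau * tau + gamma * gamma + 2.0 * abs(mu * gamma - tau * tau)

def q_bar_closed_form(mu, tau):
    """Closed form of the extended Sadowsky density (assumed, see the lead-in)."""
    if abs(mu) > abs(tau):
        return (mu * mu + tau * tau) ** 2 / (mu * mu)
    return 4.0 * tau * tau

def q_bar_by_minimization(mu, tau, tol=1e-11):
    """Golden-section search for the minimum over gamma of the strictly convex integrand."""
    f = lambda g: relaxed_integrand(mu, tau, g)
    b = 10.0 * (abs(mu) + abs(tau)) + 1.0  # the minimizer lies in [-b, b]
    a = -b
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    while b - a > tol:
        c = b - inv_phi * (b - a)
        d = a + inv_phi * (b - a)
        if f(c) < f(d):
            b = d  # minimizer lies in [a, d]
        else:
            a = c  # minimizer lies in [c, b]
    gamma = 0.5 * (a + b)
    return f(gamma), gamma

if __name__ == "__main__":
    for mu, tau in [(2.0, 1.0), (1.0, 2.0), (0.0, 1.5), (-1.5, 0.5)]:
        value, gamma = q_bar_by_minimization(mu, tau)
        print(mu, tau, value, q_bar_closed_form(mu, tau), gamma)
```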

Given a frame \((y,R)\in \mathcal {A}_0\), one can define \(\mu :=d_1'\cdot d_3\), \(\tau :=d_2'\cdot d_3\), and \(\gamma \) as a solution of the minimization problem (2.7). By the relaxation result, there exists a sequence \((M^j)\subset L^2((0,\ell );{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\) with \(\det M^j=0\) for every j, such that \((M^j)_{11}\rightharpoonup \mu \) and \((M^j)_{12}\rightharpoonup \tau \) weakly in \(L^2(0,\ell )\), and
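$$\begin{aligned} \lim _{j\rightarrow \infty }\int _0^\ell |M^j|^2\,dx_1=\int _0^\ell \overline{Q}(\mu ,\tau )\,dx_1. \end{aligned}\tag{2.8}$$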
By approximation, one can assume \(M^j\) to be smooth, and by a diagonal argument, it is enough to construct a recovery sequence for \(M^j\) for every j. To do so, the first step consists in building a new frame \((y^j,R_j)\in \mathcal {A}_0\) such that \((d^j_1)^\prime \cdot d^j_3=(M^j)_{11}\) and \((d^j_2)^\prime \cdot d^j_3=(M^j)_{12}\). To ensure that \((y^j,R_j)\) still satisfies the boundary conditions at \(x_1=0\) and \(x_1=\ell \) (and thus in fact belongs to \(\mathcal {A}_0\)), we need a refinement of the relaxation result (Freddi et al. 2016a, Lemma 3.1), showing that the sequence \((M^j)\) in (2.8) can be modified in such a way as to accommodate the boundary conditions. This is the content of the next section.
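The mechanism behind the relaxation can be seen on a simple laminate. In the sketch below (pure Python; names are illustrative, and the example is ours, not taken from the cited works), the matrices \(M^j\) alternate between the rank-one matrices \(\mathrm {diag}(2,0)\) and \(\mathrm {diag}(0,2)\) on 2j cells of (0, 1). Hence \(\det M^j=0\) everywhere and \(M^j\rightharpoonup {\mathbb {I}}\) weakly in \(L^2\), while \(\int _0^1|M^j|^2\,dx_1=4\) for every j, twice \(|{\mathbb {I}}|^2=2\). If the relaxed integrand is \(|M|^2+2|\det M|\) (an assumption here), the value 4 coincides with the relaxed energy of \({\mathbb {I}}\).

```python
import math

def laminate(x, j):
    """Entries (m11, m12, m22) of M^j(x): diag(2, 0) on even cells, diag(0, 2) on odd cells."""
    cell = int(2 * j * x)
    return (2.0, 0.0, 0.0) if cell % 2 == 0 else (0.0, 0.0, 2.0)

def integrate(f, n=20_000):
    """Midpoint rule on (0, 1); n is a multiple of the cell count used below."""
    h = 1.0 / n
    return h * sum(f((k + 0.5) * h) for k in range(n))

def frob2(m):
    """Squared Frobenius norm of the symmetric matrix [[m11, m12], [m12, m22]]."""
    m11, m12, m22 = m
    return m11 * m11 + 2.0 * m12 * m12 + m22 * m22

def det(m):
    m11, m12, m22 = m
    return m11 * m22 - m12 * m12

if __name__ == "__main__":
    phi = lambda x: math.sin(math.pi * x)  # a test function for weak convergence
    for j in (5, 50, 500):
        pairing = integrate(lambda x: laminate(x, j)[0] * phi(x))  # tends to the integral of phi
        energy = integrate(lambda x: frob2(laminate(x, j)))
        print(j, pairing, 2 / math.pi, energy)
```

The energy of the oscillating sequence does not drop to \(|{\mathbb {I}}|^2\): the zero-determinant constraint costs the extra term, and this is the gap that the relaxed density accounts for.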

3 Smooth Approximation of Infinitesimal Ribbons

We set \(\ell =1\) and \(I = (0, 1)\). All results in this section remain true with obvious changes for intervals of arbitrary length \(\ell \in (0, \infty )\). We define \({\mathfrak {A}}\subset {\mathbb {R}}^{3\times 3}\) to be the span of \(e_1\otimes e_3 - e_3\otimes e_1\) and \(e_2\otimes e_3 - e_3\otimes e_2\). For a given \(A\in L^2(I;{\mathfrak {A}})\), we define \(R_{A}: I\rightarrow SO(3)\) to be the solution of the ODE system

$$\begin{aligned} R_A' = A\,R_A \quad \text {a.e. in } I, \end{aligned}$$

with initial condition \(R_{A}(0) = {\mathbb {I}}\). Note that \(R_{A}\in W^{1,2}(I;{\mathbb {R}}^{3\times 3})\). We will call a map \(A\in L^2(I;{\mathfrak {A}})\) nondegenerate on a measurable set \(J\subset I\) if \(J\cap \{A_{13}\ne 0\}\) has positive measure. When the set J is not specified, it is understood to be \(J = I\).

For all \(A\in L^2(I;{\mathfrak {A}})\) we define

We fix some nondegenerate \(A^{(0)}\in L^2(I;{\mathfrak {A}})\) and set \(\overline{R} = R_{A^{(0)}}(1)\) and \(\overline{\Gamma } = \Gamma _{A^{(0)}}\). A map \(A\in L^2(I;{\mathfrak {A}})\) is said to be admissible if it satisfies

Note that, if \((y,R)\in \mathcal {A}_0\) (see (2.4)), then \(R=R_{A}\) with A given by (1.7). In particular, A is admissible in the sense of (3.2) with \(\overline{R}\) given by the boundary condition at \(\ell \) and \(\overline{\Gamma }=\overline{y}\).
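For piecewise constant A, which is the setting of Lemmas 3.6 and 3.7 below, the ODE defining \(R_A\) (taken in the form \(R_A'=AR_A\), as in the Cauchy problem of Sect. 4) can be solved exactly on each piece by a matrix exponential. The Python sketch below does this with Rodrigues' formula, writing \(a=A_{13}\) and \(b=A_{23}\). It also evaluates \(\Gamma _A\) under an assumption of ours, since its defining formula is not reproduced here: \(\Gamma _A=\int _I R_A^Te_1\,dt\), i.e., the endpoint of the curve with tangent \(d_1=R_A^Te_1\) starting at 0, which is consistent with \(\overline{\Gamma }=\overline{y}\). Admissibility then amounts to comparing the two outputs with \(\overline{R}\) and \(\overline{\Gamma }\). The helper names are hypothetical.

```python
import math


def mat_mul(X, Y):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def expm_frame(a, b, h):
    """exp(h*A) for A = a(e1⊗e3 - e3⊗e1) + b(e2⊗e3 - e3⊗e2), via Rodrigues' formula."""
    K = [[0.0, 0.0, h * a], [0.0, 0.0, h * b], [-h * a, -h * b, 0.0]]
    K2 = mat_mul(K, K)
    angle = h * math.hypot(a, b)               # rotation angle of exp(h*A), h > 0
    if angle < 1e-12:
        c1, c2 = 1.0, 0.5                      # limits of sin(x)/x and (1-cos x)/x^2
    else:
        c1, c2 = math.sin(angle) / angle, (1.0 - math.cos(angle)) / angle ** 2
    return [[(1.0 if i == j else 0.0) + c1 * K[i][j] + c2 * K2[i][j]
             for j in range(3)] for i in range(3)]


def solve_frame(pieces, substeps=400):
    """Solve R' = A R, R(0) = I, for A piecewise constant.

    pieces: list of (length, a, b) with a = A_13, b = A_23 on that piece.
    Returns R at the final time and Gamma = integral of d1 = R^T e1 (the first
    row of R), the latter by the trapezoidal rule (assumed form of Gamma_A).
    """
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    gamma = [0.0, 0.0, 0.0]
    for length, a, b in pieces:
        h = length / substeps
        step = expm_frame(a, b, h)             # exact propagator on one substep
        for _ in range(substeps):
            d1_old = list(R[0])
            R = mat_mul(step, R)
            gamma = [g + 0.5 * h * (u + v) for g, u, v in zip(gamma, d1_old, R[0])]
    return R, gamma


R1, Gamma1 = solve_frame([(1.0, 2 * math.pi, 0.0)])   # a planar circle
```

For instance, \(a\equiv 2\pi \), \(b\equiv 0\) gives a planar circle with \(R_A(1)={\mathbb {I}}\) and \(\Gamma _A=0\), while \(a\equiv 0\), \(b\equiv -\pi \) gives a pure half-twist with \(R_A(1)=\mathrm{diag}(1,-1,-1)\), the Möbius-type datum; the latter A is degenerate in the sense above, since \(A_{13}\equiv 0\).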

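For \(M\in {\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}}\), we define \(A_M\in {\mathfrak {A}}\) by setting \((A_M)_{13} = M_{11}\) and \((A_M)_{23} = M_{12}\), that is,

$$\begin{aligned} A_M = \begin{pmatrix} 0 & 0 & M_{11}\\ 0 & 0 & M_{12}\\ -M_{11} & -M_{12} & 0 \end{pmatrix}. \end{aligned}$$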

Let \({\mathcal {F}}\) be the functional defined in (2.6). In Freddi et al. (2016a, Lemma 3.1), it has been proved that for every \(M\in L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\) there exists a sequence \((M_n)\subset L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\) such that \(\det M_n=0\) for every n, \(M_n\rightharpoonup M\) weakly in \(L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\), and \({\mathcal {F}}(M_n)\rightarrow {\mathcal {F}}(M)\). The main purpose of this section is to prove the following refinement of this result.

Proposition 3.1

Let \(M\in L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\) be such that \(M_{11}\ne 0\) on a set of positive measure and \(A_M\) is admissible. Then, there exist \(\lambda _n\in C^\infty (\overline{I})\) and \(p_n\in C^{\infty }(\overline{I};\mathbb {S}^1)\) such that, setting \(M_n:=\lambda _n p_n\otimes p_n\), we have

(i) \(p_n\cdot \underline{e}_1 > 0\) everywhere on \(\overline{I}\), \(p_n = \underline{e}_1\) near \(\partial I\), and \(A_{M_n}\) is admissible for every n;

(ii) \(M_n\rightharpoonup M\) weakly in \(L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\);

(iii) \({\mathcal {F}}(M_n) \rightarrow {\mathcal {F}}(M)\).

The next lemma is the key tool that allows us to correct the boundary conditions at each approximation step.

Lemma 3.2

Let \(A\in L^2(I;{\mathfrak {A}})\) and assume that there is a set \(J\subset I\) of positive measure such that A is nondegenerate on J. Then, every \(L^2\)-dense subspace \(\widetilde{E}\) of

contains a finite-dimensional subspace E such that, whenever \(A_n\in L^2(I; {\mathfrak {A}})\) converge to A weakly in \(L^2(I; {\mathfrak {A}})\), there exist \(\widehat{A}_n\in E\) converging to zero in E and such that

for every n large enough.

Proof

By definition, \({\mathfrak {A}}\) is a two-dimensional subspace of \({\mathbb {R}}^{3\times 3}_{{{\,\mathrm{skew}\,}}}\) and it contains the matrix \(e_2\otimes e_3 - e_3\otimes e_2\). Since \(A_{13}\ne 0\) on a set of positive measure in J, Hornung (2021, Proposition 3.3 (ii)) shows that A is not degenerate with respect to \({\mathfrak {A}}\) on J in the sense of Hornung (2021, Definition 3.1). While we do not need the precise definition here, let us recall that this means that whenever \(M\in {\mathbb {R}}^{3\times 3}_{{{\,\mathrm{skew}\,}}}\) and \(\lambda \in {\mathbb {R}}^3\) satisfy

then necessarily \(M = 0\) and \(\lambda = 0\). Here

and \({\mathfrak {A}}^\perp \) denotes the orthogonal complement of \({\mathfrak {A}}\) in \({\mathbb {R}}^{3\times 3}\). Hence, A satisfies the hypotheses of Hornung (2021, Theorem 3.2) with \(\widetilde{{\mathfrak {A}}} = {\mathbb {R}}^{3\times 3}_{{{\,\mathrm{skew}\,}}}\). The conclusion of Lemma 3.2 is precisely the conclusion of that theorem. \(\square \)

Remark 3.3

If \(\widetilde{E}\) in Lemma 3.2 is such that \(\widetilde{E}\subset L^\infty (I; {\mathfrak {A}})\), the sequence \(\widehat{A}_n\) provided by the lemma converges to zero uniformly, since E is finite-dimensional and all norms on a finite-dimensional space are equivalent. We shall use this remark several times in what follows.
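In formulas, Remark 3.3 amounts to the following estimate, in which the constant \(C_E\) is notation introduced here; it is finite because \(\Vert \cdot \Vert _{L^\infty }\) is a norm on the finite-dimensional space E, hence bounded on the compact \(L^2\)-unit sphere of E:

$$\begin{aligned} \Vert \widehat{A}_n\Vert _{L^\infty (I;{\mathfrak {A}})}\le C_E\,\Vert \widehat{A}_n\Vert _{L^2(I;{\mathfrak {A}})}\longrightarrow 0,\qquad C_E:=\sup \big \{\Vert f\Vert _{L^\infty (I;{\mathfrak {A}})}:\ f\in E,\ \Vert f\Vert _{L^2(I;{\mathfrak {A}})}=1\big \}<\infty . \end{aligned}$$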

We now prove several approximation results for nondegenerate and admissible functions in \(L^2(I;{\mathfrak {A}})\) which preserve admissibility (and, where relevant, nondegeneracy).

Lemma 3.4

Let \(A\in L^2(I;{\mathfrak {A}})\) be nondegenerate and admissible. Then, there exist admissible \(A^{(n)}\in L^2(I;{\mathfrak {A}})\) such that \(A^{(n)}\rightarrow A\) strongly in \(L^2(I;{\mathfrak {A}})\) and \(A^{(n)}_{13}A^{(n)}_{23}\ne 0\) on a set of positive measure, independent of n.

Proof

We may assume that \(A_{13}A_{23} = 0\) almost everywhere, since otherwise there is nothing to prove. Since A is nondegenerate, there exist two disjoint sets \(J_1\), \(J_2\) of positive measure on which \(A_{13}\) does not vanish. Let \(\widetilde{A}^{(n)} : I\rightarrow {\mathfrak {A}}\) be equal to A except on \(J_2\), where we set \(\widetilde{A}_{23}^{(n)} = \frac{1}{n}\). Since \(A_{13}\ne 0\) and \(A_{13}A_{23}=0\) a.e. on \(J_2\), we have \(A_{23} = 0\) on \(J_2\); hence \(\widetilde{A}^{(n)}\) converges to A uniformly, and in particular strongly in \(L^2(I;{\mathfrak {A}})\).

Let \(\widetilde{E}\) be the set of maps in \(L^{\infty }(I; {\mathfrak {A}})\) that vanish a.e. on \(I\setminus J_1\). Since A is nondegenerate on \(J_1\), by Lemma 3.2 and Remark 3.3 there exist \(\widehat{A}^{(n)}\in \widetilde{E}\) converging to zero uniformly and such that \(A^{(n)} := \widetilde{A}^{(n)} + \widehat{A}^{(n)}\) is admissible for every n large enough. Since \(\widehat{A}^{(n)}\) vanishes outside \(J_1\) and \(J_1\cap J_2=\varnothing \), we have \(A^{(n)}_{13}A^{(n)}_{23}=\frac{1}{n}A_{13}\ne 0\) on \(J_2\), and \(A^{(n)}\rightarrow A\) strongly in \(L^2(I;{\mathfrak {A}})\). \(\square \)

For \(c > 0\), we set

Lemma 3.5

Let \(A\in L^2(I; {\mathfrak {A}})\) be nondegenerate and admissible. Then, there exist admissible \(A^{(n)}\in L^2(I;{\mathfrak {A}}_{1/n})\) such that \(A^{(n)}\rightarrow A\) strongly in \(L^2(I;{\mathfrak {A}})\).

Proof

In view of Lemma 3.4, we may assume, without loss of generality, that \(A_{13}A_{23}\) differs from zero on a set \(J_{\infty }\) of positive measure. For \(k\in {\mathbb {N}}\) define

Clearly \(J_k\uparrow J_{\infty }\), as \(k\rightarrow +\infty \). Since \(J_{\infty }\) has positive measure, there exists \(K\in {\mathbb {N}}\) such that \(J_K\) has positive measure. By definition, A is nondegenerate on \(J_K\). Denote by \(\widetilde{E}\) the set of maps in \(L^{\infty }(I;{\mathfrak {A}})\) that vanish a.e. in \(I\setminus J_K\). Now define \(\widetilde{A}^{(n)} : I\rightarrow {\mathfrak {A}}\) as follows: for \((i, j) = (2,3)\) and \((i, j) = (1,3)\) set

Since \(|\widetilde{A}^{(n)} - A|\le \frac{2}{n}\), we have that \(\widetilde{A}^{(n)}\rightarrow A\) strongly in \(L^\infty (I;{\mathfrak {A}})\). Hence, by Lemma 3.2 there exist a finite-dimensional subspace E of \(\widetilde{E}\) and some \(\widehat{A}^{(n)}\in E\) such that \(\widehat{A}^{(n)}\rightarrow 0\) uniformly and \(A^{(n)} := \widetilde{A}^{(n)} + \widehat{A}^{(n)}\) is admissible for every n large enough.

Almost everywhere on \(I\setminus J_K\), we have

whereas on \(J_K\), for large n, we have \(\widetilde{A}^{(n)} = A\) and thus,

The same holds for \(A^{(n)}_{23}\). Hence \(A^{(n)}\in L^2(I; {\mathfrak {A}}_{1/n})\) for any n large enough. Moreover, \(A^{(n)} =\widetilde{A}^{(n)} + \widehat{A}^{(n)}\rightarrow A\) strongly in \(L^{\infty }(I;{\mathfrak {A}})\) and the proof is concluded. \(\square \)

We denote by \({\mathcal {P}}(I; X)\) the piecewise constant functions from I into the set X, i.e., \(f\in {\mathcal {P}}(I; X)\) if there exists a finite covering of I by disjoint nondegenerate intervals on each of which f is constant. Moreover, we set \({\mathcal {P}}(I):={\mathcal {P}}(I; {\mathbb {R}})\).

Lemma 3.6

Let \(A\in L^2(I; {\mathfrak {A}})\) be nondegenerate and admissible. Then, there exist \(c_n > 0\) and admissible \(A^{(n)}\in {\mathcal {P}}(I; {\mathfrak {A}}_{c_n})\) such that \(A^{(n)}\rightarrow A\) strongly in \(L^2(I; {\mathfrak {A}})\).

Proof

In view of Lemma 3.5, we may assume that A takes values in \({\mathfrak {A}}_{2\varepsilon }\) for some \(\varepsilon > 0\). Then there exist \(\widetilde{A}^{(n)}\in {\mathcal {P}}(I; {\mathfrak {A}}_{\varepsilon })\) which converge to A strongly in \(L^2(I; {\mathfrak {A}})\). Indeed, for \(i=1,2\), we can write \(A_{i3}\) as the difference of its positive and negative parts, \(A_{i3}^+\) and \(A_{i3}^-\), which are not both smaller than \(\varepsilon \). It is well known that there exist increasing sequences of simple measurable functions \(S_{i3}^{\pm (n)}\) such that \(\varepsilon \le S_{i3}^{\pm (n)}\le A_{i3}^{\pm }\) and \(S_{i3}^{\pm (n)}\rightarrow A_{i3}^\pm \) a.e. in I. Since the sets on which the simple functions \(S_{i3}^{\pm (n)}\) are constant are measurable, they can be approximated in measure from inside by finite unions of intervals. Hence, the same result holds with \(S_{i3}^{\pm (n)}\in {\mathcal {P}}(I; [\varepsilon ,+\infty ))\). By Lebesgue’s dominated convergence theorem, the functions \(S_{i3}^{(n)}:=S_{i3}^{+(n)}-S_{i3}^{-(n)}\) converge strongly in \(L^2(I)\) to \(A_{i3}\), and we let \(\widetilde{A}^{(n)}\) be the map with \(\widetilde{A}^{(n)}_{i3} := S_{i3}^{(n)}\) for \(i=1,2\). Applying Lemma 3.2 with \(\widetilde{E} = {\mathcal {P}}(I; {\mathfrak {A}})\), we find \(\widehat{A}^{(n)}\in {\mathcal {P}}(I; {\mathfrak {A}})\) converging to zero uniformly and such that \(A^{(n)} := \widetilde{A}^{(n)} + \widehat{A}^{(n)}\) is admissible for n large enough. Clearly, \(A^{(n)}\in {\mathcal {P}}(I; {\mathfrak {A}}_{\varepsilon /2})\) for n large. \(\square \)
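The lower bound \(\varepsilon \le S_{i3}^{\pm (n)}\) comes for free from the standard dyadic construction at points where \(A_{i3}^\pm \ge 2\varepsilon \), as soon as \(2^{-n}\le \varepsilon \le n\); this is why it is convenient to work with \({\mathfrak {A}}_{2\varepsilon }\). The Python sketch below illustrates this pointwise with the usual formula \(S^{(n)}=\min \{n,\lfloor 2^nf\rfloor /2^n\}\). It does not reproduce the subsequent approximation of level sets by finite unions of intervals, and the function name is ours.

```python
import math


def dyadic_lower(f_value, n):
    """Standard simple-function approximation from below of f >= 0:
    S_n = min(n, floor(2**n * f) / 2**n); it increases with n and S_n <= f."""
    return min(n, math.floor(2 ** n * f_value) / 2 ** n)


eps = 0.1
for f in (0.2, 0.3, 1.0, 2.7, 5.5):        # sample values with f >= 2 * eps
    values = [dyadic_lower(f, n) for n in range(1, 40)]
    print(f, values[:6])
```

Once \(2^{-n}\le \varepsilon \) (here \(n\ge 4\)), every sampled value stays at or above \(\varepsilon \).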

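For \(A\in {\mathfrak {A}}\), we define

$$\begin{aligned} \gamma _A := \frac{A_{23}^2}{A_{13}}\quad \text {if } A_{13}\ne 0, \end{aligned}$$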

with \(\gamma _A := +\infty \) if \(A_{13} = 0\) and \(A_{23}\ne 0\), whereas \(\gamma _A := 0\) if \(A_{13} = A_{23} = 0\).

Lemma 3.7

For all nondegenerate and admissible \(A\in L^2(I;{\mathfrak {A}})\) and all \(\gamma \in L^2(I)\), there exist \(\lambda _n\in {\mathcal {P}}(I)\) and \(p_n\in {\mathcal {P}}(I; \mathbb {S}^1)\) with \(\lambda _n\ne 0\), \(p_n\cdot \underline{e}_1 > 0\) on I and \(p_n = \underline{e}_1\) near \(\partial I\), such that

is admissible, \((A^{(n)}, \gamma _{A^{(n)}})\rightharpoonup (A, \gamma )\) weakly in \(L^2(I;{\mathfrak {A}}\times {\mathbb {R}})\), and

Proof

We may assume without loss of generality that \(\gamma \in {\mathcal {P}}(I)\). In fact, if the lemma is true for all \(\gamma \in {\mathcal {P}}(I)\), then, by approximation and a standard diagonal procedure, it is true also for arbitrary \(\gamma \in L^2(I)\). For the same reason, in view of Lemma 3.6 we may assume that \(A\in {\mathcal {P}}(I;{\mathfrak {A}}_c)\) for some \(c > 0\). Hence, the map

is piecewise constant and never zero. Since \(M_{12}\) is bounded away from zero, the eigenvectors of M have components along \(\underline{e}_1\) and \(\underline{e}_2\) bounded away from zero. Among the unit eigenvectors of M(x), of either sign, we choose \(a(x)\in \mathbb {S}^1\) to be one for which \(a(x){\,\cdot \,} \underline{e}_1\) is maximal; in particular, \(a{\,\cdot \,} \underline{e}_1\ge c\) for a positive constant c. Define

where \(a^{\perp } = (-a_2, a_1)\). Then, \(\widetilde{a}\) is piecewise constant and \(\widetilde{a}\cdot \underline{e}_1 > 0\).

Denote by \(\lambda _1\) the eigenvalue corresponding to a, and by \(\lambda _2\) the other one. Like M itself, the function

is piecewise constant and never zero. Moreover, there exist piecewise constant functions \(\theta : I\rightarrow [0, 1]\) and \(\sigma ^{(i)} : I\rightarrow \{-1, 1\}\) such that the following spectral decomposition holds:

Indeed, one can define \(\sigma ^{(i)}=\chi _{\{\lambda _i\ge 0\}}-\chi _{\{\lambda _i<0\}}\) and \(\theta =|\lambda _1|/\Lambda \).
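The spectral decomposition can be made concrete. For a constant symmetric \(2\times 2\) matrix with nonzero off-diagonal entry, the Python sketch below (names of our choosing) computes the unit eigenvector a with maximal first component, the quantities \(\Lambda =|\lambda _1|+|\lambda _2|\), \(\theta =|\lambda _1|/\Lambda \) and \(\sigma ^{(i)}\) as defined above, and rebuilds \(M=\Lambda \big (\theta \sigma ^{(1)}a\otimes a+(1-\theta )\sigma ^{(2)}a^\perp \otimes a^\perp \big )\). This identity is our reading of the decomposition; it holds because \(\Lambda \theta \sigma ^{(1)}=\lambda _1\) and \(\Lambda (1-\theta )\sigma ^{(2)}=\lambda _2\).

```python
import math


def spectral_data(m11, m12, m22):
    """Return (Lam, theta, sigma, a, a_perp) for M = [[m11, m12], [m12, m22]], m12 != 0.

    a is the unit eigenvector (among both eigenvectors and both signs) with maximal
    first component, lam1 its eigenvalue and lam2 the other one; Lam = |lam1| + |lam2|,
    theta = |lam1| / Lam, sigma = (sign(lam1), sign(lam2)) with sign(0) = +1.
    """
    mean = 0.5 * (m11 + m22)
    rad = math.hypot(0.5 * (m11 - m22), m12)   # > 0 because m12 != 0
    eigs = (mean + rad, mean - rad)
    best = None
    for lam in eigs:
        v = (m12, lam - m11)                    # solves (M - lam I) v = 0 when m12 != 0
        norm = math.hypot(v[0], v[1])
        v = (v[0] / norm, v[1] / norm)
        if v[0] < 0:                            # flip sign so that v . e1 > 0
            v = (-v[0], -v[1])
        if best is None or v[0] > best[1][0]:
            best = (lam, v)
    lam1, a = best
    lam2 = eigs[1] if lam1 == eigs[0] else eigs[0]
    a_perp = (-a[1], a[0])
    Lam = abs(lam1) + abs(lam2)
    theta = abs(lam1) / Lam
    sigma = tuple(1 if lam >= 0 else -1 for lam in (lam1, lam2))
    return Lam, theta, sigma, a, a_perp


def rebuild(Lam, theta, sigma, a, a_perp):
    """Lam * (theta*sigma1*a⊗a + (1 - theta)*sigma2*a_perp⊗a_perp) as a 2x2 list."""
    return [[Lam * (theta * sigma[0] * a[i] * a[j]
                    + (1 - theta) * sigma[1] * a_perp[i] * a_perp[j])
             for j in range(2)] for i in range(2)]


print(rebuild(*spectral_data(1.0, 2.0, 3.0)))
```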

Let \(\chi _n : I\rightarrow \{0, 1\}\) be such that \(\chi _n\rightharpoonup \theta \) weakly\(^*\) in \(L^{\infty }(I)\) and define

and

Finally, let

Since we can write

we have that \(\widetilde{M}_n\) converges to M weakly\(^*\) in \(L^{\infty }(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\). Moreover,

Let \(\widetilde{A}^{(n)} := A_{\widetilde{M}_n}\). Since \(A = A_M\), see (3.3), we have that \(\widetilde{A}^{(n)}\) converges to A weakly\(^*\) in \(L^{\infty }(I;{\mathfrak {A}})\). Moreover, \(\gamma _{\widetilde{A}^{(n)}}=(\widetilde{M}_n)_{22}\) since \(\det \widetilde{M}_n=0\); hence \(\gamma _{\widetilde{A}^{(n)}}\) converges weakly\(^*\) to \(M_{22}=\gamma \) in \(L^{\infty }(I)\). Condition (3.6) can be rewritten as

We now modify \(\widetilde{A}^{(n)}\) so as to make it admissible. Let \(J\subset (\frac{1}{4}, \frac{3}{4})\) be an open interval on which M is constant. Denote by \(\widetilde{E}\) the set of maps in \({\mathcal {P}}(I; {\mathfrak {A}})\) that vanish a.e. in \(I\setminus J\). Since A is nondegenerate on J, by Lemma 3.2 there exist a finite-dimensional subspace \(E\subset \widetilde{E}\) and \(\widehat{A}^{(n)}\in E\) converging to zero uniformly such that \(A^{(n)} := \widetilde{A}^{(n)} + \widehat{A}^{(n)}\) is admissible for every n large enough. Clearly, \(A^{(n)}\) is piecewise constant and converges to A weakly\(^*\) in \(L^{\infty }(I;{\mathfrak {A}})\).
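The mechanism behind the weak\(^*\) convergence of \(\widetilde{M}_n\) is a rank-one laminate. Assume, since the defining formulas are not reproduced here, that on a piece where M is constant \(\widetilde{M}_n=\Lambda \big (\chi _n\sigma ^{(1)}a\otimes a+(1-\chi _n)\sigma ^{(2)}a^\perp \otimes a^\perp \big )\), with \(\chi _n\) the indicator of the first \(\theta \)-fraction of each of n equal cells. The Python sketch below then checks numerically that \(\det \widetilde{M}_n=0\) pointwise, that the average of \(\widetilde{M}_n\) agrees with M up to discretization error, and that the average of \(|\widetilde{M}_n|^2\) equals \(\Lambda ^2=|M|^2+2|\det M|\), which is the relaxed density. The names and the specific choice of \(\chi _n\) are illustrative.

```python
import math


def outer(u):
    """u ⊗ u for a vector u in R^2."""
    return [[u[0] * u[0], u[0] * u[1]], [u[1] * u[0], u[1] * u[1]]]


def laminate_stats(lam1, lam2, phi, n, samples_per_cell=1000):
    """Midpoint-rule averages over (0, 1) of the laminate
        M_n = Lam * (chi_n * s1 * a⊗a + (1 - chi_n) * s2 * a_perp⊗a_perp),
    where a = (cos phi, sin phi) and chi_n is the indicator of the first
    theta-fraction of each of n equal cells. Returns (mean of M_n,
    mean of |M_n|^2, max |det M_n| over the sample points)."""
    a = (math.cos(phi), math.sin(phi))
    a_perp = (-a[1], a[0])
    Lam = abs(lam1) + abs(lam2)
    theta = abs(lam1) / Lam
    s1 = 1.0 if lam1 >= 0 else -1.0
    s2 = 1.0 if lam2 >= 0 else -1.0
    N = n * samples_per_cell
    mean = [[0.0, 0.0], [0.0, 0.0]]
    energy, max_det = 0.0, 0.0
    for k in range(N):
        x = (k + 0.5) / N
        in_a = (x * n) % 1.0 < theta          # chi_n(x) == 1
        P, s = (outer(a), s1) if in_a else (outer(a_perp), s2)
        Mn = [[Lam * s * P[i][j] for j in range(2)] for i in range(2)]
        max_det = max(max_det, abs(Mn[0][0] * Mn[1][1] - Mn[0][1] * Mn[1][0]))
        energy += sum(Mn[i][j] ** 2 for i in range(2) for j in range(2)) / N
        for i in range(2):
            for j in range(2):
                mean[i][j] += Mn[i][j] / N
    return mean, energy, max_det


print(laminate_stats(3.0, -1.0, 0.4, n=10))
```

Note that \(\int |\widetilde{M}_n|^2\) does not depend on n: the gap \(2|\det M|\) between \(\Lambda ^2\) and \(|M|^2\) is exactly the energy that survives in the weak limit.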

We claim that \(\gamma _{A^{(n)}}\) converges to \(\gamma \) weakly\(^*\) in \(L^{\infty }(I)\). It is enough to show that

We first note that there is a constant \(c>0\) such that for all n

The same is true (with a smaller constant) for \(A^{(n)}_{13}\) for large n, since \(\widehat{A}^{(n)}\rightarrow 0\) uniformly. Therefore, using that \(\widetilde{A}^{(n)}\) and \(A^{(n)}\) are uniformly bounded, we obtain

which implies (3.8).

Equation (3.5) follows from (3.7), (3.8), and the uniform convergence of \(\widehat{A}^{(n)}\).

It remains to show that \(A^{(n)}\) is of the form \(A_{\lambda _n p_n\otimes p_n}\) for some \(\lambda _n\) and \(p_n\) satisfying the desired properties. Keeping in mind that \(A^{(n)}_{13}\ne 0\) due to (3.9), we define

where

and \(\lambda _n := A^{(n)}_{13} + \gamma _{A^{(n)}}\). It is easy to check that \(A^{(n)} = A_{\lambda _n p_n\otimes p_n}\), and that \(\lambda _n\) and \(p_n\) have all the stated properties. In particular, \(p_n=\widetilde{p}_n\) on \(I\setminus J\), hence \(p_n = \underline{e}_1\) near \(\partial I\), and, as a consequence of (3.9),

for a suitable constant \(c>0\). \(\square \)
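The last step of the proof, recovering \(\lambda _n\) and \(p_n\) from \(A^{(n)}\), is pointwise linear algebra. Requiring that \(\lambda p\otimes p\) have entries \(A_{13}\), \(A_{23}\), \(\gamma _A\) with \(A_{13}\ne 0\) forces \(\lambda =A_{13}+\gamma _A\) (the trace) and \(p\) parallel to \((A_{13},A_{23})\) (the first row), the sign being fixed by \(p\cdot \underline{e}_1>0\). The Python sketch below implements this with \(\gamma _A=A_{23}^2/A_{13}\); it may differ in form from (3.10), whose statement is not reproduced here, and the names are ours.

```python
import math


def gamma_A(a13, a23):
    """gamma_A = A_23^2 / A_13, with the conventions +inf and 0 when A_13 = 0."""
    if a13 != 0:
        return a23 ** 2 / a13
    return math.inf if a23 != 0 else 0.0


def rank_one_factor(a13, a23):
    """Return (lam, p) with lam * p⊗p = [[a13, a23], [a23, gamma_A]] and p . e1 > 0.
    Assumes a13 != 0."""
    lam = a13 + gamma_A(a13, a23)              # trace of the rank-one matrix
    norm = math.hypot(a13, a23)
    sign = 1.0 if a13 > 0 else -1.0            # fixes p . e1 > 0
    p = (sign * a13 / norm, sign * a23 / norm)
    return lam, p


print(rank_one_factor(2.0, -3.0))
```

In particular, where \(A_{23}=0\) the sketch returns \(p=\underline{e}_1\), which is how \(p_n=\underline{e}_1\) near \(\partial I\) is compatible with \(A_{M_n}\) there.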

We are now in a position to prove the main result of this section, namely Proposition 3.1.

Proof of Proposition 3.1

Let us assume that \(M = \lambda p\otimes p\), where \(p : I\rightarrow \mathbb {S}^1\) and \(\lambda : I\rightarrow {\mathbb {R}}\setminus \{0\}\) are piecewise constant, \(p\cdot \underline{e}_1 > 0\) on I and \(p = \underline{e}_1\) near \(\partial I\). In view of Lemma 3.7, this constitutes no loss of generality, provided we prove strong rather than weak convergence in Proposition 3.1–(ii). Indeed, since \(M_{11}\ne 0\) on a set of positive measure, \(A_M\) is nondegenerate and we can apply Lemma 3.7 to \(A=A_M\) and \(\gamma =M_{22}\). This yields a sequence \(M^{(n)}=\lambda _np_n\otimes p_n\) with \(0\ne \lambda _n\in {\mathcal {P}}(I)\) and \(p_n\in {\mathcal {P}}(I;\mathbb {S}^1)\) as in Lemma 3.7. To each element of this sequence we can apply the version of Proposition 3.1 that we are about to prove, obtaining a sequence \(M^{(n)}_k:=\lambda ^k_np_n^k\otimes p_n^k\) such that \(M^{(n)}_k\) converges to \(M^{(n)}\) strongly in \(L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\) and \(\mathcal {F}(M^{(n)}_k)\rightarrow \mathcal {F}(M^{(n)})\), as \(k\rightarrow \infty \). The required sequence is then given by \(M^{(n)}_{k_n}\), where \((k_n)\) is an increasing sequence such that \(\Vert M^{(n)}_{k_n}-M^{(n)}\Vert _{L^2} +|\mathcal {F}(M^{(n)}_{k_n})-\mathcal {F}(M^{(n)})|<\frac{1}{2^n}\) for every n.

Let us write \(p(t)=\mathrm{e}^{i\theta (t)}\) with argument \(\theta \in {\mathcal {P}}(I)\). Since p is a Lipschitz continuous function of \(\theta \), we can mollify the argument to obtain smooth \(\widetilde{p}_n : I\rightarrow \mathbb {S}^1\) converging to p boundedly in measure, that is, \(\widetilde{p}_n\) converges in measure and \(\sup _n\Vert \widetilde{p}_n\Vert _{L^\infty }<+\infty \). Note that for every n large enough we have \(\widetilde{p}_n = \underline{e}_1\) near \(\partial I\), as well as

for some constant \(c > 0\). The latter follows from the same property for p, which is stable under mollification of the argument. By mollifying \(\lambda \), we obtain smooth \(\widetilde{\lambda }_n\) converging to \(\lambda \) boundedly in measure such that

for some constant \(c>0\) and for every n large enough, since a similar inequality holds for \(\lambda \). Observe that both \(\widetilde{p}_n\) and \(\widetilde{\lambda }_n\) are well defined and smooth up to the boundary of I.
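Mollifying the argument rather than p itself keeps the values on \(\mathbb {S}^1\), and since averages of \(\theta \) stay in \([\min \theta ,\max \theta ]\subset (-\pi /2,\pi /2)\), the lower bound on \(\widetilde{p}_n\cdot \underline{e}_1\) survives. The Python sketch below illustrates this on a grid, using a discrete moving average as a stand-in for convolution with a smooth mollifier (an assumption made for brevity); the names are ours.

```python
import math


def moving_average(values, half_width):
    """Discrete moving average: a stand-in for convolution with a mollifier."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out


def mollified_director(theta, half_width):
    """Smooth the argument theta, then map back to the unit circle."""
    return [(math.cos(t), math.sin(t)) for t in moving_average(theta, half_width)]


N = 1000
xs = [(i + 0.5) / N for i in range(N)]
# piecewise constant argument with values in (-pi/2, pi/2), zero near the endpoints
theta = [0.0 if (x < 0.2 or x >= 0.8) else (1.2 if x < 0.5 else -1.0) for x in xs]
p = mollified_director(theta, half_width=20)
print(min(q[0] for q in p), math.cos(1.2))
```

Near the endpoints the window only sees \(\theta =0\), so \(\widetilde{p}_n=\underline{e}_1\) there, as required.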

Let us define \(\widetilde{M}_n := \widetilde{\lambda }_n \widetilde{p}_n\otimes \widetilde{p}_n.\) Since \(\widetilde{M}_n\rightarrow M\) boundedly in measure, we have that \(A_{\widetilde{M}_n}\rightarrow A_M\) and \(\gamma _{A_{\widetilde{M}_n}}=({\widetilde{M}_n})_{22}\rightarrow M_{22}=\gamma _{A_M}\) in the same sense.

We now modify \(A_{\widetilde{M}_n}\) so as to make it admissible. Let \(J\subset (1/4, 3/4)\) be a nondegenerate open interval on which \(\lambda \) and p are constant. Let \(\widetilde{E} = C_0^{\infty }(J; {\mathfrak {A}})\) be the space of smooth functions with compact support in J. Since \(A_M\) is admissible and nondegenerate on J, by Lemma 3.2 there exist a finite-dimensional subspace \(E\subset \widetilde{E}\) and \(\widehat{A}^{(n)}\in E\) converging to zero uniformly such that \(A^{(n)} := \widehat{A}^{(n)} + A_{\widetilde{M}_n}\) is admissible for every n large enough. Clearly, \(A^{(n)}\) is smooth up to the boundary and \(A^{(n)}\rightarrow A_M\) boundedly in measure.

We claim that \(\gamma _{A^{(n)}}\rightarrow \gamma _{A_M}\) boundedly in measure. First of all, by (3.11) and (3.12) there exists a constant \(c>0\) such that \(|({\widetilde{M}_n})_{11}|=|\widetilde{\lambda }_n||\widetilde{p}_n\cdot \underline{e}_1|^2\ge c\). This implies that also \(A_{13}^{(n)}=({\widetilde{M}_n})_{11}+\widehat{A}_{13}^{(n)}\) is bounded away from zero for n large enough, because \(\widehat{A}_{13}^{(n)}\) converges uniformly to 0. Therefore, we can argue as in the proof of Lemma 3.7 and show that \(\gamma _{A^{(n)}}-\gamma _{A_{\widetilde{M}_n}}\rightarrow 0\) uniformly. Since \(\gamma _{A_{\widetilde{M}_n}}\rightarrow \gamma _{A_M}\) boundedly in measure, this proves the claim.

We now define \(p_n\) as in (3.10) and \(\lambda _n := A_{13}^{(n)} +\gamma _{A^{(n)}}\), so that \(A^{(n)} = A_{\lambda _n p_n\otimes p_n}\). Since \(A^{(n)}\) is smooth up to the boundary and \(A_{13}^{(n)}\) is bounded away from zero for n large, the functions \(p_n\) and \(\lambda _n\) are smooth and \(p_n{\,\cdot \,} \underline{e}_1 > 0\) on \(\overline{I}\). By construction \(p_n=\widetilde{p}_n\) on \(I\setminus J\), hence \(p_n = \underline{e}_1\) near \(\partial I\).

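Finally, since \((\lambda _n p_n\otimes p_n)_{22}=\gamma _{A^{(n)}}\), we have that \(\lambda _n p_n\otimes p_n\rightarrow M\) boundedly in measure, hence, by dominated convergence on the bounded interval I, strongly in \(L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\). Since \({\mathcal {F}}\) is continuous with respect to strong convergence in \(L^2\), this implies condition (iii). \(\square \)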

4 The Recovery Sequence

In this section, we prove part (ii) of Theorem 2.5, namely the existence of a recovery sequence.

Proof of Theorem 2.5–(ii). Let \((y,R)\in \mathcal {A}_0\). We set

where \(\gamma : I\rightarrow {\mathbb {R}}\) is such that

Such a \(\gamma \) can indeed be chosen measurable. Moreover, by comparing M to the same matrix with 0 in place of \(\gamma \) and using minimality, we have

where the right-hand side is in \(L^1(I)\). Thus, we conclude that \(\gamma \in L^2(I)\).
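Explicitly, assuming that M is the symmetric matrix with entries \(M_{11}=\mu \), \(M_{12}=\tau \), \(M_{22}=\gamma \) and that \(\gamma \) minimizes \(|M|^2+2|\det M|\) pointwise (the displays defining them are not reproduced here), the comparison with \(\gamma =0\) reads

$$\begin{aligned} \gamma ^2\le \mu ^2+2\tau ^2+\gamma ^2+2|\mu \gamma -\tau ^2| \le \mu ^2+2\tau ^2+2\tau ^2=\mu ^2+4\tau ^2 . \end{aligned}$$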

The assumption \(|\overline{y}|<\ell \) implies that the director \(d_1\) cannot be constant. Since \(d_1'\cdot d_1=d_1'\cdot d_2=0\), we deduce that \(M_{11}=d_1'\cdot d_3\ne 0\) on a set of positive measure. Moreover, the boundary conditions satisfied by (y, R) guarantee that \(A_M\) is admissible in the sense of (3.2) with respect to the data \(\overline{R}\) and \(\overline{\Gamma }=\overline{y}\). By Proposition 3.1, there exist \(\lambda _j\in C^{\infty }(\overline{I})\) and \(p_j\in C^{\infty }(\overline{I}; \mathbb {S}^1)\) such that \(M^j := \lambda _j p_j\otimes p_j\) satisfies

(i) \(p_j\cdot \underline{e}_1 > 0\) everywhere on \(\overline{I}\), \(p_j = \underline{e}_1\) near \(\partial I\), and \(A_{M^j}\) is admissible;

(ii) \(M^j\rightharpoonup M\) weakly in \(L^2(I;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\);

(iii) there holds

$$\begin{aligned} \lim _{j\rightarrow \infty }\int _0^\ell |M^j|^2\,dt = \int _0^\ell ( |M|^2 + 2|\det M|)\, dt. \end{aligned}$$

Let \(R_j : I\rightarrow SO(3)\) be the solution of the Cauchy problem

Since \(M^j\) is smooth, so is \(R_j\). Moreover, since \(A_{M^j}\) is admissible, we have that \(R_j(\ell )=\overline{R}\) and

For \(t\in I\), we define

and we observe that \(y^j(\ell )=\overline{y}\). One can show that \(y^j\rightharpoonup y\) weakly in \(W^{2,2}(I;{\mathbb {R}}^3)\); see, for instance, the proof of Freddi et al. (2012, Lemma 4.2). Moreover, it follows from (4.1) that

Since the functions \(p_j\) are smooth on the interval \(\overline{I}\), they can be extended smoothly to \({\mathbb {R}}\). For \((t,s)\in {\mathbb {R}}^2\), we consider

Since

we have that

hence, there exist an interval \(I_j \supset \overline{I}\) and \(\eta _j>0\) such that

By the Inverse Function Theorem, there exists \(\rho >0\) such that, if \((t,s),(t',s')\in \overline{I}_j\times [-\eta _j,\eta _j]\), then

On the other hand, using the definition of \(\Phi _j\), we have that, up to choosing \(\eta _j\) smaller if needed, there exists a constant \(c>0\) such that, if \((t,s),(t',s')\in \overline{I}_j \times [-\eta _j,\eta _j]\), then

These two facts together imply that, up to choosing \(\eta _j\) smaller, \(\Phi _j\) is injective on \(\overline{I}_j \times [-\eta _j,\eta _j]\). By the invariance of domain theorem, the set \(U_j:=\Phi _j(I_j\times (-\eta _j,\eta _j))\) is open and, since both \(\nabla \Phi _j\) and \((\det \nabla \Phi _j)^{-1}\) are continuous on \(\overline{I}_j\times [-\eta _j,\eta _j]\), the inverse \(\Phi _j^{-1}\) belongs to \(C^1(\overline{U}_j;{\mathbb {R}}^2)\). Since

there exists \(\varepsilon _j>0\) such that \(S_\varepsilon \subset U_j\) for any \(0<\varepsilon \le \varepsilon _j\); in other words, \(\Phi _j^{-1}\) is defined on \(S_{\varepsilon }\) for \(0<\varepsilon \le \varepsilon _j\).
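To see where the nonvanishing Jacobian comes from, suppose (this is our reading of the elided definition, consistent with \(\Phi _j(0,s)=(0,s)\) and with \(\partial _s\Phi _j\perp p_j\)) that \(\Phi _j(t,s)=t\underline{e}_1+s\,p_j^\perp (t)\) with \(p_j^\perp =(-p_{j,2},p_{j,1})\), and write \(p_j=(\cos \varphi _j,\sin \varphi _j)\) for a smooth argument \(\varphi _j\) (notation introduced here). Then

$$\begin{aligned} \nabla \Phi _j(t,s)=\big (\underline{e}_1+s\,(p_j^\perp )'(t)\,\big |\,p_j^\perp (t)\big ),\qquad \det \nabla \Phi _j(t,s)=p_j(t)\cdot \underline{e}_1-s\,\varphi _j'(t), \end{aligned}$$

so that \(\det \nabla \Phi _j>0\) whenever \(|s|\,\Vert \varphi _j'\Vert _{\infty }<\min p_j\cdot \underline{e}_1\), and \(\nabla \Phi _j={\mathbb {I}}\) on any interval where \(p_j\equiv \underline{e}_1\).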

For \((t,s)\in I\times {\mathbb {R}}\), we now define

where

for every \(t\in I\), and

for \(x\in S_\varepsilon \).

By the definitions above

By means of (4.2), one can check that

With these identities at hand, one can show that \((\nabla v_j)^T\nabla v_j=(\nabla \Phi _j)^T\nabla \Phi _j\), that is, \((\nabla u_j)^T\nabla u_j={\mathbb {I}}\); for details, see Freddi et al. (2016a). Clearly, \(u_j(\cdot , 0) = y^j\) and \(\partial _1 u_j(\cdot , 0) = (y^j)'=d^j_1\). Moreover, we have

Using that \(p_j\cdot \underline{e}_1\ne 0\), one readily deduces that

Taking derivatives with respect to s on both sides of (4.5), we see that

and therefore

Taking derivatives in (4.6), we see that

and similarly that \((A_{u^j}(\cdot , 0))_{12} = (M^j)_{12}\). On the other hand, since \(\partial _s \Phi _j\) is orthogonal to \(p_j\), we have that \(M^j\partial _s \Phi _j=0\). Using (4.7) and the fact that \(p_j\cdot \underline{e}_1\ne 0\), we conclude that \(A_{u^j}(\cdot , 0) = M^j\).

We now prove that \(u_j\) satisfies the boundary conditions, namely \(u_j\in \mathcal {A}_\varepsilon \). To this aim, we remark that condition (i) ensures that \(\Phi _j(0,s)=(0,s)\) and \(\Phi _j(\ell ,s)=(\ell ,s)\) for every s. This implies that \(u_j(0,0)=v_j(0,0)=y^j(0)=0\) and \(u_j(\ell ,0)=v_j(\ell ,0)=y^j(\ell )=\overline{y}\). Again by condition (i), we have that \(p_j'(0)=p_j'(\ell )=0\) and \(b_j=d_2^j\) close to \(\partial I\), hence \(b_j'(0)=(d_2^j)'(0)\) and \(b_j'(\ell )=(d_2^j)'(\ell )\). From (4.1), it follows that \(R_j^\prime (0)=A_{M^j}(0)R_j(0)=A_{\lambda _j(0)\underline{e}_1\otimes \underline{e}_1}\), hence \((d_2^j)^\prime (0)=0\). Analogously, one can show that \((d_2^j)^\prime (\ell )=0\). By (4.3), we deduce that \(\nabla \Phi _j(0,s)=\nabla \Phi _j(\ell ,s)={\mathbb {I}}\) for every s. We now use (4.4) to conclude that \(\nabla u_j(0,x_2)=(d_1^j(0)\, |\, b_j(0))\) and \(\nabla u_j(\ell ,x_2)=(d_1^j(\ell )\, |\, b_j(\ell ))\) for \(x_2\in (-\varepsilon _j,\varepsilon _j)\). By the initial condition in (4.3) and the fact that \(p_j(0)=\underline{e}_1\), we have that \(\nabla u_j(0,x_2)= (e_1\, |\, e_2)\) for \(x_2\in (-\varepsilon _j,\varepsilon _j)\). Since \(R_j(\ell )=\overline{R}\) and \(p_j(\ell )=\underline{e}_1\), we have that \(\nabla u_j(\ell ,x_2)= (\overline{d}_1|\overline{d}_2)\) for \(x_2\in (-\varepsilon _j,\varepsilon _j)\).

We are now in a position to define the recovery sequence. For \(\varepsilon \) small enough, the maps \(y^j_{\varepsilon } : S\rightarrow {\mathbb {R}}^3\) given by \(y^j_{\varepsilon }(x_1, x_2) = u^j(x_1, \varepsilon x_2)\) are well-defined scaled \(C^2\)-isometries of S such that

as \(\varepsilon \rightarrow 0\); here \(T_{\varepsilon }x = (x_1, \varepsilon x_2)\). Set \(A_{\varepsilon }^j:= A_{y^j_\varepsilon ,\varepsilon }\). Then, since \(A_{u^j}(x_1, 0) = M^j(x_1)\), we see that \(A_{\varepsilon }^j\rightarrow M^j\) strongly in \(L^2(S;{\mathbb {R}}^{2\times 2}_{{{\,\mathrm{sym}\,}}})\), as \(\varepsilon \rightarrow 0\). Hence,

By a diagonal argument, we obtain the desired result. \(\square \)

5 Equilibrium Equations for the Sadowsky Functional

In this section, we consider the minimization problem for the Sadowsky functional (1.1) on the class \(\mathcal {A}_0\) introduced in (2.4) and the corresponding Euler–Lagrange equations.

5.1 Existence of a Solution

Let \(\chi _{\mathcal {A}_0}\) be the indicator function of the set \(\mathcal {A}_0\), equal to 0 on \(\mathcal {A}_0\) and to \(+\infty \) elsewhere. Since the density function \(\overline{Q}\) is convex and \(\overline{Q}(\mu ,\tau )\ge \mu ^2+\tau ^2\) for any \(\mu ,\tau \in {\mathbb {R}}\), it is easy to prove that the functional \(E+\chi _{\mathcal {A}_0}\) is \((W^{2,2}\times W^{1,2})\)-weakly lower semicontinuous and coercive. By the direct method, there exists a minimizer of E in \(\mathcal {A}_0\). Uniqueness is not ensured, since \(\overline{Q}\) is not strictly convex; for instance, \(\overline{Q}(\mu ,\tau )=4\tau ^2\) does not depend on \(\mu \) in the region \(|\mu |\le |\tau |\).
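The lower bound on \(\overline{Q}\) used above can be checked directly in each of its two regimes, taking for granted the piecewise form \((\mu ^2+\tau ^2)^2/\mu ^2\) for \(|\mu |>|\tau |\) and \(4\tau ^2\) for \(|\mu |\le |\tau |\) obtained by minimizing \(|M|^2+2|\det M|\) over \(\gamma \):

$$\begin{aligned} \overline{Q}(\mu ,\tau )&=(\mu ^2+\tau ^2)\Big (1+\frac{\tau ^2}{\mu ^2}\Big )\ge \mu ^2+\tau ^2 \quad \text {if } |\mu |>|\tau |,\\ \overline{Q}(\mu ,\tau )&=4\tau ^2=\tau ^2+3\tau ^2\ge \tau ^2+\mu ^2 \quad \text {if } |\mu |\le |\tau |. \end{aligned}$$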

5.2 Euler–Lagrange Equations

Let \(|\overline{y}|<\ell \) and let (y, R) be a minimizer of E on \(\mathcal {A}_0\). We always write \(R^T=(d_1|d_2|d_3)\). A system of Euler–Lagrange equations for (y, R) was derived in Hornung (2010). In that paper, the energy is considered as a function of \(\kappa =d_1'\cdot d_2\), \(\mu =d_1'\cdot d_3\) and \(\tau =-d_2'\cdot d_3\), so that the constraint \(d_1^\prime \cdot d_2=0\) corresponds to assuming \(\kappa =0\). Taking \(\dot{\kappa }=0\) in Hornung (2010, Eq. 12), one obtains that (y, R) satisfies the second and third equations in Hornung (2010, Eq. 14). Note that, since \(|\overline{y}|<\ell \), one can rule out the degenerate case where the curve y is a straight line. From these considerations, we obtain the following proposition.

Proposition 5.1

(Equilibrium equations) Let \(|\overline{y}|<\ell \) and let (y, R) be a minimizer of E on \(\mathcal {A}_0\) with \(R^T=(d_1|d_2|d_3)\). Then, there exist Lagrange multipliers \(\lambda _1,\lambda _2\in {\mathbb {R}}^3\) such that the following boundary value problem is satisfied:

Proof

The second and third equations in Hornung (2010, Eq. 14) read

Since \(d_1=y'\), we have that \(\int _t^\ell d_1(s)\,ds=\overline{y}-y(t)\). The claim follows by replacing the multiplier \(\lambda _2\) with \(\lambda _2-\lambda _1\wedge \overline{y}\). \(\square \)
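Assuming, as the proof indicates, that \(\lambda _1\) enters through the term \(\lambda _1\wedge \int _t^\ell d_1(s)\,ds\), the substitution rests on the elementary identity below: this term differs from \(-\lambda _1\wedge y(t)\) only by the constant vector \(\lambda _1\wedge \overline{y}\), which can be absorbed into \(\lambda _2\).

$$\begin{aligned} \lambda _1\wedge \int _t^\ell d_1(s)\,ds=\lambda _1\wedge \big (\overline{y}-y(t)\big )=\lambda _1\wedge \overline{y}-\lambda _1\wedge y(t). \end{aligned}$$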

Remark 5.2

The two partial derivatives on the left-hand side of the first two equations in (5.1) represent the twisting and bending moments, respectively, and are given by

The next proposition shows that on a closed planar curve like a circle, where the curvature is always positive, the constraint \(d_1'\cdot d_2=0\) is incompatible with the boundary condition \(\overline{R}=(e_1|-e_2|-e_3)\). As a consequence, the centerline of a developable Möbius band, if planar, must contain a segment.

Proposition 5.3

Let \((y, R)\in W^{2,2}(I; {\mathbb {R}}^3)\times W^{1,2}(I; SO(3))\) be such that \(R^T=(d_1|d_2|d_3)\), \(y'=d_1\), and \(d_1'\cdot d_2=0\) a.e. on I. If the curve y is planar and \(d_1'\cdot d_3>0\) a.e. in I, then \(d_2\) is constant and orthogonal to the plane of the curve.

Proof

Let \(\mu :=d_1'\cdot d_3\) and let k be a unit normal vector to the plane of the curve, that is, \(|k|=1\) and \(d_1\cdot k\equiv 0\). Since \(d_1'\cdot d_1=0\) (because \(|d_1|=1\)) and \(d_1'\cdot d_2=0\), see (2.4), we have that \(d_1'=\mu d_3\) and \(d_1\wedge d_1'=-\mu d_2\). Using the fact that \(\mu (s)>0\) for a.e. \(s\in I\), we deduce that \(d_2=-d_1\wedge d_1'/\mu \) a.e. in I. On the other hand, both \(d_1\) and \(d_1'\) are orthogonal to k (indeed, \(d_1'\cdot k=(d_1\cdot k)'=0\)), hence \(d_2\) is parallel to k a.e. in I. Since \(d_2\) is continuous and I is connected, \(d_2\) must be constantly equal to k or to \(-k\) in I. \(\square \)
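The key identity in this proof can be checked numerically. In the Python sketch below (names ours), the tangent of a curve lying in the plane orthogonal to \(k=e_2\) is written as \(d_1=(\cos \varphi ,0,\sin \varphi )\) for an angle function \(\varphi \) introduced for illustration, so that \(\mu =\varphi '\) and \(d_3=(-\sin \varphi ,0,\cos \varphi )\). The formula \(d_2=-d_1\wedge d_1'/\mu \) then returns \(e_2\) for every value of \(\varphi \) and every \(\varphi '\ne 0\), in agreement with the conclusion that \(d_2\) is constant and orthogonal to the plane; the same computation also covers \(\mu <0\).

```python
import math


def cross(u, v):
    """Cross product u ∧ v in R^3."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def d2_from_planar_tangent(phi, dphi):
    """d2 = -(d1 ∧ d1')/mu for d1 = (cos phi, 0, sin phi), with mu = dphi != 0."""
    d1 = (math.cos(phi), 0.0, math.sin(phi))
    d1_prime = (-dphi * math.sin(phi), 0.0, dphi * math.cos(phi))  # = mu * d3
    return tuple(-c / dphi for c in cross(d1, d1_prime))


print(d2_from_planar_tangent(0.7, 2.5))
```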

6 Regular Möbius Bands at Equilibrium

It is easy to construct a developable Möbius band with a planar centerline, provided that the centerline is allowed to contain straight segments; see Example 6.4. On the other hand, we show that a developable Möbius band whose centerline is regular and planar cannot satisfy the equilibrium equations. In other words, the centerline of a regular developable Möbius band at equilibrium cannot be planar.

The regularity notion that we need is the following.

Definition 6.1

A solution \((y, R)\in W^{2,2}(I; {\mathbb {R}}^3)\times W^{1,2}(I; SO(3))\) of the boundary value problem (5.1) with \(R^T=(d_1|d_2|d_3)\) is said to be regular if there exists a family \(\mathscr {F}\) of pairwise disjoint open subintervals of \((0,\ell )\) such that \(|(0,\ell )\setminus \cup \mathscr {F}|=0\) and such that in every open interval \(J\in \mathscr {F}\) the curvature \(\mu =d_1'\cdot d_3\) is (a.e.) either strictly positive, strictly negative, or zero.

Remark 6.2

An example of a function \(\mu \in L^2(0,1)\) that is not regular in the sense of Definition 6.1 is the characteristic function of a fat Cantor set in (0, 1) (or of any closed set with positive measure and empty interior).
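A concrete instance is the Smith–Volterra–Cantor set \(\mathcal {C}\), obtained from [0, 1] by removing at step n an open middle interval of length \(4^{-n}\) from each of the \(2^{n-1}\) remaining intervals. The Python sketch below (exact rational arithmetic, names ours) checks that after k steps the remaining measure is \(1/2+2^{-(k+1)}\), so that \(|\mathcal {C}|=1/2\), while the longest remaining interval has length \((2^k+1)/2^{2k+1}\rightarrow 0\), so that \(\mathcal {C}\) has empty interior. Consequently \(\mu =\chi _{\mathcal {C}}\) cannot be positive a.e. on any open interval, every \(J\in \mathscr {F}\) must satisfy \(|J\cap \mathcal {C}|=0\), and the countable union \(\cup \mathscr {F}\) misses a set of measure 1/2.

```python
from fractions import Fraction


def smith_volterra_cantor(stages):
    """Closed intervals left after `stages` steps of the Smith-Volterra-Cantor
    construction: at step n, remove the open middle interval of length 1/4**n
    from each of the 2**(n-1) intervals still present."""
    intervals = [(Fraction(0), Fraction(1))]
    for n in range(1, stages + 1):
        gap = Fraction(1, 4 ** n)
        refined = []
        for a, b in intervals:
            mid = (a + b) / 2
            refined.append((a, mid - gap / 2))
            refined.append((mid + gap / 2, b))
        intervals = refined
    return intervals


for k in (1, 2, 5):
    ivs = smith_volterra_cantor(k)
    print(k, sum(b - a for a, b in ivs), max(b - a for a, b in ivs))
```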

In the next theorem, we show that a regular solution of the Euler–Lagrange equations with boundary conditions \(\overline{y}=0\) and \(\overline{R}=(e_1|-e_2|-e_3)\) cannot be planar. In the proof, we use in a crucial way the expression of \(\overline{Q}\) for small curvatures, which is exactly the region where \(\overline{Q}\) differs from the classical Sadowsky energy density.

Theorem 6.3

Let (y, R) be a regular solution of (5.1) with \(\overline{y}=0\) and \(\overline{R}=(e_1|-e_2|-e_3)\). Then, the curve y is not planar, i.e., \(d_1\) does not lie in a plane.

Proof

Assume by contradiction that \(d_1\) lies on a plane, which we assume to be orthogonal to a unit vector k, that is, \(d_1(s)\cdot k=0\) for every \(s\in (0,\ell )\). We set \(\mu :=d_1'\cdot d_3\) and \(\tau :=-d_2'\cdot d_3\).

Let \(\mathscr {F}\) be a family of pairwise disjoint open subintervals of \((0,\ell )\) as in Definition 6.1 and let \(J\in \mathscr {F}\). If \(\mu (s)>0\) for a.e. \(s\in J\), then, by applying Proposition 5.3 on the interval J, we find that \(d_2\) is constantly equal to k or \(-k\) in J, hence \(d_2'=0\). By definition of \(\tau \), this implies that \(\tau =0\) in J. The same conclusion holds if \(\mu (s)<0\) for a.e. \(s\in J\). If, instead, \(\mu (s)=0\) for a.e. \(s\in J\), then the curvature vanishes on J and the restriction of y to J is a straight segment. Therefore, globally the curve is a union of segments and of arcs on which \(\tau =0\).

Let us set \(S:=\cup \{J\in \mathscr {F}:\ \mu =0\text { a.e. in }J\}\) and \(C:=\cup \{J\in \mathscr {F}:\ \mu \ne 0\text { a.e. in }J\}\). On the set S we have \(\mu =0\), which corresponds to the regime \(|\mu |\le |\tau |\), while on C we have \(\tau =0\), corresponding to the opposite regime \(|\mu |>|\tau |\). Hence, equations (5.1) reduce to

Then, we have

and, as a consequence, \(\mu \) and \(\tau \) are continuous on the sets S and C. By the assumption \(\overline{y}=0\), we have \(C\ne \varnothing \). Otherwise \(\mu =0\) a.e., so \(d_1\) would be constant and y would be a segment of length \(\ell >0\), which cannot return to its initial point. On the other hand, the twisting assumption \(d_2(\ell )=-e_2=-d_2(0)\) implies that \(S\ne \varnothing \) as well. Otherwise, by the argument above, \(d_2'=0\) a.e. and \(d_2\) would be constant. By the first equation in (6.1) and the second equation in (6.2), the left and right limits of \(\mu \) and \(\tau \) at the boundary points between S and C exist and are finite. Let \(t_0\) be such a point, and assume without loss of generality that S lies to the left of \(t_0\) and C to its right. By continuity of the left-hand side in (6.3), we have

where we have set

Since \(d_1(t_0)\) and \(d_2(t_0)\) are orthogonal, we deduce that \(\tau (t_0^-)=\mu (t_0^+)=0\). On the other hand, \(\tau =0\) on C and \(\mu =0\) on S, hence \(\tau (t_0^+)=\mu (t_0^-)=0\). Thus, we conclude that the functions \(\mu \) and \(\tau \) are globally continuous and must vanish at the boundary points between S and C.

From the first equation in (6.1), it follows that \(\tau \) is constant on every interval of S. Indeed, on every such interval J the curve y is affine, that is, \(y(t)=d_1t+c\) with constant vectors \(d_1\) and c. Therefore, on J we have

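Being constant on each interval of S and equal to zero at the boundary points, \(\tau \) vanishes on S. Since \(\tau =0\) on C as well, we conclude that \(\tau =0\) on \([0,\ell ]\). This gives a contradiction, because the boundary condition on R cannot be satisfied. Indeed, \(d_2'=0\) a.e. in \((0,\ell )\). On C this was shown above. On S the director \(d_1\) is constant, so that \(d_2'\cdot d_1=-d_2\cdot d_1'=0\), \(d_2'\cdot d_2=0\), and \(d_2'\cdot d_3=-\tau =0\). Hence \(d_2\) is constant, which is incompatible with \(d_2(\ell )=-e_2=-d_2(0)\). \(\square \)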

We conclude with an explicit example of a developable Möbius band whose centerline is planar. By the previous theorem, it cannot satisfy the equilibrium equations and thus cannot be a minimizer of the Sadowsky functional (1.1).

Example 6.4

(Non-minimal developable Möbius band) We consider the framed curve (y, R) given by

The boundary conditions \(y(0)=y(\ell )=0\), \(R(0)={\mathbb {I}}\), and \(R(\ell )=(e_1|-e_2|-e_3)\) with \(\ell =2+2\pi \) are satisfied. For \(t\in (0,1)\) the director \(d_2\) rotates from \(e_2\) to \(-e_2\), while it is constantly equal to \(-e_2\) for \(t\in (1,\ell )\). Since \(d_1\) is constant on (0, 1) and always lies in the \(x_1x_3\)-plane, while \(d_2=-e_2\) on \((1,\ell )\), we have \(d_1'\cdot d_2=0\); hence \((y,R)\in \mathcal {A}_0\). However, by Theorem 6.3, (y, R) cannot be a minimizer, since the curve y lies in the \(x_1x_3\)-plane.
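The explicit formula for (y, R) is not reproduced here. As a hedged illustration, the Python sketch below builds one framed curve with all the properties listed above. The specific parametrization is our assumption and need not coincide with the one in the paper. Its centerline is a "stadium" in the \(x_1x_3\)-plane with four pieces:

- a unit segment along \(e_1\), during which \(d_2\) turns from \(e_2\) to \(-e_2\);
- a unit semicircle;
- a unit segment back along \(-e_1\);
- a second unit semicircle.

The total length is \(1+\pi +1+\pi =2+2\pi =\ell \). The names `framed_curve`, `ELL`, `E1`, `E2`, `E3` are illustrative. The script checks the boundary conditions, planarity, the constraint \(d_1'\cdot d_2=0\), and \(y'=d_1\), the last two by central finite differences.

```python
import numpy as np

ELL = 2 + 2 * np.pi          # strip length l = 2 + 2*pi, as in Example 6.4
E1, E2, E3 = np.eye(3)       # standard basis e1, e2, e3


def framed_curve(t):
    """Hypothetical stadium-shaped framed curve at arc length t in [0, ELL].

    Returns (y, R), where the rows of R are d1, d2, d3, i.e. R^T = (d1|d2|d3).
    """
    if t <= 1:                           # segment along e1; d2 turns from e2 to -e2
        y = t * E1
        d1 = E1
        d2 = np.cos(np.pi * t) * E2 + np.sin(np.pi * t) * E3
    else:
        d2 = -E2                         # d2 frozen for t > 1
        if t <= 1 + np.pi:               # first unit semicircle in the x1x3-plane
            phi = t - 1
            y = np.array([1 + np.sin(phi), 0.0, 1 - np.cos(phi)])
            d1 = np.array([np.cos(phi), 0.0, np.sin(phi)])
        elif t <= 2 + np.pi:             # unit segment back along -e1
            s = t - 1 - np.pi
            y = np.array([1 - s, 0.0, 2.0])
            d1 = -E1
        else:                            # second unit semicircle, back to the origin
            phi = t - 2 - np.pi
            y = np.array([-np.sin(phi), 0.0, 1 + np.cos(phi)])
            d1 = np.array([-np.cos(phi), 0.0, -np.sin(phi)])
    d3 = np.cross(d1, d2)                # right-handed frame, so det R = 1
    return y, np.vstack([d1, d2, d3])


# Sample the interior and measure how far the claimed properties are violated.
h = 1e-6
ts = np.linspace(h, ELL - h, 4001)
max_planar = 0.0       # max |y . e2|
max_constraint = 0.0   # max |d1' . d2|, with d1' from central differences
max_tangent = 0.0      # max |y' - d1|, with y' from central differences
for t in ts:
    y_m, R_m = framed_curve(t - h)
    y_p, R_p = framed_curve(t + h)
    y, R = framed_curve(t)
    max_planar = max(max_planar, abs(y[1]))
    d1_prime = (R_p[0] - R_m[0]) / (2 * h)
    max_constraint = max(max_constraint, abs(d1_prime @ R[1]))
    max_tangent = max(max_tangent, np.linalg.norm((y_p - y_m) / (2 * h) - R[0]))

print(max_planar, max_constraint, max_tangent)
```

For this particular curve one finds \(\mu =d_1'\cdot d_3=-1\) and \(\tau =0\) along the semicircles, \(\mu =0\) and \(\tau =-\pi \) on (0, 1), and \(\mu =\tau =0\) on the returning segment. It is therefore regular in the sense of Definition 6.1.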

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Alexander, J.C., Antman, S.S.: The ambiguous twist of Love. Quart. Appl. Math. 40, 83–92 (1982)

Agostiniani, V., De Simone, A., Koumatos, K.: Shape programming for narrow ribbons of nematic elastomers. J. Elasticity 127, 1–24 (2017)

Audoly, B., Neukirch, S.: A one-dimensional model for elastic ribbons: a little stretching makes a big difference. J. Mech. Phys. Solids 153, 104–157 (2021)

Audoly, B., Seffen, K.A.: Buckling of naturally curved elastic strips: the ribbon model makes a difference. J. Elasticity 119, 293–320 (2015)

Bartels, S.: Numerical simulation of inextensible elastic ribbons. SIAM J. Numer. Anal. 58, 3332–3354 (2020)

Bartels, S., Hornung, P.: Bending paper and the Möbius strip. J. Elasticity 119, 113–136 (2015)

Brunetti, M., Favata, A., Vidoli, S.: Enhanced models for the nonlinear bending of planar rods: localization phenomena and multistability. Proc. Roy. Soc. Edinburgh Sect. A 476, 20200455 (2020)

Charrondière, R., Bertails-Descoubes, F., Neukirch, S., Romero, V.: Numerical modeling of inextensible elastic ribbons with curvature-based elements. Comput. Methods Appl. Mech. Engrg. 364, 112922 (2020)

Chopin, J., Démery, V., Davidovitch, B.: Roadmap to the morphological instabilities of a stretched twisted ribbon. J. Elasticity 119, 137–189 (2015)

Davoli, E.: Thin-walled beams with a cross-section of arbitrary geometry: derivation of linear theories starting from 3D nonlinear elasticity. Adv. Calc. Var. 6, 33–91 (2013)

Dias, M.A., Audoly, B.: Wunderlich, Meet Kirchhoff: A general and unified description of elastic ribbons and thin rods. J. Elasticity 119, 49–66 (2015)

Eberhard, P., Hornung, P.: On singularities of stationary isometric deformations. Nonlinearity 33, 4900–4923 (2020)

Fosdick, R., Fried, E. (eds.): The mechanics of ribbons and Möbius bands, Springer, (2015)

Freddi, L., Hornung, P., Mora, M.G., Paroni, R.: A corrected Sadowsky functional for inextensible elastic ribbons. J. Elasticity 123, 125–136 (2016)

Freddi, L., Hornung, P., Mora, M.G., Paroni, R.: A variational model for anisotropic and naturally twisted ribbons. SIAM J. Math. Anal. 48, 3883–3906 (2016)

Freddi, L., Hornung, P., Mora, M.G., Paroni, R.: One-dimensional von Kármán models for elastic ribbons. Meccanica 53, 659–670 (2018)

Freddi, L., Mora, M.G., Paroni, R.: Nonlinear thin-walled beams with a rectangular cross-section - Part I. Math. Models Methods Appl. Sci. 22, 1150016 (2012)

Freddi, L., Mora, M.G., Paroni, R.: Nonlinear thin-walled beams with a rectangular cross-section - Part II. Math. Models Methods Appl. Sci. 23, 743–775 (2013)

Friedrich, M., Machill, L.: Derivation of a one-dimensional von Kármán theory for viscoelastic ribbons, (2021). Preprint arXiv, arXiv:2108.05132

Hinz, D.F., Fried, E.: Translation of Michael Sadowsky’s Paper An elementary proof for the existence of a developable Möbius band and the attribution of the geometric problem to a variational problem. J. Elasticity 119, 3–6 (2015)

Hinz, D.F., Fried, E.: Translation and interpretation of Michael Sadowsky’s paper Theory of elastically bendable inextensible bands with applications to the Möbius band. J. Elasticity 119, 7–17 (2015)

Hornung, P.: Euler-Lagrange equations for variational problems on space curves. Phys. Rev. E 81, 066603 (2010)

Hornung, P.: Deformation of framed curves with boundary conditions. Calc. Var. Partial Differ. Equ. 60, 87 (2021)

Hornung, P.: Deformation of framed curves, (2021). Preprint arXiv, arXiv:2110.08541

Korner, K., Audoly, B., Bhattacharya, K.: Simple deformation measures for discrete elastic rods and ribbons, (2021). Preprint arXiv, arXiv:2107.04842

Kumar, A., Handral, P., Bhandari, C.S.D., Karmakar, A., Rangarajan, R.: An investigation of models for elastic ribbons: simulations & experiments. J. Mech. Phys. Solids 143, 104070 (2020)

Levin, I., Siéfert, E., Sharon, E., Maor, C.: Hierarchy of geometrical frustration in elastic ribbons: Shape-transitions and energy scaling obtained from a general asymptotic theory. J. Mech. Phys. Solids 156, 104579 (2021)

Moore, A., Healey, T.: Computation of elastic equilibria of complete Möbius bands and their stability. Math. Mech. Solids 24, 939–967 (2018)

Paroni, R., Tomassetti, G.: Macroscopic and microscopic behavior of narrow elastic ribbons. J. Elasticity 135, 409–433 (2019)

Sadowsky, M.: Ein elementarer Beweis für die Existenz eines abwickelbaren Möbiusschen Bandes und die Zurückführung des geometrischen Problems auf ein Variationsproblem, Sitzungsber. Preuss. Akad. Wiss. (1930). Mitteilung vom 26 Juni, pp. 412–415

Sadowsky, M.: Theorie der elastisch biegsamen undehnbaren Bänder mit Anwendungen auf das Möbiussche Band. Verhandl. des 3. Intern. Kongr. f. Techn. Mechanik 2, 444–451 (1930)

Starostin, E.L., van der Heijden, G.H.M.: The equilibrium shape of an elastic developable Möbius strip. PAMM Proc. Appl. Math. Mech. 7, 2020115–2020116 (2007)

Starostin, E.L., van der Heijden, G.H.M.: Equilibrium shapes with stress localisation for inextensible elastic Möbius and other strips. J. Elasticity 119, 67–112 (2015)

Teresi, L., Varano, V.: Modeling helicoid to spiral-ribbon transitions of twist-nematic elastomers. Soft Matter 9, 3081–3088 (2013)

Tomassetti, G., Varano, V.: Capturing the helical to spiral transitions in thin ribbons of nematic elastomers. Meccanica 52, 3431–3441 (2017)

Yu, T.: Bistability and equilibria of creased annular sheets and strips, (2021). Preprint arXiv, arXiv:2104.09704

Yu, T., Dreier, L., Marmo, F., Gabriele, S., Parascho, S., Adriaenssens, S.: Numerical modeling of static equilibria and bifurcations in bigons and bigon rings. J. Mech. Phys. Solids 152, 104459 (2021)

Yu, T., Hanna, J.A.: Bifurcations of buckled, clamped anisotropic rods and thin bands under lateral end translations. J. Mech. Phys. Solids 122, 657–685 (2019)

Acknowledgements

The authors would like to thank Gianni Dal Maso for several discussions about the content of Sect. 6. The work of LF has been supported by DMIF–PRID project PRIDEN. MGM acknowledges support by MIUR–PRIN 2017. LF and MGM are members of GNAMPA–INdAM and RP is a member of GNFM–INdAM. PH was supported by the DFG.

Funding

Open access funding provided by Università degli Studi di Pavia within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The authors have no competing interests to declare.

Additional information

Communicated by Paul Newton.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Freddi, L., Hornung, P., Mora, M.G. et al. Stability of Boundary Conditions for the Sadowsky Functional. J Nonlinear Sci 32, 72 (2022). https://doi.org/10.1007/s00332-022-09829-2
