Abstract

We investigate the effects of structural perturbations on the network’s ability to synchronize. We establish a classification of directed links according to their impact on synchronizability. We focus on adding directed links in weakly connected networks having a strongly connected component acting as a driver. When the connectivity of the driver is not stronger than the connectivity of the slave component, we can always make the network strongly connected while hindering synchronization. On the other hand, we prove the existence of a perturbation which makes the network strongly connected while increasing the synchronizability. Under additional conditions, there is a node in the driving component such that adding a single link starting at an arbitrary node of the driven component and ending at this node increases the synchronizability.

1 Introduction

Synchronization is an important phenomenon in real-world networks. For instance, in power grids, power stations must work in 50 Hz synchrony in order to avoid blackouts (Dörfler and Bullo 2012; Motter et al. 2013). In sensor networks, synchronization among the sensors is vital for the transmission of information (Papadopoulos et al. 2005; Yadav et al. 2017). On the other hand, synchronization of subcortical brain areas such as the thalamus is strongly believed to be the origin of motor diseases such as dystonia and Parkinson’s disease (Hammond et al. 2007; Milton and Jung 2003; Starr et al. 2005). In all of the mentioned examples, the stability of synchronous states is crucial for the network’s function or dysfunction, respectively. Motivated by these observations, stability properties of synchronous states in systems of coupled elements have been investigated intensively (Barahona and Pecora 2002; Pecora and Carroll 1998; Pikovsky et al. 2001; Field 2017; Li et al. 2007).

An important class, mimicking the above examples, is given by networks of identical elements which are coupled in a diffusive manner. That is, networks for which the dynamics of a node depend on the difference between its own state and its input. A special focus has been on unravelling the connection between such a network’s coupling topology and its overall dynamics (Pereira et al. 2017; Jalili 2013; Nishikawa et al. 2003, 2017; Agarwal and Field 2010; Bick and Field 2017).

While certain correlations have been observed, there are few rigorous results determining the relation between a network’s structure and its dynamical properties (Wu and Chua 1996; Pogromsky and Nijmeijer 2001; Wang and Chen 2002; Ujjwal et al. 2016). Even less is known about the impact of structural perturbations of a network on its dynamical properties such as the stability of synchrony (see for instance Milanese et al. 2010). A particularly interesting and important question in this category is the following: assume that a link’s weight in a network is perturbed or a new link with a small weight is added to the network. What is the impact on the dynamics? For instance, in interaction graphs of gene networks, it has been shown that adding links between two stable systems can lead to dynamics with positive topological entropy (Poignard 2013). In diffusive systems such as laser networks, it was shown that the addition of a link can lead to synchronization loss (Pade and Pereira 2015; Hart et al. 2015). In this article, we focus on the question of whether these structural perturbations lead to higher or lower synchronizability. In the main body, we give rigorous answers to this question for directed networks. In undirected networks, under our assumptions, undirected perturbations will never decrease the synchronizability. Here, the only non-trivial situation appears when introducing directed perturbations to undirected networks. We deal with this case in the “Appendix”. Let us first introduce the model and motivate the main questions with some examples.

1.1 Model and Examples

We call a network a triplet \((\mathcal {G}, \varvec{f}, \varvec{H})\), where \(\mathcal {G}\) is a graph, possibly weighted and directed, \(\varvec{f}:\mathbb {R}^{\ell }\rightarrow \mathbb {R}^{\ell }\) is a function representing the local dynamics of each node and \(\varvec{H}\) is a coupling function between the nodes of the network. If no confusion can arise, we sometimes identify a network and its underlying graph \(\mathcal {G}\). To this triplet, we associate the following coupled equations:

$$\begin{aligned} \dot{\varvec{x}}_i=\varvec{f}(\varvec{x}_i)+\alpha \sum _{j=1}^{N}W_{ij}\varvec{H}(\varvec{x}_j-\varvec{x}_i),\quad i=1,\ldots ,N. \end{aligned} \tag{1}$$

Here, \(\alpha \ge 0\) is the overall coupling strength and \(\varvec{W}=[W_{ij}]_{1\le i,j\le N}\in \mathbb {R}^{N\times N}\) is the adjacency matrix associated with the graph \(\mathcal {G}\). In other words, \(W_{ij}\ge 0\) measures the strength of interaction from node j to node i. The network \((\mathcal {G}, \varvec{f}, \varvec{H})\) is undirected if \(\varvec{W}\) is symmetric, otherwise it is directed. The theory we develop here can include networks of non-identical elements with minor modifications (Pereira et al. 2014).

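Note that due to the diffusive nature of the coupling, if all oscillators start with the same initial condition, then the coupling term vanishes identically. This ensures that the globally synchronized state is an invariant state for all coupling strengths \(\alpha \), and we call the set

$$\begin{aligned} M=\left\{ \varvec{x}_i\in \mathbb {R}^{\ell },\ 1\le i\le N:\ \varvec{x}_1=\varvec{x}_2=\cdots =\varvec{x}_N\right\} \end{aligned}$$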

the synchronization manifold. The transverse stability of M depends on the structure of the graph \(\mathcal {G}\). Indeed, structural changes in \(\mathcal {G}\) can have a drastic influence on the stability of M as can be seen in the next example which serves as motivating example for the subsequent analysis.
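The invariance of the synchronized state can be checked numerically. The following is a minimal sketch, assuming the standard diffusive form \(\dot{\varvec{x}}_i=\varvec{f}(\varvec{x}_i)+\alpha \sum _j W_{ij}\varvec{H}(\varvec{x}_j-\varvec{x}_i)\) for the coupled equations. The function names `roessler` and `network_rhs`, the 3-node cycle and the Rössler parameter values are illustrative placeholders, not the setup used for Fig. 1.

```python
import numpy as np

def roessler(state, a=0.2, b=0.2, c=5.7):
    """Standard Roessler vector field; parameter values are placeholders."""
    x, y, z = state
    return np.array([-y - z, x + a * y, b + z * (x - c)])

def network_rhs(X, W, alpha, f, H):
    """Right-hand side of the assumed diffusive network equations.

    X has shape (N, ell); row i is the state of node i.
    W[i, j] is the weight of the link from node j to node i.
    """
    N = X.shape[0]
    dX = np.zeros_like(X)
    for i in range(N):
        coupling = np.zeros(X.shape[1])
        for j in range(N):
            coupling += W[i, j] * H(X[j] - X[i])
        dX[i] = f(X[i]) + alpha * coupling
    return dX

# Hypothetical directed 3-cycle with unit weights and identity coupling.
W = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0],
              [1.0, 0.0, 0.0]])
x0 = np.array([4.7973, -8.4776, 0.0361])
X_sync = np.tile(x0, (3, 1))          # all nodes share the same state
dX = network_rhs(X_sync, W, alpha=0.085, f=roessler, H=lambda d: d)

# On the synchronized state every node follows the isolated dynamics f.
print(np.allclose(dX, np.tile(roessler(x0), (3, 1))))
```

Because every difference \(\varvec{x}_j-\varvec{x}_i\) is zero on the synchronized state and the identity coupling maps zero to zero, the result does not depend on \(\alpha \) or on the weights.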

1.2 Structural Perturbations in Directed Networks—About Masters and Slaves

Directed networks always consist of one or several strongly connected subnetworks in which every node is reachable from any other node through a directed path. If there is more than one strongly connected subnetwork, two such subnetworks can be connected through unidirectional links pointing from one subnetwork to another. In the top right of Fig. 1, we show a network composed of two strongly connected subnetworks (without the red link), which is weakly connected; starting from the smallest connected subnetwork, it is not possible to reach the larger connected subnetwork through a directed path. In the physics literature, this configuration is called master–slave coupling as the subnetwork consisting of nodes 1, 2 and 3 drives the subnetwork consisting of nodes 4 and 5.

The master–slave configuration is believed to be optimal in several respects, synchronization among them. For instance, feedforward networks can synchronize for a wide range of coupling strengths while having only a few links (Nishikawa and Motter 2006). The network presented in Fig. 1 also supports stable synchronized dynamics. An important question concerns the network dynamics once we make qualitative structural changes. For instance, what happens if we add a link breaking the master–slave configuration?

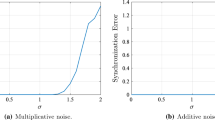

Fig. 1 Impact of making the network strongly connected. Simulations of the networks shown on the right depicting the total synchronization error \(\sum _{i{\ne }j}\Vert x_j(t)-x_i(t)\Vert /N(N-1)\) versus time, where N is the number of nodes. In the top plot (a), the red link is added after time \(t=4000\) and destroys the master–slave configuration by making the network strongly connected. As a consequence, the previously stable chaotic synchronization is no longer supported. In the bottom plot (b), parameters are adjusted such that synchronization is unstable for the original network. The addition of the blue link at time \(t=4000\) again makes the network strongly connected. However, in this case it leads to a stabilization of the synchronous state. Each link in the original network has unit weight. The new links introduced have weight 0.25, and the isolated dynamics f is described by chaotic Rössler oscillators (Poignard et al. 2018). The coupling function is the identity operator and \(\alpha \) is near the critical coupling \(\alpha _c\) (\(\alpha =0.085\) in the upper plot and \(\alpha =0.08\) in the lower plot). The parameters a, b, c as in Eq. (1) in Barrio et al. (2011) are set to \(a=0.2, b=0.2,c=0.9\). The main plots show the total synchronization error and the insets show the differences \(x_1-x_5\) of the first components of nodes 1 and 5. The initial conditions are chosen as small random deviations of \(x_i=4.7973, y_i=-8.4776\) and \(z_i=0.0361\) for \(i=1,\ldots ,5\), where \((x_i,y_i,z_i)^T\) is the state variable of the i-th node (Color figure online)

An example for this is found in Fig. 1a. Introducing the new link (in red) makes the whole network strongly connected: there is a directed path connecting any two vertices in the network. Therefore, the addition of the link significantly improves the connectivity properties of the network. However, this structural improvement has a surprising consequence for the dynamics: the network synchronization is lost, as can be seen in the simulation in Fig. 1a.

Hindrance of synchronization is not about breaking a master–slave configuration. One may think that this synchronization loss appears because we are breaking the master–slave configuration. This rationale is justified as master–slave configurations are known to synchronize well (Nishikawa and Motter 2006). However, the synchronization loss is not related to the master–slave breaking. Indeed, adding a different connection which also makes the network strongly connected stabilizes the synchronous state (see Fig. 1b).

Hindrance of synchronization is not about reinforcing the hub. Synchronization loss in the example of Fig. 1a appeared as an additional link was added to the hub of the largest subnetwork (the most connected node in the network). However, running experiments on random graphs with hubs, we found several counter-examples in which linking to the hub improves synchronization.

To sum up, while in some settings, master–slave configurations and the presence of hubs play an important role for the behaviour of a network under structural perturbations (Pereira et al. 2017; Pereira 2010; Belykh et al. 2005), for networks with diffusive dynamics near synchronization adding extra links generates nonlinearities which can either enhance or hinder synchronization. Our main result (Theorem 1) gives an almost complete explanation of the complex behaviour of such weakly connected directed networks when a master–slave configuration is reinforced or destroyed, respectively.

1.3 Informal Statement of the Main Result

Using the master stability approach to tackle the transverse stability of the synchronization manifold M (Pereira et al. 2014), we can in fact reduce the stability problem to the spectral analysis of graph Laplacians. The rather mild assumptions needed for this approach are specified in Sect. 2.2. We emphasize that under these assumptions, the master stability function is unbounded. Furthermore, the left bound exclusively depends on the spectral gap \(\lambda _2\), i.e. the other Laplacian eigenvalues do not play a role for linear stability considerations. Let us now give an informal statement of our main result.

In Theorem 1, we consider networks consisting of two strongly connected components. The general case of a higher number of strongly connected components is a straightforward generalization.

Informal Statement of Theorem 1 (Breaking master–slave configurations) Consider a directed network connected in a master–slave configuration as in Fig. 1. First, consider the situation where the master network is poorly connected. Then, strengthening the cutset is immaterial for synchronization and it will neither facilitate nor hinder synchronization. Second, consider the case where the master network is highly connected. In this case, we have:

- Strengthening the driving facilitates synchronization, leading to shorter transients towards synchronization and augmenting the basin of attraction.

- Master–slave configurations are non-optimal. It is always possible to break the master–slave configuration in a way that favours synchronization (e.g. Fig. 1). Provided the overall connectivity of the network is poor, it is even possible to find one or several nodes in the master component such that the addition of an arbitrary single link ending at this node and breaking the master–slave configuration increases the synchronizability. In fact, if additionally, the Laplacian of the master component has zero column sums, then any perturbation in opposite direction of the cutset enhances the synchronizability.

- Breaking master–slave configurations can hinder synchronization. If the connectivity of the master component is not much stronger than the connectivity of the slave component (a precise condition of this will be given in Theorem 1), we can always find a cutset such that there is a perturbation in opposite direction of this cutset for which synchronization is hindered. Our result reveals the role the eigenvectors of the master network play in the destabilization of the synchronous motion. For example, if \(\alpha _k\) is an eigenvalue of the Laplacian of the master and close to \(\lambda _2\), the spectral gap in the slave component (this is the case in our illustration), then the eigenvector \(\varvec{X}_k\) associated with \(\alpha _k\) encodes the important information about the possible destabilization. For instance, assume that the ith entry of \(\varvec{X}_k\) is the maximal (or minimal) one. If the slave network is driven by a link coming from the ith node, then it is possible to destabilize the synchronization.

The remainder of the article is organized as follows. In Sect. 2, we introduce basic notions concerning the stability of synchronization in networks and present the notion of synchronizability of networks. In Sect. 3, we present the main result of our paper, followed by Sect. 4 which is devoted to the proof of Theorem 1. The article concludes with a discussion in Sect. 5. The “Appendix” is dedicated to the study of directed perturbations in undirected networks. We state and prove the main result, Theorem 2, which establishes a classification of directed perturbations according to their impact on synchronizability.

2 Notations and Definitions

2.1 Weighted Graphs and Laplacian Matrices

We consider networks of identical elements with diffusive interaction. It will be useful to interpret the coupling structure of the network as a graph. We recall some basic facts on graph theory.

Definition 1

(Weighted graphs) A weighted graph \(\mathcal {G}\) is a set of nodes \(\mathcal {N}\) together with a set of edges \(\mathcal {E}\subset \mathcal {N}\times \mathcal {N}\) and a weight function \(w:\mathcal {E}\rightarrow \mathbb {R}_+\). We say that the graph is unweighted when we have \(w(i,j)=1\) for all (i, j) in \(\mathcal {E}\). Moreover,

- (i) We say that the graph is undirected if \((i,j)\in \mathcal {E}\iff (j,i)\in \mathcal {E}\) and \(w(i,j)=w(j,i)\) for all \((i,j)\in \mathcal {E}\). Otherwise, the graph is directed and edges are assigned orientations. A directed graph is also called digraph.

- (ii) \(\mathcal {G}=(\mathcal {N},\mathcal {E},w)\) is a subgraph of \(\mathcal {G}^{\prime }=(\mathcal {N}^{\prime },\mathcal {E}^{\prime },w^{\prime })\) if \(\mathcal {N}\subseteq \mathcal {N}^{\prime }\), and \(\mathcal {E}\subseteq \mathcal {E}^{\prime }\). In this case, we write \(\mathcal {G}\subseteq \mathcal {G}^{\prime }\).

- (iii) The adjacency matrix \(\varvec{W}\in \mathbb {R}^{N\times N}\) of the graph \(\mathcal {G}\) is defined through

$$\begin{aligned} W_{ij}=\left\{ \begin{array}{ll} w(i,j) & \text {if } (i,j)\in \mathcal {E},\\ 0 & \text {otherwise.} \end{array}\right. \end{aligned}$$

To deal with synchronization of networks, we will focus on graphs exhibiting some sort of connectedness.

Definition 2

(Connectedness of graphs) An undirected graph \(\mathcal {G}\) is connected if for any two nodes i and j, there exists a path \(\{i=i_1,\ldots ,i_p=j\}\) of nodes (successively connected by edges of \(\mathcal {G}\)) between node i and node j. For directed graphs, we have two notions of connectedness:

- (i) A digraph \(\mathcal {G}\) is strongly connected if every node is reachable from every other node through a directed path.

- (ii) The digraph is weakly connected if it is not strongly connected and the underlying graph which is obtained by ignoring the links’ directions is connected. A maximal strongly connected subgraph of a weakly connected digraph is called strongly connected component, or strong component. The maximal set of links which connects two strong components is called cutset.

- (iii) A spanning diverging tree of a digraph is a weakly connected subgraph such that one node, the root node, has no incoming edges and all other nodes have one incoming edge.

Let a weighted digraph be given by its adjacency matrix \(\varvec{W}\), and let \(\varvec{D_W}\) be the diagonal matrix whose i-th entry is given by the degree \(d_i=\sum _{j=1}^NW_{ij}\) of node i. The Laplacian of \(\varvec{W}\) is then defined as

$$\begin{aligned} \varvec{L_W}=\varvec{D_W}-\varvec{W}. \end{aligned}$$

As the Laplacian has zero row sums, the vector \(\mathbf {1}\) (all entries equal to 1) is an eigenvector associated with the eigenvalue 0. By virtue of the Gershgorin theorem (Horn and Johnson 1985), the remaining eigenvalues \(\lambda _{i}\) have nonnegative real parts. In what follows we will always assume that the eigenvalues are ordered in the following way:

$$\begin{aligned} 0=\lambda _1\le \mathfrak {R}(\lambda _2)\le \cdots \le \mathfrak {R}(\lambda _N). \end{aligned}$$

This allows us to introduce a standard notation from algebraic graph theory. We call the second eigenvalue \(\lambda _2=\lambda _2(\varvec{L_W})\) of \(\varvec{L_W}\) the spectral gap. If the graph is undirected, we call the corresponding normalized eigenvector the Fiedler vector (Chung 1997).
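As an illustration of these definitions, the following sketch builds the Laplacian \(\varvec{D_W}-\varvec{W}\) from an adjacency matrix and reads off the spectral gap as the eigenvalue with the second smallest real part. The helper names `laplacian` and `ordered_spectrum` and the 3-node example are hypothetical choices made for this sketch.

```python
import numpy as np

def laplacian(W):
    """Return D_W - W, where D_W holds the row sums d_i = sum_j W[i, j]."""
    W = np.asarray(W, dtype=float)
    return np.diag(W.sum(axis=1)) - W

def ordered_spectrum(L):
    """Eigenvalues of L sorted by increasing real part."""
    eigvals = np.linalg.eigvals(L)
    return eigvals[np.argsort(eigvals.real)]

# Hypothetical directed 3-cycle with unit weights.
# W[i, j] is the strength of the interaction from node j to node i.
W = np.array([[0, 0, 1],
              [1, 0, 0],
              [0, 1, 0]])
L = laplacian(W)
spectrum = ordered_spectrum(L)
spectral_gap = spectrum[1]

print(L.sum(axis=1))        # zero row sums
print(spectrum)             # first entry is (numerically) zero
print(spectral_gap.real)    # real part of lambda_2
```

For a directed graph the spectral gap can be complex, which is why only its real part enters the ordering.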

2.2 Synchronizability of Networks: Assumptions

Although equations of the form (1) are heavily used in the context of network synchronization, it was only very recently that a stability result has been established for the general case of time-dependent solutions (Pereira et al. 2014). In order to guarantee for the stability of synchronous motion, we make the following assumptions:

A1 (Structural assumption) \(\mathcal {G}\) has a spanning diverging tree.

B1 (Absorbing Set) The vector field \(\varvec{f}:\mathbb {R}^{\ell }\rightarrow \mathbb {R}^{\ell }\) is continuous and there exists a bounded, positively invariant open set \(U\subset \mathbb {R}^{\ell }\) such that \(\varvec{f}\) is continuously differentiable in U and there exists a \(\varrho >0\) such that

B2 (Smooth Coupling) The local coupling function \(\varvec{H}\) is smooth satisfying \(\varvec{H}(\varvec{0})=\varvec{0}\), and the eigenvalues \(\beta _{j}\) of \(d\varvec{H}\left( \varvec{0}\right) \) are real.

B3 (Spectral Interplay) The eigenvalues \(\beta _{j}\) of \(d\varvec{H}\left( \varvec{0}\right) \) and \(\lambda _{i}\) of \(\varvec{L}\) fulfil

Let us briefly discuss these assumptions to see that they are rather natural. A1 concerns the coupling topology of the underlying (directed) graph. For undirected networks, it simply amounts to assuming that the underlying coupling graph is connected. In the case of a weakly connected digraph consisting of several strong components, it is equivalent to the fact that there is at most one root component: a strong component which does not have any incoming cutsets. Algebraically, a consequence of this assumption for both undirected and directed graphs is that the zero eigenvalue of the graph Laplacian is simple (Agaev and Chebotarev 2000).

Assumption B1 guarantees that the nodes’ dynamics admit an invariant compact set, for instance an equilibrium, a periodic orbit or a chaotic orbit (such as is the case for the motivating example from Fig. 1).

The second dynamical condition B2 guarantees that the synchronous state \({x}_{1}\left( t\right) ={x}_{2}\left( t\right) =\cdots ={x}_{N}\left( t\right) \) is a solution of the coupled equations for all values of the overall coupling strength \(\alpha \): when starting with identical initial conditions, the coupling term vanishes and all the nodes behave in the same manner.

The last condition B3 remarks that for undirected graphs the zero eigenvalue of the graph Laplacian is non-simple iff the underlying graph is disconnected (Brouwer and Haemers 2011). In this case, the stability condition would be violated. Indeed, in order to observe synchronization, it is clear that one should consider networks which are connected in some sense. We remark that the assumption that the \(\beta _j\) are real is true for many applications. The general case of complex eigenvalues \(\beta _j\) can be tackled in a similar way, but the analysis becomes more technical without providing new insight into the phenomena (Pereira et al. 2014).

2.3 Critical Threshold for Synchronization

Under the previous assumptions, it was shown in Pereira et al. (2014) that for Eq. (1), there exists an \(\alpha _c = \alpha _c(\mathcal {G},\varvec{f},\varvec{H})\) such that if the global coupling strength fulfils \(\alpha > \alpha _c\), the network is locally uniformly synchronized: the synchronization manifold attracts uniformly in an open neighbourhood. More precisely, there exists a \(C=C\left( \varvec{L},d\varvec{H}\left( \varvec{0}\right) \right) >0\) such that if the initial condition \(\varvec{x}_{i}\left( t_{0}\right) \) is in a neighbourhood of the synchronization manifold, then the solution \(\varvec{x}(t)\) of Eq. (1) fulfils

Now, the key connection to the graph Laplacian is that the critical coupling \(\alpha _c\) can be factored as

where \(\rho =\rho (\varvec{f},d\varvec{H}(\varvec{0}))\) is a constant depending only on \(\varvec{f}\) and \(d\varvec{H}(\varvec{0})\). So, the constant \(\gamma \) which represents the coupling structure [see Eq. (6)] is directly related to the contraction rate towards the synchronous manifold. In fact, the condition \(\alpha >\alpha _c\) for stable synchronous motion now writes as

Condition (8) shows that the spectral gap \(\lambda _{2}\) plays a central role for synchronization properties of the network.

2.3.1 Measures of Synchronization

We can use the critical coupling \(\alpha _c\) in order to define a measure of synchronizability.

Definition 3

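We say that the network \((\mathcal {G}_1, \varvec{f}_1, \varvec{H}_1)\) is more synchronizable than \((\mathcal {G}_2, \varvec{f}_2, \varvec{H}_2)\) if their critical couplings satisfy

$$\begin{aligned} \alpha _c(\mathcal {G}_1,\varvec{f}_1,\varvec{H}_1)<\alpha _c(\mathcal {G}_2,\varvec{f}_2,\varvec{H}_2). \end{aligned}$$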

Indeed, the range of coupling strengths which yield stable synchronization is larger for \(( \mathcal {G}_1, \varvec{f}_1, \varvec{H}_1)\). Fixing the dynamics \(\varvec{f}\) and the coupling function \(\varvec{H}\), we can now measure whether structural changes in the graph will favour or hinder synchronization. Assume we have a network \((\mathcal {G}, \varvec{f}, \varvec{H})\) and a perturbed network \((\tilde{\mathcal {G}}, \varvec{f}, \varvec{H})\) with corresponding spectral gaps \(\lambda _2\) and \(\tilde{\lambda }_2\).

A direct consequence of the definition of synchronizability is that if \(\mathfrak {R}(\lambda _2)<\mathfrak {R}(\tilde{\lambda }_2)\), the perturbed network \((\tilde{\mathcal {G}}, \varvec{f}, \varvec{H})\) is more synchronizable than \((\mathcal {G}, \varvec{f}, \varvec{H})\).

We also say that the modification favours synchronization. Otherwise, if \(\mathfrak {R}(\lambda _2)>\mathfrak {R}(\tilde{\lambda }_2)\), we say the structural perturbation hinders synchronization. This enables us to reduce the stability problem to an algebraic problem, i.e. the behaviour of the spectral gap under structural perturbations. We will use this approach throughout the whole article.
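The reduction to an algebraic problem can be sketched as a direct comparison of the real parts of the two spectral gaps. The function names and the label `"neutral"` for equal gaps are hypothetical choices made for this sketch. The example is an undirected 3-node path that is closed to a triangle by one undirected link.

```python
import numpy as np

def spectral_gap(L):
    """Real part of the eigenvalue with the second smallest real part."""
    return np.sort(np.linalg.eigvals(L).real)[1]

def effect_on_synchronization(L, L_perturbed, tol=1e-12):
    """Classify a structural perturbation by the change of Re(lambda_2)."""
    gap, gap_new = spectral_gap(L), spectral_gap(L_perturbed)
    if gap_new > gap + tol:
        return "favours"
    if gap_new < gap - tol:
        return "hinders"
    return "neutral"

# Undirected path 1 - 2 - 3 with unit weights.
L_path = np.array([[ 1.0, -1.0,  0.0],
                   [-1.0,  2.0, -1.0],
                   [ 0.0, -1.0,  1.0]])
# Same graph with the undirected link 1 - 3 added.
L_triangle = np.array([[ 2.0, -1.0, -1.0],
                       [-1.0,  2.0, -1.0],
                       [-1.0, -1.0,  2.0]])

print(effect_on_synchronization(L_path, L_triangle))   # prints "favours"
```

This agrees with the earlier remark that undirected perturbations of undirected networks do not decrease the synchronizability.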

3 Main Result

In this section, we state our main result, Theorem 1, on perturbations of directed networks. We emphasize that, given assumption A1, the result is structurally generic, a term which we introduced in an earlier paper (Poignard et al. 2018). In order to explain the notion of structural genericity, consider the set of Laplacians corresponding to networks with identical coupling topologies but potentially different weights. In this set, the subset of Laplacians for which our results are valid is dense and its complement is of zero Lebesgue measure. In other words, given any network topology satisfying A1, our result is valid up to a small perturbation of the weights of the existing links of this network. This structural genericity is stronger than the classical one for which it is usually necessary to perturb drastically the structure of the original network itself. For more details on structural genericity, see Theorem 6.6 for the directed case and Theorem 3.1 for the undirected case in Poignard et al. (2018).

3.1 Structural Perturbations in Directed Networks

In this section, we investigate the class of directed networks satisfying assumption A1 and consisting of at least two strong components. Due to A1, these networks have one root component. Furthermore, we restrict ourselves to the study of the dynamical role of links between strong components, i.e. on cutsets. Here, perturbations can point either in direction of a cutset or in opposite direction of a cutset. For simplicity of presentation, we can assume that there are only two strong components. So, the corresponding Laplacian is of the form

$$\begin{aligned} \varvec{L_W}=\left( \begin{array}{cc} \varvec{L}_{1} & \varvec{0}\\ -\varvec{C} & \varvec{L}_{2}+\varvec{D_{C}} \end{array}\right) \end{aligned} \tag{10}$$

where \(\varvec{L}_{1}\in \mathbb {R}^{n\times n}\) and \(\varvec{L}_{2}\in \mathbb {R}^{m\times m}\) are the respective Laplacians of the strong components, \(\varvec{C}\in \mathbb {R}^{m\times n}\) is the adjacency matrix of the cutset pointing from one strong component to the other and \(\varvec{D_{C}}\) is a diagonal matrix with the row sums of \(\varvec{C}\) on its diagonal. The results presented in Theorem 1 below can be generalized in a straightforward way to networks with more than two strong components. For instance, our results are still valid for graph Laplacians of the form

representing a graph with p strong components connected by cutsets \(\varvec{C_{ij}}\).

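We first remark that as a consequence of the block structure of \(\varvec{L_W}\) given by Equation (10), its eigenvalues are either eigenvalues of \(\varvec{L}_{1}\) or eigenvalues of \(\varvec{L}_{2}+\varvec{D_{C}}\). A structural perturbation in direction of the cutset induced by a nonnegative matrix \(\varvec{\Delta }\in \mathbb {R}^{m\times n}\) corresponds to the following modified Laplacian matrix

$$\begin{aligned} \varvec{L}_p(\varvec{\Delta })=\left( \begin{array}{cc} \varvec{L}_{1} & \varvec{0}\\ -\left( \varvec{C}+\varvec{\Delta }\right) & \varvec{L}_{2}+\varvec{D_{C}}+\varvec{D_{\Delta }} \end{array}\right) , \end{aligned}$$

where \(\varvec{D_{\Delta }}\) is the diagonal matrix with the row sums of \(\varvec{\Delta }\) on its diagonal.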

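A structural perturbation in opposite direction of the cutset induced by a nonnegative matrix \(\varvec{\Delta }\in \mathbb {R}^{n\times m}\) corresponds to the following modified Laplacian matrix

$$\begin{aligned} \varvec{L}_p(\varvec{\Delta })=\left( \begin{array}{cc} \varvec{L}_{1}+\varvec{D_{\Delta }} & -\varvec{\Delta }\\ -\varvec{C} & \varvec{L}_{2}+\varvec{D_{C}} \end{array}\right) , \end{aligned}$$

where \(\varvec{D_{\Delta }}\) is the diagonal matrix with the row sums of \(\varvec{\Delta }\) on its diagonal.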

Remark 1

In the rest of the paper, to avoid cumbersome formulations, we will employ the formulation “a structural perturbation \(\varvec{\Delta }\) in direction of the cutset” to refer to a structural perturbation in direction of the cutset induced by a nonnegative matrix \(\varvec{\Delta } \in \mathbb {R}^{m\times n}\), and similarly for structural perturbations in opposite direction of the cutset.
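The block structure and the two kinds of perturbation can be made concrete with a short sketch. It assumes the lower block triangular form with blocks \(\varvec{L}_1\), \(-\varvec{C}\) and \(\varvec{L}_2+\varvec{D_C}\) described in the text. The function names and the 3-node master and 2-node slave example are hypothetical.

```python
import numpy as np

def block_laplacian(L1, L2, C):
    """Laplacian of two strong components; C (m x n) holds master -> slave weights."""
    n, m = L1.shape[0], L2.shape[0]
    D_C = np.diag(C.sum(axis=1))
    return np.block([[L1, np.zeros((n, m))],
                     [-C, L2 + D_C]])

def perturb_along_cutset(L1, L2, C, Delta):
    """Delta (m x n, nonnegative) adds weight in direction of the cutset."""
    return block_laplacian(L1, L2, C + Delta)

def perturb_against_cutset(L1, L2, C, Delta):
    """Delta (n x m, nonnegative) adds links from the slave to the master."""
    n = L1.shape[0]
    L = block_laplacian(L1, L2, C)
    L[:n, :n] += np.diag(Delta.sum(axis=1))   # keeps zero row sums
    L[:n, n:] -= Delta
    return L

# Hypothetical example: complete 3-node master, 2-node slave, one weak cutset link.
L1 = np.array([[ 2.0, -1.0, -1.0],
               [-1.0,  2.0, -1.0],
               [-1.0, -1.0,  2.0]])
L2 = np.array([[ 1.0, -1.0],
               [-1.0,  1.0]])
C = np.zeros((2, 3))
C[0, 0] = 0.1
L_W = block_laplacian(L1, L2, C)

# The spectrum of L_W is the union of the spectra of L1 and L2 + D_C.
eig_full = np.sort(np.linalg.eigvals(L_W).real)
eig_blocks = np.sort(np.concatenate([
    np.linalg.eigvals(L1).real,
    np.linalg.eigvals(L2 + np.diag(C.sum(axis=1))).real]))
print(np.allclose(eig_full, eig_blocks))

# One link of weight 0.25 from slave node 5 to master node 1.
Delta_back = np.zeros((3, 2))
Delta_back[0, 1] = 0.25
L_back = perturb_against_cutset(L1, L2, C, Delta_back)
print(L_back.sum(axis=1))   # still zero row sums
```

A perturbation in opposite direction fills the upper right block, so the perturbed matrix is no longer block triangular and its spectrum no longer splits into the spectra of the two diagonal blocks.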

Notation

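Given a Laplacian matrix \(\varvec{L_W}\) of a directed graph with simple spectral gap \(\lambda _2\left( \varvec{L_W}\right) \) and a nonnegative matrix \(\varvec{\Delta }\), we denote, similarly to the above notations, by

$$\begin{aligned} s\left( \varvec{\Delta }\right) =\left. \frac{\mathrm {d}}{\mathrm {d}\varepsilon }\lambda _2\left( \varvec{L}_p(\varepsilon \varvec{\Delta })\right) \right| _{\varepsilon =0} \end{aligned}$$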

the change rate of the spectral gap map under the small structural perturbations \(\varepsilon \varvec{\Delta }\) in direction or in opposite direction of the cutset.

Observe, as in Definition 4, that the spectral gap map \(\varepsilon \mapsto \lambda _2\left( \varvec{L}_p(\varepsilon \varvec{\Delta })\right) \) is regular because of the simplicity of \(\lambda _2\left( \varvec{L_W}\right) \) (Horn and Johnson 1985). In Poignard et al. (2018), we proved that having simple eigenvalues is a structurally generic property for graph Laplacians of weakly connected digraphs that satisfy Assumption A1.

Notice that we can possibly have \(s\left( \varvec{\Delta }\right) \in \mathbb {C}\) since the matrices involved in this notation are no longer symmetric. However, we will prove in Sect. 4 (Lemma 2) that in the case where \(\lambda _2\left( \varvec{L_W}\right) \) is an eigenvalue of \(\varvec{L_{2}}+\varvec{D_{C}}\), then \(\lambda _2\left( \varvec{L_W}\right) \) is real positive and therefore \(s\left( \varvec{\Delta }\right) \in \mathbb {R}\). We can now state our main result in the directed case:

Theorem 1

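Let a directed graph \(\mathcal {G}\) consist of two strong components connected by a cutset with adjacency matrix \(\varvec{C}\) and write the associated Laplacian as

$$\begin{aligned} \varvec{L_W}=\left( \begin{array}{cc} \varvec{L}_{1} & \varvec{0}\\ -\varvec{C} & \varvec{L}_{2}+\varvec{D_{C}} \end{array}\right) . \end{aligned}$$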

Assume A1 is satisfied. Then, for a generic choice of the nonzero weights of \(\varvec{L_W}\), we have the following assertions:

- (i) Invariance of synchronizability. If the spectral gap \(\lambda _2\) of \(\varvec{L_W}\) is an eigenvalue of \(\varvec{L_1}\), then the network’s synchronizability is invariant under arbitrary structural perturbations \(\varvec{\Delta }\) in direction of the cutset.

- (ii) Improving synchronizability by reinforcing the cutset. If \(\lambda _{2}\) is an eigenvalue of \(\varvec{L_{2}}+\varvec{D_{C}}\), then the network’s synchronizability increases for arbitrary structural perturbations \(\varvec{\Delta }\) in direction of the cutset.

- (iii) Non-optimality of master–slave configurations. Assume \(\lambda _{2}\) is an eigenvalue of \(\varvec{L_{2}}+\varvec{D_{C}}\). Then, we have the following statements:

  - (a) There exists a structural perturbation \(\varvec{\Delta }\) in opposite direction of the cutset such that \(s(\varvec{\Delta })> 0\).

  - (b) There exists a constant \(\delta (\varvec{L}_1)>0\) and at least one node \(1\le k_0\le n\) (in the driving component) such that if we have \(0<\lambda _2<\delta (\varvec{L}_1)\), then \(s(\varvec{\Delta })> 0\) for any structural perturbation \(\varvec{\Delta }\) consisting of only one link in opposite direction of the cutset and ending at node \(k_0\).

  - (c) If, moreover, \(\varvec{L}_1\) has zero column sums, then there exists a constant \(\delta (\varvec{L}_1)>0\) such that if \(0<\lambda _2<\delta (\varvec{L}_1)\), we have \(s(\varvec{\Delta })> 0\) for any structural perturbation \(\varvec{\Delta }\) in opposite direction of the cutset.

- (iv) Hindering synchronizability by breaking the master–slave configuration. There exists a cutset \(\varvec{C}\) for which \(\lambda _{2}\) is an eigenvalue of \(\varvec{L_{2}}+\varvec{D_{C}}\) and a perturbation \(\varvec{\Delta }\) in opposite direction of \(\varvec{C}\) such that: if \(\varvec{L}_1\) admits a positive eigenvalue sufficiently small, then we have \(s(\varvec{\Delta })\le 0\).
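Items (i) and (ii) can be illustrated numerically for a perturbation in direction of the cutset that is not small. The sketch below is an illustration on one hypothetical example, not a proof. The master is a weighted undirected path whose weights are scaled so that the spectral gap belongs either to \(\varvec{L}_1\) (weak master) or to \(\varvec{L}_2+\varvec{D_C}\) (strong master). All names and numbers are assumptions made for this example.

```python
import numpy as np

def block_laplacian(L1, L2, C):
    """Two strong components; C (m x n) holds the master -> slave weights."""
    n, m = L1.shape[0], L2.shape[0]
    return np.block([[L1, np.zeros((n, m))],
                     [-C, L2 + np.diag(C.sum(axis=1))]])

def spectral_gap(L):
    """Real part of the eigenvalue with the second smallest real part."""
    return np.sort(np.linalg.eigvals(L).real)[1]

# Master: undirected path with weights 1 and 2 (rescaled below).
path = np.array([[ 1.0, -1.0,  0.0],
                 [-1.0,  3.0, -2.0],
                 [ 0.0, -2.0,  2.0]])
# Slave: two mutually coupled nodes, driven through one weak link.
L2 = np.array([[ 1.0, -1.0],
               [-1.0,  1.0]])
C = np.zeros((2, 3))
C[0, 0] = 0.1
# Perturbation in direction of the cutset: master node 3 -> slave node 5.
Delta = np.zeros((2, 3))
Delta[1, 2] = 1.0

gaps = {}
for label, scale in [("weak master", 0.01), ("strong master", 1.0)]:
    L1 = scale * path
    before = spectral_gap(block_laplacian(L1, L2, C))
    after = spectral_gap(block_laplacian(L1, L2, C + Delta))
    gaps[label] = (before, after)
    print(label, before, after)
```

With the weak master the gap is an eigenvalue of \(\varvec{L}_1\) and stays where it is, as in item (i). With the strong master the gap is the smallest eigenvalue of \(\varvec{L}_2+\varvec{D_C}\) and moves to the right, as in item (ii).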

Let us make a few remarks. In the proof of items (i) and (ii), we repeatedly apply a perturbation result in order to handle non-small perturbations. This is not possible in items (iii)(a)–(c) and item (iv) because every perturbation in opposite direction of the cutset makes the graph strongly connected and thus qualitatively changes the network’s structure. In item (iii)(a), the perturbation can be realised by turning an arbitrary node in the slave component into a hub having directed connections to all the nodes in the master component.

As we have shown numerically in the example in Fig. 1 and also in Pade and Pereira (2015), not all perturbations in opposite direction of the cutset increase the synchronizability when \(\varvec{L}_1\) does not have zero column sums. This is stated in item (iv): an example where the situation described in this item occurs is when the master component is an undirected subnetwork, i.e. when \(\varvec{L}_1\) is symmetric, in which case all the eigenvalues are nonnegative.

In items (ii)–(iv), we assume that the spectral gap is an eigenvalue of \(\varvec{L_{2}}+\varvec{D_{C}}\). This happens for instance when the entries in the cutset \(\varvec{C}\) are very small (see Lemma 2). Topologically, this means that the master component is very well connected in comparison with the intensity and/or density of the driving force. It is worth remarking that the connection density of the second component does not play a role in this scenario. The rest of the paper is devoted to the proofs of our two main results and of other results completing our study.

4 Proof of the Main Result

The following standard result from matrix theory is the technical starting point for the rest of this article (Horn and Johnson 1985). It allows us to determine the dynamical effect of structural perturbations up to first order in the strength of the perturbation.

Lemma 1

(Spectral Perturbation Horn and Johnson 1985) Let \(\lambda \) be a simple eigenvalue of \(\varvec{L}\in \mathbb {R}^{N\times N}\) with corresponding left and right eigenvectors \(\varvec{u},\varvec{v}\), and let \(\tilde{\varvec{L}}\in \mathbb {R}^{N\times N}\). Then, for \(\varepsilon \) small enough there exists a smooth family \(\lambda \left( \varepsilon \right) \) of simple eigenvalues of \(\varvec{L}+\varepsilon \tilde{\varvec{L}}\) with \(\lambda \left( 0\right) =\lambda \) and

$$\begin{aligned} \lambda ^{\prime }\left( 0\right) =\frac{\varvec{u}\tilde{\varvec{L}}\varvec{v}}{\varvec{u}\varvec{v}}, \end{aligned}$$

where the left eigenvector \(\varvec{u}\) is understood as a row vector.
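The derivative formula of Lemma 1, in the standard form \(\lambda ^{\prime }(0)=\varvec{u}\tilde{\varvec{L}}\varvec{v}/(\varvec{u}\varvec{v})\) of Horn and Johnson, can be checked by hand on a two-node example. The following is a minimal sketch, not taken from the paper: node 1 drives node 2 with a hypothetical weight `c`, and the perturbation is the reverse link from node 2 to node 1. The helper names are illustrative only.

```python
import math

def eigenvalues_2x2(m):
    """Eigenvalues of a 2x2 matrix with real spectrum, in increasing order."""
    trace = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    root = math.sqrt(trace * trace - 4.0 * det)
    return ((trace - root) / 2.0, (trace + root) / 2.0)

def first_order_rate(u, pert, v):
    """lambda'(0) = (u pert v) / (u v) for a left (row) eigenvector u and a right eigenvector v."""
    pert_v = [sum(pert[i][j] * v[j] for j in range(2)) for i in range(2)]
    return sum(u[i] * pert_v[i] for i in range(2)) / sum(u[i] * v[i] for i in range(2))

c = 0.8                              # hypothetical weight of the link 1 -> 2
L = [[0.0, 0.0], [-c, c]]            # Laplacian: node 1 drives node 2
u, v = [-1.0, 1.0], [0.0, 1.0]       # left/right eigenvectors of L for the eigenvalue c
L_tilde = [[1.0, -1.0], [0.0, 0.0]]  # Laplacian of the reverse link 2 -> 1

eps = 1e-6
perturbed = [[L[i][j] + eps * L_tilde[i][j] for j in range(2)] for i in range(2)]
predicted = first_order_rate(u, L_tilde, v)
observed = (eigenvalues_2x2(perturbed)[1] - c) / eps  # finite-difference slope
print(predicted, observed)
```

In this toy case the nonzero eigenvalue moves to the right, so the finite-difference slope and the first-order prediction should agree up to rounding.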

In order to track the motion of the spectral gap through this representation, we first investigate the structure of the eigenvectors of \(\varvec{L_W}\) in the following two auxiliary lemmata. First, observe that the matrix \(\varvec{L}_{2}+\varvec{D_{C}}\) is nonnegative diagonally dominant (Berman and Plemmons 1994; Horn and Johnson 1985). This property enables us to find a Perron–Frobenius-like result.

Lemma 2

Let \(\varvec{L_W}\) be as in Theorem 1. Then, \(\varvec{L}_{2}+\varvec{D_{C}}\) has a minimal simple, real and positive eigenvalue with corresponding positive left and right eigenvectors.

Proof

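Let \(s:=\max _{i}\left\{ {\varvec{D_{C}}} _{(i)}+\sum _{j\ne i}W_{ij}\right\} >0\), then \(\varvec{N}=s\varvec{I}-\left( \varvec{L}_{2}+\varvec{D_{C}}\right) \) is a nonnegative matrix by definition of s. Furthermore, it is irreducible as we assumed that the component associated with \(\varvec{L}_{2}\) is strongly connected. Then, by the Perron–Frobenius theorem (Berman and Plemmons 1994), \(\varvec{N}\) has a maximal, simple and real eigenvalue \(\Lambda \) with corresponding positive left and right eigenvectors \(\varvec{\omega }\) and \(\varvec{\eta }\). That is,

$$\begin{aligned} \varvec{\omega }\varvec{N}=\Lambda \varvec{\omega },\qquad \varvec{N}\varvec{\eta }=\Lambda \varvec{\eta }, \end{aligned}$$

yielding

$$\begin{aligned} \varvec{\omega }\left( \varvec{L}_{2}+\varvec{D_{C}}\right) =\left( s-\Lambda \right) \varvec{\omega },\qquad \left( \varvec{L}_{2}+\varvec{D_{C}}\right) \varvec{\eta }=\left( s-\Lambda \right) \varvec{\eta }. \end{aligned}$$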

As \(\Lambda \) is the maximal eigenvalue and all the eigenvalues of \(\varvec{L}_{2}+\varvec{D_{C}}\) are obtained by eigenvalues \(\mu \) of \(\varvec{N}\) through \(s-\mu \), we must have that \(s-\Lambda \) is the minimal real eigenvalue of \(\varvec{L}_{2}+\varvec{D_{C}}\). Furthermore, the eigenvectors are the same, so the left and right eigenvectors of \(\varvec{L}_{2}+\varvec{D_{C}}\) corresponding to \(s-\Lambda \) are positive. As a consequence of the Gershgorin Theorem together with the strong connectivity of the second component, we have that \(\varvec{L}_{2}+\varvec{D_{C}}\) is invertible (Corollary 6.2.9 in Horn and Johnson 1985). Hence, \(s-\Lambda \ne 0\). Furthermore, by the Gershgorin Theorem again, we have \(s-\Lambda \ge 0\) and hence \(s-\Lambda >0\). \(\square \)
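The shift used in this proof also suggests a simple numerical recipe: run power iteration on \(\varvec{N}=s\varvec{I}-(\varvec{L}_{2}+\varvec{D_{C}})\) and read off \(s-\Lambda \). The sketch below is illustrative only. The matrix `M` is a hypothetical example (a directed three-cycle with unit weights whose first node receives an input of strength 0.5 from the master component), and power iteration is our choice of method, not one used in the paper. It converges here because the shifted matrix has positive diagonal entries.

```python
def mat_vec(matrix, vector):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def smallest_eigenpair(m, iterations=2000):
    """Smallest eigenvalue of m = L2 + D_C and its right eigenvector, via N = s*I - m."""
    n = len(m)
    s = max(m[i][i] for i in range(n))  # diagonal entry i equals D_C(i) + sum_{j != i} W_ij
    shifted = [[(s if i == j else 0.0) - m[i][j] for j in range(n)] for i in range(n)]
    x = [1.0 / n] * n
    for _ in range(iterations):
        x = mat_vec(shifted, x)
        total = sum(x)                  # entries stay nonnegative, so this is positive
        x = [xi / total for xi in x]
    perron = sum(mat_vec(shifted, x))   # equals Lambda because the entries of x sum to one
    return s - perron, x

# Hypothetical L2 + D_C: links 2 -> 1, 3 -> 2, 1 -> 3, and D_C = diag(0.5, 0, 0).
M = [[1.5, -1.0, 0.0],
     [0.0, 1.0, -1.0],
     [-1.0, 0.0, 1.0]]
mu, y = smallest_eigenpair(M)
print(mu, y)
```

The returned eigenvalue should be strictly positive and the eigenvector entrywise positive, in line with Lemma 2.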

This Lemma shows that the spectral gap and the corresponding eigenvectors are real in this case. So, when changing the coupling structure, the motion of \(\lambda _{2}\) will be along the real axis by Lemma 1. Next, we investigate the structure of the eigenvectors of \(\varvec{L_W}\).

Lemma 3

Let \(\varvec{L_W}\) be as in Theorem 1 and let the spectral gap \(\lambda _{2}\) of \(\varvec{L_W}\) be an eigenvalue of \(\varvec{L_{2}}+\varvec{D_{C}}\). Then, the eigenvalue is simple and the corresponding left and right eigenvectors of \(\varvec{L_W}\) have the form

where \(\varvec{w}\) and \(\varvec{y}\) are left and right eigenvectors of \(\varvec{L}_{2}+\varvec{D_{C}}\).

Proof

Let \(\left( \varvec{v},\varvec{w}\right) \) and \(\left( \varvec{x},\varvec{y}\right) \) be left and right eigenvectors of \(\varvec{L_W}\) corresponding to \(\lambda _{2}\). For the left eigenvector, we have

The second component of this equation yields that \(\varvec{w}\) is a left eigenvector of \(\left( \varvec{L}_{2}+\varvec{D_{C}}\right) \). As \(\lambda _{2}\) is simple by Lemma 2, it is not an eigenvalue of \(\varvec{L}_{1}\), so the first component yields

The equation for the right eigenvector is

As \(\varvec{L}_{1}-\lambda _{2}\varvec{I}\) is regular, we have \(\varvec{x}=\varvec{0}\). The second component then yields that \(\varvec{y}\) is a right eigenvector of \(\varvec{L}_{2}+\varvec{D_{C}}\). \(\square \)
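The block computations behind Lemma 3 can be written out explicitly. The following is a sketch under two assumptions made here for illustration: with the master component listed first, the Laplacian has the block form \(\varvec{L_W}=\left( {\begin{matrix}\varvec{L}_1 &{} \varvec{0}\\ -\varvec{C} &{} \varvec{L}_2+\varvec{D_C}\end{matrix}}\right) \), and left eigenvectors are treated as row vectors.

$$\begin{aligned} \left( \varvec{v},\varvec{w}\right) \varvec{L_W}=\lambda _2\left( \varvec{v},\varvec{w}\right)&\iff \varvec{v}\varvec{L}_1-\varvec{w}\varvec{C}=\lambda _2\varvec{v}\quad \text {and}\quad \varvec{w}\left( \varvec{L}_2+\varvec{D_C}\right) =\lambda _2\varvec{w},\\ \varvec{L_W}\begin{pmatrix}\varvec{x}\\ \varvec{y}\end{pmatrix}=\lambda _2\begin{pmatrix}\varvec{x}\\ \varvec{y}\end{pmatrix}&\iff \varvec{L}_1\varvec{x}=\lambda _2\varvec{x}\quad \text {and}\quad -\varvec{C}\varvec{x}+\left( \varvec{L}_2+\varvec{D_C}\right) \varvec{y}=\lambda _2\varvec{y}. \end{aligned}$$

Under these assumptions, regularity of \(\varvec{L}_1-\lambda _2\varvec{I}\) gives \(\varvec{v}=\varvec{w}\varvec{C}\left( \varvec{L}_1-\lambda _2\varvec{I}\right) ^{-1}\) and \(\varvec{x}=\varvec{0}\), so that the second right-eigenvector equation reduces to \(\left( \varvec{L}_2+\varvec{D_C}\right) \varvec{y}=\lambda _2\varvec{y}\).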

Proof of Theorem 1

We will use throughout the proof that the smallest eigenvalue of \(\varvec{L}_2+\varvec{D_C}\) is simple, real and positive by Lemma 2.

Ad (i). Let the nonnegative matrix \(\varepsilon \varvec{\Delta }\) be a small perturbation of the cutset, so the corresponding Laplacian writes

By assumption \(\lambda _2\) is an eigenvalue of \(\varvec{L}_1\). As the smallest eigenvalue of \(\varvec{L}_2+\varvec{D_C}\) is simple, we can apply Lemma 1 and obtain the following formula for the perturbed smallest eigenvalue \(\mu _1(\varepsilon )\) of \(\varvec{L}_2+\varvec{D_C}+\varepsilon \varvec{D_{\Delta }}\)

The positivity holds true because by Lemma 2, left and right eigenvectors \(\varvec{w}^T, \varvec{y}\) of \(\varvec{L}_2+\varvec{D_C}\) are positive, and at least one entry of \(\varvec{D_{\Delta }}\) is positive. So, we have \(\mathfrak {R}(\lambda _2)<\mu _1(0)<\mu _1(\varepsilon )\), i.e. the spectral gap of the whole network is still given by \(\lambda _2\). Now, the perturbed matrix \(\varvec{L}_2+\varvec{D_C}+\varepsilon \varvec{D_{\Delta }}\) is of the same form as \(\varvec{L}_2+\varvec{D_C}\). Hence, the above reasoning can be applied repeatedly in order to obtain the desired result.

Ad (ii). Let again \(\varepsilon \varvec{\Delta }\) be a small perturbation in direction of the cutset and let us write the perturbed Laplacian \(\varvec{L_p}\left( \varepsilon \varvec{\Delta } \right) \) as

Using Lemma 1, we have for the spectral gap of the perturbed system

where \(\left( \varvec{v},\varvec{w}\right) \) and \(\left( \varvec{x},\varvec{y}\right) \) are the eigenvectors of \(\varvec{L_W}\). Now, from Lemma 3, we have \(\varvec{x}=\varvec{0}\) and so we obtain

By assumption \(\varvec{\Delta }\) and therefore \(\varvec{D_{\Delta }}\) is nonnegative. Furthermore, Lemma 2 shows that \(\varvec{w}\) and \(\varvec{y}\) are positive, so \(s(\varvec{\Delta })\) is positive. By the same reasoning as in (i), we can perform such small perturbations repeatedly in order to obtain the result for any structural perturbation in direction of the cutset with arbitrarily large entries.

Ad (iii)(a). For a small perturbation \(\varepsilon \varvec{\Delta }\) in opposite direction of the cutset, the perturbed Laplacian writes as

where \(\varvec{w}\) and \(\varvec{y}\) are left and right eigenvectors of \(\varvec{L}_{2}+\varvec{D_C}\) and

Now, assume we can find a \(\varvec{\Delta }\) such that \(\varvec{\Delta y}=\varvec{1}\). Then, we would have

Now, by Lemma 2, the eigenvectors \(\varvec{w}\) and \(\varvec{y}\) are positive and \(\varvec{C}\) is nonnegative with at least one positive entry. Hence,

So it remains to show that there exists a \(\varvec{\Delta }\) such that \(\varvec{\Delta y}=\varvec{1}\). By Lemma 2, \(\varvec{y}\) is a positive vector, so for any fixed \(1\le k\le m \), we can choose \(\varvec{\Delta }_{ik}=\frac{1}{y_k}\) for \(1\le i \le N\) and zero elsewhere to obtain \(\varvec{\Delta y}=\varvec{1}\).
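The construction of \(\varvec{\Delta }\) in this step is easy to reproduce. The sketch below is illustrative: the vector `y` is a hypothetical positive eigenvector, and the function name and 0-based column index are our own conventions. It builds the matrix whose column k holds \(1/y_k\) in every row, which corresponds to one slave node sending a link to every master node.

```python
def hub_perturbation(y, k, n_master):
    """Delta with entries 1/y[k] in column k (0-based) and zeros elsewhere, so that Delta y = 1."""
    return [[(1.0 / y[k] if j == k else 0.0) for j in range(len(y))]
            for _ in range(n_master)]

y = [0.2, 0.5, 0.3]                      # hypothetical positive eigenvector of L2 + D_C
delta = hub_perturbation(y, k=1, n_master=4)
delta_y = [sum(row[j] * y[j] for j in range(len(y))) for row in delta]
print(delta_y)                           # every entry should equal one
```

Any column k works because all entries of \(\varvec{y}\) are positive, which is why the hub node in the slave component can be chosen arbitrarily.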

Ad (iii)(b). Here, we first use a result proved in Poignard et al. (2018) on the structure of Laplacian spectra in the case of strongly connected digraphs. Such graphs admit a spanning diverging tree, and therefore, by Theorem 6.6 in Poignard et al. (2018), we have that for a generic choice of the nonzero weights of \(\varvec{L}_1\), the spectrum of this matrix is simple. Under this genericity assumption, we can thus suppose that \(\varvec{L}_1\) is diagonalizable.

Then, let’s consider a vector \(\varvec{\Delta y}\) (with nonnegative entries) decomposed in the basis of eigenvectors \(\left( \varvec{1},\varvec{X}_2,\ldots ,\varvec{X}_N\right) \) of \(\varvec{L}_1\):

with the numbers \(\beta _i\) being possibly in \(\mathbb {C}\). Such a decomposition gives the relation:

where the numbers \(\alpha _k\) denote the eigenvalues of \(\varvec{L}_1\) sorted in increasing order of their real parts (so that \(\alpha _1=0\)). Notice that the fraction in this expression is well defined, since by assumption we have \(\mathfrak {R}\left( \alpha _k\right) >\lambda _2\) for any \(k\ge 2\).

Let’s consider \(\varvec{\Delta y}=\varvec{e}_k\), where \(\varvec{e}_k\) denotes the k-th vector of the canonical basis of \({\mathbb {R}}^N\). We first remark that the corresponding values \(\beta _1\left( \varvec{e}_k\right) \) in the decomposition (20) satisfy the following relation

which directly gives us the relation \(\sum _{k=1}^N\beta _1\left( \varvec{e}_k\right) =1\).

So, at least one of these values, say \(\beta _1\left( \varvec{e}_{k_0}\right) \), must be positive. Consequently, for any nonnegative matrix \(\varvec{\Delta }\) with \(\varvec{\Delta y}=\varvec{e}_{k_0}\) and \(\lambda _2\) small enough, we get that the right hand side in Eq. (21) is positive and hence, \(s(\varvec{\Delta })\) from Eq. (18) must be positive. In other words, since the terms in the sum in Eq. (21) depend only on \(\varvec{L}_1\) and \(\varvec{e}_{k_0}=\varvec{\Delta y}\), there exists a constant \(\delta (\varvec{L}_1,\varvec{e}_{k_0})\) such that if \(0<\lambda _2<\delta (\varvec{L}_1,\varvec{e}_{k_0})\) then \(s(\varvec{\Delta })>0\). To conclude, it suffices to consider the set \(\mathcal {A}\) of integers \(1\le k\le N\) such that \(\beta _1\left( \varvec{e}_k\right) >0\) and to set

Since \(\mathcal {A}\) contains \(k_0\), it is nonempty and thus \(\delta (\varvec{L}_1)\) exists and is positive. Now consider the structural perturbations \(\varvec{\Delta }\) with one link in opposite direction of the cutset for which there exists an integer k in \(\mathcal {A}\) such that \(\varvec{\Delta y}=\varvec{e}_k\), i.e. the structural perturbations with only one link in opposite direction of the cutset ending at a node k belonging to \(\mathcal {A}\). Such structural perturbations exist (since the vector \(\varvec{y}\) is positive), and for any such \(\varvec{\Delta }\) we have \(s(\varvec{\Delta })>0\) provided \(0<\lambda _2<\delta (\varvec{L}_1)\).
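The coefficients \(\beta _1\left( \varvec{e}_k\right) \) can also be read off from a left eigenvector. This is a standard linear-algebra observation added here for illustration, not a step of the original proof; the symbol \(\varvec{\ell }\) is our own notation. Let \(\varvec{\ell }\) be a left (row) eigenvector of \(\varvec{L}_1\) for the eigenvalue 0. Since \(\alpha _j\varvec{\ell }\varvec{X}_j=\varvec{\ell }\varvec{L}_1\varvec{X}_j=0\) and \(\alpha _j\ne 0\) for \(j\ge 2\), we have \(\varvec{\ell }\varvec{X}_j=0\), and applying \(\varvec{\ell }\) to the decomposition of a vector \(\varvec{z}=\beta _1\varvec{1}+\sum _{j\ge 2}\beta _j\varvec{X}_j\) gives

$$\begin{aligned} \beta _1\left( \varvec{z}\right) =\frac{\varvec{\ell }\varvec{z}}{\varvec{\ell }\varvec{1}},\qquad \text {so that}\qquad \beta _1\left( \varvec{e}_k\right) =\frac{\ell _k}{\sum _{j=1}^N\ell _j}\quad \text {and}\quad \sum _{k=1}^N\beta _1\left( \varvec{e}_k\right) =1. \end{aligned}$$

When \(\varvec{L}_1\) has zero column sums, one can take \(\varvec{\ell }=(1,\ldots ,1)\), which recovers the value \(\beta _1\left( \varvec{e}_k\right) =1/N\) used in item (iii)(c).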

Ad (iii)(c). As in (b), assume again that \(\varvec{L}_1\) is diagonalizable. If, moreover, it has zero column sums, then the basis of eigenvectors \(\left( \varvec{1},\varvec{X}_2,\ldots ,\varvec{X}_n\right) \) of \(\varvec{L}_1\) satisfies

Indeed, this can be directly seen by multiplying each eigenvector equation \(\varvec{L}_1\varvec{X}_k=\alpha _k\varvec{X}_k\) by the vector \((1,\ldots ,1)\) to the left.

As a result, we must have \(\beta _1 \left( \varvec{e}_k\right) =\frac{1}{n}\) for any \(1\le k \le n\). Now consider any nonnegative matrix \(\varvec{\Delta }\), if \(y_i\) denotes the ith entry of \(\varvec{y}\) in its decomposition in the canonical basis \((\varvec{e}_1,\ldots ,\varvec{e}_n)\), we have

Since all the entries \(y_i\) are positive by Lemma 2, to get \(s(\varvec{\Delta })>0\) it suffices that all the terms in brackets are positive vectors. So, it suffices that \(\lambda _2\) is small enough compared to \(\frac{1}{n}\) and compared to the sums \(\sum _{k=2}^n \frac{\beta _k(\varvec{e}_i)}{\alpha _k-\lambda _2}\varvec{X}_k\). Since the family \((\beta _k(\varvec{e}_i))_{\begin{array}{c} 2\le k\le n\\ 1\le i\le n\\ \end{array}}\) is finite, we get again (as in (iii)(b)) the existence of a constant \(\delta (\varvec{L}_1)>0\) such that, if \(0<\lambda _2<\delta (\varvec{L}_1)\), then for any structural perturbation \(\varvec{\Delta }\) in opposite direction of the cutset, we have \(s(\varvec{\Delta })>0\).

Ad (iv). As in (b) and (c), we can suppose \(\varvec{L}_1\) is diagonalizable. Since the entries of the cutset \(\varvec{C}\) are nonnegative, by virtue of Eq. (18) it suffices to show that there is a \(\varvec{\Delta }\) such that some entry of \((\varvec{L}_1 - \lambda _2 \varvec{I} )^{-1} \varvec{\Delta } \varvec{y}\) is positive. Assume \(\varvec{L}_1\) admits a positive eigenvalue \(\alpha _k\); then an eigenvector of \(\varvec{L}_1\) associated with \(\alpha _k\) can be chosen real. Let’s choose one such eigenvector \(\varvec{X}_k\): if one entry of \(\varvec{X}_k\) is negative, we define

and consider

if the set \(\mathcal {G}_{-}\) is empty (resp. \(\mathcal {G}_{+}\)), we set \(\beta _m = 0\) (resp. \(\beta _M = 0\)). Notice that in view of the genericity properties, the eigenvector \(\varvec{X}_k\) has no zero entry. Moreover, we consider \(\varvec{\Delta y} = \beta _m \varvec{1} + \varvec{X}_k\). In this way \(\varvec{\Delta y}\) is nonnegative and there is a \(\varvec{\Delta }\) that solves this equation. Thus

Assume that \(\beta _M\) is attained in the ith entry, so we obtain that if

then \(\left( (\varvec{L}_1 - \lambda _2 \varvec{I})^{-1} \varvec{\Delta y}\right) _{(i)}>0\). Therefore, for a cutset \(\varvec{C}\) connecting to this entry, we have \(s(\varvec{\Delta })\le 0\), as desired.

If all entries of \(\varvec{X}_k\) are nonnegative, then \(\varvec{\Delta y} = \varvec{X}_k\). This yields

and any \(\alpha _k > \lambda _2\) suffices. In this case, we get \(s(\varvec{\Delta })<0\) for any choice of the cutset \(\varvec{C}\). \(\square \)

In item (iv) of this theorem, the choice of the cutset \(\varvec{C}\) for which we hinder synchronization is not sharp. Indeed, suppose only one entry in \(\varvec{X}_k\) is negative. Then, we can apply the same reasoning to the vector \(-\varvec{X}_k\), for which \(n-1\) entries will be nonnegative. In this case, the suitable cutsets \(\varvec{C}\) will be more numerous.

Illustration of Item (iv) (Hindrance of Synchronization). Consider the directed network in Fig. 1 (without the addition of links). Assume that all connections in the master network have strength \(1/2< w<1\) and the connections in the slave network have strength 1. Then, the spectrum of the network can be decomposed as

So, the spectral gap \(\lambda _2 = 1\) belongs to \(\sigma (\varvec{L}_2 + \varvec{D}_{\varvec{C}})\). With the notation from the proof above, we have \(\alpha _2 = 2w\) and the corresponding eigenvector of \(\varvec{L}_1\) is given by \(\varvec{X}_2 = (-1 , 1 , -1)\). Also, the right eigenvector corresponding to \(\lambda _2\) of \(\varvec{L}_2 + \varvec{D}_{\varvec{C}}\) is \(\varvec{y} = (1, 1)\). Hence, considering

, this equation can be solved by introducing a single link from any node of the slave component to any node of the master component. However, in view of the cutset \(\varvec{C}\) that starts from node 2 of the master component, only connections to node 2 can give a contribution to \(s(\varvec{\Delta })\). Hence, we can choose

that is, a single link from node 4 to node 2 as in Fig. 1 will cause hindrance of synchronization since \(s(\varvec{\Delta }) < 0\).
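The arithmetic of this illustration can be reproduced from the quantities quoted above. The sketch below is a hedged reading of the construction \(\varvec{\Delta y}=\beta _m\varvec{1}+\varvec{X}_k\) from the proof of item (iv): it assumes \(\beta _m=1\) (the smallest shift making \(\beta _m\varvec{1}+\varvec{X}_2\) nonnegative) and uses only \(\varvec{L}_1\varvec{1}=\varvec{0}\) and \(\varvec{L}_1\varvec{X}_2=\alpha _2\varvec{X}_2\), so no explicit matrix \(\varvec{L}_1\) is needed. The function name and the sampled values of w are illustrative.

```python
def resolvent_on_delta_y(alpha, x_k, beta_m, lam2):
    """Entries of (L1 - lam2*I)^{-1} (beta_m*1 + X_k), using L1*1 = 0 and L1*X_k = alpha*X_k."""
    return [-beta_m / lam2 + entry / (alpha - lam2) for entry in x_k]

lam2 = 1.0                   # spectral gap stated in the illustration
x2 = [-1.0, 1.0, -1.0]       # eigenvector X_2 of L1 for alpha_2 = 2w
beta_m = 1.0                 # assumption: smallest shift making beta_m*1 + X_2 nonnegative

results = {w: resolvent_on_delta_y(2.0 * w, x2, beta_m, lam2) for w in (0.6, 0.75, 0.9)}
for w, entries in results.items():
    print(w, entries)
```

For each sampled w in the interval (1/2, 1), only the entry belonging to node 2 comes out positive. Since the cutset starts from node 2, this is the entry that enters \(s(\varvec{\Delta })\), which is consistent with the sign \(s(\varvec{\Delta })<0\) stated above.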

More generally, the eigenvector \(\varvec{X}_k\) provides a spectral decomposition

When the cutset is from only the nodes of a single component \(\mathcal {G}_{-}\) or \(\mathcal {G}_{+}\) then it might be possible to hinder synchronization. This suggests that to improve synchrony it is best to drive the slave by mixing multiple inputs from \(\mathcal {G}_{-}\) and \(\mathcal {G}_{+}\).

5 Discussion

In this paper, we have investigated the effect of structural perturbations on the transverse stability of the synchronous manifold in diffusively coupled networks. Establishing a connection between topological properties of a network and its synchronizability has been a challenge for the last few decades. So far, most of the existing literature focuses on establishing correlations supported by numerical simulations. Here, we present a first step in proving rigorous results for both undirected and directed networks.

For directed networks, we have investigated the behaviour of a network when its cutset is perturbed. There is only one scenario we did not investigate here: when the spectral gap is an eigenvalue of \(\varvec{L}_1\), the effect of a perturbation in opposite direction of the cutset cannot be determined in the framework presented above. It is of course possible to write down a term similar to the one in Eq. (19). However, in this case it involves left and right eigenvectors of \(\varvec{L}_1\). One would thus need to investigate eigenvectors of Laplacians of strongly connected digraphs, and more precisely the signs of their entries. To our knowledge, there have been no attempts to do so yet.

Even more involved is the question whether there exists a classification of links according to their dynamical impact in strongly connected networks. To our knowledge, no results have been obtained for the general case so far either. This is also due to the fact that there are few attempts to extend the approaches on undirected graphs due to Fiedler (see Fiedler 1973) to directed graphs. As shown here, related results would essentially improve our understanding of the dynamical impact of a link in directed networks.

References

Agaev, R., Chebotarev, P.: The matrix of maximum out forests of a digraph and its applications. Autom. Remote Control 61, 1424–1450 (2000)

Agarwal, N., Field, M.: Dynamical equivalence of networks of coupled dynamical systems: asymmetric inputs I. Nonlinearity 23(6), 1245 (2010)

Barahona, M., Pecora, L.M.: Synchronization in small-world systems. Phys. Rev. Lett. 89, 054101 (2002)

Barrio, R., Blesa, F., Dena, A., Serrano, S.: Qualitative and numerical analysis of the Rössler model: Bifurcations of equilibria. Comput. Math. Appl. 62(11), 4140–4150 (2011)

Belykh, I., Hasler, M., Lauret, M., Nijmeijer, H.: Synchronization and graph topology. Int. J. Bifurc. Chaos 15(11), 3423–3433 (2005)

Berman, A., Plemmons, R.J.: Nonnegative Matrices in the Mathematical Sciences. SIAM, University City (1994)

Bick, C., Field, M.: Asynchronous networks: modularization of dynamics theorem. Nonlinearity 30(2), 595 (2017)

Brouwer, A.E., Haemers, W.H.: Spectra of Graphs. Springer, Berlin (2011)

Chung, F.R.K.: Spectral Graph Theory. American Mathematical Society, Philadelphia (1997)

Dörfler, F., Bullo, F.: Synchronisation and transient stability in power networks and nonuniform Kuramoto oscillators. SIAM J. Cont. Optim. 50, 1616–1642 (2012)

Fiedler, M.: Algebraic connectivity of graphs. Czechoslovak Math. J. 23, 298–305 (1973)

Field, M.J.: Patterns of desynchronization and resynchronization in heteroclinic networks. Nonlinearity 30(2), 516 (2017)

Hammond, C., Bergman, H., Brown, P.: Pathological synchronization in Parkinson’s disease: networks, models and treatments. Trends Neurosci. 30, 357–364 (2007)

Hart, J.D., Pade, J.P., Pereira, T., Murphy, T.E., Roy, R.: Adding connections can hinder network synchronization of time-delayed oscillators. Phys. Rev. E 92, 2 (2015)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (1985)

Jalili, M.: Enhancing synchronizability of diffusively coupled dynamical networks: a survey. IEEE TNNLS 24, 1009–1022 (2013)

Li, C., Sun, W., Kurths, J.: Synchronization between two coupled complex networks. Phys. Rev. E 76, 046204 (2007)

Milanese, A., Sun, J., Nishikawa, T.: Approximating spectral impact of structural perturbations in large networks. Phys. Rev. E 81, 046112 (2010)

Milton, J., Jung, P. (eds.): Epilepsy as a Dynamic Disease. Springer, Berlin (2003)

Motter, A.E., Myers, S.A., Anghel, M., Nishikawa, T.: Spontaneous synchrony in power-grid networks. Nat. Phys. 9, 191–197 (2013)

Nishikawa, T., Motter, A.E., Lai, Y.C., Hoppensteadt, F.C.: Heterogeneity in oscillator networks: are smaller worlds easier to synchronize? Phys. Rev. Lett. 91, 014101 (2003)

Nishikawa, T., Motter, A.E.: Synchronization is optimal in non-diagonalizable networks. Phys. Rev. E 73, 065106 (2006)

Nishikawa, T., Sun, J., Motter, A.E.: Sensitive dependence of optimal network dynamics on network structure. To appear in Phys. Rev. X. arXiv:1611.01164 (2017)

Pade, J.P., Pereira, T.: Improving the network structure can lead to functional failures. Nat. Sci. Rep. 5 (2015)

Papadopoulos, A.A., McCann, J.A., Navarra, A.: Connectionless probabilistic (cop) routing: an efficient protocol for mobile wireless ad-hoc sensor networks. In: 24th IEEE International IPCCC (2005)

Pecora, L.M., Carroll, T.L.: Master stability functions for synchronized coupled systems. Phys. Rev. Lett. 80, 2109–2112 (1998)

Pereira, T.: Hub synchronization in scale-free networks. Phys. Rev. E 82, 036201 (2010)

Pereira, T., Eldering, J., Rasmussen, M., Veneziani, A.: Towards a theory for diffusive coupling functions allowing persistent synchronization. Nonlinearity 27, 501–525 (2014)

Pereira, T., van Strien, S., Tanzi, M.: Heterogeneously coupled maps: hub dynamics and emergence across connectivity layers. arXiv preprint arXiv:1704.06163 (2017)

Pikovsky, A., Rosenblum, M., Kurths, J.: Synchronization. A Universal Concept in Nonlinear Sciences. Cambridge University Press, Cambridge (2001)

Pogromsky, A., Nijmeijer, H.: Cooperative oscillatory behavior of mutually coupled dynamical systems. IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications 48(2), 152–162 (2001)

Poignard, C.: Inducing chaos in a gene regulatory network by coupling an oscillating dynamics with a hysteresis-type one. J. Math. Biol. 69, 1–34 (2013)

Poignard, C., Pereira, T., Pade, J.P.: Spectra of Laplacian matrices of weighted graphs: Structural genericity properties. SIAM J. Appl. Math. 78(1), 372–394 (2018)

Starr, P.A., Rau, G.M., Davis, V., Marks, W.J., Ostrem, J.L., Simmons, D., Lindsey, N., Turner, R.S.: Spontaneous pallidal neuronal activity in human dystonia: comparison with Parkinson’s disease and normal macaque. J. Neurophysiol. 93, 3165–3176 (2005)

Ujjwal, S.R., Punetha, N., Ramaswamy, R.: Phase oscillators in modular networks: the effect of nonlocal coupling. Phys. Rev. E 93, 012207 (2016)

Wang, X.F., Chen, G.: Synchronization in scale-free dynamical networks: robustness and fragility. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 49(1), 54–62 (2002)

Wu, C.W., Chua, L.O.: On a conjecture regarding the synchronization in an array of linearly coupled dynamical systems. IEEE Trans. Circuits Syst. I 43(2), 161–165 (1996)

Yadav, P., McCann, J.A., Pereira, T.: Self-synchronization in duty-cycled internet of things (IoT) applications. To appear in IEEE Internet Things J. arXiv:1707.09315 (2017)

Acknowledgements

We are indebted to Mike Field, Chris Bick, Anna Dias, Jeroen Lamb, Matteo Tanzi, and Serhyi Yanchuk for useful discussions.

Funding

CP was supported by FAPESP Cemeai grant 2013/07375-0 and by EPSRC Centre for Predictive Modelling in Healthcare grant EP/N014391/1. TP was supported by FAPESP Cemeai grant 2013/07375-0, Russian Science Foundation grant 14-41-00044 at the Lobachevsky University of Nizhny Novgorod, by the European Research Council [ERC AdG grant number 339523 RGDD] and by Serrapilheira Institute. JPP was supported by the DFG Research Center Matheon in Berlin. This study did not generate any new data.

Additional information

Communicated by Dr. Paul Newton.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 Structural Perturbations in Undirected Networks—A Partition for Synchronization Improvement

To complete the classification of links with respect to their dynamical impact on diffusive networks, we now investigate weighted undirected networks and their behaviour under both undirected and directed structural perturbations. The advantage of undirected networks is that tools from algebraic graph theory allow us to relate the coupling structure to algebraic properties of the associated graph Laplacian. We emphasize that under the assumptions B1–B3, adding undirected links to an undirected network can never hinder synchronization, as the following Proposition shows. It was first established by Fiedler for unweighted graphs (Fiedler 1973). Here, we present a straightforward generalization to weighted graphs.

Proposition 1

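Let \(\mathcal {G}^{\prime }\) be a graph with Laplacian \(\varvec{L_{W^{\prime }}}\) and \(\mathcal {G}\subseteq \mathcal {G}^{\prime }\) a subgraph with Laplacian \(\varvec{L_W}\). Then, we have

$$\begin{aligned} \lambda _2\left( \varvec{L_W}\right) \le \lambda _2\left( \varvec{L_{W^{\prime }}}\right) . \end{aligned}$$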

The proof follows from using the Courant–Fischer theorem (Horn and Johnson 1985). Hence, increasing weights or adding links in an undirected network will never decrease the synchronizability. More precisely, under the assumptions B1–B3, the master stability function is unbounded and the left bound is determined by the spectral gap exclusively and not by the other Laplacian eigenvalues (see Eq. 8).
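The Courant–Fischer argument can be sketched in two lines. The sketch assumes that both graphs share the same node set, that \(\varvec{L_W}\) belongs to the subgraph and \(\varvec{L_{W^{\prime }}}\) to the larger graph, and that the weights are symmetric with \(W^{\prime }_{ij}\ge W_{ij}\ge 0\); these conventions are spelled out here for illustration.

$$\begin{aligned} \lambda _2\left( \varvec{L_W}\right)&=\min _{\varvec{x}\perp \varvec{1},\,\varvec{x}\ne \varvec{0}}\frac{\varvec{x}^T\varvec{L_W}\varvec{x}}{\varvec{x}^T\varvec{x}},\qquad \varvec{x}^T\varvec{L_W}\varvec{x}=\sum _{i<j}W_{ij}\left( x_i-x_j\right) ^2,\\ \varvec{x}^T\varvec{L_{W^{\prime }}}\varvec{x}-\varvec{x}^T\varvec{L_W}\varvec{x}&=\sum _{i<j}\left( W^{\prime }_{ij}-W_{ij}\right) \left( x_i-x_j\right) ^2\ge 0\quad \text {for all } \varvec{x}. \end{aligned}$$

Taking the minimum over \(\varvec{x}\perp \varvec{1}\) on both sides of the second line then gives \(\lambda _2\left( \varvec{L_W}\right) \le \lambda _2\left( \varvec{L_{W^{\prime }}}\right) \).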

However, if we allow for directed perturbations, the motion of the spectral gap over the real line is not constrained to one direction any more and such a perturbation can therefore either facilitate or hinder synchronization as the following example reveals. Consider the network with a graph \(\mathcal {G}\) as shown in Fig. 2. This example can be decomposed into two connected subgraphs (in blue and red) such that the addition of any directed connection between the two subnetworks facilitates synchronization—regardless of its direction.

Fig. 2. Partition of a graph corresponding to the dynamic role of the nodes. Connecting red nodes and blue nodes with a directed link such as \(3\rightarrow 7\) favours synchronization, regardless of the direction of the added link. Adding links between nodes of the same colour has opposite effects on synchronizability. Simulations show dynamics of the network on the right with local dynamics \(\varvec{f}\) given by Rössler systems in the chaotic regime. In a, the addition of the blue link connecting nodes 2 and 3 at time \(t=4000\) leads to chaotic synchronization of the whole network. In b, the addition of the reverse link in red destabilizes the synchronous state. The insets show time traces of the chaotic dynamics of a single node (Color figure online)

On the other hand, the impact of increasing weights or adding links with small weights between red nodes does depend on the direction of the perturbation. More precisely, the addition of a link from node i to node j has the opposite effect on the stability of synchronization as the addition of a link from node j to node i. The same is valid for the blue nodes. For example, in Fig. 2 adding the directed blue link (with small weight) will enable the network to synchronize its previously asynchronized nodes (Plot a), whereas adding the red link will hinder synchronization (Plot b). In the main result of this appendix (Theorem 2), we show that this situation is generic. What’s more, we establish a full classification of directed links according to their impact on synchronizability.

Before we state the main result, we introduce some notation. We focus on rank-one perturbations which correspond to the addition of a link in a directed way or in an undirected way. This extends naturally to increasing the weight of an existing link. Observe that structurally more complex perturbations such as the addition or deletion of two or several links can be treated in the same way since by Lemma 1, the results we obtain are linear in the perturbation term. Let us first introduce the following matrix corresponding to the aforementioned perturbations.

Notation 1

We denote by \({\varvec{L}}_{{kl}}\) (respectively \({\varvec{L}}_{{k \rightarrow l}}\)) the Laplacian matrix of the disconnected undirected (respectively directed) graph with n nodes having only one link between node k and node l (respectively pointing from k to l):

where the entry \(-1\) in the matrix \(\varvec{L}_{k \rightarrow l}\) is in the l-th row and k-th column.
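The two perturbation matrices of Notation 1 can be sketched as nested lists. This is an illustration under stated assumptions: nodes are labelled from 1 as in the text, the function names are our own, and the diagonal entry \(+1\) in row l of \({\varvec{L}}_{k\rightarrow l}\) follows from the zero row sums of a Laplacian together with the stated position of the entry \(-1\).

```python
def undirected_link(n, k, l):
    """L_{kl}: Laplacian of the n-node graph whose only link joins nodes k and l (1-based)."""
    m = [[0.0] * n for _ in range(n)]
    m[k - 1][k - 1] = m[l - 1][l - 1] = 1.0
    m[k - 1][l - 1] = m[l - 1][k - 1] = -1.0
    return m

def directed_link(n, k, l):
    """L_{k->l}: only row l is nonzero, with +1 on the diagonal and -1 in column k."""
    m = [[0.0] * n for _ in range(n)]
    m[l - 1][l - 1] = 1.0
    m[l - 1][k - 1] = -1.0
    return m

n, k, l = 4, 1, 3
both_directions = [[a + b for a, b in zip(row_a, row_b)]
                   for row_a, row_b in zip(directed_link(n, k, l), directed_link(n, l, k))]
print(both_directions == undirected_link(n, k, l))
```

With these conventions an undirected link is the sum of the two opposite directed links, so by the linearity noted above its first-order effect is the sum of the two directed effects.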

Now, as exposed in Sect. 2.3.1, for linear stability considerations it is sufficient to track the spectral gap of the perturbed graph Laplacian.

Definition 4

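(Motion of Eigenvalues) For an undirected graph Laplacian \(\varvec{L_W}\) having a simple spectral gap \(\lambda _2 \left( \varvec{L_W}\right) \ne 0\), we set

$$\begin{aligned} s_{kl}:=\left. \frac{\mathrm {d}}{\mathrm {d}\varepsilon }\lambda _2\left( \varvec{L_W}+\varepsilon {\varvec{L}}_{kl}\right) \right| _{\varepsilon =0},\qquad s_{k\rightarrow l}:=\left. \frac{\mathrm {d}}{\mathrm {d}\varepsilon }\lambda _2\left( \varvec{L_W}+\varepsilon {\varvec{L}}_{k\rightarrow l}\right) \right| _{\varepsilon =0} \end{aligned}$$

for the change rates of the spectral gap maps under the small structural perturbations \(\varepsilon {\varvec{L}}_{kl}\), \(\varepsilon {\varvec{L}}_{k\rightarrow l}\).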

The maps \(\varepsilon \mapsto \lambda _2\left( \varvec{L_W}+\varepsilon {\varvec{L}}_{kl}\right) \) and \(\varepsilon \mapsto \lambda _2\left( \varvec{L_W}+\varepsilon {\varvec{L}}_{k\rightarrow l}\right) \) are smooth functions because of the simplicity of \(\lambda _2 (\varvec{L_W})\) at \(\varepsilon =0\) (see Lemma 1, which provides an expression for the derivative of the spectral gap map at \(\varepsilon =0\)). This simplicity assumption is structurally generic, as shown in Poignard et al. (2018). Now, we are ready to state the main result of the appendix.

Theorem 2

Let \(\varvec{L_W}\) be the Laplacian of an undirected, connected and weighted graph \(\mathcal {G}\). Assume that assumptions B1–B3 are fulfilled. Then, for a generic choice of the nonzero weights of \(\mathcal {G}\) we have:

-

(i)

Improving synchronizability by a unique decomposition. There is a unique partition of \(\mathcal {G}\) into disjoint connected subgraphs \(\mathcal {G}_1\) and \(\mathcal {G}_2\) such that for nodes k and l belonging to different subgraphs we have \(s_{k\rightarrow l} > 0\).

-

(ii)

Opposite effects on synchronizability. For nodes k, l belonging to the same subgraph, so either \(k,l\in \mathcal {G}_1\) or \(k,l\in \mathcal {G}_2\), we have \(s_{k\rightarrow l}s_{l\rightarrow k}\le 0\).

-

(iii)

Cascade of destabilization. There are unique increasing sequences of connected subgraphs

$$\begin{aligned} \mathcal {G}_1\subset & {} \mathcal {G}_{11}\subset \mathcal {G}_{12}\subset \cdots \subset \mathcal {G}_{1r}=\mathcal {G}\\ \mathcal {G}_2\subset & {} \mathcal {G}_{21}\subset \mathcal {G}_{22}\subset \cdots \subset \mathcal {G}_{2m}=\mathcal {G} \end{aligned}$$

with \(r+m\le N\) such that for \(j>i\) and for a node k in \(\mathcal {G}_{1i}\cap \mathcal {G}_2\) and a node l in \(\mathcal {G}_{1j}\setminus \mathcal {G}_{1i}\) we have \(s_{l\rightarrow k} < 0\). Analogously, for \(j>i\) and for a node k in \(\mathcal {G}_{2i}\cap \mathcal {G}_1\) and a node l in \(\mathcal {G}_{2j}\setminus \mathcal {G}_{2i}\) we have \(s_{l\rightarrow k} < 0\).

Theorem 2 builds on a theorem by Fiedler that we briefly recall here.

Theorem 3

[Fiedler, 1975] Let \(\mathcal {G}\) be a connected weighted graph with Fiedler vector \(\varvec{v}\). For \(r\ge 0\) define \(M(r)=\left\{ i | v_i+r\ge 0\right\} \). Then, the following holds

-

(i) The subgraph \(\mathcal {G}_r\subseteq \mathcal {G}\) induced by the set of nodes M(r) is connected.

-

(ii) If the Fiedler vector \(\varvec{v}\) fulfils \(v_i\ne 0\) for all \(1\le i\le n\), the set of edges \(\{i,j\}\) such that \(v_iv_j<0\) defines a cut \(C\subset \mathcal {E}\) and the resulting two components are connected.

This result enables us to prove Theorem 2.

Proof of Theorem 2

We first mention that by the genericity assumption in the Theorem, we have that \(\lambda _2(\varvec{L_W})\) is simple and the Fiedler vector \(\varvec{v}\) has nonzero entries (see Theorems 3.1 and 3.2 in Poignard et al. 2018). As a consequence, we can apply Theorem 3.

ad (i). The first assertion is a consequence of Theorem 3 (ii). Indeed, let \(\mathcal {N}\) be the set of nodes of \(\mathcal {G}\). Choosing the subgraph \(\mathcal {G}_1\) induced by the nodes \(M(0)=\{i\in \mathcal {N}|v_i\ge 0\}\) and \(\mathcal {G}_2\) as its complement yields two connected subgraphs. Now, by Lemma 1, we have \(s_{k\rightarrow l}=v_l^2-v_lv_k > 0\) as \(v_k\) and \(v_l\) have opposite signs. Now assume there is another decomposition of the nodes \(I_1\subset \mathcal {N}\) and \(I_2\subset \mathcal {N}\) such that \(s_{k\rightarrow l}>0\) for all \(k\in I_1\) and \(l\in I_2\). Then, necessarily there must be two nodes \(i\in I_1\), \(j\in I_2\) such that \(v_i\) and \(v_j\) have the same sign. Otherwise, it would be the same decomposition as before. But then either \(s_{i\rightarrow j}<0\) or \(s_{j\rightarrow i}<0\) or \(s_{i\rightarrow j}=s_{j\rightarrow i}=0\) which is a contradiction.

ad (ii). Assume without restriction that \(k,l\in \mathcal {G}_1\) and \(v_l\ge v_k\). Then, we have \(s_{k\rightarrow l} = v_l(v_l-v_k)\ge 0\). For the opposite link, we obtain analogously \(s_{l\rightarrow k}= v_k(v_k-v_l)\le 0\), as all the involved \(v_i\) are positive.

ad (iii). Consider again the (connected) subgraphs \(\mathcal {G}_1\) and \(\mathcal {G}_2\) induced by M(0) as in (i). Assume without restriction that the \(v_i>0\) are numbered by \(i=1,\ldots ,n_1\) and form a decreasing sequence, and that the \(v_i<0\) are numbered by \(i=n_1+1,\ldots ,n\) and likewise form a decreasing sequence. Now, set \(\varepsilon =\frac{1}{2}\min \{|v_i-v_j| | i,j\ge n_1+1, v_i\ne v_j \}\) and define the positive numbers \(d_i=-(v_{n_1+i}-\varepsilon )\) for \(1\le i\le n-n_1\). Then, by Theorem 3 (i), the subgraphs \(\mathcal {G}_{1i}\) induced by \(M(d_i)\) form an increasing sequence of connected graphs. Let now \(j>i\). For a node k in \(\mathcal {G}_{1i}\cap \mathcal {G}_2\) and a node l in \(\mathcal {G}_{1j}\setminus \mathcal {G}_{1i}\) we have \(v_k>v_l\) by construction. So using Lemma 1 again yields \(s_{l\rightarrow k} = v_k(v_k-v_l)<0\), as all the involved \(v_i\) are negative. The second decomposition, starting from the subgraph \(\mathcal {G}_2\) induced by the nodes \(\{i|v_i\le 0\}\), is obtained by considering the Fiedler vector \(-\varvec{v}\). \(\square \)
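The construction in (iii) can be traced numerically. The following minimal sketch takes the seven-entry Fiedler vector quoted in the illustration of Theorem 2 and computes \(\varepsilon \), the levels \(d_i\) and the node sets \(M(d_i)\). Since the edge set of that graph is not reproduced in the text, connectedness of the induced subgraphs is not checked here; only the node sets and the sign of \(s_{l\rightarrow k}\) are. The helper names (`s`, `M`, `chain`) are illustrative assumptions.

```python
# Fiedler vector as quoted in the illustration of Theorem 2 (nodes 1..7).
v = {1: 0.57, 2: 0.5, 3: 0.09, 4: -0.06, 5: -0.38, 6: -0.34, 7: -0.38}

def s(src, dst):
    """s_{src->dst} = v_dst * (v_dst - v_src), the factored form used in the proof."""
    return v[dst] * (v[dst] - v[src])

def M(r):
    """Node set M(r) = {i : v_i + r >= 0}."""
    return sorted(i for i in v if v[i] + r >= 0)

# Negative entries in decreasing order, as assumed in the proof.
neg = sorted((x for x in v.values() if x < 0), reverse=True)

# Half the smallest gap between distinct negative entries.
eps = 0.5 * min(abs(a - b) for a in neg for b in neg if a != b)

# Levels d_i = -(v_{n1+i} - eps); each lies just above the matching -v entry.
d = [-(x - eps) for x in neg]

# Node sets of G_11, G_12, ...; repeated sets caused by tied entries are dropped.
chain = []
for r in d:
    nodes = M(r)
    if not chain or nodes != chain[-1]:
        chain.append(nodes)

# Sign condition of (iii): s_{l->k} < 0 whenever k is a negative-entry node of a
# smaller set and l is a node of a larger set that is not in the smaller one.
signs_ok = all(
    s(l, k) < 0
    for i, small in enumerate(chain)
    for big in chain[i + 1:]
    for k in small if v[k] < 0
    for l in big if l not in small
)
```

For this vector the sketch yields the nested node sets \(\{1,2,3,4\}\subset \{1,2,3,4,6\}\subset \{1,\ldots ,7\}\). Nodes 5 and 7 enter together because their entries coincide.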

Illustration of Theorem 2. The subgraphs \(\mathcal {G}_1\) and \(\mathcal {G}_2\) are given by the nodes \(\{1,2,3\}\) and \(\{4,5,6,7\}\), respectively. The Fiedler vector is given by \(\varvec{v}=(0.57,0.5,0.09,-0.06,-0.38,-0.34,-0.38)\), and the nodes are colour coded with shades of red (\(v_i>0\)) and blue (\(v_i<0\)) (Color figure online)

Example 1

In order to illustrate Theorem 2, consider again the graph from Fig. 2 and let \(\varvec{v}\) be its Fiedler vector, i.e. the normalized eigenvector associated with the spectral gap. Then, the cut from item (i) is obtained by dividing nodes according to the sign of the corresponding entry in \(\varvec{v}\). In Fig. 2, it corresponds to red and blue nodes. To complete the picture, it remains to determine the exact effect of perturbations among nodes of the same colour, beyond item (ii). This is done by comparing the corresponding entries in \(\varvec{v}\), colour coded in Fig. 3. Then, item (iii) states that increasing weights or adding weak connections from dark red to light red nodes decreases the synchronizability, while adding an opposite link increases the synchronizability. The same is valid for the blue nodes.
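The sign pattern described in this example can be reproduced from the Fiedler vector alone. The sketch below is a hedged illustration: it uses the vector quoted in the illustration of Theorem 2 and the expression \(s_{k\rightarrow l}=v_l(v_l-v_k)\) as it appears in the proof. The function and variable names are hypothetical, and the remark on colour shades assumes that darker shades correspond to larger \(|v_i|\).

```python
# Fiedler vector as quoted in the illustration of Theorem 2 (nodes 1..7).
v = {1: 0.57, 2: 0.5, 3: 0.09, 4: -0.06, 5: -0.38, 6: -0.34, 7: -0.38}

def s(k, l):
    """s_{k->l} = v_l * (v_l - v_k), the factored form of v_l^2 - v_l v_k."""
    return v[l] * (v[l] - v[k])

# Item (i): split the nodes by the sign of their Fiedler entry.
G1 = [i for i in v if v[i] > 0]   # "red" nodes
G2 = [i for i in v if v[i] < 0]   # "blue" nodes

# Links across the cut have positive s in both directions.
cross_positive = all(s(k, l) > 0 and s(l, k) > 0 for k in G1 for l in G2)

# Item (ii): inside one group, a link towards the node with the larger |v|
# has s >= 0 and the opposite link has s <= 0 (both are zero for tied entries).
within_pattern = all(
    s(k, l) >= 0 and s(l, k) <= 0
    for group in (G1, G2)
    for k in group
    for l in group
    if abs(v[l]) >= abs(v[k])
)

# Two concrete links between red nodes 1 (entry 0.57) and 3 (entry 0.09).
s_1_to_3 = s(1, 3)
s_3_to_1 = s(3, 1)
```

Here \(s_{1\rightarrow 3}\approx -0.043\) and \(s_{3\rightarrow 1}\approx 0.274\), which matches the statement that the two directions between red nodes have opposite effects.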

Rights and permissions

OpenAccess This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Poignard, C., Pade, J.P. & Pereira, T. The Effects of Structural Perturbations on the Synchronizability of Diffusive Networks. J Nonlinear Sci 29, 1919–1942 (2019). https://doi.org/10.1007/s00332-019-09534-7

DOI: https://doi.org/10.1007/s00332-019-09534-7

Keywords

- Ordinary differential equations

- Synchronization

- Stability theory

- Phase transitions

- Perturbations

- Graphs and linear algebra

- Network models, deterministic